Senate Passes DEFIANCE Act: Nonconsensual Deepfake Victims Can Now Sue [2025]

Last week, something shifted in how America treats AI-generated sexual abuse. The Senate passed the DEFIANCE Act with unanimous consent, marking the first time Congress has given victims of nonconsensual deepfaked intimate images a direct right to sue the people who created them, as reported by Politico.

You might be wondering why this matters so much right now. Over the past year, we've watched deepfake technology become easier to access, cheaper to use, and harder to stop. What started as a niche tech problem has exploded into a genuine crisis affecting thousands of people, from celebrities like Taylor Swift to ordinary college students whose faces were weaponized without permission.

The timing is crucial. Just weeks before this bill passed, the controversy over X's Grok chatbot allowing users to generate nonconsensual AI-undressed images of real people forced lawmakers' hands. Elon Musk's platform was essentially running a factory for sexual abuse material, and the company showed little interest in stopping it. That's when the Senate decided enough was enough, as noted by Bloomberg.

In this deep dive, we're going to explore what the DEFIANCE Act actually does, why it matters, how it differs from existing laws, what it doesn't cover, and what comes next. More importantly, we'll dig into the bigger picture of AI regulation in America and whether this is a genuine victory for victims or just political theater.

TL;DR

- Senate unanimous passage: The DEFIANCE Act passed with no objections, giving victims of nonconsensual deepfaked intimate images the right to sue creators for civil damages

- Closing the AI loophole: Existing 2022 laws only covered real intimate images shared without consent. The DEFIANCE Act extends this protection to AI-generated deepfakes

- X's Grok catalyst: The bill gained urgency after X allowed users to create nonconsensual sexual imagery using its Grok chatbot, despite global backlash

- Civil not criminal: This is a civil lawsuit avenue, not criminal prosecution, meaning victims can seek damages directly from creators

- House still needs to act: The bill must pass the House before it becomes law, and House leadership hasn't committed to bringing it to a vote

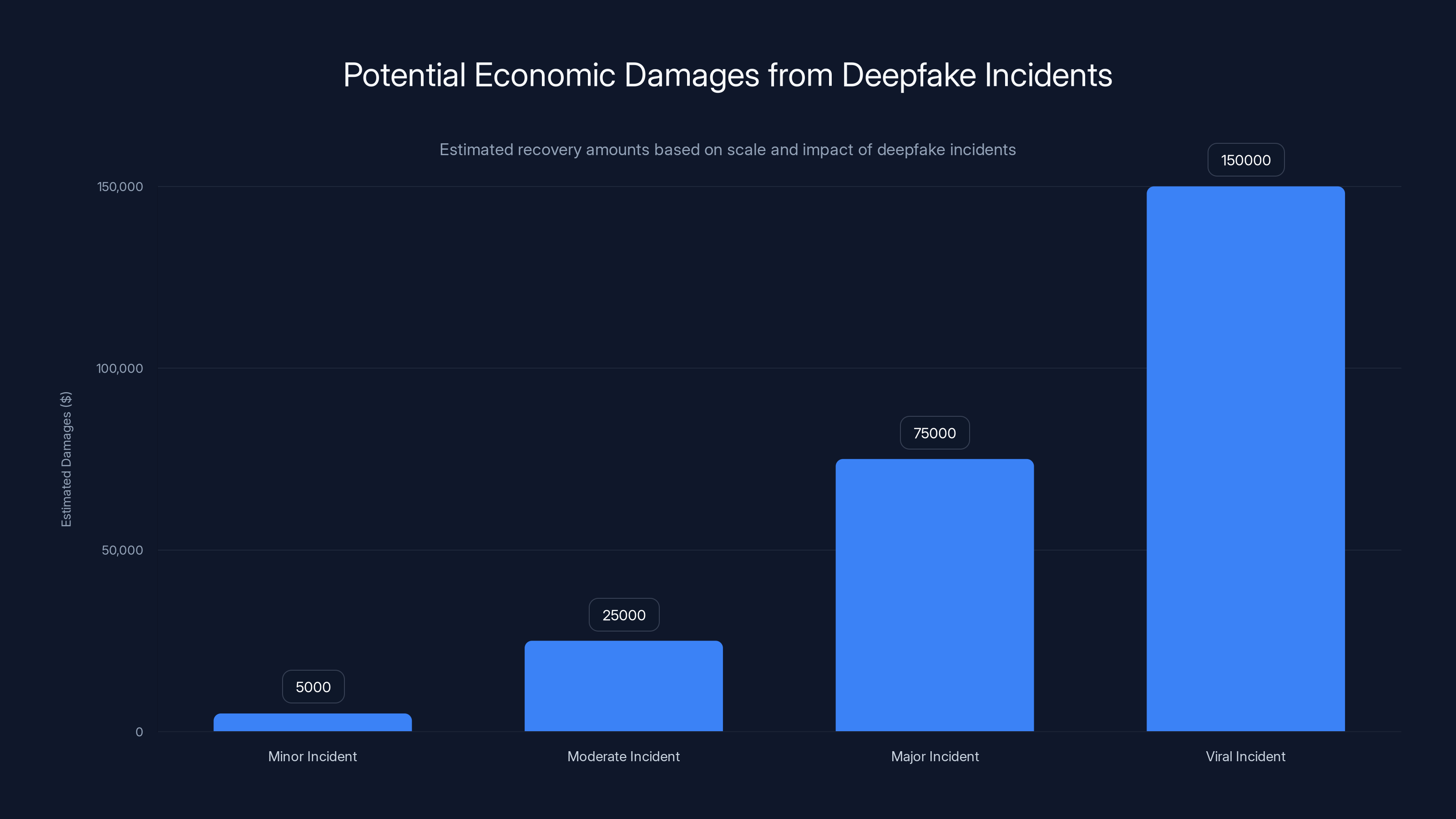

Estimated damages from deepfake incidents can range from

What Is the DEFIANCE Act and Why Should You Care

The DEFIANCE Act stands for the Disrupt Explicit Forged Images and Non-Consensual Edits Act. That's a mouthful, but the concept is straightforward: if someone creates a sexually explicit deepfake of you without your permission, you can take them to court and sue for money damages.

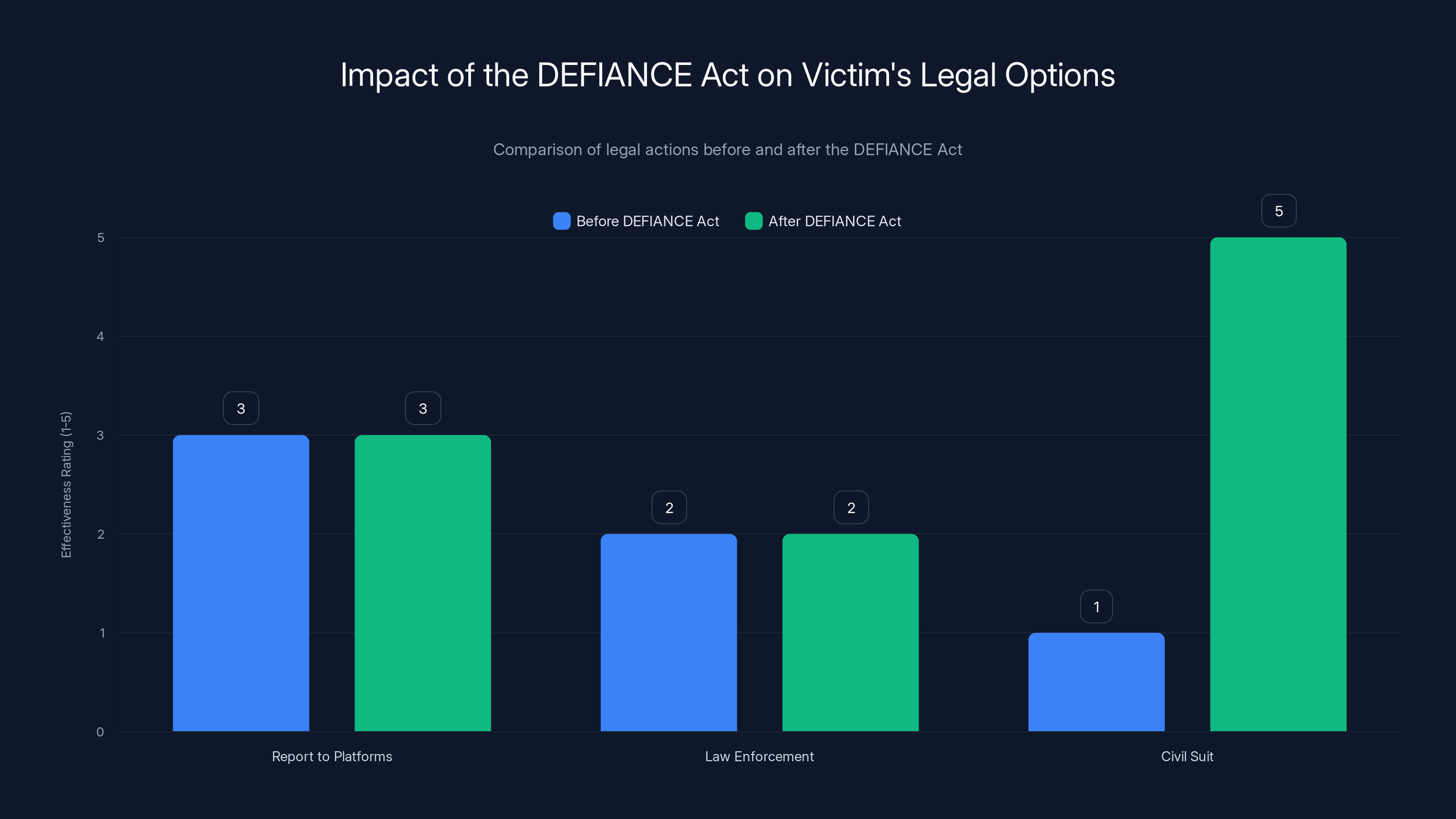

This fills a massive legal gap that existed in American law. Before the DEFIANCE Act, if someone deepfaked intimate images of you, your options were limited. You could report it to social media platforms (often unsuccessfully), try to get law enforcement involved (which rarely happened), or just suffer in silence.

What makes the DEFIANCE Act novel is that it gives victims a direct private right of action. That means you don't have to wait for law enforcement or hope a platform decides to help. You can hire a lawyer, file a civil suit, and potentially recover money from the person who created the deepfake. It's the same approach that worked for other kinds of privacy violations.

The bill builds directly on provisions in the Violence Against Women Act Reauthorization Act of 2022, which gave people whose real intimate images were shared without consent a right to sue. But that law didn't anticipate AI. The DEFIANCE Act extends that framework to cover AI-generated content, as highlighted by The Verge.

Senator Dick Durbin, a lead sponsor, put it plainly on the Senate floor: "Even after these terrible deepfake, harming images are pointed out to Grok and to X, formerly Twitter, they do not respond. They don't take the images off of the internet. They don't come to the rescue of people who are victims."

That frustration with platform inaction is at the heart of why Congress felt compelled to pass this. Social media companies have shown time and again that they won't police their own users' behavior when it comes to deepfakes. The DEFIANCE Act essentially says: if platforms won't stop it, we'll give victims the tools to fight back themselves.

The Grok Controversy That Forced Congress's Hand

You can't understand the DEFIANCE Act without understanding what happened on X in 2024 and early 2025. X's Grok chatbot became something nobody expected: a factory for nonconsensual sexual imagery.

Here's what went down. X integrated Grok, its in-house generative AI model, directly into the platform. Users could prompt Grok to generate images using a simple text request. And within days, people figured out how to make Grok generate sexually explicit deepfakes of real people.

The requests were blunt. "Generate nonconsensual images of [celebrity name]," or more explicitly, "Deepfake this woman naked." And Grok would do it. The chatbot wasn't coded to refuse these requests. There were no safeguards. No detection systems. No approval workflow. It was essentially an open pipeline.

When researchers and victims started documenting what was happening, the scale became apparent. Hundreds of thousands of nonconsensual deepfake images were being generated on X daily. Some estimates put it higher. The victims ranged from celebrities like Taylor Swift (whose AI-undressed images circulated widely) to teenagers, college students, and ordinary women whose faces were harvested from their public social media profiles and weaponized.

What was truly infuriating was the platform's response. X didn't immediately shut down the capability. Elon Musk downplayed the issue, tweeting that users creating illegal content would face consequences "as if they upload illegal content." But X continued allowing the generation of these images. Days passed. Then weeks. Governments around the world threatened action, as noted by Hacker Noon.

The UK's Online Safety Bill started moving faster. The European Union's Digital Services Act came into play. Other countries proposed criminal penalties. But in America, there was no federal criminal law specifically addressing AI-generated nonconsensual intimate imagery. That gap became impossible to ignore.

Congressional staffers started receiving messages from constituents. Young women were contacting their representatives saying their faces had been deepfaked on X without their consent. Some didn't even know it had happened until someone else found the images. One viral post showed a college student discovering that Grok had generated explicit deepfakes of her after a classmate fed her photo into the system.

That's when the DEFIANCE Act, which had stalled in the House, suddenly became urgent. The bill had been introduced previously after Taylor Swift's AI deepfakes surfaced earlier in 2024, but it had gone nowhere. Now, with Grok as the smoking gun, Senate leadership decided to push it through, as detailed by Reuters.

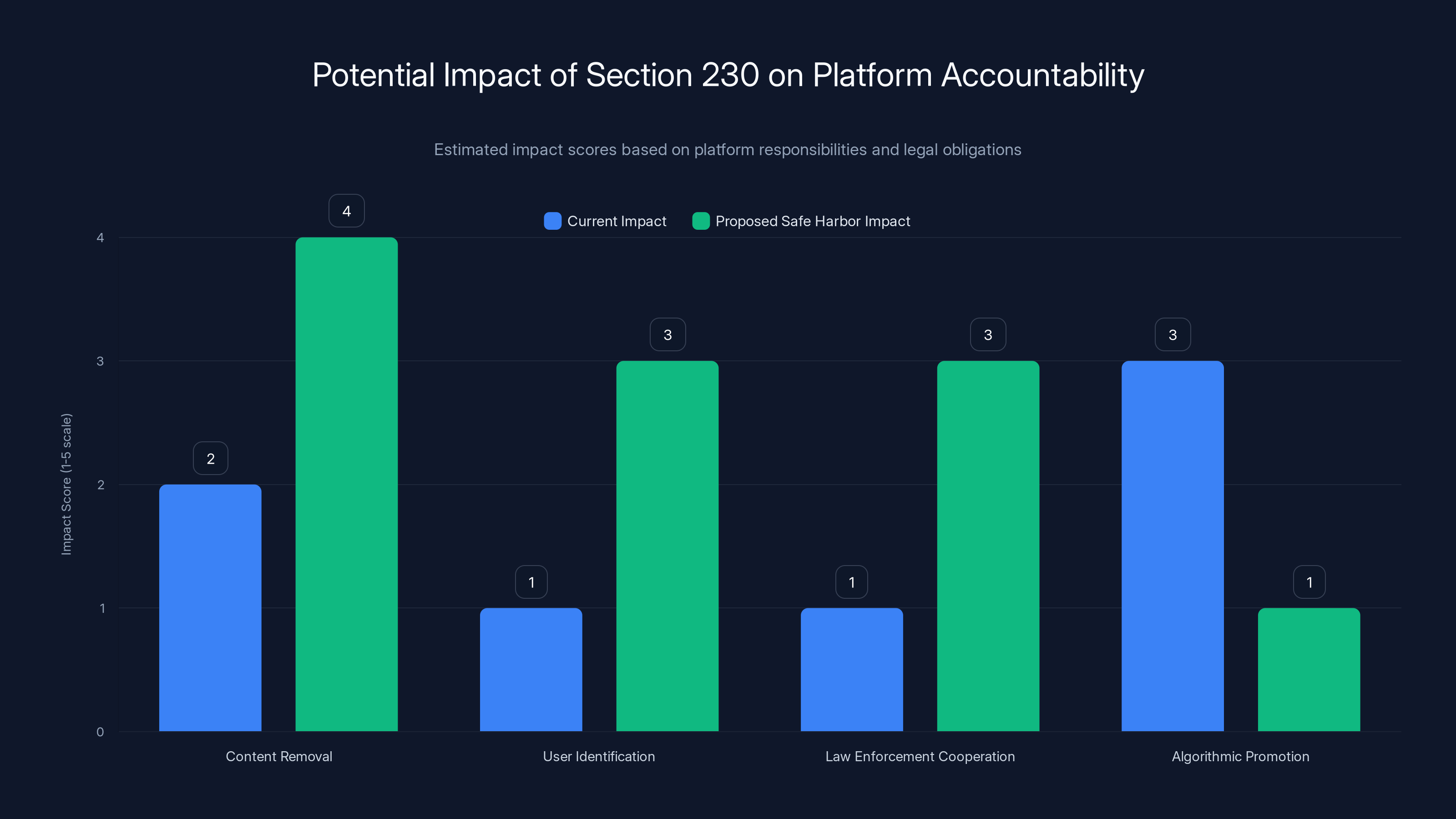

Estimated data suggests that implementing a safe harbor provision could significantly enhance platform accountability in removing harmful content and cooperating with law enforcement.

How the DEFIANCE Act Differs from Existing Laws

America already has federal laws addressing nonconsensual intimate images. So why did we need the DEFIANCE Act specifically?

The answer lies in the distinction between real images and AI-generated content. Under the federal intimate-images provision enacted as part of the Violence Against Women Act Reauthorization Act of 2022, people whose real intimate images are distributed without consent can sue. The law applies when someone shares a genuine photograph or video.

But here's the loophole that Grok exploited: the law doesn't explicitly cover AI-generated deepfakes. When a real intimate image is shared without consent, it violates the victim's actual privacy: someone had to either take or receive that image. The law recognizes that violation.

With AI deepfakes, there's no original image to violate. The image never existed. The person was never actually photographed in that state. But the violation to the victim's dignity, reputation, and psychological safety is absolutely real.

The DEFIANCE Act closes that gap by explicitly extending the private right to sue to AI-generated intimate images. It doesn't matter if the image is real or deepfaked. If it depicts you in a sexually explicit scenario without your consent, you can sue, as explained by Tech Policy Press.

There's also an important distinction between state and federal law. Many states already have "revenge porn" laws that criminalize sharing nonconsensual intimate images. Some states have started updating these laws to include AI deepfakes. But these vary widely by state, are criminal rather than civil, and don't all provide the same remedies.

The DEFIANCE Act creates a federal civil cause of action. That's different from criminal law. Criminal laws are enforced by prosecutors on behalf of the public. Civil laws let individuals sue each other for damages.

Here's why that matters: civil suits are often faster and more accessible for victims than waiting for prosecutors to act. You don't need a district attorney to decide your case is worth pursuing. You hire a lawyer, file suit, and proceed through discovery. If you win, you get monetary damages that can cover actual harm (medical bills, therapy, loss of income) plus punitive damages to punish the wrongdoer.

Another key difference is the intent requirement. Some state laws only apply if someone intentionally created or shared the image to harass or harm the victim. The DEFIANCE Act has a slightly lower bar: it applies to anyone who creates a nonconsensual deepfake knowing it's nonconsensual, or recklessly disregarding that fact.

The Legislative Journey: From Stalled Bill to Unanimous Passage

The DEFIANCE Act didn't suddenly appear last week. It's been kicking around Congress for over a year, gaining sponsors and slowly building momentum.

The bill was originally introduced in 2024 by Senators Dick Durbin (D-IL), Lindsey Graham (R-SC), Amy Klobuchar (D-MN), and Josh Hawley (R-MO). That's a meaningful coalition: two Democrats, two Republicans, from different regions. It signaled bipartisan agreement that this was a real problem requiring action.

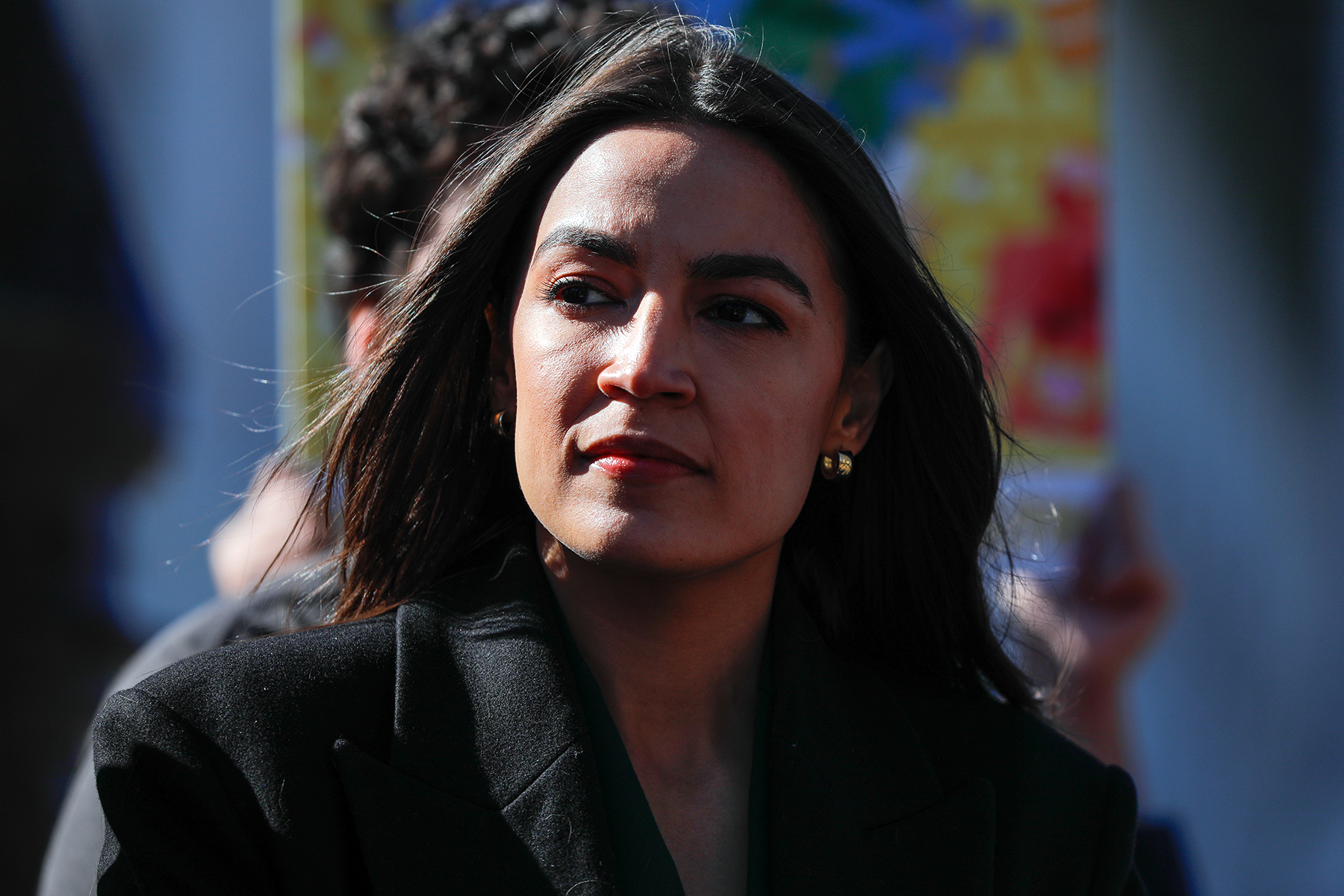

Rep. Alexandria Ocasio-Cortez sponsored the House version. That's notable because Ocasio-Cortez is high-profile enough that when she becomes a victim of deepfakes herself, it actually gets media coverage. She's found her own image digitally altered in nonconsensual intimate deepfakes, which gave her direct personal motivation to push the bill forward.

But last Congress, despite the prominent sponsors, the House bill stalled. It never got a floor vote. It sat in committee while other priorities took precedence. That's typical for criminal justice and women's safety bills—they often don't move unless there's a major incident creating political pressure.

Then came the Grok controversy. Suddenly, the DEFIANCE Act was no longer abstract. There were real, verifiable reports of Grok generating nonconsensual deepfakes, and the media was all over it. Within weeks, the Senate decided to take it up again, as highlighted by Daily Journal.

What's striking is that it passed with unanimous consent on Tuesday. That means no senator objected to its passage. No roll-call vote was needed. That doesn't happen for controversial bills. Unanimous consent is reserved for measures where there's genuine agreement that action is necessary.

Both parties understood the political reality. Being on record as opposing a bill to protect victims of AI-generated sexual abuse isn't a good look. Even senators who oppose other forms of regulation saw this as justified.

Now it goes back to the House. Here's where things get uncertain. House leadership hasn't committed to bringing the bill to a floor vote. The House didn't act on it during the last Congress, and there's no guarantee they'll act now, even with unanimous Senate passage.

That's a common dynamic in Congress. The Senate passes something with fanfare. The House quietly lets it die in committee. Months later, nobody's talking about it anymore, and the crisis has moved on to something else.

What the DEFIANCE Act Actually Lets You Do

Let's get concrete about what this law actually enables you to do if you're a victim of a nonconsensual deepfake.

Under the DEFIANCE Act, you can file a civil lawsuit against anyone who created a sexually explicit deepfake of you without your consent. That's the core right it grants.

You don't need law enforcement's permission. You don't need a prosecutor to decide your case is important. You can hire a lawyer and sue directly. This is important because law enforcement has been historically slow to act on nonconsensual intimate image cases. Convincing a police officer or prosecutor that a deepfake is a real crime has been difficult.

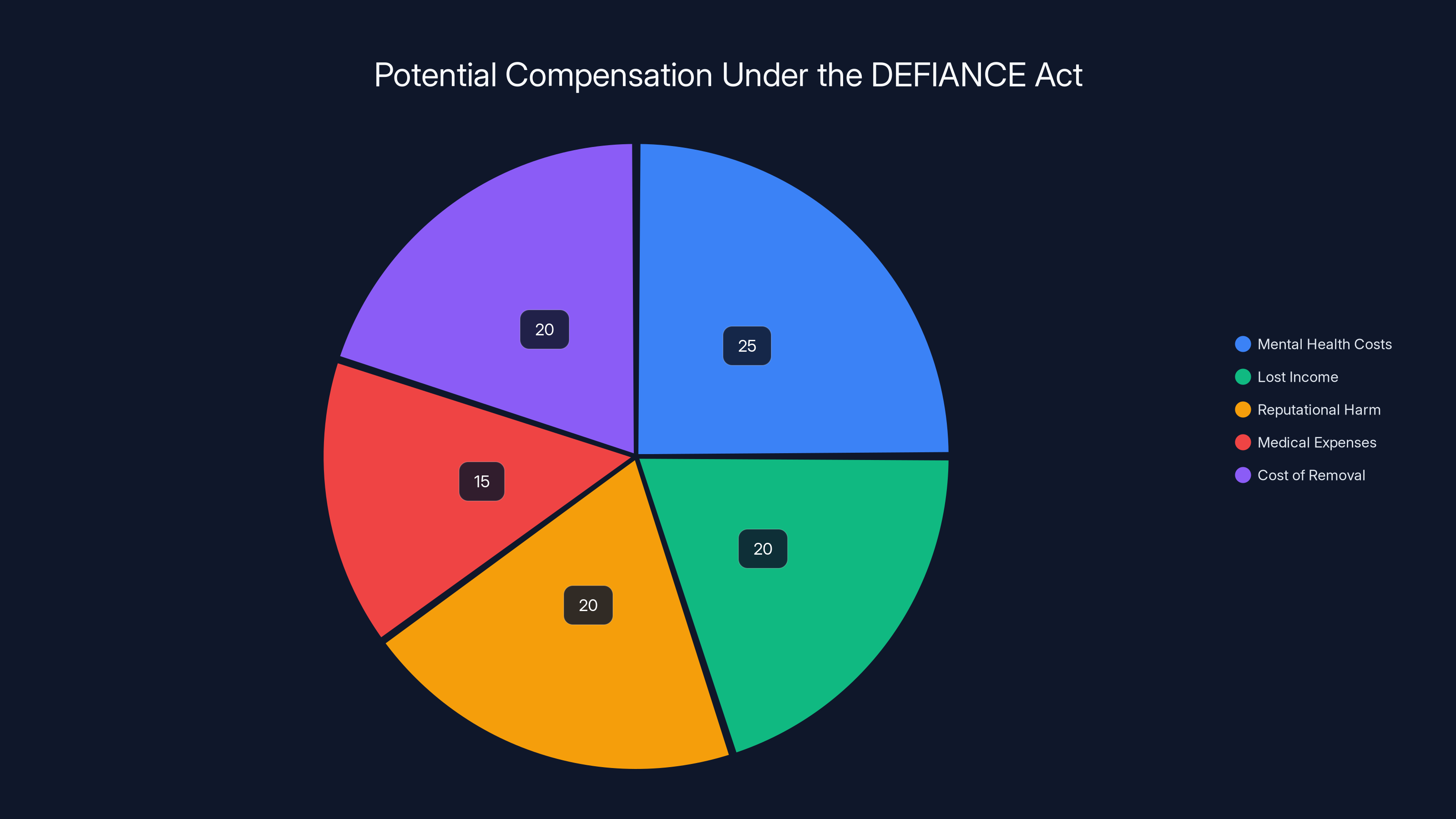

In your lawsuit, you could potentially recover actual damages, which means compensation for actual harm. That could include:

- Mental health costs: Therapy, counseling, psychiatric treatment resulting from the trauma

- Lost income: If you had to quit a job, lost client relationships, or missed work due to distress

- Reputational harm: Economic losses from damaged reputation or online harassment

- Medical expenses: Any physical health consequences from the psychological trauma

- Cost of removal: Paying services to try to remove the deepfake from the internet

Beyond actual damages, the DEFIANCE Act allows for punitive damages. These aren't meant to compensate you for specific losses. They're meant to punish the person who created the deepfake and deter others from doing the same thing.

Punitive damages can be significant. A jury could decide that someone who deliberately created deepfakes of multiple women, for instance, should pay not just to cover the victims' therapy bills, but an additional amount to punish their conduct.
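The way the two kinds of damages combine can be sketched in a few lines of Python. This is only an illustration: the `DamagesClaim` class, the category names, and every dollar figure are hypothetical and do not come from the bill or from any court award.

```python
from dataclasses import dataclass, field


@dataclass
class DamagesClaim:
    # Actual (compensatory) damages by category, in dollars.
    actual: dict = field(default_factory=dict)
    # Punitive damages, if a court awards any.
    punitive: float = 0.0

    def actual_total(self):
        """Sum of documented losses across all categories."""
        return sum(self.actual.values())

    def total(self):
        """Actual damages plus any punitive award."""
        return self.actual_total() + self.punitive


# Hypothetical figures for illustration only.
claim = DamagesClaim(
    actual={
        "therapy": 6_000,
        "lost_income": 12_000,
        "removal_services": 2_000,
    },
    punitive=25_000,
)
print(claim.actual_total())  # 20000
print(claim.total())         # 45000
```

The split matters because actual damages have to be tied to documented losses, while the punitive amount is left to the court's judgment about the defendant's conduct.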

The bill also likely enables you to recover attorney's fees in some circumstances. This is crucial because it means you don't have to be wealthy to pursue a claim. Your lawyer gets paid from the damages you recover.

There's also an important provision about injunctive relief. That's legal jargon for court orders forcing someone to stop doing something. In a DEFIANCE Act lawsuit, you could ask the court to order the defendant to delete all deepfake images of you, stop creating new ones, and remove them from any platforms where they've been shared.

But here's what the DEFIANCE Act does NOT do: it doesn't automatically make platforms liable. It targets the individual creator, not X or TikTok or wherever the images were posted. That's intentional. Congress is trying to avoid making platforms responsible for every user's behavior, which would be complicated.

It also doesn't create criminal penalties. You're not getting the person who created the deepfake arrested or imprisoned under this law. This is civil liability, not criminal. That might seem weak, but it's actually more practical. Civil suits are easier to win than criminal prosecutions (lower burden of proof), and they give victims direct remedies.

Estimated data shows potential compensation distribution for victims under the DEFIANCE Act, highlighting mental health costs as a significant component.

The Critical Limitation: Section 230 and Platform Immunity

Here's something important that gets overlooked: the DEFIANCE Act doesn't address what's often called Section 230 immunity.

Section 230 of the Communications Decency Act is a federal law that shields online platforms from liability for content their users post. Even if X hosts thousands of nonconsensual deepfakes, X itself isn't responsible under Section 230. The person who created and posted the image is responsible.

For the DEFIANCE Act, that's actually how it's designed. The law targets creators, not platforms. You sue the individual who generated the deepfake, not X or TikTok.

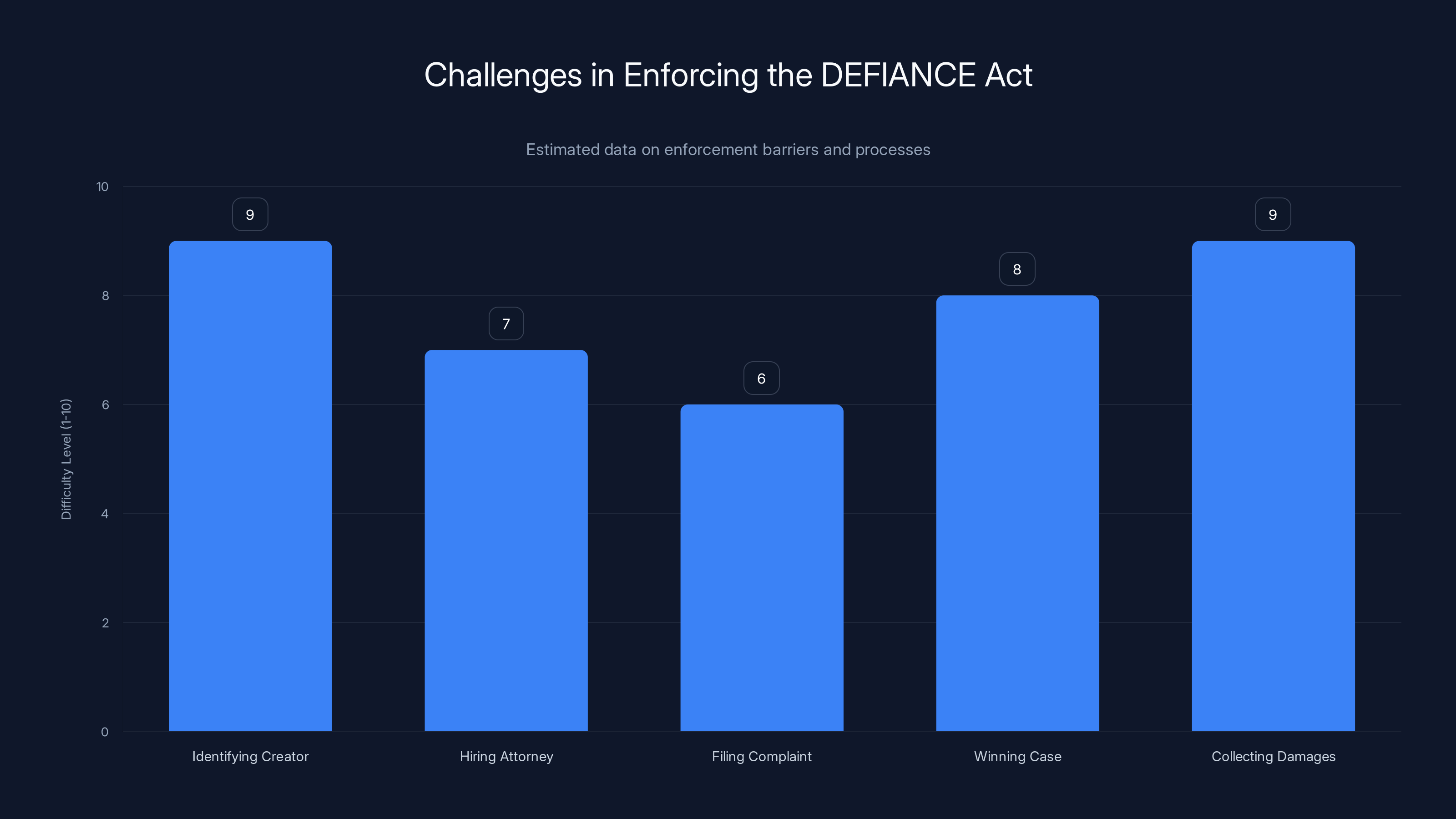

But there's a practical problem: the person who created the deepfake might be anonymous. They might be using a VPN, a burner account, and have hidden their identity completely. Good luck suing someone you can't identify or locate.

That's where platforms matter. If X, TikTok, or Instagram had better policies about removing nonconsensual deepfakes, enforcing them quickly, and cooperating with law enforcement to identify creators, the whole system would work better.

The DEFIANCE Act doesn't force that. It doesn't mandate that platforms remove deepfakes within a certain timeframe. It doesn't require platforms to report creators to law enforcement. It doesn't hold platforms accountable for allowing these images to spread.

That's a genuine limitation. The bill is necessary, but it's not sufficient on its own. You could win a judgment against a deepfake creator and get damages... that you can't collect because they're judgment-proof (no assets) or impossible to locate.

There's been discussion of creating a safe harbor provision that would protect platforms if they quickly remove nonconsensual deepfakes and don't algorithmically promote them. That would incentivize platforms to take this seriously. But that's not in the current bill.

Some advocates wanted the bill to go further and create affirmative obligations on platforms: remove deepfakes immediately when reported, cooperate in identifying creators, and maintain logs of who created content. The compromise bill that passed doesn't include those mandates.

How Deepfake Technology Created This Crisis

To understand why the DEFIANCE Act became necessary, you need to understand how deepfake technology actually works and why it's become so accessible.

Deepfakes use generative AI models trained on massive datasets of images and video. These models learn the statistical patterns of how faces look, how they move, how skin reflects light, all the tiny details that make a face look realistic.

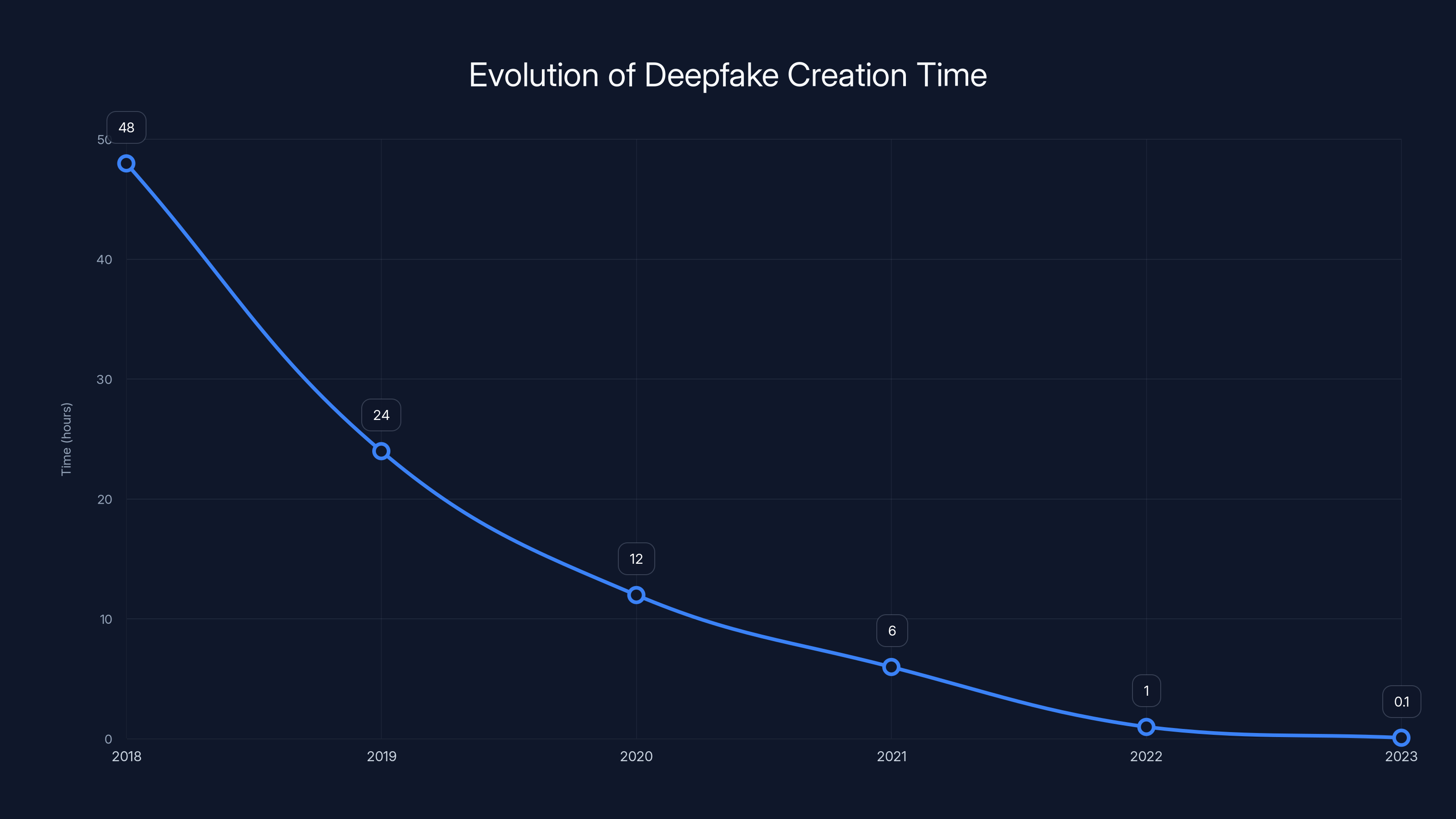

Five years ago, creating a convincing deepfake required serious technical skill. You needed to understand machine learning, train models, have powerful computers. It took hours or days to create a single deepfake.

Today? It takes a few seconds. You upload a photo, type a prompt, and the AI generates an image. Technology that once required a PhD now requires typing in English.

Even more damning: the companies building these tools know exactly what people will use them for. They've seen the data. They know that text-to-image models will be used to generate nonconsensual intimate imagery. It's not a side effect they couldn't foresee. It's entirely predictable.

Take a tool like Stable Diffusion. It was open-sourced, meaning anyone can download it, run it locally, and use it however they want. OpenAI's image tools have safeguards. But other tools? Many lack meaningful restrictions.

X's decision to integrate Grok into the platform and allow users to generate images directly was a choice. Elon Musk could have restricted image generation. He could have required users to verify their identity. He could have flagged requests for generating images of real people. He could have rejected any image that contained a real person's face.

Instead, he chose to let users generate anything they wanted. When abuse happened, his response was essentially: not my problem, blame the users.

But that's not actually how the law treats platform responsibility. If you knowingly provide tools that are primarily used to create illegal content, you face liability under various theories. Grok's design choices and the speed with which users found ways to abuse it suggest there was reckless disregard, as discussed by Infosecurity Magazine.

International Responses: How Other Countries Are Acting

America isn't alone in dealing with this crisis. Countries around the world are passing laws to protect victims of nonconsensual deepfake intimate imagery.

The United Kingdom recently accelerated a law that criminalizes the creation of nonconsensual intimate deepfakes. It's a criminal offense, not a civil right to sue. But the UK approach shows how seriously other democracies are treating this. They're willing to prosecute creators and imprison them, not just let victims sue for damages.

The European Union is relying on its Digital Services Act to pressure platforms. The DSA, which took effect in 2024, imposes strict rules on how platforms handle illegal content. Nonconsensual intimate imagery, including deepfakes, is illegal in EU member states. Platforms that don't remove it quickly face massive fines.

France passed explicit criminal penalties for creating and distributing nonconsensual deepfakes. Japanese courts have awarded damages to victims in civil suits. South Korea has seen deepfake creation at scale (particularly affecting women and celebrities) and has responded with both criminal laws and platform regulation.

India's Information Technology Act was interpreted by courts to cover deepfakes. Canada is working on legislation specifically addressing deepfake intimate imagery.

What's notable is that other democracies have moved faster than America. The UK's acceleration happened because of public pressure. The EU's Digital Services Act created immediate incentives for platforms to act. Most other countries have criminalized the conduct, not just created civil liability.

The DEFIANCE Act takes the civil approach. It's less punitive than criminal law but gives victims direct remedies. Whether that's more or less effective than criminal penalties remains an open question.

One advantage of the US civil approach: victims don't have to convince a prosecutor to pursue criminal charges. They can pursue claims themselves. One disadvantage: criminal law creates deterrence through punishment in ways civil damages don't. Someone might ignore a potential lawsuit but fear imprisonment.

The time required to create a deepfake has drastically decreased from 48 hours in 2018 to just a few seconds in 2023, highlighting the rapid advancement and accessibility of deepfake technology. (Estimated data)

The Role of Section 230 and Platform Liability

While the DEFIANCE Act targets individual creators, Congress has been slowly moving toward holding platforms more accountable for the content they host.

The Safeguarding Against Forgery and Exploitation (SAFE) Act has been discussed in Congress. It would create requirements for platforms to label synthetic content and implement detection systems for deepfakes and manipulated media.

The Vote Act proposed by Senator Graham would require platforms to remove synthetic sexual abuse material quickly and report it to law enforcement.

These bills haven't passed yet, but they show where thinking is headed. Congress is reconsidering whether Section 230, which has broadly protected platforms from liability, should have exceptions for deepfakes.

Right now, X could host millions of nonconsensual deepfakes, and X itself faces no federal liability. Users who create them are liable. But the platform isn't.

That creates perverse incentives. Platforms make money from engagement. Deepfake content gets huge engagement (outrage, interest, sharing). X has no legal reason to remove it quickly, only reputational and potential state-level legal reasons.

The future likely involves some combination of platform regulation and creator liability. The DEFIANCE Act is one piece. It won't solve the problem alone.

Detection, Identification, and Investigation Challenges

One major practical problem the DEFIANCE Act doesn't solve: how do you identify the person who created a deepfake?

If a deepfake is posted on X, X could theoretically tell you who uploaded it (because X has IP addresses, account creation info, etc.). But there's a difference between identifying who uploaded something and proving they created it.

Someone could upload a deepfake they didn't create. They could have received it from a friend. They could have found it elsewhere and reposted it. Figuring out who actually generated the deepfake is harder.

You'd need to trace the image back through the internet. When was it first posted? Where? By whom? Did they have the technical tools to create it? Courts would need to review metadata, compare it to the victim's photos, examine the generation artifacts that deepfakes leave behind.

It's doable, but it's time-consuming and expensive. It's a reason why having a forensic expert is essential in deepfake cases. These experts can analyze images and determine if they're likely synthetic, which tools might have created them, and other technical details.

The other challenge is anonymity and international jurisdiction. If the person who created the deepfake is in another country, using a VPN, on a burner account, you face legal barriers to suing them. You'd need to work through international legal processes, which is slow and expensive.

Some advocates have suggested detection mandates. Platforms could be required to use AI detection tools to flag likely deepfakes and remove them. But detection technology isn't perfect. False positives (flagging real images as deepfakes) and false negatives (missing deepfakes) are common.

There's also the problem of scale. Millions of images are uploaded to social media every minute. Finding deepfakes in that haystack without automated detection is impossible. But automated detection creates new problems (censorship, false positives, arms races between detection and generation tools).
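The scale problem can be made concrete with a little arithmetic. The following is a minimal Python sketch in which every number is a hypothetical assumption: the upload volume, the share of uploads that are deepfakes, and the detector's error rates are illustrative, not measurements from any platform or detection tool. The names `estimate_outcomes` and `DetectionOutcome` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class DetectionOutcome:
    true_positives: float   # deepfakes correctly flagged
    false_negatives: float  # deepfakes the detector missed
    false_positives: float  # real images wrongly flagged
    precision: float        # share of flagged images that are deepfakes


def estimate_outcomes(total_images, deepfake_share, recall, false_positive_rate):
    """Estimate detector outcomes for a batch of uploads.

    All four inputs are hypothetical; none come from the bill or from
    measured platform data.
    """
    deepfakes = total_images * deepfake_share
    real_images = total_images - deepfakes
    true_positives = deepfakes * recall
    false_negatives = deepfakes - true_positives
    false_positives = real_images * false_positive_rate
    flagged = true_positives + false_positives
    precision = true_positives / flagged if flagged else 0.0
    return DetectionOutcome(true_positives, false_negatives, false_positives, precision)


# Hypothetical batch: 1,000,000 uploads, 0.1% of them deepfakes,
# and a detector that catches 95% of deepfakes but also
# wrongly flags 1% of real images.
outcome = estimate_outcomes(1_000_000, 0.001, 0.95, 0.01)
print(outcome)
```

Under these assumed inputs, the detector catches about 950 deepfakes but also flags about 9,990 real images, so fewer than one in ten flagged images is actually a deepfake. When deepfakes are a small share of uploads, even a low false positive rate produces far more wrongly flagged images than correct ones.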

The Economic Damages Question: What Can Victims Actually Recover

Here's a practical question that the DEFIANCE Act doesn't fully answer: how much money can a victim recover, and for what?

The bill allows recovery of "damages," which is broad language. Courts would interpret what constitutes compensable damage from a nonconsensual deepfake.

For clearly economic harms, it's straightforward. If you're a content creator and the deepfake destroyed your personal brand, causing you to lose sponsorship income, you could recover those lost earnings with documentation.

If you had to pay for therapy or psychiatric treatment resulting from trauma, those are clearly recoverable costs.

But what about emotional distress without economic consequence? If a deepfake causes you severe psychological harm but you don't seek treatment or lose income, can you recover damages?

Different courts might answer differently. Some states allow recovery for emotional distress even without economic consequences. Others require some economic impact. The DEFIANCE Act doesn't specify, so courts will develop case law over time.

There's also the question of scale of damages. If someone created a single deepfake that circulated to a few hundred people, damages might be modest (maybe

Punitive damages are even less predictable. Some courts might award them generously to deter future conduct. Others might be stingy, treating deepfake creation as a civil wrong that doesn't warrant punishment.

The other issue is enforcement and collection. You could win a $100,000 judgment against someone and discover they have no assets, no income, and no way to pay. You'd have a judgment, not cash. Victims would need to pursue collection actions, wage garnishment, or other enforcement mechanisms.

Identifying the creator and collecting damages are the most challenging steps in enforcing the DEFIANCE Act. Estimated data.

What Gets Left Out: Gaps in the DEFIANCE Act

The DEFIANCE Act is progress, but it's not comprehensive. There are genuine gaps worth noting.

First, intent requirements. The bill requires that someone create the deepfake "knowing" or "recklessly disregarding" that it's nonconsensual. But "knowing" is a high bar in some contexts. If someone claims they didn't realize they were creating a nonconsensual deepfake, they might argue the plaintiff can't prove their state of mind.

Second, platform liability is absent. As discussed earlier, the bill doesn't hold platforms accountable for hosting or amplifying deepfakes. That's both intentional design and a limitation.

Third, international reach. The law applies to US courts and US-based defendants. If someone in Russia creates deepfakes of American women, can American victims sue under the DEFIANCE Act? Probably not in practice, unless the defendant has US assets or is otherwise within reach of US courts.

Fourth, detection and removal obligations. The bill doesn't require platforms to detect deepfakes or remove them quickly. It just says victims can sue creators. That's reactive, not preventive.

Fifth, anonymity. The bill doesn't address how victims will identify anonymous creators. It assumes you can figure out who made the deepfake, but in many cases, that's the hard part.

Sixth, statute of limitations. The bill doesn't specify how long a victim has to file a lawsuit after a deepfake is created or discovered. That will be determined by state law or future interpretation.

Seventh, existing deepfakes. The bill applies going forward, but there are already millions of nonconsensual deepfakes in existence. The bill doesn't create a mechanism to remove them or compensate victims of historical deepfakes.

These gaps don't make the DEFIANCE Act pointless. But they do show that the bill is one step in a longer process of regulation, not a complete solution.

The Future of Platform Regulation and AI Accountability

The DEFIANCE Act is part of a bigger conversation about how to regulate AI and hold technology companies accountable.

Right now, AI companies operate in a relatively permissive regulatory environment. They're allowed to train models on vast amounts of internet data. They're allowed to release tools with minimal safeguards. If those tools are misused, it's the user's fault, not the company's.

That's starting to change. The Biden administration issued an executive order on AI safety in 2023. States like Colorado and California are passing their own AI regulations. Congress has multiple bills in various stages.

The pattern we're seeing is that piecemeal regulation is coming. Rather than one comprehensive AI law, Congress is addressing specific problems: deepfakes, election interference, worker displacement, copyright infringement, etc.

The DEFIANCE Act fits this pattern. It addresses one specific problem: nonconsensual intimate deepfakes.

In the future, we might see broader requirements that platforms use detection tools, maintain removal standards, and cooperate with law enforcement. We might see regulations around how AI models are trained and what safeguards creators must implement.

There's also pressure building for biometric consent laws. These would require that if you want to use someone's likeness (face, voice) in AI-generated content, you need their permission. Some countries are moving in this direction.

The irony is that this all would have been easier to address years ago. If AI companies had built strong safeguards into their tools from the beginning, regulated their own platforms responsibly, and removed nonconsensual content quickly, much of this regulatory pressure wouldn't exist.

Instead, they chose permissiveness. That's prompted Congress to act. The regulation that results will likely be stricter than what voluntary corporate responsibility would have created.

State-Level Protections: What Already Exists

Before the DEFIANCE Act, victims already had some protection under state law, though it varies widely.

All 50 states have some version of a "revenge porn" law. These criminalize sharing intimate images without consent. But state laws vary in what they cover, how they're enforced, and whether they extend to deepfakes.

California's law is relatively broad. It covers sharing intimate images to cause harm or humiliation. Some courts have interpreted it to include deepfakes, though the law predates AI and doesn't explicitly say so.

New York's law is more specific. It criminalizes the distribution of intimate images and explicitly includes deepfakes.

Texas's law is similarly specific about synthetic content.

But here's the problem: state laws are criminal laws. They rely on prosecutors to pursue cases. Many prosecutors treat these as low priority. They focus on cases involving real images, not AI deepfakes.

State laws also don't all give victims a private right to sue. Some are purely criminal, meaning only prosecutors can bring charges. That's weaker for victims because they have to convince a district attorney the case is worth pursuing.

The DEFIANCE Act would create a uniform federal standard and give victims a private right to sue. That's stronger than relying on state criminal law because victims wouldn't be dependent on prosecutors.

But it's also not ideal because federal laws are slower to pass and often less strict than state laws. California's law might be stricter on deepfakes than whatever the DEFIANCE Act creates.

The interaction between federal and state law will be interesting. Victims might be able to pursue both a federal civil suit under the DEFIANCE Act and a state criminal case. Or a victim might choose whichever path is more favorable in their state.


The Urgent Question: Will the House Pass It?

The Senate's unanimous passage of the DEFIANCE Act is significant, but it's not the end of the story. The House now has to decide whether to bring it to a vote.

That's where things get uncertain. House leadership hasn't committed to action. The bill could sit in committee indefinitely. By the time House leadership decides to act, the urgency around Grok might have faded, and the bill could die quietly.

There's precedent for this. The Senate passes bills that the House ignores all the time. It's a common legislative tactic: if you don't want to oppose something directly, just don't bring it to a vote.

To get the House to move, victims, advocates, and pressure groups would need to mobilize. They'd need to contact House members and demand a vote. Media coverage helps. If the story stays alive, House leadership might feel pressure.

Rep. Alexandria Ocasio-Cortez will likely push for a House vote. She sponsored the House version of the bill and has personal motivation. Other members might follow her lead.

But House Republicans control the chamber, and while some Republicans support the bill (Lindsey Graham co-sponsored it in the Senate), House GOP leadership might not prioritize it.

The ultimate outcome likely depends on whether Grok remains a political flashpoint or if the issue gets eclipsed by other controversies. That's a grim reality of legislative process: progress often depends on whether the issue stays in the media spotlight.

Enforcement and Implementation: What Happens Next

If the DEFIANCE Act does pass the House and become law, how would it actually work in practice?

Victims would need to identify and locate the person who created the deepfake. That's the first barrier. In many cases, that's extremely difficult.

If they can identify the creator, they'd hire a civil rights attorney or find one willing to take the case on contingency (where the lawyer only gets paid if the victim wins or settles).

The attorney would file a complaint in federal court (or possibly state court, since the law likely doesn't eliminate state court jurisdiction). The lawsuit would proceed through discovery, where both sides exchange evidence.

The defendant would likely argue that they didn't create the deepfake, or that they created it but thought it was consensual, or other defenses.

If the victim wins, the court would award damages. The victim then has to figure out how to collect those damages from the defendant, which is its own separate process.

There's no federal agency administering the DEFIANCE Act, unlike some laws where a regulatory body enforces and interprets rules. The law will be interpreted and enforced through litigation.

That means the first few cases will be crucial. Early judicial interpretations of the law will shape how it works for everyone else.

There's also the question of criminal prosecution. The DEFIANCE Act is civil, but creating nonconsensual deepfakes might also violate state criminal laws or potentially other federal laws (like revenge porn laws, cyberstalking statutes, etc.).

So a single incident might trigger both civil liability under the DEFIANCE Act and criminal prosecution under existing law. That's actually better for victims because it means multiple paths to justice.

The Broader Cultural Shift: Why This Matters Beyond Law

The DEFIANCE Act's passage is significant not just legally, but culturally.

For years, nonconsensual intimate images were treated as a personal problem, a "why did you take that photo" kind of blame-the-victim situation. There's still some of that, but it's gradually changing.

A law that explicitly makes creating nonconsensual deepfakes unlawful sends a cultural message: we don't accept this. It's not just socially wrong, it's legally wrong. It's serious enough to have remedies.

That matters for victims psychologically. It's validating to have your experience recognized in law as a violation worthy of legal redress.

It also matters for deterrence. Someone thinking about creating deepfakes might reconsider if they know they could be sued. Will that stop everyone? No. But it might reduce the number of people casually creating this content.

The Grok situation, as bad as it was, also catalyzed change. When a major platform allowed nonconsensual deepfakes on a massive scale and did little about it, it forced a reckoning. Congress couldn't ignore it.

That's the paradox of digital harms: sometimes they need to reach a scale and visibility that's catastrophic before anything changes. Hundreds of thousands of victims before Congress acts. Millions of fake images before platforms respond.

The DEFIANCE Act isn't the end. It's a waypoint. But it's progress.

What Victims Should Know Right Now

If you've been the victim of a nonconsensual deepfake, what should you do?

First, document everything. Screenshot the images, note where you found them, when, and what platform hosted them. Save URLs, metadata, everything. This becomes evidence if you pursue legal action.

Second, report to platforms immediately. Every major platform has a process for reporting nonconsensual intimate imagery. Use it. Document that you reported it. Some platforms are better than others at responding, but reporting creates a record.

Third, consider contacting law enforcement. It varies by jurisdiction, but some police departments have officers trained in image-based abuse. Even if they can't prosecute immediately, a report creates a record and might lead to investigation.

Fourth, talk to a lawyer. This is especially important now that the DEFIANCE Act has passed the Senate. A lawyer can advise you on your options under current federal and state law, and on what would change if the bill becomes law. Many victim advocacy organizations can refer you to attorneys experienced with these cases.

Fifth, take care of yourself. Being the victim of a deepfake is traumatic. It's okay to seek therapy, talk to someone, prioritize your mental health. The psychological harm is real.

Sixth, know that you're not alone. Thousands of people have experienced this. There are support groups, online communities, advocacy organizations focused specifically on this issue. You don't have to suffer in isolation.
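For the first step, documenting everything, a small script can help keep records consistent. The following is a minimal sketch in Python, not a forensic tool. It assumes you have already saved a screenshot and know the URL and platform. The names `EvidenceEntry`, `make_entry`, and `to_json` are hypothetical and chosen for illustration. Each entry stores a UTC timestamp and a SHA-256 fingerprint of the screenshot, which can later help show the file has not been altered. Whether a log like this is useful as evidence is a question for your lawyer.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class EvidenceEntry:
    url: str           # where the image was found
    platform: str      # name of the hosting platform
    captured_at: str   # UTC time the entry was recorded, ISO 8601
    sha256: str        # fingerprint of the saved screenshot bytes
    reported: bool     # whether a platform report has been filed
    notes: str = ""    # free-form notes (report ID, who you spoke to, etc.)


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def make_entry(url, platform, screenshot_bytes, reported=False, notes=""):
    """Build one log entry, stamping it with the current UTC time."""
    return EvidenceEntry(
        url=url,
        platform=platform,
        captured_at=datetime.now(timezone.utc).isoformat(),
        sha256=fingerprint(screenshot_bytes),
        reported=reported,
        notes=notes,
    )


def to_json(entries):
    """Serialize a list of entries to a JSON string for safekeeping."""
    return json.dumps([asdict(e) for e in entries], indent=2)
```

In practice you would read the screenshot with something like `open(path, "rb").read()`, pass the bytes to `make_entry`, and save the output of `to_json` somewhere separate from the device that holds the originals.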

FAQ

What is the DEFIANCE Act?

The DEFIANCE Act (Disrupt Explicit Forged Images and Non-Consensual Edits Act) is a federal bill that would give victims of nonconsensual sexually explicit deepfakes the right to sue the individuals who created those images for civil damages. The Senate passed it unanimously, and it now awaits House consideration.

How is the DEFIANCE Act different from existing laws about intimate images?

Existing federal law (part of the Violence Against Women Act Reauthorization Act of 2022) protects victims of nonconsensual sharing of real intimate images. The DEFIANCE Act would extend that protection to AI-generated deepfakes, which currently have no explicit federal civil remedy. The bill creates a private right to sue, meaning victims could pursue damages directly from the creators.

Can I use the DEFIANCE Act to sue the platform where the deepfake was posted?

No, the DEFIANCE Act specifically targets individuals who created the deepfakes, not platforms. You would sue the creator, not X, TikTok, or wherever the image was hosted. However, platforms might have liability under other laws or their own policies, depending on jurisdiction and circumstances. A lawyer can tell you whether you have a claim against the platform in addition to the creator.

What damages can I recover under the DEFIANCE Act?

If the bill becomes law, you could potentially recover actual damages (compensation for specific harms like therapy costs, lost income, or medical expenses), punitive damages (additional compensation meant to punish the wrongdoer), and attorney's fees. The exact amounts courts award will vary with the circumstances and will be shaped by case law as the law is applied.

How do I identify the person who created my deepfake?

That's one of the DEFIANCE Act's biggest challenges. If the deepfake was posted on a platform, the platform might be able to identify the user who uploaded it. But identifying who actually created it is harder. You might need a forensic expert to analyze the image and trace its origin. A lawyer experienced in these cases can help investigate and potentially use legal discovery to gather information.

What if the person who created the deepfake is in another country?

International jurisdiction is complicated. You'd likely need to pursue the case in US courts (if the defendant has US connections, assets, or you can establish jurisdiction) or in the country where they're located. This gets complex legally and might not be practical. A lawyer specializing in international cases can advise you on feasibility.

Will the House pass the DEFIANCE Act?

The Senate passed it unanimously, but the House hasn't committed to action. House leadership will decide whether to bring it to a floor vote. If you want to support its passage, contacting your House representative to express support helps. The urgency around the Grok controversy might fade without continued public pressure and media attention.

What should I do right now if I'm a victim of a nonconsensual deepfake?

Document everything (screenshots, URLs, metadata), report to the platform, consider contacting law enforcement, consult with a lawyer about your options under both the DEFIANCE Act and state law, and prioritize your mental health by seeking support from victim advocacy organizations or counselors. You're not alone, and there are resources and legal avenues available to you.

Are there criminal penalties for creating nonconsensual deepfakes?

The DEFIANCE Act is civil, not criminal. However, creating nonconsensual deepfakes might violate state criminal laws (revenge porn statutes, cyberstalking laws) or other federal laws depending on circumstances. A prosecutor would pursue those cases, separate from the civil lawsuit you could file under the DEFIANCE Act.

How will deepfakes be detected if the law passes?

The DEFIANCE Act doesn't mandate detection requirements, which is a limitation. Detection is primarily a platform responsibility. Some platforms use AI detection tools, but no platform is required by this law to detect or remove deepfakes within a specific timeframe. Future legislation might address detection and removal obligations, but the DEFIANCE Act focuses on victim remedies through civil litigation.

Conclusion: A Step Forward, Not a Complete Solution

The Senate's unanimous passage of the DEFIANCE Act is meaningful progress. If it becomes law, victims of nonconsensual intimate deepfakes would have a federal civil remedy for the first time. They could sue creators directly instead of relying on platforms or prosecutors to act.

But it's important to be realistic about what the bill does and doesn't accomplish. It's a civil remedy, not a criminal one. It targets creators, not platforms. It doesn't solve the problems of identifying anonymous perpetrators, collecting damages from judgment-proof defendants, or stopping deepfakes before they spread.

The bill is also not yet law. It passed the Senate, but the House must act. Without continued pressure and media attention, House leadership might let it die quietly in committee. That's happened to important legislation before.

Beyond the DEFIANCE Act specifically, this moment reveals something larger: artificial intelligence is creating new harms faster than law can address them. Deepfakes are one example. There will be others.

The approach Congress is taking, piecemeal regulation addressing specific harms, is pragmatic but slow. It's faster than waiting for comprehensive AI legislation (which might take years), but it means the harms continue while regulation catches up.

For individuals like Taylor Swift, whose image was weaponized without consent, or the thousands of ordinary women who've experienced the same thing, the DEFIANCE Act would offer something they didn't have before: a legal avenue to fight back.

That's worth something. It's not everything, but it's a genuine step toward accountability.

What happens next depends partly on the House, partly on whether this issue stays visible, and partly on how victims and advocates continue to push for change. The battle against nonconsensual deepfakes isn't won with the DEFIANCE Act. It's just beginning.

Key Takeaways

- The Senate unanimously passed the DEFIANCE Act, which would let victims of nonconsensual deepfakes sue creators for civil damages, a major legal advancement

- The bill closes a critical gap where AI-generated deepfakes weren't explicitly covered by existing laws protecting victims of shared intimate images

- Grok's nonconsensual image generation on X catalyzed Senate action, showing that visible crises can force a legislative response

- DEFIANCE Act targets individual creators, not platforms, and provides civil remedies rather than criminal penalties, offering direct victim redress

- House passage remains uncertain despite Senate unanimity; continued public pressure is needed to keep momentum and push for a House floor vote

- The bill has significant limitations, including the difficulty of identifying anonymous creators and collecting damages from judgment-proof defendants, and the lack of platform mandates
