The Privacy Problem Nobody's Talking About

Every time you chat with ChatGPT, you're handing over a piece of yourself. Your questions, your problems, your half-baked ideas, your medical concerns all flow into OpenAI's servers. And while OpenAI lets you opt out of having your conversations used for training, the fundamental architecture remains unchanged: a centralized system that holds everything.

Most people don't realize this is a choice, not an inevitability. We've accepted the trade-off so completely that the alternative feels impossible. But Signal's founder, who built the most trusted encrypted messaging app on the planet, decided to do something about it. He created Confer, an AI assistant built on a radically different foundation: hardware-based encryption that makes it technically impossible for anyone—including Confer itself—to see what you're saying.

This isn't marketing speak. This is actual cryptography running at the hardware level. And it represents one of the most serious challenges to ChatGPT's dominance that we've seen yet.

The stakes matter here. AI assistants now handle everything from therapy-like conversations to business strategy to personal financial planning. The data flowing through these systems is becoming a permanent record of human consciousness. If you've ever felt uncomfortable sharing something with ChatGPT, you've already understood the problem. Confer exists because someone finally decided to solve it.

Why Privacy in AI Suddenly Became Critical

Let's start with what happened in the real world. In 2024, OpenAI announced that user conversations would be used to improve its models by default, though users could opt out. That single decision created a privacy vacuum. Even with opt-out available, the default was wrong. It meant that if you forgot to click the right button, your most sensitive conversations were feeding the machine.

But that's just OpenAI. The broader industry has spent the last eighteen months treating AI privacy as an afterthought.

Google's Gemini, Meta's AI tools, Anthropic's Claude—they all operate under similar models. They promise not to train on your data, they offer opt-outs, they claim enterprise-grade security. But they're all fundamentally built on the same assumption: the company needs to see your conversations to make the system work.

This isn't paranoia. It's pattern recognition. We've watched tech companies promise privacy for decades, then quietly monetize data when it became convenient. Facebook did it. Google did it. Amazon did it. The business model of tech is built on surveillance. So when a company says "we don't use your data," the rational response is skepticism.

That's where Confer's approach becomes genuinely interesting. It doesn't ask you to trust the company. It makes data access technically impossible through architecture, not policy.

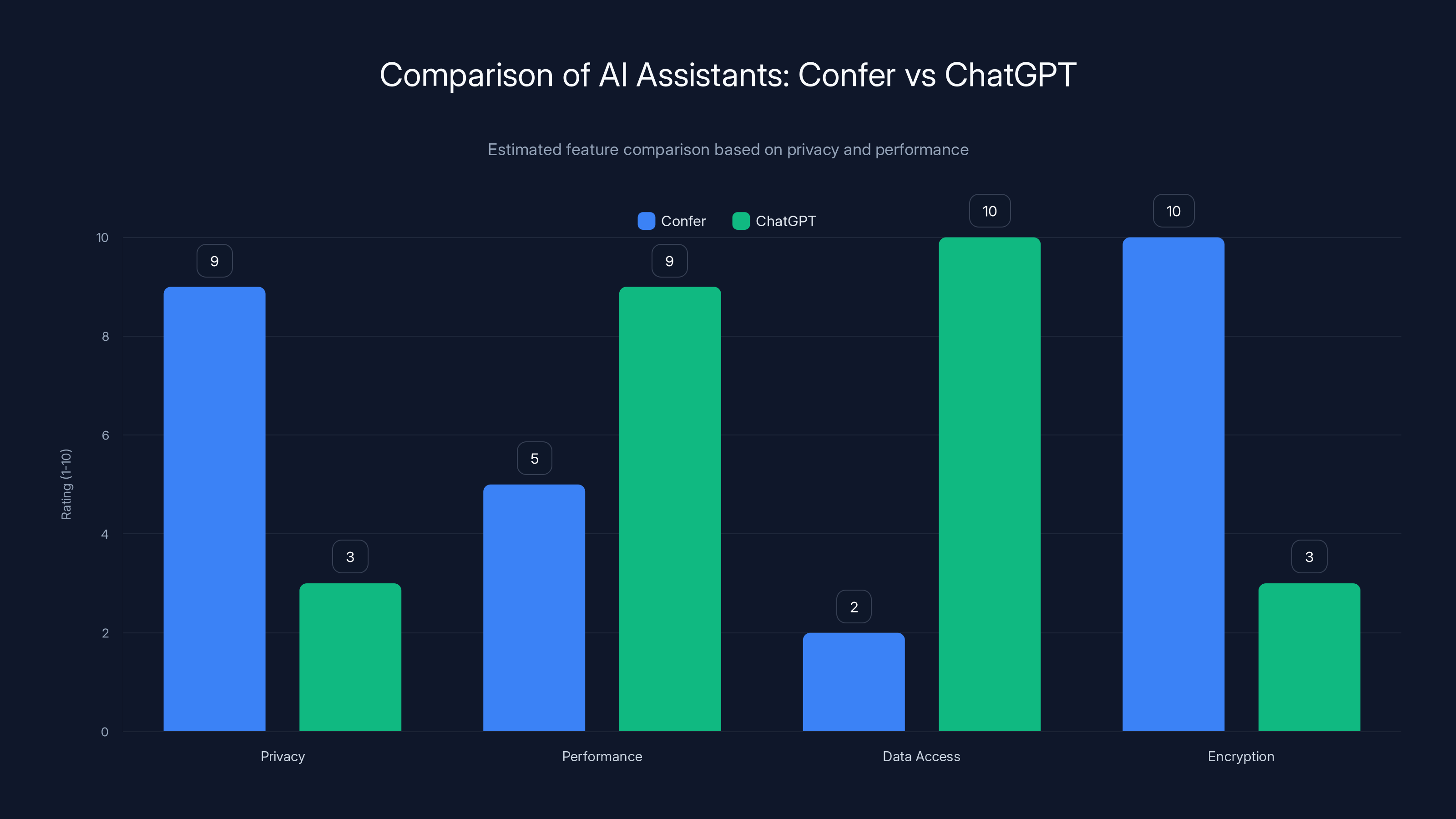

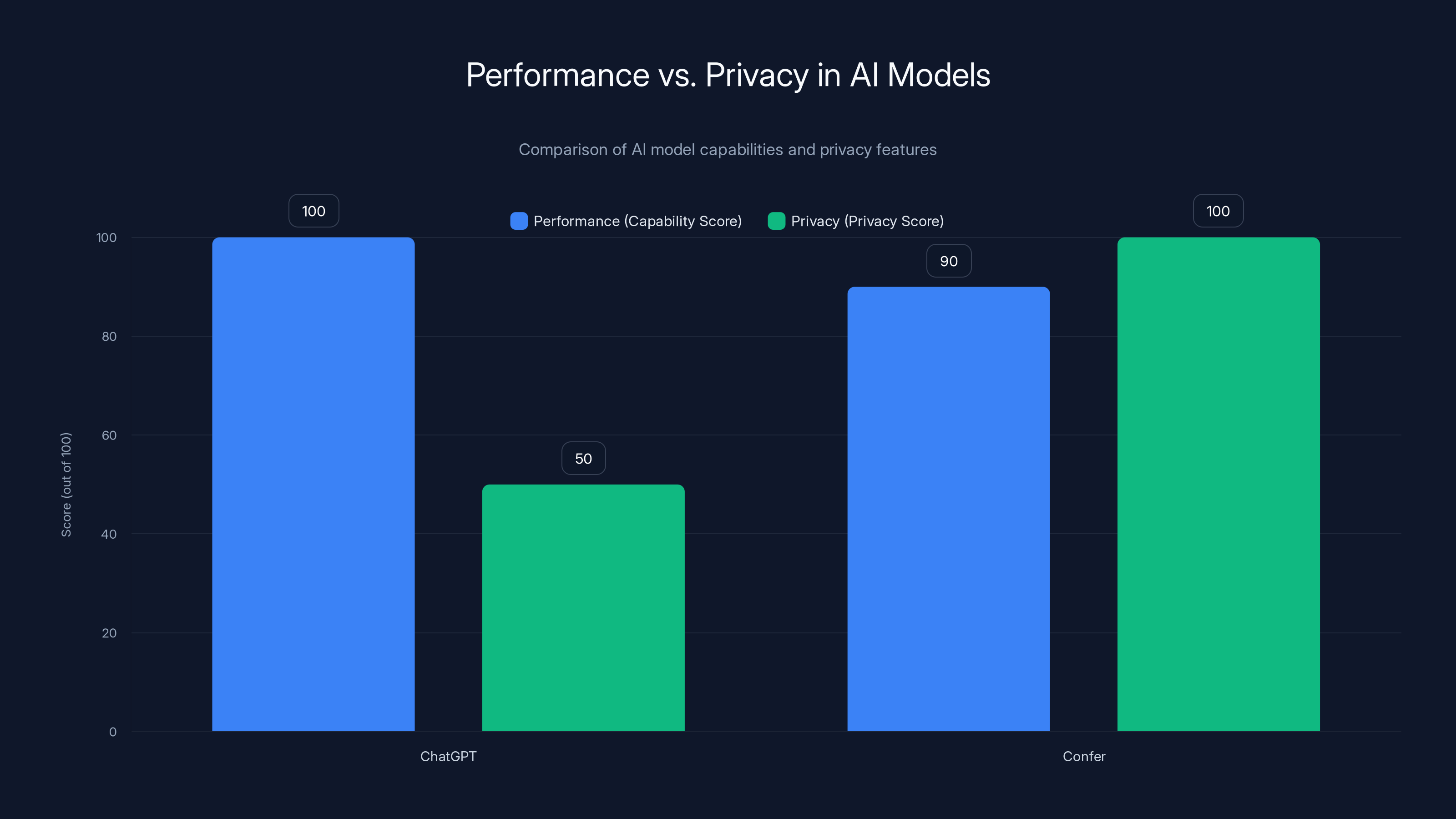

Confer excels in privacy and encryption due to its hardware-based approach, while ChatGPT performs better in data access and overall performance. (Estimated data)

Understanding Hardware-Based Encryption

Here's where most people's eyes glaze over. Let's make it concrete.

Traditional AI services work like this: you type something, it goes to the server, the server processes it, the server stores it, the server sends back a response. Even with TLS encryption during transmission, the data lands on the company's hardware in unencrypted form at some point. The company promises it's secure, promises it won't leak, promises it won't train on it. But it's always a promise. The data is always decryptable by someone inside the company.

Hardware-based encryption works differently. Instead of relying on software protections, which can be bypassed by anyone with system access, the isolation is enforced at the processor level. Your data never exists in plaintext anywhere the server's operating system, storage, or administrators can read it.

Think of it like this. With traditional encryption, it's like locking your diary in a safe. If someone has the combination or the key, they can open it. With hardware-based encryption, it's like writing your diary in a language that literally can only be read when you hold a specific physical device. The server can see the encrypted version. But without your device's hardware keys, the encrypted data is mathematically meaningless.

Confer uses this approach with what's called a Trusted Execution Environment (TEE). The AI model runs inside a secure enclave on the server. Your messages arrive encrypted. The TEE decrypts them just long enough to process them, then re-encrypts the response. The decrypted conversation never leaves the secure enclave. It never gets logged. It never gets stored.

What this means in practice: Confer literally cannot see your conversations. Not because they choose not to. But because the architecture makes it impossible. The data is encrypted when it arrives, is decrypted only inside the secure enclave while it's being processed, and returns encrypted. If the government demanded your conversations, or a hacker broke in, or someone inside Confer became curious, they'd find nothing but mathematical gibberish.

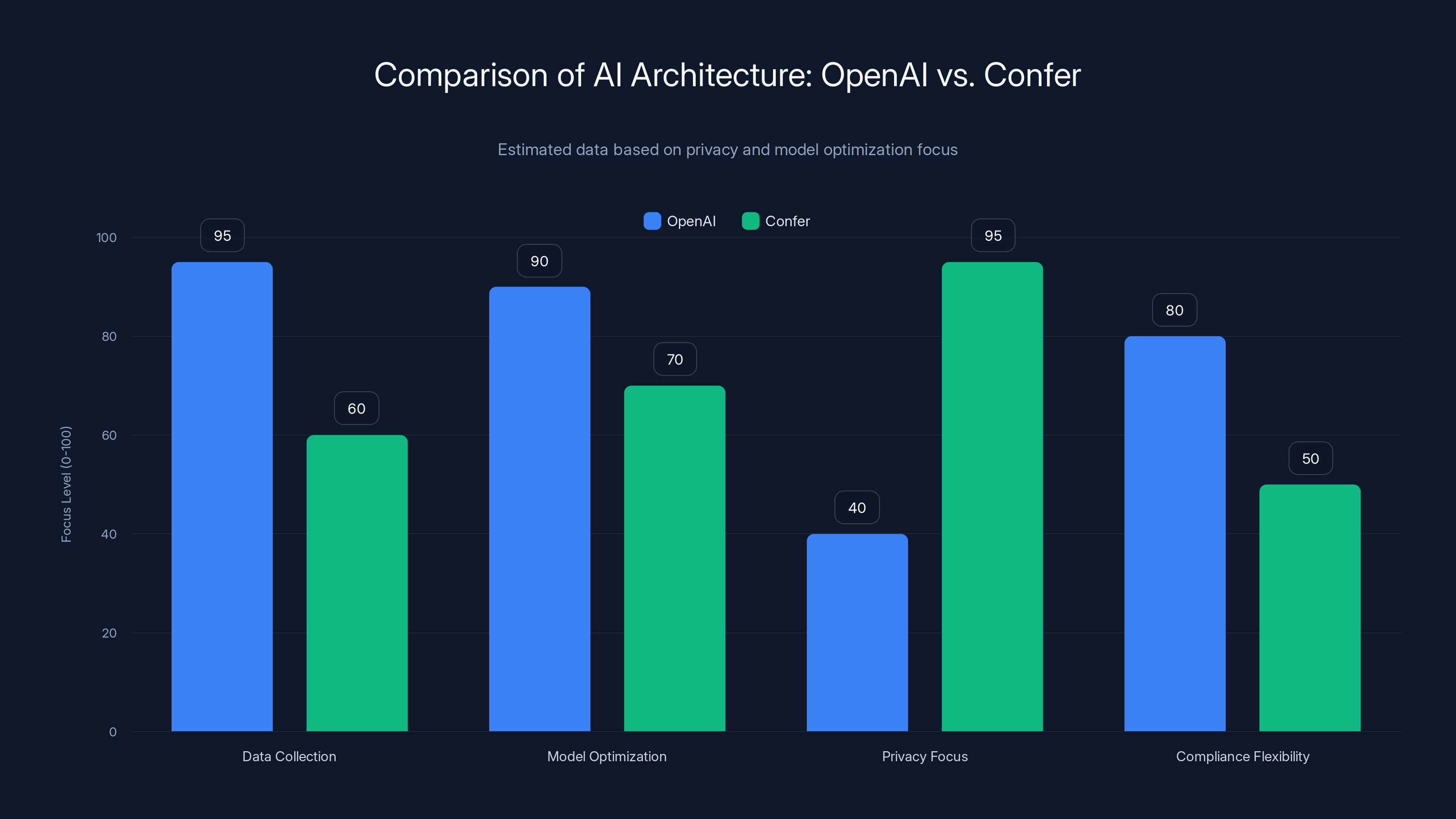

OpenAI focuses on data collection and model optimization, while Confer prioritizes privacy and encryption. Estimated data illustrates these strategic differences.

How This Actually Works in Practice

Let's walk through a real scenario. You ask Confer something sensitive—maybe a medical question you'd never Google because you're afraid it might affect your insurance, or a financial question about hiding assets in a divorce, or something you're genuinely ashamed of.

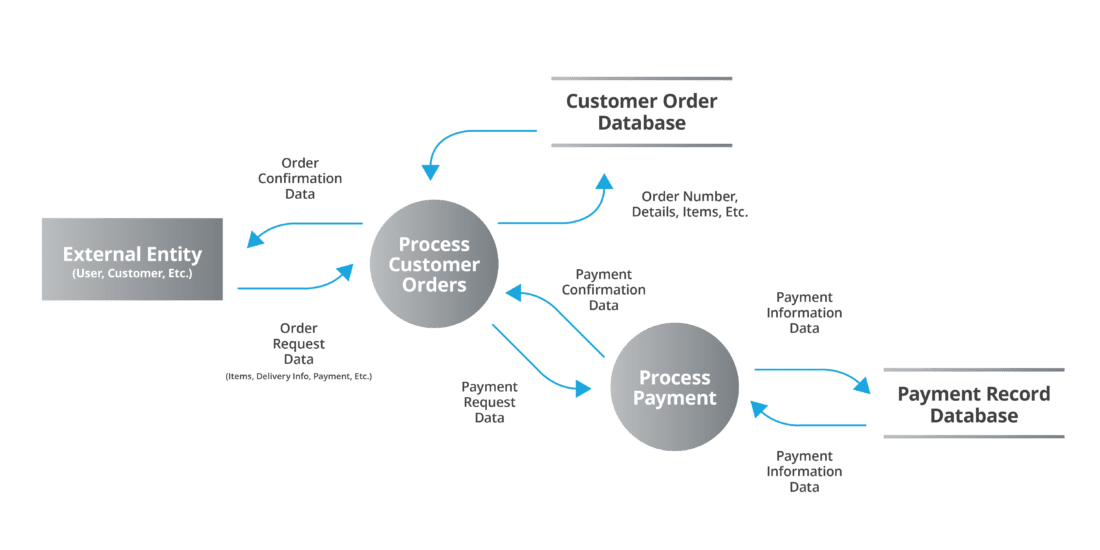

Step one: You type the question in the Confer app or web interface. At this point, the message is encrypted on your device using your encryption key.

Step two: The encrypted message travels to Confer's server. From the internet's perspective, this looks like random data. No one sniffing the network can see what you're asking.

Step three: The message arrives at Confer's server. Here's where it gets interesting. The server has hardware-based secure enclaves. Your encrypted message enters this secure area. And only inside the secure area does it get decrypted. The main operating system, the databases, the logging systems—they never see the unencrypted text.

Step four: Inside the secure enclave, the AI model processes your question. The computation happens. An answer is generated.

Step five: The response is re-encrypted using your key. Then it exits the secure enclave and travels back to you.

Step six: You decrypt and read the response. The server's copy is still encrypted and meaningless.
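The six steps above can be sketched as a small simulation. This is a minimal illustration of the data flow only, not Confer's implementation: the `Enclave` and `UntrustedHost` classes, the helper names, and the placeholder answer are all hypothetical, the session key is assumed to have been agreed between your device and the enclave beforehand, and the cipher is a toy built from SHA-256 and HMAC so the example runs on the standard library alone. It should not be used as real cryptography.

```python
import hashlib
import hmac
import secrets


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    """Derive separate encryption and MAC keys from one session key."""
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream (SHA-256 in counter mode). Illustration only."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(enc_key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC. Output layout: nonce (16) || ciphertext || tag (32)."""
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    """Verify the tag, then decrypt. Raises ValueError if the blob was altered."""
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("sealed message failed authentication")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


class Enclave:
    """Hypothetical stand-in for the TEE: the only place key and plaintext exist."""

    def __init__(self, session_key: bytes) -> None:
        self._key = session_key

    def handle(self, sealed_request: bytes) -> bytes:
        prompt = open_sealed(self._key, sealed_request).decode("utf-8")  # step 3
        answer = f"(placeholder answer to a {len(prompt)}-character prompt)"  # step 4
        return seal(self._key, answer.encode("utf-8"))  # step 5


class UntrustedHost:
    """Hypothetical stand-in for the OS, databases, and logs outside the enclave."""

    def __init__(self, enclave: Enclave) -> None:
        self._enclave = enclave
        self.log: list[bytes] = []  # everything the host gets to see

    def relay(self, sealed_request: bytes) -> bytes:
        self.log.append(sealed_request)
        sealed_response = self._enclave.handle(sealed_request)
        self.log.append(sealed_response)
        return sealed_response


session_key = secrets.token_bytes(32)  # assumed shared with the enclave in advance
host = UntrustedHost(Enclave(session_key))

question = "Is this symptom serious?"
sealed_question = seal(session_key, question.encode("utf-8"))  # step 1
sealed_answer = host.relay(sealed_question)  # steps 2 through 5
answer = open_sealed(session_key, sealed_answer).decode("utf-8")  # step 6
```

After the run, `host.log` holds only sealed blobs. The design point is that the host relays and can store messages but never holds the key, so the plaintext question and answer exist only on the client and inside `Enclave.handle`.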

This is not theoretical. Intel's SGX technology (Software Guard Extensions) has been available since 2015. Other chip manufacturers have similar implementations. The cryptography is proven. The only unusual part is that Confer actually uses it end-to-end, rather than as a partial solution.

Why ChatGPT's Model Can't Do This

OpenAI has the resources. They have the technical talent. OpenAI could implement hardware-based encryption tomorrow if they wanted to. So why haven't they?

The answer is uncomfortable but honest: it breaks their business model.

OpenAI's value comes from training better models. Better models require data. Lots of it. Their competitive advantage sits in having access to more conversations, more diverse questions, more real-world language patterns than anyone else. The larger the dataset, the better the model. The better the model, the more people pay for it.

If they implement end-to-end hardware encryption, they lose the ability to mine that data. They'd be choosing worse models to protect privacy. For a company whose entire strategy is "we'll have the best AI because we train on everything," that's an existential threat.

There's also the question of compliance and regulation. Right now, even with privacy promises, OpenAI can comply with government requests to access user data. The data is there. It's accessible. If you implement true end-to-end encryption, suddenly you physically cannot comply. Many companies avoid this because it creates legal ambiguity.

Confer, being a small startup without government customers, can afford to make the privacy pledge absolute. OpenAI, deeply embedded in enterprise and government use, cannot.

This is the core difference. ChatGPT built the best AI engine possible. Confer built the most private AI possible. They're optimizing for different variables. One prioritizes capability. The other prioritizes privacy. There's no conspiracy here, just alignment with business models and values.

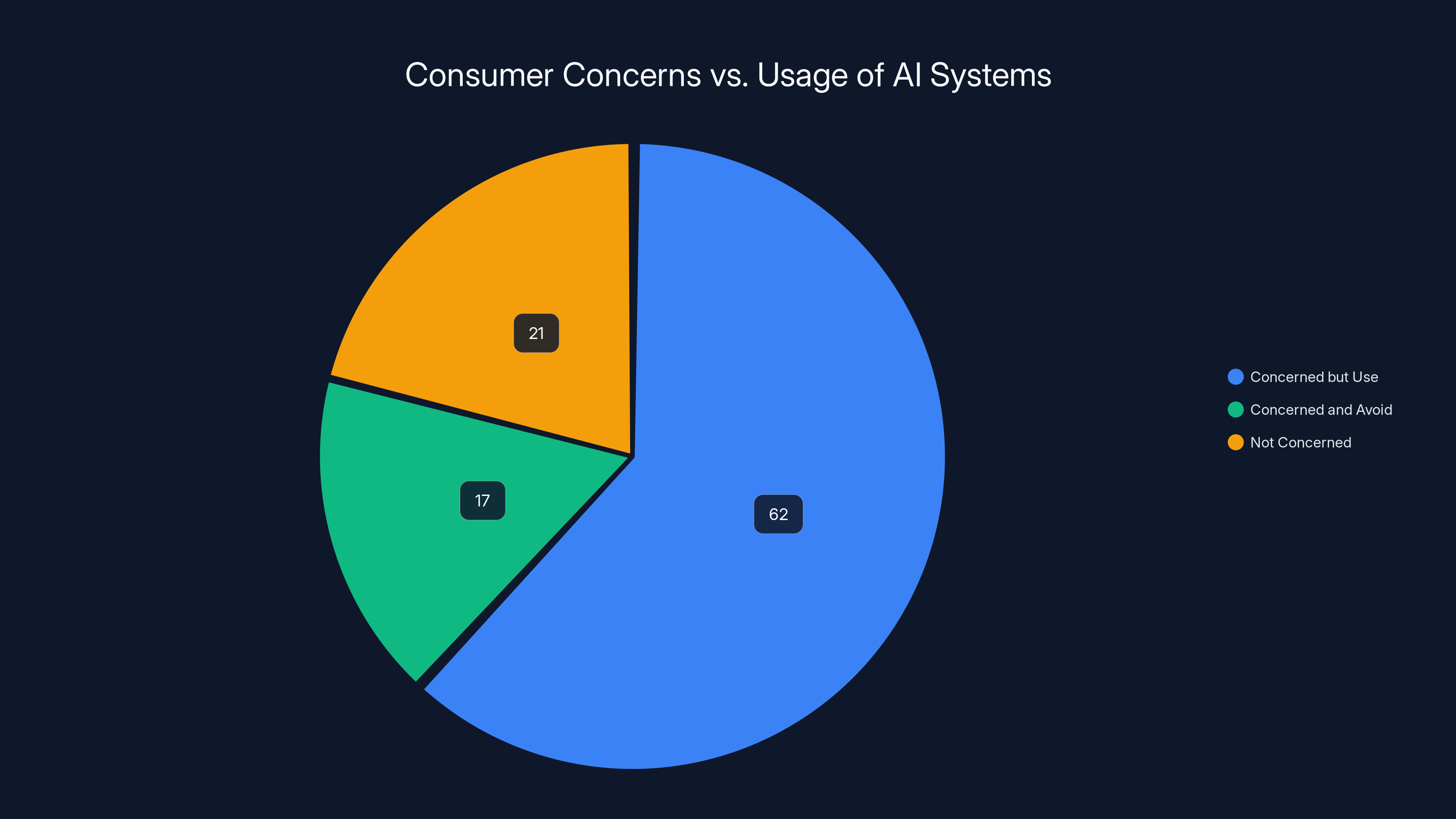

Despite 79% of consumers worrying about data privacy, 62% continue using AI systems, indicating a significant gap between concern and action. Estimated data.

The Tradeoff: Performance vs. Privacy

So here's the honest part: Confer probably isn't going to beat ChatGPT in a head-to-head intelligence test.

Why? Because of the constraints baked into the system. Processing encrypted data is slower than processing plaintext. Running inside a secure enclave limits computational resources. Training a model with privacy-first architecture means you can't use the same scale of data and compute as someone optimizing purely for capability.

There's a fundamental equation in AI: Model quality roughly correlates with training data volume and compute power. If you restrict both to protect privacy, you get a less capable model.
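One commonly cited way to write that relationship is the parametric loss fit from the compute-optimal scaling literature (often called the Chinchilla form). It is included here only to make the claim concrete. Nothing in this article says Confer or OpenAI use these exact terms, and the constants are fitted empirically and differ between model families.

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}},
\qquad C \approx 6\,N\,D
```

Here $L$ is the model's loss (lower is better), $N$ the number of parameters, $D$ the number of training tokens, $C$ the approximate training compute in FLOPs, and $E$ the irreducible loss. Shrinking $D$ (less data) or capping $C$ (less compute) raises $L$, which is the trade-off the paragraph above describes.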

Confer's team acknowledges this. They're not claiming to have the world's smartest AI. They're claiming to have the world's most private AI. Those are different goals.

But here's where it gets interesting: for most use cases, "good enough and private" beats "best and exposed."

Think about your actual AI usage. You're not asking it to solve novel physics problems or write your research paper. You're asking it to brainstorm ideas, explain concepts, give you a sounding board, help with writing, solve work problems. For those things, a model that's 90% as capable as ChatGPT but doesn't leak your conversations is an easy choice.

The Real Threat: Data Breaches

Even if you trust OpenAI's intentions, trusting their security is another matter. No company is immune to breaches.

OpenAI itself has experienced security incidents. In 2023, there were reports of data exposure affecting a subset of users. The company fixed the issues quickly, but the vulnerability existed. And OpenAI is generally considered one of the better-secured AI companies.

Think about what's actually at risk. Every conversation you've had with ChatGPT is sitting in a database somewhere. Someone, somewhere, has the ability to access it. Maybe it's encrypted at rest (it probably is). But encryption at rest is only as good as key management. Keys can be stolen. Keys can be compelled.

With Confer's hardware-based encryption, there's nothing to steal. Even if someone breaches the entire company, extracts every database, and has days to crack encryption keys, they're still looking at encrypted data they can't decrypt without your hardware device.

This isn't paranoia. It's cryptography 101: the only truly secure data is data you can't access, no matter how hard you try.

ChatGPT excels in performance with a perfect score, while Confer prioritizes privacy, achieving a perfect privacy score. Estimated data based on typical trade-offs.

Building AI Tools Around Confer

Here's where it gets practical. Confer isn't just a chat interface. It's positioning itself as an alternative foundation for AI-powered applications.

The architecture that makes Confer private could also make it the base layer for other tools. Imagine encrypted AI-powered document generation, image creation, code assistance—all with the same privacy guarantees. You'd be building on a foundation where user data is impossible to access.

This is where competition gets interesting. Right now, every AI tool is dependent on platforms built on centralized, data-accessible architectures. They're all running on OpenAI's APIs or similar services. If you want privacy, you get fewer options.

But if Confer becomes viable as a platform, developers could build private alternatives to every major AI tool. You could have private Midjourney, private GitHub Copilot, private customer support chatbots, each with the same encryption guarantees.

For enterprises, this is huge. Companies dealing with sensitive data (healthcare, finance, law, government) are increasingly uncomfortable with sending information to OpenAI. Regulators are asking harder questions. The EU's AI Act is creating compliance headaches. Having an AI alternative that's genuinely private addresses all of these concerns.

The Adoption Challenge

All of this raises an obvious question: if Confer's so great, why isn't everyone using it?

Frankly: because changing AI tools is hard. ChatGPT has inertia. It's integrated into workflows. Its API is widely adopted. It works. Even if you know it might be privacy-risky, switching to something less established and potentially less capable feels like a sacrifice.

This is the classic innovator's dilemma. The incumbent has overwhelming advantage. The challenger has a better approach but a smaller network effect.

Confer's path to adoption probably isn't "replace ChatGPT for everyone." It's more likely "become the default for sensitive use cases." Healthcare AI. Financial AI. Legal AI. Government AI. These sectors have regulatory pressure and liability concerns that make privacy non-negotiable. Confer wins there first.

Then, as it gains credibility and capability, it gradually expands to mainstream users. The people who care enough about privacy to switch early become advocates. Others follow.

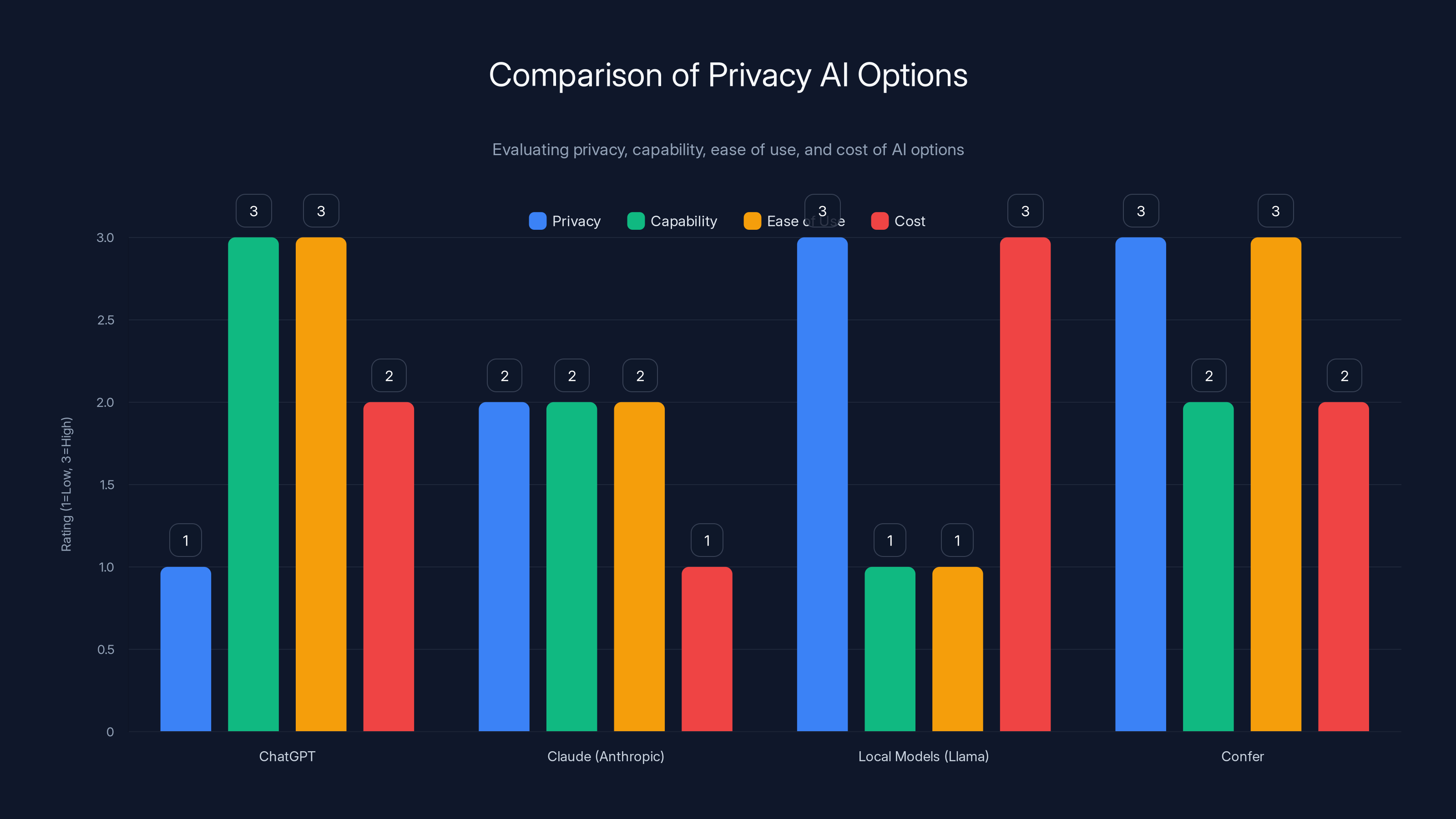

Confer offers a balanced approach with high privacy and ease of use, while maintaining moderate capability. Estimated data based on qualitative descriptions.

The Broader Shift Toward Privacy-First Architecture

Confer isn't an isolated project. It's part of a broader recognition that privacy-first architecture is becoming a differentiator.

DuckDuckGo won market share not by being a better search engine, but by being a private one. Signal dominated encrypted messaging for the same reason. Apple made privacy a selling point. The market is slowly learning that privacy isn't a luxury. It's a requirement.

AI is different because the temptation to access data is so much higher. Every conversation is training data. Every question tells you something about the user. The incentives for the company are massive. Privacy has to be enforced by architecture, not by good behavior.

That's why hardware-based encryption isn't just a feature—it's a statement about values. It says "we think privacy is so important that we're willing to make our product technically worse to protect it." You can't walk that back. You can't decide next quarter to start training on conversations. The architecture prevents it.

Potential Vulnerabilities and Limitations

Before we get too excited, let's be honest about what could go wrong.

Hardware-based encryption relies on the chip manufacturer. Intel's SGX has had documented vulnerabilities. If there's a flaw in the TEE implementation, the entire privacy guarantee collapses. This isn't theoretical—security researchers have found speculative execution flaws that could leak data from TEEs. Confer's security is only as good as the underlying hardware.

There's also the key management question. How are encryption keys managed? If Confer can recover your key, they can decrypt your messages. The cryptography only works if key management is bulletproof. We don't know all the details of how Confer handles this, which introduces uncertainty.

Then there's the metadata problem. Even if your messages are encrypted, metadata isn't. The server can see when you're chatting, how often, how long conversations last, what times you're most active. That's not nothing. It's not as bad as content exposure, but it's still informative.
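To make the metadata point concrete, here is a minimal sketch of what a server could record about encrypted requests without ever decrypting them. The `RequestRecord` type, the `observe` and `summarize` helpers, and the sample values are hypothetical and chosen for illustration. They do not describe what Confer actually logs.

```python
import os
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RequestRecord:
    """Facts visible to a server that only ever handles ciphertext."""
    account_id: str
    received_at: datetime
    ciphertext_bytes: int


def observe(account_id: str, ciphertext: bytes, received_at: datetime) -> RequestRecord:
    """Record arrival time and size. The ciphertext itself is never read."""
    return RequestRecord(account_id, received_at, len(ciphertext))


def summarize(records: list[RequestRecord]) -> dict:
    """Aggregate per-request metadata into a simple activity profile."""
    if not records:
        return {"message_count": 0, "busiest_hour_utc": None, "total_ciphertext_bytes": 0}
    hours = Counter(record.received_at.hour for record in records)
    return {
        "message_count": len(records),
        "busiest_hour_utc": hours.most_common(1)[0][0],
        "total_ciphertext_bytes": sum(record.ciphertext_bytes for record in records),
    }


# Random bytes stand in for encrypted messages of different lengths.
records = [
    observe("user-1", os.urandom(120), datetime(2025, 1, 6, 23, 5, tzinfo=timezone.utc)),
    observe("user-1", os.urandom(480), datetime(2025, 1, 6, 23, 40, tzinfo=timezone.utc)),
    observe("user-1", os.urandom(64), datetime(2025, 1, 7, 8, 15, tzinfo=timezone.utc)),
]
profile = summarize(records)
```

Even this toy profile shows when the account is most active and roughly how much it sends, which is the kind of signal that remains visible when content is fully protected.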

Finally, there's the question of scale. Confer works now at small scale. But what happens if it grows to millions of users? Will the infrastructure hold? Will new vulnerabilities emerge? Proven small-scale systems sometimes fail at enterprise scale.

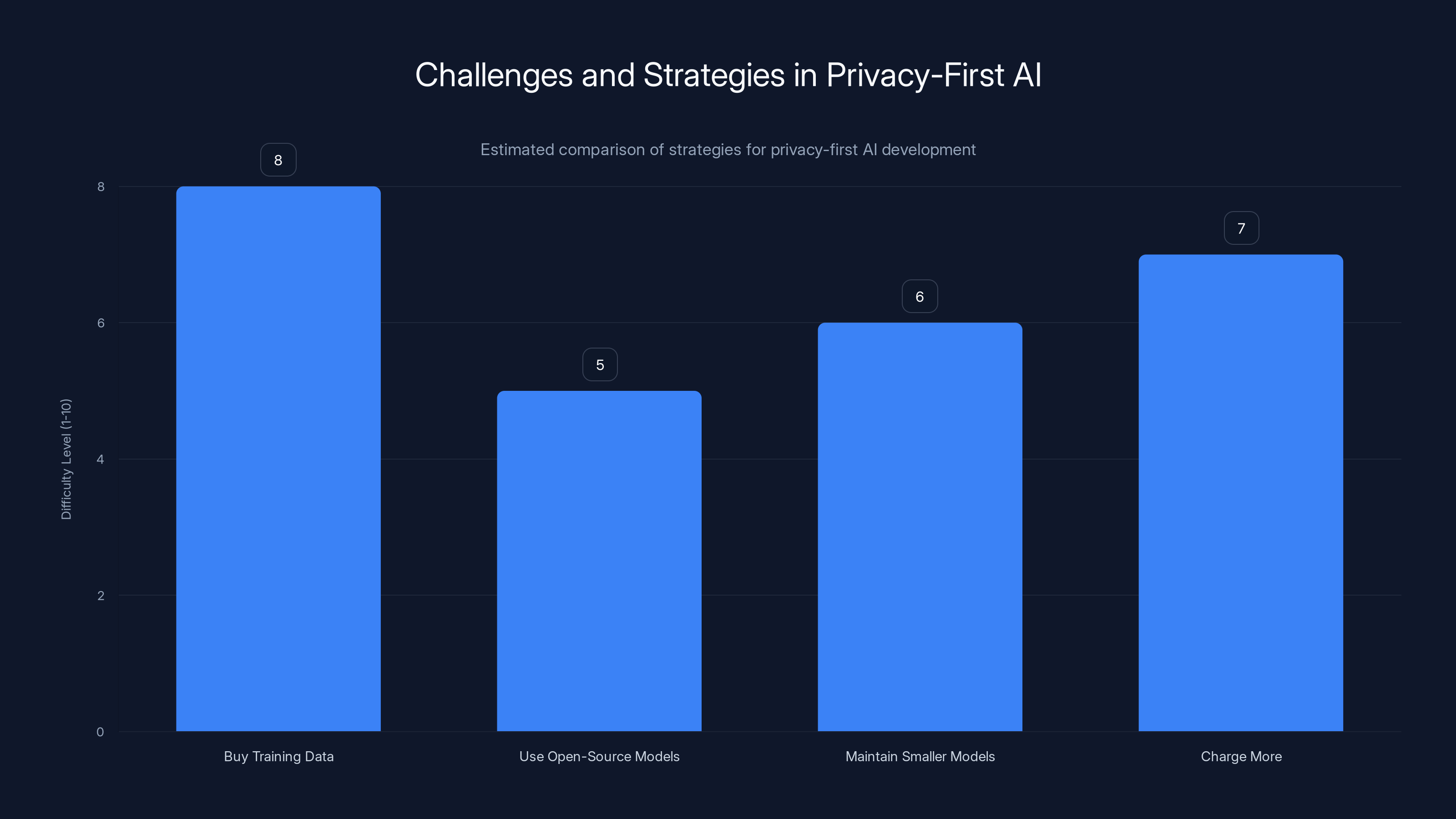

Privacy-first AI development involves various strategies, each with its own difficulty level. Buying training data is the most challenging due to cost and ethical concerns. (Estimated data)

Comparing Confer to Other "Private" AI Options

Confer isn't the only player claiming privacy. Let's put them in context.

Local AI Models: You can run open-source models like Meta's Llama on your own computer. Nothing leaves your machine. It's maximally private but requires technical setup and the models are often less capable.

Encryption in Transit Only: Services like Anthropic's Claude encrypt data in transit, which is fine but doesn't prevent server-side data access.

Open-Source with Self-Hosting: You can deploy Hugging Face models on your own server. Again, maximum privacy but heavy technical overhead.

Confer's Approach: Hardware-encrypted, server-based processing. You get privacy without managing infrastructure. It's a middle ground that might hit the optimal tradeoff for most users.

The comparison table:

| Approach | Privacy | Capability | Ease of Use | Cost |
|---|---|---|---|---|
| ChatGPT | Low | Very High | Very Easy | $20/month |
| Claude (Anthropic) | Medium | High | Easy | Free + Paid |
| Local Models (Llama) | Very High | Medium | Hard | Free + Hardware |
| Confer | Very High | High | Very Easy | TBD |

Confer is betting it can deliver privacy as strong as local models with usability close to ChatGPT and capability in between.

What This Means for the AI Industry

If Confer succeeds, even at modest scale, it forces a reckoning for OpenAI and others.

They'll have to explain why they don't offer the same privacy. The honest answer—"because it hurts our training data strategy"—isn't a good look. They'll likely add privacy-first options, but probably at a premium tier where the model quality is intentionally degraded.

More importantly, it proves that hardware-based encryption for AI is viable. Once that's proven, other companies will adopt it. We'll see privacy-focused alternatives emerge from established players. The conversation shifts from "should companies protect privacy" to "which privacy-first implementations are best."

For users, this is mostly good. More options. More competitive pressure. More companies forced to actually respect privacy because the technology makes it enforceable rather than optional.

The risk is that privacy becomes a market segmentation tool. Rich users get private AI. Everyone else gets surveilled. But that's a problem regardless of Confer's success.

The Signal Founder's Track Record

Who you trust matters. Confer isn't being built by a startup founder with one successful exit. It's being built by someone with a track record of taking privacy seriously even when it was expensive.

Moxie Marlinspike created Signal when encrypted messaging was a niche interest. He turned down acquisition offers and funding that would have forced compromises. He spent years fighting with the US government over encryption standards. He's willing to be ideologically consistent even when it costs money.

That matters. It means Confer isn't a privacy feature bolted on to extract more data. It's a core commitment from someone who's already proven they'll sacrifice profits for principles.

This doesn't mean Confer is perfect. It means you're more likely to trust the privacy claims because of who's making them. That's earned credibility.

Practical Steps to Adopting More Private AI

If you're concerned about privacy but not ready to fully switch from ChatGPT, here are practical steps:

First, understand what data you're comfortable sharing. Not everything requires full privacy. Routine questions about public topics? Fine to use ChatGPT. Personal medical questions? Use Confer or local models. Work projects with confidential information? Definitely use Confer.

Second, test Confer for one category of use. Try it for brainstorming, ideation, or exploring sensitive topics. See if the capability level works for you. You don't have to quit ChatGPT entirely.

Third, check the privacy settings on your current AI tools. Most have toggles that default to "yes, use my data." Disable them. It won't give you the guarantees Confer offers, but it's better than the default.

Fourth, consider running local models for the most sensitive work. Ollama and similar tools make this easier. It's technical but increasingly accessible.

Fifth, be honest about the tradeoff. If you need the absolute best AI, you're probably using ChatGPT and accepting the privacy implications. If privacy is more important than maximum capability, use Confer or local options.
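For the fourth step, a local model can be queried from a short script. The sketch below assumes Ollama is installed and running on its default local port, and that a model has already been pulled. The model tag `llama3.2` and the function names are illustrative assumptions, so substitute whatever model you actually have installed.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build the POST request. The model tag is an assumption, not a requirement."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

Calling `ask_local("Explain what a Trusted Execution Environment is.")` sends the prompt only to `localhost`, so nothing leaves your machine. It raises a connection error if the Ollama server is not running.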

The Economics of Privacy-First AI

Let's talk business. Privacy-first AI is harder to build and harder to monetize than traditional AI.

Without training data from user conversations, you have to either:

- Buy training data, which is expensive and sometimes ethically messy

- Use open-source models and fine-tune them with curated data

- Maintain smaller models that are less capable but still useful

- Charge more to offset the data disadvantage

Confer will likely do some combination. They'll charge a premium relative to ChatGPT (which is $20/month). They'll use open-source models as a starting point. They'll supplement with carefully curated data.

The business model is less attractive than OpenAI's, but potentially more sustainable. OpenAI's model depends on being best at AI. If a competitor gets better, their value evaporates. Confer's model depends on being private. As long as they maintain privacy guarantees, competitors can't easily replicate them without matching the ideological commitment of Signal's founder.

That's actually a durable competitive advantage. It's not about being smarter. It's about being different in a way that matters to customers and that competitors can't easily copy without fundamentally changing their business.

Future Developments to Watch

Three things could make or break Confer's long-term success:

First, hardware evolution. TEE technology is improving. Next-generation chips will be faster, more secure, more capable. Each generation makes hardware-encrypted AI more practical. AMD's SEV, Arm's TrustZone, and new Intel iterations will all expand what's possible.

Second, regulatory pressure. EU regulations, GDPR enforcement, AI Act compliance, state privacy laws—they're all increasing pressure on companies to protect user data. As the regulatory environment tightens, privacy-first architecture becomes less of a niche play and more of a necessity.

Third, user demand. Right now, most users don't care about privacy in AI. But consciousness is shifting. The more data breaches happen, the more AI's data practices become public, the more users will actively seek privacy. Confer's timing might be perfect—privacy is becoming more important just as the technology is mature enough to implement it.

What You Should Actually Do

Here's the bottom line: you should probably try Confer if you:

- Ask AI sensitive questions you'd rather not have indexed

- Work in healthcare, finance, law, or other regulated industries

- Handle client or employee information

- Are just privacy-conscious and want alternatives

- Are interested in seeing where AI goes beyond OpenAI's model

You should stick with ChatGPT if:

- You need the absolute most capable model available

- Privacy isn't a concern for your use cases

- You value integration with existing tools

- You're building production systems that need industry support

Realistically, you probably use both. ChatGPT for capabilities. Confer for privacy. They're not competitors in the sense that only one can win. They're alternatives optimized for different needs.

The important thing is recognizing that you have choices. For years, using AI meant accepting whatever privacy implications came with it. Now you can choose architecture that matches your values. That's the real shift.

TL;DR

- Hardware-Encrypted Privacy: Confer uses Trusted Execution Environments to make it technically impossible for the company to access your conversations, unlike ChatGPT, which stores everything on accessible servers

- Signal's Founder Built It: The team behind the world's most trusted encrypted messaging app created Confer, bringing decades of privacy-first philosophy to AI

- Real Tradeoff: Confer is slightly less capable than ChatGPT because privacy constraints prevent access to the training data that makes models smarter, but it is good enough for 90% of real use cases

- Data Breach Immunity: Even in a total security breach, attackers would only find encrypted gibberish since unencrypted conversations never exist outside secure hardware enclaves

- New Competitive Pressure: Confer proves privacy-first AI is viable, forcing OpenAI and others to eventually offer genuine privacy options or accept the reputational cost of refusing to

FAQ

What is Confer?

Confer is an AI assistant built with hardware-based encryption that makes your conversations technically impossible for anyone to access. Unlike ChatGPT, which stores your messages on company servers, Confer processes everything inside secure hardware enclaves where data stays shielded from the rest of the server during computation. It was created by Moxie Marlinspike, the founder of Signal, the encrypted messaging app.

How does hardware-based encryption work?

Hardware-based encryption uses a Trusted Execution Environment (TEE), a secure processor section that runs isolated from the main operating system. Your conversations arrive encrypted, are briefly decrypted and processed inside this isolated environment, and the response is re-encrypted before it leaves. The plaintext message never exists outside the secure enclave, so even the company's own databases and logging systems can't see what you said. This is fundamentally different from software encryption, which can be bypassed if someone gains system access.

Why can't ChatGPT just add this feature?

OpenAI could technically implement hardware encryption in ChatGPT, but it would break their primary business strategy. OpenAI's competitive advantage comes from training models on vast amounts of user conversation data. If conversations are encrypted end-to-end and inaccessible, OpenAI loses the training data that makes their models superior. They'd be intentionally making worse products to protect privacy. Additionally, OpenAI serves government and enterprise clients who sometimes require data access for compliance investigations. True end-to-end encryption eliminates that possibility.

Is Confer less capable than ChatGPT?

Yes, probably. Processing inside a secure enclave is computationally slower and more resource-constrained than processing on ordinary servers. Without access to user conversation data, Confer can't train models at the same scale as OpenAI. But the practical difference is smaller than it sounds: Confer handles most typical AI tasks perfectly well. The gap matters most for cutting-edge use cases like complex reasoning or specialized knowledge, but for brainstorming, writing, coding, and explaining concepts, Confer works great.

What about metadata—can Confer see when I'm chatting?

Yes, metadata isn't encrypted the same way content is. Confer's servers can see the timestamps, frequency, and length of your conversations, though not the content itself. This is a known limitation. Even so, this exposes far less than ChatGPT, which can see everything including what you ask. And metadata alone is less sensitive than content: it tells someone you're using AI, but not what you're asking.

How does Confer make money if they can't use user data?

Confer will likely charge a subscription fee higher than ChatGPT's $20/month to offset the disadvantage of not having training data. They'll use open-source models as a foundation, fine-tune them with curated data they purchase, and keep models smaller than ChatGPT's but still capable enough for most use cases. The business model is less profitable than OpenAI's but more sustainable, because their competitive advantage is privacy itself, which competitors can't copy without the same ideological commitment.

Are TEEs actually secure?

TEE technology is proven and getting stronger, but not perfect. Researchers have discovered side-channel attacks that could leak data from TEEs in specific scenarios. However, these attacks are expensive, specialized, and require technical access. For practical security against breaches, data theft, and casual snooping, TEEs work extremely well. They're used in banking, military, and high-security government applications. That said, Confer's security is only as good as Intel's (or whoever provides the TEE hardware), so vulnerabilities in the underlying chip would compromise everything.

Should I switch from ChatGPT to Confer?

Not necessarily entirely. The best approach is probably using both. Use ChatGPT for routine questions where privacy doesn't matter and you want maximum capability. Use Confer for sensitive questions, medical/financial information, work with confidential data, or anything you'd never want showing up in a data breach. Confer is the better choice ethically and for privacy, but ChatGPT remains better for pure capability and integration with existing tools and workflows.

What happens if Confer gets hacked?

Even in a total security breach where attackers steal all of Confer's servers and databases, they find encrypted data they can't decrypt without your device's encryption keys. This is radically different from ChatGPT, where a breach exposes unencrypted conversations. With Confer, a breach is essentially useless because the most valuable data, your actual conversations, remains encrypted and inaccessible. The attacker could still get metadata and see Confer's code, but not your messages.

Will Confer ever match ChatGPT's capabilities?

It's possible but difficult. As hardware and open-source models improve, Confer could get much closer. But there's a fundamental limit: without training on the full diversity of user conversations, models will always be somewhat behind a centralized system that has that data. However, "slightly behind but completely private" might be better than "technically better but your data is at risk." The question isn't whether Confer catches up, but whether users value privacy enough to accept the capability gap.

What about using local AI models instead?

Local models like Llama offer maximum privacy since nothing leaves your computer. The downside is you need to manage the setup, they require significant computing power, they're less capable than cloud-based models, and they require technical knowledge. Confer is the middle ground—nearly as private as running locally, but with the usability and capability advantage of cloud infrastructure. For non-technical users, Confer is probably the better privacy option.

When will Confer be available?

Confer was in early access as of 2024-2025. Check their official website for current availability and waitlist status. Like most privacy-focused tools, rollout is probably gradual rather than a big launch, focusing on stability and security before scaling to large user bases.

Key Takeaways


- Trusted Execution Environment (TEE): A secure processing area inside a computer processor that runs isolated from the main operating system


![Signal's Founder Built a Private AI That ChatGPT Can't Match [2025]](https://tryrunable.com/blog/signal-s-founder-built-a-private-ai-that-chatgpt-can-t-match/image-1-1768390537716.png)