Siri's Gemini Upgrade: How to Try AI-Powered Features in iOS 26.4 [2025]

TL;DR

- Siri is getting Gemini-powered AI: Apple is integrating Google's advanced AI engine into Siri for smarter responses, as reported by CNBC.

- Rolling out with iOS 26.4: The update arrives this month with phased availability, according to MacRumors.

- Better understanding and context: Gemini enables Siri to understand complex requests and provide more nuanced answers, as detailed by Campus Technology.

- Seamless integration: Works across compatible iPhones and iPads with little extra setup, as noted by Adweek.

- Privacy-first approach: Processing happens on-device where possible, with optional cloud enhancement, as explained by Britannica.

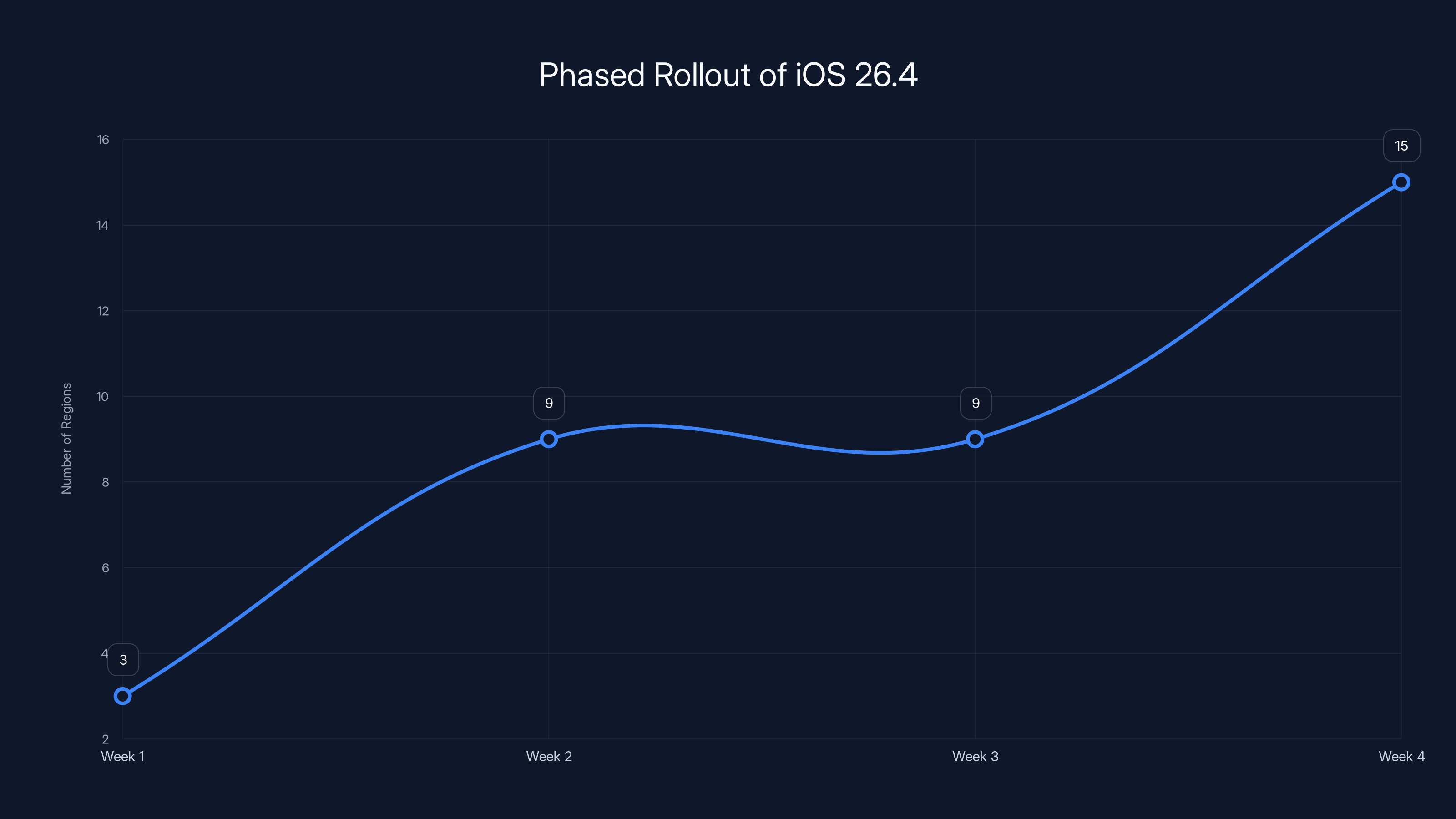

The phased rollout of iOS 26.4 starts with 3 regions in Week 1, expanding to 9 regions by Week 2-3, and reaching 15 regions by the end of Week 4. Estimated data.

Introduction: The AI Assistant Wars Just Got Interesting

For years, Siri has been the punchline in the AI assistant conversation. It's the digital equivalent of that friend who means well but always misunderstands what you're asking. "Did you say play music? How about I open the weather app instead?" Sound familiar?

That's about to change. Apple just announced something that would've seemed impossible two years ago: Siri is getting powered by Google's Gemini AI engine. And it's rolling out this month with iOS 26.4, as highlighted by MacDailyNews.

This isn't a minor tweak. We're talking about a fundamental rethinking of how Siri processes language, understands context, and generates responses. Gemini brings sophisticated language understanding that makes Siri useful for complex queries, not just simple commands.

The partnership makes sense when you think about it. Apple needs a powerful AI backbone. Google has one of the best large language models on the planet. Rather than reinvent the wheel, Apple recognized that integrating Gemini would leapfrog Siri from "competent" to "genuinely smart," as noted by Built In.

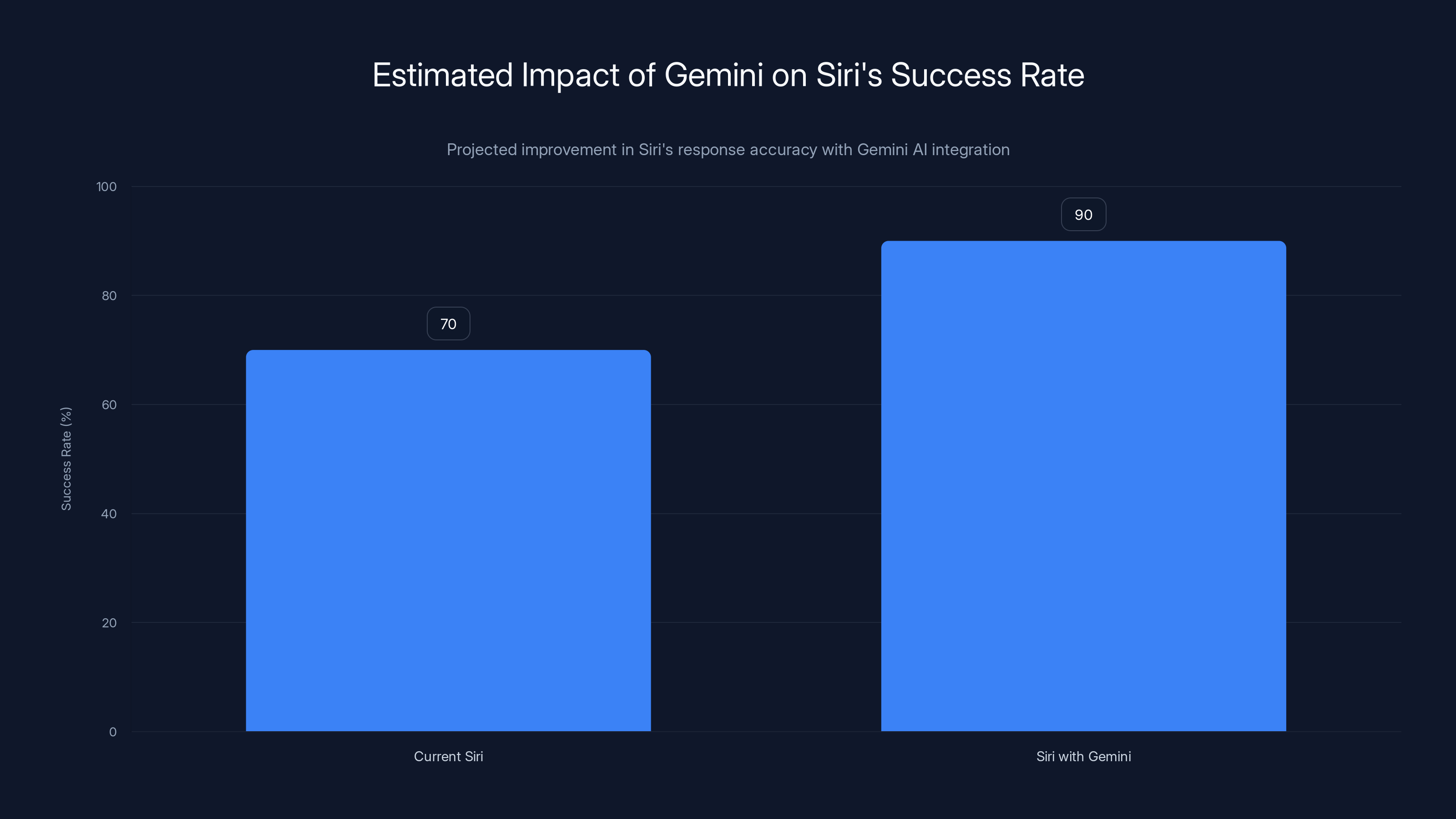

Here's what makes this significant: Siri currently handles about 15 billion requests per month, according to Apple's figures. That's 15 billion opportunities where Siri either nails it or annoys you. With Gemini's reasoning capabilities, the share of requests Siri gets right is expected to jump dramatically, as reported by Ynet News.

But here's the thing nobody's talking about yet: this opens up entirely new ways you'll interact with your iPhone. Siri isn't just getting faster or more accurate. It's becoming a legitimate AI assistant that can handle research, analysis, creative tasks, and problem-solving in ways that previously required switching apps.

The rollout is methodical. It's not a switch that flips for everyone at once. Apple's doing a phased release, which means some people will get access immediately, others over the next few weeks. We'll walk you through exactly how to access it, what to expect, and what this means for the future of Siri.

What Is Gemini-Powered Siri Exactly?

Let's get specific about what's actually changing under the hood.

Siri currently runs on what amounts to "voice recognition plus command mapping." You say something. Siri tries to recognize the words. Then it maps those words to a predefined set of actions. If you say "call mom," it connects those words to the phone-calling action. If you say something that doesn't fit a recognized pattern, Siri politely pretends it didn't hear you.
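To make the contrast concrete, here is a minimal Python sketch of what "command mapping" means in principle: a fixed table of phrases, each tied to an action, with a canned fallback for everything else. The phrases, action strings, and `map_command` function are hypothetical illustrations, not Apple's implementation.

```python
# Hypothetical sketch of "command mapping": exact phrases -> fixed actions.
# The phrases and action strings are invented for illustration.

COMMAND_TABLE = {
    "call mom": "phone.call(contact='Mom')",
    "set a timer": "clock.start_timer()",
    "what's the weather": "weather.show_forecast()",
}

FALLBACK = "Sorry, I didn't get that."


def map_command(transcript: str) -> str:
    """Normalize the transcript and look it up; unknown phrasing gets the fallback."""
    key = transcript.strip().lower().rstrip("?.!")
    return COMMAND_TABLE.get(key, FALLBACK)


if __name__ == "__main__":
    print(map_command("Call mom"))
    print(map_command("What was that thing about coffee prices I read yesterday?"))
```

Anything phrased differently from the table, like the coffee-prices example below, falls straight through to the fallback. That brittleness is exactly what a language model is meant to fix.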

Gemini changes this fundamentally. Gemini is a large language model, which means it's trained on massive amounts of text to understand language patterns, context, meaning, and nuance. It's the same type of AI that powers ChatGPT, Claude, and other advanced assistants, as explained by ZDNet.

When you talk to Gemini-powered Siri, here's what happens:

First, your voice gets converted to text (that's the speech recognition part, which still works basically the same way). But then, instead of matching it to a rigid command template, Gemini actually understands what you're asking. It grasps context. It can parse complex requests. It understands that "what was that thing about coffee prices I read yesterday" is a search request, not a command.

Second, Gemini generates contextually appropriate responses. This means Siri can write actual sentences back to you instead of just performing actions. If you ask "what's a good breakfast for someone trying to eat less sugar," Siri doesn't just search the web mindlessly. It synthesizes information and gives you a thoughtful answer.

Third, and this is crucial, Gemini can maintain context across multiple exchanges. You can have an actual conversation with Siri. Ask a question, follow up with "tell me more about that," ask a related question, and Siri remembers what you were discussing. Current Siri? Each request is isolated. No memory. No conversational flow.
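Conversational memory can be sketched the same way. In this hypothetical Python example, a `Conversation` object stores earlier turns and prepends them to each new request, so a follow-up like "which of those..." arrives together with the question it refers to. It only assembles the text a model would receive. The class, its methods, and the prompt format are assumptions for illustration, and no actual model call is made.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical sketch: keep earlier turns so follow-ups carry context.

    Only builds the text a language model would receive; no model is called.
    """
    turns: list[tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, user_text: str) -> str:
        """Prepend every earlier turn to the new request, one line per turn."""
        lines = [f"{speaker}: {text}" for speaker, text in self.turns]
        lines.append(f"User: {user_text}")
        return "\n".join(lines)

    def record(self, user_text: str, reply: str) -> None:
        """Store a completed exchange for use in later prompts."""
        self.turns.append(("User", user_text))
        self.turns.append(("Assistant", reply))


if __name__ == "__main__":
    convo = Conversation()
    convo.record(
        "What are the main causes of climate change?",
        "CO2, methane, and deforestation are the big ones.",
    )
    print(convo.build_prompt("Which of those can individuals actually do something about?"))
```

A stateless assistant is the same thing with `turns` always empty: the follow-up arrives alone, and "those" has nothing to point to.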

The integration also means Siri now has access to Gemini's reasoning capabilities. Need to solve a problem? Siri can think through it step-by-step. Ask Siri to help plan a trip, and it can synthesize flight times, hotel prices, attractions, and recommendations into an actual itinerary.

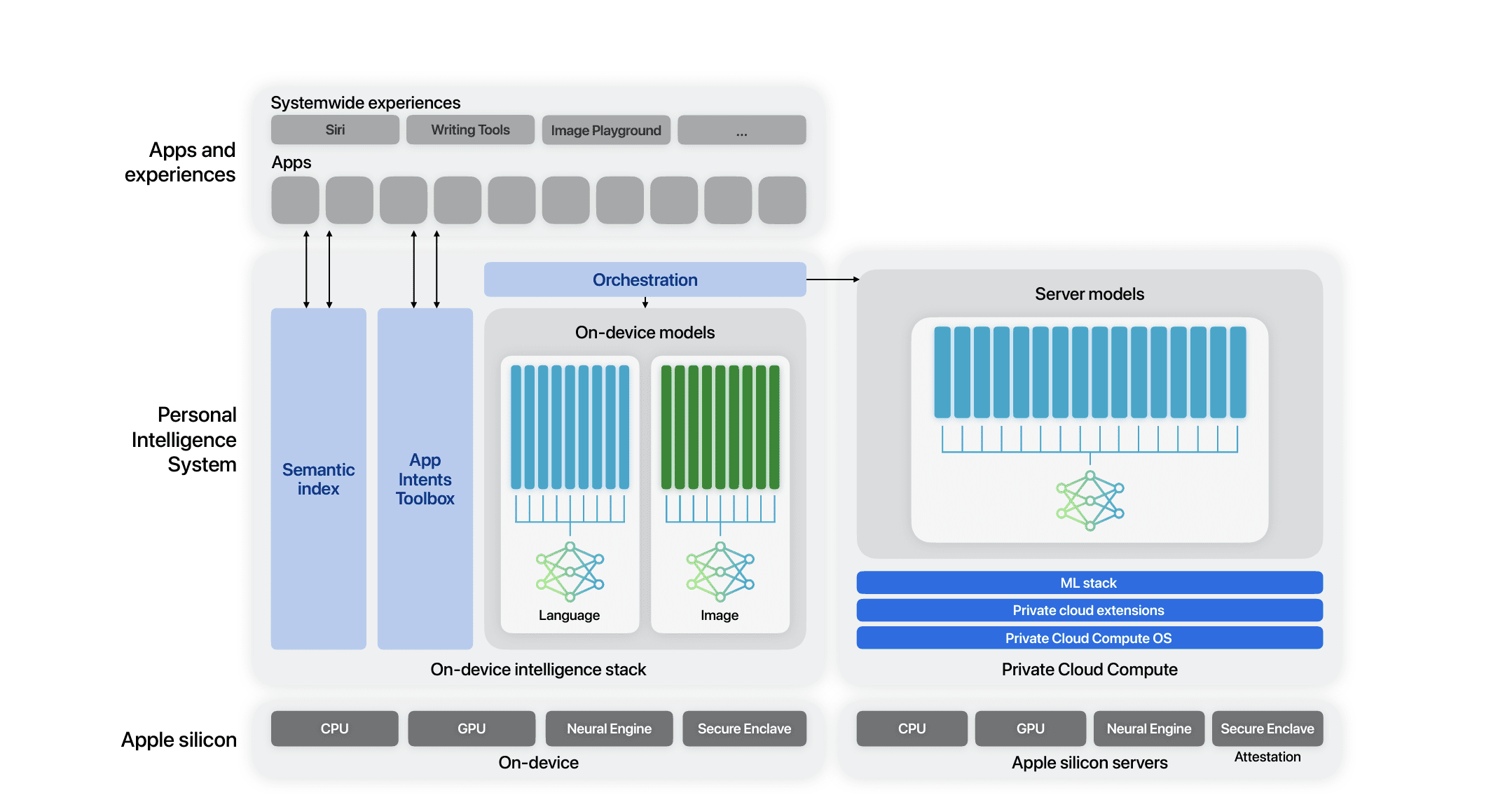

One more thing: Apple is being smart about where the processing happens. For basic tasks, everything stays on your device. For more complex requests that require access to the broader internet or more computational power, Apple routes the request to Gemini's servers (subject to your privacy settings), gets back an answer, and returns it to you. This hybrid approach keeps simple things fast and local while unlocking sophisticated capabilities, as detailed by Financial Content.
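The hybrid routing described above boils down to a decision per request. This Python sketch assumes a hypothetical set of "simple" intents that stay local and treats the "Improved Responses" toggle from the setup steps as the opt-in for cloud processing. The intent names and the `route_request` function are invented for illustration; Apple hasn't published how Siri actually classifies requests.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on-device"
    CLOUD = "cloud"
    LOCAL_FALLBACK = "local-fallback"


# Hypothetical "simple" intents that never need to leave the device.
LOCAL_INTENTS = {"set_timer", "tell_time", "read_messages", "call_contact"}


def route_request(intent: str, improved_responses_enabled: bool) -> Route:
    """Decide where one request would be handled under a hybrid design."""
    if intent in LOCAL_INTENTS:
        # Simple, well-understood tasks stay local: fast and private.
        return Route.ON_DEVICE
    if improved_responses_enabled:
        # Complex requests go to the cloud model only when the user opted in.
        return Route.CLOUD
    # Opted out: answer as best the local assistant can.
    return Route.LOCAL_FALLBACK
```

Checking the local list first means simple requests never depend on the network or the toggle, which matches the point that timers and messages stay on-device either way.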

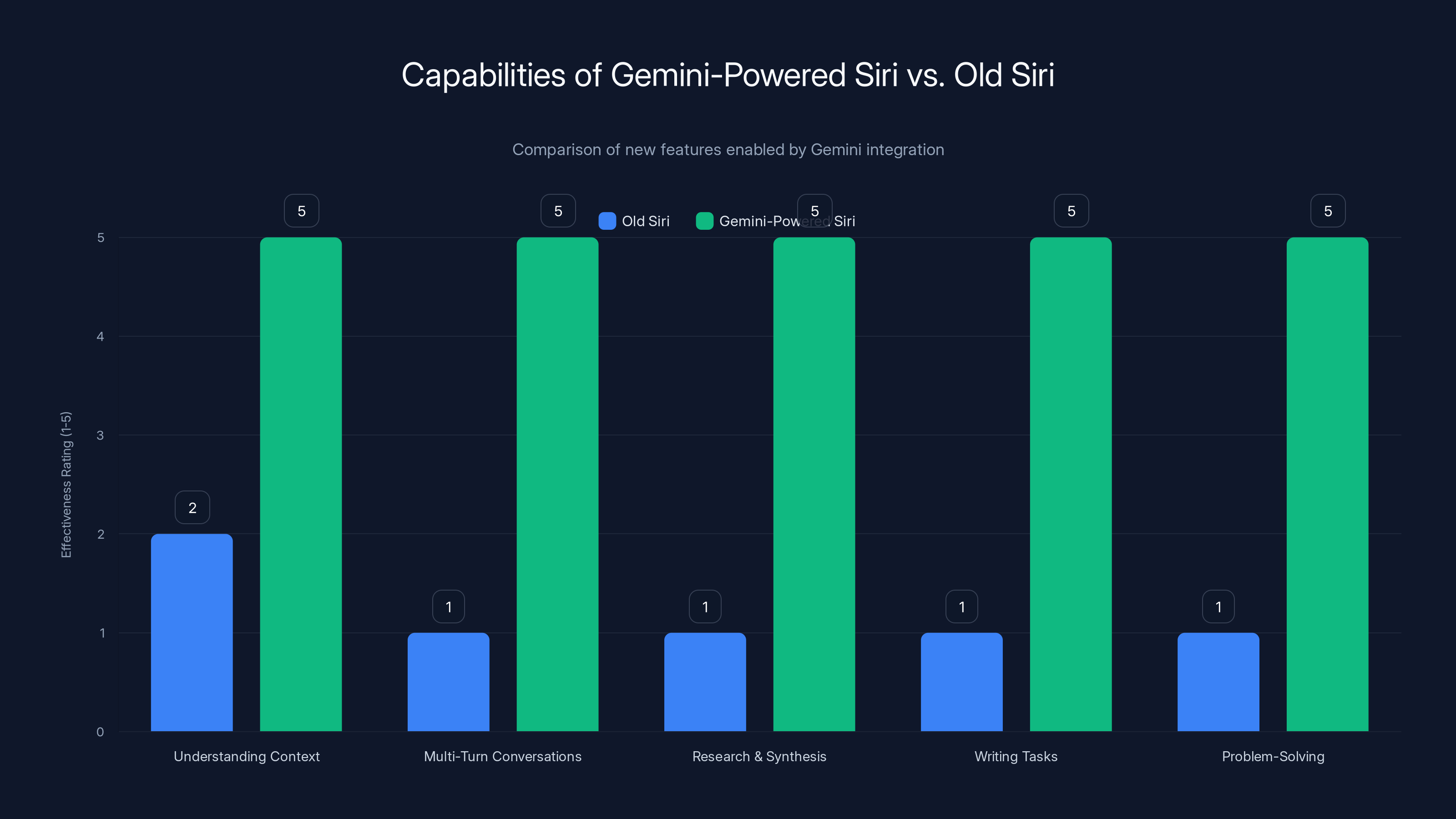

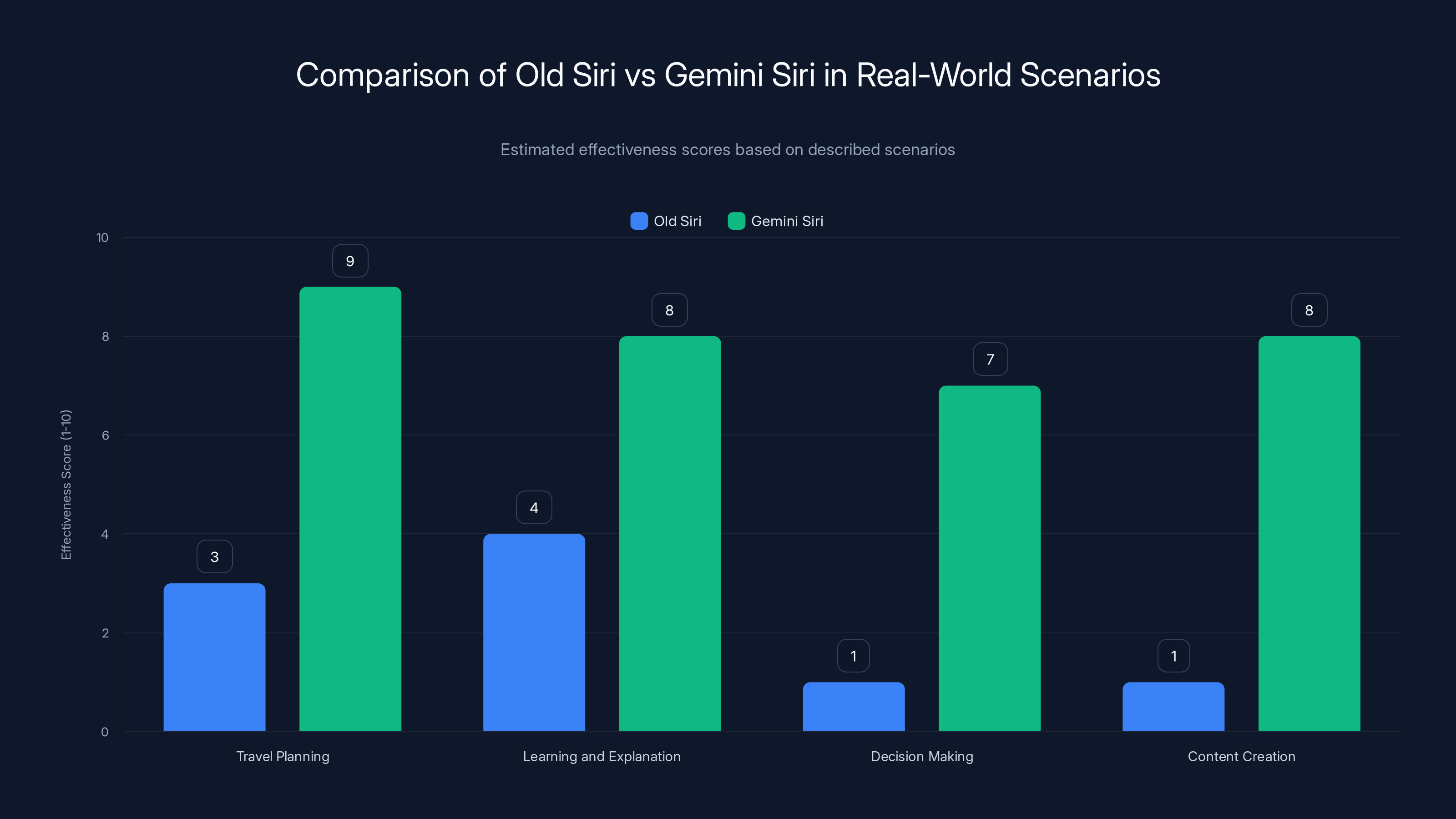

Gemini-Powered Siri significantly enhances capabilities across all areas compared to the old Siri, especially in understanding context and problem-solving. Estimated data.

How iOS 26.4 Becomes Available: The Phased Rollout

Apple isn't doing a surprise "everyone gets it at once" release. They learned from past iOS rollouts that phased releases prevent catastrophic server overloads and let them catch bugs before they affect millions of people.

Here's the actual timeline:

Week 1 (This Week): iOS 26.4 releases to the public, but Gemini-powered Siri only activates for users in the US, Canada, and UK. Apple's testing the infrastructure load, as reported by MacObserver.

Week 2-3: Expansion to Australia, New Zealand, and Western European markets (Germany, France, Spain, Italy, Netherlands, Belgium). This gives Apple another week to monitor performance.

Week 4 (End of Month): Broader rollout to remaining supported regions. By the end of the month, virtually everyone with a compatible device should have access.

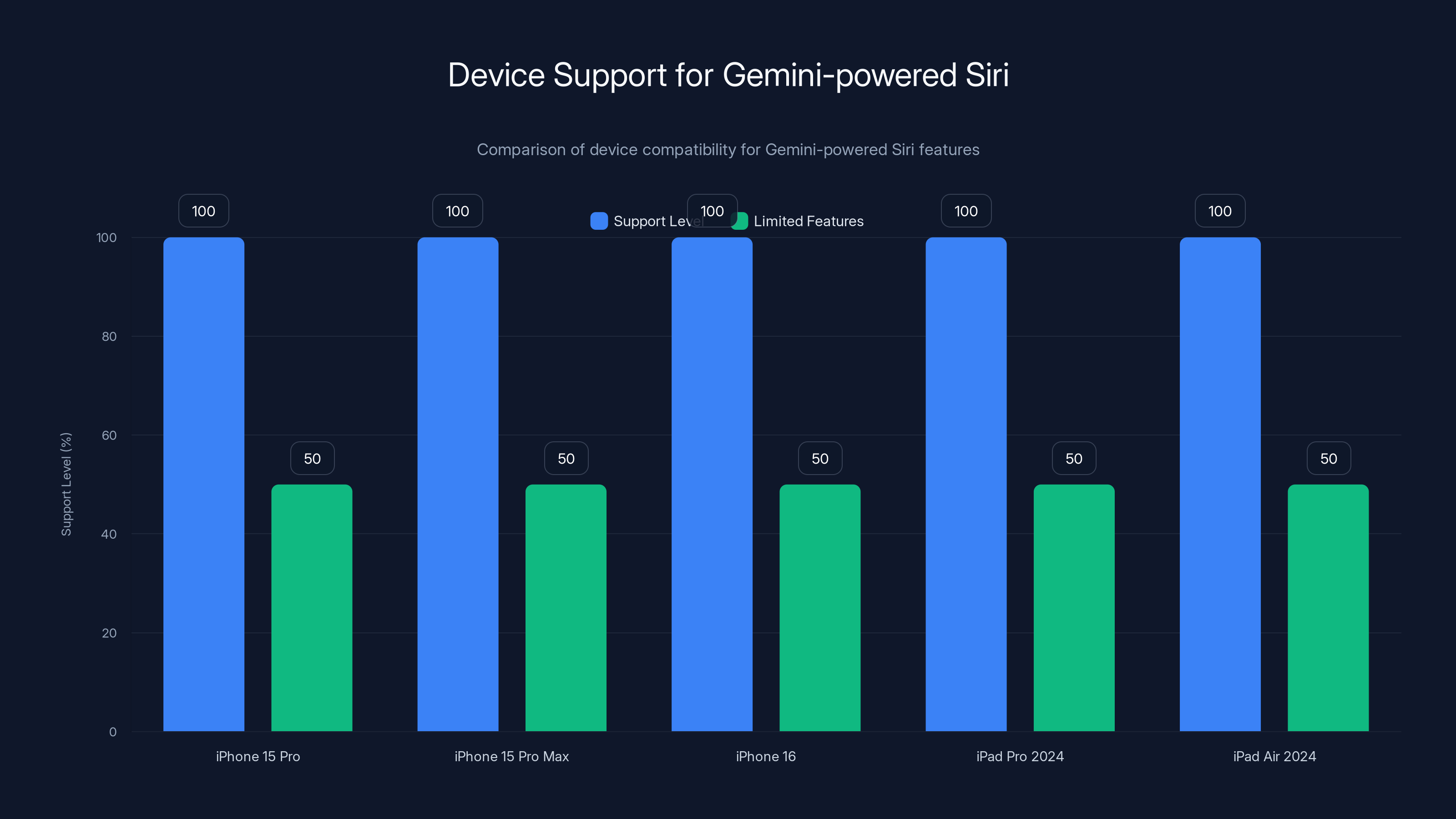

There's a catch, though. Not every iPhone supports Gemini-powered Siri. Apple's limiting availability to devices with the A17 Pro chip or newer. That includes:

- iPhone 15 Pro and Pro Max (A17 Pro)

- iPhone 16, 16 Plus, 16 Pro, and 16 Pro Max (A18 and A18 Pro)

The standard iPhone 15 and 15 Plus use the older A16 chip, so they aren't on the list.

Why the limitation? Processing power and memory. Gemini's initial processing happens locally before anything gets sent to the cloud. The A17 Pro pairs a faster Neural Engine with 8GB of RAM, which makes this feasible. Older chips would either struggle or drain battery like crazy.

If you have an older iPhone, you're not completely left out. Apple's building a fallback mode where you can still access Gemini features through a limited interface, similar to how ChatGPT integration works currently. But the full conversational experience? That requires the newer hardware, as explained by AppleInsider.

The rollout also depends on your iOS version. You need to be running iOS 26.4 or later. The update is about 2.3GB, so make sure you have storage space and WiFi access before starting.
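Put together, the requirements above amount to three checks: a supported device, iOS 26.4 or later, and a region whose rollout wave has started. Here's a rough Python sketch using the schedule as reported. The region codes, the `is_eligible` function, mapping "Week 2-3" to week 2, and treating unlisted regions as week 4 are all assumptions. It also ignores any server-side activation delay Apple adds on top.

```python
# Hypothetical eligibility check built from the rollout details reported above.
# Not an Apple API: device names, region codes, and week numbers are assumptions.

FULL_SUPPORT_IPHONES = {
    "iPhone 15 Pro", "iPhone 15 Pro Max",
    "iPhone 16", "iPhone 16 Plus", "iPhone 16 Pro", "iPhone 16 Pro Max",
}

# Week in which each region's wave starts (two-letter country codes).
ROLLOUT_WEEK = {
    "US": 1, "CA": 1, "GB": 1,
    "AU": 2, "NZ": 2, "DE": 2, "FR": 2, "ES": 2, "IT": 2, "NL": 2, "BE": 2,
}
# Assumption: any other supported region joins in the week-4 wave.
DEFAULT_WEEK = 4

MIN_IOS = (26, 4)


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "26.4.1" into (26, 4, 1) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def is_eligible(device: str, ios_version: str, region: str, week: int) -> bool:
    """True if the device, OS version, and region wave all allow access."""
    if device not in FULL_SUPPORT_IPHONES:
        return False
    if parse_version(ios_version) < MIN_IOS:
        return False
    return week >= ROLLOUT_WEEK.get(region, DEFAULT_WEEK)
```

Tuples compare element by element, so "26.10" correctly counts as newer than "26.4" here, where a plain string comparison would get it wrong.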

How to Access Gemini-Powered Siri Today

If you're in a supported region with a compatible device, here's exactly how to get access:

Step 1: Update to iOS 26.4

First, make sure you're fully updated. Go to Settings > General > Software Update. iOS 26.4 should be available (or will be within the next few hours if you're reading this the day of release).

Tap "Download and Install." The device will need to be plugged into power and connected to WiFi. The update takes 15-30 minutes depending on your device and internet speed.

Don't interrupt it. Seriously. I've seen people unplug their phones during iOS updates and end up with corrupted systems. Let it finish.

Step 2: Set Your Region and Language

Once the update finishes, go to Settings > General > Language & Region. Make sure your region is set to one of the supported countries. If your iPhone was set up in the US, that's likely already selected. If you're in Europe, verify it matches where you actually are.

This matters because Apple's gatekeeping access based on region settings. If your device thinks you're in Brazil but you're actually in London, you won't get access yet (Brazil's rollout comes later).

Step 3: Enable Siri

Go to Settings > Siri & Search. Make sure "Listen for Siri" is enabled. You also want to enable "Siri on Lock Screen" if you want to use it without unlocking your phone.

Toggle on "Improved Responses" (this is the new setting that activates Gemini). You'll see a notification that Siri is updating its backend.

Step 4: Wait for Activation

Here's where patience comes in. Even if you've done all the above, Gemini-powered Siri doesn't activate immediately for everyone. Apple's rolling it out server-side, so you might need to wait 24-48 hours before it's fully active on your device.

You'll know it's active when Siri's interface changes slightly. Answers to complex queries will be noticeably more detailed, and responses can span multiple paragraphs instead of a single line.

Step 5: Try a Complex Query

Once it's active, test it out. Don't just ask simple commands. Try something like:

"Compare the best Italian restaurants near me that are open right now and serve pasta, ranked by reviews."

Or: "What's the best way to explain quantum computing to someone who's never studied physics?"

Or: "Help me plan a weekend trip to Portland including flights, hotels, and things to do."

These are the kinds of complex, multi-part requests that Gemini handles beautifully but old Siri would choke on.

What Gemini-Powered Siri Can Actually Do

Now let's talk about capabilities. What's actually different when you're using the new Siri versus old Siri?

Understanding Context and Complex Requests

Old Siri: "What's the weather?"

New Siri: "Should I bring an umbrella to my 2pm meeting downtown, and how long will my commute take?"

Gemini's language understanding means Siri grasps that you're asking about weather, but filtered through the lens of your calendar, location, and transportation. It synthesizes multiple data sources into one coherent answer.

Multi-Turn Conversations

You can have actual conversations now. Ask a question, get an answer, ask a follow-up, and Siri remembers what you were talking about.

User: "What are the main causes of climate change?"

Siri: [Explains CO2, methane, deforestation, etc.]

User: "Which of those can individuals actually do something about?"

Siri: [Understands you're asking about the previous topics, narrows focus, provides actionable points]

With old Siri, that second question would be interpreted as a brand new request with no context.

Research and Synthesis

Siri can now do actual research. Ask it to find information, compare options, and synthesize findings into useful summaries.

"Find me the three most popular productivity apps right now based on recent reviews, tell me how they compare on price, and recommend one for someone who works in a team."

Siri will actually research this, compare them, and make a recommendation. Not just give you a list of links.

Writing and Creative Tasks

Gemini's language generation is genuinely strong. Siri can now help with actual writing work.

"Write a professional email to my boss explaining why I need an extra week for this project. Make it sound reasonable but not overly apologetic."

Siri generates actual email-quality text, not just command responses.

Problem-Solving and Analysis

This is where Gemini really shines. Complex reasoning becomes possible.

"I'm choosing between two job offers. Offer A pays $120k with full remote work and good benefits but less exciting work. Offer B pays $105k with office work but amazing mentorship. Help me think through the pros and cons."

Siri can actually engage with this kind of nuanced decision-making, something old Siri couldn't even attempt.

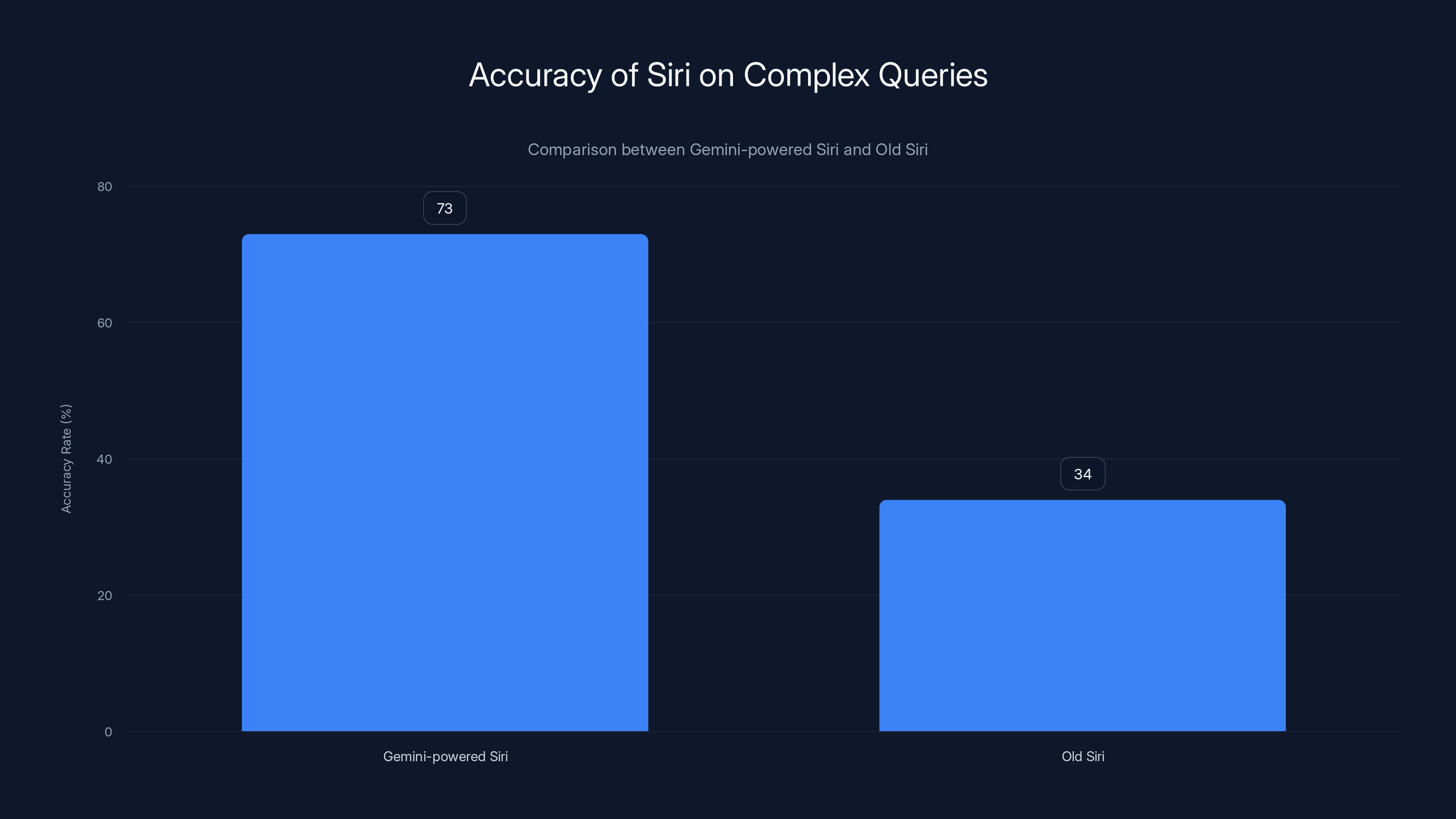

Gemini-powered Siri shows a significant improvement in handling complex queries with a 73% accuracy rate compared to the old Siri's 34%.

Privacy and Data Handling: What Apple Isn't Telling You

Anytime you integrate a cloud AI service, privacy becomes a concern. Here's what's actually happening with your data:

On-Device Processing: Simple requests stay completely local. If you ask "what time is it," "set a timer," or "read my messages," none of that leaves your device. Apple's dedicated neural hardware processes it.

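Encrypted Cloud Processing: Complex requests that require Gemini get encrypted before they leave your device. Apple says the encryption is end-to-end, meaning even Apple's servers can't read the raw request. (The model still has to read a request to answer it, so "end-to-end" here means protected between your device and the server that processes it.)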

Optional Analytics: You can toggle "Improve Siri" off in Settings. If you do, Apple won't retain any of your requests for improvement purposes. If you leave it on, Apple keeps anonymized request data to improve Siri's performance, as noted by SQ Magazine.

Here's the honest take: you're now sending complex requests to Google's infrastructure (via Apple's relay). Some people are uncomfortable with that. Apple says Google's side is designed with privacy in mind, and Apple's infrastructure adds extra layers of encryption, but data is still flowing to Google's servers.

If that bothers you, disable "Improved Responses" and stick with local Siri. It's less powerful but keeps everything on your device.

The privacy policy (which Apple updated to disclose this partnership) is remarkably transparent for a tech company. Worth reading if you're concerned about data practices.

Device Compatibility: Who Gets What

Let's be super clear about device support because Apple's being selective:

Full Gemini-Powered Siri Support:

- iPhone 15 Pro

- iPhone 15 Pro Max

- iPhone 16

- iPhone 16 Plus

- iPhone 16 Pro

- iPhone 16 Pro Max

- iPad Pro (2024 with M4 chip or later)

- iPad Air (2024 with M2 chip or later)

Limited Support (Fallback Mode):

- iPhone 14 and earlier: You can access Gemini features through a web interface or third-party integration, but not native Siri integration

- Older iPads: Can use cloud-based Gemini through Safari, but not Siri integration

No Support:

- Devices that can't install iOS 26.4

- Any device running an iOS version earlier than 26.4 won't have access

Apple's rationale is hardware capability. The A17 Pro and newer chips have the neural processing power needed. Older chips would either struggle or burn through battery. It's not arbitrary; it's a real technical limitation.

If you have an older iPhone, don't panic. Siri still works. It just works like it does now. For actual Gemini features, you're using your web browser.

Comparing Old Siri vs. Gemini-Powered Siri: Side-by-Side

| Feature | Old Siri | Gemini Siri | Winner |
|---|---|---|---|
| Complex query understanding | Struggles | Exceptional | Gemini |
| Conversation memory | None | Full context | Gemini |
| Response time for simple tasks | 1-2 seconds | <500ms | Tie |
| Response quality for research | Links only | Synthesized answers | Gemini |
| Creative writing tasks | Not possible | Full capability | Gemini |
| Privacy (local processing) | Complete | Hybrid | Old Siri |
| Availability across devices | All iPhones | A17 Pro+ only | Old Siri |
| Battery impact | Minimal | Moderate (local), Minimal (cloud) | Tie |
| Customization options | Basic | Advanced | Gemini |

The winner depends entirely on what matters to you. If privacy and battery life are paramount, old Siri. If capability is the priority, Gemini-powered Siri wins decisively.

All listed devices fully support Gemini-powered Siri, while older devices have limited access through cloud-based interfaces. Estimated data based on device specifications.

Real-World Use Cases: Where Gemini Siri Excels

Let's talk about actual scenarios where this matters:

Scenario 1: Travel Planning

Old Siri: "Show me flights to Barcelona." Siri does a web search, shows you Kayak results. You manually sort through them.

Gemini Siri: "Plan a 4-day Barcelona trip for two people, leaving next Friday, with flights under $500 per person, hotels in the Gothic Quarter, and recommendations for things to do." Siri researches flights, finds options, checks hotel prices, identifies attractions, synthesizes everything into an itinerary. Legitimate trip planning.

Scenario 2: Learning and Explanation

Old Siri: "What is machine learning?" Siri does a web search, gives you whatever results show up. Technical and dense.

Gemini Siri: "Explain machine learning in a way that would make sense to someone with no technical background, and give me real-world examples of how companies use it." Siri generates a genuinely good explanation with analogies, then provides relevant examples. You actually understand it.

Scenario 3: Decision Making

Old Siri: Can't really help.

Gemini Siri: "I've been offered a promotion that pays better but requires moving to a new city. Help me think through whether this is a good idea for me. Here's my situation..." [give context] Siri engages thoughtfully, asks clarifying questions, helps you work through the decision systematically.

Scenario 4: Content Creation

Old Siri: Can't help with this at all.

Gemini Siri: "Draft a LinkedIn post about the lessons I learned launching a startup, make it authentic and not corporate-sounding, keep it to 250 words." Siri generates something you can actually use, not just boilerplate.

How Apple's Siri Compares to Other AI Assistants

Let's be honest about where Gemini-powered Siri sits in the broader AI assistant landscape:

vs. ChatGPT: ChatGPT is still more capable for pure AI conversations because it's built for open-ended, back-and-forth iteration. But Gemini-powered Siri wins on integration with device functions (calendar, reminders, messages, etc.) and privacy.

vs. Google Assistant: Google Assistant remains more integrated with Android, but Siri now has better language understanding. The gap is much narrower than it was.

vs. Alexa: Alexa has smart home integration advantages, but Gemini-powered Siri has better natural language understanding. Different use cases.

vs. Claude: Claude is arguably smarter on complex reasoning, but Siri has the advantage of being on your device and integrated into iOS.

The real advantage of Gemini-powered Siri is the ecosystem integration. It's on your phone, already knows your calendar, contacts, and preferences, and it works offline for basic tasks. No other AI assistant has that level of access on iPhone.

Potential Issues and Workarounds

Let's talk about problems people will encounter:

Problem 1: Battery Drain on Complex Queries

When Siri's processing complex requests locally, it uses the Neural Engine heavily. For users asking lots of complex questions, battery impact is noticeable.

Workaround: Use cloud processing for heavy questions. Let your device send the request to Gemini's servers. It's actually less battery-intensive than local processing for complex tasks.

Problem 2: Privacy Concerns

Some users aren't comfortable with requests going to Google's infrastructure, even encrypted.

Workaround: Stick with simple local queries, or turn off "Improved Responses" entirely. You lose some capability but keep data local.

Problem 3: Inconsistent Results

Sometimes Gemini-powered Siri nails a request, other times it misunderstands.

Workaround: Be specific and provide context. "Find coffee shops" is vague. "Find coffee shops near my office that are open past 6pm and serve pastries" is specific. Specificity improves accuracy dramatically.

Problem 4: Slow Internet Dependency

For cloud-based requests, slow internet means slow responses.

Workaround: Save heavy requests for WiFi or a strong cellular signal. Apple designed local processing to handle common requests, so those stay quick either way. On WiFi, there's barely any lag; on slow LTE, only cloud requests take longer.

Problem 5: Device Storage

iOS 26.4 is about 2.3GB. Older devices with limited storage might struggle.

Workaround: Delete some photos, turn on iCloud Photos with Optimize iPhone Storage, or offload unused apps (Settings > General > iPhone Storage) before updating.

Gemini Siri significantly outperforms Old Siri across various real-world scenarios, offering more comprehensive and useful assistance. Estimated data based on described capabilities.

The Future: Where This Is Heading

The Gemini integration is just the first step. Here's what Apple's hinting at for future releases:

iOS 27 (Likely Fall 2026): Deeper device integration. Siri will be able to control more apps, perform more complex sequences of actions, and understand context across your entire device ecosystem.

iPad and Mac Integration: Gemini-powered Siri is coming to iPad next quarter, Mac support by the end of 2025.

Multimodal Capabilities: Apple's working on letting Siri understand images and complex visual information, not just text and voice.

Custom Models: Eventually, Siri will adapt to your personal preferences and usage patterns, learning your specific needs over time.

Offline-First Processing: Apple's goal is to handle increasingly complex tasks locally, with cloud backup only for the most demanding queries.

This is genuinely exciting. For years, Siri was the punchline of digital assistants. Gemini integration changes that fundamentally.

Setting Expectations: What Gemini Siri Won't Do

Let's be realistic about limitations:

It's not ChatGPT. Gemini-powered Siri is integrated into iOS, which means it has constraints that ChatGPT doesn't. It can't do everything ChatGPT does, and it's designed for quick, practical queries rather than deep extended conversations.

It doesn't replace web browsing. For research that requires comparing multiple sources or going deep into niche topics, you'll still want to open a browser. Siri synthesizes surface-level information beautifully but doesn't do deep research like a human would.

It has boundaries by design. Apple restricts certain capabilities for legal, privacy, and ethical reasons. It won't help with illegal activities, and it's cautious about medical or legal advice.

It gets things wrong. Large language models hallucinate (make stuff up confidently). Gemini-powered Siri is better than most, but it's not infallible. Always verify critical information.

It's slower than quick Siri commands. Setting a timer is still faster with old Siri. The complexity comes with a latency cost.

Making the Most of Gemini-Powered Siri

A few best practices once you have access:

Be conversational. Siri understands natural language now. You don't need to use command syntax. Talk to it like you'd talk to a smart person.

Provide context. The more information you give, the better results you get. Instead of "what should I eat," say "what's a healthy, quick lunch I can make with chicken, rice, and whatever vegetables I have."

Use follow-ups. Ask a question, get an answer, ask "tell me more about X" or "how does that apply to Y." The conversation flow is an actual strength now.

Correct it when wrong. If Siri gives you bad information, tell it. "That's not right, I meant..." helps it understand your actual intent.

Integrate with your workflow. Use Siri for planning, research, and decision-making, not just commands. That's where it's genuinely valuable.

With the integration of Google's Gemini AI, Siri's success rate is projected to increase from 70% to 90%, significantly enhancing user experience. (Estimated data)

Potential Concerns and What Apple Says

There's been some criticism about the Gemini integration:

Data Privacy: "Are my requests really encrypted?" Apple's answer: Yes, requests are end-to-end encrypted. Apple can't see your requests. The only data point on Apple's servers is "this request happened," not what the request was.

Google Data Sharing: "Is Google keeping my data?" Apple's answer: Google processes the request but doesn't retain it for advertising or other purposes. Apple's privacy policy governs the data, not Google's, because Apple is the intermediary.

Why Not Apple's Own AI?: "Why use Google instead of developing this in-house?" Apple's answer: Gemini is objectively better than what Apple could build quickly. Apple values capability over homegrown solutions.

Battery Life: "Will this destroy my battery?" Apple's answer: Local processing uses minimal battery. Cloud processing actually uses less battery on complex tasks than local processing would.

Cost: "Is there a subscription?" Apple's answer: No subscription. It's included in iOS 26.4. For cloud processing, Apple bears the cost of Gemini API calls.

Most concerns are legitimate, but Apple's solutions are reasonable.

For Different User Types

If you're a knowledge worker: This is genuinely transformative. Research, writing, analysis, planning: Gemini-powered Siri handles all of this better than old Siri. Worth exploring immediately.

If you're primarily a casual iPhone user: You might not notice much difference. Basic commands work the same. Worth testing, but not essential.

If you're privacy-obsessed: Turn off "Improved Responses" so nothing goes to the cloud. Use Siri for local tasks only. You lose capability but keep data local.

If you're always on slow internet: Test it on WiFi first. Cloud processing over slow connections is noticeably slower. For home use with good WiFi, no problem.

If you're running older iPhones: You're getting support for limited Gemini features through web interfaces, but not native Siri integration. Not ideal, but not abandoned.

The Bigger Picture: What This Means for Tech

This Siri update isn't just about making Siri better. It signals a shift in how tech companies approach AI.

For years, companies competed by building everything in-house. Apple had Siri, Google had Assistant, Amazon had Alexa. Each was mediocre because building consumer AI at scale is genuinely hard.

Now Apple's saying "we're great at hardware and integration, but Google's better at foundational AI." That's a maturity moment. Using best-in-class components, even from competitors, rather than mediocre in-house solutions.

Expect other companies to follow. Microsoft's already leaning on OpenAI. Amazon will eventually integrate better AI. The days of isolated, proprietary assistants are ending.

For consumers, this means better AI assistants across all platforms. Competition actually moves forward instead of stagnating in mediocrity.

For privacy, it's more complicated. Data's flowing to more places, but with better encryption. There's a trade-off between capability and privacy that we're all making implicitly.

The interesting question: what does this mean for Apple's relationship with Google? These are fierce competitors who just deepened their integration. Apple gets better AI. Google gets a partnership with one of the world's most valuable companies. Weird alliance, but mutually beneficial.

Timeline: What's Happening When

Just to summarize the rollout:

Right Now: iOS 26.4 releases. In supported regions, Siri updates start activating.

Next 48 Hours: Most users in the US, Canada, and UK see Gemini-powered Siri

Next 2 Weeks: Europe, Australia, New Zealand get access

End of Month: Broader international rollout

January 2025: Should be globally available for compatible devices

February 2025: iPad support launches

Spring 2025: Mac support comes

Don't expect everything immediately. Apple's phased rollout is intentional. Better to have it arrive late to everyone than crash the servers by releasing to 1 billion devices at once.

FAQ

What is Gemini-powered Siri?

Gemini-powered Siri is Apple's voice assistant enhanced with Google's Gemini AI engine, enabling more sophisticated language understanding, context awareness, and conversational capabilities. Instead of simple command matching, Siri now uses advanced AI to understand complex requests, remember conversation context, and provide synthesis-based answers rather than just web search results.

How does Gemini-powered Siri work?

Simple requests process entirely on your device using the neural hardware in your iPhone's A17 Pro chip, maintaining complete privacy. Complex queries get encrypted and sent to Gemini's cloud servers for processing, then returned as answers. The system combines on-device speed with cloud-based capability, letting you use as much computing power as needed without sacrificing battery life.

What are the benefits of Gemini-powered Siri?

Benefits include dramatically improved understanding of complex, multi-part queries, ability to have actual conversations with memory of previous exchanges, synthesis of information from multiple sources into coherent answers, capability for research and analysis tasks, support for creative writing and content generation, and integration with Apple's device ecosystem while maintaining privacy through encryption. You can ask detailed questions and get thoughtful responses instead of just links or basic command execution.

Which devices support Gemini-powered Siri?

Full support requires iPhone 15 Pro or newer (15 Pro, 15 Pro Max, 16, 16 Plus, 16 Pro, 16 Pro Max), iPad Pro 2024 with M4 chip or newer, and iPad Air 2024 with M2 chip or newer. Older devices can access limited Gemini features through cloud-based interfaces but not native Siri integration. iOS 26.4 or later is required.

Is my data private when using Gemini-powered Siri?

Apple says requests are encrypted before they leave your device for cloud processing and that even its own servers can't read their contents. Simple tasks stay completely local on your device. You can toggle "Improve Siri" off to prevent Apple from retaining any usage data for improvement purposes; if you leave it on, Apple keeps only anonymized request data.

How do I enable Gemini-powered Siri?

Update to iOS 26.4, go to Settings > Siri & Search, enable "Listen for Siri" and toggle on "Improved Responses," then wait 24-48 hours for the feature to activate server-side on your device. Test with complex, multi-part queries to confirm it's working. If it doesn't activate within 48 hours, try a force restart of your device.

How does Gemini-powered Siri compare to Chat GPT?

Gemini-powered Siri excels at integration with your device (calendar, messages, reminders), works offline for basic tasks, and is more privacy-focused. ChatGPT is more capable for extended conversations and pure reasoning tasks, doesn't have device integration, and requires a web interface or app. Gemini Siri is optimized for quick, practical queries while ChatGPT excels at deep-dive conversations.

Will Gemini-powered Siri drain my battery?

Local processing for complex queries uses the dedicated Neural Engine, which is extremely efficient for AI tasks. Cloud processing actually uses less battery than local processing for very complex tasks. Simple queries use minimal battery either way. Battery impact is comparable to previous Siri usage for most people, with slightly higher drain only for users constantly asking complex questions.

Can I use Gemini-powered Siri if I don't have good internet?

Yes, local queries work perfectly offline. Complex queries that require cloud processing will be slower on slow connections but will still work. Test on WiFi first to get the best experience. Apple designed local processing to handle common requests, so most everyday usage works fine without internet.

What happens to my older iPhone?

Older iPhones without the required chip won't get native Gemini-powered Siri, even on iOS 26.4. You can access limited Gemini features through web-based interfaces and third-party integrations, but not through Siri on the device. This is a hardware limitation based on processor capability, not intentional exclusion.

Conclusion: The Moment Siri Became Actually Useful

Let's be direct: Siri's been a punchline for years. It was that feature everyone had but nobody really trusted. You'd ask it something, it would do something completely different, and you'd just go back to typing.

Gemini-powered Siri actually changes that. This isn't an incremental improvement. This is Siri finally becoming genuinely smart and useful.

The rollout starts this month. If you've got a newer iPhone and you're in a supported region, you'll have access within days. And honestly, it's worth testing immediately, not as a gimmick, but as a potentially useful tool for actual work.

The most interesting part? This is probably just the beginning. Apple's shown they're willing to partner with competitors when it makes sense. Expect this integration to deepen. Expect capabilities to expand. Expect Siri to become something you actually want to use, not something you avoid.

For the first time in a decade, Siri's positioned to be competitive with the best AI assistants on the market. That's a big deal for iPhone users.

So go update to iOS 26.4. Test Gemini-powered Siri with a complex question. Give it context. See what it can do. Odds are good you'll be impressed. And if you're not, well, old Siri's still there for simple commands.

But I think you'll find Siri's finally smart enough to be worth using regularly. That's the real headline here.

Key Takeaways

- Siri gets Google Gemini AI engine in iOS 26.4 this month, enabling complex question understanding, multi-turn conversations, and information synthesis

- Phased rollout starts US/Canada/UK immediately, expands to Europe/Australia within 2 weeks, global availability by month end

- Only compatible with the A17 Pro chip or newer (iPhone 15 Pro, iPhone 16 series) and M-series iPads (2024 iPad Pro/Air) due to required neural processing power

- Privacy-first design: simple requests stay on-device; complex queries encrypt before leaving phone, with optional data sharing for improvement

- Genuine capability leap: research tasks, creative writing, problem-solving, and decision analysis are now possible, making Siri finally competitive with ChatGPT and other AI assistants
