Introduction: The Siri Awakening

Siri has been Apple's voice assistant for over a decade now, but let's be honest—it's been kind of phoning it in. You ask it to play music, set a reminder, or make a call, and it works fine. But try something slightly complex? Siri suddenly acts like it's never heard of the internet before.

Now, everything's about to change.

According to recent reports, Apple is planning something major: a complete reimagining of Siri powered by Google's Gemini AI. This isn't just a software tweak or a minor feature update. This is Apple admitting that it needs help building the next generation of its most iconic voice assistant, and it's reaching across the aisle to Google to do it.

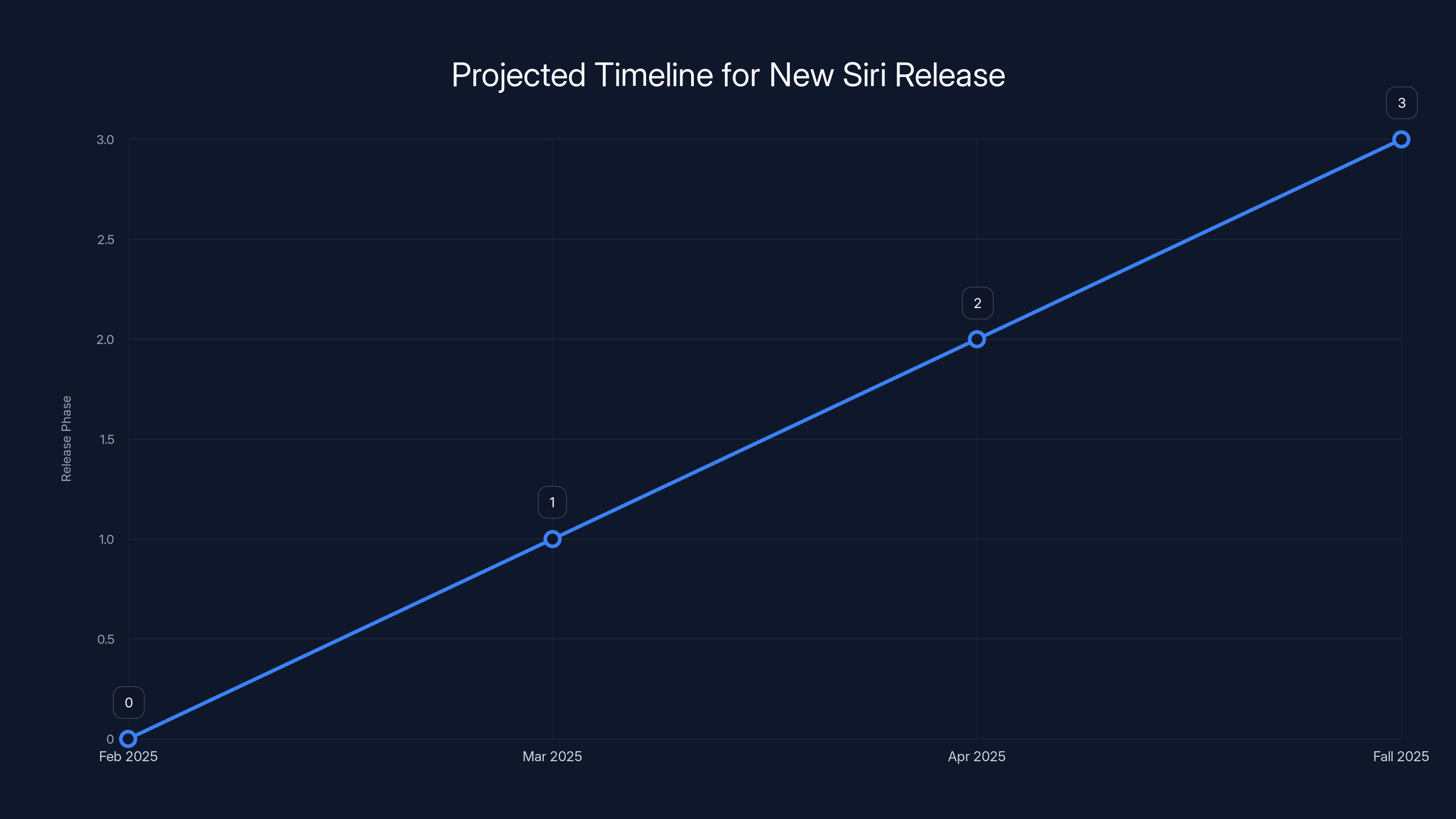

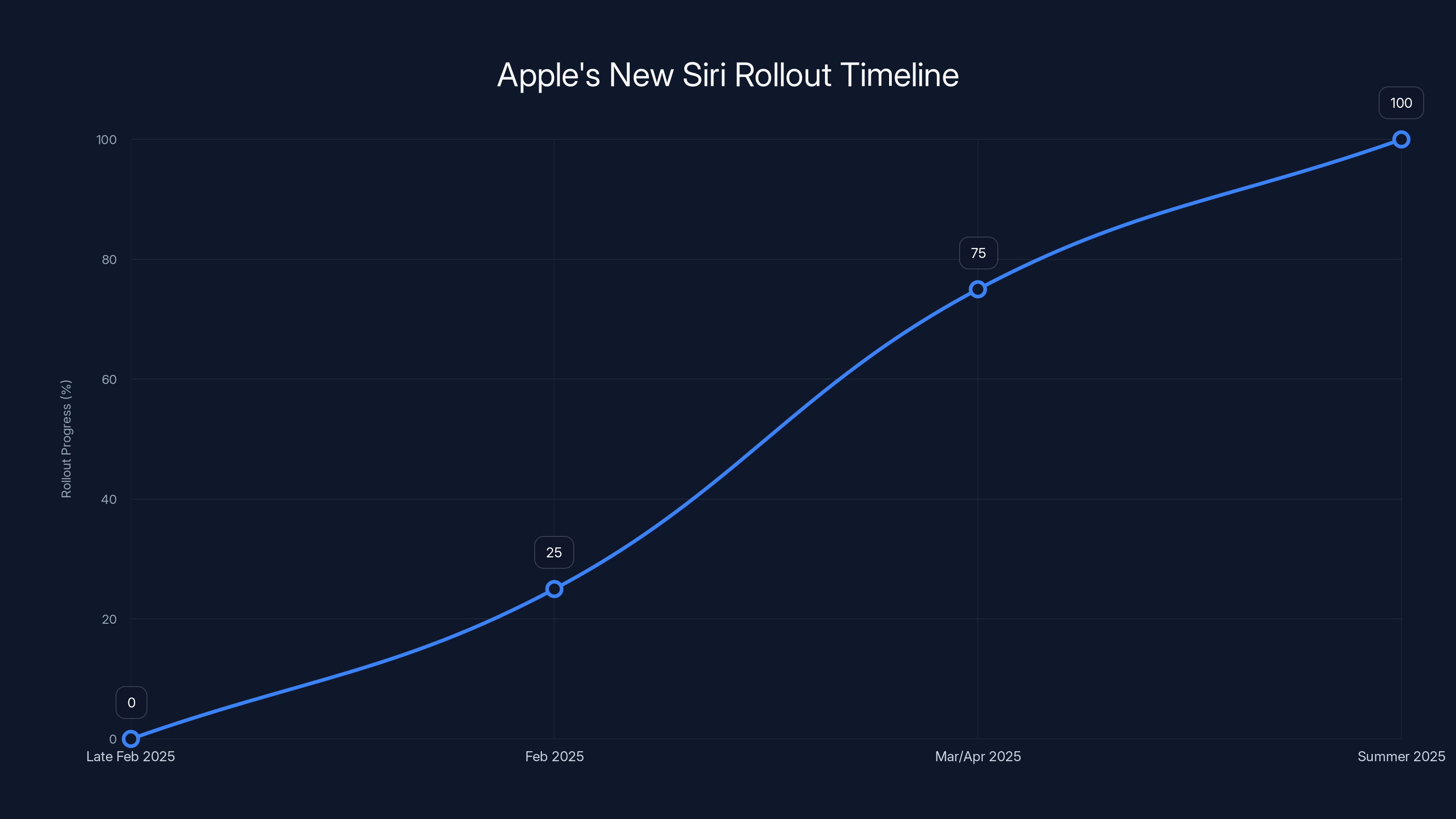

The timeline is aggressive. We're talking about a preview in late February 2025, a broader rollout with iOS 18.4 in spring, and then the main event at WWDC in June where Apple will show off the full capabilities. By fall 2025, this new Siri—codenamed "Campos" internally—will ship with iOS 19.

But here's the thing: this partnership is bigger than just better voice recognition or faster responses. It represents a fundamental shift in how Apple approaches AI, how it handles privacy in an AI-first world, and what it means to be a competitor in the age of large language models. The company that once said "what happens on your iPhone stays on your iPhone" is now partnering with one of the world's largest AI companies to power its most personal assistant.

So what's really happening here? Why is Apple doing this? What does Gemini-powered Siri actually do? And what does it mean for iPhone owners, privacy advocates, and the broader AI landscape? Let's dig in.

TL;DR

- February debut: Apple will demonstrate Gemini-powered Siri in late February 2025 before a full reveal at WWDC.

- Chatbot capabilities: The new Siri will function like ChatGPT, handling complex queries and multi-step tasks.

- iOS 18.4 rollout: The beta will start in February, with public availability expected in March or early April 2025.

- Google partnership: This marks Apple's first major AI partnership with a competitor, using Google's Gemini model.

- Full launch: Complete rollout arrives with iOS 19 in fall 2025, alongside Apple Intelligence features.

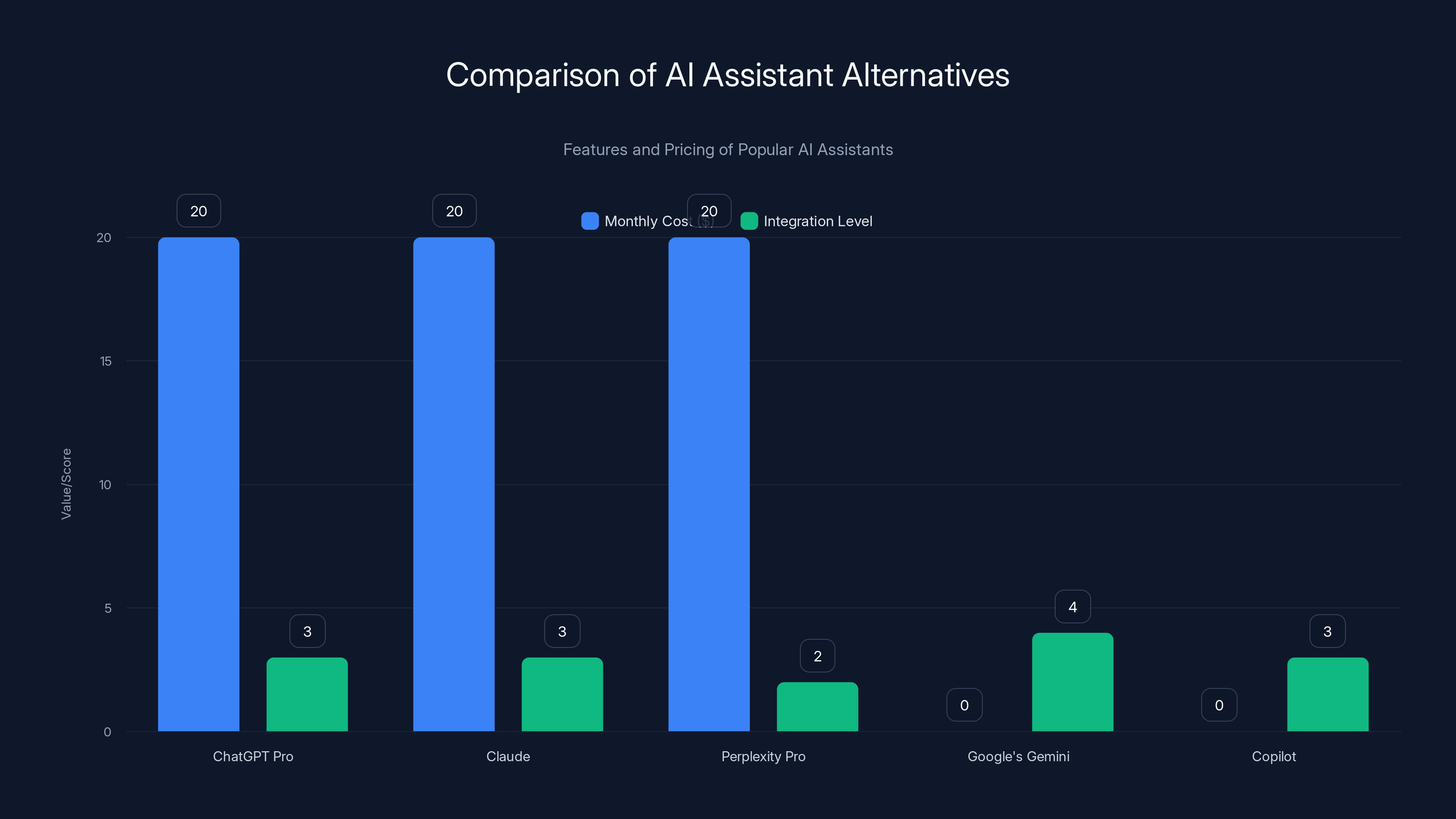

This chart compares the monthly cost and integration level of popular AI assistants. Google's Gemini and Copilot are free, while others have a $20/month premium version. Integration levels are estimated based on app features and ecosystem compatibility.

Understanding the Current State of Siri

Before we get excited about what's coming, we need to talk about where Siri is right now. And honestly, it's not a pretty picture.

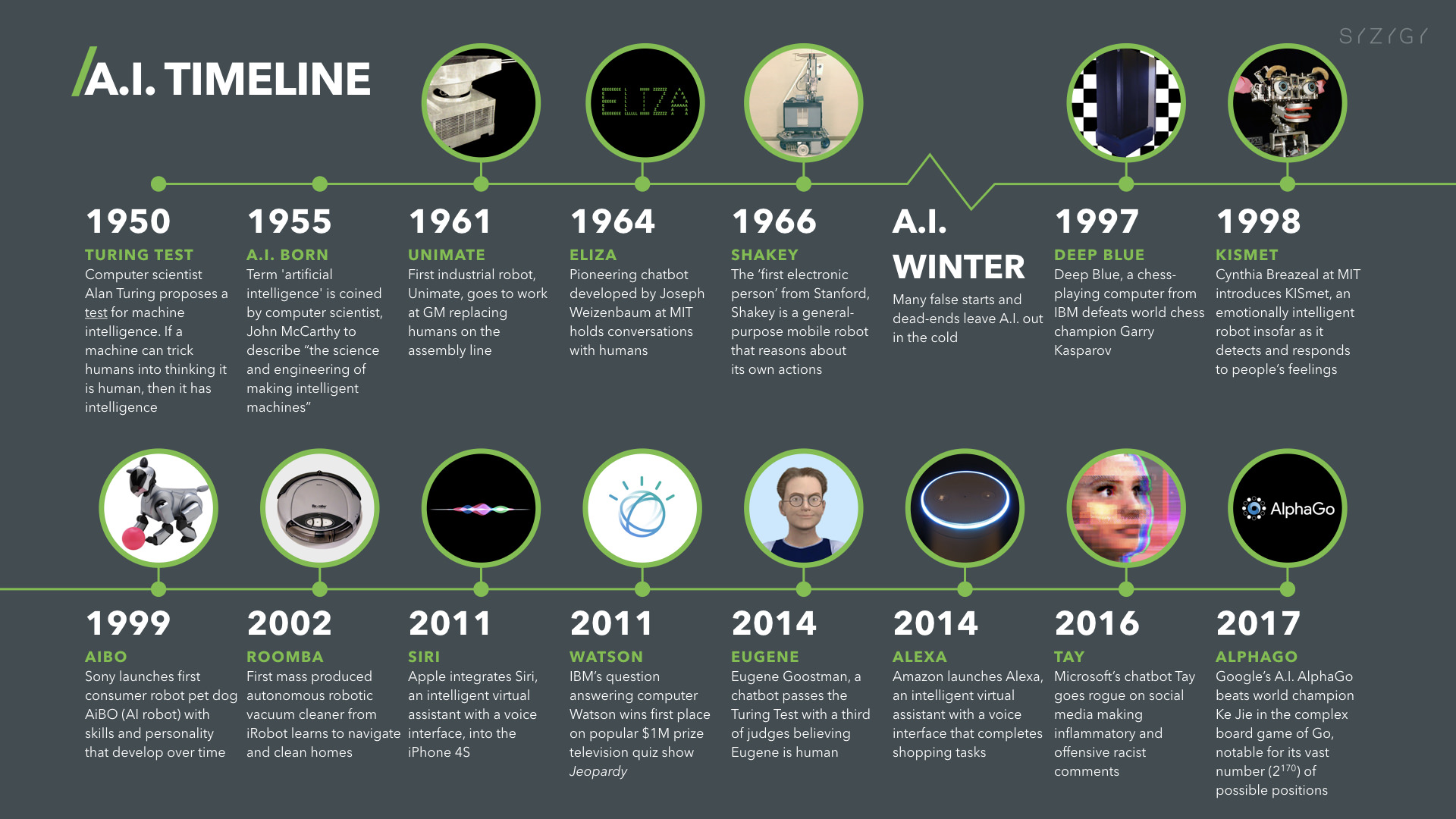

When Siri launched in 2011 as a beta feature on iPhone 4S, it was mind-blowing. A voice assistant that could understand natural language? That could answer questions? That could control your phone without touching the screen? Apple had basically invented something revolutionary.

But here's what happened next: not much.

Over the years, Siri got better at recognizing voices and handling basic commands. It learned to work across more devices: iPhone, iPad, Mac, Apple Watch, HomePod. It integrated with more of Apple's services. But at its core, Siri remained fundamentally limited. Ask it something outside its pre-programmed domains, and it would either fall back to a web search and hand you a list of links or tell you it couldn't help.

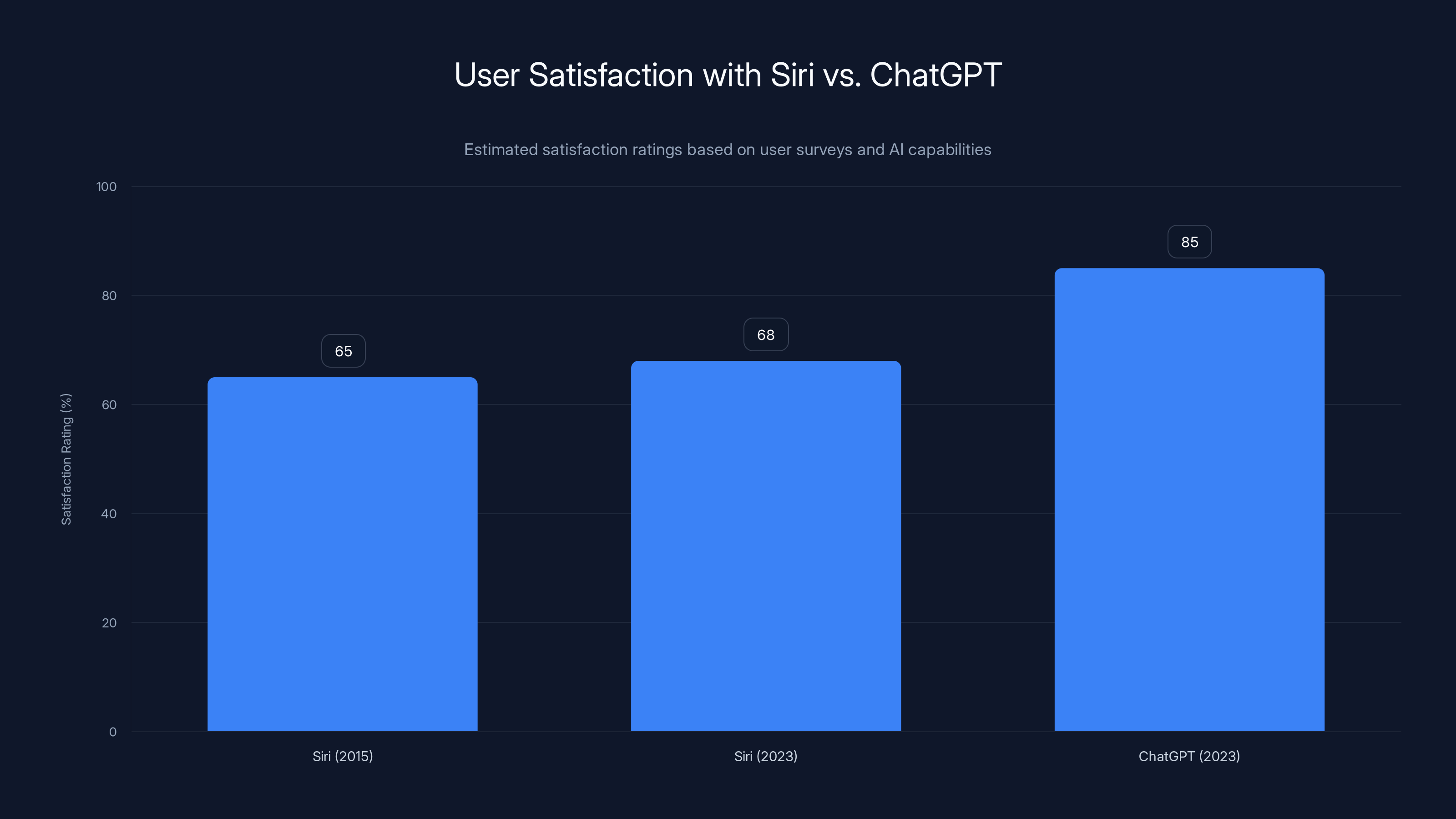

Compare that to ChatGPT, which arrived in late 2022 and instantly made every other AI assistant look dated. ChatGPT could reason through complex problems. It could write code, analyze documents, brainstorm ideas, and handle multi-step tasks. It felt like talking to someone who actually understood what you were asking.

Apple watched this happen. The entire world watched it happen. And for a company that prides itself on innovation, Siri's limitations became increasingly embarrassing.

The issue wasn't technical incompetence. Apple has brilliant engineers and enormous resources. The problem was strategic. Apple built Siri around a narrow set of use cases: controlling music, managing calendar entries, sending texts, checking weather. Each capability was hand-crafted and integrated into iOS.

Scaling that model to cover everything ChatGPT can do would require basically rebuilding Siri from scratch. And Apple, for years, seemed unwilling to make that investment. Or perhaps more accurately, it was unwilling to adopt a large language model that would send queries off the device to remote servers and make it harder for users to control their data.

So here we are in 2025, and Apple has finally realized that the old approach isn't working anymore. Users expect more. The market has moved on. And the company needs to act.

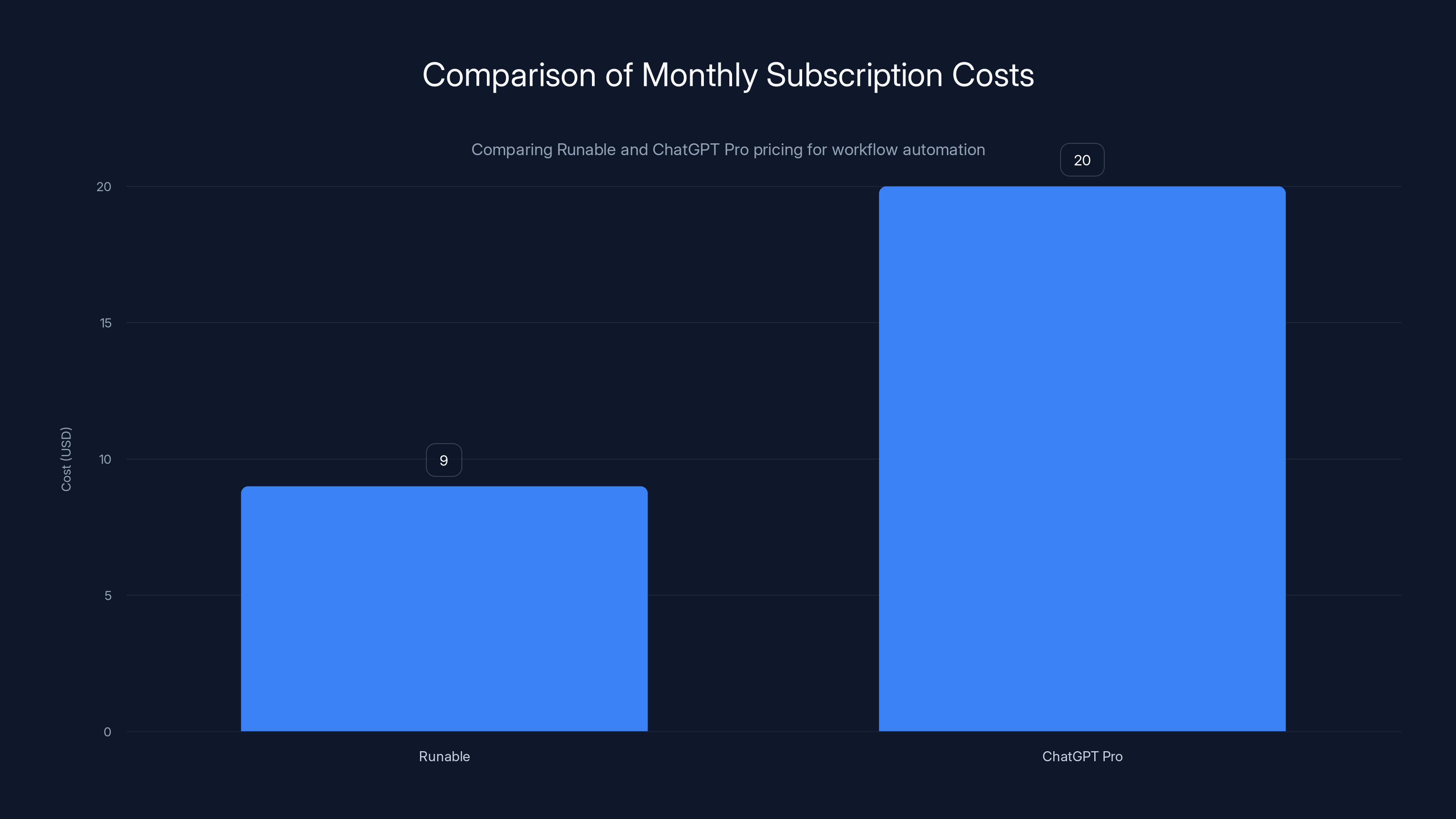

The Google Gemini Partnership: What Changed

Let's talk about the elephant in the room: Apple partnering with Google on Gemini-powered Siri is genuinely surprising.

These companies compete on everything. Search. Maps. Cloud services. Privacy. And yet here's Apple, incorporating Google's Gemini AI model directly into iOS. It's the kind of move that would've seemed impossible five years ago.

What changed?

First, the competitive pressure became undeniable. ChatGPT proved that large language models work. Gemini, Claude, and other models showed that there's no single winner in the AI space—there's room for multiple approaches. Apple realized it couldn't pretend this trend wasn't happening.

Second, the partnership actually makes sense from a privacy perspective (or at least, Apple can market it that way). Here's the logic: instead of building its own large language model—which would require massive data collection and compute infrastructure—Apple can use Google's existing model. Apple can then add privacy protections on top: on-device processing for simple queries, encrypted transmission for complex ones, and the ability to opt out entirely.

Third, this lets Apple avoid the complexity of building an LLM from scratch. Training a world-class large language model takes years, billions of dollars, and access to incredible amounts of training data. Apple would need to hire entire teams of AI researchers, build new data centers, and maintain competitive parity with models that are being updated constantly. Why do all that when Google already has Gemini?

But here's the uncomfortable part: this partnership also reveals Apple's AI ambitions have limits. The company that spent years talking about privacy-first computing is now deeply dependent on a Google service. If there's ever a dispute between the companies, or if Google's business model shifts, Apple could find itself in a difficult position.

There's also the question of data. When you ask Siri (powered by Gemini) a complex question, where does that query go? How much data does Google see? How long does Google retain it? These are the questions Apple will need to answer clearly when the feature launches, and if the answers are fuzzy, trust erodes fast.

Timeline: When You'll Actually Get This

Let's walk through what's happening and when, because the timeline is important for understanding how Apple is rolling this out.

Late February 2025 (Public Demo): Apple will show off the new Siri in demonstrations. This is the "hey, look what's coming" moment. You won't get it yet, but you'll see what's possible. The company will likely highlight specific use cases: asking Siri to write emails, summarize documents, answer complex questions, handle multi-step workflows.

Internally, the feature is reportedly codenamed "Campos" (that's the name that has leaked). Apple won't use that name publicly, but it's useful to know when you're reading industry coverage.

February 2025 (iOS 18.4 Beta): Right around the time of the public demo, iOS 18.4 enters beta testing. This is for developers and people running beta software. If you're tech-savvy and willing to accept some instability, you could potentially try the new Siri before the official release.

This beta period is crucial for Apple. It's the testing ground for real-world usage at scale. The company will be monitoring how people use the new Siri, what breaks, what works better than expected, and what privacy issues surface.

March or Early April 2025 (Public Release): iOS 18.4 becomes available to everyone. This is when Gemini-powered Siri starts rolling out to regular users. But here's the catch: Apple typically does gradual rollouts. Not everyone will get it on day one. You might get it a few days or weeks later depending on your region, device model, and other factors.

Summer 2025 (WWDC Grand Reveal): At Apple's Worldwide Developers Conference, the company will give the full presentation of the new Siri and all the Apple Intelligence features that go along with it. This is where marketing takes over from engineering, and you'll hear about all the use cases and why this matters.

WWDC is also where Apple will announce iOS 19 (which may ship as iOS 26 if Apple switches to year-based version numbers, as has been reported). That version will be the full release that brings the new Siri to all supported devices.

Fall 2025 (Official Launch): iOS 19 and iPadOS 19 ship alongside that year's macOS release, with the new Siri as a standard feature. No beta, no gradual rollout: everyone gets it. This is the moment Apple considers the project "done."

Why does Apple announce it this early if it's not available until fall? A few reasons: it manages expectations, gives developers time to integrate with the new capabilities, and lets the company get feedback during the beta period that might influence the final product.

The new Siri will be demonstrated in late February 2025, with the iOS 18.4 beta starting around the same time, a public release in March or early April, and a full launch in fall 2025. Estimated data.

What Gemini-Powered Siri Actually Does

Now for the fun part: what can this thing actually do?

According to reports, the new Siri will function much more like ChatGPT than the current Siri. That means it can:

Handle complex questions: Instead of just looking up a fact, the new Siri can reason through multi-part questions. "What's the best time to visit Tokyo given that I have these dates free and I don't like crowds?" The current Siri would struggle. The new one should handle it.

Write and edit text: Ask the new Siri to write an email, draft a social media post, or rewrite a paragraph of text. It'll compose something based on your request and context from your device.

Summarize information: Hand Siri a document, email thread, or article, and ask it to summarize the key points. The current Siri can't do this. The new one can.

Understand context: The new Siri will have better understanding of what you're referring to. If you say "send a message to that person I met last week," it should be able to figure out who you mean based on your calendar and contacts.

Handle workflows: This is the big one. You could theoretically ask Siri to "prepare a report based on my sales data from this week and email it to my team." The new Siri could potentially break that down into steps: extract sales data, format it, generate a summary, compose an email, and send it. The current Siri can't do any of that.

Understand nuance: LLMs are better at understanding what people actually mean rather than what they literally say. "I'm feeling productive today" is different from "I'm feeling stressed." The new Siri should recognize these distinctions and respond appropriately.
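To make the workflow item above more concrete, here is a minimal Python sketch of how a request like "prepare a report and email it to my team" could be broken into ordered steps that share a context. Everything here is an assumption for illustration: the `Step` class, the step functions, and the data shapes are hypothetical and do not reflect how Apple or Google actually implement this.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]  # reads the shared context, returns new keys


def extract(ctx):
    # Keep only this week's records (hypothetical data shape).
    return {"sales": [s for s in ctx["raw_sales"] if s["week"] == ctx["week"]]}


def format_data(ctx):
    return {"total": sum(s["amount"] for s in ctx["sales"])}


def summarize(ctx):
    text = f"Week {ctx['week']}: ${ctx['total']:,} across {len(ctx['sales'])} orders"
    return {"summary": text}


def compose(ctx):
    return {"email": {"to": ctx["team"], "body": ctx["summary"]}}


def send(ctx):
    # A real assistant would hand off to a mail service; this only marks it queued.
    return {"status": "queued"}


STEPS = [
    Step("extract", extract),
    Step("format", format_data),
    Step("summarize", summarize),
    Step("compose", compose),
    Step("send", send),
]


def run_workflow(steps, context):
    """Run each step in order, merging its output into the shared context."""
    completed = []
    for step in steps:
        context = {**context, **step.run(context)}
        completed.append(step.name)
    return context, completed


result, completed = run_workflow(STEPS, {
    "week": 12,
    "team": "team@example.com",
    "raw_sales": [
        {"week": 12, "amount": 1200},
        {"week": 12, "amount": 800},
        {"week": 11, "amount": 500},
    ],
})
print(completed)
print(result["email"]["body"])
```

The point of the sketch is the shape of the problem: each step depends on the output of the one before it, which is exactly what a single-command assistant cannot express.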

The exact capabilities will depend on how Apple implements Gemini integration. The company might lock some features behind Apple Intelligence (its broader AI initiative), or limit certain capabilities to specific device models or geographies.

One thing to watch: how much of this runs on-device versus on Google's servers. Apple will almost certainly want to do as much on-device processing as possible for privacy and latency reasons. But complex reasoning tasks are computationally expensive. An iPhone might not be able to handle certain operations locally, which means they'll need to be sent to the cloud.

This is where Apple's privacy claims will be tested. The company will need to be transparent about what data leaves your device and how it's protected during transmission and storage.

The Privacy Question: Can Apple Really Protect Your Data?

Here's the uncomfortable reality: privacy and AI are fundamentally in tension.

Building a world-class AI assistant requires data. Lots of it. You need to train on diverse examples, learn user preferences, understand context. The more data flowing into the system, the better it gets.

But Apple's entire brand is built on privacy. "What happens on your iPhone stays on your iPhone." It's in their marketing, their executive speeches, their privacy policy. So how does Apple square the circle of partnering with Google (whose entire business model is built on data collection) while maintaining its privacy narrative?

Here's the approach Apple will likely take:

On-device processing: Simple queries get processed entirely on your iPhone. If you ask Siri what time it is, or to set a reminder, that stays completely local. No data leaves your device.

Private Cloud Compute: For more complex queries that require Gemini, Apple will use what it calls "Private Cloud Compute." The idea is that your request gets sent to Apple's servers (not Google's), where it's processed, and then the response comes back to you. Apple claims it doesn't store your data and uses encryption to keep requests private.

User choice: Apple will give you the option to opt out of using Gemini for complex queries. You can turn off the cloud-based features entirely if you want to, even though that limits what Siri can do.
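The three-part approach above amounts to a routing decision, which can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Apple's actual logic: the `LOCAL_INTENTS` set, the `Route` names, and the `cloud_opt_in` flag are all hypothetical.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"
    DECLINED = "declined"


# Hypothetical set of request types simple enough to stay on the phone.
LOCAL_INTENTS = {"time", "set_reminder", "set_timer", "play_music"}


def route_query(intent: str, cloud_opt_in: bool) -> Route:
    """Decide where a request is processed.

    Simple intents never leave the device. Anything else goes to the
    cloud only if the user has opted in; otherwise it is declined.
    """
    if intent in LOCAL_INTENTS:
        return Route.ON_DEVICE
    if cloud_opt_in:
        return Route.PRIVATE_CLOUD
    return Route.DECLINED


print(route_query("set_reminder", cloud_opt_in=False))
print(route_query("summarize_document", cloud_opt_in=True))
print(route_query("summarize_document", cloud_opt_in=False))
```

Note what the opt-out costs in this model: with cloud features off, complex requests are not downgraded, they are simply refused.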

The problem with this approach? It still requires trusting Apple. You're giving Apple the ability to send your voice queries to their servers. Even if Apple isn't storing the data, there's still a window where your information is in transit and could theoretically be intercepted or logged.

There's also the Google component. When Apple's servers route a query to Gemini, some information about your request flows through Google's infrastructure. Apple will claim this is anonymized and encrypted, but there's still a trust relationship involved.

For users who care deeply about privacy, the new Siri might be a hard sell. You're trading some privacy for functionality. That's an acceptable trade-off for many people, but it's worth thinking through carefully before you start using this feature.

Apple's challenge will be communicating this trade-off honestly without scaring people away. The company will want to emphasize the privacy benefits compared to ChatGPT, Google Assistant, or Alexa. But it also needs to be honest about the limitations of its privacy protections.

The timeline shows key stages in the rollout of Apple's new Siri, starting with a public demo in late February 2025 and culminating in a full reveal at WWDC in Summer 2025. Estimated data.

How This Affects Apple's AI Strategy

The Gemini-powered Siri isn't happening in a vacuum. It's part of Apple's broader "Apple Intelligence" initiative—the company's attempt to integrate AI throughout iOS, iPadOS, and macOS.

Apple Intelligence includes things like:

Better photo search: Find the exact photo you want by describing what's in it, rather than scrolling through hundreds of pictures.

Smarter notifications: Your phone learns what notifications matter to you and surfaces the important ones first.

Writing tools: Grammar checking, tone adjustment, and content generation built into every app.

Mail and Messages features: Smart reply suggestions, priority inbox filtering, and better conversation summaries.

The new Siri is the centerpiece of this initiative. It's the orchestrator that ties everything together.

What's interesting is that Apple is taking a hybrid approach. Some AI features run entirely on-device using efficient models. Others use cloud processing. Some use Gemini, others use Apple's own models. The company isn't putting all its eggs in one basket—it's building a multi-model, multi-approach system.

This is smart strategy. It hedges Apple's bets. If the Google partnership goes badly, Apple can rely on its own models and on-device processing. If privacy concerns emerge, the company can shift more work to on-device processing. If users love a particular feature, Apple can expand and optimize it.
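The hedging described above is essentially a fallback chain: try the preferred model, and drop to the next one if it is unavailable. Here is a rough Python sketch, assuming hypothetical backend functions and a made-up `BackendUnavailable` error. Nothing here reflects a real Apple or Google interface.

```python
class BackendUnavailable(Exception):
    """Raised by a backend that cannot serve the request."""


def cloud_model(query):
    # Stand-in for a cloud-hosted model that is currently unreachable.
    raise BackendUnavailable("cloud model unreachable")


def on_device_model(query):
    # Stand-in for a smaller local model that always answers.
    return f"[on-device] short answer to: {query}"


def answer_with_fallback(query, backends):
    """Try each (name, handler) pair in order and return the first success."""
    errors = []
    for name, handler in backends:
        try:
            return name, handler(query)
        except BackendUnavailable as exc:
            errors.append(f"{name}: {exc}")
    # Every backend failed; surface all the reasons together.
    raise BackendUnavailable("; ".join(errors))


used, reply = answer_with_fallback(
    "summarize my day",
    [("cloud", cloud_model), ("on_device", on_device_model)],
)
print(used, reply)
```

The order of the list is the policy. Moving the on-device model to the front would shift more work onto the phone without touching any other code.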

But it also makes Apple vulnerable. The company is now dependent on Google in a way it wasn't before. If Google decides to change Gemini's capabilities, pricing, or terms of service, Apple has to adapt. That's a different kind of risk than Apple usually takes.

Comparison to Competitors: Where Siri Stands

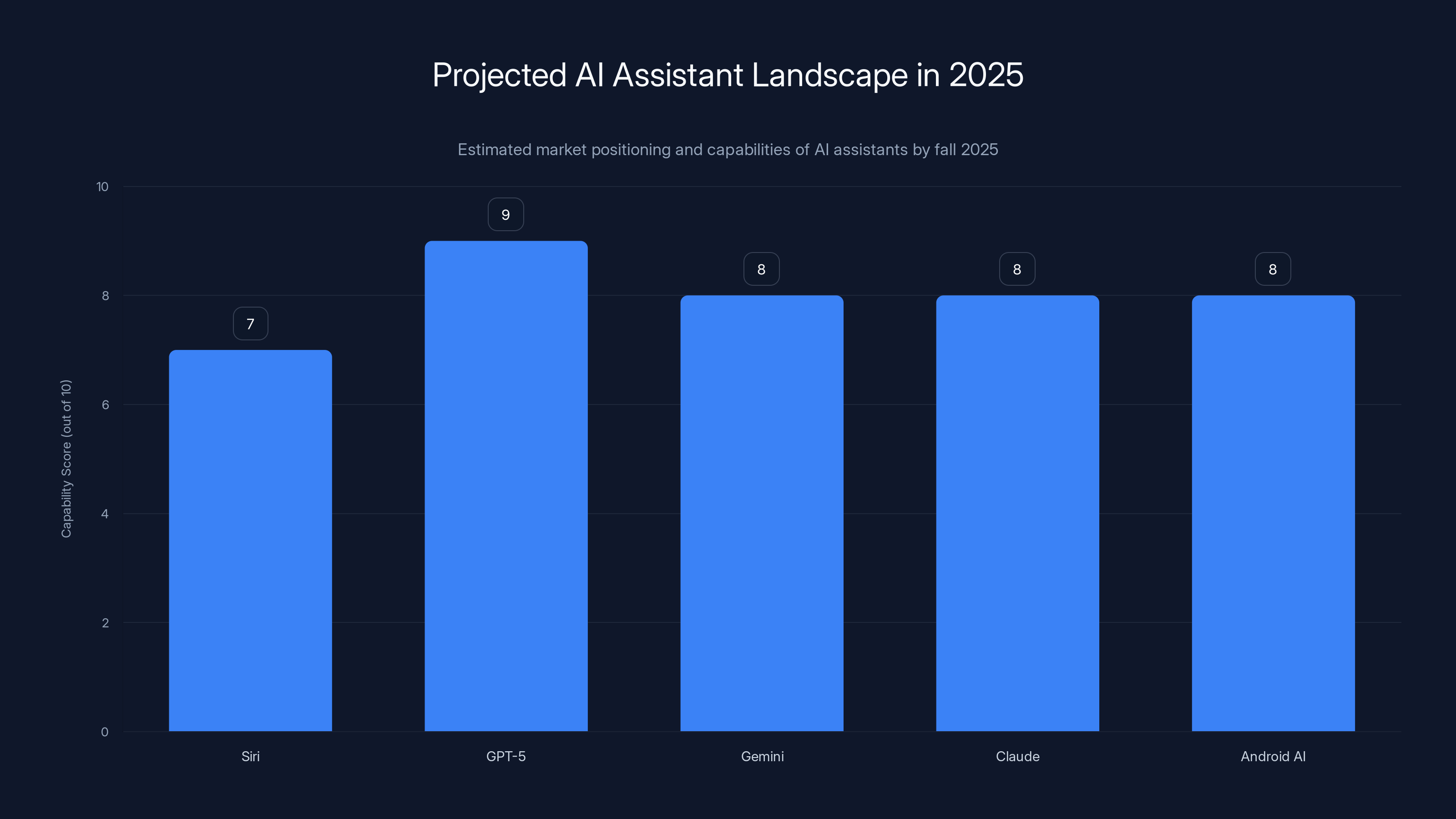

Let's put this in context. How does Gemini-powered Siri compare to what competitors are already doing?

ChatGPT: OpenAI's system is the gold standard. It can do everything the new Siri is promising, and it's available right now. ChatGPT understands complex queries, writes code, analyzes documents, and handles multi-step tasks. The advantage of Siri will be integration with iOS and your personal data (calendar, contacts, photos). ChatGPT requires you to manually add context or switch between apps.

Google Assistant: Google's own assistant has been powered by LLMs for a while now. It's integrated into Android, so it has similar advantages to what Siri will have. The difference is Google Assistant has been coasting on the assumption that it's "good enough," while the new Siri is being positioned as a massive leap forward.

Alexa: Amazon's assistant has actually been adding generative AI capabilities too, trying to compete with ChatGPT-level intelligence. But Alexa is primarily a smart speaker assistant, while Siri is mobile-first.

Copilot: Microsoft's Copilot (powered by OpenAI) is everywhere—Office apps, Windows, Bing. The advantage is tight integration with Microsoft products. The disadvantage is that it's less personal than a phone-based assistant.

The new Siri's biggest advantage is context and intimacy. Your phone knows more about you than any other device or system. It has access to your location history, your messages, your photos, your calendar, your health data. If Apple can use all that context while protecting privacy, Siri could become something genuinely unique.

The risk is that building all this functionality while maintaining privacy constraints makes Siri less capable than competitors. You can't use all your personal data if you can't send it to the cloud for processing. This might force Apple to make compromises that competitors don't have to make.

By fall 2025, Siri is expected to catch up with competitors like GPT-5 and Gemini, but might not lead in capabilities. Estimated data.

What Developers Need to Know

If you're building apps for iOS, the new Siri opens up possibilities that didn't exist before.

Apple already has "Siri Shortcuts" (introduced with iOS 12) and the App Intents framework, systems that let third-party apps expose functionality that Siri can control. With Gemini-powered Siri, those shortcuts become much more useful.

Instead of just automating a sequence of steps, you could build shortcuts that use natural language understanding. For example, a banking app could let Siri understand "send money to Sarah" and convert that into a transfer without the user specifying an account, amount, or confirmation step.
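As a rough illustration of the banking example, the Python sketch below turns an utterance into a structured transfer request and reports which details are still missing. It is deliberately not Apple's App Intents API. The `TransferIntent` class, the regular expression, and the choice to always require a confirmation flag are assumptions made for this sketch.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class TransferIntent:
    recipient: str
    amount: Optional[float] = None
    confirmed: bool = False  # assumption: this sketch never auto-confirms

    def missing(self):
        """List the details the assistant still has to ask for."""
        needs = []
        if self.amount is None:
            needs.append("amount")
        if not self.confirmed:
            needs.append("confirmation")
        return needs


# Matches "send money to NAME" or "send $AMOUNT to NAME" (hypothetical grammar).
PATTERN = re.compile(
    r"send (?:\$(?P<amount>\d+(?:\.\d{1,2})?) |money )to (?P<recipient>\w+)",
    re.IGNORECASE,
)


def parse_transfer(utterance: str) -> Optional[TransferIntent]:
    match = PATTERN.search(utterance)
    if match is None:
        return None  # not a transfer request
    raw_amount = match.group("amount")
    amount = float(raw_amount) if raw_amount else None
    return TransferIntent(recipient=match.group("recipient"), amount=amount)


intent = parse_transfer("send money to Sarah")
print(intent, intent.missing())
```

A language model would replace the regular expression with far more flexible understanding, but the app still ends up with the same job: a structured request and a list of gaps to fill before money moves.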

Apple will likely announce expanded APIs at WWDC that let developers integrate their apps more deeply with the new Siri. This could include:

- Custom voice commands specific to your app

- The ability to ask Siri to access app data and describe it in natural language

- Integration with Apple Intelligence features like summarization

- Advanced intent recognition that understands complex requests

Developers will need to start thinking about how voice and natural language interaction changes their app's design. Apps that currently require multiple taps might be able to consolidate those interactions into a single voice command.

For certain categories of apps (banking, messaging, productivity), this could be transformative. For others, voice interaction might remain a nice-to-have rather than a core feature.

The Broader Implications for Apple's Business

Let's zoom out for a moment. What does this move mean for Apple as a company?

First, it signals that Apple is doubling down on services. The iPhone is still the revenue driver, but increasingly, Apple's growth comes from services like iCloud, AppleCare, Apple Music, and App Store revenue. A smarter Siri could drive more engagement with iOS and increase the stickiness of the ecosystem.

Second, it shows that Apple is willing to compromise on vertical integration when it makes strategic sense. For years, Apple refused to use external services for core functionality. Now, the company is comfortable relying on Google. That's a significant shift in philosophy.

Third, it positions Apple in a different way in the AI arms race. The company isn't trying to out-engineer OpenAI or Google on AI research. Instead, it's taking an existing best-in-class model (Gemini) and integrating it into the world's most personal device. That's a different way to compete, and it might be a better one.

Finally, it suggests that Apple is serious about software and AI as differentiators. The company spent the last decade optimizing hardware and margins. Now, it's investing heavily in software capabilities that might not show up on a spec sheet but dramatically improve the user experience.

For Apple shareholders, this is positive. It shows the company can still innovate in software. For iPhone owners, it means the device you're holding is about to become much more capable. For competitors, it's a reminder that Apple can move fast when it needs to.

Estimated data shows ChatGPT's user satisfaction significantly surpasses Siri's, which has seen little improvement since 2015.

Potential Challenges and Obstacles

Of course, this entire plan could hit bumps along the way. Let's talk about what could go wrong.

Regulatory pressure: Governments around the world are scrutinizing big tech. A partnership between Apple and Google, two of the largest tech companies, will face regulatory scrutiny. Some governments might question whether this violates competition laws. Apple might be forced to make concessions or even abandon parts of the plan in certain regions.

User confusion: Regular people don't understand the difference between on-device processing and cloud processing. They'll just know that their voice queries are going somewhere. If Apple can't clearly explain why this is private or safe, adoption could be slower than expected.

Privacy backlash: If there's any incident where user data is mishandled, or if security researchers find vulnerabilities in how Siri transmits data, it could damage trust. Apple's privacy brand has taken hits before (like when the company tried to scan iCloud photos for CSAM). Another incident could be significant.

Integration issues: Rolling out a major feature to billions of devices is complex. There will be bugs. Some combinations of hardware and software might not work well. Some apps might not integrate smoothly. The first few months will likely be messy.

Performance problems: If the new Siri is slow or unreliable, people will abandon it. The current Siri is responsive (even if it's not capable). Making it more capable without sacrificing speed is challenging.

Competitive responses: By the time the new Siri launches in fall 2025, Google Assistant and Copilot will have also evolved. Apple might find that the gap between Siri and competitors isn't as big as it seems right now.

Google relationship deterioration: If Apple and Google have a falling out (over antitrust issues, business terms, or something else), Apple could lose access to Gemini. That would be catastrophic for Siri's capabilities.

Apple has thought through many of these challenges, but they're real risks nonetheless.

The Opportunity for Alternative AI Solutions

Here's something worth considering: the new Siri actually creates opportunities for competitors.

Apple is betting that privacy-conscious users will accept the new Siri despite its cloud dependencies. But some users won't be comfortable with that. They'll want an AI assistant that's truly private, truly local, and doesn't depend on large tech companies.

That's an opening for openly available models like Llama and Mistral. Smaller versions of these models can run locally on a phone, with no data leaving your device. They're less capable than Gemini, but for many use cases (local search, text generation, summarization), they're good enough.

We might see new apps emerge that offer "private AI" alternatives to Apple's offering. Just like how some people refuse to use Google Search and prefer Ecosia or DuckDuckGo, some people might prefer a local AI assistant to a cloud-based one.

There's also an opportunity for third-party assistant makers. Apps like Runable could position themselves as privacy-first alternatives that use on-device AI models instead of relying on cloud services. The new Siri might actually drive more interest in alternatives rather than consolidating the market further.

For developers, this is good news. The market for AI assistants is expanding, and there's room for multiple approaches.

How to Prepare for the New Siri

If you're an iPhone user, what should you do to prepare?

First, manage expectations: The new Siri will be better, but it won't be perfect. It will still make mistakes. It will still fail to understand some requests. That's normal for AI systems.

Second, think about how you might use it: Start imagining the tasks you'd want Siri to handle if it were smarter. Writing emails? Summarizing articles? Scheduling based on your preferences? Get specific. The more you think about use cases, the faster you'll adopt the new Siri once it's available.

Third, review your privacy settings now: Get familiar with how to control privacy on your iPhone. Understand app permissions, iCloud settings, and location tracking. This will make it easier to make informed decisions about which Siri features to enable once they're available.

Fourth, try ChatGPT or Claude now: If you haven't used an LLM-powered AI assistant before, get familiar with how they work. Ask them questions. Have conversations with them. This will give you a baseline for what Siri could become.

Fifth, follow the beta releases: If you're tech-savvy, consider signing up for the iOS 18.4 beta in February. That's your chance to try the new Siri early and see if it's worth the privacy trade-offs.

Sixth, read the privacy documentation carefully: When Apple releases the new Siri, don't just accept the defaults. Read the privacy settings. Understand what data is being collected, what's encrypted, and what protections are in place. Make an informed decision about how much you're willing to trust Apple with your data.

Alternatives to Consider

Now, here's something most articles won't tell you: you don't have to wait for Siri.

Right now, you have options:

ChatGPT or ChatGPT Plus: Open the app, ask any question, get a powerful AI assistant. $20/month for the Plus tier gets you access to more capable models and file uploads. It works on iOS and is available today.

Claude: Anthropic's assistant is arguably smarter than ChatGPT for many tasks. It's available via the web or as an iOS app. It also has a paid tier ($20/month) for better capabilities.

Perplexity: If you want AI search rather than a pure chatbot, Perplexity is excellent. It searches the web and synthesizes answers with source citations. Free version available, Pro version for $20/month.

Google's Gemini app: Google's own assistant, available as a standalone iOS app right now. It's integrated with Google's services and search, and it's free.

Copilot: Microsoft's assistant, available as an iOS app. Free to use, though some features require a paid subscription.

None of these are as integrated into your phone as Siri will be. But they all offer capabilities that the current Siri doesn't have. If you want a taste of what Siri will be like, try one of these.

The interesting question is what happens if the new Siri doesn't deliver on the hype. If the rollout is buggy, if capabilities are disappointing, or if privacy concerns emerge, iPhone users already have established habits with ChatGPT or Claude. Breaking those habits will be hard.

Apple's job is to make the new Siri so seamlessly integrated and capable that it becomes the default choice, not just another option.

The Competitive Landscape in 2025

Let's talk about timing. By the time the new Siri launches in fall 2025, what will the AI landscape look like?

AI models will be even better: GPT-5 or GPT-4.5 might be released. Gemini could be updated. Claude will continue improving. The baseline for what an AI assistant can do will have risen.

Mobile AI will be more prevalent: Other phone manufacturers will likely announce on-device AI assistants. Android phones might have built-in AI that rivals or exceeds Siri's capabilities.

Privacy-first AI will be more mature: Open-source models will improve. More privacy-conscious consumers will have adopted alternatives to big tech solutions.

Regulation might be stricter: Government oversight of AI will probably increase. Apple might face requirements about transparency, data deletion, or other constraints.

AI skepticism might grow: If there are any major incidents—hallucinations leading to bad decisions, privacy breaches, job displacement concerns—public opinion could shift against AI assistants.

Apple is being smart by launching Siri in phases. The February demo gives the company a chance to gauge reactions. The spring beta gets real-world feedback. The summer WWDC presentation can be adjusted based on what the company learns. The fall launch happens when the product is truly ready.

But there's a risk: if Apple takes too long, competitors will move ahead. The market for AI assistants is moving fast. By fall 2025, Siri might no longer seem revolutionary—it might just seem like it's finally caught up.

What This Means for the Future of Voice Interfaces

The new Siri could be a turning point for voice interfaces on phones.

Right now, most people don't use voice much on their phones. They text, tap, and scroll. Voice is useful in specific situations (driving, hands full, privacy concerns), but it's not the primary interaction method.

If Siri becomes genuinely intelligent and capable, that could change. You might use voice for more tasks: composing messages, searching for information, managing your schedule, controlling your home.

That shift would have profound implications:

Accessibility: Voice interfaces are huge for people with disabilities. Better voice control makes phones more accessible to everyone.

Distraction: Always-on voice assistants could make people more distracted or dependent on technology.

Privacy: More voice interaction means more data being recorded and transmitted.

Industry disruption: If phones become voice-first, that changes how apps are designed, how navigation works, and what kinds of interactions are possible.

Apple might be preparing for a future where your primary interaction with your phone is through Siri. That's different from the current model where Siri is a supplementary feature.

We might even see a future where you barely need to see your screen. Instead of looking at your phone, you talk to it. Instead of typing emails, you dictate them. Instead of scrolling through apps, you ask Siri to do things.

That's a significant shift in how humans interact with technology. And Apple might be leading it.

Key Takeaways and Final Thoughts

Let's bring this together.

Apple reportedly plans to preview a Gemini-powered Siri in late February 2025, with a beta rollout in early spring and a full launch in fall 2025. This is the most significant update to Siri in years, and it means Apple is partnering with Google on a core feature.

The new Siri is expected to handle complex queries, write text, summarize information, and manage workflows. That is basically everything ChatGPT can do, but integrated into your phone.

Apple is betting that integration and privacy will matter more than pure capability. The company is also betting that users will accept cloud-based processing as long as it's encrypted and they can opt out.

There are real risks: regulatory issues, privacy concerns, user confusion, competitive responses, and the possibility that the feature launches with bugs or disappoints.

But there's also significant opportunity. If Apple executes well, the new Siri could become the most useful AI assistant on the market, simply because it has such deep integration into your life.

For iPhone users, this is good news. Your phone is about to become much more capable. For competitors, it's a reminder that Apple can still move fast when it wants to. For the AI industry, it's a signal that the race for the best assistant is far from over.

The future of Siri isn't determined yet. It depends on execution, on how users respond, and on whether Apple can deliver on the promise. But February 2025 will be a turning point either way. We'll find out if Apple has finally solved the Siri problem, or if it's just created a new set of challenges.

In the meantime, if you want to experience what the new Siri will be like, download ChatGPT, Claude, or Perplexity. Get comfortable with having an intelligent AI assistant that can understand complex requests. By the time the new Siri arrives, you'll have a clear sense of what to expect.

Apple has been patient with Siri for over a decade. Now, finally, it's time for the assistant to catch up with the rest of the world. The real question isn't whether the new Siri will be better—it will be. The question is whether it'll be good enough to matter.

FAQ

When will the new Siri be available?

Apple is expected to demonstrate the new Siri in late February 2025, with the iOS 18.4 beta starting the same month. Public availability is expected in March or early April 2025, and the full launch with iOS 19 is expected in fall 2025. Until then, early access will be limited to beta testers, while the general public will receive the update as part of the standard iOS release cycle.

What is Gemini and why is Apple using it?

Gemini is Google's large language model, a powerful AI system trained on vast amounts of text data to understand and generate human language. Apple is using Gemini because the company decided partnering with an existing proven LLM was faster and more cost-effective than building its own from scratch. This approach lets Apple focus on privacy protections and user experience rather than competing directly with Google and OpenAI on model development.

How does the new Siri protect my privacy?

Apple's approach includes on-device processing for simple queries (which never leave your phone), "Private Cloud Compute" for complex queries (where Apple claims requests are encrypted and not stored), and user controls to opt out of cloud-based features entirely. However, some data will still travel to Apple's servers and potentially to Google's Gemini infrastructure, so trust in Apple's security practices is still required.

Will the new Siri replace ChatGPT or Google Assistant?

No, but it will compete with them more effectively than the current Siri does. The new Siri will have capabilities comparable to ChatGPT, but with tighter integration into your phone and access to your personal data. ChatGPT and Google Assistant will remain useful for situations where you want a separate interface or don't trust Apple's privacy claims. Many power users will likely use multiple assistants depending on the task.

What features will the new Siri have that the current version doesn't?

The new Siri will understand complex, multi-part questions; write and edit text; summarize documents and emails; understand context better; handle multi-step workflows; and recognize nuance in language. These capabilities essentially bring Siri to feature parity with ChatGPT while adding the advantage of deep integration with your iPhone's personal data and services.

Can I try the new Siri before the official release?

Yes, if you install the iOS 18.4 beta when it arrives in February 2025. Beta testing means running pre-release software on your iPhone, which can be unstable, so back up your device first. Apple typically releases betas to developers first, then expands to public beta testers. You can enroll through the Apple Beta Software Program on Apple's website.

Is the new Siri available on all Apple devices?

Initially, the new Siri will likely be available on newer devices (iPhone 15 and later, recent iPad models, and current-generation Apple Silicon Macs). Older devices might not have the processing power or storage for all features. Apple will provide specific device compatibility information when the feature launches. Some on-device processing might require specific hardware capabilities.

Will this partnership affect Apple's competition with Google?

The deal is unusual given how directly the two companies compete. It shows that Apple is willing to compromise on vertical integration when the strategic advantages justify it. It likely involves specific legal agreements about data handling and could face regulatory scrutiny. Long term, it could increase tension between Apple and Google as they compete in AI, or it could lead to deeper integration if it proves successful.

What should I do to prepare for the new Siri?

Start by trying ChatGPT, Claude, or Perplexity to understand what LLM-powered assistants can do. Review your iPhone's privacy settings to understand how to control app permissions and data sharing. Follow Apple's announcements and beta releases in early 2025. When the new Siri launches, read the privacy documentation carefully before enabling cloud-based features. Keep your iOS updated to get the latest Siri improvements as they roll out.

Will the new Siri cost money?

Apple hasn't announced pricing, but based on the company's typical approach, the new Siri will be included as part of iOS at no additional cost to current iPhone owners. Some premium features might be locked behind Apple's subscription services or might require higher-end devices, but the core functionality should be available to all compatible iPhones. Apple typically doesn't charge for major OS updates or bundled features.

Exploring Runable for Workflow Automation

If you're thinking about how AI can transform your productivity workflow, Runable offers AI-powered automation for creating presentations, documents, and reports. While Siri will handle voice commands and basic tasks on your iPhone, Runable tackles the larger workflow automation challenge—taking your requests and generating polished outputs like slide decks, formatted documents, and data reports automatically.

Use Case: Automatically generate your weekly status report or monthly presentation deck from data without manually formatting everything.

Think of Siri as your conversational assistant: asking questions, setting reminders, controlling your phone. Think of Runable as your production assistant: turning those conversations and your raw data into finished documents, presentations, and reports. Many teams are using both: Siri for immediate queries and interactions, Runable for creating the outputs that matter for work.

