Snapchat Parental Controls: Family Center Updates [2025]

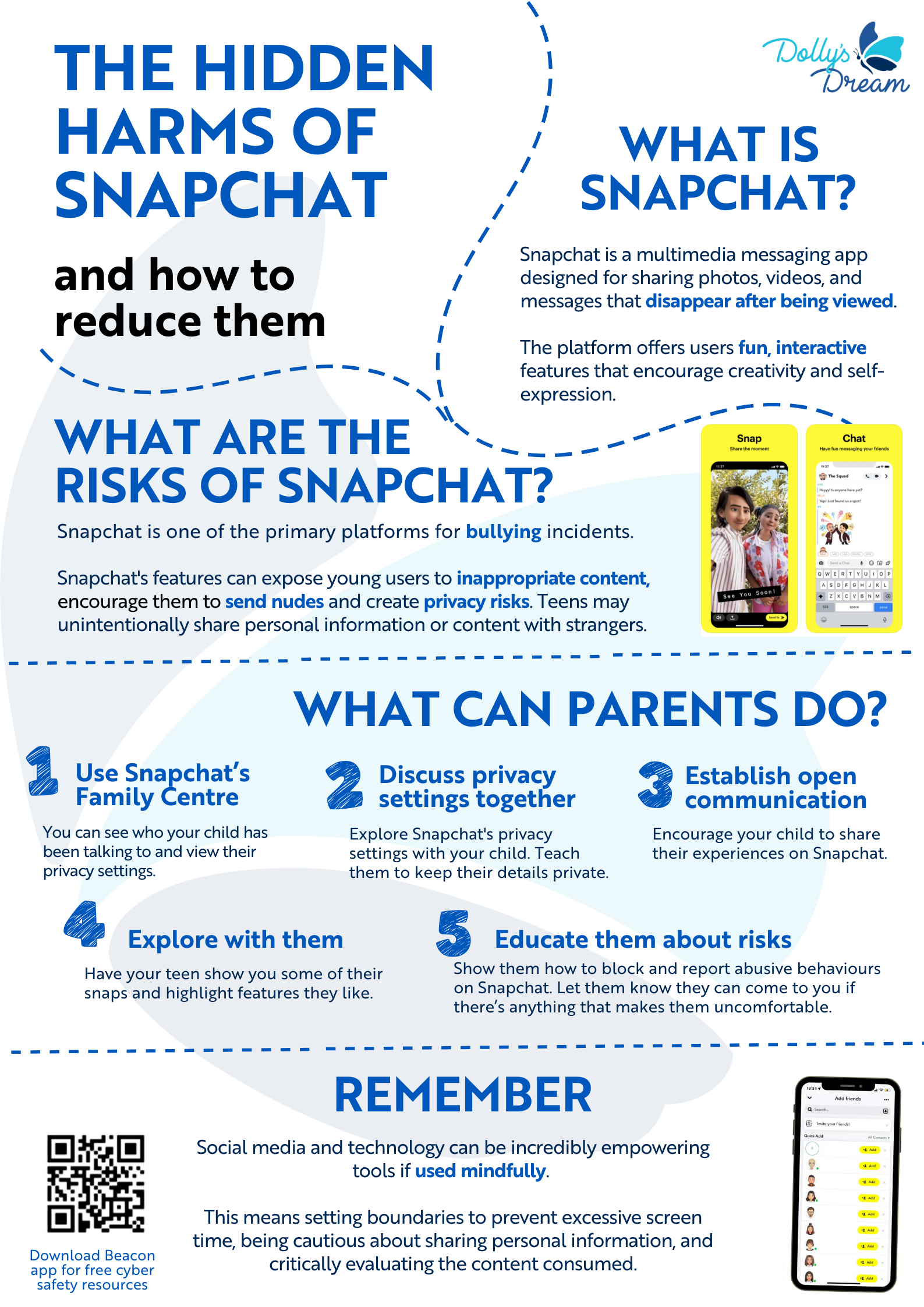

Snapchat's been under pressure for years. Lawmakers, attorneys general, and parent advocacy groups have all pointed the same finger: the app makes it way too easy for teenagers to talk to complete strangers. It's a legitimate concern. Teens get harassed. They get groomed. They fall into conversations that start innocent and turn predatory. So Snapchat is doing what most platforms eventually do when safety becomes a lawsuit risk: it's giving parents more tools to monitor what's happening, as reported by Engadget.

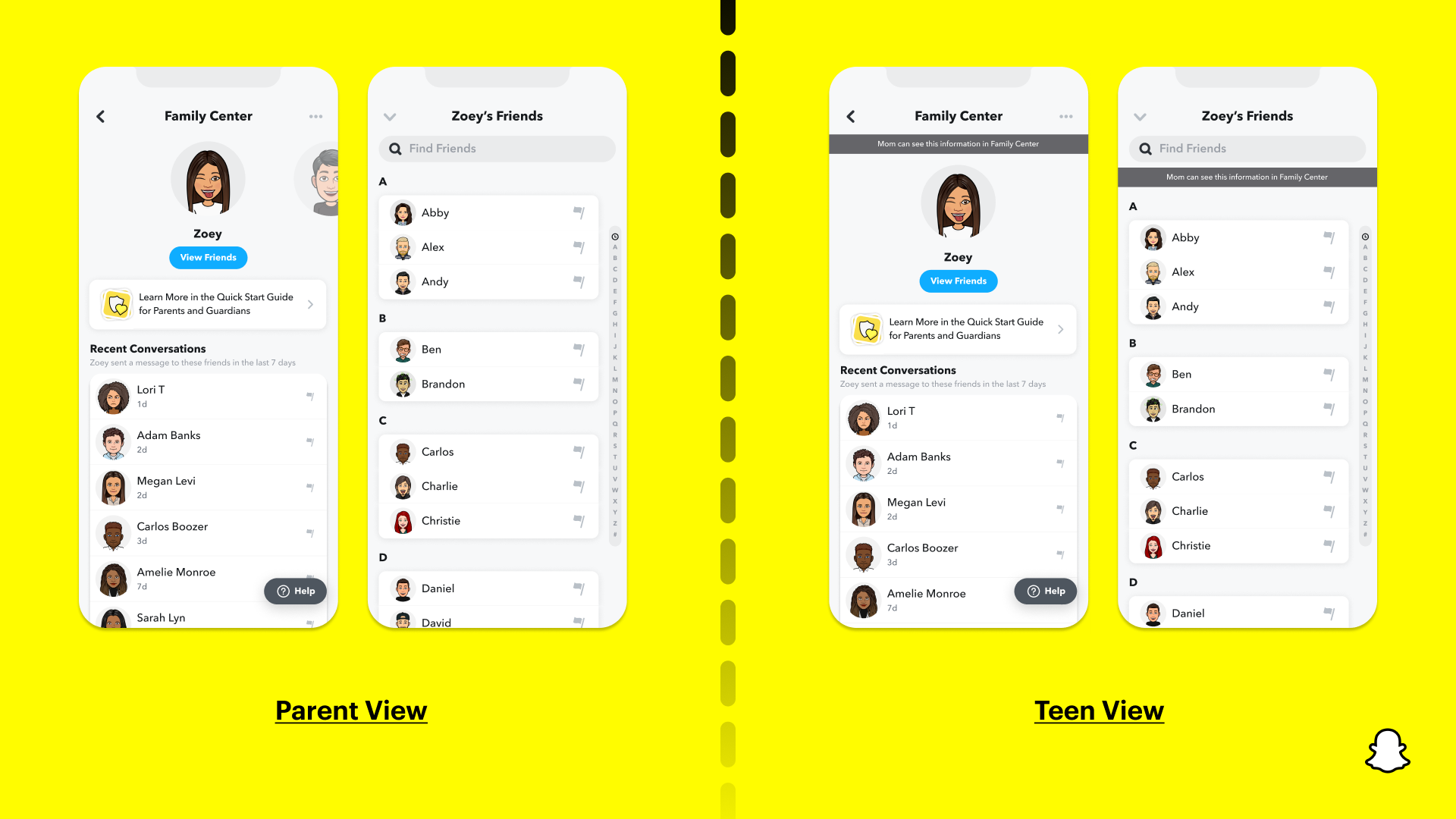

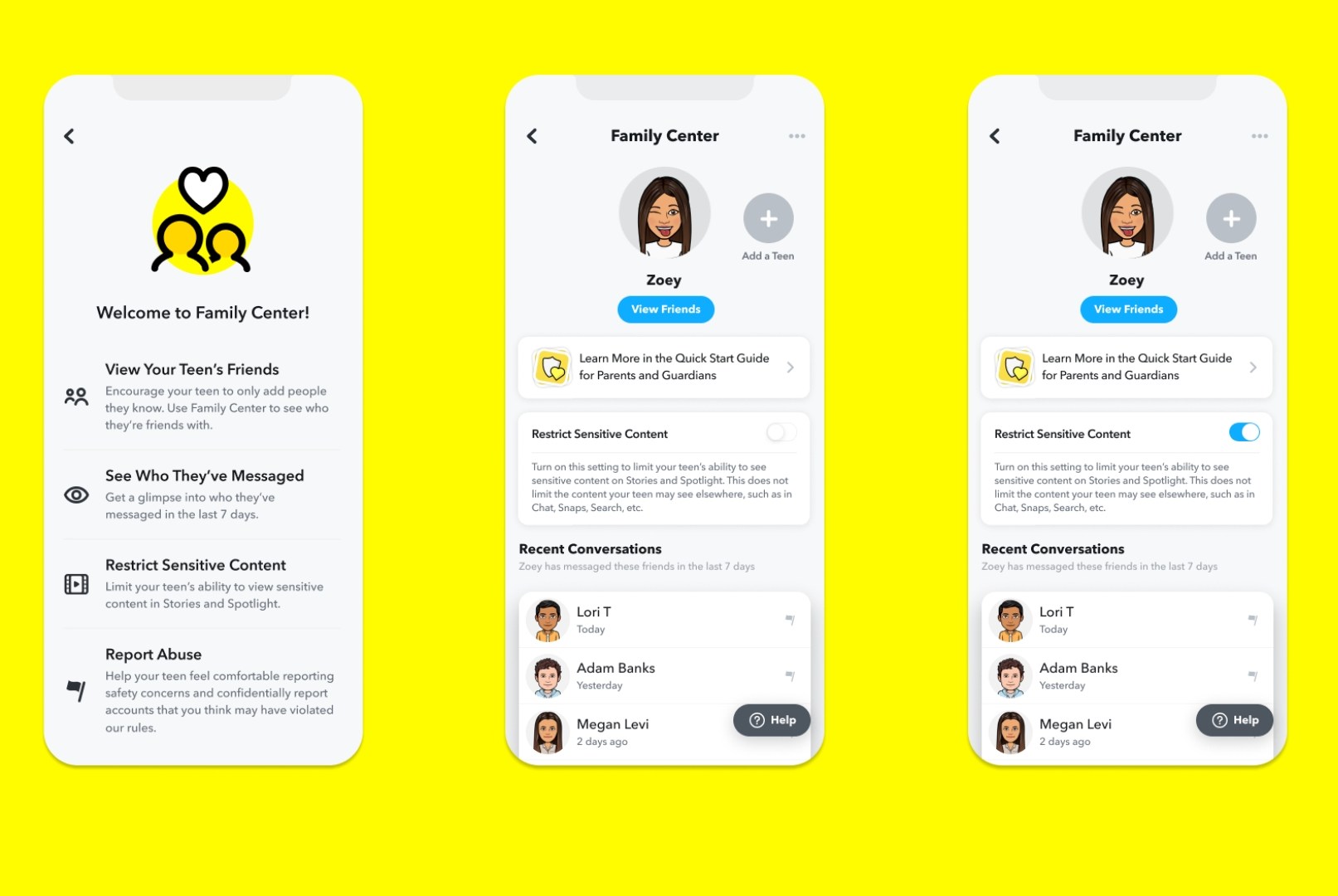

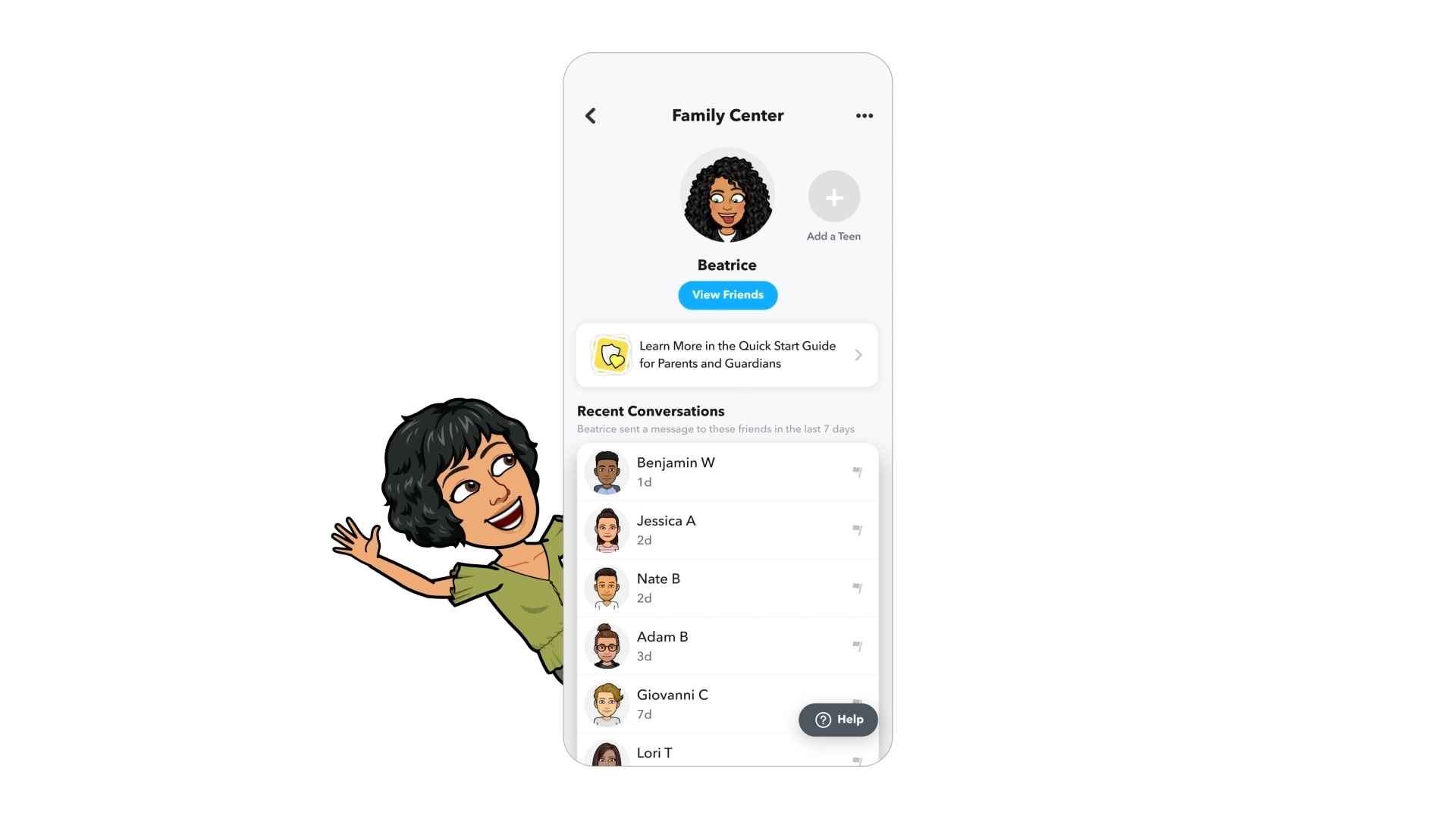

But here's where it gets interesting. Rather than going the draconian route of locking kids down completely or killing features, Snapchat is taking a more thoughtful approach. The company is adding what it calls "trust signals" to its Family Center parental control suite. When a new friend connection happens, parents now see whether that person shares mutual friends with their teen, whether their contact info is saved on the phone, or whether they're classmates in an in-app community. If there's zero overlap? That's a flag. It prompts parents to "start a productive conversation" instead of just forbidding the friendship outright, according to Snapchat's newsroom.

This matters because parental controls are only effective when they work as guidance, not just surveillance. Heavy-handed monitoring drives teenagers to hide their digital lives, not improve them. But strategic visibility into connections combined with real data about how kids spend their time? That changes the game, as noted by PCMag.

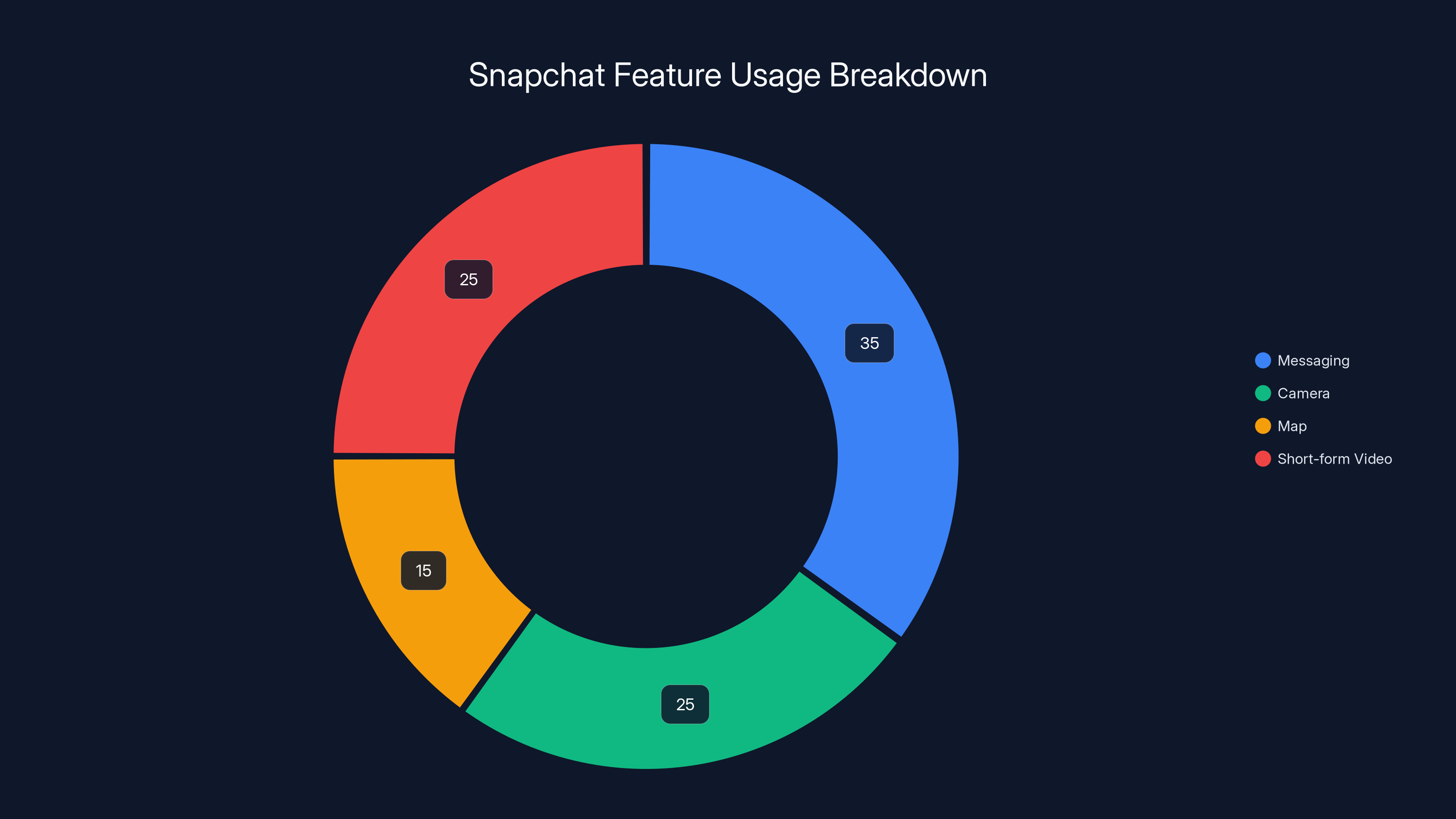

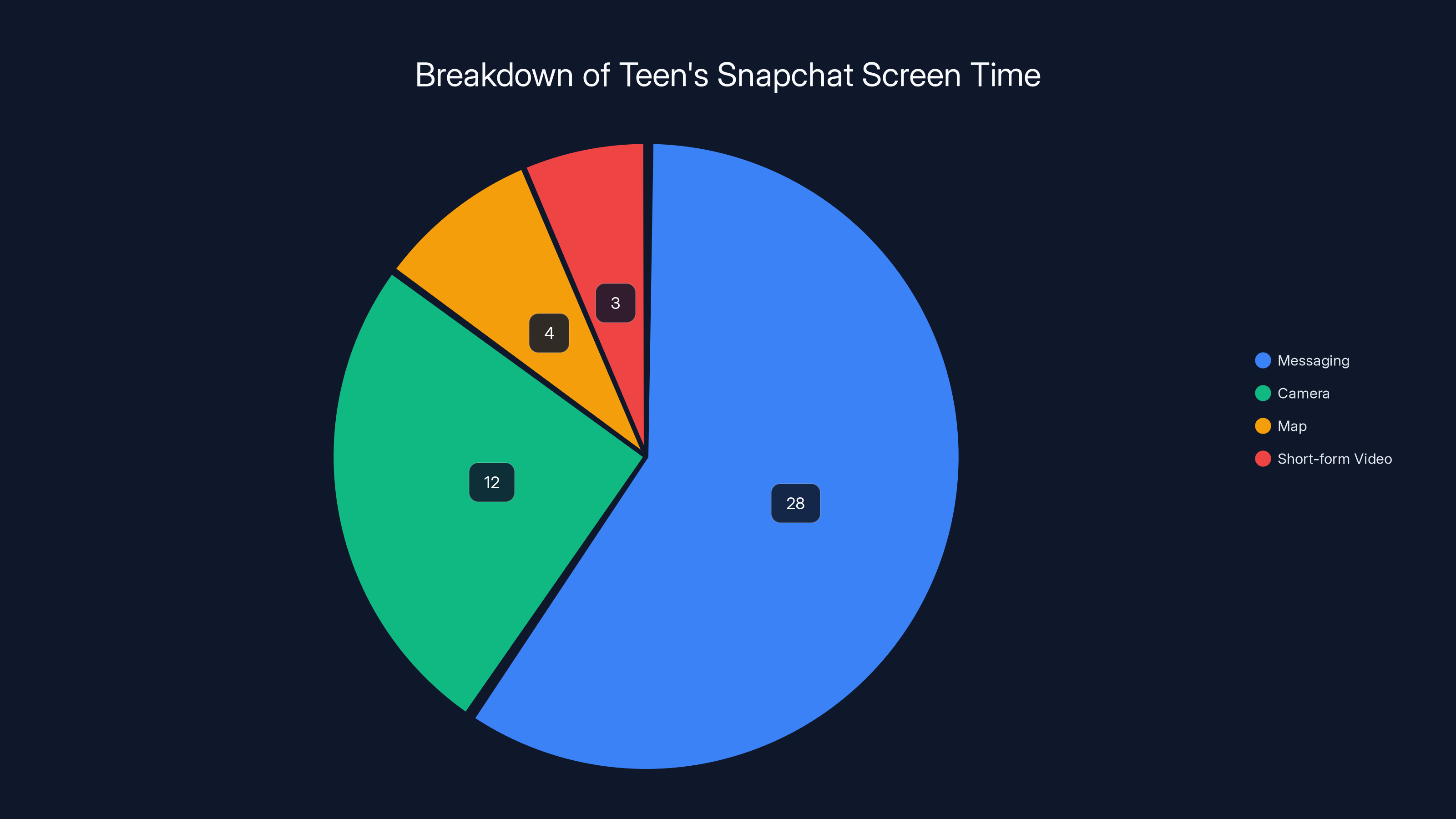

Snapchat's also completely overhauled the screen time dashboard inside Family Center. Instead of just showing "your kid spent 47 minutes today," parents now see exactly where that time went. Is it all in messaging? Camera use? The map feature? Watching short-form video? That granular breakdown reveals behavioral patterns that inform better conversations. A teen spending six hours a day in the camera but five minutes messaging has a different risk profile than the reverse, as detailed by TechCrunch.

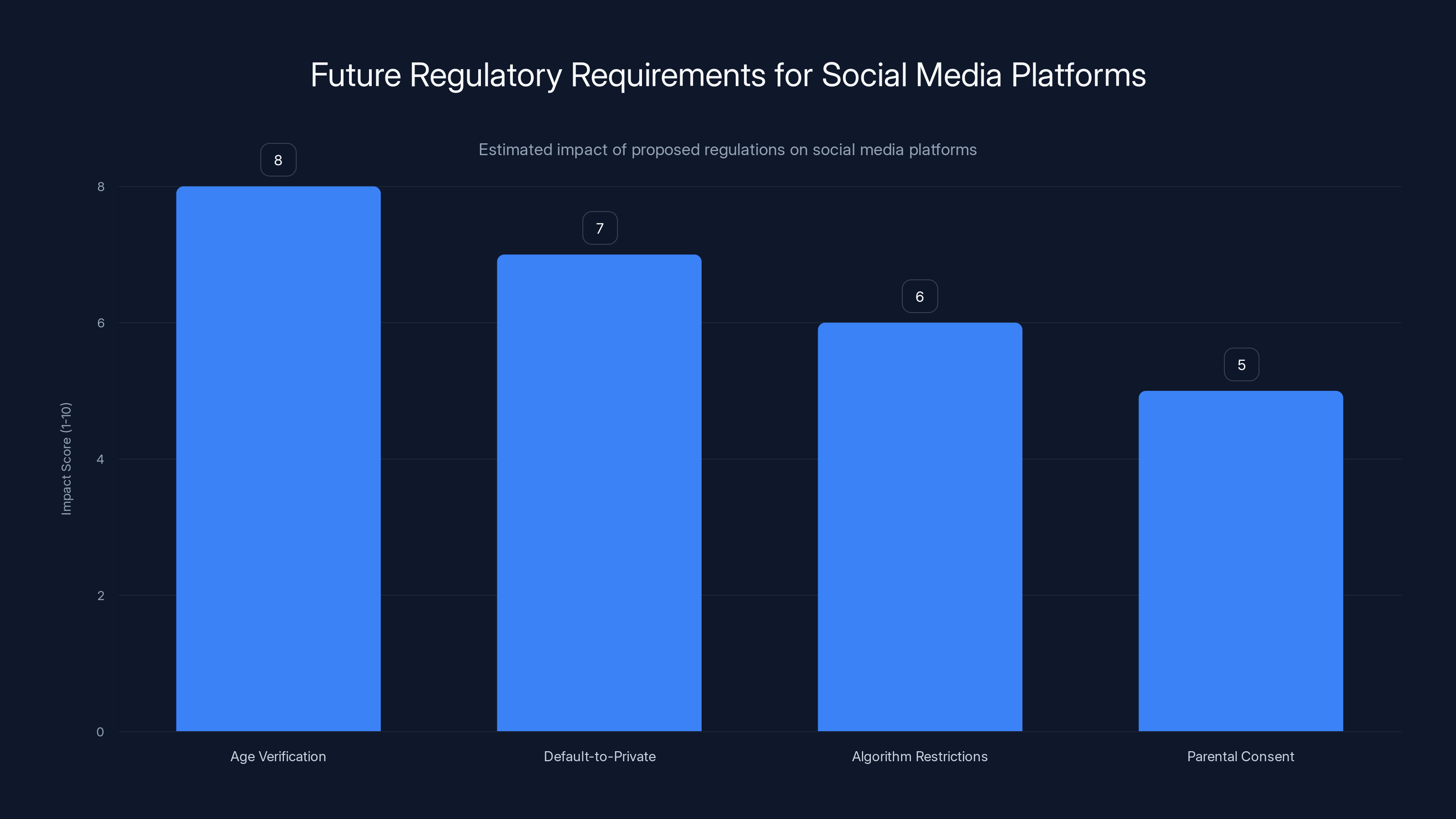

The timing of these updates isn't accidental. There's serious talk in Congress about restricting minors' social media use entirely. TikTok faces potential bans. Lawmakers are proposing age verification requirements. In this environment, Snapchat's strategy is clear: show parents you're taking safety seriously enough that outright bans become unnecessary. Give families the tools to make informed decisions rather than force-feeding restrictions, as reported by TechCrunch.

This article digs into what these updates actually do, how they work, what they reveal about parenting in the social media age, and what gaps still remain. We'll also explore the broader landscape of teen safety on social platforms and what experts say actually works when it comes to keeping kids safe online, as discussed by the Electronic Frontier Foundation.

TL;DR

- Family Center now shows trust signals when kids add new friends: mutual friends, saved contacts, shared communities, or none of these (a potential warning sign)

- Screen time is now feature-specific, breaking down time in messaging, camera, map, and video separately instead of as one lump number

- The approach prioritizes conversation over restriction, giving parents info to discuss connections rather than just block them

- Snapchat is responding to lawsuits and regulatory pressure from attorneys general and parent advocacy groups citing teen safety risks, as highlighted by the Lawsuit Information Center

- These updates reflect a broader shift in how platforms handle teen safety, balancing oversight with respecting teen autonomy

Estimated data suggests that messaging and short-form video are the most used features by teens on Snapchat, each accounting for approximately 25-35% of total screen time.

The Problem Snapchat Is Trying to Solve

Let's be direct: Snapchat has a stranger contact problem. The platform was designed with ephemeral messaging and a focus on visual communication. No permanent record. No pressure. Perfectly suited for teens who want privacy from their parents. Perfectly exploited by adults looking to contact minors.

The company has faced multiple lawsuits. New Mexico's Attorney General filed suit alleging Snapchat knowingly facilitated child exploitation. Other states have investigated. Parent groups have organized. The core complaint is always the same: the app makes it too easy for a predator to find a teenager, initiate contact, and establish what looks like a normal friendship before things get weird, as reported by Oak Hill Gazette.

Snapchat's "Quick Add" feature is often cited as particularly problematic. It suggests new friends based on shared contacts, location data, and user behavior. For most teens, that's convenient. For predators, it's a roadmap. Similar features exist on other platforms, but Snapchat's visual-first design means less friction between connecting and communicating. A photo request is just a few taps away from a conversation.

The company's response has been incremental over the years. They've added safety resources. They've implemented AI to detect and remove predatory accounts. They've created guides for parents. But those feel like band-aids when the underlying architecture makes stranger connections so frictionless.

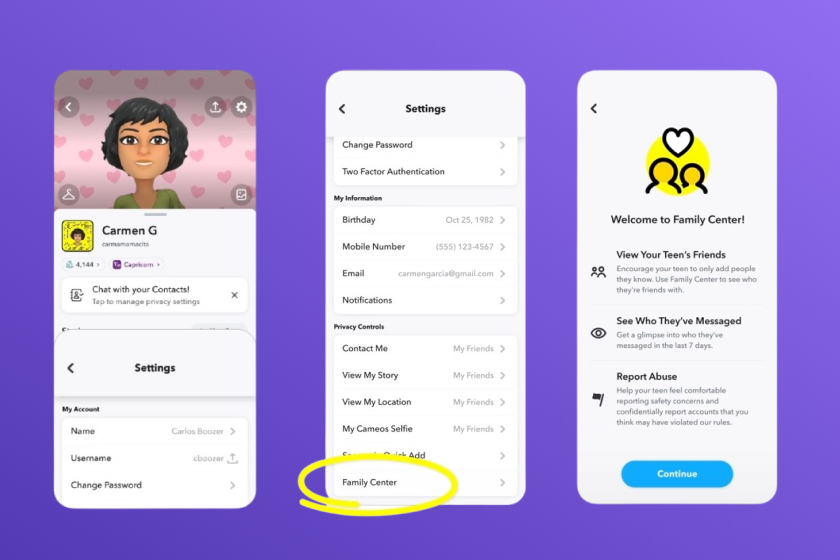

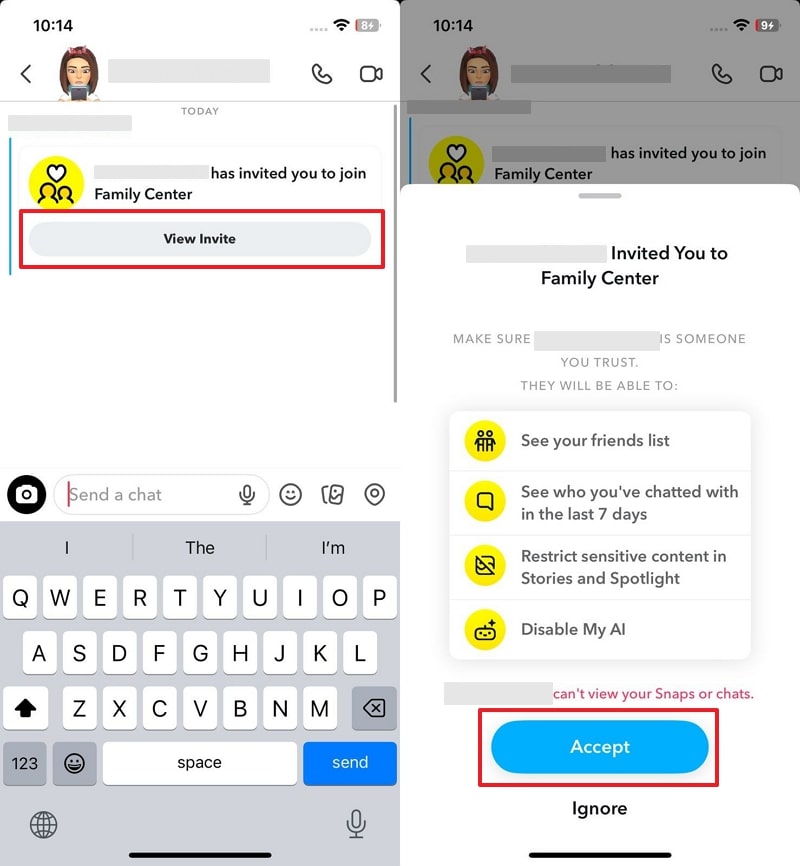

Now, with regulatory pressure intensifying and Congress seriously discussing teen social media restrictions, Snapchat is making a bigger move. Family Center, which launched in 2022, is getting a meaningful upgrade. The idea: if parents have real visibility into why their kid accepted a friend request, they can make better decisions about whether to allow it, according to Snapchat's newsroom.

Understanding Family Center's Trust Signals

The core innovation here is what Snapchat calls "trust signals." When a teen accepts a new friend request or adds someone new, Family Center now displays contextual information about that connection.

Here's what parents actually see:

Mutual Friends: This is the strongest signal. If the new contact shares multiple mutual friends with the teen, it suggests a real social connection. Kids naturally form friendship groups. If someone is genuinely part of that circle, they'd be connected to others in it. A teenager with zero mutual friends with the new person is immediately more suspicious.

Saved Contact: If the new friend's phone number or contact info is already saved on the teen's phone, it indicates a real-world relationship. Maybe it's a classmate, a friend from camp, someone from a club. The key insight is that the relationship predates Snapchat. That's a legitimate trust signal. It means the teen didn't just connect with a random person on the app; they connected with someone they already knew offline.

Shared Community: Snapchat has in-app communities organized by location, interest, or shared status (like being a student at the same school). If the new contact is part of a community the teen joined, there's institutional overlap. They might both be members of the "Central High Class of 2025" community, for example. That's not as strong as mutual friends or saved contact, but it's better than nothing.

No Commonalities: This is the red flag scenario. New friend request, zero mutual friends, not in the teen's phone, no shared communities. That's when Snapchat explicitly recommends parents start a conversation. It's not an automatic block. It's a conversation prompt. "Hey, who is this person? How do you know them?"
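The four cases above amount to a small decision rule, which can be sketched in code. This is only an illustration: Snapchat does not publish how Family Center computes these signals, so the `NewConnection` fields, the `TrustSignal` names, and both functions are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class TrustSignal(Enum):
    MUTUAL_FRIENDS = "mutual friends"
    SAVED_CONTACT = "saved contact"
    SHARED_COMMUNITY = "shared community"


@dataclass
class NewConnection:
    # Hypothetical fields; not Snapchat's actual data model.
    mutual_friend_count: int
    is_saved_contact: bool
    shared_communities: list[str]


def trust_signals(conn: NewConnection) -> list[TrustSignal]:
    """Return the signals present for a connection, in the order listed above."""
    signals = []
    if conn.mutual_friend_count > 0:
        signals.append(TrustSignal.MUTUAL_FRIENDS)
    if conn.is_saved_contact:
        signals.append(TrustSignal.SAVED_CONTACT)
    if conn.shared_communities:
        signals.append(TrustSignal.SHARED_COMMUNITY)
    return signals


def needs_conversation(conn: NewConnection) -> bool:
    """True only in the 'no commonalities' case, which prompts a conversation."""
    return not trust_signals(conn)


classmate = NewConnection(5, False, ["Central High Class of 2025"])
quick_add_stranger = NewConnection(0, False, [])

print([s.value for s in trust_signals(classmate)])  # ['mutual friends', 'shared community']
print(needs_conversation(quick_add_stranger))       # True
```

Note that the sketch only reports what overlap exists. It never blocks the connection.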

What's clever about this approach is that it's not automatically restrictive. It doesn't prevent the friendship from forming. It just makes the parent aware. The actual parenting decision—what to do about it—stays with the parent. Some will absolutely forbid it. Some will ask questions and then allow it if they're satisfied with the explanation. Some will monitor the conversation that follows.

That flexibility matters because blanket restrictions don't work. Teens whose parents are overly controlling often develop sophisticated methods to hide their digital lives. They use private accounts, friend groups their parents don't know about, private messaging apps, burner accounts. Visibility combined with conversation is more effective than surveillance combined with control.

Estimated data: Age verification and default-to-private settings are expected to have the highest impact on social media platforms, pushing them towards stricter compliance with youth protection standards.

How Feature-Level Screen Time Changes the Conversation

The screen time dashboard upgrade is subtler but potentially more revealing than the trust signals feature.

Before this update, Family Center would show that a teen spent 47 minutes on Snapchat. That's it. No context. For a parent, 47 minutes seems moderate. Might be fine. Might be excessive. Hard to say without knowing what they were actually doing.

Now, that 47 minutes gets broken down:

- 28 minutes in messaging

- 12 minutes using the camera

- 4 minutes on the map

- 3 minutes watching short-form video

This completely changes the information available to a parent.
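To make the arithmetic concrete, the 47-minute example can be converted into per-feature shares. This is a minimal sketch: the `feature_shares` helper and the dictionary keys are invented for illustration and are not part of any Snapchat API.

```python
def feature_shares(minutes: dict[str, int]) -> dict[str, float]:
    """Convert per-feature minutes into percentages of total time, rounded to 0.1."""
    total = sum(minutes.values())
    if total == 0:
        return {name: 0.0 for name in minutes}
    return {name: round(100 * value / total, 1) for name, value in minutes.items()}


# The example breakdown from the text: 28 + 12 + 4 + 3 = 47 minutes.
breakdown = {"messaging": 28, "camera": 12, "map": 4, "video": 3}
shares = feature_shares(breakdown)
print(shares)  # {'messaging': 59.6, 'camera': 25.5, 'map': 8.5, 'video': 6.4}
```

Seen this way, roughly 60% of the session is conversation and only about 6% is video, which a single 47-minute total would hide.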

Messaging-heavy usage suggests the teen is primarily in one-on-one or group conversations. That's interactive, social, not passive consumption. If a parent is concerned about screen time, messaging-focused use is generally seen as less problematic than passive video watching. The teen is actively engaging with people they know.

Camera-heavy usage indicates the teen is creating content, taking photos, applying filters, trying effects. This is creative behavior. It's also the most visible—parents can see if kids are posting publicly or just sending between close friends. Camera use also requires more active participation than passive scrolling.

Map time is actually important from a safety perspective. Snapchat's map feature shows the approximate location of friends. Extended time here might indicate stalking behavior, obsessive location-checking, or just normal friend group awareness. Parents need to understand which.

Short-form video is the passive consumption element. Snapchat's Spotlight feature competes directly with TikTok and Instagram Reels. Time spent here is algorithmically driven, less socially connected, more addictive. This is the area where research shows the strongest links to mental health issues like anxiety and depression in teens, as reported by Hootsuite.

By separating these four categories, Snapchat gives parents information that actually supports decision-making. A parent might be unconcerned about 90 minutes of messaging and camera use but alarmed by 90 minutes in Spotlight. The granular data enables better conversations.

It also reveals patterns. If a teen's usage pattern changes drastically, that's notable. If they usually spend most time messaging but suddenly shift to video, that might warrant a check-in. If the behavior stays consistent, that's more reassuring.
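One way to put a number on "changes drastically" is to compare the usage mix of two periods. The sketch below uses total variation distance, a standard measure of how far apart two distributions are. The sample minutes and the 0.3 threshold are made up for illustration, and nothing here reflects how Family Center itself works.

```python
def usage_shift(before: dict[str, float], after: dict[str, float]) -> float:
    """Total variation distance between two usage mixes.

    0.0 means the proportions are identical; 1.0 means no overlap at all.
    """
    def normalize(minutes: dict[str, float]) -> dict[str, float]:
        total = sum(minutes.values())
        return {k: v / total for k, v in minutes.items()} if total else {}

    p, q = normalize(before), normalize(after)
    features = set(p) | set(q)
    return 0.5 * sum(abs(p.get(f, 0.0) - q.get(f, 0.0)) for f in features)


# Hypothetical daily averages for two different weeks.
usual_week = {"messaging": 40, "camera": 10, "map": 5, "video": 5}
this_week = {"messaging": 10, "camera": 5, "map": 5, "video": 40}

SHIFT_THRESHOLD = 0.3  # assumption: an arbitrary cutoff for "worth a check-in"

shift = usage_shift(usual_week, this_week)
print(f"shift = {shift:.2f}, check in: {shift > SHIFT_THRESHOLD}")
```

Because the comparison uses proportions rather than raw minutes, a teen who simply spends more time overall with the same mix would score close to zero.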

The Regulatory Pressure Behind These Updates

Snapchat isn't updating Family Center because it suddenly got enlightened about teen safety. The company's making these moves because the regulatory and legal environment has become hostile.

The New Mexico lawsuit is the most visible example, but it's not alone. Illinois filed a case. Utah investigated. Parent groups have organized campaigns. There's been coverage in every major outlet about how social media harms teen mental health. Meanwhile, members of Congress are using increasingly strong language about the need for regulatory action, as noted by Barron's.

Then there's the TikTok precedent. Whether TikTok actually gets banned or forced to sell, the fact that such a massive platform faces genuine existential regulatory risk is a wake-up call for every other social media company. Snapchat's executives understand that if user safety becomes the issue that kills TikTok, they need to be visibly ahead of it.

The background to this is the rise of age-appropriate design standards. The FTC has been pushing companies to build privacy and safety measures in by design rather than bolting them on afterward. States are passing legislation requiring age verification or parental consent for minors on social platforms. The operating assumption is shifting from "social media is fine for everyone" to "social media has real risks that need active mitigation," as discussed by EdTech Innovation Hub.

Snapchat's strategy here is sophisticated. By upgrading Family Center proactively, the company positions itself as taking teen safety seriously. The message to regulators is: "We don't need to be banned or heavily restricted. We're building tools that give parents real agency. That's better for everyone."

It's a defensive play, but it's also genuinely useful. The gap between offering no parental controls and offering thoughtful ones is enormous. Whether motivated by regulation or not, the outcome is more tools for parents.

Trust Signals in Practice: Real-World Scenarios

Let's ground this in realistic scenarios. Understanding how these trust signals work in practice reveals both their usefulness and their limitations.

Scenario 1: The Classmate Nobody Knew Offline

A 15-year-old adds someone from their high school. Parents see they share five mutual friends, are in the same school community, but it's not a saved contact. Maybe it's a new student, or someone in a different grade. The teen says, "Oh yeah, we have English together." Parents can verify through the school community connection. Trust signal: moderate. Handled well.

Scenario 2: The Random Quick Add

A 14-year-old adds someone from a different state. Zero mutual friends. Not in the phone. Shared community? Nope, nothing. Parents see this flag and ask, "Who is this?" Teen says, "I don't know, they just kept appearing in Quick Add, so I figured they were cool." Red flag moment. This is the exact scenario Snapchat's system is designed to catch.

Scenario 3: The Friend From Camp

Teen adds someone from a summer program two years ago. Parents see a saved contact (the two were exchanging messages back then) and mutual friends, even though they go to different schools. Trust signals all point to legitimacy. Parents feel comfortable with it.

Scenario 4: The "Influencer" with No Real Connection

This is a trickier one. Teen adds someone with thousands of followers who seems cool. Turns out the person is someone teens often follow (maybe a student influencer or semi-famous person). Zero personal connection. No mutual friends. Not in phone. But the teen says, "Everyone knows them, they're viral." The trust signals do their job here by flagging the lack of any connection, but the teen's reasoning rests on a parasocial relationship rather than a real one. This becomes a parenting conversation about what "knowing" someone means.

Scenario 5: The Grooming Attempt (The Hard One)

A predatory adult creates a fake teen profile, uses age-appropriate language, and connects with a real teen. Theoretically, they might trick their way into some mutual connections or join communities to establish legitimacy. But here's where the system has to acknowledge its limits: sophisticated predators know how to game these signals. They're not adding random teenagers cold. They're doing reconnaissance. They're building fake networks. This is where other safety measures (content moderation, flagging suspicious account patterns, AI detection) have to pick up what trust signals miss.

The Family Center updates help with the low-friction stranger connections. They're less useful against determined bad actors who do their homework.

The pie chart shows that the majority of screen time is spent on messaging (28 minutes), followed by camera use (12 minutes), map (4 minutes), and short-form video (3 minutes). This detailed breakdown helps parents understand their teen's Snapchat usage better.

The Screen Time Breakdown: What It Actually Reveals

Let's dig deeper into what parents learn from the feature-level screen time data.

Consider two teens, both spending an hour daily on Snapchat.

Teen A's Breakdown: 48 minutes messaging, 9 minutes camera, 2 minutes map, 1 minute video.

Teen B's Breakdown: 8 minutes messaging, 5 minutes camera, 2 minutes map, 45 minutes video.

These are completely different use patterns.

Teen A is messaging-focused. They're using Snapchat like a social network, having conversations with friends. The camera use is supplementary. The research literature suggests this pattern is closer to healthy social media use—it's interactive and socially connected.

Teen B is video-focused. They're scrolling through Spotlight almost exclusively. This is passive consumption driven by algorithms. The research literature links heavy short-form video consumption to increased anxiety, depression, and sleep issues in teens. Same time investment, fundamentally different risk profile.
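The contrast between the two teens can be reduced to a single number: the fraction of time spent in passive features. This is a rough sketch that assumes only short-form video counts as passive, which is a simplification of the framing above. The `PASSIVE_FEATURES` set and the `passive_share` helper are hypothetical.

```python
# Assumption: only short-form video is treated as passive consumption.
PASSIVE_FEATURES = {"video"}


def passive_share(minutes: dict[str, int]) -> float:
    """Fraction of total time spent in features classed as passive."""
    total = sum(minutes.values())
    passive = sum(v for k, v in minutes.items() if k in PASSIVE_FEATURES)
    return passive / total if total else 0.0


teen_a = {"messaging": 48, "camera": 9, "map": 2, "video": 1}
teen_b = {"messaging": 8, "camera": 5, "map": 2, "video": 45}

for name, usage in (("Teen A", teen_a), ("Teen B", teen_b)):
    print(f"{name}: {sum(usage.values())} min total, {passive_share(usage):.0%} passive")
```

Both teens total 60 minutes, but the passive share is under 2% for Teen A and 75% for Teen B.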

For a parent armed with Teen B's breakdown, the conversation becomes specific. "I see you're spending almost all your Snapchat time watching videos. Are you enjoying that, or do you feel like it's hard to stop?" That's worlds better than "You're on Snapchat too much."

The data also reveals behavioral changes. If Teen B suddenly shifts to 40 minutes messaging, that's a shift worth discussing. New relationship? Joining a group chat? The parent has data to work with.

It's worth noting that Snapchat benefits from positioning messaging and camera use as healthier than video. That's partly because it's true from a mental health perspective, but also because it's self-interested. Short-form video is algorithmically addictive and drives engagement, but it's also the feature drawing the most criticism from researchers and regulators. By breaking out screen time this way, Snapchat subtly repositions messaging and camera use as the "good" way to use the app. Clever messaging, but not inaccurate.

Comparing Snapchat's Approach to Other Platforms

TikTok, Instagram, Facebook, and YouTube all have parental control features. How does Family Center stack up?

TikTok's Family Pairing: Allows parents to see daily usage time and some content restrictions. That's it. No visibility into connections, no feature-level breakdown. Pretty bare-bones.

Instagram's Family Center: Shows daily usage time and which accounts teens follow. Better than TikTok in some ways, but no trust-signal-style context on connections. Also, Instagram's platform is less about strangers contacting each other and more about public content, so connection safety is less of a concern.

YouTube's Family Link (through Google Family): Shows app usage, search history, browsing history, and recommended videos. More visibility into content than most, but YouTube is video-focused so the feature-level breakdown is less applicable.

Facebook's Supervision Tools: Shows friend list, message requests, and basic contacts. Similar to Snapchat's original Family Center, though Facebook's platform has less of a "random stranger" problem since the social graph is more identity-tied.

Snapchat's updated Family Center is arguably more sophisticated than competitors in two ways: (1) the trust signal system for new connections is more nuanced than simple friend list visibility, and (2) the feature-level screen time breakdown is more informative than generic usage time.

That said, all of these tools share a fundamental limitation: they're parental controls for platforms that still make stranger connections possible. The best parental control tool can't change the underlying architecture. TikTok's algorithm will still recommend content from creators the teen doesn't know. Instagram will still suggest users to follow. Snapchat will still have Quick Add. The tools help parents stay informed and have conversations, but they can't remove the fundamental risk that social platforms introduce.

The Conversation Angle: Beyond Restriction

What's genuinely interesting about Snapchat's approach is the emphasis on conversation rather than restriction.

Research on adolescent development shows that harsh restrictions on digital life often backfire. Teens whose internet and social media use is heavily controlled often get better at hiding their digital lives. They create accounts their parents don't know about. They use borrowed devices. They delete their browsing history. They communicate through apps that auto-delete. The restrictive approach creates an adversarial dynamic.

Conversational approaches work better. When parents understand why teens are using something and can discuss tradeoffs rather than just forbidding behavior, teens are more likely to internalize healthy habits rather than just hiding unhealthy ones.

Snapchat's trust signals encourage this. Instead of a parent seeing a new friend request and immediately forbidding it, they see the context and ask, "Who is this person?" If the answer makes sense—they're a classmate, they're a camp friend from a saved contact, they're in a shared community—the friendship can proceed with the parent having useful information. If the answer is "I dunno, they just kept appearing in my suggestions," that's a conversation about how to evaluate stranger requests more carefully.

Similarly, the feature-level screen time doesn't come with automatic restrictions in the interface itself. It's informational. Parents can use it to set limits if they want, or they can use it to have conversations. "I notice you're spending a lot of time in Spotlight. What are you watching? Do you feel like it's hard to stop?"

This approach assumes that parents are reasonable decision-makers who can be trusted with information. It also assumes that teens are capable of thoughtful reflection about their habits. That's a more optimistic framing than the surveillance model, and the research suggests it's more effective.

Of course, it only works if parents actually engage with the tools and have real conversations. A parent who sees that their teen is spending 45 minutes daily in Spotlight and decides not to discuss it is missing the opportunity. The tool is only as useful as the parent's commitment to using it.

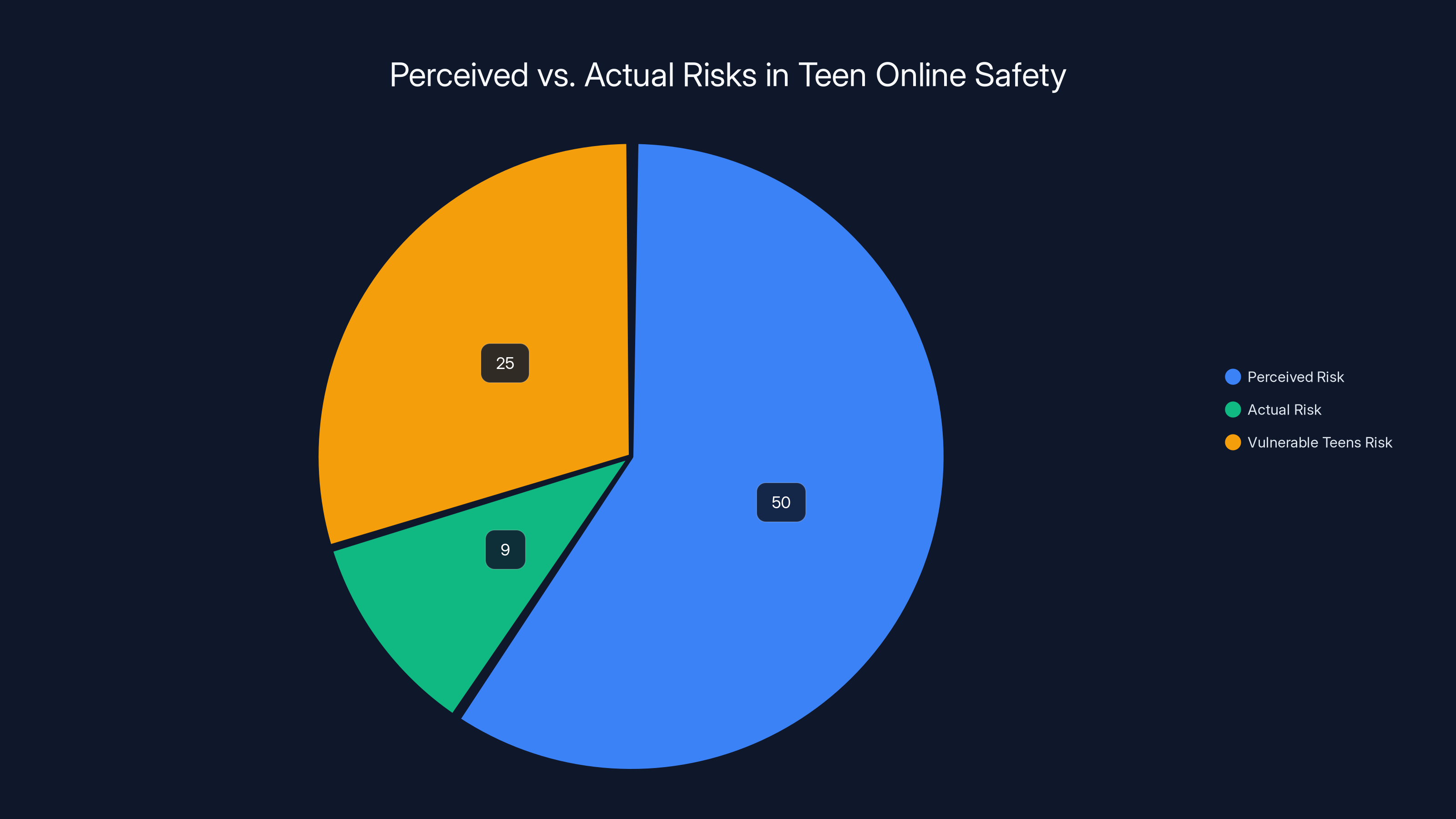

Estimated data shows a significant gap between perceived and actual risks of online predation for teens, with vulnerable teens facing higher risks.

Privacy Considerations and Data Limitations

Here's the tension: parental monitoring tools require collecting and displaying private information. That creates privacy risks that deserve discussion.

Snapchat is showing parents information about their teen's contacts, their location (via the map feature), and their activity patterns. That's sensitive data. What happens to it? Snapchat says this information is only visible to designated parents and guardians in the account. It doesn't appear in Snapchat's own analytics. But the company is fundamentally collecting and storing this data.

That's not necessarily wrong. Parents have a legitimate interest in their teen's safety, and teenagers are genuinely more vulnerable to online exploitation than adults. The question is whether the specific data collection is proportionate to the stated safety benefit.

The contact connection data (mutual friends, shared communities) is less privacy-intrusive because it's derived from information the teen's already sharing with the app. Snapchat already knows who their friends are. Making that visible to parents is an aggregation of existing data, not new collection.

The location data from map usage is more invasive. Snapchat is effectively telling parents not just whether their teen is using the map feature, but how much time they're spending there. If location data is visible in the map itself, that's a direct privacy-reducing change. Snapchat should be transparent about what location information is actually exposed to parents.

The screen time breakdown is in an intermediate position. Time spent in each feature is relatively benign compared to actual content viewed, but it's still creating detailed behavioral profiles that parents can see. That's fine if the teen knows it's happening, but it depends on family dynamics. A 17-year-old who feels micromanaged might resent this level of tracking even if it's not technically unreasonable.

The bigger privacy concern is how this data might be used. Right now, it's parent-to-teen. But what if Snapchat faced law enforcement requests for this data? What if they were hacked? What if they decided to use it for their own purposes in the future? Snapchat's privacy policy should be explicit about how long this monitoring data is retained, who can access it, and what restrictions exist on its use.

Real-World Application and Parent Feedback

To understand how these tools actually work in practice, consider real parent and teen perspectives.

Parent Perspective: Sarah is the mother of a 15-year-old. She set up Family Center after seeing news stories about teen exploitation on social media. She admits she doesn't check it obsessively—maybe twice a week. But when her teen added someone new, the trust signals immediately flagged zero mutual friends. Sarah asked about it. Her teen said, "Oh, it's someone from Discord who seemed cool." Sarah didn't ban the connection, but she said, "Okay, but be careful about what personal information you share with people you don't know in real life." The teen understood. The conversation happened because the tool provided a natural opportunity.

Teen Perspective: Marcus is 16. He knows his parents monitor his Snapchat through Family Center. He's not thrilled about it, but he gets why they're doing it. It doesn't really change his behavior because he's not doing anything particularly risky. He's mostly messaging friends and occasionally looking at Spotlight videos. The one time his parents noticed he was spending a lot of time in one feature, they asked him about it. He explained, and that was it. It felt invasive at times, but less invasive than when his parents wanted to actually read his messages.

The Implementation Gap: Not all parents actually use Family Center even after setting it up. It requires them to be proactive—checking it regularly, asking follow-up questions, using the information meaningfully. Parents who are overwhelmed, technically illiterate, or simply busy might set up the tool and then ignore it. In those cases, the tool provides no actual safety benefit.

The Workaround Problem: Savvy teens know about Family Center. Some use alternate accounts their parents don't know about, or they use other apps for conversations they want to keep private. The tool increases transparency if the teen doesn't actively circumvent it, but it doesn't provide the security guarantee that some parents might assume it provides.

What These Updates Miss: The Remaining Safety Gaps

Snapchat's updates improve parental visibility, but they don't solve the core problem: the platform still makes it easy for teenagers to connect with strangers.

The Quick Add Problem Persists: Snapchat's Quick Add algorithm still recommends people who have no connection to the teen. The trust signals help parents see when this happens, but they don't prevent the recommendation in the first place. A more fundamental change would be to stop recommending strangers entirely, but that would reduce the network effects that drive Snapchat's growth.

Account Verification Remains Optional: Snapchat doesn't require identity verification for all users. Anyone can create an account with minimal information. A predator can create a profile, add a photo, and be ready to target teens within minutes. Trust signals help catch this, but only if parents are actually monitoring.

Content Moderation Isn't Mentioned: The updates focus on connection visibility but say nothing about what happens after a connection is made. If a stranger starts sending inappropriate content to a teen, parental controls can't intervene in real-time. That requires active moderation and content scanning, which Snapchat does implement but which isn't highlighted in these updates.

The Mental Health Component: Research suggests that social comparison and algorithmic content (especially short-form video) harm teen mental health more than stranger contact does. The feature-level screen time helps parents see if their teen is spending lots of time in the video feed, but it doesn't address the underlying algorithm that's designed to be addictive.

Age Verification Gaps: Snapchat says it's for ages 13 and up, but there's no robust verification that users are actually that age. A 10-year-old can download and use Snapchat. The trust signals don't help with that problem.

These gaps suggest that while Snapchat's updates are meaningful, they're not comprehensive solutions to teen safety. They're necessary but not sufficient.

Teen A focuses on messaging, while Teen B spends most of their time on video content. This highlights different usage patterns and potential impacts on mental health.

The Broader Regulatory Context and Future Requirements

Snapchat's updates come in a specific regulatory moment. Here's what's currently happening:

Age-Appropriate Design Standards: The FTC's proposed revisions to its COPPA rule (introduced in late 2023), together with broader age-appropriate design standards, push companies to design with younger users' privacy and safety in mind from the start, not as an afterthought. Parental controls help demonstrate compliance, but design-level changes matter more.

Proposed Legislation: Various states and Congress are considering rules that would require age verification, default-to-private settings for minors, restrictions on algorithmic recommendation for under-18s, and mandatory parental consent. These go beyond what parental controls do.

The Surgeon General's Report: In 2023, the U.S. Surgeon General released an advisory on teen social media use, focusing on mental health risks. The report doesn't call for bans but emphasizes the need for platforms to reduce risk factors, including limiting algorithmic feeds and recommendation systems for teens.

Industry Precedent: YouTube, Instagram, and TikTok have all tightened teen features over the past few years: YouTube's Restricted Mode, Instagram's Teen Accounts, TikTok's screen time warnings. Each platform is trying to show regulators they're taking this seriously.

Snapchat's updates fit into this pattern. They're not radical changes, but they're meaningful improvements that help the company argue that industry self-regulation works better than legal mandates. Whether regulators agree remains to be seen.

Looking forward, expect:

- Mandatory Age Verification: Platforms will likely be required to verify that users claiming to be under 18 actually are, using ID or other methods.

- Default-to-Private for Minors: Teens' accounts might default to private with limited algorithm-driven recommendations.

- Transparent Content Moderation: Platforms will need to disclose more about how they detect and remove predatory accounts and inappropriate content.

- Enhanced Parental Controls as a Minimum: What Snapchat's doing now might become the baseline requirement rather than above-and-beyond.

Snapchat's being proactive here, which gives it credibility when future regulations are debated.

Comparison Table: Parental Control Features Across Platforms

| Feature | Snapchat Family Center | Instagram Family Center | TikTok Family Pairing | YouTube Family Link |
|---|---|---|---|---|
| Connection Visibility | Yes, with trust signals | Friend list only | Limited to followers/following | Not applicable |
| Trust Signal System | Yes (mutual friends, saved contact, shared community) | No | No | No |
| Feature-Level Screen Time | Yes (messaging, camera, map, video) | No | No | No (overall time only) |
| Content Visibility | No | No | Limited recommendations | Yes (video history) |
| Time Limits | Parent-controlled through settings | Parent-controlled | Basic screen time warning | Parent-controlled |
| Direct Contact Control | Can restrict new friends | Can restrict followers | No | No |
| Safety Resources | Yes | Yes | Yes | Yes |
| Cost | Free | Free | Free | Free (part of Google Family) |

How Teens and Parents Feel About It

Acceptance of parental controls varies enormously based on family dynamics and teen age.

Younger teens (13-15) are more likely to accept parental monitoring as normal. It feels like a natural extension of parental involvement. Older teens (16-18) often resent it as invasive unless the rules are clearly explained and they're not heavily penalized for normal behavior.

Parents who frame monitoring as "I want to understand what you're doing" tend to get less resistance than parents who frame it as "I don't trust you." The actual tool is the same, but the context determines whether it becomes a trust-building or trust-eroding conversation.

One unexpected benefit some parents report: the feature-level screen time data gives them insight into how their teen actually experiences the app, making conversations less abstract. Instead of "You're on your phone too much," it's "I see you're spending 40 minutes a day in Spotlight. What makes that so appealing? Are you enjoying it, or does it feel hard to put down?"

That specificity changes the dynamic.

Chart: Snapchat Family Center offers the most comprehensive set of parental control features, including unique trust signals and feature-level screen time controls. Estimated data based on feature availability.

Industry Trends: The Move Toward "Safe by Default"

Snapchat's updates reflect a broader industry shift from reactive safety (addressing problems after they happen) to proactive safety (building features that reduce harm by design).

Consider Apple's App Tracking Transparency feature and on-device processing for sensitive data, Google's push to reduce reliance on third-party cookies, and Meta's changes to teen accounts on Instagram. These all signal that platforms are treating user safety and privacy as features, not compliance costs.

For Snapchat specifically, the message is: "We're making our platform safer without limiting functionality." It's smart positioning because it argues that safety and usability aren't in tension. You can have both.

But there's a natural limit to what parental controls alone can do. They help parents be more aware and make better decisions. They don't change the fundamental incentives that drive platforms to maximize engagement. If Snapchat's core business model depends on teens spending as much time as possible in algorithmic recommendation feeds, parental controls can help parents limit that, but they can't change the underlying motivation.

Implementation Tips for Parents Using Family Center

For parents who are actually going to use these tools, here's what's effective:

Set It Up Early: Do it before your teen is savvy about circumventing it. Presenting it openly as a normal part of digital parenting, rather than sneaking it in, gets better buy-in.

Check It Regularly but Not Obsessively: Once or twice a week is reasonable. Daily checking feels like surveillance and creates resentment.

Use the Data for Conversation, Not Punishment: "I noticed you added someone new. Where did you meet them?" is better than immediately forbidding the friendship.

Acknowledge Graduations: As your teen demonstrates good judgment, ease off the monitoring. The point is guidance, not permanent control. A 17-year-old needs less oversight than a 14-year-old.

Be Transparent: Tell your teen that you're using Family Center and why. The key to getting cooperation is explaining that it isn't a sign of personal distrust. It's about protecting them from threats (predators, scams, etc.) that don't discriminate by how smart or careful a teen is.

Pair It with Digital Literacy Conversations: Don't just monitor. Also teach. Explain why stranger requests are risky. Discuss how algorithms work. Talk about what information is private and why.

Have an Escalation Plan: If you see something concerning (a conversation that looks inappropriate, an adult claiming a personal relationship, a request for money), know what your next step is. Do you have direct conversations? Do you get law enforcement involved? Having this thought through in advance prevents reactive panic if something does happen.

The Role of AI and Automated Detection

Snapchat's parental controls are only part of the picture. The company also uses AI to detect predatory accounts and inappropriate conversations.

Snapchat has said it uses machine learning to identify and ban accounts that show patterns consistent with child exploitation. The company claims it removes accounts and reports them to the National Center for Missing and Exploited Children. But these systems aren't perfect. They have false positives and false negatives. Some predators are sophisticated enough to evade detection. Some inappropriate conversations look normal to an AI but are actually concerning to a trained human.

The combination of parental visibility (trust signals, feature breakdown) and automated detection (AI flagging suspicious accounts) is stronger than either alone. Parents catch the nuanced cases that AI misses. AI catches the patterns too complex or numerous for parents to monitor.

That said, the effectiveness of these systems isn't independently verified. Snapchat reports the numbers to regulators and the public, but there's no third-party audit. The company has an incentive to overstate how much predatory content it catches, so healthy skepticism is warranted.

Looking Ahead: What Comes Next in Teen Safety

Snapchat's updates are smart positioning for the next five years of regulation, but they probably aren't the final form of parental controls.

Future iterations might include:

Real-Time Content Review: Instead of just showing who your teen is connected to, showing the actual conversations themselves. This is more invasive, but some parents would want it. Snapchat's ephemeral message design makes this harder technically.

Predictive Risk Assessment: Using behavioral data to flag conversations or accounts that show signs of grooming patterns before actual exploitation happens. This is more AI-intensive and requires careful validation to avoid false positives.

Cross-Platform Visibility: Right now, parents see Snapchat separately from Instagram, TikTok, etc. A unified dashboard would be powerful but raises data sharing questions between competitors.

Granular Permission Controls: Instead of just knowing what features your teen uses, actually preventing them from using certain features. This is more restrictive than current tools but gives parents more control.

Integration with Device-Level Parental Controls: Apple and Google both offer device-level parental controls. Deeper integration between those and app-level controls would reduce workarounds.

The direction of travel is clear: more visibility, more granular controls, more AI-driven detection. The tension is between safety and privacy, between control and autonomy. Finding the right balance is the challenge every platform faces.

Expert Perspectives on Teen Safety and Parental Controls

Researchers and experts in adolescent development offer perspectives worth considering.

On Parental Monitoring: Psychologists note that some parental monitoring is healthy and protective. It shows teens that you're involved in their lives. But excessive monitoring can undermine trust and push teens toward deception. The sweet spot is "protective monitoring"—knowing who your teen is communicating with, but trusting them to make good decisions once you've verified the person isn't obviously dangerous.

On Stranger Risk: Criminologists point out that while online predation is a real threat, the actual statistical risk is lower than public perception suggests. Most sexual contact with minors involves someone the teen already knows or trusts. But the risk that does exist (roughly 9% of online teen users face sexual solicitation according to some research) is concentrated among vulnerable teens—those already experiencing isolation, family problems, or low self-esteem. In other words, the tool helps everyone, but it's most valuable for kids at higher risk.

On Mental Health: Developmental psychologists have raised concerns that some teens use social media in ways that harm mental health (excessive use, comparison-driven scrolling, reduced face-to-face interaction). Parental controls can help limit problematic use patterns, but they can't address the underlying appeal of these platforms. That requires changes to platform design.

On Effectiveness: When researchers study the actual impact of parental controls on teen safety outcomes, the results are mixed. Parental controls help when parents actually use them and follow through with conversations. They don't help if they're set up and ignored. The tool is only as effective as the parent's engagement.

The Business Incentives Behind These Updates

Let's be honest about Snapchat's motivation here. This isn't purely altruistic. The company is making these changes because:

- Regulatory Risk: Lawsuits and potential legislation threaten the platform. Proactive safety measures reduce that risk.

- Market Position: Being seen as the "safe" platform for teens is valuable in a regulatory environment increasingly hostile to social media.

- User Retention: Better parental controls mean more parents allowing their teens to use the app, expanding the addressable market.

- Public Relations: Positive stories about safety tools neutralize negative coverage about exploitation.

None of these motivations are wrong, and they align with actual user benefit. But they're worth acknowledging. Snapchat isn't being generous; it's being strategic. That doesn't make the tools bad, but it means the company will keep pushing only as far as competitive pressure and regulation require.

If TikTok faced a ban or YouTube faced strict age restrictions, Snapchat would immediately invest in additional safety features to position itself as the compliant alternative. The direction of the industry depends on regulatory pressure as much as on corporate goodwill.

FAQ

What is Snapchat Family Center?

Family Center is Snapchat's parental control and monitoring suite that allows designated parents or guardians to see information about their teen's activity on the platform. It includes visibility into friend connections, screen time breakdown by feature (messaging, camera, map, short-form video), and trust signals that indicate whether new friends have real-world connections to the teen. It doesn't give parents access to the content of messages, but it does provide context about who their teen is communicating with.

How do trust signals work in Snapchat's Family Center?

When a teen adds a new friend or receives a friend request, Family Center displays contextual information about that connection. Trust signals include: whether the new person shares mutual friends with the teen, whether their contact information is saved in the teen's phone, and whether they're both members of the same in-app community (like a school group). If there are no commonalities between the new friend and the teen, the system alerts parents to start a conversation, but it doesn't automatically block the connection. This helps parents understand whether the friendship is with someone the teen already knew in real life or a complete stranger from the platform.
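The logic described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Snapchat's implementation: the `Connection` record, its field names, and the `needs_conversation` helper are all hypothetical, and the only behavior taken from the description is that a connection with no signals is flagged for a conversation rather than blocked.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    """Hypothetical summary of one new friend as a parent might see it."""
    display_name: str
    mutual_friends: int = 0
    contact_saved: bool = False
    shared_community: bool = False


def trust_signals(conn: Connection) -> list[str]:
    """Return the names of the trust signals present for this connection."""
    signals = []
    if conn.mutual_friends > 0:
        signals.append("mutual friends")
    if conn.contact_saved:
        signals.append("saved contact")
    if conn.shared_community:
        signals.append("shared community")
    return signals


def needs_conversation(conn: Connection) -> bool:
    """Flag a connection with zero overlap. Nothing is blocked here."""
    return not trust_signals(conn)


# Made-up example data for illustration only.
classmate = Connection("Sam", mutual_friends=4, shared_community=True)
stranger = Connection("user_8841")

print(trust_signals(classmate))      # signals found for the classmate
print(needs_conversation(stranger))  # no overlap, so worth a conversation
```

The flag is deliberately a yes/no prompt for the parent rather than a score, which matches the article's point that the tool is meant to start a conversation, not make the decision.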

What does the feature-level screen time breakdown show?

Instead of just showing total time spent on Snapchat, Family Center now breaks down screen time by the specific features the teen is using. The breakdown includes: messaging time (one-on-one or group conversations), camera time (creating photos and videos), map time (using the location feature), and short-form video time (watching Spotlight). This granular data helps parents understand what their teen is actually doing on the app, revealing different usage patterns that suggest different levels of risk or different types of engagement.
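To make the difference between a total and a breakdown concrete, here is a minimal sketch that turns per-feature minutes into percentage shares. The feature names follow the four categories listed above, but the function name, the data shape, and the sample numbers are assumptions for illustration. Family Center does not expose this data through any API described in this article.

```python
def feature_shares(minutes_by_feature: dict[str, float]) -> dict[str, float]:
    """Convert minutes per feature into percentage of total time."""
    total = sum(minutes_by_feature.values())
    if total == 0:
        # No usage recorded: every feature gets a 0% share.
        return {feature: 0.0 for feature in minutes_by_feature}
    return {
        feature: round(100 * minutes / total, 1)
        for feature, minutes in minutes_by_feature.items()
    }


# Made-up day of usage, in minutes.
day = {"messaging": 20, "camera": 10, "map": 10, "spotlight": 40}

shares = feature_shares(day)
top_feature = max(shares, key=shares.get)

print(shares)
print(top_feature)
```

Two teens with the same 80-minute total can have very different shares, which is exactly the information a single total hides.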

Are these parental controls invasive to teens' privacy?

Parental controls necessarily involve some privacy tradeoffs. Family Center shows parents information about who their teen is connected to and how they spend time on the app, which reduces the teen's digital privacy compared to unrestricted use. However, the system doesn't show parents the actual content of messages or photos sent, which is where the deepest privacy concerns would arise. Research suggests that moderate parental monitoring is protective without being overly invasive, but family dynamics and the teen's age matter significantly. Transparent communication about why monitoring is in place helps reduce the adversarial feeling.

How do Snapchat's parental controls compare to other social media platforms?

Snapchat's Family Center is generally more sophisticated than competitors in two specific ways: the trust signal system for new connections (which other platforms don't have) and the feature-level screen time breakdown (which other platforms provide only as total time). Instagram shows friend lists but not connection context. TikTok shows only total screen time. YouTube shows video history and recommendations. Snapchat's combination of connection context and usage breakdown gives parents more nuanced information, though no platform offers a complete picture of what their teen is seeing and doing.

Do parental controls actually prevent online predation?

Parental controls help by making parents aware of who their teen is connecting with, enabling them to have conversations about stranger safety. However, they don't directly prevent predation. A more fundamental change would require the platform to restrict how easily strangers can connect with teens, which Snapchat hasn't done. Additionally, sophisticated predators often know how to game trust signals by appearing to have mutual connections or shared communities. Parental controls are one part of a comprehensive safety approach, but they're not a guarantee against exploitation.

What age is Snapchat designed for, and how is age verified?

Snapchat's terms of service require users to be at least 13 years old, aligning with COPPA (Children's Online Privacy Protection Act) regulations. However, Snapchat doesn't require robust age verification to create an account. Users self-report their age, and the company relies on automated systems to catch obviously false ages. This means younger children can create accounts, and there's no foolproof way to verify that someone claiming to be 16 actually is. This is a limitation of Snapchat's current system and an area where regulators are pushing for stronger verification methods.

How should parents actually use Family Center to be effective?

Effective use involves regular but not obsessive checking (once or twice weekly), using the information to start conversations rather than immediately restrict activities, and being transparent with your teen about monitoring. Instead of punishment-driven responses ("You can't talk to that person"), frame it as guidance ("I don't recognize this person. How do you know them?"). For maximum effectiveness, pair parental controls with digital literacy education about stranger risk, how algorithms work, and what information should stay private. Also adjust your level of monitoring as your teen demonstrates good judgment, rather than maintaining constant surveillance through their teenage years.

Is Family Center automatically enabled for all Snapchat users under 18?

No, Family Center is an optional feature that requires deliberate setup by a parent or guardian. The teen must add a parent to Family Center for monitoring to begin, or the parent initiates it through their own Snapchat account. This means many teens and parents don't use it at all. Snapchat has been gradually increasing the default privacy settings for teens (making accounts private by default, for example), but parental monitoring itself requires active opt-in, which limits its coverage.

What about teens using alternate accounts or other apps to avoid Family Center?

This is a real limitation. Savvy teens can create accounts their parents don't know about or use messaging apps (Discord, private messaging features on other platforms) for conversations they want to keep hidden. Parental controls rely on the assumption that the teen will use the monitored account for their primary Snapchat activity. If a teen actively circumvents the tools, the parent loses visibility. Having transparent conversations about why monitoring is in place and maintaining trust tends to reduce circumvention, but it doesn't eliminate it.

Snapchat's Family Center updates represent a meaningful step forward in parental oversight capabilities. The trust signals system offers a nuanced approach to flagging potentially risky connections without automatically restricting them, while the feature-level screen time breakdown provides parents with actionable information about how their teen actually uses the platform. These tools work best when parents actively engage with them and use the data to inform conversations rather than impose blanket restrictions.

The updates also signal that Snapchat is taking regulatory pressure seriously and positioning itself as a platform that can be trusted with younger users' safety. As legislation around teen social media use continues to evolve, parental controls like Family Center will likely become table-stakes requirements rather than value-adds. The company's proactive approach suggests it understands that better controls now are preferable to government mandates later.

For parents, the message is straightforward: if you have a teen on Snapchat, Family Center is worth setting up and checking regularly. It won't solve every safety concern—the platform's design still makes stranger connections possible—but it provides useful information that can prompt better conversations about digital safety. For teens, understanding that their parents have visibility into connections and usage patterns can encourage thoughtful choices about who to connect with and how much time to spend on the platform.

The broader takeaway is that teen safety online is a shared responsibility. Platforms need to reduce friction for harmful interactions and provide tools that help parents stay informed. Parents need to engage with those tools and use them constructively. Teens need digital literacy education so they understand the risks and make thoughtful decisions. No single intervention is sufficient. Snapchat's updates improve one part of that ecosystem.

Key Takeaways

- Family Center's trust signals reveal whether new connections have real-world overlap with your teen (mutual friends, saved contacts, shared communities), helping parents distinguish legitimate friends from strangers

- Feature-level screen time breakdown shows exactly how teens spend time across messaging, camera, map, and video features separately, revealing different behavioral and risk profiles

- The approach prioritizes conversation and guidance over surveillance and punishment, making parental oversight more effective than heavy-handed restrictions

- Snapchat is responding to regulatory pressure and lawsuits by positioning these enhanced controls as evidence that industry self-regulation can address teen safety concerns

- While these tools improve parental visibility, they don't fundamentally change platform architecture that enables stranger connections, and they only work if parents actively engage with them
