The 245TB SSD Revolution: How AI is Reshaping Hyperscale Storage

Let me paint a picture for you. It's 2025, and somewhere in a hyperscale data center, an AI training job is running. The model is processing billions of embeddings, logs, and inference results. That data isn't sitting on a tape drive waiting weeks to be accessed. It's living on flash storage. Specifically, it's living on a drive that holds a quarter of a petabyte.

If that sounds wild to you, I get it. Five years ago, the largest mainstream enterprise SSDs topped out around 15TB. Today, we're looking at SSDs that are roughly 16 times larger, and this shift isn't just about bigger numbers. It represents a fundamental reset in how hyperscalers think about data infrastructure.

The catalyst? Artificial intelligence. The explosion in AI training and inference workloads has forced data center architects to rethink everything about storage architecture. And unlike most tech trends that fade after their moment in the sun, this one is reshaping the very fabric of hyperscale infrastructure.

TL;DR

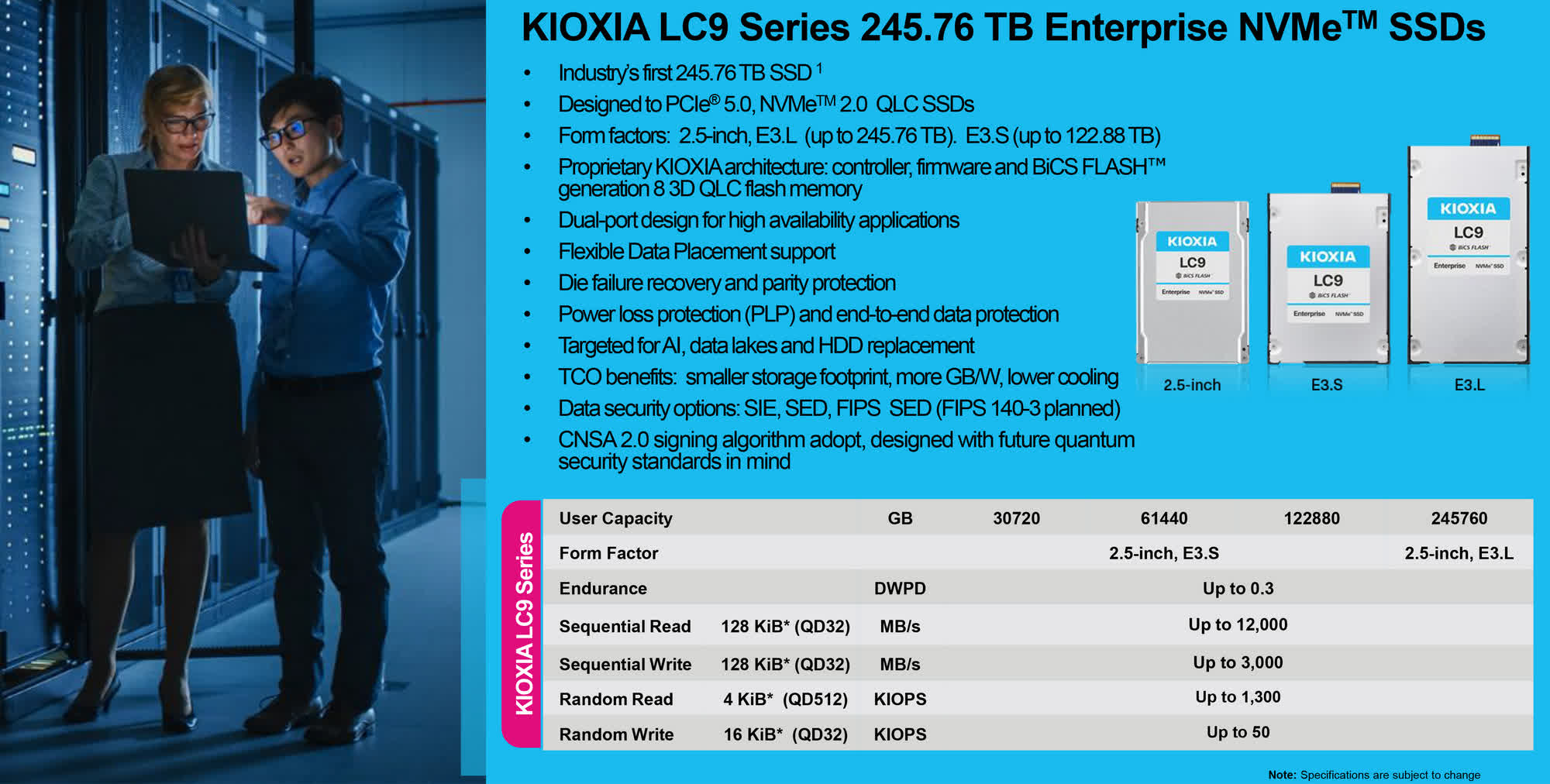

- Eight vendors have now announced 245TB class SSDs, with Dapu Stor joining Kioxia, Sandisk, Solidigm, SK Hynix, Samsung, Micron, and Huawei

- AI training generates massive embeddings and inference datasets that must stay online for frequent access, favoring dense flash over mechanical drives

- QLC NAND technology enables these capacities by storing four bits per cell, dramatically improving density and cost efficiency

- Hyperscale environments are the only market that can justify these drives, which cost tens of thousands of dollars each

- Power, cooling, and rack density have become critical factors driving adoption of ultra-capacity SSDs over traditional hard disk arrays

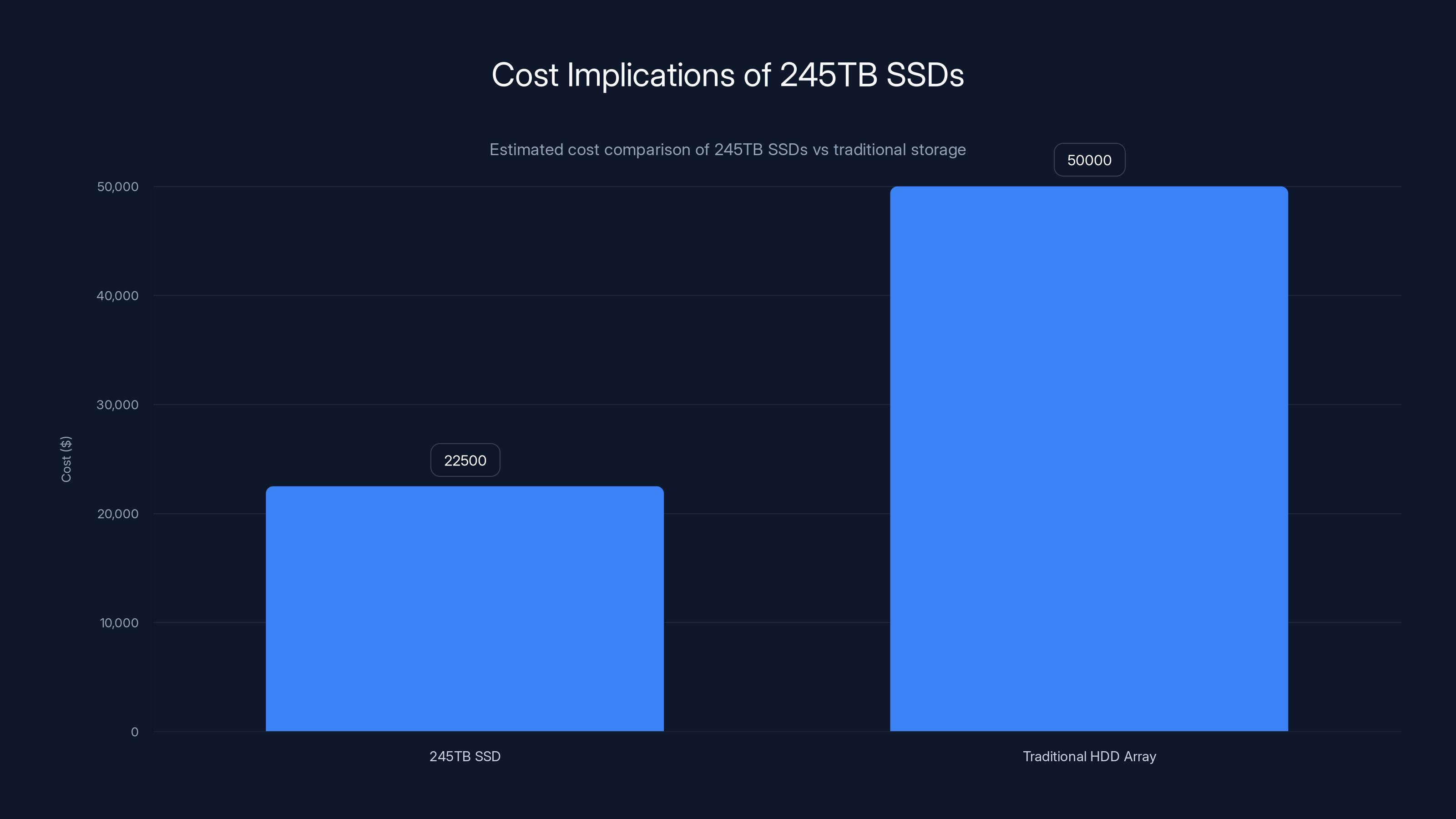

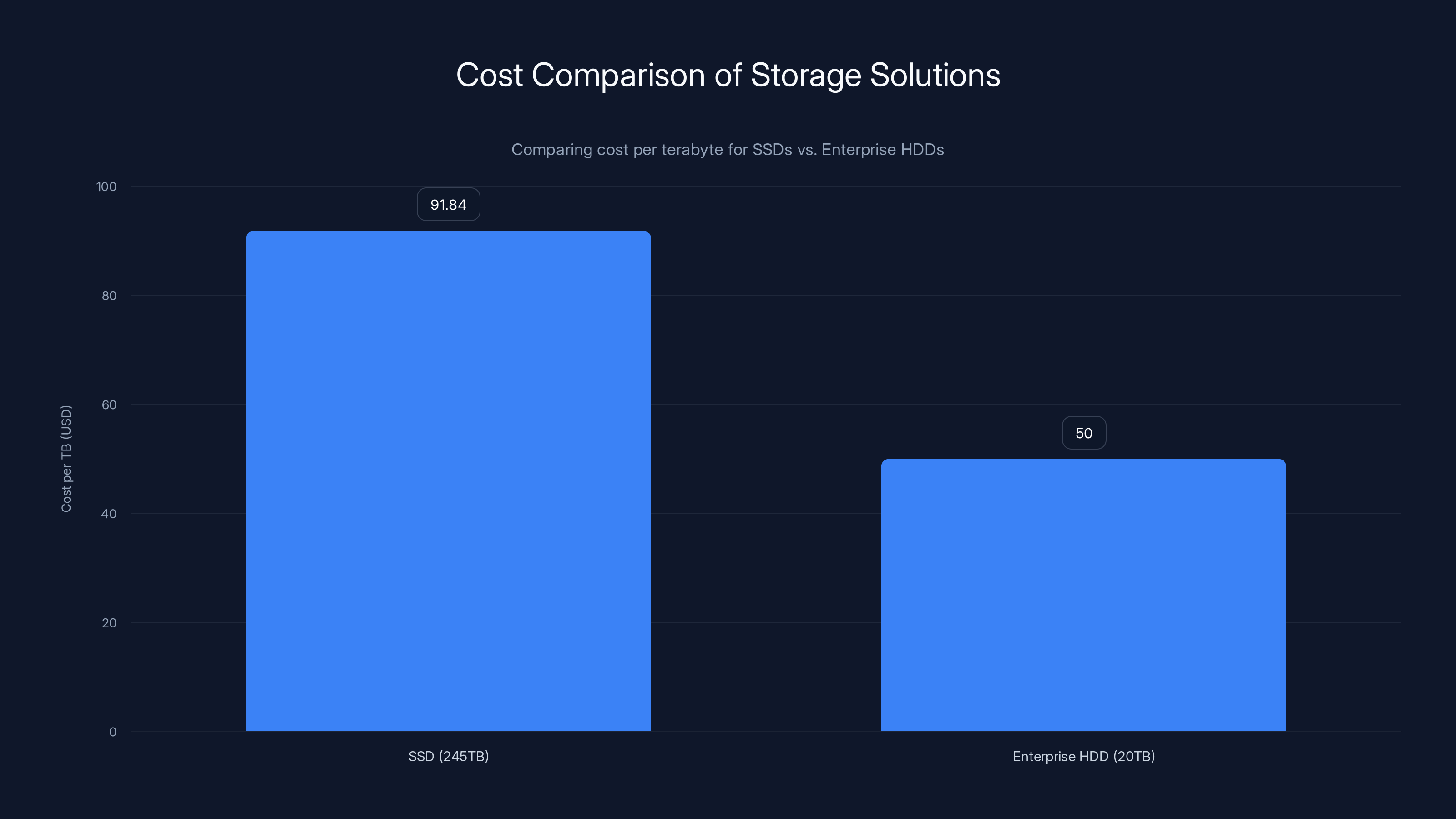

245TB SSDs, despite high upfront costs (

Why SSDs Are Eating Hard Drives' Lunch

Talk to anyone who designs data center storage, and they'll tell you the same thing: the economics have shifted. For decades, hyperscalers relied on massive arrays of mechanical hard drives. They were cheap, they had decent capacity, and for cold storage or sequential workloads, they made financial sense.

Then AI happened.

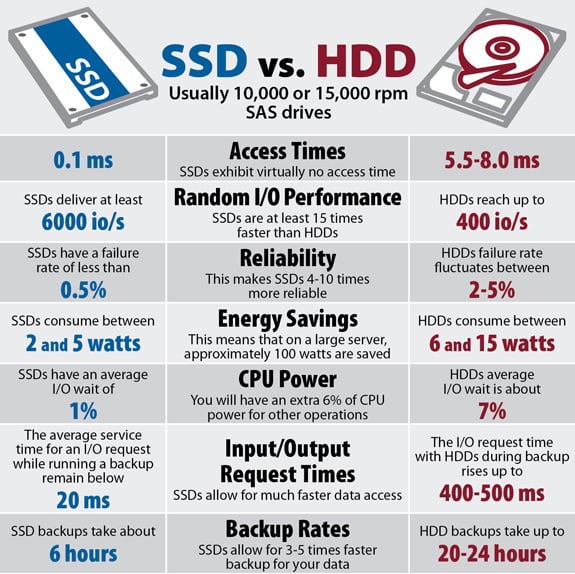

When you're training a large language model or running inference at scale, you're not accessing data sequentially. You're doing random I/O across massive datasets. A 7200 RPM hard drive can handle maybe 200 I/O operations per second. An enterprise NVMe SSD? Hundreds of thousands, and often more than a million for random reads. That gap matters when you're processing billions of data points.
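To make the IOPS gap concrete, here is a back-of-the-envelope sketch: how long one billion small random reads take at a given sustained IOPS rate. The 200 IOPS hard-drive figure comes from the text; the 1,000,000 IOPS SSD figure is a hypothetical round number, and the model ignores queue depth, caching, and parallelism across drives.

```python
def seconds_for_random_reads(num_reads: int, iops: float) -> float:
    """Time to complete num_reads random reads at a sustained IOPS rate."""
    return num_reads / iops

# Assumptions: ~200 IOPS for a 7200 RPM HDD (figure from the text) and a
# hypothetical 1,000,000 random-read IOPS for an enterprise NVMe SSD.
HDD_IOPS = 200
SSD_IOPS = 1_000_000

reads = 1_000_000_000  # one billion small random reads

hdd_days = seconds_for_random_reads(reads, HDD_IOPS) / 86_400
ssd_minutes = seconds_for_random_reads(reads, SSD_IOPS) / 60

print(f"HDD: {hdd_days:.1f} days")        # HDD: 57.9 days
print(f"SSD: {ssd_minutes:.1f} minutes")  # SSD: 16.7 minutes
```

Real deployments spread reads across many drives, but the ratio between the two media stays the same.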

But here's the real problem with hard drives: they're slow to recover from failure. Replace a failed drive in a 100-drive array, and you're waiting hours for the rebuild to finish. With SSDs, that problem shrinks. Flash also consumes significantly less power per gigabyte, and in data centers where power becomes your limiting resource, every watt counts.

The math gets even more interesting when you factor in rack density. A hard drive array might deliver 500TB of capacity across 8-10 rack units. A 245TB SSD? That's one drive, taking up less than a U of rackspace. When your data center is landlocked in an expensive metropolitan area, that density advantage translates directly to profitability.

These aren't theoretical advantages. They're the kinds of numbers that make storage architects lose sleep over their current infrastructure decisions.

Understanding the Dapu Stor Announcement

Let's zoom in on what Dapu Stor actually announced, because the details matter. They're not just slapping a bigger number on a drive and calling it a day. The engineering here is legit.

Dapu Stor's 245TB PCIe Gen 5 QLC SSD targets a specific problem: AI data lakes. These aren't small-scale operations. We're talking about data warehouses holding petabytes of training data, embeddings, vector databases, and inference logs. All of it needs to stay online. All of it gets accessed frequently.

The company says 122TB versions are already deployed with customers, which tells you this isn't vaporware. It's production hardware that people are actually using. The jump to 245TB roughly doubles capacity in the same form factor, which is where the engineering complexity comes in.

One key technical point: Dapu Stor uses QLC NAND, which stores four bits per cell instead of the three bits per cell used in TLC (triple-level cell) drives. This packs more data onto each wafer, lowering cost per terabyte and raising capacity. It sounds simple, but QLC had a reputation problem. People worried about endurance and performance.

Those concerns were legitimate, but they've largely been addressed. Modern controllers, firmware algorithms, and intelligent data placement techniques have made QLC reliable enough for enterprise workloads. When you're operating at Dapu Stor's scale, you have the engineering resources to make it work.

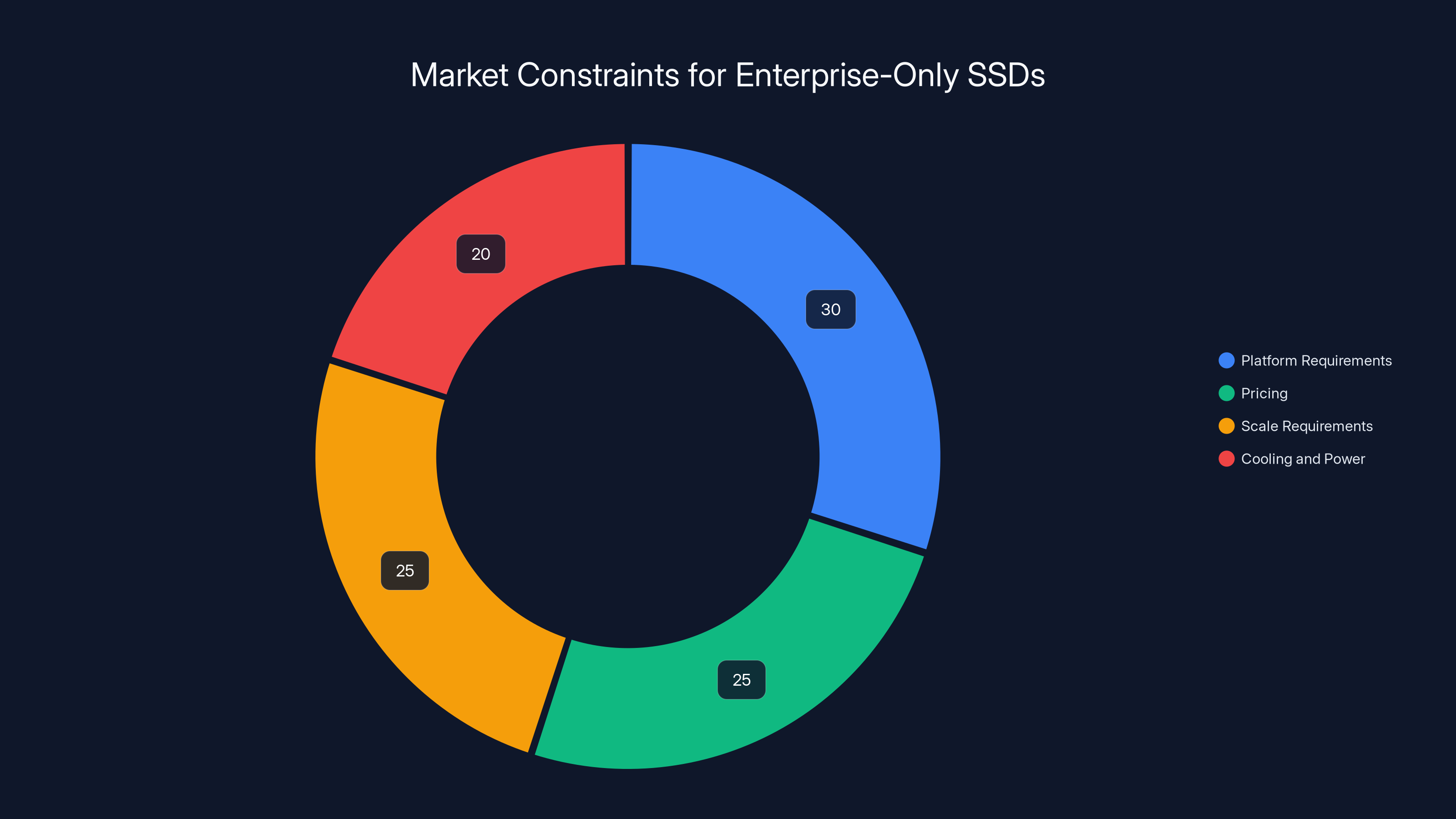

Platform requirements, pricing, scale, and cooling are key constraints, with platform requirements being the most significant. Estimated data.

The Crowded 245TB Club

Here's what's fascinating: Dapu Stor is the eighth vendor to announce a 245TB class drive. The eighth. That's not a niche announcement from a startup nobody's heard of. That's confirmation of a market trend.

Let's look at the lineup:

Kioxia announced a 246TB LC9 SSD. Sandisk revealed a 256TB model specifically targeted at AI workloads. Solidigm confirmed plans for 245TB drives and has already demonstrated massive deployments (more on that in a moment). Micron outlined 122TB PCIe Gen 5 drives as part of a broader effort to reduce reliance on hard disks. Huawei took a different approach, pairing high-capacity SSDs with controller innovations to reduce expensive HBM requirements.

Samsung has published roadmaps extending beyond current capacities. SK Hynix teased the PS1101, its own 245TB PCIe Gen 5 enterprise drive. And now Dapu Stor rounds out the group.

When every major NAND manufacturer and several storage specialists are racing to the same capacity point simultaneously, you're looking at a genuine market shift, not a marketing gimmick.

Why AI Demands Dense Storage

Let's dig deeper into why AI workloads specifically require these massive drives. It comes down to data volume and access patterns.

When you train a large language model, you're not just storing the model weights. You're storing training datasets that might span hundreds of terabytes. You're capturing embeddings, attention maps, gradient information. You're logging inference results when the model runs in production. All of that data has one thing in common: it gets accessed repeatedly.

Compare that to traditional enterprise workloads. A database might store customer records accessed thousands of times a day, but the total dataset is relatively small. Archival data sits untouched for months, making tape a rational choice. AI workloads break both patterns.

Embeddings are the real storage killer. When you convert text or images to embeddings, you're creating numerical representations that the model uses. A single large language model might generate trillions of embeddings during training. During inference, every query generates new embeddings. That's constant, high-volume data creation.

Inference logs pile up fast too. Every time someone interacts with an AI system, metadata gets logged. Request details, response times, token usage, latency metrics. Hyperscalers often store months of this data for fine-tuning, performance analysis, and regulatory compliance.

The access pattern is the killer argument. Hard drives perform best when data is read sequentially: track A, then track B, then track C. SSDs excel at random access. When your AI system is pulling embeddings from across a massive dataset, that random I/O advantage is enormous.

Add it all up, and you're looking at data that's simultaneously huge (petabytes) and hot (constantly accessed). Exactly the scenario where dense flash storage makes sense.

The QLC Technology Behind the Capacity

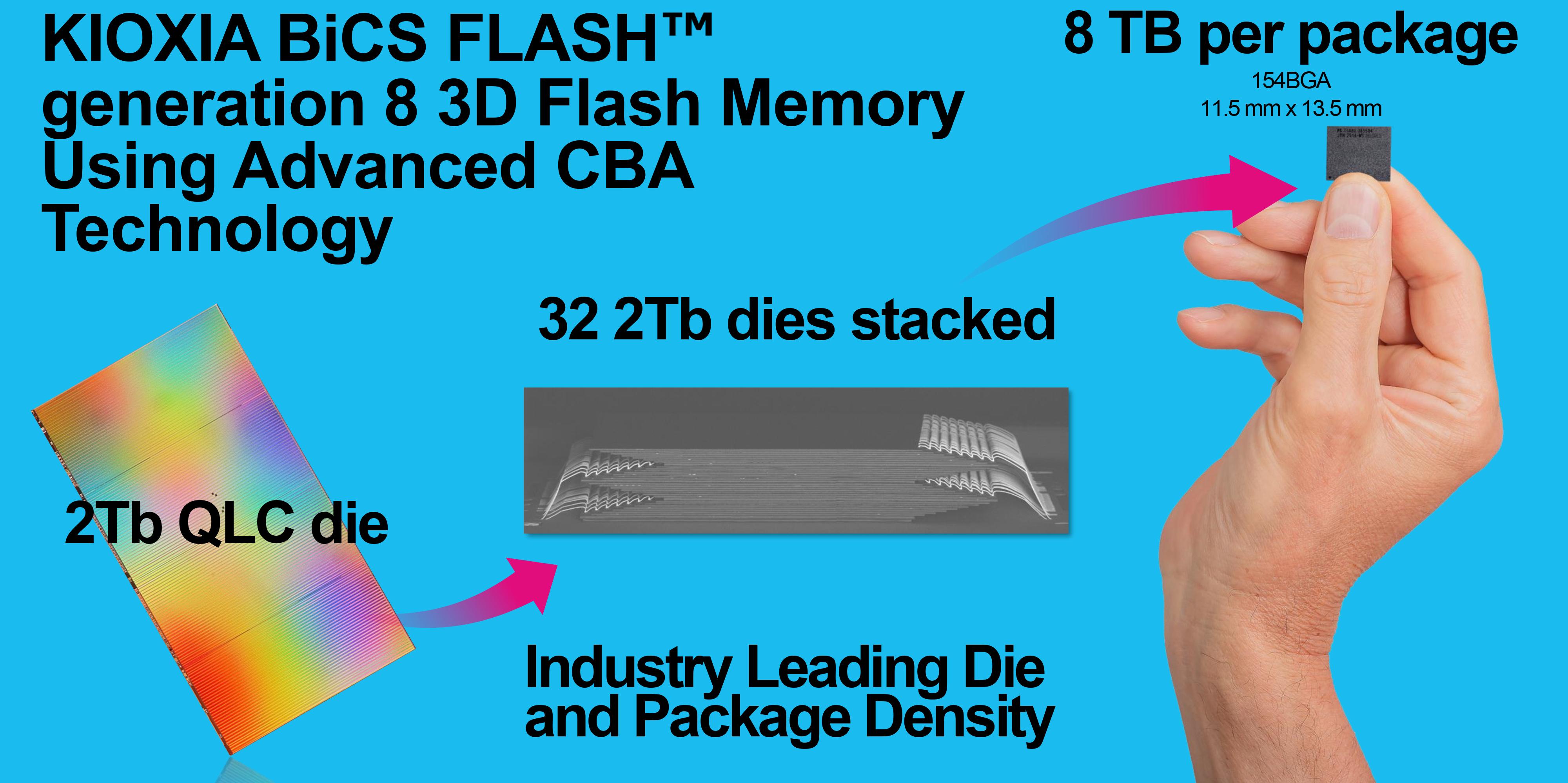

You can't build a 245TB SSD without making some unconventional engineering choices. The current generation builds on QLC NAND, and understanding how it works helps explain why these drives are suddenly possible.

Memory cells in NAND flash store data by trapping electrons. How many distinct electron states you can reliably distinguish determines how many bits you can store per cell. With SLC (single-level cell), you have two states: one bit of data. MLC (multi-level cell) introduced four states: two bits. TLC (triple-level cell) uses eight states: three bits. QLC pushes to sixteen states: four bits.

The benefit is obvious: more bits per cell means more data per wafer. The cost is reliability and endurance. More states means smaller margins between them. Electronic noise that you could ignore with TLC becomes a problem with QLC, and as cells wear, their voltage thresholds drift, so narrow margins turn into read errors sooner.
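A simple way to see the trade-off is to treat a cell's usable voltage window as fixed and split it evenly among 2**bits states. This is an idealized sketch: the 6 V window is a hypothetical round number, and real cells have non-uniform, partially overlapping voltage distributions.

```python
# Idealized model: a fixed threshold-voltage window split evenly among states.
# VOLTAGE_WINDOW is a hypothetical round number, not a datasheet value.
VOLTAGE_WINDOW = 6.0

def cell_profile(bits_per_cell: int) -> tuple[int, float]:
    """Return (number of states, spacing between adjacent states in volts)."""
    states = 2 ** bits_per_cell
    spacing = VOLTAGE_WINDOW / (states - 1)
    return states, spacing

for name, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4)]:
    states, spacing = cell_profile(bits)
    print(f"{name}: {bits} bit(s), {states} states, ~{spacing:.2f} V between states")

# Same number of cells, one more bit each: QLC stores 4/3 as much as TLC.
print(f"QLC vs TLC capacity gain: {4 / 3 - 1:.0%}")  # QLC vs TLC capacity gain: 33%
```

Going from TLC to QLC buys 33% more capacity per cell but roughly halves the spacing between states (about 0.86 V to 0.40 V in this model), which is why controllers and firmware have to work harder.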

Solving this requires multiple innovations working together:

Controller Design handles the complexity of managing those voltage states. Modern controllers use sophisticated error correction, read-retry algorithms, and wear leveling to compensate for QLC's inherent challenges.

Firmware Management makes intelligent decisions about data placement. Some drives first write incoming data to blocks running in a faster SLC mode (one bit per cell) and fold it into QLC later, so frequently rewritten data can stay in the faster region while cold data goes to QLC. This hybrid approach gives you capacity benefits without sacrificing performance where it matters.

Data Placement Techniques reduce write amplification. When you update a single byte, the controller doesn't rewrite the entire block. It uses log-structured designs and garbage collection algorithms to minimize unnecessary writes. Since write endurance is QLC's weak point, this is critical.
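Write amplification is easiest to see in a toy model. The sketch below is a hypothetical, heavily simplified log-structured flash translation layer: every write goes to a fresh page, overwritten pages become invalid, and a greedy garbage collector reclaims the block with the fewest valid pages by copying them forward. Real controllers are far more sophisticated; the block counts, 25% over-provisioning, and workloads here are assumptions for illustration.

```python
import random


class ToyFTL:
    """Hypothetical, heavily simplified log-structured flash translation layer."""

    def __init__(self, num_blocks: int, pages_per_block: int):
        self.pages_per_block = pages_per_block
        self.block_of = {}                               # logical page -> physical block
        self.live = [set() for _ in range(num_blocks)]   # valid logical pages per block
        self.free_blocks = list(range(num_blocks))
        self.active = self.free_blocks.pop()             # block currently being filled
        self.next_page = 0
        self.host_writes = 0
        self.nand_writes = 0

    def _program(self, lpn: int) -> None:
        """Append one page to the log; the old copy (if any) becomes invalid."""
        if self.next_page == self.pages_per_block:       # active block is full
            self.active = self.free_blocks.pop()
            self.next_page = 0
        old = self.block_of.get(lpn)
        if old is not None:
            self.live[old].discard(lpn)
        self.block_of[lpn] = self.active
        self.live[self.active].add(lpn)
        self.next_page += 1
        self.nand_writes += 1

    def _garbage_collect(self) -> None:
        """Greedy GC: reclaim the full block holding the fewest valid pages."""
        free = set(self.free_blocks)
        candidates = [b for b in range(len(self.live)) if b != self.active and b not in free]
        victim = min(candidates, key=lambda b: len(self.live[b]))
        if len(self.live[victim]) == self.pages_per_block:
            raise RuntimeError("no invalid pages to reclaim; add over-provisioning")
        for lpn in list(self.live[victim]):              # copy still-valid data forward
            self._program(lpn)
        self.free_blocks.append(victim)                  # "erase" the victim block

    def write(self, lpn: int) -> None:
        self.host_writes += 1
        self._program(lpn)
        while len(self.free_blocks) < 2:                 # keep a spare block for GC
            self._garbage_collect()

    @property
    def write_amplification(self) -> float:
        return self.nand_writes / self.host_writes


def simulate(pattern: str, num_blocks: int = 64, pages_per_block: int = 32,
             user_fraction: float = 0.75, overwrites: int = 20_000, seed: int = 0) -> float:
    """Fill the logical space once, then overwrite it sequentially or at random."""
    logical_pages = int(num_blocks * pages_per_block * user_fraction)
    ftl = ToyFTL(num_blocks, pages_per_block)
    rng = random.Random(seed)
    for lpn in range(logical_pages):                     # initial fill
        ftl.write(lpn)
    for i in range(overwrites):
        lpn = i % logical_pages if pattern == "sequential" else rng.randrange(logical_pages)
        ftl.write(lpn)
    return ftl.write_amplification


print(f"sequential overwrites: WAF = {simulate('sequential'):.2f}")
print(f"random overwrites:     WAF = {simulate('random'):.2f}")
```

Sequential overwrites invalidate whole blocks, so garbage collection never copies anything and write amplification stays at 1.0; random overwrites leave valid pages scattered, so every reclaimed block costs extra NAND writes. That extra wear is exactly what QLC can least afford.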

The fact that multiple vendors are shipping QLC at scale proves these problems are solved, at least well enough for enterprise deployments. Dapu Stor's 122TB drives already in the field? That's real-world validation.

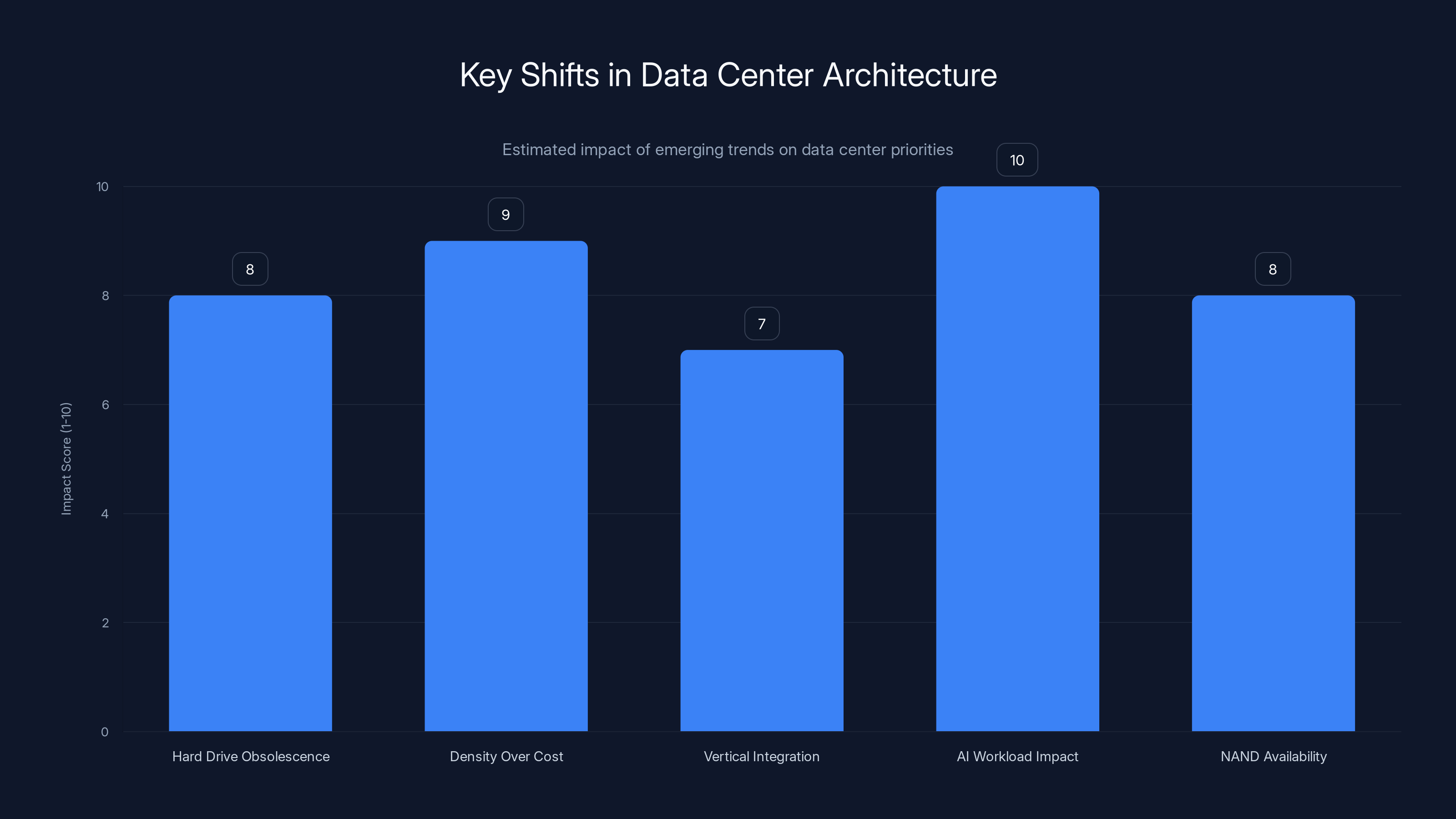

The shift towards SSDs, increased density, vertical integration, AI workload demands, and NAND availability are reshaping data center architecture. Estimated data.

Real-World Deployments: The Solidigm Case Study

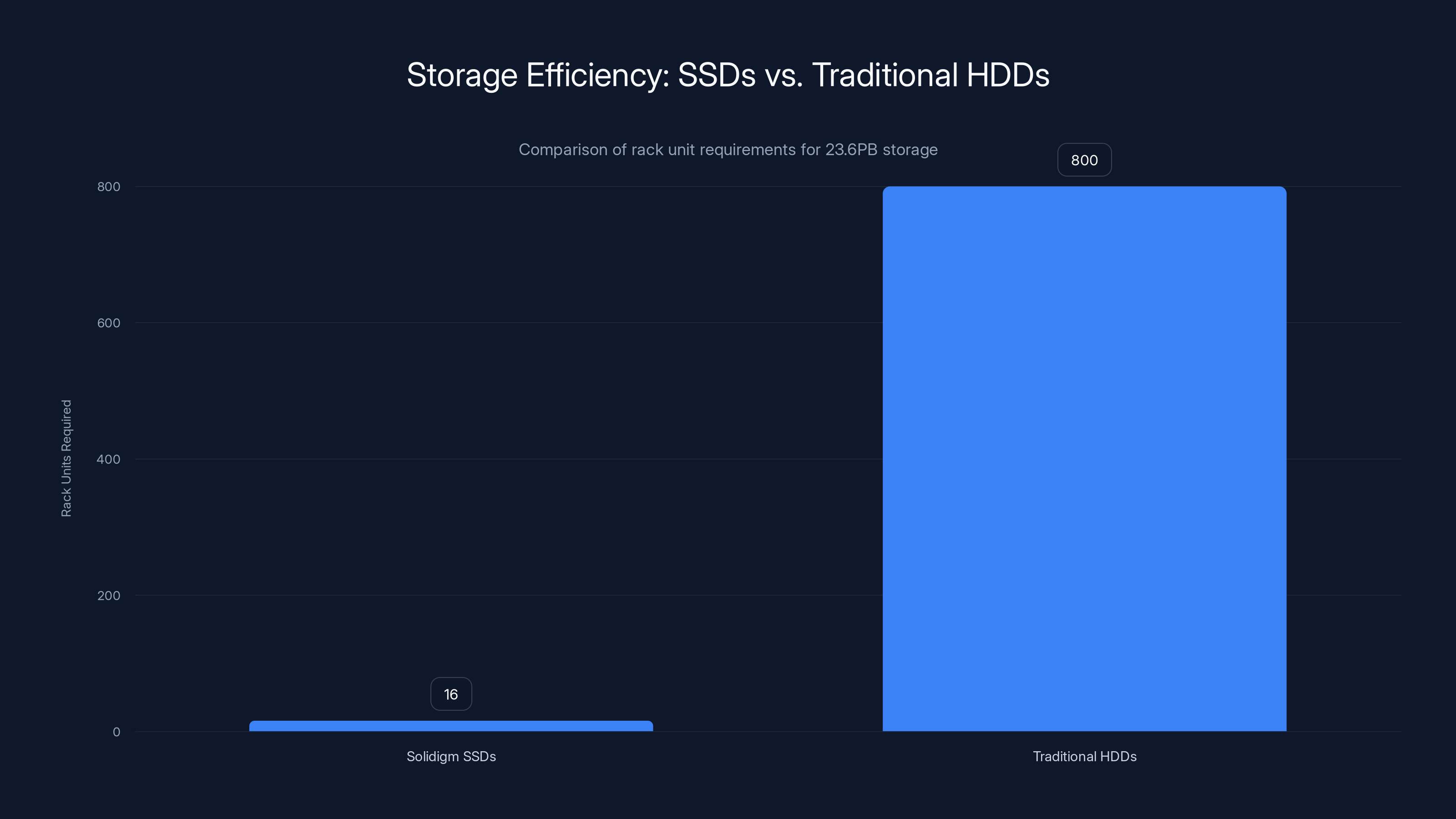

Here's a concrete example that illustrates exactly why hyperscalers are adopting these drives. Solidigm demonstrated a 23.6PB storage cluster built in 16U of rackspace using 122TB SSDs.

Think about that number. 23.6 petabytes in 16 rack units. That's 1.475 petabytes per rack unit.

Compare that to hard disk storage. A common 4U enclosure holds 60 drives. With older 2TB drives, that's 120TB in 4U, or 30TB per rack unit, and storing 23.6PB would take roughly 800 rack units. With SSDs, you need 16. Even with current 20TB-class drives (1.2PB per 4U, or 300TB per rack unit), you'd still need roughly 80 rack units, about five times the SSD footprint.

Now multiply the implications. Against the 2TB baseline, you need 50 times less physical space, 50 times less power distribution infrastructure, and 50 times fewer cables. You need a completely different cooling approach.
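The density comparison reduces to simple division. This sketch reproduces the 60-bay, 2TB-drive baseline from the text and, as an added assumption, repeats it for 20TB-class drives; capacities are decimal (23.6PB = 23,600TB) and enclosure overhead beyond the 4U chassis is ignored.

```python
def rack_units_needed(total_tb: float, tb_per_u: float) -> float:
    """Rack units required to hold total_tb at a given density."""
    return total_tb / tb_per_u

CLUSTER_TB = 23_600                  # Solidigm demo: 23.6PB...
SSD_U = 16                           # ...in 16 rack units
ssd_tb_per_u = CLUSTER_TB / SSD_U    # 1,475 TB per rack unit

# Hard-disk baseline: a 60-bay 4U enclosure, at two (assumed) drive sizes.
for drive_tb in (2, 20):
    hdd_tb_per_u = 60 * drive_tb / 4
    units = rack_units_needed(CLUSTER_TB, hdd_tb_per_u)
    print(f"{drive_tb}TB HDDs: {hdd_tb_per_u:.0f} TB/U, {units:.0f}U, "
          f"{units / SSD_U:.1f}x the SSD footprint")
```

The ratio depends heavily on which hard drives you compare against, which is why it's worth running the numbers for your own baseline.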

At that density, Solidigm specified immersion cooling. The entire drive array sits in special dielectric fluid that conducts heat away from the electronics far more efficiently than air. It sounds exotic, but at these densities, it's mandatory.

The cost of the drives themselves? Solidigm reportedly packed

Power and Cooling: The Hidden Driver

When storage architects evaluate options, they're not looking at cost per terabyte in isolation. They're looking at total cost of ownership, and power consumption is often the largest variable.

A large-capacity hard drive draws roughly 10-15 watts during normal operation, and the more capacity you need, the more drives you run. A 500 petabyte system built from 20TB drives needs about 25,000 of them; at 10-15 watts each, that's roughly 250-375 kilowatts for the drives alone. Add in storage servers, network fabric, and cooling, and the total grows well beyond that.

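SSDs draw less power per terabyte. A high-capacity enterprise SSD typically idles at a few watts and draws on the order of 20-25 watts under heavy load, which is still a fraction of a hard drive's draw once you divide by capacity. The I/O pattern matters too. Hard drives keep their platters spinning and burn much of their power even when idle, while SSDs can drop idle flash into low-power states.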

But here's the leverage point: denser drives mean fewer spindles, fewer fans, fewer power supplies. The infrastructure supporting the storage becomes simpler. You need less cooling. You have fewer redundant paths. The aggregate power savings compound.

Power cost formula: annual power cost = average power draw (kW) × 8,760 hours per year × electricity price per kWh.
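Applying that formula per terabyte shows why density matters for power. This sketch uses hypothetical figures (a 20TB hard drive at 10 W, a 245TB SSD at 25 W under load, $0.15 per kWh, and a 500PB fleet) and counts the drives only, with no cooling, server, or network overhead.

```python
HOURS_PER_YEAR = 8_760

def annual_energy_cost(watts: float, price_per_kwh: float) -> float:
    """Annual electricity cost of a constant load, in dollars."""
    return watts / 1_000 * HOURS_PER_YEAR * price_per_kwh

def watts_per_tb(drive_watts: float, drive_tb: float) -> float:
    return drive_watts / drive_tb

# Hypothetical inputs; substitute datasheet values and your local power price.
hdd = watts_per_tb(10, 20)       # 0.5 W per TB
ssd = watts_per_tb(25, 245)      # ~0.10 W per TB

FLEET_TB = 500_000               # 500PB, decimal
PRICE = 0.15                     # dollars per kWh

for name, w_per_tb in (("HDD", hdd), ("SSD", ssd)):
    fleet_watts = w_per_tb * FLEET_TB
    print(f"{name}: {fleet_watts / 1_000:,.0f} kW, "
          f"${annual_energy_cost(fleet_watts, PRICE):,.0f} per year")
```

Cooling scales roughly with this draw, so a data center's overhead multiplier (PUE) would raise both numbers proportionally.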

In regions with expensive power, the ROI calculation changes significantly. Power savings alone usually won't close the per-terabyte price gap quickly, but a hyperscaler in California or Northern Europe might find that SSDs pay for themselves in about three years once power, space, and cooling savings are combined. A hyperscaler with cheaper power in a different region might still prefer SSDs for the operational benefits.

The Huawei Angle: Different Approach, Same Problem

While most vendors are attacking the problem with bigger SSDs, Huawei took a different path. They paired high-capacity SSDs with controller innovations specifically designed to reduce the amount of expensive HBM (high-bandwidth memory) required in AI systems.

This deserves explanation. Modern AI accelerators like GPUs require fast memory to process data. The bandwidth requirements are enormous. HBM is stacked DRAM mounted in the same package as the processor, and it's wildly expensive. A single high-end GPU with HBM can cost tens of thousands of dollars.

Huawei's insight: if you can feed data to the GPU more efficiently from slower but larger storage, you reduce the HBM requirement. It's a trade-off. GPU cycles might be waiting slightly longer for data, but you're using cheaper storage instead of expensive memory.

It's a reminder that the industry doesn't solve problems in only one way. Different vendors with different strengths pursue different angles. Some optimize for pure density. Huawei optimizes for the system as a whole.

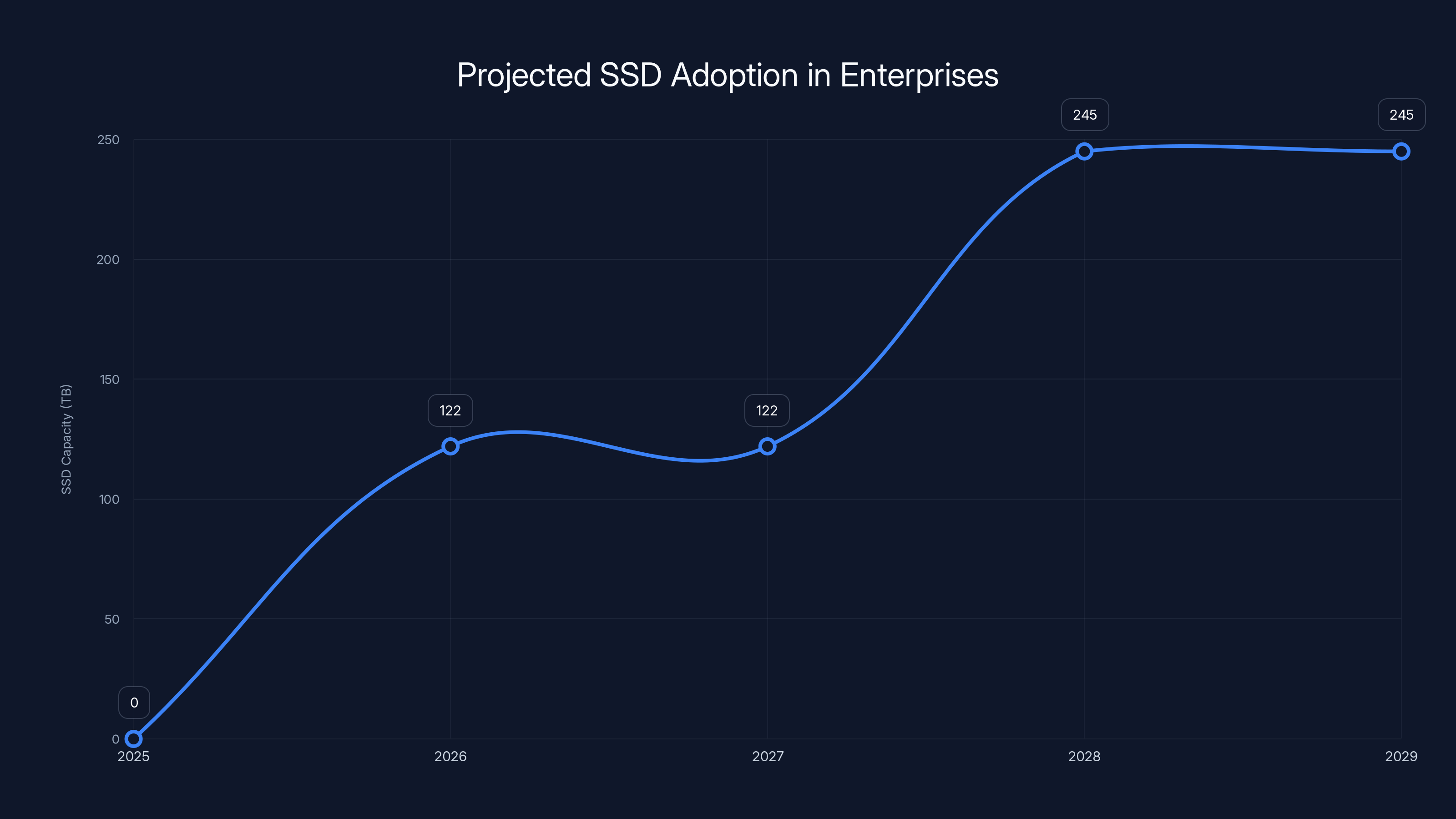

Enterprises are expected to start adopting 122TB SSDs by 2026-2027, with 245TB+ SSDs becoming standard by 2028. Estimated data.

Cost Economics of Ultra-Capacity SSDs

Let's talk dollars, because this is where the real story becomes interesting.

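A 245TB SSD costs tens of thousands of dollars; the figures below assume $22,500 per drive.

Calculate the cost per terabyte:

With a $22,500 drive and 245TB capacity: $22,500 ÷ 245TB ≈ $92 per terabyte.

Compare that to a typical enterprise hard drive. A 20TB enterprise drive costs far less per terabyte.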

Wait, the math says hard drives are cheaper?

Yes, if you only count the drive cost. But here's where the hyperscaler analysis diverges from simple math:

- Rack space cost: Real estate in a data center costs 15,000 per rack unit per year. Saving 50x rack units adds up fast.

- Power cost: Less power consumption saves hundreds of thousands per year at scale.

- Operational cost: Fewer drives means fewer replacements, fewer firmware updates, less management overhead.

- Network infrastructure: Fewer drives need fewer network ports, switch ports, and fabric connections.

- Cooling infrastructure: Denser deployments with less power mean less cooling needed, saving both capital and operational expense.

When you factor in all of these, the true cost per terabyte for the SSD approach becomes competitive with, or better than, the hard drive approach. And that's before you consider the performance and access pattern advantages.
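Here is a minimal sketch of how those line items combine into a per-terabyte figure. It covers only drive purchase, rack space, and power (with a PUE multiplier standing in for cooling); the operational, network, and performance factors above are left out, so treat it as a starting point rather than a verdict. Apart from the $22,500 drive price from the text, every input is a hypothetical placeholder.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8_760

@dataclass
class StorageOption:
    drive_price: float    # dollars per drive
    drive_tb: float       # capacity per drive, TB
    drive_watts: float    # average draw per drive, W
    drives_per_u: float   # drives per rack unit, including enclosure overhead

def cost_per_tb(opt: StorageOption, years: float, dollars_per_u_year: float,
                dollars_per_kwh: float, pue: float) -> dict[str, float]:
    """Per-TB cost over `years`: purchase + rack space + power (PUE approximates cooling)."""
    purchase = opt.drive_price / opt.drive_tb
    space = dollars_per_u_year * years / (opt.drives_per_u * opt.drive_tb)
    energy_kwh = opt.drive_watts / 1_000 * HOURS_PER_YEAR * years * pue
    power = energy_kwh * dollars_per_kwh / opt.drive_tb
    return {"purchase": purchase, "space": space, "power": power,
            "total": purchase + space + power}

# Hypothetical placeholders; substitute your own quotes and facility costs.
ssd = StorageOption(drive_price=22_500, drive_tb=245, drive_watts=25, drives_per_u=12)
hdd = StorageOption(drive_price=400, drive_tb=20, drive_watts=10, drives_per_u=15)

for name, opt in (("SSD", ssd), ("HDD", hdd)):
    parts = cost_per_tb(opt, years=5, dollars_per_u_year=1_000, dollars_per_kwh=0.15, pue=1.4)
    print(name, ", ".join(f"{k} ${v:,.2f}/TB" for k, v in parts.items()))
```

Returning the breakdown rather than a single number makes it easy to see which term dominates, and to add the missing operational and network terms for your own environment.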

The Technology Race: Why Eight Vendors, Why Now?

Here's a question worth asking: why did eight vendors independently decide to announce 245TB SSDs in roughly the same timeframe? Is it convergence, or are they following each other?

It's both, actually. But the real driver is NAND wafer supply and demand dynamics.

NAND fabrication is capital intensive. Building a new fab costs $20 billion. None of these vendors are building new fabs just for AI SSDs. They're using existing capacity. But NAND wafer demand from AI applications has grown so fast that it's become a limiting resource.

When a resource becomes scarce, you optimize how you use it. Packing four bits per cell instead of three means you get more capacity from the same number of wafers. That capacity constraint drives the industry toward QLC, which then enables higher capacities.

There's also a competitive dynamic. When Kioxia announced 246TB, other vendors quickly followed with comparable products. The market clearly wanted these drives, and no vendor wanted to be left behind. It's a classic technology race pattern.

But unlike some technology races that leave one winner and everyone else in the dust, this one has room for multiple winners. Hyperscalers run multiple supply chains intentionally, to avoid dependency on single vendors. If one vendor can't meet demand, you switch to another.

PCIe Gen 5: The Connectivity Foundation

None of these drives would work without PCIe Generation 5. That's worth understanding, because it's easy to overlook the connectivity layer while focusing on the storage layer.

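PCIe Gen 5 runs at 32 GT/s per lane, or roughly 4 GB/s per lane in each direction. A typical NVMe SSD uses four lanes (about 16 GB/s each way), and a full x16 link reaches about 64 GB/s. That sounds like overkill for a single drive, but it's not. A hyperscale storage server hosts many of these drives, and their combined traffic has to flow through the server's PCIe lanes and network fabric. You need the headroom.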
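The per-lane arithmetic behind those figures is straightforward. PCIe 3.0 through 5.0 use 128b/130b encoding, so this sketch computes one-direction link bandwidth from the transfer rate and lane count. It ignores packet and protocol overhead, so real throughput is somewhat lower, and it leaves out Gen 6, which switches to a different FLIT-based encoding.

```python
def pcie_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """One-direction bandwidth in GB/s for PCIe 3.0-5.0 (128b/130b encoding)."""
    bits_per_s = gt_per_s * 1e9 * lanes * 128 / 130
    return bits_per_s / 8 / 1e9

for gen, rate in (("Gen 4", 16), ("Gen 5", 32)):
    print(f"{gen}: x4 = {pcie_gbytes_per_s(rate, 4):.1f} GB/s, "
          f"x16 = {pcie_gbytes_per_s(rate, 16):.1f} GB/s")
# Gen 4: x4 = 7.9 GB/s, x16 = 31.5 GB/s
# Gen 5: x4 = 15.8 GB/s, x16 = 63.0 GB/s
```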

Standardizing on PCIe Gen 5 also keeps things consistent. Mixing older Gen 4 drives (about 8 GB/s per x4 link) with newer Gen 5 drives (about 16 GB/s) creates management complexity, and hyperscalers prefer standardization.

PCIe Gen 6 is coming, which will double that bandwidth again. But Gen 5 is today's sweet spot. It provides enough bandwidth for current AI workloads while keeping hardware costs reasonable.

The industry's timing here is interesting. PCIe Gen 5 only became widely available in server hardware around 2023-2024. The announcement wave for 245TB SSDs starting in 2024-2025 is no coincidence. The connectivity bottleneck was removed, and drive vendors could finally push capacity without running into bandwidth walls.

Solidigm's SSD solution requires 50 times fewer rack units than traditional HDDs for the same storage capacity, highlighting significant space and infrastructure savings.

Addressing QLC Concerns: Endurance and Performance

I mentioned earlier that QLC had a reputation problem. Let's dig into why, and why that problem is largely solved.

Endurance measures how many times you can write to a drive before it wears out. Older SLC and MLC NAND tolerated thousands to tens of thousands of program/erase cycles per cell. TLC typically handles around 1,000-3,000. QLC? Early implementations managed only a few hundred cycles per cell.

That sounds like a disaster. A 245TB drive might not even last a year if people kept rewriting entire drives repeatedly.

But here's where the firmware and controller come in. First, data doesn't live on a single cell for years. It gets moved around. Second, not all data gets rewritten equally. Hot data in cache might cycle rapidly. Cold data on the drive might write once and sit. Third, compression reduces the volume of data written.

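Combining all these techniques, modern enterprise QLC SSDs are typically rated below 1 DWPD (drive writes per day). That sounds low, but on a 245TB drive even 0.3 DWPD means about 73TB of writes every day for the life of the warranty, which is enough for read-heavy enterprise deployments. Dapu Stor's drives in production are proving this in real-world conditions.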
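DWPD ratings convert directly into lifetime writes, and a program/erase budget converts roughly into DWPD. This sketch shows both; the formulas are simplified (no over-provisioning, spare blocks, or data-retention limits), and the 0.3 DWPD, 1,000 P/E cycles, write amplification of 2, and five-year warranty are hypothetical inputs.

```python
def total_tb_written(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Lifetime writes (TBW) implied by a DWPD rating."""
    return capacity_tb * dwpd * 365 * warranty_years

def dwpd_from_pe_cycles(pe_cycles: int, write_amplification: float,
                        warranty_years: float) -> float:
    """Rough DWPD a P/E budget supports (ignores over-provisioning and spares)."""
    return pe_cycles / (write_amplification * 365 * warranty_years)

# Hypothetical inputs for a 245TB QLC drive with a five-year warranty.
print(f"TBW at 0.3 DWPD: {total_tb_written(245, 0.3, 5):,.0f} TB")
print(f"DWPD from 1,000 P/E cycles at WAF 2: {dwpd_from_pe_cycles(1_000, 2.0, 5):.2f}")
```

The second line shows why QLC ratings land below 1 DWPD: a P/E budget in the hundreds to low thousands, divided by write amplification and spread over five years of days, leaves well under one full drive write per day.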

Performance is a different concern. More bits per cell means narrower voltage margins, so reads can need more sensing steps and error correction, and read latency might increase slightly. Write speed depends heavily on the controller and its ability to manage the flash.

In practice, modern QLC SSDs hit 5,000+ MB/s sequential throughput and handle random reads at speeds comparable to TLC. Random writes remain QLC's weaker spot, but for most AI workloads, which lean heavily on reads, this is sufficient.

The key insight: QLC isn't inherently worse. It's different, and you need to design systems understanding those differences. Dapu Stor and the other vendors clearly have.

Market Constraints: Why These Are Enterprise-Only

I keep saying these drives are for hyperscalers only. Let me explain why, because it's important.

Platform Requirements: You can't just plug a 245TB PCIe Gen 5 SSD into your laptop. You need specific hardware. The server motherboard must support PCIe Gen 5. The BIOS must support it. The driver stack must exist. The operating system must handle very large drives properly (older systems using MBR partition tables couldn't address more than 2TB with 512-byte sectors).
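The 2TB ceiling comes from MBR partition tables, which store sector addresses and counts as 32-bit values. Assuming 512-byte sectors, this sketch shows the arithmetic and why GPT, with 64-bit logical block addresses, has no trouble with a 245TB drive.

```python
SECTOR_BYTES = 512                        # assumption: 512-byte logical sectors

mbr_max_bytes = 2**32 * SECTOR_BYTES      # MBR: 32-bit sector addressing
gpt_max_bytes = 2**64 * SECTOR_BYTES      # GPT: 64-bit logical block addresses

drive_bytes = 245 * 10**12                # 245TB, decimal

print(f"MBR limit: {mbr_max_bytes / 2**40:.0f} TiB")                 # MBR limit: 2 TiB
print(f"245TB drive fits under MBR: {drive_bytes <= mbr_max_bytes}")  # False
print(f"245TB drive fits under GPT: {drive_bytes <= gpt_max_bytes}")  # True
```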

Hyperscalers have standardized server hardware designed around these constraints. They work with vendors to ensure compatibility. Most enterprise environments aren't equipped for it.

Pricing: At $22,000+ per drive, you need workloads that justify the cost. That's almost exclusively AI training, large-scale analytics, and specialized vector databases. Nobody buys these for general-purpose enterprise storage.

Scale Requirements: These drives make economic sense in deployments of thousands of units. If you're buying 10 drives, the economies of scale disappear. Vendor support and negotiated pricing only work for massive commitments.

Cooling and Power: Proper deployment requires specialized infrastructure. Immersion cooling setups aren't something you bolt onto an existing data center. You're building purpose-built systems around these drives.

In other words, these SSDs aren't a trickle-down innovation. They're purpose-built for a specific market segment with specific needs. It'll be years, if ever, before similar technology reaches consumer or SMB markets.

The Broader Implications for Data Center Architecture

Zoom out for a moment. What does the rise of 245TB SSDs tell us about the future of data center architecture?

First, the hard drive is becoming obsolete for hot data. That's a dramatic shift. For decades, hard drives were the workhorse of data centers. They're not disappearing entirely, but they're being pushed to cold storage and archive roles where their cheapness compensates for slowness.

Second, density is becoming more important than cost. When you're constrained by space, power, and cooling more than money, you optimize differently. Paying more per terabyte for the drives becomes acceptable when the savings elsewhere outweigh it.

Third, the industry is vertically integrating. Hyperscalers work directly with NAND manufacturers on custom solutions. They skip the traditional OEM channel. This accelerates innovation but makes it harder for smaller players to access cutting-edge hardware.

Fourth, AI workloads are reshaping every layer of infrastructure. It's not just storage. Accelerators, interconnects, memory systems, power delivery, all of it is being redesigned for AI. We're watching the complete evolution of the hyperscale data center in real time.

Fifth, NAND availability is becoming a strategic asset. Companies are racing to secure supply because it's actually constrained. This is new. For most of the last 15 years, you could buy almost any amount of storage at commodity prices. That era is ending.

While SSDs have a higher cost per TB at

SK Hynix and Samsung's Competitive Moves

I mentioned SK Hynix and Samsung earlier, but they deserve deeper exploration because they're the biggest NAND manufacturers globally.

SK Hynix teased the PS1101, a 245TB PCIe Gen 5 enterprise drive. The term "teased" is important here. They haven't fully detailed specs or availability. Why? Probably because they're still ramping production. NAND fabrication is brutal. You can't just flip a switch and double output. It takes months to characterize new processes, validate production, and ship volume.

Samsung published roadmaps extending beyond current capacities. That's a careful way of saying they have something bigger coming, but they're not ready to announce yet. Samsung historically moves deliberately. They don't announce until they're confident in scale-up.

Both companies have competing demands. They supply their own affiliated businesses (Samsung, for example, builds components for its own products), sell to the open market, and work with OEMs. Balancing these requires careful strategy.

What's fascinating is that even the market leaders feel the pressure to go after 245TB capacity. They can't afford to sit on 122TB SKUs if competitors move to 245TB. The momentum is real.

The Role of Vector Databases

I've mentioned vector databases a couple times. They deserve specific attention because they're a major driver of these storage requirements.

Vector databases are the infrastructure for modern AI applications. Every search query, recommendation, or similar-item lookup in a modern AI system typically uses vector search. The user queries get converted to embeddings, and the system searches through a database of stored embeddings to find matches.
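At its core, vector search ranks stored embeddings by similarity to the query embedding. This brute-force sketch uses cosine similarity over a tiny hypothetical dataset of three-dimensional "embeddings"; production vector databases typically use approximate indexes such as HNSW or IVF rather than scanning every vector.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def top_k(query: list[float], stored: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact (brute-force) nearest-neighbor search by cosine similarity."""
    ranked = sorted(stored, key=lambda key: cosine_similarity(query, stored[key]), reverse=True)
    return ranked[:k]

# Toy embeddings; real models produce hundreds to thousands of dimensions.
docs = {
    "ssd": [0.9, 0.1, 0.0],
    "hdd": [0.7, 0.3, 0.1],
    "gpu": [0.0, 0.2, 0.9],
}
print(top_k([1.0, 0.0, 0.0], docs))  # ['ssd', 'hdd']
```

A full scan touches every stored vector per query, which is why large deployments lean on indexes, and on storage that can serve many small reads quickly.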

Popular vector databases include Pinecone, Weaviate, Milvus, and others. They're designed specifically to store millions or billions of vectors and search them efficiently.

Now scale that up. A hyperscaler running semantic search for millions of users generates billions of query vectors daily. Those need to be stored somewhere for future analysis, fine-tuning, and retraining. The vectors are typically high-dimensional (768 to 4,096 dimensions depending on the model), which translates to roughly 3KB to 16KB per vector at 32-bit floating-point precision, before metadata.

Multiply it out: at one billion queries a day, each stored as a 3,072-dimensional 32-bit vector, that's roughly 12TB of raw vector data per day, or about 4.5PB per year before metadata and indexes. It has to live online. It gets accessed constantly. It's exactly the scenario that demands dense flash storage.
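The sizing arithmetic is just dimensions times bytes per value times vectors per day. This sketch assumes float32 values (4 bytes each) and a hypothetical one billion queries per day, and excludes metadata and index overhead.

```python
def vector_bytes(dimensions: int, bytes_per_value: int = 4) -> int:
    """Raw size of one embedding (float32 by default), excluding metadata and index."""
    return dimensions * bytes_per_value

per_vector = vector_bytes(3_072)            # 12,288 bytes
QUERIES_PER_DAY = 1_000_000_000             # hypothetical: one billion queries per day

daily_tb = per_vector * QUERIES_PER_DAY / 1e12
yearly_pb = daily_tb * 365 / 1_000
print(f"{per_vector:,} bytes per vector, {daily_tb:.1f} TB/day, {yearly_pb:.1f} PB/year")
# 12,288 bytes per vector, 12.3 TB/day, 4.5 PB/year
```

Halving precision to 16-bit values halves every figure, which is one reason teams experiment with reduced-precision embeddings.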

Vector database support is becoming a major factor in storage decisions. Vendors that can support efficient vector lookups at scale win business.

What's Coming Next: 2026 and Beyond

Since we're in 2025, let's speculate about what's coming in 2026 and beyond. This is where it gets interesting.

Samsung, Micron, and Solidigm have publicly confirmed plans for similar products in 2026. That's not speculation. That's roadmap commitment. Expect 245-256TB SSDs from these vendors within the next 12 months.

PCIe Gen 6 is coming, which will double bandwidth again. We might see 512TB or larger SSDs within 2-3 years. At some point, the capacity per drive becomes so large that you're putting multiple petabytes into a single rack unit. That's both cool and weird from an architectural perspective.

3D NAND improvements will continue. Current-generation NAND stacks run roughly 200-300+ layers, and the industry is targeting 400+ layers within a few years. More layers mean more density, which means larger capacities without increasing the die size.

AI-optimized storage controllers will emerge. Today's controllers are reasonably generic. Tomorrow's might include dedicated hardware for compression, deduplication, or even vector search operations. Storage is becoming a compute platform, not just capacity.

Integration with AI accelerators is inevitable. Imagine a system where storage controllers directly interface with GPU memory controllers, minimizing data movement and reducing latency. We're not there yet, but vendors are clearly thinking about it.

The Supply Chain Reality

Here's something rarely discussed but incredibly important: NAND supply is actually becoming constrained.

For the last 15 years, NAND suppliers had more capacity than demand. They competed by dropping prices. This created a buyer's market where hyperscalers could demand volume discounts and custom configurations.

That dynamic is reversing. AI demand for storage is outpacing supply growth. Every NAND fab is running at capacity. Suppliers can't build new fabs fast enough to meet demand.

This has consequences:

- Prices stabilizing or increasing instead of dropping

- Lead times extending for large orders

- Negotiating power shifting toward suppliers

- Custom configurations becoming harder to get

Hyperscalers are responding by securing long-term contracts, investing in NAND manufacturing themselves, and developing closer relationships with suppliers. Amazon's partnership with Kioxia, for example, gives AWS preferential access to high-capacity drives.

This is a fundamental shift in the storage market structure. It won't be widely discussed until it affects smaller companies, but it's happening now.

Practical Implications for Enterprises

If you're running a traditional enterprise data center (not a hyperscale AI operation), what does any of this mean?

Short term (2025): Nothing changes for most enterprises. These drives aren't available at reasonable prices. Your current storage strategy remains valid. You might see some supply tightness for lower-capacity enterprise SSDs as NAND gets allocated to hyperscalers, but nothing catastrophic.

Medium term (2026-2027): You'll start seeing some 122TB SSDs trickling down to enterprise channels. Pricing will be aggressive, probably

Long term (2028+): If trends continue, 245TB+ SSDs will become standard in high-end storage arrays. Hyperscaler innovation trickles down eventually. You might see enterprise versions with slightly lower performance but lower prices. Your future all-flash data center will look nothing like today's.

The key insight for enterprises: don't panic or rush to upgrade. Let hyperscalers iron out the kinks. Adopt these technologies once they're proven and available at reasonable cost.

Why This Matters for Developers and AI Engineers

If you're building AI applications, understanding the storage architecture underlying your infrastructure matters.

When you train a model in the cloud, where's your data living? Probably on SSDs now, if you're with a major cloud provider. They've been quietly upgrading storage infrastructure for AI workloads. Your training job runs faster because of decisions made in this storage architecture race.

When you deploy inference at scale, latency matters. Dense SSDs that keep model checkpoints, embeddings, and inference logs online reduce latency in your serving stack. You get faster lookups, fewer cache misses, better throughput.

For those building vector search applications or semantic search systems, the infrastructure is becoming more capable. You can now store and search billions of vectors efficiently, something that seemed impossible five years ago.

The infrastructure is quietly getting better. These 245TB SSDs are part of that story.

The Competitive Landscape

Let's look at who's winning and why.

Kioxia and Sandisk (spun off from Western Digital in early 2025) have scale and established relationships. They're moving aggressively into hyperscale. Kioxia's 246TB drive is serious competition.

Solidigm was built from Intel's former NAND business and is now owned by SK Hynix. It lost some momentum during Intel's struggles, but it's regaining traction. Its demonstrations with massive deployments show it's serious about the hyperscale market.

Samsung has the advantage of vertical integration. They make their own NAND, their own controllers, their own everything. That control helps them move faster.

SK Hynix is smaller than Samsung in NAND but focused. Their PS1101 entry suggests they're picking this market carefully rather than competing everywhere.

Micron has solid engineering. Their PCIe Gen 5 SSDs are competitive, even if 245TB announcements came later than others.

Dapu Stor is the wild card: a Chinese company based in Shenzhen, relatively unknown in Western markets, yet here they are announcing competitive products. They're probably focusing on specific hyperscaler relationships rather than broad market competition.

Huawei is taking a different angle with system-level optimization rather than pure capacity competition.

The landscape is competitive but not zero-sum. All these vendors will find customers. Hyperscalers multi-source intentionally.

The Environmental Dimension

Let me briefly address something often overlooked: environmental impact.

SSDs use less power than hard drives. On a massive scale, that matters. A hyperscale data center switching from hard drive arrays to SSD arrays reduces power consumption by 30-50%, depending on workload. That translates to significant energy savings and reduced carbon footprint.

At the scale of a large tech company, that adds up to a meaningful cut in CO2 emissions. It's not trivial.

The counterpoint: building new NAND fabs is energy-intensive. Manufacturing semiconductors uses a lot of power and water. If we're going to replace all hard drive arrays globally with SSDs, the manufacturing footprint is substantial.

Net assessment: SSDs are better, but they're not free from environmental cost. It's a trade-off where the operational benefits outweigh the manufacturing cost, but it's worth being honest about the full picture.

Conclusion: The Storage Architecture You're Not Paying Attention To

If you follow tech news, you hear about GPUs, accelerators, model sizes, training techniques. You rarely hear about storage. But storage is becoming just as important to AI infrastructure as compute.

The announcement of an eighth 245TB SSD isn't a footnote. It's confirmation that the industry has reached consensus on the direction of hyperscale storage. These drives are being deployed now. They're becoming the standard. Within two years, they'll be table stakes for any vendor competing for hyperscale AI workloads.

What does this mean? It means the era of spinning rust in data centers is ending faster than many people realize. It means NAND availability is becoming a strategic resource companies fight over. It means the infrastructure supporting AI is being completely redesigned around dense, hot flash storage.

It means that while everyone's talking about transformer architectures and fine-tuning techniques, quietly behind the scenes, storage architects are building the plumbing that makes all of it work at scale.

The 245TB SSD is just one piece of that transformation. But it's a significant piece, and understanding it gives you insight into where hyperscale infrastructure is heading.

That matters if you're building on that infrastructure, if you work for a company that competes in this space, or if you simply want to understand how the AI revolution is reshaping the physical foundations of the internet.

Because at the end of the day, all the AI workloads running on your cloud provider's hardware are only possible because of decisions being made in storage architecture right now. The 245TB SSD is evidence of that transformation.

FAQ

What is a 245TB SSD and why does it matter?

A 245TB SSD is an ultra-high-capacity solid-state drive designed specifically for hyperscale data centers and AI workloads. It matters because it represents a fundamental shift in how data centers think about storage density. Rather than spreading data across large arrays of hard drives, hyperscalers can consolidate the capacity of roughly eight of today's 30TB-class hard drives into a single drive, reducing power consumption, cooling requirements, and rack space. Eight major vendors announcing competing products within a short span indicates this isn't a niche product but a standard direction for the industry.
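The consolidation argument comes down to how many drives, and therefore how much rack space, a given capacity needs. This minimal sketch counts drives and chassis for a target capacity. The drives-per-chassis and chassis heights are hypothetical parameters, not specific products.

```python
import math


def rack_units(total_tb: float, drive_tb: float,
               drives_per_chassis: int, chassis_ru: int) -> int:
    """Rack units needed to hold total_tb of raw capacity.

    Rounds up to whole drives and whole chassis; ignores redundancy
    (RAID or erasure coding), spares, and switch/server overhead.
    """
    drives = math.ceil(total_tb / drive_tb)
    chassis = math.ceil(drives / drives_per_chassis)
    return chassis * chassis_ru


if __name__ == "__main__":
    target_tb = 100_000  # 100PB raw
    # Hypothetical densities: 60 hard drives per 4U JBOD, 32 SSDs per 2U server.
    print("HDD rack units:", rack_units(target_tb, 30, 60, 4))
    print("SSD rack units:", rack_units(target_tb, 245, 32, 2))
```

Under those assumptions, 100PB takes 224U of hard-drive enclosures versus 26U of SSD servers.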

How does QLC NAND enable these massive capacities?

QLC (quad-level cell) NAND stores four bits of data per cell instead of three (TLC) or two (MLC). This increases the density of data that can be stored on the same physical silicon wafer. While earlier QLC technology had concerns about endurance and performance, modern implementations address these issues through sophisticated controller design, firmware management, and intelligent data placement techniques. Dapu Stor's 122TB drives already in production are evidence that QLC at scale can be reliable for enterprise deployments.
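The density gain and the engineering challenge come from the same arithmetic: a cell holding n bits must reliably distinguish 2^n voltage levels. This short sketch shows both. It models only the ideal bit count, not real die layouts or error-correction overhead.

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_levels(bits_per_cell: int) -> int:
    """Distinct threshold-voltage states a cell must hold to store n bits."""
    return 2 ** bits_per_cell


def density_gain(new_bits: int, old_bits: int) -> float:
    """Bits stored per cell relative to an older cell type, same cell count."""
    return new_bits / old_bits


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {bits} bits/cell, {voltage_levels(bits)} voltage levels")
    gain = density_gain(CELL_TYPES["QLC"], CELL_TYPES["TLC"])
    print(f"QLC vs TLC: {gain:.2f}x bits per cell")
```

QLC stores a third more bits per cell than TLC but has to fit twice as many voltage levels (16 versus 8) into a similar voltage window. That narrower margin is why controller design and firmware matter so much for QLC endurance and performance.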

Why are AI workloads driving demand for these massive SSDs?

AI training and inference generate enormous volumes of embeddings, inference logs, and vector databases that must stay online and be accessed frequently. Unlike traditional enterprise workloads that might archive data after a certain period, AI systems continuously access this data for fine-tuning, analysis, and serving. Dense flash storage excels at random access patterns, making SSDs far superior to hard drives for these workloads. Training and serving a single large language model can involve petabytes of datasets, checkpoints, and logs that need to live on hot storage.

What are the cost implications of moving to 245TB SSDs?

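While individual 245TB SSDs cost tens of thousands of dollars each, total cost of ownership at hyperscale can favor them once space, power, cooling, and operational savings are accounted for.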
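The total-cost-of-ownership reasoning behind these purchases can be sketched as capital cost plus energy plus rack space over a deployment's life. This is a simplified model under stated assumptions: every price, wattage, and density in the example is a hypothetical placeholder, and it ignores server, networking, maintenance, failure-replacement, and redundancy costs, all of which can shift the answer. Plug in real quotes before drawing conclusions.

```python
import math
from dataclasses import dataclass


@dataclass
class DriveOption:
    name: str
    capacity_tb: float
    unit_price_usd: float  # hypothetical purchase price per drive
    watts: float           # hypothetical average operating power per drive
    drives_per_ru: float   # hypothetical drives per rack unit


def tco_usd(opt: DriveOption, total_tb: float, years: float,
            usd_per_kwh: float, pue: float, usd_per_ru_year: float) -> float:
    """Capex + energy + rack-space cost for enough drives to hold total_tb."""
    drives = math.ceil(total_tb / opt.capacity_tb)
    capex = drives * opt.unit_price_usd
    kwh = drives * opt.watts * pue * 24 * 365 * years / 1000.0
    energy = kwh * usd_per_kwh
    space = math.ceil(drives / opt.drives_per_ru) * usd_per_ru_year * years
    return capex + energy + space


if __name__ == "__main__":
    # All figures below are placeholders, not market prices.
    hdd = DriveOption("30TB HDD", 30, 600, 9.5, 15)
    ssd = DriveOption("245TB SSD", 245, 20_000, 25, 16)
    for opt in (hdd, ssd):
        cost = tco_usd(opt, total_tb=100_000, years=5,
                       usd_per_kwh=0.08, pue=1.3, usd_per_ru_year=2_000)
        print(f"{opt.name}: ${cost:,.0f} over 5 years")
```

Keeping each cost term separate makes it easy to see which assumption dominates: drive price, energy, or space.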

Will these 245TB SSDs become available to enterprise or consumer markets?

Not in the foreseeable future. These drives require specific platform support (PCIe Gen 5 hosts), data-center-grade power and cooling, and custom controller firmware. They only make economic sense at hyperscale, where thousands of units are deployed together. Enterprise versions might trickle down in 2027-2028, but they'll still be expensive and purpose-built for specific workloads. Consumer access is many years away, if it ever happens.

What is the difference between these drives and traditional enterprise SSDs?

Traditional enterprise SSDs top out around 30-60TB and are designed for diverse workloads like databases, file servers, and general-purpose storage. The new 245TB drives are purpose-built for AI and analytics, optimized for sequential throughput, high-density deployment, and efficient management of massive datasets. They use QLC NAND instead of TLC, employ different controller algorithms, and require different infrastructure. They're a specialization of traditional enterprise storage, not an evolution of it.

How does PCIe Gen 5 enable these drives?

PCIe Gen 5 provides roughly 64GB/s of bandwidth per direction over an x16 link, compared to about 32GB/s for Gen 4; on the x4 links typical of NVMe SSDs, that works out to roughly 16GB/s versus 8GB/s per drive. At hyperscale deployment, multiple drives share the same storage fabric, so aggregate bandwidth matters. PCIe Gen 5 ensures the connectivity layer doesn't become a bottleneck as drives get larger and faster. Without Gen 5, drive performance would be artificially limited by connectivity constraints. That said, even Gen 5 bandwidth can become constraining with thousands of drives, which is why Gen 6 planning is already underway.
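The per-direction figures follow from the per-lane signaling rate, the lane count, and the line encoding. This sketch covers Gen 3 to Gen 5, which use 128b/130b encoding, and ignores packet-level protocol overhead, so real throughput is somewhat lower. Gen 6 is left out because it moves to PAM4 signaling with FLIT-based encoding, which this simple formula does not model.

```python
# Per-lane signaling rates in GT/s for PCIe generations using 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth per direction (GB/s), before protocol overhead."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8


def hours_to_stream(capacity_tb: float, gbytes_per_s: float) -> float:
    """Time to read an entire drive at a given sustained rate (decimal TB and GB)."""
    return capacity_tb * 1000 / gbytes_per_s / 3600


if __name__ == "__main__":
    for gen in (4, 5):
        print(f"Gen {gen}: x16 ~ {link_gbytes_per_s(gen, 16):.1f} GB/s, "
              f"x4 ~ {link_gbytes_per_s(gen, 4):.1f} GB/s")
    x4 = link_gbytes_per_s(5, 4)
    print(f"Full read of 245TB over Gen 5 x4: ~{hours_to_stream(245, x4):.1f} h")
```

Even at the full Gen 5 x4 line rate, reading one 245TB drive end to end takes over four hours, which is why both per-drive and aggregate bandwidth matter as capacities grow.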

What does this mean for storage competition and supply?

With eight vendors competing at the 245TB mark, the market is broadly distributed, preventing any single vendor from monopolizing supply. However, NAND wafer availability is increasingly constrained. The industry is essentially fighting over a limited supply of NAND, which gives suppliers more negotiating power. Hyperscalers are responding by securing long-term contracts and investing in closer relationships with NAND manufacturers. This represents a shift from the historical buyer's market to a more balanced or supplier-favorable market.

How does Huawei's approach differ from other vendors?

While most vendors focus on raw capacity expansion, Huawei is optimizing storage systems to reduce expensive high-bandwidth memory (HBM) requirements in AI accelerators. They pair high-capacity SSDs with specialized controllers that enable efficient data movement, allowing GPUs to use smaller HBM pools. It's a system-level optimization rather than a component-level innovation, reflecting different engineering priorities and access to different markets.
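To illustrate the general idea of letting a large SSD tier back a small, expensive memory tier, here is a toy two-tier LRU cache. It is not Huawei's implementation, whose details this article does not describe. The "fast" tier merely stands in for HBM and the "capacity" tier for SSD; real systems add asynchronous I/O, prefetching, and size-based rather than count-based accounting.

```python
from collections import OrderedDict


class TwoTierCache:
    """Toy LRU cache: a small fast tier spills evicted entries to a large
    capacity tier instead of discarding them (hypothetical sketch)."""

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict = OrderedDict()  # stands in for HBM
        self.capacity: dict = {}                # stands in for SSD
        self.fast_hits = 0
        self.capacity_hits = 0
        self.misses = 0

    def put(self, key, value):
        self.capacity.pop(key, None)  # keep a single copy of each key
        self.fast[key] = value
        self.fast.move_to_end(key)    # mark as most recently used
        self._evict()

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            self.fast_hits += 1
            return self.fast[key]
        if key in self.capacity:
            self.capacity_hits += 1
            value = self.capacity.pop(key)
            self.put(key, value)  # promote back into the fast tier
            return value
        self.misses += 1  # caller must recompute or fetch from elsewhere
        return None

    def _evict(self):
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # least recent
            self.capacity[old_key] = old_value  # spill to capacity tier


if __name__ == "__main__":
    cache = TwoTierCache(fast_capacity=2)
    for key in ("a", "b", "c"):
        cache.put(key, key.upper())
    print(cache.get("a"))  # served from the capacity tier, then promoted
    print(cache.fast_hits, cache.capacity_hits, cache.misses)
```

The design point is that a capacity-tier hit is slower than a fast-tier hit but far cheaper than a miss, so a bigger capacity tier lets the fast tier shrink.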

What storage technology is coming after 245TB?

Within 2-3 years, expect 512TB drives to emerge. Longer term, improvements in 3D NAND stacking (moving from today's roughly 200-300 layers to 400+ layers) will enable even higher capacities. PCIe Gen 6, with double the bandwidth of Gen 5, is expected around 2027-2028. AI-optimized storage controllers with dedicated hardware for compression and vector search operations are likely. The architecture itself is evolving toward integrated storage-compute solutions rather than separate components.

Key Takeaways

- Eight vendors have announced competing 245TB SSDs in 2024-2025, confirming a genuine industry shift away from hard drives toward dense flash for AI workloads

- QLC NAND technology stores four bits per cell instead of three, enabling these capacities while maintaining enterprise-grade reliability through sophisticated controllers and firmware

- AI systems generate continuous streams of embeddings and inference logs that must stay online and be accessed frequently, making dense hot storage superior to hard drives

- Total cost of ownership analysis shows SSDs become economically justified at hyperscale when accounting for space, power, cooling, and operational savings despite higher per-unit cost

- NAND manufacturing capacity is becoming constrained by AI demand, shifting negotiating power from hyperscaler buyers to semiconductor suppliers for the first time in years

