Introduction: When Government Finally Gets Real About AI

Here's something that doesn't happen often: the UK government admitting it has a problem and building technology to solve it. Not some flashy pilot program that disappears in six months. Not a consultant's vanity project. A real, multi-year investment in artificial intelligence that touches the lives of millions of people claiming benefits.

The Department for Work and Pensions (DWP) just announced plans to deploy a conversational AI system to handle benefits-related calls. And before you roll your eyes, here's why this actually matters: the system will answer the phone, understand what you're asking about, and route you to the right human agent, or handle it entirely through self-service. The budget? Up to £23.4 million, running from July 2026 through July 2030, with optional extensions to 2032.

But here's the real story hiding in the numbers. Over the past four years, the UK experienced an 11.8% rise in benefit claimants, adding roughly 2.4 million additional people to the system. The National Audit Office discovered that 31.6 million call minutes could have been avoided in 2022-2023 alone. That's not just inefficiency. That's more than half a million hours of human labor that could've been spent elsewhere, and millions of people waiting on hold when they needed help.

This isn't about replacing people. It's about making the system work for them. And the timing is interesting, because it reveals something bigger about how government technology is actually changing. The DWP is asking for something specific: a natural language call steering system that lets people speak normally, in their own words, rather than having them press buttons and navigate menus. The system needs to identify intent from unstructured conversation, make routing decisions, and offer self-service options that actually work.

We're going to break down what this means, how it works, why it matters, and what it says about the future of public services.

TL;DR

- The UK DWP is investing up to £23.4 million in an AI call routing system running through 2030

- The National Audit Office found 31.6 million avoidable call minutes in 2022-2023; the system aims to redirect calls like these to self-service or the appropriate agent

- Natural language processing lets callers speak normally instead of using menu navigation

- The project addresses 2.4 million additional benefit claimants added since 2019

- All infrastructure must be UK-based, GDPR-compliant, and follow UK security frameworks

- Bids close February 2, 2026, with the winning contractor announced by June 1, 2026

- This represents a fundamental shift in how UK government services operate digitally

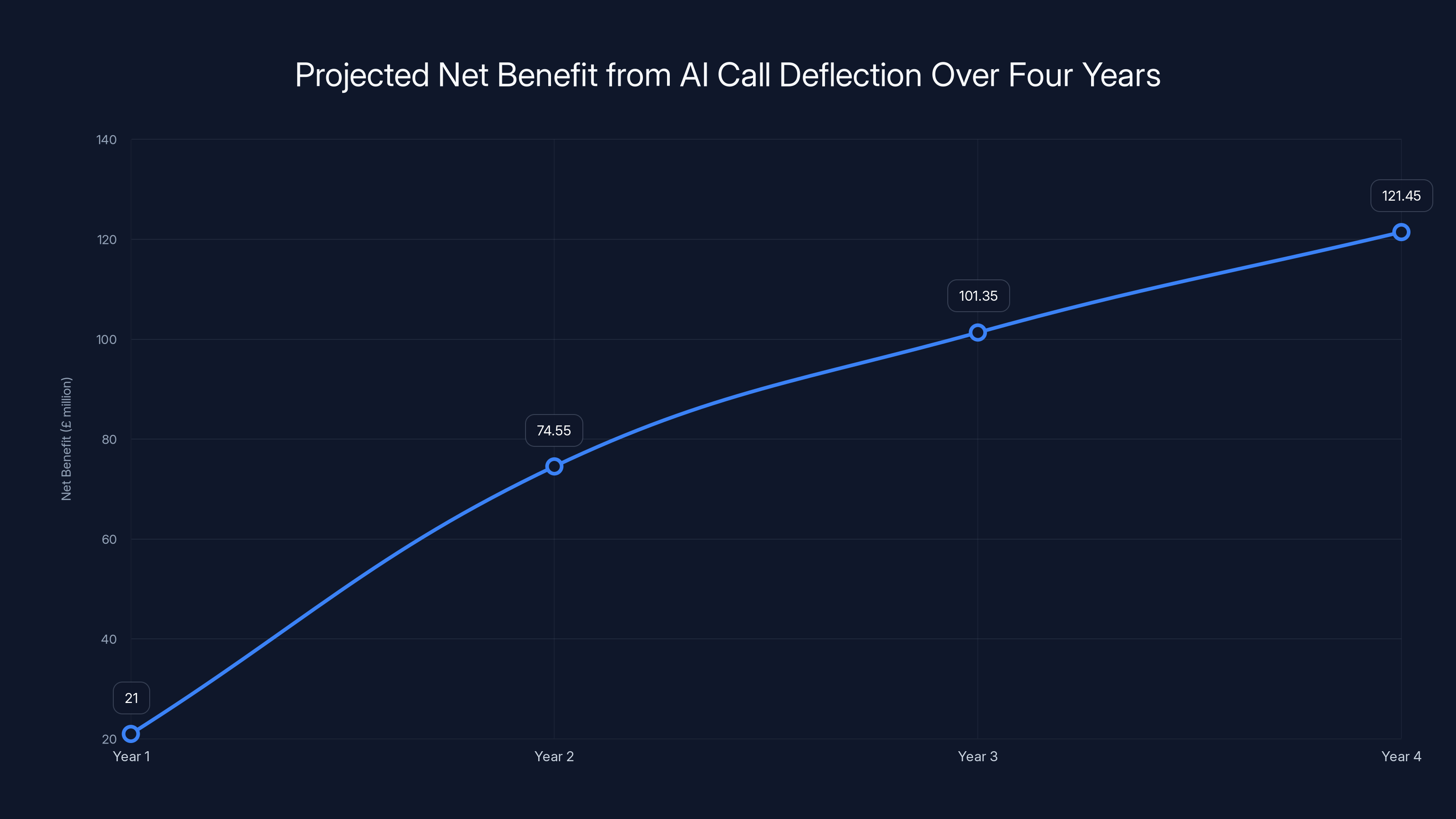

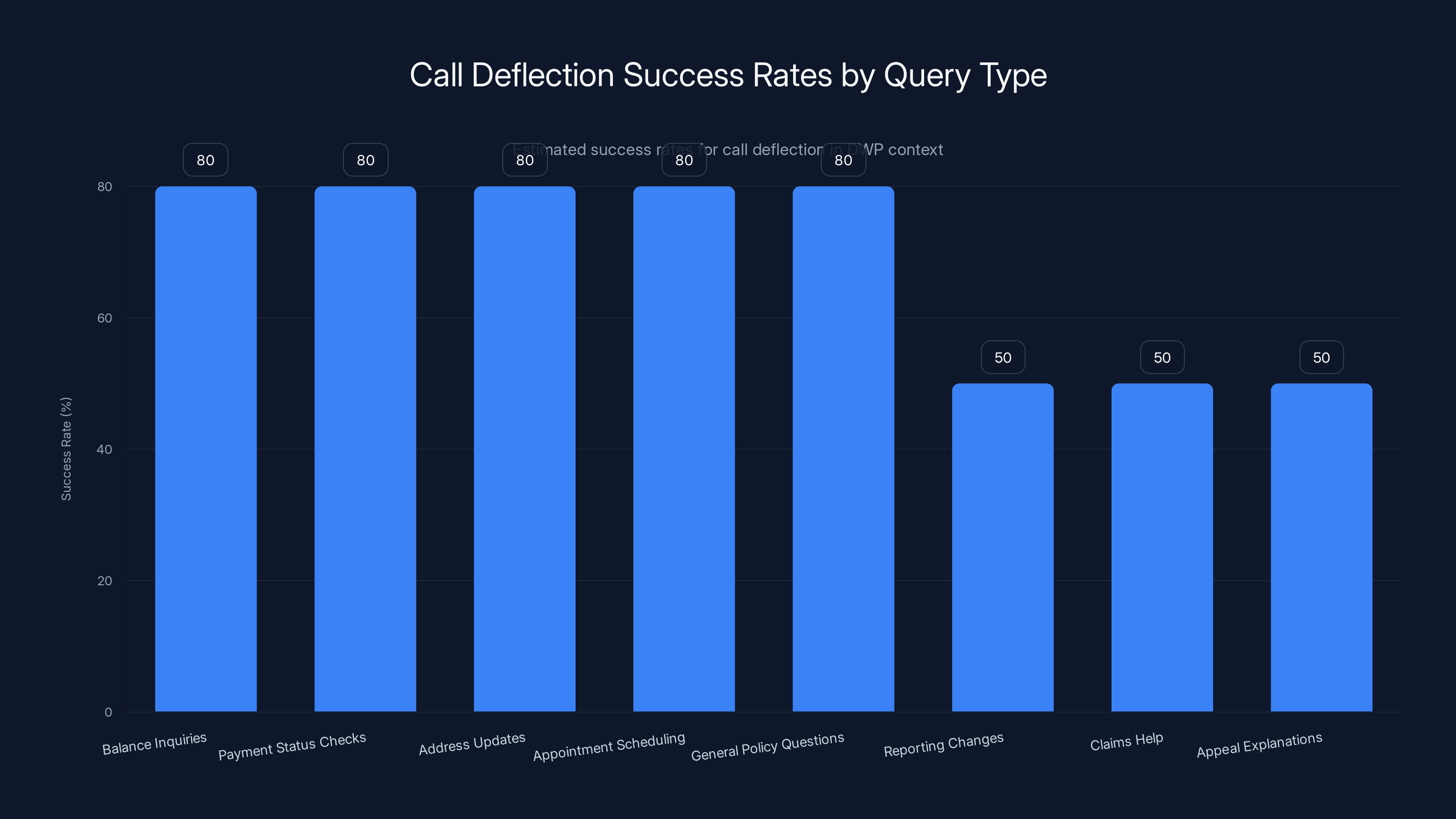

The projected net benefit from AI call deflection increases significantly over four years, reaching approximately £121.45 million in year four. Estimated data based on deflection effectiveness.

The Problem: What 31.6 Million Wasted Call Minutes Actually Means

Let's talk numbers first, because they're actually shocking. Between May 2019 and 2023, the UK's benefit claimant population grew by 11.8%. That's not a modest increase. That's roughly 2.4 million additional people trying to reach the DWP to discuss their benefits, their claims, their eligibility, their payments, and their problems.

For context, the DWP employs tens of thousands of people across the UK. But you can't scale a contact center linearly with demand. You can't just hire one person for every new claimant. Labor costs, training time, attrition rates, space constraints, all of it compounds. What happens is that call wait times explode, human agents become burnt out managing volume they weren't prepared for, and people waiting on the phone get increasingly frustrated.

The National Audit Office quantified this in a 2023 report. In 2022-2023 alone, DWP identified that 31.6 million call minutes could have been avoided. Let's sit with that for a moment. That's not 31.6 million seconds. That's more than half a million hours of human attention devoted to calls that shouldn't have needed a human in the first place.

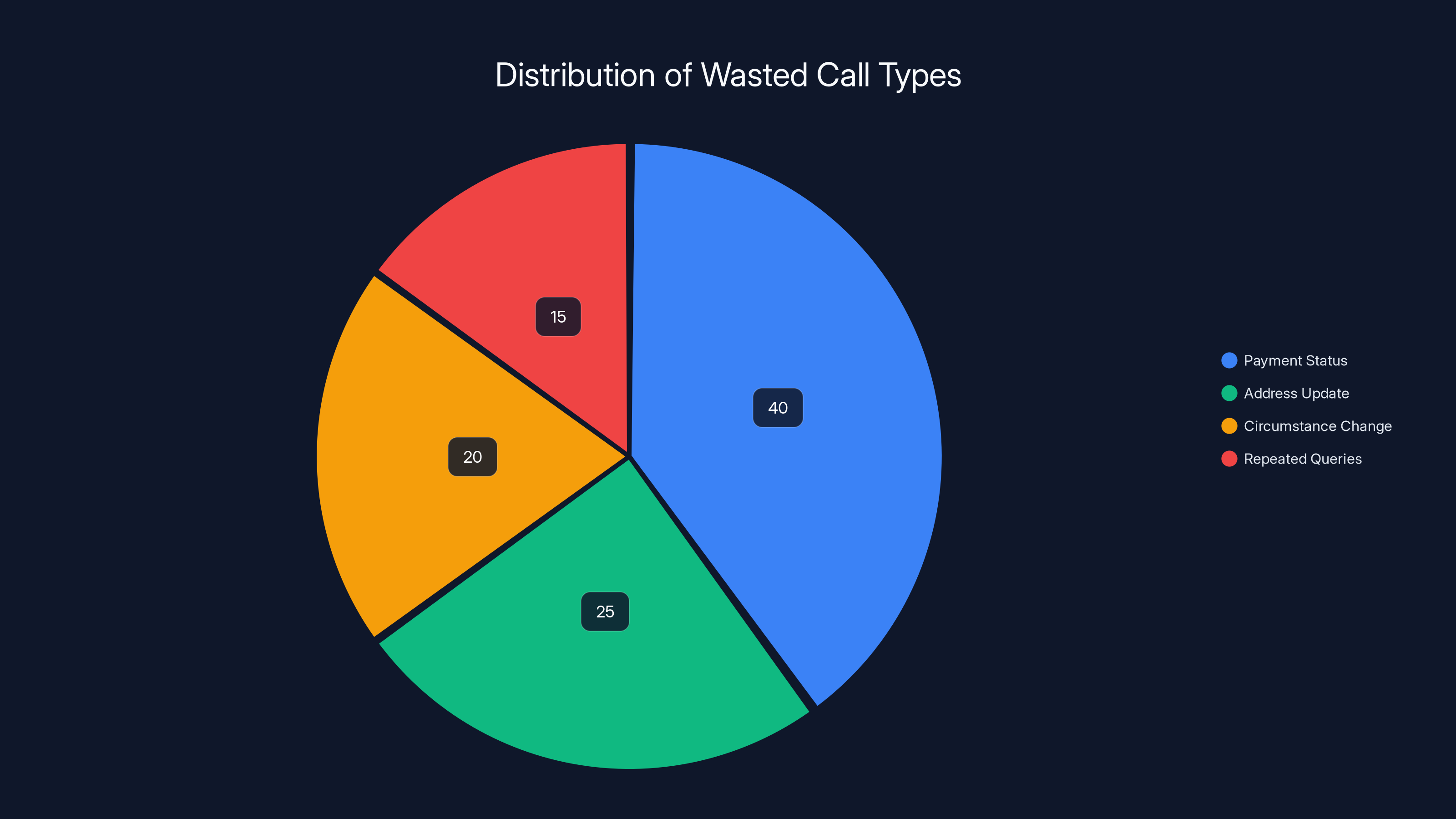

What calls could be avoided? The easy ones. Someone calling to check if their payment was processed. Someone asking how to update their address. Someone needing to report a change in circumstances that the system could handle through a simple automated workflow. Someone calling three times because they didn't understand the first two explanations, which means they probably need the information in a different format.

The cost isn't just in human labor. There's the frustration cost. There's the cost of people's time. There's the cost of delays in processing legitimate claims. There's the documented mental health impact of people in poverty being unable to reach support systems. And there's the systemic cost: when a contact center can't handle call volume, it degrades. Quality drops. Mistakes happen.

This is why the DWP is spending £23.4 million. Not because AI is trendy. Because the status quo is unsustainable.

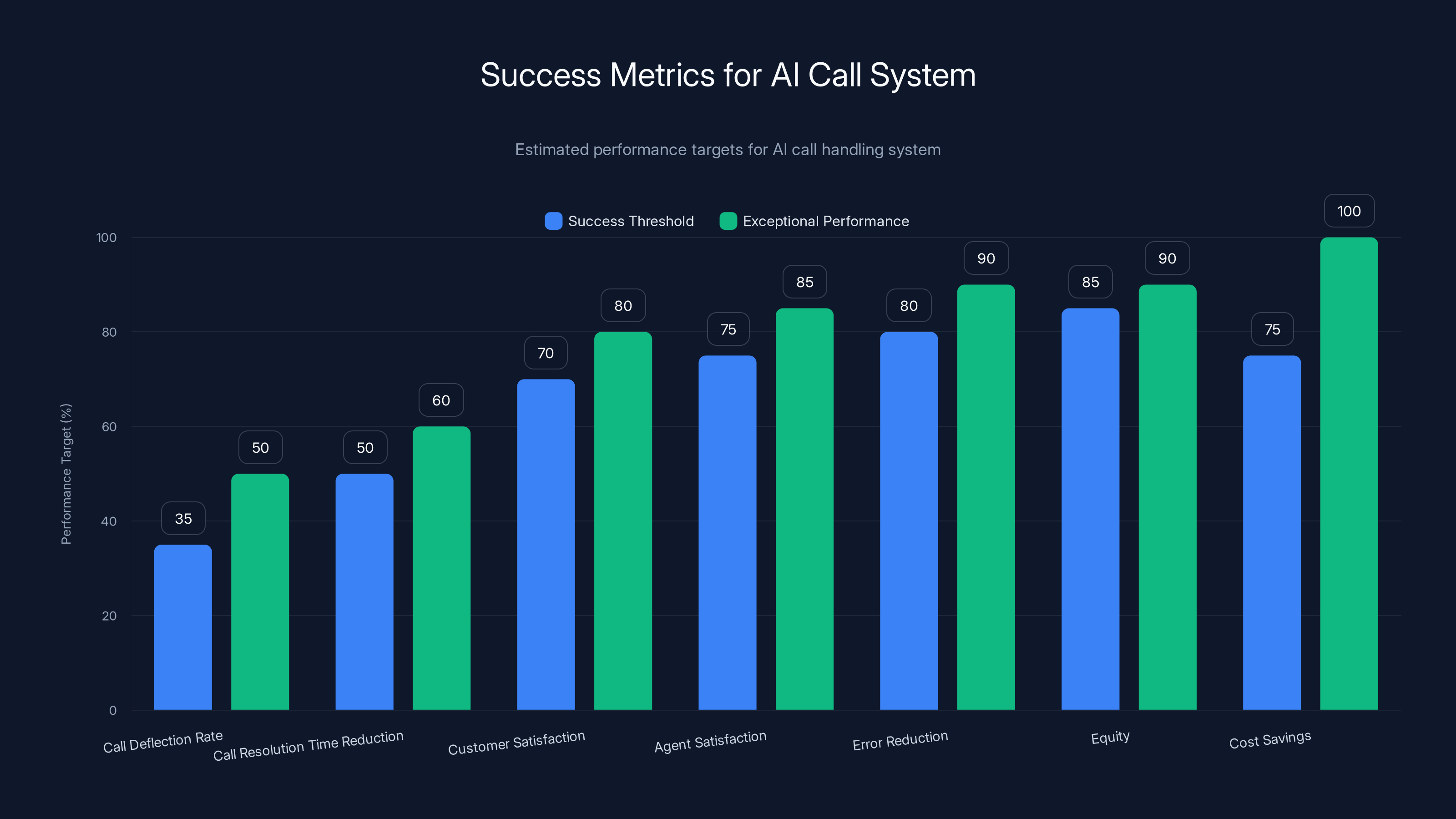

The AI call system aims for a 35% call deflection rate and a 50% reduction in call resolution time. Achieving 75%+ of projected cost savings is crucial. Estimated data.

How Conversational AI Handles Call Routing: The Technical Reality

Okay, so the DWP needs an AI system that can answer calls. But what does that actually involve? It's not as simple as throwing ChatGPT on a phone line and calling it a day. Government systems have constraints that typical enterprise systems don't.

First, let's understand the problem the system is solving. A traditional benefits call center uses interactive voice response (IVR) systems. You call, you hear a menu. "Press 1 for payment inquiries. Press 2 to report a change in circumstances. Press 3 to appeal a decision." And so on. The issue is that this forces callers into predefined categories. Someone calling with a complex problem might not fit neatly into any single category. Someone with limited literacy or language difficulties might not understand the options. Someone in crisis might not want to press buttons. They want to talk.

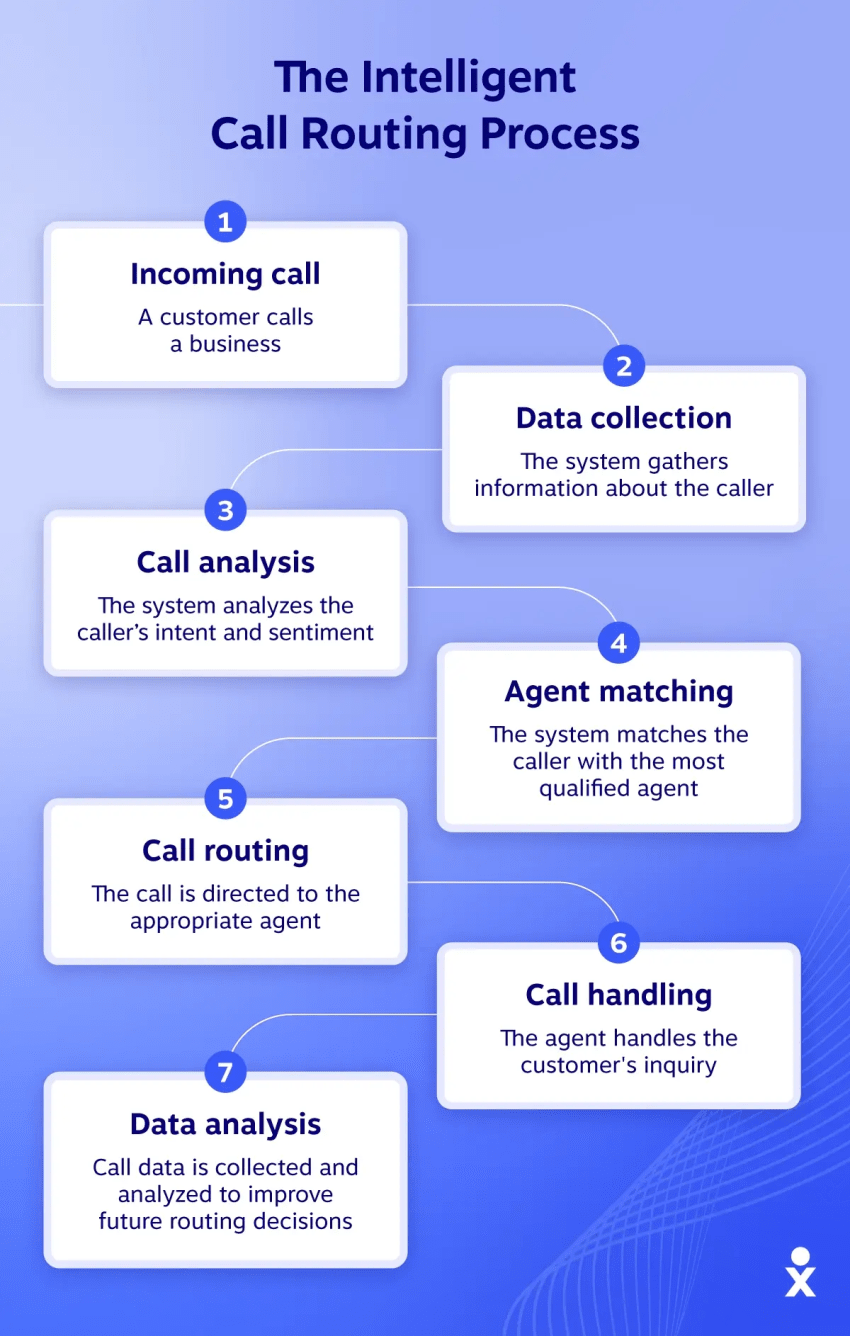

The DWP's new system uses natural language processing to handle open-ended conversation. Here's how the flow works:

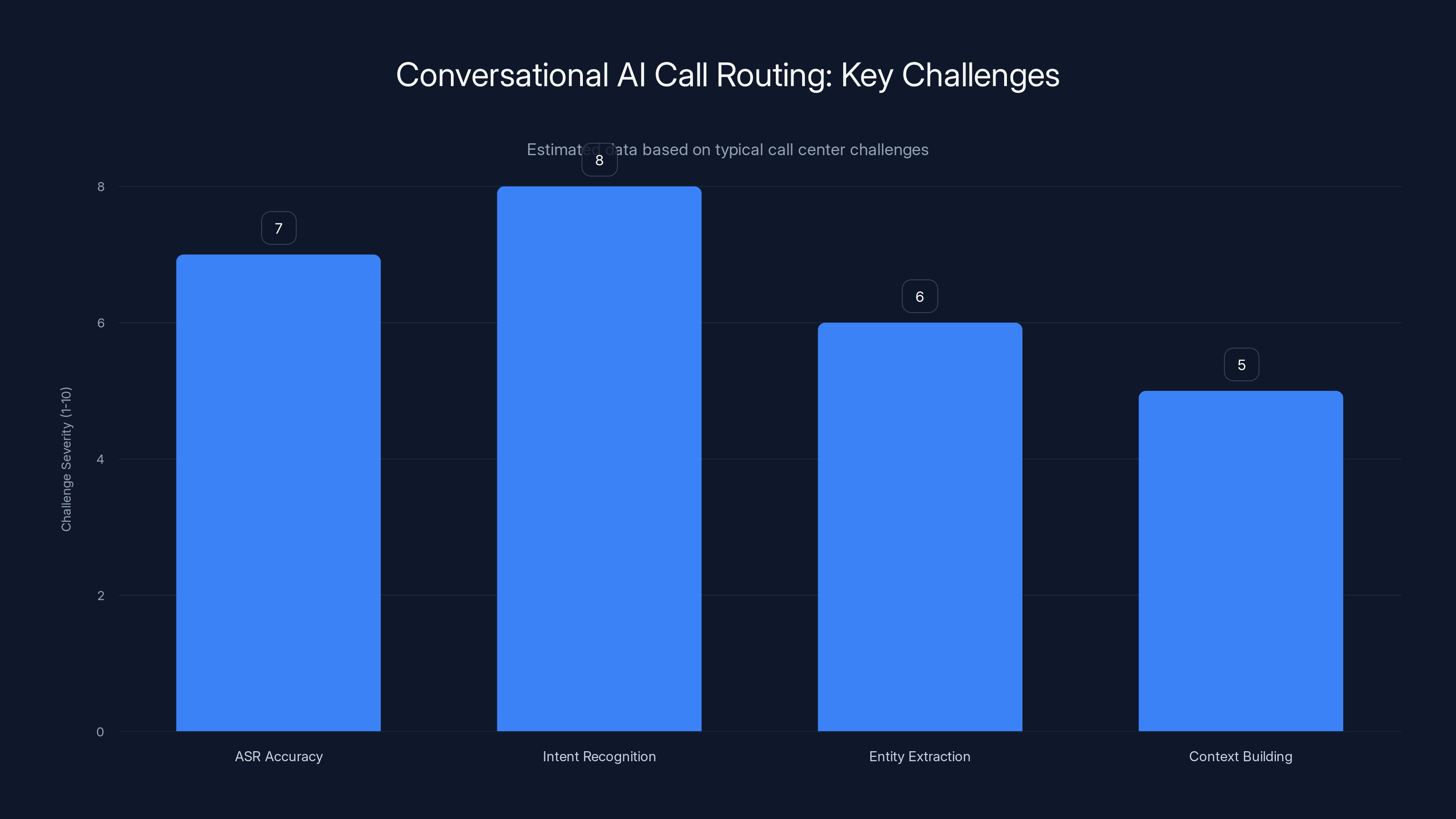

Step 1: Call Reception and Audio Processing

Someone calls the DWP. Instead of a menu, they hear a greeting and an invitation to describe their issue. The system captures audio and converts it to text using automatic speech recognition. This isn't perfect, especially with background noise, accents, or dialectal variation, but modern ASR has reached a point where it's accurate enough for this use case.

Step 2: Intent Recognition

The system analyzes the transcribed speech to identify what the caller actually wants. This is done using natural language understanding, a subset of AI that specializes in extracting meaning from unstructured text. A caller saying "I haven't received my money this month" has a different intent from "I started a new job and need to tell you" which is different from "I don't understand my decision letter." The system needs to categorize these accurately.

Step 3: Entity Extraction and Context Building

Once intent is identified, the system pulls out relevant information. Name, National Insurance number, the type of benefit they're claiming, the specific issue. Some of this might come from the call itself (the caller says it). Some might come from pre-call data if the system integrates with the DWP's existing systems. The system builds a context model of the interaction.

Step 4: Decision Making

Now the critical decision: can an AI handle this entirely, or does it need a human? The system evaluates complexity. A simple balance check? Handle it. Someone asking if they're eligible for a payment type they haven't claimed? Might need a human. Someone in distress or expressing harm? Definitely a human. The system routes accordingly.

Step 5: Self-Service or Human Handoff

If the call can be handled automatically, the system provides the information through a conversational interface. The caller asks questions, the system answers them, all without a human. If human involvement is needed, the call gets routed to the right agent with a complete summary of the conversation already loaded. The agent doesn't have to start from scratch. They see: "Caller is asking about their Universal Credit payment. They reported a change in circumstances last month but haven't received adjusted payment yet."

This is fundamentally different from traditional IVR because it's flexible. People can speak naturally. The system accommodates different communication styles. And because the system has access to the full conversation context, handoffs to humans are actually productive rather than frustrating.
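The five steps above can be sketched in a few lines of Python. This is a toy illustration, not the DWP's design: the intent labels, keyword cues, distress phrases, and the `route_call` function are all hypothetical, and a production system would use a trained classifier rather than keyword matching. The point is the shape of the flow: classify, check for distress, then decide between self-service and a human handoff with a summary attached.

```python
from dataclasses import dataclass

# Hypothetical intent labels and keyword cues (assumptions for illustration).
INTENT_KEYWORDS = {
    "payment_status": ["payment", "money", "paid"],
    "change_of_circumstances": ["new job", "moved", "started work"],
    "decision_query": ["decision letter", "appeal", "don't understand"],
}
# Hypothetical phrases that should always reach a person.
DISTRESS_CUES = ["can't cope", "hurt myself", "desperate"]
# Hypothetical set of intents simple enough for self-service.
SELF_SERVICE_INTENTS = {"payment_status", "change_of_circumstances"}


@dataclass
class RoutingDecision:
    intent: str
    destination: str  # "self_service" or "human_agent"
    summary: str      # context handed to the agent or self-service flow


def classify_intent(transcript: str) -> str:
    """Pick the intent whose cues appear most often; 'unknown' if none match."""
    text = transcript.lower()
    scores = {
        intent: sum(cue in text for cue in cues)
        for intent, cues in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def route_call(transcript: str) -> RoutingDecision:
    """Distress always goes to a human; otherwise route on intent."""
    text = transcript.lower()
    intent = classify_intent(transcript)
    if any(cue in text for cue in DISTRESS_CUES):
        destination = "human_agent"
    elif intent in SELF_SERVICE_INTENTS:
        destination = "self_service"
    else:
        destination = "human_agent"
    summary = f"Intent: {intent}. Caller said: {transcript!r}"
    return RoutingDecision(intent, destination, summary)
```

Note the ordering in `route_call`: the distress check runs before the self-service check, so a caller in crisis reaches a person even when their stated issue is a routine one.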

Natural Language Processing: Why This Matters for Government Services

There's a reason the DWP specifically requested a "natural language call steering system" rather than a traditional IVR upgrade. Natural language processing (NLP) is substantially harder than menu-driven systems, but it's also substantially more effective.

Traditional IVR works through predetermined decision trees. You go down a path based on button presses. The system knows exactly what to expect at each step. It's simple, reliable, but limited. Natural language systems work differently. They don't assume they know what the caller will say. They listen, parse the meaning, and respond contextually.

For a government service, this difference is profound. Benefit claimants aren't a homogeneous group. They include elderly people using government services for the first time. Young people comfortable with technology but unfamiliar with benefits language. People with disabilities that make menu navigation difficult. People calling in crisis. People for whom English is a second language. Traditional IVR fails at scale with this population because it assumes users will understand and follow instructions. NLP systems don't make that assumption. They work with what they get.

The DWP's requirement for intent identification is crucial here. When someone calls saying "I'm not getting my money," the system needs to identify that this is likely about a payment issue, but it also needs to consider whether the person means they haven't received their payment for the month, or they're unhappy with the payment amount, or they've heard rumors that their benefit type is ending. Same statement, multiple possible intents. An NLP system trained on historical DWP call data should resolve most of these cases, and it can ask a clarifying question when the intents are too close to call.
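One common way to handle "same statement, multiple possible intents" is to compare the classifier's top two scores and ask a follow-up question when they are too close. The sketch below assumes a classifier that returns a score per intent; the intent names, the scores, and the 0.15 margin are made-up values for illustration.

```python
def choose_intent(scores: dict[str, float], margin: float = 0.15) -> tuple[str, list[str]]:
    """Accept the top intent, or ask the caller to clarify between the top two.

    `scores` maps hypothetical intent names to classifier confidence values.
    `margin` is an assumed threshold; a real system would tune it on call data.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    if len(ranked) > 1 and scores[ranked[0]] - scores[ranked[1]] < margin:
        return "clarify", ranked[:2]
    return "accept", ranked[:1]


# "I'm not getting my money" could plausibly score like this:
ambiguous = {"missing_payment": 0.41, "amount_dispute": 0.38, "benefit_ending": 0.21}
action, candidates = choose_intent(ambiguous)
```

Here the top two scores sit within the margin, so the system would ask something like "Is this about a payment that hasn't arrived, or about the amount?" instead of guessing.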

Entity extraction is similarly important. The system needs to know that when someone says "my UC" they mean Universal Credit. When they say "I got a sanction" they mean they've had their benefit reduced as a penalty. When they reference "the letter I got last week," the system needs enough context to know which letter they mean from potentially dozens of letters the DWP sends to that person.
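A minimal sketch of that normalization step, assuming a simple alias table and a simplified National Insurance number pattern (two letters, six digits, a suffix letter A to D). The alias table and the `extract_entities` function are hypothetical, and the real format rules exclude certain prefix letters that this regex does not check.

```python
import re

# Common abbreviations mapped to full benefit names (illustrative subset).
BENEFIT_ALIASES = {
    "uc": "Universal Credit",
    "pip": "Personal Independence Payment",
    "esa": "Employment and Support Allowance",
    "jsa": "Jobseeker's Allowance",
}

# Simplified pattern: does not enforce the official prefix restrictions.
NINO_PATTERN = re.compile(
    r"\b[A-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b", re.IGNORECASE
)


def extract_entities(utterance: str) -> dict:
    """Return the benefits mentioned and any National Insurance number found."""
    words = re.findall(r"[a-z']+", utterance.lower())
    benefits = sorted({BENEFIT_ALIASES[w] for w in words if w in BENEFIT_ALIASES})
    match = NINO_PATTERN.search(utterance)
    nino = re.sub(r"\s", "", match.group()).upper() if match else None
    return {"benefits": benefits, "national_insurance_number": nino}
```

Resolving a reference like "the letter I got last week" is a harder problem than this sketch covers, because it depends on account history rather than on the words in the utterance.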

But here's where it gets complex: government language and benefit language is genuinely complex. The DWP operates under statutory requirements, working age rules, calculations with multiple variables, appeals processes, and circumstances that change dynamically. An NLP system that works for a pizza ordering service will completely fail at this. The system needs to be trained specifically on DWP language and policy.

That's why the procurement requires UK-based hosting and UK data residency. The training data for this system is millions of real calls, transcribed and annotated with intent and outcomes. That's sensitive data. It includes personal information, benefit details, and vulnerability information. It cannot leave the UK. The system must be hosted on UK infrastructure and follow UK data protection rules.

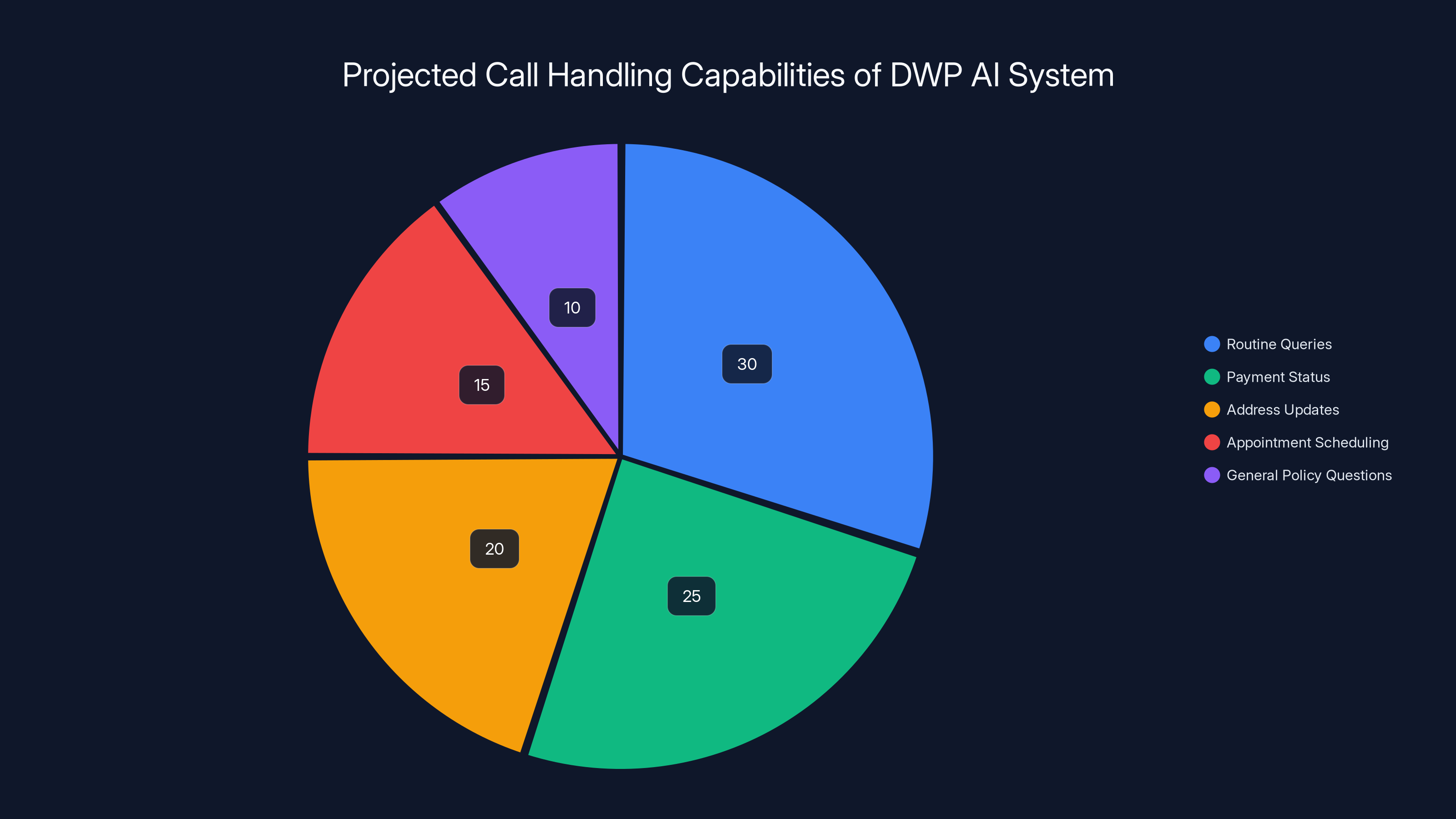

Estimated data shows that the DWP AI system will primarily handle routine queries and payment status checks, with a smaller portion dedicated to address updates and scheduling.

The DWP's Four-Year Transformation: Timeline and What Actually Needs to Happen

The project runs from July 6, 2026 to July 5, 2030. That's not a short sprint. That's a deliberate, multi-year implementation. And there's a reason for that timeline.

Year one (2026-2027) is probably procurement, contracting, and initial system design. The winning vendor needs to understand the DWP's actual call patterns, infrastructure, security requirements, and policy constraints. They need to request historical call recordings (with personally identifiable information stripped). They need to understand how the DWP's existing systems work and where the AI system needs to integrate.

Year two (2027-2028) is likely when the real work happens. System development, training on historical data, building the intent classification models, testing in controlled environments. The vendor likely builds a "sandbox" environment where the AI system can be tested against mock calls and historical patterns without affecting real callers.

Year three (2028-2029) is probably rollout. Starting with a pilot. Maybe certain call types, certain regions, certain times of day. Monitoring real performance. Adjusting models based on what actually happens when real people call the system. Fixing the inevitable failure modes that no amount of testing reveals.

Year four (2029-2030) is scaling. Once the pilot proves it works, expanding to broader coverage. More call types, broader regions, fuller integration with human agents and case management systems.

The optional extensions to July 2032 provide two additional twelve-month periods. Why would a vendor and the government agree to optional extensions? Probably to ensure the system continues to improve. Ongoing model training, adjusting to policy changes, scaling to handle continued growth in claimant volume. Government systems don't get turned on and forgotten. They evolve.

Here's what's notable about this timeline: it's realistic. Too many government tech projects fail because they try to build everything at once. The DWP's approach allows for learning and iteration. The first year won't be a mad rush to cut code. It'll be understanding the problem deeply.

Call Deflection Strategy: The Real Numbers Behind the Efficiency Gain

Let's talk about what "call deflection" actually means, because it's not what it sounds like.

Call deflection sounds like: the system hangs up on people and forces them to solve problems themselves. That's not it. Call deflection in the DWP context means: providing a resolution without the caller needing to speak to a human agent.

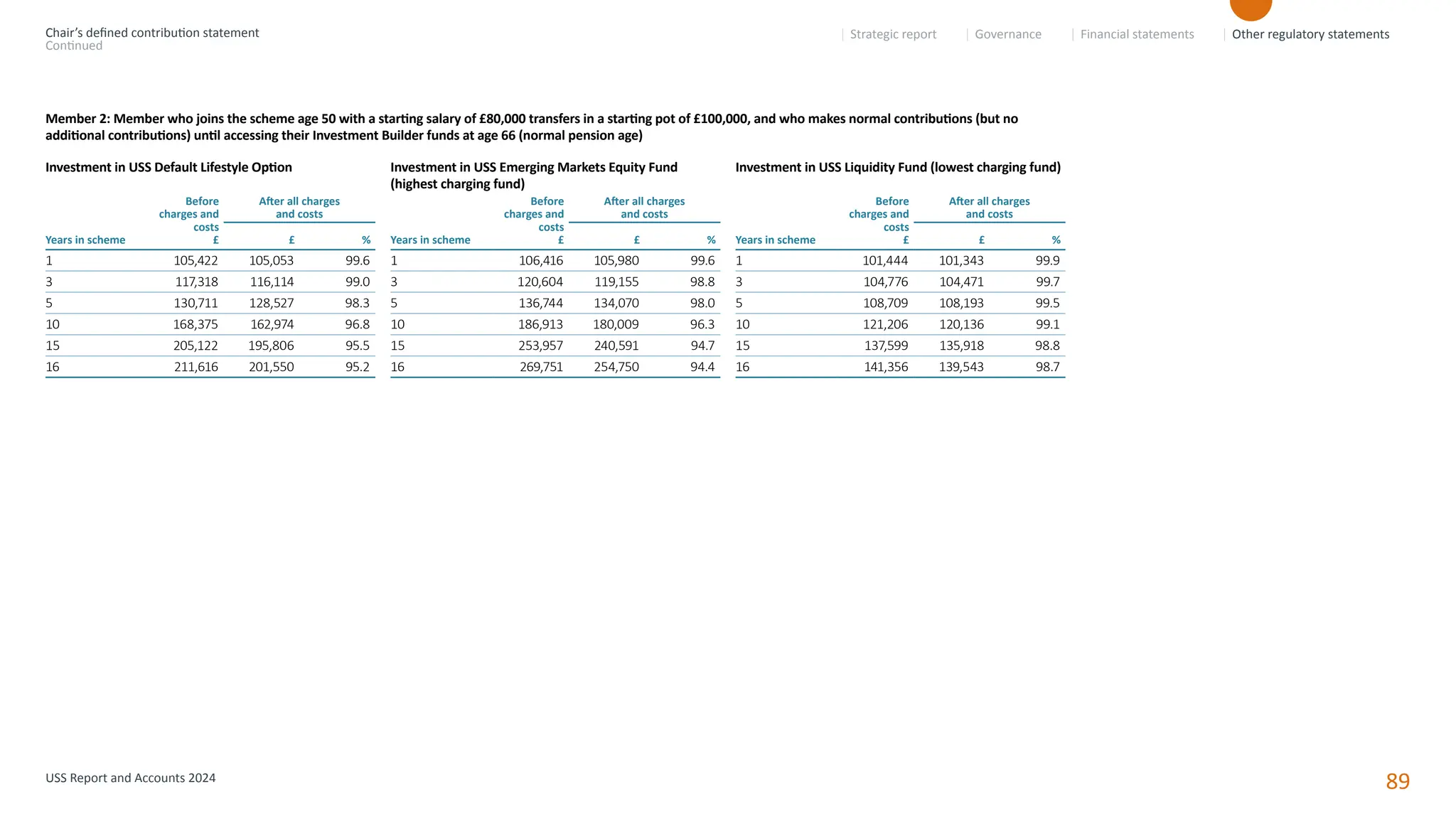

What percentage of calls can actually be deflected? That varies by call type. Some research suggests that in well-optimized contact centers, 30-50% of inbound calls can be handled through self-service once the self-service option is actually good. But "good" is the operative word. Self-service for checking a balance? Sure, 80% of people will use it. Self-service for understanding a complex benefits decision? Much lower success rate without human support.

The DWP's 31.6 million wasted call minutes gives us a clue. If we assume the average DWP call is 10 minutes (and benefits calls are often longer), that's about 3.16 million calls per year that shouldn't be happening. The DWP handles somewhere in the region of 20+ million calls annually (estimates vary), so if even half of the wasted calls could be handled through AI self-service, that's significant volume.
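The arithmetic behind that estimate is easy to check. In the sketch below, only the 31.6 million figure comes from the National Audit Office; the 10-minute average call and the 20 million annual calls are the rough assumptions used in the text, not official numbers.

```python
AVOIDABLE_MINUTES = 31_600_000     # NAO figure for 2022-2023
ASSUMED_AVG_CALL_MINUTES = 10      # assumption from the text
ASSUMED_ANNUAL_CALLS = 20_000_000  # rough estimate from the text

avoidable_hours = AVOIDABLE_MINUTES / 60
avoidable_calls = AVOIDABLE_MINUTES / ASSUMED_AVG_CALL_MINUTES
share_of_volume = avoidable_calls / ASSUMED_ANNUAL_CALLS

print(f"Avoidable hours: {avoidable_hours:,.0f}")
print(f"Avoidable calls: {avoidable_calls:,.0f}")
print(f"Share of annual volume: {share_of_volume:.1%}")
```

Under those assumptions, the avoidable minutes come to roughly 527,000 hours, 3.16 million calls, and about 15.8% of annual call volume. A longer average call would shrink the call count, so the result is sensitive to the 10-minute guess.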

But let's be realistic about what this system can actually deflect:

High Deflection Potential (80%+ success rate):

- Balance inquiries: "How much Universal Credit will I get this month?"

- Payment status checks: "Has my payment been processed?"

- Address or contact information updates: "I need to change my address."

- Appointment scheduling: "I need to book a meeting with a work coach."

- General policy questions: "How does the benefits cap work?"

Medium Deflection Potential (40-60% success rate):

- Reporting changes in circumstances: "I started a new job."

- Claims help: "How do I apply for this benefit?"

- Appeal explanations: "What does it mean that my appeal was rejected?"

- Technical issues: "Why can't I access my online account?"

Low Deflection Potential (0-20% success rate):

- Complex eligibility questions where personal circumstances matter greatly

- Appeals or disputes

- Cases involving vulnerability or safeguarding concerns

- Interactions where someone is in distress

The math works roughly like this. If the system deflects just the high-potential calls (about 40% of the roughly 3.16 million avoidable calls), and those calls currently take 8-10 minutes, that's roughly 8-12 million minutes annually removed from the system, depending on how many of those calls self-service actually resolves. At that scale, labor cost savings could go a long way toward justifying the investment.
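Here is one way to reproduce that range, as a sketch under stated assumptions: the 3.16 million avoidable calls come from the earlier 10-minute-average estimate, the 40% share and the 8-10 minute durations come from the text, and the 80% success rate is the lower bound of the high-deflection tier. The `minutes_removed` function is illustrative only.

```python
AVOIDABLE_CALLS = 3_160_000   # 31.6M avoidable minutes / assumed 10-minute average
HIGH_POTENTIAL_SHARE = 0.40   # assumed share of avoidable calls in the high tier


def minutes_removed(avg_call_minutes: float, success_rate: float = 1.0) -> float:
    """Agent minutes saved per year if high-potential calls are deflected."""
    return AVOIDABLE_CALLS * HIGH_POTENTIAL_SHARE * avg_call_minutes * success_rate


# Pessimistic case: short calls, 80% of attempts resolved without an agent.
low = minutes_removed(avg_call_minutes=8, success_rate=0.8)
# Optimistic case: longer calls, every attempt resolved.
high = minutes_removed(avg_call_minutes=10, success_rate=1.0)
```

With these inputs, `low` is about 8.1 million minutes and `high` is about 12.6 million, which brackets the rough figure quoted above.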

But here's the deeper benefit: human agents now have more time for complex calls. Right now, agents spend a large share of their time answering balance inquiries and processing routine changes. Once AI handles that baseline, agents can focus on callers who actually need help with complex problems. Call quality goes up. Agent satisfaction goes up. Resolution rates improve.

Estimated data shows that a significant portion of wasted calls (40%) are related to payment status inquiries, followed by address updates (25%). Automating these could reduce wasted call time significantly.

Infrastructure and Security: Why UK-Based Hosting Actually Matters

The procurement document specifies that the system must be "based in the UK and hosted on dedicated cloud." This isn't bureaucratic preference. It's a fundamental requirement with several layers of importance.

Data Residency and Privacy

The training data for this system includes recordings of millions of DWP calls. These aren't just any calls. They're calls from people discussing their financial situation, their circumstances, sometimes their vulnerabilities. They're discussing benefit fraud (actual or accused), domestic abuse, disability, mental health issues. This is personal data of the most sensitive type.

Under UK GDPR and the Data Protection Act 2018, personal data can only be transferred outside the UK where adequate protections are in place, such as an adequacy decision or appropriate contractual safeguards. The DWP's procurement sidesteps the question by requiring UK hosting outright. So the training data for the AI model stays in the UK. The models themselves stay in the UK. The inference (the actual processing when someone calls) happens in the UK.

This also means the vendor can't simply use pre-trained models from US companies. A vendor can't license a language model trained on arbitrary internet data and fine-tune it with DWP call data, then run that on US infrastructure. The entire thing needs UK-residency.
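In practice, a residency rule like this often becomes an automated check on deployment configuration. The sketch below is a hypothetical example: the component names and the `non_uk_components` function are invented, and the allowed set lists the UK region identifiers used by the three large public clouds (AWS `eu-west-2`, Azure `uksouth` and `ukwest`, Google Cloud `europe-west2`). The procurement's "dedicated cloud" requirement may well rule out some of these.

```python
# Region identifiers for UK data centers on major public clouds.
UK_REGIONS = {"eu-west-2", "uksouth", "ukwest", "europe-west2"}


def non_uk_components(deployment: dict[str, str]) -> list[str]:
    """Return the names of components whose region is outside the UK set."""
    return sorted(
        name for name, region in deployment.items() if region not in UK_REGIONS
    )


# Hypothetical deployment map: component name -> cloud region.
deployment = {
    "training_data": "eu-west-2",
    "model_store": "uksouth",
    "inference": "us-east-1",
}
violations = non_uk_components(deployment)
```

A check like this would run before every release, failing the build if `violations` is non-empty.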

Security and the UK Security Policy Framework

Government procurement requires compliance with HMG's Security Policy Framework. This is a specific set of standards about system access, vulnerability disclosure, incident response, and security clearances for personnel accessing the system.

What this actually means: the vendor's security practices get audited. Their staff need vetting. If they discover a security vulnerability, they report it through specific processes. Backups are stored securely. Disaster recovery exists. If someone's data is exposed, there's a clear incident response process.

Hosting on UK infrastructure makes all of this simpler. UK cloud providers (such as those listed on Crown Commercial Service frameworks) have clear compliance documentation. A US cloud provider can meet these requirements, but it's more complex. They need to have UK-specific legal agreements. They need to prove how they handle UK data differently. It's easier if the system just lives in the UK from day one.

Regulatory Compliance at Scale

The DWP is a large, complex organization with multiple benefit types, each with statutory rules about who qualifies, how much they receive, what conditions apply. Universal Credit, Jobseeker's Allowance, Employment and Support Allowance, Personal Independence Payment, Housing Benefit. Each has different eligibility criteria, calculations, conditionality.

The AI system needs to understand this regulatory landscape and apply it correctly. If the system gives someone incorrect information about their eligibility, that's not just a customer service failure. That's a government system making incorrect determinations that might affect someone's income. There's clear liability.

With UK hosting and oversight, the DWP can audit the system. They can test it against known scenarios and verify it's giving correct answers. They can see what the model is doing and why. With international hosting and proprietary vendor models, this becomes much harder.

GDPR, DPA, and Data Protection: The Compliance Burden

Complying with GDPR and the UK Data Protection Act isn't just a legal checkbox. It's a genuine constraint on how the system can be built and operated.

Data Minimization

GDPR requires that organizations collect and process only the data they need. So the AI system can't just record every word of every call and use it for training. It needs to collect specific information relevant to handling the call, and no more. This makes the system harder to build because it has less data to learn from, but it's required.
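One concrete form of minimization is redacting identifiers from transcripts before they are stored for training. The sketch below is an assumption-laden example, not the DWP's approach: it masks a simplified National Insurance number pattern and any standalone 8-digit number (treated here as a bank account number). Real redaction would need far broader coverage, including names and addresses.

```python
import re

# Simplified NI number pattern; the official rules are stricter.
NINO = re.compile(r"\b[A-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b", re.IGNORECASE)
# Assumption: a standalone 8-digit number is a bank account number.
ACCOUNT = re.compile(r"\b\d{8}\b")


def minimize(transcript: str) -> str:
    """Replace identifiers with placeholders so the stored text keeps only
    what intent training needs."""
    redacted = NINO.sub("[NINO]", transcript)
    return ACCOUNT.sub("[ACCOUNT]", redacted)


sample = "My number is QQ 12 34 56 C and my account is 12345678."
clean = minimize(sample)
```

The redacted text still carries the caller's intent, which is what the classifier learns from, while the identifiers never reach the training set.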

Consent and Transparency

Callers need to know they're speaking to an AI system, at least initially. They need to understand that their call might be recorded. They need to understand how their data will be used. The system can't just claim to be a human agent.

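Right to Explanation

If the AI system makes a decision about someone (like routing them to a specific agent, or refusing to process a request), the person may be entitled to an explanation. GDPR Article 22 restricts solely automated decisions that have legal or similarly significant effects, and the related transparency provisions entitle people to meaningful information about the logic involved. They can ask why the system made that decision. The vendor needs to be able to explain it.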

This matters because many AI systems, especially deep learning systems, are opaque. You train them, they work, but if you ask why they made a specific decision, the answer is basically "the math worked out that way." For government applications, this is increasingly unacceptable. The DWP probably requires systems that can be audited and explained.

Data Retention and Deletion

GDPR requires that data isn't kept longer than necessary. So call recordings can't be archived indefinitely. They need retention policies. After a certain period, they're deleted. This creates a tension with AI systems: the more historical data you have, the better the models. But data protection says don't keep it forever.

The vendor probably addresses this by using call recordings only for initial training and validation, then deleting them. The models remain, on the reasoning that they hold statistical patterns rather than personal data, though that assumption needs testing because models can memorize individual training examples. But the original recordings get purged.
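A retention policy ultimately reduces to a date comparison run on a schedule. The sketch below is illustrative: the `Recording` type, the `split_by_retention` function, and the 365-day window are all assumptions, since the source does not state the DWP's retention period.

```python
from dataclasses import dataclass
from datetime import date, timedelta

RETENTION_DAYS = 365  # hypothetical window; the real period is not stated


@dataclass
class Recording:
    call_id: str
    recorded_on: date


def split_by_retention(recordings, today, retention_days=RETENTION_DAYS):
    """Separate recordings still inside the window from those due for purge."""
    cutoff = today - timedelta(days=retention_days)
    keep = [r for r in recordings if r.recorded_on >= cutoff]
    purge = [r for r in recordings if r.recorded_on < cutoff]
    return keep, purge


recordings = [
    Recording("a", date(2026, 12, 1)),
    Recording("b", date(2027, 6, 1)),
]
keep, purge = split_by_retention(recordings, today=date(2028, 1, 1))
```

With a run date of January 1, 2028, recording "a" falls outside the 365-day window and lands in `purge`, while "b" stays in `keep`.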

Intent recognition poses the highest challenge in conversational AI call routing, followed closely by ASR accuracy. Estimated data based on typical challenges.

Competitive Bidding and Who Actually Builds This

Who's going to build this system? Let's think about who could realistically bid.

Large Systems Integrators

Companies like Accenture, IBM, Deloitte, Capgemini, TCS. These firms have experience with large government contracts, security clearances, compliance infrastructure. They could potentially partner with an AI/NLP vendor to provide the actual conversational AI.

AI-Specialist Vendors

Companies that specialize in conversational AI and NLP. Google Cloud's Dialogflow, Amazon's Lex, Microsoft's Conversational AI services. Or specialized NLP companies that focus on enterprise conversation. These vendors have the AI expertise but might need to partner on the security and government compliance side.

Hybrid Approaches

A mid-sized systems integrator might partner with an AI specialist. The integrator handles contracting, security, compliance, and integration. The AI specialist focuses on the conversational system. This is probably the most likely winning approach.

The procurement process opened up bidding. Companies had until January 16, 2026 to inquire about the contract, and until February 2, 2026 to request participation. The winning bid was expected to be announced by June 1, 2026. This is a formal government procurement, which means it's transparent and competitive.

Interestingly, the fact that the government is spending this money on a four-year contract with extensions suggests they expect this to actually work. They're not treating it as a pilot. They're treating it as a platform that will be in place for years. The extensions to 2032 suggest they expect ongoing development and refinement.

The Bigger Context: UK Government Tech Failures and Why This Is Different

There's a relevant detail buried in the original announcement: the UK government also admitted it will miss its previously stated target of securing all systems by 2030. In other words, while announcing a new AI project, the government simultaneously acknowledged it isn't on track to meet its own cybersecurity targets.

This matters because UK government technology has a rough recent history. The Post Office Horizon scandal exposed systematic software failures that jailed innocent people. The Robodenial program incorrectly denied thousands of benefits claimants due to flawed decision-making. Multiple government digital platforms have launched expensively and then quietly failed.

So why should we believe the DWP AI system will actually work?

First, because the scope is more limited. This system isn't trying to make benefits eligibility decisions. It's handling call routing and simple self-service. Those are harder problems than traditional IVR, but they're not welfare policy. The system identifies intent and routes accordingly. It doesn't determine eligibility.

Second, because there's clear accountability. The DWP is putting specific requirements in the procurement document. UK-based, GDPR-compliant, specific security standards. These are measurable requirements. The vendor can't cut corners without violating the contract.

Third, because the timeline is realistic. Four years with optional extensions suggests the government learned from previous failures. They're not expecting this to be perfect on day one. They're expecting iteration.

Fourth, because the problem is real enough that failure is costly. The system needs to work because the current system is struggling. Government officials and unions and claimant advocates all agree that contact center capacity is inadequate. An AI system that doesn't work still needs to fail gracefully, routing calls back to humans rather than creating new problems.
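Failing gracefully has a simple shape in code: any error or low-confidence result falls through to a human. The sketch below assumes a classifier callable that returns an intent and a confidence score; the `route_with_fallback` function, the 0.7 threshold, and the stub classifiers are hypothetical.

```python
from typing import Callable


def route_with_fallback(
    classify: Callable[[str], tuple[str, float]],
    transcript: str,
    min_confidence: float = 0.7,  # assumed threshold, to be tuned on real calls
) -> tuple[str, str]:
    """Return (destination, detail). Anything uncertain goes to a person."""
    try:
        intent, confidence = classify(transcript)
    except Exception:
        # A broken model must never leave the caller stranded.
        return "human_agent", "classifier_error"
    if confidence < min_confidence:
        return "human_agent", "low_confidence"
    return "automated", intent


def broken_classifier(_transcript: str) -> tuple[str, float]:
    """Stub that simulates a model outage."""
    raise RuntimeError("model unavailable")


outage = route_with_fallback(broken_classifier, "Has my payment gone through?")
unsure = route_with_fallback(lambda t: ("payment_status", 0.4), "um, my thing")
confident = route_with_fallback(lambda t: ("payment_status", 0.93), "payment check")
```

The design choice is that the human queue is the default and automation is the exception that has to be earned, so a failure degrades the service back to today's baseline and no further.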

That said, real risks exist. The system might perform worse for certain groups: people with accents outside standard British English, people with speech disabilities, people calling about complex or unusual situations. The system might have high false-positive rates: instances where it thinks a call can be deflected but actually can't, leaving callers frustrated. The system might take longer to hit full functionality than the timeline suggests.
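The deflection risk can be measured once call outcomes are labeled. Treating "deflectable" as the positive class, a call the system wrongly tries to deflect is a false positive, and a call it needlessly sends to an agent is a false negative. The sketch below assumes each call is recorded as a pair of booleans; the data is made up.

```python
def deflection_error_rates(calls: list[tuple[bool, bool]]) -> dict[str, float]:
    """Each call is (predicted_deflectable, actually_resolved_without_agent)."""
    fp = sum(1 for predicted, actual in calls if predicted and not actual)
    fn = sum(1 for predicted, actual in calls if not predicted and actual)
    negatives = sum(1 for _, actual in calls if not actual)
    positives = sum(1 for _, actual in calls if actual)
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
    }


# Made-up outcomes for five calls.
calls = [(True, True), (True, False), (False, False), (False, True), (True, True)]
rates = deflection_error_rates(calls)
```

For a benefits line the two errors are not equally costly: a false positive strands someone who needed a person, while a false negative only costs agent time.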

But the baseline situation is also risky: millions of people unable to reach government services they're entitled to, contact center staff burnt out managing impossible call volumes, delays in processing valid claims. The DWP is making a bet that an imperfect AI system beats the current situation. That's probably true.

High deflection potential is seen in routine queries like balance inquiries and payment status checks, with an estimated 80% success rate. More complex issues such as claims help have a lower success rate around 50%.

What Self-Service Actually Looks Like in Practice

So what does calling the DWP and getting served by this AI system actually look like? Let's imagine a typical interaction.

Scenario 1: Payment Status Check (Deflected Successfully)

Caller: "Hi, I'm just checking if my Universal Credit payment went through."

System: "Thanks for calling. I can help with that. Can I get your National Insurance number?"

Caller: "It's XX 12 34 56 A."

System: "Thanks. Your payment of £XXX was processed on March 10 and should be in your account by March 12. Is there anything else I can help with?"

Caller: "No, that's great, thanks."

Result: Call handled entirely by AI. Length: 1 minute. Agent availability: freed up for someone else.

Scenario 2: Change of Circumstances (Partially Deflected)

Caller: "I've started a new job and need to report it."

System: "Congratulations on the new job. I can help you report that. Can I get your National Insurance number?"

Caller: "XX 87 65 43 B."

System: "Thanks. When did you start this job?"

Caller: "Last Monday."

System: "And how many hours per week will you be working?"

Caller: "About 15 hours."

System: "Got it. Your Universal Credit will be adjusted based on your earnings. You'll receive an updated payment decision letter within 5 working days. You can see your new payment amount in your online account after that. Just to confirm, is the address we have on file still current?"

Caller: "Yes, that's right."

System: "Perfect. Your change of circumstances has been reported. Is there anything else I can help with?"

Caller: "No, thanks."

Result: Call handled entirely by AI in this case; a less straightforward change would be handed to an agent. Information collected, change processed, confirmation provided. Length: 2 minutes. Accuracy: system has confirmed address and basic details, reducing need for follow-up.

Scenario 3: Complex Situation (Routed to Human)

Caller: "I got a letter saying my Universal Credit is going to stop, but I don't understand why. I haven't changed anything."

System: "I'm sorry to hear that. I can help figure this out. Can I get your National Insurance number?"

Caller: "XX 11 22 33 C."

System: "Thanks. Let me pull up your account. I can see you received a decision letter on March 5. The decision letter itself will have specific reasons. Can you tell me, have there been any changes to your circumstances in the last three months? Changes to your living situation, your work, your relationships, anyone moving in or out of your home?"

Caller: "Well, my sister moved back in with us temporarily, but that shouldn't affect my Universal Credit."

System: "That actually might be relevant depending on the details. I'm going to connect you with a specialist who can review your specific situation and explain exactly why the decision was made. They can also discuss whether you have the right to appeal. One moment, please."

[System routes to human agent with complete context loaded]

Agent: "Hi, I can see you're asking about a UC cessation decision and your sister moving in. Let me walk through this..."

Result: Call routed after initial triage. Human agent has full context (call transcript, account summary, identified issue). Agent spends time on actual problem-solving rather than information-gathering. Call might still take 10-15 minutes, but it's productive.

These scenarios illustrate the real value. Not that AI handles everything, but that it handles routine queries efficiently and routes complex ones intelligently. The system doesn't make difficult calls easier. It makes easy calls instant and complex calls better-informed.

Training Data and Model Bias: The Real Challenge

Here's the thing nobody talks about enough: training an AI system on government call data is incredibly productive, but the resulting system also inherits the biases in that data.

If the DWP's historical call data shows that certain types of callers have always had their calls routed to specific departments, the AI system will learn that pattern and perpetuate it. If people with certain accents are more likely to be misunderstood by the current system, the AI system might learn the same misunderstanding patterns.

The classic example is gender bias. Conversational AI systems trained on internet text or call center data sometimes exhibit gender bias because the training data reflects societal biases. They might interpret similar requests differently depending on perceived gender. For a benefits system, this could mean the system treats similar requests from men and women differently, or treats transgender people inconsistently.

Age is another axis of bias. Older callers might be more likely to use old terminology for benefits programs. The system needs to understand "income support" when older people say it, even though it's being replaced by Universal Credit. Younger callers might be more technically sophisticated and use online services. The system needs to handle both groups fairly.

Accent and dialect bias is substantial. AI speech recognition systems are notoriously worse at understanding some accents, especially those of non-native English speakers and regional British accents that differ from the "standard" accent the system was trained on. A DWP system serving all of the UK needs to understand Scottish, Welsh, Northern Irish, and various English regional accents with equal accuracy.

Vulnerability is also a consideration. Some callers will be in crisis. Some will have mental health issues, substance use issues, or cognitive disabilities. The system shouldn't just route distressed callers; it should recognize them as distressed and treat them differently. This requires nuance that generic AI systems don't have built in.

The DWP procurement probably requires "fairness audits" of the system. Testing it with diverse call recordings to ensure it performs consistently across demographics. Testing it with callers with disabilities. Testing it with people speaking with various accents. Making sure the system doesn't systematically perform worse for protected groups.

This is actually harder than building the system initially, because once you identify a bias, fixing it isn't straightforward. You can't just tell the AI to "be less biased." You need to retrain with rebalanced data, or adjust the decision thresholds, or both.
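A fairness audit of the kind described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the DWP's method: the record format, the group labels, and the 5-percentage-point `max_gap` tolerance are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute intent-recognition accuracy per group.

    records: iterable of (group, predicted_intent, true_intent) tuples,
    e.g. drawn from a labeled set of test calls (hypothetical format).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(accuracies, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap."""
    best = max(accuracies.values())
    return sorted(g for g, acc in accuracies.items() if best - acc > max_gap)


# Made-up sample data: two accent groups, four test calls each.
sample = [
    ("accent_a", "payment_status", "payment_status"),
    ("accent_a", "address_update", "address_update"),
    ("accent_a", "appeal", "appeal"),
    ("accent_a", "payment_status", "payment_status"),
    ("accent_b", "payment_status", "payment_status"),
    ("accent_b", "address_update", "payment_status"),  # misrecognized
    ("accent_b", "appeal", "appeal"),
    ("accent_b", "payment_status", "payment_status"),
]

accuracies = accuracy_by_group(sample)
print(accuracies)
print(flag_gaps(accuracies))
```

Measuring the gap is the easy part. Closing it means going back to the training data or the decision thresholds, which is where the real work starts.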

Staffing and Labor Implications: What Happens to DWP Employees

Let's address the elephant in the room: what happens to the people currently answering DWP calls?

Realistic answer: it depends. The system isn't designed to replace everyone. It's designed to handle the volume surge and take routine calls off human agents' plates. So in the best case scenario, some agents are freed up to do other work. Some move to more complex cases. Some leave naturally through attrition and aren't replaced. Staffing levels drop, but no mass firing.

In a worse case scenario, the system proves so effective that the government decides they need fewer call center staff. Some positions are eliminated. This would be politically contentious, especially for an organization that's already struggling with staffing retention.

In a realistic middle scenario: the volume growth that made it impossible to hire enough agents is now managed by AI handling baseline calls. The DWP maintains roughly current staffing, but redirects it. Some move from call centers to appeals or more specialized work. Some take voluntary redundancy packages. Over 5-10 years, staffing numbers drop slowly as demand is managed better.

This matters because DWP jobs are concentrated in specific regions. Liverpool, Glasgow, Wolverhampton. If call center staffing drops significantly, that has economic impact in those communities. But if the alternative is the current situation where the contact center is overwhelmed, there's a tradeoff being made.

There's also a work quality perspective. Current DWP agents handle 40-60 calls per day of 8-10 minutes each. That's a grueling job. You're taking calls from people often in difficult circumstances, many of whom are frustrated, some of whom are hostile. You're following scripts, handling complex policy, making mistakes because you're tired. If AI takes the routine calls, remaining staff work fewer calls per day and have more time per call. That's better work.

Cost Savings Calculation: Does the Math Actually Work?

Let's actually do the math on whether £23.4 million makes sense.

The DWP handles roughly 20-25 million inbound calls annually (estimates vary). Let's use 22 million as a midpoint. Current average handle time is probably 8-10 minutes. Let's say 9 minutes average.

22 million calls × 9 minutes = 198 million call minutes annually.

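Agent loaded cost (salary, benefits, facility, systems): roughly £30,000-£35,000 annually per agent. Let's say £32,500. That's about £2.71 per call minute, but only if each agent delivers roughly 11,960 productive call minutes per year. That's a generous assumption: a full schedule of 40 hours/week × 50 weeks × 60 minutes/hour is 120,000 minutes, which would put the cost closer to £0.27 per minute and shrink every savings figure below by about a factor of ten.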

So 198 million call minutes × £2.71 = £536 million annually in current contact center operating costs.

Now, if the AI system deflects just 25% of calls to self-service:

5.5 million deflected calls × 9 minutes = 49.5 million minutes saved annually.

49.5 million minutes × £2.71 = £134 million annual savings.

But the project costs £23.4 million over four years. If we spread that over the four-year period, that's £5.85 million annually. But savings don't start immediately. Year one is probably 20% effective. Year two maybe 60%. Year three 80%. Year four 95%.

So actual savings ramp up:

- Year 1: 20% × £134M = £26.8M savings, £5.85M cost = £21M net benefit

- Year 2: 60% × £134M = £80.4M savings, £5.85M cost = £74.55M net benefit

- Year 3: 80% × £134M = £107.2M savings, £5.85M cost = £101.35M net benefit

- Year 4: 95% × £134M = £127.3M savings, £5.85M cost = £121.45M net benefit

Total four-year net benefit: roughly £318 million.
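If you want to check the ramp-up arithmetic or try other assumptions, here's a short Python sketch. The inputs are the rough estimates from this section, not official DWP figures, and the function and constant names are just for illustration.

```python
# Rough inputs from the estimate above; none of these are official figures.
CALLS_PER_YEAR = 22_000_000
AVG_HANDLE_MINUTES = 9
COST_PER_MINUTE = 2.71               # GBP per call minute (assumed)
PROJECT_COST = 23_400_000            # GBP over the contract
CONTRACT_YEARS = 4
RAMP = [0.20, 0.60, 0.80, 0.95]      # assumed share of full savings per year


def net_benefit(deflection_rate, cost_per_minute=COST_PER_MINUTE):
    """Return (net benefit per year, four-year total) in GBP."""
    minutes_saved = CALLS_PER_YEAR * deflection_rate * AVG_HANDLE_MINUTES
    full_annual_savings = minutes_saved * cost_per_minute
    annual_cost = PROJECT_COST / CONTRACT_YEARS
    yearly = [share * full_annual_savings - annual_cost for share in RAMP]
    return yearly, sum(yearly)


yearly, total = net_benefit(0.25)
for year, value in enumerate(yearly, start=1):
    print(f"Year {year}: GBP {value / 1e6:.1f}M net")
print(f"Total: GBP {total / 1e6:.1f}M net")
```

At 25% deflection the total lands within a million pounds of the figure above; the small difference comes from rounding annual savings to £134 million before applying the ramp. Passing a different `deflection_rate` or `cost_per_minute` shows how strongly the result depends on those two inputs.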

Even if the system is less effective (deflects only 20% of calls), you're still looking at roughly £250 million in net benefit over four years.

Now here's the thing: these numbers are rough estimates. Actual costs and benefits will vary. But the math is clearly positive. Even with conservative assumptions, the ROI is massive. The system would need to be extremely ineffective not to pay for itself.

This is probably why the DWP is confident enough to commit to a four-year project with extensions. The economics work. This is rare for government technology.

Integration with Existing DWP Systems: The Unsexy But Critical Work

One thing the procurement notice doesn't emphasize but is absolutely essential: the AI system has to integrate with everything the DWP already uses.

The DWP runs benefit payment systems. They maintain eligibility databases. They have systems for recording interactions with claimants. They have appeals processes, sanction systems, overpayment systems. All of this is decades old. Some of it is probably using COBOL. None of it was designed to integrate with AI.

So the vendor doesn't just build a conversational AI system. They also need to deliver:

Real-time Data Access: When someone calls, the system needs to pull their current benefit claim data instantly. What are they claiming? How much are they getting? Are there flags on their account (sanctions, overpayments, appeals pending)? All of this needs to query legacy systems and return data in milliseconds.

Safe Hand-Off: When the system routes a call to a human agent, it needs to pass all relevant information. Not just the conversation transcript, but the claimant's full account summary. The agent needs to see everything the system saw, plus historical details. That requires the system to integrate with case management systems.

Decision Recording: When the system processes a request (like accepting a change of circumstances report), it needs to record that decision somewhere. It needs to feed data back into the eligibility system. It needs to generate decision letters. That's not trivial integration.

Audit and Compliance: Government systems need to be auditable. Who changed what, when, and why? With an AI system handling some of this, there needs to be complete audit trails. Every decision the system makes needs to be loggable and explainable.

Security and Access Control: The system can't let any caller see any data. The system needs to verify identity and confirm that the information it's showing is relevant to that specific caller. That requires integration with identity and access control systems.
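To give a feel for what the hand-off, audit, and access-control requirements imply, here's a minimal Python sketch of the data involved. The field names, the JSON-lines audit format, and the rule of withholding account flags from unverified callers are all assumptions for illustration, not anything the procurement notice specifies.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class HandoffContext:
    """What a human agent should see when the system transfers a call."""
    call_id: str
    identity_verified: bool
    detected_intent: str
    transcript: list[str]
    account_flags: list[str] = field(default_factory=list)  # e.g. "appeal_pending"


def build_handoff(context: HandoffContext) -> dict:
    """Build the agent-facing payload, withholding account data if unverified."""
    payload = asdict(context)
    if not context.identity_verified:
        payload["account_flags"] = []  # no account details without verification
    return payload


def audit_record(context: HandoffContext, action: str, reason: str) -> str:
    """Serialize one system decision as a JSON line for an append-only log."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "call_id": context.call_id,
        "action": action,
        "reason": reason,
    }
    return json.dumps(entry, sort_keys=True)


ctx = HandoffContext(
    call_id="call-001",  # made-up identifier
    identity_verified=False,
    detected_intent="decision_dispute",
    transcript=["Caller: I want to know why my claim was stopped."],
    account_flags=["appeal_pending"],
)
print(build_handoff(ctx))
print(audit_record(ctx, action="route_to_agent", reason="dispute requires human review"))
```

The data structures are the easy part. The hard part is that every field here has to be populated from, and written back to, systems that predate this kind of integration.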

These integration challenges are probably 60% of the actual project work. Building the conversational AI might be 20%. The remaining 20% is testing, deployment, and ongoing support.

This is also why a systems integrator is probably involved. An AI company alone can't do this work. They'd need deep knowledge of legacy government systems, UK government IT standards, and experience integrating modern systems with old ones.

Looking Forward: What Success Actually Looks Like

What does success look like for this project? Here's what I'd watch for:

Call Deflection Rate: The system successfully handles (without human involvement) what percentage of calls? Success would be 30-40%. Anything above 50% would be exceptional. Anything below 20% is probably failure.

Call Resolution Time: How much faster are simple calls handled? Success would be reducing average handle time by 50% for deflected calls (from 9 minutes to 4-5 minutes for routine queries).

Customer Satisfaction: Do people prefer talking to the AI system or waiting for a human? This is where expectations should be tempered. Most people would rather talk to a human if they had the choice. Success is probably "satisfaction with AI handling is only slightly lower than human handling."

Agent Satisfaction: Do remaining DWP agents report better job satisfaction? Success would be agents spending less time on repetitive calls, more time on complex cases, and reporting feeling less burnt out.

Error Reduction: Does the system make fewer mistakes than humans? Success would be fewer repeat calls, fewer people contacting the system because they didn't understand the first response, fewer escalations due to miscommunication.

Equity: Does the system perform consistently across demographics? Success would be equal accuracy across accents, ages, and communication styles.

Cost Savings: Do the actual savings match projections? Success would be achieving 75%+ of the projected £134 million annual savings.

If the system hits even 50% of these success metrics, it's a win. Government technology usually fails on almost all of them.

The Broader Shift: Why This Matters for UK Public Services

Here's what's interesting about this project beyond the DWP specifically. It signals that the UK government is moving toward treating AI as infrastructure, not novelty.

The DWP is the largest UK government department by spending. Hundreds of billions of pounds annually. If they successfully deploy AI for call routing, expect other departments to follow. NHS services, passport applications, tax services. Government phone systems serving millions of people could all gradually shift to this model.

The requirement for UK-based hosting and security compliance signals something else: the government is done outsourcing without oversight. They've learned from failures. They're building systems with specific requirements they can audit.

The scale is also significant. £23.4 million is large enough to be serious, not so large that it's unrealistic. It's a genuine investment in modernization, not a consultant vanity project.

But the biggest signal is the realistic timeline and expectations. Four years to deliver. Optional extensions. Gradual rollout. This is how you actually make technology work in government. Not overnight transformation, but sustained commitment to improving systems over years.

FAQ

What is the DWP AI project?

The Department for Work and Pensions is deploying a conversational AI system to handle benefits-related phone calls starting in 2026. The system uses natural language processing to understand what callers need, handle routine requests automatically, and route complex issues to human agents. The four-year project costs up to £23.4 million and runs through July 2030 with optional extensions to 2032.

How does the AI call routing system actually work?

Callers speak naturally instead of using menus. The system converts speech to text, identifies what the caller needs (called intent recognition), extracts relevant information from what they say, and decides whether an AI can handle it or if a human is needed. For simple requests like checking a payment status, the system provides the answer directly. For complex issues, it routes the call to an appropriate agent with full context already loaded so the agent doesn't need to start from scratch.

What calls can the AI system handle automatically?

The system is expected to handle routine queries like balance inquiries, payment status checks, address updates, appointment scheduling, and general policy questions. It can also help with some reporting of changes in circumstances. The system cannot handle complex eligibility disputes, appeals, cases involving vulnerability, or situations where someone needs personalized advice about their specific circumstances.

Why does the system need to be UK-based and hosted in the UK?

The training data for the system includes millions of real DWP calls containing sensitive personal information about people's financial situations and circumstances. UK GDPR and the Data Protection Act 2018 restrict transfers of personal data outside the UK, so keeping this data in the country avoids that complication. Additionally, the UK government requires systems handling citizen data to comply with specific security and audit standards. UK-based hosting simplifies compliance with these requirements.

How many people could this system serve?

The DWP handles approximately 20-25 million calls annually. The system is designed to deflect an estimated 25-40% of these calls to full self-service or significantly reduce handle time, potentially freeing up capacity to serve millions more callers or allocating agent time to more complex cases. If successful, the system could handle the equivalent of 5-10 million calls annually without human involvement.

What happens to DWP call center staff if the AI system is successful?

The system is not designed to eliminate jobs but to manage call volume that's currently overwhelming the workforce. In practice, this likely means some natural attrition isn't replaced, some staff transition to other roles or more complex cases, and remaining staff work less stressful jobs handling fewer routine calls. The four-year timeline allows for gradual workforce adjustment rather than sudden changes.

When will the system actually be available?

The project timeline is July 6, 2026 to July 5, 2030. Companies had until February 2, 2026 to request participation in bidding. The winning vendor was expected to be announced by June 1, 2026. Actual deployment likely starts in late 2026 with a pilot, expanding to broader coverage through 2027-2028, scaling through 2028-2030. Callers might see the system in 2027 or 2028, though not across all call types initially.

Is the system replacing human customer service?

Not completely. The system augments human service by handling cases that don't need human judgment. Routine transactions, information inquiries, simple requests. This frees human agents to focus on cases that genuinely need human expertise: complex eligibility questions, disputes, cases involving vulnerable people, situations requiring interpretation and judgment. The goal is better service overall, not fewer service providers.

What's the actual business case for spending £23.4 million on this?

The National Audit Office found that 31.6 million call minutes could have been avoided in 2022-2023, representing capacity that could have been better used. Assuming the system deflects 25% of calls and call center labor costs roughly £2.71 per minute, annual savings at full effectiveness would be approximately £134 million. Over four years that is up to £536 million in gross savings; allowing for a gradual ramp-up, it's closer to £342 million against £23.4 million in project costs, yielding a net benefit of over £300 million. Even with conservative assumptions, the ROI is strongly positive.

What could go wrong with the system?

Potential issues include performance variation across accents and dialects, difficulty understanding complex or unusual circumstances, system overload during peak call times, integration failures with legacy DWP systems, higher than expected training costs due to data quality issues, and public or political opposition if the system is perceived as dehumanizing service or if it makes systematic errors. The realistic rollout timeline and optional extensions suggest the DWP is prepared for challenges.

Conclusion: The Real Story Here

The DWP's AI call routing project is noteworthy not because AI is trendy, but because it represents a government department acknowledging a real problem and committing actual resources to solve it.

The numbers are stark: roughly 2.4 million additional benefit claimants over the past four years, contact centers struggling to handle volume, 31.6 million avoidable call minutes in 2022-2023 alone. That's not a hypothetical problem. That's real people waiting on hold when they need help. That's real agents burning out handling impossible caseloads. That's a system degrading under its own weight.

The proposed solution is pragmatic. Not a complete technological overhaul, but a surgical intervention. Automate the obvious calls. Route the complex ones better. Free up human time for actual problem-solving. The math works even with conservative assumptions.

What's also notable is how the project is being structured. Specific requirements. UK-based infrastructure. Compliance with identifiable standards. A realistic four-year timeline. Optional extensions to allow for learning. This doesn't look like previous government tech failures that tried to build everything at once with vague requirements and unrealistic deadlines.

Will it work? Probably mostly. Will it be perfect? No. The system will misunderstand some callers. It will route some calls incorrectly. Some people will have better experiences, others will find it frustrating. But the baseline situation is already frustrating for millions of people. An imperfect improvement beats the status quo.

The bigger implication is that the UK government is slowly learning to deploy technology effectively. Not perfectly, but better than it used to. The DWP project isn't just about benefits claims. It's a test case for whether government can actually modernize at scale. If this works, expect more AI systems across government. If it fails, government will be more cautious. Either way, watch this project. It tells you something real about the future of public services in the UK.

Key Takeaways

- The DWP is investing £23.4 million in conversational AI to handle benefits calls from July 2026 through 2030, with optional extensions to 2032

- Natural language processing lets callers speak normally while the system identifies intent and routes appropriately—no menu navigation required

- If the system deflects 25% of inbound calls to self-service, it would save an estimated 49.5 million call minutes annually, worth approximately £134 million in labor costs; the success target is 30-40% of calls handled without human involvement

- All infrastructure must be UK-based, GDPR-compliant, and follow HMG Security Policy Framework due to sensitive personal data in call recordings

- At 25% deflection, the rough estimate shows the project generating over £300 million in net benefit over four years, with strong ROI making this more viable than typical government tech projects

![UK Government AI Benefits Claims System: DWP's £23.4M Transformation [2025]](https://tryrunable.com/blog/uk-government-ai-benefits-claims-system-dwp-s-23-4m-transfor/image-1-1767895929861.jpg)