The Hard Drive's Slow Fade: Why Data Storage is Reinventing Itself

Hard disk drives built the modern data center. For decades, they were the obvious choice—massive capacity at rock-bottom cost per terabyte. But something shifted. Flash got cheaper. Power bills got more expensive. Data centers started drowning in electricity costs just to spin magnetic platters. The volume of data keeps exploding, and suddenly HDDs feel like yesterday's technology.

Here's the thing: hard drives aren't dead, but their role has completely changed. They used to power everything. Now they're confined to the corners of the data center where capacity matters more than speed. The rest of the workload? It's moving to something newer, stranger, sometimes radical.

We're in the middle of a storage revolution that nobody talks about. While everyone obsesses over AI chips and GPUs, data center operators are quietly experimenting with technologies that sound like science fiction. 5D glass crystals that hold 360TB per disc. DNA storage that survives thousands of years without power. Optical media that resists radiation. Standing-wave technology inspired by 1800s photography techniques.

This isn't hype. Major companies are deploying these technologies in production. Microsoft's backing DNA storage. Meta's exploring optical media. Hyperscalers are cramming petabytes into refrigerator-sized racks. The financial pressure is real: every watt matters when you're running a million servers.

So what's replacing the hard drive? The answer depends on what you're trying to do. Need speed? Flash. Need to archive data for a century? Glass or DNA. Need warm storage that balances cost and performance? Something in between. The storage stack is fracturing into specialized tiers, each with its own champion technology.

Let's walk through the next generation of storage and what it means for data centers, enterprises, and the future of how we keep information.

TL;DR

- Flash dominates hot workloads: Enterprise SSDs now scale to 122TB+ per drive, directly replacing large HDD arrays in data centers

- Cold storage goes experimental: DNA, glass, and optical media promise durability measured in centuries, not years

- Capacity, power, and cost drive change: Data centers prioritize energy efficiency and physical footprint over raw speed

- Specialized tiers replace one-size-fits-all: Different storage technologies optimize for different workloads rather than one universal solution

- Transition underway: Major hyperscalers are already deploying next-generation tech in production environments

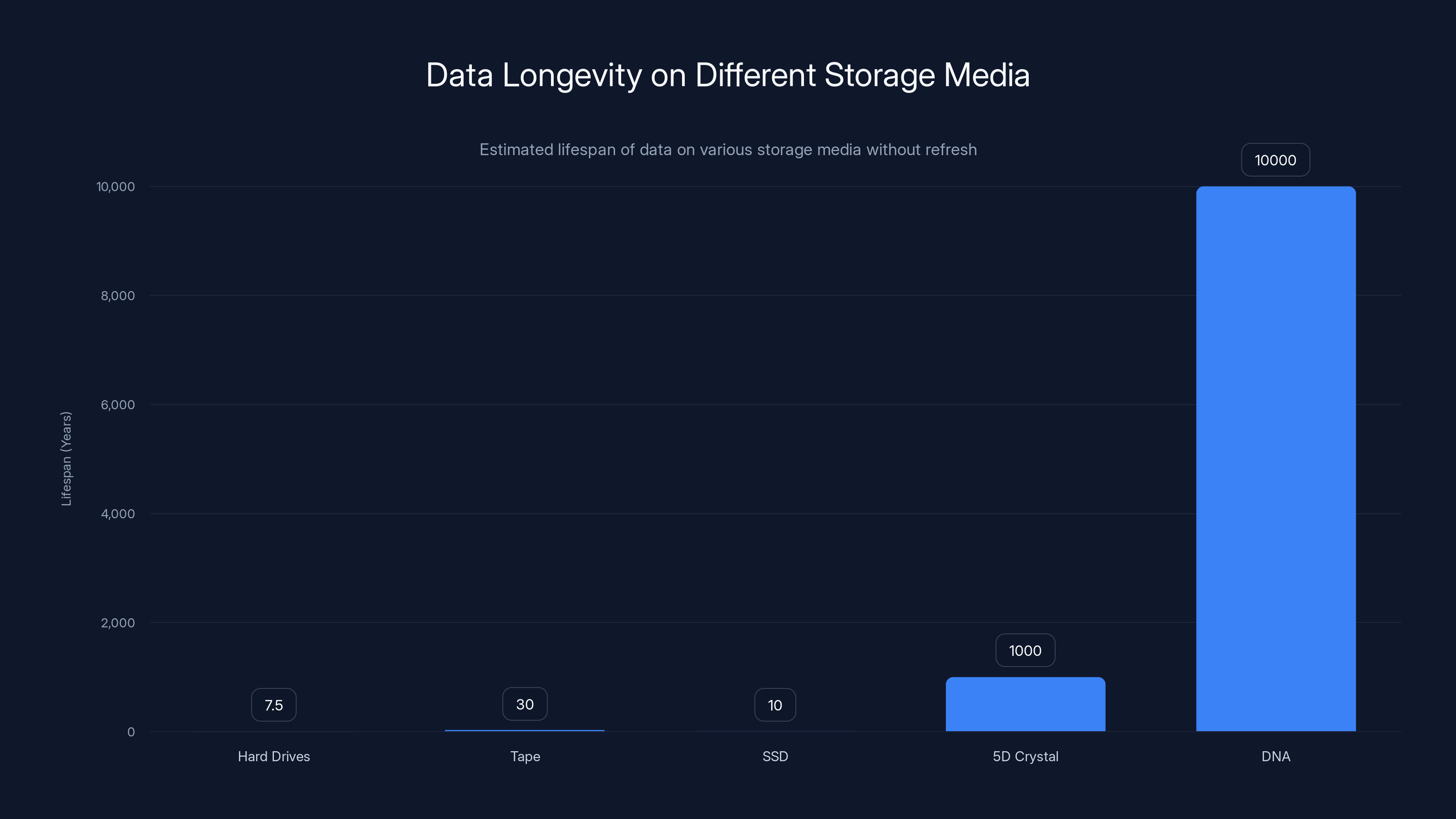

Estimated data longevity by storage medium. DNA storage potentially lasts the longest, but it's not yet commercially viable. (Estimated data)

1. High-Capacity Enterprise SSDs: The Obvious Replacement

Flash storage is winning the obvious battle. We're not talking about consumer SSDs anymore. Enterprise SSDs have evolved into machines that directly target workloads that once required massive HDD arrays.

The Scale Challenge

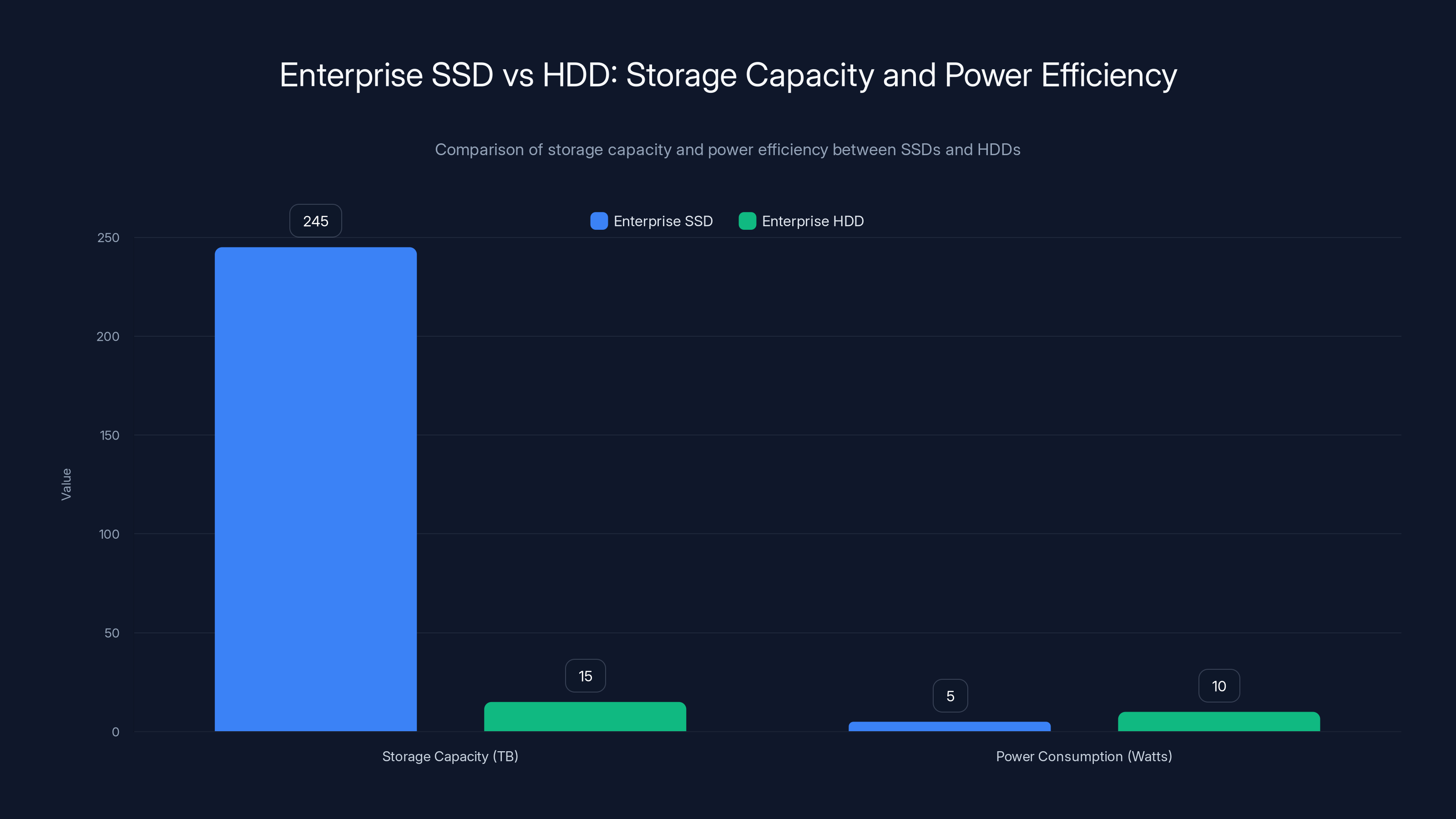

Micron's 6600 ION represents where this is headed. At 122TB per drive, it's already crossing into territory where a single SSD can replace entire HDD arrays. The company claims it'll scale to 245TB. Think about that for a second. A single drive the size of your hand holding more data than a refrigerator-sized HDD array from five years ago.

When you pack these drives into a standard 2U server with 36 E3.S form factor slots, you're looking at 4.42PB of storage in a chassis just 3.5 inches tall. The density is absurd. A single rack reaches 88PB. That's transforming how data centers think about physical space.
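The arithmetic behind those figures is easy to check. The sketch below assumes each "122TB" drive actually holds 122.88TB (an assumption; it is the value that makes the 4.42PB figure work out) and uses decimal units throughout.

```python
# Assumption: a "122TB" class drive holds 122.88 TB (decimal terabytes).
DRIVE_TB = 122.88
SLOTS_PER_2U = 36      # E3.S slots per 2U server, from the text
RACK_PB_CLAIMED = 88   # per-rack capacity quoted in the text


def server_capacity_pb(drive_tb: float = DRIVE_TB, slots: int = SLOTS_PER_2U) -> float:
    """Raw capacity of one fully populated 2U server, in petabytes."""
    return drive_tb * slots / 1000


def servers_needed(rack_pb: float, per_server_pb: float) -> float:
    """How many such servers it takes to reach a given rack capacity."""
    return rack_pb / per_server_pb


per_server = server_capacity_pb()
print(f"{per_server:.2f} PB per 2U server")
print(f"{servers_needed(RACK_PB_CLAIMED, per_server):.1f} servers for an 88 PB rack")
```

Under these assumptions the 88PB rack corresponds to roughly twenty 2U servers, or about 40U of rack space.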

Power Efficiency Over Raw Speed

But density alone doesn't drive adoption. Power consumption does. Enterprise operators live and die by electricity bills. SSDs use dramatically less power than spinning drives, especially at scale. No mechanical parts means no wasted energy keeping platters spinning. No seek times means no power spikes.

Micron built the 6600 ION on their G9 NAND, optimizing for three things: density, power efficiency, and space savings. The drive isn't about peak performance. It's about letting hyperscalers consolidate storage, lower energy use, reduce cooling requirements, and stop throwing money at power infrastructure.

The Economics Shift

For warm and cold data workloads, that power savings is the killer feature. You're not paying for speed you don't need. You're paying for reliability and efficiency. Over a five-year deployment, those power savings add up to serious money. And when you're operating at hyperscale, serious money becomes transformative.
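To see how the five-year math plays out, here is a minimal sketch of an energy cost comparison. Every input is a placeholder to be replaced with measured values: the wattages, electricity price, and PUE (the cooling and facility overhead multiplier) are hypothetical, not vendor figures.

```python
HOURS_PER_YEAR = 24 * 365


def energy_cost_usd(avg_watts: float, years: float, usd_per_kwh: float, pue: float = 1.0) -> float:
    """Electricity cost of running a constant load, scaled by facility overhead (PUE)."""
    kwh = avg_watts / 1000 * HOURS_PER_YEAR * years
    return kwh * pue * usd_per_kwh


def savings_usd(hdd_watts: float, ssd_watts: float, years: float = 5,
                usd_per_kwh: float = 0.10, pue: float = 1.5) -> float:
    """Difference in energy cost between two deployments storing the same data."""
    return (energy_cost_usd(hdd_watts, years, usd_per_kwh, pue)
            - energy_cost_usd(ssd_watts, years, usd_per_kwh, pue))


# Hypothetical example: an HDD array drawing 2,000 W versus SSDs drawing 500 W.
print(f"${savings_usd(hdd_watts=2000, ssd_watts=500):,.0f} saved over five years")
```

The point of the sketch is the shape of the calculation, not the numbers: savings scale linearly with the wattage gap, the deployment period, and the facility overhead.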

This is why enterprise SSDs are winning. They're boring. They work. They fit today's infrastructure. No crazy new technology needed. Just flash getting bigger and more efficient until HDDs look antique.

Enterprise SSDs offer significantly higher storage capacity and lower power consumption compared to traditional HDDs, making them a more efficient choice for data centers. (Estimated data)

2. The E2 SSD Form Factor: Flash's Middle Ground

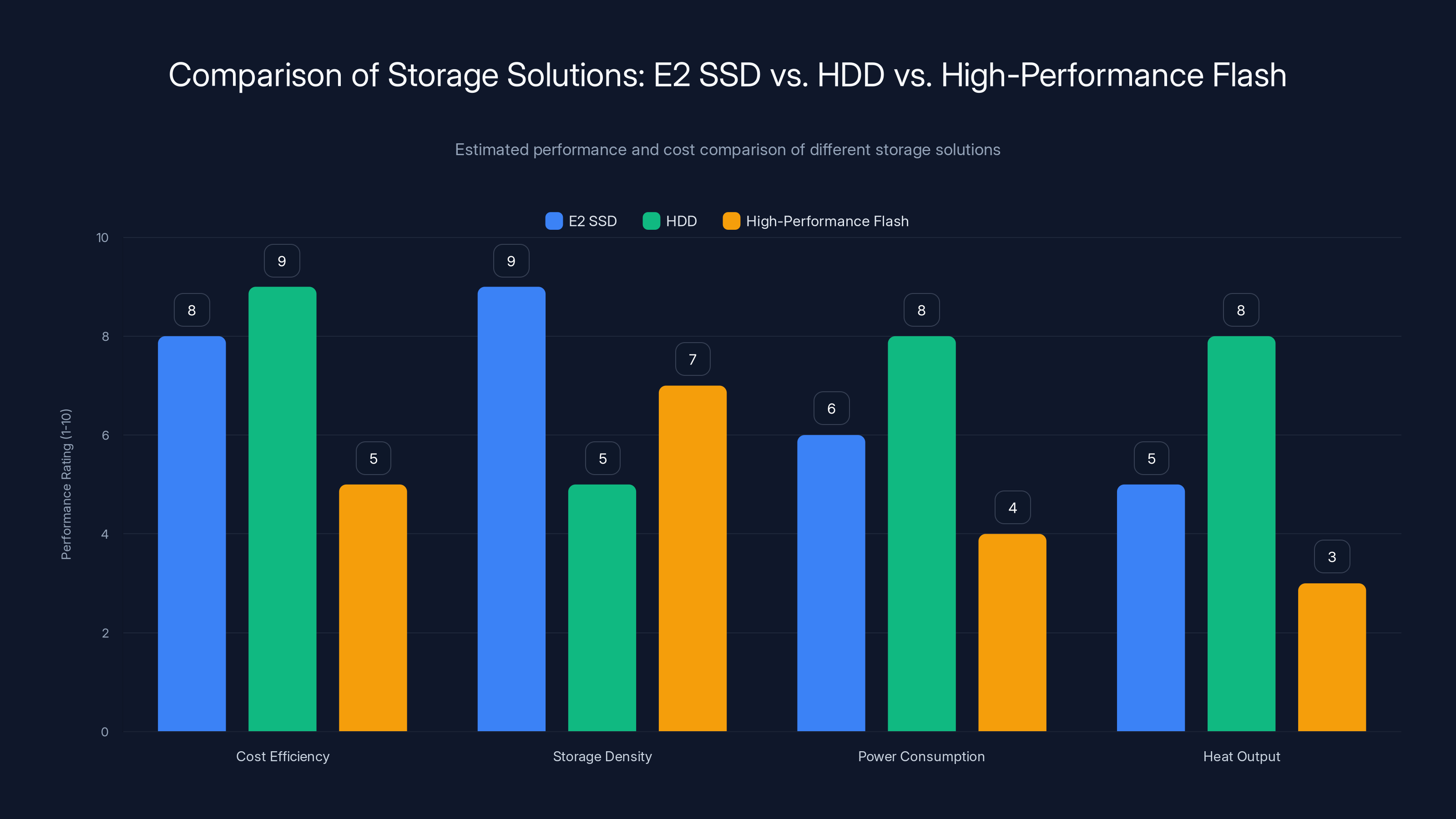

E2 targets something more interesting than just "bigger SSDs." It's about finding the sweet spot between expensive, high-performance flash and space-hungry HDDs. The market calls this warm storage, and it's where a lot of real-world data actually lives.

The Design Philosophy

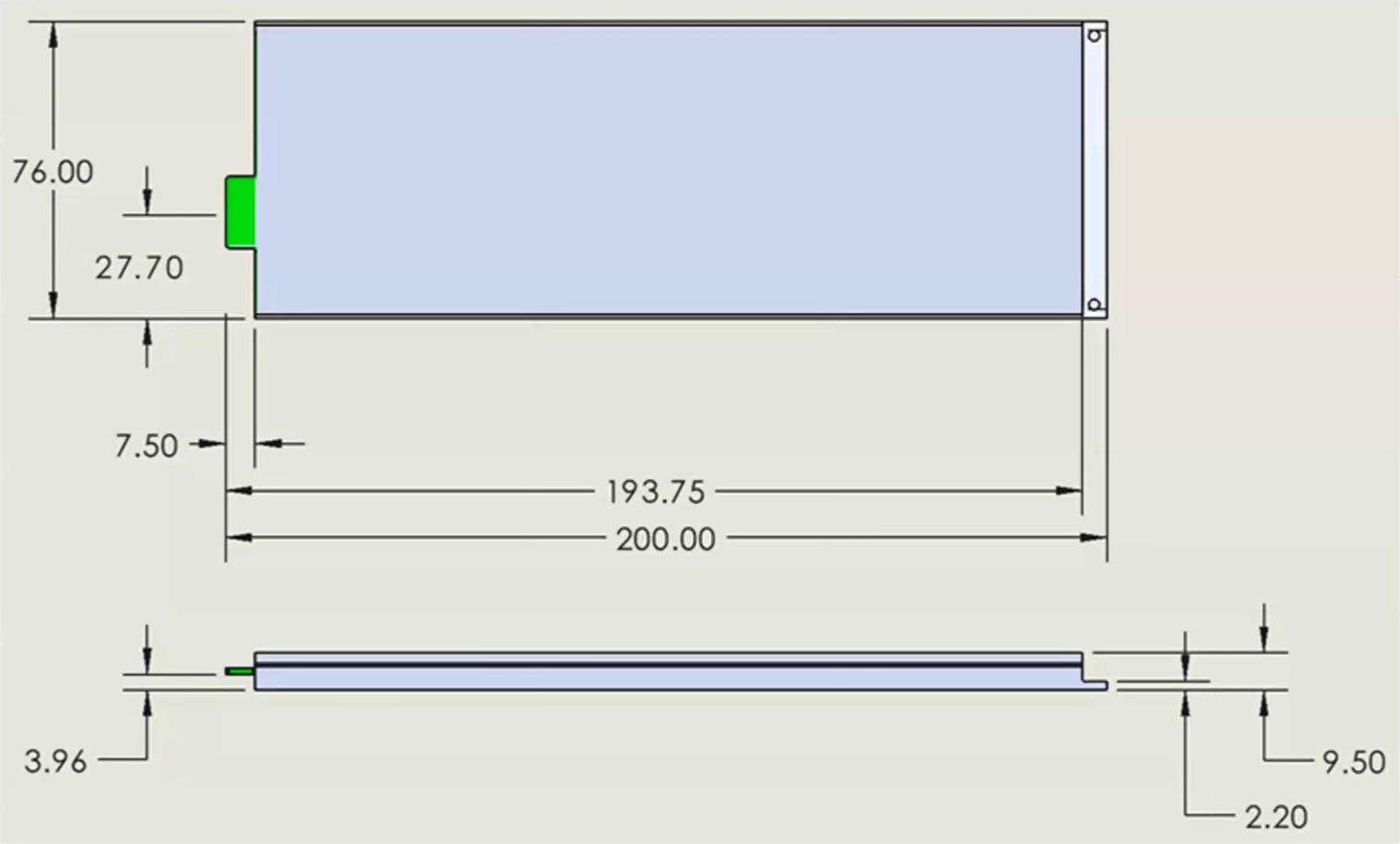

The E2 standard came from collaboration between the Storage Networking Industry Association (SNIA) and the Open Compute Project. These organizations don't mess around. They're thinking about hyperscale problems. What happens when you want petabyte-scale density in a standard 2U server? How do you achieve that without melting your power budget?

E2 answers with a ruthless focus on one thing: density in a form factor that fits existing infrastructure. Following the EDSFF Ruler standard, these drives use NVMe over PCIe 6.0. The ambitious version claims a single drive could hold up to 1PB of QLC (quad-level cell) NAND. One petabyte. In one drive.

The Power Problem

There's a catch, naturally. Power draw and heat output are brutal challenges. You can't cram a petabyte into a drive-sized package without thermodynamic consequences. The research community and vendors are working on this, but it's unsolved. This is why E2 remains a bridge technology rather than a shipping solution everywhere.

Yet the vision is clear. A single E2 drive delivers the capacity of an entire HDD array with the reliability and speed of flash, in a form factor that works with existing servers. Your power infrastructure doesn't need upgrading. Your cooling systems can handle it. You consolidate petabytes of warm storage in racks you already own.

Where It Lives

E2 isn't for your hot database tier. It's for the middle: archives that you access occasionally, backups you might need, historical data that sits mostly dormant. Performance is fine. Cost is better. And when you're storing exabytes of data, "better" costs become the entire economic story.

The technology is still emerging, but every major data center operator is watching closely. When E2 drives ship at scale with solved thermal problems, they'll reshape the storage landscape.

3. 5D Memory Crystal Storage: Archival for the Ages

Now we're getting weird. 5D memory crystals don't compete with hard drives for hot workloads. They compete for something more valuable: long-term archival that survives human timescales.

How It Works

Imagine a fused silica glass disc. Now imagine etching it with femtosecond lasers, creating microscopic structures that store data. That's the basic idea. The "5D" refers to five dimensions of data encoding: spatial position (x, y, z axes), orientation, and intensity of the etched structures. Instead of magnetism or electron charge, the data exists as physical deformation in glass.
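One way to picture the five dimensions is as a record with five fields: three for where a structure sits and two for how it was etched. The sketch below is purely illustrative. The number of distinguishable orientation and intensity levels is a hypothetical choice, not a figure from any real system.

```python
import math
from typing import NamedTuple

# Hypothetical level counts, chosen only to make the example concrete.
ORIENTATION_LEVELS = 4
INTENSITY_LEVELS = 2


class Voxel(NamedTuple):
    x: int
    y: int
    z: int
    orientation: int  # index of the etched structure's orientation
    intensity: int    # index of the etched structure's intensity


def bits_per_voxel(orientations: int = ORIENTATION_LEVELS,
                   intensities: int = INTENSITY_LEVELS) -> int:
    """Bits carried by one etched structure, beyond its position."""
    return int(math.log2(orientations * intensities))


def write_voxel(x: int, y: int, z: int, value: int) -> Voxel:
    """Split a small integer into an (orientation, intensity) pair at a position."""
    if not 0 <= value < ORIENTATION_LEVELS * INTENSITY_LEVELS:
        raise ValueError("value does not fit in one voxel")
    orientation, intensity = divmod(value, INTENSITY_LEVELS)
    return Voxel(x, y, z, orientation, intensity)


def read_voxel(voxel: Voxel) -> int:
    """Recover the stored integer from the two non-spatial dimensions."""
    return voxel.orientation * INTENSITY_LEVELS + voxel.intensity
```

With four orientations and two intensities, each position stores three bits instead of one, which is where the density gain over a simple present-or-absent mark comes from.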

A single five-inch disc can theoretically hold 360TB. Think about that density. Entire data centers worth of storage in a disc you could hold in your hand.

The Temperature Advantage

Here's what makes it different: the data remains stable at temperatures up to 190°C for extremely long periods. We're talking centuries of data preservation without power, without refresh cycles, without worry about magnetic decay or electron leakage.

Hard drives can't do this. SSDs can't do this. Their storage mechanisms degrade. Their controllers need power. Their lifespan is measured in decades at best. 5D crystals are essentially permanent.

The Speed Trade-off

The cost is performance. Current prototypes are slow. Write speeds hover around 4MB/s. Read speeds near 30MB/s. That's not a limitation for cold storage archives. It's not even a problem. You're writing data once and reading it almost never, except in disaster scenarios.
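It helps to put those prototype speeds next to the 360TB capacity figure. The following back-of-the-envelope sketch assumes decimal units and a constant sustained rate, which real hardware is unlikely to deliver exactly.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(capacity_tb: float, rate_mb_s: float) -> float:
    """Days needed to move capacity_tb terabytes at rate_mb_s megabytes per second."""
    seconds = capacity_tb * 1e12 / (rate_mb_s * 1e6)
    return seconds / SECONDS_PER_DAY


print(f"write a full disc: {transfer_days(360, 4):.0f} days")
print(f"read a full disc:  {transfer_days(360, 30):.0f} days")
```

At 4MB/s, filling one disc would take nearly three years, and reading it back at 30MB/s would take several months. That is tolerable only for write-once archives, and it shows why write speed has to improve before the full capacity is usable.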

This technology belongs in cold storage tiers. Government records. Scientific data. Media libraries. Anything that needs to survive longer than any institution. When you're thinking in centuries, speed doesn't matter.

E2 SSDs offer a balanced solution with high storage density and cost efficiency, but face challenges with power consumption and heat output. (Estimated data)

4. DNA Storage: Biology as Data Center

DNA storage sounds like pure fiction. Yet it's the most radical reimagining of how data could be stored: using actual biological molecules to encode information.

The Theory

DNA has four bases: adenine, thymine, guanine, and cytosine. Binary has two states: 0 and 1. Map those bases to binary digits, and you've got an encoding system. Store that DNA in a test tube. Keep it in a freezer. Theoretically, you can store humanity's data in a refrigerator.
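Because there are four bases, each one can carry two bits. Here is a minimal sketch of that mapping. The specific bit-to-base assignment is an arbitrary choice for illustration; practical schemes add error correction and avoid long runs of the same base, neither of which is shown here.

```python
# Arbitrary illustrative mapping: two bits per base.
BASE_FOR_BITS = {"00": "A", "01": "C", "10": "G", "11": "T"}
BITS_FOR_BASE = {base: bits for bits, base in BASE_FOR_BITS.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a strand of bases, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BASE_FOR_BITS[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Turn a strand of bases back into the original bytes."""
    bits = "".join(BITS_FOR_BASE[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

Two bytes become eight bases. The encoding is the easy part; the synthesis and sequencing chemistry is where the time and money go.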

DNA is also remarkably stable. It survives thousands of years. Archaeologists read DNA from mammoths that died tens of thousands of years ago. The stability is extraordinary compared to magnetic media or semiconductor memory.

The Performance Reality

Here's where the theory crashes into reality. Early commercial DNA storage products exist, but they're incomprehensibly slow and expensive. Write speeds are measured in minutes to hours for kilobytes of data. Read speeds are better but still glacial. The cost runs to thousands of dollars per gigabyte. This isn't a storage medium for anyone living in 2025.

But the vision persists. If DNA storage ever becomes practical at scale, it fundamentally changes the economics of long-term archival. One rack of DNA could hold exabytes. No cooling. No power. Just stability.

The Research Path

Major tech companies are funding this research. Microsoft demonstrated reading data from DNA after a year of storage. Startups are working on making synthesis faster and cheaper. The bottleneck isn't the science anymore. It's the engineering and economics.

Imagine a future where cold archives are stored in DNA. Your data survives power failures, climate disasters, even centuries of neglect. That's the promise. We're just not there yet.

5. Standing-Wave Storage: Photography as Data Archival

Standing-wave storage might be the most beautifully strange idea in this list. It takes inspiration from 1800s photographic techniques and applies it to data preservation.

The Concept

Developed at Wave Domain by Clark Johnson (the engineer behind the HDTV revolution), standing-wave storage works differently than anything else on this list. Instead of magnetism, electronics, or biology, it uses light itself.

The technology captures standing light waves inside a silver halide emulsion, similar to photographic film. The interference patterns create a physical record, encoded in the very structure of the material. The data becomes literally physical, not electrical or magnetic.

Extreme Durability

NASA tested this. They sent samples into space aboard the International Space Station. The samples were exposed to cosmic radiation for months. No measurable data degradation. Think about that. Cosmic radiation is brutal. It corrupts electronics. It damages every data storage medium. Standing-wave storage shrugged it off.

The durability comes from the storage mechanism itself. There's no power requirement. No magnetic field to decay. No electron charge to leak. The data is literally pressed into the structure of the material. Centuries of stability without energy.

The Access Problem

Reading the data requires optical scanning. You need equipment to read it. You need buffering and processing. It's not as simple as plugging in a drive. But for cold archives that you access rarely or never, that's acceptable.

Standing-wave storage is positioned for scientific data, governmental records, space missions, and anything that needs to survive far longer than hard drives or tape. It's not about speed. It's about permanence.

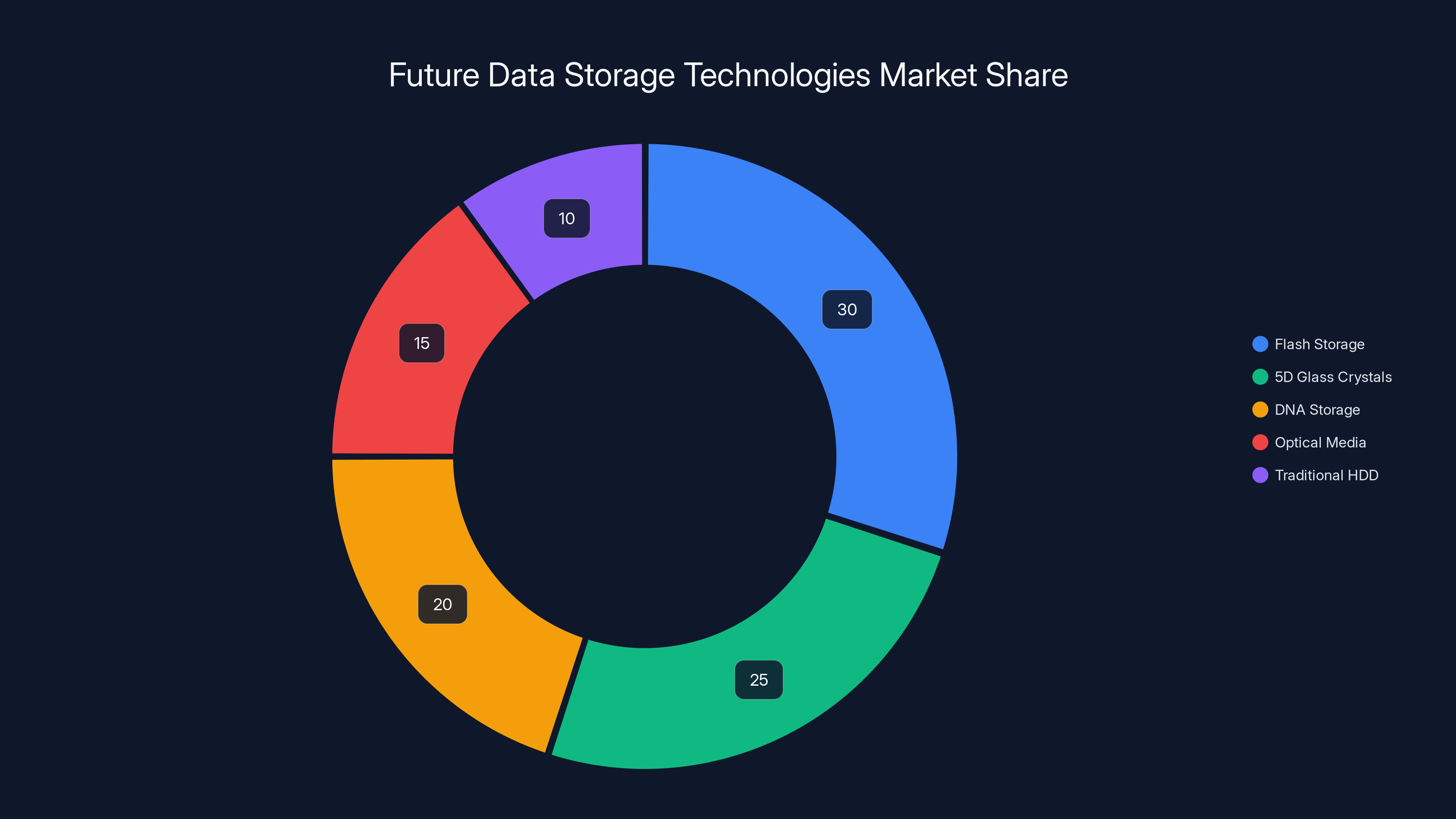

Estimated data suggests that flash storage will dominate the market, but emerging technologies like 5D glass and DNA storage are gaining traction as viable alternatives to traditional HDDs.

6. Magneto-Electric Disk (MED): Hybrid Storage Strategy

Huawei's Magneto-Electric Disk takes a different approach. Instead of replacing hard drives with one new technology, it combines two: SSD for speed and tape for capacity.

The Design

MED is essentially a box with an internal SSD connected to a tape mechanism. Externally, it presents itself as a single storage device. Hot data sits on the SSD. Cold data lives on tape. The system manages tiering automatically, moving data between the two based on access patterns.
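Automatic tiering of this kind can be sketched in a few lines. This is not Huawei's algorithm, which the article does not describe. It is a generic idle-time policy with hypothetical names, using plain dictionaries to stand in for the SSD and the tape.

```python
from dataclasses import dataclass, field


@dataclass
class TieredStore:
    """Toy two-tier store: recently used keys on 'ssd', idle keys on 'tape'."""
    idle_limit_s: float
    ssd: dict = field(default_factory=dict)
    tape: dict = field(default_factory=dict)
    last_access: dict = field(default_factory=dict)

    def write(self, key: str, value: bytes, now: float) -> None:
        """New and updated data always lands on the fast tier."""
        self.ssd[key] = value
        self.tape.pop(key, None)
        self.last_access[key] = now

    def read(self, key: str, now: float) -> bytes:
        """Reading cold data promotes it back to the fast tier."""
        if key in self.tape:
            self.ssd[key] = self.tape.pop(key)
        self.last_access[key] = now
        return self.ssd[key]

    def demote_idle(self, now: float) -> list:
        """Move anything untouched for longer than idle_limit_s to tape."""
        moved = []
        for key in list(self.ssd):
            if now - self.last_access[key] > self.idle_limit_s:
                self.tape[key] = self.ssd.pop(key)
                moved.append(key)
        return moved

    def tier_of(self, key: str) -> str:
        return "ssd" if key in self.ssd else "tape"
```

The caller only ever sees `write` and `read`. Which tier a key lives on is an internal detail, which is the abstraction the single-device design is selling.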

This is pragmatic engineering. It acknowledges that no single storage medium is optimal for everything. Hot workloads need flash speed. Cold archives need tape capacity. Why choose when you can have both?

The Interface Question

The interesting part is how it presents itself. From the outside, it's a unified device. Internally, it's two very different storage technologies working together. This abstraction matters for adoption. You don't need to change your software or your thinking. You just plug in an MED and get both speed and capacity.

Huawei's betting that enterprises prefer simplicity. One device that handles multiple storage tiers beats managing separate SSDs and tape libraries. The economics work out when you consider management overhead and space savings.

7. Optical Media Evolution: The Comeback No One Expected

Optical storage never went away. It just got better. Modern optical media doesn't look like DVDs anymore. It looks like next-generation archival.

Multi-Layer Density

New optical formats stack multiple data layers within a single disc. Instead of information spread across a flat surface, data exists in three dimensions. Blue laser technology allows for smaller pit sizes than older red laser DVD formats. The result is massive capacity in a familiar form factor.

A single optical disc can hold terabytes in next-generation formats. That's not competitive with hard drives for speed, but for capacity per dollar in archival, it's interesting.

Radiation Resistance

Here's the underappreciated advantage: optical media doesn't care about electromagnetic interference. Space applications require storage that won't fail under radiation. Hard drives fail. SSDs fail. Optical media endures.

Meta and other companies are investing in optical media research specifically for this reason. Radiation hardness matters when you're thinking about data that needs to survive decades or centuries.

Accessibility

Optical discs are durable in another way: accessibility. You don't need proprietary equipment. The technology is mature. You can read forty-year-old LaserDiscs with relatively simple hardware. That's better than proprietary formats that disappear when companies die.

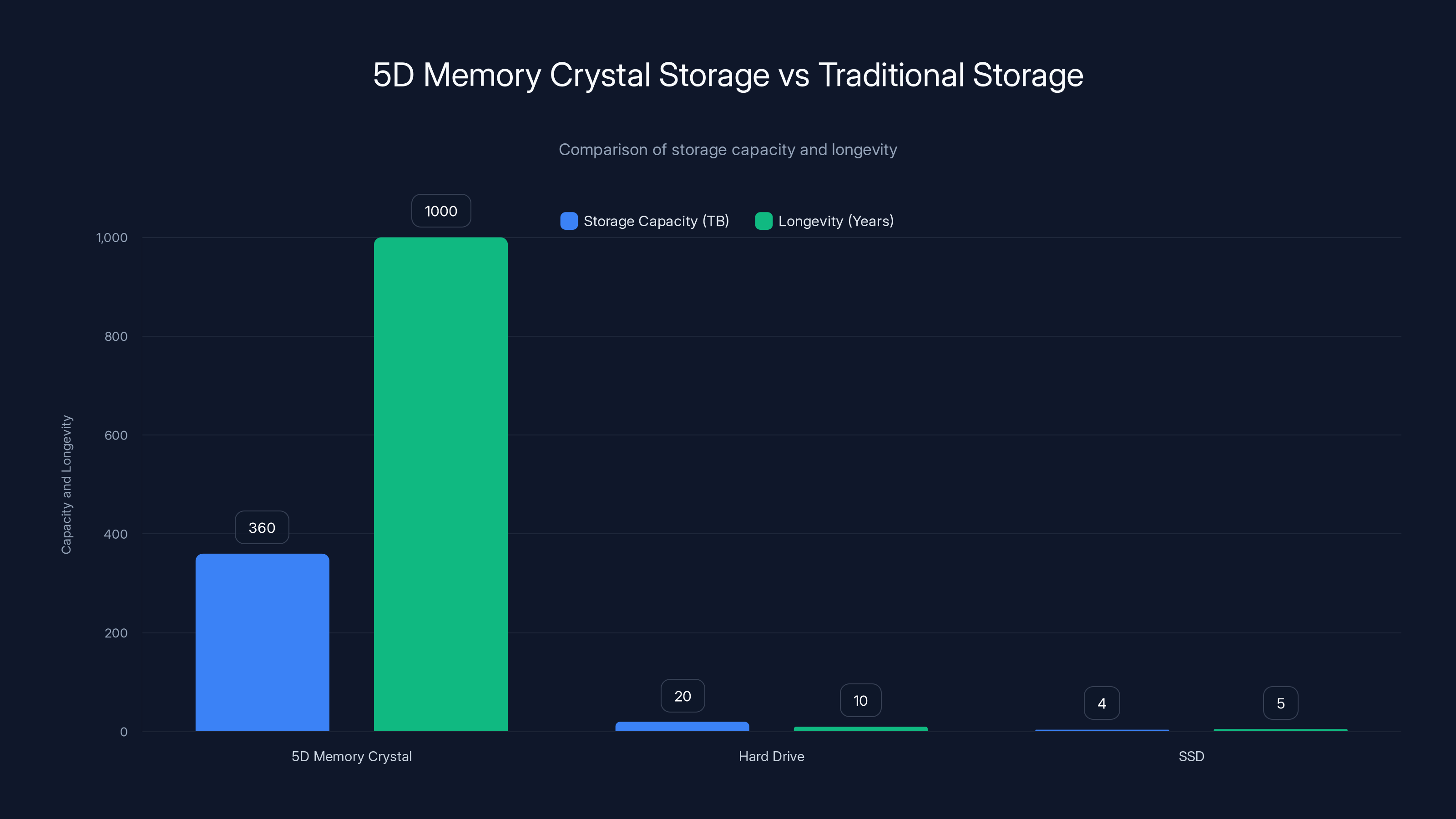

5D Memory Crystals offer unparalleled storage capacity and longevity, with 360TB per disc and stability over centuries, compared to traditional hard drives and SSDs.

8. Tape Storage: The Old Guard Gets Smarter

Tape isn't dead. It's hiding in archives and data centers everywhere, quietly storing exabytes of data that HDDs and SSDs haven't touched in years.

Why Tape Persists

Tape has two superpowers: absurd capacity at a ridiculously low cost per terabyte, and stability measured in decades without power. LTO (Linear Tape-Open) format keeps advancing. LTO-9 holds 18TB native, 45TB compressed. LTO-10 is coming with even more.

At those capacities, tape becomes economically unbeatable for cold archives. You pay nothing for power. You pay nothing for cooling. You pay almost nothing per terabyte. The tradeoff is access speed, which doesn't matter when you're accessing the data once every five years.

Automation and Tier Management

Modern tape libraries are fully automated. Robotic arms pull cartridges. Tape drives read them. The entire process is orchestrated by software. From an applications perspective, it looks like a file system. Internally, it's mechanical magic.

The advantage is transparency. Your backup system doesn't need to know it's using tape. The library abstracts that away. But the economics are undeniable. At petabyte scale, tape saves enormous amounts of money.

The Refresh Problem

The challenge with tape is refresh cycles. You can't just write data and forget about it for a century. Tape degrades. Every ten years or so, you need to read everything and rewrite it to fresh media. That's a labor cost and operational burden, but it's still cheaper than replacing hard drives or managing solid-state degradation.
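The refresh burden is easy to quantify. This sketch assumes a fixed refresh interval (ten years, per the rule of thumb above), an archive that never grows, and no refresh in the final year.

```python
def refresh_cycles(archive_years: int, refresh_interval_years: int = 10) -> int:
    """Number of full rewrites needed after the initial write."""
    if archive_years <= 0 or refresh_interval_years <= 0:
        raise ValueError("years and interval must be positive")
    return (archive_years - 1) // refresh_interval_years


def total_rewritten_pb(archive_pb: int, archive_years: int,
                       refresh_interval_years: int = 10) -> int:
    """Total data copied to fresh media over the archive's lifetime."""
    return archive_pb * refresh_cycles(archive_years, refresh_interval_years)


print(refresh_cycles(100))         # 9 rewrites over a century
print(total_rewritten_pb(5, 100))  # 45 PB copied to keep 5 PB alive
```

Keeping 5PB on tape for a century means copying 45PB along the way, which is the operational cost that write-once media like glass aim to eliminate.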

9. Holographic Storage: The Technology That Wasn't

Holographic storage represents an interesting cautionary tale. Theoretically brilliant. Commercially impossible.

The Promise

Store data in three dimensions using holographic encoding. A small disc could hold terabytes. Access would be fast. The physics was sound. Multiple research teams proved it could work.

The Reality

Building commercially viable equipment is a different beast than laboratory prototypes. The systems needed to write, read, and verify holographic data were complex. Costs per device were astronomical. Manufacturing precision requirements were extreme. Nobody could figure out how to make it economical.

One company, Cerabyte, came closest. They pivoted to ceramic glass instead. The lesson: even brilliant storage ideas fail if you can't manufacture them cheaply and reliably. The physics can be perfect. The economics can still kill you.

Holographic storage lives on in research labs. It might emerge again in some form. But the market moved on before it became viable, and that's often enough to kill a technology.

10. Photonic Storage and Emergent Technologies

Beyond the major contenders, there are experiments happening in every major research institution.

Phase-Change Memory

Some teams are exploring phase-change materials that switch between states. The transition stores data. It's faster than some experimental media but slower than flash. It might find a niche in warm storage someday.

Molecular Storage

Others are working on encoding data at the molecular level, similar to DNA but using synthetic molecules. The advantage is faster read/write. The disadvantage is everything else is still experimental.

Quantum-Inspired Systems

Quantum effects might enable storage mechanisms that don't fit traditional categories. We're nowhere near practical implementation, but the research is serious.

These technologies probably won't replace hard drives directly. They'll likely find specialized roles, each solving one specific problem: radiation resistance, thermal stability, extreme longevity, or unusual access patterns.

The Storage Tier Revolution: Replacing One Technology With Many

The big shift isn't replacement. It's differentiation.

For twenty years, hard drives did everything. They were slow, but they were cheap and spacious. Everything else was either too expensive (SSDs) or too weird (everything else).

Now the economics have flipped. You have options for every tier of your storage stack. Hot tier: high-capacity enterprise SSDs. Warm tier: E2 drives or MED hybrids. Cold tier: tape, DNA, glass, or optical media depending on your access patterns and durability requirements.

Each technology optimizes for its niche. Flash is fast but costs more per terabyte. Tape is slow but incredibly cheap. 5D glass is permanence itself. DNA is future-proof if it ever becomes practical.

The Infrastructure Consequence

This matters for data center design. You're not sizing everything for peak IOPS anymore. You're designing a tiered system where data naturally flows from hot to cold storage over time. The software, much of it built by hyperscalers and cloud providers, handles the orchestration. The hardware specializes.

This is actually more efficient than the old model. Less power for the same amount of storage. Better reliability in each tier. More appropriate technology for each workload.

The Operator Challenge

The downside is complexity. You need to understand which technology fits which workload. You need operational expertise in multiple systems. You can't just buy hard drives and call it a day.

But for hyperscale operators, that complexity is fine. They have engineers. They have the scale to justify specialized equipment. The real winners are companies building software to abstract this complexity away—tiering systems that automatically move data based on access patterns, making the underlying technology invisible to applications.

Timing and Maturity: Which Technologies Ship When

It's worth being realistic about timelines. Some of these are production-ready. Others are research projects.

Ready Now

Enterprise SSDs: Already shipping at 122TB. Scaling to 245TB soon.

E2 form factor: Early production, but real systems being tested.

Tape: Production everywhere. Already storing exabytes.

Optical media: Mature formats available today. Next-generation multi-layer formats are still emerging from research labs, with prototypes in development.

Coming in 5-10 Years

5D glass: Requires massive production investment. Currently slow. Speed improvements needed.

DNA storage: Synthesis and reading need 100x improvement in speed and 1000x improvement in cost.

Standing-wave: Small production volumes, requires custom reading equipment.

Stuck in Research

Holographic: Unlikely without major breakthroughs.

Molecular storage: Promising but years away from practical reality.

The honest timeline: SSDs dominate hot workloads immediately. Tape and optical media do the archival heavy lifting for years. By 2030-2035, you might see DNA or glass in production for extremely long-term storage. By 2040, the storage stack might look completely different.

But hard drives? They'll keep shrinking in role. Not disappearing, but confined to niches where their specific strengths (massive capacity, mechanical simplicity, established infrastructure) still matter. That's not an overnight transition. It's already happening, but it's gradual.

What This Means for Enterprise Buyers

If you're managing data storage right now, what should you do?

Start Thinking in Tiers

Don't assume one technology handles everything. Assess your workloads. What's actually accessed frequently? What sits dormant? What needs to survive decades?
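A workload assessment can start as something as simple as the sketch below. The access-frequency thresholds are hypothetical and should be tuned to your own data. The fifty-year archive cutoff and the tier-to-media mapping follow the tiers described in this article.

```python
# Hypothetical thresholds; adjust to your own access logs.
HOT_READS_PER_YEAR = 1000
WARM_READS_PER_YEAR = 12
ARCHIVE_RETENTION_YEARS = 50

TIER_MEDIA = {
    "hot": "high-capacity enterprise SSD",
    "warm": "E2 drive or MED hybrid",
    "cold": "tape",
    "archive": "5D glass or optical media",
}


def suggest_tier(reads_per_year: float, retention_years: float) -> str:
    """Pick a tier from how often data is read and how long it must survive."""
    if reads_per_year >= HOT_READS_PER_YEAR:
        return "hot"
    if reads_per_year >= WARM_READS_PER_YEAR:
        return "warm"
    if retention_years >= ARCHIVE_RETENTION_YEARS:
        return "archive"
    return "cold"


tier = suggest_tier(reads_per_year=0.2, retention_years=7)
print(tier, "->", TIER_MEDIA[tier])  # cold -> tape
```

Running a classification like this over real access logs usually shows that most data lands in the warm and cold tiers.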

Invest in Abstraction

Choose storage systems that abstract the underlying technology. Tiering software that moves data automatically. APIs that don't care if the backend is flash, tape, or something weirder.
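In code, that kind of abstraction often looks like a narrow interface that every backend implements. This is a generic sketch with hypothetical names, not any particular vendor's API.

```python
from typing import Protocol


class StorageBackend(Protocol):
    """The only operations application code is allowed to rely on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBackend:
    """Stand-in backend; a flash, tape, or glass backend would expose the same methods."""

    def __init__(self) -> None:
        self._objects: dict = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(backend: StorageBackend, name: str, body: bytes) -> bytes:
    """Application code: stores and re-reads a report without knowing the medium."""
    backend.put(name, body)
    return backend.get(name)
```

Swapping the medium then means writing a new class with the same two methods, and the application code stays unchanged.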

Watch the Transitions

E2 SSDs might become your warm storage solution. Keep tabs on maturity. When the tech is ready, it can reshape your storage costs.

Plan for Hybrids

Huawei's MED model might be the future: multiple technologies in one system, managed transparently. That reduces operational complexity even if it increases hardware complexity.

Long-Term Archives

If you're building archives that need to survive fifty-plus years, research 5D glass or optical media. Traditional hard drives aren't appropriate for that timeline.

The Bigger Picture: Energy and Sustainability

Underlying all of this is a fundamental driver: energy.

Data centers consume an estimated 1-2% of global electricity, with a noticeably higher share in some countries. That's massive and growing. Hard drives spinning uselessly consume power. Flash has lower operational power but high manufacturing energy. Every new technology is optimized partly on power efficiency.

The shift away from hard drives is partly about speed and capacity, but mostly it's about watts. You can't power another data center era the old way. The economics force innovation.

5D glass doesn't need power at all once written. DNA doesn't need cooling. Standing-wave storage is passive. Even tape's advantage is partly energetic: passive storage doesn't consume electricity.

This changes the calculus. A slower technology that consumes zero power becomes economically superior to a faster technology that drinks megawatts. That wasn't true in the past. It's true now.

The storage revolution is ultimately an energy revolution. We're not replacing hard drives because they're slow. We're replacing them because we can't afford to power them anymore.

FAQ

What makes enterprise SSDs so different from consumer SSDs?

Enterprise SSDs use more reliable NAND, have better error correction, and support higher capacity configurations than consumer drives. They're designed for 24/7 operation in data centers, with performance and reliability optimized for constant throughput rather than peak speed bursts that consumer SSDs prioritize.

Why would anyone use tape storage when SSDs are faster?

Tape's advantage is pure economics at cold storage scale. A single tape cartridge costs under $50 and, in the LTO-9 generation, holds 18TB native or up to 45TB compressed. When your data sits dormant for years, the cost per terabyte for tape is orders of magnitude lower than for SSDs, even accounting for periodic refresh requirements.

How does 5D crystal storage actually store data in five dimensions?

The five dimensions are three spatial coordinates (x, y, z position within the glass) plus the orientation and intensity of the laser-etched structures. By varying these five parameters, the technology encodes information far more densely than two-dimensional storage mechanisms like hard drives or flash.

Is DNA storage actually being used in production anywhere right now?

Not in production. Research labs have demonstrated reading data from DNA after storage periods, but synthesizing DNA is slow and expensive, reading it requires chemical processes, and the costs are thousands of dollars per gigabyte. The technology needs 100-1000x improvement in speed and cost before commercial deployment becomes realistic.

What's the main limitation of standing-wave storage?

Access speed. Reading data requires optical scanning and buffering, making it slow compared to electronic storage. This limits it to cold archives where data is accessed rarely or in disaster-recovery scenarios. For frequently accessed data, it's impractical.

How long can data survive on different storage media without refresh?

Hard drives: 5-10 years before mechanical failure becomes likely. SSDs: 10-20 years depending on NAND type. Tape: 20-30 years with proper storage conditions. Optical media: 50-100+ years claimed. 5D glass and DNA: hundreds to thousands of years theoretically.

Why haven't these new storage technologies completely replaced hard drives already?

Most are either too slow, too expensive per terabyte, require new infrastructure, or are still in early production stages. Hard drives have decades of infrastructure investment, compatibility with existing systems, and proven reliability. Transition takes time, and each technology has specific tradeoffs.

What does E2 form factor actually mean?

E2 is a standard for ultra-high-density SSD form factors designed to fit in standard 2U server chassis while holding up to 1PB per drive. It uses NVMe over PCIe 6.0 and the EDSFF Ruler physical standard to achieve extreme density in data center environments.

How does Huawei's MED handle the transition between SSD and tape storage?

MED uses software tiering policies to automatically move data between the internal SSD (hot tier) and tape mechanism (cold tier) based on access patterns. To applications, it looks like a single storage device, but internally it's optimizing access speed for hot data and cost for cold data.

Which storage technology is most likely to disrupt data centers in the next five years?

Enterprise SSDs at massive capacities (200TB+) and E2 form factors once thermal challenges are solved. These are closest to production and directly address the warm storage tier where HDD arrays currently dominate. DNA and 5D glass need more development time.

The Future of Storage: What Comes Next

We're standing at a transition point. The one-size-fits-all hard drive era is ending. The specialized, tiered storage era is beginning.

In five years, hyperscalers will deploy E2 drives at scale. Tape will still back up exabytes. Enterprise SSDs will hit 245TB and keep scaling. In ten years, 5D glass might enter production for long-term archives. DNA storage might finally become practical for select use cases.

But the real story isn't any single technology. It's the shift in thinking. Storage is no longer about having one product that does everything acceptably. It's about the right tool for each job.

That requires smarter software, better tiering systems, and operators who understand tradeoffs. It's more complex than "buy hard drives." But it's more efficient, more sustainable, and ultimately cheaper at scale.

Hard drives built the data center era. The next era will use many tools, each optimized for its specific role. That transition is messy and expensive and complicated. It's also inevitable.

Key Takeaways

- Enterprise SSDs now scale beyond 122TB, directly replacing large HDD arrays with superior power efficiency and reliability

- Different technologies target specific storage needs: 5D glass and DNA for archival, tape for cold storage economics, optical for radiation resistance

- Data centers are shifting from one-size-fits-all HDDs to specialized tiered systems optimizing for power consumption, physical footprint, and workload patterns

- Timeline matters: E2 SSDs reach production in 1-2 years, 5D glass in 5-7 years, DNA storage likely requires 10+ years of development

- Energy consumption, not speed, is the primary driver replacing HDDs—technologies requiring zero power or minimal energy become economically superior at hyperscale

![10 Storage Technologies Challenging Hard Drives [2025]](https://tryrunable.com/blog/10-storage-technologies-challenging-hard-drives-2025/image-1-1766846114745.jpg)