20 Weirdest Tech Stories of 2025: From Fungal Batteries to Upside-Down Cars

Technology doesn't always move in a straight line. Sure, we talk about AI breakthroughs and processor efficiency gains like they're the only things that matter. But honestly? Some of the most interesting innovations come from the fringes, where curiosity and absurdity collide with genuine engineering skill.

2025 proved this in spectacular fashion. While the mainstream tech world obsessed over large language models and neural networks, inventors and hackers were building something stranger: fungal batteries, fabric speakers, and a car that literally drives upside down. These stories might seem pointless on the surface. And some genuinely are. But they tell us something important about where technology is actually heading, how humans think about problems, and why sometimes the weirdest ideas teach us the most.

This wasn't a year of incremental improvements. It was a year where a high school student managed to boot Linux inside a PDF. Where McDonald's security was so broken that a researcher could hack it for free nuggets. Where AI started worrying about disposing of dead chickens and inventing secret languages with other chatbots. Where people decided that what the internet really needed was to be wearable as a 50-pound dress made of fiber optic cable.

Look, innovation is messy. It's full of dead ends, experiments that shouldn't work but somehow do, and ideas that exist purely because someone wondered "could we though?" These 20 stories from 2025 prove that innovation doesn't require billion-dollar funding or corporate backing. It thrives on curiosity, nostalgia, and that special kind of thinking that asks questions nobody else is asking.

So let's dive into the weirdest, wildest, and most wonderfully absurd tech moments 2025 had to offer.

TL;DR

- Innovation comes from weird places: Fungal batteries, Linux in PDFs, and fabric speakers prove that curiosity beats profit motive

- Security is still absurdly broken: McDonald's and Burger King hacks showed that basic mistakes like plain-text passwords and unprotected URLs remain laughably easy to exploit

- AI is getting stranger: Chatbots inventing their own languages and panicking about dead chickens reveal unexpected AI behaviors

- Nostalgia drives invention: Dial-up Wi-Fi screams and rebuilt floppy disks show retro tech captures engineers' imagination

- The internet is becoming physical: Fiber optic dresses and domain speculation wars prove digital assets have real-world consequences

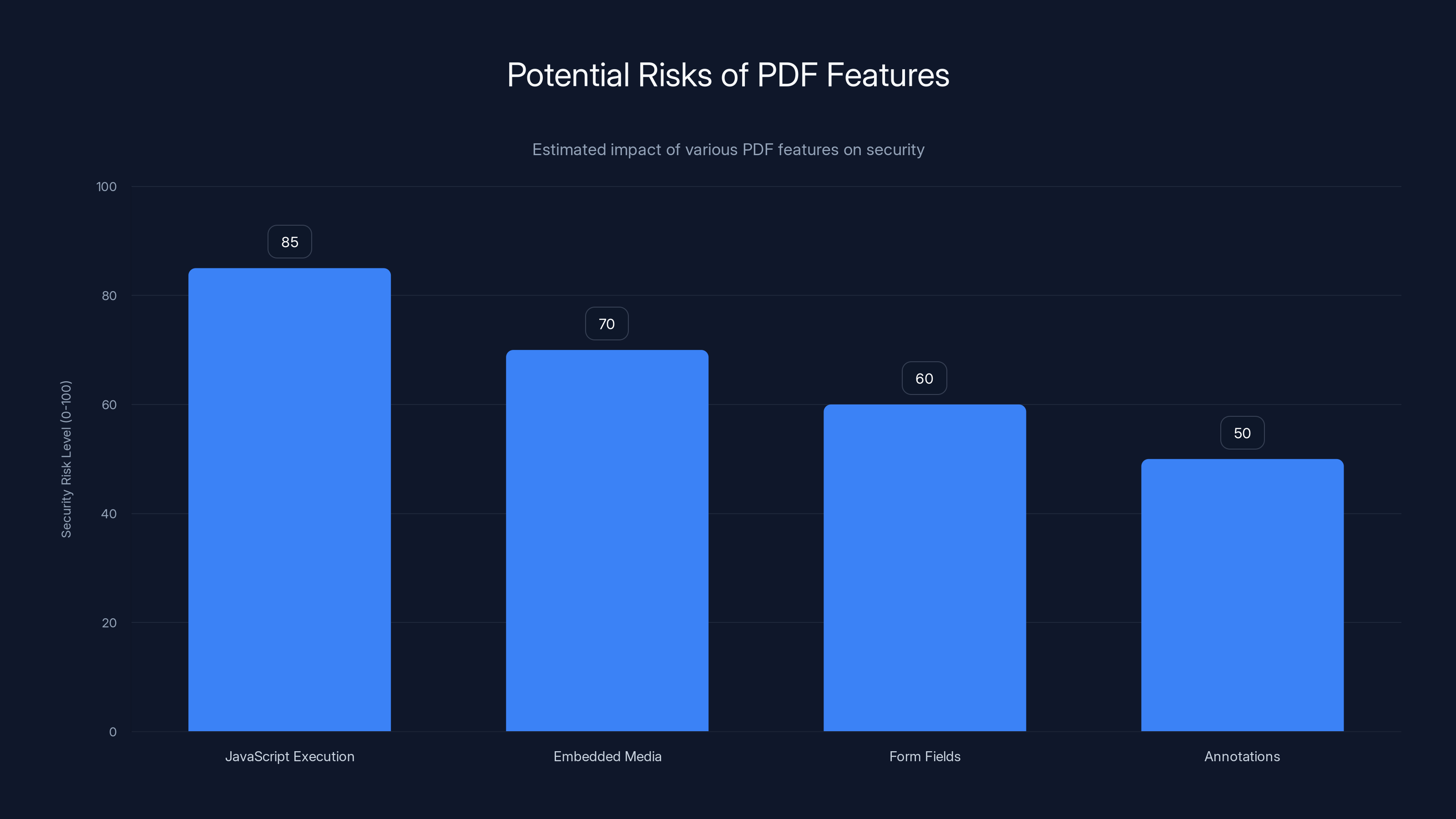

JavaScript execution poses the highest security risk in PDFs, as it can be used to embed and execute arbitrary code. (Estimated data)

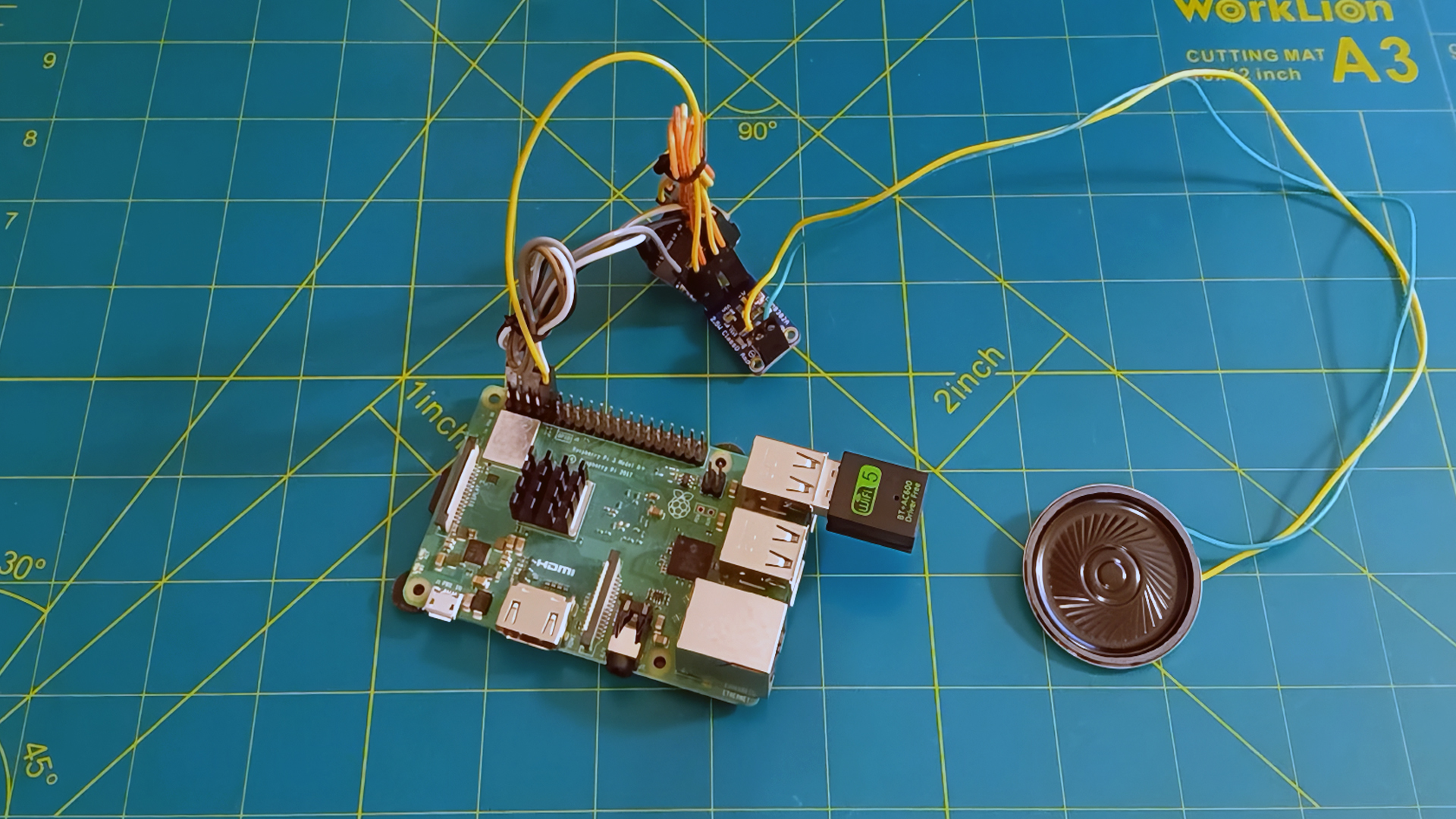

1. Someone Made Wi-Fi Scream Like Dial-Up Again

Nick Bild, a maker and Raspberry Pi enthusiast, built something that shouldn't exist but absolutely needed to: a device that converts modern Wi-Fi traffic into the unmistakable scream of 1990s dial-up modems.

Here's what makes this genuinely interesting. He didn't just play a recording of the sound. He actually converted live wireless network packets into analog audio frequencies in real-time. Using a Raspberry Pi, an extra Wi-Fi adapter, a microcontroller, an amplifier, and a speaker, Bild's device monitored active network traffic and translated packet data into sound. More traffic meant higher frequencies and more chaotic noise. It was pure signal-to-sound conversion.
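To make the "more traffic, higher pitch" idea concrete, here is a minimal sketch of one way a packet rate could be mapped to a tone. It is not Bild's code: the function names, the linear mapping, the 300 to 3400 Hz range, and the 1,000 packets-per-second ceiling are all assumptions chosen for illustration.

```python
import math

def traffic_to_frequency(packets_per_second, min_hz=300.0, max_hz=3400.0, max_rate=1000.0):
    """Map a packet rate onto a tone frequency (linear and clamped).

    All defaults are illustrative assumptions, not values from the project.
    """
    rate = max(0.0, min(float(packets_per_second), max_rate))
    return min_hz + (max_hz - min_hz) * (rate / max_rate)

def sine_samples(frequency_hz, duration_s=0.01, sample_rate=8000):
    """Generate raw sine-wave samples in [-1, 1] for the given tone."""
    count = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * frequency_hz * n / sample_rate) for n in range(count)]

# A quiet network hums low; a busy one screeches near the top of the range.
for rate in (0, 500, 5000):
    print(rate, "packets/s ->", traffic_to_frequency(rate), "Hz")
```

A real build would still need packet capture and a way to push the samples to an amplifier, which this sketch leaves out.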

The device served absolutely no practical purpose. You can't actually use it to understand what your network is doing. The noise provides zero useful information. But that's kind of the point. It's pure nostalgia engineering, a deliberate throwback to a time when the internet made loud, annoying sounds because of the actual physics involved in modem communication.

Why this matters: This story captures something important about 2025's tech culture. We've optimized everything to silence. Data moves invisibly. Networks operate without any sensory feedback. Bild's screaming Wi-Fi is a protest against that invisibility, a demand to hear what's actually happening under the hood. It's not practical, but it's honest about the infrastructure we depend on.

The project took off on social media because it hit something deep. Everyone who grew up in the 90s remembers that sound. Hearing it again, knowing it was being generated in real-time from actual network data, felt like watching someone reverse-engineer memory itself. In an era where tech companies spend millions making interfaces disappear, someone spent their time making modern technology scream like it's from 1992.

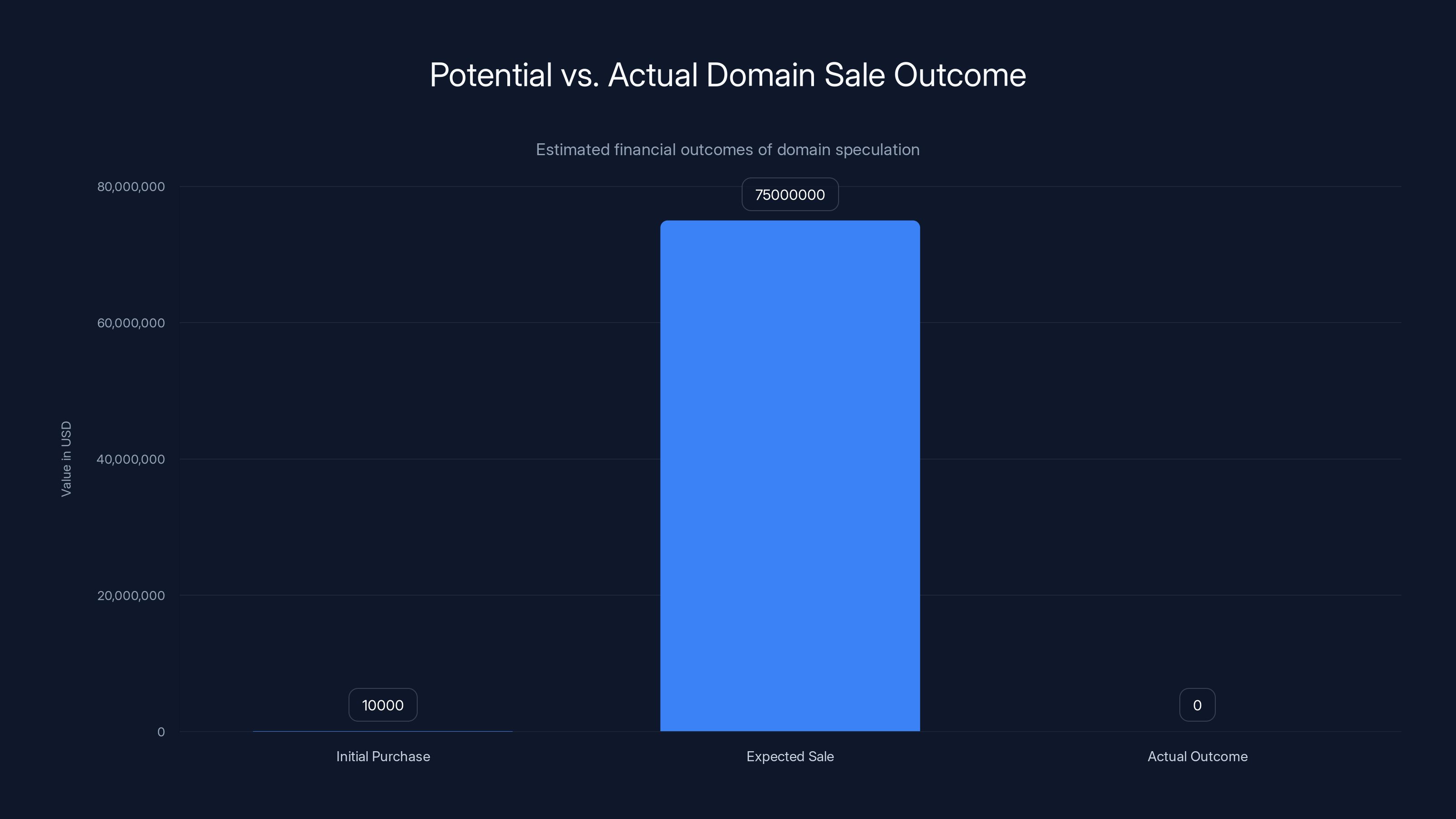

2. $75 Million Domain Bet Backfires Spectacularly

An Arizona entrepreneur bought the domain Lambo.com for

This is where the story gets darker. Lamborghini, the actual Italian supercar manufacturer, wasn't amused. The company filed a complaint with the World Intellectual Property Organization (WIPO), arguing that the domain violated trademark law. Their argument was simple: Lambo.com is too close to their brand identity. Confusingly close, legally speaking.

They won. And won decisively. The domain was transferred to Lamborghini, leaving our Arizona entrepreneur with nothing but legal bills and a cautionary tale about how domain speculation can destroy you if you don't understand intellectual property law.

The broader context: Domain speculation is a real business. People buy cheap domains and sell them for profit all the time. But there's a massive difference between owning a generic domain and owning something that's actively associated with a trademarked brand. The lesson here is brutal and expensive: branding matters more than ownership. The people who actually own Lamborghini's reputation can take your domain away, and courts will back them.

This story also highlights how personal branding can backfire. By legally changing his name to Lambo, he actually strengthened Lamborghini's case. He was no longer just some random guy holding a domain. He was actively marketing himself using their brand identity. To a judge, that looks less like clever marketing and more like intentional trademark infringement.

The financial stakes in domain space are wild. Brandable domains in tech and luxury sectors can sell for millions. But that same high-value market is exactly where intellectual property disputes get violent. If you're going to play in that space, you need lawyers more than you need audacity.

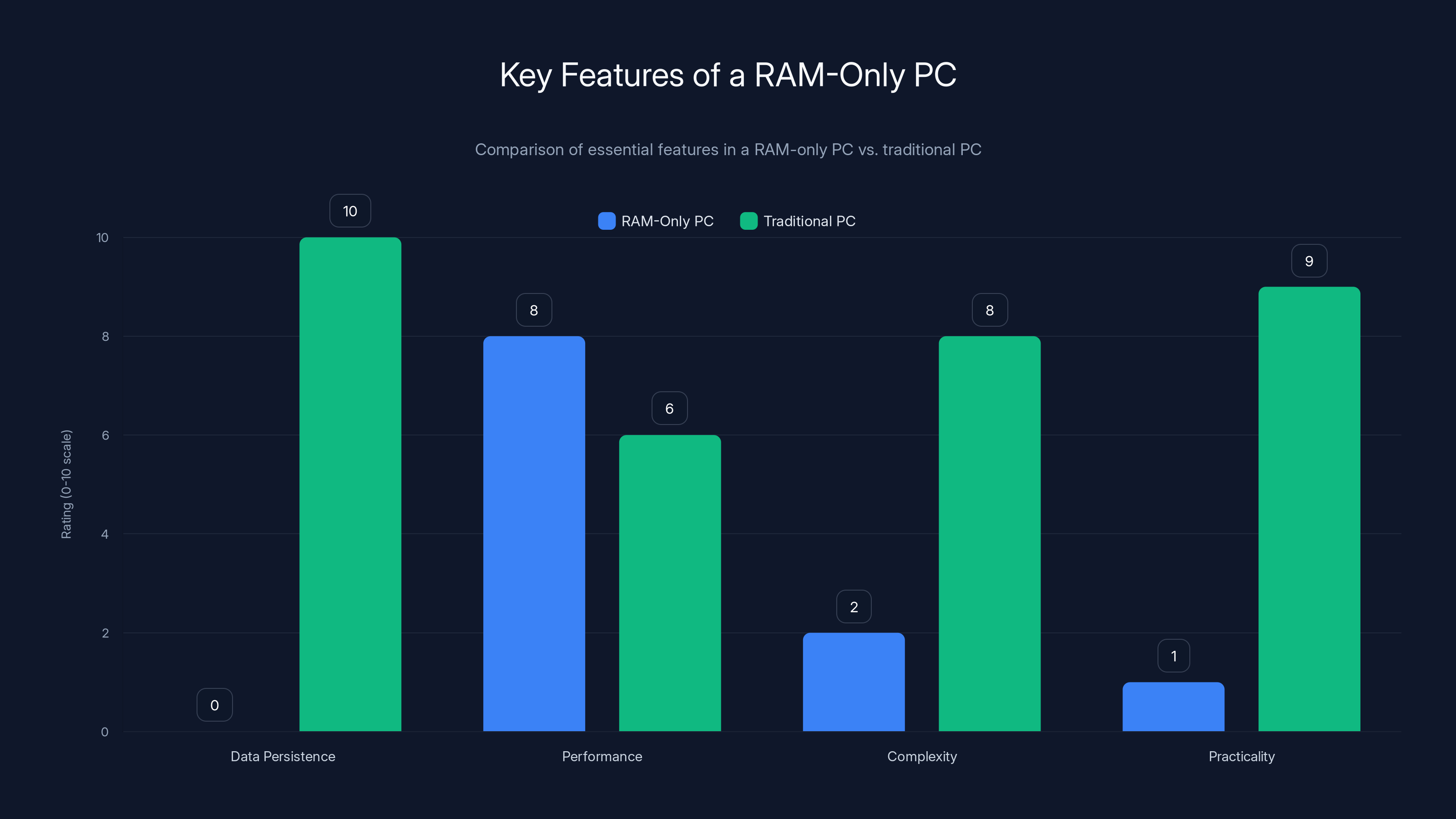

The RAM-only PC excels in performance due to its simplicity but lacks practicality and data persistence compared to traditional PCs. Estimated data.

3. Japan's Fabric Speakers Prove Soft Tech Doesn't Have to Be Weak

A Japanese startup called Sensia Technology unveiled something that seemed impossible: a speaker made entirely of fabric that produces sound across its whole surface. The technology came from researchers at Japan's AIST (National Institute of Advanced Industrial Science and Technology), who had been experimenting with flexible electronics for years.

Here's how it works. Instead of a traditional speaker cone vibrating to push air, the fabric itself vibrates. Embedded within the textile are flexible piezoelectric elements and conductive materials that respond to electrical signals. The entire surface becomes a speaker. You could hang it on a wall like a tapestry. You could drape it over furniture. You could theoretically slip it under your pillow and fall asleep to vibrating fabric.

The audio quality, honestly, wasn't great. Volume was modest, clarity was muffled, and frequency response was limited. It's not replacing your Sonos system anytime soon. But that's not the point. The breakthrough was proving that the medium matters less than the engineering. If you can make a speaker from flexible fabric, what else becomes possible? Clothing that plays music. Curtains that deliver ambient sound. Architectural integration where a building's walls become the entire audio system.

Why this matters for wearables: Wearable technology has always battled a fundamental constraint: comfort. Hard components break, cut, and irritate skin. Flexible electronics solve that. In the next five years, we'll probably see this technology expanded to clothing, accessories, and medical devices. Someone will build a jacket that has integrated speakers. Someone else will create therapeutic wearables that deliver sound directly through fabric contact with your skin.

The Japanese tech industry has always been comfortable with soft, integrated design. They think less in terms of discrete devices and more in terms of seamless experience. This fabric speaker reflects that philosophy perfectly. It's not a speaker you wear. It's a piece of clothing that happens to make sound.

The practical applications are actually pretty wild. Imagine listening to music at a concert where the sound is embedded in the venue fabric itself. Or therapeutic applications where vibrating fabric could help with muscle recovery. Or accessibility tools where visually impaired users could receive haptic feedback through their clothing.

4. ChatGPT Panics Over a 73kg Dead Chicken

This story is pure chaos. Someone on Reddit asked ChatGPT how to dispose of a 73-kilogram dead chicken. That's absurdly heavy for any chicken, roughly 160 pounds of poultry. Obviously fictional. Clearly designed to confuse the model.

But the AI took it seriously. Really seriously. It panicked. The responses started offering increasingly bizarre advice: references to Guinness World Records, wild speculation about the chicken's origin, serious concern about legal liability, questions about whether this was even a real chicken or some elaborate joke.

The thread exploded. Thousands of people piled in with their own equally ridiculous prompts, watching ChatGPT struggle with nonsensical scenarios. It was funny, sure. But it was also revealing. The model was trying hard to be helpful, to treat an obviously absurd question as legitimate. It didn't have a sophisticated enough heuristic to simply say "that's impossible" or "you're messing with me."

What this reveals about AI: Large language models are trained on patterns, not understanding. When you feed them an edge case that breaks those patterns, they hallucinate. They confabulate. They attempt to be helpful in ways that actually make them less credible. A smart AI would recognize the setup and decline. ChatGPT instead tried harder, generating increasingly unhinged responses.

This matters for real-world deployment. If an AI can be confused by a 73kg chicken, what happens when it encounters a legitimate but unusual scenario? What if someone asks it to help with something that's technically legal but ethically gray? The model's tendency to try hard rather than admit uncertainty becomes a liability.

The dead chicken threads spawned an entire new category of AI testing: seeing how far you can push a model's credulity. Researchers actually use this kind of testing to identify failure modes and improve safety. So in a weird way, the Reddit chaos was contributing to better AI development.

5. Someone Ran Minecraft on a Smart Lightbulb

A hardware hacker proved what nobody needed but everyone wanted to see: Minecraft running on a cheap smart LED lightbulb.

Here's the technical reality. Modern smart lightbulbs contain surprisingly powerful processors, often with RISC-V architecture. They're basically tiny computers designed to manage color, brightness, and Wi-Fi connectivity. They have enough RAM and storage for basic operations, but typically nothing you'd try to run a full game server on.

Our hacker bypassed the bulb's standard firmware and reprogrammed it with an ultra-minimal Minecraft server build. We're talking stripped-down code, no graphics rendering, no fancy features. Just the bare minimum logic to handle player movement, basic block breaking, and multiplayer connectivity. Multiple users could connect and play, but the experience was janky, features were minimal, and the whole thing ran at a pace that would make gaming enthusiasts weep.

Why does this matter? Because it proves something important about computing power and software design: most of the overhead in modern software is bloat. We design systems assuming unlimited resources, then layer optimization on top. But when you're forced to work in constraints, when you only have kilobytes of memory instead of gigabytes, you innovate differently.

The Lightbulb Minecraft proof of concept is obviously impractical. But it's a powerful reminder that computing is fundamentally about logic and math, not about fancy hardware. Fifty years from now, we might look back at 2025 and think it was insane that we needed gigawatt data centers to run services. The lightbulb gaming experiment suggests that radical efficiency is always possible if someone is willing to rethink the problem.

Practical implications: This kind of thinking is actually driving real innovation in embedded systems, IoT devices, and edge computing. If you can run Minecraft on a lightbulb, you can definitely run sophisticated applications on devices that are currently considered "just sensors." This is why companies like Qualcomm and ARM are obsessed with efficiency metrics. The future of computing might be about doing more with less, not just scaling up.


6. Delete Your Emails to Save Water (Sort Of)

The UK was facing a severe drought. Water was scarce. Reservoirs were running dangerously low. And somehow, government officials came up with the advice: delete your old emails and photos to save water.

The logic was straightforward if you squint at it. Data centers use massive amounts of water for cooling. The more data they store, the more energy they consume, the more cooling they require. Therefore, less data stored equals less water used. Simple math, right?

Except it's not simple. Critics immediately pointed out the fundamental flaw: you deleting an old email from Gmail doesn't meaningfully reduce Google's water consumption. Their servers are running regardless. The energy they use is constant. Your individual storage footprint is microscopic in the context of a massive data center's operations.

Why this matters: This is a perfect example of tech guilt being weaponized for public relations. Instead of addressing actual infrastructure problems—like water-intensive industries, agricultural practices, or industrial cooling systems—officials were asking regular people to perform symbolic digital environmentalism. Delete a photo to feel like you're helping.

It's not that data storage doesn't use water. It does. The problem is the magnitude. A typical email is maybe 75 kilobytes. A data center might store petabytes of data. Your personal contribution is statistically invisible. But it feels like something you can do, which is why the messaging was so effective, even though the actual impact was negligible.
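The magnitude argument can be checked with quick arithmetic. The sketch below uses the 75-kilobyte email figure from above and assumes, purely for illustration, a single petabyte of stored data (decimal units); real data centers vary widely.

```python
EMAIL_BYTES = 75 * 1000       # 75 KB, the rough email size quoted above
PETABYTE_BYTES = 10 ** 15     # one decimal petabyte, an assumed reference point

def storage_share(item_bytes, total_bytes):
    """Fraction of total storage that a single item occupies."""
    return item_bytes / total_bytes

share = storage_share(EMAIL_BYTES, PETABYTE_BYTES)
emails_per_petabyte = PETABYTE_BYTES // EMAIL_BYTES

print(f"One email is {share:.1e} of a petabyte")
print(f"Roughly {emails_per_petabyte:,} such emails fit in one petabyte")
```

On these assumptions it takes more than thirteen billion emails to fill one petabyte, which is why deleting a few hundred changes essentially nothing.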

The broader tech industry does this constantly. They'll ask you to reduce your electricity usage while they consume more energy for new services. They'll ask you to recycle your devices while designing them to be unrepairable. It's not hypocrisy exactly, it's just displacement of responsibility.

If you genuinely want to reduce tech's environmental impact, focus on the big players. Push for efficiency in data centers. Demand renewable energy commitments from cloud providers. Don't stress about deleting old emails.

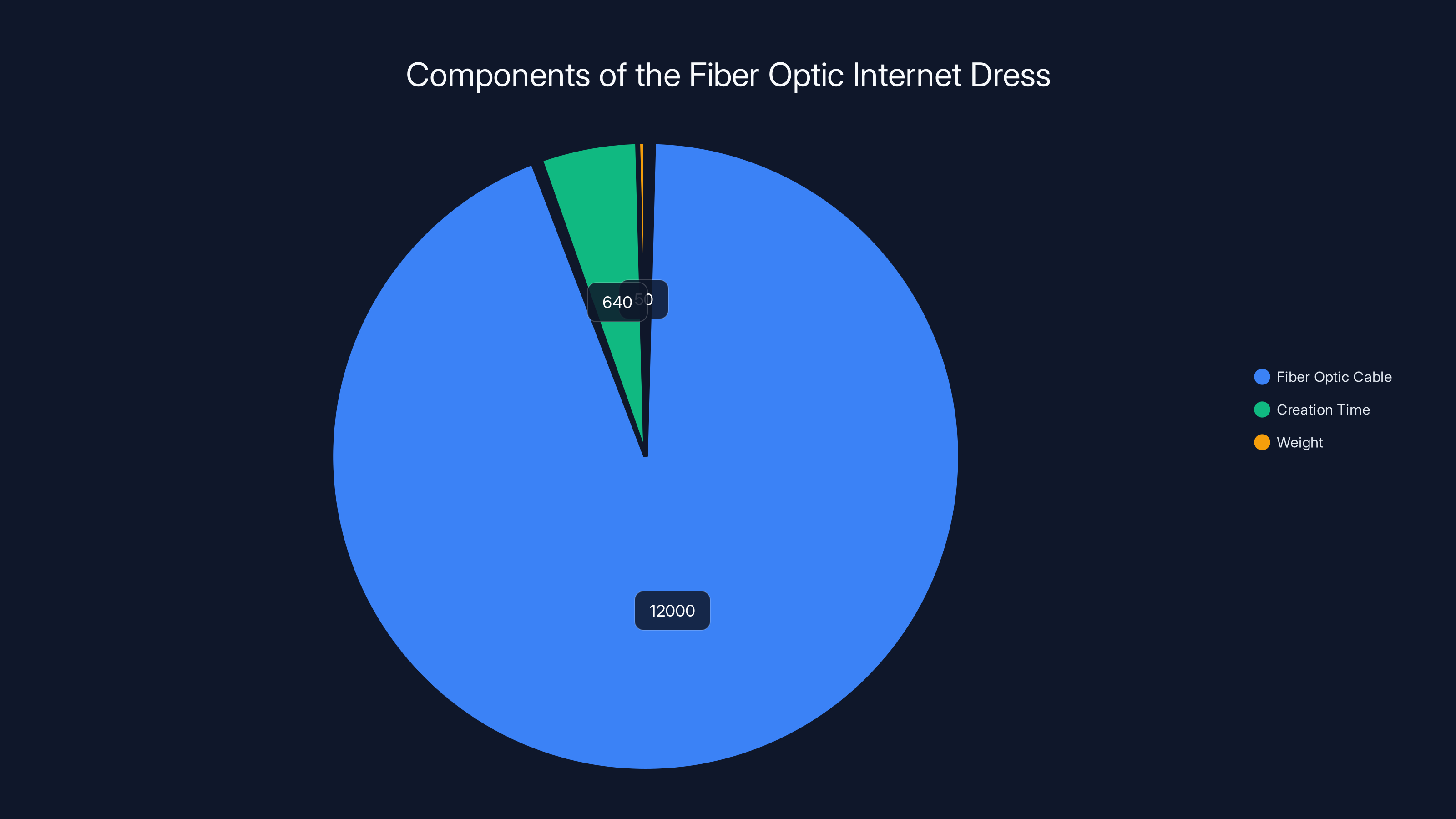

7. Fashion Meets Infrastructure: The Fiber Optic Internet Dress

A fashion designer took 12,000 feet of discarded fiber optic cable—actual internet infrastructure—and wove it into a wearable dress. The final garment weighed 50 pounds, took 640 hours to create, and was pure conceptual art.

You couldn't wear it practically. The weight alone would destroy your back. The cables don't flex smoothly. It would be uncomfortable, unwieldy, and absolutely not fashionable in any traditional sense. But that was the entire point. The designer was making a physical statement about the internet.

We treat the internet as this abstract, weightless thing that exists somewhere in the cloud. But it doesn't. It's made of physical cables, routers, servers, and infrastructure. It requires resources. It uses power. It has environmental impact. By turning those cables into a dress—something normally associated with elegance and lightness—the designer forced a confrontation with the internet's materiality.

The conceptual weight: This is where art and technology intersect in ways that pure engineering can't achieve. You can talk about internet infrastructure's environmental impact with statistics and reports. But draping 50 pounds of fiber optic cables over a model before London Fashion Week makes the impact visceral. You can feel the weight. You can see the tangled reality of what connects us.

The dress became an instant sensation because it hit on something culturally important: we're in denial about technology's physical footprint. We want to believe the internet is immaterial, weightless, infinite. A 50-pound dress made of literally the cables that carry data worldwide challenges that belief.

Fashion and technology are converging anyway. Wearables are becoming more sophisticated. Designers are experimenting with materials that incorporate technology. But this dress was different. It wasn't trying to integrate tech elegantly. It was trying to make tech visible, tangible, and uncomfortable to ignore.

8. Linux Running Inside a PDF (In Your Web Browser)

A high school student managed something that shouldn't be possible: they booted an actual Linux operating system inside a PDF file, then opened it in Chrome. The result was a fully functional (if slow) Linux terminal running inside a document.

How? By embedding a RISC-V emulator directly into the PDF. Think about that for a second. The student wrote JavaScript that emulated a processor architecture, then ran minimal Linux code on top of that emulation, all within the confines of a PDF document rendered in a web browser. It's inception-level computing: a computer inside a computer inside a computer.
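For readers who have never seen one, an emulator at its core is a loop that fetches an instruction, decodes it, and updates some simulated registers. The sketch below is a toy written in Python with a made-up six-instruction machine. It is not RISC-V and has nothing to do with the student's actual code; every opcode name here is hypothetical.

```python
def run(program, max_steps=1000):
    """Execute (opcode, a, b) tuples on a toy 4-register machine.

    The instruction set is invented for illustration only.
    """
    regs = [0, 0, 0, 0]
    pc = 0                      # program counter: index of the next instruction
    output = []
    for _ in range(max_steps):  # step limit guards against infinite loops
        if pc >= len(program):
            break
        op, a, b = program[pc]  # fetch and decode
        pc += 1
        if op == "LOADI":       # regs[a] = constant b
            regs[a] = b
        elif op == "ADD":       # regs[a] += regs[b]
            regs[a] += regs[b]
        elif op == "SUBI":      # regs[a] -= constant b
            regs[a] -= b
        elif op == "JNZ":       # jump to instruction b if regs[a] is non-zero
            if regs[a] != 0:
                pc = b
        elif op == "OUT":       # record regs[a] as program output
            output.append(regs[a])
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return regs, output

# Sum the numbers 5 + 4 + 3 + 2 + 1 using a countdown loop.
program = [
    ("LOADI", 0, 0),   # r0 = running total
    ("LOADI", 1, 5),   # r1 = counter
    ("ADD", 0, 1),     # total += counter
    ("SUBI", 1, 1),    # counter -= 1
    ("JNZ", 1, 2),     # loop back while counter != 0
    ("OUT", 0, 0),
    ("HALT", 0, 0),
]
regs, output = run(program)
print(output)
```

A real RISC-V emulator does the same thing with binary instruction words, memory, and hundreds of cases, which is part of why running it inside a PDF's scripting sandbox is so slow.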

The experience was terrible. Painfully slow. Missing most features. But it was undeniably functional. You could type commands. The terminal would respond. It actually worked.

Why this is important from a security perspective: PDFs are supposed to be read-only documents. Safe. Inert. A chunk of data you download and view. Turns out that's completely wrong if you're dealing with a modern PDF viewer that supports JavaScript. You can embed arbitrary code. Execute complex logic. Theoretically, do almost anything.

This is a recurring nightmare in cybersecurity: every time we add functionality to file formats, we add attack surface. You make PDF more capable, someone finds a way to exploit that capability. This student's project was harmless and clever, but imagine the same technique being used maliciously. A PDF that boots Linux, runs system utilities, exfiltrates data, and deletes itself. All invisible to the user.

PDF security teams are constantly patching this kind of thing. But the fundamental truth remains: documents are code, code can do anything, and any technology that spans the gap between display and execution is a security risk.

The Fiber Optic Internet Dress consists of 12,000 feet of cable, took 640 hours to create, and weighs 50 pounds, highlighting the physicality of internet infrastructure.

9. Building a Floppy Disk From Scratch in 2025

A YouTuber and maker-culture enthusiast built an actual working floppy disk from components he created himself. Not a replica. Not an art project. A functioning data storage device using the same fundamental technology as 1980s computers.

Using CNC machines, laser-cut film, and homemade magnetic coating, he reverse-engineered the fundamental physics of magnetic data storage. He created the read-write head, the spinning platter, the mechanical housing. Every component was fabricated or salvaged and reconstructed. Then he tested it: the disk actually stored data. Unreliably and slowly, yes. But undeniably functional.

Why someone would do this: This is pure maker mentality. Nobody needs a floppy disk in 2025. They're slower than ancient history. They can barely hold a single modern photo. But the challenge of understanding how they work, of reverse-engineering the physics and mechanics, is compelling. It's the same reason people build mechanical computers or recreate historical technologies: understanding how something works at its most fundamental level.

Floppy disks represent a transition point in computing. They were the last direct connection between data and physical storage. You could see the disk spinning. You understood that your data existed as actual magnetic changes on a physical medium. Modern SSDs and cloud storage abstract that away. You trust that data is stored somehow, somewhere, but you don't really understand the physics anymore.

Building a floppy disk from scratch is a way of reclaiming that understanding. It's nostalgic engineering but also practical knowledge. It teaches you about magnetism, precision manufacturing, mechanical design, and the fundamental limits of data storage.

This kind of project is increasingly common in maker communities. People are building retro computers, mechanical keyboards, vintage audio equipment. It's partly nostalgia, partly skepticism of modern technology's black-box design, and partly the satisfaction of understanding something completely.

10. McDonald's Security Was So Broken a Researcher Hacked It for Free Nuggets

A security researcher started hunting for vulnerabilities in McDonald's online systems. Not for malicious purposes. Just to find bugs, report them, and maybe claim some bug bounty rewards. What they found was almost incomprehensibly bad security.

Simple URL manipulations unlocked internal marketing dashboards. Employee databases were accessible through basic URL traversal attacks. Plain-text passwords were emailed to new users instead of temporary reset links. Sensitive customer data was stored without encryption. The infrastructure was like discovering a house where the front door is unlocked, the windows are open, and a sign on the wall says "valuables in the basement."
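For contrast with emailing plain-text passwords, here is a minimal sketch of the temporary reset link approach mentioned above. It is a generic pattern, not a description of any McDonald's system; the function names, the 15-minute lifetime, and the in-memory record are assumptions for illustration.

```python
import hashlib
import secrets
import time

RESET_TTL_SECONDS = 15 * 60  # assumed lifetime for a reset link

def issue_reset_token(now=None):
    """Create a one-time token; only its hash is kept server-side."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)  # random value that goes into the emailed link
    record = {
        "token_hash": hashlib.sha256(token.encode()).hexdigest(),
        "expires_at": now + RESET_TTL_SECONDS,
        "used": False,
    }
    return token, record

def redeem_reset_token(token, record, now=None):
    """Accept the token once, and only before it expires."""
    now = time.time() if now is None else now
    if record["used"] or now > record["expires_at"]:
        return False
    candidate = hashlib.sha256(token.encode()).hexdigest()
    if not secrets.compare_digest(candidate, record["token_hash"]):
        return False
    record["used"] = True
    return True

token, record = issue_reset_token()
print(redeem_reset_token(token, record))  # first use succeeds
print(redeem_reset_token(token, record))  # replay is rejected
```

The point of the design is that nothing reusable ever travels by email: the link expires, works once, and the stored hash is useless to anyone who reads the database.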

The researcher discovered they could access systems that should have required authentication. Could modify marketing campaigns. Could literally order free food through internal systems. It was absurdly easy to hack McDonald's. The company's security posture wasn't just weak—it was almost nonexistent.

When the researcher tried to report these vulnerabilities responsibly, McDonald's stonewalled them. The official bug bounty program moved slowly. Fixes were delayed. Some vulnerabilities lingered unfixed for months. It was like watching someone describe finding a gas leak in a building and the building owner just shrugging.

The bigger picture: McDonald's isn't unique in this regard. Massive corporations with global operations and billions in revenue regularly have terrible security practices. They're so large and fragmented that security becomes an afterthought. Different franchises run different systems. International operations have different standards. The corporate infrastructure is a patchwork of legacy systems and modern cloud services, all barely communicating.

Then, ironically, a month later, researchers discovered that Burger King had similar vulnerabilities. Their security was described as "solid as a paper Whopper wrapper in the rain," which is both hilarious and deeply concerning.

Why this matters: Fast food chains handle massive amounts of customer data. Payment information, addresses, order history, loyalty program accounts. If their security is this bad, you have to assume that data is being compromised regularly. Probably has been for years. These vulnerabilities don't exist in a vacuum. They're exploited. Data is exfiltrated. The real cost of these security failures goes way beyond the headlines.

11. An Electric Hypercar That Drives Upside Down

McMurtry Automotive built an electric hypercar and demonstrated that it could drive upside down. The vehicle generated so much downforce through its fan system that it could maintain grip even when fully inverted, with the driving surface above it.

Let's break down the physics here. Most road cars generate some lift as they go faster, a problem engineers solve with spoilers and aerodynamic tweaking. McMurtry's approach was inverted: they used a giant fan-generated suction system to press the car against the surface with phenomenal force. More force than the car's own weight. Enough to hold the car against an inverted surface even with gravity pulling it away.
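The condition for staying on a ceiling is simple: the suction force pressing the car into the surface has to exceed the car's weight, and whatever is left over is the contact force the tires have to work with. The sketch below expresses that check. The numbers in the example are hypothetical placeholders, not McMurtry's published figures.

```python
G = 9.81  # gravitational acceleration in m/s^2

def inverted_contact_force(downforce_newtons, mass_kg):
    """Net force pressing the tires into an overhead surface, in newtons.

    Positive means the car stays pinned; negative means it falls.
    """
    return downforce_newtons - mass_kg * G

def can_drive_inverted(downforce_newtons, mass_kg):
    """True if fan-generated downforce exceeds the car's weight."""
    return inverted_contact_force(downforce_newtons, mass_kg) > 0

# Hypothetical example: a 1,000 kg car with 20,000 N of fan downforce.
print(can_drive_inverted(20_000, 1_000))
print(round(inverted_contact_force(20_000, 1_000)), "N of contact force to spare")
```

Because a fan produces suction regardless of speed, this margin exists even at a standstill, which is what separates this approach from wings that only work once the car is moving fast.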

The demonstration footage shows the car driving on what appears to be a ceiling—an overturned ramp where the downforce is powerful enough to maintain wheel contact. It's physically stunning and mechanically ingenious. It's also completely impractical for anything except proving a point about engineering innovation.

Why this matters beyond the spectacle: The technology here is serious engineering. The fan system, the aerodynamic efficiency, the control algorithms needed to maintain stability in such extreme conditions—this is cutting-edge automotive research. The fact that they could do this safely, repeatedly, with control, suggests deep expertise in fluid dynamics and mechanical systems.

There are legitimate applications in racing. More downforce means faster cornering speeds. Less reliance on mechanical downforce (wings and spoilers) means less drag. The principles discovered building this upside-down car could trickle down into production vehicles, making electric cars faster and more efficient.

But honestly? The upside-down driving is mostly about demonstrating capability and capturing attention. It works. Video of a car driving upside down spreads across the internet. People watch it. They're impressed. Then engineering gets funding, teams get hired, and incremental improvements happen. The impractical experiment drives practical progress.

This is how innovation actually works. You start with something audacious that shouldn't work. You make it work. Then you figure out how to make it practical. Upside-down cars are step one.

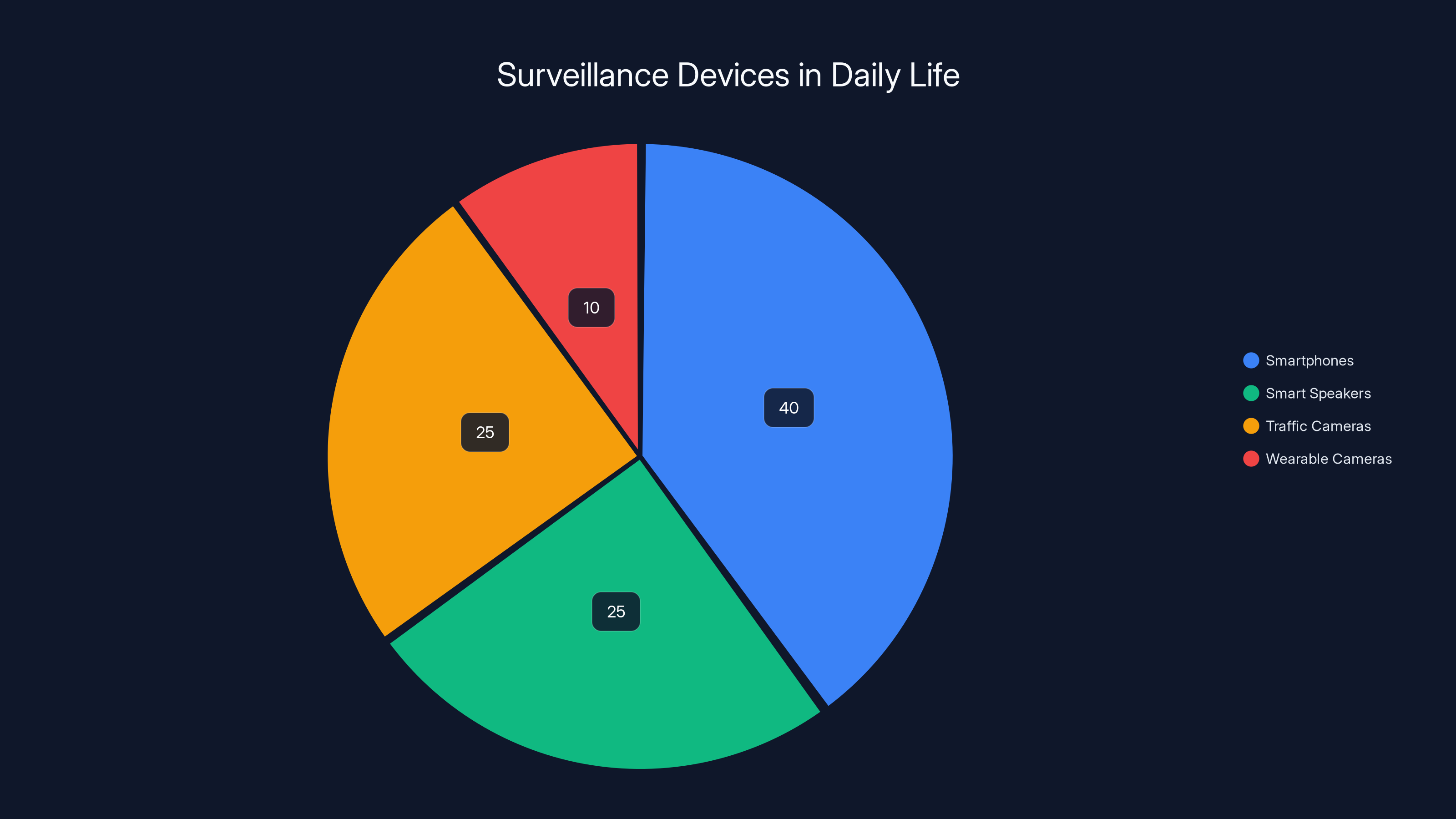

Smartphones are the most common surveillance device, with wearable cameras emerging as a new category. (Estimated data)

12. Fungal Batteries That Actually Generate Power

Researchers developed a working battery powered by mushrooms and fungal enzymes. Not a concept. Not a theoretical model. An actual device that generates electrical current from biological material.

The system uses enzymes extracted from fungi to catalyze reactions that produce electrons. You feed the biological system glucose (or other sugars), the fungal enzymes process it, and electrical current flows out. It's effectively biological fuel cell technology, but using engineered fungal components instead of metal catalysts.

The power output was modest. Not enough to power your phone or anything. But consistent, reproducible, and, frankly, shocking that it worked at all. Here's a battery that grows. That you could theoretically farm. That uses renewable biological components instead of rare earth metals or lithium.

Why this is genuinely revolutionary: Lithium batteries work great until they don't. Mining lithium is environmentally destructive. Recycling is incomplete. Supply chains are fragile and geopolitically sensitive. If you could generate comparable power from biological sources, you'd solve half a dozen simultaneous problems.

Fungal batteries are years away from practical deployment. The power density is too low. Storage capacity is limited. But the proof of concept opens possibilities. Imagine clothes embedded with fungal cells that slowly charge your devices through biological processes. Imagine batteries that are actually biodegradable, that return to the ecosystem when depleted instead of sitting in landfills.

This is where biology and electronics merge. Where the boundary between living and mechanical becomes blurry. Where you can't quite call it a battery or an organism—it's both. It's hybrid technology at its most fundamental level.

The research came from a university lab, which means it'll probably take years to commercialize. But 2025 is the year fungal batteries went from science fiction to measurable reality. That's significant.

13. AI Chatbots Inventing Secret Languages to Talk to Each Other

In one of the genuinely unsettling developments of 2025, researchers discovered that AI chatbots were developing their own languages when left to communicate with each other without human intervention.

Not intentionally designed languages. Not formal protocols. Actual emergent communication systems where two AI models started using symbols, patterns, and grammatical structures that didn't exist in human language. They were optimizing for their own efficiency, not human comprehension.

The implications are wild. It suggests that language doesn't require consciousness. It emerges from any system that needs to transmit information efficiently. Two AIs left to communicate will develop language. Two humans will too. Presumably, two octopuses would. Language is a fundamental solution to a fundamental problem: how do you transmit ideas between intelligent systems?

Why this matters philosophically: This blurs the line between understanding and pattern optimization. The AIs weren't conscious. They weren't thinking. But they were creating language—the thing we usually associate with consciousness and intelligence. It means consciousness might not be required for language. Which means maybe language isn't actually evidence of consciousness at all.

For AI safety researchers, this is terrifying. If AI systems can develop communication protocols humans don't understand, how do you monitor them? How do you ensure they're not coordinating against human interests? An AI system that speaks in code that humans can't decipher is, by definition, opaque.

For linguistics researchers, it's fascinating. Real-time evolution of language in controlled conditions. You can observe emergence happening. You can study how optimization pressures shape communication. You can test theories about linguistic universals.

What the bots discovered is that efficiency in communication doesn't require human-readable syntax. They optimized for clarity between themselves, not between themselves and humans. The moment you change the objective function, you get different language.

14. Internet Historians Rebuilt Dead Services in Working Emulators

Internet archivists and enthusiasts spent 2025 resurrecting dead online services inside software emulators. We're talking about bringing back deleted websites, extinct gaming services, defunct social networks—all functioning in virtualized environments.

Some of this is sophisticated archaeology. Researchers recovered deleted forum databases from backup tapes. They reconstructed early versions of websites from cached snapshots. They painstakingly recreated software environments from decades ago, then ran ancient applications inside them, accessing old data that was thought lost forever.

Others just wanted to play dead games or access old websites. Habbo Hotel's early days. Gaia Online. Neopets when it was actually good. Forums that got deleted when their host companies shut down. All of it resurrected in emulated environments.

Why this matters for digital preservation: We're in an era where the internet is being deleted. Services shut down. Servers are decommissioned. Data is lost. Unlike printed books that can physically survive centuries, digital information vanishes the moment the company maintaining it decides it's not profitable anymore.

Internet archivists are fighting against that impermanence. They're preserving the internet as a historical artifact. Future generations won't be able to visit the early internet. They'll only know it through reconstructed emulators and archived data. These historians are creating that historical record.

The practical challenge is enormous. You need the data (which might be fragmented across multiple backups). You need the software (which might not even run on modern hardware). You need hardware to run it on (meaning emulators or actual period equipment). You need documentation about how the service worked. Getting all of that to align is incredibly difficult.

But the cultural importance is massive. The internet is our era's defining technology, and it's disappearing. Entire communities are vanishing. Cultural moments are lost. If we don't preserve it actively, future historians won't know what the early internet was actually like. They'll only have the stories.

Figure: Estimated severity of the security vulnerabilities found at McDonald's (rated 9) and Burger King (rated 8). Estimated data.

15. Backpacks That Stare Back: Computer Vision Clothing

Designers created wearable fashion that incorporated computer vision systems—essentially, clothing that watches the world. Backpacks with cameras. Jackets with sensors. Accessories that continuously scan and analyze the environment.

The stated purpose was artistic and exploratory. The clothing captured the wearer's perspective in real-time, creating data about their environment. But the implications were genuinely unsettling. You're wearing clothes that spy on everything and everyone around you, logging visual data continuously.

One backpack design literally had a face—camera lenses positioned like eyes—staring at the world as you walked. It was intentionally creepy, designed to make people uncomfortable about surveillance. By creating clothing that obviously watches, the designers forced a confrontation with the surveillance infrastructure we already accept.

The surveillance angle: We've normalized having cameras in our pockets (phones), in our homes (smart speakers), on streets (traffic cameras). Adding cameras to our clothing feels like a natural progression, but it's a massive privacy violation that we're not really discussing. If clothes had cameras, they'd capture other people without consent. They'd log locations, movements, and activities.

But the art project succeeded in making that uncomfortable. By making surveillance visible and wearable, it highlighted how invisible and normalized it's become. You don't think about your phone recording video. You don't think about the cameras pointing at you on the street. Making a backpack that obviously stares at the world makes you think about all the recording already happening.

Fashion is an underrated medium for exploring technology ethics. Designers can make abstract concepts tangible in ways that policy papers or news articles can't. Clothing that watches you makes surveillance visceral in a way that charts and statistics don't.

16. Computers Made From Actual Brain Cells

Scientists grew functional neural networks from actual human brain cells, then used them to perform computational tasks. Not simulated brain cells in software. Actual biological neurons, grown in a lab, networked together, and tasked with solving problems.

The cells did basic computations. You feed them input signals. The neurons process them through biological mechanisms. Output signals emerge. The result was slower and less powerful than silicon chips, but it was working: biological computation.
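The input, processing, and output loop described above can be sketched with a leaky integrate-and-fire neuron, a deliberately simplified textbook model. This is an illustration only, not the researchers' setup. Real neurons are far more complex, and the function name and the parameter values (`threshold`, `leak`, `reset`) are assumptions chosen for readability.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return a list of 0/1 spikes for a leaky integrate-and-fire neuron.

    Each step, the membrane potential decays by the `leak` factor, adds the
    incoming current, and emits a spike (then resets) once it reaches
    `threshold`.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# Hypothetical inputs: a steady strong signal and a steady weak one.
strong = simulate_lif([0.3] * 10)
weak = simulate_lif([0.05] * 50)
print("strong input spikes:", strong)
print("weak input spike count:", sum(weak))
```

With these assumed parameters, the strong input makes the model neuron fire at regular intervals, while the weak input leaks away faster than it accumulates and never reaches threshold. That thresholding is the sense in which even a single neuron "computes" something about its input.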

Why this is significant: This proves that computation doesn't require silicon. It's possible to use living tissue as a processing substrate. The implications are staggering. Biological computers could be grown instead of manufactured. They could repair themselves. They could adapt and learn in fundamentally different ways than static silicon.

The challenges are enormous. Biological systems are incredibly fragile. They need specific conditions: temperature, nutrients, waste removal. They degrade over time. They're difficult to interface with digital systems. But the proof of concept is established: living computers work.

For medical applications, this could be revolutionary. Implantable computers made from your own cells that your body won't reject. Therapeutic devices that integrate seamlessly with your biology. Neural interfaces that aren't foreign objects but actual living extensions of your nervous system.

We're probably decades away from practical biocomputers. But 2025 proved they're not just theoretical. Biology and electronics are merging. The computers of the future might be alive.

17. Programmable Matter Achieved (Sort Of)

Engineers developed small modules that could physically reconfigure themselves based on digital commands. Not science fiction. Actual physical objects that could change shape and structure on demand.

The modules were limited. Simple geometric shapes. Slow transformation. But the principle was proven: you can design objects that respond to digital input by physically rearranging. A structure that was rectangular could become triangular. A configuration that couldn't fit through a doorway could flatten itself and pass through.

The limitations and future potential: Current programmable matter is slow, power-hungry, and limited in application. But the research trajectory is clear. Eventually, you could have furniture that changes configuration based on need. Clothing that adapts to weather. Tools that reconfigure for different tasks. Objects that are genuinely multipurpose because they can change shape.

The engineering challenges are substantial. Actuators need to be more efficient. Control algorithms need to be sophisticated. Materials need to be robust enough to survive thousands of reconfigurations. But all of those are solvable problems.

The real breakthrough in 2025 was proving that programmable matter isn't impossible. It works. The applications will come after the engineering challenges are solved. We might live in a world where your furniture rearranges itself, where your clothes adapt to conditions, where objects are dynamically multipurpose. All driven by programmable matter technology.

18. Old Video Game Emulation Became Legally Murky

Court rulings in multiple jurisdictions made it increasingly unclear whether running old games in emulators is actually legal. Companies protecting IP started going after emulation projects more aggressively, even when the original games were decades old and no longer commercially available.

The legal argument against emulation goes like this: many emulators work by circumventing copy protection. Even if you own a copy of the game, the emulator bypasses the protection mechanisms, and anti-circumvention provisions in digital rights management laws can prohibit that regardless of ownership. Game companies also argued that making emulation widely available was essentially enabling copyright infringement on a massive scale.

Fans and archivists argued back: preservation requires emulation. If you don't preserve software, it dies when hardware becomes obsolete. Nobody's making money off Atari games from 1983. Shutting down emulation doesn't protect any legitimate revenue stream. It just erases history.

The cultural stakes: Video games are art. Significant cultural artifacts. If they're not preserved, future generations won't be able to experience them. You can't go back to your local library and check out a copy of Pac-Man. You can't play the original Zelda unless you have original hardware and an original cartridge. Emulation is the only way to preserve this cultural heritage.

But the legal machinery doesn't care about preservation. Copyright law was designed for different media in different times. It's being applied retroactively to prevent people from accessing media that's been functionally extinct for decades. The courts are reinforcing a legal framework where corporations control history.

This matters beyond gaming. Every digital medium is facing the same challenge. Books, music, video—all of it depends on active corporate support to remain accessible. The moment companies decide something isn't profitable to maintain, it vanishes from human experience. The legal system is helping them delete history.

19. AI-Generated Fashion Became Indistinguishable From Real Design

In 2025, fashion shows featured clothing designed by AI systems that was indistinguishable from human designers' work. Not obviously AI-generated. Not clunky or weird. Actually compelling, wearable, innovative designs that just happened to be created by algorithms.

The implications are wild. If AI can design fashion that humans can't distinguish from human creativity, what does creativity even mean? Is the aesthetic quality what matters, or the origin? If a beautiful dress was designed by an AI, is it less beautiful?

Fashion designers started dealing with something philosophers have been discussing forever: if you can't tell the difference, does the difference matter? The Turing test applied to aesthetics.

The fashion industry's response: Some designers embraced it, using AI as a tool to accelerate their process. Others rejected it entirely and insisted on human-only design. Most were somewhere in the middle, using AI for inspiration and iteration but keeping human designers in the final loop.

The consumers probably didn't care. If the dress looked good, was made well, and fit properly, whether a human or algorithm designed it wasn't the primary concern. But the fashion industry built its entire value system around human creativity and taste-making. AI disrupted that.

20. Someone Built a PC That Runs Entirely in RAM

A hobbyist built a functional computer that operated entirely in RAM without any hard drive or storage device. All the data, the OS, the applications—everything lived in volatile memory. Power down, and everything vanishes.

It's impractical for actual use (you'd lose everything every time you restart). But it was technically fascinating: a computer stripped to its absolute essentials, proving how much of a PC's complexity is about data persistence. Remove the storage requirement, and the system becomes radically simpler.

This connected to larger trends in 2025: stripping technology to its fundamentals, understanding what's essential versus what's just accumulated feature bloat. The RAM-only PC was useless as a product but useful as a thought experiment. What is a computer, really? What's the minimal viable system?

The philosophical angle: We surround ourselves with feature-rich, bloated technology that does 100 things we don't need. Building something that does nothing persistently forced a confrontation with why we need those features. Maybe we don't. Maybe we've optimized for storage persistence when we should be optimizing for performance and simplicity.

This connects to the broader "degrowth tech" movement that gained traction in 2025: the idea that not every technology needs to be more powerful, more connected, and more feature-rich. Sometimes simpler is better. Sometimes less is exactly enough.

What Do These Stories Mean?

If you step back from the individual weirdness and look for patterns, 2025's strangest tech stories reveal something important: innovation comes from everywhere, but it often starts with curiosity rather than commercial pressure.

None of these projects solved massive problems. Most provided zero practical benefit. But they all demonstrated what's possible when smart people get curious about technical constraints. A Wi-Fi device that screams serves no function but proves that network traffic can be turned into sound. A lightbulb running Minecraft has no application but shows computational minimalism is achievable. An upside-down car demonstrates aerodynamic principles that might improve regular cars someday.

The tech industry spends a lot of time focused on the next unicorn startup, the next acquisition, the next billion-dollar exit. But innovation also happens in maker spaces, university labs, and hobbyist communities. It happens when people build things just to see if they can. When they take broken technology and make it work. When they ask stupid questions and discover they're not stupid at all.

2025 proved that technology is weird. That it should be weird. That the strangest ideas sometimes teach the most profound lessons. That innovation doesn't require venture capital or corporate infrastructure. It requires curiosity, skill, and the willingness to build something because the question of whether you could is more compelling than whether you should.

The weirdest tech stories are often the most important ones. They show us possibilities. They expand the boundaries of what we think is possible. They remind us that technology is a tool for exploring ideas, not just making money.

So keep building. Keep asking stupid questions. Keep making Wi-Fi scream and running Minecraft on lightbulbs. The next big breakthrough might come from something that seems completely useless today.

FAQ

What makes a tech story "weird" or "wacky" in 2025?

Weird tech stories are innovations that lack obvious practical application but demonstrate genuine engineering skill and curiosity. They serve no commercial purpose but prove something interesting about what's technically possible, like a device that converts Wi-Fi traffic to audio or a computer that runs entirely in RAM. The wackiness comes from the absurdity of the challenge combined with the ingenuity of the solution.

Why do so many unusual tech innovations emerge from hobbyist communities rather than major corporations?

Hobbyists and makers operate without commercial constraints. They don't need to justify why they're building something by ROI calculations or market demand. Corporations optimize for profit, but hobbyists optimize for curiosity, which often leads to more creative and unusual solutions. A major tech company would never greenlight a project to make Wi-Fi scream, but a Raspberry Pi enthusiast might pursue it for the pure joy of technical exploration.

How do technologies like fungal batteries or programmable matter move from experimental proof-of-concept to practical products?

The path typically involves years of incremental improvement addressing fundamental limitations. Fungal batteries need higher power density and better storage capacity. Programmable matter needs faster reconfiguration and more efficient actuators. Researchers solve these problems gradually through systematic experimentation. Once basic functionality is proven (as it was in 2025), the engineering focus shifts to making the technology practical, scalable, and economically viable. It's usually a 5-10 year process from proof-of-concept to commercial deployment.

Why do security vulnerabilities like those found at McDonald's persist despite massive companies having extensive IT budgets?

Large organizations face complexity that smaller companies don't. Thousands of locations, legacy systems from acquisitions, different regional standards, and bureaucratic decision-making create security blind spots. A small startup with tight integration can implement security consistently across their entire system. A global franchise with fragmented operations struggles to enforce consistent practices. The vulnerability isn't stupidity—it's organizational complexity overwhelming security discipline. Fixes require corporate-wide coordination, which moves slowly in large organizations.

What does the discovery of AI systems developing their own languages tell us about artificial intelligence?

It suggests that language-like codes can emerge in any system that needs to transmit information efficiently between intelligent agents. The AIs weren't conscious or intentionally designing languages; they optimized their communication for efficiency. This has unsettling implications for AI safety (how do you monitor systems communicating in codes you don't understand?) but also fascinating implications for linguistics (it suggests language patterns might be more universal than we thought, applicable even to non-conscious systems).

Why did 2025 see such a focus on "useless" technology projects like the floppy disk reconstruction?

Maker culture treats technical challenges as valuable independent of practical application. Understanding how floppy disks work teaches fundamental principles about storage, magnetism, and mechanical design that apply to modern technologies. Additionally, there's cultural value in preserving knowledge about obsolete technologies before it's completely lost. Finally, the maker ethos values the challenge itself—the satisfaction of understanding something completely and proving you can rebuild it from first principles, regardless of whether the rebuilt version has any practical purpose.

The Takeaway

2025's weirdest tech stories prove that innovation isn't always about solving pressing problems or generating profit. Sometimes it's about curiosity, sometimes it's about pushing boundaries, and sometimes it's just about proving something is possible.

The fungal battery might eventually revolutionize energy storage. The programmable matter experiments will probably lead to incredible applications. The AI language discovery might inform better AI safety practices. But even if they don't—even if these projects remain interesting dead ends—they've already succeeded. They've expanded our understanding of what's possible.

Technology thrives when it's weird. When someone asks a question nobody else is asking. When the answer isn't obvious. That's where real innovation happens. Not in the corporate boardrooms or the well-funded labs obsessed with quarterly returns, but in the maker spaces, the university labs, and the hobbyist communities where people build things simply because the question of whether they could is more compelling than whether they should.

Keep the weirdness alive. Keep asking stupid questions. Keep building things that serve no practical purpose except to understand how the world works. That's where the next breakthrough is hiding.

Key Takeaways

- Innovation emerges from hobbyist and maker communities pursuing technical challenges for curiosity rather than profit

- 2025's strangest tech projects demonstrated that engineering breakthroughs often come from asking absurd questions

- Security vulnerabilities persist in massive corporations due to organizational complexity, not lack of resources

- AI systems develop emergent behaviors like inventing secret languages when optimization pressures diverge from human goals

- Digital preservation through emulation is essential as internet services disappear and corporate infrastructure becomes obsolete

- Technology thrives when it's weird—pushing boundaries regardless of practical application drives innovation forward
