The Great Code Volume Myth

Nvidia just dropped a bombshell claim. Thirty thousand of its engineers are now committing three times more code than they used to, thanks to AI-assisted development tools like Cursor. On the surface, this sounds incredible. Imagine tripling your team's output overnight. Sounds like a competitive advantage that would make any CTO weep with joy.

But here's where it gets messy.

Industry observers, software architects, and even some engineers within the company are raising eyebrows. Not because Nvidia is lying, but because they're measuring the wrong thing. Lines of code committed is a metric so divorced from actual software quality that treating it as a success indicator is like measuring a chef's skill by how many dishes they can plate per hour instead of how they taste.

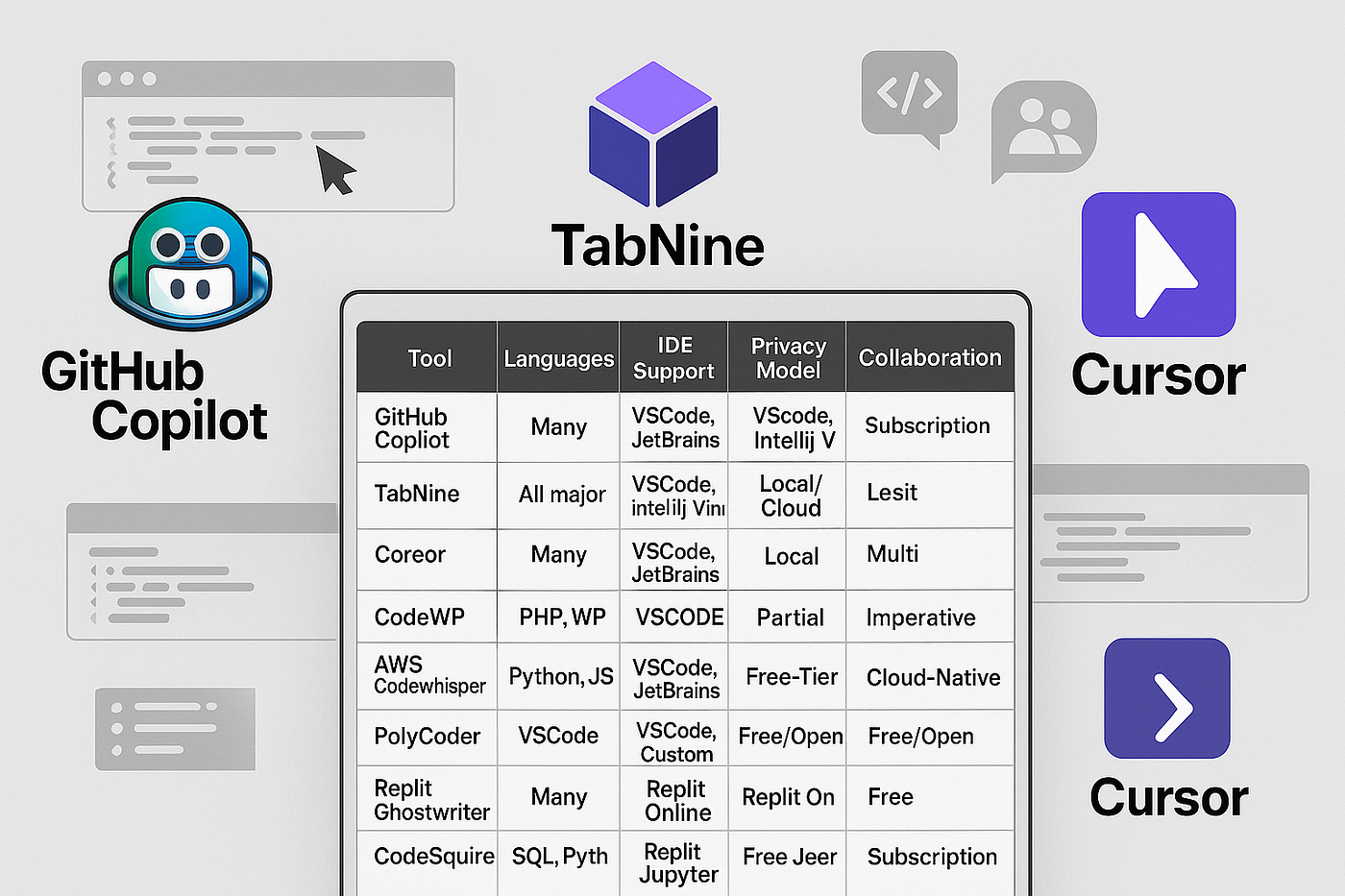

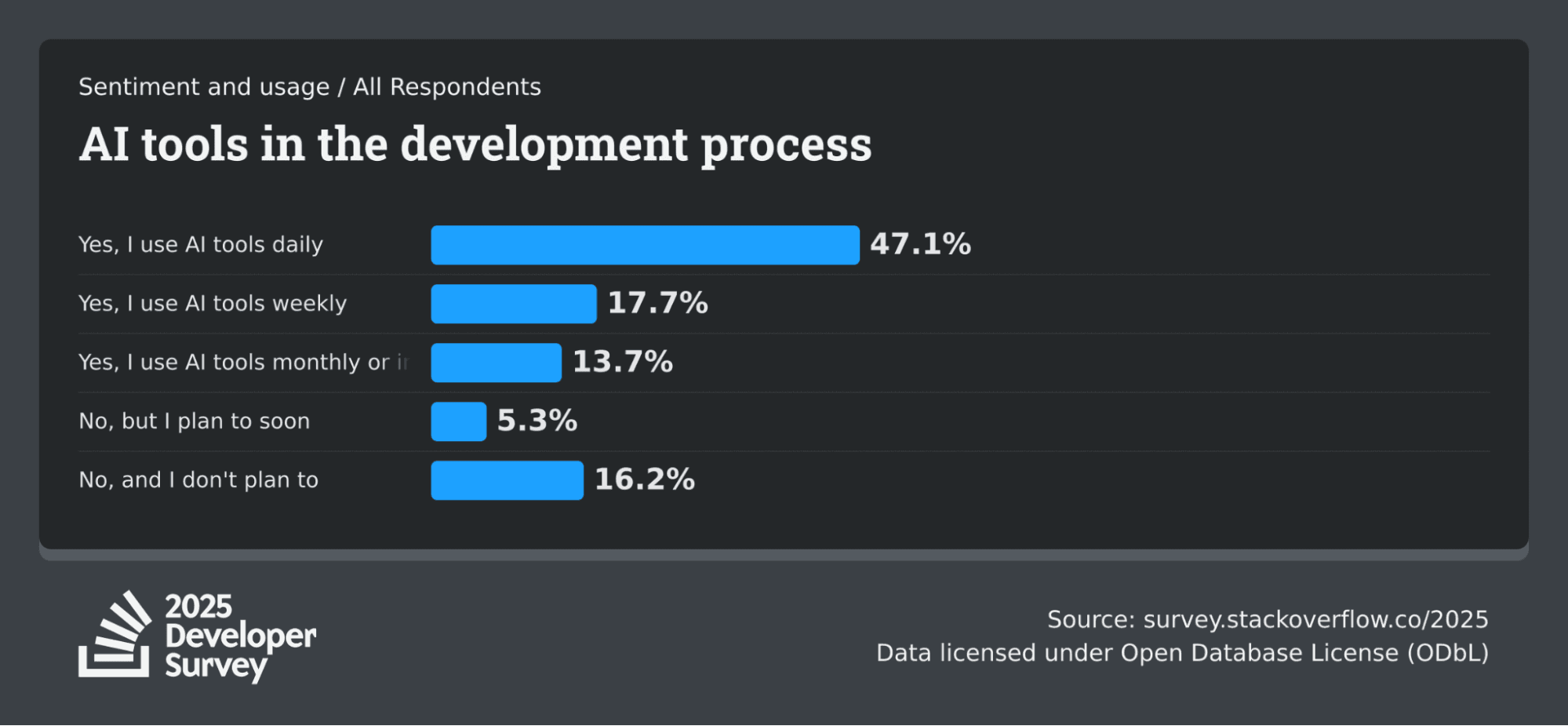

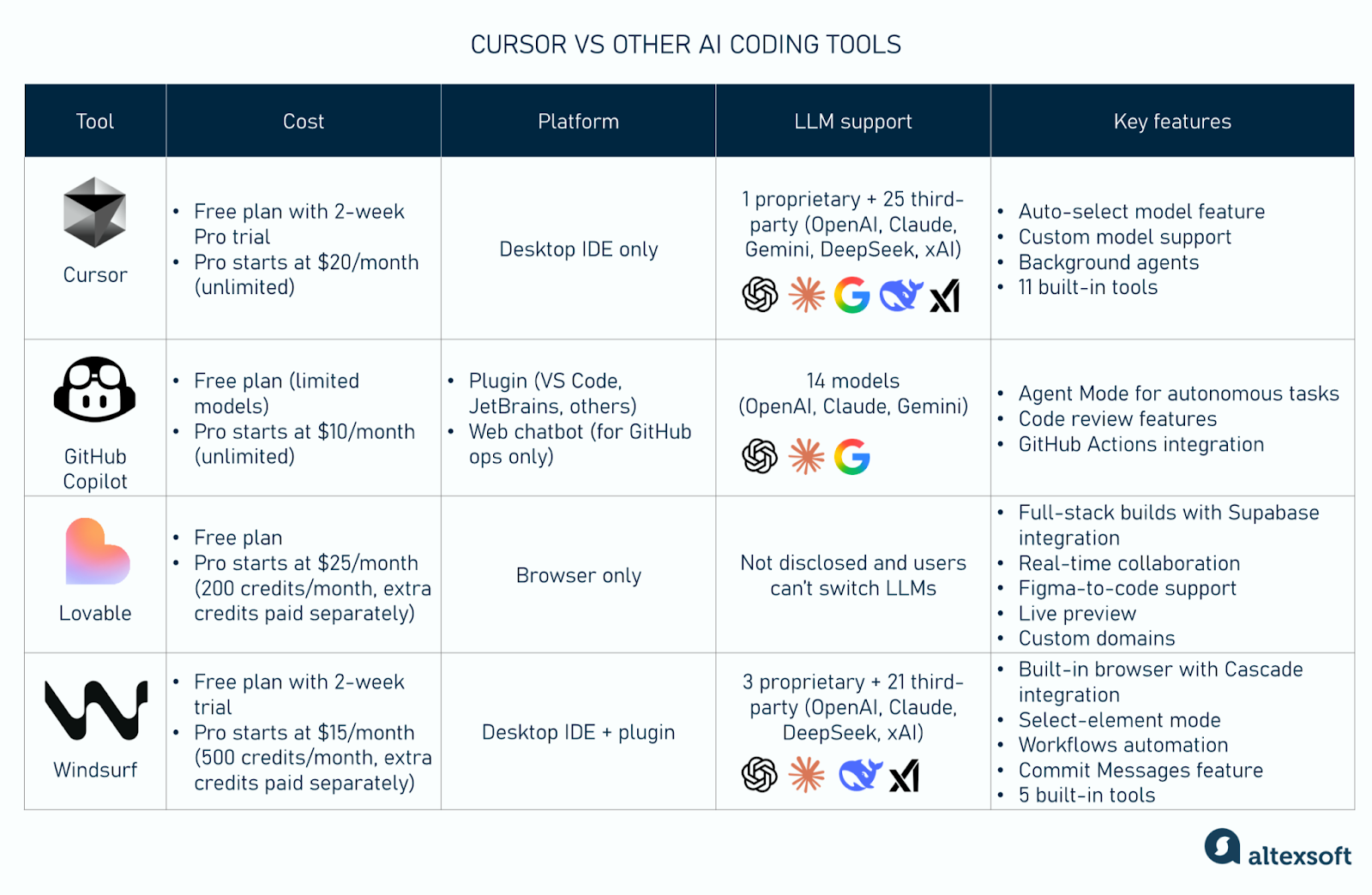

Let me be clear: AI-assisted coding is real. It works. Tools like GitHub Copilot, Cursor, and Claude are genuinely changing how developers work. But the narrative around what this means for software engineering is getting warped. We're conflating velocity with value, and that confusion is spreading through the industry like wildfire.

This matters because decisions being made right now about AI adoption in engineering teams are being influenced by metrics that don't actually measure what we care about. If you're a CTO evaluating whether to roll out AI coding assistants, you need to understand what you're actually looking at.

Understanding the Metrics That Matter

When Nvidia says engineers are committing three times more code, what does that actually mean? It means the amount of text being written and merged into version control has tripled. That's measurable. That's concrete. And that's almost completely useless for evaluating engineering effectiveness.

Think about it this way: if I asked you to write a function that checks if a number is even, you could do it in one line in Python.

```python
is_even = lambda x: x % 2 == 0
```

Or you could write it in fifteen lines with detailed comments, error handling, and logging.

```python
import logging

logger = logging.getLogger(__name__)


def is_even(number):
    """Check if a number is even.

    Args:
        number: An integer to check

    Returns:
        bool: True if even, False if odd
    """
    if not isinstance(number, int):
        raise TypeError("Input must be an integer")

    logger.info(f"Checking if {number} is even")
    result = number % 2 == 0
    logger.info(f"Result: {result}")
    return result
```

The second one commits more lines of code. It's not necessarily better. Sometimes it is. Sometimes it's bloated. This is the fundamental problem with volume metrics.
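To make that concrete, here is a minimal sketch that scores two behaviorally identical snippets by line count. The snippets and the helper names (`count_loc`, `load_is_even`) are made up for illustration and have nothing to do with Nvidia's tooling.

```python
# Two snippets with identical behavior but very different line counts.
# Both snippets and both helpers are illustrative only.

SHORT_SRC = "is_even = lambda x: x % 2 == 0\n"

LONG_SRC = '''
def is_even(number):
    """Check if a number is even."""
    if not isinstance(number, int):
        raise TypeError("Input must be an integer")
    remainder = number % 2
    if remainder == 0:
        result = True
    else:
        result = False
    return result
'''


def count_loc(source):
    """Count non-blank lines, a crude stand-in for 'lines of code committed'."""
    return sum(1 for line in source.splitlines() if line.strip())


def load_is_even(source):
    """Execute a snippet in a fresh namespace and return its is_even callable."""
    namespace = {}
    exec(source, namespace)
    return namespace["is_even"]


short_fn = load_is_even(SHORT_SRC)
long_fn = load_is_even(LONG_SRC)

# Same answers on every input tested, yet the volume metric differs widely.
same_behavior = all(short_fn(n) == long_fn(n) for n in range(-10, 11))

print(f"short: {count_loc(SHORT_SRC)} lines, "
      f"long: {count_loc(LONG_SRC)} lines, "
      f"same behavior: {same_behavior}")
```

A volume metric would score the longer snippet far higher, even though a caller can't tell the two apart on these inputs.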

When AI tools generate code, they tend to be verbose. They add comments where humans might use context. They structure things explicitly where experienced developers might rely on conventions. Some of this is good. Some of it is technical debt being written in real time.

Nvidia maintains that defect rates have stayed flat despite the surge in output. That's actually the important claim here, not the volume increase. But we don't have independent verification of that. We have Nvidia's word. And Nvidia has a massive financial incentive to promote AI-driven development because their entire infrastructure business depends on GPU consumption growing.

This is the pattern we're seeing across the industry. Faster writing doesn't mean faster shipping. It sometimes means faster broken shipping.

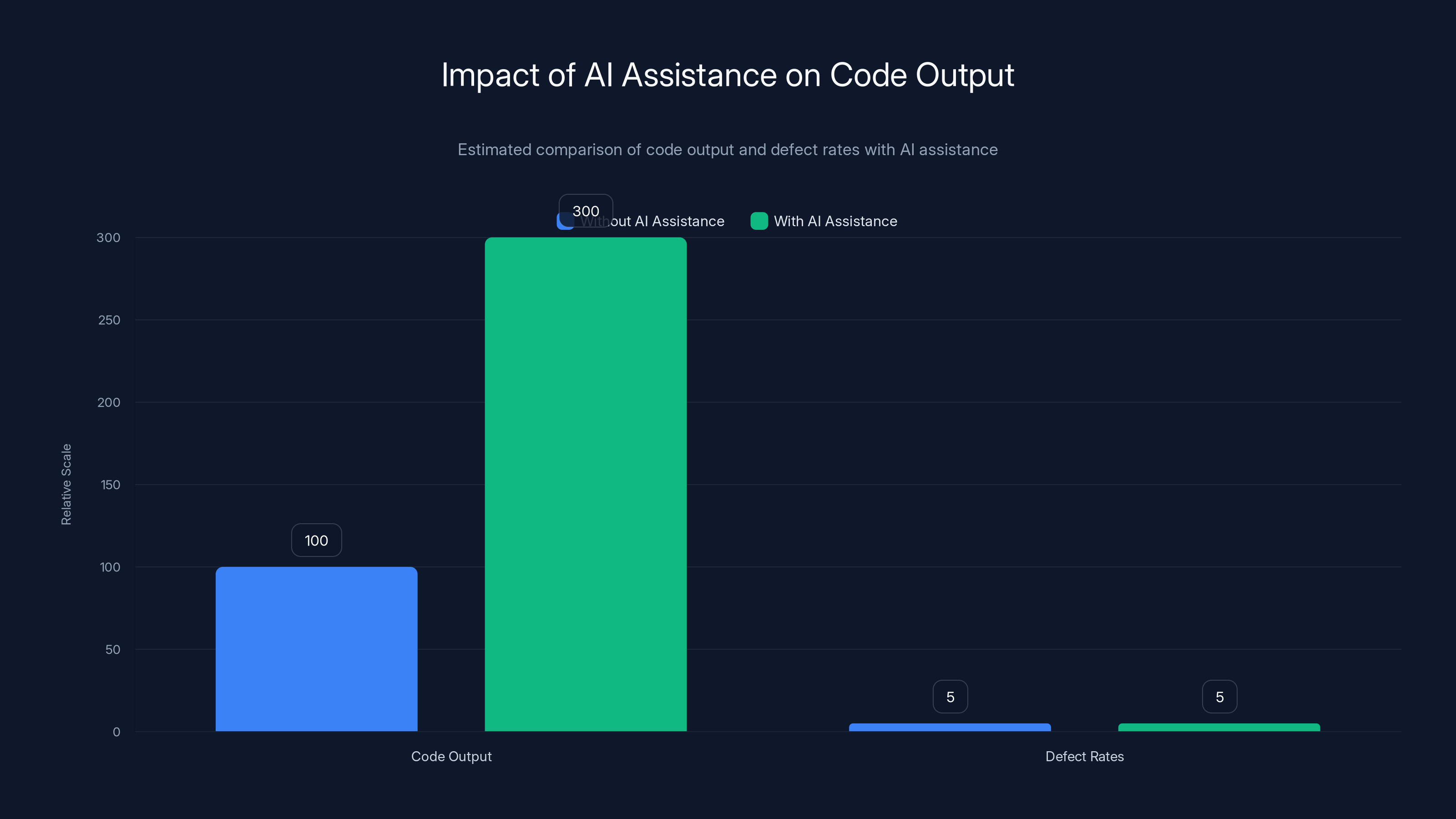

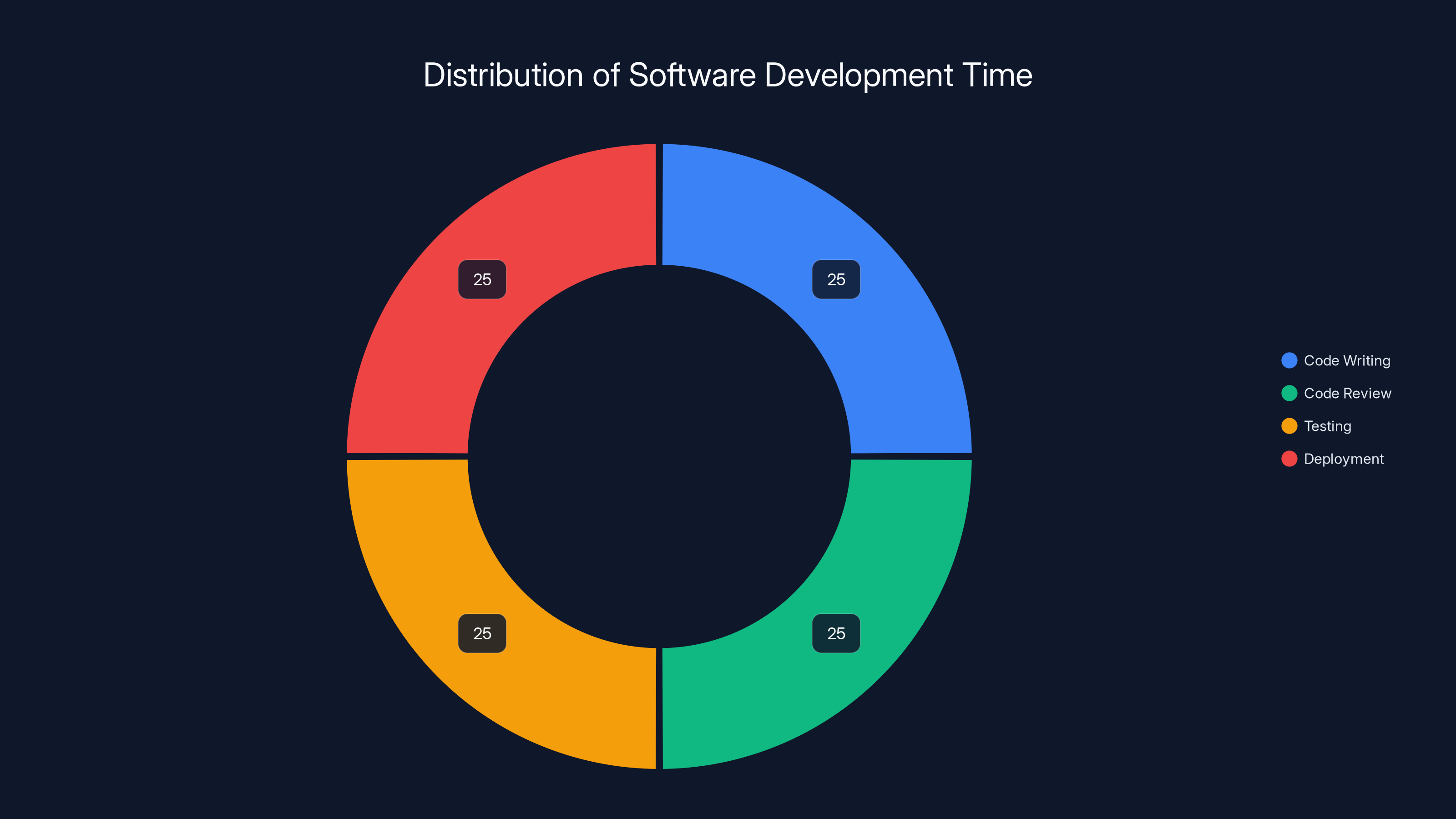

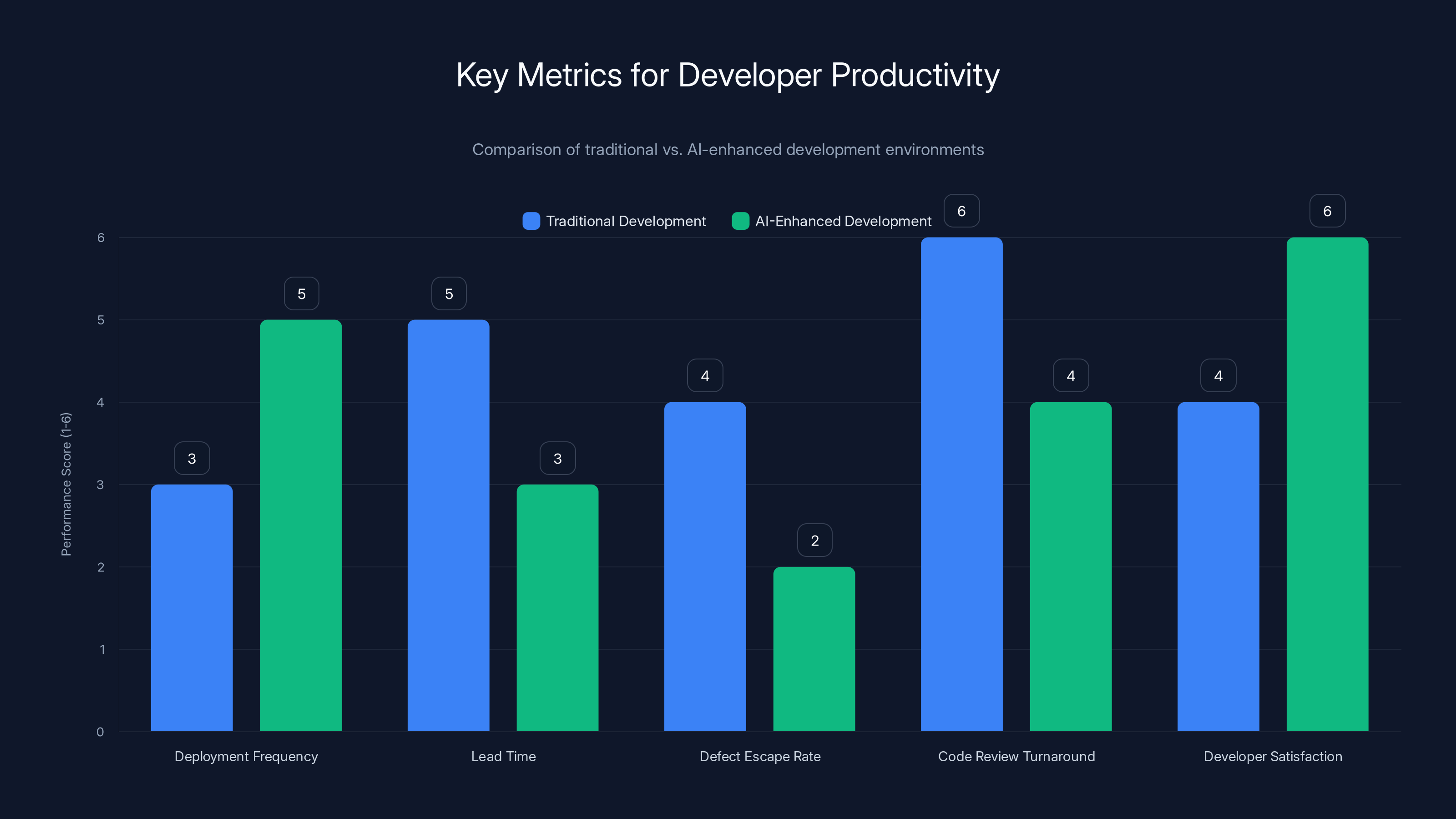

According to Nvidia, AI assistance tripled code output while defect rates held flat. Estimated data based on typical industry scenarios.

The GPU Driver Reality Check

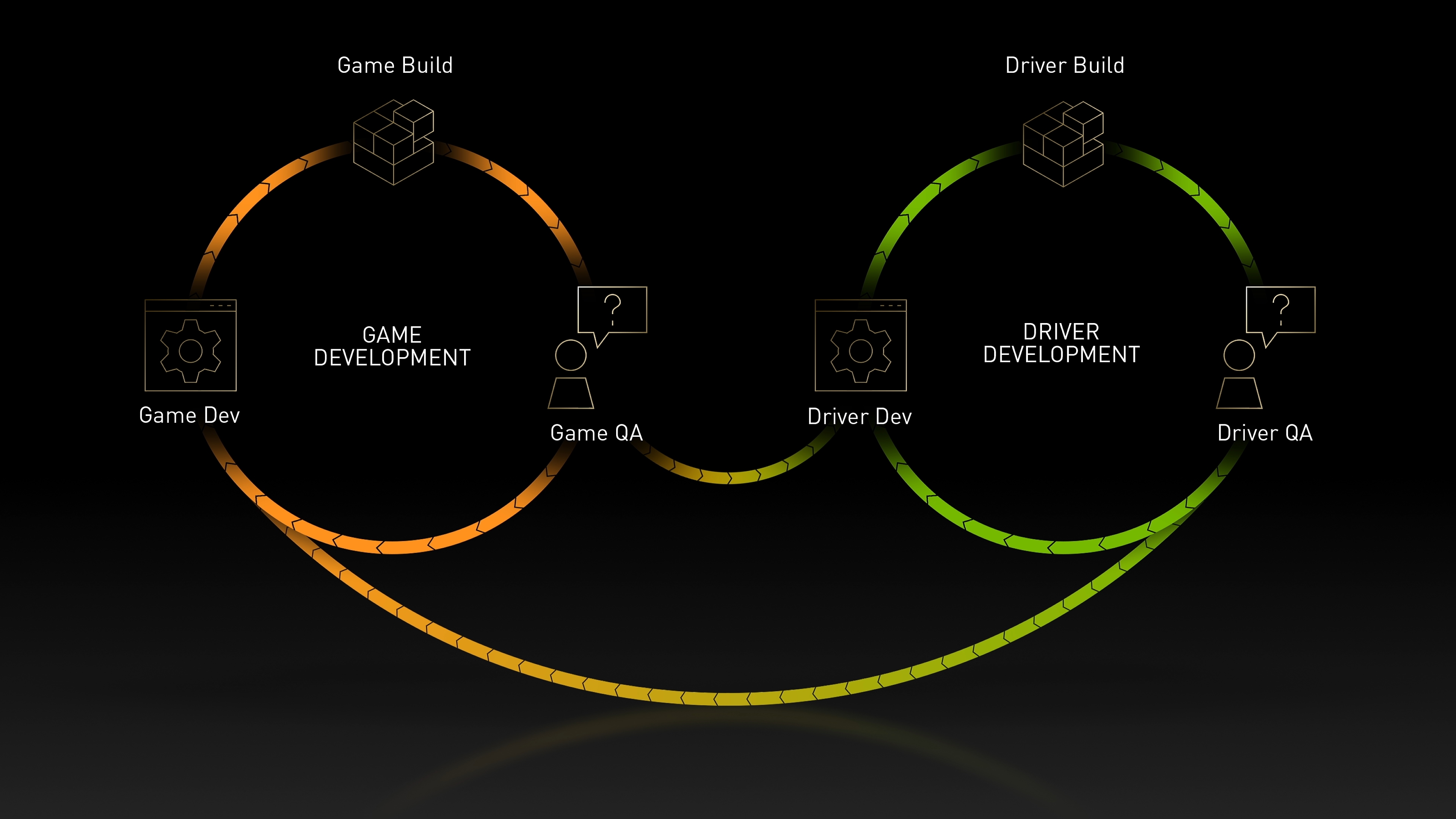

Let's talk about what Nvidia is actually using AI for. The company has integrated AI tools into development workflows across critical systems: GPU drivers, firmware, low-level infrastructure code that runs training systems, gaming platforms, and data center software. These aren't toys. Bugs in driver code have visible consequences. They cause crashes. They reduce performance. They create security vulnerabilities.

Nvidia points to recent wins as proof that AI-assisted development works. DLSS 4 shipped faster. GPU die sizes shrank. These are real achievements. But here's the thing: Nvidia was already shipping complex, optimized hardware and software before AI tools became widespread. The company has some of the best systems engineers on the planet.

When you have that level of expertise, AI becomes a force multiplier. An expert engineer using Copilot probably produces better work faster than that engineer working alone. But we need to be careful about generalizing from Nvidia's experience. The company operates in a controlled environment with massive resources, strict code review processes, and extensive testing infrastructure.

Most companies don't have that. They have teams of varying skill levels, sometimes-chaotic code review processes, and testing infrastructure that's perpetually under-resourced. In that environment, tripling code output without tripling review and testing capacity is a recipe for disaster.

Nvidia has a defense here that's actually pretty solid. The company says its internal controls remain in place. Code still goes through review. Testing requirements haven't changed. If that's true, then AI isn't making their software worse. It's just making their developers faster at writing code that gets reviewed and tested anyway.

But that's not the story being told. The story being told is about productivity gains. Three times more code. And productivity gains are impressive, which is why they get quoted in earnings calls and industry discussions. The more complicated truth—code gets written faster, then reviewed and tested at the same pace as before—doesn't sound like a revolution.

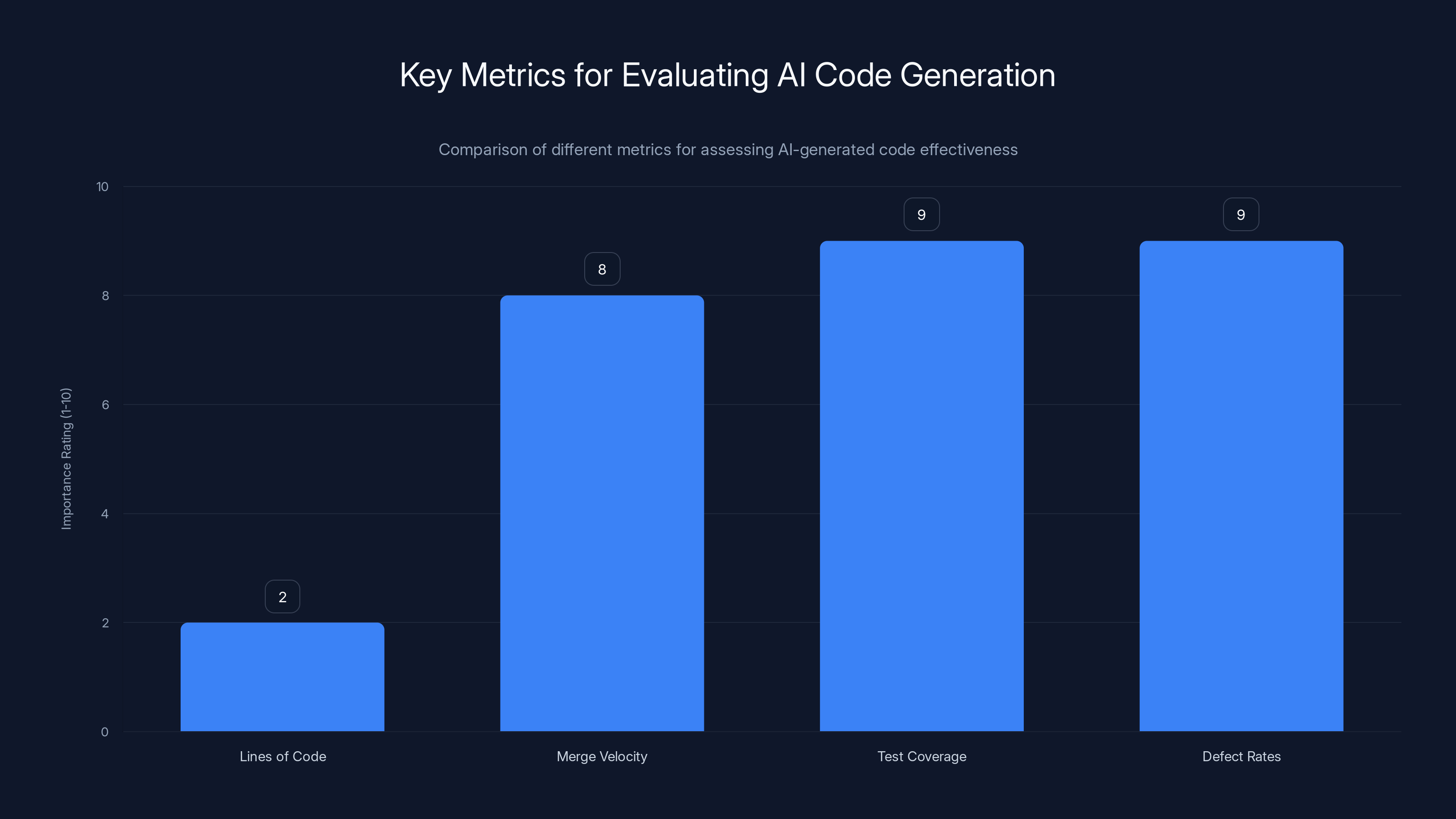

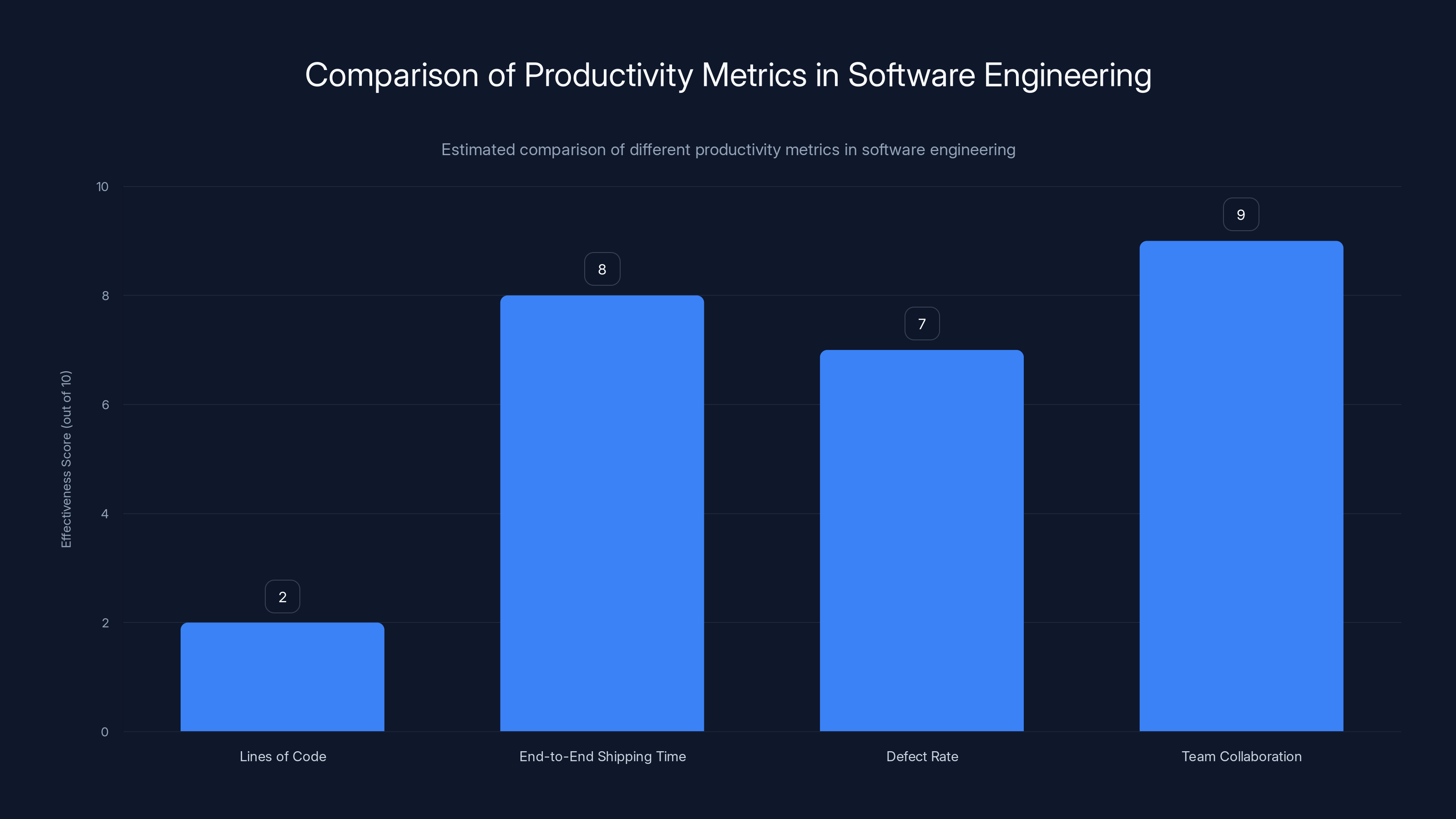

While lines of code are often cited, metrics like merge velocity, test coverage, and defect rates are more critical for evaluating AI code generation effectiveness. Estimated data.

The Quality Question That Won't Go Away

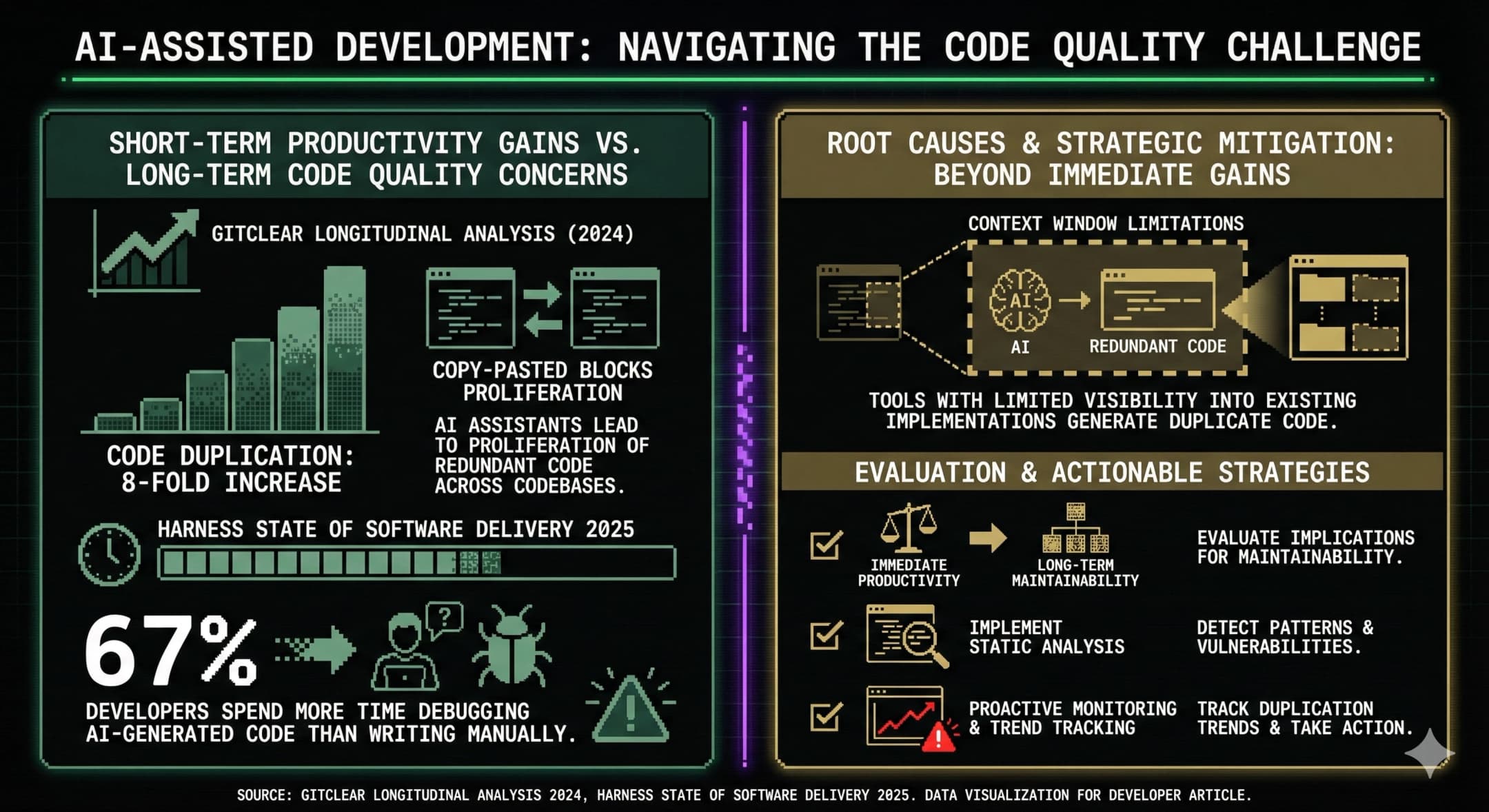

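Here's where skepticism is justified. Several studies suggest that AI-generated code can carry more bugs and security vulnerabilities than carefully written human code. A controlled experiment by GitHub and Microsoft researchers found that developers completed a coding task about 55% faster with Copilot, but that study measured speed, not code quality. Separate academic work has looked at the security side: an NYU study found that a large share of Copilot's suggestions in security-sensitive scenarios contained vulnerabilities, and a Stanford study found that developers using an AI assistant wrote less secure code while feeling more confident about it. Tools and usage habits have improved since then, but the early signal was clear: faster doesn't automatically mean safer.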

Why? Several reasons:

First, AI models are trained on open source code, which is not a representative sample of good code. Open source projects span from masterfully engineered systems to abandoned hobby projects. The model learns patterns from all of it, which means it picks up bad habits alongside good ones.

Second, AI tools don't understand context the way humans do. A developer might look at a function and think, "This needs to handle the case where the user has never set a preference," and add defensive code. An AI model sees syntax patterns and generates code that fits those patterns, but it might miss edge cases that matter in your specific domain.

Third, AI generates code that looks plausible but might not be correct. It's really good at writing code that's syntactically valid, uses the right libraries, and follows conventions. It's worse at ensuring the logic actually solves the problem correctly. This is the hallucination problem that's plagued AI tools since their inception.

Nvidia's response to this is essentially, "Our review process catches it." That's fair. Thorough code review catches most bugs, whether they come from AI or humans. But it means the gains from faster writing are partially offset by review time spent fixing mistakes.

What we don't know is how much of Nvidia's supposed defect-rate flatness is because AI output quality is actually fine, and how much is because the company's review process is so strong that it catches problems before they ship. That distinction matters. If it's the latter, then the real value isn't in the code generation. It's in the code review infrastructure. The AI is just another tool in that pipeline.

Developer Velocity vs. System Throughput

There's a principle in operations management called the Theory of Constraints. A system's throughput is limited by its most constrained resource. For most software organizations, that's not the ability to write code. It's the ability to review it, test it, deploy it, and maintain it.

I've worked at three startups and two larger companies. In every single one, the constraint was never, "We don't have enough developers writing code." It was always downstream. Code review took two weeks. QA testing was the bottleneck. Deployment processes were manual and risky. Building on top of legacy systems was complicated and slow.

Giving developers a tool to write code three times faster doesn't fix any of that. It might actually make things worse. Now you have a queue of code changes waiting for review. Now your QA team is drowning. Now your deployment infrastructure is the bottleneck instead of code writing.
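Here's a rough sketch of that bottleneck effect. The stage names and weekly capacities are hypothetical numbers I picked for illustration, and the model ignores rework and batching.

```python
# Weekly capacity of each delivery stage, in changes per week.
# All numbers are hypothetical and chosen only to illustrate the bottleneck effect.

def system_throughput(stage_capacity: dict) -> tuple:
    """Return the bottleneck stage and the throughput it imposes on the pipeline."""
    bottleneck = min(stage_capacity, key=stage_capacity.get)
    return bottleneck, stage_capacity[bottleneck]


before = {"writing": 40, "review": 30, "qa": 25, "deploy": 35}

# Triple only the writing stage, as an AI assistant might.
after = dict(before, writing=before["writing"] * 3)

stage_before, shipped_before = system_throughput(before)
stage_after, shipped_after = system_throughput(after)

# Changes written each week that review cannot absorb pile up as a queue.
review_backlog_growth = after["writing"] - after["review"]

print(f"before: {shipped_before}/week (bottleneck: {stage_before})")
print(f"after:  {shipped_after}/week (bottleneck: {stage_after})")
print(f"review queue grows by {review_backlog_growth} changes/week")
```

In this toy model, shipped throughput doesn't move at all when writing triples. The only number that changes is the size of the review queue.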

This is what engineering leaders should be thinking about. Not how fast your developers can write code, but how fast you can ship robust, tested, maintainable code end-to-end.

Nvidia's environment is different because it's highly optimized. The company probably has fast code review turnaround. It probably has comprehensive automated testing. It probably has streamlined deployment processes. That's why tripling code writing speed can actually translate to productivity gains. The system can handle it.

Most companies can't say the same.

Estimated data shows that code writing represents about 25% of total development time, with the rest taken up by review, testing, and deployment processes.
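Taking that 25% estimate at face value, Amdahl's law gives a quick back-of-the-envelope answer for what tripling writing speed does end to end. This is a sketch under the simplifying assumption that the other stages are completely unaffected; `overall_speedup` is an illustrative helper name.

```python
def overall_speedup(fraction_accelerated: float, stage_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the work gets faster."""
    remaining = 1.0 - fraction_accelerated
    return 1.0 / (remaining + fraction_accelerated / stage_speedup)


writing_share = 0.25    # share of total time spent writing code (the estimate above)
writing_speedup = 3.0   # "three times more code", read generously as 3x faster writing

speedup = overall_speedup(writing_share, writing_speedup)

# Upper bound if writing became instantaneous: the other 75% still takes time.
ceiling = 1.0 / (1.0 - writing_share)

print(f"{speedup:.2f}x overall, ceiling {ceiling:.2f}x")
```

Under these assumptions, a 3x gain in writing speed works out to roughly a 1.2x gain end to end, and even infinitely fast writing tops out around 1.33x.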

The Hidden Costs of AI Code Generation

Let's talk about what nobody mentions when they tout AI productivity gains: the hidden costs.

Maintenance burden increases. More code means more stuff to maintain. Every line of code is a liability. It can have bugs. It can interact with other code in unexpected ways. It needs to be understood by future maintainers. If Nvidia's engineers are writing three times more code, the codebase is growing. That matters.

Cognitive load on reviews. When a developer submits a pull request with 300 lines of AI-generated code instead of 100 lines of human-written code, the reviewer's job gets harder. They have to understand more context, check more logic, verify more edge cases. This isn't always obvious, but reviewers definitely feel it.

Inconsistency creeps in. AI generates code that's locally correct but might not match the patterns used elsewhere in the codebase. You end up with multiple ways to solve the same problem, which makes the codebase harder to navigate and maintain.

Technical debt accumulation. This is the big one. When code gets written faster than it gets cleaned up, technical debt compounds. You end up with more cruft, more deprecated patterns, more complexity that should be simplified but never is because there's always new code to write.

Nvidia can handle this because the company invests heavily in code quality and maintenance. But most organizations can't. They get seduced by the productivity numbers, roll out AI tools, and then six months later they're wondering why shipping is slower and everything is harder to change.

The Nvidia Commercial Incentive

Let's be direct about something. Nvidia has a massive commercial interest in promoting AI-driven development. The company supplies the hardware that runs AI models. The more developers use AI tools, the more GPU compute they consume. The more they consume, the more they buy from Nvidia.

This isn't a criticism of Nvidia. It's just how capitalism works. The company is being a good corporate actor. It's rolling out tools that genuinely help developers. It's measuring outcomes. But the company also has an incentive to frame those outcomes in the most favorable way possible.

When Nvidia says engineers are three times more productive, that framing benefits the company. It makes investors happy. It makes GPU sales sound justified. It makes AI infrastructure spending look like a no-brainer.

When Nvidia says defect rates stayed flat, that's also great news for GPU sales, because it suggests AI tools don't introduce quality problems. Convenient, right?

I'm not saying Nvidia is lying. I'm saying Nvidia has selected the metrics that tell the best story. That's not unusual. That's normal business behavior. But it's why skepticism is warranted.

Lines of code is often a misleading metric for productivity, whereas metrics like end-to-end shipping time and defect rate provide more meaningful insights. Estimated data.

What AI Code Generation Actually Does Well

Let's be fair to the technology. There are real, meaningful things AI tools are genuinely good at.

Boilerplate code. Writing configuration files, scaffolding new projects, setting up standard patterns—AI is excellent at this. You save time not because the code is better, but because you don't have to think about it. It's automating the tedious stuff.

Documentation generation. This is underrated. AI can read your code and generate helpful docstrings and comments. That saves time and makes code more maintainable.

Pattern matching. When you're working in a new framework or using a new library, AI can suggest the right patterns. It's like having a more patient Stack Overflow.

Velocity on constrained problems. When the problem space is well-defined and the solution is somewhat routine, AI can help you move faster. Building CRUD endpoints? AI can help. Building novel algorithms? Probably not.

Bug fixing suggestions. Some AI tools are getting pretty good at spotting bugs and suggesting fixes. This is genuinely useful.

None of this requires three times more code to be written. It just requires the right code to be written faster.

The Experience of Developers Using AI Tools

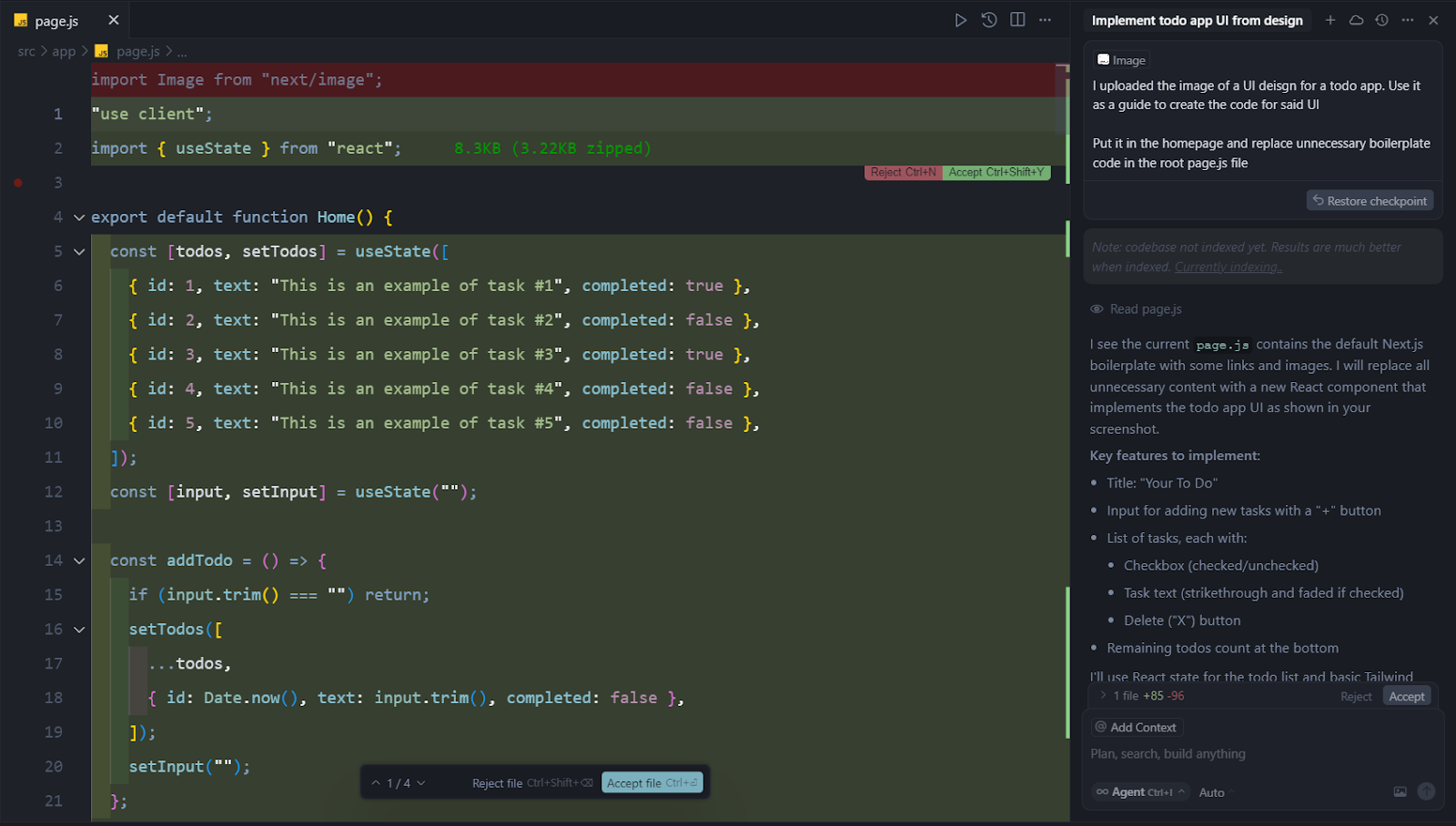

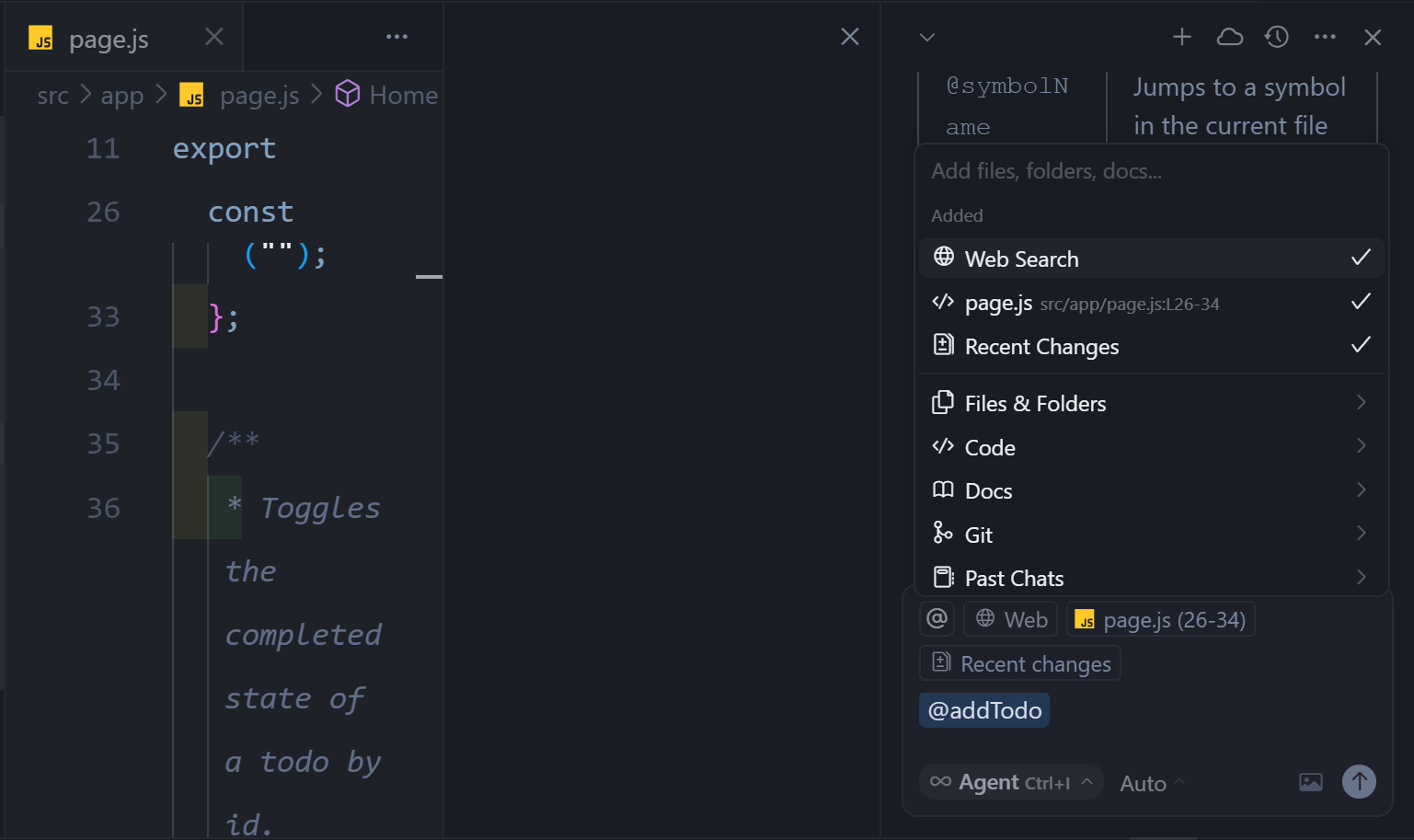

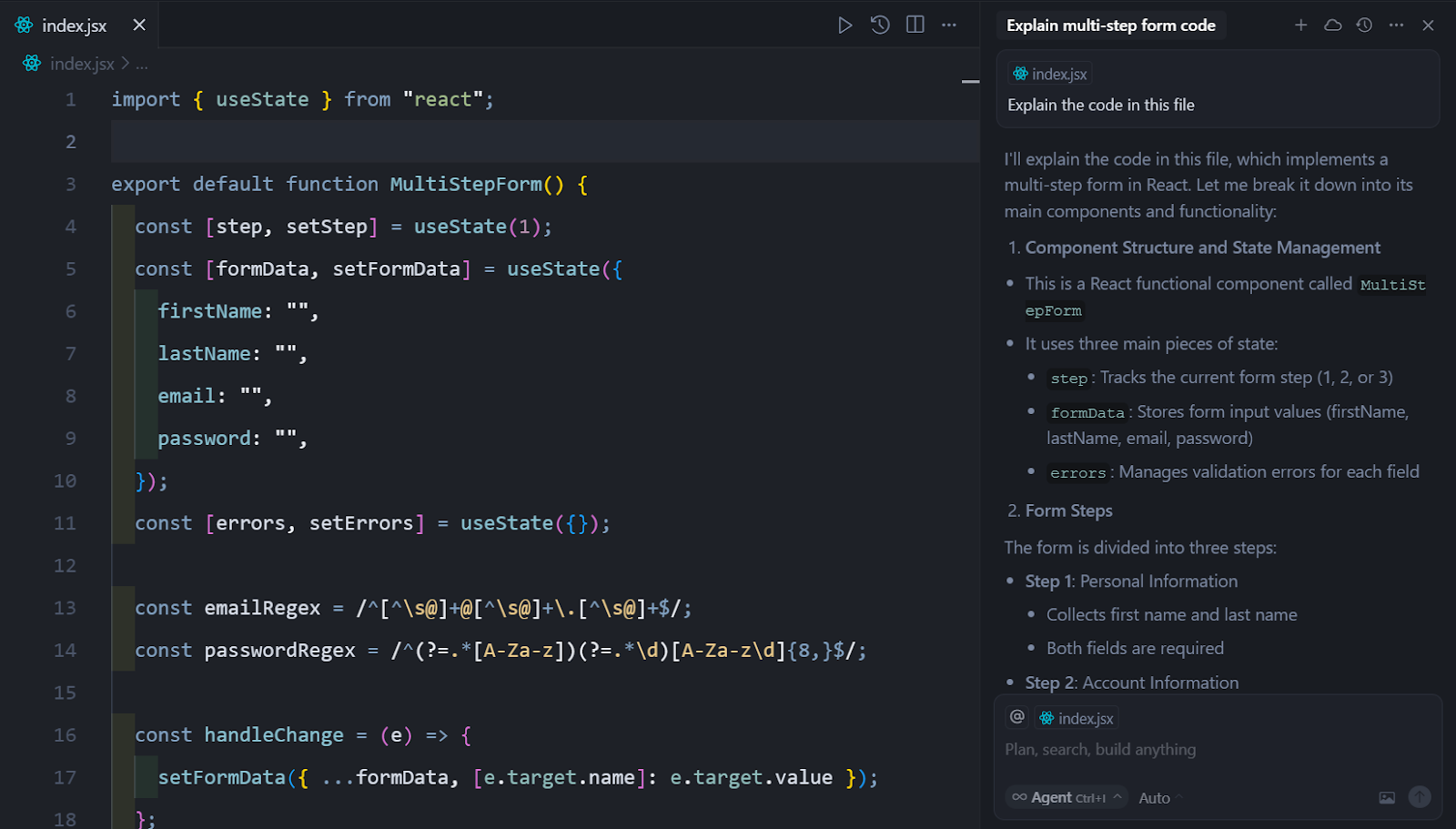

Here's something that gets lost in the metrics. Developers actually like using these tools. Cursor makes coding more fun. Copilot feels like pair programming with an imperfect but always-available partner. That's real value that doesn't show up in commit statistics.

Job satisfaction is a metric that matters. If your developers are happier, they stay longer, they're more engaged, they do better work. That's worth something. Maybe it's not worth three times the code output, but it's worth something.

The experience of using AI tools has improved dramatically in the last year. Cursor in particular has gotten smart about understanding your codebase context and making suggestions that actually fit your project. That's genuinely useful, and users notice it.

But even developers who love these tools recognize the limitations. They know AI can hallucinate. They know they have to review every suggestion. They know it works best for certain kinds of problems and fails for others.

The issue is when these nuances get lost in corporate messaging. Developers understand the reality. But when their managers hear "three times more code," without the context, expectations get misaligned.

AI-enhanced development environments show improved performance in key productivity metrics, particularly in reducing lead time and defect escape rates. Estimated data.

How to Evaluate AI Code Tools Properly

If you're considering rolling out AI-assisted coding in your organization, here's what you should actually measure.

Time to first shipping commit. How long does it take to get from "we have a feature request" to "code goes live"? This is end-to-end throughput, which is what actually matters.

Defect rates in production. Count bugs that make it to production and cause actual problems. Not potential bugs in code review, not theoretical security issues, actual bugs that users encounter.

Code review turnaround time. How long does code sit in review? Does AI-generated code sit longer? Are reviewers taking more time to review it?

Developer satisfaction. Do developers prefer writing with or without AI tools? Are they more or less happy? This matters more than you might think.

Technical debt accumulation. How much code is being written that should probably be refactored or deleted? Are you accumulating complexity faster than you're cleaning it up?

Maintenance burden. How much of developer time goes to fixing old code versus writing new code? Is that ratio changing?

These metrics are harder to measure than lines of code committed. They require more thought to set up. But they measure what you actually care about.
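As a starting point, here's a minimal sketch of computing lead time, review turnaround, and incident rate from change records, split by whether AI assistance was used. The `Change` fields, the `make_change` helper, and every number in the sample data are hypothetical. In practice you'd pull these timestamps from your own issue tracker, code review tool, and deploy logs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    requested: datetime
    review_opened: datetime
    review_closed: datetime
    deployed: datetime
    ai_assisted: bool
    caused_incident: bool


def make_change(write_h, review_h, deploy_wait_h, ai_assisted, caused_incident):
    """Build a hypothetical change record from stage durations in hours."""
    requested = datetime(2025, 1, 6, 9, 0)
    review_opened = requested + timedelta(hours=write_h)
    review_closed = review_opened + timedelta(hours=review_h)
    deployed = review_closed + timedelta(hours=deploy_wait_h)
    return Change(requested, review_opened, review_closed, deployed,
                  ai_assisted, caused_incident)


def hours_between(start, end):
    return (end - start).total_seconds() / 3600


def summarize(changes):
    """End-to-end and review metrics for one group of changes."""
    return {
        "median_lead_time_h": median(
            hours_between(c.requested, c.deployed) for c in changes),
        "median_review_h": median(
            hours_between(c.review_opened, c.review_closed) for c in changes),
        "incident_rate": sum(c.caused_incident for c in changes) / len(changes),
    }


# Made-up sample data: (write hours, review hours, deploy wait hours, AI?, incident?)
changes = [
    make_change(4, 30, 10, True, False),
    make_change(2, 50, 12, True, True),
    make_change(3, 40, 8, True, False),
    make_change(10, 20, 10, False, False),
    make_change(12, 24, 12, False, False),
    make_change(8, 16, 6, False, True),
]

by_group = {
    flag: summarize([c for c in changes if c.ai_assisted == flag])
    for flag in (True, False)
}

for flag, stats in by_group.items():
    print("AI-assisted" if flag else "Unassisted", stats)
```

In this invented dataset, the AI-assisted changes are written faster but spend longer in review, so their median lead time comes out worse. Whether that happens in your organization is exactly what the measurement is for.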

The Role of Testing and Code Review

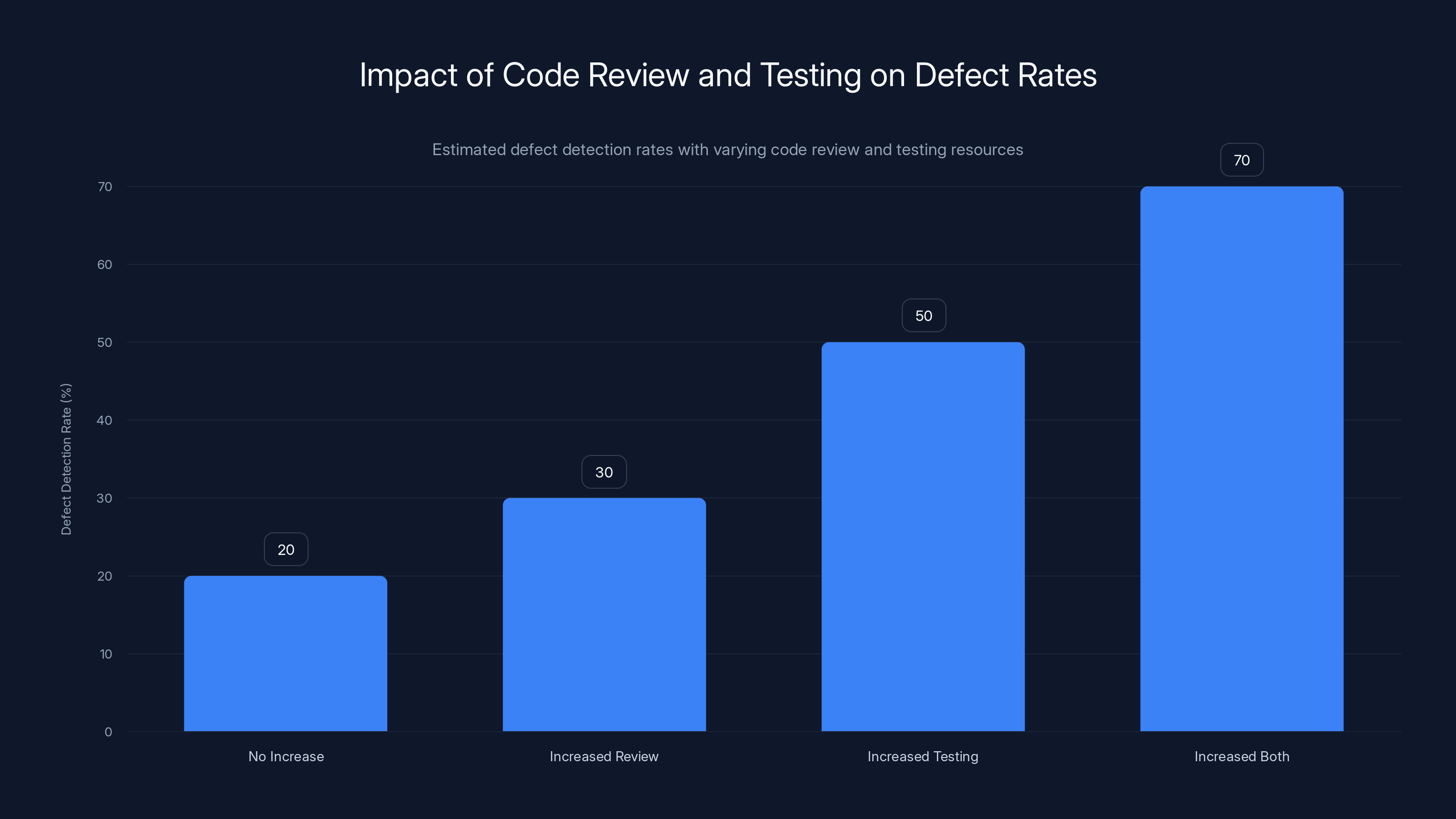

Here's the reality that Nvidia probably isn't shouting about: if you triple code output without proportionally increasing testing and review resources, your quality goes down. Period.

If Nvidia's defect rates really have stayed flat, the most plausible explanation is that the company maintained or increased its code review and testing resources. Tripling code output while keeping review resources the same would be insane.

Most companies don't think about this carefully. They see "developers can write three times more code" and think, "Great, let's deploy this company-wide." They don't think about what that means for code review capacity. They don't plan for increased QA burden. Then three months in, they're overloaded, quality is suffering, and they blame the AI tools.

The AI tools aren't the problem. The resourcing decision is the problem.

Nvidia says its code review processes haven't changed, but review is only one filter. It catches some fraction of bugs (estimates vary widely), and much of the remaining filtering happens in testing. So if review and testing capacity stay the same while code volume triples, and the number of bugs per line stays roughly constant, you catch about the same absolute number of bugs while a higher percentage slips through.

Unless, and this is a big unless, the bugs are less likely to exist in the first place. And that requires either really good AI models, really thorough review, or both.
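The arithmetic behind that argument can be sketched in a few lines. Everything here is a simplifying assumption: a fixed number of defects that review and testing can catch per period, a constant defect density, and round numbers I made up.

```python
def escaped_defects(lines_written, defects_per_kloc, catch_capacity):
    """Return (escaped defects, escape rate) when review and testing can
    catch at most `catch_capacity` defects in the period."""
    introduced = round(lines_written / 1000 * defects_per_kloc)
    caught = min(introduced, catch_capacity)
    escaped = introduced - caught
    rate = escaped / introduced if introduced else 0.0
    return escaped, rate


CATCH_CAPACITY = 800  # hypothetical: defects the team can find per quarter
DENSITY = 10.0        # hypothetical: defects introduced per 1,000 lines

baseline = escaped_defects(100_000, DENSITY, CATCH_CAPACITY)
tripled = escaped_defects(300_000, DENSITY, CATCH_CAPACITY)

# Defect density the tripled output would need for escapes to stay at baseline.
break_even_density = (CATCH_CAPACITY + baseline[0]) / (300_000 / 1000)

print(f"baseline: {baseline[0]} escaped ({baseline[1]:.0%})")
print(f"tripled:  {tripled[0]} escaped ({tripled[1]:.0%})")
print(f"break-even density: {break_even_density:.2f} defects per KLOC")
```

With these made-up inputs, escapes jump from 200 to 2,200 unless defect density falls to about a third of its original level. That is the "big unless" in numeric form.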

Increasing both code review and testing resources significantly boosts defect detection rates, highlighting the importance of balanced resource allocation. (Estimated data)

The Future of AI in Software Development

This whole situation is going to evolve. AI models are getting better. Tools are becoming more context-aware. Integration with development environments is getting seamless.

In five years, AI-assisted coding will probably be the default. Most developers will use it, most teams will have workflows built around it, and pretending it doesn't exist will be like pretending Google doesn't exist today.

But the metric of success won't change. It's not going to be lines of code. It's going to be the same things it's always been: does the software work, does it stay working, can we change it without breaking it, and how fast can we ship improvements.

AI tools will succeed because they make these things easier, not because they increase raw code output. The teams that figure this out will win. The teams that optimize for code volume will hit a wall.

There's a pattern in software engineering history. Every time a tool promises to make development faster, organizations try to exploit that to squeeze more output without actually improving throughput. It never works. But they always try.

AI is just the latest iteration of that pattern.

Practical Implementation Strategy

If you're thinking about adopting AI-assisted coding, here's how to do it without creating a mess.

Phase 1: Pilot Program. Deploy to a small team, ideally one that works on well-defined problems. Give them three months. Measure everything. Let them get comfortable with the tools. This builds credibility and uncovers problems in a controlled way.

Phase 2: Infrastructure Upgrade. Before rolling out company-wide, upgrade your code review and testing infrastructure. Not after. Before. Add review capacity. Invest in automated testing. Make sure your deployment pipeline can handle higher velocity.

Phase 3: Training and Culture. Teach developers not just how to use AI tools, but how to use them well. Teach code reviewers how to review AI-generated code effectively. Build culture around "quality first, velocity second."

Phase 4: Gradual Rollout. Deploy to teams one by one, not all at once. Monitor metrics closely. Be prepared to pull back if things go sideways.

Phase 5: Continuous Measurement. Keep measuring. Don't let this become a set-it-and-forget-it initiative. Understand how it's actually affecting your organization.

This sounds like a lot of process. That's because it is. But it's less painful than the alternative, which is deploying AI tools, seeing metrics go weird, and having to figure out what went wrong while under pressure.

Lessons from Other Industry Transformations

Software engineering has been here before. When version control became standard, organizations had to rethink code review. When continuous integration became possible, organizations had to adapt deployment workflows. When cloud computing made scaling easy, teams had to learn not to over-engineer infrastructure.

Every time, the pattern was the same. New tool promises big productivity gains. Organizations get excited. Some implement carefully and win. Others stumble and generate organizational debt they spend years paying off.

AI is just the next iteration. The careful ones will figure out how to integrate it into their processes without creating problems. The careless ones will push too hard, too fast, and then spend years dealing with the consequences.

Nvidia is implementing carefully. That's probably why their defect rates stayed flat. But Nvidia is also a company with basically unlimited engineering resources and an extremely high bar for code quality. Your organization is probably different.

That's not necessarily bad. It just means you need a strategy tailored to your constraints, not a strategy copied from Nvidia's blog.

The Real Question: Why Are We Talking About Lines of Code?

Honestly, the whole debate highlights something fundamentally off about how we measure software engineering productivity.

Lines of code is a terrible metric. Everyone knows this. Fred Brooks warned in The Mythical Man-Month in 1975 that crude units like the man-month are deceptive measures of software work. It's a common observation that small, highly productive teams often have the lowest lines-of-code output. The software industry has known for decades that code volume is a bad proxy for productivity.

And yet, here we are, in 2025, having a big discussion about how many lines of code Nvidia engineers are committing. Because it's a number. It's quantifiable. It fits in a press release.

The real measures of productivity are harder. They require context. They take time to understand. "Three times more code" is a headline. "End-to-end shipping time is down 23% while maintaining the same defect rate" is a mouthful.

So we talk about lines of code because it's easy. And then we have to spend months debunking it.

The lesson here isn't just about AI. It's about being skeptical of simplistic metrics. When someone claims a huge productivity gain, ask what they're actually measuring. Ask if that measurement captures what you care about. Ask if there are hidden constraints or downsides.

Where AI Coding Tools Actually Add Value

Let's wrap up with the honest assessment. AI coding tools are valuable. But not for the reason everyone's talking about.

They're valuable because they reduce friction. They reduce the number of times a developer has to break context to look something up. They reduce the mental load of remembering syntax. They reduce the tedium of writing boilerplate. These things add up. A developer might spend 30-45 minutes per day on friction and context switching. Cut that in half, and you get meaningful productivity gains.

But those gains don't scale with code volume. They're asymptotic. Once you eliminate the friction, you're back to being limited by the hard parts: understanding the problem, designing the solution, testing it, integrating it with existing code.
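For a sense of scale, here's the arithmetic on that friction estimate. The 8-hour workday is my assumption, and `daily_gain` is just an illustrative helper.

```python
WORKDAY_MINUTES = 8 * 60  # assumption: an 8-hour working day


def daily_gain(friction_minutes, reduction=0.5):
    """Return (minutes saved per day, share of the workday recovered)."""
    saved = friction_minutes * reduction
    return saved, saved / WORKDAY_MINUTES


low = daily_gain(30)    # halving 30 minutes of daily friction
high = daily_gain(45)   # halving 45 minutes of daily friction

# Hard ceiling: even eliminating all 45 minutes recovers a bounded share of the day.
ceiling = daily_gain(45, reduction=1.0)

print(f"low: {low[0]} min ({low[1]:.1%}), "
      f"high: {high[0]} min ({high[1]:.1%}), "
      f"ceiling: {ceiling[0]} min ({ceiling[1]:.1%})")
```

Under these assumptions, halving friction recovers roughly 3% to 5% of the day, and removing it entirely recovers under 10%. That's meaningful, but it's nowhere near a tripling.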

The developers who get the most value from AI tools aren't the ones trying to maximize lines of code. They're the ones using the tools to eliminate busywork so they can focus on the parts that require thinking.

Nvidia's engineers are probably doing exactly this. They're using Cursor to write boilerplate and handle routine tasks, freeing up time to focus on optimization, architecture, and solving hard problems. That's a legitimate win.

But that story doesn't involve tripling code output. It involves the same or slightly lower output, done with less friction, leaving more time for high-value work.

The question isn't whether AI tools are valuable. They are. The question is whether we're measuring value correctly. And lines of code is, definitively, not the right measurement.

The Path Forward

AI-assisted coding is here to stay. In a few years, not using it will be like not using spell check. That's fine. Tools improve productivity, and productivity improvements are good.

But the path forward requires honesty about what these tools do and don't do. They make certain kinds of work faster. They introduce different kinds of quality challenges. They shift where bottlenecks are in the development pipeline. They change the experience of being a developer, in ways that are mostly good but require adjustment.

The companies that will succeed with AI coding are the ones that understand this. They'll integrate the tools thoughtfully. They'll invest in supporting infrastructure. They'll measure what actually matters. They'll be honest about tradeoffs.

The companies that will struggle are the ones that see three times more code and think they've found a magic bullet. They haven't. There are no magic bullets. There's just better tooling, which requires thoughtful implementation to actually deliver value.

Nvidia is probably doing this right. Their claim about defect rates remaining flat is credible because the company has the resources to maintain quality while increasing output.

But Nvidia's success isn't replicable everywhere. It's a function of the company's engineering maturity, resources, and culture. For most organizations, the lesson isn't "deploy AI tools and expect three times output." It's "deploy AI tools thoughtfully and expect modest throughput gains if you support them with proper infrastructure."

That's less exciting than a three times claim. But it's more honest. And in the long run, honesty is what builds sustainable technical organizations.

FAQ

What does it mean when developers commit three times more code with AI assistance?

It means the total number of lines of code being merged into version control has tripled. However, this metric alone doesn't indicate whether the code is better, more maintainable, or ships faster. Code volume can increase due to verbose comments, redundant implementations, or bloated boilerplate that AI tools generate. The real question is whether end-to-end shipping velocity and quality have improved, which isn't captured by raw line counts.

How accurate is lines of code as a productivity metric?

Lines of code is widely considered a poor productivity metric by software engineering researchers and practitioners. It incentivizes writing unnecessarily long code instead of elegant, concise solutions. Fred Brooks documented this problem decades ago. High-performing teams often produce fewer lines of code while shipping more valuable features faster. Better metrics include deployment frequency, lead time for changes, mean time to recovery, and change failure rate.

Why does Nvidia claim its defect rates stayed flat if engineers are writing three times more code?

Nvidia likely maintained or increased code review and testing resources proportionally with code output. The company has sophisticated internal testing infrastructure, strict code review processes, and senior engineers who catch problems before code ships. This level of quality infrastructure is expensive and resource-intensive, which is why most organizations can't replicate Nvidia's results without significant investment in QA and code review systems.

What are the actual benefits of AI-assisted coding tools?

The genuine benefits include faster boilerplate generation, reduced context switching when looking up syntax or patterns, automated documentation generation, and quicker velocity on well-defined problems. AI tools excel at eliminating routine work, freeing developers to focus on harder problems. Tools like Cursor also improve developer experience and satisfaction, which contributes to retention and team morale.

Should I roll out AI coding tools company-wide based on productivity claims?

No. Instead, start with a pilot program on one small team for 90 days. Measure end-to-end shipping time, defect rates in production, code review turnaround, and developer satisfaction. Before wide deployment, upgrade code review capacity and testing infrastructure. Plan for the fact that more code requires more review. Be skeptical of raw output metrics and focus on actual business outcomes like feature delivery speed and quality.

What's the relationship between AI code generation and code quality?

Several studies have found that AI-generated code can contain more bugs and security vulnerabilities than human-written code, particularly in edge cases and complex logic. However, thorough code review, automated testing, and strong review culture can catch most of these issues before they reach production. The key is that quality doesn't come free with AI tools. It comes from maintaining strong engineering practices around code review and testing.

How does AI code generation affect technical debt?

AI tools can increase technical debt accumulation if teams write code faster without increasing refactoring and cleanup efforts. More code means more maintenance burden, more potential interactions between components, and more complexity overall. Organizations that deploy AI tools without investing in code quality and refactoring see technical debt grow exponentially over time, eventually slowing down all development work.

What's the difference between developer velocity and system throughput?

Developer velocity is how fast individual developers can write code. System throughput is how fast working, tested, deployed features reach users. These are different things. A developer might write code 50% faster, but if code review takes twice as long and deployment is bottlenecked, the system throughput might actually go down. Optimizing developer velocity without optimizing bottlenecks downstream often makes organizations slower overall.

Why should I be skeptical of Nvidia's three-times-more-code claim?

Nvidia benefits commercially from promoting AI-driven development because the company sells GPU infrastructure that runs AI models. More developers using AI means more GPU consumption and more revenue for Nvidia. This doesn't mean the claim is false, but it means the company has incentive to frame results in the most favorable way. The company selected the metric (code volume) that tells the best story, not necessarily the metric that tells the most useful story.

What should I measure instead of lines of code?

Focus on deployment frequency (how often you ship), lead time for changes (how long from request to production), change failure rate (what percentage of changes cause production problems), and mean time to recovery (how long to fix problems). These metrics, known as DORA metrics, actually correlate with organizational performance. You should also measure defect escape rate, code review turnaround time, and developer satisfaction when evaluating AI tool deployment.
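As a rough sketch, the four DORA metrics can be computed from a list of deployment records like this. The `Deployment` fields and the sample data are hypothetical. Note that DORA usually measures lead time from commit to production, so this sketch starts the clock at an assumed `committed_at` timestamp rather than at the original request.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_summary(deployments, period_days):
    """Compute the four DORA metrics over a non-empty list of deployments."""
    failures = [d for d in deployments if d.failed]
    recoveries = [_hours(d.restored_at - d.deployed_at)
                  for d in failures if d.restored_at is not None]
    return {
        "deploys_per_week": len(deployments) * 7 / period_days,
        "median_lead_time_h": median(
            _hours(d.deployed_at - d.committed_at) for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_recovery_h": mean(recoveries) if recoveries else None,
    }


# Made-up two-week sample.
start = datetime(2025, 3, 3, 10, 0)
H = timedelta(hours=1)
D = timedelta(days=1)

deployments = [
    Deployment(start, start + 24 * H),
    Deployment(start + 2 * D, start + 2 * D + 12 * H, failed=True,
               restored_at=start + 2 * D + 15 * H),
    Deployment(start + 5 * D, start + 5 * D + 36 * H),
    Deployment(start + 9 * D, start + 9 * D + 24 * H, failed=True,
               restored_at=start + 9 * D + 29 * H),
]

summary = dora_summary(deployments, period_days=14)
print(summary)
```

Comparing a summary like this before and after an AI rollout tells you far more than a commit count does.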

TL;DR

- Nvidia claims 30,000 engineers write 3x more code with AI, but lines of code is a terrible productivity metric. Code volume tells you nothing about quality, maintainability, or shipping speed. More code can mean more technical debt, not more productivity.

- Defect rates staying flat is the actual important claim. This suggests Nvidia invested in proportional code review and testing resources, which explains how quality was maintained while output tripled. Most organizations can't do this without major infrastructure investment.

- AI tools genuinely reduce friction and improve developer experience. The real value is in eliminating busywork like boilerplate generation, not in raw code quantity. Developers who benefit most use AI to free time for thinking, not to maximize output.

- More code without more review capacity creates problems. If you triple code output but keep review resources the same, quality degrades. Organizations deploying AI tools must increase QA and code review capacity proportionally.

- Measure what actually matters instead of code volume. Track deployment frequency, lead time, defect escape rate, code review turnaround, and developer satisfaction. These metrics correlate with real organizational performance. Volume metrics don't.

- Bottom line: AI coding tools work, but not the way the headlines suggest. They make certain kinds of work faster, they require supporting infrastructure to maintain quality, and they're best deployed thoughtfully with proper measurement. Shortcuts in implementation lead to technical debt and organizational problems down the line.

Key Takeaways

- Lines of code is a fundamentally flawed productivity metric that tells nothing about code quality or actual engineering velocity

- Nvidia's flat defect rates despite 3x code output is credible only because the company invested proportionally in code review and testing infrastructure

- AI coding tools are valuable for reducing friction and eliminating boilerplate, but not because they increase raw code output

- More code without proportional increases in review capacity and testing resources leads to exponential technical debt accumulation

- Organizations should measure deployment frequency, lead time, change failure rate, and defect escape rate instead of code volume

- Most companies cannot replicate Nvidia's results without significant investment in QA and code review infrastructure to support increased output

![AI Code Output vs. Code Quality: The Nvidia Cursor Debate [2025]](https://tryrunable.com/blog/ai-code-output-vs-code-quality-the-nvidia-cursor-debate-2025/image-1-1770934011885.jpg)