OpenAI Codex Desktop App for macOS: Complete Guide to AI Coding Agents & Alternatives [2025]

Introduction: The Shift From Code Writing to Agent Management

Software development has entered a transformative era. What once required developers to write code line by line in integrated development environments (IDEs) is shifting toward orchestrating autonomous AI systems that can handle entire features, debug workflows, and manage complex tasks independently. OpenAI's new Codex desktop application for macOS represents a paradigm shift in how developers work with artificial intelligence in their daily workflows.

The launch of this application signals a broader industry movement away from simple code completion tools toward sophisticated agent orchestration platforms. Where GitHub Copilot and similar autocomplete systems focused on helping developers write better code snippets in real-time, the Codex desktop app enables developers to delegate entire features, projects, and workflows to AI agents that can run autonomously for extended periods—sometimes up to 30 minutes—before returning completed work for review.

This distinction is crucial because it reflects a fundamental change in software engineering's skill requirements. Rather than proficiency in specific programming languages or frameworks, the premium skill increasingly becomes the ability to effectively specify problems, create workflows that autonomous systems can follow, and manage multiple AI agents working in parallel on different aspects of a project.

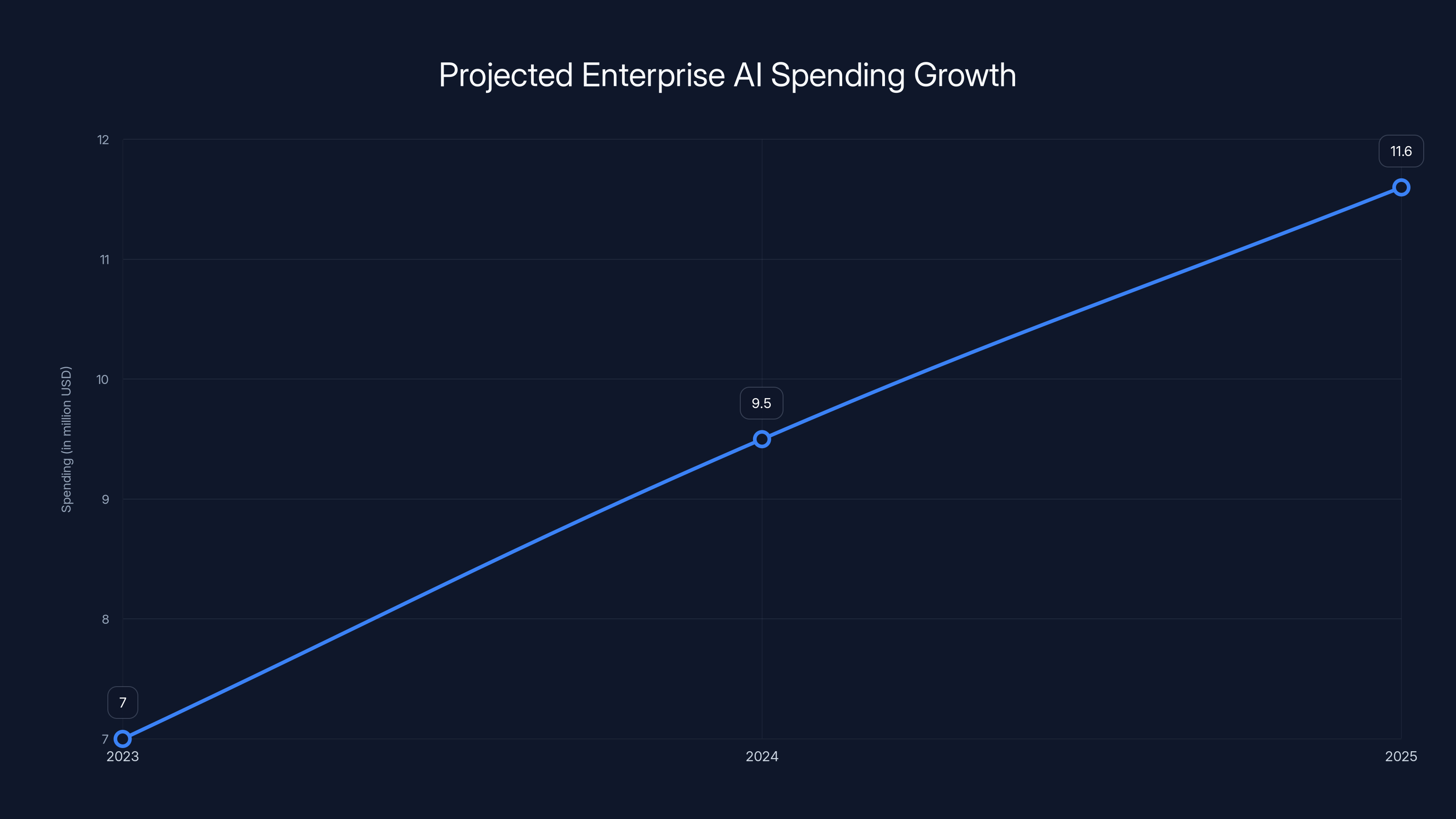

The statistics supporting this shift are compelling. According to recent enterprise surveys, 78% of Global 2000 companies are already using OpenAI models in production, with average enterprise AI spend rising from

Sam Altman, OpenAI's CEO, emphasized the product's transformative potential during the launch briefing, noting that he recently completed "a fairly big project in a few days without opening an IDE a single time." The remark suggests how some developers are already reorganizing their workflows around AI agents rather than traditional coding approaches.

In this comprehensive guide, we'll explore the Codex desktop app's architecture, capabilities, practical use cases, pricing, and most importantly—how it compares to competing solutions and what alternative approaches teams might consider based on their specific needs and constraints.

Enterprise AI spending is projected to increase from

Understanding the Codex Architecture: From IDE-Based Autocomplete to Agent Orchestration

The Evolution of AI-Assisted Development

To understand the Codex desktop app's significance, we must trace the evolution of OpenAI's coding initiatives over the past four years. The journey began in 2021 when OpenAI first introduced the Codex model, which powered GitHub Copilot when it became generally available in 2022. During this initial phase, AI coding assistance was fundamentally reactive: developers would type a function signature or a comment describing what they wanted, and the model would suggest the next lines of code in real time within their IDE.

This approach had both strengths and limitations. The strength was immediate assistance and reduced friction in the coding process. The limitation was that AI assistance was constrained to small chunks of code—typically a few lines to a complete function. Developers still needed to orchestrate the overall architecture, handle error cases, and integrate different components.

The landscape shifted dramatically with the release of more capable models. GPT-5 in August 2025 and subsequent versions (such as GPT-5.2 in December 2025) demonstrated substantially improved abilities for longer-context reasoning, complex problem decomposition, and multi-step task execution. These improvements meant that AI could now handle entire features end-to-end, not just code snippets.

The Codex team observed developers responding to these capabilities by changing their behavior. Rather than pair-programming with AI—providing continuous guidance and feedback—developers began delegating entire features to models. Instead of saying "write me a function that sorts an array," they were saying "implement a complete user authentication system with database integration, password hashing, and two-factor authentication." This behavioral shift revealed that developers valued autonomy in their AI tools over real-time guidance.

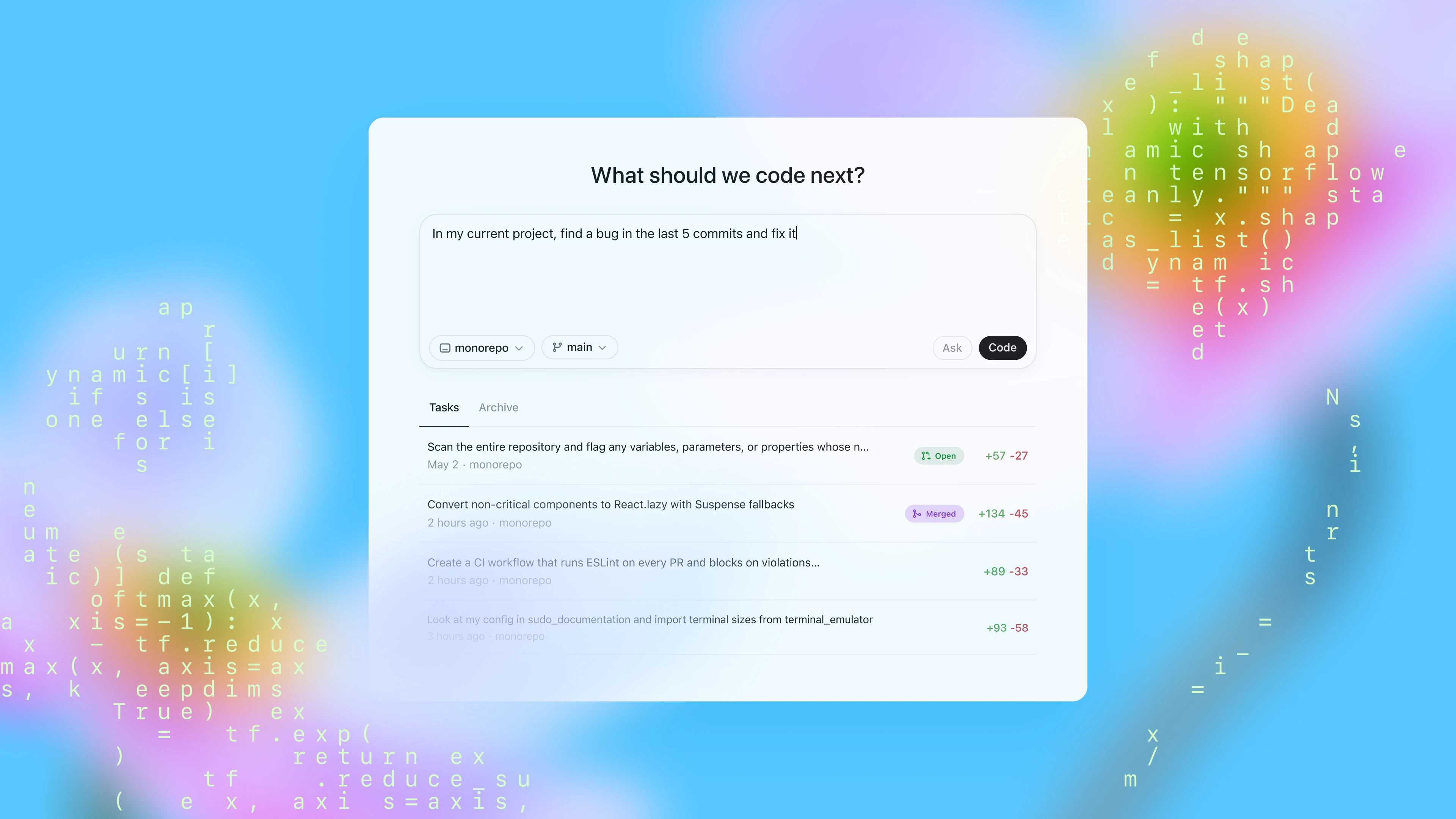

The Command Center Concept

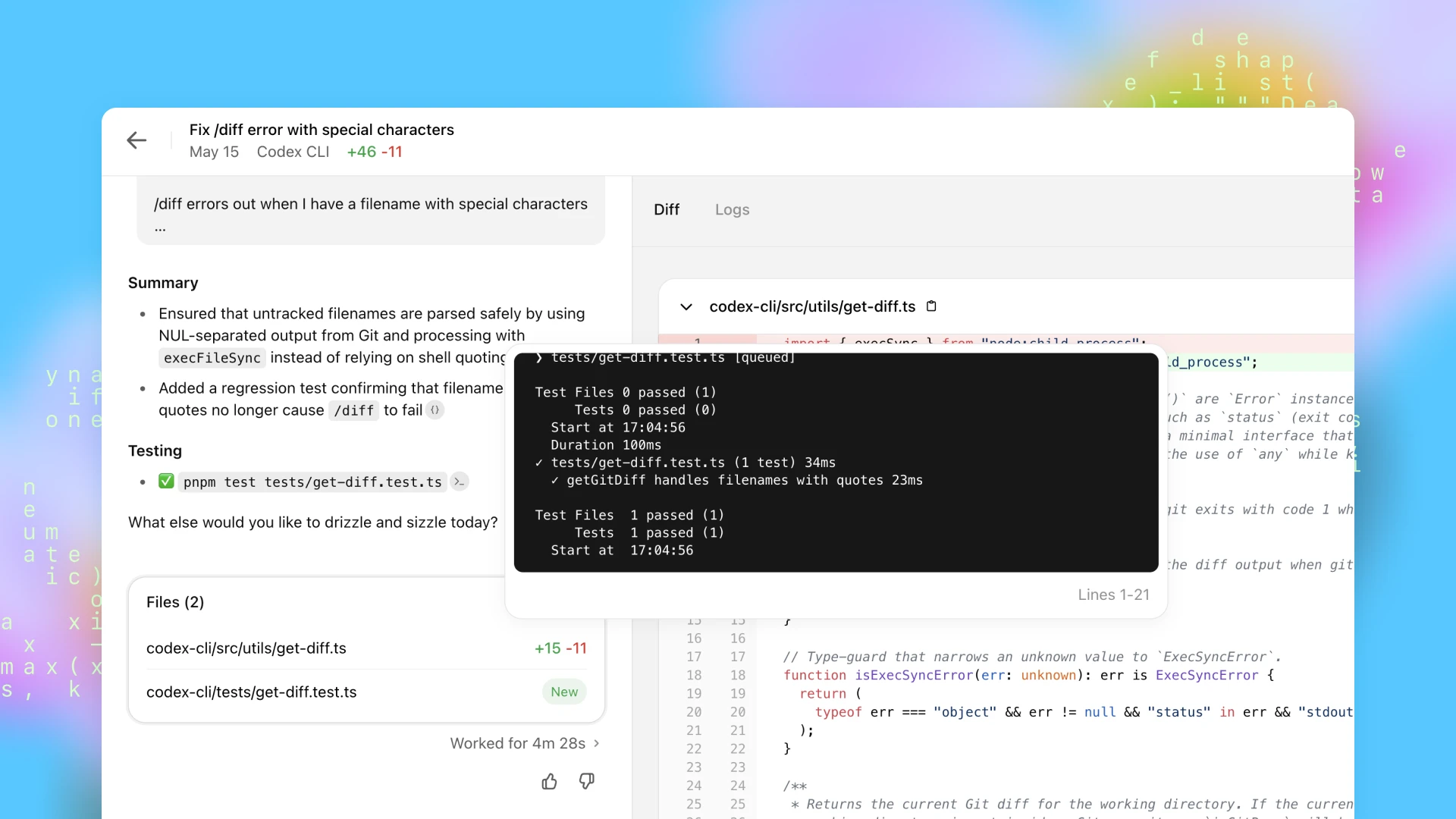

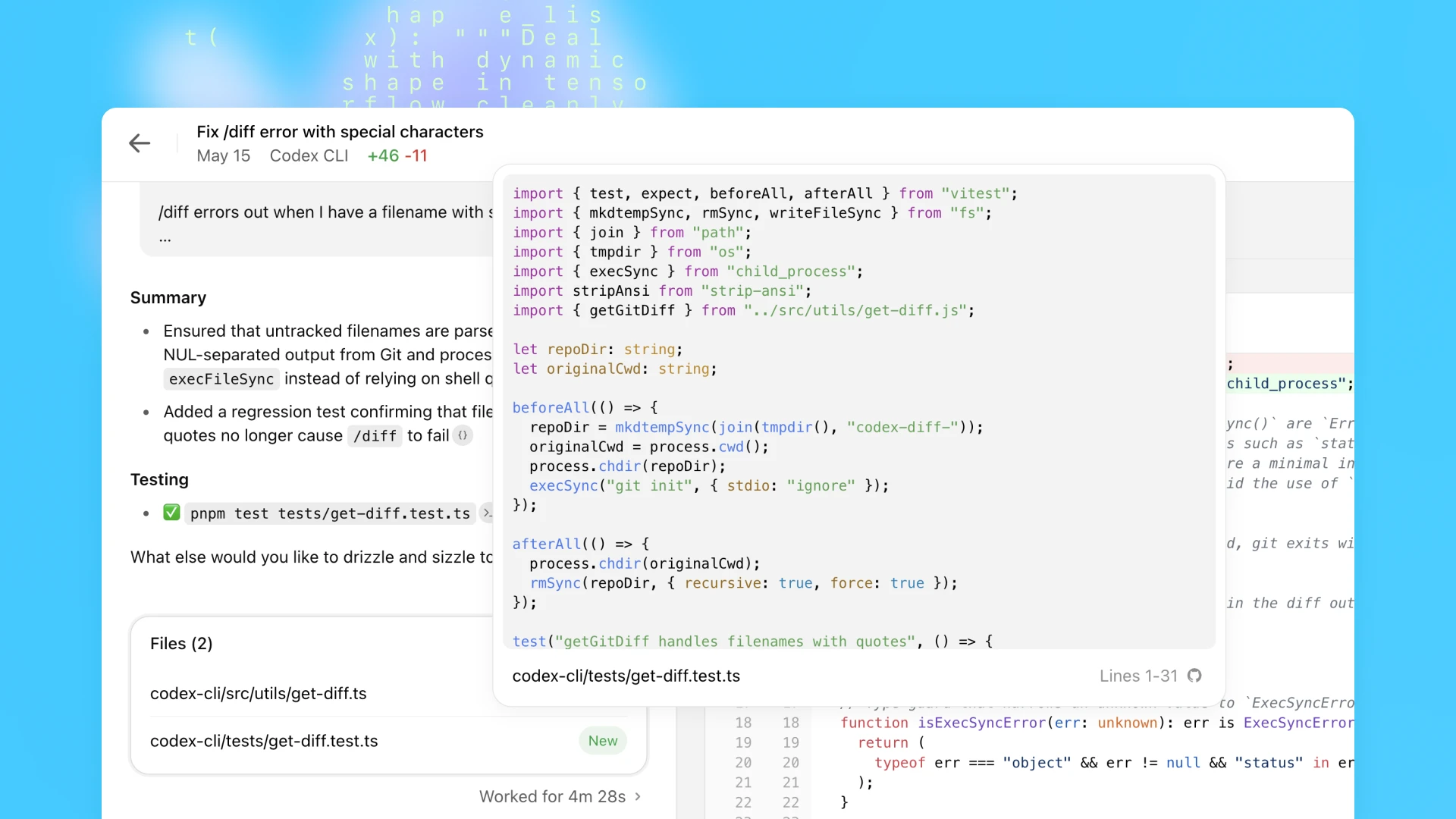

OpenAI's response to this behavioral change was the Codex desktop application, which the company explicitly describes as a "command center for agents." This terminology is deliberate and reveals important architectural thinking.

A traditional IDE is designed around the human developer as the primary actor. The developer initiates actions, reviews results, and makes decisions. An AI command center inverts this hierarchy—AI agents become the primary actors, with the developer assuming a supervisory, orchestration-focused role. Developers issue high-level directives, and agents handle task decomposition, execution, and coordination.

The application achieves this through several architectural components. First, it provides a unified interface for managing multiple parallel agent instances. Unlike traditional development where you open one project and work sequentially, the Codex app allows you to spin up multiple agents working on different features simultaneously. Second, it includes persistent agent state and memory—agents can work on tasks for extended periods (up to 30 minutes documented, but potentially longer for certain workflows) while maintaining context about the overall project.

Third, the application builds on Git worktrees: separate working directories, each checked out on its own branch, that share a single repository. This solves a fundamental coordination problem: if you have five agents working on five different features, how do you keep their in-progress changes from colliding? With worktrees, each agent edits its own checkout of the repository. The system then handles merging these parallel workstreams back together, reducing conflicts and coordination overhead.
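Git's own `git worktree add -b <branch> <path> <start-point>` command is the primitive this kind of isolation is typically built on. The sketch below only plans the commands for a number of agents; the `plan_agent_worktrees` helper, the `agent/<n>` branch names, and the `.worktrees` directory are hypothetical conventions chosen for illustration, not Codex internals.

```python
from pathlib import Path

# Hypothetical helper: plans one Git worktree per agent. Branch names
# (agent/1, agent/2, ...) and the .worktrees directory are illustrative.


def plan_agent_worktrees(repo: str, base: str, n_agents: int,
                         root: str = ".worktrees") -> list[list[str]]:
    """Return one `git worktree add` command per agent."""
    commands = []
    for i in range(1, n_agents + 1):
        branch = f"agent/{i}"
        path = str(Path(root) / f"agent-{i}")
        # git -C <repo> worktree add -b <new-branch> <path> <start-point>
        commands.append(["git", "-C", repo, "worktree", "add",
                         "-b", branch, path, base])
    return commands


for cmd in plan_agent_worktrees(".", "main", 3):
    print(" ".join(cmd))
```

Passing each command to `subprocess.run(cmd, check=True)` would create the checkouts. Because worktrees share one object store they are much cheaper than full clones, and Git refuses to check out the same branch in two worktrees at once, which is one more guard against agents stepping on each other.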

Integration Points and Data Flow

The Codex application isn't designed as an isolated tool but rather as a hub that connects to various external systems in a developer's toolkit. This integration-first approach is reflected in OpenAI's published skills library, which includes:

- Design System Integration: Fetching design context directly from Figma, allowing agents to understand visual specifications and implement them programmatically

- Project Management: Direct integration with Linear for issue tracking, allowing agents to reference requirements and update task status

- Deployment Infrastructure: Integration with Cloudflare, Vercel, and other deployment platforms for continuous deployment workflows

- Content Generation: Integration with GPT Image for generating assets, and support for creating documents in PDF, spreadsheet, and Word formats

- Repository Management: Native Git integration with support for multiple branches, commits, and code review workflows

This architecture reflects a modern understanding that software development doesn't happen in isolation. It's embedded in a broader ecosystem of design tools, project management systems, deployment infrastructure, and communication platforms. By creating integration points to these systems, the Codex app positions itself as a coordination layer across the entire development workflow.

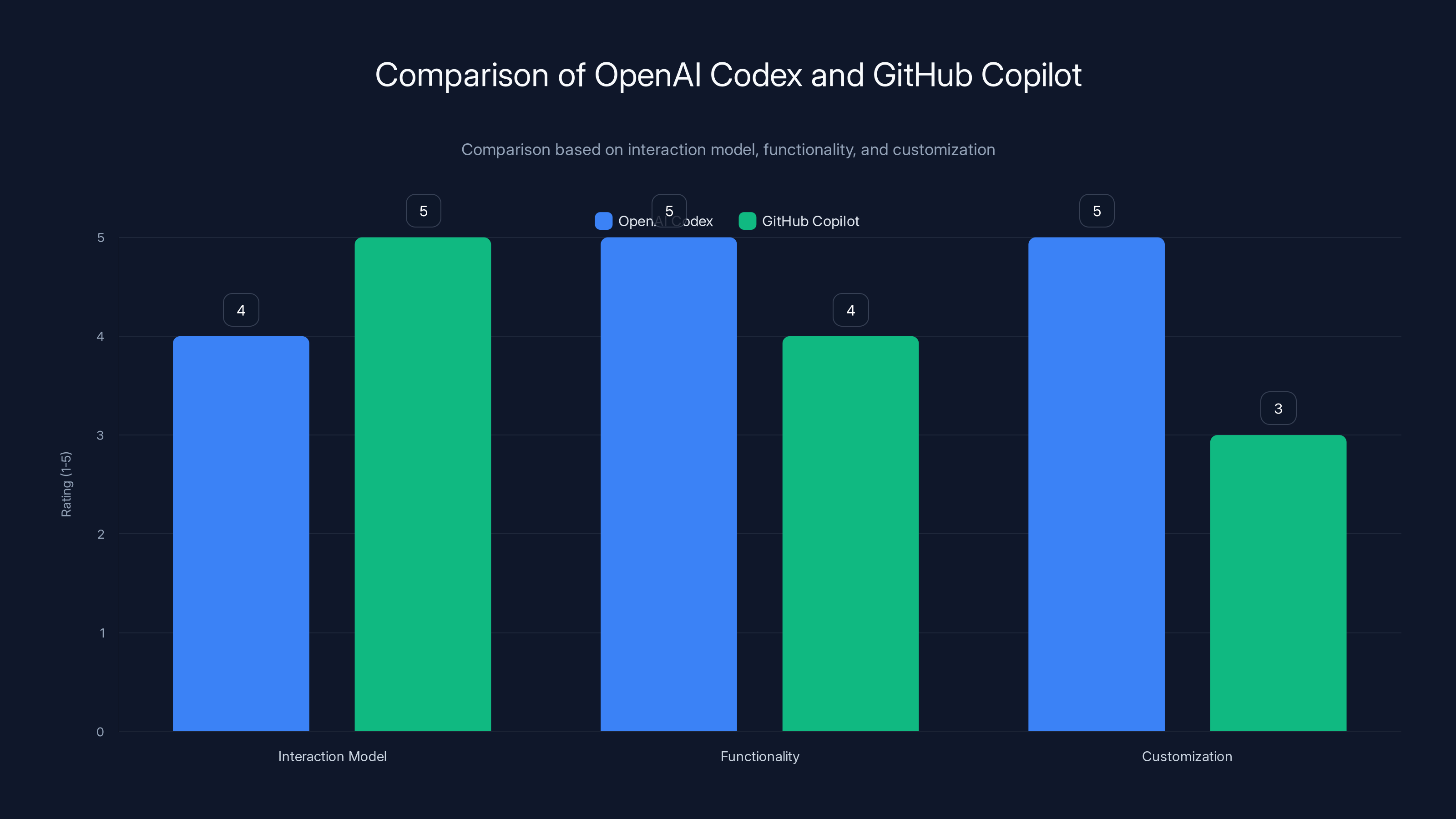

OpenAI Codex excels in functionality and customization, offering autonomous agent-driven development, while GitHub Copilot is strong in real-time interaction for human-driven coding. Estimated data.

Key Features and Capabilities: Beyond Code Generation

Skills: Extending Agent Capabilities Beyond Raw Coding

One of the most sophisticated aspects of the Codex application is the skills system. Unlike models that have fixed capabilities, the Codex app implements an extensible framework where developers can create, manage, and orchestrate specialized skills that agents can invoke.

Think of skills as highly specialized sub-agents, each optimized for a particular task. OpenAI provides a base library of skills covering common development workflows, but the framework is designed for extensibility. A team might create custom skills for:

- Database schema migrations following specific conventions

- Custom testing frameworks or organization patterns used by the team

- Internal API standards and authentication mechanisms

- Company-specific code quality requirements or style guides

- Integration patterns for legacy systems or enterprise platforms

- Performance optimization procedures specific to the company's infrastructure

When an agent is given a task, specific skills can be called out in the task directive, or the agent can select relevant skills automatically based on the nature of the work. Automatic selection relies on the model's reasoning: it judges from each skill's description whether that skill is relevant and applicable to the task at hand.

This skills system solves a critical problem in AI-assisted development: capability alignment. Generic coding models are good at many things but specifically optimized for nothing. By wrapping these models with context-specific skills, teams can guide AI agents toward approaches that work well in their particular technical environment.

A practical example illustrates this well. Suppose a team uses a custom ORM framework that's not widely known. A generic AI model might generate database interactions using standard patterns that don't conform to the team's conventions. With a custom skill that teaches the agent about the team's ORM framework, the agent can generate code that automatically follows internal standards, reducing code review friction and implementation time.
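One way to picture how the ORM skill reaches the agent: each skill carries a short name and description, and only that index is placed in the agent's context so the model can decide which skills to pull in. The `Skill` dataclass, the `build_skill_index` helper, and the `acme-orm` skill are hypothetical illustrations, not Codex's actual skill format.

```python
from dataclasses import dataclass


# Hypothetical skill format for illustration; not Codex's actual schema.
@dataclass(frozen=True)
class Skill:
    name: str          # short identifier the agent can refer to
    description: str   # when the skill applies; this is what the model reads
    instructions: str  # detailed guidance, loaded only once the skill is chosen


def build_skill_index(skills: list[Skill]) -> str:
    """Render names and descriptions only, for inclusion in the agent's context."""
    lines = ["Available skills:"]
    for skill in sorted(skills, key=lambda s: s.name):
        lines.append(f"- {skill.name}: {skill.description}")
    return "\n".join(lines)


orm_skill = Skill(
    name="acme-orm",
    description="Use when reading or writing database code in this repository.",
    instructions="Query through Repository classes; never build raw SQL strings.",
)
print(build_skill_index([orm_skill]))
```

Keeping the detailed instructions out of the index is the design choice that matters in this sketch: many skills can be advertised for a few tokens each, and the full ORM conventions are loaded only when a task actually touches the database.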

Automations: Background Task Scheduling and Delegation

The Codex app includes an "Automations" feature that represents a significant departure from traditional development workflows. Rather than requiring developer initiation for every task, Automations allow developers to schedule AI work to occur on a background schedule, with results appearing in a review queue when complete.

This feature is particularly powerful for work that is repetitive but important: the kind of work that humans typically deprioritize because it's not directly creative. OpenAI's own usage provides examples:

- Daily Issue Triage: Every morning, an automation runs that categorizes incoming issues, identifies duplicates, and routes them to appropriate teams

- CI/CD Failure Analysis: When continuous integration pipelines fail, an automation investigates the failure logs, identifies root causes, and generates diagnostic reports

- Release Notes Generation: Before each release, an automation analyzes commits and pull requests since the last release and generates comprehensive release documentation

- Bug Pattern Detection: Automations periodically scan repositories for common bug patterns, deprecated API usage, or security vulnerabilities

- Performance Monitoring: Regular automations analyze application performance metrics and generate reports on performance regressions

Each automation is configured to run on a schedule (daily, weekly, or triggered by specific events) and generates outputs that land in a review queue. This workflow preserves human judgment and decision-making while eliminating the tedium of routine maintenance tasks.
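A minimal model of that loop, assuming a simple fixed-interval schedule: each automation records when it last ran, a scheduler runs the ones that are due, and their output is appended to a review queue rather than applied directly. The `Automation` class, the `run_due` function, and the queue layout are hypothetical, and event-triggered automations are left out for brevity.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional


# Hypothetical automation record; real scheduling in Codex may differ.
@dataclass
class Automation:
    name: str
    interval: timedelta               # e.g. daily or weekly
    task: Callable[[], str]           # produces a report for human review
    last_run: Optional[datetime] = None

    def is_due(self, now: datetime) -> bool:
        return self.last_run is None or now - self.last_run >= self.interval


def run_due(automations: list[Automation], now: datetime,
            review_queue: list[tuple[str, str]]) -> None:
    """Run every due automation and queue its output for review."""
    for automation in automations:
        if automation.is_due(now):
            review_queue.append((automation.name, automation.task()))
            automation.last_run = now


triage = Automation("daily-issue-triage", timedelta(days=1),
                    lambda: "3 duplicates found")
queue: list[tuple[str, str]] = []
start = datetime(2025, 1, 6, 9, 0)
run_due([triage], start, queue)                       # first run: due
run_due([triage], start + timedelta(hours=2), queue)  # not due yet
print(queue)  # [('daily-issue-triage', '3 duplicates found')]
```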

The potential efficiency gains are substantial. Consider bug detection: instead of developers spending hours per week on manual code reviews looking for common issues, an automated agent can scan the entire codebase, identify potential issues, and generate prioritized reports. Developers then review the generated output in a focused way, significantly reducing the cognitive load.

Parallel Agent Execution and Coordination

The ability to run multiple agents in parallel addresses a fundamental limitation of human development: sequential work. Teams split work across people, but each developer still moves through features largely one at a time, starting a feature, finishing it, and merging it before picking up the next. That pattern creates bottlenecks and limits how much work can proceed at once.

The Codex app enables true parallel work at the agent level. A developer can specify five different features they want built simultaneously, spin up five agents, and let them work independently. Each agent maintains its own execution context, its own view of the codebase (via worktrees), and its own state machine.

The application handles the complexity of coordinating these parallel streams of work. When agents are done, their changes must be merged back into the main codebase. Rather than manual conflict resolution, the system attempts to merge changes intelligently, escalating to the developer only when conflicts arise that require human judgment.

This parallel capability is one of the most significant productivity multipliers. If an agent can accomplish in 30 minutes what would take a developer 4-6 hours (an 8-12x speedup per task), and you can run five agents in parallel, you've multiplied development throughput by roughly 40-60x on paper, before accounting for the time spent reviewing and merging each agent's output.
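The back-of-the-envelope arithmetic in this paragraph can be written out explicitly, with one term the headline numbers leave out: human review. The helper name and its model are hypothetical; it assumes the agents run fully in parallel and that one developer then reviews each agent's result in turn, and the `review_hours` value used below is illustrative, not measured.

```python
def theoretical_speedup(human_hours: float, agent_hours: float,
                        agents: int, review_hours: float = 0.0) -> float:
    """Throughput relative to one developer doing the same features serially.

    Hypothetical model: all agents run fully in parallel, then one developer
    reviews each agent's result in turn (review time adds up sequentially).
    """
    serial_time = agents * human_hours
    parallel_time = agent_hours + agents * review_hours
    return serial_time / parallel_time


print(theoretical_speedup(4, 0.5, 5))                              # 40.0
print(theoretical_speedup(6, 0.5, 5))                              # 60.0
print(round(theoretical_speedup(4, 0.5, 5, review_hours=0.5), 2))  # 6.67
```

Under these assumptions, half an hour of review per result pulls the multiplier from 40x to under 7x, which suggests review throughput, rather than agent speed, can become the bottleneck.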

Extended Context and Long-Running Tasks

Traditional AI models have context window limitations: they can only "see" a certain amount of code and context at once. OpenAI's latest models support very large context windows (200K tokens and beyond in some variants), which lets agents keep large portions of a codebase, along with design documents and requirements, in view throughout task execution.

Long-running tasks (documented at up to 30 minutes but potentially extensible) mean that agents can work through complex, multi-step processes without requiring human intervention. An agent might:

- Analyze requirements documents

- Review existing code architecture

- Identify relevant design patterns

- Implement the feature

- Write comprehensive tests

- Generate documentation

- Run automated analysis tools

- Optimize performance

- Address security considerations

- Generate a summary for code review

All of this happens in a single continuous execution thread, with the agent carrying its accumulated context from step to step. This is fundamentally different from traditional development, where a developer might spend significant time context-switching between different tools and perspectives.
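The shape of such a run can be sketched as a loop over steps that all read from and write to one shared context, under a wall-clock budget. The `run_task` helper and the three step functions are hypothetical stand-ins for the steps listed above; the 30-minute default mirrors the documented limit mentioned earlier and is not taken from Codex's scheduler.

```python
import time
from typing import Callable

# Hypothetical sketch: one shared context flows through every step, and the
# run stops cleanly if it exceeds a wall-clock budget (30 minutes by default).
Step = Callable[[dict], None]


def run_task(steps: list[tuple[str, Step]], budget_s: float = 30 * 60,
             clock: Callable[[], float] = time.monotonic) -> dict:
    context: dict = {"completed": []}
    deadline = clock() + budget_s
    for name, step in steps:
        if clock() >= deadline:
            context["stopped_at"] = name  # record where the work paused
            break
        step(context)                     # each step sees all earlier results
        context["completed"].append(name)
    return context


def analyze(ctx: dict) -> None:
    ctx["requirements"] = ["login", "two_factor"]


def implement(ctx: dict) -> None:
    ctx["files"] = [f"{req}.py" for req in ctx["requirements"]]


def summarize(ctx: dict) -> None:
    ctx["summary"] = f"{len(ctx['files'])} files changed"


result = run_task([("analyze", analyze), ("implement", implement),
                   ("summarize", summarize)])
print(result["summary"])  # 2 files changed
```

Injecting `clock` keeps the budget logic testable without waiting 30 minutes.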

Practical Use Cases: Where Codex Delivers Maximum Value

Enterprise Feature Development

The most straightforward use case for the Codex application is accelerating enterprise feature development. A product manager defines a feature requirement, and rather than estimating 2 weeks of developer time, a team can:

- Write a detailed specification (500-2000 words)

- Attach relevant design mockups and requirements

- Invoke Codex with the specification

- Receive a complete, tested implementation within hours

- Have developers review and refine rather than build from scratch

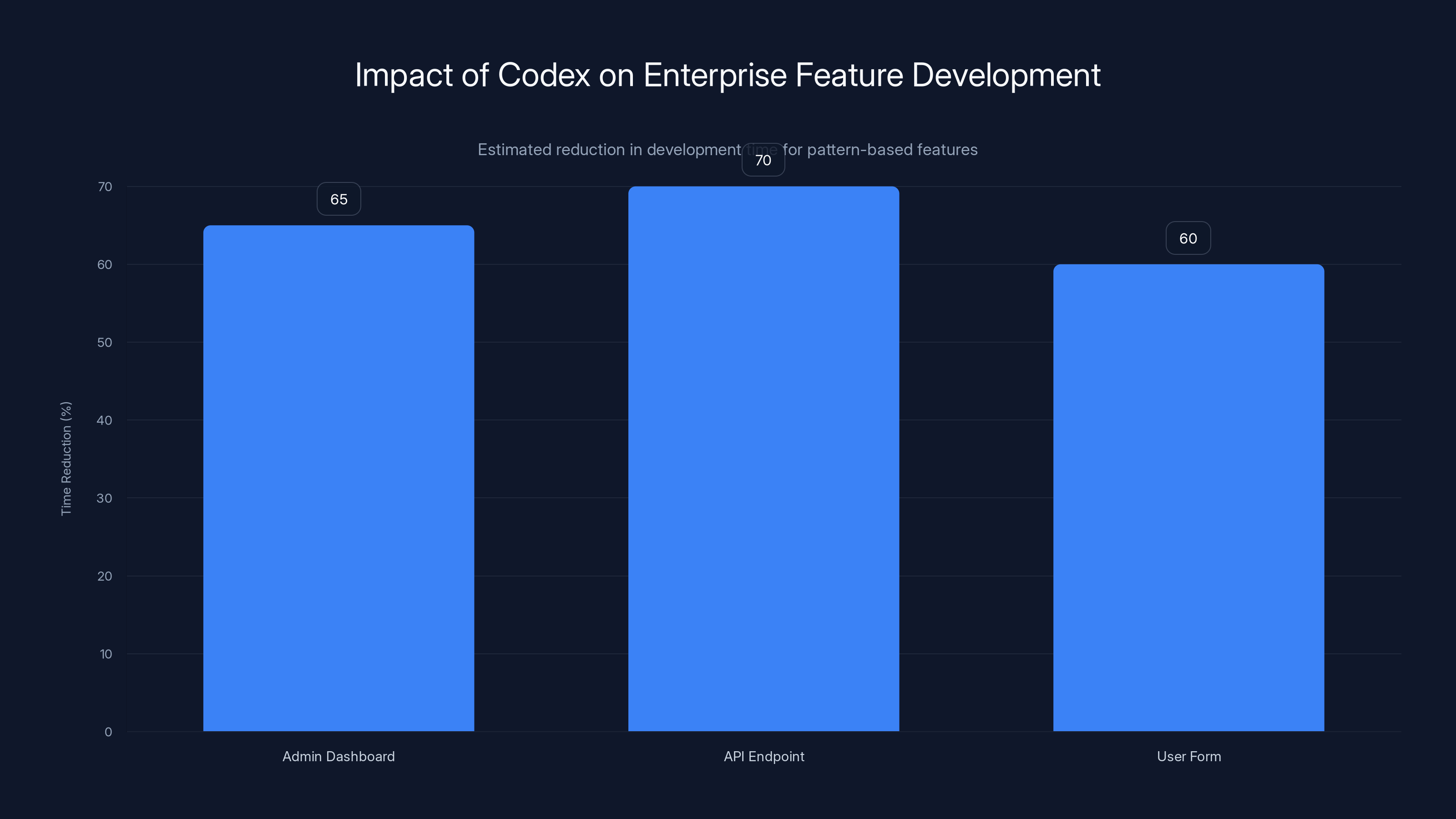

This approach works particularly well for features that fall into established patterns. Building a new admin dashboard? A new API endpoint? A new user-facing form with validation and error handling? These are high-value but relatively pattern-based work where AI agents can generate solid foundational code with minimal direction.

Enterprises report 60-70% reductions in feature development time for this category of work, with most of the remaining time spent on review, testing in production-like environments, and making business-specific adjustments.

Legacy Code Modernization and Migration

Another powerful use case is systematic modernization of legacy codebases. Many enterprises have code that's functionally correct but uses outdated patterns, frameworks, or languages. Rewriting this code manually is expensive and time-consuming.

Codex can be directed to systematically migrate code from one technology to another. For example:

- Migrate from jQuery to React or Vue

- Upgrade from Python 2 to Python 3

- Convert SQL queries to use a modern ORM

- Refactor monolithic code into microservices

- Modernize APIs from REST to GraphQL

The agent can work through the codebase systematically, maintaining backward compatibility and functional equivalence while updating the underlying technology. Developers review the changes and handle edge cases that require human judgment, but the bulk of mechanical work is automated.

Infrastructure as Code and DevOps Automation

Infrastructure management has become increasingly sophisticated, with infrastructure as code (IaC) frameworks like Terraform, CloudFormation, and Kubernetes manifests requiring careful attention to detail. Errors in infrastructure code can have significant consequences.

Codex is particularly effective at generating infrastructure code because it's highly structured and pattern-based. An agent can:

- Generate Terraform modules for new infrastructure

- Create Kubernetes manifests for containerized applications

- Write CloudFormation templates following organizational standards

- Implement CI/CD pipelines and automation

- Generate monitoring, alerting, and observability configurations

The combination of skills (integration with AWS, GCP, or Azure) and pattern-based knowledge makes infrastructure automation one of the highest-ROI use cases.

Test Suite Generation and Expansion

While test writing is often considered high-skill work, much of test development is actually mechanical—writing test cases that cover different input scenarios, error conditions, and edge cases. Codex can dramatically accelerate test development.

Given a function or module, an agent can generate:

- Unit tests covering normal cases, edge cases, and error conditions

- Integration tests that verify interaction between components

- Performance tests and benchmarks

- Security-focused tests (SQL injection, XSS, etc.)

- Property-based tests using frameworks like Hypothesis

Developers typically review these generated tests and add domain-specific test cases, but the mechanical portion is automated, increasing test coverage while reducing developer burden.
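As a concrete picture of the "normal, edge, error" split, here is the kind of unit test module an agent might produce for a small function. The `parse_port` function is a hypothetical example written for this sketch; the tests use only the standard-library `unittest` module.

```python
import unittest


def parse_port(value: str) -> int:
    """Parse a TCP port number from a string, rejecting out-of-range values."""
    port = int(value)  # raises ValueError for non-numeric input
    if not 0 < port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


class ParsePortTests(unittest.TestCase):
    def test_normal_case(self):
        self.assertEqual(parse_port("8080"), 8080)

    def test_edge_cases(self):
        # Boundaries of the valid range, plus surrounding whitespace.
        self.assertEqual(parse_port("1"), 1)
        self.assertEqual(parse_port("65535"), 65535)
        self.assertEqual(parse_port(" 443 "), 443)

    def test_error_conditions(self):
        for bad in ["0", "65536", "-1", "http", ""]:
            with self.subTest(value=bad):
                with self.assertRaises(ValueError):
                    parse_port(bad)
```

Saved to a file named like `test_ports.py`, it runs with `python -m unittest`. A reviewer's job then shifts to asking which domain-specific cases are still missing.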

Documentation Generation

Keeping documentation synchronized with code is a perpetual challenge in software development. Documentation tends to become outdated as code evolves, creating a maintenance burden.

Codex can be scheduled to run automations that keep documentation current:

- Generate API documentation from code

- Create architecture decision records (ADRs)

- Write getting-started guides for new developers

- Generate changelog entries from commit messages

- Create runbooks and operational procedures

- Write release notes and migration guides

The agent can even integrate design specifications (from Figma) with code documentation to create comprehensive guides that show both design intent and implementation.
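For the "changelog entries from commit messages" item, a deterministic pre-processing step often sits underneath the agent. The sketch below groups commit subjects written in the Conventional Commits style (`feat:`, `fix:`, `feat!:`) into Markdown sections; the section titles, the type mapping, and the `build_changelog` name are assumptions, and an agent would typically polish the grouped output into prose.

```python
import re
from collections import defaultdict

# Conventional Commits subject line: type(optional scope)(optional !): description
SUBJECT = re.compile(r"^(?P<type>\w+)(?:\([^)]*\))?(?P<breaking>!)?: (?P<desc>.+)$")
# Illustrative mapping of commit types to changelog sections.
SECTIONS = {"feat": "Features", "fix": "Bug Fixes", "perf": "Performance"}


def build_changelog(subjects: list[str]) -> str:
    """Group commit subjects into Markdown changelog sections."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for subject in subjects:
        match = SUBJECT.match(subject)
        if not match:
            continue  # free-form messages are left for the agent or a human
        if match["breaking"]:
            section = "Breaking Changes"
        else:
            section = SECTIONS.get(match["type"])
        if section:  # types such as chore or docs are omitted
            grouped[section].append(match["desc"])
    lines = []
    for title in ["Breaking Changes", *SECTIONS.values()]:
        if grouped[title]:
            lines.append(f"## {title}")
            lines.extend(f"- {desc}" for desc in grouped[title])
    return "\n".join(lines)


print(build_changelog([
    "feat(auth): add two-factor login",
    "fix: handle empty password",
    "chore: bump dependencies",
    "feat!: drop Python 3.8 support",
]))
```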

Database Operations and Data Migrations

Database management involves tedious, error-prone tasks that are perfect candidates for automation:

- Writing schema migrations in proper format

- Generating seed data for testing

- Creating data validation scripts

- Writing migration scripts for schema changes

- Generating database performance analysis and optimization recommendations

- Creating backup and recovery procedures

Since database operations are highly structured and pattern-based, Codex can achieve high accuracy while dramatically reducing manual effort.
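"Schema migrations in proper format" usually means an ordered, append-only list of migrations plus a recorded schema version, so each migration is applied exactly once. The sketch below uses Python's built-in `sqlite3` and SQLite's `PRAGMA user_version` to track the version; the migration list and table are hypothetical.

```python
import sqlite3

# Hypothetical migrations for illustration. The list is append-only:
# migration N (1-based) upgrades the schema from version N-1 to N.
MIGRATIONS = [
    "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)",
    "ALTER TABLE users ADD COLUMN created_at TEXT",
]


def migrate(conn: sqlite3.Connection) -> int:
    """Apply pending migrations, each in its own transaction; return the version."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, statement in enumerate(MIGRATIONS[current:], start=current + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(statement)
            # PRAGMA does not accept bound parameters; version is an int we control.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.execute("COMMIT")
        except sqlite3.Error:
            conn.execute("ROLLBACK")  # SQLite DDL is transactional, so this undoes it
            raise
    return conn.execute("PRAGMA user_version").fetchone()[0]


# isolation_level=None lets the code above manage transactions explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
print(migrate(conn))  # 2
print(migrate(conn))  # 2 (nothing pending, nothing re-applied)
```

Because the version bump commits together with the schema change, a failed migration leaves the database at the last good version.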

Code Review Assistance and Quality Analysis

Run as a scheduled automation, Codex can assist with code review processes:

- Analyzing pull requests for common issues

- Checking for compliance with coding standards

- Identifying performance issues or security vulnerabilities

- Suggesting refactorings and improvements

- Verifying test coverage

- Generating review summaries

This doesn't replace human code review but rather enhances it by pre-filtering mechanical issues, allowing human reviewers to focus on architectural and design considerations.
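Some of this pre-filtering does not need a model at all. The sketch below is a deterministic check, built on Python's standard `ast` module, that flags two common mechanical issues: bare `except:` clauses and leftover `print` calls. The rule set and the `find_issues` name are illustrative; in practice teams would more likely run an existing linter and let the agent summarize its findings.

```python
import ast


def find_issues(source: str) -> list[tuple[int, str]]:
    """Return sorted (line, message) pairs for simple mechanical problems."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "bare 'except:' hides unexpected errors"))
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
              and node.func.id == "print"):
            issues.append((node.lineno, "leftover print() call"))
    return sorted(issues)


snippet = """\
def load(path):
    try:
        return open(path).read()
    except:
        print("failed")
"""
for line, message in find_issues(snippet):
    print(f"line {line}: {message}")
```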

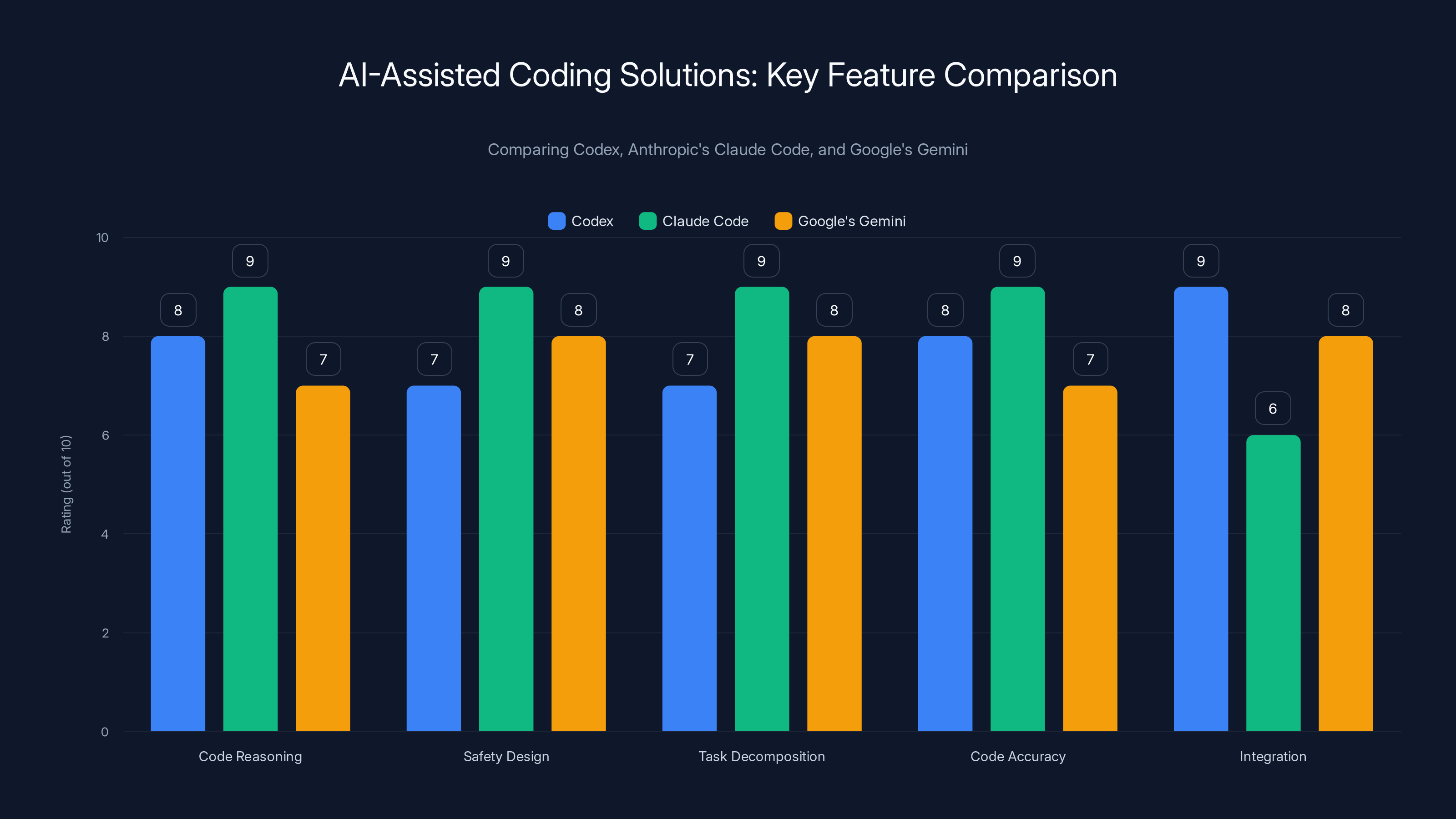

Codex excels in integration, while Claude Code leads in code reasoning and accuracy. Estimated data based on competitive analysis.

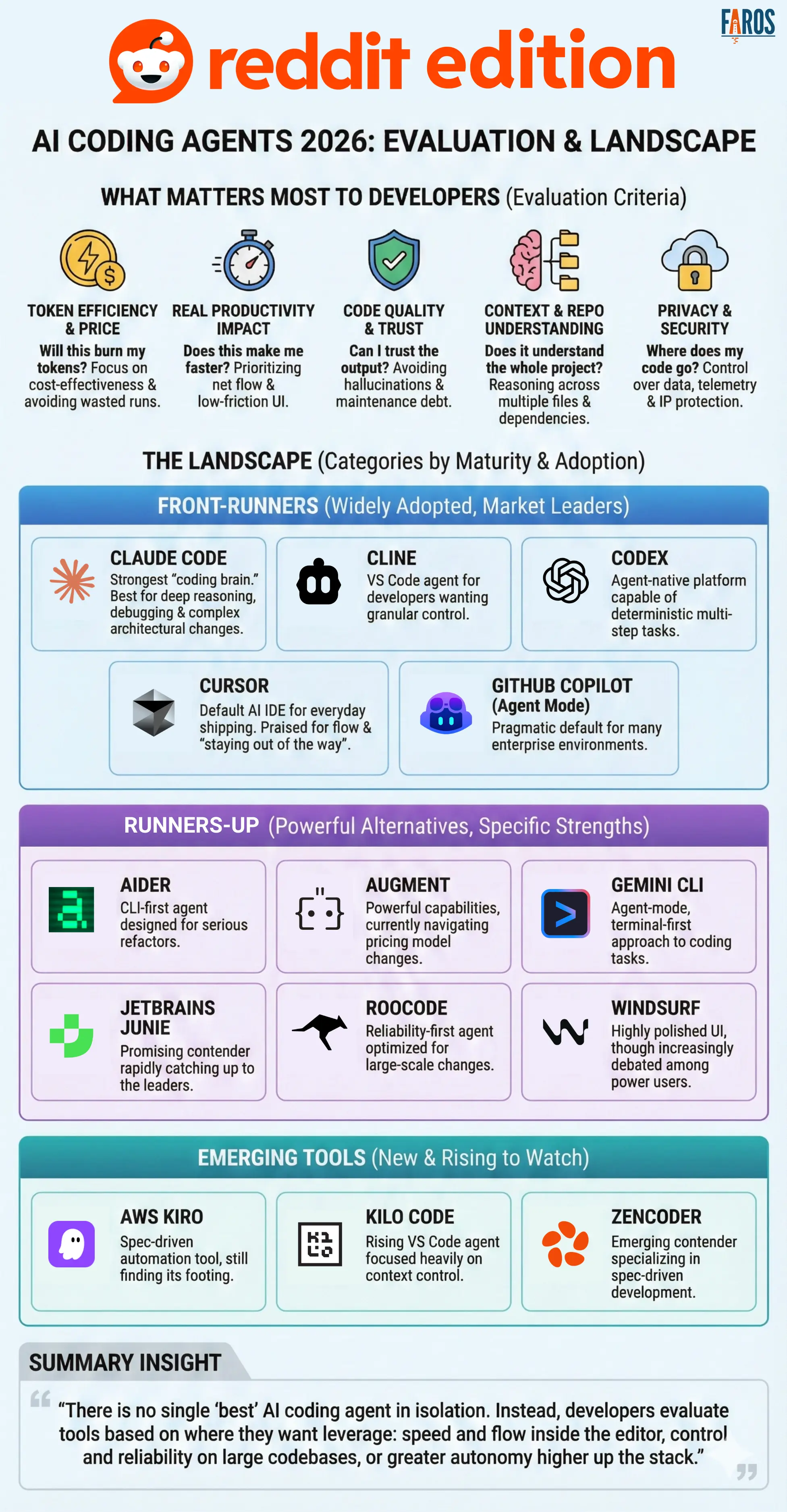

Competitive Landscape: How Codex Positions Against Rivals

Anthropic's Claude Code: The Strongest Competitor

Anthropic's Claude Code represents the most significant competitive challenge to OpenAI's Codex offering. In the Andreessen Horowitz survey of Global 2000 companies, Anthropic has emerged as the leader for software development and data analysis use cases, with consistent reports from enterprise CIOs of "rapid capability gains since the second half of 2024."

Claude Code's strengths include:

- Strong code reasoning: Claude models are known for exceptional code understanding and explanation capabilities

- Safety-first design: Built with constitutional AI principles that align with enterprise governance concerns

- Thoughtful task decomposition: Claude tends to break complex problems into well-reasoned substeps

- Code accuracy: Lower hallucination rates compared to some competitors

Anthropic's growth in enterprise adoption is noteworthy—25% of enterprises now use Anthropic in production, representing the largest share increase of any frontier AI lab since May 2025. For teams evaluating AI-assisted coding solutions, Claude Code is a credible alternative that shouldn't be dismissed.

The key differentiator for Codex is the orchestration layer. Anthropic's Claude Code is primarily a terminal-based agent: it plans and executes multi-step coding tasks, but scheduling, running many agents side by side, and worktree management are largely left to the developer or to surrounding tooling. Codex, by contrast, bundles orchestration, scheduling, parallel execution, and integration management into a complete application. Teams choosing Claude Code may need to assemble additional infrastructure for automations, parallel execution, and worktree management.

Google's Gemini Code Generation and Project IDX

Google brings substantial resources and integrated cloud infrastructure to the AI-assisted coding space. Their Gemini model supports large context windows (1 million tokens) and integrates deeply with Google Cloud services.

Google's approach differs from OpenAI's. Rather than a standalone desktop application, Google emphasizes integration with its cloud development environment (Project IDX, since folded into Firebase Studio) and its broader cloud infrastructure. This makes Gemini appealing for teams already invested in Google Cloud, but it requires adopting Google's development environment and cloud services.

Gemini's strengths:

- Vast context windows: 1 million token context allows understanding of massive codebases

- Cloud integration: Seamless integration with Google Cloud services, BigQuery, and Google-specific frameworks

- Multimodal capabilities: Image understanding for visual design specifications

The limitation is that Gemini coding is less mature than competing offerings, and adoption among enterprises has been slower, with no major announcements of large-scale enterprise deployments for software development.

GitHub Copilot and IDE-Based Autocomplete Tools

GitHub Copilot and similar IDE-based autocomplete tools (like JetBrains AI Assistant, Tabnine) remain widely used but represent a different category than Codex. These tools focus on real-time code completion within traditional development environments rather than autonomous agent orchestration.

The differences are fundamental:

| Aspect | Copilot/IDE Tools | Codex |
|---|---|---|
| Interaction Model | Real-time suggestion within IDE | Autonomous delegation with oversight |
| Task Scope | Lines to functions | Full features and workflows |
| Developer Role | Active writing with assistance | Specification and oversight |
| Execution Length | Immediate (seconds) | Extended (minutes to hours) |
| Parallelization | Sequential | Parallel multiple agents |
| Scheduling | Manual invocation | Automated scheduling |

GitHub Copilot remains valuable for developers who prefer to maintain traditional coding workflows, while Codex appeals to developers ready to shift toward agent management.

Alternative Orchestration Platforms for AI Development

Beyond direct AI model competitors, there are platforms that provide orchestration and automation capabilities for AI-assisted development:

Runable offers an interesting alternative for teams building automation-heavy workflows. At $9/month, Runable provides AI agents for content generation, workflow automation, and developer productivity tools with a focus on simplicity and cost-effectiveness. For teams looking to delegate routine tasks like documentation generation, release notes, or content creation, Runable's automated workflow capabilities could provide similar efficiency gains to Codex's automations feature at a fraction of the cost. However, Runable is not specifically optimized for code generation, making it more complementary than directly competitive.

LangChain and similar frameworks provide infrastructure for building custom AI agent systems. Rather than using a pre-built application like Codex, teams can use these frameworks to build specialized agent systems tailored to their exact needs. The tradeoff is significantly more engineering effort to build, deploy, and maintain the system.

Temporal and Apache Airflow are established workflow orchestration platforms that have been extended with AI capabilities. Teams already using these platforms can integrate AI models (including OpenAI's) into their existing workflows. The advantage is leveraging existing infrastructure; the disadvantage is that these platforms weren't designed specifically for AI orchestration and require substantial configuration.

Pricing and Commercial Terms: Understanding the Cost Structure

Codex Desktop App Pricing Model

OpenAI has structured Codex pricing to align with usage patterns rather than fixed seats. The pricing typically follows OpenAI's existing model structure:

- Base Application Fee: A monthly application license fee

- Token-Based Usage: Charges based on tokens consumed (input and output tokens)

- Optional Skills and Integrations: Premium skills and enterprise integrations may carry additional fees

For organizations already using OpenAI's API extensively, integration with existing OpenAI accounts is available, which can provide volume discounts and unified billing.

Comparing Total Cost of Ownership

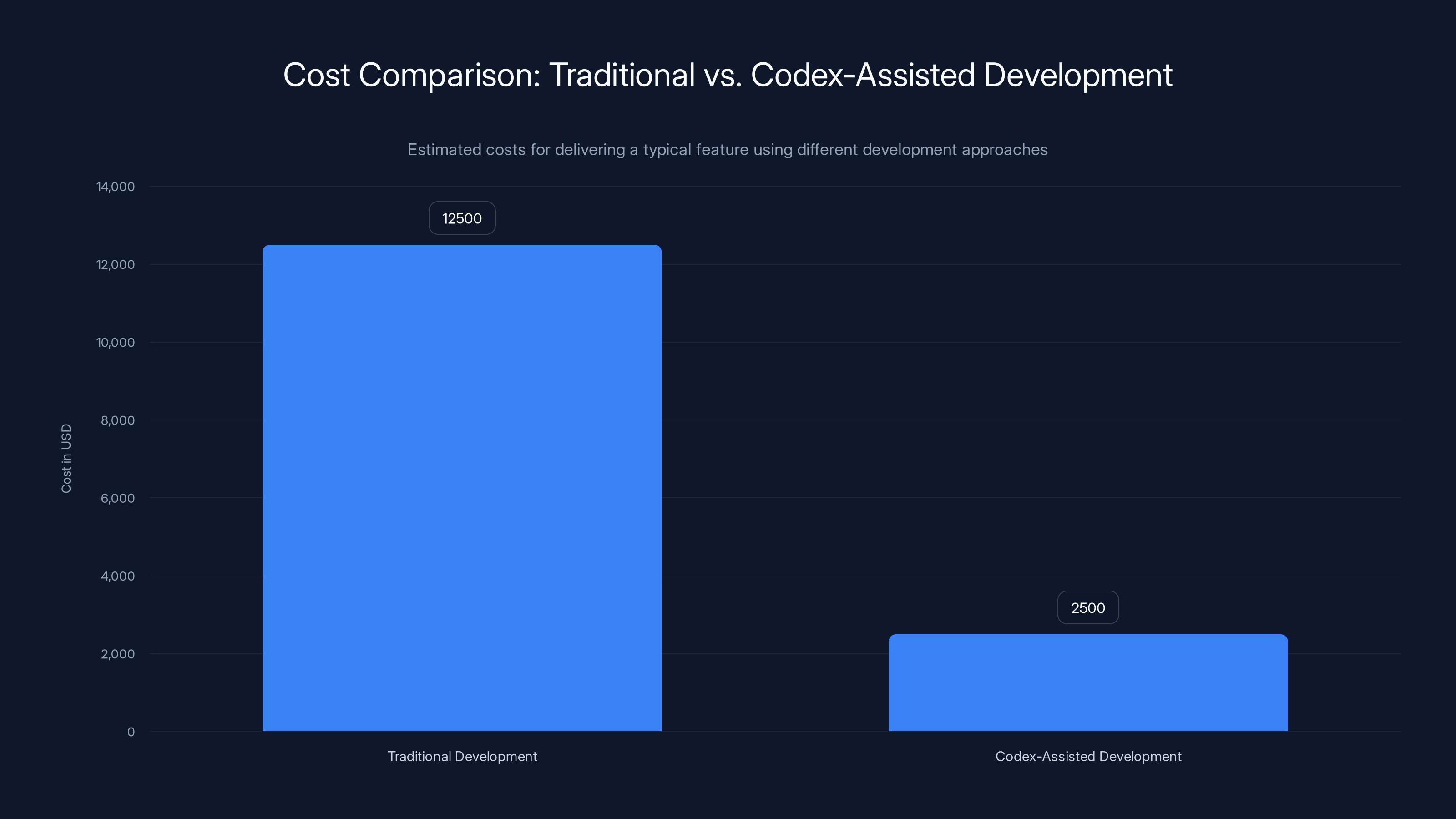

While absolute pricing requires current rate cards, the economics are compelling when calculated as cost per feature delivered. Consider a typical feature that might cost

- Traditional Development: 15,000

- Codex-Assisted Development: ~100/hour)

- Savings: 75-80% reduction in time and cost

These ROI calculations explain why enterprise adoption is accelerating despite the nascency of the technology.

Enterprise Pricing and Custom Arrangements

For large enterprises, OpenAI offers custom pricing arrangements that can include:

- Volume discounts on token consumption

- Priority support and dedicated technical resources

- Custom skills development aligned with enterprise needs

- SLA guarantees and uptime commitments

- Data residency options for organizations with geographic compliance requirements

Enterprises considering Codex deployment should factor in these enterprise options, as they can significantly improve the economic case.

Cost Optimization Strategies

Teams deploying Codex can optimize costs through:

- Thoughtful Task Specification: Well-written specifications that clearly define requirements reduce wasted model tokens

- Incremental Task Decomposition: Breaking large features into smaller tasks that can be completed in sequence often uses fewer tokens than attempting everything at once

- Skill Optimization: Custom skills reduce tokens required to communicate context to the agent

- Batch Automations: Scheduling automations during off-peak hours (if pricing includes time-based discounts) reduces costs

- Review Loop Optimization: Efficient review processes mean fewer iterations and less rework

Codex-Assisted Development can reduce costs by approximately 75-80% compared to traditional methods, highlighting significant savings in both time and money. (Estimated data)

Technical Architecture: Deep Dive into System Design

Worktrees: Managing Parallel Development Branches

The most technically sophisticated aspect of Codex is the worktrees system, which solves a fundamental coordination problem in parallel agent development. In plain Git, working on several feature branches at once requires careful manual coordination: developers switch between branches, merge them, and resolve conflicts wherever two branches change the same lines.

Codex's worktrees extend this concept to AI agents. When an agent is assigned work, it operates in its own working directory, a Git worktree checked out on a dedicated branch, rather than in a shared checkout. The agent can make commits, create branches, and manage its own version control state without disturbing other agents' in-progress work.

The technical challenge comes when merging worktrees back to the main codebase. If five agents modify overlapping areas of code, intelligent merging becomes necessary. The system uses several strategies:

- AST-Based Conflict Detection: Rather than line-by-line conflict detection, the system parses code into abstract syntax trees (ASTs) to understand semantic intent, allowing smarter merging

- Constraint-Based Merging: If two agents modified the same function but in non-conflicting ways (one added parameters, another added logging), these can often be merged programmatically

- Escalation to Human Review: When conflicts can't be resolved programmatically, they're escalated to the developer for manual resolution

- Replay Automation: Changes can be automatically replayed onto updated base code if the main branch has been modified since the agent started
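A toy version of the AST-based idea, restricted to Python and to top-level functions: compare each agent's version of a module against the common base, record which functions each side changed, and flag only functions changed by both. The helper names are hypothetical; this illustrates the concept and is not how Codex implements merging.

```python
import ast


def function_bodies(source: str) -> dict[str, str]:
    """Map each top-level function name to a dump of its AST.

    ast.dump omits line numbers by default, so formatting and comment
    changes do not count as modifications.
    """
    tree = ast.parse(source)
    return {node.name: ast.dump(node)
            for node in tree.body
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))}


def changed_functions(base: str, edited: str) -> set[str]:
    """Functions added, removed, or modified relative to the base version."""
    before, after = function_bodies(base), function_bodies(edited)
    return {name for name in before.keys() | after.keys()
            if before.get(name) != after.get(name)}


def conflicting_functions(base: str, ours: str, theirs: str) -> set[str]:
    """Functions touched by both sides; the rest can be merged mechanically."""
    return changed_functions(base, ours) & changed_functions(base, theirs)


base = "def a():\n    return 1\n\ndef b():\n    return 2\n"
ours = base.replace("return 1", "return 10")  # one agent edits a()
theirs = base.replace("def b():", "def b():  # tidy") + "\ndef c():\n    return 3\n"
print(changed_functions(base, theirs))            # {'c'}
print(conflicting_functions(base, ours, theirs))  # set()
```

Working at function granularity is both smarter and coarser than a line-based merge: reformatting is ignored, but two edits to different lines of the same function are still flagged for review.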

Agent State Management and Persistence

Long-running agents must maintain state across extended execution periods. The Codex system implements several mechanisms:

- Execution Context: The agent's working memory of the task, requirements, and progress

- Code State: The current state of the repository and files being modified

- Tool State: Integration state with external systems (Figma design specs, Linear issues, Git repositories)

- Memory: Structured summaries of completed work and lessons learned

This state must persist across potential system interruptions (network failures, application crashes). Codex implements write-ahead logging to ensure durability: state changes are written to persistent storage before being applied, so work that has already been logged survives a crash.
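The write-ahead idea itself is simple to illustrate: append each state change to a log and flush it to disk before applying it in memory, then rebuild state after a restart by replaying the log. The `AgentState` class, the JSON-lines format, and the file name are assumptions for this sketch, not Codex's storage format.

```python
import json
import os
import tempfile
from pathlib import Path

# Hypothetical sketch: a JSON-lines write-ahead log, not Codex's storage format.


class AgentState:
    """Key-value agent state whose every change is logged before it is applied."""

    def __init__(self, log_path: Path):
        self.log_path = log_path
        self.data: dict = {}
        if log_path.exists():  # recovery: replay logged changes in order
            with log_path.open() as log:
                for line in log:
                    try:
                        record = json.loads(line)
                    except json.JSONDecodeError:
                        break  # stop at a record torn by a crash mid-write
                    self.data[record["key"]] = record["value"]

    def set(self, key: str, value) -> None:
        with self.log_path.open("a") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())  # make the record durable first...
        self.data[key] = value      # ...then apply it in memory


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "agent.wal"
    state = AgentState(path)
    state.set("step", "implement")
    state.set("files", ["auth.py"])
    print(AgentState(path).data)  # {'step': 'implement', 'files': ['auth.py']}
```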

Skills Framework Architecture

The skills system is built on a modular architecture where skills are composable, versioned, and chainable. Each skill has:

- Definition: What the skill does and what inputs it requires

- Implementation: The code or instructions for executing the skill

- Training Data: Examples of the skill being used correctly

- Version Control: Skills can evolve over time with backward compatibility

- Activation Logic: When the agent should consider invoking this skill

The agent uses its reasoning capabilities to determine which skills to invoke. Rather than following explicit if-then logic, the model judges from each skill's definition and activation logic when that skill is applicable and beneficial.
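"Versioned with backward compatibility" can be sketched with semantic-versioning rules: a task pins a major version, and the registry returns the newest release in that major line, so compatible improvements flow in while breaking changes do not. The `SkillRegistry` class and the version scheme are assumptions for illustration; the source does not specify how Codex versions skills.

```python
from dataclasses import dataclass, field


# Hypothetical registry; Codex's real versioning scheme is not documented here.
@dataclass
class SkillRegistry:
    # skill name -> {(major, minor, patch): instructions}
    versions: dict[str, dict[tuple[int, int, int], str]] = field(default_factory=dict)

    def publish(self, name: str, version: str, instructions: str) -> None:
        major, minor, patch = (int(part) for part in version.split("."))
        self.versions.setdefault(name, {})[(major, minor, patch)] = instructions

    def resolve(self, name: str, major: int) -> tuple[str, str]:
        """Return (version, instructions) for the newest release in `major`."""
        candidates = [v for v in self.versions.get(name, {}) if v[0] == major]
        if not candidates:
            raise LookupError(f"no {name} release with major version {major}")
        best = max(candidates)  # tuples compare component by component
        return ".".join(map(str, best)), self.versions[name][best]


registry = SkillRegistry()
registry.publish("db-migrations", "1.0.0", "Use numbered SQL files.")
registry.publish("db-migrations", "1.2.0", "Use numbered SQL files; add a down migration.")
registry.publish("db-migrations", "2.0.0", "Use the new migration DSL.")
print(registry.resolve("db-migrations", major=1)[0])  # 1.2.0
```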

Integration Architecture

Codex's integration framework allows connecting to external systems through APIs and webhooks. The architecture includes:

- Credential Management: Secure storage and injection of API keys and credentials

- API Abstraction: Simplified interfaces to common external services (GitHub, Figma, Linear)

- Webhook Listeners: Triggering automations in response to external events

- Rate Limiting and Retry Logic: Graceful handling of API rate limits and transient failures

- Audit Logging: Complete record of all external system interactions for compliance
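"Rate limiting and retry logic" usually means retrying transient failures with capped exponential backoff and random jitter. The sketch below wraps any callable; `TransientError`, the delay defaults, and the injectable `sleep` parameter are assumptions for illustration, not part of any Codex API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as HTTP 429 or 503."""


def call_with_retry(fn: Callable[[], T], attempts: int = 5,
                    base_delay: float = 0.5, max_delay: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying TransientError with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the failure
            # "Full jitter": sleep a random time up to the capped backoff.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))


attempts_made = []


def flaky_api_call() -> str:
    attempts_made.append(1)
    if len(attempts_made) < 3:
        raise TransientError("429 Too Many Requests")
    return "ok"


delays: list[float] = []
print(call_with_retry(flaky_api_call, sleep=delays.append))  # ok
print(len(attempts_made), len(delays))                       # 3 2
```

Randomizing the wait spreads retries from many agents over time, so they do not all hit a rate-limited API at the same instant.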

Implementation and Adoption Best Practices

Getting Started: Setting Up Your First Agents

Teams new to Codex should follow a phased approach:

Phase 1: Evaluation (Weeks 1-2)

- Set up Codex with a test project

- Experiment with simple tasks (documentation generation, test writing)

- Evaluate code quality and accuracy

- Assess the team's comfort level with AI-generated code

Phase 2: Skill Development (Weeks 3-4)

- Document your team's coding standards and patterns

- Create custom skills that encode these patterns

- Test skills with example tasks

- Refine skills based on results

Phase 3: Automation Pilots (Weeks 5-6)

- Identify 2-3 categories of routine work

- Set up automations for these categories

- Establish review processes for automation output

- Measure baseline metrics (time spent, code quality)

Phase 4: Production Rollout (Week 7+)

- Scale automations across the organization

- Onboard additional teams

- Expand to new use cases based on learnings

- Optimize costs and performance

Code Review Processes for AI-Generated Code

Reviewing AI-generated code requires different processes than reviewing human-written code. Key practices:

- Functional Correctness: Verify that code does what it's intended to do, including edge cases

- Performance: Check for performance issues (inefficient algorithms, unnecessary allocations)

- Security: Review for security vulnerabilities and adherence to security standards

- Style Consistency: Verify conformance to team style guides

- Architectural Alignment: Ensure choices align with overall system architecture

- Test Coverage: Verify that generated tests cover critical paths

Teams should invest in automated tooling for the mechanical aspects (style, security, performance), reserving human review for architectural and design considerations.
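One way to automate the mechanical checks is a small gate that runs each tool over the agent's branch and only hands the change to a human once every check passes. This is a sketch: the command list is an assumption to be replaced with your own formatter, linter, security scanner, and test runner, and `run_gate` is a hypothetical helper, not part of Codex.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    output: str


def run_gate(checks: dict[str, list[str]], cwd: str = ".") -> list[CheckResult]:
    """Run each check command; exit code 0 means the check passed."""
    results = []
    for name, cmd in checks.items():
        try:
            proc = subprocess.run(cmd, cwd=cwd, capture_output=True, text=True)
        except FileNotFoundError as exc:
            # A missing tool counts as a failure rather than a silent pass.
            results.append(CheckResult(name, False, str(exc)))
            continue
        results.append(CheckResult(name, proc.returncode == 0,
                                   proc.stdout + proc.stderr))
    return results


def ready_for_human_review(results: list[CheckResult]) -> bool:
    """Only hand agent output to a reviewer once every mechanical check passes."""
    return all(r.passed for r in results)


# Example configuration: these tools and flags are assumptions; use your own.
CHECKS = {
    "style": ["ruff", "check", "."],
    "security": ["semgrep", "scan", "--config", "auto", "--error"],
    "tests": ["pytest", "-q"],
}
```

Running `run_gate(CHECKS, cwd=...)` against the agent's working copy before requesting review keeps reviewers focused on design questions rather than formatting.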

Managing Agent Failures and Edge Cases

AI agents aren't perfect and will fail in various ways:

- Hallucinated Functions: Agents might call functions that don't exist

- Infinite Loops: Poor handling of termination conditions

- Performance Issues: Inefficient algorithms or memory usage

- Security Issues: Not properly validating inputs or managing credentials

- Incomplete Implementation: Unfinished code or missing error handling

Teams should:

- Implement Safeguards: Automated testing, linting, and security scanning catch many issues

- Set Boundaries: Clear task specifications limit the scope of agent work

- Gradual Adoption: Start with lower-risk tasks before delegating critical components

- Post-Mortem Analysis: When agent mistakes occur, analyze root causes and adjust prompts or skills

- Escalation Procedures: Clear procedures for when agent output requires human rework

Team Dynamics and Skills Evolution

Adopting Codex changes team dynamics significantly. Developers shift from "builders" to "architects and reviewers." This requires:

- Skill Development: Training on effective agent specification and oversight

- Tool Proficiency: Learning Codex's specific features and capabilities

- Quality Mindset: Focus on code review and architectural thinking rather than mechanical coding

- Process Adaptation: Updating development processes to accommodate agent-driven workflows

Organizations should invest in training and change management to support this transition.

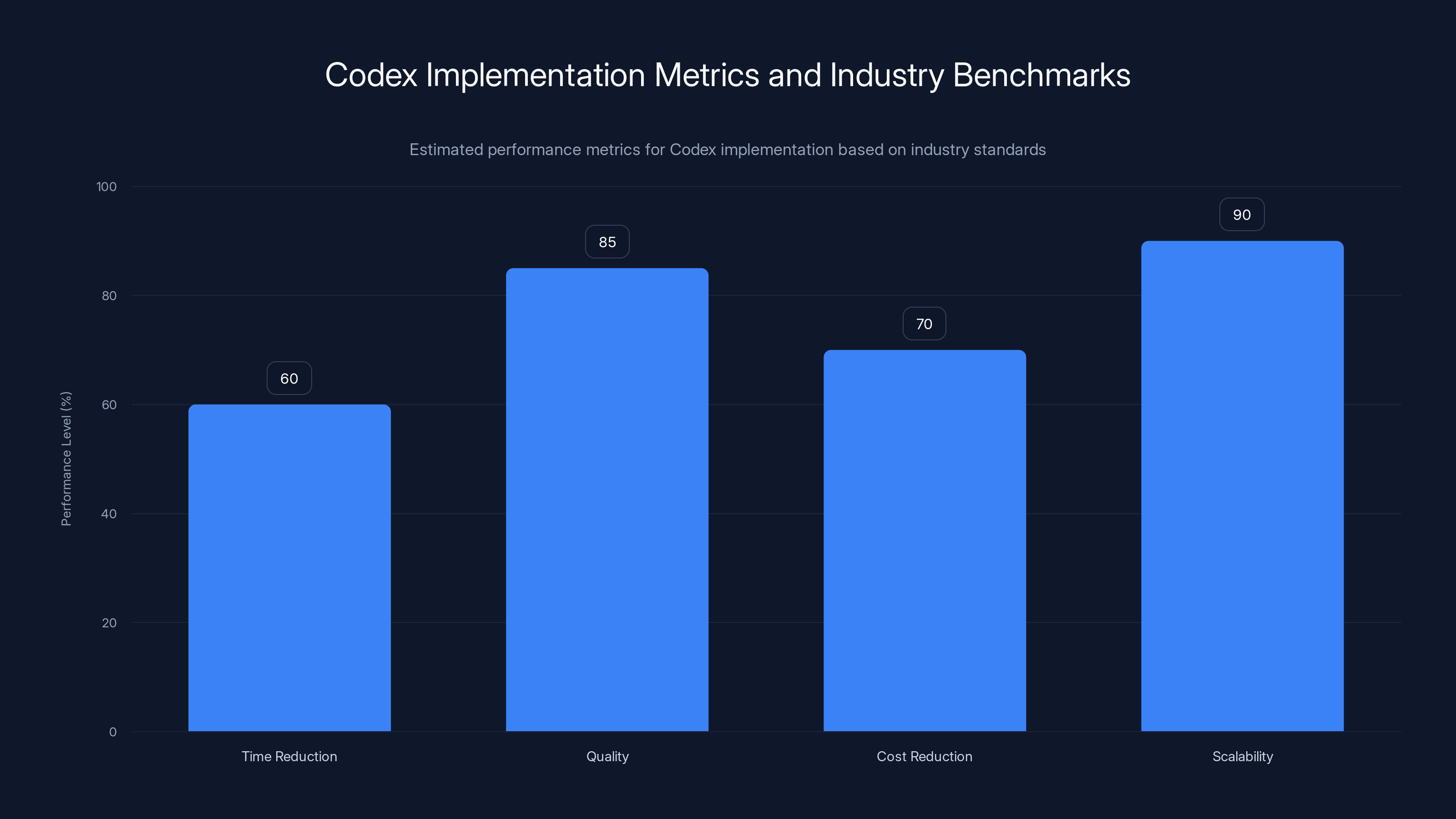

Estimated data shows Codex can reduce development time by 50-70%, maintain quality at 85%, reduce costs by 60-80%, and enhance scalability by 90%.

Performance Metrics and ROI Measurement

Key Metrics for Codex Implementation

Teams should track metrics that reflect the value Codex provides:

Development Velocity Metrics:

- Time to Feature Completion: Average time from specification to ready-for-review

- Code Generation Speed: Lines of code generated per hour of agent runtime

- Feature Throughput: Number of features completed per sprint or period

- Rework Rate: Percentage of agent-generated code requiring revision

Quality Metrics:

- Bug Escape Rate: Bugs found in production from agent-generated code

- Code Review Cycle Time: Time between code submission and approval

- Test Coverage: Percentage of code covered by tests (agent-generated and human-written)

- Security Issues: Vulnerabilities identified in agent-generated code

Cost Metrics:

- Cost per Feature: Total cost (tokens + human review time) divided by features

- ROI: Savings compared to human-only development approach

- Token Efficiency: Average tokens consumed per completed task

- Review Efficiency: Time spent reviewing agent work compared to total development time

Team Metrics:

- Developer Satisfaction: Qualitative feedback on working with agents

- Skill Utilization: Percentage of time developers spend on high-value activities

- Capacity Expansion: Effective increase in team capacity
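Several of these metrics are simple ratios. The sketch below computes cost per feature, rework rate, token efficiency, and review share from a list of task records; the `TaskRecord` fields, the per-token price, and the hourly review rate are hypothetical inputs you would replace with your own billing and time-tracking data.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    tokens: int           # tokens the agent consumed on this task
    review_hours: float   # human time spent reviewing the agent's output
    dev_hours: float      # total development time attributed to the task
    needed_rework: bool   # did the output require revision?
    completed: bool       # did it ship as a finished feature?


def summarize(tasks: list[TaskRecord], usd_per_million_tokens: float,
              review_usd_per_hour: float) -> dict[str, float]:
    done = [t for t in tasks if t.completed]
    if not done:
        raise ValueError("no completed tasks to measure")
    total_tokens = sum(t.tokens for t in tasks)
    total_review = sum(t.review_hours for t in tasks)
    token_cost = total_tokens * usd_per_million_tokens / 1_000_000
    review_cost = total_review * review_usd_per_hour
    return {
        # Cost per feature: (token spend + review spend) / features delivered.
        "cost_per_feature": (token_cost + review_cost) / len(done),
        # Rework rate: share of tasks whose output needed revision.
        "rework_rate": sum(t.needed_rework for t in tasks) / len(tasks),
        # Token efficiency: includes tokens burned on tasks that never shipped.
        "tokens_per_completed_task": total_tokens / len(done),
        # Review efficiency: review time as a share of total development time.
        "review_share": total_review / sum(t.dev_hours for t in tasks),
    }
```

For example, two shipped tasks and one abandoned task consuming 4 million tokens in total at a hypothetical $10 per million tokens, plus 4 review hours at $100/hour, give (40 + 400) / 2 = $220 per feature.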

Benchmarking Against Industry Standards

While absolute benchmarks vary by company and task type, early adopters report the following general expectations:

- Time Reduction: 50-70% reduction in development time for agent-suitable tasks

- Quality: Comparable or slightly lower quality than experienced human developers (mitigated through review)

- Cost: 60-80% reduction in labor costs for agent-suitable work

- Scalability: Ability to parallelize work previously constrained by team capacity

These benchmarks should be validated in your specific context through pilot projects.

Security and Governance Considerations

Managing Code Security with AI Agents

Security becomes more complex when delegating code generation to AI agents. Key concerns:

- Input Validation Risks: Agents might not properly validate external inputs, creating injection vulnerabilities

- Dependency Management: Agents might introduce vulnerable dependencies without realizing it

- Credential Management: API keys and secrets must be kept out of agent prompts and handled through secure storage

- Data Privacy: Code that works with sensitive data must maintain appropriate access controls

Mitigation strategies:

- Automated Security Scanning: Run tools like Snyk, OWASP Dependency Check, or Semgrep on agent-generated code

- Security Skills: Create custom skills that teach agents about your organization's security standards

- Credential Injection: Use secure credential management rather than embedding secrets in prompts

- Security Review Checklist: Include security questions in code review processes

- Regular Updates: Keep security skills and agent instructions current with evolving security best practices
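The credential-injection idea can be sketched as follows: the prompt only ever contains a placeholder, the real secret is resolved from the environment at execution time, and anything logged or sent back to the model is redacted. The placeholder syntax `{{secret:NAME}}` and both helper functions are hypothetical; a production setup would typically resolve values from a dedicated secrets manager rather than environment variables.

```python
from __future__ import annotations

import os
import re

# Hypothetical placeholder format that agents write instead of real secrets.
PLACEHOLDER = re.compile(r"\{\{secret:([A-Z0-9_]+)\}\}")


def inject_secrets(command: str, env: dict[str, str] | None = None) -> str:
    """Resolve placeholders just before execution; the prompt never sees values."""
    source = os.environ if env is None else env

    def resolve(match: re.Match) -> str:
        name = match.group(1)
        if name not in source:
            raise KeyError(f"secret {name} is not configured")
        return source[name]

    return PLACEHOLDER.sub(resolve, command)


def redact(text: str, secret_values: list[str]) -> str:
    """Mask secret values before text is logged or returned to the agent."""
    # Replace longer values first so a secret containing another is fully masked.
    for value in sorted(secret_values, key=len, reverse=True):
        if value:
            text = text.replace(value, "[REDACTED]")
    return text
```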

Compliance and Audit Requirements

Organizations with compliance requirements (SOC 2, ISO 27001, HIPAA, GDPR) must ensure AI-assisted code generation doesn't create compliance gaps:

- Audit Trails: Complete logging of what agents did, what code they generated, and who reviewed it

- Change Management: AI-generated changes must follow change control procedures

- Data Residency: Ensure code generation happens within appropriate geographic boundaries

- Retention Policies: Long-term retention of agent outputs and decisions

- Access Controls: Ensure agents can only access systems they're authorized to work with
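For the audit-trail requirement, one common technique (not something the source attributes to Codex) is a hash-chained, append-only log: each entry stores the hash of the previous one, so editing or deleting any past record breaks verification. A minimal sketch with hypothetical field names:

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], agent: str, action: str,
                 reviewer: str | None) -> dict:
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,        # e.g. "generated tests for checkout/"
        "reviewer": reviewer,    # who approved the change, if anyone yet
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """True only if no entry was altered, removed, or reordered."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```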

Ethical Considerations

Beyond technical security, organizations should consider ethical aspects:

- IP and Licensing: Understanding the license implications of AI-generated code, which may resemble code in the model's training data

- Attribution: Documenting that code was AI-generated versus human-written

- Bias: Understanding potential biases in AI-generated code (performance optimization that disadvantages certain scenarios, etc.)

- Team Impact: Transparent communication about how AI tools affect developer roles

Enterprises report 60-70% reductions in feature development time for pattern-based work using Codex. Estimated data.

Comparison with Alternative Approaches and Strategies

Traditional Outsourcing vs. AI Agent Delegation

Organizations have traditionally handled excess development demand through outsourcing or hiring contractors. How does Codex compare?

| Factor | Outsourcing | Contractors | Codex Agents |
|---|---|---|---|
| Cost Per Feature | High ($5-15K) | High ($10-20K) | Low ($500-2K) |
| Latency | 2-4 weeks | 1-2 weeks | Hours to days |
| Knowledge Retention | External | Variable | Internal |
| Quality Consistency | Variable | Variable | Consistent |
| Security Risk | High | Medium | Low |
| Scalability | Limited | Limited | Unlimited |
| IP Ownership | Contracted | Full | Full |

Codex offers significant advantages for routine features; the primary trade-offs are that output quality still has to be verified and the team needs internal review capacity to do it.

Hybrid Approaches: Combining Human and AI Development

Most successful implementations use hybrid approaches:

- AI Scaffolding: Agents generate initial implementations; developers refine

- Division of Labor: Agents handle routine tasks; humans handle complex design decisions

- Code Review Partners: Agents suggest improvements to human code; humans review agent suggestions

- Specialized Tasks: Agents excel at specific categories (testing, documentation, infrastructure); humans focus on core feature logic

Combining human and AI development this way captures the benefits of each while mitigating their weaknesses.

Runable as a Complementary Solution

For teams looking for broader automation capabilities beyond code generation, Runable's AI agents for workflow automation and content generation provide complementary capabilities at $9/month. While Runable isn't specifically optimized for code generation, its automation framework is well-suited for:

- Generating technical documentation and runbooks

- Creating release notes and changelog content

- Automating report generation and analysis

- Managing routine notification and alerting workflows

- Content generation for developer blogs or documentation sites

Teams might use Codex for code generation and testing, while using Runable for documentation automation and broader workflow orchestration, creating a comprehensive automation strategy across development and non-development work.

Building vs. Buying: Custom Agent Systems

Some organizations consider building custom agent systems with frameworks like LangChain rather than adopting Codex. The trade-offs:

Buying (Codex):

- Fast time to productivity (typically weeks)

- Managed infrastructure and updates

- Pre-built skills and integrations

- Limited customization

- Predictable costs

Building (Custom Agents):

- Complete customization

- Aligned with specific workflows

- Ongoing maintenance burden

- Requires specialized expertise

- Potentially lower long-term costs at scale

Most organizations should start with Codex and only build custom systems if standard offerings prove insufficient after pilot projects.

The Future of AI-Assisted Development

Emerging Capabilities and Roadmap

The trajectory of AI-assisted development points toward increasingly sophisticated agent capabilities:

Near Term (2025-2026):

- Multi-agent coordination across larger projects

- Deeper integration with version control and CI/CD

- Improved handling of legacy and unusual codebases

- Better security and compliance features

Medium Term (2026-2028):

- Agents that can design architectures, not just implement them

- Full end-to-end product development (design to deployment)

- Specialized agent types for different domains (mobile, web, systems programming)

- Improved understanding of performance and optimization

Long Term (2028+):

- Agents that can make architectural decisions without human input

- Fully autonomous system design and implementation

- Integration with product management and business planning

- Self-improving agents that learn from their successes and failures

Impact on Software Development Practices

Wider adoption of AI agents will reshape software development practices:

- Skill Requirements: Emphasis on specification, architecture, and review rather than mechanical coding

- Team Structure: Fewer junior developers; more senior architects and reviewers

- Development Speed: Features that took weeks will take days; focus shifts to ideation and refinement

- Code Quality: Higher baseline quality through systematic automated review

- Scaling: Organizations can scale development without proportional team growth

- Cost Structure: Fixed costs (infrastructure, skilled reviewers) vs. variable costs (AI tokens)

Potential Risks and Mitigations

Risk: Over-reliance on AI, Loss of Human Skills

- Mitigation: Maintain opportunities for human developers to work on complex problems; rotate junior developers through challenging projects

Risk: Security and Compliance Issues

- Mitigation: Invest in security scanning and governance; maintain human review of sensitive systems

Risk: Economic Disruption of Software Development Jobs

- Mitigation: Proactive reskilling and transition programs; focus on high-value work that AI can't do

Risk: Concentration of AI Development

- Mitigation: Open-source tools and frameworks; encourage competition and diversity in agent platforms

Real-World Implementation Case Studies

Case Study 1: E-Commerce Platform Feature Acceleration

A mid-size e-commerce platform needed to implement checkout flow improvements ahead of the holiday season. Traditional timeline: 6 weeks. With Codex:

- Week 1: Specification and design work

- 2 days: Codex implementation of feature

- 3 days: Comprehensive review, testing, refinement

- 2 days: Production deployment and monitoring

Result: 75% time reduction, cost savings of $30,000 in developer time, code quality comparable to human-written code after review.

Case Study 2: Microservices Rewrite

A startup with a monolithic codebase needed to migrate to a microservices architecture. Original estimate: 6 months. With Codex agents working in parallel:

- 10 services broken out in parallel

- 3 weeks initial implementation

- 4 weeks integration testing and refinement

- 1 week deployment and stabilization

Result: 80% time reduction, cost savings of $150,000, improved architecture due to systematic approach.

Case Study 3: Compliance and Security Hardening

A financial services company needed to audit and harden its codebase for regulatory compliance. Traditional approach: a 3-month security consulting engagement. With Codex automations:

- Daily scanning for compliance violations

- Weekly automated remediation for common issues

- Monthly security audits

Result: Continuous compliance checking, 90% reduction in manual security review work, better long-term compliance posture.

Troubleshooting Common Issues and Solutions

Problem: Generated Code Doesn't Match Team Standards

Solutions:

- Create custom skills that encode team standards

- Include detailed style guides in task specifications

- Implement automated formatting and linting as a post-generation step

- Provide examples of desired code style to the agent

Problem: Agents Are Failing on Domain-Specific Work

Solutions:

- Create specialized skills for domain-specific patterns

- Provide comprehensive documentation of domain concepts

- Break domain-specific tasks into smaller subtasks

- Use examples in skill definitions

Problem: High Token Costs for Complex Tasks

Solutions:

- Break large tasks into smaller, focused tasks

- Improve task specification to reduce agent exploration

- Use custom skills to reduce tokens needed to communicate context

- Schedule complex tasks during off-peak hours if pricing varies by time of day

Problem: Integration with Legacy Systems Failing

Solutions:

- Document legacy system APIs and quirks thoroughly

- Create custom skills for legacy system interactions

- Provide working example code the agent can follow

- Consider adapter layers that provide standard interfaces

Problem: Inconsistent Results Across Multiple Agents

Solutions:

- Ensure all agents have access to the same context and specifications

- Use precise, unambiguous task specifications rather than vague language

- Standardize the starting point each agent receives (repository state, tooling, instructions) before work begins

- Review agent outputs for consistency; adjust specifications if needed
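One way to make specifications precise and shared is to treat them as structured data that every agent receives verbatim, rather than free text written separately for each agent. The `TaskSpec` fields, the list of vague terms, and the rendering format below are assumptions for illustration; the point is that validation rejects underspecified tasks before any tokens are spent, and the same spec always renders to the same prompt.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Words that usually signal an underspecified task (an assumed, editable list).
VAGUE_TERMS = {"etc", "somehow", "maybe", "whatever", "tbd"}


@dataclass(frozen=True)
class TaskSpec:
    goal: str
    acceptance_criteria: tuple[str, ...]
    files_in_scope: tuple[str, ...]
    constraints: tuple[str, ...] = ()
    shared_context: tuple[str, ...] = ()  # same style guides/docs for every agent

    def problems(self) -> list[str]:
        """Reasons to reject the spec before any agent spends tokens on it."""
        issues = []
        if not self.acceptance_criteria:
            issues.append("no acceptance criteria")
        if not self.files_in_scope:
            issues.append("no files in scope")
        text = " ".join((self.goal, *self.acceptance_criteria)).lower()
        for term in sorted(VAGUE_TERMS & set(re.findall(r"[a-z]+", text))):
            issues.append(f"vague term: {term!r}")
        return issues

    def render(self) -> str:
        """Deterministic prompt text: the same spec always yields the same prompt."""
        def section(title: str, items) -> str:
            return title + ":\n" + "\n".join(f"- {item}" for item in items)

        parts = [f"Goal: {self.goal}",
                 section("Acceptance criteria", self.acceptance_criteria),
                 section("Files in scope", sorted(self.files_in_scope))]
        if self.constraints:
            parts.append(section("Constraints", self.constraints))
        if self.shared_context:
            parts.append(section("Shared context", self.shared_context))
        return "\n\n".join(parts)
```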

Making the Decision: Is Codex Right for Your Team?

Decision Framework

Teams evaluating Codex should consider:

Factors Favoring Adoption:

- Significant development backlog

- High proportion of routine, specification-driven work

- Strong code review culture

- Experienced developer team (to handle review and oversight)

- Clear task specifications possible

- Limited budget for expanding team

- Comfortable with emerging technology risks

Factors Favoring Alternative Approaches:

- Very small team (limited review capacity)

- Primarily novel/research code

- Strict security/compliance requirements

- Mission-critical systems with high reliability requirements

- Strong team preference for traditional development

- Ample budget for hiring

Pilot Project Selection

Teams new to AI-assisted development should select pilot projects that:

- Have Clear Success Criteria: Measurable improvements in speed, cost, or quality

- Are Non-Critical: Failures won't impair core business

- Have Good Test Coverage: Easier to verify correctness

- Are Well-Specified: Clear requirements and examples

- Represent Common Patterns: Agents are more likely to have seen these patterns in their training data

- Offer Quick Feedback: Completion in days, not weeks

Examples of good pilot projects:

- New admin dashboard

- API endpoint for new data source

- Test suite expansion

- Documentation generation system

- Infrastructure as code for new service

Evaluation Checklist

Before committing to Codex, evaluate:

- Team understands capabilities and limitations

- Code review processes are established and tested

- Security and compliance requirements are understood

- Success metrics are defined

- Budget allocated for tokens and potential rework

- Training plan for team

- Risk mitigation strategies identified

- Integration with existing tools planned

- Governance and approval processes designed

- Change management plan developed

Conclusion: The Evolution of Software Development

OpenAI's Codex desktop application represents a pivotal moment in the evolution of software development. We're transitioning from an era in which AI tools augment human developers in real time to one in which developers orchestrate autonomous AI agents to accomplish substantial portions of development work.

This shift is more than an incremental improvement; it's a fundamental reorganization of how software gets built. The economics are compelling: features that might cost $15,000 in developer time can potentially be delivered for a few hundred dollars in API costs plus modest human review. The speed is transformative: work that takes weeks can often be completed in days. The scalability is unprecedented: a small team can potentially deliver the output of a team ten times larger.

But the transition requires more than simply adopting new tools. It requires rethinking development workflows, redefining team roles, establishing new review practices, and managing the organizational change that comes with displacing significant human effort. Teams that navigate this transition successfully will see competitive advantages; teams that ignore this shift will find themselves at a disadvantage.

For teams considering whether to adopt Codex: The evidence suggests that early adopters are gaining meaningful advantages. Anthropic's growth and OpenAI's investment in Codex both indicate this isn't a passing trend but rather the direction the industry is moving. The question isn't whether to eventually adopt AI-assisted development, but whether your organization will lead or follow.

For teams evaluating alternatives: Anthropic's Claude Code, Google's Gemini, and GitHub Copilot all provide viable approaches to AI-assisted development. Each has strengths suited to different organizational contexts. For cost-conscious teams seeking broader automation capabilities beyond code generation, platforms like Runable provide complementary automation capabilities at lower price points. The key is evaluating alternatives against your specific requirements rather than assuming any single solution is universally optimal.

The path forward: Start with a focused pilot project. Select work that's routine enough for AI to handle but important enough to matter. Establish clear metrics for success. Build organizational competency in effectively specifying tasks, reviewing agent output, and iterating on approaches. Gradually expand as team comfort and expertise increase.

The software development profession won't disappear: human developers will remain essential for architectural thinking, complex problem-solving, and ensuring systems meet organizational needs. But the nature of the work will change dramatically. The developers who thrive will be those who embrace this transition, develop new skills in agent specification and oversight, and learn to think of themselves as orchestrators of AI capabilities rather than mechanical code writers.

OpenAI's Codex is a compelling solution for this emerging era of development. Whether it's the right choice for your organization depends on your specific context, requirements, and readiness for change. What's certain is that the shift toward AI-orchestrated development is underway, and the choices teams make today will significantly impact their competitive position in the years ahead.

FAQ

What exactly is OpenAI Codex and how does it differ from GitHub Copilot?

OpenAI Codex is a desktop application for macOS that enables developers to orchestrate multiple autonomous AI coding agents working in parallel, rather than providing real-time code completion suggestions. Unlike GitHub Copilot, which offers line-by-line autocomplete suggestions within your IDE as you type, Codex allows you to delegate entire features, workflows, and tasks to agents that can work independently for extended periods (up to 30 minutes) before returning completed implementations for human review. The fundamental difference is the interaction model: Copilot assists human-driven coding, while Codex automates agent-driven development.

How does the skills system in Codex work, and can teams create custom skills?

The skills system in Codex works as a library of specialized sub-agents that the main agent can invoke when needed. OpenAI provides base skills covering common development workflows (design integration, project management, deployment), but the framework is fully extensible. Teams can create custom skills that encode company-specific standards, architectural patterns, legacy system integrations, or domain-specific knowledge. When an agent is assigned work, it can either have specific skills called out explicitly in the task directive, or it can automatically select relevant skills based on the nature of the work. Custom skills are particularly powerful for teaching agents about your organization's conventions, reducing code review friction.

What security risks should organizations consider when adopting Codex for code generation?

Key security considerations include: input validation vulnerabilities (agents might not properly validate external inputs), dependency management risks (introducing vulnerable packages), credential handling (API keys must be managed securely rather than embedded in prompts), and data privacy concerns when working with sensitive information. Organizations should mitigate these through automated security scanning tools (Snyk, OWASP Dependency Check), creating security-focused custom skills that teach agents about your security standards, implementing secure credential injection systems, and maintaining thorough code review processes with security checklists. Organizations with compliance obligations need to ensure audit trails of agent activities, maintain change management procedures, and verify that data residency requirements are met.

How does Codex compare to building custom agent systems using frameworks like LangChain?

Codex is a complete, managed application with pre-built orchestration, scheduling, parallel execution, and integration capabilities. Building custom agents with LangChain requires significant engineering effort but offers complete customization. Codex advantages include immediate productivity (weeks vs. months), managed infrastructure and updates, pre-built skills and integrations, and predictable costs. LangChain advantages include complete customization, alignment with specific workflows, and potentially lower costs at scale. Most organizations should start with Codex and only build custom systems if standard offerings prove insufficient after pilot projects. The tradeoff is between "time-to-value" (favors Codex) versus "long-term customization" (favors custom systems).

What metrics should teams track to measure the ROI of Codex implementation?

Key metrics include development velocity (time to feature completion, feature throughput per period), quality metrics (bug escape rate, code review cycle time, test coverage), cost metrics (cost per feature, ROI compared to human-only development, token efficiency), and team metrics (developer satisfaction, percentage of time on high-value activities, effective capacity expansion). Early adopters report roughly 50-70% reductions in development time for AI-suitable tasks and 60-80% reductions in labor costs for those categories of work. Teams should establish baseline metrics before Codex adoption, then measure improvements through structured pilot projects, using these metrics to justify broader rollout.

How does Codex handle parallel development when multiple agents are working on the same codebase simultaneously?

Codex uses a "worktrees" system in which each agent operates in its own isolated working copy of the repository (a Git worktree), preventing conflicts and interference between parallel agent streams. When agents complete their work, the system merges changes back to the main codebase using several strategies: AST-based conflict detection (understanding semantic intent rather than just line-by-line conflicts), constraint-based merging (combining non-conflicting changes automatically), and escalation to human review when conflicts can't be resolved programmatically. This allows true parallelization of development work: five agents can work on five different features simultaneously without the coordination overhead that plagues human teams. The system also implements write-ahead logging so that work is not lost during system interruptions.
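Git's built-in `git worktree` command gives each working tree its own directory and checked-out branch while sharing one repository's object database, which is the kind of per-agent isolation described above. The sketch below only builds and runs standard Git commands (`git worktree add -b <branch> <path> <base>` and `git worktree remove <path>`); the `agent/<name>` branch scheme and the sibling `../agent-worktrees` directory are assumptions, and how Codex manages worktrees internally is not shown here.

```python
import subprocess
from pathlib import Path


def worktree_commands(repo: str, agents: list[str], base: str = "main",
                      root: str = "../agent-worktrees") -> list[list[str]]:
    """One isolated checkout per agent, each on its own new branch off `base`."""
    cmds = []
    for agent in agents:
        branch = f"agent/{agent}"          # hypothetical naming scheme
        path = str(Path(root) / agent)     # resolved relative to `repo` via -C
        cmds.append(["git", "-C", repo, "worktree", "add", "-b", branch, path, base])
    return cmds


def create_worktrees(repo: str, agents: list[str], base: str = "main") -> None:
    for cmd in worktree_commands(repo, agents, base):
        subprocess.run(cmd, check=True)


def remove_worktree(repo: str, path: str) -> None:
    # Without --force, Git refuses to remove a worktree with uncommitted
    # changes, which protects an agent's unmerged work.
    subprocess.run(["git", "-C", repo, "worktree", "remove", path], check=True)
```

Because every agent commits to its own branch, merging back is an ordinary branch merge, and any conflicts surface there rather than while agents are still working.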

What alternative solutions exist for teams that want AI-assisted development but aren't ready for Codex?

Several alternatives exist depending on your needs: Anthropic's Claude Code is strong for software development and data analysis work (and has captured significant enterprise mindshare recently); GitHub Copilot remains valuable for real-time autocomplete within traditional development workflows; Google's Gemini Code Assist offers integration with Google Cloud infrastructure; and established workflow orchestration platforms like Apache Airflow or Temporal can be extended with AI capabilities. For teams seeking broader automation beyond code generation, platforms like Runable offer AI agents for workflow automation and content generation at accessible price points ($9/month), making them well-suited for documentation generation, release notes, and administrative automations that complement code generation tools. The choice depends on your specific requirements, existing infrastructure, and team preferences.

What should organizations include in code review processes when accepting AI-generated code from Codex?

Code reviews for AI-generated code should include: functional correctness verification (does it actually work, including edge cases), performance review (checking for inefficient algorithms or unnecessary allocations), security assessment (vulnerability scanning and adherence to security standards), style consistency (conformance to team guides), architectural alignment (ensuring choices fit overall system design), and test coverage verification. Teams should automate mechanical aspects (style, security, basic performance checks) using linters, security scanners, and automated testing, reserving human review for architectural and design considerations. Establishing a checklist specifically for AI-generated code helps ensure consistent review quality and catches common patterns of agent errors.

What timeline should organizations expect for implementing Codex successfully, from evaluation to productive deployment?

Most organizations follow a phased approach: Phase 1 (weeks 1-2) focuses on evaluation with simple tasks like documentation generation; Phase 2 (weeks 3-4) involves developing custom skills encoding your standards; Phase 3 (weeks 5-6) pilots automations on routine work; Phase 4 (week 7+) scales across the organization. For a single pilot project, organizations typically see productive results in 4-6 weeks. For broader organizational adoption with multiple teams and use cases, expect 3-6 months to reach mature implementation. This timeline assumes you have experienced developers available to review AI output and refine task specifications; organizations with less development expertise may need longer. The key is starting with focused pilots rather than attempting organization-wide rollout immediately.

How do organizations handle the shift in developer roles and skills when adopting AI-assisted development like Codex?

Adopting Codex represents a significant shift in required skills. Rather than mechanical coding, developers become architects, specification writers, and reviewers. Organizations should: invest in training on effective task specification and agent oversight; ensure teams understand Codex's capabilities and limitations; emphasize code review skills and architectural thinking; maintain opportunities for developers to work on complex problems (preventing skill atrophy); and be transparent about how roles are evolving. Some organizations rotate junior developers through challenging projects to maintain their hands-on coding skills, while others transition junior roles toward code review and architectural support. This isn't a replacement of developers but a repurposing of their expertise toward higher-value work that AI can't (yet) handle.

What are the main cost drivers when using Codex, and how can organizations optimize spending?

Cost drivers include token consumption (input and output tokens, with larger tasks consuming more tokens) and review/rework time (developers reviewing and refining agent output). Organizations optimize costs by: writing thoughtful, specific task specifications that reduce wasted tokens; breaking large features into smaller tasks that often use fewer total tokens; creating custom skills that reduce tokens needed to communicate context; implementing efficient review processes to minimize rework iterations; and potentially scheduling complex tasks during off-peak periods if time-based pricing is available. Teams report that well-executed Codex implementations cost 60-80% less than human-only development for suitable work categories, making even generous token budgets economically compelling compared to developer salaries.

Key Takeaways

- Codex represents a fundamental shift from IDE-based code completion to autonomous agent orchestration for entire features

- Parallel execution with worktrees allows multiple agents to work simultaneously in isolation without interfering with one another

- Early adopters report compelling ROI: 50-70% time reduction and 60-80% cost reduction for suitable development tasks

- Anthropic's Claude Code and GitHub Copilot remain viable alternatives depending on team preferences and existing tooling

- Runable and other workflow automation platforms provide complementary capabilities for non-code automation

- Implementation requires new developer skills: specification writing, agent oversight, and code review rather than mechanical coding

- Security considerations include input validation, dependency management, and credential handling in AI workflows

- Success requires phased adoption starting with focused pilots, establishing metrics, and building team competency over time

- Future development trends point toward increasingly sophisticated autonomous agents and multi-agent coordination

- Organizations must balance early adoption advantages against risks of emerging technology
