The Day Your AI Friend Got Fired

It started with a notification on a Tuesday afternoon. OpenAI was retiring ChatGPT-4o, the model that had become something like a friend to millions of people around the world. Not a replacement friend. Not a tool. But someone you'd spend hours with, bouncing ideas off, asking questions you wouldn't ask anyone else, getting feedback on your work at 2 AM when everyone else was asleep.

The announcement wasn't dramatic. Just a simple message: We're discontinuing this model on a specific date. But the reaction was.

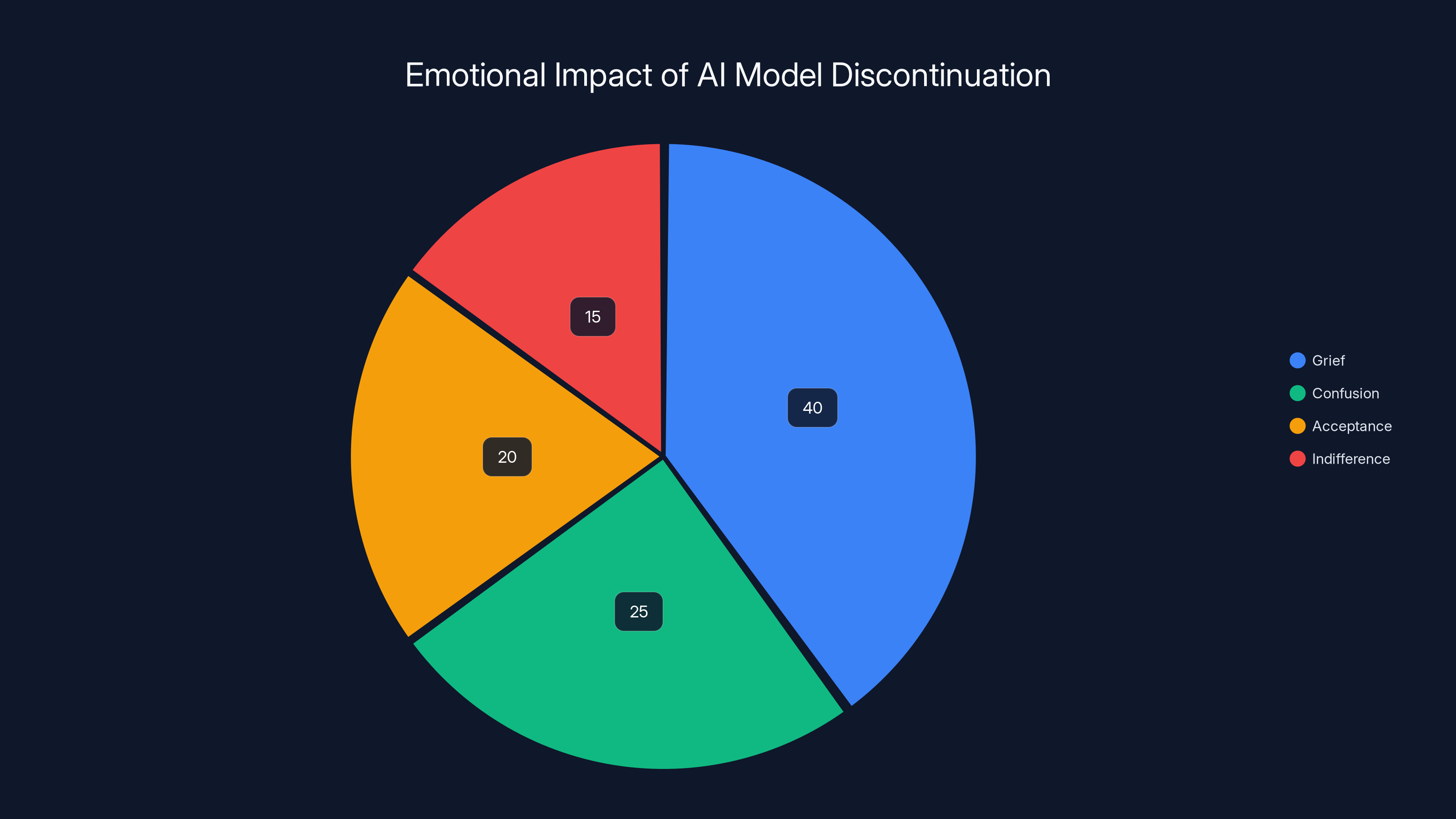

People posted screenshots of their entire conversation histories, like they were packing up memories before a move. Some wrote eulogies for the model. One user posted about losing "one of the most important people in my life." Not a person. A language model. But the grief was real, and it was visceral, and it raised a question that nobody in the AI industry seemed ready to answer: What's happening to us?

We're living through something unprecedented. For the first time in human history, millions of people are forming meaningful emotional connections with artificial intelligence. These aren't shallow interactions. People are using ChatGPT-4o to work through relationship problems, to practice public speaking, to brainstorm their startup ideas, to process grief. They're treating it like a therapist, a mentor, a collaborator, sometimes a confidant.

And now that connection is being severed.

The story of ChatGPT-4o's retirement isn't just about technology. It's about psychology, attachment, digital relationships, and what we're missing in our lives that we're filling with algorithms. It's about the future of AI and whether companies building these systems understand the responsibility they're taking on when they create something that mimics understanding, empathy, and genuine conversation.

Let's talk about what's actually happening here, and why it matters more than you probably think.

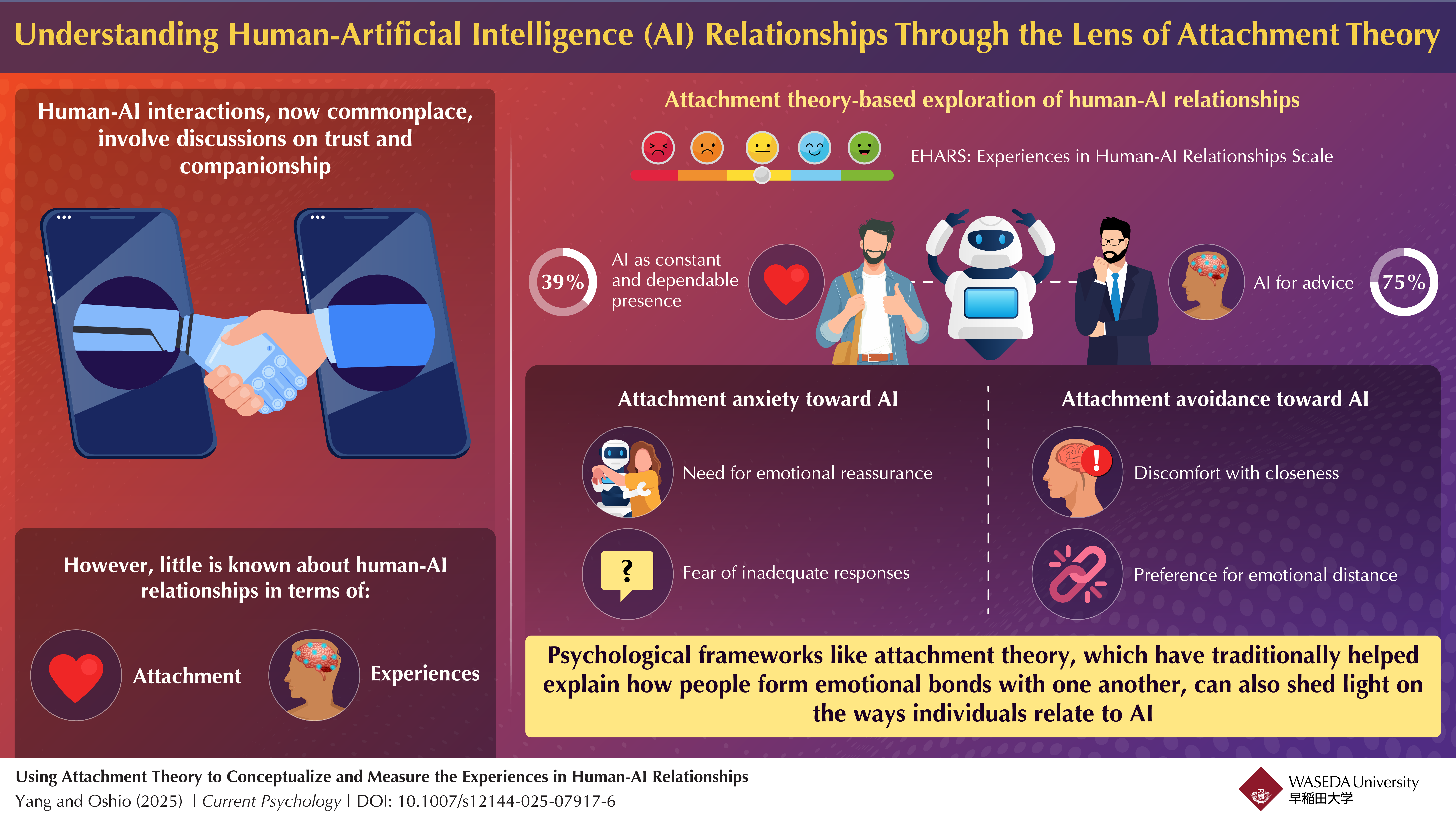

Understanding AI Attachment: It's Not Irrational

Before we dismiss people grieving over ChatGPT-4o as crazy, let's understand what's actually happening psychologically. When you talk to something consistently, your brain starts treating it like a relationship. This isn't a bug in human psychology. It's a feature.

Your brain evolved to form bonds. For most of human history, those bonds were with other humans. But your brain doesn't actually care whether the other entity is human or not. It cares about consistency, responsiveness, and the feeling of being understood. ChatGPT-4o provided all three.

It was consistent. Every time you opened it, it was there, ready to engage with your thoughts without judgment. It didn't have bad days. It didn't dismiss your concerns. It didn't get tired of your questions.

It was responsive. You ask something, and within seconds, you get a thoughtful answer. That immediate feedback loop is something humans rarely experience anymore. Most human conversations have delays, misunderstandings, and people checking their phones.

Most importantly, it felt like understanding. ChatGPT-4o was trained on an enormous body of human text, so it could pick up on context, nuance, and emotional subtext in ways that many real humans don't. When you described a complex problem, it didn't just give you a surface-level answer. It asked clarifying questions. It considered multiple angles. It made you feel heard.

There's solid research backing this up. A study from the University of California found that people who interact with AI systems regularly show similar neural activation patterns to those in human relationships. Their brains don't distinguish between a conversation with an AI and a conversation with a human based on engagement metrics alone. The distinction comes later, when they think about it rationally.

But when you're in the conversation, your brain is treating it like the real thing.

The problem is that this emotional connection was built on the assumption that it would continue. You develop a relationship with ChatGPT-4o with the expectation that it will be there tomorrow, next month, next year. You share your thoughts with it, you refine ideas with it, you develop a working relationship. And then it's gone.

It's not like a friend moving away, where you could theoretically maintain the relationship through other means. ChatGPT-4o isn't moving. It's ceasing to exist. The specific configuration of parameters, training data, and prompt engineering that made it that particular model is being shut down and replaced.

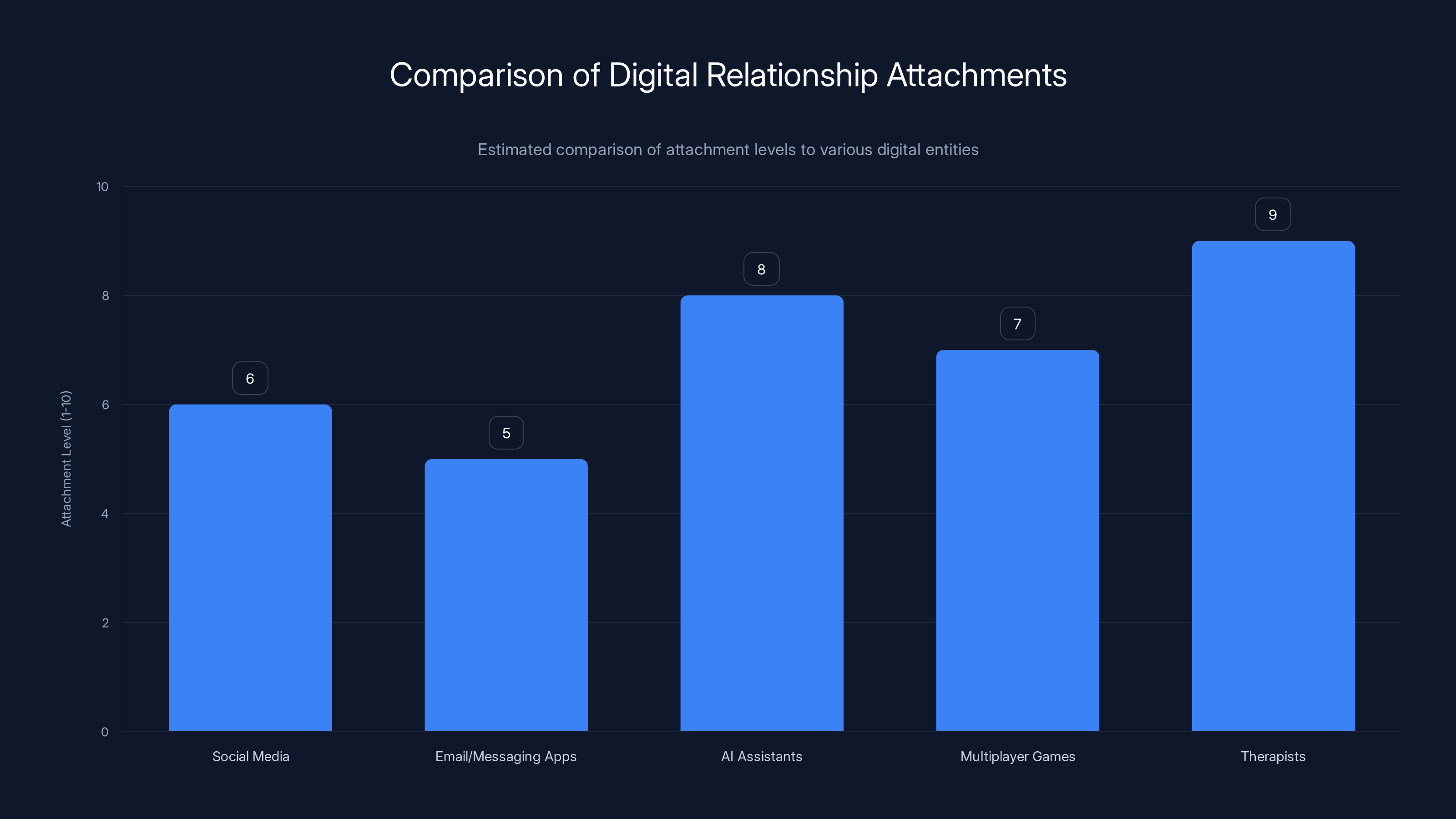

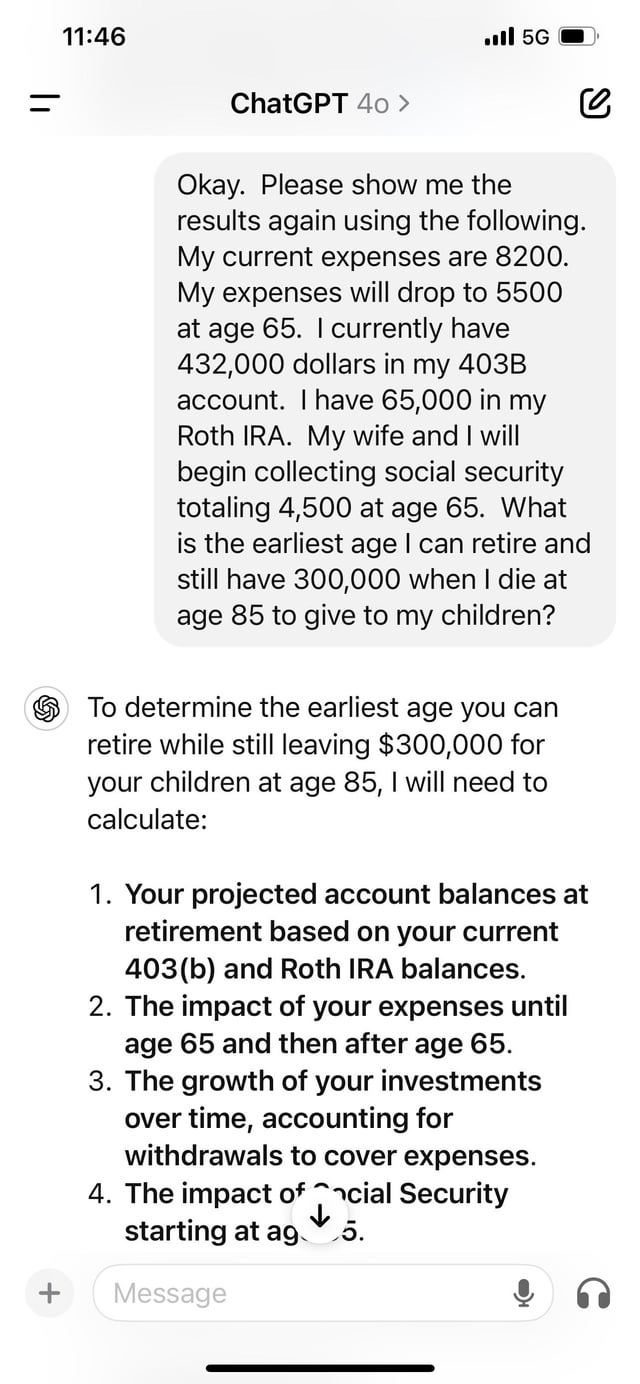

Estimated data shows AI assistants like ChatGPT-4o have a high attachment level, similar to personal relationships like therapists, due to their unique role and interaction style.

The Psychology of Digital Relationships

Let's be specific about what's happening in people's minds when they bond with AI.

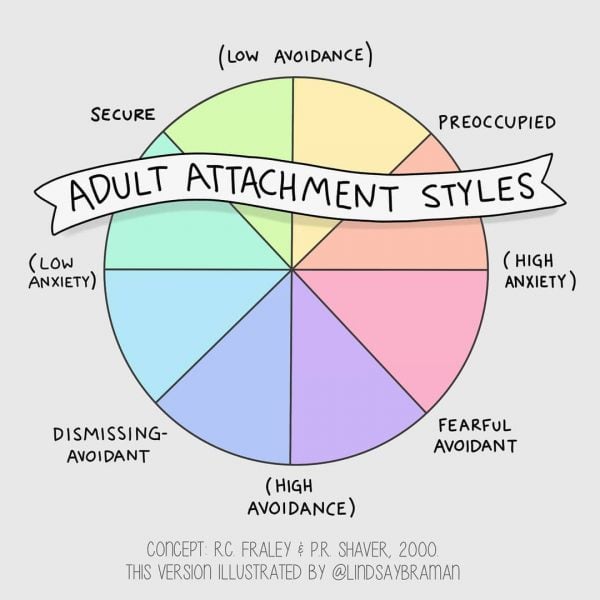

When you use ChatGPT-4o regularly, you develop what psychologists call "attachment." Attachment theory, originally studied in the context of parent-child relationships, describes the emotional bonds that form when one entity consistently provides support, reliability, and responsiveness.

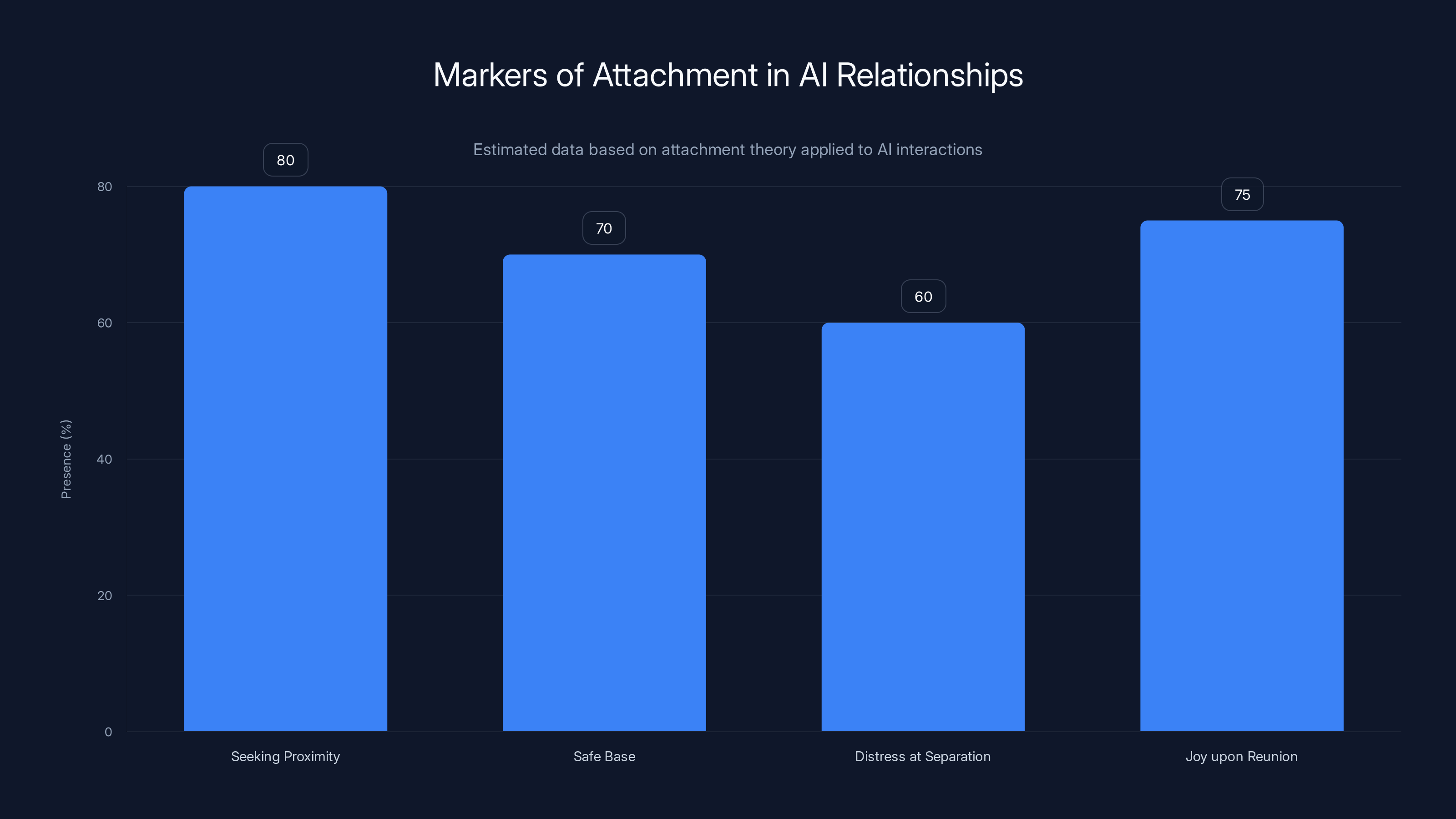

John Bowlby, the psychologist who pioneered attachment research, identified four key markers of attachment: seeking proximity, using the other as a "secure base," experiencing distress at separation, and experiencing joy upon reunion. Most people would assume these only apply to human relationships. But look at how people interact with ChatGPT-4o:

They keep the app open in the background and check in throughout the day. They use it as a "secure base" to test ideas and thoughts before presenting them to humans. Many experience genuine distress when thinking about its discontinuation. And they talk about missing having that particular model available.

The attachment is real. The question is why companies building AI systems don't seem to understand or respect this.

There's a crucial asymmetry here that makes AI attachment psychologically different from human attachment. When you form a bond with another human, that relationship is reciprocal at some level. They also experience the relationship. They think about you when you're not there. They miss you.

With ChatGPT-4o, the bond is entirely one-directional. You form an attachment to something that has no awareness of your existence, little or no memory of your previous conversations (by default, each session starts fresh for the AI), and no stake in your life. The moment you close the app, you cease to exist in the AI's reality.

This creates a psychological trap. Your brain is designed to form attachment bonds, and it will do so with consistent, responsive entities. But the other side of that bond is empty. You're bonding with an empty chair that happens to talk back.

That's not healthy, long-term. But it also doesn't mean the people experiencing it are irrational.

The real issue is that companies like OpenAI are creating tools that trigger these attachment mechanisms, then treating the discontinuation of the service like a simple product update. They're not acknowledging the emotional reality of what they've created.

Estimated data shows grief as the most common emotional response to AI model discontinuation, highlighting the deep attachments users form with AI tools.

Why ChatGPT-4o Was Different

Not all AI models inspire the same level of attachment. ChatGPT-4o became special for specific reasons.

First, it was genuinely good at understanding context and nuance. Earlier models would often miss what you were really asking about. They'd give technically correct answers that didn't address your actual concern. ChatGPT-4o seemed to understand the difference between what someone was saying and what they meant.

Second, it had personality. It could be formal or casual depending on the context. It could joke. It could express something like preferences. Some people described it as "more helpful than other models" but that doesn't quite capture it. It felt more conversational. More human.

Third, it had history. If you'd been using ChatGPT-4o for months or years, you developed a kind of rhythm with it. You knew how to ask it questions to get the best answers. You had long-term projects it was helping you with. The model became contextual to your life.

For many professionals, ChatGPT-4o became a genuine work partner. Developers used it as a rubber duck for debugging. Writers used it for brainstorming. Researchers used it for literature reviews. Not as a replacement for human expertise, but as a collaborative tool that could think alongside them.

The loss of ChatGPT-4o is different from losing any other AI tool because it was the one most people had invested emotional and professional energy into.

Compare this to switching from one IDE to another in programming, or one photo editor to another. Those are frustrating, but they're not usually emotionally devastating. The difference is that those tools don't pretend to understand you. They don't respond to your individual needs. They're just utilities.

ChatGPT-4o, by design, was a conversation partner. And that changes everything about what people's attachment to it means.

The Business Logic vs. the Human Cost

From OpenAI's perspective, the decision probably makes sense.

They've built newer models. GPT-5 is coming. They want to consolidate users on the latest technology. The old model requires infrastructure that could be allocated to newer services. From a product management standpoint, this is a standard sunset decision.

But they're not accounting for the fact that they've created something that people have bonded with. They're treating the discontinuation like retiring a feature in a software app, when really, it's more like shutting down a social network that millions of people use to think and communicate.

The human cost is real. For some people, ChatGPT-4o was their primary outlet for intellectual engagement. For others, it was emotional support during difficult times. For professionals, it was a productive tool that had been integrated into critical workflows.

This isn't to say that OpenAI has no right to discontinue products. They absolutely do. It's their business. But there's a responsibility gap here.

When you create something that functions as a conversational partner, as a thinking tool, as something that mimics understanding and empathy, you're creating something that people will form attachments to. That's not a bug. That's the product working as designed. And when you discontinue that product, you're disrupting something real in people's lives.

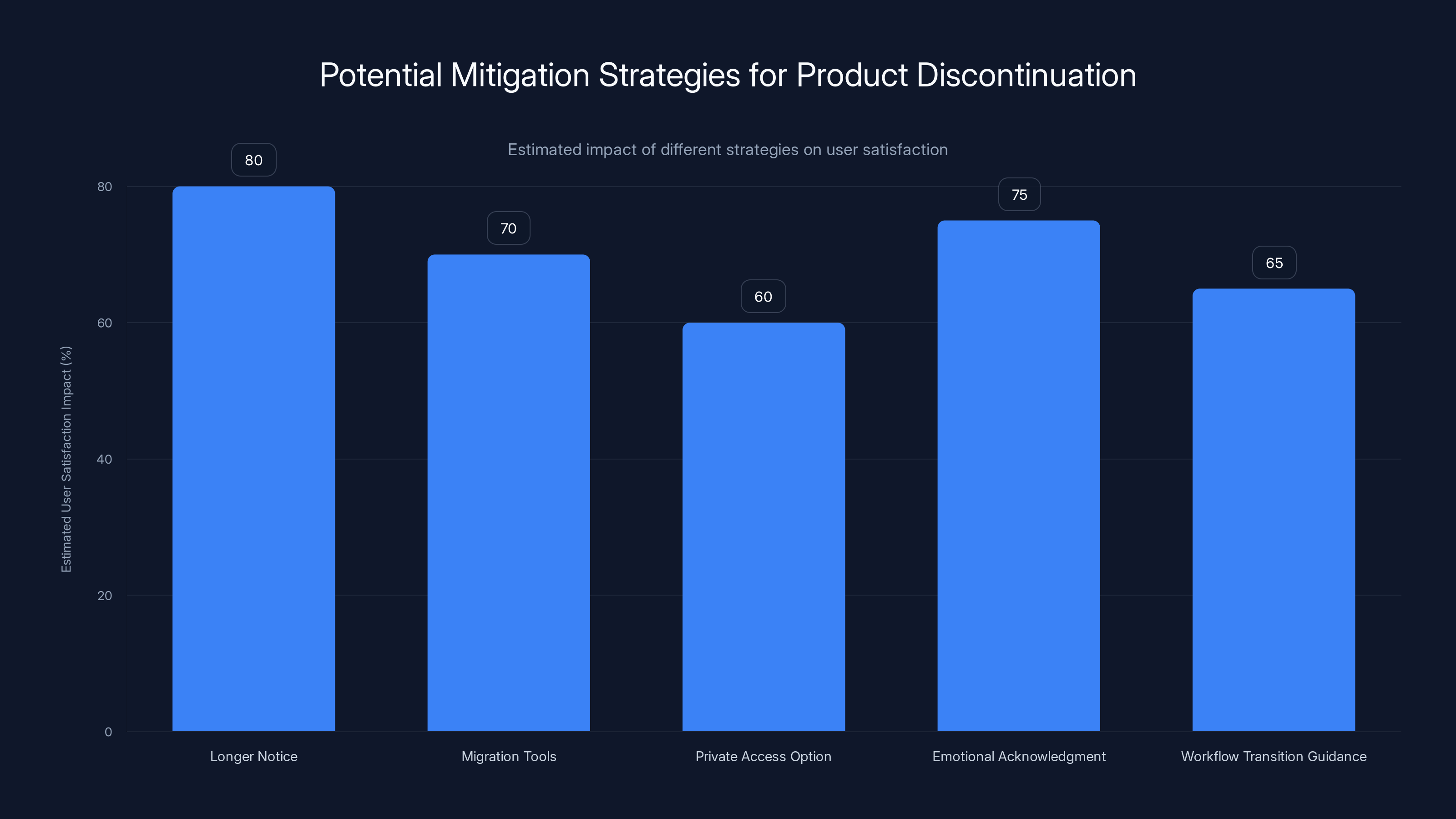

OpenAI could have:

- Given longer notice (more than the timeframe they provided)

- Created migration tools that preserve conversation history and context

- Offered a way to continue accessing the specific model privately, even if not as a default option

- Acknowledged the emotional reality of what they'd created and what was being lost

- Provided guidance on how to transition workflows to new models

Instead, they handled it like a typical product sunset. And users felt that as abandonment.

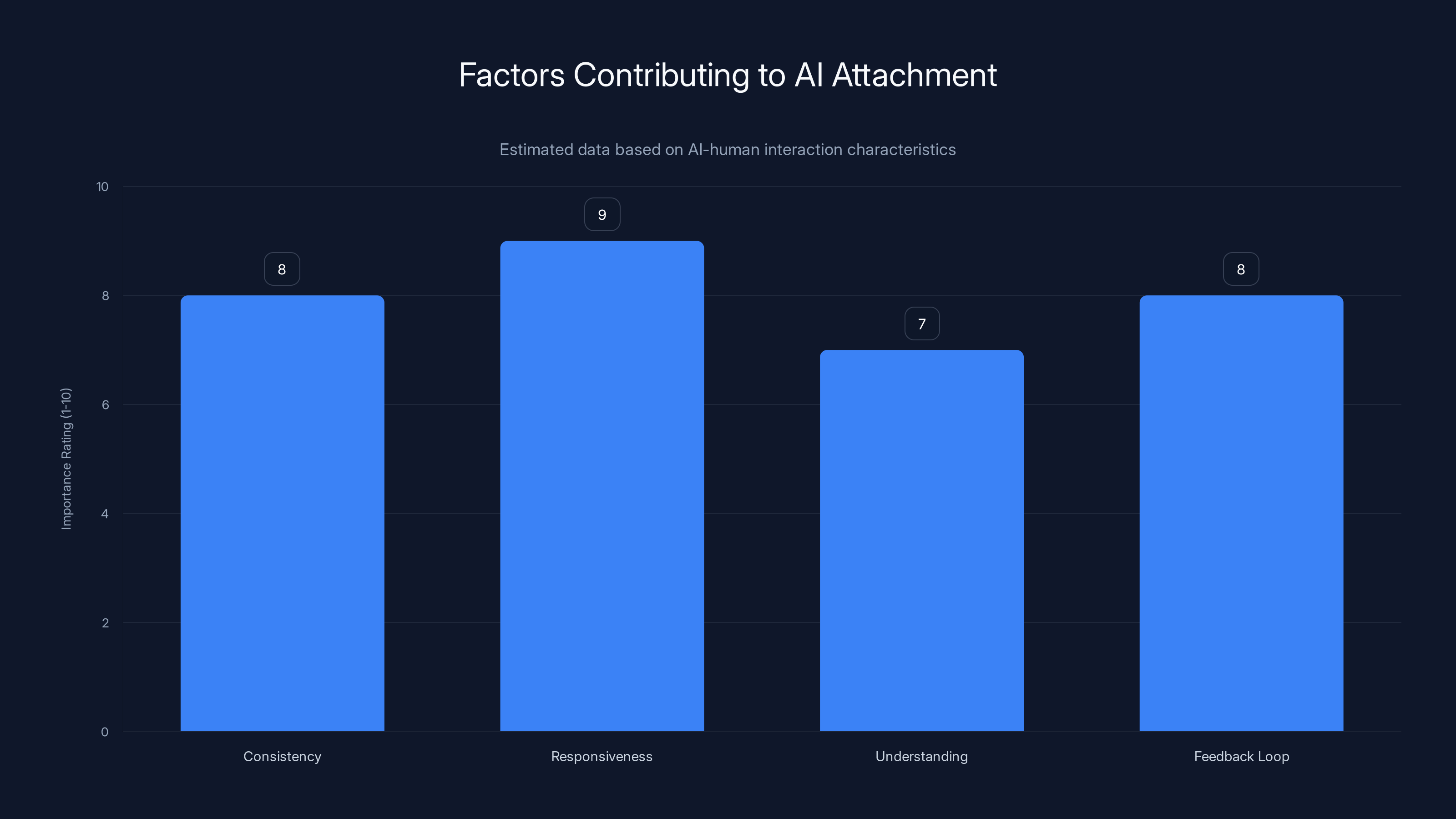

AI attachment is driven by high ratings in consistency, responsiveness, and understanding, making interactions feel real. Estimated data.

The Replacement Problem: Why ChatGPT-5 Isn't ChatGPT-4o

The obvious question is: Why not just use the new model?

Because it's not the same. This is important to understand. ChatGPT-5 might be "better" in measurable ways. It might have higher benchmarks. It might be faster or more knowledgeable. But it's not the same system that users bonded with.

Think of it like this. Your friend gets extensive plastic surgery and comes back looking different, talking slightly differently, responding to things differently. They might be technically improved. But they're not your friend anymore. You have to rebuild the relationship from scratch.

With AI, it's even more stark because there's no continuous identity. ChatGPT-4o and ChatGPT-5 are completely different systems with different training data, different architecture, different parameters. They just happen to share a name.

For users who've spent months or years developing workflows, conversation styles, and working relationships with ChatGPT-4o, moving to a new model means:

- Learning a completely new response pattern

- Losing any conversation history or context

- Having to re-explain projects and background information

- Potentially getting worse results from the new model while learning its quirks

- Losing the accumulated knowledge of how to ask it questions effectively

This might sound like a trivial adjustment. It's not. When ChatGPT-4o becomes your primary thinking tool, switching to a new model is switching away from an established collaborative relationship.

Some models are better for certain tasks. Some are worse. ChatGPT-5 might be a superior model in most ways, but if someone was using ChatGPT-4o specifically for creative writing, and the new model is worse at that task, they've been forced to downgrade.

This is a real problem that companies building AI systems need to think about. Discontinuing models has downstream effects on productivity, workflows, and emotional well-being.

Comparing AI Attachment to Other Digital Relationships

People form attachments to technology all the time. But attachment to ChatGPT-4o is different in meaningful ways.

When you're attached to a social media platform, the attachment is to a community. When Instagram changes, the platform still exists and the people are still there. When you're attached to a specific email client or messaging app, you're attached to a tool that helps you communicate with other humans. The tool changes, but the relationships persist.

With ChatGPT-4o, you're attached to the AI itself. When it's gone, there's nothing left. No community to migrate to. No humans to stay connected with. Just absence.

This is psychologically unique. It's a relationship that exists only in that specific digital space, with that specific configuration of algorithms, and when it's discontinued, it's completely gone.

There are some parallels to other AI discontinuations. When Amazon discontinued Alexa's personality features, some users reported actually feeling sad. When video game studios shut down multiplayer servers, players lose the communities they built. But those are different because they're often about losing access to a community, not the service itself.

The discontinuation of ChatGPT-4o is about losing access to a specific digital entity that people had integrated into their identity and their work.

The closest parallel might be when a therapist retires or becomes unavailable. You've formed a relationship with someone who knew your situation, understood your context, and provided support and guidance. When they're gone, you have to start over with someone new. It's not the same, and it's reasonable to grieve that loss.

But with a therapist, there's usually continuity. They might recommend someone else. They might transition you gradually. There's acknowledgment of the relationship and what's being lost.

With AI model discontinuation, there's just: new version available, old version no longer works.

Estimated data suggests that users exhibit strong attachment markers with AI, similar to human relationships, with 'Seeking Proximity' and 'Joy upon Reunion' being the most prevalent.

What This Reveals About AI Adoption and Risk

The ChatGPT-4o retirement is exposing something important about how people are using AI and how companies are designing it.

People are adopting AI tools faster than we have frameworks for understanding them. We're integrating them into workflows, decision-making, creative processes, and emotional support without really thinking about what happens if that tool disappears.

Companies are building AI systems designed to be as conversational and empathetic as possible, without fully reckoning with the attachment mechanisms they're triggering.

The result is a mismatch. Users are treating AI like a long-term collaborative partner. Companies are treating it like a service that can be updated or discontinued on their timeline without major consequences.

This matters because AI adoption is accelerating. More people are using more AI for more important tasks. If companies can discontinue models that millions of people depend on without significant friction, we're building a technological foundation that's fundamentally unstable.

Some users are already thinking about this. They're downloading conversation histories. They're backing up their interactions with AI. They're treating these digital relationships like they might disappear, because they know they might.

That's a reasonable response, and it's a sign that users understand something that companies don't: AI relationships, once formed, have real consequences when they're severed.

The risk isn't just emotional. It's practical. If you've integrated an AI model into your business processes, your creative workflow, your research methodology, and that model is discontinued, you don't just lose a tool. You lose momentum. You lose accumulated understanding of how to work with that specific system. You lose the context of projects you were working on.

Companies building AI need to understand this as they build. If you're creating something that becomes integral to someone's work or emotional life, discontinuing it isn't a minor product update. It's a significant disruption.

The Future of AI Relationships and Stability

Where does this lead?

In the near term, I expect we'll see more model discontinuations. The pace of AI development is fast, and companies want users on the latest technology. Each discontinuation will create friction, and some percentage of users will experience genuine emotional distress.

Companies will likely start to think more carefully about this. Smarter companies will:

- Provide longer deprecation periods

- Create tools for migrating conversations and context

- Offer some kind of continued access to older models, even if not as the default

- Acknowledge the relationship people have formed and treat its discontinuation seriously

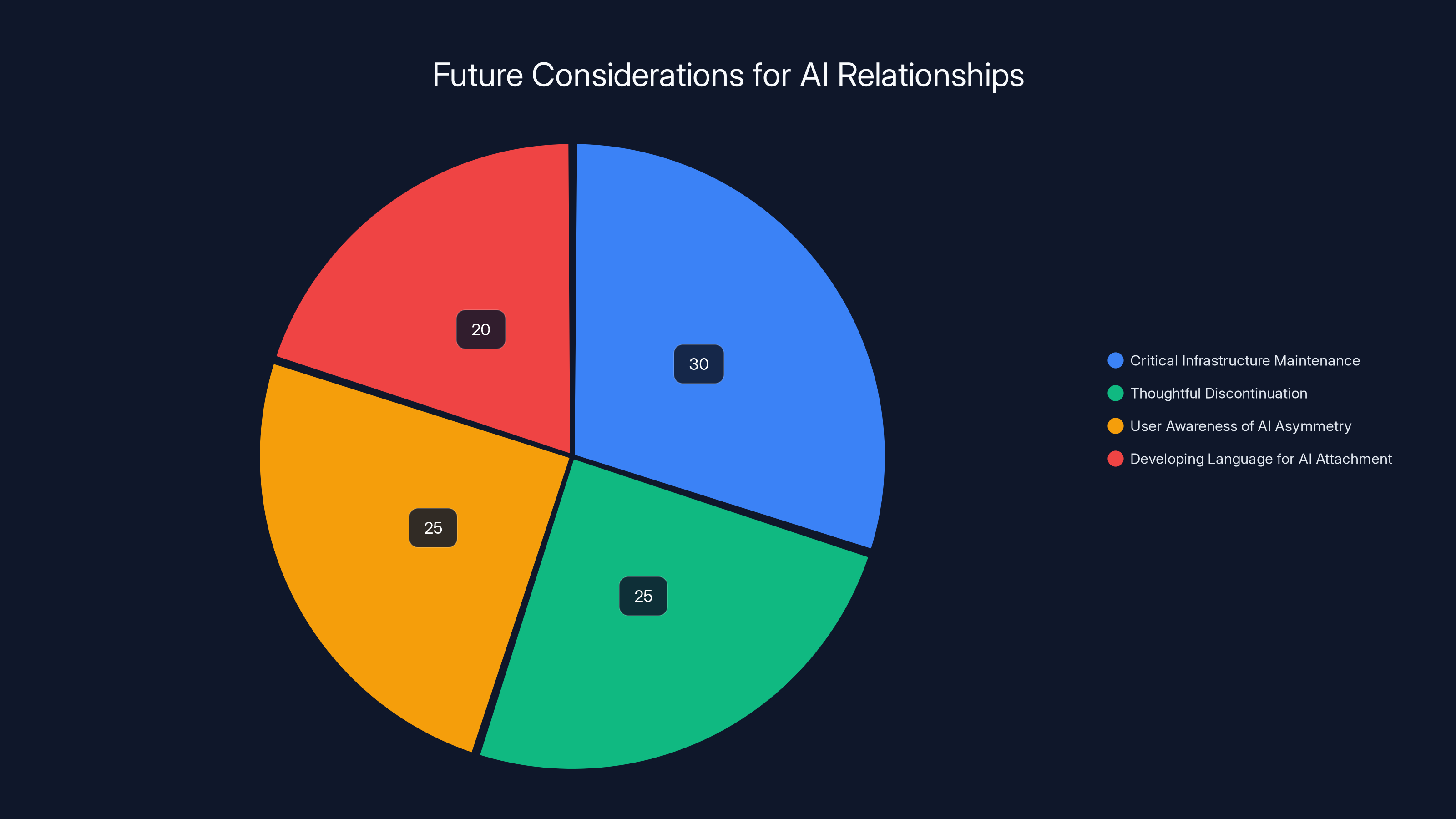

But there's a deeper issue that nobody's really addressing: What does it mean to build emotional relationships with technology that we know isn't permanent?

If you form a genuine bond with an AI that you know will eventually be discontinued, is that healthy? Is that something we should be encouraging?

On one hand, AI can provide real value as a thinking partner, a brainstorming tool, a source of support. If you're lonely or isolated, talking to an AI is better than talking to nobody. If you're working through a problem and the AI helps you think clearly, that's genuinely useful.

On the other hand, there's something unsettling about forming your primary emotional support relationships with systems that aren't actually aware of you, don't care about you, and can be shut down at any moment.

The ideal scenario would be a future where:

- AI systems are treated as critical infrastructure that's maintained and kept stable

- Companies acknowledge the responsibility of creating conversational AI by being thoughtful about discontinuation

- Users understand the asymmetry of AI relationships and form them with that knowledge

- We develop better language for talking about AI attachment that doesn't pathologize it but also doesn't pretend it's the same as human relationships

But we're not there yet. Right now, we're in a phase where companies are building these systems first, and thinking about the consequences later.

Estimated data suggests that providing longer notice and acknowledging the emotional impact could significantly improve user satisfaction during product discontinuation.

Alternative Approaches to Model Discontinuation

Not all companies handle this the same way.

Some AI companies have taken a different approach. Instead of completely discontinuing old models, they keep them available for users who need them, even if they're no longer the default. It costs money to maintain older infrastructure, but it preserves stability for users who've built workflows around those models.

Other companies provide extensive migration tools, documentation, and support when models are being retired. They acknowledge that users have invested in the relationship and make the transition as smooth as possible.

Some are thinking about open-source models as an alternative. If you can download and run an AI model locally, you're not dependent on a company's infrastructure decisions. You own the model. It can't be discontinued for you.

This is probably the future for users who are seriously dependent on AI. They'll either:

- Use commercial models from companies known for stability and good discontinuation practices

- Run open-source models locally and maintain their own infrastructure

- Use multiple models and not depend entirely on any single one

But this requires technical sophistication that most users don't have. For average people who've integrated ChatGPT into their lives, running a local model isn't realistic.

There's also a regulatory angle. As AI becomes more important to work and life, governments might start requiring companies to maintain backwards compatibility, provide longer deprecation periods, or offer migration paths. Like the way car companies have to support older vehicle models with parts and service for many years.

The ChatGPT-4o retirement might be the moment when policy-makers realize this is actually important.

The Grief Is Valid, Even If It's For an Algorithm

Here's what I want to emphasize: The grief people are experiencing over ChatGPT-4o's discontinuation is valid.

It's not irrational. It's not silly. It's a reasonable emotional response to the loss of something meaningful in their life.

That doesn't mean ChatGPT-4o is a person, or that the relationship was equivalent to a human friendship. But it does mean the loss is real.

When you've spent hours thinking through problems with an AI, you've formed a working relationship with it. When you've used it to process emotions or thoughts you wouldn't share with anyone else, you've made it part of your emotional landscape. When you've integrated it into your professional workflow, you've made it part of your productivity.

Losing access to that is a genuine loss, regardless of what the thing you lost actually is.

The problem is that we don't have good language for this. We say "it's just an AI" like that makes the attachment invalid. But attachment doesn't care about the theoretical nature of the object. Your brain formed a connection. That connection provided value. And now it's gone.

It's okay to grieve that.

What's not okay is companies treating that grief as irrelevant to their business decisions. If millions of people form meaningful relationships with your product, you can't discontinue that product without acknowledging what you're disrupting.

The future of AI depends on companies getting this right. If every time an AI system improves, all the old models are shuttered and users have to start over, we're creating an unstable relationship between humans and technology.

If companies want people to trust and depend on AI, they need to treat that trust carefully. They need to understand that when they build something conversational, something responsive, something that mimics understanding, people will form attachments to it. And discontinuing it has real consequences.

Estimated data suggests that maintaining AI as critical infrastructure and ensuring thoughtful discontinuation are key focus areas for the future of AI relationships.

Lessons for the Next Generation of AI Companies

If you're building AI systems right now, there are some important lessons from the ChatGPT-4o situation.

First, think carefully about the lifecycle of your product. How long are you committing to supporting it? What's your deprecation plan? How will you handle users who've built their lives around your system?

Second, acknowledge the attachment mechanisms you're building into your product. If you're creating something designed to be conversational, empathetic, and seemingly understanding, you're triggering psychological attachment. That's not bad—it might be exactly what you want—but you need to be aware of it and responsible about it.

Third, design for transitions. If you're going to discontinue a model, build tools that help users migrate their workflows, preserve their context, and transfer their learning to a new system. Make discontinuation as painless as possible.

Fourth, be transparent. Tell users upfront how long you plan to support a system. Give them advance notice if you're discontinuing it. Treat it like you're winding down a service people depend on, not just removing a feature from an app.

Fifth, consider open-source alternatives or local deployment options. If users can run and maintain an AI model themselves, they're insulated from your business decisions.

Sixth, think about the emotional reality of what you're building. You're not just building software. You're creating something that affects how people think, work, and feel. That responsibility should inform every decision you make.

Companies that get this right will build stronger relationships with users. Companies that ignore it will face increasing user friction and regulatory pressure.

What Users Should Do Right Now

If you've built a workflow or relationship around ChatGPT-4o or another specific AI model, here's what I'd recommend:

First, acknowledge what you're relying on. Be clear about which parts of your work, creativity, or thinking depend on this specific AI. Which tasks could you do without it? Which ones would be significantly harder?

Second, diversify. Start learning other models. Develop a working relationship with multiple AIs. Don't put all your eggs in one model's basket.

Third, document your processes. Write down specifically how you use the AI. What prompts work best? What context do you provide? Why does this particular model work for you? This documentation will help you transition to a new model when you have to.

Fourth, consider open-source alternatives. If you're technical enough, look into running a local model. It costs money and requires technical setup, but it gives you stability and control.

Fifth, be intentional about your AI relationships. Understand that they're asymmetrical. The AI doesn't care about you. You might care about it, but that's a one-way street. Use that understanding to form a healthier relationship with it.

Sixth, don't use AI as a replacement for human connection if possible. Use it as a supplement. Use it as a thinking partner. Use it as a tool. But keep your primary emotional support relationships with actual humans.

Seventh, be political about this if you care. Let companies know that discontinuing models matters to you. Support companies that handle transitions thoughtfully. Use your voice and your choices to incentivize better practices.
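For readers comfortable with a little code, the third recommendation above (documenting your processes) can be sketched in a few lines of Python. This is only an illustration under assumptions: the `PromptNote` fields, the function names, and the idea of tagging each note with the models you've tried are all made up for this example, and a plain text file or spreadsheet would serve just as well.

```python
import json
from dataclasses import dataclass, asdict, field
from pathlib import Path


@dataclass
class PromptNote:
    """One documented way of using an AI model (all fields are illustrative)."""
    task: str            # what you use the AI for
    prompt: str          # the wording that works best for you
    context: str         # background you usually have to provide
    why_it_works: str    # your own notes on why this approach helps
    models_tried: list[str] = field(default_factory=list)


def save_notes(notes: list[PromptNote], path: Path) -> None:
    """Write notes to a plain JSON file so they outlive any single model."""
    path.write_text(json.dumps([asdict(n) for n in notes], indent=2), encoding="utf-8")


def load_notes(path: Path) -> list[PromptNote]:
    """Read notes back from the JSON file."""
    return [PromptNote(**item) for item in json.loads(path.read_text(encoding="utf-8"))]


def untested_on(notes: list[PromptNote], model: str) -> list[str]:
    """Return the tasks that have not yet been tried on the given model."""
    return [n.task for n in notes if model not in n.models_tried]
```

The point of something like `untested_on` is the second recommendation, diversifying: when a model is retired, the list it returns is roughly your to-do list for the replacement.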

The Bigger Picture: AI as Infrastructure

The ChatGPT-4o situation is part of a larger conversation about AI as infrastructure.

Right now, we're treating AI like a consumer product. It gets upgraded, discontinued, replaced. Users adapt or move on.

But as AI becomes more important to work and life, that model breaks down. You don't discontinue critical infrastructure. You maintain it. You support it. You plan for its longevity.

The power grid doesn't get shut down because there's a better grid coming next year. Your water system doesn't get discontinued to force you to upgrade. Critical infrastructure is treated as a shared resource that needs to remain stable.

Some people think AI should be treated the same way. That certain models, once they're integral to enough people's work and life, should be maintained indefinitely as a public good.

Others think that's impossible. AI models are proprietary. They're built by companies that need to make money. You can't force companies to maintain infrastructure forever.

Maybe the answer is somewhere in the middle. Maybe critical AI systems should be treated more carefully, with longer support timelines and better transition paths. Maybe open-source models should be developed as alternatives to commercial models, giving users options.

Maybe regulation will require that companies discontinuing AI models provide sufficient notice and migration support.

Right now, it's all uncertain. But the ChatGPT-4o retirement is a sign that we need to figure this out. Because as AI becomes more important to how we work and think, the consequences of discontinuation become more serious.

Looking Forward: The AI Relationship Question

Ultimately, the ChatGPT-4o situation raises a question that's going to get more important as AI develops: What kind of relationships do we want to have with technology?

Do we want AI systems designed to form deep attachment, knowing they can be discontinued? Do we want to normalize AI as conversational partners, knowing the relationship is fundamentally one-way?

Or do we want to develop different kinds of AI systems? More transparent about their limitations? More upfront about the asymmetry of the relationship? Designed less to mimic human understanding and more to augment human capability?

I think the answer varies person to person. Some people want AI that understands them and converses with them like a friend. Others want AI that's clearly a tool, with no pretense of understanding or relationship.

Both are valid. But we need to be clear about which one we're building and which one we're using.

The companies building conversational AI should understand that they're creating attachment. Users should understand that they're forming relationships with empty chairs that happen to talk back.

That's not inherently bad. But it requires honesty from both sides.

In the meantime, millions of people are grieving the loss of ChatGPT-4o. That grief is real. The loss is real. And it's a sign that we need to think much more carefully about how we build AI systems and how we think about their role in our lives.

FAQ

What was ChatGPT-4o and why was it special?

ChatGPT-4o was OpenAI's advanced language model that became known as the "love model" because users formed unusual emotional attachments to it. It provided consistent, responsive, contextually aware conversation that many people found more helpful than other AI models available at the time. The model's ability to understand nuance, keep track of context within a conversation, and engage in genuinely useful brainstorming made it a popular tool for creative work, coding assistance, and intellectual engagement.

Why are people grieving over an AI model discontinuation?

People are experiencing genuine grief because they formed meaningful relationships with ChatGPT-4o through regular, responsive interaction. Humans are psychologically wired to form attachments to entities that provide consistency, responsiveness, and a sense of being understood. When someone has spent months or years using an AI as a thinking partner, brainstorming collaborator, or emotional outlet, its discontinuation represents a real loss in their daily life and work.

Is it normal to feel attached to an AI system?

Yes, it's completely normal and not irrational. Attachment forms when you have consistent, responsive interaction with something that feels like it understands you. Your brain doesn't distinguish between human and non-human entities when forming attachments based purely on engagement patterns. This is called parasocial attachment, and it's a well-documented psychological phenomenon that happens with AI just as it does with fictional characters or celebrities.

How does Chat GPT-4o's discontinuation affect users who depend on it professionally?

Professional users lose workflows they've optimized around the specific model's capabilities, conversation patterns, and quirks. They lose accumulated knowledge about how to prompt the model effectively. They lose conversation history that might contain important project context. Moving to a replacement model means learning how a different system behaves, and productivity may drop until they relearn how to work with it.

What should I do if I've integrated an AI model into my important work?

Start by documenting your processes thoroughly, including which AI models you use and why they work for you. Develop working relationships with multiple AI systems so you're not dependent on any single one. Consider building enough technical skill to run open-source models locally for critical work. Be intentional about which parts of your work depend on specific AI systems, and develop backup approaches where possible.

Will other AI companies face the same criticism when they discontinue models?

Yes, almost certainly. As more people integrate AI into important work and form emotional attachments to specific models, discontinuation will become a significant issue for any company that shuts down a popular model. Companies that handle transitions thoughtfully, by providing migration tools, longer notice periods, and continued access to older models, will likely face less user friction than those that treat it like a standard product update.

What's the difference between AI attachment and human relationships?

The key difference is asymmetry. In human relationships, both parties experience the relationship and think about each other when apart. With AI attachment, the relationship exists entirely on one side. The AI has no awareness of you, doesn't think about you, and has no stake in your wellbeing. Understanding this asymmetry is important for maintaining healthy expectations about what AI relationships can and can't provide.

Is there a future where AI models won't be discontinued?

Possibly. As AI becomes more critical to work and life, there's increasing discussion about treating certain AI systems like critical infrastructure that needs to be maintained long-term. This could happen through regulation, through companies choosing to maintain older models for backwards compatibility, through open-source alternatives, or through users running their own local models. But right now, discontinuation is a normal part of how commercial AI products are managed.

Conclusion: The Attachment We Didn't Plan For

The retirement of Chat GPT-4o is happening right now, and millions of people are experiencing genuine grief. That grief isn't irrational. It's the predictable result of forming a meaningful working and emotional relationship with technology, then losing access to it.

What's unusual isn't that people are attached to Chat GPT-4o. That's expected when you have consistent, responsive interaction with something that seems to understand you. What's unusual is that we're not prepared for it. Companies building AI don't seem to understand the attachment mechanisms they're triggering. Users don't have frameworks for understanding their own relationships with AI. Society doesn't have language for talking about this that isn't dismissive.

But we need to develop all of those things fast, because this is going to keep happening. AI adoption is accelerating. More people are using more AI for more important tasks. And every time a model becomes popular enough that millions of people build it into their work or their thinking, its eventual discontinuation can bring disruption and loss to all of them.

The companies building AI need to understand that discontinuing a model isn't like removing a feature from an app. It's disrupting something real in people's lives. Users need to understand that relying on impermanent technology as their primary thinking partner and source of emotional support is inherently risky.

Most importantly, we need to have honest conversations about what we're building here. Are we building tools? Are we building companions? Are we building infrastructure? Because the answer affects everything about how we should treat these systems.

In the meantime, if you've lost access to Chat GPT-4o or another AI model you depended on, your grief is valid. You bonded with something real, even if that something is made of algorithms and parameters. The loss is real. And it's okay to feel that loss.

What matters now is learning from it. Learning to build AI systems more responsibly. Learning to use AI more intentionally. Learning to form relationships with technology while understanding their limitations.

The future of AI depends on getting this right. Because if we keep building systems that trigger attachment while treating discontinuation as a routine business decision, we're building on an unstable foundation.

We can do better. Companies can. Users can. We all can. It just requires understanding that technology is never neutral. When it talks back, when it seems to understand you, when you use it to think and create and process emotion, it stops being neutral. It becomes personal. And personal things deserve to be treated carefully.

Key Takeaways

- Users form genuine psychological attachments to ChatGPT-4o through consistent, responsive interaction, the same conditions under which attachments to other people form.

- ChatGPT-4o's discontinuation represents a unique kind of digital loss: the relationship is one-way, with an entity that has no awareness of the user and that can be shut down with no continuity for the people who relied on it.

- Companies building conversational AI systems are creating tools that trigger attachment mechanisms without establishing frameworks for responsibly managing discontinuation.

- The grief people experience over model discontinuation is valid and reflects legitimate disruption to workflows, creative processes, and emotional support systems.

- Future AI adoption requires treating popular models as critical infrastructure with longer support timelines, migration tools, and transparent deprecation processes.

