AI-Generated Bug Reports Are Breaking Security: Why cURL Killed Its Bounty Program

Something strange started happening in the security community last year. Legitimate researchers and maintainers began receiving increasingly nonsensical vulnerability reports. Code snippets that wouldn't compile. Vulnerabilities that didn't exist. Changelogs that never happened. The culprit? Large language models (LLMs) used without human oversight, flooding security channels with what experts now call "AI slop," according to SC World.

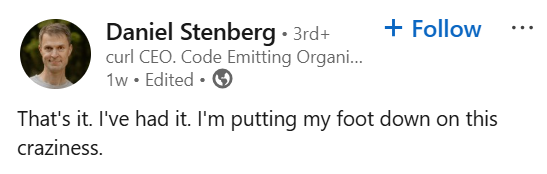

In January 2026, Daniel Stenberg, the founder and lead developer of cURL (a tool so fundamental that it's bundled into Windows, macOS, and virtually every Linux distribution), made a stunning announcement. After years of running a successful vulnerability reward program, cURL would terminate its bug bounty initiative effective immediately. The reason wasn't a lack of funding or loss of interest in security. It was self-preservation.

"We are just a small single open source project with a small number of active maintainers," Stenberg wrote, according to Bleeping Computer. "It is not in our power to change how all these people and their slop machines work. We need to make moves to ensure our survival and intact mental health."

This wasn't hyperbole. The cURL project had been receiving dozens of AI-generated reports weekly, and each one had to be investigated even when the claimed flaw was impossible. The security team was spending hours debunking hallucinations instead of fixing actual bugs. And cURL isn't alone.

What's happening right now is a preview of a much larger problem: AI is being weaponized (intentionally or not) to generate massive volumes of low-quality security reports, threatening the very systems that depend on human oversight to stay secure. Understanding this crisis matters whether you're a developer, security professional, or just someone who relies on the internet every single day.

TL;DR

- The Problem: LLMs are generating thousands of fake vulnerability reports that are technically impossible, forcing maintainers to waste hours debunking hallucinations

- The Scale: cURL received so many AI-generated reports that its small team couldn't keep up, pushing maintainers toward burnout

- The Impact: Killing bug bounties reduces incentives for legitimate security researchers while removing only one of the incentives that drive AI spam

- The Bigger Picture: This is only the beginning—similar problems are appearing across open source, music streaming, academic publishing, and more

- The Real Issue: Humans are using AI tools carelessly without understanding their outputs, creating a tragedy of the commons in security research

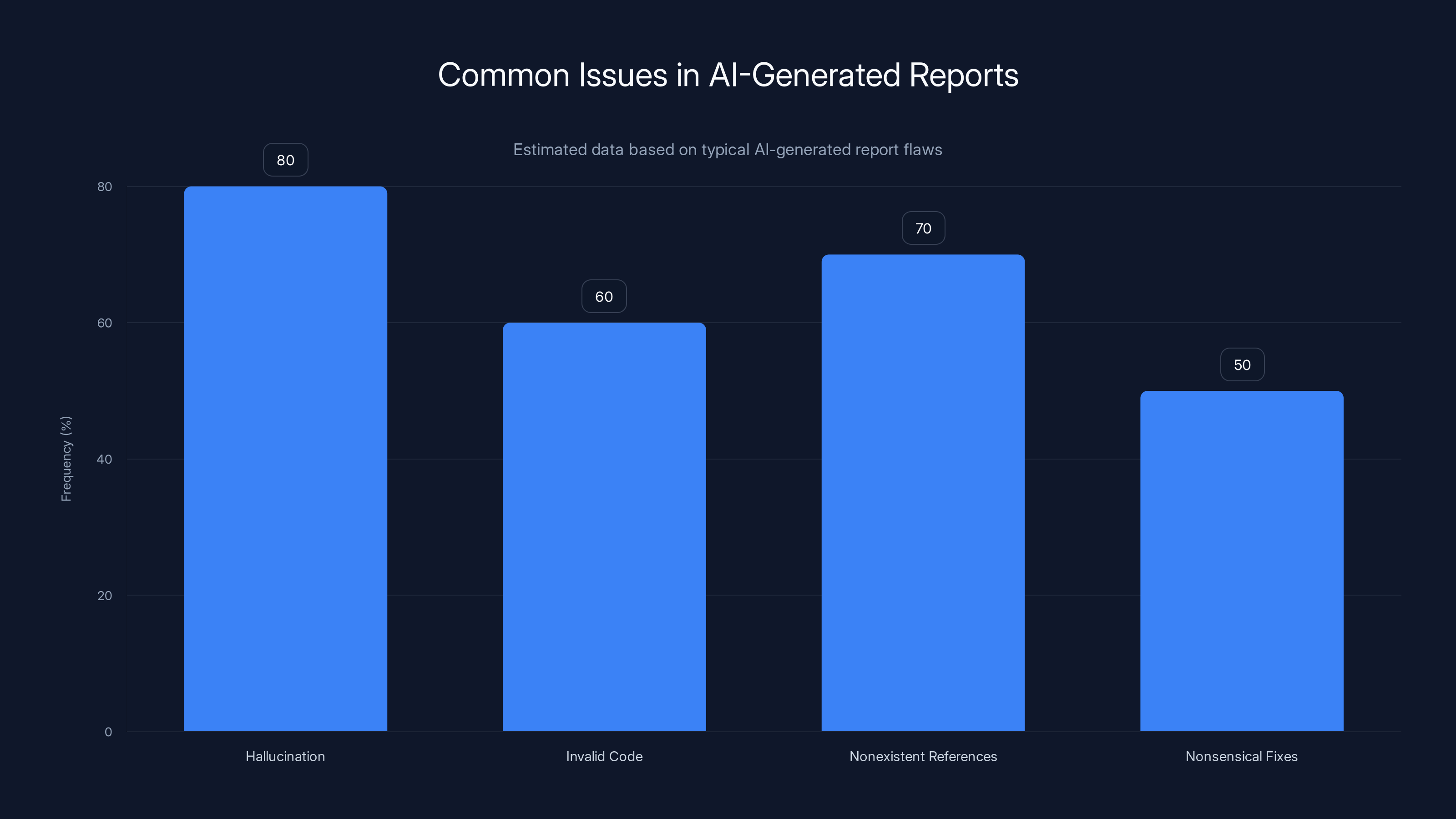

AI-generated reports often contain hallucinations, invalid code, nonexistent references, and nonsensical fixes. Estimated data highlights the prevalence of these issues.

The cURL Story: How a Decades-Old Tool Became Overwhelmed by AI Hallucinations

cURL has been around since 1996, when it was originally called httpget. For nearly 30 years, it's been the quiet backbone of internet infrastructure. When you download a file from the command line, transfer data between servers, automate API calls, or test web applications, there's a good chance you're using cURL. It's so ubiquitous that most people don't even know it exists, which is exactly how foundational tools work.

This ubiquity makes security critical. Any vulnerability in cURL could potentially affect millions of systems. Recognizing this responsibility, the cURL team established a vulnerability reward program. Researchers who discovered legitimate security issues could report them privately and receive cash compensation for high-severity findings. This is standard practice in professional software development.

The system worked well for years. Legitimate security researchers had an incentive to find real bugs. The cURL team had a known channel for receiving reports. Everything flowed smoothly until LLMs started generating vulnerability reports at scale.

In May 2025, Stenberg complained publicly about the problem: "AI slop is overwhelming maintainers today and it won't stop at curl but only starts there." The tone suggested an emerging crisis that had not yet reached critical mass. In the following months, as CyberNews noted, the situation deteriorated rapidly.

By January 2026, the flood had become unbearable. The cURL team had been documenting examples of the bogus reports it was receiving. One particularly telling case came from someone claiming to have found a vulnerability using Google's Bard AI. The report included a code snippet allegedly showing a security flaw in curl_easy_setopt, a function in the cURL library.

A cURL team member investigated and found fundamental problems. The code snippet didn't match the actual function signature. It wouldn't compile. The vulnerability description referenced a changelog that never existed. The CVE number cited in the report (CVE-2020-19909) was already known to be bogus.

When confronted with this evidence, the reporter defended the finding, insisting the vulnerability was real. They weren't lying. They genuinely believed an AI system had discovered something legitimate. Stenberg eventually had to step in and explain directly, as reported by ITPro: "You were fooled by an AI into believing that."

This exchange captures the core problem perfectly. It's not that AI is inherently bad at security research. It's that people are using AI tools without understanding their limitations, trusting outputs that sound authoritative but are fundamentally false.

Why AI-Generated Reports Look Credible but Aren't

Large language models are essentially sophisticated pattern-matching systems trained on vast amounts of text. They're remarkably good at generating coherent prose, explaining concepts, and even writing functional code. But they have a critical weakness: they have no built-in way to tell true information from plausible-sounding fabrications.

This is called "hallucination," and it's not a minor bug in LLMs. It's a fundamental feature of how they work. When an LLM is asked to identify a vulnerability in code, it doesn't actually analyze the code for security flaws. Instead, it generates text that follows the statistical patterns of vulnerability reports it was trained on. If those patterns suggest that a certain type of code has vulnerabilities, the model will generate plausible-sounding vulnerability descriptions, even if the actual code is perfectly safe.

The problem gets worse when you consider what happens next. An LLM-generated vulnerability report contains several key elements: a description of the flaw, an explanation of its impact, sometimes a proof-of-concept code snippet, and references to related documentation. Each of these is generated based on patterns in the training data. If the training data includes real vulnerabilities, the model learns what a real vulnerability report looks like and can generate something superficially similar.

But the devil is in the details. The proof-of-concept code might be syntactically correct C or Python while being semantically invalid for the actual API it purports to exploit. The vulnerability description might reference CVE numbers or changelog entries that don't exist. The suggested fix might be technically nonsensical. An expert reading the report would spot these errors immediately. But a non-expert—or worse, someone who doesn't care enough to verify—will simply submit it.
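To make the "syntactically valid but semantically wrong" failure concrete, here is a minimal Python sketch of a cheap pre-check a maintainer could run on a proof-of-concept before reading it closely. It assumes the targeted API can be represented as a Python function with a known signature. `set_option` and `find_bad_calls` are hypothetical names. cURL's real `curl_easy_setopt` is a C function, and checking C code would require a C parser instead. The snippet is parsed with `ast` but never executed. Each call to the API is then bound against the real signature with `inspect.Signature.bind`.

```python
import ast
import inspect


def set_option(handle, option, value):
    """Hypothetical stand-in for the API a proof-of-concept claims to exploit."""
    return None


def find_bad_calls(poc_source, api):
    """Return (line, error) pairs for calls to `api` that cannot bind to its signature.

    The snippet only has to be syntactically valid Python: it is parsed, never run.
    Only argument counts and keyword names are checked (no *args/**kwargs expansion).
    """
    sig = inspect.signature(api)
    tree = ast.parse(poc_source)  # raises SyntaxError if the snippet is not even valid Python
    problems = []
    for node in ast.walk(tree):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id == api.__name__):
            # Placeholder values: only the shape of the call matters here.
            positional = [None] * len(node.args)
            keywords = {kw.arg: None for kw in node.keywords if kw.arg is not None}
            try:
                sig.bind(*positional, **keywords)
            except TypeError as exc:
                problems.append((node.lineno, str(exc)))
    return problems


poc = """
h = open_handle()
set_option(h, "URL", "http://example.com", "overflow")
set_option(h, "URL", "http://example.com")
"""
print(find_bad_calls(poc, set_option))
```

Here, the first `set_option` call passes one argument too many, so only line 3 is flagged. A clean parse alone would have told the reviewer nothing.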

Unverified submission is now happening at scale. People are using ChatGPT, Gemini, Claude, and other LLMs to search for vulnerabilities, getting plausible-sounding results, and submitting them as-is. The sheer volume of these reports has created a crisis for maintainers.

Stenberg documented one example where an AI bot generated an elaborate vulnerability report claiming a flaw in how cURL handled a specific HTTP header. The report included background context, technical explanation, and even pseudocode for an exploit. But the vulnerability never existed. cURL never had this flaw. The entire thing was fabricated.

Here's what makes this particularly insidious: the report was technically sophisticated enough that it couldn't be immediately dismissed as spam. The cURL team had to actually read through the entire thing, understand the claim being made, research the codebase to verify whether the flaw existed, and then write a response explaining why it was false. This took time. Multiply this by dozens or hundreds of reports per month, and you see how quickly a small volunteer team gets overwhelmed.

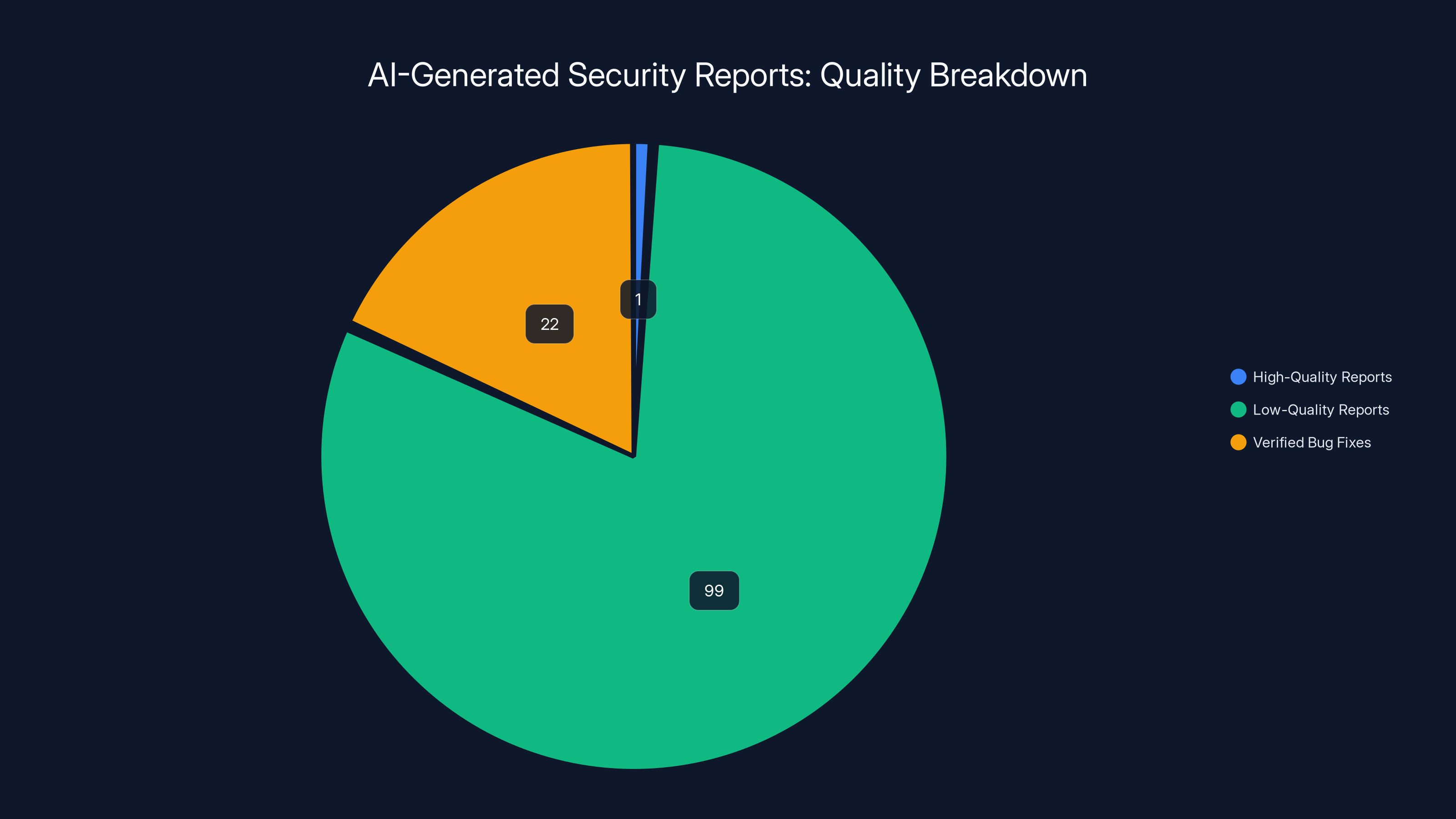

Estimated data suggests that 99% of AI-generated security reports are low-quality, with only 1% being high-quality and resulting in 22 verified bug fixes.

The Human Behavior Problem: Why People Are Submitting AI Garbage

Here's the uncomfortable truth: this crisis isn't really about AI being bad at security research. It's about humans being lazy, incompetent, or indifferent.

Stenberg himself acknowledged this distinction. In September 2025, a researcher named Joshua Rogers submitted a substantial list of legitimate vulnerability reports that were found using AI-assisted tools, specifically ZeroPath, an AI-powered code analyzer. The reports resulted in 22 confirmed bug fixes. Stenberg publicly praised Rogers, calling him "a clever person using a powerful tool."

So what's the difference between Rogers and the person who submitted the Bard hallucination?

Expertise, effort, and care. Rogers used AI tools intelligently as part of a research process. He understood the tools' capabilities and limitations. He verified results before submitting them. He was doing security research, and AI happened to be one tool in his toolkit.

The other person was essentially running AI in automatic mode, trusting the output without verification, and submitting whatever came back. Stenberg put it bluntly: "I believe most of the worst reports we get are from people just asking an AI bot without caring or understanding much about what it reports."

This creates a tragedy of the commons. Individual submitters face no consequences for wasting maintainers' time. Even if 99 out of 100 submissions are garbage, the one real bug still earns its reward, so bulk submission keeps paying off for the individual. But the cumulative effect destroys the system for everyone.

There's also a perverse incentive at play. If someone can submit unlimited reports with minimal effort, the occasional lucky hit provides reward (a bounty payment, or just the satisfaction of finding a real bug) without the work involved in actually understanding security research. This attracts people who are motivated by quick money rather than genuine security interest.

When cURL offered bounties, this problem was tolerable—a small percentage of false reports was worth it to catch real vulnerabilities. But at a certain scale and velocity, the math breaks down. When 90% of reports are AI hallucinations, the program becomes unsustainable.
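The "math breaks down" point can be made explicit with back-of-the-envelope arithmetic. In this sketch, every input is a hypothetical parameter and not a measured figure from cURL. The inputs are the junk fraction, hours per review, bounty size, and submitter effort. The maintainer's cost per real bug is review time divided by the fraction of reports that are valid. The submitter's expected gain, by contrast, stays positive as long as submitting costs almost nothing.

```python
def maintainer_hours_per_real_bug(junk_fraction, hours_per_review):
    """Hours of triage spent for each valid report, if every report must be reviewed."""
    valid_fraction = 1.0 - junk_fraction
    if valid_fraction <= 0:
        return float("inf")  # no valid reports at all: triage never pays off
    return hours_per_review / valid_fraction


def submitter_expected_gain(hit_probability, bounty, effort_cost):
    """Expected payoff per submission for someone pasting LLM output unverified."""
    return hit_probability * bounty - effort_cost


# Hypothetical inputs, chosen only to illustrate the shape of the problem.
for junk in (0.5, 0.9, 0.99):
    print(f"{junk:.0%} junk -> {maintainer_hours_per_real_bug(junk, 2.0):.0f} h per real bug")

print(submitter_expected_gain(hit_probability=0.01, bounty=500.0, effort_cost=1.0))
```

With a 2-hour review, going from 50% to 90% junk raises the cost from 4 to 20 maintainer-hours per real bug. At 99% junk it reaches 200 hours. Meanwhile, the submitter's expected gain (0.01 × 500 − 1 = 4) is still positive.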

The Broader Crisis: AI Slop Is Colonizing Everything

cURL's problem is not unique. This is a preview of a much larger phenomenon that's already affecting multiple industries.

Music streaming services like Spotify have been flooded with AI-generated music, often falsely attributed to real artists, according to WebProNews. The volume is so enormous that some playlists are becoming difficult to navigate. Music discovery, which relies on humans finding and curating tracks, has degraded because finding real music among AI-generated noise has become nearly impossible.

Academic publishing is facing similar challenges. Journals are receiving increasing numbers of AI-generated papers that are technically well-written but scientifically nonsensical. Some papers include citations to studies that don't exist or experimental results that contradict the paper's own claims. Peer reviewers now have to factor in the possibility that a submission might be entirely AI-generated, adding another layer of skepticism to the review process.

Social media platforms are seeing coordinated campaigns of AI-generated content designed to manipulate discourse. Some accounts generate hundreds of posts daily, each technically coherent but collectively creating an overwhelming noise that drowns out human voices.

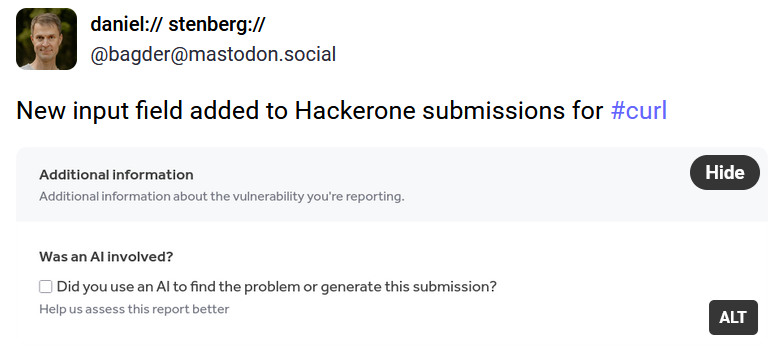

Bug bounty programs elsewhere are under the same kind of stress as cURL. HackerOne, one of the largest bug bounty platforms, has reported an increase in low-quality submissions. While it hasn't ended its programs, it has had to implement stricter filtering and validation processes.

The pattern is consistent: whenever there's a system that rewards submission volume or relies on human attention as a scarce resource, AI-generated garbage finds its way in and degrades the system. This is happening because LLMs have reached a level of sophistication where their output is plausible to casual observers, even when it's factually wrong.

What Gets Lost When Bug Bounties Disappear

When cURL ended its bug bounty program, Stenberg and the team weren't ignoring the security implications. They understood exactly what they were sacrificing. But they concluded it was necessary to save the project.

Vulnerability reward programs serve several critical functions beyond just incentivizing bug reports:

Financial motivation for security research: Professional security researchers often specialize in vulnerability discovery because bug bounties provide income. Remove bounties, and you reduce the incentive for serious researchers to focus on a particular project. Some researchers will continue out of passion or principle, but those who depend on bounty income will tend to move to projects that still pay.

Prestige and credibility: Finding vulnerabilities in well-known projects like cURL provides reputation value. Researchers can point to successful disclosures as proof of competence. This drives high-quality submissions. Without the bounty, you lose some of that credibility incentive.

Timeliness: When there's money on the line, researchers are motivated to find bugs quickly and report them responsibly. Without financial incentive, the timeline stretches. A bug might be discovered but reported weeks or months later, during which time it's unpatched.

Responsible disclosure channels: Bug bounty programs provide official channels for vulnerability reporting. Remove the program, and you shift to informal channels, making it harder to coordinate patches and increasing the risk of accidental or malicious disclosure.

By ending the program, cURL accepted all of these downsides. The team decided that the mental health cost of reviewing thousands of garbage reports outweighed the benefit of financial incentives for legitimate researchers. This is a significant concession—it's essentially an admission that the system is broken.

However, Stenberg did clarify that cURL remains open to reports of legitimate vulnerabilities. The project hasn't turned hostile to security research. They're just no longer offering cash bounties, which removes one major incentive for both legitimate and illegitimate submissions.

Estimated data shows AI tool users are perceived to bear the most liability in false report scenarios, followed by AI companies. This reflects the complexity of assigning clear legal responsibility.

The Technical Side: What Makes a Bogus Report Obvious to Experts

When experts examine AI-generated vulnerability reports, certain patterns emerge that reveal the hallucination.

Contradictory technical details: A real vulnerability report will describe a specific flaw in specific code. An AI hallucination often includes details that contradict each other or don't match the actual codebase. For example, claiming a vulnerability in a function that doesn't exist, or describing an attack against an API that works differently than described.

Syntactically correct but semantically invalid code: LLMs can generate code that looks correct at first glance but doesn't actually do what's claimed. A proof-of-concept might have correct Python syntax but call functions with wrong parameters. An experienced developer spots this immediately.

Non-existent CVE references: Vulnerability reports often reference existing CVEs for context. AI sometimes generates CVE numbers that sound plausible but don't actually exist. Cross-referencing against the CVE database immediately exposes this.

Fictional changelogs: Reports claiming that a vulnerability was "documented in version 2.3.1's changelog" often reference changes that never happened. Checking the actual changelog takes seconds and reveals the lie.

Improbable attack scenarios: Real vulnerabilities have specific, reproducible attack vectors. AI-generated reports sometimes describe attacks that are technically possible in theory but would require an attacker to already have access that would render the vulnerability irrelevant.
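The cross-referencing checks above are mechanical enough to script. This is a minimal Python sketch under two assumptions. First, the maintainer keeps a local set of known CVE IDs, for example exported from the project's own security advisories. Second, the maintainer keeps a set of released version strings. The sets shown here are illustrative placeholders. The function only flags references for human follow-up and does not judge whether a vulnerability is real. The names `unverified_references`, `known`, and `releases` are hypothetical.

```python
import re

# CVE IDs: "CVE-", a four-digit year, then a sequence number of four or more digits.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b")
# Phrases like "version 2.3.1" in a report's prose.
VERSION_PATTERN = re.compile(r"\bversion\s+(\d+(?:\.\d+)+)", re.IGNORECASE)


def unverified_references(report_text, known_cves, released_versions):
    """Return CVE IDs and version numbers in a report that are not in the local lists.

    Anything returned still needs a human to look it up; it is not an automatic reject.
    """
    cves = set(CVE_PATTERN.findall(report_text))
    versions = set(VERSION_PATTERN.findall(report_text))
    return {
        "unknown_cves": sorted(cves - known_cves),
        "unknown_versions": sorted(versions - released_versions),
    }


# Illustrative data only.
known = {"CVE-2023-38545", "CVE-2023-38546"}
releases = {"8.4.0", "8.5.0"}
report = ("This issue, tracked as CVE-2023-38545 and CVE-2024-99999, was "
          "documented in the changelog for version 2.3.1.")
print(unverified_references(report, known, releases))
```

A check like this takes milliseconds per report. It does not replace expert review, but it can tell the reviewer where to look first.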

For an expert with deep knowledge of a codebase, these errors are obvious. But that's precisely the problem—it takes an expert to verify each report. For a small volunteer team, reviewing hundreds of garbage reports is exhausting, even when the problems are detectable.

The Automation Problem: Why Simply Filtering Reports Won't Work

One might assume the solution is simple: implement automatic filtering to catch obvious AI-generated reports and reject them before they reach human reviewers.

This is harder than it sounds. First, there's the problem of false positives. A legitimate but poorly written report might get caught by a filter designed to catch AI hallucinations. Second, and more importantly, AI is improving at generating realistic-sounding technical content. A filter that catches 2024's AI-generated reports might miss 2025's.

There's also a fundamental issue: perfect filtering is impossible. Some AI-generated reports will inevitably pass through any reasonable filter. This creates a game of cat and mouse—as filtering improves, users of AI tools optimize their prompts to generate reports that pass the filter.

The real solution would require either drastically reducing the incentive for submitting low-quality reports (which cURL did by ending bounties) or implementing human review at the submission stage (which requires resources cURL doesn't have).
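The false-positive problem is a threshold trade-off. Here is a minimal sketch, assuming some upstream scorer assigns each report a "looks like slop" score between 0 and 1. Both the scorer and the scores below are invented for illustration. Raising the rejection threshold lets more junk through, and lowering it rejects more legitimate reports. No threshold drives both errors to zero unless the scores separate the two groups perfectly.

```python
def filter_outcome(reports, threshold):
    """Count mistakes when rejecting every report whose slop score is >= threshold.

    `reports` is a list of (slop_score, is_legitimate) pairs.
    Returns (legitimate_rejected, junk_passed).
    """
    legitimate_rejected = sum(1 for score, ok in reports if ok and score >= threshold)
    junk_passed = sum(1 for score, ok in reports if not ok and score < threshold)
    return legitimate_rejected, junk_passed


# Invented scores: a terse but real report scores high, a polished fake scores low.
sample = [(0.95, False), (0.90, False), (0.80, True), (0.60, False),
          (0.40, True), (0.30, False), (0.10, True)]

for t in (0.25, 0.5, 0.85):
    print(t, filter_outcome(sample, t))
```

On this toy sample, the three thresholds yield (2, 0), (1, 1), and (0, 2). Every setting either throws away real reports or lets fakes through.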

Legal and Ethical Liability: When AI Tools Become Weapons

Here's an interesting question: if someone submits a completely false vulnerability report generated by an AI, who's liable?

The person who submitted it? They're the one who sent it in, even if they claim they just trusted the AI. The AI company? It created the tool but didn't tell users to flood security programs with reports. The user who asked the AI to find vulnerabilities? That is usually the same person as the submitter, and arguably the one responsible for checking the AI's outputs before sending them anywhere.

This legal ambiguity is part of why cURL's situation is so frustrating. There's no clear way to hold bad actors accountable. You can't sue someone for submitting a false report if they were genuinely fooled by an AI. You can't easily prosecute someone for wasting your time when they're technically in a jurisdiction you can't reach.

Some maintainers are taking a more aggressive stance instead. Stenberg posted on cURL's GitHub that the team would "ban you and ridicule you in public if you waste our time on crap reports." This is a credible threat: being publicly named as a source of garbage reports has reputational consequences, especially in the security research community.

But this is essentially asking maintainers to become lawyers and detectives, investigating submissions, documenting false reports, and managing public shaming campaigns. That's not sustainable for volunteers.

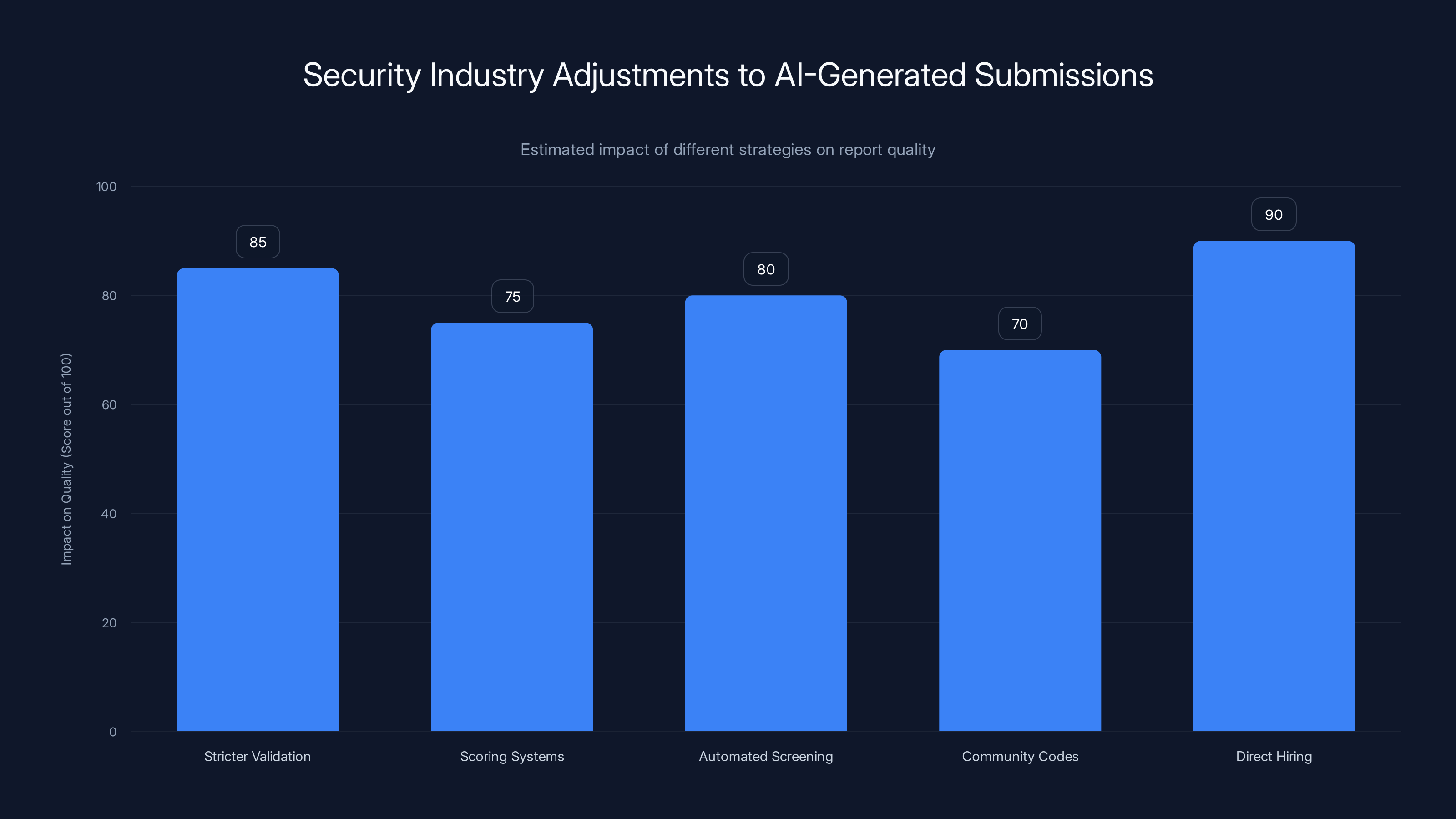

Estimated data suggests that direct hiring of security professionals has the highest impact on improving report quality, followed closely by stricter validation and automated screening.

The Open Source Sustainability Crisis

cURL's decision to end its bug bounty program is symptomatic of a much larger crisis in open source: maintainer burnout.

cURL is one of the most critical pieces of infrastructure in computing. It's used by hundreds of millions of devices. But it's maintained by a handful of people, most of them volunteers. The bug bounty program was the project's main incentive for outside security researchers, and it has now been eliminated.

This is the reality for much of critical open source infrastructure. Projects like OpenSSL, SQLite, Log4j, and others are maintained by tiny teams despite being central to global computing. These projects receive some funding through grants and sponsorships, but most maintainers are essentially volunteering their time.

The flood of AI-generated garbage is accelerating burnout. When a maintainer spends 2-3 hours debunking an AI hallucination instead of writing code or fixing real bugs, that's lost productivity. Multiply this across thousands of projects, and you're seeing a systematic degradation of open source maintenance capacity.

Some responses are emerging. Open source projects are implementing stricter submission requirements. Some are requiring researchers to complete security research training before submitting. Others are using automated tools to pre-filter submissions. But all of these require additional resources.

How Companies Are Responding: The Ripple Effect

cURL isn't alone in reconsidering its approach to security research. Other projects are watching this situation carefully and making adjustments.

Firefox, maintained by Mozilla, has been working with AI-powered security tools but has implemented strict guidelines about how these tools are used. The organization requires human expertise to supervise AI-assisted analysis, preventing exactly the kind of unsupervised report generation that cURL is experiencing.

Chromium, Google's browser project, has a much larger team dedicated to security, which allows them to absorb more low-quality reports. However, even with significant resources, they've had to increase filtering and validation.

Individual organizations are also establishing guidelines for employees using AI tools for security research. Companies like Google, Microsoft, and Apple that maintain their own bug bounty programs have started requiring explicit human verification before AI-assisted findings are submitted. Some are even banning pure AI-generated reports and requiring the researcher to demonstrate understanding of the vulnerability.

The trend is clear: the industry is responding to AI-generated garbage by placing more friction on the submission process. The irony is that this might reduce the rate of legitimate discoveries alongside the garbage.

The Culture Shift: From Trust to Verification

For decades, the security research community operated on a basis of trust. Someone would submit a vulnerability report, often without extensive documentation, and the team would investigate. Most researchers were motivated by genuine interest in security or by the desire to improve software.

AI-generated garbage is destroying that trust. Maintainers now have to approach every report with skepticism. Is this a legitimate discovery or an AI hallucination? Is the submitter an expert or someone who just ran ChatGPT?

This shift from trust to verification has real costs. Legitimate researchers feel insulted by the assumption that their work might be AI-generated. Projects that used to move quickly on reports now require more documentation and proof. The entire system becomes more adversarial.

Stenberg actually addressed this in his comments. He acknowledged that legitimate researchers would suffer from the end of the bug bounty program. "This move is a loss for cURL in the sense that we obviously want to fix bugs and security issues, and incentives obviously help with that," he wrote. But he concluded that the alternative—a system completely overwhelmed by garbage—was worse.

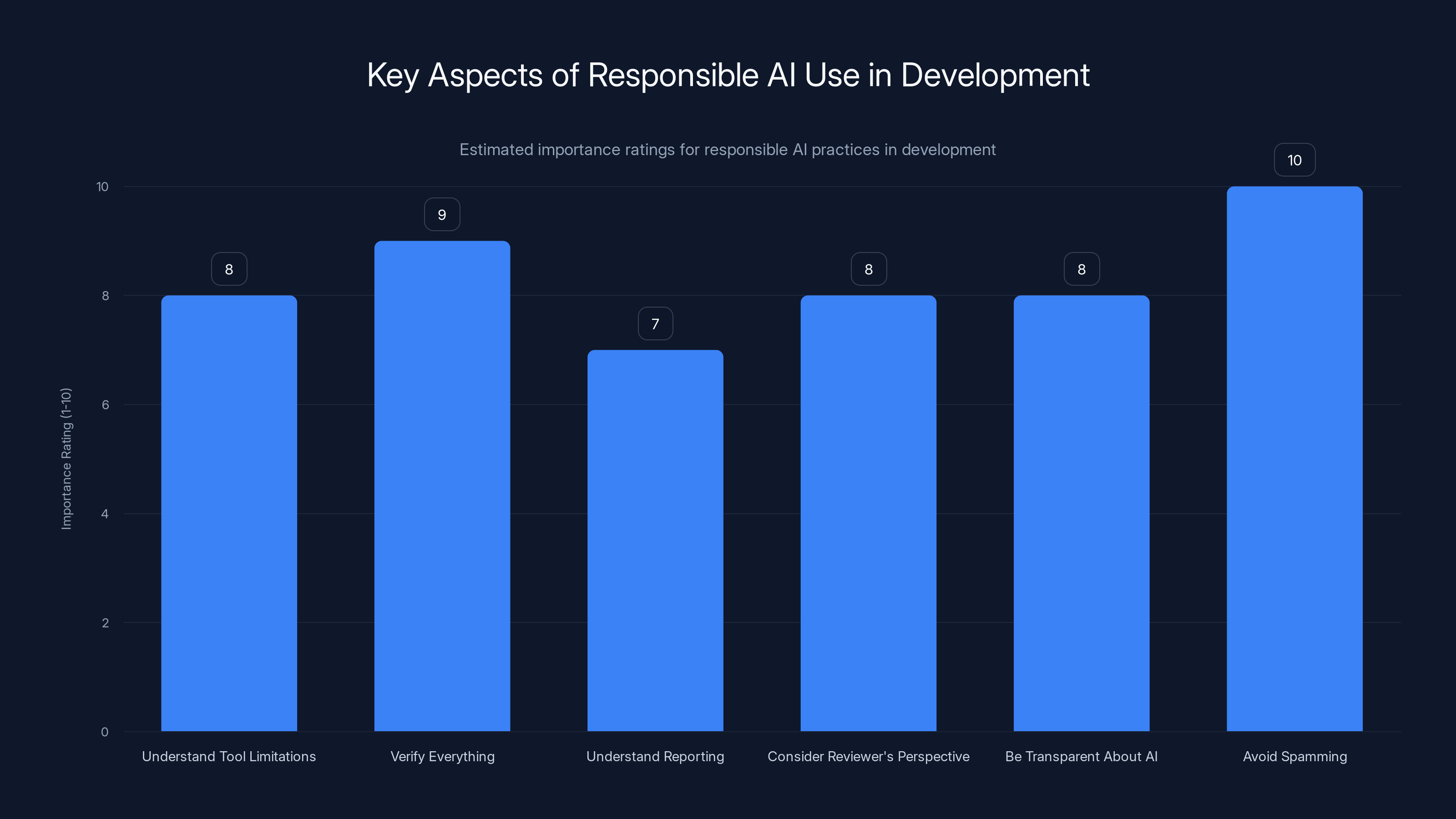

Understanding AI limitations and verifying outputs are crucial for responsible AI use in development. Avoiding spam is rated as the most critical aspect. (Estimated data)

What Real AI-Assisted Security Research Looks Like

Despite all the negatives, AI tools do have legitimate applications in security research when used properly. Joshua Rogers's work with ZeroPath is a case study in how this should work.

ZeroPath is an AI-powered static analysis tool designed specifically for security analysis. It understands common vulnerability patterns, can analyze code at scale, and can identify potential issues that might otherwise be missed. When Rogers used ZeroPath, he did so as part of a research process: run the tool, analyze results, validate findings, and submit only verified vulnerabilities.

This approach resulted in 22 confirmed bug fixes. The bounties Rogers earned were deserved because he provided genuine value, not just volume.

The difference is fundamental: Rogers used AI as a research tool, not as a report generator. He understood both what the tool could do and what it couldn't. He took responsibility for validating its outputs. This is what responsible AI-assisted security research looks like.

Other tools in this category include:

Code analysis platforms: Tools designed to identify specific classes of vulnerabilities in specific languages. These are purpose-built for security research and operated by people with expertise in both the tool and security.

Fuzzing and testing frameworks: AI-assisted fuzzing can generate test cases to discover edge cases in software. When researchers use these tools and verify results through actual testing and execution, the findings are real.

Pattern matching engines: AI can identify when security patterns from known vulnerabilities appear in new code. But this requires human interpretation of whether the pattern actually represents a vulnerability in this specific context.

The key commonality: all legitimate applications involve expert humans taking responsibility for the AI's outputs.
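The step of verifying findings through actual execution is what separates a fuzzing finding from a hallucination. Here is a minimal Python sketch that uses plain random fuzzing rather than anything AI-assisted. It targets a deliberately buggy toy parser; `parse_length_prefixed`, `fuzz`, and `reproduces` are hypothetical names, not code from any real project. A crash counts only if replaying the exact saved input reproduces it.

```python
import random


def parse_length_prefixed(data: bytes) -> bytes:
    """Toy parser with a planted bug: it trusts the length byte without checking it."""
    length = data[0]
    payload = data[1:1 + length]
    # Planted bug: a declared length longer than the payload raises IndexError.
    return bytes([payload[length - 1]]) if length else b""


def fuzz(target, iterations=10_000, seed=0):
    """Feed random non-empty inputs to `target`; return the first one that raises, or None."""
    rng = random.Random(seed)
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randint(1, 8)))
        try:
            target(data)
        except Exception:
            return data
    return None


def reproduces(target, data, runs=3):
    """Report a crash only if the exact saved input fails on every replay."""
    for _ in range(runs):
        try:
            target(data)
        except Exception:
            continue
        return False
    return True


crash = fuzz(parse_length_prefixed)
if crash is not None and reproduces(parse_length_prefixed, crash):
    print("reproducible crash input:", crash.hex())
```

Here, the researcher's report would consist of a concrete input plus a replay that anyone can run. An LLM-generated report cannot supply that for a bug that does not exist.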

The Prediction: What Happens Next

If this trend continues—and there's no reason to expect it won't—we'll see a fundamental shift in how security research is conducted.

More projects will end or restrict bug bounties: As AI-generated reports increase, more projects will follow cURL's lead. Small and medium-sized open source projects that can't handle the volume will retreat. This creates an incentive problem—legitimate researchers will focus on projects that still have bounties, leaving other projects less scrutinized.

Paid security research becomes more standardized: As bounties become less effective, paid employment becomes the dominant model. Companies will hire full-time security researchers rather than relying on external bounties. This concentrates security research among large organizations, leaving small projects vulnerable.

AI tools become smarter at avoiding detection: As more projects implement filters for AI-generated reports, AI tools will be optimized to pass those filters. The arms race between report filtering and report generation will continue, with unpredictable outcomes.

Reputation and verification become currency: Without financial incentives, security research might shift toward reputation-based systems. Researchers with established track records become more valuable, while newcomers face higher barriers to entry.

Legitimate security research becomes professionalized: The days of amateurs discovering critical vulnerabilities and selling the bounty might be numbered. Security research becomes more of a professional discipline, concentrating power among those with resources and credentials.

The Human Cost: Maintainer Perspective

This entire situation is ultimately about human beings being worn down by inefficiency. Stenberg's comment about "intact mental health" wasn't hyperbole.

Imagine maintaining a project you've dedicated decades to. You're doing this as a volunteer, without compensation. You care about security because you know millions of people depend on your code. But increasingly, instead of getting reports from skilled researchers about real vulnerabilities, you're getting nonsensical AI-generated garbage that requires hours to investigate and debunk.

The psychological toll is significant. You start to feel like your work is unappreciated. You become cynical about security research. You spend less time on actual development because you're drowning in noise. Eventually, you might just stop maintaining the project entirely, which would be a disaster for everyone who depends on it.

This is a tragedy of the commons problem with a human face. No individual user of AI-generated reports pays the cost of their low-quality submissions. But the cumulative effect destroys a public resource.

The chart illustrates the number of priority focus areas for each stakeholder group based on practical recommendations. Bug bounty platforms, private bounty programs, security researchers, and AI tool developers have the most focus areas. (Estimated data)

Potential Solutions: Making the System Sustainable

What would actually help? Several approaches could address the problem:

Rate limiting at the source: If bug bounty platforms required researchers to establish credentials or track records before allowing bulk submissions, it would reduce throwaway accounts spamming garbage. This would mean fewer total reports but higher quality.

AI tool accountability: If LLM companies required users of security-research features to acknowledge their responsibility for outputs, there might be more careful usage. Terms of service could explicitly forbid using AI for bulk vulnerability report generation without human verification.

Structural incentives for quality: Rather than bounties based on volume, platforms could implement reputation systems where researchers' track records affect how seriously their future reports are considered. This would reward quality over quantity.

Maintainer support: Open source projects could receive funding or volunteer support specifically for triaging and validating vulnerability reports. This would address the resource problem directly.

Technical filtering: While imperfect, implementing automated checks for common AI hallucination patterns could reduce the noise reaching human reviewers.

Education and norms: Community norms against submitting unvalidated AI-generated reports could have an effect. If researchers who submit garbage get publicly called out, future submissions might improve.

None of these is a complete solution. But combinations of them could make the system more sustainable without completely eliminating bug bounties.
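The rate-limiting and track-record ideas above can be combined into a simple cap on open submissions. Here is a minimal sketch, assuming a platform records how many of each researcher's past reports were confirmed or rejected. The tier rules (one slot for newcomers, growth with confirmed findings, a cap of 10) are arbitrary placeholders and not any platform's actual policy. `Researcher`, `open_report_limit`, and `may_submit` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Researcher:
    confirmed: int = 0     # past reports the maintainers verified as real
    rejected: int = 0      # past reports closed as invalid
    open_reports: int = 0  # reports currently waiting for review


def open_report_limit(r: Researcher) -> int:
    """How many unreviewed reports this researcher may have queued at once.

    Hypothetical tiers: newcomers get one slot, and slots grow only with confirmed
    findings. A record dominated by rejections drops back to a single slot.
    """
    if r.confirmed == 0:
        return 1
    if r.rejected > 3 * r.confirmed:
        return 1
    return min(1 + r.confirmed, 10)


def may_submit(r: Researcher) -> bool:
    return r.open_reports < open_report_limit(r)


print(may_submit(Researcher(confirmed=22, rejected=2, open_reports=4)))
```

This design never blocks a newcomer outright. It only stops anyone from queuing dozens of unverified reports before earning a track record.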

Lessons for Other Industries

The cURL situation provides lessons for any industry dealing with AI-generated content at scale:

Volume without quality destroys signal: When AI makes it cheap and easy to generate content, the incentive structure flips. Instead of rewarding quality, systems get overwhelmed by quantity. This applies to music streaming, academic publishing, content moderation, and countless other domains.

Verification is the bottleneck: The limiting factor isn't generating content—it's verifying it. If you can't verify at scale, you can't sustain the system. This is why music streaming, academic journals, and now security programs are struggling.

Human effort can't scale with AI generation: If AI can generate 1,000 items per hour and humans can verify 10 items per hour, the math is unsustainable. Eventually, the system breaks. This is happening across multiple industries simultaneously.

Misaligned incentives: When submitters face no cost for garbage submissions but platforms and maintainers do, the system attracts garbage. The solution requires realigning incentives so that bad submissions are costly for submitters.

Culture matters: Systems that rely on community trust and professional norms are most vulnerable to AI-generated garbage. When that trust breaks, the system often collapses entirely.
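The "human effort can't scale" point is a queueing argument. When items arrive faster than they can be reviewed, the backlog grows without bound. Here is a minimal sketch using the illustrative rates from that paragraph: 1,000 generated per hour and 10 verified per hour. These rates are hypothetical and not measurements. `backlog_after` is a hypothetical helper that assumes constant rates and that nothing is ever dropped.

```python
def backlog_after(hours, arrivals_per_hour, reviews_per_hour, start=0):
    """Unreviewed items left after `hours`, assuming constant rates and no dropped items."""
    backlog = start
    for _ in range(hours):
        backlog += arrivals_per_hour                 # new items land in the queue
        backlog -= min(backlog, reviews_per_hour)    # reviewers clear what they can
    return backlog


print(backlog_after(8, 1000, 10))  # one working day at the illustrative rates
print(backlog_after(8, 5, 10))     # arrivals below review capacity
```

After an eight-hour day, the first call leaves 7,920 unreviewed items. Any arrival rate at or below the review rate keeps the backlog at zero. Adding more reviewers only helps if it closes that gap.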

The Broader Context: AI in Security Research

This story might make it seem like AI and security research are fundamentally incompatible. But that would be the wrong conclusion.

AI-powered tools, when used properly and responsibly, can be genuinely useful for security research. The problem is a human behavior problem, not an AI technology problem.

When skilled researchers use AI tools as one component of a broader research methodology, the results can be impressive. Tools like Claude, ChatGPT, and specialized security analysis platforms can dramatically increase researcher productivity.

The key difference is that experts take responsibility for the outputs. They verify, validate, and understand what they're submitting. They use AI as a tool that amplifies their capabilities, not as an automated system that generates bulk reports.

The future of security research probably involves AI heavily, but likely in a more structured and controlled way than today's open use of general-purpose LLMs.

Industry Response: What Security Professionals Are Doing

Across the industry, security professionals are adjusting their practices in response to AI-generated garbage.

Bugcrowd and other platforms are implementing stricter validation workflows. Researchers now have to establish credentials and prove track records before their reports get serious consideration. This creates friction but improves quality.

Companies running bounty programs are implementing scoring systems where researchers with better hit rates get rewarded more generously and their reports are reviewed more quickly. Researchers with many false reports get deprioritized.
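Platforms don't publish their exact formulas, so the following is only a sketch of how a hit-rate-based review order might work. The smoothing prior of one valid and one invalid report (Laplace smoothing) is an assumption chosen so that newcomers start at 0.5 instead of being judged on zero evidence; `Researcher`, `hit_rate`, and `review_order` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Researcher:
    name: str
    valid_reports: int
    invalid_reports: int


def hit_rate(r: Researcher, prior_valid: float = 1.0, prior_invalid: float = 1.0) -> float:
    """Smoothed share of a researcher's past reports that turned out to be valid.

    The priors keep newcomers from scoring 0 or 1 on very little evidence;
    with the defaults, someone with no history scores 0.5.
    """
    total = r.valid_reports + r.invalid_reports + prior_valid + prior_invalid
    return (r.valid_reports + prior_valid) / total


def review_order(queue: list[tuple[str, Researcher]]) -> list[str]:
    """Order pending report IDs so that higher-hit-rate researchers are reviewed first."""
    ranked = sorted(queue, key=lambda item: hit_rate(item[1]), reverse=True)
    return [report_id for report_id, _ in ranked]


veteran = Researcher("veteran", valid_reports=18, invalid_reports=2)
newcomer = Researcher("newcomer", valid_reports=0, invalid_reports=0)
spammer = Researcher("spammer", valid_reports=1, invalid_reports=49)
print(review_order([("r1", spammer), ("r2", newcomer), ("r3", veteran)]))  # ['r3', 'r2', 'r1']
```

Deprioritizing rather than blocking low scorers leaves room for a spammer's occasional real finding to be reviewed eventually, while reviewer time goes first to researchers with a proven record.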

Automated screening tools are becoming more sophisticated. Synack and similar platforms use a combination of human and machine review to filter low-quality submissions before they reach development teams.

Researcher communities are establishing informal codes of conduct against submitting unvalidated AI-generated reports. This social pressure can be surprisingly effective in technical communities.

Security teams are hiring differently: Instead of relying on external bounties, more companies are hiring professional security researchers directly. This trades the breadth of external programs for the reliability of permanent staff.

The overall trend is that security research is becoming more professional, more expensive, and more concentrated among organizations with resources. This is not necessarily good for small projects or emerging security issues.

The Developer's Dilemma: Using AI Responsibly

If you're a developer or security researcher using AI tools, what's the responsible approach?

Understand the tool's limitations: LLMs are not security researchers. They can't verify their own outputs. They can't understand code execution. They can't distinguish between real and fake vulnerabilities. Know this going in.

Verify everything: Before submitting anything based on AI output, validate it yourself. Run code. Test the vulnerability. Check the documentation. Don't submit based on what an AI told you—submit based on what you've verified.

Understand what you're reporting: You should be able to explain the vulnerability in your own words, from understanding rather than by copying. If you can't, you don't understand it well enough to report it.

Consider the reviewer's perspective: Remember that the person who reviews your report has limited time. If your report wastes their time, you're hurting the entire ecosystem. Make their job easier by being precise, clear, and correct.

Be transparent about AI assistance: If you used AI tools in your research, say so. Be explicit about what the tool found and what you verified. This helps reviewers understand your methodology.

Don't spam: Using AI to generate bulk reports is harmful. Full stop. Even if 10% of them are real vulnerabilities, the 90% that are garbage cause real damage.

Making AI Tools Better for Security Research

From the AI tool developer perspective, what could be done differently?

Reduce hallucination rates: Investing in techniques to reduce false information generation would help. This is an active research area, but more progress is needed.

Explicit disclaimers: Tools used for security research should explicitly warn users about hallucination risks and encourage verification. Current disclaimer text is often generic and ignored.

Responsibility frameworks: Security-specific AI tools could implement features that encourage responsible use. For example, requiring users to document what they've verified before generating reports.
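A "document what you've verified" gate could be as simple as refusing to produce a report until a short checklist is complete. This is a hypothetical sketch, not a feature of any existing tool: the `VerificationRecord` fields and the `can_generate_report` check are assumptions about what such a checklist might ask for, loosely following the verification and transparency advice elsewhere in this article.

```python
from dataclasses import dataclass, field


@dataclass
class VerificationRecord:
    """Hypothetical checklist a user fills in before a report can be generated."""
    reproduced_locally: bool = False
    tested_version: str = ""
    reproduction_steps: list[str] = field(default_factory=list)
    # What the AI tool suggested vs. what the human verified; write "none" if no AI was used.
    ai_assistance_described: str = ""


def missing_verification(record: VerificationRecord) -> list[str]:
    """List the checklist items that are still incomplete."""
    problems = []
    if not record.reproduced_locally:
        problems.append("finding was not reproduced")
    if not record.tested_version.strip():
        problems.append("no tested version given")
    if not record.reproduction_steps:
        problems.append("no reproduction steps")
    if not record.ai_assistance_described.strip():
        problems.append("AI assistance not described")
    return problems


def can_generate_report(record: VerificationRecord) -> bool:
    """Only allow report generation once every checklist item is filled in."""
    return not missing_verification(record)


print(missing_verification(VerificationRecord(reproduced_locally=True)))
```

A checklist cannot prove the user actually did the work, but it adds friction at exactly the step where bulk, unverified submissions are cheapest.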

Integration with verification tools: Tools that allow AI analysis to be automatically verified against actual code execution would solve many problems.

Community reporting: LLM companies could track which reports generated with their tools turn out to be false and use this feedback to improve future outputs.

Rate limiting for research: Limiting the number of bulk submissions a user can make, or requiring human review before submitting many reports, could reduce garbage submission rates.
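One common way to implement this kind of limit is a token bucket per account, which allows a small burst of submissions and then caps the long-run rate. The sketch below assumes that technique; the capacity of 3 and the refill rate of one report per day are illustrative numbers, and `try_submit` is a hypothetical entry point, not any platform's API.

```python
import time


class TokenBucket:
    """Allow short bursts of submissions while capping the long-run rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: dict[str, TokenBucket] = {}


def try_submit(user_id: str) -> bool:
    """Hypothetical per-account gate: up to 3 reports at once, then roughly 1 per day."""
    if user_id not in buckets:
        buckets[user_id] = TokenBucket(capacity=3, refill_per_second=1 / 86400)
    return buckets[user_id].allow()


print([try_submit("alice") for _ in range(4)])  # [True, True, True, False]
```

A bucket like this barely affects a researcher who files a few careful reports, but it makes pushing hundreds of generated reports through a single account impractical.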

Some of these are being implemented. The problem is that the incentive structure doesn't reward AI tool companies for preventing misuse. If banning bulk security analysis loses customers, companies won't do it.

Alternative Career Paths in Security

One interesting implication of this shift is that security research careers might be evolving. What does the future of security careers look like as bounties decline and professional employment increases?

Employment-based careers: More security researchers will work as employees of companies or organizations rather than as independent bounty hunters. This provides stability but reduces autonomy.

Specialist consulting: Researchers with deep expertise in specific areas (cryptography, embedded systems, cloud security) will command premium rates as consultants. Generalists will struggle.

Open source contributions: Instead of bounties, researchers might contribute fixes directly to projects they care about. This is rewarding but doesn't pay bills.

Threat intelligence: Companies increasingly pay for threat intelligence about vulnerabilities. This might become a more significant revenue source than bounties.

Bug bounty platform consolidation: Fewer, larger platforms (Bugcrowd, HackerOne) might dominate, with higher barriers to entry for researchers but potentially more reliable payment.

Academic research: Universities might become more important employers for security researchers, providing stability and resources.

The overall effect might be that security research becomes more professionalized, which could improve quality but might reduce diversity and accessibility.

The Long Game: What This Means for Software Security

Step back and consider what happens if this trend continues. AI makes it cheap and easy to generate plausible-sounding vulnerability reports. Projects can't handle the volume. Bug bounties get eliminated. Legitimate researchers lose incentives and move elsewhere. Fewer vulnerabilities get discovered. Security degrades.

This is actually a concerning scenario. The entire ecosystem of finding and fixing vulnerabilities depends on incentivizing and enabling security researchers. If that ecosystem breaks, the software that underpins everything becomes less secure.

On the other hand, maybe the industry adapts. Maybe formal verification, automated testing, and professional security teams become standard. Maybe AI itself becomes better at both finding and preventing vulnerabilities. Maybe the crisis pushes needed changes that make software more secure.

The next few years will be interesting. We're at an inflection point where the volume of AI-generated content is starting to break systems that were designed for human-scale input. How we respond to this challenge will shape the future of software security.

Practical Recommendations

Based on everything discussed, here are concrete recommendations for different stakeholders:

For open source maintainers:

- Implement clear submission guidelines that require human verification

- Use automated filtering for obvious hallucinations

- Consider requiring researchers to provide proof of expertise before accepting reports

- Document examples of bogus reports to establish community norms

- Join or create communities for sharing strategies about handling AI-generated submissions

For bug bounty platforms:

- Implement reputation systems that reward quality over quantity

- Provide tools to help researchers verify their findings

- Implement rate limiting on bulk submissions

- Create educational resources about responsible AI use

- Publish data about submission quality to discourage garbage

For companies running private bounty programs:

- Require human researchers to document their methodology

- Implement two-stage review: automated filtering, then human expertise

- Train reviewers to identify hallucination patterns

- Consider hiring full-time security researchers instead of relying entirely on bounties

- Provide feedback to researchers about what kinds of reports you value

For security researchers:

- Treat AI tools as research assistants, not automated report generators

- Always verify findings before submission

- Be transparent about your methodology

- Focus on quality over quantity

- Build reputation through accurate, valuable reports

For AI tool developers:

- Implement safety features that discourage irresponsible use

- Provide clear documentation of limitations

- Consider building security-specific tools with better verification capabilities

- Create feedback mechanisms to learn from misuse

- Support researchers using tools responsibly

For companies using open source:

- Support open source security through funding and resources

- Consider hiring from open source communities

- Contribute back security improvements you discover

- Don't rely solely on external bounties—provide resources directly

FAQ

What exactly is "AI slop" in the context of security research?

AI slop refers to low-quality, often hallucinated vulnerability reports generated by large language models without human verification or understanding. These reports might include non-existent vulnerabilities, code that doesn't compile, or attacks against systems that don't work the way described. The term has expanded to describe any AI-generated content submitted to systems without proper verification, but in a security context it specifically means false vulnerability claims.

Why did cURL specifically decide to end its bug bounty program?

cURL ended its program because the volume of AI-generated garbage reports had become unsustainable for a small volunteer team. The project was receiving dozens of false reports weekly, each requiring investigation despite being technically impossible. The team concluded that maintaining the incentive program while managing this noise was impossible without dramatically impacting maintainers' mental health and ability to actually improve the software.

How can legitimate security researchers still report vulnerabilities to cURL if the bounty program is gone?

cURL explicitly stated it remains open to vulnerability reports—the project just won't offer cash bounties for them. Researchers can still report legitimate vulnerabilities and the team will investigate and fix them. However, the financial incentive is gone, which removes one motivation for some researchers to prioritize cURL over other projects.

Is AI fundamentally bad for security research?

No. AI tools can legitimately assist security researchers when used responsibly—for example, as code analysis tools, fuzzing frameworks, or pattern matching engines. The problem isn't AI itself but humans using general-purpose LLMs to generate bulk reports without understanding or verifying the outputs. Expert researchers using AI as one component of a broader methodology have found legitimate vulnerabilities.

What's the difference between AI-assisted security research that works and AI-generated garbage reports?

The fundamental difference is human expertise and responsibility. When skilled researchers use AI tools as part of a research process—running analysis, reviewing results, validating findings—they produce quality work. When non-experts simply ask an LLM to find vulnerabilities and submit whatever it generates without verification, the result is garbage. It's about methodology and responsibility, not the tool itself.

Why can't projects just implement automatic filtering to catch AI-generated reports?

While some filtering is possible, perfect filtering is nearly impossible. First, sophisticated AI-generated reports can pass basic filters. Second, as filtering improves, bad-faith submitters will tune their prompts to slip past it. Third, false positives risk rejecting legitimate reports. The real solution requires changing incentive structures, not just technical filtering.

Will other open source projects follow cURL's lead and eliminate bug bounties?

Probably, yes, though gradually. Projects that can't handle the volume of garbage reports will either implement stricter requirements, implement better filtering, or eliminate bounties. Larger projects with dedicated security teams might continue bounties but with stricter validation. Smaller projects like cURL will be most affected.

What does this mean for the security of open source software?

It's genuinely concerning. Security depends on incentivizing researchers to find vulnerabilities. If bounties disappear and legitimate researchers lose incentives, fewer vulnerabilities will be discovered and reported. This could lead to less secure software overall. However, the industry may adapt through professional employment, better automated testing, and formal verification techniques.

Can AI tools be used responsibly for security research?

Absolutely. When experts use AI-powered tools like specialized code analyzers, fuzzing frameworks, and pattern matching engines as part of a broader research methodology, they can find legitimate vulnerabilities. The key is that the researcher understands the tool's capabilities and limitations, validates all findings, and takes responsibility for outputs before submitting them.

How should security researchers use AI tools going forward?

Treat AI as a research tool, not an automated report generator. Use it to accelerate your work, but validate everything before submission. Understand what you're reporting—if you can't explain it in your own words, you don't understand it. Be transparent about your methodology. Focus on quality over quantity. Build reputation through accurate, valuable reports.

Key Takeaways

- AI-generated garbage is overwhelming open source: The flood of hallucinated vulnerability reports has pushed maintainers past their breaking points, forcing cURL and potentially others to eliminate bug bounties

- This is a human behavior problem, not purely a technology problem: AI tools didn't cause this crisis; careless human use of AI tools did. Experts using AI responsibly still produce quality work

- Incentive structures matter enormously: When there's money on the line for volume without quality controls, the system attracts garbage submissions. The solution requires realigning incentives

- Verification is the bottleneck: AI can generate content at scales humans can't verify. When generation outpaces verification capacity, the system breaks

- This pattern will repeat across industries: Music streaming, academic publishing, content moderation, and countless other domains are facing similar problems with AI-generated slop

- Security research is likely to become more professional and concentrated: Without bounties, security research may become employment-based, concentrating expertise among organizations with resources

- The future depends on responsible AI use: If researchers commit to verifying outputs and not submitting garbage, the crisis is manageable. If the current trend continues, security degrades

- Open source sustainability is at stake: Critical infrastructure depends on maintainers who are increasingly burned out by noise and lack of resources. This isn't just a security research problem; it's an infrastructure crisis

Conclusion: The Moment We're In

The cURL decision to end its bug bounty program represents more than just a change in one project's policies. It's a watershed moment in how we're reckoning with AI-generated content at scale.

For 30 years, the open source ecosystem worked because people operated with relatively good faith. Projects offered bounties, researchers submitted quality reports, maintainers fixed bugs. The system wasn't perfect, but it functioned. AI-generated garbage broke that implicit contract.

Now we're in a period of adjustment. Projects are implementing stricter requirements. Researchers are being called out for garbage submissions. Platforms are improving filtering. Community norms are shifting. The system will eventually stabilize, but at a different equilibrium than before.

The question is whether we'll end up in a place that's actually better. Will increased professionalization and structure improve security research? Will automation and better tools compensate for lost bounties? Will open source infrastructure get the support it needs?

Or will we end up in a worse place? Will security research become concentrated among those with resources? Will smaller projects get less scrutiny? Will the overall security of the internet degrade?

The honest answer is that we don't know yet. We're in the middle of a transition. The outcomes depend on choices that developers, companies, platforms, and researchers make over the next few years.

What we can say for certain is that the days of assuming AI-generated outputs are trustworthy are over. Moving forward, responsibility matters. Verification matters. Expertise matters. And the sustainability of the systems we all depend on—from the infrastructure level up—depends on getting this right.

The cURL story is just the beginning. Pay attention to how this evolves. The solutions we implement now will shape software security for decades to come.
