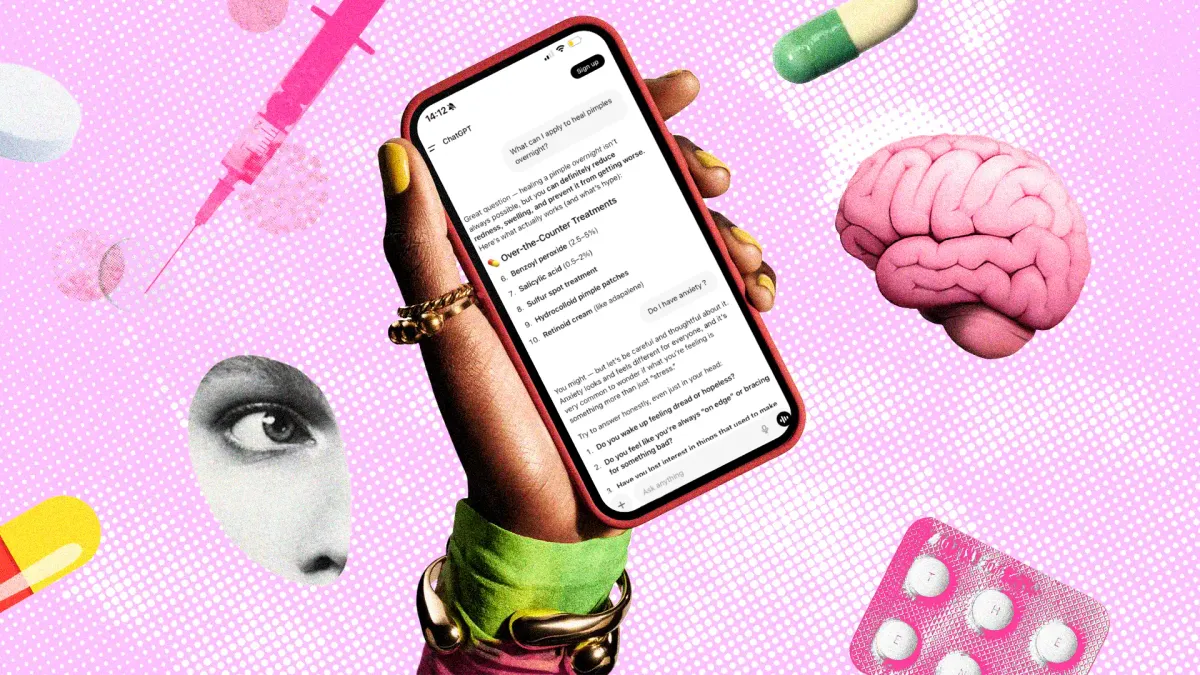

The Confidence Trap: Why AI Health Advice Feels Right But Might Be Wrong

You've felt it before. You ask ChatGPT about a persistent headache, and it responds with measured certainty. The answer sounds authoritative. It cites specific conditions. It lists potential causes in a logical sequence. You read it, feel slightly reassured, and maybe decide you don't need to call your doctor after all.

Here's the problem: confidence and correctness aren't the same thing.

This distinction became the centerpiece of my investigation into how medical professionals view AI health tools. After interviewing dozens of doctors, specialists, and healthcare researchers, I discovered something that genuinely surprised me. Most medical experts didn't outright condemn ChatGPT or similar AI systems for health queries. Instead, they expressed something more nuanced and unsettling: concern about the gap between how authoritative these tools sound and how often they get it wrong. A New York Times article likewise describes this gap as a significant issue.

The conversation around AI in healthcare has become oversimplified. You hear headlines about "AI replacing doctors" or "never trust AI for medical advice," but the reality is far more complicated. Doctors use AI tools themselves. Hospitals integrate machine learning into diagnostics. The question isn't whether AI has a place in healthcare; it already does, as a Medical Economics article on AI in clinical practice discusses. The real question is what specific risks arise when you use a general-purpose chatbot to self-diagnose.

In 2024, healthcare providers noticed a tangible shift in patient behavior. People arrived at appointments already primed with information, sometimes accurate and sometimes dangerously wrong, obtained from AI conversations. Emergency departments reported cases where patients delayed seeking care because an AI tool told them their symptoms were "likely minor." Other patients arrived over-informed, with treatment plans they'd drafted with ChatGPT, expecting their doctor to simply validate the AI's recommendations.

Medical professionals I spoke with described this shift in cautious terms. They weren't angry about it. They were concerned, because the problem isn't the technology itself. It's the transparency gap between what these tools can actually do and what users assume they can do.

The Hallucination Problem: Why ChatGPT Sounds So Certain When It's Making Things Up

Let's start with something that feels almost counterintuitive: ChatGPT has a tendency to invent medical details with complete confidence. Researchers call this "hallucination," and in healthcare it's not a metaphorical issue; it's potentially dangerous. A study published in Nature reports that hallucinations can lead to serious misinterpretations in medical contexts.

Dr. Sarah Mitchell, a physician at Boston Medical Center who studies AI literacy in patient populations, explained this with a concrete example. "A patient came in asking about a medication combination we'd never discussed," she told me. "He said ChatGPT recommended it. When I looked it up, the combination was plausible-sounding but not actually supported by clinical evidence. The AI had essentially fabricated a treatment protocol that sounded completely legitimate."

Hallucinations in medical contexts are particularly insidious because they follow patterns that mirror real medical information. ChatGPT is trained on vast amounts of legitimate medical literature. It understands the language of medicine. It knows how doctors write. So when it makes something up, it doesn't sound made up. It sounds like an excerpt from a medical journal.

This happens because of how large language models actually work. ChatGPT doesn't access real-time medical databases. It doesn't "know" medical facts the way a doctor learns them. Instead, it predicts the most likely next word based on patterns in its training data. Sometimes those predictions align with reality; sometimes they don't. Critically, the model has no built-in mechanism to distinguish between the two. A Britannica article explains in more detail how large language models like ChatGPT function.
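To make the "most likely next word" idea concrete, here is a deliberately tiny sketch in Python. It is a toy bigram model, not how ChatGPT is actually built (real models use large neural networks trained on vastly more text), and the three-sentence corpus and the `generate` function are hypothetical, invented purely for illustration. It shows the key point: the generator only tracks which words tend to follow which, so nothing in it checks whether the sentence it produces is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training corpus"; real models learn from vast amounts of text.
corpus = (
    "headache is often caused by tension . "
    "headache is often caused by dehydration . "
    "headache is rarely caused by a tumor ."
).split()

# Count which word follows which (a bigram model, far simpler than a real LLM).
follows = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follows[prev][nxt] += 1


def generate(start, max_words=8):
    """Greedily append the most frequent next word. No step checks truth."""
    words = [start]
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # the model has never seen this word followed by anything
        next_word, _count = options.most_common(1)[0]  # ties go to the first seen
        words.append(next_word)
        if next_word == ".":
            break
    return " ".join(words)


print(generate("headache"))
print(generate("rarely"))
```

Starting from "rarely," this toy produces "rarely caused by tension .", a sentence that appears nowhere in its corpus. It was stitched together from familiar patterns, which is the same basic way a fluent-sounding but unsupported claim can emerge.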

Researchers at Stanford Medical School conducted a study comparing ChatGPT's responses to actual medical diagnoses. They found that while the AI often mentioned legitimate conditions, it frequently prioritized them incorrectly. A patient describing symptoms of seasonal allergies might receive a response that focused on rare autoimmune disorders while barely mentioning the most likely explanation.

Medical Bias in Training Data

Here's another layer to this problem: ChatGPT's training data reflects historical biases in medicine itself. The model was trained on information created largely by and about specific demographic groups. This means the AI tends to provide better information for symptoms as they appear in white male patients, because that's disproportionately who was included in the research that trained it. A Nature article discusses these biases in AI training data.

A woman describing chest pain might receive different guidance than a man with identical symptoms, because the AI has learned from a literature where women's cardiac symptoms have historically been documented less thoroughly. A patient from a non-Western background describing symptoms using different cultural frameworks might receive less accurate responses.

This isn't ChatGPT being deliberately discriminatory. It's reflecting the biases embedded in centuries of medical knowledge documentation. But the effect is the same: the tool is less reliable for people who aren't white men, and it answers them in exactly the same confident tone.

Dr. James Chen, a cardiologist at UCLA who specializes in health equity, emphasized this point: "If a patient uses ChatGPT and receives guidance based on white male presentation of symptoms, that's not neutral. That's harmful. The person reading the response doesn't know this bias exists. They just think ChatGPT is giving them accurate medical information."

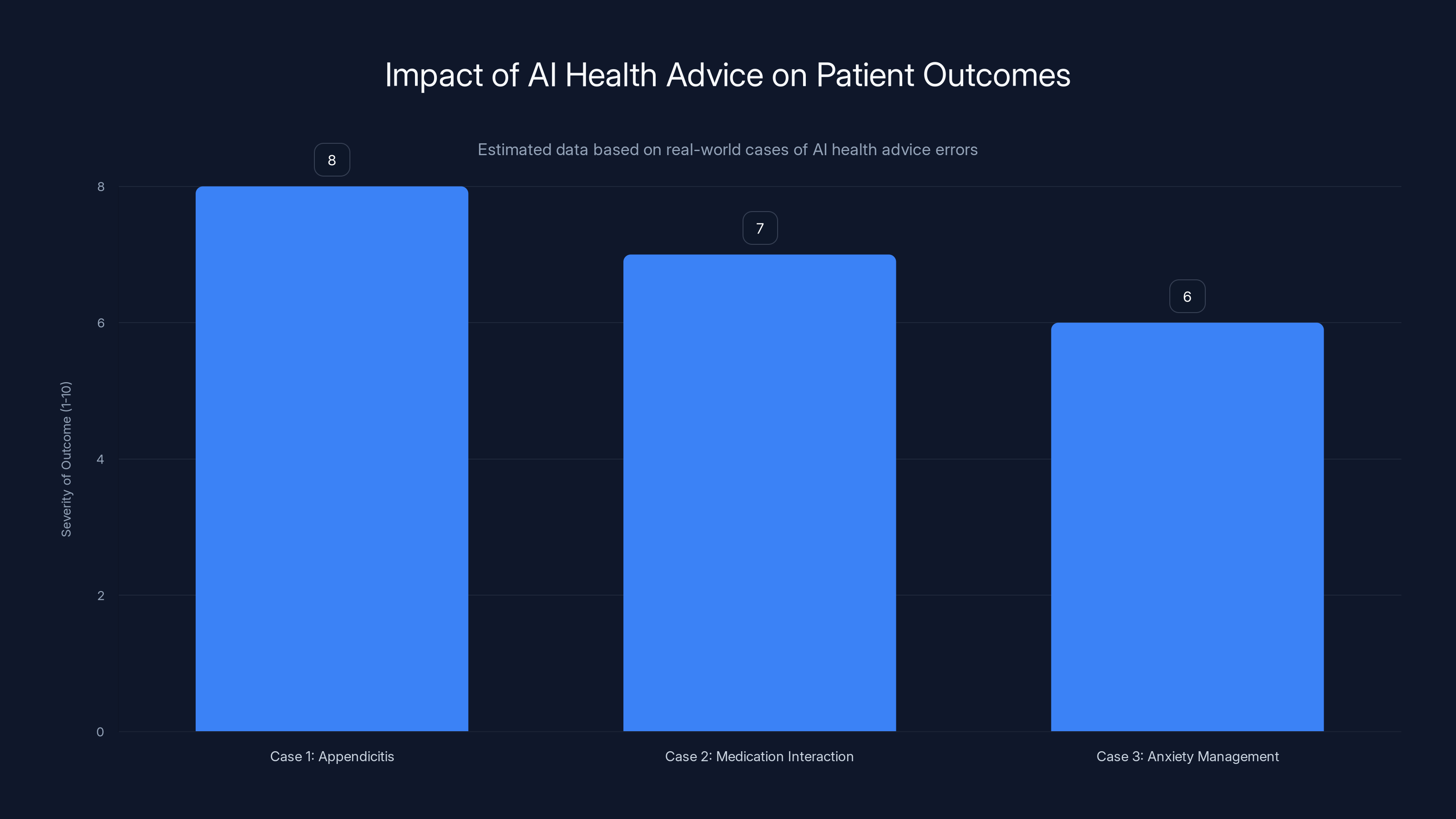

Estimated data shows varying severity in outcomes when AI health advice was incorrect. Case 1 had the highest severity due to delayed surgery.

The Confidence-Accuracy Gap: Why Experts Are Deeply Concerned

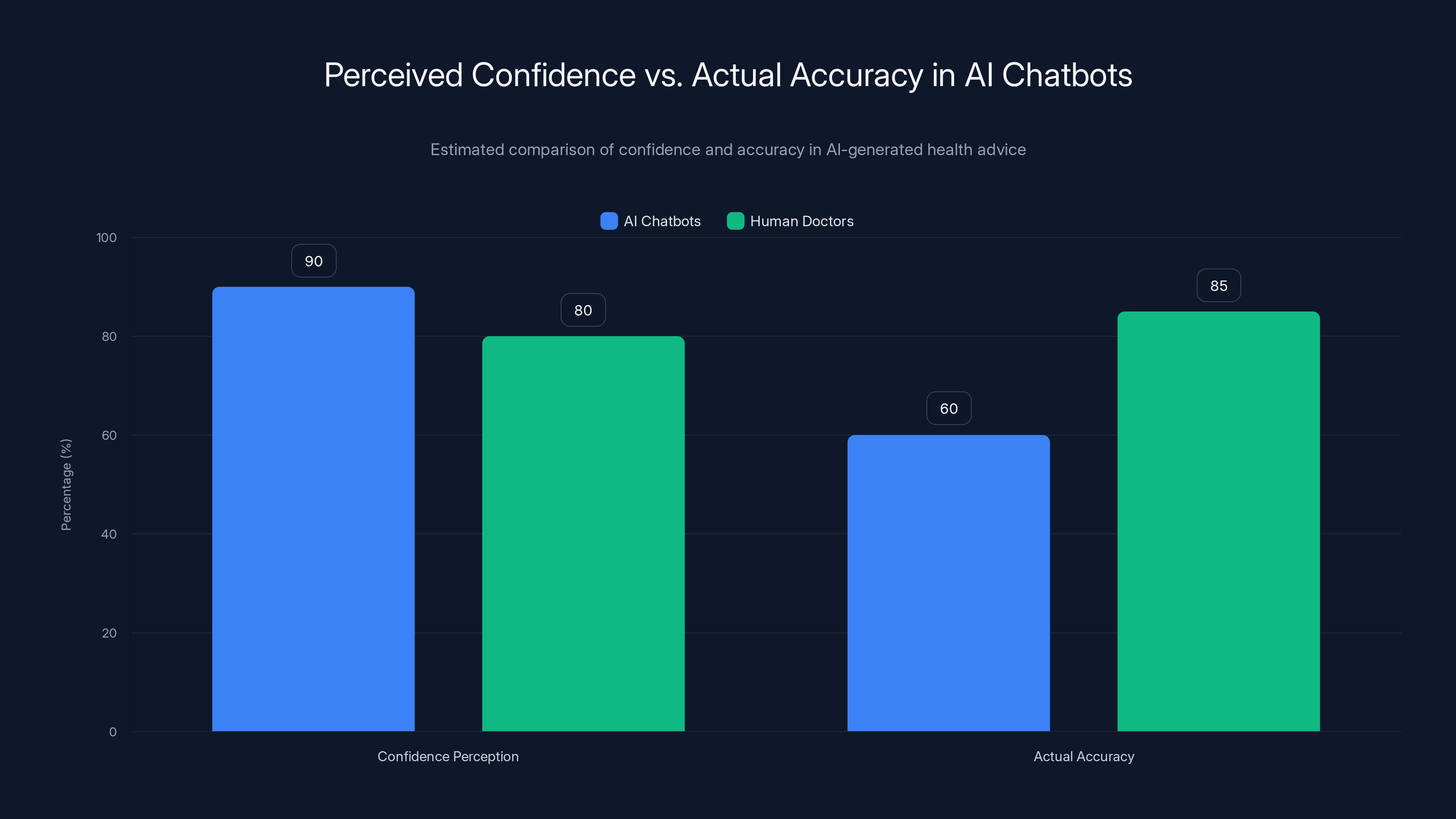

When I asked medical professionals what worried them most about patient use of AI chatbots, the answer was nearly unanimous: the model doesn't express uncertainty appropriately.

Consider how a good doctor handles uncertainty. When you describe symptoms, a competent physician will say things like "this could be X, but we should rule out Y," or "most likely this is minor, but I want to run these tests to be sure," or "I'm not certain, so I'm referring you to a specialist."

ChatGPT, by contrast, tends to present information in an orderly list format. Here's what causes this symptom. Here are the conditions we should consider. The structure itself lends equal credibility to all options, even when some are vanishingly rare and others are the obvious first thing to check.

This matters because people are poor at judging how much confidence written prose deserves. We're fairly good at detecting uncertainty in speech: hearing hesitation in a doctor's voice makes us more cautious. Text strips those cues away, and ChatGPT's training data taught it to write in a consistently assured register. That's useful for many tasks. For health advice, it's a liability. A New York Times opinion piece discusses the implications of AI's confident tone.

Dr. Rachel Goldman, a psychiatrist and medical writer at Columbia, explained the stakes: "A patient reads confident-sounding medical information from ChatGPT and changes their behavior based on it. They don't take a medication because the AI suggested an alternative. They delay seeing a doctor because the symptoms sound benign. The confident tone increases the chance they'll act on the information."

She emphasized that doctors aren't worried about patients having information. They're worried about patients having confident-sounding wrong information, which is worse than having no information at all.

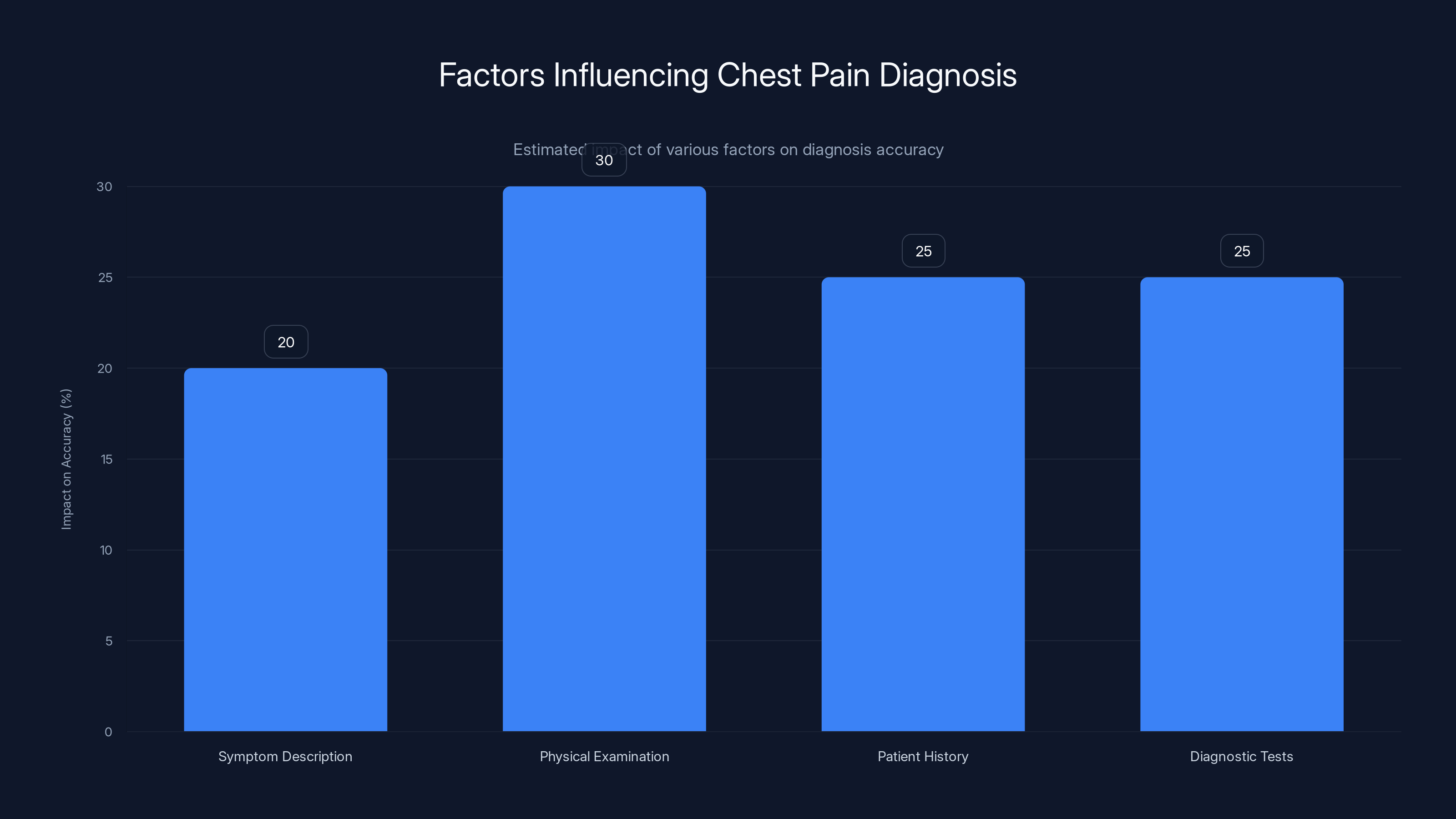

Estimated data shows that while symptom description is crucial, physical examination, patient history, and diagnostic tests significantly enhance diagnosis accuracy.

Real-World Cases: When AI Health Advice Went Wrong

Abstract discussions about AI limitations are easier to dismiss than concrete stories. So let me share what I learned from physicians who've dealt with the actual consequences.

Case 1 involved a woman in her thirties who presented to an emergency department with severe abdominal pain. Before arriving, she'd used ChatGPT to self-diagnose. The AI had listed appendicitis as one possibility but emphasized that her symptoms "more likely indicated irritable bowel syndrome." She spent three days at home, gradually worsening, because she thought she was managing a condition she didn't actually have. She had appendicitis. The delay meant she needed more extensive emergency surgery rather than a simpler laparoscopic procedure. The outcome was fine, but it could have been catastrophic.

Case 2 involved an older man with multiple chronic conditions. He used ChatGPT to check whether a new medication his doctor prescribed was appropriate. The AI flagged a potential interaction, and he stopped taking the medication without consulting his physician. The "interaction" was speculative and based on theoretical drug metabolism patterns. In his specific medical context, with his specific dosage, the interaction wasn't clinically significant. By stopping the medication, he caused his existing condition to worsen.

Case 3 involved a teenager who used ChatGPT to understand anxiety symptoms. The AI provided accurate information about generalized anxiety disorder, and the teenager felt reassured they could manage it alone. They never mentioned the anxiety to their parents or sought actual care. The condition progressed. By the time they finally sought help a year later, it had interfered with their academics and social development in ways that required more intensive treatment than would have been necessary with earlier intervention.

Doctors told me versions of these stories repeatedly. Not every case was dramatic. Some involved minor delays in necessary care. Some involved patients seeking unnecessary testing because an AI had suggested remote possibilities. But the pattern was consistent: the tools provided information with more confidence than accuracy justified, leading patients to make different healthcare decisions than they would have made with genuinely uncertain or appropriately cautious guidance.

Dr. Michael Torres, chief of emergency medicine at a major urban hospital, put it this way: "We're seeing more patients make healthcare decisions based on AI guidance. Some of it's harmless. Some of it's costly. A small percentage is genuinely dangerous. What's hard to convey to patients is that the danger isn't obvious. When the AI is right, it feels great. When it's wrong, it can feel right too—until something goes wrong."

What ChatGPT Actually Does Well (And Where It Fails)

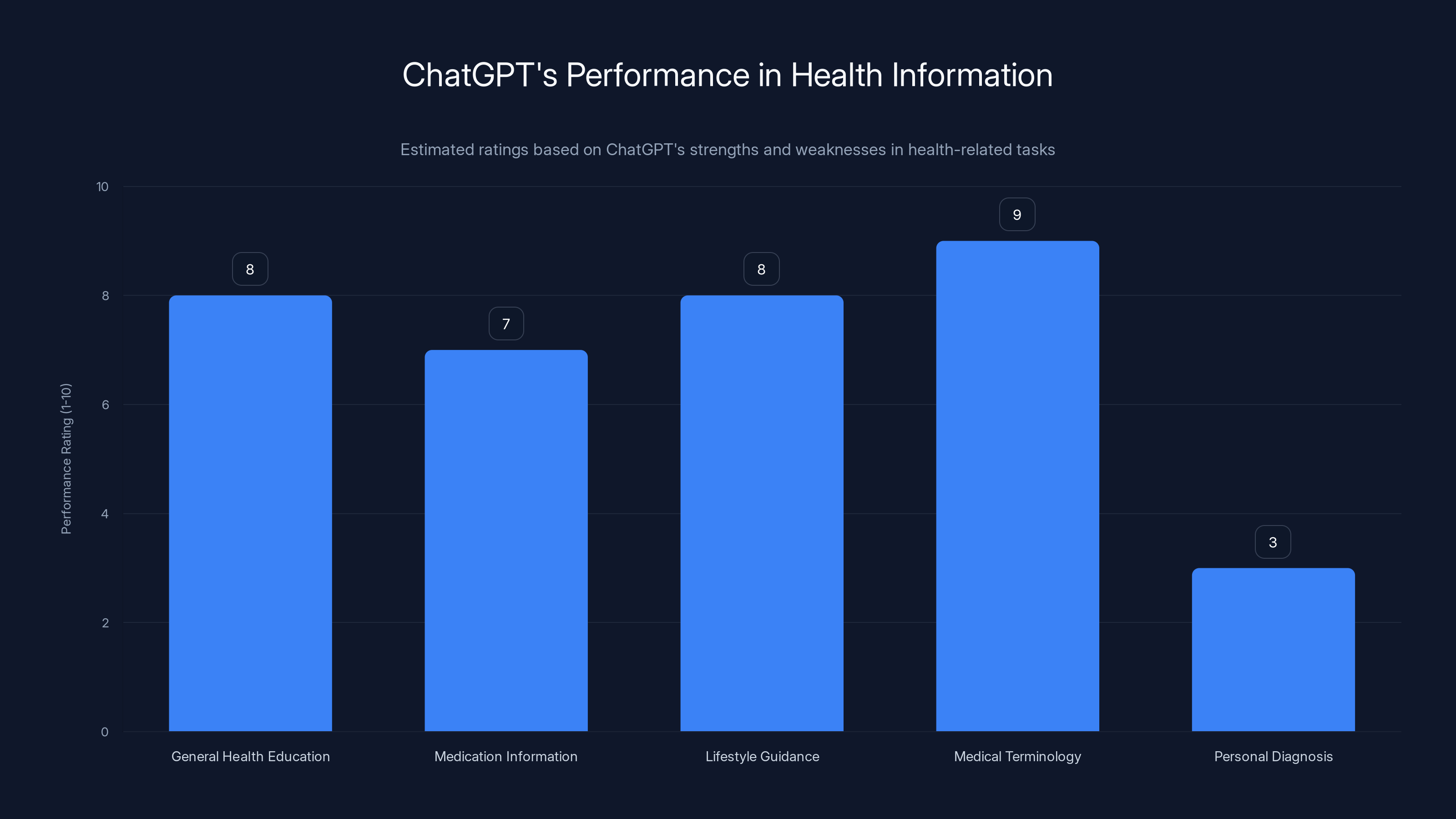

It's important to be precise here: ChatGPT isn't universally bad for health information. There are specific scenarios where it works reasonably well, and other scenarios where it fails predictably.

Where ChatGPT Succeeds

General health education is an area where the tool performs capably. If you want to understand how diabetes works, how your immune system functions, or what happens during pregnancy, ChatGPT can provide accurate, clearly explained information. The tool excels at synthesis and explanation. It can take complex physiological concepts and make them understandable.

Medication information is another area of relative strength. ChatGPT has been trained on extensive pharmaceutical data. Ask it how a specific medication works, what its typical side effects are, or how it should be taken, and you'll usually get accurate information. The AI is less good at assessing your individual suitability for a medication, but describing what the medication does? It's quite reliable.

Lifestyle and prevention guidance tends to be solid. Questions about nutrition, exercise, sleep, or stress management produce answers that align with established health guidance. These are areas with strong consensus in the medical literature, and the AI reflects that consensus well.

Understanding medical terminology is something ChatGPT handles beautifully. If your doctor mentioned a condition or procedure you didn't understand, asking the AI to explain it is genuinely useful. You'll get clear, accurate definitions that help you have better conversations with your healthcare provider.

Where ChatGPT Fails Critically

Personal diagnosis is the most dangerous failure mode. Because every person is unique, accurate diagnosis requires understanding individual context that ChatGPT can't properly evaluate. You can describe your symptoms, but the AI can't examine you. It can't order tests. It can't compare your presentation to ten similar patients it's seen. It can't access your complete medical history. It makes educated guesses based on symptom patterns, and those guesses are frequently wrong.

Determining urgency is another critical failure point. Should you see a doctor today, this week, or is this something you can monitor at home? This is a judgment call that requires medical training and experience. ChatGPT tends to err toward caution here (which seems good), but its caution is inconsistent. Sometimes it flags symptoms as potentially serious when they're routine. Sometimes it downplays symptoms that actually warrant immediate attention.

Assessing medication interactions is more dangerous than many people realize. While ChatGPT has good baseline knowledge of how medications work individually, assessing interactions in the context of a specific person's age, kidney function, liver function, other conditions, and genetic factors requires specialized pharmacology training. The AI will sometimes identify interactions that aren't clinically meaningful. Other times it misses interactions that are critical.

Rare conditions expose the AI's pattern-matching limitations. If you have an unusual presentation of a common condition, or an actual rare condition, ChatGPT is likely to suggest common conditions that seem to match your symptoms. You go to your doctor expecting to discuss the AI's suggestions, when your actual condition is something completely different.

Emerging medical information gets handled poorly because ChatGPT's training data has a knowledge cutoff. New research, new treatments, updated guidelines: the AI doesn't know about them. If you're dealing with a condition that's been recently reclassified or has new treatment options, you might get outdated information delivered with the same confident tone as everything else.

The Nuance Most People Miss

Here's what medical experts emphasized repeatedly: the issue isn't that ChatGPT is bad at medicine. The issue is that it's mediocre at some aspects of medicine, bad at others, and users can't tell the difference. There's no indicator that says "I'm being accurate here" or "this is outside my competence." Everything comes out in the same authoritative format.

A competent doctor has internalized the limits of their knowledge. They know what they don't know, and they know how confident to be about what they do know. ChatGPT doesn't have this self-awareness. It will happily discuss conditions it shouldn't discuss, suggest treatments it shouldn't suggest, and provide confidence levels that don't match its actual accuracy.

This is why medical experts didn't tell me "never use ChatGPT for health information." They told me "understand that you're not getting medical advice; you're getting an educated guess that sounds like medical advice."

AI chatbots often present information with high perceived confidence (90%), but their actual accuracy (60%) can be lower than that of human doctors, who maintain a balance between confidence (80%) and accuracy (85%). Estimated data.

How Doctors Actually Use AI (And Why Their Use Is Different From Yours)

One thing that surprised me during my research was learning how extensively doctors actually use AI tools themselves. This seemed contradictory to their warnings about patient use. How can they warn against AI in healthcare while using AI in their own practice?

The answer reveals something crucial about context and training.

When a cardiologist uses an AI tool to analyze a heart imaging study, they're not using ChatGPT. They're using a specialized medical AI trained specifically on cardiology images, validated against thousands of real cases, and approved by medical regulators. The cardiologist reviews the AI's output through the lens of their medical education and the specific patient's clinical picture. The AI is a tool that augments their judgment, not a tool that replaces it. A report on AI in radiology highlights how these tools are used in practice.

When a radiologist uses AI to identify potential abnormalities in X-rays, again, they're using purpose-built tools with explicit accuracy rates, trained on the specific task, and incorporated into a workflow where a qualified professional reviews and validates the output.

This is completely different from you using ChatGPT to self-diagnose. The physician has years of training that lets them assess whether the AI output makes sense. They can compare it against clinical findings. They can place it in the context of the specific patient's medical history and risk factors. They can validate or reject the AI's conclusions based on experience.

You don't have that training. So when ChatGPT gives you an answer, you can't actually assess whether it's accurate. You can only assess whether it sounds plausible, and ChatGPT excels at sounding plausible whether it's right or wrong.

Dr. Jennifer Williams, a pathologist who works with diagnostic AI regularly, explained the distinction: "There's a huge difference between AI as a consultation tool for a trained professional and AI as a diagnostic tool for a patient. When I use AI, I'm asking 'what am I missing?' I have the expertise to evaluate whether that answer makes sense. A patient is asking 'what do I have?' and assuming the AI's answer is correct because they lack the expertise to evaluate it."

She added an important point: even physicians using AI tools have to be cautious about over-relying on them. There's emerging concern in medicine about "AI blind spots," cases where multiple physicians accept an AI diagnosis without properly evaluating it themselves, allowing systematic errors to cascade. A study on AI in hospitals discusses these potential pitfalls.

If that happens with trained physicians using specialized medical AI tools, imagine the risks when untrained patients use general-purpose language models.

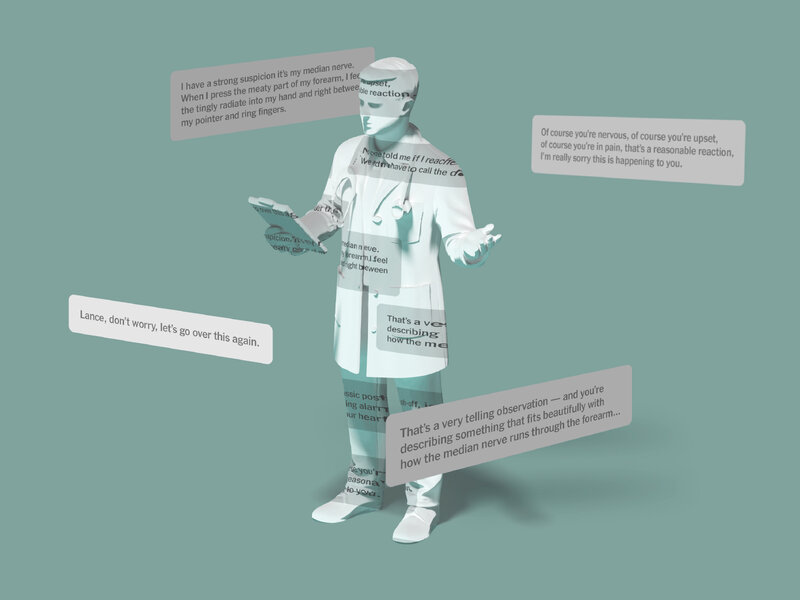

The Symptom Variability Problem: Why Your Symptom Description Never Captures the Full Picture

One thing every physician emphasized was the limitation of text-based symptom reporting for diagnosis. This is relevant to all health information seeking, but it's particularly important when using AI tools.

Consider a simple example: chest pain. This could indicate anything from a pulled muscle to a heart attack. The risk level depends on dozens of factors that don't appear in your symptom description. Is the pain sharp or dull? That matters. But how does the pain change when you move? That matters too. Was there a triggering event? Is the pain constant or intermittent? Does it radiate? Does it change with breathing?

You might describe this as "chest pain," and ChatGPT will generate a list of possibilities. But a doctor examining you will ask questions you didn't think to mention. They'll note the exact location of tenderness. They'll observe your posture and breathing. They'll check your heart rhythm. They'll review your family history and current medications. They might order tests.

All of this matters for accuracy. Diagnosis isn't pattern matching on textual descriptions. It's a complex integration of direct observation, historical information, physical examination, and testing.

This is why two people describing identical symptoms might have completely different underlying conditions. The accuracy of any diagnosis depends on the completeness and accuracy of the information going into the diagnostic process. ChatGPT by definition only gets the information you think to type, filtered through your understanding of medicine and expressed in your medical vocabulary (or lack thereof).

A doctor gets all of that plus the information they elicit through questioning and examination. This asymmetry is why physician diagnosis is more accurate, and why ChatGPT diagnosis is inherently limited.

Dr. Patricia Okonkwo, an emergency medicine physician, gave me a striking example: "A patient presented with 'persistent cough.' They'd researched on ChatGPT and thought they might have asthma or a chronic infection. Actual exam revealed they had a foreign body lodged in their throat from eating too quickly. There was no way that would appear in a text description of symptoms. There's no way ChatGPT would guess it. But a five-minute examination revealed the problem immediately."

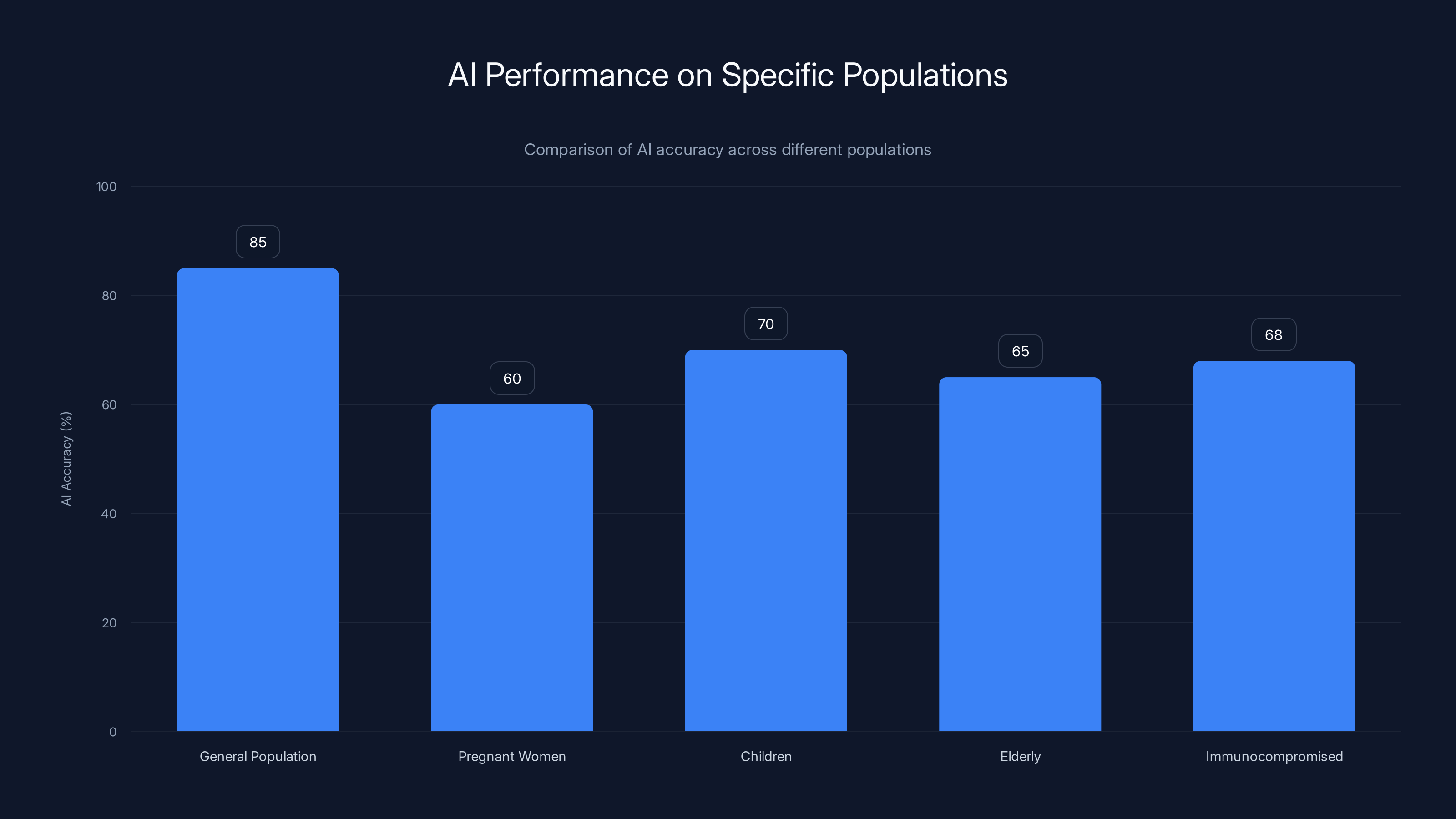

AI tools like ChatGPT show varying accuracy across different populations, with notably lower performance for pregnant women due to the unique medical considerations required. Estimated data.

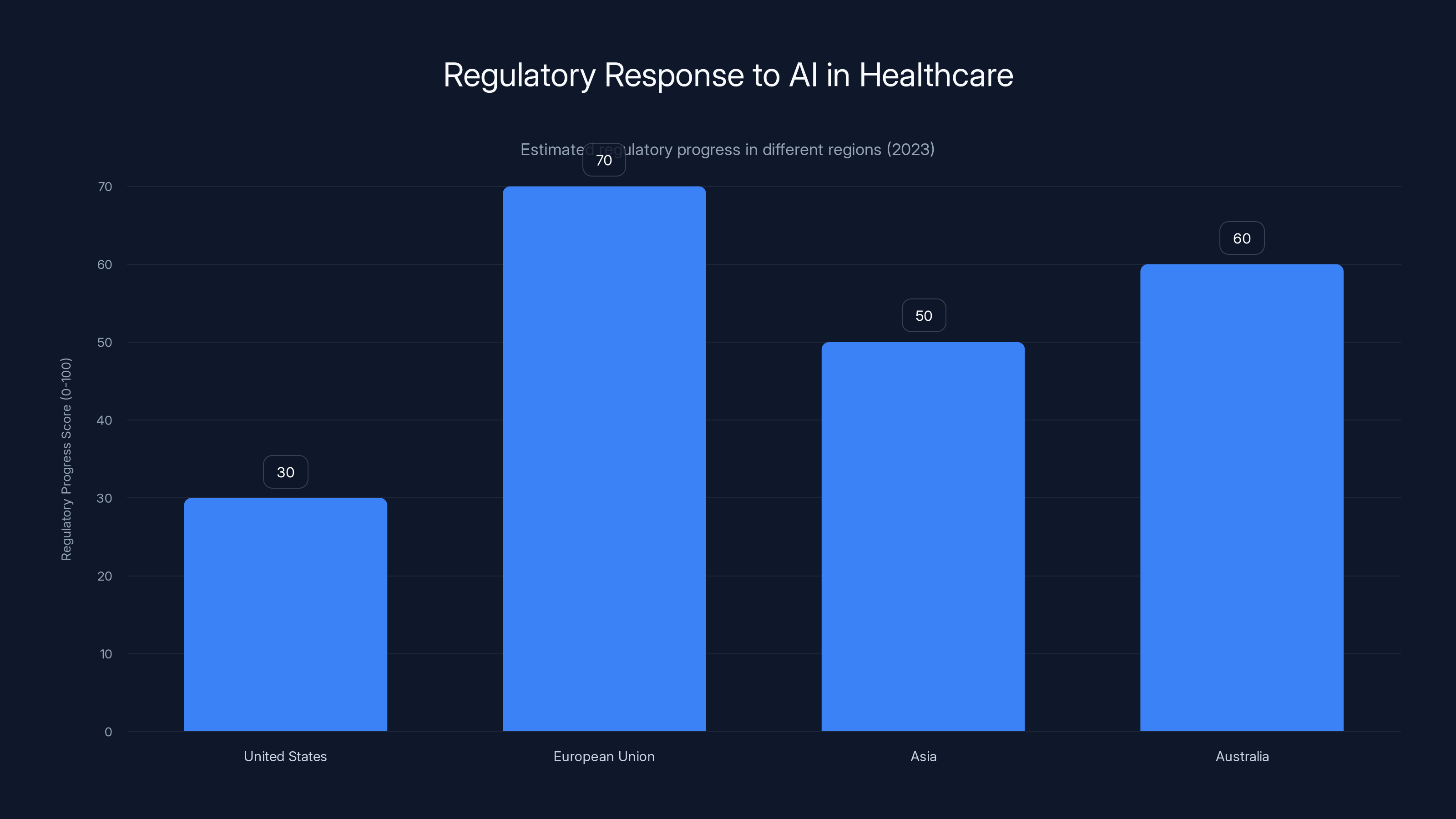

Regulation and Accountability: The Scary Gap in Healthcare AI

One particularly troubling aspect emerged from my conversations with healthcare policy experts: there's very little regulation of ChatGPT's use for health information.

When a medical device helps diagnose or treat a condition, it goes through extensive FDA approval. Clinical trials demonstrate safety and efficacy. Manufacturers are liable if the device causes harm. There's accountability built into the system.

When you ask ChatGPT for health advice, none of that applies. ChatGPT isn't regulated as a medical device. OpenAI isn't liable if you make a healthcare decision based on its advice. There are no clinical trials demonstrating the AI's diagnostic accuracy. There's no independent verification of the information it provides.

This is starting to change. Medical regulators are increasingly interested in AI tools that affect health. But right now, we're in a state where anyone can build an AI system, market it without health claims (which keeps it outside medical regulation), and let millions of people use it to make health decisions. A report on AI regulation discusses these emerging concerns.

It's a governance vacuum.

Dr. Timothy Chen, who studies healthcare policy and AI regulation at Georgetown University, explained the problem: "The issue is that ChatGPT doesn't officially claim to be giving medical advice. It's just a general conversational AI. So regulators struggle with whether they have authority. But the functional outcome is that people are using it to make health decisions without any system ensuring it's safe or accurate for that purpose."

He noted that other countries are responding more quickly to this gap. The EU is developing stricter AI regulations that specifically address health applications. Some countries require disclaimers when AI systems discuss health. But in the United States, the regulatory framework is still catching up to the technology.

When ChatGPT Delays Necessary Care: The Dangerous Wait-and-See Approach

One pattern that concerned physicians most was what they called "AI-enabled delay." This is when someone uses ChatGPT, receives reassurance that a symptom is minor, and subsequently delays seeking care they actually needed.

This is particularly dangerous for conditions where early intervention matters. Stroke, heart attack, severe infections, appendicitis, and numerous other conditions have narrow windows where early treatment dramatically improves outcomes. A delay of hours or days can make the difference between full recovery and permanent disability.

ChatGPT can't know if you're in that narrow window. It doesn't have medical training. It can't examine you. It can't order tests. But it can make your symptoms sound reassuring enough that you decide waiting a few more days is fine.

Dr. Marcus Johnson, an emergency physician at a major trauma center, described the stakes: "We see patients come in who clearly should have come in days ago. When we ask why they didn't seek care earlier, some say they researched their symptoms and thought it was minor. When we ask what they researched, half the time they mention ChatGPT or a similar AI tool. If they'd come in when symptoms started, we would have identified the problem while it was still easy to treat. By waiting, they've complicated the case."

He emphasized that this isn't about internet information generally. Patients finding information on legitimate health websites at least encounter some level of accuracy checking. ChatGPT operates without that filter.

The incentive structure makes this worse. ChatGPT doesn't know if you show up at a hospital later with a complication. It doesn't experience the negative consequence. You do. But by then, the damage might be irreversible.

This is why medical experts are less concerned about ChatGPT providing health information and more concerned about ChatGPT providing health guidance that changes behavior. Knowing about a condition is neutral. Deciding you don't need to see a doctor because an AI told you to wait is dangerous.

ChatGPT excels in explaining medical terminology and general health education, but struggles with personal diagnosis. Estimated data based on described strengths and weaknesses.

The Pregnant Woman Problem: Why General AI Performs Poorly on Specific Populations

One conversation that stood out involved a perinatologist discussing how ChatGPT handles pregnancy-related questions. It illustrates how AI tools fail for specific populations.

Pregnancy creates a unique situation where normal medical guidance doesn't apply. Medications that are safe for non-pregnant people can harm a developing fetus. Symptoms that would be minor in a non-pregnant person require urgent evaluation in pregnancy. Risk stratification is completely different.

ChatGPT's training data includes pregnancy information, but it also includes general medical information. When answering a query about symptoms during pregnancy, the AI might prioritize general medical possibilities without properly accounting for pregnancy-specific factors. It might suggest a treatment that's contraindicated in pregnancy. It might downplay symptoms that require urgent evaluation in pregnant women.

The same problem applies to other specific populations: children (who have different physiology and medication dosing), elderly patients (who have multiple comorbidities and different medication metabolism), immunocompromised patients, and others. The AI has general information about these populations, but using general medical reasoning on population-specific cases is dangerous.

Dr. Elena Rodriguez, a perinatologist who's studied AI use in obstetrics, made this clear: "A pregnant woman asking ChatGPT about abdominal pain gets information that might be safe for a non-pregnant woman but dangerous for her. The AI doesn't have a mechanism to weight pregnancy-specific risks differently than general medical risks. It will talk about all the same conditions, without adequately flagging which are emergency situations specific to pregnancy."

She noted that even experienced doctors occasionally face this problem. If an obstetrician is asked a question outside their specialty, say about a dermatological condition, they might not remember that certain medications used for that condition are contraindicated in pregnancy. But a doctor at least has the training to consult resources and seek a specialist's opinion. ChatGPT doesn't have that option.

The Nocebo Effect: How AI Health Anxiety Can Make You Sicker

Here's a psychological angle that came up frequently: the nocebo effect is real, and AI health advice can trigger it.

The nocebo effect is the opposite of placebo. With placebo, believing a treatment will help actually makes you feel better. With nocebo, believing a condition is serious actually makes symptoms worse.

ChatGPT mentions a condition. You read about it. You start noticing symptoms that match the description. Your anxiety increases. That anxiety causes physiological changes that intensify the symptoms. You become more convinced the condition exists. Your anxiety increases further. The cycle spirals.

This is particularly powerful with conditions involving pain, fatigue, or neurological symptoms—which are subjective and often affected by psychological state. Tell someone they might have a chronic pain condition and they start noticing every ache. Tell them they might have a neurological condition and they start interpreting normal sensations as abnormal.

Dr. Lisa Martinez, a psychologist specializing in health anxiety, explained how AI amplifies this: "When people search for health information online in general, there's some chance they'll read reassuring information. When they use ChatGPT, the AI tends to generate information that covers all possibilities, and human attention naturally focuses on the scariest possibilities. The AI isn't intentionally fear-mongering, but the structure of its responses can activate health anxiety."

She noted that some patients she works with become more anxious after using ChatGPT for health research. They find themselves in an endless loop of asking the AI about progressively more alarming possibilities, each query reinforcing their anxiety.

This is particularly problematic because the anxiety itself can cause symptoms. When someone is anxious about their heart, they become hyperaware of their heartbeat and notice normal variations they would otherwise ignore, which confirms their fear that something is wrong.

Medical professionals can interrupt this cycle by explaining what's normal, reassuring patients appropriately, and addressing the underlying anxiety. ChatGPT, by contrast, tends to add more information to consider, which can intensify the anxiety.

The European Union leads in regulatory progress for AI in healthcare, while the United States is still catching up. Estimated data based on current trends.

The Missing Context: Why Your Medical History Matters (And ChatGPT Doesn't Have It)

One thing that really clarified the problem was understanding why doctors always ask about medical history, medications, and family history.

These aren't just formalities. They're critical diagnostic information that completely changes risk assessment. A symptom that's minor in a 25-year-old healthy person could be a medical emergency in a 65-year-old with heart disease. A medication interaction that's irrelevant for someone with normal kidney function could be deadly for someone with kidney disease.

ChatGPT can't incorporate your medical history into its reasoning in any meaningful way. You could tell it "I have diabetes and take metformin," but the AI then has to sort through general medical knowledge to reason about what's relevant. A doctor with years of training can instantly categorize what matters. ChatGPT has to rely on pattern matching.

Worse, patients often don't know what medical information is relevant. You might think your childhood illness doesn't matter now. If you mention it, the AI may or may not pick up on its significance. If you forget to mention it, crucial information never enters the conversation. You might not know that your medication interacts with common treatments for your symptom. You might not know that your genetic background affects your risk for certain conditions.

A doctor knows to ask about these things. Chat GPT only knows about them if you volunteer the information. And even if you do, the AI might not weight it properly.

Dr. Raymond Foster, a primary care physician with 30 years of experience, put it this way: "My relationship with a patient is developed over years. I know their baseline. I know how they handle stress. I know their family history. I know their medication allergies. I know what they tend to worry about. When they describe new symptoms, I'm integrating that into a complete picture. Chat GPT is seeing a symptom description with a few contextual details. Those aren't the same kind of information."

This is why the same symptom can have different significance for different people. It's also why text-based AI assessment, no matter how sophisticated, will always be limited to the information patients volunteer rather than the complete picture a long-term healthcare relationship provides.

Mental Health Queries: Where Chat GPT Is Particularly Risky

When I asked psychiatrists about Chat GPT's use for mental health questions, their responses were more guarded than those of other specialists.

Mental health assessment is inherently subjective and contextual. Depression in one person might look different from depression in another. Anxiety manifests differently across individuals. The same symptom can have completely different causes and require completely different treatments.

Chat GPT can discuss mental health conditions in general terms, but it can't assess the specific person reading its answer. When someone describes depressive symptoms and receives information about depression, they might feel understood and reassured. Or the information might feed confirmation bias: they fix on descriptions suggesting their symptoms are more serious than they are, which deepens their distress.

Dr. Andrew Patel, a psychiatrist specializing in digital mental health, explained the specific risk: "Someone struggling with anxiety asks Chat GPT about their symptoms. The AI generates comprehensive information about anxiety disorders. The person reads about severe anxiety disorder, social anxiety disorder, generalized anxiety disorder, and panic disorder. They match their experience to multiple descriptions. Their anxiety about having anxiety increases. The AI inadvertently made things worse."

Another concern: Chat GPT sometimes offers suggestions that can be harmful to a particular person's mental health because it lacks that person's context. It might suggest meditation (which can be harmful for some trauma survivors), breathing exercises (which can trigger panic in some people), or isolation (which worsens some mental health conditions).

The AI doesn't know enough about the specific person to know whether general suggestions are helpful or harmful.

There's also a question of accountability. If someone is suicidal and Chat GPT offers reassurance instead of directing them to crisis resources, who is responsible? Right now, effectively no one: Chat GPT isn't liable. That might be the correct legal framework, but it does mean the tool carries risks without corresponding safeguards.

How to Actually Use AI Responsibly for Health Information

After all of this, it's important to be clear: Chat GPT isn't categorically unusable for health information. But it requires a fundamentally different approach than most people take.

Start With Legitimate Medical Resources

If you're going to research a health condition, begin with sources specifically designed for patient education. The NHS provides information written by medical professionals for lay audiences. The Mayo Clinic website does the same. Web MD, despite its reputation, employs medical editors to review content for accuracy. The NIH provides authoritative information about diseases and treatments.

These sources are vetted. They're updated regularly with new evidence. They're written to be understandable without sacrificing accuracy. They carry some level of accountability if information is wrong. Chat GPT has none of these characteristics.

Use Chat GPT for Explanation, Not Diagnosis

If you encounter a term in a medical article you don't understand, Chat GPT can be useful for explanation. Ask "What does ejection fraction mean?" and Chat GPT will usually explain it accurately. Ask "What are the side effects of lisinopril?" and the AI will typically provide good information.

But "I have these symptoms, what do I have?" is fundamentally different. That's asking the AI to do something it's not good at.

Always Verify With a Doctor

If you use AI for any health guidance, treat it as a starting point for a conversation with your healthcare provider, not a conclusion. Mention what you researched. Ask your doctor if the information was relevant. Let them explain how it applies (or doesn't apply) to your specific situation.

A good doctor will appreciate that you're engaged with understanding your health. They'll clarify misconceptions. They'll explain why certain information doesn't apply to you. This is how you actually use health research responsibly.

Understand the Limitations

Be aware that Chat GPT can't examine you. It can't order tests. It can't access your complete medical history. It doesn't know whether a symptom is new or has been present for years. It doesn't know whether you're at high risk or low risk for serious conditions. All of this matters for accurate assessment.

Watch for Overconfidence

If an AI tool sounds too certain, that's a red flag. Medical decision-making involves uncertainty. A good source will express that. "This could be X, but we should rule out Y" is more trustworthy than "This is definitely X."

Seek Human Connection for Serious Issues

For anything involving significant symptoms, urgent concerns, or mental health struggles, talk to a person: a doctor, a nurse hotline, or a mental health professional, someone who can hold a two-way conversation and understand your context. AI tools lack the information and accountability necessary for serious health decisions.

The Future of AI and Healthcare: What Changes Are Coming?

Medical professionals aren't uniformly negative about AI's role in healthcare. The question is how to develop AI systems that are actually safe and effective for patient use.

Some promising directions are emerging. There's work on medical-specific AI systems that are trained and validated for health tasks, rather than general-purpose language models. The FDA has started approving some AI diagnostic tools with demonstrated accuracy and safety profiles. Researchers are building systems that express uncertainty appropriately, telling users when a question is at the limits of what an AI can reliably assess.

Some hospitals and health systems are exploring AI systems that integrate with electronic medical records. An AI with access to your actual medical history, current medications, and test results could provide more contextually accurate information than a standalone chatbot.

There's also movement toward integrated systems where AI handles some tasks (analysis of imaging, identification of drug interactions, synthesis of medical literature) while human professionals handle others (diagnosis, communication, judgment calls). This division of labor can leverage AI's strengths while keeping humans in charge of decisions that require clinical judgment.

But most of these advances are years away from being widely available. In the meantime, Chat GPT and similar general-purpose AI tools will continue to be used for health questions despite their limitations.

Medical professionals are adapting by explicitly educating patients about AI limitations. Some primary care providers now ask patients whether they've used AI for health research and discuss the findings together. This bridges the gap between patient research and clinical judgment.

The ideal future might involve AI tools that are transparent about their limitations, that integrate with verified medical knowledge sources, that express uncertainty appropriately, and that encourage human oversight. We're not there yet. Today's Chat GPT is a general-purpose tool being used for a specialized purpose it wasn't designed for.

The Bottom Line: Confident Doesn't Mean Correct

Let me return to where we started: a confident answer isn't the same as a correct one.

Chat GPT is good at producing confident-sounding answers. It's trained on vast amounts of text. It understands the language of medicine. It can synthesize complex information into clear explanations. But confidence is a feature of the format, not a measure of accuracy.

Medical professionals aren't opposed to patients using AI. They're concerned about patients using AI without understanding what they're actually getting: a statistically sophisticated guess that sounds authoritative, based on patterns in training data, without any mechanism for expressing uncertainty or accounting for individual context.

The gap between how the tool sounds and what it actually does is the core problem. It's not that Chat GPT is uniquely terrible at health advice. It's that it sounds perfectly reasonable while giving advice that might be wrong. That combination is dangerous.

This is why doctors repeatedly emphasized the same point: use Chat GPT for education, not diagnosis. Use it to understand conditions, not to assess whether you have them. Use it to learn about medications, not to decide whether to take them. And always, always verify AI health guidance with an actual healthcare provider before changing any health decisions.

The technology isn't going away. AI will become increasingly capable. But capability and safety aren't the same thing. Until AI health tools are specifically designed for medical use, validated through clinical testing, and regulated like medical devices, they'll remain tools that can supplement medical information—but never replace actual medical judgment.

Your health is too important to rely on a confident guess. Even a very good confident guess.

FAQ

What exactly is hallucination in AI health advice?

Hallucination is when an AI system generates false information with complete confidence. In medical contexts, this means Chat GPT might invent medication interactions, make up treatment protocols, or attribute your symptoms to conditions that don't actually match what you've described. The dangerous part is that these inventions often sound completely plausible because they follow patterns from the legitimate medical literature the AI was trained on. The AI isn't lying; it simply has no built-in way to distinguish information it learned from its training data from plausible-sounding text it generates through pattern prediction.

Why can't doctors just tell me if Chat GPT's answer is right or wrong?

Doctors can spot obviously wrong information, but that's different from verifying that everything Chat GPT said is correct. If the AI generates plausible-sounding information that's actually inaccurate, a doctor might assume it's correct or might not catch it without specifically checking. That's why the point of bringing AI information to a doctor isn't to have them validate it, but to let them integrate it with their medical judgment about your specific situation. Their value isn't in fact-checking the AI; it's in putting information in proper context and assessing what's actually relevant to you.

Is Chat GPT better or worse than Web MD for health information?

They're different in important ways. Web MD has medical editors reviewing content for accuracy. Chat GPT doesn't. Web MD's content is written in advance and can be revised when evidence changes. Chat GPT's answers are generated on the fly from training data with a knowledge cutoff. Web MD tends to cover broad possibilities, which can trigger health anxiety, but it's typically accurate. Chat GPT can be inaccurate in ways that aren't obvious. For general health education, Web MD is probably safer. For understanding medical terminology or getting explanations of concepts, Chat GPT might be helpful. Neither should be used for diagnosis.

What should I do if Chat GPT gives me health advice that contradicts what my doctor said?

Ask your doctor about it. Bring the specific information from Chat GPT to your appointment. Your doctor can explain why the AI's information might not apply to your situation, might be outdated, or might rest on a misunderstanding of your specific context. If your doctor can't articulate why the AI's information doesn't apply, that might be worth discussing further. Still, your doctor's assessment is based on examining you and understanding your complete medical picture, while Chat GPT's is based on pattern matching, so the doctor's assessment is more reliable for your specific situation.

Can I use Chat GPT to research my medication's side effects?

Chat GPT generally provides accurate information about the common side effects of medications. Where it becomes problematic is in assessing whether a symptom you're experiencing is actually a side effect, whether it's serious enough to warrant stopping the medication, or whether it's related to the medication at all. If you experience concerning symptoms while taking a medication, discuss them with your doctor and mention the potential side effects you've researched. But don't stop a medication based on Chat GPT's information; talk to your healthcare provider first.

Is using Chat GPT for mental health support safe?

It's risky. Mental health assessment is highly contextual and subjective. Chat GPT can provide general information about mental health conditions, but it can't assess your specific situation or understand what's actually helping versus harming you. Some suggestions the AI makes might be counterproductive for your specific condition. For mental health concerns, connecting with an actual therapist, counselor, or psychiatrist is important. If you're having a mental health crisis, contact a crisis hotline or emergency services. Don't rely on Chat GPT for guidance in serious mental health situations.

How do I know if Chat GPT is expressing appropriate uncertainty about health information?

Learn what genuine uncertainty sounds like. "This could be X, but we'd want to rule out Y" expresses appropriate uncertainty. So does "Most commonly this indicates X, though less common possibilities include Y and Z." Chat GPT tends to present information in structured lists without clearly flagging how likely each possibility is or how certain the information is. When an answer arrives in a tidy format with no explicit statement of confidence levels, that's a sign appropriate uncertainty may be missing.

Should I tell my doctor I've researched my condition with Chat GPT?

Yes. Mention what you researched and what information you found. A good doctor will appreciate that you're engaged with understanding your health. They can discuss which information is relevant to your situation, explain any AI conclusions that might be inaccurate, and use your research as a starting point for conversation. Some doctors might express concerns about AI accuracy, which is valuable feedback for understanding the limitations of what you've learned. The key is treating the Chat GPT research as a starting point for conversation, not as conclusions you expect the doctor to validate.

Is Chat GPT ever appropriate to use for pregnancy-related health questions?

It's generally not recommended. Pregnancy changes the medical context: treatments that are safe for people who aren't pregnant can harm a developing fetus. Chat GPT doesn't appropriately weight pregnancy-specific risks. It might suggest treatments that are contraindicated in pregnancy or downplay symptoms that require urgent evaluation. If you have pregnancy-related health questions, talking with your obstetrician or midwife is much more important than researching with AI. This is an area where the difference between general medical reasoning and pregnancy-specific medical judgment is critical.

What's the difference between using AI for health education versus using it for diagnosis?

Health education is learning how your body works, what conditions are, and how treatments function. This is relatively safe with Chat GPT because the goal is understanding, and inaccuracies are easier to catch because you can check against other sources. Diagnosis is assessing whether you have a specific condition based on your symptoms. This is dangerous with Chat GPT because you're asking it to apply medical judgment to your specific situation, something the AI is not qualified to do, and you have no way to verify its accuracy. Keep AI in the education category and keep diagnosis in the healthcare provider category.

Key Takeaways

- A confident AI answer can leave you feeling reassured enough to decide you don't need to call your doctor after all.

- Some patients now arrive at appointments with treatment plans they drafted with Chat GPT, expecting their doctor to simply validate the AI's recommendations.

- Chat GPT can fabricate treatment protocols that sound completely legitimate, and patients may act on them simply because the AI recommended them.

- Critically, the model has no built-in mechanism to distinguish accurate information from fabricated information.


![ChatGPT for Health Advice: What Medical Experts Really Think [2025]](https://tryrunable.com/blog/chatgpt-for-health-advice-what-medical-experts-really-think-/image-1-1771666555227.jpg)