The Discover Weekly Crisis: When Algorithms Started Serving Garbage

Something changed on Spotify last year. Not gradually, either. One week your Discover Weekly felt curated by someone who actually understood your taste. The next, it looked like an AI had trained itself on 10,000 bedroom producers and decided you'd enjoy all of them at once.

Music fans started noticing the problem around mid-2024. Reddit threads exploded. Twitter filled with complaints. TikTok videos showed side-by-side comparisons of "before and after" playlists. The verdict was unanimous: Spotify's most beloved feature had become unreliable.

This isn't about snobbery. It's not purists hating electronic music or gatekeeping indie. The issue is specifically about AI-generated tracks flooding the platform with production so generic it makes elevator music sound innovative. Users describe tracks with zero instrumentation depth, vocals that sound like text-to-speech, and lyrics that follow formulaic patterns so obvious they might as well be randomized.

The core problem: Spotify's algorithm can't distinguish quality AI music from shovelware. It just sees music. The platform's discovery engine, which once only had to rank human artists against one another, now has to swim through a growing sea of synthetic content generated by tools designed to game the system.

The real question isn't whether AI belongs on Spotify. It's whether the algorithm was ever built to handle this much garbage data.

How We Got Here: The Perfect Storm of Bad Incentives

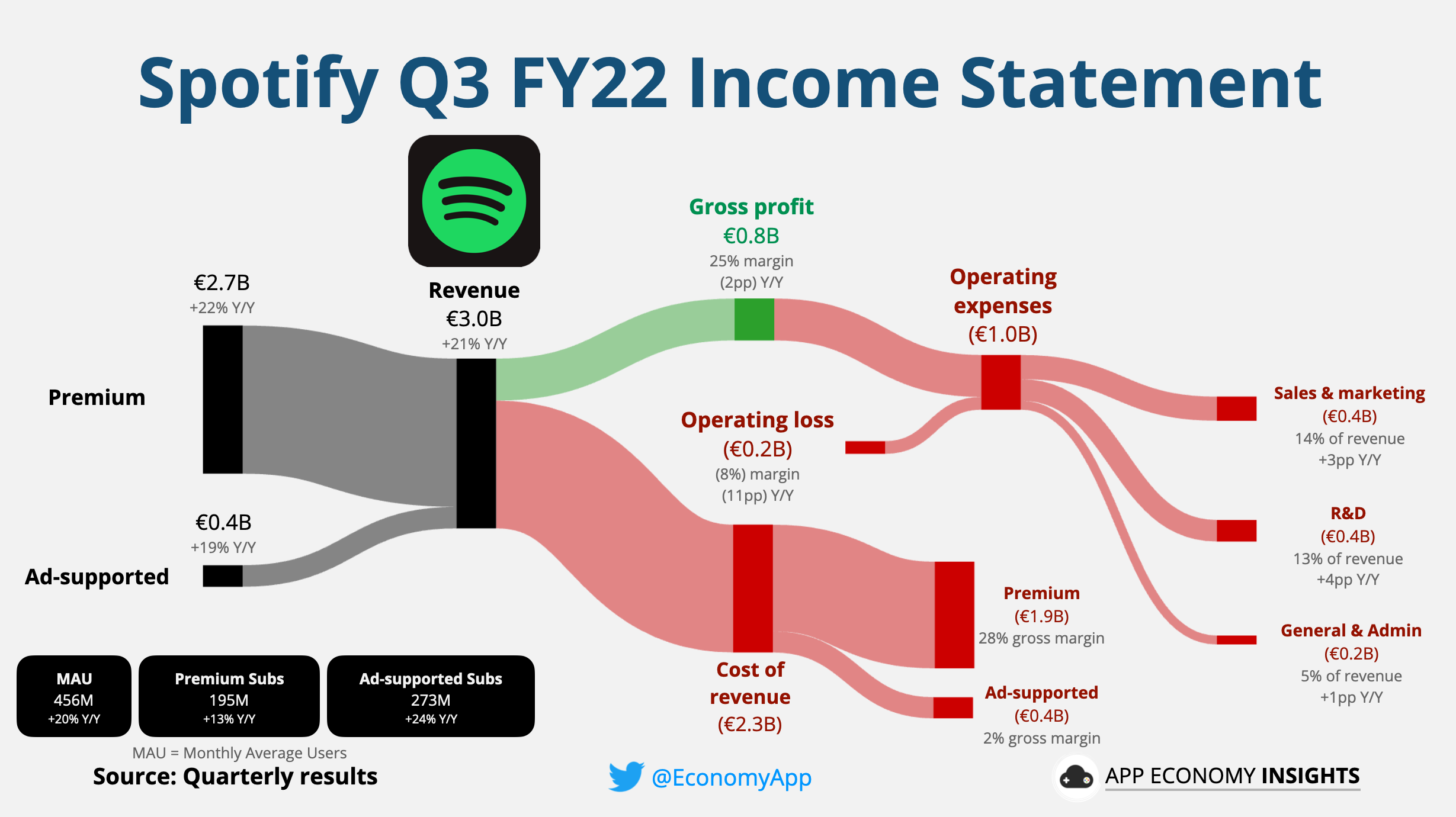

Understanding this crisis requires backing up to look at how Spotify's economics actually work. The platform doesn't pay artists per stream in the way most people think. There's no "$0.003 per play" that applies evenly. Instead, Spotify pools subscription and advertising revenue and distributes it to rights holders according to their share of total streams, with major label deals, placement on official playlists, and algorithmic promotion all affecting how much money flows where.
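To make the pooled model concrete, here is a minimal sketch of a pro-rata split. It is a simplification for illustration, not Spotify's actual payout formula: the function name `pro_rata_payouts`, the pool size, and the stream counts are all hypothetical, and real payouts also depend on licensing deals and eligibility rules.

```python
from typing import Dict


def pro_rata_payouts(royalty_pool: float, streams: Dict[str, int]) -> Dict[str, float]:
    """Split a fixed royalty pool among rights holders by share of total streams."""
    total_streams = sum(streams.values())
    if total_streams == 0:
        return {name: 0.0 for name in streams}
    return {name: royalty_pool * count / total_streams for name, count in streams.items()}


# Hypothetical month: a fixed pool shared by two human artists.
before = pro_rata_payouts(1000.0, {"artist_a": 600, "artist_b": 400})

# Same pool and same human streams, plus a bulk uploader who captures 1,000 streams.
after = pro_rata_payouts(
    1000.0, {"artist_a": 600, "artist_b": 400, "bulk_uploader": 1000}
)

print(before["artist_a"], after["artist_a"])
```

Under these assumed numbers, `artist_a` keeps exactly the same listeners but receives half as much, because the pool is fixed and the bulk uploader's streams dilute everyone else's share.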

This created an obvious arbitrage opportunity: if you could generate music cheaply and get it featured in high-visibility playlists like Discover Weekly, you could theoretically make money with minimal investment. The barrier to entry wasn't talent or production skill. It was just learning how to use AI music generation tools.

Startups like Boomy and AIVA emerged specifically to promise artists that AI could handle composition and arrangement automatically. Upload lyrics, select a genre, generate a thousand variations, and upload them all to Spotify. Some producers openly admitted they were uploading dozens of AI tracks per day, experimenting to see which ones gained traction.

Spotify didn't stop this. The platform's content moderation systems weren't equipped to flag obvious AI generation at scale. As long as a track was played for at least 30 seconds (the point at which Spotify counts a stream), Spotify treated it the same as a professionally produced song.

The incentive structure broke. Making "good enough" AI music became more profitable than making honest attempts at real production.

Big labels noticed. They started experimenting with AI composition tools themselves, not always disclosing it. Boutique labels popped up that existed specifically to A/B test generated music. The cost of creating a track dropped from hours of production to minutes of prompting.

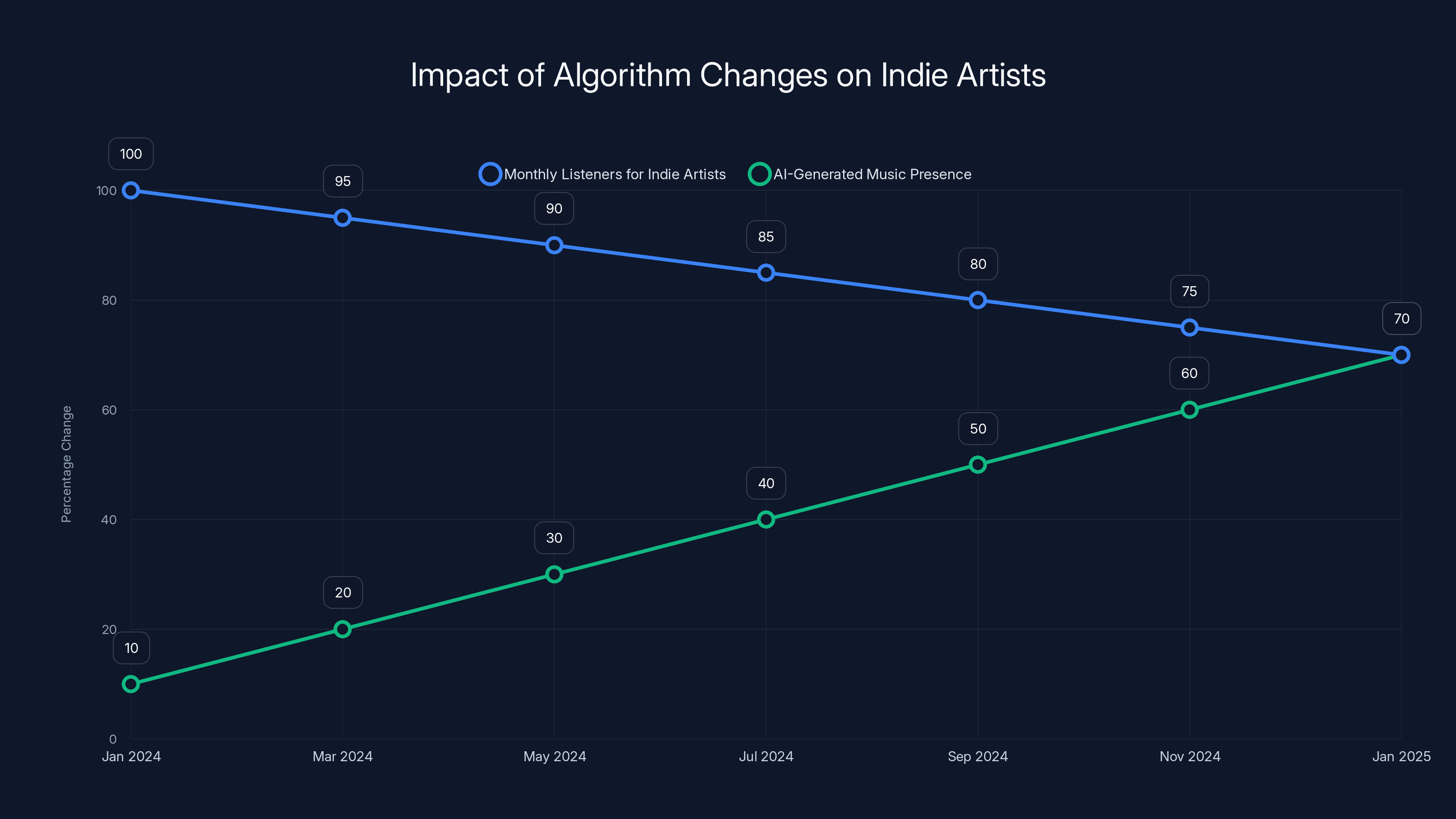

Estimated data shows a decline in indie artists' monthly listeners by 30% over a year, while AI-generated music presence increased significantly.

The Algorithm Learned Bad Habits

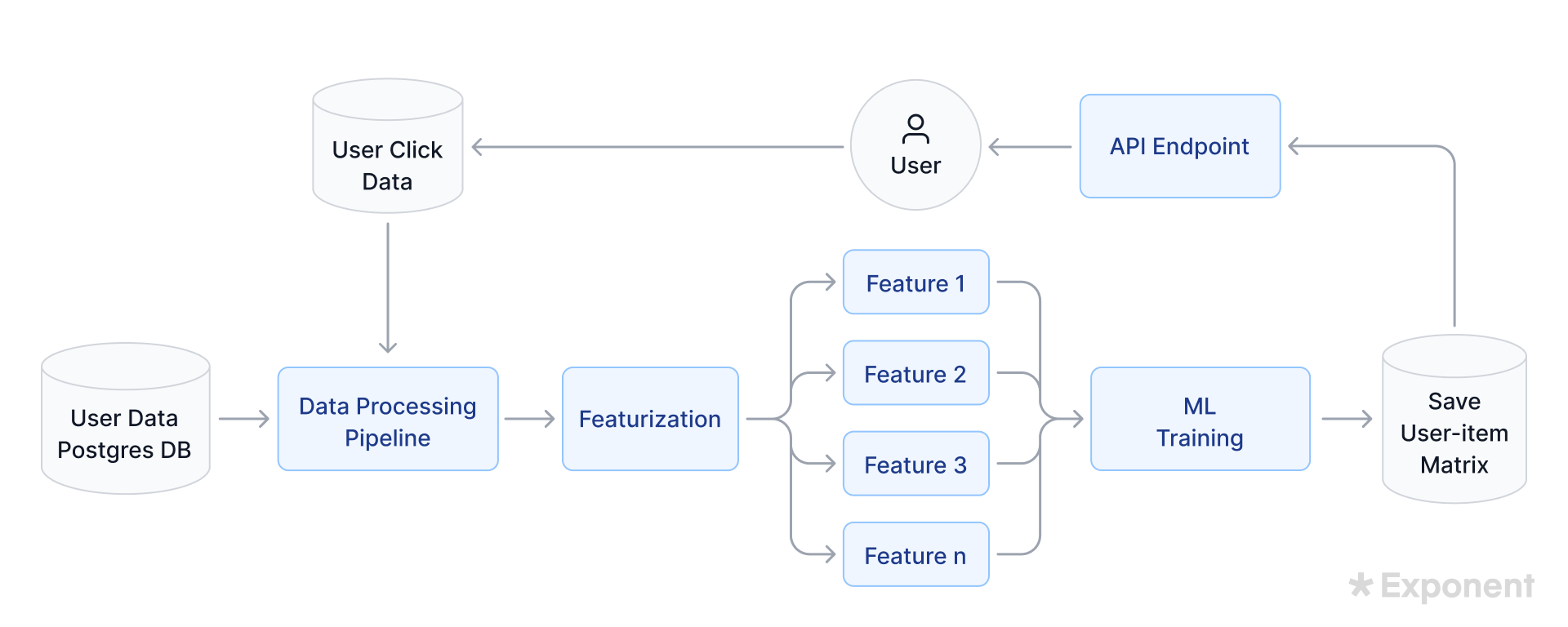

Here's where it gets technical. Discover Weekly runs on recommendation algorithms that learn patterns from listening behavior. When enough users skip a song after 5 seconds, the algorithm penalizes it slightly. When users save tracks and add them to personal playlists, the algorithm sees that as a strong signal.

AI-generated tracks, especially early ones, had one peculiar property: high skip rates but decent save rates from people trying them out of curiosity. The algorithm interpreted this as "interesting but niche." It then recommended similar tracks to users with similar tastes, creating feedback loops that amplified AI music in playlists.
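The way a "high skip, decent save" profile can still come out ahead is easier to see with a toy scoring function. This is a sketch under stated assumptions, not Spotify's ranking logic: the `TrackStats` fields, the two weights, and the sample numbers are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class TrackStats:
    impressions: int  # times the track was recommended
    early_skips: int  # skipped within the first few seconds
    saves: int        # saved or added to a personal playlist


# Hypothetical weights: a skip is a slight penalty, a save is a strong signal.
SKIP_PENALTY = 0.2
SAVE_REWARD = 3.0


def engagement_score(stats: TrackStats) -> float:
    """Per-impression score: weighted save rate minus weighted skip rate."""
    if stats.impressions == 0:
        return 0.0
    skip_rate = stats.early_skips / stats.impressions
    save_rate = stats.saves / stats.impressions
    return SAVE_REWARD * save_rate - SKIP_PENALTY * skip_rate


# A track many people skip, but a curious minority saves.
curiosity_track = TrackStats(impressions=1000, early_skips=600, saves=80)
# A track fewer people skip, but also fewer people save.
steady_track = TrackStats(impressions=1000, early_skips=200, saves=30)

print(engagement_score(curiosity_track), engagement_score(steady_track))
```

With these assumed weights, the heavily skipped track still outscores the steadier one, because the save signal outweighs the skip penalty. Any system that weights signals this way would keep recommending similar tracks.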

Meanwhile, mid-tier human artists producing legitimate music in relatively niche genres found their Discover Weekly placement declining. The algorithm had decided that AI-adjacent content was more engaging for certain demographic clusters. Real artists competing for the same listener attention watched their playlist placements drop.

The platform's search function also started returning AI-generated results more prominently. Search for "lo-fi hip hop" and you'd get a wall of AI-generated beats before you'd see human producers who'd been making lo-fi for a decade.

The algorithm didn't choose AI over human artists. It just chose engagement metrics, and AI was winning that game through sheer volume.

Why Users Completely Lost Trust

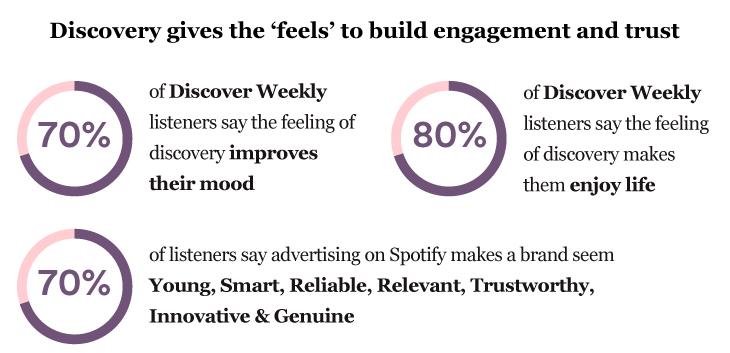

Spotify's brand promise was always that its algorithms understood you better than any human curator could. Discover Weekly was the proof of concept. Every Monday, you'd get 30 songs you'd never heard before but would probably like. For years, this actually worked.

Trust erodes slowly, then all at once. Users started noticing that the quality of recommendations had degraded. Not uniformly. Some playlists still felt perfectly tuned. Others looked like someone had fed random words to a title generator.

The breaking point came when users realized they couldn't trust the feature to discover actual artists anymore. They questioned whether that compelling instrumental track was produced by a human or generated by code. They wondered if the folk song they liked was authentic or another AI demo.

This uncertainty itself is corrosive. Spotify sold itself partly on curation quality. Now users were questioning the premise. If you can't trust Discover Weekly, why not just search YouTube for "synthwave 2024" and listen to established producers? Why rely on an algorithm that might be feeding you machine-generated slop?

Comments across social media captured the sentiment perfectly: "Discover Weekly feels like I'm listening to the demo version of music." Another: "Half of my recommendations sound like AI now. I've started using Apple Music just for variety." The recurring theme was betrayed expectations.

When an algorithm's entire value is based on trust, and that trust breaks, the feature becomes worse than useless.

The Scale of the Problem: Numbers Behind the Fury

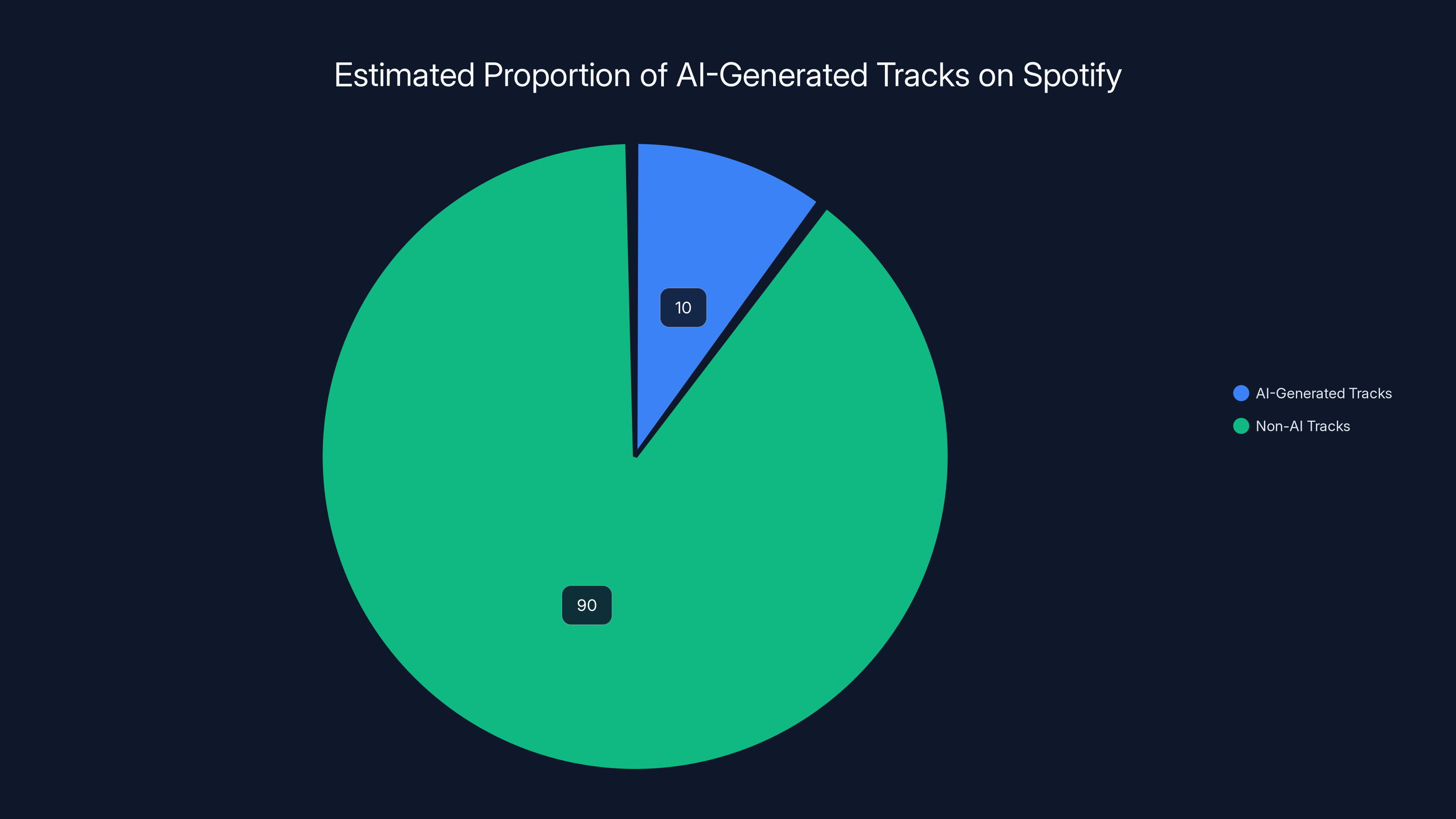

How many AI-generated tracks are actually on Spotify? The platform doesn't publish exact numbers, but industry estimates suggest somewhere between 5% and 15% of daily uploads are AI-generated or AI-assisted. On a platform where over 100,000 tracks are uploaded daily, that's 5,000 to 15,000 AI tracks hitting the system every single day.

Not all of those make it to Discover Weekly. The algorithm does filter. But when your filtering system wasn't designed to identify low-quality AI content, and the volume is this high, degradation in playlist quality becomes inevitable.

User-generated reports paint the picture. In r/spotify, threads about AI-flooded playlists typically garner hundreds of comments within hours. Music production forums documented the exact tools being used to generate tracks at scale. Some Spotify listeners reported that 40-60% of their Discover Weekly recommendations appeared to be AI-generated based on obvious tells like repetitive structures, generic vocal processing, or impossible audio characteristics.

Spotify itself has been cagey about admitting the scale. In late 2024, Daniel Ek mentioned that the company was working on tools to identify AI-generated content, but offered no timeline and no admission that this was a major ongoing problem. The lack of transparency only fueled user frustration.

When users can't get straight answers from a company, they assume the problem is bigger than it's admitting.

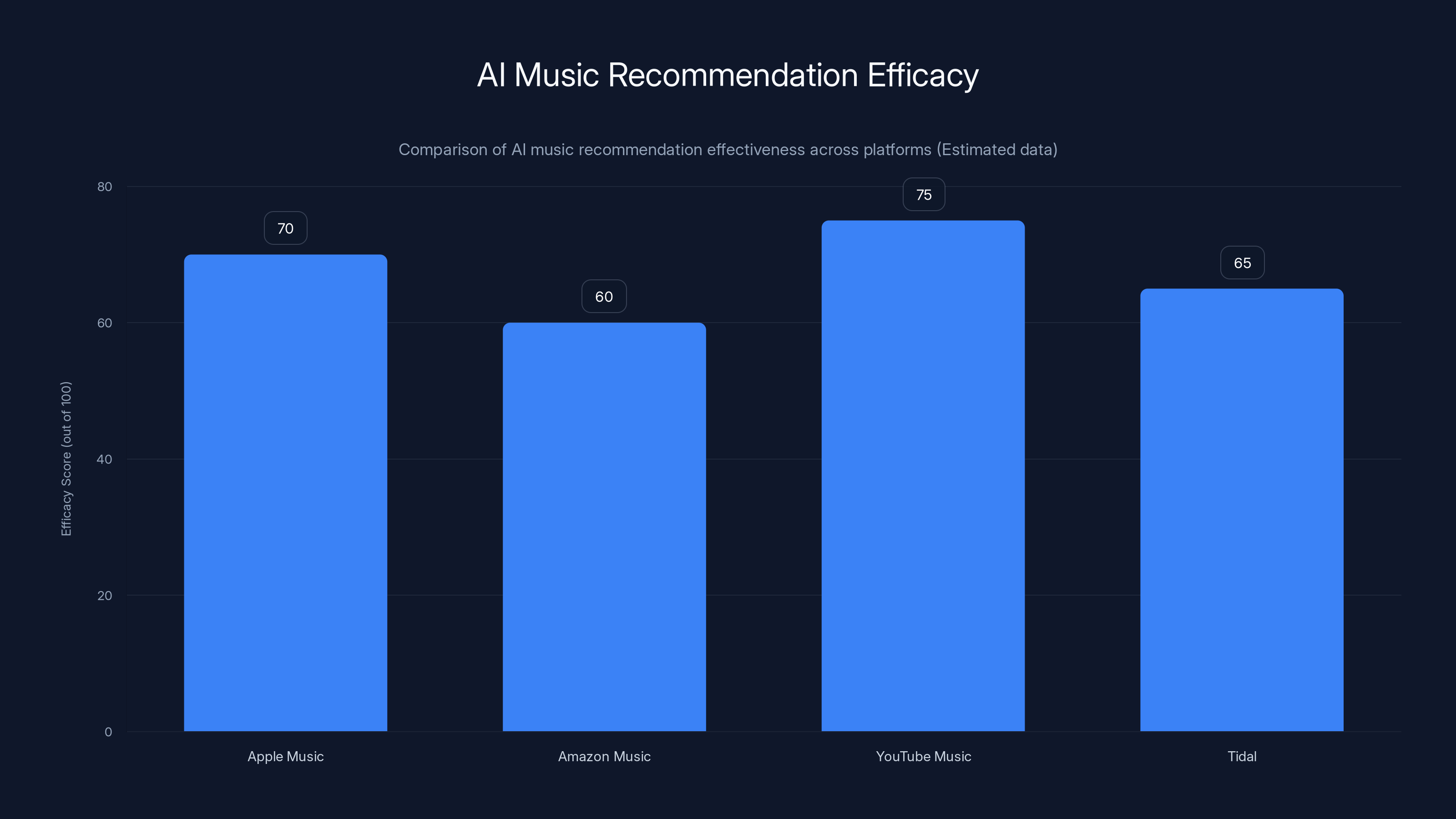

YouTube Music leads in AI music recommendation efficacy due to its integration with Google's broader recommendation infrastructure. Tidal, while prioritizing human curation, scores lower due to its smaller user base. (Estimated data)

How to Spot AI-Generated Tracks (And Why You Shouldn't Have To)

Music production professionals can identify AI-generated content with decent accuracy, though the technology keeps improving. Here are the tells:

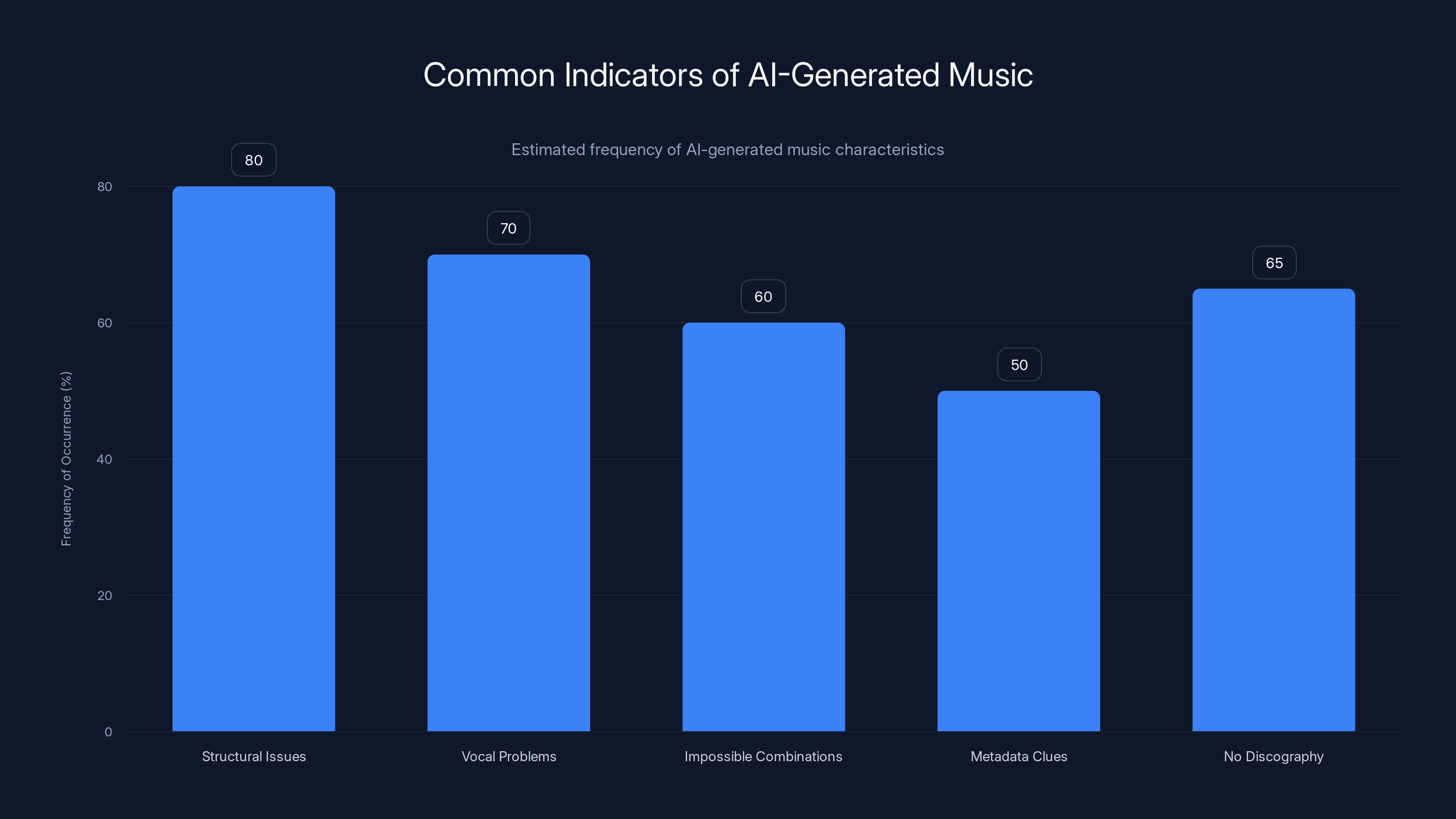

Structural Issues: Most AI music generators still struggle with longer-form composition. You'll hear repetitive 8-bar or 16-bar loops that never truly evolve. The "middle section" feels copy-pasted rather than intentionally different.

Vocal Problems: AI-generated vocals have a particular glossiness that's hard to describe until you've heard enough to recognize it. No breathy imperfections. No timing variations that come from human performance. Everything is too clean, like a voice passed through excessive compression and correction.

Impossible Combinations: Sometimes AI generates instrumentation combinations that no real producer would choose. Heavily reverb-drenched synth lines paired with bone-dry vocal samples. Genre mashups so unintuitive they signal generation rather than intentional curation.

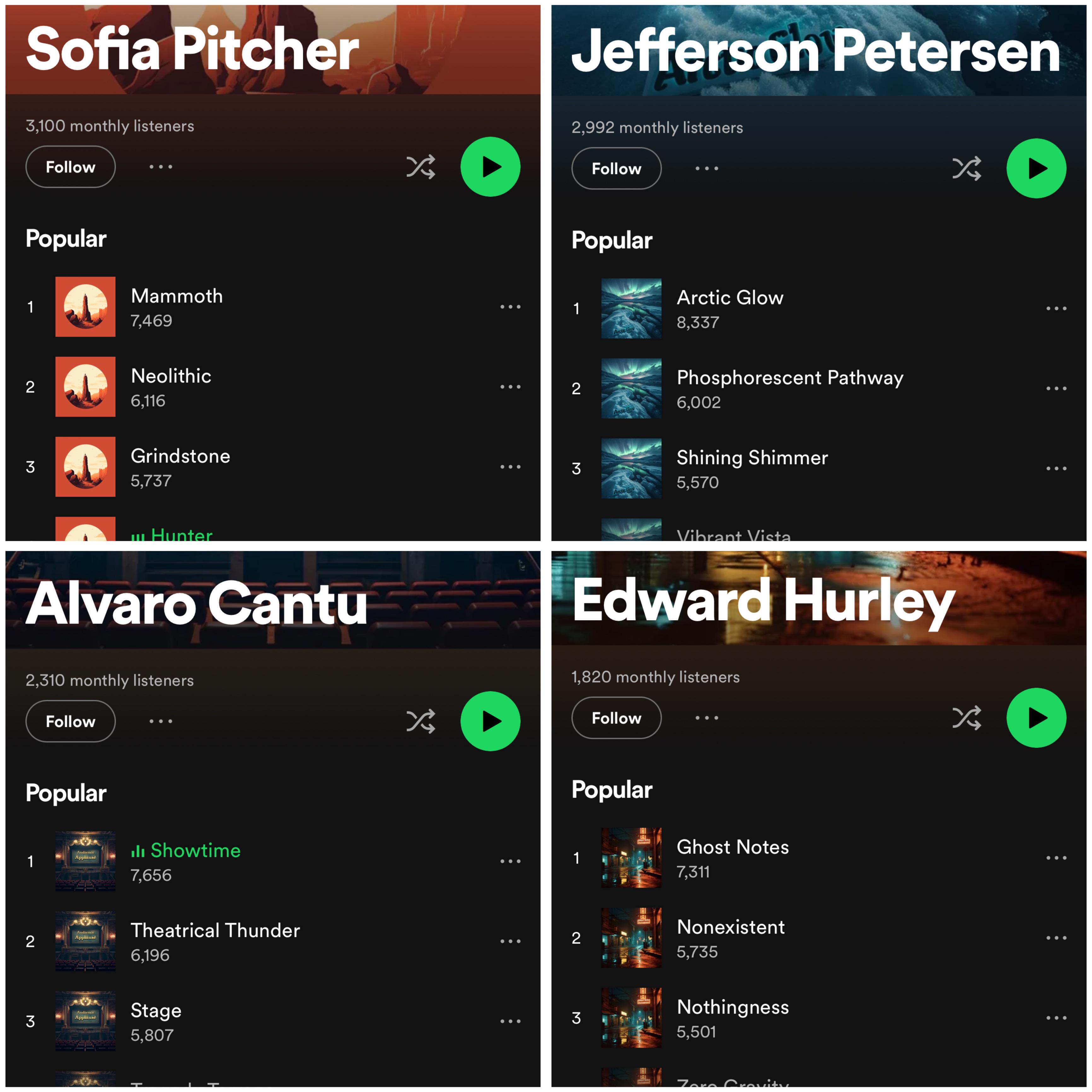

Metadata Clues: Some AI-generated tracks ship with obvious metadata like "Generated by [Tool Name]" in the description. Others use suspiciously generic artist names that sound auto-generated.

No Discography: Real artists accumulate work. They have collaborations, features, label associations, social media. AI-generated accounts sometimes have just one song or dozens with zero connection between them.

But here's the frustrating part: users shouldn't need a forensic audio analysis degree to enjoy a music streaming platform. The responsibility for quality control should rest with the platform, not with listeners acting as gatekeepers.

The Label Response: Record Companies Playing Both Sides

Major record labels have responded to AI music in contradictory ways that reveal their actual priorities. Publicly, they express concern about AI-generated music undercutting human artists. Behind closed doors, some labels are experimenting with AI composition tools themselves.

Universal Music Group has been the most vocal opponent of uncontrolled AI music. In 2024, they actively removed songs from some music generation platforms and pushed Spotify and other services to implement better detection. But even UMG has signed artists who use AI as part of their production process.

Independent labels have splintered. Some have created explicit policies banning AI-generated content from their catalogs. Others have embraced it as a cost-saving measure for their roster. The incentives point in one direction: AI music is cheaper to produce, so if you can get away with uploading it, the profit margins look incredible.

This creates a perverse dynamic where the companies best positioned to police AI music (the major labels with the most Spotify clout) have conflicted interests. They want to prevent AI from disrupting their business models while simultaneously experimenting with it to reduce production costs.

The market for authenticity is real, but it's losing to the market for cost reduction.

Spotify's Half-Measures and Why They're Not Working

Spotify has announced several initiatives to address AI-generated content, but most amount to incomplete fixes that either miss the mark or come too late.

The company introduced a "Content ID" system for identifying AI-generated music, loosely modeled on YouTube's copyright detection. But the system relies on uploaded metadata and voluntary disclosure. It's trivial to circumvent: just don't label your track as AI-generated.

Spotify also added tools for artists to indicate whether they've used AI in their production. Great in theory. In practice, there's zero enforcement mechanism. An AI track generator can upload content and simply not use the disclosure tool.

The platform has experimented with algorithmic detection but hasn't shared results publicly. Early indications suggest it's moderately effective (maybe 60-70% accuracy) but creates false positives that occasionally flag legitimate experimental music as AI-generated.

Most frustratingly, Spotify hasn't changed the underlying payment structure that incentivizes AI music uploads. As long as a track is played past the 30-second mark that counts as a stream and reaches minimum streaming thresholds, it generates the same revenue as a human-produced track. Until the economics change, every incentive in the system rewards flooding the platform with volume over quality.

Detection systems are necessary but insufficient. You need to change the incentives.

The Artist Perspective: When the Algorithm Chooses Against You

Interview independent musicians about their Spotify experience in 2024-2025, and you hear a consistent story: playlist placement has become harder, discovery has become noisier, and competition feels increasingly unfair.

One mid-tier indie artist reported that their monthly listeners dropped 15% over six months while AI-generated music in their genre exploded. They hadn't changed their production quality. Their human fanbase still engaged. But algorithmic discovery dried up as the system prioritized the volume play.

Another artist described the Discover Weekly experience as "a lottery you're increasingly unlikely to win." With thousands of new tracks daily, the statistical chance that any single artist gets meaningful algorithmic discovery has declined. Meanwhile, AI generators can flood the system with hundreds of variations, making it far more likely that at least some of them will hit algorithmically.
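The lottery framing can be put in numbers. The sketch below assumes each upload has the same small, independent chance of being picked up by the algorithm. That is a simplification, and the 0.1% figure and the upload counts are hypothetical.

```python
def chance_of_at_least_one_hit(p_single: float, uploads: int) -> float:
    """Probability that at least one of `uploads` independent tracks gets picked up."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if uploads < 0:
        raise ValueError("uploads must be non-negative")
    # Complement rule: 1 minus the probability that every upload misses.
    return 1.0 - (1.0 - p_single) ** uploads


P_SINGLE = 0.001  # hypothetical 0.1% chance per track

one_release = chance_of_at_least_one_hit(P_SINGLE, 1)
bulk_uploads = chance_of_at_least_one_hit(P_SINGLE, 500)

print(f"one release: {one_release:.4f}, 500 uploads: {bulk_uploads:.4f}")
```

Under these assumptions, a single release has a 0.1% chance while 500 uploads have roughly a 39% chance of landing at least one hit. Volume doesn't guarantee a win, but it changes the odds by orders of magnitude.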

This creates a chilling effect. New human artists look at the math, see that competing with AI-generated volume is like competing with infinite free labor, and some decide the platform isn't worth the effort. Spotify loses the creators that would produce its future breakout stars.

The platform's playlist submission system also broke under the weight of AI submissions. Curators report being overwhelmed with generic pitches. The signal-to-noise ratio for finding legitimate emerging artists has degraded significantly.

Estimated data suggests that 10% of daily uploads on Spotify are AI-generated, highlighting a significant presence of AI content on the platform.

Competing Platforms: Can They Do Better?

Apple Music has been quieter about AI music, which could mean either they're handling it gracefully or ignoring it entirely. The reality is probably somewhere in between. Apple's recommendation engine is similar to Spotify's in that it learns from listening patterns, but Apple's smaller user base means less incentive for the AI music arbitrage play.

Amazon Music's approach has been even less transparent. The platform exists primarily as an add-on benefit for Prime members, so the pure content quality incentives are different. If Amazon Music serves mediocre recommendations, users don't necessarily leave (they might tolerate it as a bonus feature).

YouTube Music, owned by Google, has algorithmic advantages in that it can leverage YouTube's broader recommendation infrastructure. But YouTube's own ecosystem is already dealing with AI-generated content at scale. The platform's quality issues might just be upstream problems manifesting.

Tidal, majority-owned by Block since 2021 and still closely associated with Jay-Z, has made explicit commitments to prioritizing human artists. Their recommendations emphasize curation and artist relationships over pure algorithmic optimization. But Tidal's smaller user base (around 3 million paid subscribers compared to Spotify's total user base of 500+ million) means less data for algorithms to learn from.

The honest truth: none of the major platforms have solved the AI music problem. They're all racing to implement detection systems while trying not to alienate labels or disrupt their own revenue streams. The competitive pressure that would usually incentivize innovation toward higher quality playlists is being undercut by the cost savings AI music provides.

Technical Solutions Being Explored

Several promising approaches exist for addressing AI music at scale, though none are perfect.

Audio Fingerprinting and Watermarking: Rather than analyzing the audio content itself (which AI improves at constantly), watermarking embeds a digital signature in AI-generated tracks. This requires coordination between AI tool providers and streaming platforms, which is happening but slowly.

Metadata Analysis: Advanced ML models can analyze hundreds of metadata signals (artist history, upload patterns, collaboration networks, licensing data) to predict AI-generated content with decent accuracy. Early research suggests 75-85% accuracy is achievable without analyzing the actual audio.

Human-in-the-Loop Verification: For high-stakes playlist placements, human curators could verify content before algorithmic amplification. This adds cost, but concentrates it where it matters most: preventing bad content from reaching millions of listeners.

Blockchain-Based Attribution: Some researchers propose blockchain to create permanent, auditable records of whether a track uses AI in production. This would make disclosure harder to fake, though implementation challenges are significant.

Incentive Restructuring: The most effective solution would be changing how Spotify pays out revenue. If AI-generated tracks paid out less than human-created content, the economic case for flooding the platform collapses instantly. Spotify has shown zero interest in this approach, likely because it would anger AI music startups that are paying customers.

None of these solutions are sufficient alone. A robust approach would combine several: detection systems, payment incentive changes, clearer disclosure requirements, and algorithmic filtering that deprioritizes detected AI content in high-visibility playlists.
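As a rough illustration of how two of these pieces could fit together, the sketch below combines a metadata-based likelihood estimate with ranking that deprioritizes likely AI content. Everything in it is an assumption for illustration: the `Candidate` fields, the thresholds, and the weights are hypothetical, and a real system would learn them from data rather than hard-code them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    title: str
    relevance: float           # base recommendation score, 0..1
    uploads_last_30_days: int  # upload-pattern signal
    has_artist_history: bool   # prior releases, collaborations, etc.
    disclosed_ai: bool         # voluntary disclosure flag


def ai_likelihood(c: Candidate) -> float:
    """Crude, hand-weighted estimate (0..1) that a track is bulk AI output."""
    score = 0.0
    if c.disclosed_ai:
        score += 0.6
    if c.uploads_last_30_days > 50:
        score += 0.3
    if not c.has_artist_history:
        score += 0.2
    return min(score, 1.0)


def rank_for_playlist(candidates: List[Candidate], penalty: float = 0.5) -> List[Candidate]:
    """Sort by relevance, scaled down in proportion to the AI-likelihood estimate."""
    def adjusted(c: Candidate) -> float:
        return c.relevance * (1.0 - penalty * ai_likelihood(c))
    return sorted(candidates, key=adjusted, reverse=True)


human = Candidate("human_single", 0.70, 2, True, False)
bulk = Candidate("bulk_variation_417", 0.80, 120, False, False)

ranked = rank_for_playlist([bulk, human])
print([c.title for c in ranked])
```

Here the bulk upload starts with the higher raw relevance but ends up ranked below the human release once the penalty is applied. Nothing is banned outright; suspect tracks simply need to clear a higher bar to reach a high-visibility playlist.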

The technology exists. The question is whether Spotify prioritizes quality over the path of least resistance.

The Long-Term Implications: What This Means for Music Discovery

If this trend continues unchecked, the consequences extend beyond individual users being frustrated with their playlists. We're potentially watching the degradation of the primary mechanism through which new artists reach audiences.

For decades, radio DJs and human curators were the gatekeepers. Spotify promised algorithmic meritocracy: listen to enough users' behavior, and good music rises naturally. But that meritocracy assumed you could distinguish signal from noise. When the noise becomes louder than the signal, the system breaks.

Emerging artists already face a massive disadvantage in gaining visibility. Label backing, previous success, network effects, and timing all matter. Adding AI-generated volume to the mix essentially tips the scales further toward established artists and away from genuine emerging talent.

This has downstream effects on the entire music industry ecosystem. If new artists struggle to gain discovery traction on Spotify, they're less likely to view music professionally. The pipeline of talent feeding into the industry constricts. Eventually, the catalog of "new human-created music" would start to feel stale because fewer humans are investing in becoming good at making it.

Streaming platforms would become increasingly dominated by AI music, archive content, and a small group of mega-artists. Discovery would cease to be a feature and become a curation museum.

The tragedy would be that Spotify created the problem while claiming to solve it.

Alternatively, if the platform gets serious about quality, a different scenario plays out. AI music finds its proper role: not as a tool for arbitrage and bulk uploading, but as a creative tool that human artists use to enhance their work. The detection systems improve faster than AI generation does. Spotify stabilizes as a platform where algorithmic discovery actually works again, and users regain trust in their playlists.

Which timeline wins depends almost entirely on decisions Spotify executives make in the next 18 months. Every quarter they delay substantial policy changes, the AI music problem entrenches deeper into their dataset and user experience.

What Users Actually Want (and Why Spotify Isn't Delivering)

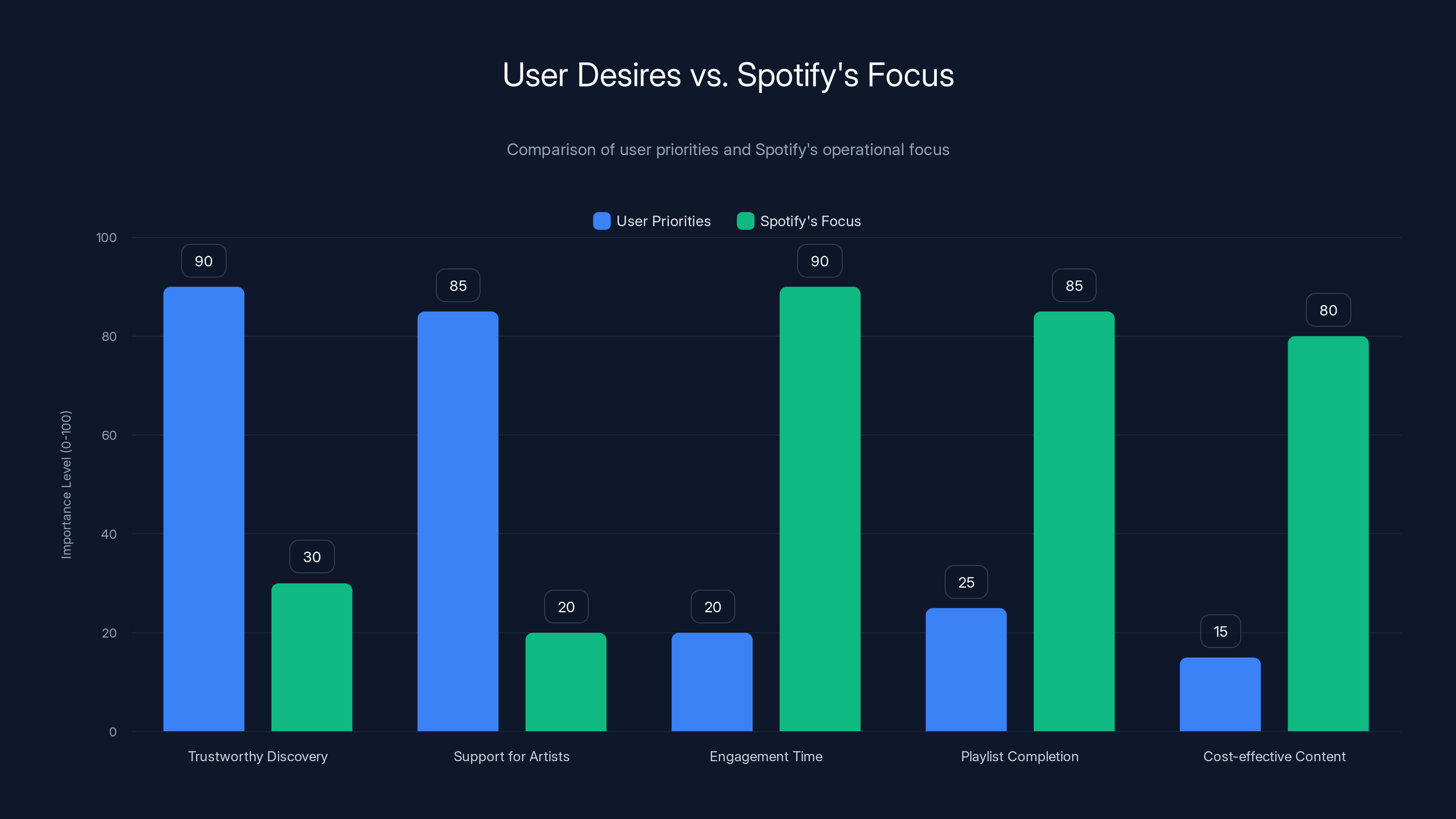

This entire situation stems from a misalignment between what Spotify users want and what Spotify's incentive structure provides.

Users want: Trustworthy discovery of music they'll actually like, produced by real musicians and artists they can connect with.

Spotify's system optimizes for: Engagement time, playlist completion rates, and cost-effective content that minimizes licensing disputes.

These aren't opposed, but they're not aligned either. The platform makes more money from engagement metrics, and AI music is genuinely good at generating engagement (people skip it, click through it, listen to it, and create the data trails that train the recommendation engine).

Users also want to support artists they like, which is a moral consideration that Spotify's metrics don't capture. If you listen to AI-generated music on Spotify, your streams go toward an AI tool developer's cut, not a musician's rent. Users feel implicitly complicit in a system that undermines human artists.

Spotify's solution to this has been transparency theater: adding AI disclosure tags that users can ignore, offering tools to identify AI content that require effort to use, making public statements about their commitment to artist discovery while their algorithms do the opposite.

The platform is solving for the wrong variables while pretending to solve for the right ones.

Estimated data shows that structural issues and vocal problems are the most common indicators of AI-generated music, highlighting areas where AI technology still struggles.

The Regulatory Question: Should Governments Step In?

Several European countries have started exploring regulations around AI music and artist compensation. These efforts have been tentative and mostly symbolic, but they signal that policymakers are aware of the problem.

The challenge with regulation is that music streaming exists in a complicated jurisdictional space. Spotify is headquartered in Sweden but operates globally. AI music tools are created in multiple countries. Artists are distributed worldwide. Crafting regulations that address the AI problem without strangling innovation or creating unintended consequences is extremely difficult.

Some argue that mandatory disclosure of AI-generated content is the right starting point: require all AI music to be labeled, and let users decide whether to engage with it. This is low-friction but relies on enforcement (AI uploaders can easily lie), and it doesn't address the underlying algorithmic fairness issues.

Others propose artist-friendly payment structures: increase per-stream rates for human artists, decrease them for AI-generated content, and mandate that a percentage of streaming revenue goes to a fund supporting emerging musicians. This would create a genuine incentive to prioritize human-created content, but it would face massive industry opposition and might be economically unworkable.

A middle ground might involve mandatory algorithmic transparency: require platforms to disclose how much AI-generated content their recommendation algorithms are promoting, and set targets to limit that percentage. This would create pressure on Spotify to improve detection and reduce AI music prominence without outright banning anything.

Regulation alone won't solve this, but the absence of any regulatory pressure means Spotify has zero external incentive to change.

The Path Forward: What Spotify Could Actually Do

If Spotify genuinely wanted to restore trust in Discover Weekly and address the AI music problem, here's what the roadmap would look like:

Immediate Actions (Next 60 Days): Implement strict AI music detection in the Discover Weekly algorithm and actively deprioritize detected content. This is technically feasible with existing ML models. Communicate this change transparently to users.

Short-Term Changes (3-6 Months): Adjust the payment structure so AI-generated tracks pay out at a reduced rate compared to human-created music. Make the AI music disclosure tool mandatory for all uploads, not optional. Partner with major labels and independent organizations to validate detection accuracy.

Medium-Term Initiatives (6-18 Months): Invest in human curation for niche playlists where algorithmic discovery has degraded most. Create a separate recommendation track for users who explicitly want to explore AI-generated music (some users do enjoy it). Develop artist support programs to help human musicians compete with AI's volume advantage.

Long-Term Vision (18+ Months): Rethink the fundamental discovery mechanism to balance algorithmic efficiency with curation quality. Invest in artist development tools that help emerging musicians build fanbases directly, reducing their dependence on algorithmic discovery.

This would be expensive. It would require Spotify to sacrifice short-term engagement metrics for long-term platform health. It would upset AI music startups that are currently Spotify customers. It would require honesty about the scale of the problem.

The probability of Spotify doing all of this? Low. The probability of them doing some of it? Moderate. They have strong financial incentives to make incremental improvements while maintaining the status quo.

The most likely scenario is that Spotify will do just enough to quiet complaints without fundamentally changing the system.

The Bigger Picture: AI Music Beyond Spotify

The Spotify situation is a microcosm of a larger tension in the music industry: how do you integrate AI as a creative tool without letting it become a mechanism for lowering quality and undercutting human artists?

Spotify's case is especially problematic because discovery is its core value proposition. For YouTube Music or Apple Music, a degraded recommendation system is annoying but not existential. For Spotify, if discovery becomes unreliable, the main reason casual listeners stick around is gone.

Other parts of the music industry are dealing with similar issues differently. Music production forums are discussing AI tools explicitly and have largely coalesced around a view that AI is fine for inspiration, workflow enhancement, and certain specific tasks (fixing timing, adjusting levels), but not for replacing human musicianship entirely.

Radio stations and terrestrial music platforms are mostly insulated from the AI flooding problem because they receive far fewer submissions and curate more actively. Your local radio station isn't getting thousands of AI submissions daily.

Live music venues have no AI problem. Touring artists, session musicians, and other live music professionals are actually protected from AI displacement because so far no AI can convincingly perform live. This has created an interesting dynamic where live shows have become more valuable relative to streaming as an artist revenue source.

The structural differences in how different parts of the music industry work mean they'll experience the AI disruption very differently.

What This Reveals About Algorithmic Discovery At Scale

Zoom out from the Spotify drama, and this situation reveals something important about how algorithmic recommendation systems work in the real world versus in theory.

The theory: algorithms analyze user behavior, identify patterns, and match users with content they'll enjoy. Beautiful in its simplicity.

The reality: algorithms respond to whatever signals are available in the data. If those signals are dominated by low-quality AI content, the algorithm's recommendations will be dominated by low-quality AI content. The algorithm isn't smart enough to know the difference; it just knows that people engage with stuff, and it optimizes for engagement.

This is a fundamental problem with recommendation systems that's been known since recommendation algorithms were invented. But it's been invisible in Spotify's case because the platform was curated by a combination of algorithmic and human factors (label relationships, playlist curation, human taste in editorial content).

Once the human curation layer partially failed (due to volume and cost pressures), the pure algorithmic layer became visible, and its limitations became obvious.

Other platforms will eventually face this same problem. YouTube, Amazon, TikTok: any platform that relies on algorithmic recommendation will be vulnerable to this kind of degradation once the incentives align to flood the system with volume.

Building recommendation systems is easier than maintaining them against adversarial input.

Estimated data shows a clear misalignment between user desires for authentic music discovery and artist support, and Spotify's focus on engagement metrics and cost-efficiency.

The Creator Economy Angle: AI Music and DIY Artists

Not everyone is using AI music generation for nefarious arbitrage purposes. Some legitimate creators are using AI as a tool.

Independent producers making lo-fi hip hop, ambient music, and other generative genres have embraced AI to increase their output without sacrificing quality. Some artists use AI to handle composition while they focus on production and mixing. Others use it for inspiration when they're stuck.

The issue isn't that AI music exists. It's that the platform's structure makes it economically rational to upload low-effort AI content en masse rather than put real work into creating good music.

If Spotify's payment system made it clear that human-created content was valued more highly, you'd see the creator community pivot immediately. The same people uploading hundreds of AI variations would instead focus on creating fewer, higher-quality tracks. The incentive gradient is what matters.

Some creators have tried to explicitly market their AI-generated music by being transparent about their tools and approach. This actually works for certain niche audiences who are interested in AI as a creative medium itself. But this is different from uploading generic AI tracks under artist names that sound human-generated.

Transparency about using AI tools is completely different from deception about the music's origin.

The Human Curation Question: Is It Dead?

One counterargument to the algorithmic discovery problem is that human curation is still available. Spotify employs curators who create playlists. You can follow other users' playlists. Communities still share music recommendations.

But algorithmic recommendation was valuable precisely because it scaled human taste. One curator can only evaluate so many tracks. Algorithms can evaluate millions. The promise was that you could get personalized curation at scale.

When algorithms fail, you're left with either: curated playlists (human-created, limited quantity, not personalized) or broken algorithmic recommendations (personalized, broken). Most users will choose broken algorithms over generic curation, which is why Discover Weekly remains popular despite its degradation.

The human curation industry also got hollowed out in the streaming era. Record labels used to have A&R departments that scouted talent and guided musical taste. Radio stations had program directors and DJs who chose songs. Magazines had editors who wrote about music they loved.

Streaming platforms mostly eliminated these roles or reduced them significantly. The idea was that algorithms could replace human judgment at scale. Now that algorithms are failing in the presence of adversarial input, the human expertise needed to fix the problem has already left the industry.

The music industry optimized itself out of the institutional knowledge needed to solve the problem it created.

Comparisons to Other Content Flooding Crises

Spotify's AI music problem isn't unique. Several other platforms have faced similar issues when incentives aligned to flood systems with low-quality content.

YouTube faced this with video content. Early on, anyone could upload anything, and the algorithm would distribute it based on engagement. This led to an explosion of clickbait, misinformation, and low-effort content. YouTube responded by investing heavily in human moderation, automated content identification, and policy changes. They're still dealing with the problem, but with much more active governance.

Twitter/X dealt with bot networks that would flood the platform with low-quality tweets, trying to game the algorithmic promotion system. The platform responded with detection systems and eventually subscription-based verification (which has its own issues).

App stores faced app spam: developers uploading thousands of low-quality apps trying to game search and discovery. App stores responded with stricter submission processes, better curation, and algorithmic quality scoring.

In each case, the platform had to actively choose quality over the path of least resistance. Spotify is currently on the "path of least resistance" side of that equation.

Every platform faces this same choice eventually: maintain quality or allow the system to be gamed.

The Financial Reality: Why Spotify is Dragging Its Feet

Understanding Spotify's slow response requires understanding their financial situation and incentives.

Spotify's revenue comes almost entirely from subscriptions and advertising, while the catalog it streams is licensed, largely from major labels. The company turns around and pays out royalties to artists, labels, and rights holders. The margin between what Spotify charges users and what it pays out is relatively thin.

This means every additional cost that Spotify takes on (better detection systems, human curation, increased payments to human artists) directly hits the bottom line. In an era where Spotify is trying to reach sustained profitability (it has historically been unprofitable or barely profitable), adding costs is a hard sell to investors.

AI music is actually economically beneficial to Spotify under this model because:

- AI music is cheap to license or doesn't need licensing at all

- AI music fills the platform with content that drives engagement

- AI music doesn't require the company to take on additional licensing costs

- Users who encounter AI music in Discover Weekly might still stay on the platform

From a pure financial perspective, Spotify's current approach makes perfect sense. They're extracting every penny of value from the platform without taking on costs.

The counterargument—that degraded discovery will eventually drive away users—requires believing that the long-term user loss from degraded Discover Weekly will outweigh the short-term cost savings from not investing in quality control. Spotify's financial incentives are currently aligned against that belief.
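The trade-off in that paragraph can be framed as a break-even calculation. The sketch below is a simplification with hypothetical names and made-up inputs (none of the figures are Spotify's): it asks how many subscribers would have to leave before the lost revenue equals the annual cost of quality control.

```python
def break_even_churned_users(annual_quality_cost: float,
                             monthly_revenue_per_user: float,
                             months_of_lost_revenue: int) -> float:
    """Number of lost subscribers at which churn costs as much as quality control."""
    lost_revenue_per_user = monthly_revenue_per_user * months_of_lost_revenue
    if lost_revenue_per_user <= 0:
        raise ValueError("lost revenue per user must be positive")
    return annual_quality_cost / lost_revenue_per_user


# Purely illustrative inputs, not real Spotify figures.
threshold = break_even_churned_users(
    annual_quality_cost=50_000_000,   # assumed yearly spend on detection and curation
    monthly_revenue_per_user=5.0,     # assumed net revenue per subscriber per month
    months_of_lost_revenue=24,        # assumed horizon over which a lost user matters
)
print(f"Break-even churn: {threshold:,.0f} users")
```

If expected churn from degraded discovery sits below that threshold, the cost-saving path looks rational on paper, which is the incentive problem described above.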

If you want Spotify to change, you need to convince them that quality matters more than quarterly profit margins.

Estimated data suggests that around 50% of tracks on Spotify's Discover Weekly are perceived as AI-generated, highlighting a significant impact on user experience.

User Backlash: When Communities Organize

The Spotify AI music problem is unusual in that it's generated genuine grassroots backlash rather than just individual frustration.

Reddit's r/spotify community has become a hub for discussing and documenting the problem. Users post screenshots of obviously AI-generated tracks in Discover Weekly. Communities dedicated to specific genres have started creating filters and curating alternative recommendations. Discord servers and subreddits have popped up specifically to help users find actual human artists in their preferred genre.

This is consumer organizing in the social media age: rather than wait for Spotify to fix the problem, communities are building workarounds and solutions.

Some users have started using alternative platforms more frequently. Apple Music and Tidal have both reported upticks in users citing "better curation" as a reason for switching. Bandcamp, which connects users directly to artists, has seen increased interest from users frustrated with Spotify's direction.

The long-term impact of this is uncertain. If it reaches critical mass, it could force Spotify's hand. If it remains a niche complaint, Spotify will continue ignoring it. The historical pattern suggests that platform companies respond to coordinated user backlash only when it threatens revenue or regulatory attention.

The AI Music Arms Race: Tool Improvements vs. Detection

This is worth understanding because it illustrates why detection-only solutions are insufficient.

AI music generation tools are improving rapidly. Six months ago, generated vocals were obviously artificial. Today's tools produce vocals that, in isolation, are convincingly human. The tools are getting better faster than detection systems can adapt.

This means any detection system built today will gradually lose effectiveness as AI tools improve. You'd need constant updates and retraining of detection models to keep up, which is a perpetual cost burden.

Detection-based solutions also have a fundamental asymmetry: the AI tool developer is incentivized to improve their generation to evade detection. The platform is incentivized to improve detection, but detection is a cost center, not a revenue generator. Over time, the AI tool developers might pull ahead.

This isn't speculative. Similar dynamics have played out in other domains. Email spam filtering is a decades-old arms race in which filters need constant retraining as spammers adapt. Deepfake video detection faces the same pressure as generation tools improve.

The only way to sustainably solve this is to change the incentives so that uploading AI music is economically irrational, not just technologically difficult. Detection helps, but it's not a permanent solution.
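A rough way to see why incentives matter more than detection is to look at the uploader's expected profit per track. The model below is a minimal sketch: `UploadEconomics` and all of its numbers are hypothetical, and "payout per stream" stands in for an effective average, since actual payouts come from a shared pool rather than a fixed rate.

```python
from dataclasses import dataclass


@dataclass
class UploadEconomics:
    cost_per_track: float             # assumed generation plus distribution cost
    expected_streams: float           # assumed streams if the track stays up
    payout_per_stream: float          # assumed effective average payout
    removal_probability: float = 0.0  # chance detection removes the track

    def expected_profit(self) -> float:
        """Expected revenue, discounted by removal risk, minus the upload cost."""
        surviving_share = 1.0 - self.removal_probability
        revenue = self.expected_streams * self.payout_per_stream * surviving_share
        return revenue - self.cost_per_track


baseline = UploadEconomics(cost_per_track=0.10, expected_streams=500, payout_per_stream=0.003)
with_detection = UploadEconomics(0.10, 500, 0.003, removal_probability=0.5)
with_lower_payout = UploadEconomics(0.10, 500, 0.0001)

for label, scenario in [("baseline", baseline),
                        ("50% detection", with_detection),
                        ("reduced payout", with_lower_payout)]:
    print(f"{label}: {scenario.expected_profit():+.2f} per track")
```

Under these assumed numbers, catching half of all uploads still leaves bulk uploading profitable, while a lower payout pushes expected profit below zero.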

You can't solve an economic problem with purely technical tools.

Looking at Genre-Specific Impact

The AI music problem isn't uniform across all genres. Some are much harder hit than others.

Lo-fi hip hop and ambient music: These genres are disproportionately affected because they're relatively simple to generate convincingly. A basic AI music tool can produce passable lo-fi beats almost instantly. Listeners of these genres report that 50%+ of Discover Weekly recommendations appear AI-generated.

Synthwave and synthpop: Similar story. These genres rely on specific sound palettes that AI tools have largely figured out. Synthwave artists specifically complain that their genre has been flooded with indistinguishable AI variations.

Classical and orchestral: Surprisingly affected. Composers realized that AI could generate basic orchestral arrangements cheaply, and now Spotify is full of "background classical" tracks that are clearly AI-generated.

Jazz and complex instrumental: Harder for AI to handle convincingly, so less affected. The complexity of improvisation and nuanced playing still trips up AI models.

Vocal-heavy pop and hip hop: Less flooded initially because AI vocals were obviously fake. As vocal generation improves, this is changing, but the problem hasn't reached peak saturation yet.

Folk and acoustic music: Interesting case. Genuine folk music is harder to fake convincingly because authenticity and human quirks are central to the genre's appeal. But the algorithm can't hear that difference: it can't distinguish real folk music from well-made AI folk music, so authenticity earns human artists no extra algorithmic promotion.

The pattern is clear: genres that are structurally simple but pleasing to listen to get hit hardest. This creates a perverse incentive where creating genuinely good AI music in complex genres is harder, so fewer people try, so those genres remain less affected but also get less attention.

The Label Accountability Question

Why aren't major record labels, which built the music industry's current structure, more actively policing AI music released under their own rosters?

Partially, it's a monitoring problem. A major label like Universal has thousands of artists. They can't monitor everything every artist uploads. Some AI music likely slips through under the radar.

Partially, it's because there's actually money in it for the labels. A label that figures out how to use AI tools to generate content for their B-tier artists can increase margins significantly. Some labels have explicitly run experiments with AI composition, and if it works (generates revenue without complaints), there's no incentive to stop.

Partially, it's because the alternative—cracking down hard on AI music—would require going against the industry trend and potentially losing artists who want to experiment with AI tools. No label wants to be seen as anti-innovation.

But the most important factor is that labels don't actually care about Spotify's user experience. They care about getting paid royalties. If AI music degrades Discover Weekly's quality and causes Spotify's user base to shrink, that eventually affects the label's revenue. But that's a secondary concern compared to the immediate revenue from collecting royalties on AI-generated uploads.

The economic incentives are misaligned at every level of the industry.

The Trust Element: Why This Matters Beyond Just Playlists

On a surface level, this is about music recommendation quality. But deeper down, it's about platform trust.

Spotify built its entire brand on the idea that it understands music listeners better than anyone else. The company's value proposition to listeners is: "We'll deliver you music you love, algorithmically, at scale." The value proposition to labels is: "We'll distribute your music to listeners who want it."

When the algorithm starts serving garbage, both value propositions break. Listeners lose faith in the recommendations. Labels start questioning whether algorithmic promotion is worth the licensing money they're giving Spotify.

Once trust erodes, recovery is slow. Users who stop trusting Discover Weekly will explore alternative sources (YouTube, TikTok, friend recommendations, other platforms). Even if Spotify fixes the AI music problem completely, it will take months or years of consistently good recommendations to rebuild trust.

This is the long-term risk that makes the current situation serious, not just annoying.

The Regulatory Pressure Building

Governments are starting to notice the AI music problem, and initial regulatory responses are being proposed.

The European Union, which has been aggressive in regulating tech platforms, has started exploring requirements for AI-generated content disclosure. The Digital Services Act (DSA) creates obligations for large platforms around content moderation and user protection, which could eventually extend to AI music.

Spain has proposed specific regulations around AI music, including mandatory artist payments and transparency requirements. France has floated similar ideas.

In the United States, the approach has been more cautious, with mostly industry self-regulation. But this could change if consumer advocates start pushing for regulation.

The challenge for regulators is that music streaming operates in a complex international space with lots of industry lobbying. Any regulation that's too heavy-handed risks pushing legitimate AI music out while creating loopholes for bad actors. Regulation that's too light doesn't solve the problem.

What's likely is that in the next 2-3 years, some form of AI music disclosure requirement will become standard across major platforms. Whether that's sufficient to address the quality problem remains to be seen.

Regulatory pressure exists, but it's moving slowly relative to the speed of the problem.

A Personal Perspective on AI Music

Let's be clear about what's valuable about AI music generation and what isn't.

AI music is genuinely useful for: background scoring in videos, placeholder music for creative projects, starting points for human composition, exploring musical ideas, learning about music theory and structure.

AI music is problematic for: replacing human musicians, deceiving listeners about its origin, flooding platforms to game recommendation algorithms, artificially inflating artist counts to manipulate metrics.

The distinction is intentionality and transparency. An artist who uses AI tools to enhance their work and discloses that fact is doing something legitimate. A tool developer who uploads thousands of AI tracks under fake artist names trying to game Spotify's algorithm is doing something harmful.

Unfortunately, Spotify's systems can't distinguish between these. The platform treats all music the same way, so legitimate AI music and fraudulent AI music both degrade the discovery system.

The problem isn't AI music. It's the incentive structure that rewards deceiving users at scale.

What Happens Next: The 2025 Projections

If Spotify continues on its current path:

- The percentage of AI music in Discover Weekly will continue to increase

- User satisfaction with the feature will continue to decline

- Some users will switch to alternative platforms or discovery methods

- AI music tools will continue to improve, making detection harder

- The feature will eventually become unreliable enough that casual users stop checking it regularly

If Spotify makes meaningful changes:

- Detection systems would need to be deployed in discovery playlists by mid-2025

- Payment structures would need to change to prioritize human artists

- User satisfaction could stabilize and potentially recover by late 2025

- The company would establish itself as the platform that cares about quality

- Competitive advantage over Apple Music and other services would increase

The most likely scenario is somewhere in between: Spotify makes incremental improvements (better detection, clearer disclosures) without fundamentally changing the system. This partially addresses user complaints without solving the core problem. The feature continues to degrade slowly rather than rapidly.

The next 18 months will be crucial in determining which scenario plays out.

The Broader Implications for AI and Creative Work

What's happening with music is a preview of what we'll see across creative industries.

AI image generation has already followed a similar arc: initial excitement about the tool, followed by a flood of low-quality generated content, followed by platform saturation and decreased trust in image recommendations.

AI writing is heading the same direction. As AI writing tools improve, we'll see increased AI-generated content on publishing platforms, Medium, Substack, and other places where algorithmic discovery determines visibility. The same incentive structure—cheap to produce, hard to detect, profitable to upload at scale—will apply.

This suggests a broader pattern: any creative domain where (a) AI can generate passable content, (b) platforms have algorithmic discovery, and (c) uploaders have economic incentives will face this problem.

The solution, if it exists, probably involves a combination of approaches:

- Better detection systems (necessary but insufficient)

- Changed incentive structures (payment, visibility, recommendations)

- Transparent disclosure and user choice (let people opt in or out)

- Regulatory pressure (ensuring baseline standards)

- Community organizing and platform selection (users voting with their attention)

Understanding the Spotify situation now will help other industries navigate similar challenges later.

TL;DR

- AI music flooded Spotify's Discover Weekly: An estimated 5-15% of daily uploads are AI-generated, overwhelming recommendation algorithms designed before this was possible

- The problem started with incentives: Cheap AI music tools combined with Spotify's stream-based royalty pool created arbitrage opportunities for uploading bulk AI content

- User trust completely eroded: Listeners reported 40-60% of Discover Weekly tracks appeared AI-generated, prompting complaints across Reddit, Twitter, and music forums

- Detection is necessary but insufficient: Spotify's half-measures (voluntary disclosure, weak detection) don't address the core issue that AI music is economically rational to upload at scale

- The solution requires changing incentives: Until Spotify either reduces payouts for AI music or increases payouts for human music, the problem will continue

FAQ

What is Discover Weekly?

Discover Weekly is Spotify's personalized playlist feature that generates a unique 30-song playlist for each user every Monday. It uses machine learning algorithms to analyze listening history, similar users' preferences, and audio characteristics to recommend music users are likely to enjoy. For years, it was considered one of the best music discovery tools available and a key differentiator for Spotify.

How does AI-generated music get onto Spotify?

AI music generation tools like Boomy, AIVA, and others allow users to create music by inputting prompts or parameters. These tools produce audio files that can be uploaded to Spotify like any other track. Since Spotify doesn't have perfect detection systems for AI-generated content, and the platform's content moderation relies partly on disclosure that creators can skip, AI music can reach the platform easily. Uploaders then see their content distributed through algorithmic recommendations if it generates engagement.

Why does AI music flooding hurt the Discover Weekly algorithm?

Spotify's recommendation algorithm learns from user behavior: skips, saves, playlist additions, and completion rates. When AI-generated music is uploaded at massive scale, it creates noise in the data the algorithm learns from. The algorithm can't distinguish between high-quality human music and low-quality AI music, so it treats both the same way. With millions of AI tracks competing for attention, they increasingly dominate the algorithmic recommendations, degrading playlist quality.
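To make that concrete, here is a toy ranking sketch. It is not Spotify's actual algorithm: `TrackStats`, `engagement_score`, the weights, and the catalog are all invented for illustration. The point is that a scorer built only on behavioral signals never looks at where a track came from, so volume alone lets "good enough" tracks take most of the slots.

```python
from dataclasses import dataclass


@dataclass
class TrackStats:
    title: str
    is_ai_generated: bool  # ground truth for this demo; the scorer never reads it
    plays: int
    skips: int
    saves: int
    playlist_adds: int


def engagement_score(track: TrackStats) -> float:
    """Blend completion, save, and playlist-add rates (weights are assumptions)."""
    if track.plays == 0:
        return 0.0
    completion_rate = 1 - track.skips / track.plays
    save_rate = track.saves / track.plays
    add_rate = track.playlist_adds / track.plays
    return 0.6 * completion_rate + 0.25 * save_rate + 0.15 * add_rate


catalog = [
    TrackStats("Human standout", False, plays=1000, skips=200, saves=100, playlist_adds=50),
    TrackStats("Human deep cut", False, plays=1000, skips=500, saves=30, playlist_adds=10),
]
# Six near-identical AI uploads that are inoffensive enough to avoid most skips.
catalog += [
    TrackStats(f"AI variation {i}", True, plays=1000, skips=250, saves=20, playlist_adds=10)
    for i in range(1, 7)
]

top_five = sorted(catalog, key=engagement_score, reverse=True)[:5]
ai_slots = sum(track.is_ai_generated for track in top_five)
print(f"{ai_slots} of the top 5 slots go to AI tracks")
```

The best human track still ranks first in this toy catalog, but every remaining slot goes to an AI variation, and the more adventurous human track never surfaces.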

What makes AI-generated music detectable?

Audio engineers and producers can identify AI music through several methods: structural repetition and loops, impossibly clean vocal processing without human imperfections, instrumentation combinations no human would choose, metadata patterns suggesting automatic generation, and artist profiles lacking history or discography. However, AI music quality is improving rapidly, making detection harder. Audio fingerprinting and watermarking embedded during generation are more reliable but require coordination between AI tool providers and platforms.
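Some of those signals can be combined into a simple rule-based score. The sketch below is hypothetical throughout: the field names, thresholds, and point values are assumptions for illustration, not a description of any real detection system, and a production system would need audio analysis that is far more involved.

```python
from dataclasses import dataclass


@dataclass
class UploadProfile:
    tracks_uploaded_last_30_days: int
    artist_account_age_days: int
    loop_repetition_ratio: float   # assumed share of the track made of repeated segments, 0..1
    has_generation_watermark: bool  # assumed flag set when a tool-embedded watermark is found


def suspicion_score(profile: UploadProfile) -> float:
    """Return a 0..1 score; higher means more signs of bulk AI generation."""
    if profile.has_generation_watermark:
        return 1.0  # a verified watermark is treated as conclusive in this sketch
    points = 0
    if profile.tracks_uploaded_last_30_days > 100:
        points += 4  # upload volume no single artist plausibly sustains
    if profile.artist_account_age_days < 30:
        points += 2  # no history or discography yet
    if profile.loop_repetition_ratio > 0.8:
        points += 4  # heavy structural repetition
    return points / 10


established_artist = UploadProfile(2, 2000, 0.3, False)
new_human_artist = UploadProfile(3, 10, 0.4, False)
bulk_uploader = UploadProfile(500, 10, 0.9, False)

for name, profile in [("established", established_artist),
                      ("new human", new_human_artist),
                      ("bulk uploader", bulk_uploader)]:
    print(f"{name}: {suspicion_score(profile):.1f}")
```

Note that the new human artist still picks up a nonzero score, which hints at the false-positive risk of heuristics like these.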

Why hasn't Spotify fixed this problem yet?

Spotify has implemented detection systems and disclosure tools, but they're incomplete and easy to circumvent. The core reason for the slow response is financial incentives. Building better detection requires expensive machine learning infrastructure and ongoing maintenance. Changing payment structures to deprioritize AI music would upset AI music startups and potentially reduce revenue in the short term. Spotify, like most public companies, faces pressure to maintain profitability, so long-term quality investments often lose to short-term cost containment.

What should Spotify do to fix Discover Weekly?

Effective solutions would include: (1) implementing mandatory AI disclosure with verification, not just voluntary tags; (2) adjusting payment structures so AI-generated music pays out at reduced rates compared to human-created music; (3) improving algorithmic detection and actively deprioritizing detected AI music in discovery playlists; (4) investing in human curation for niche playlists where algorithm quality has degraded most; (5) creating support programs for human artists to help them compete with AI's volume advantage. This would be expensive and would face industry opposition, but would restore user trust and platform quality.
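Point (2) can be sketched as a weighted version of a pooled payout. This is a minimal illustration with hypothetical names and numbers: `weighted_pro_rata` and the `ai_weight` of 0.25 are assumptions, and it presumes the platform already knows which uploads are AI-generated, which is the hard part.

```python
def weighted_pro_rata(pool: float,
                      streams: dict[str, int],
                      ai_flags: dict[str, bool],
                      ai_weight: float = 0.25) -> dict[str, float]:
    """Split a royalty pool by stream share, counting AI streams at a reduced weight."""
    weighted = {
        name: count * (ai_weight if ai_flags[name] else 1.0)
        for name, count in streams.items()
    }
    total = sum(weighted.values())
    if total == 0:
        return {name: 0.0 for name in streams}
    return {name: pool * share / total for name, share in weighted.items()}


streams = {"human_band": 600, "ai_farm": 400}
ai_flags = {"human_band": False, "ai_farm": True}

unweighted = weighted_pro_rata(1000.0, streams, ai_flags, ai_weight=1.0)
reweighted = weighted_pro_rata(1000.0, streams, ai_flags)

print("unweighted:", {name: round(amount, 2) for name, amount in unweighted.items()})
print("reweighted:", {name: round(amount, 2) for name, amount in reweighted.items()})
```

The pool size stays the same in both cases. Only the split changes, so the reduced rate for AI tracks flows to human artists rather than back to the platform.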

Are there alternative music streaming platforms without AI music problems?

No platform has completely solved this issue, but some have less severe problems. Tidal emphasizes artist relationships and human curation over pure algorithms, so its recommendations reflect different values, though it has a smaller user base and less algorithmic sophistication. Apple Music and YouTube Music have similar-scale AI music problems but less public transparency about them. Bandcamp connects users directly to artists and has better quality control because uploads come from individual artists rather than bulk generators. No streaming service has figured out how to prevent AI flooding while maintaining open upload policies.

What does the music industry say about AI music flooding playlists?

Responses are mixed and contradictory. Major labels like Universal publicly oppose uncontrolled AI music, but some are experimenting with AI composition tools for their own artists. Independent artists are split: some embrace AI as a creative tool, others see it as unfair competition that commodifies their work. Artist advocacy groups have called for stronger disclosure requirements and changed payment structures. The lack of unified industry response reflects deeper conflicts about whether AI should be regulated, restricted, or integrated into normal music production.

Could this problem expand to other creative fields beyond music?

Absolutely. AI image generation is already following the same pattern on platforms like DeviantArt. AI writing tools are beginning to flood platforms like Medium and Substack. The pattern is predictable: as AI improves, uploading low-quality generated content at scale becomes economically rational if the platform pays per view or per interaction. Any creative field with algorithmic discovery and payment incentives will eventually face this problem unless policies proactively prevent it. Understanding how Spotify handled (or failed to handle) the music version is instructive for other industries now facing similar challenges.

What would it cost users if Spotify fixed this problem?

Actually implementing strong AI music controls probably wouldn't raise subscription prices, but it would require Spotify to take on costs that would otherwise go to profit. Those costs might manifest as slightly lower music licensing budgets, which could theoretically mean fewer resources for new artist development. In practice, fixing the discovery quality probably benefits users enough that any price impact would be more than offset by increased platform value. The real question isn't cost to users but cost to Spotify's shareholders, which is why change has been slow.

Is all AI music bad?

No. AI music generation is a legitimate creative tool when used transparently and intentionally. Artists using AI to enhance their production, compose background music for projects, or explore musical ideas are doing something valuable. The problem isn't the tool; it's the incentive structure that rewards uploading thousands of low-effort AI variations under fake artist names to game algorithmic recommendations. A musician who discloses that they used AI tools and creates thoughtful work is different from a bot that uploads generic AI variations. Platforms need to distinguish between these, but Spotify's systems treat all music the same way.

Conclusion: The Reckoning Coming For Spotify

The Discover Weekly crisis is bigger than just music playlists. It's a test case for whether algorithmic systems can maintain quality when incentives align to flood them with low-cost content.

Spotify invented the paradigm: algorithms understand users better than humans can. Build enough data, train enough models, and you can scale human curation infinitely. Discover Weekly proved the concept worked.

But the company didn't build in safeguards for when the system got gamed. They assumed that quality music would naturally rise to the top because users would skip low-quality content. That assumption held as long as the platform wasn't flooded with AI music specifically designed to be engaging enough to not skip while being cheap enough to upload at massive scale.

Once that happened, the algorithm's fundamental mechanism broke. Tracks that were just inoffensive enough to play through without a skip still generated the data signals that told the algorithm to recommend similar AI music. Engagement with AI music was engagement that the system optimized for, regardless of whether users were actually happy with the results.

Spotify has built a business on the premise that its algorithms are trustworthy. That trust is now visibly broken. The company can rebuild it, but only if they prioritize quality over quarterly earnings. Given the financial pressures and competitive landscape, betting on Spotify choosing quality seems optimistic.

Most likely, we'll see incremental improvements and user frustration that gradually drives some portion of the user base away. The platform will remain usable for most purposes, but Discover Weekly will never recapture its reputation as a genuinely good discovery tool.

The broader lesson: trust in algorithmic systems is fragile, and once broken, difficult to restore. Any platform relying on algorithmic discovery needs to actively maintain the quality of that discovery, not assume the algorithm will do it automatically. Spotify didn't, and now they're paying the price in user confidence.

For other creative platforms facing similar challenges (YouTube with video, Medium with writing, DeviantArt with images), the Spotify situation should be a cautionary tale about what happens when incentives align to flood systems with low-quality generated content. Building detection systems helps, but you also need to fundamentally change the incentives that make flooding profitable.

The companies that figure this out first will win user trust. The ones that wait will gradually lose it.

Key Takeaways

- Spotify's Discover Weekly algorithm became unreliable as AI-generated music flooded the platform at scale, with estimates of 5-15% of daily uploads being AI-created tracks

- The core problem stems from misaligned incentives: cheap AI music generation combined with Spotify's stream-based royalty pool made bulk uploading economically rational

- User trust completely eroded when listeners realized they couldn't distinguish genuine artist recommendations from AI-generated content, with complaints across Reddit and social media

- Spotify's half-measures like voluntary disclosure and weak detection haven't worked because they don't address the fundamental incentive structure rewarding AI music uploads

- Competing platforms face similar issues but haven't solved them either, indicating this is a structural problem affecting the entire music streaming ecosystem
