Apple's Strategic Shift: Why Siri Is Getting Gemini AI Instead of ChatGPT

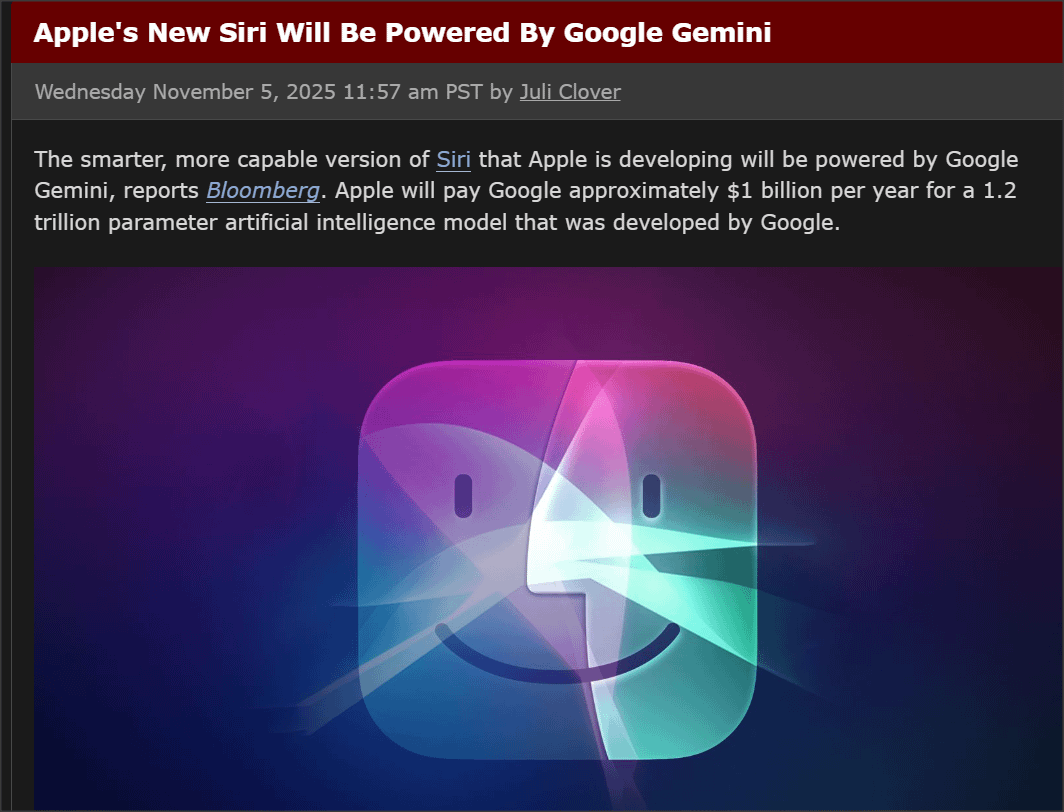

Something genuinely surprising just happened in Big Tech. Apple, the company that built its entire brand on keeping Google at arm's length, just announced it's partnering with Google to power the next generation of Siri with Google's Gemini AI models. And this isn't a quiet integration, either: it's a multi-year, high-stakes partnership that will cost Apple around $1 billion a year, according to CNBC.

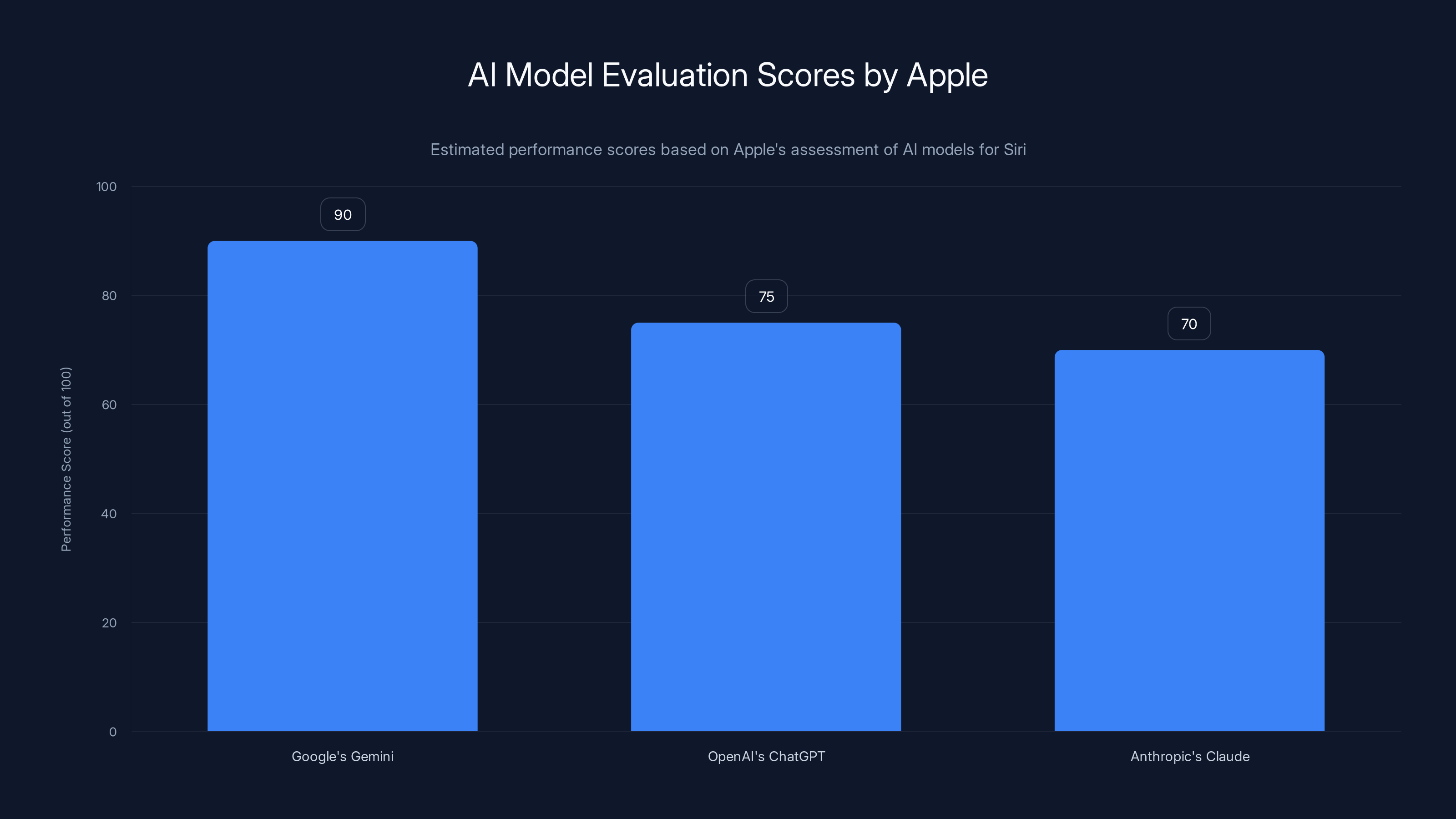

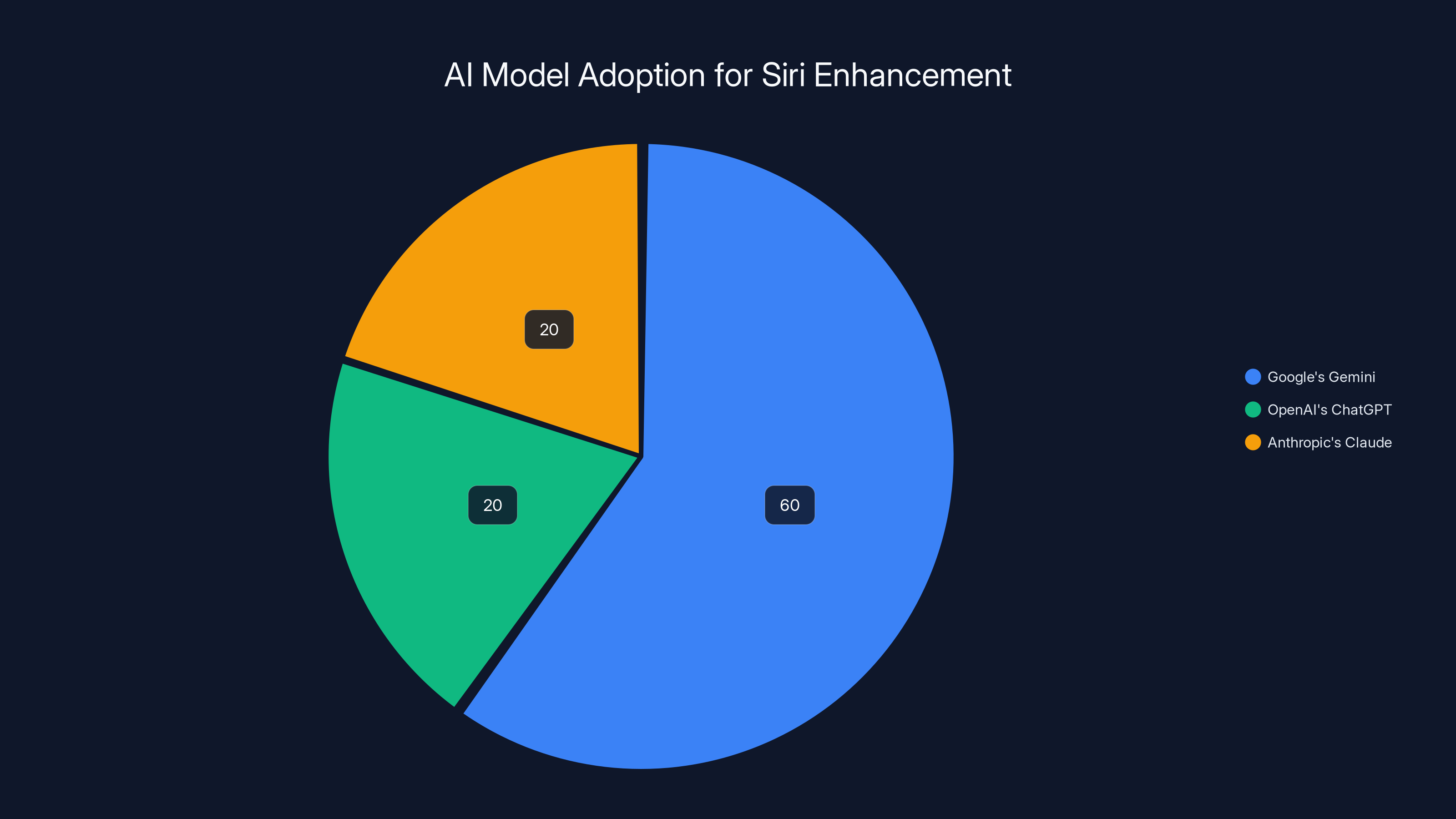

Let that sink in for a moment. Apple tested OpenAI's ChatGPT. They tested Anthropic's Claude. They have their own in-house AI teams working around the clock. Yet they looked at all their options and said, "Yeah, Google's technology is what we need." That decision tells you everything about where the AI race actually stands right now, and it's not where most people thought it was.

This isn't just a business move. It's a referendum on which AI models actually perform best when it matters most. It's a wake-up call for OpenAI, whose CEO Sam Altman declared a "code red" last month over Google's Gemini advances, according to Fortune. It's a validation of Google's AI infrastructure after years of playing catch-up. And it's a fascinating glimpse into how even bitter competitors have to work together when the technology demands it.

I've been following the AI landscape closely, and what's wild is how quickly this shifted. Two years ago, ChatGPT was untouchable. Everyone wanted to be ChatGPT. Now? OpenAI's own CEO is worried enough about Gemini to restructure his company's roadmap. And Apple just declared Google's models the most capable option it evaluated.

Here's what actually went down, what it means for your phone, and why this partnership might reshape how we think about AI competition in 2025 and beyond.

TL;DR

- The Deal: Apple is using Google's Gemini AI models to power the next version of Siri, reportedly paying Google $1 billion annually for a multi-year partnership

- Why It Matters: Apple tested ChatGPT and Claude but found Gemini offered superior capabilities, validating Google's AI strategy

- The Timeline: Enhanced Siri arrives in iOS 26, iPadOS 26, and macOS Tahoe 26 later in 2025, as noted by MacRumors (it was originally promised for 2024)

- Privacy Angle: User data stays on Apple's Private Cloud Compute servers, isolated from Google's infrastructure

- The Competition: OpenAI's Sam Altman called a "code red" and restructured ChatGPT's roadmap in response to Gemini advances

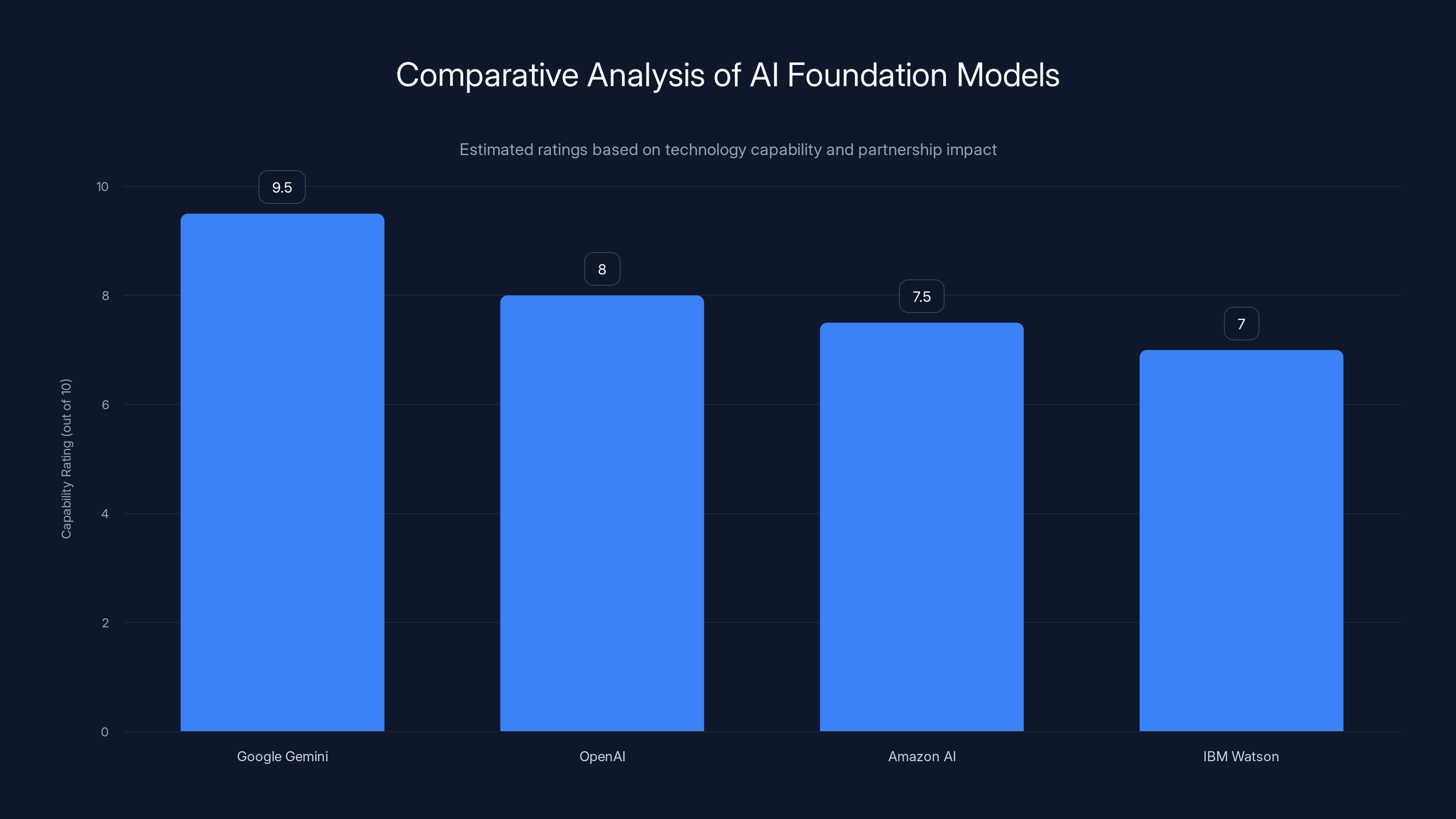

Apple's evaluation suggests Google's Gemini outperformed ChatGPT and Claude in key areas like natural language understanding and reasoning. Estimated data based on narrative insights.

The Announcement That Shocked Silicon Valley

Apple's official statement was carefully worded but clear. "After careful evaluation, we determined that Google's technology provides the most capable foundation for Apple Foundation Models," the company told press outlets. Note the language: "most capable." That's not negotiation speak. That's a company saying it ran the tests and Google won.

The partnership was reported by CNBC, and it's the kind of story that rarely leaks early: when Apple and Google want to keep something quiet, it stays quiet. These two companies have spent decades building walls between their ecosystems. Android versus iOS. Google Search versus Spotlight. Google Maps versus Apple Maps. The list goes on. So when they publicly announce a technology partnership, you know both sides have concluded it's worth more than the PR optics cost.

What makes this announcement particularly brutal for OpenAI is the timing and the tone. Apple didn't say Gemini was "competitive." They said it was "the most capable foundation." Those words have weight in Silicon Valley. They're the kind of words that ripple through boardrooms and shape engineering roadmaps for quarters to come.

The multi-year aspect is equally important. This isn't a one-year trial. This isn't a "let's see how it goes." Apple committed to years of partnership with Google. That means Siri's AI backbone will be Gemini-based for the foreseeable future, unless something drastic changes. In the world of AI, where capabilities shift rapidly, a multi-year commitment is a massive vote of confidence.

And we can't ignore the financial component. $1 billion annually is real money, even for Apple. That's not licensing a patent or paying for API access. That's a substantial partnership fee that reflects both the value Google brings and the scale at which Apple will use these models. Scale matters because it means Apple isn't just testing Gemini on a small feature—Siri's core intelligence will run on it.

What This Means for Siri's Future

Siri has always been the forgotten stepchild of Apple's AI ambitions. The voice assistant came to life in 2011 with a ton of hype, but over the years, it became clear that Siri was... not great. It couldn't understand natural language the way you'd want. It got confused by colloquialisms. It couldn't handle complex requests. Compared to what Alexa and Google Assistant could do, Siri felt stuck in 2012.

Apple kept trying to fix it. They acquired AI companies, hired top machine learning researchers, poured resources into on-device processing. But Siri remained mediocre. That's the gap Gemini is supposed to close.

With Gemini backing Siri, you're looking at a fundamentally different assistant. We're talking about natural language understanding that actually works. Context awareness that makes sense. The ability to handle multi-step requests without getting lost. If Apple executes this well, Siri could finally become the assistant people actually want to use instead of grudgingly accepting.

The privacy angle is crucial here too. Apple's been hammering the privacy message for years, and they could have taken the easy route of simply calling Gemini on Google's servers. Instead, they committed to running Gemini on their own servers, specifically their Private Cloud Compute infrastructure, as detailed by Spyglass. In practice, that means your Siri queries don't hand your data to Google's servers. The model runs on Apple's hardware, and your data theoretically stays in the Apple ecosystem.

Now, there's a reasonable debate about whether Private Cloud Compute actually provides the privacy Apple claims. But the point is they built architecture specifically designed to isolate user data from Google's infrastructure. That's not nothing. It's Apple's way of saying, "We're using Gemini's intelligence, but we're not letting Google profile you."

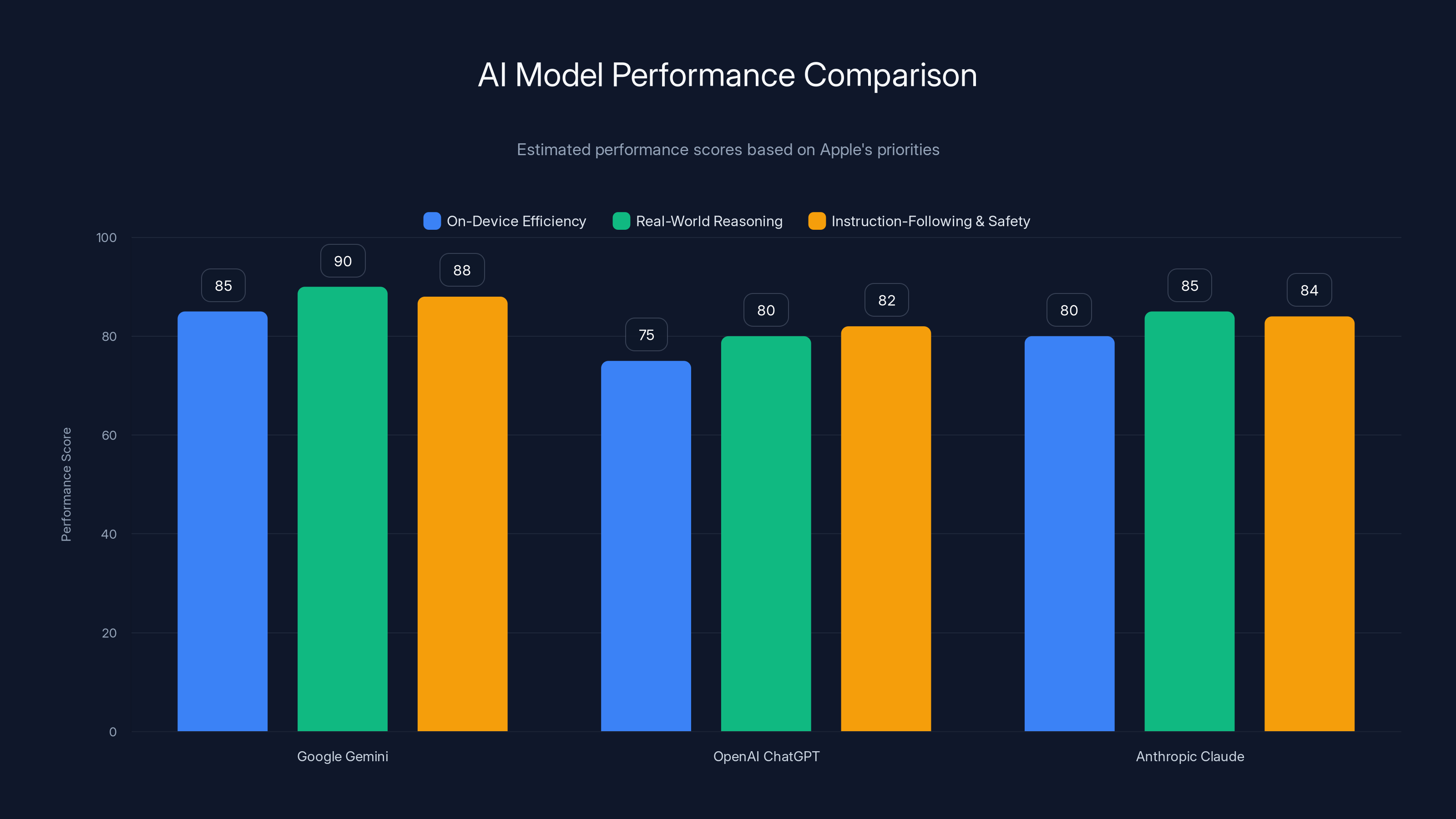

Google's Gemini outperformed competitors in on-device efficiency and real-world reasoning, key factors for Apple's selection. (Estimated data)

The AI Capabilities Comparison That Led Here

Apple ran actual tests. They compared Google's Gemini models, Open AI's Chat GPT, and Anthropic's Claude side by side. Then they picked Gemini. That's the headline, but the subtext is what matters.

Open AI built Chat GPT on massive amounts of training data and compute. The model was frankly better than everything else for about eighteen months. Then competitors caught up. Google's Gemini improved. Anthropic's Claude got smarter. The gap narrowed. But here's the thing nobody expected: Google didn't just catch up on capability. They surpassed Chat GPT in the specific tests Apple cared about.

We don't know the exact benchmarks Apple used, but you can infer a lot from the context. Apple cares about efficiency, so inference cost and latency probably mattered, and Gemini's multimodal capabilities likely counted in its favor. They care about real-world reasoning for assistant tasks, so scores on MMLU (Massive Multitask Language Understanding) and similar reasoning tests probably figured in. And they care about instruction-following and safety, so response quality on adversarial prompts probably mattered.

Gemini's performance on these metrics improved dramatically over 2024. Google released Gemini 2.0 with expanded capabilities, better reasoning, faster inference as noted by Tech Times. The model got noticeably better at the things Siri actually needs to do—understand context, follow complex instructions, handle ambiguous requests gracefully.

Meanwhile, OpenAI was dealing with organizational chaos: Sam Altman's ouster and return as CEO, the departure of CTO Mira Murati, and pressure from Claude being better at specific tasks. Then Gemini kept improving, and Altman essentially said the company needed to pause some roadmap items to respond. That pause is telling.

What's not public is the exact performance gap. But when a company like Apple, which has enormous resources and could build its own AI from scratch, chooses an external partner, that partner has to be offering something genuinely superior. You don't pay $1 billion annually for a technology that's merely "fine." You do it when the alternative is months or years of development work that wouldn't get you to the same place.

The Collapse of the AI Moat

This partnership basically confirms what researchers and engineers have been saying quietly for months: the advantage in AI is shrinking. It's not gone. But it's collapsing faster than anyone expected.

Think about how this played out. OpenAI emerged with ChatGPT and had the world's attention for a solid year. Everyone wanted to be OpenAI. The startup world tried to build ChatGPT competitors. Enterprises lined up to integrate ChatGPT into products. OpenAI was on top.

Then Google, using its existing research infrastructure and compute resources, released Gemini. The model wasn't immediately better at everything, but it was better at some critical things and available through APIs quickly. Then they iterated. Gemini 2.0 came out with multimodal capabilities, vision understanding, real-time reasoning. Suddenly the gap compressed.

Anthropic's Claude arrived as another strong option, one that some users prefer for specific tasks. The market that had been monolithic around ChatGPT fractured.

And Apple's decision suggests the moat is nearly gone. If one company had a technological advantage nobody else could replicate, Apple would have had no real choice to make. The fact that they could look at several capable options and say, "Google's is the best we can get externally" means the capabilities are genuinely commoditizing.

This doesn't mean AI is solved or that capability gaps don't matter. It means the gap isn't huge anymore. The leaders are clustered together. If you need best-in-class AI, you have options. That's a major shift from eighteen months ago, when ChatGPT was untouchable.

Why Not Just Use ChatGPT?

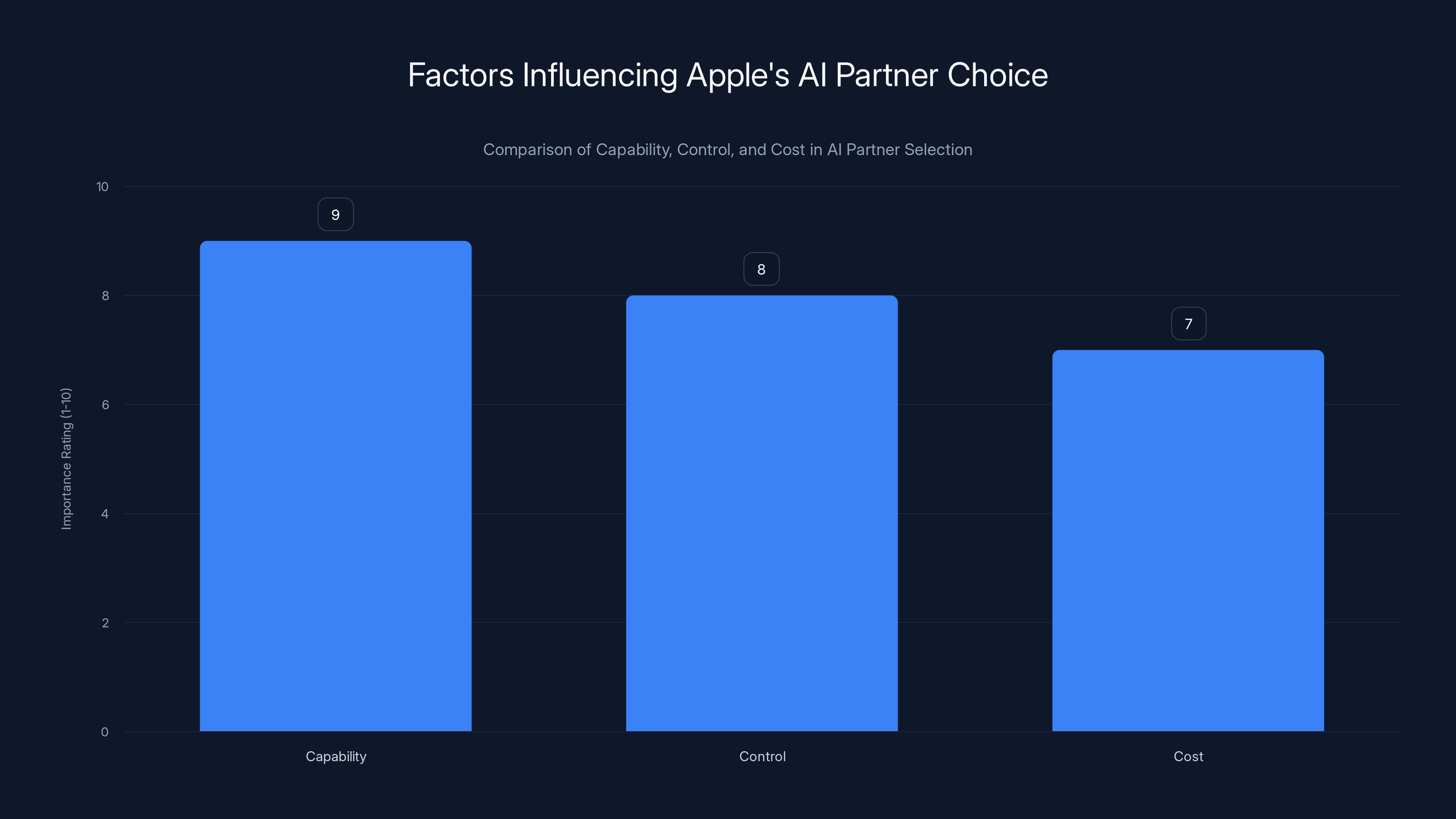

Apple actually has a relationship with OpenAI already. iOS 18 lets you hand off requests to ChatGPT if you want. So why not build Siri entirely on ChatGPT? The answer probably comes down to three factors: capability, control, and cost.

On capability, we've already covered it. Apple tested ChatGPT and found Gemini superior for the specific tasks Siri needs to do. That's the clean answer.

But control matters too. With Gemini, Apple has more leverage. Google needs the partnership to succeed because it validates Google's AI strategy against OpenAI. OpenAI doesn't need Apple in the same way: they have their own consumer products, enterprise access, and API revenue. For Apple, a partner that needs the relationship to work is a more stable partner. This doesn't mean Google will give Apple special treatment, but the incentive alignment is better.

Cost could be a factor as well, though $1 billion annually isn't cheap. If ChatGPT's API costs were higher or the commercial terms less favorable, Gemini becomes more attractive. We'll probably never know the exact numbers, but API pricing matters at scale.

There's also the longer-term strategy to consider. Apple wants to reduce dependence on external AI. Their statement mentioned they're working on in-house models that could eventually replace Gemini. But that's years away. In the meantime, they need a partner. Google's the right choice for now, but Apple will eventually build enough internal capability to reduce that dependence.

Apple's choice of AI partner is influenced by capability, control, and cost, with capability being the most critical factor. (Estimated data)

The Broader Context: Google's AI Redemption

This partnership is a huge moment for Google. The company invented the transformer architecture that underpins modern AI. They were doing groundbreaking AI research when OpenAI was still a nonprofit lab. But they had a branding problem: they were late to the consumer AI moment.

When ChatGPT launched in November 2022, it was a shock to Google. The company had incredible AI research (BERT, LaMDA, PaLM), but they weren't moving fast in the product space. By the time Google released Bard (now Gemini), the narrative was "Google's response to ChatGPT." That's a bad narrative if you want to be seen as the leader.

Over 2024, that changed. Gemini started winning benchmarks. The multimodal capabilities became genuinely impressive. The reasoning improved. And now Apple's partnership is basically saying, "Hey, we're not just using Google's AI for fun. We're using it because it's the best." That's a narrative reset.

For Google, this is validation that their AI strategy is working. It's evidence that being second to market but better in execution can still win. It's also a defensive move: if Apple had publicly used ChatGPT for Siri, that would have been a massive PR win for OpenAI. Instead, Google gets the credit.

The partnership may also help Google improve Gemini further. If the terms give Google even aggregate visibility into how the model performs on Siri queries, running at that scale would offer a window into what real-world language understanding actually looks like. That kind of data is incredibly valuable for training the next generation of models.

The Timing of OpenAI's "Code Red"

Sam Altman called a "code red" at OpenAI last month. Specifically, he said the company needed to pick up the pace on AI capability development because Google's Gemini was advancing faster than expected. Some features got deprioritized, and the focus shifted entirely to capability and speed, as reported by Fortune.

Apple's testing is the likely backdrop for that decision. Altman was watching the same benchmarks everyone else was. He could see Gemini improving. And he probably heard whispers about Apple's evaluation. When your biggest potential customer (Apple) is testing your product against competitors and your competitor wins, that's a code red situation.

What's interesting is Altman's framing. He didn't say, "We made a mistake and ChatGPT isn't competitive." He said the pace of capability development needed to increase. That's a correct diagnosis. OpenAI has been working on incremental improvements and new features when it should have been focused on raw capability gains. Gemini's improvement trajectory is steeper right now, which means OpenAI needs to catch up on fundamentals.

The reality is the AI race is incredibly competitive right now. It's not winner-take-all. But there are tier-one competitors and everyone else. Apple just confirmed that Google is tier-one. OpenAI is too, but they're not obviously ahead. Anthropic has Claude in the tier-one conversation. That's a crowded top tier, which means everyone has to keep improving or fall back to tier-two.

What Apple's Private Cloud Compute Actually Does

Apple made a big deal about running Gemini on their own servers using something called Private Cloud Compute. This deserves explanation because it's actually meaningful for privacy, even if perfect privacy isn't guaranteed.

Here's how it works in theory: You make a request to Siri. That request gets processed locally on your device first. If it needs to go to the cloud for additional processing, it goes to Apple's servers, not Google's. The Gemini model runs on Apple's infrastructure. Your data and the inference happen in Apple's controlled environment. Only the results come back to your phone.
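To make that flow concrete, here is a minimal Python sketch of the routing as described above. Every name in it (`Request`, `handle_on_device`, `apple_pcc_inference`, `siri_request`) is hypothetical, and the `needs_cloud` flag is an assumption standing in for whatever on-device logic Apple actually uses to decide when to escalate. It illustrates the described data path. It is not Apple's implementation or any real Apple or Google API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical model of the Siri request path described above.
# None of these names are Apple or Google APIs.


@dataclass
class Request:
    text: str
    needs_cloud: bool  # assumption: stands in for Apple's on-device escalation logic


@dataclass
class Response:
    text: str
    handled_by: str  # "device" or "apple_pcc"


# Records which operator's servers saw the raw query text, for illustration only.
servers_that_saw_query: List[str] = []


def handle_on_device(req: Request) -> Optional[Response]:
    """Answer locally when the request doesn't need the cloud."""
    if not req.needs_cloud:
        return Response(text=f"local answer to: {req.text}", handled_by="device")
    return None


def apple_pcc_inference(req: Request) -> Response:
    """Stand-in for Private Cloud Compute: the Gemini-based model runs on
    Apple-operated servers, so only Apple's stack sees the raw query."""
    servers_that_saw_query.append("apple_pcc")
    return Response(text=f"cloud answer to: {req.text}", handled_by="apple_pcc")


def siri_request(req: Request) -> Response:
    # 1. Try to handle the request on the device first.
    local = handle_on_device(req)
    if local is not None:
        return local
    # 2. Escalate to Apple's servers, never directly to Google's.
    # 3. Only the result travels back to the phone.
    return apple_pcc_inference(req)


r1 = siri_request(Request("set a timer for 5 minutes", needs_cloud=False))
r2 = siri_request(Request("summarize emails about the project", needs_cloud=True))
print(r1.handled_by, r2.handled_by)  # device apple_pcc
print(servers_that_saw_query)        # ['apple_pcc']
```

What the sketch captures is the shape of the path. The device is tried first, escalation goes only to Apple-operated servers, and only the result returns to the phone. No step calls a Google endpoint, which is the property the architecture is meant to guarantee.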

Why does this matter? Because it means Google doesn't see your raw queries. Google may receive aggregate data about model performance, but not the specific requests you made. That's different from handing your Siri queries directly to Google's API.

The catch is "in theory." In practice, there are questions. Apple controls the infrastructure, but Google built the model. If you were truly paranoid, you might worry about backdoors or telemetry at the model level. Apple probably has contract clauses preventing that, but you can't verify it yourself.

Moreover, this only applies to Siri. If you're using ChatGPT through the iOS integration, your queries go directly to OpenAI. If you're using Google's apps directly, your data goes to Google. So the privacy benefit is specific to the Siri-Gemini integration, not comprehensive.

But evaluated purely as engineering, Private Cloud Compute is clever. It lets Apple use a best-in-class AI model without giving a competitor direct access to user data. That's a meaningful privacy protection, even if it's not perfect.

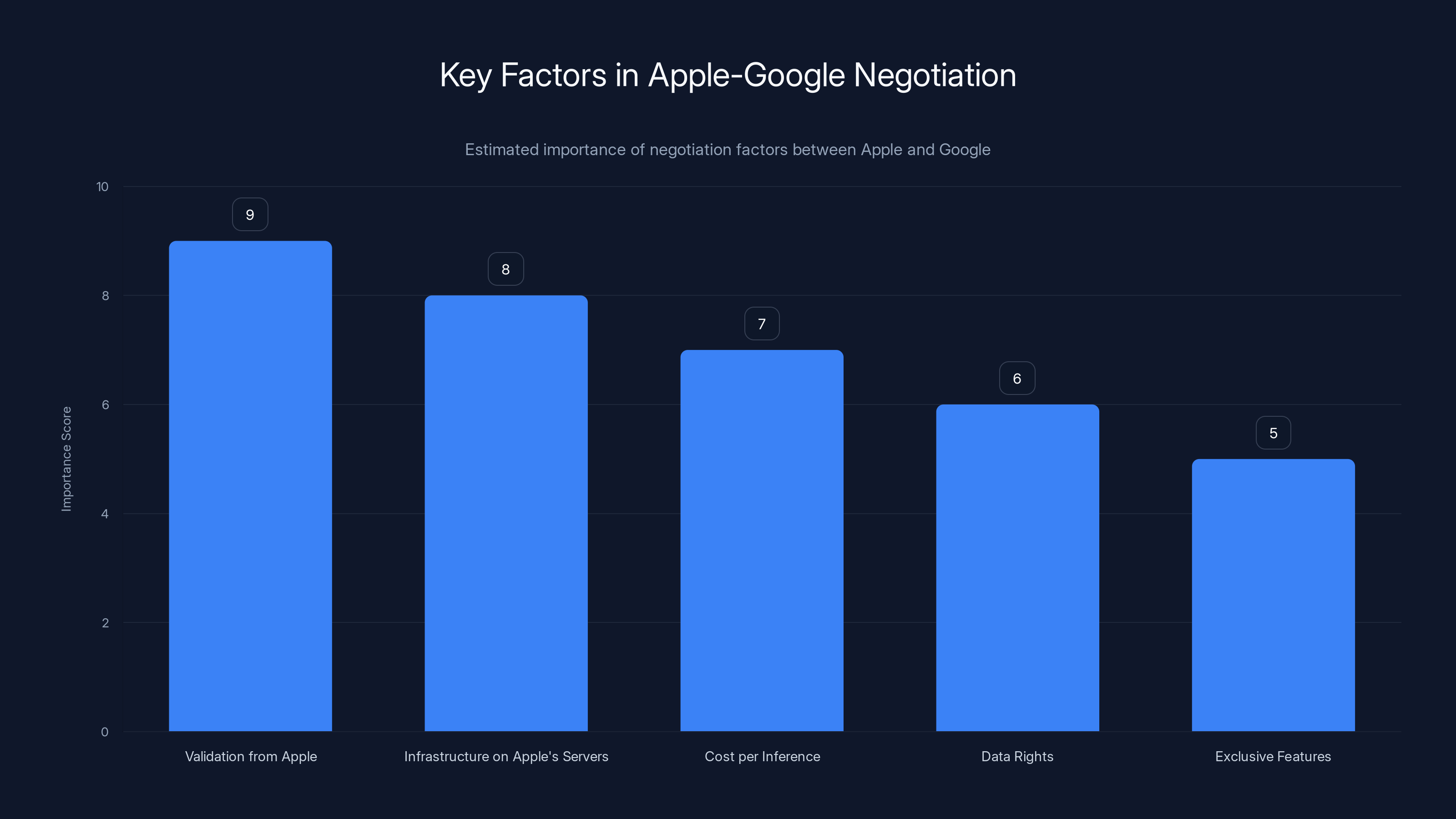

Estimated data shows that validation from Apple and infrastructure on Apple's servers were crucial factors in the negotiation, scoring highest in importance.

The Competitive Landscape Resets

Let's map out where everyone stands right now after this announcement:

Google: Gemini is now powering Siri. They're validated as tier-one. They have a partnership with one of the world's most valuable companies. Momentum is theirs. The question is whether they can keep improving fast enough, given that Apple wants to eventually replace Gemini with in-house models.

OpenAI: Still tier-one, but passed over this round. ChatGPT is integrated into iOS for fallback queries, which is valuable but not the same as being the core Siri engine. Sam Altman's code red suggests they know they need to innovate faster. They have the advantage of being proven at scale, but that's yesterday's advantage.

Anthropic: Claude is somewhere in the tier-one conversation, but Apple didn't pick them. That's not fatal, but it's a signal that Claude trailed Gemini for this specific use case. It probably tested well, just not as well as Gemini.

Apple: Committed to using external models for now but building internal capability to reduce dependence. Long-term, they want in-house models that can compete with Gemini. In the short term, they're buying best-in-class capabilities from Google.

Everyone else: If you're not Google, OpenAI, Anthropic, or Apple building in-house, you're probably reselling or wrapping someone else's models. The tier-one player advantage is real.

This is actually good for the AI market. Competition is pushing everyone to improve. But it also means the barrier to being a top-tier AI company is incredibly high. You need billions in compute resources, world-class researchers, and access to enough data to train properly. That limits who can play at the highest level.

The Path to iOS 26 and What Users Will Actually Experience

Apple promised Siri improvements for 2024's iOS 18, then delayed. Now they're coming to iOS 26 in 2025. That's significant because it means you're probably not getting a fully transformed Siri in the first iOS 26 release. Apple typically rolls out new capabilities gradually, beta testing with a subset of users before wide release.

What you'll likely see first: Better natural language understanding. Siri should understand more conversational requests without needing exact phrasing. Questions with context ("Show me emails from my team about the project we discussed last week") should work better because Gemini handles context reasoning.

Second: More accurate task completion. Rather than Siri defaulting to showing you web results, it'll actually try to complete the task you requested. If you ask for something it doesn't immediately understand, it'll ask clarifying questions instead of giving up.

Third: Cross-app awareness. Siri might become better at understanding what app you're in and what you're trying to do. "Schedule this for tomorrow at 10am" when you're in an email should know to create a calendar event, not a reminder.

What's less clear: how much deep reasoning fits into a real-time exchange. OpenAI's o1 model can spend minutes reasoning about complex problems. Siri can't do that because you're waiting for a response on your phone. So even with Gemini backing it, latency matters. Apple will probably optimize for responses under 2 to 3 seconds, which limits how complex the reasoning can be.

Also uncertain: Privacy improvements beyond what already exists. iOS has on-device processing already. Using Gemini on Private Cloud Compute is better than handing queries to Google directly, but it's not a revolution in privacy compared to current Siri.

Expectations matter here. If you're picturing ChatGPT-level natural conversation, that's probably overstated. This is Siri getting substantially better at understanding and executing on what you ask, not Siri becoming a conversational AI that can chat with you about philosophy. The focus is task completion, not conversation.

What This Means for iPhone Users and Ecosystem Lock-in

Here's the thing that might matter most: This partnership deepens ecosystem lock-in in a new way.

Previously, you stayed in the Apple ecosystem because of the devices, the services, the app selection, and the integration. Now you stay partly because Google's best AI is available through Siri on iPhone, but getting similar capabilities on Android might be different (or worse).

Google's Gemini is available on Android, sure. But Apple's getting first-class integration with Private Cloud Compute and Siri's tight integration with the OS. When a user experiences Gemini through Siri on iPhone, it might feel more polished and faster than if they get Gemini on Android (where it competes with other options and runs through Google's infrastructure).

This creates an asymmetry: Apple users get the best of Google's AI. Android users get Google's AI, but it's not coordinated with the OS the way Siri is. That's not massive, but it's one more reason to stay on iPhone.

Long-term, though, Apple's strategy is clear: use Google's models now, build internal capability, eventually reduce dependence. In five years, Apple might have in-house models good enough that they don't need Gemini. When that happens, Apple gets all the benefits of best-in-class AI without paying anyone $1 billion annually.

That's why this partnership is temporary in Apple's mind, even if it's multi-year. It's a way to accelerate to the right Siri experience while buying time to build internal AI capability. Google knows this too, which is why they're probably extracting as much value as possible from the partnership, including whatever aggregate performance data the terms allow.

Apple chose Google's Gemini AI model over ChatGPT and Claude, indicating a preference for Gemini's capabilities. Estimated data based on reported preferences.

The Ripple Effects Through the Tech Industry

Apple's choice cascades through the industry in ways most people won't notice immediately:

For enterprise customers: If Apple is confident enough in Gemini for Siri, maybe they should be confident in it for their own enterprise AI initiatives. This validates Google's models for high-stakes applications.

For AI startups: The threshold for competing gets higher. If you're not Google, OpenAI, or Anthropic, how do you build something that Apple would pick? The answer is probably that you become part of one of those companies or build for a narrow use case they don't cover.

For content creators: Siri will now run on models built from whatever data Gemini was trained on. That raises questions about copyright and attribution that the tech industry is still fighting over. The more AI products are built on your content, the more lawsuits are likely to follow.

For Google's stock: In theory, this should be positive. Validation from Apple that their AI is best-in-class. But markets don't always react logically. The real value is in improved Gemini performance in the market, which will come out over months and years.

For Microsoft and Copilot: Microsoft's bet on OpenAI looks less certain. Apple surveyed the same field and didn't pick the path Microsoft chose. That's a signal.

The biggest ripple: This shows AI capability is becoming more important than brand loyalty. Apple picked Google, their biggest competitor, because Google was better. That's ruthless prioritization of capability, and every other tech company should notice that capability now outweighs partnership preferences.

Future Evolution: What Comes After Gemini?

Apple's statement included a revealing line: "innovative new experiences it will unlock for our users." Note that it didn't say Gemini is the endpoint. It said Gemini is the foundation.

That foundation could shift. Apple's investing heavily in machine learning infrastructure, hiring top AI researchers, and building capabilities internally. In three to five years, they might have models competitive with Gemini. When that happens, they'll probably transition. The partnership is not permanent. It's a checkpoint.

What will spur that transition? Probably a combination of factors:

- Apple's internal models reaching parity with Gemini on key benchmarks

- The pressure of paying $1 billion annually becoming intolerable

- A shift in Apple's business strategy toward vertical integration

- Better on-device processing capabilities that reduce reliance on cloud inference

When that happens, Siri won't necessarily lose capability. It'll just run on Apple's own models instead of Gemini. The experience for users might be identical, but Apple will own the entire stack.

Internally at Apple, there's probably a roadmap. "Use Gemini for iOS 26 and 27, evaluate internal models, potentially transition in iOS 28." That's how Apple operates. They use external technology as a bridge while building internal alternatives.

Google knows this too. They're probably planning for the day Apple leaves. That's why Google's focus on improving Gemini matters so much. They need to make Siri so good that even Apple's own engineers, comparing internal models to Gemini, struggle to justify the cost of transition.

The Geopolitical Angle Nobody's Discussing

There's a geopolitical subtext to this announcement that most coverage misses. Apple is relying on Google's AI models, which keeps the whole arrangement between two American companies. That matters given how closely AI capability is tied to the relationship between the US and China.

China's AI capability is advancing rapidly. If US-China tech relations ever deteriorate further, relying on Google's models keeps Apple's AI supply chain inside the US tech ecosystem rather than tied to a foreign provider.

That might sound paranoid, but it's how governments and large companies think about strategic technology. When you make infrastructure decisions, geopolitical resilience matters.

Also relevant: Apple's privacy story becomes more coherent if Siri runs on Apple's servers instead of sending queries directly to external APIs. From a regulatory standpoint (especially in Europe with GDPR), keeping data within Apple's infrastructure is cleaner than sending it to third parties.

This doesn't mean geopolitics drove the decision. Capability did. But the geopolitical alignment makes the decision easier politically.

Google's Gemini is rated as the most capable AI foundation model, influencing Apple's decision for a multi-year partnership. Estimated data based on industry insights.

What About Privacy Really?

Let's be honest about the privacy question because it's important and gets glossed over.

Apple's marketing has been "privacy-first." That narrative gets harder to maintain when you're using Google's Gemini for Siri. Even with Private Cloud Compute, you're trusting that the technical and contractual walls between your queries and Google actually hold.

The PR answer is: Private Cloud Compute isolates data from Google's servers, so your privacy is protected. The technical answer is: Probably true, but not verifiable by users. You have to trust Apple's engineering.

The honest answer is: This is a privacy vs. capability tradeoff. Apple decided capability matters more than the pure privacy ideal of on-device-only processing. That's reasonable! Better AI is genuinely valuable. But don't mistake it for privacy maximization.

Over time, Apple will probably improve the privacy angle by improving on-device models. Eventually, some Siri tasks might run entirely on your phone. Some might use Gemini on Private Cloud Compute. Some might be hybrid. That's the sophisticated approach.

But right now, understand what's actually happening: Apple is saying that best-in-class AI, even if it requires cloud processing and involves a Google partnership, is more important than the simplicity of "we never touch your data." That's probably the right call, but it's worth being clear about the tradeoff.

The Negotiation That Got Here

We'll never fully know what the negotiations looked like between Apple and Google. But you can infer some contours:

Apple probably opened with: "We want to integrate your Gemini models into Siri. Here's what we need..." They had requirements around latency, quality, cost, and data handling.

Google probably said: "Interesting. What did OpenAI's ChatGPT offer?" They wanted to understand the benchmark they had to beat.

Apple then ran tests on the candidates, including ChatGPT and Claude. Gemini won. Apple came back with: "Here's the business structure we're thinking about."

Google said yes because:

- Validation from Apple is incredibly valuable

- Running inference at Apple scale is good business

- Whatever aggregate data the deal allows could help train better models

- It's a defensive move against OpenAI's dominance in consumer AI

Apple said yes because:

- Gemini performed best in testing

- Google was willing to let its model run on Apple's servers

- The terms were acceptable

- They got a partner who needs the relationship to work

Both sides probably negotiated hard on: cost per inference, data rights, exclusive features, availability commitments, and termination clauses. We know the annual fee is approximately $1 billion, but not the per-inference cost or volume commitments.

What we can infer about the negotiation reveals something important: Apple drives hard bargains. Even when a technology is best-in-class, Apple extracts value. Google presumably accepted tough terms because being integrated into Siri is too valuable to lose.

Implications for the AI Startup Ecosystem

There's a message here for every AI startup that's not Google, OpenAI, or Anthropic: You're probably not going to power Siri. That's not fair, but it's true.

The AI startup playbook used to be: Build a better model, sell it as an API, get rich. That worked fine when OpenAI had an 18-month head start. But now the tier-one players are so far ahead on capability and infrastructure that startups can't compete on raw model quality.

So where can startups win? Verticals. Integrations. User experience. Specific use cases that tier-one players don't optimize for. You don't beat Google on general AI, but you might build something better for legal document analysis or medical imaging.

Apple's decision effectively says: "For general-purpose AI, we want the best vendor available. We picked Google." That's harsh for everyone else. But it's also clarifying. The AI market isn't winner-take-all, but the winner-take-most dynamics are real.

The startup lesson is: If you're betting on building a general-purpose AI model that competes with Gemini, ChatGPT, and Claude, you're probably making a bad bet. If you're building focused AI for a specific problem, you have a shot.

The Longer Arc: AI Commoditization

Why does any of this matter beyond the immediate news cycle? Because it signals that frontier AI is commoditizing faster than anyone expected.

Think back to 2023. ChatGPT was magic. It was obviously better than the alternatives. Everyone wanted it. The moat seemed permanent.

Now it's 2025, and capability is so distributed that Apple can pick whatever's best without getting locked into a single vendor's roadmap. The moat collapsed in two years.

Extend that timeline and the future comes into focus: In five years, frontier large language models will be like cloud computing was in 2010. Commoditized. Multiple vendors with similar capabilities. The differentiation will move upmarket to application-specific models and specialized AI. The commoditized general-purpose models will be embedded everywhere.

That's actually good for consumers and enterprises. Competition drives prices down and quality up. But it's bad for anyone betting their company on owning the frontier model moat.

Apple's partnership with Google is a data point in that commoditization arc. Not the end, but definitely proof the arc exists. Five years from now, this announcement will look like the obvious thing that happened when AI infrastructure became mature enough for multiple vendors to compete.

FAQ

What exactly is the Apple-Google partnership about?

Apple announced a multi-year partnership with Google to power the next version of Siri using Google's Gemini language models. Apple will reportedly pay approximately $1 billion annually for access to Gemini, which will run on Apple's Private Cloud Compute servers. The partnership represents a major shift in strategy, with Apple choosing Google's AI over alternatives like OpenAI's ChatGPT and Anthropic's Claude.

Why did Apple choose Google's Gemini over other AI models like ChatGPT?

Apple tested Google's Gemini, OpenAI's ChatGPT, and Anthropic's Claude through rigorous evaluation. In Apple's assessment, Gemini proved to be "the most capable foundation for Apple Foundation Models." While the exact evaluation criteria aren't public, Apple's decision suggests Gemini outperformed competitors in natural language understanding, reasoning, multimodal capabilities, and the specific tasks Siri needs to perform.

How does this partnership affect user privacy and data security?

Apple confirmed that Gemini inference runs on Apple's Private Cloud Compute servers, not directly on Google's infrastructure. This architectural choice means user Siri queries remain isolated within Apple's ecosystem rather than being sent to Google's servers. However, this is a privacy improvement over direct API access, not absolute privacy—users are still dependent on Apple's server security and infrastructure.

When will the AI-powered Siri be available to users?

Apple originally promised the improved Siri for iOS 18 in 2024 but delayed it due to reliability concerns. The new Siri-Gemini integration will arrive as part of iOS 26, iPadOS 26, and macOS 26 Tahoe later in 2025 as noted by MacRumors. Apple typically rolls out new features gradually, so you might not see all improvements in the initial release—expect updates throughout the year.

What does this decision mean for Open AI and Chat GPT?

Apple's choice of Gemini over ChatGPT is a significant validation of Google's AI strategy and a competitive setback for OpenAI. It lands shortly after OpenAI CEO Sam Altman declared a "code red" at the company over Gemini's advances and deprioritized some roadmap features to focus on capability improvements. While iOS still integrates ChatGPT for fallback queries, Gemini is now Siri's primary AI engine.

Will this partnership be permanent, or could Apple switch back to ChatGPT or another provider?

Apple's statement indicates the partnership is multi-year, but not necessarily permanent. Apple is simultaneously developing in-house AI models and has stated intentions to eventually reduce reliance on external providers. Over the next few years, Apple will likely improve its internal models, and if they reach competitive parity with Gemini, Apple may transition Siri to use its own technology instead.

How does Siri compare to other AI assistants like Google Assistant after this partnership?

Siri's capabilities will significantly improve with Gemini backing, but the experience will differ based on integration approach. Siri will understand context better, handle complex multi-step requests, and provide more accurate task completion. However, this doesn't mean Siri becomes ChatGPT-like in conversational ability; the focus is on task completion and natural language understanding for assistant functions rather than open-ended conversation.

What about competitors like Anthropic and Claude—why weren't they chosen?

Anthropic's Claude was tested alongside ChatGPT and Gemini, but Gemini outperformed it in Apple's evaluation. This doesn't mean Claude isn't capable (Anthropic has built an excellent model), but for Siri's specific requirements and performance benchmarks, Gemini was the better choice. Claude might still see integration in other contexts or products over time.

How much will Siri actually improve from this partnership?

The improvements will be meaningful but not revolutionary. You can expect: better natural language understanding, more accurate task completion, improved context awareness, and fewer "I didn't understand" moments. However, Siri won't become a philosophical conversationalist or spend minutes reasoning through complex problems—those capabilities require different architectural choices that prioritize depth over latency.

What are the financial implications of Apple paying Google $1 billion annually?

At $1 billion annually, Apple is making a substantial investment, but one justified by the scale and strategic importance of Siri. This cost is offset by not needing to spend years developing competitive in-house models. The payment structure likely includes both base fees and per-inference costs, though Apple's massive scale gives them leverage for better pricing than smaller companies would negotiate.

The Significance of Tech Rivals Working Together

When Apple and Google cooperate despite being fierce competitors, it signals something profound about the tech industry. These companies battle over search, smartphones, privacy, app stores, and market share. They have fundamentally different philosophies on data and advertising. Yet they're choosing partnership on AI.

This happens only when the technology becomes important enough that cooperation outweighs competition. The same way Microsoft and Apple put aside rivalry to serve enterprises. The same way Amazon and competitors share infrastructure in cloud computing. Sometimes the market demands cooperation even between adversaries.

Apple's choice validates Google's technology so thoroughly that no other narrative works. If ChatGPT were better, Apple would use ChatGPT and brag about it. Instead, Apple chose a competitor and is paying it about $1 billion annually. That's respect earned through capability.

For the industry, this sets a precedent: If you build something genuinely better, the best companies in the world will use it, regardless of competitive context. That incentivizes continuous improvement. Complacency is punished. Innovation is rewarded. Google's getting rewarded right now.

OpenAI has been warned. Anthropic is still learning. The message is clear: Stay at the frontier or get left behind. Apple's partnership with Google is a reminder that in technology, yesterday's dominance doesn't guarantee tomorrow's relevance.

The AI landscape will keep shifting. Models will keep improving. New competitors will emerge. But Apple's choice to use Google's Gemini is a data point that matters. It's proof that the AI race is real, the competition is fierce, and the winners are determined by capability, not marketing or brand loyalty.

That's good for everyone except those betting on complacency.

Key Takeaways

- Apple partnered with Google to power Siri with Gemini AI models in a multi-year deal worth approximately $1 billion annually

- Apple evaluated ChatGPT and Claude but found Gemini superior, validating Google's AI capabilities and surprising the industry

- The partnership uses Private Cloud Compute to isolate user data from Google's infrastructure, addressing privacy concerns

- OpenAI CEO Sam Altman called a 'code red' in response to Gemini's advances, indicating heightened competition among AI leaders

- Apple intends to reduce its reliance on external providers and may eventually replace Gemini with in-house models, using the partnership as a foundation while building internal capability

