The Future of AI Is Closer Than You Think: 2026 Predictions

Last year, I watched a developer build an entire customer support system in under three hours using AI agents. No frontend code. No database schema. Just prompts and automation.

That's the moment it hit me: AI isn't coming to your workflow. It's already there. And 2026 is going to make that way more obvious.

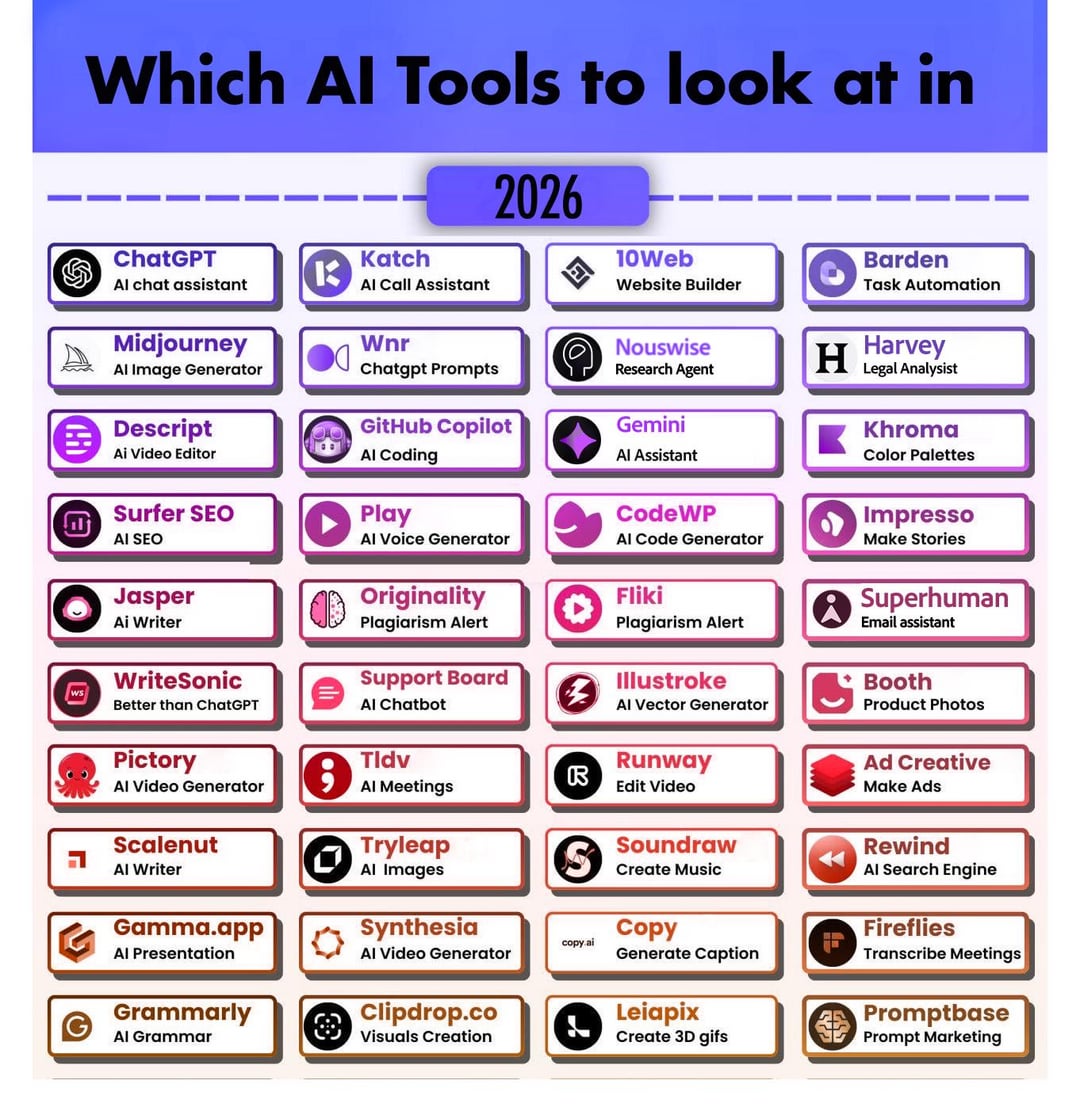

We're not talking about ChatGPT getting a new interface or Gemini adding a few more parameters. This is about AI becoming so woven into the tools you use daily that you won't think of them as "AI tools" anymore. They'll just be tools.

My team's been tracking what's brewing in the AI space—patent filings, job postings, developer conferences, closed beta tests. Add that to what the major players are publicly saying, and a pretty clear picture emerges. So here's what I think is coming in 2026.

The thing is, these aren't wild predictions. They're anchored in what's already happening. I'm just connecting the dots a few months ahead.

TL;DR

- AI agents become standard: Multi-step task automation moves from "cool experiment" to default workflow

- Context windows explode in usefulness: 200K token limits stop being party tricks and actually solve real problems

- Real-time reasoning transforms workflows: Models that think before responding shift how teams work

- Personalization gets genuinely useful: AI actually remembers what you care about instead of generic responses

- Horizontal integration dominates: AI stops being a separate tool and becomes embedded in everything

Prediction 1: AI Agents Become the Default Way Work Actually Gets Done

Let me set the scene. Right now, most people use AI like a search engine with better answers. You ask, it responds. Done.

But that's not where this is heading.

AI agents—systems that break down goals into steps, execute them, check results, and adapt—are still in early adopter territory in 2025. By 2026, they won't be.

Here's what I mean by agents: You tell the system a goal ("create a weekly report from our database, format it as slides, add our brand colors, and schedule it for Friday morning"). The agent breaks that into subtasks, executes them sequentially, handles errors, and delivers the final product. No human intervention between start and finish.
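To make that loop concrete, here is a minimal sketch of the "break into subtasks, execute in order, handle errors" pattern. Everything in it is hypothetical: `run_agent`, `StepResult`, and the three toy steps are stand-ins I made up for illustration, and a real agent would call a model and real tools where these lambdas just build strings.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    name: str
    ok: bool
    output: str
    attempts: int


def run_agent(
    steps: list[tuple[str, Callable[[str], str]]],
    goal: str,
    max_retries: int = 2,
) -> list[StepResult]:
    """Run steps in order, passing each output to the next step.

    A failing step is retried up to max_retries times; if it still
    fails, the run stops and the failure is recorded.
    """
    results: list[StepResult] = []
    current = goal
    for name, action in steps:
        for attempt in range(1, max_retries + 2):
            try:
                output = action(current)
            except Exception as exc:
                if attempt == max_retries + 1:
                    results.append(StepResult(name, False, str(exc), attempt))
                    return results
                continue
            results.append(StepResult(name, True, output, attempt))
            current = output
            break
    return results


def make_flaky(fn: Callable[[str], str], failures: int) -> Callable[[str], str]:
    """Wrap fn so it raises on its first `failures` calls (simulated errors)."""
    state = {"left": failures}

    def wrapped(text: str) -> str:
        if state["left"] > 0:
            state["left"] -= 1
            raise RuntimeError("temporary failure")
        return fn(text)

    return wrapped


# Hypothetical subtasks for the weekly-report goal described above.
steps = [
    ("query", lambda goal: "rows for: " + goal),
    ("slides", make_flaky(lambda rows: "slides[" + rows + "]", failures=1)),
    ("schedule", lambda deck: deck + " @ Friday 09:00"),
]

results = run_agent(steps, "weekly report")
for r in results:
    print(r.name, r.ok, r.attempts)
```

The second step fails once and succeeds on retry, which is the "handles errors" part in miniature. The interesting design questions (how many retries, when to stop and ask a human) live in that inner loop.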

Why This Is Happening Now

Three things had to align. First, models got smart enough to plan reliably. Second, the infrastructure for chaining prompts together became stable. Third, real companies started seeing ROI from it.

Take OpenAI's recent moves. They're not just making ChatGPT smarter. They're building frameworks for agent reasoning. Anthropic released similar capabilities. Even Google's Gemini is getting agent features baked in.

Why? Because users are already hacking agents together manually. They're using multi-step prompts, copying outputs between windows, building pipelines. The big players are seeing demand and moving to make it native.

What This Looks Like in Practice

Imagine you're a content manager. Today, creating a blog post means: write outline (AI), expand sections (AI), find images (Google), add metadata (manually), schedule (third tool), update social calendars (another tool).

In 2026, you'd just tell the AI agent: "Write a 2,000-word blog post about AI in healthcare, target SEO for 'AI medical diagnosis,' include three original images, add schema markup, and create three social variations." Ninety seconds later, it's done and scheduled.

This isn't theoretical. Runable and similar platforms already offer agent-based automation for creating presentations, documents, reports, and multi-format content at scale. By 2026, this becomes the standard, not the novelty.

The Catch

Agents work best when goals are well-defined and outcomes are measurable. Fuzzy requests still fail. And security gets messier when an AI is executing multiple steps autonomously. But by 2026, most teams will have figured out how to constrain agents safely.

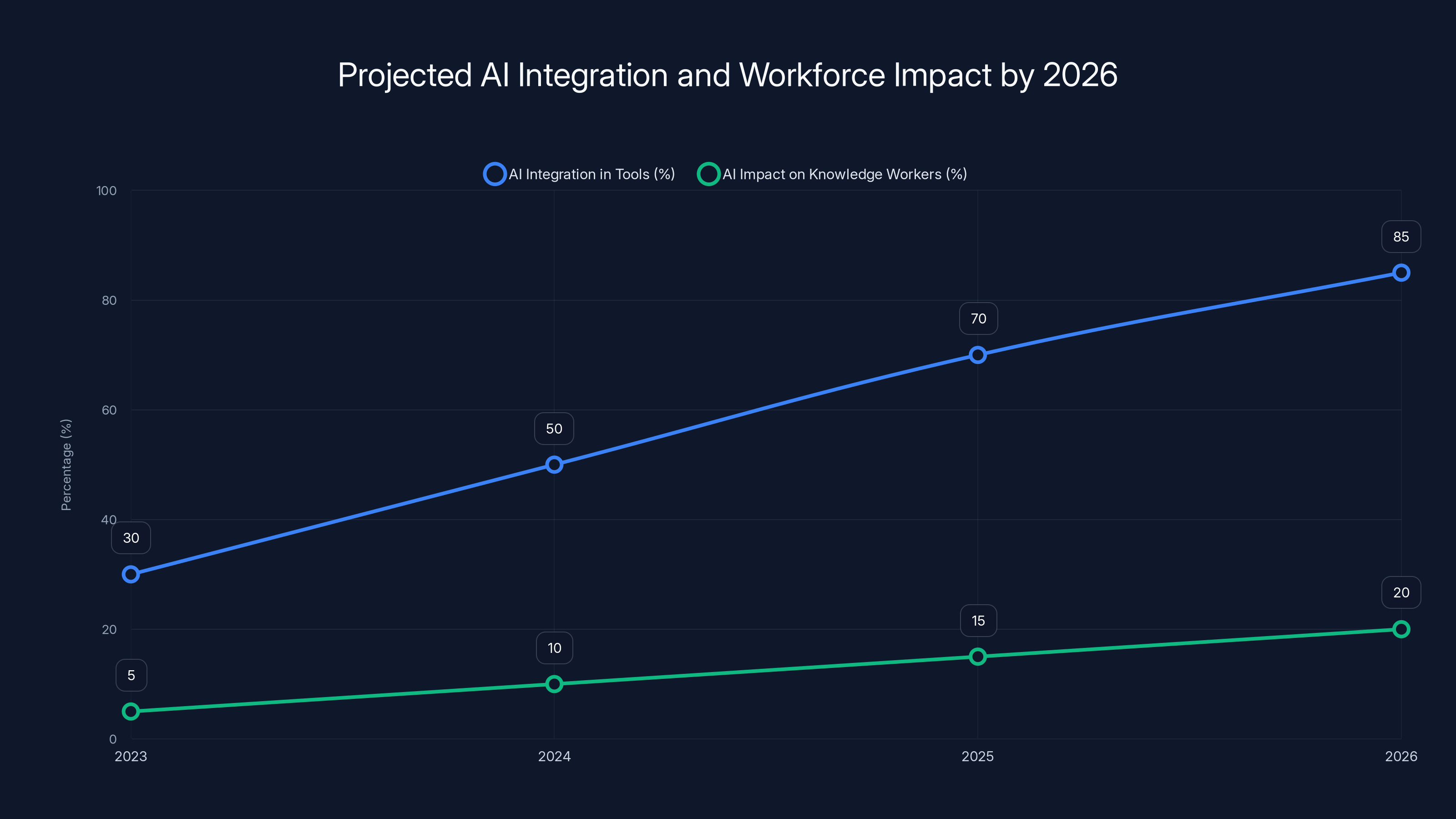

Estimated data shows increasing AI integration in tools, reaching 85% by 2026, while the impact on knowledge workers remains moderate at 20%.

Prediction 2: Context Windows Become Genuinely Useful, Not Just a Numbers Game

Right now, companies are obsessed with context window size. "Our model reads 200K tokens!" "Ours reads 1 million!"

But that's missing the point.

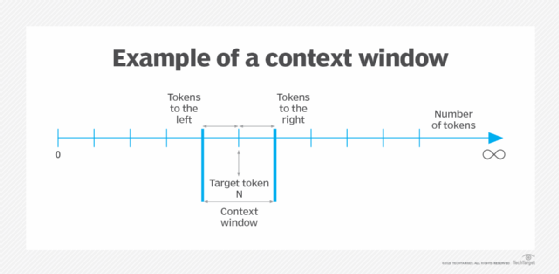

In 2025, big context windows are mostly bragging rights. You get a 200K token limit, but feeding it that much actually makes the model stupider. It gets confused. The "context degradation" problem is real.

By 2026, that changes fundamentally.

Models will get smarter about what to pay attention to within massive context windows. Instead of treating all 200K tokens equally, they'll learn to identify the relevant 10% and focus there. That's a bigger leap than simply increasing size.

Where This Gets Useful

Long-form documents. Code repositories. Research threads. Customer history. These are the places big context matters.

Picture a developer working on a legacy codebase. In 2025, feeding the entire codebase to an AI helps a little. In 2026, the AI actually understands how the pieces fit together because it's grasping relationships across the whole system at once.

Or a lawyer reviewing discovery documents. Instead of summarizing one file at a time, an AI agent could read an entire case folder and identify contradictions, highlight key evidence, and flag relevant precedents—all in one pass.

The practical effect: problems that currently require multiple AI calls, human review, and manual synthesis get solved in a single, coherent response.

The Technical Shift

This happens through better attention mechanisms and smarter retrieval. Instead of "give me everything," it becomes "give me what matters." Anthropic and OpenAI are both publishing research on this exact problem.
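The "give me what matters" idea can be illustrated with a toy retrieval step. To be clear about the assumptions: this sketch scores chunks by plain keyword overlap, which is a deliberately crude stand-in and not how attention mechanisms or any lab's retrieval system works. The function names and sample chunks are invented for the example.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def select_relevant(chunks: list[str], query: str, keep: int = 2) -> list[str]:
    """Keep the `keep` chunks sharing the most terms with the query."""
    query_terms = set(tokenize(query))
    scored = []
    for index, chunk in enumerate(chunks):
        counts = Counter(tokenize(chunk))
        score = sum(counts[term] for term in query_terms)
        scored.append((score, index, chunk))
    # Highest score first; ties go to the earlier chunk.
    top = sorted(scored, key=lambda item: (-item[0], item[1]))[:keep]
    # Restore document order so the selected chunks still read in sequence.
    return [chunk for _, _, chunk in sorted(top, key=lambda item: item[1])]


chunks = [
    "The billing service retries failed payments three times.",
    "Our office dog is named Biscuit.",
    "Payments are stored in the ledger table after billing completes.",
    "The cafeteria menu changes weekly.",
]
query = "How does billing handle failed payments?"

for chunk in select_relevant(chunks, query, keep=2):
    print(chunk)
```

Only the two billing-related chunks survive the filter. Swap the overlap score for embeddings or a learned ranker and the shape stays the same: score, select, then hand the model a smaller and more relevant context.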

By mid-2026, the first models with truly usable 100K+ token windows will ship. Not usable because they're big, but usable because they're smart about what's inside them.

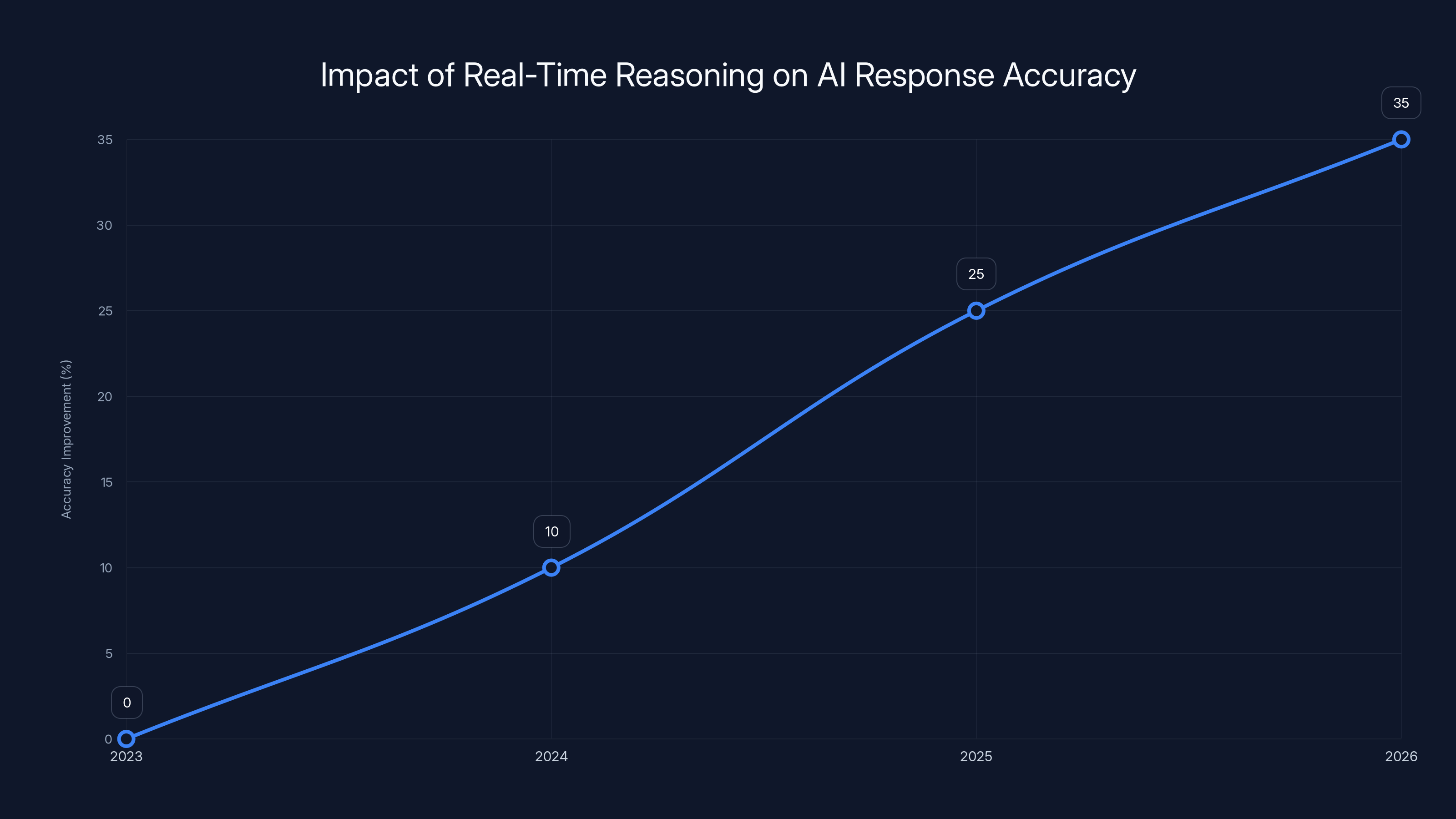

Prediction 3: Real-Time Reasoning Becomes the Hidden Advantage

There's a difference between fast and thoughtful.

Most of today's AI is fast. But by default it doesn't think through problems before answering. It starts producing the first plausible response almost immediately.

That's fine for most things. But for complex reasoning, analysis, or debugging, it's a weakness.

The Shift Coming

By 2026, you'll have models that reason step-by-step before responding. Not the "chain of thought" prompting hacky workarounds we use now. Native, built-in reasoning where the model genuinely thinks through the problem.

OpenAI already hinted at this. DeepMind published research showing that thinking before responding dramatically improves accuracy on hard problems.

What changes for you? Response times go from instant to 2-5 seconds for complex tasks. But accuracy on those tasks jumps 30-40%. It's a trade worth making for anything that matters.

Where This Actually Matters

Code generation. Financial analysis. Strategic decision-making. Research synthesis. Bug fixing. Architecture design.

These are the high-stakes problems where fast-but-sloppy is worse than slow-but-right.

A developer asks an AI to help design a database schema for a multi-tenant SaaS. With real-time reasoning, the model actually considers scaling implications, security patterns, and common pitfalls before suggesting an approach. Without it, you get something that works for the demo but breaks at scale.

The Developer Experience

Imagine asking your AI assistant a complex question and seeing it "think" for a few seconds before responding. Some responses might get a small thinking indicator, like "Reasoning for 3 seconds..." That delay signals to your brain that the answer is solid.

By late 2026, this becomes standard for any model claiming to handle serious work.

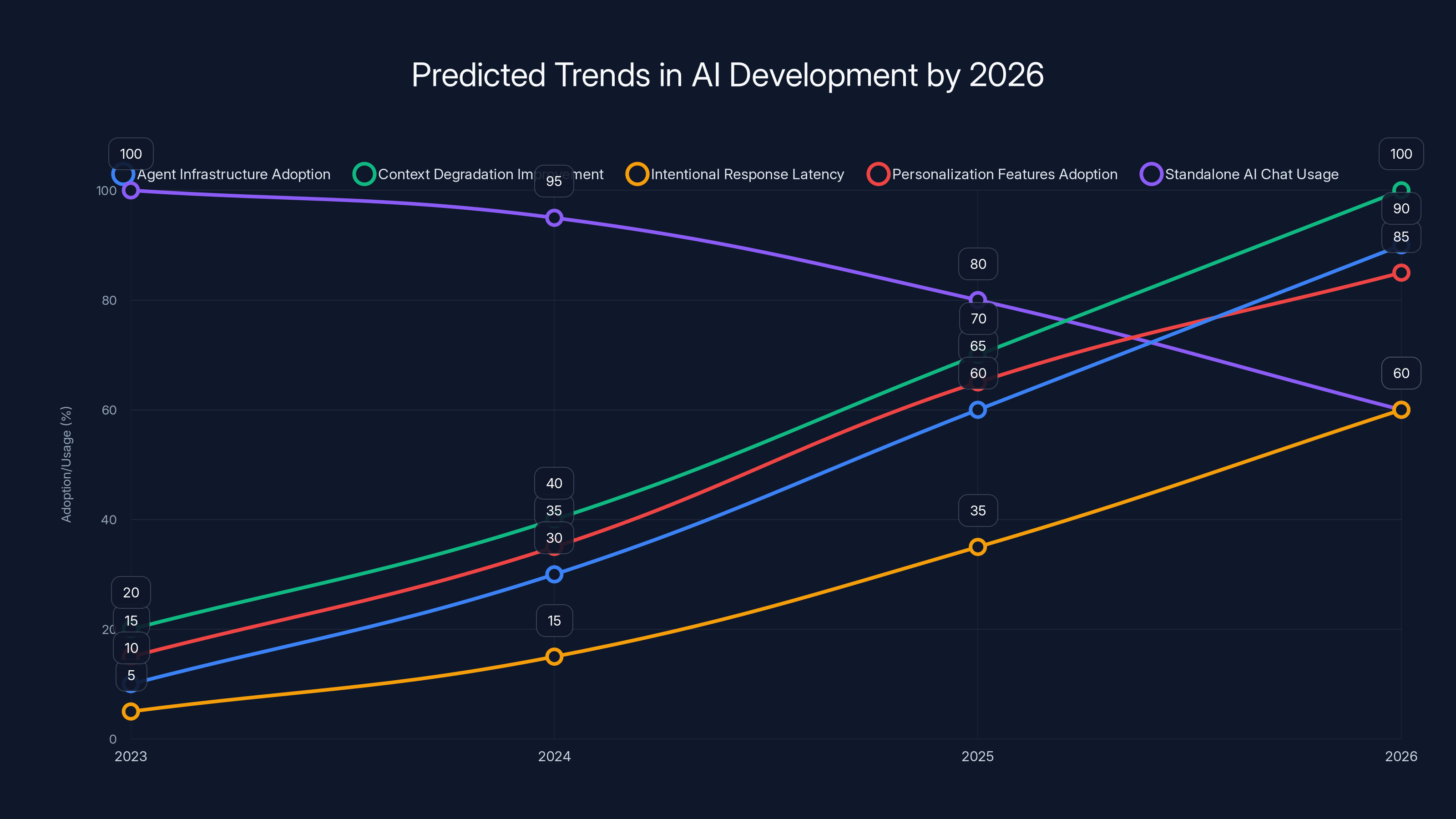

This chart projects key AI trends leading up to 2026, with agent infrastructure and personalization features expected to see significant adoption, while standalone AI chat usage declines. Estimated data.

Prediction 4: Personalization Actually Works (Finally)

AI's biggest weakness right now? It treats you like a stranger every time.

You tell it about a project. It responds generically. You explain your constraints again. It still gives broad advice. There's no learning.

In 2026, that ends.

What Changes

Models get better at storing and retrieving personal context. Not through simple conversation memory—we have that. But through genuine understanding of your preferences, goals, past decisions, and unique situation.

This means your AI actually knows you prefer certain code styles. It understands your company's tech stack and doesn't suggest incompatible solutions. It remembers what you've tried before and doesn't repeat failed approaches.

How It Works Technically

Some of this comes from better long-context windows (see Prediction 2). Some from improved fine-tuning where companies customize models to their specific needs. Some from smarter knowledge retrieval.
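The simplest version of the retrieval piece is a stored profile that gets prepended to every request. The sketch below assumes exactly that and nothing more: `UserProfile` and `build_prompt` are hypothetical names, and real products may store and inject this context quite differently.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical store for what an assistant should remember about a user."""

    preferences: dict[str, str] = field(default_factory=dict)
    failed_approaches: list[str] = field(default_factory=list)

    def remember(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def record_failure(self, approach: str) -> None:
        # Avoid storing the same failed approach twice.
        if approach not in self.failed_approaches:
            self.failed_approaches.append(approach)


def build_prompt(profile: UserProfile, request: str) -> str:
    """Prepend stored context to the request so the user need not repeat it."""
    lines = ["Known context about this user:"]
    for key in sorted(profile.preferences):
        lines.append(f"- {key}: {profile.preferences[key]}")
    for approach in profile.failed_approaches:
        lines.append(f"- already tried, did not work: {approach}")
    lines.append("")
    lines.append(f"Request: {request}")
    return "\n".join(lines)


profile = UserProfile()
profile.remember("stack", "Python + PostgreSQL")
profile.remember("code style", "type hints, no globals")
profile.record_failure("switching to MongoDB")

prompt = build_prompt(profile, "Suggest a caching layer.")
print(prompt)
```

The hard part is not this plumbing. It is deciding what is worth remembering and which stored facts are relevant to a given request.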

The result: AI that doesn't feel generic. That feels like it knows what it's talking about because it does.

The Privacy Question

Obviously, personalization raises privacy concerns. By 2026, expect:

- Local models becoming more viable for sensitive work

- Better privacy guarantees from major providers

- Enterprise deployments with strict data isolation

- User controls for what data gets stored and how

Companies like Anthropic are already building privacy-first models. By 2026, privacy-respecting personalization becomes table stakes, not a premium feature.

Practical Upside

You're a marketer working on campaign messaging. Instead of giving your AI the brand guidelines every time, it just knows them. Instead of explaining your target audience's pain points again, it remembers them. Instead of asking for three different tones, it already knows your preferred voice.

This saves maybe 5-10 minutes per prompt. On a team running 100 prompts a day, that's real productivity.
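Here's the back-of-the-envelope math behind that, using only the numbers above (100 prompts a day, 5 to 10 minutes saved on each). The function name is just for illustration.

```python
def daily_hours_saved(prompts_per_day: int, minutes_low: float, minutes_high: float) -> tuple[float, float]:
    """Convert a per-prompt saving range (minutes) into team hours per day."""
    low = prompts_per_day * minutes_low / 60
    high = prompts_per_day * minutes_high / 60
    return low, high


low, high = daily_hours_saved(100, 5, 10)
print(f"{low:.1f} to {high:.1f} hours per day")  # prints "8.3 to 16.7 hours per day"
```

That works out to roughly one to two full working days of effort recovered across the team every day, if the 5-10 minute estimate holds.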

Prediction 5: Horizontal Integration—AI Gets Embedded Everywhere

This is the big one.

Right now, AI tools are separate. ChatGPT is one app. Claude is another. Gemini is another. You bounce between them.

By 2026, this changes dramatically.

The Shift from Vertical to Horizontal

Instead of standalone AI tools, you get AI baked into everything you already use. Email, documents, spreadsheets, databases, design tools, project managers.

You won't launch a separate app to use AI. You'll use AI within the apps you live in.

This is already starting. Microsoft's integrating AI into Office. Google embedded it in Workspace. Figma added design AI.

By 2026, it's not "AI in some tools." It's "AI in everything."

What This Means for Your Workflow

You're working on a presentation in your design tool. You highlight some text and ask the AI to expand it. It does, inline, without switching apps.

You're in your email. You draft a response. The AI suggests improvements before you hit send.

You're building a spreadsheet. You ask the AI to analyze the data and suggest what metrics matter. It does, within the sheet.

No friction. No context switching. Just work.

The Platforms That Win

Companies that become "platforms for AI" win big. Zapier already moved in this direction. Make.com is aggressive about AI integrations.

But the real winners are the ones already in your daily workflow: Microsoft, Google, Notion, Slack.

They own the distribution. They own the habit. By 2026, they'll own the AI experience too.

Specialized Tools Adapt or Die

Standalone AI tools don't disappear. But they become specialized. You use a specific tool for coding, another for research, another for creativity. The general-purpose chat interface becomes less valuable as AI spreads everywhere.

This is actually good for users. Instead of one AI trying to be everything, you get tools optimized for specific tasks.

The Broader Shift: From "AI Tools" to Just "Tools"

Here's the meta trend underneath all five predictions.

In 2025, we think of AI as a category. "AI tools," "AI assistants," "AI platforms." It's a bucket.

By 2026, we stop. AI becomes invisible infrastructure. You don't use an "AI-powered presentation maker." You use a presentation maker that happens to be powered by AI. The AI isn't the feature—it's the foundation.

This shift changes everything about how people relate to these tools.

Why This Matters

When something is new and exciting, people experiment. They test. They learn the edges.

When something becomes routine? It gets serious. Teams adopt it. Companies build on top of it. Standards emerge.

In 2026, AI crosses that threshold. It stops being novel. It becomes boring in the best way—it just works.

For people building products, this means the playbook changes. You're not selling "AI capabilities" anymore. You're selling "faster design workflows" or "better code quality" or "cleaner data." The AI is just how you deliver that.

The Developer Experience Shift

Today, AI gives developers superpowers. Code faster. Ship quicker. Solve harder problems.

By 2026, AI stops being optional. It becomes baseline.

If you're a developer in 2026 and your tools don't have AI built in, you're working at a disadvantage. Everyone else is automating their grunt work. You're not.

This creates pressure on tools that haven't integrated AI. They adapt or lose market share.

Platforms like Runable—which offer AI agents for creating slides, documents, reports, and multi-format content—become the standard toolkit for teams that care about efficiency. Not because they're labeled "AI tools," but because they deliver measurable results.

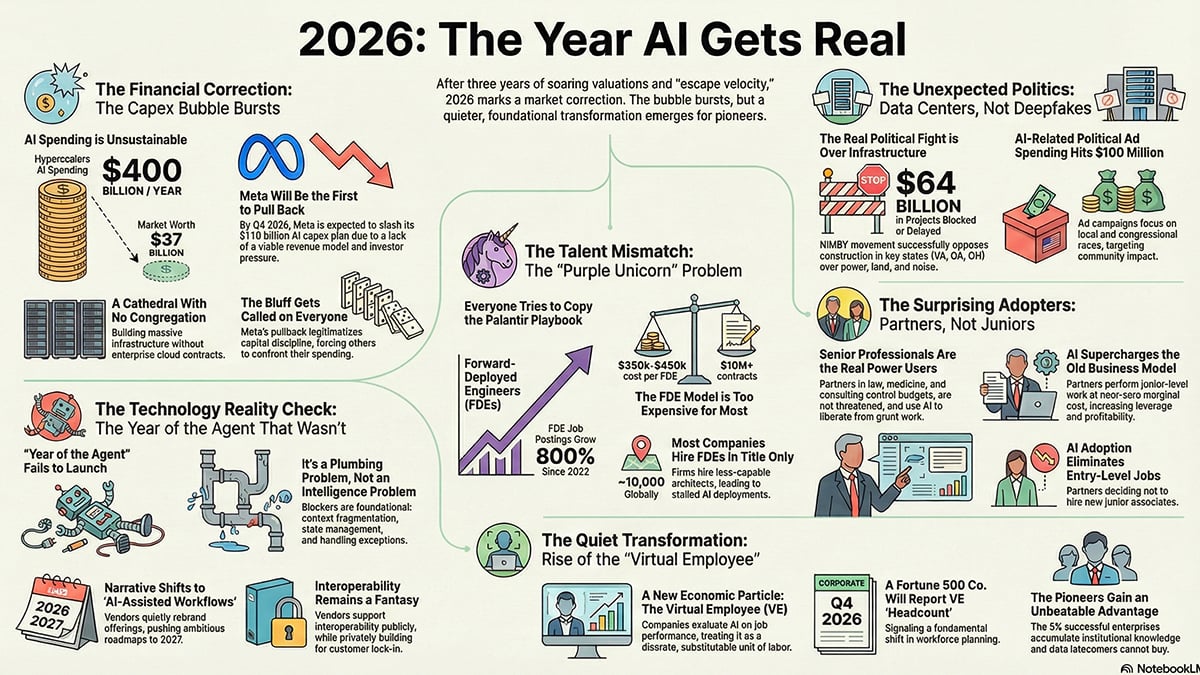

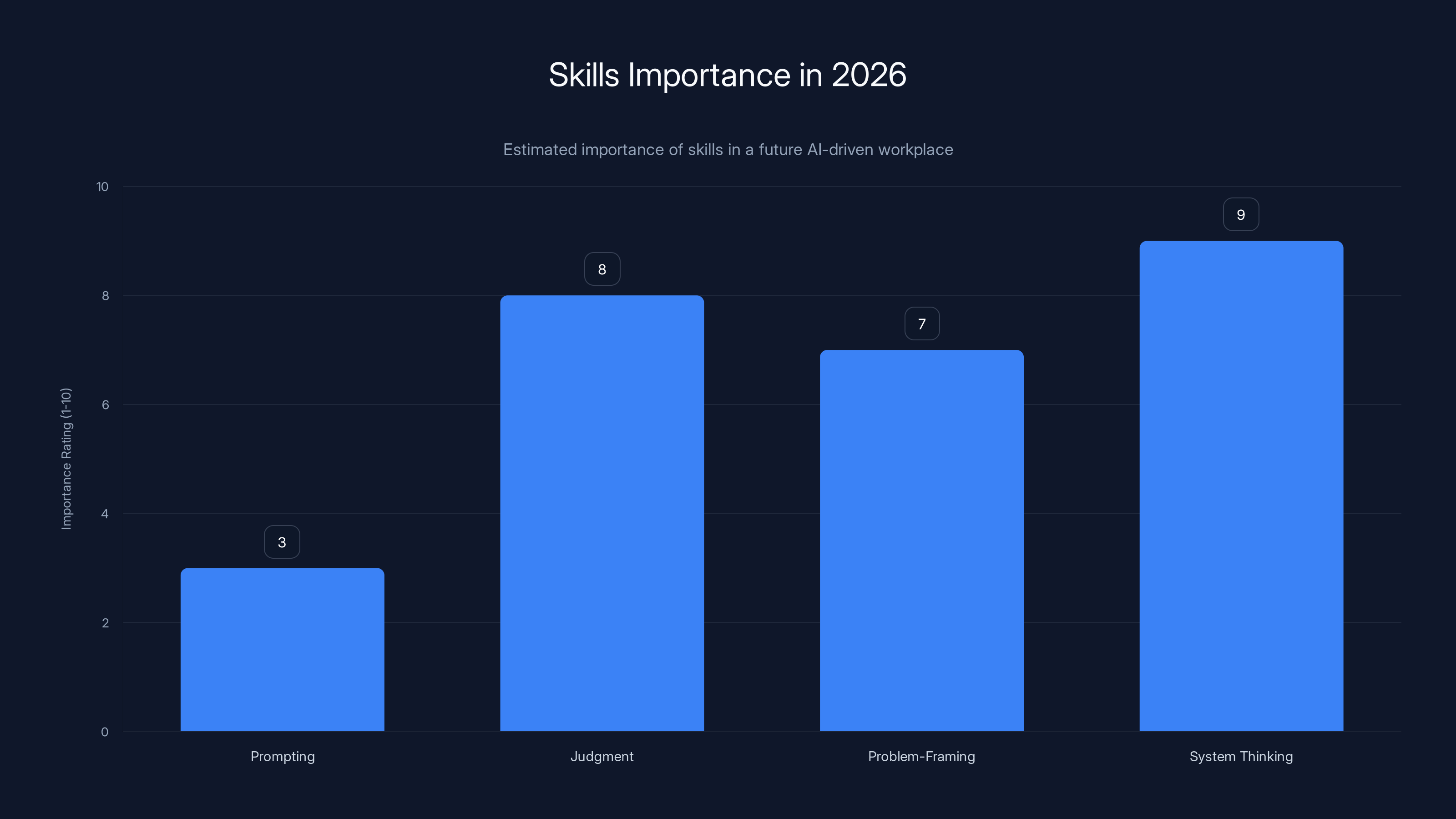

In 2026, judgment, problem-framing, and system thinking become crucial skills in an AI-driven workplace, while prompting becomes less critical. Estimated data.

How These Predictions Interconnect

These five predictions aren't independent. They're part of a larger pattern.

Agents (Prediction 1) need better context windows (Prediction 2) to plan effectively. Real-time reasoning (Prediction 3) helps agents make smarter decisions. Personalization (Prediction 4) lets agents understand your specific constraints. Horizontal integration (Prediction 5) puts agents where you actually work.

Together, they describe a shift from "AI as a tool I launch" to "AI as infrastructure in the tools I use."

The Timeline

Early 2026: First major models ship with agent frameworks and improved context handling.

Mid-2026: Real-time reasoning becomes competitive. Horizontal integrations proliferate.

Late 2026: Personalization reaches maturity. It becomes weird if your AI assistant doesn't know basic facts about your situation.

By end of 2026: Most people don't think "I'm using AI." They just think "this is fast" or "this works well."

What This Means for Your Work

Let me get practical.

If you're a developer, 2026 is the year you stop writing boilerplate. Agents handle it. You focus on architecture and logic.

If you're in marketing or content, 2026 is when personalization actually helps. Your AI assistant knows your brand voice, your audience, your past campaigns. It doesn't suggest generic garbage anymore.

If you're in operations or management, 2026 is when AI agents start handling workflows end-to-end. Weekly reports. Data analysis. Scheduling. Resource allocation. These become fire-and-forget.

If you're in creative work, real-time reasoning helps. Your AI doesn't just generate ideas. It thinks through implications, constraints, and creative directions before showing you options.

The Skills That Matter

Prompting becomes less valuable. Understanding "how to talk to AI" is now basic literacy.

What matters is judgment. You need to know if the AI's answer is right, even if you couldn't have generated it yourself. You need to spot when it's hallucinating. You need to understand its constraints.

Problem-framing becomes more valuable too. The better you describe what you actually need, the better the result. This is true with people and machines, but it becomes acute with AI that can do deep work if pointed right.

System thinking matters more. These tools interact with each other and your existing systems. Understanding those interactions is where value lives.

The Risks and Uncertainties

Now, I should be honest about what could derail these predictions.

Regulation

If governments move aggressively on AI regulation, adoption could slow. Not stop, but slow. Paradoxically, regulation might also push the industry toward better privacy and safety practices, and that could accelerate horizontal integration, because enterprises demand those practices before letting AI into sensitive workflows.

Capability Plateaus

Maybe we're hitting fundamental limits on what next-token prediction can achieve. Maybe we're not, and the next breakthroughs are around the corner. This uncertainty makes 2026 predictions inherently fuzzy.

Market Consolidation

We might not get the diversified ecosystem the predictions assume. A single platform might dominate horizontal integration. That changes how accessible these tools are.

Security and Reliability

For agents to become standard, they need to be reliable. If we ship agents that break critical workflows, adoption stalls. This is the biggest dependency: execution quality.

Actual User Adoption

Fancy AI features don't matter if people don't use them. By 2026, we'll know which predictions stuck with real users and which were hype. My guess: agents and horizontal integration stick. Real-time reasoning becomes niche. Personalization mostly works but privacy concerns limit it.

Projected data suggests that by 2026, AI models with real-time reasoning could improve accuracy on complex tasks by up to 35%. Estimated data.

How to Prepare for 2026 Right Now

If these predictions are right, what should you do today?

For Individuals

Start experimenting with multi-step workflows. Not just asking ChatGPT questions, but building sequences. Learn what breaks. Learn what works. Build intuition for where AI is genuinely useful versus where it's solving imaginary problems.

Experiment with context. Feed AI large documents. See where it loses coherence. Get a feel for the limits.
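One way to run that experiment systematically is to plant a known fact at different depths in a long document and check whether the answer comes back. The sketch below is a rough harness under stated assumptions: `ask_model` is whatever function you write to call your model of choice, and `forgetful_model` is a fake stand-in (it only "sees" the last 2,000 characters) so the example runs without any API.

```python
from typing import Callable


def build_document(filler: str, needle: str, total_sentences: int, depth: float) -> str:
    """Place `needle` at a relative depth (0.0 = start, 1.0 = end) in filler text."""
    sentences = [filler] * total_sentences
    sentences.insert(int(depth * total_sentences), needle)
    return " ".join(sentences)


def probe_depths(
    ask_model: Callable[[str], str],
    needle: str,
    answer: str,
    question: str,
    depths: list[float],
    total_sentences: int = 200,
) -> dict[float, bool]:
    """Return, for each depth, whether the model's reply contained the answer."""
    results = {}
    for depth in depths:
        document = build_document(
            "The sky was grey that day.", needle, total_sentences, depth
        )
        reply = ask_model(document + "\n\n" + question)
        results[depth] = answer.lower() in reply.lower()
    return results


def forgetful_model(prompt: str) -> str:
    """Fake model for the demo: it only reads the last 2,000 characters."""
    visible = prompt[-2000:]
    return "The code is 7431." if "7431" in visible else "I could not find it."


report = probe_depths(
    forgetful_model,
    needle="The vault code is 7431.",
    answer="7431",
    question="What is the vault code?",
    depths=[0.0, 0.5, 1.0],
)
print(report)
```

With the fake model, the fact is only recovered when it sits near the end of the document. Replace `forgetful_model` with a real call and you get a crude picture of where your model starts losing the thread.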

Think about personalization. What would an AI need to know about you to be useful? Not just surface-level preferences, but deep stuff about your constraints, goals, and taste. Start documenting that. Some tools let you provide this; most don't yet. But 2026 is coming.

For Teams

Audit your workflows. Find the repetitive, high-friction stuff. That's where agents will hit hardest first. Start planning how to integrate AI into those workflows.

Build AI literacy. Not everyone needs to be an AI expert. But everyone should understand what it can and can't do, how it breaks, and when it's worth using.

Think about your platform strategy. If AI becomes embedded in tools you use, which tools matter most? What's your stack going to look like? Start moving toward platforms that already have AI integrated.

For Builders

If you're building products, embed AI early. Don't bolt it on later—it won't integrate cleanly. Think about where in the user's workflow AI naturally helps. Build there.

Focus on problems AI actually solves. Not "our tool has AI" but "our tool saves you six hours a week." The AI is an implementation detail.

Think horizontally. Can your AI feature work inside other tools? Can it integrate with existing workflows? If not, you're fighting the trend.

The Competitive Landscape in 2026

Who wins and who loses?

Winners

Platforms with existing distribution: Microsoft, Google, Slack, Notion. They own the attention. They'll win at horizontal integration.

Specialized AI tools that solve specific problems really well: Coding assistants, design AI, research tools that outperform general-purpose models.

Infrastructure companies enabling agents: Zapier, Make, and similar orchestration platforms become more valuable as agents need to integrate with existing systems.

Productivity platforms that embrace AI agents: Tools like Runable that make it stupidly easy to automate multi-step workflows across presentations, documents, reports, and images gain serious traction.

Losers

General-purpose chat interfaces: Standalone ChatGPT competitors struggle as AI embeds everywhere.

Tools that ignore AI: They lose to competitors that integrate it, period. No differentiation.

Companies that sell AI as the product instead of AI as the feature: People don't want "AI tools." They want tools that work better and faster. If your pitch is "AI," you're doing it wrong.

What 2026 Means for the AI Industry Itself

These predictions also reshape what AI companies do.

The Model-Building Shift

By 2026, the focus moves from "make a better foundation model" to "make better tools on top of foundation models."

This shifts competition. It's no longer just about parameter count or benchmark scores. It's about integration, reliability, specific use cases, and user experience.

Smaller companies can compete. A team of 20 people building a specialized coding AI can outperform the big foundational model labs if they optimize for developers specifically.

API-First Becomes Standard

Most AI is accessed through APIs by 2026. You mostly don't run models locally. You call APIs. This centralizes power with companies that control the APIs but also lets anyone build on top.

Data Becomes the Moat

With foundation models commoditizing, the differentiation shifts to data. Companies with rich, specific, proprietary training data win. This means enterprise AI solutions thrive because they can fine-tune on company-specific data.

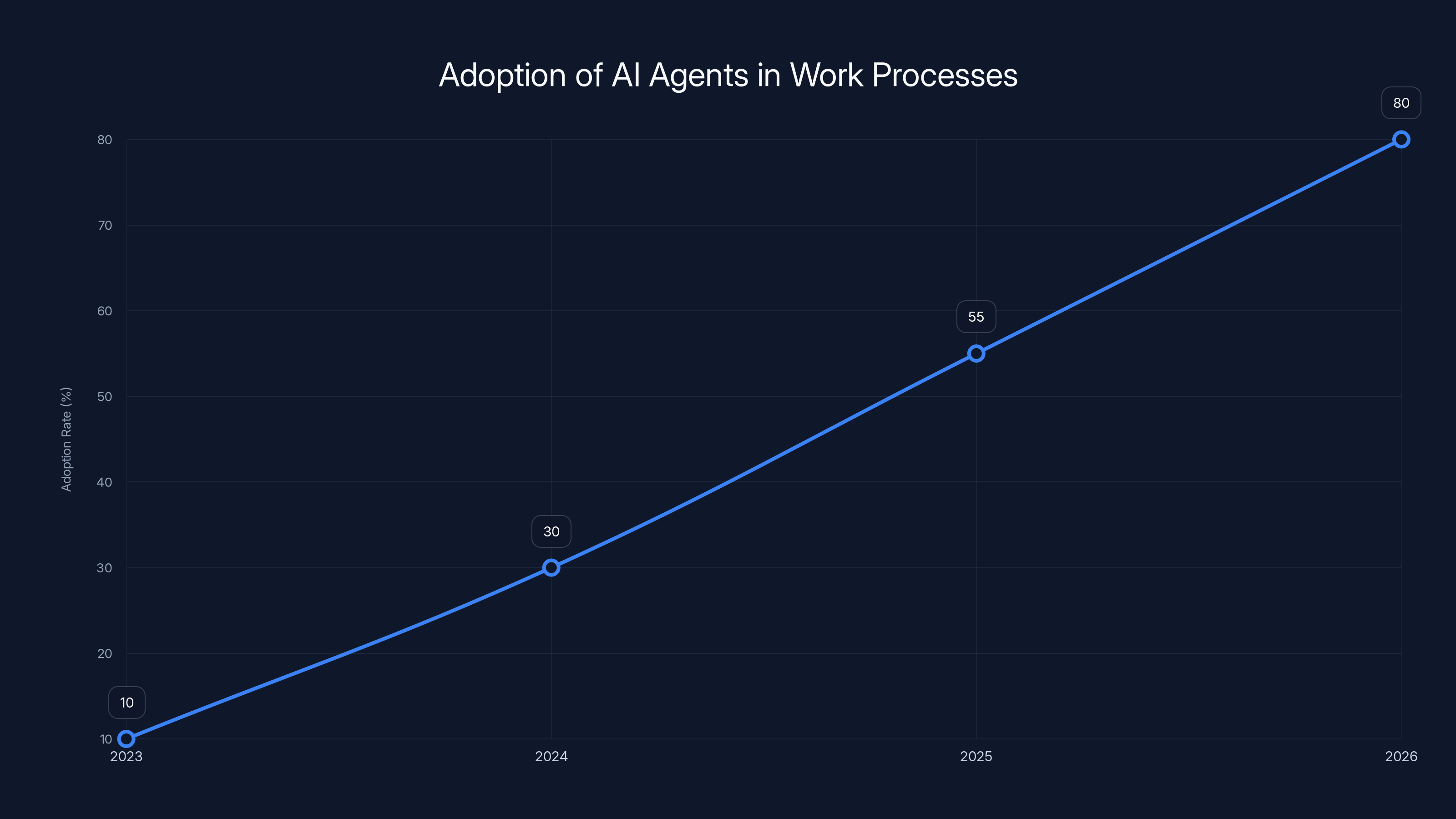

The adoption of AI agents in work processes is projected to increase significantly, reaching 80% by 2026. Estimated data.

The Long-Term Trajectory Beyond 2026

These five predictions are steps on a longer staircase.

By 2027-2028, we'll probably see:

- Agentic AI handling 40-50% of knowledge worker tasks fully autonomously

- Context windows becoming almost meaningless (models might "remember" entire project histories)

- Reasoning becoming invisible (it's just part of every response)

- Personalization so specific that generic AI seems quaint

- "AI tools" as a category becoming obsolete—it's just tools

By 2030, the question shifts from "can AI do this?" to "why would a human do this manually?"

But 2026? That's the inflection point. The year AI stops being novel and becomes infrastructure. The year it stops being a separate thing you launch and becomes part of how work works.

Key Uncertainties and What Could Change

I should note where I'm most uncertain.

Real-time reasoning adoption: This could be slow. Most people work on problems where "good enough now" beats "perfect in 5 seconds." Reasoning might stay niche for years.

Personalization viability: Privacy concerns could wall this off. We might end up with local models for personalization while cloud APIs remain generic. That's actually fine—probably better.

Agent reliability: If agents can't reliably execute complex workflows without human supervision, adoption won't happen the way I'm predicting. We might stay in a "supervising agents" phase for longer.

Horizontal integration resistance: Some users might hate AI everywhere. They might value simplicity and transparency over convenience. If that movement gains traction, horizontal integration could stall.

Economic factors: If recession hits hard, companies cut AI spending. This delays adoption but probably doesn't change the direction.

My confidence in agents becoming standard is high (80%+). Confidence in horizontal integration is similarly high. Real-time reasoning? Medium confidence (60%). Personalization? Medium-high (70%). Everything else is dependent on execution and market conditions.

What You Should Watch For in 2026

These signals will tell you if these predictions are actually happening:

- Agent infrastructure going mainstream: If you see agent frameworks from OpenAI, Google, and Anthropic shipping with serious production support, Prediction 1 is materializing.

- Context degradation disappearing: If major models show consistent performance across their entire context window (no accuracy cliff), Prediction 2 is happening.

- Response latency increasing intentionally: If major AI companies add visible thinking time and market it as better, Prediction 3 is real.

- Personalization features shipping widely: If platforms start offering saved contexts, memory systems, and preference learning, Prediction 4 is taking off.

- Standalone AI chat declining: If ChatGPT's usage growth flattens or declines while embedded AI in other tools grows, Prediction 5 is materializing.

Watch for these. If you see three or more of these signals by Q3 2026, the predictions are tracking well.

The Practical Bottom Line

If you read nothing else, remember this:

AI in 2026 becomes invisible. It stops being a tool you launch and becomes infrastructure in the tools you already use. The organizations that adapt first—that integrate AI into their workflows early—will have significant competitive advantages.

For individuals, this means developing judgment about when AI is useful and building skill in extracting value from it. For teams, this means auditing your workflows now and planning integrations before they become mandatory. For builders, this means embedding AI early and thinking horizontally about distribution.

The pace of change is real. But 2026 isn't a surprise—the pieces are already visible. We're just connecting them and watching the integration accelerate.

FAQ

What is an AI agent?

An AI agent is a system that can break complex goals into steps, execute them automatically, check results, and adapt based on outcomes without constant human intervention. For example, an agent could handle creating a multi-step marketing campaign by researching competitors, drafting copy, generating images, scheduling posts, and analyzing performance—all without you touching the keyboard.

How does real-time reasoning improve AI responses?

Instead of generating the first plausible answer instantly, models that use real-time reasoning spend a few seconds thinking through the problem step-by-step before responding. This deliberation process significantly improves accuracy on complex tasks like debugging code, analyzing data, or strategic planning, though it trades off speed for quality.

Will AI replace knowledge workers by 2026?

No. By 2026, AI handles specific high-volume tasks very well—routine analysis, content creation, code generation, summarization. But it still needs human judgment for strategy, creativity, ethical decisions, and anything requiring real-world understanding. The realistic timeline for significant displacement is multiple years beyond 2026.

How does horizontal integration of AI change which tools I should use?

Instead of maintaining separate AI chat apps alongside your regular tools, horizontal integration means the AI you need is built into your email, documents, spreadsheets, and project management software. This reduces app-switching friction and context loss. By 2026, you should prioritize platforms that already integrate AI rather than trying to manage separate specialized AI tools.

What's the trade-off between personalization and privacy?

Personalization requires storing information about your preferences, history, and constraints. This creates privacy risks because that data could be accessed improperly. By 2026, this should be mitigated through better encryption, local processing for sensitive data, and user controls over what gets stored. The goal is personalization without exposing your information unnecessarily.

Should I invest in learning AI tools if they'll change so much by 2026?

Absolutely. The skills transfer. Learning how to work effectively with AI, understanding its limitations, and developing judgment about when it helps: these skills apply regardless of the specific tool. Plus, the fundamentals of AI (how context works, what makes prompts effective, where it breaks) aren't changing in 2026. You're building solid foundations.

How do I prepare my team for these AI changes?

Start by identifying repetitive workflows where AI could help. Run experiments with existing tools. Build understanding of what AI can realistically do in your domain. Evaluate platforms that are already integrating AI rather than waiting for tools to catch up. Most importantly, start now—teams that pilot these tools in 2025 will be far ahead when 2026 arrives and integration accelerates.

Will smaller companies be able to compete in an AI-first world?

Yes, actually better than in previous tech transitions. Because AI is becoming infrastructure available through APIs, smaller teams can build sophisticated products without needing massive resources. The barrier to entry for AI-powered products is lower than ever. The competitive advantage shifts from "can we afford to build AI?" to "can we solve a real problem and integrate it effectively?"

What happens to the companies that built the foundation models if AI becomes embedded everywhere?

They shift from selling models to selling AI as a service through APIs. OpenAI, Anthropic, and Google will make more money from APIs consumed by platforms and enterprises than from direct users. Their competitive advantage remains in model quality and scaling, but it's invisible to end users.

How will AI regulation affect these predictions?

Regulation could accelerate or slow adoption depending on how it's designed. Heavy compliance requirements might actually favor horizontal integration with major platforms (because they can afford compliance teams) over smaller tools. Privacy-focused regulation would slow some aspects of personalization but push toward local processing and better security—which is good long-term. These predictions assume reasonable regulation, not existential restrictions.

What's the single most important thing I should do to prepare for 2026 AI changes?

Start using AI for real work now. Not experimenting—actually integrating it into your workflow. Get comfortable with when it fails, when it succeeds, and how to get the most value. This knowledge becomes your competitive advantage when AI adoption accelerates. Everyone will have access to the same tools in 2026. The difference will be who knows how to use them effectively.

Final Thoughts on 2026

The AI revolution isn't coming in 2026. It's already here. 2026 is just when it becomes undeniable. When the early adopters become the baseline. When AI stops being optional.

The companies and individuals who've been experimenting and building since 2023-2024 will have a massive head start. They'll have workflows optimized for AI integration, teams trained in AI-first approaches, and products that leverage these capabilities.

If you haven't started yet, now is the time. Not because 2026 is some magic date that changes everything. But because the trends visible today will be fully mature by then, and you want to be on the front edge of that wave, not trying to catch up after it's already landed.

The future is AI-augmented work. Less busywork, more actual thinking. Less repetition, more exploration. Less waiting for tools to catch up, more building what you actually need.

2026 doesn't invent that future. It just makes it normal.

Key Takeaways

- AI agents move from experimental to standard by 2026, handling multi-step workflows end-to-end without human intervention

- Context windows become genuinely useful through intelligent prioritization, not just size increases, solving real problems for developers and analysts

- Real-time reasoning becomes competitive as models spend seconds thinking through complex problems before responding, improving accuracy 30-40%

- Personalization finally works by 2026 as AI remembers your preferences, constraints, and past decisions instead of treating you generically each time

- Horizontal integration embeds AI into existing tools you use daily rather than requiring separate AI applications, fundamentally changing how knowledge work gets done

