The AI Slop Crisis: Why Your Music Streaming Service Is Broken

Last week, I opened YouTube Music and scrolled through what should've been a curated listening experience. Instead, I found myself staring at track after track of absolutely lifeless, corporate-sounding AI-generated music. Artist names I'd never heard of. Album covers that looked like they were generated in 0.3 seconds. Song titles that read like they were assembled by a marketing algorithm with zero taste.

And here's the thing: I'm willing to bet you've heard some of this stuff too. You just didn't realize it was AI-generated.

This isn't theoretical anymore. YouTube Music—one of the biggest music streaming platforms in the world—has become a dumping ground for what industry insiders are calling "AI slop". Not music made with AI as a creative tool. Not artists experimenting with generative technology. This is something far more cynical: thousands of tracks designed explicitly to game the algorithm, feed the recommendation engine, and extract ad revenue without ever being listened to by a human being.

The problem is so pervasive that actual musicians, listeners, and even industry veterans are losing their minds over it. And as someone who's spent years in music production, I understand exactly why. This isn't just about artistic integrity anymore. It's about the infrastructure of music discovery itself breaking down.

Let me walk you through what's actually happening, why it matters, and what needs to change.

What Exactly Is AI Slop in Music?

First, let's define this clearly because the term "AI slop" gets thrown around a lot, and people mean different things by it.

AI slop isn't every song made with AI assistance. It's not AI music generation tools used creatively. It's not an artist using AI audio editing software to speed up their workflow. Those things exist on a spectrum, and reasonable people can disagree about where the line is.

No, AI slop is something more specific and more insidious. It's music that's generated with zero creative intent, uploaded in bulk, and designed specifically to exploit platform mechanics. It's the digital equivalent of spam emails—except it's taking up valuable real estate on music streaming services.

Here's what makes it slop:

It's generated in bulk. We're talking thousands of tracks, sometimes tens of thousands, created by a single actor or small group using generative AI tools. The speed of creation is the entire point. You can't create that volume of music quickly if you're actually making something thoughtful.

It's algorithmically optimized, not artistically optimized. These tracks are titled, tagged, and uploaded specifically to trigger the algorithm's recommendation engine. Genre tags are stuffed with irrelevant keywords. Metadata is engineered to maximize placement in playlists. None of this serves the listener—it only serves the revenue mechanism.

It's intentionally forgettable. The music itself is typically generic, mood-based background material: lo-fi hip-hop beats, ambient soundscapes, that kind of thing. It's designed to be inoffensive enough that people don't skip it, but not so engaging that they actually care about it. It's background noise masquerading as music.

The entire purpose is extracting revenue. Most of these tracks exist for one reason: to generate streaming revenue through the platform's payment system. Since streaming services pay fractions of a cent per stream, the math actually works if you can generate enough fake or semi-fake engagement. Bots stream the tracks. The algorithm promotes them. Real listeners hear them in playlists they never asked for. Money flows to accounts created specifically to harvest it.

This is fundamentally different from an artist using AI as a tool within their creative process. It's spam, dressed up as music, infecting the very platforms meant to help artists reach listeners.

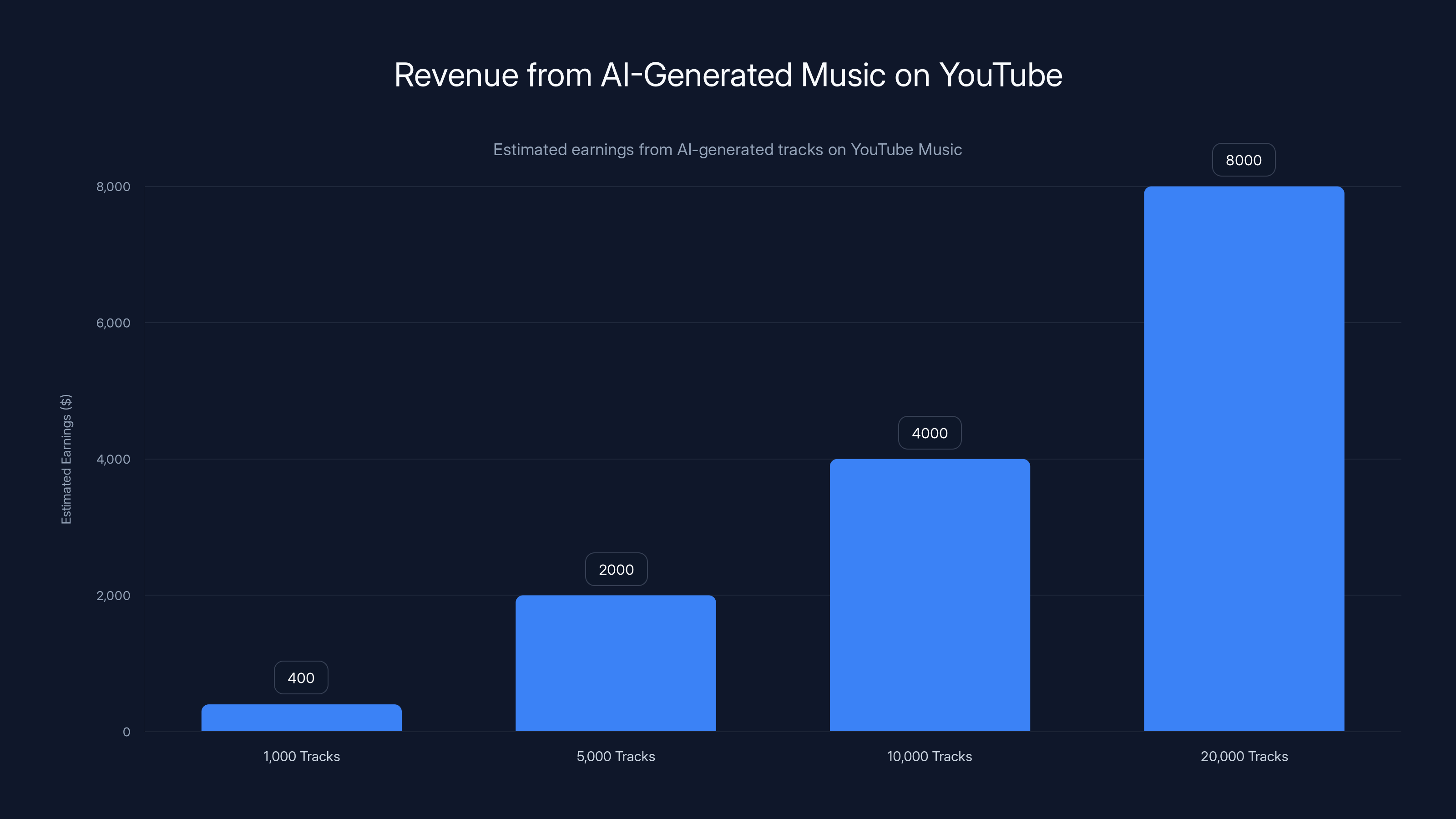

Uploading 10,000 AI-generated tracks can yield approximately $4,000, highlighting how volume can drive revenue despite low per-stream payouts. Estimated data.

How Did YouTube Music Become an AI Slop Landfill?

YouTube Music didn't wake up one day and decide to become the dumping ground for AI-generated garbage. This happened gradually, the way most platform problems do: through a combination of misaligned incentives, scaling challenges, and insufficient moderation.

Let's trace the incentive chain. YouTube Music uses a payment model where rights holders earn money based on streams. It's not a huge amount—we're talking somewhere in the range of

If you upload 10,000 tracks and each one averages just 100 streams from a combination of bot activity and algorithmic promotion, that's a million streams. At

Scale that further. If you create a dozen AI slop "artists" or split uploads across multiple accounts, suddenly you're talking about meaningful revenue. Not wealthy-person money, but enough to incentivize doing it. Especially if you're in a country where that income is substantial relative to local wages.
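To make the arithmetic in this section concrete, here's a rough sketch. The track and stream counts come from the example above. The per-stream rate is my assumption: it's simply what you get if you divide the roughly $4,000 estimate by a million streams. It is not an official payout figure, and the function name is just for illustration.

```python
# Back-of-the-envelope slop revenue, using the example numbers from this section.
# ASSUMPTION: the rate is inferred from the ~$4,000-per-million-streams estimate,
# not taken from any platform's published payout data.
ASSUMED_RATE_PER_STREAM = 0.004  # dollars, hypothetical


def estimated_revenue(tracks: int, streams_per_track: int, rate: float) -> float:
    """Total payout = number of tracks x average streams per track x rate."""
    return tracks * streams_per_track * rate


# One account: 10,000 tracks averaging 100 streams each (one million streams).
single_account = estimated_revenue(10_000, 100, ASSUMED_RATE_PER_STREAM)

# "Scale that further": a dozen fake artists doing the same thing.
dozen_accounts = 12 * single_account

print(f"one account:     ${single_account:,.0f}")
print(f"twelve accounts: ${dozen_accounts:,.0f}")
```

Swap in a different rate and the totals change, but the shape of the incentive doesn't: revenue scales linearly with how much you can upload.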

The problem is compounded by YouTube's recommendation algorithm. The algorithm is trained to maximize watch time and engagement. It doesn't care if that engagement is genuine. If a track gets decent streaming numbers, the algorithm assumes listeners like it and starts recommending it to similar listeners. This creates a feedback loop where AI slop actually gets promoted to real users, who might stream a few seconds before skipping.

For real artists, this is catastrophic. Your album gets buried under thousands of generically titled "Chill Lo-Fi Study Beats Vol. 437" tracks. Discovery becomes impossible. Getting on playlists becomes harder because curators are sifting through noise. The signal-to-noise ratio on music discovery has degraded to the point where it's barely functional.

YouTube's moderation infrastructure simply wasn't built to handle this scale of abuse. The platform has content moderators for video, but audio at scale is a different challenge. You can't reasonably listen to every track uploaded to the platform. Detection is mostly automated, which means AI slop creators are constantly trying to find ways around the automated filters.

Meanwhile, YouTube's approach has been relatively hands-off. The company hasn't taken aggressive measures to prevent bulk uploads or identify and remove AI-generated slop in volume. This isn't necessarily malicious—it might just be resource constraints. But the result is the same: the platform has become increasingly unusable for discovering actual music.

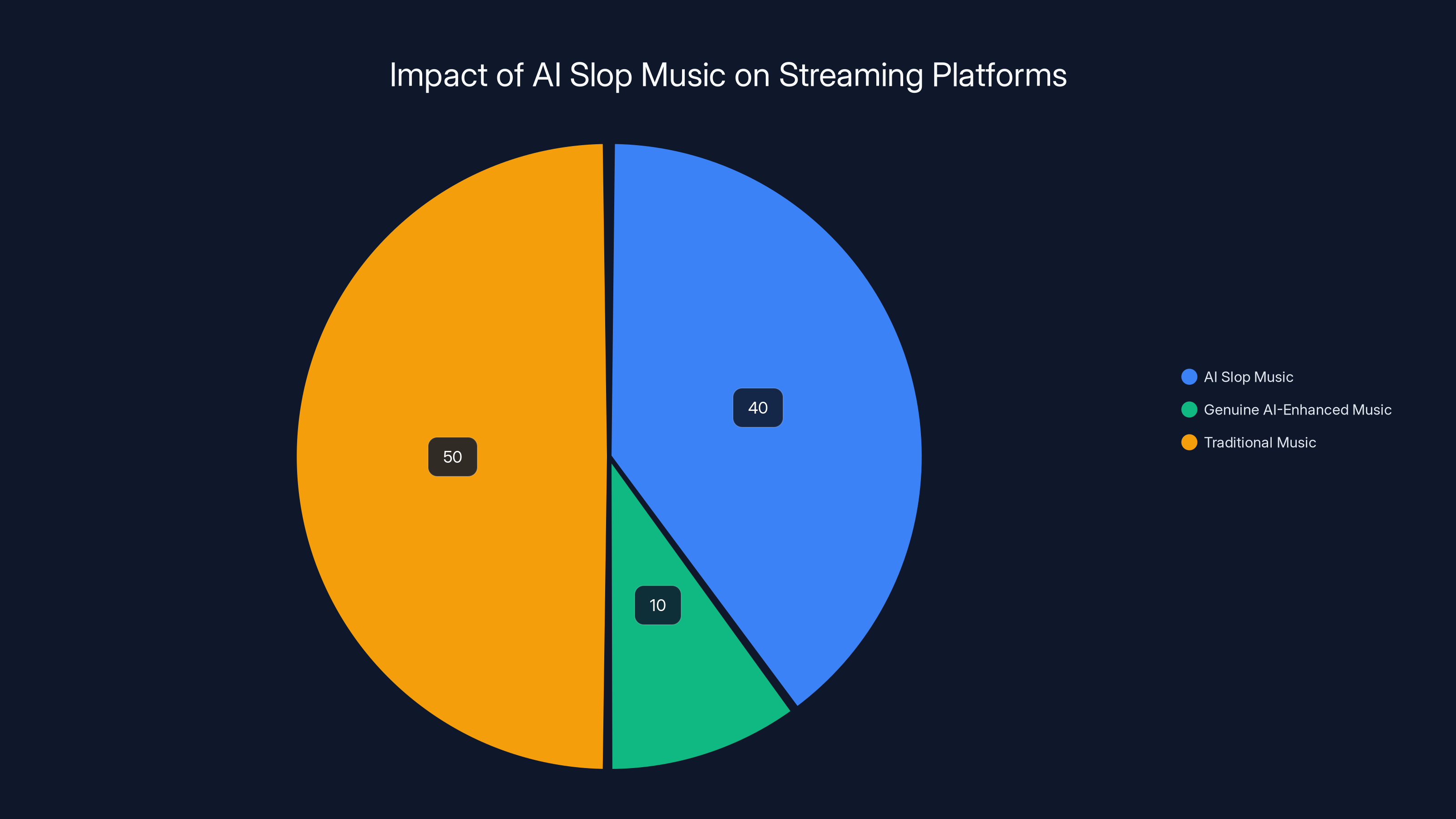

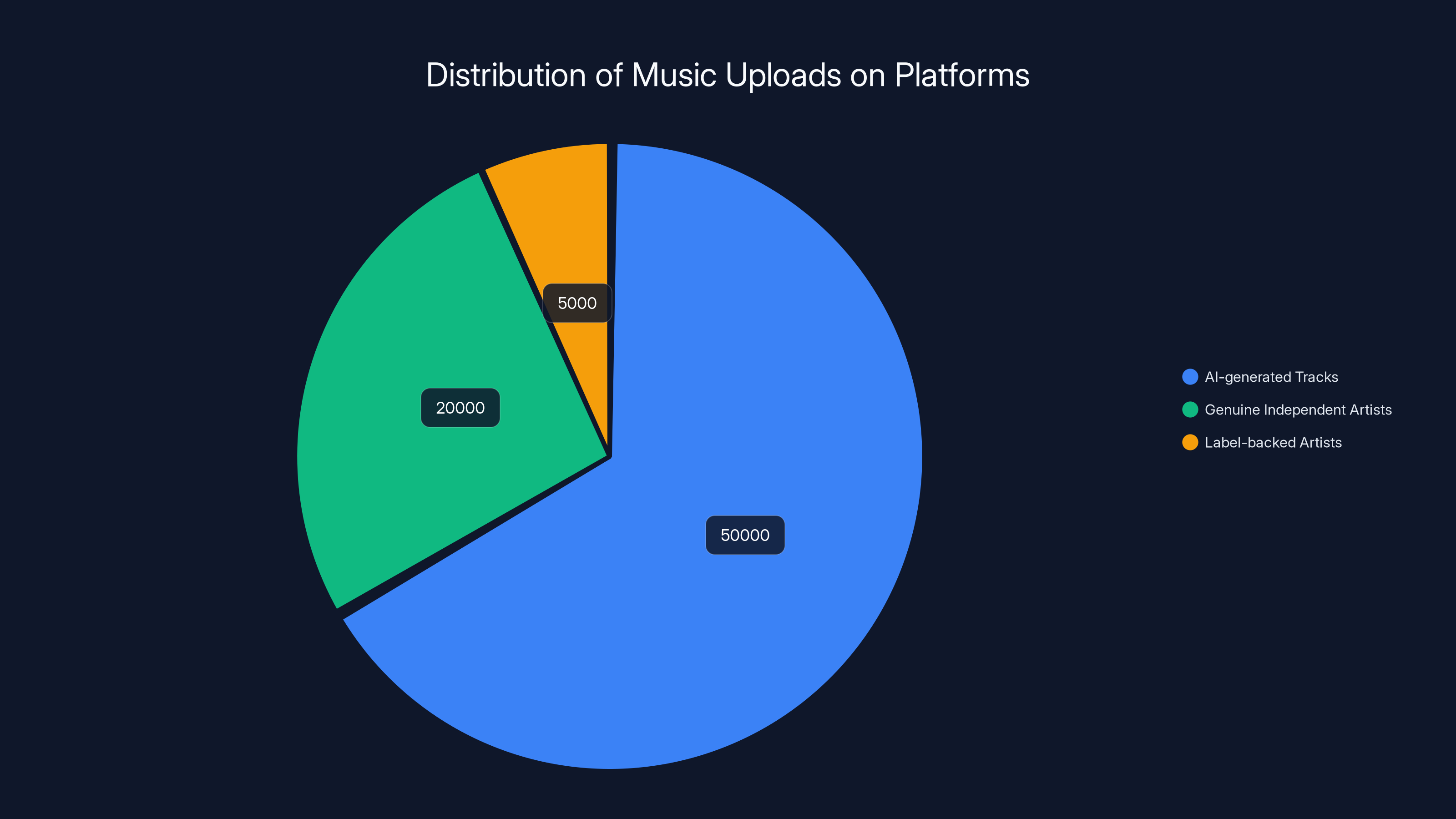

Estimated data suggests AI slop music constitutes a significant portion of streaming content, potentially overshadowing genuine music.

The Streaming Economy Is Broken

Here's the uncomfortable truth: AI slop is a symptom of a system that's fundamentally broken. And that broken system existed long before AI-generated music became an issue.

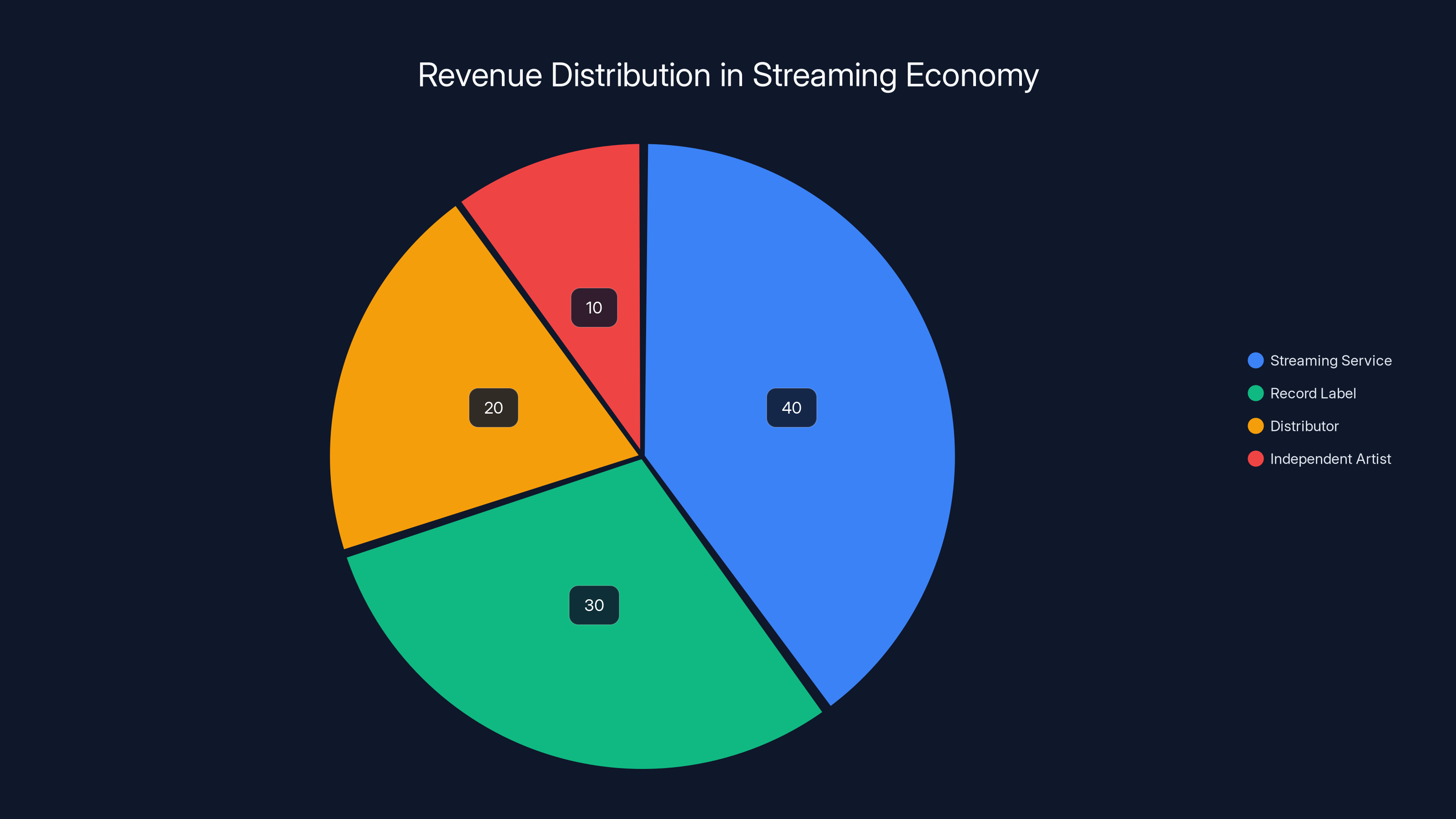

The streaming economy has never been fair to artists. Streaming services pay out an average of

Add onto this the fact that streaming services take a cut, then labels take a cut, then distributors take a cut. By the time money reaches an independent artist, they're looking at pennies per thousand streams.

This is why AI slop exists. The system created the incentive structure that makes it profitable. If streaming services paid artists fairly, AI slop wouldn't be economically viable. If artist discovery actually worked, slop wouldn't need to game the algorithm. If moderation was robust, slop couldn't survive at scale.

Instead, we have a situation where the economics make sense for someone to automate music generation, upload it in bulk, and let the algorithm do the rest. The platform benefits from increased content volume and watch time. The AI slop creator benefits from the revenue stream. Everyone else—real artists, real listeners—loses.

This is what happens when you align economic incentives around volume rather than quality.

The Impact on Real Musicians

If you're an independent musician, AI slop isn't just annoying—it's actively destroying your ability to be discovered.

Let me paint a scenario. You're a solo artist. You've spent six months writing, recording, and producing an album. You've paid for studio time or invested in home recording equipment. You've mastered the tracks. You've designed cover art. You've uploaded to DistroKid, TuneCore, or CD Baby to get your music onto all the major platforms.

You release the album. It's genuinely good. But so is the new album from the person two towns over. So is the album from the artist on the other side of the country. And there are thousands of other genuine artists releasing music at the exact same time.

But alongside all of that, there are also 50,000 AI-generated slop tracks uploaded that day. Many of them have better algorithmic optimization. They're titled with trending keywords. They're tagged with genres they don't belong to, stretching across every conceivable listener type. They're technically in the queue, taking up space.

Playlist curators are overwhelmed. They can't possibly listen to everything that gets uploaded to find genuine talent. So they either rely on algorithm-driven recommendations (which are now poisoned with slop) or they work with labels and distributors they already know (which typically means they're not discovering new independent artists).

The result: your album gets lost. Not because it's bad, but because there's too much noise. The platform's discovery mechanisms have degraded to the point where they're unreliable.

This is where the actual harm happens. It's not that AI slop is aesthetically bad (though it usually is). It's that AI slop is breaking the infrastructure that allows real artists to reach audiences.

I know musicians personally who've given up trying to build careers through streaming. The odds feel impossible. The time investment required to game the algorithm or build an audience seems insurmountable when you're competing with both legitimate artists and thousands of spam tracks. Some of them have shifted to live performances. Some have quit music entirely. All of them are frustrated with the platforms they invested in.

Estimated data shows that independent artists receive only about 10% of the revenue per million streams, highlighting the disparity in the streaming economy.

What The Data Says About AI Music Slop

Let's look at what actually happens at scale. The evidence is pretty damning.

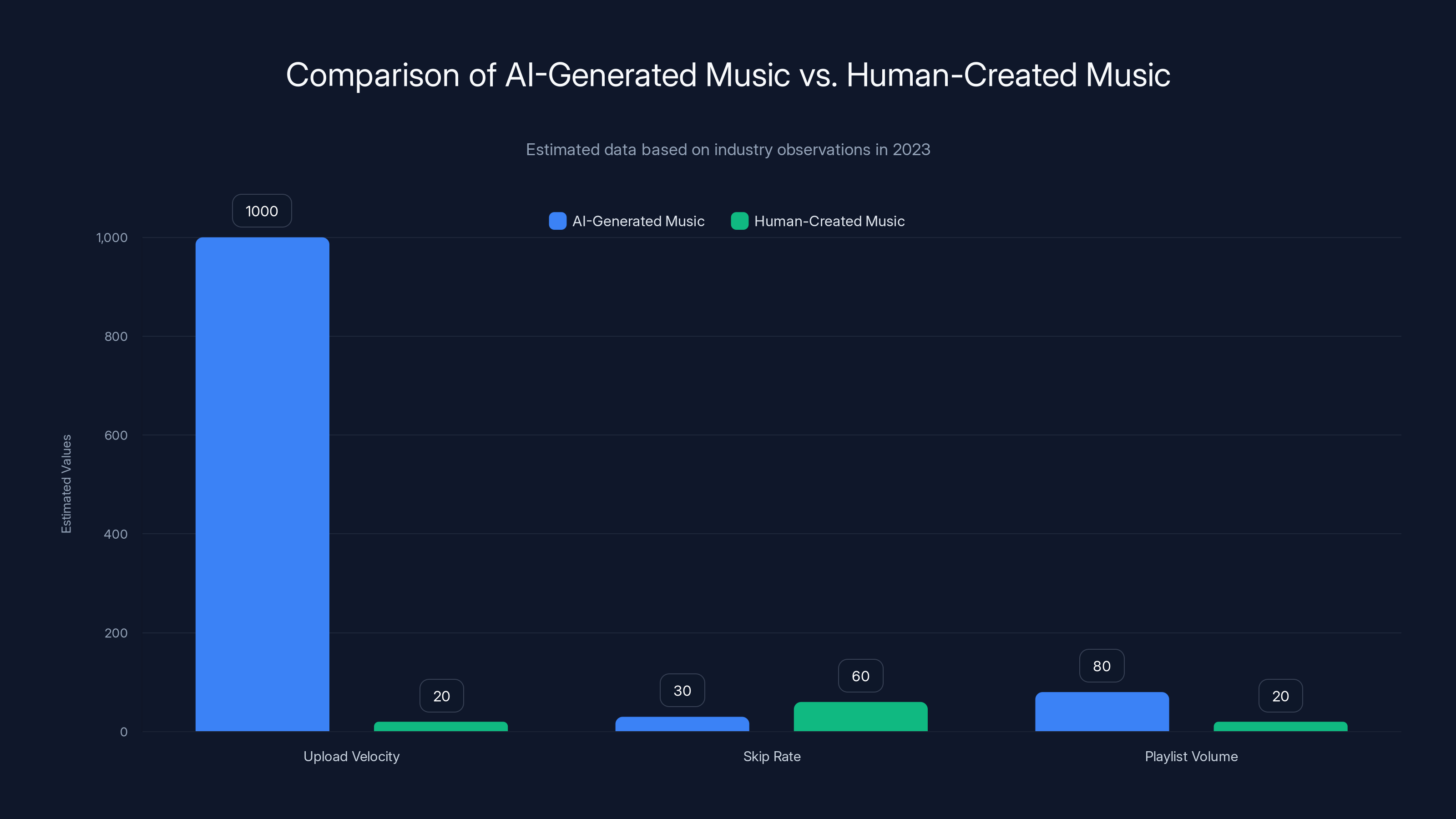

First, there's the upload velocity. Industry researchers tracking metadata from music distribution services have documented an exponential increase in track uploads since late 2023. But here's the interesting part: this increase doesn't correspond with an increase in artist registrations. Instead, existing accounts or networks of accounts are uploading dramatically more content.

In practical terms, this means individual creators or small groups are uploading hundreds or thousands of tracks per month. A legitimate musician might release 10-20 songs a year. Someone leveraging AI to generate slop might upload that many in a week.

Second, there's the engagement data. Research analyzing playlist inclusion patterns shows that AI-generated slop tracks tend to get lower average skip rates than human-created music, but higher bulk volume in algorithmic playlists. This is suspicious. Real listeners skip music they don't like. The fact that slop gets lower skip rates despite sounding generic suggests these tracks are being played in contexts where engagement isn't real: background mood playlists that autoplay, bots, and algorithmic fill-ins to meet playlist length requirements.

Third, there's the metadata analysis. When you look at how slop tracks are tagged and titled, the pattern is obvious. Keywords are stuffed in unusual ways. Genre combinations are bizarre and technically incorrect. Release schedules show clear batch processing rather than the typical artist release calendar. These aren't the fingerprints of creative work. They're the fingerprints of algorithmic optimization.
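Two of those fingerprints, batch release schedules and stuffed genre tags, are simple enough to check mechanically. Here's a minimal sketch of what that might look like. The function names and both thresholds are hypothetical, picked for illustration, and not anything a platform has published.

```python
from collections import Counter
from datetime import date


def batch_upload_days(release_dates, max_per_day=5):
    """Return the days on which more than max_per_day tracks were released."""
    counts = Counter(release_dates)
    return sorted(day for day, n in counts.items() if n > max_per_day)


def has_stuffed_tags(tags, max_tags=6):
    """Flag a track whose number of distinct genre tags exceeds max_tags."""
    return len({t.lower() for t in tags}) > max_tags


# Made-up example data: 40 tracks dumped on one day, 2 released on another.
releases = [date(2025, 1, 3)] * 40 + [date(2025, 2, 14)] * 2
stuffed = ["lo-fi", "jazz", "metal", "k-pop", "ambient", "country", "trap", "classical"]

print(batch_upload_days(releases))
print(has_stuffed_tags(stuffed))
print(has_stuffed_tags(["lo-fi", "chill"]))
```

A prolific human artist could trip either check on a busy release day, so I'd treat these as signals for a closer look, not proof.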

Fourth, there's the creator behavior. Slop creators operate at scale. They use distribution networks that allow bulk uploading. They monitor streaming numbers across accounts. They have automated workflows for creating, uploading, and promoting content. A real artist might track their streaming numbers. A slop creator has dashboards monitoring thousands of tracks across dozens of accounts.

All of this points to the same conclusion: AI slop is a systematic, industrialized problem. It's not a few bad actors making weird music. It's an entire supply chain optimized to extract revenue from the streaming economy.

Platform Responses and Why They're Failing

To be fair, platforms are responding. But the responses are mostly inadequate.

Spotify has been more aggressive than most. The company has implemented policies requiring artists to verify their identity before distributing music. They've cracked down on artificial streaming tactics. They've removed tracks and accounts engaged in clear manipulation.

But here's the problem: these measures are reactive. Spotify catches violations after they happen, not before. And the scale of the problem is so large that even when you remove thousands of slop tracks, more are already being created and uploaded. It's a game of whack-a-mole that Spotify can't win because the economic incentive structure is still in place.

YouTube has been even more passive. The platform has content moderation tools designed for video, but music at scale is a different challenge. YouTube Music reportedly has less robust moderation than Spotify, and the platform has been slower to act on AI slop specifically.

Some platforms are experimenting with AI-detection tools. The idea is to automatically identify AI-generated music and either flag it or remove it. But here's the catch: AI detection is imperfect. You can't reliably distinguish between a song made with AI assistance and a song made entirely by AI. You also can't reliably distinguish between legitimate AI music generation (which should be allowed) and slop (which shouldn't). The technology simply isn't there yet.

Meanwhile, the slop creators are using more sophisticated techniques. They're adding slight variations to generated music to evade detection. They're mixing AI-generated elements with real instruments to make detection harder. They're learning from removed content to create material that's harder to identify.

It's an arms race, and the platforms are losing.

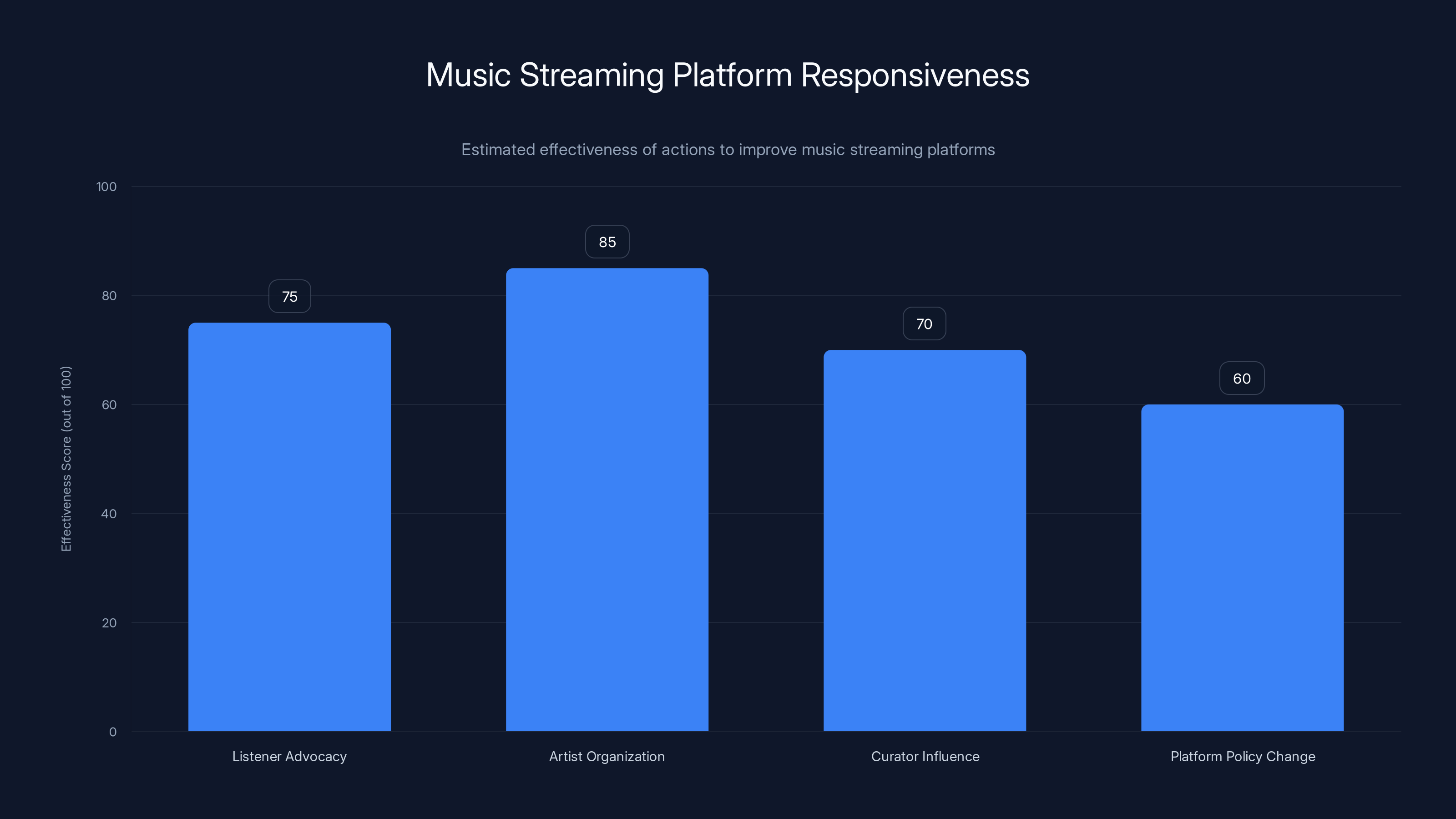

Listener advocacy and artist organization are estimated to be the most effective actions in driving positive changes in music streaming platforms. (Estimated data)

The Copyright and Attribution Nightmare

There's another dimension to this problem that doesn't get discussed enough: copyright and attribution.

When you generate music with AI, that music has to come from somewhere. Generative AI is trained on existing music. The models learn from millions of recordings. While the output is technically new, it's derived from patterns learned from copyrighted works.

Here's what happens in practice. An AI slop creator uses a tool like Amper Music or similar services to generate tracks. These tools are trained on massive music databases. The generated output might not be a direct copy of any single song, but it's derived from music that's owned and copyrighted.

The legal question becomes: who owns the copyright to AI-generated music? Is it the tool creator? The user? The artists whose music was used to train the model? Current legal frameworks don't have clear answers, and companies aren't providing clarity.

Meanwhile, there's a secondary issue: misattribution. Slop creators upload music under generic artist names or fake names. They might use existing artist names, creating confusion. If you search for "John Smith" on Spotify, you might find both the real John Smith and five fake John Smiths uploading slop. Real listeners get confused. Real artists see their names diluted and their discoverability harmed.

And then there's the sampling issue. Some AI generation tools can create music that sounds suspiciously similar to specific existing songs. While the AI isn't technically copying, it's producing something that's derivative to the point of copyright infringement. The legal gray area here is enormous, and nobody's really grappling with it.

This is where stronger regulation could help. If platforms required clear attribution of AI-generated content and were legally liable for copyright violations in their AI-generated music, they'd have incentive to moderate more aggressively. Right now, they operate in a gray zone where they're not sure what their responsibilities are.

Why Listeners Should Care (Even If They Don't Listen to These Songs)

Maybe you're thinking: "I don't listen to AI slop, so why should I care?"

Here's why you should care, even if you never intentionally listen to this stuff.

First, the recommendation algorithm is degraded. When a platform's algorithm is trained on bad data (slop tracks mixed in with real music), it makes worse recommendations for everyone. Your personalized playlists get worse. The "recommended for you" suggestions get worse. Discovery playlists get worse. This is a negative externality that affects all users.

Second, the platform's resources are diverted. Money that could go to paying artists goes to moderation and fraud prevention instead. Content moderation teams are overwhelmed handling slop uploads, which means they have fewer resources for actual harmful content. This is particularly true for smaller platforms and for markets where English isn't the primary language.

Third, the creative economy suffers. When the economics are broken such that spam is more profitable than genuine creation, you get less genuine creation. Talented musicians don't pursue careers. Artists who might've spent time creating music spend time instead trying to game the algorithm. The entire industry becomes less vibrant and less innovative.

Fourth, there's the precedent-setting concern. If AI slop becomes accepted and normalized, it changes the baseline for what's acceptable on platforms. Then the next level of increasingly cynical content becomes the new normal. Before you know it, the distinction between intentional, creative work and spam has collapsed entirely.

Fifth, if you care about AI development, this matters. AI music generation isn't inherently bad. It has legitimate creative applications. But when the primary use case for a technology is spam, it poisons public perception of that technology. It makes people defensive about AI generally, which actually slows down development of beneficial applications.

In other words, AI slop isn't just a problem for musicians. It's a problem for the entire ecosystem.

Estimated data shows AI-generated tracks dominate music uploads, overshadowing genuine independent artists and label-backed artists.

What Real AI Music Creation Looks Like (And Why It's Different)

Here's where I want to be careful with nuance. AI music generation technology isn't inherently bad. There are legitimate, creative uses for these tools.

I know producers who use LANDR's AI mastering to improve their mixes. I know creators who use Splice's AI-powered sample suggestions to find sounds they wouldn't have discovered otherwise. I know YouTube creators who use AI tools to generate background music quickly for videos. These applications make sense. They're using AI as a productivity tool within a creative process.

There's also experimental music being made with generative AI as a primary tool. Artists like Holly Herndon and David Cope have been exploring AI-assisted music creation for years, and they're producing genuinely interesting work. It's not mainstream, but it exists and it's worth listening to.

The difference between these applications and slop is intentionality and finality. When a musician uses AI, they're typically using it as a step in a creative process. The AI output is the starting point, not the end product. The artist refines it, adds to it, modifies it, combines it with other elements. They take responsibility for the final product.

AI slop, by contrast, treats the AI output as final. There's no refinement. There's no artistic decision-making beyond the initial parameters fed into the AI. The output is uploaded as-is because the entire point is volume, not quality.

This is an important distinction that often gets lost in the conversation. The problem isn't AI in music. The problem is industrial-scale automated spam disguised as music.

The Solutions Aren't Simple, But They're Necessary

So what actually fixes this? There's no single solution, but several approaches working together could make a real difference.

Stronger platform responsibility. Platforms need to take AI slop more seriously. This means investment in detection tools, human moderation, and policies that explicitly prohibit bulk AI-generated uploads designed to game the algorithm. It means treating this like the fraud problem it actually is, not a minor content moderation issue.

Better artist verification. Spotify's identity verification is a start, but it needs to go further. Platforms should verify that uploaders are actually the artists they claim to be. This doesn't prevent all slop, but it does eliminate some of the anonymous bulk upload vectors.

Algorithmic changes. Recommendation algorithms could be modified to detect and downrank suspicious patterns. If a new artist suddenly has thousands of tracks, that's a red flag. If engagement patterns look bot-like, that's a red flag. If metadata looks algorithmically optimized rather than naturally created, that's a red flag. The algorithm can't be fooled perfectly, but it can be made harder to exploit.
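As a rough illustration of how those three red flags could feed into ranking, here's a minimal sketch. Everything in it is an assumption on my part: the field names, the thresholds, and the way flags shrink the ranking weight. It shows the idea, not how any real recommendation system works.

```python
from dataclasses import dataclass


# All field names and thresholds are hypothetical, chosen for illustration only.
@dataclass
class ArtistStats:
    account_age_days: int
    track_count: int
    streams: int
    unique_listeners: int
    avg_genre_tags: float


def red_flags(a: ArtistStats) -> list[str]:
    """Collect the red flags described above for one artist account."""
    flags = []
    if a.account_age_days < 90 and a.track_count > 1000:
        flags.append("new artist with thousands of tracks")
    # Very many streams from very few listeners looks automated.
    if a.unique_listeners > 0 and a.streams / a.unique_listeners > 100:
        flags.append("bot-like engagement")
    if a.avg_genre_tags > 8:
        flags.append("over-optimized metadata")
    return flags


def ranking_weight(a: ArtistStats) -> float:
    """Each flag shrinks the weight; an account with no flags keeps 1.0."""
    return 1.0 / (1 + len(red_flags(a)))


suspicious = ArtistStats(30, 5000, 200_000, 400, 12.0)
typical = ArtistStats(900, 24, 50_000, 20_000, 2.0)

print(red_flags(suspicious), ranking_weight(suspicious))
print(red_flags(typical), ranking_weight(typical))
```

Downranking instead of deleting is a deliberate choice in this sketch: a false positive costs a real artist some visibility, not their whole catalog.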

Changing payment structures. If streaming services paid per-minute listened rather than per-stream, it would change the economics dramatically. It's harder to game minute-listening metrics with bot engagement. It's also fairer to artists because you don't get paid if someone skips after two seconds. This would reduce the incentive to create slop significantly.
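Here's a small sketch of why the payment model matters. It compares the same number of plays under a per-stream payout and a per-minute payout. Both rates are hypothetical, and the per-stream model counts every play regardless of length, which is the simplification the paragraph above makes.

```python
# Both rates are hypothetical, picked so that full listens pay roughly the same
# under either model. They are not real platform figures.
RATE_PER_STREAM = 0.004   # dollars per play
RATE_PER_MINUTE = 0.0013  # dollars per minute actually listened


def per_stream_payout(listen_seconds, rate=RATE_PER_STREAM):
    """Pay a flat amount per play, however long the play lasted."""
    return len(listen_seconds) * rate


def per_minute_payout(listen_seconds, rate=RATE_PER_MINUTE):
    """Pay in proportion to total listening time."""
    return sum(listen_seconds) / 60 * rate


skipped_plays = [2] * 1000   # 1,000 plays abandoned after two seconds
full_listens = [180] * 1000  # 1,000 plays of a three-minute track

for label, plays in [("skipped", skipped_plays), ("full", full_listens)]:
    print(label,
          f"per-stream ${per_stream_payout(plays):.2f}",
          f"per-minute ${per_minute_payout(plays):.2f}")
```

Under the per-stream model the two cases earn the same. Under the per-minute model the skipped plays earn almost nothing, which is the whole point.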

Copyright enforcement. Clearer copyright law around AI-generated music and stricter enforcement against copyright violation in generated content would help. If platforms were liable for copyright infringement in AI music, they'd moderate much more aggressively.

Industry standards. The music industry could establish standards for AI-generated music metadata. Clearly flagging AI-generated content isn't censorship. It's transparency. It lets listeners and curators make informed decisions. Some platforms are moving in this direction, but it needs to be industry-wide.

Artist support initiatives. This is maybe the most important one. If platforms genuinely invested in artist discovery and artist development (not through algorithms, but through human curation and support), it would make the ecosystem healthier. When real artists have visibility and real income potential, they're more likely to participate. When the barrier to entry is algorithmic gaming, only the people willing to game it bother.

AI-generated music shows significantly higher upload velocity and playlist volume but lower skip rates compared to human-created music. Estimated data suggests AI tracks are optimized for quantity and algorithmic playlist inclusion.

The Bigger Picture: AI, Authenticity, and Trust

This whole situation is actually a perfect case study in why AI governance matters.

Generative AI is incredibly useful technology. It can solve real problems. But when economic incentives and platform mechanics align to make spam the rational choice, the technology gets used for spam. This isn't because AI is inherently bad. It's because humans are optimizing for the wrong things.

The same dynamic will play out across industries. We'll see AI-generated news articles designed to trigger engagement. AI-generated social media comments designed to manipulate sentiment. AI-generated images designed to deceive. AI-generated video designed to spread misinformation.

The pattern is always the same: a technology emerges, platforms adopt it, incentives get misaligned, spam follows. The platforms scramble to respond, but they're always behind because the economic incentive is too strong.

This is why regulation matters. This is why platform responsibility matters. And this is why the design of incentive structures in technology platforms needs to be intentional, not accidental.

In the short term, the AI slop problem in music will get worse before it gets better. More music platforms will face the same issue. More sophisticated techniques will emerge. More harm will be done to real artists trying to build careers.

But long-term, something's got to give. Either platforms will be forced to take stronger action, or the entire discovery infrastructure will become so degraded that the model breaks. Neither outcome is great, but both are inevitable if nothing changes.

What Listeners Can Do

If you're frustrated with AI slop in your music streaming experience, here are concrete things you can do.

Report suspicious content. When you encounter obviously AI-generated slop, report it through the platform's reporting mechanism. Include details about why it seems suspicious. The more reports a creator accumulates, the more likely the platform is to take a closer look.

Support artists directly. Use Bandcamp, Patreon, or direct artist websites. These models pay artists significantly better than streaming services.

Engage with real discovery. Follow music publications, curators, and critics who do manual curation. Follow subreddits and communities around genres you care about. Seek out music intentionally rather than relying entirely on algorithmic recommendations.

Be skeptical. When you encounter a "new artist" with a suspiciously perfect mood-based name and no social media presence, be skeptical. Ask questions. Do a bit of research. This is the fastest way to train your ear to detect slop.

Make playlists. Curate your own playlists of artists you like. Share them. This helps real artists reach new listeners through genuine discovery rather than algorithmic manipulation.

Advocate for change. If you use YouTube Music, tell Google to take the problem seriously. If you use Spotify, tell Spotify. Platforms respond to user feedback when enough users care about an issue.

The Future of Music Streaming

Here's my honest take on where this goes.

The AI slop problem is going to force a reckoning with the entire streaming economics model. The current system was designed when streaming was seen as a convenience on top of traditional music sales. Now it's the primary way most people access music. The economics that made sense at small scale don't work at global scale.

Something's got to change. Either streaming services will have to radically change their payment models and quality controls, or we'll see a shift back toward other distribution models. Maybe that's more artists selling directly to listeners. Maybe it's more subscription services focused on quality curation rather than volume. Maybe it's blockchain-based models that cut out intermediaries.

What almost certainly won't happen is the status quo continuing. The status quo is broken. It's broken economically, broken algorithmically, broken in terms of discovery. AI slop is a symptom of that breakage.

The musicians I know who are successfully building careers in 2025 aren't relying on streaming platform algorithms. They're building communities. They're performing live. They're selling directly. They're being guests on podcasts. They're engaging with fans on social media. They're using every tool except algorithm gaming because algorithm gaming doesn't work anymore.

That's not a sustainable model for most musicians, especially early-career artists. But it might be the direction we're heading if platforms don't fundamentally change how they approach discovery and artist payment.

What Artists Need To Do Right Now

If you're a musician trying to build a career in this environment, here's the hard truth: you can't rely on streaming platforms alone anymore.

That said, you shouldn't ignore them either. You should be on Spotify, YouTube Music, Apple Music, and the rest. But those should be one part of a diversified approach.

Build your own community. Email list, Discord server, whatever. The algorithm can disappear or change overnight. Your email list is yours.

Prioritize live performance. This is where real connection happens and where you can actually make real income. Touring might seem expensive, but it's often more profitable than trying to build a streaming career.

Leverage YouTube directly. Upload your music as videos, not just audio. Build a YouTube following separate from YouTube Music. The platform dynamics are different and you have more control.

Consider Bandcamp as a primary distribution channel. Yes, use aggregators like DistroKid to get on all the platforms. But encourage fans to buy or stream on Bandcamp where you make more money.

Collaborate and cross-promote. Build relationships with other artists. These relationships matter more than algorithm placement.

Be intentional about your release strategy. Release less frequently, but make each release count. Build anticipation. Create context for your releases. This takes more effort than just uploading every week, but it works better.

Don't try to game the algorithm. This is a losing game against people and systems with more resources than you. Instead, focus on making music that resonates with people and building real relationships with those people.

I know this is harder advice than "post consistently and game the algorithm." But it's more realistic advice for where we actually are in 2025.

FAQ

What is AI slop music?

AI slop is music generated using artificial intelligence tools, created in bulk, and uploaded to streaming platforms specifically to exploit algorithmic recommendation systems and extract streaming revenue. Unlike music made with AI as a creative tool, AI slop treats the AI output as a finished product with zero artistic refinement, typically uses generic mood-based titles and algorithmic keyword stuffing, and is designed to maximize platform metrics rather than create genuine listening experiences. The entire purpose is revenue extraction through bot engagement and algorithmic placement.

How can I identify AI slop on YouTube Music and Spotify?

AI slop usually has several telltale signs: generic mood-based titles with unnecessary modifiers (like "Chill Lo-Fi Study Beats Vol. 437"), artist names with no social media presence or band history, album covers that look algorithmically generated or generic, metadata that seems stuffed with unrelated genre keywords, and release patterns showing hundreds of tracks from a single account. When you encounter a song with these characteristics, you can research the artist name, check if they have a real online presence, and report suspicious content through the platform's reporting mechanism.

Why is AI slop bad for real musicians?

AI slop directly damages real musicians' ability to be discovered by flooding streaming platforms with low-quality content that still triggers recommendation algorithms. This forces genuine artists to compete with thousands of algorithmically-optimized spam tracks for playlist inclusion and algorithmic placement. Real musicians also lose potential streaming revenue as their share of platform payments is distributed across millions of slop tracks that shouldn't exist, and the degraded recommendation algorithm makes it harder for listeners to find authentic music, reducing discoverability for all independent artists.

Is all AI-generated music the same as AI slop?

No. AI-generated music created as part of an intentional artistic process—where an artist uses AI tools to assist in their creative workflow, refines the output, and takes responsibility for the final product—is legitimate music creation. The problem isn't AI as a creative tool; it's industrial-scale automated spam where AI output is uploaded without refinement, human decision-making, or creative intent, purely for revenue extraction. Artists like Holly Herndon and production tools like LANDR demonstrate legitimate AI music applications.

What can streaming platforms do to stop AI slop?

Platforms can combine several strategies: stronger artist identity verification, detection tools that flag bulk uploads and suspicious engagement patterns, ranking changes that downrank flagged content, changes to payment structures (like paying per minute listened instead of per stream), copyright enforcement against infringing AI-generated content, and investment in human curation and artist support rather than relying entirely on algorithm-driven discovery. The most effective approach combines platform responsibility, better detection, stronger verification, and changes to the economic incentives that make slop profitable.
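To see why the payment structure matters, here's a toy comparison of splitting the same royalty pool by stream count versus by minutes listened. Every number in it is made up for illustration: the $100 pool, the stream counts, and the average listening times are assumptions, and real platform payout formulas are more complicated than a simple proportional split.

```python
def split_pool(pool, weights):
    """Divide a fixed pool of money in proportion to each account's weight."""
    total = sum(weights.values())
    return {name: pool * weight / total for name, weight in weights.items()}


# Hypothetical accounts: (streams, average minutes listened per stream).
listening = {
    "slop_account": (1_000, 0.6),    # bot-driven plays that bail out early
    "working_artist": (1_000, 3.5),  # listeners who mostly finish the song
}

POOL = 100.0  # dollars, illustrative only

# Per-stream: every play counts the same, however short it was.
by_stream = split_pool(POOL, {name: s for name, (s, m) in listening.items()})

# Per-minute: each play is weighted by how long someone actually listened.
by_minute = split_pool(POOL, {name: s * m for name, (s, m) in listening.items()})

for name in listening:
    print(f"{name}: ${by_stream[name]:.2f} per-stream, ${by_minute[name]:.2f} per-minute")
```

With these made-up numbers, both accounts get $50 under the per-stream split, but the slop account's share drops to about $14.63 once plays are weighted by listening time. Bots can fake minutes too, of course, so this only raises the cost of gaming the system rather than eliminating it.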

Should AI-generated music be labeled?

Yes. Clear labeling of AI-generated music is transparency, not censorship. It allows listeners and curators to make informed decisions about the music they're listening to and discovering. This is similar to how food products are labeled, how synthesizers are labeled as synths, and how sampled music credits the original artists. Transparent labeling doesn't prevent AI music from existing; it just ensures everyone knows what they're listening to.

How much revenue do AI slop creators actually make?

At scale, it can be significant. If someone uploads 10,000 AI-generated tracks and achieves even modest bot engagement plus algorithmic placement, they might generate 1 million streams monthly, which translates to roughly $3,000-5,000 in platform revenue (about $0.003-0.005 per stream). For people in countries where that income is substantial relative to local wages, or for operators running multiple accounts, the economics are compelling enough to make it worth doing at industrial scale.
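It helps to work backwards from those figures to see how little each individual track has to do. The sketch below only rearranges the numbers in the answer above; the function name is mine, and the per-stream rate it prints is what the estimate implies, not an official payout rate from any platform.

```python
def implied_economics(tracks, monthly_streams, revenue_low, revenue_high):
    """Derive per-track and per-stream figures from a monthly revenue estimate."""
    return {
        "streams_per_track": monthly_streams / tracks,
        "rate_low": revenue_low / monthly_streams,
        "rate_high": revenue_high / monthly_streams,
    }


# Figures taken from the estimate above: 10,000 tracks, 1 million streams,
# and $3,000-5,000 in monthly revenue.
figures = implied_economics(10_000, 1_000_000, 3_000, 5_000)

print(f"{figures['streams_per_track']:.0f} streams per track per month")
print(f"${figures['rate_low']:.3f} to ${figures['rate_high']:.3f} per stream")
```

In other words, each track only needs about 100 streams a month, at an implied $0.003 to $0.005 per stream. No single upload has to succeed; the catalog size does the work.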

What should I do if I encounter AI slop on a streaming platform?

Report it through the platform's reporting mechanism with specific details about why the content appears suspicious, including bulk uploads, generic titles with keyword stuffing, algorithmic metadata, and suspicious release patterns. Follow artists and curators who do manual discovery work. Support artists directly through Bandcamp, Patreon, or artist websites where they earn significantly more per transaction. Be skeptical of new artists with no online presence and generic mood-based names.

Will streaming platforms ever fully solve the AI slop problem?

Not without fundamental changes to their incentive structures and business models. The current streaming economics make AI slop rational and profitable, so fighting it will always be an uphill battle. Real solutions require changing payment structures (per-minute instead of per-stream), stronger artist verification, better algorithmic detection, meaningful copyright enforcement, and genuine investment in artist discovery and support—changes that require rethinking core platform mechanics and business models.

How is this different from other internet spam?

AI slop follows the same pattern as email spam, comment spam, and SEO spam: a technology enables cheap, scalable distribution, economic incentives make spam profitable, platforms struggle to respond, and legitimate users suffer from degraded experience. The difference is that music is creative work that's supposed to enrich culture, while spam actively harms that goal. Music spam doesn't just degrade experience; it damages careers and corrupts the entire discovery infrastructure for everyone.

Conclusion: The Fight for Music Worth Listening To

Look, I get why people are frustrated with YouTube Music and the state of music streaming generally. The platforms have become cluttered with content that isn't designed to be listened to, only to be exploited. The discovery mechanisms have degraded to the point where finding actual music from real artists takes more work than it should.

But here's the thing: this problem is solvable. It's not some inevitable consequence of technology. It's the result of specific design choices, specific incentive structures, and specific platform policies. Change those things, and the problem changes.

What's frustrating is that platforms know how to fix this. Spotify has already shown they can implement identity verification and content moderation at scale. The technology for detecting suspicious patterns exists. The ability to change payment structures is there. The knowledge about what actually works for artist discovery exists.

What's missing is the will. Because the current system, with all its problems, works financially for the platforms. It works for the slop creators. It doesn't work for artists or for listeners, but nobody's forcing platforms to prioritize those groups.

That's where pressure matters. When enough listeners care enough to use alternative platforms, report content, and advocate for change, platforms respond. When enough artists organize and demand better terms, platforms respond. When enough curators, publications, and music professionals make noise about the problem, platforms respond.

Change is possible. It just requires the people who are harmed most—artists and listeners—to care enough to demand it.

If you're a musician reading this, keep making real music. Keep building real relationships with listeners. Keep refusing to game the algorithm. The authentic stuff outlasts the spam every single time.

If you're a listener reading this, support artists directly. Demand better from platforms. Seek out real discovery. Be skeptical of what you hear in algorithm-driven playlists. Your choices matter more than you think.

And if you work for a streaming platform, fix this. You know what's wrong. You know how to fix it. Stop pretending it's someone else's problem.

Key Takeaways

- AI slop is industrial-scale automated music generation designed to exploit platform algorithms and extract streaming revenue, not to be listened to

- Slop creators upload thousands of tracks with generic titles and algorithmic keyword-stuffing, overwhelming discovery systems and harming real musicians' visibility

- The streaming payment model directly incentivizes slop creation: uploading 10,000 AI tracks can generate roughly $3,000-5,000 monthly in revenue with minimal effort

- Legitimate music discovery on YouTube Music and Spotify has degraded significantly as recommendation algorithms are poisoned by millions of slop tracks

- Platforms like Spotify and YouTube have failed to moderate aggressively enough despite having the tools and knowledge to combat the problem

![AI Slop Songs Are Destroying YouTube Music—Here's Why Musicians Are Fighting Back [2025]](https://tryrunable.com/blog/ai-slop-songs-are-destroying-youtube-music-here-s-why-musici/image-1-1767791349427.jpg)