AI vs AI: How Machine Learning Solves Data Center Energy Crisis

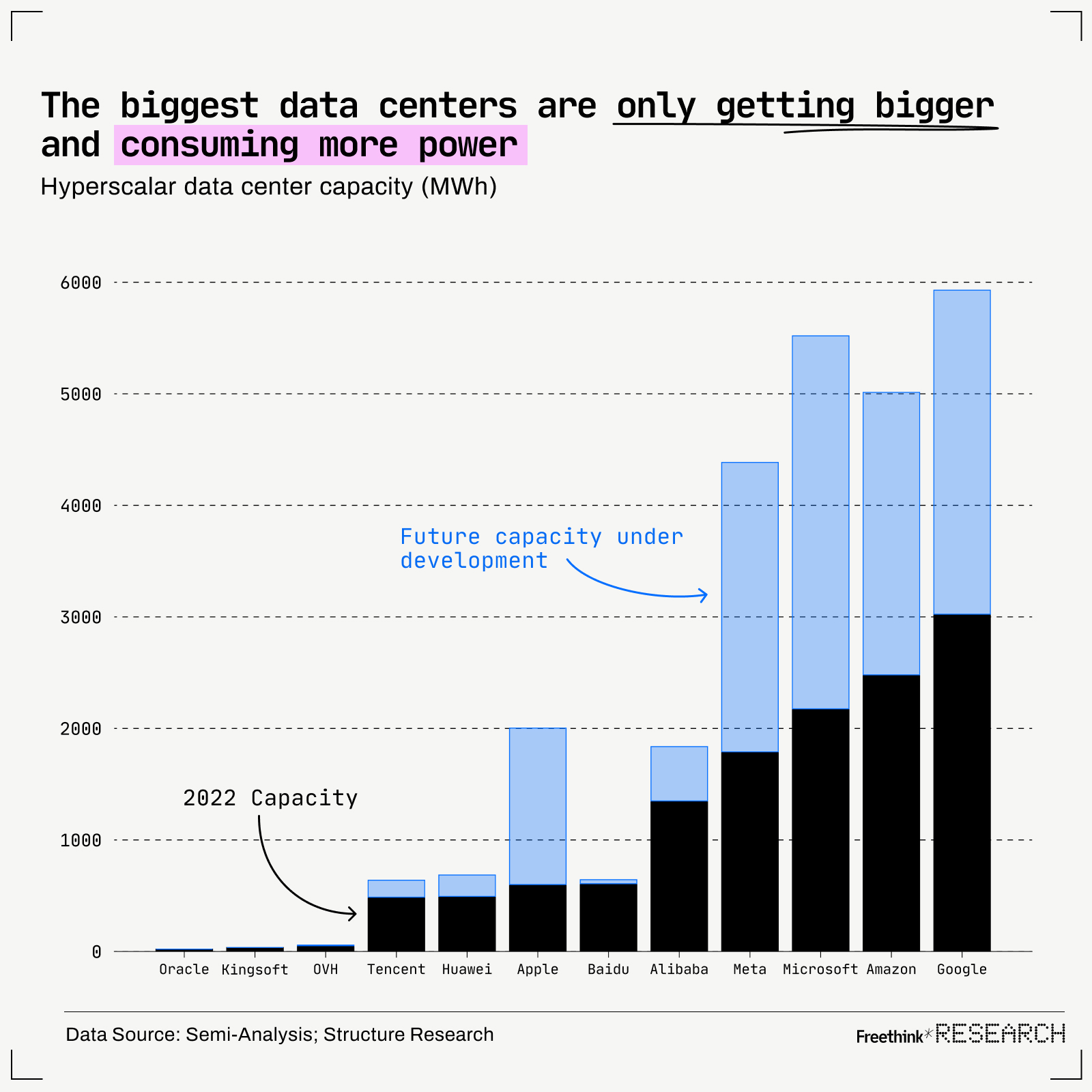

Here's the problem nobody wants to talk about: a single AI-focused data center uses as much electricity as 100,000 homes. And we're building more of them every day.

This isn't some distant climate concern anymore. It's happening right now, in real cities, creating real infrastructure bottlenecks. West London saw it firsthand in 2022 when grid headroom was literally exhausted, forcing housing projects to delay timelines. Developers couldn't build because data centers were consuming all available power capacity.

The irony is impossible to miss. We're building AI systems to solve increasingly complex problems, but the very act of building them is creating a crisis we can't solve with traditional infrastructure planning. Linear forecasting models that worked for decades suddenly fail when you're dealing with hyperscale facilities demanding unpredictable power bursts across interconnected grids powered by fluctuating renewables.

So what's the solution? More AI. Specifically, AI designed to manage the energy chaos that AI itself creates.

This isn't some futuristic concept. It's already happening. Utilities are deploying intelligent systems that predict demand hours ahead. Data centers are shifting compute loads across regions dynamically. Virtual power plants are aggregating batteries, electric vehicles, and solar panels into coordinated units. The grid is becoming smarter, more responsive, and capable of absorbing the energy demands of the digital economy.

But here's what most articles get wrong: they treat this as a one-way problem. "AI consumes too much energy, so we need renewable energy." That's like saying your car uses too much gas, so you should build more roads.

The real story is far more interesting. It's about using intelligence to solve the very problem intelligence created. It's about re-architecting entire energy systems so they can think and adapt in real-time. And it's about understanding that the only tool capable of managing this complexity is AI itself.

Let's dig into how this actually works, why it matters, and what comes next.

TL;DR

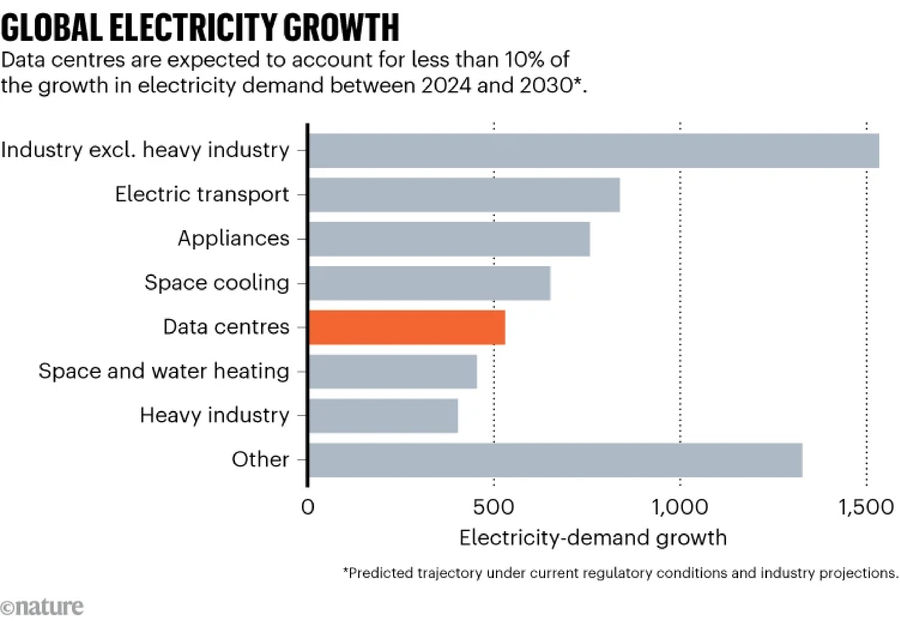

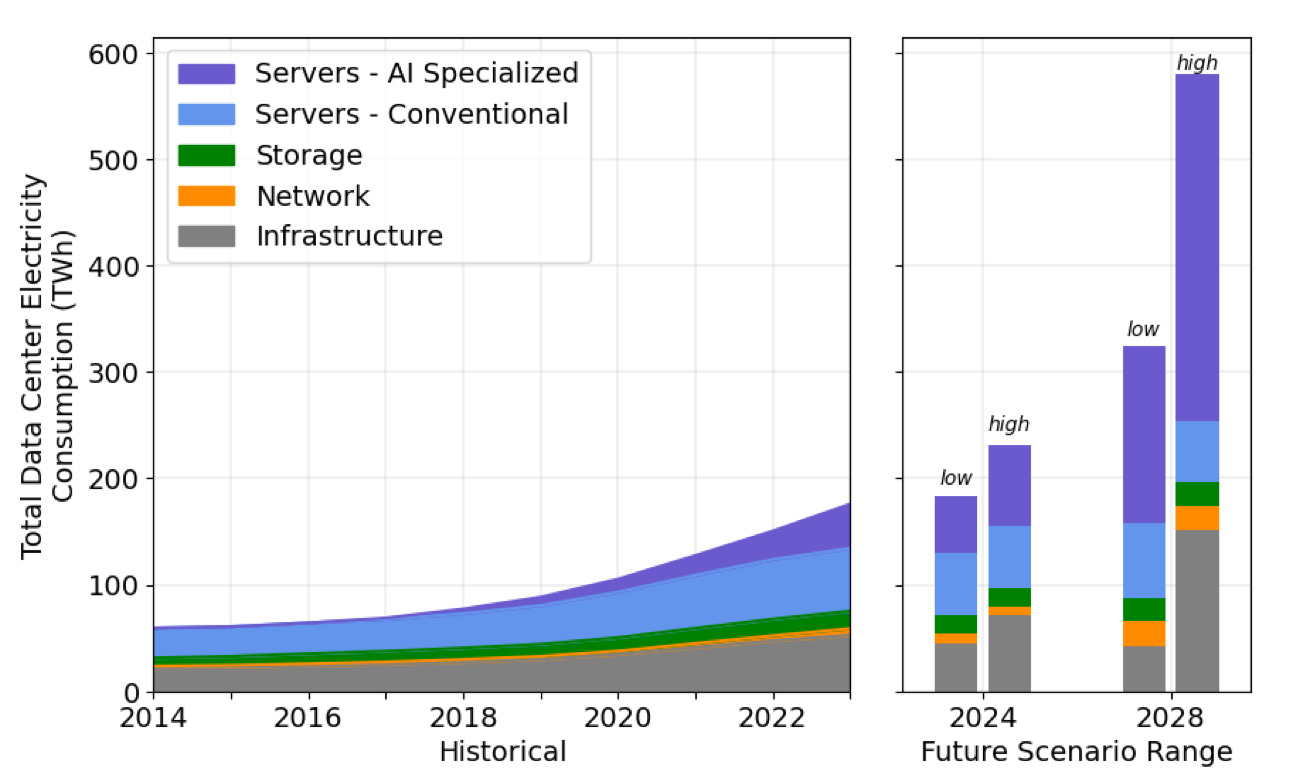

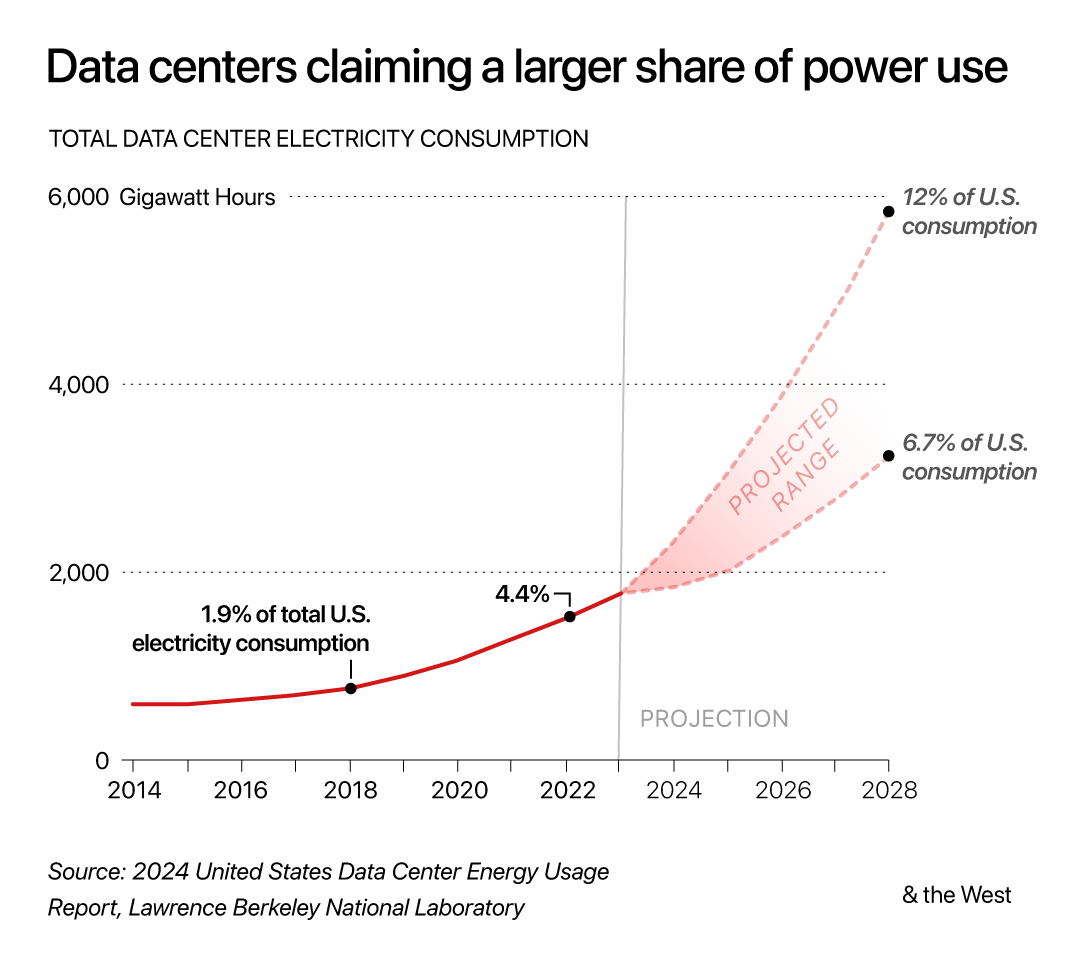

- Data center power demand will hit 700 TWh in 2025, exceeding electric vehicle electricity consumption globally

- AI-driven grid management systems can process millions of data points per second to optimize load distribution and prevent blackouts

- Virtual power plants aggregate distributed energy resources into coordinated units that actively support grid stability

- Spatial shifting technology allows hyperscalers to move compute loads across regions in real-time, aligning energy consumption with renewable availability

- Knowledge graphs and machine learning models enable utilities to anticipate disruptions before they cascade across interconnected grids

- Bottom Line: AI is no longer just a consumer of energy; it's becoming the nervous system that keeps modern grids functional under unprecedented demand

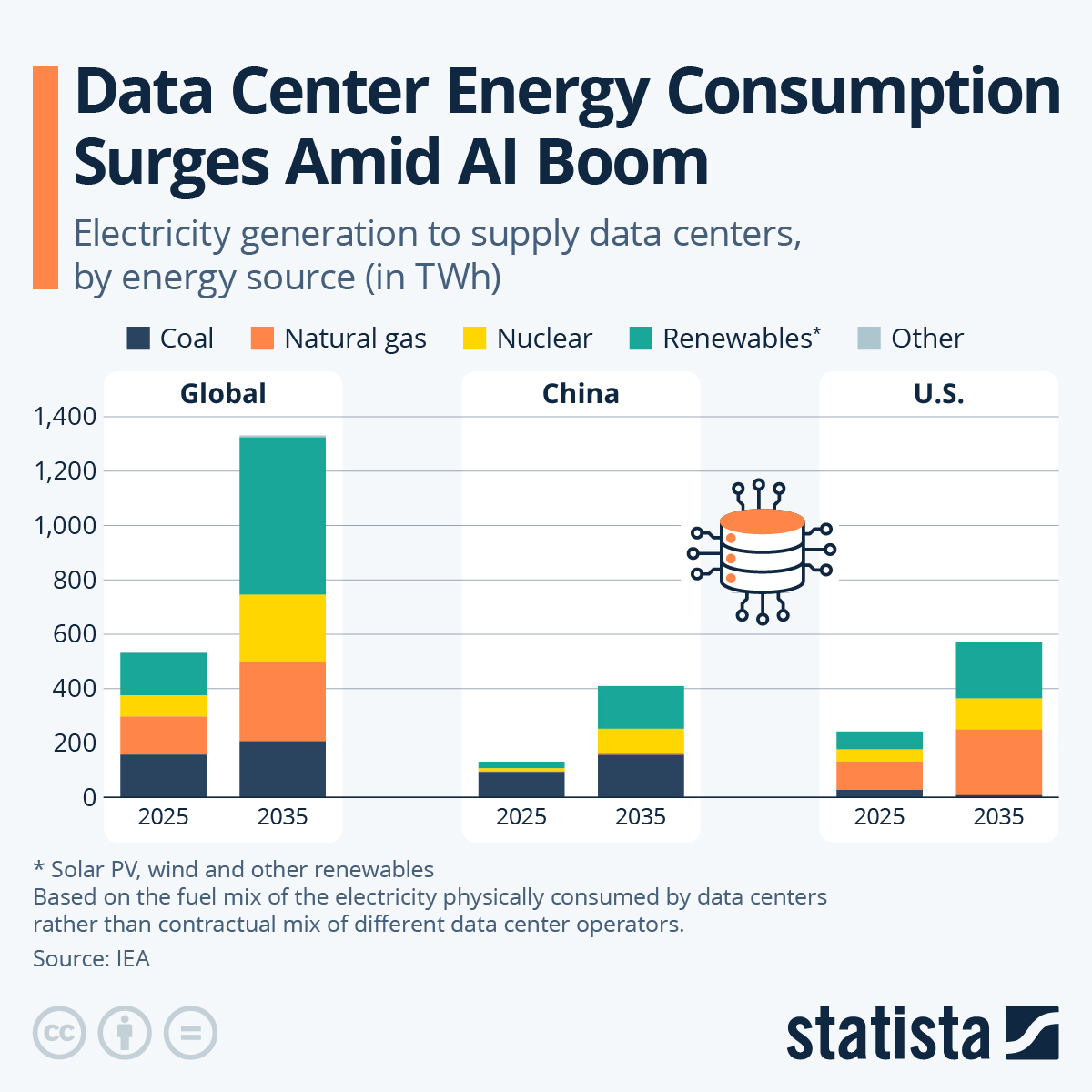

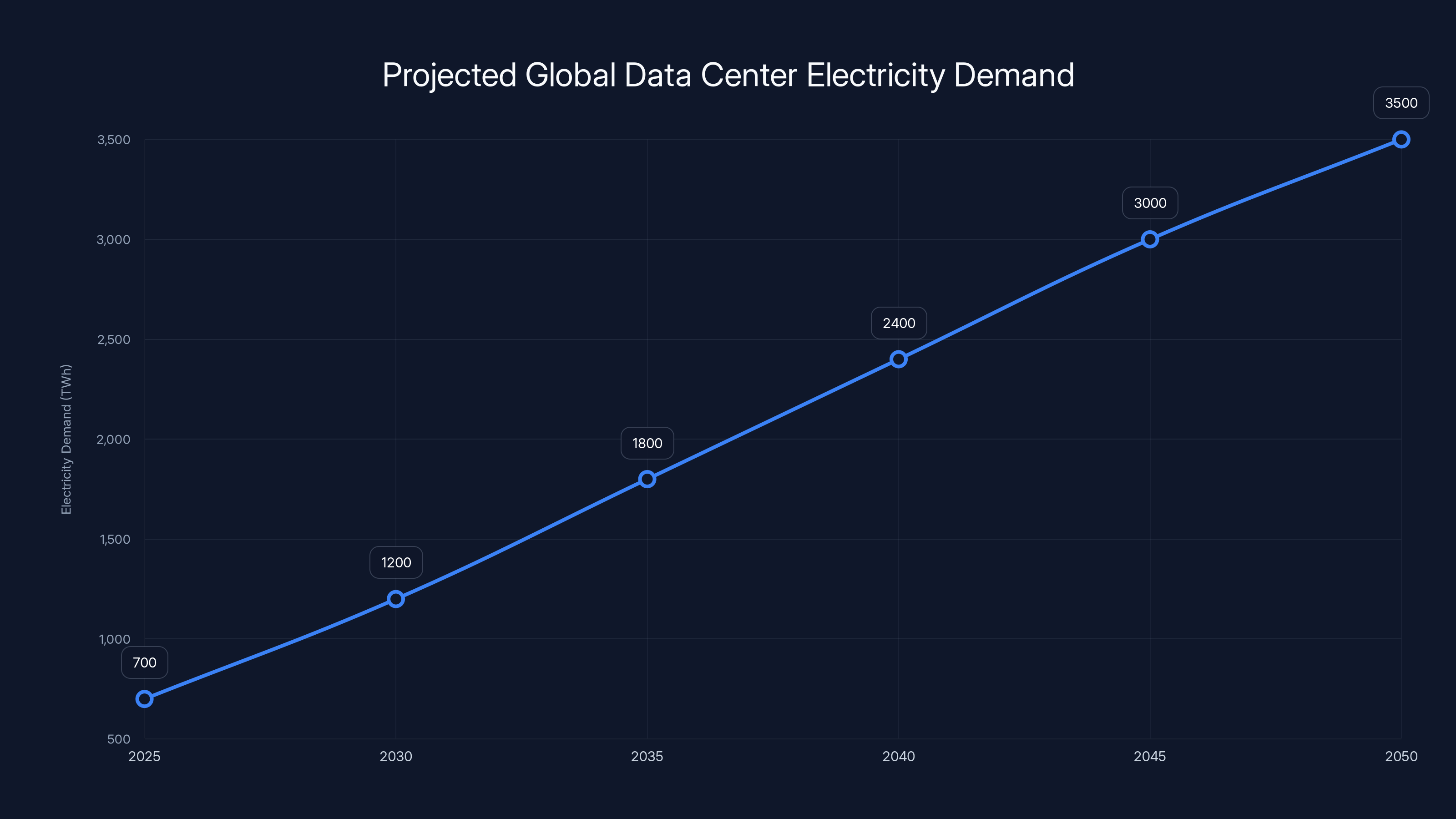

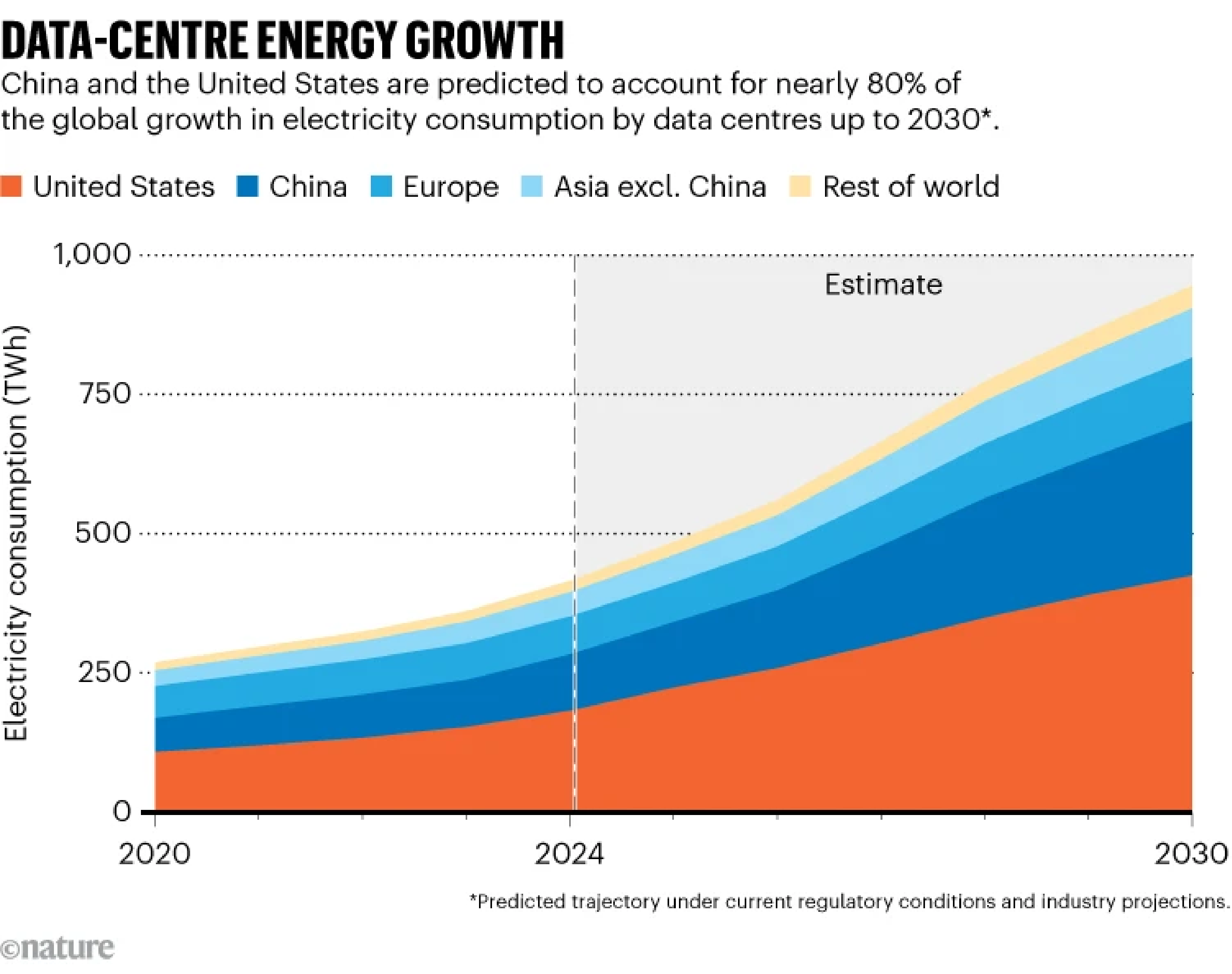

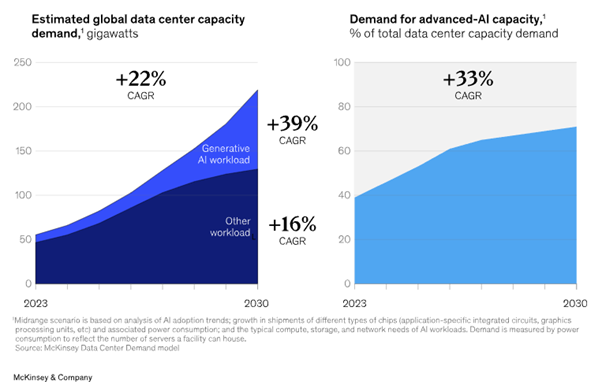

Global electricity demand by AI data centers is projected to increase significantly, reaching 3,500 TWh by 2050. This growth poses a major challenge for energy infrastructure. Estimated data.

The Staggering Scale of Data Center Energy Consumption

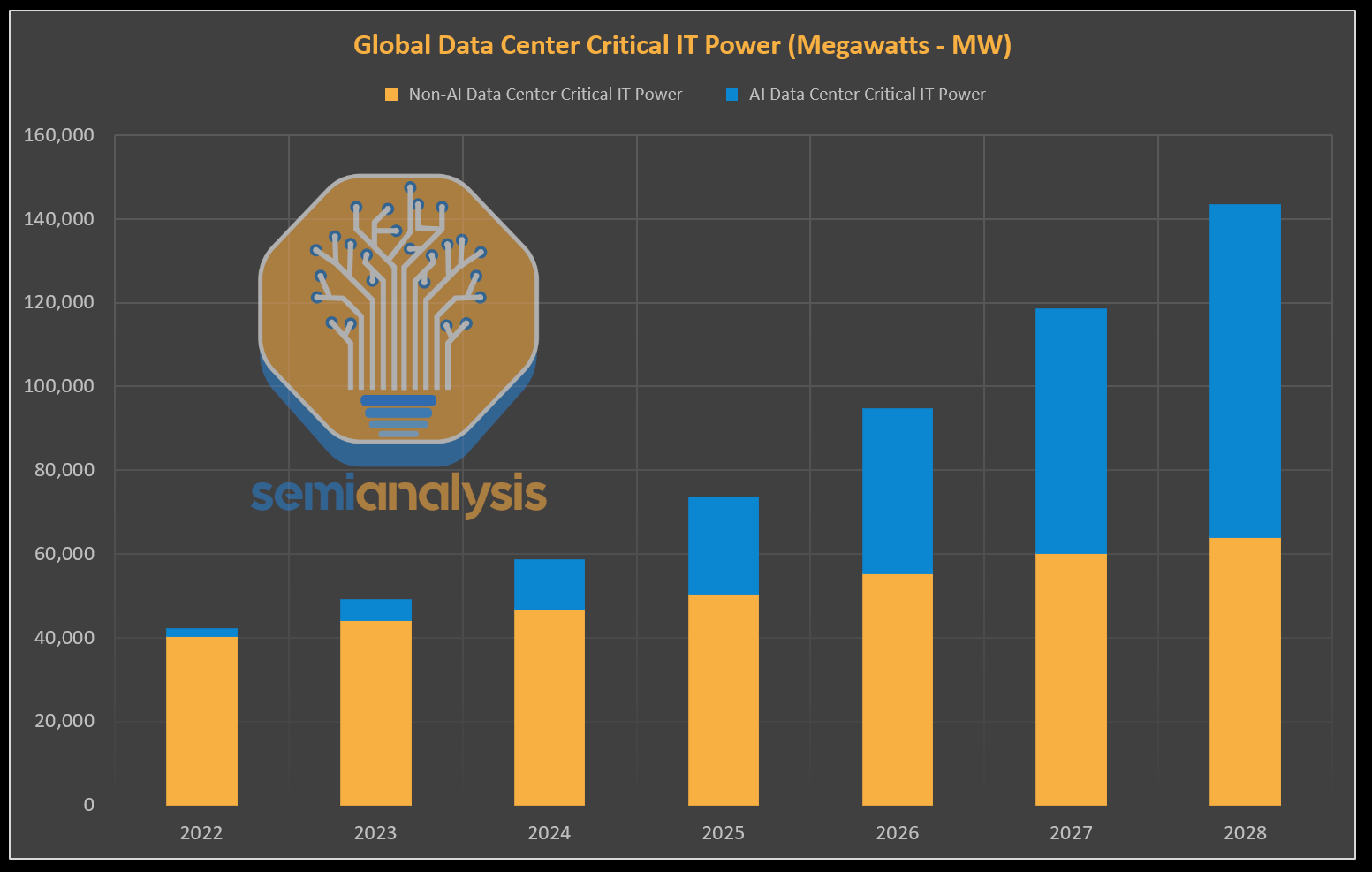

Let's start with numbers that actually mean something. A single AI-focused data center consumes roughly 13 to 17 megawatts of continuous power. That's equivalent to the electricity needs of 100,000 typical homes.

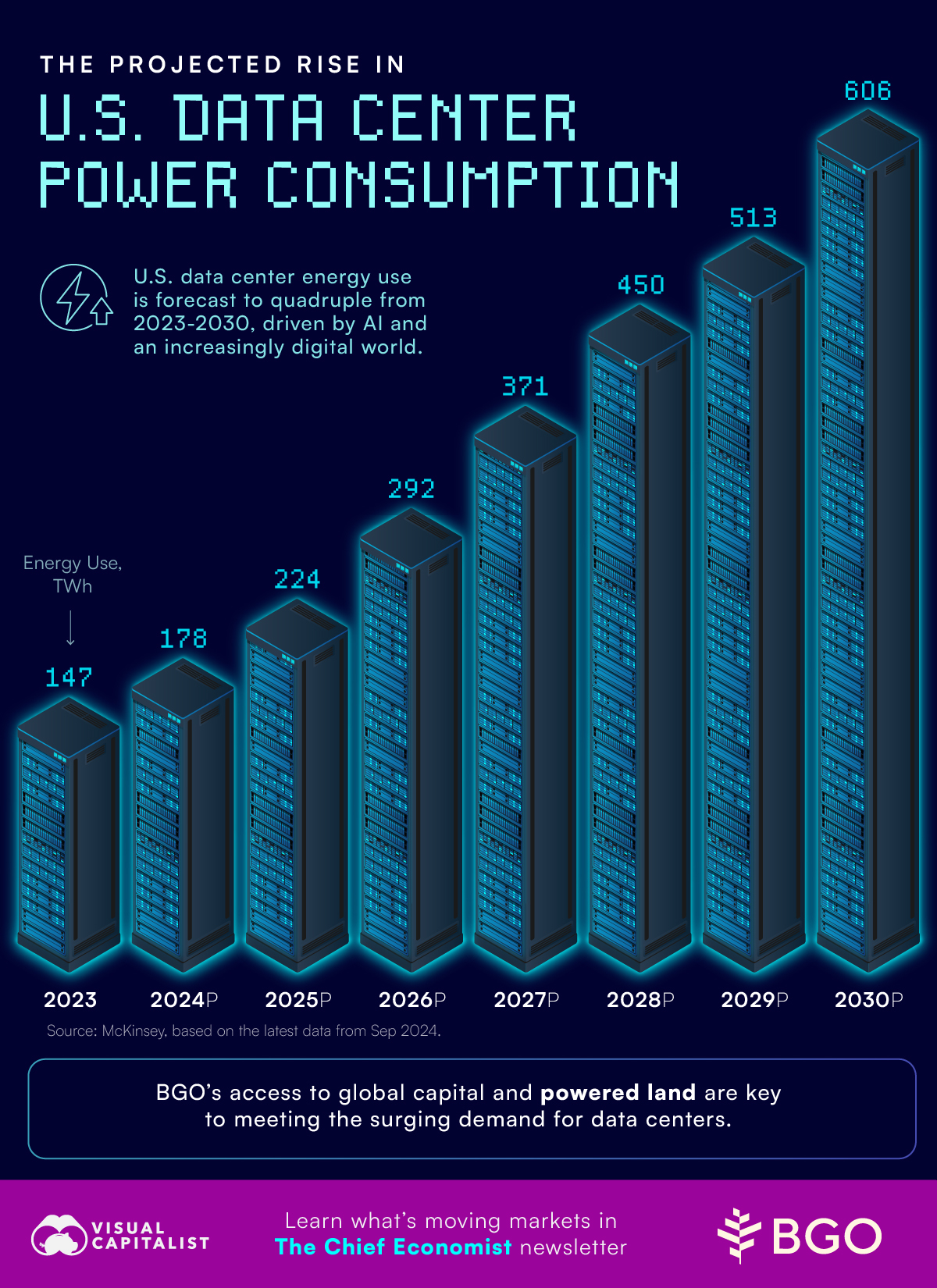

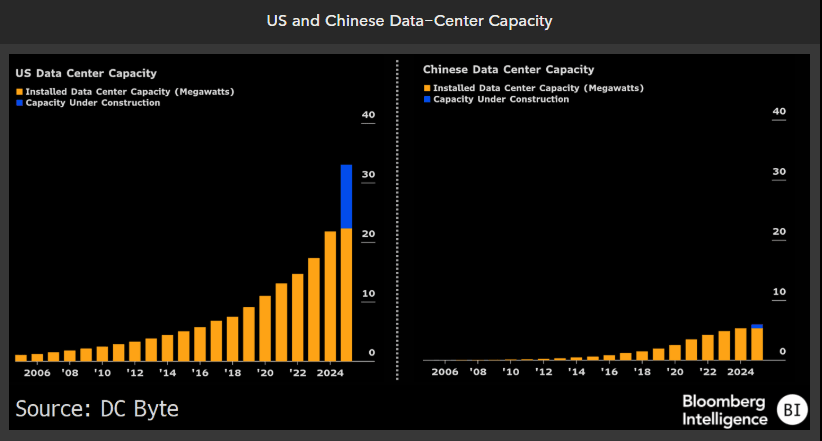

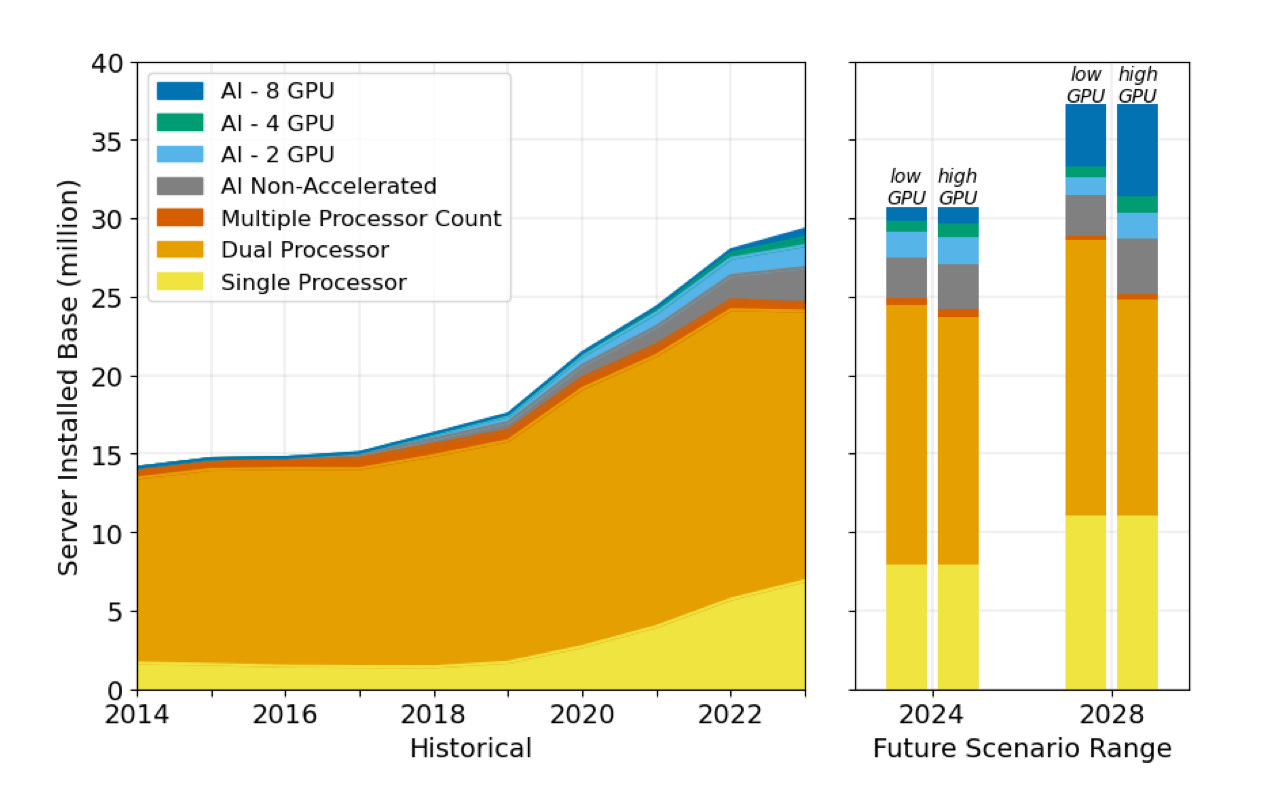

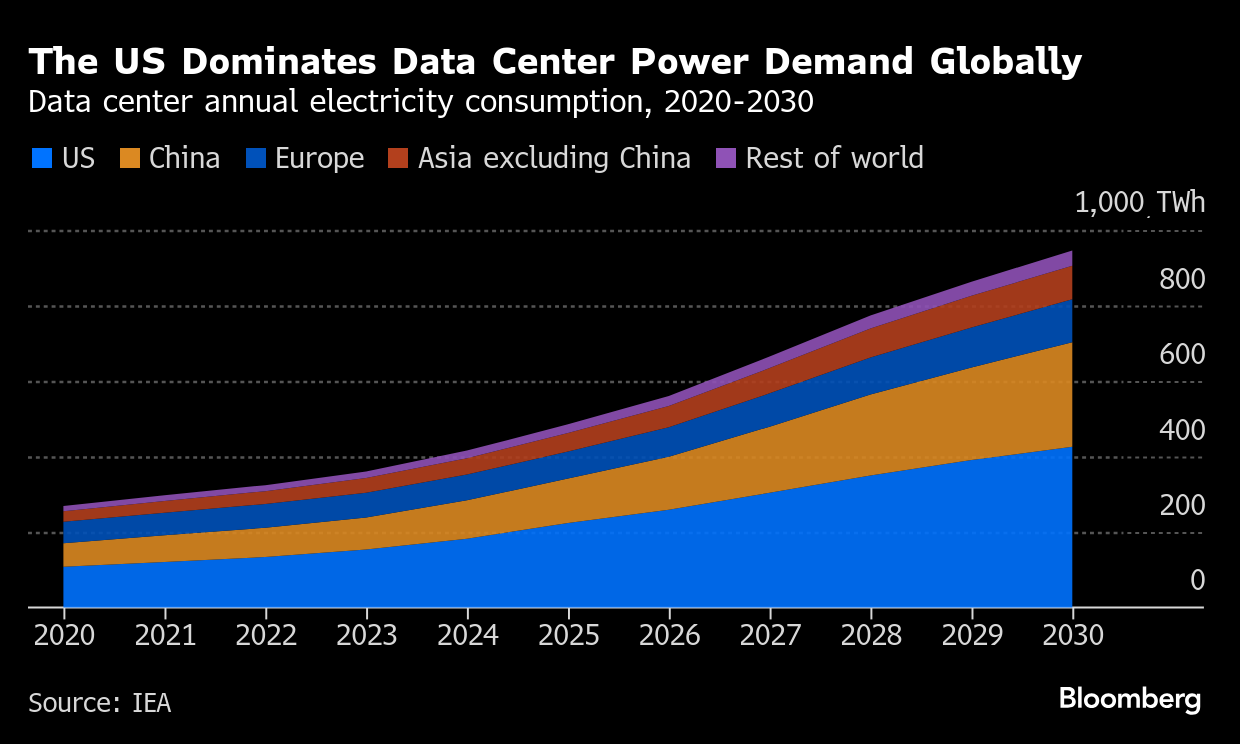

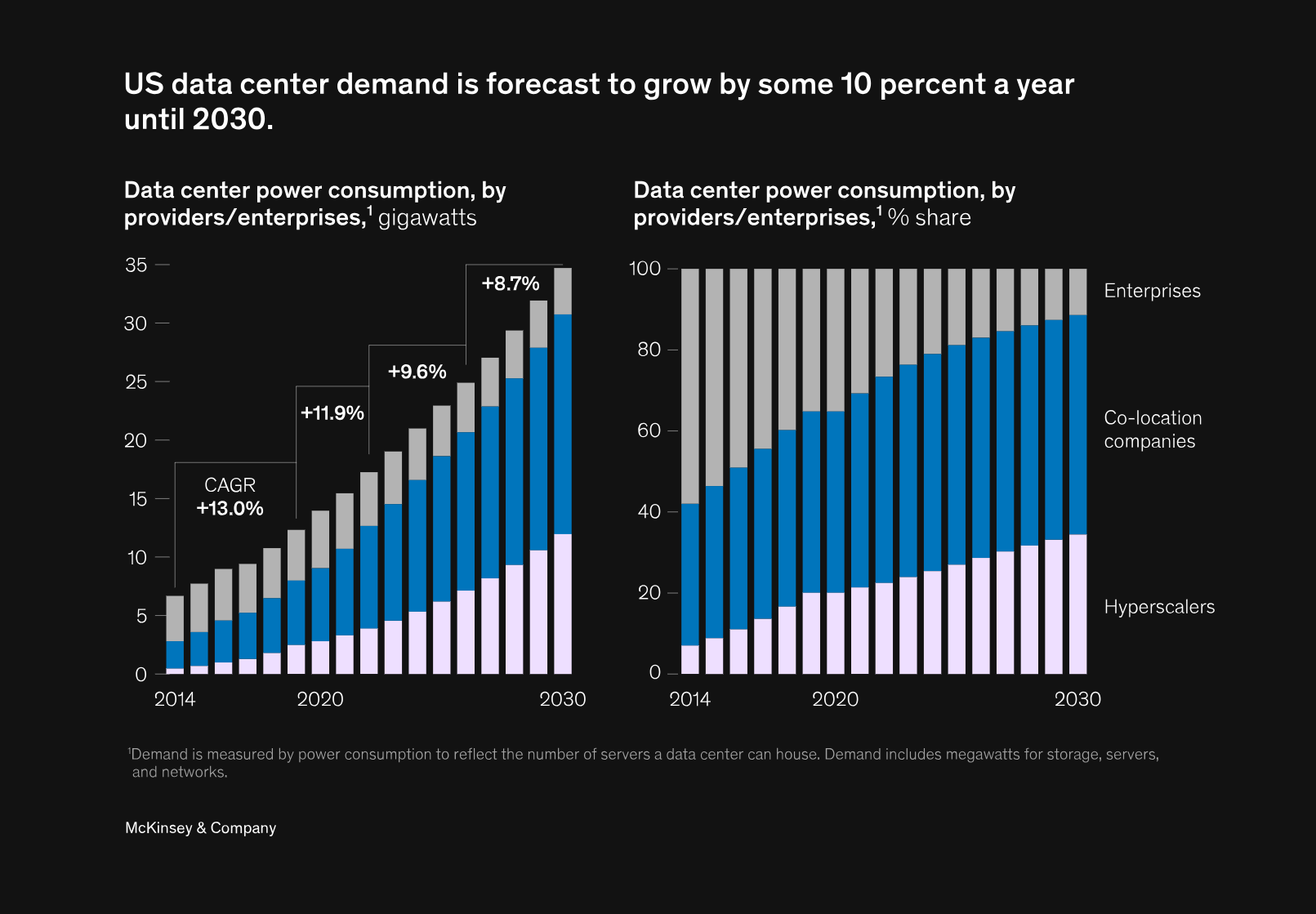

Now multiply that across the globe. According to Wood Mackenzie research, global data center power demand reached approximately 620 TWh in 2024 and will hit 700 TWh in 2025. To put that in perspective, the entire nation of Japan consumed about 980 TWh in 2023. Data centers are approaching the electricity consumption of entire developed nations.

The trajectory gets worse. Projections suggest data centers could demand 3,500 TWh by 2050, equivalent to the combined current power demand of India and the Middle East. That's not hyperbole. That's the trajectory we're on if current trends continue.
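As a rough check on that trajectory, the growth rate implied by the two figures quoted above (700 TWh in 2025, 3,500 TWh in 2050) can be worked out directly. This assumes smooth compound growth over 25 years, which is a simplification rather than a forecast:

$$
r = \left(\frac{3500\ \text{TWh}}{700\ \text{TWh}}\right)^{1/25} - 1 = 5^{0.04} - 1 \approx 0.067
$$

In other words, roughly 6.7% growth per year, sustained for a quarter of a century.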

What makes this especially problematic is that data centers aren't distributed evenly. They cluster near major fiber routes, undersea cable landing points, and existing transmission infrastructure. West London felt this pressure acutely. The area exhausted all available grid headroom, forcing developers to pause construction on housing projects. Fiber providers couldn't expand capacity. Hyperscale facilities couldn't power up additional racks. Everyone hit the same constraint simultaneously: the grid literally didn't have power to give.

Similar bottlenecks are emerging across Northern Europe, the eastern United States, and Southeast Asia. In Ireland, data centers accounted for 28% of total electricity demand growth between 2015 and 2023. Danish data center operators are competing with residential customers for renewable energy allocation.

The problem compounds because data center demand is non-linear and unpredictable. Legacy grid planning assumed demand growth of 2-3% annually with predictable seasonal variations. Data centers created spikes that could reach 20-30% increases in specific regions within months. A single AI training run can demand concentrated power for weeks. A new model deployment creates sudden baseline increases. The system wasn't built to handle this kind of volatility.

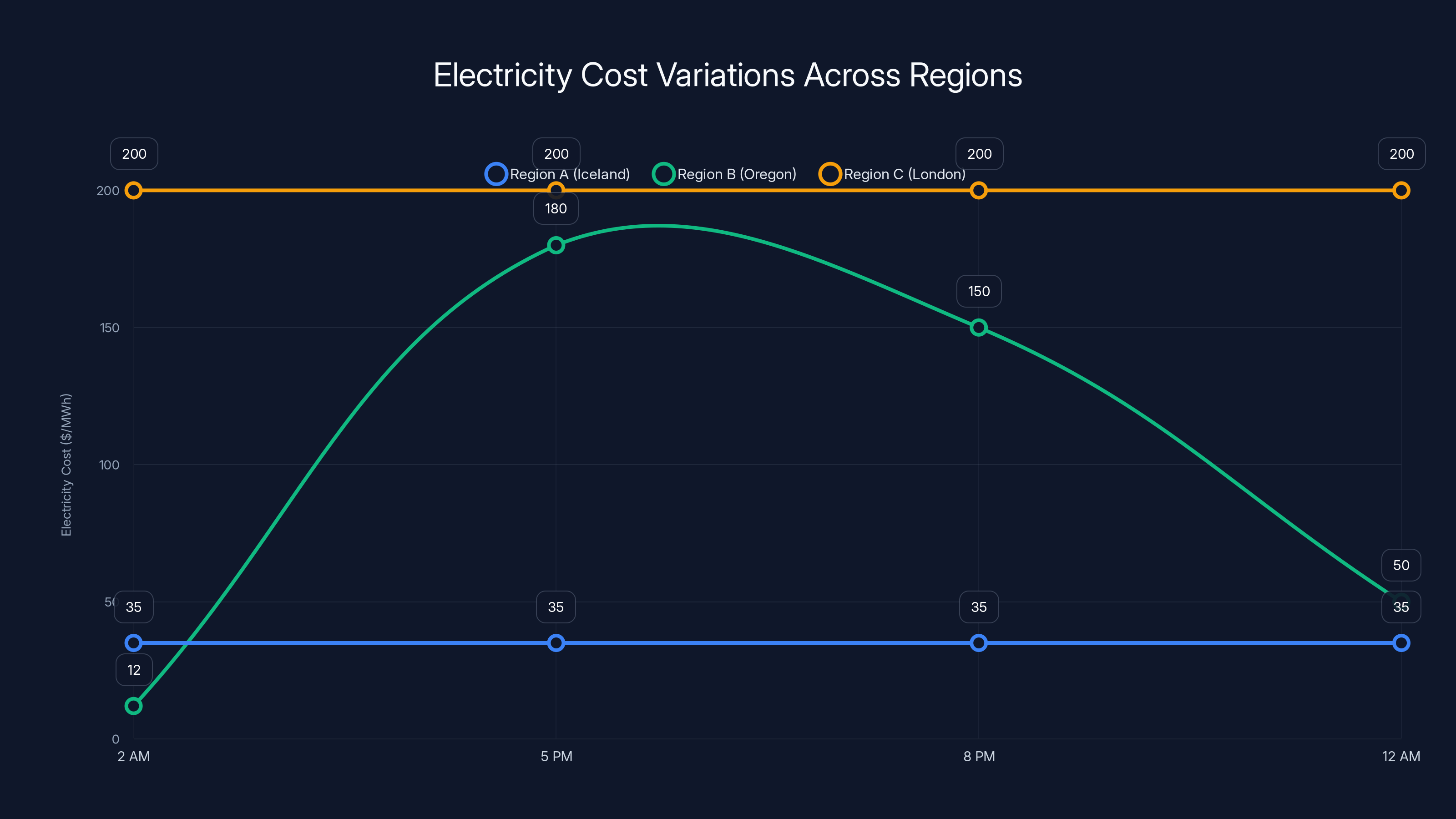

The chart illustrates how spatial shifting can optimize data center operations by moving workloads to regions with lower electricity costs. Estimated data shows significant cost savings by utilizing regions with surplus renewable energy.

Why Traditional Energy Planning is Fundamentally Broken

Energy grid planning has worked the same way for decades. Utility companies analyze historical consumption patterns, project future demand using statistical models, then plan infrastructure investments accordingly. It's remarkably predictable when demand grows steadily and generation sources are stable.

That entire framework collapses in the modern energy landscape. Here's why.

First: Distributed renewables are fundamentally unpredictable. A solar farm produces wildly different power output based on cloud cover, season, and time of day. Wind farms generate power randomly based on weather patterns. Battery systems can discharge or charge based on market prices and grid conditions. Legacy forecasting models treated generation as a fixed resource that utilities could dispatch predictably. Modern grids treat generation as a variable that must be absorbed through demand flexibility, battery storage, and interconnected trading with neighboring grids.

Second: Demand is no longer predictable. Cryptocurrency mining creates sudden industrial loads. Cloud providers spin up new facilities. AI training jobs create massive but temporary consumption spikes. Smart buildings adjust consumption based on price signals. Electric vehicles charge opportunistically when renewable energy is abundant. The grid went from having a handful of large, predictable consumers to millions of small, flexible consumers plus dozens of massive, unpredictable industrial loads.

Third: The system is increasingly interconnected, which means local failures ripple globally. The 2003 Northeast blackout affected 55 million people across eight states and Canada, cascading from a single tree branch touching a power line in Ohio. Modern grids are more tightly interconnected than ever. A solar surge in California flows to Arizona. A wind farm ramp-down in Germany affects France. A data center load spike in England affects the entire Western European grid. Local optimization can create systemic problems.

Traditional planning tools literally cannot handle this. Most utility forecasting systems are Excel-based models updated quarterly or annually. They can't ingest real-time data from thousands of distributed sources. They can't simulate thousands of scenarios per second. They can't react to changing conditions. When faced with data center connection requests, utilities often fall back on conservative assumptions, denying connections or requiring infrastructure upgrades that take years to complete.

This is where AI becomes essential. Not as a nice-to-have optimization layer, but as a fundamental requirement for grid stability.

How AI-Driven Grid Management Actually Works

Modern AI systems approach grid optimization differently than human operators ever could. Instead of relying on periodic forecasts and manual adjustments, they process millions of data points continuously, identify patterns humans can't see, and execute corrective actions in real-time.

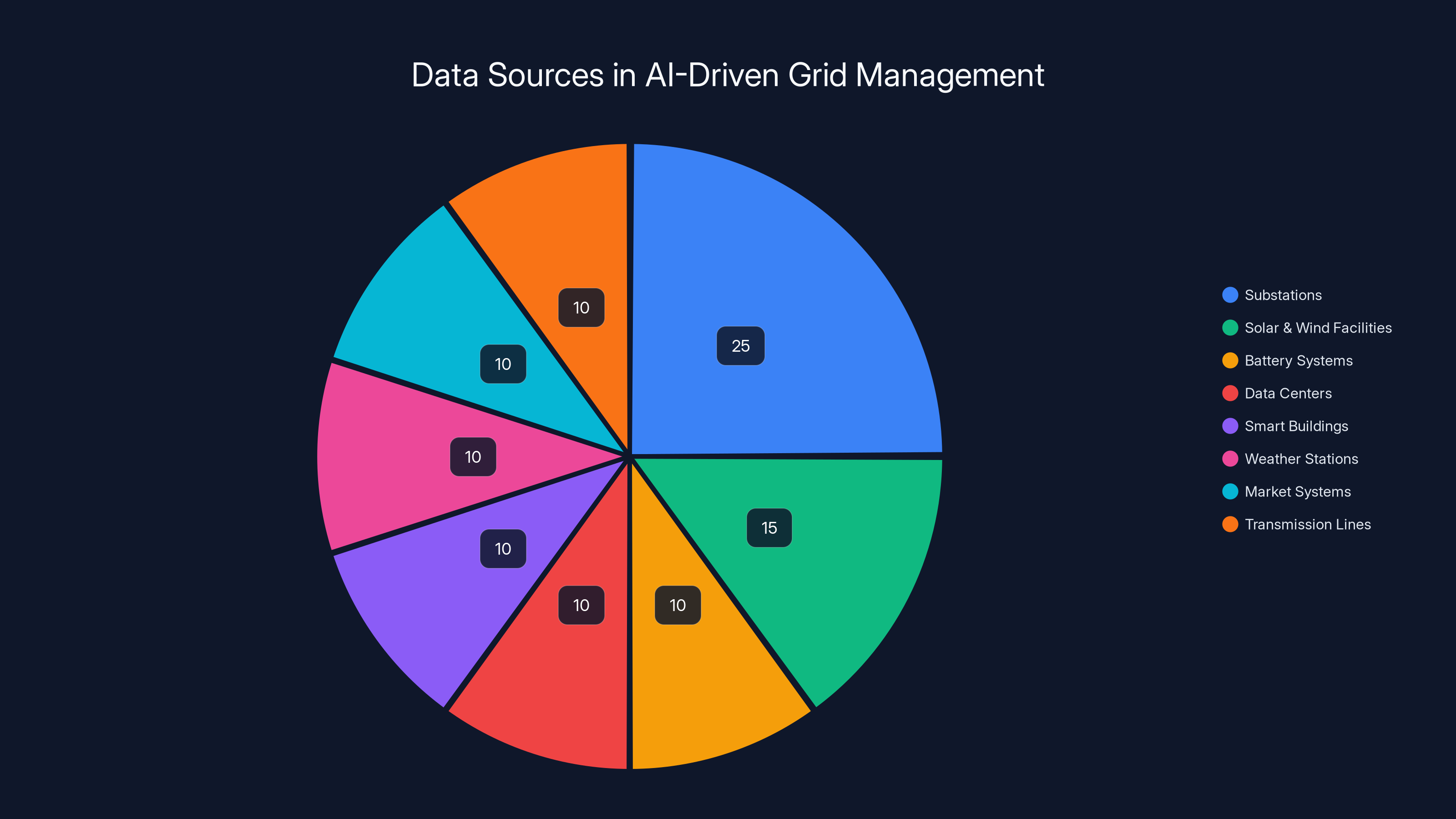

The data ingestion layer is massive. A modern grid management system receives data from:

- Thousands of substations reporting voltage, frequency, and load in real-time

- Distributed solar and wind facilities reporting generation output

- Battery systems reporting charge state and available dispatch capacity

- Data centers reporting power consumption and flexibility

- Smart buildings reporting demand and flexibility

- Weather stations reporting conditions and forecasts

- Market systems reporting prices and trading activity

- Transmission lines reporting flow and congestion

Each data point updates continuously. Processing this volume manually is impossible. A single day generates billions of data points. You need AI to consume it.

The forecasting layer predicts what's coming. Machine learning models trained on historical patterns can forecast demand and generation hours ahead with remarkable accuracy. More importantly, they can quantify uncertainty. A model might predict solar generation will be 450 MW between 2 PM and 4 PM tomorrow, but acknowledge 15% uncertainty due to cloud cover forecasts. That uncertainty quantification lets operators prepare for multiple scenarios simultaneously.
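To make the idea of uncertainty quantification concrete, here is a minimal sketch that wraps a point forecast in an interval derived from past forecast errors. The function name `prediction_interval` and the error history are hypothetical, and production systems would more likely use quantile regression or ensemble models; this only illustrates the shape of the output.

```python
import statistics

def prediction_interval(point_forecast_mw, past_errors_mw, coverage=0.9):
    """Bracket a point forecast using the empirical spread of past errors.

    past_errors_mw holds (actual - forecast) values from earlier days.
    """
    # With n=100, quantiles() returns the 1st through 99th percentiles.
    percentiles = statistics.quantiles(past_errors_mw, n=100, method="inclusive")
    tail = (1.0 - coverage) / 2.0
    low_pct = max(1, round(tail * 100))            # 5 for 90% coverage
    high_pct = min(99, round((1.0 - tail) * 100))  # 95 for 90% coverage
    low = point_forecast_mw + percentiles[low_pct - 1]
    high = point_forecast_mw + percentiles[high_pct - 1]
    return low, high

# Hypothetical error history (MW) for the 2 PM to 4 PM window.
past_errors = [-60, -45, -30, -20, -10, -5, 0, 5, 10, 20, 30, 45, 60]
low, high = prediction_interval(450.0, past_errors)
print(f"solar forecast: 450 MW, 90% interval: {low:.0f} to {high:.0f} MW")
```

An operator would then plan reserves against the low end of the interval rather than the point forecast alone.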

The scenario modeling layer identifies optimal actions. Rather than reacting to problems after they occur, advanced systems simulate hundreds of potential actions and their consequences in parallel. Should we discharge batteries now or in two hours? Should we offer data centers incentives to shift loads? Should we adjust interconnection flows with neighboring grids? Each action gets modeled through a complete simulation of the next four hours of grid operation, showing costs, benefits, and risks.

The optimization layer finds the best solution across multiple objectives. Minimizing cost matters, but so does minimizing emissions, maximizing reliability, and maintaining operator comfort. Modern systems use multi-objective optimization algorithms to find solutions that balance competing goals. Sometimes that means accepting slightly higher costs to improve grid stability. Sometimes it means running renewable energy through the system less efficiently because it's cleaner overall.
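One simple way to balance competing goals is a weighted sum over normalized objectives. The sketch below is an assumption-laden illustration, not how any particular grid operator does it: the `Candidate` fields, the weights, and the example numbers are all hypothetical, and real systems often use Pareto-front methods instead.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    cost_usd: float
    emissions_tco2: float
    reliability_risk: float  # 0 (safe) to 1 (risky)

def normalize(values):
    """Rescale values to the 0..1 range so objectives are comparable."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

def pick_best(candidates, w_cost=0.4, w_emissions=0.3, w_risk=0.3):
    """Return the candidate with the lowest weighted score."""
    costs = normalize([c.cost_usd for c in candidates])
    emissions = normalize([c.emissions_tco2 for c in candidates])
    risks = normalize([c.reliability_risk for c in candidates])
    scores = [
        w_cost * a + w_emissions * b + w_risk * c
        for a, b, c in zip(costs, emissions, risks)
    ]
    return candidates[scores.index(min(scores))]

# Hypothetical options for covering the same shortfall.
options = [
    Candidate("discharge_batteries", 2000, 0, 0.30),
    Candidate("import_power", 5000, 12, 0.10),
    Candidate("run_gas_peaker", 4000, 30, 0.05),
]
balanced = pick_best(options)
reliability_first = pick_best(options, w_cost=0.1, w_emissions=0.1, w_risk=0.8)
print(balanced.name, reliability_first.name)
```

Changing the weights changes the answer, which is exactly the point: accepting a higher cost for stability is a choice expressed in the objective, not a property of the solver.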

The execution layer automates implementation. Once a solution is identified, the system can automatically execute dispatch instructions. Batteries charge or discharge. Data centers shift loads. Smart buildings adjust consumption. Transmission lines rebalance flows. All of this happens without human intervention, on timescales measured in milliseconds.

The result is a grid that can absorb unprecedented load swings while maintaining stability and minimizing waste. When a data center suddenly demands 50 MW more power, the system doesn't scramble. It automatically sources that power from the optimal combination of available resources.


Virtual Power Plants: Turning Distributed Assets into Coordinated Resources

Traditional utility thinking treats power generation as the domain of large central facilities. A coal plant generates 1,000 MW. A nuclear plant generates 1,200 MW. Utilities dispatch power from these large facilities to meet customer demand.

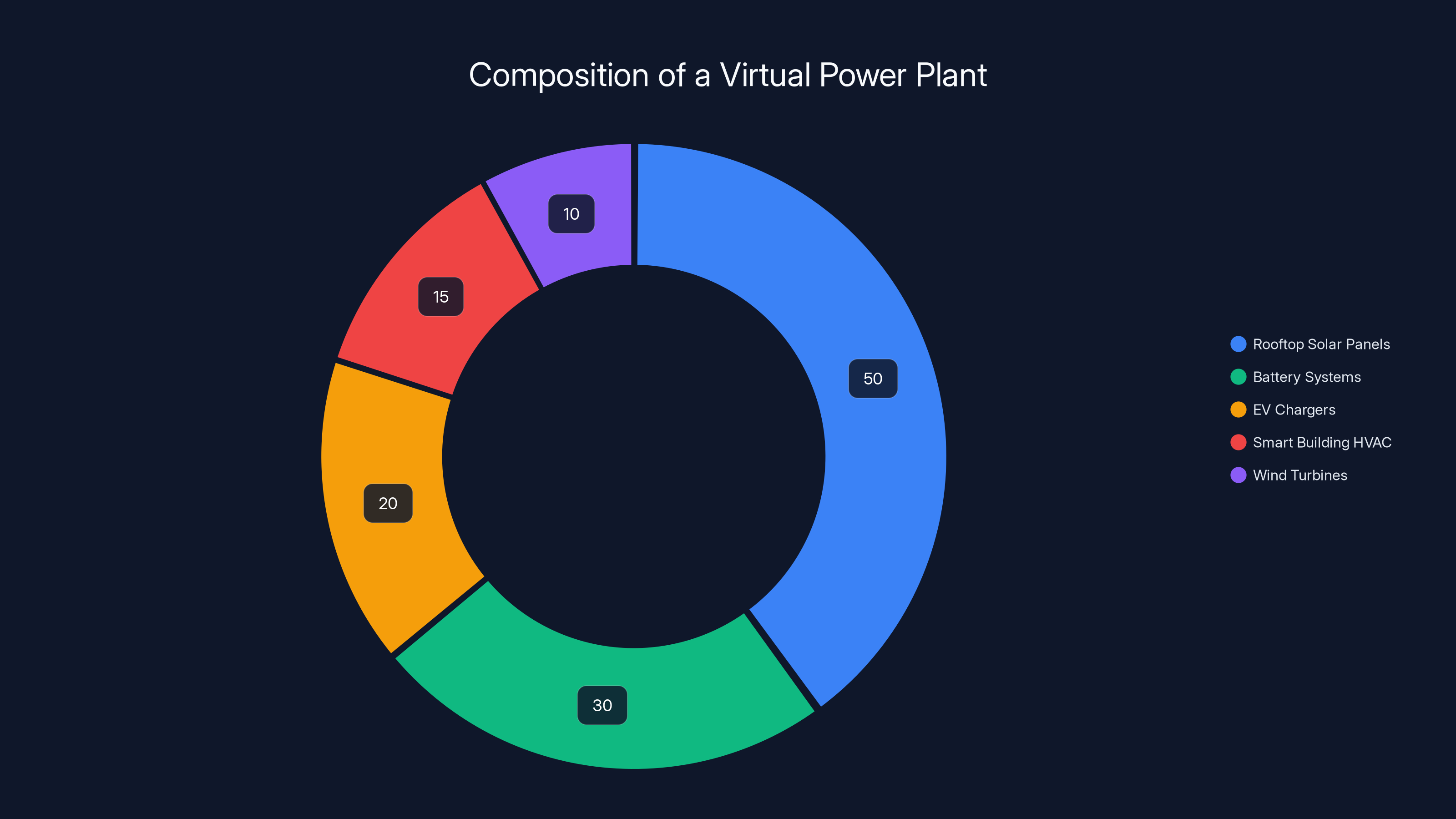

Virtual power plants invert this model. Instead of treating distributed energy resources (solar, wind, batteries, EVs, smart buildings) as problems to manage, they treat them as a coordinated system that can be optimized as a single entity.

Here's how it works in practice. A virtual power plant aggregates:

- Rooftop solar panels from thousands of homes (maybe 50 MW total capacity)

- Battery systems in homes and businesses (maybe 30 MW capacity, 100 MWh storage)

- Electric vehicle chargers that can charge or discharge (maybe 20 MW capacity)

- Smart building HVAC systems that can shift consumption (maybe 15 MW flexibility)

- Wind turbines from small farms (maybe 10 MW capacity)

Individually, these assets are too small to participate in grid balancing markets. A home solar system can't sell power to the grid economically because transaction costs exceed benefit. But aggregated, these assets become a 125 MW resource with 100 MWh of storage, capable of responding to grid signals with millisecond precision.
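The aggregation arithmetic can be checked in a few lines. The capacities below are the illustrative figures from the list above; the variable names are just for this sketch. Note that 125 MW is a nameplate sum, and not every asset is available at the same moment (rooftop solar contributes nothing at night, for example).

```python
# Capacities (MW) are the illustrative figures from the list above.
ASSETS_MW = {
    "rooftop_solar": 50,
    "batteries": 30,
    "ev_chargers": 20,
    "smart_hvac": 15,
    "small_wind": 10,
}
BATTERY_STORAGE_MWH = 100

total_mw = sum(ASSETS_MW.values())
shares = {name: mw / total_mw for name, mw in ASSETS_MW.items()}
largest = max(shares, key=shares.get)

# How long the batteries can sustain their full rated power.
battery_hours_at_full_power = BATTERY_STORAGE_MWH / ASSETS_MW["batteries"]

print(f"total: {total_mw} MW, largest share: {largest} ({shares[largest]:.0%})")
print(f"battery duration at full power: {battery_hours_at_full_power:.1f} h")
```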

The AI system managing the virtual power plant must solve a complex optimization problem: which assets should charge or discharge at each moment to maximize value while respecting technical constraints and customer preferences?

It sounds simple. It's not. The optimization considers:

- Price signals: Electricity is cheaper at some times and locations. Should we charge batteries now (cheap) or later (expensive)? Should we shift load to where it's cheaper?

- Physical constraints: Batteries have minimum/maximum charge rates. Cables have current limits. Transformers have capacity limits. The system must respect all of them simultaneously.

- Customer preferences: Some customers want their EV charged by 8 AM tomorrow. Others want solar energy prioritized over grid energy. These constraints must be satisfied.

- Forecast uncertainty: We don't know exactly how much solar we'll have tomorrow. The system must maintain enough reserve capacity to accommodate surprises.

- Market opportunities: Sometimes the grid offers premium prices for fast response. Should we sacrifice customer preferences to capture these payments?

Traditional optimization algorithms would explore millions of possible combinations and pick the best one. That works for small problems but breaks down with thousands of assets and hundreds of decision points. Machine learning systems use neural networks trained on similar optimization problems to propose good solutions quickly, then refine them with classical algorithms.
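To give a feel for the problem, here is a deliberately simple stand-in: a threshold rule for one aggregated battery that charges when prices are low and discharges when they are high, while respecting power and energy limits. This is not the neural-network-plus-classical-refinement approach described above, just a baseline such a system would need to beat. The price thresholds, the hourly prices, and the assumption of lossless storage are all hypothetical.

```python
def threshold_dispatch(prices, power_mw=30.0, capacity_mwh=100.0,
                       buy_below=40.0, sell_above=90.0):
    """Simulate hourly charge/discharge decisions for one aggregated battery.

    Returns (schedule, profit, final_soc). In the schedule, negative values
    mean charging and positive values mean discharging (MWh per hour).
    """
    soc = 0.0      # state of charge in MWh, starts empty
    profit = 0.0
    schedule = []
    for price in prices:  # one price per hour, in $/MWh
        if price < buy_below:
            energy = min(power_mw, capacity_mwh - soc)  # respect both limits
            soc += energy
            profit -= energy * price
            schedule.append(-energy)
        elif price > sell_above:
            energy = min(power_mw, soc)  # cannot discharge more than stored
            soc -= energy
            profit += energy * price
            schedule.append(energy)
        else:
            schedule.append(0.0)
    return schedule, profit, soc

# Hypothetical hourly prices: cheap overnight, expensive in the evening.
hourly_prices = [30, 25, 35, 60, 80, 120, 110, 70]
schedule, profit, final_soc = threshold_dispatch(hourly_prices)
print(schedule, profit, final_soc)
```

Even this toy version shows why the real problem is hard: the thresholds interact with forecasts, customer deadlines, and thousands of assets with different limits.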

The business case is compelling. Virtual power plant operators make money from:

- Energy arbitrage: Buy electricity cheap, sell it expensive

- Capacity payments: Get paid to be available during peak hours

- Frequency regulation: Get paid to inject or absorb power to maintain grid frequency

- Congestion relief: Get paid to reduce load in congested areas

- Renewable integration: Get paid to absorb excess renewable generation

These revenue streams often exceed the cost of deployment within 3-5 years. Virtual power plants have deployed across Australia, California, Germany, and Scandinavia with measured success. A 2023 study of Australian virtual power plants found they reduced peak demand by 15-20% in participating areas while reducing participants' electricity costs by 25-30%.

For data centers, virtual power plants represent something different: an opportunity to become active participants in grid stability rather than passive consumers. Some operators are installing batteries and bidirectional EV charging to support the grid during peak periods. During off-peak hours with abundant renewable generation, they charge batteries and offer EV charging. During peak periods, they shift non-latency-sensitive compute loads to lower-demand regions and potentially provide backup power.

Spatial Shifting: Moving Computation to Where Energy is Available

Hyperscale data center operators have a unique advantage that most industrial facilities lack: flexibility in where computation happens. A cloud provider running training on a neural network can run it in Dublin, Oregon, or Singapore. The result is identical. The location is fungible.

This flexibility creates an opportunity. Instead of fixing data centers in one location and forcing grids to accommodate them, what if operators shifted where computation happens based on where energy is available?

This is spatial shifting. The concept is elegant. At any moment, different regions have different renewable energy availability and different electricity prices. The system moves compute loads toward abundant, cheap energy and away from scarce, expensive energy. The result: data centers operate more efficiently while supporting grid stability.

Here's a concrete example. Imagine a hyperscaler with data centers in three regions:

- Region A (Iceland): Abundant geothermal and hydro power, cheap electricity, but limited data center capacity

- Region B (Oregon): Abundant wind power overnight, but tight supply during the afternoon demand peak

- Region C (London): Expensive electricity, high demand, limited renewable capacity

Traditional planning would distribute workloads across regions statically based on latency requirements and contractual commitments. Spatial shifting does something smarter.

Non-latency-sensitive workloads (data processing, model training, video transcoding, batch analytics) shift dynamically based on energy availability. When Region B has wind generation surplus at 2 AM and electricity costs

The impact compounds. Over a year, spatial shifting might reduce a data center's average electricity cost by 15-25%. More importantly, it reduces the aggregate demand on expensive, constrained grids during peak hours. A single data center shifting 10 MW of load away from London's peak reduces strain on the entire Western European grid.

Implementing this requires sophisticated systems:

Real-time energy accounting: The system must know the current cost of electricity at each data center, including both the commodity price and the grid constraint premium (a premium that increases when the grid is stressed).

Workload characterization: The system must understand which workloads can move (low latency sensitivity) and which can't (customer-facing interactive systems). A machine learning recommendation system requires low latency. A data processing pipeline doesn't.

Data movement optimization: Moving workloads between data centers requires moving data. A terabyte of neural network weights takes time to transfer. The system must decide: is the energy savings worth the data movement cost and latency impact?

Contractual compliance: Some workloads have data residency requirements or latency SLAs that prevent movement. The system must respect these constraints while finding optimal solutions within them.

Carbon accounting: Forward-looking operators care about carbon, not just cost. Wind energy in Oregon is carbon-free but might be more expensive than hydroelectric power in Iceland. The system can optimize for carbon instead of cost, or balance both.
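Several of these requirements can be combined into one placement decision. The sketch below is a simplified illustration under stated assumptions: the `Region` fields, the flat per-terabyte transfer cost, and all prices are hypothetical, and carbon and latency are left out for brevity.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Region:
    name: str
    price_usd_per_mwh: float
    free_capacity_mw: float

def choose_region(job_mw, job_hours, data_tb, current, regions,
                  transfer_usd_per_tb=20.0, movable=True):
    """Pick the region with the lowest energy-plus-transfer cost for a job."""
    if not movable:
        # Data residency or latency SLAs pin the workload where it is.
        return current

    def total_cost(region):
        energy = job_mw * job_hours * region.price_usd_per_mwh
        moved = region.name != current.name
        return energy + (data_tb * transfer_usd_per_tb if moved else 0.0)

    # Staying put is always feasible; moving requires spare capacity.
    feasible = [r for r in regions
                if r.name == current.name or r.free_capacity_mw >= job_mw]
    return min(feasible, key=total_cost)

# Hypothetical snapshot at 2 AM Pacific time.
iceland = Region("iceland", 30.0, 2.0)
oregon = Region("oregon", 25.0, 20.0)
london = Region("london", 140.0, 50.0)
regions = [iceland, oregon, london]

training_job = choose_region(5.0, 6.0, 10.0, london, regions)
tiny_job = choose_region(0.01, 1.0, 500.0, london, regions)
pinned_job = choose_region(5.0, 6.0, 10.0, london, regions, movable=False)
print(training_job.name, tiny_job.name, pinned_job.name)
```

The small job stays in London because moving its data would cost far more than the energy it saves, which is the trade-off the data movement requirement describes.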

Operators who've deployed spatial shifting report interesting results. Meta's data center strategy involves maintaining excess capacity in renewable-rich regions and shifting workloads toward them as renewable availability fluctuates. Google has disclosed that geographic load shifting reduced their energy consumption by approximately 13% while improving renewable electricity utilization.

The technology isn't yet perfect. Moving workloads takes minutes to hours, and some workloads can't move without impacting user experience. But as systems improve, as data center capacity in renewable-rich regions expands, and as electricity price volatility increases, spatial shifting becomes increasingly important.

The virtual power plant aggregates distributed assets, creating a 125 MW resource. Rooftop solar panels contribute the largest share, followed by battery systems. Estimated data.

Knowledge Graphs and Causal Understanding in Energy Systems

Most data scientists would agree that correlation doesn't imply causation. But in practice, machine learning systems optimize based on correlations. A system trained on historical data learns that when certain patterns occur, certain outcomes follow. It doesn't understand why.

This limitation becomes critical in energy systems. When an LNG shipment is delayed, fuel costs increase. This causes power plant dispatch decisions to change. This affects grid frequency and transmission line flows. These effects cascade across interconnected grids, potentially causing problems thousands of miles away.

A machine learning system trained on historical correlations might learn "when shipping delays occur, transmission congestion appears 18 hours later." It could use this correlation to predict congestion. But it wouldn't understand the causal chain, so it couldn't explain the relationship to operators or anticipate novel situations.

Knowledge graphs solve this by explicitly mapping causal relationships in the system. A knowledge graph for an energy system might include:

- Entities: Power plants, transmission lines, substations, batteries, data centers, weather stations, LNG terminals, natural gas pipelines

- Relationships: Generates, transmits, stores, consumes, controls, influences, supplies

- Attributes: Generation capacity, transmission capacity, current flow, cost, carbon intensity, location, availability

With this graph, the AI system can reason about causality. "If an LNG shipment is delayed, the gas terminal will reduce supply, so gas power plants will generate less, so the grid frequency will change, so the system should prepare backup resources."
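The reasoning chain in that example can be sketched as a walk over a small directed graph. The node names and the `downstream_effects` helper are hypothetical, and a real knowledge graph would carry typed relationships and attributes rather than bare edges; this only shows how an explicit causal path differs from a learned correlation.

```python
from collections import deque

# Each key causes the events in its list (hypothetical, simplified edges).
CAUSES = {
    "lng_shipment_delayed": ["gas_terminal_supply_reduced"],
    "gas_terminal_supply_reduced": ["gas_plant_output_reduced"],
    "gas_plant_output_reduced": ["grid_frequency_deviation"],
    "grid_frequency_deviation": ["backup_resources_needed"],
}

def downstream_effects(graph, event):
    """Breadth-first walk returning (effect, causal_path) pairs."""
    seen = {event}
    queue = deque([(event, [event])])
    effects = []
    while queue:
        node, path = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                new_path = path + [nxt]
                effects.append((nxt, new_path))
                queue.append((nxt, new_path))
    return effects

effects = downstream_effects(CAUSES, "lng_shipment_delayed")
for effect, path in effects:
    print(effect, "<-", " -> ".join(path))
```

Because every effect comes with its path, the system can show operators why it expects a problem, not just that it expects one.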

This reasoning capability transforms forecasting. Instead of learning from historical patterns, the system can simulate the physical processes that actually occur. This makes it more robust to novel situations.

Knowledge graphs also enable integration of disparate data sources. Historically, energy market data, physical grid data, weather data, and commodity market data existed in separate systems. Analyzing relationships between them required custom integration work. Knowledge graphs can unify these datasets, allowing the AI system to understand how policy decisions, weather, market conditions, and physical grid operations all interact.

Some utilities have begun deploying knowledge graphs for specific applications. The Spanish transmission operator Red Eléctrica uses knowledge graphs to improve renewable energy forecasting by explicitly modeling relationships between weather patterns, generation facility characteristics, and historical generation. The result: forecast accuracy improved by 7-12% compared to standard machine learning approaches.

Beyond forecasting, knowledge graphs enable root cause analysis. When a grid event occurs, an AI system with causal understanding can trace back: what triggered this? What alternatives could have prevented it? This transforms grid operations from reactive (responding to problems) to proactive (preventing problems).

AI Agents: From Analysis to Autonomous Decision-Making

Most current AI systems in energy management are decision-support tools. A utility operator sees forecasts, scenario simulations, and recommendations. The operator makes the final decision about which action to take. The AI helps, but humans remain in control.

AI agents represent the next evolution: systems that can perceive conditions, reason about options, make decisions, and take actions autonomously, without human intervention.

This isn't science fiction. Early versions are already operating. Utilities are testing AI agents that:

- Monitor grid conditions continuously

- Detect anomalies or potential problems before they occur

- Simulate response options and their consequences

- Execute corrective actions automatically

- Learn from outcomes and adjust future decisions

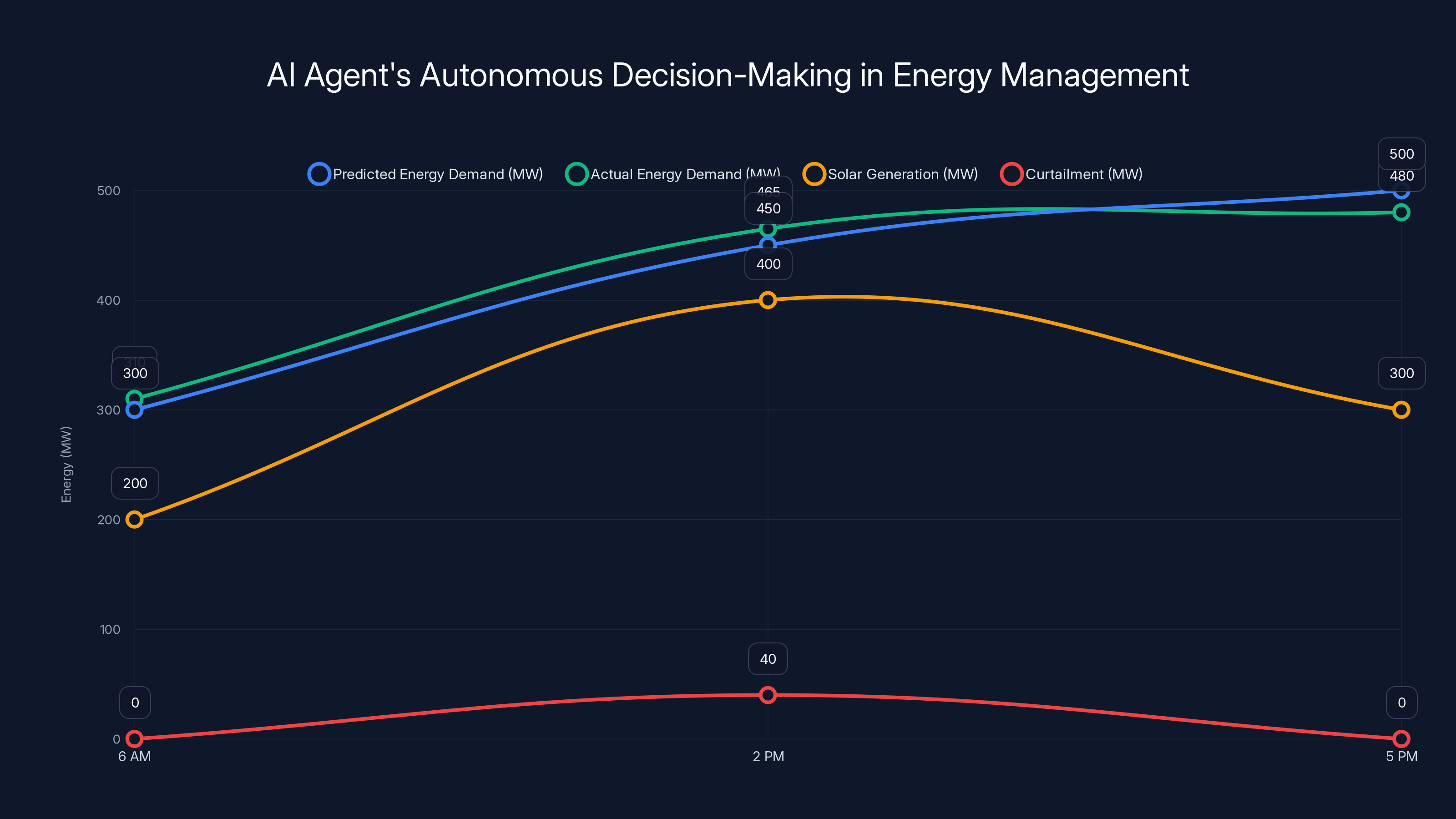

Here's what an AI agent managing grid operations might do during a typical day:

6 AM: The agent wakes up and reviews overnight conditions. Solar generation will ramp up starting in two hours. Battery systems are fully charged. Demand is about to increase as people wake up. The agent predicts that solar generation will exceed regional demand by 2-3 PM, causing curtailment (wasted renewable energy) unless loads shift. Action: automatically trigger incentive offers to smart buildings and EV chargers in the region, offering them lower rates if they increase consumption between 2-4 PM.

2 PM: Solar generation peaks. The incentive worked partially. Demand increased by 15%, but 40 MW of solar is still curtailed. The agent checks with neighboring grids. One region can accept additional power through interconnection lines. Action: automatically adjust interconnection flows and power generation dispatch to move excess solar to neighboring regions where it has value.

5 PM: Demand peaks as people return home. Wind generation has dropped unexpectedly due to changing weather. The agent forecasts that the region will be short 80 MW between 5-7 PM. Multiple response options exist:

- Discharge batteries (reduces available capacity for overnight)

- Shift data center loads to other regions (some latency cost)

- Import power from neighboring regions (more expensive)

- Shed non-critical loads (customer impact)

The agent runs full simulations of each option including cascading effects, then chooses the combination that minimizes total cost and risk. Action: automatically discharge 40 MW from batteries, shift 30 MW of data center loads, and import 10 MW from neighbors at negotiated rates.
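A much-simplified version of that 5 PM decision is a merit-order fill: use the cheapest option first, up to its limit, until the shortfall is covered. The per-MW costs and limits below are hypothetical and were chosen so the result matches the 40/30/10 split in the scenario; a real agent would simulate cascading effects rather than sort a list.

```python
def cover_shortfall(shortfall_mw, options):
    """Fill a shortfall from the cheapest options first.

    options: list of (name, max_mw, cost_per_mw) tuples, where cost_per_mw
    is assumed to already include a risk penalty.
    """
    plan = {}
    remaining = shortfall_mw
    for name, max_mw, _cost in sorted(options, key=lambda o: o[2]):
        if remaining <= 0:
            break
        used = min(max_mw, remaining)
        plan[name] = used
        remaining -= used
    if remaining > 0:
        raise ValueError(f"cannot cover shortfall, {remaining} MW unmet")
    return plan

# Hypothetical limits (MW) and risk-adjusted costs ($/MW) for each response.
response_options = [
    ("shed_non_critical_load", 100, 500),
    ("import_from_neighbors", 50, 60),
    ("discharge_batteries", 40, 20),
    ("shift_data_center_load", 30, 35),
]
plan = cover_shortfall(80, response_options)
print(plan)
```

Load shedding never appears in the plan because cheaper options cover the full 80 MW first.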

8 PM: Wind generation recovers. Demand drops. The agent now has excess generation and needs to manage it. Action: automatically reduce output from expensive generators, offer discounted rates to incentivize off-peak charging and consumption, and recharge batteries for the next day.

Midnight: The agent reviews the day's performance, identifies deviations from forecasts, and updates its predictive models. It finds that weather forecasts were inaccurate in ways similar to three other recent days. It tags these patterns and will weight them more heavily in future model updates.

This isn't a speculative scenario. Versions of this are operating today. The challenge is moving from pilot systems to production scale while maintaining safety and regulatory compliance.

Why are AI agents essential for managing data center growth? Because human operators literally cannot make decisions fast enough. Grid frequency deviations that take seconds to propagate are corrected by agents in milliseconds. Load forecasting errors that would have taken hours to identify and address are detected in minutes. Optimal resource allocation decisions that would require days of expert analysis happen in seconds.

Moreover, AI agents can handle complexity that exceeds human cognitive capacity. Optimizing across thousands of variables simultaneously while respecting hundreds of constraints is not something human operators can do mentally. The system becomes too complex. AI agents handle it routinely.

The trust problem is real, though. Operators are naturally hesitant to give autonomous systems control over critical infrastructure. The solution is graduated autonomy. Start with systems that recommend actions to human operators. Move to systems that execute actions with human oversight. Eventually move to systems that make decisions within predefined parameters autonomously, with human review of boundary cases.
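Graduated autonomy can be expressed as a small gate in front of every action. The three levels below mirror the stages just described; the names `Autonomy` and `decide`, and the MW-based boundary, are assumptions made for this sketch.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    RECOMMEND = 1   # system suggests, operator acts
    SUPERVISED = 2  # system acts once an operator approves
    BOUNDED = 3     # system acts alone within predefined limits

def decide(level, action_mw, limit_mw, operator_approved=False):
    """Return what should happen to a proposed action at a given level."""
    if level == Autonomy.RECOMMEND:
        return "recommend"
    if level == Autonomy.SUPERVISED:
        return "execute" if operator_approved else "await_approval"
    # BOUNDED: act autonomously inside the limit, escalate boundary cases.
    if abs(action_mw) <= limit_mw:
        return "execute"
    return "escalate"

print(decide(Autonomy.BOUNDED, action_mw=40, limit_mw=50))
print(decide(Autonomy.BOUNDED, action_mw=80, limit_mw=50))
```

Raising `limit_mw` over time is one way to widen the autonomous envelope as operators gain confidence.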

This has precedent. Modern aircraft fly themselves 99% of the time using autopilot. Pilots monitor and intervene when needed. The result: safer operations than human-only control. Energy grids are moving in the same direction.

The AI agent effectively manages energy demand and generation, reducing curtailment and optimizing grid operations. Estimated data reflects typical daily operations.

The Carbon Opportunity: AI Optimization for Emissions Reduction

Strictly speaking, AI grid optimization is neutral with respect to carbon: it minimizes whatever objective it is given. If you optimize to minimize cost, you reduce cost. If you optimize to minimize carbon, you reduce carbon. The underlying capability is the same: finding the optimal solution to a complex problem.

But carbon optimization is different because the objective changes decision-making in interesting ways. Instead of choosing the cheapest electricity source, you choose the lowest-carbon source. Instead of minimizing energy consumption, you maximize renewable energy utilization. These lead to different operational decisions.

Consider a decision: should a data center run a non-urgent computation job right now, or should it wait until tomorrow? The cost optimization algorithm says: run it now (cheaper). The carbon optimization algorithm says: wait until tomorrow (more renewable generation expected). Both are valid based on different objectives.
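The run-now-or-wait decision can be sketched with two time slots and three objectives: pure cost, pure carbon, and a blend that prices carbon. All prices, carbon intensities, and the carbon price are hypothetical numbers chosen for illustration.

```python
# Hypothetical slots: price in $/MWh, carbon intensity in kgCO2/MWh.
SLOTS = [
    {"when": "now", "price": 40.0, "carbon": 450.0},
    {"when": "tomorrow_noon", "price": 55.0, "carbon": 120.0},
]

def cheapest(slots):
    return min(slots, key=lambda s: s["price"])

def cleanest(slots):
    return min(slots, key=lambda s: s["carbon"])

def blended(slots, carbon_price_usd_per_tonne):
    """Minimize price plus the cost of emissions at a given carbon price."""
    def effective_price(slot):
        tonnes_per_mwh = slot["carbon"] / 1000.0
        return slot["price"] + carbon_price_usd_per_tonne * tonnes_per_mwh
    return min(slots, key=effective_price)

print(cheapest(SLOTS)["when"])      # cost objective
print(cleanest(SLOTS)["when"])      # carbon objective
print(blended(SLOTS, 30)["when"])   # low carbon price
print(blended(SLOTS, 100)["when"])  # high carbon price
```

With these numbers the blended objective flips from "now" to "tomorrow_noon" once the carbon price passes roughly $45 per tonne, which shows how a single parameter moves the schedule between the two answers.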

Forward-looking operators are optimizing for carbon explicitly. Google discloses that their data centers aim to run on 24/7 carbon-free energy by matching electricity consumption with renewable generation hourly. This requires significant optimization work: shifting workloads by hours or days to align with renewable availability, diversifying geographic locations to capture different renewable sources (wind, solar, hydro), and potentially running workloads at less-than-peak efficiency to maximize renewable utilization.

The technical challenge is enormous. Perfect 24/7 carbon-free matching would require massive battery storage or demand flexibility. Neither is currently available at required scale. But incremental progress is visible. Operators improving from 50% to 60% renewable matching save roughly 120,000 tons of carbon annually per data center.

AI systems enable this optimization at scale. A machine learning system managing grid operations can:

- Forecast renewable generation hours or days ahead with 80-90% accuracy

- Optimize data center scheduling to align compute with renewable availability

- Coordinate across data centers to find the globally optimal schedule that maximizes renewable utilization

- Negotiate with power producers to secure renewable energy purchases that match timing

- Adjust applications in real-time to use more compute when renewable generation is abundant

This creates a virtuous cycle. As data centers become more efficient at using renewable energy, renewable energy becomes more valuable. This encourages investment in additional renewable capacity. As renewable capacity increases, grid balancing becomes easier (more resources to coordinate). As grid balancing becomes easier, data centers can operate more efficiently with lower carbon footprint.

Practical Implementation: Building Intelligent Energy Systems

All of this technology is fascinating in theory, but how does it actually get built and deployed? What does implementation look like for utilities, data centers, and grid operators?

Step 1: Data Integration and Real-time Visibility

You cannot optimize what you cannot see. The first requirement is real-time visibility into the energy system. This means:

- Installing sensors on transmission lines that report voltage, current, and frequency in real-time

- Connecting generation facilities to report current output and capacity

- Installing smart meters on key loads to understand consumption patterns

- Integrating weather stations and forecasts to predict renewable generation

- Connecting market data feeds to track prices and trading

This is expensive. A single transmission line monitoring system costs $50,000-150,000. A major utility might need thousands of them. But the benefits are substantial: prevented outages, optimized operations, and better investment decisions.

Step 2: Forecasting Model Development

With data in hand, the next step is building models that can forecast future conditions. This involves:

- Collecting historical data from multiple years

- Developing machine learning models for demand forecasting

- Developing models for renewable generation forecasting

- Validating models against holdout test data

- Implementing models in production systems

This typically takes 6-18 months. Demand forecasting is relatively mature; renewable forecasting is still evolving. A well-tuned system should forecast demand 24 hours ahead with 90%+ accuracy and renewable generation with 80-90% accuracy.
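
The validation step can be illustrated with the simplest possible baseline: a seasonal naive forecast scored against a held-out day. This is a sketch on synthetic data, and it assumes "accuracy" means 100 minus the mean absolute percentage error (MAPE); the article's accuracy figures may be based on a different metric. Any real model should at least beat a baseline like this one.

```python
def seasonal_naive_forecast(history, season=24):
    """Forecast the next `season` hours by repeating the last observed season."""
    if len(history) < season:
        raise ValueError("need at least one full season of history")
    return list(history[-season:])


def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Assumes no zero actuals."""
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


# Synthetic hourly demand (MW): a daily shape that grows 2% day over day.
daily_shape = [60, 58, 57, 57, 59, 64, 72, 80, 85, 88, 90, 91,
               92, 91, 90, 89, 90, 94, 97, 95, 88, 78, 70, 64]
days = [[v * (1.02 ** d) for v in daily_shape] for d in range(8)]
series = [v for day in days for v in day]

train, holdout = series[:-24], series[-24:]   # hold out the final day
forecast = seasonal_naive_forecast(train)
accuracy = 100.0 - mape(holdout, forecast)    # about 98% on this synthetic series
```

The holdout day is never shown to the forecaster, which is the property that makes the score meaningful.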

Step 3: Optimization Algorithm Development

With forecasts, the next step is optimization: given predicted supply and demand, what's the best dispatch schedule? This involves:

- Defining the optimization objective (minimize cost, minimize carbon, maximize reliability, or multi-objective)

- Building models of physical constraints (transmission capacity, generator ramp rates, battery limits)

- Implementing optimization algorithms (linear programming, dynamic programming, or machine learning-based approaches)

- Testing the optimization offline against historical scenarios

- Gradually introducing it to operations

This is computationally complex. A large grid might have thousands of variables and millions of constraints. Commercial optimization solvers can handle problems of this size, but licenses are expensive. Some operators use machine learning approaches that are faster but less exact.
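
For intuition, here is a minimal sketch of the dispatch problem in its simplest form: merit-order dispatch, where the cheapest generators are used first. With only capacity limits and linear costs this greedy rule gives the least-cost answer, but it deliberately ignores transmission capacity, ramp rates, and battery limits, which is exactly where full solvers become necessary. The fleet and prices are made-up values.

```python
def merit_order_dispatch(generators, demand_mw):
    """Meet demand at least cost by dispatching the cheapest units first.

    generators: list of (name, capacity_mw, cost_per_mwh) tuples
    Returns (dispatch dict in MW, total cost for one hour).
    Ignores transmission limits, ramp rates, and storage.
    """
    dispatch = {}
    remaining = demand_mw
    total_cost = 0.0
    for name, capacity, cost in sorted(generators, key=lambda g: g[2]):
        output = min(capacity, remaining)
        dispatch[name] = output
        total_cost += output * cost
        remaining -= output
    if remaining > 1e-9:
        raise ValueError("demand exceeds total available capacity")
    return dispatch, total_cost


# Hypothetical fleet: (name, capacity in MW, marginal cost in $/MWh).
fleet = [("gas_peaker", 200, 90.0), ("wind", 300, 0.0),
         ("solar", 150, 0.0), ("gas_ccgt", 400, 45.0)]
dispatch, cost = merit_order_dispatch(fleet, demand_mw=700)
```

For 700 MW of demand, wind and solar run at full output, the combined-cycle plant covers the remaining 250 MW, and the peaker stays off.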

Step 4: Integration with SCADA Systems

The system must integrate with SCADA (Supervisory Control and Data Acquisition) systems that actually control grid equipment. This means:

- Building APIs that connect optimization outputs to SCADA systems

- Implementing safety checks and limits on autonomous actions

- Creating dashboards for operator monitoring

- Testing extensively before handing over control

This is critical. A buggy integration could cause serious problems. Operators typically run parallel systems for months, comparing AI recommendations to human operators, before moving to autonomous operation.
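
Two of the ideas above, safety limits and parallel running, can be sketched in a few lines. This is an illustration under assumed names and thresholds, not a real SCADA interface: the first function clamps an AI-proposed setpoint to absolute bounds and a maximum step size, and the second measures how often AI recommendations agreed with what human operators actually did.

```python
def apply_safety_limits(requested_mw, current_mw, min_mw, max_mw, max_step_mw):
    """Clamp an AI-proposed setpoint to absolute limits and a maximum step size."""
    bounded = max(min_mw, min(max_mw, requested_mw))
    step = max(-max_step_mw, min(max_step_mw, bounded - current_mw))
    return current_mw + step


def shadow_mode_agreement(ai_setpoints, operator_setpoints, tolerance_mw):
    """Share of intervals where the AI was within tolerance of the operator."""
    pairs = list(zip(ai_setpoints, operator_setpoints))
    close = sum(1 for ai, op in pairs if abs(ai - op) <= tolerance_mw)
    return close / len(pairs)


# The AI asks for 180 MW; limits allow at most 150 MW and a 20 MW move per step.
safe = apply_safety_limits(requested_mw=180, current_mw=100,
                           min_mw=0, max_mw=150, max_step_mw=20)

# Hypothetical shadow-mode log: AI recommendations vs. operator actions (MW).
agreement = shadow_mode_agreement([100, 120, 140, 90], [105, 118, 110, 92],
                                  tolerance_mw=5)
```

In this example the request is cut back to 120 MW, and the AI matched the operator in three of four intervals. The disagreements are the interesting part: each one is either a case the AI got wrong or an improvement the operator missed.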

Step 5: Continuous Learning and Improvement

Once deployed, the system must learn and improve:

- Comparing predictions to actual outcomes and identifying systematic errors

- Retraining models with new data quarterly or semi-annually

- Analyzing operational decisions that created problems and adjusting algorithms

- Staying current with new forecasting and optimization techniques

This is ongoing work. Energy systems are constantly changing (new generation added, loads shifting, grid configuration changing). Models trained on last year's data are already slightly stale.
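
One way to catch a stale model between scheduled retrains is to track signed forecast error: random errors average out, while a persistent bias suggests the system has changed. The sketch below uses assumed thresholds (2% bias, 8% absolute error) chosen purely for illustration.

```python
from statistics import mean


def needs_retraining(actual, predicted, bias_threshold_pct=2.0, error_threshold_pct=8.0):
    """Flag a model when recent errors are systematically biased or too large.

    Returns (flag, mean_bias_pct, mean_abs_error_pct).
    """
    pct_errors = [100.0 * (p - a) / a for a, p in zip(actual, predicted)]
    bias = mean(pct_errors)                         # signed: positive = over-forecast
    abs_error = mean([abs(e) for e in pct_errors])  # unsigned magnitude
    flag = abs(bias) > bias_threshold_pct or abs_error > error_threshold_pct
    return flag, bias, abs_error


# Hypothetical week where a new load makes the model under-forecast every day.
actual = [100, 104, 98, 110, 107, 103, 99]
predicted = [95, 99, 94, 104, 101, 98, 95]
flag, bias, abs_error = needs_retraining(actual, predicted)
```

Every daily error here is modest, but they all point the same way, so the bias check trips even though the absolute error threshold does not.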

Total cost to build a complete intelligent energy system for a major utility? Typically $50-150 million over 3-5 years. This includes hardware (sensors, servers), software development, consulting, training, and operations. The payoff comes from:

- Reduced fuel costs: 5-10% operational cost reduction

- Reduced curtailment: preventing renewable generation waste

- Improved reliability: fewer outages and disruptions

- Deferred infrastructure: optimizing existing assets before building new ones

Most utilities find these benefits justify the investment, with payback periods of 5-7 years.
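
The payback arithmetic is simple enough to check by hand. The sketch below uses an undiscounted payback calculation with hypothetical inputs: the $100M project cost and 7.5% savings rate sit inside the ranges quoted above, while the $200M annual operating cost and $2M curtailment benefit are assumptions picked for the example.

```python
def simple_payback_years(capital_cost, annual_operating_cost, savings_rate,
                         other_annual_benefits=0.0):
    """Undiscounted payback: capital cost divided by yearly benefit."""
    annual_benefit = annual_operating_cost * savings_rate + other_annual_benefits
    if annual_benefit <= 0:
        raise ValueError("annual benefit must be positive")
    return capital_cost / annual_benefit


# Hypothetical utility: $100M project, $200M/year operating cost,
# 7.5% savings (midpoint of the 5-10% range), $2M/year from avoided curtailment.
years = simple_payback_years(100e6, 200e6, 0.075, other_annual_benefits=2e6)
```

With these inputs the yearly benefit is $17M and the payback is just under six years. A real business case would also discount future savings and include ongoing operating costs for the system itself.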

Figure: Estimated share of data by source in AI-driven grid management. Substations contribute the largest share, followed by solar and wind facilities.

The Competitive Advantage: Who's Leading and Who's Falling Behind

Around the world, energy system intelligence is becoming a competitive advantage. Regions that deploy it first gain significant benefits. Those that don't risk infrastructure constraints and higher costs.

The Nordic countries are leading in many dimensions. Denmark, Norway, Sweden, and Finland all have high renewable penetration (60-100% of electricity from renewables) and sophisticated grid management systems. They integrate wind and hydro efficiently, support Europe's energy balancing, and maintain reliable systems despite high variability. Their secret: decades of investment in grid monitoring, forecasting, and optimization.

Germany has made massive renewable investments but struggles with integration. When the grid reaches 100% renewable generation on sunny, windy days, there's nowhere for excess energy to go. Some is exported to neighbors. Some is curtailed. The system is working, but it's inefficient. German operators are now deploying more sophisticated storage and demand management systems to reduce waste.

The United States is geographically divided. California leads with sophisticated renewable integration, virtual power plants, and demand response systems. It also has some of the most expensive electricity in the nation, due in part to these systems and California's environmental regulations. Texas pioneered renewable integration and has benefited from cheap wind energy, but faced operational challenges during the 2021 winter freeze. Other states are moving more slowly.

For data center operations, the geographic differences matter enormously. A facility in Denmark has cheap, clean electricity and a grid designed for renewable integration. A facility in Texas has cheap electricity from wind but occasional grid stress. A facility in London has expensive, carbon-intensive electricity and constrained grid capacity.

Smart operators are establishing facilities in regions with good energy fundamentals (cheap electricity, a high renewable share, sophisticated grid management) and weighing this alongside labor costs, fiber infrastructure, and regulatory environment. Some operators are explicitly targeting regions with grid constraints to support local communities (a public relations benefit) while gaining operational advantages (lower electricity prices when renewable output would otherwise be curtailed).

Governments are taking notice. The UK's energy strategy now explicitly considers data center capacity and coordinates it with renewable energy deployment plans. The EU is developing frameworks to ensure data centers support grid stability rather than destabilizing it. These regulatory developments will increasingly shape where data centers locate and how they operate.

The Barriers: Why This Isn't Adopted Everywhere Yet

If AI grid optimization is so beneficial, why isn't it deployed everywhere?

Cost and complexity are the first barriers. Developing these systems requires expertise in electrical engineering, machine learning, software development, and operations. Few organizations have this expertise in-house. Hiring external consultants costs millions. Many utilities struggle to justify this spending when existing systems work adequately.

Regulatory uncertainty is the second barrier. Grid operation is highly regulated. Making changes to how grids operate requires regulatory approval. Regulators are cautious about autonomous systems, rightfully so. Getting approval to test new approaches can take years. Some jurisdictions actively restrict automated grid control.

Legacy infrastructure is the third barrier. Most grids were built decades ago without real-time monitoring or automated control. Retrofitting these systems is expensive and risky. You cannot simply shut down an existing grid and upgrade it. You must maintain operations while slowly, carefully adding new capabilities. This takes time and money.

Skills shortage is the fourth barrier. Building and operating intelligent energy systems requires people with skills that span electrical engineering, data science, and software development. These people are rare and expensive. Many organizations struggle to hire and retain them.

Vendor lock-in is the fifth barrier. Deploying proprietary systems from vendors creates long-term dependencies. Organizations are rightfully concerned about being locked into expensive vendor relationships. Open-source alternatives are limited, creating a dilemma.

Performance concerns are the sixth barrier. Any system change risks stability. What if the AI makes a bad decision? What if the forecast is wrong? What if the optimization algorithm produces unexpected results? These concerns are valid. Robust testing and gradual deployment address them, but operators remain cautious.

Despite these barriers, progress is happening. The value proposition is strong enough that utilities, data centers, and grid operators are beginning deployment. Cost is declining as competition increases and open-source tools mature. Regulatory frameworks are evolving to support innovation. As early deployments prove success, adoption will accelerate.

Looking Forward: The Grid of 2035

What will energy grids look like in a decade?

If current trends continue, we'll see:

Fully distributed, real-time optimized energy systems. Grids will continuously optimize across thousands of generation resources, storage systems, and flexible loads. Operators will manage at a level of sophistication far beyond current capability. This enables higher renewable penetration with lower curtailment.

Geographic load balancing as standard practice. Data centers will routinely shift workloads across regions to align with energy availability. This will reduce peak demand on constrained grids while improving renewable utilization. Companies will report their 24/7 carbon-free energy percentage as a standard metric.

Prosumers as the norm. Most buildings will have solar panels and batteries. Most vehicles will have bidirectional charging. Businesses will actively participate in grid balancing, earning revenue from demand flexibility. The traditional divide between producers and consumers dissolves.

Virtual power plants at scale. Millions of distributed resources will coordinate through AI systems, effectively replacing large central generators for certain functions. This provides resilience (failures affect small portions of the system) and efficiency (small systems match demand locally).

Energy as an information problem. Grid operators will think about energy like data center operators think about data: something that flows, gets routed optimally, gets stored, and gets consumed efficiently. The physics is different, but the optimization principles are similar.

Will data centers continue growing? Almost certainly. Demand for AI, cloud computing, and digital services will continue to increase. But the way data centers integrate with energy systems will transform. Instead of passive consumers that utilities accommodate, they'll be active participants that support grid stability. Instead of locating based purely on labor and infrastructure, they'll locate based on energy availability. Instead of operating with fixed consumption patterns, they'll shift workloads dynamically.

This represents a fundamental shift in how energy and computing interact. It's not inevitable. Poor choices about regulation, investment, or technology could derail it. But the incentives are strong enough that we'll likely see substantial progress over the next decade.

The Role of Platforms Like Runable in Energy Optimization

Complexity management is central to solving data center energy challenges. As systems grow more sophisticated, managing them becomes harder. Runable represents a class of platforms designed to automate complex operational tasks through AI-driven workflows.

For energy system operators, this matters because managing the interactions between forecasting, optimization, and execution is tedious and error-prone. Runable's AI agents and automation capabilities could streamline operational workflows, generate reports on grid performance, and create presentation decks summarizing optimization results. Instead of manually running forecasts, optimization algorithms, and coordination with operators, these processes become automated.

For data center operators considering spatial shifting or demand response programs, Runable could automate the analysis of regional electricity prices, renewable availability, and optimal load distribution. The system could generate documents analyzing cost-benefit tradeoffs, create presentations for stakeholders, and automate workflows that trigger load shifts based on changing conditions.

Consider a typical workflow: an energy analyst receives updated grid forecasts at 10 AM daily, analyzes them against current data center configuration, identifies optimization opportunities, creates a summary for operations teams, and hands it off for implementation. With AI automation, this entire workflow runs without human intervention. Forecasts are ingested automatically. Analysis runs on schedule. Documents summarizing findings and recommendations are generated automatically. Operations teams receive a prioritized list of actions to take.

At $9/month, platforms like Runable are accessible to even small organizations. This democratizes access to AI-driven automation for energy management, allowing utilities and data center operators of all sizes to benefit from AI workflows without massive infrastructure investments.

Conclusion: Intelligence as Essential Infrastructure

We've arrived at a point where energy system complexity exceeds human cognitive capacity. A utility operator cannot simultaneously optimize across thousands of variables while respecting hundreds of constraints in real-time. A data center manager cannot manually decide where to shift workloads as grid conditions change hourly. Grid operators cannot predict disruptions far enough in advance to prevent them.

Yet these decisions must be made, and made well. The energy system that powers modern civilization depends on getting them right.

AI is not a solution we chose because it was convenient. It's a solution we need because the problem exceeded human capability. Using intelligence to solve the problems that intelligence created might sound paradoxical, but it reflects the reality of modern systems. We've built something so complex that only artificial intelligence can manage it.

This creates an opportunity. Regions, utilities, and companies that deploy intelligent energy systems early will operate more efficiently, pay less for energy, and produce less carbon. Those that don't will face increasing costs and constraints. The competitive advantage is substantial.

For data center operators, the implication is clear: energy will increasingly be a managed resource, allocated efficiently based on availability rather than consumed greedily regardless of cost. Data centers that adapt to this will thrive. Those that don't will find themselves in constrained regions paying premium prices.

The grid is becoming intelligent. Data centers must become intelligent in return. The dance between energy supply and computational demand is about to get a lot more sophisticated. Those who understand it first will write the rules for everyone else.

FAQ

What is the primary energy challenge posed by AI data centers?

AI-focused data centers consume enormous amounts of electricity, with a single facility using as much power as 100,000 homes. This concentrated demand creates infrastructure bottlenecks and forces grids to accommodate unpredictable power surges. Global data center demand is projected to reach 700 TWh in 2025 (equivalent to Japan's total annual electricity consumption) and could reach 3,500 TWh by 2050, creating unprecedented strain on energy infrastructure.

How do AI-driven grid management systems work?

AI grid management systems continuously process millions of data points from sensors, weather stations, power plants, and loads. Machine learning models forecast future demand and generation hours ahead. Optimization algorithms then identify the best combination of resources to meet demand while minimizing cost and carbon. Advanced systems execute these decisions autonomously, adjusting power dispatch, battery charging, and load distribution without human intervention. This enables grids to absorb unprecedented demand swings while maintaining stability.

What are virtual power plants and why do they matter for grid stability?

Virtual power plants aggregate distributed energy resources (solar panels, batteries, electric vehicles, smart buildings) into a coordinated system managed by AI. Instead of operating as individual, uncontrollable assets, thousands of small resources become a large flexible resource that can be optimized together. They provide benefits including energy cost arbitrage, peak demand reduction of 15-20%, and the ability to support grid services like frequency regulation, making renewable energy integration much more efficient.

How does spatial shifting help data centers manage energy costs and support grids?

Spatial shifting moves computational workloads across geographic regions based on where electricity is cheapest and most abundant. Non-latency-sensitive workloads (data processing, model training, video transcoding) shift to regions with abundant renewable energy and cheap prices, while latency-sensitive workloads stay local. This reduces average electricity costs by 15-25% while supporting grid stability by shifting demand away from constrained regions during peak hours. Some operators reduce energy consumption by 13% through geographic load shifting alone.
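
A minimal sketch of the placement logic: each workload goes to the cheapest region that still meets its latency limit and has spare capacity. The region names, prices, latencies, and workload sizes are all hypothetical, and this greedy rule is a simplification of what a real scheduler would do.

```python
def place_workloads(workloads, regions):
    """Assign each workload to the cheapest region that meets its latency limit.

    workloads: list of dicts with 'name', 'mwh', 'max_latency_ms'
    regions: list of dicts with 'name', 'price_per_mwh', 'latency_ms', 'spare_mwh'
    Returns {workload name: region name}.
    """
    spare = {r["name"]: r["spare_mwh"] for r in regions}
    by_price = sorted(regions, key=lambda r: r["price_per_mwh"])
    placement = {}
    # Place the most latency-sensitive workloads first; they have the fewest options.
    for w in sorted(workloads, key=lambda w: w["max_latency_ms"]):
        for r in by_price:
            if r["latency_ms"] <= w["max_latency_ms"] and spare[r["name"]] >= w["mwh"]:
                placement[w["name"]] = r["name"]
                spare[r["name"]] -= w["mwh"]
                break
        else:
            raise ValueError(f"no feasible region for {w['name']}")
    return placement


# Hypothetical regions and workloads.
regions = [
    {"name": "local", "price_per_mwh": 95.0, "latency_ms": 5, "spare_mwh": 50},
    {"name": "nordic", "price_per_mwh": 40.0, "latency_ms": 45, "spare_mwh": 30},
    {"name": "texas", "price_per_mwh": 55.0, "latency_ms": 110, "spare_mwh": 100},
]
workloads = [
    {"name": "web_api", "mwh": 10, "max_latency_ms": 20},
    {"name": "video_transcode", "mwh": 25, "max_latency_ms": 500},
    {"name": "model_training", "mwh": 40, "max_latency_ms": 10_000},
]
placement = place_workloads(workloads, regions)
```

The latency-sensitive API stays local, transcoding takes the cheapest region, and training spills to the next-cheapest region once the cheapest one runs out of spare capacity.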

What is a knowledge graph and why is it important for energy systems?

A knowledge graph explicitly maps causal relationships in energy systems, connecting entities like power plants, transmission lines, weather stations, and data centers. Instead of learning only correlations from historical data, AI systems can understand why certain outcomes occur. This enables better forecasting, root cause analysis of grid events, and reasoning about consequences of different actions. Knowledge graphs transform grid management from reactive (responding to problems) to proactive (preventing problems).

What are AI Agents in the context of energy grids?

AI Agents are autonomous systems that continuously monitor grid conditions, forecast future states, simulate response options, make decisions, and execute actions without human intervention. They perceive the energy system, reason about optimization opportunities, choose the best actions based on multiple objectives, and implement decisions in real-time. Rather than supporting human decisions, agents make decisions autonomously within predefined parameters, enabling response speeds and decision complexity that exceed human capability.

What barriers prevent wider adoption of intelligent energy systems?

Key barriers include: high cost (implementing full systems costs $50-150 million for major utilities), regulatory uncertainty (grids are highly regulated and slow to change), legacy infrastructure (retrofitting existing systems is complex and risky), skills shortage (few people have expertise spanning electrical engineering, data science, and operations), vendor lock-in concerns, and performance concerns (operators are rightfully cautious about autonomous systems controlling critical infrastructure). Despite these barriers, the value proposition is strong enough to drive gradual adoption.

How will energy grids evolve over the next decade?

Expect fully distributed, real-time optimized energy systems where thousands of resources are continuously coordinated. Geographic load balancing will become standard for data centers. Prosumers (buildings with solar and batteries) will actively participate in grid balancing. Virtual power plants will operate at massive scale. Energy will be managed like information: routed optimally, stored efficiently, and consumed based on availability. Data centers will shift from passive consumers to active participants supporting grid stability.

How does carbon optimization differ from cost optimization in energy systems?

Cost optimization chooses the cheapest energy source and running time. Carbon optimization chooses the lowest-carbon source and running time. This often leads to different decisions. A data center might run a job immediately if cost optimization is used, but delay it until tomorrow if carbon optimization is used (waiting for more renewable generation). AI systems can explicitly optimize for either objective or balance both, enabling operators to achieve carbon reduction while managing costs. Forward-looking operators are increasingly targeting carbon-free electricity matching.
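
The difference is easy to see in a small example. The sketch below picks the best start hour for a two-hour job under each objective, using made-up hourly price and carbon-intensity values. The same function serves both cases; only the signal being minimized changes.

```python
def best_start_hour(hourly_signal, duration_hours):
    """Start hour that minimizes the summed signal over a contiguous run."""
    last_start = len(hourly_signal) - duration_hours
    if last_start < 0:
        raise ValueError("job is longer than the planning horizon")
    return min(range(last_start + 1),
               key=lambda s: sum(hourly_signal[s:s + duration_hours]))


# Hypothetical 8-hour horizon.
price_per_mwh = [30, 32, 45, 60, 58, 50, 48, 52]
carbon_g_per_kwh = [420, 410, 300, 150, 120, 180, 350, 400]

cost_start = best_start_hour(price_per_mwh, duration_hours=2)       # hour 0
carbon_start = best_start_hour(carbon_g_per_kwh, duration_hours=2)  # hour 3
```

Cost optimization runs the job immediately, while carbon optimization waits three hours for cleaner power. Balancing both would mean minimizing a weighted combination of the two signals.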

What role will automation platforms play in energy management?

Automation platforms like Runable can streamline energy operations by automating routine analysis, report generation, and workflow execution. Energy analysts can use AI to automatically process forecasts, run optimization analyses, generate summaries, and trigger operational decisions. This reduces manual work, improves consistency, and enables faster response to changing conditions. At accessible pricing ($9/month), these tools democratize AI-driven energy management for organizations of all sizes.

Key Takeaways

- Data center electricity demand will reach 700 TWh in 2025, exceeding EV consumption globally and rivaling entire nations

- AI-driven grid management systems process millions of real-time data points to optimize energy distribution and prevent blackouts

- Virtual power plants aggregate thousands of distributed resources into coordinated units that actively support grid stability

- Spatial shifting technology allows data centers to move workloads to regions with abundant renewable energy, reducing costs 15-25%

- Knowledge graphs enable AI systems to understand causal relationships in energy systems, enabling proactive disruption prevention

- AI Agents make autonomous operational decisions at speeds and complexity levels exceeding human capability

- Intelligent grid systems deliver 5-10% operational cost reduction and improved reliability despite initial deployment costs of $50-150M

- Geographic distribution of data centers will increasingly follow renewable energy availability rather than traditional infrastructure
