Apple's AI Revolution: The Siri Transformation Nobody Expected

Let's be honest. Siri's been... fine. Not great, but fine. For years, it sat there on your iPhone doing voice searches and setting timers while everyone else marveled at what Google Assistant could pull off. But something's about to change. If the latest reports are accurate, Apple's about to fundamentally reimagine what Siri can do, and the timing couldn't be more interesting.

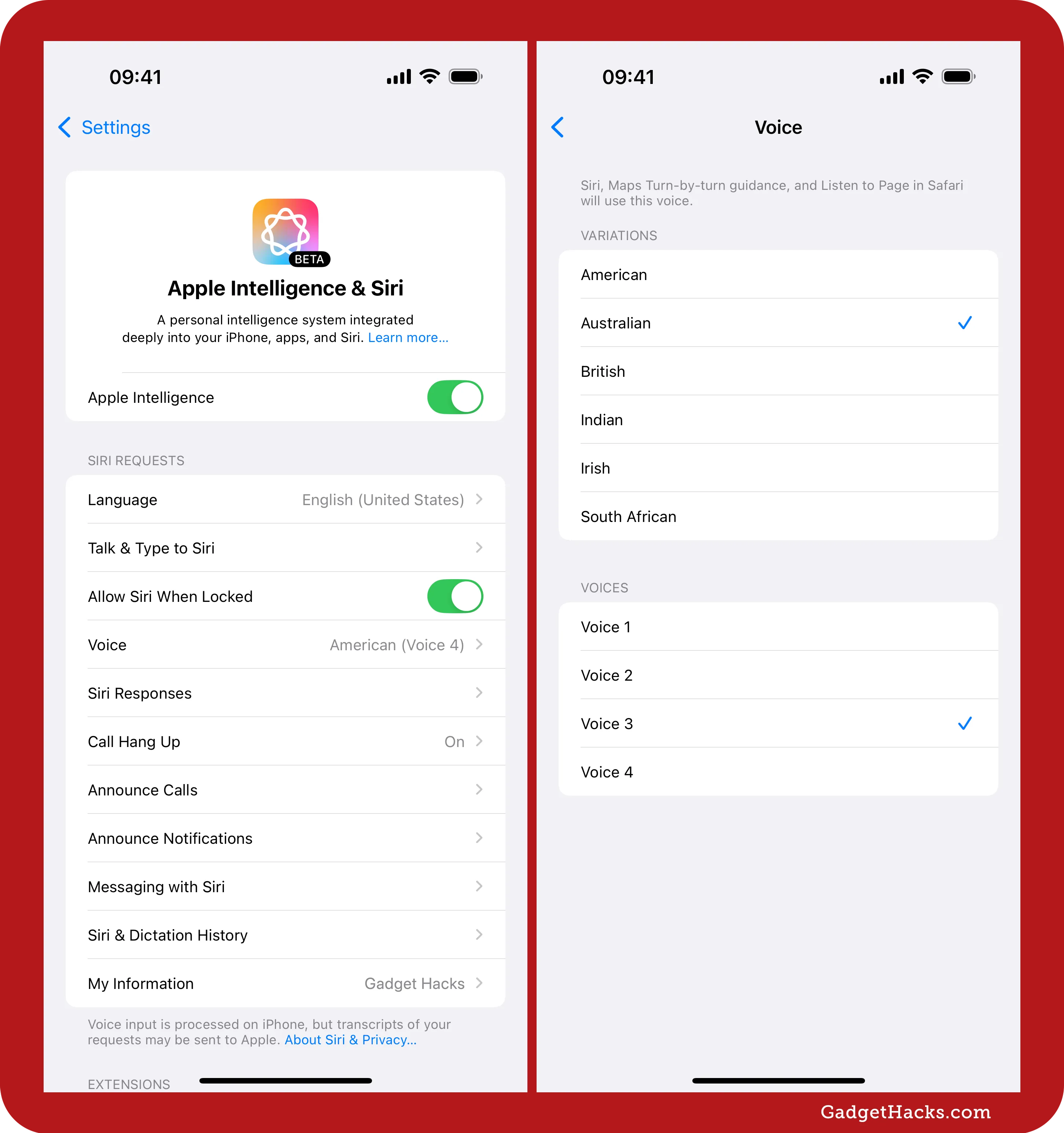

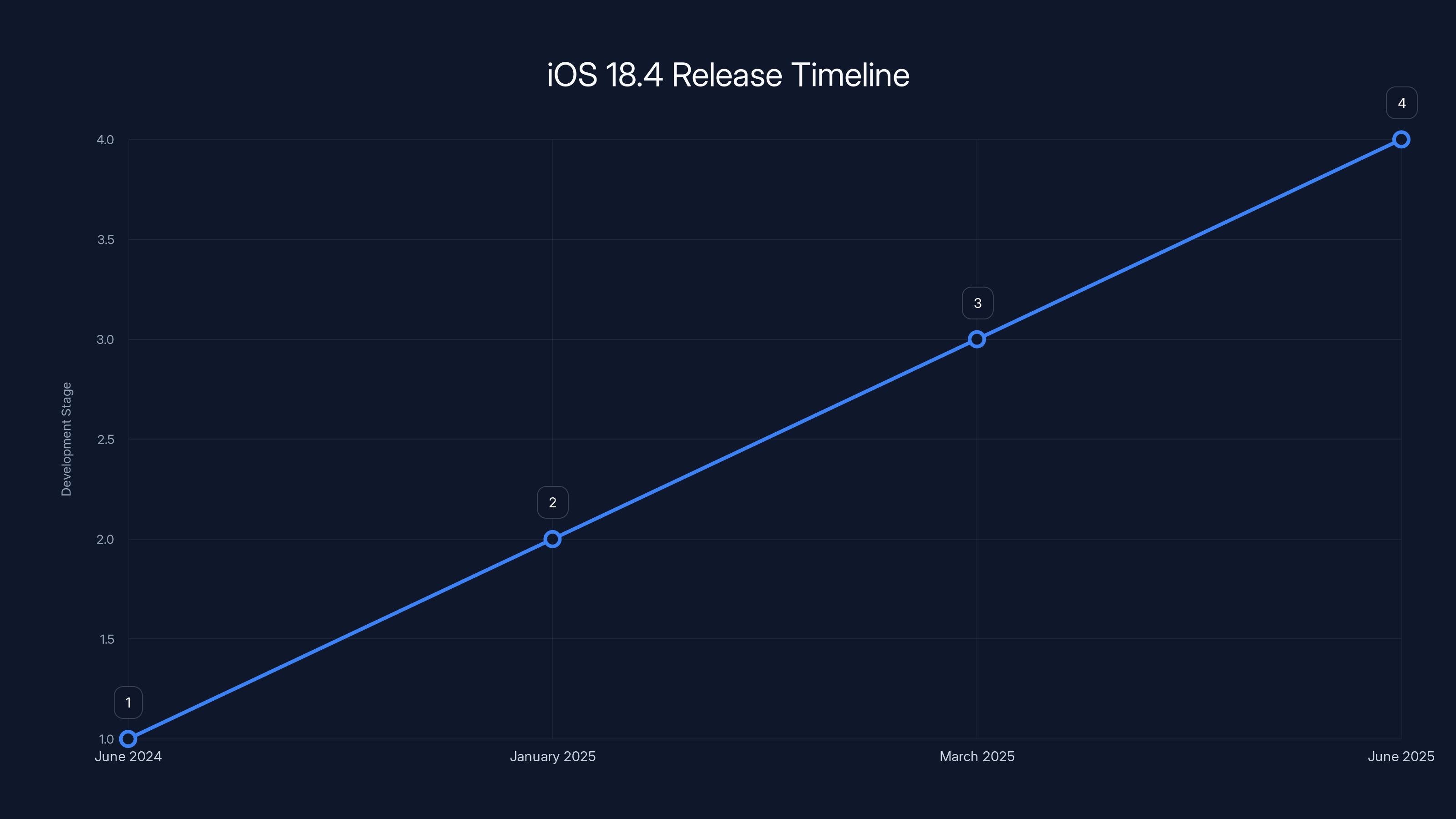

Back in June 2024, Apple showed off "Apple Intelligence" during WWDC. It was a moment where the company finally admitted it needed to catch up in the AI space. But here's the thing: that initial rollout? It didn't include the Siri upgrade everyone was really waiting for. That was planned for later. Much later. And now, after months of waiting, we're getting signals that the real Siri transformation might finally be arriving in iOS 18.4, potentially within the next few months.

This isn't just a cosmetic refresh. This is about Siri actually understanding context, remembering what you've done before, and handling complex requests without you having to break them down into three separate voice commands. Imagine telling Siri to "find all emails from Sarah about the marketing campaign and summarize them" and having it actually work the way you'd expect. That's the vision here.

But what's taking so long? Why is Apple letting Google and Amazon sit on this advantage for so long? Part of it comes down to how these systems work. Building a voice assistant that's genuinely smart requires processing language in ways that are fundamentally different from search or regular AI chatbots. You need to understand intent, context, and personal preference. You need to be fast enough that people don't feel like they're waiting for a response. And you need to do most of it on-device so Apple can maintain its privacy stance, which is a whole other engineering challenge.

The delays tell us something important: Apple's taking this seriously. They're not shipping something half-baked just to check a box. What's coming should actually be worth the wait.

Why Siri Has Always Been the Weak Link

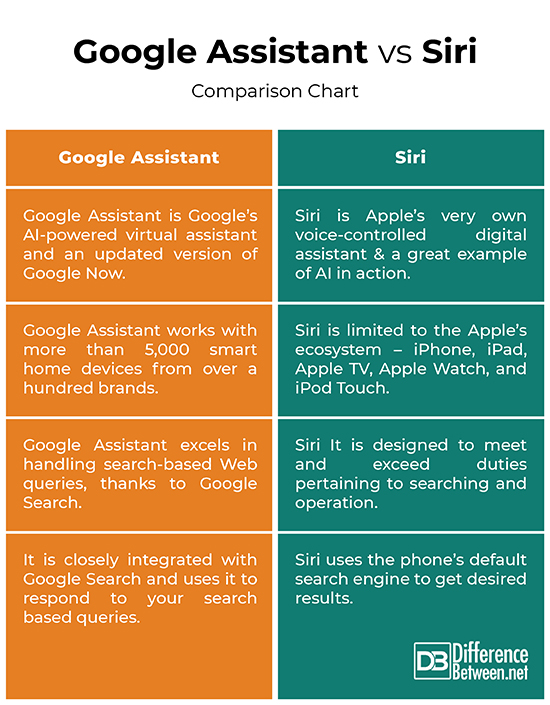

Siri launched in 2011 with the iPhone 4S. At the time, it was revolutionary. Nobody had voice assistants on phones that actually worked. Fast forward thirteen years, and Siri's essentially the same. Sure, Apple added features here and there. Siri got smarter about controlling smart home devices. It learned to handle more app-specific commands. But fundamentally, Siri still requires you to think in a very specific way. You can't have a natural conversation with it. It doesn't learn from your behavior. It doesn't anticipate what you might need.

Google Assistant and Alexa moved past these limitations years ago. They got better at understanding natural language. They started learning patterns. When you ask a question, they actually try to understand what you really mean, not just what you literally said. Amazon's Alexa can carry context across multiple requests. Google's assistant can tell you're asking a follow-up question and actually use information from your previous request to answer the new one.

Siri? It's been stuck. Every command feels transactional. Ask it something, get a response, move on. There's no sense that it understands you or remembers anything about how you work. This is why so many iPhone users just pull out their phones and type instead of using Siri. Talking to it isn't faster. It isn't more natural. It's just another interface that kind of works.

Apple's been aware of this gap. The company's had brilliant engineers looking at this problem for years. But here's what makes this interesting: Apple's got a privacy-first approach that other companies don't have. Most of what Alexa and Google Assistant do happens on distant servers. Apple wants to process as much as possible directly on your device. That's computationally harder. It requires training models to be more efficient. It's the right approach from a privacy standpoint, but it's technically more challenging.

What iOS 18.4 Actually Brings to the Table

So what's actually coming in iOS 18.4? Based on current reports, this isn't just a speed bump or a few new skills. Apple's essentially building a new Siri from the ground up, and it shows a fundamental rethinking of how voice assistants should work on personal devices.

First, context awareness. Real context. Not the "I remember you asked me something five seconds ago" kind, but actual understanding of what you've been doing, what you care about, and how you work. If you've been researching flights to Tokyo, Siri will understand that when you ask "what should I pack." It'll remember that you booked a hotel, and it'll factor that location into recommendations. This seems simple in description, but it requires the system to track information across your device, apps, and browsing history in ways that previous Siri iterations never attempted.

Second, on-device processing. This is crucial to understanding why it took so long. Apple wants to keep this intelligent without sending all your data to the cloud. That's not just a preference, it's a core part of Apple's marketing message. "What happens on your iPhone stays on your iPhone." Building a genuinely smart AI system that mostly runs locally is substantially harder than building one that can offload to server farms. It's like the difference between having someone smart in your pocket versus a line to the smartest person in the world. The pocket version needs to be way more efficient.

Third, natural language understanding. You should be able to talk to Siri like you talk to another person. Follow-up questions without repeating context. Casual phrasing instead of precise commands. "Hey Siri, find that email I mentioned yesterday and remind me to respond when I get to the office." That should work. No fuss, no special phrasing required.

The Technical Challenge Behind the Scenes

Building this required solving some genuinely hard problems. The biggest one: how do you run a sophisticated AI model on a device with limited battery and processing power? The answer involves something called "model compression," which is exactly what it sounds like. You take a large, capable AI model and you squeeze it down to run efficiently on a phone. You also do more processing on the device's neural engine (Apple's specialized hardware for AI tasks) and less on the CPU.
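To make "model compression" a little more concrete, here's a minimal sketch of one common compression technique, 8-bit quantization. This is an illustration under stated assumptions, not Apple's actual method: the function names and the sample weights are made up for this example, and real systems quantize large tensors with more sophisticated schemes.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero list, where any scale would work.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


# Illustrative weights only; a real model has millions or billions of these.
weights = [0.82, -1.27, 0.05, 0.4, -0.63]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The price of compression: each restored weight is slightly off.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error (never more than half the scale factor). That's the basic trade: less memory and less compute in exchange for a little precision.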

Another challenge: privacy at scale. When Apple's system is learning from your behavior to get smarter, where does that data go? How is it encrypted? Can Apple actually not see it even if they wanted to? These aren't academic questions. They're engineering requirements that have to be baked into every part of the system. It's one reason competitors haven't solved this problem the same way.

The third challenge is integration. Siri needs to work across iOS, iPadOS, macOS, and watchOS. It needs to understand commands that span multiple apps. It needs to know which app you're currently in and adjust its behavior accordingly. This integration layer is where a lot of the complexity sits.

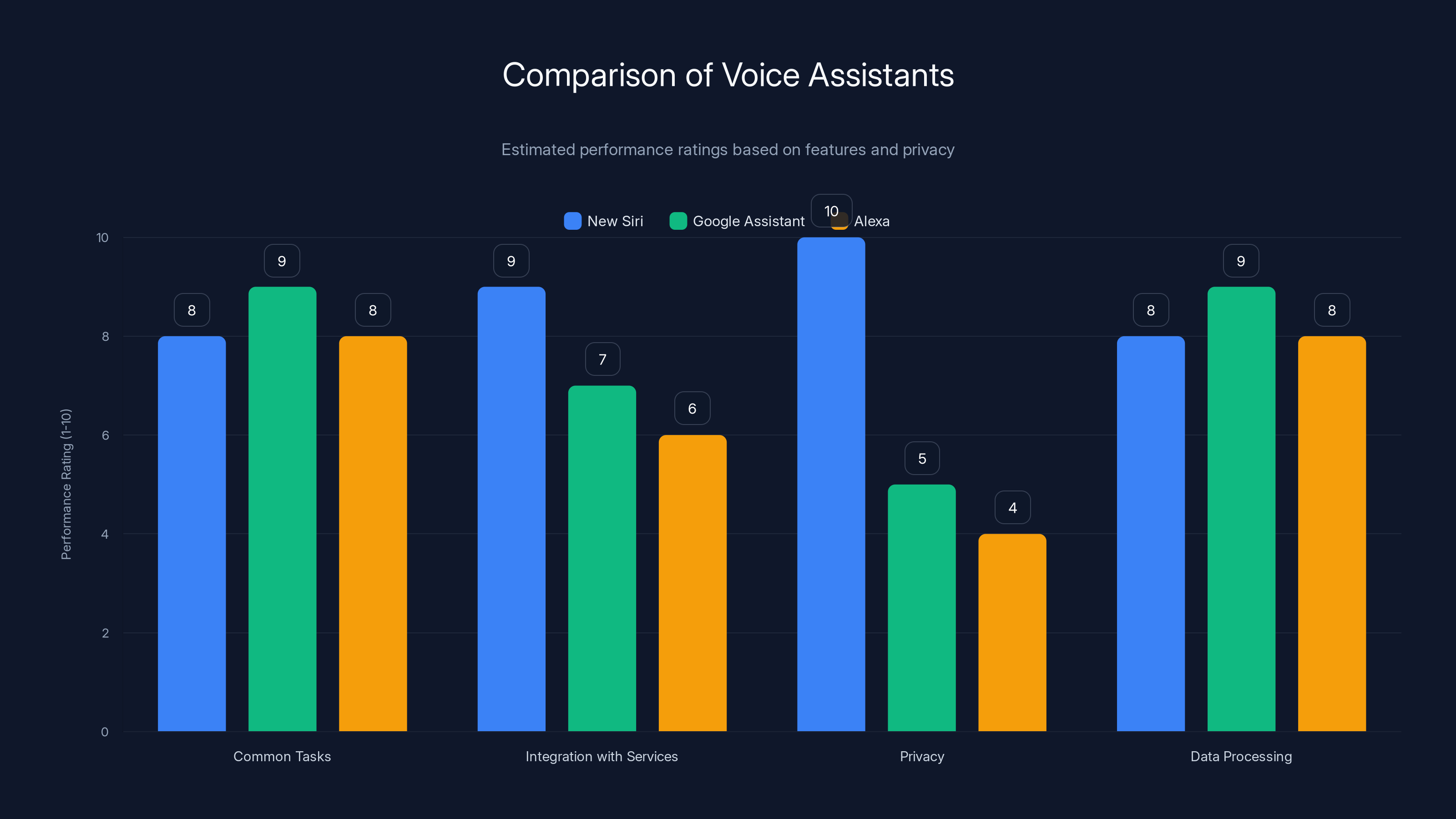

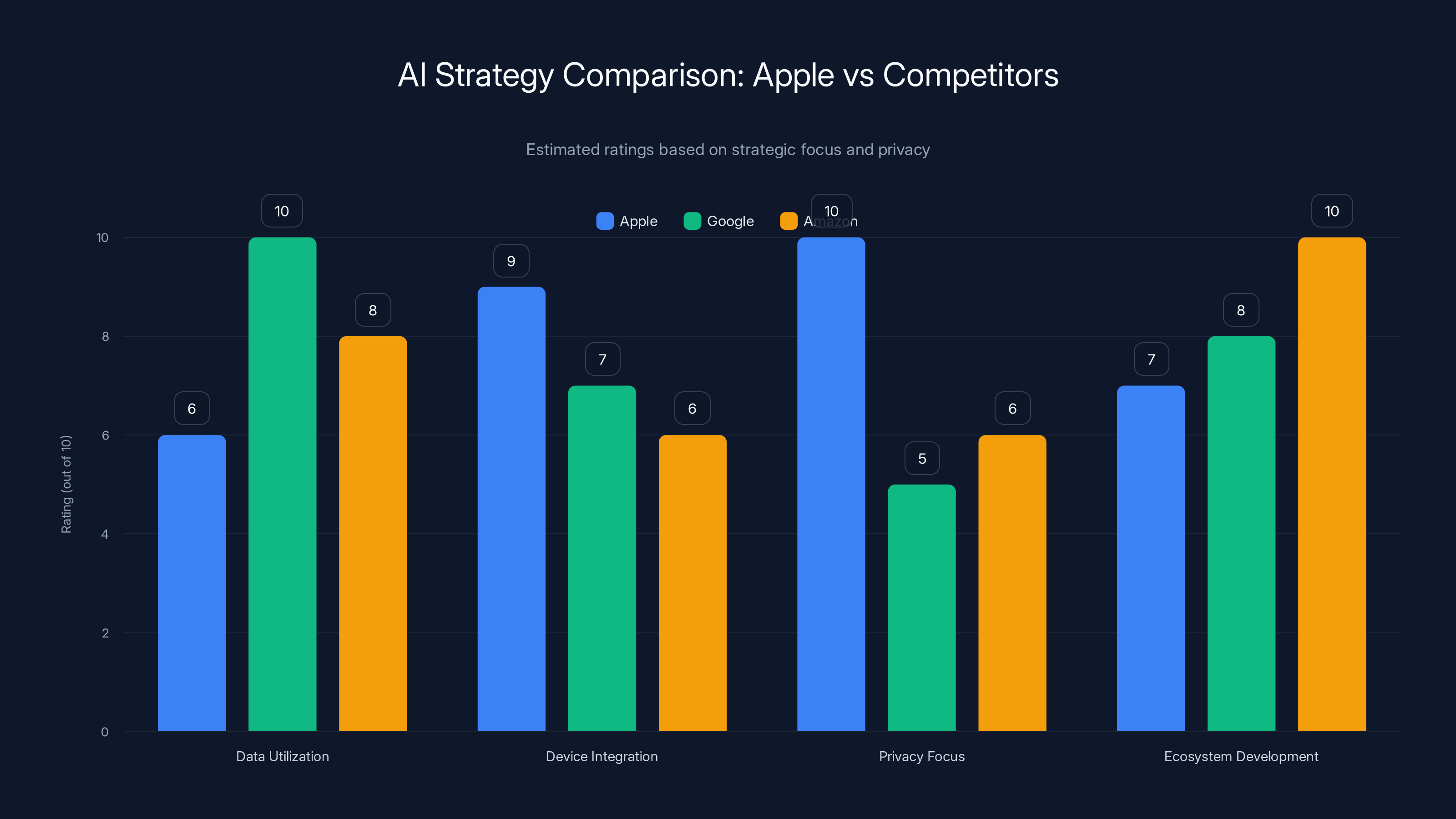

The new Siri is expected to excel in privacy and integration with Apple services, while Google Assistant may lead in data processing due to its extensive data resources. Estimated data based on feature analysis.

Apple Intelligence: The Broader Picture

It's important to understand that Siri's upgrade is just one part of Apple Intelligence, the broader AI initiative Apple announced at WWDC in June 2024. Think of it as Apple's attempt to integrate AI throughout iOS without making the operating system feel like it's just running AI services.

Apple Intelligence includes on-device image generation, smarter writing tools, more capable photo search, and context-aware summaries of your notifications and emails. The Siri upgrade is the most visible piece because we interact with Siri constantly, but the entire system is working toward something larger: making your device smarter about understanding your life and anticipating your needs.

What makes this different from competitors is the philosophy. Google's approach is essentially: "We have more data about more people than anyone else, so our AI will be better." Amazon's approach is: "Alexa is everywhere, so we can create an ecosystem around voice." Apple's approach is: "We have the most capable devices in people's pockets, and we can make them smarter in ways that protect your privacy."

These are fundamentally different bets. Apple's betting that smart devices don't need to be connected to massive cloud infrastructure to be useful. That's either going to look brilliant in five years or like a decision that cost Apple dearly. Based on the delays and the apparent complexity of what's coming, Apple's fully committed to the bet either way.

Why the Delay Matters

Apple promised Apple Intelligence in iOS 18 back in June. It's now late in the year, and the full vision still isn't here. This tells us something: Apple's standards for shipping are extremely high. They'd rather delay and get it right than ship something that feels half-baked. This is actually consistent with how Apple operates. They're not the fastest company. They're the company that waits until they have something really good.

The Siri delays specifically suggest that Apple ran into more complexity than anticipated. Most voice assistant improvements follow a predictable path: you get better natural language understanding, you improve accuracy, you add features. But Apple seems to have decided that wasn't enough. They wanted something that actually felt like a different product. That level of ambition takes time.

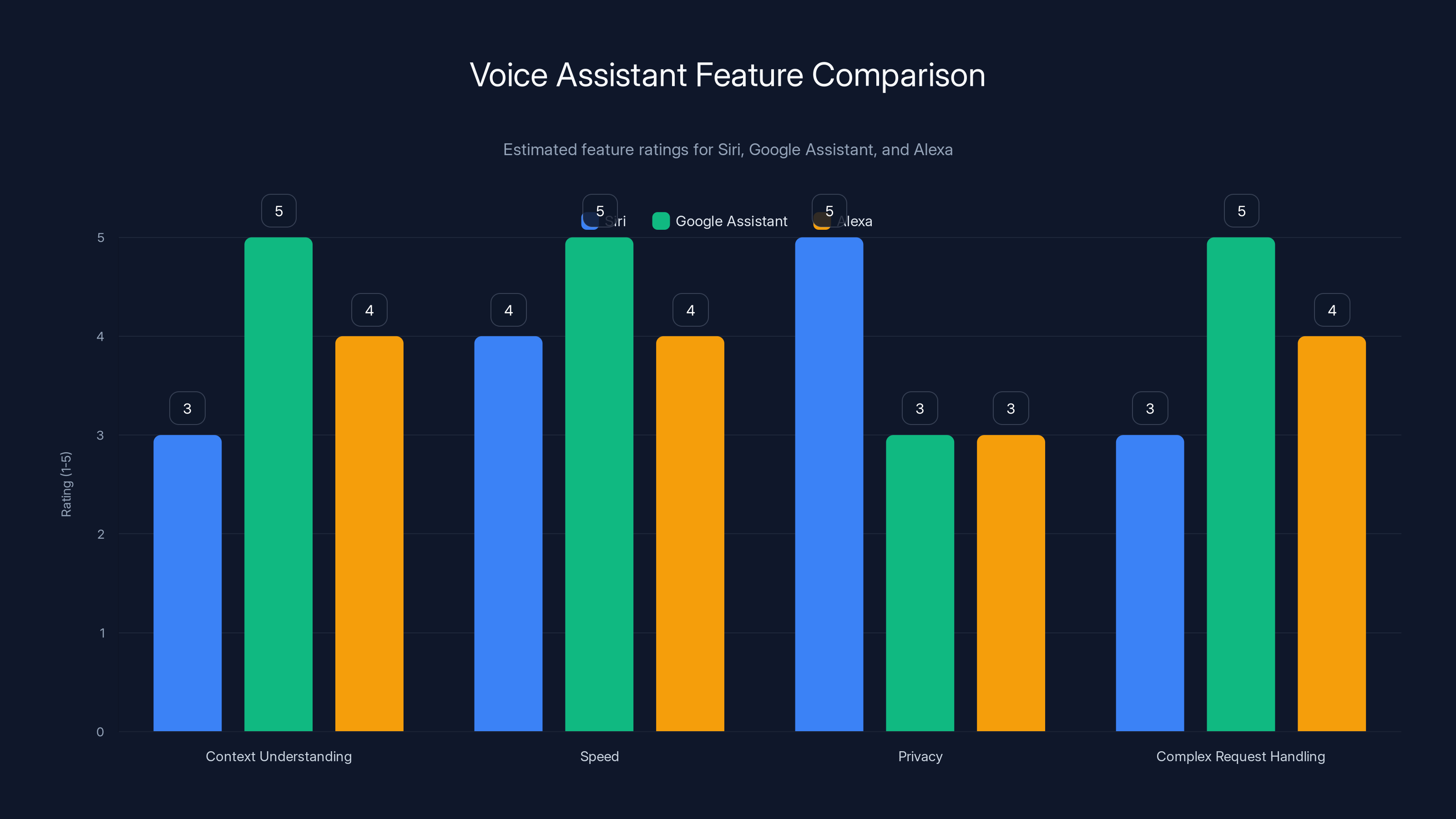

Estimated data shows Siri's potential improvements in context understanding and complex request handling, while maintaining high privacy standards.

What We Know About iOS 18.4 and the Timeline

Let's talk specifics. iOS 18.4 is expected to arrive sometime in the first half of 2025. This is when the enhanced Siri experience should become available to regular users. The exact date isn't set yet, but Apple typically releases major iOS updates in the spring.

Beta versions are likely to hit developers before that, probably in early 2025. If you want to try this out before the general release, that's your window. Apple seeded beta versions of iOS 18 starting back in June, so the pattern will probably be similar here. Developers get first access, then gradually the public gets their turn.

Not all devices will be supported immediately. Apple's narrowed the scope of Apple Intelligence to newer devices with more processing power. If you've got an iPhone 15 Pro or newer, you're good. Older models will either get a subset of features or nothing at all. This is Apple saying: "We'd rather have this work really well on recent hardware than pretend we can make it work on everything."

What Gets Left Behind

There's a real question here about what happens to people with older iPhones. Apple's built some features into the standard iOS updates that everyone gets. But the really interesting AI features? Those are locked to recent hardware. This is partly a processing power question, but it's also a marketing decision. New hardware performs better, so your new device feels smarter. That drives upgrades.

For most people this doesn't matter much. iPhone upgrade cycles are typically three to four years, and the latest hardware is available if you want it. But it does create a capability gap between new and old devices that's more significant than it used to be. Someone with an iPhone 14 will notice a difference when they move to an iPhone 16 or later. That difference will be obvious.

How Siri 2.0 Actually Works (What We Know)

Diving into the technical details, the new Siri experience is built on several foundational changes:

Contextual Processing: Siri now maintains a richer understanding of your digital life. It's not just looking at what you're asking right now. It's considering:

- What app you're in

- What you've been working on recently

- Your location history

- Your calendar and commitments

- Your contact relationships

- Recent communications

All of this happens locally on your device, not on Apple's servers. That's the privacy piece. Your data stays on your phone.

Semantic Understanding: Rather than pattern matching against pre-trained responses, the new Siri attempts genuine semantic understanding. It understands the meaning of requests, not just the surface words. "What should I wear tomorrow" involves understanding your location, the weather forecast, your calendar (to know if you have meetings), and your personal style preferences.

Cross-App Integration: Previous Siri could handle single-app requests well. "Text Sarah that I'll be late." New Siri can orchestrate across apps. "Delay my 3 PM meeting, text the attendees, and reschedule the conference room." That's three separate systems working together based on a single request.

Adaptive Learning: The system improves over time based on how you interact with it. If you frequently ask Siri to do something in a particular way, it learns that pattern and can anticipate similar needs in the future.
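The cross-app piece is easier to picture as a plan: one spoken request becomes an ordered list of steps, and each step is handed to whichever app can handle it. Here's a rough sketch of that idea. Everything in it is hypothetical (the `Step` type, the handler functions, the attendee names); it is not how Siri is actually built, just one way such orchestration could be structured.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    app: str      # which app should handle this step
    action: str   # what that app is asked to do
    params: dict = field(default_factory=dict)


# Hypothetical handlers standing in for real app integrations.
def calendar_handler(step):
    return f"calendar: {step.action} {step.params['event']}"


def messages_handler(step):
    return f"messages: {step.action} {', '.join(step.params['to'])}"


HANDLERS = {"calendar": calendar_handler, "messages": messages_handler}


def run_plan(steps):
    """Run steps in order, stopping at the first app with no handler."""
    results = []
    for step in steps:
        handler = HANDLERS.get(step.app)
        if handler is None:
            results.append(f"{step.app}: unsupported")
            break
        results.append(handler(step))
    return results


# Hand-written plan for: "Delay my 3 PM meeting, text the attendees,
# and reschedule the conference room." Attendee names are placeholders.
plan = [
    Step("calendar", "delay", {"event": "3 PM meeting"}),
    Step("messages", "notify", {"to": ["Sarah", "Raj"]}),
    Step("rooms", "rebook", {"room": "conference room"}),
]
results = run_plan(plan)
```

In this sketch the third step fails because nothing is registered for room booking. That's the hard part in miniature: a multi-step request only works if every app involved exposes something the assistant can call.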

Apple focuses heavily on device integration and privacy, setting it apart from Google and Amazon, which emphasize data utilization and ecosystem development, respectively. Estimated data.

Privacy Implications: What Actually Stays Private

Apple's privacy stance is central to understanding the new Siri, so let's dig into what it actually means:

When Siri processes your requests locally, Apple doesn't see the raw data. Your email contents, your location history, your calendar events: these stay on your device. Apple doesn't have copies. This is genuinely different from how Google Assistant or Alexa have typically worked. Those systems send most requests through servers where Google and Amazon can see them (they claim they don't store the data long-term, but they can see it).

But here's the complexity: sometimes Siri needs to reach out to Apple's servers. For example, if you ask "what's the weather in Tokyo," it needs to fetch current data. In those cases, Apple's designed the system so it sends minimal identifying information. It won't send a request that says "I'm Sarah, I live at 123 Oak Street, and I want to know about Tokyo because I'm traveling there on December 15th." It sends something much more basic: a location query. Nothing that identifies you.
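One simple way to picture "send minimal identifying information" is an allowlist: before anything leaves the device, keep only the fields the remote service needs and drop the rest. The sketch below is an assumption-laden illustration, not Apple's implementation. The field names and the `minimize_request` helper are invented for this example.

```python
# Fields a (hypothetical) weather service needs to answer the question.
ALLOWED_FIELDS = {"query_type", "location"}


def minimize_request(full_context):
    """Keep only allowlisted fields; everything else stays on the device."""
    return {k: v for k, v in full_context.items() if k in ALLOWED_FIELDS}


# What the device knows locally when you ask about the weather in Tokyo.
full_context = {
    "query_type": "weather",
    "location": "Tokyo",
    "user_name": "Sarah",
    "home_address": "123 Oak Street",
    "travel_date": "December 15",
}

# What would actually be sent over the network in this sketch.
outgoing = minimize_request(full_context)
```

An allowlist is the safer design here compared with a blocklist: a new personal field added later is excluded by default instead of leaking until someone remembers to block it.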

When on-device processing isn't possible (like when you ask Siri to draft an email using generative AI), Apple has options. One approach is to use Private Cloud Compute, where your request goes to Apple's servers, gets processed, but the servers immediately forget about it. No logs, no learning from your data. Another approach is to offer a toggle: you can use the cloud version for better results, or stick with the local version for privacy.

You can't fully verify this from the outside. At some point, you either trust Apple's approach or you don't. But the important distinction is that Apple's betting its reputation on privacy in a way that Google and Amazon aren't.

The Trade-offs of Privacy-First AI

Privacy-first AI has real costs. A system running locally on your phone can't access the massive datasets that cloud-based systems use for training. That means Siri might be less accurate on edge cases than Google Assistant. It might miss more nuance. It might be slower at learning new things because it can't learn from the billions of interactions happening across all devices.

But Apple's apparently decided that's an acceptable trade-off. They're optimizing for capability that serves your individual needs, even if that means less overall accuracy on aggregate problems.

There's also a battery cost to consider. Running sophisticated AI on your device uses power. Apple's optimized this extensively with the neural engine, but it's still real. A cloud-based system offloads that processing to servers that don't run on your battery.

Comparing to the Competition: How Far Behind Is Apple Really?

Let's be direct: Apple's behind on AI-powered voice assistants right now. Google Assistant is more capable. Alexa has more integrations. But Apple's not trying to win on those metrics. They're trying to win on a different axis: privacy and integration with the Apple ecosystem.

Google Assistant advantage: it understands context from across your Google services. It knows your emails, your calendar, your search history. It can synthesize information from all of those to answer questions. It does this really well. If you live in the Google ecosystem, it's the best voice assistant available.

Alexa advantage: it's everywhere. Every Amazon device, every device with Alexa built in. The ecosystem is enormous. If you're looking for a voice assistant that controls smart home devices and interfaces with Amazon services, Alexa's a solid choice.

Siri advantage: it's entirely focused on making iPhone and iPad users' lives better. It doesn't try to be a platform for third-party integrations. It doesn't try to sell you products. It's just a tool that lives on your device and helps you accomplish what you want to accomplish. The new Siri doubles down on this: deeply integrated, contextually aware, privacy-preserving.

Which approach wins depends on what you value. If you value privacy, Siri wins. If you value capability across services, Google wins. If you value ecosystem breadth, Alexa wins.

Siri 2.0 shows significant improvements in semantic understanding and contextual processing, enhancing user interaction and privacy. (Estimated data)

Real-World Use Cases: What You'll Actually Be Able to Do

Here's where the abstract becomes concrete. When iOS 18.4 arrives, what changes in how you actually use Siri?

Email Management: "Find all emails from my mom about Thanksgiving and summarize them." Siri looks through your mail, identifies emails from your mom's address, filters for ones mentioning Thanksgiving, and gives you a summary. Previously, this would require multiple searches.

Meeting Management: "I can't make my 2 PM. Reschedule it for tomorrow and let everyone know." Siri identifies the meeting, checks your availability tomorrow, proposes a time, updates the calendar, and sends notifications to attendees. It does all of this because it understands the full context of what a "meeting" means in your life.

Information Synthesis: "What did I miss while I was offline?" Instead of just reading notifications, Siri understands what's actually important to you. It prioritizes messages from close contacts, urgent work emails, and meaningful social updates. It deprioritizes notifications from apps you never use.

Smart Automation: "I'm leaving the office." Siri knows what "leaving the office" means for you. It turns off work notifications, updates your status, suggests the fastest route home, starts your commute music, and preps your car controls if you have an Apple CarPlay-compatible vehicle. This is orchestration across multiple systems based on understanding your routine.

Shopping and Planning: "I need a gift for my dad's birthday. What should I get?" Siri knows your dad's interests from your emails and messages. It knows the budget you typically spend on gifts because it's learned your pattern. It understands what he might like. It could even suggest specific items or help you find deals.
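The "what did I miss" scenario above boils down to ranking: score each notification by signals like who sent it, whether it's urgent, and whether you ever open the app. Here's a toy version of that logic. The weights, field names, and sample data are all assumptions made for illustration; a real system would presumably learn these signals instead of hard-coding them.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    sender: str
    app: str
    urgent: bool = False


def priority(note, close_contacts, rarely_used_apps):
    """Higher score means the notification is surfaced earlier."""
    score = 0
    if note.sender in close_contacts:
        score += 2
    if note.urgent:
        score += 3
    if note.app in rarely_used_apps:
        score -= 2
    return score


def summarize(notes, close_contacts, rarely_used_apps):
    """Return notifications sorted from most to least important."""
    return sorted(
        notes,
        key=lambda n: priority(n, close_contacts, rarely_used_apps),
        reverse=True,
    )


# Placeholder notifications that arrived while you were offline.
notes = [
    Notification("GameApp", "game"),
    Notification("Mom", "messages"),
    Notification("Boss", "mail", urgent=True),
]
ranked = summarize(notes, close_contacts={"Mom"}, rarely_used_apps={"game"})
```

With these made-up weights, the urgent work email comes first, the message from a close contact second, and the game notification last.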

The Business Angle: Why Apple Cares and Why It Matters

Let's address the money question: why is Apple investing this heavily in Siri? It's not like Siri makes money directly. Users don't pay for it. Apple doesn't charge per command.

The answer is ecosystem lock-in. If your voice assistant is deeply integrated with your phone, deeply integrated with how you work, deeply integrated with your calendar and email and messaging, you're less likely to switch to Android. Across Apple's user base, that kind of retention is worth an enormous amount in lifetime value. One good reason not to switch is a phone that understands you better than any other phone. That's valuable.

There's also the AI arms race. Every major tech company is investing in AI assistants because they're betting these will be the primary interface for computers in five to ten years. Google, Amazon, Microsoft, OpenAI—they're all all-in on this. Apple can't afford to be substantially worse at this, or the company starts to look outdated.

But there's a third reason that's less obvious: controlling the AI narrative. Apple's framing of AI is very specific. "AI that respects your privacy." "AI that's just useful, not creepy." "AI that stays on your device." These aren't just features, they're identity statements. They're how Apple differentiates in a world where everyone's trying to do AI.

Siri 2.0 is basically Apple saying: "We can do sophisticated AI without surveillance capitalism." Whether that's true or not is almost beside the point. The message is powerful because it's different from everyone else's message.

iOS 18.4 introduces significant improvements in context awareness and natural language understanding, with a strong focus on on-device processing. Estimated data based on feature descriptions.

Challenges Apple Needs to Overcome

Now, let's be honest about what could go wrong. Several technical and social challenges remain:

Accuracy at Scale: Getting a local AI system accurate across millions of devices and use cases is hard. Google and Amazon have solved this because they process everything centrally and can see patterns. Apple has to solve it without that advantage.

Feature Parity: Users will compare the new Siri to Google Assistant and expect feature parity. If something Google does effortlessly, Apple's Siri can't do at all, that's a negative headline. Apple needs to be at least competitive on every common use case.

User Expectations: People's expectations for AI assistants have risen because of ChatGPT. Users expect genuine understanding, not pattern matching. If the new Siri doesn't clear that bar, disappointment will be immediate.

Integration Complexity: Getting Siri to work seamlessly across iOS, iPadOS, macOS, and watchOS while maintaining consistent behavior is a monumental task. Different devices have different capabilities, different screen sizes, different interaction models.

Device Fragmentation: Not all devices support the new Siri. Managing user expectations around what does and doesn't work on older hardware is going to be tricky.

When the Rollout Actually Happens

Let's talk timeline more specifically. If iOS 18.4 arrives in spring 2025 (which is most likely), here's what the progression probably looks like:

January/February 2025: Developer beta versions start circulating. Tech people and developers get to play with it. Apple starts gathering feedback.

March 2025: Public beta release. Anyone can opt into the beta program and try the new Siri. This is when real-world feedback starts coming in.

Late March/Early April 2025: iOS 18.4 general release. The general public gets the update.

Summer 2025 onwards: Apple refines based on feedback, fixes bugs, maybe releases iOS 18.4.1, 18.4.2 as needed.

During all this time, there will be headlines about how good the new Siri is, or how much it disappoints. Expect a lot of comparison articles with Google Assistant. Expect think pieces about Apple's approach to AI privacy.

Estimated timeline for iOS 18.4 suggests beta releases in early 2025 with a public release in spring 2025. Estimated data.

What This Means for Your Device Right Now

If you've got an iPhone 15 Pro or newer, congratulations, you'll be in the first wave of devices supporting the new Siri. Assuming your device can handle the iOS 18.4 update (which it should), you'll get this when it rolls out.

If you've got an older iPhone, you have options. You can wait until your next upgrade to get Siri 2.0. Or you can accept that Siri will remain mostly as it is, which is still fine for basic tasks. Siri handles timers, music control, and simple searches well. It's just not going to be an intelligent assistant that understands your life.

There's also a question of whether you should enable certain privacy-sensitive features when they arrive. Even though Apple's privacy model is better than competitors, that doesn't mean you have to use every feature. If there are aspects of the new Siri that feel too invasive to you, you can disable them. Apple typically offers granular controls for this kind of thing.

The Broader Implications for How We'll Use Our Phones

The new Siri isn't just about voice commands. It represents a fundamental shift in how Apple thinks about the relationship between you and your device. Instead of a device that you command, you're getting a device that anticipates your needs and suggests actions.

This changes how notification management works. Instead of being bombarded with notifications, your device filters them intelligently. It knows what matters to you right now.

It changes how app usage works. Instead of hunting through your phone to find what you need, Siri can surface it proactively. "Your dentist appointment is in an hour and traffic is bad. I've called your car." That's the kind of thing that becomes possible.

It changes how privacy feels. On one hand, your device knows a lot more about you. On the other hand, that information stays on your device instead of being sent to servers. There's a real psychological difference between those scenarios.

How to Prepare for iOS 18.4

If you want to get the most out of the new Siri when it arrives, there are some practical steps you can take now:

Clean Up Your Digital Life: The better organized your calendar, email, and contacts are, the better Siri can work with them. If you've got hundreds of contacts with no organization, fix that now.

Establish Patterns: Create routines and habits that are consistent. Siri learns from patterns, so if your life is predictable, it can better anticipate your needs.

Check Permissions: Make sure relevant apps have the permissions they need. If you want Siri to know about your location, make sure location services are enabled for relevant apps.

Test the Current Siri: Get comfortable with the current version. The new Siri builds on it, so understanding what it can and can't do currently helps you understand what improvements are meaningful.

Stay Updated: Make sure you're running the latest iOS version. Apple often backports features and fixes that improve overall system stability.

Skepticism Is Reasonable

Let me be direct: there's reason to be skeptical about how much this actually changes things. Apple has promised AI improvements before. We've seen tech companies over-promise on AI capabilities. The gap between what's shown in a demo and what actually works in the real world is often substantial.

Will the new Siri really understand context the way Apple shows in videos? Probably most of the time, but not always. Will it be faster? Probably. Will it occasionally misunderstand what you're asking and do something wrong? Almost certainly yes.

The important thing is whether it's significantly better than current Siri. Based on what we know, the answer is probably yes. Not perfect, not as good as Google Assistant in every scenario, but better at understanding what you actually need in ways that feel meaningful.

The skepticism should also extend to privacy claims. Apple's approach is better than alternatives, but it's not zero surveillance. Your device still collects data about you. Apple still knows when you buy things, where you go, what apps you use. They're just claiming (and probably being truthful) that they're not using that data for ad targeting or selling to third parties.

The Bottom Line: This Actually Matters

Siri 2.0 represents the first large-scale attempt by a major tech company to build a genuinely intelligent voice assistant that respects privacy. If Apple pulls this off, it changes the conversation around AI. It proves that you don't need to hand over all your data to have an AI system that actually understands you.

If they fumble it, if the new Siri disappoints users, then the message is different: maybe you do need to sacrifice privacy for capability. Google wins that narrative.

So the stakes here are bigger than just "is Siri better." It's a test of whether Apple's entire vision for privacy-first computing actually works at scale. iOS 18.4 and the new Siri are going to be carefully watched by people way beyond Apple users. Every tech company is going to be watching to see if this approach is viable.

For you, practically speaking: if you've got a newer iPhone, you should expect significantly better voice assistant capabilities starting in spring 2025. It's worth looking forward to. And if you've got an older device, this might be the feature that finally makes you want to upgrade.

TL;DR

- The Upgrade: Apple's redesigned Siri with AI intelligence is coming in iOS 18.4, expected spring 2025

- What's New: Real contextual understanding, on-device processing for privacy, cross-app coordination, and natural language conversations

- Device Requirements: iPhone 15 Pro and newer will get full support, older models may get limited features

- Privacy Approach: Data stays on your device instead of being sent to servers, a major difference from Google and Amazon

- Timeline: Beta testing early 2025, general release spring 2025, with continued refinements through the summer

- Bottom Line: This is Apple's bet that you can have intelligent AI without surveillance, and how well it works matters for the entire industry

FAQ

What exactly is the new Siri doing that current Siri can't?

The new Siri can understand context across your digital life—your calendar, emails, messages, location, and recent activities. Current Siri handles single, isolated commands. New Siri understands relationships between commands and can accomplish complex multi-step tasks with a single request. It also learns patterns in how you work and can anticipate needs before you ask.

When will iOS 18.4 actually be available?

Based on Apple's typical release schedule, iOS 18.4 should arrive in the spring of 2025, likely between March and May. Developer betas will probably start arriving in January or February 2025. There's always uncertainty with exact dates, but spring 2025 is the most reliable estimate based on historical patterns.

Do I need a new iPhone to use the new Siri?

You need an iPhone 15 Pro or newer for the full capabilities. Older devices may get some features, but not the complete experience. This is because the neural engine in newer phones has the processing power required for on-device AI. If you have an iPhone 14 or older, you'll be limited to whatever subset of features Apple decides to enable.

How does Apple keep this private if Siri knows so much about me?

Apple processes most Siri requests directly on your device, so the information never leaves your phone. When cloud processing is necessary, Apple uses what it calls Private Cloud Compute, where servers process your request and immediately forget it without storing logs. Compare this to Google Assistant or Alexa, which send most requests to centralized servers that can see your data.

Will the new Siri be as good as Google Assistant?

It will probably be comparable for common tasks and better for integration with Apple services. Google Assistant might still have advantages in certain areas because Google has more data to work with. But Apple's betting that a privacy-first, device-focused approach can be competitive enough. The trade-off is that you give up a little capability and get more privacy in return.

What happens to Siri on my old iPhone if I don't upgrade?

Current Siri will continue to work as it does now. Apple will probably push out some improvements to the current version for older devices, but the AI-powered redesign is reserved for newer hardware. You won't be missing major functionality—Siri handles basic tasks well. You just won't get the smarter, context-aware version.

Can I turn off the new privacy features if I don't trust them?

Apple typically provides granular privacy controls, so yes. If there are aspects of the new Siri that concern you, you should be able to disable them selectively. You might not get the full benefits, but you'll maintain control over what data the system can access. Apple's entire privacy marketing depends on giving users this kind of control.

Is Apple actually processing this on my device, or is that marketing?

Apple's commitment to on-device processing is real and verifiable to some degree. Security researchers and app trackers can monitor network activity to see if data is being sent to Apple's servers. That said, you can't verify everything Apple claims without looking at their source code. Trust in Apple's privacy claims requires some faith that their public commitments match their actual implementation.

What's the battery cost of running all this AI on my device?

Apple's optimized the neural engine to be power-efficient, so the battery drain shouldn't be significant. But there is some cost—running AI processing uses power that cloud-based assistants offload to servers. In practice, most users probably won't notice much difference, but power-sensitive users might see some impact during heavy Siri use.

Should I wait to upgrade my iPhone until iOS 18.4 arrives?

If you're thinking about upgrading anyway and Siri is a factor in your decision, it might be worth waiting to try it first. But if your current iPhone still works fine, you don't need to rush. The new Siri is nice, but not life-changing. It's an improvement, not a revolution. Upgrade on your normal schedule, whenever your device needs replacing.

Why did this take so long if Apple promised it in June 2024?

Apple is taking a fundamentally different approach to voice assistants than competitors. Building local AI processing that's as capable as cloud-based systems is technically harder. Apple's also working to balance capability with privacy, which adds complexity. The company would rather ship something really good late than something mediocre on time. This approach usually works out better in the long run, even if it's frustrating in the moment.

Key Takeaways

- iOS 18.4, expected in spring 2025, will bring a redesigned Siri with context awareness and on-device processing

- New Siri understands multi-step commands, learns from patterns, and integrates across Calendar, Mail, Messages, and other apps

- Privacy-first approach keeps data on device, unlike Google Assistant and Alexa, which process most requests on remote servers

- iPhone 15 Pro or newer required for full Apple Intelligence Siri features due to neural engine hardware requirements

- Apple's approach trades off raw capability for privacy and ecosystem integration, betting this matters to users
