Apple Pauses Texas App Store Changes After Age Verification Court Block: What This Means for Digital Rights

On a Tuesday that will likely echo through tech policy circles for years, a federal judge handed down a decision that reshaped how we think about age verification, digital privacy, and the power of massive tech corporations to resist state regulation. Apple announced it would pause its previously announced compliance plans for Texas's ambitious new age assurance law. The reasoning wasn't about protecting kids—it was about protecting free speech. And that's where things get complicated.

Texas had passed something genuinely unprecedented: a law that would force app stores to verify users' ages before they could download nearly any app, require parental consent for anyone under 18, and mandate that sensitive age data be shared with developers. The App Store Accountability Act, officially known as SB2420, was supposed to take effect in January, setting off a domino effect that could have fundamentally changed how mobile apps work across America.

But it never got there. The federal judge, citing First Amendment concerns, blocked the law's enforcement before it could even launch. Apple, which had spent months preparing new technical requirements and APIs to comply, suddenly found itself in a holding pattern. The company released a statement saying it would pause its Texas-specific implementation while continuing to monitor the legal process.

Here's what makes this moment so important: this isn't just about one state's law or one company's compliance headaches. This is about the fundamental tension between protecting minors online and protecting everyone's privacy and free speech rights. It's about whether states can mandate invasive data collection in the name of child safety. And it raises uncomfortable questions about whether the biggest tech companies will ever voluntarily police themselves, or whether government regulation is the only answer.

Let me walk you through what happened, why it matters, and what comes next—because despite this court ruling, the fight is far from over.

TL;DR

- Federal court blocked Texas age assurance law on First Amendment grounds before it could take effect in January

- Apple pauses compliance plans but keeps age verification tools available for developers choosing to adopt them voluntarily

- Law would have required invasive data collection: age verification, parental consent for users under 18, and sharing age data with app developers

- Privacy vs. protection debate: Texas argues the law protects minors; Apple argues it requires unconstitutional collection of sensitive personal data

- Legal battle continues: Texas Attorney General's office plans to appeal the ruling, guaranteeing years of litigation

- Bottom Line: Age verification regulation remains one of the most contentious policy issues in tech, with no clear resolution in sight

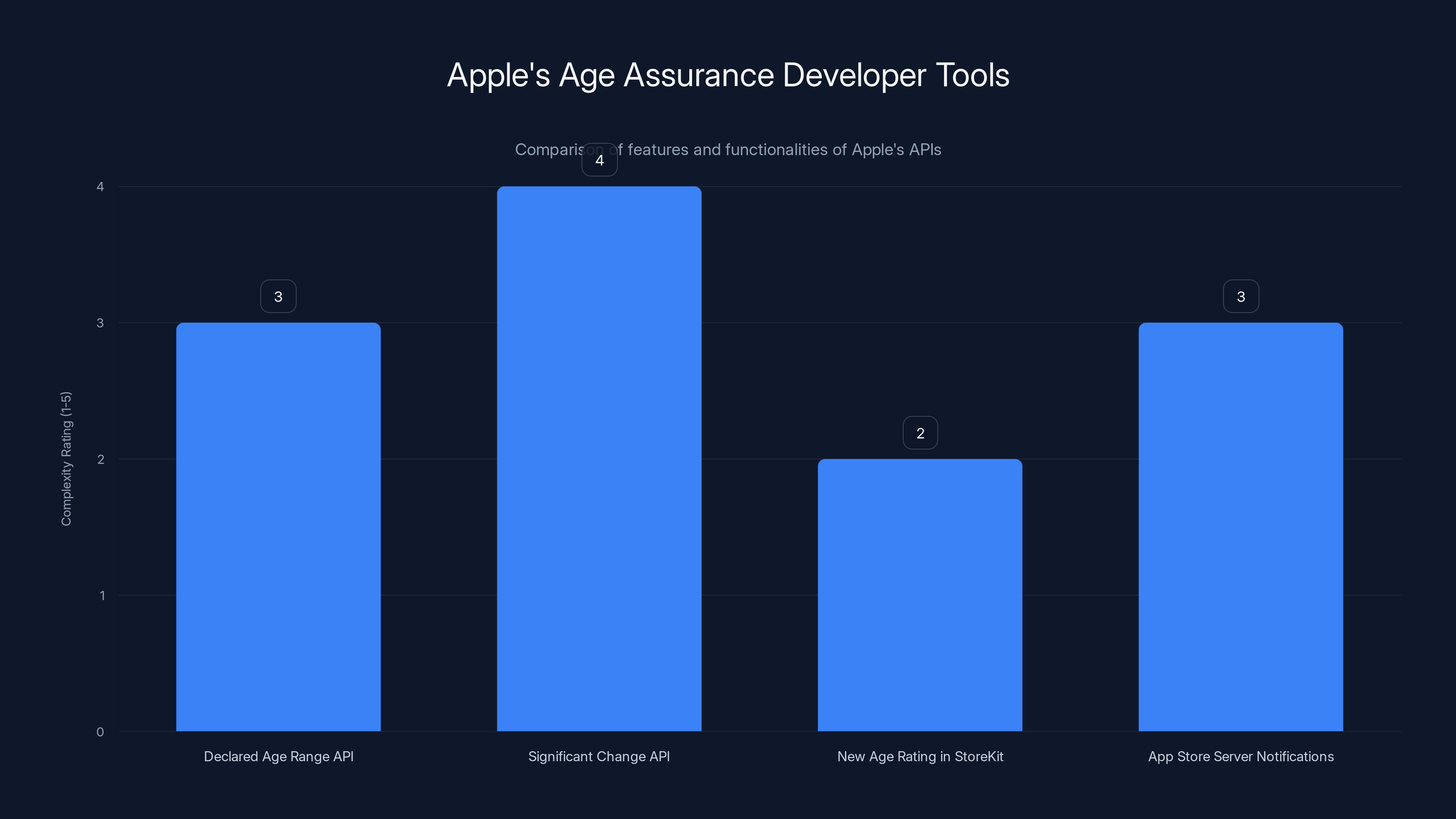

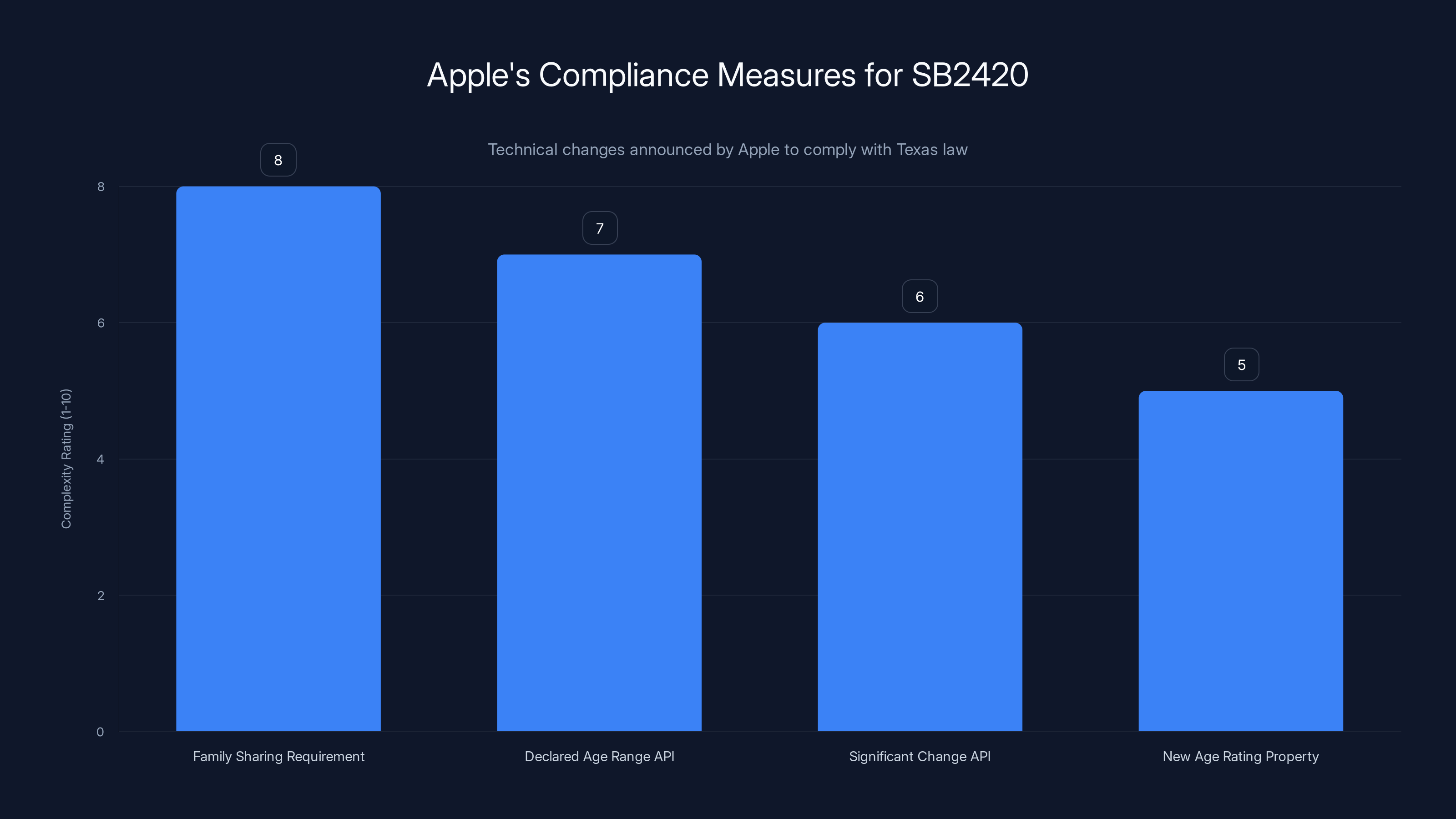

Apple's developer tools vary in complexity, with the Significant Change API being the most complex due to its dynamic consent flow feature.

Understanding SB2420: What Texas Tried to Do

Texas didn't wake up one morning and decide to regulate app stores on a whim. The state had watched years of controversies unfold: social media platforms enabling cyberbullying, apps designed specifically to exploit children's psychology, and widespread concerns that digital services aimed at kids had virtually zero meaningful age verification. So lawmakers decided to take action with something radical.

SB2420, formally titled the App Store Accountability Act, would have created the most aggressive age verification mandate ever attempted at the state level. Here's what it would have required:

Every person downloading an app through Apple's App Store or Google Play would have needed to verify their age. Not just kids. Not just specific categories of apps. Everyone. This would have applied to weather apps, sports score apps, calculator apps: literally everything. The law didn't carve out exceptions for low-risk apps. If you wanted to download an app, any app, you'd need to prove your age.

For anyone under 18, the requirements went further. Parents or guardians would have needed to provide affirmative consent before the download could proceed. This wasn't passive consent or opt-out after the fact. This was active, documented permission. Parents would be able to monitor and revoke consent at any time, which on the surface sounds protective.

But here's the part that made tech companies lose their minds: the age data would have to be shared with developers. The app makers would get information about whether their users were adults or minors. This is where the law crossed into genuinely invasive territory. Developers would know the age demographics of their user base with precise detail.
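To make those three requirements concrete, here's a minimal sketch of the download gate as this article describes it. It is not Apple's implementation or the statute's text: the `DownloadRequest` type, the `evaluate_download` function, and the "minor"/"adult" signal are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

ADULT_AGE = 18  # threshold described in the article


@dataclass
class DownloadRequest:
    """Hypothetical model of one download attempt (not a real App Store type)."""
    verified_age: Optional[int]     # None means no age has been verified yet
    parental_consent: bool = False  # affirmative consent from a parent or guardian


def evaluate_download(request: DownloadRequest) -> dict:
    """Decide whether a download proceeds and what the developer would learn."""
    if request.verified_age is None:
        # Applies to every app: no carve-out for weather or calculator apps.
        return {"allowed": False, "reason": "age not verified",
                "shared_with_developer": None}

    is_minor = request.verified_age < ADULT_AGE
    if is_minor and not request.parental_consent:
        # Consent must be affirmative and given before the download.
        return {"allowed": False, "reason": "parental consent required",
                "shared_with_developer": None}

    # The contested step: an age signal is passed on to the app's developer.
    return {"allowed": True, "reason": "ok",
            "shared_with_developer": "minor" if is_minor else "adult"}
```

Notice that the final branch runs for every successful download, including the weather app. That is the data flow the privacy objection targets.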

Apple prepared for this. In October, the company announced a series of technical changes it would implement in Texas:

Family Sharing requirement: All users under 18 would need to be enrolled in a Family Sharing group. Parents would provide consent not just at download, but for all in-app purchases and transactions. This would centralize parental control in a way Apple's system hadn't required before.

Declared Age Range API: Apple had developed this technology to help comply with age assurance laws worldwide. In Texas, it would be updated to provide the required age categories for new account users. Developers could query this data and understand their user age distribution.

Significant Change API: If an app received a major update, developers could trigger a new parental consent request. This prevents the scenario where a parent gives consent for version 1.0, then version 2.0 becomes completely different without re-verification.

Updated app rating property: A new age rating property type in StoreKit would allow developers to declare age requirements more granularly.

Apple was preparing to comply. The company even announced a timeline for rolling out these changes. But compliance never happened because the law never went into effect.

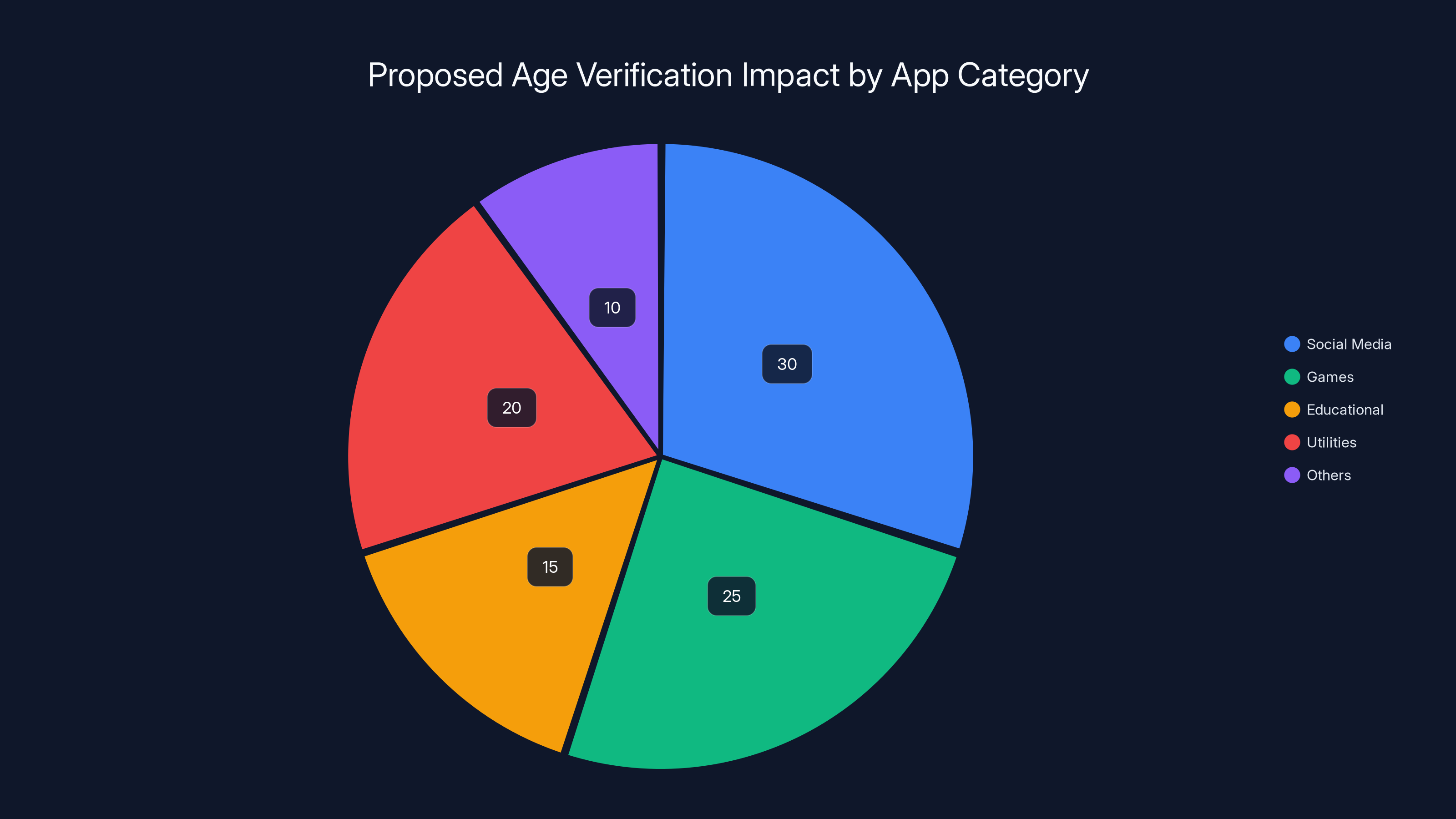

Estimated data shows that social media and games would be most affected by SB2420's age verification mandate, accounting for over half of the impacted app categories.

Apple's Privacy Argument: The First Amendment Defense

Apple's opposition to SB2420 wasn't framed as "we don't want to protect kids." Instead, the company made a sophisticated constitutional argument rooted in free speech protections.

The company argued that requiring the collection and sharing of sensitive personally identifiable information—specifically age data—to download any app constitutes an unconstitutional burden on free speech. Here's the reasoning: if you want to download an app, even something completely innocuous like checking the weather, you'd have to disclose your age. That's a barrier to accessing information and services. It's compelled disclosure of personal information as a condition for exercising First Amendment rights.

In Apple's developer announcement, the company explained it clearly: "While we share the goal of strengthening kids' online safety, we are concerned that SB2420 impacts the privacy of users by requiring the collection of sensitive, personally identifiable information to download any app, even if a user simply wants to check the weather or sports scores."

This argument has teeth. Courts have historically been skeptical of government-mandated collection of personal information, especially when it's required as a condition for accessing services or information. The First Amendment doesn't explicitly mention privacy, but courts have read privacy protections into it through the lens of compelled disclosure.

The federal judge agreed. In the ruling blocking the law, the judge cited First Amendment concerns as a primary reason. The decision essentially said: Texas can't require people to hand over sensitive personal information just to access an app, even in service of a legitimate goal like child protection.

But here's where things get murky. Is accessing an app really a First Amendment issue? Apps aren't inherently speech, though they often contain or facilitate speech. A weather app transmits information. A social media app facilitates speech. A game app... well, games involve some expressive elements. But is downloading software a First Amendment-protected activity?

Texas would argue no. States have broad authority to regulate commerce within their borders, including digital commerce. If a state can require age verification for buying alcohol or cigarettes in physical stores, why not for digital products? The judge disagreed, but that disagreement isn't inevitable or final.

The Judge's Decision: First Amendment Triumphs

The federal judge didn't just block SB2420. The decision provided a roadmap for why age assurance laws might be constitutionally problematic, and that matters for similar laws in other states.

The key holding was that SB2420 imposes a compelled disclosure requirement on speakers and listeners. When someone downloads an app and accesses information or services within it, they're engaging in something the First Amendment protects. Requiring them to disclose their age as a precondition violates their right to speak and receive information anonymously.

The judge also noted that less restrictive alternatives exist. Apple itself had developed age verification tools that could work without mandatory data collection and sharing. Developers could voluntarily implement age verification if they wanted to. Parents could use parental control apps. The law wasn't narrowly tailored to achieve its child protection goals with minimal First Amendment burden.

This reasoning echoes a long line of First Amendment case law. The Supreme Court has repeatedly struck down laws requiring compelled disclosure of information, even when the government had legitimate reasons. The classic example is laws requiring disclosure of anonymous speakers—courts generally won't allow that because the speech itself is protected.

But there's a reasonable counterargument. Digital child safety is genuinely important. Kids are being exploited at scale in ways that weren't possible before. Social media companies have algorithmic systems designed to maximize engagement for young users, and engagement often correlates with distress and mental health problems. Predators use apps to find and groom victims. The harms are real, documented, and severe.

Some argue that First Amendment protections shouldn't apply to commercial platforms making business decisions about what content to recommend or whose information to collect. A weather app doesn't really need to know your age. But TikTok's algorithm definitely should know if it's recommending self-harm content to a 13-year-old. Should the First Amendment really shield that?

The judge's decision doesn't fully grapple with this tension. It treats app access as inherently a First Amendment issue without considering whether different types of apps might warrant different legal treatment. A version of the Texas law that specifically required age verification only for apps that collect user data or have algorithmic recommendations might have fared better legally.

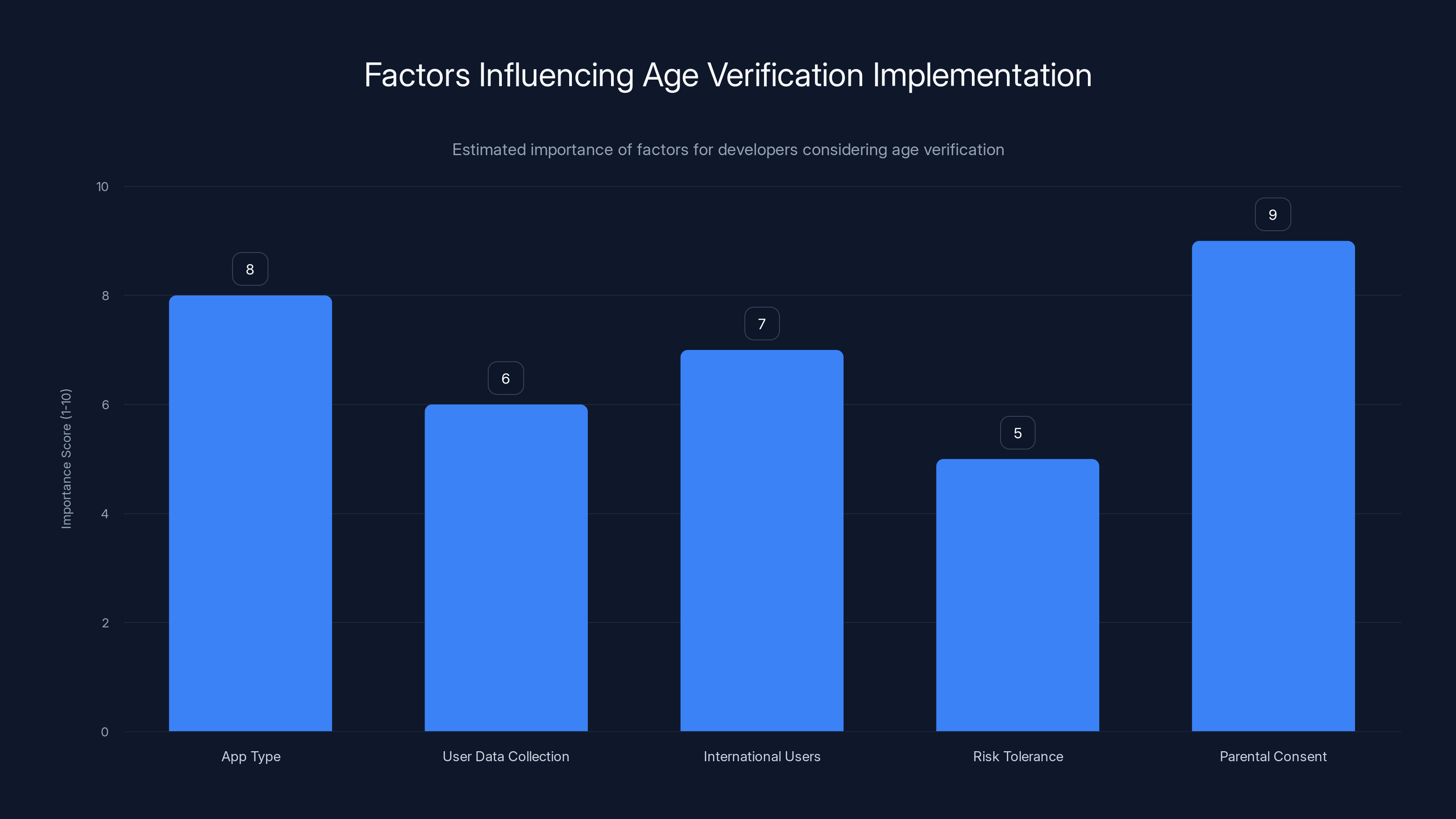

Parental consent and app type are the most critical factors when considering age verification. Estimated data based on developer considerations.

Similar Laws in Other States: Utah and Louisiana on Deck

Texas's law didn't emerge in a vacuum. Similar age assurance laws are already queued up to take effect in other states, and the Texas court ruling creates immediate uncertainty for all of them.

Utah has its own age verification law taking effect soon. Louisiana passed similar legislation. Both states are now watching what happens in the Texas appeal, because if that ruling gets affirmed, their laws face the same First Amendment challenges.

The difference is timing and implementation. Utah's law focuses more specifically on social media rather than all apps, which might give it better legal footing. Laws narrowly targeted at particular categories of apps with documented harms might survive constitutional scrutiny better than blanket age verification requirements.

Some states are taking a different approach entirely. Instead of requiring age verification at the platform level, they're focusing on platform accountability for recommended content to minors. Others are requiring parental consent for specific features like algorithmic recommendations or personalized advertising. These approaches might avoid the direct First Amendment problems that killed SB2420.

What's clear is that the Texas ruling doesn't end this debate. It's the opening move in a longer legal chess match. The Texas Attorney General's office immediately announced plans to appeal. If the ruling gets reversed on appeal, age assurance laws could start spreading rapidly across state lines.

Companies like Apple and Google are already preparing for that scenario. By keeping their age verification APIs available even after pausing Texas-specific implementation, they're signaling that when other states require age verification, they're ready to deploy it.

The Privacy Implications: What This Law Would Have Meant

To really understand why Apple fought so hard, you need to think through what would actually happen if SB2420 had gone into effect.

Every single person in Texas downloading any app would need to establish verified identity. That means either facial recognition, government ID verification, or credit card information. One of the three. Apple would be running these verifications at scale—potentially millions of transactions per month.

Apple isn't in the business of storing sensitive personal identification data. The company's entire marketing argument is built around privacy. If Apple started maintaining databases of age-verified Texans linked to their Apple accounts, that's a massive liability. That data becomes a target for hackers. It becomes leverage in lawsuits. It fundamentally changes what Apple is as a company.

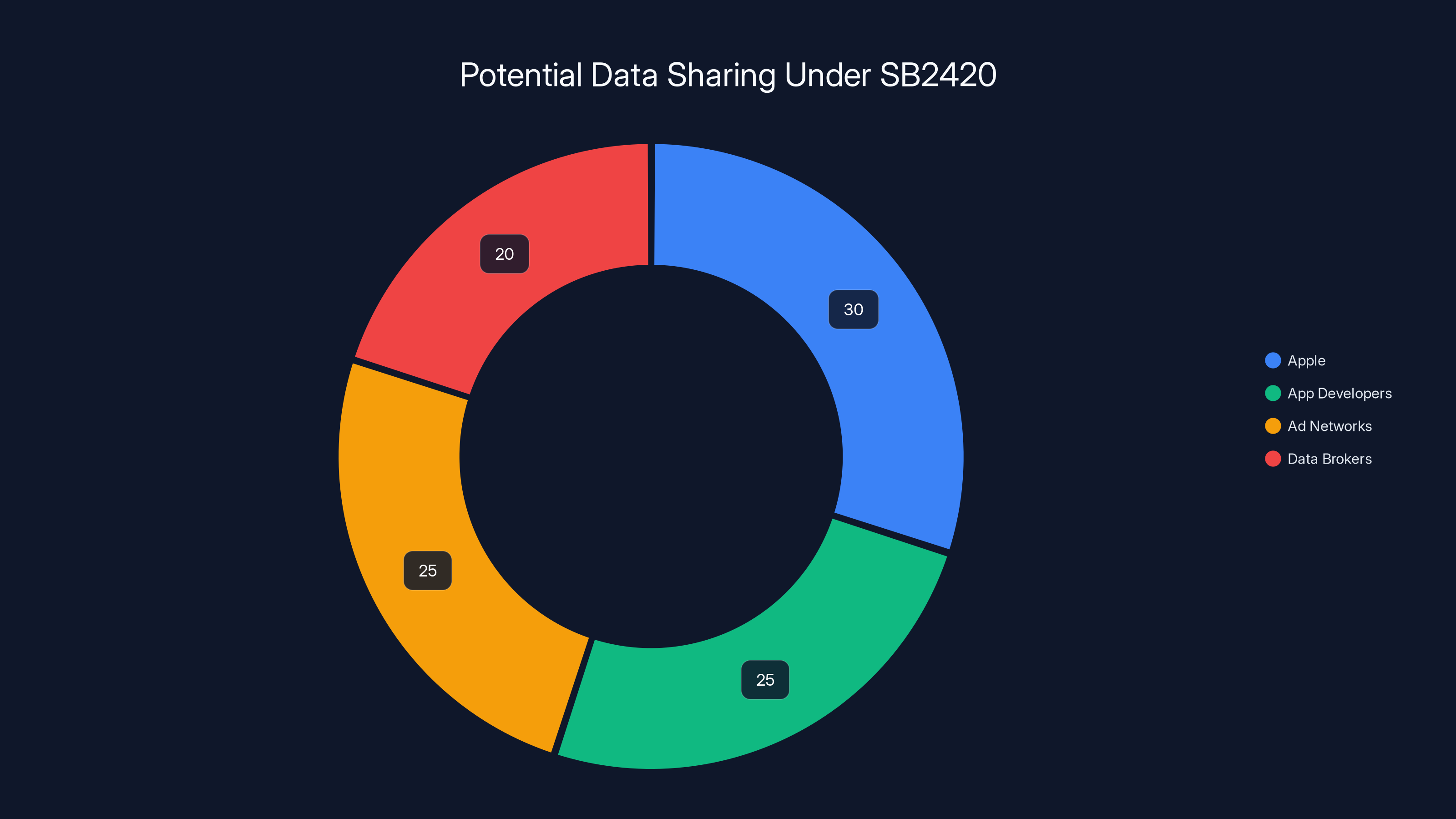

When the law requires sharing age data with developers, the problem compounds. Suddenly thousands of app makers have your age in their systems. If you use a free app that's ad-supported, your age goes to ad networks. If you use a social media app, the platform knows your exact age or age range. The data spread is enormous.

Here's the specific scenario Apple was worried about: someone searches for information about reproductive health, mental health treatment, addiction recovery, or sexual orientation on an app. That app developer now knows their age. That information could be sold to data brokers. An insurance company could eventually use it to set rates. A future authoritarian regime could use it to target and surveil vulnerable populations.

Apple's privacy concern isn't theoretical. We live in a world where data brokers sell age, location, and app usage information to anyone with a credit card. We're not far from a scenario where sensitive personal information collected for ostensibly protective purposes gets weaponized against the people it was supposed to protect.

That said, the current alternative isn't great either. Without any age verification requirements, children access apps designed to be habit-forming. Social media algorithms show them distressing content. Predators can contact them without any verification that they're actually connecting with a minor.

The real problem might be that we're asking app stores to solve a problem that app stores can't actually solve. Age verification at the download level doesn't prevent grooming, cyberbullying, or algorithmic harm. It just creates a data collection mechanism that could be abused.

Apple's compliance measures for SB2420 varied in complexity, with Family Sharing requirements being the most complex. Estimated data.

Apple's Technical Response: The APIs That Remain

Even though Apple paused its Texas-specific implementation, the company made clear that its age assurance developer tools aren't going anywhere. In fact, Apple emphasized that these tools would remain available worldwide on iOS 26, iPadOS 26, and macOS 26 or later.

What are these tools, exactly? Let's break down what Apple built:

Declared Age Range API: This lets app developers request a user's age range instead of a precise age or birthdate. The API works in categories: for example under 13, 13-15, 16-17, and 18 or older. The range is declared on the user's side, and developers get enough information to understand whether their user base skews young. This is less invasive than precise age verification but still gives developers insight into their user demographics.

Significant Change API: Part of Apple's PermissionKit, this API allows developers to re-request parental consent if their app undergoes significant changes. A parent approves version 1.0, but version 2.0 adds features the parent might not want their child accessing. The API triggers a new consent flow, ensuring continued parental oversight.

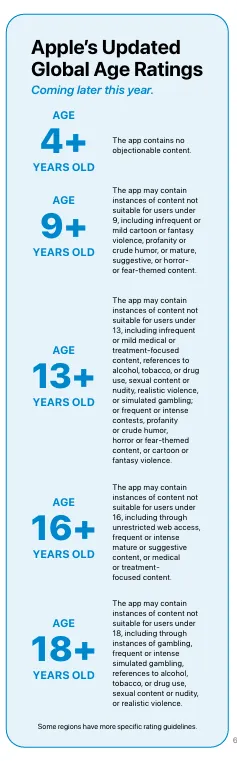

New age rating property in StoreKit: StoreKit is Apple's framework for in-app purchases and App Store functionality. The new property type allows more granular age rating declarations. Developers can specify age requirements more precisely than the existing age rating system, which just has broad categories like 12+, 17+, etc.

App Store Server Notifications: This infrastructure allows the App Store to send real-time notifications to developers about changes to app permissions, ratings, or parental consent status. If a parent revokes consent for an app, the developer gets notified immediately.
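The consent lifecycle these tools imply (approve, re-request after a significant change, revoke) can be sketched as a small state model. This is a rough illustration only, not PermissionKit or App Store Server Notifications code: `ConsentRecord` and its methods are hypothetical names, and the rule for what counts as a "significant change" is assumed to be the developer's call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical per-child, per-app consent state (not an Apple type)."""
    approved_version: Optional[str] = None  # version the parent last approved
    revoked: bool = False

    def approve(self, version: str) -> None:
        """Parent gives affirmative consent for a specific app version."""
        self.approved_version = version
        self.revoked = False

    def revoke(self) -> None:
        """Parent withdraws consent; the developer would be notified."""
        self.revoked = True

    def needs_consent(self, current_version: str, significant_change: bool) -> bool:
        """Return True when a new consent request should be shown."""
        if self.revoked or self.approved_version is None:
            return True
        # Minor updates keep the existing approval; flagged ones do not.
        return significant_change and current_version != self.approved_version
```

In this sketch, a parent who approved version 1.0 is not asked again for a routine 1.1 release, but is asked again when the developer flags 2.0 as a significant change.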

What's interesting is that Apple describes these as tools that "remain available for testing and use." That phrasing is deliberate. Apple isn't pushing developers to implement these tools. The company is making them available for developers who want to voluntarily adopt age assurance measures.

This represents a philosophical difference from what Texas law would have required. Texas wanted mandatory age verification with data sharing. Apple's approach is: if you want to do age verification, here are the tools that let you do it in a privacy-respecting way. The choice is yours.

Many developers will choose not to use these tools. A weather app developer has no incentive to implement age verification. The data doesn't provide business value, and it creates friction that might reduce downloads. But a social media app or a game targeting teens might implement Declared Age Range to get useful demographic data.
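Here's a rough sketch of what "useful demographic data" could look like when an app only ever sees age ranges, never exact ages. The bucket boundaries and the `age_range` and `minor_share` helpers are assumptions made up for illustration; they are not the Declared Age Range API's actual types or return values.

```python
from bisect import bisect_right
from collections import Counter

# Illustrative bucket boundaries (an assumption, not Apple's definitions).
BOUNDARIES = [13, 16, 18]
LABELS = ["under 13", "13-15", "16-17", "18+"]


def age_range(age: int) -> str:
    """Map an exact age to a coarse range label."""
    return LABELS[bisect_right(BOUNDARIES, age)]


def minor_share(declared_ranges: list[str]) -> float:
    """Fraction of users whose declared range is below 18."""
    if not declared_ranges:
        return 0.0
    counts = Counter(declared_ranges)
    minors = sum(n for label, n in counts.items() if label != "18+")
    return minors / len(declared_ranges)
```

The point of the design is that `minor_share` works entirely on labels. A developer can learn that half of a user base is under 18 without ever holding a birthdate.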

The question is whether this voluntary approach is sufficient. If age verification is truly important for child safety, leaving it optional means lots of apps won't use it. Kids can still access age-restricted apps by lying about their age. Parents can't enforce consistent rules across apps unless each app individually implements parental controls.

What Happens Next: The Appeals Process

The Texas Attorney General's office didn't accept the court's ruling quietly. Immediately after the decision, the state announced plans to appeal. This means the case is far from over.

The appeal will likely go to the Fifth Circuit Court of Appeals, which covers Texas, Louisiana, and Mississippi. The Fifth Circuit is known for being relatively conservative, which might favor Texas's argument that states have broad power to regulate commerce and protect minors.

Apple and other tech companies will file amicus briefs—basically saying, "Hey, Fifth Circuit, we have an interest in this case too." Privacy advocates will file their own briefs. Kids' safety organizations will argue that the law is necessary and constitutional.

The Fifth Circuit's decision won't be final either. Whoever loses can appeal to the Supreme Court. Whether the Supreme Court takes the case depends on several factors: whether there's a circuit split (different appeals courts ruling different ways), whether the case involves a major constitutional question, and whether the Court is in the mood to take it.

Here's a realistic timeline: If the Fifth Circuit rules in the next year or two, and the Supreme Court takes the case after that, we're looking at a final decision in 2027 or 2028 at the earliest. That's a long time for legal uncertainty.

During this period, companies won't know whether to implement age verification. Some might preemptively build it, betting that laws will eventually pass. Others will wait for legal clarity. States will keep passing new laws, adding more complexity.

Meanwhile, the actual kids these laws are supposedly protecting aren't getting safer. They're still using social media platforms that optimize for engagement over wellbeing. They're still vulnerable to exploitation. The legal fight over age verification procedures is a distraction from the harder work of actually redesigning digital platforms to be safer by default.

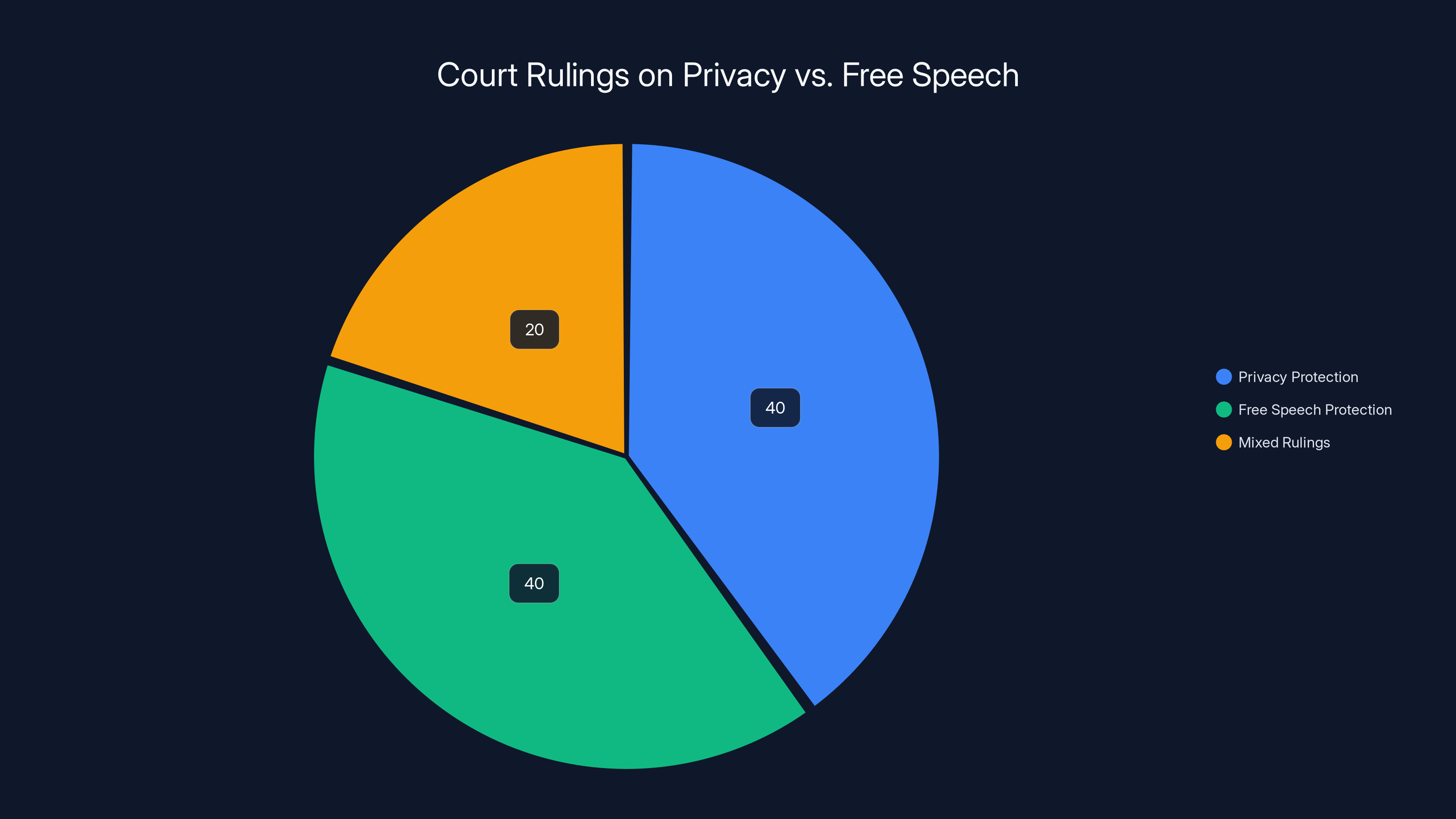

Estimated data shows that court rulings on privacy vs. free speech are often balanced, with a significant portion being mixed rulings. This highlights the complexity of cases like Apple's First Amendment defense.

The Broader Context: Other States Watching Closely

Texas isn't fighting this battle alone. Louisiana and Utah have their own age assurance laws on the books. Several other states are considering similar legislation. All of them are now watching what happens in Texas, because the legal outcome will affect whether their laws can survive constitutional challenges.

What's interesting is how the laws vary. Utah's law is narrower—it specifically targets social media apps with algorithmic recommendations, rather than all apps. That narrower focus might actually make it more likely to survive legal scrutiny. The broader the law, the harder it is to argue that it's narrowly tailored to achieve a legitimate government purpose.

Louisiana's law has some similar features to Texas's but also some differences in implementation. These variations matter because they might affect how courts analyze the constitutional questions.

International context matters too. The European Union has passed age assurance regulations as part of its Digital Services Act. These approaches differ significantly from what Texas tried—they focus more on platform responsibility for recommended content to minors rather than universal age verification at download.

Apple operates globally, so it's watching how different jurisdictions approach this problem. The company might eventually find itself complying with age assurance laws in some regions while resisting them in others, creating a patchwork where American users get different privacy protections depending on their state.

The Child Safety Argument: Is Age Verification the Answer?

Let's zoom out and ask the bigger question: even if age verification were constitutional, would it actually improve child safety online?

The honest answer is: maybe not much. Age verification at the app download level doesn't prevent most of the harms kids actually face online:

Social media cyberbullying: Age verification doesn't stop peers from harassing each other. The bullying happens on the platform itself, which already knows users' ages (or should).

Predatory behavior: Predators often pose as peers, so knowing someone's age doesn't automatically prevent grooming. What matters is verifying that the person claiming to be a 14-year-old is actually 14.

Algorithmic harms: The recommendation algorithms that push kids toward distressing content don't care whether the user is 15 or 25. Age verification at download doesn't change the algorithm.

Exploitative apps: Freemium games designed to exploit psychological vulnerabilities don't become safer because parents gave consent. The business model remains exploitative.

What actually prevents these harms is harder work: platform design changes, algorithmic transparency, content moderation, and enforcement against predators. That's not as easy as checking IDs at download.

Age verification might help with some specific problems—preventing access to genuinely age-inappropriate content like extreme pornography. But that's a small slice of the child safety problem online.

Some researchers argue that age verification might actually harm child safety. If the only way to access certain apps is through verified age identity, that creates a centralized database of minors. That's actually a bigger security risk than the decentralized current system. Predators don't need to hack millions of app accounts; they just need to hack one age verification database.

Texas's law tried to address that by requiring Apple to do the verification centrally (with parental data) rather than creating a separate database. But Apple was right that this still creates a massive privacy risk.

The uncomfortable truth is that protecting kids online is hard, and there's no technical solution that doesn't involve tradeoffs. Every approach to age verification involves either creating invasive databases or allowing identity fraud. Every approach to algorithmic safety involves transparency that companies resist because it reveals how their systems work. Every approach to content moderation requires judgment calls that are controversial.

Age assurance laws are appealing because they feel like a clear solution—just verify ages and require parental consent. But they're actually a Band-Aid on a much larger problem.

Estimated data sharing distribution under SB2420 shows significant spread to app developers and ad networks, raising privacy concerns.

The Business Impact: What Companies Need to Know

If you're a developer or run a tech company, the Texas ruling and the broader age assurance debate create real business uncertainty.

The safest approach right now is to implement Apple's voluntary age assurance APIs if your app targets young users or handles sensitive data. You're not required to, but building the infrastructure now means you're ready if laws eventually take effect in your state.

If you run a freemium game or social app that monetizes through user engagement, age data suddenly becomes valuable. The Declared Age Range API tells you what percentage of your users are under 18. That demographic insight helps with advertising targeting and content strategy.

For most apps, though, age verification creates friction without clear benefits. A task management app doesn't need to verify ages. A calendar app doesn't care how old users are. The cost of implementing age verification exceeds the business value.

The real issue for companies is the patchwork of potential regulations. If age assurance laws vary significantly between states, companies might need to implement different systems for different regions. That's expensive and creates compliance nightmares.
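One way teams sometimes contain that patchwork is a per-region policy table with a safe default. The sketch below is purely illustrative: the `AgePolicy` fields, the region codes, and the "EXAMPLE-REGION" entry are hypothetical, and the Texas row only mirrors what this article describes (requirements on paper, enforcement blocked by the court).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgePolicy:
    """Hypothetical summary of what one jurisdiction requires of an app."""
    verify_age: bool
    parental_consent_under_18: bool
    in_effect: bool


# Default when a region has no rule on record.
NO_REQUIREMENT = AgePolicy(verify_age=False, parental_consent_under_18=False,
                           in_effect=True)

POLICIES = {
    # Texas as described in this article: enforcement is blocked, so not in effect.
    "US-TX": AgePolicy(verify_age=True, parental_consent_under_18=True,
                       in_effect=False),
    # Made-up placeholder region, for illustration only.
    "EXAMPLE-REGION": AgePolicy(verify_age=True, parental_consent_under_18=False,
                                in_effect=True),
}


def active_policy(region: str) -> AgePolicy:
    """Return the rules an app should enforce right now for a region."""
    policy = POLICIES.get(region, NO_REQUIREMENT)
    return policy if policy.in_effect else NO_REQUIREMENT
```

Keeping `in_effect` as data rather than code means a court ruling in either direction becomes a one-line change instead of a new release.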

Given this uncertainty, many companies are adopting a wait-and-see approach. When the Fifth Circuit issues its decision on the Texas appeal, that will be a moment when companies reassess their compliance strategies. If the law is upheld, you can expect rapid deployment of age assurance systems. If it's struck down again, the industry will likely relax for a while until the next legal battle.

International Perspective: How Other Countries Handle Age Assurance

The United States isn't the only place grappling with these issues. Different countries have taken radically different approaches.

The UK has been experimenting with age assurance for social media access. The Online Safety Act requires platforms to implement age verification for age-restricted content, but it gives platforms flexibility in how to do it. Some use ID verification, others use behavioral analysis, others use shared device assumptions (like, if you're using an adult's device, you're probably not a young child).

The EU's Digital Services Act takes a different approach. Rather than requiring universal age verification, it focuses on platform accountability. If a platform recommends harmful content to minors, the platform is responsible. Age verification might be one tool to prevent that, but it's not mandatory.

Australia has legislated a ban on social media accounts for under-16s, which is more blunt than age verification: just outright prohibition. That raises its own constitutional and practical issues.

Singapore has proposed age verification requirements for apps, but with lighter privacy requirements than Texas. The age verification is done by device manufacturers and platforms, not centrally stored.

China has implemented real-name registration requirements for online services, which is technically age-verifiable but also enables surveillance of the population. That's obviously a cautionary tale about what overly aggressive age verification infrastructure can become.

What's clear from the international perspective is that there's no consensus approach. Every country is experimenting with different models, trying to balance child protection with privacy and free speech. Some models protect privacy better than others. Some are more effective at preventing harm than others. And some (looking at you, China) are more about surveillance than protection.

The question for the United States is which model to follow. Should we adopt the EU's platform accountability approach? The UK's flexible verification? Or the restrictive Australian approach? Texas tried the most invasive American approach, and the courts shot it down.

What Apple Actually Did During the Pause, and Where Things Stand Now

When Apple announced it would "pause" its Texas compliance plans, what did that actually mean?

It didn't mean Apple deleted code or removed features. The company still has all the infrastructure it built for Texas compliance. The Declared Age Range API, the Significant Change API, and the new age rating properties in StoreKit are all still functional and available worldwide.

What Apple did pause is enforcement of the specific requirements. Apple isn't going to require all apps in Texas to implement Family Sharing or age verification. Apple isn't going to push developers to use the age assurance tools. The company is simply saying: "We built this for compliance, but since the law is blocked, we're not requiring it."

This is actually a clever position. Apple preserved its technical work, maintained capability to comply with future laws, but doesn't have to burden its entire Texas user base with invasive compliance measures right now.

Developers can still voluntarily use Apple's age assurance tools if they want to. Some developers might do this anyway—not because the law requires it, but because having age demographic data is valuable for their business. A social media app might want to know what percentage of its user base is under 18 for advertising purposes.

Apple's current status, as far as public statements go, is that it's monitoring the legal situation and will adjust as the appeals process unfolds. The company made clear that if the law gets reinstated, it's ready to implement whatever technical requirements are necessary.

Developer Perspective: Should You Implement Age Verification Now?

If you're building an app, should you implement age assurance features even though they're not currently required anywhere in the United States?

The answer depends on several factors:

What does your app do? If it's a social media app, messaging app, or anything where user identity matters, age verification might be valuable. If it's a weather app or calculator, probably not.

How much user data does it collect? If you're collecting detailed user data, knowing ages helps you understand your demographic. If you're minimizing data collection, age verification adds friction without much value.

Do you have international users? If you operate in the EU or other jurisdictions with strict privacy laws, implementing privacy-respecting age assurance might be smart preemptively.

What's your risk tolerance? Some companies implement compliance features now to avoid scrambling if laws change. Others wait until they're actually required.

How important is parental consent for your business? If you're building something kids use, having documented parental consent might reduce legal liability even if it's not required.

For most developers, the pragmatic approach is to wait. Building age verification infrastructure costs time and money. Creating additional friction for users reduces downloads and engagement. You're not legally required to do it right now, so there's no pressing reason.

But keep Apple's tools in mind. When the legal situation becomes clearer, whether through appellate court decisions or through laws actually taking effect, you'll be able to implement age assurance quickly using Apple's infrastructure.
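To make the checklist above concrete, here is a minimal sketch that turns the five questions into a rough recommendation. The `AppProfile` fields, the `recommend` function, and the thresholds are all hypothetical and chosen only for illustration; they are not legal advice and do not come from Apple or any statute.

```python
from dataclasses import dataclass


@dataclass
class AppProfile:
    """Hypothetical summary of an app, one field per question above."""
    identity_matters: bool            # social media, messaging, and similar
    collects_detailed_data: bool      # detailed user data vs. minimal collection
    has_international_users: bool     # for example, users in the EU
    prefers_early_compliance: bool    # low risk tolerance
    relies_on_parental_consent: bool  # product is used by kids
    legally_required: bool = False    # flips if a law actually takes effect


def recommend(profile: AppProfile) -> str:
    """Return a rough recommendation; the thresholds are illustrative assumptions."""
    if profile.legally_required:
        return "implement now"
    signals = sum([
        profile.identity_matters,
        profile.collects_detailed_data,
        profile.has_international_users,
        profile.prefers_early_compliance,
        profile.relies_on_parental_consent,
    ])
    if signals >= 3:
        return "consider implementing early"
    if signals >= 1:
        return "prepare, but wait"
    return "wait"


weather_app = AppProfile(False, False, False, False, False)
kids_social_app = AppProfile(True, True, True, False, True)
print(recommend(weather_app))      # wait
print(recommend(kids_social_app))  # consider implementing early
```

The point of the sketch is that a legal requirement overrides everything else, and that without one, most apps with few of these signals land on "wait".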

The Political Dynamics: Why Age Verification Remains Contentious

What's fascinating about the age assurance debate is how it splits traditional political coalitions.

Conservatives generally want child protection, which aligns with age assurance laws. But conservatives also tend to oppose government mandates on business and worry about big government. So some conservatives oppose age verification requirements on anti-regulation grounds.

Progressives generally want privacy protection, which aligns with opposing age verification laws. But progressives also care about child safety. So some progressives support age verification as necessary protection for vulnerable minors.

Tech companies mostly oppose age verification because it creates compliance costs and liability risks. But some tech companies support it as a way to share responsibility for child safety between platforms and parents, rather than leaving it with platforms alone.

Kids' safety organizations strongly support age verification as a tool to prevent exploitation and grooming. Mental health advocates sometimes support it to prevent access to harmful content.

Privacy advocates strongly oppose age verification because it enables surveillance and creates centralized databases of personal information that could be misused.

This political complexity explains why the legal battles are so protracted. There's no clear "left" and "right" position on age assurance—the debate cuts across traditional political lines. That makes compromise harder and makes it more likely the courts will end up deciding.

The Supreme Court, if it takes the case, will have to weigh fundamental constitutional questions: Does First Amendment protection extend to app access? Do states have broad police powers to regulate digital commerce and protect minors? How do we balance privacy rights against child safety?

These aren't questions with obvious answers. Reasonable people disagree.

The Future of Age Assurance: What Comes Next

Even if the block on the Texas law is upheld on appeal and the Supreme Court eventually declines to hear the case, age assurance regulation isn't going away.

States will keep passing laws with slightly different structures, trying to find an approach that survives constitutional scrutiny. Some will focus specifically on social media rather than all apps. Some will target platforms rather than app stores. Some will require age verification only for specific features rather than blanket requirements.

International regulation will influence American law. If the EU successfully implements age assurance without major privacy scandals, American lawmakers will point to that as proof of concept. If the EU's systems get hacked or misused, that will discourage American adoption.

Technology will also evolve. Better privacy-preserving age verification might emerge—ways to verify age without collecting and storing personal data. Zero-knowledge proofs, for instance, could theoretically verify that someone is over 18 without revealing their actual age. Biometric age estimation might improve enough to be reliable. If better technical solutions emerge, the legal arguments change.
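The data-minimization idea behind these proposals can be sketched in a few lines. What follows is not a zero-knowledge proof; it is a much simpler signed attestation, in which a trusted issuer that already knows the birthdate hands out a token saying only "over 18: yes or no". The issuer key, function names, and token format are assumptions made up for this example, and a real system would use public-key signatures plus expiry and replay protection rather than a shared HMAC key.

```python
import hashlib
import hmac
import json
from datetime import date

ISSUER_KEY = b"demo-issuer-secret"  # hypothetical key, for illustration only


def age_in_years(birthdate: date, today: date) -> int:
    """Whole years elapsed between birthdate and today."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def issue_attestation(birthdate: date, today: date, key: bytes = ISSUER_KEY) -> dict:
    """Issuer side: sign a claim that contains only the over-18 flag."""
    payload = json.dumps({"over_18": age_in_years(birthdate, today) >= 18})
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_attestation(token: dict, key: bytes = ISSUER_KEY) -> bool:
    """Verifier side: accept only an untampered token that says over_18 is true."""
    expected = hmac.new(key, token["payload"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, token["tag"]):
        return False
    return json.loads(token["payload"])["over_18"] is True


token = issue_attestation(date(2000, 6, 15), today=date(2025, 1, 1))
print(token["payload"])           # the birthdate never appears in the token
print(verify_attestation(token))  # True
```

The app receiving the token learns a single yes-or-no answer, not a birthdate or an ID scan. A zero-knowledge scheme would go further by removing the need to trust the issuer with that much detail.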

Meanwhile, the fundamental problem—kids being harmed online—won't wait for legal clarity. Platforms will keep optimizing for engagement. Parents will keep struggling to protect their kids. Predators will keep evolving tactics. And policymakers will keep looking for regulatory solutions.

Age assurance might be part of the answer, but it's probably not the whole answer. The real solutions involve redesigning platforms to prioritize safety, implementing actual content moderation, enforcing laws against predators, and teaching digital literacy. That's harder than age verification, which is probably why there's so much focus on age verification.

Takeaways for Different Stakeholders

For Parents: Age verification laws won't solve the problem of digital child safety. Use the existing parental controls on your devices. Have conversations with your kids about online risks. Monitor their activity when it's appropriate. Don't rely on legal solutions to replace engaged parenting.

For Developers: Be ready to implement Apple's age assurance tools if your app targets young users or handles sensitive data. But don't implement them now unless there's a specific business reason. Wait for legal clarity before making major compliance investments.

For Tech Companies: The patchwork of state laws is coming whether you like it or not. Start building compliance infrastructure now, but don't deploy it until legally required. Monitor the appellate process in Texas and similar cases. Engage with policymakers to shape regulations toward privacy-respecting approaches.

For Policymakers: Age verification alone won't protect kids. Focus on platform accountability, algorithmic transparency, and enforcement against predators. If you do require age verification, build in strong privacy protections and regular audits to prevent misuse.

For Privacy Advocates: Age assurance laws threaten privacy if they're designed poorly. Engage with the legislative process to push for privacy-protective implementations. Support the courts in blocking overly invasive laws. But also acknowledge that online child safety is a real problem requiring solutions.

FAQ

What is SB2420 and why did Texas pass it?

SB2420, also called the App Store Accountability Act, was a Texas law designed to require age verification for all app downloads and parental consent for users under 18. Texas passed it to address concerns about children accessing age-inappropriate content, encountering predators, and being exposed to algorithmically curated harmful content. The law aimed to make app stores responsible for verifying ages and enforcing parental consent.

How did Apple plan to comply with the Texas law?

Apple announced several technical changes including a Family Sharing requirement for all users under 18, updates to the Declared Age Range API to provide age categories for new accounts, a Significant Change API to re-request parental consent when apps are significantly updated, and new age rating property types in StoreKit. These tools would have allowed Apple to enforce the law's requirements while collecting minimal personal data.
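Two of the ideas in that answer, sharing an age category instead of a birthdate and re-requesting consent after a significant update, can be illustrated with a small sketch. This is not Apple's API: the range boundaries, the `ConsentLedger` class, and every name below are hypothetical and exist only to show the logic.

```python
from dataclasses import dataclass, field

# Hypothetical age categories; the real boundaries are not given in this article.
AGE_RANGES = [(0, 12, "under 13"), (13, 15, "13-15"), (16, 17, "16-17")]


def declared_range(age: int) -> str:
    """Map an exact age to a coarse category so the exact age is never shared."""
    for low, high, label in AGE_RANGES:
        if low <= age <= high:
            return label
    return "18+"


@dataclass
class ConsentLedger:
    """Tracks which significant version of an app a parent last approved."""
    approved: dict = field(default_factory=dict)  # (user, app) -> version

    def record_consent(self, user: str, app: str, version: int) -> None:
        self.approved[(user, app)] = version

    def needs_consent(self, user: str, app: str, age_range: str, version: int) -> bool:
        """Adults never need consent; minors need it again after a significant change."""
        if age_range == "18+":
            return False
        return self.approved.get((user, app)) != version


ledger = ConsentLedger()
teen = declared_range(14)
ledger.record_consent("sam", "chat-app", version=1)
print(ledger.needs_consent("sam", "chat-app", teen, version=1))  # False
print(ledger.needs_consent("sam", "chat-app", teen, version=2))  # True
```

Here a "significant change" is modeled as a bump in a version counter, which invalidates the earlier approval until a parent consents again.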

Why did the federal judge block the law on First Amendment grounds?

The judge determined that requiring people to disclose personal information as a condition for downloading any app, including low-risk apps like weather or calculator apps, is an unconstitutional burden on free speech. In the judge's view, the compelled disclosure of age data violates First Amendment protections for anonymous speech and for receiving information. The judge also noted that less restrictive alternatives exist for achieving child safety goals.

What are the privacy concerns with mandatory age verification?

Mandatory age verification would require massive collection of personal identification data, whether through facial recognition, government ID verification, or credit card information. Apple would need to store this data, creating a target for hackers and potential for misuse. When age data must be shared with developers, the problem multiplies as thousands of companies gain access to sensitive personal information. This data could eventually be sold to data brokers, used for discrimination, or exploited by future authoritarian regimes.

Does the court's ruling mean age verification laws are unconstitutional everywhere?

No. The ruling applies to Texas's specific law and its requirement for universal age verification across all apps. Other states' laws with narrower scope, such as those targeting only social media, might survive constitutional challenges. The appeals process could reverse this ruling. And the Supreme Court might eventually take the case and rule differently. The First Amendment analysis is complex, and reasonable judges disagree.

Will Apple be required to implement age verification if other states pass similar laws?

Most likely, yes. Apple will probably comply with state age verification laws if they're legally required, though the company will push back in court and argue for privacy-protective implementations. What Apple did in Texas, preparing technical infrastructure while pausing deployment, shows the company's strategy: build compliance capability without deploying it until legally forced to do so.

What are Apple's developer tools for age assurance, and who would use them?

Apple offers the Declared Age Range API (which lets developers receive a user's age category rather than an exact birthdate), the Significant Change API (which re-requests parental consent when apps are significantly updated), updated age rating properties in StoreKit, and App Store Server Notifications (for parental consent changes). Developers might voluntarily use these tools if they target young users or want demographic data about their user base, even though they're not currently required.

If age verification isn't mandated, does that mean kids will be at risk online?

Age verification at the app download level prevents only some harms. It doesn't stop cyberbullying, predatory behavior by those posing as peers, or algorithmic recommendations of harmful content. What actually prevents these harms is better platform design, content moderation, parental oversight, and enforcement against predators. Age verification is one tool among many, not a complete solution for online child safety.

What happens next in the legal battle over age assurance laws?

Texas plans to appeal the court's decision to the Fifth Circuit Court of Appeals. Whichever side loses there can ask the Supreme Court to review the case. The entire process could take several years. During this period, other states' laws remain in legal limbo. Companies won't know whether to implement age verification until the legal situation becomes clearer.

Are other countries implementing age verification differently than the United States?

Yes. The EU's Digital Services Act focuses on platform accountability for recommended content rather than requiring universal age verification. The UK allows platforms flexibility in how to verify age. Australia has proposed banning social media for under-16s entirely. Singapore requires age verification but with lighter privacy requirements. International approaches range from the privacy-protective (EU) to the surveillance-heavy (China), offering different models for the U.S. to potentially follow.

Conclusion: The Unsolved Tension Between Protection and Privacy

The federal judge's decision blocking Texas's age assurance law didn't solve anything. It just highlighted how difficult this problem actually is.

Texas wanted to protect kids. That's a legitimate goal. Kids are genuinely at risk online—from predators, from algorithmic manipulation, from content that harms their mental health. Age verification could theoretically prevent some of those risks. But it would do so by creating massive privacy risks for everyone, including adults who just want to download a weather app.

Apple opposed the law on constitutional and privacy grounds. Those are also legitimate concerns. Compelled disclosure of personal information is something democracies should be cautious about. Once you create a centralized database of age-verified users, that database becomes a target for anyone with nefarious intentions.

Both sides are right about the problem they're worried about. The question is how to weigh the risks. Which is worse: kids having access to age-inappropriate content, or adults having their age and app usage patterns linked to their identity? Different people answer differently based on their values.

What's clear is that age verification regulation is coming, even if the Texas law doesn't survive appeal. Some state will eventually pass an age assurance law that survives constitutional scrutiny. Maybe it will be narrowly tailored to social media only. Maybe it will include strong privacy protections. Maybe it will use innovative technology like zero-knowledge proofs to verify age without collecting personal data.

Apple and other tech companies should be preparing for that inevitability while pushing back against overly invasive approaches. Policymakers should be designing regulations that actually protect kids while respecting privacy. Privacy advocates should acknowledge that some level of age verification might be necessary while fighting against surveillance-heavy implementations.

And all of us should remember that age verification, no matter how well-designed, isn't going to solve the core problem of how to make digital platforms safe for kids. That requires harder work: better platform design, real content moderation, stronger enforcement against predators, and honest conversations about the harms that technology can cause.

The Texas ruling is only the beginning of this conversation. The real debate is just getting started.

Key Takeaways

- Federal judge blocked Texas age assurance law citing First Amendment concerns about compelled disclosure of personal information

- Apple pauses Texas implementation but maintains voluntary age assurance developer tools available worldwide for companies choosing to adopt them

- Age verification at app download level cannot prevent most online child harms like cyberbullying, predatory behavior, or algorithmic manipulation

- Patchwork of state laws emerging in Texas, Louisiana, and Utah creates regulatory uncertainty forcing tech companies to prepare for varied compliance requirements

- Privacy vs. protection debate remains unresolved as appeals process could take years, during which vulnerable minors continue facing unmitigated online risks

![Apple Pauses Texas App Store Changes After Age Verification Court Block [2025]](https://tryrunable.com/blog/apple-pauses-texas-app-store-changes-after-age-verification-/image-1-1766590593551.jpg)