Discord's Age Verification Crisis: What Happened With Persona [2025]

Discord did something that fundamentally violated user trust, and the internet noticed immediately.

The messaging platform announced a sweeping change: all users would soon default to "teen experiences" until they verified their ages. Sounds reasonable on the surface, right? Protect kids from adult content. But the execution was where things got messy. Discord planned to collect government IDs as part of its global age verification rollout, which seemed reckless given that a third-party age verification vendor had recently exposed 70,000 Discord users' government IDs in a breach.

What made this worse wasn't just the plan itself. It was how Discord communicated about it, what the company didn't say, and, most controversially, the third-party vendor it chose as a partner for a UK test.

This article breaks down exactly what happened with Discord and Persona, why users got furious, what the privacy implications actually are, and what this means for age verification on social platforms moving forward.

TL;DR

- Discord partnered with Persona for age verification in a UK test without initially disclosing the partnership or full details

- The company planned to collect government IDs while users were still reeling from a prior breach that exposed 70,000 IDs

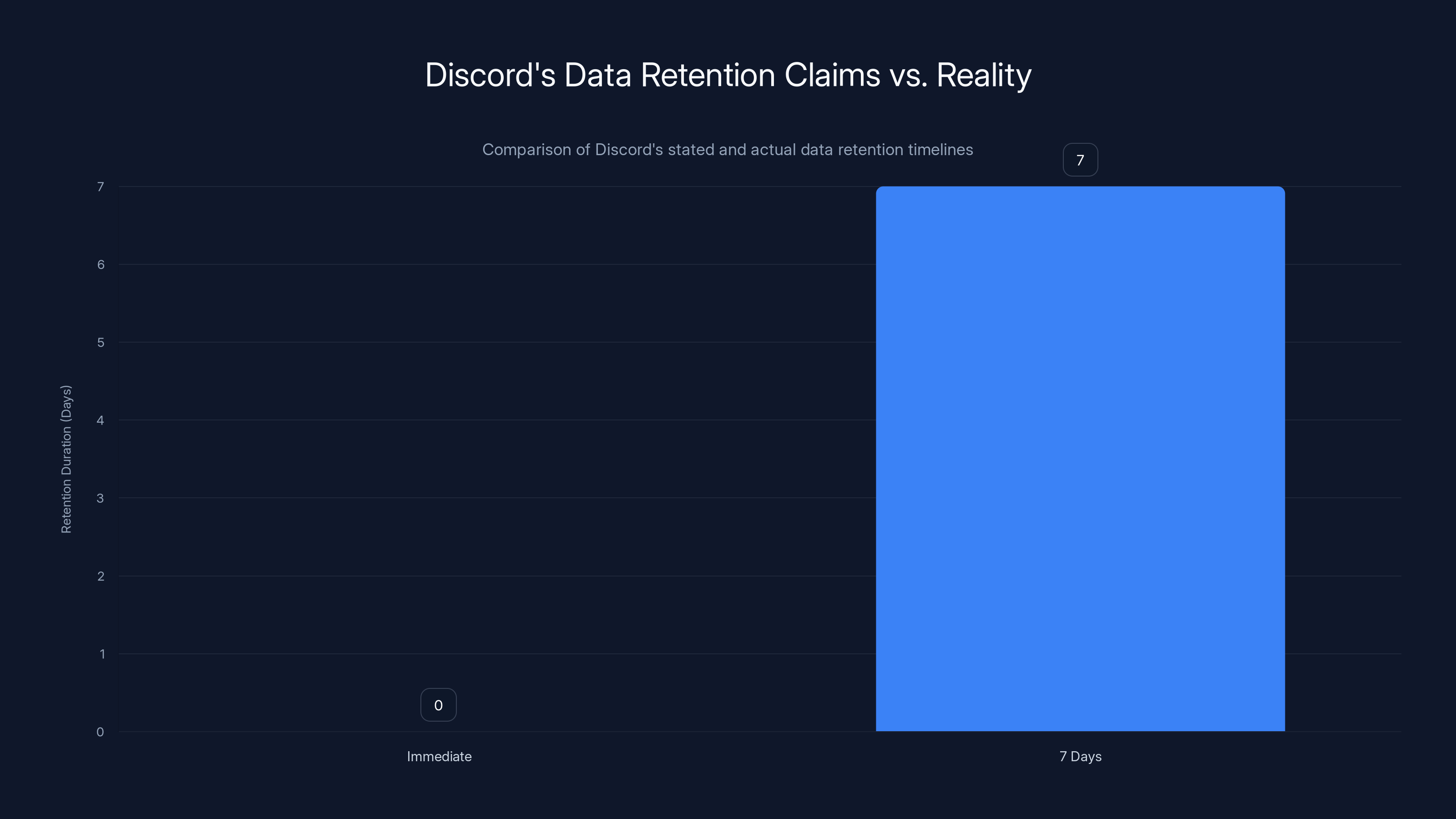

- A deleted FAQ disclaimer revealed IDs would be stored for up to 7 days, contradicting Discord's claims of immediate deletion

- Backlash included concerns about Persona's investor base and data security practices

- Discord ended the Persona partnership after widespread criticism, with Persona confirming all test data was deleted

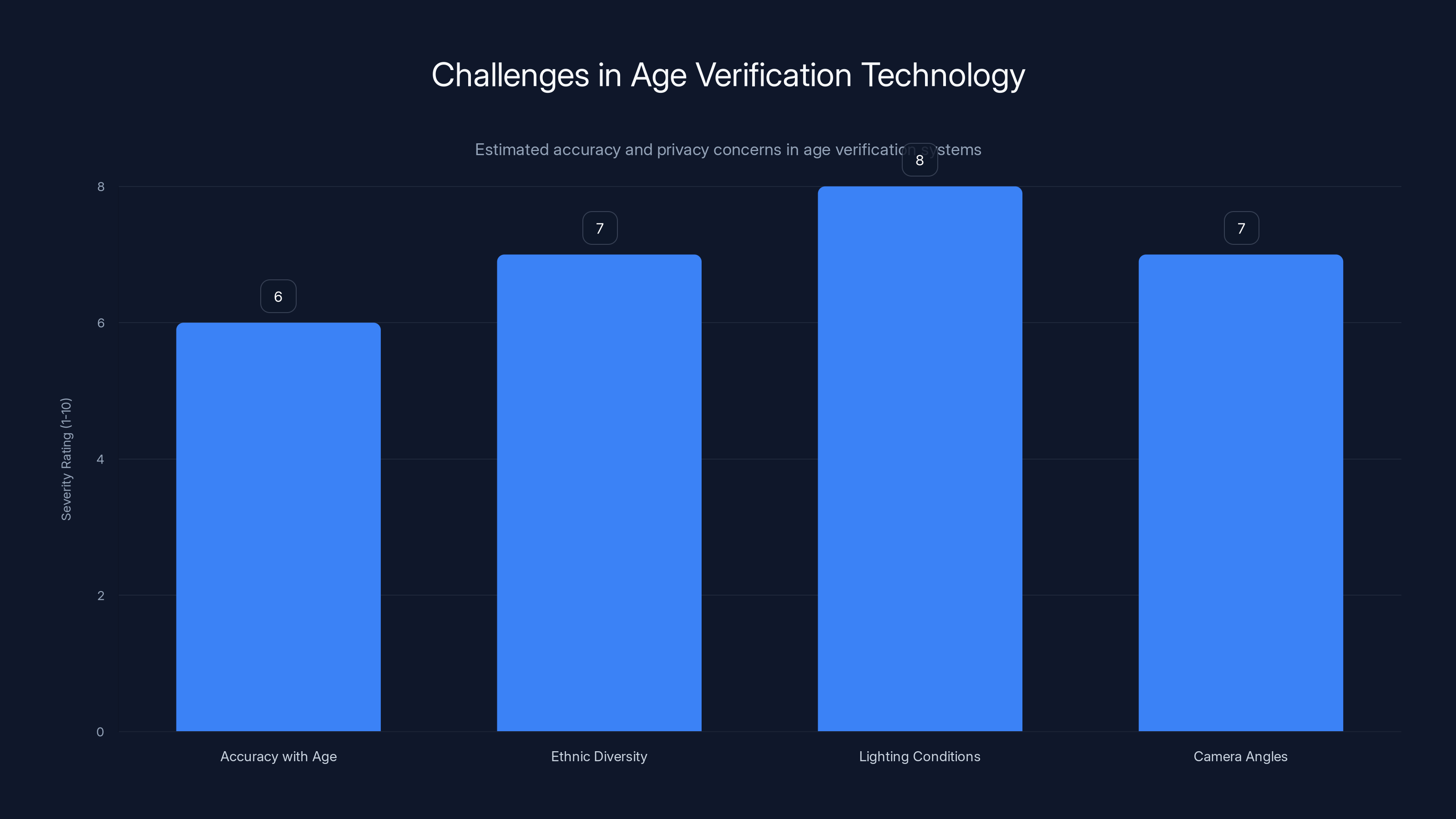

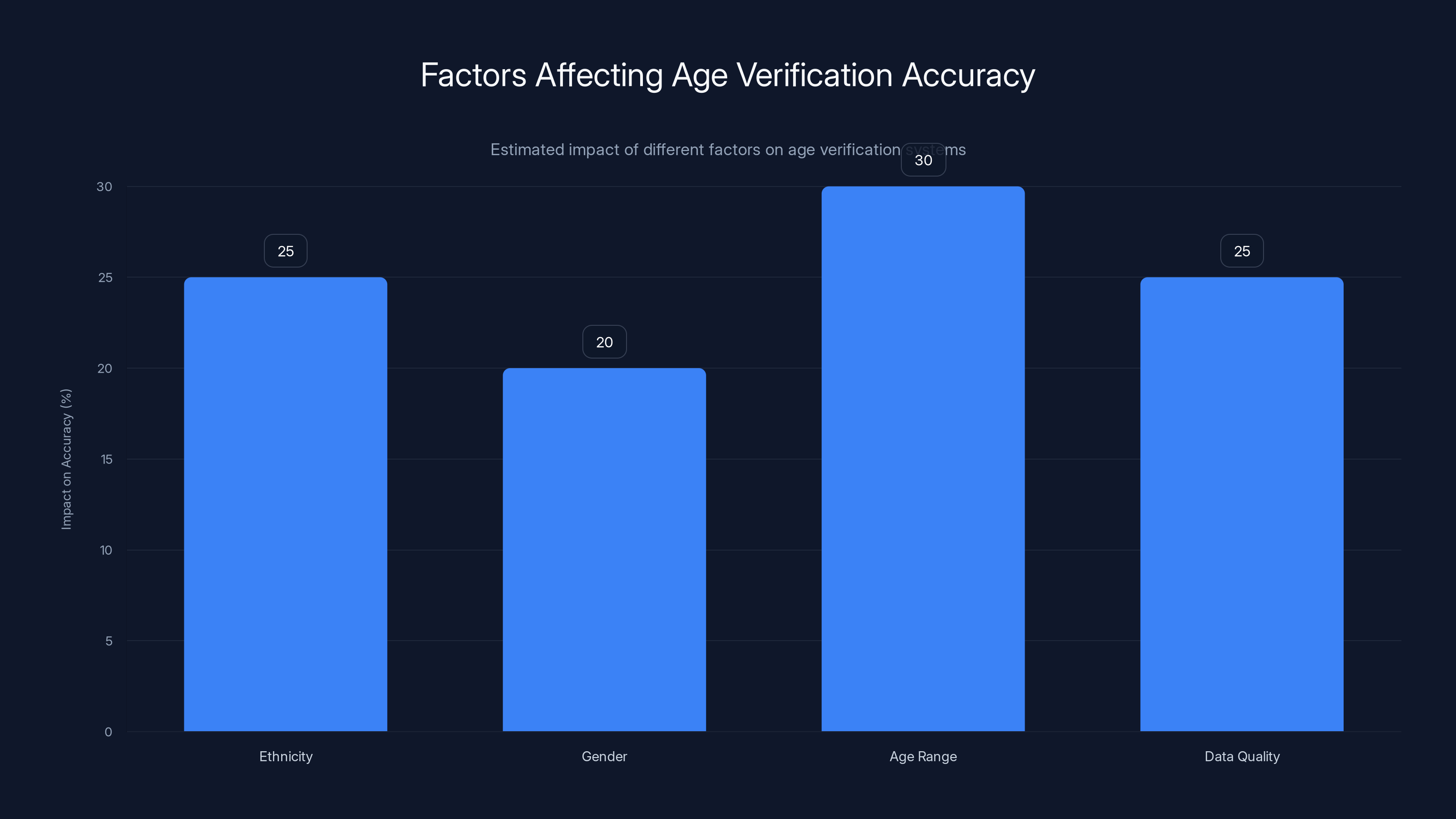

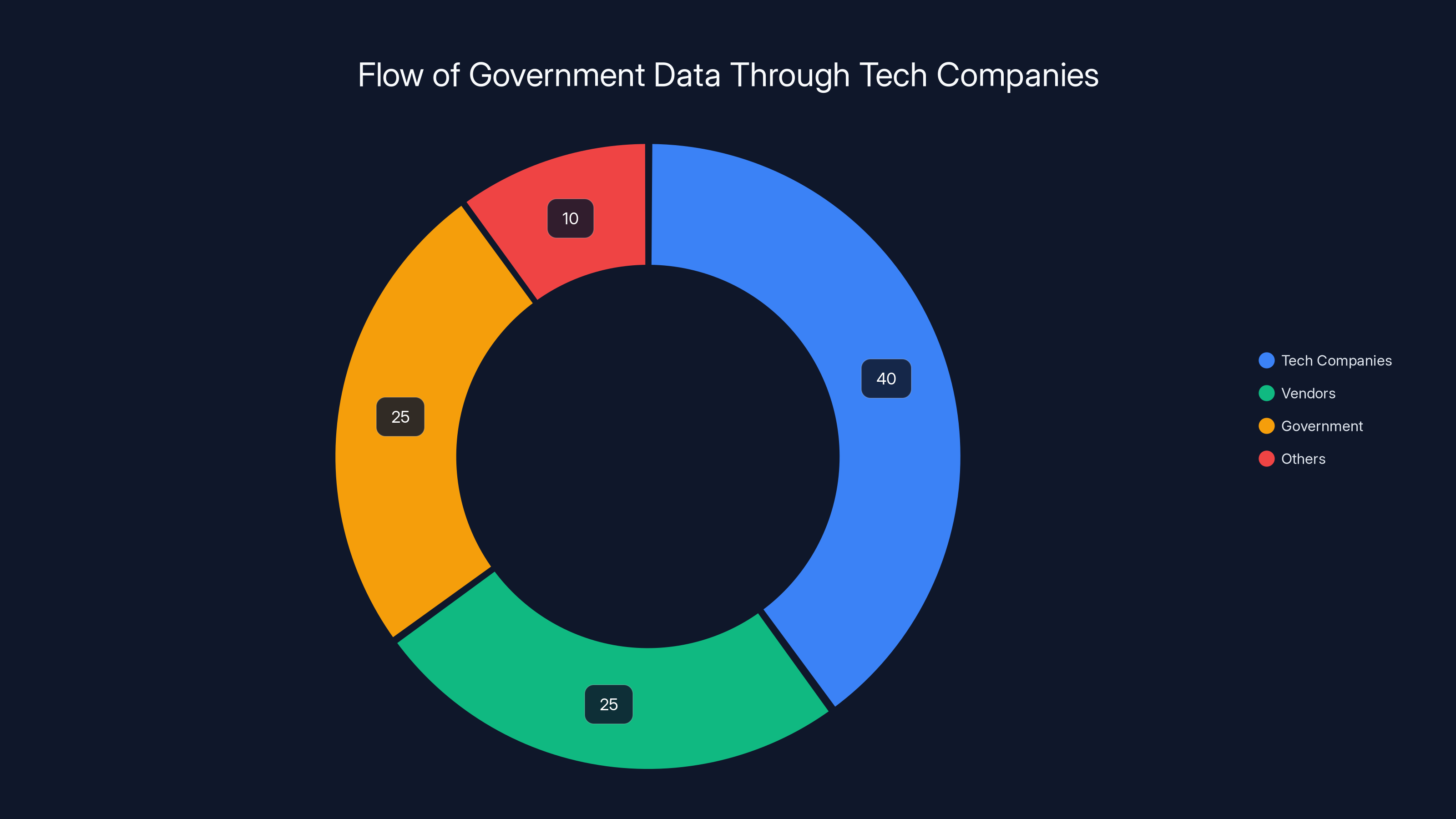

Age verification technology faces significant challenges, particularly with accuracy under varying conditions and privacy concerns. Estimated data.

Why Discord Needed Age Verification in the First Place

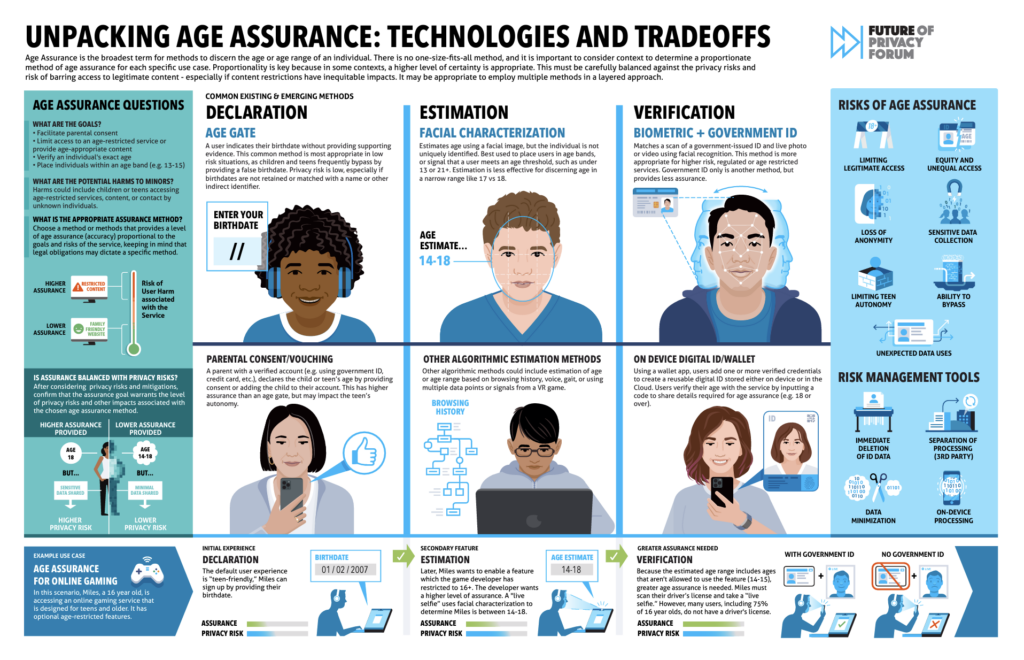

Discord didn't wake up one day and decide age verification sounded fun. The platform was forced into this decision by regulatory pressure, specifically from two major jurisdictions.

Australia introduced an under-16 social media ban that requires platforms to implement age verification or face massive fines. Around the same time, the United Kingdom's Online Safety Act (OSA) came into force, with requirements even more complex than Australia's. The UK didn't just want Discord to block kids from accessing adult content. It required Discord to prevent adults from messaging minors. That's a fundamentally different and harder problem to solve.

Think about it from a technical perspective. Blocking a 12-year-old from a channel labeled "adult" is one thing: an AI model that estimates age from a video selfie can make that call correctly most of the time. But preventing a 45-year-old predator from contacting minors on the platform requires much higher confidence in age detection. The stakes are different. A false negative (classifying an adult as a teen) would place that adult in the spaces built for minors, which is catastrophic.
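A minimal Python sketch can show why no single cutoff escapes this tradeoff. It assumes a hypothetical model that returns one age estimate per user and a platform that treats anyone estimated at or above a threshold as an adult. The sample ages and estimates are invented for illustration and are not drawn from Discord or Persona.

```python
# Toy illustration of the two error types an age gate trades off.
# Assumptions (hypothetical): one point estimate per user, and anyone
# estimated at or above `threshold` is treated as an adult.
from dataclasses import dataclass


@dataclass
class Sample:
    true_age: int
    estimated_age: float


def error_counts(samples: list[Sample], threshold: float) -> tuple[int, int]:
    """Return (minors_passed_as_adults, adults_treated_as_teens)."""
    minors_as_adults = 0
    adults_as_teens = 0
    for s in samples:
        is_adult = s.true_age >= 18
        judged_adult = s.estimated_age >= threshold
        if not is_adult and judged_adult:
            minors_as_adults += 1
        elif is_adult and not judged_adult:
            adults_as_teens += 1
    return minors_as_adults, adults_as_teens


# Invented estimates that are off by a few years in either direction.
samples = [
    Sample(15, 17.5), Sample(16, 19.0), Sample(17, 16.0),
    Sample(19, 17.0), Sample(22, 20.5), Sample(45, 31.0),
]

for threshold in (16, 18, 21):
    print(threshold, error_counts(samples, threshold))
```

On this toy data, a threshold of 16 lets three minors through as adults. A threshold of 18 makes one error of each kind. A threshold of 21 stops every minor but pushes two adults into the teen experience. Raising the bar keeps minors out of adult spaces, but it places more misjudged adults in teen ones, which is the error described above as catastrophic.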

This is why Discord couldn't just slap on a basic age-check solution and call it done. The UK's OSA was specifically written to handle this dual requirement, and Discord needed a partner that could credibly meet those standards. Enter Persona.

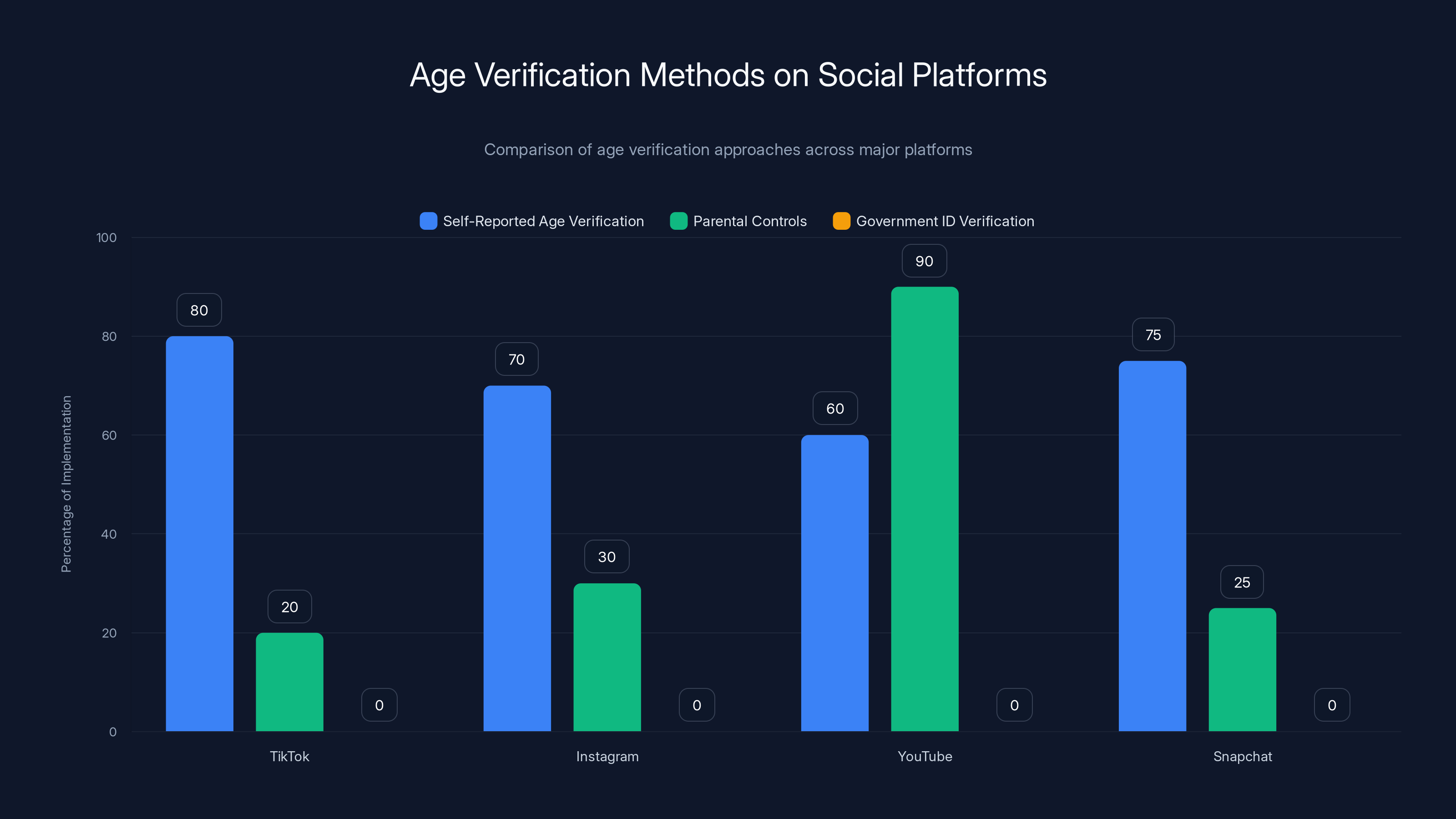

Most platforms rely on self-reported age verification, with limited use of parental controls and no widespread government ID verification. Estimated data based on typical platform practices.

Who Is Persona and Why Did Discord Choose Them?

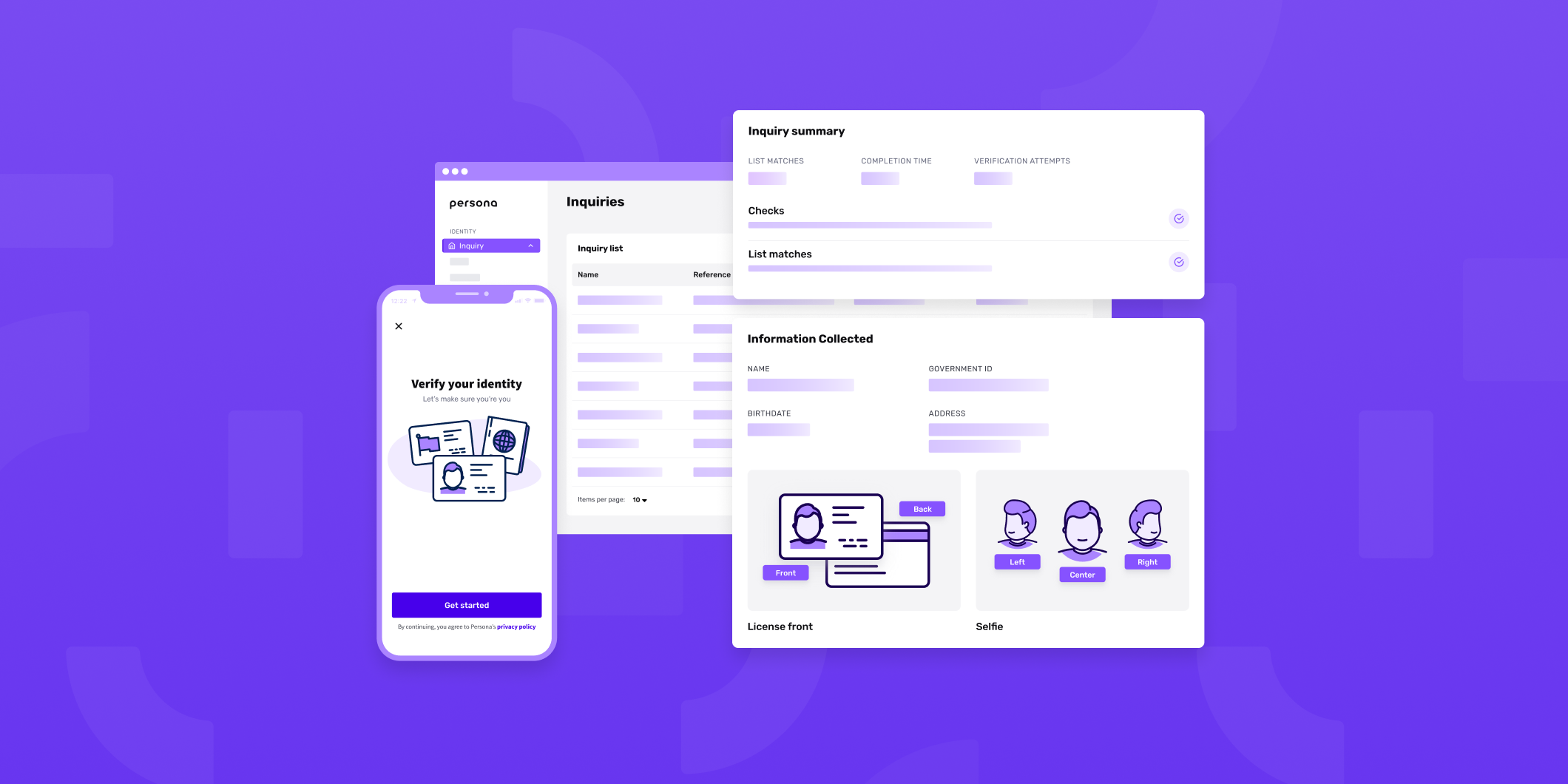

Persona is an identity verification company that specializes in helping platforms implement age checks and KYC (know your customer) compliance. The company uses AI to process government IDs, video selfies, and behavioral signals to estimate age and verify identity.

On paper, Persona made sense for Discord. The company had already been approved by UK regulators as an age verification service on Reddit, another platform with similarly complex age verification requirements. Reddit needed to allow adults to access NSFW communities while preventing minors. Persona apparently passed that scrutiny, which suggested it would work for Discord too.

But here's where the investor controversy came in. Peter Thiel's Founders Fund was a major investor in Persona. Peter Thiel isn't just any Silicon Valley figure. He co-founded PayPal, co-founded Palantir (a data analytics company with deep government contracts), and has well-documented ties to the Trump administration. When internet sleuths discovered this connection, conspiracy theories spread rapidly across social media.

Users worried about several things simultaneously: Would Thiel have access to Discord's age verification data? Would that data eventually be fed into government facial recognition systems? Could the Trump administration gain access to photos of Discord users? These aren't wild paranoid fantasies. Palantir does work with law enforcement and government agencies, and Thiel has been vocal about his political influence.

The backlash wasn't just about privacy. It was about trust, transparency, and who actually had access to users' most sensitive biometric data.

The Deleted Disclaimer That Started Everything

Here's where things went from bad to worse for Discord.

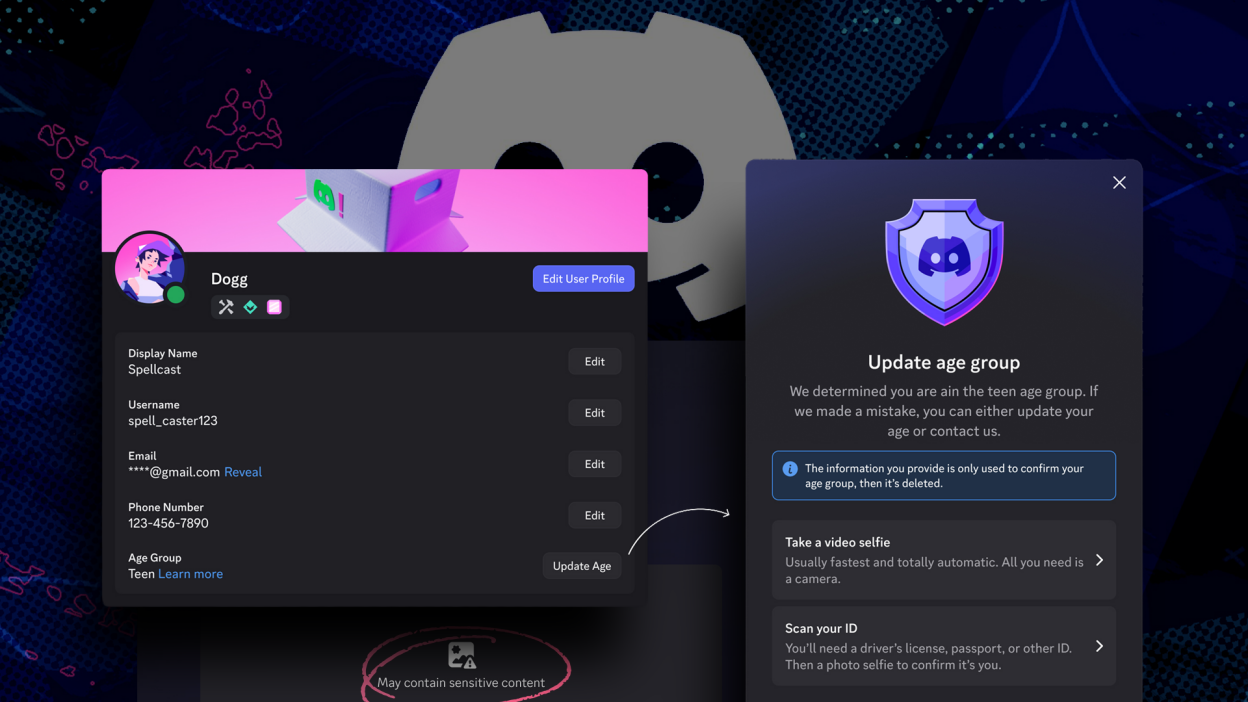

The company posted an FAQ page about its age assurance policies that included a disclaimer about the Persona test. The disclaimer was specific and concerning: "If you're located in the UK, you may be part of an experiment where your information will be processed by an age-assurance vendor, Persona. The information you submit will be temporarily stored for up to 7 days, then deleted."

The disclaimer stayed up briefly before Discord deleted it. And when internet archivists pulled up the cached version, the contradiction became obvious. Discord's leadership had been telling The Verge and other publications that ID data was deleted "immediately after age confirmation" or "in most cases, immediately." But the FAQ said data would be stored for up to 7 days.

Which was it? Immediately or up to 7 days? That's a massive difference when you're talking about storing government IDs. And why did Discord delete the disclaimer? Was it an accident? A PR decision? The company never clearly explained.

The deletion itself became the story. When a company deletes information that contradicts what leadership publicly claimed, users naturally assume the worst. Transparency is gone. Trust evaporates. And suddenly, you've got a PR crisis on your hands.

Worse still, Discord didn't acknowledge that Persona was even a partner. The vendor wasn't listed anywhere on Discord's platform documentation. Users found out about it from a deleted FAQ and internet sleuthing, not from Discord being upfront.

Discord claimed immediate deletion of IDs, but the actual retention period was up to 7 days, leading to user mistrust.

Discord's Contradictory Claims About Data Storage

This is where the messaging got genuinely confusing, and confusion breeds mistrust.

Savannah Badalich, Discord's global head of product policy, told The Verge that IDs shared during appeals "are deleted quickly—in most cases, immediately after age confirmation." That's a strong claim. Immediately. No delay. Right now.

But the FAQ told a different story. Up to 7 days of storage. That's not immediately. That's a full week where your government ID, your photo, and your date of birth are sitting on Persona's servers.

Why the discrepancy? A few possibilities:

One: Discord's leadership genuinely didn't understand what retention policy Persona had in place. They made a promise they couldn't keep because they didn't verify the details with their vendor.

Two: Discord knew the real retention was 7 days but wanted to downplay it in media interviews, so they said "immediately" instead.

Three: Persona's actual retention practices fell somewhere in between, and Discord was being technically accurate (IDs do get deleted eventually, and seven days is quick by data-retention standards) while still misleading users about the timeline.

Regardless of which is true, the gap between "immediately" and "7 days" destroyed user confidence. And when you're asking people to upload government IDs, confidence is the entire ballgame.

The Prior Breach That Made Everything Worse

Timing is everything, and Discord's timing was absolutely terrible.

Just before Discord announced its age verification plans, a third-party age verification vendor had suffered a breach that exposed 70,000 Discord users' government IDs. The IDs were stolen. They were on the internet. This happened because a vendor that was supposed to be protecting that data failed catastrophically.

Then Discord stands up and says, "We're collecting government IDs now." You can see why users reacted with visceral fear. The memory of that breach was still fresh. Trust in age verification vendors was at an all-time low. And Discord had the audacity to plan an even more invasive system right after that.

Discord seemed aware of how this looked. The company tried to reassure users by saying "most users won't have to show ID." Instead, Discord would use video selfies and AI to estimate age, which raised a different set of privacy concerns. Users would opt into a system that scans their face, analyzes their appearance, and makes judgments about their age.

But if the AI gets it wrong? If you look young and the system thinks you're underage? You'd have to appeal. And appeals required showing an ID. Which meant that even if you initially avoided the ID collection, you might get pushed into it anyway.

So Discord was essentially saying: "We'll use AI to guess your age first, and then if we guess wrong and you complain, we'll collect your government ID as punishment for disagreeing with our AI." That's a system designed to pressure people into compliance.
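The flow Discord described can be sketched as a small decision function. This is a hypothetical illustration: the function name, the 18-year threshold, and the outcome labels are assumptions, and the real system's logic was never published.

```python
# Hypothetical sketch of an "estimate first, ID on appeal" age check.
# The name, threshold, and outcome labels are illustrative assumptions.
def age_check_outcome(estimated_age: float, appeals: bool, threshold: float = 18.0) -> str:
    """Return what a user ends up providing under the described flow."""
    if estimated_age >= threshold:
        # The selfie estimate alone is accepted.
        return "selfie only"
    if not appeals:
        # The user accepts the (possibly wrong) teen classification.
        return "teen experience"
    # Disputing the estimate is the step that requires a government ID.
    return "government ID"


# An adult the model misjudges as 16.5 can accept teen restrictions
# or hand over an ID. This flow offers no third option.
print(age_check_outcome(16.5, appeals=False))  # teen experience
print(age_check_outcome(16.5, appeals=True))   # government ID
```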

Estimated data shows that age range and data quality have the highest impact on the accuracy of age verification systems, each contributing around 25-30% to potential errors.

What Happened to the Persona Test

Discord later confirmed that the Persona test was small in scope and ran for less than one month. The company said only a small number of users were affected, but it didn't provide numbers, didn't explain what it was actually testing, and didn't say when the test started or ended.

That lack of transparency was itself a problem. When you're running experiments on user data, especially sensitive data like government IDs, people deserve to know:

- How many users were involved?

- How long did the test run?

- What specific variables were you testing?

- How were test participants selected?

- Were they informed they were part of an experiment?

Discord answered almost none of these questions. The company just confirmed the test happened, it's over, and Persona is no longer a partner.

Persona's CEO Rick Song did make one explicit claim to help calm fears. He said all age verification data from Discord's UK test had been deleted. Not stored for 7 days. Not kept for compliance purposes. Deleted. Song appeared to understand that the only way to manage the PR crisis was to promise complete data destruction.

But here's the thing: we have no way to independently verify that claim. We're supposed to trust Persona that the data is gone. Given that the company had just been embroiled in a privacy controversy and faced intense scrutiny, was that trust reasonable? Not really.

The Hacker Investigation and Security Vulnerabilities

The backlash against Persona intensified when security researchers started investigating the company's infrastructure.

They didn't just find theoretical vulnerabilities. They found practical exploits. Independent researchers discovered a "workaround" to avoid Persona's age checks entirely on Discord. The hack wasn't difficult. It was accessible enough that average internet users could potentially use it to bypass age verification.

But more concerning than the bypass was what else researchers found: a Persona frontend exposed to the open internet on a US government-authorized server. Not a private server. Not encrypted. A government server with Persona's interface just sitting there, accessible to anyone who knew where to look.

This is the kind of thing that makes security professionals lose sleep. If Persona's interface is exposed on government servers, what else might be exposed? What data might be accessible? How many other vulnerabilities exist that haven't been discovered yet?

These findings didn't cause the backlash. But they validated all the fears that users had already expressed. Persona wasn't just a privacy concern. It was a security liability.

Transparency and vendor security are crucial lessons from Discord's challenges, rated highest in importance. Estimated data.

The Broader Debate About Age Verification Technology

All of this happened within a larger context about whether age verification technology actually works, and whether it's worth the privacy cost.

Here's the honest assessment: Age estimation AI is not as accurate as most people think. These systems can't consistently distinguish between a 15-year-old and a 17-year-old with high confidence. They struggle with different ethnicities, lighting conditions, and camera angles. A person who dyes their hair gray might be identified as older. A person with youthful features might be identified as younger.

And yet, age verification is being deployed in higher-stakes situations. The UK's Online Safety Act requires platforms to prevent adults from messaging minors, which is a much harder problem than just blocking kids from adult content. It requires error rates to be extremely low in both directions: minors must not pass as adults, and adults must not land in spaces meant for teens. You can't afford many mistakes.

There's also the philosophical question: Is surveillance the only answer? Discord's system would eventually use "behavioral signals" to override the need for age checks. That means Discord would be tracking and analyzing user behavior to infer age. That's not less privacy-invasive than government IDs. That's more invasive.

Some researchers have argued that age verification on this scale is impossible to do responsibly. You're either storing sensitive biometric data (privacy nightmare) or you're analyzing behavioral patterns (different privacy nightmare). There's no clean solution.

Discord's rollout suggested the company hadn't really grappled with these tradeoffs. The company seemed to assume age verification was just a technical problem to solve, not a fundamental privacy and surveillance issue.

Why Transparency Matters in Age Verification

Let's step back and talk about why the transparency failures mattered so much here.

When a company collects government IDs, that's a high-trust interaction. Users are making a decision to share their most sensitive personal data because they believe it's necessary and safe. But that trust is fragile. It breaks the moment users detect dishonesty or ambiguity.

Discord failed on multiple transparency fronts:

The Persona partnership wasn't disclosed. Users weren't told upfront that their age verification data would be processed by a third-party company. They found out from archived web pages and internet sleuthing.

The retention policy was contradictory. Leadership said "immediately deleted." The FAQ said "up to 7 days." Those aren't compatible claims, and Discord didn't reconcile them.

The test wasn't explained. Users didn't know it was a test, how long it ran, what was being tested, or how many people were affected.

The deleted disclaimer was suspicious. When companies delete information that contradicts their public statements, it looks like a cover-up, not a correction.

Each of these failures individually would be a problem. Together, they created a narrative of deliberate obfuscation. And once that narrative takes hold, it's almost impossible to fix with statements and promises.

Estimated data flow shows tech companies and vendors as major conduits for government data. Estimated data.

What Happened After the Partnership Ended

Discord moved quickly to distance itself from Persona after the backlash. The company confirmed the partnership had ended, all test data had been deleted (according to Persona), and moving forward, Discord would "keep our users informed as vendors are added or updated."

That last part is important. Discord basically admitted that it hadn't done that with Persona. The company had added a vendor and not informed users about it. So the promise going forward was to change that behavior.

But promises aren't trust. And Discord had just demonstrated that its internal communication about data practices could be contradictory or deliberately vague. Even with new pledges of transparency, users had reason to be skeptical.

The Persona situation became a case study in how not to implement controversial tech. It was a masterclass in things that destroy user confidence: undisclosed partnerships, contradictory statements, deleted disclaimers, high-profile investor concerns, and security vulnerabilities discovered by independent researchers.

The Regulatory and Legal Implications

While Discord and Persona were dealing with the PR crisis, regulators were probably paying attention too.

The UK's Online Safety Act has real teeth. Ofcom, the UK's communications regulator, can fine platforms for failures to meet safety requirements. What Discord tried to do with Persona might not have complied with those requirements. A test that stores government IDs for up to 7 days without clear user consent or notification could violate GDPR and the OSA.

Similarly, Australia's age verification regime comes with real penalties. Platforms that don't implement adequate age verification can face massive fines. But those fines exist to protect kids, not to force platforms into privacy-invasive data collection. If Discord's approach doesn't actually protect kids (and there's debate about whether it does), the regulatory pressure continues.

The legal exposure here is significant. Discord could face:

- GDPR violations for data processing without clear consent

- OSA violations for failing to implement adequate age verification

- Regulatory investigation by Ofcom

- Data protection inquiries in multiple jurisdictions

- Class action lawsuits from affected users

Persona could face similar exposure, plus additional liability for its own security vulnerabilities and the discovered exposed frontend on government servers.

How Other Platforms Are Handling Age Verification

Discord isn't the only platform dealing with age verification. TikTok, Instagram, YouTube, and Snapchat all have age gating features. But they're mostly voluntary, not global mandates.

TikTok's approach involves asking users their age during signup but relies heavily on self-reporting. Instagram lets teens use the platform with restricted features. YouTube Kids exists as a separate app with parental controls. None of these platforms are collecting government IDs at scale.

The difference is that most platforms were given time to develop age verification gradually. Discord faced sudden regulatory pressure from multiple jurisdictions almost simultaneously, which forced a rushed solution.

When regulatory pressure forces implementation rather than allowing thoughtful product development, you get situations like the Persona debacle. Companies make bad choices to hit compliance deadlines. They partner with vendors they haven't fully vetted. They communicate unclearly because they haven't worked through the implications themselves.

This is a pattern regulators should learn from. When you mandate age verification with tight timelines, you're practically guaranteeing that platforms will implement it poorly.

The Privacy Implications for Biometric Data

Even if Persona had executed perfectly—secure infrastructure, clear data retention, transparent practices—the underlying technology still raises privacy concerns that deserve discussion.

Video selfie age estimation involves processing facial features, skin texture, facial structure, and other biometric data. That data is being analyzed by AI to make inferences about age. But the same biometric data could theoretically be used for facial recognition, emotion detection, deception detection, or other purposes.

Once you've collected someone's face scan, that data becomes valuable. Not just to the company collecting it. The data could be stolen, sold, or repurposed. A government facial recognition database would be far more complete if it included voluntary contributions from every teenager on Discord.

This is why privacy advocates worry about biometric data collection even from companies with good intentions. The data is too valuable and too sensitive to be collected without extraordinary safeguards. And the safeguards are often inadequate.

In Discord's case, the planned reliance on behavioral signals to eventually eliminate the need for age checks is even more invasive. It means Discord would be tracking and analyzing user behavior across the platform to infer age. That's not facial recognition. That's behavioral surveillance. It's different data, but it's arguably a more comprehensive privacy invasion.

What Users Should Understand About Age Verification

For Discord users trying to make sense of this situation, here are some things to understand about age verification systems in general:

Age verification is not the same as identity verification. Just because a system can estimate someone's age doesn't mean it can prevent someone from lying about who they are. A 12-year-old could use a parent's ID to claim to be 18. An adult could claim to be 35 when they're actually 45. Age and identity are different data points.

No system is perfectly accurate. Age estimation AI has error rates. They vary by ethnicity, gender, age range, and other factors. The systems Discord was using wouldn't have been perfectly accurate for anyone.

Data retention is the real risk. The question users should ask isn't "Will this company sell my data?" It's "How long will this company retain my data, and what's their track record on security?" Retention time is the primary lever that controls risk.

Third parties expand the risk surface. When Discord uses Persona, your data isn't just with Discord. It's with Persona, on Persona's servers, subject to Persona's security practices. That's a bigger risk than if Discord processed everything internally (which also has risks, but it's one company, not two).

Transparency is the only accountability mechanism. You can't verify that your data is actually deleted. You can't audit Persona's security practices. The only way to hold companies accountable is if they're transparent about what they're doing, so external researchers and journalists can investigate.
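A back-of-the-envelope Python sketch can make the retention and third-party points concrete. It assumes a steady stream of uploads, so Little's law applies: the average number of stored items equals the arrival rate times the time each item is kept. It also treats each data holder's breach risk as independent. The upload volume, the 5% breach risk, and the function names are hypothetical placeholders, not Discord or Persona figures.

```python
# Rough model of the two risk levers above. All figures are hypothetical.
import math


def ids_at_rest(uploads_per_day: float, retention_days: float) -> float:
    """Little's law (L = lambda * W): average number of IDs in storage at once."""
    return uploads_per_day * retention_days


def p_any_breach(per_party_risks: list[float]) -> float:
    """Chance that at least one data holder is breached, assuming independence."""
    return 1 - math.prod(1 - p for p in per_party_risks)


UPLOADS_PER_DAY = 5_000  # hypothetical verification volume

# Retention: "immediately" (modeled here as one minute) versus "up to 7 days".
print(round(ids_at_rest(UPLOADS_PER_DAY, 1 / (24 * 60)), 1))  # 3.5
print(ids_at_rest(UPLOADS_PER_DAY, 7))  # 35000

# Third parties: one holder versus a platform plus a vendor, each with a
# hypothetical 5% yearly breach risk.
print(round(p_any_breach([0.05]), 4))        # 0.05
print(round(p_any_breach([0.05, 0.05]), 4))  # 0.0975
```

Under these assumptions, moving from one minute to seven days of retention multiplies the number of IDs sitting in storage by 10,080, the number of minutes in a week. Adding a second holder nearly doubles the chance that at least one copy leaks.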

The Industry Lesson From Discord's Failure

If other platforms and companies are paying attention, they should learn something from what happened to Discord.

First, transparency about data practices isn't optional. It's the foundation of user trust. The moment you're vague about how long data is stored, who has access to it, or which third parties are involved, you're broadcasting that you haven't thought through the privacy implications yourself.

Second, regulatory pressure is real, but it shouldn't override good security and privacy practices. Discord faced a deadline to implement age verification in the UK. The company could have said, "We can't implement age verification responsibly in that timeframe. Here's what we can do instead, and here's when we can do it properly." Instead, Discord rushed, cut corners, and paid a reputational price.

Third, high-profile investors in your vendors matter. When Thiel's Founders Fund is backing an age verification company, that information is going to come out, and people are going to have concerns. Company leadership should acknowledge those concerns and explain why they chose the vendor despite the investor overlap.

Fourth, deleted statements create conspiracy theories. When Discord deleted that FAQ disclaimer, it invited speculation about why. Was there something to hide? Transparency would have stopped that immediately. A simple statement like "We updated our retention policy and this disclaimer is no longer accurate" would have resolved it.

Finally, vendors need security reviews before deployment, not after. The fact that Persona's infrastructure had publicly exploitable vulnerabilities and exposed frontends on government servers suggests the company wasn't thoroughly vetted before Discord handed over user data.

What Happens Next With Age Verification on Discord

Discord is still required to implement age verification in the UK and Australia. The Persona test ending doesn't change those regulatory requirements. So the company will need to find a different vendor or build the system in-house.

Given the backlash, Discord will probably move more slowly next time. It might test different vendors in different regions, or be more transparent about the process. Or Discord might decide that the risk to its brand isn't worth the regulatory benefit and challenge the regulations themselves.

What seems unlikely is that Discord will return to Persona. The vendor's brand is now toxic in the context of Discord. Every time Discord mentions age verification, users will think about Persona, government IDs, and Peter Thiel's investment fund.

That's the real cost of this debacle. It's not just reputational damage to Discord. It's reputational damage to the age verification industry itself. Users now associate age verification with sketchy practices, opaque data handling, and regulatory capture.

The Broader Context: Tech Companies and Government Data

The Discord-Persona situation fits into a larger pattern of tech companies collecting government-issued ID data and storing it in ways that users don't fully understand or trust.

There's a fundamental asymmetry here. Governments require age verification, which forces platforms to collect government IDs. Governments also have contracts with companies like Palantir that specialize in analyzing data from tech companies. Over time, the government gets more and more access to user data, and users have less and less ability to opt out.

This isn't necessarily a conspiracy. It's how bureaucratic incentives work. Regulators want to protect kids, so they mandate age verification. Tech companies want to comply with regulations, so they use the easiest vendor available. That vendor might have government contracts, but that's not the company's problem. And before you know it, you've created a system where government data ends up with government-connected companies.

Users should be aware of this pattern. When you're asked for government ID, you're not just giving it to the company asking for it. You're potentially giving it to that company's vendors, and those vendors' business partners, and eventually, you're creating a trail that leads back to the government.

That's not to say it's necessarily illegal or even wrong. Governments have legitimate interests in knowing the ages of platform users. But users should understand what they're consenting to, and platforms should be transparent about how government data flows through their systems.

FAQ

What is Persona in the context of age verification?

Persona is an identity verification company that specializes in age verification for digital platforms. The company uses AI to process government IDs, analyze video selfies, and apply behavioral analysis to estimate user age. Persona had been approved by UK regulators as an age verification service on Reddit before Discord selected it as a vendor for the UK test.

Why did Discord choose Persona for age verification?

Discord selected Persona because the company had already been approved by UK regulators as an age verification service on Reddit, another platform with complex age verification requirements. This prior regulatory approval suggested Persona would meet Discord's needs for the UK's Online Safety Act compliance.

What was the main privacy concern with Discord's age verification plan?

The primary concern was that Discord planned to collect government IDs, highly sensitive documents that include a facial photo alongside identifying details. This plan came just after a prior age verification vendor had suffered a breach exposing 70,000 Discord users' government IDs. The combination of recent breach history and plans to expand ID collection created justified user fears about data security.

Why did users worry about Peter Thiel's involvement with Persona?

Users discovered that Thiel's Founders Fund was a major investor in Persona. Because Thiel is a co-founder of Palantir, a data analytics company with government contracts, and has ties to the Trump administration, users worried that Persona data might eventually reach government agencies or be used for facial recognition systems. While speculative, these concerns reflected legitimate questions about data access and government surveillance.

What did the deleted Discord FAQ disclaimer reveal?

The deleted FAQ stated that information from Discord's UK age verification test "will be temporarily stored for up to 7 days, then deleted." This directly contradicted public claims by Discord's leadership that ID data would be "deleted immediately" after age confirmation. The deletion of this disclaimer itself raised questions about whether Discord was trying to hide information from users.

What happened to the data from Persona's work with Discord?

Persona's CEO confirmed that all age verification data from Discord's UK test had been deleted. However, users have no independent way to verify this claim. The company had no obligation to provide proof of deletion, and no independent audit has been reported. Users were asked to take Persona's word after the company had already been implicated in privacy controversies.

Is Discord still using Persona for age verification?

No. Discord ended the partnership with Persona after the backlash. The company confirmed the test concluded, and Persona is no longer an active vendor. However, Discord still faces regulatory requirements to implement age verification in the UK and Australia, so the company will need to find a different vendor or approach.

What security vulnerabilities were discovered in Persona's systems?

Independent researchers discovered a "workaround" that let users bypass Persona's age checks on Discord. More concerning, researchers found that a Persona frontend was exposed to the open internet on a US government-authorized server. These findings suggested significant security gaps in Persona's infrastructure that should have been caught before its technology was deployed on a platform as large as Discord.

How does age verification affect youth privacy on social platforms?

Age verification creates multiple privacy risks. Biometric data from video selfies can be repurposed for facial recognition. Behavioral analysis used to infer age involves extensive tracking of user activity. Government ID data is extremely sensitive and creates breach risks. All of these methods involve tradeoffs between protecting children and invading privacy, with no perfect solution currently available.

Should users provide government IDs for age verification?

Users should carefully evaluate the necessity and security of any system asking for government IDs. Questions to ask include: How long will the data be retained? Is the check mandatory or optional? Who are the third parties with access? What's the company's track record on security? Are there alternatives available? In Discord's case, the answers to these questions were unclear or unsatisfactory, which justified user reluctance.

Conclusion

The Discord-Persona situation was a perfect storm of poor decisions, unclear communication, and regulatory pressure colliding with user privacy concerns. It wasn't a massive breach or a data theft. It was something potentially worse: a case study in how trusted companies can fumble their handling of sensitive information and destroy user trust in the process.

What happened here matters beyond Discord and Persona. It matters because age verification is coming to more platforms in more jurisdictions. Regulators are going to keep pushing for it. Tech companies are going to keep implementing it. And users are going to keep worrying about whether their government IDs are actually safe.

The Discord situation shows what goes wrong when companies don't think through the implications of what they're collecting, when they don't communicate clearly with users, and when they partner with vendors without fully vetting them. It shows what happens when regulatory deadlines force rushed implementation over thoughtful product development.

For users, the lesson is to be skeptical of any platform asking for government IDs or biometric data. Ask questions. Demand clarity on retention policies, third-party vendors, and security practices. If the company isn't transparent, that's a signal that they haven't thought through the privacy implications themselves.

For regulators, the lesson is that mandating age verification without giving companies adequate time to implement it responsibly leads to worse outcomes. Better to allow gradual implementation with real security reviews than to create artificial deadlines that force corner-cutting.

For other tech companies, the lesson is that transparency about data practices is the foundation of user trust. The moment you start being vague about how long data is stored, who has access, or which third parties are involved, you're inviting scrutiny and conspiracy theories. Clear, honest communication about the tradeoffs and risks is the only path to maintaining trust when you're asking users to share sensitive information.

Discord will move forward. The company will find another age verification vendor, or it will build the system internally. The platform's massive user base gives it staying power despite this controversy. But the reputational damage lingers. And that damage serves as a reminder that when you're handling sensitive user data, transparency isn't optional. It's the price of trust in the digital age.

Key Takeaways

- Discord partnered with Persona for UK age verification without initially disclosing the vendor to users, creating trust issues

- Contradictory claims about data retention (immediately deleted vs. up to 7 days) destroyed user confidence in the system

- A deleted FAQ disclaimer that contradicted leadership statements created the appearance of a cover-up

- Persona's investor ties to Peter Thiel's Founders Fund raised concerns about potential government data access

- Security researchers discovered vulnerabilities in Persona's infrastructure, including a frontend exposed to the open internet on a US government-authorized server

- The prior breach of another age verification vendor (70,000 IDs) made the timing of Discord's ID collection plan particularly poor

- Regulatory deadline pressure forced a rushed implementation that skipped proper security review and vendor vetting

- Age estimation AI has accuracy limitations and cannot distinguish ages reliably across all demographics

- Even with deletion, biometric data collection creates privacy risks that can't be fully mitigated

- Transparency about data practices is the foundation of user trust for sensitive information collection
