Introduction: Apple's Major Pivot Toward Wearable AI

Apple's been quiet about its AI hardware roadmap. Too quiet, actually. While everyone's been watching OpenAI ship new GPT models and AI chatbots explode in popularity, Apple's been working on something different: something physical. And according to recent reports, the company is about to make a major move into wearable AI devices.

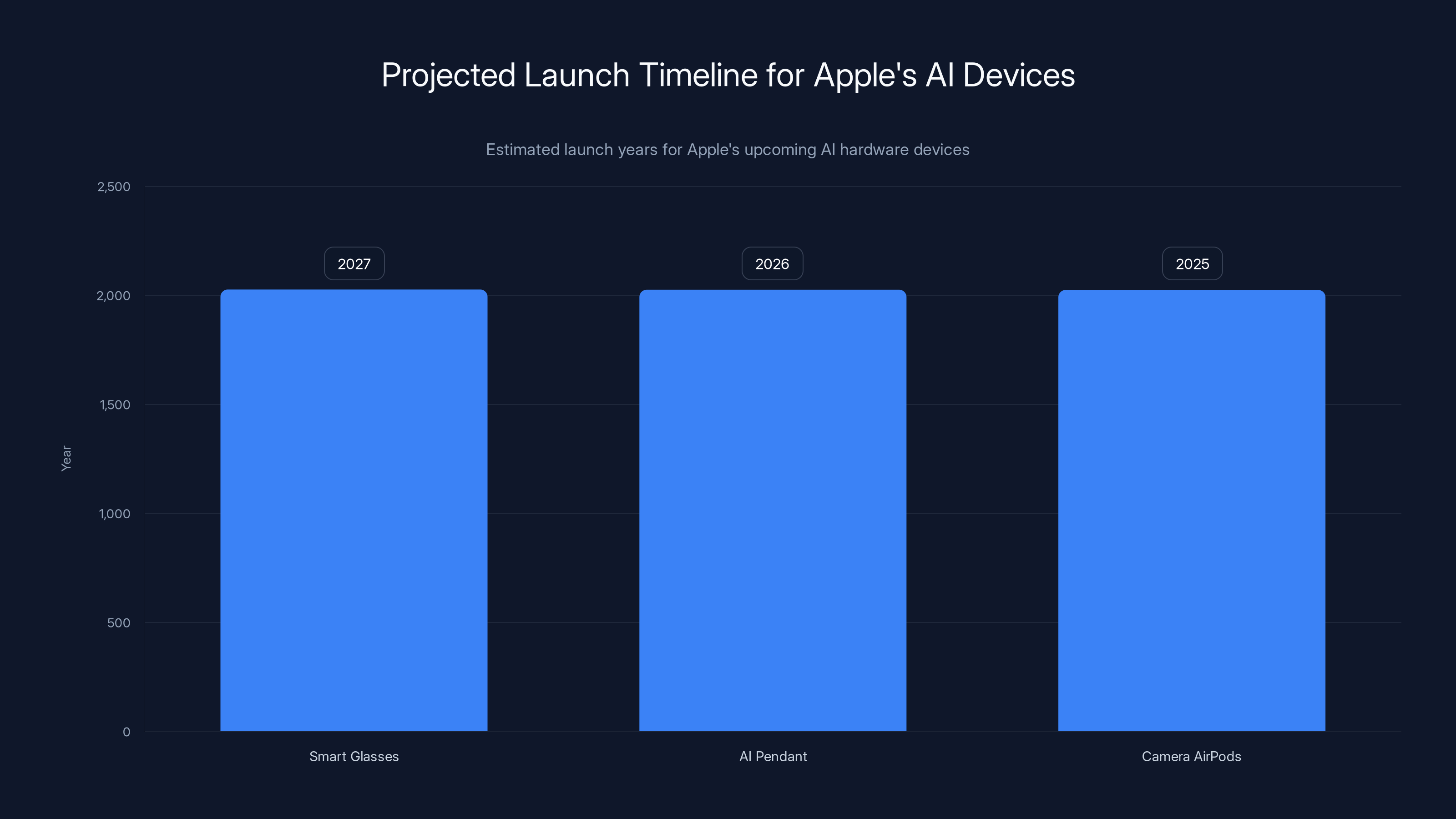

The news hit hard: Apple isn't just thinking about AI glasses anymore. The company is actively developing a complete ecosystem of AI-powered wearables. We're talking smart glasses without a display, an always-on AI pendant, and upgraded AirPods with built-in cameras. This isn't some far-off dream. Production plans are reportedly already in motion, with launch timelines ranging from this year to 2027, as detailed in Bloomberg's report.

Here's what's wild about this approach. Apple doesn't typically chase trends. The company waits, watches what works and what doesn't, then enters the market with something that feels inevitable in hindsight. That's what happened with the iPad, the Apple Watch, and AirPods. This AI hardware strategy feels different. It's aggressive. It's diverse. It's Apple saying, "We're not betting on one form factor—we're betting on the entire wearable ecosystem."

But let's be clear: this is a calculated risk. Smart glasses have failed before. Google Glass became a punchline. Snap's Spectacles never took off. Meta's smart glasses are selling, sure, but they're still niche products. So why is Apple jumping in now? What does Apple know that others don't?

The answer lies in AI. Specifically, how AI makes wearable cameras useful. A camera without AI context is just a camera. But add generative AI to the equation, and suddenly you've got something that understands what you're looking at, who you're talking to, and what you might need next. That's the real game here. Apple's not building hardware. Apple's building AI interfaces that happen to be made of glass and aluminum.

This article digs into everything we know about Apple's wearable AI strategy—the timeline, the technology, the competition, and what it all means for the future of human-computer interaction. Let's start with the smart glasses.

TL;DR

- Apple's developing three new AI devices: Smart glasses (launching 2027), an AI pendant (possibly 2026), and upgraded AirPods with cameras

- Production timeline is aggressive: Smart glasses manufacturing starts December 2025, no display but packed with cameras and AI features

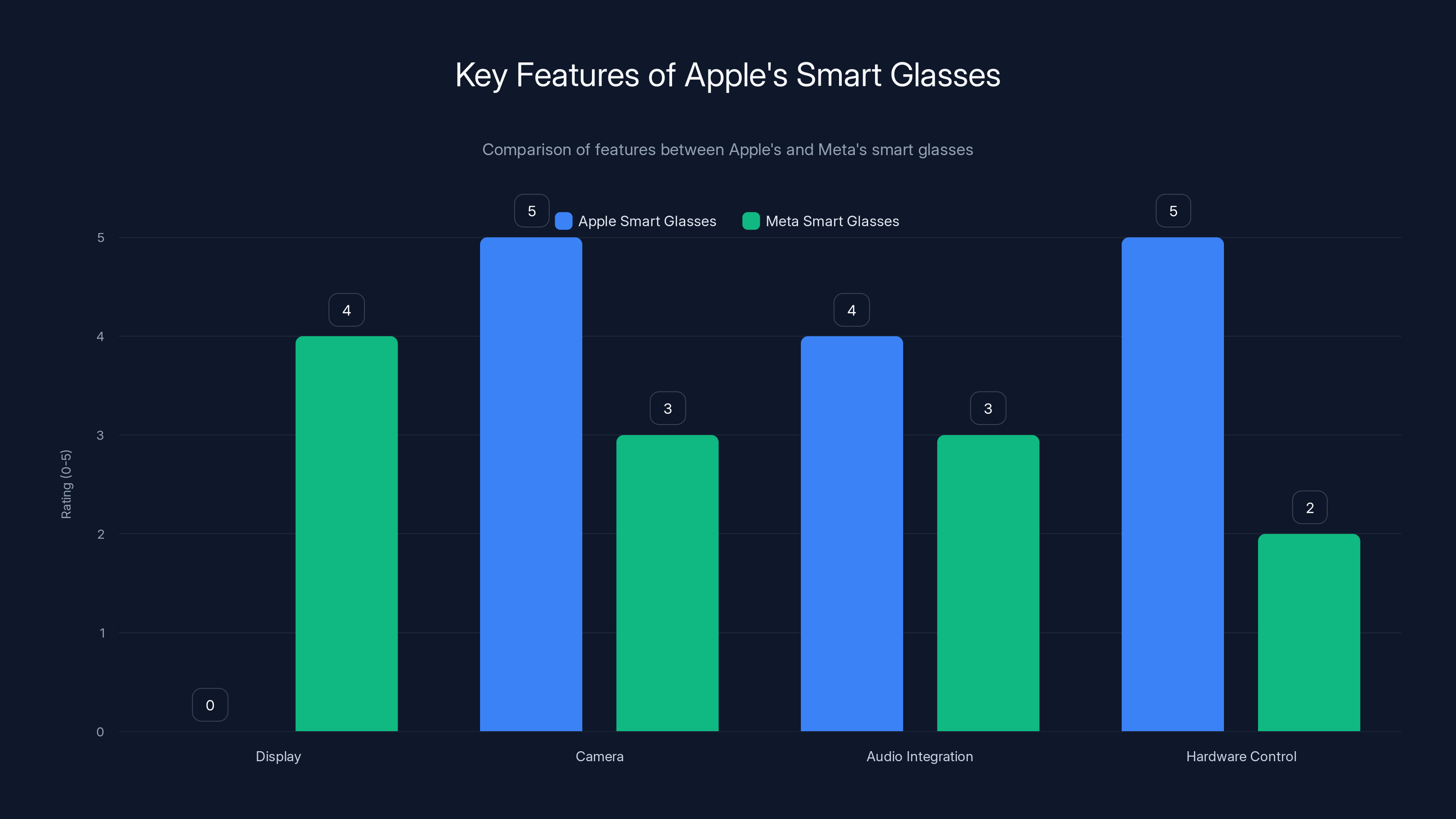

- Direct competition with Meta: Unlike Meta, which partners with Ray-Ban, Apple is building frames in-house for superior quality and control

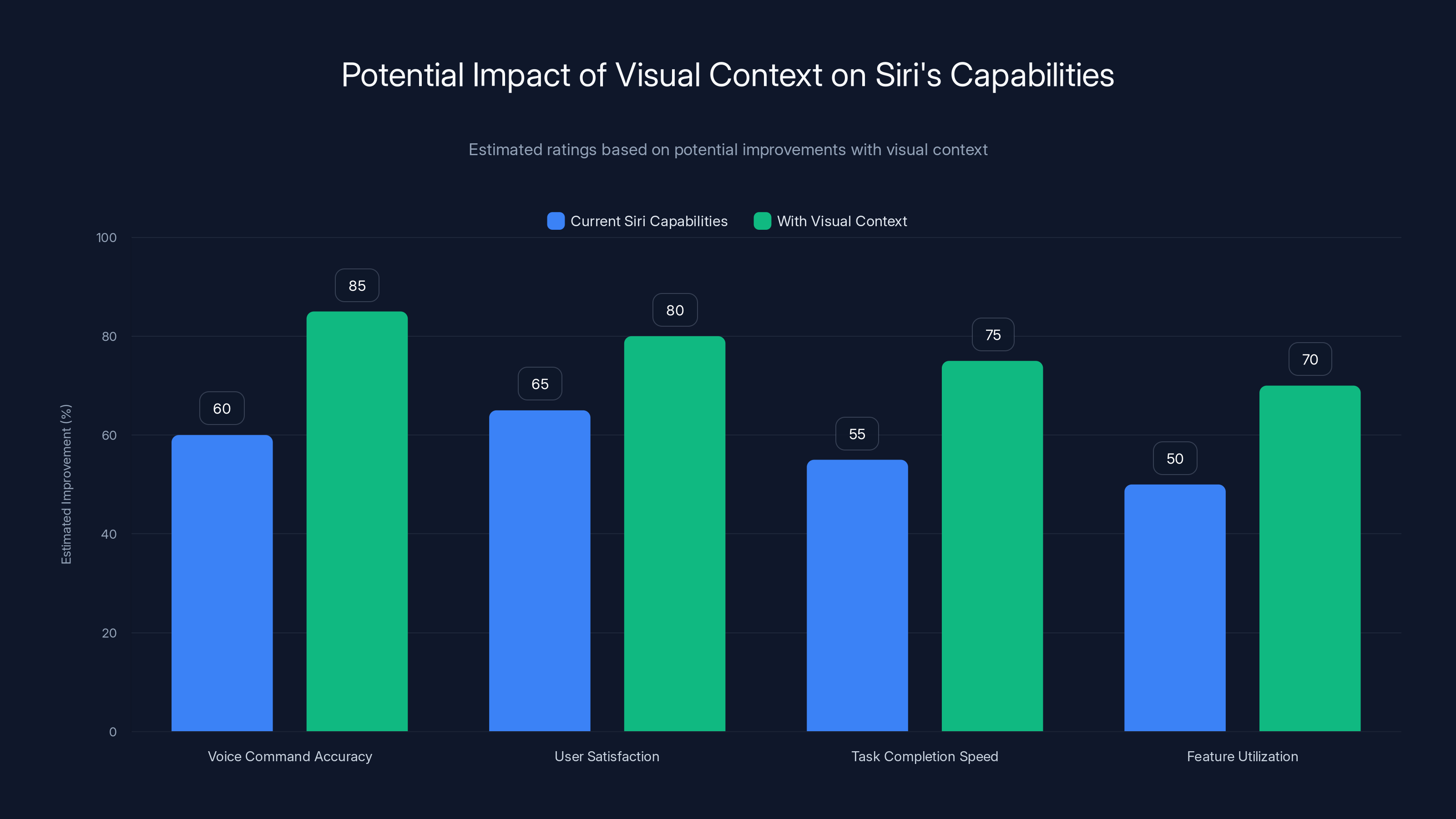

- Visual context is the key: Siri will analyze what you're seeing and take actions based on your surroundings, not just voice commands

- Still years away from full AR displays: Apple has a long-term vision for glasses with displays, but that's many years out

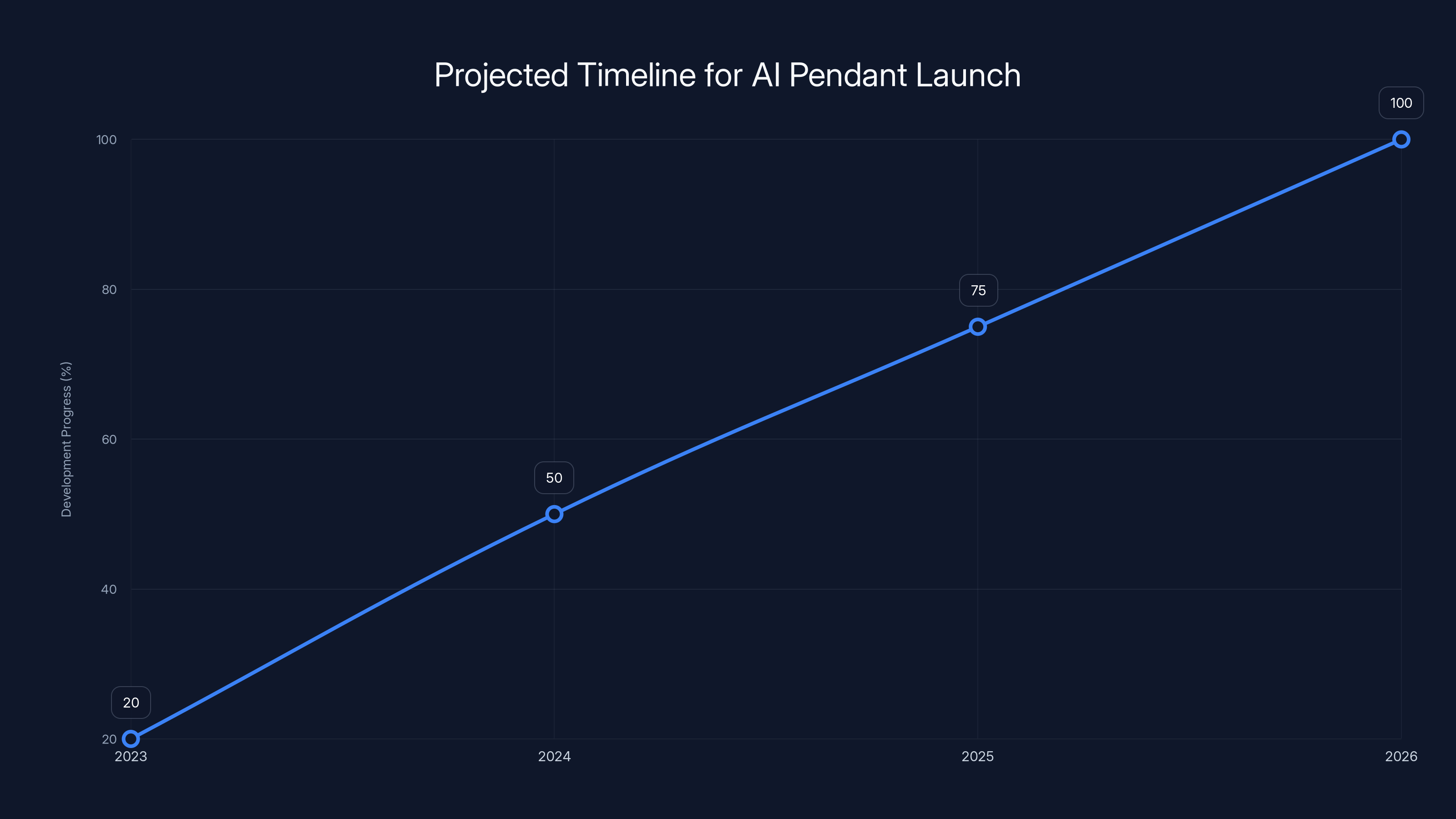

Apple's AI hardware devices have staggered launch timelines, with Camera AirPods expected in 2025, AI Pendant in 2026, and Smart Glasses in 2027. Estimated data based on current reports.

Apple's Smart Glasses: No Display, All Camera

The Core Design Philosophy

Apple's first-generation smart glasses won't have a display. This is actually the smart move, and here's why. Displays are expensive. They drain battery faster than anything else. They create heat management problems. And they're not technically necessary if your phone is already in your pocket.

Instead, Apple's glasses are being built as a visual intelligence device. Think of them as an extension of your iPhone's camera—but mounted on your face. The glasses will have multiple high-resolution cameras. One primary camera will handle photos and videos. Another lens is specifically designed for AI-powered context analysis. This dual-camera approach is crucial. You don't want the same lens doing double duty. It compromises both.

The frame itself is being developed entirely in-house. This is different from Meta's approach, which relies on partnerships with Ray-Ban and Oakley. Apple wants complete control over the hardware-software integration. That control allows for tighter optimization and faster iteration when something doesn't work, as noted in PCMag's analysis.

Speakers and microphones will be embedded into the frames, so you can listen to music, take calls, and interact with Siri without pulling out your phone. The weight distribution matters here. Putting everything in the frame means equal balance on both sides of your face. Prototypes are already moving in this direction: earlier versions used a cable running to a separate battery pack, and that battery is expected to be embedded in the frame itself eventually.

Camera Technology and Visual AI

The cameras are where the magic happens. Apple is reportedly working on what it calls a "high-resolution" primary camera. We don't have exact specifications yet, but based on Apple's typical approach, we're probably talking about at least 12-megapixel sensors, possibly higher. The secondary lens for AI analysis is likely a lower-resolution sensor optimized for recognition tasks: identifying objects, reading text, recognizing faces.

Here's the key insight: these cameras aren't just passive recorders. They're active intelligence sensors. When you look at something, the glasses will analyze what you're seeing in real time. That analysis happens partly on the device itself and partly on your iPhone. Apple's been building computational photography capabilities for years with the iPhone. Those same principles apply here.

Imagine you're at a restaurant and you're not sure if a dish is vegan. You ask Siri, "Does this have dairy?" The glasses see what you're looking at. Your iPhone processes the image. Within seconds, you get an answer. Maybe it's pulling from a restaurant's menu database. Maybe it's analyzing the food itself. The point is, the context matters.

Or consider this scenario: you're walking through a city you've never visited. You ask for directions to a specific landmark. Instead of just giving you a turn-by-turn route, Siri can see what you're seeing. It can point out when you're approaching the landmark. It can tell you about the architecture. It becomes an actual personal guide, not just a voice telling you which way to turn.

Privacy will be a massive concern here, and Apple knows it. The company will likely position this as fully encrypted, with processing happening on-device wherever possible. Apple's got a good track record on privacy compared to other tech companies. But the optics of wearing a camera on your face in public—that's going to require serious trust-building.

Timeline: December 2025 Production Start

According to reports, Apple is aiming to start production in December 2025. That's months away from now. This isn't a "we're exploring this" timeline. This is a "we're committed, and we're moving fast" timeline.

A production start in December typically means a public announcement by summer 2026, followed by a holiday 2026 launch or early 2027 launch. The timeline provided in reports suggests 2027. That's a reasonable window. It gives Apple time to work out manufacturing issues, gather feedback from beta testers, and refine the software experience.

But here's the thing about Apple timelines: they're often conservative. The company announces products when it's confident they're ready. If we're looking at a December 2025 production start, that suggests Apple has probably spent two to three years getting to this point. By then the prototypes should work, the battery life should be acceptable, and the AI features should actually do something useful. This isn't vaporware.

Competing with Meta's Ray-Bans

Meta's Ray-Ban Meta smart glasses are the closest competitor right now. Those glasses have cameras, they have AI processing, and they're actually selling. But there are differences in the approach.

Meta partnered with Ray-Ban, which gave Meta instant access to optical expertise and retail distribution. But it also means Meta doesn't control the entire product. Apple's approach is different. By building the frames in-house, Apple maintains complete control over the hardware-software experience. That's the Apple way.

Meta's glasses are about capturing life and sharing it. They've got a social media angle. They're positioned as a tool for creators and people who want to document their lives. Apple's positioning feels different. Apple's glasses are about intelligence. They're about what you can do with the information you're gathering. That subtle difference could matter a lot in how these products sell.

Pricing will tell you a lot about Apple's intentions. Ray-Ban Meta glasses run around

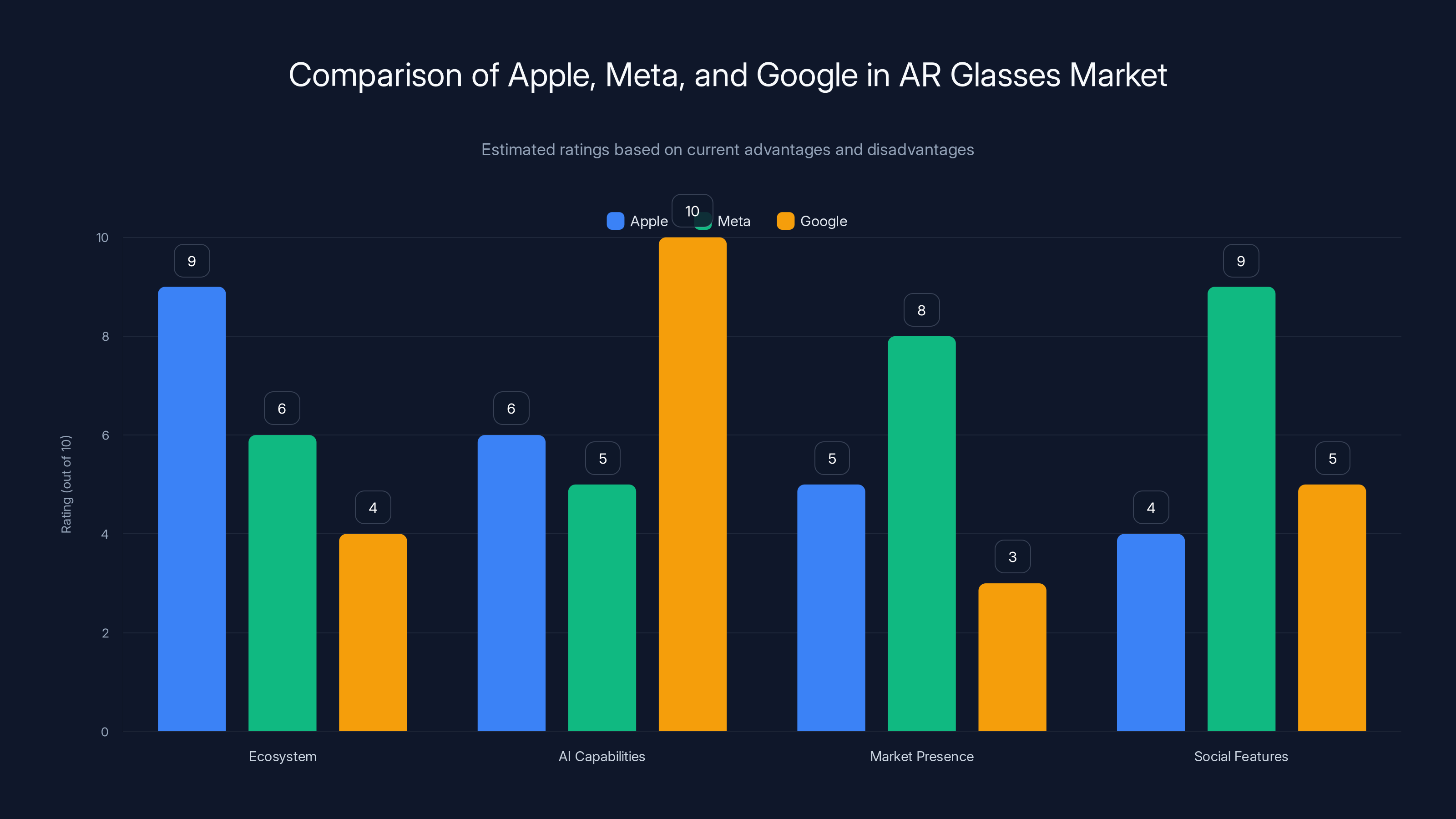

Apple's smart glasses focus heavily on camera technology and hardware control, while Meta's glasses include a display but have less emphasis on integrated audio and control. Estimated data based on feature emphasis.

The AI Pendant: Your Personal AI Assistant Gets Physical

What Is This Thing, Exactly?

An AI pendant sounds weird when you first hear it. A pendant sized like an AirTag that you wear around your neck or clip to your shirt. But think about it from a practical standpoint: it's a way to always have a camera on you without the social friction of obvious glasses. You're already used to wearing jewelry or carrying keychains. A pendant is just the next step.

This device will essentially be an always-on camera paired with a microphone. Unlike the smart glasses, the pendant doesn't need to handle complex processing. It's primarily a capture device. The real intelligence happens on your iPhone. The pendant is the sensor. Your phone is the brain.

Built-in hardware will handle some processing—probably audio capture and maybe some basic image compression. But the heavy lifting happens on your phone. This is where Apple's ecosystem advantage becomes clear. If you own an iPhone, iPad, and Mac, this pendant has a lot of computing power to tap into. Android users can't participate in this system in any meaningful way.

The form factor matters more than people might think. A pendant is inconspicuous in a way that glasses are not. People tend to notice when camera-equipped glasses are pointed at them. A pendant is much more ambiguous. That has both privacy implications (good for the wearer) and surveillance implications (bad for the people being filmed). Apple will need to be careful about how it markets this.

Timeline: Possibly 2026

Reports suggest the pendant could launch as early as next year. That's much sooner than the smart glasses. Why? Because it's simpler. There's no display to develop. The optical design is straightforward. The software integration is lighter touch—it's mostly just passing video to your iPhone.

A 2026 launch would actually make sense from a product strategy perspective. Apple could introduce the pendant as a more accessible entry point to visual AI features. Then when the smart glasses arrive in 2027, they become the premium flagship. You're building an ecosystem.

Always-On Camera: Battery Reality

Here's a hard truth: a truly always-on camera will destroy battery life, both on the pendant and on the iPhone doing the processing. Recording and streaming video constantly? That's going to drain your phone in hours. Apple will need to solve this with one of several approaches:

First option: periodic sampling. The pendant doesn't record continuously. It samples the camera feed at intervals, then asks the AI "is anything interesting happening?" Most of the time, nothing is. So it doesn't waste power recording. Only when something interesting is detected does it start continuous recording.

Second option: edge processing. Do as much processing as possible on the pendant itself. This is computationally expensive, but it's better than sending raw video to your phone constantly. A dedicated AI chip on the pendant could handle basic object recognition and scene analysis. Your phone gets summaries, not raw data.

Third option: accept lower quality. Maybe the pendant doesn't record in full resolution. Maybe it's recording in a compressed format that's lower quality but uses less power. Apple could trade resolution for battery life.
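The first option, periodic sampling, can be sketched in a few lines. This is only an illustration of the idea, not anything Apple has described: the `Frame` type, the `interest` score (standing in for a cheap "is anything interesting happening?" check), and the parameters `sample_every`, `threshold`, and `burst_len` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Frame:
    timestamp_s: float
    interest: float  # hypothetical score in [0, 1] from a cheap detector


def select_frames(
    frames: Iterable[Frame],
    sample_every: int,
    threshold: float,
    burst_len: int,
) -> List[Frame]:
    """Return the frames that would actually be recorded.

    Only every `sample_every`-th frame is inspected. If an inspected frame
    scores at or above `threshold`, it is kept and the next `burst_len`
    frames are recorded continuously before sampling resumes.
    """
    kept: List[Frame] = []
    burst_remaining = 0
    for index, frame in enumerate(frames):
        if burst_remaining > 0:
            # Inside a burst: record without re-checking the score.
            kept.append(frame)
            burst_remaining -= 1
            continue
        if index % sample_every != 0:
            # Between samples: the capture pipeline stays idle.
            continue
        if frame.interest >= threshold:
            kept.append(frame)
            burst_remaining = burst_len
    return kept


# Ten frames, one per second; only frame 4 looks interesting.
stream = [Frame(float(t), 0.9 if t == 4 else 0.0) for t in range(10)]
recorded = select_frames(stream, sample_every=2, threshold=0.5, burst_len=2)
print([f.timestamp_s for f in recorded])
```

The trade-off is visible in the sampling interval: an interesting moment that falls between two samples is simply missed, so a longer interval saves power at the cost of coverage.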

Whatever approach Apple takes, battery life will be the first test of whether this product works. If it needs charging every eight hours, it's dead on arrival. If it can get through a full day on a charge, it's viable. Apple hasn't disclosed this yet, but it's absolutely critical.
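To see why the share of time spent recording matters so much, here is a back-of-the-envelope runtime estimate. Every number in it is a made-up placeholder, since no battery capacity or power figures have been reported; the only real content is the arithmetic: runtime equals capacity divided by average power draw.

```python
def estimated_runtime_hours(
    capacity_mwh: float,
    active_mw: float,
    idle_mw: float,
    duty_cycle: float,
) -> float:
    """Estimate runtime from a simple two-state power model.

    duty_cycle is the fraction of time (0 to 1) spent in the active,
    camera-on state; the rest of the time is spent idle.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_mw = duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw
    return capacity_mwh / average_mw


# Hypothetical figures chosen only to illustrate the shape of the trade-off.
CAPACITY_MWH = 1000.0  # assumed battery capacity
ACTIVE_MW = 500.0      # assumed draw while capturing
IDLE_MW = 10.0         # assumed draw while idle

always_on = estimated_runtime_hours(CAPACITY_MWH, ACTIVE_MW, IDLE_MW, 1.0)
sampled = estimated_runtime_hours(CAPACITY_MWH, ACTIVE_MW, IDLE_MW, 0.1)
print(f"always on: {always_on:.1f} h, 10% duty cycle: {sampled:.1f} h")
```

With these placeholder figures, continuous capture lasts about two hours, while recording 10% of the time stretches that to roughly seventeen. The real numbers will differ, but the relationship between duty cycle and runtime holds.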

Camera-Equipped AirPods: The Sneakiest Hardware Play

Why AirPods Are the Perfect Vehicle

AirPods are ubiquitous. Millions of people wear them every day. They're not as socially imposing as glasses. They're already accepted as normal accessories. So adding a camera to AirPods is actually genius from a market adoption perspective. People already think of AirPods as wearable tech. Adding a camera is just the next evolution.

But here's the technical challenge: where do you put the camera on an earbud? The form factor is tiny. There's barely room for a speaker and microphone. Adding optics and a sensor increases the size significantly. Apple will probably put the cameras on the lower or outer edge of the earbuds, probably in a way that's not immediately obvious. Discretion matters here.

These won't be the high-resolution cameras in the smart glasses. Reports suggest low-resolution cameras optimized for AI analysis rather than photo quality. You're talking probably 2 to 5 megapixels, tops. But that's actually fine. You don't need a professional camera to give an AI assistant a rough view of what's nearby.

The Use Cases

Low-resolution cameras on earbuds might seem pointless until you start thinking about the use cases. Imagine asking Siri a question that requires visual context. "What ingredient is this?" Your AirPods see it. You get an answer. You don't need to pull out your phone. You don't need to wear glasses. It just works.

Or consider this: you're on a call with someone, and you're describing an object in front of you. They say, "Show me." The AirPod camera captures it and sends it to the person you're talking to. Instant visual context without fumbling for your phone.

There's also a hearing aid angle here. Cameras on earbuds could help with spatial awareness for people with hearing loss. But that's probably further down the roadmap.

Timeline: This Year Possible

Unlike the pendant and glasses, camera AirPods could launch much sooner. Mark Gurman's reporting suggests this could happen in 2025, meaning later this year. That's aggressive, but it's technically simpler than the other devices.

If Apple launches camera AirPods in the next few months, that sends a signal: the company is moving fast on visual AI. It's not waiting for perfect. It's shipping things to gather real-world data and user feedback.

A 2025 launch for AirPods, followed by a 2026 pendant, followed by 2027 smart glasses would be a smart rollout strategy. Each product teaches Apple something about how people interact with always-on cameras and AI context. Each product refines the experience for the next one.

Visual context is projected to significantly enhance Siri's capabilities, improving accuracy and user satisfaction by approximately 20-25%. (Estimated data)

The Siri Angle: Visual Context Changes Everything

From Voice to Vision

Siri's been a voice assistant for over a decade. It's gotten better over the years, but it's still limited by what it can do without context. Ask Siri "what time is the game?" and it has no idea what game you're talking about. You need to provide context verbally.

But when Siri can see what you're seeing, everything changes. "What time is the game?" Siri looks at your screen, sees you've got a sports app open with a specific team and game loaded. Now it knows exactly what you're asking. Context transforms voice commands from guesses to certainties.

This is actually how human conversation works. When you talk to someone in person, they see what you're talking about. They see your facial expressions. They see the environment. All of that context makes conversation faster and more accurate. Giving Siri that same context makes it dramatically more useful.

Apple's been building toward this with the iOS upgrades that added picture recognition, scene detection, and visual processing. The company's computational photography team has been working on understanding images for years. Now all of that expertise gets funneled into a voice assistant that can actually see.

Specific AI Capabilities We Know About

Based on the reports, here are the visual AI features Apple is working on:

Object and ingredient identification: You point at something. Siri tells you what it is. For food, it could tell you ingredients or nutritional information. For products, it could give you pricing or reviews.

Landmark recognition: You're traveling. You see something interesting. Siri recognizes it and tells you about it. This is basically Google's reverse image search, but integrated into your AI assistant.

Context-based reminders: You're in a specific location, doing a specific activity. Siri reminds you to do something relevant. You're at the grocery store, and you forgot to add milk to your list. Siri reminds you because it recognizes where you are.

Direction assistance: Siri doesn't just tell you which way to turn. It sees where you're going. It points out landmarks so you know you're on the right track.

Visual information retrieval: You're reading something, see a word you don't know, and ask Siri. Instead of a dictionary definition, Siri could pull context from what you're actually looking at.

These are all things that Google Assistant and Alexa can theoretically do with your phone's camera. But Apple's doing it with glasses and earbuds that make it much more natural and seamless.

Privacy and Data Collection Concerns

Let's be direct: a Siri that can see everything you're looking at is a surveillance system. Apple will need to address this head-on.

The company's approach will likely be heavy on-device processing. Analyze what you're seeing on your phone, not on Apple's servers. Keep that visual data local. This is technically harder than sending everything to the cloud for processing, but it's better for privacy.

Apple could also be transparent about when cameras are active. Visual indicators on the hardware showing that recording is happening. Clear software controls for when the camera is on or off. Privacy dashboards showing what data is being collected.

But here's the challenge: people will still be skeptical. "Is Apple really not recording everything?" Building trust is going to take time and consistent behavior. One data breach, one revelation that Apple was collecting more than it said, and the whole thing collapses.

How Apple's Ecosystem Advantage Makes This Work

iPhone as the Processing Powerhouse

All of these devices—glasses, pendant, AirPods—they're all designed to work with your iPhone. The iPhone is the processing core. The wearables are the sensors and input/output devices.

This is a huge advantage. The A18 chip in recent iPhones is incredibly powerful. Processing video on the fly, running AI models, analyzing images in real time, all while maintaining battery life: the iPhone can do it. And if it can't, the phone can tap into iCloud for heavier processing.

But there's a subtle point here: this locks users into the Apple ecosystem. You can't use these wearables without an iPhone. That's by design. Apple isn't trying to make a platform that works across Android and iOS. It's trying to make an ecosystem that works because all the pieces are designed together.

Google and Meta can't do this the same way. They don't control both the wearables and the phone as tightly. Google's making Pixel phones and trying to get people to use them, but there's no guarantee someone buying Pixel-adjacent hardware will buy a Pixel phone. Meta doesn't make phones at all.

Apple's got the ultimate advantage: vertical integration. Every layer, from silicon to software to wearables, is made by Apple. Everything talks to everything else. Everything assumes you're in the ecosystem.

iCloud Processing and On-Device AI

Most of the processing will happen on-device. Your iPhone analyzes that image from the glasses. Your iPhone processes the audio from the pendant. Your iPhone figures out what you're looking at.

But for harder problems, the iPhone can call to iCloud. Complex image recognition that requires knowledge of the entire internet? That might go to the cloud. Identifying a famous landmark? Could go to the cloud. But personal data—photos of your face, your home, your private moments—that stays on device.
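The split described above amounts to a routing policy: personal data never leaves the device, and only non-personal requests that need broad world knowledge go to the cloud. A minimal sketch of that policy follows. The `VisionRequest` fields and the `choose_route` function are hypothetical names for illustration, and how a real system would decide that an image is "personal" is left out entirely.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


@dataclass(frozen=True)
class VisionRequest:
    task: str
    contains_personal_data: bool  # assumed to be set by an earlier on-device check
    needs_world_knowledge: bool   # e.g. identifying a famous landmark


def choose_route(request: VisionRequest) -> Route:
    """Pick where a request runs; the privacy rule takes precedence."""
    if request.contains_personal_data:
        return Route.ON_DEVICE
    if request.needs_world_knowledge:
        return Route.CLOUD
    # Default to local processing when nothing requires the cloud.
    return Route.ON_DEVICE


requests = [
    VisionRequest("identify landmark", False, True),
    VisionRequest("recognize family member", True, True),
    VisionRequest("read street sign", False, False),
]
for request in requests:
    print(request.task, "->", choose_route(request).value)
```

Checking the privacy flag first is the important design choice: a request that is both personal and hard stays local, even if that means a weaker answer.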

This is where Apple's infrastructure matters. The company's built iCloud to handle massive data processing. It's got the servers, the networking, the data centers. But it's also built a reputation for keeping personal data private. Balancing those two things is the challenge.

The App Store Angle

There's also an app ecosystem opportunity here. Once these devices exist, developers will want to build on them. New apps for smart glasses. New uses for the pendant. New AI features for AirPods.

Apple controls the App Store. It can curate which apps get access to camera data. It can set rules about privacy. It can take a cut of the revenue. This is a business opportunity, but it's also a control mechanism.

We've seen app ecosystems drive adoption before. The iPhone became dominant not just because it was a good phone, but because developers built incredible apps. If Apple can create a compelling app ecosystem for visual AI wearables, that becomes a powerful lock-in mechanism.

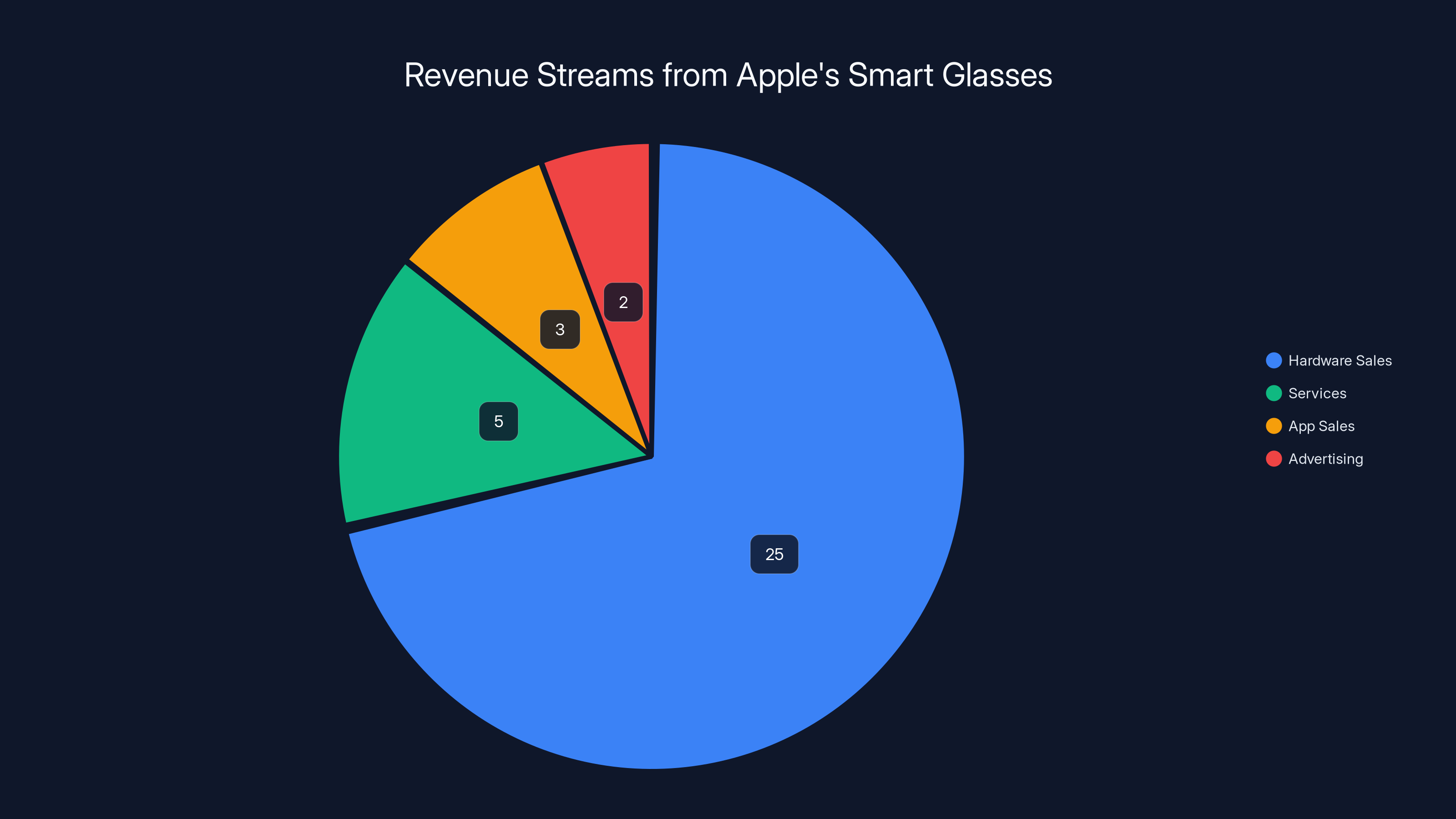

Apple's smart glasses could generate significant revenue from hardware sales, services, app sales, and potential advertising. Estimated data based on strategic projections.

Competition: Meta, Google, and Others

Meta's Current Advantage

Meta's got smart glasses already on the market. The Ray-Ban Meta glasses are shipping, people are using them, and the company is gathering real-world data. That's a huge advantage. Apple's still in development.

But Meta's glasses are limited in some ways. They're designed for sharing and entertainment. They're a creator's tool. Apple's glasses are being positioned as an intelligence tool. Different use case, different audience.

Meta also has Instagram and WhatsApp. It's building an entire infrastructure around visual content sharing. Apple doesn't have a comparable social media play. But Apple does have one of the most loyal user bases in tech. Apple users trust Apple in a way they don't trust Meta. That matters.

Google's Uncertainty

Google's been unusually quiet on smart glasses. The company tried Google Glass years ago and failed spectacularly. It's been burned before. But Google's AI capabilities are arguably better than Apple's. The company has Gemini, which is competitive with ChatGPT and Claude. Google's image understanding is incredibly strong.

So why isn't Google in the smart glasses race hard? Possibly because the company is focused on Gemini integration everywhere else. Maybe it's waiting for the market to mature before jumping in. Or maybe Google sees the social friction of obvious glasses and thinks the market is smaller than everyone assumes.

Startups and Smaller Players

There are startups working on AR glasses. Some, like Mojo Vision, have stepped back from consumer AR hardware, but others are still trying. None of them have the capital, the manufacturing expertise, or the ecosystem that Apple, Google, or Meta have. The market is probably going to consolidate around the big three.

Manufacturing: The Underrated Challenge

In-House Frame Production

Apple building the frames in-house is a decision with enormous implications. It means Apple needs to invest in manufacturing infrastructure. It means the company needs to hire optical engineers and manufacturing specialists. It means dealing with supply chain complexity.

But it also means control. Apple doesn't have to negotiate with Ray-Ban or Oakley about design changes. If the company wants to iterate quickly, it can. If it needs to customize hardware for different face shapes, it can do that.

Manufacturing glasses at scale is genuinely difficult. You're dealing with precise optical tolerances. You're dealing with comfort and fit. You're dealing with durability. Get any of these wrong, and you've got a failure on your hands.

But Apple's done hard manufacturing before. The original iPhone was manufacturing hell. The Apple Watch pushed the boundaries of what's possible in tiny device manufacturing. The AirPods required novel approaches to miniaturization and assembly. Apple's got the infrastructure and expertise to do this.

Supply Chain and Scaling

If Apple really starts production in December 2025, that means the company has already secured the parts. The camera sensors, the processors, the optics—all of it. Building the supply chain for millions of units takes time.

Apple will probably start with a limited production run. Maybe a few million units in 2027. If it works, scale up to tens of millions. If it doesn't work, shut it down. That's the standard Apple approach.

The supply chain could also be Apple's competitive moat. If Apple can build glasses more efficiently than competitors, at lower costs, with better quality—that's a sustained advantage. And the company's track record suggests it can do exactly that.

The AI Pendant is projected to launch by 2026, with significant development progress expected in the years leading up to it. Estimated data.

Software: The Real Innovation

Real-Time AI Processing

The actual innovation here isn't the hardware. The hardware is important, but it's not revolutionary. The innovation is doing real-time AI processing on that video stream.

When you put on the glasses, they're constantly capturing video. That video is being analyzed by AI models running on your phone. Those models need to identify objects, read text, recognize faces, understand context. All in real time. With low latency. Without destroying battery life.

This is a software and systems engineering challenge. Apple's probably spent as much time optimizing the software as designing the hardware. The company's got teams working on:

Vision processing: Analyzing what the cameras see.

Language processing: Understanding what you're asking Siri.

Context integration: Combining visual and audio information to understand what's happening.

Privacy implementation: Making sure data is handled securely and stays local when it should.

Battery optimization: Making sure all this processing doesn't drain your phone in an hour.

These are hard problems. But solving them is what makes or breaks the product.

Machine Learning at the Edge

A lot of this will be edge computing—running ML models directly on the device, not sending everything to the cloud. Apple's been investing in this for years. The Neural Engine in iPhone chips is designed for exactly this.

Edge computing is faster (no network latency), more private (data stays local), and can use less battery (no constant network communication), as long as the on-device models are small enough to run efficiently. It's the right approach for a wearable device.

But edge computing is also constrained. You can't run the biggest, most powerful AI models on a phone. You need smaller, more efficient models. Apple's probably been working with its AI team to build and optimize models that work at the edge.

Siri Integration

All of this visual processing needs to feed back to Siri. Siri needs to understand what the cameras are seeing and incorporate that into its responses. That integration is non-trivial.

Right now, Siri is primarily speech-based. It hears your request and tries to fulfill it. In the future, Siri will hear your request AND see what you're looking at, AND understand the broader context. That's a much richer information environment.

Siri could also become more proactive. Instead of waiting for you to ask a question, Siri could notice something interesting and mention it. "That landmark over there is the old courthouse. It was built in 1892." You didn't ask, but Siri thought it was relevant.

This kind of proactive AI is controversial. Some people will love it. Others will find it intrusive. Apple will need to calibrate the right level of helpfulness without being annoying.

Privacy, Ethics, and Regulation

The Camera Problem

Wearing a camera on your face or in your ears raises legitimate privacy concerns. Not just for you, but for everyone around you. When you're wearing glasses with cameras, you're potentially recording everyone in your vicinity without their consent.

Apple will need to address this. Possible solutions include:

Clear indicators: Visual lights that show the camera is active. People around you can see that you're recording.

Legal compliance: Following local laws about recording in public. Some places require consent, some don't.

Technical limitations: Geofencing the devices. They might disable recording in certain locations (bathrooms, locker rooms, etc.).

User responsibility: Putting the onus on the user to respect others' privacy. But this is weak—people will do it anyway.

Transparency reports: Publishing data about what the glasses record and how it's used. Building trust through openness.
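The geofencing idea in the list above can be sketched as a distance check against a set of restricted zones. This is an assumption-heavy illustration: the `Zone` type and `recording_allowed` function are hypothetical, and location-based fencing is far too coarse to pick out an individual room like a bathroom, so a real system would need other signals as well.

```python
import math
from dataclasses import dataclass
from typing import Iterable

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass(frozen=True)
class Zone:
    name: str
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def recording_allowed(lat: float, lon: float, zones: Iterable[Zone]) -> bool:
    """Allow recording only when the wearer is outside every restricted zone."""
    return all(
        haversine_m(lat, lon, zone.lat, zone.lon) > zone.radius_m
        for zone in zones
    )


# Hypothetical restricted zone with a 100 m radius.
restricted = [Zone("example gym", lat=0.0, lon=0.0, radius_m=100.0)]
print(recording_allowed(0.0, 0.0005, restricted))  # inside the zone
print(recording_allowed(0.0, 0.01, restricted))    # well outside the zone
```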

This is a problem that will take years to solve satisfactorily. But Apple's got to try, because if these devices get a reputation as secret surveillance tools, they're dead.

Data Collection and Government Access

Apple's been in fights with law enforcement over encryption and data access. The company's taken a strong stance: your data is yours, and we won't make backdoors for governments.

But glasses that record everything you see? That's a lot of data. Governments will want access. Possibly for legitimate reasons (investigating crimes). Possibly for authoritarian reasons (suppressing dissent).

Apple's been clear that it won't cooperate with unreasonable requests. But we're in territory where the company's privacy stance will be tested. Don't be surprised if there are court battles over this.

Consent and Recording Ethics

On the interpersonal level, there's a consent issue. If you're wearing glasses that record, people near you might not know. They might not want to be recorded. What are their rights?

This is philosophically interesting. Cameras are everywhere now. Phones can record video at any moment. Google's Street View cars drive around photographing streets. But there's something different about a person recording you without your knowledge. It feels more invasive.

Apple could address this with transparency requirements. Maybe the glasses need to be visually obvious. Maybe there's a light that indicates recording. Maybe you need to announce that you're wearing them. These are all possible approaches.

Apple excels in ecosystem integration, Meta leads in market presence and social features, while Google dominates in AI capabilities. (Estimated data)

The Broader Vision: Where This Goes

Year-by-Year Roadmap

If reports are accurate, here's what we might expect:

2025: Camera AirPods launch, possibly alongside upgraded software that makes them useful.

2026: AI pendant launches. Adoption grows. Developers start building apps for visual AI.

2027: Smart glasses launch. This becomes the flagship visual AI device. High price, premium positioning.

2028-2030: Iterative improvements. Display technology gets better. Price comes down. Market adoption accelerates.

2031+: AR glasses with full displays and true augmented reality. This is years away, but it's coming.

Each product teaches Apple something. Each product refines the experience. By the time full AR glasses arrive, Apple will have years of data about how people use visual AI. That knowledge is invaluable.

The AR Display Question

Apple's reportedly still working on smart glasses with full AR displays. But those are "many years away" according to reports. Why the long timeline?

Displays are hard. AR displays are harder. The display has to be bright enough to see in sunlight and light enough to be comfortable, with a large field of view, but not so large that it drains the battery. It also has to show content at infinity focus (far away) and near focus (close up) without tiring your eyes.

The technical challenges are immense. But Apple's working on them. When the company finally releases AR glasses with displays, they'll probably be revolutionary. That's worth waiting for.

AI Becomes Personal

The bigger picture is that AI becomes incredibly personal. Instead of talking to a chatbot on your phone, AI becomes an ambient intelligence. It's always there, always aware of context, always ready to help.

Some people will love this. Others will find it creepy. The truth is probably that it becomes normal. Just like people got used to carrying a camera in their pocket, people will get used to having AI always aware of their surroundings.

What matters is implementation. If Apple implements this thoughtfully, with strong privacy protections and user control, it's a positive. If it becomes a surveillance tool, it's a negative.

Comparison: Apple vs. Meta vs. Google

The Current State

Meta: Glasses on the market now. Social media integration. Creator-focused. Lower price point.

Apple: Glasses in development. Ecosystem-focused. Utility-focused. Premium positioning.

Google: Quiet. Probably working on something. Strong AI capabilities. Undefined product strategy.

Apple's got a clear vision. Meta's got first-mover advantage. Google's got the best AI but no clear product strategy. The next three years will be interesting.

Advantages and Disadvantages

Apple's advantages: Ecosystem integration, brand loyalty, privacy stance, vertical integration.

Apple's disadvantages: Enters late, premium pricing, limited social features.

Meta's advantages: First-mover, social features, partnership with Ray-Ban, existing user base.

Meta's disadvantages: Privacy reputation, less cohesive ecosystem, weaker AI.

Google's advantages: Best AI, largest search index, YouTube integration.

Google's disadvantages: No cohesive product strategy, weak wearable ecosystem, consumer trust issues.

The Business Case: Why Apple Is Doing This

Ecosystem Lock-In

Apple's core strategy is selling an ecosystem. The company makes money from iPhone sales, but also from services (App Store, Apple Music, iCloud). Adding visual AI wearables extends that ecosystem. More devices means more lock-in. More lock-in means higher lifetime value per customer.

If you own an iPhone, iPad, Mac, Apple Watch, AirPods, and smart glasses, you're fully committed to Apple. Switching to Android becomes much harder. You'd have to replace everything.

This is worth billions of dollars in revenue. That's why Apple is investing in these products.

Revenue Opportunities

Beyond ecosystem lock-in, there's direct revenue:

Hardware sales: If Apple sells 50 million smart glasses at

Services: Subscriptions for advanced Siri features, iCloud processing, premium AI capabilities. Probably $10-20 per month, optional.

App sales: Premium apps built specifically for visual AI. Apple takes a 30% cut. With millions of users, this becomes significant revenue.

Advertising: This is controversial, but Apple could theoretically use visual context data (anonymized) to improve ad targeting. Probably not in the first generation, but maybe down the line.

The TAM (total addressable market) for smart glasses could be enormous. If 500 million people globally wear smart glasses eventually, and Apple captures 30% of that market, that's 150 million customers. At
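The market-sizing arithmetic in this section can be checked with a short sketch. The 500 million wearers and the 30% share come from the text; the unit prices are an assumption borrowed from the $500-1000 range quoted elsewhere in this article, and the function name is purely illustrative.

```python
def market_estimate(total_wearers: int, share: float, unit_price: int) -> tuple[int, int]:
    """Return (customers, one-time hardware revenue) for one hypothetical scenario."""
    customers = round(total_wearers * share)
    return customers, customers * unit_price


# Figures from the text: 500 million eventual wearers, 30% Apple share.
TOTAL_WEARERS = 500_000_000
APPLE_SHARE = 0.30

# Assumption: unit prices taken from the $500-1000 range cited elsewhere in the article.
for price in (500, 1000):
    customers, revenue = market_estimate(TOTAL_WEARERS, APPLE_SHARE, price)
    print(f"${price}: {customers:,} customers, ${revenue / 1e9:,.0f}B in hardware revenue")
```

This counts each customer as a single purchase. Upgrades, services, and app revenue would sit on top of it, so treat the result as a rough order of magnitude rather than a forecast.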

Defensive Strategy

Apple also has defensive reasons. If Meta dominates smart glasses, that's a major competitor in a space Apple considers core to its future. Glasses will be as important as phones eventually. Apple can't cede that market to Meta.

By launching first-generation smart glasses without displays, Apple is buying time to develop display technology while still participating in the market. It's a smart move. The company gets experience manufacturing glasses, gets customer feedback, and doesn't overcommit to unproven display tech.

Challenges and Risks

Manufacturing and Scaling

Making millions of smart glasses is genuinely hard. Optics manufacturing is complex. Quality control is difficult. Supply chains are fragile. If Apple has production problems, the whole timeline slips.

The company's got experience with this—iPhones, Apple Watches, AirPods have all had manufacturing challenges. But smart glasses might be harder. The tolerance requirements are tighter.

Adoption Challenges

People might not want to wear glasses. Some people wear contacts instead. Some people wear prescription glasses and don't want another pair. Some people just don't like the form factor.

Apple's banking that the utility of visual AI outweighs the form factor friction. That's not guaranteed. If adoption is weak, the entire strategy stalls.

Battery Life

This is the big one. If battery life is disappointing—if you need to charge glasses multiple times a day—they won't work. Earbuds have accustomed people to all-day battery life. Glasses will need the same.

Apple's probably solved this, but it's the biggest technical risk. If the company announces all-day battery life, great. If it's vague about battery, watch out.

Privacy Backlash

People might just not trust that Apple isn't recording everything. Even if the company is genuine, even if on-device processing is real, people might not believe it. One scandal, one revelation of misuse, and the whole product is tainted.

This is maybe the biggest risk. It's not technical, it's social.

Competition Response

Once Apple enters the market, Google and Meta will respond. Meta will drop Ray-Ban prices. Google will finally announce its own smart glasses. Samsung might enter the market. Price wars will follow. Margins will compress.

Apple's used to this dynamic. The company competes on brand, quality, and ecosystem, not price. But price competition is still a risk.

Looking Ahead: 2027 and Beyond

The Vision

Apple's vision for wearable AI is clear: invisible computers that enhance your life without getting in the way. Glasses that give you information about your surroundings. Earbuds that listen and understand. A pendant that's always watching.

It's powerful. It's also a bit scary. The line between helpful and intrusive is thin.

Market Adoption Predictions

If smart glasses launch in 2027 at a premium price ($500-1000), adoption will probably be slow initially. The first buyers will be early adopters, tech enthusiasts, and professionals who can benefit from the features.

Over time, as price comes down and features improve, adoption accelerates. By 2035, smart glasses might be as common as AirPods are now. By 2045, almost everyone might have them.

But that's speculation. The technology could also flop. Google Glass was going to revolutionize everything too. Then it didn't.

The Long Game

Apple's playing a long game. The company doesn't need smart glasses to be a massive hit immediately. It just needs them to be interesting enough to attract early adopters, generate revenue, and gather data for the next generation.

If Apple can maintain its premium brand positioning while slowly expanding the user base, the company wins. In ten years, smart glasses will be normal. Apple wants to own that market.

The Investment Implications

Capital Requirements

Building this ecosystem requires massive capital investment. New factories. New research. New teams. Probably billions of dollars over the next five years.

Apple's got the cash, so it can do this. But it's a bet. The company is betting that wearable AI will be as big as the iPhone. If it's wrong, that's a lot of money wasted.

Shareholders should be aware of this. Apple's capital allocation is shifting toward wearables and AI. That's a strategic choice with real risks and rewards.

Competitive Advantage

Apple's advantages are structural: vertical integration, brand, ecosystem, privacy reputation. These are hard to replicate. Meta has social, but not privacy. Google has AI, but not ecosystem coherence.

If Apple executes well, these structural advantages compound. The company's smart glasses become the default. App developers optimize for Apple. Other platforms fade. Apple's market position strengthens.

But execution is hard. And competitors won't give up market share without fighting.

What Users Should Know

Should You Wait for These Devices?

If you're considering smart glasses, waiting for Apple's is probably smart. The company's track record on hardware is excellent. The first-generation experience will likely be better than competitors'.

But be prepared to wait. Smart glasses probably won't ship until 2027. And they'll be expensive. $500-1000 seems likely.

If you need smart glasses now, Meta's Ray-Bans are available. They work well for their intended purpose. Just be aware that Apple's will probably be better (and more expensive) when they arrive.

Privacy Considerations

Think carefully about wearing a camera on your face. Yes, Apple's committed to privacy. But you're still capturing images of everyone around you. That has ethical implications.

Consider the people around you. Respect their privacy. If someone asks you not to record them, don't. The technology allows something, but that doesn't mean it's always right to do it.

Ecosystem Lock-In

Understand that buying Apple wearables locks you into the Apple ecosystem. That's not necessarily bad; the ecosystem is good. But it's not reversible without significant friction.

If you think you might switch to Android someday, buying expensive Apple wearables is a bet that you'll stay in the ecosystem. Make sure you're comfortable with that.

FAQ

What are Apple's new AI hardware devices?

Apple is developing three new AI-powered wearable devices: smart glasses (no display, launching in 2027), an AI pendant (AirTag-sized, possibly launching in 2026), and upgraded AirPods with built-in cameras (possibly in 2025). All three devices will connect to your iPhone and allow Siri to understand visual context from what the camera sees, enabling the AI to take actions based on your surroundings.

When will Apple's smart glasses be available?

According to reports, Apple is targeting production start in December 2025, with a launch expected in 2027. The AI pendant could arrive as early as 2026, while upgraded camera AirPods might launch as soon as 2025. These timelines are based on current development reports, but Apple typically keeps product launches confidential, so exact dates could change.

How is Apple's approach different from Meta's smart glasses?

Apple's glasses won't have a display (at least initially), whereas Meta's Ray-Ban Meta glasses have built-in capabilities for sharing content. Apple is building the frames in-house rather than partnering with a glasses manufacturer like Ray-Ban. Apple's strategy focuses on visual context for Siri and iPhone integration, while Meta's focuses on content creation and social sharing. Apple's will likely price higher as a premium product, typically in the

What cameras will these devices have?

The smart glasses will feature a high-resolution primary camera for photos and videos, plus a secondary lens specifically designed for AI-powered visual analysis. The AI pendant will have an always-on camera optimized for context capture. The AirPods will have low-resolution cameras designed primarily for AI analysis rather than photo quality. All cameras are engineered to work with Siri to provide visual context for AI responses.

How will Apple protect privacy with always-on cameras?

Apple is expected to emphasize on-device processing, meaning visual data analysis happens primarily on your iPhone rather than being sent to Apple's servers. The company will likely implement technical privacy features like indicators showing when cameras are active, geofencing that disables recording in certain locations, and strong encryption. However, the company will face ongoing scrutiny around consent and ethics regarding recording people without their knowledge, as these devices can capture anyone nearby.
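Geofencing, one of the possible safeguards mentioned above, comes down to a distance check against a list of restricted areas. The sketch below is a minimal illustration, not Apple's design: the `NoRecordZone` type, the `recording_allowed` function, and the example coordinates are all hypothetical.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass(frozen=True)
class NoRecordZone:
    """Hypothetical circular area where recording should be disabled."""
    name: str
    lat: float
    lon: float
    radius_m: float


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters, using the haversine formula."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def recording_allowed(lat: float, lon: float, zones: list[NoRecordZone]) -> bool:
    """Allow recording only when the wearer is outside every restricted zone."""
    return all(distance_m(lat, lon, z.lat, z.lon) > z.radius_m for z in zones)


# Made-up zone and positions, for illustration only.
zones = [NoRecordZone("example clinic", 37.0, -122.0, 150.0)]
print(recording_allowed(37.0, -122.0, zones))   # False: standing at the zone center
print(recording_allowed(37.01, -122.0, zones))  # True: roughly 1.1 km north of it
```

A real implementation would also have to decide who maintains the zone list and how location is verified, which are policy questions the code does not answer.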

Will I need an iPhone to use these devices?

Yes. All three devices (the smart glasses, pendant, and camera AirPods) are designed specifically to work with iPhones. They rely on the iPhone's processing power for AI analysis and connect to it via Bluetooth or a proprietary wireless connection. Android users won't be able to use these Apple wearables in the same way, which reinforces Apple's ecosystem lock-in strategy and positions the devices as premium Apple-only products.

What AI features will these devices enable?

Siri will gain the ability to identify objects and ingredients, recognize landmarks and provide information about them, understand context from your visual surroundings to answer questions more accurately, set location-based reminders based on what you're doing, assist with directions by seeing where you're going, and help with visual information retrieval like reading text or identifying products. Essentially, these devices turn Siri from a voice-only assistant into one that understands your visual context and environment.

How will battery life work for always-on cameras?

Battery life is the critical unknown. Apple will likely use a combination of edge processing (analyzing some video on the device itself), periodic sampling (not recording continuously but checking for interesting moments), and careful power management to maintain all-day battery life. However, the exact battery specs haven't been announced, and this remains one of the biggest technical challenges for these products, as continuous video recording would quickly drain batteries in any wearable device.
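To see why periodic sampling matters so much for battery life, here is a rough duty-cycle sketch. Every number in it (battery capacity, active and idle power draw, sampling fraction) is a hypothetical placeholder, not an Apple specification; only the idea of sampling instead of recording continuously comes from the text.

```python
def runtime_hours(capacity_mwh: float, active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Estimate runtime when the camera is active for a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    # Average draw is a weighted mix of the active and idle power levels.
    average_mw = duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw
    return capacity_mwh / average_mw


# Hypothetical values chosen only for illustration.
CAPACITY_MWH = 600.0  # small wearable battery
ACTIVE_MW = 400.0     # camera plus on-device analysis running
IDLE_MW = 20.0        # sensors waiting for a trigger

continuous = runtime_hours(CAPACITY_MWH, ACTIVE_MW, IDLE_MW, duty_cycle=1.0)
sampled = runtime_hours(CAPACITY_MWH, ACTIVE_MW, IDLE_MW, duty_cycle=0.05)
print(f"continuous capture: {continuous:.1f} h, 5% sampling: {sampled:.1f} h")
```

With these made-up inputs, dropping from continuous capture to 5% sampling stretches runtime by about a factor of ten, which is the kind of gap that separates a midday recharge from all-day use.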

How much will Apple's smart glasses cost?

Pricing hasn't been officially announced, but based on Apple's typical premium positioning and the complexity of the technology involved, expect smart glasses to cost between

Will these devices work with Android phones?

No. These devices are specifically designed for the Apple ecosystem and require iPhones for full functionality. The wearables won't work with Android phones in any meaningful way, as they rely on iPhone-specific hardware, software, and AI processing. This is a deliberate strategy by Apple to increase ecosystem lock-in and ensure tight integration between hardware and software.

How does this compare to Google's approach?

Google has been unusually quiet about smart glasses despite having strong AI capabilities with Gemini. The company was burned by Google Glass years ago and hasn't committed to a clear wearable AI strategy yet. Apple's move forces Google to either accelerate its own smart glasses roadmap or cede the market to Apple and Meta. Google's challenge is that it lacks Apple's ecosystem cohesion and Meta's first-mover advantage in the smart glasses space.

Conclusion: A New Era of Wearable Intelligence

Apple's pushing into wearable AI with three new devices that represent a fundamental shift in how we interact with technology. These aren't gadgets. They're the future of computing.

The smart glasses arriving in 2027 will be watched closely. They need to work. They need to be useful. They need to be private. They need to be beautiful. That's a high bar. But if anyone can clear it, it's Apple.

What's happening here is bigger than one product category. Apple is positioning itself to dominate the next era of computing, just like the company dominated mobile with the iPhone. These wearables are the beginning of that transition.

The competition will be fierce. Meta's already in the market. Google will eventually move. Startups will try. But Apple's structural advantages—ecosystem, brand, privacy reputation, vertical integration—are enormous.

The risks are real too. Privacy backlash. Adoption resistance. Manufacturing challenges. Competition. But Apple's betting that wearable AI will be as transformative as the iPhone. Based on what we know, that's not an unreasonable bet.

The next three years will tell us if Apple's vision is right. If it is, we're looking at a complete reimagining of how people interact with AI. AI that's always there, always aware, always helpful. Some will love it. Others will find it intrusive.

But one way or another, the future of computing is personal, visual, and always on. Apple's building the tools for that future. Competitors are scrambling to catch up.

The best time to pay attention to these devices was when they were first reported. The second best time is now, before they ship and before the hype becomes unbearable. Understand what Apple is building. Understand the implications. Then decide if it's right for you.

Because once these devices ship, once they prove the concept, once people get used to wearing cameras on their faces, the world changes. And Apple will have led the way.

Key Takeaways

- Apple is developing three AI wearable devices: smart glasses (2027), AI pendant (2026), and camera AirPods (2025) designed to give Siri visual context from your surroundings

- Unlike Meta's partnership with Ray-Ban, Apple is building smart glass frames in-house for complete hardware-software control and optimization

- Smart glasses won't have displays initially; Apple is prioritizing visual intelligence and AI capabilities over augmented reality displays in generation one

- All devices rely on iPhone as the processing core, strengthening ecosystem lock-in and requiring users to stay within Apple's platform

- Visual AI features will enable Siri to identify objects, recognize landmarks, understand context, and take actions based on what the wearer is seeing

![Apple's AI Hardware Push: Smart Glasses, Pendant, and AirPods [2025]](https://tryrunable.com/blog/apple-s-ai-hardware-push-smart-glasses-pendant-and-airpods-2/image-1-1771357050888.jpg)