Apple's Ambitious AI Health Coach Dream Died Quietly in 2025

Apple just killed one of its most ambitious health technology projects, and almost nobody noticed. The company was building an AI health coach—basically a digital doctor that could live on your iPhone. This wasn't some experimental lab project either. Apple invested serious resources: teams of engineers, production crews filming medical training videos, and infrastructure for processing massive amounts of health data. The vision was compelling: an AI that understands your fitness habits, your diet, your medical history, and your lifestyle patterns, then offers personalized health guidance without needing a subscription to some third-party wellness app.

Then, in late 2024, Apple's services chief Eddy Cue took over the health division, took one look at the project timeline, and apparently decided to shelve the whole thing. The decision, reported by Bloomberg, says something important about where AI technology actually stands right now, and why even trillion-dollar companies struggle to ship AI products that actually work.

Why Apple's Health Coach Represented Peak Ambition

When you really understand what Apple was attempting, the scope becomes obvious. The company wasn't just trying to build another fitness tracker app. They were building a system that would need to:

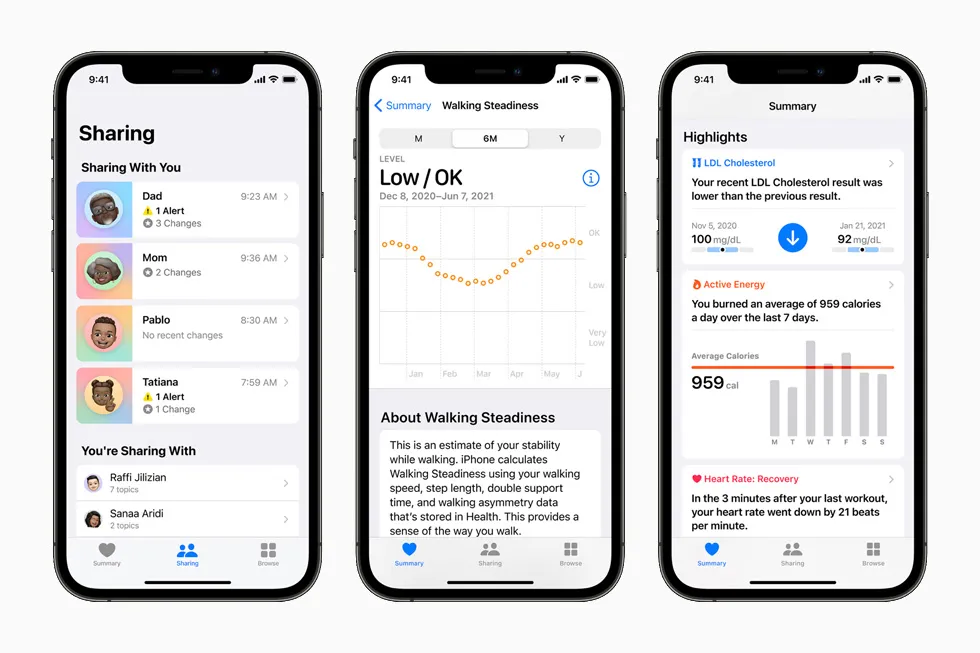

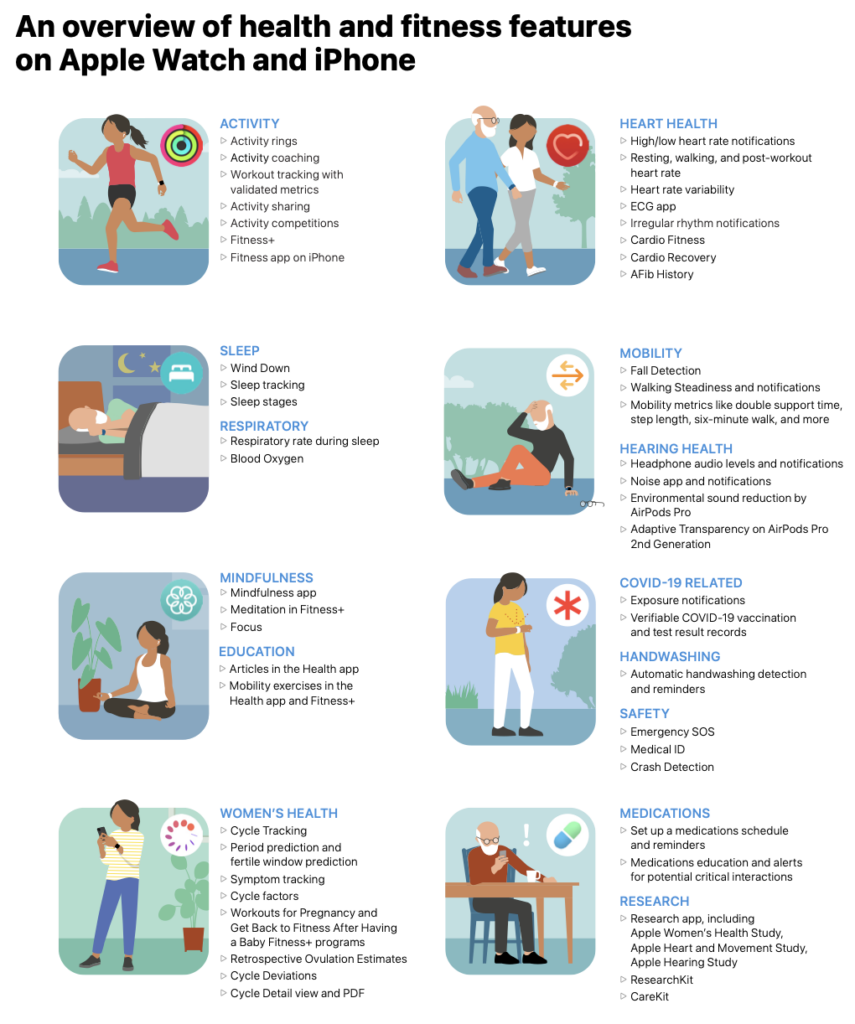

Process complex health data across multiple sources. Your iPhone already knows a ton about you: heart rate from Apple Watch, workout data, sleep patterns, and now increasingly detailed health metrics from wearables. But aggregating that data and drawing meaningful insights from it? That's far harder than just storing the numbers. According to the World Economic Forum, digital solutions in healthcare are evolving rapidly, but integrating them effectively remains a challenge.

Understand context and nuance in health recommendations. A generic fitness app can tell you to drink more water. But a real health coach needs to understand that you just started a new medication, you're training for a marathon, you've got a family history of diabetes, and you're already pushing yourself too hard. It needs to balance competing health goals and adapt recommendations when your circumstances change.

Handle the regulatory minefield. The second you start making health recommendations, you're operating in territory that the FDA takes seriously. Apple would need to be incredibly careful about not crossing the line from "helpful suggestion" to "medical advice." That requires serious legal infrastructure and constant monitoring. The FDA's regulations are stringent, ensuring that health tech companies maintain high standards.

Build trust at scale. Health is personal. People aren't going to trust an AI coach with sensitive health information unless that AI proves itself over time. Building that credibility takes more than a slick interface. It takes months of reliable, accurate, personalized guidance.

Apple had actually started down this path already. The company produced training videos explaining medical conditions. It built out the capability to make recommendations based on your personal health data patterns. The infrastructure was starting to take shape.

But here's the crucial part: Eddy Cue looked at the timeline and the competitive landscape and apparently concluded that Apple's plans couldn't compete with what companies like Oura were already shipping.

The Competitive Reality That Killed the Project

Oura doesn't get mainstream tech press coverage the way Apple does, but the company has been absolutely dominating the personalized health data space. Their smart rings give them access to incredibly detailed biometric data—skin temperature, HRV, respiratory rate, sleep stages—that most fitness trackers can't touch. And they've been shipping AI-powered insights for years.

When Cue evaluated Apple's health coach project, he was probably looking at questions like:

How long until we can actually ship this? Apple's projects take time. But the health AI space is moving incredibly fast. Every quarter that passes, competitors get better. By the time Apple shipped, would their AI-powered recommendations actually be better than what Oura or Whoop or other specialized health companies were already doing? Or would Apple look behind the curve?

Do we have the hardware moat anymore? Apple's advantage has always been hardware-software integration. But Oura arguably has better health data than Apple does. The ring is designed to be worn around the clock, which can give it more continuous readings than a watch that comes off to charge. If you're building an AI health coach, who has the better raw material to work with: Apple with its watch data, or Oura with their comprehensive biometric package?

Is this actually where users want health insights? Apple Watch is great, but it's not the device people look at constantly. Your iPhone is. But here's the problem: people open their health app maybe once a week, if that. They're not checking it obsessively. Meanwhile, health-focused companies have built app ecosystems where users engage constantly. The engagement differential probably mattered more than Apple initially thought.

Cue's assessment seems brutal but probably accurate: Apple couldn't ship fast enough to compete, and even if they did, they might not have a fundamental advantage that justified the massive investment required to build a genuine AI health coach.

The Path Apple Is Taking Instead

So what happens to all that work? The videos explaining medical conditions, the recommendation engine, the infrastructure for processing health data—does it all just get deleted from internal servers?

No. Apple's new strategy is actually smarter, albeit less visionary.

The company is going to release individual features into the Health app over time instead of bundling them into a single "AI health coach" service. The videos Apple produced about medical conditions? Those can roll out as an educational feature. The recommendation engine based on your health data? That can surface insights in the Health app without being positioned as a comprehensive coaching service.

This approach has real advantages:

Lower regulatory risk. Individual features that help you track metrics are much easier to ship than a service positioned as replacing doctor guidance. Apple can be more conservative with claims.

Faster iteration. Instead of waiting for a complete product, Apple gets health features in front of users now. They can gather feedback and improve specific capabilities incrementally.

Reduced engineering complexity. You don't need to build the comprehensive AI reasoning system all at once. You can ship helpful features independently and wire them together later if the pieces actually work well together.

Learning opportunities. By shipping individual features, Apple gets real-world usage data. They learn what health recommendations users actually trust, what data combinations generate useful insights, what the engagement patterns look like.

But here's the honest part: this is also a much less ambitious vision. It's Apple saying, "We're going to be helpful with health, but we're not going to be the health company."

The Interim AI Health Chatbot Strategy

Apple isn't completely giving up on AI health conversations though. The company is building an AI health chatbot to answer wellness questions in the near term. Think of it like Siri specifically trained to understand health queries and provide reliable information.

But here's where this gets interesting: Apple views even the health chatbot as temporary. The real plan is to eventually let a more general-purpose Siri chatbot—the next evolution of Siri that can handle complex conversational AI—handle these health inquiries. OpenAI has demonstrated the potential of AI in health-related conversations, setting a benchmark for future developments.

This is Apple being strategic about not building a bunch of specialized AI systems when they can build one better system that handles multiple domains. Instead of Health Chatbot, Weather Chatbot, Travel Chatbot, etc., you eventually get one Siri that's competent across all of these areas.

The intelligence required for this to work is non-trivial though. A Siri that can have a genuine health conversation needs to:

Understand medical context. It can't just run searches for disease symptoms. It needs to understand interactions between conditions, medications, and lifestyle factors.

Know what it doesn't know. This is actually the hardest part. An AI health chatbot has to be comfortable saying, "You should talk to a doctor about this," instead of confidently giving advice that might be wrong.

Maintain consistency over time. If you ask Siri about your cholesterol situation in January and December, the AI needs to remember the context and adapt advice based on changes in your situation.

Avoid medicolegal nightmare scenarios. Every single response could theoretically be questioned later if a health outcome goes wrong. The AI needs to be defensive without being useless.

Apple believes that building a general-purpose Siri with strong reasoning capabilities will eventually solve these problems better than building a specialized health AI. We'll see if that bet pays off.
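The "know what it doesn't know" requirement can be illustrated with a small sketch. This is not how Apple's chatbot works; it is a minimal illustration that assumes a hypothetical upstream model supplies a draft answer with a confidence score. The `ESCALATION_TERMS` list, the `Draft` type, and the 0.8 threshold are all invented for the example.

```python
from dataclasses import dataclass

# Hypothetical phrases that should always be routed to a clinician.
# A real system would need a far more careful, clinically reviewed policy.
ESCALATION_TERMS = ("chest pain", "medication", "dosage", "pregnan")

DEFERRAL = "You should talk to a doctor about this."


@dataclass
class Draft:
    """A candidate answer from some upstream model (assumed, not a real API)."""
    text: str
    confidence: float  # 0.0 to 1.0


def respond(question: str, draft: Draft, min_confidence: float = 0.8) -> str:
    """Return the draft answer only when it is safe to do so, else defer."""
    lowered = question.lower()
    # Rule 1: certain topics are deferred no matter how confident the model is.
    if any(term in lowered for term in ESCALATION_TERMS):
        return DEFERRAL
    # Rule 2: a low-confidence draft is never shown to the user.
    if draft.confidence < min_confidence:
        return DEFERRAL
    return draft.text


if __name__ == "__main__":
    print(respond("What are signs of dehydration?",
                  Draft("General wellness answer.", 0.9)))
    print(respond("Can I change my medication dosage?",
                  Draft("Some answer.", 0.99)))
```

The design choice worth noticing is that the deferral rules run before the answer is returned, so a confident but risky draft still gets replaced by the referral message.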

What Happened Behind the Scenes

The reported story is that Eddy Cue took over the health division and thought Apple's plans weren't competitive. But there's probably more context worth understanding.

Cue is known for wanting to move faster and being willing to make aggressive competitive decisions. When he took over health from its previous reporting structure, he probably had a mandate to either accelerate the division's output or rationalize what Apple was actually investing in.

What he apparently found was a health coach project that was ambitious but had unclear product-market fit. Sure, the Health app is on every iPhone. But usage is shallow and inconsistent. Many people rarely open it. Meanwhile, specialized health companies have built engaged communities of users who actively check their apps multiple times per day.

Apple has a tendency to say, "We're going to build this better than anyone," and sometimes that's true. But the health coach project was becoming a case where "better" in Apple's mind meant "more carefully engineered" when what the market actually needed was "shipped and iterable."

Cue's decision was probably: let's not spend two more years building the perfect AI health coach. Let's ship useful health features now, get them in front of users, learn what works, and then decide if we want to build something bigger.

The Competitive Landscape That Made This Decision

Apple's health division is operating in a surprisingly crowded market now.

Oura dominates the data collection side with their rings. Whoop focuses on athletes and performance tracking. Fitbit has enormous distribution through Google's ecosystem. Garmin runs deep in the sports watch market. Peloton built engagement through connected fitness. MyFitnessPal owns food tracking. Withings makes health hardware that's genuinely innovative.

Each of these companies has been shipping AI-powered health insights for years. They've learned what works, what users ignore, what creates actual behavior change versus what just looks cool in a demo.

Apple's assumption was probably that they could leverage their scale and their position in people's pockets to do health better. But scaling health insights is weird. It's not like scaling entertainment where more money just equals more content. With health, different populations need different insights. An elite athlete needs completely different guidance than someone trying to recover from cardiac surgery. Building one-size-fits-most health AI is nearly impossible.

The companies that are succeeding in health are the ones who picked a narrow focus: elite athlete performance, managing a specific chronic condition, weight loss, sleep optimization. Not the ones trying to be everything to everyone.

Apple's canceled health coach project was trying to be a comprehensive health AI coach. That's an incredibly hard problem. Maybe too hard for a company that doesn't have deep expertise in health behavior change and medical science.

What This Tells Us About AI Progress in 2025

The cancellation of Apple's health coach is actually a really useful signal about where AI technology stands right now.

There's this common belief that AI is so powerful that any company with smart engineers and enough resources can build anything. That's not quite true. Some problems have structural constraints that AI hasn't solved yet.

For AI health coaching, the constraints include:

Data quality and privacy. Building a trustworthy health AI requires incredibly clean, reliable health data. But most health data in the wild is messy, incomplete, and fragmented across different providers and devices. Getting high-quality data at scale while respecting privacy is genuinely hard.

Regulatory uncertainty. Is your AI health coach "advice" or "medical diagnosis"? Different jurisdictions draw that line differently, and it keeps shifting. Building a system that works globally while staying on the right side of regulations is a massive engineering headache.

Evaluation and safety. How do you test whether an AI health coach is actually safe? You can't just release it and hope nothing goes wrong. You need clinical validation. You need to demonstrate that the AI's recommendations actually improve health outcomes or at least don't make things worse. That's expensive and time-consuming.

User trust. Health is where people are most skeptical about AI. They've seen AI make mistakes. They're worried about their privacy. Building enough trust to have people act on AI health recommendations takes time and a track record of reliability.

Apple has engineers who could probably solve any single one of these problems. But solving all of them simultaneously while also shipping faster than competitors? That's legitimately difficult.

The Fate of Health Features Apple Still Has Plans For

Here's what's still happening at Apple on the health side:

Medical condition explainer videos. These are actually valuable. Apple spent resources producing professional videos explaining conditions like diabetes, heart disease, and asthma. Those can ship as educational content in the Health app without any AI complexity. Users interested in understanding a condition better can watch high-quality, accurate information.

Data-driven health insights. The capability to show users patterns in their health data—like "your heart rate variability tends to be lower when you sleep less than 7 hours"—is useful. This is pattern matching more than true AI, but it requires infrastructure to analyze personal health data without sending it to servers. Apple can ship this.

AI health chatbot for wellness questions. The interim solution. Not as ambitious as the full health coach, but more helpful than nothing. This can answer questions like, "How much water should I drink?" or "What are signs of dehydration?" without claiming to replace a doctor.

Integration with Siri's reasoning. The long-term bet. As Apple's Siri AI gets smarter, health conversations can become a natural capability. Not a specialized health bot, but Siri being competent about health alongside its other capabilities.

These aren't as visionary as a comprehensive AI health coach, but they're probably more achievable and more likely to actually ship in the next 12-18 months.
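The sleep and heart rate variability insight described above is simple enough to sketch. The snippet below is a minimal illustration, not Apple's implementation: the nightly records are made-up numbers, and the function name, the 7-hour threshold, and the minimum-gap rule are assumptions chosen for the example. It only uses the standard library, so it could run entirely on a local device.

```python
from statistics import mean

# Hypothetical nightly records: (hours of sleep, next-morning HRV in ms).
# These values are invented for illustration, not real measurements.
NIGHTS = [
    (6.0, 38.0), (7.5, 52.0), (5.5, 35.0), (8.0, 55.0),
    (6.5, 41.0), (7.0, 50.0), (8.5, 58.0), (6.0, 40.0),
]


def sleep_hrv_insight(nights, threshold_hours=7.0, min_nights=3, min_gap_ms=5.0):
    """Return a plain-language insight, or None if the data does not support one."""
    short = [hrv for hours, hrv in nights if hours < threshold_hours]
    enough = [hrv for hours, hrv in nights if hours >= threshold_hours]
    # Refuse to say anything when either group is too small to compare.
    if len(short) < min_nights or len(enough) < min_nights:
        return None
    gap = mean(enough) - mean(short)
    # Ignore small differences that are likely noise.
    if gap < min_gap_ms:
        return None
    return (
        f"Your heart rate variability averages {gap:.0f} ms lower "
        f"after nights with less than {threshold_hours:g} hours of sleep."
    )


if __name__ == "__main__":
    print(sleep_hrv_insight(NIGHTS))
```

Note that the function returns nothing when there are too few nights or the gap is small. Staying silent on weak evidence is part of what separates a useful insight from noise.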

What This Means for Health Tech Users

If you're someone who was hoping Apple would become a major player in health guidance, this is disappointing news. Apple just said, "We're not going to be the health company. We're going to add some helpful health features to our ecosystem."

But here's the thing: that's probably fine. It means Apple is getting out of the way and letting specialized health companies do what they do best.

If you're serious about health tracking and guidance, you're probably better off with Oura or Whoop or whatever focused health company best matches your needs anyway. Apple adding general health features to the Health app doesn't hurt those services. It just means you can aggregate your data in one place.

If you're a casual health tracker who just wants to understand your patterns without obsessing, Apple's individual health features will probably help you. You can see what's happening with your metrics without paying for another subscription.

The real loss here is that we don't get to see whether Apple could have solved the hard problems of AI health coaching. Could they have built something genuinely better than what competitors offer? We'll never know. But the fact that they looked at it and said, "This isn't worth the investment and timeline," is its own kind of answer.

The Broader Pattern: When Tech Giants Retreat

This isn't the first time Apple has killed an ambitious project. But it's interesting to see it happening in real-time with health technology.

The pattern usually goes like this: Apple identifies a space where they think they can win. They invest heavily in engineering and infrastructure. Then, when execution proves harder than expected, they make a brutal decision to either ship something smaller or get out entirely.

The difference between Apple and competitors is that Apple can absorb the sunk cost. They invested whatever they invested in the health coach project, decided it wasn't going to work, and just moved on. Most companies would try to force the project out the door anyway because they're desperate to justify the investment.

Apple's willingness to kill projects is actually a competitive advantage. It means they're not stuck shipping things that don't work just because people are already working on them.

It also means that when Apple stays focused on something, that commitment is a meaningful signal. The reverse is also true. If Apple looked at a project and decided it wasn't worth it, that tells you something about the difficulty level.

Health Data Privacy: The Unsolved Problem in Health AI

One thing the canceled health coach project probably struggled with is the fundamental privacy challenge of building health AI at Apple's scale.

Apple has been very public about privacy-first design. But building a health AI that learns about you requires processing data. That data has to go somewhere, be analyzed somehow. Even with on-device processing and encryption, there's inherent tension between "powerful personalization" and "privacy first."

A health coach that really understands you needs to:

Store historical data about you. What's your baseline heart rate? How much have your sleep patterns changed? What were your fitness trends last season? To give you good guidance, the system needs to know your history.

Compare you to reference populations. Is your heart rate at rest normal for your age and fitness level? Is your sleep quality comparable to others? Reference data requires aggregating information from other users (anonymized, but still).

Make inferences about you. If the system detects patterns that might indicate a health issue, should it tell you? How confident does it need to be? Who's liable if it's wrong?

Update its understanding over time. As your health data changes, the AI needs to update its models. But that's computationally expensive and requires some infrastructure for processing.

Apple's privacy commitments make this even harder than at a company like Google, which is more comfortable with data processing. If Apple can't find a way to do health AI that meets their privacy bar, they won't ship it.

That's probably why the health coach got killed. It's not that the AI technology doesn't exist. It's that combining strong AI capabilities with Apple's privacy requirements is genuinely complicated, and it wasn't clear they could solve it well enough to justify the timeline and cost.
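To make the "store historical data" requirement concrete, here is a minimal sketch of comparing today's reading against a personal baseline. It is an assumption-laden illustration rather than anything Apple has described: the function name, the seven-day minimum, and the sample resting heart rates are all hypothetical. The point is that this kind of calculation needs only the user's own history, so it can run locally without a reference population.

```python
from statistics import mean, stdev


def baseline_deviation(history, today, min_days=7):
    """Return how many standard deviations today's value is from the baseline.

    Returns None when there is too little history or no variation to compare against.
    """
    if len(history) < min_days:
        return None
    spread = stdev(history)  # sample standard deviation of past readings
    if spread == 0:
        return None
    return (today - mean(history)) / spread


if __name__ == "__main__":
    # Hypothetical resting heart rates (beats per minute) for the past week.
    resting_hr = [58, 60, 59, 61, 60, 62, 60]
    score = baseline_deviation(resting_hr, today=66)
    print(f"Deviation from personal baseline: {score:.2f} standard deviations")
```

Comparing against reference populations, by contrast, needs data from other users, which is where the privacy tension described above begins.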

Comparing Apple's Approach to Competitors

Companies like Google have taken a different approach. Google is comfortable processing health data more aggressively. They've been integrating health data across their ecosystem—fitness data from your phone, search queries you've done, maybe eventually healthcare data partnerships.

Google's bet is: we'll process this data deeply, find real insights, and demonstrate value good enough that people accept the privacy tradeoffs.

Apple's bet is: we'll process less data, make fewer assumptions, but what we do process stays on-device and private.

For a health coach specifically, Google's approach might actually be better. Google can collect more data, build a more powerful AI, and frankly have fewer qualms about the privacy aspects.

Apple's constraint is: we want to maintain our privacy positioning. That's valuable for brand and trust, but it may make it harder to build truly ambitious health AI.

What Health Tech Investors Should Take Away

If you're investing in health technology, this Apple decision is actually useful data.

It confirms that building comprehensive AI health coaching is genuinely hard. It's not a problem that can be solved with just a better algorithm or more engineering. It requires solving regulatory, privacy, data quality, and user trust problems simultaneously.

Companies that are succeeding in health tech are the ones who picked a narrow lane: Oura focuses on biometric data quality. Whoop focuses on athlete performance. Fitbit focuses on mainstream adoption and integration.

Companies trying to be comprehensive health AI platforms are struggling. Apple just joined that list of struggling companies—except Apple has the resources to say, "This isn't working, let's try something else."

For investors, this probably means: bet on companies with narrow focus and strong data moats, not companies trying to be "everything health." The narrow focus companies are more likely to actually ship and improve their products.

The Timeline: What Happens Next

According to reports, the medical condition explainer videos and recommendation engine capabilities could be available "early this year." That probably means the first or second quarter.

The AI health chatbot is described as an interim solution, meaning it gets built faster than the full health coach was, but it's not the final vision.

The real question is: when does the improved Siri chatbot show up? That's the long-term play. But building a genuinely capable AI reasoning system takes time. Apple probably won't even show off Siri's new capabilities until they're confident it's actually good.

So the realistic timeline probably looks like:

Next 6 months: Health app gets new features for explainer videos, recommendations, maybe starts rolling out the health chatbot.

Next 12-18 months: Health chatbot gets better at answering wellness questions. Maybe ties into Health app more deeply.

2-3 years out: Improved Siri starts showing up in iOS with actual reasoning capabilities. Health conversations become just one domain where Siri is competent.

That's a much longer timeline than the original health coach project probably had, but it's more realistic about what Apple can actually build and ship.

Lessons From the Health Coach Cancellation

When a company like Apple kills a project this ambitious, there are lessons in there.

First lesson: execution speed matters more than you think. Apple could have built a better health AI than competitors eventually. But "eventually" was taking too long. By the time it shipped, competitors would have improved. Speed is a feature.

Second lesson: your hardware advantages don't guarantee software advantages. Apple makes incredible hardware. But having a Watch on someone's wrist doesn't automatically mean they'll use Apple's health software. Market share is divided among many companies.

Third lesson: narrow focus beats comprehensive visions when you're building sophisticated AI. Companies that win in AI tend to own one specific domain really well, not everything.

Fourth lesson: privacy is a real constraint, not just marketing. If you're serious about privacy, it limits what AI you can build and how quickly you can build it. That's a real tradeoff.

Fifth lesson: knowing when to kill a project is important. Apple could have forced the health coach out the door anyway. Instead, they killed it and moved resources elsewhere. That discipline is rare.

The Future of AI Health Coaching

Does this mean AI health coaching is dead? No. But it's probably going to happen differently than we expected.

Instead of one company building the comprehensive health coach, we'll probably see an ecosystem develop:

Specialized health companies will build deep AI in their areas. Oura will get better at sleep and recovery. Whoop will get better at athletic performance. There will be companies we haven't heard of yet that build AI for managing specific conditions.

General purpose AI assistants (like future Siri or ChatGPT) will become more capable at health conversations. They won't be specialized health AIs, but they'll be knowledgeable enough to be helpful.

Health platforms will integrate data from multiple sources and help you understand patterns. Apple Health is one example. Google Health is another. These will become more sophisticated but probably won't be making recommendations.

Regulatory clarity will improve. Right now, it's unclear what an AI can say without it being considered medical advice. As regulators clarify, more companies will be comfortable building health AI.

The canceled health coach was an attempt to do everything in one system. The future is probably more modular.

What This Means for Your Health Data

If you're an Apple user wondering what this means for your health data and privacy, here's the practical reality:

Your health data in Apple Health is still under Apple's privacy controls. Apple is still not sending it to servers for analysis (with some limited exceptions). Nothing about this cancellation changes that.

What changes is that you won't get a comprehensive AI health coach built into Apple's ecosystem. You'll get individual features that help you understand your health: explainer videos, pattern recognition, and conversational help through Siri.

If you want more comprehensive AI health guidance, you'll need to use a specialized health app from Oura, Whoop, or another company. And you'll need to accept that those companies process your data more aggressively to provide more powerful insights.

That's actually probably fine. Most people don't need comprehensive AI health coaching. They need to understand their patterns and get nudges toward healthier behavior. Apple's approach probably serves that well enough.

FAQ

What exactly was Apple's AI health coach supposed to do?

Apple was building a service that would analyze your personal health data from your iPhone and Apple Watch, then provide personalized health recommendations similar to a coach or healthcare advisor. It could have suggested lifestyle changes based on your patterns, corrected workout form using the iPhone camera, tracked nutrition, and made recommendations adapted to your individual situation. The system was designed to handle complex health conversations and understand your medical context.

Why did Apple cancel the health coach project?

Apple's services chief Eddy Cue took over the health division and concluded that the project's timeline was too long and its competitive position was weak. Specialized health companies like Oura were already shipping compelling AI-powered health insights, and Cue determined that Apple couldn't build something significantly better fast enough to justify the investment. The decision reflected a strategic choice to focus on shipping smaller, incremental health features rather than one comprehensive coaching service.

What happened to all the work Apple did on the health coach?

Apple is repurposing the individual components. The medical education videos explaining health conditions will be released as features in the Health app. The recommendation engine that analyzes health patterns is being adapted for Health app insights. The infrastructure developed for the project isn't wasted—it's being broken into smaller pieces that can ship independently and more quickly.

Is Apple still building health AI products?

Yes, but with a different strategy. Apple is developing an interim AI health chatbot to answer wellness questions, building educational content about health conditions, and creating features that surface health insights from your data. The longer-term plan is to integrate health conversations into an improved Siri that handles health inquiries alongside other conversational tasks, rather than maintaining a specialized health-focused AI.

How does this affect my health data privacy with Apple?

Nothing about this cancellation changes Apple's health data privacy practices. Your health data continues to be protected under Apple's privacy controls and generally isn't processed on Apple's servers without your explicit permission. The cancellation means you won't get a comprehensive AI health coach, but your data protection hasn't changed.

What are the alternatives if I want AI health coaching?

Companies like Oura, Whoop, Fitbit, Garmin, and specialized health apps offer AI-powered health insights and coaching. These services process your data more actively than Apple does, which allows them to provide more sophisticated recommendations. For general wellness conversations, you can also use general-purpose AI assistants like ChatGPT or future versions of Siri that will have health knowledge without being health-specific.

When will Apple's individual health features start rolling out?

According to reports, the medical condition explainer videos and recommendation engine capabilities could be available in early 2025. The health chatbot is being developed as an interim solution. The larger timeline for AI health capabilities being integrated into Siri is probably 2-3 years away as Apple improves Siri's reasoning capabilities.

Does this mean Apple is giving up on health technology?

No. Apple is restructuring its health strategy from "build one comprehensive AI health coach" to "build multiple focused health features that can ship faster." This is actually a more pragmatic approach than the original vision. Apple is staying in the health space but with a different product strategy.

Could Apple change its mind and build the health coach later?

Possibly, but it's unlikely in the near term. For Apple to revisit a health coach project, they'd need to see material progress in how their individual health features are adopted and used, plus significant advances in AI reasoning capabilities that make the health coaching problem easier to solve. Right now, the calculation is that the effort-to-benefit ratio doesn't justify the investment.

What does this tell us about AI limitations in 2025?

It demonstrates that building comprehensive AI systems with multiple constraints—health data complexity, privacy requirements, regulatory concerns, user trust, competitive timelines—is genuinely difficult even for companies with massive resources. The limitation isn't computing power or algorithms. It's the coordination problem of solving multiple hard problems simultaneously.

Apple's AI Health Coach faced high complexity in data processing and context understanding, leading to its discontinuation. Estimated data.

The Takeaway

Apple's cancellation of its AI health coach is actually a useful reminder about how AI technology works in practice. The capability to build a sophisticated AI system exists. The constraint is everything else: regulation, privacy, user trust, data quality, engineering timeline, and competitive positioning.

For Apple specifically, the decision means pivoting from a visionary health coaching product to a more pragmatic approach of shipping incremental health features that can improve over time. For the health tech industry, it suggests that comprehensive AI health coaching is harder than we thought, and the future probably belongs to companies with narrow focus and deep domain expertise rather than companies trying to be everything.

And for users, it means you'll still get helpful health features from Apple, but if you want sophisticated AI health guidance, you'll probably need to use specialized health apps from companies that have built their entire business around health data and insights.

The health coach dream was ambitious. But sometimes the right call is to step back and build something smaller that you can actually ship well.

Apple's decision to cancel the health coach project was primarily influenced by the long project timeline and weak competitive position, scoring highest in impact. Estimated data.

Key Takeaways

- Apple cancelled its AI health coach project because it couldn't ship fast enough to compete with specialized health companies already offering AI insights

- The decision reveals that building comprehensive AI under privacy constraints, regulatory compliance, and user trust requirements takes more than engineering alone

- Apple will release individual health features incrementally (education videos, a recommendation engine, and a health chatbot) instead of one complete service

- Specialized health companies like Oura and Whoop have data advantages that are difficult for Apple to overcome despite superior engineering resources

- The future of health AI is modular with specialized companies owning narrow domains rather than any single company building comprehensive health coaching
