Why Apple's Siri Redesign Keeps Getting Pushed Back

Apple promised us a smarter Siri. Not the voice assistant we've complained about for years, but something genuinely different. A Siri that actually understands your life, your preferences, and what you're doing right now on your screen. A Siri that could read your email, glance at a web page, and take action without you spelling everything out.

Then nothing happened.

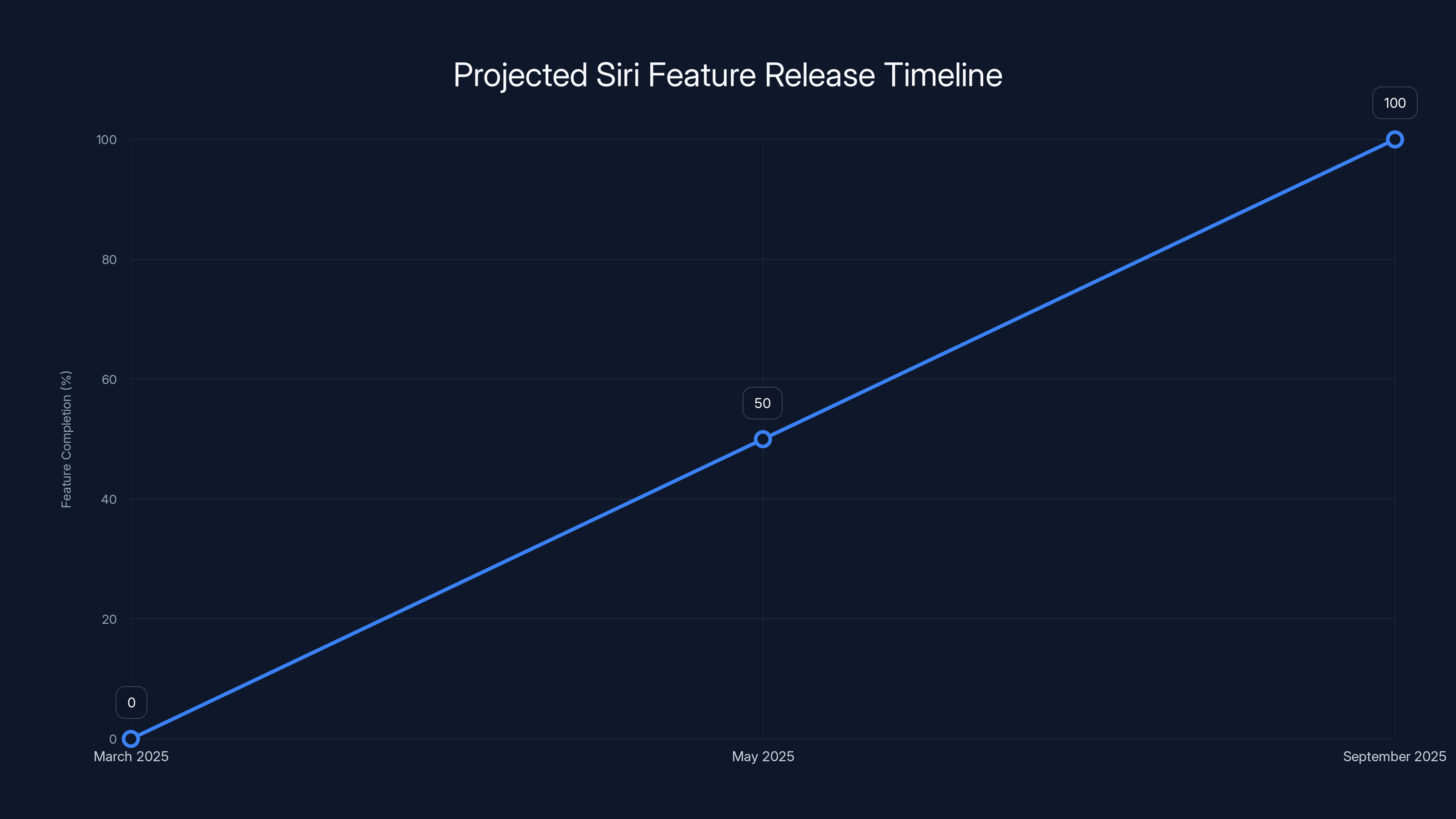

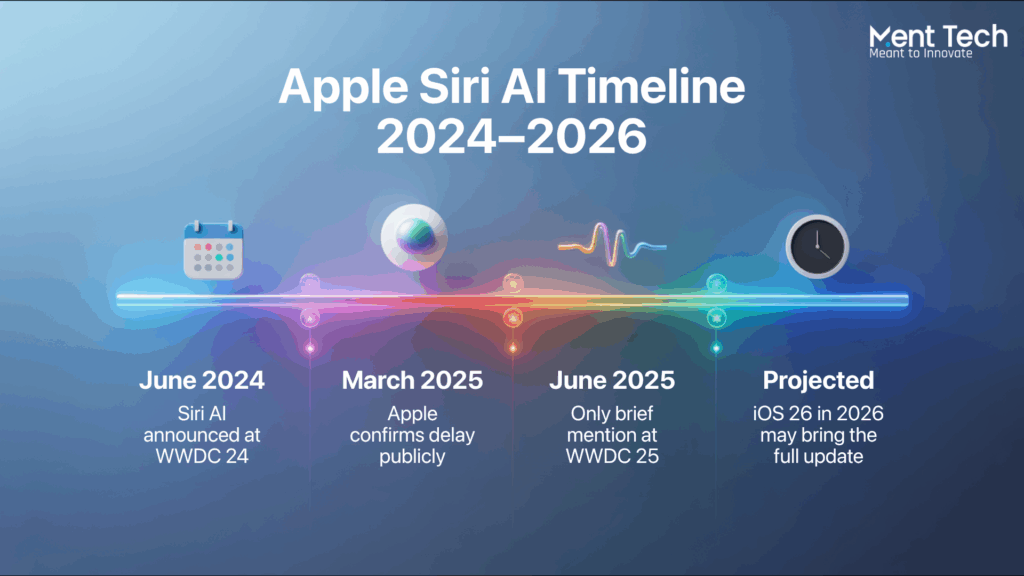

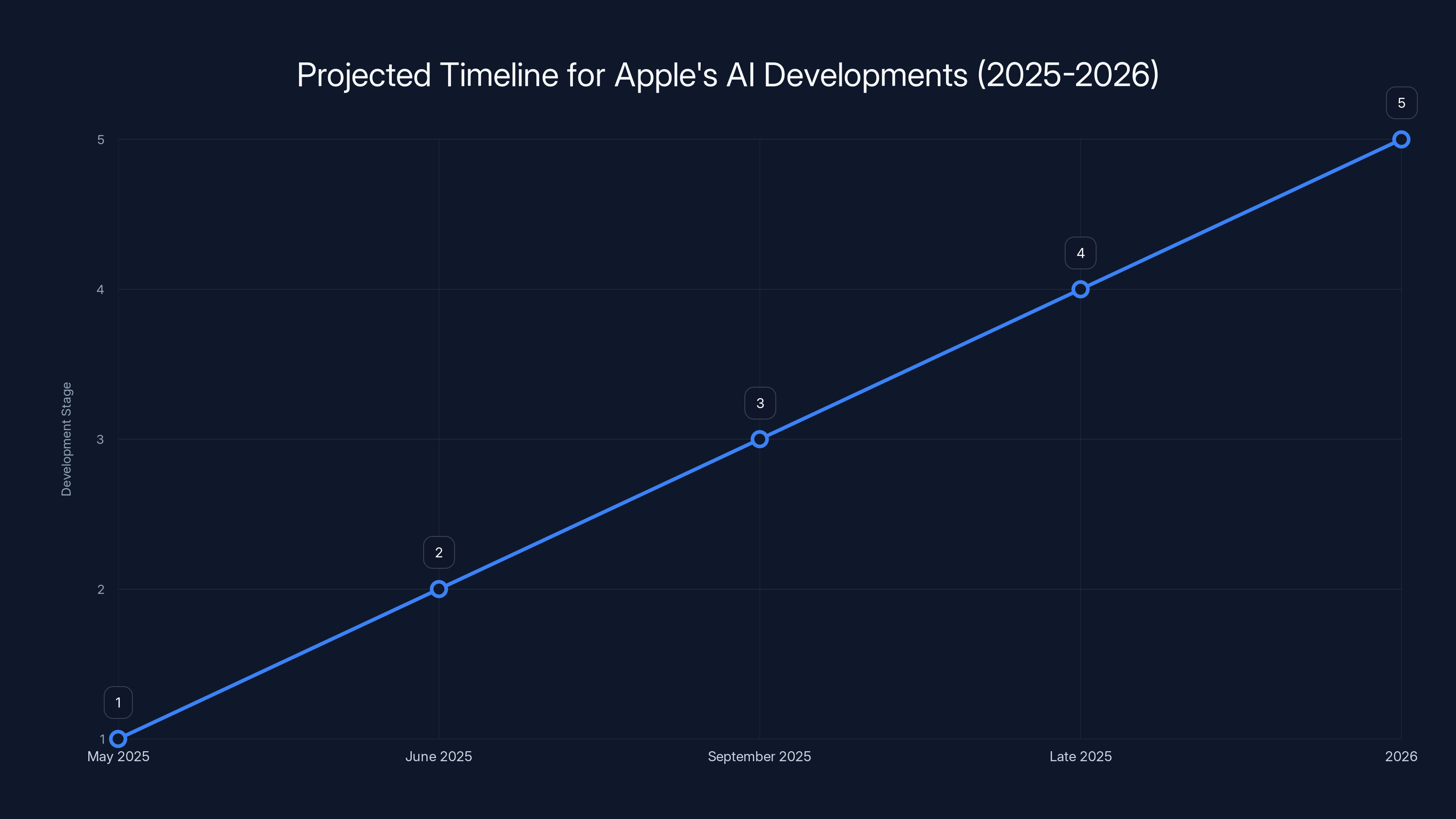

Last year, Apple delayed these features. Then it delayed them again. Now, according to recent reports, the company is pushing what was supposed to arrive in iOS 26.4 (launching March 2025) into iOS 26.5 (May 2025) and potentially iOS 27 (September 2025). The reason? Testing found problems. Fresh ones. The kind that suggest Apple's AI ambitions for Siri are running into the same messy reality that plagues every major software overhaul.

This isn't just about a missed deadline. It's a window into how hard it actually is to rebuild a core product that's been baked into hundreds of millions of devices for over a decade. It's about the gap between what sounds impressive in a keynote and what actually works when real people use it on their iPhones. And it raises a bigger question: what's taking so long?

The Original Siri Problem: A Decade of Stagnation

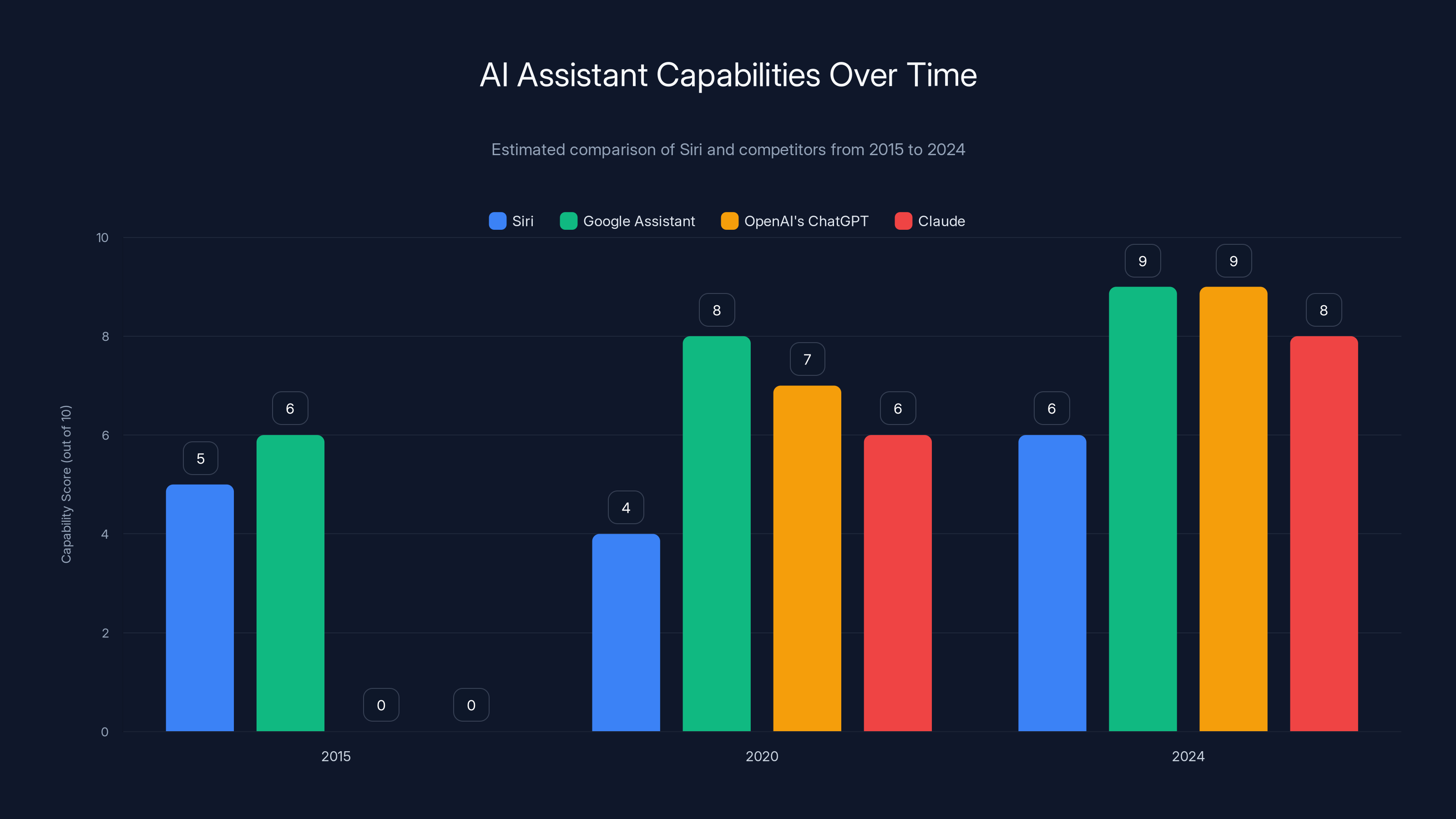

Let's be honest about where we started. Siri in 2015 felt cutting-edge. By 2020, it felt archaic. By 2024, it was genuinely embarrassing for a company as obsessed with AI as Apple claims to be.

The core issue was fundamental: Siri couldn't understand context. You'd say "Remind me about this when I get home," and Siri would create a reminder about the word "this." You'd ask Siri to do something on your screen, and it would default to a web search instead. The assistant lived in a bubble, unable to see what you were looking at or understand the flow of your actual day.

Meanwhile, competitors were shipping smarter tools. Claude was proving that AI could understand nuance. OpenAI's ChatGPT had already hit 100 million users by doing things Siri still couldn't do. Even Google Assistant was miles ahead in understanding natural language and taking meaningful actions across your device.

Apple's response? The company started making moves toward something bigger.

In 2024, Apple signaled it was finally going to overhaul Siri from the ground up. The company even made deals with OpenAI to integrate GPT-4 capabilities and reportedly worked with Anthropic on other AI features. This wasn't a small patch. It was a fundamental reimagining of what Siri could do.

Except here's the thing: promising a smarter Siri and actually shipping one are two very different challenges.

The new Siri features are expected to be partially available in May 2025 with a full release in September 2025. Estimated data based on current projections.

What Apple Promised: Personalization and Context

When Apple first announced plans for the upgraded Siri, the company focused on two things: personalization and device context.

Personalization means Siri would learn who you are. It would understand your daily routines, your communication patterns, and your preferences. You wouldn't have to explain everything from scratch. Siri would know that when you say "mom," you mean a specific contact, and when you ask for coffee, you probably want locations near your home, not randomly across the map.

Device context is even more important. The new Siri would actually look at your screen. If you're reading an email about a meeting at 3 PM on Thursday, and you ask Siri to add it to your calendar, Siri would see that meeting information and handle it automatically. You wouldn't need to provide redundant details. The assistant could see what you see.

This seems obvious in 2025. Most modern AI assistants work this way. But for Siri, it's a completely different architecture. The current version of Siri has to be told everything explicitly. It's like having an assistant who's never seen the inside of your office, doesn't know your routines, and requires you to repeat information twice before acting.

The new Siri was supposed to change that. The feature Apple called "personal context" would let Siri understand what's on your screen and take action based on that information, not just voice commands. This would theoretically make Siri useful for something beyond setting timers and playing music.

That's the feature that's now delayed into iOS 26.5 at the earliest.

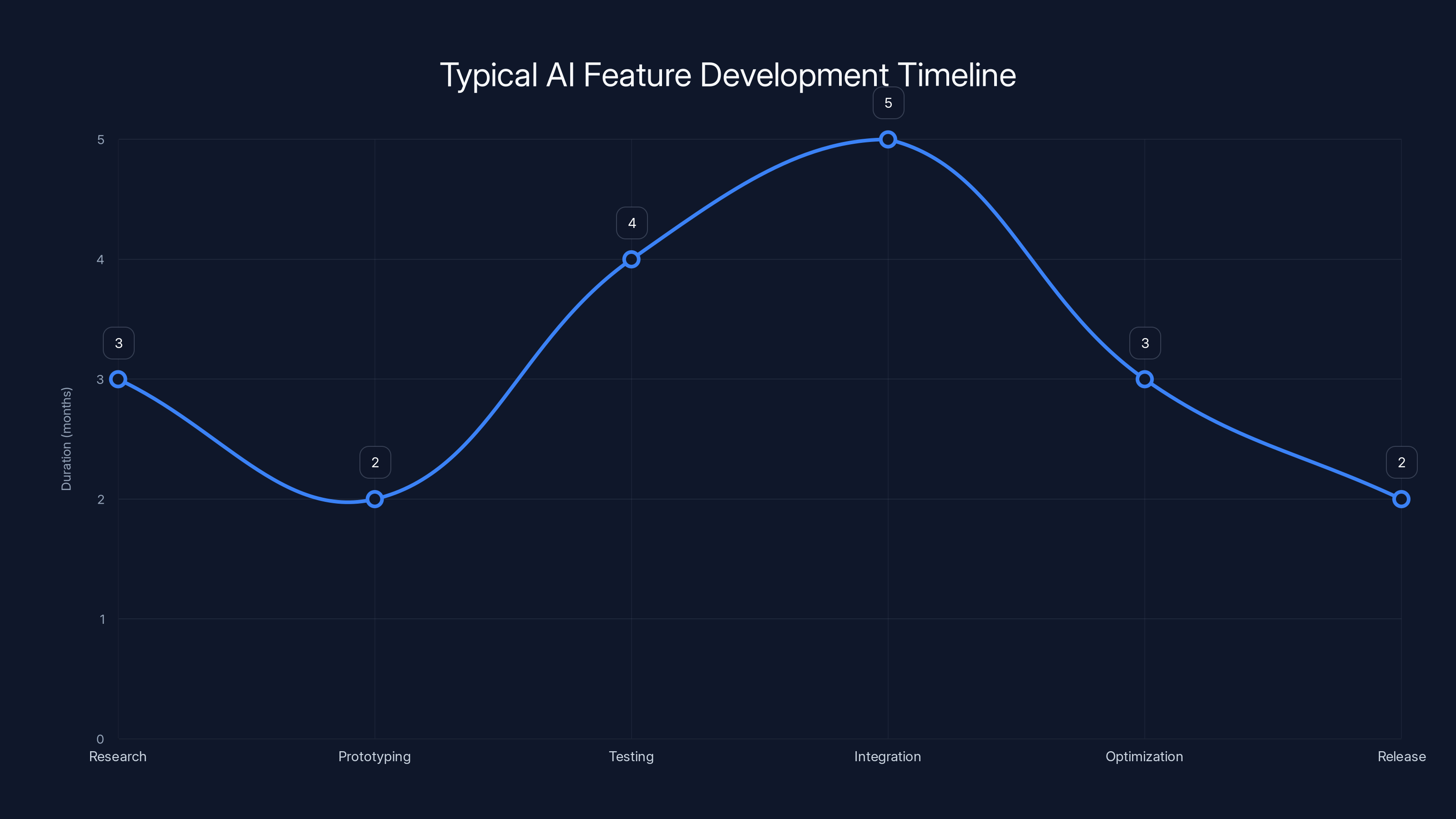

AI feature development typically spans several phases, with integration and testing often taking the longest. Estimated data based on industry trends.

The First Delay: Why March Became May

Apple initially planned to launch these enhanced Siri features with iOS 26.4, scheduled for March 2025. The company had been testing the features. Engineers were working toward a deadline. Everything seemed on track.

Then testing found problems.

According to reports from people familiar with Apple's development process, the new Siri features didn't work reliably enough for a public launch. We're not talking about minor bugs here. We're talking about fundamental issues with how the AI understood context, how it processed personal information, and how it translated that understanding into actual actions.

The specifics aren't entirely clear, but consider what's happening under the hood. Siri needs to:

- See what's on your screen

- Understand what that content means

- Connect it to your personal information and routines

- Execute actions based on that understanding

- Do all of this while preserving privacy and not breaking existing functionality

Each of these is a complex problem. Together, they're exponentially harder. When you're trying to get them all working simultaneously across hundreds of millions of devices running different versions of iOS, with different hardware capabilities and network conditions, the failure modes multiply.
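To see why chaining these stages hurts so much, here's a minimal back-of-the-envelope sketch. The per-step success rates are made-up assumptions for illustration, not measurements from Apple, and the calculation assumes each stage fails independently of the others.

```python
from math import prod

# Hypothetical per-step success rates for the five stages listed above.
# These numbers are illustrative assumptions, not real measurements.
step_reliability = {
    "see_screen": 0.99,
    "understand_content": 0.95,
    "link_personal_context": 0.95,
    "execute_action": 0.98,
    "preserve_privacy_and_compat": 0.99,
}

def end_to_end_success(rates):
    """Probability that every stage succeeds, assuming independent failures."""
    return prod(rates.values())

overall = end_to_end_success(step_reliability)
print(f"End-to-end success rate: {overall:.1%}")
```

With these assumed numbers, five stages that each look solid on their own combine into a request that succeeds only about 87% of the time, which is roughly one failure in every eight attempts.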

So Apple made a decision: delay to iOS 26.5 in May. Use the additional testing time to fix what's broken. Iron out the reliability issues. Make sure Siri actually works before putting it in front of users.

This is actually the right call, but it reveals something important: Apple's AI timelines overestimated what was achievable.

The Broader Challenge: Making AI Reliable at Scale

Apple's problem with Siri is the same problem every company faces when trying to scale AI from a research project to hundreds of millions of real devices: reliability at scale is far harder than reliability in demos.

When you show a feature to a journalist, you can cherry-pick the best examples. When you launch it to 500 million iPhone users, you're exposing it to edge cases you never imagined. Users will ask Siri things you didn't anticipate. They'll use the feature in ways that seem obvious in hindsight but weren't obvious during development. They'll expose bugs in the underlying models that only appear when you have that kind of scale and diversity of input.
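A rough bit of arithmetic makes the scale problem concrete. This sketch uses the 500 million user figure from above; the two requests per user per day and the success rates are assumptions chosen purely for illustration.

```python
def expected_daily_failures(users, requests_per_user, success_rate):
    """Expected number of failed requests per day at a given success rate."""
    return users * requests_per_user * (1 - success_rate)

users = 500_000_000      # user count mentioned in the text
requests_per_user = 2    # assumption: two Siri requests per user per day

for rate in (0.99, 0.999, 0.9999):
    failures = expected_daily_failures(users, requests_per_user, rate)
    print(f"{rate:.2%} success -> {failures:,.0f} failed requests per day")
```

Under those assumptions, even a 99.9% success rate leaves about a million failed requests every day. A demo only has to work once.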

This is why companies like OpenAI spend months on "alignment" and "safety" testing before major releases. It's why Anthropic emphasizes constitutional AI principles. It's why Google takes conservative approaches with large language model integrations. Getting AI right is hard.

For Siri specifically, there are additional complications:

Privacy constraints. Apple has positioned itself as the privacy-first tech company. The new Siri needs to understand your personal information without actually collecting it on Apple's servers. That means running AI models on-device, which means dealing with the computational limitations of your iPhone. This is harder than just running everything in the cloud like your competitors do.

Hardware diversity. Your iPhone runs on an A-series chip. Your Mac uses different silicon. Your iPad is somewhere in between. Getting an AI model that works reliably across all these different hardware configurations, with different RAM, storage, and computational capacity, is significantly more complex than shipping the same model to everyone.

Integration complexity. Siri touches almost every part of iOS. Mail, Calendar, Messages, Maps, Home, Health, third-party apps. Every integration point is a potential failure mode. If Siri's context understanding breaks something in Maps, that's not just a Siri problem. That's an iOS problem. This forces Apple to be extremely cautious.

User expectations. People have been using Siri for 13 years. They have ingrained habits. They know its limitations. When you fundamentally change how something works, you risk breaking the mental model people have built around it. Apple needs to ensure that the new Siri is backward-compatible enough that it doesn't confuse existing users, while also being advanced enough to actually be useful.

Solve any one of these problems in isolation, and you've got a publishable research paper. Solve all of them simultaneously, and you're doing real product engineering.
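Take the hardware diversity point as an example. One common way to cope is to ship several sizes of the same model and pick one per device. The sketch below shows that idea only; the variant names and RAM thresholds are hypothetical, and nothing here reflects how Apple actually selects models.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelVariant:
    name: str
    min_ram_gb: int

# Hypothetical variants, ordered from most to least demanding.
VARIANTS = [
    ModelVariant("large", 16),
    ModelVariant("medium", 8),
    ModelVariant("small", 6),
]

def pick_variant(device_ram_gb):
    """Return the most capable variant the device can hold, or None."""
    for variant in VARIANTS:
        if device_ram_gb >= variant.min_ram_gb:
            return variant
    return None  # device can't run any variant; the feature stays off

for ram in (4, 8, 32):
    chosen = pick_variant(ram)
    print(ram, "GB ->", chosen.name if chosen else "unsupported")
```

Every extra variant is another model to train, test, and keep behaving consistently, which is part of why this is harder than shipping one model from a data center.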

Estimated data shows Apple's AI development milestones from limited feature releases in May 2025 to potential advanced model integration by 2026.

What Else Is Delayed: Web Search and Image Generation

Beyond the personalization features, Apple was also planning to add other AI capabilities to iOS 26.4. Some of these are also being pushed to later versions.

Apple has been testing a Perplexity-like web search feature for Siri. Instead of defaulting to Google or Bing, Siri would do what Perplexity does: synthesize information from multiple sources and provide a more intelligent answer than a traditional search would. This requires understanding what the user is actually asking, finding relevant sources, and synthesizing the information into a coherent response.

That's not trivial. It requires a capable language model, access to real-time search data, and the ability to rank sources for relevance and reliability. When you're doing this on a device with limited connectivity, battery life concerns, and privacy requirements, it becomes significantly more complex.
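The ranking part can be sketched in a few lines. This is a simplified illustration, not how Siri or Perplexity actually rank results: the `relevance` and `reliability` scores, the 0.6 weight, and the example URLs are all assumptions, and a real system would have to compute those scores somehow.

```python
from dataclasses import dataclass

@dataclass
class Source:
    url: str
    relevance: float    # 0..1, how well the page matches the question
    reliability: float  # 0..1, how trustworthy the publisher is judged to be

def rank_sources(sources, relevance_weight=0.6, top_k=3):
    """Order sources by a weighted blend of relevance and reliability."""
    def score(source):
        return (relevance_weight * source.relevance
                + (1 - relevance_weight) * source.reliability)
    return sorted(sources, key=score, reverse=True)[:top_k]

candidates = [
    Source("https://example.com/forum-post", relevance=0.9, reliability=0.3),
    Source("https://example.com/reference", relevance=0.7, reliability=0.9),
    Source("https://example.com/unrelated", relevance=0.2, reliability=0.95),
]

for source in rank_sources(candidates, top_k=2):
    print(source.url)
```

Here the slightly less relevant but far more reliable page wins. Only the top few sources would then be handed to the language model for synthesis.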

Apple has also been testing custom image generation. The idea would be that you could ask Siri to generate an image, and it would create one using on-device models. This requires large generative models running locally, which brings back all the hardware constraint problems we mentioned earlier.

Both features are still in testing for iOS 26.5, which suggests they're not ready either. The combination of these delays paints a picture: Apple's entire AI strategy for Siri is behind schedule, and the company is taking the cautious approach of pushing everything back rather than shipping partially working features.

The ChatGPT Integration: A Different Kind of Siri

While personal context features are being delayed, Apple is also planning something more dramatic: a ChatGPT-like version of Siri that would ship with iOS 27 in September 2025.

This is actually a different product than what we've been discussing. Instead of an improved voice assistant, this would be a conversational AI chatbot version of Siri. You could have multi-turn conversations with it. You could ask it to explain concepts, brainstorm ideas, or help with creative work. It wouldn't just take commands; it would actually discuss things with you.

The announcement is planned for the Worldwide Developers Conference in June 2025, with a public launch in September alongside iOS 27.

This is Apple's answer to ChatGPT. The company is integrating OpenAI's GPT-4 technology (and possibly models from other partners) to power a more capable assistant. Users would be able to handle more complex requests than current Siri can manage.

But here's the thing: this is also a bet that the market actually wants conversational AI built into iOS. We know people use ChatGPT. We know they find it useful. But does it need to be Siri? Will iPhone users actually use a ChatGPT-like Siri, or will they just open the ChatGPT app like they do now?

Apple's thinking is probably that if Siri is more capable, users will reach for it instead of switching to a different app. That's a reasonable theory. It's also unproven.

Estimated data shows Siri's stagnation in capabilities compared to competitors like Google Assistant and OpenAI's ChatGPT, which have shown significant improvements over the years.

The Competition Problem: Siri Is Already Falling Behind

While Apple has been delaying, everyone else has been shipping.

Google Assistant has gotten significantly smarter, especially with the integration of Gemini models. Microsoft's Copilot is available across Windows and other devices. Amazon's Alexa has been adding AI capabilities. Even phone manufacturers like Samsung are shipping their own AI assistants with more capability than Siri currently has.

The market has moved on from simple voice assistants. Users now expect AI that understands context, can have conversations, and integrates meaningfully with their digital life. Siri doesn't do any of that yet. The delays make that gap wider.

Apple's strategy seems to be: take your time, ship something really good, and leapfrog the competition with the quality of the execution. It's a strategy that has worked for Apple before. But it requires actually shipping something eventually. And it requires that something being good enough to justify the wait.

The challenge for Apple is that by the time iOS 27 ships in September 2025, it will have been almost two full years since the company first announced these AI upgrades for Siri. In AI timescales, that's an eternity. The landscape will have changed. New competitors will have emerged. Expectations will have shifted.

Apple has to deliver something genuinely impressive to make those delays feel justified.

Technical Deep Dive: Why Context Understanding Is Hard

Let's get into the weeds a bit, because understanding why this is technically difficult helps explain why Apple keeps pushing deadlines.

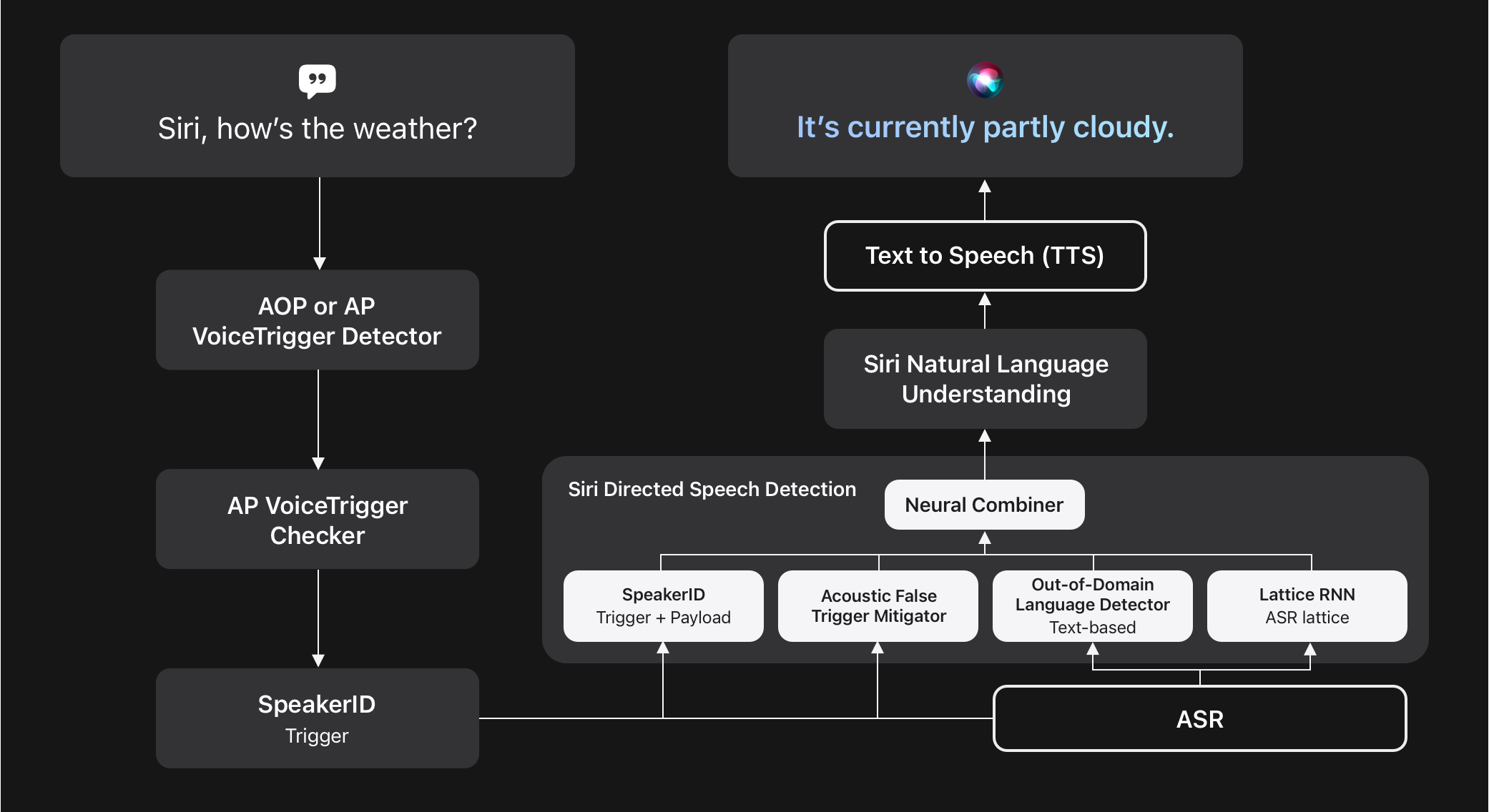

When you ask Siri to "add this to my calendar," the current version of Siri does the following:

- Listens to your voice

- Converts speech to text

- Identifies the intent (calendar event creation)

- Asks for missing details (date, time, title)

The new Siri would need to do this:

1. Listen to your voice

2. Convert speech to text

3. Look at what's currently on screen

4. Understand what that content means

5. Extract relevant information from screen content

6. Combine screen information with the spoken command

7. Identify the intent

8. Execute the action without asking for additional details

9. Provide feedback confirming what it did

Step 4 is where it gets complicated. "Understanding what's on screen" means running a vision model that can analyze the pixels on your display. This requires either:

a) Running an advanced vision model on-device, which requires significant computational resources and battery power, or

b) Sending your screen content to Apple's servers for analysis, which violates Apple's privacy positioning

Apple is almost certainly going the first route, which means dealing with the computational constraints of on-device inference.

Step 5 (extracting relevant information) requires understanding not just what's on screen, but what parts are relevant to the user's command. If you're looking at an email thread and you ask Siri to "call the person who sent this," Siri needs to:

- Identify that you're in an email application

- Extract the sender's email address

- Look up that contact

- Initiate a call
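Those four steps can be sketched as follows. It assumes the screen has already been reduced to a small structured description, which is the hard part and is skipped here. The `screen_context` keys, the contact book, and the names in it are hypothetical.

```python
def resolve_call_target(screen_context, contacts):
    """Map 'call the person who sent this' to a contact, or None if unsure."""
    # Step 1: confirm the user is looking at an email.
    if screen_context.get("app") != "mail":
        return None
    # Step 2: pull the sender's address out of the screen description.
    sender = screen_context.get("sender_email")
    if sender is None:
        return None
    # Step 3: look up the contact. Step 4 (dialing) is left to the caller.
    return contacts.get(sender.lower())

contacts = {"ana@example.com": {"name": "Ana", "phone": "+1-555-0100"}}
screen = {"app": "mail", "sender_email": "Ana@Example.com"}

print(resolve_call_target(screen, contacts))
print(resolve_call_target({"app": "maps"}, contacts))
```

Returning `None` instead of guessing matters here: calling the wrong person is a much worse failure than asking a follow-up question.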

Step 6 (combining information) is actually harder than it sounds. Siri needs to understand that information from two different sources (voice command + screen content) refers to the same thing. This requires language understanding that current AI models struggle with in unrestricted domains.
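Here's a toy version of that combination step for the calendar example. It's a sketch under heavy assumptions: the screen is plain text, "this" is detected by a simple word match, and details are pulled out with regular expressions instead of a language model. When anything is missing, it falls back to asking, which is roughly what Siri does today.

```python
import re

REQUIRED_SLOTS = ("title", "day", "time")

DAY_PATTERN = re.compile(
    r"\b(Monday|Tuesday|Wednesday|Thursday|Friday|Saturday|Sunday)\b")
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:AM|PM))\b", re.IGNORECASE)
TITLE_PATTERN = re.compile(r"^Subject:\s*(.+)$", re.MULTILINE)

def slots_from_screen(screen_text):
    """Very rough extraction of event details from visible text."""
    slots = {}
    patterns = (("title", TITLE_PATTERN), ("day", DAY_PATTERN), ("time", TIME_PATTERN))
    for name, pattern in patterns:
        match = pattern.search(screen_text)
        if match:
            slots[name] = match.group(1).strip()
    return slots

def handle_calendar_request(command, screen_text=None):
    """Fill event details from the screen when the command points at it."""
    slots = {}
    refers_to_screen = "this" in command.lower().split()
    if screen_text and refers_to_screen:
        slots.update(slots_from_screen(screen_text))
    missing = [name for name in REQUIRED_SLOTS if name not in slots]
    if missing:
        # Same behavior as the current assistant: ask for what's missing.
        return {"action": "ask_user", "missing": missing}
    return {"action": "create_event", **slots}

email = "Subject: Budget review\nLet's meet at 3 PM on Thursday."
print(handle_calendar_request("Add this to my calendar", email))
print(handle_calendar_request("Add this to my calendar"))
```

The regular expressions only work because the sample email is tidy. Real screens contain several dates, quoted replies, and ambiguous wording, which is exactly where a brittle approach like this falls apart.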

Then there's the privacy layer on top of all this. Every piece of data extracted from your screen needs to be handled carefully. It shouldn't be stored. It shouldn't be sent to the cloud. It shouldn't be logged in a way that could be used to build a profile of your activity. This adds complexity to every step of the pipeline.
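One way to picture those rules in code is a short-lived container for extracted data plus a logging helper that never records values. This is an illustrative pattern, not Apple's implementation; the function names are invented for the example, and clearing a Python dictionary doesn't guarantee the underlying memory is wiped.

```python
from contextlib import contextmanager

@contextmanager
def ephemeral_screen_data(extracted):
    """Hand out a working copy of extracted data, then discard it."""
    working = dict(extracted)
    try:
        yield working
    finally:
        # Drop every value as soon as the request is finished.
        working.clear()

def redact_for_log(extracted):
    """Record which fields were present without recording their values."""
    return {key: "<redacted>" for key in extracted}

with ephemeral_screen_data({"sender_email": "ana@example.com"}) as data:
    log_line = redact_for_log(data)
    # ... the assistant would act on `data` here ...

print(log_line)
print(data)
```

After the `with` block ends, `data` is empty, and the log only shows that a `sender_email` field existed. Applying that discipline at every stage of the pipeline is where the extra complexity comes from.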

And finally, this all needs to work in iOS, which means it needs to be compatible with the existing app ecosystem, user preferences, accessibility settings, and dozens of other integration points.

When you look at the actual complexity, the delays start to make sense. This isn't a simple feature. It's a complete reimagining of how an operating system's fundamental assistant works.

Screen content analysis and information extraction are the most challenging steps in enhancing Siri's context understanding; higher scores mean longer development times. Estimated data.

What Developers Need to Know About the New Siri

If you're building apps for iOS, the new Siri features matter to you. Apple is redesigning how developers integrate with Siri, and understanding these changes helps you prepare.

First, Apple is expanding Siri's capabilities to handle more complex app interactions. Currently, Siri can do basic things with your app if you've defined "Siri Shortcuts." The new version should understand more sophisticated requests and take more meaningful actions within your app.

Second, the personal context features mean developers need to think about how their apps expose information to Siri. If Siri can see your screen, it can potentially understand more about what you're doing in your app. Developers will need to decide what information to expose and how to structure it so Siri can understand it.
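Since Apple hasn't published the actual interfaces, the following is only a language-agnostic sketch of the design decision, written in Python for brevity. It shows an app choosing an explicit allow-list of fields to describe its current view, so that private fields are never handed to an assistant. The field names and the `build_assistant_payload` helper are hypothetical and don't correspond to any Apple API.

```python
import json

# Hypothetical allow-list: only these fields may be described to an assistant.
EXPOSED_FIELDS = {"kind", "title", "start", "location"}

def build_assistant_payload(view_state):
    """Serialize only the allow-listed parts of the current view."""
    visible = {key: value for key, value in view_state.items()
               if key in EXPOSED_FIELDS}
    return json.dumps(visible, sort_keys=True)

view_state = {
    "kind": "event",
    "title": "Budget review",
    "start": "Thursday 3 PM",
    "private_notes": "Ask about layoffs",  # deliberately not exposed
}

print(build_assistant_payload(view_state))
```

An allow-list is the conservative choice: new fields stay private until someone decides to expose them, rather than leaking by default.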

Third, the conversational aspect of the new ChatGPT-like Siri means that Siri might engage in longer interactions with users about your app's functionality. Developers might want to surface more information about their apps' capabilities to Siri during these conversations.

Apple will likely announce the exact technical details at WWDC 2025, but developers should start thinking about how their apps will integrate with a smarter, more capable Siri. This could be an opportunity to improve user experience, or it could be a pain if Apple's design decisions don't align with your app's architecture.

The Timeline Problem: iOS Release Cycles and Feature Shipping

Here's something people outside the tech industry don't fully understand: iOS release cycles create artificial pressure that can force premature feature launches.

Apple ships major iOS versions once a year, in September. The development cycle is roughly six months. That means if a feature misses a major release, it's typically delayed by a full year, not just a few months.

So when Apple says Siri's context features are going from iOS 26.4 (March) to iOS 26.5 (May), that's a two-month delay. But these are point releases, not major releases. They're smaller update cycles. This gives Apple more flexibility than it would have with major versions.

However, the bigger context features are now tied to iOS 27 (September 2025). If they miss that deadline, they're pushed to iOS 27.1 (October), or potentially into 2026. These kinds of delays cascade across the entire ecosystem because users, developers, and media all orient around the major version schedules.

This is why companies obsess over release dates: missing one can create a chain of delays that affects product strategy for years. It's also why companies sometimes ship unfinished features. The pressure to hit the arbitrary September deadline can override the requirement to ship something polished.

Apple seems to have decided that for Siri, quality matters more than the schedule. The company is willing to push features into the next release cycle if they're not ready. This is the right call, but it does mean that Apple's original promises about what iOS 26.4 would deliver were overly optimistic.

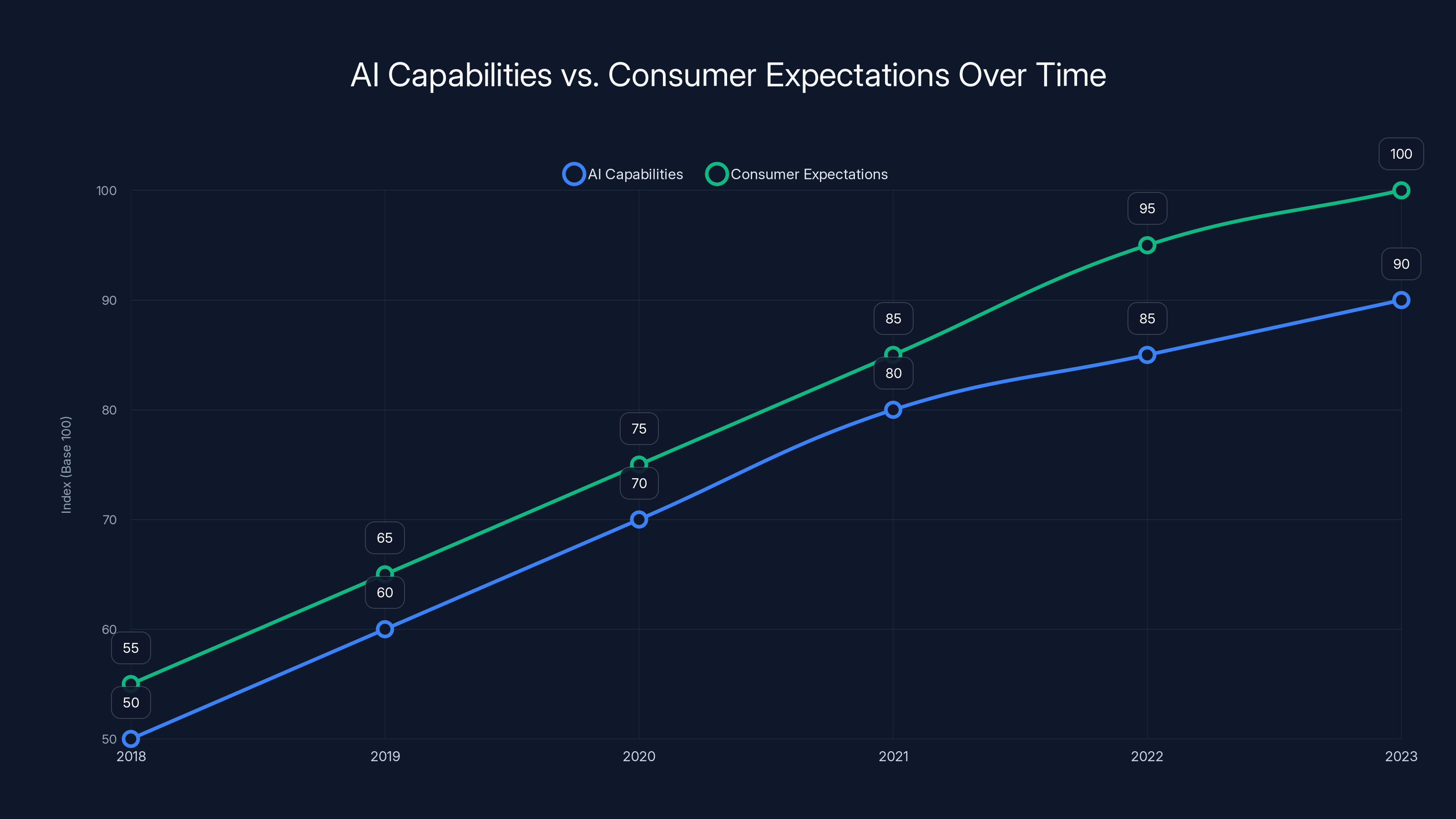

Consumer expectations for AI have consistently outpaced actual capabilities, highlighting the challenge of meeting user demands. Estimated data.

Privacy vs. Capability: The Fundamental Tension

The delays also reveal a deeper tension in Apple's product strategy: privacy and capability are often at odds.

To make Siri truly capable, Apple could send everything to the cloud. Sophisticated AI models running on Apple's servers could analyze your emails, understand your calendar, read your messages, and synthesize all that information to provide smart suggestions. Competitors are essentially doing this. It works. It's powerful.

But Apple has positioned itself as the company that respects privacy. The marketing narrative is: we don't collect your data; we process it on your device; we don't profile you. If Apple started sending all your personal information to servers to make Siri smarter, it would undermine that positioning.

So Apple is trying to do the hard thing: run sophisticated AI models on your device, where your data never leaves your phone, while still delivering capabilities that match cloud-based AI services.

This is technically much harder. On-device models need to be smaller and more efficient than cloud-based models. They're more constrained. They typically perform worse. But they preserve privacy.

Apple is betting that it can close the gap through better engineering, better model optimization, and better integration with iOS. Whether that bet will pay off is still an open question. But the delays suggest the gap is larger than Apple initially thought.

This is also why Apple is hedging by integrating ChatGPT. For truly complex tasks that require the most capable models, users can opt to send requests to OpenAI. But for basic stuff, Siri should handle it locally. It's a pragmatic compromise.
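That compromise boils down to a routing decision. The sketch below is a simplified illustration: the complexity score, the 0.5 threshold, and the route names are assumptions, and how a real system would estimate complexity is left open.

```python
from enum import Enum

class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"
    ON_DEVICE_BEST_EFFORT = "on_device_best_effort"

def route_request(estimated_complexity, user_allows_cloud, on_device_limit=0.5):
    """Keep simple requests local; send hard ones out only with consent."""
    if estimated_complexity <= on_device_limit:
        return Route.ON_DEVICE
    if user_allows_cloud:
        return Route.CLOUD
    # No consent: stay local and accept a weaker answer.
    return Route.ON_DEVICE_BEST_EFFORT

print(route_request(0.2, user_allows_cloud=False))
print(route_request(0.9, user_allows_cloud=True))
print(route_request(0.9, user_allows_cloud=False))
```

The key property is that nothing leaves the device unless the user has said yes, even if that means the answer is worse.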

What the Delays Tell Us About Apple's AI Strategy

The Siri delays aren't isolated. They reflect broader challenges in Apple's AI strategy.

The company has been aggressive in announcing AI features. But the execution has been slower than the announcements suggest it should be. Features get pushed back. Timelines slip. The gap between what Apple promises at keynotes and what Apple ships in reality keeps widening.

This could mean a few things:

One: Apple's estimation of its own capabilities is becoming less accurate. The company might be underestimating the complexity of integrating advanced AI into core OS features.

Two: Apple's quality bar for AI features is high. The company isn't willing to ship something half-baked. This is admirable, but it creates timeline pressure.

Three: The AI landscape is moving faster than Apple expected. By the time Apple ships something, it's already been superseded by newer approaches or stronger competitors.

All three are probably true to some degree.

For customers, this means expecting longer waits between announcement and availability for Apple's major AI features. For developers, it means planning for a more uncertain feature roadmap. For investors, it's a signal that Apple's AI execution might not move at the pace the company has historically managed.

Apple has built a reputation for getting products right, not getting them first. But in AI, first-mover advantage still matters. By being slow and deliberate, Apple risks being third or fourth, even if the final product is more polished.

The User Experience Question: Will Better Siri Actually Matter?

There's another question lurking beneath all of this: will users actually want a smarter Siri?

We know people love ChatGPT. We know they use AI writing assistants and image generators. But those are optional tools people actively choose to use. Siri is baked into iOS, always available, but that doesn't mean people want it to become a more prominent part of their workflow.

Siri's current limitation (simplicity) is actually a feature for many users. You ask it to set a timer. It sets a timer. You ask it to play music. It plays music. Simple, predictable, reliable. A more capable Siri might be less predictable. It might do things you didn't ask for. It might misunderstand your intent in more creative ways.

Apple will need to design the new Siri carefully to avoid these pitfalls. It needs to be powerful without being intrusive. Helpful without being presumptuous. Smart without being surprising.

That's a difficult balance to strike. And it's probably another reason the delays are happening. Apple is likely testing not just whether the feature works, but whether users actually want it.

Once Siri ships, Apple will get real data about usage. The company will see how often people invoke the new features, whether they're satisfied with the results, and whether the new Siri actually improves the user experience. That will inform whether this bet on a smarter assistant pays off.

Looking Ahead: What to Expect in 2025 and Beyond

Based on current timelines, here's what we can expect:

By May 2025 (iOS 26.5): Apple will likely introduce limited versions of personal context features, web search improvements, and possibly custom image generation. These will still be experimental, with significant limitations.

By June 2025 (WWDC): Apple will announce the new ChatGPT-like Siri for iOS 27, probably with impressive demos showing what's possible.

By September 2025 (iOS 27): The full redesigned Siri ships, along with the conversational AI features. Early reviews will either praise Apple's quality execution or criticize the lack of innovation compared to what's already available in other AI tools.

By late 2025 and into 2026: Apple will iterate on the features based on real-world usage data, fixing problems, adding capabilities, and potentially integrating even more advanced models.

Beyond that, the arc is less clear. If the new Siri is genuinely good, it becomes a competitive advantage. If it's merely competent, it's just catching up to where other AI assistants already are. If it's disappointing, Apple will have burned credibility on multiple missed deadlines for a feature that didn't justify the wait.

The stakes are actually pretty high. Apple has positioned itself as an AI company. But the execution so far has been more about partnerships (OpenAI, Anthropic) than independent innovation. True Siri innovation would be a genuine demonstration that Apple can execute on AI strategy. The delays suggest that's harder than it looks.

Lessons for Other Companies: How to Handle AI Feature Delays

While Apple specifically is facing Siri delays, every company trying to ship AI features is dealing with similar challenges. Here are some lessons worth learning:

One: Announcing early and shipping late is costly. Apple talked about Siri's future at keynotes before the features were ready. This builds anticipation but also creates expectations that might not be met. The lesson: be more conservative with public commitments.

Two: Quality matters more than speed. Apple could have shipped partially working context features in iOS 26.4. Instead, it delayed. Users generally prefer a feature that works to one that's available but broken. But this comes at the cost of being slow to market.

Three: Integration complexity is underestimated. Siri touches almost everything in iOS. Making changes to Siri affects the entire ecosystem. This creates cascading complexity that pure research projects don't face.

Four: User expectations set by marketing are hard to reset. Once you tell customers something is coming, they remember. Even if you explain the delay, some portion of users will feel disappointed or frustrated. This is why being overly specific about timelines is risky.

Five: Hardware diversity creates real engineering challenges. iOS runs on devices ranging from iPhone 15 to iPhone SE. Making AI features work well across all of them, with vastly different computational capacity, is a real problem that cloud-only competitors don't face.

These lessons apply beyond Apple. Any company making promises about AI capabilities should factor these realities into their timelines.

The Bigger Picture: AI and Consumer Expectations

Siri's delays are part of a broader pattern in how consumer AI is being adopted and deployed.

We're in a phase where AI capabilities are advancing extremely quickly, but consumer expectations are advancing even faster. Every new breakthrough in language models, vision understanding, or reasoning creates headlines and gets people excited about what's possible. Then when those capabilities hit the messy reality of consumer software, things slow down.

It turns out that making AI work reliably at scale is hard. It turns out that privacy and capability are often at odds. It turns out that integrating AI with existing systems creates complexity that demos don't reveal. It turns out that users have preferences and edge cases that pure performance metrics don't capture.

Apple isn't unique in facing these challenges. Every company shipping AI features is dealing with them. The difference is that Apple has committed publicly to specific features and timelines, so the delays are more visible.

This is actually good for consumers. It means companies are prioritizing quality over hype. It means features are being tested more thoroughly before launch. It means less likelihood of embarrassing failures after shipping.

But it also means managing expectations. The age of rapid AI deployment is hitting the limits of what's actually feasible at scale. We're entering the era of consolidation and refinement, where the focus shifts from what's theoretically possible to what's practically useful.

Siri's redesign is a good case study in that transition.

FAQ

What is the new Siri supposed to do differently?

The redesigned Siri is supposed to understand personal context by seeing what's on your screen and knowing your routines. Instead of requiring explicit commands, it would extract information from your email, calendar, or web pages and take action automatically. For example, if you're looking at a meeting time in an email and ask Siri to add it to your calendar, the new Siri would see that information and add the event without asking you to repeat the details. It is also expected to become more conversational, like ChatGPT, allowing multi-turn discussions and more complex requests than the current voice-command-based Siri allows.

Why has Apple delayed the new Siri features so many times?

Apple initially planned to ship context-aware Siri features with iOS 26.4 in March 2025, but testing found problems with reliability and functionality. The company needed more time to fix these issues, so it pushed the features to iOS 26.5 (May 2025) and iOS 27 (September 2025). The delays reflect the complexity of running advanced AI models on-device while respecting privacy, integrating with all of iOS's existing features, and ensuring reliable performance across different hardware configurations.

When will the new Siri actually ship?

Limited versions of the new Siri features are expected in iOS 26.5, scheduled for May 2025. The more comprehensive redesign, including conversational AI capabilities, is planned for iOS 27, launching in September 2025. However, given previous delays, these timelines should be considered estimates rather than guarantees. Apple will announce the exact feature set and capabilities at the Worldwide Developers Conference in June 2025.

Why can't Apple just use cloud-based AI like competitors do?

Apple has positioned itself as a privacy-first company that processes data on-device rather than sending it to servers. If Apple sent all your personal information to cloud servers for Siri to analyze, it would contradict that positioning. Instead, the company is investing in on-device AI models that can understand context without leaving your device. This approach is more technically challenging but aligns with Apple's privacy messaging. For very complex tasks, Apple is allowing users to optionally send requests to OpenAI for ChatGPT-powered assistance, giving users a choice about privacy versus capability.

How will the new Siri work with privacy and personal data?

The new Siri processes information locally on your device, meaning your personal data doesn't get transmitted to Apple's servers. The AI models that analyze your screen content and understand your context run directly on your iPhone using dedicated neural processing hardware. Apple hasn't published technical specifications yet, but the approach involves understanding your data without storing it or using it to build profiles of your behavior. This is different from how cloud-based AI assistants work, where data typically flows to company servers for processing and analysis.

What does the ChatGPT integration mean for Siri's future?

Apple is integrating OpenAI's GPT-4 technology into Siri to handle more complex requests that exceed the capabilities of on-device models. This means Siri will transform from a simple voice assistant into something more like a conversational AI chatbot. Users will be able to ask Siri longer, more complex questions and engage in multi-turn conversations. The integration also gives users an option to send queries to OpenAI when they want access to the most capable models, with Apple handling the privacy implications of that choice.

Will developers need to change their apps to work with the new Siri?

Apple hasn't detailed the developer requirements yet, but they'll likely be announced at WWDC 2025. Developers will probably need to consider how their apps expose information to Siri and how to structure that information so the AI can understand it. Apps that want to take full advantage of the new Siri's conversational capabilities might need to define additional integration points. Apple will provide documentation and APIs to help developers prepare their apps for the upgraded assistant.

Is the new Siri delayed because Apple is struggling with AI?

The delays don't necessarily indicate that Apple is struggling with AI research. Rather, they reflect the challenges of shipping consumer AI features at scale. Moving from research prototypes to reliable, privacy-respecting consumer features is significantly harder than proving a concept works. The delays suggest Apple is being cautious about quality and reliability, which is generally the right approach. However, they also show that Apple's initial timeline estimates were too optimistic about how quickly these complex features could be ready for public use.

How does the new Siri compare to Google Assistant or Amazon Alexa?

Once the new Siri ships, it will likely match or exceed the capabilities of Google Assistant and Amazon Alexa in terms of conversational ability and context understanding. The key difference will be Apple's on-device approach, which prioritizes privacy over the potentially superior results of cloud-based processing. Whether that trade-off appeals to users will determine the new Siri's success compared to alternatives.

What should users do in the meantime?

Continue using Siri for what it currently does well: basic commands like setting timers, playing music, or calling contacts. For more complex AI tasks, use dedicated tools like ChatGPT, Perplexity, or Claude. Once the new Siri ships in late 2025, you can evaluate whether it's useful enough to integrate into your daily workflow. Don't hold your current productivity hostage waiting for these features to arrive; they'll improve Siri over time, but they're not essential until they're actually available and proven useful.

Conclusion: The Cost of Getting AI Right

Apple's Siri delays teach us something important about AI in consumer products: getting it right is harder and slower than the hype suggests.

We live in an era where breakthroughs in artificial intelligence happen constantly. Every few months, there's a new model or capability that seems to unlock something previously impossible. The temptation is to ship these breakthroughs immediately, to capitalize on the momentum and excitement.

But there's a chasm between "impressive in a demo" and "reliable for 500 million users." That chasm is what Apple is crossing right now with Siri. The company is trying to build an AI assistant that doesn't just work in the lab; it works on your actual iPhone, preserving privacy, integrating seamlessly with existing features, and not breaking anything.

That's hard. Harder than most people appreciate.

The delays don't mean Apple is failing at AI. They mean Apple is doing what it historically does: taking its time to get things right, even if it means missing the initial wave of excitement. The company has built a reputation on this approach. Whether that reputation will hold in the AI era remains to be seen.

What we can say is this: when Siri finally does ship with these new capabilities, people will have a clear picture of what Apple thinks a consumer AI assistant should look like. It will either vindicate the delays or prove them to be a costly mistake. But one way or another, we're about to find out whether Apple's measured approach to AI actually delivers on its promise.

The wait continues, and Apple is betting that when the new Siri finally arrives, it will be worth it.

Key Takeaways

- Apple delayed Siri's major redesign from iOS 26.4 (March 2025) to iOS 26.5/iOS 27 because testing found reliability issues with context understanding features.

- The new Siri will understand personal context by analyzing screen content and taking actions without explicit commands, requiring advanced on-device AI models.

- Privacy constraints push Apple to run AI models locally rather than in the cloud, which makes development considerably harder than it is for competitors that rely on cloud processing.

- Apple is also integrating ChatGPT to provide conversational AI capabilities, transforming Siri from a voice assistant to a true AI chatbot.

- The delays reveal fundamental challenges: integrating new AI features across hundreds of millions of devices with different hardware, supporting legacy functionality, and balancing capability with privacy.

