Apple's Siri Revamp Delayed Again: What's Really Happening [2025]

Apple promised us a transformed Siri back in 2024. We're still waiting.

When Apple unveiled Apple Intelligence at its 2024 Worldwide Developers Conference, it was supposed to mark a turning point. Siri would finally get smart. Really smart. The voice assistant that's been... okay... for more than a decade would suddenly compete with Claude, ChatGPT, and Google Gemini. It would understand context. Execute complex tasks. Feel less like talking to a calculator and more like talking to an actual assistant.

Then came the delays. First one. Then another. Now we're in early 2025, and the newest Siri you were promised in March? That's been rescheduled for May. Or September. Or maybe next year.

This isn't just a product delay. It's a pattern that reveals something real about the AI revolution, the pressure on Apple, and what happens when a company tries to play catch-up in a space where others are already lapping them.

Let me walk you through what's actually happening.

TL;DR

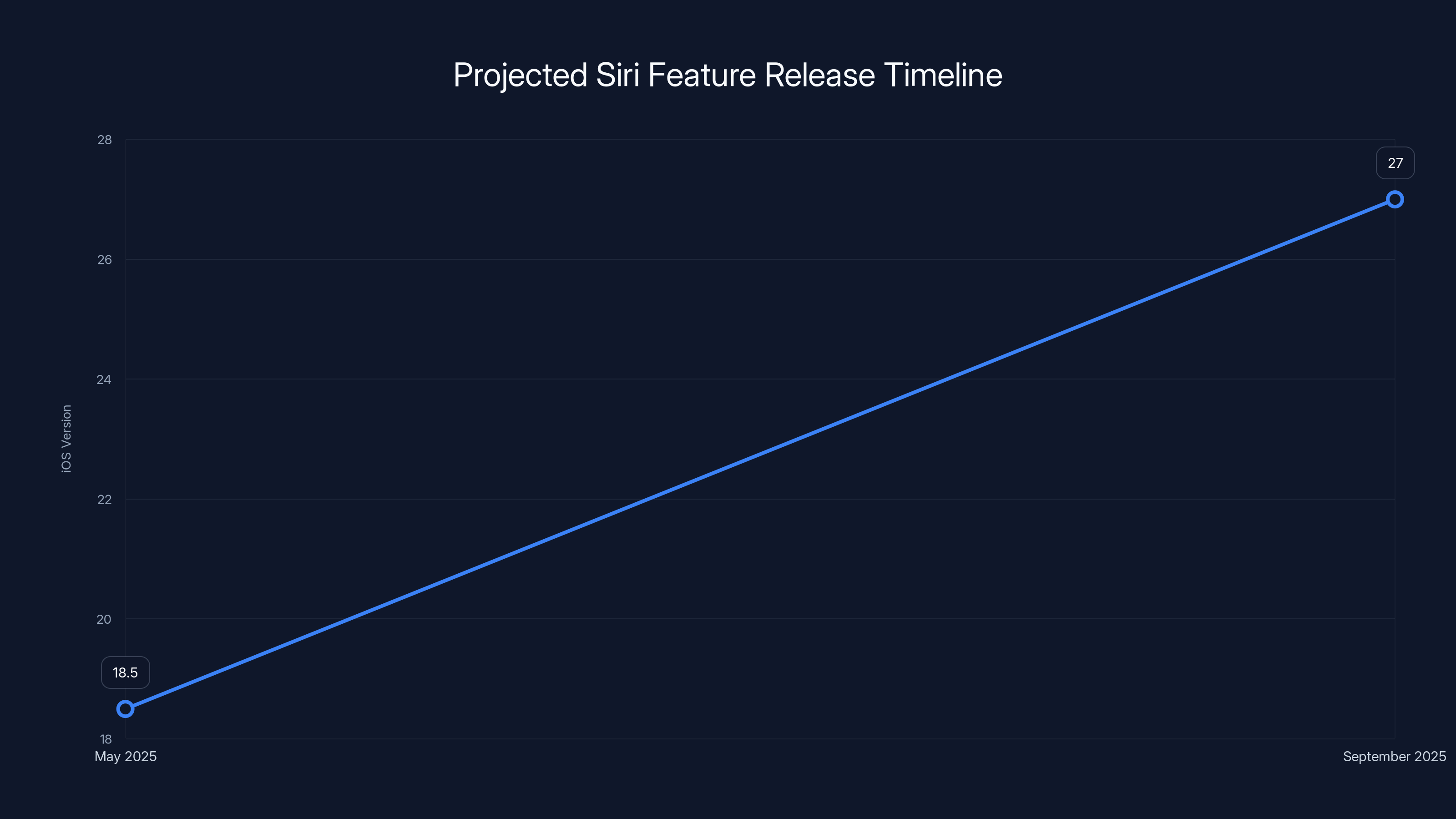

- The delay chain: Originally promised for iOS 18.4 in March 2025, now spreading across May (iOS 18.5) and September (iOS 27)

- The real problem: Testing revealed compatibility issues and integration challenges with Google Gemini backend systems

- What's actually coming: A smarter Siri that functions more like modern LLM chatbots while staying voice-first on Apple devices

- The stakes: This revamp directly competes with every major AI assistant, not just Siri's previous iterations

- Industry impact: Shows the gap between promising AI features and shipping them reliably at scale

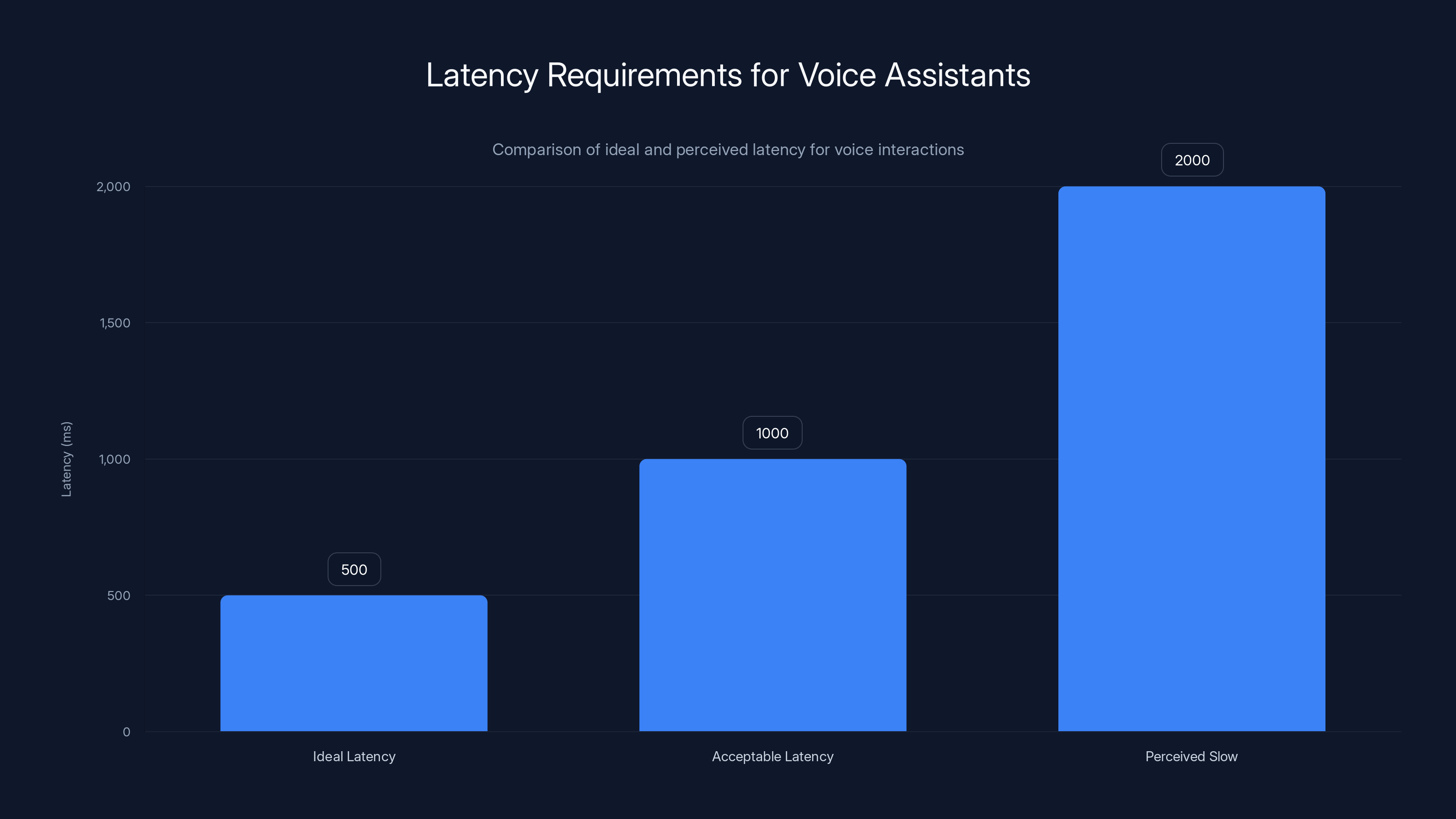

Voice assistants require latency under 500ms for ideal performance. Latency above 1000ms is perceived as slow, impacting user experience. Estimated data.

The History of Siri Delays: A Timeline Nobody Wanted

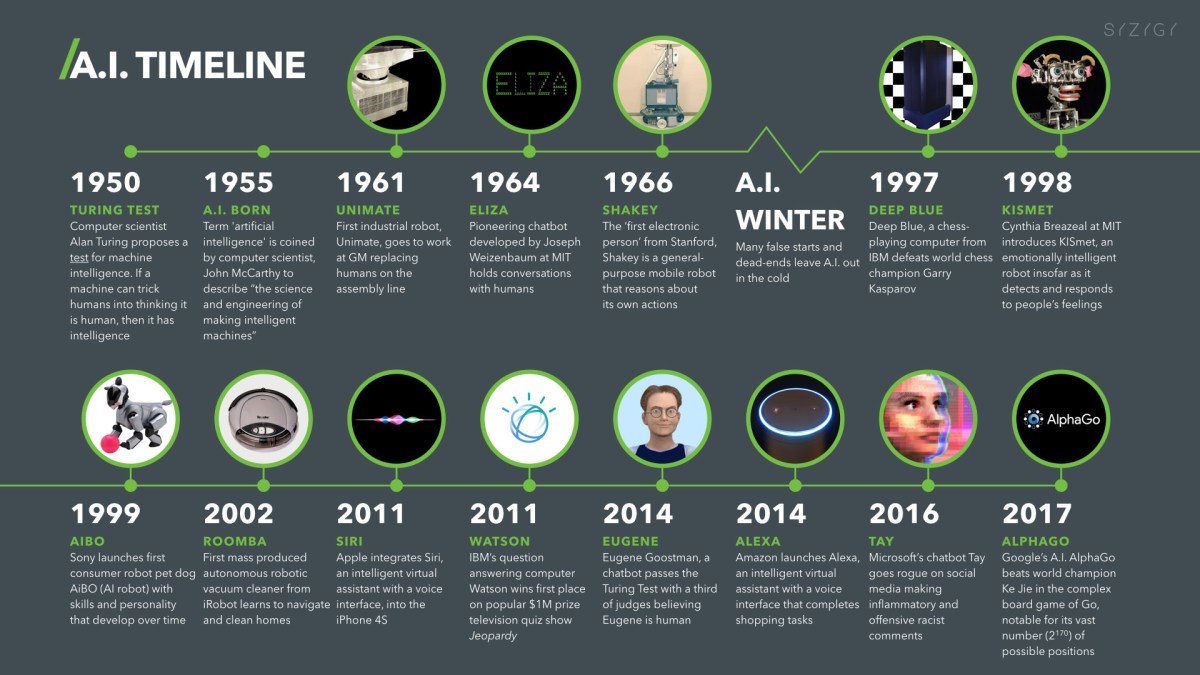

Let's be honest. Siri's been disappointing people since 2011. Apple acquired Siri Inc. in 2010 and launched it in iOS 5 on the iPhone 4S with promises of a voice assistant that would actually understand you. It worked okay for simple things. Ask it the weather? Sure. Ask it to remind you about something? Fine. Ask it anything requiring context or reasoning? Prepare to be disappointed.

For over a decade, Siri languished while competitors caught up and then pulled ahead. Google Assistant got smarter. Alexa understood follow-ups. Meanwhile, Siri was still struggling with basic comprehension in 2023.

Then the AI boom happened. OpenAI released ChatGPT in November 2022, and suddenly everyone realized what was actually possible. Large language models could reason. They could write code. They could hold conversations. They could do things that made Siri look like a feature phone from 2007.

Apple watched this. Tim Cook's team saw the opportunity. They couldn't let Siri become a relic while their competitors powered ahead.

That's where Apple Intelligence came in.

What Apple Intelligence Actually Is (And Why It Matters)

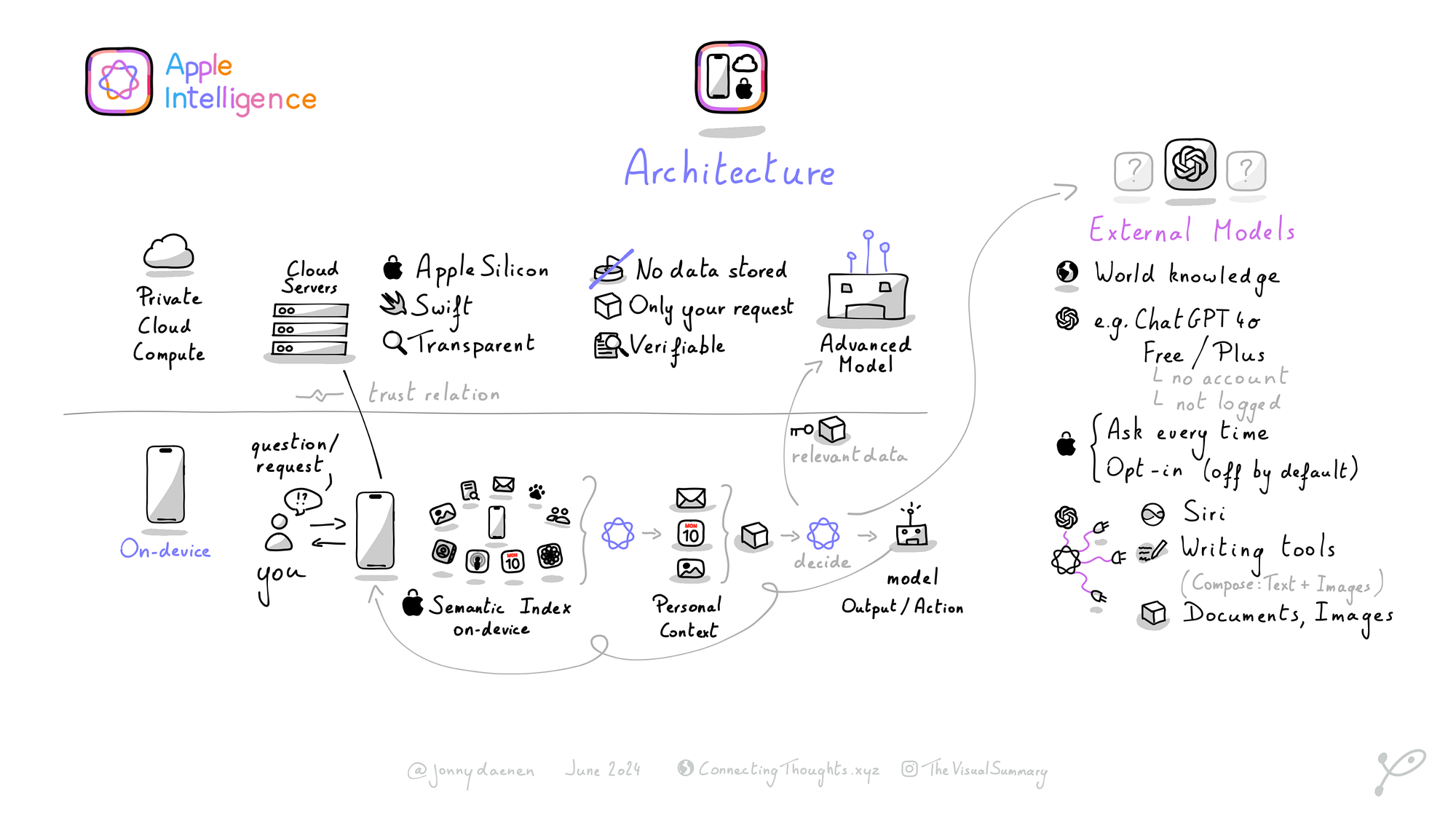

Apple Intelligence isn't just Siri 2.0. It's a company-wide commitment to embed AI into every product, every feature, every interaction.

The key innovation Apple keeps pushing? On-device processing. Most AI services rely on sending your data to cloud servers. OpenAI processes your queries on remote servers. Google Gemini does the same. But Apple's approach is different. They want as much as possible running on your device, on your hardware, in your hands.

That's harder. It's more expensive computationally. It requires careful optimization. But it addresses privacy concerns that matter to Apple's brand identity and customer expectations.

So when Apple Intelligence arrived with iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1, it already included several AI features. Writing tools that suggest edits. Photo search improvements. Notification summarization. These were relatively safe, privacy-respecting features that could run largely on-device without sending everything to Apple's servers.

But Siri? That required something different.

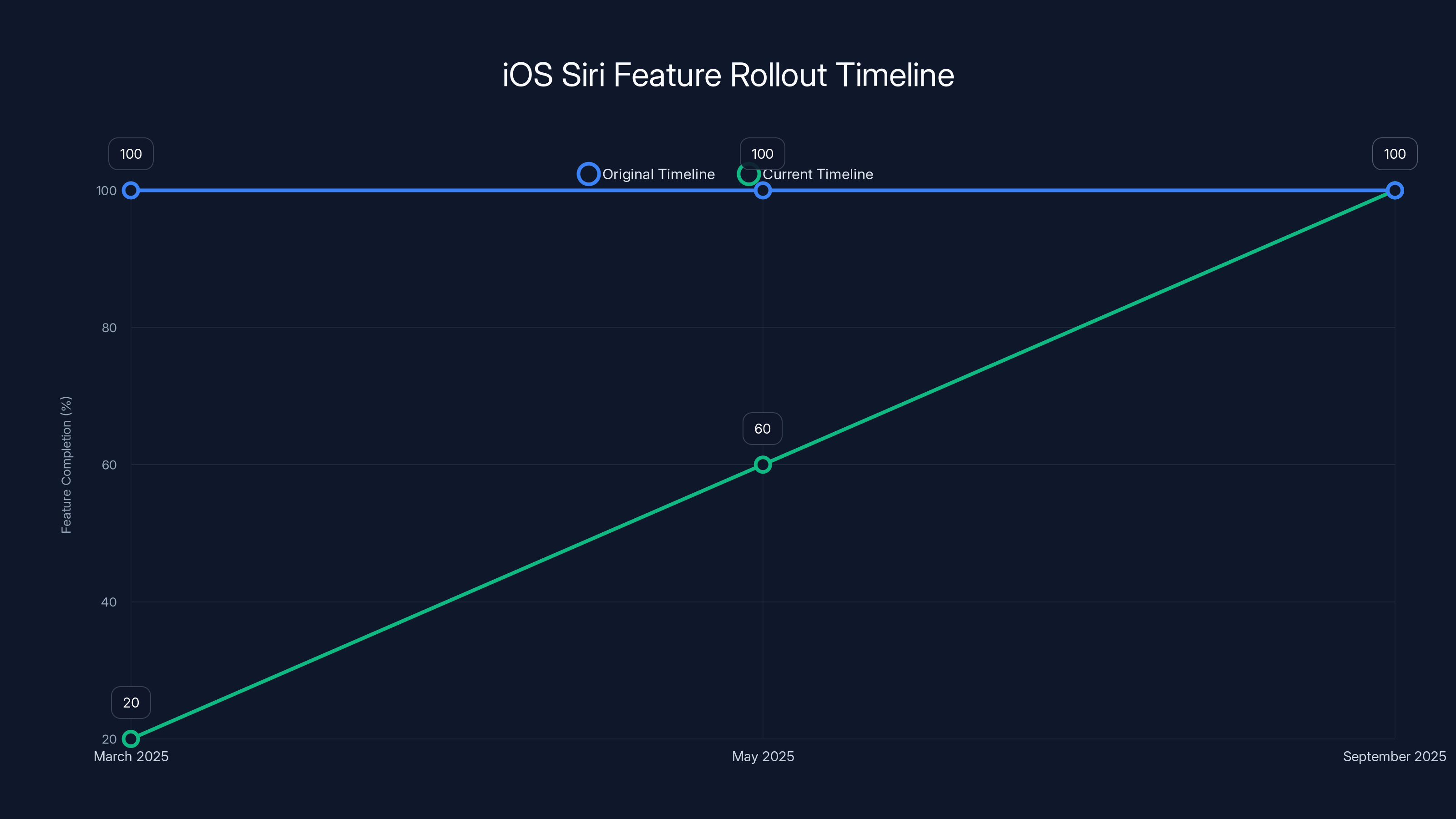

The new Siri features are expected to roll out in two phases, with initial updates in May 2025 and full capabilities by September 2025. Estimated data based on current projections.

Why New Siri Couldn't Launch On Time

Here's the technical challenge nobody talks about enough: making Siri work like a modern AI assistant while keeping most of it on-device is genuinely hard.

Traditional Siri is pattern-matching at scale. You say something, the system maps it to predefined commands, and executes. "Set a timer for 10 minutes." "Call Mom." "Play my workout playlist." It's deterministic. It's fast. It's also brittle.

Modern Siri needs to do something different. It needs to understand intent. It needs context. It needs to reason about what you actually want, not just match your words to a command.
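To make the contrast concrete, here's a minimal Python sketch of the old pattern-matching approach. The rule table and function name are hypothetical, not Apple's code; the point is that an exact phrasing works while a slightly different one falls through, even when the intent is obvious.

```python
import re
from typing import Optional, Tuple

# Hypothetical rule table mapping fixed phrasings to commands (not Apple's code).
COMMAND_PATTERNS = [
    (re.compile(r"^set a timer for (\d+) minutes?$", re.IGNORECASE), "timer"),
    (re.compile(r"^call (\w+)$", re.IGNORECASE), "call"),
    (re.compile(r"^play my (.+) playlist$", re.IGNORECASE), "play_playlist"),
]


def match_command(utterance: str) -> Optional[Tuple[str, str]]:
    """Return (command, argument) when a rule matches the phrasing exactly, else None."""
    text = utterance.strip().rstrip(".?!")
    for pattern, command in COMMAND_PATTERNS:
        match = pattern.match(text)
        if match:
            return command, match.group(1)
    # No rule fired: the request fails even though the intent is obvious to a human.
    return None


print(match_command("Set a timer for 10 minutes"))        # ('timer', '10')
print(match_command("Can you time ten minutes for me?"))  # None
```

Every new phrasing needs another hand-written rule, which is exactly the brittleness an LLM-based approach is meant to remove.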

That requires large language model capabilities. Which Apple can't run efficiently on every iPhone yet. So the plan? Hybrid processing. Some queries stay on-device. Complex ones go to the cloud, powered by Google Gemini (yes, Apple and Google are partnering here).

That hybrid approach is where the engineering gets messy.

When you're coordinating between on-device processing and cloud backend, you need:

- Fallback mechanisms - What happens if the cloud request times out? If the connection drops? If the user is in airplane mode?

- Latency optimization - Voice assistants need to feel responsive. If Siri takes 3 seconds to respond, people get frustrated and move to competitors

- Privacy preservation - Apple needs the cloud to handle complexity without learning too much about what users ask

- Compatibility across devices - This needs to work on iPhone 15, iPhone 16, older devices, newer ones, all running slightly different OS versions

- Fallback to on-device capabilities - When cloud fails, what can the device still do?
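Here's a rough Python sketch of how a hybrid router with those fallbacks might look. Everything in it is an assumption for illustration: `on_device_answer`, `cloud_answer`, and the word-count heuristic in `looks_complex` are hypothetical stand-ins, not Apple or Google APIs. It shows the core pattern: use the cloud only when it's needed and reachable, cap the wait with a timeout, and degrade to on-device processing instead of failing.

```python
import asyncio


# Hypothetical stand-ins for illustration; neither is a real Apple or Google API.
async def on_device_answer(query: str) -> str:
    return f"[on-device] {query}"


async def cloud_answer(query: str, delay_s: float) -> str:
    await asyncio.sleep(delay_s)  # Simulates network plus model latency.
    return f"[cloud] {query}"


def looks_complex(query: str) -> bool:
    # Placeholder heuristic; a real system would use a trained classifier.
    return len(query.split()) > 6


async def route(query: str, online: bool, cloud_delay_s: float,
                timeout_s: float = 1.0) -> str:
    """Use the cloud only when needed and reachable; otherwise stay on-device."""
    if not online or not looks_complex(query):
        return await on_device_answer(query)
    try:
        return await asyncio.wait_for(cloud_answer(query, cloud_delay_s), timeout_s)
    except asyncio.TimeoutError:
        # Cloud too slow: degrade to the on-device answer instead of making the user wait.
        return await on_device_answer(query)


query = "summarize the emails my manager sent me this week"
# Fast cloud reply: the cloud answer comes back.
print(asyncio.run(route(query, online=True, cloud_delay_s=0.1)))
# Cloud slower than the timeout: falls back to on-device.
print(asyncio.run(route(query, online=True, cloud_delay_s=0.5, timeout_s=0.05)))
# Airplane mode: never tries the cloud at all.
print(asyncio.run(route(query, online=False, cloud_delay_s=0.1)))
```

A production system would also need to tell the user when it fell back, and every branch above multiplies the scenarios that have to be tested.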

Apple reportedly ran into problems during testing with these exact scenarios. The software worked in the lab. In the wild? Different story.

The Cloud Partner Problem: Why Google Gemini Matters More Than You Think

This partnership is huge and gets overlooked.

Apple and Google are competitors. They've sued each other. They disagree on privacy, on business models, on almost everything. Yet here they are, partnering on something core to Apple's AI strategy.

Why? The likely reasons: OpenAI wanted to charge for premium features, a Claude deal would have required concessions from Anthropic on pricing and data handling that didn't make sense for Apple, and Apple couldn't build its own LLM competitive with the best options fast enough.

So Google Gemini it is.

Now here's the actual challenge: Apple needs Gemini to start responding within a few hundred milliseconds. Google built Gemini for general web use, where a 2-3 second response is fine. Voice assistants can't work that way. Users expect natural conversation without awkward pauses.
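A rough latency budget shows why a 2-3 second response is a non-starter for voice. The stage names and millisecond figures below are assumptions for illustration, not measured Apple or Google numbers; the 500 ms target comes from the chart caption earlier in this article.

```python
from typing import Dict

# Hypothetical per-stage estimates in milliseconds, not measured Apple or Google figures.
VOICE_BUDGET_MS = 500  # "Ideal" target from the chart caption earlier in this article.

stages_ms: Dict[str, int] = {
    "speech_recognition": 120,
    "network_round_trip": 80,
    "model_first_token": 200,
    "speech_synthesis_start": 60,
}


def remaining_budget(stages: Dict[str, int], budget_ms: int = VOICE_BUDGET_MS) -> int:
    """Positive means headroom; negative means the reply will feel slow."""
    return budget_ms - sum(stages.values())


print(remaining_budget(stages_ms))  # 40
# A 2.5-second model response, inside the range that's fine for web use, blows the budget.
print(remaining_budget({**stages_ms, "model_first_token": 2500}))  # -2260
```

The model's time to first token is only one slice of the budget, which is why custom low-latency endpoints matter so much.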

Integrating Gemini into Apple's infrastructure requires:

- Custom API endpoints optimized for low latency

- Prioritization of voice requests over text ones

- Fallback handling when Gemini is congested

- Data transmission efficiency (voice queries are small, but responses need to be snappy)
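The "prioritize voice over text" requirement maps naturally onto a priority queue. This is a minimal sketch using Python's `heapq`; the two priority tiers and the `RequestQueue` class are assumptions for illustration, not a description of how Google or Apple actually schedule requests.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed priority tiers for illustration: lower numbers are served first.
PRIORITY = {"voice": 0, "text": 1}


@dataclass(order=True)
class Request:
    priority: int
    seq: int  # Tie-breaker: keeps first-in, first-out order within a tier.
    query: str = field(compare=False)
    channel: str = field(compare=False)


class RequestQueue:
    """Serves voice requests before text ones, FIFO within each tier."""

    def __init__(self) -> None:
        self._heap: List[Request] = []
        self._counter = itertools.count()

    def submit(self, query: str, channel: str) -> None:
        request = Request(PRIORITY[channel], next(self._counter), query, channel)
        heapq.heappush(self._heap, request)

    def next_request(self) -> Optional[Request]:
        # Returns None when there is nothing left to serve.
        return heapq.heappop(self._heap) if self._heap else None


queue = RequestQueue()
queue.submit("draft a long reply to this thread", "text")
queue.submit("when is my next meeting", "voice")
queue.submit("summarize this page", "text")
print([queue.next_request().query for _ in range(3)])
# ['when is my next meeting', 'draft a long reply to this thread', 'summarize this page']
```

Strict priority like this can starve text requests when voice traffic spikes, so real schedulers typically add aging or per-tier capacity limits.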

And Apple needs this to work globally. On billions of devices. Simultaneously.

That's not a trivial engineering challenge. It's possibly why the delays exist.

Timeline Breakdown: What Got Pushed and When

Let's map the actual delay schedule based on what we know:

Original Timeline (WWDC 2024):

- iOS 18.4 in March 2025: New Siri features roll out

- All features available simultaneously

Current Timeline (January 2025):

- iOS 18.4 (March 2025): Minimal Siri changes, mostly other improvements

- iOS 18.5 (May 2025): Some new Siri features arrive

- iOS 27 (September 2025): Full new Siri experience launches

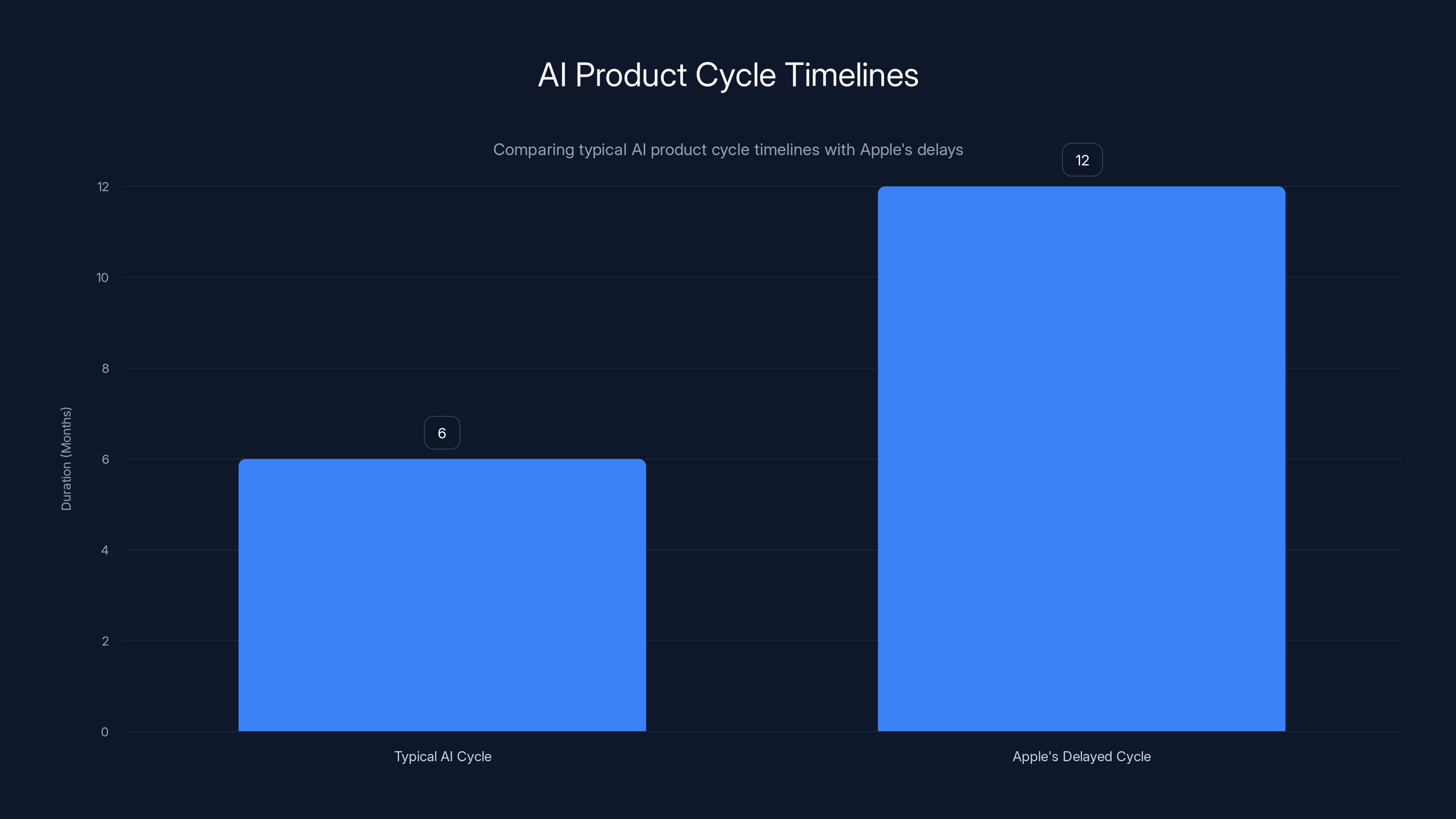

That's a 6-month delay. Not huge in software terms, but significant when competitors are shipping similar features.

The breakdown also reveals something interesting about Apple's strategy. They're not doing one big launch. They're rolling out in phases. Why? Probably because:

- They need more testing time - Phases allow them to catch issues with smaller rollouts first

- They want to avoid catastrophic bugs - A botched Siri launch affects the core Apple experience

- They're still optimizing performance - May's release might still not be perfect, so they're saving the full feature set for September
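Apple's phases are tied to OS releases, but the idea of "smaller rollouts first" is often implemented with percentage-based feature flags. Here's a generic sketch of that technique, not Apple's mechanism: hash each device ID into a stable bucket from 0 to 99 and enable the feature for buckets below the current rollout percentage. The function names and the `new_siri` feature key are hypothetical.

```python
import hashlib


def rollout_bucket(device_id: str, feature: str) -> int:
    """Map a device to a stable bucket from 0 to 99 for one feature."""
    # Including the feature name gives each feature an independent bucket assignment.
    digest = hashlib.sha256(f"{feature}:{device_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(device_id: str, feature: str, rollout_percent: int) -> bool:
    """Enable the feature for buckets below the current rollout percentage."""
    return rollout_bucket(device_id, feature) < rollout_percent


# Hypothetical phases: widen the rollout only after each stage looks healthy.
devices = [f"device-{i}" for i in range(10_000)]
for percent in (1, 10, 50, 100):
    enabled = sum(is_enabled(d, "new_siri", percent) for d in devices)
    print(percent, enabled)
```

Because a device's bucket never changes, raising the percentage only adds devices; nobody who already has the feature loses it, which makes problems in each phase easier to attribute.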

The current timeline shows a phased rollout of Siri features, with full functionality delayed until September 2025. Estimated data based on feature completion.

How This Compares to Competitors' AI Strategies

While Apple's been delaying, what have others been doing?

Google has been aggressive. Gemini is in Android, in search, in Chrome, in Google's entire ecosystem. Google Assistant is getting upgraded with Gemini capabilities. It's not perfect, but it's shipping.

Microsoft built OpenAI's models into Windows and Office through Copilot, and into nearly everything else. Again, not flawless, but available.

Amazon keeps iterating on Alexa, adding generative AI features, improving context understanding.

Apple's delay puts them further behind. When the new Siri finally launches in May or September, competitors will have been shipping and iterating their own AI assistants for months longer.

That's the real cost of these delays. Not just customer frustration, but lost market position in the AI race.

What's Actually Coming in the New Siri

Beyond "smarter," what does new Siri actually do differently?

Based on available information and the architecture Apple's revealed, here's what we're likely to see:

Context Awareness - New Siri will remember what you've been talking about. Ask about the weather, then say "will I need an umbrella?" Old Siri would be confused. New Siri will connect the dots.

Multi-Turn Conversations - Follow-ups will work naturally. Real conversation, not command-response-command cycles.

Screen Awareness - Siri will understand what's on your screen and reference it. Open an email, ask Siri about it, and Siri knows exactly which email you mean.

Device Control at Scale - Siri should be able to orchestrate complex actions across your devices. Not just "turn off the lights," but "I'm leaving, lock the doors, turn off the lights, set the thermostat to away mode, and remind me to grab my keys."

Natural Language Processing - Siri won't need the exact phrasing. You can ask for the same thing three different ways and get the same result.

Fallback Intelligence - If something fails, Siri explains what went wrong instead of just saying "I couldn't find that."

These aren't novel. ChatGPT does all of this. Google Gemini does all of this. The Apple difference is getting them to work in a voice-first interface that respects privacy and keeps simpler requests working offline.
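Context awareness and multi-turn conversation both depend on carrying state between turns. A common technique is slot carryover: remember the details from earlier requests and reuse them when a follow-up leaves them out. This sketch assumes a separate, hypothetical language-understanding step has already turned each utterance into an intent plus slots; `DialogueState` is illustrative, not how Apple implements it.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class DialogueState:
    """Remembers slots (location, date, ...) across turns so follow-ups can omit them."""

    slots: Dict[str, str] = field(default_factory=dict)

    def update(self, intent: str, new_slots: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
        # Start from what earlier turns established, then let this turn's explicit values win.
        self.slots = {**self.slots, **new_slots}
        return intent, dict(self.slots)


state = DialogueState()
# Hypothetical parser output for "What's the weather in Paris today?"
print(state.update("weather", {"location": "Paris", "date": "today"}))
# ('weather', {'location': 'Paris', 'date': 'today'})
# Hypothetical parser output for "Will I need an umbrella?": no slots of its own.
print(state.update("rain_forecast", {}))
# ('rain_forecast', {'location': 'Paris', 'date': 'today'})
```

In the umbrella example, the follow-up arrives with no location or date, so the state supplies the ones from the weather question. Knowing when to forget old slots is the harder part.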

The Privacy Trade-off Apple Needs to Address

Here's the tension that probably delayed Siri even more: privacy vs. capability.

Apple's brand promise is privacy. They've built an entire marketing campaign around not tracking users, not selling data, encrypting everything. It's core to their identity.

But modern AI assistants need to learn. They need patterns. They need data to get better. Full on-device learning can happen, but it's slower and less capable than cloud-based learning.

So Apple is in a bind. They need Siri to work like modern AI assistants, which means sending queries to Google Gemini. But every query that goes to Google is data leaving Apple's ecosystem, and that contradicts Apple's privacy positioning.

They've addressed this with privacy commitments: Apple promises Gemini won't learn from your Siri queries, Apple strips identifying information before sending, etc. But technically, the data still leaves your device.

This philosophical conflict probably contributed to delays too. Apple needed to engineer solutions that maintain privacy while still getting good results from Gemini. That's non-trivial.
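Apple hasn't published exactly how it strips identifying information, so here's only a hypothetical sketch of what that step could involve: redact obvious identifiers such as email addresses, phone numbers, and known contact names, and send a random one-time token instead of a stable device ID. The function name and regex patterns are assumptions, and the patterns are deliberately simple; they would miss plenty of real-world cases.

```python
import re
import secrets
from typing import Dict, List

# Deliberately simple, illustrative patterns; real PII detection needs much more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def prepare_cloud_request(query: str, contact_names: List[str]) -> Dict[str, str]:
    """Redact obvious identifiers and attach a one-time token instead of a device ID."""
    redacted = EMAIL.sub("<email>", query)
    redacted = PHONE.sub("<phone>", redacted)
    for name in contact_names:
        # Whole-word match so "Dana" doesn't clobber "Danae".
        redacted = re.sub(rf"\b{re.escape(name)}\b", "<contact>", redacted)
    # A fresh random token per request, so the token itself can't link requests to one device.
    return {"query": redacted, "request_token": secrets.token_hex(16)}
```

For example, "Email Dana at dana@example.com" becomes "Email <contact> at <email>" before it leaves the device. The cloud model still sees what you asked, just not who you asked it about.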

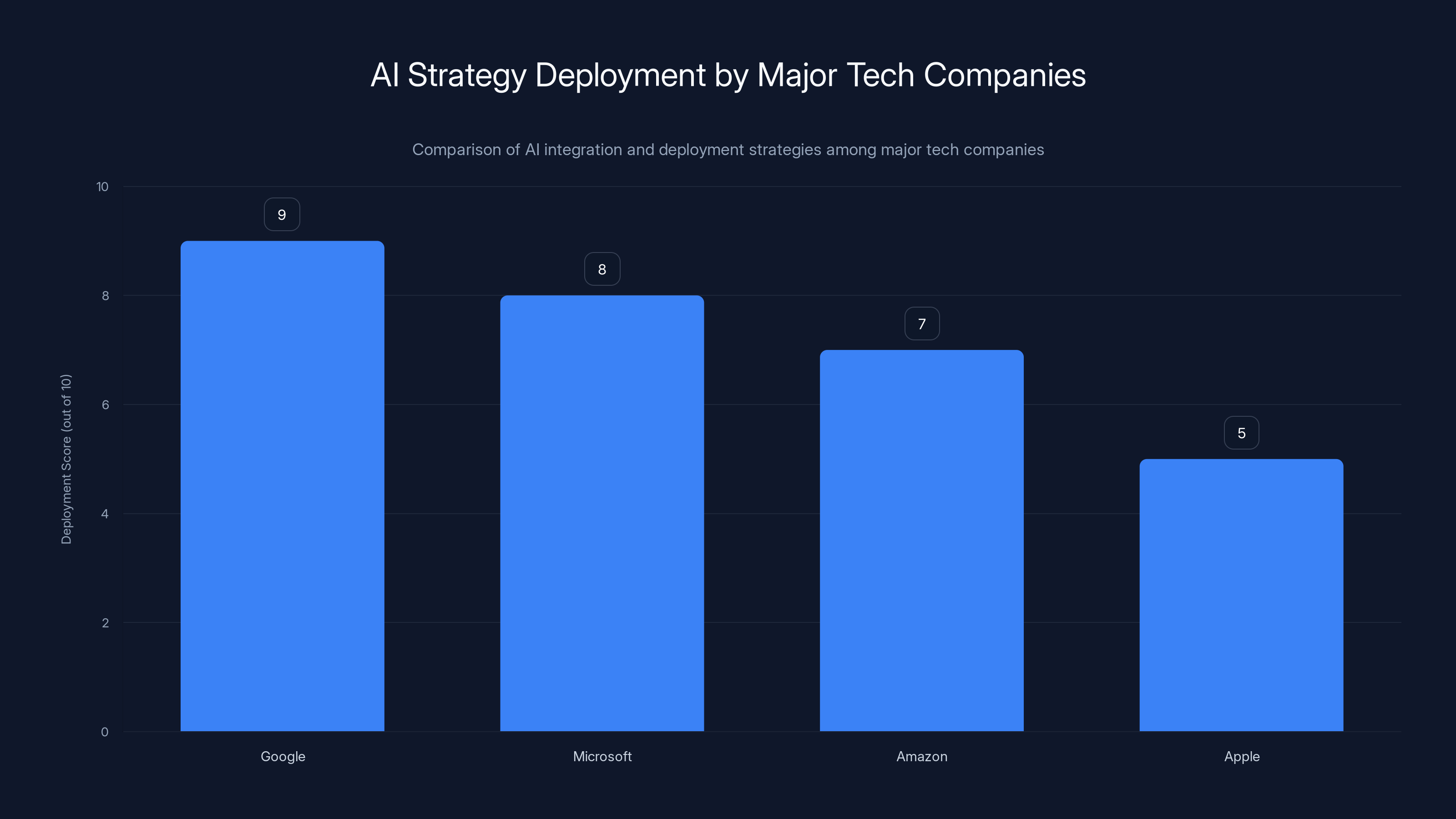

Google leads with aggressive AI integration across its ecosystem, followed by Microsoft and Amazon. Apple's delayed AI strategy results in a lower deployment score. Estimated data.

What These Delays Mean for Apple's AI Credibility

Apple positioned itself as serious about AI. Tim Cook's been talking about it. The marketing's been building. Apple Intelligence launched with real features.

But Siri? The most visible AI interface Apple owns? Still not ready.

That sends a message competitors will exploit. When marketing teams at Google and Microsoft point out that "our AI assistants shipped on time, Apple's keeps getting delayed," they're hitting a real credibility point.

It also raises questions about Apple's ability to execute on AI at the pace the market demands. The AI space moves fast. Companies that get stalled by delays risk getting leapfrogged by faster competitors.

For Apple, a company used to controlling its narrative and shipping on schedule, these delays are unusual and noteworthy. It suggests the technical challenges are genuinely difficult, not just Apple being cautious.

The Testing Problem: Why Delays Exist in Real Terms

Apple's statement about delays blamed "testing issues." That's corporate speak, but it's not wrong.

Voice assistants are incredibly hard to test at scale because the test surface is essentially infinite. Every possible combination of words, accents, languages, contexts, device states, network conditions, and user intent is a test case.

Testing frameworks usually work like this:

- Unit testing - Does each component work in isolation?

- Integration testing - Do components work together?

- System testing - Does the full system work?

- User acceptance testing - Do real users think it works?

- Load testing - Does it work when thousands/millions of people use it simultaneously?

- Edge case testing - What breaks?

Siri needs to pass all of these, but especially the last one. Every weird request, every accent, every corner case where Siri could fail and embarrass Apple in front of users.

You multiply that across 17 languages, 50+ countries, different device hardware, different iOS versions people are still running, and the test matrix becomes enormous.
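A quick back-of-the-envelope calculation shows how fast that matrix grows. Only the 17 languages come from the text above; the other dimension sizes and the phrasing count are assumptions picked for illustration.

```python
from math import prod
from typing import Dict

# Only the 17 languages comes from the article; the other sizes are assumptions.
dimensions: Dict[str, int] = {
    "language": 17,
    "device_model": 10,  # assumed number of supported hardware models
    "os_version": 4,     # assumed OS versions still in wide use
    "network": 4,        # e.g. Wi-Fi, 5G, weak signal, offline
    "device_state": 3,   # e.g. unlocked, locked, low-power mode
}


def matrix_size(dims: Dict[str, int], phrasings_per_config: int = 1) -> int:
    """Number of test cases if every combination of dimension values is covered."""
    return prod(dims.values()) * phrasings_per_config


print(matrix_size(dimensions))         # 8160
print(matrix_size(dimensions, 1_000))  # 8160000
```

That's 8,160 configurations before a single phrasing variant is considered, and over 8 million cases at an assumed 1,000 phrasings each, with accents and background noise not even in the picture yet. Teams often cut this down with techniques like pairwise (all-pairs) testing rather than covering every combination.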

A single uncaught bug where Siri misinterprets a voice command could go viral on social media. Apple can't afford that, so they test obsessively.

Runable and Workflow Automation Lessons from Siri's Delays

There's a broader lesson here for any team building complex systems. When you're integrating multiple components, testing becomes exponentially harder.

Platforms like Runable solve a related problem by allowing teams to build automated workflows without hitting integration nightmares. Instead of coordinating between voice processing, language models, device control, and cloud backends (like Apple is doing), you define workflows visually and let the platform handle the integration complexity.

Apple's challenge—coordinating between on-device and cloud AI—is similar to what enterprise teams face when trying to coordinate between multiple tools and APIs. The solution isn't avoiding complexity; it's managing it systematically.

Use Case: Teams building AI workflows can use Runable to create automated processes that coordinate across multiple AI models and services without custom integration work.


Apple's AI product delays run about twice the typical product-cycle duration, putting it at risk of falling behind competitors. Estimated data based on industry trends.

Market Impact: What Delayed Siri Means for Competitors

For Google, this is an opportunity. Android phones get a faster AI assistant upgrade than iPhones. Developers might start optimizing for Google Assistant first, knowing iOS will lag.

For Microsoft, this validates their bet on Copilot. While Apple's struggling to ship, Microsoft's been building AI into Windows, Office, and browsers at scale.

For Anthropic and OpenAI, it confirms that integrating advanced AI into existing products can be harder than building the models in the first place. That's actually good news for them: building AI models is one challenge, integrating them into products at scale is another, and both are valuable.

For end users, the impact is straightforward: if you're on iOS and waiting for a better Siri, you've got another 8+ months to wait. In the meantime, Android users are getting Gemini integration in Google Assistant. That's a real product advantage.

Why Apple's Delays Matter More Than Other Companies' Delays

When Google delays something, it's frustrating but expected. Google ships beta features all the time. Delays are part of their process.

When Microsoft delays something, people assume Windows complexity made it necessary.

When Apple delays something, it signals that something is genuinely wrong. Apple's brand is built on precision, on shipping things right. Delays break that perception.

So these Siri delays are uniquely damaging to Apple's brand positioning. Customers expect Apple products to work flawlessly. Delays suggest they don't, that engineers are struggling, that Apple might have overpromised.

The Months Ahead: What to Expect

March brings iOS 18.4 with minor Siri improvements, probably limited to low-risk enhancements Apple is confident about.

May brings iOS 18.5 with more substantial Siri improvements, but probably not the full feature set. Expect on-device improvements first, since those don't depend on the Google Gemini partnership.

September brings iOS 27 with the full vision Apple originally promised. Assuming the May rollout goes smoothly and they're not hit with new issues.

Each phase will be watched closely by competitors and the tech press. If May's release feels half-baked, expect headlines about Apple's AI stumble. If it's solid, the narrative resets to "Apple got it right, just took time."

Lessons for Building AI Products at Scale

The Siri delays teach anyone building AI products some hard truths:

First: Integration complexity grows non-linearly. Apple isn't just shipping a language model. They're shipping it integrated into devices, ecosystems, privacy frameworks, and backward compatibility requirements. Each integration point multiplies the number of interactions that can go wrong.

Second: Testing voice at scale is genuinely hard. Text chatbots can be tested more systematically because the input already arrives as clean text. Voice has accents, background noise, regional variations, colloquialisms. Testing all of that across millions of devices is an engineering nightmare.

Third: Cloud partnership adds risk. Apple's dependency on Google Gemini means delays on Google's side can affect Apple's timeline. That's one reason relying on a cloud partner can be riskier than building everything in-house.

Fourth: Privacy is a design constraint, not an afterthought. If Apple tried to skip privacy considerations and just copy OpenAI's or Anthropic's approach, they'd ship faster. But it would contradict their brand. So they have to engineer privacy in from the start, and that's harder and slower.

The Future: What's Beyond September's iOS 27

Assuming the September launch goes well, what's next for Siri?

Expect continuous iteration. Apple will push toward more on-device capability, reducing cloud dependency as neural engines get faster. They'll expand to new languages and regions gradually. They'll integrate Siri more deeply with the smart home, health features, and the car.

But the next big milestone will be whether Siri actually becomes competitive with Google Gemini, ChatGPT, and Claude. Shipping isn't winning. Working well matters more.

If September's Siri is fast, accurate, and actually useful, the delays become forgiven history. If it launches and people immediately notice it's still not as good as asking ChatGPT, the delays become a symbol of Apple's AI struggle.

The Broader AI Market Implications

These delays aren't just about Siri. They reflect a broader truth: building AI products at the scale Apple operates requires time and capital that even Apple struggles with.

Smaller companies might move faster with simpler solutions. But Apple's challenge is different. They need Siri to work across billions of devices, in dozens of languages, while maintaining privacy, integrating with their ecosystem, and not breaking existing functionality.

That's not easy. And these delays prove it.

For the industry, it means the AI race isn't won by whoever ships first, but by whoever ships right. Patience matters. Execution matters more. Apple's betting on that—that if they take their time and ship a great Siri, the delays become irrelevant.

Time will tell if they're right.

FAQ

When will the new Siri actually launch?

Based on current timelines, some new Siri features will arrive with iOS 18.5 in May 2025, while the full reimagined Siri with complete AI capabilities is expected with iOS 27 in September 2025. However, Apple has delayed these dates before, so further pushbacks are possible.

Why is the new Siri taking so long to develop?

The delays stem from testing issues discovered during development, particularly around the integration between on-device processing and cloud-based Google Gemini services. Apple needs to ensure the assistant works seamlessly across billions of devices in multiple languages while maintaining privacy standards and handling edge cases where connectivity fails or responses are delayed.

How will new Siri be different from current Siri?

New Siri will function more like modern large language model chatbots such as ChatGPT and Google Gemini, with improved context awareness, multi-turn conversations, screen understanding, and the ability to execute complex multi-step commands. Users will be able to speak naturally without requiring exact command phrasing.

Will new Siri work without internet connection?

Partly. Apple is designing new Siri to work both on-device and in a hybrid mode with cloud processing. Simpler requests should work offline using on-device processing, while more complex queries will be sent to Google Gemini when an internet connection is available. Fallback mechanisms should let Siri degrade gracefully when cloud services are unavailable.

Is Apple partnering with Google for Siri's AI?

Yes, Apple and Google are partnering to integrate Google Gemini as the cloud-based language model powering new Siri's advanced capabilities. This partnership allows Apple to leverage Gemini's reasoning and language understanding while maintaining their privacy-first approach through data handling agreements.

How does delayed Siri affect Apple's competitiveness in AI?

The delays put Apple behind competitors like Google and Microsoft, who have already integrated advanced AI assistants into their devices and services. However, Apple's approach of focusing on quality and privacy, rather than speed to market, aims to eventually deliver a superior user experience that justifies the longer development timeline.

Will the new Siri work on older iPhone models?

While Apple hasn't officially specified device requirements, the new Siri will likely require an iPhone 15 Pro or later, the same hardware Apple Intelligence already requires, because on-device processing depends on the Neural Engine and memory in those models. Older devices may still receive some cloud-based features if they can handle the connectivity and API requirements, though with reduced capability.

What happens to current Siri functionality during the transition?

Current Siri will continue functioning normally and receive minor improvements during the transition period. Apple is implementing the new Siri features gradually through iOS 18.4, 18.5, and iOS 27 releases to ensure stability and avoid disrupting existing user workflows that depend on current Siri capabilities.

How will Apple ensure privacy with cloud-based Gemini integration?

Apple has committed to stripping identifying information from Siri queries before sending them to Google Gemini, preventing Google from learning user-specific patterns. Additionally, Google has agreed not to use Siri queries for model training or ad targeting, though this creates a unique business arrangement between the typically competing companies.

Conclusion: Patience in the AI Age

Apple's Siri delays are frustrating, but they're also revealing.

They show that even the most capable company in the world can't instantly pivot from being behind in AI to being ahead. They show that integration complexity is real. They show that shipping working AI at scale is harder than most people realize.

But here's what matters: Apple's committed to getting it right. They're not shipping a half-baked Siri just to hit a deadline. They're taking the time to make it work.

In a market flooded with rushed AI features that often don't work as promised, there's something to be said for that approach.

The new Siri launching in May or September won't be perfect. No voice assistant is. But if Apple nails the basics—speed, accuracy, context awareness, reliability—then these delays become a footnote in history.

When Siri finally arrives, the conversation will shift from "why did it take so long?" to "does it actually work?" That's when the real competition begins.

Until then, we wait. And Apple keeps testing. And competitors keep shipping features. And users keep asking why their iPhone's assistant still isn't as smart as ChatGPT.

The delays matter less than the final product. And Apple is betting that taking the extra time will pay off.

Key Takeaways

- New Siri features delayed from March 2025 to May (iOS 18.5) and September (iOS 27) due to testing issues with Google Gemini integration

- Apple is building a hybrid on-device and cloud-based Siri powered by Google's Gemini to compete with ChatGPT and Claude capabilities

- Testing voice assistants at scale is exponentially complex, requiring validation across billions of devices, multiple languages, and countless edge cases

- The delays signal that integrating advanced AI into existing products is harder than building AI from scratch, benefiting both AI startups and platform companies differently

- Competitors like Google and Microsoft are shipping AI assistant features faster, giving them market advantage while Apple prioritizes quality over speed

