Bandcamp's AI Music Ban: What It Means for Artists and the Industry [2025]

In January 2025, something genuinely rare happened in tech. A major platform took a hard stance against AI. Not a cautious middle ground. Not a "we're monitoring the situation." A complete ban.

Bandcamp announced it would no longer permit AI-generated music on its platform. Period. The company also prohibited users from scraping content or using uploaded audio to train machine learning models.

This matters because Bandcamp isn't some niche startup. It's one of the few places where independent musicians actually make money. Artists have earned over $3 billion through the platform since its founding in 2008. When Bandcamp speaks, independent musicians listen.

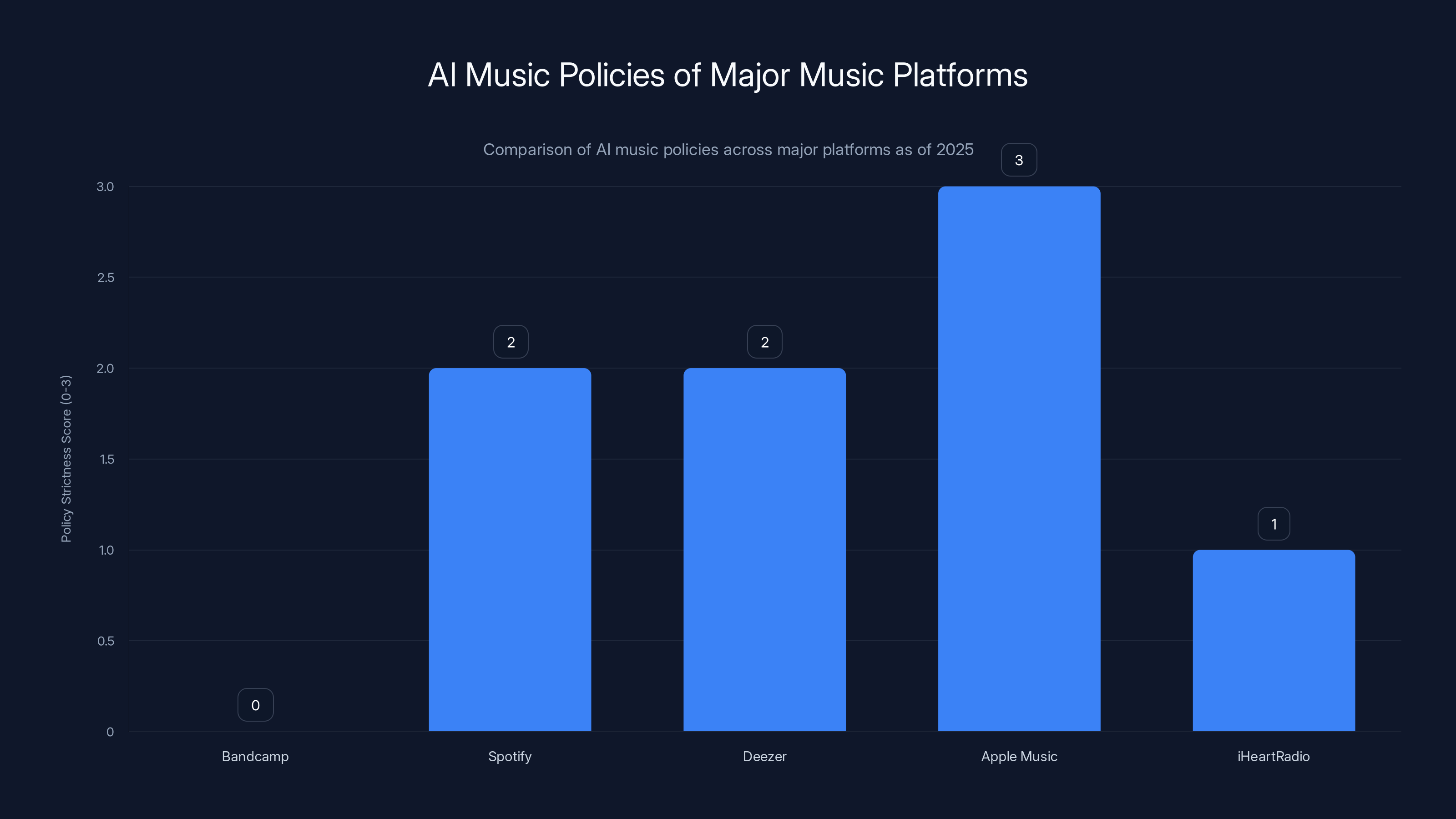

But here's what caught everyone by surprise: Bandcamp was the first major music platform to go this far. Spotify? They banned music that imitates real artists, but they allow some AI content. Deezer took a similar cautious approach. Even Apple Music hasn't issued a blanket prohibition. Meanwhile, iHeartRadio pledged not to play AI music or use AI DJs, but enforcement remains unclear.

Bandcamp just drew a line in the sand.

This decision didn't happen in a vacuum. It comes after months of increasing artist outrage, copyright lawsuits against AI companies, and growing awareness that AI training datasets were built largely without artist consent. Understanding this moment—and what comes next—requires looking at the who, what, why, and how of Bandcamp's decision.

TL;DR

- Bandcamp banned AI-generated music entirely, making it the first major music platform with a complete prohibition

- Artists cannot upload music made substantially or wholly by AI, and the platform encourages users to report violations

- Content scraping for AI training is prohibited, preventing companies from using Bandcamp's catalog to train models without permission

- Other platforms remain cautious, with Spotify and Deezer focusing on imitation rather than outright bans

- This reflects broader artist concerns about consent, copyright, and the future of human-made music

Bandcamp has implemented the strictest policy against AI music with a complete ban, while other platforms have partial restrictions or unclear policies. Estimated data based on policy descriptions.

The Context: Why This Moment, Why Now

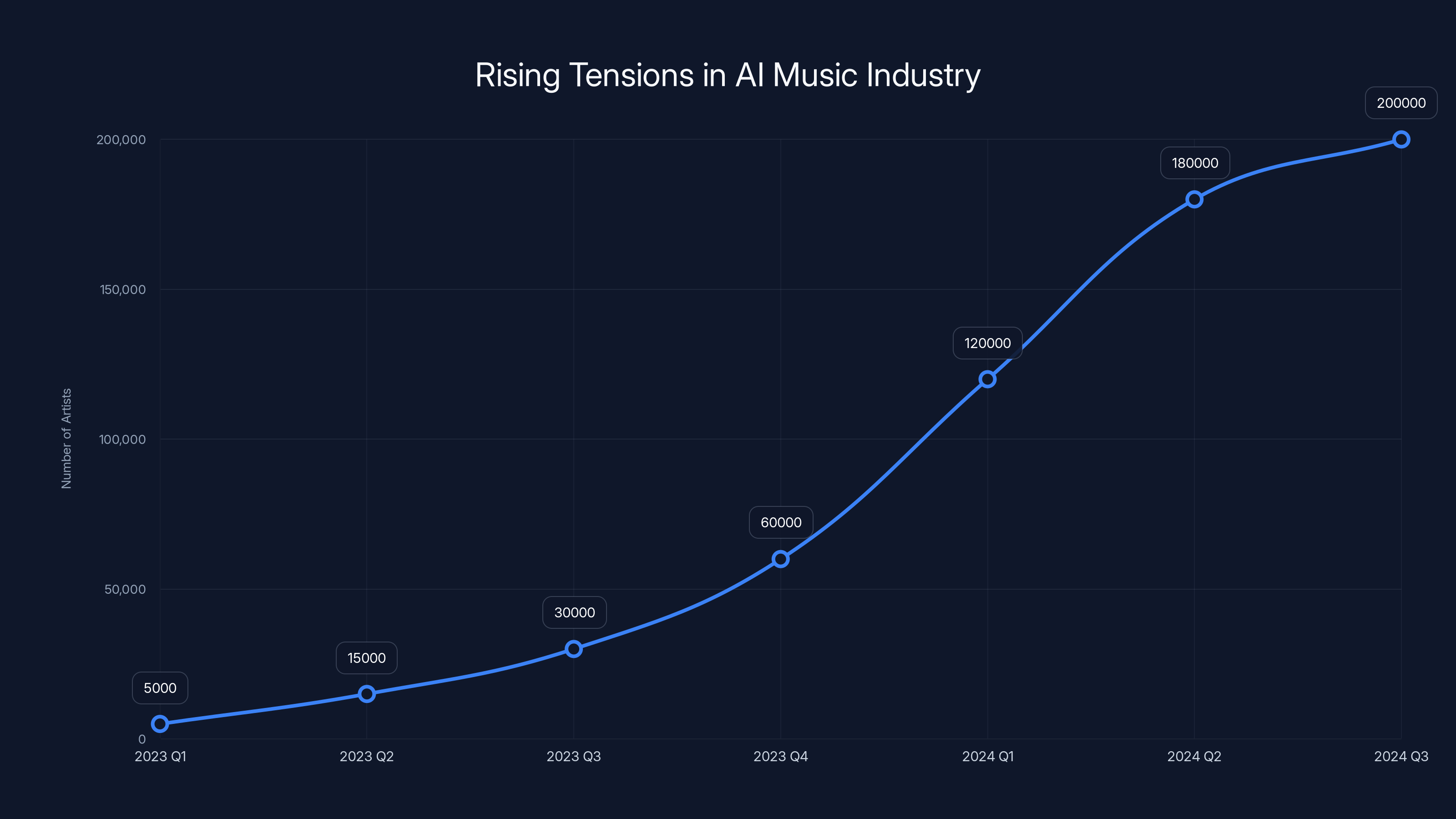

Bandcamp's decision didn't emerge from nowhere. It's the culmination of a year of increasing tension between AI companies, artists, record labels, and music platforms.

The AI music training debate has three interconnected problems. First, companies like OpenAI, Google, and Meta have trained their models on massive datasets that include copyrighted music and artist work—often without explicit consent or compensation. Second, generative AI tools can now create passable music, which threatens traditional musician income streams. Third, AI can impersonate specific artists, which raises both legal and ethical red flags.

By mid-2024, the anger reached critical mass. Universal Music Group, Sony Music Entertainment, and Warner Music Group sued major AI music generators Suno and Udio, claiming copyright infringement. The lawsuits alleged that the AI companies used copyrighted songs at massive scale to train their models without licensing or permission.

Then came the protest songs. Artists started releasing tracks with lyrics specifically designed to poison AI training data. The message was clear: we didn't consent to this, and we're not happy about it.

Bandcamp's timing is strategic but also inevitable. The company has always positioned itself as artist-first. Spotify, Apple Music, and Amazon Music answer to shareholders or parent companies with other interests. Bandcamp has a corporate owner too (the music licensing company Songtradr acquired it in 2023), but its core business model still depends on artists trusting the platform with their work and their livelihood.

If artists stopped uploading to Bandcamp, the platform would die. So when the artist community mobilized against AI, Bandcamp listened.

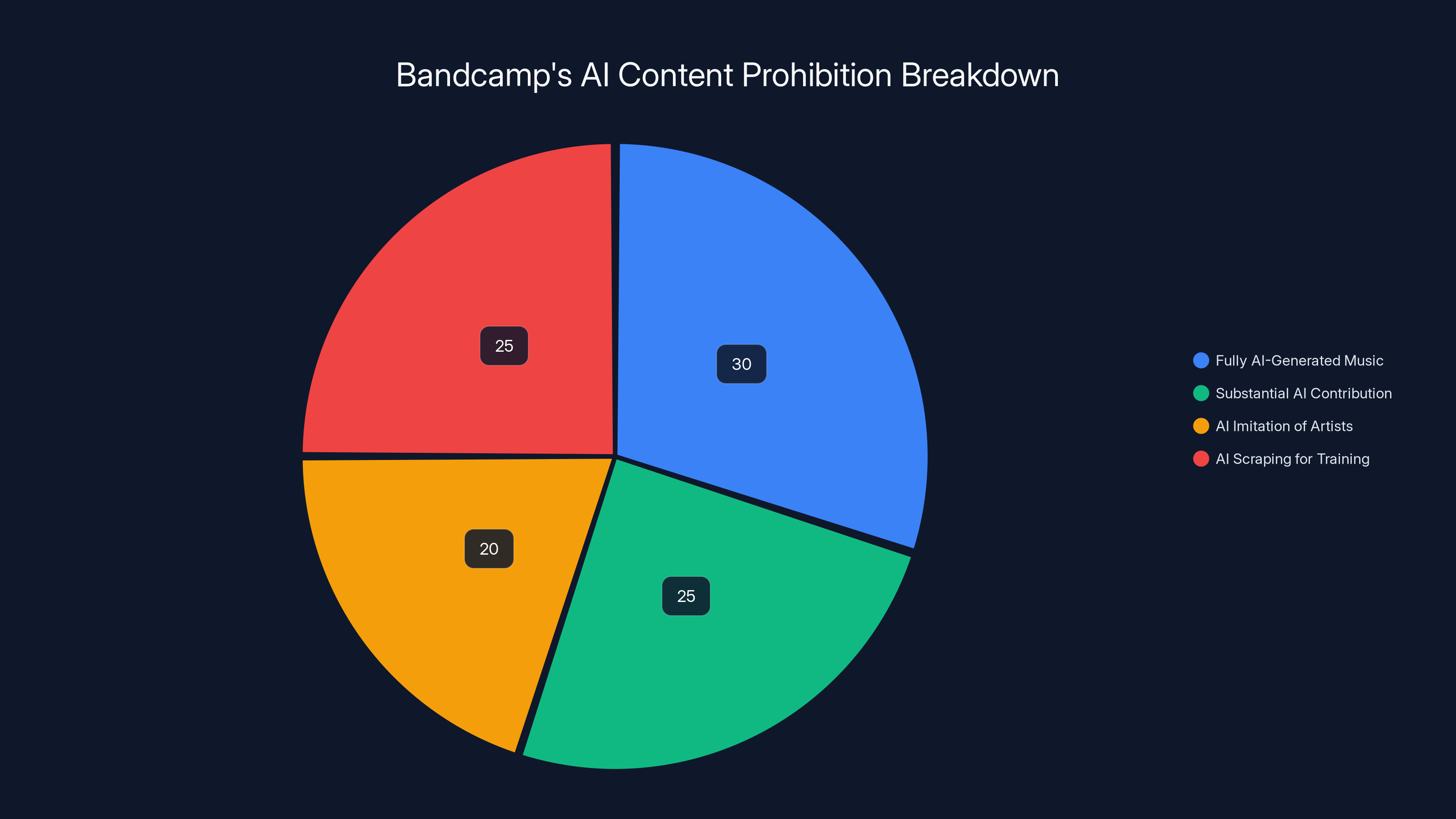

Estimated data suggests that Bandcamp's AI policy is equally focused on prohibiting fully AI-generated music, substantial AI contributions, AI imitation of artists, and AI scraping for training purposes.

What Exactly Did Bandcamp Prohibit

Bandcamp's policy is actually remarkably clear, which is refreshing in an industry drowning in ambiguity.

The core rule: "Music and audio that is generated wholly or in substantial part by AI is not permitted on Bandcamp."

Let's break down what this means in practice. If you used an AI tool to generate 100% of a track, it's banned. If AI generated 70% of a track and you wrote or heavily reworked the remaining 30%, it's still banned because it was "in substantial part" AI-generated. These percentages are illustrative; the quoted rule names no numeric threshold.

But what about using AI for a drum loop while writing melodies yourself? Or using AI for arrangement suggestions while playing instruments live? The policy doesn't explicitly address these hybrid scenarios, and that's where enforcement gets tricky.

The second part of the policy specifically prohibits using AI to imitate other artists or styles. This mirrors Spotify's policy from September 2024, which focuses heavily on impersonation.

The third prohibition is perhaps most significant for AI companies: Bandcamp explicitly prohibits scraping its platform or using its content to train any machine learning or AI model. Users agree not to "train any machine learning or AI model using content on our site or otherwise ingest any data or content from Bandcamp's platform into a machine learning or AI model."

This is a direct response to how modern AI works. Companies train models on massive datasets scraped from the internet. By prohibiting scraping, Bandcamp removes itself from the training data pool for any responsible AI company. Companies that ignore this and scrape anyway open themselves to legal liability.

Bandcamp also encourages the community to report violations. The company built this into the platform—users can flag music that "appears to be made entirely or with heavy reliance on generative AI." This shifts some moderation burden to the community, which is both efficient and risky. Community moderation works when standards are clear and the community agrees on enforcement. On a subjective topic like "how much AI is too much," this could get messy.
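One way to picture how community flags could feed a review queue is the small sketch below. It is an illustration only, not Bandcamp's system: the function name, the data shape, and the threshold of three distinct reporters are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical threshold: how many distinct users must flag a track
# before it is queued for human review. Not a Bandcamp value.
REVIEW_THRESHOLD = 3


def tracks_needing_review(reports, threshold=REVIEW_THRESHOLD):
    """Return track IDs flagged by at least `threshold` distinct users.

    `reports` is an iterable of (track_id, reporter_id) pairs.
    Repeat flags from the same user count only once, which blunts
    one person mass-reporting an artist they dislike.
    """
    reporters_by_track = defaultdict(set)
    for track_id, reporter_id in reports:
        reporters_by_track[track_id].add(reporter_id)
    return sorted(
        track_id
        for track_id, reporters in reporters_by_track.items()
        if len(reporters) >= threshold
    )


reports = [
    ("track-a", "user-1"),
    ("track-a", "user-1"),  # duplicate flag from the same user
    ("track-a", "user-2"),
    ("track-b", "user-1"),
    ("track-b", "user-2"),
    ("track-b", "user-3"),
]
print(tracks_needing_review(reports))  # ['track-b']
```

Counting distinct reporters rather than raw flags is a design choice that addresses the "messy" case described above, but it only decides what a human looks at. It says nothing about whether the track is actually AI-generated.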

How Bandcamp's Ban Compares to Other Platforms

Here's where the landscape gets interesting. Bandcamp isn't operating in isolation. Let's map how major music platforms are actually handling AI content right now.

Spotify's Cautious Approach

Spotify announced its AI policy in September 2024. The platform doesn't ban AI-generated music outright. Instead, it focuses on preventing impersonation and requiring clear labeling in some cases.

Spotify's actual enforcement mechanism is softer than Bandcamp's. The company said it would remove content if "created to sound like a real artist without their consent," but content that's labeled as AI or doesn't attempt impersonation remains allowed. Spotify also allows artists to opt out of AI training via their artist dashboard, which is useful but requires individual action.

The practical difference: a generic lo-fi beat created entirely by Suno would theoretically be fine on Spotify if it doesn't impersonate anyone. That same track would be banned on Bandcamp.

Apple Music's Silent Handling

Apple Music hasn't issued a comprehensive AI policy statement. The platform moderated some AI content quietly, removing music suspected of being AI-generated, but there's no public framework for what is or isn't allowed.

This approach is frustrating for both artists and AI music creators because the rules remain invisible. You upload something, it gets removed, and the reason might be AI detection—or it might be something else entirely.

Amazon Music and YouTube Music

Both services have largely stayed quiet on comprehensive AI bans. Amazon Music and YouTube Music moderate content case-by-case without announcing a major policy shift.

YouTube's position is complicated because the platform hosts AI music creation content—tutorials, reviews, and music created with AI tools. Banning AI music entirely would require massive content removal.

SoundCloud's Ambiguous Position

SoundCloud, another major platform for independent creators, hasn't made a strong statement either direction. The platform allows AI-generated music currently but provides tools for artists to protect their work from being used in training datasets.

iHeartRadio's Radio-Specific Ban

iHeartRadio pledged in November 2024 not to play AI-generated music on its radio stations or use AI DJs. But iHeartRadio is a radio service, not a music hosting platform. The company can control what plays on its network without needing to enforce an upload ban.

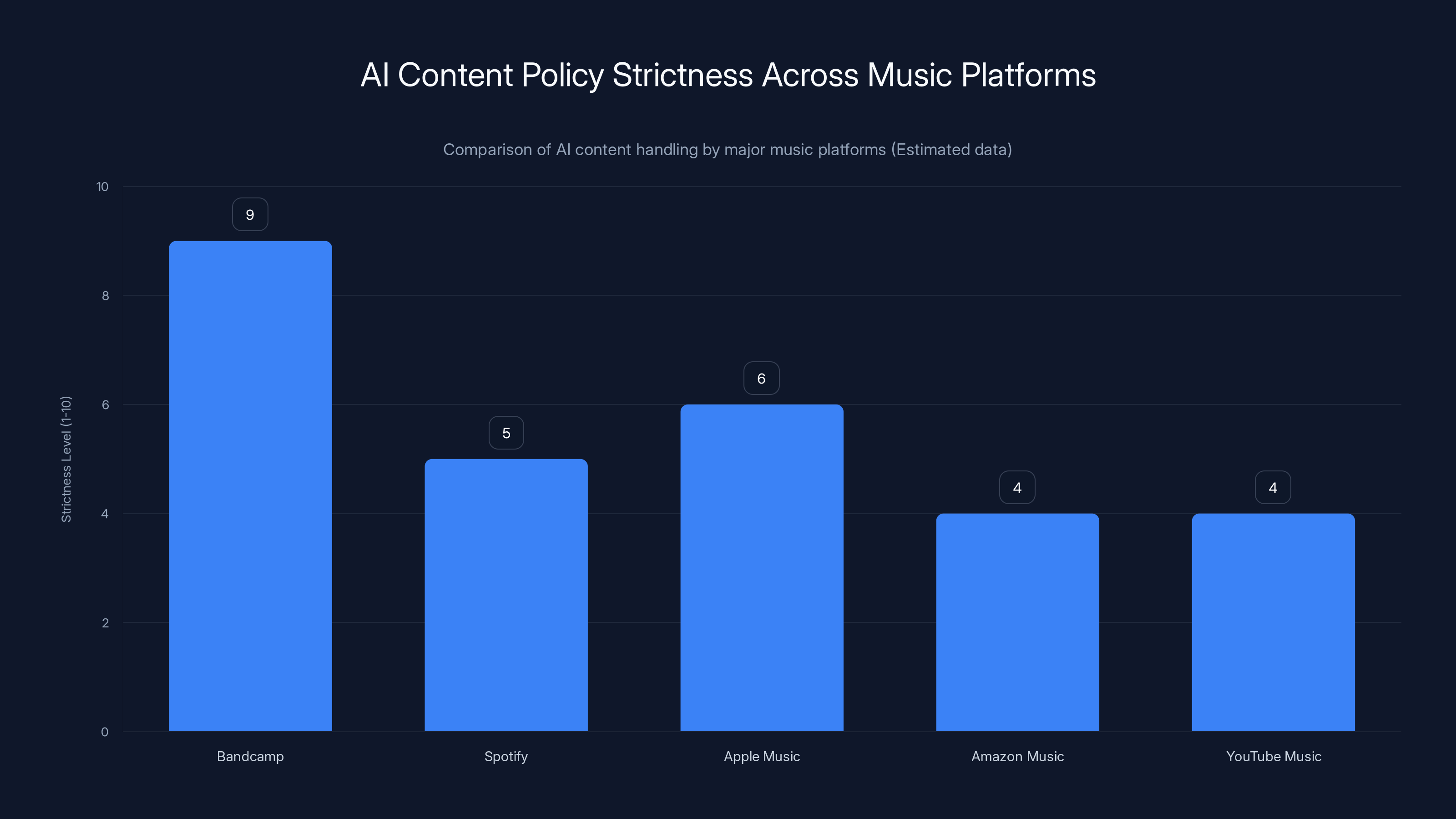

Bandcamp has the strictest AI content policy, banning all AI-generated music, while Spotify and others have more lenient or unclear policies. (Estimated data)

Why Bandcamp's Approach Is Different

Bandcamp's decision stands apart for one key reason: it's not a compromise policy. The company didn't create a middle ground. It didn't introduce labeling requirements or individual opt-outs. It went all-in on artist protection.

This reflects Bandcamp's business model and values in a way that Spotify's approach cannot. Spotify is a publicly traded company managing relationships with labels, rights holders, and investors with competing interests. Bandcamp, although owned by Songtradr since 2023, earns its revenue from cuts of direct artist sales, so the stakeholders that matter most are artists.

When your entire platform exists to serve musicians and take cuts from their sales, you can't afford to let AI-generated music dilute the value of human-created work. Every AI-generated track that trends on Bandcamp is a track that might have been created by a real artist who needs that income.

The policy also reflects something deeper: Bandcamp's team genuinely seems to believe that unauthorized training data scraping is wrong. The company isn't just preventing uploads; it's actively prohibiting its content from being used to train AI models. This is a values-based position, not just a business decision.

The Enforcement Question: How Will This Actually Work

Here's where reality gets complicated. Banning something and enforcing that ban are two very different challenges.

Detecting AI-generated music is getting harder. Early AI music tools produced output that was obviously synthetic—repetitive, uncanny, missing the subtle details that make human-played music compelling. But by late 2024, tools like Suno and Udio were producing material that could pass casual listening tests.

Bandcamp's strategy relies on three enforcement mechanisms:

First: Community reporting. Users flag content they believe is AI-generated. Bandcamp then reviews flagged content. This works but creates problems. What happens when someone falsely reports a human artist? What if a musician made something unconventional and it gets reported? The policy says content generated "wholly or in substantial part" by AI is banned, but determining that threshold requires human judgment.

Second: Audio fingerprinting and detection tools. Bandcamp could deploy (or develop) AI tools specifically designed to detect AI-generated music. This is recursive—using AI to catch AI—but it's technically possible. Companies like Authenticity.ai have started offering detection services for this exact purpose.

Third: Metadata and context. If someone uploads a track and the metadata includes "Generated with Suno," that's pretty clear. But users can hide this information or lie about their tools.
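The metadata check in the third mechanism is the simplest to sketch. The example below is a minimal illustration, assuming track tags have already been parsed into a dictionary; the function name and the two-name generator list (the tools named in this article) are assumptions, and nothing here reflects how Bandcamp actually screens uploads.

```python
import re

# Illustrative list: Suno and Udio are the generators named in this article.
# Word boundaries matter, otherwise "udio" would match inside "audio".
GENERATOR_PATTERN = re.compile(r"\b(suno|udio)\b", re.IGNORECASE)


def declared_ai_tools(metadata):
    """Return generator names mentioned in any metadata value.

    `metadata` is a dict of tag name -> text. This only catches
    self-declared AI use: stripped or falsified tags pass straight through.
    """
    found = set()
    for value in metadata.values():
        for match in GENERATOR_PATTERN.findall(str(value)):
            found.add(match.lower())
    return sorted(found)


print(declared_ai_tools({"comment": "Generated with Suno", "title": "Night Drive"}))  # ['suno']
print(declared_ai_tools({"comment": "Recorded to audio tape"}))  # []
```

As the text notes, this catches only uploaders who leave the evidence in place, so it works as a cheap first filter and not as a detector.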

Bandcamp hasn't announced which detection methods they're using, which means they're probably relying primarily on community reports for now and building detection capability over time.

The enforcement challenge also extends to the scraping prohibition. How does Bandcamp prevent AI companies from scraping its platform? Primarily through legal terms and technical barriers. The company probably uses robots.txt rules and rate-limiting to discourage bots. They can also pursue legal action against companies that scrape deliberately and in violation of the terms of service.
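To make the robots.txt point concrete, here is a small sketch using Python's standard `urllib.robotparser`. The robots.txt content, the `ExampleAIBot` user agent, and the example.com URLs are all hypothetical; this is not Bandcamp's actual file. It shows how a well-behaved crawler would check the rules before fetching.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt, not Bandcamp's actual file.
EXAMPLE_ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_ROBOTS_TXT.splitlines())


def may_fetch(user_agent, url):
    """Return True if the parsed rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


print(may_fetch("ExampleAIBot", "https://example.com/album/demo"))  # False
print(may_fetch("SomeBrowser", "https://example.com/album/demo"))   # True
print(may_fetch("SomeBrowser", "https://example.com/private/x"))    # False
```

The check runs on the crawler's side, which is the weakness: robots.txt is a request, not a barrier. A scraper that never calls anything like `may_fetch` is stopped only by rate limits and, ultimately, by the terms of service.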

But determined scrapers can work around these defenses. A sufficiently motivated AI company could download Bandcamp tracks manually, use rotating IP addresses to avoid detection, or hire contractors to collect data. This is why Bandcamp's policy is meaningful primarily as a moral and legal statement: "If you scrape us, you're liable."

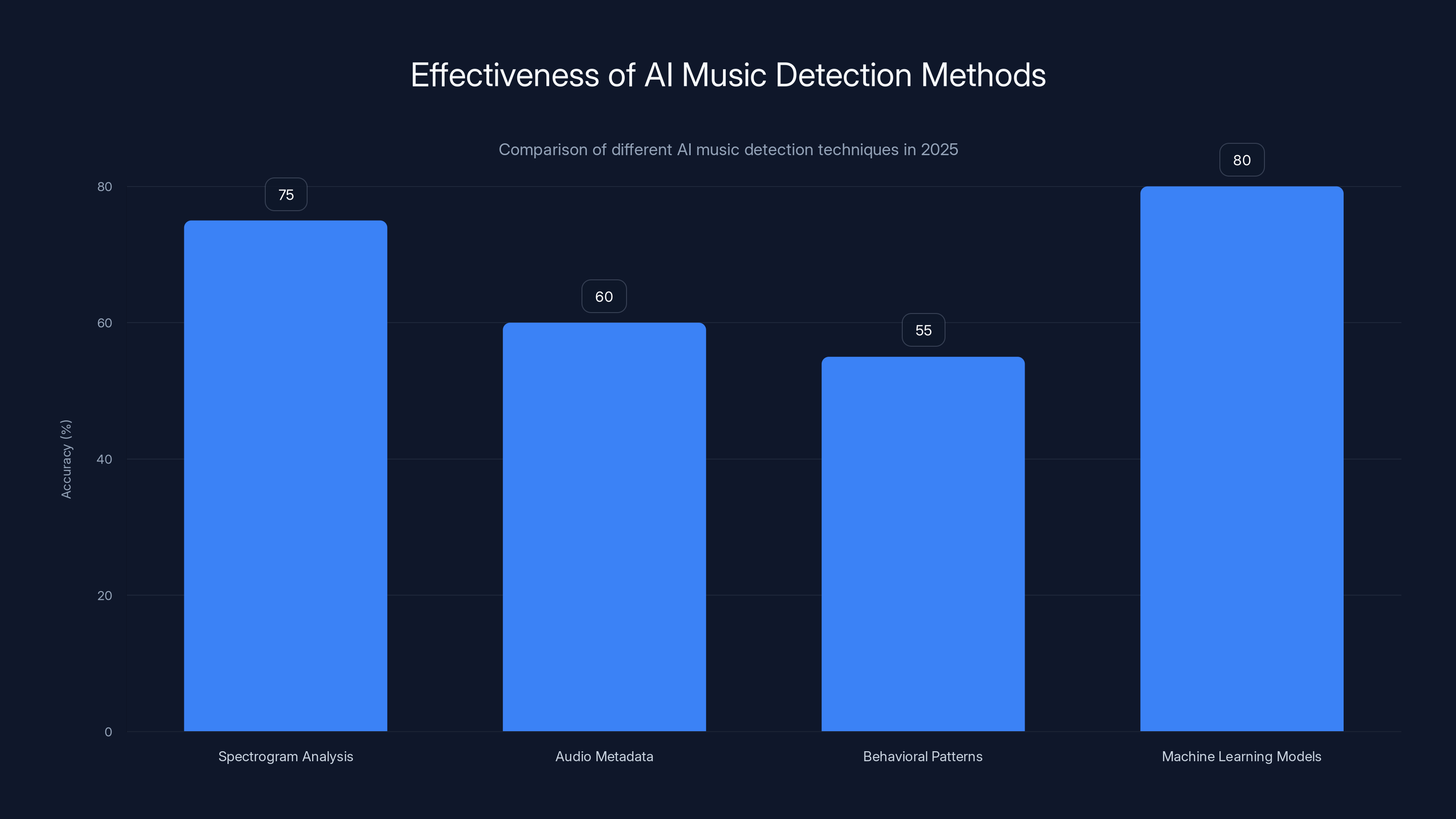

Machine learning models are currently the most effective method for detecting AI-generated music, with an estimated accuracy of 80%. However, all methods face challenges with false positives and negatives. (Estimated data)

What This Means for AI Music Creators

Let's be clear about what Bandcamp's ban does NOT do. It doesn't prevent AI music tools from existing. It doesn't shut down Suno or Udio or any other AI music generator. It doesn't make it illegal to create AI music.

What it does is eliminate one major distribution channel for AI-generated music. For creators trying to monetize AI-generated tracks through direct sales to listeners, Bandcamp is now off the table.

For hobbyists and experimentalists, this is probably fine. There are dozens of platforms where you can upload and share AI-generated music without restriction. SoundCloud still allows it. Lesser-known platforms like Audiomatch, ReverbNation, and various music submission sites don't have blanket bans.

But for someone trying to build a legitimate business around AI music—creating background music for content creators, producing AI-generated instrumental albums, or developing AI as a creative tool—losing Bandcamp is a real cost. Bandcamp is one of the few platforms where independent creators can actually build an audience and earn meaningful revenue without a record label.

The bigger picture: Bandcamp's ban signals that the industry is increasingly hostile to unattributed, non-consensual AI music. If more platforms follow Bandcamp's lead, AI music creators will face a narrowing distribution landscape.

There's also a philosophical question here. Some artists and technologists argue that AI music creation is a legitimate artistic tool, no different from synthesizers or drum machines. This argument has merit. The issue isn't AI creation itself; it's unauthorized training data and AI that impersonates real artists without consent.

Bandcamp's policy conflates these issues somewhat. It bans all AI-generated music, even music created by someone who built their own AI model on their own training data and created something entirely original. That's arguably overly broad.

What This Means for Human Musicians and Artists

For the artists Bandcamp serves, the ban is largely good news. It's a statement that Bandcamp values human creativity and is willing to limit the platform to protect artist income and reputation.

But there are nuances. The ban doesn't prevent AI music from existing elsewhere. It just keeps it off Bandcamp. A musician still faces competition from AI-generated music on every other platform. Spotify will still recommend AI lo-fi beats to users looking for background music. YouTube still hosts AI-generated music. Apple Music still allows it (though they're quieter about it).

Bandcamp's decision is meaningful primarily because Bandcamp's users—both artists and listeners—tend to value human creativity and artist support. The platform's community culture aligns with protection of human-made music.

There's also a longer-term question: Does this increase or decrease opportunities for musicians?

One argument says it increases them: By eliminating cheap AI-generated competition on Bandcamp, the platform becomes more valuable for human artists. Listeners come to Bandcamp specifically to find human-created music. That concentration of human artists creates a more valuable audience.

Another argument says the ban is mostly symbolic. The real problem isn't AI music existing on Bandcamp; it's AI music existing everywhere else, trained on human artist data without consent. Banning it from one platform doesn't solve the core problem.

Estimated data shows a sharp increase in artists opting out of AI training datasets, reaching over 200,000 by October 2024.

The Bigger Issue: Training Data and Consent

Here's the thing that matters most: Bandcamp's AI music ban is really a proxy for the actual issue, which is about training data consent.

Artists aren't mainly angry because AI music exists. They're angry because AI companies built their models using copyrighted music without asking permission, without offering compensation, and without clear licensing.

Consider what happened with music AI. Companies like OpenAI (used music in text-to-music experiments), Google (trained models on YouTube music), and various startups (scraped entire music catalogs) essentially said: "We're going to use your work to build a product that will compete with you, and you don't get a say."

This is why the lawsuits from Warner Music Group, Universal, and Sony matter so much. These companies are fighting for the principle that artists should control their work and get paid when their work is used.

Bandcamp's scraping prohibition is the real enforcement mechanism. By saying "you can't train on our content," Bandcamp is drawing a line that forces AI companies to either license content or find other sources.

This matters because it raises the cost of building music AI ethically. If every platform requires licensing, AI companies have to negotiate deals with artists and rights holders. That's expensive. It's also more fair.

The unresolved question is whether legal pressure and platform policies can actually change AI company behavior. Companies determined to build AI music models can always scrape content, use licensing loopholes, or source data from outside the U.S. (where enforcement is weaker).

But they do so at legal risk. And increasingly, that legal risk seems substantial.

How the Industry Might Respond

Bandcamp's decision creates pressure on other platforms. Internally, executives at Spotify, Apple Music, and Amazon Music are probably having urgent conversations about policy.

The calculus looks like this: If Bandcamp can take a hard AI music stance without losing users, other platforms might follow. But Bandcamp's incentive structure is unique. Its revenue comes from artist sales, so it is artist-focused by design. Spotify answers to investors and major labels. The decision-making pressures are different.

Still, expect more explicit AI music policies in 2025. Here's what might happen:

Scenario 1: Status Quo Continuation. Platforms maintain ambiguous policies, moderate on a case-by-case basis, and avoid taking strong positions. This is easiest for platforms because it minimizes liability and avoids alienating either AI enthusiasts or artist communities.

Scenario 2: Spectrum-Based Approaches. Platforms create tiered categories: AI-generated content must be clearly labeled, and recommendations are adjusted accordingly. Users can opt to see or hide AI music. This is the Spotify path: middle-ground enforcement.

Scenario 3: Bandcamp-Style Bans. Other platforms recognize that artist trust matters more than hosting AI music, and they implement comprehensive bans. This seems less likely for major platforms but possible for artist-focused services.

Scenario 4: Licensing-Based Solutions. Platforms develop licensing agreements with AI companies, creating legal pathways to use human music for training in exchange for compensation. This requires negotiation but could become standard practice.

My prediction: We'll see a mix. Larger platforms will lean toward spectrum-based approaches with clearer labeling. Artist-focused platforms will follow Bandcamp toward bans. New AI music platforms will emerge that focus on transparently licensed or original training data.

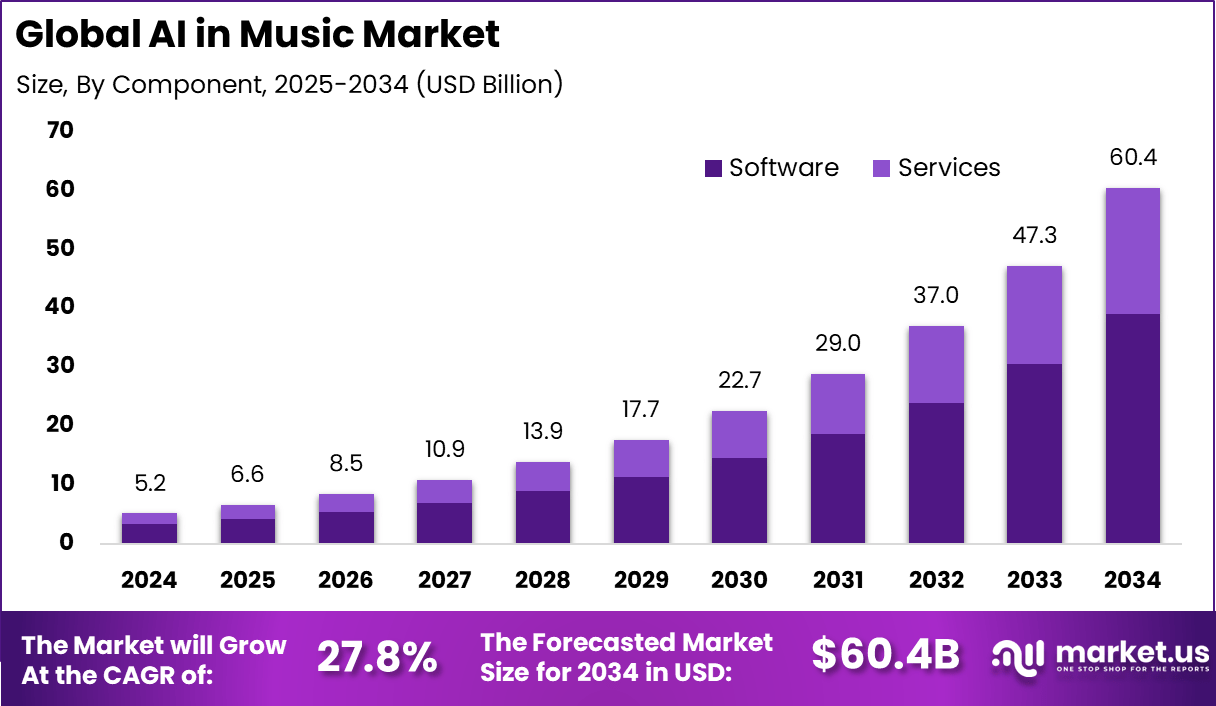

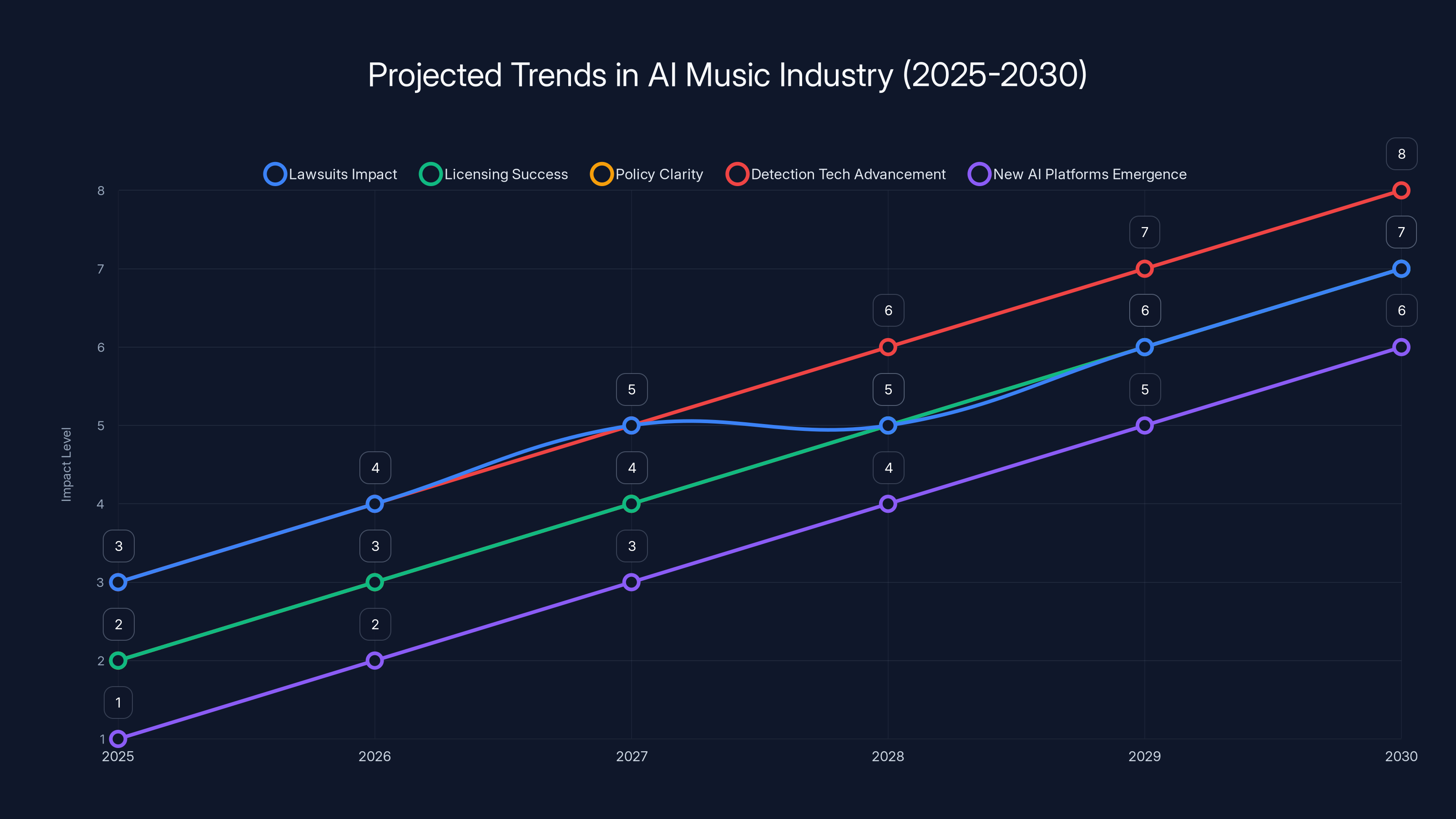

Estimated data suggests a steady increase in the impact of lawsuits, licensing success, policy clarity, detection technology, and new AI platforms from 2025 to 2030.

The Technical Challenge: Actually Detecting AI Music

Bandcamp's ban is only as strong as its detection capability. Let's talk about the technical reality of detecting AI-generated music in 2025.

Early AI music had tell-tale signs. The audio would have subtle artifacts. The timing of instruments would be slightly too perfect. The dynamics would lack the natural variation of human performance. Tools like audio forensics could identify these patterns.

But modern AI music tools have improved dramatically. Suno and Udio now produce output that passes basic listening tests. The music sounds human enough for background music, lo-fi beats, and instrumental tracks.

Detecting this requires more sophisticated approaches:

Spectrogram analysis: AI-generated audio can leave fingerprints in a spectrogram, patterns that a given neural network produces consistently and that differ from human performance. Tools can detect these patterns.

Audio metadata: AI music tools often embed metadata or watermarks that identify the source. This only works if the metadata isn't stripped.

Behavioral patterns: Patterns in how artists upload, what genres they choose, how frequently they upload—these can suggest AI generation at scale. Someone uploading 50 tracks per day might be using AI generation tools.

Machine learning detection models: The ultimate recursive solution—train AI models specifically to detect AI-generated music. Companies like Authenticity AI are building exactly this.
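Of the four approaches, the behavioral signal is the easiest to illustrate without audio tooling. The sketch below counts uploads per artist per calendar day and flags anyone at or above a cutoff. The cutoff of 50 comes from the example in the list above. The function name and data shape are assumptions, and a real system would treat this as one weak signal among several.

```python
from collections import Counter
from datetime import datetime

# Cutoff taken from the "50 tracks per day" example; purely illustrative.
MAX_UPLOADS_PER_DAY = 50


def high_volume_uploaders(uploads, limit=MAX_UPLOADS_PER_DAY):
    """Return {(artist_id, date): count} for days at or above `limit`.

    `uploads` is an iterable of (artist_id, datetime) pairs.
    """
    per_day = Counter((artist_id, ts.date()) for artist_id, ts in uploads)
    return {key: count for key, count in per_day.items() if count >= limit}


# Fifty uploads in one morning from one account, three from another.
uploads = [("artist-x", datetime(2025, 1, 10, 9, minute)) for minute in range(50)]
uploads += [("artist-y", datetime(2025, 1, 10, 12, minute)) for minute in range(3)]

flagged = high_volume_uploaders(uploads)
print(sorted(artist for artist, _day in flagged))  # ['artist-x']
```

A label uploading a back catalog in bulk would trip the same rule, which is why a flag like this should lead to review and not to automatic removal.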

The challenge is that all of these approaches have false positive and false negative problems. Spectrogram analysis might flag an unusual human instrument as AI. A human artist uploading many songs might be flagged as an AI user. Detection models are probabilistic—they give confidence scores, not definitive answers.
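A short worked calculation shows why false positives matter so much. It takes the 80% accuracy estimate cited earlier in this article and, as an assumption, treats it as both the detector's hit rate on AI tracks and its correct-pass rate on human tracks. The 5% share of AI uploads is an invented number chosen only to make the arithmetic concrete.

```python
def flag_precision(sensitivity, specificity, prevalence):
    """Fraction of flagged tracks that really are AI-generated (Bayes' rule).

    sensitivity: share of AI tracks the detector flags
    specificity: share of human tracks the detector correctly passes
    prevalence:  share of all uploads that are AI-generated
    """
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Assumed inputs: 80% sensitivity and specificity, 5% of uploads are AI.
precision = flag_precision(0.80, 0.80, 0.05)
print(f"{precision:.1%}")  # 17.4%
```

Under these assumptions, fewer than one in five flagged tracks would actually be AI-generated, because human uploads vastly outnumber AI ones. The exact figure depends entirely on the assumed inputs, but the direction of the effect is why detection scores need human review behind them.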

Bandcamp will likely need to accept this uncertainty and build appeals processes. If your music gets flagged for AI generation, you should be able to contest it and provide evidence (multitrack files, production photos, video of the creation process) that you created it.

The Global Dimension: What Happens Outside the U.S.

Bandcamp is a U.S.-based company, but its terms apply to users wherever they are. Music services based in other countries operate under different legal frameworks and cultural attitudes toward AI.

In the EU, for example, copyright law is stricter and creator protections are stronger. The EU's approach to AI regulation (the AI Act) is more prescriptive than U.S. regulation. European platforms might find it easier to justify AI music bans within this legal context.

In other regions, especially developing markets, AI music tools are seen as democratizing—they let people create music without expensive equipment or training. The cultural attitude is more permissive.

This creates a fragmented global landscape. Artists in different regions face different rules. AI music creators can target markets with looser policies. And major platforms have to navigate different standards across geographies.

Bandcamp's global user base is significant, so the company's policy will affect artists and creators worldwide. But it won't create complete uniformity.

What This Means for Music AI Companies

For companies building AI music tools, Bandcamp's ban is a signal: the industry is tightening around AI music creation and usage rights.

This doesn't kill the AI music industry. But it does require those companies to think more carefully about licensing, consent, and ethical training data practices.

The sustainable path forward probably looks like this:

Licensed Training Data. AI music companies negotiate with rights holders, platforms, and artists to use music for training. They pay licensing fees. This is expensive but creates a defensible legal position.

Opt-In Systems. Artists choose to contribute to AI training data in exchange for compensation. This solves the consent problem.

Original Model Training. Companies develop models trained on original compositions they commission or own, rather than scraped web data. This is also expensive but avoids legal liability.

Transparent Attribution. When AI generates music, the tool clearly indicates it's AI-created. This manages expectations and respects human artist distinctiveness.

Companies taking shortcuts—scraping training data, building models without licensing, not disclosing AI generation—face increasing legal and reputational risk. Bandcamp's policy is part of that pressure.

The Artist Perspective: Mixed Feelings

Bandcamp's decision isn't a unanimous victory for the artist community. Artists have complicated feelings about AI in general.

Some artists are enthusiastically anti-AI. They see AI music tools as theft and exploitation. For them, Bandcamp's ban is exactly right.

Other artists are pragmatists. They see AI as a tool—like synthesizers, drum machines, or digital audio workstations. They're happy to use AI as part of their creative process. For them, Bandcamp's blanket ban is too broad. It doesn't distinguish between AI used as a creative tool and AI used as a replacement for human creativity.

A third group is experimental. They're interested in exploring AI as a new artistic medium. They want to collaborate with AI or use it to create entirely new kinds of music that humans couldn't make alone. For them, Bandcamp's ban closes off creative possibilities.

The policy does take sides in this debate. It says: AI-generated or substantially AI-generated music isn't welcome here. This is a clear values statement, but it's not universally aligned with what all artists want.

Over time, this might create niche platforms for AI music experimentation while Bandcamp becomes the home of human-created music. That's probably fine—different platforms for different purposes.

What Happens Next: 2025 and Beyond

Bandcamp's ban in January 2025 is part of a larger shift. Here's what to watch for:

More lawsuits. The Universal, Sony, and Warner Music Group lawsuits against AI music generators will continue through 2025. These could establish legal precedent for whether AI training on copyrighted music constitutes infringement.

Licensing negotiations. AI music companies will attempt to negotiate licensing deals with record labels and artist groups. Some will succeed; others will fail.

Policy evolution. Expect major platforms to gradually clarify their AI music policies. Some will move toward stronger bans; others will establish labeling and discovery restrictions instead.

Detection technology advancement. Tools for detecting and attributing AI-generated music will improve. This doesn't mean they'll be perfect, but detection will become easier and more reliable.

New AI music platforms. Platforms specifically designed for AI music creation and sharing will emerge to fill the niche left by mainstream platform bans. These will likely focus on AI as a collaborative tool or on music created with licensed or original training data.

Creator protection tools. More platforms like Spawning will emerge, giving artists tools to protect their work from unauthorized AI training.

International harmonization. Over time, expect some convergence in AI music policies globally, driven partly by platforms standardizing rules across regions and partly by emerging international norms.

The Philosophical Question: What Does "Created By" Mean

Underneath the practical questions, there's a philosophical one: What counts as a music creator?

If I use Suno to generate a piece of music, did I create it? I didn't play any instruments. I didn't compose melodies or chord progressions in the traditional sense. I wrote a text prompt.

Bandcamp's position is essentially: No, you didn't create it substantially. The AI system did.

But this gets philosophically thorny. What if I used the AI tool to generate 100 variations, listened to each, made decisions about which direction worked best, then heavily arranged and mixed the result? Did I create that?

Or consider sampling, which is unquestionably a legitimate creative practice. A producer takes a piece of existing music and builds something new with it. We consider that creation. Why? Because the artistic decisions—what to sample, how to arrange it, what to do with it—come from the artist.

With AI music, the core artistic decisions (the model training, the algorithm design, the neural network architecture) come from the AI company. The user's contribution (the text prompt) is relatively thin.

That's why Bandcamp's distinction makes sense: using AI as a tool within a creative process is different from using AI as your primary creative engine.

This distinction might become more important as AI tools evolve. A future version of AI music tools might function more like synthesizers—giving users fine-grained control over every aspect of the output. That would be closer to traditional creation.

For now, most AI music tools operate more like magic boxes. You input a prompt; the algorithm does the work. In that context, Bandcamp's ban is philosophically defensible.

FAQ

What exactly is Bandcamp's AI music policy?

Bandcamp bans music generated wholly or substantially by AI. The platform also prohibits using AI to impersonate other artists or styles. Additionally, users cannot scrape Bandcamp's content or use any audio uploaded to the platform to train machine learning or AI models. Violations are subject to removal, and community members are encouraged to report suspected AI-generated music.

How does Bandcamp plan to enforce this ban?

Bandcamp's enforcement appears to rest on three mechanisms: community reporting (users flag suspected AI music), audio detection tools to identify AI-generated content, and terms of service enforcement against scraping. The company hasn't detailed its specific detection methods, so for now enforcement likely leans on community reports, with technical analysis of audio files added over time. Enforcement of the scraping prohibition is primarily legal, with violations potentially subject to lawsuits.

Can I use AI tools to help with production but still upload to Bandcamp?

According to Bandcamp's policy, music generated "wholly or in substantial part" by AI cannot be uploaded. This creates ambiguity for hybrid approaches where artists use AI for some elements but create much of the music themselves. The practical answer depends on enforcement. If you use AI for a single drum loop but compose and perform everything else, you're probably fine. If you use AI to generate most of the track and then make minor edits, you'd likely violate the policy.

Why does Bandcamp's ban matter if AI music is allowed on other platforms?

Bandcamp's ban matters because the platform is uniquely important to independent musicians. It's one of the few places where musicians can earn substantial income without a record label. Bandcamp's decision signals that artist-focused platforms will prioritize human creators, which affects the broader industry narrative around AI music. When major music platforms see Bandcamp taking this stance successfully, it increases pressure on them to adopt stronger AI policies.

What's the difference between Bandcamp's approach and Spotify's?

Spotify's policy focuses on preventing impersonation and allows AI-generated music that doesn't try to sound like real artists. Bandcamp bans all substantially AI-generated music. Spotify's approach is more permissive but requires artists to opt out of AI training rather than AI companies getting consent upfront. Bandcamp's approach is more protective but potentially too broad for artists who want to use AI as a creative tool.

Can AI music companies legally train on Bandcamp content if they ignore the ban?

Technically, they can try, but they face significant legal liability. Bandcamp's terms of service explicitly prohibit scraping and using platform content for AI training. Companies that violate this open themselves to legal action from Bandcamp and potentially from artists whose work is infringed. The terms are clear enough that courts would likely find scraping to be an intentional violation, which could trigger damages beyond the original infringement.

Will other music platforms follow Bandcamp's lead?

Some probably will, but major platforms like Spotify and Apple Music likely won't implement complete bans. These companies answer to investors and major labels with competing interests. However, expect more explicit policies and stronger enforcement against impersonation. Smaller artist-focused platforms will probably follow Bandcamp's lead more quickly. YouTube Music likely won't ban AI music because it hosts so much creator-made content, but expect clearer labeling and discovery restrictions.

What does this mean for musicians who want to use AI as a creative tool?

Bandcamp is no longer an option for music made wholly or substantially with AI, but many other platforms allow AI-assisted music. SoundCloud, ReverbNation, Audiomatch, and other services don't have blanket bans. However, the industry trend is toward stricter policies, so finding platforms that welcome AI music is getting harder. For musicians interested in AI as a legitimate creative tool, the sustainable path forward probably involves seeking out platforms with clear definitions of what counts as AI-generated and maintaining control over your own AI models and training data.

What This Moment Reveals About the Future

Bandcamp's decision is a flashpoint, not an endpoint. It reveals deeper tensions in how we think about creativity, technology, and compensation in the AI age.

On one side: Artists who feel their work is being exploited, who see AI as a threat to their livelihoods and legitimacy. These people built careers in a world where skill mattered, where hours of practice and thousands of failed attempts were necessary. AI threatens to collapse that value.

On the other side: Technologists and creators who see AI as the next frontier of creative expression. They want to explore what's possible, to collaborate with machines, to build new forms of art that humans couldn't create alone.

Bandcamp's ban doesn't resolve this tension. It takes a side. And that choice has consequences.

The optimistic view: This conversation forces the industry to develop better policies around consent, licensing, and attribution. AI music companies have to pay for the data they use. Artists have a say in whether their work trains models. The industry develops norms around disclosure and fairness.

The pessimistic view: Restrictions on AI music push development underground or offshore. The most interesting research happens in private labs rather than open platforms. Artists still face competition from AI but lose access to tools that might help them.

The more realistic view: We get a mixed landscape. Some platforms ban AI music. Others embrace it with clear policies. Some artists use AI; others refuse to. The industry develops both better protections for artists and clearer paths for AI music creation. Over time, most people stop caring whether music was made by humans or machines and instead focus on whether they like how it sounds.

Bandcamp's move matters because it shifts the conversation. The default is no longer "AI music is allowed; deal with it." The default is increasingly "AI music is restricted unless handled ethically." That's a significant shift, and it opens space for the industry to establish better norms.

Whether those norms will actually protect artists or just make tech companies more sophisticated at hiding their practices remains to be seen. But the conversation is moving in a direction that might actually benefit creators.

And in an industry that's been extractive and exploitative toward artists for decades, even the possibility of change is notable.

Key Takeaways

- Bandcamp took a hard stance against AI, banning music generated wholly or in substantial part by AI

- Bandcamp was the first major music platform to go this far

- iHeart Radio pledged not to play AI music or use AI DJs, but enforcement remains unclear

- Spotify's policy targets impersonation rather than banning AI-generated music outright

- Artist anger over AI training reached critical mass by mid-2024
