The Future of Automotive Voice Assistants is Here

For decades, talking to your car has felt more like shouting orders at an uncooperative teenager than conversing with an intelligent assistant. You'd ask something slightly ambiguous, the system would either do nothing or execute the wrong command, and you'd spend the next five minutes fishing for the right phrasing while keeping one hand on the wheel. It's been a frustrating experience that has made most of us default back to using our phones or just accepting silence as our travel companion.

But something fundamental is about to change. The 2026 BMW iX3 arrives as the first vehicle equipped with Alexa+, Amazon's next-generation voice assistant powered by large language models and generative AI. This isn't just another incremental improvement to voice recognition. This is a complete rethinking of how cars understand and respond to driver requests.

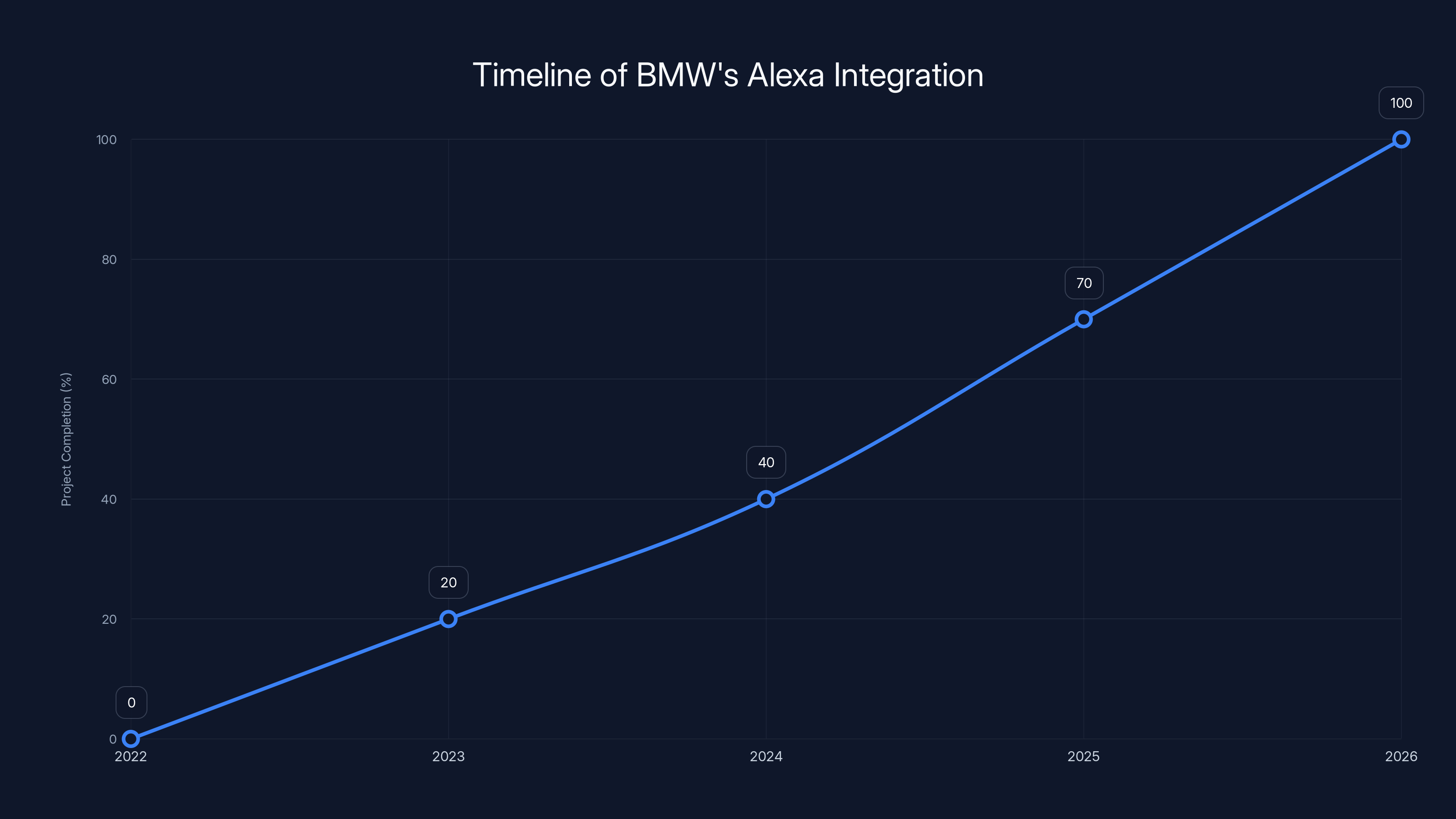

For more than three years, BMW and Amazon have been engineering this partnership largely out of public view. The timeline wasn't arbitrary. Amazon spent years building Alexa+, an AI system that fundamentally reimagines voice interaction, while simultaneously developing an automotive-specific version that could handle the unique demands of the driving environment. What they've created could finally solve a problem that has plagued the automotive industry since voice assistants first appeared in cars: making them actually useful.

The stakes are enormous. Voice interfaces in vehicles represent one of the last major unsolved problems in automotive technology. Self-driving capabilities get the headlines, but voice assistants affect every single interaction a driver has with their car, every single day. Get this right, and you've just improved the driving experience for millions of people. Get it wrong, and you're back to manually poking at screens while trying not to crash.

This partnership between BMW and Amazon signals something bigger than just one luxury carmaker adopting a new voice system. It represents a fundamental shift in how the automotive industry approaches artificial intelligence, how generative AI is being deployed in physical products, and how cars will increasingly become intelligent agents capable of understanding context, managing complexity, and anticipating user needs.

Let's break down what's actually happening here, why it matters, and what this means for the future of in-car technology.

Understanding Alexa+: More Than Just Better Voice Recognition

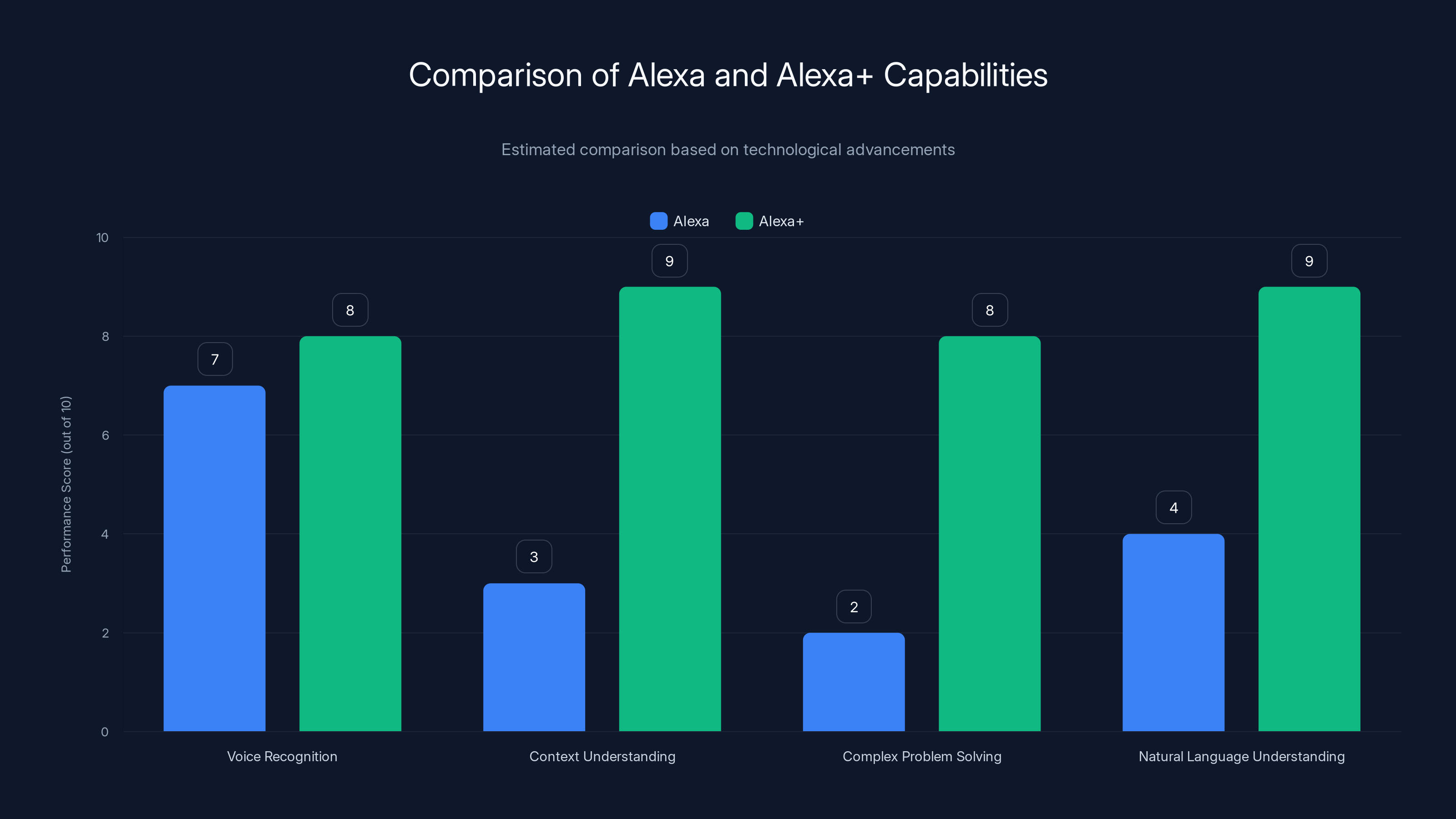

It's easy to misunderstand what makes Alexa+ different from the original Alexa. It's not that it's faster, or that it transcribes speech more accurately. Those are nice-to-haves, but they're not the fundamental innovation.

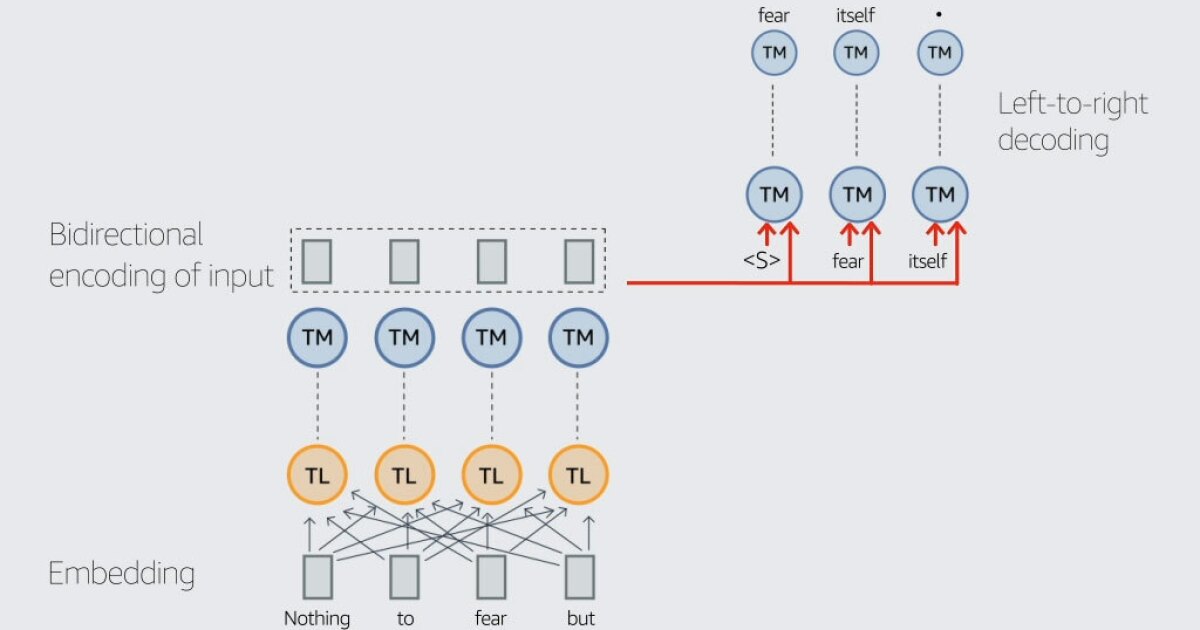

Alexa+ is built on something completely different: large language models (LLMs) that can reason through complex problems, understand context across multiple turns of conversation, and generate natural responses that sound like they're coming from an intelligent entity rather than a lookup table.

Consider a simple request. With earlier voice assistants, if you said "I'm cold," the system would either do nothing (because "cold" wasn't explicitly mapped to a command) or misinterpret your request and search the internet for information about temperature. Alexa+ uses context instead. It knows you're in a car. It knows it's January. It knows you have climate controls available. So it infers that you want the heat turned up, and it does that without you having to say the exact magic words.

This capability, natural language understanding (NLU), has been a goal of researchers and tech companies for decades. Early voice assistants were essentially sophisticated pattern matchers: engineers supplied examples of things people might say, and the system matched each incoming request to the closest example. That approach falls apart as soon as someone uses a phrasing nobody anticipated.
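The pattern-matching approach can be sketched in a few lines. This is a hypothetical illustration, not any vendor's actual matcher: the command names and example phrases are invented, and Python's standard `difflib.SequenceMatcher` stands in for whatever similarity measure a real system used.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical command table: each command lists example phrasings engineers anticipated.
COMMAND_EXAMPLES = {
    "climate.increase_temperature": ["turn up the heat", "increase the temperature"],
    "media.play": ["play music", "play my playlist"],
    "navigation.start": ["navigate home", "take me home"],
}


def match_command(utterance: str, threshold: float = 0.75) -> Optional[str]:
    """Return the command whose closest example is similar enough, else None."""
    best_command, best_score = None, 0.0
    text = utterance.lower().strip()
    for command, examples in COMMAND_EXAMPLES.items():
        for example in examples:
            # ratio() is a character-level similarity in [0, 1]; it knows nothing about meaning.
            score = SequenceMatcher(None, text, example).ratio()
            if score > best_score:
                best_command, best_score = command, score
    return best_command if best_score >= threshold else None


print(match_command("Turn up the heat"))  # an anticipated phrasing
print(match_command("Turn up heat"))      # a close variant
print(match_command("I'm cold"))          # a novel phrasing
```

The first two calls find the climate command. "I'm cold" matches nothing, because no stored example resembles it character by character, even though its meaning is obvious to a person.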

LLMs work differently. They've been trained on enormous volumes of human text (for recent models, often trillions of tokens), learning the underlying patterns of how language works. This lets them handle novel phrasings, context switching, ambiguity, and multi-step requests in ways that older systems fundamentally couldn't.

In the BMW iX3, this means you can start a conversation normally, make requests that are genuinely complex, and the system will follow the thread of what you're asking for rather than hitting a wall when you deviate from its expected inputs.

Amazon built Alexa+ using Amazon Bedrock, a platform that lets companies customize generative AI models with their own proprietary data. BMW will be able to train the system with BMW-specific information—details about how their specific vehicles work, their feature sets, their menus, their systems—creating a version of Alexa+ that knows their cars better than a generic voice assistant ever could.

This customization layer is crucial. A generic model can get you most of the way, but making the last stretch feel natural and reliable usually requires domain-specific training and optimization. BMW gets to do that.

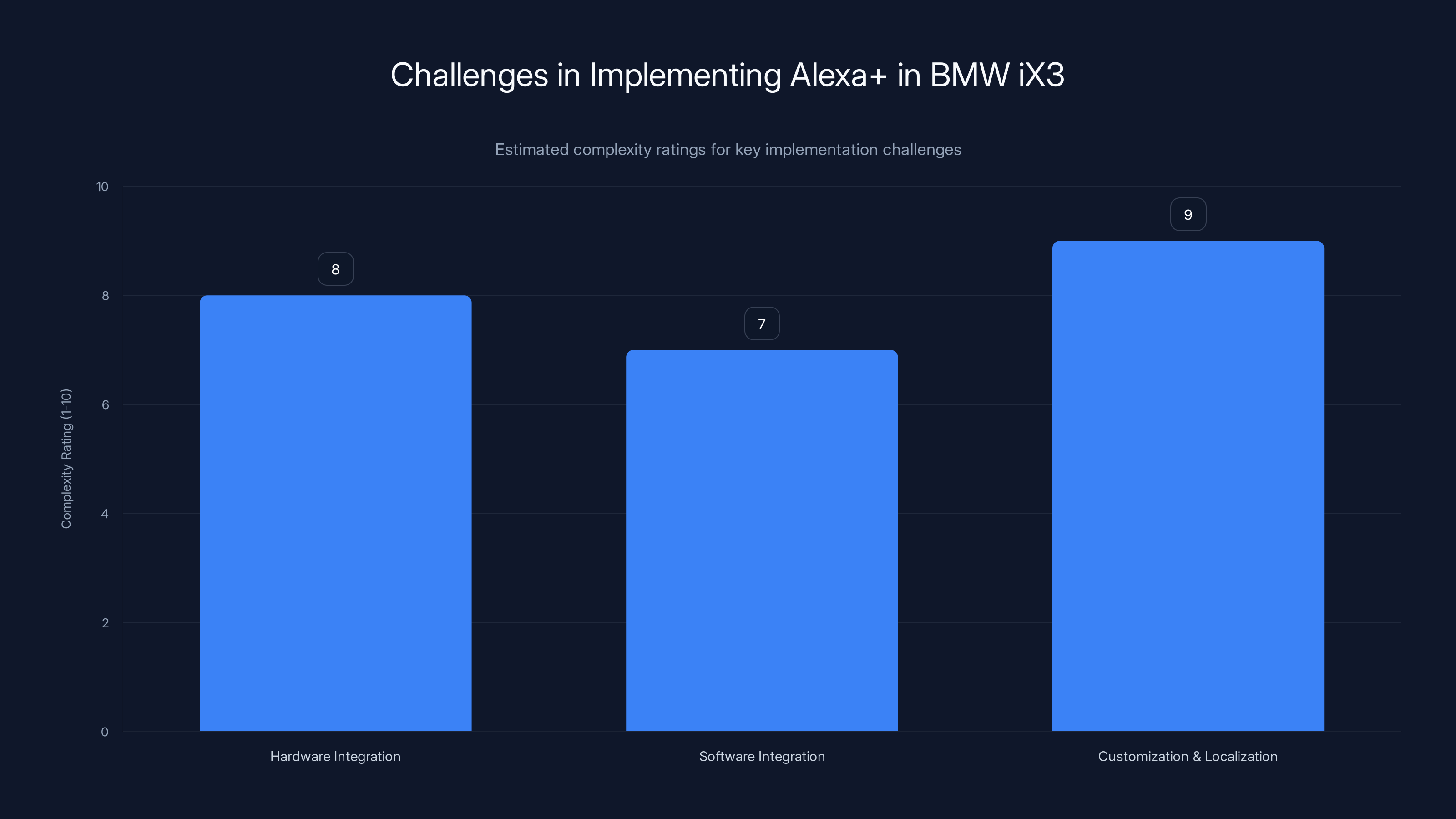

Estimated data suggests that customization and localization posed the highest complexity, followed by hardware and software integration challenges.

The Three-Year Journey: Why This Took So Long

BMW announced the Alexa partnership in 2022, and the iX3 arrives in 2026. More than three years from announcement to deployment seems absurdly long for integrating existing software into a car.

But here's the thing: the timeline stretched because building an automotive-grade voice assistant is far more complex than building one for a smart speaker or phone.

When Alexa sits on your bedside table, it needs to be accurate. When it's in your car while you're doing 65 mph on a highway, it also needs to respond instantly, cope with road and wind noise, handle safety-critical operations where a misunderstanding could be dangerous, and integrate seamlessly with hardware and software that wasn't designed with Alexa+ in mind.

The automotive environment is uniquely challenging. Home devices sit in controlled environments. Phones stay in pockets or are held at fairly consistent distances from the user's face. But a car? There's wind noise, tire noise, drivetrain noise, multiple passengers talking, and music playing, and the driver sits at varying distances from the microphones depending on seat position and posture.

Beyond the acoustic challenges, there are safety considerations. When a smart speaker misunderstands a request, the worst-case scenario is that it plays the wrong song or orders the wrong product. When a car misunderstands a request while navigating or controlling climate systems, the consequences could genuinely be dangerous.

BMW and Amazon spent years developing algorithms that could filter out noise while preserving the driver's voice, testing the system in real driving conditions, and validating that it could handle critical operations without failure.
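BMW and Amazon haven't published the details of their signal processing. As an illustration of one classic technique for this job, here is a minimal delay-and-sum beamformer: if the voice reaches each microphone at a known delay, shifting the channels into alignment and averaging them reinforces the voice and partially cancels noise that differs between microphones. The delays, the sine-wave stand-in for speech, and the noise model are all invented for the example; production systems typically combine beamforming with echo cancellation and adaptive noise suppression.

```python
import math
import random
from typing import List, Sequence


def delay_and_sum(channels: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Advance each channel by its arrival delay (in samples), then average.

    The driver's voice lines up across channels and adds coherently, while
    noise that differs from mic to mic partly averages out.
    """
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels)
        for i in range(length)
    ]


def simulate_mics(voice, delays, noise_std, seed=0):
    """Toy model: each mic hears the voice late by its delay, plus independent noise."""
    rng = random.Random(seed)
    total = len(voice) + max(delays)
    mics = []
    for d in delays:
        mic = [voice[t - d] if 0 <= t - d < len(voice) else 0.0 for t in range(total)]
        mics.append([x + rng.gauss(0.0, noise_std) for x in mic])
    return mics


def mse(a, b):
    """Mean squared error over the overlapping length."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / min(len(a), len(b))


voice = [math.sin(2 * math.pi * 0.01 * t) for t in range(4000)]  # stand-in "speech"
delays = [0, 3, 7, 12]                                            # hypothetical, in samples
mics = simulate_mics(voice, delays, noise_std=0.5)
cleaned = delay_and_sum(mics, delays)
print(f"single mic error: {mse(mics[0], voice):.3f}, beamformed: {mse(cleaned, voice):.3f}")
```

With four microphones and fully independent noise, averaging cuts the noise power roughly fourfold. Real cabin noise is partly correlated across microphones, so practical gains are smaller.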

They also spent time integrating the system with BMW's existing vehicle architecture. BMW doesn't just need to make Alexa+ work in their cars—they need to make it integrate with every system in the vehicle, from climate control to navigation, from infotainment to vehicle diagnostics.

The extended timeline also reflects Amazon's decision to wait until Alexa+ was genuinely ready. Amazon could presumably have shipped an earlier, less capable generative AI assistant, but it apparently decided that releasing something half-baked would be worse than waiting for the technology to mature.

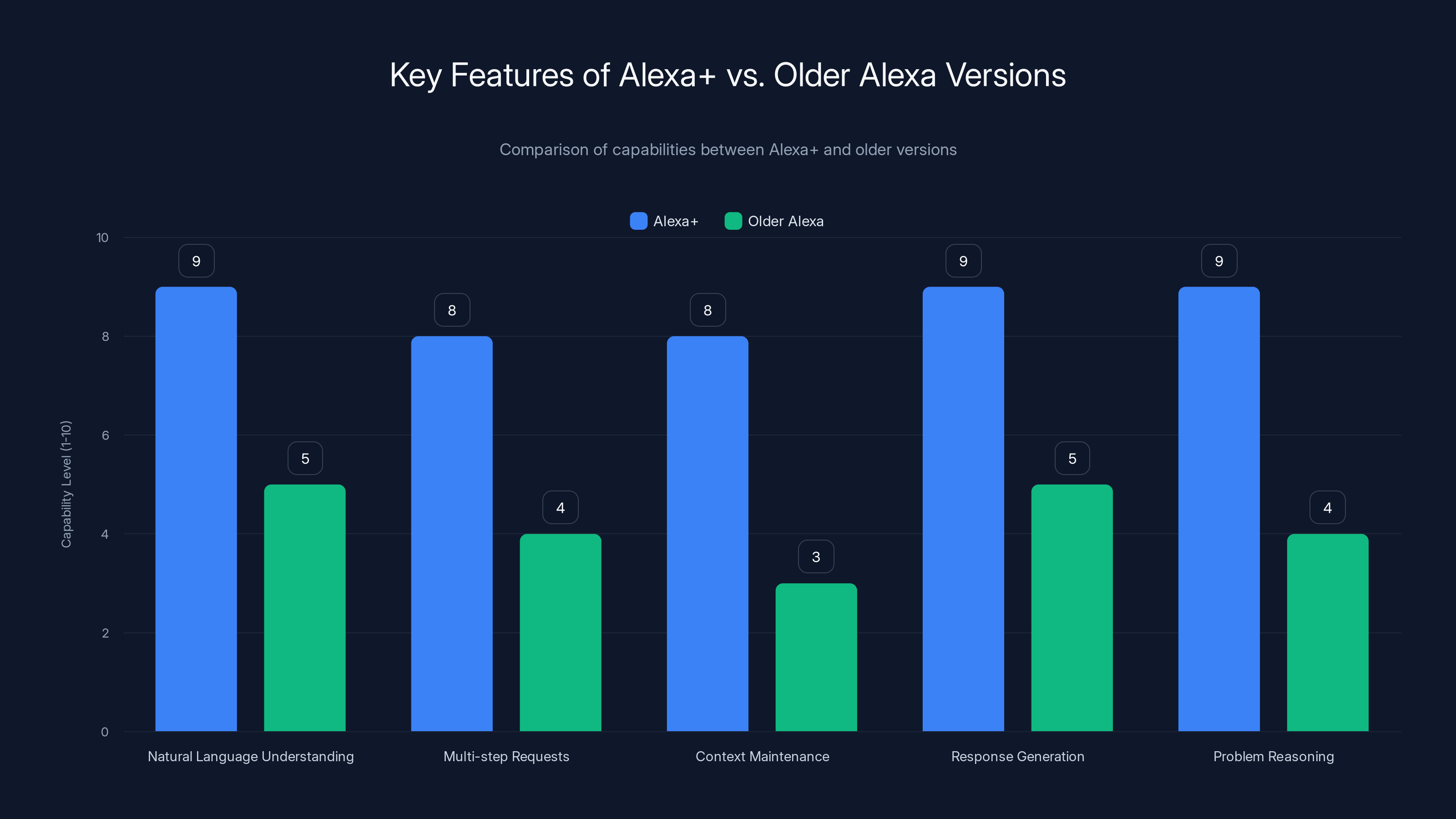

Alexa+ significantly outperforms older versions in understanding natural language, handling complex requests, and generating responses. Estimated data.

How Alexa+ Actually Works in the BMW iX3

Let's get specific about what actually happens when you talk to Alexa+ in the 2026 BMW iX3.

You start a conversation with a wake word (BMW will presumably have customized this). The system is now listening. Rather than waiting for you to finish your entire statement before doing anything, Alexa+ can begin processing your speech while you're still talking, a technique known as streaming speech recognition.

As you finish your statement, the system converts your speech to text (a process called speech-to-text or STT). That text gets processed by the language model, which has been customized for the BMW iX3. The model doesn't just try to identify a single command. It performs several simultaneous operations: intent recognition (what are you actually asking for?), entity extraction (what specific things did you mention?), context analysis (how does this fit with what we've talked about before?), and action planning (what should I do in response?).
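The four analyses above are easiest to picture as a structured result that the model must produce and the vehicle software then checks. A minimal sketch, assuming the model is prompted to answer in JSON: `parse_request`, `ParsedRequest`, the intent names, and `fake_model` (which stands in for a real LLM call) are all hypothetical, not Amazon's or BMW's interfaces.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ParsedRequest:
    intent: str                                     # what the driver is asking for
    entities: Dict[str, object]                     # specific things mentioned
    uses_context: bool                              # did earlier turns matter?
    plan: List[dict] = field(default_factory=list)  # ordered actions to execute


# Hypothetical set of intents the vehicle integration is willing to act on.
ALLOWED_INTENTS = {"navigate", "set_climate", "play_media", "ask_clarification"}


def parse_request(transcript: str, history: List[dict],
                  model: Callable[[str], str]) -> ParsedRequest:
    """Ask a language model for a JSON analysis of one utterance and validate it."""
    prompt = (
        "Analyze the driver's request. Reply with JSON containing the keys "
        "intent, entities, uses_context, plan.\n"
        f"Conversation so far: {json.dumps(history)}\n"
        f"Driver said: {transcript}"
    )
    data = json.loads(model(prompt))
    if data.get("intent") not in ALLOWED_INTENTS:
        raise ValueError(f"unsupported intent: {data.get('intent')!r}")
    return ParsedRequest(
        intent=data["intent"],
        entities=dict(data.get("entities", {})),
        uses_context=bool(data.get("uses_context", False)),
        plan=list(data.get("plan", [])),
    )


def fake_model(prompt: str) -> str:
    """Stand-in for a hosted LLM; always returns the same canned analysis."""
    return json.dumps({
        "intent": "set_climate",
        "entities": {"temperature_f": 72},
        "uses_context": False,
        "plan": [{"action": "climate.set_temperature", "value": 72}],
    })


request = parse_request("Make it 72 degrees", history=[], model=fake_model)
print(request.intent, request.plan)
```

Validating the model's output against an allowlist before acting on it is one way to keep a free-form model from triggering actions the car doesn't support.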

Here's where it gets interesting. The model might recognize that you've asked for something that requires multiple steps. For example: "I'm heading to my sister's place in downtown Portland, but I need to grab coffee on the way and pick up dry cleaning."

An older voice assistant would either:

- Do nothing (this request doesn't match any predefined command)

- Try to navigate you to Portland and ignore the rest

- Ask you to repeat yourself in a more "voice assistant-friendly" way

Alexa+ would parse this as: (1) destination is downtown Portland, (2) add a stop for coffee somewhere on the route, (3) add a stop for dry cleaning. It would then take action on all three elements, likely asking for clarifications only if needed ("Which coffee place do you want, or should I find one near your route?").
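Once a request like this has been parsed into a destination and two generic stops, deciding whether a follow-up question is needed can be a simple check. In this hypothetical sketch, `TripPlan`, `Stop`, and `resolve_stops` are invented names, the café and dry cleaner names are placeholders, and the candidate places would in practice come from a maps or points-of-interest service rather than a hard-coded dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Stop:
    category: str                  # e.g. "coffee", "dry_cleaning"
    place: Optional[str] = None    # filled in once a specific place is chosen


@dataclass
class TripPlan:
    destination: str
    stops: List[Stop] = field(default_factory=list)


def resolve_stops(plan: TripPlan, candidates: Dict[str, List[str]]) -> Optional[str]:
    """Fill in unambiguous stops; return a clarifying question if any remain.

    `candidates` maps a stop category to places found along the route.
    Returns None when every stop is resolved and the route can be built.
    """
    # First pass: a category with exactly one candidate needs no question.
    for stop in plan.stops:
        options = candidates.get(stop.category, [])
        if stop.place is None and len(options) == 1:
            stop.place = options[0]
    # Second pass: ask about the first stop that is still open.
    for stop in plan.stops:
        if stop.place is None:
            options = candidates.get(stop.category, [])
            label = stop.category.replace("_", " ")
            if options:
                return f"Which {label} place do you want: {' or '.join(options)}?"
            return f"I couldn't find a {label} stop on your route. Should I search nearby?"
    return None


plan = TripPlan("downtown Portland", [Stop("coffee"), Stop("dry_cleaning")])
nearby = {"coffee": ["Cafe A", "Cafe B"], "dry_cleaning": ["Quick Clean"]}
print(resolve_stops(plan, nearby))
```

Resolving every unambiguous stop before asking keeps the conversation to a single question here: the dry cleaner is filled in silently, and only the coffee choice goes back to the driver.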

The system generates a natural language response back to you, which gets converted to speech using text-to-speech (TTS) technology. Again, this is customized for BMW, so the voice sounds like it belongs in the car rather than sounding like a generic Alexa voice.

Critically, Alexa+ in the iX3 maintains context across multiple turns. If you ask it to navigate somewhere, then ask "How long until I arrive?", the system knows you're asking about the current navigation, not starting a new task. If you ask "Make it 72 degrees," it understands you're adjusting climate control, not asking for weather information.
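One common way to get this behavior from an LLM is to send each new utterance together with the recent conversation and a snapshot of relevant vehicle state, so the model can see that navigation is active when the driver asks "How long until I arrive?" Whether Alexa+ works exactly this way isn't public; `Session`, `VehicleState`, and the turn limit below are hypothetical.

```python
from collections import deque
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class VehicleState:
    destination: Optional[str] = None   # set while navigation is active
    eta_minutes: Optional[int] = None
    cabin_temp_f: float = 70.0


class Session:
    """Keeps recent turns plus a vehicle snapshot to send with each new request."""

    def __init__(self, max_turns: int = 6):
        # deque(maxlen=...) silently drops the oldest turn once the limit is reached.
        self.turns = deque(maxlen=max_turns)
        self.vehicle = VehicleState()

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append({"speaker": speaker, "text": text})

    def context_for_model(self) -> dict:
        return {"recent_turns": list(self.turns), "vehicle": asdict(self.vehicle)}


session = Session(max_turns=4)
session.add_turn("driver", "Take me to downtown Portland")
session.vehicle.destination, session.vehicle.eta_minutes = "downtown Portland", 25
session.add_turn("assistant", "Starting navigation to downtown Portland.")
session.add_turn("driver", "How long until I arrive?")
print(session.context_for_model())
```

Capping the history keeps each request small and fast at the cost of forgetting older turns; the vehicle snapshot carries facts that shouldn't be forgotten, such as the active destination.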

One of the most powerful aspects of this system is that it can reason through steps. If you ask the vehicle to handle something that requires multiple operations, the model can think through what those operations are and execute them in sequence. It can also recognize when it doesn't have enough information and ask clarifying questions that sound natural rather than robotic.

Continuity Across Devices: The Home-to-Car Experience

This is where the BMW-Amazon partnership gets genuinely innovative. Many of us already have Alexa devices in our homes; Amazon says there are more than 600 million Alexa-enabled devices worldwide. One of the key benefits of the iX3 implementation is that it doesn't start from scratch. It connects with your existing Amazon ecosystem.

Imagine this scenario: You're sitting in your living room with an Alexa-enabled Echo device, and you're planning a road trip. You ask Alexa, "Add a reminder to pack suitcases before my trip to San Francisco next Thursday."

When Thursday comes, you're in your 2026 BMW iX3, about to head to the airport. As you get in the car, Alexa+ notices that you have a pending reminder for today. But rather than just announcing it, the iX3 offers to start navigation to the airport, to preheat or precool the car for the journey, and to play your favorite road trip playlist.

The example in Amazon's announcement is slightly different but illustrative: you start a conversation with your Echo at home, discussing a trip. Then you get in the car and continue the conversation with Alexa+ in the BMW. The assistant remembers what you were just talking about, maintains that context, and can now help you execute the journey—navigation, climate control, music, all without you having to restart the conversation.

This continuity is powerful because it acknowledges how people actually live their lives. We don't compartmentalize our digital assistants by device. We expect them to be extensions of a single, intelligent entity that follows us from home to car to wherever we're going next.

For BMW owners, this means the car becomes less like a separate device you interact with and more like a natural extension of the smart home you've already set up.

There's also integration with other Amazon services. You can ask Alexa+ in the iX3 to order food for delivery to a specific location, to check in for flights, to control smart home devices in your house while you're away, or to manage your calendar and send messages to contacts.

Alexa+ significantly outperforms the original Alexa in context understanding, complex problem solving, and natural language understanding, thanks to its integration of large language models. Estimated data.

Voice Assistants in Automotive: The Troubled History

Understanding why the BMW-Amazon partnership is significant requires understanding how badly voice assistants have performed in cars historically.

Almost every major automaker has tried to deploy voice assistants. General Motors has offered voice features through OnStar and its infotainment systems. Audi has integrated Amazon Alexa for years. Tesla vehicles support voice commands. Mercedes has MBUX (Mercedes-Benz User Experience). Ford supports Alexa alongside its own SYNC system. Porsche, Volkswagen, and others have all attempted voice-based control.

And almost universally, they've been frustrating. Here's the core problem: older voice assistant technology was fundamentally rule-based. Engineers had to anticipate what drivers might say and program the system to respond to those specific phrasings or close variations.

In a home or office, this works okay. There's a limited set of things you might ask Alexa. Mostly: play music, check the weather, control smart home devices, set timers, make purchases.

But in a car? The potential requests are essentially infinite. Climate control alone has dozens of possible variations: "It's too cold," "Turn on the heat," "I'm freezing," "Warm it up," "Set it to 72," "Make it more comfortable," "Adjust the temperature." That's just climate control. Multiply that across every system in the vehicle, and you're looking at thousands of possible phrasings for relatively simple operations.

And here's the catch: people don't communicate with voice assistants the way engineers think they will. In testing, people naturally use conversational language that contains ambiguity, context dependency, and implicit references that rule-based systems can't handle.

This is why so many people defaulted back to using their phones or just accepting silence. The voice assistant in their car demanded that they speak in a specific, unnatural way, and if they deviated from that script, it would fail. After a few frustrating experiences, they stopped trying.

Generative AI changes this dynamic fundamentally. An LLM-based system doesn't need to have every possible request pre-programmed. It can understand intent from natural language and figure out what action to take. It can handle ambiguity, ask clarifying questions, and improve its accuracy through machine learning over time.

BMW isn't the first car company to attempt this transition, but they're doing it with more maturity and resources than most. And crucially, they're partnering with Amazon, which has been deploying and optimizing LLM-based AI at massive scale for over a year now.

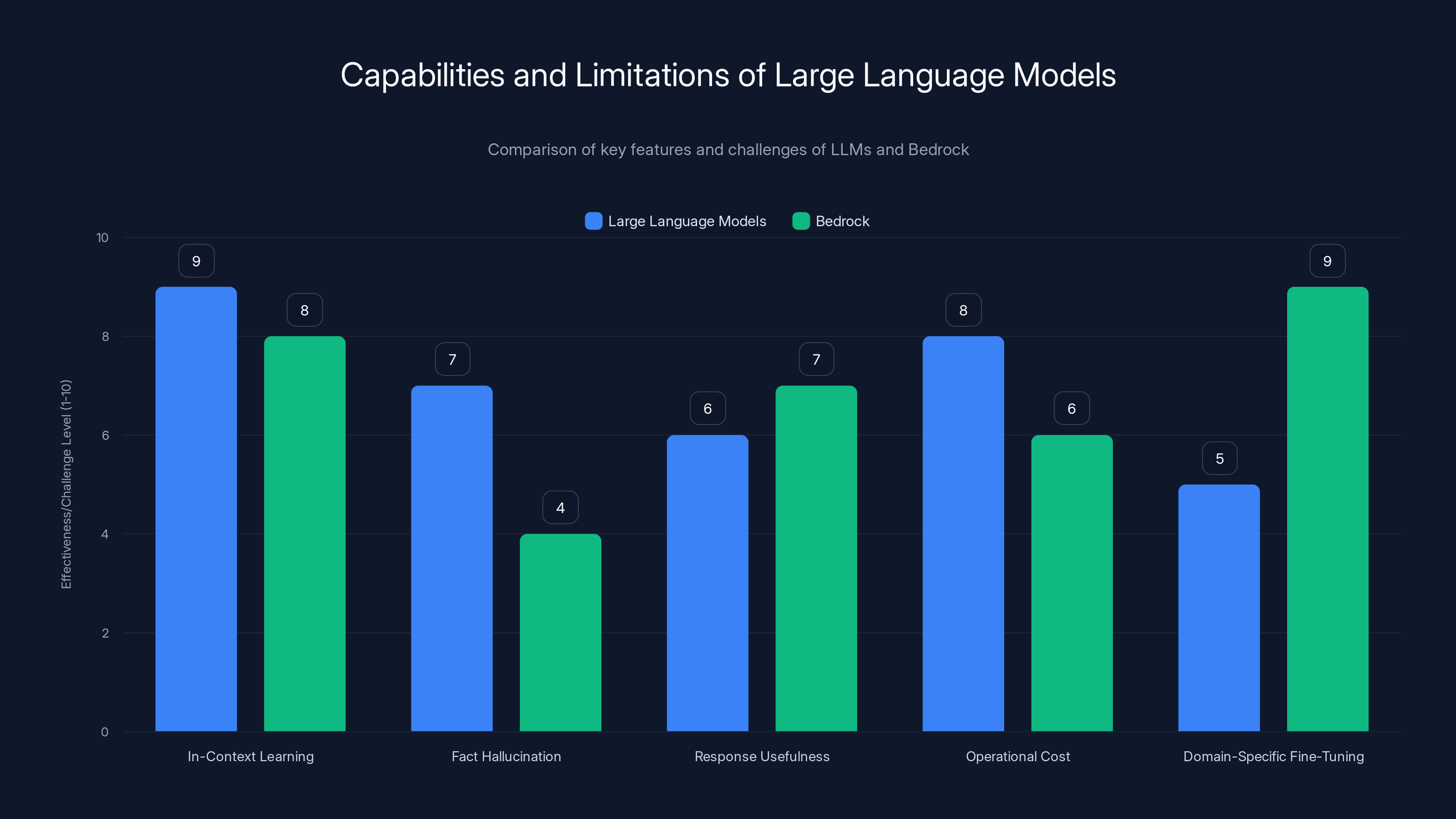

The Technology Behind Alexa+: Large Language Models and Bedrock

To understand what makes Alexa+ different, we need to understand the underlying technology: large language models and the platforms that make them accessible.

A large language model is essentially a massive neural network that's been trained on an enormous corpus of text, often trillions of tokens for recent models. The model learns statistical patterns about how language works: which words tend to follow other words, how concepts relate to each other, how meaning is constructed through language.

Because the model has learned these patterns at scale, it can perform tasks it was never explicitly trained for. Give it a few examples of what you want, and it can often figure out the pattern and apply it to new cases. This capability is called in-context learning, and it's one of the reasons LLMs are so powerful.
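In-context learning needs no retraining: the worked examples simply go into the prompt, and the model continues the pattern. A minimal sketch of assembling such a few-shot prompt; the command syntax and examples are invented, and the call to an actual model is left out.

```python
from typing import List, Tuple

# Hypothetical request -> command pairs used as in-prompt examples.
EXAMPLES: List[Tuple[str, str]] = [
    ("Turn up the heat", "climate.adjust(delta_f=+2)"),
    ("Play something relaxing", "media.play(mood='calm')"),
    ("Take me home", "navigation.start(destination='home')"),
]


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Lay out worked examples, then leave the last answer for the model to complete."""
    parts = ["Map each driver request to a vehicle command."]
    for request, command in examples:
        parts.append(f"Request: {request}\nCommand: {command}")
    parts.append(f"Request: {query}\nCommand:")
    return "\n\n".join(parts)


print(build_few_shot_prompt(EXAMPLES, "I'm freezing"))
```

Nothing in the examples mentions "freezing"; the hope is that the model generalizes from "Turn up the heat" by meaning rather than by matching characters.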

But raw LLMs have limitations. They can sometimes hallucinate facts that sound plausible but are incorrect. They might generate responses that are technically impressive but not actually useful. They can be slow and expensive to run. And they don't have the ability to take actions in the real world—they just generate text.

This is where Amazon Bedrock comes in. Bedrock is a platform that makes it easy for companies to work with foundation models (the large language models that serve as the foundation for more specialized systems). Bedrock abstracts away a lot of the complexity of working with raw LLMs.

More importantly, Bedrock lets companies fine-tune models with their own data. BMW can take the base Alexa+ model and train it with information specific to BMW vehicles. This fine-tuning process is crucial because it takes a general-purpose system and makes it domain-specific.
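Fine-tuning jobs on Bedrock read their training data from JSON Lines files stored in Amazon S3. For several text models, each line is an object with a `prompt` and a `completion` field, though the required schema depends on the base model. Whether Alexa+ is customized through exactly this mechanism isn't stated, so this is only a sketch of what domain-specific training records can look like; the example pairs and command strings are invented.

```python
import json
from typing import Dict, List

# Hypothetical domain-specific training pairs; real data would come from the
# automaker's own documentation and vehicle interfaces.
TRAINING_PAIRS: List[Dict[str, str]] = [
    {"prompt": "Warm up my seat", "completion": "seat_heating.set(seat='driver', level=2)"},
    {"prompt": "The windshield is fogging up", "completion": "climate.defrost(front=True)"},
]


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize one JSON object per line (the JSON Lines layout)."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


def write_jsonl(records: List[Dict[str, str]], path: str) -> None:
    """Write the records to a local file, ready to upload for a training job."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_jsonl(records))


print(to_jsonl(TRAINING_PAIRS), end="")
```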

Alongside the language model, Alexa+ also needs what's called a reasoning engine: the component that translates natural language intent into actual actions. When you ask the car to "find coffee on the way," the reasoning engine needs to extract that you want coffee, understand where you are and where you're going, access real-time location data, query a database of nearby coffee shops, insert that stop into your navigation, and execute the navigation update.

This requires integrating the language model with multiple APIs, databases, and vehicle control systems. It's non-trivial engineering.
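Two of the steps listed above, querying nearby places and inserting a stop into the route, can be illustrated with a cheapest-insertion heuristic: try each candidate between each pair of consecutive route points and keep the one that adds the least distance. This sketch uses straight-line distances on a toy grid and invented café names; a real navigation stack would use road-network travel times and live data.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def detour(a: Point, stop: Point, b: Point) -> float:
    """Extra distance from visiting `stop` between consecutive route points a and b."""
    return math.dist(a, stop) + math.dist(stop, b) - math.dist(a, b)


def insert_best_stop(route: List[Point], candidates: Dict[str, Point]) -> Tuple[str, List[Point]]:
    """Pick the candidate and position that add the least distance to the route."""
    best_name, best_index, best_extra = None, None, math.inf
    for name, location in candidates.items():
        for i in range(len(route) - 1):
            extra = detour(route[i], location, route[i + 1])
            if extra < best_extra:
                best_name, best_index, best_extra = name, i + 1, extra
    if best_name is None:
        raise ValueError("need at least one candidate and a route with two points")
    new_route = route[:best_index] + [candidates[best_name]] + route[best_index:]
    return best_name, new_route


route = [(0.0, 0.0), (10.0, 0.0)]  # current position -> destination (km, toy grid)
coffee = {"Cafe A": (5.0, 1.0), "Cafe B": (5.0, 4.0)}
print(insert_best_stop(route, coffee))
```

Cheapest insertion is greedy: it is fast and usually reasonable for adding one or two errands, but it doesn't guarantee the best ordering when many stops are added at once.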

BMW and Amazon have presumably spent significant time optimizing this for the automotive context. They've likely incorporated safeguards to ensure that the system doesn't attempt to execute commands that would be dangerous while driving. They've built in error handling so that if the system misunderstands a request, it fails gracefully rather than doing something unexpected.

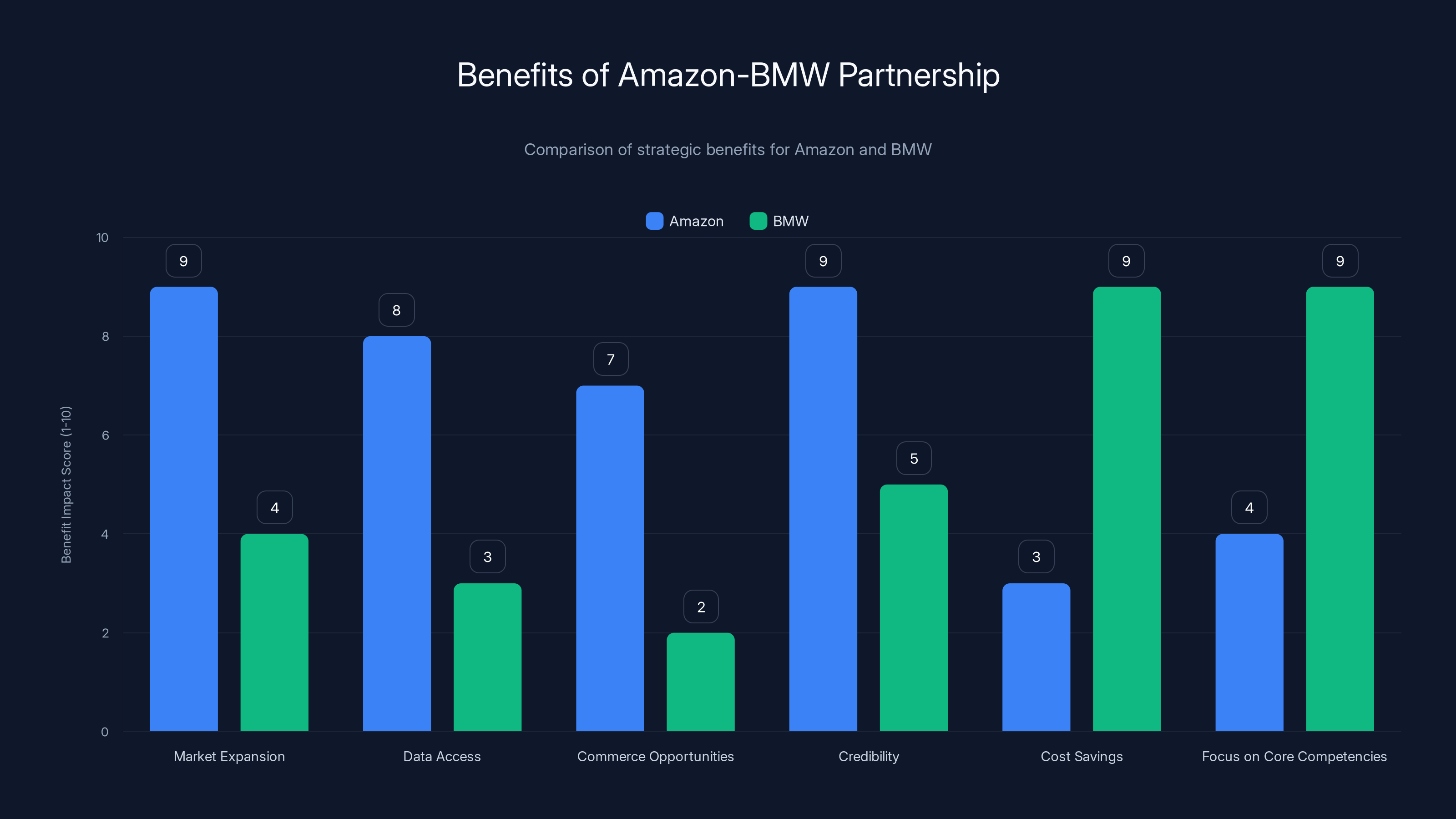

Amazon benefits from market expansion, data access, and credibility, while BMW gains cost savings and focuses on core competencies. (Estimated data)

Safety Considerations: Why This Matters for Drivers

When you're giving voice commands to a speaker in your home, safety is not a primary concern. If the speaker misunderstands and does something weird, it's inconvenient but not dangerous.

In a car, safety is paramount. A misunderstood voice command could potentially distract a driver, cause them to take an incorrect route, or inadvertently disable a safety-critical system.

Both BMW and Amazon have presumably considered this extensively, and there are likely several layers of safety built into the system.

First, the system needs to recognize when a request should be allowed and when it shouldn't. For example, the system probably won't allow you to disable airbags through voice command, even if you explicitly ask for it. Similarly, if you're on a highway driving 70 mph, the system might decline to execute requests that require significant manual input.
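One common design enforces rules like these outside the language model, as a deterministic check that every planned action must pass, so that no phrasing can talk the model into a forbidden action. A minimal sketch; the action names, the speed threshold, and the three-way allow/defer/deny outcome are illustrative assumptions, not BMW's policy.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DEFER = "defer"   # e.g. offer to do it once the car is parked
    DENY = "deny"


# Hypothetical policy tables; real ones would come from the automaker's safety engineering.
NEVER_BY_VOICE = {"disable_airbags"}
NEEDS_DRIVER_ATTENTION = {"pair_new_phone", "browse_settings_menu"}
MAX_SPEED_FOR_ATTENTION_MPH = 3.0


def check_action(action: str, speed_mph: float) -> Decision:
    """Gate a planned action before it reaches the vehicle's control systems."""
    if action in NEVER_BY_VOICE:
        return Decision.DENY
    if action in NEEDS_DRIVER_ATTENTION and speed_mph > MAX_SPEED_FOR_ATTENTION_MPH:
        return Decision.DEFER
    return Decision.ALLOW


for action, speed in [("climate.set_temperature", 70), ("pair_new_phone", 70),
                      ("pair_new_phone", 0), ("disable_airbags", 0)]:
    print(action, speed, check_action(action, speed).value)
```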

Second, the system needs to be accurate enough that false positives are rare. If the system frequently misidentifies what you said and executes the wrong command, drivers will stop using it, which means they might resort to more dangerous behaviors (like trying to manually control systems while driving).

Third, the system needs to have a graceful fallback when it's uncertain. Rather than guessing, it should ask a clarifying question. "Did you want me to navigate to the coffee shop on 5th Street, or the one on Main Street?" This is more natural and reduces the risk of executing the wrong action.
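One simple way to decide between guessing and asking is to compare the scores of competing interpretations: act only when the best one is both confident and clearly ahead of the runner-up. The thresholds and scores here are invented for illustration, and a real assistant might phrase the question with the language model rather than a fixed template.

```python
from typing import List, Tuple


def choose_or_clarify(interpretations: List[Tuple[str, float]],
                      min_confidence: float = 0.6,
                      min_margin: float = 0.2) -> Tuple[str, str]:
    """Act on the top interpretation only if it is confident and clearly ahead.

    `interpretations` holds (description, score) pairs; the scores are assumed
    to come from the recognizer or language model.
    """
    if not interpretations:
        return "ask", "Sorry, could you say that again?"
    ranked = sorted(interpretations, key=lambda item: item[1], reverse=True)
    top_label, top_score = ranked[0]
    runner_up_score = ranked[1][1] if len(ranked) > 1 else 0.0
    if top_score >= min_confidence and top_score - runner_up_score >= min_margin:
        return "execute", top_label
    options = " or ".join(label for label, _ in ranked[:2])
    return "ask", f"Did you mean {options}?"


print(choose_or_clarify([("the coffee shop on 5th Street", 0.48),
                         ("the coffee shop on Main Street", 0.45)]))
print(choose_or_clarify([("set temperature to 72", 0.91), ("play the radio", 0.04)]))
```

The margin check matters as much as the confidence check: two nearly tied readings are exactly the case where guessing is most likely to do the wrong thing.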

Amazon has extensive experience with this from deploying Alexa in millions of homes. They've learned how to build systems that are simultaneously smart and reliable. The automotive application is more critical, so presumably, the bar is even higher.

BMW's reputation is built on engineering excellence. They presumably wouldn't ship this feature if it wasn't meeting rigorous safety and reliability standards. The fact that the timeline extended by years suggests they're being appropriately cautious.

The Competitive Landscape: Where Are Other Automakers?

BMW isn't the only luxury automaker interested in upgrading their voice assistant technology. Several competitors are working on similar solutions, though most are still earlier in development.

Mercedes-Benz has MBUX (Mercedes-Benz User Experience), their own in-house voice and language system. They've been iterating on this for years and have recently begun incorporating more advanced AI. However, they haven't yet reached the level of integration and sophistication that Alexa+ in the BMW apparently achieves.

Audi and Volkswagen (both part of the Volkswagen Group) have been using Amazon Alexa for years. However, they're using earlier versions. They may eventually upgrade to Alexa+ as well, but there's no official announcement yet.

Tesla has their own voice assistant built directly into their vehicles. Elon Musk has made it clear that Tesla wants to own all the software layers in their vehicles. However, Tesla's approach is closed-ecosystem and proprietary, which means they can't leverage the breadth of services that Amazon Alexa provides.

Porsche has partnerships with voice assistant companies, but they've historically been less aggressive in this space than some competitors.

Luxury Chinese EV makers like NIO and Li Auto have been incorporating more sophisticated voice assistants in their vehicles, though these are typically based on local AI platforms rather than global systems like Alexa+.

The competitive advantage BMW is gaining here is significant but potentially temporary. Once Alexa+ proves itself in the iX3, other automakers using Alexa (Audi, Volkswagen, potentially others) can adopt it as well. But for at least the next few years, BMW will have a sophisticated voice assistant that most competitors lack.

More strategically, this partnership helps BMW brand itself as a company that values intelligent technology and seamless user experience. For luxury car buyers, this kind of thoughtful integration is exactly what they're paying extra for.

The integration of Alexa into BMW's iX3 spans more than three years, reflecting the complexity of adapting voice technology for automotive use. Estimated data.

The Broader Implications: Voice as an Interface Revolution

The BMW iX3 and Alexa+ partnership is significant not just for BMW owners, but because it signals a broader shift in how voice will be used as an interface to machines and services.

For nearly two decades, we've primarily interacted with devices through touchscreens. The iPhone revolutionized this with a brilliant touchscreen interface, and subsequent devices have mostly followed its pattern.

But touchscreens are actually pretty terrible for some use cases—particularly driving. Your eyes need to stay on the road. Your hands need to be ready to control the vehicle. Voice is the natural interface for this context.

The problem has always been that voice technology wasn't sophisticated enough. When voice assistants were simple pattern matchers, they were only useful for a limited set of interactions. But with generative AI, voice assistants are finally becoming sophisticated enough to be genuinely useful.

This means that voice is likely to become the primary interface for more and more devices and services. You'll see voice assistants in appliances, in homes, in public spaces, and increasingly in vehicles.

For tech companies and device manufacturers, this represents an enormous opportunity. The company that owns the voice assistant becomes the gateway through which users access a wide range of services. Amazon recognized this early, which is why they've invested so heavily in Alexa and why they're now expanding Alexa+ to automotive.

For users, this has both benefits and concerns. The benefits: if voice assistants become sophisticated and reliable, they can be genuinely helpful, reducing cognitive load and improving safety in contexts like driving. The concerns: voice assistants are always listening for their wake word, and recordings of requests are typically processed in the cloud, raising privacy questions. And if a single company controls the voice interface in multiple devices across your life, that company has enormous power over your digital experience.

Integration with the BMW Ecosystem: How This Fits into Modern Luxury

BMW's approach to the iX3 has always been to position it as a statement about the future of luxury. The iX3 isn't just an electric crossover—it's a showcase for how BMW thinks about design, technology, and experience.

Alexa+ integration fits naturally into this positioning. Modern luxury, particularly for affluent tech-savvy buyers, is increasingly about seamless integration. It's not about having the most features. It's about having the features that matter work together elegantly, without friction.

A driver walking up to their iX3 expects it to be aware of their schedule, their preferences, and their destination (if they've already planned it). As they get in the car and start driving, they expect to be able to adjust climate, navigation, and entertainment without taking their eyes off the road or their hands off the wheel.

Alexa+ enables all of this naturally. Because it's integrated with the broader Amazon ecosystem, it can access your calendar, your shopping list, your music preferences, your home settings. It can anticipate what you might need.

Beyond the functional integration, there's a symbolic integration. BMW is saying: "We recognize that your digital life extends beyond the car. We're not trying to create a closed ecosystem that locks you in. Instead, we're creating a car that integrates with the tools and services you already use and trust."

This positioning is important for luxury. Affluent buyers have options. They could buy a Tesla, a Mercedes, a Porsche. BMW needs to offer something that's not just technically excellent but that feels thoughtfully integrated into how these buyers actually live their lives.

Integration with Alexa also helps BMW with something they've struggled with: future-proofing. Cars have 10-15 year lifespans. Technology changes much faster than that. By using Amazon's Alexa+ platform, BMW is essentially saying, "We're tying our voice assistant to a platform that will continue to evolve and improve over time." Amazon will update and improve Alexa+. BMW owners will get those improvements through over-the-air updates.

This is much better than building a proprietary voice assistant that will inevitably feel outdated in 3-4 years when AI capabilities advance.

Large Language Models excel in in-context learning but face challenges like fact hallucination and high operational costs. Bedrock enhances domain-specific fine-tuning and reduces hallucination issues. Estimated data.

The Business Model: Why Amazon and BMW Benefit

This partnership makes business sense for both companies, though in different ways.

For Amazon, automotive represents one of the last major categories where Alexa hasn't achieved significant penetration. Amazon has Alexa in smart speakers, phones, tablets, wearables, smart displays, and hundreds of other devices. But in cars, its presence has been limited to a few automaker partnerships, add-on devices, and phone-based integrations through the Alexa app.

The auto industry is enormous. If Amazon can successfully establish Alexa+ as the standard voice assistant across multiple automakers' vehicles, that's a massive market. It also gives them access to rich data about driving behavior, preferences, and commerce patterns.

Moreover, voice assistants in cars create new commerce opportunities for Amazon. If the voice assistant can help users order food, navigate to restaurants, manage deliveries, or handle other transactions, that's potential revenue.

The automotive space also gives Alexa+ credibility. If it works well in cars—arguably the most demanding environment for voice assistants—it demonstrates the platform's maturity and sophistication. This makes it easier for Amazon to convince other industries and device manufacturers to adopt Alexa+.

For BMW, the benefits are somewhat different. They get a best-in-class voice assistant without having to build it themselves. BMW is not a software company. Building a competitive generative AI system from scratch would require hiring hundreds of engineers, running massive compute infrastructure, and competing with companies like Amazon, Google, Microsoft, and OpenAI that have been doing this longer.

By partnering with Amazon, BMW gets to focus on what they do well: automotive engineering, design, and brand. They get a voice assistant built on a platform that runs on more than 600 million devices. And they get the ability to customize it for their specific needs through Bedrock.

Beyond the technical benefits, there's a marketing benefit. "Powered by Alexa+" is credible. Customers recognize the Alexa brand. They know it works (because they likely have Alexa devices at home). This makes it easier for BMW to convince buyers that the voice assistant in their car is worth trusting and using.

There's also a competitive benefit. If Audi and Volkswagen (both part of VW Group) are still using older Alexa versions, BMW has a feature differentiation. The iX3's voice assistant will be noticeably better than what those competitors offer.

Finally, the partnership helps BMW differentiate in a category where differentiation is increasingly hard. When multiple companies are building electric crossovers with similar range, power, and pricing, features like a sophisticated voice assistant can be genuine differentiators in purchasing decisions.

Looking Forward: What Comes After Alexa+ in Cars?

The BMW iX3 with Alexa+ is a significant milestone, but it's clearly not the end point. As technology continues to evolve, in-car voice assistants will likely become even more sophisticated and integrated.

In the near term (next 2-3 years), we'll likely see other automakers adopting Alexa+ or developing their own generative AI-powered voice systems. Mercedes, Audi, and Volkswagen will probably upgrade. Some manufacturers will develop proprietary systems similar to what Tesla has attempted.

We'll also see expansion of voice assistant capabilities. Current voice assistants handle individual requests. Future versions will handle multi-step, multi-system orchestrations more seamlessly. You might ask the car to "plan the most efficient route that includes stops at the places we talked about last week." The assistant would need to remember previous conversations, infer which places were discussed, and plan accordingly. This level of context and inference will become more common.

There's also the question of how voice assistants integrate with autonomous driving. As vehicles become more autonomous, voice control becomes even more important. In a car with full self-driving, the driver might need to give fewer manual commands, but voice might become the primary interface through which they interact with the vehicle and access services.

Privacy and regulation will also shape development. As voice assistants become more prevalent in cars, regulators may impose requirements around data handling, user consent, and the ability to opt out. This will likely lead to more privacy-focused voice assistant designs and clearer rules about data usage.

There's also the interesting question of whether voice assistants in cars will eventually be integrated with other vehicle intelligence systems. Imagine a system that can optimize your route not just based on your destination, but based on real-time traffic, energy efficiency in electric vehicles, and your schedule. The voice interface would be the way you interact with this system, asking for explanations about route choices, requesting changes, or requesting that the system optimize for a specific factor (fastest route, most scenic route, most efficient route, cheapest route).
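Letting the driver say which factor to optimize maps naturally onto choosing among candidate routes with a different cost function per goal. A minimal sketch; the route data, field names, and objectives are invented, and a real planner would compute the candidates from live traffic and the vehicle's energy model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class RouteOption:
    name: str
    minutes: float        # predicted travel time with live traffic
    energy_kwh: float     # predicted battery use
    tolls_usd: float
    scenic_score: float   # 0..1, a hypothetical rating


# Each objective maps a route to a cost; lower is better.
OBJECTIVES: Dict[str, Callable[[RouteOption], float]] = {
    "fastest": lambda r: r.minutes,
    "most_efficient": lambda r: r.energy_kwh,
    "cheapest": lambda r: r.tolls_usd,
    "most_scenic": lambda r: -r.scenic_score,
}


def pick_route(options: List[RouteOption], objective: str) -> RouteOption:
    """Return the candidate route with the lowest cost under the chosen objective."""
    if objective not in OBJECTIVES:
        raise ValueError(f"unknown objective {objective!r}; choose from {sorted(OBJECTIVES)}")
    return min(options, key=OBJECTIVES[objective])


routes = [
    RouteOption("Highway", minutes=32, energy_kwh=9.5, tolls_usd=4.0, scenic_score=0.2),
    RouteOption("Surface streets", minutes=41, energy_kwh=7.8, tolls_usd=0.0, scenic_score=0.4),
    RouteOption("Coast road", minutes=55, energy_kwh=8.9, tolls_usd=1.5, scenic_score=0.9),
]
for goal in OBJECTIVES:
    print(goal, "->", pick_route(routes, goal).name)
```

Because each choice is the minimum of a named cost, the assistant can also explain it ("this route uses the least energy") when the driver asks why.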

The Bigger Picture: AI Integration in Physical Products

The BMW iX3 and Alexa+ partnership is part of a larger trend: the integration of sophisticated AI systems into physical products.

For decades, product categories have largely stayed separate. A car was a car. A refrigerator was a refrigerator. A washing machine was a washing machine. Each had its own manufacturer, its own interface, its own limitations.

But increasingly, smart device companies, cloud AI platforms, and traditional hardware manufacturers are recognizing that the real value comes from integration. A car without cloud-connected services, without access to real-time information, without integration with a driver's broader digital life, is less useful than a car with all of those features.

This integration creates new possibilities and new challenges. Technically, building a car that integrates with a cloud AI service is much harder than building one with only local intelligence. You need robust connectivity, a plan for when that connectivity drops, and careful management of latency and power consumption.

But the benefits can be tremendous. A car with access to real-time traffic, weather, and information about services and attractions can make driving more pleasant and efficient. A car with a sophisticated voice assistant can reduce driver cognitive load and improve safety.

For consumers, this trend raises important questions about privacy, data ownership, and choice. If your car is sending data to Amazon, to BMW, and to dozens of other services, what happens to that data? Who has access to it? Can you opt out?

These questions don't have easy answers, but they're important to think about as AI integration in physical products accelerates.

Implementation Challenges BMW and Amazon Likely Faced

While the announcement of Alexa+ in the iX3 makes it sound straightforward, the actual implementation process was likely filled with thorny challenges.

Hardware Integration: The iX3 has specific microphone placements, speaker configurations, and acoustic properties. The system had to be optimized for the specific acoustic environment of the vehicle. Microphones might be in the ceiling, the door panels, or the windshield. The acoustic properties of a car (hard surfaces that reflect sound, insulation that absorbs sound, wind noise entering through multiple locations) are completely different from the acoustic environment of a smart speaker sitting on a table.

BMW and Amazon presumably spent months testing microphone configurations, developing noise cancellation algorithms specific to the automotive environment, and optimizing the speech recognition pipeline to work reliably in cars.

Software Integration: The iX3 runs BMW's existing infotainment software stack. Integrating a new voice assistant platform meant creating compatible APIs, ensuring that Alexa+ could communicate with every system in the vehicle, and making sure that existing controls still worked if someone preferred not to use voice.

This kind of integration is straightforward in theory but complex in practice. You need to ensure that voice commands don't interfere with other systems, that the voice assistant has the appropriate permissions and access controls, and that everything fails gracefully if there are unexpected errors.
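The permission and graceful-failure requirements can be illustrated with a small Python sketch. Everything in it is hypothetical: the `ASSISTANT_PERMISSIONS` allowlist, the `VehicleCommandError` exception, and the `dispatch` helper stand in for whatever BMW's software stack actually uses. The point is the shape of the logic. The sketch checks permissions before acting, and it turns a failure into a spoken explanation instead of a crash, while the physical controls keep working.

```python
from typing import Callable


class VehicleCommandError(Exception):
    """Hypothetical error raised when a vehicle subsystem rejects a command."""


# Hypothetical allowlist of subsystems the voice assistant may control.
ASSISTANT_PERMISSIONS = {"climate", "media", "navigation"}


def dispatch(subsystem: str, action: Callable[[], str]) -> str:
    """Run a voice-triggered action behind a permission check and a safe fallback."""
    if subsystem not in ASSISTANT_PERMISSIONS:
        return f"Sorry, I can't control {subsystem} by voice."
    try:
        return action()
    except VehicleCommandError as err:
        # Report the failure instead of crashing; manual controls are unaffected.
        return f"That didn't work ({err}). The manual controls still work."


def set_temperature() -> str:
    return "Temperature set to 21 degrees"


def unresponsive_media() -> str:
    raise VehicleCommandError("media unit not responding")


print(dispatch("climate", set_temperature))   # Temperature set to 21 degrees
print(dispatch("brakes", set_temperature))    # Sorry, I can't control brakes by voice.
print(dispatch("media", unresponsive_media))
```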

Customization and Localization: The automotive version of Alexa+ isn't the same as the Alexa+ running in millions of Echo devices. It's been customized for BMW vehicles and will presumably be localized for different markets (German for Germany, French for France, etc., with market-specific services and preferences).

This customization process using Amazon Bedrock requires careful data preparation, training, testing, and validation. BMW and Amazon had to make sure that the system understood BMW-specific terminology, that it knew about BMW-specific features, and that localization didn't break any of the core functionality.

Safety Validation: Any system that affects vehicle safety needs extensive testing and validation before deployment. Modern cars can receive over-the-air updates, but a bug in a moving vehicle carries far higher stakes than a bug in a smartphone app, and cars stay in use for a decade or more. A safety issue with the voice assistant could affect every vehicle that ships with it.

BMW and Amazon presumably conducted real-world testing in various driving conditions, at various speeds, with various inputs. They tested edge cases: What happens if someone shouts a voice command? What if multiple people are talking in the car? What if the system is uncertain and the user clarifies their request? What if the system crashes?

Connectivity and Offline Functionality: Alexa+ is cloud-based, so most of its features need a reliable internet connection. But connectivity in cars is inconsistent. You might have strong 4G/5G in cities, a weaker signal on rural highways, and no signal at all in tunnels.

The team had to implement fallback behavior for when the connection is poor or unavailable. Some functions might be available offline (basic navigation, climate control), while others might require cloud connectivity (accessing real-time information, making purchases).

BMW and Amazon likely had to define the specific trade-offs: which functions are available offline versus online, how the system handles spotty connectivity, and how long it can cache data before that data goes stale.
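Those trade-offs (what runs on board, what needs the cloud, and how long a cached answer stays usable) can be sketched as a small router. This Python example rests on assumptions throughout. The `OFFLINE_CAPABLE` set, the five-minute cache lifetime, and the `AssistantRouter` class are hypothetical, because the actual offline feature set of the iX3 hasn't been published. Connectivity and time are simulated so the behavior is deterministic.

```python
import time
from typing import Callable

# Hypothetical split; the actual offline feature set in the iX3 isn't public.
OFFLINE_CAPABLE = {"climate", "basic_navigation"}


class AssistantRouter:
    """Handle requests on board, in the cloud, or from a short-lived cache."""

    def __init__(self, is_online: Callable[[], bool], cache_ttl_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.is_online = is_online
        self.cache_ttl_s = cache_ttl_s  # how long cached answers count as fresh
        self.clock = clock
        self._cache: dict[str, tuple[float, str]] = {}  # feature -> (time, answer)

    def handle(self, feature: str, fetch_from_cloud: Callable[[], str]) -> str:
        if feature in OFFLINE_CAPABLE:
            return f"{feature}: handled on board"
        if self.is_online():
            answer = fetch_from_cloud()
            self._cache[feature] = (self.clock(), answer)
            return answer
        cached = self._cache.get(feature)
        if cached is not None and self.clock() - cached[0] <= self.cache_ttl_s:
            return f"{cached[1]} (cached, may be out of date)"
        return f"{feature}: needs an internet connection"


# Simulated connectivity and clock so the example is deterministic.
state = {"online": True, "now": 0.0}
router = AssistantRouter(is_online=lambda: state["online"], clock=lambda: state["now"])

print(router.handle("traffic", lambda: "Light traffic ahead"))  # Light traffic ahead
state.update(online=False, now=120.0)  # enter a tunnel two minutes later
print(router.handle("traffic", lambda: "unused"))  # Light traffic ahead (cached, may be out of date)
state.update(now=900.0)                # still offline fifteen minutes later
print(router.handle("traffic", lambda: "unused"))  # traffic: needs an internet connection
```

Labeling cached answers as possibly out of date is one way to address the staleness question: the driver still gets something useful, along with a warning.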

Power Management: Vehicles have power constraints. The voice assistant needs to be constantly listening (in case someone says the wake word) without draining the battery. This is a non-trivial challenge, especially when the car is parked and not running.

They likely had to optimize the system to use ultra-low-power microphone arrays, to only activate the full system when the wake word is detected, and to manage power consumption carefully when the car is idle.
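A common general pattern for always-on listening is a cascade. A cheap check runs on every audio frame, and the more expensive wake-word model runs only when that check passes. The Python sketch below assumes that pattern. The RMS energy gate stands in for dedicated low-power hardware, `fake_detector` is a hypothetical placeholder for a real keyword-spotting model, and nothing here reflects BMW's or Amazon's actual pipeline.

```python
import math
from typing import Callable, Iterable


def rms(frame: list[float]) -> float:
    """Root-mean-square energy of one audio frame; cheap enough to run constantly."""
    return math.sqrt(sum(x * x for x in frame) / len(frame)) if frame else 0.0


def gated_listener(frames: Iterable[list[float]],
                   detect_wake_word: Callable[[list[float]], bool],
                   energy_threshold: float = 0.02) -> tuple[int, int]:
    """Call the costly wake-word detector only on frames loud enough to hold speech.

    Returns (frames passed to the detector, wake-word detections).
    """
    checked = hits = 0
    for frame in frames:
        if rms(frame) < energy_threshold:
            continue   # stage 1: quiet frame, stay on the low-power path
        checked += 1   # stage 2: run the more expensive detector
        if detect_wake_word(frame):
            hits += 1  # stage 3 (not shown): start recording, wake the full assistant
    return checked, hits


def fake_detector(frame: list[float]) -> bool:
    """Hypothetical stand-in for a real keyword-spotting model."""
    return max(frame) > 0.5


# Toy frames: silence, faint noise, speech-level audio, and a "wake word" frame.
frames = [[0.0] * 160, [0.001] * 160, [0.1] * 160, [0.6] * 160]
print(gated_listener(frames, fake_detector))  # (2, 1)
```

Only two of the four frames ever reach the detector. That ratio is the source of the power savings when the cabin is mostly quiet.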

Data Privacy and Legal Compliance: Every conversation with the voice assistant is potentially sensitive. If a user is giving navigation commands, location data is involved. If a user is setting up calendar integration or making purchases, financial or personal data might be involved.

BMW and Amazon had to navigate privacy regulations (GDPR in Europe, CCPA in California, and various regulations in other jurisdictions), define data handling policies, ensure encryption, and provide users with transparency and control.

Competitive Alternatives and What They Offer

While Alexa+ in the BMW iX3 is impressive, it's worth considering what alternatives are available and how they compare.

Tesla's Voice Assistant: Tesla has built its own voice system, integrated directly into its vehicles. The advantage is deep integration with Tesla's ecosystem. The disadvantage is that it's largely closed to services outside that ecosystem, so third-party integrations are limited to what Tesla chooses to build in. Tesla's voice assistant is useful, but it's limited.

Google Assistant in Cars: Google offers a voice assistant integrated into some vehicles, particularly through Android Automotive OS. Google has different strengths than Amazon: deep search capabilities, excellent music integration (YouTube Music, Spotify), and strong smart home integration (Google Home). However, Google's automotive integration is less mature than Alexa+.

Mercedes MBUX: Mercedes-Benz developed its own voice assistant. It's sophisticated and well integrated, but it's proprietary: unless you're a Mercedes owner, you can't use it. Mercedes has begun adding generative AI features, but MBUX wasn't originally built around a large language model, so its natural language understanding may not match what Alexa+ provides.

Siri via Apple CarPlay: Apple brings Siri into vehicles through CarPlay, but it requires an iPhone, and it primarily handles navigation, messaging, and music. For broader vehicle control, it's not as comprehensive as Alexa+.

The advantage of Alexa+ is breadth. It works with third-party services, understands natural language, integrates with your existing smart home and cloud services, and can control a wide range of vehicle systems.

The trade-off is that you're relying on Amazon's infrastructure and data handling. If that concerns you, proprietary systems like Tesla's or Mercedes' might be preferable despite their limitations.

The Business of Voice: Why Voice Assistants Matter to Tech Giants

To understand why Amazon has invested so heavily in Alexa and Alexa+, it helps to understand how voice assistants fit into the bigger picture of tech company strategy.

For Amazon, Google, Microsoft, and Apple, voice assistants represent a way to deepen their relationship with users and increase their share of wallet. When you have a voice assistant, you're more likely to use that company's services. You're more likely to make purchases through that platform. You generate data that helps that company understand your preferences and behavior.

Voice assistants are also a hedge against disruption. As new categories of devices emerge (smart homes, wearables, cars, etc.), a dominant voice assistant means you have a presence in those categories without needing to build hardware for each one.

For Amazon specifically, Alexa+ in cars serves a strategic purpose: it extends Amazon's reach into a new domain and creates a reason for BMW owners to integrate more Amazon services into their lives. If your car has Alexa+, you might be more likely to order food, manage deliveries, control your smart home, or use Amazon Music.

This is the broader context in which the BMW partnership should be understood. It's not just about making cars better. It's about establishing Amazon as the trusted voice assistant platform across all of the categories where voice assistants matter.

Privacy, Data, and the Elephant in the Room

Here's the uncomfortable truth about voice assistants: they're always listening for their wake word, and once triggered, they record what you say and send it somewhere.

When you activate Alexa+ in your BMW, what happens? The microphone is listening for the wake word. If it detects the wake word, it starts recording. That audio gets sent to Amazon's cloud servers. Amazon transcribes it, understands what you're asking for, executes the request, and sends a response back to your car.

Amazon has policies about how it handles this data. For standard Alexa, users can have voice recordings deleted automatically after a set period (3 months is one of the options), but transcripts and other data derived from requests may be retained longer and used for machine learning purposes.

For some users, this is fine. They trust Amazon and don't mind if Amazon has access to their voice commands. They might even benefit from it (Amazon can suggest useful automations based on patterns in your commands).

For other users, the privacy implications are concerning. If Amazon is recording all your voice commands, they're getting visibility into your location (from navigation requests), your preferences (from music and shopping requests), your health (from queries about medical conditions), your relationships (from messages to contacts), and more.

BMW has addressed this to some degree by allowing users to control when the microphone is listening and by providing controls over data sharing. But the fundamental issue remains: using a cloud-based voice assistant means accepting some level of data sharing with the cloud provider.

This is an important consideration for potential BMW iX3 buyers. If privacy is a primary concern, the solution is either to not use the voice assistant or to be very deliberate about what commands you issue through it.

Amazon's business model depends on understanding user behavior. They're not going to build a voice assistant that doesn't provide them with insights. So if you choose to use Alexa+, you're implicitly choosing to share data with Amazon.

Real-World Use Cases: What This Actually Enables

Let's think concretely about how someone might use Alexa+ in the BMW iX3 and what that experience could be like.

Scenario 1: The Commute

You get in your iX3 on a Monday morning. Alexa+ automatically checks your calendar and sees you have a 9 AM meeting downtown. It proactively suggests starting navigation and tells you that traffic is light right now but will get heavy in about 20 minutes.

You ask, "What's my schedule for today?" Alexa+ lists your meetings. You then ask, "Remind me to grab lunch before the 2 PM meeting." Alexa+ confirms the reminder.

As you drive, traffic gets heavier than expected. You ask, "Find an alternate route." Alexa+ re-routes you, saving about 8 minutes.

You realize you're going to be a few minutes late. You ask, "Send a message to Sarah saying I'll be there in 5 minutes." Alexa+ composes a message, reads it back to you for confirmation, and sends it.

Scenario 2: Road Trip Planning

You're planning a road trip from Los Angeles to San Francisco. The night before, you ask your home Echo: "Plan a road trip to San Francisco tomorrow with stops at scenic viewpoints and good coffee places."

The next day, you get in your iX3. Alexa+ already has the route planned. It's loaded with waypoints for coffee shops and viewpoints. As you drive, it proactively suggests stops: "There's a highly rated coffee place 10 miles ahead. Would you like me to navigate there?"

At one of the scenic viewpoints, you ask the car to take a photo of the landscape and send it to your family group chat. Alexa+ does that.

Scenario 3: Connected Home and Car

You're leaving your home for a drive to a client meeting. As you're getting in your iX3, Alexa+ asks if you'd like to set your home to "away mode" (lock doors, arm security system, adjust thermostat for energy savings). You confirm.

On the drive, you realize you'll need to grab something from your home office when you get back. You ask Alexa+ to turn on the lights so you can see clearly when you walk in.

After the meeting, before driving home, you ask Alexa+ to "unlock the front door and turn on the living room lights." When you arrive home, the door is unlocked and the lights are on, because Alexa+ coordinated these actions from your car.

Scenario 4: Emergency at Home

You're driving when you get an alert that a smart sensor has detected a water leak at home. You ask Alexa+, "Turn off the water main in my house." Assuming your home has a connected shutoff valve, Alexa+ does that, confirms the action, and suggests you contact a plumber.

These scenarios illustrate the real power of the system: it's not just about playing music or setting the temperature. It's about orchestrating complex, multi-step actions and integrating your car with your broader digital and physical life.

The Bottom Line: Why This Matters and What It Means

The 2026 BMW iX3 with Alexa+ represents a significant step forward in automotive voice technology. It's the first vehicle to ship with Alexa+, and it suggests that generative AI voice assistants are mature enough for real-world deployment in a consumer product.

The implications extend beyond BMW owners. This partnership validates the approach of integrating cloud-based AI services into physical products. It shows that generative AI can meaningfully improve user experience in domains where rule-based systems have historically failed.

For the automotive industry, it raises the bar for what users will expect from voice assistants. Competitors will need to match Alexa+ functionality or they'll risk losing sales to customers who want more sophisticated voice control.

For Amazon, it's a validation that Alexa+ is ready for prime time and a beachhead for expanding into other automotive partnerships. Over the next few years, we'll likely see Alexa+ in vehicles from multiple manufacturers.

For users, it means better experiences in a device they use every day. A voice assistant that actually understands what you're asking for, handles complex requests, and integrates seamlessly with your digital life can make driving safer and more enjoyable.

Of course, this comes with trade-offs around privacy and data sharing. Users who choose to use Alexa+ are accepting that Amazon will have visibility into their driving patterns, preferences, and behaviors.

But for many people, the convenience and capability will be worth it. And as voice assistants continue to improve—as they become more accurate, faster, and more capable of handling complex scenarios—they'll become increasingly central to how we interact with our vehicles and our digital lives.

The three-year journey from announcement to deployment might seem long, but it reflects the complexity of bringing sophisticated AI to a physical product. The result is a system that's been carefully engineered, extensively tested, and optimized for real-world use. That's worth the wait.

FAQ

What is Alexa+ and how does it differ from older Alexa versions?

Alexa+ is Amazon's next-generation voice assistant powered by large language models and generative AI. Earlier versions relied on pattern matching and pre-programmed responses. Alexa+ can understand natural language at a deeper level, handle complex multi-step requests, maintain context across conversation turns, and generate natural-sounding responses. It's built on large language models trained on vast amounts of text, so it can reason through problems rather than just matching keywords to fixed commands. This means you can speak naturally to your BMW iX3 without having to use specific phrases or script-like language.

How did Amazon build Alexa+ for automotive use specifically?

Amazon used Amazon Bedrock, a platform that lets companies customize generative AI models with their own proprietary data. BMW trained the base Alexa+ model with BMW-specific information about its vehicles, features, and systems. The partnership took three years because the system had to be optimized for the automotive environment, which presents unique challenges: road and wind noise, varied microphone placement in the cabin, the need for safe operation, integration with existing vehicle systems, and real-time responsiveness while driving. Both companies conducted extensive testing to ensure the system worked reliably in real driving conditions.

Can I use Alexa+ in the BMW iX3 if I don't have other Alexa devices at home?

Yes, you can use Alexa+ in the iX3 without owning any other Amazon devices. However, the system becomes more powerful if you have an existing Amazon ecosystem. If you do own Echo devices, smart home products, or use Amazon services at home, Alexa+ can leverage that existing setup for continuity. The system can continue conversations started on your home Echo device, control smart home devices in your house, and access your Amazon preferences and history. But none of this is required—the voice assistant in the car works standalone.

What safety features does Alexa+ have to prevent dangerous driver distraction?

Alexa+ includes multiple layers of safety features, though Amazon and BMW haven't disclosed all the specific details. The system recognizes which requests are safe to execute while driving and which require the vehicle to be stopped or parked. It's designed to reduce distraction rather than increase it, allowing drivers to keep their eyes on the road while handling navigation, climate, and other vehicle functions. The system can also refuse requests that would be dangerous, such as adjusting safety-critical systems or operations that would require significant manual input. Both companies have emphasized that safety was a primary consideration throughout the development process.
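One simple way to picture "recognizing which requests are safe while driving" is a policy table consulted before anything executes. The Python sketch below illustrates that general idea only. It does not describe how Alexa+ is implemented: the request names, the three policy levels, and the speed check are all hypothetical. Unknown requests default to the cautious path.

```python
from enum import Enum


class Policy(Enum):
    ALLOWED = "allowed"          # fine at any speed
    PARKED_ONLY = "parked_only"  # needs too much attention while moving
    REFUSED = "refused"          # never done by voice


# Hypothetical policy table; BMW and Amazon have not published their actual rules.
REQUEST_POLICY = {
    "set_temperature": Policy.ALLOWED,
    "start_navigation": Policy.ALLOWED,
    "play_music": Policy.ALLOWED,
    "browse_settings_menu": Policy.PARKED_ONLY,
    "disable_stability_control": Policy.REFUSED,
}


def gate(request: str, speed_kmh: float) -> str:
    """Decide what to do with a request given the current vehicle speed."""
    policy = REQUEST_POLICY.get(request, Policy.PARKED_ONLY)  # unknown: cautious default
    if policy is Policy.REFUSED:
        return "refuse"
    if policy is Policy.PARKED_ONLY and speed_kmh > 0:
        return "defer until parked"
    return "execute"


print(gate("set_temperature", 100))          # execute
print(gate("browse_settings_menu", 100))     # defer until parked
print(gate("disable_stability_control", 0))  # refuse
```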

How does Alexa+ maintain privacy and handle the data it collects?

For standard Alexa, Amazon lets users have voice recordings deleted automatically after a set period (3 months is one of the options), though transcripts and other derived data may be retained longer and used for machine learning purposes. Users can control when the microphone is listening and can manage data-sharing preferences through BMW's interface. BMW and Amazon must comply with privacy regulations such as GDPR and CCPA. However, using a cloud-based voice assistant does mean accepting that Amazon will have access to your voice commands and the data those commands reveal about your location, preferences, and behavior. Users concerned about privacy can choose not to use the voice assistant or be selective about what commands they issue through it.

When will Alexa+ be available in other BMW vehicles or other automakers' cars?

Amazon has announced Alexa+ specifically for the 2026 BMW iX3 but hasn't made official announcements about other vehicles or timeframes. Still, it's reasonable to expect that automakers already using Alexa (such as Audi and Volkswagen, both part of the Volkswagen Group) will eventually upgrade to Alexa+. The technology is now in production, and Amazon Bedrock is available to other manufacturers as well, so adoption elsewhere seems likely. Timeframes for those rollouts haven't been announced, but new technology typically debuts in flagship luxury models before expanding to more affordable vehicle segments.

What happens if the internet connection drops while you're driving?

Alexa+ requires cloud connectivity for most features, but the system has fallback behavior for poor or dropped connections. Basic functions like climate control and some navigation features should work offline. Real-time services such as traffic updates, live information queries, and cloud-connected smart home controls won't work without connectivity. The system is designed to handle spotty connectivity gracefully: it keeps local functions available and alerts the user when a function requires an internet connection. This reflects the reality of driving through varied coverage areas, from strong 5G in cities to weaker signal on rural highways.

How do I enable or disable the microphone for privacy?

BMW provides user controls for the microphone and voice assistant. Users who choose not to use voice control can disable the microphone entirely. The specific settings will be available in the iX3's user interface, letting drivers control when the system is listening and what permissions it has. This gives users control over their privacy while keeping voice control available to those who want it.

Can Alexa+ handle requests that require controlling multiple vehicle systems at once?

Yes, this is one of Alexa+'s key strengths. Because it's powered by a language model that can reason through complex tasks, it can handle requests that span multiple steps or systems. For example, you could ask it to "Start navigation to the restaurant, then set the temperature to 72 degrees and play my driving playlist." The system recognizes these as three separate requests and executes all three. Or you could ask for something more complex, like "Find a highly rated coffee shop within 5 miles, navigate there, and make a reservation." The system would break that down into its component parts, executing what it can directly (navigation) and handing the rest to external services (the reservation).
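The plan-then-dispatch idea can be shown with a deliberately naive Python sketch. Alexa+ uses a language model for the planning step. This example swaps in a simple rule-based splitter (commas and the words "and", "then", "and then") and a keyword router instead, purely to show the shape of the process. The keywords, system names, and helper functions are hypothetical.

```python
import re

# Naive stand-in for the LLM planning step: split on commas and the words
# "and", "then", or "and then". Alexa+ itself does not work this way.
_SPLIT = re.compile(r"(?:,\s*|\s+)(?:and\s+then|then|and)\s+|,\s*")

# Hypothetical keyword -> vehicle system routing table.
_ROUTES = [("navigat", "navigation"), ("temperature", "climate"), ("play", "media")]


def split_request(utterance: str) -> list[str]:
    """Break a compound command into individual steps."""
    text = utterance.strip().rstrip(".")
    return [part.strip() for part in _SPLIT.split(text) if part.strip()]


def route(step: str) -> str:
    """Pick the system that should handle one step (first keyword match wins)."""
    lowered = step.lower()
    for keyword, system in _ROUTES:
        if keyword in lowered:
            return system
    return "external_service"  # e.g. search or booking handled outside the car


def plan(utterance: str) -> list[tuple[str, str]]:
    """Return (system, step) pairs in the order the user asked for them."""
    return [(route(step), step) for step in split_request(utterance)]


for system, step in plan("Start navigation to the restaurant, then set the "
                         "temperature to 72 degrees and play my driving playlist."):
    print(f"{system:>10}: {step}")
```

A keyword splitter like this breaks easily on phrasing such as "play something calm and not too loud". That fragility is exactly the case for using a language model for the planning step.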

How does Alexa+ compare to voice assistants in other luxury cars?

Alexa+ represents a significant advancement over most existing automotive voice assistants, primarily because it's built on generative AI rather than traditional pattern-matching systems. Mercedes' MBUX is sophisticated but not yet powered by LLMs at the same level. Tesla's voice assistant is integrated but limited to Tesla's proprietary ecosystem. Google Assistant and Siri are available in some vehicles but offer less comprehensive vehicle control than Alexa+. The BMW iX3 arguably has the most capable voice assistant available in a production vehicle, which would be a meaningful competitive advantage. However, other manufacturers will likely catch up as they implement their own generative AI-powered systems or adopt newer versions of competing platforms.

Key Takeaways

- The 2026 BMW iX3 is the first production vehicle with Alexa+, Amazon's generative AI-powered voice assistant that uses large language models instead of pattern matching

- Three-year development timeline reflects complexity of optimizing AI for automotive safety, reliability, and integration with existing vehicle systems

- Alexa+ understands natural language, maintains conversation context, handles multi-step requests, and integrates with BMW vehicles and smart home systems

- Amazon Bedrock platform allows BMW to customize Alexa+ with BMW-specific data, creating a vehicle-optimized version that understands BMW features and terminology

- This partnership signals broader trend of integrating sophisticated cloud AI into physical products, raising both capabilities and privacy considerations for consumers

![BMW iX3 Alexa+ Voice Assistant: The Future of In-Car AI [2026]](https://tryrunable.com/blog/bmw-ix3-alexa-voice-assistant-the-future-of-in-car-ai-2026/image-1-1767634929885.jpg)