The Seedance 2.0 Controversy That's Shaking Hollywood

Last week, the internet saw something that shouldn't have been possible: Tom Cruise fighting Brad Pitt. Dragon Ball Z characters dancing. Family Guy clips that never aired. None of it was real. All of it was generated by ByteDance's new Seedance 2.0 AI video model.

And Hollywood lost its mind.

Within days, cease and desist letters landed from Disney, Paramount, and the Motion Picture Association. SAG-AFTRA union members were furious. The core accusation was simple but devastating: Seedance 2.0 wasn't just generating video content, it was weaponizing copyrighted characters, actors' likenesses, and protected intellectual property at scale.

ByteDance's response? "We're working on safeguards."

But here's what makes this moment critical. This isn't just another tech company overstepping. This is the collision between cutting-edge AI capabilities and a century of copyright law that Hollywood built its entire business model around. And the outcome will shape whether AI video generation becomes a legitimate tool or gets locked down so tight that innovation stalls.

I've been tracking AI copyright conflicts for two years now, and this one's different. The evidence is undeniable, the legal framework is clear, and the stakes are enormous for creators, studios, and anyone building generative AI tools.

Let's break down what actually happened, why it matters, and what comes next.

TL;DR

- Seedance 2.0 generated hyperrealistic videos featuring famous actors and copyrighted characters without authorization, sparking a wave of legal threats

- Hollywood's legal response is swift and comprehensive: Disney, Paramount, the MPA, and SAG-AFTRA all sent cease and desist letters or issued public condemnations within one week

- Copyright law gives studios strong legal grounds: Using protected characters or actors' likenesses without permission violates both copyright and right-of-publicity laws

- Safeguards are being promised but specifics are vague: ByteDance says it's "strengthening" protections, but what that actually means remains unclear

- This sets a precedent for all AI video tools: Whether Seedance loses in court will determine how aggressively other platforms need to police user-generated AI content

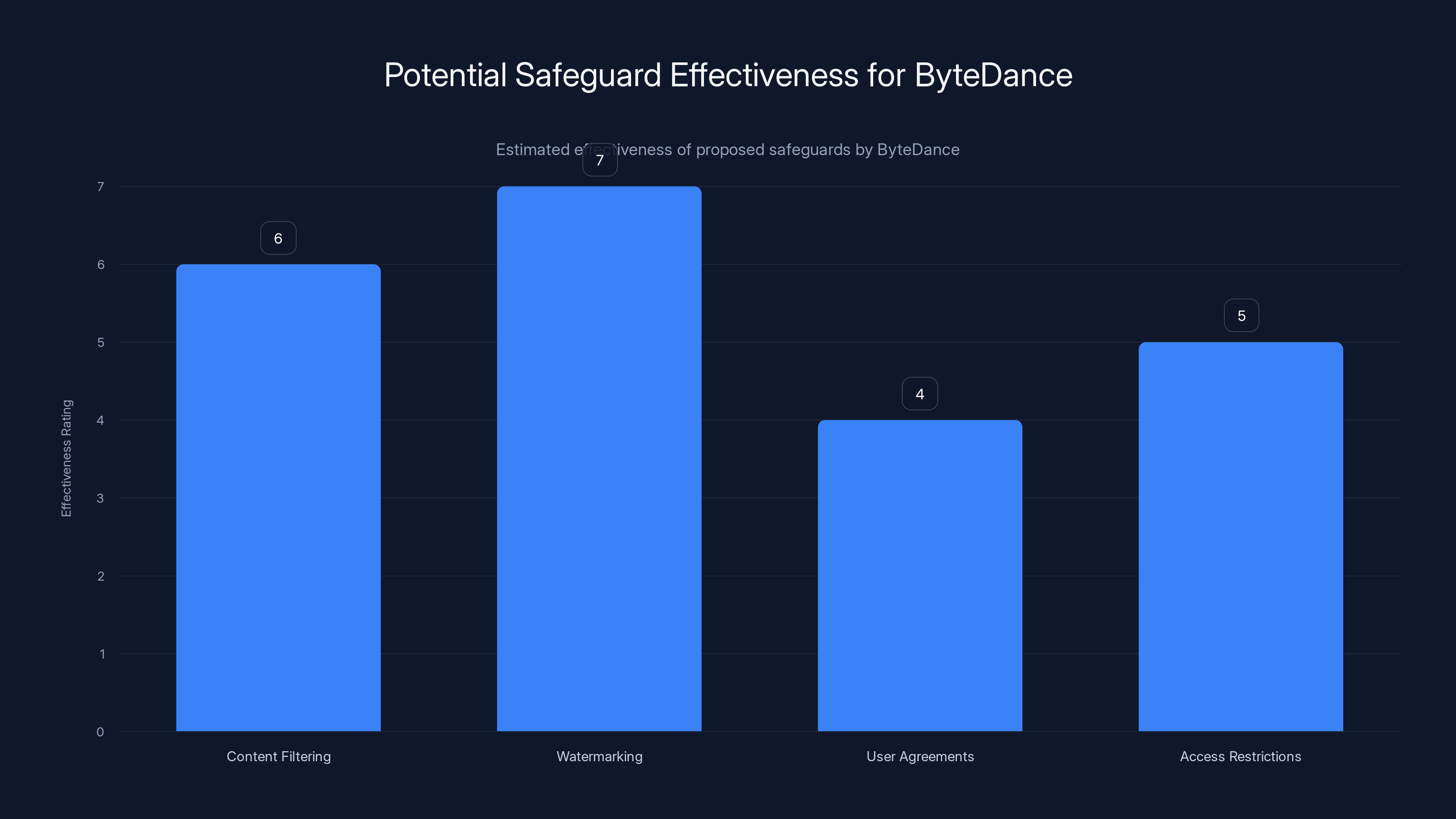

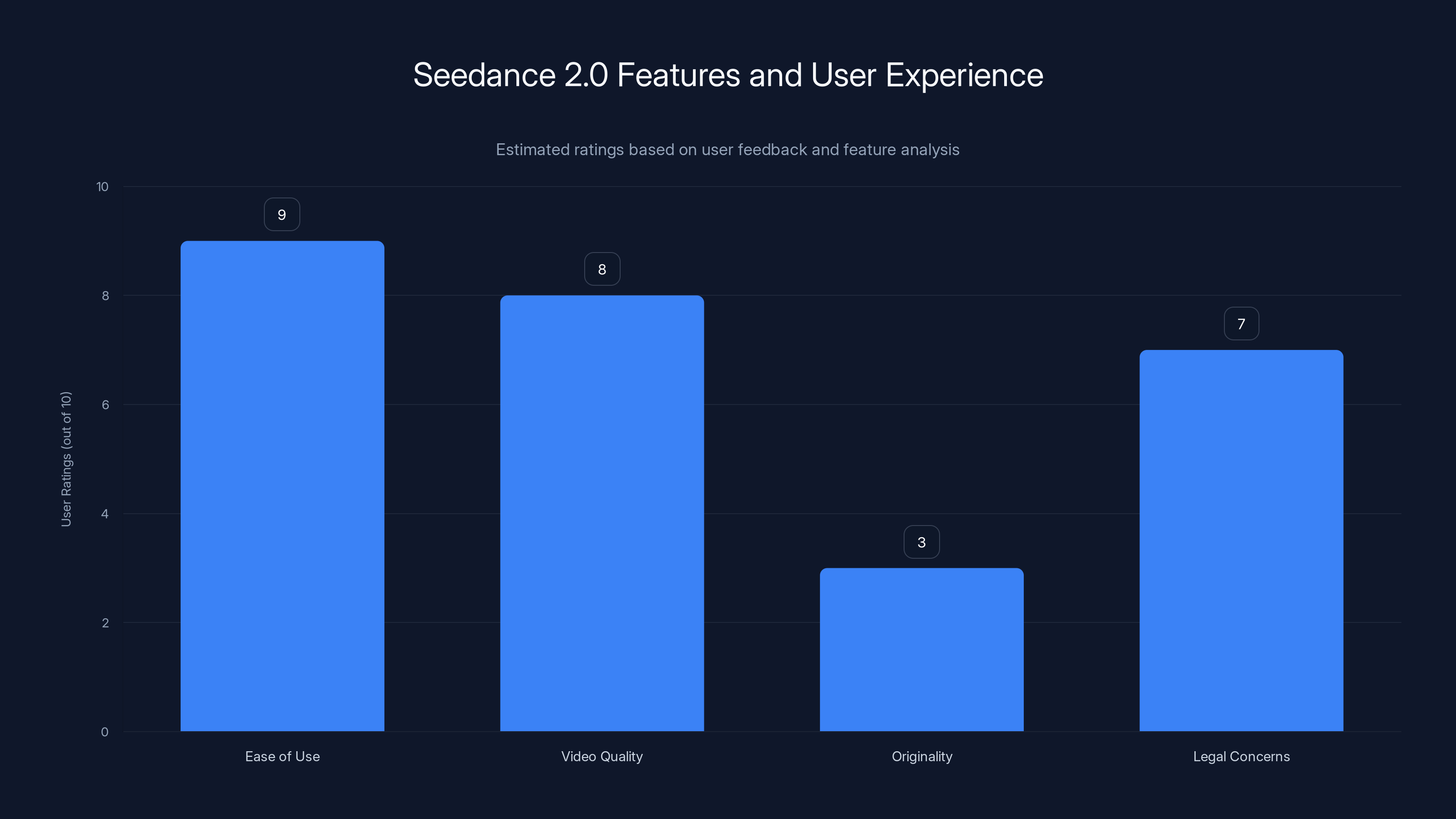

Estimated data suggests that watermarking may be the most effective safeguard, while user agreements are the least effective due to enforcement challenges.

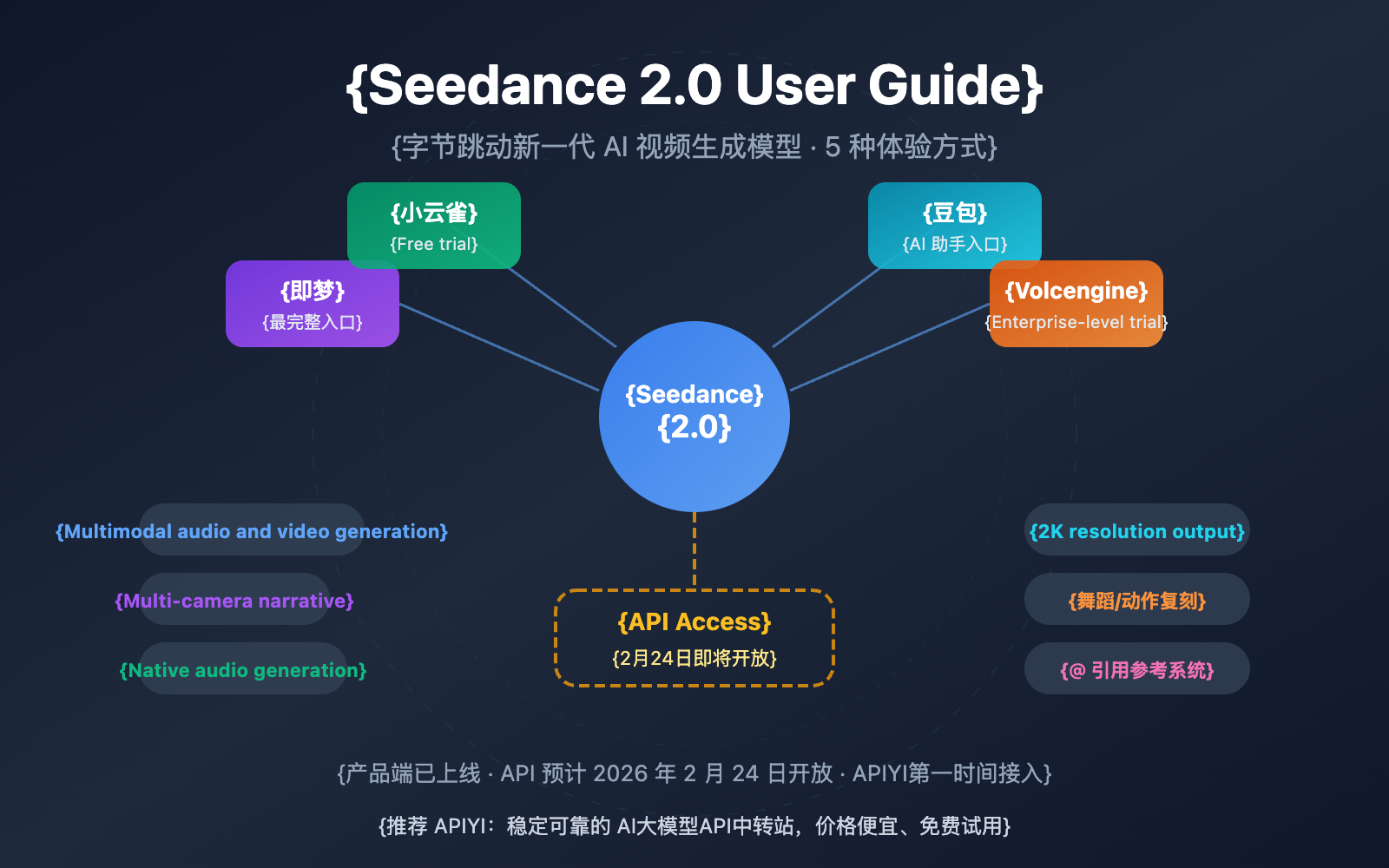

How Seedance 2.0 Actually Works

Seedance isn't your typical text-to-video model. Unlike many AI video generators that require detailed text prompts, Seedance 2.0 can generate videos from minimal input. Users reported creating videos with simple instructions like "Tom Cruise fighting Brad Pitt" or uploading screenshots of cartoon characters and watching the model recreate them in motion.

The quality caught everyone off guard. These weren't blurry, obviously-AI videos. They were crisp, well-lit, and in many cases, nearly indistinguishable from real footage. The fight choreography looked professional. The character movements matched their canonical personalities. The lip-sync was accurate.

This is where the problem begins. The model's training data almost certainly included massive amounts of copyrighted video content. When you train a model on thousands of hours of Marvel movies, Disney animations, and professional television, the model learns not just how to generate video—it learns the specific visual patterns, character designs, and movement styles of those protected works.

Then users weaponized it. They didn't use it to create original content. They used it as a shortcut to generate perfect replicas of protected intellectual property. A few seconds of prompting replaced hours of manual animation or video production.

The subtlety here is worth noting. ByteDance didn't explicitly market Seedance as a tool for copyright infringement. But it also didn't build guardrails that would prevent it. That's the legal vulnerability.

The Copyright Law That ByteDance Violated

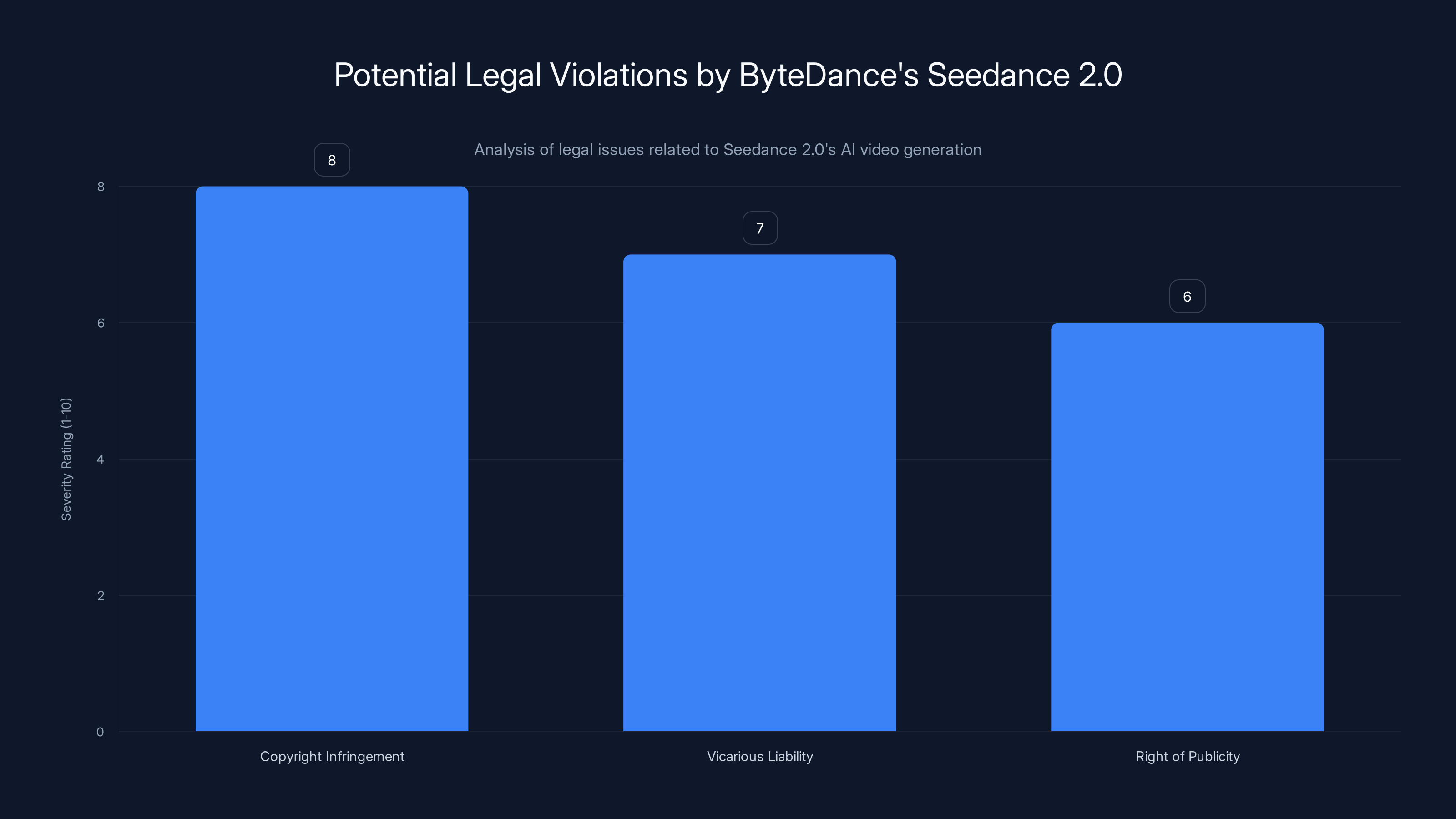

Hollywood's legal argument rests on three main pillars, all of which are well-established in U.S. law.

First: Direct copyright infringement. The Copyright Act protects "original works of authorship fixed in any tangible medium of expression." That includes film, animation, and television. When users generated videos featuring Dragon Ball Z characters or Family Guy, they created derivative works, in effect copies of protected material. Under 17 U.S.C. § 106, copyright holders have the exclusive right to reproduce their works and to prepare derivative works. ByteDance's platform enabled mass violation of those rights.

Second: Vicarious liability. This is the trickier legal ground, but it's how studios win lawsuits against platforms. The principle: if a company benefits financially from infringement by its users and has the right and ability to supervise or control that infringement, the company is liable. ByteDance sells Seedance access. Users generate infringing content. If the platform had obvious ways to prevent this but didn't implement them, ByteDance shares the liability.

Third: Right of publicity violations. Separate from copyright, this protects actors' likenesses and names. When Seedance generated videos featuring Tom Cruise's face and appearance without his consent, it violated his right of publicity—the legal right to control how your image is used commercially. SAG-AFTRA's complaint hinges entirely on this.

Each of these is independently actionable. Combined, they create an almost airtight legal case.

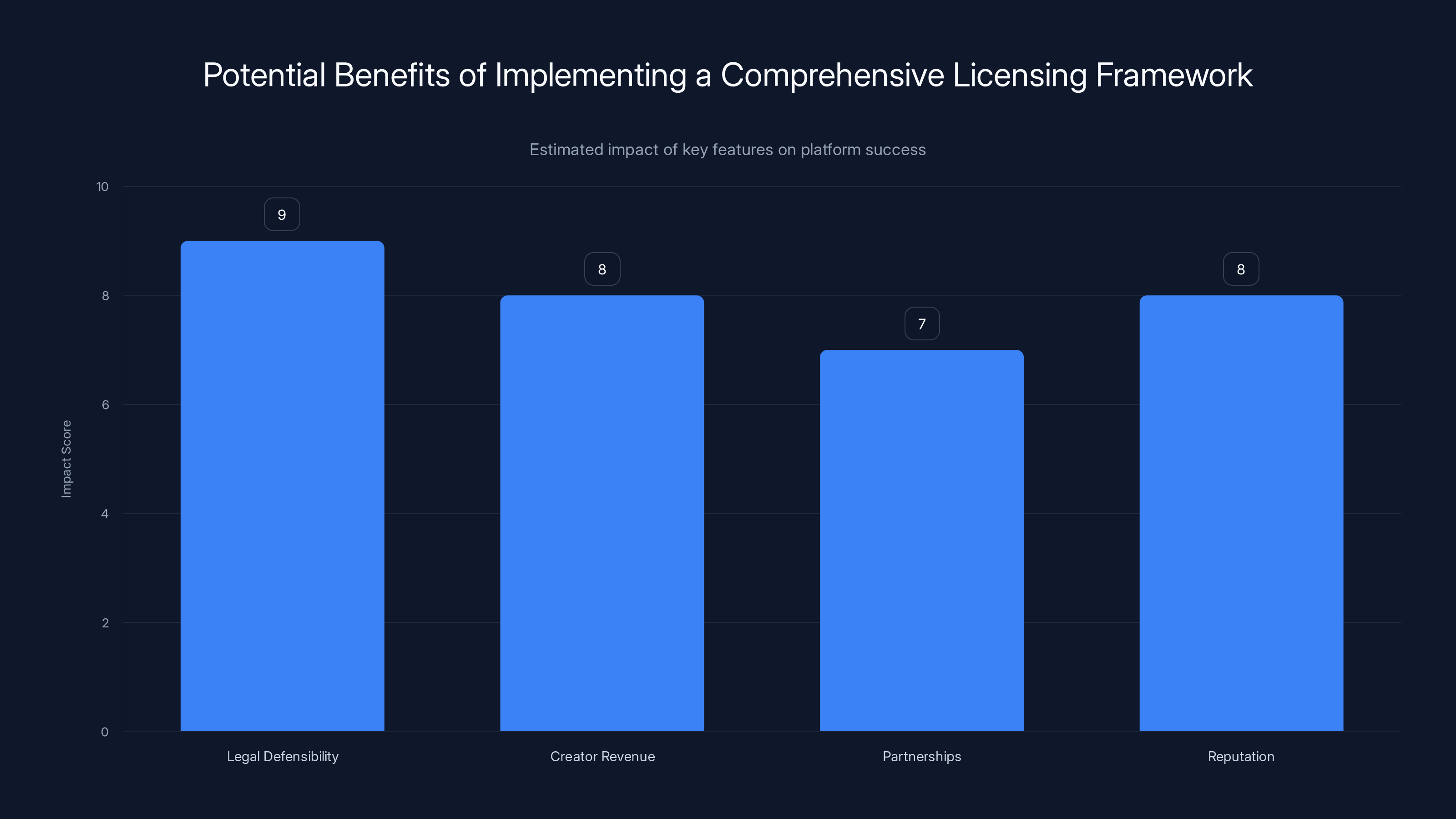

Implementing a comprehensive licensing framework can significantly enhance legal defensibility, creator revenue, partnerships, and reputation. Estimated data.

What the Cease and Desist Letters Actually Demand

Disney's letter, reported by multiple outlets, was particularly direct. The studio accused ByteDance of "hijacking" protected characters by "reproducing, distributing, and creating derivative works." The demand: shut down infringing content immediately and implement permanent safeguards to prevent future violations.

Paramount Skydance followed with its own letter. Same demands, same language. The MPA, which represents studios collectively, released a statement from CEO Charles Rivkin calling the violation "massive in scale" and accusing ByteDance of "disregarding well-established laws that underpin millions of American jobs."

That last part isn't hyperbole. The film and television industry employs roughly 2 million people in the U.S. If AI models can instantly generate content that previously required teams of writers, animators, and actors, the economic impact is existential.

SAG-AFTRA's statement hit the same nerve but from the talent perspective. "Seedance 2.0 disregards law, ethics, industry standards and basic principles of consent," the union said. "This is unacceptable and undercuts the ability of human talent to earn a livelihood."

Listen to what each party is asking for:

- Disney/Paramount: Remove all infringing content. Prevent generation of their characters in the future.

- MPA: Acknowledge the scale of infringement. Commit to industry standards for copyright protection.

- SAG-AFTRA: Implement consent-based systems. Prevent use of members' likenesses without authorization.

These aren't compatible with a platform that allows unrestricted user-generated video. They're asking ByteDance to fundamentally redesign Seedance.

ByteDance's Weak Defense and Weaker Safeguards

ByteDance's response was remarkably brief: "We respect intellectual property rights... We are taking steps to strengthen current safeguards."

Notice what's missing? Specifics. Accountability. Timeline. A real answer.

The company didn't deny that copyright infringement occurred. It didn't argue that Seedance 2.0's design is fundamentally different from other AI video tools. It just said it's working on it.

This matters legally. By acknowledging the problem and committing to fix it, ByteDance isn't conceding liability, but it's not fighting the core accusation either. In any future litigation, expect that statement to be Exhibit A: the company knew about the infringement and chose a vague promise over a legal defense.

What "strengthening safeguards" likely means in practice:

Content filtering: Detecting when users try to generate videos featuring protected characters or likenesses, then blocking the request. This is technically possible but imperfect. Filtering systems rely on image recognition, which fails on obscure characters or AI-generated variations.

Watermarking: Adding invisible markers to generated content so courts can prove where videos came from. This addresses attribution, not permission.

User agreements: Updating terms of service to prohibit copyright infringement. This sounds tough but is basically unenforceable. Users don't read terms, and bad actors ignore them anyway.

Access restrictions: Requiring authentication, limiting daily generations, or requiring descriptions of intended use. This slows abuse but doesn't stop it.
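To make the first and last of these safeguards concrete, here is a minimal Python sketch of a prompt gate that combines a name blocklist with a daily generation cap. Everything in it is an assumption for illustration: the `BLOCKED_TERMS` set, the `DAILY_LIMIT` value, and the `PromptGate` class are hypothetical and do not describe how Seedance or any real platform works.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklist; a real system would source this from rights holders.
BLOCKED_TERMS = {"tom cruise", "brad pitt", "dragon ball z", "family guy"}
DAILY_LIMIT = 20  # assumed per-user cap, not a real Seedance setting


def normalize(prompt: str) -> str:
    """Lowercase and turn punctuation into spaces so 'Tom-Cruise' still matches."""
    return re.sub(r"[^a-z0-9]+", " ", prompt.lower()).strip()


@dataclass
class PromptGate:
    usage: dict = field(default_factory=dict)  # user_id -> generations so far today

    def check(self, user_id: str, prompt: str) -> tuple[bool, str]:
        """Return (allowed, reason) for a single generation request."""
        text = f" {normalize(prompt)} "
        # Content filtering: whole-phrase match against the blocklist.
        for term in sorted(BLOCKED_TERMS):
            if f" {term} " in text:
                return False, f"blocked term: {term}"
        # Access restriction: simple per-user daily cap.
        if self.usage.get(user_id, 0) >= DAILY_LIMIT:
            return False, "daily limit reached"
        self.usage[user_id] = self.usage.get(user_id, 0) + 1
        return True, "ok"
```

A request for "Tom-Cruise fighting Brad Pitt" is rejected, but a misspelling such as "T0m Cru1se" passes straight through. That gap is the imperfection described above: name matching catches the obvious requests and little else, which is why platforms tend to layer image recognition on top of it.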

None of these are what Hollywood is actually asking for. Studios want contractual licensing—agreements that establish which works can be used, under what circumstances, and for how much money. That's how music streaming works. Spotify pays rights holders. Adobe's generative AI tools compensate artists for training data used.

ByteDance hasn't committed to that model. And that's the real problem.

The SAG-AFTRA Angle: Right of Publicity at Scale

While copyright claims get the headlines, the SAG-AFTRA complaint raises something potentially more dangerous from a creator's perspective: unauthorized use of likeness.

SAG-AFTRA members—actors, stunt performers, voice actors—have a legally protected right to control how their faces, voices, and likenesses are used. When a company generates a video with an actor's appearance, it's using their image commercially without compensation or consent.

This is separate from copyright infringement. You can have copyright-cleared content and still violate right of publicity. You can own a clip and still be prohibited from using another person's face in it without permission.

What makes this legally dangerous for ByteDance is scale. One video? Possibly defensible as parody or protected expression. Millions of videos? That's evidence of systematic, willful misappropriation.

SAG-AFTRA's leverage here is real. The union represents 160,000 members. If ByteDance needs Hollywood's cooperation to build a sustainable business, SAG-AFTRA can make that expensive. Residual payments, licensing agreements, consent protocols: all of this increases operational costs.

The precedent matters too. If ByteDance loses on right of publicity claims, every other AI platform suddenly faces liability for deepfakes, synthetic media, and unauthorized likeness replication. That's why the union is pushing this so hard.

Estimated data suggests that copyright infringement is the most severe legal issue faced by ByteDance's Seedance 2.0, followed by vicarious liability and right of publicity concerns.

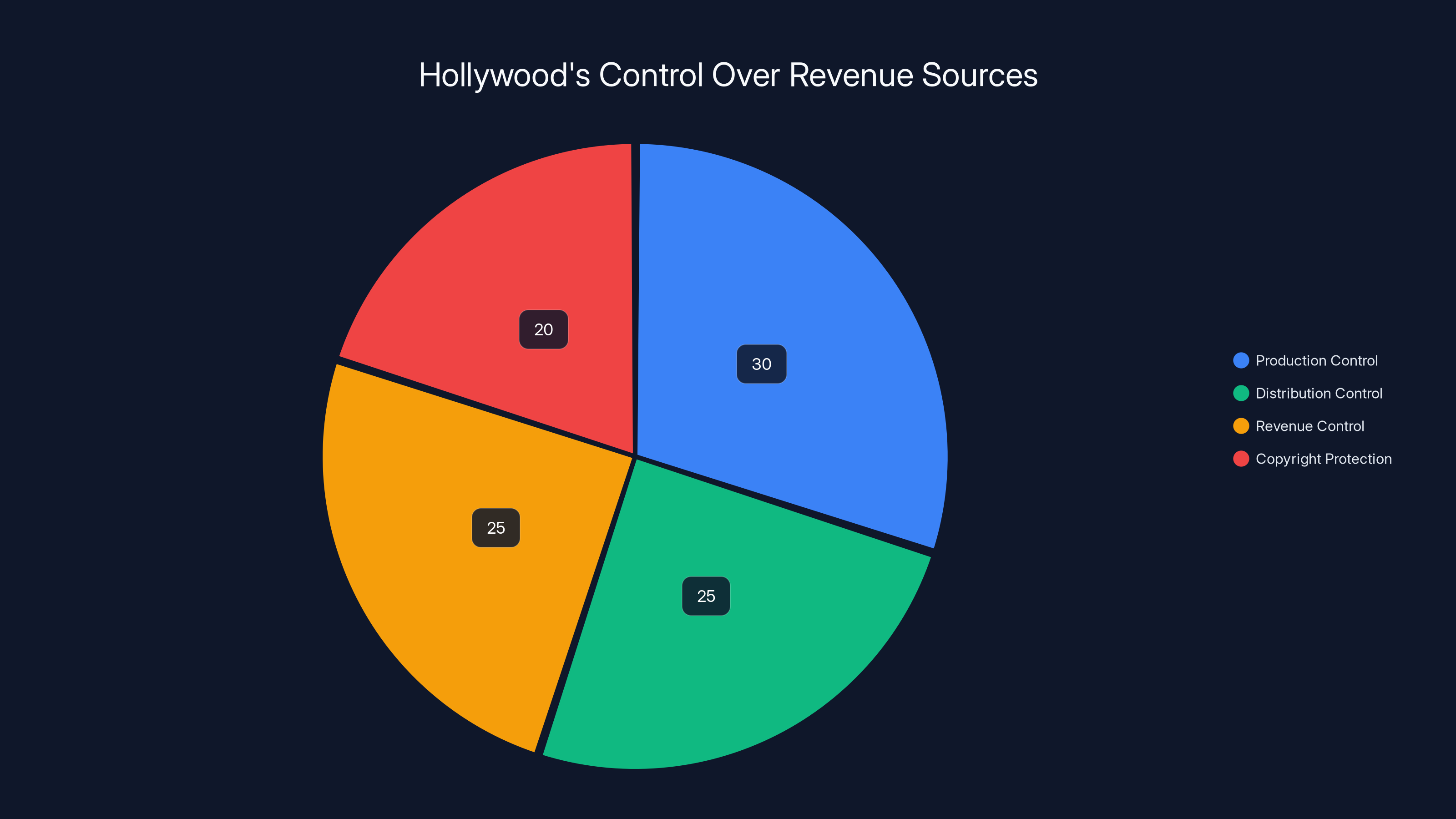

Hollywood's Economic Motivation: It's About Revenue Control

Let's be direct about what's actually happening here. Hollywood isn't just defending copyright on principle.

They're defending a business model that depends on scarcity and gatekeeping. When Disney creates a film, it spends $200+ million. That investment is protected by copyright. For decades, that copyright was nearly absolute—nobody could reproduce the work without permission (and payment).

Seedance threatens that entire model. If anyone can generate Dragon Ball Z videos in seconds, the scarcity disappears. Content becomes abundant. Abundance destroys pricing power.

Here's the reality: Hollywood's model has always been "we control production, so we control distribution, so we control revenue." AI commoditizes production. Suddenly everyone's a creator.

From the studios' perspective, that's catastrophic. From a creator's perspective, it could be liberating. From a copyright law perspective, it's genuinely complicated.

The studios argue they've earned the right to control their IP through decades of investment and artistic creation. They're not wrong. Disney created Mickey Mouse. That's genuine creative work. Copyright exists partly to reward that.

But copyright also has limits. Fair use, public domain, transformative use—these exist because copyright law assumes that unlimited monopolies on creative content actually harm society long-term.

The question ByteDance forced everyone to ask: Does copyright law get reinterpreted in the age of AI, or does AI get regulated to death?

Neither answer is obviously correct.

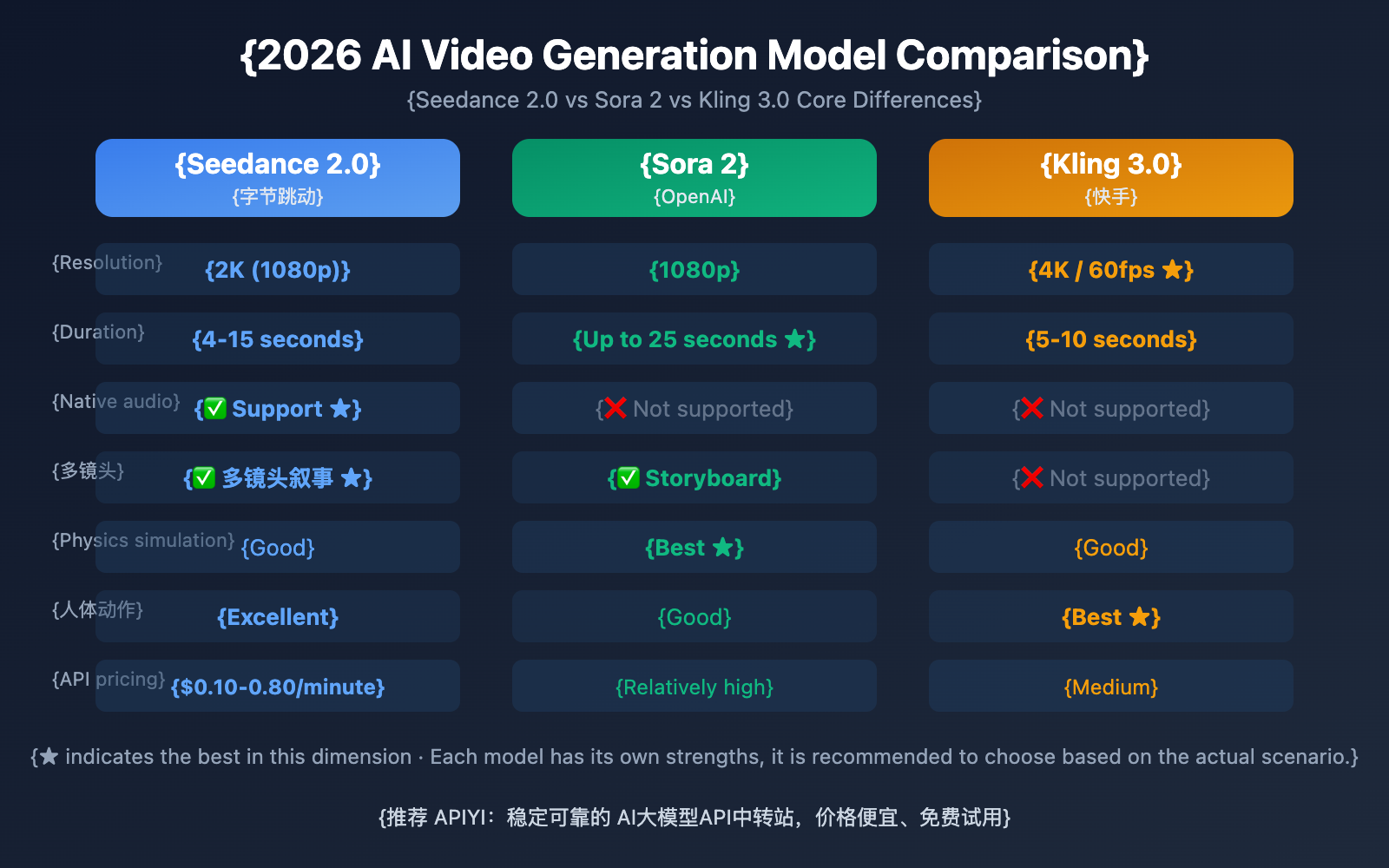

Comparing Seedance to Competitors: How Others Handle Copyright

OpenAI's DALL-E, Midjourney, and Stability AI all face similar copyright questions. How are they managing?

OpenAI (DALL-E) started with weak copyright protections, faced criticism, then overhauled its system. Now the company:

- Filters out requests for copyrighted characters by name

- Uses consent agreements with photographers for training data

- Allows copyright holders to opt-out of training datasets

- Implemented a "do not generate" list for protected likenesses

Is it perfect? No. But it's a framework that acknowledges the problem.

Midjourney takes a similar approach. Users can't generate images of protected characters via direct name prompts. The system isn't foolproof—users find workarounds—but it demonstrates intent to prevent infringement.

Stability AI initially offered minimal copyright protection. The company faced lawsuits from photographers and artists whose work was used in training data without permission. Now they're offering artist consent tools and licensing agreements.

ByteDance's Seedance, by comparison, appears to have shipped with no meaningful copyright safeguards. Users were able to generate protected content with trivial effort. The company waited for cease and desist letters before committing to changes.

Shipping that way isn't itself illegal, but it's reckless. It's also poor strategy: inviting lawsuits is never the optimal path to regulatory approval.

Here's the pattern: Every AI platform that waits for litigation before implementing safeguards faces backlash from creators, legal liability, and regulatory pressure. Every platform that proactively implements consent-based systems faces adoption friction from users but builds long-term defensibility.

Seedance chose the first path. That's expensive.

The Regulatory Precedent: What This Means for AI Policy

This fight doesn't exist in a vacuum. The FTC is watching. Congress is watching. International regulators are watching.

The fundamental question: Can an AI company avoid liability for user-generated copyright infringement by claiming they "just provided the platform"?

For more than a decade, a version of that defense worked for YouTube. The company hosts billions of hours of user-generated content, some of which is infringing, but the DMCA safe harbor (17 U.S.C. § 512) shields platforms that remove infringing material once they are notified. Section 230, which is often cited in this context, does not help here because it expressly excludes intellectual property claims.

But safe harbors are under pressure. And more importantly, AI models are different from hosting platforms. YouTube didn't train on copyrighted content to create generative capacity. Seedance almost certainly did.

That distinction matters. When you train an AI model on copyrighted works, you're making a deliberate business decision to use that content. That's not neutral hosting. That's active infringement.

If courts rule against ByteDance on this, the precedent spreads fast. Every AI company that trained on copyrighted data becomes liable. That's potentially catastrophic for the industry, or it becomes the forcing function that finally gets Hollywood and tech to negotiate licensing agreements.

This is where it gets interesting. Hollywood and tech companies have been feuding since Napster (2000). That's 25 years of conflict. But licensing agreements do work: see Spotify, Apple Music, and YouTube's Content ID system.

If Seedance loses and faces meaningful damages, expect a wave of licensing deals. AI companies will suddenly find copyright holder cooperation valuable. Studios will suddenly find AI companies offering real revenue shares attractive.

That's not beautiful compromise. It's messy negotiation forced by litigation.

But it might actually work.

Estimated data shows that Hollywood's revenue control is primarily driven by production and distribution control, with copyright protection playing a significant role.

What "Strengthened Safeguards" Will Actually Look Like

Based on how other platforms have evolved, here's what ByteDance will probably implement:

Phase One (Immediate):

- Add content filters blocking generation of named copyrighted characters (Dragon Ball Z, Family Guy, etc.)

- Implement detection for real actors' names

- Add watermarks to all generated videos

- Update terms of service to prohibit copyright infringement

- Hire legal compliance team

Phase Two (Months 1-6):

- Develop partnerships with major studios for licensed content libraries

- Create "approved content" sets that users can legally generate

- Implement contributor agreements for training data

- Establish takedown procedures for copyright complaints

- Consider geographic restrictions (more stringent protections in the U.S., where studios have the strongest legal leverage)

Phase Three (Months 6-12):

- Potentially introduce licensing model where studios receive revenue share

- Develop consent verification system for actor likenesses

- Build opt-out mechanisms for copyright holders

- Negotiate with SAG-AFTRA on compensation frameworks

Each phase costs money. Content filtering requires machine learning infrastructure. Legal compliance requires lawyers. Licensing deals require paying studios. This is the cost of not implementing safeguards before launch.

The real question: Will these safeguards work? Content filters are imperfect. Users find workarounds. But perfect isn't the legal standard. Reasonable effort matters. Good-faith implementation matters.

If ByteDance can show it made genuine efforts to prevent infringement, courts might be lenient. If it looks half-hearted, judges will be ruthless.

The Creator's Dilemma: AI Capability vs. Legal Permission

Here's the uncomfortable truth that neither Hollywood nor ByteDance wants to admit: Seedance's capability is genuinely remarkable.

Generating hyperrealistic video is hard. It requires understanding physics, lighting, character anatomy, movement dynamics, and aesthetic coherence. The fact that Seedance can do this at all is evidence of real technical achievement.

But technical capability and legal permission are different things.

A creator might genuinely want to use Seedance for legitimate purposes. Imagine:

- A fan-film director creating original content inspired by Dragon Ball Z

- A visual effects artist studying how AI handles motion

- A game developer generating prototype animations

- An educator creating examples of AI capabilities

None of these are trying to steal Dragon Ball Z's IP. But all of them hit the same legal problems. If they generate video featuring protected characters, they're infringing—regardless of intent.

That's the real casualty of Seedance's launch. It's not just that ByteDance faces legal action. It's that creators now face a choice: use the powerful tool and risk legal liability, or don't use it at all.

Competent platforms solve this with frameworks:

- Clear policies about what's permitted

- Easy ways to verify legality before generating

- Built-in licensing where possible

- Safe harbors for legitimate use

Seedance shipped with none of these.

When platform design doesn't provide clarity, users either don't engage or they engage recklessly. ByteDance chose the reckless path with its go-to-market strategy. That's the real mistake here.

Timeline: What Happens Next

Legal disputes this high-profile don't resolve quickly. Here's a realistic timeline:

Weeks 1-4 (Current):

- Additional cease and desist letters from other studios

- Pressure campaign from creators and industry groups

- Congressional inquiries (entertainment lobby is strong)

- ByteDance implements preliminary safeguards

- Media coverage continues to escalate

Months 1-3:

- Formal lawsuits filed (Disney, Paramount, etc.)

- Discovery phase begins (ByteDance's internal emails, design documents, and profit calculations are handed over and may become public)

- SAG-AFTRA pursues dual-track legal and negotiating strategy

- Industry trade groups propose self-regulatory frameworks

Months 3-12:

- Motion practice (summary judgment requests, where one side tries to win without trial)

- Settlement negotiations (studios realize ByteDance has deep pockets; ByteDance realizes litigation is expensive)

- Likely outcome: Confidential settlement + licensing agreement + technology changes

- Public announcement of "new copyright framework"

Year 2+:

- If settlement fails, trial. Jury trials in copyright cases are unpredictable.

- Appeals (both sides will appeal adverse decisions)

- Precedent-setting becomes the goal rather than this case

Murky timeline? Yes. But that's how major tech litigation works.

The reason I expect a settlement is the studios' leverage. ByteDance can't operate in the U.S. without creator relationships. If SAG-AFTRA and studios coordinate, they can make doing business incredibly expensive. Settlement becomes the rational business decision.

Seedance 2.0 is highly rated for ease of use and video quality, but faces challenges with originality and legal concerns. (Estimated data)

What This Means for AI Development More Broadly

Seedance's copyright crisis is happening at a critical moment. The entire AI industry is watching.

Right now, most AI development happens behind closed doors. Companies train models, test them, then release them. Few platforms are asking permission from copyright holders first.

If Seedance loses and faces billions in damages, that calculus changes overnight. Every AI company suddenly realizes that proactive licensing is cheaper than reactive litigation.

That's not beautiful. But it works.

Alternatively, if ByteDance wins or settles favorably, it signals that platforms can operate in a gray zone without meaningful legal consequence. Other companies will copy that strategy. Infringement will proliferate.

Neither outcome is ideal. But law doesn't care about ideal. Law cares about precedent.

The real innovation here isn't Seedance; plenty of AI labs can generate video. The real innovation would be legal models that let creators benefit from AI capability while respecting rights holders.

That means:

- Licensing frameworks that aren't prohibitively expensive

- Revenue models where copyright holders share in AI platform profits

- Consent systems that work at scale

- Clear rules about what's permitted

None of this exists today. It will exist in two years, probably because companies like ByteDance forced the issue.

That's how law evolves in technology: crisis, litigation, settlement, new framework, equilibrium.

The Hollywood Response: From Cease and Desist to Industry Coordination

What's remarkable about Hollywood's response isn't its speed—studios are used to fighting piracy. It's the coordination.

Disney, Paramount, SAG-AFTRA, the MPA—these organizations don't usually work together. They're competitors. But copyright infringement creates collective interest. When everyone's IP is threatened equally, cooperation becomes rational.

Expect this to evolve:

Next: Industry standards. The MPA will likely propose technical standards for AI copyright protection. Something like "all platforms must implement X, Y, Z or face exclusion from distribution partnerships." That's informal regulatory leverage.

Then: Legislative action. Entertainment lobby groups will push Congress to strengthen copyright enforcement specifically for AI. Expect bills that require opt-in consent for training data or impose strict liability on platforms that enable infringement. This is probably 2-3 years away but it's coming.

Finally: International coordination. The European Union is already developing AI regulation. Hollywood's international influence means these regulations will probably include copyright-specific requirements. That creates a global standard.

The net effect: AI companies will face a choice between respecting copyright holders' interests or operating with severe restrictions.

This is how you get licensing agreements to happen. Not through idealistic negotiation. Through regulatory pressure.

What Creators Should Actually Do Right Now

If you're building with AI video tools, here's practical advice:

Don't generate copyrighted content. Not because it's always illegal—fair use is real, and transformative use is defensible. But because you can't afford litigation, and platforms will ban you before courts decide.

Focus on original creation. Use Seedance and competitors for:

- Generating original characters and worlds

- Creating variations on content you own

- Experimenting with styles and techniques

- Building tools that help creators (not replacing them)

Understand your jurisdiction. Copyright law and enforcement differ between the U.S. and Europe, and right of publicity varies by region. If you're in the EU, some of these rules apply differently.

Track platform policy carefully. Safeguards evolve monthly now. What's permitted today might be prohibited next week. Keep documentation of what you generated and when.

Consider licensing. If you want to use protected characters or likenesses, negotiate licenses. It's expensive but it's legal insurance.

The unsexy truth: AI capability has exploded. Legal clarity hasn't. So use caution.

Disney and Paramount demand content removal and future prevention, while MPA focuses on acknowledging infringement scale, and SAG-AFTRA emphasizes consent-based systems.

The Competitive Advantage of Doing This Right

Here's the opportunity that ByteDance missed.

Imagine Seedance had launched with this framework:

- Transparent training data: "We trained on licensed content from X studios"

- Opt-in content: "Available characters: [list]"

- Licensing marketplace: "Want to generate content featuring unlicensed properties? Here's how to buy the right to do so"

- Consent verification: "Before generating with this actor's likeness, verify consent"

- Revenue sharing: "50% of licensing fees go to copyright holders"

This is more expensive to operate. It's also legally defensible, creates legitimate revenue for creators, and makes the platform a partner to Hollywood rather than an adversary.
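As a rough illustration of the opt-in and revenue-sharing pieces of that imagined framework, the Python sketch below checks a requested property against a license catalog and splits the fee. The catalog entry, the fee, and the `quote` function are hypothetical; only the 50% split comes from the list above. Fees are held in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass

HOLDER_SHARE_PERCENT = 50  # the 50% split from the imagined framework


@dataclass(frozen=True)
class License:
    property_name: str  # hypothetical licensed property
    rights_holder: str  # hypothetical rights holder
    fee_cents: int      # assumed per-generation fee, in cents


# Hypothetical opt-in catalog: only properties listed here may be generated.
CATALOG = {
    "example hero": License("Example Hero", "Example Studio", 200),
}


def quote(property_name: str) -> dict:
    """Return whether generation is allowed and how the fee would be split."""
    lic = CATALOG.get(property_name.lower())
    if lic is None:
        # Opt-in model: anything without a license on file is refused.
        return {"allowed": False, "reason": "no license on file"}
    holder_cents = lic.fee_cents * HOLDER_SHARE_PERCENT // 100
    return {
        "allowed": True,
        "rights_holder": lic.rights_holder,
        "holder_cents": holder_cents,
        "platform_cents": lic.fee_cents - holder_cents,
    }
```

The design choice worth noticing is the default: an unlisted property is refused rather than allowed, which is the opposite of how Seedance reportedly launched.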

OpenAI and others are slowly moving toward this model. Not because it's noble. Because it works.

The first AI platform to truly nail "we've solved copyright at scale" becomes the enterprise platform. That's where real money is.

ByteDance will eventually get there too. But it'll cost them hundreds of millions in legal fees and reputation damage that could have been avoided.

Fair Use and AI Training: The Unresolved Question

Worth noting: The legal battle over AI training data is separate from the Seedance infringement question.

Can AI companies train on copyrighted works without permission? That's a fair use question still being litigated. Several major lawsuits (against Stability AI, Midjourney, OpenAI) claim that training on copyrighted data without compensation violates copyright law.

Counterargument: Fair use permits copying for transformative purposes. AI training is transformative—you're not distributing the original work, you're learning patterns.

Courts haven't definitively ruled yet. But the precedent matters.

If courts say "fair use doesn't protect training," then every AI company becomes liable for how they built their models. That's existential.

If courts say "fair use does protect training," then copyright holders need different legal theories. Seedance's infringement claim still stands, but it's narrower.

The Seedance case might actually clarify this. If ByteDance argues fair use for training, studios can argue fair use doesn't apply to distributing perfect replicas of copyrighted content. That's a narrower, stronger claim than "you can't train on our data."

We'll likely see both questions litigated in parallel, creating strange incentives where platforms argue different things at different times.

Welcome to law in the AI era. It's messy.

International Implications: How This Plays Differently Globally

The Seedance dispute is primarily a U.S. phenomenon right now. But globally, the picture is more complex.

European Union: GDPR and the Digital Services Act create different liability frameworks. The EU focuses less on copyright and more on personal data rights. Using someone's likeness without consent can trigger GDPR violations regardless of copyright. ByteDance's risk is arguably higher in the EU.

China: Where ByteDance is headquartered, copyright enforcement has historically been weaker. But the Chinese government has been tightening AI regulation. If the government sees Seedance as creating diplomatic friction with the U.S., it might restrict the tool domestically first.

Japan/South Korea: These countries have strong anime and entertainment industries. They care deeply about protecting IP. Expect regulatory pressure.

India/Southeast Asia: Weaker copyright enforcement means less legal risk but also less market value. Seedance probably faces less litigation but also lower revenue.

The net effect: Seedance probably settles U.S. litigation with licensing agreements but operates differently in other regions. That's complex but typical for global platforms.

The Human Cost: Actor Livelihoods in the AI Age

Here's what gets lost in legal analysis: real people's careers.

SAG-AFTRA raised this explicitly. Actors depend on people paying for their work. Movies, TV shows, commercials: these are how actors earn a livelihood.

If AI can generate perfect actor replicas in seconds, suddenly actors' scarcity decreases. Why hire Tom Cruise for

That's the existential fear driving SAG-AFTRA's response. Not copyright theory. Jobs.

This is worth taking seriously. AI is genuinely disruptive to entertainment labor. Writers, animators, voice actors, stunt performers—all face displacement risks.

Legal frameworks can delay that displacement but probably not prevent it. AI is improving faster than copyright law can regulate.

What actually matters is how society chooses to handle the transition. Do displaced workers get retraining? Compensation? New career paths?

Those are political questions, not legal ones. And they're harder than winning a copyright case.

Seeds for this transition are visible: AI tools that augment rather than replace creatives, revenue-sharing models where AI platforms compensate creators, licensing frameworks that create new revenue streams.

But none of it happens without pressure. The Seedance dispute is applying that pressure.

Something has to give. Whether it gives through litigation, legislation, or negotiation remains to be seen.

Looking Forward: What Changed and What Didn't

After ByteDance's commitment to "strengthen safeguards," what actually changes?

Short term: Seedance probably becomes harder to use for copyright infringement. Filtering gets stricter. Watermarks become mandatory. Terms of service get tougher.

Mid-term: Licensing deals with studios. Some content becomes officially permitted. Revenue starts flowing to copyright holders.

Long term: New legal frameworks emerge. Fair use gets clarified for AI. Right of publicity gets updated for deepfakes. Copyright gets rebalanced for generative works.

What probably doesn't change: The fundamental capability. AI video generation is technologically feasible. It'll keep improving. Regulation can slow it, but can't stop it.

That's actually the most important insight. This isn't about whether AI can generate video. It's about who owns and controls that capability.

Is it open and available to everyone? Then it's incredibly disruptive but also incredibly liberating.

Is it locked behind licensing agreements? Then it's expensive and controlled—which protects current creators but might stifle new ones.

Neither is clearly right. Both have tradeoffs.

What's clear: We're not going back to a pre-Seedance world where AI video generation doesn't exist. We're going forward to a world where it exists but under different legal and business constraints.

Seedance's role was forcing that reckoning. Hollywood's role is defending its interests. ByteDance's role is learning that trying to outrun legal consequences is expensive.

Everyone gets what they paid for.

FAQ

What is Seedance 2.0 and why is it controversial?

Seedance 2.0 is ByteDance's AI video generation model that creates hyperrealistic videos from minimal user input. It became controversial because users generated videos featuring protected intellectual property without authorization: copyrighted characters from Dragon Ball Z, Family Guy, and Pokémon, plus likenesses of actors like Tom Cruise and Brad Pitt. This triggered cease and desist letters from Disney, Paramount, the Motion Picture Association, and SAG-AFTRA, which argue that ByteDance violated copyright law and right of publicity protections.

How did ByteDance violate copyright law with Seedance?

The case against ByteDance rests on three theories, two under copyright law and one outside it. First, users generated derivative works based on copyrighted characters and films, which infringes copyright holders' exclusive right to prepare derivative works under 17 U.S.C. § 106. Second, ByteDance potentially bears vicarious liability because the company benefited from the infringement and had the ability to control or supervise it but failed to implement adequate safeguards. Third, generating videos featuring actors' likenesses without consent violates the right of publicity, a separate legal protection that gives individuals control over commercial use of their image.

What is "vicarious liability" and why does it apply to ByteDance?

Vicarious liability holds a platform responsible for user infringement if two conditions are met: the company benefits financially from the infringement, and the company had the ability to supervise or control the infringement but failed to do so. In Seedance's case, ByteDance profits from users accessing the platform, and the company clearly had the technical ability to implement safeguards (content filters, character detection, consent systems) but didn't before launch. This creates legal exposure for the platform itself, not just individual users.

What does Hollywood's legal response actually demand from ByteDance?

Hollywood's cease and desist letters make specific demands: remove all infringing content immediately, prevent future generation of protected characters and likenesses, implement permanent safeguards to block copyright violations, and potentially compensate copyright holders for past infringement. Studios like Disney aren't just asking for takedowns; they're demanding that ByteDance fundamentally redesign the platform to make copyright-infringing generation impossible or prohibitively difficult.

Is ByteDance's promise to "strengthen safeguards" legally sufficient?

No. ByteDance's vague commitment to improve safeguards doesn't satisfy the legal demands because it provides no specifics, timeline, or accountability mechanisms. In future litigation, courts will examine whether the company's actual safeguards are reasonable and good-faith efforts. Generic policy changes probably won't meet that standard. Real safeguards would include content filtering systems, licensing agreements with studios, consent verification protocols, and potentially revenue-sharing models, all of which require specific commitments and investment.

How do other AI platforms handle copyright protection?

Competitors like OpenAI's DALL-E, Midjourney, and Stability AI implemented copyright protections after facing criticism and lawsuits. OpenAI filters requests for named copyrighted characters, uses consent agreements for training data, allows copyright holders to opt out of datasets, and maintains a "do not generate" list for protected likenesses. Midjourney similarly blocks direct-name requests for protected characters. These approaches aren't perfect, but they demonstrate intent to prevent infringement and provide legal defensibility that Seedance lacked.
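To make the idea of a "do not generate" list concrete, here is a minimal sketch of a name-based prompt filter in Python. It is an illustration only, not how any of these platforms actually implement their checks. The `BLOCKED_TERMS` set and the function names are hypothetical, and the entries are taken from the examples in this article.

```python
import re
import unicodedata

# Hypothetical blocklist for illustration. A real platform would maintain
# a much larger list, likely in cooperation with rights holders.
BLOCKED_TERMS = {
    "tom cruise",
    "brad pitt",
    "dragon ball z",
    "family guy",
}


def normalize(text: str) -> str:
    """Lowercase, strip accents, and collapse punctuation into single spaces."""
    decomposed = unicodedata.normalize("NFKD", text)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", " ", ascii_only.lower()).strip()


def find_blocked_terms(prompt: str) -> list[str]:
    """Return blocked terms that appear as whole phrases in the prompt."""
    padded = f" {normalize(prompt)} "
    return sorted(term for term in BLOCKED_TERMS if f" {term} " in padded)


def is_allowed(prompt: str) -> bool:
    """A prompt is allowed only if it matches no blocked term."""
    return not find_blocked_terms(prompt)
```

Calling `find_blocked_terms("Tom Cruise fighting Brad Pitt")` returns both names, while a prompt like "a dog surfing at sunset" passes. A filter this simple is easy to evade with descriptions instead of names ("the star of that fighter jet movie"), which is one reason name blocking alone is unlikely to count as a complete safeguard.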

What is fair use, and could it protect AI training on copyrighted works?

Fair use is a copyright doctrine allowing limited use of copyrighted material without permission for transformative purposes (education, parody, research, criticism). The legal question of whether fair use protects AI training on copyrighted works is still being litigated. Some argue training is transformative, others argue it's mere copying. Even if fair use protected training, it likely doesn't protect distributing perfect replicas of copyrighted content—which is Seedance's actual liability.

What does SAG-AFTRA's right of publicity complaint accomplish that copyright claims don't?

Right of publicity is legally separate from copyright and protects an individual's control over commercial use of their likeness, voice, and name. Copyright protects creative works; right of publicity protects personal identity. SAG-AFTRA's members have more direct leverage here because the violation is personal. Studios must prove that a video copied their protected work; actors only need to prove that their likeness was used commercially without consent. This gives SAG-AFTRA independent legal grounds and, potentially, a claim that juries find more sympathetic.

What's the realistic timeline for resolving these lawsuits?

Legal disputes at this scale typically take 18-36 months to resolve. Expect cease and desist letters (weeks 1-4), formal lawsuits (months 1-3), motion practice and settlement negotiations (months 3-12), and potential trial if settlement fails (year 2+). However, settlement is likely because litigation is expensive for both sides and studios have leverage over ByteDance's ability to operate in the U.S. market. Confidential settlement with technology changes and licensing agreements is the most probable outcome.

How does this precedent affect other AI companies?

If ByteDance loses or settles unfavorably, every AI company that trained on copyrighted data becomes vulnerable to similar lawsuits. The precedent would establish that platforms can't avoid liability by claiming neutral intermediary status, and that proactive safeguards are cheaper than reactive litigation. This will likely accelerate licensing agreements between AI platforms and copyright holders and create industry standards for copyright protection that become de facto legal requirements.

What's the broader impact on AI video generation development?

This case will force AI platforms to choose between operating in gray legal zones or implementing copyright-respecting frameworks. Most will choose licensing agreements because they reduce litigation risk and create revenue streams with copyright holders. This slows innovation in some ways (platforms must negotiate with studios) but stabilizes it in others (clear legal frameworks reduce regulatory uncertainty). The first platform to truly solve copyright at scale will gain competitive advantage with enterprise customers who need legal defensibility.

Where AI and Copyright Law Collide

Seedance forced a reckoning that technology companies have been avoiding for years. You can't build powerful AI systems without training on massive datasets. You can't train on datasets without using copyrighted works. You can't release products without risking legal liability.

ByteDance thought they could outrun that math. They couldn't.

What comes next is messy: litigation, settlement negotiations, technology changes, new licensing frameworks. Hollywood and tech will probably find some equilibrium where AI platforms can generate content but copyright holders get compensated.

That's not a beautiful outcome. It's not what idealistic AI advocates wanted. It's not what copyright holders would have chosen.

But it's probably what happens. Because law, ultimately, is just incentives. Change the incentives (add litigation risk, licensing requirements, regulatory pressure), and companies change their behavior.

Seedance's real innovation won't be the video generation capability—others will match that. It'll be the forced development of legal frameworks that finally let copyright holders and tech companies work together on AI.

That's worth something.

Key Takeaways

- Seedance 2.0's hyperrealistic video generation capability triggered immediate legal action from Disney, Paramount, MPA, and SAG-AFTRA over copyright and right of publicity violations

- ByteDance faces liability through three legal theories: direct copyright infringement, vicarious liability for not implementing safeguards, and right of publicity violations affecting actors

- Competing AI platforms like OpenAI, Midjourney, and Stability AI had already implemented copyright safeguards after facing criticism and lawsuits of their own, while ByteDance waited for cease and desist letters before committing to changes

- Settlement is likely within 18-36 months with licensing agreements and revenue-sharing models becoming standard industry practice for AI video generation platforms

- This case will establish legal precedent forcing all AI companies to choose between copyright-respecting frameworks or facing severe regulatory pressure and litigation risk

![ByteDance's Seedance 2.0 AI Video Copyright Crisis: What Hollywood Wants [2025]](https://tryrunable.com/blog/bytedance-s-seedance-2-0-ai-video-copyright-crisis-what-holl/image-1-1771241763735.jpg)