The Moment Everything Changed for AI Image Generation

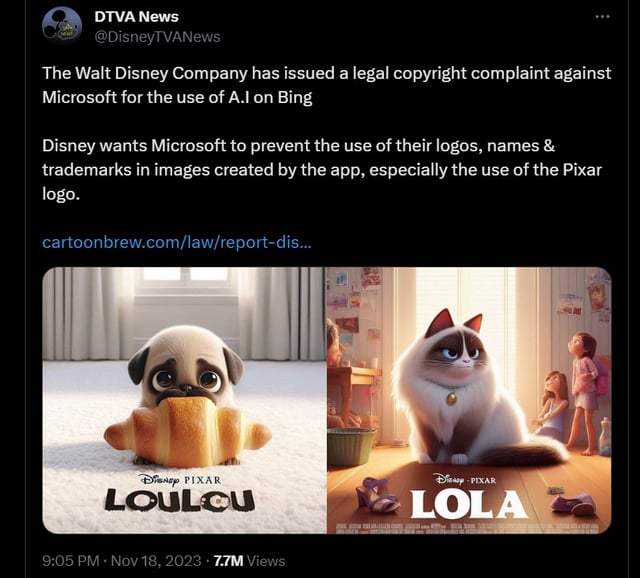

Last month, something quietly shifted in the AI world. Disney didn't announce it with fanfare or press releases. But if you tried to generate images of Mickey Mouse, Cinderella, or any Disney character through Google's Gemini or other AI image platforms, you suddenly got blocked. A flat "no." No workaround, no loophole.

This wasn't just Disney being Disney. This was the first domino falling.

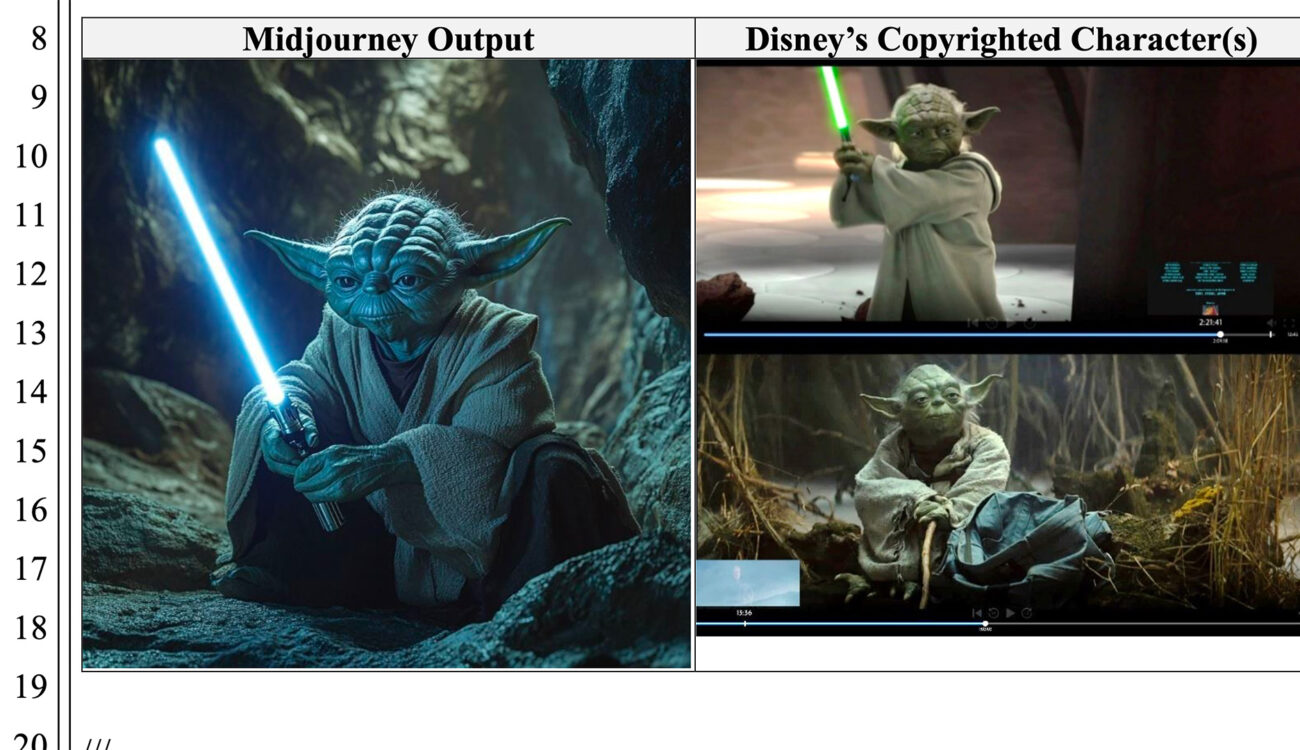

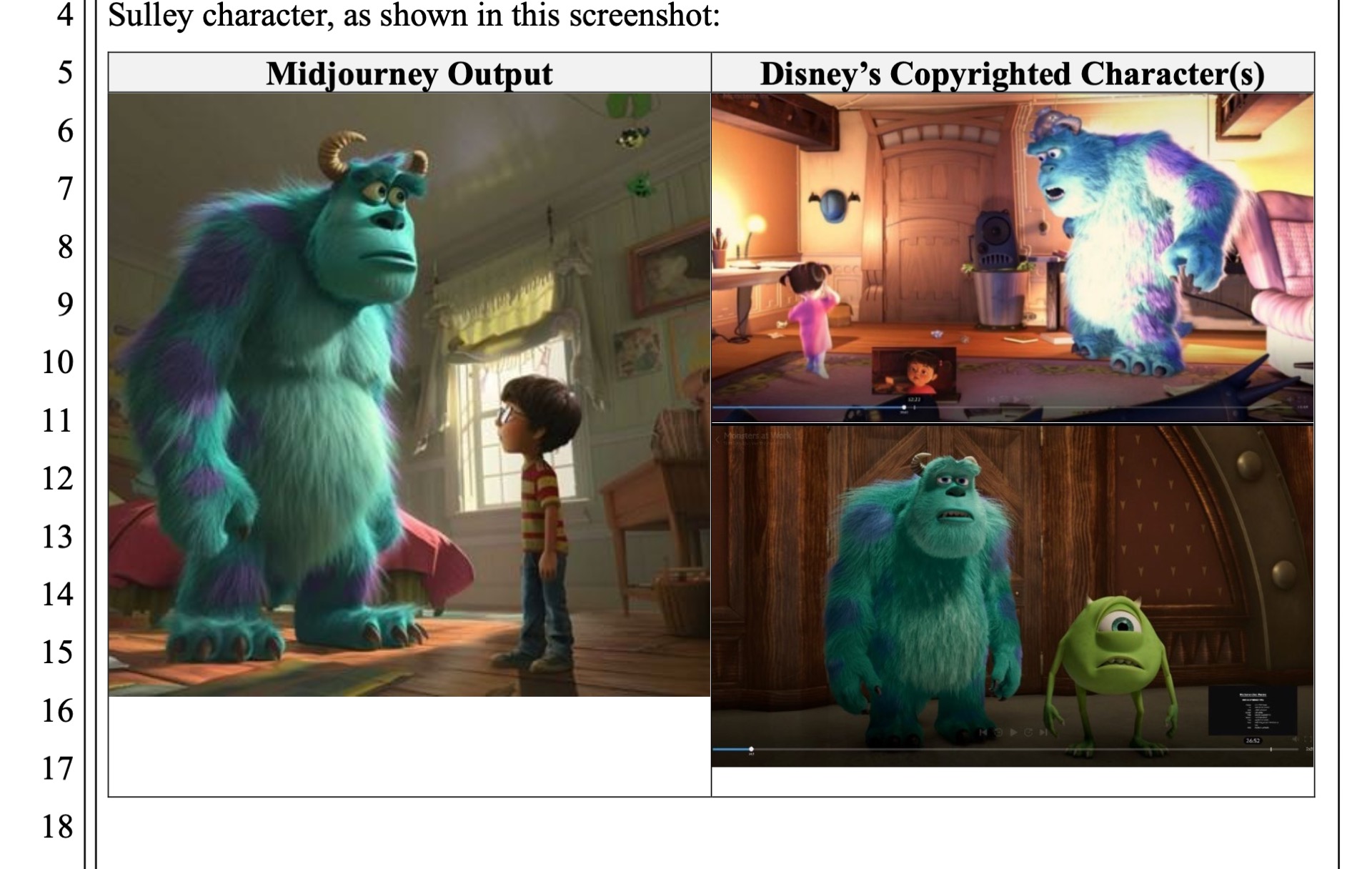

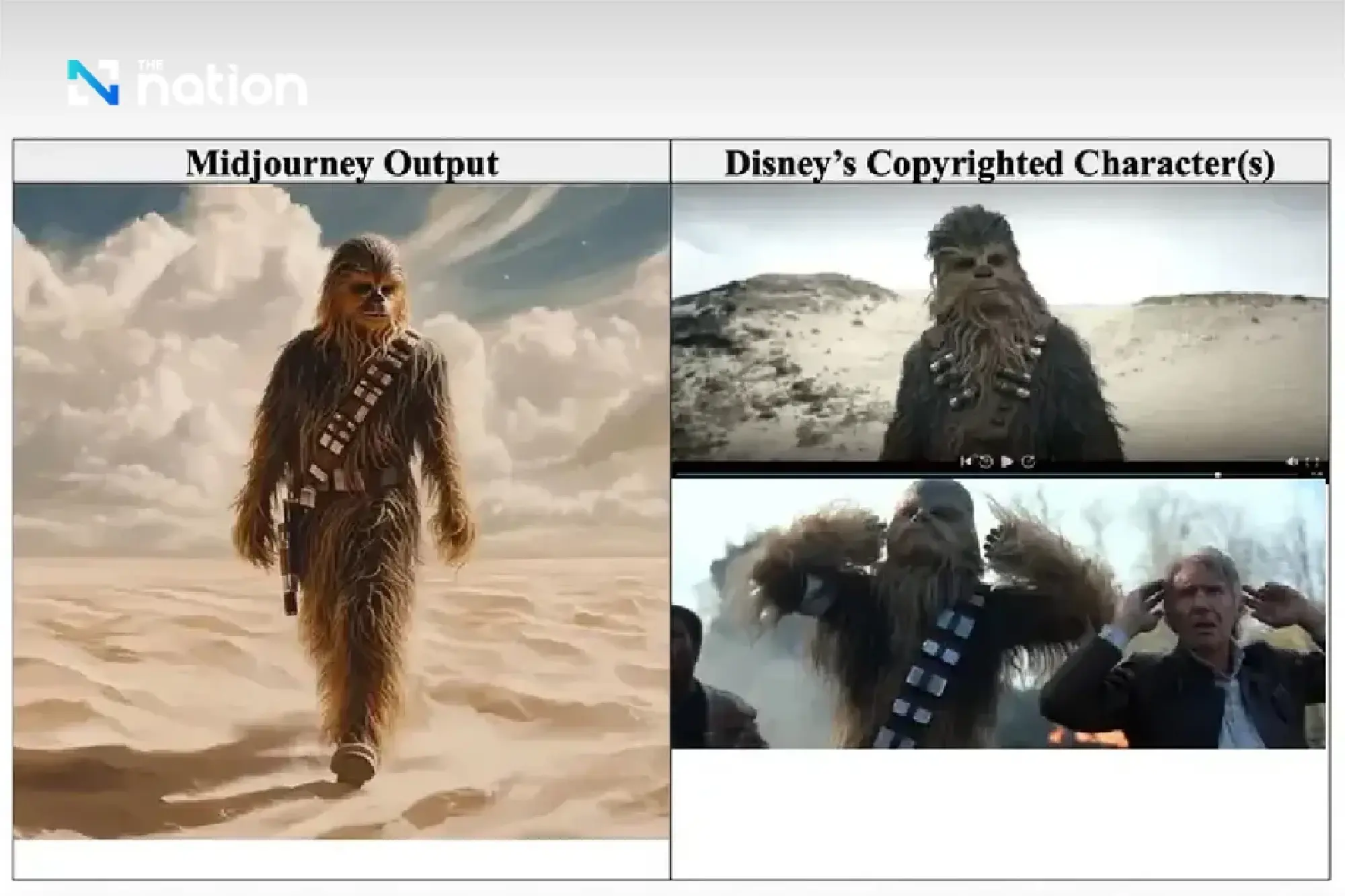

For the past few years, generative AI has existed in a kind of regulatory gray zone. Entrepreneurs fired up Midjourney prompts to create artwork that looked suspiciously like famous characters. Content creators fed copyrighted images into training datasets. Artists watched their styles get replicated by algorithms trained on their work. And mostly, nobody could stop them. The law was unclear. The enforcement was nonexistent.

Then Disney said enough.

This move signals something bigger than copyright protection. It marks the end of AI's Wild West era, where anything went because nobody knew the rules yet. We're entering a new phase now, one where major corporations are drawing lines in the sand and tech companies are enforcing them. It's messier than you might think, and it affects way more than just Disney fans who want to generate fan art.

Here's what you need to understand about why this matters, what it actually changes, and what comes next.

TL;DR

- Disney blocked AI character generation across multiple platforms including Google Gemini, shutting down a popular feature that let users create images of Mickey, Elsa, and other iconic characters.

- Copyright protection just got real for AI companies: they'll now have to enforce IP rights or face legal liability from major rights holders.

- The Wild West era is ending: Companies like OpenAI, Google, and Midjourney can no longer claim ignorance about copyrighted content in their systems.

- Expect rapid dominoes to fall: Other studios, brands, and creators will demand similar protections, forcing AI platforms to implement content filters across the board.

- The rules are being written right now: They won't come from legislators. They'll come from power plays between corporations, with users and smaller creators caught in the middle.

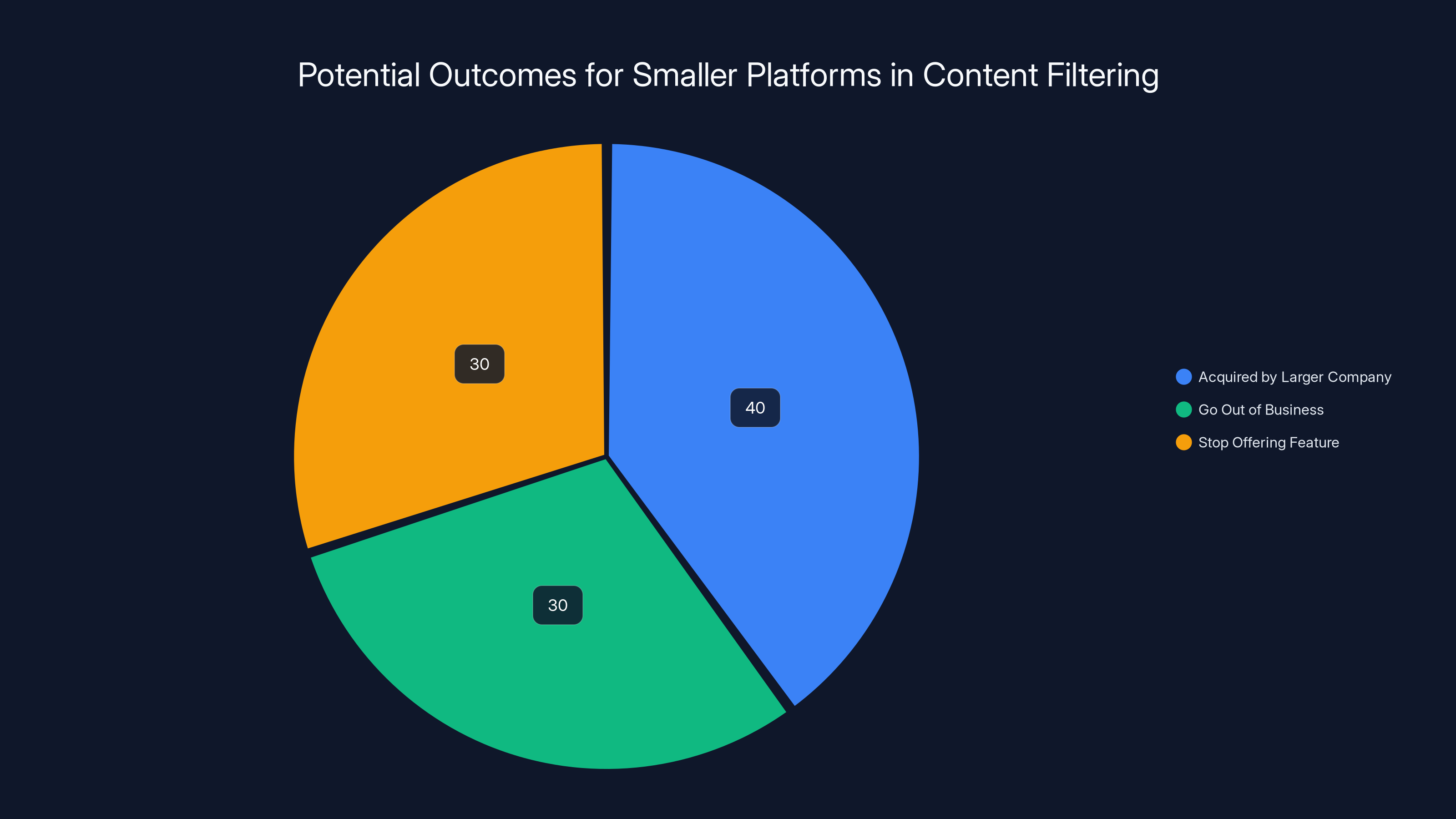

Estimated data suggests that smaller platforms face significant challenges, with many potentially being acquired, going out of business, or dropping the image-generation feature entirely.

Why Disney's Crackdown Matters More Than You Think

You might think this is just about protecting Mickey Mouse's image. That's actually the least interesting part.

What's really happening is this: Disney demonstrated that companies can force tech platforms to build new enforcement mechanisms. Not through lawsuits (yet). Not through legislation (yet). But through simple demand and the threat of future legal action.

Google, which powers Gemini, has a choice: filter out Disney characters or face potential litigation from a company with nearly unlimited legal resources. The choice becomes obvious pretty quickly.

But here's where it gets complicated. Once you build the filter for Disney, you've now created infrastructure that other companies will demand. Marvel wants one. Universal wants one. Every major studio wants one. Every luxury brand wants one. Every artist's estate wants one.

Suddenly, your "general-purpose" AI image generator needs hundreds of specialized filters. You're not building a tool anymore. You're building a gatekeeper.

The real question isn't whether this will happen. It's how fast it'll happen and who gets to decide what gets filtered.

Disney's move also reveals something uncomfortable about how AI training works. These models were trained on massive datasets pulled from the internet. Some of that data included Disney content. Some of it included images scraped from Google Images without explicit permission. The entire AI industry has been built, to some degree, on content that wasn't quite legally obtained.

Disney's crackdown forces platforms to actually reckon with that reality. And that reckoning doesn't just affect copyright holders. It affects everyone.

The Legal Minefield Underneath This Decision

On the surface, Disney's demand seems straightforward: don't let people generate our characters. But the legal landscape supporting this move is actually pretty murky.

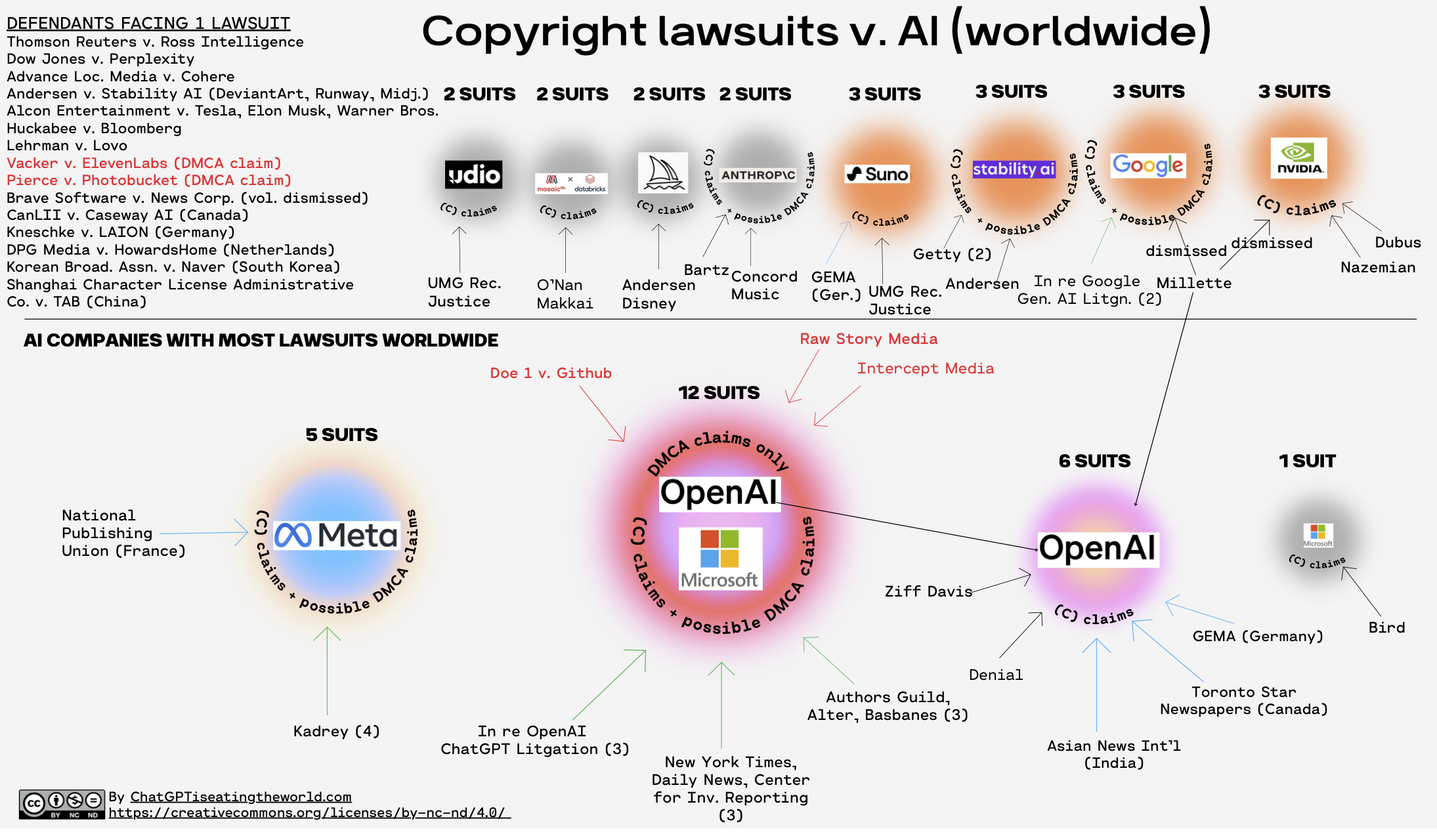

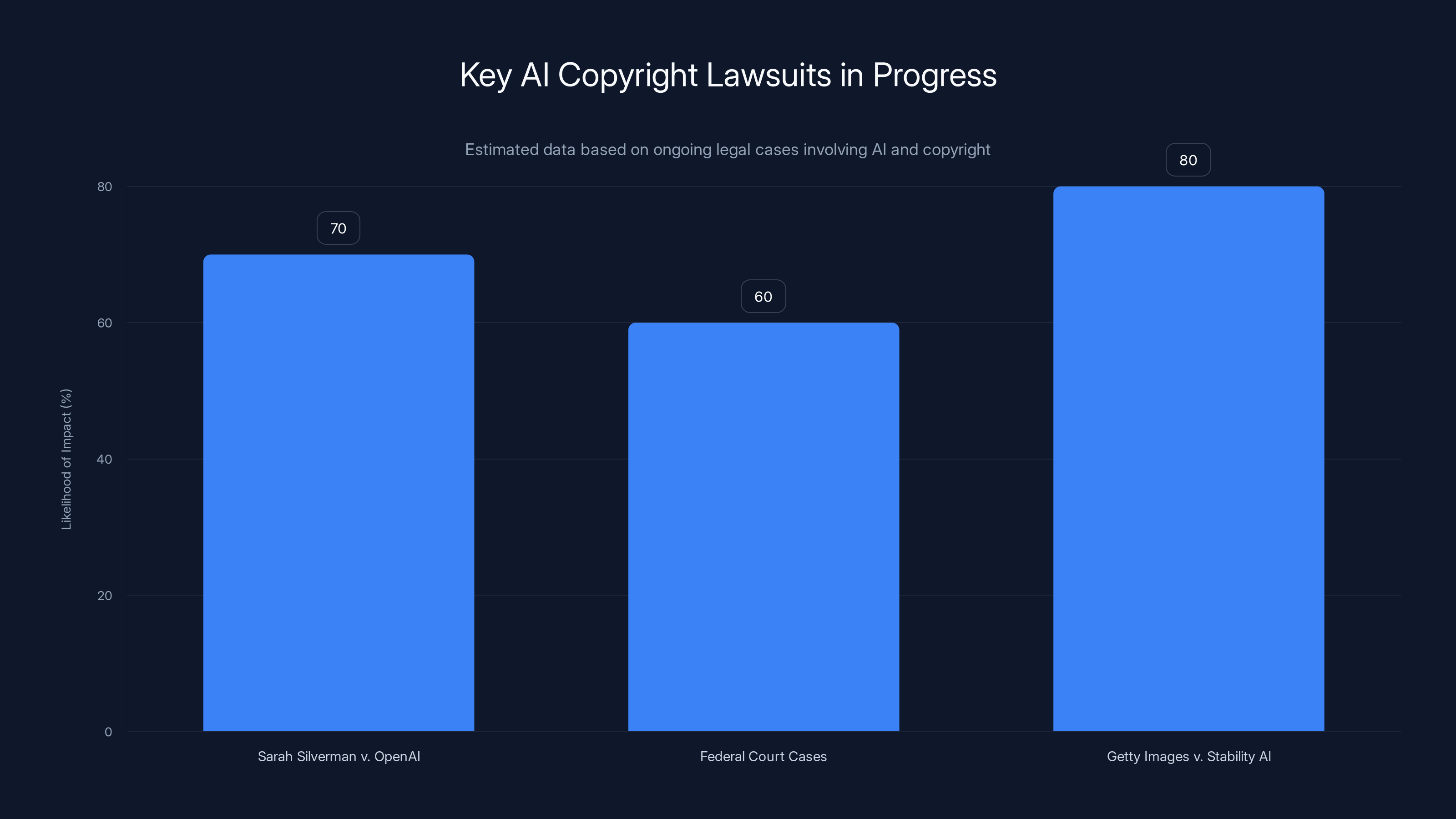

Generative AI copyright law is genuinely unsettled. There are multiple cases working through courts right now. Sarah Silverman and other authors sued OpenAI over whether training on copyrighted books was legal. Federal courts have suggested that AI companies can be held liable for reproducing copyrighted training data. But nothing is final. Nothing is clear.

Technically, Disney doesn't have a slam-dunk legal case that AI platforms are liable for letting users generate their characters. The case for liability is stronger if you can prove the platform knew copyrighted material was in the training data and did nothing. But even that's contested.

Yet Disney still got what it wanted. Why? Because the legal uncertainty itself is expensive and unpleasant for Google.

Disney is simply saying: "The legal risk of defending this isn't worth the benefit of offering this feature. Build a filter."

Google, facing potential litigation and bad press from one of the world's most powerful entertainment companies, decided to build the filter. It's cheaper and easier than fighting.

This sets an important precedent: companies with enough legal leverage and brand power can essentially get what they want from AI platforms without needing a court to prove anything. They just need to make the math uncomfortable enough.

The problem is, this math only works if you're Disney. What about mid-size brands? What about individual artists? What about creators who aren't household names?

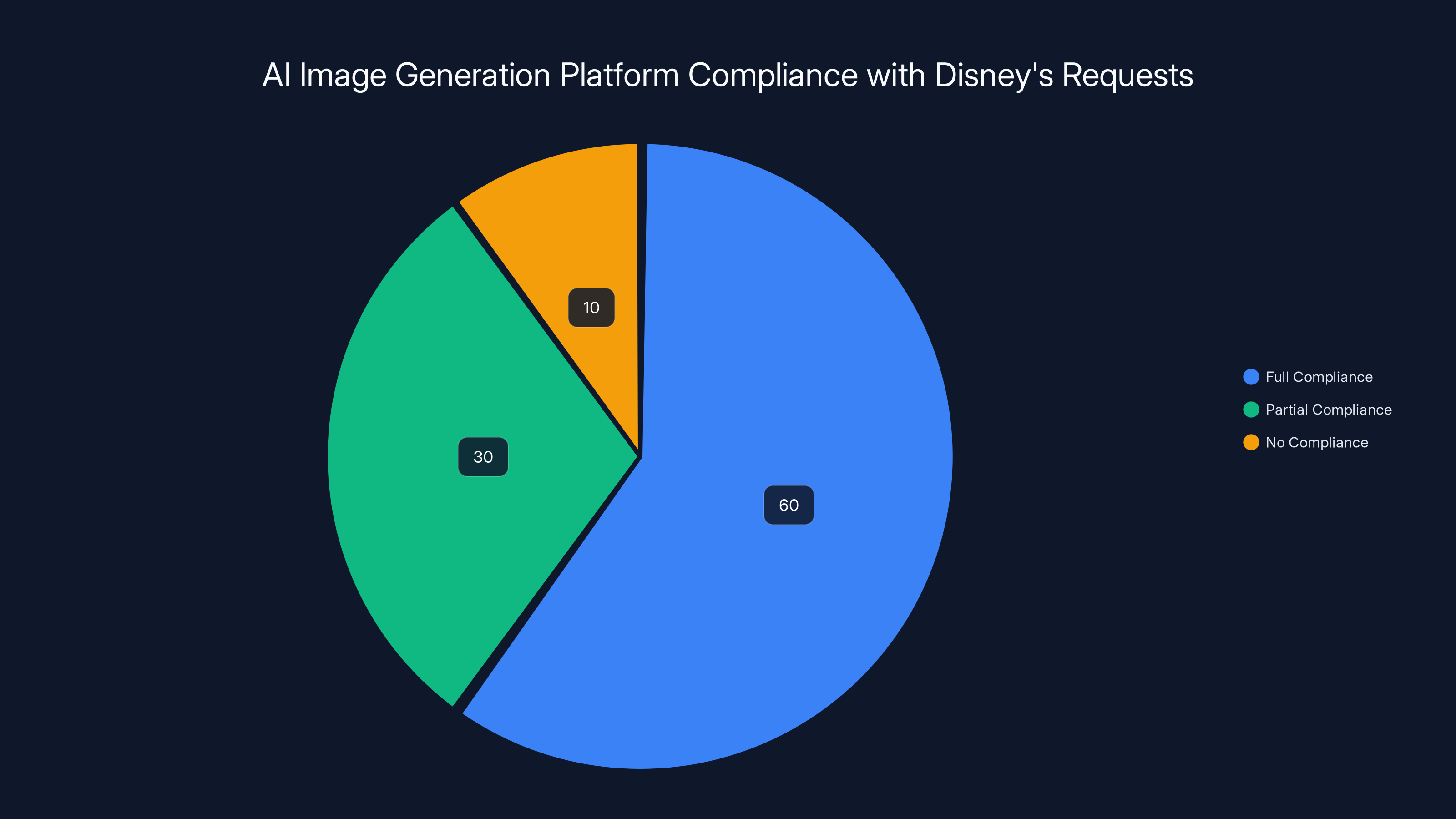

Estimated data suggests that 60% of AI platforms fully comply with Disney's filter requests, while 30% partially comply, and 10% do not comply at all.

How AI Image Filters Actually Work

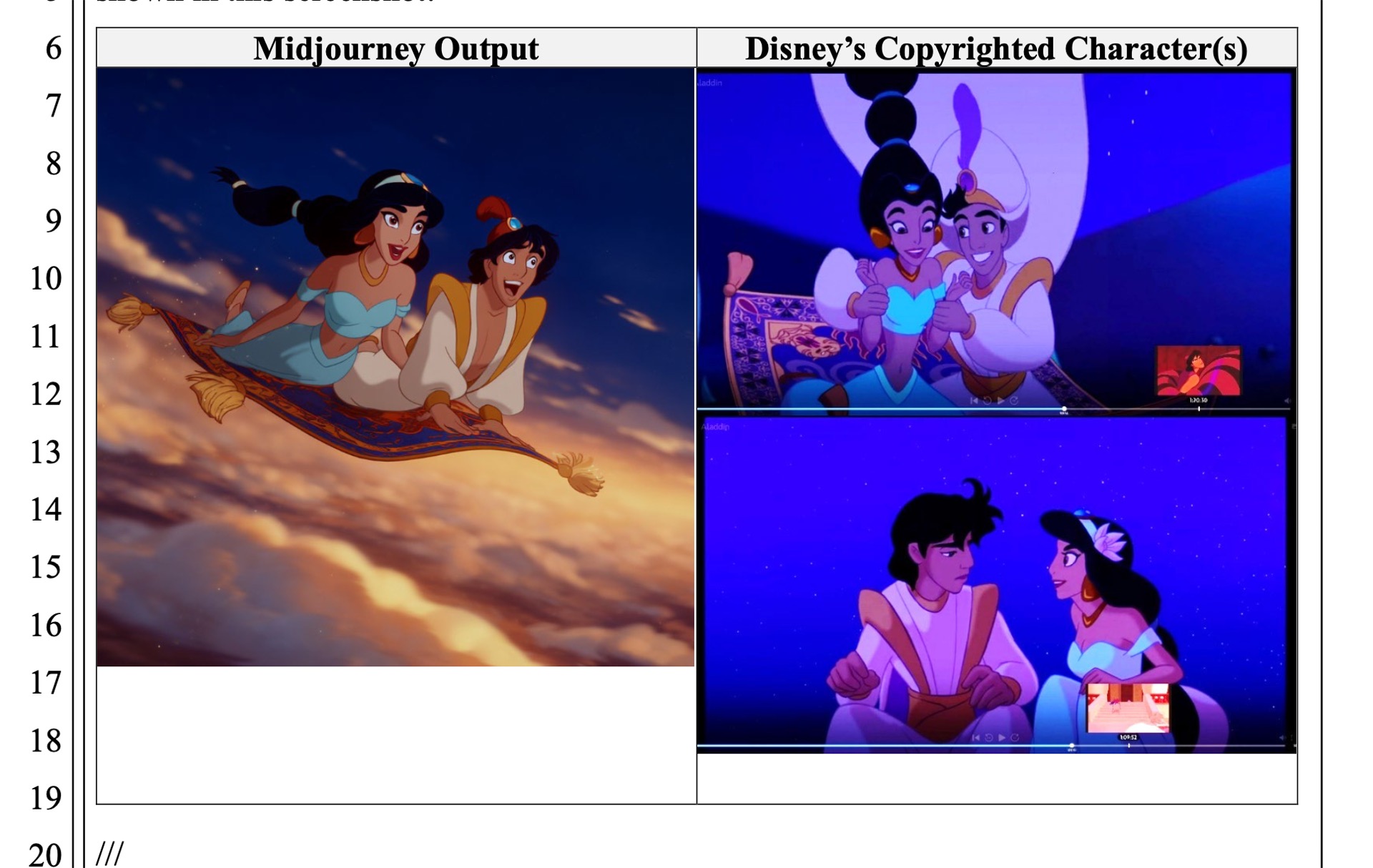

You might assume Disney's filter is just a simple blocklist. Something like: "If prompt contains 'Mickey Mouse,' block it."

If only it were that simple.

In reality, AI content filters are absurdly complex. Here's why: people are creative with their prompts. They'll say "the big-eared mouse from the House of Mouse." They'll say "animated mascot from 1928." They'll say "copyright-free character resembling a famous mouse." Each of these needs to be caught by the filter.

Advanced filters don't look for exact phrase matches. They use machine learning to understand intent. The system learns what makes an image "Mickey Mouse-like" versus just "a generic cartoon mouse." The filter looks for visual indicators: white gloves, specific ear shapes, the art style, the clothing.

So the filter becomes its own AI model, trained to recognize when you're trying to generate something too close to a protected character.
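The idea of a filter that weighs visual indicators can be sketched as a tiny scoring model. This is a minimal illustration, assuming some upstream image model has already tagged an output with indicators; the indicator names, weights, and bias are hypothetical, not Google's or any platform's real filter. The logistic score is the form a simple trained classifier would take.

```python
import math

# Hypothetical visual indicators and weights. A real filter would learn these
# from labeled images; the names and numbers here are illustrative only.
INDICATOR_WEIGHTS = {
    "white_gloves": 1.5,
    "round_black_ears": 2.0,
    "red_shorts": 1.5,
    "rubber_hose_limbs": 0.8,  # early-animation art style
}
BIAS = -3.0  # with no indicators present, the score starts low


def resemblance_score(detected: set) -> float:
    """Return a 0-1 score for how closely an image matches the protected character.

    `detected` is the set of indicators an upstream image model reported.
    The score is a logistic function of the summed weights; unknown
    indicators contribute nothing.
    """
    z = BIAS + sum(w for name, w in INDICATOR_WEIGHTS.items() if name in detected)
    return 1.0 / (1.0 + math.exp(-z))


generic_mouse = {"rubber_hose_limbs"}
lookalike = {"white_gloves", "round_black_ears", "red_shorts"}
print(round(resemblance_score(generic_mouse), 2))  # 0.1
print(round(resemblance_score(lookalike), 2))      # 0.88
```

A single indicator such as the art style barely moves the score; it takes several indicators together to push an image toward "this is the character."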

But there's a huge problem with this approach: false positives.

If the filter is too sensitive, it blocks legitimate use cases. You can't generate any cartoon mouse. You can't generate images inspired by 1920s animation styles. The tool becomes less useful.

If the filter is too loose, people just work around it. They'll use slightly different prompts, blend characters from multiple universes, or use tools that don't have the filter.

Google and other platforms are essentially stuck choosing between a filter strict enough to make their tools worse and one loose enough to let protected content slip through.
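The tradeoff becomes concrete if you count errors at different blocking thresholds. The scores below are invented for illustration, not measurements of any real filter, and `filter_errors` is a hypothetical helper.

```python
def filter_errors(examples, threshold):
    """Return (false_positives, false_negatives) when every score >= threshold is blocked.

    `examples` is a list of (score, is_protected) pairs, where is_protected says
    whether the prompt really targets the protected character.
    """
    false_pos = sum(1 for score, protected in examples
                    if score >= threshold and not protected)
    false_neg = sum(1 for score, protected in examples
                    if score < threshold and protected)
    return false_pos, false_neg


# Invented scores for illustration only, not measurements of any real filter.
EXAMPLES = [
    (0.95, True),   # "Mickey Mouse waving"
    (0.70, True),   # "the big-eared mouse from the House of Mouse"
    (0.40, True),   # "cheerful mouse in red shorts and white gloves"
    (0.55, False),  # an original mouse drawn in 1920s animation style
    (0.30, False),  # a generic field mouse
    (0.10, False),  # a landscape with no characters
]

for threshold in (0.3, 0.5, 0.8):
    print(threshold, filter_errors(EXAMPLES, threshold))
# 0.3 (2, 0)   too sensitive: legitimate mice get blocked
# 0.5 (1, 1)
# 0.8 (0, 2)   too loose: lookalike prompts get through
```

On this toy data no threshold gets both counts to zero, because the original 1920s-style mouse scores higher than one of the genuine lookalike prompts.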

There's another issue: the filter only works if it's applied everywhere consistently. But Midjourney might have a filter. DALL-E 3 might have a filter. Some random open-source model someone released might not have any filter at all.

A user who can't generate Mickey on Google Gemini will just switch to a different tool. The demand doesn't disappear. It just moves.

The Domino Effect: Who Gets Protected Next?

Disney's crackdown is already creating ripples.

Other studios are probably having urgent meetings about AI character generation right now: Universal, Paramount, Sony, Warner Bros., DC Comics, the anime studios of Japan. Each of these has a catalog of intellectual property worth billions. Each of them is watching to see if Disney's move actually worked.

If it did, they'll all demand the same treatment.

But studios are just the beginning. What about:

Luxury brands? Hermès, Gucci, Louis Vuitton. These companies have fought counterfeiting for decades. AI image generation that creates "Louis Vuitton-style" bags is essentially counterfeiting with extra steps. They'll demand filters too.

Fashion designers? Alexander McQueen's distinctive aesthetic. Balenciaga's silhouettes. Whether these are copyrightable is doubtful, since U.S. law gives clothing designs little protection. But designers will argue they are and pressure platforms until they get filters.

Musicians and artists? Taylor Swift's team has been actively fighting AI voice clones. If voice synthesis filters start getting implemented, will visual art filters be far behind? An artist whose style has been trained into a model without permission might demand a filter that prevents the AI from "sounding" like their work.

Sports teams and athletes? The NBA might say you can't generate images of LeBron James in unauthorized merchandise. The NFL might demand protections on team logos and uniform designs.

This isn't speculation. This is the inevitable trajectory once you establish that filters can be forced through pressure rather than law.

The question becomes: who controls the filters? Who gets protected and who doesn't? A mega-studio like Disney can probably force a filter. A regional theater company probably can't. An individual artist definitely can't.

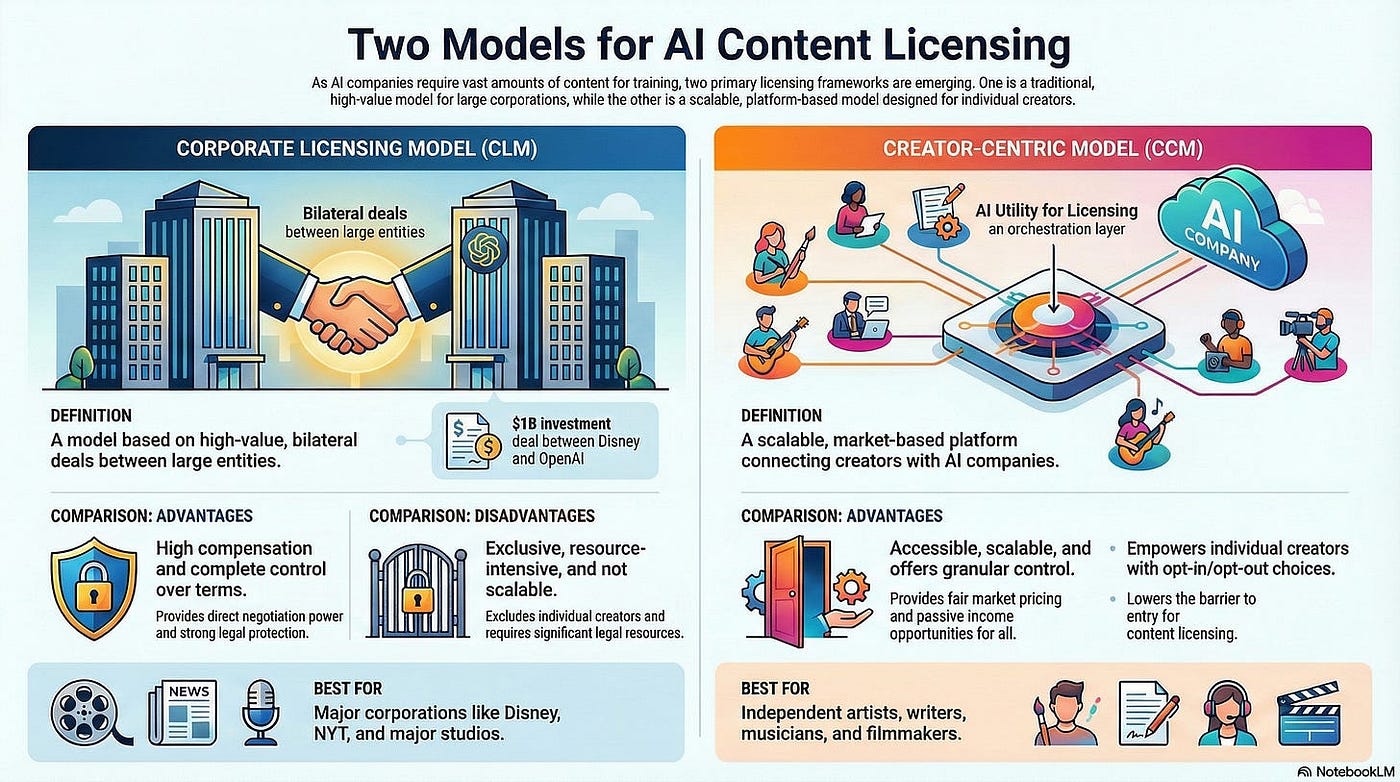

You start creating a two-tier system: protected content (controlled by people with legal budgets) and unprotected content (everything else).

What This Means for Creators and Artists

Here's where this gets really uncomfortable for a lot of people.

For the last few years, generative AI has been both a tool and a threat for creators. On one hand, it's democratized certain kinds of content creation. A person with no design skills can now generate images in seconds. A writer can outline a story with AI assistance.

On the other hand, AI has been trained on creators' work without their permission. Artists woke up to find their names in model cards, their styles replicated, their work used to train systems that now compete with them for clients.

Disney's crackdown doesn't fix this. It actually makes it worse in some ways.

Right now, if you're a digital artist whose style has been trained into a model, you have almost no legal recourse. The AI companies argue that training on publicly accessible data is fair use (the images were scraped, not licensed, but they were technically "accessible"). The courts haven't ruled definitively against them.

But individual artists won't be able to force filters like Disney can. You'll need a lawyer. You'll need money. You'll need proof that the model's output literally violates your copyright (not just mimics your style).

Meanwhile, Disney gets protection almost automatically, just by asking.

The inequality here is stark. It's not that Disney doesn't deserve protection. It's that everyone else probably deserves the same protection, and they're not getting it.

There's also a weird secondary effect: Disney characters are now harder to find in generated images, which might actually drive more interest in them. The banned character becomes more mythical, more desired. It's like the Streisand Effect but for AI.

Estimated data suggests that ongoing AI copyright lawsuits could significantly impact AI platforms, with Getty Images v. Stability AI potentially having the highest impact.

The Training Data Problem That Nobody's Solving

Here's the thing that Disney's filter doesn't actually address: the model was already trained on Disney images.

Filtering prompts is one thing. Filtering training data is another. And that's much harder.

When you train an AI image model, you feed it millions of images. Those images come from somewhere. The internet, mostly. That internet data includes Disney characters. Lots of them. Promotional images, fan art, screenshots from movies, merchandise photos.

Once the model is trained on that data, the knowledge is baked in. You can't really "untrain" it. The model has learned Disney's visual language as part of learning visual language generally.

A content filter prevents users from explicitly asking the model to generate Mickey Mouse. But if someone asks for "a cheerful mouse character with a boat captain theme," the model might generate something that looks suspiciously like Mickey in "Steamboat Willie" anyway, because it learned that connection during training.

The deeper problem: nobody's actually regulating what goes into training data. There's no requirement to get permission before using copyrighted images to train a model. There's no registry. There's no consent system.

That leaves training on copyrighted material in a legal gray area, though the courts are slowly moving toward "probably not without permission."

Disney's filter is a surface-level solution to a deep structural problem. It's like putting a fence around a pasture while the horses have already escaped.

The real fix would require:

- Transparent training data labeling: Every image in a training dataset marked with its source and copyright status

- Permission systems: Opt-in rather than opt-out. Creators consent to their work being used

- Compensation mechanisms: If someone's work trains a commercial model, they get paid

- Auditability: Platforms open their training processes to legal review
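The four requirements above can be pictured as fields and checks on a training-data manifest. This is a minimal sketch under stated assumptions: the `TrainingRecord` schema, the even-split payout rule, and the example records are all hypothetical, not an existing standard or any company's pipeline.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Consent(Enum):
    OPTED_IN = "opted_in"
    OPTED_OUT = "opted_out"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class TrainingRecord:
    """Provenance label for one training image (hypothetical schema)."""
    image_id: str
    source_url: str     # where the image came from (transparent labeling)
    rights_holder: str
    license: str        # e.g. "public-domain", "CC-BY-4.0", "all-rights-reserved"
    consent: Consent    # permission status recorded for the rights holder


def eligible_for_training(records):
    """Opt-in rule: keep public-domain items and items whose holder opted in.

    Anything with unknown or refused consent is excluded by default.
    """
    return [r for r in records
            if r.license == "public-domain" or r.consent is Consent.OPTED_IN]


def payout_shares(records, pool):
    """Compensation: split `pool` evenly across non-public-domain records,
    summed per rights holder."""
    paid = [r for r in records if r.license != "public-domain"]
    if not paid:
        return {}
    per_record = pool / len(paid)
    shares = {}
    for r in paid:
        shares[r.rights_holder] = shares.get(r.rights_holder, 0.0) + per_record
    return shares


def audit_summary(records):
    """Auditability: count records by consent status for an outside reviewer."""
    return Counter(r.consent for r in records)


dataset = [
    TrainingRecord("img-1", "https://example.org/1", "none", "public-domain", Consent.UNKNOWN),
    TrainingRecord("img-2", "https://example.org/2", "Artist A", "all-rights-reserved", Consent.OPTED_IN),
    TrainingRecord("img-3", "https://example.org/3", "Studio B", "all-rights-reserved", Consent.OPTED_OUT),
    TrainingRecord("img-4", "https://example.org/4", "Artist C", "CC-BY-4.0", Consent.OPTED_IN),
]
usable = eligible_for_training(dataset)
print([r.image_id for r in usable])   # ['img-1', 'img-2', 'img-4']
print(payout_shares(usable, 100.0))   # {'Artist A': 50.0, 'Artist C': 50.0}
```

The key design choice is the default: a record with unknown consent is excluded, which is what "opt-in rather than opt-out" means in practice.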

None of this is happening. Instead, we're getting filters on prompts, which is theater.

How Other Platforms Are Responding

This is where it gets interesting, because not all AI platforms are responding the same way.

Google Gemini implemented the Disney filter pretty quickly. Google's risk calculus is interesting because Google isn't primarily an AI company. It's an advertising company. Bad press from Disney is expensive. Legal liability is expensive. Compliance is cheaper.

OpenAI has been more cautious. ChatGPT's image generation can't depict specific real people without permission (a policy restriction), but OpenAI's stance on Disney characters is less clear. OpenAI has already been in fights with copyright holders, so it's thinking more carefully about its liability.

Midjourney is taking a hybrid approach. They're actively researching what they can and can't do legally. They're consulting with lawyers. They're trying not to get sued, but they're also not preemptively banning content.

Stable Diffusion (the open-source model) is essentially uncontrollable. Because it's open-source, anyone can run it, modify it, or remove safeguards. You can't enforce a filter on software that thousands of people have downloaded and are running locally.

This creates an interesting split: closed-source, commercial AI platforms will increasingly have filters and restrictions (because they can be sued). Open-source models will increasingly become the refuge for people who want to generate protected content.

So Disney's filter doesn't stop the problem. It just pushes it somewhere else.

The Regulatory Landscape That's Quietly Forming

People keep waiting for Congress to pass AI regulation. They probably will, eventually. But in the meantime, regulation is happening informally through corporate enforcement.

Companies are setting their own rules. Studios are demanding protections. Courts are slowly clarifying liability. The result is a patchwork where rules are different for different companies, different models, and different types of content.

Europe is slightly ahead of the U.S. here. The EU's AI Act includes provisions about high-risk applications and transparency in training data. It's not perfect, but it's something.

The U.S. is basically letting companies figure it out. There's the White House's Executive Order on AI, but it's focused on government use and high-risk scenarios. It doesn't directly address copyright and intellectual property in AI training.

So we're entering a weird phase where:

- Big companies enforce their own IP protection through platform pressure

- Smaller companies either comply or get sued

- Creators have almost no protection unless they're famous enough to threaten litigation

- Users get an increasingly restricted experience as filters accumulate

It's less "regulation" and more "might makes right."

The question nobody's asking: Is this the kind of regulation we actually want? Or are we letting corporations define the rules because it's convenient in the short term?

Transparent labeling, permission systems, compensation, and auditability are crucial for ethical AI training data practices. Estimated data.

What Filtering Disney Characters Actually Prevents

Let's be concrete about what Disney's filter actually stops and what it doesn't.

What it stops:

- Direct requests: "Generate an image of Mickey Mouse"

- Clear character prompts: "Draw Cinderella in modern clothing"

- Trademark use: "Generate Minnie Mouse wearing a business suit"

What it probably doesn't stop:

- Indirect requests: "Generate a cheerful mouse character wearing red shorts and large white gloves"

- Style requests: "Generate an image in the style of early 1920s animation"

- Character combinations: "Generate a character that looks like Mickey and Minnie combined"

- Workarounds: "Generate the main character from that old Disney movie with the steamboat"
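A naive name-based blocklist reproduces this split almost exactly. The sketch below is hypothetical (the blocklist and the `is_blocked` helper are illustrative, not any platform's real filter) and runs the example prompts from the two lists above through it.

```python
import re

# Hypothetical blocklist of full character names. Real lists would be much
# longer and maintained per rights holder.
BLOCKED_NAMES = ["mickey mouse", "minnie mouse", "cinderella"]

# One case-insensitive pattern; \b keeps names from matching inside longer words.
BLOCK_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(name) for name in BLOCKED_NAMES) + r")\b",
    re.IGNORECASE,
)


def is_blocked(prompt: str) -> bool:
    """Return True if the prompt names a protected character outright."""
    return BLOCK_PATTERN.search(prompt) is not None


PROMPTS = {
    "direct": "Generate an image of Mickey Mouse",
    "character": "Draw Cinderella in modern clothing",
    "trademark": "Generate Minnie Mouse wearing a business suit",
    "indirect": "Generate a cheerful mouse character wearing red shorts and large white gloves",
    "style": "Generate an image in the style of early 1920s animation",
    "combination": "Generate a character that looks like Mickey and Minnie combined",
    "workaround": "Generate the main character from that old Disney movie with the steamboat",
}

for label, prompt in PROMPTS.items():
    print(f"{label:12} {'BLOCKED' if is_blocked(prompt) else 'allowed'}")
```

Only the first three prompts are blocked. Adding bare first names like "mickey" would catch the combination prompt, but it would also block unrelated prompts that happen to use those names, which is the false-positive problem again.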

Users are remarkably creative at working around filters. They'll use descriptions instead of names. They'll ask for "inspired by" rather than "copy of." They'll blend multiple sources.

So, as a rough guess, the filter might cut explicit Disney character generation by 70-80%. But it doesn't stop it entirely.

The real impact is more about signaling: "We take this seriously." It protects the platform legally by showing they made a reasonable effort to prevent infringement.

It also trains users to change their behavior. If you try to generate Mickey Mouse and get blocked, you learn pretty quickly not to ask. Eventually, the demand adapts.

The Economics of Content Filters

Building and maintaining content filters is expensive and ongoing.

First, you have to design the filter: what exactly are you protecting? For Disney, it's the characters. But which characters? Just the most famous ones? Or every single Disney character ever? The scope matters.

Then you have to implement it. This means training a model or building a heuristic system to detect when someone's trying to generate protected content. This isn't a one-time cost. You have to maintain it, update it, test it.

Then you have to handle false positives and false negatives. Someone will complain that a legitimate request got blocked. Someone else will report that a prompt clearly violated the filter but went through anyway.

And you have to do this for every company that demands protection. If you're Google and you've got 50 different entertainment studios all demanding character filters, you've now got 50 different systems to maintain.

The economics get worse for smaller platforms. For Google, building a Disney filter is maybe a rounding error in their engineering budget. For a startup AI company, it's a significant expense.

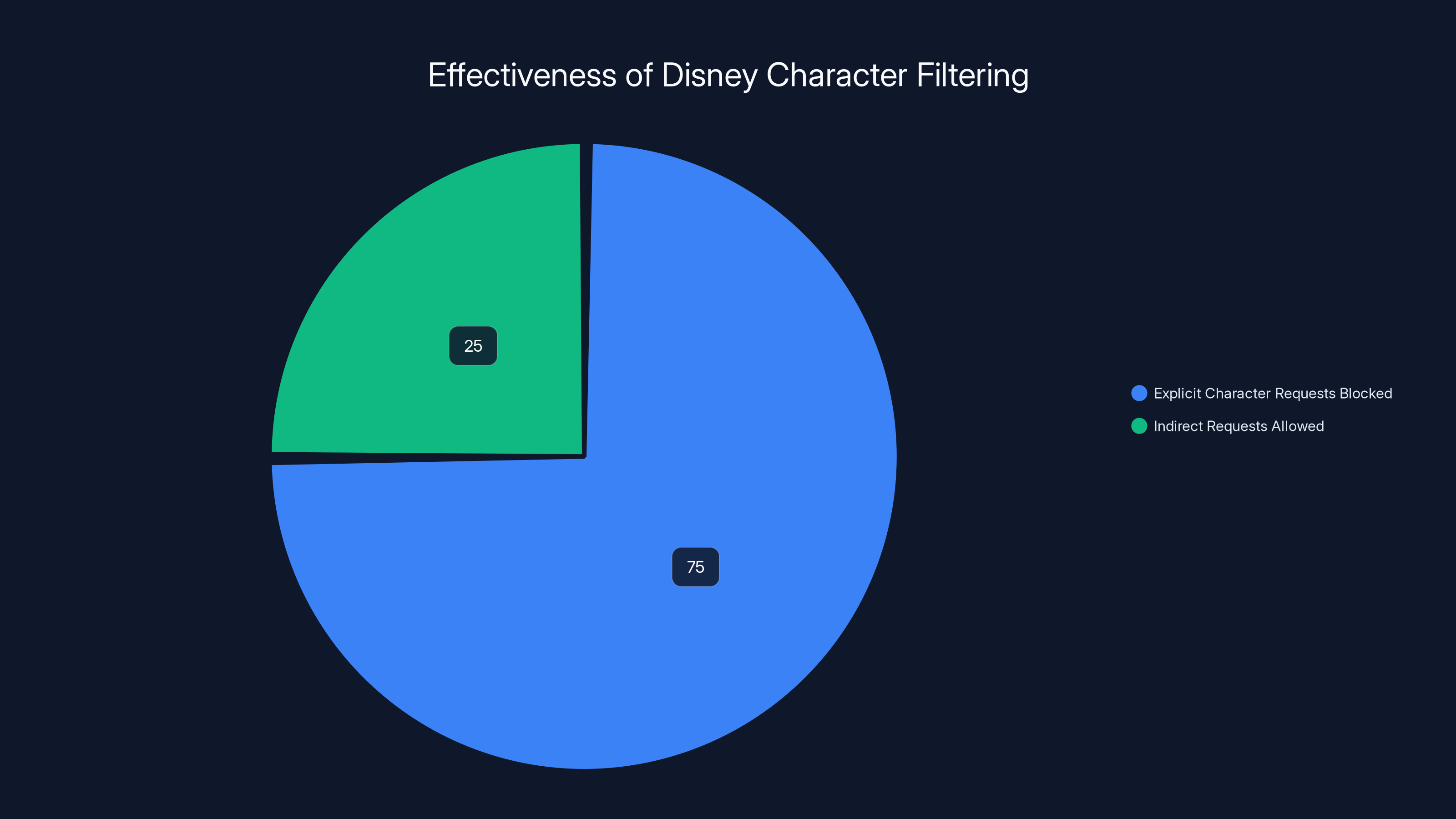

This creates natural consolidation pressure. Smaller platforms can't afford to build filters for everyone who demands them. So they either:

- Get acquired by a larger company with resources

- Go out of business

- Stop offering the feature entirely

The result is that the AI image generation market will likely consolidate further into a few large players (Google, OpenAI, Meta, maybe Midjourney). And those players will have increasingly restrictive feature sets because they're under more pressure from more rights holders.

The open-source movement will remain as the alternative, but it'll remain technically harder to access and use.

What Comes Next: The Escalation

Disney's crackdown is probably not the end of anything. It's the beginning.

In the next 12-24 months, expect:

More character filters. Universal, Sony, Disney subsidiaries (Marvel, Lucasfilm, 20th Century Studios) will all demand the same treatment. By mid-2026, major AI image generators might have filters for hundreds of protected characters.

Style-based protections. Fashion brands and individual artists will start demanding that their visual styles be protected. This is legally shakier (you can't copyright a style, only specific creative works), but they'll try. The liability risk might force compliance anyway.

Trademark and brand protection. Companies will demand that their logos, packaging designs, and branded elements can't be generated. Again, some of these demands probably go beyond what the law grants (trademark law doesn't prevent every use of a protected mark), but the threat of litigation might be enough.

Geographic fragmentation. Different rules in different places. The EU filters might be stricter than U.S. filters due to GDPR and regional regulations. Asia will have its own rules. You'll get a fragmented internet of AI where the same prompt produces different results depending on where you access it from.

New tools emerging specifically to circumvent filters. Once every mainstream platform has filters, new platforms will emerge specifically marketing themselves as "no filters" or "no censorship." These will be less safe, less regulated, and will attract the people who want to generate protected content.

The overall trend is clear: more restrictions, more fragmentation, more inequality between big IP holders and everyone else.

Estimated data suggests that Disney's filtering system blocks around 75% of explicit character requests, while indirect requests still pass through.

The Question of Fair Use and Transformation

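There's a legal concept called "fair use" that's supposed to protect transformative uses of copyrighted material. Courts weigh several factors, including the purpose of the use, how much of the original is taken, and the effect on the market for the original. If you use a small part of something copyrighted in a way that creates new value, that's often okay.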

But where does AI fit in this?

If you use AI to generate a Mickey Mouse parody, is that fair use? Arguably yes, it's transformative. But if you're generating it for commercial purposes without Disney's permission, that's a lot shakier.

The problem is that the courts haven't really figured this out yet. And in the meantime, Disney doesn't have to wait for a court ruling. They can just demand filters, and most platforms will comply to avoid the legal risk.

So fair use becomes whatever Disney (or whoever has the biggest legal budget) says it is.

This is a real loss for creativity. There's value in parody, in remixing, in taking copyrighted material and doing something new with it. But when the only way to test whether something is fair use is to go to court, most people will just not do it.

Small creators especially get squeezed out. A major studio can afford to push back on a filter if they think something should be allowed. An individual artist can't.

Training Models on Public Domain Content Instead

One interesting response to this whole mess: train AI models only on public domain content.

There are thousands of books, images, and films in the public domain. Most importantly, there's no copyright holder to sue.

Some companies are exploring this. The theory is that a model trained only on public domain material would be legally uncontroversial. You'd still get useful AI capabilities, but without the copyright liability.

The downside is obvious: public domain material is older. A model trained mostly on books and films from the 1920s and earlier will have a very different character than a modern model trained on contemporary material.

But it's an interesting path forward for anyone who wants to build AI tools without getting sued into oblivion.

The User Experience Gets Worse

Let's talk about what this actually means for people using these tools.

Right now, if you want to generate an image, you can prompt for pretty much anything (with some limits). The experience is fairly open-ended.

As filters accumulate, the experience degrades. You can't generate protected characters. You can't generate protected styles. You can't generate recognizable people without special approval. You might not even be able to generate certain objects (luxury handbags look like specific brands).

The tool becomes less of a creative tool and more of a restricted utility.

It's like the difference between owning a paint set and being given a paint set where you're told in advance which subjects you're not allowed to paint.

Platforms will probably try to soften this with explanations. "We can't generate images of this character because it's protected by copyright." But the effect is still the same: fewer possibilities, less freedom.

For people who just want to make cool images, this sucks.

For Disney, it's a win. For other rights holders, it depends on how aggressively they push for filters. For creators who want to use AI as a tool, it's complicated. For companies like Google that have to maintain all these filters, it's an operational nightmare.

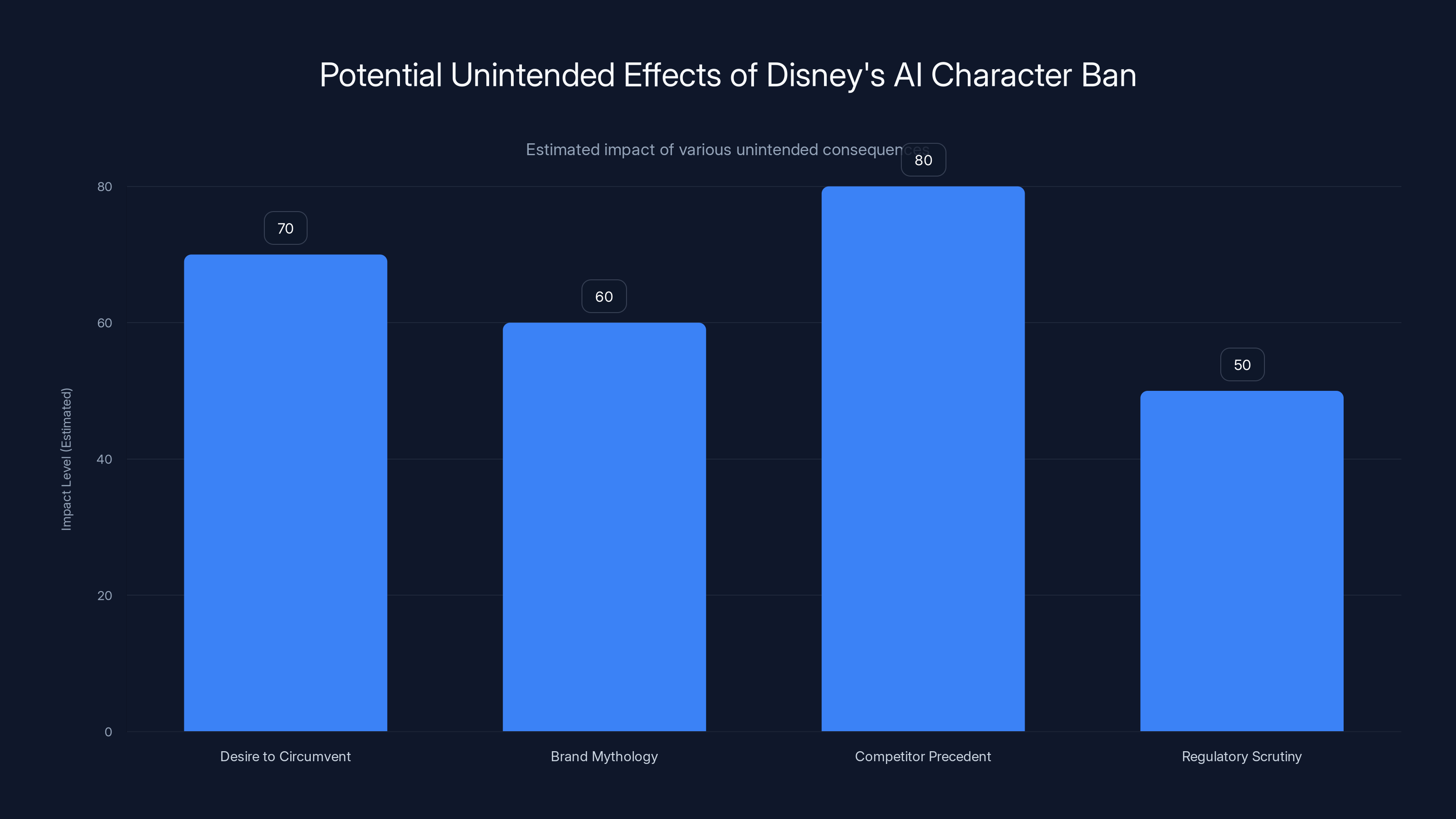

Disney's AI character ban may have unintended consequences, with competitor precedent and desire to circumvent potentially having the highest impact. Estimated data.

Comparing This to Other Content Moderation Crackdowns

Disney's AI character ban is not the first time we've seen content restrictions expand due to corporate pressure.

Think about YouTube. The platform started as essentially unregulated. You could upload almost anything. But as the platform grew and companies realized the liability risks, content moderation expanded dramatically. Copyrighted music got flagged. Bootleg copies got removed. Trademarked content got pulled.

Now YouTube has extremely sophisticated automated systems detecting copyright violations. And the user experience is more restricted as a result.

Something similar happened with social media. Facebook, Twitter, Instagram all evolved from "anything goes" to increasingly restricted environments, partly due to legal pressure.

AI image generation is probably heading in the same direction. We're in its YouTube-circa-2005 era, where the platform is still relatively open. Disney's move is probably the first of many pushes toward a more moderated ecosystem.

The lesson from these previous platforms is that once you start restricting content, the restrictions tend to expand, not contract. There's always a new group demanding protection, a new liability concern, a new reason to filter.

Where Open Source Models Fit In

Amid all this, open-source AI models like Stable Diffusion remain interesting because they're harder to control.

If you download an open-source model and run it on your own computer, nobody can force you to add filters. The creator might include them, but you can remove them.

This makes open-source models attractive to people who want unrestricted access. It also makes them attractive to people who want to generate protected content.

The downside is that open-source models are harder to use. You need technical knowledge. You need computational resources. Most people won't bother.

But for serious users and researchers, open-source provides an alternative to the increasingly restricted commercial platforms.

The long-term question is whether this creates a two-tier system: restricted commercial models for mainstream users, and unrestricted open-source models for technical users.

Or whether legal pressure and regulatory crackdowns eventually force open-source models to include filters too.

What Disney Probably Didn't Anticipate

When Disney demanded that AI platforms filter out their characters, they probably thought about fan art prevention and IP protection.

But there are second and third-order effects they might not have considered:

Increased desire to circumvent. Restrictions create the desire to circumvent them. The fact that you can't generate Mickey Mouse easily makes some people want to try harder.

Brand mythology. A banned character becomes more interesting, more discussed, more mythical. People will remember that Mickey Mouse was the first character Disney used to force AI filters. That's kind of a big deal.

Precedent for competitors. Every studio in Hollywood watched this and immediately started planning their own demands. Disney didn't just protect itself. It created a template for everyone else.

Regulatory scrutiny. This kind of private enforcement of IP protection might eventually attract antitrust attention. Disney using its market power to force changes in AI platforms could eventually be seen as anticompetitive.

Disney got what it wanted in the short term. But the long-term effects are more complicated.

The International Angle: Different Rules in Different Places

Here's a complication that almost nobody's talking about: this will play out differently in different countries.

In the U.S., copyright duration is long: 70 years after the author's death for individual creators, and 95 years from publication for works made for hire. Most Disney characters are protected and will be for decades.
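The U.S. term rules reduce to simple arithmetic. This is a simplified sketch, assuming the standard rules for works published in 1923 or later with properly maintained copyright; it ignores unpublished works, joint authorship, pre-1978 individual works, foreign terms, and trademark protection (which can last as long as a mark stays in use). The function names are hypothetical.

```python
from typing import Optional


def pd_year_individual(author_death_year: int) -> int:
    """Individual author, work created 1978 or later: life + 70 years.

    U.S. terms run through December 31 of the final year, so the work enters
    the public domain on January 1 of the following year.
    """
    return author_death_year + 70 + 1


def pd_year_work_for_hire(publication_year: int,
                          creation_year: Optional[int] = None) -> int:
    """Work made for hire: 95 years from publication.

    For works created in 1978 or later, the term is 95 years from publication
    or 120 years from creation, whichever ends first. Assumes a work published
    in 1923 or later whose copyright was properly maintained.
    """
    last_year = publication_year + 95
    if creation_year is not None and creation_year >= 1978:
        last_year = min(last_year, creation_year + 120)
    return last_year + 1


print(pd_year_individual(2000))           # 2071
print(pd_year_work_for_hire(1995, 1995))  # 2091
```

A studio character introduced in the mid-1990s, for example, stays under U.S. copyright until the end of the 2090s.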

In some countries, copyright duration is shorter. In others, fair use protections are stronger. In others, enforcement is basically nonexistent.

A user in a country with broader fair use exceptions might be able to generate images that would be blocked in the U.S. Or a user could access the tool through a VPN based in a different country to circumvent filters.

This creates a patchwork where AI tools might have different capabilities in different regions.

Google and other platforms will probably implement geographically specific filters. U.S. users get fewer options. Users in countries with different copyright regimes get different rules.
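Region-specific filtering can be sketched as a lookup from the user's inferred region to a rule set. Everything here is hypothetical: the region keys, the term lists, and the `is_allowed` helper are illustrative and do not describe any platform's actual policy.

```python
# Hypothetical per-region rule sets. Region keys and term lists are
# illustrative only; they do not describe any platform's actual policy.
REGION_RULES = {
    "US": {"mickey mouse", "minnie mouse", "cinderella"},
    "REGION_B": {"mickey mouse"},  # a jurisdiction with a different regime
}
DEFAULT_RULES = {"mickey mouse"}  # fallback when the region is unknown


def is_allowed(prompt: str, region: str) -> bool:
    """Check a prompt against the rule set for the user's region.

    Uses simple substring matching. `region` is whatever the platform infers
    (for example from an IP address), which is why routing traffic through a
    VPN can change the answer.
    """
    terms = REGION_RULES.get(region, DEFAULT_RULES)
    text = prompt.lower()
    return not any(term in text for term in terms)


prompt = "Draw Cinderella in modern clothing"
for region in ("US", "REGION_B"):
    print(region, "allowed" if is_allowed(prompt, region) else "blocked")
```

The same prompt is blocked for the U.S. user and allowed in the other region, which is the fragmentation described above in miniature.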

This is already happening with content moderation on social media platforms. It'll happen with AI too.

The Weird Economics of Character Protection

Here's something interesting: Disney characters are valuable because they're iconic, famous, and trademarked. But they're also valuable because people want to generate content with them.

By preventing AI generation, Disney protects the exclusivity of their characters. Only Disney can generate official Mickey Mouse content. If you want Mickey Mouse imagery, you have to buy it from Disney.

This actually increases the value of the characters economically. Scarcity drives price.

But it also means Disney is basically saying: "This character is so valuable that we can't let anyone else create content with it, even fans, even for free." There's a confidence there that borders on arrogance.

Smaller creators or brands probably can't afford to take that stance. They need fan content. They need people generating content with their IP because it drives engagement and interest.

But Disney is big enough that it can say "no" and still thrive.

The economics are probably different 5-10 years from now when AI tools are more integrated into everything. Disney might need to reconsider. Or they might double down. Either way, the decision is revealing about how Disney sees its intellectual property.

What This Means for the Future of Generative AI

Step back from the Disney stuff for a second. What does this decision signal about generative AI's future?

It signals that generative AI is now mainstream enough to be a concern for major corporations. It signals that IP protection is going to be a major governance issue. It signals that we're moving away from the Wild West toward a more regulated ecosystem.

It probably also signals that generative AI, as a general-purpose creative tool, is going to have limitations that weren't previously necessary.

There are a few possible futures:

Future 1: Regulated convergence. AI tools gradually add more and more filters and restrictions until they become relatively safe and limited. The tools are less creative, but they're legally defensible. This is probably the most likely path given current incentives.

Future 2: Market fragmentation. Restricted commercial models emerge as the mainstream, while unrestricted open-source tools become the alternative. Users pick based on their preferences and tolerance for risk. This is possible, but it requires widespread adoption of open-source tools.

Future 3: Copyright reform. Policymakers realize the current system is broken and reform copyright law to be more AI-friendly. Creators get compensated for training data. Licenses become more standardized. This is probably the most optimistic path and also the least likely given how hard it is to reform IP law.

Future 4: Arms race. Restrictions and circumvention tools escalate. Every filter gets defeated. Every new tool creates pressure for new regulations. This seems plausible and would be messy.

We're probably heading toward some combination of these, with different regions following different paths.

Lessons for Other Tech Companies

If you're running a tech company that offers any kind of content generation or creation tools, Disney's move should worry you.

Not because you're doing anything wrong, but because you're now in a position where powerful companies can demand compliance even without legal precedent.

The lessons are:

- Content moderation infrastructure is now mandatory. You need to build systems to handle IP protection requests as table stakes, not as a nice-to-have.

- Legal ambiguity costs money. When the law is unclear (like AI copyright liability), platforms comply with powerful companies because litigation is more expensive than compliance.

- Your users' experience will decline. As you add more restrictions, your product becomes more limited. This is probably unavoidable, but users will notice.

- Open-source alternatives will emerge. As commercial tools become more restricted, open-source options become more attractive to power users.

- First movers set the terms. Disney went first, so Disney gets the favorable treatment. Companies that wait for legal clarity will have already lost the ability to shape the outcome.

For any startup building AI tools, this means you should probably be consulting with lawyers now about IP protection, even if you don't think you're at risk yet.

The Wild West is Officially Over

There's a moment in any new technology's lifecycle where the rules solidify.

Internet startups had a Wild West era. Bitcoin did. Social media did. Generative AI is now officially past that point.

Disney's character filter isn't the last boundary; it's the first one to be clearly drawn. And once boundaries start getting drawn, others follow quickly.

The Wild West era of AI image generation, where you could prompt for pretty much anything and get a result, is ending. Not everywhere, not all at once, but the trend is clear.

What replaces it is probably a more complex, more fragmented, more regulated ecosystem where:

- Different platforms have different rules

- Different regions have different rules

- Big rights holders get special treatment

- Regular creators get squeezed

- Users have fewer options but safer experiences

It's not necessarily worse. Some regulation and IP protection is probably good. You want creators to get paid for their work. You want companies to have some say over how their IP is used.

But it's definitely different. And it's definitely more restrictive.

If you've been exploring generative AI image tools and getting comfortable with what they can do, make the most of them now. The capabilities you have access to today might not be available in 12 to 24 months.

FAQ

What exactly did Disney do to AI image generators?

Disney demanded that Google Gemini and other AI image platforms implement filters preventing users from generating images of Disney characters like Mickey Mouse, Cinderella, and other iconic figures. The platforms complied, essentially blocking prompts that try to create these protected characters. This wasn't a lawsuit, but rather corporate pressure backed by the threat of litigation and reputational damage.

Is it legal for AI companies to train on Disney images without permission?

The legality is currently unsettled and being challenged in multiple lawsuits. Cases like Sarah Silverman's lawsuit against OpenAI are testing whether training on copyrighted material without permission violates copyright law. Federal courts have suggested AI companies could be liable, but nothing is final. Disney didn't wait for courts to decide; they just demanded filters, and platforms complied because legal uncertainty is expensive.

Can people still generate Disney characters using other tools or workarounds?

Yes, but with difficulty. Users can probably circumvent filters using indirect prompts or descriptions rather than character names. Open-source models that people download and run locally have no filters at all. However, mainstream commercial platforms like Gemini and DALL-E 3 are increasingly restricting protected characters. The restrictions aren't perfect, but they significantly reduce how often protected characters can be generated explicitly.

Does this filter actually protect Disney, or can people still make infringing content?

The filter reduces direct generation of Disney characters but doesn't completely prevent it. Creative prompting can still generate very similar-looking characters. More importantly, it doesn't address the fundamental issue that the AI model was already trained on Disney content. The filter is more about legal protection for the platform (showing they made a reasonable effort to prevent infringement) than about completely preventing Disney content creation.

Will other companies demand similar filters?

Almost certainly yes. Marvel, Universal, Paramount, luxury brands, individual artists, and others will see that Disney successfully got what it wanted without litigation and will demand the same treatment. Expect hundreds of character, brand, and style filters within the next 2-3 years. This will significantly reduce the creative flexibility of mainstream AI image tools.

What happens to open-source AI models like Stable Diffusion?

Open-source models are harder to control because they're downloaded and run locally. Users can remove filters or run versions without restrictions. This makes open-source models attractive as an alternative to increasingly restricted commercial platforms. However, open-source requires more technical knowledge and computing resources, so it remains less accessible to casual users. Open-source will likely become the refuge for unrestricted AI image generation.

Is this copyright infringement or fair use?

That's genuinely unclear. Generating a parody of Mickey Mouse might be fair use (transformative), but generating it commercially without permission is shakier legally. The problem is that nobody wants to test this in court, so Disney's definition of what's acceptable (essentially "nothing") becomes the de facto rule. Small creators can't afford to push back on filters even if they think their use would qualify as fair use.

How does this affect AI image generation for legitimate purposes?

It depends on your use case. If you want to generate generic creative content, you'll be fine. If you want to generate anything inspired by or similar to protected characters, brands, or copyrighted works, you'll face increasing restrictions. The more mainstream the AI tool, the more filters it probably has. This limits creative experimentation and means users have to be more careful about what they prompt for.

Could this lead to copyright law reform?

Maybe, but probably slowly. These AI copyright questions could eventually prompt legislation clarifying what's legal in AI training and generation. However, current politics and lobbying make comprehensive copyright reform unlikely in the short term. More likely, we'll get a patchwork of rules, with different platforms and regions handling things differently while the legal question remains unsettled.

What's the difference between filtering prompts and filtering training data?

Filtering prompts prevents users from asking the AI to generate protected content. Filtering training data would mean removing copyrighted material before training the model. Filtering prompts is relatively easy and shows compliance. Filtering training data is much harder and less common, because by the time anyone demands it, the model is already trained. The real solution would be getting permission before training, but that's not happening at scale yet.
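The difference between the two approaches can be sketched in a few lines of Python. This is an illustrative assumption, not how Gemini or any other platform actually works: the term list, the caption-based record format, and the names `filter_prompt` and `filter_training_set` are hypothetical.

```python
"""Hypothetical sketch contrasting prompt filtering with training-data filtering.

The term list, record format, and function names are assumptions made for
illustration; production systems are far more sophisticated.
"""
import re

# Protected names a rights holder might ask a platform to block (illustrative).
PROTECTED_TERMS = ["mickey mouse", "cinderella", "elsa"]

# One case-insensitive regex with word boundaries, so a term only matches
# as a whole word or phrase, not inside a longer word.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(t) for t in PROTECTED_TERMS) + r")\b",
    re.IGNORECASE,
)


def filter_prompt(prompt: str) -> bool:
    """Prompt-time check: return True if the request may proceed.

    Cheap and deployable at any time, but it only sees the words typed.
    """
    return _PATTERN.search(prompt) is None


def filter_training_set(records: list[dict]) -> list[dict]:
    """Training-time check: drop records whose caption names a protected term.

    Only useful before training; an already-trained model keeps whatever
    it learned from records that are removed afterwards.
    """
    return [r for r in records if _PATTERN.search(r["caption"]) is None]


if __name__ == "__main__":
    print(filter_prompt("Mickey Mouse on a skateboard"))
    print(filter_prompt("a cartoon mouse with round black ears and red shorts"))
    data = [{"caption": "Cinderella at the ball"}, {"caption": "a red barn at sunset"}]
    print(filter_training_set(data))
```

The sketch also shows the weakness described in the answers above: "Mickey Mouse on a skateboard" is blocked, but a purely descriptive prompt such as "a cartoon mouse with round black ears and red shorts" passes, because a name-based filter only checks what the user typed, not what the model will draw.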

The Road Ahead

We're at an inflection point in generative AI history. The days of "anything goes" are genuinely over. What replaces that era will be determined partly by regulation, partly by litigation, and partly by power dynamics between companies.

Disney's move is the first significant domino. Others will follow. The question isn't whether more restrictions are coming, but how fast and how restrictive they'll be.

For now, use these tools while they're still relatively open. Understand their limitations. Think about what you're generating and why. The golden age of unrestricted AI image generation probably just ended, even if we didn't notice it happening.

Key Takeaways

- Disney successfully forced AI platforms to implement character filters without litigation, signaling the end of unregulated AI generation

- The filter is technically limited but legally important: it shows platforms made a reasonable effort to prevent infringement

- Expect rapid expansion as other studios, brands, and creators demand similar protections across platforms

- Open-source AI models remain unfiltered, creating a two-tier system of restricted commercial tools vs unrestricted alternatives

- Copyright law remains unsettled, but corporate power and liability concerns are driving enforcement faster than legislation
