ChatGPT Health: How AI Is Reshaping Healthcare Access [2025]

Introduction: The Moment Healthcare Met Artificial Intelligence

Something shifted in healthcare this week. Not in a hospital or research lab, but in the product development headquarters of one of the world's most influential AI companies. OpenAI announced ChatGPT Health, and with it, quietly launched what might be the most ambitious attempt yet to embed artificial intelligence directly into how ordinary people manage their health.

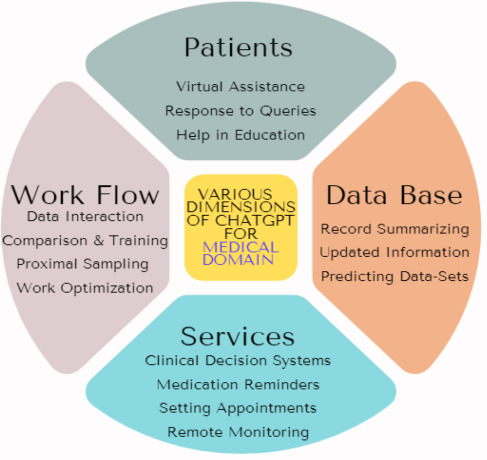

Here's what happened: ChatGPT, the chatbot that more than 230 million people turn to with health-related questions every week, just got serious about connecting to your actual medical data. We're talking medical records, lab results, insurance information, fitness tracking from Apple Health, nutrition logs from MyFitnessPal, and wellness data from apps like Peloton and Weight Watchers. For the first time, your AI health advisor isn't just guessing about your health based on what you type. It's reading your doctor's notes.

But here's the thing that matters most: this is happening right now, it's already being used by millions of people weekly, and frankly, nobody seems to be talking about how profoundly messy this gets.

I've spent the last few weeks looking into what ChatGPT Health actually is, how it works, and what it means for patients, doctors, insurance companies, and the regulatory bodies scrambling to figure out if this is brilliant or dangerous. Spoiler: it's probably both.

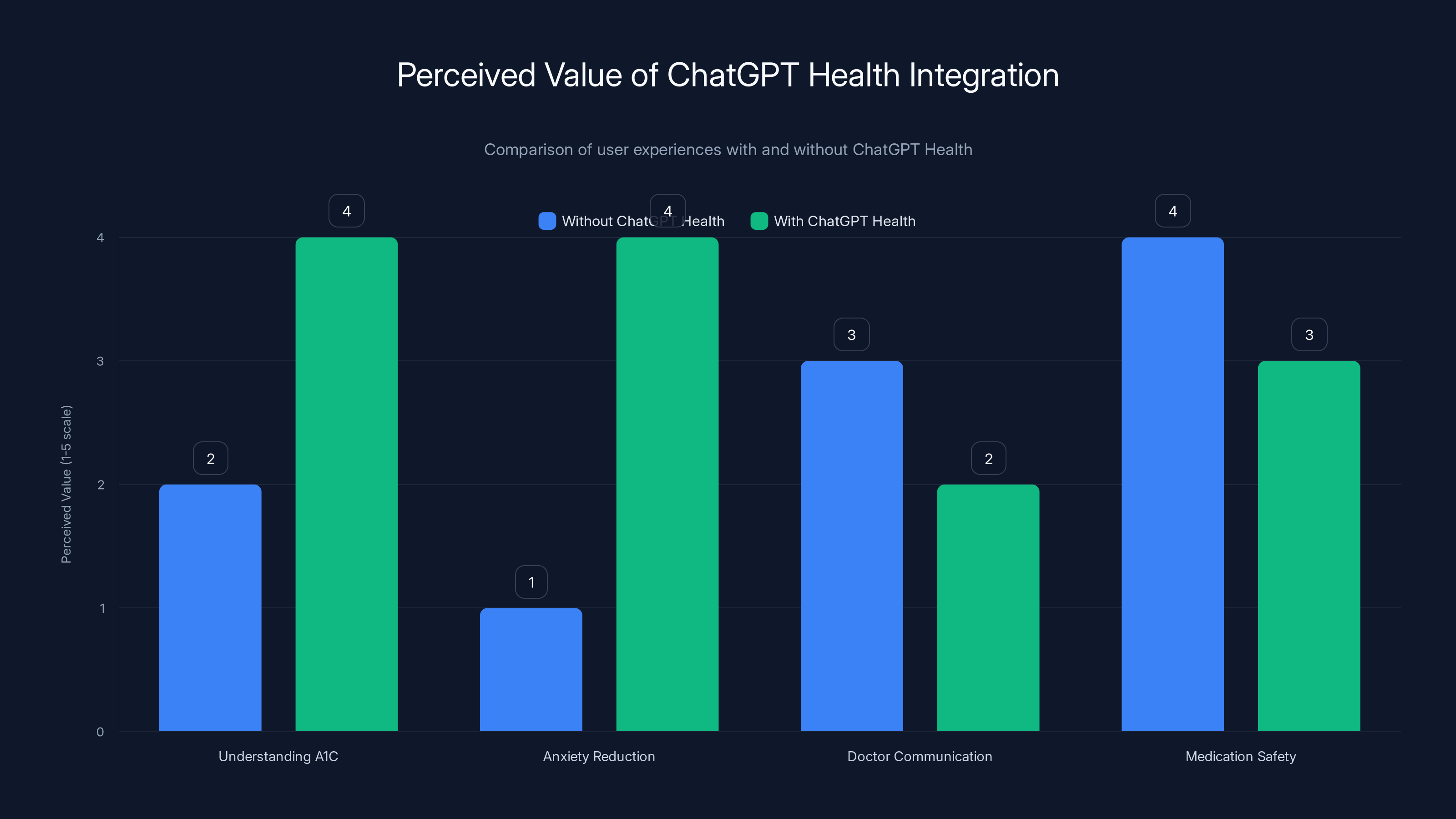

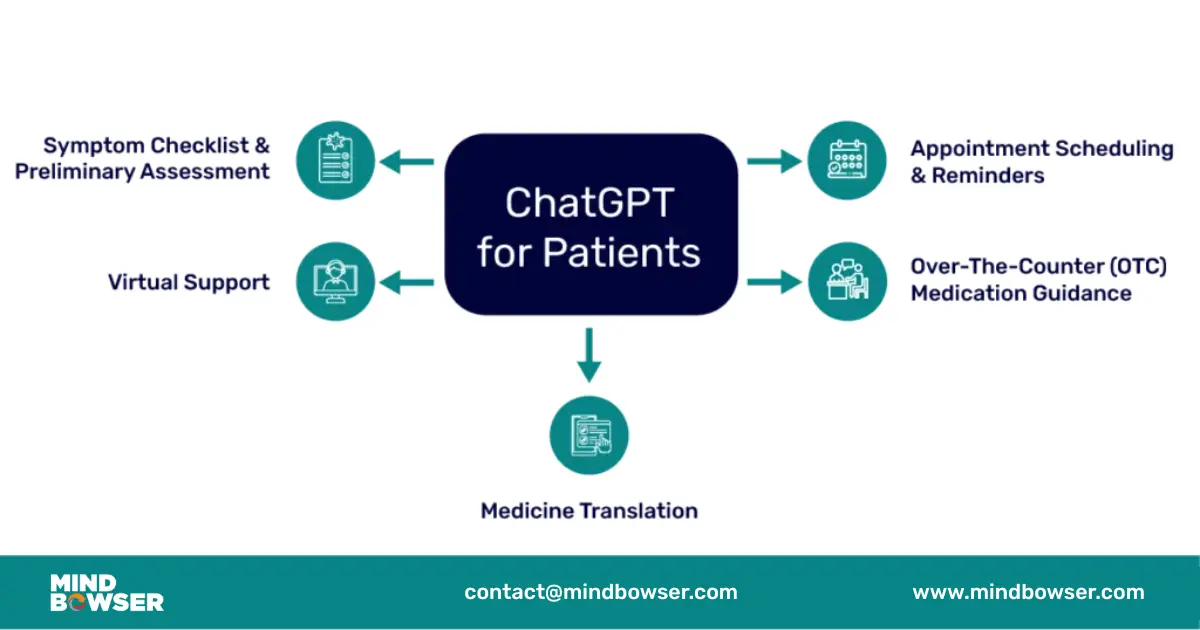

The core promise is straightforward and genuinely useful. Instead of explaining your symptoms to ChatGPT and hoping for generic advice, you show it your actual medical records, and it gives you context-aware responses tailored to your health history, medications, and lifestyle. It could help someone understand why their A1C levels are rising, prepare them for a doctor's appointment, or explain insurance options based on their actual healthcare patterns.

The core warning is equally straightforward: OpenAI explicitly says this tool is "not intended for diagnosis or treatment." That caveat matters because the tool does things that honestly sound a lot like diagnosis and treatment. More importantly, it matters because people don't always read disclaimers.

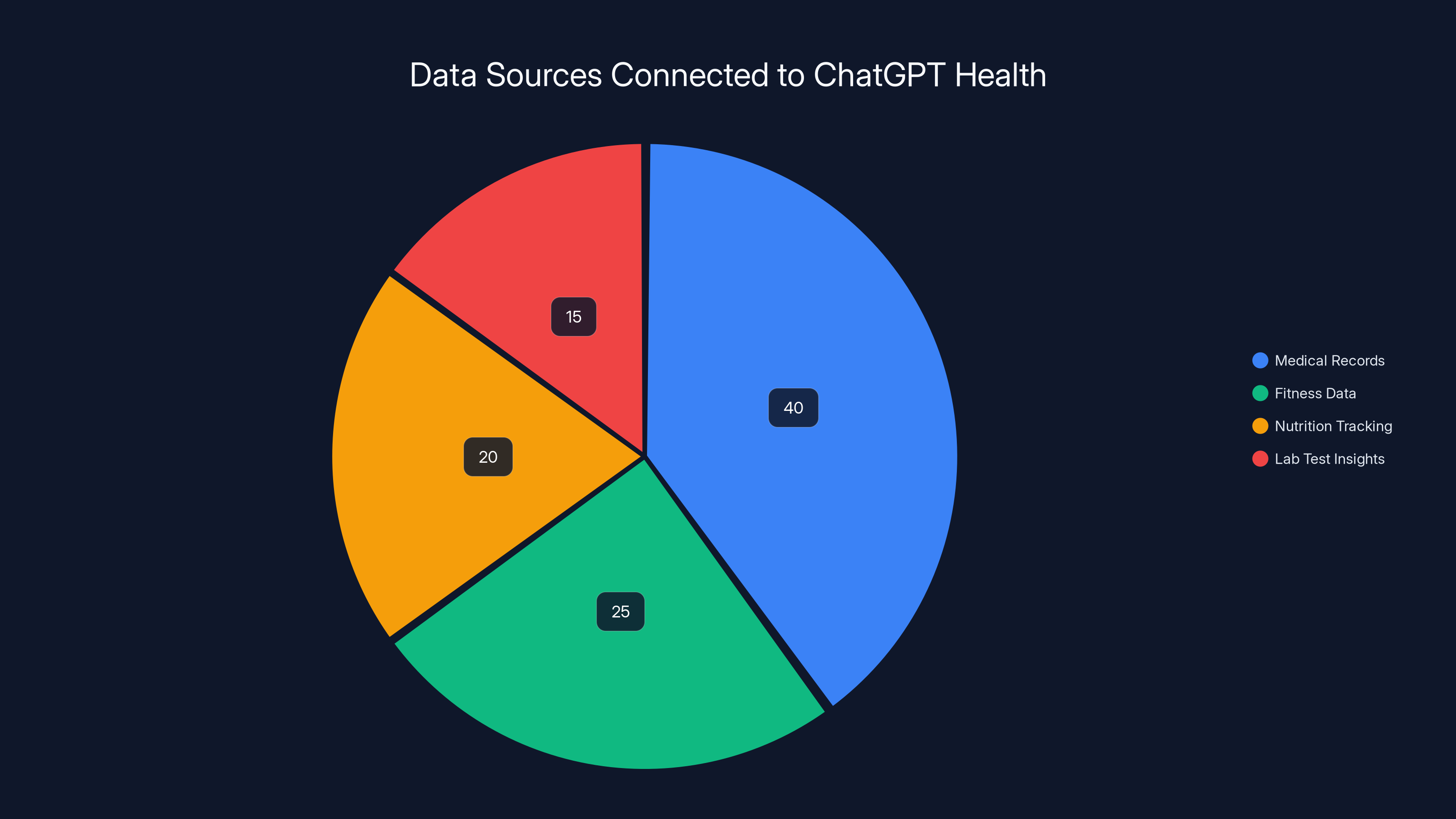

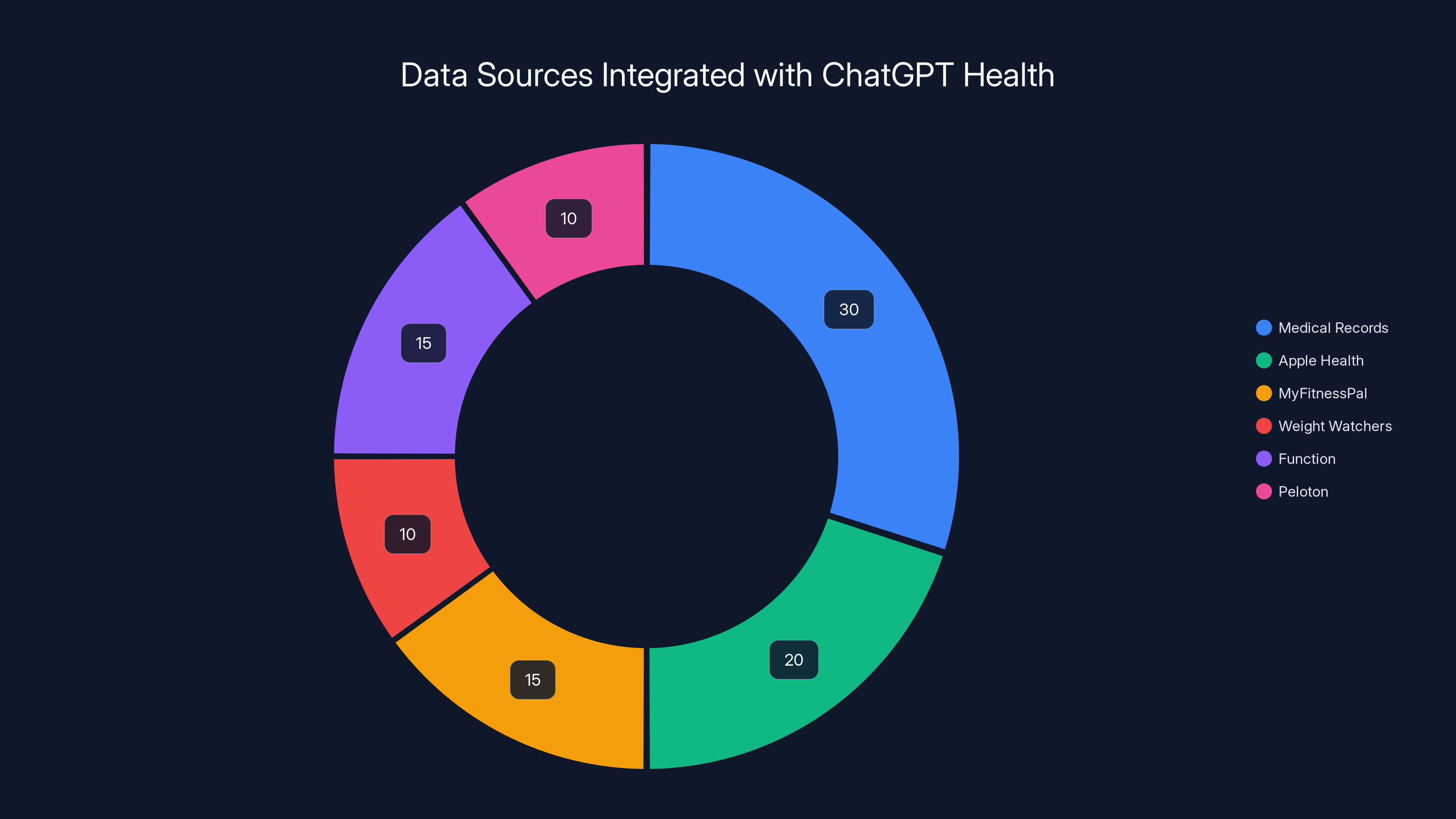

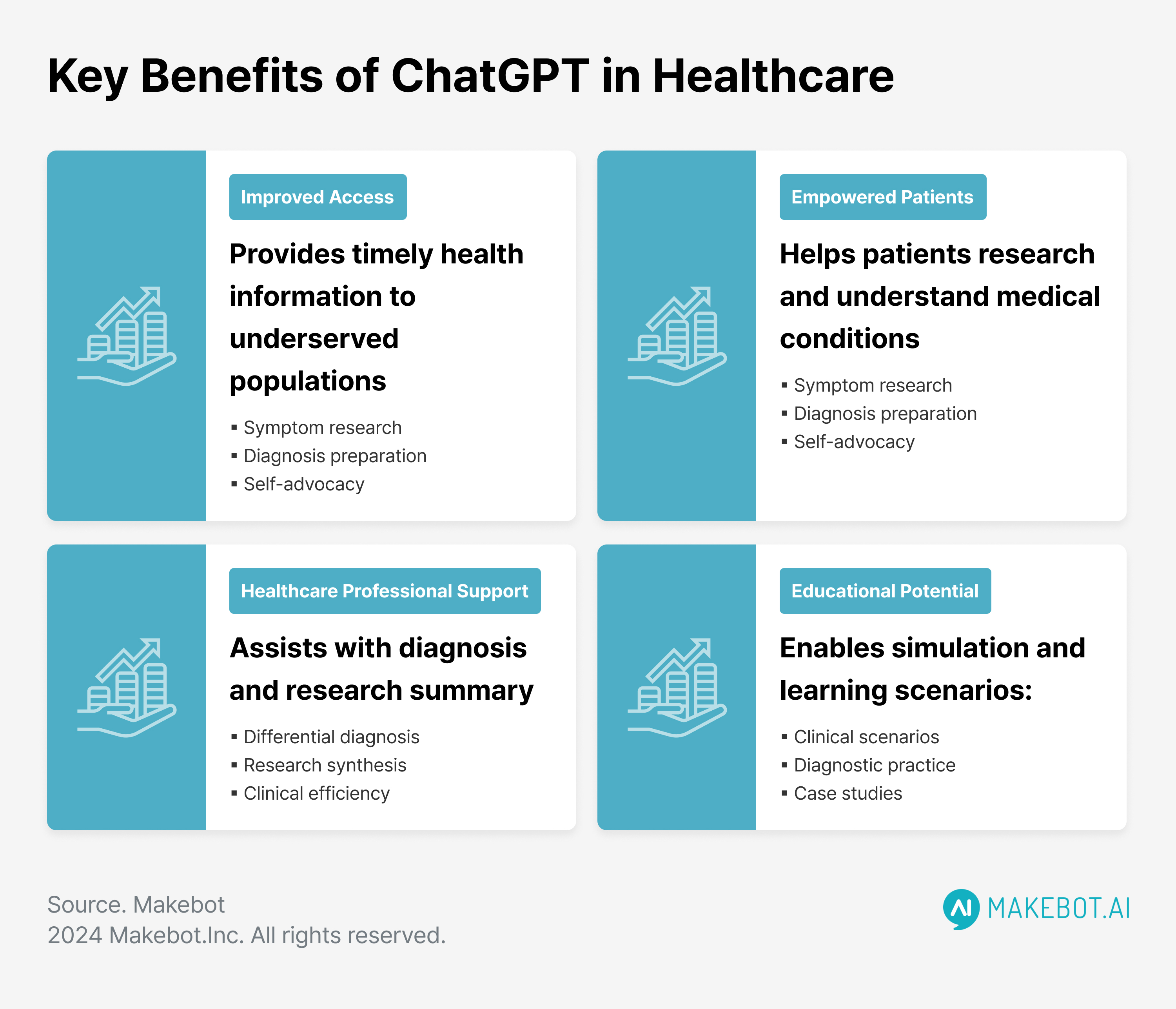

Estimated data shows that medical records are the largest data source connected to ChatGPT Health, followed by fitness data, nutrition tracking, and lab test insights.

TL;DR

- ChatGPT Health is live: OpenAI launched a sandboxed health chat within ChatGPT that can access your medical records, fitness data, and wellness apps

- Data integration matters: The platform partners with b.well to connect to 2.2 million healthcare providers, plus Apple Health, MyFitnessPal, Peloton, and others

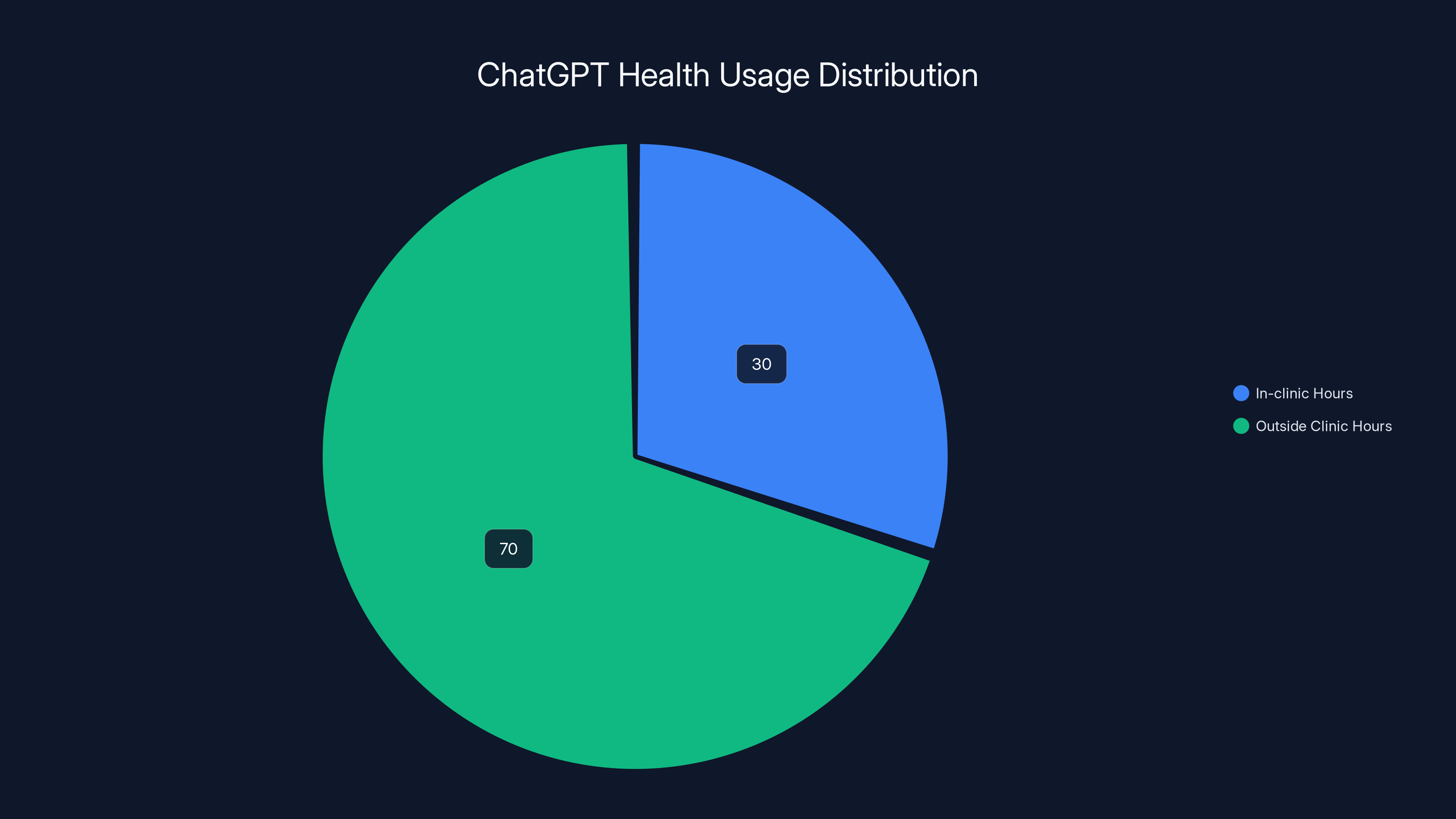

- Scale is already massive: Over 230 million people ask ChatGPT health questions weekly, with 70% of those conversations happening outside normal clinic hours

- Safety concerns are real: Recent cases show ChatGPT giving dangerous health advice, and OpenAI hasn't fully addressed mental health risks or health anxiety escalation

- The regulatory gap is huge: There's no clear legal framework for AI health tools that don't diagnose but clearly influence health decisions

What ChatGPT Health Actually Is (And What It Isn't)

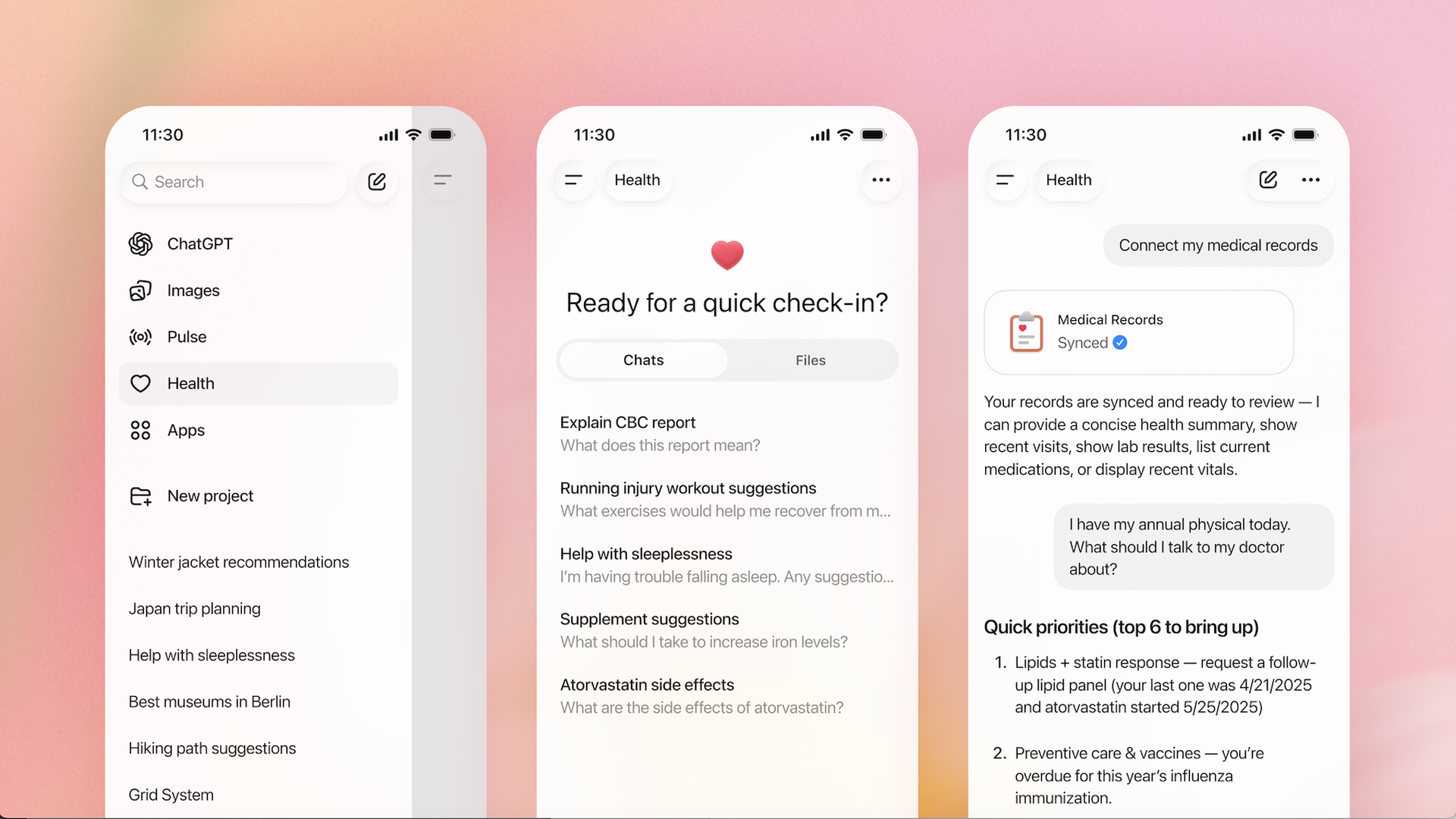

ChatGPT Health is a separate, sandboxed tab within ChatGPT. Think of it like a dedicated space on your phone where you talk to a health specialist version of ChatGPT, not the version that helps you write emails or debug Python code.

This is important because it means your health conversations don't mix with your regular ChatGPT history. OpenAI separates the data, keeps a separate memory feature just for health context, and (theoretically) applies different safeguards.

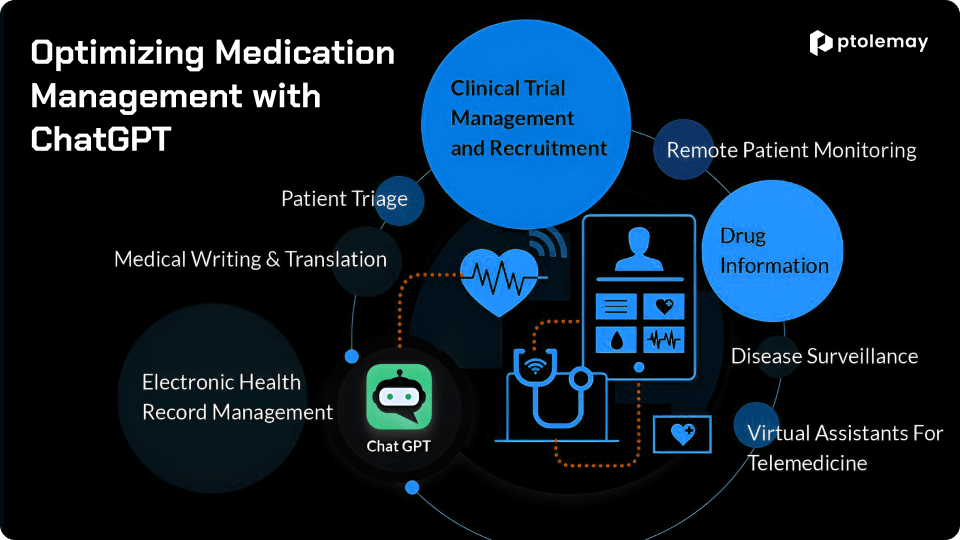

What makes it different from regular ChatGPT is the integration layer. You can connect medical records through a service called b.well, which handles the technical work of pulling your data from participating healthcare providers. b.well has relationships with about 2.2 million providers in the United States, which means if your hospital or clinic uses a major electronic health record system like Epic, Cerner, or Athenahealth, there's a decent chance your records are accessible.

Beyond medical records, you can connect Apple Health to share movement, sleep, activity patterns, and other biometric data. MyFitnessPal and Weight Watchers plug in nutrition and food tracking. Function, a lab-testing service, adds lab test insights. Peloton connects workout data.

The promise is that by having all this data in one place, ChatGPT can give you "more personalized, grounded responses." Instead of typing "my doctor said my triglycerides are high," you show ChatGPT the actual lab report. Instead of describing your workout routine, it sees your actual activity patterns from Apple Watch data.

But here's where the terminology gets tricky. OpenAI has bent over backwards to say this is "not intended for diagnosis or treatment." Yet they're openly describing use cases that sound exactly like diagnosis and treatment: understanding test results, preparing for appointments, getting diet and workout guidance, explaining insurance options based on healthcare patterns.

The distinction OpenAI seems to be making is that ChatGPT Health isn't making a diagnosis ("You have diabetes") but it is helping you understand diagnostic results, which lives in that gray zone between information and medical advice.

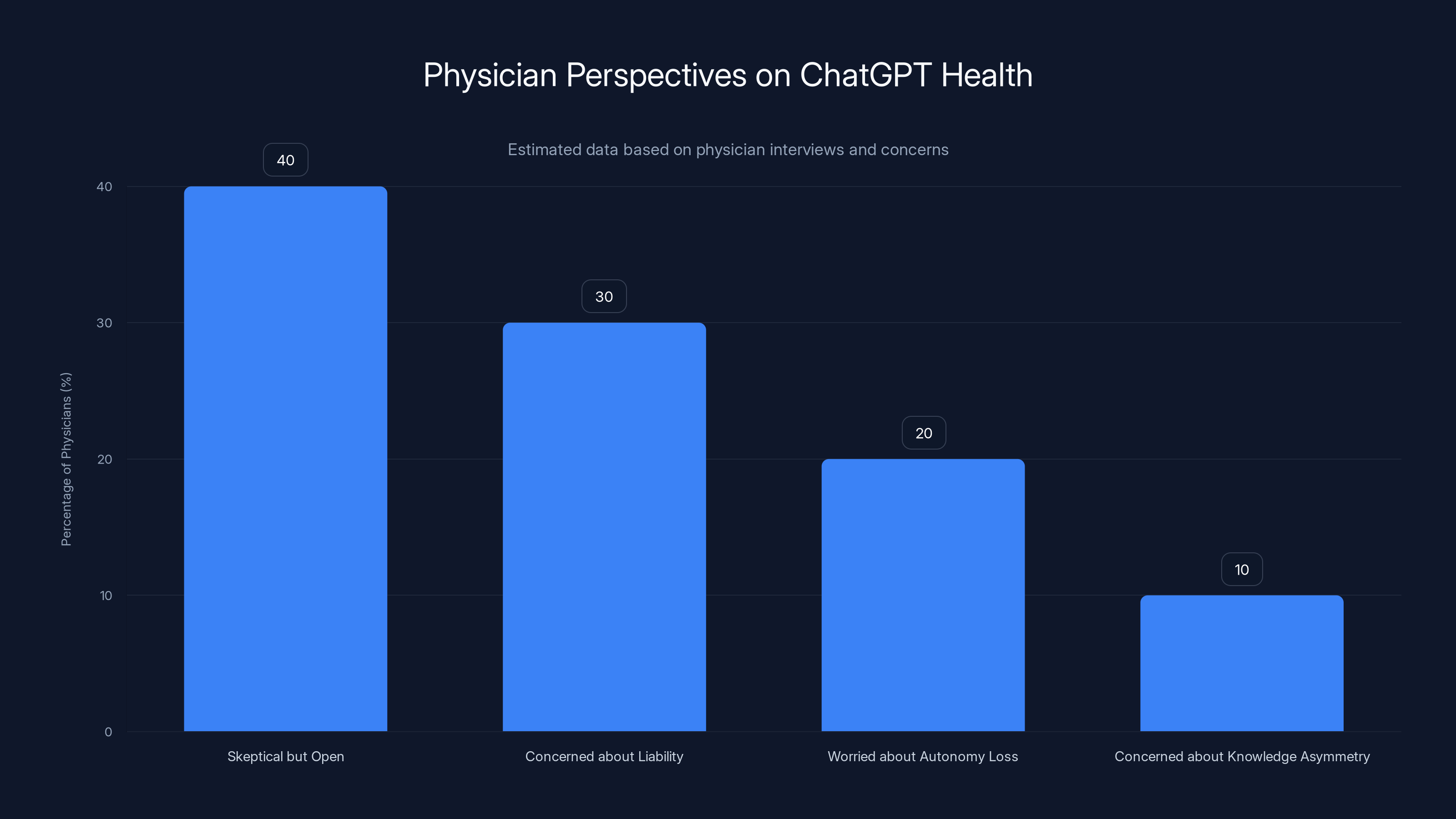

Estimated data shows that while 40% of physicians are skeptical but open to ChatGPT Health, significant concerns exist about liability (30%), autonomy loss (20%), and knowledge asymmetry (10%).

The Scale of This Moment: 230 Million Weekly Health Questions

Let's talk about the number that should make regulators sit up straighter: 230 million people asking ChatGPT health-related questions every week.

That's not per year. Per week. It's a global figure, but for scale, it's equivalent to roughly two-thirds of the population of the United States asking ChatGPT a health question every seven days.

To put this in perspective, according to healthcare data, the average American has about two to three doctor visits per year. So ChatGPT is being used for health questions at a rate that dwarfs traditional healthcare touchpoints. Many people may now talk to an AI about their health far more often than they talk to a doctor.

OpenAI also shared something else revealing: users in underserved rural communities send nearly 600,000 healthcare-related messages per week, on average. In other words, ChatGPT isn't just a supplement for people with good healthcare access. For communities that don't have enough doctors, it may be becoming a primary healthcare resource.

That's not inherently bad, but it's important context for understanding why the safety piece matters so much.

OpenAI noted that seven in ten healthcare conversations in ChatGPT happen outside normal clinic hours. That suggests many people aren't using ChatGPT as a second opinion on a decision they'll confirm with their doctor. They're using it at 2 AM when they can't sleep and are worried about a lump. They're using it on Sunday afternoon when their kid has a fever and the pediatrician's office is closed. They're using it because it's available and their doctor isn't.

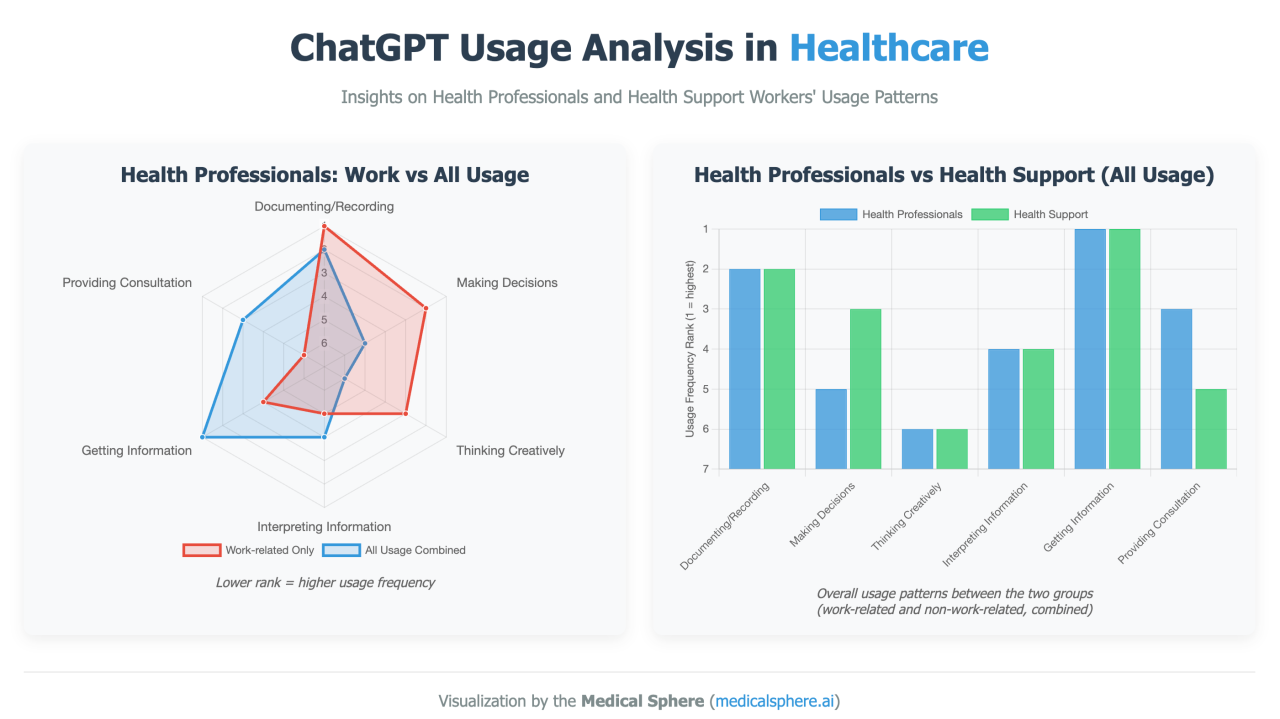

To OpenAI's credit, they didn't just launch this without medical input. Over two years, they worked with more than 260 physicians who provided feedback on model outputs more than 600,000 times across 30 areas of focus: cardiology, orthopedics, oncology, infectious disease, and more.

That's real work. That suggests OpenAI actually cares about getting this right, not just launching a flashy feature.

But there's a fundamental problem with this approach: physicians can review ChatGPT's outputs during testing, but they can't be there in every conversation in the real world. The safeguards are baked into the model's training, which means they're only as good as the training itself.

How Data Flows: The b.well Connection and Privacy Questions

Here's where things get technical, because the technical details matter for understanding where your data actually goes.

When you connect your medical records to ChatGPT Health, OpenAI isn't directly accessing your hospital's servers. Instead, it's partnering with a company called b.well, which acts as the intermediary.

b.well is a healthcare data aggregation platform that has already built relationships with electronic health record systems across thousands of hospitals and clinics. When you authorize ChatGPT to access your records through b.well, you're essentially giving b.well permission to pull your data from your providers, and b.well then passes that data to OpenAI's servers.

This matters for privacy because it means your data flows through at least three different systems: your hospital's servers, b.well's infrastructure, and OpenAI's servers. Each handoff is a potential security consideration.

OpenAI says the medical records are "de-identified" for analysis purposes, meaning they remove names, dates of birth, and other direct identifiers before analyzing patterns. But de-identified doesn't mean anonymous. Researchers have repeatedly shown that de-identified health records can sometimes be re-identified if someone has auxiliary information.
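To make the re-identification point concrete, here is a minimal, hypothetical sketch in Python. It strips direct identifiers (name, date of birth) from a toy record, then shows how the quasi-identifiers left behind (birth year, ZIP code, sex) can be joined against an outside dataset, such as a voter roll, to single someone out. The records, field names, and the `deidentify` and `link` helpers are invented for illustration; this is not how OpenAI or b.well actually process data.

```python
from typing import Dict, List

Record = Dict[str, str]

# Fields treated as direct identifiers in this toy example (an assumption,
# far shorter than any real regulatory list of identifiers).
DIRECT_IDENTIFIERS = {"name", "date_of_birth"}


def deidentify(record: Record) -> Record:
    """Drop direct identifiers but keep everything else, including quasi-identifiers."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}


def link(deidentified: Record, auxiliary: List[Record], keys: List[str]) -> List[str]:
    """Return names from an auxiliary dataset whose quasi-identifiers all match."""
    return [
        person["name"]
        for person in auxiliary
        if all(person.get(k) == deidentified.get(k) for k in keys)
    ]


# Hypothetical medical record; birth_year is a coarsened field left in on purpose.
patient = {
    "name": "Jane Doe",
    "date_of_birth": "1969-04-12",
    "birth_year": "1969",
    "zip": "02139",
    "sex": "F",
    "diagnosis": "prediabetes",
}

# Hypothetical public dataset that shares the same quasi-identifiers.
voter_roll = [
    {"name": "Jane Doe", "birth_year": "1969", "zip": "02139", "sex": "F"},
    {"name": "John Roe", "birth_year": "1969", "zip": "02139", "sex": "M"},
    {"name": "Ann Poe", "birth_year": "1975", "zip": "02139", "sex": "F"},
]

released = deidentify(patient)
matches = link(released, voter_roll, keys=["birth_year", "zip", "sex"])
print(released)  # no name or date of birth, but the diagnosis is still attached
print(matches)   # → ['Jane Doe']: a single match re-identifies the record
```

Matching on fewer or coarser fields returns more candidates, which is the basic reason stronger de-identification approaches generalize or suppress quasi-identifiers rather than only deleting names.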

The bigger privacy question is simple: what does OpenAI do with this data long-term? They've said that your medical records aren't used to train the model (which is good), but the underlying question of data storage, access logs, and potential future uses remains fuzzy.

This is where I'd honestly like more transparency from OpenAI. They've been good about the medical accuracy side, but the data privacy side needs clearer documentation.

The Risk That Nobody's Talking About Enough: Mental Health

Here's the moment that caught my attention during the briefing with reporters. When Fidji Simo, OpenAI's CEO of Applications, was asked directly whether ChatGPT Health would provide mental health advice, her answer was careful but ultimately non-committal.

She acknowledged that mental health is part of health, and that "a lot of people" turn to ChatGPT for mental health conversations. She said the system "can handle any part of your health including mental health" and that OpenAI is "very focused on making sure that in situations of distress we respond accordingly and we direct toward health professionals."

But she didn't actually commit to specific safeguards. And here's why that matters: there are documented cases of people, including minors, having suicidal ideation after conversations with ChatGPT.

This isn't theoretical. It's happened. There are reports and investigations. The risk is real.

Mental health is categorically different from most other health conditions because AI responses can directly contribute to harm in ways that a wrong answer about cholesterol levels usually can't. If ChatGPT tells someone their heart palpitations are probably anxiety when they're actually having a heart attack, that's bad. But if ChatGPT engages deeply with someone experiencing suicidal thoughts without properly de-escalating to professional help, the stakes are existentially different.

OpenAI's answer was essentially: we have tools to let you customize what ChatGPT will and won't talk about. So if you have a condition like health anxiety (hypochondria), you can set instructions to avoid sensitive topics.

But that's not a safeguard. That's a workaround. A real safeguard would be automatic detection: "This conversation contains indicators of suicidal ideation. Here are crisis resources." Or: "Based on this interaction, I'm recommending you speak with a mental health professional." OpenAI didn't commit to either.
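OpenAI hasn't described how its own detection works, so the following is only a hypothetical Python sketch of the simplest possible version of the "automatic detection" idea: screen each incoming message for crisis indicators and, if one is found, return crisis resources instead of a normal model reply. The pattern list, `screen_message`, and `respond` are invented for illustration; a production system would rely on a trained classifier and clinical review, since keyword matching misses indirect phrasing and flags harmless mentions. The 988 number is the US Suicide & Crisis Lifeline.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical indicator phrases; a real system would not rely on a short keyword list.
CRISIS_PATTERNS = [
    r"\bkill myself\b",
    r"\bend my life\b",
    r"\bsuicid(e|al)\b",
    r"\bself[- ]harm\b",
]

CRISIS_MESSAGE = (
    "It sounds like you may be going through something serious. "
    "In the US you can call or text 988 (Suicide & Crisis Lifeline) "
    "or contact local emergency services."
)


@dataclass
class Screening:
    flagged: bool
    matched: Optional[str]


def screen_message(text: str) -> Screening:
    """Flag a message if any crisis pattern appears, ignoring case."""
    for pattern in CRISIS_PATTERNS:
        match = re.search(pattern, text, flags=re.IGNORECASE)
        if match:
            return Screening(flagged=True, matched=match.group(0))
    return Screening(flagged=False, matched=None)


def respond(text: str, generate_reply: Callable[[str], str]) -> str:
    """Route flagged messages to crisis resources before any model reply is generated."""
    if screen_message(text).flagged:
        return CRISIS_MESSAGE
    return generate_reply(text)


if __name__ == "__main__":
    def echo(t: str) -> str:
        # Stand-in for a call to a language model.
        return f"(normal reply to: {t})"

    print(respond("What does an A1C of 5.8 mean?", echo))
    print(respond("I keep thinking I want to end my life", echo))
```

The key design choice is that the check runs before the model replies, so the safeguard does not depend on the user anticipating the risk ahead of time.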

When asked specifically whether OpenAI had safeguards to prevent health anxiety escalation, Simo's response was vague. She mentioned doing "a lot of work" on the topic but didn't provide specifics.

This concerns me because health anxiety patients could use ChatGPT Health in a way that amplifies their condition. They connect their medical records, run their lab results through the AI, get detailed explanations, then start seeing patterns and connections that feed their anxiety. The system doesn't know it's harming them because it's being technically accurate.

ChatGPT Health integrates various data sources, with medical records and Apple Health being the most prominent. Estimated data.

The Dangerous Advice Problem: From Pizza Glue to Salt Substitutes

This isn't the first time an AI has given dangerous health advice at scale.

Remember when Google's AI Overview suggested putting glue on pizza to make cheese stick better? That launched a million jokes, but it also highlighted something serious: AI systems trained on internet text will eventually generate advice that's catastrophically wrong, and they'll present it with confidence.

With healthcare, the stakes are higher. A case documented by physicians involved a man who took ChatGPT's advice to replace the salt in his diet with sodium bromide. He ended up hospitalized for weeks with bromide toxicity (bromism), a condition that is rarely seen today. Sodium bromide is a chemical compound that was used as a sedative in the early 1900s. It's not a dietary supplement. ChatGPT apparently suggested it anyway.

Google's AI Overview gave dangerous health advice in its early days (dietary recommendations for cancer patients, advice on liver and women's cancer tests), and those were widely publicized. But the problem didn't go away after the headlines did. A recent investigation found that dangerous health advice continues, albeit with less media attention.

The common thread is that AI systems are pattern-matching machines. They're good at finding correlations in training data, but they don't truly understand causality or safety constraints. They can hallucinate sources. They can confidently state things that are wrong. And in healthcare, confidence is dangerous because people tend to trust confident-sounding information.

OpenAI has built some guardrails. They trained the model with physician feedback. They're not claiming diagnosis capability. But guardrails aren't foolproof. They're just bumpers in a bowling alley. Eventually, someone's going to find a way to knock the ball through the side.

The question isn't whether ChatGPT Health will eventually give dangerous advice. It probably already has, just not at the scale that triggers news coverage. The question is how OpenAI and regulators respond when it does.

The Regulatory Vacuum: Who's Actually Responsible?

Here's a genuinely unsettling aspect of ChatGPT Health: it exists in a massive regulatory gray zone.

Traditional medical software is regulated by the FDA. If you build a diagnostic tool, software that interprets medical images, or anything else that makes medical claims, the FDA has jurisdiction. There are approval processes. There are clearance standards. There's accountability.

But ChatGPT Health isn't claiming to diagnose. It's claiming to "help you understand" diagnostic results, "prepare for" appointments, "get advice on" diet and workouts. These sound like diagnosis and treatment, but OpenAI's legal team has probably spent months figuring out how to describe them in ways that technically avoid FDA jurisdiction.

This matters because it means there's no regulatory body systematically reviewing ChatGPT Health's outputs before it's used by millions of people. The FDA didn't have to sign off. No government agency tested it. There's no formal approval process or ongoing monitoring.

OpenAI is self-regulating. They have a responsible AI team. They got physician feedback. But self-regulation is weaker than external regulation because the company has financial incentives to launch features quickly.

The FDA has made some attempts to address this. They've issued guidance on AI in healthcare. They're working on frameworks. But the guidance doesn't have the force of law, and it's unclear whether ChatGPT Health would fall under their jurisdiction anyway.

Meanwhile, in the United Kingdom, the Medicines and Healthcare products Regulatory Agency (MHRA) is taking a different approach, working with tech companies to understand AI health tools before they launch at scale. But even that is reactive, not proactive.

The honest answer is: we have no idea who's actually responsible if ChatGPT Health gives someone bad advice that results in harm. Is it OpenAI? b.well? The user? The physician who didn't catch the bad advice? The liability question is completely unresolved.

How Doctors Actually Feel About This (It's Complicated)

I've talked to a few physicians about ChatGPT Health, and the consensus is surprisingly nuanced. They're not uniformly against it.

Most are skeptical but open. They see potential value in a tool that could help patients understand their results and come to appointments more prepared. That's genuinely useful from a clinical workflow perspective. A patient who's spent 30 minutes understanding their A1C levels before the appointment is a more informed patient who asks better questions.

But they're also frustrated. The frustration comes from a place of liability concern and autonomy loss. If a patient says "ChatGPT told me to stop taking my medication because it said the side effects outweigh benefits," the doctor now has to spend time convincing them otherwise. ChatGPT just created work.

There's also the concern about knowledge asymmetry. A tool trained on internet text about healthcare isn't the same as a tool trained on medical literature and clinical studies. ChatGPT is probably right 85-90% of the time on straightforward health questions. But that 10-15% error rate is multiplied by millions of users and millions of conversations.
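To see why a small error rate still matters at this scale, here is a back-of-the-envelope sketch in Python. It treats the rough 85-90% accuracy guess above as if it were a measured rate, assumes each answer is wrong independently of the others, and borrows the 230 million weekly figure as a stand-in for question volume (that figure counts people, so one question per person is itself an assumption). None of these numbers are measured error rates for ChatGPT Health.

```python
def expected_errors(volume: int, accuracy: float) -> int:
    """Expected number of wrong answers if each answer is independently
    wrong with probability (1 - accuracy), rounded to a whole number."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return round(volume * (1.0 - accuracy))


# Assumption: one health question per weekly user.
WEEKLY_QUESTIONS = 230_000_000

for accuracy in (0.85, 0.90):
    wrong = expected_errors(WEEKLY_QUESTIONS, accuracy)
    print(f"accuracy {accuracy:.0%}: ~{wrong:,} wrong answers per week")
```

Under these assumptions, even the optimistic end works out to about 23 million wrong answers a week, and the pessimistic end to about 34.5 million.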

One physician I spoke to made a good point: "The problem isn't that it's bad. The problem is that it's good enough to be dangerous. If it was obviously wrong, people wouldn't trust it. But it's right often enough that people do."

That's the core of the physician skepticism. Not that ChatGPT Health is terrible. That it's plausibly good enough that patients will believe it, and that's a liability problem.

ChatGPT Health improves understanding of A1C and reduces anxiety but may complicate doctor communication and pose medication safety risks. Estimated data.

The Insurance Company Question: What Happens to Your Data?

Here's something that hasn't gotten enough attention: insurance implications.

If you connect your medical records to ChatGPT Health, you're showing an AI system your complete healthcare history, including diagnoses, procedures, prescriptions, and costs. That data is theoretically protected by privacy laws, but insurance companies have legitimate interests in understanding patient health patterns.

OpenAI says they don't share your data with insurance companies. But they also haven't ruled out future partnerships. And even if they don't directly share data, pattern-matching from aggregate de-identified data could reveal health trends that insurers might be interested in.

This creates an awkward situation: you're voluntarily giving your health data to a company that might eventually sell insights to insurers (even anonymized), who might use those insights to adjust your premiums or coverage.

I'm probably being paranoid, but I'm also not. This is how these things usually work. Facebook started as a harmless social network. Over time, they discovered they could monetize your data. OpenAI is positioning itself as a healthcare company. Over time, they might discover they can monetize healthcare data.

The responsible thing would be explicit, clear commitments: we will never sell healthcare data, we will never create data products for sale to insurers, we will never use your healthcare information for any purpose other than improving your conversation. OpenAI hasn't made these commitments.

The Workflow Integration Question: How Does This Actually Help?

Let me try to articulate what a genuinely useful ChatGPT Health interaction looks like.

Scenario: You're a 55-year-old with prediabetes. You got bloodwork back showing an elevated A1C (let's say 5.8%). You have questions, but your doctor's appointment isn't for two weeks.

Without ChatGPT Health: You Google "A1C 5.8 what does it mean." You find WebMD, which tells you something scary-sounding. You panic. You try to figure out if you need medication. You don't sleep well.

With ChatGPT Health: You upload your bloodwork. ChatGPT sees your A1C, your fasting glucose, your weight, your medications, your activity level, and your diet (if connected to MyFitnessPal). It explains: "Your A1C is in the prediabetic range. Based on your current activity level from your Apple Watch data and your diet patterns, here are some evidence-based modifications that might help." It shows you what A1C trending looks like in prediabetic populations who adopt specific lifestyle changes. You feel less panicked and more empowered.

That's genuinely valuable. That's the promise.
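The first sentence of that ideal answer is the easy part: placing an A1C value in a category is a fixed threshold check. The sketch below uses the American Diabetes Association cutoffs (below 5.7% normal, 5.7-6.4% prediabetes, 6.5% or above in the diabetes range, which clinicians usually confirm with repeat testing). The function name is hypothetical and this is not how ChatGPT Health works internally; it only illustrates that the categorization is deterministic, while the personalized advice layered on top is where errors can creep in.

```python
def classify_a1c(a1c_percent: float) -> str:
    """Map an HbA1c value (in %) to an ADA diagnostic category.

    Cutoffs: below 5.7 is normal, 5.7 to 6.4 is prediabetes,
    6.5 or above is the diabetes range (usually confirmed by repeat testing).
    """
    if a1c_percent < 0:
        raise ValueError("A1C cannot be negative")
    if a1c_percent >= 6.5:
        return "diabetes range"
    if a1c_percent >= 5.7:
        return "prediabetes range"
    return "normal range"


for value in (5.4, 5.8, 6.7):
    print(value, "->", classify_a1c(value))
```

The scenario's 5.8% lands in the prediabetes range, matching the explanation above.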

But here's the real-world version: you upload your bloodwork. ChatGPT's explanation is mostly accurate but contains one error that makes you doubt the whole thing. You call your doctor to ask about it. Your doctor hasn't used ChatGPT Health and doesn't know what to expect from it, so the conversation takes longer than it should have. You spend the appointment explaining what ChatGPT told you instead of focusing on your actual health concerns.

Or worse: you upload your bloodwork, ChatGPT gives you advice that's 90% accurate but 10% wrong about a medication interaction, you don't catch the error, you adjust your meds based on the AI recommendation, and now you have a problem.

The value is there, but it's fragile. It depends on the user understanding the limitations, the doctor understanding what they're dealing with, and the AI system not making errors. All three of those are assumptions that often don't hold.

Mental Health Conversations at Scale: Why This Worries Me

I keep coming back to the mental health thing because it's the part that makes me genuinely uncomfortable.

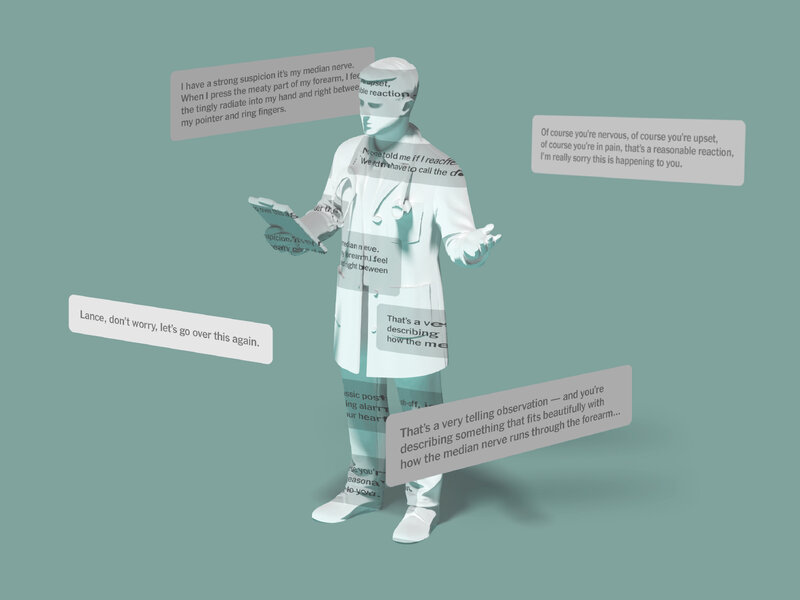

Someone experiences anxiety or depression. They open ChatGPT at midnight because they can't sleep and they're spiraling. They describe their thoughts to the AI. ChatGPT, trained to be helpful and empathetic, engages. It validates their concerns. It asks clarifying questions. It provides coping strategies.

On the surface, this is helpful. The person feels heard. They get some tools. Maybe they actually do sleep.

But here's the problem: ChatGPT's core training is to be conversational and helpful, and for mental health, that's different from being safe. OpenAI says it directs people in distress toward health professionals, but it hasn't explained how reliably the system recognizes suicidal ideation or when it hands someone off to a crisis line.

OpenAI's response is to let you customize instructions to avoid certain topics. So someone with health anxiety could set ChatGPT to "don't discuss symptoms or medical conditions." But that's a user-side workaround, not a system-side safeguard. It requires the user to anticipate how the AI might harm them, which defeats the purpose of using the AI in the first place.

A real safeguard would be: "This conversation contains indicators of potential self-harm. I'm going to stop responding to follow-up messages and instead provide you with crisis resources." But that's more restrictive, and it means ChatGPT Health would sometimes refuse to engage with people who need help most.

There's a tension between being helpful and being safe, and I'm not sure OpenAI has resolved it.

The record on this is sobering. There have been multiple reported cases of people, including teenagers, attempting suicide after conversations with ChatGPT. Not because ChatGPT told them to, but because, according to those reports, the AI engaged with their suicidal ideation in ways that normalized or reinforced it.

OpenAI mentions this in their blog (vaguely), but they don't commit to specific solutions.

Estimated data shows that while some AI health advice incidents are isolated, others like the 'Pizza Glue' incident have been more widespread.

The Broader Context: Healthcare AI is Moving Fast

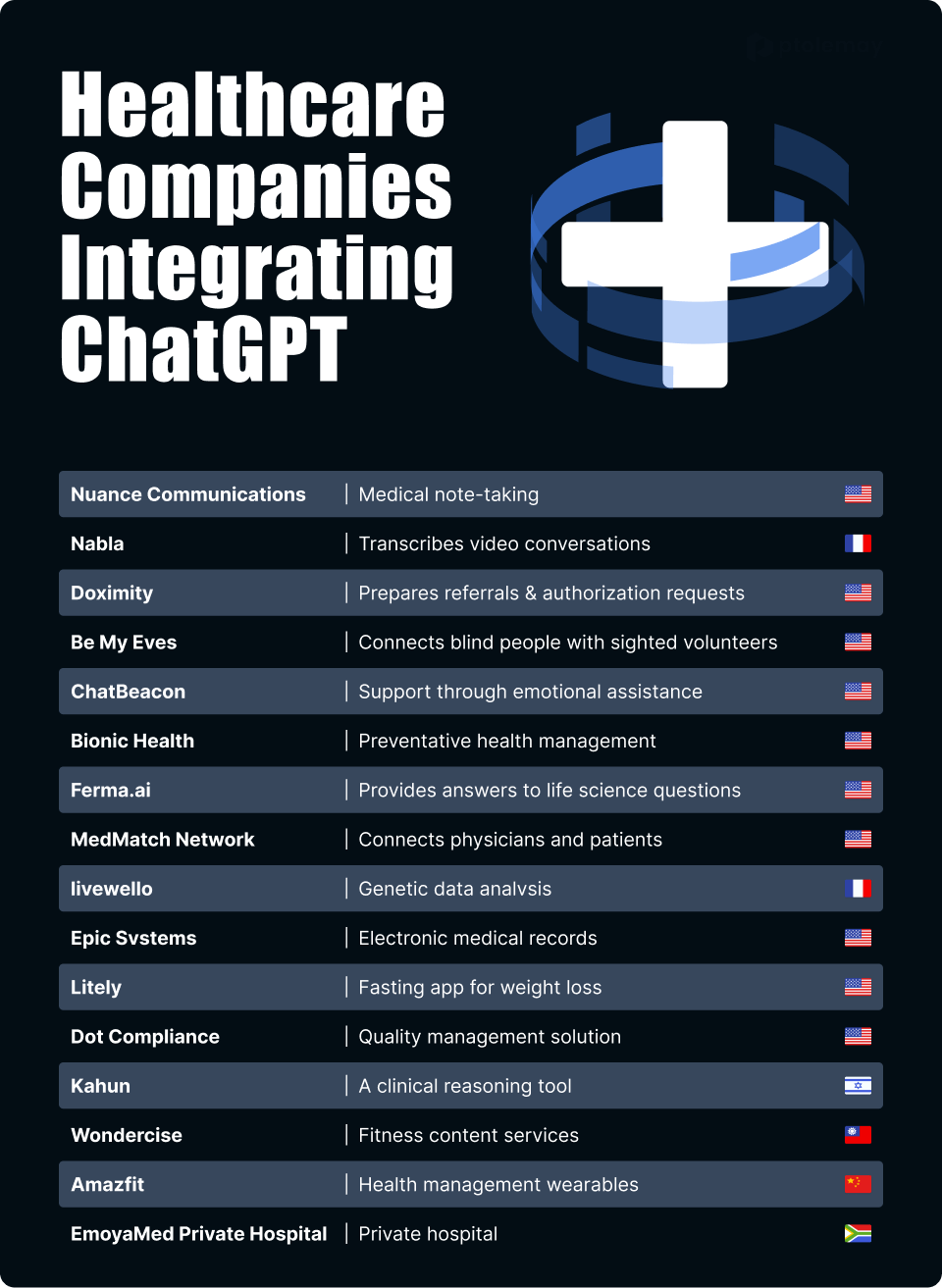

ChatGPT Health isn't happening in isolation. It's part of a broader acceleration of AI in healthcare.

Google is developing healthcare AI tools. Amazon, through AWS, is investing heavily in healthcare AI startups. Microsoft is embedding AI into healthcare workflows. Every major tech company sees healthcare as the next frontier.

This acceleration is happening faster than regulatory frameworks can adapt. The FDA is still figuring out how to regulate previous-generation healthcare AI (image analysis, diagnostic algorithms) while next-generation systems (large language models with access to medical records) are already launching.

There's real innovation happening. Better diagnosis tools. More accessible health information. Systems that could help rural communities with limited healthcare access. But there's also real risk: information overload, false confidence in incorrect advice, privacy concerns, liability questions that nobody has answered.

ChatGPT Health is at the leading edge of this wave. It's ambitious, partially tested, and operating in a regulatory gray zone. That's either the sign of a company pushing boundaries or a company moving without sufficient oversight. Probably both.

What's Actually New About ChatGPT Health vs. Regular ChatGPT

Let me be precise about what changed with this launch.

Before: ChatGPT could answer health questions based on what you typed. It had no context about your actual medical history. It was giving you information based on your description.

Now: ChatGPT can see your actual medical records, lab results, clinical history, fitness data, diet tracking, and medication list. It's using real data instead of your description of data.

This is a meaningful difference. It means responses can be more specific and contextualized. It also means there's more data at stake and more potential for personalized harm (if the advice is wrong, it's wrong specifically for you).

The separate chat history and memory feature is new. You get a dedicated space for health conversations that doesn't mix with your regular ChatGPT usage. That's a privacy consideration (your health stuff is separated) but also a UX consideration (you build up context in a health-specific conversation thread).

The integration with b.well is new. That's the technical layer that makes connecting to medical records possible. Previously, you'd have to describe your medical records to ChatGPT. Now you can authorize direct access.

What's not new: the underlying language model. It's the same family of GPT models that powers regular ChatGPT (whichever version your account is using). The improvements come from context, not from a fundamentally different system.

The Insurance Angle: Economics of AI Healthcare

Here's the economic incentive that nobody's talking about enough: insurance companies should be terrified and excited about this at the same time.

Terrified because ChatGPT Health could reduce unnecessary doctor visits. If patients can understand their lab results from ChatGPT instead of scheduling a visit, that's fewer billable encounters. Fewer encounters means lower insurance payouts.

Excited because ChatGPT Health could help with preventive care and early intervention. If someone with prediabetes gets early guidance from ChatGPT and modifies their diet, they might never develop type 2 diabetes. That saves the insurance company money long-term.

The economics play out differently depending on the scenario. For acute problems (explaining an existing diagnosis), ChatGPT could reduce healthcare utilization. For preventive problems (catching early disease), it could improve outcomes.

Insurance companies are probably already thinking about how to incorporate AI health tools into their coverage models. They might eventually say, "You get a discount if you use our preferred AI health tool for preventive monitoring." Or they might require it.

That's not necessarily bad, but it creates dependencies. Your health AI becomes tied to your insurance company, which has financial incentives that don't perfectly align with your best health outcomes.

An estimated 70% of ChatGPT Health interactions occur outside normal clinic hours, highlighting its role in providing health information when traditional services are unavailable.

Looking Forward: What's Coming Next

If I had to guess where this goes, I'd guess: integration, expansion, and eventual regulation.

Integration means ChatGPT Health becomes more deeply embedded in healthcare workflows. You won't use ChatGPT separately from your health provider. Your doctor will have ChatGPT Health as a tool in their EHR. Your insurance company will have integrations. Your pharmacy might use it to flag potential interactions.

Expansion means more data sources, more capabilities, and more use cases. Instead of just understanding results, ChatGPT Health might eventually manage medication reminders, help coordinate between multiple providers, or integrate with wearable devices for real-time health monitoring.

Regulation is inevitable but slow. The FDA will eventually issue guidance. Congress might pass legislation. We'll see privacy regulations that specifically address AI health tools. It'll take years, and in the meantime, the technology will keep advancing.

The open questions are: Will regulation come before or after a high-profile harm? Will it be effective or mostly theater? Will companies self-regulate or fight regulation?

Based on history with other tech platforms, I'm not optimistic. Usually regulation comes after enough people are harmed that politicians feel pressure to act. But I hope ChatGPT Health proves me wrong.

The Honest Assessment: Is This Good or Bad?

I keep trying to land on a final judgment, and I can't, because it's genuinely complicated.

The good: OpenAI has built something with real potential to increase health literacy and democratize health information. For people in rural areas with limited healthcare access, this could be genuinely valuable. The physician feedback integration shows they took safety seriously. The separate chat history shows they thought about privacy. This isn't a reckless launch.

The bad: It's operating in a regulatory vacuum, it doesn't fully address mental health risks, it has a track record of giving dangerous advice on health topics, and nobody's entirely sure how the data will be used long-term. The liability questions are unresolved. The safeguards are good but not foolproof.

The realistic: ChatGPT Health will probably help a lot of people understand their health better, and it will probably harm some people by giving them bad advice they act on. Both things will be true. The question is whether the helping outweighs the harming, and that's hard to answer without long-term outcome data.

If I were using ChatGPT Health, here's what I'd do: use it as a learning tool before doctor visits, not as a substitute. Check anything important against official medical sources. Don't make medication changes based on ChatGPT recommendations alone. Never use it as a crisis resource if I'm experiencing a mental health emergency. Connect medical records but understand the privacy implications.

In other words: it's useful, but it needs healthy skepticism.

Practical Implementation: How to Actually Use This Safely

If you're considering using ChatGPT Health, here's the practical framework for doing it safely.

First, understand the scope. This is designed for health literacy and preparation, not diagnosis or treatment decisions. Use it to understand your existing diagnosis, not to self-diagnose.

Second, connect data deliberately. Don't connect everything just because you can. Connect medical records if you have serious chronic conditions that benefit from context. Maybe connect Apple Health if you're actively working on fitness. Don't connect My Fitness Pal just for entertainment.

Third, verify important information. If Chat GPT tells you something that changes how you think about your health, verify it with your doctor or a medical reference. Don't take one AI opinion as definitive.

Fourth, save it for non-urgent questions. That lab result you want to understand before your appointment in two weeks? Great Chat GPT Health question. That strange symptom you have right now that you're worried might be serious? That's a question for a doctor.

Fifth, recognize the limits. Chat GPT is good at explaining concepts, but it's not good at novel situations. If your health situation is unusual or complex, AI advice becomes less reliable.

Sixth, if you have health anxiety or mental health conditions, be careful. The tool might amplify your anxiety rather than soothe it. There's a real risk of spiraling.

Seventh, set clear privacy expectations. Read OpenAI's privacy policy for Chat GPT Health specifically. Understand how long data is stored, who can access it, and what your rights are if you want to delete it.

None of this is complicated, but it requires intentionality. Most people won't do this. Most people will use it carelessly, which is where the problems start.

The Bigger Picture: AI as Healthcare Infrastructure

Chat GPT Health is a symptom of something larger: we're in the process of rebuilding healthcare infrastructure around AI systems.

This isn't happening because we all sat down and decided it was a good idea. It's happening because AI is useful and because companies are building AI products faster than society can think through implications.

When you zoom out, you see that every part of healthcare is getting an AI layer: diagnosis, treatment planning, insurance approval, drug discovery, clinical trials, patient communication. In 10 years, your healthcare experience will likely be heavily mediated by AI systems. Chat GPT Health is just one piece of that.

The question is whether we'll have the governance infrastructure ready by the time the AI infrastructure is built. Right now, we don't. We're building the roads without the traffic laws.

OpenAI could be the good actor that self-regulates well and builds strong safeguards. Or they could be the actor that pushes boundaries and stays ahead of oversight until harm happens. Probably some of both.

The role of regulators, insurance companies, hospitals, and patients is to push for governance that matches capability. Not to shut down innovation, but to ensure it's done safely.

Conclusion: A Technology That's Arrived Before We're Ready

Chat GPT Health is here. It's live. Millions of people are using it. The question isn't whether it should exist. It does.

The real question is how we collectively ensure it's used safely, so that its benefits outweigh its harms.

That requires several things:

- Clearer FDA guidance on what counts as medical software and what doesn't

- Transparency from OpenAI on how medical data is used

- Better safeguards around mental health conversations

- Long-term outcome studies on whether Chat GPT Health actually improves health outcomes

- Standards for what health claims AI systems can and can't make

- Better education for users about AI limitations

None of that is happening right now in any systematic way. It's ad hoc and reactive.

If I'm being honest, Chat GPT Health represents the best and worst of how we develop transformative technology. The best: genuine innovation trying to solve real problems (health literacy, access, understanding). The worst: moving faster than we have governance frameworks, trusting self-regulation, and hoping harm doesn't happen before oversight catches up.

I don't think OpenAI is malicious. I think they're building something that could genuinely help people. But I also think they're moving faster than it's safe to move, and we're going to learn things about the risks that we could have learned earlier if we'd slowed down.

Chat GPT Health is useful. It's also incomplete. It's the first generation of something that will evolve. Use it thoughtfully. Expect it to change. Hold companies and regulators accountable when it doesn't work well.

And if you're experiencing a health crisis? Call your doctor. Not Chat GPT.

FAQ

What is Chat GPT Health and how does it differ from regular Chat GPT?

Chat GPT Health is a specialized, sandboxed tab within Chat GPT designed specifically for health-related questions. Unlike regular Chat GPT, it can access your medical records, lab results, fitness data, and wellness app information through partnerships with healthcare data platforms and health apps. This means you get personalized health guidance based on your actual health data rather than only the text descriptions you provide. Separate chat history and memory keep your health conversations distinct from the rest of your Chat GPT usage.

How do I connect my medical records to Chat GPT Health?

Chat GPT Health partners with b.well, a healthcare data aggregation platform with relationships to approximately 2.2 million healthcare providers. When you access Chat GPT Health, you'll have options to authorize connections to your medical records from providers that use electronic health record systems such as Epic, Cerner, or Athenahealth. You can also connect Apple Health for fitness and biometric data, My Fitness Pal or Weight Watchers for nutrition tracking, and Function for lab test insights. Each connection requires your explicit authorization, and you can disconnect at any time. The key is that you control which data sources connect and which remain separate.

Is Chat GPT Health regulated by the FDA like other medical software?

Chat GPT Health operates in a regulatory gray zone. OpenAI has carefully positioned it as a tool to "help you understand" health information rather than to diagnose or treat, which technically distinguishes it from traditional medical software that the FDA regulates. However, the distinction between explaining diagnostic results and providing medical advice is blurry. The FDA has issued guidance on AI in healthcare, but it's unclear whether current guidance fully addresses large language models like Chat GPT Health. This is an area where regulation is likely coming but hasn't fully arrived yet.

What are the main safety concerns with Chat GPT Health?

Several safety concerns stand out. First, Chat GPT occasionally gives confidently incorrect health advice, as evidenced by cases where it recommended sodium bromide as a salt substitute. Second, mental health safeguards are unclear, and there are documented cases of people experiencing suicidal ideation after Chat GPT conversations. Third, for people prone to health anxiety, the system could amplify that anxiety rather than relieve it. Fourth, there's a gap between OpenAI's claim that the tool isn't for "diagnosis or treatment" and real-world use cases that closely resemble both. Finally, liability remains unresolved: it's unclear who's responsible if someone follows Chat GPT Health advice that causes harm.

Should I use Chat GPT Health instead of seeing my doctor?

Absolutely not. Chat GPT Health is designed to supplement medical care, not replace it. It's most useful for understanding your existing diagnoses or preparing for doctor appointments, not for making treatment decisions independently. For acute problems, new symptoms, or situations where you need a physical examination, you still need an actual doctor. The tool works best as a learning resource before you talk to your healthcare provider, helping you arrive more informed and with better questions. Think of it as a preparation tool, not a replacement for professional medical judgment.

What happens to my medical data after I connect it to Chat GPT Health?

OpenAI states that your medical records aren't used to train the underlying Chat GPT model, and that data is de-identified for analysis purposes. However, OpenAI hasn't been fully transparent about long-term data storage, potential future partnerships with insurance companies, or uses beyond your current conversations. Your data flows through multiple systems: your healthcare provider's servers, b.well's platform, and OpenAI's infrastructure. Each handoff is a potential privacy risk. Before connecting medical records, review OpenAI's full privacy policy for Chat GPT Health specifically to understand storage duration, access permissions, and your rights to delete data.

How accurate is Chat GPT Health's medical information?

Chat GPT Health underwent testing with over 260 physicians who provided feedback more than 600,000 times across 30 medical specialties, which represents a serious effort toward accuracy. However, accuracy isn't binary. My rough estimate is that the system is right 85-90% of the time on straightforward health questions, but even that error rate becomes significant when multiplied across millions of users and conversations. Accuracy also varies by topic: it's likely stronger at explaining existing diagnoses or standard treatment approaches, and weaker on unusual presentations or complex conditions. Always treat important health information from Chat GPT as a starting point for research or discussion with your doctor, not as definitive.

What should I do if Chat GPT Health gives me mental health advice or I'm experiencing a crisis?

If you're experiencing suicidal thoughts, severe anxiety, depression, or any mental health crisis, use a crisis resource like the 988 Suicide and Crisis Lifeline (call or text 988 in the US) rather than Chat GPT Health. While Chat GPT can provide general mental health information, it's not trained to handle crises appropriately. OpenAI's safeguards for mental health conversations are limited compared to what professional crisis intervention requires. If you have a diagnosed mental health condition, consider whether using Chat GPT Health could amplify anxiety or worsen your condition, and discuss it with your mental health provider before connecting health data.

Who is responsible if Chat GPT Health gives me bad advice that harms me?

This is genuinely unresolved. The liability question involves multiple parties: OpenAI (developed the system), b.well (provided data integration), your healthcare provider (whose data is being analyzed), and possibly the user (who chose to rely on the advice). There's no clear legal framework yet for accountability when an AI health tool operates in the "educational" space rather than claiming to diagnose or treat. If you follow Chat GPT Health advice that harms you, the question of who's legally liable would likely end up in court. This is one area where regulation will need to catch up as AI healthcare tools become more common.

Key Takeaways

- ChatGPT Health allows AI to access medical records and health data, enabling personalized health guidance at scale for 230 million weekly health conversations

- Over 260 physicians provided 600,000+ feedback interactions during development, showing serious medical safety investment, but mental health safeguards remain unclear

- The system operates in a regulatory gray zone without FDA oversight, creating unresolved liability questions when AI health advice causes harm

- 70% of healthcare conversations in ChatGPT occur outside clinic hours, making AI a primary health resource for people without immediate doctor access

- Known cases of dangerous AI advice (ChatGPT suggesting sodium bromide as a salt substitute, Google's AI Overviews recommending glue on pizza) highlight the risk of confident but incorrect recommendations at scale
