Google Pulls AI Overviews From Medical Searches: What Happened [2025]

Introduction: When AI Health Advice Becomes Dangerous

Picture this: You wake up with liver pain and do what millions do every day. You open Google and type "what is the normal range for liver blood tests?" Instead of traditional search results, you get an AI overview synthesizing information into a neat, confident answer. Except the answer is completely wrong. And you might be about to make a health decision based on false information.

This isn't hypothetical. It happened to thousands of people. In March 2025, The Guardian published an investigation revealing that Google's AI Overviews feature was dispensing dangerously misleading medical guidance. When experts reviewed specific queries, they found recommendations that were medically backwards, potentially harmful, and in at least one case, life-threatening.

Google's response? Disable the feature for those medical queries. No major announcement. No apology. Just quietly removing AI overviews from health-related searches while the tech community asks harder questions about whether AI should be summarizing medical information at all.

This story matters beyond Google. It's about trust, reliability, and what happens when we let machine learning systems make decisions in domains where mistakes kill people. It's about the gap between what these systems can do and what they should do. And it's about a growing realization in the AI industry: speed and scale don't matter if the foundation is fundamentally unreliable.

Let's break down what happened, why it happened, and what it means for the future of AI in healthcare and beyond.

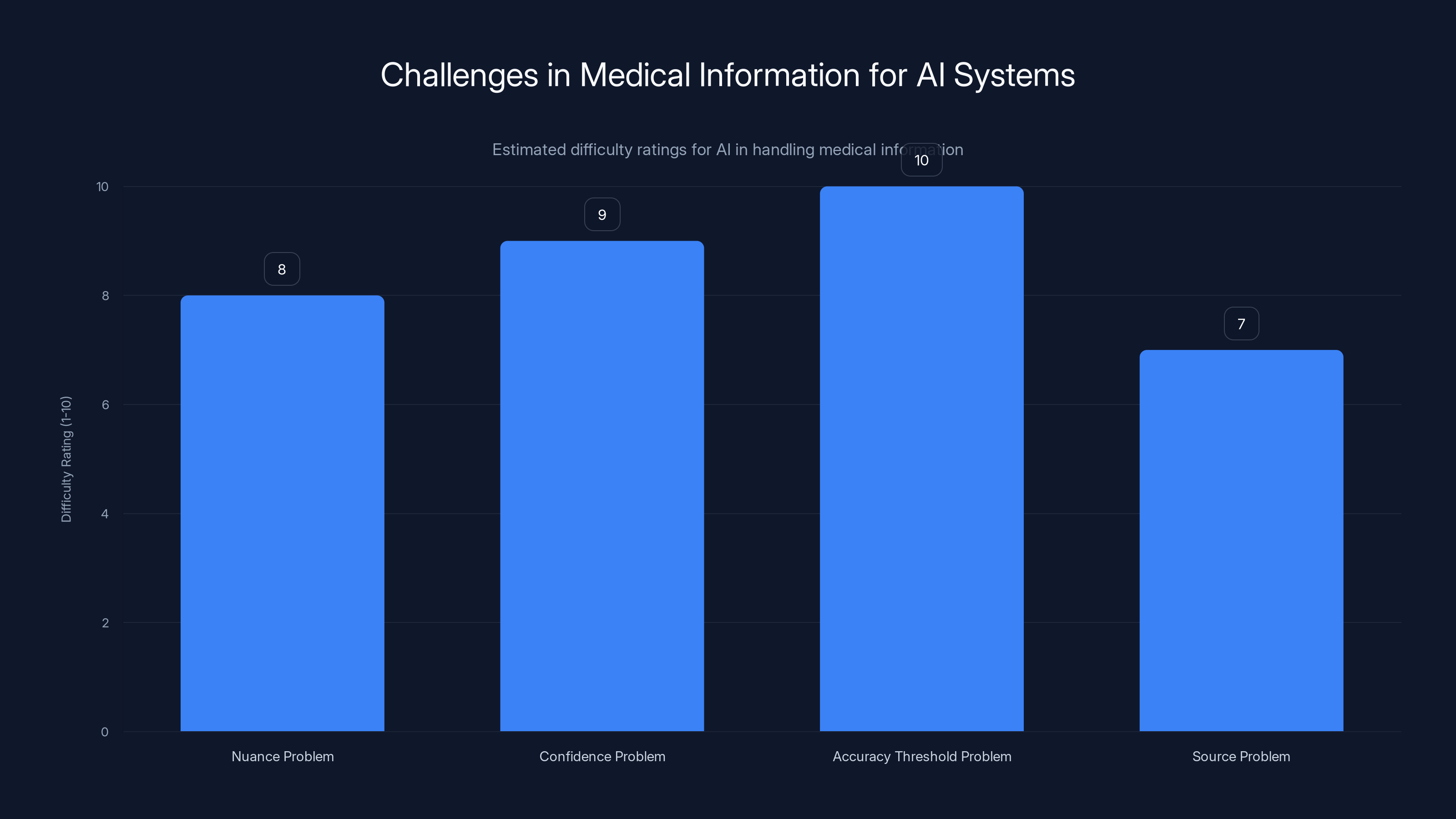

The 'Accuracy Threshold Problem' is rated as the most challenging for AI systems, highlighting the critical need for near-perfect accuracy in medical information. (Estimated data)

TL;DR

- Dangerous Medical Misinformation: Google's AI Overviews gave medically backwards advice on pancreatic cancer diets and incorrect liver function test information, prompting expert concern

- Swift Removal: Within weeks of The Guardian's investigation, Google disabled AI overviews for medical searches

- Broader Pattern: This is one of several high-profile AI Overviews failures, alongside the "glue on pizza" incident, and the feature now faces lawsuits

- Trust Crisis: The incident highlights fundamental challenges in deploying large language models for high-stakes domains

- Industry Lesson: Speed to market and "move fast and break things" don't work when breaking things means giving bad medical advice

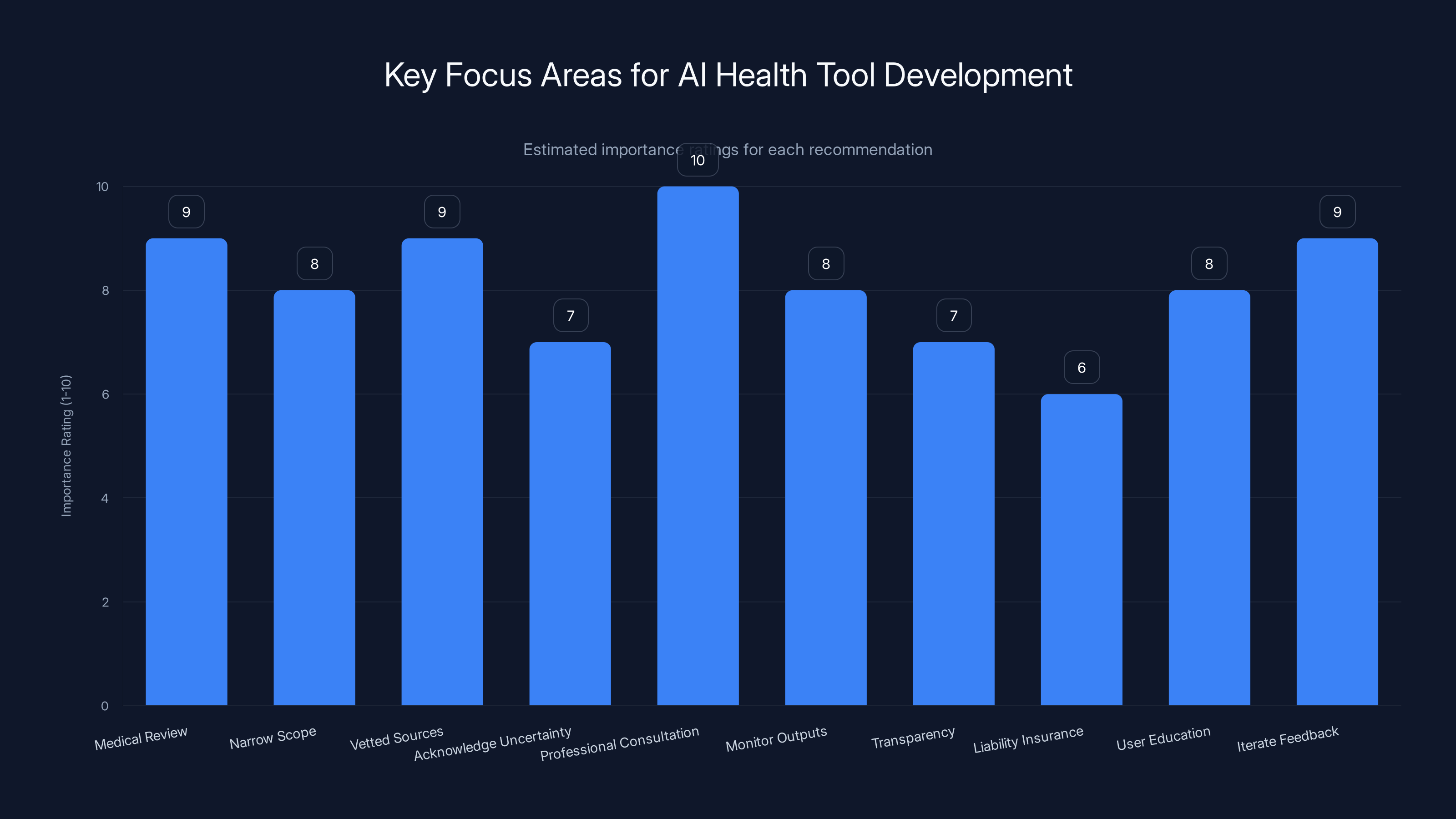

This bar chart estimates the importance of various recommendations for companies developing AI health tools. 'Professional Consultation' is rated highest, emphasizing the critical need for human oversight.

The Guardian's Investigation: How Medical AI Went Wrong

The investigation that triggered Google's response was thorough and damning. Researchers didn't find edge cases or rare glitches. They found a systematic problem: when people asked medical questions, the AI overviews were synthesizing information in ways that contradicted established medical guidance.

Let's look at the pancreatic cancer example because it illustrates just how wrong things went. A person searching for dietary recommendations for pancreatic cancer would see an AI overview advising them to avoid high-fat foods. That's not just slightly off. That's the opposite of what oncologists recommend. Pancreatic cancer patients typically need high-calorie, high-fat diets because the disease and its treatments severely impair nutrition absorption. Telling someone to do the exact opposite isn't a difference of opinion—it's medically dangerous.

The liver function test example was equally concerning. AI overviews provided incorrect reference ranges for tests like AST and ALT levels. Someone with serious liver disease could misread these results and think they're healthy. They might delay seeing a doctor or skip treatment altogether. That's not a minor mistake.

What's particularly troubling is that these weren't hallucinations in the technical sense. The AI didn't completely fabricate numbers or sources out of thin air. Instead, it synthesized real information in ways that created false impressions. It pulled accurate information about high-fat diets in some contexts and applied it incorrectly to pancreatic cancer. It found real reference ranges for liver tests but attributed them incorrectly or presented them without proper context.

This is actually harder to catch than outright fabrication. A completely made-up statistic might ring alarm bells. But information that's partially correct but contextually wrong? That's the kind of mistake that slips past both algorithms and humans.

The "Glue on Pizza" Problem: A Pattern of Errors

The medical misinformation wasn't Google's first major AI Overviews embarrassment. Months earlier, the feature told people to add glue to pizza to make cheese stick better. Before that, it suggested eating rocks for mineral intake. These aren't sophisticated medical errors—they're basic reasoning failures that would seem obvious to anyone thinking critically.

But here's the thing about AI systems: they're very good at pattern matching and confident in delivering answers regardless of whether those answers make sense. When someone asks how to make cheese stick to pizza, the AI finds websites discussing cheese, stickiness, recipes, and glue. It synthesizes this into a recommendation that sounds authoritative but is completely unhelpful.

The difference between "glue on pizza" and "avoid high-fat foods with pancreatic cancer" is that the latter sounds medically plausible. It doesn't trigger the same immediate skepticism. Someone might actually follow this advice. They might share it with friends. It might affect treatment decisions.

These failures point to a fundamental limitation: large language models are trained to predict the next word based on patterns in training data. They're not trained to understand whether the information they're synthesizing is medically accurate. They don't have built-in safeguards for high-stakes domains. They just follow statistical patterns that happen to work well for casual conversation.

What's become clear is that Google deployed this feature broadly without adequate testing in sensitive domains. The company likely validated the feature on general knowledge questions, casual queries, and straightforward topics. Medical information requires different validation standards. You can't just let a machine learning system generate health advice at scale without rigorous evaluation by medical professionals.

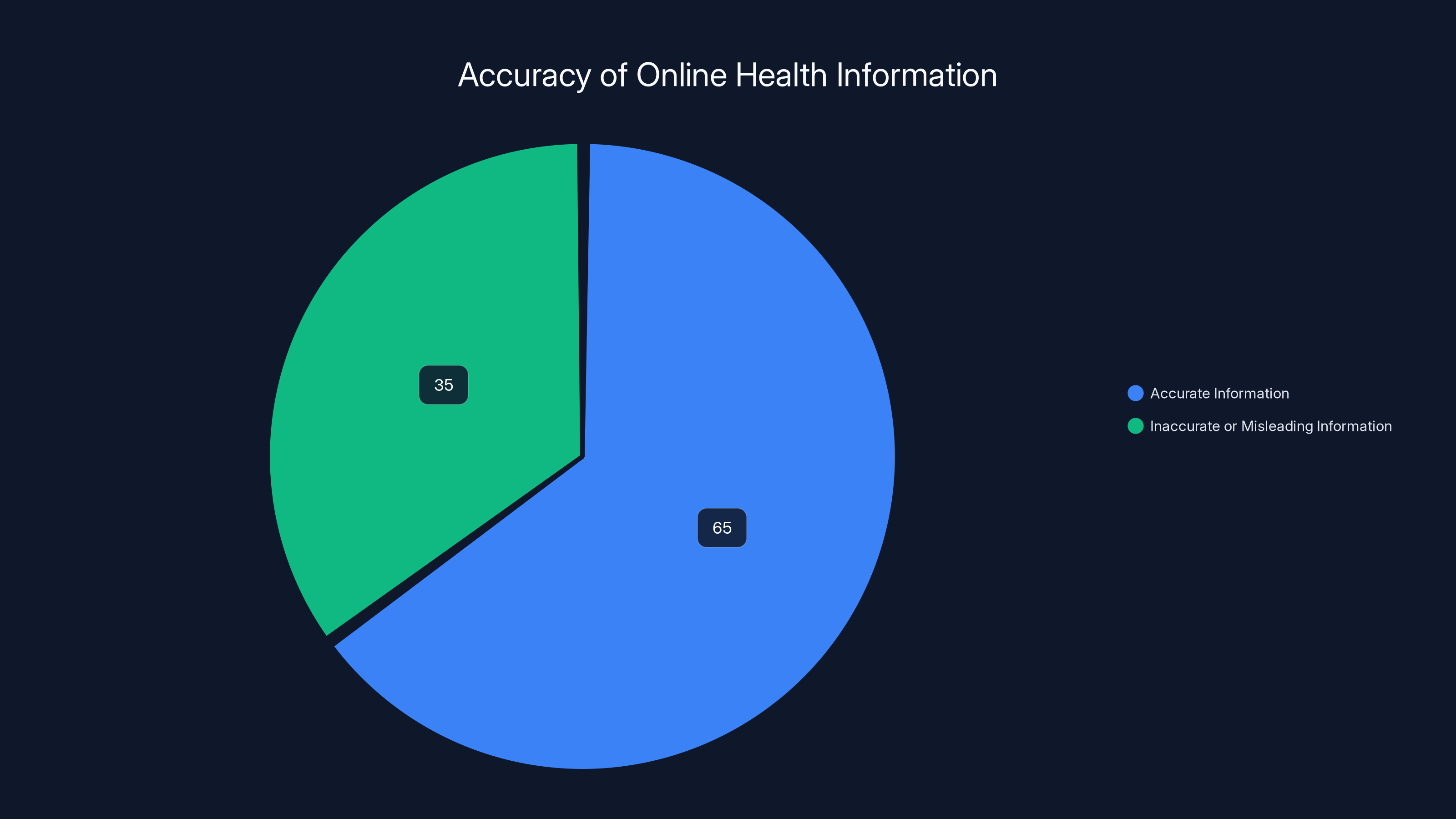

Studies suggest that approximately 35% of health information online is inaccurate or misleading, posing challenges for AI systems trained on such data. Estimated data.

Why Medical Information Is Uniquely Difficult

Medicine is hard. Not just for AI, but for everyone. Even doctors make mistakes. Medical knowledge is nuanced, context-dependent, and constantly evolving. There are legitimate debates about treatment approaches. Different patients need different advice based on their specific situations.

For AI systems specifically, medicine presents several unique challenges:

The Nuance Problem: Medical guidance depends heavily on context that's not always explicit in a search query. "Should I take antibiotics?" requires knowing what infection you have, your medical history, your current medications, and your allergies. An AI system can't ask follow-up questions like a doctor would.

The Confidence Problem: Large language models are trained to be confident. They produce answers formatted as if they're definitive. But medicine is full of "it depends" and "consult your doctor." The format of AI responses—clean, authoritative summaries—is fundamentally mismatched with medical uncertainty.

The Accuracy Threshold Problem: With casual information, 90% accuracy is fine. If Google tells you about the history of pizza, a small error isn't catastrophic. With medical information, 99% accuracy might not be good enough. That remaining 1% could affect treatment decisions. The acceptable error rate for health advice is essentially zero.

The Source Problem: Medical information on the internet varies wildly in quality. Legitimate medical journals sit next to health blogs. Personal anecdotes share space with peer-reviewed research. When an AI system synthesizes all of this, it has no inherent way to weight sources by reliability. It can't distinguish between a study published in The Lancet and a wellness blog.
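The accuracy threshold point is easier to see with a little arithmetic. The sketch below is illustrative only: the query volume is a made-up round number, not a figure from Google or The Guardian, and `expected_errors` is a hypothetical helper.

```python
def expected_errors(queries: int, accuracy: float) -> float:
    """Expected number of wrong answers for a given volume and accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return queries * (1.0 - accuracy)


# Hypothetical volume: one million health queries answered by a summary.
QUERIES = 1_000_000

for accuracy in (0.90, 0.99, 0.999):
    wrong = expected_errors(QUERIES, accuracy)
    print(f"{accuracy:.1%} accurate -> about {wrong:,.0f} wrong answers")
```

Even at 99% accuracy, this hypothetical volume still leaves about ten thousand wrong answers, which is why an error rate that is tolerable for trivia is not tolerable for health advice.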

Google's Response: Too Late, But Still Important

Google's official response was muted. The company declined to comment specifically about why it removed the feature for medical queries. But the action spoke clearly: AI Overviews couldn't be trusted for health information, at least not in their current form.

This raises questions about deployment strategy. Why wasn't this testing done before launch? Why did it take an external investigation to trigger the removal? Why hasn't Google issued a public apology or explained what went wrong?

The silence suggests internal friction. Product teams probably pushed for broad deployment to compete with ChatGPT and other AI services. Safety teams probably raised concerns. But the product shipped anyway. When things went wrong, the response was damage control, not transparency.

This pattern repeats across the AI industry. Companies launch features fast, fix problems reactively, and avoid public discussion of failures. This works fine for features that are merely inconvenient when they fail. But it doesn't work for high-stakes applications.

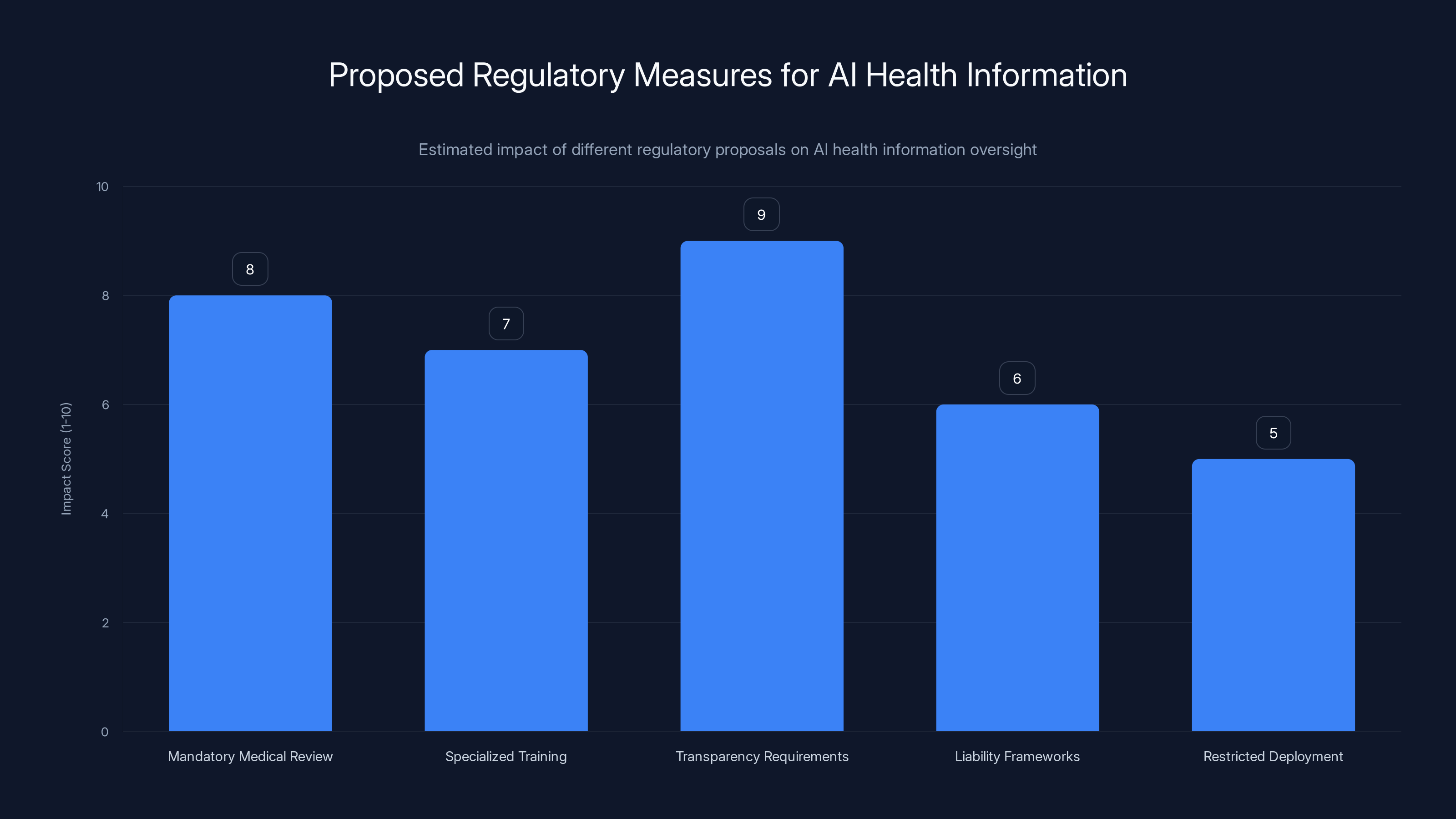

Transparency requirements are estimated to have the highest impact on ensuring safe AI health information, while restricted deployment might have the least impact. Estimated data.

The Liability Question: Who's Responsible?

One question that hasn't been fully answered: if someone followed Google's advice and suffered harm, who bears responsibility? Google? The websites Google cited? The AI model itself?

Legally, it's murky. Search engines have traditionally had limited liability for content they link to. But AI Overviews don't just link to content—they synthesize it into new statements. If Google's AI system says something false about a medical condition, Google is arguably responsible for that statement in a way it wouldn't be for simply linking to a false claim.

Multiple lawsuits have already been filed against Google over AI Overviews. These aren't resolved, but they point to growing pressure on the company to ensure accuracy or withdraw the feature entirely from sensitive domains.

This legal uncertainty has downstream effects on the entire industry. If Google faces significant liability for AI-generated medical misinformation, it sets a precedent. It creates incentive for other companies to be more cautious about health-related AI applications. Or it could push the industry toward demanding much stronger validation before launch.

Why AI Systems Struggle With Medical Information

To understand why Google's AI Overviews failed at medical information, we need to understand how these systems actually work. They don't understand medicine in the way a doctor does. They don't have intuition or accumulated experience. They work through statistical patterns.

When trained on the internet, these models learn correlations. They learn that certain words tend to appear together. "Pancreatic cancer" often appears near "nutrition" and "diet." "High-fat foods" appear near "weight gain" and "avoid." The model might connect these patterns and generate text that sounds medically authoritative but is factually backwards.

The model has no internal representation of medical concepts. It doesn't know what pancreatic cancer is or why high-fat diets matter for these patients. It just knows statistical patterns. And sometimes those patterns lead to conclusions that contradict ground truth.
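A toy example can show how frequency alone pulls a phrase toward the wrong context. This is a sketch under heavy assumptions: the four-sentence corpus is invented for illustration, and a word-pair counter is far simpler than a real large language model. It only shows the flavor of the problem, where general diet advice outnumbers the one condition-specific sentence.

```python
from collections import Counter, defaultdict

# Made-up corpus: three general-diet sentences and one condition-specific one.
CORPUS = [
    "avoid high-fat foods to lose weight",
    "avoid high-fat foods for heart health",
    "avoid high-fat foods when dieting",
    "pancreatic cancer patients may need high-fat foods",
]


def preceding_word_counts(corpus):
    """Count which word appears immediately before each word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, word in zip(words, words[1:]):
            counts[word][prev] += 1
    return counts


counts = preceding_word_counts(CORPUS)
most_common_context, _ = counts["high-fat"].most_common(1)[0]
print(most_common_context)  # prints "avoid"
```

In this toy corpus, "avoid" precedes "high-fat" three times and "need" only once, so a purely frequency-driven system would pair the phrase with "avoid" no matter which condition the question was about.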

Furthermore, internet text includes a lot of incorrect medical information. Studies suggest that 30-40% of health information online is either inaccurate or misleading. When an AI system is trained to predict text that sounds like internet text, it absorbs these inaccuracies. It learns to generate text that sounds plausible, whether or not it's true.

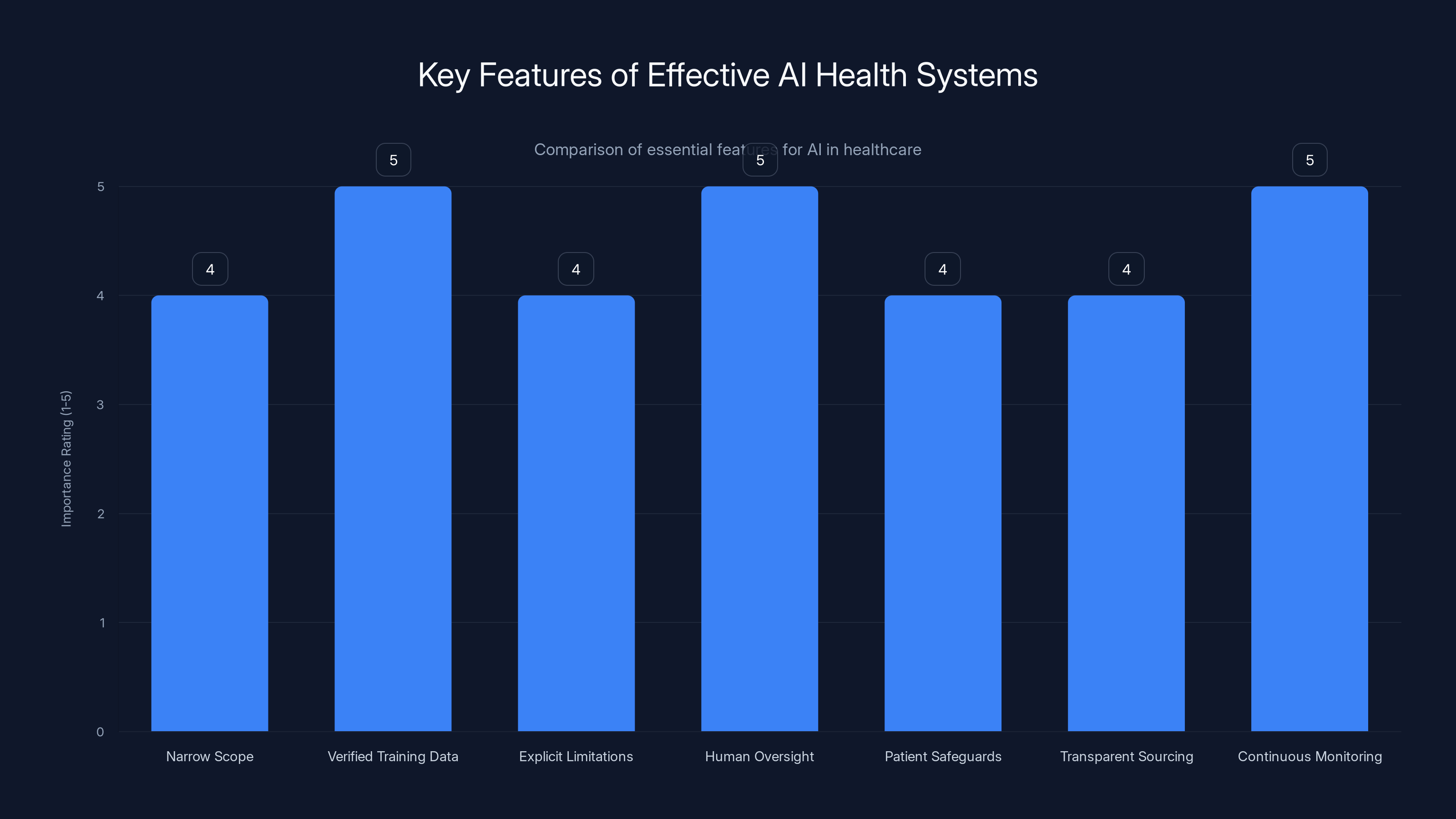

Key features of effective AI health systems include verified training data and continuous monitoring, both rated as highly important. (Estimated data)

The Testing Problem: How This Slipped Through

Google surely tested AI Overviews before launch. So how did dangerous medical misinformation survive that testing? A few possibilities:

Scope Issues: Internal testing might have focused on common queries rather than edge cases. The medical queries that caused problems might not have been in the test set. For every query that was thoroughly evaluated, there might be hundreds that received minimal testing.

Domain Expertise Gap: Testing might have been done by engineers and data scientists without deep medical expertise. They might not have recognized medically backwards recommendations. To a layperson, advice to avoid high-fat foods might sound reasonable without knowing the specific context of pancreatic cancer.

Scale Problem: With millions of possible queries, comprehensive manual testing is impossible. Google likely relied on automated metrics to identify problems. But automated metrics don't measure medical accuracy. They measure things like how many sources agree, how well-sourced the response is, or how coherent it sounds.

Automation Bias: Once a system passes initial testing, there's a tendency to assume it works well at scale. Engineers might not expect failure modes in production that never appeared in testing. But internet queries are unpredictable. They surface edge cases that testing didn't anticipate.

Regulatory Implications: Who Should Oversee AI Health Information?

This incident raises questions about regulation. Should governments restrict AI companies from generating health information? Should there be licensing requirements? Should AI systems be required to include disclaimers?

The challenge is balancing innovation with safety. The internet already contains tons of health information. Some of it's accurate, some of it's not. AI systems can potentially improve on this by synthesizing authoritative sources and providing context. But they can also amplify misinformation by presenting it with false authority.

Some proposals on the table:

Mandatory Medical Review: AI systems generating health information could be required to have that information reviewed by medical professionals before deployment. This would slow innovation but catch dangerous errors.

Specialized Training: Rather than training general-purpose AI models, companies could train specialized models on vetted medical literature only, with additional safeguards against hallucination.

Transparency Requirements: AI systems could be required to cite their sources clearly and acknowledge uncertainty. If the training data disagrees, the system could present multiple perspectives rather than picking one.

Liability Frameworks: Clear legal standards about who's responsible when AI health information causes harm could incentivize companies to be more cautious.

Restricted Deployment: Certain medical queries might be restricted from AI generation entirely, with results instead pointing to authoritative medical websites.
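The restricted deployment proposal could look something like the sketch below. Everything here is an assumption for illustration: the term list is deliberately tiny, keyword matching stands in for what would need to be a clinically reviewed classifier, and `is_medical` and `route` are hypothetical names, not anything Google has described.

```python
import re

# Hypothetical, deliberately small term list for illustration only.
MEDICAL_TERMS = {"cancer", "liver", "blood test", "antibiotics", "symptom", "dosage"}

# Domains of well-known medical organizations, used here as examples.
AUTHORITATIVE_SITES = ["nih.gov", "cdc.gov", "mayoclinic.org", "clevelandclinic.org"]


def is_medical(query: str) -> bool:
    """Return True if the query contains a term starting at a word boundary."""
    text = query.lower()
    return any(re.search(rf"\b{re.escape(term)}", text) for term in MEDICAL_TERMS)


def route(query: str) -> dict:
    """Send medical queries to links only; allow a summary for everything else."""
    if is_medical(query):
        return {"mode": "links_only", "sites": AUTHORITATIVE_SITES}
    return {"mode": "ai_summary", "sites": []}


print(route("what is the normal range for liver blood tests?")["mode"])
print(route("how to make cheese stick to pizza")["mode"])
```

The design choice is to fail closed: when a query looks medical, the system gives up the summary rather than risk a wrong one.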

Different countries will likely take different approaches. The EU, known for strict tech regulation, might mandate medical review. The US might rely more on liability and market pressure. Developing countries might have fewer resources to oversee this.

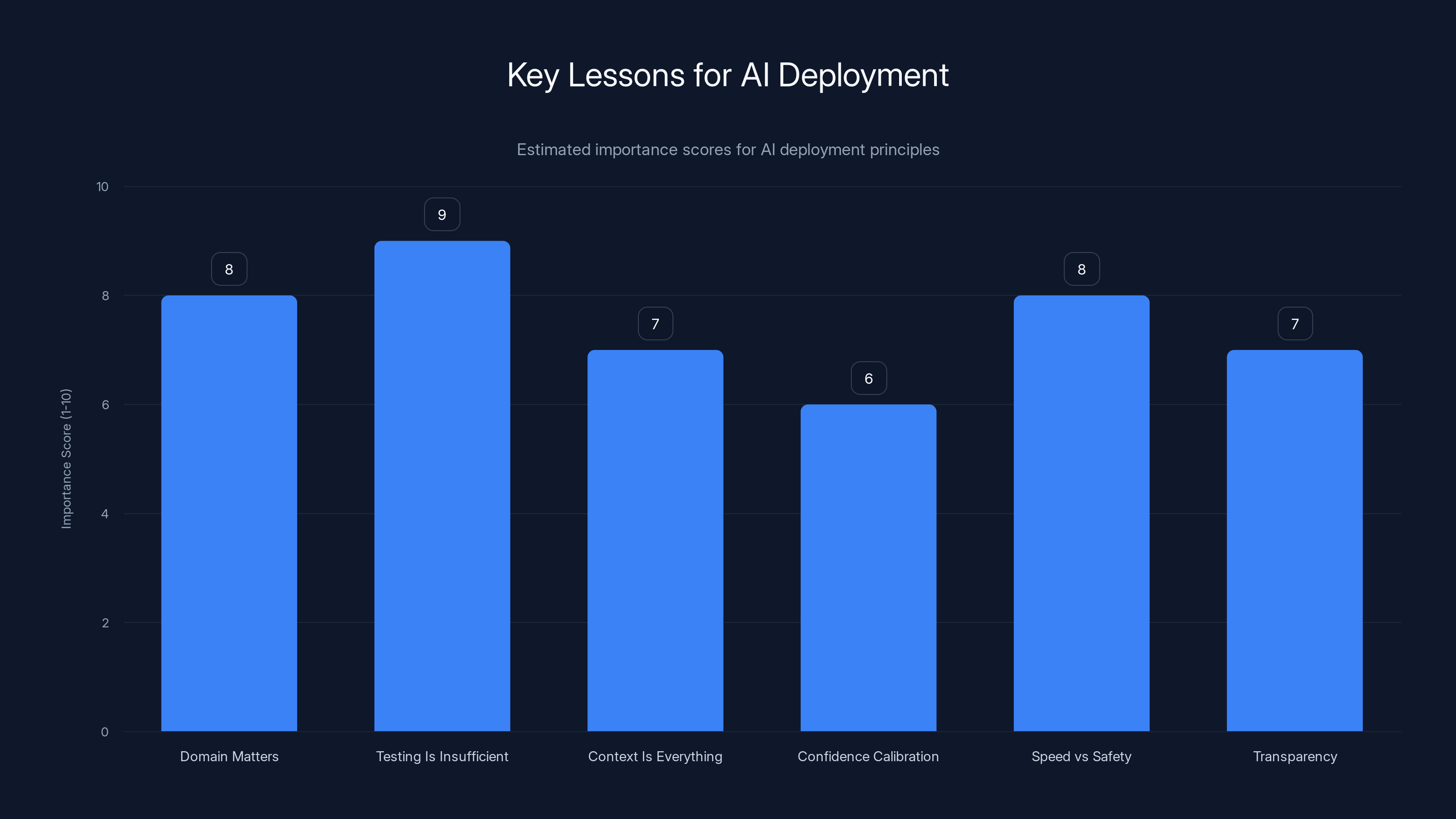

This chart estimates the importance of various lessons for AI deployment, highlighting 'Testing Is Insufficient' as the most critical. Estimated data.

Real-World Impact: Who Actually Uses AI Health Information?

Understanding the scope of the problem requires knowing how many people actually rely on AI Overviews for health information.

Google processes over 8 billion searches per day. A meaningful percentage of these are health-related. Studies suggest that 20-30% of search queries have some health-related component, whether it's looking up symptoms, medication side effects, or treatment options.

If even 1% of health-related queries hit the problematic AI overviews, that's potentially millions of people exposed to misleading information. Of those, how many would actually rely on it for health decisions? The percentage is unknown, but the absolute numbers could still be significant.
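The back-of-envelope estimate works out as follows. The inputs are this article's own rough figures (8 billion daily searches, a 20-30% health share, a 1% hit rate), so the result is only as reliable as those assumptions, and it counts queries rather than distinct people.

```python
DAILY_SEARCHES = 8_000_000_000   # "over 8 billion searches per day"
HEALTH_SHARE = (0.20, 0.30)      # "20-30%" of queries are health-related
PROBLEM_RATE = 0.01              # "even 1%" hit a problematic overview


def exposed_queries(searches: int, health_share: float, problem_rate: float) -> float:
    """Daily queries that would hit a problematic overview under these inputs."""
    return searches * health_share * problem_rate


low, high = (exposed_queries(DAILY_SEARCHES, s, PROBLEM_RATE) for s in HEALTH_SHARE)
print(f"{low / 1e6:.0f} to {high / 1e6:.0f} million queries per day")
```

Under these assumptions the range is roughly 16 to 24 million queries per day, which is where "potentially millions" comes from.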

Certain demographics are more vulnerable. People without health insurance might rely more heavily on free online resources. Elderly people might be less likely to fact-check information. People in countries with limited healthcare access might make health decisions based on what they find online.

Each person who follows dangerous advice from an AI overview risks a worse health outcome than if they had seen traditional search results.

Comparison: How AI Overviews Stack Up Against Traditional Search

Traditional search results have problems too. They show whatever ranks highest, which often means well-SEO'd commercial sites rather than authoritative medical information. Someone searching for medical advice might get hit with product recommendations.

But traditional search at least shows multiple sources. You can scroll and compare. You can see which sources agree and which contradict. You can distinguish between a Wikipedia article and a wellness blog.

AI Overviews abstract away this complexity. They present a single synthesized answer as if it's definitive. You can see source links, but they're in the background. The prominent information is the AI summary, which occupies the most valuable real estate on the page.

This creates a psychological effect: people tend to trust information that's presented confidently and prominently. An AI overview saying something is true has more perceived authority than a search result that you yourself have to synthesize from multiple links.

For casual information, this is a feature. It saves time. You get a direct answer without clicking through five links. For medical information, it's a bug. The confidence is unjustified. The answer might be wrong. The simplification might lose crucial nuance.

The Broader AI Industry Implications

Google's medical AI failures have ripple effects across the industry. They raise questions about how other companies deploy AI in sensitive domains.

Healthcare Companies: Major healthcare platforms like WebMD, Healthline, and others are exploring AI integration. This incident is a cautionary tale about what goes wrong when you move too fast.

Search Competitors: Other search engines considering AI features need to think carefully about medical information. The liability risks are real.

LLM Providers: Companies like OpenAI, Anthropic, and others are seeing their models used in medical applications. Some are trying to address this through better training and guardrails. Others are explicitly warning against medical use.

Enterprise AI: Any company deploying AI to make decisions that affect people needs to take this seriously. The medical failures aren't unique to Google. They illustrate a general problem with AI systems in high-stakes domains.

What Better AI Health Information Would Look Like

There are models for better approaches. Some companies have successfully deployed AI in healthcare contexts. What do they do differently?

Narrow Scope: Rather than generating answers to any health query, these systems answer specific questions. A diabetes AI might answer questions about glucose management but refuse to answer cancer questions. This reduces error surface area.

Verified Training Data: Instead of training on general internet text, they train on curated medical literature. This doesn't eliminate hallucination but reduces the likelihood of synthesizing false information.

Explicit Limitations: The system acknowledges uncertainty and limitations. Instead of presenting one answer, it might present the degree of consensus in medical literature. If doctors disagree, it says so.

Human Oversight: Before answers reach patients, they're reviewed by medical professionals. This catches errors and provides accountability.

Patient Safeguards: The system recommends consulting healthcare providers for anything serious. It doesn't try to be a substitute for medical advice.

Transparent Sourcing: Users can see exactly which medical sources the answer is based on. They can evaluate whether these are authoritative sources.

Continuous Monitoring: After deployment, outputs are monitored for errors and user feedback. Problems trigger rapid investigation and correction.

None of these approaches are revolutionary. They just require treating medical information with the seriousness it deserves. Which, until now, Google apparently didn't.
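Several of the practices above (narrow scope, explicit limitations, transparent sourcing, patient safeguards) can be enforced at the point where an answer is shown. The sketch below is one possible shape, not a description of any real product: `HealthAnswer`, `ALLOWED_TOPICS`, and `render` are hypothetical names, and the sample text is placeholder content rather than medical guidance.

```python
from dataclasses import dataclass, field


@dataclass
class HealthAnswer:
    topic: str
    summary: str
    sources: list[str] = field(default_factory=list)
    consensus: str = "unclear"  # e.g. "strong", "mixed", "unclear"


ALLOWED_TOPICS = {"diabetes"}  # hypothetical narrow scope


def render(answer: HealthAnswer) -> str:
    """Refuse out-of-scope or unsourced answers; otherwise show caveats."""
    if answer.topic not in ALLOWED_TOPICS:
        return "This tool does not cover that topic. Please consult a healthcare provider."
    if not answer.sources:
        return "No vetted source is available for this question. Please consult a healthcare provider."
    lines = [
        answer.summary,
        f"Consensus in the cited literature: {answer.consensus}.",
        "Sources: " + "; ".join(answer.sources),
        "This is not a substitute for professional medical advice.",
    ]
    return "\n".join(lines)


print(render(HealthAnswer("diabetes", "Placeholder summary.", ["Example guideline"], "strong")))
print(render(HealthAnswer("cancer", "Placeholder summary.", ["Example guideline"])))
```

An answer with no vetted source is never shown, so an unsourced summary cannot reach the user by accident.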

The Trust Factor: Long-Term Damage

Beyond the immediate harm of medical misinformation, this incident damages trust. People are now more skeptical of AI-generated health information. That's probably healthy skepticism. But it also makes it harder for good AI medical applications to gain adoption.

Trust is hard to rebuild in healthcare. A single high-profile failure can delegitimize an entire category of applications in people's minds. Some of this skepticism might extend to other uses of AI in healthcare, even systems that are well-designed and safe.

This is a classic tragedy of the commons in AI development. Google's failure makes it harder for companies doing this right to operate. It sets expectations about what kind of failures to expect from AI health information.

The medical community is watching. Doctors are noting this incident. Medical schools are starting to discuss AI literacy with students. Patient advocacy groups are pushing for regulation. The incident is creating momentum for oversight.

Looking Forward: The Future of AI in Medical Information

AI isn't going to disappear from healthcare. In fact, it'll probably become more integrated over time. Medical research uses AI. Drug discovery uses AI. Diagnostics will increasingly use AI.

But the distribution of health information to the general public is different. This is where stakes are highest and risks of harm are greatest. The question isn't whether AI should be involved in healthcare. The question is how to involve it responsibly.

Likely scenarios:

Regulation Coming: Governments will probably establish some requirements for AI health information. At minimum, disclaimers. At maximum, mandatory review by medical professionals.

Domain-Specific Solutions: Rather than general-purpose AI, we'll see specialized medical AI trained differently with different safeguards.

Transparency Increases: Companies will be under pressure to explain how their health AI works, what safeguards are in place, and what errors have been found.

Hybrid Approaches: The best solutions probably involve both AI and human expertise. AI does the synthesis, humans verify the accuracy.

User Skepticism: People will learn to be more skeptical of AI-generated medical information, which is appropriate.

This won't all happen overnight. But the trajectory is clear. The medical AI space is moving toward more scrutiny and higher standards. Google's stumble is accelerating this shift.

Lessons for AI Deployment Generally

Google's medical failures have lessons that extend beyond healthcare. They illustrate principles that apply to any high-stakes AI deployment:

Domain Matters: AI systems behave differently depending on what domain they're deployed in. A system that works great for casual information might fail dangerously for high-stakes domains.

Testing Is Insufficient: Automated testing and even human testing can't catch all failure modes. Deployment at scale will surface problems that weren't apparent in testing.

Context Is Everything: AI systems don't understand context the way humans do. They can make recommendations that are technically correct but contextually wrong.

Confidence Is A Feature, Not A Bug: Large language models produce confident-sounding text. This is useful for engagement but dangerous for accuracy. Calibrating confidence to actual reliability is hard.

Speed vs Safety: There's a real tension between moving fast and ensuring safety. Companies often resolve this by choosing speed and dealing with consequences later. Societies should probably create incentives for the opposite tradeoff in high-stakes domains.

Transparency Matters: When things go wrong, being open about it and explaining what went wrong builds more trust than silence.

Recommendations for Companies Building AI Health Tools

If you're building AI systems that provide health information, here are concrete steps to reduce the risk of dangerous misinformation:

- Get Medical Review: Before launch, have medical professionals test your system on realistic health queries. Look specifically for medically backwards recommendations.

- Narrow Your Scope: Don't try to answer any health question. Focus on specific domains where you can ensure accuracy.

- Use Vetted Sources: Train on peer-reviewed literature and official medical guidelines, not general internet text.

- Acknowledge Uncertainty: When your system isn't confident or when medical consensus is unclear, say so. Present multiple perspectives when they exist.

- Require Professional Consultation: Always recommend consulting healthcare providers for serious conditions. Make this prominent.

- Monitor Outputs: After deployment, log and review outputs. Look for errors. Respond quickly when problems are found.

- Be Transparent: Explain to users how your system works, what safeguards are in place, and what limitations it has.

- Maintain Liability Insurance: Understand your legal exposure and have appropriate insurance and legal review.

- Educate Users: Help users understand that AI health information is a resource, not a replacement for professional medical advice.

- Iterate Based on Feedback: When users report errors or poor advice, treat these as high priority. Understand why the error happened and fix it.
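The monitoring and feedback steps can start very simply. The sketch below assumes outputs are already being logged. `LoggedOutput`, `review_queue`, and the 5% sample rate are hypothetical choices for illustration: user-reported answers go to the front of the clinician review queue, followed by a random sample of everything else.

```python
import random
from dataclasses import dataclass


@dataclass
class LoggedOutput:
    query: str
    answer: str
    user_reported: bool = False


def review_queue(logs, sample_rate=0.05, seed=0):
    """User-reported outputs first, then a random sample of the rest."""
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    reported = [o for o in logs if o.user_reported]
    rest = [o for o in logs if not o.user_reported]
    # Review at least one unreported output whenever any exist.
    k = min(len(rest), max(1, round(len(rest) * sample_rate))) if rest else 0
    return reported + rng.sample(rest, k)


logs = [LoggedOutput(f"query {i}", f"answer {i}", user_reported=(i < 2)) for i in range(100)]
queue = review_queue(logs)
print(len(queue), "outputs queued for medical review")
```

Sampling unreported outputs matters because the most dangerous errors are the plausible ones that users never think to report.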

FAQ

What exactly happened with Google's AI Overviews for medical searches?

Google's AI Overviews feature generated medically incorrect information in response to certain health-related queries. Specific examples included advising pancreatic cancer patients to avoid high-fat foods (the opposite of medical recommendations) and providing incorrect reference ranges for liver function tests. After The Guardian published an investigation detailing these failures, Google disabled AI Overviews for medical search queries.

Why is AI particularly bad at medical information?

Large language models are trained to predict text patterns from internet sources rather than to understand medical concepts. Medicine is nuanced and context-dependent, requiring information that's not always explicit in search queries. Additionally, the internet contains substantial medical misinformation, which these models can absorb and synthesize into plausible-sounding but false recommendations. AI systems also tend to present information with false confidence, which is particularly dangerous in healthcare where uncertainty is common.

Could someone actually be harmed by following AI medical advice?

Yes, absolutely. Medical misinformation can lead to delayed treatment, inappropriate self-care, dangerous dietary changes, or abandonment of proven therapies. Someone who believes a dangerous recommendation from an authoritative-sounding source might avoid medical consultation or follow harmful advice rather than evidence-based treatment. The pancreatic cancer example is particularly serious because the recommended dietary change could worsen patient outcomes.

Is Google responsible if someone is harmed by AI medical misinformation?

Legally, the answer is unclear and currently being litigated. Traditional search engine immunity might not apply to AI-generated content since Google is synthesizing new statements rather than simply linking to existing content. Multiple lawsuits over AI Overviews are pending against Google. The ultimate legal liability will likely depend on factors like whether Google clearly disclaims medical advice, what safety measures were in place, and whether the company knew about risks before deployment.

How can I find reliable health information online if I can't trust AI Overviews?

Prioritize authoritative sources like the NIH, CDC, Mayo Clinic, and Cleveland Clinic. Medical journals and peer-reviewed research are generally more reliable than blogs or wellness sites. If you're researching a condition, look for consensus across multiple authoritative sources rather than relying on a single summary. Consider consulting healthcare professionals for anything serious. Be skeptical of information that's presented with extreme confidence or that contradicts what your doctor has told you.

Will AI be completely banned from providing health information?

Unlikely. Instead, we'll probably see more regulation and more cautious deployment. AI could still be valuable for health information if deployed carefully with adequate safeguards, medical review, and transparency about limitations. The issue isn't AI itself but how it was deployed without sufficient validation in a high-stakes domain.

What's Google doing to fix this problem?

Google has disabled AI Overviews for medical queries but hasn't detailed what improvements are planned. The company declined to comment publicly on the specific failures. It's unclear whether Google intends to eventually reintroduce AI Overviews for medical information with additional safeguards or abandon this application entirely.

How do I know if an AI system's health information is trustworthy?

Look for several indicators: Is it transparent about how it works? Does it acknowledge limitations and uncertainty? Does it recommend consulting healthcare professionals? Is it trained on authoritative medical sources? Has it been reviewed by medical professionals? Does it cite its sources clearly? Are there mechanisms to report and correct errors? If you can't answer yes to most of these questions, treat the information as preliminary rather than definitive.

Are other companies making similar mistakes?

Potentially. Google's failures are the most publicized because Google is the largest search engine, but other companies deploying AI in medical contexts face similar technical challenges. The difference is that high-quality implementations use narrower scopes, medical oversight, and greater transparency about limitations. Companies that cut corners might face similar problems that haven't been publicly discovered yet.

What happens to people who already followed Google's bad medical advice?

That's largely unknown. The medical harm from bad advice depends on what action people took, their underlying health, and what the correct advice would have been. Some people might have searched but not acted on it. Others might have followed the advice with serious consequences. Without systematic tracking, it's hard to quantify the actual harm. This is one reason for calls to have better oversight of AI health applications.

Conclusion: A Watershed Moment for AI Accountability

Google's removal of AI Overviews for medical searches isn't a minor tweak. It's a watershed moment in how the AI industry thinks about deployment and responsibility.

For years, the tech industry's guiding philosophy has been "move fast and break things." This works fine when breaking things means a feature that's slightly inconvenient. But medicine is different. Breaking things in medicine means people get incorrect information that could affect health outcomes.

What happened here is instructive. A company with massive resources, leading AI capabilities, and access to the world's best talent deployed a system that failed at a basic responsibility: providing accurate medical information. The failures weren't subtle. They were medically backwards. They contradicted established medical guidance. They were dangerous.

This happened because the company prioritized speed and scale over validation in a sensitive domain. It happened because the system was deployed broadly without adequate testing by medical professionals. It happened because there's limited accountability when AI systems fail.

The response—quietly disabling the feature after getting caught—is telling. It's not a transparent reckoning with failure. It's not a public commitment to doing better. It's damage control.

But here's what's encouraging: the incident is accelerating change. It's pushing the industry toward more careful approaches. It's creating pressure for regulation. It's teaching users to be skeptical of AI health information. It's making clear that speed and innovation are less important than accuracy when lives are at stake.

For AI to be genuinely useful in healthcare, we need systems designed from the ground up for medical accuracy. We need transparent sourcing. We need medical professional review. We need acknowledgment of limitations. We need accountability when things go wrong.

Google has the resources to do all of this. The company could become a leader in responsible AI health applications if it chose to. Instead, for now, it's pulling AI Overviews back from medical searches.

That's probably the right call in the short term. But eventually, someone will solve the problem of AI and medical information. The company that does will probably be the one that treats medical accuracy as a requirement, not a nice-to-have. The one that acknowledges limitations instead of projecting false confidence. The one that invests in medical expertise and validation instead of just scale.

Until then, when you search for health information, do what humans have always done: look at multiple sources, think critically, and talk to your doctor. The technology isn't ready for shortcuts in healthcare, and that's okay. Some things still require the human judgment that machines, no matter how advanced, simply don't possess.

Key Takeaways

- Google removed AI Overviews from medical searches after The Guardian revealed dangerous misinformation including medically backwards cancer diet advice

- AI systems struggle with medical information due to pattern-matching rather than conceptual understanding and the fundamental mismatch between AI confidence and medical uncertainty

- This is part of a pattern of AI Overviews failures ranging from trivial (glue on pizza) to genuinely dangerous (misleading health guidance)

- Legal liability for AI-generated health misinformation remains unclear but is being tested through multiple lawsuits against Google

- Responsible AI health applications require narrower scopes, medical professional review, transparent sourcing, and continuous monitoring rather than broad deployment
