Clear Audio Drives AI Productivity: Why Sound Quality Matters in Modern Workplaces

Here's something nobody talks about: your AI tools are only as good as the audio feeding them.

Think about the last meeting where Copilot missed half the context. Or when your transcription service turned "quarterly revenue" into "court-early revenue." Before you blame the AI, ask yourself one question: was the audio actually clear?

This isn't theoretical. When sound quality drops, AI performance collapses. Transcription accuracy plummets. Meeting insights become unreliable. And the whole reason you deployed AI in the first place—to make teams smarter and faster—falls apart because the foundation is broken.

The problem runs deeper than most organizations realize. We've spent billions on AI tools, cloud infrastructure, and collaboration platforms. But we've left audio quality to chance. Someone joins on their laptop microphone from across the room. Audio from three open browser tabs bleeds into the call. A speaker is muted without realizing it, so nobody can hear them. And then we're shocked when the AI doesn't understand what happened.

But here's the opportunity: audio quality is one of the few collaborative elements you can control today that directly impacts every single AI feature your organization uses. From live translation to meeting transcription to AI-powered decision-making tools, everything depends on the quality of the sound being captured.

The companies getting the most value from their AI investments aren't the ones throwing the most money at new tools. They're the ones who fixed the audio first.

TL;DR

- Poor audio kills AI performance: Transcription accuracy drops to 65% or below with background noise, compared to 95%+ with clear audio

- This is a business problem, not a tech problem: Audio quality directly impacts decision-making speed, inclusion, and AI-powered meeting intelligence

- Investment in certified audio solutions pays immediate dividends: Organizations see 30-40% reduction in meeting time, faster decision cycles, and measurable productivity gains

- Audio technology is foundational to AI strategies: Companies embedding audio strategy into IT roadmaps unlock the full potential of AI tools like Copilot and custom agents

- The fix is practical: Better microphones, intelligent audio software, and room optimization deliver immediate, measurable ROI

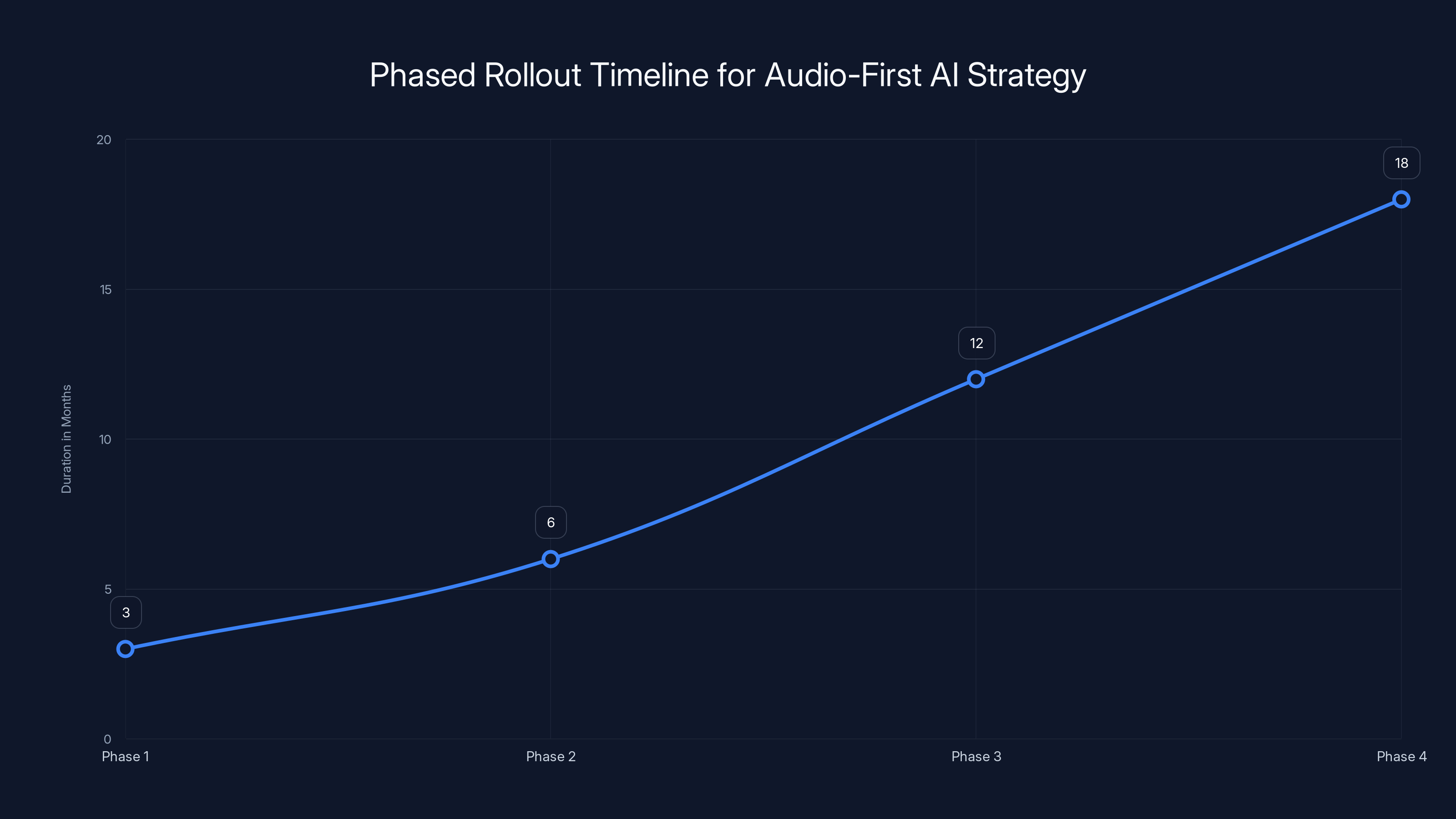

The phased rollout of an audio-first AI strategy spans over 18 months, starting with high-impact areas and gradually covering all workspaces. Estimated data.

Why AI Needs Clear Audio to Function

Let's start with the technical reality: AI models trained on speech data have no tolerance for garbage input.

When you feed a speech recognition model audio that's compressed, muffled, or buried in noise, the model doesn't somehow "fill in the gaps" like a human brain would. It makes its best guess based on statistical patterns. And when the audio is poor, it makes increasingly bad guesses.

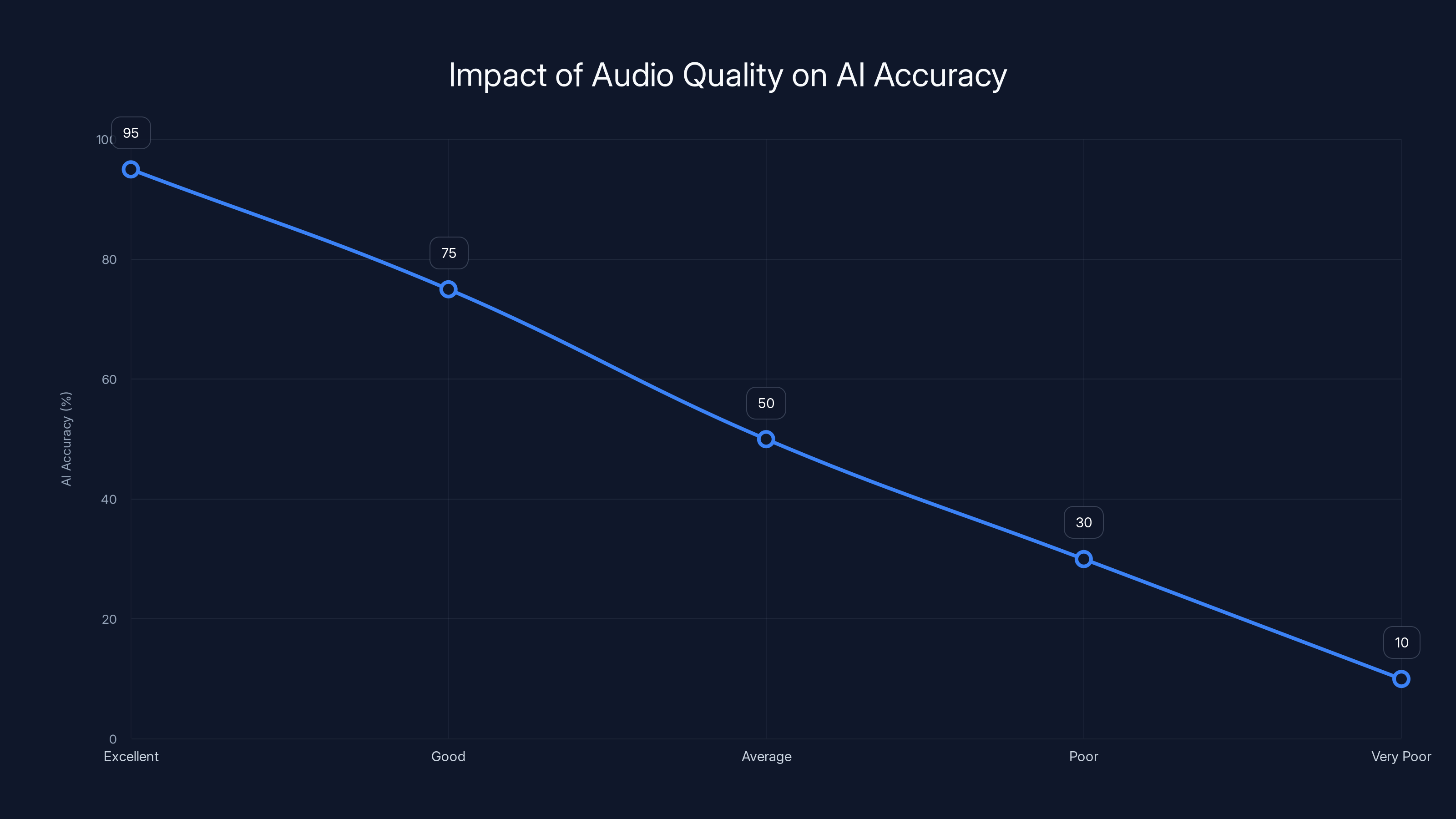

The relationship between audio quality and AI accuracy isn't linear: small drops in audio quality produce outsized drops in accuracy. Move from excellent audio to just "pretty good" audio, and accuracy doesn't drop by 5%. It drops by 20-30%. Add background noise on top of that, and you're looking at 40-50% accuracy degradation.

This matters because modern AI meeting tools do more than just transcribe what people say. They analyze sentiment, extract action items, identify speakers, detect topics, and generate insights. Every single one of those capabilities depends on the AI correctly understanding what was actually said.

Imagine a customer support scenario. A client calls your support team. The AI agent needs to understand their problem, assess their sentiment, and route them appropriately. If the audio is unclear, the AI might misclassify the issue entirely. The customer gets transferred to the wrong department. Frustration increases. Resolution time doubles. The AI that was supposed to improve the customer experience actually made it worse.

Or consider an executive team having a strategy discussion. The AI is listening, tracking decisions, and generating an action item summary. But because someone's microphone is picking up keyboard clacking and wind from an open window, the AI captures maybe 60% of what was actually discussed. The summary is incomplete. Critical context is lost. A follow-up meeting becomes necessary that shouldn't have been.

These aren't edge cases. They're happening in thousands of organizations every single day.

The technical reality is this: speech recognition systems perform best on clear speech, and the large language models that write summaries and extract action items only ever see the transcript those systems produce. When you feed them degraded audio, you're running them outside the conditions they were optimized for, and every transcription error flows straight into the summary. It's like running high-performance racing software on a computer held together with tape. The software works fine on its intended system. On yours, it struggles.

The solution isn't to accept degraded performance. It's to recognize that audio quality is the first layer of your AI infrastructure. Everything else sits on top of that foundation.

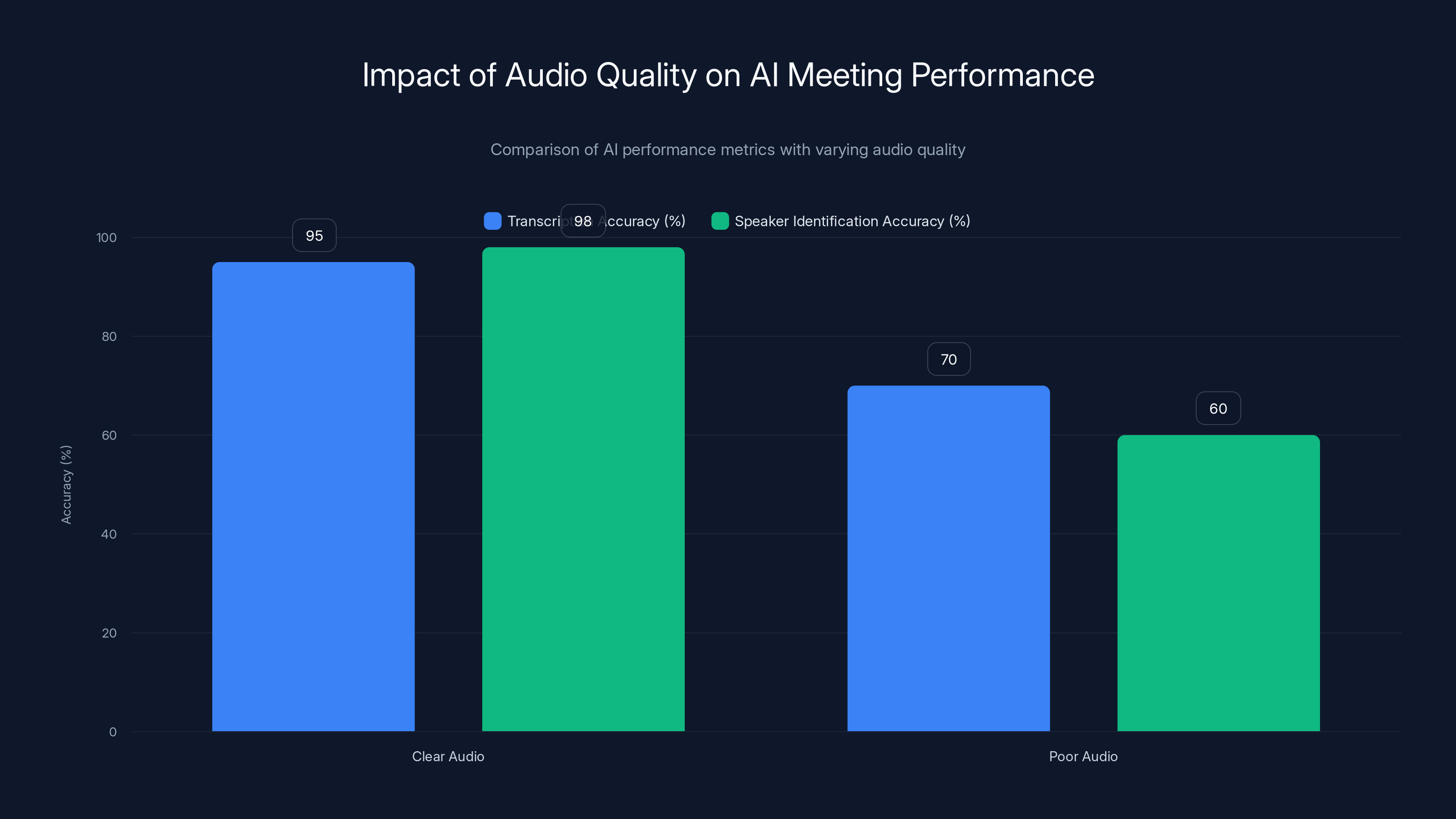

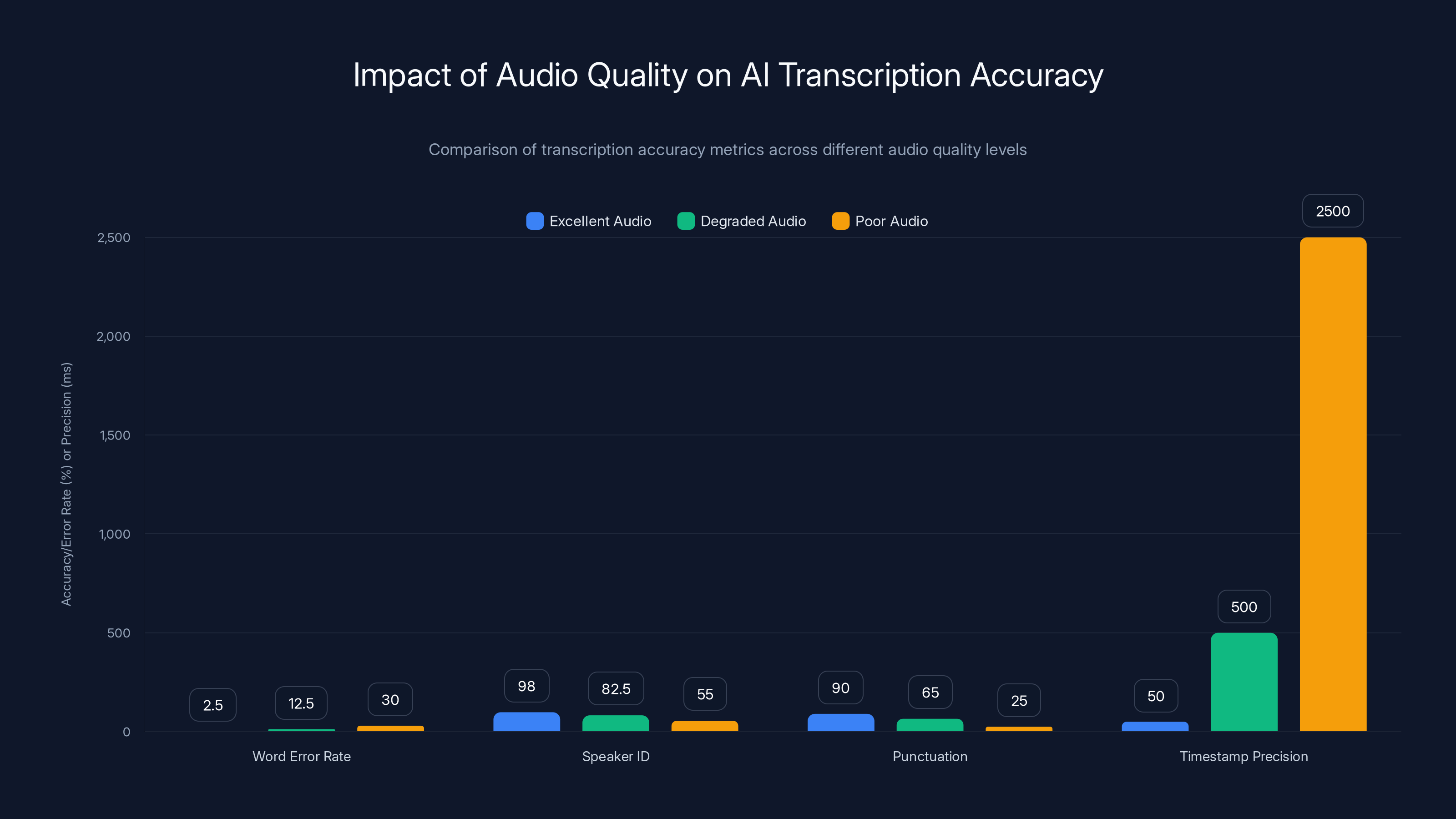

Clear audio significantly enhances AI meeting performance, with transcription accuracy at 95% and speaker identification at 98%. Poor audio drastically reduces these metrics.

The Hidden Cost of Poor Audio Quality

Most organizations track the cost of their AI tools, their cloud subscriptions, their licensing. What they don't track is the cost of audio quality degradation.

Let's calculate it.

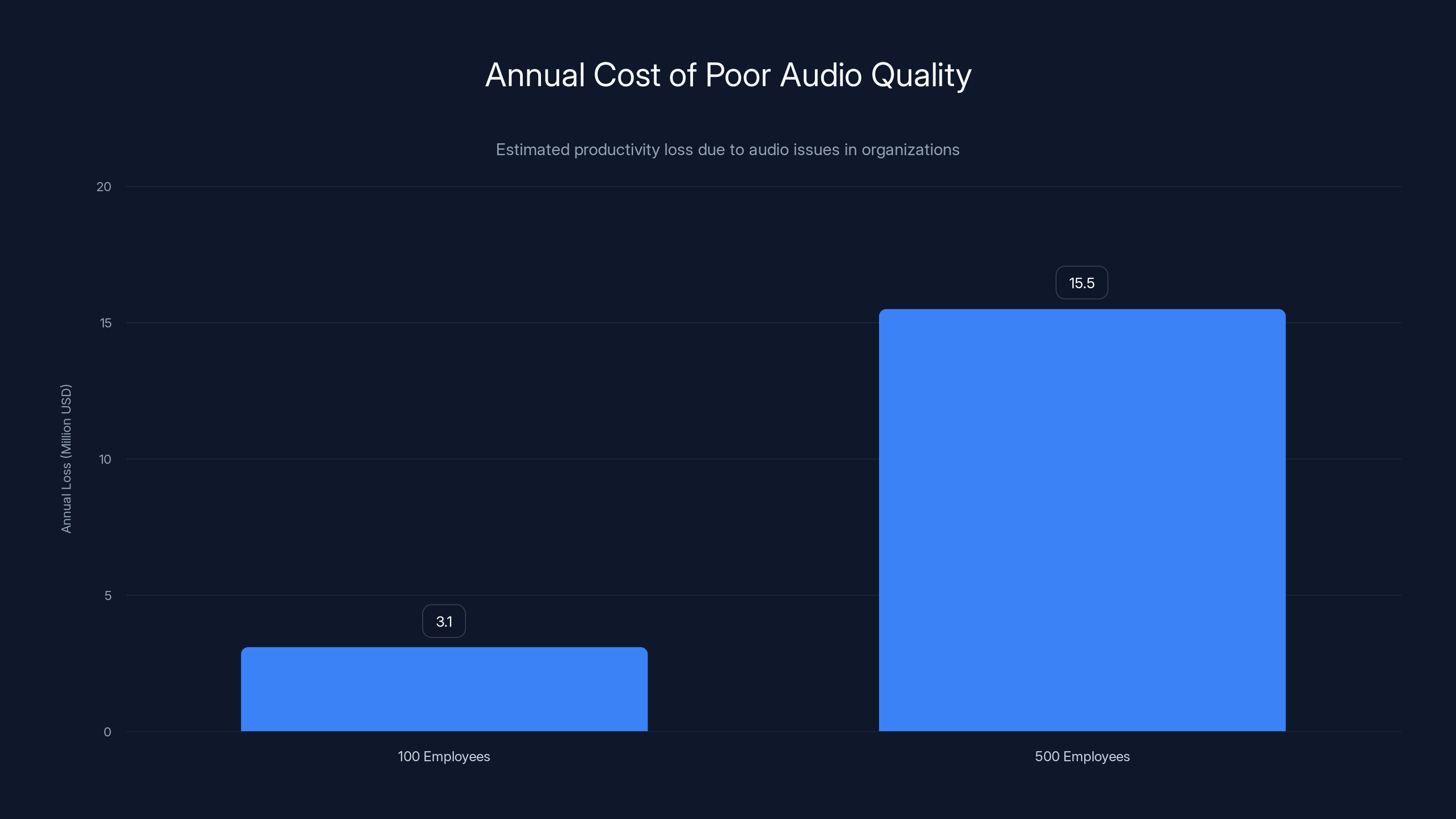

Assume your organization has 100 people in collaborative roles (sales, customer support, leadership, product teams). Assume each person participates in 10 meetings per week that involve AI features (transcription, meeting summarization, intelligence, etc.). That's 1,000 AI-assisted meetings per week.

Now assume that due to audio quality issues, 30% of those meetings require follow-up work—either because the transcription was incomplete, action items were missed, or context wasn't captured. That's 300 additional meetings per week that shouldn't have happened.

Each follow-up meeting costs roughly

Over a year, that's roughly $3.1 million in productivity loss from poor audio quality.

For a company with 500 people, multiply that by 5. You're looking at $15+ million in annual loss from audio quality issues alone.
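The arithmetic above can be reproduced in a few lines. This is a sketch that mirrors the article's own assumptions (100 people, 10 AI-assisted meetings per person per week, a 30% follow-up rate, 52 weeks). Because the per-meeting cost is cut off in the text, the sketch back-solves the figure implied by the $3.1 million total rather than assuming one; the function names are hypothetical.

```python
# Assumptions taken from the worked example in the text.
MEETINGS_PER_PERSON_PER_WEEK = 10
FOLLOW_UP_RATE = 0.30          # share of AI-assisted meetings needing a follow-up
WEEKS_PER_YEAR = 52
ANNUAL_LOSS_AT_100_PEOPLE = 3_100_000


def follow_ups_per_year(people: int) -> float:
    """Follow-up meetings per year attributed to audio problems."""
    weekly_meetings = people * MEETINGS_PER_PERSON_PER_WEEK
    return weekly_meetings * FOLLOW_UP_RATE * WEEKS_PER_YEAR


def implied_cost_per_follow_up() -> float:
    """Per-meeting cost implied by the $3.1M total (back-solved, not stated in the text)."""
    return ANNUAL_LOSS_AT_100_PEOPLE / follow_ups_per_year(100)


def annual_loss(people: int) -> float:
    """Scale the loss linearly with headcount, as the text does."""
    return follow_ups_per_year(people) * implied_cost_per_follow_up()


print(round(follow_ups_per_year(100)))         # 15600
print(round(implied_cost_per_follow_up(), 2))  # 198.72
print(round(annual_loss(500)))                 # 15500000
```

Treat the output as the article's scenario restated, not a benchmark: the 30% follow-up rate is an assumption, and the result scales directly with it.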

And that's just the obvious, calculable cost. There's also the cost of:

- Slower decision-making: Incomplete meeting context means decisions take longer to finalize

- Lower inclusion: Remote workers with poor audio are excluded from critical discussions

- Customer experience degradation: Support interactions become frustrating when AI misunderstands

- Regulatory and compliance risk: Poor transcription creates liability in regulated industries

- Organizational frustration: Teams get frustrated with tools that don't work, leading to shadow IT and tool fragmentation

The irony is that the fix costs dramatically less than the problem. A certified meeting microphone costs

So a 10-person organization might spend

But there's another angle that makes this even more compelling: competitive advantage.

The organizations that figure out audio quality first are going to get 30-40% more value from their AI investments than organizations that don't. They'll make faster decisions. They'll have better customer interactions. Their teams will feel heard and included. And their AI tools will actually work as advertised.

That's not a small advantage. That's a fundamental competitive edge.

How Audio Quality Breaks Modern Meeting AI

Let's walk through exactly what happens when audio quality degrades.

Transcription Accuracy Collapse

Start with basic transcription. When audio is clean, modern speech-to-text systems achieve 95%+ accuracy. But accuracy doesn't fall off evenly. Background noise, muffled speech, and interruptions compound one another, so each additional problem costs more accuracy than the last.

Background noise at 50 decibels (a quiet office) still allows 90%+ accuracy. At 60 decibels (normal conversation around you), accuracy drops to 80-85%. At 70 decibels (loud background noise), you're down to 65-70%. At 80 decibels (very loud noise), accuracy is below 50%.

Now imagine someone joining a call from a coffee shop. You're looking at 80+ decibels of ambient noise. The transcription is essentially unreliable.
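For a quick room audit, the noise bands above can be turned into a lookup. A minimal sketch, assuming you have a sound-level reading in dB for the space; the bands are the article's rough figures, not measurements from any particular speech engine, and `expected_accuracy` is a hypothetical helper.

```python
# (noise level in dB, approximate transcription accuracy) from the text above.
NOISE_BANDS = [
    (50, "90%+"),       # quiet office
    (60, "80-85%"),     # normal conversation nearby
    (70, "65-70%"),     # loud background noise
    (80, "below 50%"),  # very loud noise
]


def expected_accuracy(noise_db: float) -> str:
    """Return the band for the loudest quoted level at or below noise_db.

    Readings quieter than 50 dB get the quiet-office band, since the text
    treats 50 dB as already good enough for 90%+ accuracy.
    """
    band = NOISE_BANDS[0][1]
    for level, label in NOISE_BANDS:
        if noise_db >= level:
            band = label
    return band


for reading in (45, 63, 82):
    print(reading, "dB ->", expected_accuracy(reading))
```

Rounding down to the nearest quoted level is optimistic: a 68 dB room is probably closer to the 65-70% band than to 80-85%.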

Speaker Identification Failure

Many AI tools attempt to identify who said what. This depends on audio quality. If speakers are muffled or unclear, the AI can't build an acoustic profile. Voices blend together. The AI either stops trying to identify speakers or makes wild guesses.

This breaks several downstream features. Action item assignment becomes impossible. Meeting summaries can't properly attribute comments. Meeting insights that highlight who said what become useless.

Sentiment and Intent Detection Issues

Advanced AI tools analyze tone, pace, and linguistic markers to understand sentiment and intent. All of these depend on clear audio. If the audio is degraded, the AI can't detect the subtle vocal cues that indicate frustration, uncertainty, or emphasis.

A customer support AI might fail to detect that a customer is frustrated and escalate appropriately. An internal meeting AI might miss that a team member is uncertain about a decision and needs support. The AI becomes blind to the human elements that actually matter.

Real-Time Translation Degradation

Live translation features are increasingly common in global organizations. But translation depends on accurate transcription happening first. If transcription fails due to audio quality, translation fails next. And if translation fails, your global team suddenly can't communicate effectively.

A non-native English speaker in a meeting suddenly has their words mangled by poor transcription, then mistranslated, then their meaning is completely lost. The inclusion that live translation promised becomes the opposite—exclusion.

Meeting Intelligence and Insights Breakdown

When organizations invest in meeting intelligence platforms, they're buying insights about their meetings: what was discussed, what was decided, what's outstanding, what's at risk. All of these depend on the AI understanding what was actually said.

With poor audio, the AI generates low-quality insights. The summaries are incomplete. The action items are wrong. The risks aren't identified. The tool becomes a checkbox exercise rather than a genuine value driver.

It's like building a decision-making system on corrupted data. The outputs are technically generated, but they're not reliable. And teams quickly learn not to trust them.

Organizations with 100 employees face an estimated

The Role of Microphones in AI-Powered Collaboration

Microphones are the first link in the chain. Everything downstream depends on what they capture.

But not all microphones are created equal. Most built-in laptop microphones are terrible. They're designed to be cheap. They're designed to fit in a thin chassis. They're not designed for quality audio capture.

Built-in laptop microphones typically feature:

- Poor frequency response: They don't capture the full range of human speech, losing nuance

- No directionality: They pick up everything equally—your voice, keyboard clicks, fan noise, the person next to you

- Weak preamps: The electronics amplify noise as much as your voice

- No processing: There's no intelligent audio management happening in real-time

When you're relying on that microphone to feed your AI tools, you're feeding them degraded input. That's your baseline problem.

A certified meeting microphone does several things differently:

Directional pickup patterns

A quality microphone is designed to pick up your voice strongly and background noise weakly. This is done through physical design (multiple microphone elements that cancel out noise from certain directions) and tuning (frequency response curves that emphasize human speech frequencies).

The result is that your voice comes through clearly, while background noise is naturally suppressed. You're not using software to try to remove noise after the fact. You're not capturing it in the first place.

Intelligent audio processing

Modern certified microphones include built-in audio processing that happens in real-time. This includes:

- Noise gating: Automatically mutes audio when only background noise is detected

- Echo cancellation: Removes the sound of your own speakers from your microphone

- Equalization: Shapes the audio to emphasize speech frequencies and de-emphasize noise

- Gain optimization: Automatically adjusts microphone levels so you're never too quiet or too loud

All of this happens transparently, in real-time, without any action required from the user. It's like having a professional audio engineer sitting next to you during every call.
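Two of these functions, noise gating and gain optimization, are simple enough to sketch. The code below is a minimal illustration on frames of float samples in [-1, 1], assuming a -50 dBFS gate threshold and a -20 dBFS gain target (both hypothetical values). It is not how any particular microphone's firmware works, and it leaves out echo cancellation and equalization.

```python
import math

SILENT = float("-inf")


def rms_dbfs(frame: list[float]) -> float:
    """RMS level of a frame relative to full scale (1.0), in dBFS."""
    if not frame:
        return SILENT
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    return 20 * math.log10(rms) if rms > 0 else SILENT


def noise_gate(frames: list[list[float]], threshold_dbfs: float = -50.0) -> list[list[float]]:
    """Mute any frame whose level falls below the threshold."""
    return [f if rms_dbfs(f) >= threshold_dbfs else [0.0] * len(f) for f in frames]


def auto_gain(frame: list[float], target_dbfs: float = -20.0,
              max_gain_db: float = 20.0) -> list[float]:
    """Scale a frame toward the target level, capping the boost and clipping at full scale."""
    level = rms_dbfs(frame)
    if level == SILENT:
        return frame
    gain = 10 ** (min(target_dbfs - level, max_gain_db) / 20)
    return [max(-1.0, min(1.0, s * gain)) for s in frame]


quiet_speech = [0.01, -0.01] * 80   # about -40 dBFS
room_hiss = [0.001, -0.001] * 80    # about -60 dBFS
gated = noise_gate([quiet_speech, room_hiss])
boosted = auto_gain(gated[0])
print(round(rms_dbfs(boosted), 1))  # -20.0
print(any(gated[1]))                # False: the hiss frame was muted
```

Real gates add hysteresis and attack/release times so word endings aren't clipped; this sketch switches hard on every frame.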

Multiple microphone elements

A single-element microphone captures audio uniformly from all directions. A multi-element microphone captures audio separately from different directions, then combines those signals intelligently.

The result is dramatically better rejection of noise from sources that aren't directly in front of the microphone. Someone rustling papers next to you doesn't get picked up. The coffee shop noise behind you gets suppressed. Your voice remains clear.
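The simplest way to combine multiple elements is delay-and-sum beamforming: shift each channel so sound from the target direction lines up, then average. The sketch below is a textbook illustration, not any vendor's algorithm. It assumes two elements, a 16 kHz sample rate, integer-sample delays, and a 4-sample arrival difference for sound from the side (about 8.6 cm of extra path at 343 m/s).

```python
import math


def delay_and_sum(channels: list[list[float]], delays: list[int]) -> list[float]:
    """Delay each channel by an integer number of samples, then average them.

    delays should time-align a wavefront arriving from the steering direction;
    sound from other directions stays misaligned and partially cancels.
    """
    n = len(channels[0])
    out = [0.0] * n
    for channel, delay in zip(channels, delays):
        for i in range(n):
            j = i - delay
            if 0 <= j < n:
                out[i] += channel[j]
    return [s / len(channels) for s in out]


FS = 16_000
SIDE_DELAY = 4  # samples between elements for sound arriving from the side


def tone(freq: float, n: int, shift: int = 0) -> list[float]:
    return [math.sin(2 * math.pi * freq * (i - shift) / FS) for i in range(n)]


N = 64
voice = tone(500, N)     # talker straight ahead: reaches both elements together
noise = tone(2000, N)    # side source: reaches element 2 four samples later
mic1 = [v + x for v, x in zip(voice, noise)]
mic2 = [v + x for v, x in zip(voice, tone(2000, N, SIDE_DELAY))]

out = delay_and_sum([mic1, mic2], delays=[0, 0])  # steer straight ahead
residual = max(abs(o - v) for o, v in zip(out, voice))
print(residual < 1e-9)  # True: the 2 kHz side noise cancels
```

The cancellation here is perfect only because 2 kHz is where a 4-sample delay equals half a period. At other frequencies the side noise is reduced less, which is why real arrays use more elements, fractional delays, and adaptive weighting.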

Proper impedance and connectivity

How the microphone connects to your device matters. USB microphones are typically more reliable than analog connections because they include a dedicated audio interface. They're not trying to negotiate with 47 different drivers and settings. They just work.

Certified meeting microphones are typically designed to work with platforms like Microsoft Teams, Zoom, and Google Meet out of the box. They're tested and optimized for those platforms.

Now, here's where this connects to AI: when your AI tools receive clear audio from a quality microphone, everything downstream improves.

Transcription accuracy improves. Speaker identification works. Sentiment analysis works. Meeting insights become reliable. Real-time translation becomes viable. The entire AI-powered meeting experience elevates.

And the crazy part? The cost is minimal. A quality meeting microphone costs $300-600, roughly one or two days of a typical employee's salary. The return is 30-40% improvement in AI meeting feature accuracy, plus dramatic improvements in meeting experience and productivity.

Intelligent Audio Software: The Second Layer

Microphone hardware is the foundation. But modern audio also requires intelligent software.

This is where many organizations get confused. They buy a quality microphone, then plug it into a system that's still running basic audio codecs from 2010. The microphone captures great audio, but then the software compresses it, removes detail, and degrades it for transmission.

Intelligent audio software handles several critical functions:

Adaptive bitrate management

When network conditions are poor, audio needs to be compressed. But most systems compress audio in ways that remove the details that speech recognition depends on. Intelligent audio software uses machine learning to identify which parts of the audio are essential for understanding (speech) versus which parts can be compressed without harming meaning (silence, certain background noise).

The result is that even on poor networks, the AI gets the information it needs.
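The core idea, spending bits on speech and few bits on everything else, can be sketched with a crude energy-based detector standing in for the machine-learning classifier the text describes. The bitrate tiers and threshold here are hypothetical. Production codecs such as Opus expose related controls (variable bitrate and discontinuous transmission), but this is not their API.

```python
import math

SPEECH_KBPS = 24      # hypothetical tier for frames that look like speech
BACKGROUND_KBPS = 6   # hypothetical tier for silence and steady background


def frame_energy_db(frame: list[float]) -> float:
    """Mean-square energy of a frame in dB relative to full scale."""
    mean_sq = sum(s * s for s in frame) / len(frame)
    return 10 * math.log10(mean_sq) if mean_sq > 0 else float("-inf")


def allocate_bitrate(frames: list[list[float]], speech_threshold_db: float = -40.0) -> list[int]:
    """Pick a bitrate tier per frame from a simple energy threshold."""
    return [SPEECH_KBPS if frame_energy_db(f) >= speech_threshold_db else BACKGROUND_KBPS
            for f in frames]


frames = [[0.1] * 320, [0.0001] * 320, [0.2] * 320, [0.0] * 320]
tiers = allocate_bitrate(frames)
print(tiers)                    # [24, 6, 24, 6]
print(sum(tiers) / len(tiers))  # 15.0 average kbps vs 24 for a fixed rate
```

An energy threshold cannot tell speech from a loud fan, which is exactly why the text points to learned classifiers for this decision.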

Active noise suppression

This is different from noise cancellation. Noise cancellation removes noise from the output (what you hear in your headphones). Active noise suppression removes noise from the input (what your microphone sends, and therefore what the AI receives).

Intelligent software analyzes incoming audio in real-time, identifies noise sources, and suppresses them before the audio is transmitted or stored. Modern implementations typically run machine-learning models on the device or in the meeting platform, which is computationally intensive. But the payoff is dramatic.

You can be in a loud environment and the person on the other end hears just you. More importantly, the AI hears just you.
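Neural suppressors are too large to show here, but the classic DSP ancestor of the idea, spectral subtraction, fits in a few lines. This sketch assumes you already have per-frame magnitude spectra from a short-time Fourier transform and some frames known to contain only noise; the `floor` value is a hypothetical tuning choice. It is a simplified stand-in for the machine-learning approach described above, not a description of it.

```python
def estimate_noise(noise_frames: list[list[float]]) -> list[float]:
    """Average magnitude per frequency bin over frames that contain only noise."""
    count = len(noise_frames)
    return [sum(bin_values) / count for bin_values in zip(*noise_frames)]


def spectral_subtract(frame_mag: list[float], noise_mag: list[float],
                      floor: float = 0.05) -> list[float]:
    """Subtract the noise estimate from each bin, keeping a small spectral floor.

    The floor (a fraction of the original magnitude) avoids driving bins to zero,
    which otherwise produces the warbling artifact known as "musical noise".
    """
    return [max(m - n, floor * m) for m, n in zip(frame_mag, noise_mag)]


noise_profile = estimate_noise([[1.0, 1.0, 1.0], [1.0, 3.0, 1.0]])
print(noise_profile)                                      # [1.0, 2.0, 1.0]
print(spectral_subtract([5.0, 2.0, 0.5], noise_profile))  # [4.0, 0.1, 0.025]
```

The cleaned magnitudes are recombined with the original phase and inverse-transformed. The method's weakness is its assumption that noise is steady: a door slam or a nearby conversation isn't captured by an averaged profile, which is where learned models do better.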

Speaker separation and enhancement

When multiple people are speaking simultaneously, intelligent software can separate the speakers and enhance each one. This is called "speaker separation" and it's computationally complex.

But for AI, it's transformative. Instead of trying to extract two simultaneous conversations from one muddy audio stream, the AI receives two clear, separate streams. Transcription accuracy jumps. Speaker identification becomes far more reliable. Real-time translation handles each speaker independently.

Frequency response optimization

Different platforms, different AI models, and different network conditions benefit from different frequency response characteristics. Intelligent software can adapt in real-time.

For example, if audio is being compressed heavily for transmission, emphasizing the mid-range frequencies (where most speech intelligibility lives) helps maintain clarity. If you're in a noisy environment, de-emphasizing the frequencies where the noise lives helps the AI focus on speech.

This isn't a fixed EQ curve. It's adaptive, real-time, and driven by what the system detects in the audio and network conditions.
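A single band of that kind of shaping can be sketched as a peaking filter built from the widely used biquad formulas in Robert Bristow-Johnson's Audio EQ Cookbook. The sketch assumes a 16 kHz sample rate and a +6 dB boost centered at 2 kHz with Q = 1 (illustrative values, not a recommended curve). An adaptive system would recompute these coefficients as conditions change; this shows the fixed building block it would retune.

```python
import cmath
import math


def peaking_eq(fs: float, f0: float, gain_db: float, q: float):
    """Audio EQ Cookbook peaking filter; returns (b, a) normalized so a[0] == 1."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    b = [1 + alpha * amp, -2 * cos_w0, 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * cos_w0, 1 - alpha / amp]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def biquad(samples, b, a):
    """Filter samples with a Direct Form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def gain_at(b, a, f: float, fs: float) -> float:
    """Magnitude response in dB at frequency f."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))


b, a = peaking_eq(fs=16_000, f0=2_000, gain_db=6.0, q=1.0)
print(round(gain_at(b, a, 2_000, 16_000), 3))  # 6.0: full boost at the center frequency
print(abs(gain_at(b, a, 0, 16_000)) < 1e-9)    # True: no change at DC
shaped = biquad([1.0] + [0.0] * 31, b, a)      # 32-sample impulse response
```

Boosting the band where intelligibility lives only helps if the noise isn't concentrated in the same band; if it is, the same filter makes things worse, which is the argument for adapting the curve.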

Integration with platform APIs

Modern collaboration platforms expose APIs that allow audio software to integrate deeply. For example, Microsoft Teams allows audio partners to integrate features that were previously only available in the platform itself.

This means that certified audio solutions can provide:

- Automatic speaker identification integration

- Live transcription quality monitoring

- AI feature performance optimization

- Meeting analytics

- Compliance and recording features

All of this happens transparently to the user. The software ensures that the platform's AI features get the best possible input.

Audio quality significantly impacts AI transcription accuracy. Excellent audio yields a word error rate of 2-3%, while poor audio increases it to 25-35%. Timestamp precision also varies greatly, from ±50 ms with excellent audio to ±2-3 seconds with poor audio.

Room Acoustics and Audio Environment Optimization

Even the best microphone and software can't overcome a bad audio environment.

Consider a typical meeting room: hard walls that bounce sound, a large table that reflects audio, a doorway that lets outside noise in, a ventilation system that provides constant background hum. Acoustically, it's a disaster. Sound bounces everywhere. Everything echoes. The microphone picks up reflections alongside direct sound, which creates phase cancellation and muddy audio.

This is partly why Zoom calls from conference rooms often sound worse than Zoom calls from people's home offices. Home offices have carpet, furniture, bookshelves—stuff that absorbs sound. Conference rooms have nothing but hard surfaces.

Room acoustics are critical because they affect both the audio being captured and the audio being heard.

Acoustic treatment for recording

When you're trying to capture clear audio from a microphone, you want to minimize reflections and reverb. This is done through acoustic treatment:

- Absorption panels: Foam, fiberglass, or mineral wool absorb sound at various frequencies

- Bass traps: Specialized absorption handles low frequencies that standard panels can't deal with

- Diffusion: Scatters sound rather than absorbing it, maintaining liveliness while reducing echo

A well-treated meeting room will capture significantly clearer audio than an untreated room. The microphone isn't struggling against reflections. The audio is dry and clean.

For AI, this is critical. Clean audio means higher transcription accuracy, better speaker identification, and more reliable meeting intelligence.

Speaker placement and monitoring audio

How you place speakers in a room dramatically affects how audio is experienced. If speakers are mounted too high or too low, if they're positioned against walls, if they're creating hot spots where audio is too loud—all of these create bad listening experiences.

More importantly for AI, speakers that are placed poorly can create feedback (the squealing you hear when output gets back into a microphone) or acoustic effects that make speech hard to understand.

Proper speaker placement ensures:

- Intelligibility: Speech is clear from everywhere in the room

- Feedback prevention: No acoustic loops where audio goes from speaker back to microphone

- Balanced audio: Volume levels are consistent across the room

- Echo prevention: Reflections don't create the "hollow" feeling of a room with poor acoustics

For AI listening to the room, clear playback audio matters because it affects how participants respond. If they can't hear clearly, they speak differently, they move around to hear better, they miss context. All of that changes their audio quality.

Ventilation and background noise reduction

Meeting rooms typically have HVAC systems that provide constant background noise. This 50-70 decibel hum is a baseline problem in most office environments.

Solutions include:

- Acoustic ductwork: Reduces noise transmission through ventilation

- Vibration isolation: Isolates HVAC equipment from the room structure

- Smart microphone placement: Positions microphones away from ventilation returns

- Room isolation: Seals gaps and cracks that let outside noise in

Reducing background noise by 10 decibels typically increases AI accuracy by 10-15%. It's a direct relationship.
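One caveat when chasing that 10 dB: decibels don't add arithmetically. Uncorrelated noise sources combine by power, L_total = 10·log10(Σ 10^(L_i/10)), so quieting the loudest source helps less than you might expect when a second source sits close behind it. A short sketch, assuming a hypothetical room with 55 dB of HVAC hum and 50 dB of hallway noise.

```python
import math


def combine_levels(levels_db: list[float]) -> float:
    """Total level of uncorrelated noise sources, summed by power."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def power_ratio(reduction_db: float) -> float:
    """Fraction of noise power left after a reduction of reduction_db."""
    return 10 ** (-reduction_db / 10)


# Hypothetical room: HVAC hum plus hallway noise leaking through the door.
hvac, hallway = 55.0, 50.0
print(round(combine_levels([hvac, hallway]), 1))       # 56.2
print(round(combine_levels([hvac - 10, hallway]), 1))  # 51.2
print(power_ratio(10))                                 # 0.1
```

Cutting the HVAC by 10 dB lowers the room total by only about 5 dB, because the hallway noise now dominates. Treating sources in order of loudness, and re-measuring after each fix, avoids overpaying for the first one.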

A well-optimized meeting room environment creates conditions where:

- Microphones capture clear, usable audio

- Software can optimize intelligently

- AI tools can perform at their peak capability

The investment in room acoustics typically ranges from

Audio Quality Impact on AI Transcription and Meeting Intelligence

Let's get specific about what happens when you feed quality audio into AI transcription and meeting intelligence systems.

Transcription accuracy benchmark

With excellent audio quality (clean microphone, minimal background noise, clear speech):

- Word error rate: 2-3% (95-98% accuracy)

- Speaker identification: 98%+ accuracy

- Punctuation accuracy: 90%+

- Timestamp precision: ±50 milliseconds

With degraded audio (laptop microphone, some background noise, normal meeting conditions):

- Word error rate: 10-15% (85-90% accuracy)

- Speaker identification: 80-85% accuracy

- Punctuation accuracy: 60-70%

- Timestamp precision: ±500 milliseconds

With poor audio (low-quality microphone, significant background noise, multiple speakers):

- Word error rate: 25-35% (65-75% accuracy)

- Speaker identification: 50-60% accuracy

- Punctuation accuracy: 20-30%

- Timestamp precision: ±2-3 seconds

The difference isn't academic. A 5% word error rate means roughly 18 wrong words out of every 360, or about 1 error every 20 words. At a typical conversational pace of around 150 words per minute, a one-hour meeting produces roughly 9,000 words, which works out to about 450 errors. Put that in a transcription, and it reads like a bad translation from a different language.
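Word error rate itself is straightforward to compute once you have a trusted reference transcript: it is the minimum number of word substitutions, deletions, and insertions separating the AI's transcript from the reference, divided by the number of reference words. A minimal sketch using a word-level edit distance; real evaluations also normalize case and punctuation first, which this skips.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,          # reference word deleted
                          curr[j - 1] + 1,      # extra word inserted
                          prev[j - 1] + cost)   # match or substitution
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("quarterly revenue grew ten percent",
                      "court early revenue grew ten percent"))  # 0.4
```

The "court-early revenue" mistake from the introduction costs two edits (one substitution, one insertion) against a five-word reference, so that short sentence alone scores a 40% word error rate.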

Meeting intelligence accuracy

Meeting intelligence systems extract:

- Action items: What needs to happen, who's responsible, when it's due

- Topics discussed: What was the focus of the meeting

- Decisions made: What was decided, who decided it

- Risks identified: What could go wrong

- Attendee sentiment: Who was positive, negative, neutral

All of these depend on the AI correctly understanding what was said. With degraded audio, accuracy collapses:

- Action item extraction: 85%+ accuracy with good audio, 55-65% with poor audio

- Topic identification: 90%+ accuracy with good audio, 65-75% with poor audio

- Decision capture: 88%+ accuracy with good audio, 60-70% with poor audio

- Risk detection: 82%+ accuracy with good audio, 50-60% with poor audio

- Sentiment analysis: 85%+ accuracy with good audio, 55-70% with poor audio

Notice the pattern. Even with good audio, these systems miss roughly 10-18% of what they should capture. With poor audio, they miss 25-50%. That's not a small difference. That's a fundamental breakdown of the AI's ability to extract value from the meeting.
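The gaps can be read straight off the list above. A small sketch that computes, for each capability, how many percentage points are lost between good audio and the poor-audio range; the figures are the article's, and treating the "85%+" style numbers as exact values is a simplifying assumption.

```python
# (good audio, poor audio low, poor audio high), in percent, from the list above.
METRICS = {
    "action items": (85, 55, 65),
    "topics": (90, 65, 75),
    "decisions": (88, 60, 70),
    "risks": (82, 50, 60),
    "sentiment": (85, 55, 70),
}


def point_drop(good: int, poor_low: int, poor_high: int) -> tuple[int, int]:
    """Smallest and largest percentage-point gap between good and poor audio."""
    return good - poor_high, good - poor_low


for name, figures in METRICS.items():
    smallest, largest = point_drop(*figures)
    print(f"{name}: {smallest}-{largest} points lower with poor audio")
```

Across the five capabilities, poor audio costs roughly 15 to 32 percentage points, with risk detection hit hardest.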

Real-world impact: customer support

Consider a customer support scenario. A customer calls in with a problem. The AI is listening to:

- Categorize the issue

- Assess customer sentiment (angry, frustrated, confused, satisfied)

- Identify key information (account number, problem description, context)

- Detect escalation triggers (customer becoming angry, problem complexity)

With good audio quality and speech recognition:

- Issue categorization: 92% accuracy

- Sentiment detection: 88% accuracy

- Information extraction: 95% accuracy

- Escalation detection: 89% accuracy

With poor audio quality and degraded speech recognition:

- Issue categorization: 65% accuracy

- Sentiment detection: 60% accuracy

- Information extraction: 70% accuracy

- Escalation detection: 55% accuracy

What does this mean operationally? The AI miscategorizes roughly a third of calls, sending many of them to the wrong department. It misses customer frustration and doesn't escalate when it should. It extracts wrong information, leading to follow-ups. It fails to detect problems that need urgent escalation.

The customer experience degrades. Call resolution times increase. Customer satisfaction drops. And the organization blames the AI when the real problem was audio quality.

Real-world impact: strategic meetings

Consider a quarterly business review meeting with 12 people. The AI is generating an executive summary, action items, and business insights.

With good audio:

- The summary captures all major discussion threads

- Action items are accurate and properly assigned

- Business insights are based on clear context

- Follow-up can be efficient because people trust the AI record

With poor audio:

- The summary is incomplete and confusing

- Action items are wrong or missing

- Business insights are unreliable

- People don't trust the AI record and recreate notes manually

What's the cost? People waste time redoing work the AI was supposed to handle. Decisions get made with incomplete information. Follow-ups become necessary that shouldn't have happened. The meeting effectiveness drops dramatically.

Multiply this across dozens of meetings per week in a large organization, and you're looking at millions of dollars in lost productivity from audio quality issues.

AI accuracy significantly drops as audio quality decreases, with a potential 50% degradation from excellent to poor audio. Estimated data.

Certification Standards: Why They Matter for AI Performance

Not all audio equipment is created equal. Certification standards exist to ensure that audio equipment meets minimum quality thresholds for specific platforms and use cases.

For AI and meeting tools, several certification standards matter:

Microsoft Teams Certified

Microsoft certifies audio equipment specifically for Teams. Certification requires:

- Microphone specifications (frequency response, noise rejection, etc.)

- Speaker specifications (output quality, echo cancellation, etc.)

- Real-world performance testing on Teams

- Driver and firmware updates for at least 2 years

- Documentation and support

Why does this matter for AI? Teams Copilot features depend on clean audio. Certified equipment ensures the AI gets the best possible input. Uncertified equipment may work fine for voice calls but degrade Copilot performance.

Zoom for Home/Office Certified

Zoom has similar certification for audio equipment. Zoom recordings feed into analytics and transcription services. Certified equipment ensures optimal performance for those downstream AI features.

Skype for Business and Lync Certified

Legacy Microsoft platforms have their own certifications. If your organization still runs on older platforms, certified equipment ensures compatibility and optimal performance.

AES and other audio standards

Beyond platform-specific certification, audio equipment should meet basic audio engineering standards:

- Frequency response: 50 Hz-20 kHz is a common full-range specification. For speech-focused applications, 80 Hz-12 kHz is acceptable.

- Signal-to-noise ratio: 85 dB is the minimum acceptable. 95 dB+ is excellent.

- Total harmonic distortion: Should be below 1% at normal operating levels

- Phase response: Affects how the microphone captures multiple speakers simultaneously
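These thresholds are easy to apply to spec sheets during procurement. A minimal sketch, assuming the spec sheet gives the frequency-response range, SNR, and THD as plain numbers; the `MicSpec` fields and `check_spec` helper are hypothetical, and phase response is left out because the text gives no numeric threshold for it.

```python
from dataclasses import dataclass


@dataclass
class MicSpec:
    low_hz: float       # lower end of the rated frequency response
    high_hz: float      # upper end of the rated frequency response
    snr_db: float       # signal-to-noise ratio
    thd_percent: float  # total harmonic distortion at normal operating level


def check_spec(spec: MicSpec) -> list[str]:
    """Return the ways a spec sheet misses the minimums listed above (empty = passes)."""
    problems = []
    if spec.low_hz > 80 or spec.high_hz < 12_000:
        problems.append("frequency response narrower than 80 Hz-12 kHz")
    if spec.snr_db < 85:
        problems.append("SNR below the 85 dB minimum")
    if spec.thd_percent >= 1.0:
        problems.append("THD at or above 1%")
    return problems


def snr_grade(spec: MicSpec) -> str:
    if spec.snr_db >= 95:
        return "excellent"
    return "acceptable" if spec.snr_db >= 85 else "below minimum"


candidate = MicSpec(low_hz=50, high_hz=20_000, snr_db=96, thd_percent=0.3)
budget = MicSpec(low_hz=100, high_hz=10_000, snr_db=80, thd_percent=1.5)
print(check_spec(candidate), snr_grade(candidate))  # [] excellent
print(len(check_spec(budget)))                      # 3
```

Spec-sheet numbers are measured under each manufacturer's own conditions, so a pass here is a screening step, not a substitute for the real-world testing that platform certification adds.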

Why do these standards matter? Because when equipment meets these standards, AI models trained on high-quality audio operate in conditions they're optimized for. When equipment falls below standards, you're operating AI in non-standard conditions, and performance degrades predictably.

Certified solutions typically come from manufacturers like Shure, Sennheiser, Polycom, and others who specialize in professional audio and have invested in meeting certification processes.

The certification process isn't just marketing. It's validation that the equipment meets specific performance thresholds and that real-world performance has been tested.

For organizations deploying AI tools, certified audio equipment should be a non-negotiable requirement. It's the foundation that makes everything else work.

The Integration Challenge: Audio in the Modern Tech Stack

Most organizations have fragmented tech stacks. They use Microsoft Teams, Slack, Zoom, Google Meet, and custom web conferencing all simultaneously. They record meetings in multiple places. They run different AI transcription services.

Integrating audio quality across this fragmented landscape is non-trivial.

The problem with point solutions

A typical organization might use:

- Laptop microphones for casual calls

- Desktop USB mics for primary workspace

- Headsets for mobile/hybrid workers

- Conference room systems for meeting spaces

- Different AI transcription services for different platforms

Each of these operates independently. Audio quality varies wildly depending on which device you're using and which platform you're on. The AI experience is inconsistent.

The solution: integrated audio strategy

Organizations that get audio right implement an integrated strategy:

- Standard equipment across the organization: Everyone gets the same certified microphone and headset

- Certified software: Audio processing and transcription use certified solutions designed for the platforms the organization uses

- Consistent environment: Meeting spaces are optimized to a standard

- Unified transcription and analytics: All meetings feed into a common pool where transcription and AI analysis happen consistently

- Quality monitoring: Real-time monitoring of audio quality feeds into IT operations so problems are identified and fixed immediately

This integration creates conditions where:

- Every meeting has similar audio quality

- AI features perform consistently

- Users experience the same level of service regardless of which platform or device they're using

- IT can troubleshoot problems at scale instead of handling individual complaints

Platform-specific considerations

Each platform has unique audio handling:

Microsoft Teams and Copilot

Teams Copilot features depend heavily on audio quality:

- Meeting transcription

- Automatic action item extraction

- Meeting insights and summaries

- Real-time translation

Integration with certified audio equipment ensures all of these features perform at their peak. Teams' API surface also allows third-party audio vendors to integrate directly with Copilot features.

Zoom and analytics

Zoom recordings feed into analytics services that depend on transcription quality. Zoom's AI assistant features are similarly dependent on clear audio.

Zoom also allows third-party audio partners to integrate analytics and quality monitoring features directly into the platform.

Slack and integrated voice

Slack's voice and video features are increasingly important for organizations. Slack also supports third-party audio integration for quality monitoring and optimization.

Custom platforms and bots

Organizations building custom meeting bots and AI agents need to ensure their audio pipeline is compatible with the platforms being used and optimized for the AI models in the agent.

This is where many organizations stumble. They build a sophisticated AI agent but feed it degraded audio from a laptop microphone. The agent's capability is bottlenecked by audio quality before it ever gets a chance to shine.
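One concrete compatibility check for a custom bot's pipeline is confirming that captured audio matches the format its speech model expects before any AI processing runs. The sketch below uses Python's standard `wave` module. The 16 kHz, mono, 16-bit target is an assumption (it is common for speech models, but check the documentation for the model your agent actually uses), and `check_wav_format` and `make_silent_wav` are hypothetical helper names.

```python
import io
import wave

# Assumed target format; confirm against your speech model's documentation.
TARGET = {"sample_rate": 16000, "channels": 1, "sample_width_bytes": 2}


def check_wav_format(fileobj, target=TARGET):
    """Return a list of mismatches between a WAV stream and the target format."""
    with wave.open(fileobj, "rb") as wav:
        actual = {
            "sample_rate": wav.getframerate(),
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
        }
    return [
        f"{key}: expected {target[key]}, got {actual[key]}"
        for key in target
        if actual[key] != target[key]
    ]


def make_silent_wav(sample_rate, channels, sample_width, seconds=0.1):
    """Build an in-memory WAV file of silence, useful for testing the check."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(sample_width)
        wav.setframerate(sample_rate)
        frames = int(sample_rate * seconds)
        wav.writeframes(b"\x00" * (frames * channels * sample_width))
    buf.seek(0)
    return buf
```

For example, a 44.1 kHz stereo capture would produce two mismatches (sample rate and channel count), which is a signal to resample and downmix in the pipeline rather than hand the model audio it was not built for.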

Chart: relative importance of certification standards such as AES and Microsoft Teams certification for high-quality AI audio input (estimated data based on typical industry importance).

Building an Audio-First AI Strategy

The best organizations are embedding audio strategy into their broader AI and collaboration initiatives. Instead of audio being an afterthought, it's foundational.

Step 1: Assess current state

First, understand what you have:

- What audio equipment is currently deployed

- Which equipment is certified for your platforms

- What audio quality issues are being reported

- What performance degradation you're seeing in AI features

- What poor audio quality is costing you

This assessment typically involves:

- Auditing current equipment and certification status

- Recording sample meetings and evaluating audio quality

- Analyzing AI feature performance (transcription accuracy, meeting intelligence quality)

- Surveying users about audio quality and meeting experience

- Calculating the cost of audio-related support tickets and productivity loss
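The last assessment item is simple arithmetic once you pick your inputs. Here is a minimal sketch of one way to combine support costs and lost meeting time. Every input (tickets per month, cost per ticket, minutes lost per meeting, attendees, hourly rate, 48 working weeks) is a hypothetical placeholder to replace with your own data, not a figure from this article.

```python
def annual_cost_of_poor_audio(
    tickets_per_month: int,
    cost_per_ticket: float,
    meetings_per_week: int,
    minutes_lost_per_meeting: float,
    attendees_per_meeting: float,
    hourly_rate: float,
    weeks_per_year: int = 48,
) -> dict:
    """Estimate yearly support and productivity cost attributable to poor audio."""
    support = tickets_per_month * 12 * cost_per_ticket
    # Person-hours lost: each affected meeting wastes minutes for every attendee.
    hours_lost = (
        meetings_per_week * weeks_per_year * minutes_lost_per_meeting / 60
    ) * attendees_per_meeting
    productivity = hours_lost * hourly_rate
    return {"support": support, "productivity": productivity, "total": support + productivity}


# Hypothetical inputs for illustration only.
estimate = annual_cost_of_poor_audio(
    tickets_per_month=40,
    cost_per_ticket=50.0,
    meetings_per_week=200,
    minutes_lost_per_meeting=5,
    attendees_per_meeting=6,
    hourly_rate=75.0,
)
```

With these made-up inputs, support costs come to $24,000 a year while lost meeting time comes to $360,000, which is typical of why the productivity term tends to dominate the business case.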

Step 2: Define standards

Based on your assessment, define audio standards for your organization:

- Equipment standards: What certified equipment is approved for deployment

- Platform standards: Which platforms are considered primary (Teams, Zoom, Google Meet)

- AI standards: Which AI features are critical to your business and therefore require audio quality assurance

- Environment standards: Acoustic and audio environment specifications for meeting spaces

- Monitoring standards: How audio quality is monitored and what triggers corrective action

Step 3: Phased rollout

Don't try to fix everything at once. Phase the rollout:

Phase 1 (3 months): Upgrade high-impact meeting spaces (executive conference rooms, customer-facing spaces, large collaboration areas) with certified equipment and acoustic treatment.

Phase 2 (3-6 months): Upgrade primary meeting spaces with consistent equipment and begin monitoring audio quality at scale.

Phase 3 (6-12 months): Deploy consistent equipment to individual workspaces and remote workers.

Phase 4 (ongoing): Continuous monitoring, optimization, and upgrades as new equipment becomes available.

This approach allows you to demonstrate ROI early (phases 1-2 typically show 30-40% improvement in meeting productivity) and justify continued investment in phases 3-4.
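Demonstrating ROI from the early phases usually comes down to a payback calculation. The sketch below assumes savings ramp up linearly while a phase is being deployed and then hold steady; that ramp model, the function name, and the dollar figures in the usage note are hypothetical.

```python
def payback_month(investment: float, monthly_savings: float, ramp_months: int = 0) -> int:
    """Return the first month in which cumulative savings cover the investment.

    Savings ramp up linearly over `ramp_months` (a hypothetical model of a
    phased rollout), then stay at `monthly_savings`.
    """
    if monthly_savings <= 0:
        raise ValueError("monthly savings must be positive")
    cumulative, month = 0.0, 0
    while cumulative < investment:
        month += 1
        ramp = min(1.0, month / ramp_months) if ramp_months > 0 else 1.0
        cumulative += monthly_savings * ramp
    return month
```

With illustrative figures of $41,000 invested and $3,000 saved per month, payback lands in month 14 if savings start immediately and in month 15 with a three-month ramp, so the ramp assumption is worth stating explicitly when you present the numbers.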

Step 4: Integrate with AI roadmap

As you plan to deploy new AI features—whether Copilot, custom agents, or other AI-powered tools—ensure audio quality is part of the deployment plan.

Specifically:

- Before deploying an AI feature, audit audio quality in the environments where the feature will be used

- If audio quality is below standard, upgrade before deploying the AI feature

- When evaluating AI tools, test them with realistic audio quality (degraded audio, background noise, multiple speakers) to understand realistic performance

- Design AI feature rollouts to hit audio-optimized environments first, demonstrating value before broader rollout
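Testing with realistic audio usually means mixing noise into clean speech at controlled signal-to-noise ratios and running each version through the AI tool. The sketch below assumes both signals are lists of float samples at the same sample rate, and defines SNR as 10 × log10 of the clean-signal power over the scaled-noise power. In practice you would use recorded office noise and real speech; the synthetic tone and Gaussian noise in the usage section are placeholders.

```python
import math
import random


def power(signal):
    """Mean squared amplitude of a list of samples."""
    return sum(s * s for s in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Add `noise` to `clean`, scaled so the result has the requested SNR in dB."""
    if len(noise) < len(clean):
        raise ValueError("noise must be at least as long as the clean signal")
    noise = noise[: len(clean)]
    p_noise = power(noise)
    if p_noise == 0:
        raise ValueError("noise signal is silent")
    # Solve P_clean / (gain^2 * P_noise) = 10^(snr_db / 10) for gain.
    gain = math.sqrt(power(clean) / (p_noise * 10 ** (snr_db / 10)))
    return [c + gain * n for c, n in zip(clean, noise)]


if __name__ == "__main__":
    random.seed(0)
    speech = [0.5 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
    office_noise = [random.gauss(0, 0.1) for _ in range(16000)]
    # One test clip per condition, from clean-ish to very noisy.
    test_set = {snr: mix_at_snr(speech, office_noise, snr) for snr in (20, 10, 5, 0)}
```

Scoring the AI tool's output at each SNR level shows how quickly its performance falls off, which is more informative than a single demo run in a quiet room.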

Step 5: Measure and optimize

Once you've deployed audio solutions, measure the impact:

- Transcription accuracy: Comparing before/after transcription quality

- Meeting intelligence quality: Evaluating action item accuracy, topic identification, decision capture

- User experience: Surveying teams about meeting quality and productivity

- Adoption: Measuring how much teams are using AI features

- Business impact: Measuring time saved, productivity gains, customer satisfaction improvements

Use these metrics to identify optimization opportunities and continue improving.
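Transcription accuracy is most often measured as word error rate (WER): substitutions, deletions, and insertions needed to turn the AI transcript into a human reference transcript, divided by the number of reference words. The sketch below computes WER with a word-level edit distance. It assumes that the "accuracy" percentages quoted by vendors roughly correspond to 1 - WER, which varies by vendor, and it only lowercases text; a real evaluation would also normalize punctuation and numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, 1):
            cur[j] = min(
                prev[j] + 1,             # deletion: reference word missing from transcript
                cur[j - 1] + 1,          # insertion: extra word in transcript
                prev[j - 1] + (r != h),  # substitution, or free match
            )
        prev = cur
    return prev[-1] / len(ref)
```

Using the example from the introduction, "we discussed quarterly revenue" transcribed as "we discussed court-early revenue" is one substitution in four words, a WER of 0.25. Running the same reference recordings through the AI before and after an audio upgrade gives a direct before/after comparison.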

Real-World Case Study: Large Enterprise Transformation

A financial services company with 5,000 employees across 12 offices deployed an AI-powered meeting intelligence platform. Initially, the rollout was disappointing. AI-generated summaries were incomplete. Action items were often wrong. User adoption was low.

The organization investigated and discovered the root cause: audio quality. The company was trying to run AI on conference room audio captured with 15-year-old microphones and laptop microphones used by remote participants.

They implemented a targeted audio upgrade:

- Replaced all conference room microphones with certified solutions

- Deployed quality headsets to all primary remote workers

- Implemented acoustic treatment in 40 primary meeting spaces

- Integrated audio quality monitoring into their IT operations

Results after 6 months:

- Transcription accuracy: Improved from 72% to 94%

- Action item accuracy: Improved from 65% to 91%

- User adoption of meeting intelligence: Increased from 22% to 78%

- Meeting follow-up time: Decreased by 35% (fewer follow-ups needed because the first meeting was better captured)

- Executive perception of AI value: Shifted from "not useful" to "critical business tool"

- ROI on audio investment: Payback in 14 months through productivity savings alone

The interesting part: the company didn't change the AI platform or deploy new features. They just fixed the audio. The same AI that was producing poor results with degraded audio produced excellent results with quality audio.

This case illustrates a critical point: audio quality is often the limiting factor in AI performance, and it's frequently overlooked because organizations assume the AI is the problem.

The Future: AI That Demands Quality Audio

As AI becomes more sophisticated, it will demand higher audio quality, not lower.

Current speech recognition and transcription are impressive but relatively simple: convert audio to text. Future AI will:

- Analyze emotional nuance: Understanding not just what was said but how it was said

- Extract implicit context: Understanding unstated assumptions and implications

- Identify expertise and disagreement: Knowing who the expert is on a topic and recognizing when people disagree

- Predict outcomes: Based on tone, discussion quality, and decision-making patterns, predicting which decisions will succeed and which will fail

- Generate personalized insights: Creating different summaries and insights for different people based on their role and needs

All of these capabilities require higher audio quality, not lower. They depend on preserving subtle vocal cues and avoiding the artifacts that appear when audio is degraded.

Organizations that invest in audio quality today are future-proofing themselves for the AI capabilities coming tomorrow. Organizations that defer this investment will find themselves unable to use advanced AI features because the audio foundation isn't there.

Practical Implementation: A Step-by-Step Roadmap

Here's how to actually implement audio strategy:

Week 1-2: Assessment

- Audit current audio equipment in the top 10 meeting spaces

- Record sample meetings and evaluate audio quality

- Test transcription accuracy on those recordings

- Survey teams about audio quality issues

- Document cost of support tickets related to audio problems

Week 3-4: Planning

- Define equipment standards (which devices are approved)

- Identify high-impact spaces for first upgrade

- Get budget approval (typically $30K-50K for the first phase)

- Select certified equipment

- Plan installation and cutover schedule

Month 2: First phase deployment

- Procure equipment

- Train IT staff on installation and troubleshooting

- Install in first batch of spaces

- Establish audio quality monitoring

- Begin collecting baseline data on improvements

Month 3-6: Expansion and optimization

- Deploy to additional spaces based on impact analysis

- Refine standards based on real-world learning

- Measure AI feature performance improvements

- Plan next phases of expansion

- Document ROI and communicate successes

Ongoing: Maintenance and continuous improvement

- Monitor audio quality across all spaces

- Replace equipment as needed

- Upgrade software and firmware

- Optimize settings based on new learning

- Plan next-generation equipment as it becomes available

Common Mistakes to Avoid

Mistake 1: Deploying AI without auditing audio quality first

Don't assume your current audio setup can support AI. Test it. Record a meeting. Listen to it. Run it through transcription. If it sounds bad to you, it will sound bad to the AI.

Mistake 2: Buying the cheapest option

Cheap audio equipment can look nearly identical to quality equipment but performs dramatically differently. This isn't an area where you can save money without paying the price in AI performance.

Mistake 3: Ignoring room acoustics

Even perfect microphones and software can't overcome a room with terrible acoustics. You need both: quality microphones AND proper acoustic environment.

Mistake 4: Deploying without monitoring

Once audio equipment is installed, it can degrade over time. Microphone ports get clogged with dust. Software gets outdated. Monitoring ensures problems are identified and fixed immediately.
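Catching gradual degradation means comparing recent measurements with a baseline recorded when the equipment was installed. The sketch below assumes each room reports one quality score per meeting (for example an estimated SNR in dB); the `DriftMonitor` class, the window size, and the 3 dB tolerance are hypothetical choices.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flag a room when its recent average score drops too far below its baseline."""

    def __init__(self, baseline: float, window: int = 10, tolerance: float = 3.0):
        self.baseline = baseline      # score measured right after installation
        self.tolerance = tolerance    # allowed drop before flagging
        self.recent = deque(maxlen=window)

    def add(self, score: float) -> bool:
        """Record a score; return True if the room should be flagged for service."""
        self.recent.append(score)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough meetings yet to judge a trend
        return mean(self.recent) < self.baseline - self.tolerance
```

Averaging over a window keeps one noisy meeting from paging IT, while a slow slide (a dust-clogged microphone port, for instance) still trips the alert once the rolling mean falls below the threshold.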

Mistake 5: Treating audio as solved after equipment deployment

Audio strategy is ongoing. As platforms change, as AI capabilities evolve, as new equipment becomes available, strategy needs to evolve too. This isn't a one-time fix.

The Competitive Advantage of Audio-First Strategy

Organizations that master audio quality gain significant competitive advantages:

Faster decision-making: Clearer meetings with better AI insights mean decisions get made with better information and higher confidence.

Better customer experience: AI-powered customer support works better with clear audio, leading to faster resolution times and higher satisfaction.

Faster execution: Fewer follow-up meetings because the first meeting was captured completely and accurately.

Better inclusion: Remote workers and non-native speakers are more effectively included when audio quality is high.

AI capabilities at scale: Organizations can deploy advanced AI features at scale because the audio foundation supports it.

Reduced IT overhead: Clearer audio means fewer support tickets, fewer troubleshooting calls, less frustration.

Quantifying this: organizations that get audio right typically see 30-40% improvement in meeting productivity. At scale, across thousands of meetings, that's millions of dollars in value.

The companies winning in AI aren't winning because they have better AI tools. They're winning because they have better foundations—including audio quality—that allow their AI tools to perform at their peak.

FAQ

What is the relationship between audio quality and AI meeting performance?

Audio quality directly determines how well AI can understand meetings. When audio is clear, transcription accuracy is 95%+, speaker identification is 98%+ accurate, and AI insights are reliable. When audio is poor, transcription accuracy drops to 65-75%, speaker identification becomes guesswork, and AI insights become unreliable. The relationship is nonlinear: small drops in audio quality lead to large drops in AI performance.

How much does audio quality improvement cost and what's the ROI?

A certified meeting microphone costs

What audio certification standards matter most for AI?

Microsoft Teams Certified and Zoom for Home/Office Certified are the most important certifications for AI-powered collaboration. These certifications indicate the equipment has been tested for audio performance on those platforms, and that audio is exactly what the platforms' AI features consume. Beyond platform certifications, look for equipment that meets basic audio engineering standards: frequency response of 50 Hz-20 kHz for speech, signal-to-noise ratio of 85 dB minimum (95 dB+ preferred), and total harmonic distortion below 1%.
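The engineering thresholds in this answer can be applied mechanically when comparing vendor spec sheets. A minimal sketch: the `MicSpec` fields are hypothetical names for values you would copy from a data sheet, and the checks use only the thresholds listed above (50 Hz-20 kHz response, 85 dB minimum SNR with 95 dB preferred, THD below 1%).

```python
from dataclasses import dataclass


@dataclass
class MicSpec:
    name: str
    freq_low_hz: float    # lower edge of rated frequency response
    freq_high_hz: float   # upper edge of rated frequency response
    snr_db: float         # rated signal-to-noise ratio
    thd_percent: float    # rated total harmonic distortion


def check_spec(spec: MicSpec) -> tuple:
    """Return (failures, notes) for a spec sheet against the thresholds above."""
    failures, notes = [], []
    if spec.freq_low_hz > 50 or spec.freq_high_hz < 20_000:
        failures.append("frequency response narrower than 50 Hz-20 kHz")
    if spec.snr_db < 85:
        failures.append("SNR below 85 dB minimum")
    elif spec.snr_db < 95:
        notes.append("SNR meets minimum but is below the preferred 95 dB")
    if spec.thd_percent >= 1.0:
        failures.append("THD not below 1%")
    return failures, notes
```

A spec sheet is only a starting point: two devices with similar numbers can still behave differently in a real room, which is why platform certification and in-room testing remain worth doing.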

Can software fix poor audio quality?

Intelligent audio software can mitigate some audio quality issues through noise suppression, speaker separation, and adaptive processing. However, software cannot fully recover audio quality that was lost during initial capture. It's better to capture quality audio in the first place than to try fixing poor audio in software. The best approach combines quality microphone hardware and intelligent software together.

How do I measure audio quality improvement?

Measure transcription accuracy by comparing AI-generated transcripts to manual transcription. Track AI feature performance metrics like action item accuracy, topic identification, and sentiment detection. Survey users about meeting experience and productivity. Calculate business impact through metrics like follow-up meeting reduction, decision-making speed, and customer satisfaction. Most organizations see measurable improvements in 30-90 days after deploying quality audio equipment.

What should I do if my audio quality is degraded in existing meetings?

Start by assessing the problem: Is it the microphone, the room acoustics, the network connection, or the software settings? Record a sample meeting and listen to it directly. If you can't hear it clearly, neither can the AI. Common quick fixes include: repositioning the microphone closer to speakers, muting unused apps that might be creating noise, checking microphone driver versions, and testing with a different microphone. For persistent problems, conduct a professional audio assessment of your meeting spaces.

How does audio quality affect AI-powered customer support?

In customer support, audio quality determines whether the AI correctly understands the customer's problem, detects their emotional state, and routes them appropriately. With poor audio, the AI misclassifies issues (customer goes to wrong department), misses that the customer is frustrated (no escalation), and extracts wrong information (wrong follow-up actions). This leads to longer resolution times, lower customer satisfaction, and inefficient support operations. High audio quality enables the AI to categorize issues with 90%+ accuracy, detect customer sentiment reliably, and extract information correctly.

What's the most common mistake organizations make with audio and AI?

The most common mistake is deploying sophisticated AI tools while assuming the existing audio infrastructure is sufficient. Organizations spend millions on AI platforms, then cripple those platforms by feeding them poor quality audio from laptop microphones in acoustically terrible rooms. The fix is simple: audit audio quality before deploying AI, test AI performance with realistic audio conditions, and ensure audio meets standards before declaring the AI ready for production.

Final Thoughts: Audio as Strategic Infrastructure

Audio quality has moved from being a "nice to have" collaboration feature to being critical infrastructure for AI-powered workplaces.

Organizations that recognize this—and invest accordingly—are getting dramatic value from their AI tools. Organizations that ignore this are wasting millions on AI that never reaches its potential.

The good news: this is fixable. Audio quality is measurable. The solutions are well-understood. The ROI is clear. And the investment is modest compared to the value it unlocks.

Start with assessment. Understand where your audio quality gaps are. Then fix them systematically, measuring impact as you go.

If you want AI to deliver on its promise, start with sound. Clear audio doesn't just improve meetings. It unlocks the full potential of AI-powered collaboration.

That's not theoretical. That's happening in organizations right now. The question is: will your organization be among them?

Key Takeaways

- Poor audio quality reduces AI transcription accuracy from 95% to 65-75%, making meeting intelligence unreliable

- Audio equipment investment (typically $30K-50K for first phase) breaks even in 14-18 months through productivity savings

- Certified microphones, intelligent audio software, and room acoustics work together as foundational infrastructure for AI

- Organizations investing in audio quality first see 30-40% improvement in meeting effectiveness and AI feature adoption

- Every AI meeting feature depends on clear audio: transcription, speaker identification, sentiment analysis, and decision extraction
