Discord Age Verification: Complete Guide to Teen-Safe Features [2025]

Introduction: The Shift Toward Age-Aware Platforms

Discord just made one of the biggest policy changes in its history. Starting in early March 2025, every single user on the platform faces a choice: verify your age, or lose access to a massive chunk of Discord's functionality.

This isn't some minor update buried in settings. We're talking about a fundamental restructuring of how the platform works. Messages from strangers automatically get filtered. Age-restricted channels become invisible unless you prove you're an adult. Speaking on stage in voice channels gets gated. Sensitive content stays blurred by default.

For a platform with over 150 million monthly active users, this is seismic. And honestly? It matters way more than most people realize.

The announcement came in February 2025, catching some users off guard. Discord's head of product policy, Savannah Badalich, framed it as building on the company's existing safety architecture, giving teens strong protections while allowing verified adults flexibility. Sounds reasonable on the surface. But dig deeper, and you'll find a lot of complicated questions about privacy, effectiveness, and whether age verification actually solves what it claims to solve.

Here's what you need to know: why Discord is doing this, how the system works, what the privacy implications are, and what it means for the future of age-gating on the internet.

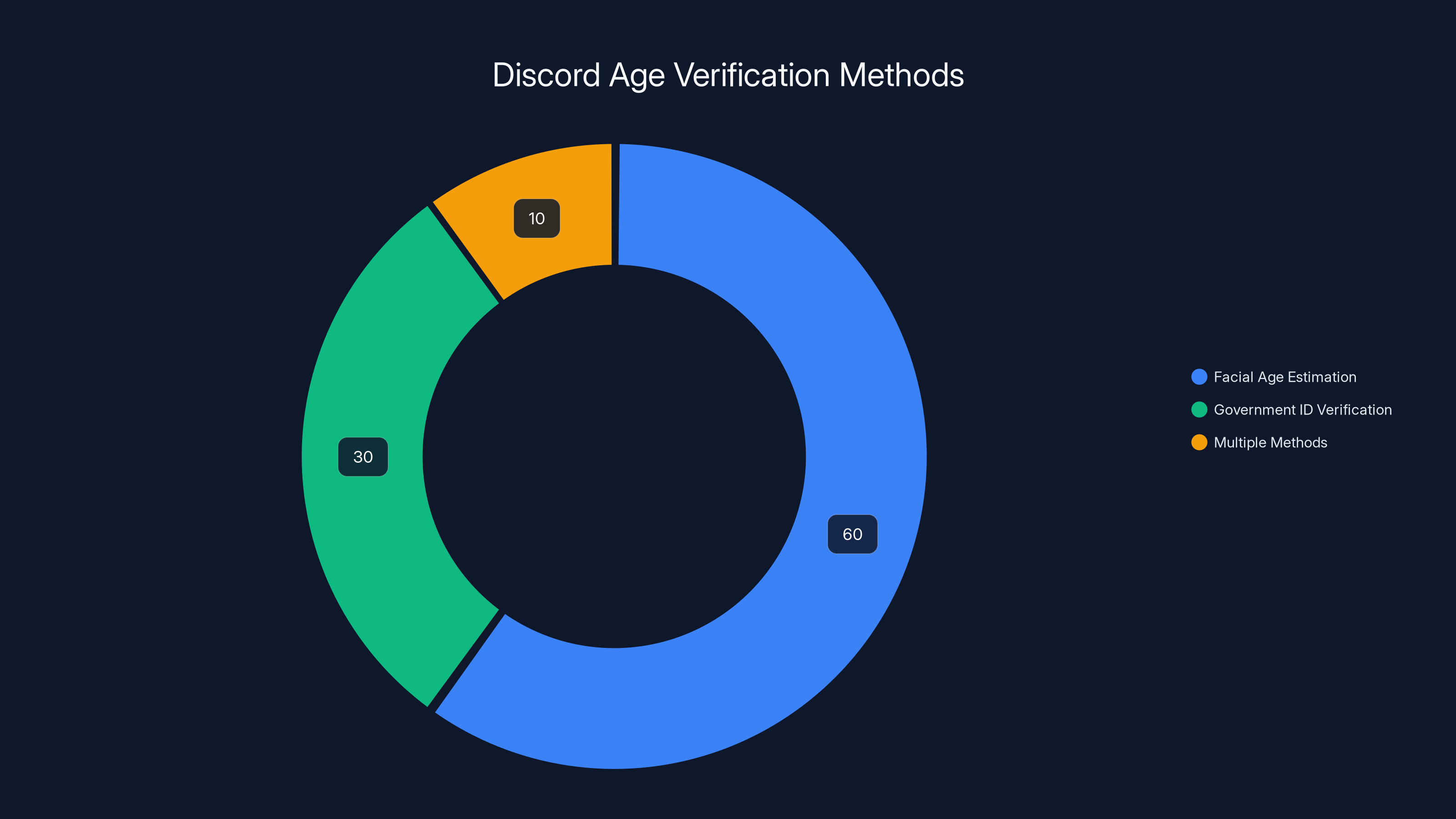

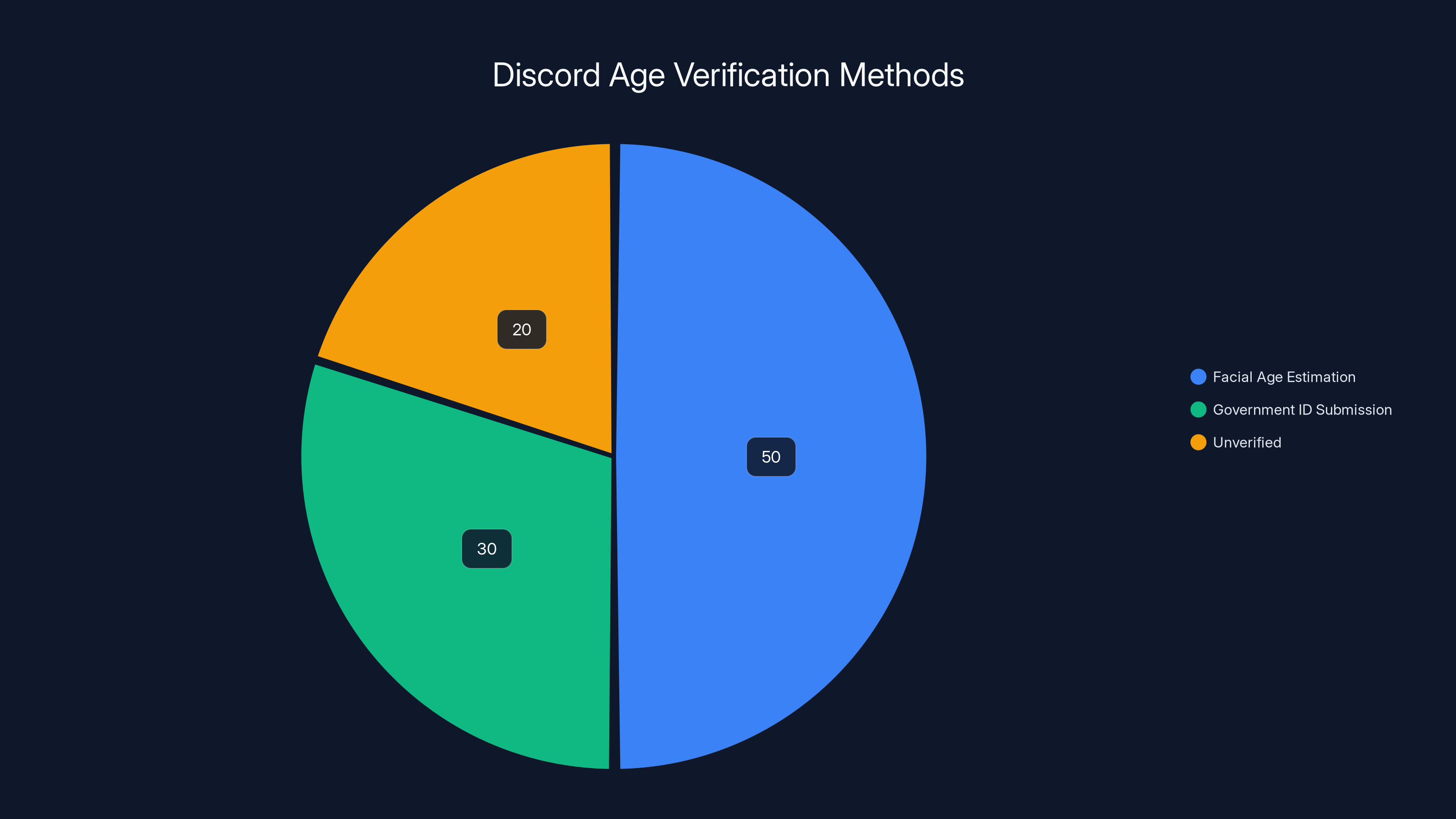

Estimated data shows that most users prefer facial age estimation due to privacy concerns, while a smaller percentage use government ID verification or multiple methods.

TL;DR

- Global rollout begins March 2025: All Discord users default to "teen-appropriate experience" unless age-verified

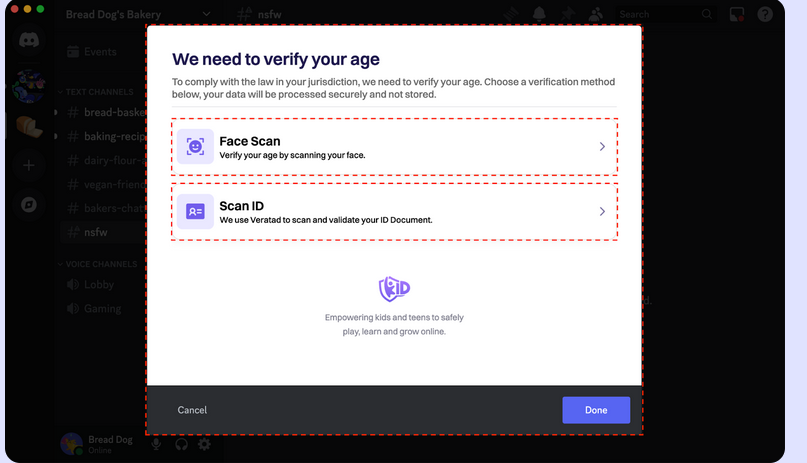

- Two verification methods available: Facial age estimation (video selfies) or government ID submission to third-party vendors

- Significant access changes: Verified adults gain access to age-restricted channels, can modify inbox filters, and can use stage features

- Privacy concerns linger: Discord experienced a breach in 2024 involving ID photos from a third-party vendor

- Industry-wide trend: Roblox, YouTube, and other platforms implementing similar age-verification systems

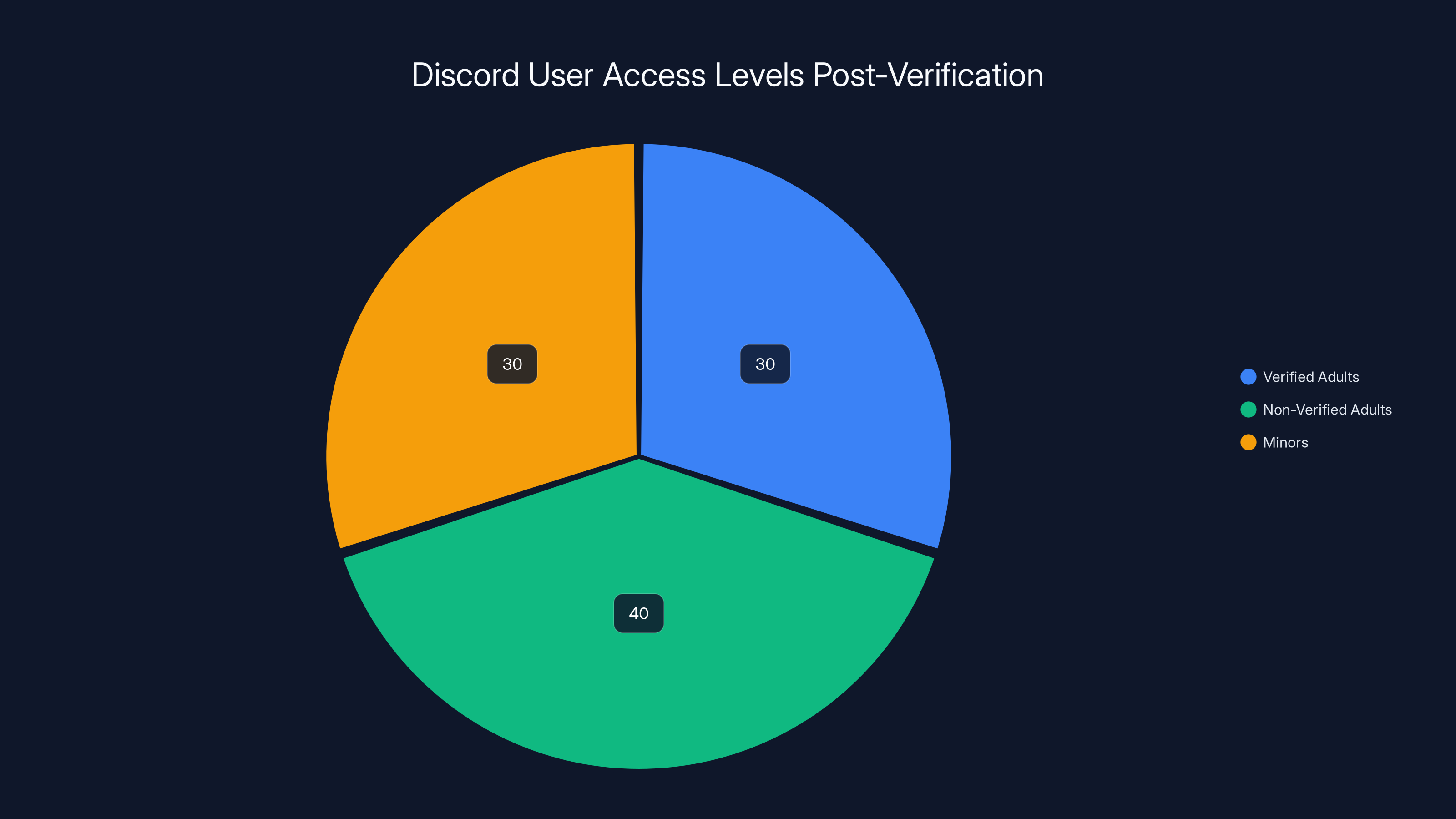

Estimated data suggests that a significant portion of adults may choose not to verify, maintaining teen-appropriate defaults. Minors constitute a similar share, highlighting the impact of verification on access levels.

Why Discord Is Rolling Out Age Verification Now

This didn't happen in a vacuum. Discord's age-verification push is part of a much larger movement toward platforms taking responsibility for protecting minors.

For years, Discord operated in a somewhat gray area. The platform has communities for everything: gaming, education, crypto, art, fitness. Some servers are squeaky clean. Others? Not so much. There's adult content. There are unmoderated spaces where kids can stumble into conversations they shouldn't be having.

From a liability perspective, Discord needed to do something. Regulators globally are tightening the screws on tech platforms regarding child safety. The UK's Online Safety Act went into effect. Australia introduced its own age-verification requirements. The EU's Digital Services Act imposes penalties for inadequate child protections. The US Congress keeps proposing new legislation aimed at restricting minors' access to social platforms.

Discord already implemented age verification for UK and Australian users in 2024. The global rollout is the logical next step. Once you've proven the system works in specific regions, expanding it worldwide becomes a matter of operational feasibility.

But there's another angle worth considering. Adult users have complained for years that Discord feels overrun with younger users. Implementing teen-by-default creates a two-tier experience. Adults can enjoy fewer restrictions. Teens get a curated, safer version. It theoretically creates better experiences for both groups.

The timing also matters. Discord's trying to position itself as a serious platform, not just a gaming chat app. If the company wants enterprise adoption, government contracts, or mainstream legitimacy, it needs to prove it takes safety seriously. Age verification is a highly visible way to demonstrate that commitment.

There's also the competitive angle. Roblox added mandatory facial verification for chat access. YouTube rolled out age-estimation technology. Other platforms are moving fast on this. Discord doesn't want to be perceived as lagging behind on child safety.

How Discord's Age Verification System Works

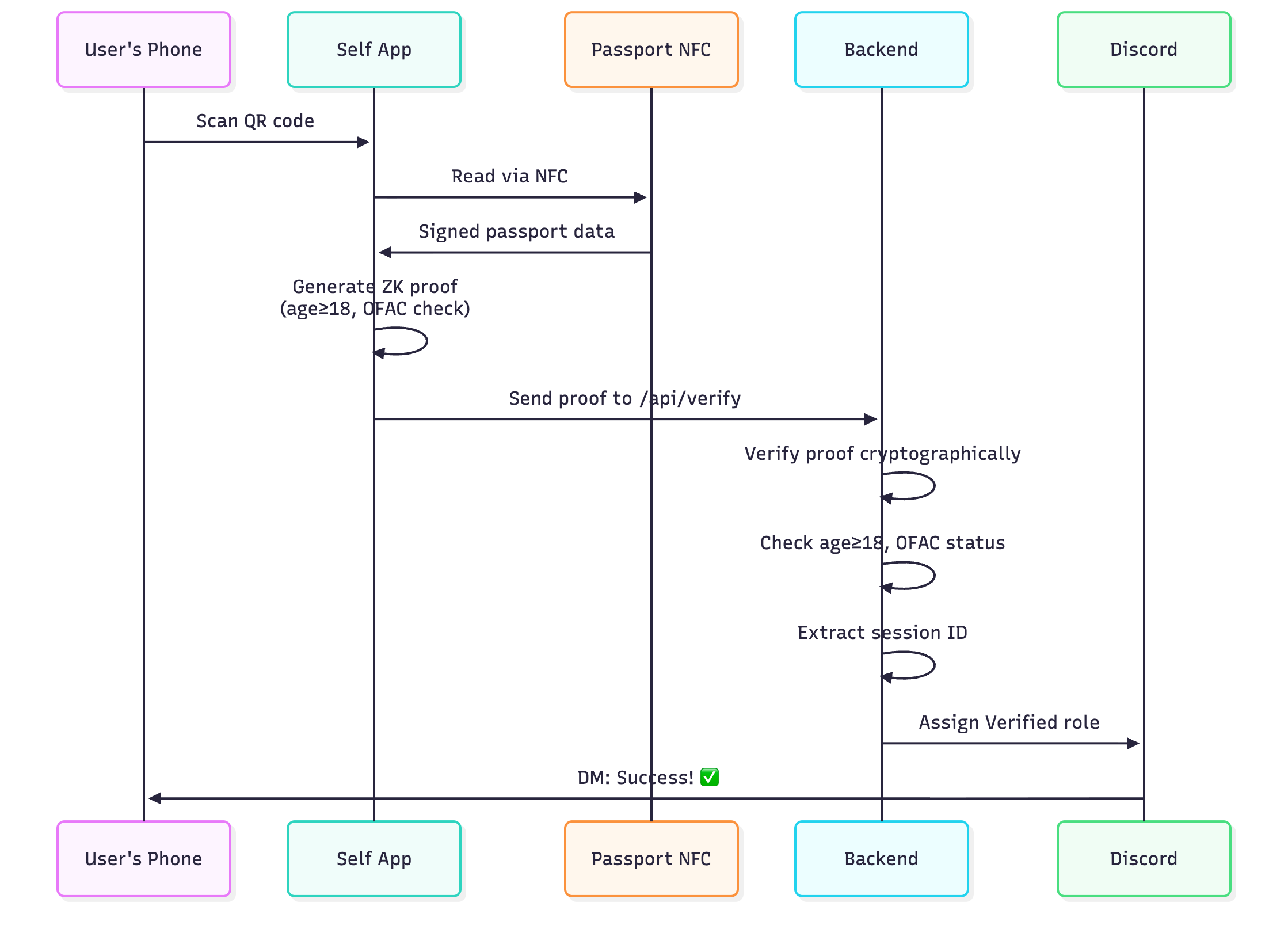

Let's get into the mechanics. Understanding how the system actually functions is crucial because it's where most of the privacy debates happen.

Discord offers two primary verification methods. First, there's facial age estimation. Users submit video selfies, and Discord's AI analyzes facial features to estimate age group. The company claims these videos never leave your device. They're processed locally, then deleted. In theory, you're not uploading sensitive video data anywhere.

Second, there's government ID verification. Users submit their ID to Discord's third-party vendor partners. This is where things get thorny. Your government ID contains a lot of sensitive information: your full legal name, date of birth, address, ID number, and sometimes physical descriptors like height and eye color. Discord says these IDs are deleted quickly, most often immediately after age confirmation. But the mere act of submitting them to a third party introduces risk.

Here's the critical part: Discord notes that some users might be asked to use multiple methods if additional information is needed. So facial estimation doesn't work? Time to submit ID. This creates a funnel effect where people who can't pass the easier verification method end up using the more invasive one.
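The funnel is easy to picture as an ordered fallback: try the least invasive method first, and only move down the list when a method can't reach a conclusion. Here's a minimal sketch under that assumption. It is not Discord's code; the method names, the `Outcome` values, and the stub checks are all hypothetical.

```python
from enum import Enum
from typing import Callable, List, Sequence, Tuple


class Outcome(Enum):
    ADULT = "adult"
    TEEN = "teen"
    INCONCLUSIVE = "inconclusive"


# A method is a (name, check) pair; check() returns an Outcome.
Method = Tuple[str, Callable[[], Outcome]]


def verify(methods: Sequence[Method]) -> Tuple[Outcome, List[str]]:
    """Try each method in order and stop at the first conclusive result.

    Also returns the names of every method the user went through,
    which is what makes the funnel effect visible.
    """
    attempted: List[str] = []
    for name, check in methods:
        attempted.append(name)
        result = check()
        if result is not Outcome.INCONCLUSIVE:
            return result, attempted
    # Nothing was conclusive: the account keeps the teen-appropriate defaults.
    return Outcome.TEEN, attempted


# The facial check can't decide, so the user is pushed on to the ID check.
outcome, steps = verify([
    ("facial_estimation", lambda: Outcome.INCONCLUSIVE),
    ("government_id", lambda: Outcome.ADULT),
])
print(outcome.value, steps)  # adult ['facial_estimation', 'government_id']
```

The `attempted` list is the interesting part: for anyone the first method can't place, it always ends with the more invasive option.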

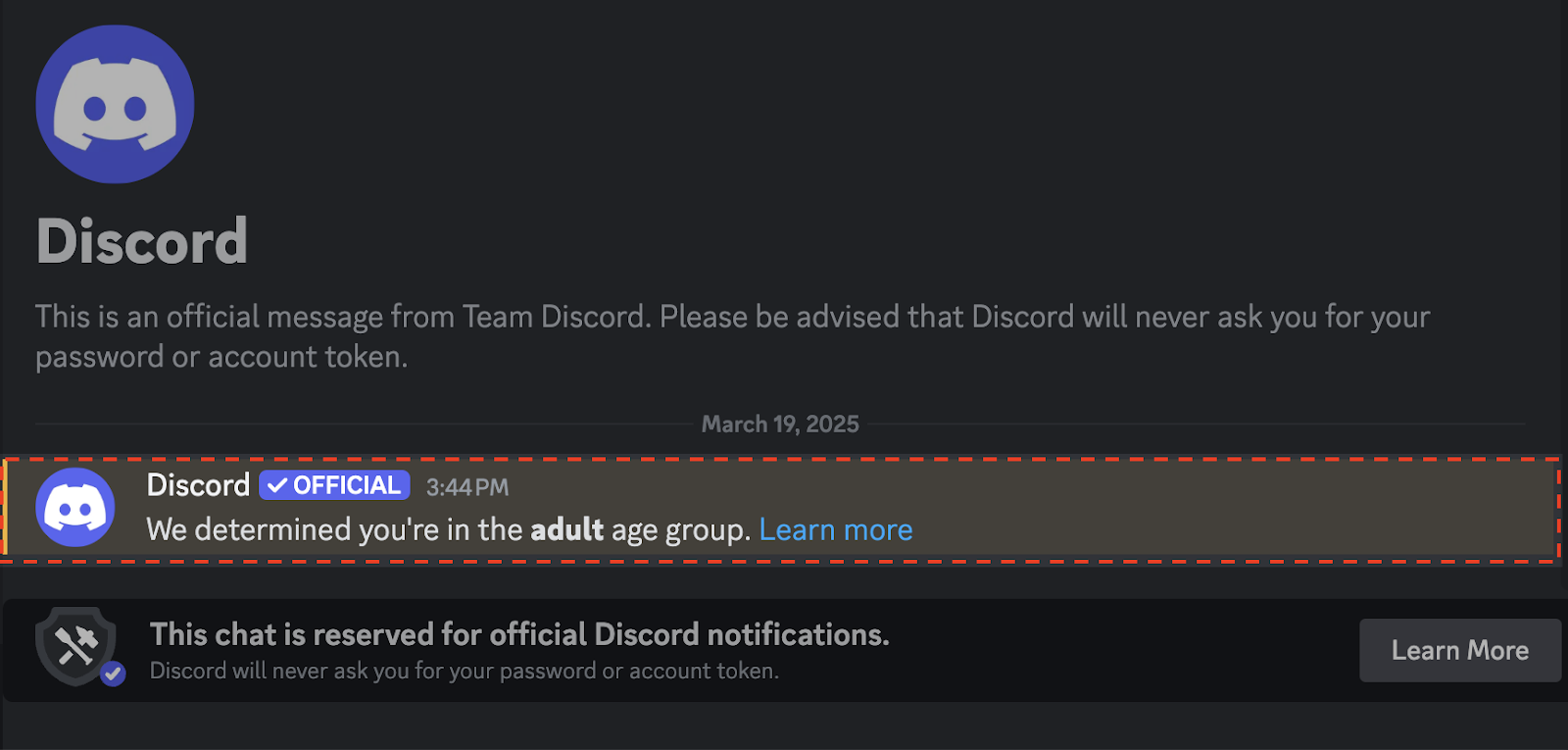

Once verified, your account gets flagged as "adult" or "teen." This flag then determines what you can access across the platform. It's binary. You're either verified as an adult, or you're not. There's no middle ground.

The verification flag also doesn't expire. Once you're verified, Discord remembers. That raises an interesting question: what happens if you're 17, verify with ID, and then turn 18? You shouldn't need to reverify, since the system treats your ID information as accurate, but that only works if Discord keeps your date of birth (or at least the date you turn 18) rather than a bare teen/adult flag.
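The difference between storing a birthdate and storing a flag shows up clearly in a few lines of code. This is a minimal sketch with made-up dates; it assumes nothing about what Discord actually retains.

```python
from datetime import date


def age_on(birth: date, today: date) -> int:
    """Whole years of age on a given day."""
    years = today.year - birth.year
    # Subtract one if this year's birthday hasn't happened yet.
    if (today.month, today.day) < (birth.month, birth.day):
        years -= 1
    return years


def bracket(birth: date, today: date) -> str:
    return "adult" if age_on(birth, today) >= 18 else "teen"


# Made-up user who verifies with ID at 17.
birth = date(2008, 6, 1)
stored_flag = bracket(birth, date(2025, 9, 1))  # computed once, then frozen

print(stored_flag)                       # teen
print(bracket(birth, date(2026, 6, 1)))  # adult: recomputed from the stored birthdate
```

The trade-off cuts both ways: keeping the birthdate lets the account upgrade itself on the 18th birthday, but it also means holding onto more personal data than a one-bit flag.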

But what if the system misidentifies you? What if the facial estimation algorithm thinks you're 18 when you're actually 15? Discord says it might ask for multiple verification methods when it's uncertain, but there's limited information about what happens if the system is consistently wrong about your age.

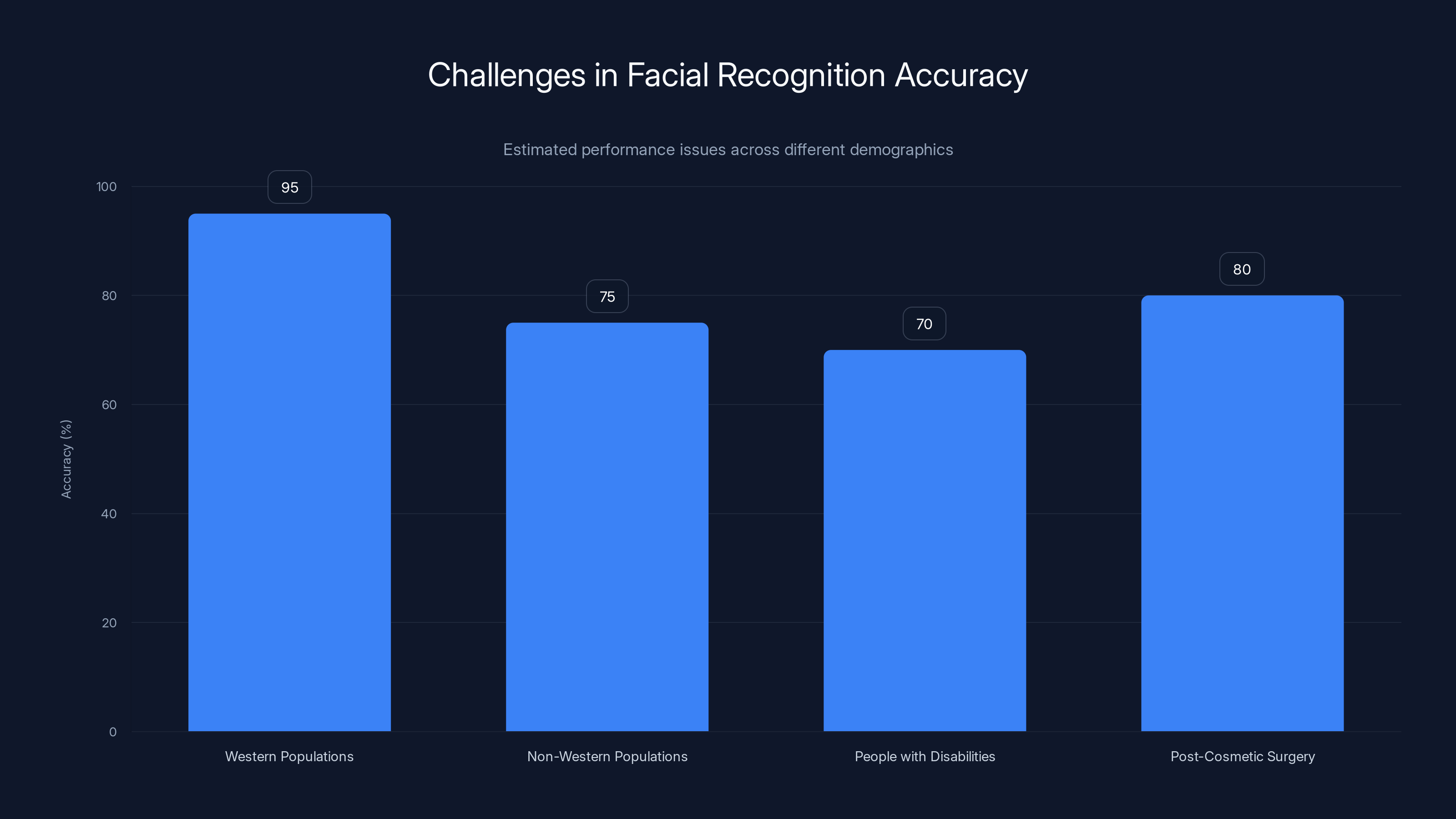

Estimated data suggests facial recognition models may perform significantly worse for non-Western populations and individuals with facial structure changes, highlighting potential discrimination issues.

The Teen-Appropriate Experience: What Changes for Younger Users

So you're a young user, and Discord now defaults you into "teen-appropriate mode." What does that actually mean?

First, sensitive content gets blurred. Images and videos marked as sensitive stay hidden unless you explicitly choose to see them. This is the unblur setting that only verified adults can toggle.

Second, age-restricted channels become invisible to you. If a server has channels marked for adults only, they don't even appear in your channel list. You can't accidentally click into them. You can't see what conversations are happening there. This is a pretty significant change from the current system where you might see channel names but not necessarily have access to the content.

Third, app commands and integrations that are age-restricted get blocked. If a server uses a bot that has adult-content commands, those commands won't work for you. Discord's building this capability into its platform, so developers can designate certain commands as adults-only.
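On the developer side, Discord's application-command API already has an age-restricted marker: command objects carry an `nsfw` boolean. The sketch below only builds the JSON body for registering such a command. The command name and description are made up, and whether the new teen defaults key off this exact field is an assumption, not something the announcement states.

```python
import json

# Discord application command type 1 is CHAT_INPUT (a slash command).
CHAT_INPUT = 1


def age_restricted_command(name: str, description: str) -> dict:
    """Build the JSON body for a slash command marked as age-restricted.

    The body would be sent to
    POST https://discord.com/api/v10/applications/{application_id}/commands
    with an "Authorization: Bot <token>" header; sending it is left out here.
    """
    return {
        "name": name,  # hypothetical command name
        "description": description,
        "type": CHAT_INPUT,
        "nsfw": True,  # marks the command as age-restricted
    }


body = age_restricted_command("mature-trivia", "Trivia for verified adults only")
print(json.dumps(body, sort_keys=True))
```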

Fourth, messages from people you don't know automatically route to a separate inbox. This is actually quite clever from a safety perspective. You still receive messages, but they're quarantined. They don't flood your main inbox. You have to actively decide to open them.

Fifth, you'll get warning prompts for friend requests from users you may not know. So if a random account sends you a friend request, Discord alerts you. It's not blocking the request, just making you think twice before accepting.

Sixth, you can't speak on stage in servers. Stage channels are a kind of voice channel where designated speakers broadcast to an audience. Teens can listen but not speak. This prevents a young person from broadcasting their voice to an audience of strangers.
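Taken together, the six defaults amount to a deny-list keyed on a single flag. Here's a minimal sketch with hypothetical capability names. It also shows why the binary flag matters: an unverified account and a verified teen end up in exactly the same place.

```python
from enum import Enum


class AgeStatus(Enum):
    UNVERIFIED = "unverified"  # treated like a teen by default
    TEEN = "teen"
    ADULT = "adult"


# Hypothetical names summarizing the six teen defaults.
ADULT_ONLY = {
    "unblur_sensitive_media",
    "view_age_restricted_channels",
    "use_age_restricted_commands",
    "change_dm_filter",
    "skip_friend_request_warning",
    "speak_on_stage",
}


def can(status: AgeStatus, capability: str) -> bool:
    """Adult-only capabilities need a verified adult; everything else is open."""
    if capability in ADULT_ONLY:
        return status is AgeStatus.ADULT
    return True


print(can(AgeStatus.UNVERIFIED, "speak_on_stage"))  # False
print(can(AgeStatus.ADULT, "speak_on_stage"))       # True
```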

There's also context to understand here. These aren't punitive restrictions. Discord's positioning them as protections. And frankly, most of them make sense from a child safety perspective. Random adults messaging your kid? That's a legitimate concern. Age-restricted content being visible to minors? Yeah, that's worth controlling.

But it also creates a two-tiered experience. Adults have full flexibility. Teens have guardrails. Whether those guardrails are effective, well, that's a different question entirely.

Facial Age Estimation: How Discord's AI Makes the Call

Let's zoom in on the facial age estimation method, because it's the more privacy-friendly option on the surface and also the more technically interesting one.

Facial age estimation isn't new technology. It's been in development for decades. The general principle is straightforward: human faces have certain characteristics that correlate with age. Skin texture, bone structure, eye shape, hair patterns. AI models trained on thousands of faces can learn to recognize these patterns and make age predictions.

Discord's approach, according to the announcement, processes video selfies locally on your device. You open the Discord app, start the verification flow, record a video of your face from multiple angles, and the local AI analyzes the video. The analysis happens entirely on your device. The video gets deleted. Discord never sees the actual video.

This is a smart privacy choice. It means Discord doesn't have to store biometric data. It doesn't have to build a facial database. It just gets a yes/no answer: "This person is likely an adult, or they're likely a teen."
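The data-minimization idea is that only a label ever crosses the network. Here's a sketch under stated assumptions: `classify` stands in for whatever on-device model Discord uses, and the payload fields are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List


@dataclass(frozen=True)
class AgeCheckResult:
    # The only fields that would leave the device in this sketch.
    bracket: str  # "adult" or "teen"
    method: str   # which check produced the answer


def check_on_device(frames: List[bytes],
                    classify: Callable[[List[bytes]], str]) -> str:
    """Run a local classifier, empty the frame buffer, return a JSON payload.

    `classify` is a hypothetical on-device model returning "adult" or "teen".
    Clearing the list drops these references; it is not a secure memory wipe.
    """
    bracket = classify(frames)
    frames.clear()
    return json.dumps(asdict(AgeCheckResult(bracket=bracket, method="facial_estimation")))


frames = [b"frame-1", b"frame-2"]
payload = check_on_device(frames, lambda fs: "adult")
print(payload)  # {"bracket": "adult", "method": "facial_estimation"}
print(frames)   # []
```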

But there are limitations to facial estimation. It's not perfect. Different ethnic groups have different facial development patterns. The AI might be more accurate for some demographics than others. If someone has a genetic condition that affects facial development, the model might misclassify them. If someone uses makeup, angles the camera, or has had cosmetic procedures, the accuracy drops.

Discord addresses this by saying some users will be asked to use multiple methods. Translation: if the facial estimation is uncertain, they'll ask for ID. But this creates a problematic situation for people who don't want to submit government IDs. If the AI keeps misidentifying you, you're forced into a more invasive verification method.
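One common way to handle "uncertain" is a buffer zone around the threshold: estimates that land too close to 18 are escalated instead of decided. A minimal sketch; the margin and threshold values are illustrative, not Discord's.

```python
def decide(estimated_age: float, margin: float = 3.0, threshold: float = 18.0) -> str:
    """Map a point estimate to "adult", "teen", or "escalate".

    Estimates within `margin` years of `threshold` are treated as too close
    to call and sent to another method, such as an ID check.
    """
    if estimated_age >= threshold + margin:
        return "adult"
    if estimated_age < threshold - margin:
        return "teen"
    return "escalate"


for age in (14.0, 17.5, 20.9, 21.0, 30.0):
    print(age, decide(age))
```

The wider the margin, the fewer minors slip through as adults, but the more genuine adults near the threshold get pushed into the ID path. And if the estimator is systematically off for some group, that group lands in the escalation band more often.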

There's also the question of long-term accuracy. As you age, your face changes. A facial estimate made when you're 15 only says you looked like a teen at that moment; unlike an ID, it doesn't establish a birthdate. So it's unclear how the system decides when a teen-estimated user has become an adult: whether it re-checks them later, asks them to verify again at some point, or leaves them in teen mode until they act.

The broader issue: Discord is relying on an AI system that has documented accuracy problems, especially across different demographics. And if that system makes mistakes, the consequences could affect access to entire communities.

Estimated data suggests that 50% of users may prefer facial age estimation due to privacy concerns, while 30% opt for government ID submission. 20% remain unverified, defaulting to a teen-appropriate experience.

Government ID Verification: Privacy Trade-offs and Data Handling

Now let's talk about the ID verification path. This is where things get genuinely uncomfortable from a privacy standpoint.

When you submit government ID to Discord, you're not submitting it directly to Discord. You're submitting it to "vendor partners." Discord hasn't named these vendors. We don't know who they are. We don't know what their security practices look like. We don't know their track records.

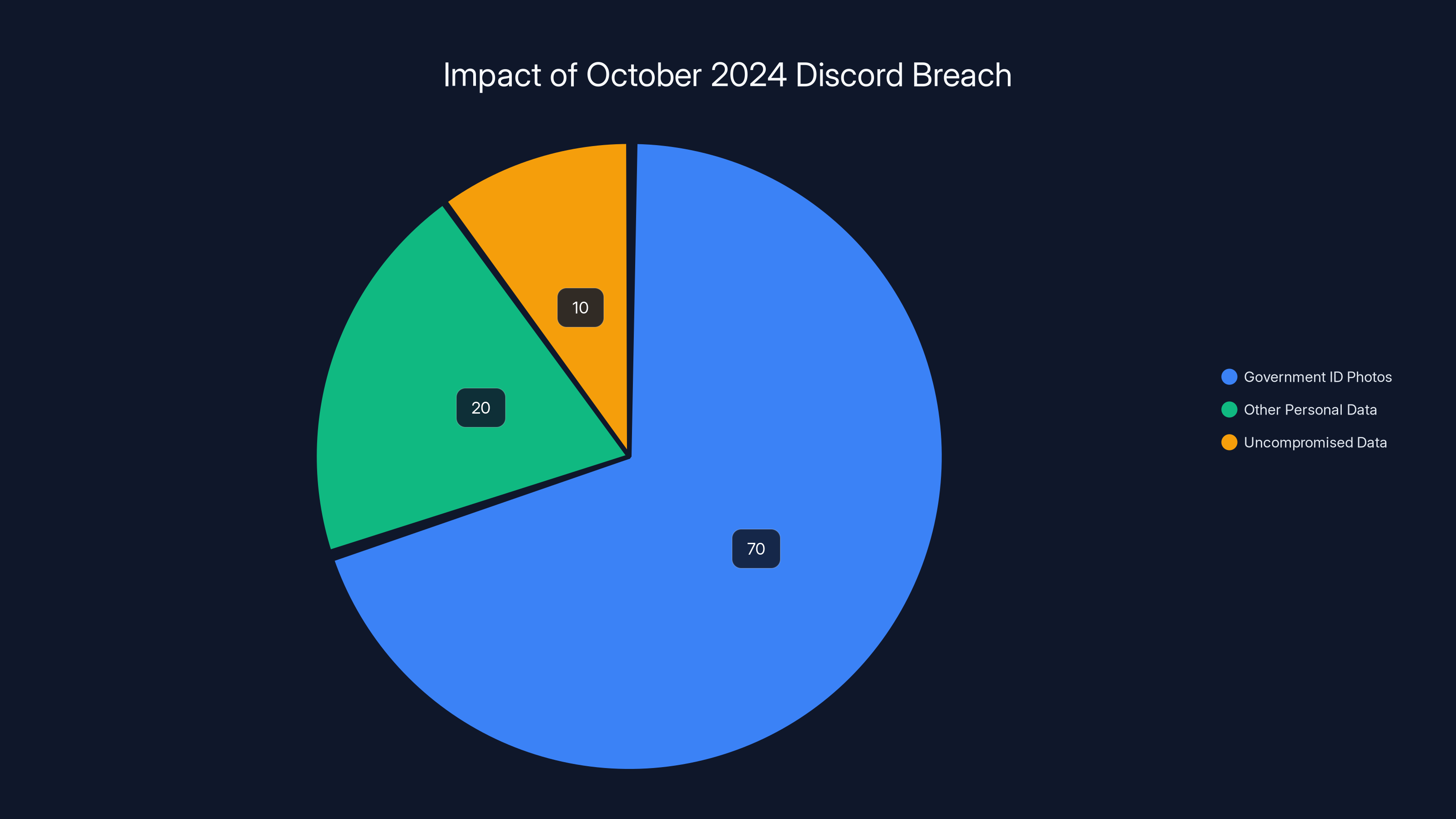

This is critical context because Discord literally experienced a breach in October 2024 involving a third-party vendor. Approximately 70,000 users had sensitive data exposed, including government ID photos. The breach wasn't Discord's infrastructure that failed. It was the vendor's infrastructure.

So when Discord says IDs are deleted quickly after verification, you have to ask: deleted by whom? When? How? And are they actually secure during the window between submission and deletion?

Here's what we know about typical ID verification flows: You take a photo or video of your ID. You upload it. A human or AI reviews it to confirm it's legitimate. Age information gets extracted. You either pass or fail verification. Then, theoretically, the ID gets deleted.
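The happy-path promise is "check, then delete." Here's a minimal sketch of what that looks like when deletion is tied to the check itself. `extract` stands in for a vendor's document reader, and the field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass
class ExtractedFields:
    birth_date: date
    looks_genuine: bool


def verify_id(image: bytearray,
              extract: Callable[[bytes], ExtractedFields],
              today: date) -> Optional[bool]:
    """Return True (adult), False (minor), or None (document rejected).

    The caller's image buffer is zeroed in `finally`, so it is wiped whether
    the check passes, fails, or raises. Copies made elsewhere (the bytes()
    copy below, logs, vendor backups) are untouched, which is exactly the
    hard part of any deletion promise.
    """
    try:
        fields = extract(bytes(image))
        if not fields.looks_genuine:
            return None
        b = fields.birth_date
        age = today.year - b.year - ((today.month, today.day) < (b.month, b.day))
        return age >= 18
    finally:
        for i in range(len(image)):
            image[i] = 0


buf = bytearray(b"fake-id-scan")
fake_reader = lambda data: ExtractedFields(birth_date=date(2000, 1, 15), looks_genuine=True)
print(verify_id(buf, fake_reader, today=date(2025, 3, 1)))  # True
print(set(buf))  # {0}
```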

But "immediately after age confirmation" is vague. Does it mean within seconds? Minutes? Hours? And what about backups? What about logs that might reference the ID? What about downstream systems that received the data?

The other issue is that government IDs are persistent. Your government ID number is something you'll have for decades. If a vendor gets breached, that ID number could be sold, could be used for identity theft, could be tied to you for years.

Some countries have stricter ID verification laws than others. In the European Union, for example, the GDPR imposes strict requirements on storing biometric data. Discord might have to handle European ID verification differently than US verification. But the announcement doesn't clarify these regional differences.

There's also the business model question. Why do vendors exist for age verification? Because there's money in it. Vendors provide this service to multiple companies. They're handling millions of ID submissions. The more data they accumulate, the more valuable it becomes to potential buyers or hackers.

Discord's saying it's taking precautions. But precautions aren't guarantees. And the company's history with third-party breaches suggests the supply chain is a weak link.

What Verified Adults Get: Unrestricted Access

If you verify as an adult, congratulations. You basically get the Discord experience that existed before this change.

You can access age-restricted channels and servers. You can view sensitive content. You can use adult-flagged app commands and integrations. You can modify your message inbox settings, which means random messages from strangers don't automatically route to a separate inbox unless you want them to.
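The inbox behavior for teens and adults boils down to a small routing rule. Here's a sketch with hypothetical field and inbox names (`filter_unknown_senders`, `"requests"`); Discord hasn't published how it actually decides.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Recipient:
    is_verified_adult: bool
    friends: Set[str] = field(default_factory=set)
    # Hypothetical setting; only verified adults can actually turn it off.
    filter_unknown_senders: bool = True


def route_dm(recipient: Recipient, sender_id: str) -> str:
    """Return the inbox a direct message lands in: "main" or "requests"."""
    if sender_id in recipient.friends:
        return "main"
    # Teens are always filtered; adults only if they left the filter on.
    if not recipient.is_verified_adult or recipient.filter_unknown_senders:
        return "requests"
    return "main"


teen = Recipient(is_verified_adult=False, friends={"friend1"}, filter_unknown_senders=False)
adult = Recipient(is_verified_adult=True, filter_unknown_senders=False)
print(route_dm(teen, "stranger"))   # requests (the teen's setting is ignored)
print(route_dm(adult, "stranger"))  # main
```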

Most significantly for some users, you can broadcast on stage in voice channels. This matters for creators, streamers, educators, and anyone running a community that might want designated speakers.

Verified adults also don't get the warning prompts for friend requests from unknown users. If someone you don't know sends you a friend request, it just shows up like normal. You're trusted to make your own decisions about who you connect with.

This creates a genuine two-tier experience. But here's the thing that's worth pointing out: for most Discord users who are actually adults, this doesn't represent a massive change. They probably weren't heavily using the age-restricted features or communities anyway.

Where this gets interesting is for users on the borderline. Someone who's 15 might have reached some age-gated content before by clicking through an age prompt. Now they can't, and verifying won't help, because it would only confirm they're a teen. The experience becomes significantly more restricted.

There's also a behavioral economics angle. Friction discourages action. By requiring age verification for adult features, Discord introduces friction. Some adults might simply accept the teen-appropriate defaults rather than go through the verification process, effectively restricting their own experience voluntarily.

But from Discord's perspective, that's fine. The company gets plausible deniability about child safety without locking anyone out: users who can't or won't verify simply keep the teen-appropriate defaults.

Estimated data suggests that having conversations about online safety is the most important parental action regarding Discord's changes, followed closely by monitoring activity and educating about risks.

The Privacy Paradox: Age Verification as a Double-Edged Sword

Here's where the conversation gets genuinely complicated.

Age verification is supposed to protect minors. Make the internet safer for kids. Prevent them from accessing adult content. Keep predators from targeting them. These are legitimate goals.

But age verification systems create permanent records of young people. If you verify as a 15-year-old, somewhere, someone has a record that says you're 15. If that record gets breached or misused, it could follow you. Bad actors might specifically target young people's verified accounts, knowing they're likely minors.

There's also the chilling effect. Some users, particularly young adults, might avoid age verification entirely, choosing to live with the teen-appropriate restrictions rather than submit government ID. They're making a privacy calculation: is the risk of my data being breached worth unrestricted platform access? For many, the answer is no.

This creates a strange situation where the safety measure itself becomes a privacy liability. The system designed to protect kids creates new vectors for harm.

Digital rights activists have been sounding alarms about age verification for exactly this reason. They argue it's a Trojan horse for broader surveillance. Once governments and companies normalize age verification, they can expand it. Age checks become identity checks become tracking systems.

Discord's approach is relatively mild compared to some proposals. They're not asking for proof of age in public ways. You're not registering your age with a government database. But the principle is the same: you're creating a digital record that links your identity to your age.

There's also the question of who benefits from age verification data. Discord's vendors collect this data. Even if they delete it promptly, they see patterns. They understand market demographics. They know which regions have high verification rates, which age groups are most resistant to verification. This data, in aggregate, has value.

The counterargument is that age verification is necessary. Letting kids run wild on unmoderated platforms exposes them to genuinely harmful content and contact. Some level of friction, some level of identity verification, might be worth the privacy trade-off.

But the conversation needs to happen explicitly. We shouldn't sleepwalk into age verification systems without really understanding what we're trading away.

Facial Recognition and Biometric Data: Technical and Ethical Concerns

Facial age estimation uses biometric data. Your face is essentially a fingerprint. It's unique to you. It's permanent. It's hard to change.

Discord claims the facial estimation happens locally, on-device, and videos are deleted immediately. That's the promise. But let's examine what could go wrong.

First, there's the question of model robustness. The AI model that estimates age has been trained on specific datasets. Those datasets might underrepresent certain demographics. The model might perform significantly worse for non-Western populations, for people with disabilities that affect facial structure, for people who've had cosmetic surgery.

If the model performs worse for certain groups, those groups will face more friction. They'll be asked to verify multiple times or submit IDs when other groups pass with facial estimation alone. That's a form of discrimination, even if unintentional.
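Whether the friction falls unevenly is measurable. Here's a minimal auditing sketch: given labeled test results, compare how often each group gets escalated from the facial check to an ID request. The group labels and numbers are invented for illustration, not real data.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Tuple


def escalation_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of facial checks per group that ended in an ID request.

    Each record is (group_label, was_escalated). Labels would come from a
    consented audit dataset; they are hypothetical here.
    """
    totals: DefaultDict[str, int] = defaultdict(int)
    escalated: DefaultDict[str, int] = defaultdict(int)
    for group, was_escalated in records:
        totals[group] += 1
        escalated[group] += was_escalated  # True counts as 1
    return {g: escalated[g] / totals[g] for g in totals}


# Made-up audit sample.
sample = [("A", False)] * 90 + [("A", True)] * 10 + [("B", False)] * 70 + [("B", True)] * 30
rates = escalation_rates(sample)
print(rates)  # {'A': 0.1, 'B': 0.3}
```

A gap like 10% versus 30% would mean group B is three times as likely to be asked for ID, which is the unintentional discrimination described here.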

Second, there's the rollback question. Once a biometric sample leaves your device, whether through a bug, a server-side fallback, or a vendor integration, you can't get it back. Unlike a password, a face can't be reset. Even if Discord deletes the video after estimation, you're relying on its word that no copy was made.

Third, there's the scope creep question. Today, Discord uses facial estimation for age verification. Tomorrow, could it use the same system for identity verification? For authentication? For tracking? The infrastructure would already exist; all it would take is a policy change or a regulatory justification.

There's also the question of what happens with edge cases. What if the system thinks you're an adult when you're clearly a child, or vice versa? What's the appeal process? Can you challenge the verification? Discord hasn't detailed how they handle disputes.

The broader context is that facial recognition technology is increasingly controversial. Governments are restricting its use. Privacy advocates are pushing for bans. And yet, companies are quietly integrating it into age verification systems, claiming it's more privacy-friendly than ID verification.

It's a marketing narrative with technical merit. Biometric analysis on-device is genuinely more privacy-protective than storing ID photos. But it also normalizes facial recognition technology in ways that could have long-term consequences.

Estimated data shows that government ID photos were the most exposed type of data in the October 2024 Discord breach, highlighting the risks of third-party vendor involvement.

The October 2024 Breach: Why Trust Is the Real Issue

In October 2024, Discord disclosed a security incident. A third-party vendor that the company uses for age-related appeals had been breached. Approximately 70,000 users had sensitive data exposed, including government ID photos.

Let that sink in. A vendor breach exposed ID photos for 70,000 people. Not to Discord. To hackers.

This is the exact scenario that critics of age verification point to as inevitable. No matter how careful Discord is, no matter how strict their contracts with vendors are, the vendors themselves can be compromised.

Now, Discord is rolling out age verification globally using vendors. The company's presumably learned from the October breach. They're probably using different vendors, demanding better security, implementing additional safeguards.

But the fundamental risk hasn't changed. Once you involve third parties in handling sensitive data, you're accepting that risk. No vendor is so secure that breaches become impossible; there are only vendors whose breaches become less likely.

For users considering ID verification, this breach is worth remembering. It's proof that the infrastructure isn't foolproof. It's evidence that third-party vendors can fail.

The other angle is that this breach might have made Discord more cautious. The company has an incentive to avoid future breaches because the brand damage is significant. A second major breach involving user ID data would be catastrophic for Discord's reputation and user trust.

But incentives aren't guarantees. Companies get breached despite incentives to avoid breaches. It happens because infrastructure is complex, human error is constant, and attackers are sophisticated.

Global Implementation and Regional Differences

Discord isn't implementing age verification identically across the world. Different regions have different legal requirements and cultural norms around age verification.

In the UK and Australia, Discord already rolled out age verification in 2024. These regions had regulatory pressures that required it. Now, with the global rollout, Discord is bringing similar systems to 150+ countries.

But regulatory requirements differ significantly. The UK's Online Safety Act has specific requirements for platforms to age-gate certain content. The EU's Digital Services Act requires child protections but doesn't mandate specific age-verification mechanisms.

The US has no comprehensive federal age-verification requirement for platforms, though Congress has proposed legislation. Some states have enacted age-verification laws for specific categories, such as adult content sites or minors' social media accounts.

Asia has wildly different requirements. China mandates real-name registration for minors on some platforms. India has emerging data protection laws that might restrict how age verification data is handled. Japan has different cultural norms around online safety.

Discord's trying to implement a system that works globally while respecting regional requirements. That's genuinely difficult. One system that works perfectly in the UK might violate laws in the EU. One approach that's acceptable in the US might be illegal in Germany.

The global rollout probably means Discord's creating a baseline system that meets the lowest common denominator of requirements, with regional adjustments for stricter jurisdictions.
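A "baseline plus regional adjustments" design is essentially a layered config. Here's a minimal sketch; every key, value, and region label is hypothetical and says nothing about what any jurisdiction actually requires.

```python
# Hypothetical policy knobs; the values are illustrative only.
BASELINE = {
    "teen_by_default": True,
    "allow_facial_estimation": True,
    "allow_government_id": True,
    "id_retention_hours": 24,
}

# Hypothetical stricter jurisdictions.
REGIONAL_OVERRIDES = {
    "REGION_A": {"id_retention_hours": 0},          # delete ID images at once
    "REGION_B": {"allow_facial_estimation": False},  # method not permitted
}


def policy_for(region: str) -> dict:
    """Start from the global baseline and apply any regional settings on top."""
    merged = dict(BASELINE)  # copy so the baseline itself is never mutated
    merged.update(REGIONAL_OVERRIDES.get(region, {}))
    return merged


print(policy_for("REGION_A")["id_retention_hours"])  # 0
print(policy_for("ELSEWHERE") == BASELINE)           # True
```

A plain dictionary merge only behaves like "lowest common denominator plus stricter adjustments" if every override really is stricter; a real system would need to check that rather than assume it.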

What's unclear is whether Discord will transparently communicate regional differences to users. Will the company explain that your age verification data is handled differently depending on your location? Will they explain what vendors they're using in different regions?

Probably not. Most companies don't because it's complex and might raise concerns.

Comparison With Competitors: How Other Platforms Handle Age Verification

Discord's not alone in implementing age verification. Other major platforms are doing the same thing, often more aggressively.

Roblox, which skews even younger than Discord, made facial verification mandatory for access to chats. You can't talk to other people on Roblox unless you verify your age using facial recognition. That's a harder requirement than Discord's implementing.

YouTube launched age-estimation technology in the US to identify teen users and provide age-appropriate recommendations. But YouTube's approach is more passive. The company's trying to infer age without requiring explicit verification. That's actually more privacy-invasive because it's not consensual.

TikTok has been pushing age verification for years, partly because of regulatory pressure. The platform has implemented systems to ask for ID when users try to access certain content or when unusual account behavior is detected.

Instagram and Facebook use age-gating but haven't fully implemented verification systems. Meta's approach is more about setting age restrictions on who can create accounts, then trusting users to not lie.

Snap has similar age restrictions but limited verification beyond asking for date of birth, which is easily spoofed.

The trend is clear: platforms are moving toward more rigorous age verification. Discord's joining that trend rather than leading it. But Discord's implementing it more thoroughly than most. Few platforms are making age verification as central to their architecture as Discord is doing.

There's probably a competitive element here. If Discord becomes known as the safest platform for minors, that's an advantage. If Roblox is known for strict verification but Discord is seen as more private, Discord wins different users.

But all these systems are converging on the same conclusion: age verification is becoming baseline infrastructure for social platforms.

Effectiveness Questions: Does Age Verification Actually Work?

Here's the uncomfortable question: does age verification actually make platforms safer?

The assumption behind age verification is straightforward. Minors can't access adult content. Adults can't easily target minors. Platforms become safer for kids.

But the evidence is mixed. Age verification reduces some risks (accidental exposure to adult content) but might increase others (concentrated data on minors becomes an attractive target for attackers).

There's also the spoofing question. How many kids will defeat age verification systems? Facial estimation might be harder to spoof than claiming you're 18, but it's not impossible. If a significant percentage of minors successfully bypass verification, the system's effectiveness drops.

Age verification also doesn't address the actual problem: predatory behavior. A predator can verify as an adult and then target minors in public Discord communities. The age verification of the predator doesn't prevent harm to children.

What actually prevents predatory behavior is moderation, reporting systems, and community standards enforcement. Age verification is a complementary measure, not a primary one.

There's also the question of whether age verification creates a false sense of security. Parents think Discord is safe for their kids because the platform has age verification. So they're less vigilant about what their kids are doing on Discord. In reality, the platform might not be any safer.

From a research perspective, we don't have strong evidence that age verification significantly reduces harm to minors on social platforms. We have evidence it works as a content-filtering mechanism. We have evidence it doesn't stop determined bad actors.

What we don't have is long-term data on whether age-verification systems actually improve outcomes for young people using these platforms.

For Parents: What You Should Know About Discord's Changes

If you're a parent with kids on Discord, here's what matters.

Discord is implementing a safety system that's genuinely more protective than the previous version. Kids will no longer see adult content by default. Messages from strangers get quarantined. And teens can't speak on stage, so they won't be broadcasting their voice to audiences of strangers.

These are real improvements. They're not perfect, but they're better than the alternative.

However, you shouldn't interpret age verification as a solution. It's a tool. It reduces some risks but introduces others. Just because your kid's account is marked as "teen-appropriate" doesn't mean they're safe from all harm.

You still need to:

- Have conversations with your kids about online safety

- Understand who they're talking to on Discord

- Know what servers and communities they're joining

- Monitor their activity (without being invasive)

- Set boundaries about screen time and appropriate content

- Educate them about phishing, social engineering, and predatory behavior

Age verification can reduce the likelihood that they'll stumble into genuinely harmful situations. But determined predators, toxic communities, and problematic content will still find ways through.

Also, if your kid is using Discord legitimately, they might run into the teen-appropriate experience and feel like they're being treated like a child. This could create resentment or encourage them to use VPNs or fake verification to access adult areas. That's actually more risky than letting them see the content, because they're now deliberately hiding their activity from you.

The sweet spot is probably having a conversation with your kid about why Discord's making these changes and what the teen-appropriate features are designed to protect against. If your kid is actually 18 or older, you might also have them go through the verification process so they're not dealing with restrictions meant for younger users.

But that's a personal decision that depends on your kid, your family, and your comfort level.

Technical Implementation: How Discord's Building This at Scale

Implementing age verification for 150+ million users isn't trivial from a technical perspective.

Discord has to build infrastructure for processing verification requests at massive scale. Facial age estimation requires running AI models, which is computationally expensive. ID verification requires integration with multiple vendors, each with their own API, authentication requirements, and data handling procedures.

The company also has to manage backwards compatibility. Millions of accounts already exist. Some of those accounts belong to minors, some to adults. Discord has to decide whether to grandfather in existing users, infer their age some other way, or force them through verification when they next log in.
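The options in the paragraph above can be framed as a policy applied at login to accounts whose age is still unknown. This is a hypothetical sketch: the policy names and the returned labels are illustrative, not Discord's. The `TEEN_DEFAULT` branch corresponds to the rollout this guide describes, where unverified accounts get the teen-appropriate experience.

```python
from enum import Enum
from typing import Optional


class Policy(Enum):
    GRANDFATHER = "grandfather"    # keep existing accounts' current access
    TEEN_DEFAULT = "teen_default"  # unknown age means teen-appropriate experience
    FORCE_VERIFY = "force_verify"  # block until the user verifies at next login


def access_on_login(policy: Policy, known_status: Optional[str], is_existing_account: bool) -> str:
    """Return the experience an account gets at login (hypothetical labels)."""
    if known_status is not None:
        return known_status  # age already established: nothing to migrate
    if is_existing_account and policy is Policy.GRANDFATHER:
        return "legacy_full_access"
    if policy is Policy.FORCE_VERIFY:
        return "verification_required"
    # TEEN_DEFAULT, and new accounts under GRANDFATHER, get the safe default.
    return "teen_default"
```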

There's also the international infrastructure question. If a user in Japan needs to verify using ID, they might use a different vendor than a user in Brazil. Discord likely needs a system that routes users to appropriate vendors based on location, currency, language, and regulatory requirements.
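Routing of this kind can be as simple as an ordered list of vendors per region, filtered by which vendors are currently up and which languages they support. The region codes, vendor names, and language sets below are placeholders, not Discord's actual vendors or coverage.

```python
# Hypothetical routing table: region code -> vendors in order of preference.
ROUTES: dict[str, list[str]] = {
    "JP": ["vendor_jp", "vendor_global"],
    "BR": ["vendor_br", "vendor_global"],
}
DEFAULT_ROUTE = ["vendor_global"]

# Hypothetical language support per vendor (used to localize the vendor's flow).
LANGUAGES: dict[str, set[str]] = {
    "vendor_jp": {"ja"},
    "vendor_br": {"pt"},
    "vendor_global": {"en", "ja", "pt"},
}


def route(region: str, language: str, available: set[str]) -> str:
    """Pick the first vendor for the region that is up and supports the language."""
    for vendor in ROUTES.get(region, DEFAULT_ROUTE):
        if vendor in available and language in LANGUAGES.get(vendor, set()):
            return vendor
    raise LookupError(f"no ID vendor available for region {region}")
```

Keeping the table as data rather than code means a new region or regulatory requirement becomes a configuration change.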

Then there's the data consistency problem. When an account gets verified, that verification status has to be propagated everywhere. Every server, every bot, every API endpoint needs to know whether the user is verified. If the system gets out of sync, you might have situations where a user appears verified in one place but not another.
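A standard way to keep copies of a value from drifting out of sync is to keep one source of truth, attach a version number that increases on every change, push changes to each consumer, and have consumers reject anything older than what they already hold. The sketch assumes that design purely for illustration; `StatusStore`, `StatusCache`, and the status labels are hypothetical, and a real deployment would deliver updates over a message bus rather than direct method calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgeStatus:
    value: str    # e.g. "unverified", "adult_verified" (hypothetical labels)
    version: int  # increases on every change so readers can spot stale copies


class StatusStore:
    """Single source of truth for each user's verification status."""

    def __init__(self) -> None:
        self._data: dict[int, AgeStatus] = {}
        self._subscribers: list["StatusCache"] = []

    def subscribe(self, cache: "StatusCache") -> None:
        self._subscribers.append(cache)

    def set(self, user_id: int, value: str) -> AgeStatus:
        old = self._data.get(user_id)
        new = AgeStatus(value, old.version + 1 if old else 1)
        self._data[user_id] = new
        for cache in self._subscribers:  # push the change to every consumer
            cache.apply(user_id, new)
        return new

    def get(self, user_id: int) -> AgeStatus:
        return self._data.get(user_id, AgeStatus("unverified", 0))


class StatusCache:
    """Per-service copy (e.g. a gateway or API node) that never moves backwards."""

    def __init__(self, store: StatusStore) -> None:
        self._store = store
        self._local: dict[int, AgeStatus] = {}
        store.subscribe(self)

    def apply(self, user_id: int, status: AgeStatus) -> None:
        current = self._local.get(user_id)
        # Ignore out-of-order deliveries: only accept strictly newer versions.
        if current is None or status.version > current.version:
            self._local[user_id] = status

    def get(self, user_id: int) -> AgeStatus:
        if user_id not in self._local:  # cache miss: read the source of truth
            self._local[user_id] = self._store.get(user_id)
        return self._local[user_id]
```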

From a product perspective, Discord also has to build UI that explains the new system without confusing users. The company has to manage a new set of account states: pre-verified (account just created), unverified, teen-verified, adult-verified, and potentially disputed verification (the user claims the system got their age wrong).
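The account states listed above behave like a small state machine in which each state permits only certain next states. The transition table below is an assumption made for illustration (for example, that a teen-verified user can re-verify as an adult after turning 18); Discord hasn't published its actual rules.

```python
from enum import Enum


class AgeState(Enum):
    PRE_VERIFIED = "pre_verified"  # account just created, not yet prompted
    UNVERIFIED = "unverified"      # prompted but not verified: teen defaults apply
    TEEN_VERIFIED = "teen_verified"
    ADULT_VERIFIED = "adult_verified"
    DISPUTED = "disputed"          # user contests the result


# Hypothetical transition table; not Discord's published rules.
ALLOWED: dict[AgeState, set[AgeState]] = {
    AgeState.PRE_VERIFIED: {AgeState.UNVERIFIED, AgeState.TEEN_VERIFIED, AgeState.ADULT_VERIFIED},
    AgeState.UNVERIFIED: {AgeState.TEEN_VERIFIED, AgeState.ADULT_VERIFIED},
    # A teen can re-verify as an adult after turning 18, or dispute the result.
    AgeState.TEEN_VERIFIED: {AgeState.ADULT_VERIFIED, AgeState.DISPUTED},
    AgeState.ADULT_VERIFIED: {AgeState.DISPUTED},
    AgeState.DISPUTED: {AgeState.TEEN_VERIFIED, AgeState.ADULT_VERIFIED},
}


def transition(current: AgeState, target: AgeState) -> AgeState:
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target


def has_adult_access(state: AgeState) -> bool:
    # Only a confirmed adult result unlocks adult features.
    return state is AgeState.ADULT_VERIFIED
```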

They also have to plan for failure modes. What happens if facial estimation fails consistently? What if the ID vendor goes down? What's the fallback experience? These questions don't have easy answers at scale.
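One plausible answer to those questions is an ordered fallback chain: try each method a limited number of times, skip a method immediately if it's down, and keep the teen-appropriate defaults if nothing produces a result. This is a hypothetical sketch; the method names, the retry limit, and the `VerificationUnavailable` exception are assumptions, not Discord's design.

```python
from typing import Callable, Optional


class VerificationUnavailable(Exception):
    """Raised by a method that cannot run at all (e.g. vendor outage)."""


# A method returns True (adult), False (minor), or None (inconclusive).
Method = Callable[[str], Optional[bool]]


def verify_with_fallback(
    user_id: str, methods: list[tuple[str, Method]], max_attempts: int = 2
) -> tuple[str, Optional[bool]]:
    for name, method in methods:
        for _ in range(max_attempts):
            try:
                result = method(user_id)
            except VerificationUnavailable:
                break  # method is down: move straight to the next one
            if result is not None:
                return name, result
        # Inconclusive max_attempts times, or unavailable: try the next method.
    # Nothing worked: keep the safe teen-appropriate default.
    return "teen_default", None
```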

The engineering team probably spent months just on database schema changes to support age-verification states and history.
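Supporting verification history often means an append-only table: each change is a new row, and the current state is derived from the latest row, which preserves an audit trail for disputes. The in-memory sketch below models that idea with hypothetical field names and state labels; it isn't Discord's schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class VerificationEvent:
    """One immutable row in a hypothetical age_verification_history table."""
    user_id: int
    state: str   # e.g. "adult_verified", "disputed" (hypothetical labels)
    method: str  # e.g. "facial_estimation", "government_id"
    at: datetime


@dataclass
class VerificationHistory:
    events: list[VerificationEvent] = field(default_factory=list)

    def record(self, user_id: int, state: str, method: str, at: Optional[datetime] = None) -> None:
        # Append only: past rows are never updated, keeping a full audit trail.
        when = at or datetime.now(timezone.utc)
        self.events.append(VerificationEvent(user_id, state, method, when))

    def current_state(self, user_id: int) -> str:
        # The current state is whatever the most recent row says.
        rows = [e for e in self.events if e.user_id == user_id]
        return max(rows, key=lambda e: e.at).state if rows else "unverified"
```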

Future Outlook: Where Age Verification Is Heading

Age verification is becoming table stakes for platforms. The question isn't whether it'll be standard. It's whether it'll become more invasive or more privacy-preserving.

There are technological trends moving in opposite directions. On one hand, AI-powered age estimation from facial images is getting more capable. On the other hand, privacy-preserving technologies (like on-device processing and differential privacy) are also improving.

Discord's implementation is a bet that on-device facial estimation will become the dominant approach. No government ID needed. Far less third-party breach risk. Just local processing.

But governments might not be satisfied with that approach. Regulators might require verifiable age confirmation, which means government ID or other hard evidence. If that happens, Discord would have to pivot to more invasive systems.

There's also the possibility that blockchain-based identity systems gain traction. Users could have verifiable age credentials that they control themselves, then share those credentials with platforms without giving up their ID data. That's technically possible but requires a lot of infrastructure that doesn't exist yet.

Another possibility is that age verification becomes linked to national ID systems, so your age is confirmed automatically against a government record when you sign up for a platform. But that requires government infrastructure and raises its own privacy concerns.

The most likely scenario is that platforms continue implementing a mix of approaches. Facial estimation where feasible, ID verification where required, and progressive disclosure where users gradually prove their identity over time.

But the overall trend is clear: platforms are moving toward more robust age verification, not less. The question is how invasive that verification becomes and whether privacy protections keep pace.

Key Takeaways: What You Need to Remember

Let's bring this together.

Discord's age verification system is genuinely motivated by child safety concerns, but it also serves Discord's business interests. The company gets a more defensible liability position. Users get a more structured experience.

The technical implementation is sound. Facial estimation on-device is a smart privacy choice. ID verification is more invasive but more reliable.

However, trust is the real issue. Discord experienced a vendor breach in 2024. That breach exposed ID photos for 70,000 users. No system is breach-proof.

For teens, the new restrictions are real. But they're also rational from a safety perspective. Random adults messaging your kid? That's worth preventing.

For adults, age verification is a one-time friction point that then unlocks full platform access. It's annoying but not catastrophic.

The broader context is that age verification is becoming standard across platforms. Discord's joining a trend, not creating one.

If you do decide to verify, facial estimation is preferable to ID submission. But understand the trade-offs. You're attaching an age record to your account, and any data handled during verification carries some risk, even if it's deleted promptly.

If you're a parent, understand that age verification is a tool, not a complete solution. It reduces some risks but introduces others. You still need to be involved in your kid's online activity.

If you're just a user? Decide whether access to age-restricted content is worth verifying your age. For most people, the answer is probably yes. For others, privacy concerns will outweigh the benefits, and the teen-appropriate defaults will be an acceptable trade-off.

There's no wrong answer. Just different calculations of risk and benefit.

FAQ

What is Discord age verification?

Discord age verification is a system that confirms whether a user is an adult or a minor. Starting in March 2025, users must verify their age through either facial age estimation or government ID submission to access age-restricted content, modify privacy settings, and use certain platform features. Unverified users default to a "teen-appropriate experience" with restricted access to sensitive content and limited messaging from strangers.

How does Discord's facial age estimation work?

Facial age estimation uses artificial intelligence to analyze facial features in video selfies and predict whether someone is an adult or a minor. According to Discord, the video selfies are processed entirely on your device and never leave it. The AI analyzes the video locally, makes an age determination, then deletes the video. Only the age determination result is sent to Discord, not the biometric data itself.
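The flow Discord describes is a data-minimization pattern: the model runs locally and only a yes/no age bracket is uploaded. This sketch illustrates that pattern only; `model` stands in for a hypothetical on-device age estimator, and averaging per-frame estimates against an 18-year threshold is an assumption, not Discord's published method.

```python
from dataclasses import asdict, dataclass
from typing import Callable, Sequence

Frame = bytes  # raw camera frame; never serialized or uploaded in this sketch


@dataclass(frozen=True)
class AgeAssertion:
    """The only thing the client uploads: an age bracket, no images or face data."""
    is_adult: bool


def estimate_on_device(frames: Sequence[Frame], model: Callable[[Frame], float]) -> AgeAssertion:
    if not frames:
        raise ValueError("no frames captured")
    # Run the hypothetical local model on each frame and average its estimates.
    estimates = [model(f) for f in frames]
    mean_age = sum(estimates) / len(estimates)
    # Frames are only read here, never stored or returned; a real client would
    # also have to delete any recording it wrote to disk.
    return AgeAssertion(is_adult=mean_age >= 18)


def build_upload_payload(assertion: AgeAssertion) -> dict:
    # Contains only the bracket: no frames, no per-frame estimates.
    return asdict(assertion)
```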

What happens if I don't verify my age on Discord?

If you don't verify your age, your account defaults to the "teen-appropriate experience." This means sensitive content stays blurred, age-restricted channels are hidden, random messages from strangers automatically go to a separate inbox, you can't modify inbox filter settings, you can't speak on stage in voice channels, and you'll get warning prompts for friend requests from unknown users. You'll have limited access to adult communities and some server features.
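The capabilities that verification unlocks, as described in this FAQ, can be expressed as a small settings object chosen from the account's verification state. The field names and the `"adult_verified"` label are hypothetical, and the sketch assumes a fail-closed rule: any state other than a confirmed adult gets the teen-appropriate set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    can_unblur_sensitive_content: bool
    can_view_age_restricted_channels: bool
    can_change_inbox_filters: bool
    can_speak_on_stage: bool


# Hypothetical presets mirroring the restrictions listed above.
TEEN_APPROPRIATE = Capabilities(
    can_unblur_sensitive_content=False,
    can_view_age_restricted_channels=False,
    can_change_inbox_filters=False,
    can_speak_on_stage=False,
)
VERIFIED_ADULT = Capabilities(
    can_unblur_sensitive_content=True,
    can_view_age_restricted_channels=True,
    can_change_inbox_filters=True,
    can_speak_on_stage=True,
)


def capabilities_for(verification_state: str) -> Capabilities:
    # Fail closed: unknown, unverified, teen, or disputed all get teen defaults.
    return VERIFIED_ADULT if verification_state == "adult_verified" else TEEN_APPROPRIATE
```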

Is submitting my government ID to Discord safe?

Discord says government IDs submitted for age verification are handled by third-party vendors and are deleted immediately after age confirmation. However, Discord experienced a vendor breach in October 2024 where ID photos for 70,000 users were exposed. While Discord likely improved security after this incident, third-party vendor breaches remain a risk. If you're concerned about ID submission, facial age estimation is the more privacy-protective option.

Can I appeal if Discord's age verification system gets my age wrong?

Discord's announcement doesn't provide detailed information about appeals or disputes for incorrect age verification. The company mentions that some users might be asked to use multiple verification methods if additional information is needed, but there's no clear process for users who disagree with their verification result. This is a gap that Discord should clarify in documentation.

How long does age verification take?

Facial age estimation should take just a few minutes once you start the process. You record brief video selfies from multiple angles, the AI analyzes them on your device, and you get a result. Government ID verification typically takes longer because the vendor needs to review your submission; depending on the vendor's processing time, it could take anywhere from minutes to hours. Discord says IDs are deleted immediately after confirmation.

Will age verification data be used for other purposes?

Discord states that age verification data is used specifically for confirming age and routing users to appropriate experiences. However, the company doesn't explicitly guarantee that third-party vendors won't use aggregated data (patterns about verification rates, demographics, regional trends) for other purposes. This is worth keeping in mind when deciding whether to verify using ID.

What if I'm a minor and want access to adult content anyway?

Under Discord's new system, if you're verified as a minor, you won't have access to age-restricted channels, can't unblur sensitive content, and can't use adult-flagged commands. The system is designed to prevent this access. However, determined minors might try to get around verification with a fake ID or by lying about their age, though this carries its own risks, including account suspension.

Is facial age estimation accurate across different ethnicities and appearances?

Facial age estimation AI models have documented accuracy variations across different demographics. The models might perform better for some ethnic groups than others, and factors like makeup, lighting, and appearance can affect accuracy. If Discord's system repeatedly misidentifies your age, you'll be asked to use additional verification methods like ID submission.
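One way to handle estimation error is a safety margin around the age threshold: accept an estimate only when it lands clearly on one side, and ask for another method when it's borderline or inconsistent across attempts. The 3-year `margin` below is an assumed value for illustration; Discord hasn't published how its system decides when to escalate.

```python
from enum import Enum
from typing import Sequence


class Outcome(Enum):
    ADULT = "adult"
    MINOR = "minor"
    NEEDS_ANOTHER_METHOD = "needs_another_method"


def decide(estimates: Sequence[float], threshold: float = 18.0, margin: float = 3.0) -> Outcome:
    """Accept facial estimates only when every attempt is clearly on one side.

    `margin` is an assumed safety buffer; in practice it would need tuning
    against the model's measured error.
    """
    if not estimates:
        return Outcome.NEEDS_ANOTHER_METHOD
    if all(e >= threshold + margin for e in estimates):
        return Outcome.ADULT
    if all(e < threshold - margin for e in estimates):
        return Outcome.MINOR
    # Borderline or inconsistent across attempts: escalate, e.g. to ID submission.
    return Outcome.NEEDS_ANOTHER_METHOD
```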

Why is Discord implementing age verification now?

Discord is rolling out age verification globally in March 2025 for several reasons: increasing regulatory pressure for child safety across multiple countries, competition from platforms like Roblox and YouTube that are implementing similar systems, lessons learned from regional implementations in the UK and Australia in 2024, and the company's need to demonstrate strong child safety measures for liability purposes and platform legitimacy.

Conclusion: The Future of Age-Gated Internet Communities

Discord's age verification rollout isn't just a policy change for one platform. It's a signal about where the internet is heading.

For years, platforms operated on an honor system. Users claimed to be 18. Platforms didn't verify. Everyone pretended this arrangement was sufficient.

It wasn't. Kids accessed adult content. Predators found minors. Platforms had minimal ability to claim they took child safety seriously.

Age verification changes that. It's not perfect. It's not foolproof. But it makes harmful access meaningfully harder.

The costs are real too. Privacy becomes a trade-off. Data breaches become a risk. Systems get more complex. User experience gets more friction.

But the needle is moving. Regulators expect platforms to take child safety seriously. Parents expect better protections. Society's tolerance for online harm to minors is shrinking.

Discord's implementation is thoughtful. Facial estimation on-device is legitimately privacy-preserving. Teen-appropriate defaults are rational. The system isn't trying to be draconian.

But individual platforms implementing verification isn't enough. Real progress would mean digital literacy education keeping pace with this infrastructure. Parents understanding these systems. Teens learning about online safety as the technology develops.

Age verification is a technical solution to a social problem. It helps. But technology alone won't solve child safety online. The infrastructure matters. The culture matters more.

For Discord users, the practical reality is straightforward. Verify if you want full access. Don't verify if you prefer privacy. Either choice is defensible.

For the broader internet, the question is whether age verification becomes invasive or remains privacy-respecting. That answer depends on regulatory choices, technical developments, and public pressure over the next few years.

One thing's certain: platforms won't move backward. Age verification is here to stay. The only variable is how invasive it becomes.

Discord's bet is that on-device facial estimation can provide sufficient security without requiring government ID for everyone. Time will tell if that's true. But it's a bet worth watching.
