Discord's Age Verification Rollout: The Complete Breakdown

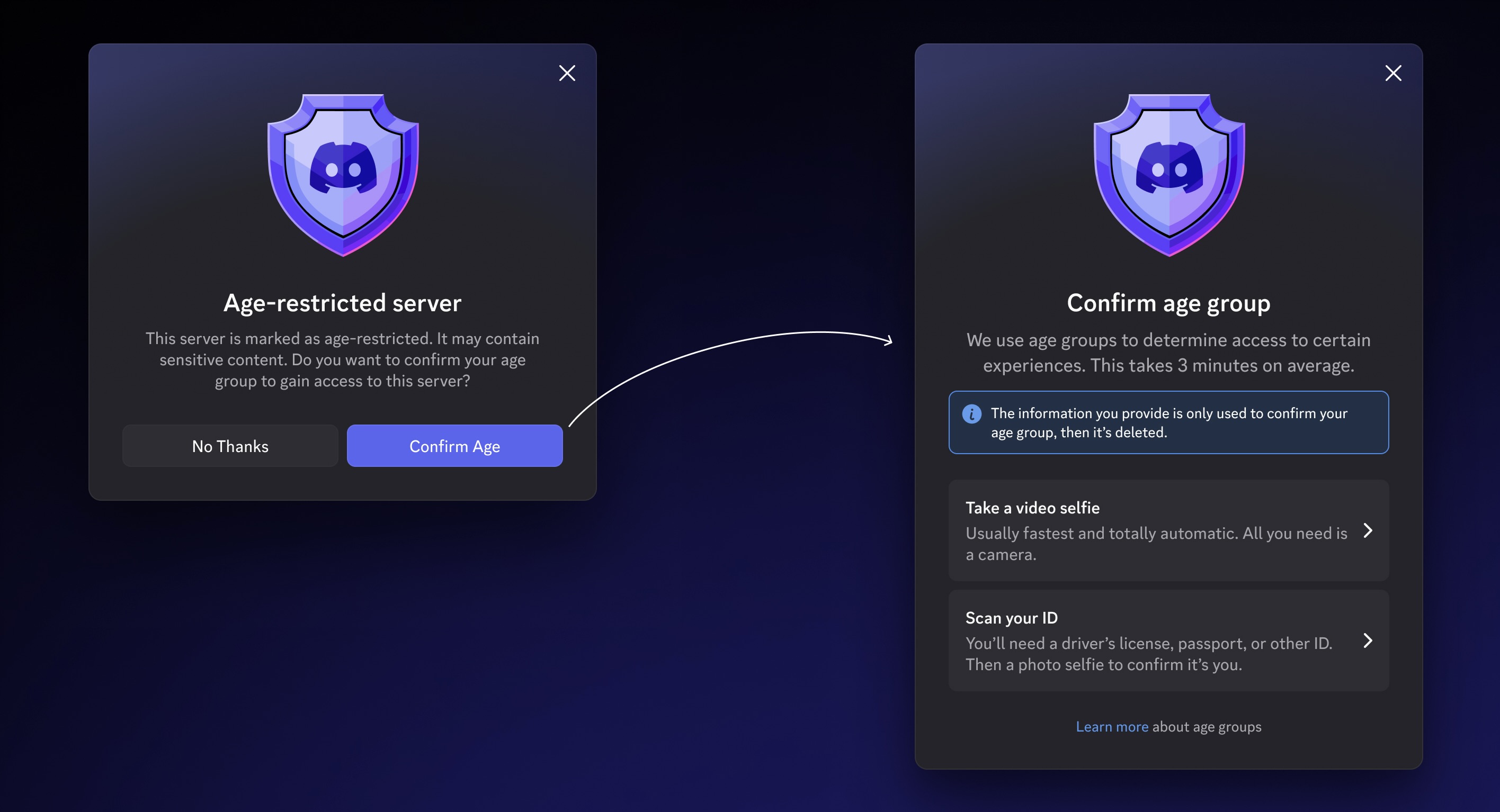

Here's the situation: Discord just announced that starting in March 2025, every single user on the platform will see major changes to how they access the service. If you don't verify your age, you're getting locked into what they're calling a "teen-appropriate experience." That sounds mild until you realize what that actually means.

You won't be able to join age-restricted servers. You can't speak in stage channels. Content filters kick in automatically. Friend requests from strangers get flagged. It's a fundamental shift in how the platform operates, and it affects millions of users worldwide.

This isn't Discord doing something random. It's part of a global wave of age verification mandates sweeping across social platforms. Governments worldwide are pushing hard on child safety, and platforms that don't comply are facing serious consequences. Discord decided to go nuclear and implement verification at scale rather than risk regulatory fallout.

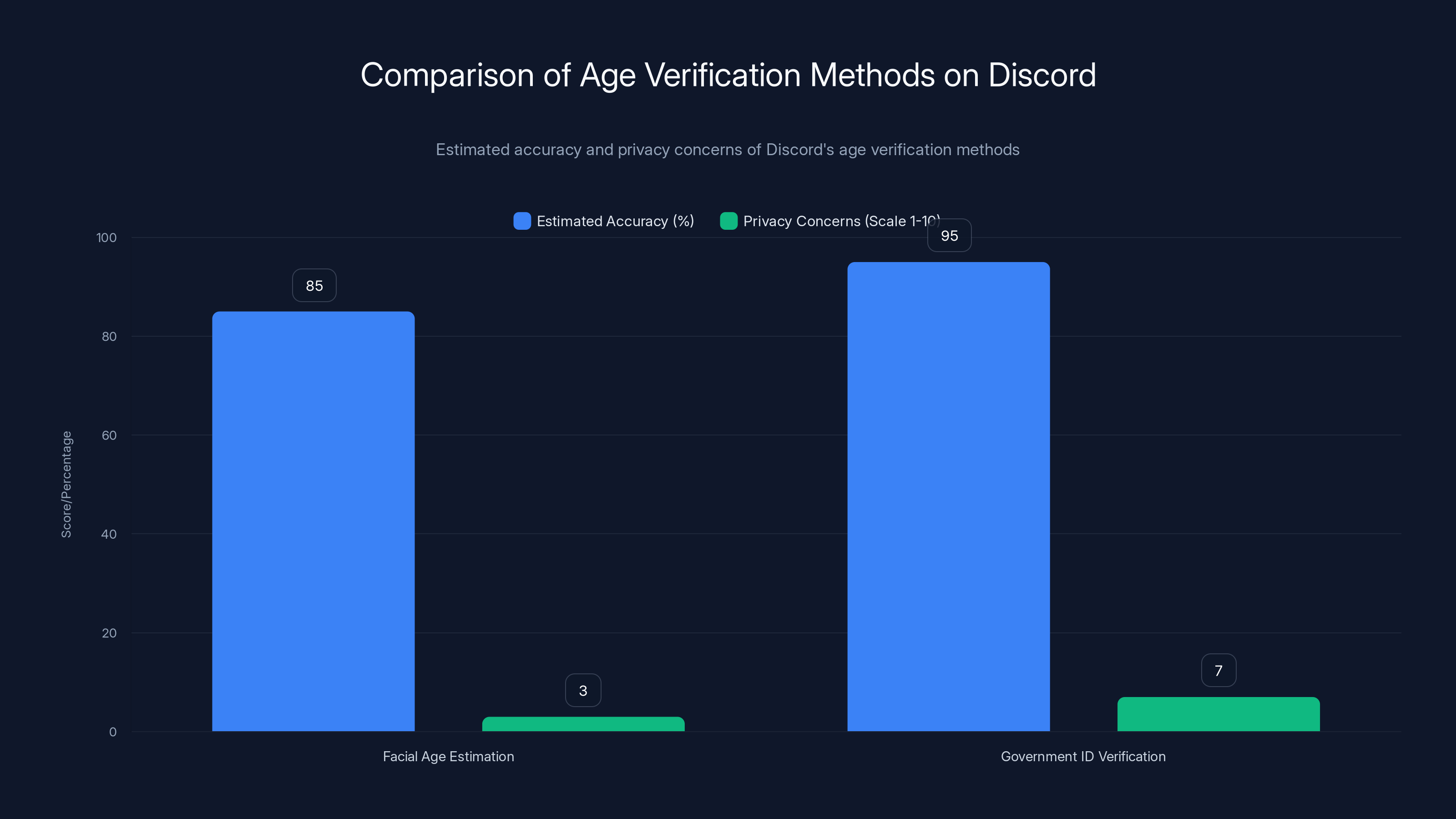

But here's what nobody's talking about: the privacy implications are genuinely thorny. Discord says they're using AI to estimate your age from a selfie, and the image never leaves your device. Sounds good. Then they added: or submit a government ID to a third-party vendor. Which sounds less good, especially after that October 2024 data breach where one of their vendors got compromised and leaked actual photos of government IDs.

We're going to walk through exactly what's happening, why Discord is doing this, what the technical implementation actually looks like, and most importantly, what the privacy and security trade-offs really mean for users.

TL;DR

- Global rollout starts March 2025: All Discord users automatically enter "teen mode" unless they verify their age

- Two verification options: AI-powered facial age estimation (selfie) or government ID upload to a third party

- Access restrictions hit hard: No age-restricted servers, stage channels blocked, content filtered, DMs from strangers separated

- Privacy concerns are real: Government ID images get uploaded to third-party vendors, even after a vendor breach in October 2024

- This is regulatory-driven: Governments globally are mandating age checks, not optional platform improvements

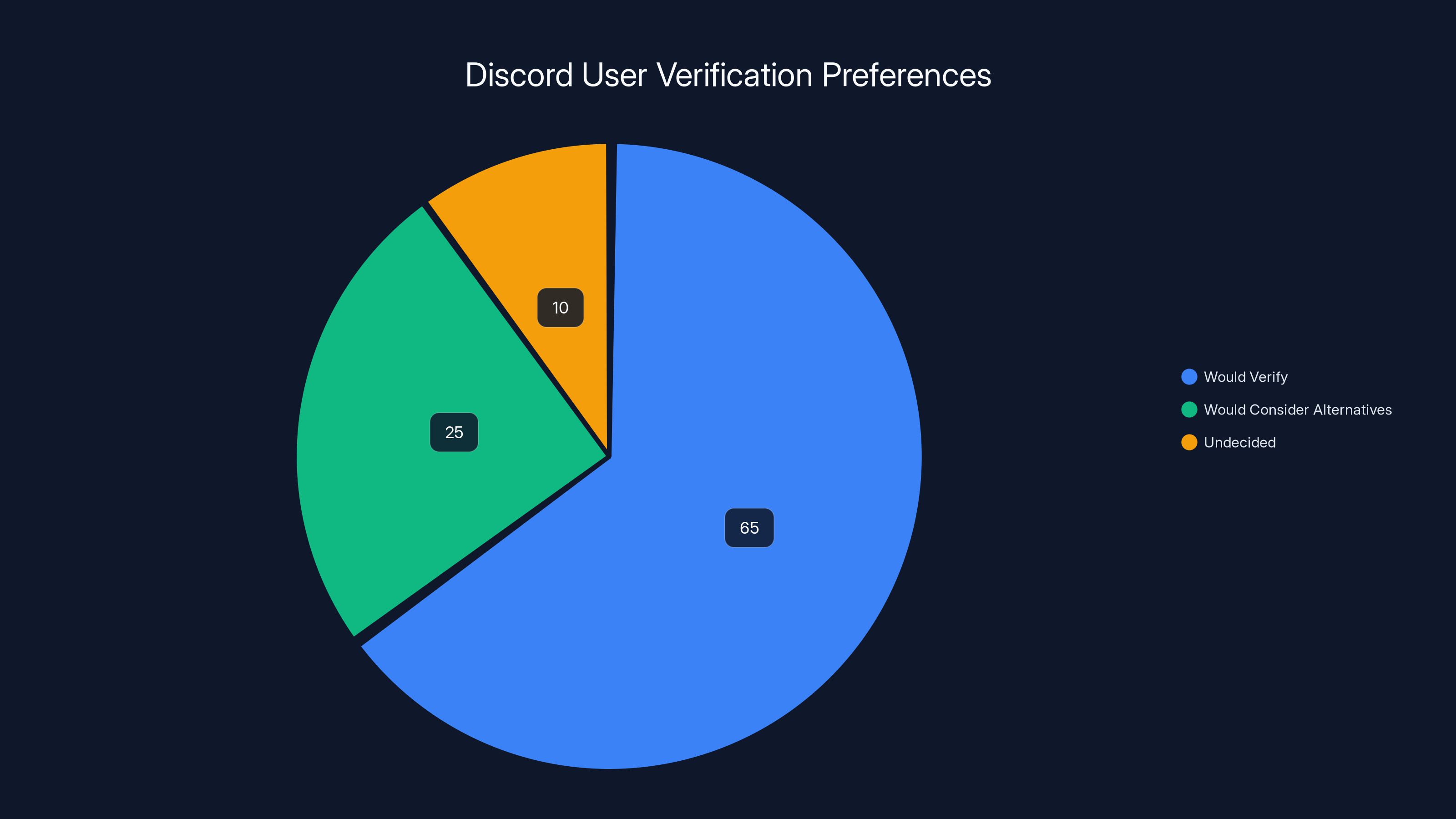

Approximately 65% of Discord users are willing to verify their age if required, while 25% would consider alternatives. Estimated data for undecided users.

Understanding Discord's "Teen-Appropriate Experience"

When Discord says "teen-appropriate," they're being strategic with language. What they actually mean is a significantly restricted version of the platform.

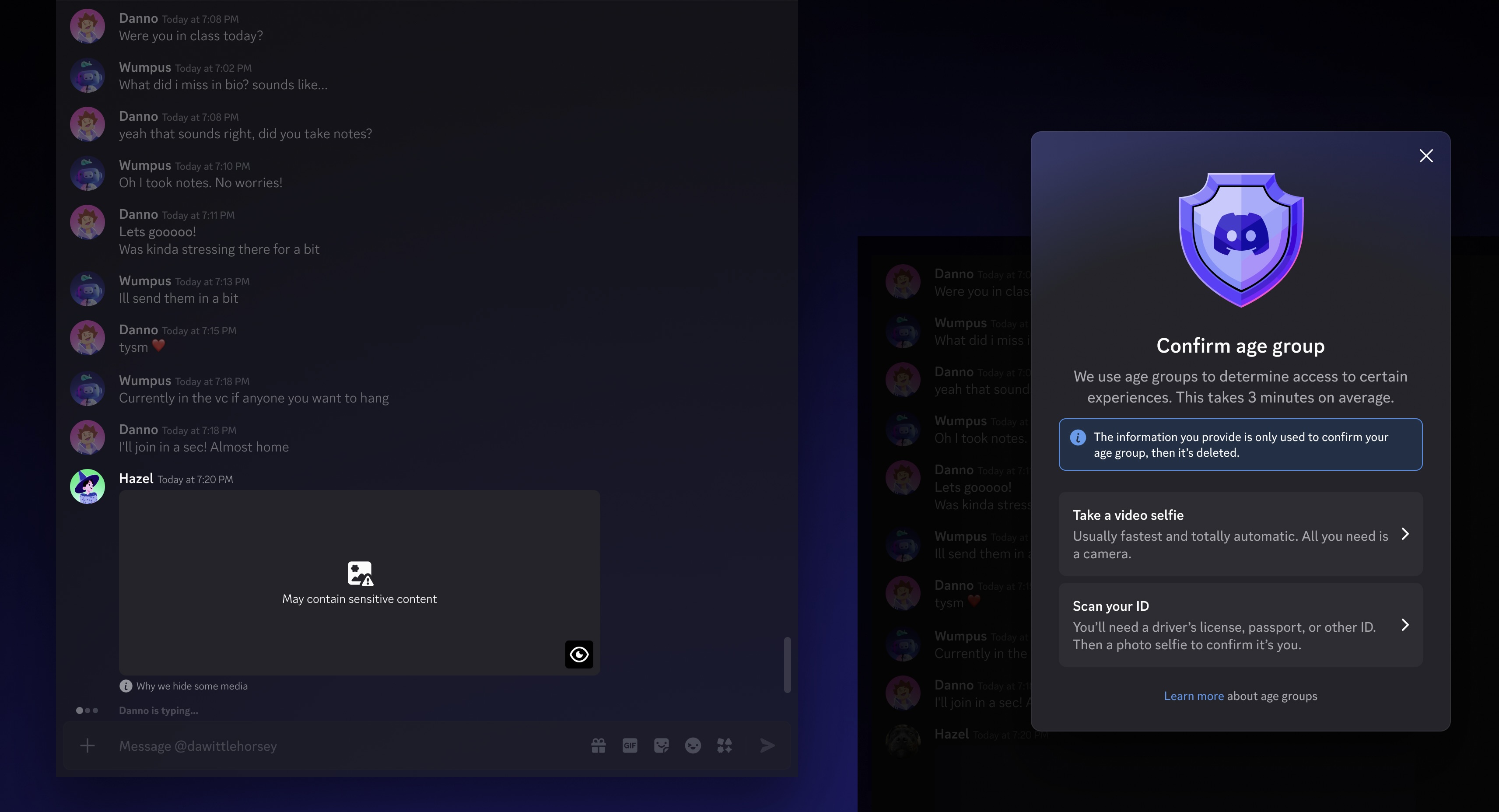

First, the access restrictions. If you're in the teen mode (because you haven't verified), any server marked as age-restricted becomes completely inaccessible. You get a black screen when you try to open it. Discord calls this "obfuscating" the server. You can't see what's in it. You can't read the description. You can't even peek at the member list. It's just gone.

Stage channels are out too. These are Discord's livestream-like feature where users can listen to hosts speaking. They're restricted across the board for unverified users, which actually makes sense from a moderation standpoint but blocks a whole category of community interaction.

The content filtering is automatic. Discord has detection systems scanning for graphic or sensitive content. If you're in teen mode, anything flagged gets blurred or filtered from your view. This isn't perfect technology. False positives happen. Important information sometimes gets caught in overly broad filters.

Direct messaging gets nuanced. Messages from people you already know work fine. But if someone you don't know tries to DM you, there's a warning prompt. If they persist, those DMs go into a separate inbox. It's like Discord is building a protective barrier around unverified users, which sounds good until you realize it could prevent important communication or legitimate community building.

When age-restricted servers get marked as such, existing members don't get grandfathered in. If you were part of a server before verification rolled out and you haven't verified your age, you lose access. You can't message in it. You can't see content. You're effectively kicked out unless you complete the verification process.
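The teen-mode rules above boil down to a handful of access checks keyed on one question: has this account verified as an adult? Below is a minimal sketch that assumes a single hypothetical `verified_adult` flag per account. The names `Account`, `Server`, `can_open_server`, and `route_dm` are invented for illustration and are not Discord's API. The sketch also simplifies the DM flow (warning prompt, then separate inbox) to a single "separated" outcome.

```python
from dataclasses import dataclass
from enum import Enum


class DmRoute(Enum):
    INBOX = "inbox"              # delivered normally
    SEPARATE_INBOX = "separate"  # held behind a warning in a separate inbox


@dataclass(frozen=True)
class Account:
    verified_adult: bool  # hypothetical flag set once age verification succeeds


@dataclass(frozen=True)
class Server:
    age_restricted: bool


def can_open_server(account: Account, server: Server) -> bool:
    # Age-restricted servers are obfuscated for anyone in teen mode,
    # existing members included (no grandfathering).
    return account.verified_adult or not server.age_restricted


def can_speak_in_stage(account: Account) -> bool:
    # Stage channels are closed to unverified accounts across the board.
    return account.verified_adult


def filters_sensitive_media(account: Account) -> bool:
    # Sensitive-content filters are on by default in teen mode.
    return not account.verified_adult


def route_dm(account: Account, sender_is_known: bool) -> DmRoute:
    # In teen mode, DMs from strangers are separated; known contacts are not.
    if account.verified_adult or sender_is_known:
        return DmRoute.INBOX
    return DmRoute.SEPARATE_INBOX


teen = Account(verified_adult=False)
print(can_open_server(teen, Server(age_restricted=True)))  # False
print(route_dm(teen, sender_is_known=False))  # DmRoute.SEPARATE_INBOX
```

Every restriction depends on the same boolean. That explains why losing verification status (or never gaining it) flips an account out of servers it was already a member of.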

The Age Verification Methods: How Discord Actually Checks Your Age

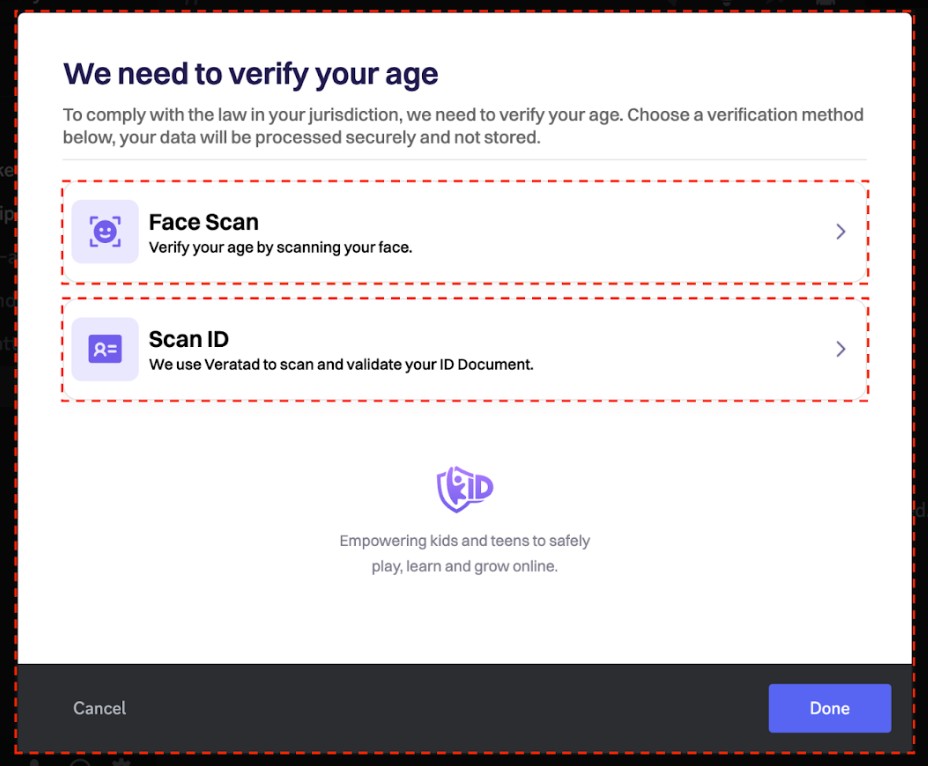

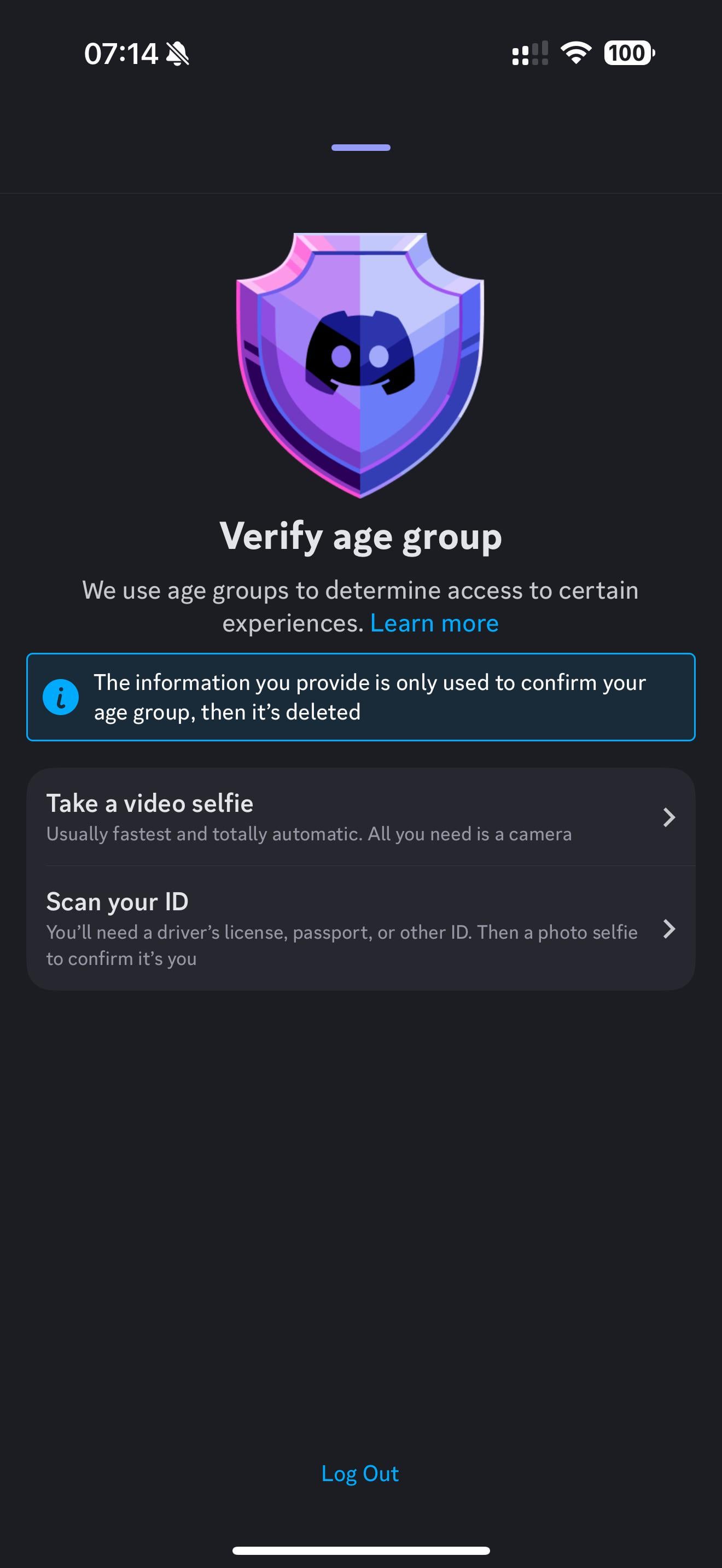

Discord is offering two paths to verification, and they work very differently.

The first option is facial age estimation. This is where Discord's own AI comes in. You take a video selfie through Discord's app. The AI analyzes your face and estimates whether you're a teen or an adult. Discord claims this analysis happens entirely on your device. The video never gets sent to their servers. It never gets stored. It never gets processed remotely.

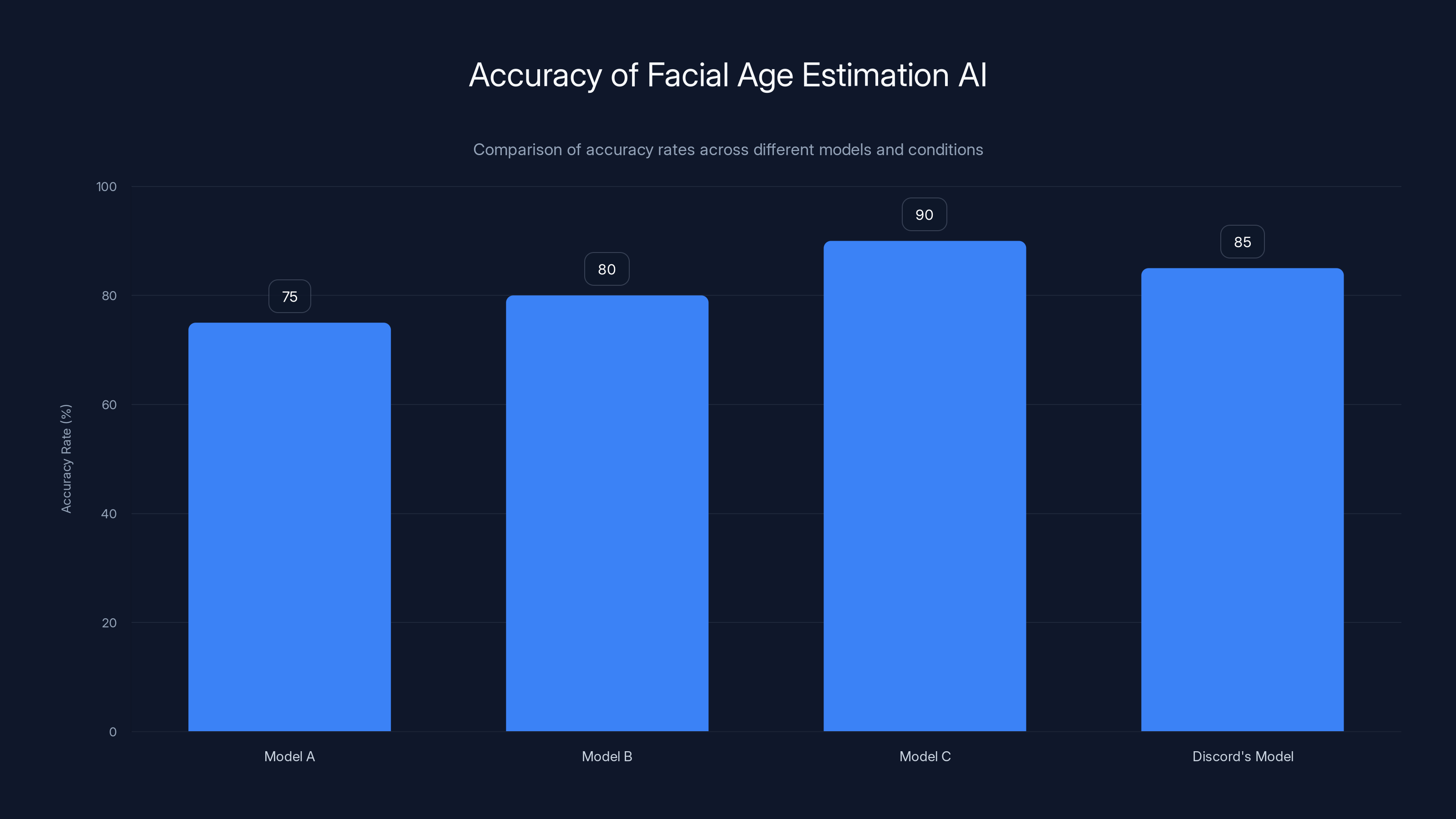

That's the official position. The practical reality is more complicated. The technology has to work somehow. Face-based age estimation requires sophisticated machine learning models that have been trained on thousands of images. Those models have accuracy ranges. Some research suggests facial age estimation can be off by several years in either direction, especially across different ethnicities and lighting conditions.

If the AI gets it wrong and marks you as a teen when you're actually an adult (or vice versa), Discord built in an appeals process. You can challenge the decision. If you want to guarantee accuracy, you move to option two.

The second option is government ID verification. You upload a photo of your driver's license, passport, national ID card, or birth certificate. This goes to a third-party vendor partner. They verify the ID is real, extract the age information, and confirm whether you're over the age threshold.

Here's where the privacy implications get sharp. Discord says these ID images are "deleted quickly, in most cases immediately after age confirmation." That's their phrasing. "In most cases." Not all cases. Most cases.

And here's the historical context that makes this scary: in October 2024, one of Discord's third-party vendors for age verification got breached. The hackers accessed and leaked images of government IDs, including the sensitive photos and information from users who had submitted them. Discord found out and stopped working with that vendor immediately.

But the fact that a breach happened once means it can happen again. The vendor Discord is using now is different, and they claim they've learned lessons and hardened security. But you're still uploading a government ID to a company that isn't Discord, trusting that company to delete it, and hoping their security is better than the last vendor's.

Discord's Savannah Badalich (global head of product policy) gave this statement to press: "We're not doing biometric scanning or facial recognition. We're doing facial estimation. The ID is immediately deleted. We do not keep any information around like your name, the city that you live in, if you used a birth certificate or something else, any of that information."

That's reassuring language, but it's important to parse what they're saying. They're not keeping the ID document image. They might still be keeping the age confirmation itself. They might be logging the fact that verification happened. They're not keeping your name from the ID, but they know your Discord username already. This is accurate but carefully worded.

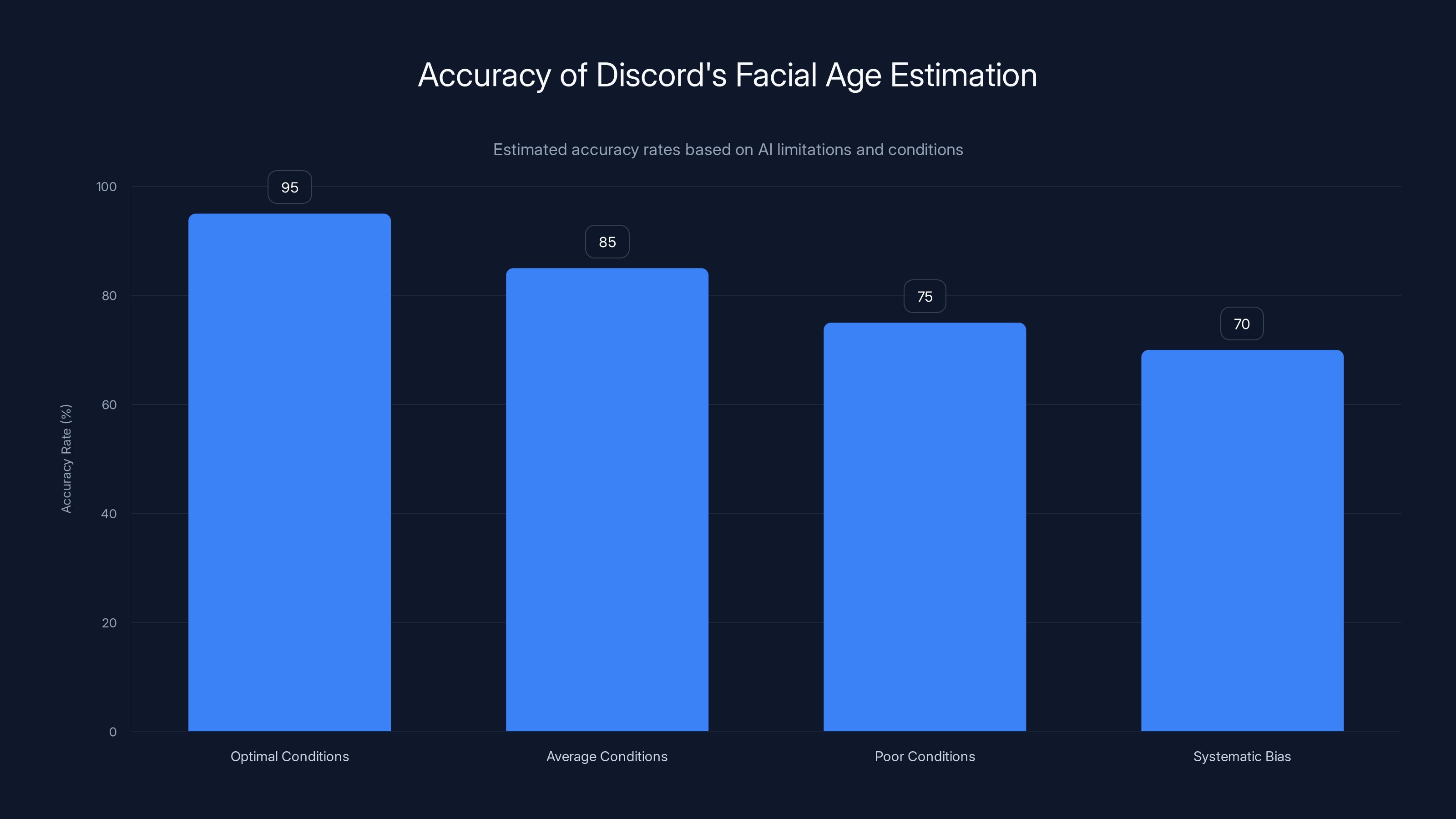

Discord's facial age estimation accuracy ranges from 75% to 95%, depending on conditions like lighting and camera quality. Systematic biases can reduce accuracy further. Estimated data.

Why Discord Is Doing This: The Regulatory Pressure Nobody Talks About

Discord didn't wake up one morning and think, "You know what this platform needs? Mandatory face scanning." This is regulatory reaction, pure and simple.

Multiple countries have been increasing pressure on tech platforms to implement age verification and child safety measures. Users in the UK and Australia got it first last year, when Discord tested age verification in those markets. Now it's going global because waiting is riskier than implementing.

The logic is straightforward: if Discord doesn't implement age verification on their own terms, governments will mandate it with worse terms. Regulatory mandates are usually more intrusive, less carefully implemented, and offer less flexibility than voluntary corporate systems. So Discord chose to implement something themselves first.

This isn't unique to Discord. Major tech platforms globally are implementing similar verification systems. It's becoming industry standard because the alternative is regulatory enforcement.

Child safety is the stated rationale, and it's legitimate. Predators do use platforms like Discord to target minors. Age verification creates a barrier. It's not perfect, but it's something.

However, the secondary effect is surveillance-adjacent. Platforms now have verified age data on users. They have government ID information (even if briefly). They have selfie analysis from AI systems. This creates a new kind of data liability and a new category of personal information that can be leaked or misused.

The Technical Implementation: What Actually Happens When You Verify

Let's walk through the actual user experience and technical flow.

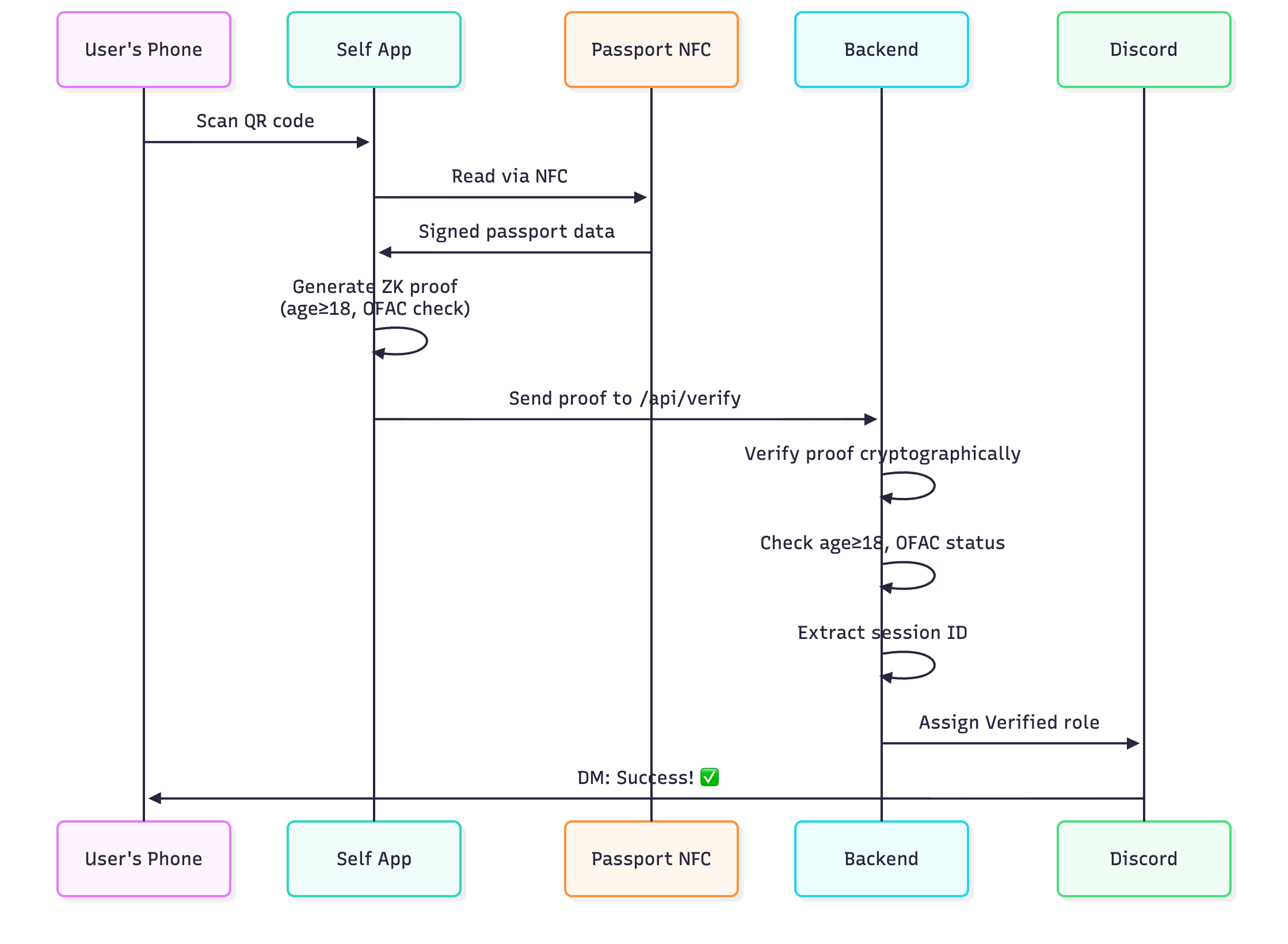

A user logs into Discord on or after March 2025. The system detects they haven't been age-verified. Instead of accessing the full platform immediately, they get prompted. "Complete age verification to unlock full Discord experience," essentially.

They have a choice. Option A: use their phone camera to record a short video selfie. Option B: upload a government ID photo. They pick option A.

The phone camera activates. The Discord app runs an AI model locally on the device. This model analyzes the video and produces a classification: teen or adult. Only that result gets attached to their account. No video gets sent anywhere. No processing happens on Discord's servers. That's the claim.

The system shows them the result. "You've been verified as an adult." Done. They get full access.
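Here is one way the on-device flow could be structured. This is a sketch under stated assumptions: the local model is a hypothetical function passed in as `estimate_age`, the threshold is 18, and results are averaged over several frames. Discord has not described the averaging step. The design point is that the function returns only a coarse label, and neither the frames nor the numeric estimates become part of the result.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

Frame = bytes  # stand-in for one raw camera frame


@dataclass(frozen=True)
class AgeCheckResult:
    label: str  # "adult" or "teen": the only thing attached to the account


def estimate_on_device(
    frames: Sequence[Frame],
    estimate_age: Callable[[Frame], float],  # hypothetical local model
    adult_threshold: float = 18.0,
) -> AgeCheckResult:
    if not frames:
        raise ValueError("no frames captured")
    # Run the model on each frame locally and average the estimates.
    estimates = [estimate_age(frame) for frame in frames]
    mean_age = sum(estimates) / len(estimates)
    # Neither the frames nor the numeric estimates go into the result.
    return AgeCheckResult("adult" if mean_age >= adult_threshold else "teen")


def fake_model(frame: Frame) -> float:
    # Stand-in for a real on-device model; always estimates 27.
    return 27.0


print(estimate_on_device([b"frame1", b"frame2"], fake_model).label)  # adult
```

Averaging across frames is one simple way to dampen single-frame noise from lighting or angle. Whether Discord's model does anything similar is unknown.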

Now for option B. Same starting point. They pick the ID route. They take a photo of their driver's license, front and back. The app sends those images to a third-party vendor. The vendor's automated systems verify that the ID is real (checking security features, internal consistency, etc.), extract the birthdate, and calculate the age. They send back to Discord something like: "This person is over 18, verified." The ID photos get deleted (theoretically, immediately).

Discord records that the account is verified as an adult. It doesn't store the ID images themselves. But Discord or the vendor likely logs confirmation details: date verified, method used, and possibly a timestamp and device info.
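The vendor's core computation is simple: it turns a birthdate into a yes/no answer against a threshold. This minimal sketch assumes an 18-year threshold and a hypothetical `Attestation` record. It shows how little the platform actually needs back, namely a boolean plus the kind of metadata described above.

```python
from dataclasses import dataclass
from datetime import date


def age_on(birthdate: date, today: date) -> int:
    # Subtract one year if this year's birthday hasn't happened yet.
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


@dataclass(frozen=True)
class Attestation:
    over_threshold: bool
    method: str        # e.g. "id" or "face"
    verified_on: date  # the kind of metadata a platform might keep


def attest(birthdate: date, today: date, threshold: int = 18) -> Attestation:
    # The vendor reads the birthdate from the ID; only the yes/no answer
    # and minimal metadata need to go back to the platform.
    return Attestation(age_on(birthdate, today) >= threshold, "id", today)


print(attest(date(1998, 1, 1), today=date(2025, 3, 1)).over_threshold)  # True
```

Even in this minimal form, the record still says *that* you verified, *how*, and *when*. That is the metadata trail discussed later in the article.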

Once verified, the user gets full platform access. They can join age-restricted servers. They can speak in stage channels. Content filters turn off. Friend requests work normally. Everything unlocks.

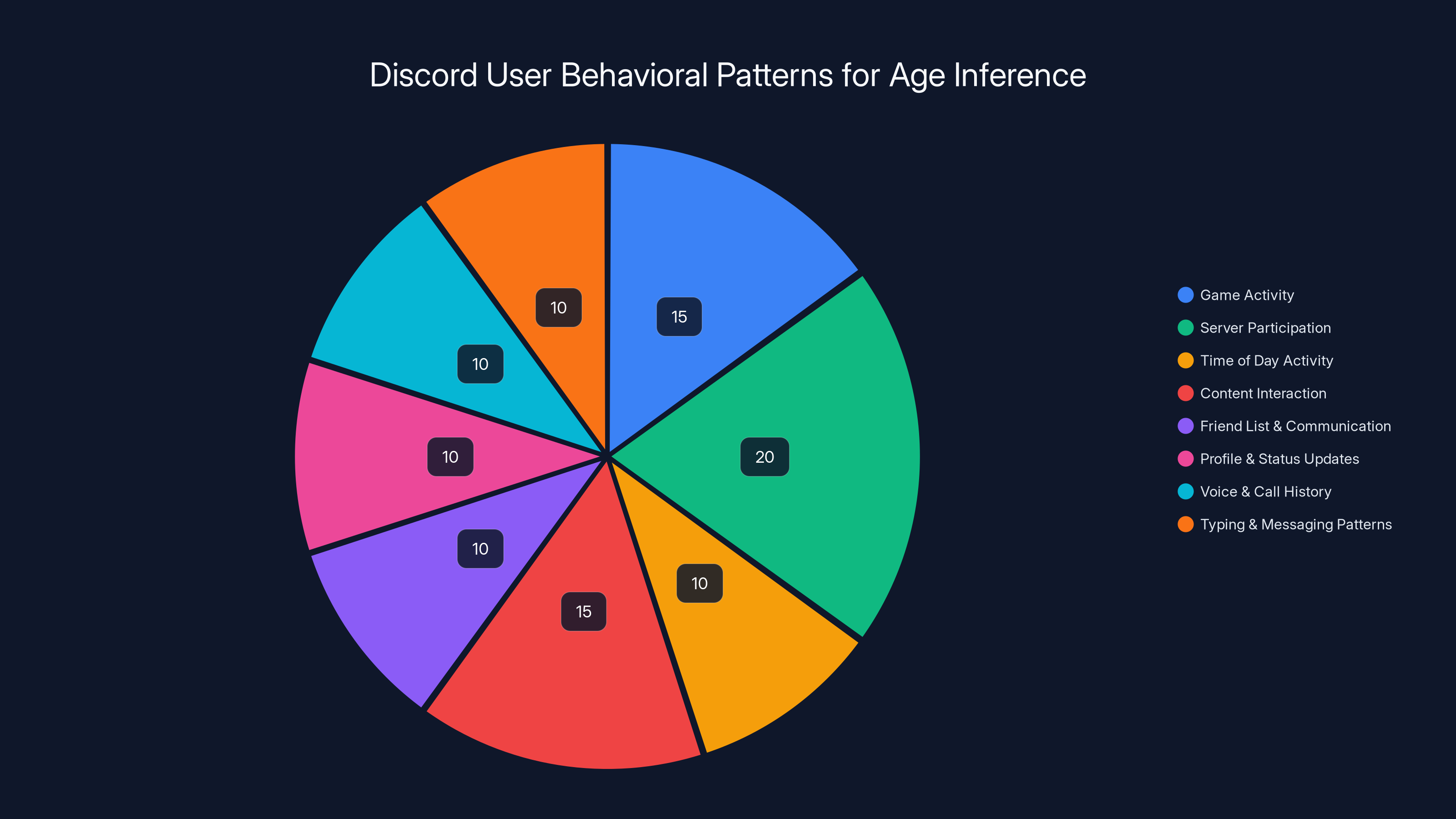

What's interesting is that Discord is also rolling out something called an "age inference model." This is a completely separate system. They're analyzing behavioral signals: what games you play, your activity patterns, your posting times, how long you spend on the platform, whether you have patterns consistent with work hours.

This inference model isn't a replacement for verification. But it's being used as an initial screening layer. Some users might already be automatically classified as likely adult based on behavioral patterns alone, which could affect when or how they get prompted for verification.
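A screening layer like this could work as a simple mapping from an inferred probability to a prompting policy. The sketch below is hypothetical throughout: the `Prompt` categories and the 0.9 and 0.3 cutoffs are invented for illustration, and Discord has not described its policy in these terms.

```python
from enum import Enum


class Prompt(Enum):
    NONE = "none"      # score says "probably an adult": may not be prompted at all
    NORMAL = "normal"  # standard verification prompt
    EARLY = "early"    # score says "probably a teen": prompted sooner or more often


def screening_prompt(p_adult: float, high: float = 0.9, low: float = 0.3) -> Prompt:
    """Map an inferred probability of adulthood to a prompting policy.

    The default cutoffs are invented for this sketch.
    """
    if not 0.0 <= p_adult <= 1.0:
        raise ValueError("p_adult must be between 0 and 1")
    if p_adult >= high:
        return Prompt.NONE
    if p_adult <= low:
        return Prompt.EARLY
    return Prompt.NORMAL


print(screening_prompt(0.95), screening_prompt(0.5), screening_prompt(0.1))
```

The cutoffs decide who never sees a prompt at all. An account that scores above `high` is effectively age-classified without any input from the user.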

That's the controversial part nobody's discussing much. Discord is analyzing your behavior to estimate your age, even before you voluntarily submit proof.

The Privacy Trade-off: What You're Giving Up

Let's be honest about the privacy implications here.

First, facial data. Even if the video never leaves your device, your face still passes through an analysis system. The AI model was trained on faces. When you run it locally, you're running machine learning code built to recognize age-related facial patterns. It's not clear what data that model was trained on. If Discord user data was part of it, then a large number of users' faces have already been analyzed to build this system.

Second, behavioral data. Discord is analyzing your gameplay, your posting times, your activity levels, your activity type. They're building profiles about whether you're a teen or an adult based on how you use the platform. That's new data collection they're doing specifically for this feature.

Third, government ID data (if you choose that route). Even if images are deleted immediately, someone at the vendor company is handling your ID. Someone is processing it. It travels across the internet. It exists in temporary caches and buffers. The October 2024 breach proved that vendors in this space can be compromised.

Fourth, verification metadata. Discord almost certainly keeps records of when you verified, what method you used, and whether verification succeeded. It might also keep device information, IP address, or other context. Nothing suggests this metadata gets the same quick-deletion treatment as the ID images, and it could be used for trust scoring, platform safety systems, and responses to law enforcement requests.

Fifth, function creep. Once the verification system is in place, Discord will likely use it for other features: age-based content recommendations, age-based feature access, advertising customization based on verified age. The system becomes more useful to Discord the more data it collects.

Discord isn't being dishonest about this. They're transparent in the documentation. But the scope of data collection is broader than just "checking your age." It's building an identity layer on top of Discord accounts.

Facial age estimation AI models show varying accuracy rates; the chart assumes 85% accuracy for Discord's model. Estimated data.

Circumvention: How Users Have Already Found Workarounds

When Discord rolled out age verification in the UK and Australia in 2024, users found a loophole almost immediately. Someone discovered that the photo mode in Death Stranding, Kojima's game, could be manipulated to trick the facial age estimation system.

How? The photo mode let you adjust lighting, angles, and facial features in ways that confused the age detection AI. Users who wanted to bypass verification took photos of different faces or the same face altered enough to look significantly older or younger than they actually were.

Discord noticed and fixed it within a week. They updated their AI model and possibly added anti-spoofing checks. But the fact that a solution existed and spread so quickly tells you something important: determined users can find ways around this.

For the global rollout, Discord is expecting more attempts. Savannah Badalich acknowledged this in interviews. They know users will try to bypass the system. They're expecting it. They're planning to "bug bash as much as we possibly can," her exact words.

Potential workarounds that users might try:

- Using old photos: Submitting ID photos from years ago when they were younger (or older if pretending to be older)

- Photo editing: Manipulating selfies to appear older or younger

- Deepfakes or AI-generated images: Creating synthetic faces that fool the AI estimator

- Borrowing IDs: Using someone else's government ID (illegal but possible)

- VPN and proxies: Trying to appear as different locations to access different verification systems

- Timing exploits: Finding time windows before verification systems activate

- Technical exploits: If Discord's implementation has bugs, finding and using them

Some adults might also skip verification even though they have nothing to circumvent. Why? Privacy concerns. They don't want to submit government IDs or facial videos to any system, even one that claims deletion.

Some tech-savvy users might simply accept the teen restrictions rather than go through verification. The trade-off is worth it to them if it means avoiding data submission.

The October 2024 Vendor Breach: What Actually Happened

Context matters. Before you submit your government ID to anyone, you should understand what went wrong before.

In October 2024, one of Discord's third-party vendors for age verification infrastructure got hacked. This wasn't Discord's direct servers. This was an external company they'd partnered with. But that distinction matters less to users whose data leaked.

The breach exposed images of government-issued IDs. That's the worst-case scenario for an ID verification system. Photos included sensitive identifying information, not just the age data.

How did it happen? The vendor's security infrastructure had vulnerabilities. Exact details are limited because vendor companies rarely disclose full postmortem details publicly. But it likely involved something like unpatched systems, weak access controls, inadequate encryption, or compromised credentials.

What Discord did: they immediately stopped using that vendor. They moved to a different third-party partner. They claim they've reviewed security practices and implemented new safeguards.

But here's the thing: this isn't unique to Discord's vendor. ID verification companies as a category have been breached repeatedly. Cybersecurity researchers consistently find vulnerabilities in identity verification systems.

Why? Because ID verification services are high-value targets. Stolen government ID images are traded on criminal markets and used for identity fraud, and a single breach can expose a whole database of them at once. So vendors are targeted constantly. Perfect security doesn't exist.

Discord's new vendor might have better security than the last one. But "better" isn't the same as "unhackable." Users should understand they're taking a real risk by submitting government IDs, even to Discord's carefully selected partner.

Behavioral Age Inference: The Sneakiest Part of the System

Here's what Discord isn't emphasizing: they're implementing age inference based on how you actually use the platform.

Discord has access to massive amounts of behavioral data on every user. They know:

- What games you play and when

- What servers you're in

- What time of day you're active

- How many servers you're in

- What types of content you interact with

- Your friend list and who you talk to

- Your status updates and profile information

- Your voice activity and call history

- Your typing patterns and message frequency

Using machine learning on this data, they can build models that predict whether an account belongs to a teen or an adult. Teens have different activity patterns than adults. Adults with jobs are active during different hours. Adults and teens play different games on average. Adults tend to be in more diverse server communities.
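The simplest version of such a model is a logistic regression. It computes a weighted sum of behavioral features and squashes it into a probability. Everything specific here is invented for illustration: the feature names, the weights, and the bias are hypothetical, and Discord has not published its features or model type. A real model would learn its weights from labeled data.

```python
import math
from typing import Mapping

# Invented features and weights, for illustration only.
# Positive weights push the score toward "adult".
WEIGHTS: Mapping[str, float] = {
    "account_age_years": 0.4,
    "distinct_server_topics": 0.3,  # diversity of server communities
    "weekday_daytime_share": 1.5,   # fraction of activity in weekday daytime (sign invented)
}
BIAS = -2.0


def p_adult(features: Mapping[str, float]) -> float:
    """Logistic regression: a weighted sum squashed into (0, 1)."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


new_account = {"account_age_years": 0.5, "distinct_server_topics": 2, "weekday_daytime_share": 0.1}
veteran = {"account_age_years": 6, "distinct_server_topics": 8, "weekday_daytime_share": 0.4}
print(round(p_adult(new_account), 2), round(p_adult(veteran), 2))
```

The output is a probability, not a fact about the person. A teen whose usage happens to look like the `veteran` profile gets a high score regardless of actual age.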

The inference model isn't perfect. But it's probably reasonably accurate. And here's the kicker: Discord can use it immediately without waiting for explicit verification.

If your inference score is high ("probably an adult"), Discord might not even bother prompting you for verification. If it's low ("probably a teen"), you get prompted earlier or more aggressively.

This is incredibly efficient for Discord from an implementation standpoint. They get to satisfy regulatory requirements while not requiring everyone to go through verification. But it also means they're making age decisions about you without your input, based on inference models that can be wrong.

A teen using Discord like an adult (because they're in creative communities, do game design, work on projects) might get incorrectly classified as adult. An adult who's very casual and lurks a lot might get classified as teen. These misclassifications cascade through the system.

Worse, once the inference model is deployed, Discord is collecting even more behavioral data to continuously refine it. Every user interaction is training data for age detection. That's a permanent shift in how much data Discord collects.

Facial age estimation is less accurate and has lower privacy concerns compared to government ID verification, which is more accurate but raises significant privacy issues. Estimated data.

The Age Gate Itself: How It Affects Community Dynamics

Age verification systems change how online communities function. They're not just administrative overhead. They have real effects on platform culture.

First, the friction. Verification requires steps. Some percentage of users will simply abandon the platform rather than go through it. Discord expects most users to verify (it hasn't published a figure), but some won't. These users either stay in teen mode or disappear.

Second, the segregation. Age-restricted servers become more of a thing. Server creators who want to gate content can now do it at the platform level rather than relying on honor systems or manual verification. More servers will implement age gates. More content becomes age-restricted.

This sounds good for child safety. But it also means that valuable communities and resources that happen to contain any mature content become off-limits to anyone who looks young or hasn't verified. It's a blunt tool.

Third, the authentication layer. Once verified age exists, it becomes useful for everything. Advertisers want to know verified ages. Content recommendations want to use it. Moderation systems want to consider it. What started as a safety feature becomes a categorization system for the entire platform.

Fourth, the barrier to entry. New users now have an extra step before they can access the full platform. That's friction that reduces initial adoption. It's especially significant for young users, who are the primary demographic Discord is supposedly protecting, but who also get the most locked-down experience.

Fifth, the chilling effect. Knowing your age is verified and recorded makes some users more cautious. They might self-censor. They might not join communities that are technically legal but socially controversial. They might avoid expressing unpopular opinions. Whether intentional or not, age verification creates a surveillance effect.

Global Variations: How Age Verification Differs Across Countries

Discord isn't implementing the same system everywhere. Different countries have different legal requirements and different age-of-majority definitions.

In the US and most of Europe, the age of majority is 18. You're an adult or you're not. Discord is implementing it that way in those regions.

But countries vary. In some places, the age of majority is 16. In others, it's 21. Some countries have specific laws about what platforms can do with age verification data. Some require that minors get special protections. Some prohibit certain types of collection.

Discord has to navigate this patchwork. They're implementing a global system but with local variations. That's complex. It means different user experiences in different countries. It means the verification threshold might be different. It means some countries might require more privacy protections than others.
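Mechanically, "same system, local variations" often means a default threshold with per-region overrides. In this sketch the region codes and values are placeholders (`REGION_A`, `REGION_B`), chosen only to mirror the 16 and 21 examples above. They are not a claim about any real jurisdiction or about Discord's configuration.

```python
from typing import Mapping

DEFAULT_THRESHOLD = 18

# Hypothetical overrides; real thresholds depend on each jurisdiction's law.
REGION_THRESHOLDS: Mapping[str, int] = {
    "REGION_A": 16,
    "REGION_B": 21,
}


def adult_threshold(region: str) -> int:
    # Fall back to 18 for any region without an explicit override.
    return REGION_THRESHOLDS.get(region, DEFAULT_THRESHOLD)


def is_adult(age: int, region: str) -> bool:
    return age >= adult_threshold(region)


for region in ("REGION_A", "REGION_B", "ANYWHERE_ELSE"):
    print(region, is_adult(19, region))
```

The same 19-year-old counts as an adult in two of these three hypothetical regions. That is the "different user experiences in different countries" problem in miniature.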

The European Union is particularly strict. GDPR applies to EU users, and collecting face images and ID data falls under it. Discord needs a lawful basis under GDPR for processing this data. Child safety obligations are the likely justification, but the requirements around data minimization, deletion, and user rights are strict.

The UK, post-Brexit, passed the Online Safety Act 2023, which explicitly addresses age assurance for platforms. It sets out what platforms must do rather than replacing data protection law, so UK GDPR still governs the data itself. Other countries have their own frameworks.

Discord's approach seems to be: implement global systems with privacy-first design as much as possible, then add country-specific modifications where legally required. That means some users get better protections depending on where they are.

What Actually Stops Dedicated Circumvention

There's a gap between what technically prevents circumvention and what Discord can realistically implement.

To truly prevent all circumvention, Discord would need:

Liveness detection: The system would need to verify that your selfie is of you, right now, not a photo from a year ago or a synthetic image. This requires advanced anti-spoofing technology that's not perfect. Deepfakes can fool liveness detection. The technology itself is controversial.

Document verification: For ID verification, they'd need real-time communication with government databases to verify the ID is authentic and hasn't been reported stolen. Most ID verification systems still rely on visual inspection of security features rather than government database access, which is less secure.

Behavioral validation: They could try to match the selfie to the behavior profile (does the verified person match the account's usage pattern?). But this gets complicated and creates false positives.

Device fingerprinting: They could require that verification happens on a specific device and that the account stays associated with that device. But this enables tracking and reduces user freedom.

Legal enforcement: They could actually enforce age restrictions, not just content filtering. If someone violates restrictions, Discord could take legal action. But that scales poorly and invades privacy further.
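To see why checks without database access are weaker, consider one common offline consistency check. Passports carry a machine-readable zone, and each of its key fields ends in a check digit defined by ICAO Doc 9303. The sketch below implements that published algorithm and tests it against values from the ICAO specimen passport. Whether Discord's vendor runs this particular check is unknown. The point is that anyone can compute a valid check digit, so passing it proves the data is internally consistent, not that the document is genuine.

```python
def mrz_check_digit(field: str) -> int:
    """ICAO Doc 9303 check digit: weights 7, 3, 1 repeating, sum mod 10."""
    weights = (7, 3, 1)
    total = 0
    for i, ch in enumerate(field):
        if "0" <= ch <= "9":
            value = int(ch)
        elif "A" <= ch <= "Z":
            value = ord(ch) - ord("A") + 10  # A=10 ... Z=35
        elif ch == "<":
            value = 0  # filler character
        else:
            raise ValueError(f"invalid MRZ character: {ch!r}")
        total += value * weights[i % 3]
    return total % 10


def field_is_consistent(field: str, check_digit: str) -> bool:
    # A single ASCII digit is required; anything else fails the check.
    if len(check_digit) != 1 or not "0" <= check_digit <= "9":
        return False
    return mrz_check_digit(field) == int(check_digit)


# Values from the ICAO specimen passport.
print(field_is_consistent("L898902C3", "6"))  # True (document number)
print(field_is_consistent("740812", "2"))     # True (birth date 1974-08-12)
```

A forger who edits the birth date can simply recompute the digit. Catching that kind of forgery requires checks beyond arithmetic, such as security-feature inspection or a lookup against the issuer's records.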

Discord probably isn't implementing most of these. Why? Because they're expensive, they harm legitimate user experience, and they create privacy nightmares. Instead, Discord is probably accepting that some circumvention will happen and focusing on catching obvious cases while accepting that determined users will find ways around it.

The system is more about liability reduction ("we implemented something") than perfect age enforcement.

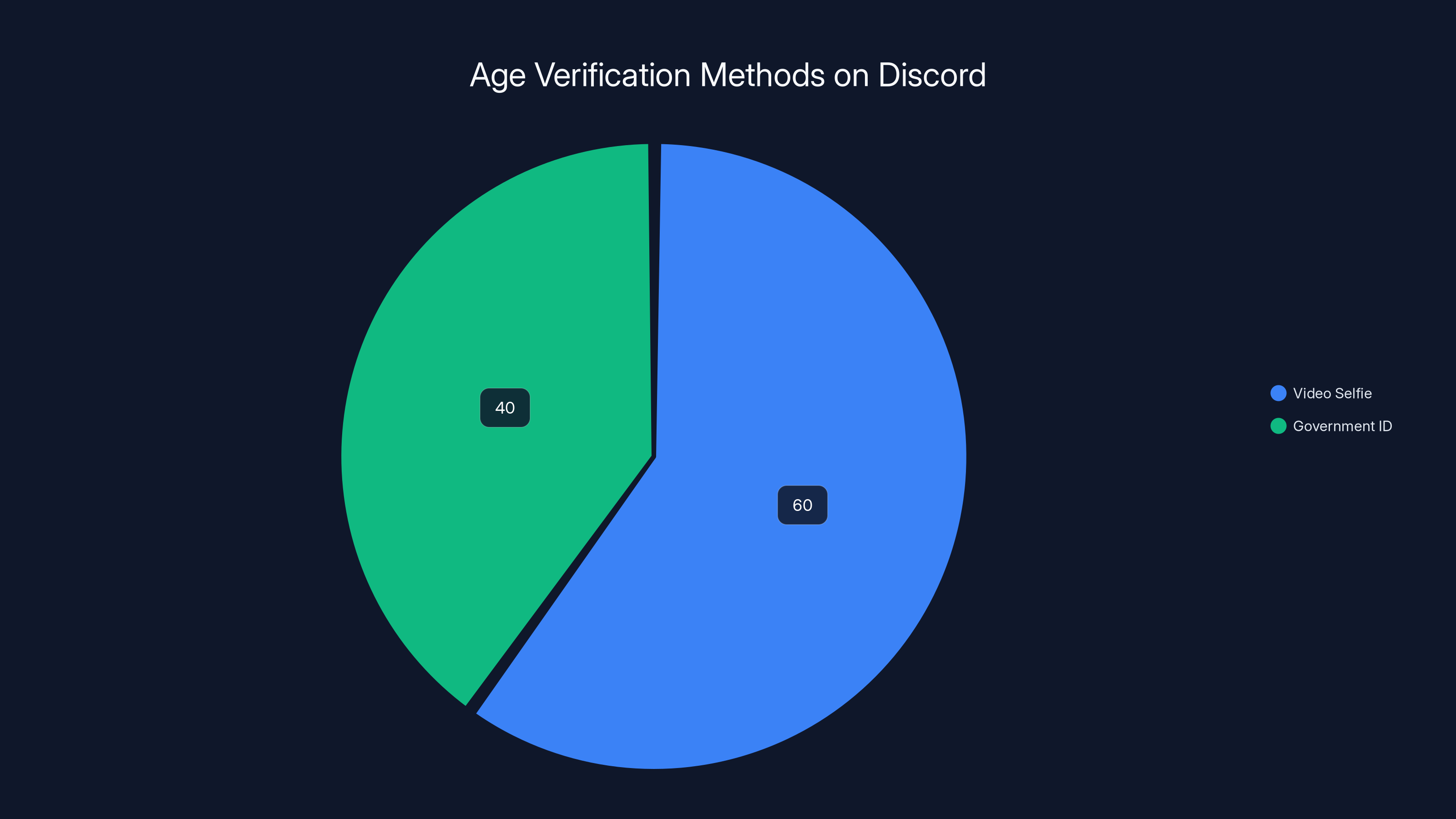

Estimated data suggests that 60% of users prefer using a video selfie for age verification on Discord, while 40% opt for uploading a government ID.

The Data Deletion Problem: Is It Really Deleted?

Discord claims ID images are deleted "quickly, in most cases immediately after age confirmation." This language matters because it's vague.

Here's how systems actually work: when you upload a file, it gets written to a server. It gets processed. It gets stored temporarily in various places: memory buffers, disk caches, backup systems, log files. The image data gets moved between systems. It might be logged. It might be cached for performance. It might be included in automated backups.

To actually delete it, you need to:

1. Identify all copies: find every location where the file exists (main server, caches, backups, replicas, logs)

2. Delete securely: not just remove the file pointer, but overwrite the actual bytes on disk so they can't be recovered

3. Delete from logs: remove references from transaction logs, audit logs, and backups

4. Verify deletion: confirm the data is actually gone

Most systems don't do all of this. They typically remove the primary copy without securely overwriting it, and they handle step 1 only partially. Steps 3 and 4 are often skipped. And vendors, despite good intentions, often fail at some of these steps.

When Discord says "deleted immediately," they probably mean "deleted from the primary database and not used for future processing." They probably don't mean "securely overwritten everywhere including backups and logs."
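The gap between "deleted from the primary database" and "gone" can be shown with a toy model. In this sketch one upload fans out to several storage locations, and only the primary copy is deleted. The location names are hypothetical, and the sketch only tracks whether each location still holds a reference. Secure overwriting of bytes on disk (step 2) is out of scope.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Store:
    name: str
    keys: set[str] = field(default_factory=set)


def delete_from(stores: list[Store], key: str) -> None:
    # Removes the reference only; this does not overwrite any bytes.
    for store in stores:
        store.keys.discard(key)


def remaining_copies(stores: list[Store], key: str) -> list[str]:
    # Step 4 (verify deletion): list every location that still holds the key.
    return [s.name for s in stores if key in s.keys]


# Hypothetical layout: one ID upload lands in four places.
primary = Store("primary_db", {"id-123"})
cache = Store("cdn_cache", {"id-123"})
backup = Store("nightly_backup", {"id-123"})
logs = Store("request_logs", {"id-123"})
everything = [primary, cache, backup, logs]

delete_from([primary], "id-123")  # "deleted immediately"
print(remaining_copies(everything, "id-123"))
# ['cdn_cache', 'nightly_backup', 'request_logs']
```

Only a verification pass that knows about every location can show that the primary-only delete left three copies behind. That inventory of locations is exactly the step 1 that systems tend to do partially.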

This matters because if a breach like the October 2024 one happens again, what gets compromised might not be current ID images (they're deleted) but rather backup copies or log files that nobody properly cleaned up.

You should assume that once you upload a government ID to any system, it's in that system somewhere. Probably for longer than you think. Even if it gets deleted, copies might persist in backups or archives for years.

The Facial Age Estimation AI: How Accurate Is It Really

Let's dig into the facial age estimation technology because this is the privacy-friendlier option, but it comes with accuracy trade-offs.

Facial age estimation AI works by analyzing features like:

- Skin texture and wrinkles

- Face shape and proportions

- Bone structure (the jaw, cheekbones, and brow change shape with age)

- Skin elasticity

- Specific age-related features (gray hair, age spots, etc.)

The model was trained on thousands or millions of labeled images. It learned to recognize patterns that correlate with age. In testing, these models achieve accuracy rates between 75% and 95%, depending on the dataset, the model architecture, and what "accuracy" means.

But there are systematic biases. Different ethnicities show age differently. Lighting affects how wrinkles appear. Makeup can significantly alter facial features. Accuracy can also differ by gender when training data is unbalanced. Camera quality matters. Angle matters.

Say Discord's model is 85% accurate overall. That's pretty good. But it means 15% of the people it checks are misclassified. If Discord has 150 million active users and all of them went through facial estimation, that's 22.5 million people classified incorrectly.
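Here is the same arithmetic as a worked check across the 75% to 95% accuracy range quoted earlier. It keeps the paragraph's simplifying assumptions: all 150 million users go through facial estimation, and "accuracy" is a single overall error rate. Integer percentages avoid floating-point rounding.

```python
def misclassified(users: int, accuracy_pct: int) -> int:
    """Expected number of wrong classifications at a given overall accuracy."""
    return users * (100 - accuracy_pct) // 100


for pct in (75, 85, 95):
    print(f"{pct}% accurate -> {misclassified(150_000_000, pct):,} misclassified")
# 75% accurate -> 37,500,000 misclassified
# 85% accurate -> 22,500,000 misclassified
# 95% accurate -> 7,500,000 misclassified
```

Even at the top of the range, the absolute number of misclassified people runs into the millions at this scale.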

A 16-year-old might look old for their age, get classified as an adult, and be let in with no further check. A 19-year-old might look young, get classified as a teen, and end up restricted. There's also variance over time: the same person might look different in different selfies because of lighting, angle, or changes in appearance.
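A flat "15% wrong" hides where the errors land. This sketch makes an explicit assumption that the estimator's error is normally distributed around the true age, with a standard deviation of 3 years (an assumed value, in line with "off by several years"). Under that assumption, the chance of being classified as an adult depends on how far someone's true age sits from the 18-year threshold.

```python
import math


def p_classified_adult(true_age: float, sigma: float = 3.0, threshold: float = 18.0) -> float:
    """P(estimate >= threshold), assuming estimate ~ Normal(true_age, sigma)."""
    z = (threshold - true_age) / sigma
    # Standard normal CDF computed via the error function.
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return 1.0 - cdf


for age in (15, 16, 17, 18, 19, 21, 25):
    print(age, round(p_classified_adult(age), 2))
```

Under this model, misclassification concentrates among people within a few years of 18. In other words, it falls on the older teens and young adults the system is most concerned with, while a 25-year-old is almost always passed.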

Discord allows appeals. If you get misclassified, you can challenge the decision. The appeal process requires submitting an ID, which brings you back to the privacy concerns you were trying to avoid with facial estimation in the first place.

So the appeal process creates a weird incentive: if you're misclassified, the only way to fix it is to give them exactly the data you were trying to avoid giving them.

Comparing Discord's System to Other Platforms

Discord isn't alone in implementing age verification. But their approach differs from competitors.

Meta (Facebook, Instagram, WhatsApp): Uses a combination of ID verification and document scanning, and Instagram also offers video-selfie age estimation through its partner Yoti. Meta has faced repeated criticism over privacy and data handling, which might actually make users trust Discord's approach more.

TikTok: Implements age verification in some regions but not globally. Uses ID verification where required by law. Less aggressive about enforcement in regions with less regulatory pressure. Facing potential bans in some countries, so verification might be moot soon.

Snapchat: Uses AI-based age estimation but also allows ID verification. More focused on limiting contact between adults and minors than on gating content. Different threat model.

YouTube: Age verification for mature content exists but is generally optional. Relies more on self-reported age at account creation. Less strict enforcement.

Reddit: Similar to YouTube. Age verification is available but not mandatory. Relies on community moderation.

Discord's approach is stricter than most. They're making it mandatory rather than optional. They're blocking access rather than just hiding content. They're using behavioral inference in addition to explicit verification.

This strictness makes sense from a liability standpoint. If something happens involving minors, Discord can now demonstrate they took "reasonable" precautions. But it also means a more intrusive system than most competitors.

Estimated data shows that server participation and game activity are major components in Discord's age inference model, each contributing significantly to the overall prediction.

The Liability and Regulatory Angle: Why Discord Is Actually Doing This

Here's what nobody talks about directly: Discord is implementing this to reduce their legal liability.

If Discord doesn't verify age and a minor accesses adult content or is harmed by someone, Discord is potentially liable. They could face lawsuits. Regulators could fine them. Their reputation suffers.

With age verification in place, Discord has a defense. They can argue: "We implemented industry-standard age verification. We took reasonable precautions. Users circumvented the system despite our best efforts."

Legal liability is the primary driver, not user safety (though that's the stated rationale).

Regulatory bodies worldwide have been increasing pressure. The Online Safety Act in the UK, the Digital Services Act in the EU, various bills in the US, and country-specific laws in Asia and elsewhere all address age verification and minor protection.

The biggest risks for Discord: being dragged into a legal proceeding where its age verification system is found inadequate, ending up on the wrong side of a regulatory enforcement action, or having countries ban or restrict the platform over inadequate protections.

Implementing verification proactively is cheaper than fighting regulatory battles later. It's a business decision, dressed up in child safety language.

This isn't meant cynically. Child safety is important. But the primary motivation is risk reduction and liability management.

What Happens to Unverified Users Long-Term

Here's a question Discord hasn't explicitly answered: what happens if users remain unverified indefinitely?

The teen-mode restrictions don't go away. Users stay locked out of age-restricted content. But do they get pestered constantly? Do they get limited server invites? Do they get suspended?

Discord says verification is required to access the full platform, but "required" is vague. Is it required immediately? After 30 days? Will they force it?

Most likely scenario: Discord doesn't actively force verification, but makes it increasingly inconvenient not to. They gradually roll out features that require verification. They make the teen experience worse. They increase the frequency of verification prompts.

Some users will simply leave. Some will bite the bullet and verify. Some will use workarounds. Some will stick it out in teen mode indefinitely.

The majority of users will probably verify within 6 months, whether through explicit verification or through the inference model that likely already has them classified.

Adult users avoiding verification due to privacy concerns are in a bind. They can either give up privacy (by verifying) or give up functionality (by staying in teen mode). There's no third path.

Discord could theoretically add a pure-privacy option: verify age without submitting government IDs and without facial data collection. But that's less useful to them. They'd still get the regulatory compliance but less data to work with.

The Future: What Comes After Age Verification

Age verification is unlikely to be the endpoint. It's a foundation for further systems.

Once Discord has verified ages on accounts, they can:

Personalize recommendations: Serve different content based on verified age. This is valuable for advertisers and for content creators targeting specific demographics.

Adjust moderation rules: Apply stricter rules to accounts classified as minors. Looser rules to adults. This is fine in theory but creates complexity in practice.

Enable age-based monetization: Allow creators to monetize age-restricted content differently. Charge different prices for different age groups.

Build audience demographics: Tell creators and advertisers the exact age breakdown of their audience.

Implement parental controls: Let parents manage their children's accounts more closely (if Discord decides to go there).

Facilitate data sharing: Share age data with law enforcement when requested, since they now hold verified age records.

Each of these creates new privacy concerns but also new value for Discord and for other stakeholders. Age verification is the infrastructure that enables all of it.

In 5 years, the current controversy will probably seem quaint. Platforms will have far more intrusive identity systems. Kids will be used to it. Adults will be used to it. The boundary between identity and pseudonymity online will have shifted permanently.

For now, though, age verification is the visible frontier of this shift.

Practical Steps Users Should Take

If you're a Discord user, here's what to actually do.

First, decide your privacy tolerance. Are you okay submitting a government ID to Discord's vendor? Are you comfortable with facial data being analyzed? Your answer determines your path.

Second, understand the teen-mode restrictions. If you're not verifying, know exactly what you're losing. Check if communities you care about are age-restricted. Check if you participate in stage channels. Map out the impact.

Third, verify early if you're going to verify. Don't wait until you're locked out of important servers. If possible, verify before the March 2025 rollout, which doesn't leave much time.

Fourth, use facial estimation if you must verify. It's less accurate but significantly more private than ID submission. Only escalate to ID if the facial estimation fails.

Fifth, take security seriously. If you do submit an ID, give your Discord account a unique password that you don't reuse anywhere else, and enable two-factor authentication. Secure the account properly, because it is now tied to identity data.

Sixth, read Discord's actual documentation. The blog posts and official statements are careful and sometimes vague. Discord's support docs might have more detail about data handling.

Seventh, consider alternatives. If Discord's approach doesn't work for you and other platforms (Slack, Teams, Matrix) fit your use case, switching might make sense.

Most users probably won't do anything. They'll go through verification when prompted, forget about it, and move on. That's probably fine; the system is designed to be low-friction for people who don't care.

But if you do care, be intentional about it.

The Bigger Picture: Age Verification as a Broader Trend

This isn't just about Discord. Discord is simply the most visible case right now. Age verification is becoming standard across platforms.

The infrastructure layer is being built. Third-party vendors specializing in age verification are proliferating. Their business models depend on making verification faster, cheaper, and more widespread. Every platform that implements it creates demand for their services.

Regulatory bodies are building age verification requirements into new laws. Once enough governments mandate it, it stops being optional. It becomes necessary for platform viability.

That means we're heading toward a world where significant portions of the internet require age verification. You'll need verified identity to access certain communities, services, and content.

That has implications:

- Privacy erosion: Your age is one more data point feeding into comprehensive identity profiles.

- Reduced anonymity: Fewer places where you can participate pseudonymously.

- Increased surveillance: Once identity infrastructure exists, it becomes a tool for tracking and moderation.

- New vulnerabilities: Identity data becomes a target for breaches and misuse.

- Increased friction: More steps between you and the services you want to use.

But also potential benefits:

- Genuine child protection: Age-restricted content is actually age-restricted.

- Reduced fraud: Easier to identify and penalize bad actors.

- Platform accountability: Harder for platforms to claim they can't identify harmful users.

The question isn't whether Discord's system is good or bad in isolation. It's whether this is the future you want for the internet.

For most people, it's not a choice they're making actively. It's a change that's happening around them. By the time the implications become clear, the infrastructure is already in place and extremely hard to unwind.

FAQ

What exactly is age verification and why is Discord implementing it?

Age verification is a process where Discord confirms whether a user is an adult or a teenager. Discord is implementing it due to global regulatory pressure and child safety requirements, not because of user demand. Starting in March 2025, all users will be classified as "teen" by default unless they complete verification using either facial age estimation (a selfie analyzed by AI) or a government ID submitted to a third-party vendor.

How does Discord's facial age estimation work and is it accurate?

Discord's facial age estimation uses artificial intelligence to analyze your face from a video selfie and classify you as either a teen or adult. The analysis happens locally on your device, and no video gets sent to Discord's servers. However, like all facial age estimation AI, it has limitations. Accuracy rates typically range from 75% to 95% depending on lighting, camera quality, and other factors. Systematic biases also exist, meaning the technology performs differently across different ethnicities and demographics. If the system misclassifies you, you can appeal and submit government ID instead.
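The flow described above (estimate on-device, classify as teen or adult, fall back to ID when that fails) can be sketched as a small decision function. This is a minimal sketch under stated assumptions, not Discord's implementation: the `Estimate` type, the confidence cutoff, and the 5-year buffer above 18 are all hypothetical. The buffer is an assumed safety margin so that borderline estimates are not auto-approved but routed to the ID fallback instead.

```python
from dataclasses import dataclass
from enum import Enum

ADULT_AGE = 18
BUFFER_YEARS = 5        # hypothetical margin to absorb estimation error
MIN_CONFIDENCE = 0.8    # hypothetical cutoff below which the estimate is not trusted


class Outcome(Enum):
    ADULT = "adult"
    TEEN = "teen"          # user can still appeal with an ID
    NEEDS_ID = "needs_id"  # borderline or low-confidence: offer the ID fallback


@dataclass
class Estimate:
    """Output of a hypothetical on-device age-estimation model."""
    age: float
    confidence: float  # 0.0 to 1.0


def classify(est: Estimate) -> Outcome:
    """Map an on-device estimate to an outcome.

    In the design Discord describes, only a result like this would leave
    the device, never the selfie itself.
    """
    if est.confidence < MIN_CONFIDENCE:
        return Outcome.NEEDS_ID
    if est.age >= ADULT_AGE + BUFFER_YEARS:
        return Outcome.ADULT
    if est.age < ADULT_AGE:
        return Outcome.TEEN
    # Estimates between 18 and 18 + BUFFER_YEARS are too close to auto-approve.
    return Outcome.NEEDS_ID


print(classify(Estimate(age=31.0, confidence=0.93)))  # Outcome.ADULT
```

The buffer trades convenience for safety: a genuine 20-year-old may be asked for an ID, but an estimation error of a few years is less likely to let a minor through.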

What are my options if I don't want to submit a government ID?

Your primary option is facial age estimation, which analyzes a video selfie on your device without submitting any images. If facial estimation misclassifies you and you don't want to escalate to an ID, you can remain in teen mode and accept the content restrictions. That means you cannot join age-restricted servers or speak in stage channels. There's no option to bypass age verification entirely while keeping full access to the platform.

What happened to the data from the October 2024 vendor breach?

In October 2024, one of Discord's third-party age verification vendors suffered a security breach that exposed government ID images and sensitive information from users who had submitted IDs. Discord immediately stopped working with that vendor and switched to a different third-party partner. They claim the new vendor has enhanced security measures, though the previous breach shows that even vetted vendors can be compromised. If you submit an ID, you're accepting a real risk despite Discord's efforts to partner with secure vendors.

How long does Discord actually keep my government ID information after verification?

Discord claims that government ID images are "deleted immediately, in most cases" after age confirmation. The company specifically states they don't retain your name, address, or other personal information from the ID. However, verification metadata (the fact that you verified, the date you verified, and possibly other context) is likely kept for compliance and security purposes. Additionally, "deleted immediately" is somewhat ambiguous and may not account for copies in backup systems, logs, or temporary caches that persist longer than intended.

Can Discord see my face or use facial recognition technology on me?

Discord states they are not performing facial recognition or biometric scanning. They're specifically using "facial age estimation," which analyzes age-related features but doesn't create a facial print or identify your specific face. However, to perform age estimation, the system must analyze your facial data even if it doesn't store it. The distinction between age estimation and facial recognition is real but subtle, and both involve analyzing your face.

What are the restrictions for "teen" mode and how severe are they?

Users in teen mode cannot access age-restricted servers (the interface shows a black screen), cannot speak in stage channels, have content automatically filtered, and receive warning prompts for friend requests and DMs from unfamiliar users. These are significant restrictions that prevent participation in many communities. However, regular direct messages with known contacts and non-age-restricted servers work normally. Many communities will likely implement age restrictions even for content that isn't explicitly adult, which could substantially impact a teen user's Discord experience.
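To make these teen-mode rules concrete, here is a minimal sketch of them as an access check. The enum names and the `is_allowed` / `route_dm` helpers are hypothetical illustrations, not Discord APIs; the rule set comes from the restrictions listed in this answer, and treating verified adults' DMs from strangers as unfiltered is an assumption.

```python
from enum import Enum, auto


class AgeStatus(Enum):
    TEEN_DEFAULT = auto()    # every unverified account lands here
    VERIFIED_ADULT = auto()


class Action(Enum):
    JOIN_AGE_RESTRICTED_SERVER = auto()
    SPEAK_IN_STAGE_CHANNEL = auto()
    VIEW_UNFILTERED_CONTENT = auto()
    DM_KNOWN_CONTACT = auto()
    JOIN_REGULAR_SERVER = auto()


# Actions teen mode blocks outright, per the restrictions described above.
TEEN_BLOCKED = {
    Action.JOIN_AGE_RESTRICTED_SERVER,
    Action.SPEAK_IN_STAGE_CHANNEL,
    Action.VIEW_UNFILTERED_CONTENT,
}


def is_allowed(status: AgeStatus, action: Action) -> bool:
    """Verified adults can do everything; teen mode blocks a fixed set."""
    if status is AgeStatus.VERIFIED_ADULT:
        return True
    return action not in TEEN_BLOCKED


def route_dm(status: AgeStatus, sender_is_known: bool) -> str:
    """Messages from strangers to teen-mode users are not blocked,
    but go to a separate inbox with a warning prompt."""
    if status is AgeStatus.TEEN_DEFAULT and not sender_is_known:
        return "separate_inbox_with_warning"
    return "inbox"


print(is_allowed(AgeStatus.TEEN_DEFAULT, Action.SPEAK_IN_STAGE_CHANNEL))  # False
```

Note the asymmetry: blocked actions are a short deny-list, so anything not on it (regular servers, DMs with known contacts) keeps working normally in teen mode.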

Will Discord force me to verify or will teen mode remain optional?

Discord hasn't explicitly stated whether verification will eventually become mandatory. Because teen mode will be the default, verification is effectively required for full access. However, Discord's language suggests they won't aggressively force immediate verification. Most likely, they'll gradually make teen mode increasingly inconvenient through fewer features, more limitations, and more frequent prompts until most users voluntarily verify. Some users may choose to remain unverified indefinitely and simply accept the restrictions.

How does Discord's behavioral age inference work and what data does it analyze?

Discord is deploying an age inference model that analyzes behavioral patterns including what games you play, what servers you're in, your activity times, how long you spend on Discord, typing patterns, and other usage signals. The system uses these patterns to predict whether you're likely a teen or adult without requiring explicit verification. This inference happens automatically and is separate from the verification process. Discord may use this inference to determine who gets age verification prompts and when, or potentially to automatically classify some users without requiring verification.
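Discord hasn't published how its inference model works. As a rough illustration of how behavioral signals can be combined into a prediction, here is a toy logistic-regression scorer. Every feature name, weight, and threshold below is hypothetical; a real model would learn its weights from labeled data. The point is the shape: signals are weighted, summed, squashed into a probability, and only confident predictions skip the verification prompt.

```python
import math

# Hypothetical features and hand-picked weights (positive pushes toward "adult").
WEIGHTS = {
    "share_of_servers_age_restricted": 2.0,
    "share_of_playtime_in_mature_games": 1.5,
    "share_of_activity_in_school_hours": -2.0,
    "account_age_years": 0.3,
}
BIAS = -1.0


def adult_probability(features: dict[str, float]) -> float:
    """Logistic regression: sigmoid of the bias plus a weighted sum of signals."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def route(features: dict[str, float],
          adult_cutoff: float = 0.9,
          teen_cutoff: float = 0.1) -> str:
    """Only confident predictions skip the explicit verification prompt."""
    p = adult_probability(features)
    if p >= adult_cutoff:
        return "likely_adult"
    if p <= teen_cutoff:
        return "likely_teen"
    return "prompt_for_verification"


veteran = {
    "share_of_servers_age_restricted": 1.0,
    "share_of_playtime_in_mature_games": 1.0,
    "share_of_activity_in_school_hours": 0.0,
    "account_age_years": 5.0,
}
print(route(veteran))  # likely_adult
```

The middle band is the interesting design choice: users the model can't place confidently get prompted, which matches the guess above that inference may decide who sees verification prompts and when.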

What should I do if I'm concerned about privacy but need full Discord access?

If you need full access but are privacy-conscious, use the facial age estimation method rather than submitting a government ID. This avoids submitting sensitive documents to vendors. If facial estimation misclassifies you, you can choose to remain unverified in teen mode rather than escalate to ID submission. You could also consider whether alternative platforms (Slack, Matrix, self-hosted solutions) better serve your privacy needs, though they have their own trade-offs. If you do verify with an ID, enable two-factor authentication, use unique credentials, and consider the long-term implications of having your age verified on the platform.

Looking Ahead: What This Means for Digital Identity

Discord's age verification rollout is a watershed moment. It's not revolutionary in technology. Facial age estimation and ID verification are mature technologies. But it's revolutionary in scope. A platform with 150 million active users is integrating identity verification into core platform function.

This normalizes something that seemed unlikely five years ago: the idea that casual social platforms require verified identity. Once it's normal, the resistance to it crumbles.

Governments will point to Discord as an example. "Other platforms do it successfully." Regulations will cite it. Competitors will follow because staying behind creates a competitive disadvantage in the regulatory environment.

The internet is shifting from pseudonymous spaces to identity-verified spaces. This shift has real benefits for safety and accountability. It also has real costs for privacy, freedom, and anonymity.

Users who care about these trade-offs need to make intentional choices now, while there are still choices to make. In five years, verified identity on social platforms will probably feel inevitable. Right now, it still feels like a choice.

Make it deliberately, not accidentally. That's the only real advice that matters here.

Key Takeaways

- Discord's March 2025 global rollout makes age verification mandatory, defaulting all users to "teen mode" until they verify via facial estimation or government ID.

- Facial age estimation uses AI to analyze selfies locally on-device (no image transmission), while ID verification uploads documents to third-party vendors; Discord says IDs are deleted, but one vendor has already been breached.

- Teen-mode users cannot join or message in age-restricted servers or speak in stage channels, and they get automatic content filtering plus a separate inbox for DMs from strangers.

- The October 2024 vendor breach exposed government ID photos, demonstrating real risks of submitting identity documents despite Discord's claims of immediate deletion and security improvements.

- Behavioral age inference systems analyze gaming patterns, activity times, and platform usage to auto-classify users, collecting new behavioral data even before explicit verification occurs.

- Global regulatory pressure drives implementation, not user demand; Discord prioritizes liability reduction over pure privacy, positioning itself ahead of stricter mandates coming from governments worldwide.
