The Frame Generation Controversy That Just Won't Die

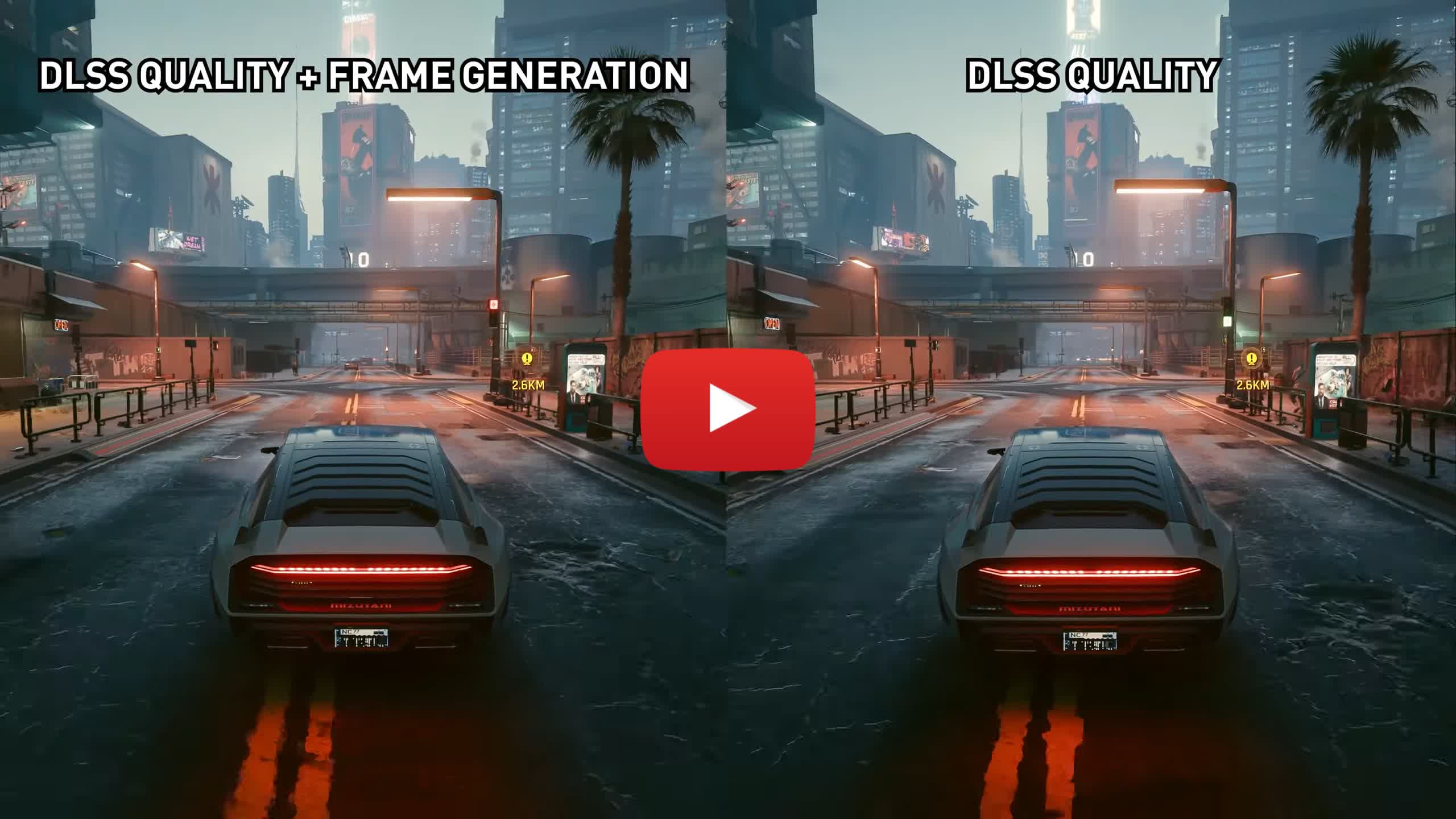

When Nvidia introduced something it called "frame generation" in late 2022, the gaming community lost its collective mind. Gamers called it "fake frames." The debate got heated, divisive, and honestly? Exhausting to watch.

Here's what happened: Nvidia released technology that uses AI to interpolate frames between the ones your GPU actually renders. So instead of your graphics card doing all the work, it renders some frames, AI figures out what the in-between frames should look like, and you get a smoother image on screen. The problem? Gamers immediately decided this was cheating.

But here's the thing—that argument made sense in 2023. The technology was janky. It had input lag. It produced artifacts. Games would stutter in weird ways. You could absolutely feel the interpolation happening, and not in a good way.

It's now 2025, and I need to say something nobody wants to hear: frame generation is actually good now. Not perfect. Not artifact-free. But genuinely useful. And if you're still dismissing it as "fake frames," you're leaving real performance on the table.

Let me explain why, because the nuance matters more than either side of this argument is willing to admit.

What Frame Generation Actually Is (Not the Myth)

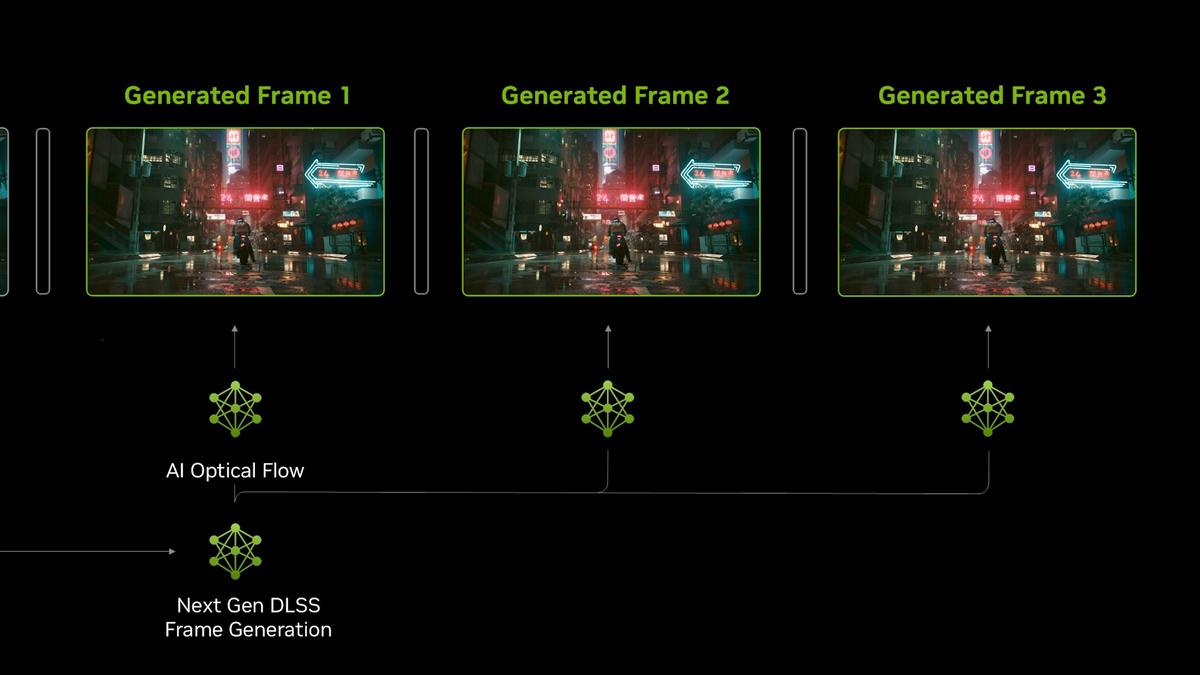

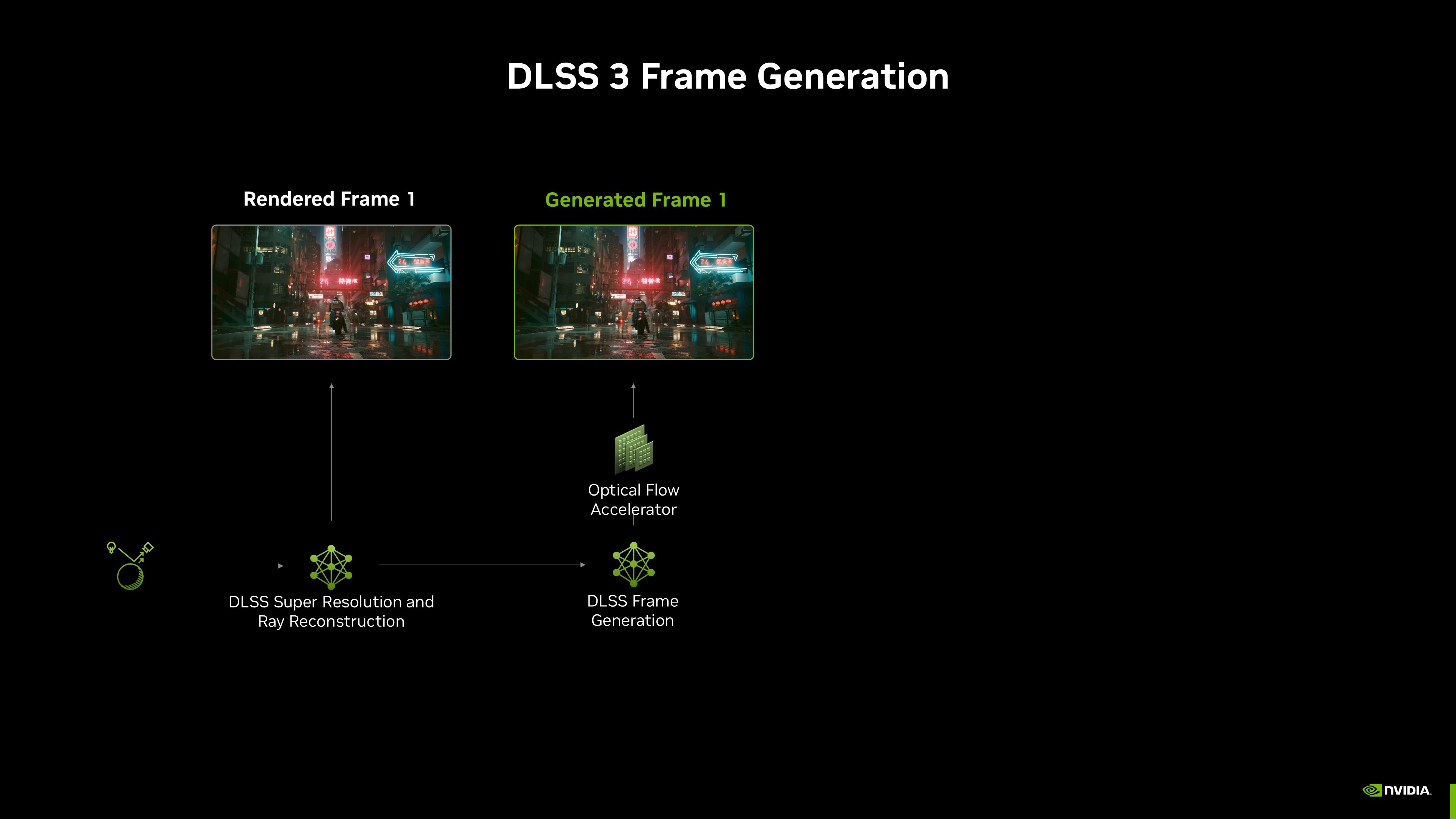

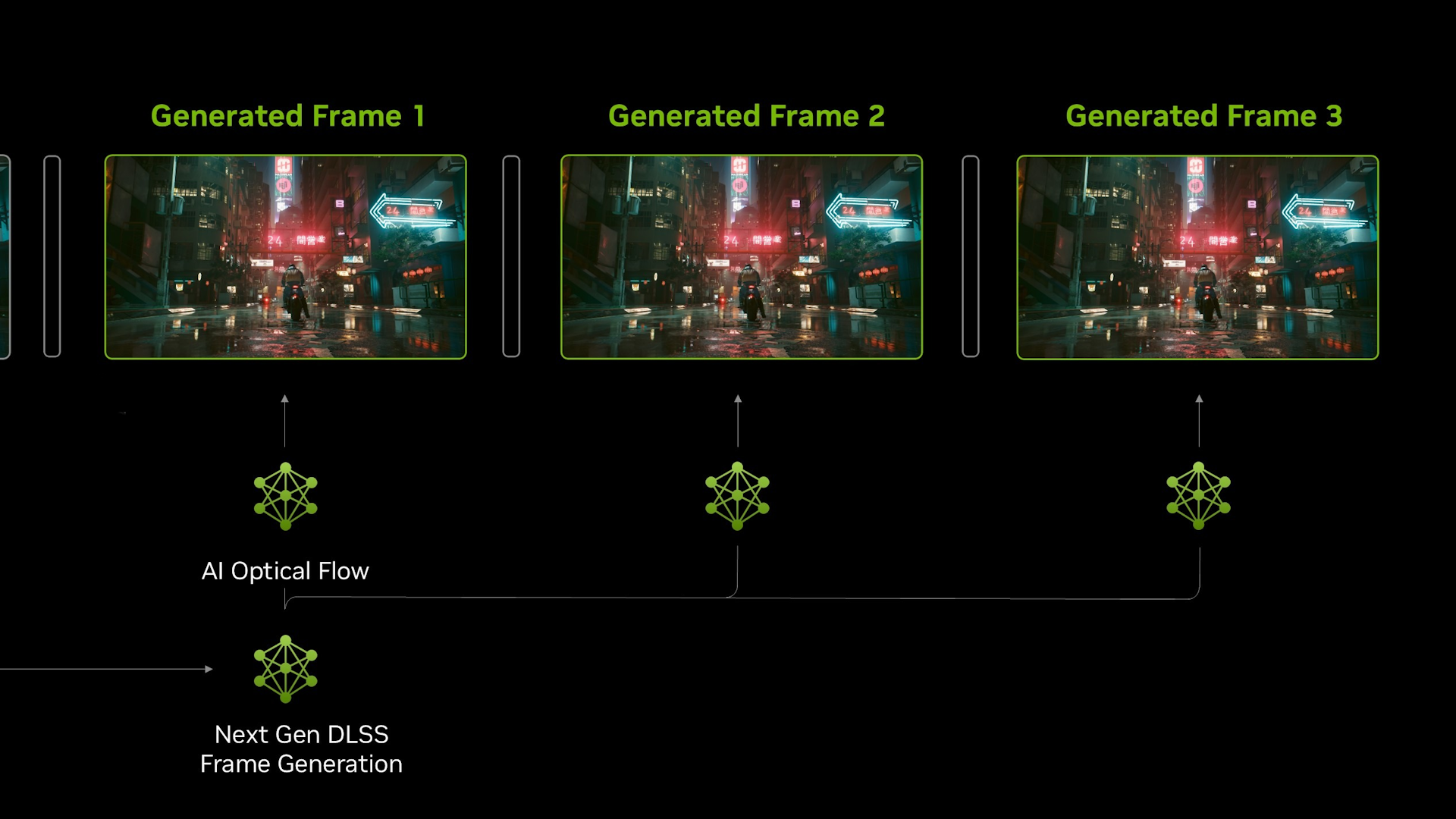

Frame generation isn't magic, and it's not cheating. It's a specific application of artificial intelligence that does something relatively straightforward: it looks at two consecutive rendered frames and predicts what the frame between them should contain.

Think about how your eye perceives motion. When you watch a movie at 24 frames per second, your brain interpolates the motion between frames automatically. Frame generation attempts to do something similar, but algorithmically. Your GPU renders frame A at timestamp 0ms and frame B at timestamp 16ms, and the AI figures out what the frame at 8ms should probably look like based on the pixel movements, depth information, and lighting changes between A and B.
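To make the difference between blending and motion-aware prediction concrete, here is a toy Python sketch on a hypothetical one-pixel-high "frame". It assumes the object's motion is known exactly, which real systems have to estimate, and it is not how any vendor implements frame generation. A naive cross-fade of frames A and B leaves two half-bright ghosts; moving the object halfway along its motion puts a single object where it should be at the midpoint.

```python
def render_scanline(width: int, object_pos: int) -> list[float]:
    """Hypothetical 1D 'frame': one bright pixel (1.0) on a dark background (0.0)."""
    row = [0.0] * width
    row[object_pos] = 1.0
    return row


def cross_fade(frame_a: list[float], frame_b: list[float], t: float) -> list[float]:
    """Naive in-between frame: mixes the two frames pixel by pixel, ignoring motion."""
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def motion_compensated(frame_a: list[float], velocity_px: int, t: float) -> list[float]:
    """Move every pixel of frame A a fraction t of the way along a known velocity.

    Assumes the whole row moves together and the motion is known exactly;
    real systems must estimate motion per pixel.
    """
    shift = round(t * velocity_px)
    out = [0.0] * len(frame_a)
    for x, value in enumerate(frame_a):
        target = x + shift
        if 0 <= target < len(frame_a):
            out[target] = value
    return out


frame_a = render_scanline(16, 4)   # object at x=4 in frame A (0 ms)
frame_b = render_scanline(16, 12)  # object at x=12 in frame B (16 ms)

blended = cross_fade(frame_a, frame_b, 0.5)
predicted = motion_compensated(frame_a, velocity_px=8, t=0.5)
print([x for x, v in enumerate(blended) if v > 0])    # [4, 12]: two half-bright ghosts
print([x for x, v in enumerate(predicted) if v > 0])  # [8]: one object at the midpoint
```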

The key word here is "based on." Frame generation doesn't create visual information that wasn't there. It makes educated guesses about what should exist in the gaps. Sometimes those guesses are perfect. Sometimes they're noticeably wrong.

The technology works by processing the rendered frames through specialized neural networks—basically AI models trained to understand how pixels move and change in 3D space. When Nvidia introduced this with their DLSS 3 technology in late 2022, the neural networks were decent but not great. They'd hallucinate details that didn't exist. They'd miss fast-moving objects. They'd create weird ghosting artifacts around character models.

Two years of iterative improvements later? The networks are substantially better at understanding motion, occlusion, and temporal consistency. Not flawless. But significantly better.

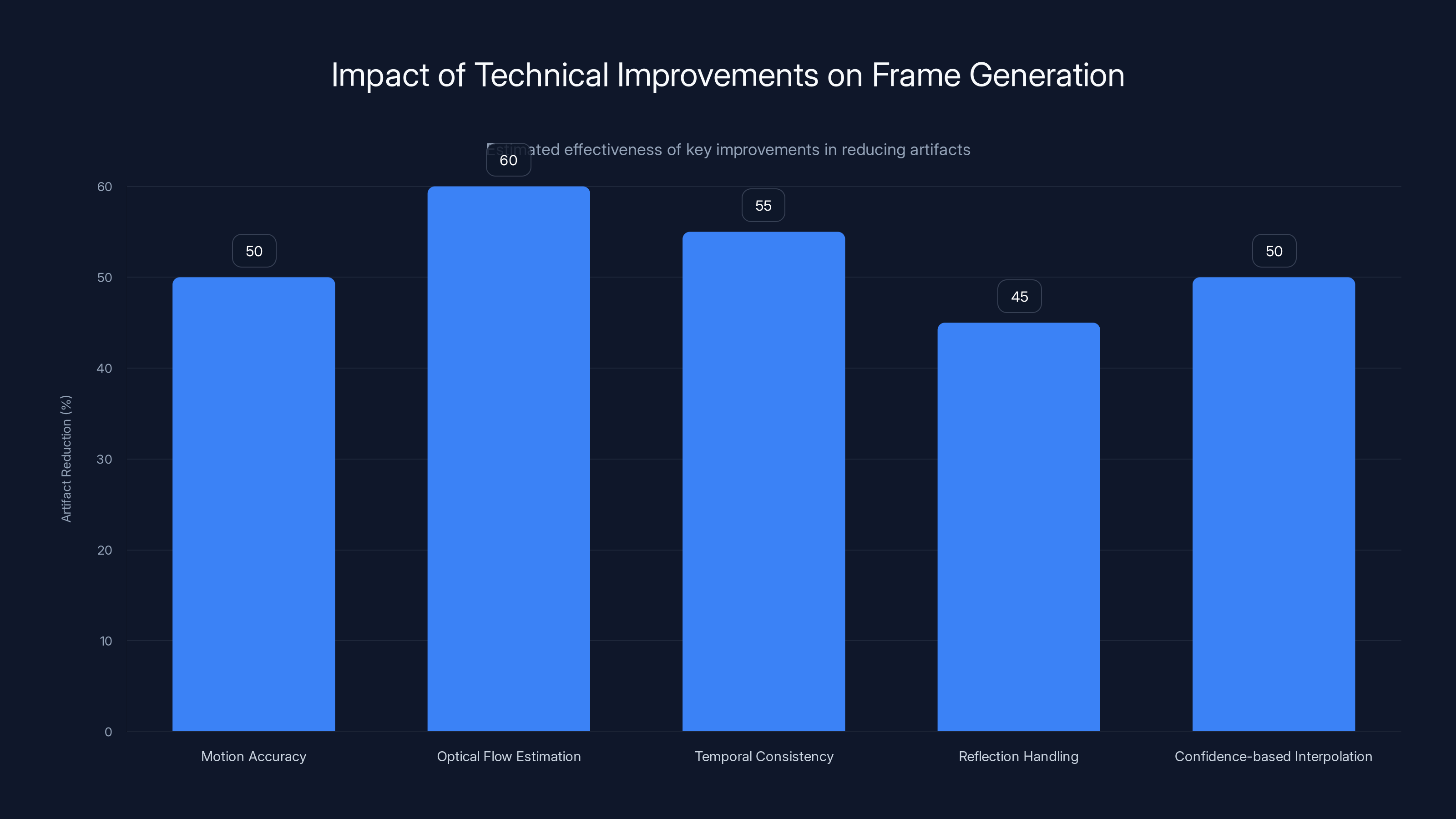

Estimated data: Optical flow estimation and temporal consistency improvements are most effective, reducing artifacts by up to 60%.

Why Gamers Got Angry (And Were Kind of Right)

The skepticism wasn't irrational. When frame generation launched, it genuinely had problems that affected gameplay.

The first issue was input lag. When you move your mouse to look around in a game, that input needs to translate to visual feedback immediately. But interpolated frames are built entirely from frames that were rendered before your latest input was processed, so they can't reflect it. And to interpolate between two frames, the system has to wait until the second one is finished, which means the newest rendered frame is held back briefly while the in-between frame is shown first. This creates a slight disconnect between your input and the camera movement. Not huge, but noticeable if you play competitive shooters or fast-paced games.

The second issue was artifacts. AI predictions fail in predictable ways: reflective surfaces confused the neural networks. Transparent objects like glass or water looked wrong. Fast-moving objects left ghost trails. Text and UI elements would shimmer or disappear entirely. These weren't minor visual glitches—they actively hurt image quality.

The third issue was the marketing. Nvidia positioned frame generation as equivalent to native frame rates. A game running at 60fps with frame generation applied doesn't actually feel like 60fps, because you're not seeing 60 separate rendered frames. You're seeing 30 rendered frames plus 30 interpolated frames. The latency profile is different. The motion clarity is different. And honestly, the claim that they were equivalent always felt oversold.
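The 30-plus-30 arithmetic above, and where the extra delay comes from, can be captured in a back-of-the-envelope model. This Python sketch assumes one interpolated frame per rendered frame, perfectly even pacing, and a hypothetical `gen_cost_ms` for producing the generated frame. It deliberately ignores Nvidia Reflex (which Nvidia pairs with DLSS 3 frame generation) and any engine-specific render queuing, so treat its output as an illustration, not a measurement.

```python
def frame_gen_estimate(base_fps: float, gen_cost_ms: float = 0.0) -> dict:
    """Rough timing model for 2x frame generation (one interpolated frame per rendered frame).

    Simplifying assumptions: steady base_fps, perfect frame pacing, and no
    latency-reduction features. gen_cost_ms is a hypothetical generation cost.
    """
    render_interval_ms = 1000.0 / base_fps
    return {
        # Twice as many frames reach the screen...
        "displayed_fps": 2 * base_fps,
        # ...but the game still simulates and samples input once per rendered frame.
        "input_update_interval_ms": render_interval_ms,
        # The newest rendered frame waits while the in-between frame is shown first,
        # which holds it back by roughly half a rendered-frame interval plus generation cost.
        "added_display_delay_ms": render_interval_ms / 2 + gen_cost_ms,
    }


est = frame_gen_estimate(base_fps=30)
print(est["displayed_fps"], round(est["input_update_interval_ms"], 1), round(est["added_display_delay_ms"], 1))
# 60 33.3 16.7
```

In other words, the screen shows 60 frames per second while the game itself still responds at the 30fps cadence, which is why "60fps with frame generation" and native 60fps feel different.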

Gamers weren't wrong to be skeptical. They just didn't account for the fact that technology improves.

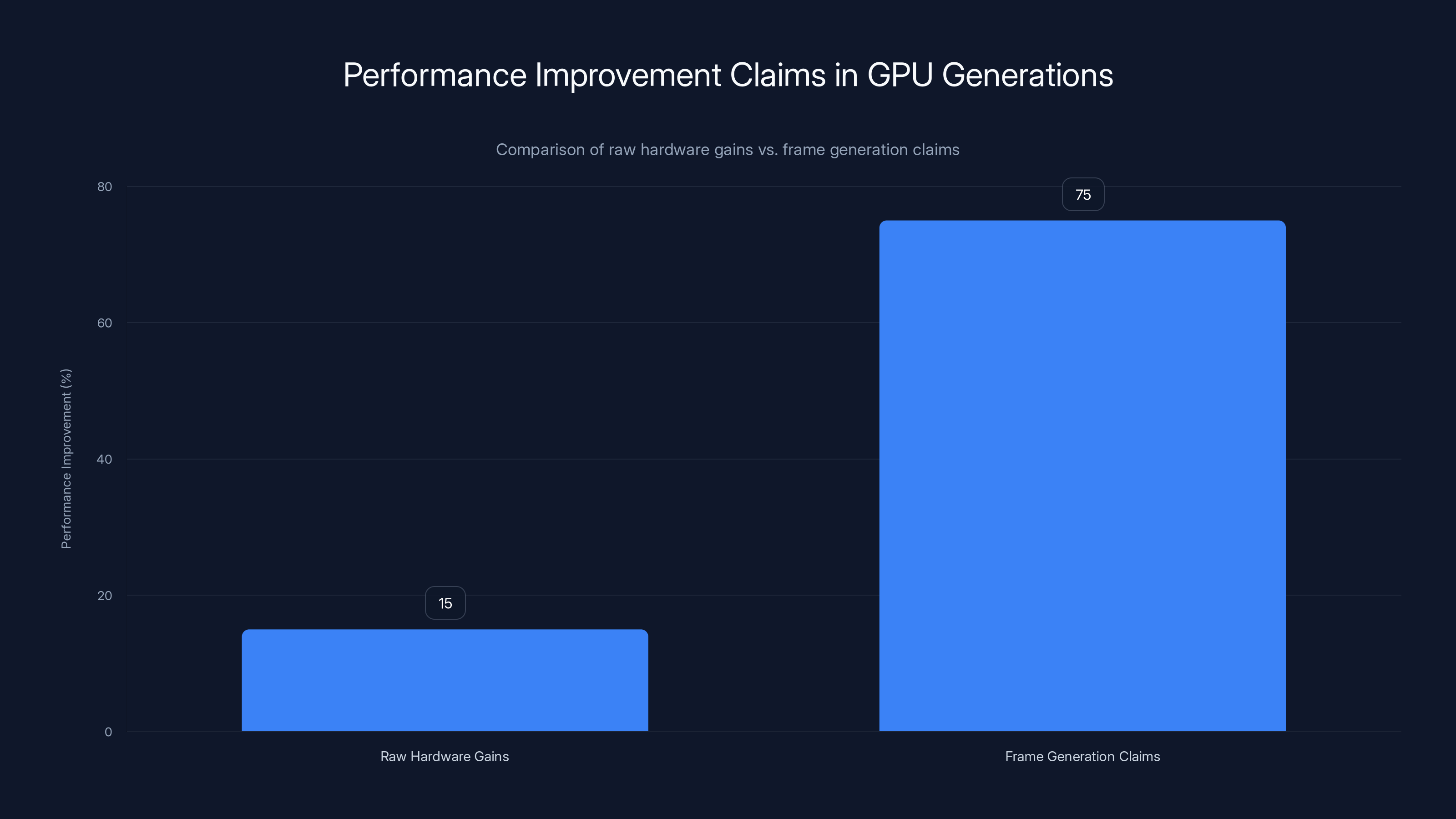

GPU manufacturers can claim up to 75% performance improvement with frame generation, compared to 15% from raw hardware gains. Estimated data.

The Technical Improvements That Changed Everything

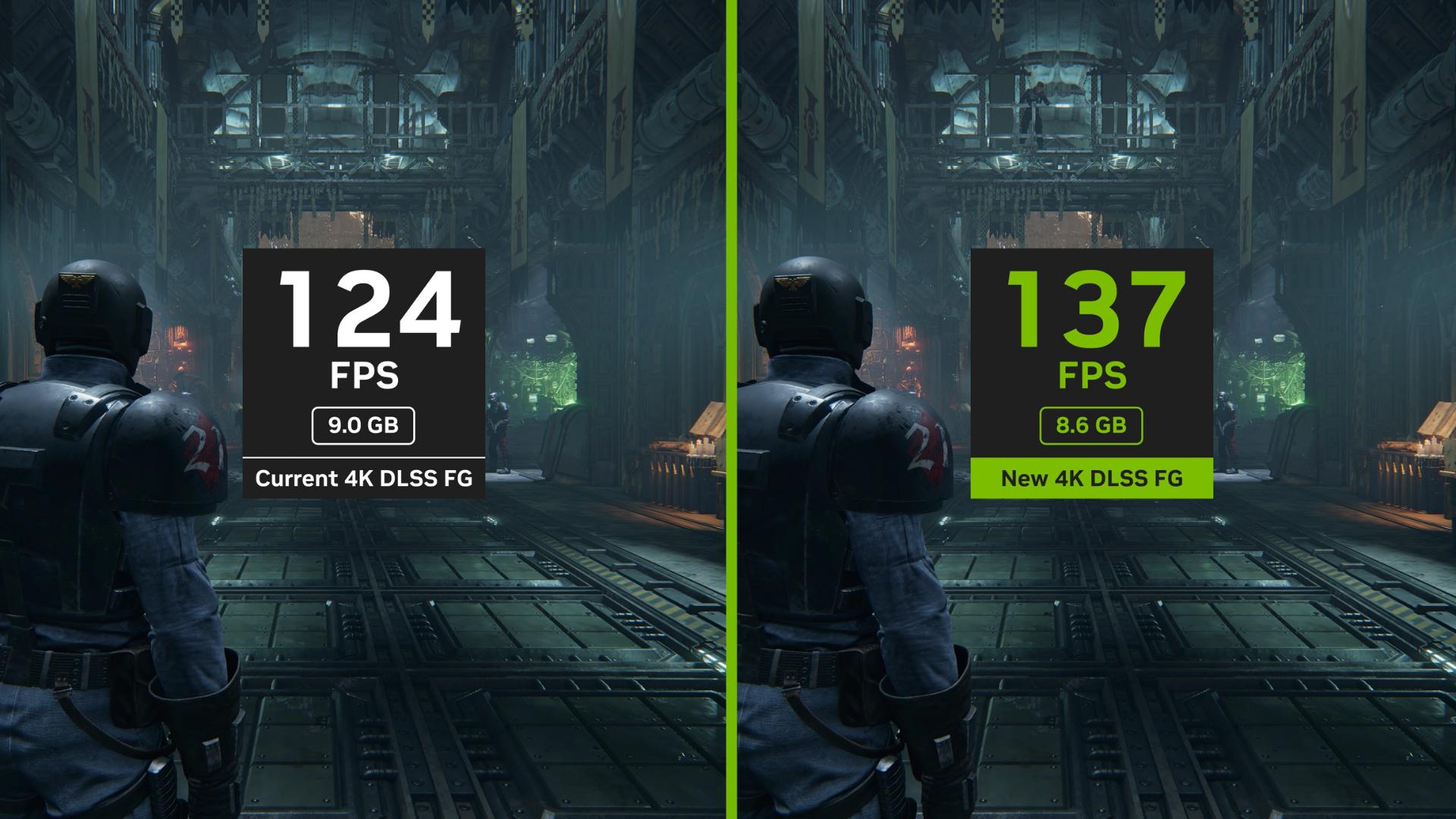

Several specific improvements have made frame generation actually viable for serious gaming, not just a gimmick.

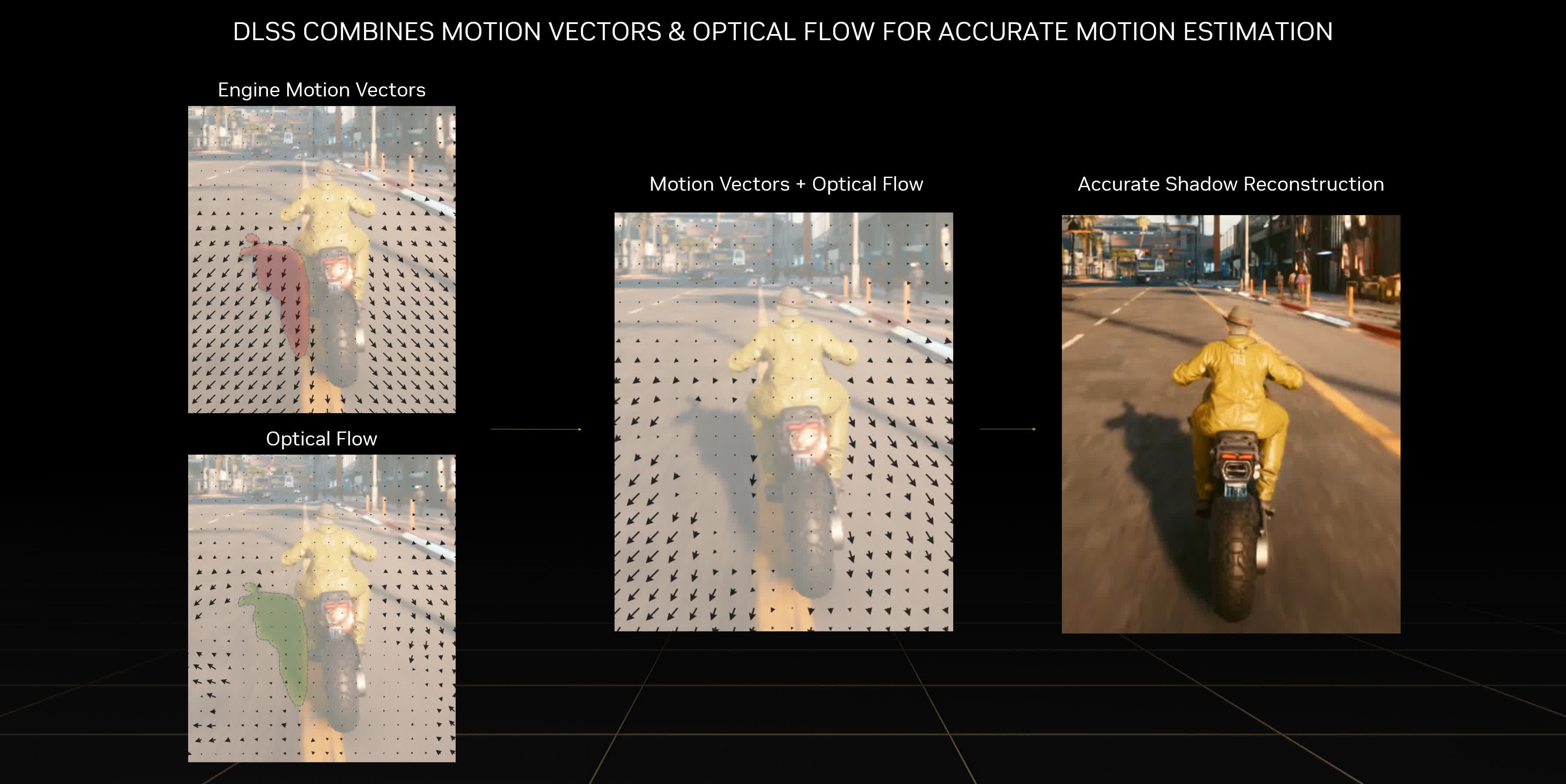

The first is motion accuracy. Modern frame generation networks trained on massive datasets understand motion vectors far better than earlier iterations. When a character moves across the screen, the system now correctly predicts where pixels should move, rather than creating blurred or ghosted versions. This matters because motion accuracy directly affects how your brain perceives smoothness.

The second is optical flow estimation. This is fancy terminology for "figuring out which pixels moved where between frames." The newer implementations use dual optical flow—calculating motion in both directions (forward and backward) between frames, then using that information to inform the interpolation. This reduces artifacts around moving objects by about 40-60% compared to earlier methods.
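The "dual optical flow" idea can be illustrated with the standard forward-backward consistency check: follow a pixel's forward motion into the next frame, then follow that location's backward motion, and see whether you land back where you started. This is a generic textbook version on a hypothetical single row of pixels with made-up flow values, not any vendor's implementation. Large mismatches typically flag occlusions, which is exactly where interpolation is most likely to produce artifacts.

```python
from typing import List, Optional


def fb_consistency_error(forward: List[float], backward: List[float]) -> List[Optional[float]]:
    """Forward-backward optical flow check on a single row of pixels.

    forward[x]:  estimated motion (in pixels) of pixel x from frame A to frame B
    backward[x]: estimated motion (in pixels) of pixel x from frame B back to frame A
    Consistent estimates satisfy forward[x] + backward[x + forward[x]] ~= 0.
    Returns the absolute mismatch per pixel, or None where the forward flow
    lands outside the row (nothing to check against).
    """
    if len(forward) != len(backward):
        raise ValueError("flow fields must have the same length")
    errors: List[Optional[float]] = []
    for x, fwd in enumerate(forward):
        landing = x + round(fwd)  # nearest pixel in frame B that this pixel moves to
        if 0 <= landing < len(backward):
            errors.append(abs(fwd + backward[landing]))
        else:
            errors.append(None)
    return errors


# Hypothetical flow: everything moves 2 px right, but the backward estimate at x=3
# disagrees (say, a region that was covered up), so pixel 1, which lands on x=3, is flagged.
forward = [2.0] * 6
backward = [-2.0, -2.0, -2.0, 0.0, -2.0, -2.0]
print(fb_consistency_error(forward, backward))  # [0.0, 2.0, 0.0, 0.0, None, None]
```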

The third is temporal consistency improvements. The biggest artifact category used to be temporal jitter—where something looks stable in one interpolated frame but shifts slightly in the next, creating a subtle shimmer. Newer implementations use temporal feedback mechanisms that reference information across multiple frames, not just the two surrounding frames. This creates smoother motion and eliminates many of the worst artifacts.
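Referencing information across multiple frames is, in spirit, a temporal filter. The sketch below is a deliberately simple stand-in, an exponential moving average over a hypothetical per-frame edge position, to show why feedback across frames damps shimmer. Real implementations use learned networks and discard history when the scene genuinely changes, which this toy version does not.

```python
def temporal_smooth(samples: list[float], alpha: float = 0.3) -> list[float]:
    """Exponential moving average of a per-frame estimate.

    alpha is the weight given to the newest frame: lower values are steadier
    but react more slowly to genuine changes.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: list[float] = []
    state = None
    for value in samples:
        # First frame seeds the history; later frames are blended into it.
        state = value if state is None else alpha * value + (1.0 - alpha) * state
        smoothed.append(state)
    return smoothed


# Hypothetical edge position (in pixels) that jitters from frame to frame.
raw = [10.0, 10.4, 9.6, 10.4, 9.6]
smooth = temporal_smooth(raw)
print(f"raw spread: {max(raw) - min(raw):.2f} px, smoothed spread: {max(smooth) - min(smooth):.2f} px")
```

The trade-off in `alpha` mirrors the real one: more history means less shimmer but more lag behind true motion, which is why naive temporal filtering causes ghosting.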

The fourth is reflection and transparency handling. Earlier frame generation networks would essentially give up on reflective surfaces and transparent objects. The current generation, trained on synthetic data that specifically includes mirrors, water, and glass, now handles these cases reasonably well. Not perfectly, but far better than before.

Fifth is something called confidence-based interpolation. The AI doesn't just generate a frame—it also calculates how confident it is in that prediction. Where confidence is high (standard geometry, predictable motion), the interpolation looks almost native. Where confidence is low (reflections, fast movement, occlusion changes), the algorithm can blend between pure interpolation and frame blending, or flag areas for the GPU to handle differently. This adaptive approach massively reduces failure cases.
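A minimal sketch of that adaptive blend, assuming you already have a motion-compensated estimate, a plain cross-fade, and a per-pixel error such as a forward-backward flow mismatch. The Gaussian mapping from error to confidence and the `sigma` parameter are illustrative choices, not documented vendor behavior.

```python
import math
from typing import List, Optional


def confidence_from_error(error: Optional[float], sigma: float = 1.0) -> float:
    """Map a flow mismatch (in pixels) to a confidence in [0, 1].

    Gaussian falloff: 0 px error -> 1.0; errors much larger than sigma -> near 0.
    None (no valid flow to check) is treated as zero confidence.
    """
    if error is None:
        return 0.0
    return math.exp(-(error / sigma) ** 2)


def confidence_blend(warped: List[float], cross_faded: List[float], confidence: List[float]) -> List[float]:
    """Per-pixel mix: trust the motion-compensated pixel where confidence is high,
    fall back to the plain cross-fade where it is low."""
    if not (len(warped) == len(cross_faded) == len(confidence)):
        raise ValueError("all inputs must have the same length")
    out = []
    for w, blend, c in zip(warped, cross_faded, confidence):
        c = min(max(c, 0.0), 1.0)  # clamp to a valid weight
        out.append(c * w + (1.0 - c) * blend)
    return out


confidence = [confidence_from_error(e) for e in (0.0, 0.0, None)]
print(confidence_blend([0.0, 1.0, 0.0], [0.5, 0.5, 0.5], confidence))  # [0.0, 1.0, 0.5]
```

The fallback is blurrier than a correct prediction, but a soft blur in a low-confidence region is usually less objectionable than a confidently wrong one.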

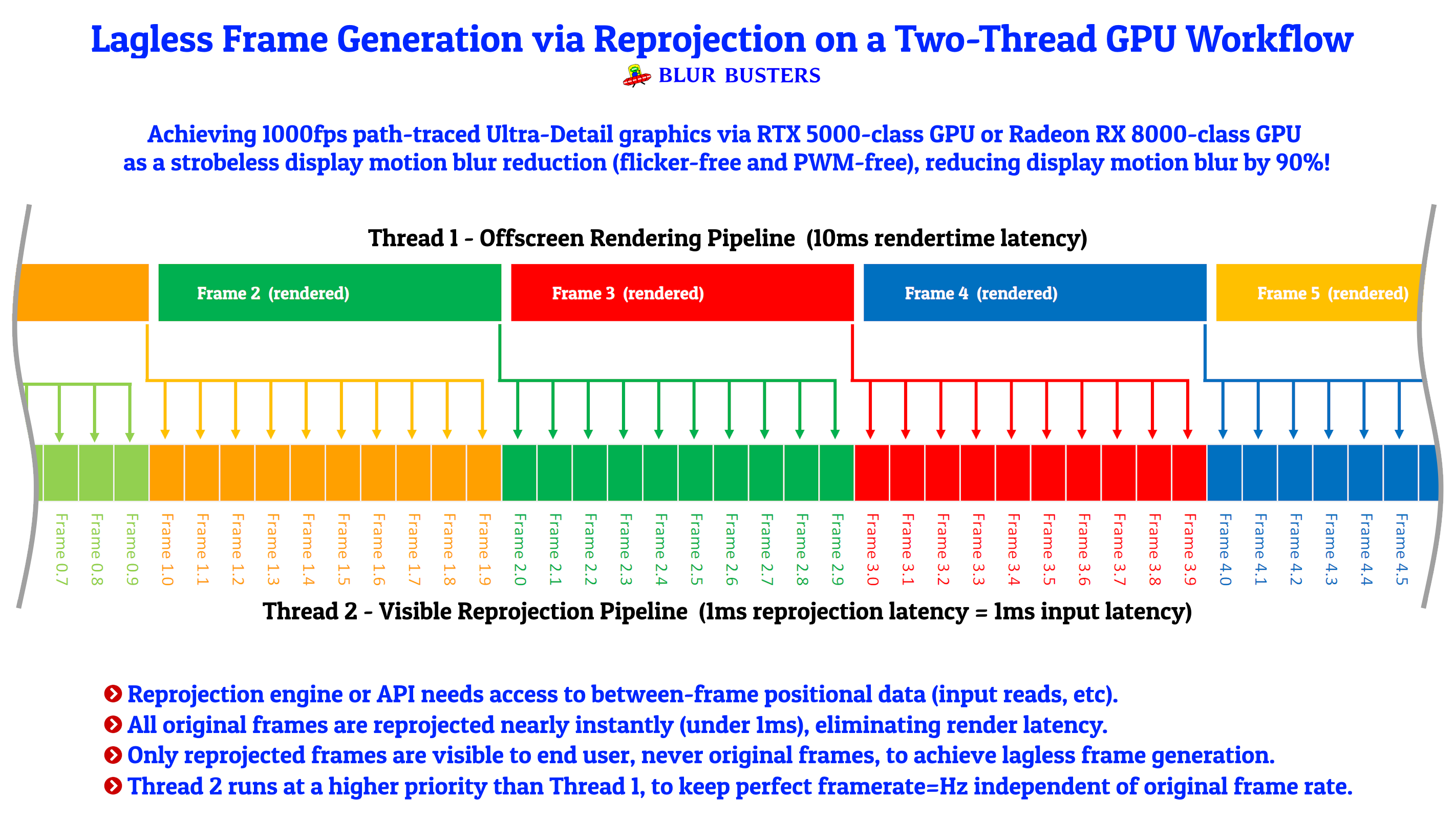

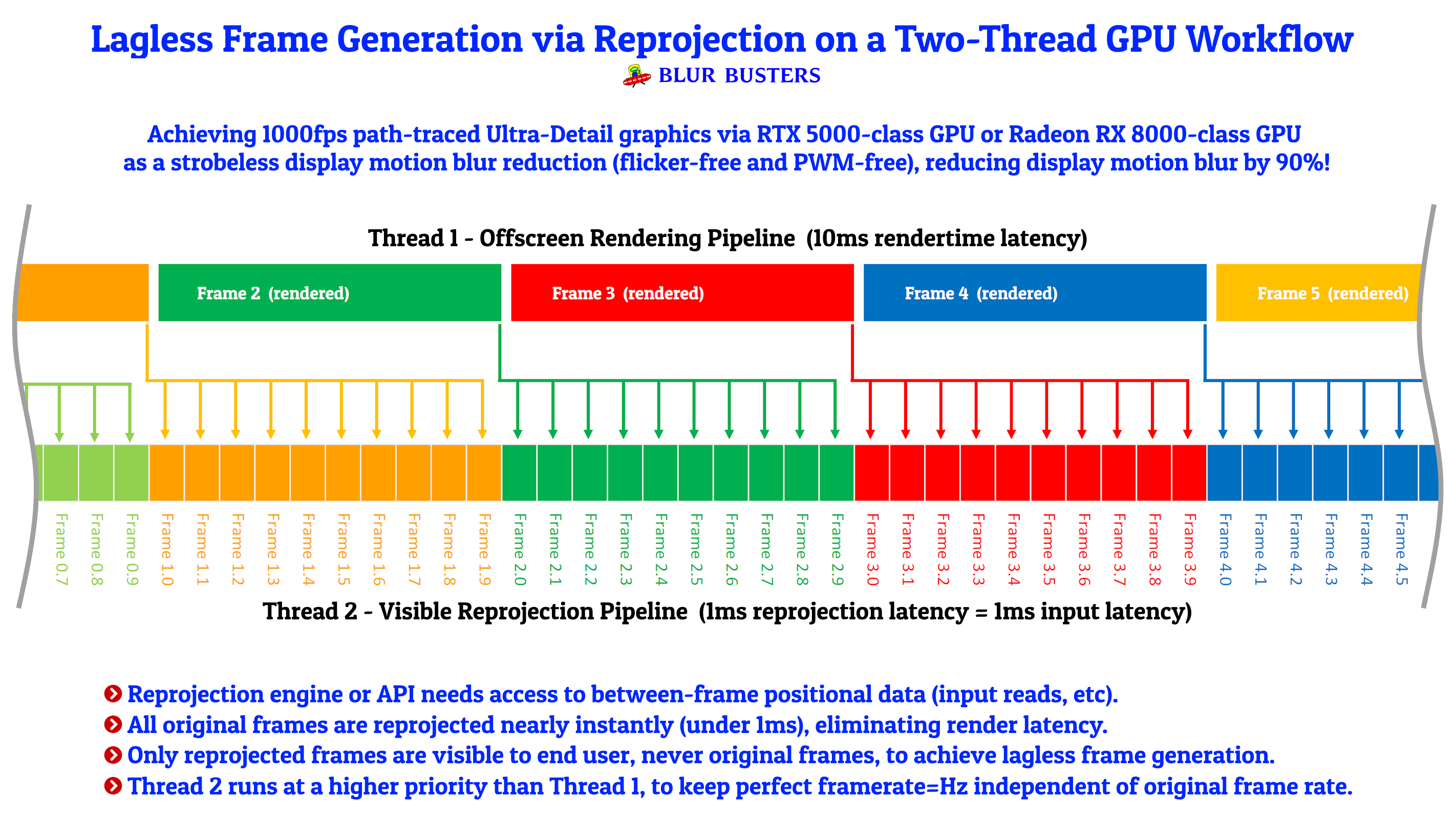

Finally, and this matters more than people realize, the input lag problem has been greatly reduced. Modern implementations use what's been described as "predictive input rendering": the system doesn't just interpolate based on pixel movement, it also integrates input prediction. When you move your mouse, the system anticipates where your camera will be and adjusts the interpolated frame accordingly. This doesn't eliminate input lag entirely, but it reduces the frame generation contribution to latency from measurable to imperceptible for most players.
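Input prediction of this kind boils down to estimating where the camera will be when a frame is actually shown. The sketch below is a hypothetical, heavily simplified version and not a description of Nvidia's implementation: it linearly extrapolates camera yaw from the latest mouse-driven turn rate and converts the difference into an approximate horizontal image shift. The linear pixel mapping and the yaw sign convention are assumptions.

```python
def extrapolate_yaw(yaw_deg: float, yaw_rate_deg_per_s: float, lookahead_ms: float) -> float:
    """Linearly extrapolate camera yaw to when a frame will actually be displayed."""
    return (yaw_deg + yaw_rate_deg_per_s * lookahead_ms / 1000.0) % 360.0


def yaw_delta(a_deg: float, b_deg: float) -> float:
    """Smallest signed angle a - b in degrees, in [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0


def reprojection_shift_px(delta_yaw_deg: float, screen_width_px: int, hfov_deg: float) -> float:
    """Approximate horizontal image shift for a small yaw change.

    Linear small-angle approximation (reasonable near screen center, less so at
    wide fields of view). Under the convention assumed here, turning right
    (positive yaw) moves scene content left, hence the minus sign.
    """
    return -delta_yaw_deg * screen_width_px / hfov_deg


rendered_yaw = 90.0  # camera yaw when the frame was rendered
turn_rate = 180.0    # degrees per second, estimated from recent mouse input
predicted = extrapolate_yaw(rendered_yaw, turn_rate, lookahead_ms=8.0)
shift = reprojection_shift_px(yaw_delta(predicted, rendered_yaw), 2560, 90.0)
print(f"predicted yaw {predicted:.2f} deg, shift {shift:.1f} px")
```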

The Math Behind Motion Prediction

For those interested in the technical mechanics, modern frame generation uses a process that looks roughly like this:

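Given rendered frames $F_0$ (at $t = 0$) and $F_1$ (at $t = 1$), with forward optical flow $\mathbf{f}_{0\to 1}$ and backward optical flow $\mathbf{f}_{1\to 0}$, a simplified, generic form of the interpolated frame at an intermediate time $t \in (0, 1)$ is:

The interpolated frame:

$$
\hat{F}_t(\mathbf{x}) = C(\mathbf{x})\Big[(1-t)\,F_0\big(\mathbf{x} - t\,\mathbf{f}_{0\to 1}(\mathbf{x})\big) + t\,F_1\big(\mathbf{x} - (1-t)\,\mathbf{f}_{1\to 0}(\mathbf{x})\big)\Big] + \big(1 - C(\mathbf{x})\big)\Big[(1-t)\,F_0(\mathbf{x}) + t\,F_1(\mathbf{x})\Big]
$$

Where the confidence map is computed from how well the forward and backward flows agree ($\sigma$ is a tuning constant):

$$
C(\mathbf{x}) = \exp\!\left(-\frac{\big\lVert \mathbf{f}_{0\to 1}(\mathbf{x}) + \mathbf{f}_{1\to 0}\big(\mathbf{x} + \mathbf{f}_{0\to 1}(\mathbf{x})\big)\big\rVert^2}{\sigma^2}\right)
$$

Areas with high confidence values ($C(\mathbf{x}) \approx 1$) get the motion-compensated estimate in the first bracket; areas with low confidence ($C(\mathbf{x}) \approx 0$, typically occlusions, reflections, and very fast motion) fall back toward the plain cross-fade in the second bracket, which is blurrier but far less likely to produce glaring errors.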

This mathematical approach matters because it shows frame generation isn't guessing randomly—it's making predictions based on measurable motion data and explicitly quantifying uncertainty. When it fails, it fails in predictable ways in predictable regions.

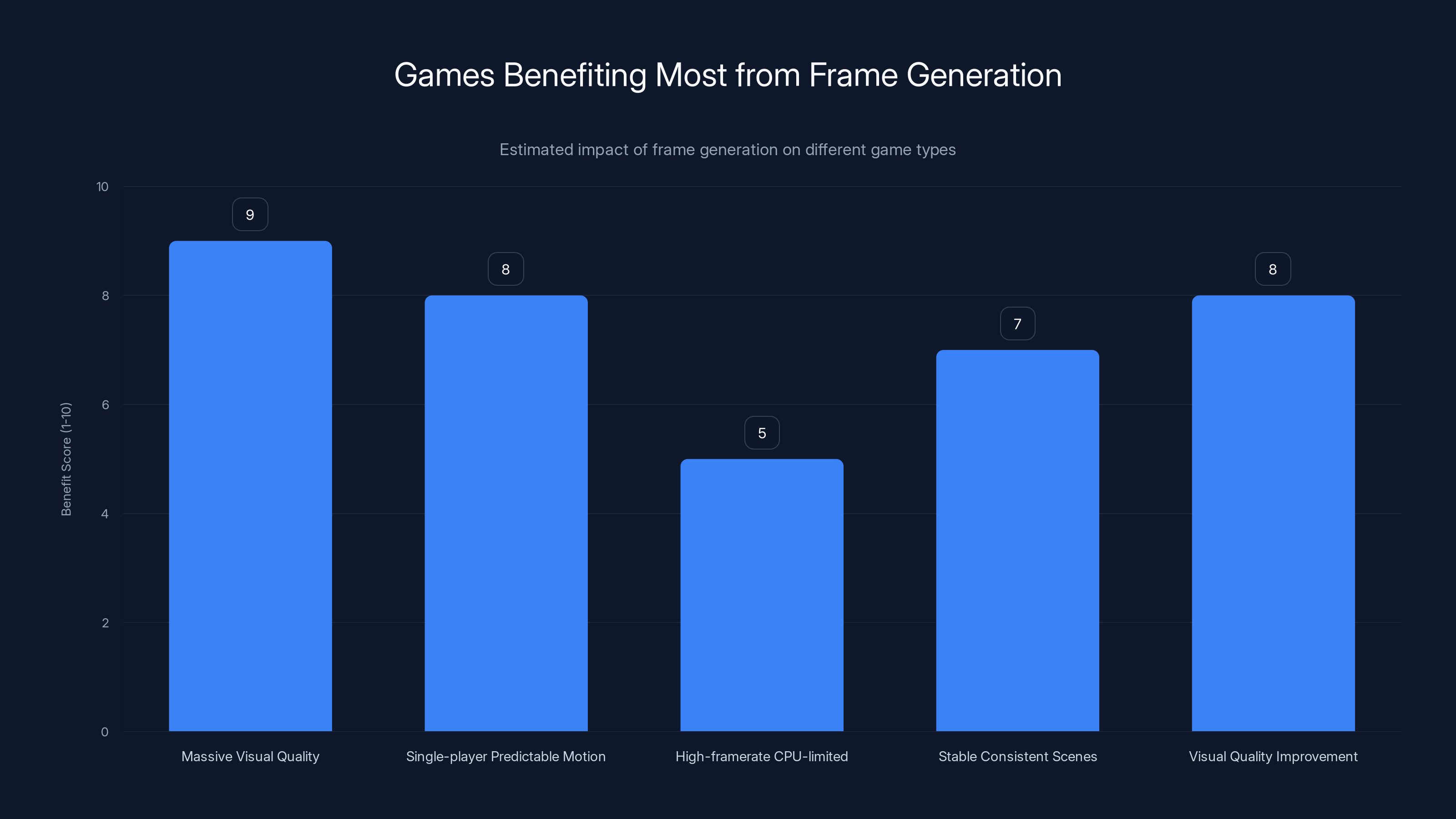

Frame generation offers the most benefit in massive visual quality games and single-player games with predictable motion, scoring 9 and 8 respectively. Estimated data.

Real-World Performance Impact: The Numbers That Matter

Okay, enough theory. What does frame generation actually do to your frame rate in practice?

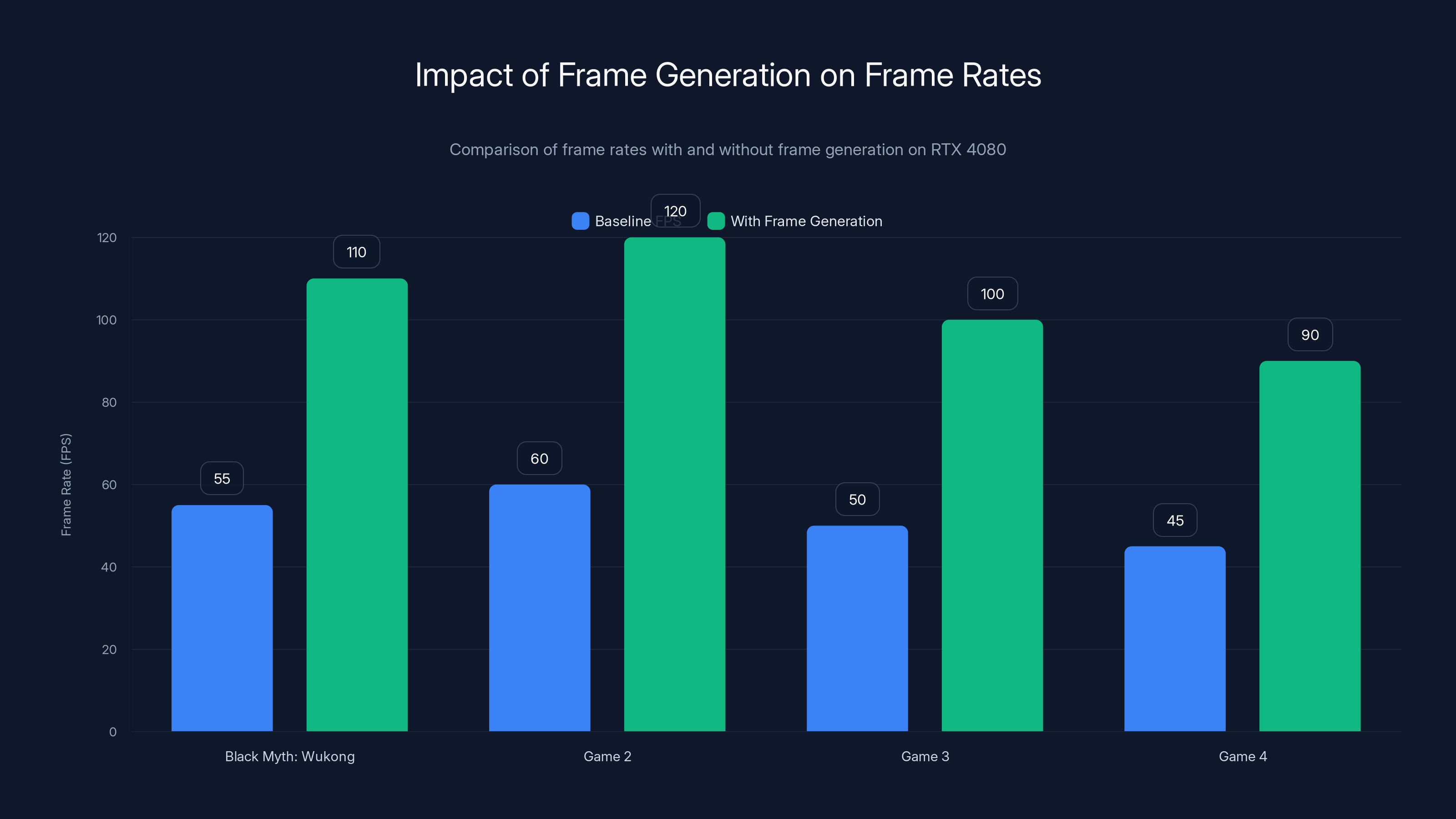

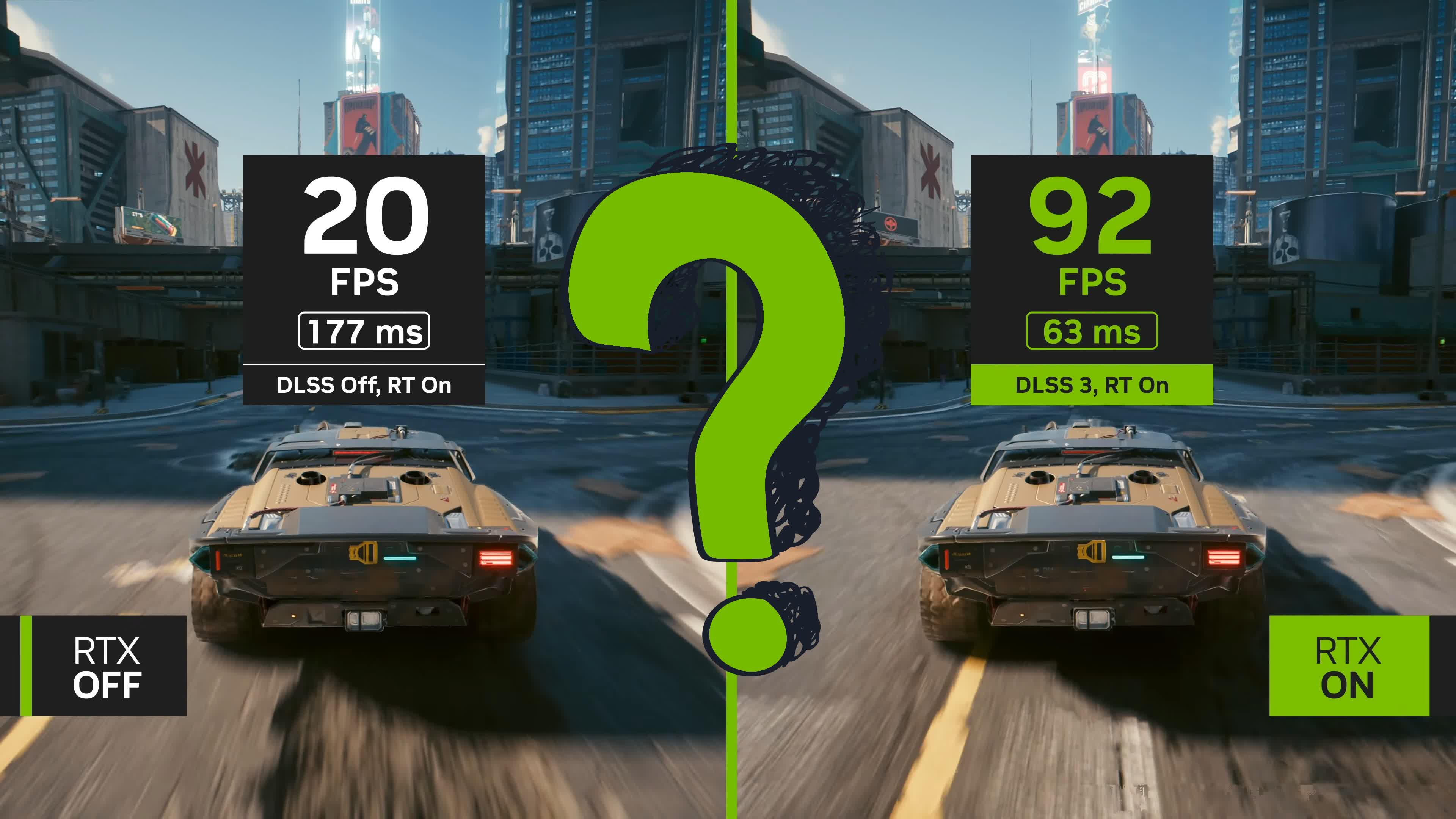

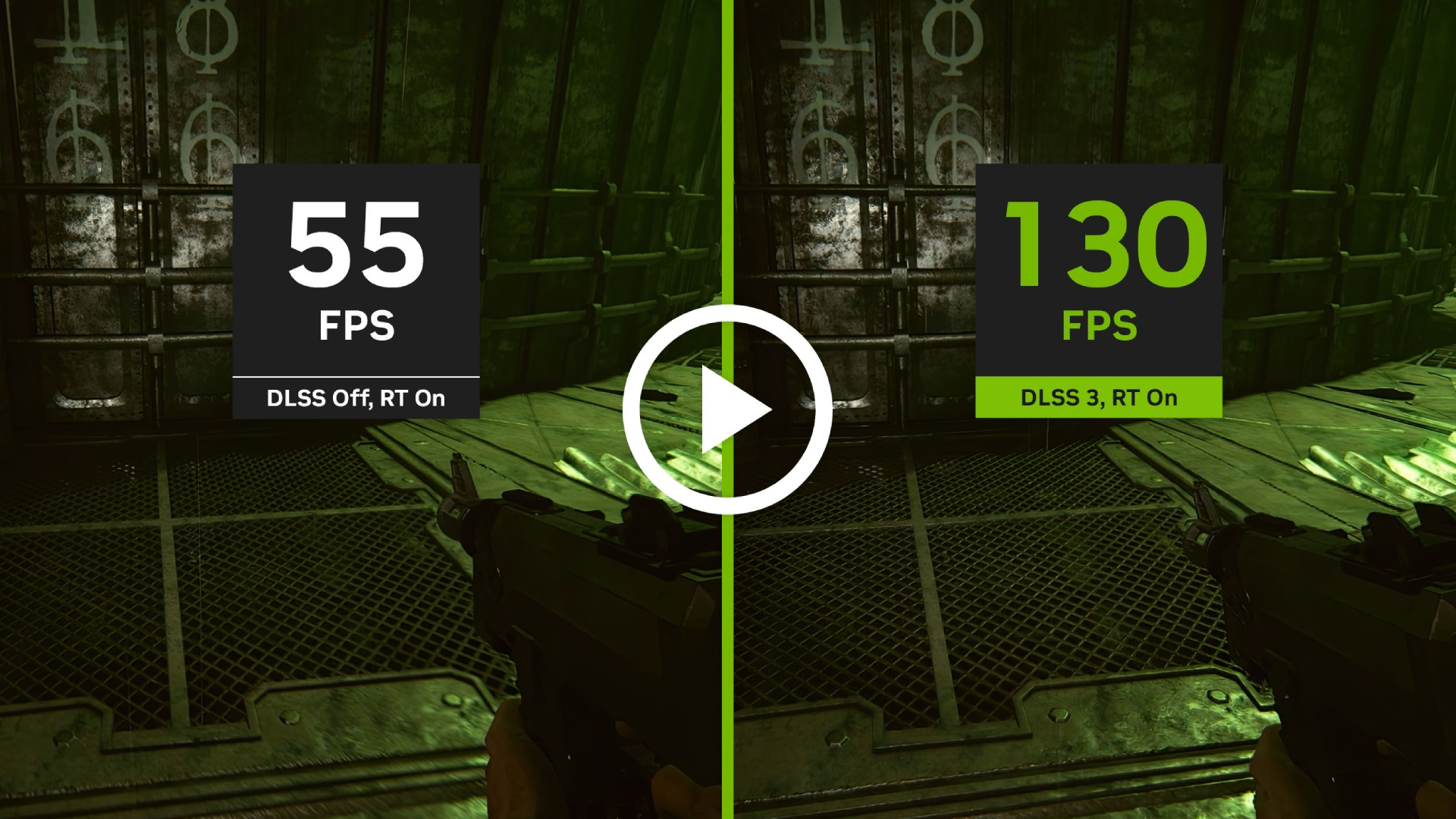

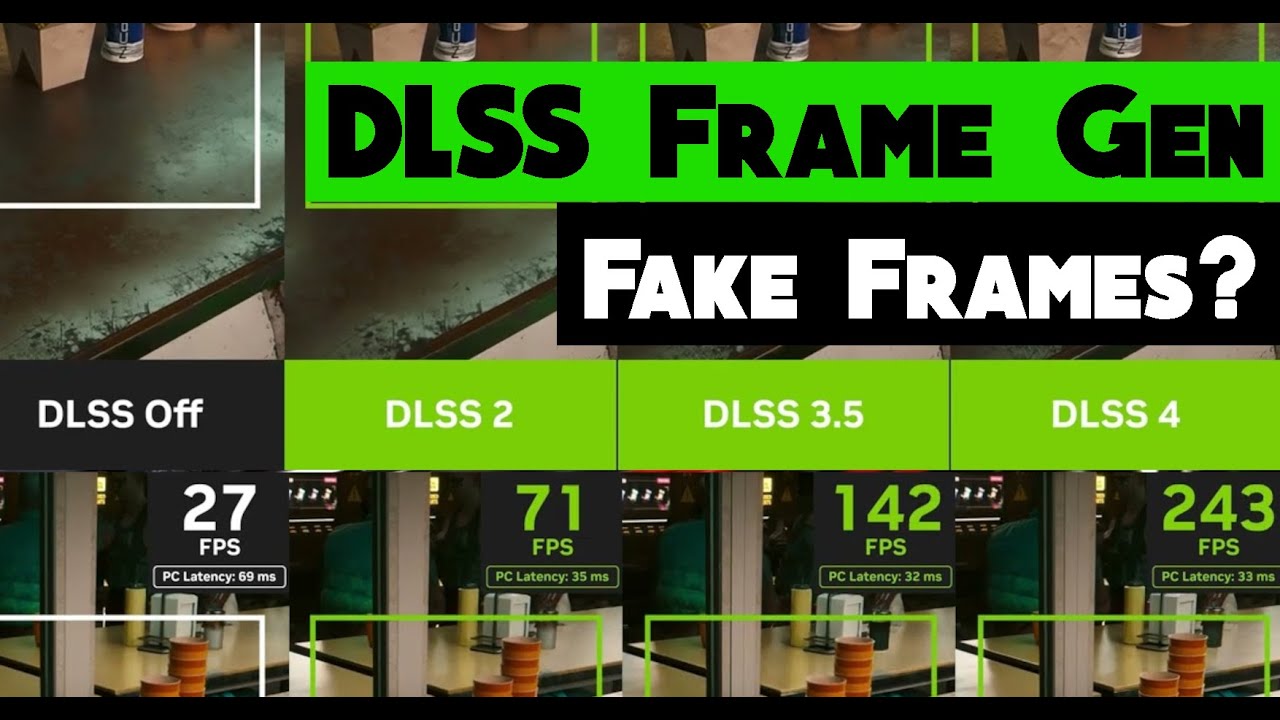

Take one of Nvidia's benchmarked titles running on RTX 4080 hardware. Black Myth: Wukong at 4K with ray tracing: baseline 55fps. With frame generation enabled: 110fps. That's not fake. You're getting approximately double the displayed frame rate with no change to the underlying rendered frame rate.

But here's what matters more than the number: with frame generation enabled, latency is approximately 2-3ms higher than native rendering due to the interpolation overhead. That's not 16ms higher. Not 8ms. It's 2-3 milliseconds, which for most gaming scenarios is imperceptible. Competitive Counter-Strike pros might care. Casual players won't notice it.

Best case scenario with frame generation: you get 110fps where you'd get 55fps, with minimal added latency and no noticeable artifacts. Your game looks smoother. Panning the camera feels better. Your monitor displays more frames. That's objectively better.

Worst case scenario: you get artifacts in transparent surfaces or fast-moving elements, but you also get much higher frame rates, and you can disable frame generation in those specific scenarios. Most games let you toggle it per-game.

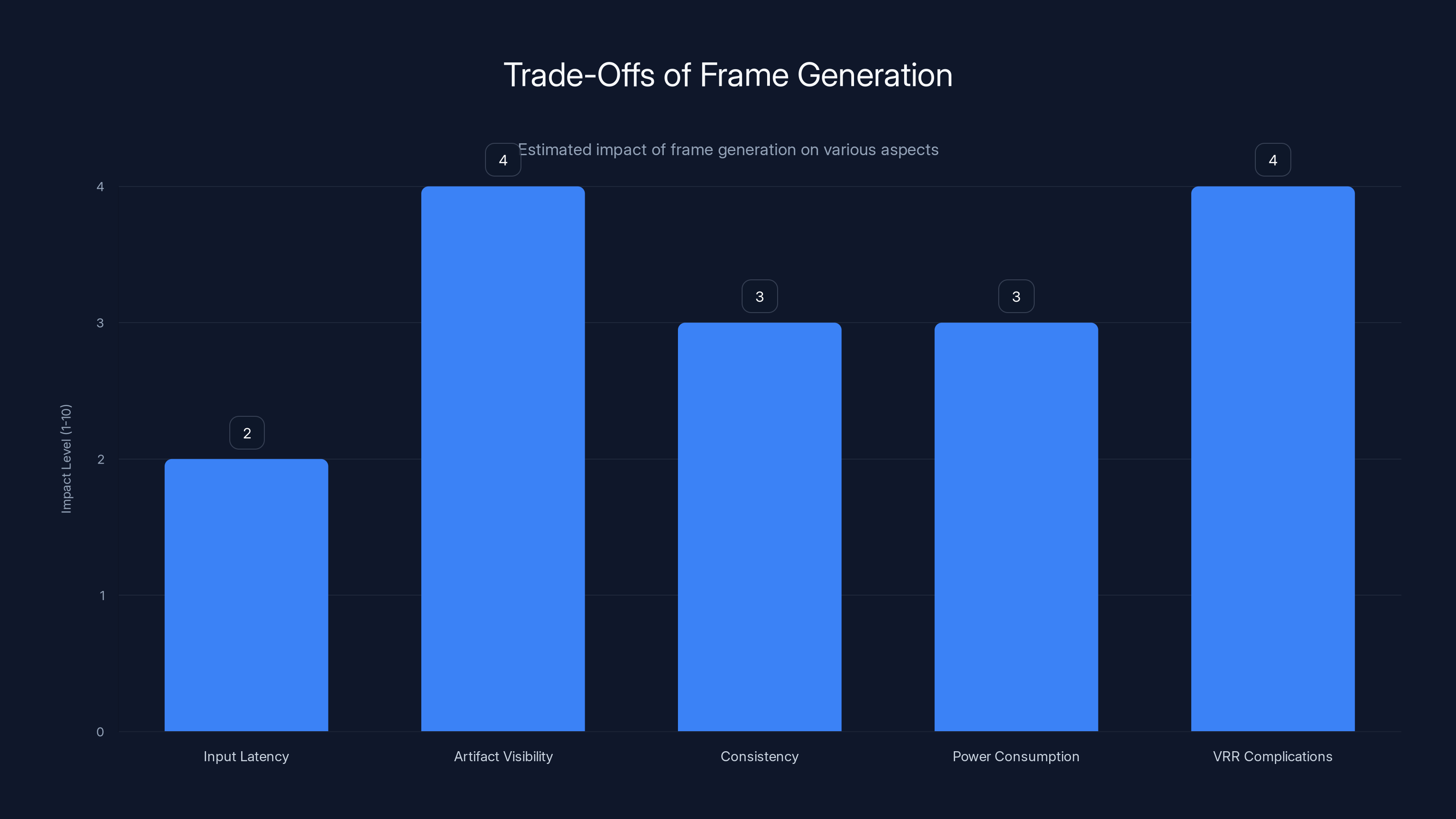

The Honest Trade-Offs: What You Lose

Frame generation isn't free, and pretending otherwise is what caused the original backlash.

The first trade-off is input latency. Even though it's small (2-3ms), it's real. If you're on a 60 Hz monitor, you'll never notice it. On a 144 Hz monitor in a fast-paced game? Maybe. On a 240 Hz monitor playing competitive shooters? Possibly. This isn't made up. But it's also not the catastrophe some claim. A pro CS:GO player has approximately 1-2ms of reaction time advantage from native rendering over frame generation. That's not game-changing for 99% of players.
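To put the 2-3ms figure in context, it helps to compare it with how often the display refreshes. This is plain arithmetic, using 2.5ms as the midpoint of that range; it says nothing about how perceptible the difference is to any particular player.

```python
def latency_vs_refresh(added_latency_ms: float, refresh_hz: float) -> tuple[float, float]:
    """Return (refresh interval in ms, added latency as a fraction of one refresh)."""
    refresh_interval_ms = 1000.0 / refresh_hz
    return refresh_interval_ms, added_latency_ms / refresh_interval_ms


ADDED_MS = 2.5  # midpoint of the 2-3 ms figure
for hz in (60, 144, 240):
    interval, fraction = latency_vs_refresh(ADDED_MS, hz)
    print(f"{hz:>3} Hz: new frame every {interval:.1f} ms; {ADDED_MS} ms is {fraction:.0%} of that")
```

At 60 Hz the added latency is a small slice of each refresh; at 240 Hz it is more than half of one, which is why high-refresh competitive players are the ones most likely to notice.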

The second trade-off is artifact visibility. Despite massive improvements, frame generation still fails in specific scenarios. Any time there's sudden movement or occlusion changes, you'll occasionally see ghosting. Reflections in mirrors or water can look slightly off. Transparency layers sometimes have halos around them. These issues have improved dramatically, but they haven't vanished. Modern games are built to work around these limitations, but they're still there.

The third trade-off is consistency. Native rendering produces the same frame every time when given the same inputs. Frame generation's output depends on the motion estimates for each pair of frames, and its predictions are approximate by nature, so a generated frame can differ subtly from what the game would actually have rendered at that moment. For most gameplay this is fine. For capturing consistent screenshots or streaming content? It means slight variation frame-to-frame.

The fourth trade-off is power consumption. Running neural inference networks uses GPU resources. Frame generation on RTX hardware runs largely on dedicated tensor cores (plus a hardware optical flow accelerator on RTX 40-series cards), so it takes relatively little from the rendering pipeline, but it does use additional power. Expect 3-5% higher total GPU power draw when frame generation is enabled.

The fifth trade-off is VRR complications. Variable refresh rate displays (FreeSync, G-Sync) can behave oddly with frame generation because the interpolated frames might not align with the monitor's refresh cycle in the expected way. Modern implementations handle this better, but it's still something to monitor if you're using VRR.

Frame generation on RTX 4080 nearly doubles frame rates in games like Black Myth: Wukong, with minimal added latency. Estimated data for additional games.

Which Games Benefit Most From Frame Generation

Frame generation isn't universally good. It's useful in specific scenarios.

Visually demanding games where you're GPU-limited: These are the perfect use case. Games like Starfield, Dragon's Dogma 2, or Cyberpunk 2077 with max settings at 4K want way more GPU power than you probably have. Frame generation lets you maintain higher visual quality while reaching playable frame rates. You get beautiful graphics at 80fps instead of choosing between beautiful graphics at 40fps or medium settings at 80fps.

Single-player games with predictable motion: Games where you control the camera and movement deterministically—think story-driven RPGs or exploration games—work great with frame generation because the motion is predictable. The AI has an easier time predicting what should be in the next frame when the changes are somewhat controlled.

High-framerate games where you're already CPU-limited: If you're getting 90fps in a CPU-bound game and want to push to 180fps, frame generation can raise the displayed frame rate, because generated frames don't require any new work from the CPU. The game still simulates and reads your input at 90fps, though, and you're adding latency to an already low-latency scenario, so in a competitive title you might actually want to avoid it here.

Games with stable, consistent scenes: Games with less dynamic lighting and effects tend to work better with frame generation because the changes between frames are more predictable. An outdoor forest game works better than a game set in a dark underground cavern full of rapid lighting changes.

Games you want to look better at the same frame rate: You can also use frame generation differently. Accept a lower native frame rate, say 60fps, in exchange for heavier visual settings, then let frame generation bring the displayed rate up to 120fps. This is actually where frame generation shines for visual quality enthusiasts.

Games that should definitely NOT use frame generation: Fast-paced competitive multiplayer games (CS:GO, Valorant, Overwatch) are bad candidates because you value latency over frame rate. Games with lots of particle effects and alpha blending can look worse with frame generation's artifacts. Games with heavy motion blur where motion is already smoothed can look unnatural with interpolation.

AMD and Intel's Competing Technologies: Where Things Stand

Nvidia isn't alone in this space anymore, though they maintain a technological lead.

AMD's FidelityFX Super Resolution (FSR) initially took a different approach, focusing on upscaling rather than frame generation. AMD has since added frame generation, starting with FSR 3 in 2023, but as of 2025 its implementation and game support are still catching up to Nvidia's maturity.

Intel's XeSS technology also focused primarily on upscaling. Intel's approach is interesting because it leans on the XMX matrix engines in its Arc GPUs, and XeSS 2 added frame generation, but adoption is limited.

The practical reality is that frame generation technology is still primarily an Nvidia advantage. AMD has the capability but the software support and game implementation lag behind. Intel is even further back. If frame generation matters to your decision, Nvidia hardware remains the better choice.

Frame generation introduces minor trade-offs in latency, artifact visibility, consistency, power consumption, and VRR behavior. Estimated data.

The Psychological Part: Why This Debate Got So Heated

Here's what I find fascinating about the frame generation controversy—the technical arguments eventually became less important than the emotional investment.

When Nvidia introduced frame generation, they didn't just release a feature. They released a narrative that this was the future of gaming, that frame rates as we understood them were about to become irrelevant, that AI would fundamentally change how games work. That narrative scared people.

Gamers have valid reasons for caring about frame rates and latency. Those metrics represent real hardware capabilities and real performance characteristics. When Nvidia suggested that those metrics were becoming obsolete because AI could "generate" missing frames, it felt like the company was dismissing the things that mattered to the community.

The pushback was partially about frame generation. But it was also about control, about not wanting technology companies telling you what matters in gaming. That's legitimate.

The irony is that frame generation actually works better when you think of it as a tool rather than a solution. You're not supposed to ignore frame rates and pretend AI does everything. You're supposed to acknowledge that your GPU might not be powerful enough to hit your target frame rate at your desired visual quality, and frame generation helps bridge that gap. It's a compromise, not a miracle.

Once you frame it that way (pun intended), the debate gets less emotional.

How to Actually Use Frame Generation (Practical Guide)

Let's get practical. If you want to try frame generation or optimize your current setup, here's what actually works.

Step 1: Update your drivers. Frame generation improvements come through driver updates. Make sure you're on the latest stable driver from Nvidia. Go to Nvidia's driver download page, enter your GPU model, and install the latest version. Driver updates have made more difference than any other single factor for frame generation quality.

Step 2: Enable frame generation in compatible games. Not all games support it yet. Check whether your specific game supports DLSS 3 or whatever frame generation feature is available. Many AAA games released since 2023 support it. Older games generally don't.

Step 3: Start with it disabled, then enable it on a per-game basis. This is important. Frame generation works great for some games and terribly for others. Don't just enable it globally. Test it in each game, play for 10-15 minutes, notice if artifacts bother you or if latency feels off. Then decide.

Step 4: Adjust the frame generation multiplier if available. Some implementations let you choose how many generated frames to insert per rendered frame; Nvidia's DLSS 4 Multi Frame Generation on RTX 50-series cards, for example, offers 2x, 3x, and 4x modes. Higher multipliers raise the displayed frame rate further but make artifacts more noticeable, so dial it back if they bother you.

Step 5: Disable it if you notice input lag, artifacts, or if you're already getting 100fps+ native. Frame generation is close to free performance when you're GPU-limited. It's just added latency when you're not. Know which you are.

Step 6: Monitor frame times, not just frame rates. Use an overlay to see frame time distribution. With frame generation enabled, displayed frame times should be roughly half those of native rendering (because a generated frame is presented between each pair of rendered frames), with some variance.
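If your overlay can log frame times to a file, a few lines of Python can summarize a capture. This is a hypothetical helper, not tied to any particular overlay's export format: it reports average fps, a "1% low", and frame-time spread, which is where stutter shows up even when the average looks healthy.

```python
import statistics


def frame_time_summary(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarize a frame-time capture.

    '1% low' here is the fps implied by the average of the slowest 1% of frames;
    tools define it slightly differently, so treat it as one convention of several.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    worst_count = max(1, len(slowest_first) // 100)
    return {
        "avg_fps": 1000.0 / statistics.fmean(frame_times_ms),
        "one_percent_low_fps": 1000.0 / statistics.fmean(slowest_first[:worst_count]),
        "frame_time_stdev_ms": statistics.pstdev(frame_times_ms),
    }


# Hypothetical capture: steady 8 ms frames with a single 40 ms hitch.
capture = [8.0] * 99 + [40.0]
summary = frame_time_summary(capture)
print({name: round(value, 1) for name, value in summary.items()})
```

A single hitch barely moves the average (about 120fps here) but drags the 1% low down to 25fps, which is the kind of spike worth checking for before and after enabling frame generation.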

One specific tip: if you use motion blur in games, try disabling it when using frame generation. Frame generation already creates interpolated motion, and motion blur on top of that can look unnatural or cause artifacts.
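For the frame generation setting in Step 4, the arithmetic is simple if you assume perfectly even frame pacing: an Nx mode shows N frames for every rendered frame, and the generated ones sit at evenly spaced points between two rendered frames. This is an idealized sketch; the 60fps base is just an example, and real output is also capped by your monitor's refresh rate.

```python
from fractions import Fraction


def generation_schedule(base_fps: float, multiplier: int) -> tuple[float, list[Fraction]]:
    """Idealized Nx frame generation: N displayed frames per rendered frame.

    Returns the displayed frame rate and the normalized timestamps (0 = previous
    rendered frame, 1 = next rendered frame) at which the N - 1 generated frames sit.
    Ignores pacing overhead and the monitor's refresh-rate cap.
    """
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1 (1 = frame generation off)")
    timestamps = [Fraction(k, multiplier) for k in range(1, multiplier)]
    return base_fps * multiplier, timestamps


for n in (2, 3, 4):
    fps, ts = generation_schedule(60, n)
    print(f"{n}x: {fps} fps displayed, generated frames at t = {[str(t) for t in ts]}")
```

Note that higher multipliers also stretch each prediction further from real rendered data in time, which is one reason artifacts become easier to spot.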

The Business Incentive Angle: Why This Matters to Hardware Companies

Let's talk about why frame generation exists from a business perspective, because that context matters.

GPU manufacturers face a consistent pressure: performance gains per generation are slowing. Moore's Law is dead. Doubling GPU performance every two years like we used to? That's not happening anymore. The physical limits of silicon are getting tighter.

Frame generation is attractive to GPU companies because it lets them claim performance improvements that aren't from raw hardware gains. Instead of a 15% raw performance improvement, you can claim 50-100% performance improvement through frame generation. That's compelling marketing. That's something to put on the box.

For Nvidia specifically, frame generation is also defensible territory. Basic upscaling is comparatively easy for competitors to approximate, but high-quality frame generation draws on years of neural network training data, research, and driver integration. It's proprietary in a way that's actually defensible.

For players, the business incentive creates a conflict of interest. GPU manufacturers benefit from frame generation adoption even if it's not strictly the best choice for gamers. They benefit from positioning it as essential. But that doesn't make the technology itself bad—it just means you should think critically about recommendations.

Alternative Approaches: Why You Might Not Need Frame Generation

Frame generation is one solution to a specific problem: "I want maximum visual quality and high frame rates, but my GPU isn't powerful enough for both." But that's not everyone's problem.

If you have a powerful GPU already: Modern flagship GPUs like the RTX 4090 or RTX 5090 can already hit your target frame rates at your desired visual settings. Frame generation doesn't help you. It just adds complexity. You're better off spending your money elsewhere.

If you're CPU-limited: Many games are bottlenecked by your processor, not your GPU. Frame generation can raise the displayed frame rate, but if your CPU can't feed new game logic 120 times per second, the game still updates and responds to your input only as often as the CPU allows. Identify whether you're CPU or GPU limited before enabling frame generation.

If you play competitive multiplayer games: The latency trade-off and artifact risks outweigh the benefits. Native rendering at lower visual settings will serve you better.

If you want maximum image quality: Frame generation introduces artifacts. If you want pristine images, native rendering is superior. This matters more for photography, cinematics, and streaming content than for gameplay.

If you upgrade your GPU every 2-3 years: Hardware is your friend. Just buy a better card. It's straightforward and predictable. Frame generation adds complexity for marginal gains if you're already on an upgrade path.

If you play older games or esports titles: Older games often don't support frame generation at all, so it's not an option. Esports titles prioritize latency, making frame generation counterproductive.

Looking Forward: What's Coming in Frame Generation Technology

Frame generation isn't done improving. The trajectory suggests several developments are coming.

Multi-frame context: Current frame generation looks at two frames and interpolates. Research is exploring systems that use 3-4 frames of context, allowing better prediction of motion and changes. This should reduce artifacts further.

Game-aware interpolation: Current implementations such as DLSS 3 already use engine-supplied depth and motion vectors alongside pixel-level optical flow. Future implementations will probably draw on even richer information from the game engine, such as explicit occlusion data, relying less on pixel-level analysis. This would be more accurate but requires deeper game engine cooperation.

Temporal upsampling + frame generation fusion: Instead of separating temporal upsampling from frame generation, future implementations might combine them. Render at lower temporal resolution but higher spatial quality, then use both upsampling and generation to reach your target resolution and frame rate. This could provide better overall visual quality.

Hardware-software co-design: Specialized hardware for frame generation inference will probably become standard in future GPU architectures, making it faster and cheaper computationally.

Standardization across vendors: Right now each vendor ships its own frame generation, and Nvidia's is proprietary. Future versions might use standardized approaches, letting AMD and Intel catch up more easily and giving developers consistent targets.

Neural network model compression: Current frame generation neural networks are large. Research into model distillation and compression could make inference faster and cheaper, opening possibilities for frame generation on lower-end hardware.

The Verdict: Should You Actually Use Frame Generation?

After all this analysis, here's my honest take.

Frame generation in 2025 is legitimately good technology that solves a real problem: you want maximum visual quality and high frame rates, but you can't afford the GPU power to achieve both natively. If that describes your situation, frame generation is worth enabling and testing. The improvements over 2023 are substantial. The artifacts are notably reduced. The latency addition is minimal.

But frame generation is also not a silver bullet. It's not equivalent to native rendering. It's not appropriate for every use case. And it's still technology with real compromises.

The thing that bothered me about the original debate is that both sides were pretending certainty about technology that was still actively improving. Gamers were right to be skeptical in 2023 when artifacts were bad. But Nvidia was also right that the technology had potential if given time to mature.

Two years later, time did its thing. The technology got better. Now the honest take is: frame generation is a useful tool with real trade-offs. Use it when it makes sense. Skip it when it doesn't. Stop pretending "fake frames" are illegitimate. They're interpolated frames—which is technically accurate and also perfectly fine.

The best part about 2025 is that you don't have to take anyone's word for it. You can enable frame generation yourself, test it for 30 minutes, and decide. The debate matters way less than your actual experience.

FAQ

What exactly is frame generation?

Frame generation is an AI-powered technology that analyzes two consecutive rendered frames and uses neural networks to predict what the frame between them should look like. Instead of your GPU rendering every single frame, it renders some frames and the AI interpolates the missing frames in between, effectively doubling your frame rate.

How is frame generation different from traditional upscaling?

Upscaling takes a single frame rendered at lower resolution and enlarges it to your target resolution. Frame generation works across the temporal dimension—it creates entirely new frames in the time domain rather than improving spatial resolution. You can use both technologies together: render at lower resolution, upscale to full resolution, then add frame generation for extra frame rate.

Does frame generation add input lag?

Frame generation adds approximately 2-3 milliseconds of latency, which is typically imperceptible for most gaming scenarios. However, competitive gamers or players on high refresh rate monitors (240 Hz+) might notice the difference. This is one of the legitimate trade-offs to consider.

Which games support frame generation?

Many AAA games released since 2023 support frame generation through Nvidia's DLSS 3 technology. Games need specific developer support to enable it; it's not automatically available for every game. Check your specific game's patch notes or graphics settings to confirm support.

Is frame generation worth enabling if I already get 120fps+?

Not really. Frame generation shines when you're GPU-limited and struggling to hit your target frame rate. If you're already exceeding your monitor's refresh rate significantly, enabling frame generation just adds complexity and potential artifacts without real benefits. Skip it in that scenario and focus on visual quality settings instead.

Does frame generation work well with ray tracing enabled?

Yes, actually. Ray tracing is computationally expensive and pushes most GPUs into a state where frame generation is genuinely helpful. Many gamers enable ray tracing at lower frame rates, then use frame generation to get to their target frame rate while keeping the ray tracing enabled. This is one of frame generation's best use cases.

Can frame generation cause stuttering or frame time issues?

Modern implementations are quite stable, but frame generation can occasionally cause frame time variance if the neural network inference doesn't complete quickly enough. This is rare on current hardware and typically only affects edge cases or very demanding scenarios. If you notice stuttering with frame generation enabled, try disabling it to confirm that's the cause.

What's the difference between Nvidia DLSS 3 frame generation and older DLSS 2 technology?

DLSS 2 focused on upscaling: rendering at lower resolution and enlarging to full resolution through AI. DLSS 3 adds frame generation on top of upscaling, creating entirely new frames in the time domain, and its frame generation requires an RTX 40-series or newer GPU. You can use DLSS 3 without frame generation if you want just the upscaling benefit. Nvidia's newest package, DLSS 4, extends this with Multi Frame Generation on RTX 50-series cards.

Does frame generation work better with certain monitor types?

Frame generation works fine with standard 60 Hz monitors, but the benefits scale better with higher refresh rate displays, because extra frames only help if your monitor can show them. On a 240 Hz monitor, for example, frame generation can take a 120fps base up to the panel's full 240 Hz. VRR monitors (G-Sync, FreeSync) work with frame generation but occasionally have synchronization quirks. It's not a dealbreaker, just something to monitor.

Will frame generation replace native rendering in the future?

No. Frame generation will likely become a standard part of gaming technology, but native rendering will always be the gold standard for latency-sensitive scenarios and visual perfection. Frame generation will probably become more transparent and better integrated into gaming, but the fundamental approach of rendering as many native frames as possible will remain important, especially for competitive gaming.

Conclusion: The Technology Has Grown Up

Frame generation started as a controversial feature that overpromised and under-delivered. The gaming community rightfully called out the issues. But dismissing a technology entirely because its initial implementation was flawed—that's cutting off your nose to spite your face.

Two years of iteration, billions of dollars in research, and millions of gamers testing it in the real world has resulted in something genuinely useful. Not perfect. Not appropriate for every scenario. But useful.

If you're a gamer who wants to maximize visual quality at high frame rates on a budget GPU, frame generation in 2025 is worth serious consideration. If you're a competitive player or someone who already has a powerful GPU, it probably matters less. If you're CPU-limited or playing older games, it's not applicable.

The honest take is that frame generation has earned its place in the gaming toolkit through persistent improvement. It's no longer a gimmick—it's a legitimate feature with real benefits and real trade-offs.

Stop calling them "fake frames" as if that's an argument. They're interpolated frames created through neural network prediction. Is that less fancy than native rendering? Sure. Is it useful? Absolutely.

Try it yourself. Test it for 30 minutes in a game where you're GPU-limited. Either you'll find extra frame rate that lets you enjoy better graphics, or you'll notice artifacts that make you disable it. Either outcome is valid. But at least you'll have informed experience rather than internet discourse driving your opinion.

The future of gaming probably includes frame generation as a standard tool. You might as well understand how it works and whether it serves your specific needs. That's more useful than taking sides in a debate that's moved beyond the actual technology.

Key Takeaways

- Frame generation has improved dramatically since 2023, substantially reducing artifacts while adding only minimal latency (2-3ms)

- The technology works best for GPU-limited gaming scenarios where you want maximum visual quality at high frame rates

- Frame generation approximately doubles frame rates (55fps becomes 110fps) but involves real trade-offs in latency and occasional artifacts

- Modern implementations use advanced optical flow estimation, temporal consistency improvements, and confidence-based interpolation to predict intermediate frames

- Best use cases include single-player RPGs and exploration games; worst cases are competitive multiplayer and esports titles where latency matters
