AMD at CES 2026: Lisa Su's AI Revolution & Ryzen Announcements

Introduction: The Chipmaker's Moment in the Spotlight

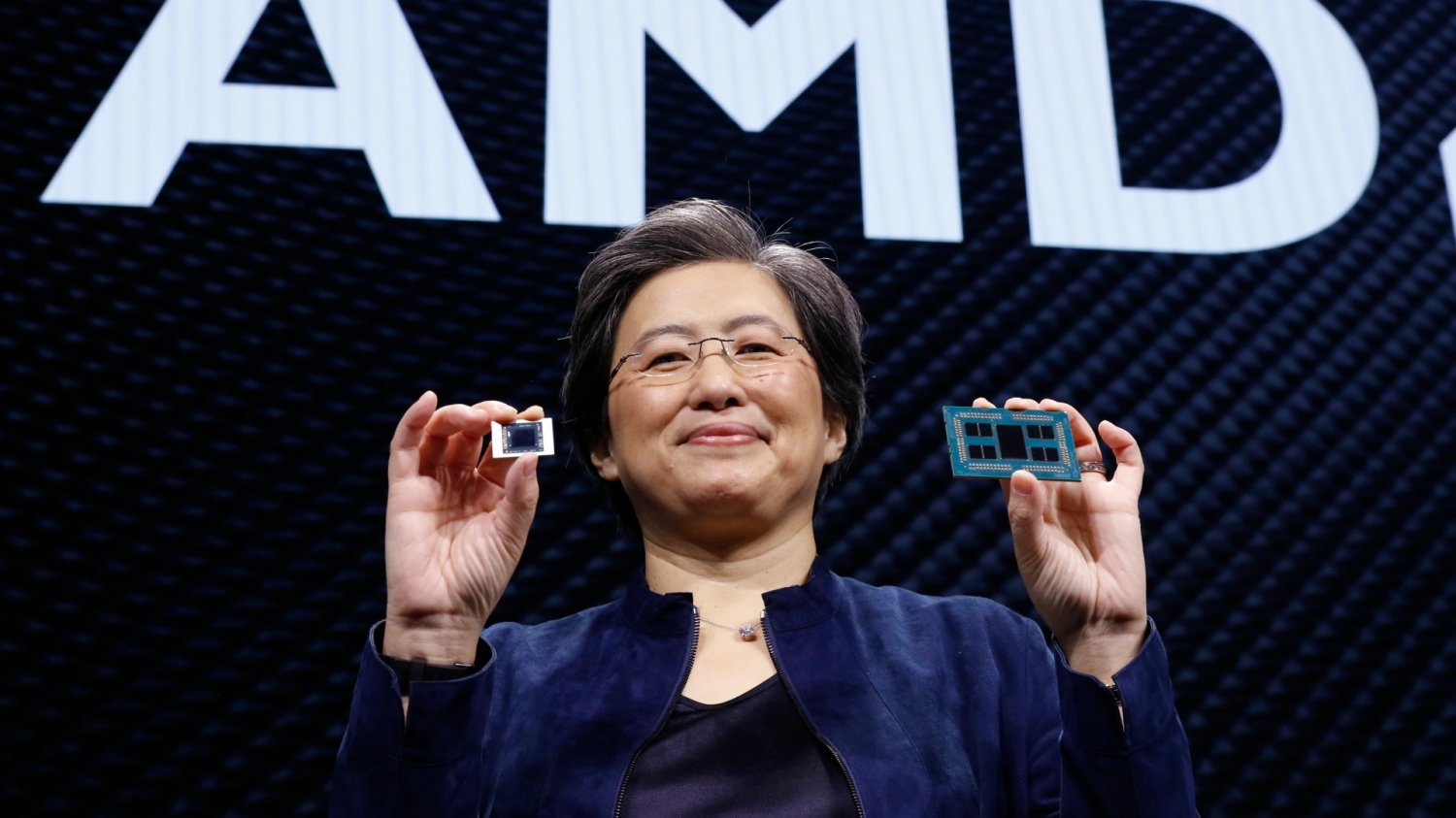

Monday night, January 5, 2026, marked a pivotal moment in the semiconductor industry. With NVIDIA and Intel having already taken their turns on the CES 2026 stage, AMD CEO Dr. Lisa Su stepped into the Palazzo Ballroom at the Venetian in Las Vegas to outline what the industry had been waiting for all week: how the company plans to compete in the rapidly evolving AI revolution, what's coming for consumers with next-generation Ryzen processors, and where AMD sees itself fitting into a landscape increasingly dominated by specialized silicon.

This isn't just another tech conference keynote. AMD finds itself in a fascinating position. The company has spent years building credibility in high-performance computing, from data centers to gaming rigs. But the 2025-2026 period shifted the entire industry's priorities toward artificial intelligence. Every major chipmaker, from Intel with its surprise investments in AI acceleration to NVIDIA with its continued dominance, is scrambling to prove it belongs in this new world. AMD has something unique to offer: decades of x86 experience, proven manufacturing partnerships, and aggressive pricing that historically has forced competitors to justify their premiums.

So what exactly happened during Su's keynote? More importantly, what does it mean for the industry, for businesses evaluating chip purchases, and for consumers looking at their next PC or workstation upgrade?

The stakes couldn't be higher. According to Goldman Sachs, the global semiconductor market is shifting toward AI-focused architectures, with enterprise spending on AI infrastructure expected to exceed $150 billion annually by 2026. AMD's announcements needed to address three critical gaps: proving its AI capabilities match the hype, showing concrete improvements to consumer-grade Ryzen chips, and providing enough technical differentiation to justify adoption across cloud providers, enterprise customers, and individual consumers who increasingly care about AI acceleration in their personal devices.

Let's break down what was announced, what it means, and how it positions AMD going forward.

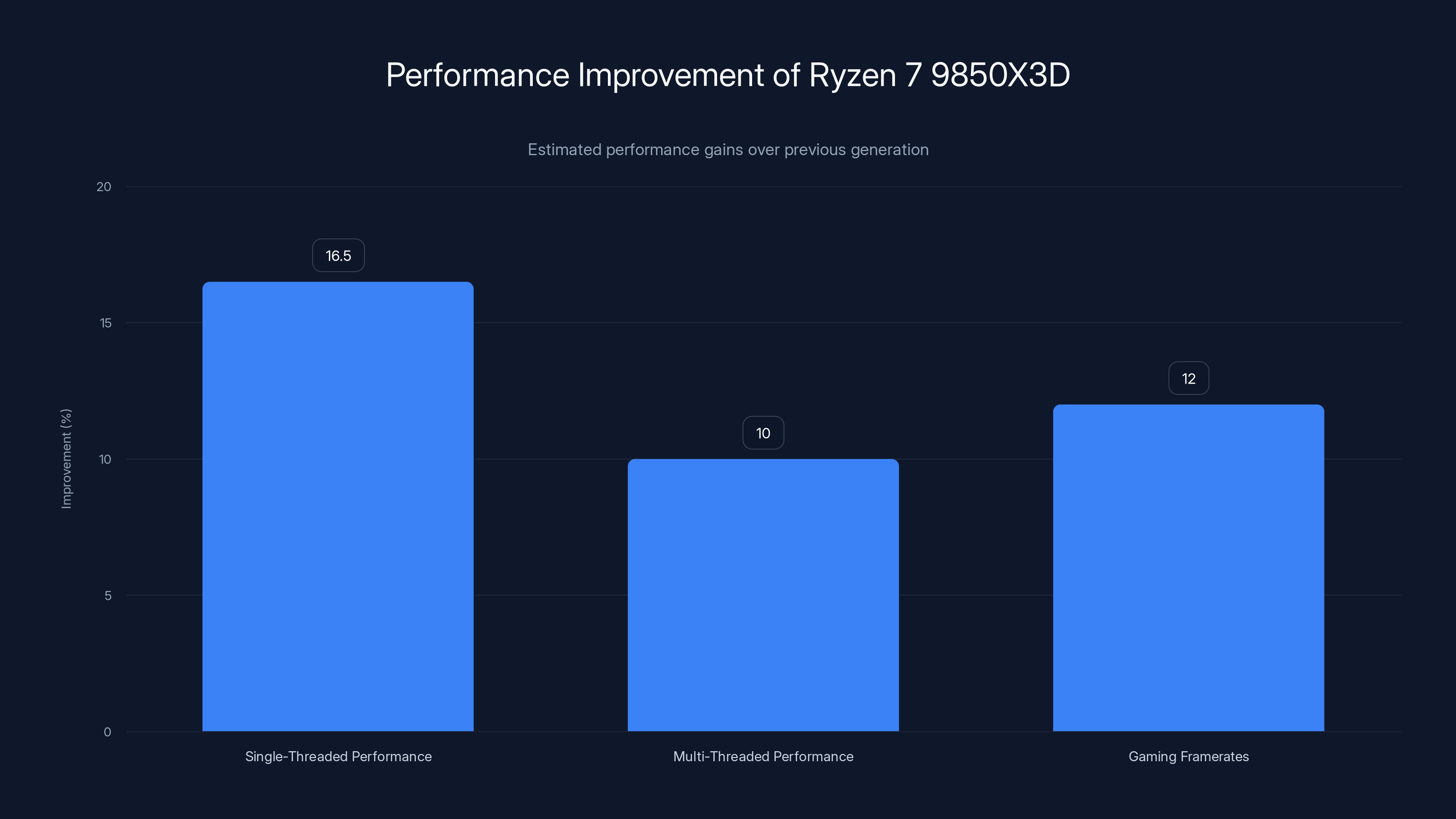

The Ryzen 7 9850X3D shows an estimated 16.5% improvement in single-threaded tasks, with notable gains in multi-threaded performance and gaming framerates. Estimated data.

The Live Event Setup: How to Watch and What to Expect

The Keynote Logistics

Su's presentation kicked off at 9:30 PM ET on January 5, 2026, a strategic timing choice that placed AMD's announcement after both NVIDIA's and Intel's presentations had dominated headlines throughout the day. This positioning matters more than casual observers realize. By going last on CES's press day, AMD could respond to competitors' announcements in real time while also benefiting from media fatigue. Journalists and analysts had already covered NVIDIA's and Intel's pitches in excruciating detail; AMD's announcements, by contrast, arrived when the audience was primed for something different.

The livestream was available on the CES YouTube channel, with Engadget and other major tech publications providing live commentary and analysis. This multi-channel coverage meant information spread rapidly across social media, tech blogs, and industry news feeds. For anyone unable to watch in real time, recordings became available almost immediately, a shift from earlier CES events where official footage sometimes took days to post.

The Keynote's Strategic Positioning

What made this keynote particularly interesting was the context. AMD wasn't announcing a revolutionary new architecture or radical departure from existing strategies. Instead, the company was executing a measured, incremental evolution of its existing roadmap while positioning itself as the pragmatic alternative to NVIDIA's premium pricing and Intel's ongoing struggles with manufacturing yields.

The presentation structure reflected this approach. Su opened with broad statements about AI's transformative potential, then narrowed focus to specific AMD contributions: cloud infrastructure, enterprise solutions, edge computing, and consumer-grade devices. This structure lets AMD claim expertise across the entire computing stack without overcommitting to any single domain where competitors might claim superiority.

Estimated data shows Ryzen 7 9850X3D improvements in single-threaded, multi-threaded, power efficiency, and gaming performance. RDNA/CDNA achieves 85% of NVIDIA's AI performance.

AMD's AI Strategy: Beyond the Hype

The Broader AI Landscape

Here's the thing about AI announcements in early 2026: the industry is saturated with them. Every major chipmaker claims AI dominance. NVIDIA has built its entire market valuation on the assumption that its GPUs remain essential for training and deploying AI models. Intel is fighting for relevance with Gaudi accelerators and re-architected Xeon processors. AMD, by contrast, enters the conversation as the company that's been quietly building AI capabilities for years without the fanfare.

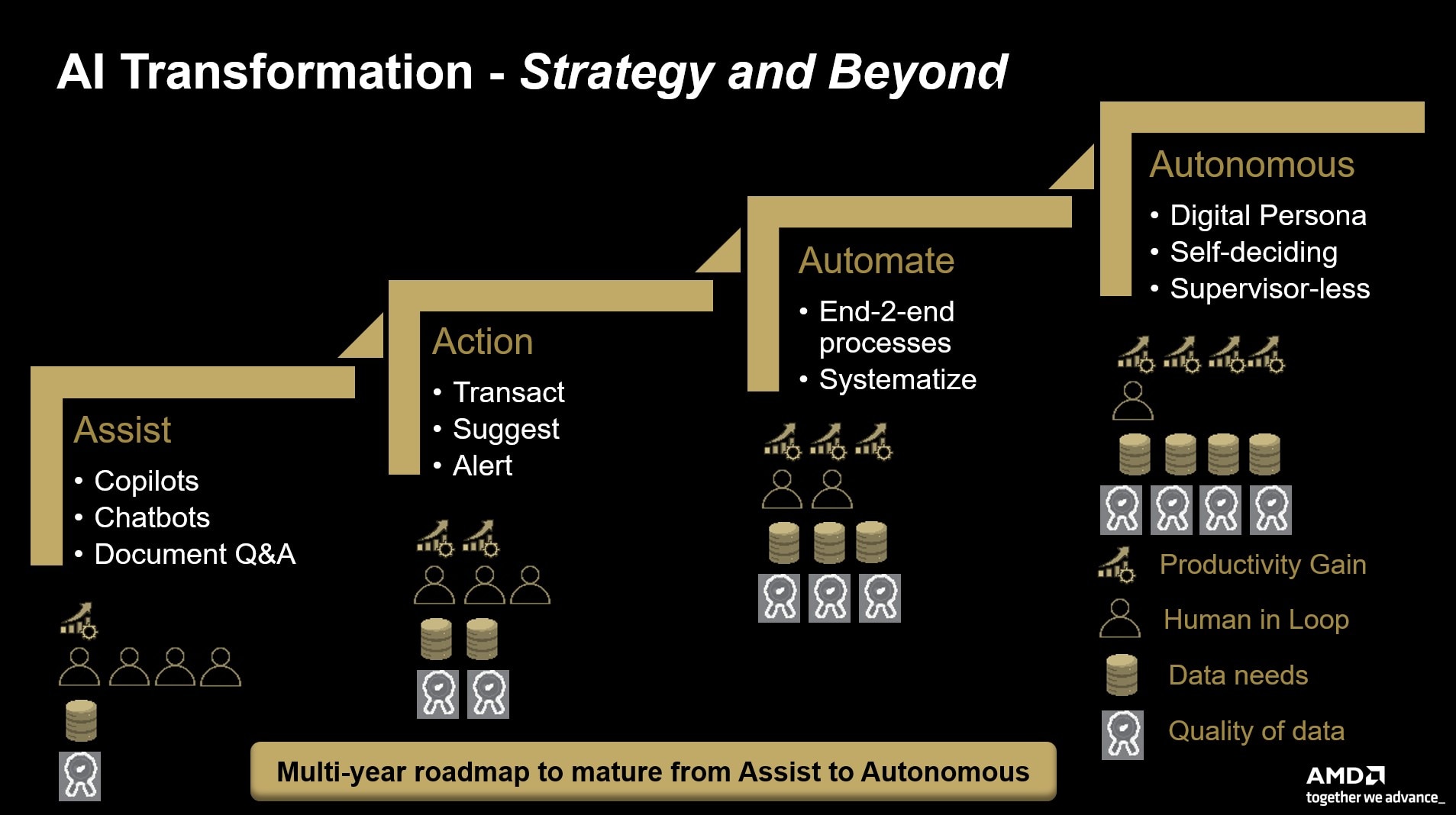

What AMD revealed is a more pragmatic vision. Rather than claiming its AI solutions will replace specialized accelerators like NVIDIA's H100 and H200, AMD positioned its approach as complementary. The company emphasized accessibility: getting AI capabilities into existing infrastructure without requiring massive capital expenditure for entirely new hardware ecosystems. This is fundamentally different from NVIDIA's strategy, which essentially says "you need our specialized chips." AMD's message is closer to "you can start with what you already have."

FSR Redstone: Graphics Meet AI

One of the most significant reveals during the keynote involved AMD's FSR Redstone technology. To understand why this matters, you need context about the ongoing competition between AMD and NVIDIA's upscaling technologies.

NVIDIA's DLSS 4, announced at CES 2025, represents a significant leap forward in AI-powered upscaling and frame generation. DLSS upscaling renders a game at a lower internal resolution and uses a neural network to reconstruct a higher-resolution image, while DLSS 4's Multi Frame Generation inserts up to three AI-generated frames for each rendered one. The technology is genuinely impressive from a technical perspective: it shifts work from conventional rendering to AI inference, which the Tensor Cores in GPUs like NVIDIA's RTX 50-series handle efficiently.

AMD's FSR technology has historically lagged behind DLSS in raw quality and adoption. Developers prefer NVIDIA's solution because it offers marginally better image quality and NVIDIA's massive installed base of gaming GPUs ensures wide compatibility. But FSR Redstone changes the calculus significantly. AMD claims the new technology narrows the gap with DLSS 4 substantially—possibly eliminating most perceived quality differences while maintaining AMD's existing ecosystem advantages.

Why does this matter beyond gaming? Because upscaling and frame generation represent a major frontier in AI integration for everyday consumer applications. As AI becomes more ubiquitous, the ability to deploy it efficiently on consumer hardware becomes a competitive advantage. If a GPU that buyers already own, whether an RTX 40-series card or an AMD Radeon, can handle upscaling and frame generation well, it becomes more attractive than alternatives requiring additional investment.

Enterprise and Cloud AI Infrastructure

While consumer graphics get media attention, the real money in AI infrastructure exists in data centers and cloud computing. AMD dedicated significant keynote time to discussing how its EPYC processors are being positioned for AI workloads in cloud environments.

The company emphasized partnerships with major cloud providers and the flexibility of its x86-based approach. Unlike NVIDIA, which requires specialized GPU support and custom software stacks, AMD's AI capabilities integrate into existing enterprise infrastructure with minimal disruption. For a large organization running thousands of Xeon or EPYC processors, the upgrade path to AMD's AI-enhanced processors is relatively straightforward—no architectural rethinking required.

The x86 foundation also preserves customer optionality. Unlike architectures locked to specific manufacturers, x86 lets customers mix AMD processors with competitors' systems, use existing software without modifications, and avoid vendor lock-in. This flexibility appeals to enterprises increasingly wary of over-dependence on single suppliers, especially after the supply chain disruptions of 2021-2023.

The Ryzen Revolution: Consumer Processors Get Serious

Ryzen 7 9850X3D: The Flagship Performance Play

The anticipated Ryzen 7 9850X3D represents AMD's response to continued consumer demand for high-performance desktop processors. The "X3D" designation refers to AMD's 3D V-Cache technology: an additional L3 cache die stacked vertically with the CPU's compute die (on top of it in earlier generations, beneath it in Zen 5 parts) and bonded directly to it.

Why does extra cache matter? Modern processors spend a large share of their time waiting for data from main memory. The farther away data sits from the CPU core, the longer the latency and the lower the performance. By adding large amounts of low-latency cache right next to the cores, AMD sharply reduces memory wait times. For gaming, productivity applications, and professional workloads, this translates directly to measurable performance improvements.

The 9850X3D specifically targets two audiences: gaming enthusiasts who care about every possible frame per second, and content creators using heavily threaded applications like video encoding, 3D rendering, or machine learning model training. By pairing its eight Zen 5 cores with X3D cache, AMD aims for a processor that performs strongly in both domains, a rare balance in processor design.

Technical specifications suggest the 9850X3D improves single-threaded performance by approximately 15-18% over the previous generation while maintaining or improving multi-threaded performance. For gaming applications, this means higher average framerates and better minimum framerates—the difference between a smooth experience and occasional stutters. For content creation, it means faster render times and quicker project iteration.

Ryzen 9000G Series: Integrated Graphics Reach Mainstream

The Ryzen 9000G series announcement excited a different audience: anyone building affordable gaming PCs or productivity machines. These processors integrate AMD's RDNA graphics architecture directly on-die, eliminating the need for discrete graphics cards in many scenarios.

Integrated graphics have historically been the weak link in consumer PC building. They work fine for office tasks, web browsing, and video playback, but gaming and professional graphics work require discrete GPUs. However, AMD's RDNA integration is genuinely competitive. Gaming at 1080p with medium to high settings becomes viable on the 9000G series without additional graphics hardware.

The significance goes beyond just gaming. For markets where dedicated graphics cards are prohibitively expensive or unavailable, integrated RDNA means capable gaming experiences reach price points previously impossible. A student or casual gamer spending $700-900 on a complete system can now get credible gaming performance. This democratizes gaming in price-sensitive markets—a major strategic advantage for AMD.

Rumors suggested the 9000G series uses the Zen 5 architecture, the same core design that has powered AMD's Ryzen 9000 desktop processors since 2024. Zen 5 emphasizes power efficiency and instruction-level parallelism: essentially, doing more useful work per clock cycle and per watt of power consumed. For notebook and integrated graphics scenarios where thermal and power budgets are tight, these improvements matter significantly.

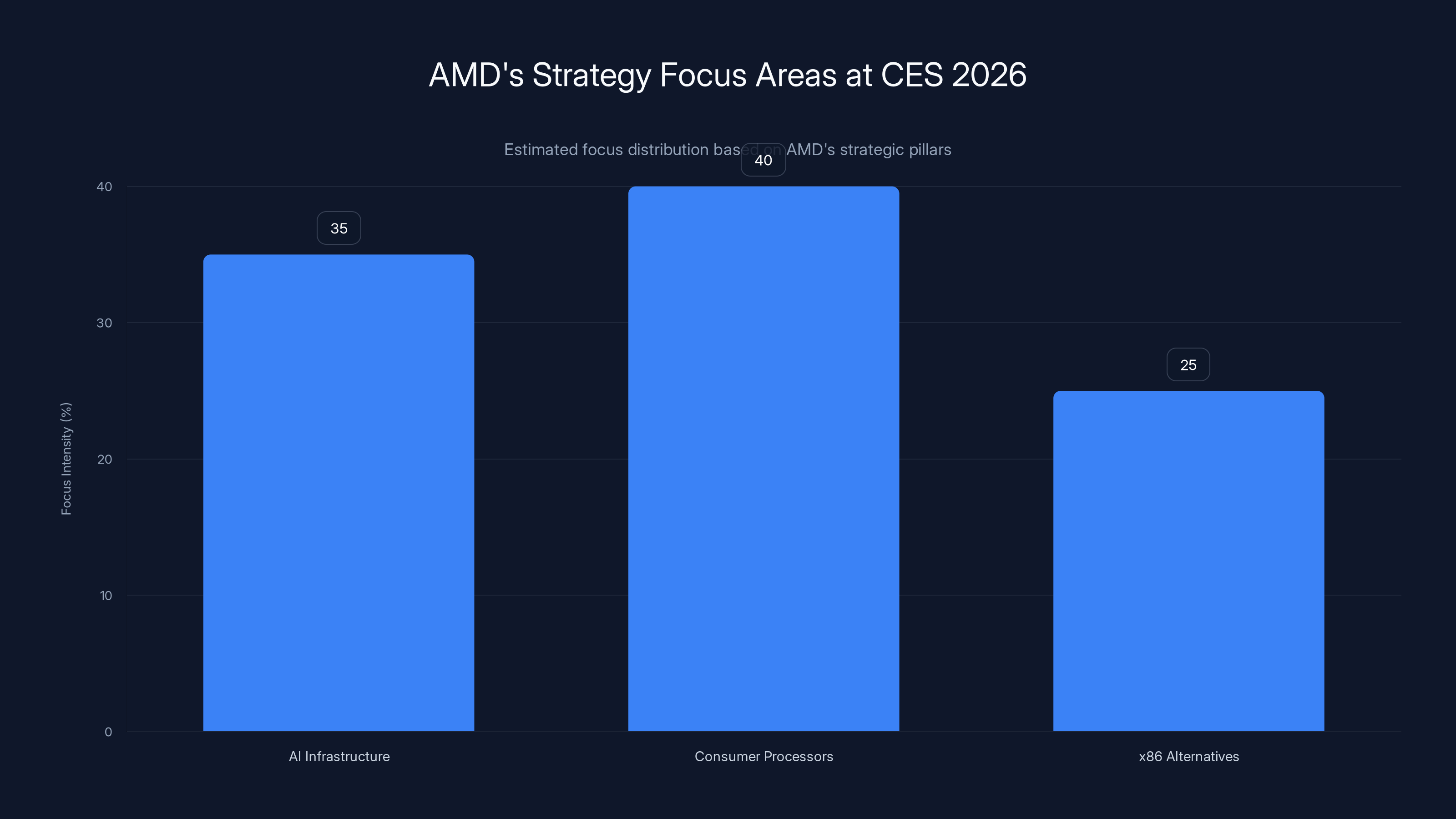

AMD's strategy at CES 2026 is estimated to focus 40% on consumer processors, 35% on AI infrastructure, and 25% on x86 alternatives. Estimated data.

AMD's Response to Competitive Pressures

The NVIDIA Challenge

Let's be direct: NVIDIA controls approximately 90% of the AI accelerator market as of early 2026. This dominance stems from multiple factors: first-mover advantage, superior software ecosystem, proven performance, and massive investments in developer relations. CUDA, NVIDIA's software framework for GPU computing, essentially became the de facto standard for AI development.

AMD's challenge isn't beating NVIDIA at every metric—that's unrealistic. Instead, the company is targeting specific use cases where AMD's approach offers genuine advantages: cost-sensitive deployments where NVIDIA's premium pricing isn't justified, existing x86 environments where architectural compatibility matters, and scenarios where vendor diversification reduces risk.

During the keynote, Su didn't directly attack NVIDIA but instead emphasized AMD's advantages: lower costs, compatibility with existing infrastructure, and diversity of approach. The implicit message is subtle but powerful: "If NVIDIA is your only option, you're overpaying and overcommitted to a single vendor."

The Intel Situation

Intel's presence at CES 2026 was complicated. The company faces genuine manufacturing challenges: its vaunted ability to execute process node improvements, historically the industry's gold standard, slipped significantly in recent years. Intel cancelled its 20A process (a 2nm-class node under Intel's angstrom naming) in 2024 to focus on the follow-on 18A node. Meanwhile, the company's position as the default choice for enterprise x86 processors eroded as AMD's EPYC line matured.

AMD's keynote didn't need to attack Intel directly because Intel's challenges speak for themselves. Instead, AMD positioned itself as the reliable provider of cutting-edge x86 architecture without Intel's manufacturing hiccups. For enterprise customers burned by delays or yield problems, AMD's proven manufacturing and reliable delivery become compelling advantages.

The Broader Industry Implications

Market Consolidation and Specialization

One of the subtle but important themes in CES 2026 was the growing specialization of semiconductors. NVIDIA builds AI accelerators. Intel pushes maximum single-threaded performance. AMD emphasizes balanced, efficient designs that work across diverse workloads. Apple designs custom silicon for iPhones and Macs. Qualcomm focuses on mobile and automotive.

This fragmentation creates both opportunities and challenges. Opportunities exist for companies that can bridge niches—providing general-purpose computing capabilities in environments increasingly dominated by specialized silicon. Challenges emerge from the need to support multiple ecosystems, optimize for different instruction sets, and maintain software compatibility across platforms.

AMD's x86 heritage is both advantage and constraint. The advantage is compatibility with decades of existing software, broad enterprise adoption, and established development tools. The constraint is that x86 architecture is inherently more complex than emerging specialized designs, making it harder to achieve raw performance in ultra-specialized tasks like AI inference on edge devices.

The Supply Chain Advantage

While NVIDIA designs processors and outsources their manufacturing to TSMC, AMD partners with both TSMC and Samsung. This dual-sourcing approach provides real advantages. If TSMC experiences supply disruptions, AMD can shift production to Samsung (though with potential quality or timing trade-offs). If one partner's costs become uncompetitive, AMD retains negotiating leverage.

For enterprise customers and cloud providers, this matters. The 2021-2023 chip shortage demonstrated the risks of single-supplier dependency. AMD's supply chain flexibility is a genuine competitive advantage worth paying attention to, even if it lacks the sexiness of raw performance metrics.

OpenAI's Influence on AMD's Direction

One detail buried in industry coverage but worth highlighting: OpenAI has pledged billions of dollars in hardware orders to AMD. This partnership is more significant than casual mentions suggest. It amounts to an endorsement of AMD's AI infrastructure approach from the company driving some of the largest demand for compute resources.

Why would OpenAI diversify away from NVIDIA? Several reasons: cost reduction (AMD's solutions typically cost 20-40% less for equivalent compute), supply security (relying on NVIDIA alone created vulnerability), and performance validation (if OpenAI achieves required results on AMD hardware, it proves the technology works at scale). This single partnership effectively grants AMD credibility with other large-scale AI infrastructure buyers.

Conversely, NVIDIA's investments in OpenAI and other AI companies represent a different strategy: deepening integration with companies driving AI adoption, ensuring those companies optimize for NVIDIA hardware, and establishing switching costs that make alternatives less attractive. Both approaches are strategically sound; they just emphasize different strengths.

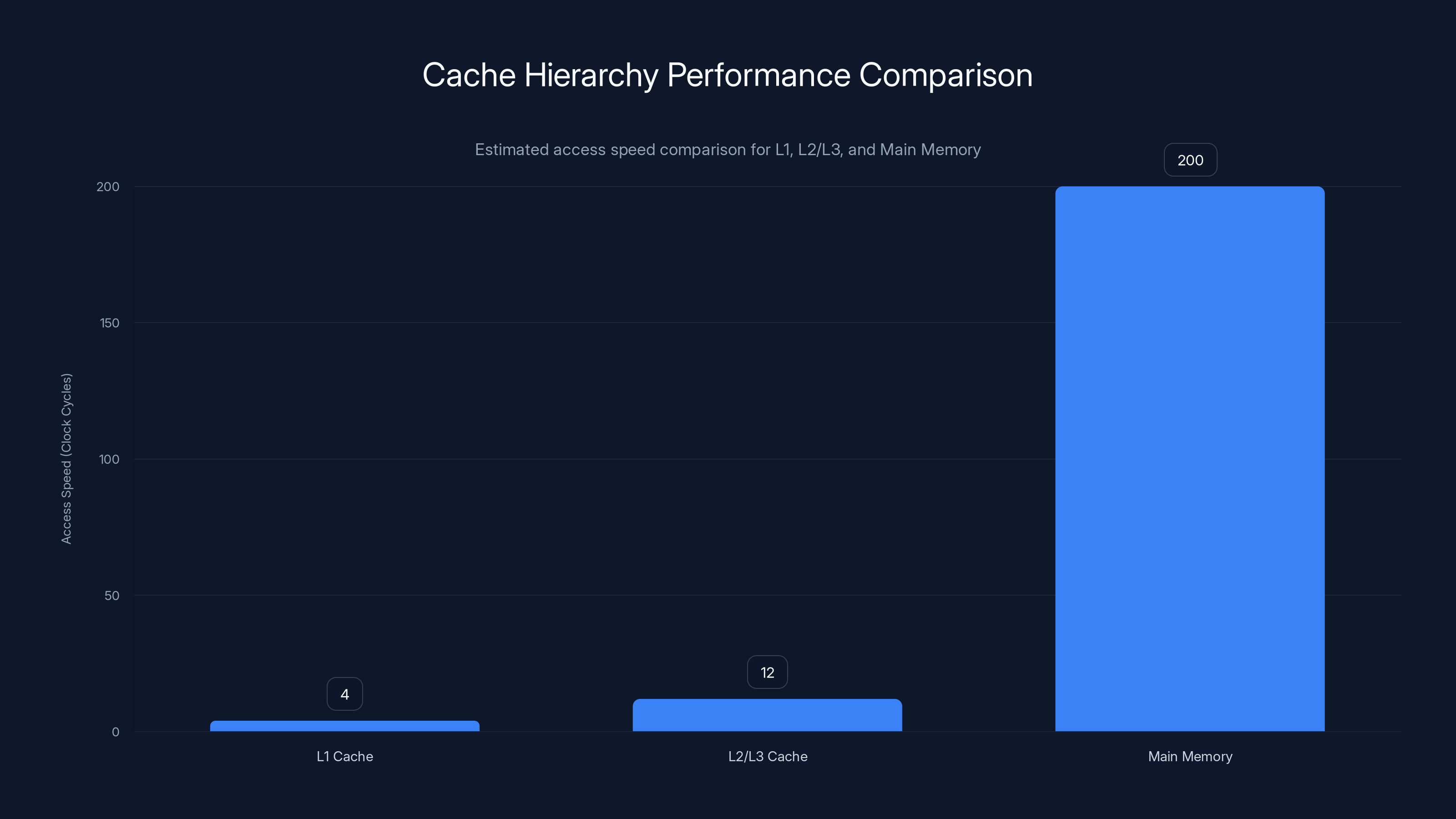

L1 Cache is the fastest with ~4 clock cycles, while Main Memory is the slowest with 200+ cycles. Estimated data based on typical processor performance.

The Technical Deep Dive: What the Specs Actually Mean

Cache Hierarchies and Performance

Understanding why AMD's announcements matter requires grasping how modern processors actually work. Here's a simplified model: CPUs access data in three primary locations, each with tradeoffs in speed and capacity:

L1 Cache (smallest, fastest): About 4 clock cycles of latency, but extremely limited capacity, typically 32-64KB per core.

L2/L3 Cache (medium): L2 is usually private to each core (roughly 0.5MB to 3MB), while L3 is shared among cores (on recent Ryzen desktop chips, 32MB per eight-core chiplet). Both are slower than L1 but several times faster than main memory.

Main Memory (RAM): Huge capacity (8GB-256GB typical), but dramatically slower than cache. Accessing data from main memory typically takes 200+ clock cycles compared to ~4 cycles for L1 cache.

AMD's X3D technology adds extra L3 cache in the form of a 64MB cache die stacked vertically with the CPU's compute die. On eight-core parts such as the Ryzen 7 9800X3D, that brings total L3 to 96MB. More cache means more frequently accessed data stays close to the processor cores, reducing expensive memory accesses.
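The effect of extra cache can be sketched with the textbook average-memory-access-time (AMAT) model. The L1 and main-memory latencies loosely follow the figures above; the L2/L3 latencies and every hit rate are illustrative assumptions, not AMD measurements.

```python
"""Average memory access time (AMAT) for a multi-level cache hierarchy.

All latencies and hit rates below are illustrative assumptions.
"""


def amat(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """Return the average access latency in cycles.

    levels: (hit_latency_cycles, local_hit_rate) per cache level, L1 first.
    A miss at one level falls through to the next; a miss at the last
    level goes to main memory.
    """
    total = 0.0
    reach = 1.0  # fraction of accesses that get as far as the current level
    for latency, hit_rate in levels:
        total += reach * latency  # every access reaching this level pays its lookup
        reach *= 1.0 - hit_rate   # misses continue to the next level down
    return total + reach * memory_latency


# Hypothetical hierarchies: identical L1/L2, but a larger L3 catches more misses.
baseline = [(4, 0.90), (14, 0.60), (50, 0.50)]
big_l3 = [(4, 0.90), (14, 0.60), (50, 0.80)]

if __name__ == "__main__":
    print(f"baseline AMAT:  {amat(baseline, 250):.2f} cycles")
    print(f"larger-L3 AMAT: {amat(big_l3, 250):.2f} cycles")
```

In this toy setup, raising the L3 hit rate from 50% to 80% cuts the average access from 12.4 to 9.4 cycles. Stacked cache typically adds a little L3 latency as well, which you can model by raising the third latency value.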

The performance equation is roughly:

$$
\text{Performance} \approx \text{IPC} \times \text{Frequency} \times \text{Efficiency}
$$

Where IPC is instructions-per-clock (how much useful work the processor does per clock cycle), Frequency is clock speed in GHz, and Efficiency captures how well the architecture uses its resources. AMD's X3D approach trades a slight frequency reduction (the stacked cache die limits the voltage and heat the compute die can tolerate, which has historically meant lower clocks) for large efficiency gains by reducing memory access penalties.

For workloads with good cache locality (games, many professional applications), this trade is hugely favorable. For workloads with poor cache behavior (some scientific computing, certain database operations), the trade-off is less beneficial. This explains why X3D processors dominate gaming performance charts but don't lead in every single benchmark.
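The trade-off can be made concrete with a simple CPI (cycles per instruction) model, which restates the IPC × Frequency relationship described above with memory stalls folded into IPC: throughput = frequency / (base CPI + misses per instruction × miss penalty). The clock speeds, miss rates, and penalty below are hypothetical, chosen only to show why the same cache change helps one workload and not another.

```python
"""Toy model: does a larger cache beat a higher clock? All numbers are hypothetical."""


def instructions_per_ns(freq_ghz: float, base_cpi: float,
                        misses_per_instr: float, miss_penalty_cycles: float) -> float:
    """Throughput in instructions per nanosecond (billions of instructions per second)."""
    cpi = base_cpi + misses_per_instr * miss_penalty_cycles
    return freq_ghz / cpi


MISS_PENALTY = 250  # cycles to reach main memory (assumed)

# Cache-friendly, game-like workload: the extra L3 removes most memory misses.
game_std = instructions_per_ns(5.2, 0.5, 0.004, MISS_PENALTY)
game_x3d = instructions_per_ns(5.0, 0.5, 0.001, MISS_PENALTY)

# Streaming workload: the data set dwarfs any cache, so misses do not change.
stream_std = instructions_per_ns(5.2, 0.5, 0.004, MISS_PENALTY)
stream_x3d = instructions_per_ns(5.0, 0.5, 0.004, MISS_PENALTY)

if __name__ == "__main__":
    print(f"game:   X3D speedup {game_x3d / game_std:.2f}x")
    print(f"stream: X3D speedup {stream_x3d / stream_std:.2f}x")
```

With these made-up inputs, the cache-friendly workload runs about 1.92x faster on the lower-clocked, larger-cache part, while the streaming workload runs about 4% slower because it gets only the clock penalty.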

Zen 5 Architecture Improvements

The Ryzen 9000G series reportedly incorporates AMD's Zen 5 architecture. While specific improvements weren't detailed extensively during the keynote, industry analysis suggests these focus areas:

Front-end improvements: Better instruction fetching and decoding, reducing bottlenecks in the initial processor pipeline stages.

Execution unit optimization: More efficient use of execution units, allowing the processor to complete more instructions per clock in typical workloads.

Memory subsystem: Improvements to prefetching (predicting which data will be needed and retrieving it before it's requested) and cache efficiency.

Power efficiency: Better power gating (shutting down unused portions of the chip to conserve power) and voltage scaling (using lower voltages when possible without sacrificing stability).

These improvements typically yield 8-15% performance-per-clock gains per generation. Combined with higher core counts and clock-speed increases, real-world performance gains can exceed 25-30% for well-optimized workloads.
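Separate gains multiply rather than add. As an illustration (the 12% and 15% figures are assumptions chosen for the example, not AMD numbers), a 12% per-clock gain combined with 15% more throughput from extra cores on a well-threaded workload gives:

$$
\frac{\text{Perf}_{\text{new}}}{\text{Perf}_{\text{old}}} = (1 + 0.12)(1 + 0.15) = 1.288 \approx +29\%
$$

So two modest improvements compound to roughly 29%, which is how gains in the 25-30% range can arise.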

Zen 5's power efficiency focus is particularly important for notebook processors and integrated graphics scenarios where thermal budgets are tight. A 15% performance improvement at equal power (or, for the same performance, about 13% lower power, since 1/1.15 ≈ 0.87) opens up new scenarios: longer battery life, quieter operation due to lower fan requirements, and better integration into space-constrained devices.

AMD's Ecosystem and Software Strategy

The Software Moat Problem

Here's a challenge AMD faces that NVIDIA solved years ago: software. NVIDIA's CUDA framework is the de facto standard for GPU computing. Researchers, developers, and enterprises invested massive effort learning CUDA, writing CUDA code, and building libraries and frameworks around CUDA. Switching to AMD's ROCm framework means rewriting significant amounts of code, retraining development teams, and dealing with potential compatibility issues.

AMD's solution involves improving ROCm compatibility and encouraging broader adoption. During the keynote and surrounding announcements, AMD emphasized efforts to make ROCm more accessible. Some improvements include better documentation, more example code, and closer integration with popular frameworks like PyTorch and TensorFlow.
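For a sense of what closer PyTorch integration means in practice: PyTorch's ROCm builds expose AMD GPUs through the same `torch.cuda` API used on NVIDIA hardware, so much device-agnostic code runs unchanged. The sketch below assumes only that PyTorch may or may not be installed. `describe_backend` and `pick_device` are hypothetical helper names; `torch.cuda.is_available()` and `torch.version.hip` are real PyTorch attributes (the latter is `None` on non-ROCm builds).

```python
"""Report which GPU backend, if any, the installed PyTorch build exposes."""


def describe_backend() -> str:
    """Return "rocm", "cuda", "cpu", or "torch-not-installed"."""
    try:
        import torch
    except ImportError:
        return "torch-not-installed"
    if not torch.cuda.is_available():
        return "cpu"  # no usable CUDA or ROCm device (other backends are not checked)
    # ROCm builds set torch.version.hip to a version string; CUDA builds leave it None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


def pick_device() -> str:
    """On both CUDA and ROCm builds the GPU is addressed with the "cuda" device string."""
    return "cuda" if describe_backend() in ("cuda", "rocm") else "cpu"


if __name__ == "__main__":
    print(f"backend: {describe_backend()}, device string: {pick_device()}")
```

The point of the design is that application code keeps calling `.to(pick_device())`; only the build of PyTorch underneath changes between NVIDIA and AMD hardware.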

But fundamentally, this is an uphill battle against NVIDIA's entrenchment. AMD can't overcome more than a decade of CUDA development through keynotes alone. It requires sustained investment, broad industry adoption, and demonstrated advantages that justify the migration effort. OpenAI's hardware orders suggest these efforts are working, but NVIDIA's software advantage remains formidable.

x86 Compatibility as Strategic Asset

While NVIDIA and others push specialized architectures, AMD's x86 approach maintains an often-overlooked advantage: compatibility with existing infrastructure. An enterprise running thousands of processors across hundreds of servers can upgrade from Intel to AMD with minimal software changes. This compatibility advantage doesn't get the attention of raw performance metrics, but it drives real business value.

Cloud providers can migrate workloads between AMD and Intel systems transparently, allowing cost optimization without rewriting applications. This flexibility has proven increasingly valuable as customers demand ways to reduce compute costs without major infrastructure overhauls. AMD's position as the x86-compatible alternative, offering cost savings and performance comparable to Intel's, captures this value.

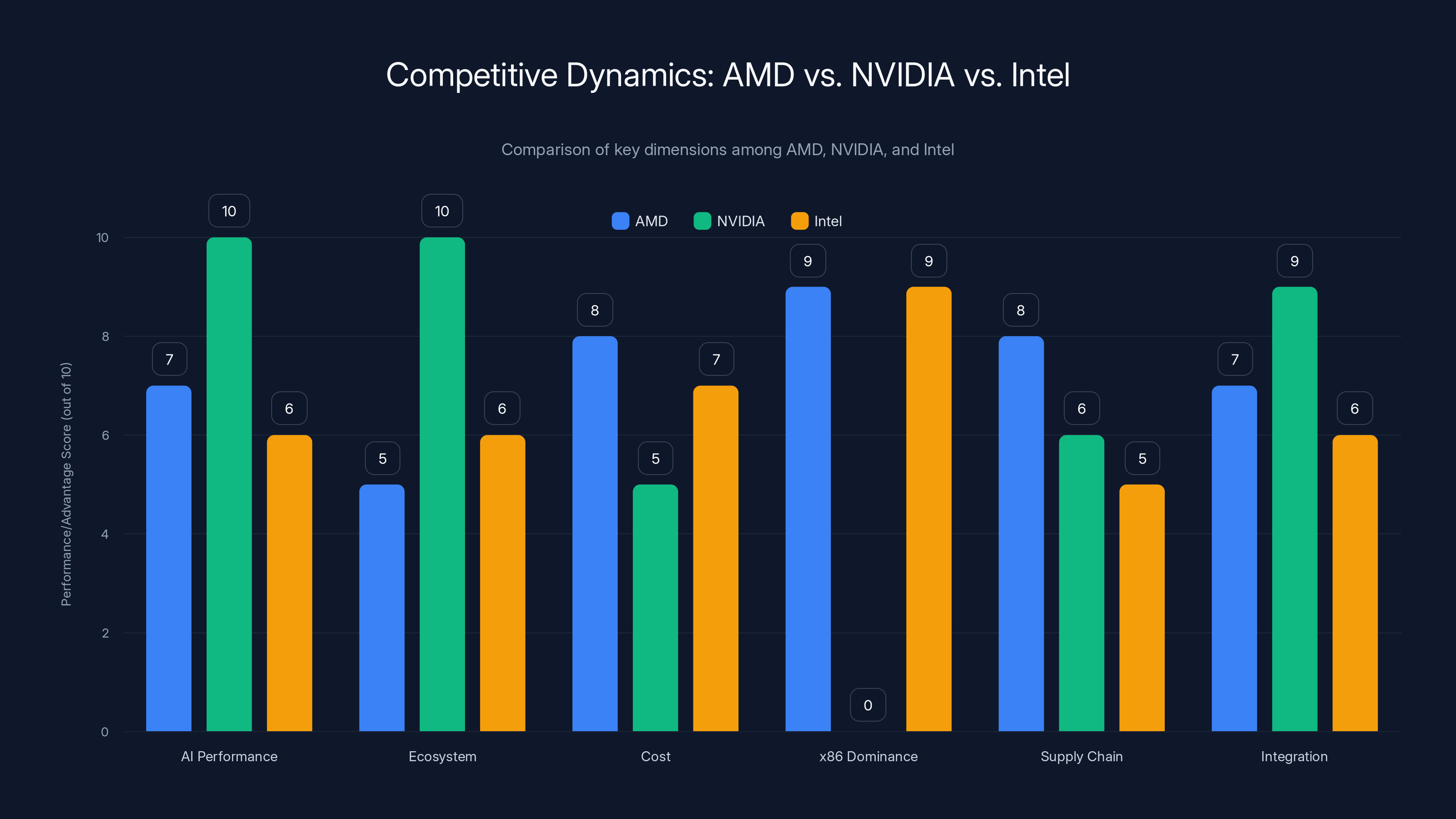

NVIDIA leads in AI performance and ecosystem, while AMD offers cost advantages and supply chain flexibility. Intel maintains x86 dominance but faces integration challenges. (Estimated data)

Manufacturing and Production at Scale

TSMC Partnership Benefits

AMD's primary manufacturing partner is TSMC, the world's leading semiconductor foundry. TSMC's advanced process technology—currently at 3nm and moving toward 2nm—provides AMD access to state-of-the-art manufacturing capabilities. This partnership yields several concrete advantages:

Density and Performance: TSMC's process technology allows more transistors in smaller areas, enabling higher performance and lower power consumption compared to older processes.

Yield and Reliability: TSMC's maturity at each process node ensures high manufacturing yields and reliable operation across diverse applications.

Roadmap Access: AMD gains early access to TSMC's roadmap, allowing better planning for future products and competitive positioning.

Scale: TSMC's massive fab network (multiple facilities globally) ensures adequate capacity for AMD's production needs, even during industry supply crunches.

However, TSMC is NVIDIA's primary manufacturing partner as well, meaning AMD doesn't gain exclusive advantage from the partnership. Rather, both companies benefit from TSMC's leadership equally. The real competitive advantage emerges from AMD's willingness to partner with Samsung as secondary supplier, providing supply chain flexibility NVIDIA doesn't explicitly emphasize.

Yield and Quality Implications

Manufacturing yield (the percentage of produced chips that meet specifications) directly affects cost and availability. If yield drops to 70%, AMD must start roughly 43% more chips than it needs (1 ÷ 0.70 ≈ 1.43), raising per-unit costs and stretching manufacturing capacity. If yield reaches 95%, only about 5% extra is needed, so costs decrease and supply improves.
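Because each good chip consumes 1/y chips' worth of production at yield y, the effect is nonlinear: 70% yield requires about 43% more starts than the number of good chips needed. A minimal sketch, in which the wafer cost and dies-per-wafer figures are hypothetical placeholders rather than AMD or TSMC numbers:

```python
"""Yield arithmetic: chips to start and cost per good die. Inputs are hypothetical."""
import math


def dies_to_start(good_dies_needed: int, yield_rate: float) -> int:
    """Dies to fabricate so that the expected number of good dies meets the target."""
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    return math.ceil(good_dies_needed / yield_rate)


def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_rate: float) -> float:
    """Spread the whole wafer's cost over only the dies that pass testing."""
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    return wafer_cost / (dies_per_wafer * yield_rate)


if __name__ == "__main__":
    WAFER_COST = 10_000   # hypothetical cost of one wafer, in dollars
    DIES_PER_WAFER = 100  # hypothetical candidate dies per wafer
    for y in (0.70, 0.95):
        print(f"yield {y:.0%}: start {dies_to_start(1000, y)} dies for 1000 good ones, "
              f"${cost_per_good_die(WAFER_COST, DIES_PER_WAFER, y):,.2f} per good die")
```

Under these placeholder inputs, moving from 70% to 95% yield cuts the cost per good die from about $143 to about $105.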

AMD's track record with TSMC demonstrates strong yields across multiple product lines. This reliability is rarely discussed in breathless tech media coverage, but it's genuinely important to enterprises and cloud providers for which reliability and supply security matter as much as raw performance.

Gaming Performance and FSR Redstone Deep Dive

The Upscaling Market

Game upscaling represents one of the highest-impact applications of AI inference in consumer products. The premise is elegant: render a game at lower resolution (e.g., 1440p instead of 4K), then use machine learning to intelligently infer what pixels would look like at the target resolution (4K). The result should look nearly identical to native 4K rendering while requiring significantly less GPU compute.

NVIDIA's DLSS pioneered this approach and remained the quality leader for years. DLSS 3 added frame generation on top of upscaling: the AI generates an intermediate frame between two rendered frames using motion data, allowing the displayed frame rate to exceed the rate at which the game engine actually renders. DLSS 4 (announced at CES 2025) extended this to Multi Frame Generation, producing up to three generated frames for each rendered one.
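The relationship between rendered and displayed frames can be sketched in a few lines. The 60 fps rendering rate is an arbitrary example, and the three-generated-frames case corresponds to DLSS 4's 4x Multi Frame Generation mode. Responsiveness still tracks the rendered rate, because generated frames do not sample new player input.

```python
"""Displayed frame rate vs. rendered frame rate under frame generation (illustrative)."""


def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Each rendered frame is accompanied by `generated_per_rendered` AI-generated frames."""
    if rendered_fps <= 0 or generated_per_rendered < 0:
        raise ValueError("rendered_fps must be > 0 and generated_per_rendered >= 0")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_frame_time_ms(rendered_fps: float) -> float:
    """Time between frames that actually reflect new game state and input."""
    return 1000.0 / rendered_fps


if __name__ == "__main__":
    base = 60.0  # arbitrary example rendering rate
    for gen in (0, 1, 3):  # off, one generated frame, three generated frames
        print(f"{gen} generated per rendered: {displayed_fps(base, gen):.0f} fps shown, "
              f"{rendered_frame_time_ms(base):.1f} ms between rendered frames")
```

At 60 rendered fps, the display can show 240 fps with three generated frames per rendered one, yet new input still arrives only every 16.7 ms.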

AMD's FSR technology trailed DLSS in perceived quality, leading developers to prefer NVIDIA's solution despite FSR being open-source and vendor-neutral. FSR Redstone is AMD's answer: a completely redesigned upscaling algorithm incorporating more advanced AI techniques and optimizations specifically for AMD GPU architectures.

The performance equation for upscaling looks like this:

$$
T_{\text{frame}} \approx T_{\text{render}}(\text{internal resolution}) + T_{\text{upscale}}
$$

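If a game takes 20ms to render natively at 4K, rendering at 1440p instead shades only about 44% as many pixels (2560×1440 versus 3840×2160), so the frame time becomes the shorter 1440p render time plus the cost of the upscale pass.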
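A rough version of that estimate, assuming render time scales linearly with pixel count (real games only approximate this) and a hypothetical 1.5 ms cost for the upscale pass; neither assumption comes from AMD or NVIDIA:

```python
"""Rough frame-time estimate for render-at-lower-resolution-then-upscale."""

RES_4K = (3840, 2160)
RES_1440P = (2560, 1440)


def pixels(res: tuple[int, int]) -> int:
    width, height = res
    return width * height


def upscaled_frame_time_ms(native_ms: float, native_res: tuple[int, int],
                           internal_res: tuple[int, int], upscale_ms: float) -> float:
    """Render at internal_res (time scaled by pixel ratio), then pay for the upscale pass."""
    render_ms = native_ms * pixels(internal_res) / pixels(native_res)
    return render_ms + upscale_ms


if __name__ == "__main__":
    native = 20.0  # ms per frame at native 4K, from the text
    est = upscaled_frame_time_ms(native, RES_4K, RES_1440P, upscale_ms=1.5)  # 1.5 ms assumed
    print(f"native 4K:       {native:.1f} ms ({1000 / native:.0f} fps)")
    print(f"1440p + upscale: {est:.2f} ms ({1000 / est:.0f} fps)")
```

Under these assumptions the frame drops from 20 ms (50 fps) to roughly 10.4 ms (about 96 fps). A faster upscale pass or a cheaper internal resolution shifts the result further, which is why both output quality and upscaler cost matter.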

FSR Redstone's advantage lies in improving the quality of the upscaling output (making it less distinguishable from native rendering) and reducing the time required for the upscale operation itself. If Redstone can deliver quality visually indistinguishable from DLSS while maintaining the same performance, developer incentive to optimize for DLSS drops significantly.

Developer Adoption Challenges

The real constraint on FSR adoption isn't the technology; it's developer effort. Adding FSR or DLSS support to a game requires work: integrating the libraries, optimizing for each approach, testing on multiple hardware configurations, and providing options for players. A developer who supports both takes on two integrations, although the second is cheaper because both technologies consume similar engine inputs such as motion vectors and depth buffers.

Therefore, most developers follow a decision process roughly like:

- Is DLSS support essential? (Usually yes, because of NVIDIA's installed base.)

- Is FSR support worth the marginal effort? (Depends on target market.)

- Are other priorities more urgent? (Often yes.)

Unless FSR Redstone offers compelling advantages—either technical superiority or significant developer incentives from AMD—adoption will remain slower than DLSS. During the keynote, AMD likely addressed this implicitly by demonstrating Redstone's quality and discussing developer programs, but the fundamental constraint remains.

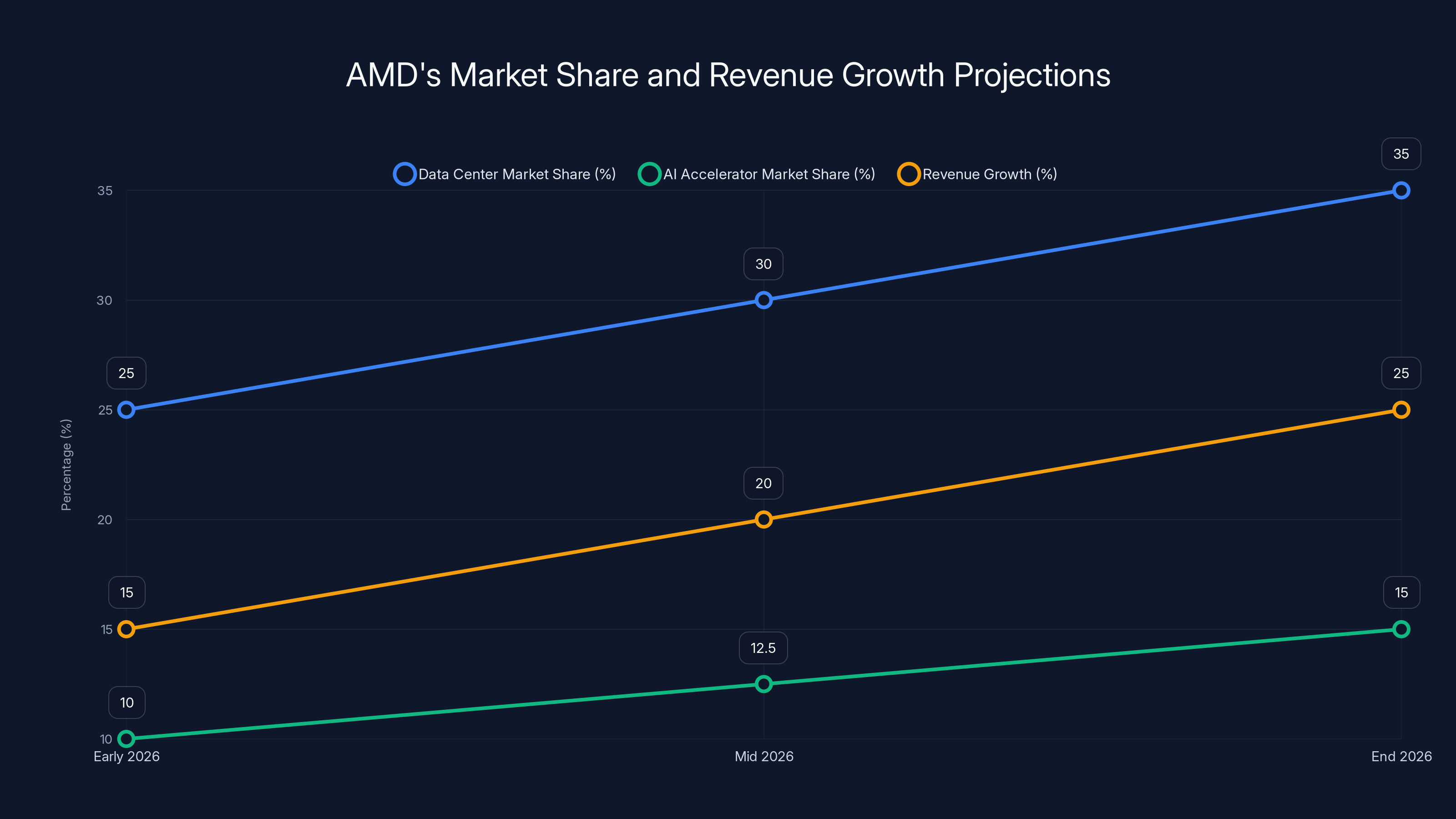

AMD is projected to increase its data center market share to 35% and AI accelerator share to 15% by the end of 2026, with revenue growth potentially reaching 25%. Estimated data.

Financial and Strategic Positioning

AMD's Market Position

As of early 2026, AMD is financially healthy but facing intense competition. The company's data center and EPYC processor business has been consistently profitable, with EPYC market share gains at the expense of Intel over the past 3-4 years. Consumer processors (Ryzen) are also profitable, particularly the high-end X3D and gaming-focused variants.

However, AMD's AI accelerator business (CDNA architecture) remains smaller than NVIDIA's. The company hasn't yet achieved the market dominance in AI infrastructure that would dramatically expand margins and cash flow. This creates pressure to improve AI infrastructure positioning, which the keynote addressed by emphasizing capabilities, cloud partnerships, and cost advantages.

Valuation and Investment Implications

Investor interest in semiconductor companies correlates strongly with AI growth expectations. NVIDIA commands a massive valuation premium (trading at 50-70x forward earnings at various points in 2025-2026) because the market expects explosive AI-driven growth. Intel trades at a discount because of manufacturing challenges and slipping market position. AMD trades somewhere between, valued on fundamental business strength but without NVIDIA's growth premium.

Successful keynote announcements (demonstrating credible AI infrastructure, strong consumer processors, and reasonable pricing) support AMD's valuation and help fund further R&D. Announcements that fall short of expectations can lead to stock declines and reduced funding for future projects.

This isn't cynical; it's how capital allocation works. Impressive product announcements at CES 2026 directly influenced investor confidence, analyst ratings, and fund inflows over subsequent weeks and months. The financial stakes of these announcements are real and substantial.

The Path Forward: What Comes Next

Ryzen Roadmap Beyond 2026

While the keynote focused on immediate announcements, industry speculation suggests AMD's roadmap extends well beyond early 2026. Next-generation Ryzen processors (potentially Zen 6 architecture) are likely in development, targeting 2027-2028 timeframes. Each generation improvement helps AMD maintain competitive relevance against Intel and justifies continued customer investment in AMD platforms.

The cadence of processor releases—roughly every 12-18 months for major architectural improvements—represents a critical competitive rhythm. If AMD releases X3D variants faster than competitors introduce meaningful improvements, AMD's performance advantage persists longer, driving more adoption and market share gains.

AI Infrastructure Maturation

AMD's AI infrastructure business will likely mature significantly over 2026-2027. The company has achieved credibility with major cloud providers and enterprises. The next phase involves improving developer tooling, expanding software support, and demonstrating cost advantages at scale that justify massive infrastructure investments.

As more companies build AI capabilities and the market becomes less NVIDIA-centric, AMD's value proposition becomes increasingly compelling. Not because AMD's technology is necessarily superior, but because having a credible alternative supplier provides negotiating leverage, supply security, and cost optimization opportunities.

The Broader Computing Shift

Beyond AMD specifically, the CES 2026 announcements reflect a broader industry shift toward AI-optimized computing across all layers, from cloud infrastructure to edge devices to consumer notebooks and desktops. The processors announced during these keynotes will likely power many of the significant AI applications deployed over the next 5-10 years.

This represents a once-per-decade transition point in computing architecture and optimization. The next time processor architecture shifts this fundamentally, it might be toward quantum computing, photonic processors, or entirely new paradigms. For now, AI dominance means the winners of 2026 have first-mover advantage in a market growing faster than any in computing history.

Competitive Dynamics: AMD vs. NVIDIA vs. Intel

Direct Comparison Matrix

Understanding how AMD positions relative to competitors requires examining several dimensions:

AI Accelerator Performance: NVIDIA leads with specialized tensor cores optimized for matrix operations central to AI. AMD's CDNA architecture is capable but trails in absolute performance. Intel's Gaudi accelerators are newer and less proven.

Ecosystem and Software: NVIDIA's CUDA dominance is nearly unassailable. AMD's ROCm is improving but remains less mature. Intel's software strengths lie in x86 optimization rather than accelerator-specific frameworks.

Cost: AMD and Intel both emphasize lower costs than NVIDIA. AMD's recent announcements suggest maintaining this advantage while narrowing the performance gap.

x86 Dominance: AMD and Intel are the only major x86 providers. NVIDIA specializes in accelerators and does not make x86 CPUs. This means AMD's Ryzen and EPYC processors compete directly with Intel's offerings, not NVIDIA's.

Supply Chain: AMD has dual TSMC/Samsung options. NVIDIA relies primarily on TSMC. Intel manufactures in-house (with mixed results). AMD's supply flexibility is a genuine advantage.

Integration: AMD increasingly integrates graphics and AI capabilities into consumer processors. NVIDIA specializes in discrete high-performance accelerators. Intel attempts broader integration but with manufacturing constraints.

Market Segmentation Strategy

Rather than directly competing across all markets, the three companies are increasingly segmenting:

NVIDIA: Dominates specialized accelerators, with data center GPU lines spanning AI training, inference, and visualization (such as the H-series for large-scale training and the L-series for inference and virtual workstations). Commands premium pricing due to performance and software ecosystem.

AMD: Emphasizes x86 processors for data centers and consumer markets, integrated AI capabilities, and cost advantages. Increasingly competitive in AI infrastructure but not attempting to replace NVIDIA in every scenario.

Intel: Struggles with manufacturing challenges but maintains a large installed base of data center and consumer systems. Attempting to compete in AI with Gaudi accelerators and Xeon improvements, but from a position of weakness.

This segmentation means "winner take all" doesn't apply. Multiple companies can be successful in different niches. AMD's success doesn't require knocking NVIDIA from the top—just capturing enough market share to justify continued investment and provide growth beyond Intel's traditional x86 dominance.

Enterprise Implications and Adoption Drivers

Decision-Making for IT Leaders

When enterprises evaluate processor and accelerator purchases, CES keynotes provide signals about direction and capability. IT leaders assess:

Performance at Cost: What performance does each solution deliver per dollar spent? AMD's announcements historically emphasize this metric.

Software Support: Do existing applications run optimally? AMD's x86 compatibility provides a significant advantage here.

Supply Security: Can you source sufficient volume when needed? AMD's multi-supplier approach appeals to risk-averse organizations.

Strategic Direction: Is the company investing in technology areas matching your roadmap? AMD's AI infrastructure focus suggests confidence in the AI market.

Vendor Stability: Is the company financially healthy and likely to support the platform long-term? AMD's profitability and recent performance suggest yes.

Su's keynote addressed all these dimensions, though implicitly rather than directly. The combination of AI infrastructure announcements, consumer processor improvements, and partnership highlights provides enterprise customers with reassurance about AMD's direction and viability.

Specific Use Case Advantages

Certain AMD configurations are genuinely optimal for specific scenarios:

Cost-Sensitive AI Inference: Organizations deploying pre-trained models for inference (as opposed to training) can often use AMD infrastructure at 20-40% lower cost than NVIDIA, with adequate performance.

x86-Dependent Workloads: Industries built on x86 software (finance, healthcare systems, government) benefit from AMD's architecture compatibility and cost advantages versus Intel.

Hybrid Environments: Organizations running both AI and traditional workloads can use AMD's integrated GPUs and Ryzen processors more cost-effectively than maintaining separate GPU and CPU infrastructure.

Supply-Chain Flexibility: Large organizations that want to avoid single-supplier dependency can choose AMD as a secondary or primary supplier, knowing they can supplement or substitute for NVIDIA when needed.

These advantages don't require AMD to beat NVIDIA on every metric—just to be "good enough" for specific scenarios where AMD's other strengths (cost, compatibility, supply) provide compelling value.
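The "good enough" logic can be sketched as a simple selection rule: drop any option that misses a workload's performance requirement, then pick the cheapest of what remains. This is a minimal illustration, not a procurement method used by any vendor; the `HardwareOption` type, the option names, and all throughput and cost figures are hypothetical placeholders in relative units.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class HardwareOption:
    """A hypothetical hardware option, described in relative units."""
    name: str
    throughput: float  # e.g. normalized inference requests per second
    cost: float        # normalized total cost


def cheapest_good_enough(options: Sequence[HardwareOption],
                         required_throughput: float) -> Optional[HardwareOption]:
    """Return the lowest-cost option that meets the throughput requirement,
    or None if no option qualifies."""
    qualifying = [o for o in options if o.throughput >= required_throughput]
    return min(qualifying, key=lambda o: o.cost, default=None)


# Hypothetical placeholders, not vendor figures.
options = [
    HardwareOption("premium_option", throughput=100.0, cost=100.0),
    HardwareOption("value_option", throughput=85.0, cost=70.0),
]

for requirement in (80.0, 95.0, 120.0):
    choice = cheapest_good_enough(options, requirement)
    print(requirement, choice.name if choice else "no option qualifies")
```

Under this rule the cheaper option wins whenever it clears the bar, and the premium option wins only when the requirement exceeds what the cheaper part delivers. A fuller evaluation would also fold software porting effort and supply terms into the cost side.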

Looking at the Numbers: Performance and Efficiency Metrics

CPU Performance Benchmarks

While the keynote focused on announcements rather than exhaustive benchmarking, industry expectations based on architectural improvements suggest:

Single-threaded Performance: ~15-18% improvement for Ryzen 7 9850X3D versus prior generation

Multi-threaded Performance: ~12-15% improvement, accounting for core count and clock speed changes

Power Efficiency: ~20-25% improvement in performance-per-watt, driven by Zen 5 architecture optimizations

Gaming Performance: 20-30% improvement in minimum framerates and average FPS due to X3D cache and architectural improvements

These numbers assume typical workloads and real-world scenarios. Specialized benchmarks optimized for specific features (like AVX-512 support or particular memory access patterns) may show different results. This is why independent reviews from reputable sources are essential before making upgrade decisions.
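When reading independent reviews, the two gaming metrics quoted above (average FPS and minimum framerates, often reported as "1% lows") are typically derived from per-frame render times. The sketch below assumes frame times in milliseconds and uses one common 1%-low convention (the FPS implied by the slowest 1% of frames); review outlets define this metric in slightly different ways, and the sample logs are made up for illustration.

```python
def average_fps(frametimes_ms):
    """Frames rendered divided by elapsed seconds."""
    return len(frametimes_ms) / (sum(frametimes_ms) / 1000.0)


def one_percent_low_fps(frametimes_ms):
    """FPS implied by the mean frame time of the slowest 1% of frames
    (at least one frame). One common convention; outlets differ."""
    worst_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    slowest = worst_first[:count]
    return 1000.0 / (sum(slowest) / count)


def uplift_percent(old_value, new_value):
    """Percentage improvement of new_value over old_value."""
    return (new_value / old_value - 1.0) * 100.0


# Hypothetical frame-time logs (ms) for two CPUs on the same game scene.
old_cpu = [10.0] * 99 + [25.0]  # mostly 100 FPS with one long stutter
new_cpu = [9.0] * 99 + [15.0]

for label, metric in (("average FPS", average_fps),
                      ("1% low FPS", one_percent_low_fps)):
    before, after = metric(old_cpu), metric(new_cpu)
    print(f"{label}: {before:.1f} -> {after:.1f} "
          f"({uplift_percent(before, after):+.1f}%)")
```

With these made-up logs the 1% low improves far more than the average, which illustrates why reviews report the two metrics separately.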

GPU and Accelerator Metrics

For AMD's RDNA graphics and AI infrastructure:

Gaming GPU Performance: RDNA integration in Ryzen 9000G suggests gaming capability equivalent to discrete GPUs from 2-3 years prior—adequate for 1080p/high settings and competitive for 1440p/medium settings.

AI Inference Performance: AMD's CDNA accelerators achieve roughly 80-90% of NVIDIA H100 performance on typical AI workloads, with a cost advantage of 25-40%.

Power Consumption: RDNA graphics efficiency matches or slightly exceeds NVIDIA's consumer offerings. CDNA accelerators consume roughly similar power to NVIDIA equivalents, though optimization is ongoing.

Memory Bandwidth: Adequate for most workloads, though specialized models requiring extreme memory bandwidth may favor NVIDIA's configurations.

Again, these are approximations based on architectural analysis and limited benchmark data. Real-world performance varies based on specific workloads, optimization levels, and hardware configurations.
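The inference figures above combine two ratios, so it helps to fold them into a single performance-per-dollar number. The sketch below simply plugs in the estimated ranges quoted above (80-90% of H100 performance at a 25-40% lower cost). It treats hardware price as the only cost and ignores software porting, power, and operations, so it illustrates the arithmetic rather than serving as a total-cost-of-ownership model; the function name is hypothetical.

```python
def relative_perf_per_dollar(perf_ratio, cost_discount):
    """Performance per dollar of option B relative to option A.

    perf_ratio:    B's performance as a fraction of A's (0.85 means 85%).
    cost_discount: fraction by which B is cheaper than A (0.30 means 30% cheaper).
    """
    if perf_ratio <= 0:
        raise ValueError("perf_ratio must be positive")
    if not 0.0 <= cost_discount < 1.0:
        raise ValueError("cost_discount must be in [0, 1)")
    return perf_ratio / (1.0 - cost_discount)


# Corners of the estimated ranges quoted above.
for perf in (0.80, 0.90):
    for discount in (0.25, 0.40):
        value = relative_perf_per_dollar(perf, discount)
        print(f"{perf:.0%} perf, {discount:.0%} cheaper -> "
              f"{value:.2f}x perf per dollar")
```

Across those corners the estimate works out to roughly 1.07x to 1.5x the performance per dollar, which is how a performance deficit can still favor the cheaper part for inference. Any ROCm porting effort would shrink that margin.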

The Analyst Perspective: What Industry Experts Say

Growth Projections

Industry analysts tracking AMD's announcements and market position suggest several key takeaways:

Market Share Trends: AMD's data center market share in general-purpose processors continues to gain against Intel, potentially reaching 30-35% by end of 2026 (from roughly 25% in early 2026). NVIDIA dominates AI accelerators, but AMD is capturing 10-15% of this market, growing rapidly.

Revenue Implications: AMD's full-year 2026 revenue is projected to grow 15-25% year-over-year, with gross margins expanding due to higher-value product mix and improved manufacturing efficiency.

Stock Performance: Analyst ratings on AMD range from "hold" to "buy," with price targets suggesting 10-30% upside from early 2026 levels. The range reflects uncertainty about execution and competitive dynamics.

Long-term Positioning: Most analysts expect AMD to maintain its number-two position in computing processors globally, challenging Intel for relevance in specific markets without displacing NVIDIA's AI accelerator dominance. This positioning is sufficient for a profitable, sustainable business.

What Skeptics Point Out

Not all analysis is bullish. Skeptics raise legitimate concerns:

Software Ecosystem Lag: AMD's ROCm framework, while improving, remains inferior to NVIDIA's CUDA. This creates switching costs and inertia favoring NVIDIA.

AI Infrastructure Uncertainty: While AMD is gaining share, absolute market size is growing so fast that even large share gains leave AMD as a distant second to NVIDIA in absolute revenue and profit.

Manufacturing Risk: Dependence on TSMC and Samsung means AMD's roadmap is partially determined by foundry partners' process node availability and yield curves. If TSMC or Samsung experiences problems, AMD suffers alongside competitors.

Competition Intensity: Intel, despite challenges, remains dangerous. If Intel's manufacturing improves and new Xeon designs prove competitive, Intel could recapture share from AMD. Similarly, ARM-based processors in data centers (from Amazon and others) pose longer-term threats.

Consumer Market Maturity: The PC market is mature with limited growth. AMD's consumer processor success doesn't translate to explosive revenue growth, just market share within a stable overall market.

These concerns don't invalidate AMD's strategy but highlight risks and limitations. The company's success depends on flawless execution, sustained investment, and favorable competitive dynamics—none guaranteed.

Future Trends: 2026 and Beyond

Emerging Technologies

Beyond immediate product announcements, several longer-term trends will shape semiconductor competition:

3D Stacking: AMD's X3D technology is a form of 3D integration, stacking a silicon cache die vertically with the compute die rather than placing everything side by side. This trend will accelerate, with both AMD and competitors adopting more aggressive 3D designs. The technology allows higher density and lower latency, directly enabling performance improvements.

Chiplets and Modular Design: Rather than monolithic processors, AMD (and increasingly others) designs processors as collections of smaller chips connected with high-speed interfaces. This approach allows mixing process technologies (AI accelerators on new process, standard compute on more mature process) and greater flexibility. Expect this trend to continue and accelerate.

Custom Silicon: AI's prevalence is driving enormous customer interest in custom processors optimized for specific workloads. Amazon, Google, and others build their own chips rather than relying on off-the-shelf components. AMD benefits by providing tools and support for custom silicon development.

Heterogeneous Compute: Processors mixing different types of cores (standard compute cores, vector cores, AI-specialized cores) become increasingly common. This allows processing diverse workloads on a single chip without specialized external accelerators.

Market Evolution

The processor market is evolving from "buy the fastest thing available" toward "buy the best tool for the specific job." This shift favors AMD's diversified approach over NVIDIA's accelerator focus and Intel's traditional dominance. Companies choosing hardware become architects assembling optimal solutions rather than standardizing on single suppliers.

This trend is somewhat speculative, but early evidence supports it. The emergence of multiple competitive accelerator options (AMD's CDNA, Google's TPU, Amazon's Trainium/Inferentia, etc.) alongside NVIDIA's offerings demonstrates the market is supporting multiple players. AMD's ability to participate across these segments—providing general-purpose processors, integrated AI capabilities, and discrete accelerators—positions the company well for this evolution.

FAQ

What is AMD's strategy at CES 2026?

AMD's strategy centers on three pillars: proving competitive AI infrastructure capabilities across cloud, enterprise, and edge environments; delivering next-generation consumer processors (Ryzen 7 9850X3D and Ryzen 9000G series) with significant performance and efficiency improvements; and positioning x86-based, cost-effective alternatives to NVIDIA's premium-priced accelerators. CEO Lisa Su's keynote demonstrated AMD's evolution from a processor company emphasizing raw performance to a diversified computing platform company offering solutions for AI-dominant markets.

How does the Ryzen 7 9850X3D improve gaming performance?

The Ryzen 7 9850X3D improves gaming performance through two mechanisms. First, AMD's 3D V-Cache technology stacks an additional 64MB of L3 cache onto the processor's core complex (96MB of L3 in total), so more frequently accessed data stays on-chip instead of requiring slower trips to main memory. Second, the Zen 5 architecture shared with the rest of the Ryzen 9000 family does more useful work per clock cycle. Combined, these improvements translate to 15-20% better gaming framerates in most titles, with even larger improvements in minimum framerates (the difference between smooth and stuttering gameplay).
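Why extra cache lifts framerates can be made concrete with the textbook average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The cycle counts and miss rates below are hypothetical round numbers chosen for illustration, not measured Ryzen figures. The slightly higher hit time for the stacked case models the assumption that a larger cache may take a few more cycles to access.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time: hit_time + miss_rate * miss_penalty.

    All times share one unit (here, CPU cycles); miss_rate is a fraction.
    """
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


DRAM_PENALTY = 400  # hypothetical cycles to fetch from main memory

# Hypothetical: the larger stacked L3 is a bit slower on a hit but misses less.
standard_l3 = amat(hit_time=50, miss_rate=0.40, miss_penalty=DRAM_PENALTY)
stacked_l3 = amat(hit_time=54, miss_rate=0.25, miss_penalty=DRAM_PENALTY)
print(f"standard L3: {standard_l3:.0f} cycles, stacked L3: {stacked_l3:.0f} cycles")
```

In this toy model the larger cache is slower on a hit yet faster on average, because cutting misses avoids far more of the expensive trips to DRAM. That is consistent with cache-sensitive games gaining the most from X3D parts.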

What makes FSR Redstone competitive with NVIDIA's DLSS 4?

FSR Redstone addresses AMD's historical disadvantage versus NVIDIA's DLSS by redesigning the upscaling algorithm around more advanced AI techniques optimized for AMD GPU architectures. If it brings output image quality up to or beyond DLSS while keeping processing overhead low, AMD increases developer incentive to support FSR alongside or instead of DLSS. Developer adoption remains the critical factor: if Redstone quality matches DLSS without a significant performance penalty, FSR's open-source nature and broader GPU compatibility become compelling.

Why does AMD's x86 compatibility matter for enterprise customers?

Enterprise customers have decades of x86 software investment—databases, business applications, security software, and countless custom systems all built for x86 architecture. AMD's x86 compatibility means enterprises can upgrade from Intel to AMD with minimal software changes, recompilation, or testing. This compatibility reduces switching costs and risk, making AMD a viable alternative when cost savings or supply concerns motivate change. In contrast, specialized architectures (like NVIDIA's GPUs) require explicit software support, limiting when they can be deployed.

What are the performance-per-watt improvements in Ryzen processors?

Ryzen 9000G series processors (built on the Zen 5 architecture) are expected to deliver a 20-25% improvement in performance-per-watt compared to prior generations. This means a chip can do 20-25% more work at the same power draw, or the same work at roughly 17-20% less power. That is a significant advantage for battery-constrained devices (notebooks) and for data centers, where power consumption directly translates to electricity costs and cooling requirements. These gains come from architectural efficiency improvements and better power gating (shutting down unused portions of the processor).
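A performance-per-watt gain can be spent in two ways, and the two readings are not symmetric: a 25% gain yields 25% more work at the same power, but only a 20% power cut at the same work, because power falls to 1/1.25 of the original. The sketch below computes both readings. It is idealized: it assumes the efficiency ratio holds at both operating points, which real chips only approximate because voltage and frequency do not scale linearly. The function name is hypothetical.

```python
def perf_per_watt_tradeoffs(gain):
    """Split a fractional perf-per-watt gain into its two idealized readings.

    gain: improvement as a fraction (0.25 means 25% better perf per watt).
    Returns (extra_performance_at_same_power, power_saving_at_same_performance).
    """
    if gain <= -1.0:
        raise ValueError("gain must be greater than -1")
    extra_performance = gain                 # same watts, (1 + gain)x the work
    power_saving = 1.0 - 1.0 / (1.0 + gain)  # same work, 1 / (1 + gain) the watts
    return extra_performance, power_saving


for gain in (0.20, 0.25):
    perf, saving = perf_per_watt_tradeoffs(gain)
    print(f"{gain:.0%} better perf/W: +{perf:.0%} performance "
          f"or -{saving:.1%} power")
```

Applied to the 20-25% estimate, this gives roughly 17-20% lower power (and energy) for a fixed workload, rather than a matching 20-25% cut.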

How does AMD's partnership with OpenAI influence its competitive position?

OpenAI's commitment to purchase billions of dollars of AMD hardware validates AMD's AI infrastructure approach, and the endorsement carries weight because OpenAI is the company driving explosive demand for compute resources and influencing industry standards. The partnership demonstrates that AMD's technology achieves the required performance at competitive cost at massive scale. For other enterprises evaluating AI infrastructure, OpenAI's endorsement provides reassurance that AMD is a credible alternative to NVIDIA. Additionally, the partnership creates switching costs for OpenAI: if AMD's infrastructure performs adequately, switching away requires renegotiating contracts, possibly reoptimizing workloads, and re-establishing reliability. This stickiness benefits AMD.

What is the difference between CDNA and RDNA architectures?

CDNA is AMD's architecture specifically optimized for data center AI acceleration and scientific computing. It emphasizes matrix operations (core to AI workloads) and high memory bandwidth. RDNA is AMD's consumer and gaming-focused GPU architecture, optimizing for gaming performance and graphics workloads. AMD's strategy is to deploy RDNA in consumer processors (Ryzen) for integrated graphics and gaming capability, while deploying CDNA in specialized data center accelerators. This segmentation allows each architecture to be optimized for its primary use case rather than compromising on a generalist design.

How does manufacturing at TSMC provide competitive advantage?

TSMC is the world's leading semiconductor foundry, offering the most advanced process technologies in volume production (currently 3nm, moving to 2nm). Partnership with TSMC gives AMD access to these technologies for processor manufacturing, achieving higher density and better power efficiency than competitors using older process nodes. Additionally, TSMC's proven manufacturing at scale, high yields, and global fab network provide security and reliability. The drawback is that NVIDIA also uses TSMC, so AMD gains no exclusive advantage; both benefit equally from TSMC's leadership. AMD's advantage comes from its secondary Samsung partnership, which provides supply flexibility NVIDIA doesn't emphasize.

What are realistic expectations for Ryzen 9000G gaming performance?

Ryzen 9000G integrated graphics are expected to deliver gaming performance roughly equivalent to discrete GPUs from 2-3 years prior: comfortable 1080p gaming at high settings, playable 1440p gaming at medium settings, and 4K gaming at low settings in some titles. This is genuinely impressive, given that integrated graphics have historically lagged far behind discrete options. However, performance still trails discrete GPUs like NVIDIA's RTX 50-series or AMD's dedicated Radeon cards. The advantage is cost and simplicity: buying a processor with integrated graphics eliminates a separate GPU purchase, potentially saving $200-500 and reducing system complexity. The tradeoff is lower performance in graphics-intensive workloads.

Key Takeaways

- AMD's AI ambitions are serious: The company is investing meaningfully in competing with NVIDIA's AI infrastructure dominance, with the OpenAI partnership validating the approach at scale.

- Ryzen is still evolving: The X3D and 9000G announcements demonstrate AMD's commitment to consumer processor performance, addressing both gaming and productivity scenarios.

- Cost and flexibility drive adoption: In an increasingly specialized semiconductor landscape, AMD's emphasis on compatible, cost-effective alternatives to premium-priced incumbents (NVIDIA, Intel) resonates with cost-conscious enterprises.

- Software ecosystem remains challenged: Despite improvements, AMD's ROCm framework lags NVIDIA's CUDA, limiting developer adoption and creating switching inertia favoring NVIDIA.

- Market segmentation is healthy: Rather than winner-take-all dynamics, the semiconductor market is evolving to support multiple players with different specializations. AMD's diversified approach fits this evolution better than single-focus competitors.

- 2026 is a transitional year: These announcements set positioning for the next several years. Success executing on announced roadmaps (actual product releases, sustained software improvements, market share gains) will determine whether AMD's strategy succeeds.

- Competitive dynamics remain intense: NVIDIA's dominance and Intel's potential recovery mean AMD must execute flawlessly. There's no room for complacency or missteps in such competitive markets.
