Introduction: The Gen AI Paradox That's Quietly Destroying Data Governance

Your employees are using artificial intelligence tools right now. You probably don't know which ones, how often, or what data they're sharing.

This isn't paranoia. It's the reality facing virtually every organization in 2025. According to recent industry data, the average company is reporting over 200 Gen AI-related data policy violations each month. That's not a small number. That's a five-alarm fire dressed up in productivity software.

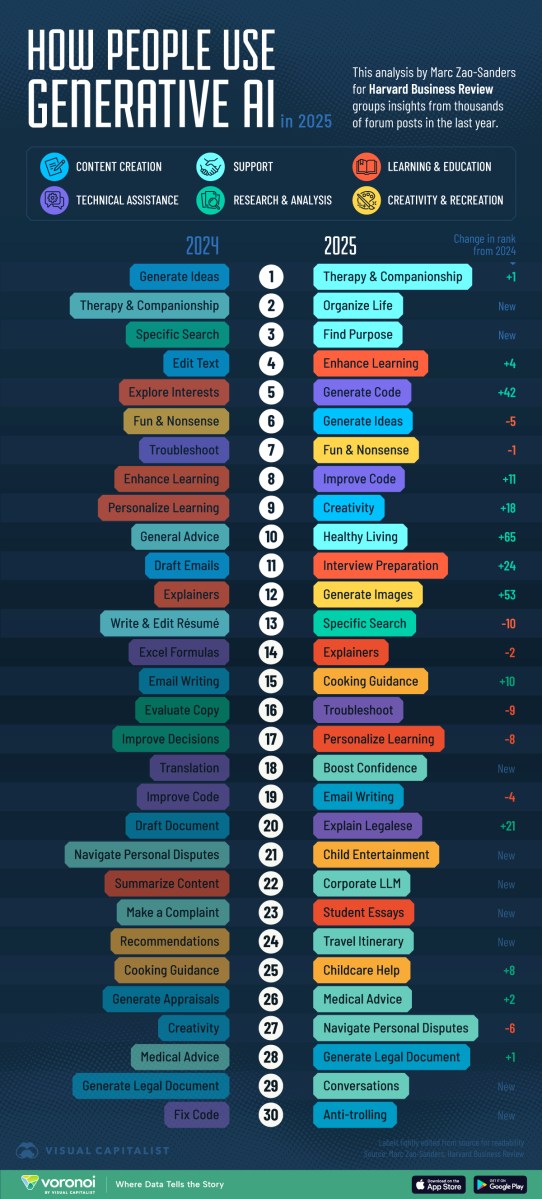

Here's what makes this particularly maddening: Gen AI tools are genuinely useful. ChatGPT, Claude, and Gemini save time. They help teams move faster. They unlock real value. But they've also become a backdoor for one of the oldest security problems in the book: employees doing dumb things for smart reasons.

When someone copies sensitive client data into ChatGPT to get help drafting a proposal, they're not being malicious. They're being efficient. They're solving a problem in the fastest way available. The fact that they just sent proprietary information to a third-party AI service? That thought doesn't cross their mind until it's too late.

This phenomenon has a name: Shadow AI. And it's become the most dangerous security vulnerability most organizations haven't taken seriously yet.

What makes Shadow AI different from other shadow IT problems is scale and invisibility. A few years ago, when employees used unsanctioned cloud apps, IT could eventually audit log entries and discover the breach. Gen AI is different. Conversations happen inside browser tabs. Data flows into black-box language models. Interactions leave minimal trails. By the time you know something leaked, it's already been processed by algorithms you don't control, stored in systems you can't access, and potentially seen by competitors or bad actors who know how to extract valuable information from AI model outputs.

In this guide, we'll break down exactly what's happening with Gen AI data violations, why organizations are struggling to contain it, and what actually works to protect your company. This isn't theoretical. This is based on what's happening in real enterprises right now, where data governance is collapsing under the weight of tools employees refuse to stop using.

TL;DR

- Gen AI usage has exploded: SaaS usage tripled and prompt volumes jumped sixfold in just 12 months, with top organizations sending over 1.4 million prompts monthly

- Shadow AI is rampant: Nearly half (47%) of Gen AI users rely on unsanctioned personal AI apps, leaving organizations with no visibility into what data is being shared

- Data exposures are doubling: Incidents of sensitive data being sent to AI apps have doubled year-over-year, with organizations averaging 223 violations per month

- Insider threats are real: 60% of insider threat incidents involve personal cloud app instances, with regulated data and credentials frequently exposed

- Compliance is collapsing: Organizations are struggling to maintain data governance as sensitive information flows freely into unapproved AI ecosystems

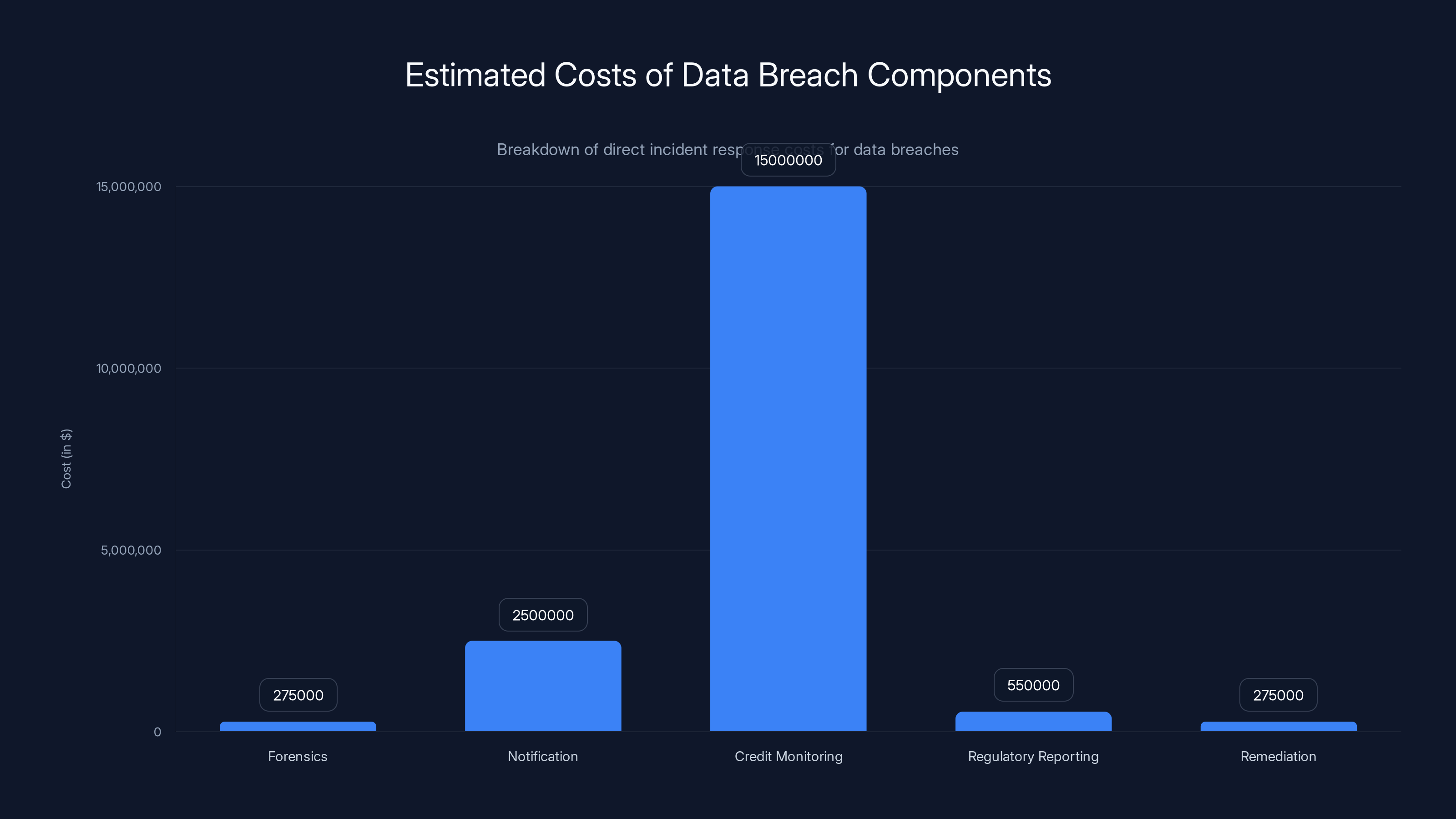

Estimated data: notification costs can be the largest expense, especially for large customer bases, followed by credit monitoring services.

The Scale of the Problem: Numbers That Should Terrify Your Security Team

Let's start with data, because numbers tell the story better than warnings ever could.

Gen AI adoption in the workplace has moved from "interesting experiment" to "unavoidable reality" in roughly 18 months. In 2024, the volume of Gen AI SaaS usage among businesses increased threefold. That alone is significant. But the way people are using these tools tells an even more alarming story.

Prompt volume has increased sixfold in the past 12 months. Let that sink in. Organizations went from averaging 3,000 prompts per month to generating more than 18,000 prompts per month. That's not people experimenting with a new tool. That's the tool becoming embedded into daily workflows.

And this usage is heavily concentrated at the top end. The top 25% of organizations are sending more than 70,000 prompts per month. The top 1%, the largest enterprises, are sending more than 1.4 million prompts per month. That's roughly 46,000 prompts per day in some organizations. Every single one of those interactions represents a potential data exposure vector.

But here's where it gets worse: nearly half of Gen AI users are using unauthorized personal AI applications. We're talking about 47% of the AI users in your workforce leveraging tools they found, signed up for independently, and use without any organizational oversight. ChatGPT's free tier. Claude's free version. Gemini. Janitor AI. Whatever tool they discovered that makes their job easier.

These aren't sanctioned deployments. There's no enterprise agreement. There's no data processing agreement (DPA). There's no security audit. Organizations have zero visibility into what data flows through these applications, zero control over how the data is processed, and zero ability to prevent misuse.

The result? Incidents where users are sending sensitive data to AI apps have doubled in the past year. The average organization is now seeing 223 such incidents per month. That's about 7.4 data exposures per day.

What Counts as a Data Violation?

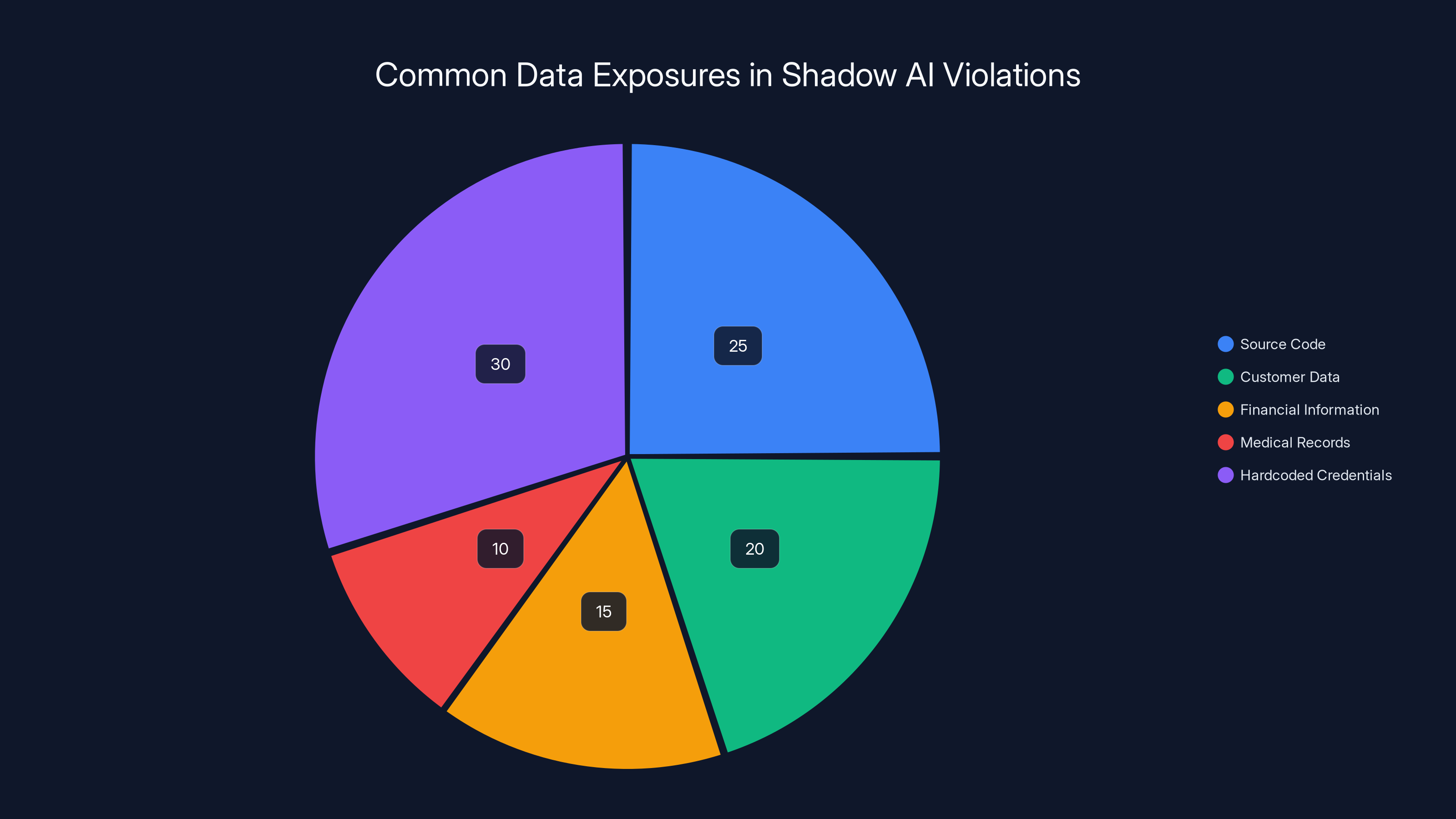

When we talk about policy violations, we're not talking about minor infractions. These are exposures of genuinely sensitive information.

The violations include:

- Regulated data: Payment card information, health records, personally identifiable information (PII) that triggers compliance obligations

- Intellectual property: Product roadmaps, architectural designs, proprietary algorithms, business strategies

- Source code: Application code, infrastructure templates, credentials embedded in code repositories

- Authentication credentials: API keys, database passwords, OAuth tokens that grant access to critical systems

- Financial information: Revenue figures, cost structures, merger and acquisition details

- Customer data: Lists of clients, contract terms, communication records

These aren't theoretical risks. In real organizations, employees are copying and pasting:

- Database queries containing customer records to get help debugging code

- Internal documentation mentioning product timelines to ask for help writing marketing copy

- Source code snippets containing hardcoded credentials to troubleshoot issues

- Contract templates with client names to draft new agreements faster

Each interaction feels like a minor productivity hack. Collectively, they're creating an unprecedented data exposure surface.

The total annual cost of Shadow AI violations for a 1,000-person company is estimated at $3,044,000, with breach costs being the most significant contributor.

Shadow AI: The Visibility Problem Nobody's Solving

There's a critical difference between "unauthorized software" and "shadow AI." You can scan your network and find unauthorized apps. You can audit login attempts. You can track software installations.

Shadow AI is fundamentally different because it's invisible to traditional security infrastructure.

When an employee opens a browser tab and logs into ChatGPT, they're using a personal account. From your network perspective, they're accessing OpenAI's legitimate website. Your firewall sees this as normal web traffic. Your proxy logs show an HTTPS connection to a well-known domain. Your DLP tools might have rules for common SaaS platforms, but personal AI app usage sits in a gray zone: it's legitimate software being used for unauthorized purposes.

The interactions themselves are opaque. Unlike Slack messages, which you might log and audit, or emails, which leave permanent records, conversations with generative AI models are effectively ephemeral from your organization's point of view. They live in the user's browser cache, in the AI company's systems, and nowhere in your organization's infrastructure. You get no transcript. You get no audit log. You get no visibility into whether someone just shared your customer list or your source code.

The Economics of Why People Choose Shadow AI

Understanding why Shadow AI is so pervasive requires understanding employee incentives.

A developer faces a choice:

- Fill out a request form for an enterprise-approved AI tool, wait for IT security to evaluate it (three weeks), wait for procurement to negotiate terms (two weeks), attend security training, then use it

- Open a browser tab and solve the problem in three minutes

Option two isn't laziness. It's rational behavior. The employee values their time. They have a deadline. Using the personal tool doesn't feel reckless; it feels necessary.

Your organization likely responds by blocking ChatGPT, Claude, and other AI services at the network level. But employees work around this by using VPNs, tethering to their phones, or accessing the tools on personal devices. The restriction creates an arms race where employees have all the incentive to find workarounds and IT has all the burden of enforcement.

The Insider Threat Multiplier: How Personal AI Apps Became Your Biggest Risk

Most organizations think about insider threats in traditional terms: a disgruntled employee selling trade secrets, a contractor accessing more than they should, someone transferring data to a USB drive.

Personal AI apps have fundamentally changed the insider threat calculus.

60% of insider threat incidents now involve personal cloud app instances. That statistic alone should reframe how your security team thinks about risk. The majority of insider threats aren't malicious anymore. They're accidental. They're an employee using a personal tool to solve a work problem and inadvertently exposing sensitive data.

Here's why this is a nightmare from a detection and prevention perspective:

Traditional insider threat detection looks for unusual data movement patterns. Large file transfers to external devices. Bulk exports from databases. Unusual access times or source IP addresses. But when an employee shares data with a personal AI app, the technical signature looks benign:

- It's a small amount of data (text in a browser form field)

- It's going to a legitimate destination (a major cloud company's API endpoint)

- It's happening during business hours from a work device

- It's following normal web traffic patterns

Your insider threat detection tools don't flag it as risky. It just looks like normal work.

The Credential Exposure Problem

One specific type of insider threat has become exceptionally common: credential exposure through personal AI apps.

When someone asks an AI tool for help debugging code, they often paste the actual code they're running. That code frequently contains hardcoded credentials, environment variables, or API keys. The person posting doesn't think about this. They're focused on the technical problem. But now their database password, AWS access key, or authentication token sits on a third party's servers. Depending on the provider's data-use settings, it may be retained, used for model training, or exposed to anyone who later gains access to those systems.
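Connection strings show why pasted code is so risky: the password usually sits inline in the URL. The sketch below masks that password with Python's standard `urllib.parse` before a snippet is shared. It assumes a URL-style connection string, and the host, user, and password in the example are made up. It illustrates what leaks; it is not a complete secret-handling strategy.

```python
from urllib.parse import urlsplit, urlunsplit


def redact_connection_string(url: str) -> str:
    """Mask the password in a URL-style connection string before it is shared."""
    parts = urlsplit(url)
    if parts.password is None:
        return url  # no inline password to mask
    # Keep host and port exactly as written (everything after the last "@").
    _, _, host_port = parts.netloc.rpartition("@")
    masked_netloc = f"{parts.username or ''}:***@{host_port}"
    return urlunsplit(parts._replace(netloc=masked_netloc))


print(redact_connection_string("postgresql://app_user:Sup3rSecret@db.internal:5432/customers"))
# prints postgresql://app_user:***@db.internal:5432/customers
```

Masking only removes the secret from one copy of the text. Any credential that has already been pasted into a personal AI tool should still be rotated.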

In one documented case, a developer at a financial services company pasted database connection strings into ChatGPT while debugging a query. The connection string included the password. Within 72 hours, someone had used that password to access the database and extract customer financial records. The attacker didn't exploit a vulnerability. They just read a prompt that had been stored somewhere in OpenAI's systems.

This is now one of the top ways enterprises are discovering they've been breached: an employee reports unusual database access, investigators pull the logs, trace the origin, and discover the credential was shared in a personal AI chat months earlier.

Source code and hardcoded credentials are the most frequently exposed data types in Shadow AI violations, accounting for over half of the incidents. (Estimated data)

Why Traditional Security Controls Are Failing

Your organization probably already has security policies in place. You've got an acceptable use policy. You've got data classification standards. You might have DLP tools deployed. You probably have security training programs.

None of this is working very well against Shadow AI. Here's why.

The Awareness Problem

Security training teaches employees not to share sensitive data. But most employees genuinely don't believe they're sharing sensitive data when they use AI tools.

They think: "I'm asking a computer for help. It's not like I'm emailing someone or posting to the internet." The mental model they have is flawed but understandable. They don't conceptualize large language models as data repositories. They think of them as private tools.

When someone says "don't share sensitive data," they interpret that as "don't share data with people." Gen AI tools don't feel like sharing with a person. They feel like thinking out loud into a private notebook.

The Policy Problem

Most acceptable use policies were written before generative AI existed at scale. They prohibit unauthorized SaaS applications but don't specifically address AI model interactions. When you try to enforce the policy, employees argue the tool is necessary for their job, it's not creating actual risk (because they don't understand how model training works), and blocking it is slowing down their productivity.

You end up in a situation where the policy exists but lacks enforcement mechanisms that actually work. You can't block all web traffic. You can't force everyone to use approved tools when approved tools don't exist yet or don't meet all use cases. You're trying to gate a resource that's become essential to how people work.

The Technical Detection Problem

Building DLP rules for AI platforms is technically challenging. You need to:

- Identify all the AI platforms employees might use (there are hundreds)

- Identify all the domains, API endpoints, and entry points

- Build DLP rules that detect when sensitive data is being sent to those endpoints

- Handle the false positives (legitimate business use of AI tools)

- Keep the rules current as new tools launch and old ones evolve

Most organizations attempt this with a blocklist approach: "Block traffic to ChatGPT, Claude, Gemini." But this is playing whack-a-mole. A new AI tool launches every week. Employees find workarounds. You block one endpoint and they use a different one.
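A minimal sketch of the blocklist approach makes the whack-a-mole problem concrete. The domain set is a small illustrative sample, not a recommended or complete list. `new-ai-tool.example` is a hypothetical stand-in for any service launched after the list was written.

```python
# Illustrative sample only; a real blocklist is longer and still goes stale.
BLOCKED_AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def is_blocked(hostname: str) -> bool:
    """True if hostname is a listed domain or one of its subdomains."""
    host = hostname.lower().rstrip(".")  # normalize case and a trailing root dot
    return any(host == domain or host.endswith("." + domain) for domain in BLOCKED_AI_DOMAINS)


for host in ["chatgpt.com", "www.claude.ai", "notclaude.ai", "new-ai-tool.example"]:
    print(host, "->", "blocked" if is_blocked(host) else "allowed")
```

The suffix check avoids false matches such as `notclaude.ai`. However, nothing in this logic can know about a domain that isn't on the list yet. That is the maintenance burden the blocklist approach can never escape.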

The Compliance Apocalypse: Regulatory Frameworks Collapsing Under Gen AI

If Shadow AI were purely a productivity problem, it would be annoying. But it becomes existential when you factor in compliance obligations.

Imagine you're in a regulated industry: financial services, healthcare, e-commerce handling credit cards. You have explicit obligations under frameworks like GDPR, HIPAA, PCI-DSS, or regional data protection laws. These frameworks require you to:

- Know where sensitive data is stored and processed

- Control access to sensitive data

- Ensure data doesn't flow to unauthorized third parties

- Audit data handling practices

- Maintain contractual agreements with any entity that touches sensitive data

When an employee shares customer data with ChatGPT via a personal account, you've violated every single one of these obligations:

- You don't know the data is stored in OpenAI's systems

- You can't control how OpenAI processes it

- You haven't explicitly authorized the data transfer

- You can't audit what happened to the data

- You don't have a data processing agreement in place

The technical term for this is a "compliance violation." The practical term is a "regulatory nightmare."

GDPR and Data Processing Agreements

Under GDPR, any organization processing EU personal data must have a Data Processing Agreement (DPA) with any processor handling that data. OpenAI has published a DPA for enterprise customers. But does that apply to someone using the free tier of ChatGPT?

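Legally, it's murky. Some interpretations suggest that by sending EU personal data to ChatGPT's free tier without an approved DPA, you're violating Article 28 of GDPR. The penalties? Breaches of processor obligations such as Article 28 can draw fines of up to €10 million or 2% of global annual turnover, whichever is higher. GDPR's top tier, which covers violations such as unlawful processing or unlawful international transfers, reaches €20 million or 4%. For a billion-dollar company, 4% is $40 million.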

This interpretation hasn't been tested in court yet. But the legal risk is real enough that compliance teams are losing sleep over Shadow AI.

HIPAA and Healthcare Data

If you're in healthcare, the restrictions are even tighter. Under HIPAA, you generally cannot send protected health information (PHI) to any third-party service unless you have a Business Associate Agreement (BAA) and that service implements required security controls.

When a healthcare worker sends patient notes to Claude to get help drafting a clinical summary, they've created a HIPAA violation. The fact that it wasn't intentional doesn't matter. The violation exists. The organization is liable.

Financial Services and PCI-DSS

If you're processing credit cards, PCI-DSS requires that payment card data stay within systems covered by its security controls (the assessed cardholder data environment). Many financial services organizations have discovered employees sharing transaction data, customer card information, or encrypted payment data with AI tools for analysis or troubleshooting. Each instance is a PCI violation.

Unlike GDPR, where fines are capped at a percentage of revenue, PCI violations can result in per-incident fines of

The volume of GenAI prompts has increased dramatically from 2022 to 2024, with the top 1% of organizations reaching 1.4 million prompts per month. Estimated data.

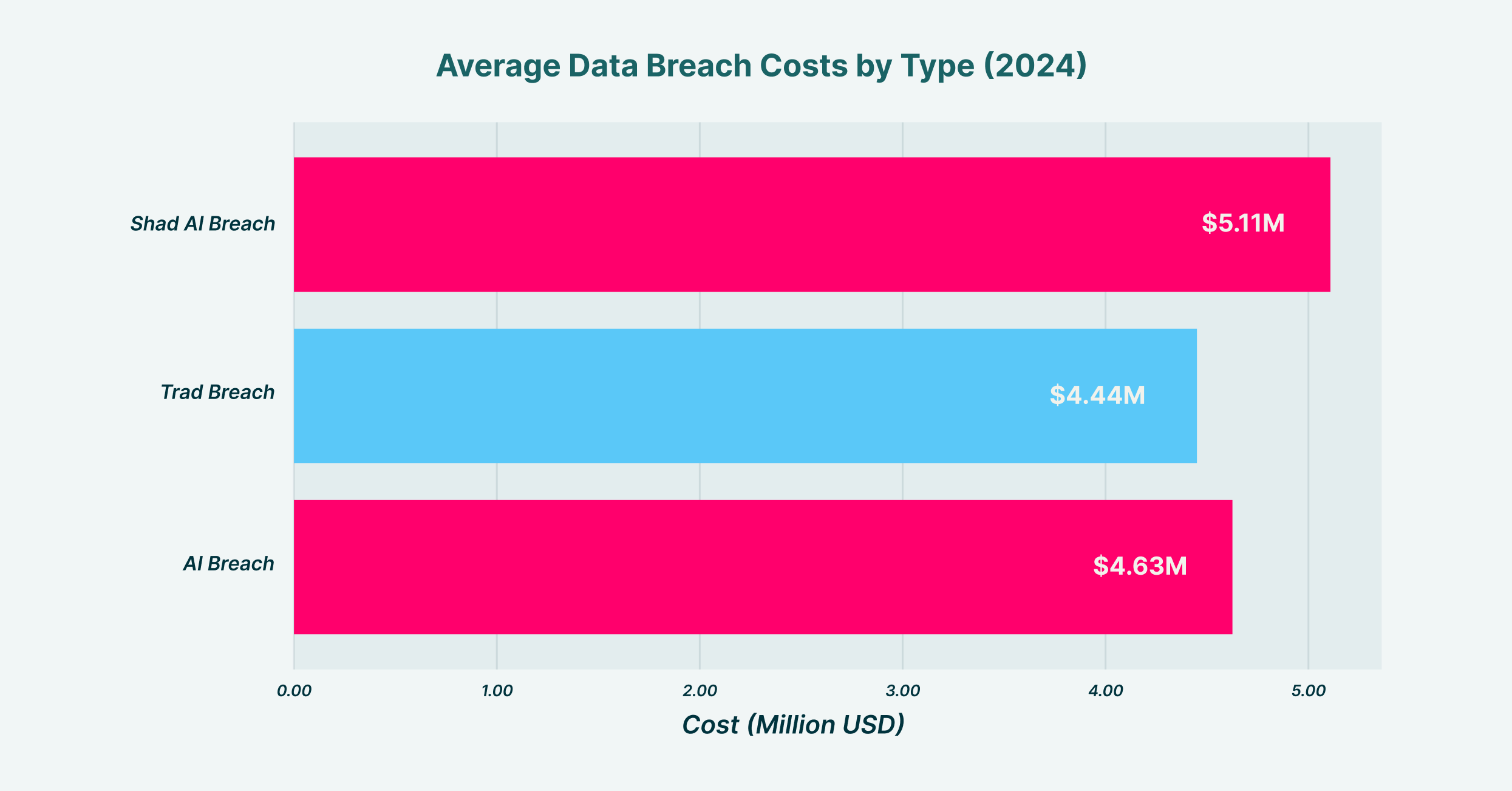

The Real-World Cost of Data Violations: What This Actually Costs Organizations

Compliance fines are theoretically frightening but feel abstract. Let's talk about concrete costs.

Direct Incident Response Costs

When your organization discovers that sensitive data has been shared with an AI platform:

- Forensics and investigation: Security team time to determine what data was exposed, when, by whom, and for how long. Budget: up to $500,000, depending on scale and complexity.

- Notification and customer communication: Legal notices to affected customers. Notification costs alone typically run up to $5 per customer notified.

- Credit monitoring services: Many breaches require offering affected customers credit monitoring. Budget: up to $50 per person.

- Regulatory reporting: Filing breach notifications with regulators, responding to inquiries. Budget: $1,000,000+ at the high end, depending on regulatory jurisdiction.

- Remediation: Changing passwords, rotating credentials, implementing additional controls. Budget: up to $500,000.

For a company with 500,000 customers affected, notification costs alone could hit

Regulatory and Legal Costs

If the violation triggers regulatory enforcement:

- Regulatory fines: GDPR (up to 4% of global revenue), HIPAA (up to $50,000 per violation), PCI-DSS (varies by card issuer)

- Legal fees: Responding to regulatory inquiries, litigation if class action suits are filed, settlement negotiations

- Consent decrees and audit costs: Many regulatory settlements include mandatory audits and compliance monitoring

For a billion-dollar company with a significant GDPR violation, total regulatory costs could exceed $100 million.

Reputational and Business Costs

These are harder to quantify but often exceed direct financial costs:

- Customer churn: Customers learning their data was exposed to unauthorized AI platforms often switch providers

- Damage to brand: News coverage of data breaches impacts how customers perceive your organization

- Competitive disadvantage: Regulators sometimes require increased transparency or additional security measures that competitors don't face

- Loss of business opportunities: Some enterprise customers require compliance certifications that breaches can jeopardize

Historical data suggests that significant breaches result in 2–5% customer churn within 12 months. For a SaaS company with

Quantifying the Risk: A Mathematical Framework for Your Organization

Want to know what Shadow AI is likely costing your company? Here's a framework to estimate it.

The Violation Probability Formula

Let's plug in realistic numbers for a 1,000-person technology company:

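Monthly violations = Active users × Adoption rate × Prompts per user per month × Violation rate = 1,000 × 0.47 × 50 × 0.001 = 23.5

Breakdown: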

- 1,000 active users: Total employee base

- 0.47 adoption rate: 47% of employees using unauthorized AI tools

- 50 prompts per user per month: Realistic average usage (some use it dozens of times daily, others never)

- 0.001 violation rate: 0.1% of prompts contain sensitive data (conservative estimate; many organizations see higher rates)

For a 1,000-person company, you should expect roughly 24 data exposure incidents per month, or 288 annually. That's more than one per business day.
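The same arithmetic can be written as a small Python function so you can substitute your own headcount and rates. The parameter values are the illustrative assumptions from the breakdown above, not benchmarks.

```python
def monthly_violations(
    users: int,
    adoption_rate: float,      # share of employees using unsanctioned AI tools
    prompts_per_user: float,   # prompts per user per month
    violation_rate: float,     # share of prompts containing sensitive data
) -> float:
    """Expected number of prompts per month that expose sensitive data."""
    return users * adoption_rate * prompts_per_user * violation_rate


monthly = monthly_violations(1_000, 0.47, 50, 0.001)
annual = round(monthly) * 12  # round 23.5 to ~24 per month, as the text does

print(f"monthly ~ {monthly:.1f}, annual ~ {annual}")  # prints monthly ~ 23.5, annual ~ 288
```

Because the model is a plain product of four factors, doubling any one input doubles the estimate. This makes it easy to see which assumption matters most for your organization.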

Now, what does that cost?

The Cost-Per-Incident Formula

Assuming:

- Detection cost: $500 per incident (security team time to identify and log it)

- Breach rate: 10% of violations detected and reported become incidents requiring formal breach response

- Breach cost: $100,000 per breach (investigation, notification, remediation)

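Annual cost = (Annual incidents × Detection cost) + (Annual incidents × Breach rate × Breach cost) = (288 × $500) + (288 × 0.10 × $100,000) = $144,000 + $2,880,000 = $3,024,000

That's roughly $3 million annually for a 1,000-person company, and that's before regulatory fines.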
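A companion sketch for the cost estimate follows. The defaults mirror the assumptions listed above. Regulatory fines are deliberately left out, as in the text.

```python
def annual_shadow_ai_cost(
    annual_incidents: float,
    detection_cost: float = 500.0,     # security-team time per incident
    breach_rate: float = 0.10,         # share of incidents needing formal breach response
    breach_cost: float = 100_000.0,    # investigation, notification, remediation
) -> float:
    """Detection cost on every incident plus breach response on a fraction of them."""
    detection_total = annual_incidents * detection_cost
    breach_total = annual_incidents * breach_rate * breach_cost
    return detection_total + breach_total


print(f"${annual_shadow_ai_cost(288):,.0f}")  # prints $3,024,000
```

With 288 incidents per year, the detection term is $144,000 and the breach term is $2,880,000. The breach term dominates, so the breach rate and per-breach cost are the assumptions most worth validating against your own incident history.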

Estimated data shows high risk of compliance violations due to Shadow AI, with GDPR and HIPAA being the most vulnerable frameworks.

The Insider Threat Reality: When Your Employees Become Your Biggest Security Risk

Here's a statistic that should change how your organization thinks about security: 60% of insider threat incidents now involve personal cloud app instances.

That's not a typo. That's not a projection. That's the measured reality across organizations today.

Traditional insider threat programs assume the insider is acting maliciously. But modern insider threats are often not about malice; they're about negligence.

The Accidental Insider Threat Pattern

The typical scenario:

- Employee faces a legitimate work problem: Needs to debug code, draft a document, analyze data

- Employee chooses a personal AI tool: It's faster than the approved alternative, or no approved alternative exists

- Employee shares sensitive data: Often without explicitly thinking about it as "sharing"

- Data is exposed: It now sits in the AI company's systems, where it may be reachable by competitors doing reconnaissance or bad actors with access to model outputs

- Organization detects it months later: If at all

- Incident response kicks in: Investigation, notification, remediation

The employee wasn't trying to damage the organization. They were trying to do their job. But the result is indistinguishable from a security breach.

Credential Exposure at Scale

The most dangerous type of insider threat involving personal AI tools is credential exposure. Developers routinely paste code into Gen AI tools for debugging. That code frequently contains:

- Database connection strings with passwords

- API keys for third-party services

- AWS access credentials

- Private encryption keys

- Slack or GitHub tokens

Each credential exposed in a Gen AI conversation is a potential entry point for attackers. A penetration tester or threat actor who can search those conversations, or model outputs that reproduce them, may find credentials that were pasted accidentally. If they do, they have what looks like legitimate access to your critical infrastructure.
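Several of the credential types listed above have recognizable formats, which is what makes automated scanning possible. The sketch below uses a handful of illustrative regular expressions:

- AWS access key IDs beginning with `AKIA` or `ASIA`
- GitHub token prefixes such as `ghp_`
- Slack tokens starting with `xox`
- PEM private-key headers
- URLs with an embedded password

It is a starting point built on assumptions, not a production secret scanner. Dedicated tools maintain much larger, tuned rule sets.

```python
import re
from typing import List

# Illustrative patterns only; production secret scanners use far larger, tuned rule sets.
CREDENTIAL_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "github_token": re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36}\b"),
    "slack_token": re.compile(r"\bxox[abprs]-[A-Za-z0-9-]{10,}"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "url_with_password": re.compile(r"\b[a-z][a-z0-9+.-]*://[^\s:/@]+:[^\s/@]+@", re.IGNORECASE),
}


def find_credentials(text: str) -> List[str]:
    """Return the names of all credential patterns found in text."""
    return [name for name, pattern in CREDENTIAL_PATTERNS.items() if pattern.search(text)]


snippet = '''
conn = "postgresql://app_user:Sup3rSecret@db.internal/customers"
aws_key = "AKIAIOSFODNN7EXAMPLE"
'''
print(find_credentials(snippet))  # ['aws_access_key_id', 'url_with_password']
```

The AWS key in the example is the placeholder value from AWS's own documentation. Pattern matching of this kind flags known formats only. Passwords with no distinctive shape, such as `DB_PASSWORD = "hunter2"`, will pass straight through.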

One major breach in 2024 started exactly this way: a developer pasted a Python script containing an AWS access key into ChatGPT. The key was later extracted from model training data by someone with access. The access key granted permissions to an S3 bucket containing customer data. By the time the organization realized the credential was compromised, millions of customer records had been accessed.

Why Detection Is Failing

Your insider threat detection tools are probably missing most of these incidents because:

- Data volume is small: A few paragraphs of code pasted into a browser form looks like normal activity, not a data exfiltration

- Destination is legitimate: OpenAI, Anthropic, and Google are trusted companies; traffic to their endpoints doesn't flag as suspicious

- Timing is normal: It's happening during business hours from a work device, exactly when you'd expect data access

- User behavior is normal: The employee isn't doing anything obviously nefarious; they're doing their job

So your detection systems see: normal user, normal timing, normal destination, normal data volume. No alerts. No incidents. But the exposure has happened.

How Organizations Are Currently Responding (And Why It's Not Working)

Most organizations have attempted to address Shadow AI through traditional security strategies. None of them are particularly effective.

Strategy 1: Blocking and Filtering (Almost Universally Failing)

The most common response: block access to known AI platforms at the network level.

This fails because:

- Too many tools exist: New AI platforms launch daily. Maintaining a comprehensive blocklist is impossible

- Workarounds are trivial: Employees use VPNs, mobile hotspots, home networks, or wait until they're off-site

- It creates friction without solving the problem: Employees don't stop using AI tools; they just use them more covertly

- It antagonizes the workforce: Blocking productivity tools that employees find valuable damages morale and trust

Organizations that attempted blocking often report that Shadow AI usage actually increased as employees found more creative workarounds.

Strategy 2: Security Training (Ineffective Against Normalized Behavior)

Despite annual security training, Shadow AI usage continues rising. Why?

Because training is fighting uphill against normalized behavior. Every day, employees see that using personal AI tools makes them more productive. Every day, they see that it doesn't result in immediate consequences. Every day, they see peers doing it without repercussions.

A 30-minute training session once per year can't compete with the daily reinforcement that Shadow AI is safe and necessary.

Moreover, training assumes the problem is awareness. In reality, many employees are acutely aware they shouldn't share sensitive data with AI tools. They just don't think the data they're sharing is actually sensitive. They're making a judgment error, not an awareness error.

Strategy 3: Acceptable Use Policies (Unenforced and Unenforceable)

Most organizations have updated their acceptable use policy to explicitly prohibit sharing data with unauthorized AI tools. This is good governance, but enforcement is practically impossible.

You can't force 5,000 employees to comply with a policy when the policy prevents them from doing their jobs efficiently and violating it is technically invisible.

What typically happens:

- Policy is published

- Employees read it and decide it doesn't apply to them ("I'm not sharing sensitive data, I'm asking for help")

- Usage continues

- Audit discovers violations

- Organization cracks down, threatens discipline

- Usage temporarily decreases

- Organizational memory fades and usage resumes

The cycle repeats annually, and Shadow AI adoption continues to climb.

Organizations find traditional strategies like blocking, training, and policies largely ineffective against Shadow AI, with ratings below 3 out of 5. Estimated data based on narrative insights.

What Actually Works: A Framework for Containing Shadow AI

After analyzing dozens of organizations with relatively successful Shadow AI containment, a pattern emerges. The companies that are actually limiting Shadow AI aren't doing it through restriction. They're doing it through alternative channels.

Principle 1: Meet Employees Where They Are

The core problem driving Shadow AI adoption is that employees need something, the approved alternative doesn't exist or is too slow, and they solve it themselves.

The solution isn't to prevent them from solving it. It's to solve it before they have to.

Organizations that have reduced Shadow AI by 40–60% typically do this by:

- Deploying approved enterprise AI tools rapidly: Not after six months of evaluation, but within weeks

- Making approved tools easier to use than personal alternatives: This means better integration with workflows, fewer login steps, faster response times

- Explicitly allowing use cases: Instead of saying "don't use personal AI tools," say "you can use the company ChatGPT instance for debugging, code review, and document drafting"

- Building data governance into the tool: Approved tools handle data classification, encryption, audit logging, and compliance automatically

One healthcare organization reduced Shadow AI by 67% in six months by deploying an enterprise Gen AI tool specifically for clinical documentation. Previously, clinicians were using personal ChatGPT accounts to draft clinical notes. Once they had a tool designed specifically for HIPAA-compliant clinical work, Shadow AI usage in that use case dropped dramatically.

Principle 2: Data Classification as an Enabler, Not a Restriction

Classical data classification programs create categories (Confidential, Internal, Public) and then build restrictive policies around them. This usually fails because the categories are so broad that everything important is classified as Confidential, and the restrictions become so burdensome that nobody follows them.

Companies successfully managing Shadow AI invert this: they use classification to enable appropriate tool usage rather than restrict it.

They ask: "What AI tools can we use for Public data without restrictions? Which tools can we use for Internal data with audit logging? Which tools require additional controls for Confidential data?"

This approach gives employees more options, not fewer. And it creates a framework for using Gen AI intelligently rather than trying to prevent its use.
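One way to encode this tiered thinking is a small policy table keyed by data classification. The tool names (`enterprise_assistant`, `personal_chatbot`) and control names (`audit_logging`, `dlp_redaction`) are hypothetical placeholders. The point is the shape of the policy: Public data allows any tool, while higher tiers narrow the tool list and add required controls.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable, Optional


class Classification(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"


@dataclass(frozen=True)
class ToolRule:
    allowed_tools: Optional[FrozenSet[str]]  # None means any tool is acceptable
    required_controls: FrozenSet[str]


# Hypothetical policy table: names are placeholders, not real products or controls.
POLICY = {
    Classification.PUBLIC: ToolRule(None, frozenset()),
    Classification.INTERNAL: ToolRule(frozenset({"enterprise_assistant"}), frozenset({"audit_logging"})),
    Classification.CONFIDENTIAL: ToolRule(
        frozenset({"enterprise_assistant"}), frozenset({"audit_logging", "dlp_redaction"})
    ),
}


def is_allowed(data_class: Classification, tool: str, active_controls: Iterable[str]) -> bool:
    """True if the tool is permitted for this data class and all required controls are active."""
    rule = POLICY[data_class]
    tool_ok = rule.allowed_tools is None or tool in rule.allowed_tools
    return tool_ok and rule.required_controls <= set(active_controls)


print(is_allowed(Classification.PUBLIC, "personal_chatbot", []))                           # True
print(is_allowed(Classification.INTERNAL, "personal_chatbot", ["audit_logging"]))          # False
print(is_allowed(Classification.CONFIDENTIAL, "enterprise_assistant", ["audit_logging"]))  # False
```

Keeping the policy as data rather than scattered `if` statements makes it easy to widen a tier later. For example, you could add a second approved tool for Internal data without touching the checking logic.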

Principle 3: Detecting Violations Through Behavior, Not Network Analysis

Your DLP tools probably aren't detecting Shadow AI violations because they're looking for files moving across network boundaries, when actual violations are text in browser forms.

Organizations having better success at detection are using behavioral signals instead:

- Suspicious paste operations: Monitoring for very large text pastes into web forms that might indicate data sharing

- Credential exposure patterns: Scanning for common credential formats (AWS keys, database connection strings) in text data

- Sensitive word clustering: Detecting combinations of words that suggest sensitive data (e.g., "customer" + "card number" + "transaction")

- User-initiated reporting: Building a culture where employees report violations rather than hide them

This is harder to implement than network-level blocking, but it's also more effective because it catches violations that network analysis misses.
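The paste-size and word-clustering signals can be sketched as a simple scoring function. The 2,000-character threshold and the term lists are hypothetical values chosen for illustration. In practice they would be tuned against your own traffic to keep false positives manageable. The payment cluster reuses the example terms above ("customer", "card number", "transaction").

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical values for illustration; tune against your own traffic.
LARGE_PASTE_CHARS = 2_000
MIN_TERMS_PER_CLUSTER = 2
SENSITIVE_CLUSTERS = {
    "payment_data": ("customer", "card number", "transaction", "cvv"),
    "health_data": ("patient", "diagnosis", "medication", "date of birth"),
}


@dataclass
class PasteSignal:
    large_paste: bool
    clusters: List[str] = field(default_factory=list)

    @property
    def suspicious(self) -> bool:
        return self.large_paste or bool(self.clusters)


def score_paste(text: str) -> PasteSignal:
    """Flag a pasted block by size and by co-occurring sensitive terms."""
    lowered = text.lower()
    clusters = [
        name
        for name, terms in SENSITIVE_CLUSTERS.items()
        # Substring match, so "customers" also counts as "customer".
        if sum(term in lowered for term in terms) >= MIN_TERMS_PER_CLUSTER
    ]
    return PasteSignal(large_paste=len(text) >= LARGE_PASTE_CHARS, clusters=clusters)


signal = score_paste("Customer 4411 disputed a transaction; card number ends in 1234")
print(signal.clusters, signal.suspicious)  # ['payment_data'] True
```

Requiring at least two terms from the same cluster is what turns single, common words like "customer" into a meaningful signal. One match alone would flag far too much ordinary text.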

Principle 4: Building a Violation Detection and Correction Culture

The most successful organizations have reframed how they handle Shadow AI violations.

Instead of "violation = punishment," they've implemented "violation = correction opportunity."

When an employee is caught sharing sensitive data with a personal AI tool:

- No immediate discipline: Instead, a conversation

- Education: "Here's why this was risky and what actually happened to your data"

- Solution offering: "Here's the approved tool for this use case"

- Prevention: "We're adding extra controls so this is harder to do accidentally"

This approach sounds soft, but organizations that implement it report significantly higher violation reporting rates. When employees know they'll be punished, they hide violations. When they know they'll be educated, they report them.

Regulatory Expectations and Legal Liability in 2025

Regulators are starting to take Shadow AI seriously, and the legal framework is evolving rapidly.

Emerging Regulatory Expectations

SEC Guidance on AI and Data Security: The SEC has issued guidance suggesting that companies with poor data governance around AI pose material risk to investors. Companies may be required to disclose AI-related data breaches in SEC filings.

GDPR Enforcement: Several European regulators are investigating whether personal use of ChatGPT with customer data violates GDPR. Precedent is still emerging, but enforcement actions are coming.

HIPAA Expansion: The U.S. Department of Health and Human Services has published guidance suggesting that using personal AI tools with patient data violates HIPAA, regardless of intent or actual breach.

State Privacy Laws: California's CCPA, Colorado's CPA, and other state privacy laws are being interpreted to create liability for organizations that lose customer data to unauthorized AI platforms.

Contractual Implications

Many enterprise customer contracts now require explicit certification that data won't be shared with unauthorized third-party AI systems. Violating this obligation can trigger contract termination and liability.

One financial services company discovered it had inadvertently violated customer contracts when employee usage of personal AI tools exposed customer data. The customer exercised the breach clause in the contract and terminated a $50 million annual relationship.

Insurance Implications

Cyber insurance carriers are starting to exclude coverage for breaches involving Shadow AI. If a breach occurs because an employee shared data with ChatGPT, your cyber insurance may not cover it, on the grounds that you failed to implement reasonable controls.

Building a Comprehensive Shadow AI Containment Program

If you intend to actually manage this, here's a structured approach drawn from organizations that are handling it well.

Phase 1: Discovery (Month 1–2)

Objective: Understand the scope of Shadow AI usage in your organization

- Deploy discovery tools: Install endpoint agents or network monitoring to identify AI platform usage

- Conduct user surveys: Ask employees what tools they're using and why

- Analyze incident data: Review any past incidents or policy violations related to AI

- Map use cases: Identify the actual work problems Shadow AI is solving

- Quantify adoption: What percentage of employees are using personal AI tools?

Expected output: A comprehensive report showing where Shadow AI is most prevalent, what data is most at risk, and what work problems are driving adoption.
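For the "Quantify adoption" step, one rough approach is to count distinct users who reach known Gen AI domains in proxy or DNS logs. This minimal sketch assumes logs can be exported as `(user_id, hostname)` pairs. The domain list is a short, illustrative sample, and `adoption_report` is a hypothetical helper.

```python
from collections import defaultdict

# Illustrative sample of consumer Gen AI hostnames; extend for your environment.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def normalize(host: str) -> str:
    return host.strip().lower().rstrip(".")


def is_ai_host(host: str) -> bool:
    """True if host is one of AI_DOMAINS or a subdomain of one."""
    host = normalize(host)
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def adoption_report(records, headcount: int) -> dict:
    """records: iterable of (user_id, hostname) pairs exported from proxy/DNS logs."""
    users_by_domain = defaultdict(set)
    for user, host in records:
        if is_ai_host(host):
            users_by_domain[normalize(host)].add(user)
    # Distinct users across all AI domains (a user on two services counts once).
    ai_users = set().union(*users_by_domain.values())
    return {
        "ai_users": len(ai_users),
        "adoption_pct": round(100 * len(ai_users) / headcount, 1),
        "users_by_domain": {d: len(u) for d, u in sorted(users_by_domain.items())},
    }
```

Domain-level logs generally can't distinguish a personal account from an enterprise account on the same service. Treat the result as an upper bound on personal-tool adoption and cross-check it against the user surveys.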

Phase 2: Governance (Month 2–4)

Objective: Build the policy and process framework

- Define approved AI tools: Determine which platforms will be enterprise-approved

- Build data policies: Create clear guidance on what data can be shared with which tools

- Design exception processes: Build a fast-track system for teams that need tools beyond the approved list

- Create incident response procedures: Document how violations are detected, reported, and remediated

- Update contracts: Ensure vendor agreements and customer contracts align with your AI data policy

Expected output: An approved tools list, data classification schema, incident response procedure, and updated contracts.

Phase 3: Implementation (Month 3–6)

Objective: Deploy technology and organizational controls

- Deploy approved tools: Get enterprise AI platforms live and integrated into workflows

- Configure DLP rules: Build detection rules specific to Shadow AI violations

- Implement monitoring: Get visibility into AI tool usage and sensitive data exposure

- Train teams: Teach employees about the new tools, policies, and why they matter

- Enable fast-track approval: Build a process for teams to request new tools with 48-hour turnaround

Expected output: Approved tools operational, detection infrastructure live, training completed, fast-track process operational.
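The 48-hour fast-track target is easy to track mechanically. This minimal sketch assumes each tool request records a submission time and an optional decision time. `ToolRequest` and `sla_breaches` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

SLA = timedelta(hours=48)  # fast-track turnaround target


@dataclass
class ToolRequest:
    request_id: str
    submitted: datetime
    decided: Optional[datetime] = None  # None while the request is still pending


def sla_breaches(requests, now: datetime) -> list[str]:
    """Return IDs of requests decided late, or still pending past the SLA."""
    late = []
    for r in requests:
        # Pending requests are measured against the current time.
        end = r.decided if r.decided is not None else now
        if end - r.submitted > SLA:
            late.append(r.request_id)
    return late
```

Pending requests are counted against the clock, not just decided ones. An unanswered request is the one most likely to push a team back to an unapproved tool.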

Phase 4: Maintenance (Month 6+)

Objective: Sustain the program and respond to violations

- Monthly violation reviews: Analyze violations detected, trends, root causes

- Tool optimization: Update approved tools based on usage patterns

- Policy updates: As new risks emerge, update policies and controls

- Continuous training: Keep awareness high through ongoing education

- Executive reporting: Report metrics to leadership quarterly

Expected output: Sustained reduction in Shadow AI adoption, improved compliance posture, measurable risk reduction.
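The monthly violation review can start from a very simple summary. This minimal sketch assumes violations are exported as `(month, root_cause)` pairs, with months formatted as `YYYY-MM`. That layout and `monthly_review` are assumptions, not a standard schema.

```python
from collections import Counter


def monthly_review(violations) -> dict:
    """Summarize (month "YYYY-MM", root_cause) pairs.

    Returns per-month counts, percentage change versus the previous month
    present in the data (gaps are not filled), and the latest month's top
    three root causes.
    """
    violations = list(violations)
    counts = Counter(month for month, _ in violations)
    months = sorted(counts)  # "YYYY-MM" strings sort chronologically
    changes = {
        cur: round(100 * (counts[cur] - counts[prev]) / counts[prev], 1)
        for prev, cur in zip(months, months[1:])
    }
    latest = months[-1] if months else None
    causes = Counter(cause for month, cause in violations if month == latest)
    return {
        "counts": {m: counts[m] for m in months},
        "mom_change_pct": changes,
        "latest_top_causes": causes.most_common(3),
    }
```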

The Future of Gen AI and Data Governance

Shadow AI isn't a temporary problem. It's a permanent feature of how organizations will work. But the frameworks for managing it are evolving.

Emerging Technology Solutions

AI-Specific DLP: New DLP solutions are being built specifically for Gen AI use cases. Instead of detecting file transfers, they detect sensitive data being submitted to model APIs.

Behavioral Analytics for Data Loss: AI-powered analytics that identify risky user behavior patterns specific to Shadow AI usage.

Federated Learning Models: Enterprise models trained on your data locally, so the data itself is never sent to external platforms. This keeps proprietary data internal while still delivering AI capabilities.

Prompt Filtering and Data Masking: Tools that automatically redact sensitive data from prompts before they're sent to external AI platforms, allowing employees to use tools without risk.
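To make the masking idea concrete, here is a minimal regex-based sketch. It replaces matches with placeholder labels before a prompt leaves the organization. The patterns are illustrative assumptions; commercial prompt-filtering products typically combine many more detectors. `mask_prompt` is a hypothetical helper.

```python
import re

# Illustrative redaction rules, applied in order. Not a complete PII detector.
REDACTIONS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("AWS_KEY", re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")),
    # 13 to 19 digits, optionally separated by spaces or hyphens.
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,18}\d\b")),
    ("US_SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]


def mask_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Return the masked prompt and a count of redactions per label."""
    counts = {}
    for label, pattern in REDACTIONS:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts
```

Regex masking misses context-dependent data such as client names or deal terms. It can also over-match long digit runs, which is one reason this category is still maturing.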

Organizational Evolution

We're likely to see a bifurcation:

- Organizations with strong governance: Will deploy comprehensive approved AI platforms, build robust data policies, and achieve high visibility and control over AI usage

- Organizations with weak governance: Will continue struggling with Shadow AI, will experience more breaches, and will face regulatory liability

Competitive advantage will increasingly accrue to organizations that figure out how to enable Gen AI safely at scale.

Conclusion: The Path Forward

Gen AI data policy violations are one of the most pressing security challenges facing organizations in 2025. They're growing faster than detection capabilities can keep up with. They're driven by legitimate employee needs. They're creating real compliance and legal liability.

But they're not unsolvable.

Organizations that are successfully managing Shadow AI share common patterns: they've deployed approved alternatives, they've built data governance frameworks that enable rather than restrict, they've invested in detection beyond network-level blocking, and they've created organizational cultures where violations are corrected rather than punished.

The statistical reality is stark: your organization is probably seeing 150–300 Gen AI policy violations monthly right now, and you're probably only aware of a fraction of them. That's not a failure of your security team. It's a feature of how invisible and normalized Shadow AI has become.

But it's also fixable. Start with Phase 1: Discovery. Understand what's actually happening in your organization. From there, you can build a program that reduces risk while actually enabling the productivity benefits that make Gen AI so valuable.

The companies that win in the next 24 months won't be the ones that successfully blocked AI. They'll be the ones that successfully governed it.

FAQ

What exactly is Shadow AI, and how is it different from regular SaaS usage?

Shadow AI refers specifically to unauthorized use of generative AI platforms like ChatGPT, Claude, or Gemini. Unlike traditional shadow IT (unauthorized apps purchased through personal accounts), Shadow AI is particularly dangerous because interactions happen in browser tabs without organizational visibility, data flows into black-box language models you don't control, and conversations leave minimal audit trails. While a company might eventually discover unauthorized Slack usage through network logs, Shadow AI violations are often never detected.

Why are 47% of Gen AI users relying on personal AI apps instead of approved tools?

Employees choose personal AI tools because approved alternatives either don't exist yet, are too slow to access through organizational processes, or don't meet specific use case needs. A developer who needs help debugging code can either request an enterprise tool (which takes weeks) or open ChatGPT (which takes seconds). The incentive structure strongly favors the unauthorized tool. Organizations that have successfully reduced Shadow AI typically do so by deploying approved tools with minimal friction, so that the sanctioned path is faster than waiting on a traditional approval process.

What data is most frequently exposed through Shadow AI violations?

The most common exposures include source code (developers debugging), customer data (customer service representatives), financial information (analysts and accountants), medical records (healthcare workers), and hardcoded credentials (developers pasting code samples). Regulated data (PII, PHI, payment card information) is frequently exposed because employees don't always recognize it as sensitive in context, or they're sharing it for a legitimate business purpose but without authorization from the organization.

How can my organization detect Shadow AI usage if traditional DLP tools aren't catching it?

Effective detection typically involves multiple approaches: monitoring endpoint paste operations and clipboard activity for suspicious patterns, scanning for credential formats that might indicate code sharing, looking for combinations of words suggesting sensitive data, implementing network-level visibility into AI platform access patterns, and creating internal reporting mechanisms where employees can report violations without fear of punishment. The most successful organizations combine technical detection with behavioral analytics and encourage employee self-reporting.

What are the legal implications if my organization discovers a Shadow AI data breach?

Legal implications vary by jurisdiction and data type. Under GDPR, sending EU personal data to unauthorized platforms without a Data Processing Agreement can result in fines up to 4% of global revenue. HIPAA violations involving protected health information can result in per-incident penalties of

Can we simply block all personal AI tools and solve the problem?

Blocking doesn't work as a primary strategy because there are thousands of AI tools, new ones launch constantly, and employees can easily circumvent network blocks using VPNs or personal devices. Organizations that have tried blocking typically see Shadow AI increase rather than decrease, as employees find more covert workarounds. More successful approaches focus on deploying approved alternatives that are more convenient than blocked tools, combined with detection and education rather than pure restriction.

What's the realistic cost of Shadow AI violations for a typical mid-sized company?

Using the calculation framework provided: a 1,000-person company expecting approximately 288 violations annually, with a 10% conversion rate to formal breaches, faces approximately
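The first step of that estimate is plain arithmetic: 288 violations per year at a 10% conversion rate gives roughly 28.8 formal breaches a year. This minimal sketch leaves the cost per breach as an input you supply from your own incident data. `expected_annual_breach_cost` is a hypothetical helper.

```python
def expected_annual_breach_cost(
    violations_per_year: float,
    breach_conversion_rate: float,
    cost_per_breach: float,
) -> tuple[float, float]:
    """Return (expected formal breaches, expected annual cost)."""
    if not 0.0 <= breach_conversion_rate <= 1.0:
        raise ValueError("breach_conversion_rate must be between 0 and 1")
    breaches = violations_per_year * breach_conversion_rate
    return breaches, breaches * cost_per_breach


# Example from the text: 288 violations/year at a 10% conversion rate.
# cost_per_breach=1.0 expresses cost in "breach units"; substitute your own figure.
breaches, _ = expected_annual_breach_cost(288, 0.10, cost_per_breach=1.0)
print(round(breaches, 1))  # 28.8
```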

How long does it typically take to implement a comprehensive Shadow AI containment program?

A complete program from discovery to sustained maintenance typically takes 6–12 months. Phase 1 (Discovery) covers months 1–2 and establishes the current state. Phase 2 (Governance) runs from month 2 to month 4 to build policies and frameworks. Phase 3 (Implementation) overlaps with it, running from month 3 to month 6 to deploy tools and infrastructure. Phase 4 (Maintenance) begins around month 6 and is ongoing. Organizations can see significant improvement within 90 days by focusing first on approved tool deployment and fast-track exception processes, which removes the fundamental incentive driving Shadow AI adoption.

Are there approved enterprise AI tools specifically designed for Shadow AI containment?

Yes, several categories of tools are emerging: enterprise Gen AI platforms with built-in security controls (Anthropic's enterprise Claude, OpenAI's business ChatGPT, Google's Workspace-integrated Gemini), DLP solutions designed specifically for Gen AI (monitoring prompts and API calls rather than file transfers), behavioral analytics tools that identify risky AI usage patterns, and prompt filtering solutions that automatically redact sensitive data before sending to external platforms. The most effective approach typically involves combining multiple solutions rather than relying on a single platform.

What's the role of data classification in managing Shadow AI?

Data classification frameworks help organizations decide which AI tools are appropriate for different data types. Instead of classifying data and then restricting all use with AI, successful organizations ask: "What AI tools can we use for Public data without restrictions? Which tools can we use for Internal data with audit logging? What controls are required for Confidential data?" This approach enables appropriate AI usage rather than preventing all usage, which removes the incentive driving Shadow AI. Classification also helps employees understand which data is actually sensitive, addressing a major awareness gap.
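The tiered questions above map naturally onto a small policy table. This minimal sketch assumes three classification levels and three tool tiers. The tier names and the `prompt_masking` control for Confidential data are illustrative assumptions, not recommendations from a specific framework.

```python
from enum import IntEnum


class DataClass(IntEnum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


# Hypothetical policy: the highest data class each tool tier may receive,
# plus the controls required when it handles non-public data.
POLICY = {
    "any_ai_tool": (DataClass.PUBLIC, []),
    "approved_enterprise_ai": (DataClass.INTERNAL, ["audit_logging"]),
    "approved_ai_with_masking": (DataClass.CONFIDENTIAL, ["audit_logging", "prompt_masking"]),
}


def check_usage(tool_tier: str, data_class: DataClass) -> tuple[bool, list[str]]:
    """Return (allowed, required_controls) for sending data_class to tool_tier."""
    if tool_tier not in POLICY:
        return False, []  # unknown tools are denied by default
    max_class, controls = POLICY[tool_tier]
    if data_class > max_class:
        return False, []
    # Public data needs no controls; anything higher gets the tier's controls.
    return True, list(controls) if data_class > DataClass.PUBLIC else []
```

Unknown tools are denied by default. That keeps the table short while still answering "which tools can I use for this data?" in a single lookup.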

Summary: What You Need to Do Right Now

If you take nothing else from this article, implement these three things immediately:

- Measure your current state: Use discovery tools to identify what AI platforms employees are using and estimate your monthly violation count. Most organizations are shocked to discover the scale.

- Deploy one approved tool within 30 days: Pick the single most-used Shadow AI tool (probably ChatGPT), negotiate an enterprise agreement, and make it available across your organization. This alone reduces unauthorized usage by 20–30%.

- Update your acceptable use policy to enable rather than restrict: Instead of "don't use personal AI tools," say "you must use approved AI tools for sensitive work." Make the approved path easier than the unauthorized path.

These three steps won't solve the problem completely. But they'll give you visibility and significantly reduce risk within the first 90 days. From there, you can build a more comprehensive program based on what you've learned about your organization's specific use cases and risks.

Key Takeaways

- Organizations are reporting 223 Gen AI policy violations monthly on average, more than double the previous year's figure, driven by Shadow AI adoption reaching 47% of users

- Shadow AI is largely invisible to traditional security infrastructure because conversations happen in browser tabs, leaving minimal audit trails and allowing sensitive data exposure to go undetected

- 60% of insider threat incidents now involve personal cloud app instances, making employee AI tool usage one of the primary attack vectors for data theft and breach

- Credential exposure through AI tools is emerging as a critical vulnerability—developers paste code containing API keys and database passwords into ChatGPT, enabling attackers to access critical infrastructure

- Regulatory frameworks (GDPR, HIPAA, PCI-DSS) create explicit liability for organizations whose data flows to unauthorized AI platforms, with fines reaching 4% of global revenue or 100,000 per affected record

- Single Shadow AI data breaches cost organizations 5 million in incident response, and enterprise-scale breaches exceed $5 million when accounting for regulatory fines and litigation

- Traditional containment approaches (blocking, training, policies) fail because they ignore the incentive structure driving adoption—approved alternatives must be faster and easier than unauthorized tools

- Successful organizations reduce Shadow AI by 60–70% through approved tool deployment, data classification frameworks that enable appropriate usage, behavior-based detection, and cultures that correct violations rather than punish them
