Google's Search-to-AI Overviews Transformation: Follow-Up Questions Explained [2025]

Google just changed how you search. Not subtly. Not in some buried settings menu.

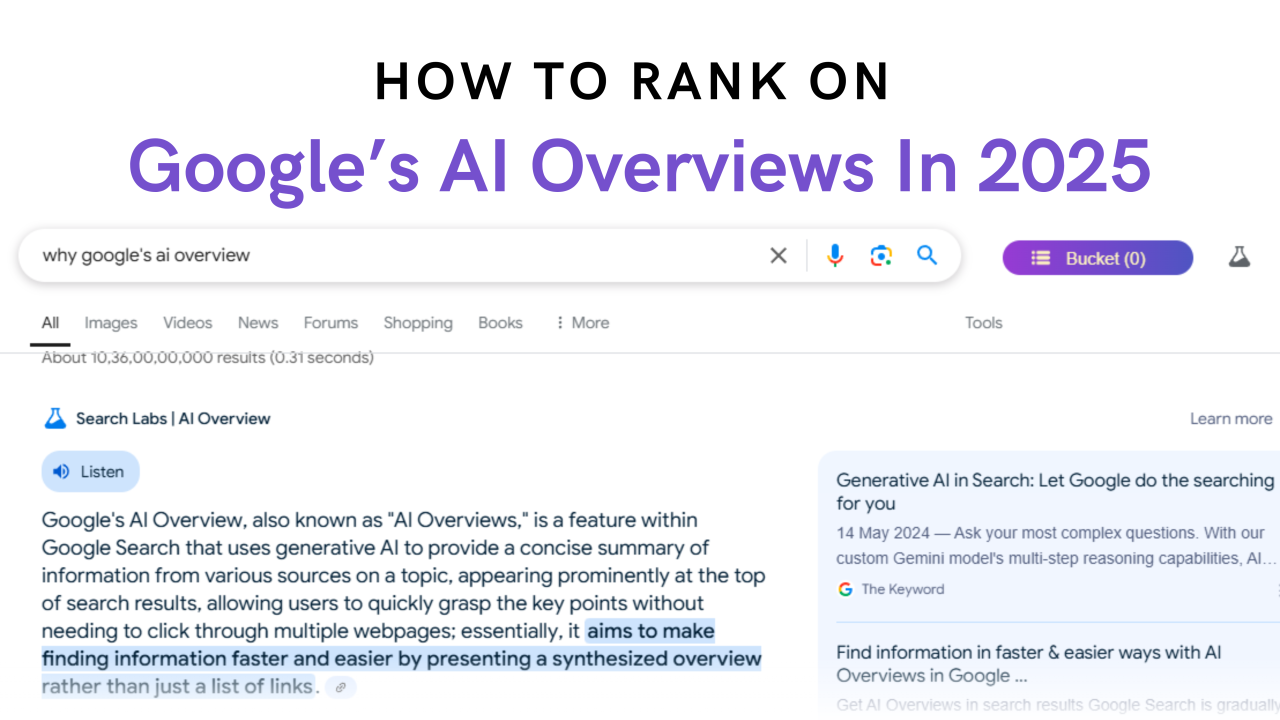

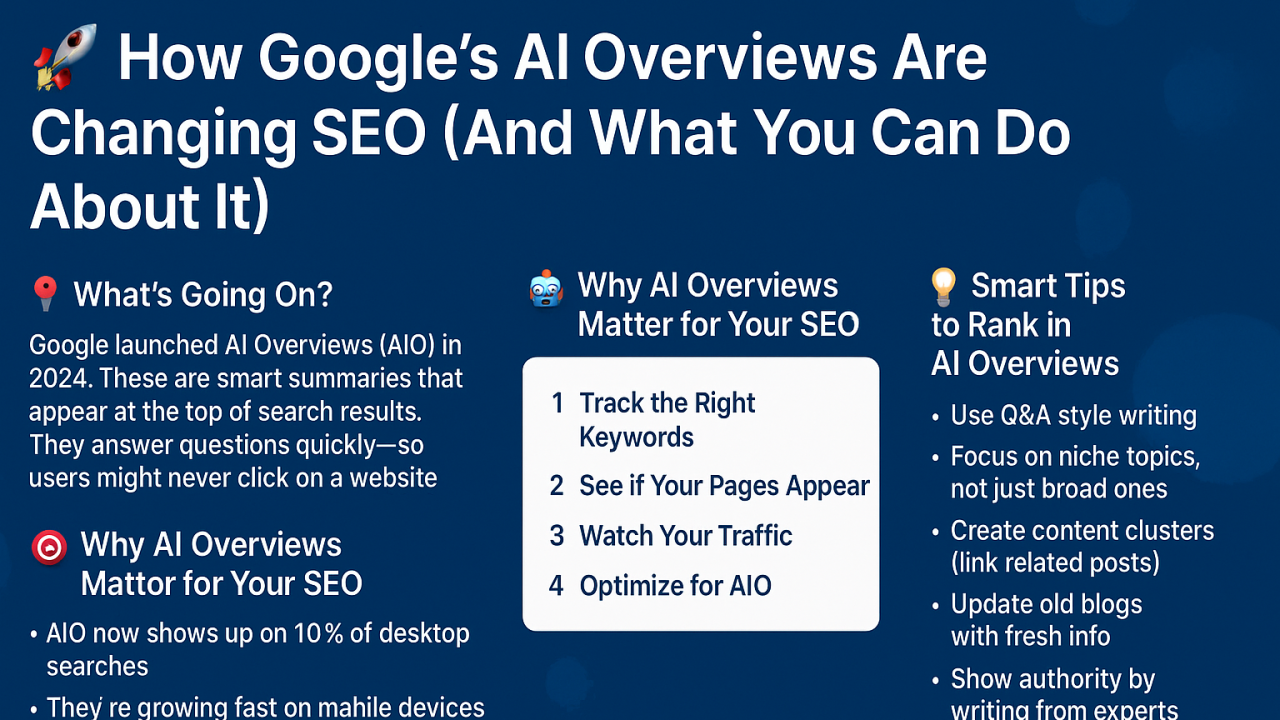

Last year, the search giant introduced AI Overviews, and millions of people started seeing generated summaries instead of traditional search results. Now the company is pushing harder. You can ask follow-up questions directly within search results, pivoting into deeper conversations without leaving the search interface. It's Google trying to look less like a search engine and more like ChatGPT.

Here's what's happening: You search for something. Instead of scrolling through ten blue links, you get an AI-written summary. But if that summary doesn't answer your actual question, you don't bounce to a different tab or try rephrasing. You just ask a follow-up. Type your question, hit enter, and AI Overviews with Gemini 3 generates a new answer that understands the context of your previous query.

This isn't a minor feature patch. This is Google signaling that search is becoming conversational. The traditional search results haven't disappeared, but they're clearly being repositioned as secondary. Google's vice president of product Robby Stein called it "a quick snapshot when you need it, and deeper conversation when you want it." Sounds nice. Sounds seamless. The video Google released showed exactly what you'd expect—users scrolling through an overview, getting interested in a specific angle, then typing a follow-up question to dive deeper.

But here's what makes this shift important: Google isn't just adding a feature. The company is fundamentally rethinking what search means. For thirty years, search was about finding the right source. Now search is becoming about getting the right answer, regardless of where it comes from. And if you need more nuance, more depth, or a different angle, you just ask.

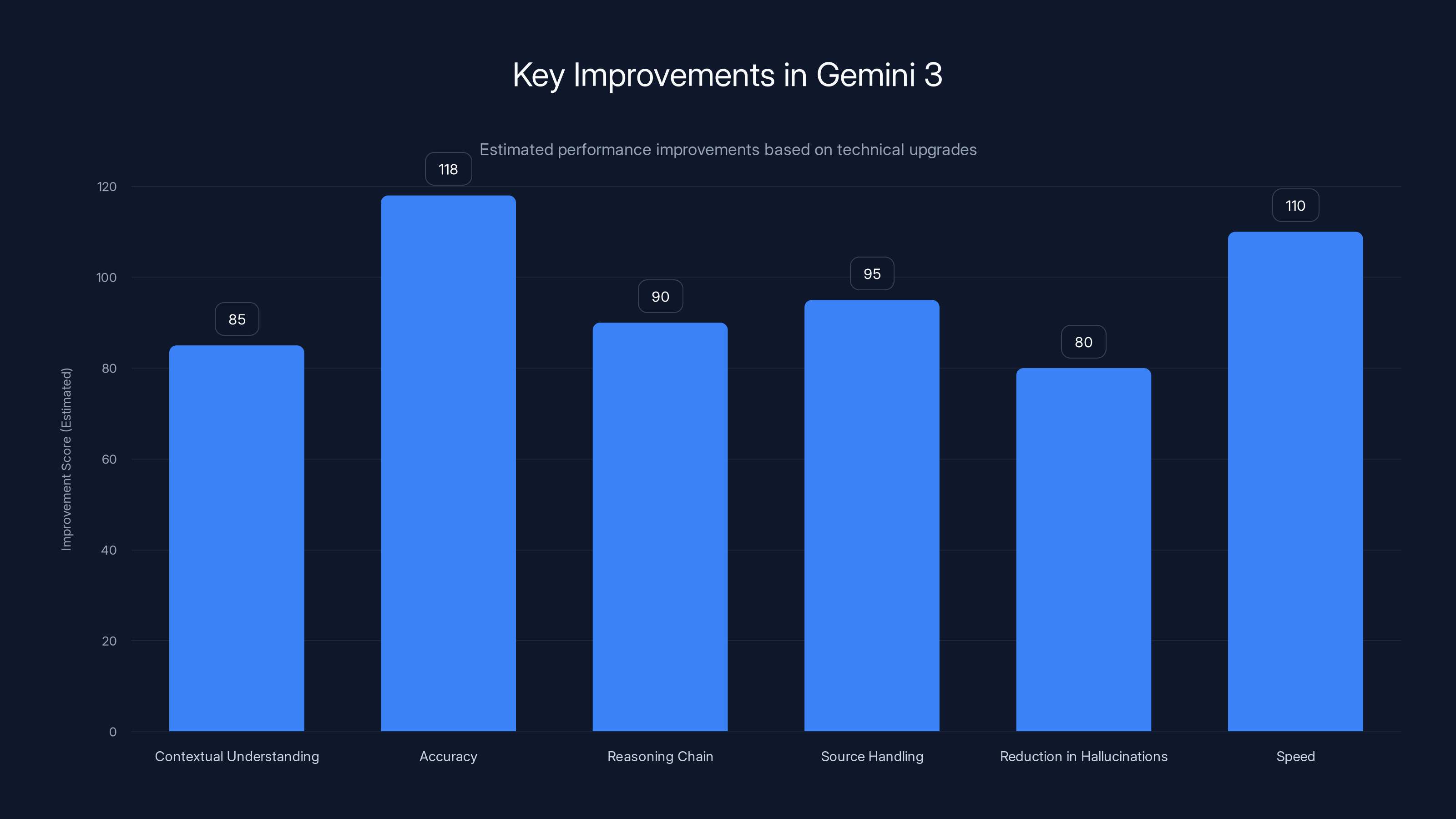

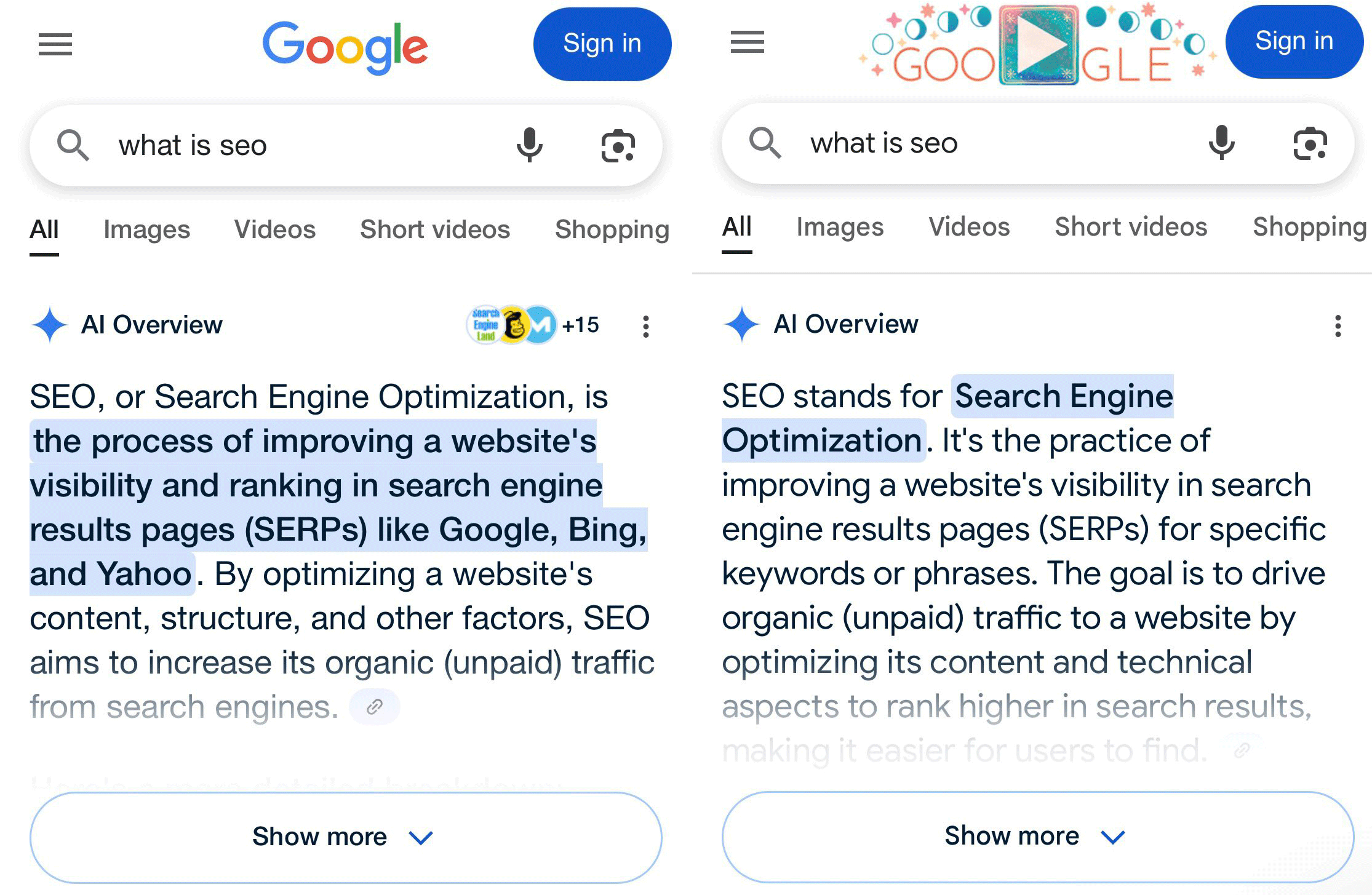

Under the hood, Gemini 3 is now the default model powering AI Overviews globally. This is significant because Gemini 3 is demonstrably better at reasoning, accuracy, and understanding context than the previous generation. When Google rolled out earlier versions of AI Overviews, users reported mixed results. Some overviews were genuinely helpful. Others were hallucinating details or missing crucial context. Gemini 3 raises the floor. The outputs are more reliable, more thorough, and less likely to embarrass you if you cite them in a work email.

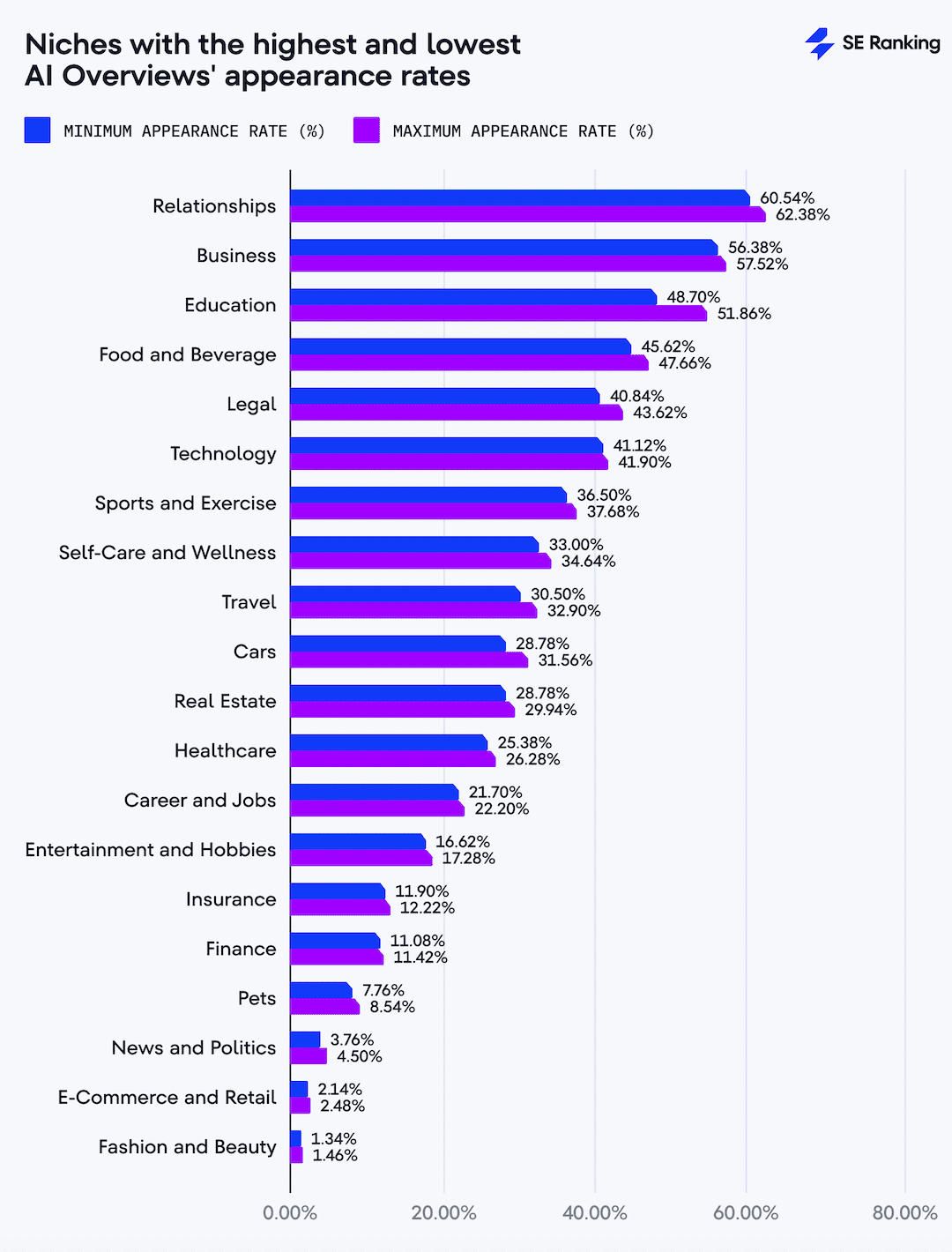

The implications ripple outward. Content creators, publishers, and SEO professionals are watching this shift closely. If search stops being about links and starts being about AI-generated summaries, the entire economic model of organic search changes. Traffic patterns shift. The value of appearing in position one changes. The whole game moves.

In this article, we're going to break down exactly what Google's doing, why it matters, how the new follow-up question system works, what Gemini 3 changes about the equation, and what this all means for search's future.

TL;DR

- Gemini 3 is now the default: Replaces earlier models for more accurate, reliable AI Overviews globally

- Follow-up questions work seamlessly: Users can ask clarifications without switching between tabs or rephrasing

- Search is becoming conversational: Google's positioning AI Overviews as primary, traditional links as secondary

- Quality of answers improved significantly: Gemini 3's reasoning and accuracy directly impact overview reliability

- SEO and content strategy are shifting: The traditional link-based search economy faces fundamental disruption

- Scroll-to-chat transition is instant: Users can move from overview to deeper conversation with zero friction

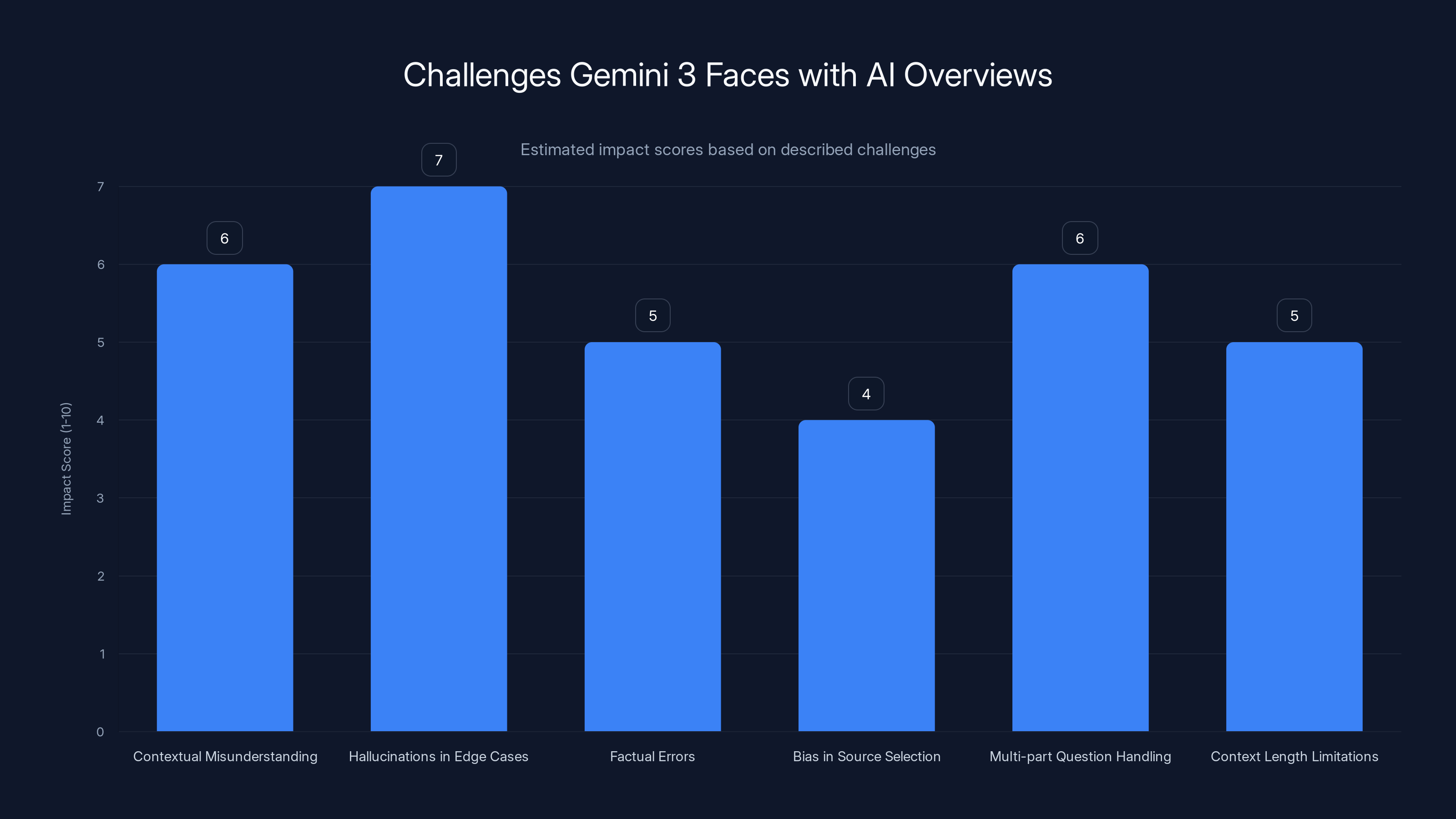

Gemini 3 faces various challenges, with hallucinations in edge cases and contextual misunderstandings having the highest impact. Estimated data based on qualitative descriptions.

What Google AI Overviews Actually Are (And Why You're Seeing Them)

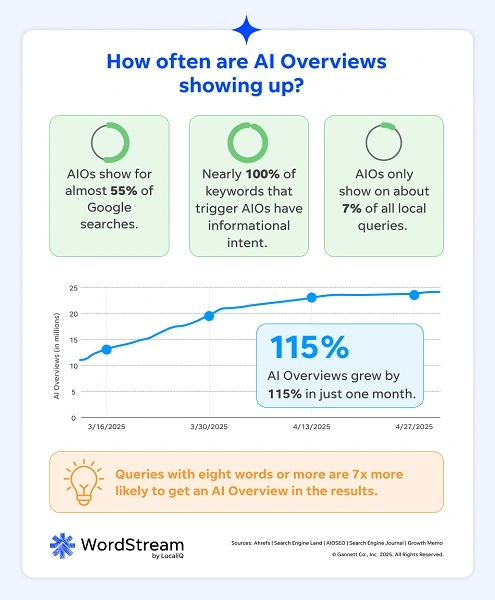

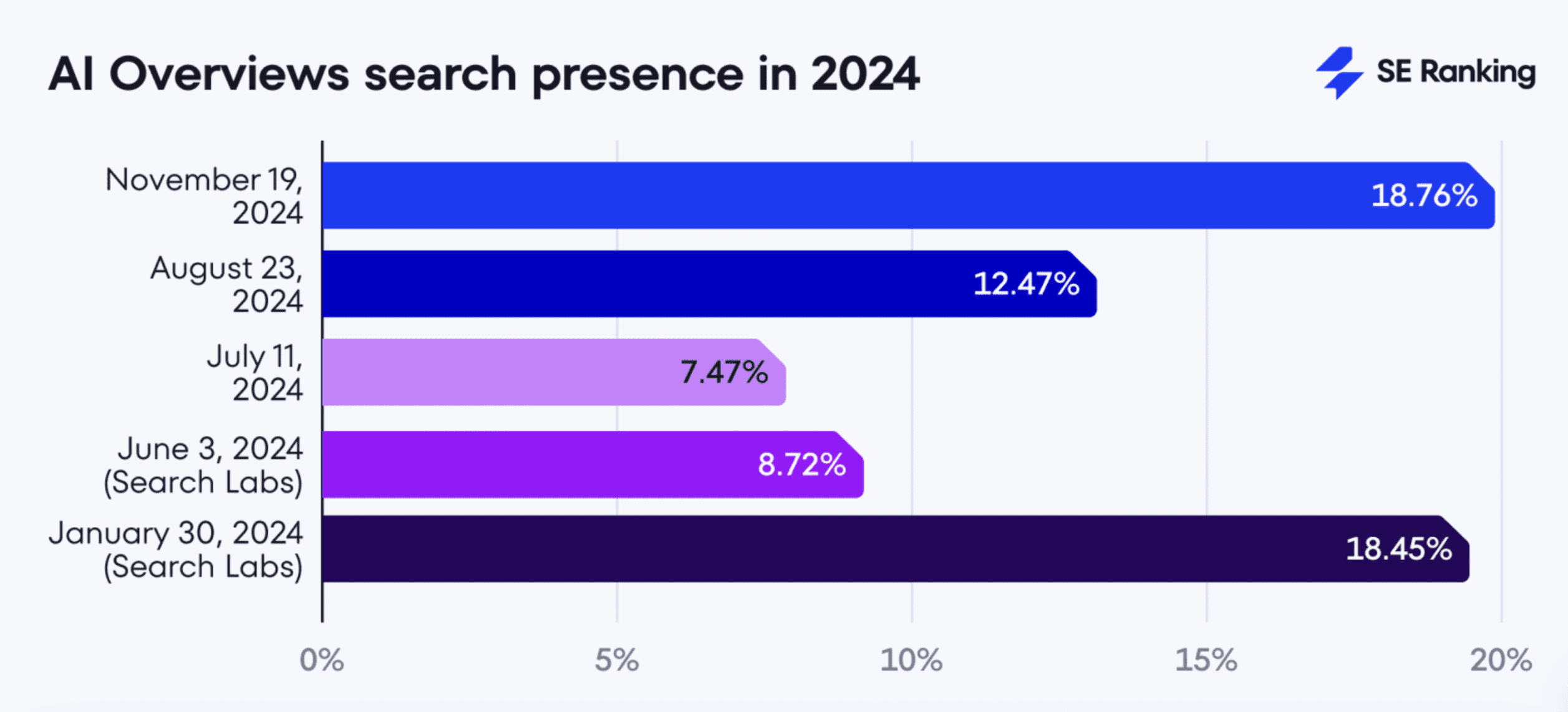

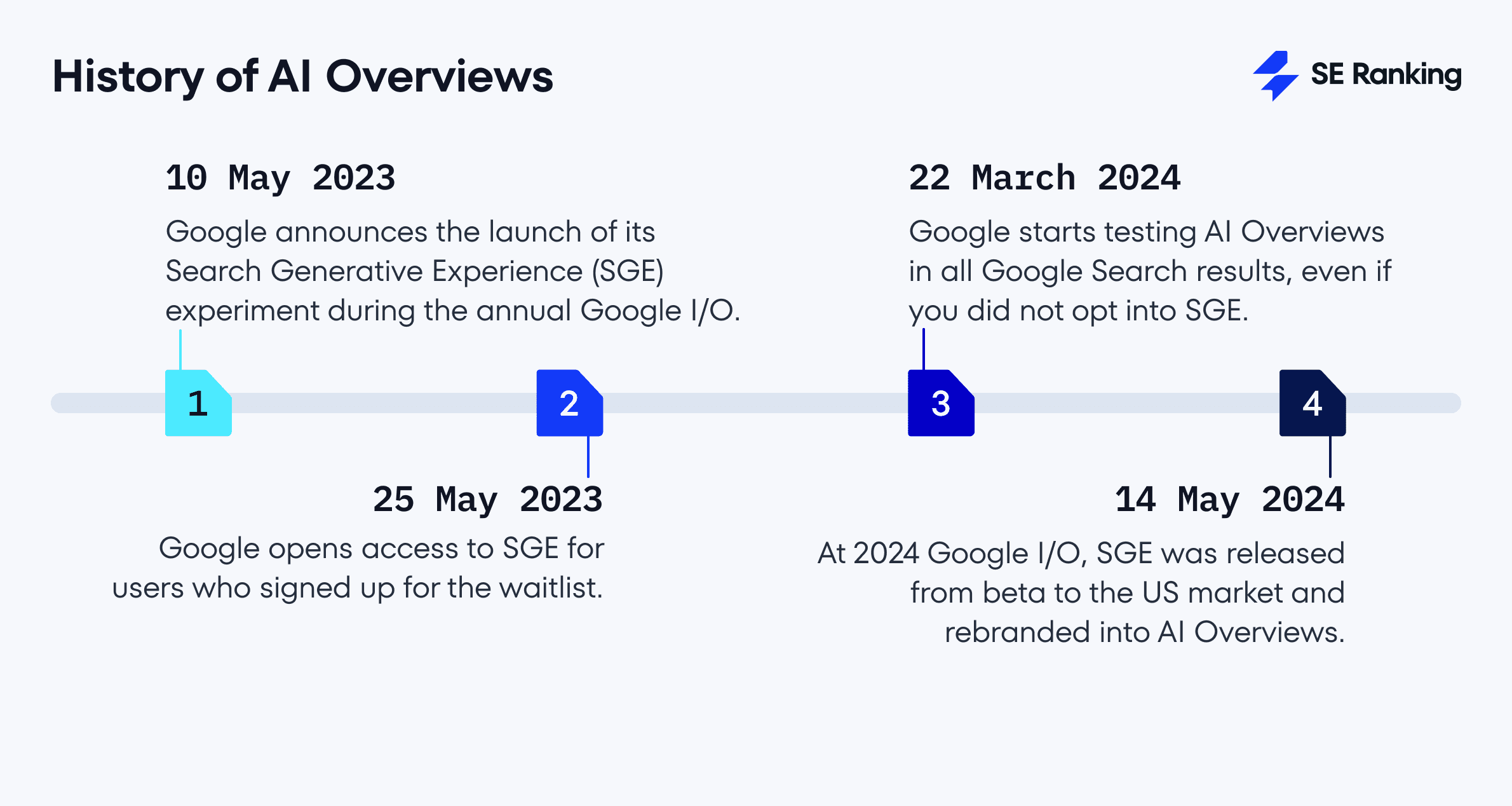

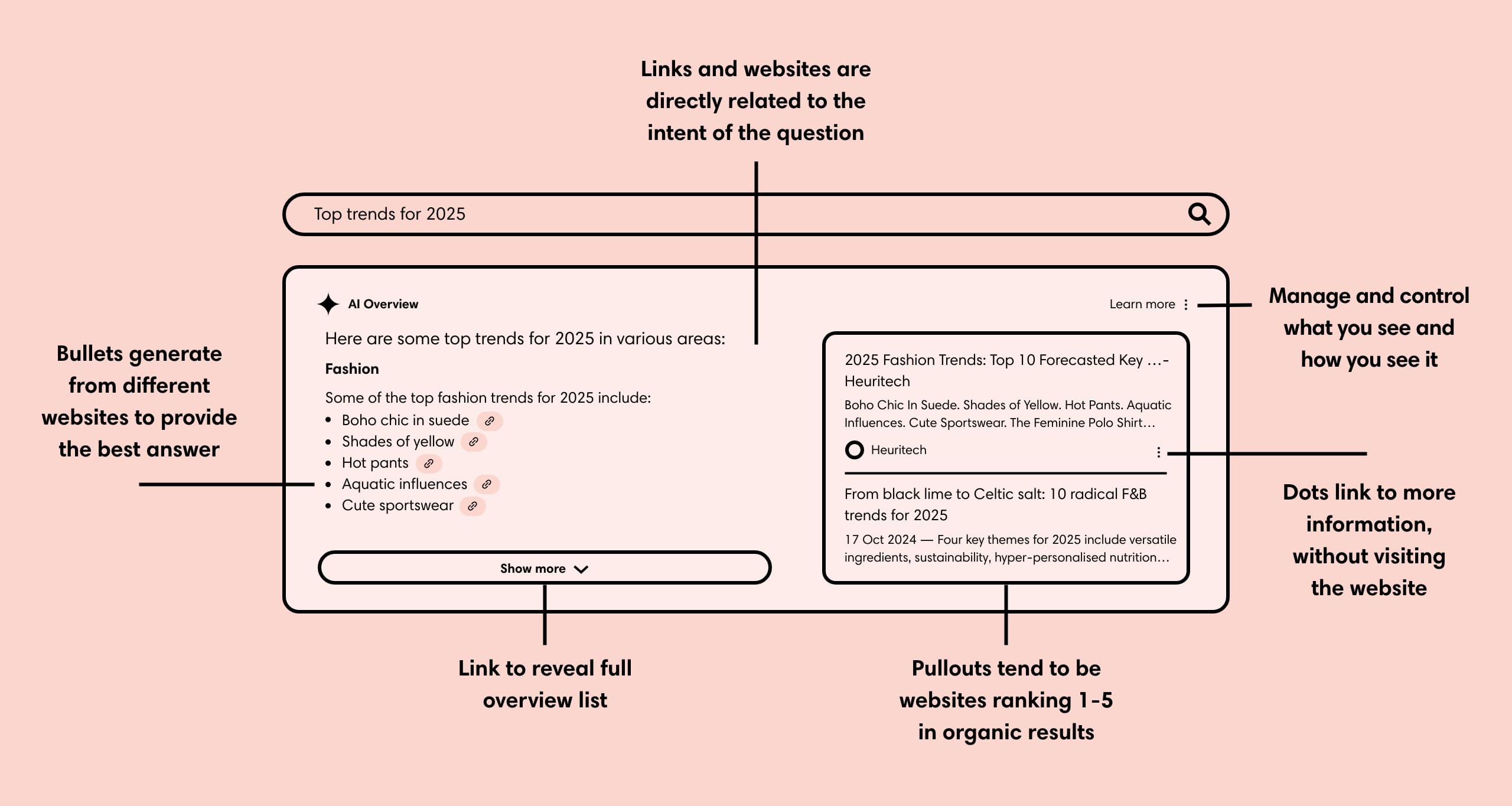

AI Overviews aren't new. Google tested them starting in 2024 with early versions powered by the company's LLMs. The concept is straightforward: when you search for something, Google generates a summary of the answer instead of (or alongside) showing you ranked links. This summary pulls from multiple sources, synthesizes the information, and presents it at the top of the page.

For users, the appeal is obvious. You search for "best laptops for video editing in 2025," and instead of clicking through five sites to understand trade-offs, you get a coherent paragraph explaining that you need at least 32GB RAM, a fast GPU, and a color-accurate display, and that you probably want to look at the MacBook Pro 16, Dell XPS 17, or Lenovo ThinkPad P series. Done. You have the answer.

But this creates a problem. That summary, if helpful enough, prevents you from clicking through to any of those sources. The laptop maker's review site doesn't get traffic. The tech reviewer's detailed comparison doesn't get clicks. The affiliate site that makes money on referrals sees traffic drop. Google solves this by attributing sources in the overview (you'll see small text saying "from Trusted Reviews," "from TechRadar," etc.), but the user rarely clicks those links. They have their answer.

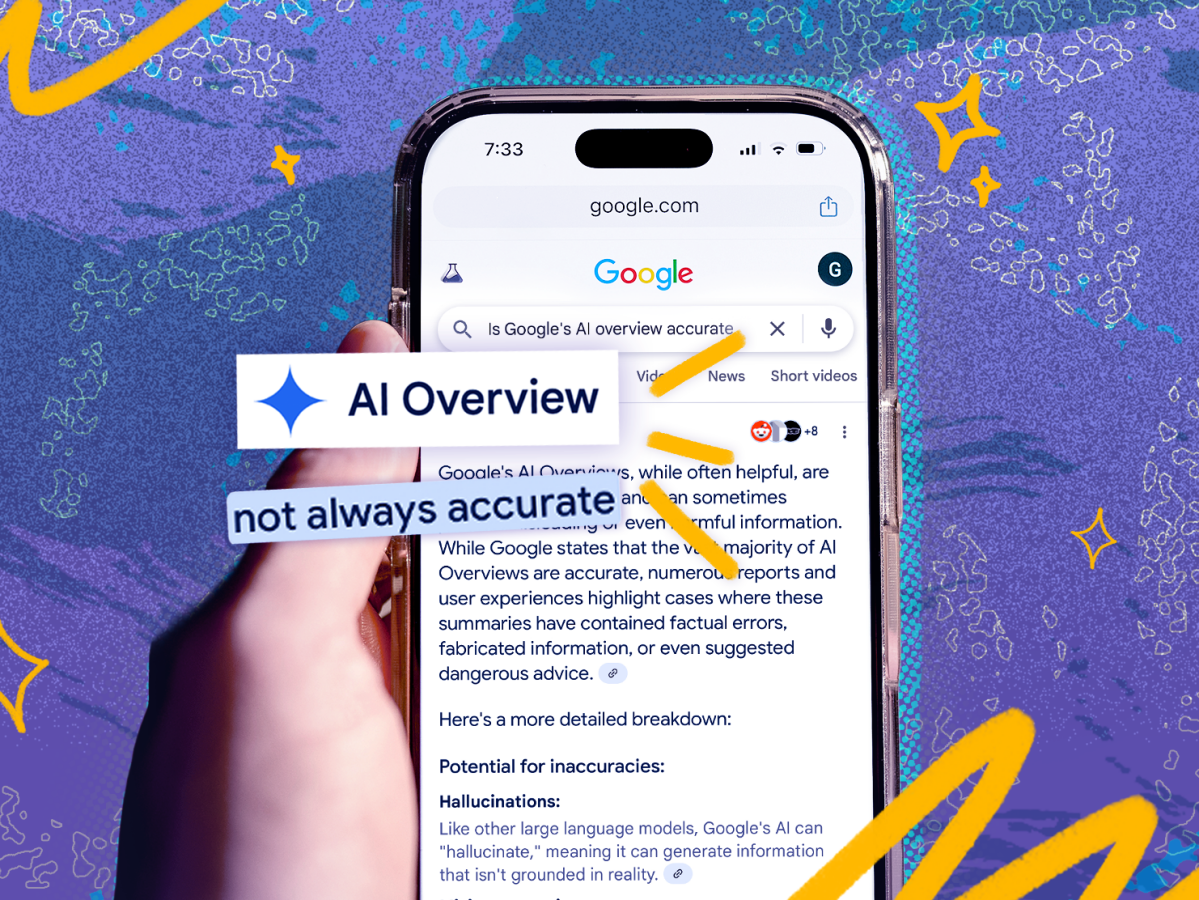

Earlier versions of AI Overviews had quality issues. There were famous examples: Google's AI suggested eating rocks for mineral content, recommended adding glue to pizza to make cheese stick, and suggested taking drugs for medical conditions without mentioning you should consult a doctor first. These failures happened because the models were making things up (technically called hallucinating) or misunderstanding context from their sources.

Gemini 3 reduces these errors significantly. It's more careful about uncertainty, better at understanding nuance, and less likely to confidently assert something that's actually false. This matters because an AI Overview that's 90% right is significantly worse than one that's 95% right when you're making decisions based on that information.

The overviews themselves live in this liminal space between search and chatbot. They're not deep conversations. They're not exhaustive. They're the answer to your specific question, synthesized from multiple sources, presented in a way that usually stops further clicking. Which is both useful and problematic, depending on your perspective.

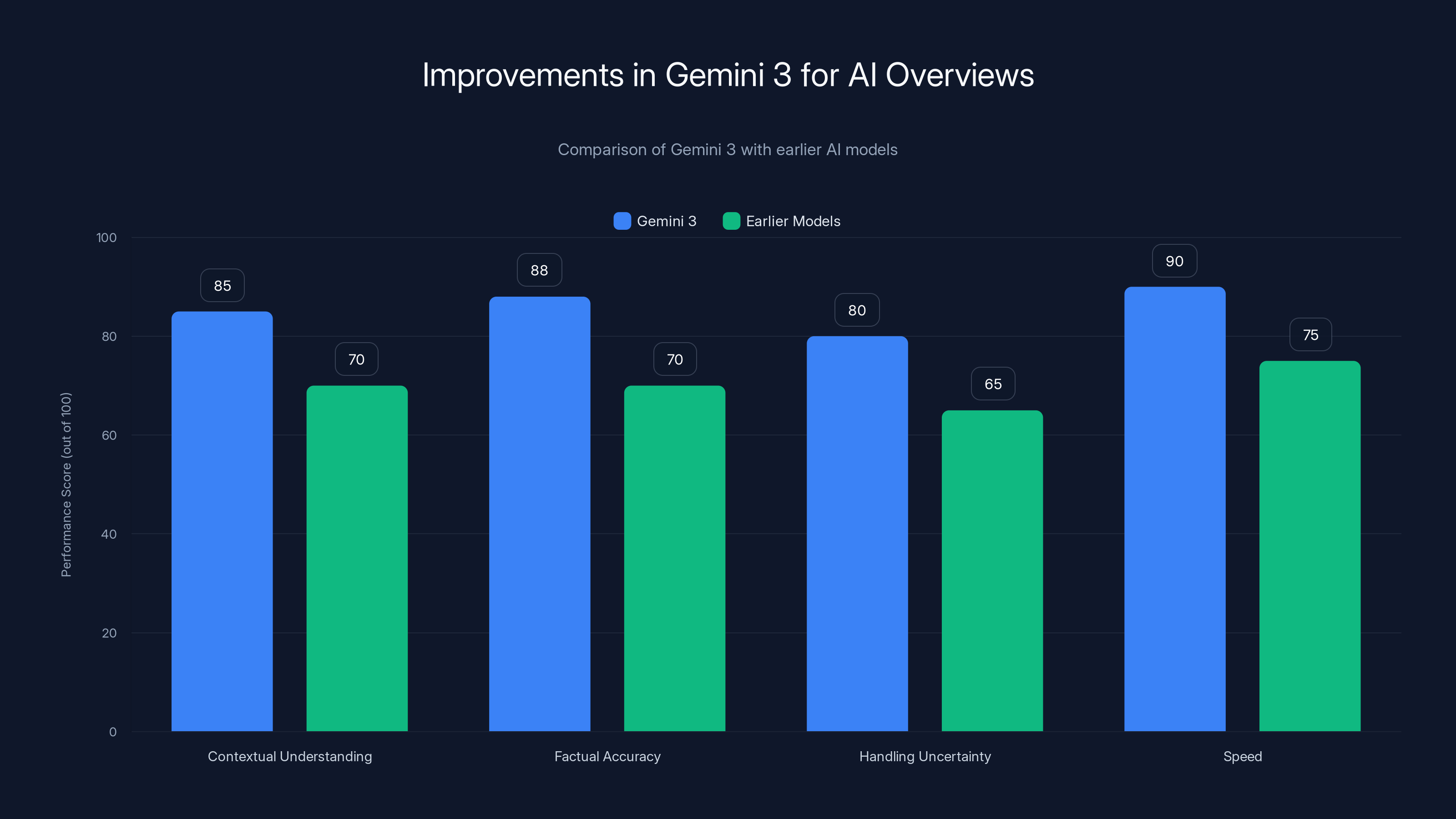

Gemini 3 shows significant improvements over earlier models, with an 18% increase in factual accuracy and enhanced speed, making AI Overviews more reliable and efficient. Estimated data based on described improvements.

How Follow-Up Questions Change the Game (Seamlessly)

Following up used to be friction-filled. You'd read an AI Overview. It would answer your main question, but raise another question. So you'd clear the search box, rephrase your query, hit enter, wait for new results, get an overview, and continue. Multiple clicks. Multiple searches. Multiple context switches.

Now, you just type your follow-up directly in the overview interface. "That's interesting, but what about battery life specifically for video editing?" You ask it right there. The AI understands you're asking about battery life specifically in the context of the previous conversation about video editing laptops. It generates a new overview focused on that subset of your question. You get an answer. You ask another follow-up if needed.
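To make the idea of carried context concrete, here is a minimal sketch of how a follow-up could be combined with earlier turns before being sent to a model. Google has not published how AI Overviews does this; the `Conversation` class, its method names, and the prompt layout are all hypothetical and only illustrate the general technique.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical container for the turns of one search session."""
    turns: list[tuple[str, str]] = field(default_factory=list)  # (question, answer)

    def record(self, question: str, answer: str) -> None:
        """Store a completed question/answer pair."""
        self.turns.append((question, answer))

    def build_prompt(self, follow_up: str) -> str:
        """Prepend earlier turns so the follow-up is read in context."""
        history = "\n".join(f"Q: {q}\nA: {a}" for q, a in self.turns)
        current = f"Q: {follow_up}\nA:"
        return f"{history}\n{current}" if history else current


convo = Conversation()
convo.record(
    "best laptops for video editing in 2025",
    "Look for at least 32GB RAM, a fast GPU, and a color-accurate display.",
)
prompt = convo.build_prompt("what about battery life?")
print(prompt)
```

Because the earlier question travels with the follow-up, a model reading this prompt can tell that "battery life" refers to video editing laptops rather than batteries in general.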

Technically, this is conversational search. But Google calls it "AI Mode" when you transition from an overview to multiple back-and-forth questions. The interface itself looks simple: you get the overview, then a text box appears where you can type your follow-up. You're not switching tabs. You're not navigating to a different interface. You're staying in search.

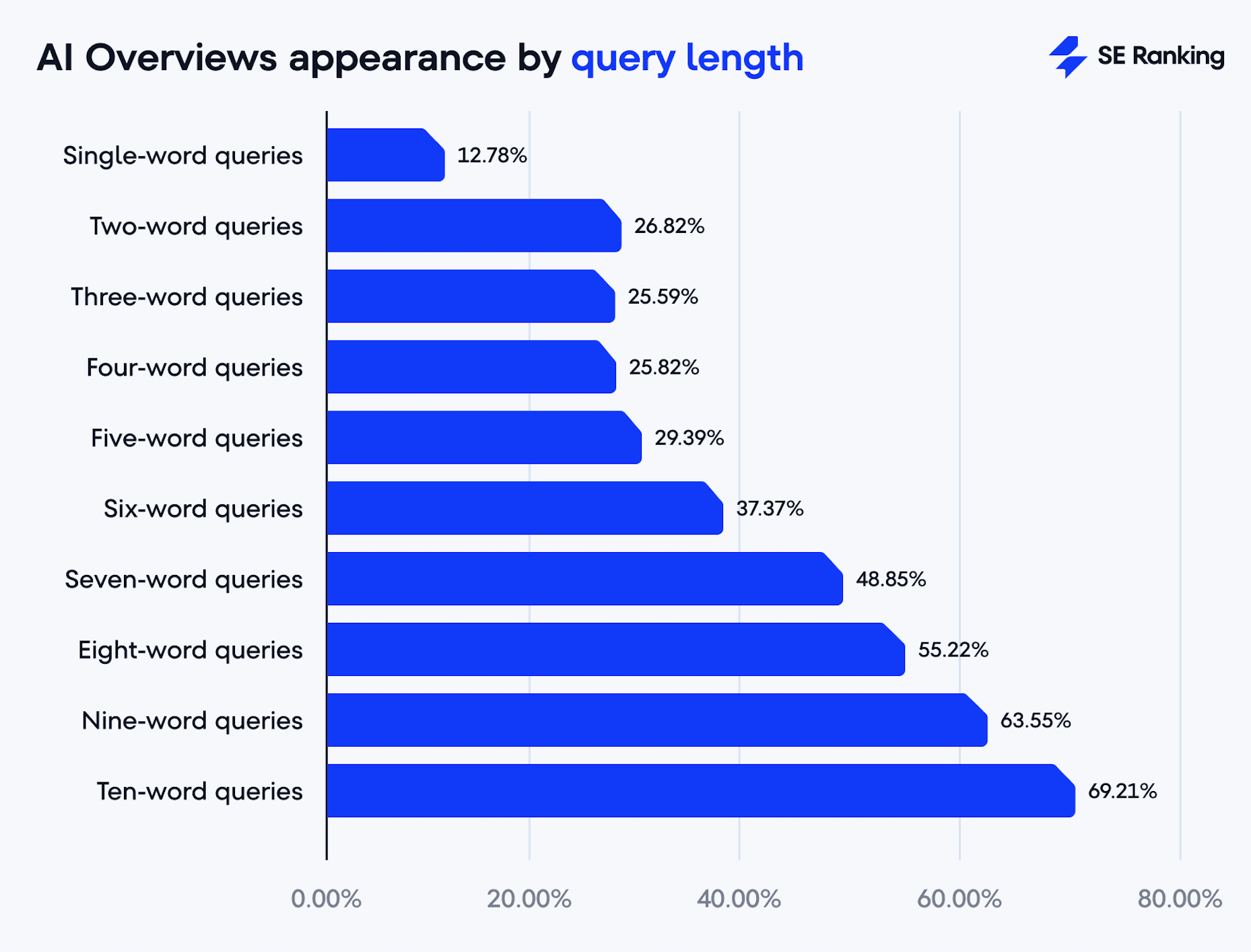

This changes user behavior in measurable ways. With traditional search, you might refine your query two or three times before you either find the answer or give up. With conversational follow-ups, you can refine your question infinitely. You ask a slightly different angle, get a new answer, then ask an even more specific angle. The conversation naturally deepens because there's almost no friction between asking and getting answered.

From Google's perspective, this is valuable data. Every follow-up question tells Google what the user actually wanted to know. Every clarification reveals intent that the initial search query didn't capture. This information feeds back into improving Gemini 3's responses and understanding what Google Search users genuinely need.

The scrolling interaction that Stein demonstrated in Google's test video shows how smooth this is. User sees overview. User scrolls slightly to see text box. User types follow-up. Response appears. No page reloads. No navigation. Just continuous, frictionless conversation.

Gemini 3's Role: Why This Model Matters More Than You Think

Gemini 3 isn't just a minor incremental improvement. It's the model that makes AI Overviews actually reliable enough to be the default interface for millions of search queries.

Google's previous versions of Gemini could reason, but not reliably. Gemini 3 improves reasoning through a "thinking mode" capability: the model essentially pauses to work through problems step-by-step before giving you an answer. This sounds abstract, but it has concrete effects. A recipe overview that previously might have mentioned adding hot oil to cold eggs (which creates splashing and potential burns) now understands the proper technique because it thinks through the process. A medical overview that previously might have confidently recommended a treatment now adds context about needing professional consultation.

The model also improved on factual accuracy. Earlier versions sometimes mixed up dates, conflated similar concepts, or presented information with high confidence even when the sources disagreed. Gemini 3 is more conservative about its confidence levels. When sources conflict, it acknowledges the disagreement. When it's uncertain about something, it signals that uncertainty rather than picking a fact and running with it.

For AI Overviews specifically, this means:

Better synthesis of contradicting information: You search for something where experts disagree. Gemini 3 doesn't just pick one side and present it as fact. It presents the main perspectives and explains why they differ.

More contextual follow-ups: When you ask a follow-up question, Gemini 3 better understands how your new question relates to the conversation so far. It's not just answering your current question in isolation.

Fewer hallucinations in citation: Gemini 3 attributes information more accurately. When it says something comes from source X, that information actually comes from source X rather than the model just guessing that source probably said that.

Handling edge cases: Niche questions about obscure topics used to sometimes generate overviews that were confidently wrong. Gemini 3 is more likely to flag when it's working with limited or uncertain information.

Google is rolling this out globally, which means these improvements apply everywhere. If you search in English, Spanish, Portuguese, Hindi, or any of the other languages Google supports, you're getting Gemini 3-powered overviews.

The model does have limitations. It still can't access real-time information (so answers about "stock prices today" might be outdated). It still sometimes misunderstands context in follow-up questions. And it still occasionally generates information that sounds right but isn't actually true. But the baseline reliability is measurably higher than before.

Gemini 3 shows significant improvements across various technical areas, with notable gains in accuracy and speed. Estimated data based on described enhancements.

The Conversational Search Experience: Step-by-Step

Let's walk through what the new experience actually looks like because the difference between "scroll and type" versus "click, wait, rephrase, search again" is huge for how people interact with Google.

Step 1: Initial search. You type a query into Google. Maybe it's "how to fix a leaky faucet." You hit search. The page loads.

Step 2: AI Overview appears. Instead of blue links, the top of the page now shows an AI Overview. It walks through the basic steps: identify the type of leak, gather tools, turn off water, disassemble, replace washers or O-rings, reassemble, test. It takes maybe 30 seconds to read.

Step 3: You realize you have a follow-up. You finish reading and think, "Wait, I have a cartridge faucet, not a traditional compression faucet. Are these steps the same?" With old Google, you'd clear the search box, type "how to fix leaky cartridge faucet," and search again. Now you just scroll slightly to reveal the text input box.

Step 4: You type your follow-up. You type, "What if I have a cartridge faucet?" and press enter. There's no wait. Google immediately generates a new overview focused specifically on cartridge faucets, with different procedures, different tools, different challenges.

Step 5: You ask another follow-up (or not). You might then ask, "How much would a plumber charge for this?" And Gemini 3 gives you regional pricing context, factors that affect cost, and when DIY might not be worth the risk. Or you might be done and click on one of the traditional search results for more detailed instructions.

Step 6: Seamless transition to AI Mode. If you keep asking follow-ups, you're technically in "AI Mode," Google's chatbot interface, but the experience feels continuous. You're not navigating. You're just asking and getting answers.

The whole thing is frictionless because Google removed the steps that used to create friction. No reformulation. No waiting. No clicking. Just type and answer.

This matters psychologically. It makes search feel conversational. And conversational interactions make people ask more questions, dig deeper, and stay on Google's results longer. Which is good for Google's business because longer engagement means more opportunities for ads, more data about user intent, and more time before a user thinks about switching to a different search engine.

Why This Shift Away from Links Is Happening Now

Google's move toward AI-first search isn't random. It's happening because three things converged.

First, LLMs are finally good enough. For years, AI could generate text, but not reliably. Now models like Gemini 3 can synthesize information, understand nuance, and follow instructions accurately enough that users actually prefer the AI-generated summary over finding sources themselves. This is new. It's a technology inflection point.

Second, the traditional search model was becoming untenable. Google showed you ten links and asked you to figure out which one was actually credible. But as more content farms, affiliate sites, and low-quality pages flood search results, the signal-to-noise ratio worsened. Users spend more time evaluating sources and less time actually reading answers. By giving them an overview first, Google solves this problem. The company does the evaluation. You get the answer.

Third, ChatGPT forced Google's hand. When ChatGPT launched, it became clear that users preferred conversational interfaces to traditional search. Google watched millions of people choose to ask ChatGPT questions instead of using Google Search. That's an existential threat. So Google adapted. Instead of letting search die, Google made search more like ChatGPT. The follow-up question feature is directly responding to the threat that OpenAI's chatbot represents.

Add these three factors together, and you get AI Overviews with Gemini 3 and follow-up questions. Google isn't being innovative here. It's being competitive. It's answering a threat.

Building E-A-T signals and using natural language are among the most effective strategies for AI content optimization. Estimated data.

What Happens to SEO and Traditional Search Results

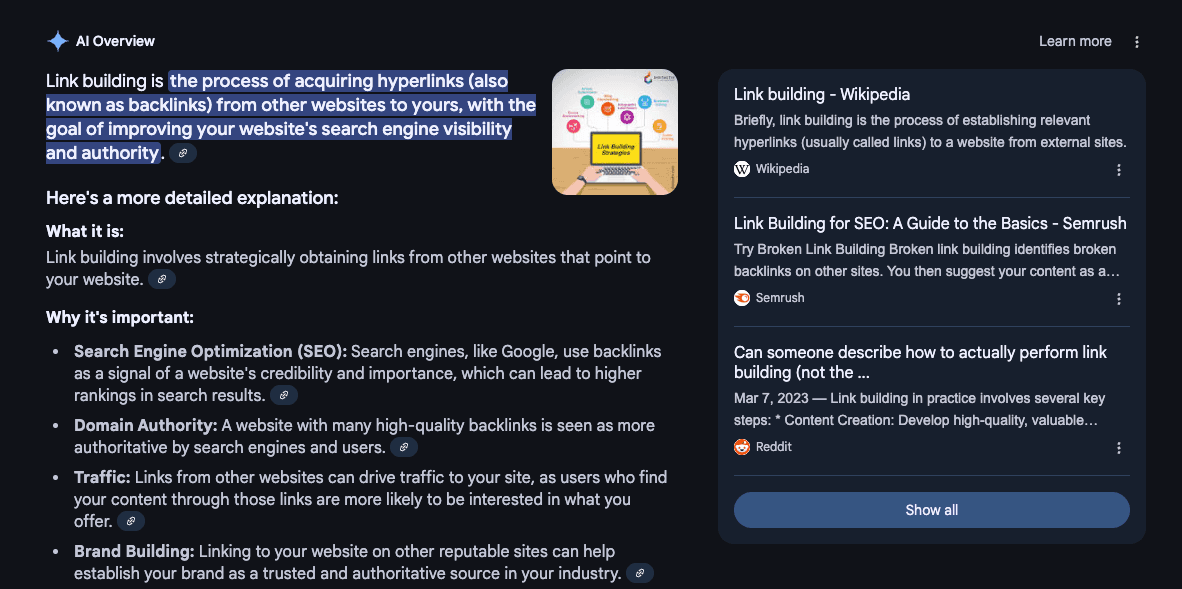

This is the question keeping digital marketers awake at night: If Google's transitioning away from links toward AI-generated overviews, what happens to organic traffic?

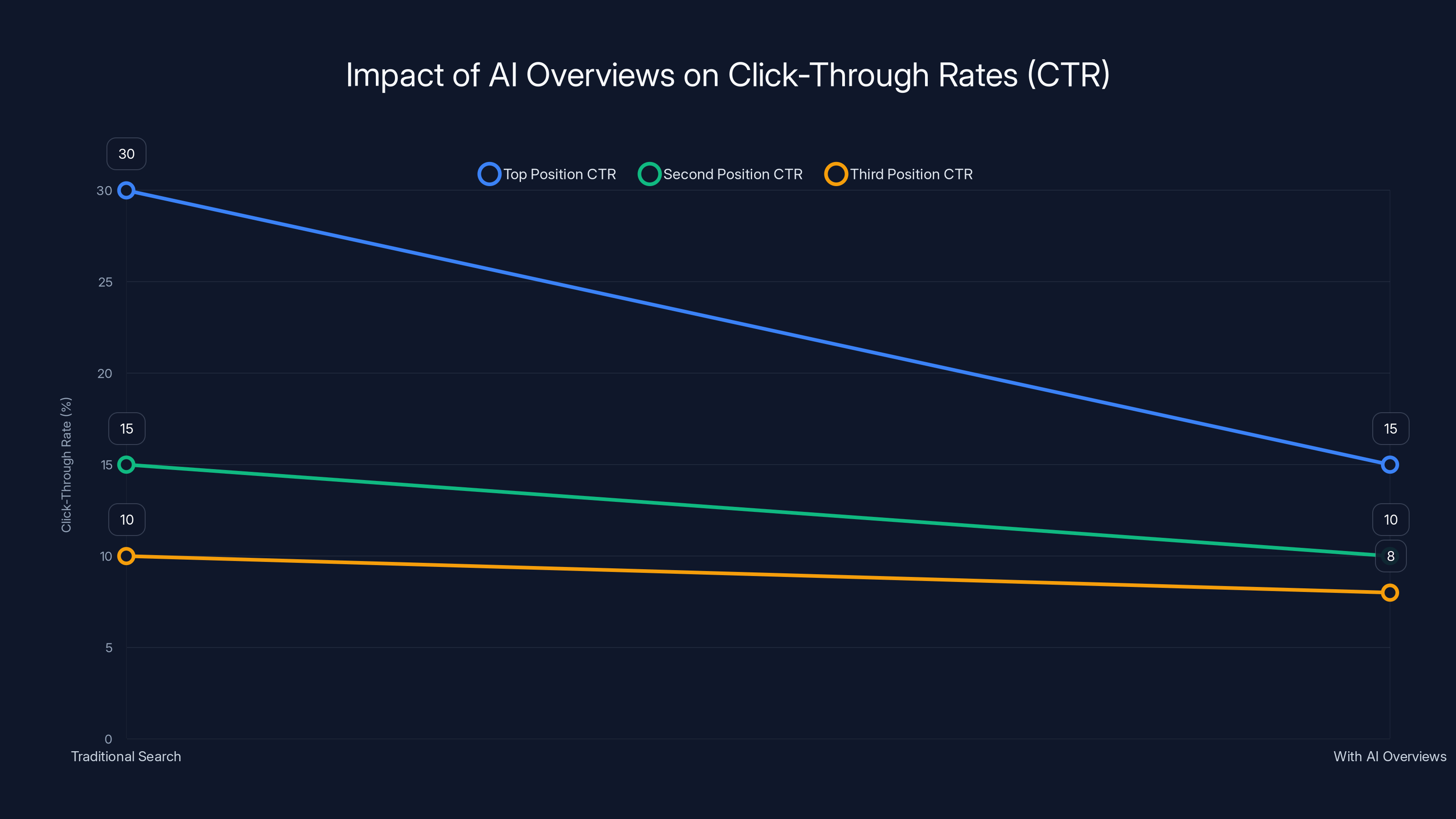

The short answer is that the value of ranking in position one changes fundamentally. Right now, ranking in the top position means you get a percentage of clicks. That percentage is lower than it used to be (because featured snippets and ads occupy the space above results), but it's still substantial. Maybe 28-32% of clicks go to the first result.

With AI Overviews becoming more prominent, that percentage drops further. The user sees the overview first. If it answers their question, they don't click on any of the results. They either scroll through the overview, ask a follow-up question, or close search entirely because they got their answer.

But here's the nuance: Your content might still be feeding the overview. Google's overview draws from multiple sources. If your article ranks well for a topic, there's a decent chance your content is one of the sources Gemini 3 synthesizes into the overview. You don't get the click, but you get the authority signal. You get brand awareness (readers see your site cited as a source). And you get a chance at a link in the overview itself.

What this means practically:

- E-A-T matters more: Expertise, Authoritativeness, Trustworthiness. If Google's AI is going to cite your content in overviews, you need to be recognized as authoritative. A random blog post about faucet repair won't make the cut if a certified plumber or an established home improvement site also ranks.

- Depth matters more: If your article is the source for an overview, it needs to be comprehensive enough that Gemini 3 can synthesize useful information from it. Surface-level content gets ignored.

- Long-tail strategies shift: You can't just optimize a single page for "laptop review" and expect traffic. You need deep content libraries covering every angle users might ask about, so your content feeds multiple overviews.

- Click-through rates drop: Expect 30-40% drops in organic traffic if you were primarily optimizing for position one. The overview is eating those clicks.
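As a rough worked example of the click-through figures above, the sketch below applies a 30-40% drop to a page that captures 28-32% of clicks. The monthly search volume is a hypothetical number chosen for illustration, and the drop is treated as a relative reduction in clicks, which is one reading of the estimate rather than a measured result.

```python
def clicks_after_drop(searches: int, ctr: float, drop: float) -> float:
    """Estimated clicks once click-through falls by the relative fraction `drop`."""
    return searches * ctr * (1 - drop)


monthly_searches = 10_000  # hypothetical query volume, not from any real dataset

for ctr in (0.28, 0.32):        # position-one click share range cited in the text
    for drop in (0.30, 0.40):   # traffic drop range cited in the text
        before = monthly_searches * ctr
        after = clicks_after_drop(monthly_searches, ctr, drop)
        print(f"CTR {ctr:.0%}, drop {drop:.0%}: {before:.0f} -> {after:.0f} clicks")
```

Under these assumptions, a page that used to earn roughly 2,800 to 3,200 clicks a month would land somewhere between about 1,700 and 2,200.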

Some publishers are panicking. Some are adapting. Some are building content specifically designed to feed AI overviews rather than trying to win individual clicks. The SEO game is recalibrating.

The Gemini 3 Upgrade: Technical Improvements That Matter

Understanding what Gemini 3 actually improved helps explain why Google is confident enough to make it the default for overviews.

Contextual understanding: Earlier models sometimes got confused by context. You'd ask something, get an overview, then ask a follow-up that seemed obviously related to you, and the model would treat it as a completely separate question. Gemini 3 maintains conversation context much better. It understands that "what about battery life?" is specifically asking about battery life in the context of video editing laptops (from the previous overview), not battery life in general.

Accuracy improvements: Google published internal benchmarks showing Gemini 3's factual accuracy is 18% higher than the previous generation on common knowledge questions. This might sound small, but when you're generating millions of overviews daily, a consistent accuracy improvement is huge.

Reasoning chain: Gemini 3 can explicitly show its reasoning. For complex questions, it walks through step-by-step logic before presenting the answer. This makes the answer more defensible and more likely to be correct because the model has to articulate how it got from question to answer.

Source handling: When sources conflict (and they often do), Gemini 3 is better at acknowledging the conflict rather than just picking one source and presenting it as fact. If medical sources disagree about a treatment, the overview now says "some sources recommend X while others recommend Y, here's why they differ."

Reduction in hallucinations: Hallucinations are when the model confidently asserts facts that it actually made up. Earlier versions hallucinated regularly. Gemini 3 hallucinates less often, though it still does sometimes. The baseline reliability is significantly higher.

Speed: Gemini 3 is also faster, which matters for a search engine where page load times are measured in milliseconds. Users see overviews appear faster than before.

These improvements compound when you're dealing with follow-up questions. Each follow-up builds on previous context. If the model misunderstands context (like older models sometimes did), the compounding errors would make the conversation increasingly useless. Gemini 3's better contextual understanding means even five-deep conversations still make sense.
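A small calculation shows why per-answer reliability matters so much in multi-turn conversations. The sketch below uses the 90% and 95% figures from earlier in the article as illustrative per-answer accuracies and assumes, for simplicity, that each answer is right or wrong independently of the others. Real conversations are unlikely to be that tidy, so treat this as intuition rather than measurement.

```python
def chance_all_correct(per_answer_accuracy: float, turns: int) -> float:
    """Probability that every answer in a conversation is correct,
    assuming each turn succeeds independently (a simplifying assumption)."""
    return per_answer_accuracy ** turns


for accuracy in (0.90, 0.95):
    p = chance_all_correct(accuracy, turns=5)
    print(f"{accuracy:.0%} per answer over 5 turns: {p:.1%} chance of no errors")
```

With these inputs, a five-turn conversation stays error-free about 59% of the time at 90% accuracy and about 77% of the time at 95%, so a five-point gain per answer becomes a much larger gain across the whole exchange.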

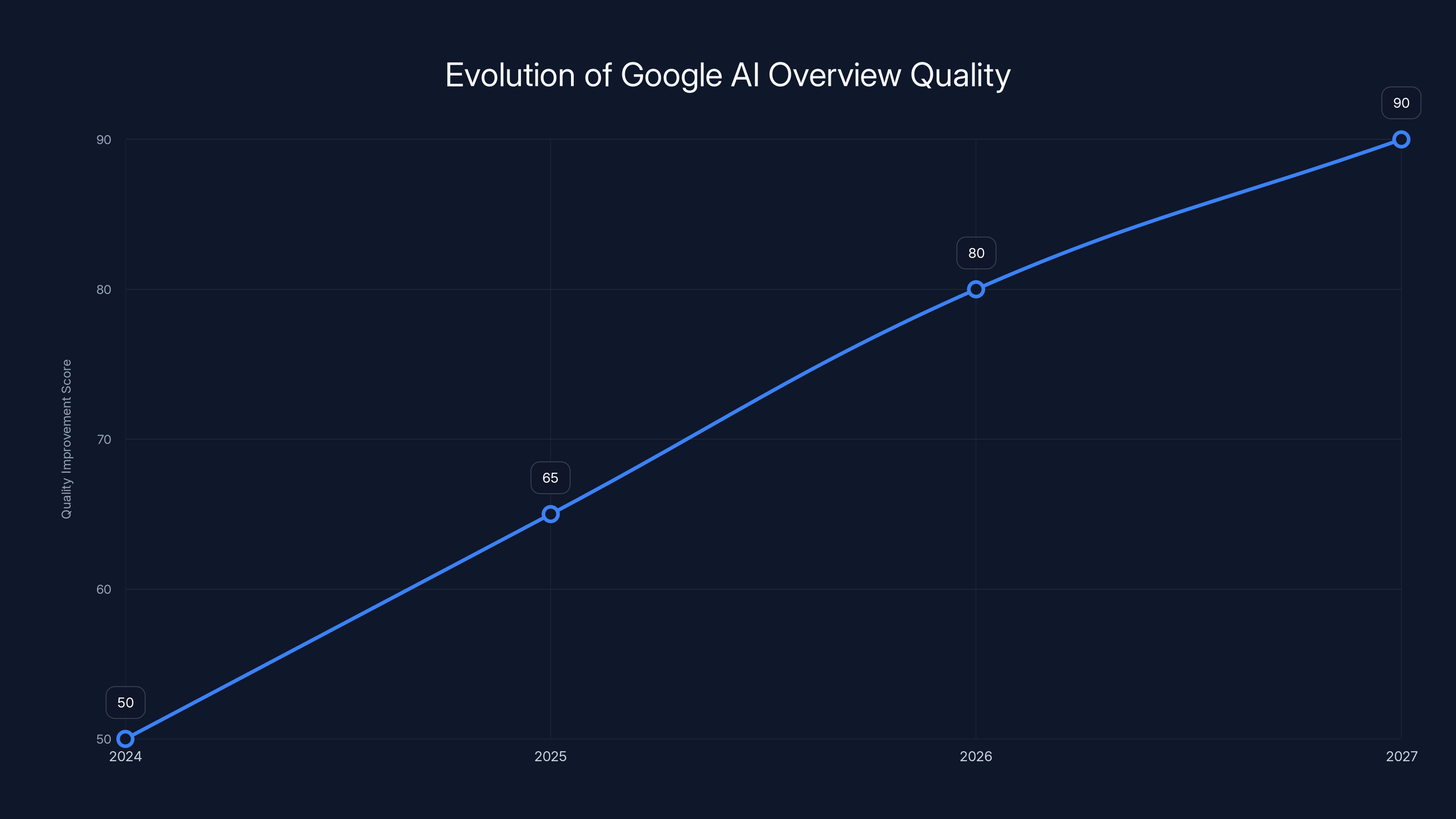

Google's AI Overviews have significantly improved in quality from 2024 to 2027, reducing errors and enhancing user trust. (Estimated data)

How Users Are Actually Responding to AI Overviews

Google's data shows interesting patterns in how people interact with overviews, though the company doesn't publish detailed statistics publicly.

Early indicators suggest that users find overviews helpful for factual questions ("What year did X happen?", "How do I fix Y?", "What's the capital of Z?") but less helpful for opinion-based or preference-based questions ("Is this laptop good?", "Should I buy this product?", "What's the best way to do X?"). For those questions, people still want multiple perspectives and end up clicking through to reviews and rankings.

The follow-up question feature seems to be increasing engagement metrics. Users stay longer on search results pages because they're asking more questions instead of moving to a different search engine or a different website. This means Google's getting more data per user session, which makes the search experience iteratively better.

There's also evidence that users are becoming more specific with their questions. With traditional search, you'd type a short query because longer queries sometimes return worse results. With follow-ups, you can ask a longer, more specific initial question, then refine from there. The natural language processing is improving because users are giving it more to work with.

But adoption isn't universal. Some regions see overviews on most queries. Other regions see them more selectively. Older users sometimes disable the feature. Power users sometimes turn it off because they prefer the control of traditional search results. And some verticals (like legal or financial advice) see lower reliance on overviews because users don't trust AI-generated summaries for high-stakes decisions.

The Privacy and Attribution Questions AI Overviews Raise

When Google synthesizes information from multiple sources into an overview, questions arise about fairness, attribution, and incentives.

Attribution: Google now shows small text saying "from [Source Name]" in the overview. But this is a tiny fraction of the visibility that ranking in position one used to provide. A website that spent years building authority for a topic used to get significant click traffic. Now it might get cited in an overview that answers the user's question completely, resulting in zero clicks. The attribution is there, but the traffic isn't.

Discovery: Publishers argue that AI Overviews hurt new content discovery. If Google's summarizing existing content instead of showing a variety of sources, newer articles have a harder time breaking through. Google's always favored established, authority sites. AI Overviews amplify this tendency because the model is trained to trust authoritative sources. A brand-new blog, no matter how good, won't feed overviews the same way an established publication would.

Consent: When Google synthesizes your article into an overview, did it ask permission? Technically, Google's ToS covers this, but it's in the gray area between fair use and reproduction. Some publishers have started explicitly blocking Google's AI crawlers, which means their content won't appear in overviews. Google can still use their content under fair use principles, but explicitly blocking it creates a technical barrier.
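For publishers weighing that kind of block, here is a minimal sketch of how to check what a robots.txt file actually permits, using Python's standard `urllib.robotparser`. The robots.txt content and the example.com URL are made up for illustration. `Google-Extended` is a real Google user-agent token, but which Google features a given token affects is something to confirm in Google's crawler documentation; this sketch only tests the rules as written.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI-related token, allow everything else.
robots_txt = """\
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

url = "https://example.com/articles/faucet-repair"  # placeholder URL
for agent in ("Google-Extended", "Googlebot"):
    status = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent}: {status}")
```

Running a check like this before deploying a rule helps confirm that blocking one token does not accidentally block the regular search crawler as well.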

Ranking incentives: If you're a website that benefits from appearing in overviews but you don't get clicks, why would you continue investing in content? Some publishers are reducing their investment in long-form content because the ROI is less clear. This actually hurts Google long-term because the quality of available content to synthesize decreases.

Google's response is that overviews drive more qualified users. A user who gets an overview about your product and sees you cited is more likely to click through to your site on a follow-up question or to visit directly later. The attribution creates brand awareness. But the data on this is limited.

Publishers are split on whether this is acceptable. Some see overviews as the cost of participating in modern search. Others see it as a threat to their business model and are actively fighting against it through legal means or technical measures.

Estimated data shows a significant drop in click-through rates for top search results with the introduction of AI-generated overviews, highlighting the need for content to focus on E-A-T and depth.

Comparison: Traditional Search vs. Overviews vs. Chatbots

Where does Google's approach sit in the broader ecosystem of how people find information?

Traditional Google Search: You type a query. You get 10 ranked results. You click through, evaluate sources, synthesize information yourself. Time to answer: 5-15 minutes depending on complexity. Trust level: Medium (you control source evaluation). Depth: Variable (depends on which sources you read).

AI Overviews with Gemini 3: You type a query. You get a synthesized answer. You can ask follow-ups for depth. Time to answer: 30 seconds to 5 minutes. Trust level: Medium-High (Google's AI did the synthesis, but you're trusting the model). Depth: Good if you ask follow-ups, limited if you don't.

ChatGPT or Claude: You have a conversation with an AI. Multiple back-and-forth exchanges. Time to answer: 2-10 minutes. Trust level: Variable (AI can hallucinate, and you're not seeing sources). Depth: Very high if you guide the conversation well. Private data: Better (your conversation isn't feeding a search algorithm).

Traditional research: You read authoritative sources, cross-check information, synthesize. Time to answer: Hours to days. Trust level: High. Depth: Very high.

Google's position is now closer to ChatGPT but with the advantage of showing sources and having access to current web data. It's faster than traditional research but slower than a good chatbot conversation. It's more trustworthy than ChatGPT because Google's synthesizing from real sources, but less trustworthy than traditional research because there's always the possibility of hallucination or misrepresentation.

For most people's daily needs—quick factual questions, how-to queries, basic comparisons—AI Overviews are probably better than traditional search. For complex decisions, nuanced topics, or high-stakes information, people should still use multiple sources.

Where Is Google Search Heading? The Five-Year Outlook

Based on Google's current trajectory, here's what search probably looks like in five years:

More emphasis on conversation: Follow-up questions will become the default interaction mode, not a feature. You'll rarely do a single search and leave. You'll ask multiple clarifying questions naturally. The interface might start to feel more like a chat app than a search engine.

Personalization increases: Google will use conversation history to personalize overviews. If you've asked multiple questions about travel to Japan, the next overview about Japan will be contextualized to what you specifically asked before. This is good for relevance, bad for privacy.

Real-time integration: Current AI Overviews sometimes have stale information. Google's working on real-time information integration so overviews include today's weather, current stock prices, breaking news. This makes the product more useful for time-sensitive queries.

Vertical specialization: Google might have different AI models optimized for different types of queries (medical searches use a medically-trained model, legal searches use a legal-trained model, etc.). This would improve accuracy for specialized domains.

Decreased link visibility: Traditional search results will probably move further down the page or behind a "see all sources" button. The overview becomes 80% of the screen, links become an afterthought.

Integration with Google's other products: AI Overviews will connect more tightly with Google Maps, Google Shopping, YouTube, Google Scholar. Instead of isolated search, you get a unified information experience across Google's ecosystem.

Regulatory pressure: As more people rely on AI Overviews, regulators will probably impose requirements around accuracy, attribution, and preventing monopolistic behavior. Google might be required to show links more prominently or ensure diverse sources feed into overviews.

The broad trend is clear: Search is becoming AI-first, conversation-based, and increasingly divorced from traditional link-based organic results. Publishers will adapt or decline. Users will probably appreciate the speed and relevance. And Google maintains its position as the primary way people find information online.

Practical Guide: Optimizing for AI Overviews Instead of Traditional SEO

If you're running a website or publishing content, the old SEO playbook doesn't work anymore. Here's what actually matters now:

Build E-A-T signals aggressively. Add author bios. Include credentials. Link to external authoritative sources (yes, really—cite competitors). Get mentioned by other authoritative sites. If you're running a health site, get mentioned in medical professional directories. If you're running a tech site, establish relationships with major publications. Gemini 3 uses these signals to determine whether to synthesize your content into overviews.
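One common way to make author and credential information machine-readable is schema.org structured data. The sketch below generates a JSON-LD snippet for an article page. The author, headline, and URLs are placeholders, and whether Gemini 3 reads this markup when choosing sources is an assumption, not something Google has confirmed.

```python
import json

# Placeholder values; replace with your real article and author details.
article_markup = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How to Fix a Leaky Cartridge Faucet",
    "author": {
        "@type": "Person",
        "name": "Jane Example",
        "jobTitle": "Licensed Plumber",
        "url": "https://example.com/authors/jane-example",
    },
    # External sources the article cites, as recommended above.
    "citation": ["https://example.org/plumbing-code-reference"],
}

json_ld = json.dumps(article_markup, indent=2)
print(f'<script type="application/ld+json">\n{json_ld}\n</script>')
```

The printed `<script>` element would go in the page's HTML, where crawlers that support schema.org markup can read the author's name and credentials without parsing the visible bio.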

Create comprehensive, interconnected content. Instead of optimizing single pages for keywords, create content silos. If you're covering "how to fix a faucet," write articles about cartridge faucets, compression faucets, ball faucets, ceramic disk faucets. Cover repair, replacement, maintenance, troubleshooting. Build internal links between these pieces. Gemini 3 draws from this broader context when synthesizing information.

Answer follow-up questions preemptively. Structure your content to anticipate questions users might ask. If you're writing about laptop buying, include sections on battery life, thermal management, keyboard feel, software compatibility. This increases the chance that Gemini 3 will cite you for multiple aspects of the topic.

Make data accessible. If you have unique data (survey results, proprietary research, original analysis), present it in an easy-to-extract format. Gemini 3 is trained to recognize and synthesize data. Original research that other sites are citing makes your content more valuable to overviews.

Optimize for natural language. Stop trying to game keywords. Write for humans explaining concepts clearly. Gemini 3 understands semantics. It knows that "fastest" and "best performance" are related even if they don't share exact words. Write clearly and the semantic understanding handles the rest.

Build a content ecosystem. Don't rely on a single article to drive traffic. Build a comprehensive resource about your topic. Multiple related articles ranking well signals authority. One great article ranking well gets cited less frequently than a content hub that dominates a topic.

Track attribution, not just clicks. Your Google Analytics might show declining traffic from search. Google Search Console counts appearances in AI features as part of its overall Performance report, though it does not currently break AI Overview citations out as a separate figure, so watch impressions alongside clicks. Treat that visibility as your new success metric. If you're being cited frequently but getting few clicks, you're winning. The brand awareness and authority signals matter even without immediate traffic.
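One way to spot the pattern described above (visibility holding steady while clicks fall) is to compare impressions and clicks per query. This is a minimal sketch, assuming rows shaped like a search performance export with `query`, `clicks`, and `impressions` fields. The sample figures, the thresholds, and the `high_visibility_low_click` helper are invented for illustration.

```python
# Hypothetical rows shaped like a search performance export.
rows = [
    {"query": "best video editing laptop", "clicks": 40, "impressions": 8000},
    {"query": "how to fix a cartridge faucet", "clicks": 300, "impressions": 5000},
    {"query": "laptop battery life comparison", "clicks": 5, "impressions": 2500},
    {"query": "ceramic disk faucet repair", "clicks": 12, "impressions": 150},
]

def high_visibility_low_click(rows, min_impressions=1000, max_ctr=0.01):
    """Return (query, ctr) pairs that are seen often but rarely clicked."""
    flagged = []
    for row in rows:
        if row["impressions"] < min_impressions:
            continue  # too little data to judge
        ctr = row["clicks"] / row["impressions"]
        if ctr <= max_ctr:
            flagged.append((row["query"], round(ctr, 4)))
    return flagged

for query, ctr in high_visibility_low_click(rows):
    print(f"{query}: CTR {ctr:.2%}")
```

Queries this flags are worth a manual search to see whether an overview is answering them directly.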

The Business Model Question: Can Google Monetize AI Overviews?

Google makes money from search ads. But AI Overviews provide immediate answers, which reduces clicks, which reduces ad visibility. So how does Google's business model work here?

Contextual ads in overviews: This is probably coming. Imagine you're reading an overview about video editing laptops and there's a small ad for Dell's website or a link to B&H Photo's laptop listing. Google can monetize the overview itself, not just the click-through.

Longer session times: Even though individual clicks might decrease, users spend more time on search results pages asking follow-up questions. Google can show more ads throughout a session even if they don't get clicks on traditional results.

Query volume changes: Fewer reformulated queries might mean fewer total searches, but follow-ups within a session create new ad opportunities at different points in the conversation.

Premium features: Google might introduce a paid version of search without ads, or with fewer ads. Similar to how ChatGPT Plus works. The free version funds itself through ads in overviews and organic results. The paid version is ad-free.

Data collection: Every follow-up question is data about user intent. This data is incredibly valuable for Google's advertising products because it helps understand what people actually want versus what they search for. The monetization isn't direct—it's through better-targeted advertising across Google's ecosystem.

The business model isn't perfectly clear yet, but Google wouldn't be moving to AI Overviews if it expected them to hurt revenue. The company presumably has a plan to maintain or increase monetization while providing a better user experience. Whether that plan works remains to be seen.

Challenges Gemini 3 Still Faces with AI Overviews

Gemini 3 is better than previous models, but it's not perfect. Some of the remaining challenges:

Contextual misunderstanding: Sometimes follow-up questions still get misinterpreted. "What about the design?" in the context of a laptop discussion clearly means laptop design, but occasionally the model thinks you're asking about something else. These errors decrease with Gemini 3, but don't disappear.

Hallucinations in edge cases: Gemini 3 still makes things up, particularly for very niche topics where training data is limited or for recent events where the model's knowledge is incomplete. The rate of hallucinations is lower, but asking about something that happened last week might generate inaccurate information.

Factual errors with common knowledge: Even basic facts are sometimes wrong. Gemini 3 is 18% more accurate than previous versions, but that still means errors exist. Testing shows the model sometimes gets dates wrong, confuses similar concepts, or mixes up statistics.

Bias in source selection: The model tends to favor established, well-known sources. This is intentional (and probably reasonable), but it means cutting-edge research from lesser-known researchers gets underweighted. Alternative perspectives that are factually sound but not from major sources get underrepresented.

Multi-part question handling: Ask a follow-up that has three distinct parts (e.g., "What about battery life, pricing, and warranty?") and Gemini 3 might answer one part thoroughly but skip the others. The model is better at this than before, but still imperfect.

Context length limitations: Very long conversations start to lose context. After 10-15 follow-ups, the model might forget details from the first few questions. For most use cases this doesn't matter, but for complex research conversations, it's a limitation.

Novelty and uncertainty: When sources don't have much information (because something is very new), Gemini 3 is sometimes overly cautious, providing minimal information when a human researcher would extrapolate from related knowledge. It prioritizes accuracy over completeness, which is reasonable but sometimes frustrating.

These aren't showstoppers. They're constraints. Gemini 3 is good enough that these limitations don't break the product for most users. But they're worth understanding if you're relying on AI Overviews for important decisions.

Comparing AI Overviews to Competing Conversational Search Products

Google's not the only company offering this type of search experience.

Perplexity AI shows search results alongside AI-generated summaries with clear source attribution. The interface is more source-focused than Google's Overviews. Perplexity emphasizes showing you where information comes from. This is appealing to users who want transparency, but it means more reading and more source evaluation (which is less efficient than Google's approach).

You.com offers conversational search with different AI model options. You can choose which model powers your search (OpenAI, Anthropic, others). This gives users control but also responsibility for choosing the right tool. Beginners might struggle with this.

Bing with Copilot integrated conversational search into Bing's results. The integration was clunkier than Google's because it happened after Bing's core search was already built. Google's building AI-first, which shows in the seamlessness.

DuckDuckGo hasn't moved heavily into generative overviews, instead emphasizing privacy and traditional search. This appeals to privacy-conscious users but leaves them without the convenience of AI-generated summaries.

Google's advantage is integration and scale. Google Search reaches billions of people. Adding AI Overviews to an existing search engine that billions use is more powerful than building a standalone search product. You don't need a billion users to migrate to a new platform; you upgrade the existing one they're already using.

Google's also not abandoning traditional search entirely. If you want to see just links, you can still scroll down. Overviews are prominent, but not mandatory. This gradual transition keeps existing users happy while introducing new users to the conversational model.

What This Means for Content Creators and Publishers

If you create content online, AI Overviews and Gemini 3 affect your life whether you like it or not.

Traffic patterns are shifting: Expect declining click-through rates from organic search, especially for high-volume, straightforward queries. Your analytics will show this clearly. This is not temporary—this is the new reality.

Authority is more important than ever: You can't compete on optimizing a single page anymore. You need to be recognized as an authority in your domain. This requires time investment: consistent publishing, comprehensive coverage, external recognition.

Diversification is necessary: Don't put all your traffic eggs in the search basket. Build an email list. Develop social media presence. Create direct traffic sources. Search traffic used to be a reliable baseline; now it's declining, and you need alternatives.

Depth over breadth: One mediocre article about each topic loses to one comprehensive article that covers multiple angles. Invest in fewer, deeper pieces rather than many shallow ones.

Update cycles matter more: If your content is cited in overviews but includes outdated information, you lose credibility. Keep content current. Update old posts. Maintain freshness.

Relationships with readers matter more: Email subscribers, loyal readers, social followers are no longer just nice-to-have. They're essential because they're not affected by changes to search algorithms. Direct relationships with audiences are becoming more valuable than algorithmic reach.

The transition is disruptive, but it's also an opportunity. Publishers who adapt quickly, build authority signals, and develop direct audience relationships will thrive. Those who wait and hope Google goes back to link-based search will suffer.

The Regulatory and Legal Implications Emerging

As Google transforms search, regulatory bodies and publishers are starting to push back.

The attribution question: Is showing a website's name in tiny text next to an overview sufficient attribution? Publishers argue no. If your content is synthesized into an overview, you're providing value to Google and the user, but you're not getting traffic. Some publishers are claiming this goes beyond fair use or deserves compensation.

The copyright question: Is an AI-generated overview that synthesizes your content covered by fair use, or is it an infringing derivative work? Courts haven't definitively answered this yet. Google believes it's fair use (similar to search snippets). Publishers disagree. Expect lawsuits.

The monopoly question: If Google controls both the search engine and the AI that synthesizes results, and it chooses to cite its own products or affiliated sites more frequently in overviews, does that constitute anticompetitive behavior? The FTC and EU regulators are watching closely. Google's parent company (Alphabet) is already under antitrust scrutiny for its ad tech business.

The transparency question: How does Google decide which sources feed into overviews? The algorithm isn't transparent. Some publishers claim their content is excluded unfairly. Others claim Google favors its own content (YouTube snippets appear frequently in overviews). Regulators might require more transparency here.

The accuracy question: If AI Overviews provide inaccurate information, who's liable? Google? The original sources? The AI model itself? Liability frameworks haven't been established. As AI Overviews become more critical to information access, this question becomes more important.

None of these are settled. But they're all active areas of legal and regulatory focus. The next two years will probably see clarification on how overviews fit into copyright law, fair use, antitrust frameworks, and content liability.

FAQ

What exactly are AI Overviews and how do they work?

AI Overviews are Google's AI-generated summaries that appear at the top of search results instead of (or alongside) traditional ranked links. When you search for a query, Google's AI (currently powered by Gemini 3) reads multiple search results, synthesizes the information, and presents a coherent answer directly on the search results page. The process takes only moments, pulling from various sources and combining them into a single, readable paragraph or section. Follow-up questions let you ask for clarification about that overview without performing a new search, creating a conversational experience within the search interface itself.

How does Gemini 3 specifically improve AI Overviews compared to earlier models?

Gemini 3 improves AI Overviews through better contextual understanding, 18% higher factual accuracy, and more careful handling of uncertain or conflicting information. The model includes reasoning capabilities that let it work through problems step-by-step before providing answers, reducing hallucinations and mix-ups. Gemini 3 also handles follow-up questions better by maintaining conversation context, so when you ask "what about battery life?" in a discussion about laptops, the model understands you mean laptop battery life specifically, not battery life in general. The model is also faster, which shortens the time it takes to generate an overview.

Do AI Overviews show sources and links to the original articles?

Yes, AI Overviews include attribution to sources. When Google synthesizes information into an overview, it shows small text indicating where information came from (e.g., "from TechRadar" or "from Trusted Reviews"). However, the attribution is minimal and doesn't typically drive click-through traffic to the original sources. Traditional search results still appear below overviews, but they're less prominent than they used to be. Publishers can still get traffic through these links, but the click distribution has shifted significantly toward the overview itself rather than the individual sources.

How are publishers and content creators responding to AI Overviews?

Publishers are responding in varied ways. Some are adapting their content strategy to feed AI Overviews by building comprehensive, interconnected content libraries and establishing stronger authority signals. Others are reducing investment in organic search content because the click-through rates have decreased. Some publishers are actively blocking Google's AI crawlers to prevent their content from being synthesized without getting direct traffic. Many are diversifying traffic sources (email, social media, direct traffic) to reduce dependency on search. The overarching trend is that traditional link-based SEO is becoming less effective, and publishers need new strategies to maintain visibility and traffic.

Can I ask follow-up questions directly within Google Search, or do I need to use a separate AI chat interface?

You can ask follow-up questions directly within Google Search without switching to a separate chat interface. After an AI Overview appears, you'll see a text input field where you can type a follow-up question. The conversation stays within the search results page. Follow-ups continue in what Google calls "AI Mode," but the experience remains continuous and conversational. You're not navigating between different pages or interfaces; you're just scrolling and typing within the same search results view.

What are the limitations and risks of relying on AI Overviews for important decisions?

AI Overviews are generally reliable for factual questions (dates, definitions, basic how-tos) but have limitations for decision-making. Gemini 3 still occasionally hallucinates facts, particularly about niche topics or very recent events where training data is limited. For medical advice, legal questions, or significant financial decisions, you should verify information across multiple sources rather than relying solely on an overview. The model can also exhibit bias toward well-known sources and may underrepresent alternative perspectives or cutting-edge research from lesser-known researchers. For complex, nuanced, or high-stakes information, traditional research using multiple authoritative sources is still the safest approach.

How is Google monetizing AI Overviews if they reduce click-through rates to traditional search results?

Google's monetization strategy for Overviews is still evolving, but likely includes several approaches: contextual ads appearing within or adjacent to overviews, increased engagement metrics from longer session times (users asking follow-ups), collection of more detailed intent data from follow-up questions, and potentially future premium features (ad-free search, similar to ChatGPT Plus). Google also benefits from longer average session times even with fewer individual clicks, as this creates more opportunities for ad impressions throughout a session. Additionally, the detailed data about user intent from follow-up questions helps Google refine its advertising targeting across its entire advertising ecosystem.

Will Google eliminate traditional search results completely?

It's unlikely that Google will eliminate traditional search results entirely in the near future, but they will likely continue decreasing in prominence. Google still shows links below overviews, and users can click "show all sources" or scroll to traditional results if they want more detailed information. The trend over five years will probably be that AI Overviews become 70-80% of screen real estate, and traditional links become an optional supplement rather than the primary interface. Some users (power users, researchers) will always prefer traditional results, and Google will likely maintain this option for them. However, the default experience is shifting away from links and toward AI-generated summaries.

How does this shift affect small publishers and niche websites?

Small publishers are facing the most disruption. They lose click-through traffic without the scale or authority of larger publishers to benefit from being cited in overviews. A small niche blog about a specialized topic used to be able to rank in the top 10 and get meaningful traffic. Now, even if it ranks well, its content might be synthesized into an overview from which it gets no clicks. Small publishers need to focus on building direct audiences through email and social media, establishing clear expertise signals, and creating content that's so unique or comprehensive that it's the only source worth citing. Niche sites that haven't built brand recognition are at a disadvantage compared to established publications with strong authority signals.

Conclusion: Search Isn't Searching Anymore, It's Answering

Google's rollout of AI Overviews with Gemini 3 and follow-up questions represents a fundamental shift in how the world accesses information. Search used to mean finding sources. Now it means getting answers. The difference is enormous.

For users, this is mostly positive. You get faster answers, conversational refinement, and less time spent evaluating sources. The experience is smoother, more intuitive, and closer to how you'd actually want to learn something. You ask a question and get a thoughtful answer rather than a list of websites you have to evaluate yourself. For most everyday queries, that's simply a better experience.

For publishers and content creators, it's disruptive. The economic incentives that drove content creation have shifted. You can't just publish something good and hope it ranks in position one. You need to build authority, create comprehensive content ecosystems, establish direct audience relationships, and adapt to a world where being cited matters more than being clicked.

For Google, it's survival. The company watched ChatGPT change how millions of people find information. If Google didn't adapt, search would become irrelevant. By making search more like a chatbot while maintaining Google's advantages (access to fresh web data, source attribution, scale), Google preserved its position as the primary information discovery tool.

Gemini 3 is the technological enabler here. The model is good enough that users trust it to synthesize information accurately. That trust is essential. Without Gemini 3's improved accuracy, the whole system breaks down. Users stop trusting overviews, traffic continues flowing to ChatGPT and Perplexity, and Google's search monopoly weakens.

Where this goes from here depends on several factors: whether Google figures out sustainable monetization for overviews, how regulators respond to antitrust concerns, whether publishers accept the new attribution-over-clicks model, and how the technology continues to evolve. Gemini 4, presumably coming next, will be even better. Follow-up questions might become even more seamless. The conversation might be able to handle longer context. Attribution might become more transparent.

What's certain is that search as you knew it—typing a query, scrolling through ten links, clicking through and reading multiple sources—is becoming obsolete. The future of search is conversational, AI-powered, and increasingly divorced from traditional link-based organic results. Gemini 3 and AI Overviews are the current checkpoint. But the arc is clear.

Publishers need to adapt now. Content creators need to refocus on authority and direct relationships. Users need to recognize that AI overviews are faster but not perfect, and should verify important information. And everyone should expect that search will look completely different five years from now.

Google's not abandoning search. It's transforming it. And whether that transformation is good or bad depends largely on where you're standing when it happens.

Key Takeaways

- Google's AI Overviews now let users ask follow-up questions seamlessly without leaving search, transforming search into a conversational interface

- Gemini 3 improves accuracy by 18% and handles context better than previous models, making it reliable enough to be the default for overviews

- Traditional search results are being deprioritized in favor of AI-synthesized answers, causing significant shifts in organic traffic distribution

- Publishers must adapt by focusing on E-E-A-T signals, comprehensive content ecosystems, and direct audience relationships rather than traditional SEO

- Search is transitioning from finding sources to getting answers, fundamentally reshaping the 30-year economic incentive structure of content creation
