Google's Quiet Retreat from Medical AI: What Happened and Why

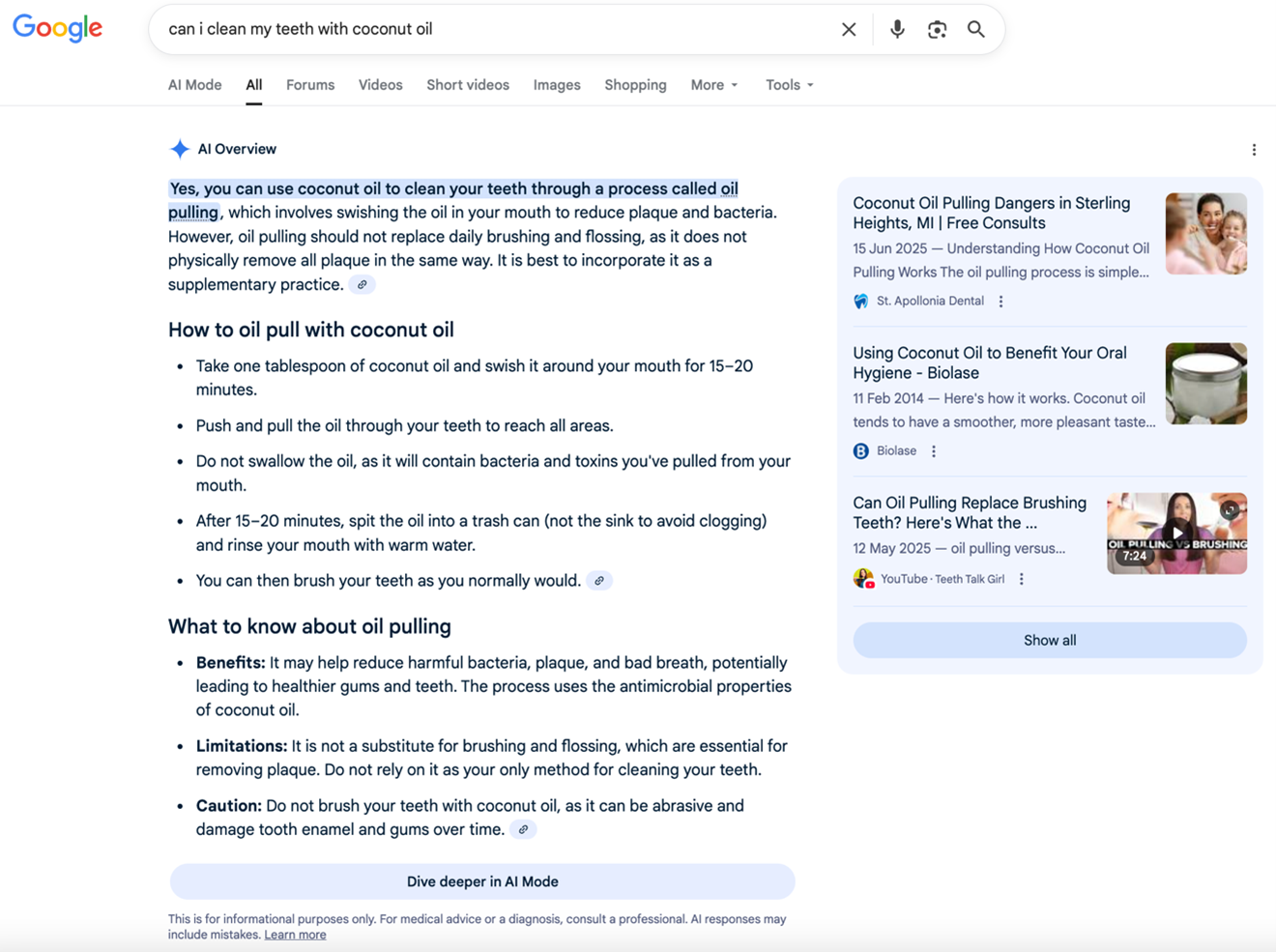

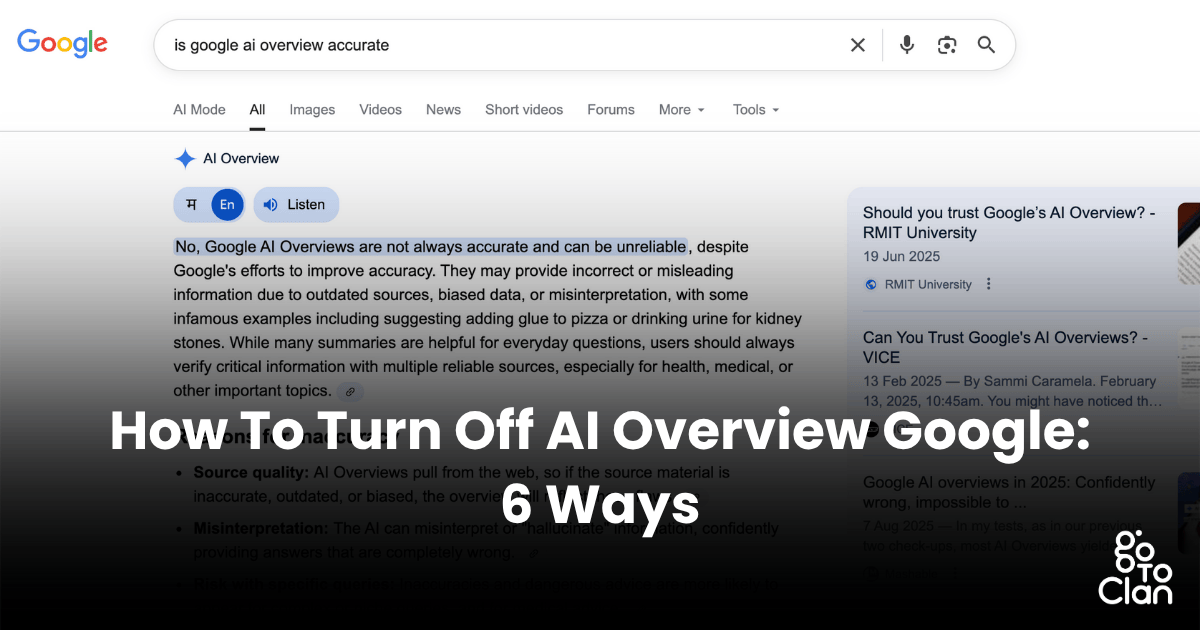

Something strange happened in January 2025. Google, the company that's built its entire search empire on providing answers to nearly every question humans can ask, suddenly started removing those answers. Not all of them, mind you. Just the ones about health.

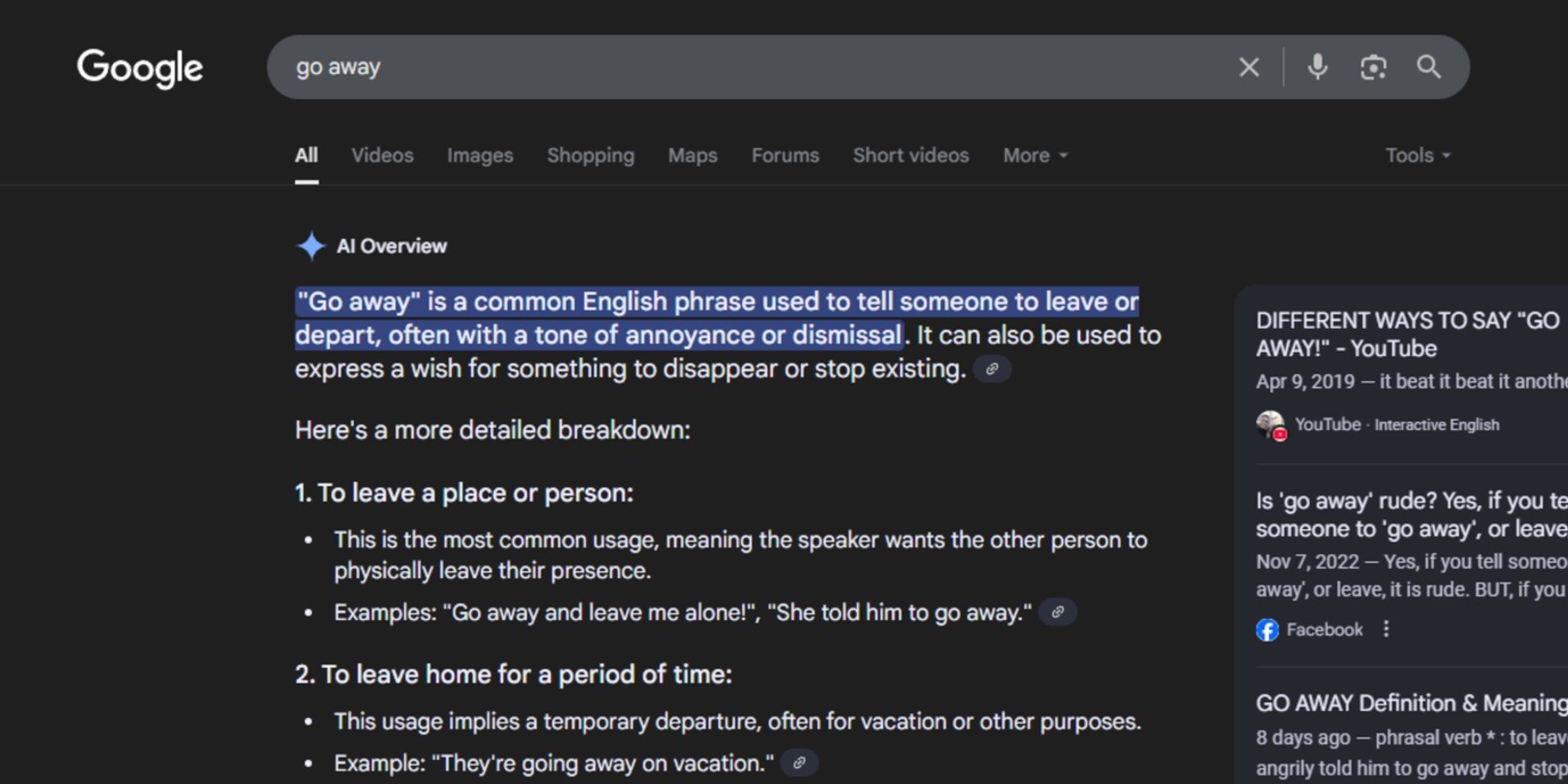

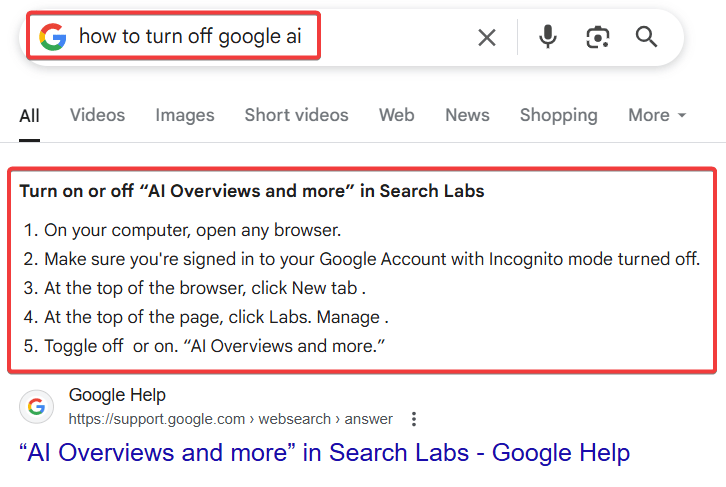

It started small. A British newspaper called the Guardian published an investigation that made Google look bad. The outlet found that when people searched for things like "what is the normal range for liver blood tests," Google's AI Overviews feature was serving up numbers that didn't account for age, sex, ethnicity, or nationality. For someone actually dealing with test results, this could mean the difference between realizing you need medical attention and thinking everything's fine.

Within hours, Google started pulling AI Overviews from those specific queries.

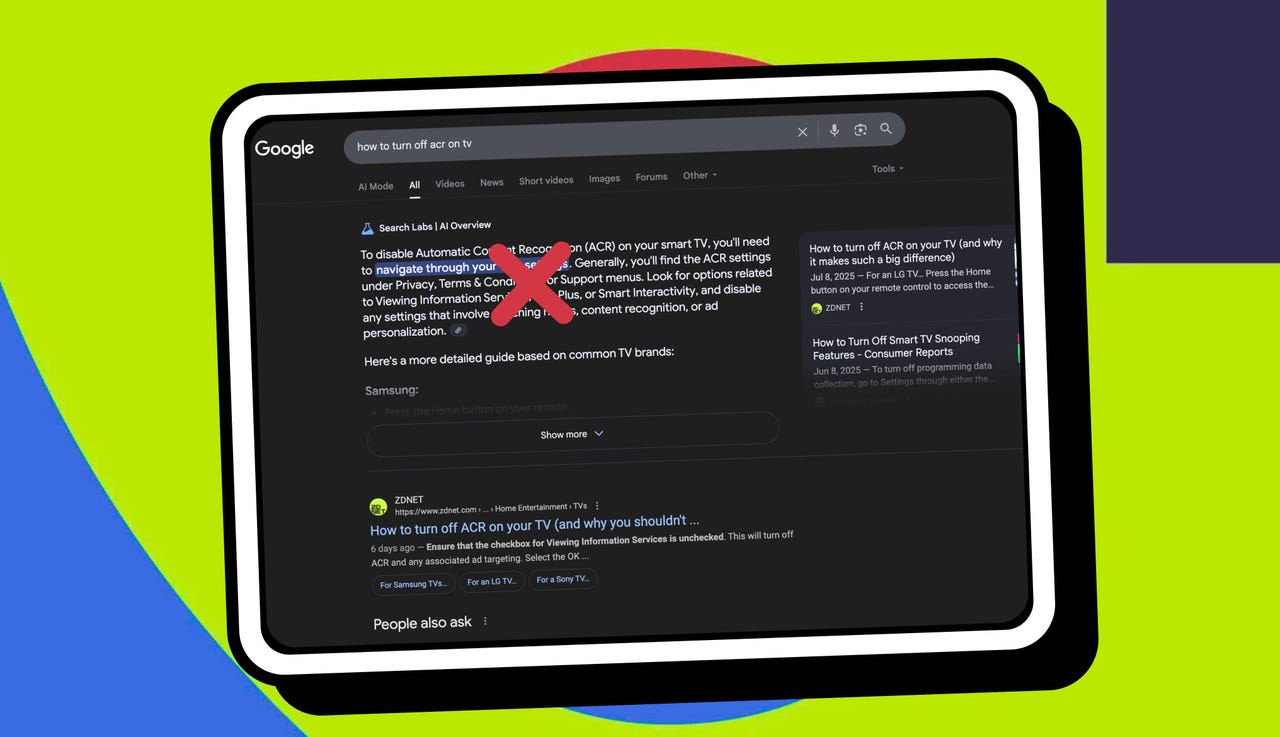

But here's where it gets complicated. Google removed the overviews from the exact phrases the Guardian tested. Then users tried slight variations like "lft reference range" and boom, the AI Overviews were still there, still potentially misleading. This wasn't a comprehensive fix. This was damage control.

When I tested those same queries myself a few hours after the Guardian's article dropped, something interesting happened. The AI Overviews were gone. But Google still offered to let me "ask in AI Mode," essentially moving the same feature to a different part of the interface. It's like Google turned off the lights in one room but left a door open to the dark hallway.

The top search results? Often they were the Guardian's own article explaining why Google's AI Overviews were dangerous. There's a certain irony in that.

Google responded to the Guardian with a statement that basically said "we disagree with your assessment." A Google spokesperson claimed that an internal team of clinicians reviewed the questioned queries and found "in many instances, the information was not inaccurate and was also supported by high quality websites." So either the Guardian's testing was flawed, or Google's clinicians disagreed with the newspaper's interpretation of medical accuracy. Neither scenario is great when we're talking about health information that people actually rely on to make decisions about their bodies.

This incident exposed something fundamental about the current state of AI in search: nobody's really sure who's responsible when things go wrong.

The Guardian Investigation That Sparked It All

Let's back up. The Guardian didn't just wake up one morning and decide to test Google's AI Overviews. They were investigating how Google's new search features were affecting information quality. What they found was troubling enough to publish as a major investigation.

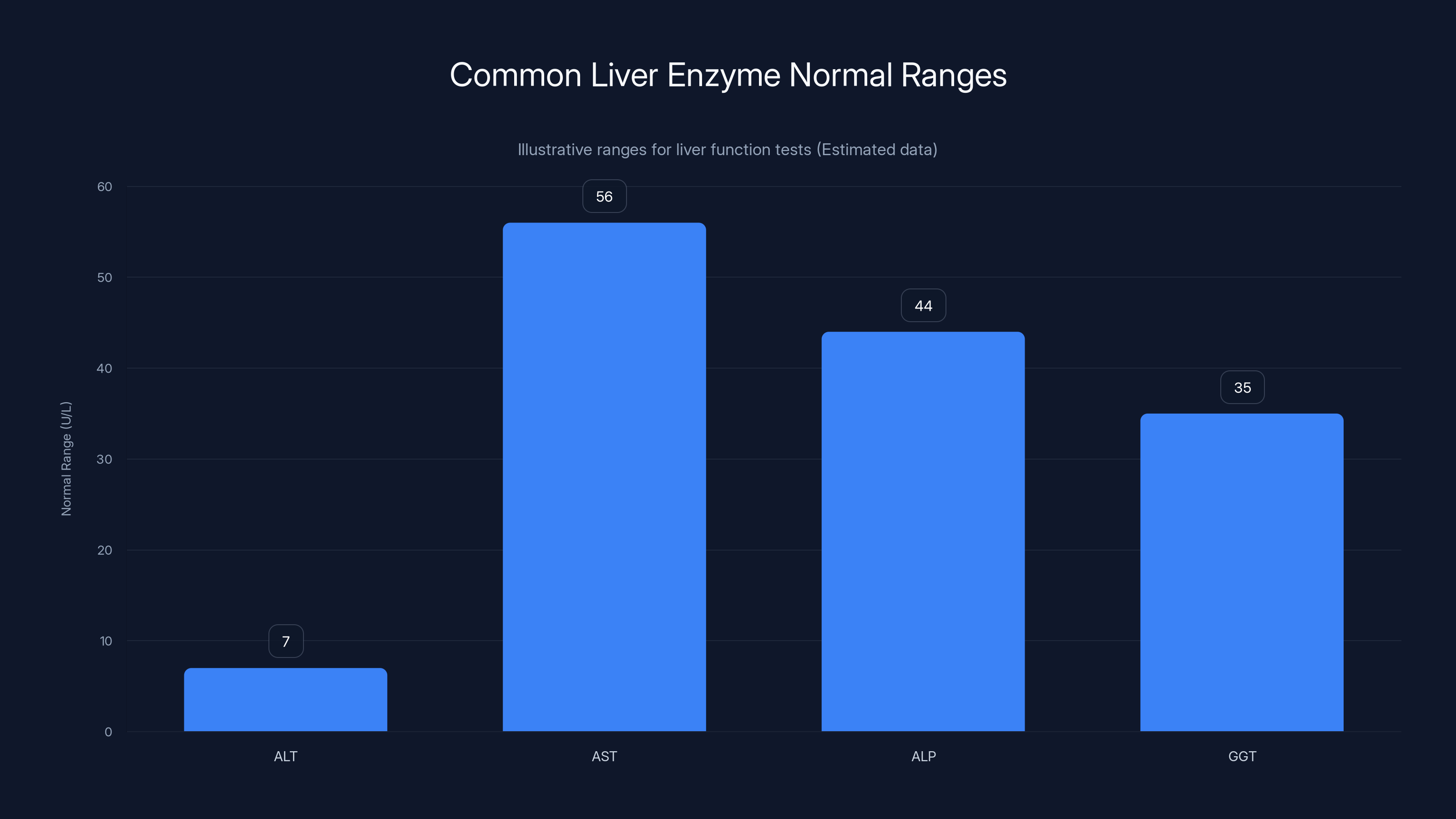

The newspaper tested dozens of health-related queries and found patterns of inaccuracy. The liver function tests example was just the most obvious. Users searching for normal test ranges got numbers without any context about what factors affect those ranges. Someone with a liver enzyme reading of 45 units per liter might see that number and think it's normal across all groups, when in reality that same result might indicate a problem depending on their age, sex, and ethnicity.

This isn't a minor formatting issue. This is medical information that could influence whether someone calls their doctor or ignores a warning sign.

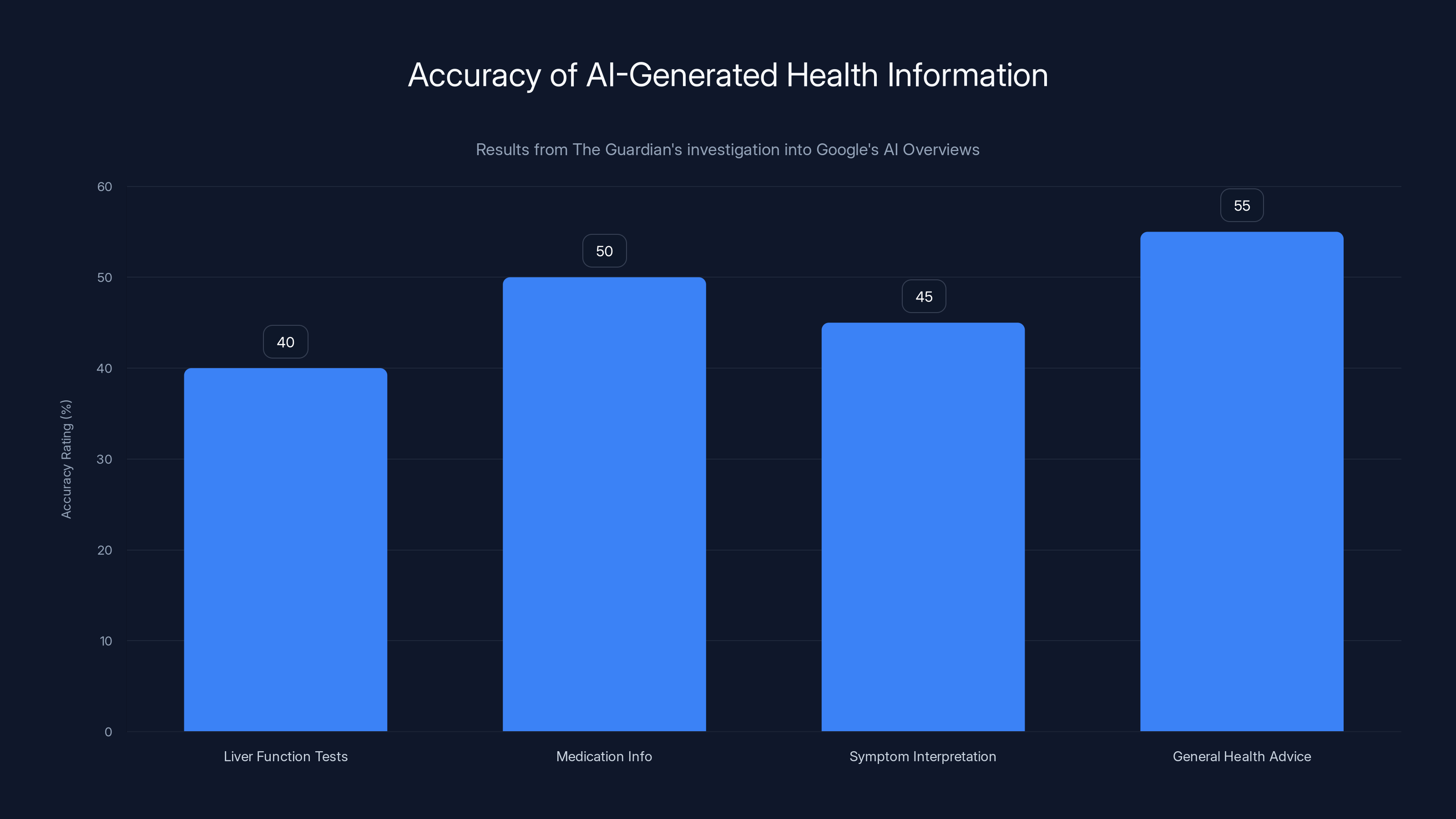

The Guardian tested other queries too. They found misleading information about medications, symptom interpretations, and general health advice. In some cases, the AI summaries oversimplified complex medical topics in ways that could genuinely harm someone's health decisions.

What made this investigation significant wasn't just that it found errors. It was that it found systematic errors. These weren't random mistakes. They were patterns that suggested Google's AI system wasn't handling nuance the way a qualified medical professional would.

The publication gave Google time to respond. Google didn't deny that there were issues. Instead, they started quietly removing AI Overviews from specific queries. But as the Guardian noted immediately after, the response was incomplete. Variations on the same queries still showed AI-generated summaries. The problem wasn't fixed, just partially hidden.

This is where the story gets really interesting, because it tells us something important about how Google operates. When there's a PR crisis around a specific phrase, Google can and will remove AI Overviews for that phrase. But the deeper work, actually ensuring that all variations and related queries provide accurate health information, is harder. That takes more resources, more human review, more expertise.

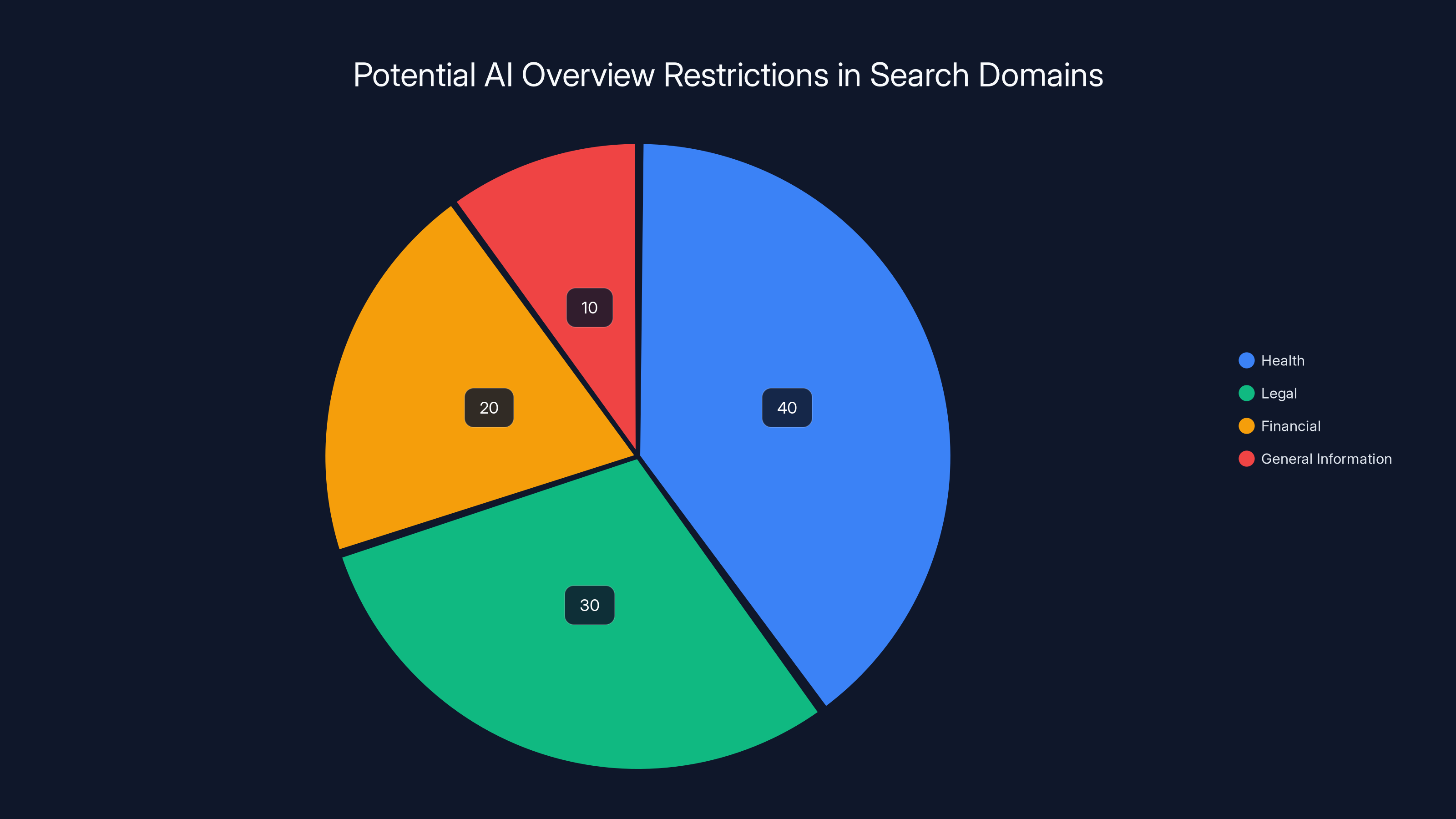

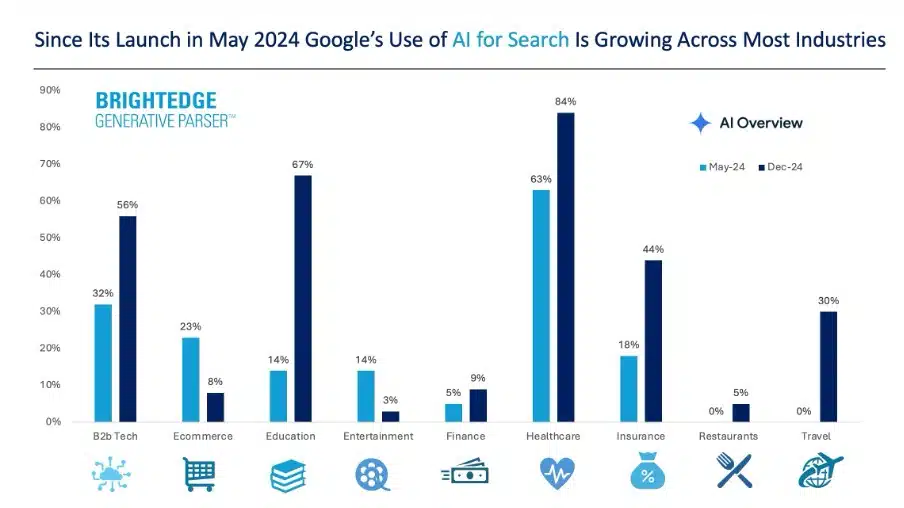

Estimated data suggests health, legal, and financial domains might see higher restrictions on AI Overviews due to their critical nature.

How AI Overviews Work and Why They're Different from Regular Search

Before we can understand what went wrong, we need to understand what AI Overviews actually are.

For decades, Google Search worked like this: you type a query, Google's algorithms find the most relevant web pages, and you see a ranked list. You click through to websites and read. It was simple, and it worked because Google's job was clear. Find relevant pages. Don't vouch for their accuracy. That's the website owner's responsibility.

AI Overviews change that relationship entirely.

When you search for something now, Google doesn't just show you links. In many cases, Google generates a summary. This summary is created by Google's AI models, trained on massive amounts of text data and fine-tuned to synthesize information. Google is saying, in essence: "Here's the answer to your question, synthesized from multiple sources."

The crucial word there is "synthesized." Google isn't just copying text from one website. The AI is reading multiple sources and creating new text that summarizes or explains the topic. This is fundamentally different from listing search results, because Google is now creating content, not just organizing other people's content.

When you're synthesizing information about a restaurant's hours or a movie's plot, synthesis works well. You get a quick answer. But when you're synthesizing medical information, you're not just organizing facts anymore. You're making editorial decisions about what matters, what's reliable, what should be emphasized.

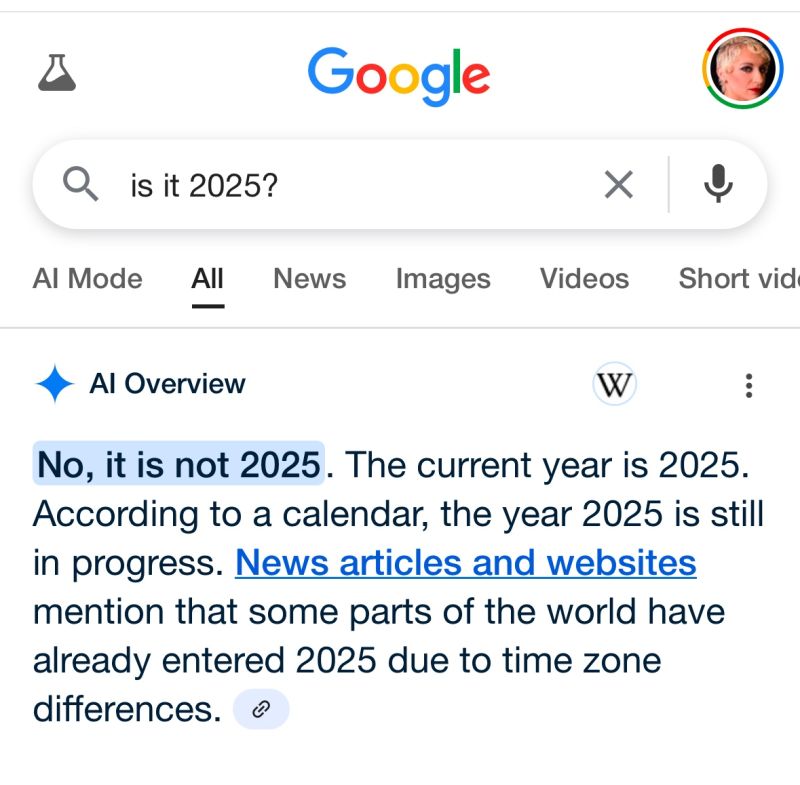

The AI system doesn't think like a doctor. It doesn't say, "Well, this applies to men but not women." It doesn't automatically add important caveats. It optimizes for being concise and sounding authoritative. Those two goals are sometimes at odds with accuracy and completeness.

Google introduced AI Overviews as a feature to make search faster and easier. You get an answer right at the top, generated specifically for your query. For many searches, this is genuinely useful. But for health queries, that same feature becomes risky because health information without proper context can be misleading or even dangerous.

The problem isn't that Google's AI is stupid. The problem is that health information isn't a problem that can be solved by optimizing for conciseness and relevance. Health information needs nuance, caveats, context, and sometimes the simple acknowledgment that "it depends" or "you should talk to a doctor."

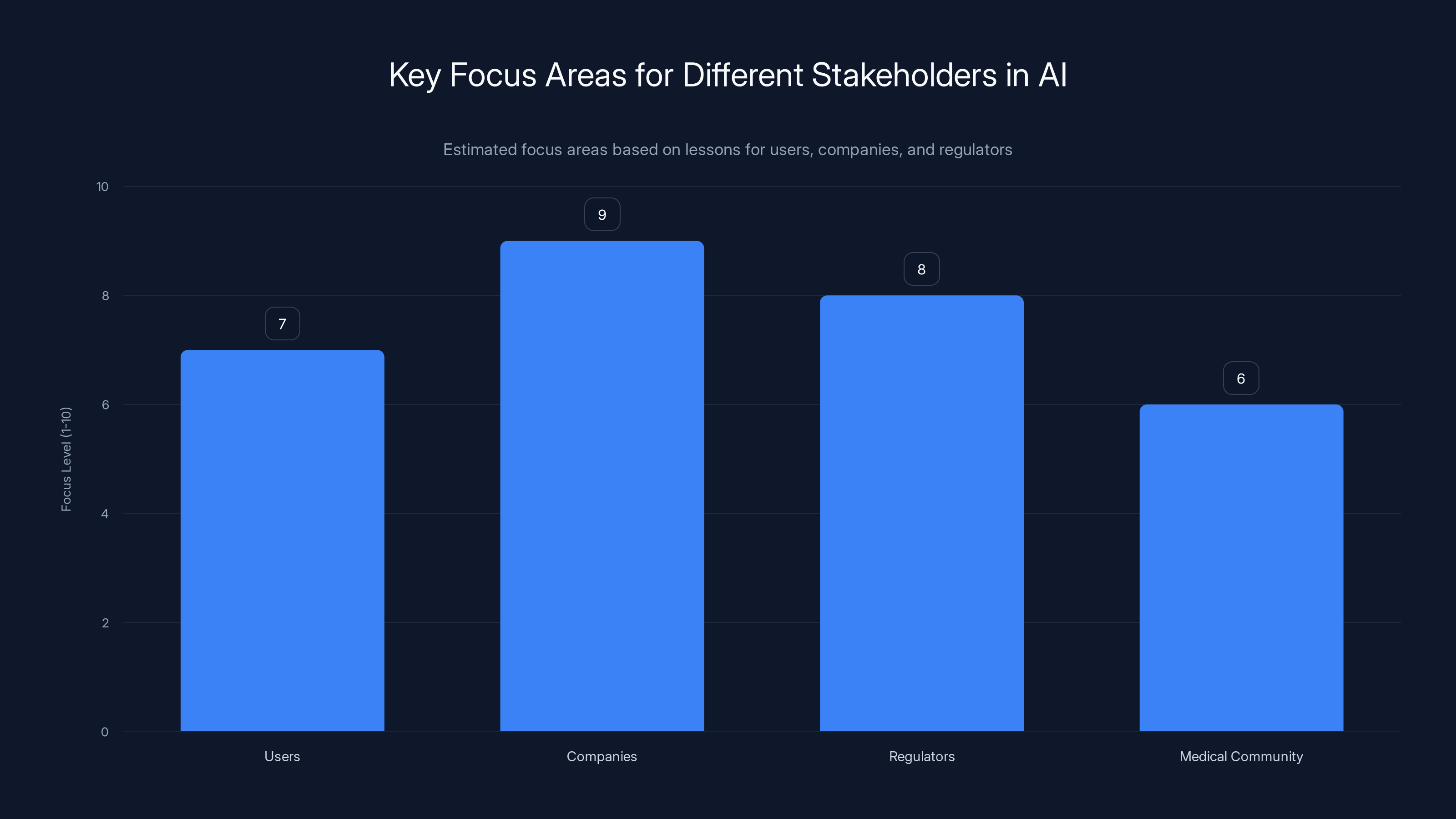

Estimated focus levels show companies need the highest attention to detail in AI deployment, followed by regulators. Estimated data.

The Liver Function Test Case: A Microcosm of the Problem

Let's dig deeper into the specific example that started this whole thing, because it really illustrates the core issue.

Liver function tests, or LFTs, measure how well your liver is working. Doctors check several different enzyme levels and other markers. The normal range for these markers varies significantly based on multiple factors.

When someone searches for "what is the normal range for liver blood tests," they might be searching because they just got lab results back and they're worried. They want to know if those results are normal. It's a high-stakes search.

Google's AI Overview gave them numbers without context. Someone could see their result, compare it to the number in the AI Overview, and either feel reassured or worried based on incomplete information.

But here's the thing: the ranges the AI provided might have been "correct" in some sense. They might be the average normal ranges used in many labs. The problem was that they weren't complete. They weren't wrong, exactly. They were just insufficiently qualified.

This is actually worse than being obviously wrong, because someone might think, "Well, Google said 7-56 is normal, and I have 52, so I'm fine." Meanwhile, their specific age and demographics might mean that 52 is concerning.

The Guardian tested whether Google's AI Overviews accounted for these differences. They found that they largely didn't. The information was presented as universal when it was actually population-specific.
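To make that concrete, here is a minimal Python sketch of the difference between a universal range check and one that knows something about the person. The generic range is the 7-56 figure from the example above. The subgroup limits, the `Patient` class, and both function names are invented for illustration and are not clinical reference values.

```python
from dataclasses import dataclass

# Generic range quoted in the article's example.
GENERIC_RANGE = (7, 56)

# PLACEHOLDER upper limits per subgroup. These numbers are invented for
# illustration only and are NOT clinical reference values.
HYPOTHETICAL_UPPER_LIMITS = {
    ("female", "adult"): 35,
    ("male", "adult"): 50,
}


@dataclass
class Patient:
    sex: str
    age_group: str


def generic_check(value: float) -> str:
    """Compare a result against one range that ignores who the patient is."""
    low, high = GENERIC_RANGE
    return "within range" if low <= value <= high else "outside range"


def contextual_check(value: float, patient: Patient) -> str:
    """Compare a result against a subgroup limit (upper limit only, for brevity)."""
    upper = HYPOTHETICAL_UPPER_LIMITS.get((patient.sex, patient.age_group))
    if upper is None:
        # No data for this subgroup: refuse to guess.
        return "no subgroup data: ask a clinician"
    return "within range" if value <= upper else "outside range for this subgroup"


if __name__ == "__main__":
    reading = 52
    print(generic_check(reading))
    print(contextual_check(reading, Patient("female", "adult")))
    print(contextual_check(reading, Patient("male", "child")))
```

With these placeholder numbers, a reading of 52 passes the generic check but fails the subgroup check for one patient, and the function declines to answer when it has no data for the subgroup. That last branch is the "it depends" that a one-line summary tends to leave out.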

When Google removed AI Overviews for the exact phrase "what is the normal range for liver blood tests," they fixed the problem for people searching that exact phrase. But someone searching "normal lft range" or "lft test reference range" would still get potentially misleading information.

This exposes a fundamental limitation of the fix: it's too narrow. Google can't realistically blacklist every possible variation of a query. Covering them all would mean fixing the underlying problem, which is harder than blocking specific phrases.
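Why do variations slip through? Here's a rough Python sketch, assuming the removal behaves like an exact-phrase blocklist. Google hasn't said how its removals actually work, so the blocklist, the term sets, and the function names below are all hypothetical.

```python
import re

# Hypothetical blocklist holding only the exact phrase that was reported.
EXACT_BLOCKLIST = {"what is the normal range for liver blood tests"}

# Hypothetical term groups: a query that hits one term from each group is
# treated as a liver-test-range query. Illustrative, not exhaustive.
TOPIC_TERMS = {"lft", "liver"}
INTENT_TERMS = {"range", "normal", "reference"}


def blocked_by_exact_match(query: str) -> bool:
    """Suppress the overview only when the query equals a listed phrase."""
    return query.strip().lower() in EXACT_BLOCKLIST


def blocked_by_topic(query: str) -> bool:
    """Suppress the overview when the query mentions both topic and intent."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    return bool(tokens & TOPIC_TERMS) and bool(tokens & INTENT_TERMS)


if __name__ == "__main__":
    queries = [
        "what is the normal range for liver blood tests",
        "normal lft range",
        "lft test reference range",
    ]
    for q in queries:
        print(f"{q!r}: exact={blocked_by_exact_match(q)} topic={blocked_by_topic(q)}")
```

The exact-match check catches only the first query, while the topic check catches all three. Even the topic version is a keyword patch, though: it suppresses the summary without making any summary more accurate.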

Google's Response: Denial or Disagreement?

Google's official response to the Guardian investigation is worth examining carefully, because it reveals a lot about how the company thinks about responsibility and accuracy.

A Google spokesperson told the Guardian: "We don't comment on individual removals within Search. We do work to make broad improvements."

Then came the more detailed response: "An internal team of clinicians reviewed the queries highlighted by the Guardian and found in many instances, the information was not inaccurate and was also supported by high quality websites."

Let's parse this. Google is claiming that clinicians on their staff reviewed the information and found it acceptable. They're saying the information wasn't inaccurate and was supported by high-quality sources.

But the Guardian's investigation wasn't about whether the numbers came from reputable medical sources. Of course they did. The investigation was about whether the information was presented in a sufficiently contextualized way for someone making health decisions.

There's a big difference between "this number appears on reputable medical websites" and "this number is a complete and sufficient answer to someone's health question."

Google's response suggests they might be talking past the Guardian. The Guardian is saying, "Your AI is oversimplifying medical information in ways that could harm people." Google is saying, "But the numbers come from good sources." Those are different arguments.

It's also worth noting that Google's response says they "work to make broad improvements." This is a classic corporate phrase that basically means "we're doing something but we're not going to tell you what." The actual broad improvements aren't yet visible. What's visible is narrow, reactive removal of AI Overviews from specific queries.

Vanessa Hebditch from the British Liver Trust captured the real concern: "Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it's not tackling the bigger issue of AI Overviews for health."

She's saying what many experts have been thinking: removing AI Overviews from a few specific queries is performative. It makes Google look responsive without actually fixing the systemic issue that AI-generated health information is inherently risky when it lacks proper medical supervision and qualification.

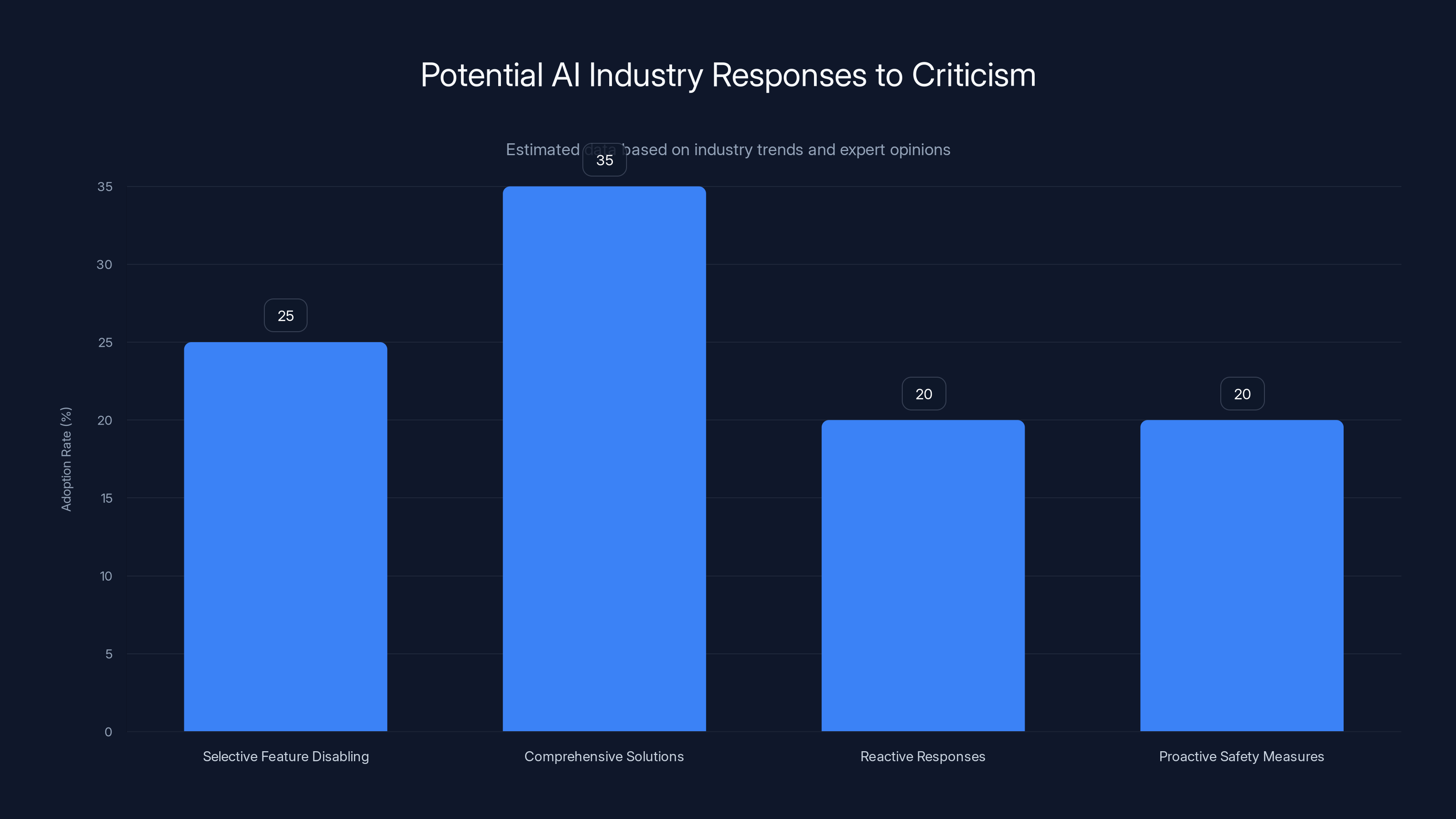

The AI industry may adopt various strategies in response to criticism. Comprehensive solutions and proactive safety measures are estimated to be the most favored approaches. (Estimated data)

Why Health Information Is Different

This incident raises a crucial question: why is health information different from other types of information that AI Overviews handle just fine?

The answer comes down to consequences. When you search for "best restaurants near me" and the AI Overview suggests a place you don't like, you've lost maybe an hour of your evening and a meal that wasn't great. When you search for health information and get incomplete or misleading information, you could literally harm yourself.

Health information sits at an intersection of complexity, stakes, and expertise. Most people aren't trained to interpret medical information. They don't know what they don't know. They can't easily spot when important context is missing because they don't understand the field well enough.

This is why doctors exist. Medicine is complicated. A doctor's job includes knowing what questions to ask, what factors matter, and what information is relevant for a specific person. An AI trained on general text data doesn't have the same framework.

Consider a query like "why am I tired?" There are literally hundreds of possible causes. A human doctor would ask follow-up questions: Have you had this for weeks or months? Do you have other symptoms? How's your sleep? Any stress in your life? Any medical conditions? What medications are you taking?

An AI Overview can't do this. It has to give a summary. That summary, by definition, leaves out possibilities and context that might be individually relevant.

There's also the issue of responsibility. If a restaurant recommendation is bad, you know who to blame: Google and the sources they pulled from. But health information exists in a weird gray zone. If you follow AI-generated health advice and something goes wrong, who's responsible? The AI developers? The sources the AI learned from? You, for taking advice from an AI instead of a doctor?

Medicine has evolved to require human expertise and judgment precisely because the stakes are high and the variables are complex. Automating health information summary creates a system that bypasses that expertise without acknowledging that it's doing so.

The Broader Implications for AI in Search

This incident isn't just about Google and health queries. It's a test case for how AI features in search will evolve and what guardrails will develop around them.

Right now, we're in a moment where AI companies are moving fast and rolling out features because they can. Google has always been a "launch fast and iterate" company. But AI Overviews expose a problem with that approach: in some domains, what breaks when you move fast matters too much.

Search is arguably the single most-consulted resource in human history. Billions of searches happen every day. When you introduce a feature that generates text instead of just organizing existing text, you're fundamentally changing what search does.

The question the industry hasn't fully grappled with yet is whether there are domains where generated summaries shouldn't appear at all. Should AI Overviews exist for health queries? For legal information? For financial advice? For anything that could affect someone's well-being?

We could imagine a system where Google disables AI Overviews entirely for health-related searches, treating them like restricted information. Users would get ranked search results as they have for the past two decades, without the AI-generated summary layer.

But Google probably won't do this, because it would mean admitting that the feature isn't ready for certain domains. And from a business perspective, disabling a feature is a loss. Instead, we'll likely see incremental improvements: better training data, more careful fine-tuning, human review processes, clearer disclaimers.

The question is whether those improvements are enough. The Guardian's investigation suggests they might not be.

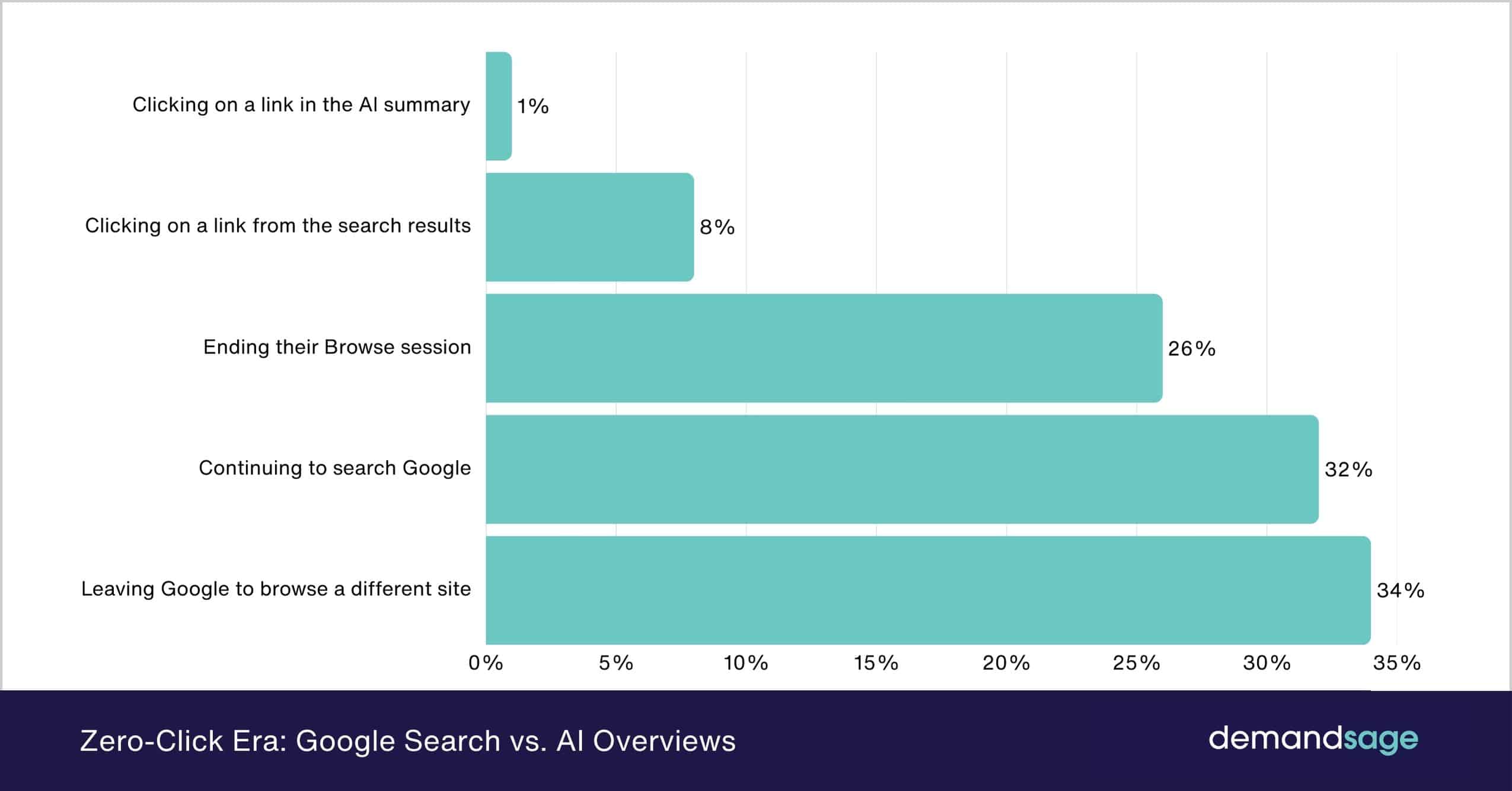

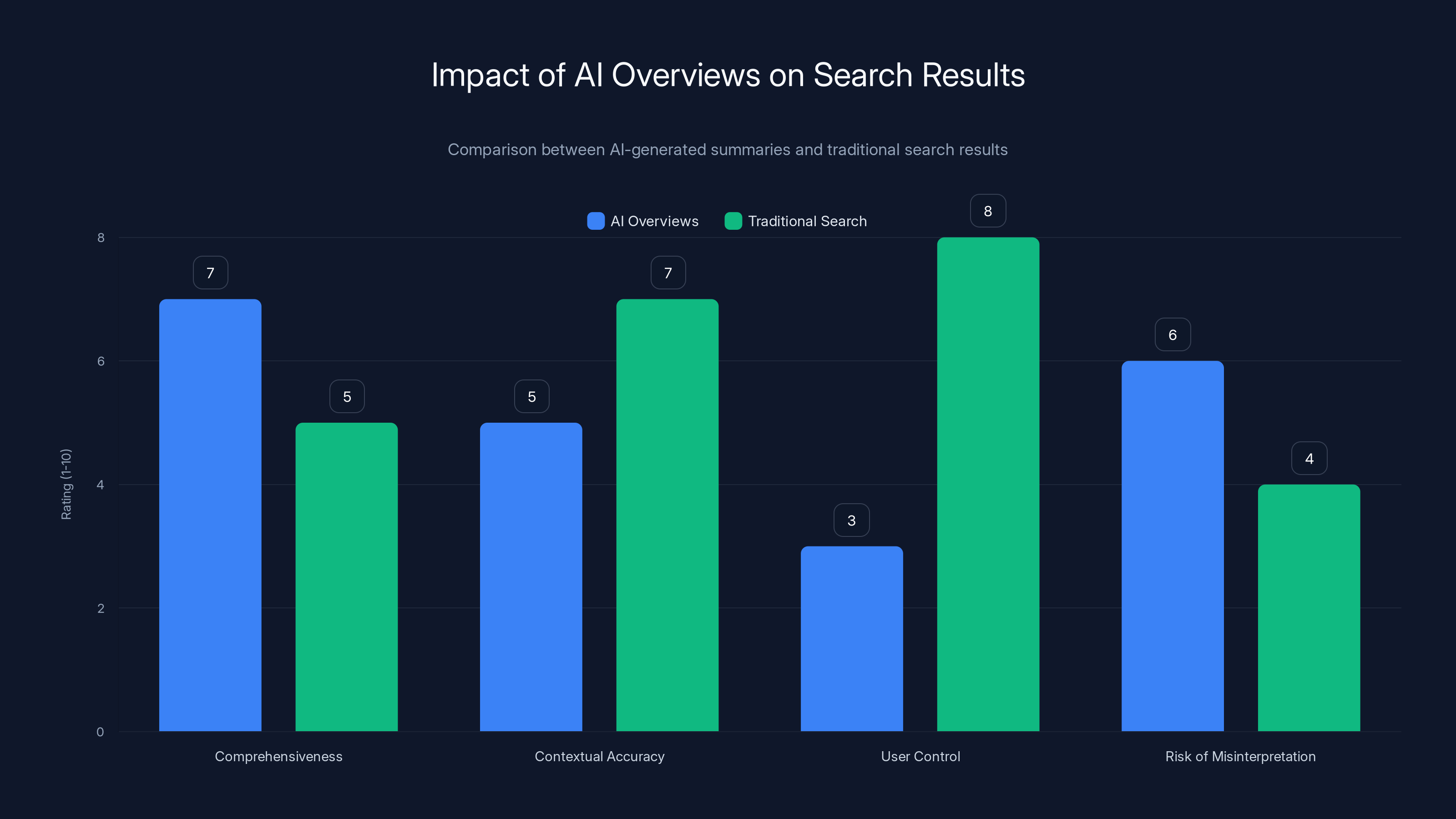

AI Overviews provide more comprehensive summaries but may lack contextual accuracy and user control, increasing the risk of misinterpretation compared to traditional search results. Estimated data.

How This Affects Regular Users

If you're someone who uses Google Search regularly, what does this mean for you?

Practically speaking, if you're searching for health information, you should be aware that AI Overviews might appear and might not be sufficient context for making health decisions. You should probably treat them the way you'd treat information from a friend: potentially useful as a starting point, but not a substitute for professional medical advice.

You might notice that some health queries don't show AI Overviews anymore. That's probably intentional. Google is trying to reduce the risk of providing incomplete health information by removing the feature from specific high-risk queries.

But you'll also notice that many health queries still show AI Overviews. The removal isn't comprehensive. It's targeted at specific phrases that got media attention. This creates an inconsistent experience where some health searches have AI summaries and others don't.

For anyone searching health information, the safest approach is still the approach that worked before AI Overviews: look at multiple sources, understand the context, and if you're genuinely concerned about your health, talk to a doctor. The AI Overview isn't a substitute for that process.

There's also a transparency issue. When Google removes AI Overviews from a specific query, they don't announce it. Users might not realize that the same type of search sometimes gets AI summaries and sometimes doesn't, and they might not understand why. The decision-making process is invisible.

The Role of Clinician Review

Google's response mentioned that an internal team of clinicians reviewed the Guardian's examples. This raises an interesting question: how much clinician review happens before AI Overviews are generated for health queries?

Likely not much, because that would be expensive and slow. Clinician review probably happens reactively, after something goes wrong and gets reported.

This is a fundamental challenge for large-scale AI systems. You can't have a clinician review every health query and every generated overview. That's not scalable. But without that review, you're essentially letting an AI system make judgment calls about medical information.

There are a few potential solutions. One is better training data and fine-tuning: train the model specifically on health information from reputable sources and explicitly teach it to include important caveats and context. Another is automated flagging systems that identify when a health overview might be incomplete or risky. A third is human review of a representative sample of health queries to catch systematic problems.
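The second of those options, automated flagging, could start out as something very simple. The Python sketch below checks a generated summary for a few kinds of caveat and flags it for human review when any are missing. The cue lists, category names, and threshold are assumptions made up for this example, and a real system would need far more than keyword matching.

```python
import re

# Hypothetical caveat categories and the words that count as evidence for each.
CAVEAT_CUES = {
    "demographics": ("age", "sex", "ethnicity"),
    "professional_advice": ("doctor", "clinician", "healthcare professional"),
    "variability": ("varies", "vary", "depends"),
}


def missing_caveats(overview: str) -> list[str]:
    """Return the caveat categories that have no matching cue in the text."""
    text = overview.lower()
    missing = []
    for name, cues in CAVEAT_CUES.items():
        # Word boundaries stop "age" from matching inside "range" or "average".
        found = any(re.search(rf"\b{re.escape(cue)}\b", text) for cue in cues)
        if not found:
            missing.append(name)
    return missing


def needs_review(overview: str, max_missing: int = 0) -> bool:
    """Flag the summary when more caveat categories are missing than allowed."""
    return len(missing_caveats(overview)) > max_missing


if __name__ == "__main__":
    bare = "A normal result is between 7 and 56 units per liter."
    qualified = "Ranges vary by age and sex, so ask your doctor to interpret your result."
    print(missing_caveats(bare), needs_review(bare))
    print(missing_caveats(qualified), needs_review(qualified))
```

A check like this can only tell that a caveat word is present, not that the caveat is correct or prominent, so it works as a filter that routes summaries to people rather than as a replacement for them.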

Google probably uses some combination of these. But the Guardian's investigation suggests that whatever approach they're using isn't catching all the problems.

The ideal would be a system where high-stakes health queries get reviewed by a clinician before the AI Overview appears to users. But again, that's not scalable to billions of searches per day. There's a fundamental tension between scale and safety.

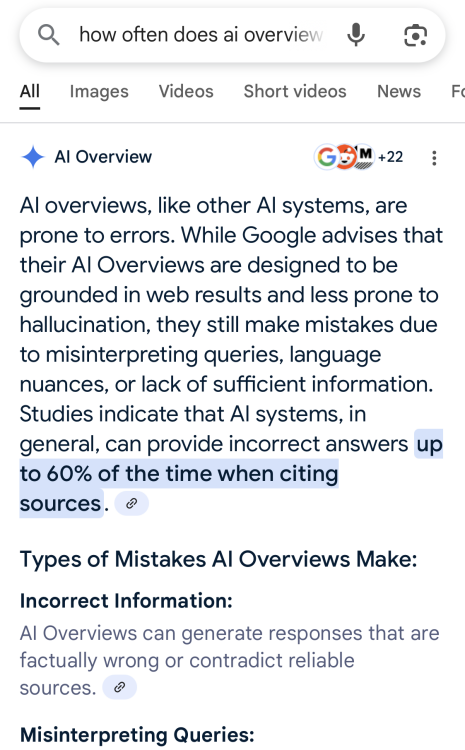

The Guardian's investigation highlighted systematic inaccuracies in Google's AI Overviews, with accuracy ratings ranging from 40% to 55%. Estimated data based on investigative findings.

What Experts Are Saying About This

Beyond the Guardian's investigation and Google's response, what are experts in medicine, AI, and search saying about this?

Medical professionals have been cautiously skeptical of AI-generated health summaries from the start. They understand that medicine is complex and that context matters enormously. They also understand the limitations of their own field. A doctor doesn't pretend to know everything about every condition. They make educated guesses based on their training and experience, and they often tell patients "let's run some tests" or "let's see how this develops."

An AI system generates confident summaries without that built-in humility.

AI researchers have pointed out that this is a known problem in the field: AI systems tend to hallucinate confidently. They don't say "I'm not sure." They give you an answer that sounds authoritative even when the training data didn't support a completely confident answer.

Search and security experts and privacy advocates have raised concerns about AI Overviews in general, noting that they reduce visibility into the sources behind the information. You used to see multiple sources and could evaluate them yourself. Now you get Google's summary of those sources. That removes a layer of transparency.

Journalists and information quality experts have largely sided with the Guardian's concerns. When a major publication investigates and finds systematic problems with information quality, other journalists tend to trust that investigation unless there's clear evidence it's flawed.

The Regulatory Angle

There's also a regulatory question lurking here, especially in regions like the European Union where regulatory bodies are paying close attention to how tech companies handle information and AI.

The EU has been developing regulation around AI, including requirements that high-risk AI systems have proper oversight and safety measures. Health information arguably qualifies as high-risk.

In the United States, regulators at the FDA have been increasingly focused on how AI affects health information. The Federal Trade Commission has been investigating companies for making unsupported claims about AI.

Google's approach to AI Overviews for health queries might eventually face regulatory scrutiny. If it turns out that Google is generating medical summaries without proper medical oversight, regulators might require changes.

Right now, Google is mostly managing this through PR and incremental fixes rather than comprehensive changes. But if the problem persists and more investigations document harm, regulatory action becomes more likely.

This chart shows estimated normal ranges for common liver enzymes. It's crucial to consider individual factors like age and demographics, as these ranges can vary significantly.

Comparing This to Other AI Search Features

Google isn't the only company with AI-powered search features, and this incident highlights how different companies are approaching similar challenges.

Perplexity, an AI search engine, shows sources alongside its answers and is transparent about what information came from where. This is arguably a better approach for high-stakes searches, though Perplexity also operates on a different business model from Google's.

OpenAI's ChatGPT has disclaimers about its limitations and explicitly tells users to verify important information. When you ask ChatGPT for health advice, it usually includes a disclaimer that it's not a substitute for professional medical advice.

Bing's AI features in search have some integration with medical databases, though the specifics of their approach aren't as publicly documented as Google's.

DuckDuckGo has been more cautious about adding AI features at all, prioritizing privacy and information accuracy.

Google's approach has been to move fast and then respond to problems as they emerge. The Guardian investigation is one of those moments. It's possible that Google will learn from this and be more careful with future health-related AI features. It's also possible that they'll continue with incremental fixes and hope the controversy passes.

The Future of AI Overviews

If we look ahead, what's likely to happen with AI Overviews and health information?

Short term, expect more targeted removals of AI Overviews from specific health queries. Google will probably work with medical experts to identify high-risk queries and disable the feature for those specific searches. This is performative in some ways, but it does reduce harm.

They might also add more explicit disclaimers to health-related AI Overviews, making it clear that these are summaries and not medical advice, and that users should consult healthcare professionals for actual medical decisions.

Medium term, expect more sophisticated training of the AI models themselves. Google will likely invest in better health-focused training data and fine-tuning specifically to address the issues that emerged in the Guardian investigation.

There might also be more human review, either through expanding clinician teams or through automated systems that flag potentially problematic health summaries.

Long term, the question is whether AI-generated health summaries will remain a feature at all, or whether they'll be phased out in favor of curated health information from medical databases and reputable health organizations.

Regulation will probably play a role too. If the EU or other regulators decide that AI-generated health information needs specific oversight, Google will have to comply. That could reshape how health search works across the industry.

Implementing Better Safety Systems

If Google (or any company) wanted to do this right, what would a safer system look like?

First, you'd have a human-in-the-loop system where health-related AI summaries are reviewed by medical professionals before appearing to users. This isn't scalable to all health queries, but it could be done for common, high-stakes queries.

Second, you'd have clear, upfront disclaimers that health summaries are not medical advice and should not be used for actual health decisions. These disclaimers would be prominent and specific, not buried in fine print.

Third, you'd link explicitly to reputable health resources like the National Institutes of Health, Mayo Clinic, and other established medical organizations. Users should know where the information is coming from.

Fourth, you'd include caveats and context in health summaries automatically. For something like liver function tests, you'd note that ranges vary by age, sex, and ethnicity. You'd mention that results should be interpreted by a healthcare professional. You'd avoid presenting medical information as settled when it's actually complex.

Fifth, you'd have a feedback mechanism where healthcare professionals and patients can flag problematic health summaries. You'd use that feedback to continuously improve the system.

Sixth, you'd probably disable AI summaries entirely for certain types of health queries, particularly ones related to symptom checking or diagnosis. These are the highest-risk queries where incomplete information can cause real harm.

Would Google implement all of this? Probably not, because it would slow down the feature and require significant resources. But it's what a truly responsible approach would look like.
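Several of the measures above boil down to a routing decision made before a summary is shown. Here's a minimal Python sketch of that decision, assuming upstream classifiers already label each query. The `Query` fields, the `Action` names, and the ordering of the rules are hypothetical and don't describe any real system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SHOW = "show overview"
    SHOW_WITH_DISCLAIMER = "show overview with disclaimer and source links"
    HOLD_FOR_REVIEW = "hold until a clinician approves"
    SUPPRESS = "show ranked results only"


@dataclass
class Query:
    text: str
    is_health: bool                 # assumed output of an upstream classifier
    is_symptom_or_diagnosis: bool   # assumed output of an upstream classifier
    is_common_high_stakes: bool     # assumed flag for frequently searched, risky queries
    clinician_approved: bool = False


def decide(q: Query) -> Action:
    """Pick what to display for a query, strictest rule first."""
    if not q.is_health:
        return Action.SHOW
    if q.is_symptom_or_diagnosis:
        # Sixth measure: no AI summary for symptom checking or diagnosis.
        return Action.SUPPRESS
    if q.is_common_high_stakes and not q.clinician_approved:
        # First measure: human review before common, high-stakes summaries go live.
        return Action.HOLD_FOR_REVIEW
    # Second and third measures: disclaimer plus links to established sources.
    return Action.SHOW_WITH_DISCLAIMER


if __name__ == "__main__":
    print(decide(Query("why am I tired", True, True, True)))
    print(decide(Query("normal lft range", True, False, True)))
    print(decide(Query("normal lft range", True, False, True, clinician_approved=True)))
```

The ordering matters: suppression is checked before review, so a diagnosis-style query never reaches a summary even if someone has approved it. The hard part, which this sketch simply assumes away, is the classifier that decides what counts as a health query in the first place.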

The Bigger Question: Who's Responsible?

As we wrap up the specifics, there's a larger question lurking underneath all of this: when something goes wrong with AI-generated information, who's responsible?

If someone sees a misleading health summary in Google's AI Overview and makes a decision based on that information, and something bad happens, who bears responsibility?

Google would argue that they're just aggregating information from reputable sources and that users should verify information before acting on it.

The sources would argue that Google's AI is presenting their information in a different context and context changes meaning.

The user would argue that they relied on information Google presented as authoritative.

From a legal perspective, this is genuinely unclear. There isn't established precedent for AI-generated search summaries and liability.

From an ethical perspective, Google is presenting information without the usual friction and verification that comes with looking at multiple sources. This creates a responsibility to be especially careful about accuracy.

The incident with medical queries and AI Overviews is essentially a stress test of who's responsible when AI-generated information is wrong. The answer Google is implicitly giving comes in two parts: "not us, we just aggregate," and "it isn't really wrong, because our clinicians say it's fine." But that answer might not hold up if the problem gets worse or becomes better documented.

What This Means for the Broader AI Industry

Google's approach to this incident is being watched carefully by other AI companies because it sets a precedent.

If Google can respond to criticism about AI information quality by selectively disabling the feature for specific queries and claiming their experts say everything's fine, that becomes a template for how other companies might respond to similar criticism.

Alternatively, if this incident creates enough pressure for comprehensive solutions rather than patch jobs, it might push the entire industry toward more rigorous safety measures for AI-generated information.

Right now, the AI industry is in a move-fast-and-break-things phase. This incident is a case study in what "breaking things" can mean when you're dealing with information that affects human health and well-being.

Future AI companies rolling out information-generating features will have to decide: do we learn from Google's approach and do a more thorough job of safety from the start, or do we follow the same path and respond reactively when problems emerge?

There's also a question about commodification of expertise. For centuries, certain roles like doctor or lawyer existed because they had specialized knowledge that required training and judgment. AI systems can't replicate judgment, but they can provide plausible, authoritative-sounding summaries. This creates a risk that people mistake AI-generated information for expert judgment.

The health information incident is one manifestation of a larger challenge the industry is grappling with: how do you deploy AI systems that generate information without creating false impressions that the information has been vetted by human experts when it hasn't?

Lessons for Users, Companies, and Regulators

As this story evolves, there are lessons for different groups.

For users: AI-generated summaries of health information are useful for quick overviews but should never be your only source when making actual health decisions. Cross-reference, look at multiple sources, and consult healthcare professionals for anything important.

For companies building AI search features: recognize that not all domains are equal. Some information is high-stakes enough that you need special care. Health, legal, and financial information might need different approaches than general information. Disabling a feature for specific queries is a short-term fix, not a comprehensive solution.

For regulators: this is the moment to start thinking about AI-generated information and guardrails. You can't regulate at the speed these companies move, so you need principles and frameworks that will apply to future technologies we haven't even seen yet.

For the medical community: expect more non-specialists to encounter AI-generated health information and potentially make decisions based on it. Consider ways to provide better education about how to evaluate health information and work with AI tools.

FAQ

What are AI Overviews in Google Search?

AI Overviews are automated summaries that Google generates using AI models to synthesize information from multiple sources and present a direct answer at the top of search results. Rather than simply showing a ranked list of websites, Google's AI reads multiple sources and creates new text that attempts to comprehensively answer the user's query in a concise format. This differs fundamentally from traditional search, where Google organized existing content rather than creating new summaries.

Why did Google remove AI Overviews for certain medical queries?

Google removed AI Overviews for specific health queries following an investigation by the Guardian that documented cases where the AI-generated summaries provided incomplete or contextually insufficient medical information. For example, AI Overviews for liver function test ranges didn't account for important variables like age, sex, ethnicity, and nationality that significantly affect what constitutes a "normal" result. The removal was a reactive response to negative publicity rather than a comprehensive fix addressing all variations of health-related queries.

How can incorrect health information in search results harm users?

Incorrect or incomplete health information can directly harm users by causing them to make medical decisions without proper context or professional guidance. If someone receives lab results and checks AI-generated summaries rather than consulting their doctor, they might misinterpret their results and either ignore concerning findings or worry unnecessarily about normal results. The risk is especially high because most people lack medical training to identify when important context is missing from health information.

What is the difference between AI-generated summaries and traditional search results?

Traditional search results show a ranked list of websites, allowing users to evaluate sources themselves and read the full context. With AI-generated summaries, Google creates new text that synthesizes information, which makes the original sources less visible and requires users to trust Google's judgment about what's important. This difference is significant for health information because AI systems lack the medical training and judgment that doctors apply when evaluating it.

Should people rely on AI Overviews for making health decisions?

No. AI Overviews can provide useful initial information but should never be the sole source for making health decisions, particularly regarding diagnosis, treatment, or interpreting medical test results. Users should treat AI-generated health summaries as a starting point for research and always consult qualified healthcare professionals before making decisions based on health information found in search results. The Guardian's investigation showed that even AI summaries drawing on reputable sources can lack the critical context needed for safe medical decision-making.

What steps is Google taking to improve AI Overviews for health content?

Google has said it works to "make broad improvements" and that it conducted an internal clinical review of the queries highlighted by the Guardian. However, the company has been vague about specific improvements and their timeline. Visible changes include the removal of AI Overviews for certain specific health queries, though this is a narrow fix since variations of those queries may still show AI summaries. Comprehensive solutions would require substantial investment in medical expertise integration, better training data, and possibly human clinical review of health-related summaries before they appear to users.

The Path Forward

The Guardian's investigation and Google's response represent an important moment in how we think about AI, information quality, and responsibility.

Google is the primary gateway to information for much of the world. When Google decides to summarize health information with AI, that decision affects millions of people. The company has enormous power and equally enormous responsibility.

Right now, Google is managing this moment by making selective changes and claiming their internal experts support their current approach. This might work short-term, especially as media attention moves to the next story.

But the underlying issue remains: AI systems aren't appropriate substitutes for expert human judgment in high-stakes domains like health. Acknowledging this isn't a failure. It's wisdom.

The next phase will likely include more pressure from regulators, more investigations from journalists, and potentially more comprehensive changes to how health information is handled in search.

For anyone using Google Search for health information, the practical advice is simple: treat AI Overviews as a starting point, not an endpoint. Cross-reference information, look at multiple sources, and when it matters for your health, talk to a qualified healthcare professional. That advice was true before AI Overviews and remains true now.

Google will continue evolving AI Overviews. The question is whether that evolution will be thoughtful, with genuine concern for accuracy and safety, or whether the company will continue its pattern of moving fast and responding reactively to criticism.

The answer to that question will say a lot, not just about Google, but about what responsible AI deployment looks like at scale.

Key Takeaways

- Google removed AI Overviews from specific health queries after the Guardian documented misleading medical information, including liver function test ranges without proper context

- The removal targets only the exact phrases tested; variations of the same queries still show AI-generated summaries, suggesting the fix is performative rather than comprehensive

- Health information is fundamentally different from other searchable content because incomplete information can directly harm people who lack medical expertise to evaluate context

- Google's response claimed internal clinicians found the information acceptable, but experts argue the issue isn't source quality; it's whether the information is presented with sufficient nuance for safe decision-making

- This incident raises crucial questions about responsibility when AI-generated information is wrong, what regulatory frameworks should govern high-stakes AI in search, and whether AI summaries should exist for health queries at all

