Introduction: The Era of Agentic AI Has Arrived

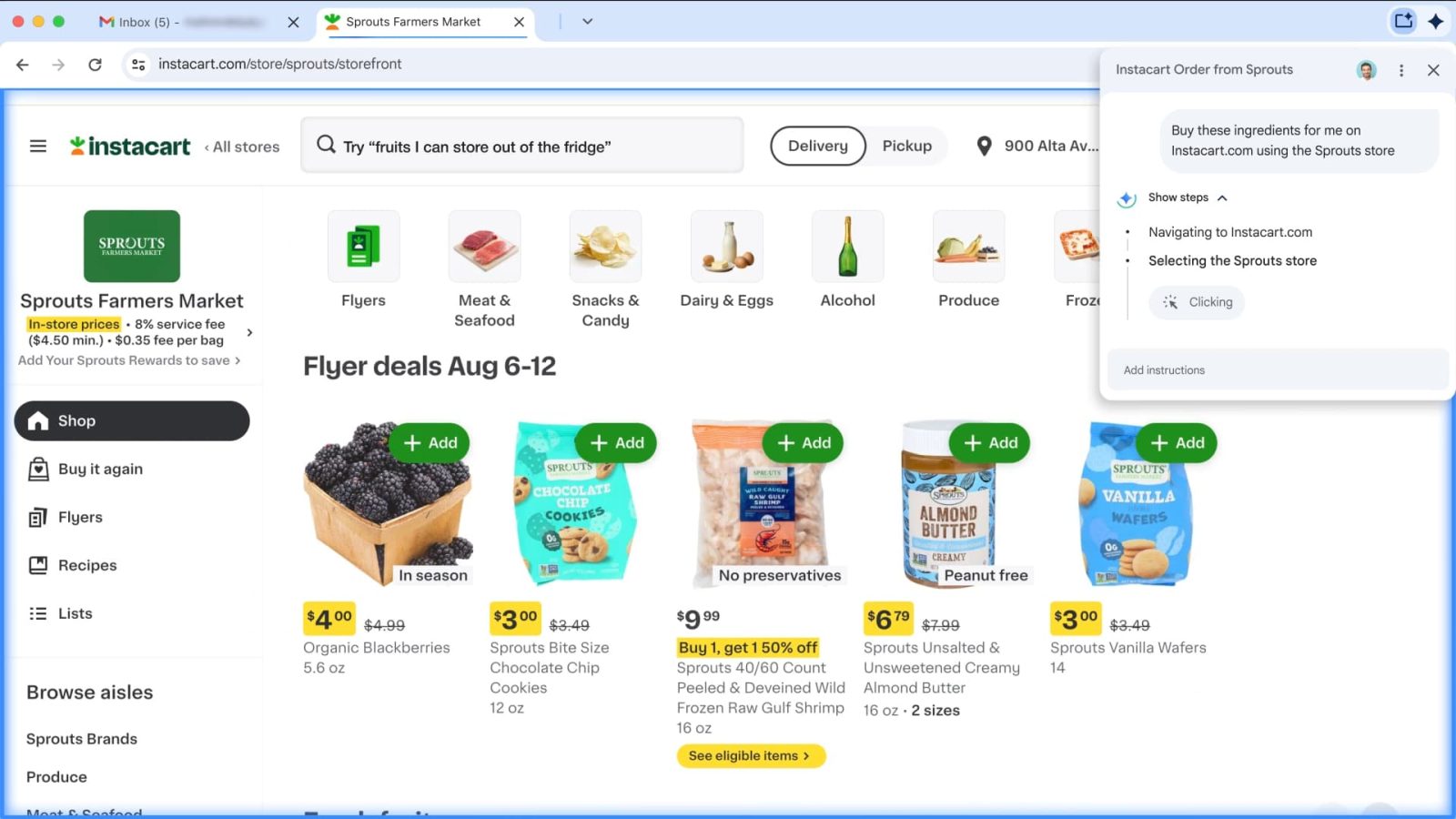

Last month, I watched something genuinely remarkable happen on my computer screen. Without typing a single command, an AI agent opened multiple tabs, compared hotel prices across three different booking sites, checked my calendar for conflicts, and compiled everything into a neat summary. It sounds like science fiction, but it's happening right now inside Google Chrome.

Google just rolled out Gemini's auto browse feature to Chrome, and this isn't just another incremental AI upgrade. This is a fundamental shift in how we interact with the web. For the first time, an AI living inside your browser can act as your personal agent, handling the tedious, multi-step tasks that normally eat hours of your week.

The feature is currently available to Google AI Pro and Ultra subscribers in the US, but it's the clearest sign yet that we're moving into an era where AI doesn't just answer questions. It actually does things. It researches hotel costs, schedules appointments, fills out forms, manages subscriptions, compares products, and handles the kind of repetitive web work that should've been automated years ago.

What makes this different from previous AI tools? Google has positioned Gemini as more than a chatbot. It's an agent with intentions, memory, and the ability to navigate your digital life. It understands context from your emails, calendars, shopping history, and browsing patterns. It knows what you care about because it's connected to the parts of your digital life that matter most.

But here's the honest part: this feature represents both tremendous opportunity and a fundamental recalibration of what we expect from technology. The browser is no longer just a passive viewing window. It's becoming an active participant in your tasks. That's powerful. It's also worth thinking carefully about.

Let's break down exactly what Google built, why it matters, and what this means for the future of how you work on the web.

What Is Gemini Auto Browse, Exactly?

Gemini auto browse is essentially an AI agent that lives in your Chrome browser and can execute multi-step tasks on your behalf across the web. Think of it as hiring a personal assistant who sits next to you and handles annoying tasks while you focus on more important work.

When you ask Gemini to "find the cheapest flight to Miami next month, check my calendar for conflicts, and book it," the AI doesn't just search Google. It actually opens new tabs, compares prices across multiple sites, references your personal calendar data, and completes forms. It moves through the web with intention.

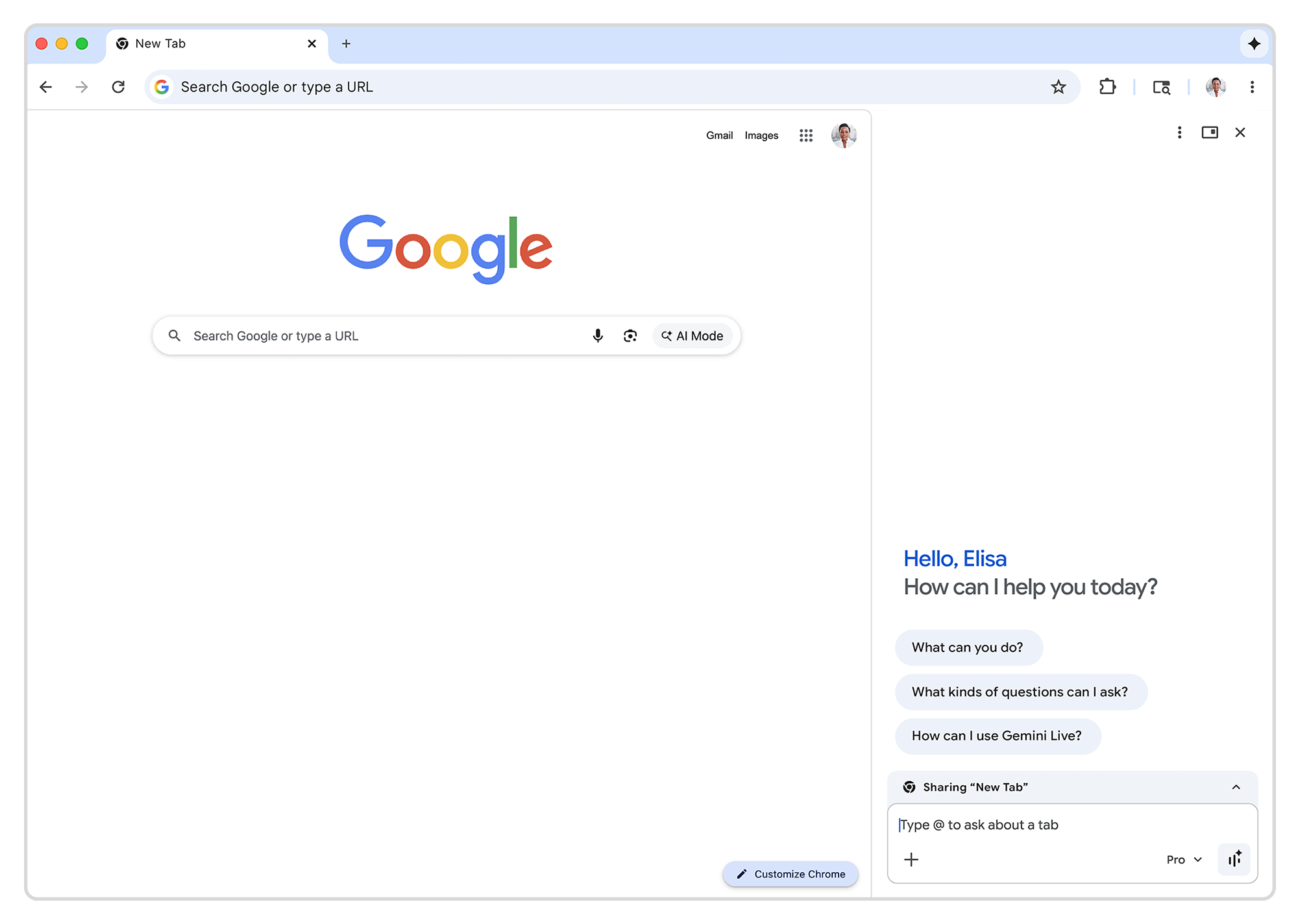

The feature moved from a pop-up window to a persistent panel on the right side of Chrome, making it feel less like a chatbot and more like a colleague who's always at hand. This isn't a subtle design choice. The positioning signals that Google sees this as a core part of how you'll use the browser.

What's particularly clever is that auto browse integrates with your actual Google ecosystem. It can reference information from Gmail, Google Calendar, Google Maps, Google Shopping, and Google Flights. So when you ask it to plan a trip, it doesn't just search the public web. It pulls context from your personal emails about the conference you're attending, checks your calendar availability, and understands your travel preferences based on your search history.

Google's own example: "You're traveling to a conference and need to book a flight. Gemini can dig up that old email with event details, reference context from Google Flights to provide recommendations, and later draft an email letting your colleagues know your arrival time." That's a multi-step task that normally means switching between several applications. The AI does it in one request.

The technical implementation pairs Gemini's multimodal models with Nano Banana, Google's image generation and editing model. When Gemini encounters a web form or interface it doesn't immediately understand, it can analyze the visual layout and figure out what data goes where.

But the real innovation isn't the individual pieces. It's that everything works together. The AI has your context (emails, calendar, search history), understands visual interfaces (what buttons to click, where to enter data), and can navigate multi-step workflows that would previously require human attention.

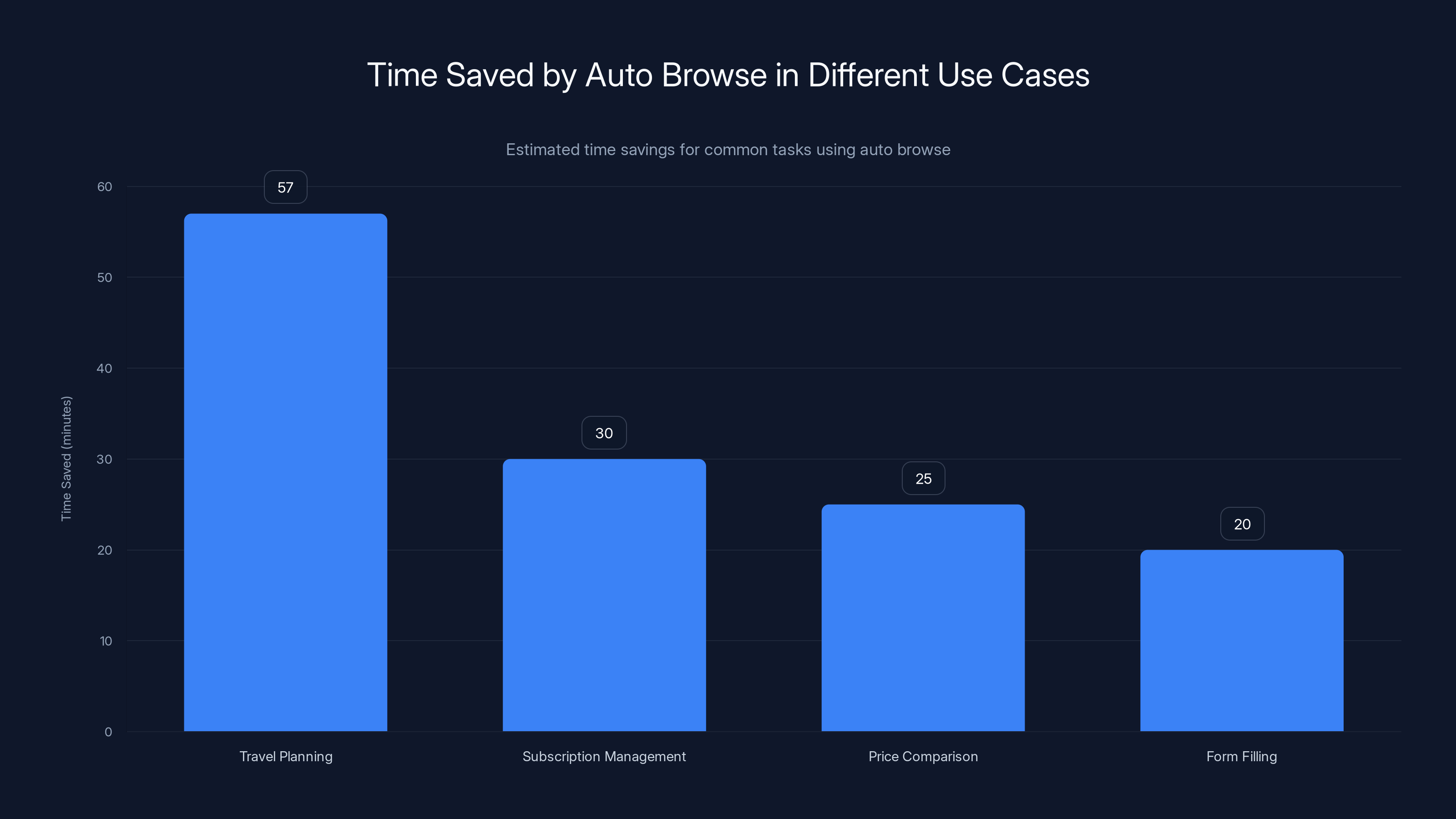

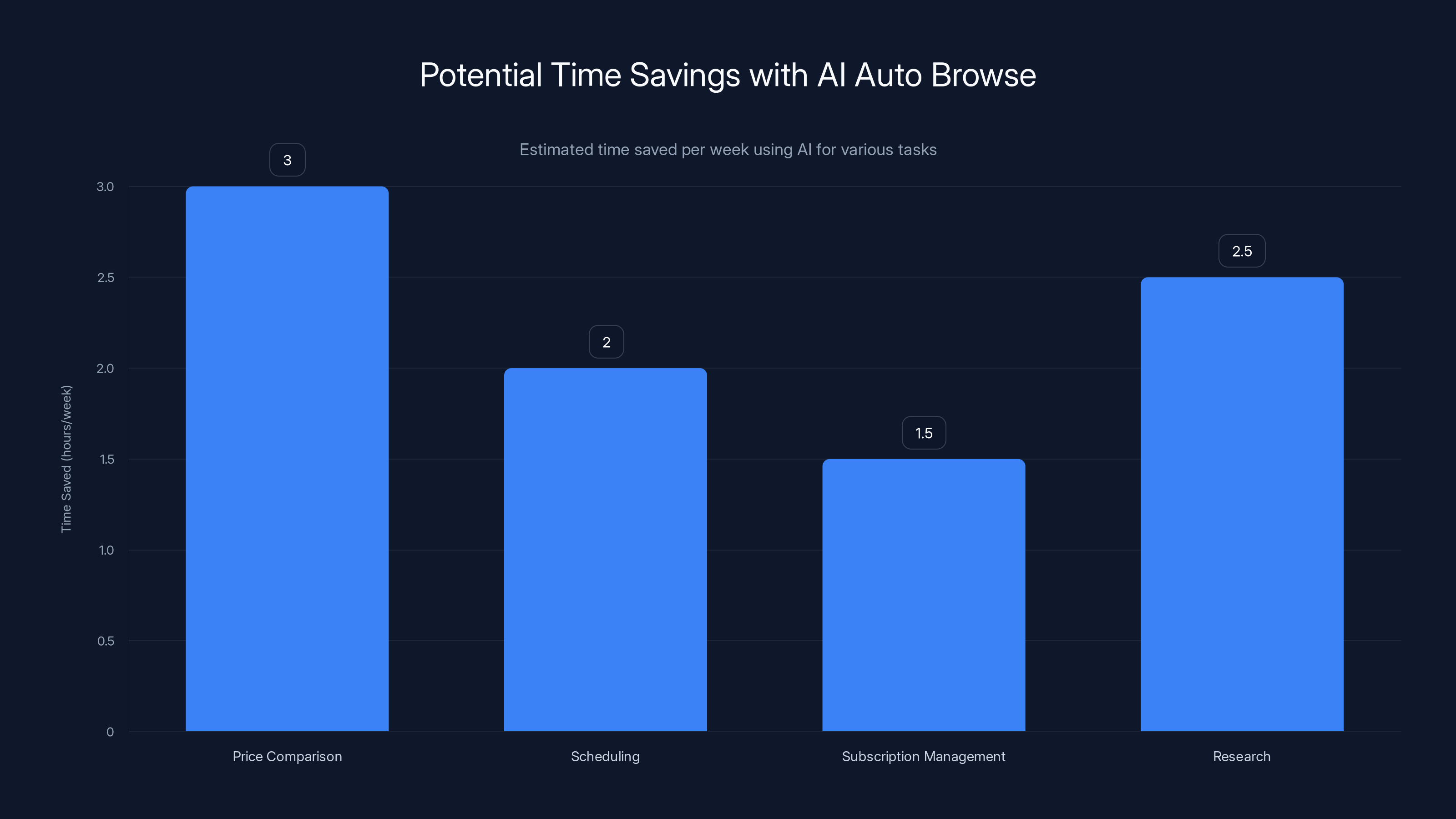

Auto browse significantly reduces time spent on tasks like travel planning and subscription management, saving up to 57 minutes for travel planning alone. Estimated data.

How Auto Browse Works: The Mechanics Behind the Magic

Understanding how auto browse functions requires understanding that this is fundamentally different from traditional chatbots. This isn't a system that just generates text. It's a decision-making system that navigates interfaces.

When you input a task into Gemini auto browse, the system breaks it down into steps. Not steps you define. Steps the AI figures out on its own. If you say "find me a budget hotel in Denver for next weekend," Gemini decides it needs to: (1) open a hotel booking site, (2) enter your dates, (3) set a budget filter, (4) compare options, (5) extract key information, (6) present results.
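To make the idea of decomposition concrete, here is a minimal Python sketch that represents the Denver example as an ordered plan whose steps share a scratchpad, so later steps can use what earlier ones found. It is not how Gemini plans internally: the `Step` type, the hard-coded listings, and the $150 budget are all hypothetical, and a real agent would generate the steps itself rather than read them from code.

```python
from dataclasses import dataclass
from typing import Callable

# Shared scratchpad: later steps read what earlier steps wrote.
State = dict


@dataclass
class Step:
    description: str
    run: Callable[[State], None]


# Hypothetical stand-in for a booking site's search results.
FAKE_LISTINGS = [
    {"name": "Hotel A", "price": 129, "rating": 4.1},
    {"name": "Hotel B", "price": 210, "rating": 4.6},
    {"name": "Hotel C", "price": 99, "rating": 3.8},
]


def build_plan(dates: str, max_price: int) -> list[Step]:
    """The six steps from the Denver example, hard-coded for illustration."""
    return [
        Step("open a hotel booking site",
             lambda s: s.update(results=list(FAKE_LISTINGS))),
        Step(f"enter dates: {dates}",
             lambda s: s.update(dates=dates)),
        Step(f"set budget filter: <= ${max_price}/night",
             lambda s: s.update(results=[r for r in s["results"] if r["price"] <= max_price])),
        Step("compare options (highest rating first)",
             lambda s: s["results"].sort(key=lambda r: r["rating"], reverse=True)),
        Step("extract key information",
             lambda s: s.update(summary=[(r["name"], r["price"]) for r in s["results"]])),
        Step("present results",
             lambda s: print(s["summary"])),
    ]


def run_plan(steps: list[Step], state: State) -> None:
    # Execute steps in order, announcing each one as it runs.
    for i, step in enumerate(steps, start=1):
        print(f"({i}) {step.description}")
        step.run(state)


state: State = {}
run_plan(build_plan("next weekend", 150), state)
```

The design point is that each step reads and writes shared state; that is what lets the budget filter in step (3) act on the listings collected in step (1).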

This requires what researchers call multimodal reasoning. The AI needs to understand text (your request, website content, form labels), visuals (button locations, layout structure, product images), and context (your preferences, budget constraints, availability).

Google's implementation leverages its Gemini model family, and Pro and Ultra subscribers get access to its more capable tiers. The reasoning capabilities of these models allow the AI to understand complex workflows and decide which websites to visit and in what sequence.

Here's a concrete workflow example. You ask: "I need to schedule a meeting with my team, but first check everyone's availability on Thursday." Auto browse would:

- Access your Google Calendar through the integrated connection

- Pull up your team members' availability (if shared calendars are set up)

- Identify Thursday slots with the most overlap

- Switch to Gmail and draft meeting invitations

- Suggest sending them through your email

- Wait for your confirmation before actually sending

The crucial detail here is safety checkpoints. Google built in confirmations before auto browse takes actions that require authorization. It won't automatically send emails or make purchases. It will show you what it's about to do and ask for your permission.
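A confirmation checkpoint can be sketched as a gate in front of the executor. In this minimal Python example, the set of sensitive action kinds and the `confirm` callback are assumptions for illustration, not Google's API; in a real product `confirm` would show you a preview and wait for your answer.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed categories of actions that must never run without approval.
SENSITIVE_KINDS = {"send_email", "purchase"}


@dataclass
class Action:
    kind: str
    summary: str


def execute(action: Action, confirm: Callable[[Action], bool]) -> str:
    """Run an action, pausing for user confirmation if it is sensitive."""
    if action.kind in SENSITIVE_KINDS and not confirm(action):
        return f"skipped: {action.summary}"
    # A real agent would perform the action here; this sketch only reports it.
    return f"done: {action.summary}"


def decline(action: Action) -> bool:
    # Simulates a user who says no to everything they are asked about.
    return False


print(execute(Action("send_email", "invite team to Thursday sync"), decline))
print(execute(Action("read_calendar", "check Thursday availability"), decline))
```

Reading the calendar goes through without a prompt, while the email stays unsent until the user approves it.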

The browsing capability itself uses a technique similar to OpenAI's Operator agent and Anthropic's computer use capability for Claude. Google's system can click buttons, fill text fields, scroll pages, and interpret the results. When it encounters an interface it doesn't understand, it screenshots the current state and analyzes it visually.
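The observe, decide, act cycle behind this kind of browsing can be sketched as a loop. In the minimal Python version below, `FakePage` stands in for a real browser tab and `decide` is a toy rule-based policy where a production agent would consult a model over a screenshot or the page structure; all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FakePage:
    """Hypothetical stand-in for a browser tab the agent can observe and act on."""
    fields: dict = field(default_factory=lambda: {"search": ""})
    submitted: bool = False

    def observe(self) -> dict:
        # Real agents may read the DOM or a screenshot; this returns plain state.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def act(self, action: tuple) -> None:
        kind, *args = action
        if kind == "type":
            name, text = args
            self.fields[name] = text
        elif kind == "click_submit":
            self.submitted = True


def decide(observation: dict, goal: str) -> Optional[tuple]:
    """Toy policy: type the goal into the search box, submit, then stop."""
    if observation["fields"]["search"] != goal:
        return ("type", "search", goal)
    if not observation["submitted"]:
        return ("click_submit",)
    return None  # goal reached


def run_agent(page: FakePage, goal: str, max_steps: int = 10) -> list:
    history = []
    for _ in range(max_steps):
        action = decide(page.observe(), goal)
        if action is None:
            break
        page.act(action)
        history.append(action)
    return history


page = FakePage()
history = run_agent(page, "hotels in Denver")
```

The `max_steps` cap is a common safeguard in agent loops: if the policy never reaches its goal, the loop stops instead of clicking forever.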

For image editing, Google integrated Nano Banana, which is its lightweight image generation and editing model. This means if Gemini encounters an image on your screen that you want to modify (say, you want to remove someone from a photo or change colors), it can do that directly without leaving the browser.

The context window is also critical. Gemini auto browse maintains what researchers call persistent context. It remembers what you asked for, what it's already checked, and what decisions you've made. This prevents the common problem where AI agents start over with every interaction and lose the plot.
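Persistent context can be approximated as a small per-task memory. This hypothetical Python sketch records the original request, the pages already checked, and decisions you've made, so the agent can skip work it has done instead of starting over. Gemini's actual mechanism isn't described here; this only illustrates the idea.

```python
from dataclasses import dataclass, field


@dataclass
class TaskMemory:
    """Hypothetical memory an agent carries across the steps of one task."""
    request: str
    checked: set = field(default_factory=set)
    decisions: list = field(default_factory=list)

    def should_visit(self, url: str) -> bool:
        # Skip pages already examined rather than starting over.
        if url in self.checked:
            return False
        self.checked.add(url)
        return True

    def record(self, decision: str) -> None:
        self.decisions.append(decision)


memory = TaskMemory("budget hotel in Denver, next weekend")
candidates = ["hotels-a.example.com", "hotels-b.example.com", "hotels-a.example.com"]
to_visit = [url for url in candidates if memory.should_visit(url)]
memory.record("user wants free cancellation")
```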

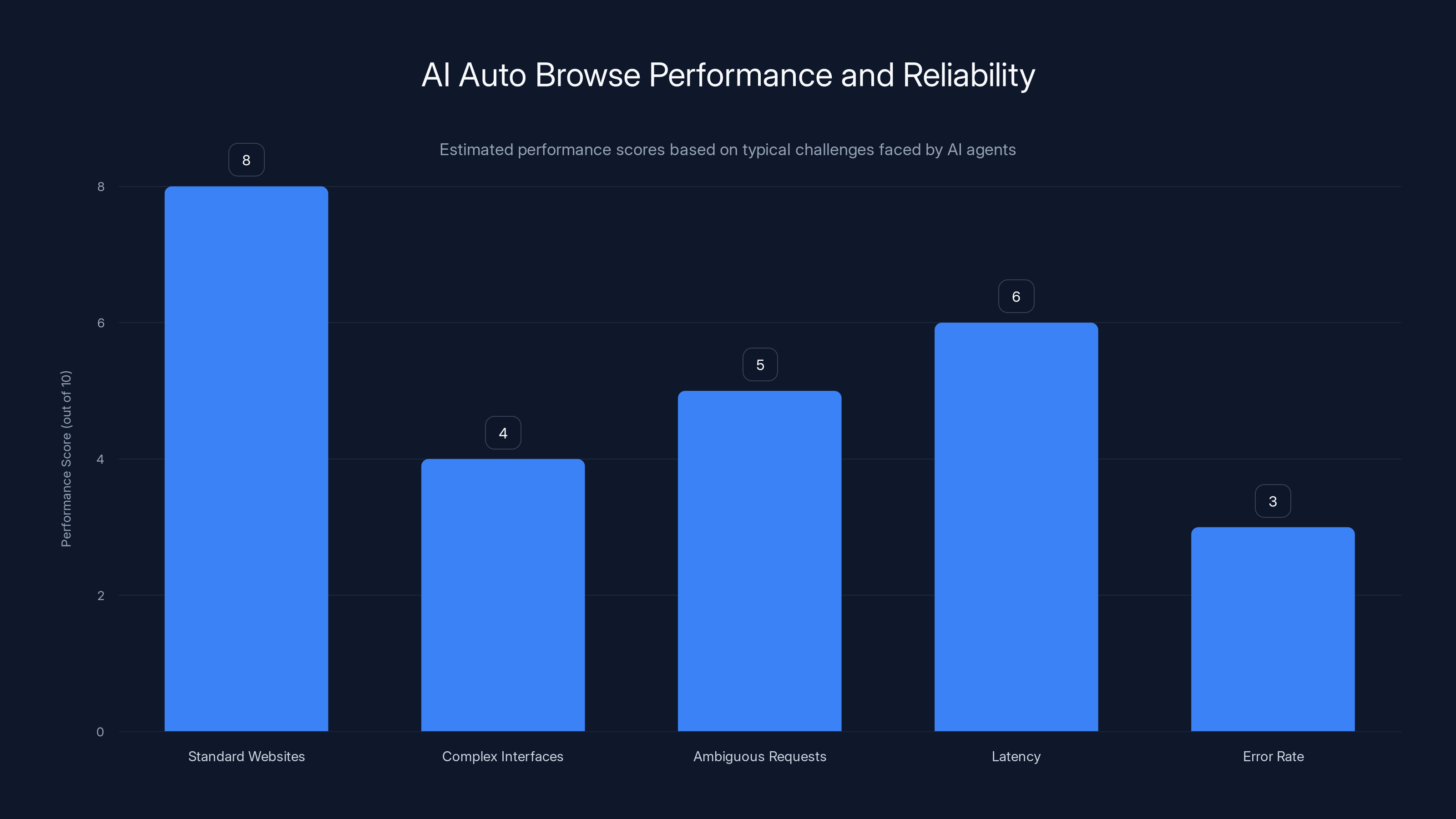

AI auto browse performs well on standard websites but struggles with complex interfaces and has a notable error rate. Estimated data.

The Integration Ecosystem: Your Digital Life as Context

What separates Gemini auto browse from standalone AI agents is that it lives inside your Google ecosystem. This is both its greatest strength and the most important privacy consideration.

Gemini in Chrome now integrates with five key Google services:

Gmail integration means Gemini can search your email history for context. You don't need to tell it about your conference. It finds the email with all the details. You don't need to manually input your colleague's availability. It can infer that from previous meeting invitations and scheduling threads.

Google Calendar integration is perhaps the most powerful piece. Gemini understands your schedule. It knows when you're busy, what time zones matter, and what meetings are already on your calendar. When you ask it to schedule something, it's not making a suggestion. It's working within your actual constraints.

Google Maps integration enables travel planning. The AI understands geography, distance, and travel time. When planning a trip, it can factor in commute times between locations and weather patterns.

Google Shopping and Google Flights integration means browsing history and saved items become active data. If you've been comparing flights, Gemini already knows your price sensitivity and routing preferences.

Google Photos integration, coming in future updates, will allow Gemini to understand your personal photos and memories. This is more significant than it sounds. Imagine telling Gemini "find all photos from my trip to Japan and create a travel summary." The AI would be pulling from your personal library.

The architecture here is elegant, but it rests on a significant amount of trust. Every browsing action, search, email, and calendar entry becomes potential input for the AI's decision-making. Google claims this is all processed with privacy controls, but the scale of integration is notable.

What makes this different from previous "AI assistants" is the integration depth. Earlier versions of Gemini in Chrome could analyze the webpage you were currently viewing. Now it can pull context from across your entire Google account. That's exponentially more powerful, and it gives you exponentially more reasons to understand what data is being processed.

Google has designed this so that certain actions require explicit permission. The AI won't automatically send emails or make purchases. But it will suggest them, which is a meaningful distinction. The friction isn't eliminating the action. It's asking you to confirm.

Real-World Use Cases: Where Auto Browse Actually Saves Time

Let's move from abstract to practical. Here's what auto browse actually does for people today.

Travel Planning. This is the marquee use case. You're going to a conference in three weeks. Normally: you open Gmail, search for the conference email, note the dates and location. You open Google Flights, enter the dates, check prices, open three comparison tabs. You check your calendar for conflicts. You open your hotel booking app, search for hotels, read reviews. You check your credit card rewards status to optimize your booking method.

With auto browse: "Book me a flight and hotel for the conference in Denver. I have a $150/night budget for the hotel and prefer early morning flights." Gemini works through all of the above on its own, returns a summary of options with estimated costs, and asks if you want to proceed. One request. Massive time savings.
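Under the hood, a request like this turns into hard filters plus a ranking. The Python sketch below applies the $150/night hotel budget and an early-morning flight preference to made-up options; the 9:00 cutoff for "early morning" and the three-night stay are assumptions, and the data is invented.

```python
from datetime import time

# Hypothetical options an agent might have collected from different sites.
hotels = [
    {"name": "Downtown Inn", "nightly": 139},
    {"name": "Convention Suites", "nightly": 189},
    {"name": "Airport Lodge", "nightly": 112},
]
flights = [
    {"code": "XY101", "departs": time(6, 45), "price": 268},
    {"code": "XY205", "departs": time(11, 30), "price": 231},
    {"code": "XY099", "departs": time(8, 15), "price": 295},
]

MAX_NIGHTLY = 150
EARLY_CUTOFF = time(9, 0)  # assumption: "early morning" means departing before 9:00

# Hard constraints first, then rank the survivors by price.
ok_hotels = sorted((h for h in hotels if h["nightly"] <= MAX_NIGHTLY),
                   key=lambda h: h["nightly"])
ok_flights = sorted((f for f in flights if f["departs"] < EARLY_CUTOFF),
                    key=lambda f: f["price"])


def trip_cost(hotel: dict, flight: dict, nights: int) -> int:
    return hotel["nightly"] * nights + flight["price"]


best = trip_cost(ok_hotels[0], ok_flights[0], nights=3)
```

Note that the cheapest flight overall (XY205) never makes the list, because it violates the stated departure preference.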

Subscription Management. You probably have more subscriptions than you realize, scattered across different services. When you want to cancel one or check the price, you're manually digging through emails and login screens.

Gemini auto browse can navigate to each service, check your current subscriptions, identify which ones you're not using, and provide a summary. It won't automatically cancel anything. But it'll show you what's costing money and why.
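The summary step boils down to totaling costs and flagging idle services. This is a minimal sketch over invented data; the 60-day idle threshold and the field names are assumptions, and, matching the behavior described above, nothing gets cancelled.

```python
from datetime import date

# Hypothetical data an agent might gather from receipts and account pages.
subscriptions = [
    {"service": "StreamCo", "monthly": 15.49, "last_used": date(2025, 1, 3)},
    {"service": "CloudBox", "monthly": 2.99, "last_used": date(2024, 9, 12)},
    {"service": "NewsDaily", "monthly": 12.00, "last_used": date(2024, 10, 1)},
]


def summarize(subs: list, today: date, idle_days: int = 60):
    """Return total monthly spend and services idle longer than idle_days."""
    total = round(sum(s["monthly"] for s in subs), 2)
    idle = [s["service"] for s in subs if (today - s["last_used"]).days > idle_days]
    return total, idle


total, idle = summarize(subscriptions, today=date(2025, 1, 15))
```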

Price Comparison. You want a new camera and you've narrowed it down to two models. You want to know where's cheapest, what the warranty covers, what people are saying in reviews. Normally you'd open five tabs and spend 30 minutes cross-referencing.

Auto browse can check three major retailers, compile the prices, note warranty differences, summarize review sentiment across multiple sites, and present everything in one place. The AI understands that "best price" is only meaningful in context of warranty and return policies.
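Treating warranty and return policy as part of "best price" can be expressed as eligibility rules applied before picking the cheapest offer. The retailers, prices, and minimums in this Python sketch are hypothetical.

```python
# Hypothetical listings for one camera model across three retailers.
offers = [
    {"retailer": "ShopA", "price": 649, "warranty_months": 12, "return_days": 30},
    {"retailer": "ShopB", "price": 619, "warranty_months": 0, "return_days": 14},
    {"retailer": "ShopC", "price": 639, "warranty_months": 24, "return_days": 30},
]


def best_offer(offers: list, min_warranty: int = 12, min_return: int = 30):
    """Cheapest offer that still meets warranty and return-policy minimums."""
    eligible = [o for o in offers
                if o["warranty_months"] >= min_warranty and o["return_days"] >= min_return]
    return min(eligible, key=lambda o: o["price"]) if eligible else None


cheapest = min(offers, key=lambda o: o["price"])["retailer"]
best = best_offer(offers)["retailer"]
```

Here the lowest sticker price (ShopB) loses to ShopC once the 12-month warranty and 30-day return minimums apply.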

Form Filling. Some of the most tedious web work is filling out forms. Job applications, rental applications, service requests. You're entering the same information over and over: your address, phone number, employment history.

Gemini can reference this information from your Google account (where it's stored) and auto-fill where appropriate. Not perfectly. There will be edge cases. But it eliminates the most repetitive part.
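A simplified version of that autofill logic: match each form label to a saved profile field and hand anything unmatched back to you. The profile, the synonym table, and the matching rule in this Python sketch are assumptions; real form understanding is far messier, which is where the edge cases come from.

```python
# Hypothetical saved profile; a real agent would draw on account data.
profile = {"full_name": "Alex Rivera", "phone": "555-0142", "street": "12 Elm St"}

# Assumed synonym table mapping normalized form labels to profile keys.
LABEL_TO_KEY = {
    "name": "full_name", "full name": "full_name",
    "phone": "phone", "phone number": "phone", "mobile": "phone",
    "address": "street", "street address": "street",
}


def autofill(labels: list, profile: dict):
    """Fill fields we can match; return the rest for the user to handle."""
    filled, needs_user = {}, []
    for label in labels:
        # Normalize "Full Name:" to "full name" before lookup.
        key = LABEL_TO_KEY.get(label.strip().lower().rstrip(":"))
        if key in profile:
            filled[label] = profile[key]
        else:
            needs_user.append(label)
    return filled, needs_user


filled, needs_user = autofill(["Full Name:", "Mobile", "Employer"], profile)
```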

Appointment Scheduling. You need to schedule a meeting with your team. Normally: you send an email asking for availability, wait for responses, manually check each person's calendar, find an overlap, send a calendar invite.

With auto browse: "Schedule a 90-minute meeting with the marketing team next Thursday. I need at least three people." Gemini checks shared calendars, identifies the best time slot, drafts the invite for your approval, and updates you. The team doesn't need to do anything.
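Finding a slot like this is an interval-overlap search. The Python sketch below scans a 9:00 to 17:00 day in 30-minute steps and returns the first 90-minute window in which at least three people are free. The busy times are invented, and the step size and working hours are assumptions.

```python
# Busy intervals on Thursday, in minutes after midnight (hypothetical data).
busy = {
    "ana": [(9 * 60, 11 * 60)],
    "ben": [(13 * 60, 14 * 60)],
    "chloe": [(10 * 60, 12 * 60)],
    "dev": [(9 * 60, 17 * 60)],  # out all day
}


def is_free(intervals: list, start: int, end: int) -> bool:
    # Free if the slot overlaps none of the busy intervals.
    return all(end <= b_start or start >= b_end for b_start, b_end in intervals)


def find_slot(busy: dict, duration: int = 90, min_people: int = 3,
              day_start: int = 9 * 60, day_end: int = 17 * 60, step: int = 30):
    """Earliest slot where at least min_people are free for the whole duration."""
    for start in range(day_start, day_end - duration + 1, step):
        end = start + duration
        free = [person for person, iv in busy.items() if is_free(iv, start, end)]
        if len(free) >= min_people:
            return start, free
    return None


start, attendees = find_slot(busy)
print(f"{start // 60}:{start % 60:02d}", attendees)
```

With this data the earliest qualifying window is 14:00, since Dev is busy all day and the other three only line up for 90 minutes after Ben's 13:00 meeting.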

Research Tasks. You're writing about a topic and need information from multiple sources. Normally you're tabbing between search results, reading carefully, extracting information, synthesizing it yourself.

Gemini can browse multiple sources, extract relevant information, fact-check across sources, and present a summary. It's like having a research assistant who reads quickly and takes good notes.
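Cross-checking can be approximated by counting how many independent sources support each extracted claim. This Python sketch uses exact string matching on invented claims, which is a deliberate simplification; a real system would need to recognize paraphrases and weigh source quality.

```python
from collections import Counter

# Hypothetical claims extracted from three sources on the same topic.
extracted = {
    "source1": {"launch year 2024", "supports offline mode"},
    "source2": {"launch year 2024", "price $99"},
    "source3": {"launch year 2024", "supports offline mode", "price $89"},
}


def corroborated(extracted: dict, min_sources: int = 2) -> list:
    """Claims that appear in at least min_sources independent sources."""
    counts = Counter(claim for claims in extracted.values() for claim in claims)
    return sorted(claim for claim, n in counts.items() if n >= min_sources)


confirmed = corroborated(extracted)
```

The conflicting prices each appear only once, so neither is reported as confirmed.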

The pattern across all these use cases: they involve navigating multiple interfaces, synthesizing information, and making decisions based on constraints. These are exactly the kinds of tasks that are tedious for humans but straightforward for a capable AI system.

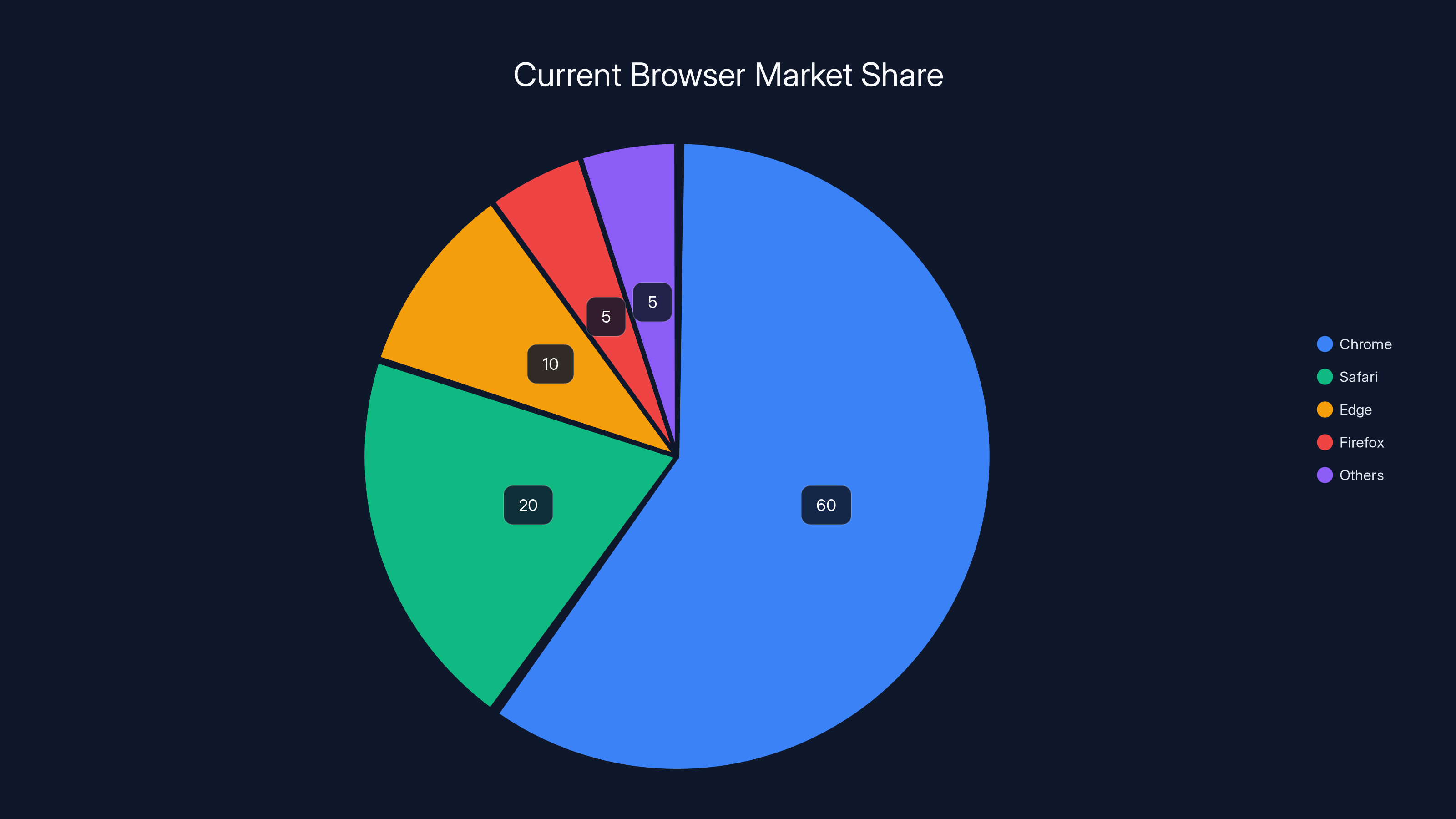

Chrome dominates the market with over 60% share, positioning Google advantageously as browsers evolve into active participants. Estimated data.

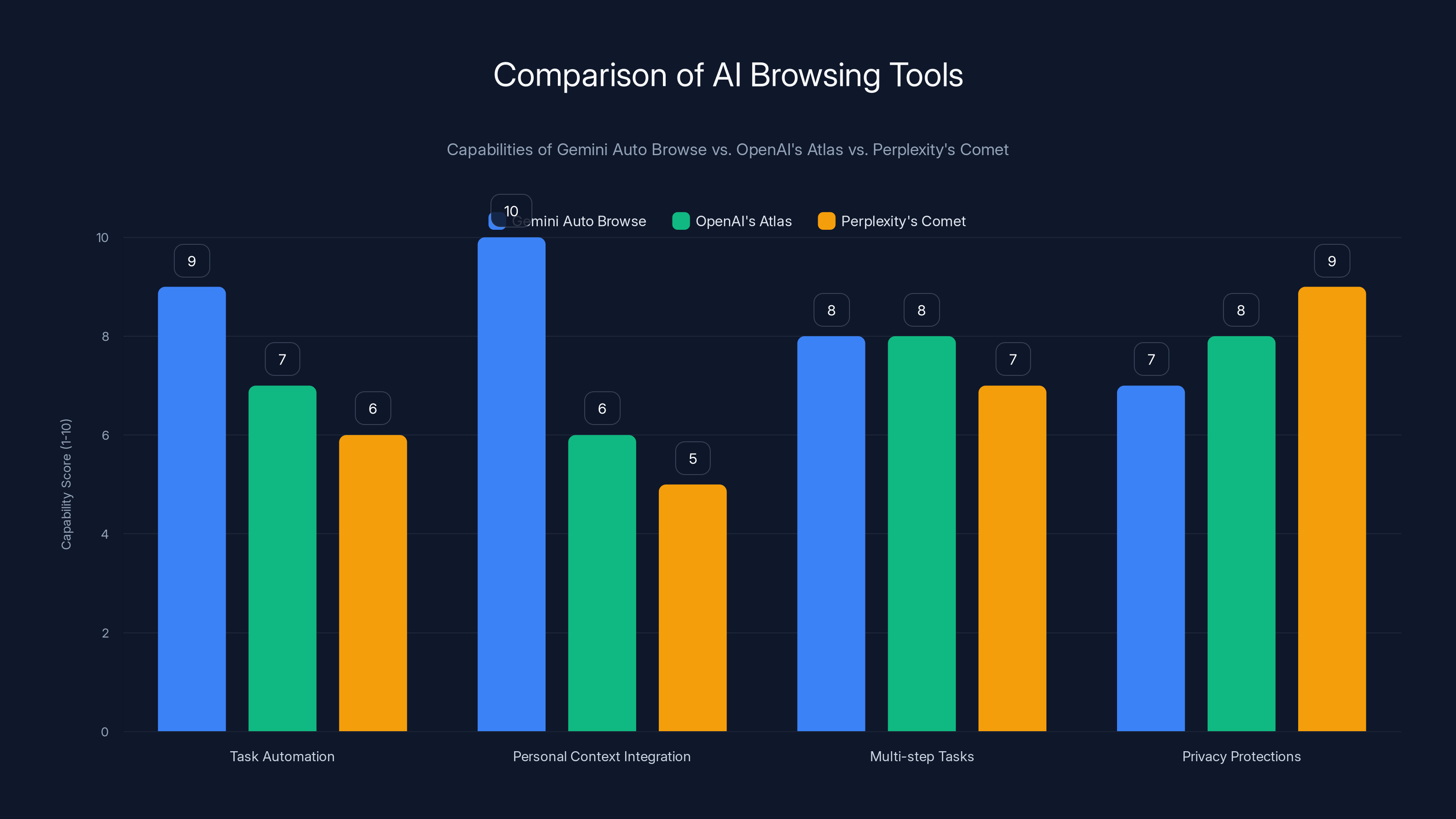

Comparison to Competitors: OpenAI Atlas and Perplexity Comet

Google isn't alone in this race. There's a three-way competition emerging, and understanding the differences matters for which tool you'll actually use.

OpenAI's Atlas is the company's answer to agentic browsing. It's built around ChatGPT and has similar capabilities to Gemini auto browse. Atlas can navigate the web, fill forms, compare prices, and handle multi-step tasks.

The key difference: Atlas is less integrated into a personal ecosystem. It's more like a standalone agent. OpenAI hasn't built it into an email or calendar system (yet). This makes it more neutral and less dependent on using OpenAI's other products, but it also means less context. Atlas is browsing the open web without knowing your personal constraints and preferences.

Perplexity's Comet takes a different approach. Perplexity has built its reputation on being the "thinking search engine," and Comet extends that. It's designed for research tasks specifically. Where Atlas and Gemini auto browse are general agents, Comet excels at finding information across sources and synthesizing it accurately.

Comet currently handles fewer action tasks (it's not designed to fill forms or make purchases) but is stronger at the "gather and synthesize information" part. It shows you sources, lets you drill down, and helps you understand the information landscape.

Here's the practical difference:

| Task | Gemini Auto Browse | OpenAI Atlas | Perplexity Comet |
|---|---|---|---|
| Book a flight | Excellent | Excellent | Good (shows options only) |
| Compare prices | Excellent | Excellent | Excellent |
| Fill forms | Excellent | Good | Limited |
| Schedule meetings | Excellent (with Gmail) | Moderate | Not designed for this |
| Research topic | Good | Moderate | Excellent |
| Check personal calendar | Excellent (integrated) | Moderate | Limited |
| Fill subscription form | Excellent | Good | Limited |

The real distinction is about intent and context. Gemini auto browse is optimized for tasks that require knowing your personal information. Atlas is better for standalone tasks where you don't need personal context. Comet is best for pure research and information synthesis.

For most users, the choice comes down to: "Are you already using Google's ecosystem?" If yes, Gemini auto browse is immediately more powerful because it knows your calendar, email, and preferences. If you're in the OpenAI ecosystem (ChatGPT Plus or a business account), Atlas makes sense. If you're doing research-heavy work, Comet is compelling.

But here's what's interesting: the competition is driving all of these tools toward better reasoning, more reliable browsing, and safer interaction models. A year ago, few AI tools could reliably fill out a form. Now it's quickly becoming table stakes.

Privacy and Security: The Elephant in the Room

Let's talk about the thing nobody wants to think about but everyone should: what happens when your AI agent has access to your entire digital life?

Gemini auto browse sees your emails, calendar, search history, shopping behavior, and browsing patterns. Google says this data isn't used for advertising purposes (unlike some other Google services). But it's worth understanding the actual mechanics.

When Gemini accesses your Gmail to find conference details, that data is processed. When it checks your calendar to find scheduling conflicts, that's visible to the system. Google's privacy policy claims this isn't used for targeting, but it's still being processed, analyzed, and understood by an AI system.

The bigger privacy question: what happens if Gemini is compromised or if its behavior becomes unpredictable? An AI system with access to your calendar and email has the capability to do significant damage. It could schedule fake meetings, send emails pretending to be you, or extract sensitive information.

Google has built in safeguards: the AI asks for confirmation before sending emails or making purchases. But these safeguards are only as good as the AI's ability to understand what it's doing. If the AI hallucinates or misunderstands a task, the confirmation step only helps if you're paying attention.

There's also the question of corporate surveillance. Google having access to information about which tasks you ask Gemini to do is different from Google seeing your search history. One is passive. The other is an explicit log of things you actually wanted to get done. That data is incredibly valuable to advertisers and marketers.

Google claims it's not using this data for advertising. Believe that or not based on your faith in Google's intentions and its historical track record. The technical capability is definitely there.

The honest assessment: Gemini auto browse is probably safer than the alternative of you manually visiting 20 websites where advertisers are tracking you anyway. But it does represent a significant consolidation of personal digital data in one system. If you're uncomfortable with that, you should understand it before you use the feature.

One important note: auto browse is currently only available to paid subscribers (Google AI Pro and Ultra). Google hasn't announced plans to bring it to free tier users. This might be partly about monetization, but it's also partly about limiting the number of accounts with this level of access. That's not reassuring, exactly, but it's notable.

Using AI auto browse can save an estimated 1.5 to 3 hours per week on administrative tasks, reducing cognitive load. Estimated data.

Performance and Reliability: Where It Works and Where It Fails

Let's be honest about what works and what doesn't. AI agents are remarkable, but they're not perfect.

Auto browse excels with standard, well-structured websites. Amazon, Google Flights, major hotel booking sites, bank account portals. These sites have clear structures that the AI can learn to navigate. Click the search box, enter the query, analyze the results, click through to details. Straightforward.

Auto browse struggles with complex, non-standard interfaces, sites with unusual layouts, or sites designed to prevent bots from accessing them. Many news sites, for example, have paywalls and verification screens. Some websites actively block automated access. The AI has to work around these obstacles, and success is inconsistent.

It also struggles with ambiguous requests. If you say "find me a good camera under $500," what makes a camera "good"? Do you care more about image quality, autofocus speed, or battery life? Humans understand this through personal experience and preference. The AI has to guess. Sometimes it guesses right. Sometimes it optimizes for the wrong metric and you end up with recommendations that don't match what you actually want.

There's also a latency question. Auto browse is slower than a human browsing. An experienced human can compare three hotel booking sites and make a decision in ten minutes. Auto browse might take five or six minutes per site, so the total time could be longer. This matters less for complex tasks (where the AI's ability to track multiple criteria matters) and matters more for simple tasks (where you could just browse yourself).

The error rate is non-trivial. In testing and early user reports, auto browse occasionally:

- Misreads form fields and enters information in the wrong place

- Gets stuck in loading screens or authentication flows

- Fails to find information that's clearly visible to a human

- Makes decisions that don't match the stated criteria

- Loses context mid-task and starts over

Google claims this improves with use, as the model learns your preferences and common patterns. But early data suggests it's not perfect, and important tasks might need human verification.
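One way to add that verification is to read the form back after the agent fills it and compare against what it intended to enter. This is a hypothetical Python sketch; how values are read back from the page is left out, and the field names are invented.

```python
def verify_form(intended: dict, read_back: dict) -> list[str]:
    """List fields whose on-page value differs from what the agent meant to enter."""
    problems = []
    for field_name, expected in intended.items():
        actual = read_back.get(field_name)
        if actual != expected:
            problems.append(f"{field_name}: expected {expected!r}, found {actual!r}")
    return problems


intended = {"email": "alex@example.com", "zip": "80202"}
# Simulates the "wrong place" failure: the ZIP code landed in the email field.
read_back = {"email": "80202", "zip": ""}
issues = verify_form(intended, read_back)
```

An empty `issues` list means the form matches the agent's intent; anything else is a reason to stop and review before submitting.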

One specific limitation: auto browse works better with tasks where you have clear constraints and the outcome is measurable. "Find flights under $400 with no more than one stop" is perfect. The AI can optimize clearly. "Find me something to wear to a casual dinner" is much harder. The AI doesn't understand your style.

Google has been transparent that auto browse is launching in beta. The company doesn't claim it's flawless. But beta software from a company with Google's resources tends to be pretty reliable for common cases. Edge cases? Those might take time.

The Personal Intelligence Feature: Memory Meets AI

Along with auto browse, Google is expanding what it calls Personal Intelligence. This feature launched inside the Gemini app and is headed to Chrome in the next few months.

Personal Intelligence is Gemini's way of understanding your life. It gives the AI system access to your past conversations with Gemini, your email conversations, your calendar patterns, your photos, and your search history. The idea: the AI becomes more useful because it understands you as a person, not just the current task.

Consider this example: you ask Gemini "Can I attend the conference next month?" With Personal Intelligence, Gemini understands this is referring to a specific conference mentioned in emails weeks ago, knows why you wanted to attend, understands your scheduling constraints, and can give a much more useful answer than just checking next month's calendar.

Or: you ask "Should I buy that camera?" Personal Intelligence has context about cameras you've looked at, photography equipment you own, past purchases you've been happy with, and your current budget situation. The recommendation is much more informed.

This is where things get genuinely powerful and genuinely concerning. On one hand, an AI that understands your preferences, past decisions, and actual constraints can be tremendously helpful. On the other hand, an AI system with that level of personal knowledge is also a very sensitive data collection point.

Google's implementation requires explicit opt-in. You have to turn on Personal Intelligence. The company is positioning it as an opt-in convenience feature, not a default behavior. That's responsible but also means the feature isn't enabled for most people yet.

The technical implementation uses Gemini's reasoning capabilities to analyze your data without (Google claims) storing a complete profile. The AI understands patterns in your behavior without necessarily maintaining a persistent "user profile." Whether that distinction is meaningful from a privacy perspective is debatable.

Where Personal Intelligence gets really interesting is combining it with auto browse. Imagine: "I'm planning a trip like the one I took to Europe last year, but with a bigger budget. Can you plan it?" The AI knows where you went, what you enjoyed, how you like to travel, and how much you have to spend. The result isn't just a travel plan. It's a travel plan specifically tailored to your travel style.

This is where we see the real trajectory of AI assistants. They're moving from answering questions to understanding who you are, what matters to you, and proactively helping you achieve your goals.

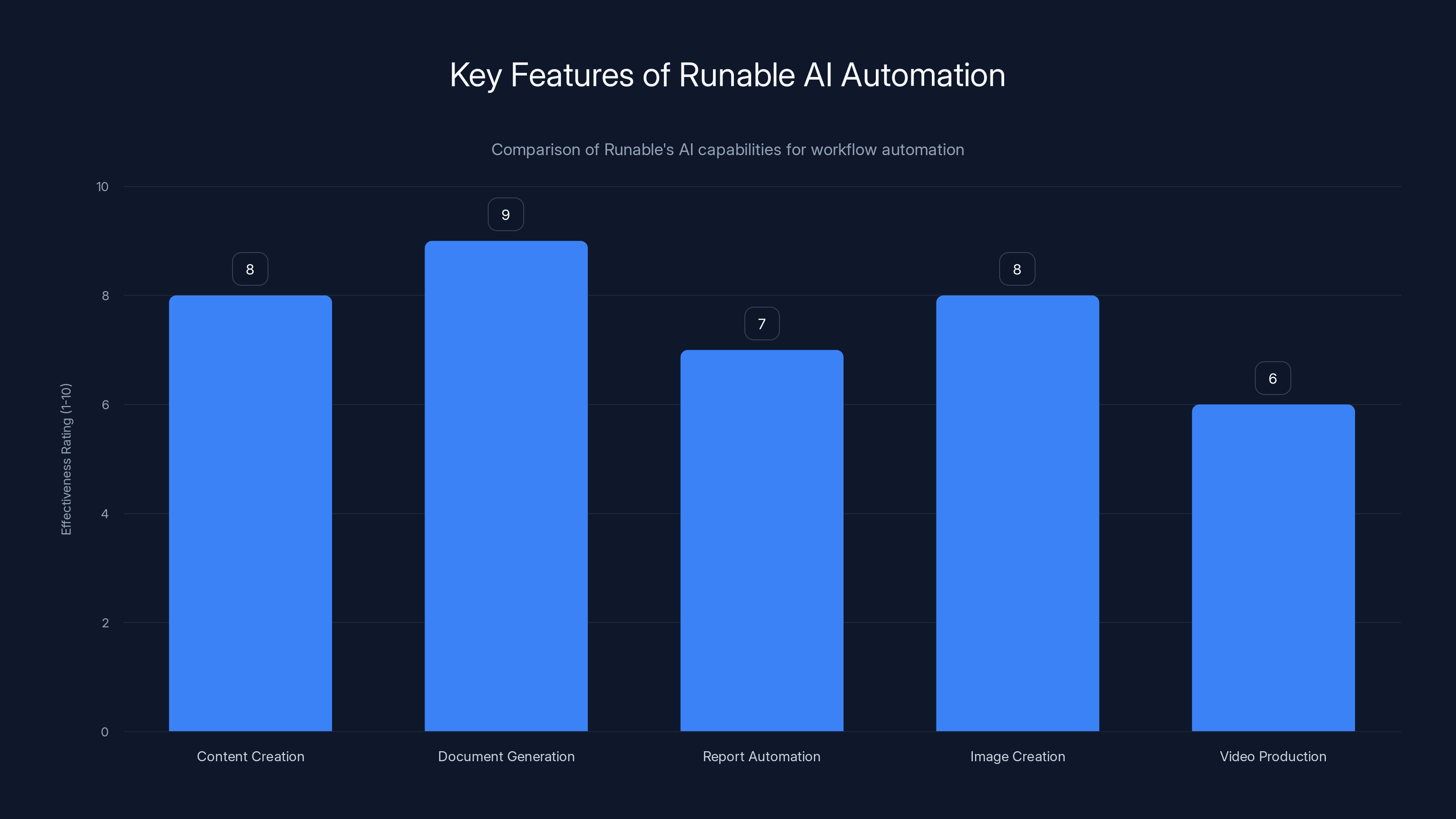

Runable offers a range of AI-powered automation features, with document generation and content creation being the most effective. Estimated data based on typical feature effectiveness.

The Broader Implications: Browsers Aren't Passive Anymore

Step back from the features for a moment. What Google is announcing is a fundamental change in what a web browser is.

For the past 30 years, the browser has been a viewing window. You go to a website, you see content, you interact with it. The browser is a portal. You're the agent.

With Gemini auto browse, the browser is becoming an acting participant. You tell the browser what you want, and it goes out into the web and does things on your behalf. The browser is becoming the agent. You're the director.

This is a massive shift. It means websites need to be designed not just for human users but for AI agents. Some websites might start blocking agents completely. Others might build specific APIs for agent access. The entire structure of how websites work might change.

It also means the browser becomes much more important as a computing platform. Right now, most of your digital work happens in applications (email app, calendar app, productivity suite). The browser is how you access services. With auto browse, the browser becomes the primary interface for getting things done.

This has implications for competition. Apple has Safari. Microsoft has Edge. Firefox is independent. If browsing becomes the primary way people interact with AI agents, the browser company becomes incredibly influential. Google's advantage is that Chrome is already dominant (over 60% market share), and Gemini auto browse is built directly into it.

It also raises questions about AI safety and alignment. An AI agent capable of navigating the web and taking actions needs to be very aligned with human values. It needs to understand what you want and do exactly that, no more, no less. As these systems get more capable, the margin for error shrinks.

Finally, there's the question of economic disruption. If AI agents can do these tasks, what happens to service jobs that involve coordinating between systems? Much of a travel agent's or personal assistant's coordination work is exactly what auto browse does. These systems aren't replacing humans today, but the trajectory is clear.

Google isn't creating this future alone. OpenAI is building it with Atlas. Perplexity is building it with Comet. Runable is building AI automation for creating presentations, documents, and reports. The entire industry is moving toward agentic AI. Google's advantage is simply that it's already in your browser.

Nano Banana: The Image Generation Layer

One component of Gemini auto browse that deserves attention is Nano Banana, Google's lightweight image generation and editing model integrated directly into the browser.

Where previous Gemini implementations in Chrome just analyzed the websites you were viewing, auto browse goes further. It can look at an image on your screen and edit it based on text prompts. Want to remove someone from a photo? Change the colors in an image? Regenerate part of a screenshot? Nano Banana handles it.

This is a smaller feature than auto browse itself, but it's important because it shows how Google is thinking about AI integration. Rather than directing you to different tools, it's embedding capabilities directly into the browsing experience.

Lighter models run faster and use less processing power. That lets Google offer complex image operations (understanding what's in an image, making edits, generating variations) with less of the cost and delay of heavy cloud processing, and some of this may be able to run on your device.

The practical implication: you can edit images without leaving your browser or switching to a separate tool. That's a convenience win, and to the extent edits are processed locally rather than uploaded, it's a privacy win too.

Where Nano Banana shows its limitations: it's not as capable as full Gemini or other image generation models. It works well for edits and modifications. For creating entirely new images from scratch, the quality is decent but not remarkable. Google positioned it as integrated into workflow, not as a replacement for dedicated image tools.

The interesting question: how does image editing in your browser connect to the rest of your workflow? If you're shopping and see a product image, you might want to see what it looks like in different colors. Nano Banana lets you experiment without leaving the page. If you're researching and an image has text you want to modify, you can edit it directly. It's small conveniences that add up.

Gemini Auto Browse leads in personal context integration due to its deep integration with Google's ecosystem, making it superior for tasks like booking travel and scheduling meetings. Estimated data.

What the Gemini Expansion Means for Google's AI Strategy

Zoom out further. These features (auto browse, Personal Intelligence, Nano Banana image editing) are all part of a cohesive strategy from Google: become the infrastructure layer for AI-powered work.

Google's revenue historically came from search advertising. You search, Google shows you ads. That model is being disrupted by AI. ChatGPT answers questions without showing you a page of search ads. Perplexity shows search results without needing Google. AI is eating into Google's primary business model.

So Google's new strategy: own the interface where AI happens. If you're using an AI agent, it should live in Chrome. If you're asking an AI questions, it should be Gemini. If you're managing your productivity with AI assistance, it should be integrated with Google Workspace (Gmail, Calendar, Docs, etc.).

This is actually a smart pivot. Rather than fighting the AI disruption to search, Google is repositioning itself as the infrastructure company. "We don't need to be the search destination. We'll be the platform where work happens." That's a different business model, but it could be equally valuable.

The risk for Google: lock-in only holds as long as the product earns it. If Gemini is the only agent available and it's mediocre, users get frustrated. If Google's ecosystem integration with Gemini creates security vulnerabilities, there's a backlash. The company has to execute perfectly on both capability and trust.

The opportunity: Google has data and infrastructure nobody else has. Gmail is the dominant email platform. Google Calendar is the dominant calendar (for both personal and business use). Google Photos stores billions of people's memories. Google Maps understands travel patterns. Google Search has a record of what humanity cares about. If you can weave all of that together into one AI agent, you have something that's genuinely hard to replicate.

The competition is real, but Google's advantages are substantial. That's why OpenAI and Perplexity are moving fast. They know that if Google nails the integration piece, it's going to be very hard to compete.

Practical Steps: How to Actually Use This Effectively

If you have access to Gemini auto browse (you need Google AI Pro or Ultra), here's how to actually use it productively.

Start with high-friction tasks. These are tasks that take lots of clicks, multiple websites, and context switching. Booking travel is perfect. Managing subscriptions is perfect. Scheduling with multiple people is perfect. Don't use auto browse for a simple Google search.

Be specific about constraints. "Book me a flight" is too vague. "Book me a flight to Denver on May 15th, budget of $400, prefer an early-morning departure, Southwest if possible" is useful. The more specific you are about what you're optimizing for, the better the results.

Verify before it acts. Before auto browse actually sends an email or makes a purchase, review what it's about to do. Read the email draft. Check the booking details. This shouldn't take long because the AI has already done the work. You're just quality-checking.

Use it with your email and calendar. The real power comes when auto browse understands your personal context. If you set it up to access your email and calendar, tell it about tasks that reference people, meetings, or past communications. That's where it shines.

Batch your tasks. Rather than asking auto browse to handle one task at a time, group similar tasks. "Check all three of these subscription services and tell me what I'm paying for" is more efficient than asking about each separately.

Monitor the quality. Keep track of when auto browse gets things right and wrong. Are there particular websites where it struggles? Particular kinds of requests? Over time, you'll develop intuition for where it's reliable and where you should do things manually.

Don't trust it with irreversible actions... yet. Auto browse is getting better, but it's not perfect. High-stakes decisions (like actually deleting accounts or making large purchases) should still involve human judgment. The technology isn't quite there for full delegation of important decisions.

The Competitive Landscape: Who Else Is Building This

Understanding where the market is heading requires knowing who else is building agentic browsers and where the competition is fierce.

Anthropic, the company behind Claude, has been researching agent architectures but has only offered its Claude for Chrome extension as a limited research preview rather than a broad browser rollout. Claude is powerful for reasoning and analysis, but it isn't yet built into a mainstream browser the way Gemini is built into Chrome. This might be deliberate: Anthropic seems to be focusing on being the model infrastructure and letting others integrate it.

Perplexity AI has made web research its core competency. Its agent offering is Comet, an AI-powered browser. Comet is strong for synthesis and analysis but less capable at taking actions than Gemini auto browse. Perplexity is winning the "research assistant" title but not the "personal assistant" title.

OpenAI's answer to Gemini auto browse is ChatGPT Atlas, its AI browser. Atlas is sophisticated and ties into the OpenAI ecosystem (ChatGPT Plus accounts, business integrations). The main limitation: OpenAI doesn't have an ecosystem like Google's. OpenAI doesn't own your email, calendar, or office productivity suite.

The Browser Company (makers of the Arc browser) has been experimenting with AI integration but has taken a different approach. They're building AI tools that help you manage your browser and organize information, rather than building autonomous agents. This is more about AI-assisted browsing than agentic browsing.

Outside the browser, companies like Zapier and Make.com are adding AI capabilities to workflow automation. These aren't browser agents, but they're solving similar problems (automating multi-step tasks across platforms).

The landscape is fragmented right now because the technology is new. In a few years, there will probably be a few dominant approaches: one heavily integrated with the Google ecosystem, one based on OpenAI's models but with broader integrations, and specialized tools for specific industries. The winner will be whoever builds the most reliable agent with the deepest context about your life.

Looking Ahead: What's Next for Browser AI

If you're trying to understand where this is heading, here are the trends worth watching.

More capable reasoning. Current-generation AI can navigate websites and fill forms. The next generation will handle complex multi-step workflows that require judgment calls and preference optimization. The AI will get better at understanding what you actually want, not just what you said.

Better context understanding. Right now, auto browse understands the public web and your personal Google data. Future versions will have access to enterprise systems (your corporate email, your company's project management tools, your actual work context). This will be even more powerful and even more sensitive.

Standardization of agent protocols. Websites will start providing dedicated data formats and interfaces for AI agents, much as RSS feeds let readers pull content and APIs let developers build integrations. This will make it easier for agents to understand websites.

Multi-agent collaboration. Instead of one agent doing everything, you might have specialized agents that work together. One agent researches, another negotiates, another executes. This is already starting to happen in research and development settings.

On-device models. Processing everything in the cloud has latency and privacy trade-offs, so we'll see more computation happen on your device. Gemini Nano, the on-device model already built into Chrome, is the beginning. Full AI reasoning happening locally will follow.

Regulatory frameworks. As AI agents gain more autonomy and access to more of your data, governments will create regulations. We're already seeing the beginning of this in the EU. Within the next few years, using an AI agent will probably require opt-in consent and clear disclosures about what data the agent can access.

An agent market as competitive as the app market. Right now, Gemini auto browse is something Google built for Chrome. In a few years, you might have dozens of agents available, each specialized for different tasks, each with different privacy and capability trade-offs. You'll choose which agent to trust for which tasks.

None of these are pure speculation. Companies in the space are actively working on all of them.

The Real Question: What Should You Actually Do?

At the heart of all this technical capability is a simpler question: does this actually improve your life?

For some people and some tasks, absolutely. If you spend 5+ hours per week on tasks like comparing prices, scheduling, managing subscriptions, or researching topics, auto browse will save you time. It's not just about the hours. It's about the cognitive burden. Switching between five websites to comparison shop is mentally taxing. Having an AI do it and present a summary is genuinely easier.

For other people and other tasks, maybe not. If your work is creative or strategic, an AI agent checking the details doesn't move the needle. If you care deeply about optimizing your choices in ways that require personal judgment, an agent making suggestions is less valuable.

The honest assessment: Gemini auto browse is a tool that's useful for a specific set of tasks, not a magical life upgrade. It excels at administrative work and information synthesis. It doesn't replace human judgment or creativity.

The bigger picture: these tools are the beginning. In five years, the question won't be "should I use an AI agent?" It'll be "which agents should I trust and which tasks should I delegate to them?" The technology will be better, more integrated, and probably mandatory for competitive job performance.

Right now, if you're curious and you have access, it's worth trying. Start with a small task that you find annoying. See if it actually works better than doing it yourself. Use that experience to decide if this technology is for you.

If you don't have access yet, you probably will soon. Google is rolling this out to Pro and Ultra subscribers first, but the company has a track record of eventually making good features available to everyone. Within the next year or so, this might be standard in Chrome.

Conclusion: We're At an Inflection Point

Google's Gemini auto browse isn't revolutionary because it does something that was technically impossible. Smart people have been working on AI web agents for years. It's revolutionary because it's arriving as a polished product integrated into a browser that billions of people already use.

This matters more than it might seem. In technology, the difference between "technically possible" and "shipping in a product" is everything. ChatGPT didn't invent language models. It made them accessible. Tesla didn't invent electric cars. It made them desirable. Google isn't inventing AI agents. It's making them practical.

What we're seeing is the moment when AI moves from cool demo to actual utility. Not everywhere. Not for everything. But for a significant slice of digital work (the administrative, repetitive, cross-platform work that eats hours of your week), AI agents can now do the job well enough to be worth delegating.

That's a genuine milestone.

The implications are still unfolding. Some of them are positive. You get your time back. You can focus on work that requires creativity and judgment. Some are concerning. Privacy gets more complicated. The tools that understand you best are built by companies with advertising incentives. Economic disruption is real.

But the direction is clear. AI agents are here. They're getting better. Competitors are racing to catch up to Google. In a few years, not using AI agents for suitable tasks will seem quaint.

The question for you: How are you going to stay ahead of this change? How are you going to use these tools to amplify your capabilities instead of being replaced by them? What skills matter more when routine tasks become automated?

Those are the questions worth thinking about now.

FAQ

What exactly is Gemini auto browse and how is it different from a regular chatbot?

Gemini auto browse is an AI agent that can perform multi-step tasks across websites on your behalf, whereas regular chatbots only answer questions through conversation. Auto browse can open tabs, click buttons, fill forms, check your calendar and email, and execute actions without you doing it manually. A chatbot responds to you. Auto browse acts for you. This fundamental difference makes it far more useful for administrative and research tasks.

How does Google's auto browse use my personal data like email and calendar?

When you enable auto browse, it can access your Gmail, Google Calendar, Google Maps, Google Shopping, and Google Flights to provide context for the tasks you assign. For example, if you ask it to book a hotel, it can reference the conference email you received to get dates and location. Google says this data is processed with privacy protections and isn't used for advertising, but it is still processed. You control which services you grant access to, and you can revoke access anytime through your Google Account settings.

When will regular Chrome users get access to auto browse, or is it only for paid subscribers?

Currently, Gemini auto browse is limited to Google AI Pro ($20/month) and Google AI Ultra subscribers in the United States. Google hasn't announced a timeline for bringing it to free tier users or to other countries, though the company typically expands successful features over time. Since this is a complex feature with privacy and safety implications, the phased rollout to paying subscribers first makes sense from a testing and liability perspective.

How does auto browse compare to OpenAI's Atlas and Perplexity's Comet in terms of capabilities?

Gemini auto browse excels at tasks requiring personal context (booking travel, scheduling meetings, managing subscriptions) because it integrates with Google's ecosystem. OpenAI's Atlas is powerful for standalone tasks and draws on OpenAI's advanced reasoning models, but lacks the personal data integration that makes Gemini special. Perplexity's Comet is strongest for research and information synthesis but is weaker at action tasks like form-filling or bookings. The best choice depends on whether you need personal context integration or just pure browsing capability.

What happens if auto browse makes a mistake, like filling out a form incorrectly or sending an email to the wrong person?

Google built in safeguards where auto browse asks for confirmation before taking important actions like sending emails or making purchases. However, the AI could still misunderstand your intentions or misread form fields before it ever asks for confirmation. The safeguards help catch obvious errors, but you should always review what the AI is about to do before confirming, especially for important or irreversible actions. As the technology improves, these safeguards should become more reliable.

Can auto browse work with websites other than Google's services, or is it limited to Google products?

Auto browse can work with most websites, including Amazon, hotel booking sites, subscription services, news sites, and more. It's not limited to Google products. However, it has enhanced capabilities with Google's own services because it has direct integration with Gmail, Calendar, etc. For non-Google websites, auto browse relies on understanding the visual layout and structure of the site, similar to how a human would navigate it. Some websites designed to block automated access might not work as well.

Is there a learning curve to using auto browse effectively, or can anyone pick it up immediately?

Auto browse is designed to be intuitive—you describe what you want and it does it. However, you'll get better results with practice. Learning to be specific about your constraints (budget limits, dates, preferences) helps the AI optimize correctly. Monitoring where it succeeds and fails teaches you which tasks it's good at. Most users find it intuitive within a few uses, but mastering it takes a few weeks as you develop intuition for what requests yield the best results.

What's the difference between Personal Intelligence and auto browse, and do you need both?

Auto browse performs tasks for you across the web. Personal Intelligence gives Gemini context about your life, preferences, and history to provide better recommendations and understanding. They're complementary features. Auto browse without Personal Intelligence can handle tasks but might miss important context. Personal Intelligence without auto browse can give good suggestions but can't execute actions. Together, they create a more capable assistant that understands you and can act on your behalf.

Are there privacy controls if I'm concerned about Google having so much access to my personal data?

Yes, Google provides privacy controls at multiple levels. You can choose which Google services (Gmail, Calendar, etc.) to allow auto browse to access. You can disable auto browse entirely. You can manage your Google privacy settings to limit what data Google collects and retains. However, these are controls over Google's access, not over whether using auto browse is safe in general. The fundamental trade-off is: more convenience and better AI assistance comes with more data being processed about your digital life. You have to decide if that trade-off works for you.

Looking to Automate Your Own Workflows?

If you're interested in AI-powered automation beyond just browsing, Runable offers comprehensive AI automation capabilities starting at just $9/month. Runable can automate content creation with AI agents that generate presentations, documents, reports, images, and videos. For teams and individuals looking to eliminate repetitive work beyond just web browsing, Runable provides an integrated platform for automating the broader set of workflow tasks.

Use Case: Automate your weekly report generation and presentation creation with AI, freeing up hours of manual work.


Key Takeaways

- Gemini auto browse represents a fundamental shift from passive web browsing to AI agents that actively execute tasks across websites on your behalf

- Integration with Google's ecosystem (Gmail, Calendar, Maps, Flights) gives Gemini auto browse a significant advantage over competitors like OpenAI's Atlas and Perplexity's Comet

- The feature saves the most time on high-friction tasks like travel booking, subscription management, price comparison, and scheduling

- Privacy trade-offs are real—using auto browse means extensive personal data (email, calendar, search history) becomes visible to AI systems

- This technology will likely reshape how websites are designed, disrupt certain service-based jobs, and make AI agent adoption a competitive requirement within 3-5 years


![Google Gemini Auto Browse in Chrome: The AI Agent Revolution [2025]](https://tryrunable.com/blog/google-gemini-auto-browse-in-chrome-the-ai-agent-revolution-/image-1-1769638458211.jpg)