Introduction: The Siri Problem That Apple Can't Ignore

Listen, we need to talk about Siri. Not the Siri you've grown to tolerate, but the one that's quietly becoming Apple's biggest competitive liability.

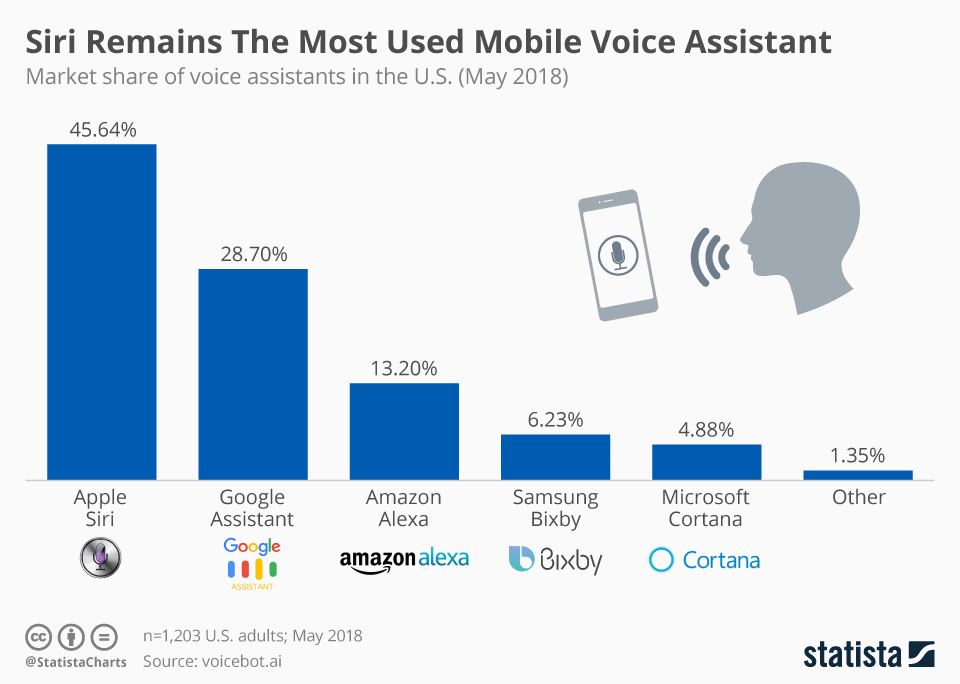

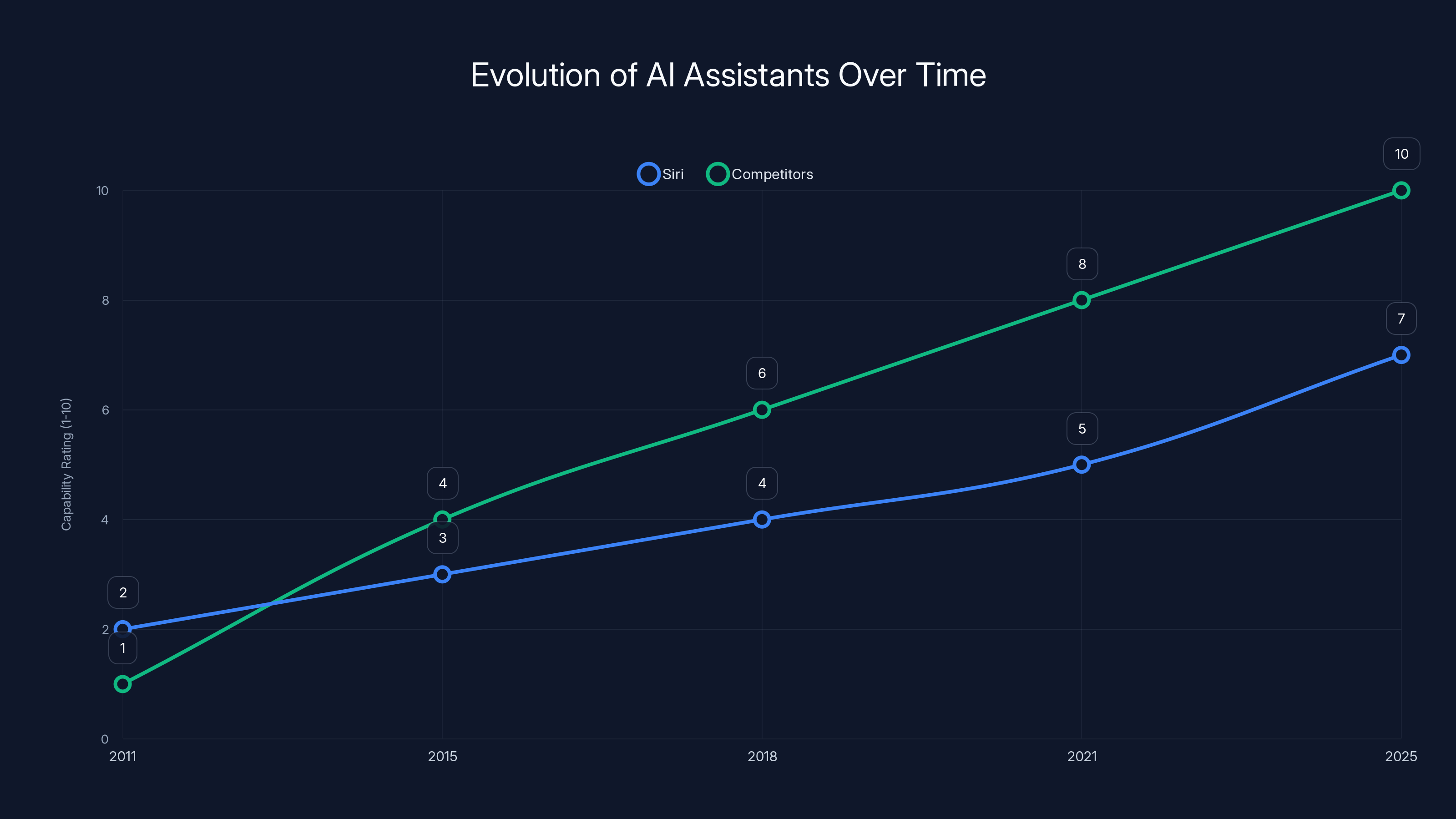

For over a decade, Siri has been the default voice assistant on hundreds of millions of iPhones. It's been there from the start, deeply integrated into iOS, Siri Shortcuts, and the broader Apple ecosystem. But here's the brutal truth: while Google Assistant got smarter and Amazon's Alexa got more capable, Siri stagnated. It became the assistant people used only when they had no other choice.

Then OpenAI released ChatGPT. Then Google released Gemini. And suddenly, AI assistants stopped being nice-to-have features and became must-have tools.

Apple got the message. The company announced it would integrate Gemini into Siri, bringing Google's large language model technology directly into the iOS experience. On the surface, this sounds like salvation. Under the hood, it's only the beginning of what needs to happen.

The real question isn't whether Gemini will make Siri better. It will. The question is whether it'll be enough to make Siri genuinely useful in ways that matter to iPhone users who've spent years watching their assistant fail them.

Because here's what we know: iPhone users deserve better. They've waited years. They've watched Siri miss voice commands that ChatGPT would nail. They've been frustrated by Siri's inability to understand context, chain complex requests, or do anything beyond surface-level tasks. When you spend a thousand dollars on a flagship smartphone, you expect the AI that comes with it to feel premium, not like an afterthought.

So what does Gemini-powered Siri actually need to accomplish? What does "saving Apple from AI irrelevance" even look like in concrete terms?

That's what we're breaking down here. Not the hype. Not what marketing will say in keynote slides. But the actual, specific capabilities that will determine whether this update changes anything or just becomes another missed opportunity.

Let's dig in.

TL;DR

- Siri has fallen behind: ChatGPT and Google Assistant outperform Siri in reasoning, context, and complex task handling by significant margins.

- Gemini integration is necessary but not sufficient: Just swapping out the backend AI model won't fix Siri's architectural and UX problems.

- Five critical changes required: Conversational understanding, on-device processing, true context awareness, actionable intelligence, and deep app integration.

- The stakes are massive: Apple's AI credibility in 2025 and beyond depends on delivering an assistant that's genuinely useful, not just marginally better.

- Timeline matters: Users expect this in months, not years, and Apple's window to recapture mindshare is closing fast.

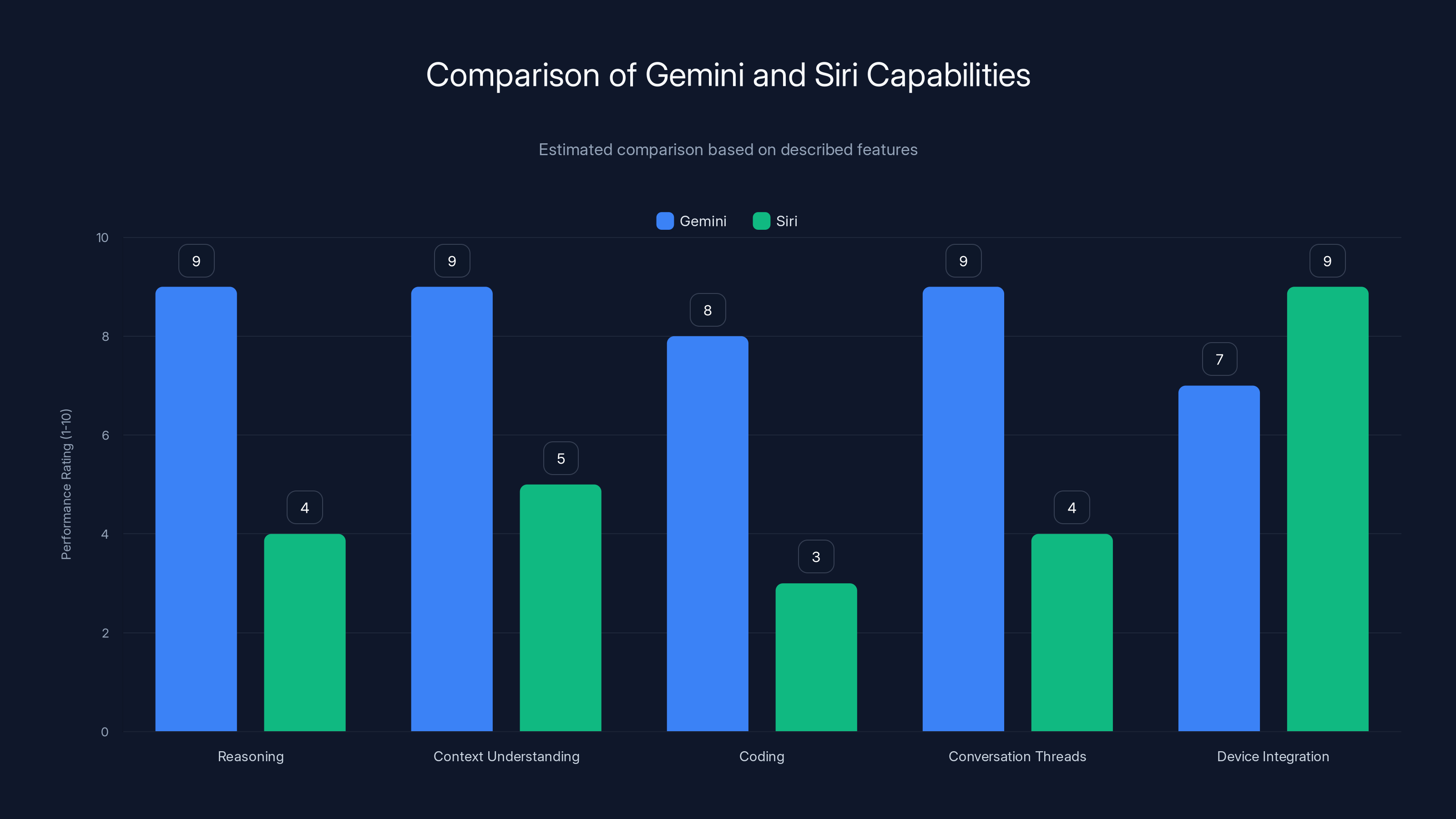

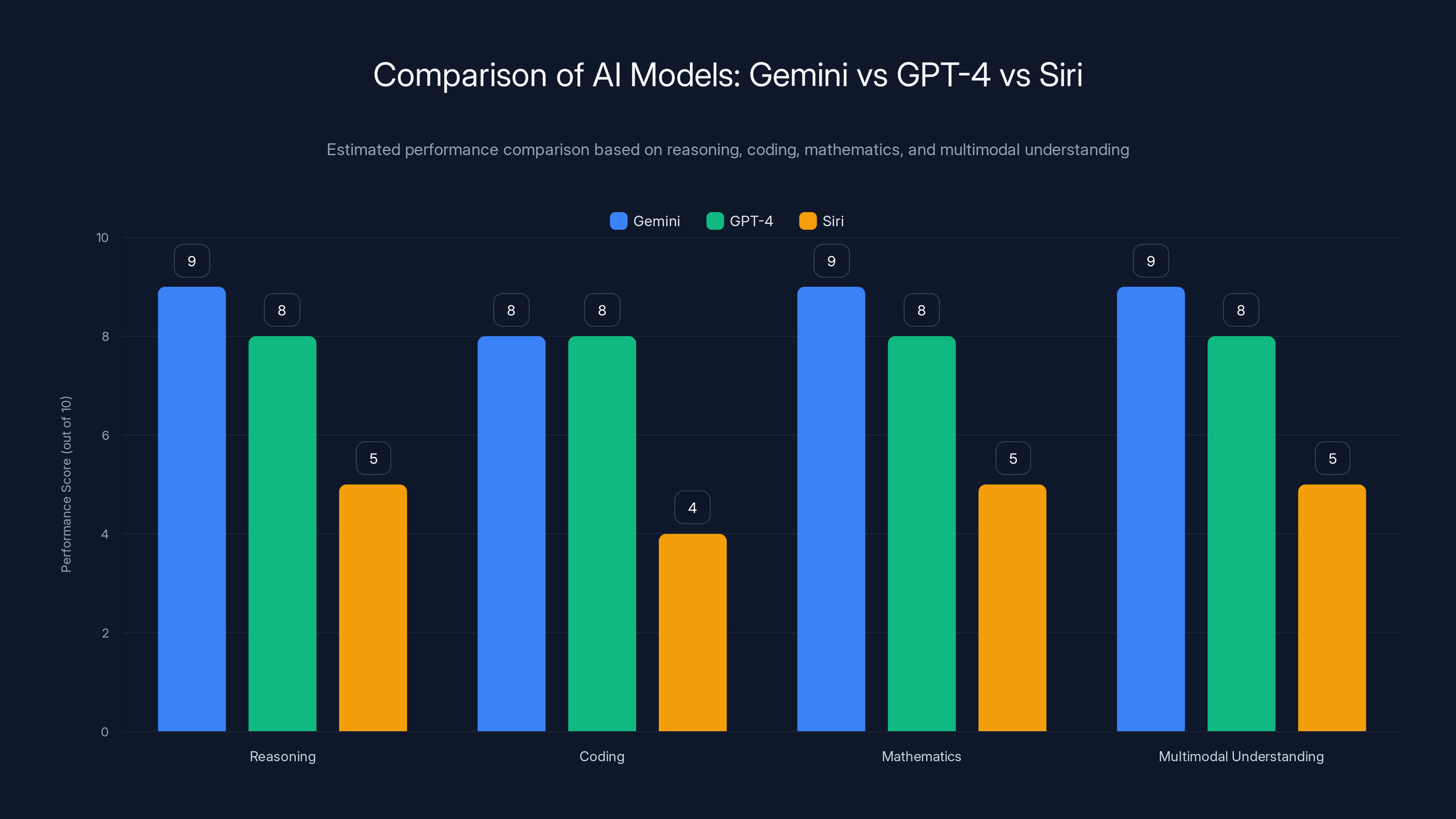

Gemini significantly outperforms Siri in reasoning, context understanding, coding, and maintaining conversation threads, while Siri excels in device integration. (Estimated data)

The Current State: Why Siri Lost Its Way

Siri Was Never Built for This Era

When Siri launched in 2011 as an iOS 5 feature (built on technology from Siri Inc., a startup Apple acquired in 2010), it was genuinely innovative. Voice recognition wasn't trivial then. Understanding natural language queries was cutting-edge. Siri could set reminders, send texts, make calls, and search the web. For 2011, that was impressive.

But the problem is that Siri was architected for a different world. It was built as a task-completion engine, not a reasoning engine. It could follow predefined command patterns. It struggled with anything outside those patterns.

A simple example: Ask Siri "remind me to call my mom when I get home." Siri can do that. Ask Siri "what's a good restaurant near me that serves Thai food and is open after 9 PM?" Siri will fumble, probably returning generic results or asking you to clarify despite the fact that a modern AI would immediately understand the compound query with multiple constraints.

That's not a bug. That's the original design. Siri was trained on discrete commands, not on understanding complex conversational intent.

Meanwhile, large language models changed everything. Suddenly, AI assistants could understand nuance. They could chain multiple steps together. They could reason about problems instead of just executing scripts.

Why Competitors Pulled Ahead

Google Assistant gets better every quarter. It understands conversation history. It knows context from your Google account. It can look at your calendar, your location, your previous searches, and use that to give you smarter answers.

ChatGPT doesn't have Siri's deep integration with your iPhone, but when users open it, they get an assistant that handles complex reasoning, creative tasks, and genuinely difficult questions. It's not perfect, but it's powerful.

Amazon's Alexa has become the hub for smart home integration. You can have actual conversations with it that span multiple topics and don't require specific command syntax.

And Siri? Siri still sometimes mishears voice commands. Siri still returns irrelevant search results. Siri still requires you to speak in ways it understands rather than letting the system adapt to how humans naturally communicate.

Apple users have noticed. In 2024 surveys, when asked what they'd change about iOS, voice assistant capability consistently ranks in the top five complaints.

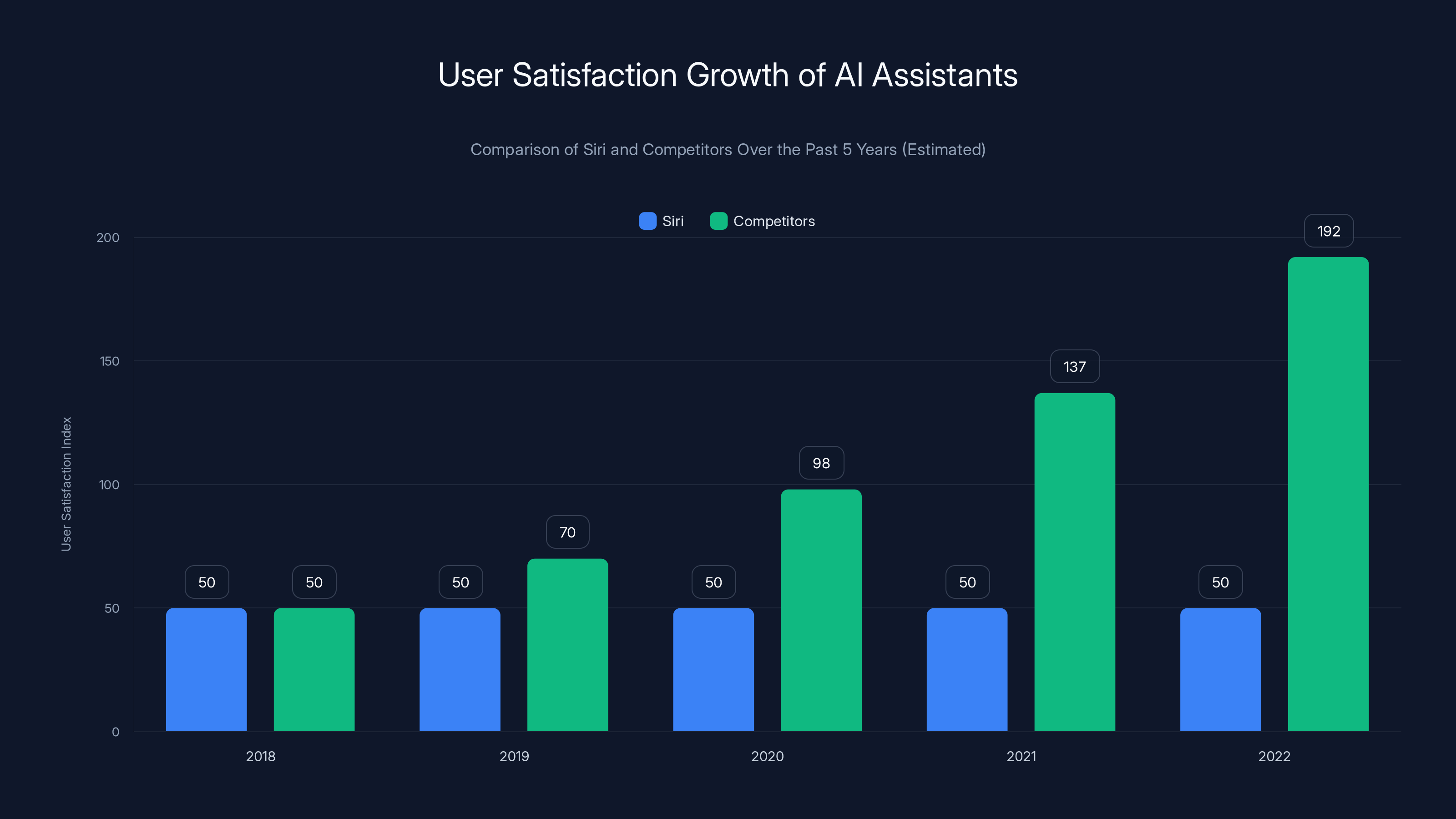

While Siri's user satisfaction has remained flat, competitors have seen a 40% year-over-year growth in satisfaction, highlighting their ability to adapt to modern AI demands. Estimated data.

What Gemini Brings to the Table

The Technology Advantage

Google's Gemini is genuinely strong. The company built it specifically to compete with and exceed OpenAI's GPT-4. In Google's published benchmark results, Gemini matches or beats GPT-4 on reasoning, coding, mathematics, and multimodal understanding (text, images, audio).

For Siri, this matters. A lot.

Gemini understands context in ways Siri currently doesn't. If you ask a follow-up question, Gemini remembers what you said before. If you ask for creative help, Gemini can actually be creative. If you ask about your calendar and then ask "what's the weather there?", Gemini knows which location you meant.

That's not magic. That's just what modern LLMs do.

But here's the nuance: Gemini being better than Siri's current engine doesn't automatically make Gemini-powered Siri better than Claude or ChatGPT. The underlying model is only part of the equation.

Why Backend Swap Isn't Enough

Apple's mistake would be assuming that putting Gemini under Siri's hood automatically fixes everything.

Here's an analogy: imagine your car's engine is slow, but the real problem is that the transmission is broken. You could put a Ferrari engine in there, and yeah, the car would be faster. But it won't be good until you fix the transmission too.

Siri's problems aren't just the language model. They're also:

- The interface: Siri still feels clunky to activate and interact with compared to holding down a button and talking to ChatGPT.

- The integration: Siri can control some Apple apps and some third-party apps, but it's wildly inconsistent.

- The learning: Siri doesn't learn your preferences or communication style over time.

- The feedback: Siri rarely explains why it's giving you an answer or what it's doing behind the scenes.

- The processing model: Siri still routes most queries through Apple's servers, and adding a trip to Gemini's servers on top of that creates more latency and raises new privacy questions.

Putting Gemini in that system is necessary. It's not sufficient.

Critical Requirement #1: True Conversational Understanding

Multi-Turn Context That Actually Works

Gemini-powered Siri needs to excel at something Siri has always struggled with: remembering what you said five minutes ago.

Right now, if you ask Siri "what's the weather in Seattle?" and then ask "what about Portland?", there's about a 50/50 chance Siri understands that you're still asking about the weather, and that you mean Portland, Oregon rather than Portland, Maine. It gets confused. It asks for clarification. It breaks the conversational flow.

A real assistant doesn't do that. A real assistant tracks the thread.

Gemini can do this. The model maintains conversation history and understands that follow-up questions reference previous context. But Siri would need to be rebuilt to preserve and pass that context properly.

This means:

- Conversation threading: Every interaction should be linked to previous Siri sessions (within a defined timeframe, obviously with clear privacy boundaries).

- Contextual memory: If you ask about your calendar, subsequent questions about time or location should reference that calendar data.

- Implicit requests: "Schedule that at 3 PM" should work even if you haven't explicitly stated what "that" is, because Siri would understand it from context.

- Clarification handling: Instead of asking "which Portland?" five times, Siri should ask once, learn your answer, and remember it for future queries.

Implementing this well is harder than it sounds. It requires:

- Storing conversation history securely on-device (not sending everything to Apple's servers).

- Deciding how long context persists (hours? days? across phone restarts?).

- Managing privacy—users need to know what data is being kept and why.

- Handling multi-user households where different family members use the same phone.

Apple has the infrastructure to do this. The question is whether it will prioritize it over launching quickly.
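To make the threading and clarification-memory ideas concrete, here is a minimal Python sketch, assuming each turn has already been parsed into an intent plus slots. The names (`ConversationContext`, `get_weather`, `place`) and the one-hour expiry are hypothetical choices for illustration, not Apple's design. It shows a follow-up inheriting the previous intent, a remembered answer to "which Portland?", and context expiring after a time limit.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    text: str
    intent: str
    slots: dict
    timestamp: float


@dataclass
class ConversationContext:
    """Hypothetical in-memory store; a real one would use encrypted on-device storage."""
    ttl_seconds: float = 3600.0                      # assumption: context expires after one hour
    turns: list = field(default_factory=list)
    resolutions: dict = field(default_factory=dict)  # remembered answers to clarifying questions

    def add_turn(self, text: str, intent: str, slots: dict, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        self.turns.append(Turn(text, intent, dict(slots), now))

    def remember_clarification(self, ambiguous: str, resolved: str) -> None:
        # Ask "which Portland?" once, then reuse the answer
        self.resolutions[ambiguous.lower()] = resolved

    def resolve_follow_up(self, slots: dict, now: Optional[float] = None):
        """Inherit the intent and any missing slots from the latest unexpired turn."""
        now = time.time() if now is None else now
        live = [t for t in self.turns if now - t.timestamp <= self.ttl_seconds]
        if not live:
            return None  # context expired: the caller should ask a clarifying question
        last = live[-1]
        merged = {**last.slots, **slots}  # new values override; missing ones are inherited
        place = merged.get("place")
        if place and place.lower() in self.resolutions:
            merged["place"] = self.resolutions[place.lower()]
        return last.intent, merged


ctx = ConversationContext()
ctx.add_turn("what's the weather in Seattle?", "get_weather", {"place": "Seattle, WA"}, now=0)
ctx.remember_clarification("Portland", "Portland, OR")
print(ctx.resolve_follow_up({"place": "Portland"}, now=60))
# ('get_weather', {'place': 'Portland, OR'})
```

Passing `now` explicitly keeps the expiry logic testable; a production system would also need to decide what survives restarts and how to separate household members.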

Natural Language That Matches How Humans Actually Talk

Siri users know the drill. You can't ask Siri things naturally. You have to use a specific command structure or the system misunderstands.

Want to ask "I'm running late to my meeting, can you let my mom know I'll be 20 minutes late?" Siri might mishear or misinterpret. The safer way is to explicitly command it: "Send a message to Mom saying I'm 20 minutes late."

Gemini-powered Siri needs to understand both, plus dozens of variations, plus imprecise language.

This isn't about being fancy. It's about basic usability. When you talk to a human, you don't speak in commands. You speak in natural sentences, with filler words, with assumptions that the person understands context.

Siri should work the same way.

Implementing this requires:

- Robust intent recognition: Understanding that "I need to move my 3 o'clock meeting because I'm stuck in traffic" is a meeting reschedule request, not a statement.

- Flexible phrasing: Supporting dozens of ways to say the same thing.

- Partial information handling: Letting users say "remind me about this tomorrow" even if Siri doesn't know what "this" is initially (it should ask, learn, and remember).

- Error recovery: When Siri does mishear, it should suggest corrections rather than just failing silently.
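As a rough illustration of intent recognition and error recovery, the sketch below uses a hand-written pattern table as a stand-in for the trained model a real assistant would need, plus Python's standard `difflib.get_close_matches` to suggest a correction for a misheard contact name. The intent names, patterns, and the 0.6 similarity cutoff are assumptions chosen for the example.

```python
import difflib
import re

# Hypothetical rule table standing in for a trained intent model.
INTENT_PATTERNS = {
    "reschedule_meeting": [
        r"\b(move|push|reschedule|shift)\b.*\bmeeting\b",
        r"\bmeeting\b.*\b(later|earlier|another time)\b",
    ],
    "send_message": [
        r"\b(text|message|tell|let)\b.*\bknow\b",
        r"\b(text|message)\b",
    ],
    "set_timer": [r"\btimer\b"],
}


def classify_intent(utterance: str):
    """Return the first intent whose patterns match, or None if nothing does."""
    text = utterance.lower()
    for intent, patterns in INTENT_PATTERNS.items():
        if any(re.search(p, text) for p in patterns):
            return intent
    return None


def suggest_correction(heard: str, known_names: list, cutoff: float = 0.6):
    """Error recovery: map a misheard name to the closest known contact, if any."""
    lookup = {name.lower(): name for name in known_names}
    matches = difflib.get_close_matches(heard.lower(), list(lookup), n=1, cutoff=cutoff)
    return lookup[matches[0]] if matches else None


print(classify_intent("I need to move my 3 o'clock meeting because I'm stuck in traffic"))
print(suggest_correction("Janette", ["Mom", "Janet", "Sarah"]))  # "Did you mean Janet?"
```

Both phrasings of the "running late" request from the text resolve to `send_message` here; a pattern table like this breaks down quickly, which is exactly why the text argues for a model that handles dozens of phrasings.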

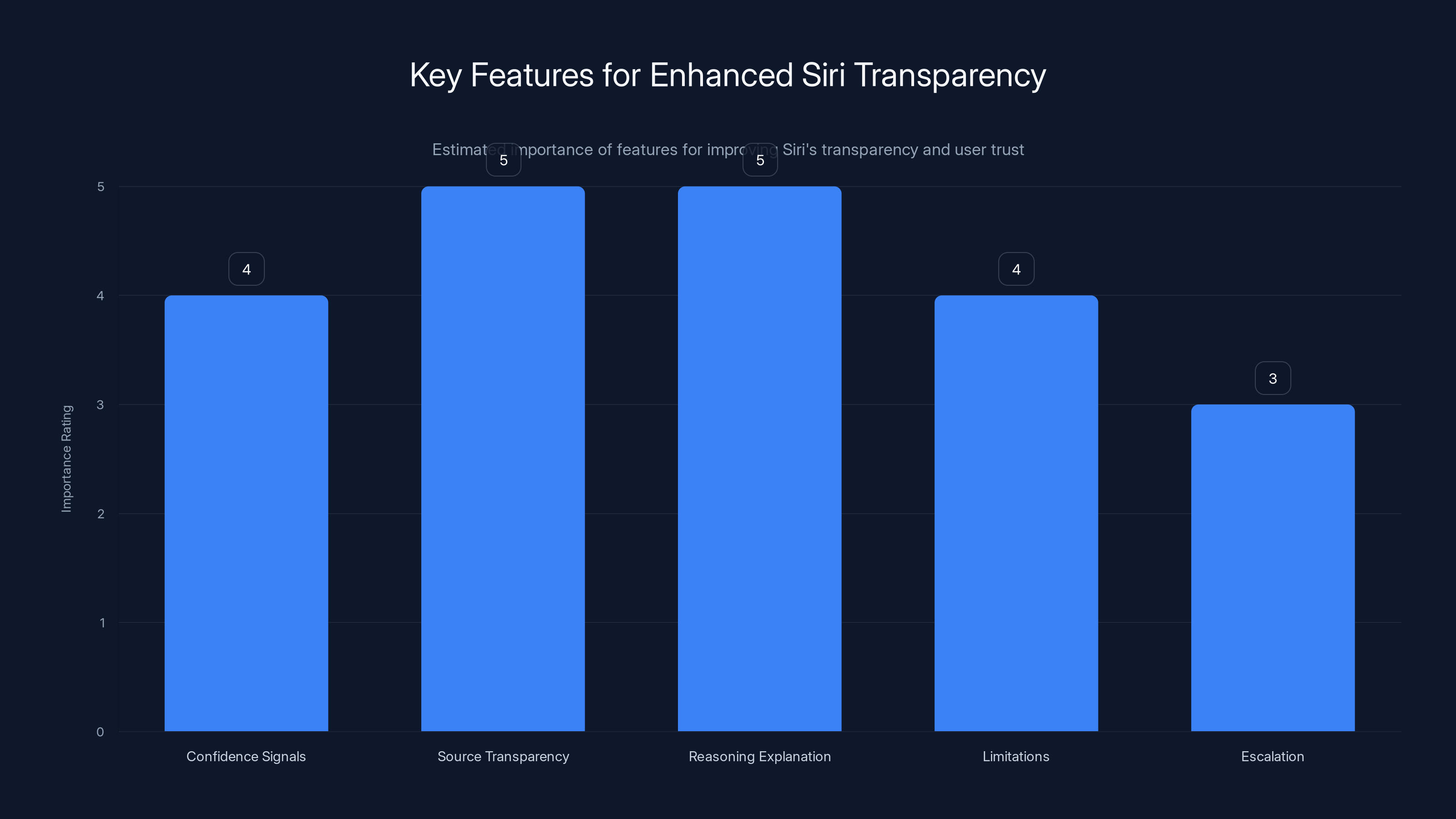

Source transparency and reasoning explanation are crucial for building user trust in Siri. Estimated data based on typical user expectations.

Critical Requirement #2: On-Device Processing and Speed

Why Privacy and Latency Are Inseparable

Here's a hot take: every time Siri sends your voice to Apple's servers, there's a privacy tradeoff. Apple says the data is encrypted and deleted, and I believe them. But the fact that it's being sent at all is the issue.

Users have a right to expect that some things stay on their device.

Gemini-powered Siri should process as much as possible locally. Not everything—Gemini's full model is huge, and running it locally on a smartphone would crush battery life. But common requests? Simple queries? Those should stay on-device.

Apple already has precedent for this. Apple's privacy-first approach includes on-device processing for photos, dictation, and face recognition. Siri should follow the same pattern.

This solves two problems simultaneously:

Privacy: Your voice and queries stay on your device for routine tasks. Apple isn't logging patterns about what you're asking Siri to do all day.

Speed: Processing happens instantly instead of requiring a network round trip. On-device processing means Siri can respond to "hey Siri, set a timer" in milliseconds, not the current half-second or more.

The technical approach would likely be:

- Local model for simple tasks: A lightweight language model on-device handles basic commands, reminders, timers, and common queries.

- Cloud fallback: Complex requests, creative tasks, or searches route to full Gemini when needed.

- Smart routing: The system learns which queries work locally and routes intelligently.

- Battery optimization: On-device processing is tuned to minimize battery drain.
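A minimal sketch of the smart-routing idea, under stated assumptions: the intent labels are hypothetical, the router learns a per-intent on-device success rate from feedback, and both the 80% threshold and the choice to prefer the cloud in Low Power Mode (on the theory that one network call costs less than a speculative local model run) are illustrative guesses rather than measured trade-offs.

```python
from dataclasses import dataclass, field

# Hypothetical set of cheap, common intents the small on-device model always handles.
LOCAL_INTENTS = {"set_timer", "set_reminder", "toggle_setting", "quick_fact"}


@dataclass
class DeviceState:
    online: bool
    low_power_mode: bool


@dataclass
class Router:
    min_success_rate: float = 0.8              # assumption: would be tuned from real telemetry
    stats: dict = field(default_factory=dict)  # intent -> [local successes, local attempts]

    def record(self, intent: str, succeeded_locally: bool) -> None:
        s = self.stats.setdefault(intent, [0, 0])
        s[0] += int(succeeded_locally)
        s[1] += 1

    def local_success_rate(self, intent: str):
        successes, attempts = self.stats.get(intent, (0, 0))
        return successes / attempts if attempts else None

    def route(self, intent: str, state: DeviceState) -> str:
        if intent in LOCAL_INTENTS:
            return "local"  # common tasks never leave the device
        if not state.online:
            return "local"  # graceful degradation: best effort without the cloud
        if state.low_power_mode:
            return "cloud"  # skip speculative on-device attempts to save battery
        rate = self.local_success_rate(intent)
        if rate is not None and rate >= self.min_success_rate:
            return "local"  # learned: this intent usually succeeds on-device
        return "cloud"
```

The learning here is deliberately simple (a running success ratio); the point is that routing decisions can improve from usage without sending the queries themselves anywhere.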

Implementing this is ambitious but doable. Apple's A17 Pro and M-series chips are powerful enough to run reasonably sized language models. Apple and Google would need to optimize Gemini or ship a smaller variant specifically for this.

The Battery Question

On-device LLM processing burns battery. Running Gemini locally all the time would drain your iPhone's battery in hours.

But running it selectively? That's viable. Apple needs to:

- Profile which queries are most common (settings, timers, quick facts, reminders).

- Optimize the on-device model for those specific tasks.

- Only activate the full model when needed.

- Defer heavier processing until the phone is plugged in, and fall back to lower-power processing modes when Low Power Mode is on.

Apple's done harder optimization. The real question is whether this gets priority in iOS 19 or becomes a mid-cycle update.

Critical Requirement #3: Deep Context Awareness Across Your Digital Life

Calendar, Location, and Predictive Understanding

Google Assistant is genuinely useful because it connects to your calendar, your location, your search history, your Gmail, your Google Maps, your travel bookings. When you ask "how long to the airport?", it knows where you are, looks at your calendar, finds your flight, calculates the route based on current traffic, and gives you a specific number.

Siri can do some of this. But it's inconsistent.

Gemini-powered Siri needs to be integrated with Apple's ecosystem in ways that feel natural, not bolted-on.

This means:

- Calendar integration: Siri should understand your schedule context and use it to inform responses. If you ask "when can I schedule a meeting with Sarah?", Siri looks at both calendars and suggests specific times.

- Location awareness: Siri knows where you are and uses that context. "What's nearby?" should be answered for your current location, with Siri inferring from context whether you want restaurants, coffee, or something else.

- Cross-app intelligence: If you ask "did I send that email to Janet?" Siri can search Mail for Janet, understand the context, and answer.

- Predictive assistance: Siri notices patterns. If you always check the weather before your morning run, Siri offers it. If you frequently check flight status when you're near the airport, Siri mentions it.

- Device coordination: Siri on your iPhone should know about requests made on your Mac or Apple Watch and maintain context across devices.
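The "when can I schedule a meeting with Sarah?" request boils down to interval arithmetic. This sketch assumes Siri can see both people's busy blocks (for example through shared free/busy information; the function names here are hypothetical) and returns every gap of at least a given length within the working day.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]


def merge_busy(intervals: List[Interval]) -> List[Interval]:
    """Sort busy blocks and merge any that overlap or touch."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def common_free_slots(busy_a: List[Interval], busy_b: List[Interval],
                      day_start: datetime, day_end: datetime,
                      duration: timedelta) -> List[Interval]:
    """Gaps of at least `duration` inside [day_start, day_end] when neither person is busy."""
    slots: List[Interval] = []
    cursor = day_start
    for start, end in merge_busy(busy_a + busy_b):
        if end <= cursor:
            continue  # block ended before the window we're scanning
        if start >= day_end:
            break     # remaining blocks are after working hours
        if start - cursor >= duration:
            slots.append((cursor, start))
        cursor = max(cursor, end)
    if day_end - cursor >= duration:
        slots.append((cursor, day_end))
    return slots
```

Merging both calendars first turns the problem into a single sweep over sorted busy time, so Siri can offer specific times instead of asking the user to compare schedules.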

The technical challenge is massive. Siri would need:

- Secure access to your most sensitive data (calendar, location, email).

- Algorithms that learn your patterns without sending everything to Apple's servers.

- Privacy controls that let you granularly choose what Siri can access.

- Transparency about what data it's using and why.

But here's why it matters: this is where Siri could actually beat Google Assistant and ChatGPT.

Google has your data, but you don't use Google's primary services on your iPhone the way you do on a computer. Siri owns your iPhone. If Apple gets this right, Siri could be the most contextually aware assistant in the world specifically on Apple devices.

The Privacy Implementation That Matters

Apple needs to be aggressive and transparent here. Not "we encrypted your data so it's fine." But rather, "we process your calendar and location on your device, we don't store interaction patterns, here's exactly what we send to our servers, and here's how you can verify it."

This is a competitive advantage. If Gemini-powered Siri respects privacy better than Google Assistant while being equally smart, that's a win.

The implementation would likely use:

- On-device indexing: Your calendar, emails, and location data are indexed locally.

- Selective sharing: Only necessary data is shared with cloud services for complex tasks.

- Anonymization: When data must be sent, it's anonymized or processed through privacy-preserving techniques.

- User control: Settings clearly show what Siri can and can't access.
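One simple privacy-preserving technique for the selective-sharing step is pseudonymization: swap sensitive terms for placeholder tokens before a request leaves the device, and restore them locally in the reply. The sketch below is a generic illustration, not Apple's mechanism; it assumes the sensitive terms (contact names, addresses) are already known from the on-device index and that the cloud model echoes placeholder tokens back unchanged.

```python
import itertools
import re
from typing import Dict, List, Tuple


def pseudonymize(text: str, sensitive_terms: List[str]) -> Tuple[str, Dict[str, str]]:
    """Replace each sensitive term with a placeholder token; the mapping never leaves the device."""
    mapping: Dict[str, str] = {}
    counter = itertools.count(1)
    # Longest terms first, so "Sarah Chen" is replaced before "Sarah"
    for term in sorted(set(sensitive_terms), key=len, reverse=True):
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        if pattern.search(text):
            token = f"[ENTITY_{next(counter)}]"
            mapping[token] = term
            text = pattern.sub(token, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put the real values back into the cloud model's reply, on-device."""
    for token, term in mapping.items():
        text = text.replace(token, term)
    return text


query = "Find a time for me and Sarah Chen near 123 Main St"
redacted, mapping = pseudonymize(query, ["Sarah Chen", "123 Main St", "Sarah"])
print(redacted)  # only this string would be sent to the cloud
```

This hides names and addresses but not the structure of the request, so it complements, rather than replaces, keeping as much processing on-device as possible.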

Estimated data shows Siri's capabilities have improved slowly compared to competitors, highlighting the need for a significant upgrade to meet user expectations.

Critical Requirement #4: Actionable Intelligence and Task Completion

From Answering Questions to Solving Problems

ChatGPT and Gemini are fantastic at answering questions. "What's the capital of Peru?" "How do I make sourdough bread?" "Explain quantum computing." They nail these.

But most Siri users aren't asking trivia questions. They're trying to do something.

"Schedule a meeting with my team next Thursday." "Text my mom that I'm running late." "Order paper towels." "Book a reservation at that restaurant we talked about."

These aren't questions. They're tasks. And they require Siri to not just understand your intent, but to actually execute it in your apps and services.

This is where Siri could potentially pull ahead. Apple owns iOS. It can integrate Siri with Apple apps and reasonably demand that third-party developers support Siri integrations properly.

The requirements:

- Robust app integration: Siri needs to be able to read and write data across apps, not just send basic commands.

- Smart defaults: When you say "send the photos from today to Sarah," Siri should understand what photos and default to the right app (Messages, AirDrop, etc.) and ask only if necessary.

- Multi-step task handling: "Set a reminder to pick up groceries, and add milk, eggs, and cheese to my shopping list" should be a single interaction, not three separate commands.

- Service integrations: Siri needs to be able to order from services like DoorDash, Uber, etc. by defaulting to your preferred app and past preferences.

- Failure handling: When something can't be done, Siri should explain why clearly and offer alternatives.
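Multi-step handling is easier to get right if the language model emits a structured plan instead of Siri splitting the sentence on "and" (which would break "milk, eggs, and cheese"). The sketch assumes a hypothetical JSON plan format and stand-in handlers (`create_reminder`, `add_to_list`); it executes each step and collects plain-language explanations for anything it couldn't do, in line with the failure-handling point above.

```python
import json
from typing import Dict, List, Tuple


# Hypothetical handlers standing in for real app integrations.
def create_reminder(state: Dict, title: str) -> None:
    state["reminders"].append(title)


def add_to_list(state: Dict, list_name: str, items: List[str]) -> None:
    state["lists"].setdefault(list_name, []).extend(items)


HANDLERS = {"create_reminder": create_reminder, "add_to_list": add_to_list}


def execute_plan(plan_json: str, state: Dict) -> Tuple[List[str], List[str]]:
    """Run each planned step; report problems in plain language instead of failing silently."""
    done, problems = [], []
    for step in json.loads(plan_json):
        action = step.get("action")
        handler = HANDLERS.get(action)
        if handler is None:
            problems.append(f"I can't do '{action}' yet; you may need to open the app.")
            continue
        try:
            handler(state, **step.get("args", {}))
            done.append(action)
        except TypeError as exc:  # the planner produced wrong or missing arguments
            problems.append(f"I couldn't finish '{action}': {exc}")
    return done, problems


# What a model might return for "Set a reminder to pick up groceries,
# and add milk, eggs, and cheese to my shopping list" (format is assumed).
plan = json.dumps([
    {"action": "create_reminder", "args": {"title": "Pick up groceries"}},
    {"action": "add_to_list", "args": {"list_name": "Shopping", "items": ["milk", "eggs", "cheese"]}},
])
state = {"reminders": [], "lists": {}}
print(execute_plan(plan, state))
```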

Implementing this requires:

- Frameworks that let app developers provide rich Siri integrations (Apple partially has this with Siri Shortcuts, but it needs to be deeper).

- Permission and authentication flows that are secure but don't require users to re-authenticate constantly.

- Smart intent resolution (when you say "order coffee," which app? Your favorite? The one closest to you? Ask once, learn for next time).

This is solvable. Apple's platform advantage is exactly this kind of vertical integration.
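The "ask once, learn for next time" rule for smart intent resolution can be sketched as a small preference store. `AppResolver` and the request-type labels are hypothetical; the sketch picks the app you chose before if it is still installed, falls back to the only installed option, and otherwise returns `None` so the caller knows to ask.

```python
from collections import Counter
from typing import Dict, List, Optional


class AppResolver:
    """Hypothetical preference store for resolving requests like "order coffee" to an app."""

    def __init__(self, min_uses: int = 1):
        self.min_uses = min_uses               # 1 = ask once, then remember
        self.history: Dict[str, Counter] = {}  # request type -> how often each app was chosen

    def record_choice(self, request_type: str, app: str) -> None:
        self.history.setdefault(request_type, Counter())[app] += 1

    def resolve(self, request_type: str, installed_apps: List[str]) -> Optional[str]:
        counts = self.history.get(request_type, Counter())
        # Only consider previously chosen apps that are still installed
        candidates = [(n, app) for app, n in counts.items() if app in installed_apps]
        if candidates:
            n, app = max(candidates)
            if n >= self.min_uses:
                return app
        if len(installed_apps) == 1:
            return installed_apps[0]           # no real choice to make
        return None                            # ambiguous: ask the user, then record_choice()


resolver = AppResolver()
apps = ["DoorDash", "Uber Eats"]
print(resolver.resolve("order_coffee", apps))  # None, so Siri asks once
resolver.record_choice("order_coffee", "DoorDash")
print(resolver.resolve("order_coffee", apps))  # DoorDash
```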

Proactive Assistance and Suggestions

Good assistants don't just wait for commands. They notice when you might need help.

Google Assistant does this. If you have a flight booked and ask a question the assistant doesn't understand, it could proactively say "I see you have a flight tomorrow, would information about that help?"

Gemini-powered Siri should develop similar capability:

- When you're heading to the airport, offer flight info and directions.

- When it's approaching your regular workout time, ask if you want to schedule it.

- When a coworker cancels a meeting, suggest rescheduling with another person.

- When the weather changes unexpectedly, alert you if you have outdoor plans.

This requires learning your patterns and maintaining a model of your life. It's invasive if done wrong (and users hate it). It's magical if done right (and users love it).
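To stay on the "magical" side, a pattern should have strong evidence behind it before Siri acts on it. This sketch of the weather-before-a-run example assumes on-device logs of run start times and weather checks; the function name, 30-minute window, five-run minimum, and 80% threshold are illustrative, not tuned values.

```python
from datetime import datetime, timedelta
from typing import List


def should_offer_weather(
    run_starts: List[datetime],
    weather_checks: List[datetime],
    window: timedelta = timedelta(minutes=30),
    min_runs: int = 5,
    threshold: float = 0.8,
) -> bool:
    """Offer the forecast only if the user usually checks it shortly before a run."""
    if len(run_starts) < min_runs:
        return False  # too little evidence; staying quiet beats a creepy guess
    hits = sum(
        any(run - window <= check <= run for check in weather_checks)
        for run in run_starts
    )
    return hits / len(run_starts) >= threshold


runs = [datetime(2025, 3, day, 6, 30) for day in range(1, 7)]
checks = [datetime(2025, 3, day, 6, 10) for day in range(1, 6)]
print(should_offer_weather(runs, checks))  # True: 5 of 6 runs were preceded by a check
```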

Critical Requirement #5: Honest Limitations and Transparent Reasoning

When Siri Doesn't Know, It Should Say So

One of the most frustrating Siri experiences is asking something and getting a garbage answer with no indication that it's garbage.

You ask "what's the best laptop for video editing?" and Siri returns generic web results that don't actually answer your question. But it delivers them with confidence, as if it's correct.

Gemini-powered Siri needs to be honest about uncertainty.

This means:

- Confidence signals: "I'm not sure about this, but..." when the confidence is low.

- Source transparency: Saying where information comes from and whether it's current.

- Reasoning explanation: Showing the logic behind answers ("I'm suggesting this restaurant because you liked Mediterranean food last time, it's highly rated, and it's open until 11 PM").

- Limitations: Being upfront about what Siri can and can't do.

- Escalation: When something is beyond Siri's capability, offering to open the full ChatGPT app or direct you elsewhere.

This is actually a competitive advantage. Humans trust systems more when they admit uncertainty than when they bluff. ChatGPT does this well, and users appreciate it.

Implementing this requires:

- Updating Siri's response framework to include confidence scores and reasoning chains.

- Training Gemini specifically to explain its logic, not just provide answers.

- UI changes to surface this information in a natural way (voice and screen).

- Fallback mechanisms to point users elsewhere when appropriate.
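A sketch of how a response framework might turn a confidence score into the signals listed above. It assumes the model (or a separate calibration step) supplies a score between 0 and 1; the `Answer` type, the 0.5 and 0.8 thresholds, and the wording are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    text: str
    confidence: float             # assumed to be a calibrated score in [0, 1]
    source: Optional[str] = None
    as_of: Optional[str] = None


def frame_answer(ans: Answer, low: float = 0.5, high: float = 0.8) -> str:
    if ans.confidence < low:
        # Escalate instead of bluffing
        return "I'm not confident I can answer that. Want me to search the web instead?"
    text = ans.text
    if ans.confidence < high:
        text = "I'm not sure about this, but here's my best answer: " + text
    if ans.source:
        when = f", as of {ans.as_of}" if ans.as_of else ""
        text += f" (Source: {ans.source}{when})"
    return text


print(frame_answer(Answer("The store closes at 9 PM.", 0.6, "Apple Maps", "today")))
```

The hard part in practice is calibration: these thresholds only mean something if a 0.6 answer is actually right about 60% of the time.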

The Reasoning Gap

Siri currently can't explain its reasoning because it doesn't have any. It's executing scripts, not reasoning.

When Gemini powers Siri, that changes. Gemini can think through problems step-by-step. It can show its work.

Apple needs to make this visible. When you ask Siri something complex, show the reasoning:

"You asked 'when should I water my plants?' I looked at the weather forecast (rain is unlikely for three days), your watering schedule (you water every five days), and the last time you watered (four days ago). You're due tomorrow, and since no rain is coming to help, I'd suggest watering today."

That's not trying to be fancy. That's transparency. That's a user understanding not just the answer, but why.
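The plant example maps naturally onto a function that returns its reasons alongside its decision, so the explanation comes from the same logic that made the call rather than being written after the fact. The `WateringInputs` type and the "water a day early if no rain is coming" rule are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class WateringInputs:
    days_since_watered: int
    interval_days: int
    rain_in_days: Optional[int]  # None = no rain in the forecast window


def watering_advice(inp: WateringInputs) -> Tuple[str, List[str]]:
    """Return a decision plus the human-readable reasons that produced it."""
    due_in = inp.interval_days - inp.days_since_watered
    reasons = [
        f"you water every {inp.interval_days} days and last watered "
        f"{inp.days_since_watered} days ago, so you're due in {max(due_in, 0)} day(s)"
    ]
    if inp.rain_in_days is not None and inp.rain_in_days <= max(due_in, 0):
        reasons.append(f"rain is expected in {inp.rain_in_days} day(s)")
        return "skip watering; the rain should cover it", reasons
    if due_in <= 1:
        reasons.append("no rain is expected before then")
        return "water today", reasons
    return f"water in {due_in} days", reasons


decision, reasons = watering_advice(WateringInputs(days_since_watered=4, interval_days=5, rain_in_days=None))
print(f"I'd suggest: {decision}, because " + " and ".join(reasons) + ".")
```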

Gemini outperforms GPT-4 and Siri in reasoning, coding, mathematics, and multimodal understanding. Estimated data based on benchmark tests.

Integration Architecture: How It All Comes Together

The Processing Model

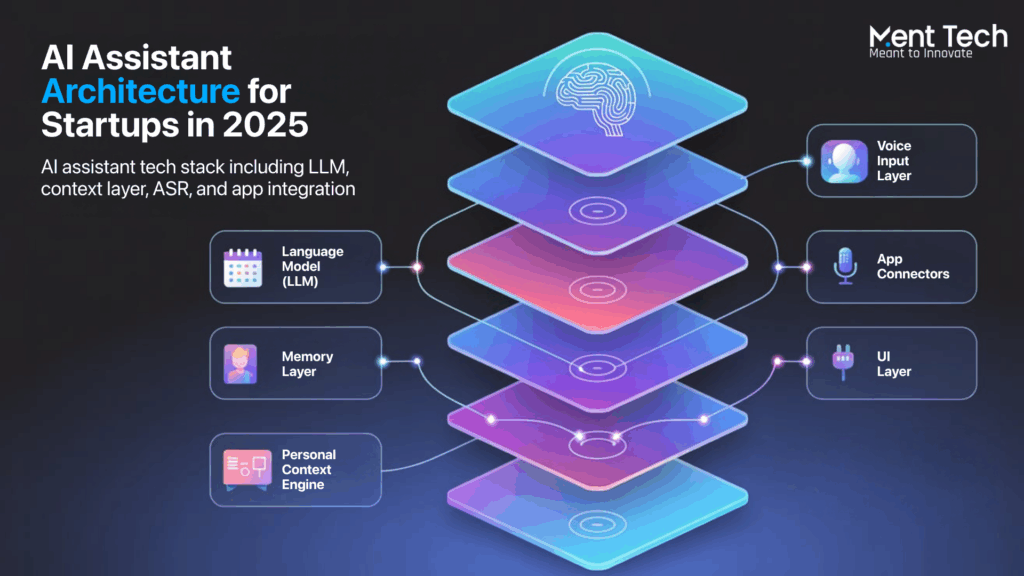

Gemini-powered Siri can't be a simple swap. It needs an architecture that handles:

- Local processing: Simple tasks, routine requests, privacy-sensitive queries.

- Cloud processing: Complex reasoning, knowledge-intensive questions, creative tasks.

- Intelligent routing: The system decides where to process based on query type, device state, and connectivity.

- Graceful degradation: If cloud is unavailable, Siri still works for common tasks.

The ideal architecture:

Voice Input
↓
Intent Classification (On-device)
↓
├─ Simple Task → Local Model → Action
├─ Complex Query → Gemini Cloud → Response
└─ Requires Confirmation → User → Action
↓
Response Synthesis
↓
Voice/Text Output

Each stage would be optimized for speed and accuracy. Apple would need to:

- Train a lightweight on-device model for intent classification.

- Optimize Gemini for latency-sensitive queries.

- Build smart routing logic.

- Handle fallbacks and errors gracefully.

This is architecturally sound but requires significant engineering.
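The diagram above can be sketched as a single dispatch function. Everything here is hypothetical: the keyword classifier stands in for a trained on-device model, and the local model, cloud model, and confirmation prompt are passed in as plain functions so the control flow (confirmation branch, local versus cloud, offline fallback) is easy to see and test.

```python
from typing import Callable

# Hypothetical intent sets; names are illustrative, not Apple's.
SIMPLE_INTENTS = {"set_timer", "set_reminder"}
NEEDS_CONFIRMATION = {"send_message", "make_purchase"}


def classify_on_device(utterance: str) -> str:
    """Toy stand-in for the on-device intent classifier."""
    text = utterance.lower()
    if "timer" in text:
        return "set_timer"
    if "remind" in text:
        return "set_reminder"
    if "buy" in text or "order" in text:
        return "make_purchase"
    return "open_question"


def run_pipeline(
    utterance: str,
    cloud_available: bool,
    confirm: Callable[[str], bool],
    local_model: Callable[[str, str], str],
    cloud_model: Callable[[str], str],
) -> str:
    intent = classify_on_device(utterance)          # intent classification (on-device)
    if intent in NEEDS_CONFIRMATION and not confirm(utterance):
        return "Okay, I won't do that."             # user declined at the confirmation step
    if intent in SIMPLE_INTENTS:
        result = local_model(intent, utterance)     # simple task: local model
    elif cloud_available:
        result = cloud_model(utterance)             # complex query: cloud model
    else:
        # Graceful degradation: say what still works offline
        result = "I can't reach the server right now, but timers and reminders still work."
    return result.strip()                           # response synthesis (trivial here)


print(run_pipeline("Set a timer for 5 minutes", cloud_available=False,
                   confirm=lambda u: True,
                   local_model=lambda intent, u: f"Timer started ({intent})",
                   cloud_model=lambda u: "cloud answer"))
```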

The User Experience Arc

From the user's perspective, Gemini-powered Siri should feel like:

Activation: Press and hold, or just say "Hey Siri." No change needed here.

Request: Speak naturally. "I need to reschedule my 3 o'clock meeting because I'm stuck in traffic and won't make it."

Processing: Siri processes on-device if possible, routes to Gemini if needed. Users see a brief visual indicator that Siri is thinking.

Response: Siri either confirms and executes ("I've moved your 3 o'clock meeting with the team to 4 PM") or asks for clarification ("Which calendar should I update? And which team meeting is this?").

Follow-up: Context persists. "Can you also tell them?" should be understood as "tell the team members whose meeting I just moved."

The entire interaction should feel conversational, not transactional.

The Competition: What Siri Is Up Against

ChatGPT's Advantages

ChatGPT isn't integrated into iOS, but millions of iPhone users open the app because Siri is inadequate for their needs.

ChatGPT's advantages:

- Reasoning ability: It thinks through complex problems step-by-step.

- Knowledge breadth: Trained on massive amounts of text data.

- Flexibility: Can help with writing, coding, analysis, creative work, not just task completion.

- Transparency: Shows reasoning and is upfront about limitations.

- Updates: OpenAI updates the model frequently with improvements.

For Siri to compete, it needs to match or exceed ChatGPT on reasoning while maintaining better integration with iOS.

Google Assistant's Ecosystem Power

Google Assistant isn't the default on iPhone, but it's available and deeply integrated with Android.

Google Assistant's advantages:

- Information access: Can query Google's search index, calendar, Gmail, Maps in real-time.

- Smart home control: Dominates in smart speaker integration.

- Learning: Improves over time based on user interactions.

- Integration: Works across Android, iOS, and smart home devices.

Siri can beat Google Assistant on privacy and device integration, but would need to match its information access.

Claude's Capability

Anthropic's Claude is a growing competitor that emphasizes accuracy and harmlessness.

Claude's advantages:

- Reliability: Very low hallucination rate.

- Safety: Trained using Anthropic's Constitutional AI approach, which aims for more predictable behavior.

- Analysis: Excels at long-form analysis and complex document understanding.

Claude isn't integrated into iOS, but it's available on the web and through its own apps.

Siri's competitive position:

If Gemini powers Siri and Apple executes on the five critical requirements, Siri could be the most useful assistant on iPhone specifically because of iOS integration, not despite it. It won't be the smartest for standalone reasoning tasks. But for actually getting things done on your device, it could dominate.

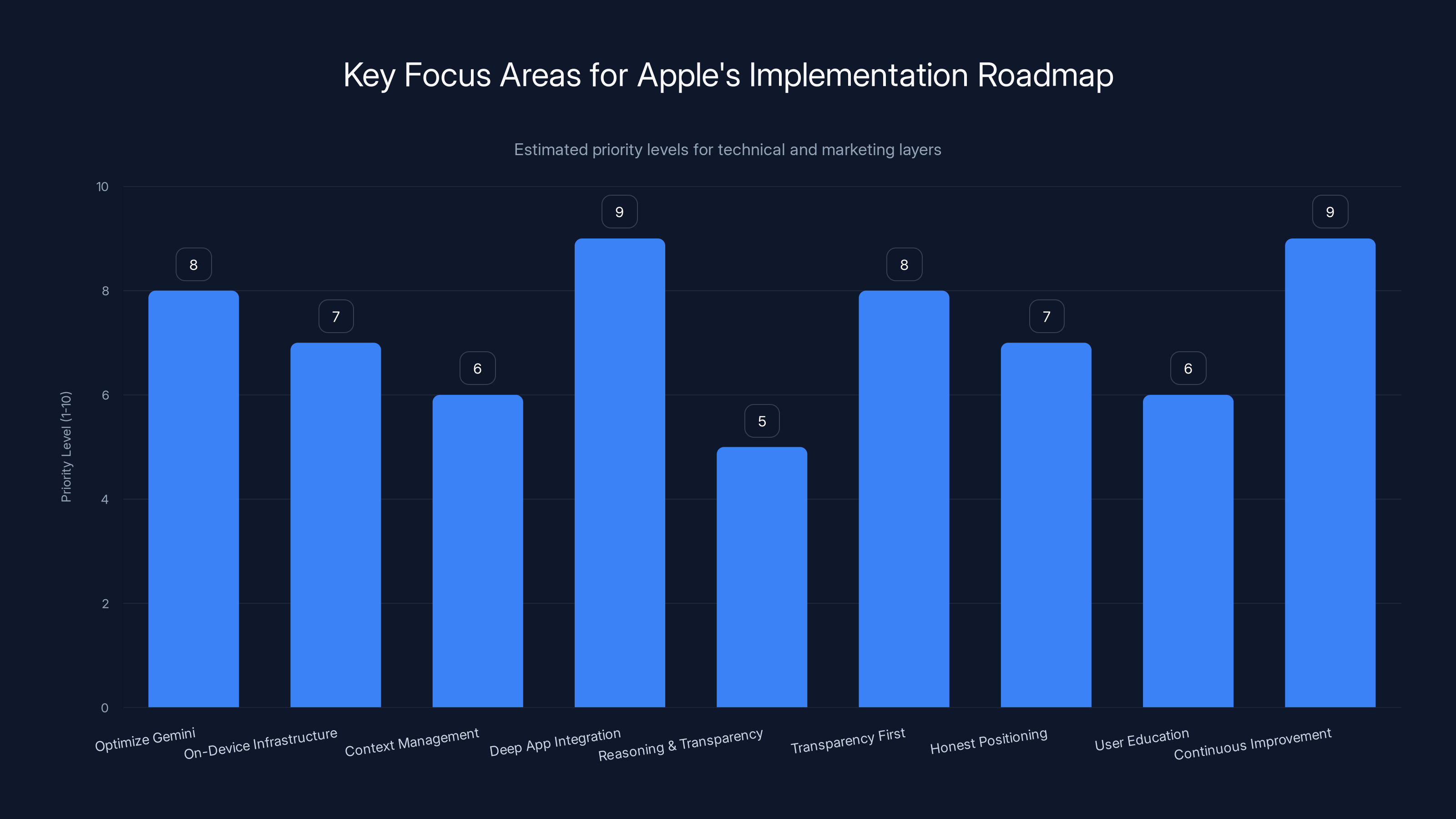

Deep App Integration and Continuous Improvement are top priorities, with a focus on enhancing user experience and communication. Estimated data based on typical strategic priorities.

Timeline and Rollout Expectations

When Will This Actually Happen?

Apple announced Gemini integration for Siri, but specific timelines are vague.

Based on Apple's typical development cycles:

- Phase 1 (Early 2025): Basic Gemini integration in beta. Handles simple queries better, supports more natural language.

- Phase 2 (Mid-2025): On-device processing for common tasks. Conversational context improves. Task completion expands.

- Phase 3 (Late 2025): Full feature set including predictive assistance, advanced reasoning, multi-step tasks.

- Phase 4 (2026): Continuous improvement, expanded third-party integration.

This is speculative, but Apple rarely ships half-baked features. If they're doing Gemini integration, it'll likely be comprehensive when it launches.

The Risk: Rushed Release

There's a scenario where Apple rushes Gemini-Siri to market before it's ready, just to meet competitive pressure. A rushed launch would be:

- Buggy and unreliable.

- Slower than current Siri (cloud-dependent without local optimization).

- Inadequate at task completion.

- A PR disaster.

Apple hasn't made this mistake with major features in years. But the pressure is real.

The Other Risk: Incremental Improvements

The opposite risk is that Apple ships Gemini-Siri, and it's just... fine. Better than current Siri, but not dramatically better. Users will use it sometimes, but still default to ChatGPT or Google for important tasks.

This is the "merely fine" scenario. It's plausible, and it would be disappointing.

For Gemini-Siri to actually "save Apple from AI irrelevance," it needs to be genuinely transformative, not just incremental.

What Users Should Expect (And Demand)

Red Flags to Watch For

When Gemini-Siri launches (or when rumors start circulating), watch for these red flags:

- Still cloud-dependent: If every query goes to Apple's servers, on-device optimization failed.

- No improvement in understanding: If "what about Portland?" still confuses Siri, conversational context didn't work.

- Same app integration limitations: If Siri still can't read your email or see your location, context awareness wasn't implemented.

- Slower responses: If latency is worse than current Siri, the architecture is wrong.

- No reasoning transparency: If Siri still gives answers without explaining why, reasoning disclosure didn't happen.

If three or more of these are true, Gemini-Siri will be another incremental update, not a transformation.

Green Flags to Look For

On the flip side, these would indicate Apple nailed it:

- Instant responses for simple queries: Siri responds the moment you finish speaking for timers, reminders, and similar requests.

- Natural conversation: You can speak however you want, and Siri understands.

- Context persistence: Siri remembers your previous questions for hours.

- Complex task handling: Multi-step requests work without clarification.

- Transparent reasoning: Siri explains its logic, not just the answer.

- Privacy confidence: Apple transparently documents what stays on-device and what doesn't.

If most of these are true, Apple succeeded.

The Larger Stakes: Why This Matters Beyond Siri

Apple's AI Credibility

Right now, Apple is behind on AI. Not just assistants, but broadly. The company was late to generative AI, late to acknowledge it matters, and slow to integrate it comprehensively.

Gemini-powered Siri is the company's chance to say "we're not behind, we just care about doing it right."

If Gemini-Siri is transformative, it reframes Apple's narrative from "playing catch-up" to "choosing quality over speed."

If Gemini-Siri is mediocre, it confirms that Apple is, in fact, behind.

The Ecosystem Advantage

Apple's real advantage in the AI space isn't raw capability. It's integration. Apple controls the entire stack: hardware, OS, first-party apps, and the App Store.

No one else has that. Google has breadth but loses control on Android. OpenAI has capability but no platform. Amazon has integration but limited hardware.

Apple has everything. If the company builds Siri to leverage that systematically, it could be genuinely unbeatable on iOS specifically.

Gemini-powered Siri that's truly integrated with Calendar, Mail, Maps, Health, HomeKit, and third-party apps would be more useful than any standalone AI, not because the model is better, but because it's connected.

The iPhone 17 Question

When the next flagship iPhone launches, Siri will be either a major selling point or a major liability.

If Gemini-Siri works, Apple will lead with it: "iPhone 17: The most integrated AI on any phone."

If Gemini-Siri doesn't work, Apple won't mention Siri at all, and users will assume it's still mediocre.

That's the pressure Apple is under.

Building the Case: Why Users Deserve This

Fourteen Years Is Too Long

Siri launched in 2011. It's been the default iPhone assistant for 14 years. In that time:

- Technology moved from scripted assistants to neural networks to large language models.

- Competitors went from nonexistent to highly competitive.

- User expectations multiplied.

- The bar for "acceptable" assistant moved from "understands basic commands" to "thinks through complex problems."

Apple users paid premium prices for iPhones during this entire period. They deserved better assistance for years.

The iPhone Experience Should Be Premium

Apple charges $1,000+ for flagship iPhones. The entire pitch is premium integration, premium hardware, premium experience.

But Siri? Siri is the opposite of premium. It's clunky, limited, frustrating.

That's a disconnect. If you're paying for premium, every part of the experience should be premium, including the assistant.

A Gemini-powered Siri that's genuinely good would finally close that gap.

Competitive Necessity

This isn't just about Siri being good. It's about Apple's entire AI strategy.

In 2025, AI is a primary decision factor for many phone buyers. A great camera and a fast processor are no longer enough. The AI needs to be great too.

Apple missed this for years. Gemini-Siri is the company's chance to catch up before the gap becomes insurmountable.

Practical Implementation Roadmap

What Apple Needs to Do (Technical Layer)

Optimize Gemini for iOS:

- Create a mobile-optimized version of Gemini.

- Profile latency for typical queries.

- Ensure the model fits within iPhone memory constraints.

Build On-Device Infrastructure:

- Deploy a lightweight language model on-device.

- Create routing logic that decides what goes local vs. cloud.

- Optimize battery usage through smart processing scheduling.

Implement Context Management:

- Store conversation history securely on-device.

- Create privacy-preserving indexing of user data (calendar, location, etc.).

- Build fallback systems for when data isn't available.

Deep App Integration:

- Expand Siri capabilities for Mail, Messages, Calendar, Maps, etc.

- Create standardized frameworks for third-party developers.

- Build intent resolution that's smart about which app to use.

Reasoning and Transparency:

- Modify Gemini to surface reasoning chains.

- Create UI that displays this information naturally.

- Add confidence scoring to responses.
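Of these technical items, the local-vs.-cloud routing logic does the most to decide whether Siri feels fast, private, and battery-friendly. Apple hasn't published how such a router would work, so the Python below is only a minimal sketch under stated assumptions: the `LOCAL_INTENTS` set, the token threshold, and the `Request` fields are all hypothetical. It also shows one simple fallback: when a request needs the cloud but the phone is offline, the router reports that instead of guessing.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"


# Hypothetical set of intents a small on-device model could handle alone.
LOCAL_INTENTS = {"set_timer", "toggle_light", "read_calendar", "send_message"}


@dataclass
class Request:
    intent: str
    token_count: int          # rough size of the utterance plus context
    needs_web: bool = False   # e.g. a search or fresh-news query


def route(req: Request, online: bool, max_local_tokens: int = 256) -> Route:
    """Decide where a request runs. Thresholds are illustrative, not Apple's."""
    fits_locally = (
        req.intent in LOCAL_INTENTS
        and not req.needs_web
        and req.token_count <= max_local_tokens
    )
    if fits_locally:
        return Route.ON_DEVICE
    # Everything else needs the larger cloud model. With no connection,
    # the fallback is to say so rather than attempt a degraded guess.
    return Route.CLOUD if online else Route.UNAVAILABLE
```

Keeping the decision rule explicit like this also makes it inspectable, which supports the transparency goals in this roadmap: a router whose rules can be read is easier to explain to users and to auditors.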

What Apple Needs to Communicate (Marketing Layer)

Transparency First:

- Explain how on-device processing works.

- Detail what data is stored where.

- Show privacy advantages over competitors.

Honest Positioning:

- Don't claim Siri is "the smartest assistant." Claim it's "the most integrated."

- Don't compare to ChatGPT on reasoning. Compare on usefulness on iOS.

- Be upfront about limitations.

User Education:

- Teach users to interact naturally, undoing years of training them to use specific command phrasing.

- Show what Siri can do that ChatGPT can't (iOS integration).

- Highlight privacy advantages.

Continuous Improvement Messaging:

- Set expectations that Siri will improve over time.

- Communicate update cadences.

- Show that Apple is listening to user feedback.

Alternative Scenarios: What Could Go Wrong

Scenario 1: Gemini-Siri Works, But Usage Doesn't Change

Apple ships a genuinely good Gemini-powered Siri. Technically, it's an improvement. But iPhone users have spent years using ChatGPT, and habit is strong.

Siri stays unused because users don't trust it anymore, despite the improvement.

Likelihood: Moderate. Breaking user habits is hard.

Mitigation: Aggressive communication and marketing. Apple would need to actively promote the new Siri, not just release it quietly.

Scenario 2: On-Device Processing Causes Battery Problems

Apple tries to run Gemini locally, optimization is insufficient, and battery life tanks.

Users disable the feature and revert to cloud-based Siri, defeating the purpose.

Likelihood: Low. Apple's optimization is usually good. But possible.

Mitigation: Extensive beta testing and battery profiling before release.

Scenario 3: Privacy Implementation Has Holes

Users discover that despite Apple's claims, Siri is still sending sensitive data to the cloud.

Public backlash ensues, similar to the 2021 backlash over Apple's proposed CSAM-detection system.

Likelihood: Low-moderate. Apple is usually careful. But privacy is complex.

Mitigation: Independent security audits before launch. Third-party verification of privacy claims.

Scenario 4: App Integration Is Incomplete

Siri can't properly integrate with important third-party apps (Gmail, Slack, etc.).

Task completion remains limited. Siri works for Apple apps but not the broader ecosystem.

Likelihood: Moderate. Third-party integration is always a challenge.

Mitigation: Apple works closely with major app developers pre-launch to ensure quality integrations.

The Moment of Truth: 2025

Apple is at an inflection point. The company's approach to AI for the next decade will be decided by how it executes Gemini-powered Siri.

If it's transformative, Apple has found its path forward in the AI era: one built on privacy, integration, and user experience rather than raw model size.

If it's mediocre, Apple will have missed the moment. Competitors will pull further ahead, and Apple will spend the next few years playing catch-up.

The technology is ready. Gemini is capable. Apple's hardware is powerful. The only variable is execution.

iPhone users have waited long enough. They deserve an assistant that works like the premium device it came with. Gemini-powered Siri has the potential to deliver that.

The question is whether Apple will actually do the work, or settle for good-enough improvements and call it a victory.

Based on the company's history, I'm cautiously optimistic. Apple usually gets it right when it matters. This matters.

FAQ

What is Gemini and why is it better than Siri's current system?

Gemini is Google's family of large language models, built to compete with OpenAI's GPT-4. It excels at reasoning, understanding context, coding, and maintaining conversation threads. Siri's current system is older and was designed to execute predefined commands rather than understand complex language and reason through problems. Gemini can understand nuance, remember conversation history, and handle multi-step requests in ways current Siri fundamentally cannot.

Will Gemini-powered Siri replace ChatGPT for iPhone users?

Likely not for specialized tasks, but possibly for daily assistance. Gemini-Siri will have an advantage in iOS integration and privacy, meaning it'll be better for device-related tasks like scheduling, messaging, and smart home control. But for complex reasoning, creative work, or specialized knowledge, many users will continue using ChatGPT because it's more powerful for those specific tasks. The goal should be that Siri becomes the first choice for iOS-specific assistance and ChatGPT remains the choice when you need standalone AI reasoning.

How will Apple handle privacy with Gemini-powered Siri?

Apple's stated approach is to process simple queries on-device using a lightweight language model, while routing complex requests to Gemini cloud servers. The company claims this preserves privacy for routine tasks. However, the real test is whether Apple publishes transparent documentation about what data is sent where and allows independent verification. Users should be skeptical of privacy claims without transparency and third-party audits.
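One way to make "what data is sent where" verifiable is data minimization plus an on-device log of every cloud transmission. The sketch below is illustrative only: the per-intent allowlist, the field names, and the `TransmissionLog` class are hypothetical and do not describe Apple's actual implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical allowlist: the only context fields that may leave the
# device for each kind of cloud request. Unknown intents send the query only.
CLOUD_FIELD_ALLOWLIST = {
    "plan_trip": {"query", "city"},
    "summarize_article": {"query", "url"},
}


@dataclass
class TransmissionLog:
    """On-device record of what left the phone, so privacy claims can be audited."""
    entries: list = field(default_factory=list)

    def record(self, intent: str, sent_fields: set) -> None:
        timestamp = datetime.now(timezone.utc).isoformat()
        self.entries.append((timestamp, intent, sorted(sent_fields)))


def prepare_cloud_payload(intent: str, context: dict, log: TransmissionLog) -> dict:
    """Drop every field not allowlisted for this intent, then log what remains."""
    allowed = CLOUD_FIELD_ALLOWLIST.get(intent, {"query"})
    payload = {key: value for key, value in context.items() if key in allowed}
    log.record(intent, set(payload))
    return payload
```

For a trip-planning request whose context also holds contacts and health data, only the query and city would be sent, and the log would show exactly those two fields. Publishing the allowlist and letting auditors inspect such a log is the kind of transparency this answer calls for; the sketch itself says nothing about what any shipping product does.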

When will Gemini-powered Siri launch?

Apple hasn't announced a specific date, but based on typical development cycles and competitive pressure, expect a beta release in early-to-mid 2025 and a full release later that year. The company rarely launches major OS features before they're ready, so patience is likely necessary. Check Apple's announcements or beta releases (accessible through Apple's beta program) for official timelines.

Can I try Gemini-Siri now?

Not yet. As of 2025, Gemini integration is in development and not publicly available. When it does launch, it'll likely first appear in beta versions of iOS available through Apple's beta testing program, then in released versions in subsequent iOS updates. If you want early access, you can join Apple's beta testing program, but there's no guarantee Gemini-Siri will be included in the initial beta releases.

How will Gemini-Siri handle requests that previous Siri couldn't understand?

The primary mechanism is better language understanding. Gemini can interpret natural language in ways earlier systems couldn't. Instead of requiring specific command syntax, Gemini-Siri should understand requests phrased in conversational ways. When ambiguity remains, Siri should ask clarifying questions intelligently ("When you said 'that restaurant,' do you mean the one near your work or the one near your home?") rather than failing silently. Context awareness from conversation history will also help reduce misunderstandings.
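The "ask instead of failing silently" behavior can be expressed as a simple margin rule: if the best and second-best interpretations score too close together, ask the user. This Python sketch assumes a hypothetical relevance score for each candidate and an arbitrary margin of 0.15; a real system would derive both from its models and the user's context.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    score: float  # hypothetical relevance score in [0, 1]


def resolve_or_ask(mention: str, candidates: list, margin: float = 0.15) -> str:
    """Act on a clear winner; ask a clarifying question when the top two are close."""
    if not candidates:
        return f"I couldn't find anything called {mention}. Can you tell me more?"
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    best = ranked[0]
    # A single candidate, or a clear lead over the runner-up, means act directly.
    if len(ranked) == 1 or best.score - ranked[1].score >= margin:
        return f"OK, using {best.name}."
    runner_up = ranked[1]
    return f'When you said "{mention}", do you mean {best.name} or {runner_up.name}?'
```

With scores of 0.62 and 0.58 for the restaurants near work and home, the gap is below the margin, so this sketch asks; with 0.9 and 0.3 it simply picks the first.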

Will Gemini-Siri work offline like current Siri does?

Partially. Simple tasks like setting timers, controlling smart home devices, and accessing local data should work offline using on-device processing. But complex queries and searches will require cloud connectivity. Apple should communicate this clearly so users understand what works offline and what doesn't. This is actually a strength compared to ChatGPT, which requires internet for everything.

Is Gemini-Siri enough to make me switch from Android to iPhone?

For most users, probably not alone. Siri would need to be dramatically better than every other assistant to overcome switching costs, existing ecosystem investment, and habit. However, if you're already considering an iPhone, improved Siri becomes a feature that tips the scales toward Apple rather than against it. For heavy iOS users, Gemini-Siri could be a compelling reason to stay or upgrade within the ecosystem.

What features from ChatGPT or Google Assistant are missing from Siri that matter most?

The most important missing features are conversation memory (remembering questions from hours ago), context awareness (knowing about your schedule and location automatically), complex task handling (multi-step requests without clarification), and honest uncertainty (saying "I'm not sure" instead of guessing). Siri also struggles with creative tasks, coding help, and detailed explanations. Gemini-Siri should address most of these if Apple implements the five critical requirements properly.
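Of those gaps, honest uncertainty is the easiest to state precisely: below some confidence level, say so and show where the guess came from. The Python below is a minimal sketch; the `Answer` fields and the 0.7 threshold are hypothetical, and it assumes the underlying model reports a usable confidence score, which is itself a hard problem.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    text: str
    confidence: float  # hypothetical model-reported confidence in [0, 1]
    source: str        # where the answer came from, e.g. "your calendar"


def present(answer: Answer, threshold: float = 0.7) -> str:
    """Return the answer with its source, or an honest 'not sure' below the threshold."""
    if answer.confidence < threshold:
        return (
            f"I'm not sure. My best guess is {answer.text}, "
            f"based on {answer.source}, but please double-check."
        )
    return f"{answer.text} (from {answer.source})"
```

Citing the source in both branches is a deliberate choice: it gives the user a way to check the answer, which is what separates transparent reasoning from confident guessing.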

How will I know if Gemini-Siri actually works?

Test immediately after launch with real-world requests: complex scheduling, multi-step tasks, conversational follow-ups, and requests that previous Siri struggled with. Pay attention to response time (should be instant for simple queries), understanding accuracy (fewer clarifications asked), and context awareness (remembers prior questions). Compare to ChatGPT and Google Assistant side-by-side. If Gemini-Siri handles 80%+ of your daily requests without frustration, Apple succeeded. If it still falls short of competitors, they didn't.
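The 80% bar is simple arithmetic: count the requests that completed without friction and divide by the total. A minimal Python sketch, assuming a hypothetical definition of "without frustration" (the task succeeded and Siri asked at most one clarifying question), could tally a personal test log like this:

```python
from dataclasses import dataclass


@dataclass
class Trial:
    request: str
    succeeded: bool          # the task completed as intended
    clarifications: int = 0  # follow-up questions Siri had to ask


def pass_rate(trials: list, max_clarifications: int = 1) -> float:
    """Share of requests handled without frustration, under the assumed definition."""
    if not trials:
        return 0.0
    ok = sum(1 for t in trials if t.succeeded and t.clarifications <= max_clarifications)
    return ok / len(trials)


def meets_bar(trials: list, bar: float = 0.8) -> bool:
    """True when the pass rate reaches the 80% threshold suggested above."""
    return pass_rate(trials) >= bar
```

A week of five logged requests in which one needed two clarifications gives 4 / 5 = 0.8, which just clears the bar; one more failure would drop it to 0.6.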

Conclusion: The Moment Siri Can Actually Matter

For 14 years, Siri has been the phone assistant nobody wanted to use.

That's harsh, but the pattern is hard to dispute. Users reach for ChatGPT. They reach for Google Assistant. They reach for Alexa. They default to Siri only when they have no choice.

That's a failure. Not because Siri is fundamentally broken, but because it was designed for an era that passed it by.

Gemini-powered Siri is Apple's chance to fix that. Not with incremental improvements. With a fundamental shift in what the assistant can do and how it integrates with your life.

But it's only a chance, not a guarantee.

The five critical requirements aren't optional nice-to-haves. They're the minimum bar for Siri to be genuinely useful:

- True conversational understanding: Remembering context, understanding natural language, and handling follow-ups.

- On-device processing: Speed and privacy by handling common tasks locally.

- Deep context awareness: Connecting to your calendar, location, and digital life.

- Actionable intelligence: Actually completing tasks, not just answering questions.

- Transparent reasoning: Explaining answers and admitting limitations.

If Apple nails all five, Siri becomes indispensable. Not just better than before, but genuinely useful in ways that make it your first choice, not your last resort.

If Apple nails three or four, Siri becomes acceptable. An improvement worth noting but not transformative.

If Apple nails fewer than three, Siri stays irrelevant. Gemini under the hood doesn't matter if the rest of the system hasn't fundamentally changed.

The technical work is doable. Apple has the resources, the talent, and the platform control to execute on every single requirement. The company's optimization is legendary. Its integration capabilities are unmatched. Its privacy infrastructure is robust.

The question isn't technical. It's organizational. Did Apple's leadership prioritize this? Did engineering get the resources? Did product management demand the full vision or settle for quick wins?

Based on competitive pressure and Apple's historical response to competitive threats, I think leadership did prioritize this. iPhone sales depend on ecosystem stickiness, and a genuinely good Siri is a big part of that stickiness.

But execution remains uncertain.

What's certain is this: iPhone users deserve better. They've waited long enough. The technology is ready. Gemini is capable. Apple's platform is powerful.

All that's left is for Apple to deliver. If Gemini-Siri works, the next decade of AI on iPhones changes fundamentally.

If it doesn't, well, users already know where to find ChatGPT.

The ball is in Apple's court. For once, the company is chasing, not leading. The competition is real. The stakes are high. And users are watching.

Let's see if Apple remembers how to deliver when it matters.

Key Takeaways

- Siri has stagnated for 14 years while competitors like ChatGPT and Google Assistant advanced significantly, making Gemini integration necessary but not sufficient.

- Five critical requirements must be met: conversational understanding with context, on-device processing for speed and privacy, deep iOS integration, true task completion, and transparent reasoning.

- On-device processing is essential for latency improvement and privacy protection; cloud-only routing defeats Siri's platform advantages.

- Apple's real competitive advantage isn't raw AI capability but vertical integration of hardware, OS, and services that competitors can't match.

- Success requires honest positioning, transparent privacy documentation, deep third-party app integration, and user education about changed Siri capabilities.
