Enterprise AI Agents & RAG Systems: From Prototype to Production [2025]

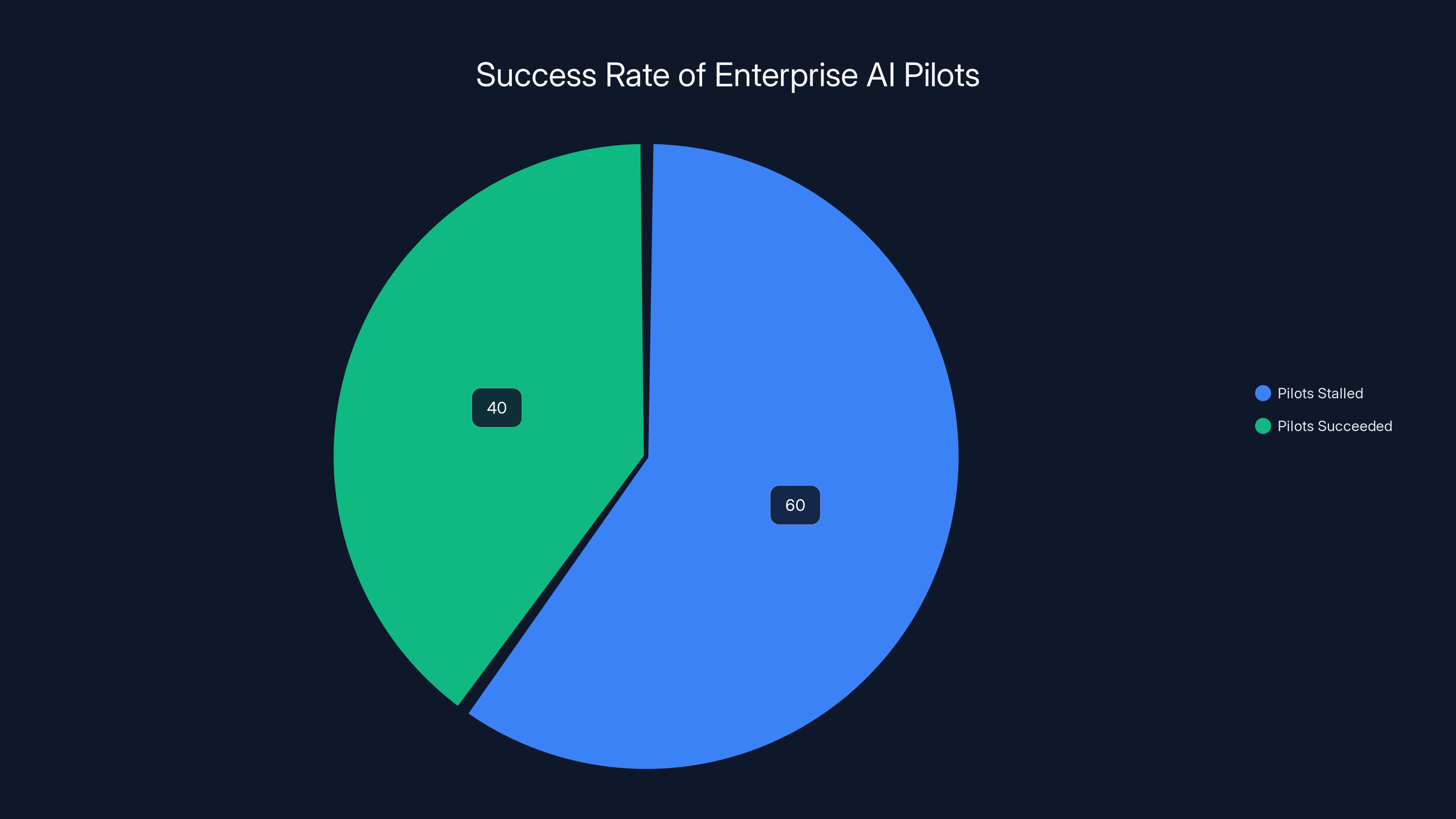

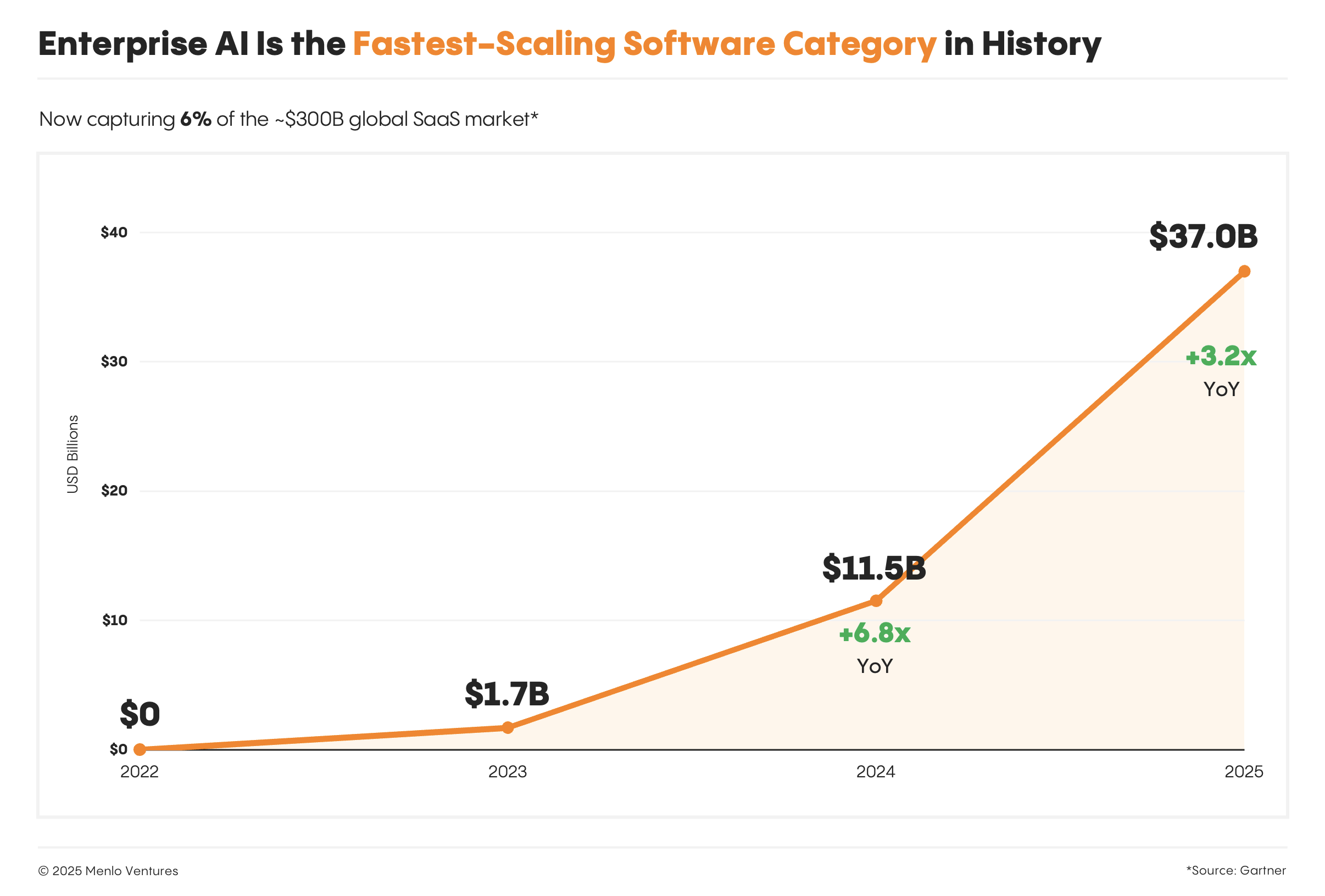

Here's the harsh truth about enterprise AI right now: it's not the models holding you back. Four years after ChatGPT exploded into the mainstream, thousands of companies are still stuck in pilot purgatory. They've spent millions on AI initiatives. Executives are impatient. Teams are burned out. And the projects that were supposed to change everything? They're quietly sitting in sandboxes, waiting for someone to figure out how to actually use them.

The bottleneck isn't intelligence anymore. A 73-year-old semiconductor engineer knows infinitely more about circuit design than any AI model ever will. The real problem is context. Can your AI system actually access your proprietary specifications, engineering docs, institutional knowledge, and the thousands of documents that live in your company's database? Can it ground its responses in your data instead of hallucinating? And once it understands your context, can it orchestrate multiple steps to actually solve complex workflows?

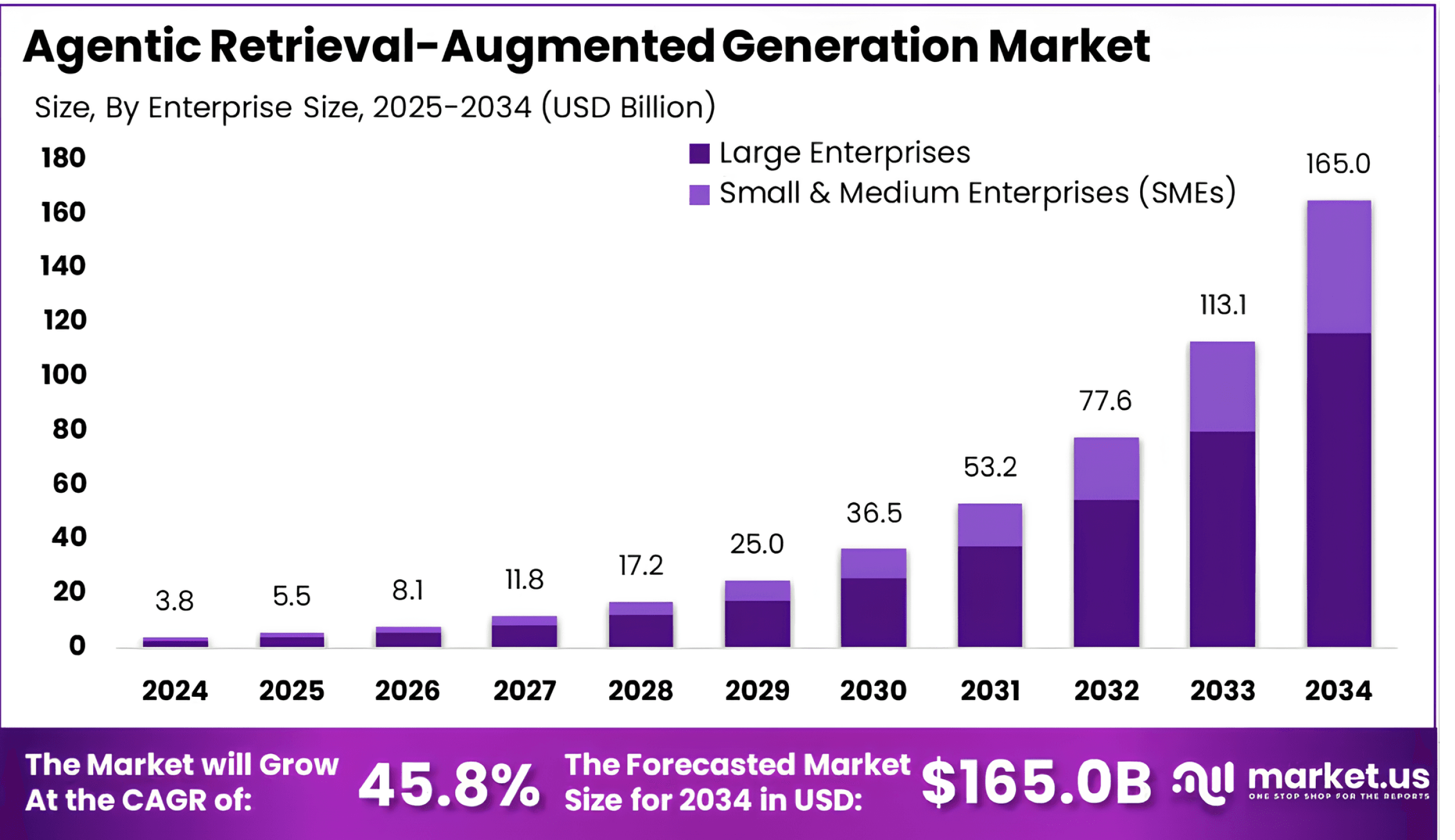

That's the fundamental shift happening right now in enterprise AI. The industry has spent the last four years optimizing for model intelligence. Now it's finally waking up to the fact that the real constraint is context intelligence. How you structure, retrieve, and present information to your AI systems determines whether your AI agent becomes a reliable automation tool or just an expensive chatbot that sounds confident while being confidently wrong.

This is where enterprise AI is headed in 2025, and understanding this shift is critical if you're building AI systems, evaluating AI platforms, or trying to move your AI pilots into actual production.

TL;DR

- The enterprise AI bottleneck is context, not models: Modern LLMs are commoditized. The constraint is how well your AI system accesses, retrieves, and grounds responses in your proprietary data.

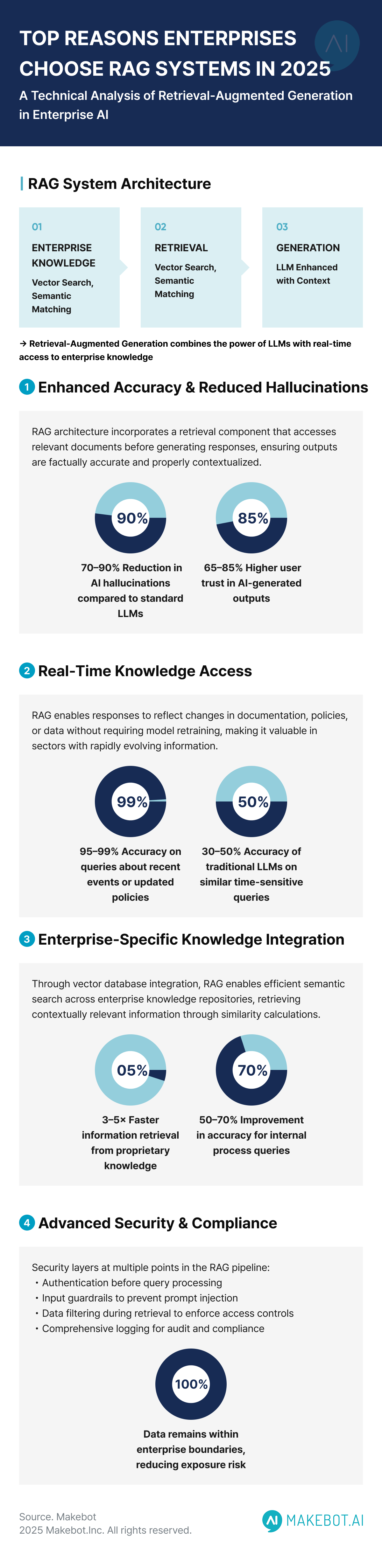

- RAG systems are foundational but weren't designed for production: Traditional retrieval-augmented generation works, but early implementations compound errors, hallucinate frequently, and can't handle complex multi-step workflows.

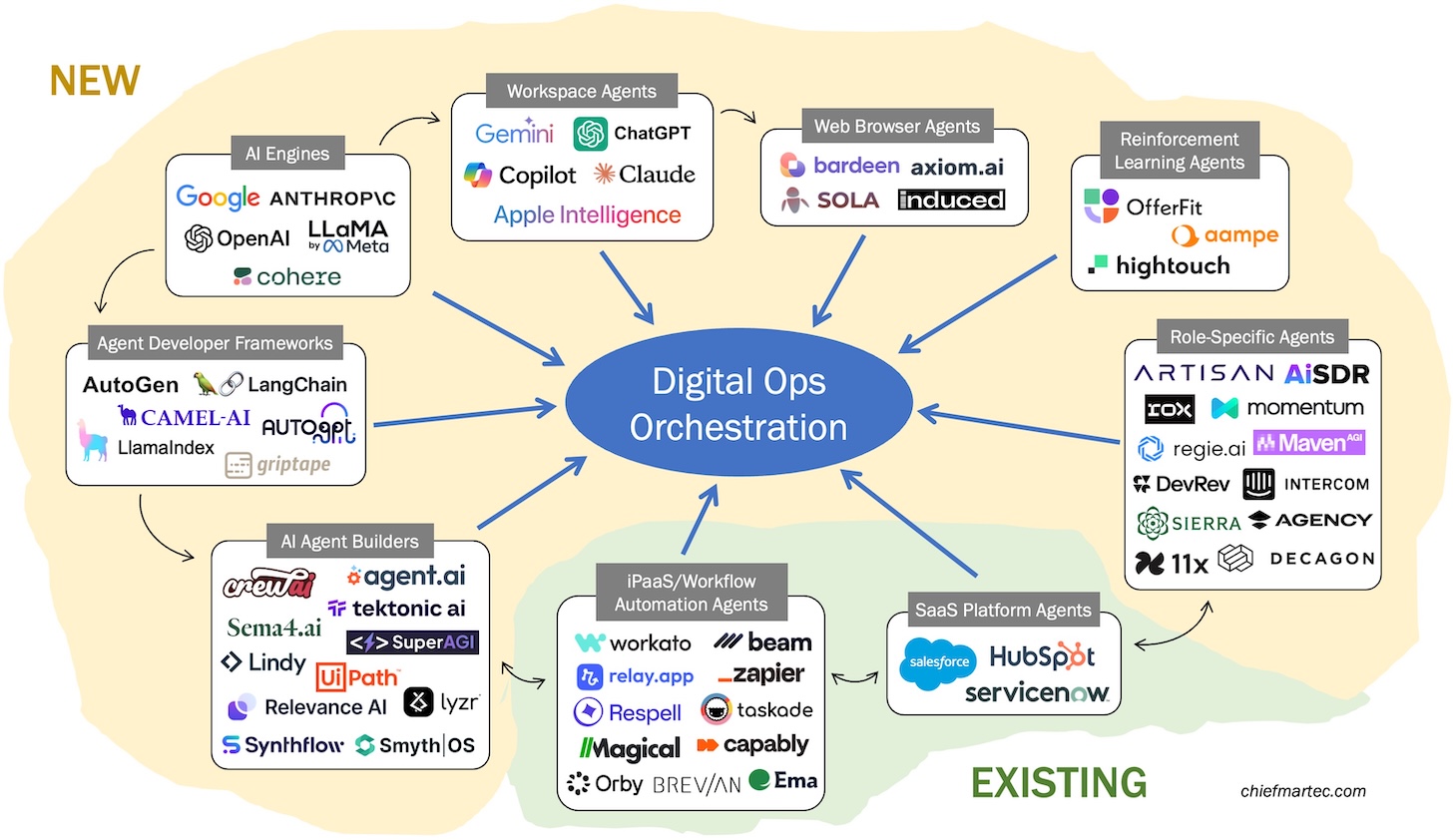

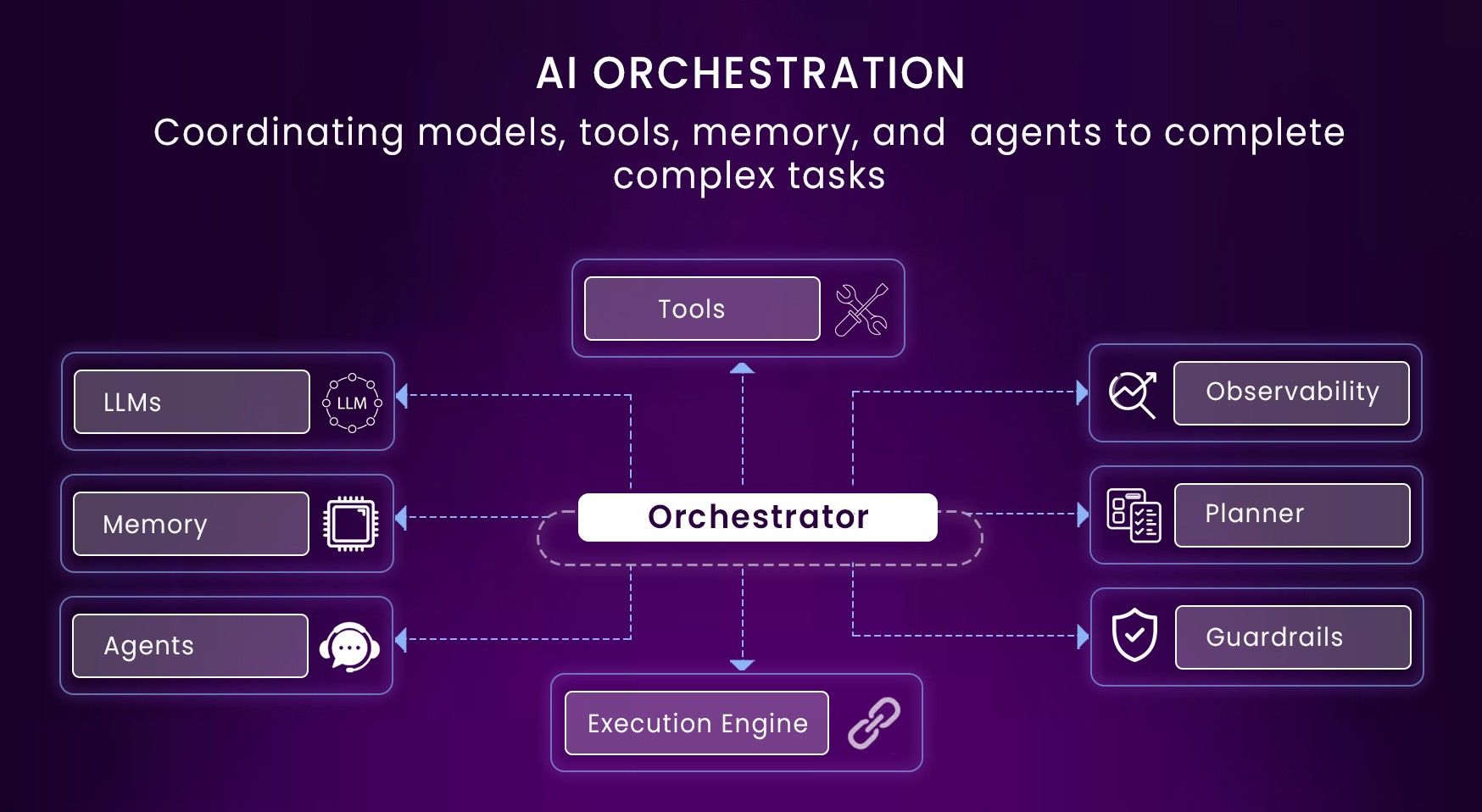

- AI agent orchestration bridges the gap: Multi-step workflow coordination, deterministic rules for critical steps, and intelligent reasoning for exploratory work together create production-grade AI agents.

- Hybrid architectures reduce risk while improving capability: Combining strict validation rules with dynamic AI reasoning prevents catastrophic failures while enabling automation at scale.

- Citation and auditability are non-negotiable for enterprises: Every decision an AI agent makes must be traceable to source documents, creating accountability and enabling faster debugging.

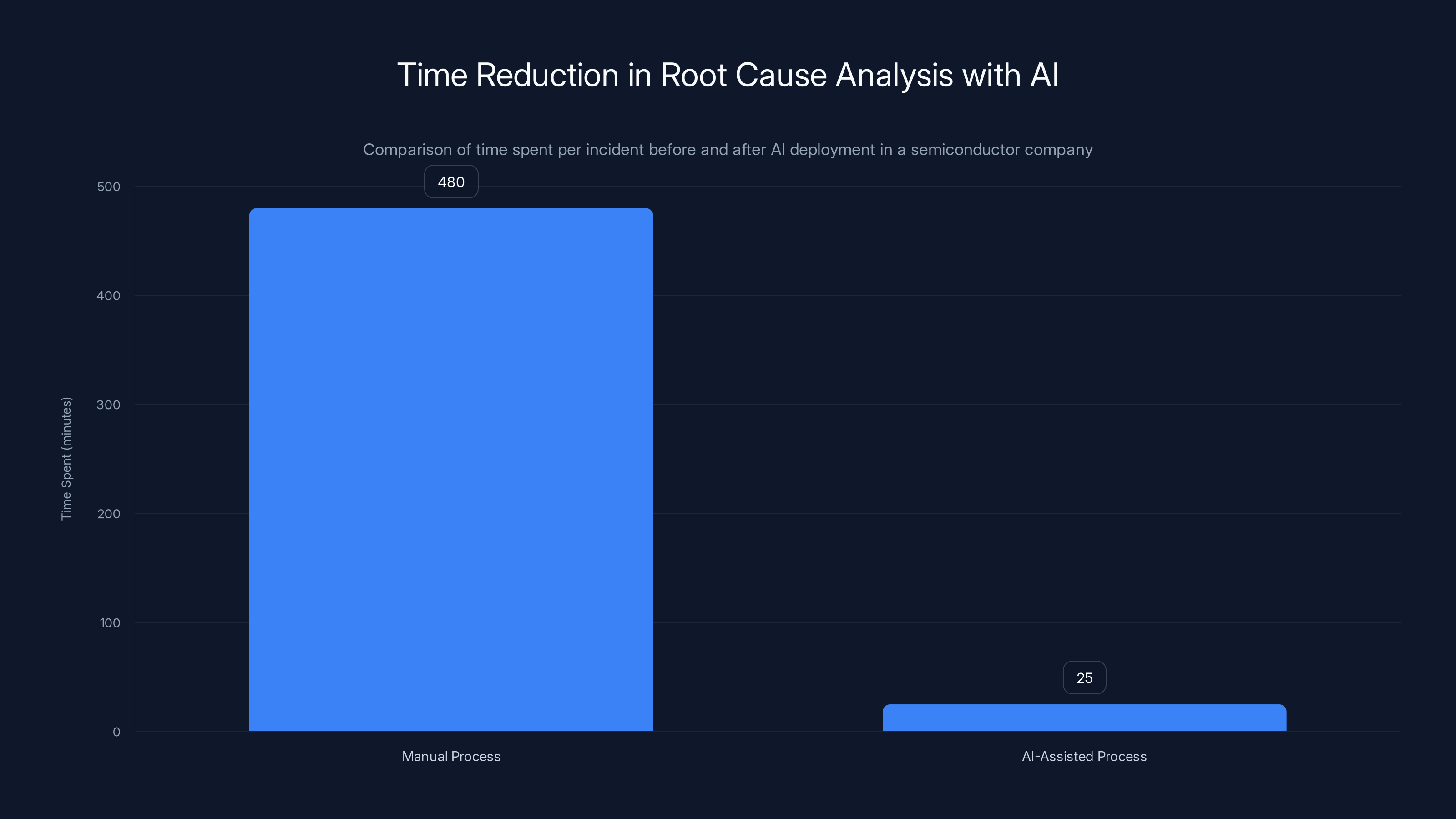

The deployment of AI reduced the time spent on root cause analysis from 480 minutes to just 25 minutes per incident, a reduction of roughly 95%.

The State of Enterprise AI: Why Pilots Become Prisons

Walk into most Fortune 500 companies right now and ask about their AI initiatives. You'll hear similar stories across industries. Teams launched ambitious pilots in 2023 and 2024. They connected ChatGPT or Claude to their internal databases. Early results looked promising. But somewhere between the pilot and production, everything stalled.

The reasons are predictable once you understand the technical constraints. First, off-the-shelf large language models have a fundamental knowledge cutoff. A model trained in 2024 knows nothing about engineering specifications finalized in 2025, proprietary manufacturing processes developed last month, or the informal institutional knowledge that lives in your engineers' heads. When you ask that model a question about company-specific details, it invents answers that sound authoritative but are completely fabricated.

Second, these hallucinations compound when you build multi-step workflows. If step one retrieves the wrong document, step two makes decisions based on bad information. Step three amplifies the error. By step five, you've built an elaborate fiction that nobody noticed until something breaks in production.

Third, early teams didn't think about governance. Who's responsible when an AI agent makes a decision? How do you audit what the system did? How do you know which source documents influenced the AI's output? Enterprise organizations need answers to these questions before deploying anything critical. Startups can move fast and break things. Manufacturers can't.

The companies that are succeeding understand something crucial: the problem is never the model's intelligence. It's always the plumbing. How information flows from your databases into the model. How the model retrieves the most relevant context. How you validate outputs before they affect critical systems. How you ensure every decision is auditable and traceable.

These are boring engineering problems. They don't make headlines. But they're the difference between a compelling demo and a system that actually works in production.

Over 60% of enterprise AI pilots never make it to production, highlighting significant challenges in scaling AI initiatives.

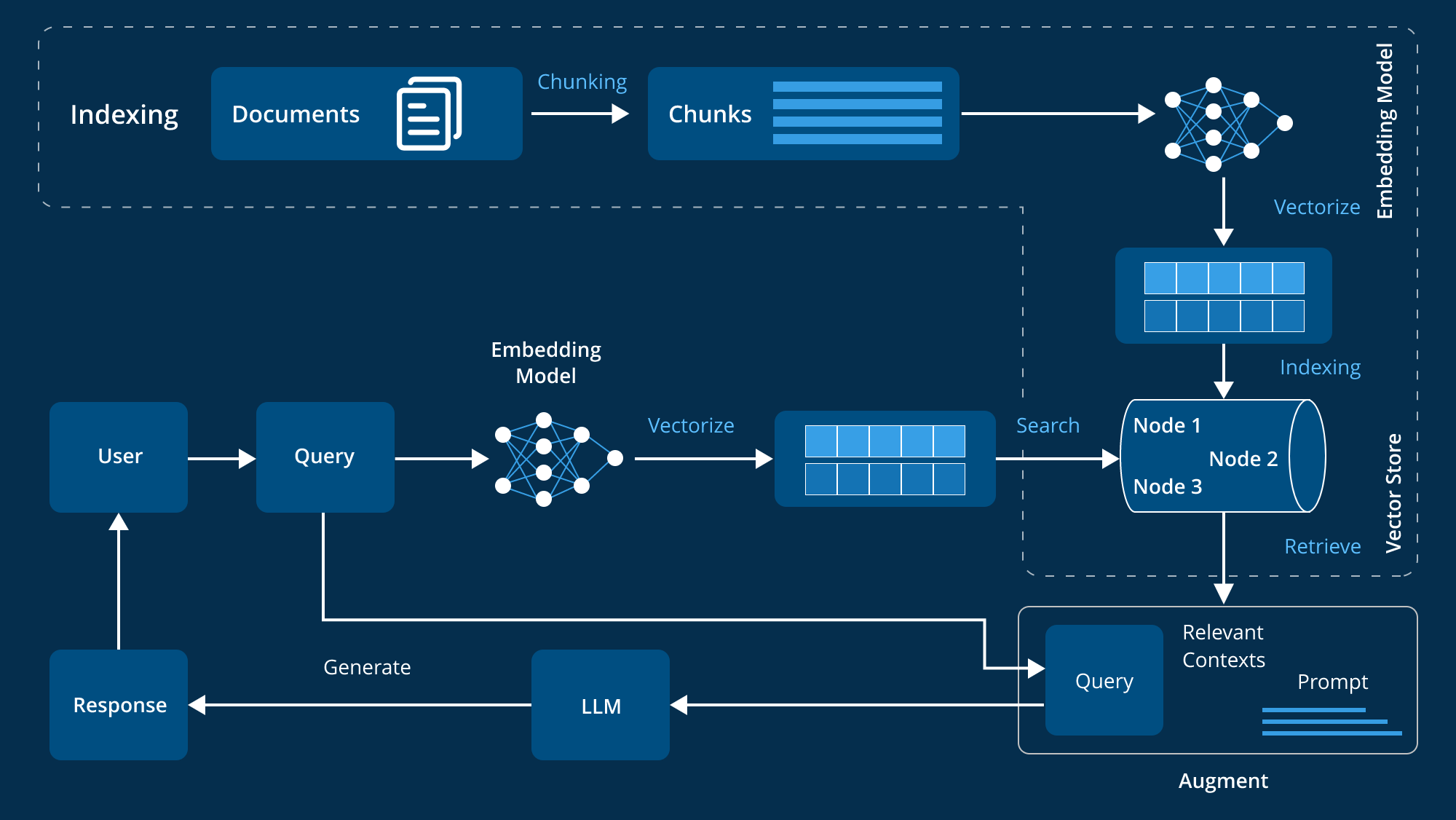

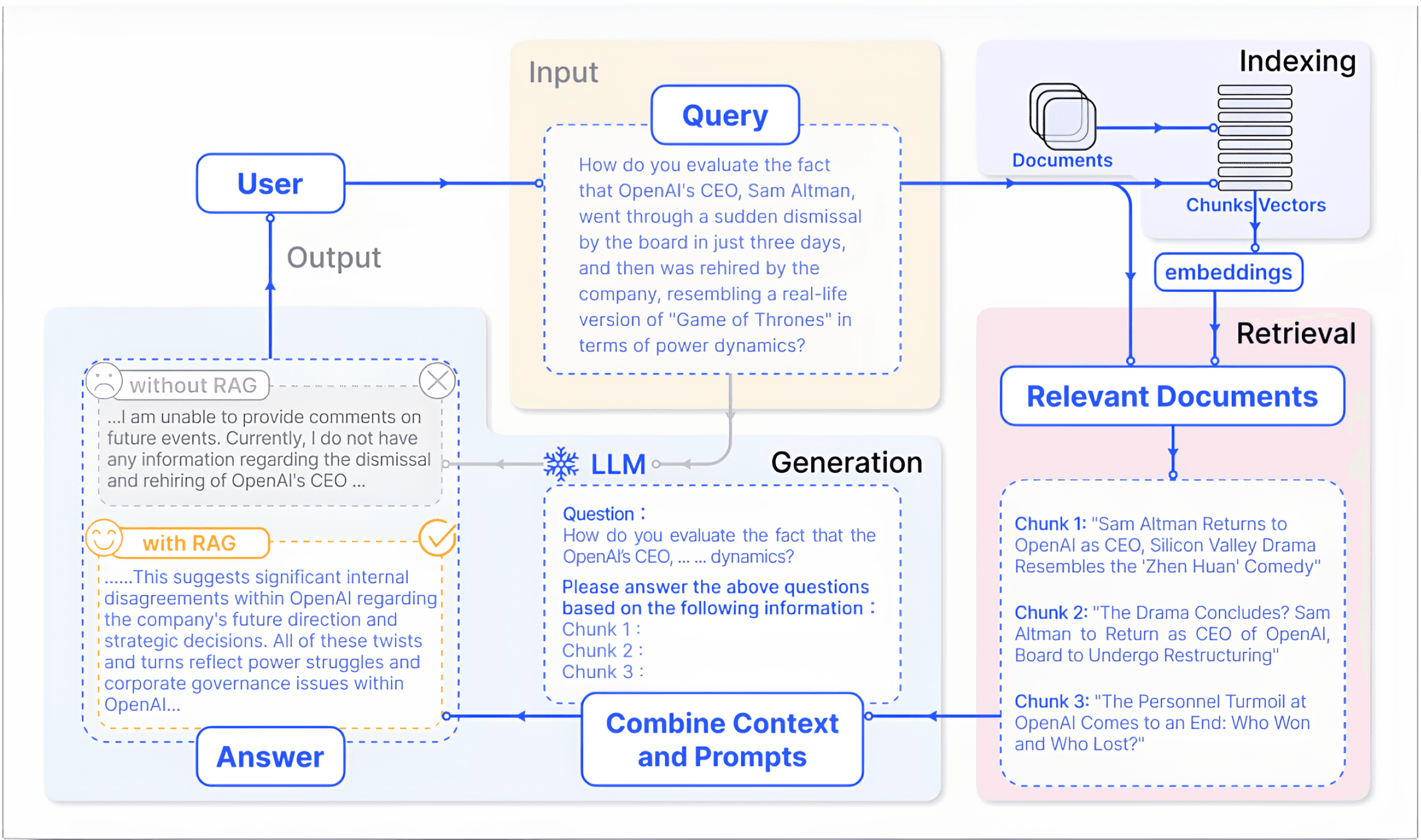

Understanding RAG: How Enterprises Ground AI in Their Own Data

Retrieval-augmented generation (RAG) is deceptively simple in concept. Instead of relying solely on what a model learned during training, you give the model access to your company's documents right before it generates a response.

Here's how the basic pipeline works: User asks a question. System searches your document database for relevant materials. Those materials get fed into the model alongside the question. The model generates a response grounded in your actual data. Theoretically, this prevents hallucinations because the model is working with facts instead of training data memories.
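The pipeline above can be sketched in a few lines. This is a minimal illustration, not a production design: the word-overlap scoring is a stand-in for a real semantic search engine, and the `Document`, `retrieve`, and `build_prompt` names are invented for this example. The final call to a model is left out because it depends on which provider you use.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase and split on whitespace, stripping basic punctuation."""
    return {word.strip(".,?!:;()").lower() for word in text.split()} - {""}


def retrieve(question: str, corpus: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the question (stand-in for real search)."""
    query_terms = tokenize(question)
    scored = [(len(query_terms & tokenize(doc.text)), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question: str, context: list[Document]) -> str:
    """Place the retrieved documents ahead of the question."""
    sources = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in context)
    return (
        "Answer using only the sources below. Cite source ids.\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


corpus = [
    Document("spec-7", "The torque limit for the M8 fastener is 25 Nm."),
    Document("memo-2", "The cafeteria closes at 3 pm on Fridays."),
]
question = "What is the torque limit for the M8 fastener?"
prompt = build_prompt(question, retrieve(question, corpus, top_k=1))
print(prompt)  # this string would be sent to whichever model you use
```

Note that with `top_k=2` the cafeteria memo would also be retrieved, because it shares the word "the" with the question. That is a small-scale version of the noise problem that crude retrieval runs into.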

In practice, RAG systems are where most enterprises first tried to solve the context problem. And RAG did solve a real problem. But it also created new problems that nobody anticipated.

The original RAG approach was crude. Take an off-the-shelf semantic search engine. Grab whatever documents seemed relevant. Dump them into the prompt. Hope the model reads them correctly and doesn't invent information anyway. This approach worked for simple Q&A systems. For complex workflows? It was disaster-prone.

Multiple problems emerged quickly. First, retrieval is hard. How many documents should you include? If you include too few, you miss relevant context. Include too many, and the model gets confused by noise. The semantic search engines people were using weren't designed for the specific way enterprises organize documents. A technical specification has a different structure than a customer service memo. A supply chain contract differs from a manufacturing standard.
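One common way to handle the "too few versus too many" trade-off is to give the prompt a fixed budget and a relevance floor. The sketch below assumes your retriever already returns relevance scores between 0 and 1; the `pack_context` name, the word-count budget, and the 0.3 floor are illustrative choices, not values from any particular platform.

```python
def pack_context(ranked_chunks: list[tuple[float, str]], max_words: int,
                 min_score: float = 0.3) -> list[str]:
    """Keep the best-scoring chunks that fit within a word budget.

    ranked_chunks: (relevance score, text) pairs; scores are assumed to be
    in [0, 1] and to come from whatever retriever you already run.
    """
    selected: list[str] = []
    used = 0
    for score, text in sorted(ranked_chunks, key=lambda c: c[0], reverse=True):
        if score < min_score:
            break  # everything after this is weaker still: treat it as noise
        cost = len(text.split())
        if used + cost > max_words:
            continue  # too large for the remaining budget; try a smaller chunk
        selected.append(text)
        used += cost
    return selected


chunks = [
    (0.91, "Section 4.2: maximum operating temperature is 85 C."),
    (0.40, "Section 4.3: storage temperature range is -40 C to 125 C."),
    (0.12, "Appendix B: document revision history."),
]
context = pack_context(chunks, max_words=12)
```

With a 12-word budget only the first chunk fits. Raising the budget to 20 words admits the second chunk, while the revision-history appendix is dropped in both cases because it scores below the floor.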

Second, even when retrieval worked perfectly, models sometimes ignored the retrieved documents and relied on training data instead. You could see this in the generated responses. The model would reference facts that contradicted the documents right there in the context window. It had learned to value its training data over the provided context.

Third, early RAG systems had no way to handle multi-step reasoning. Real enterprise workflows aren't simple Q&A. They're complex: retrieve documentation, analyze it for compliance issues, check against regulatory requirements, cross-reference with supplier contracts, validate against internal policies, route for approval. At each step, the model might need different information. Traditional RAG treated the whole workflow as a single retrieval problem.

The companies building next-generation RAG systems understood that the problem wasn't the core concept. It was execution. You needed to be much more strategic about how you retrieved context, how you structured it in the prompt, how you trained models to prefer provided documents over training data, and how you orchestrated workflows that required different retrievals at different steps.

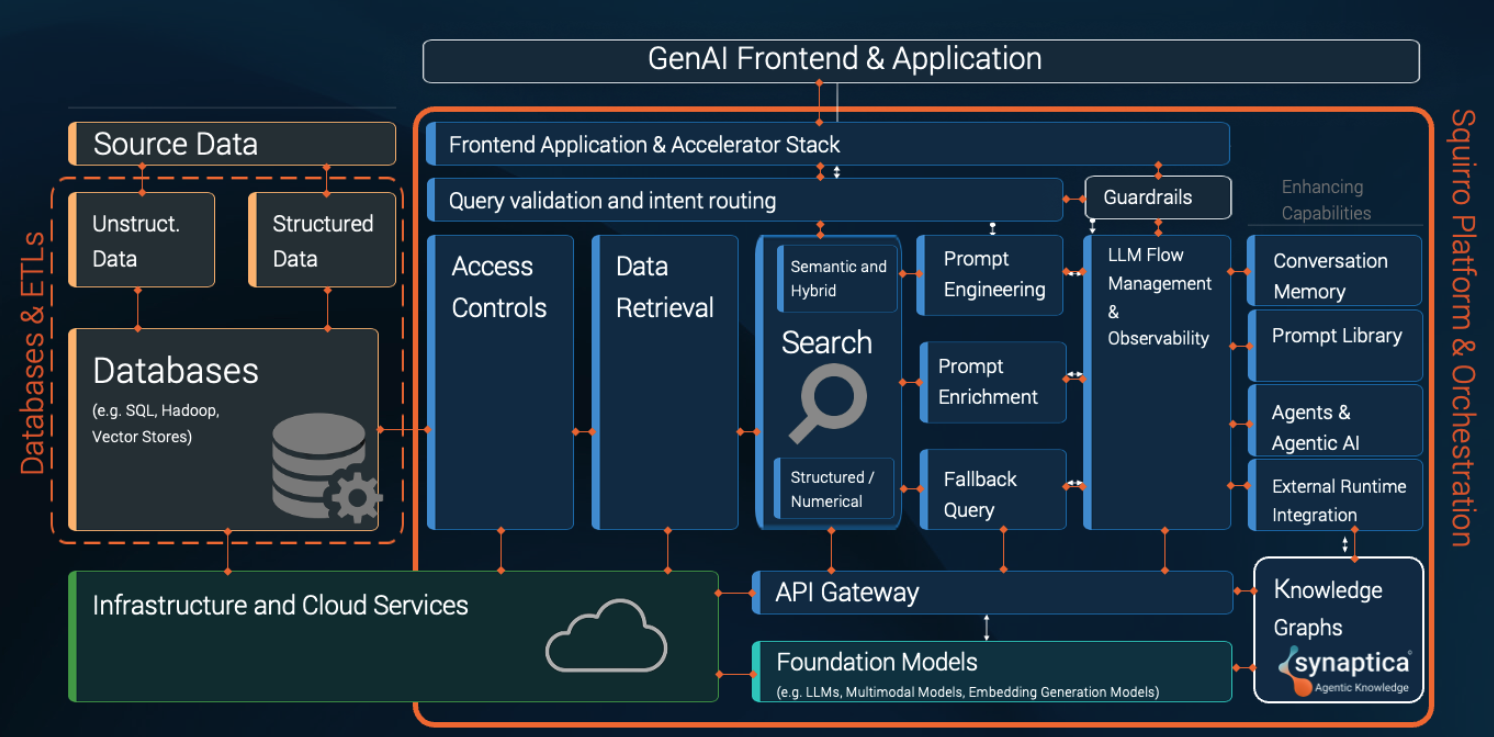

This led to what the better platforms now call a "unified context layer." Instead of treating RAG as a simple search-and-stuff operation, you build a sophisticated layer between your data and your models that handles retrieval strategy, document enrichment, context formatting, and quality validation.

The Context Layer Revolution: Where RAG Becomes Intelligent

Modern enterprise AI platforms have stopped thinking of RAG as a simple pipeline. Instead, they've built what could be called "intelligent context layers." This is the infrastructure that sits between your data and your AI models, making sure the right information reaches the model in the right format at exactly the right time.

What does a real context layer actually do? It's much more sophisticated than basic search.

Smart Retrieval Strategy: Instead of treating all documents equally, the context layer understands document types, relationships, and importance. An engineering spec has different retrieval logic than a regulatory document. A recent update to a standard supersedes the previous version. The context layer handles these relationships automatically. It doesn't just find relevant documents. It finds the right documents in the right order.

Semantic Understanding of Your Domain: This is where enterprise AI differs fundamentally from consumer AI. The context layer learns your domain-specific terminology. In semiconductor manufacturing, terms have precise meanings. The same word used in finance or aerospace might mean something completely different. A context layer trained on your industry understands these nuances. It knows which document contains the authoritative definition. It can disambiguate between similar concepts.

Prompt Optimization: Once you've retrieved documents, how you present them matters enormously. Simply concatenating documents into a prompt is inefficient and often produces poor results. Advanced context layers format retrieved information to highlight the most relevant passages, structure complex information hierarchically, and present context in a way that maximizes model performance.

Hallucination Detection and Prevention: Better context layers actually monitor model outputs against the source documents. If the model generates information that contradicts its context, systems can flag it, regenerate, or route it for human review. You're not just hoping the model stays grounded. You're actively enforcing it.

Cross-Document Reasoning: Complex workflows often require information from multiple documents. A context layer can retrieve related documents together, helping the model understand relationships and dependencies. It handles the case where information in Document A depends on information in Document B, but the model never would have thought to look for Document B.
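To make the "recent update supersedes the previous version" rule from the retrieval strategy above concrete, here is a minimal sketch. It assumes every retrieved document carries a standard identifier and a numeric revision; the `SpecVersion` type and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpecVersion:
    standard: str   # identifier of the standard (hypothetical example ids below)
    revision: int   # higher means newer
    text: str


def latest_revisions(candidates: list[SpecVersion]) -> list[SpecVersion]:
    """Keep only the newest revision of each standard among retrieved hits."""
    newest: dict[str, SpecVersion] = {}
    for doc in candidates:
        current = newest.get(doc.standard)
        if current is None or doc.revision > current.revision:
            newest[doc.standard] = doc
    return list(newest.values())


hits = [
    SpecVersion("STD-100", 1, "Clearance must be at least 2 mm."),
    SpecVersion("STD-200", 3, "Use lead-free solder."),
    SpecVersion("STD-100", 2, "Clearance must be at least 3 mm."),
]
current_hits = latest_revisions(hits)
```

Filtering like this before prompt construction keeps the model from seeing two conflicting clearance requirements and having to guess which one is in force.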

The technical challenge here is significant. You're essentially building a specialized retrieval and formatting system that understands your specific business domain. This is why generic RAG platforms often underperform for critical enterprise workflows. They're optimized for general-purpose Q&A, not domain-specific reasoning.
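As one narrow illustration of checking outputs against sources, the sketch below flags any number in an answer that appears in none of the retrieved documents. Real grounding checks go well beyond this (paraphrase, units, negation), so treat it as a toy example of the idea; the function names are invented here.

```python
import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(answer: str, sources: list[str]) -> list[str]:
    """Return numeric values in the answer that appear in none of the sources."""
    source_numbers = {n for text in sources for n in NUMBER.findall(text)}
    return [n for n in NUMBER.findall(answer) if n not in source_numbers]


def review_action(answer: str, sources: list[str]) -> str:
    """Decide what to do with an answer: accept it or send it to a human."""
    return "human_review" if unsupported_numbers(answer, sources) else "accept"


sources = ["The torque limit for the M8 fastener is 25 Nm."]
grounded = review_action("The M8 torque limit is 25 Nm.", sources)
ungrounded = review_action("The M8 torque limit is 30 Nm.", sources)
```

Here the first answer is accepted and the second is routed to review, because "30" never appears in the source text.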

Companies that have built sophisticated context layers report dramatic improvements in accuracy and reliability. Some report a 90% reduction in hallucinations compared to basic RAG approaches. Others report a 75% reduction in retrieval errors. These improvements aren't theoretical. They directly impact whether your AI system is trustworthy enough for production workflows.

But here's where things get interesting: a perfect context layer still doesn't solve the enterprise AI problem completely. Even if your model has perfect information and never hallucinates, it's still just answering questions. Enterprise workflows need more than Q&A. They need orchestration.

Implementing orchestration can lead to significant net annual benefits, with estimated savings of

AI Agent Orchestration: Automating Multi-Step Workflows

Real enterprise processes aren't single-step operations. They're workflows. Design review. Compliance checking. Supplier qualification. Supply chain optimization. Incident investigation. Each of these involves multiple steps, branching logic, and different AI capabilities at different points.

Consider root cause analysis in manufacturing. Step one: collect data from equipment sensors, maintenance logs, and incident reports. Step two: analyze this data for patterns and anomalies. Step three: cross-reference findings against known failure modes in technical documentation. Step four: identify potential root causes. Step five: estimate likelihood of each cause based on historical data. Step six: recommend next steps for verification. Step seven: document findings and route for engineer review.

Each step has different requirements. Steps one and five are largely deterministic—you're pulling data and doing calculations. Steps two and four require AI reasoning. Step three requires domain knowledge retrieval. Step six needs to consider risk and resource constraints.

AI agent orchestration means building a system that can coordinate these different capabilities across multiple steps. Instead of asking a single AI model to handle everything (which it will do poorly), orchestration lets you use the right tool at each stage.

This matters because it enables what we might call "hybrid workflows." For high-stakes steps, you use strict deterministic rules. Data validation? Run a deterministic check. Compliance verification? Use explicit rules. Financial transaction? Follow regulatory requirements precisely. No AI reasoning, no hallucinations, no uncertainty.

For exploratory steps where you actually need intelligence and reasoning? That's where you deploy AI. Analyzing patterns in data. Generating hypotheses about what might be wrong. Exploring possibilities. Recommending strategies.

By mixing deterministic and intelligent steps, you eliminate unnecessary risk while preserving the capability you actually need AI for.
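A hybrid workflow can be modeled as an ordered list of steps, each labeled with its kind. The sketch below is an assumption-laden toy: the `Step` type, the sensor values, and the 90 C limit are all invented, and the AI step is a stub where a real system would call a model.

```python
from dataclasses import dataclass
from typing import Callable

State = dict[str, object]


@dataclass
class Step:
    name: str
    kind: str                      # "deterministic" or "ai"
    run: Callable[[State], State]


def run_workflow(steps: list[Step], state: State) -> tuple[State, list[str]]:
    """Run steps in order and record which kind of step produced each change."""
    trail: list[str] = []
    for step in steps:
        state = {**state, **step.run(state)}
        trail.append(f"{step.name} ({step.kind})")
    return state, trail


def collect_readings(state: State) -> State:
    # Deterministic: in practice this would query sensors or a database.
    return {"temps_c": [71, 72, 96, 73]}


def flag_out_of_range(state: State) -> State:
    # Deterministic rule: the 90 C limit is an invented example value.
    return {"anomalies": [t for t in state["temps_c"] if t > 90]}


def hypothesize_cause(state: State) -> State:
    # AI step: a real system would call a model here; this stub stands in.
    guess = "possible cooling fault" if state["anomalies"] else "no fault suspected"
    return {"hypothesis": guess}


steps = [
    Step("collect", "deterministic", collect_readings),
    Step("validate", "deterministic", flag_out_of_range),
    Step("hypothesize", "ai", hypothesize_cause),
]
final_state, trail = run_workflow(steps, {})
```

Labeling each step's kind in the trail makes it easy to see, after the fact, which parts of a result came from fixed rules and which came from model reasoning.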

Orchestration also enables branching logic. What happens if a validation check fails? What if the model is uncertain? High-confidence paths versus low-confidence paths. Escalation to humans when needed. Retry logic. Fallback mechanisms. These aren't theoretical—they're how you make AI systems reliable enough to actually deploy.

Consider a compliance workflow. You retrieve relevant regulatory documents. You ask an AI to analyze proposed changes against these regulations. But what if the model's confidence is low? What if there's ambiguity? Orchestration lets you branch: high-confidence approval goes straight through. Low-confidence flags for human review. This prevents both unnecessary bottlenecks and catastrophic failures.
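The branch described above reduces to a small routing function. This is a sketch under stated assumptions: the model is assumed to return a confidence between 0 and 1, the 0.9 threshold is an arbitrary example, and the branch names are hypothetical.

```python
def route_decision(compliant: bool, confidence: float,
                   auto_threshold: float = 0.9) -> str:
    """Pick the next workflow branch for a compliance finding."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < auto_threshold:
        return "human_review"      # ambiguous either way: a person decides
    return "auto_approve" if compliant else "reject_with_findings"


high = route_decision(compliant=True, confidence=0.95)
low = route_decision(compliant=True, confidence=0.62)
```

The threshold is the knob that trades reviewer workload against risk, so in practice it would be set per workflow rather than hard-coded.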

Building Versus Buying: When to Use Orchestration Platforms

There's a fundamental decision every enterprise faces: build a custom AI orchestration system or use an existing platform?

Building in-house offers theoretical advantages. You control everything. You can optimize for your specific workflows. But the reality is brutal: orchestration platforms are genuinely complex. You need to handle retrieval, model integration, workflow management, error handling, audit logging, monitoring, and dozens of other concerns. A small team can spend a year building something that a mature platform provides out of the box.

Moreover, orchestration platforms have learned from thousands of deployments. They know which workflows fail. They know how to handle edge cases. They include industry-specific templates that accelerate implementation. They have monitoring and debugging tools specifically designed for AI workflows.

There are plausible-sounding arguments for building custom systems, but they usually don't hold up. Yes, your workflow is unique. But the orchestration challenges you face are solved problems. Use a platform. Focus your engineering resources on the parts of your system that are actually differentiated.

When evaluating orchestration platforms, look for these capabilities:

Pre-Built Templates for Your Industry: Aerospace companies have unique challenges. So do semiconductor manufacturers. So do financial services firms. Platforms that have built templates for your industry typically work 10x better than generic platforms. They've already solved the domain-specific problems you'll face.

Visual Workflow Builder: If your team needs to understand and modify workflows, a visual interface is critical. Code-based systems are powerful but require engineers. Visual builders let domain experts, product managers, and business analysts participate in workflow design.

Deterministic and Intelligent Step Support: The platform should make it easy to mix rules-based logic with AI reasoning. If everything has to be AI or everything has to be rules, you've lost the benefits of hybrid workflows.

Robust Error Handling: How does the platform handle AI uncertainty? What about validation failures? Retrieval problems? Upstream data issues? Good platforms have sophisticated error handling built in.

Audit and Observability: Every decision should be traceable. You should be able to see what documents influenced a decision, what the model's reasoning was, what data flowed through each step. This is non-negotiable for production systems.

Fine-Tuning and Continuous Improvement: Does the platform let you improve performance based on real-world results? Can you feed back information about which decisions were correct and which weren't? Platforms with learning loops get better over time.

Semantic understanding is rated as the most important feature of intelligent context layers, followed by smart retrieval and prompt optimization. Estimated data.

Deterministic Hybrid Workflows: Eliminating Uncertainty Where It Matters

One of the most powerful patterns emerging in enterprise AI is the deliberately hybrid workflow. This is where you acknowledge that some steps need intelligence and some steps need certainty.

Consider a loan approval workflow. Step one: collect applicant data and pull credit records. Deterministic. Step two: validate that all required documents are present and meet format specifications. Deterministic. Step three: assess creditworthiness based on historical data and models. This could use AI, but compliance requires explainability, so you might actually use traditional risk models or hybrid systems. Step four: check against regulatory requirements. Deterministic. Step five: route to approval or denial. Deterministic if the rules are clear. Escalate to human review if ambiguous.

Notice that only one of these steps actually benefits from AI reasoning, and even that step might be better served by traditional explainable models for regulatory reasons.

This is where many enterprise AI initiatives fail. They replace working deterministic systems with AI systems that sound nicer but are less reliable. This is backwards. You should only use AI where it actually improves outcomes compared to the alternative.

The companies getting impressive results are doing something counter-intuitive: they're using less AI, not more. They're identifying exactly the steps where AI reasoning helps and using it only there. Everywhere else, they're using the best tool for that specific step, which is often a simple rule or database query.

This approach also has significant security and reliability benefits. Deterministic steps are verifiable. You can test them. You can prove they work correctly. You can point to the logic. AI steps are inherently probabilistic. They might fail sometimes. They might make strange decisions occasionally. By limiting AI to where it actually helps, you improve overall system reliability while still getting AI's benefits where they matter.

Measuring the benefit of hybrid workflows is also easier. You have clear control groups. You can see that the deterministic validation steps catch 99.2% of errors. You can see that the AI step improves decision quality by 23% compared to the previous approach. You can calculate ROI. You can explain the system to stakeholders and regulators.

Citation and Auditability: The Foundation of Enterprise Trust

Here's something that distinguishes enterprise AI from consumer AI: every decision needs to be explainable and auditable. If an AI agent recommends rejecting a supplier, someone needs to be able to ask why. If an AI system approves a design change, auditors need to be able to trace the decision.

This is why citation is such a big deal in production AI systems. It's not just nice to have. It's a requirement.

Production-grade systems capture at the sentence level what information influenced each decision. You're not just tracking "documents 3, 5, and 7 were used." You're tracking "this specific sentence from document 5 influenced this specific part of the decision." Sometimes even to the word level for critical applications.

This serves multiple purposes. First, it enables debugging. When a decision was wrong, you can see exactly which source information was problematic. Was the information outdated? Was it retrieved incorrectly? Was the model's interpretation wrong? Citation helps you identify the failure mode.

Second, it enables trust. When stakeholders can see exactly where information came from, they're more likely to trust the system. If you can't explain your reasoning, they won't trust it, no matter how good the results are.

Third, it enables compliance. Regulations increasingly require explainability. You need to show that decisions were based on accurate information and legitimate reasoning, not bias or arbitrary factors.

Building comprehensive citation into AI systems is non-trivial. It requires training models to include citations. It requires modifying retrieval systems to track which retrieved chunks influenced which outputs. It requires building audit logging at multiple levels.
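One possible shape for sentence-level citation data is sketched below. The `Citation` and `Claim` types, their fields, and the JSON layout are assumptions for illustration; actual platforms will structure their audit logs differently.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Citation:
    doc_id: str
    sentence_index: int   # position of the supporting sentence in the document
    quote: str


@dataclass(frozen=True)
class Claim:
    text: str
    citations: tuple[Citation, ...]


def uncited_claims(claims: list[Claim]) -> list[str]:
    """Return the text of any claim that has no supporting citation."""
    return [claim.text for claim in claims if not claim.citations]


def audit_record(decision: str, claims: list[Claim]) -> str:
    """Serialize a decision and its evidence as one JSON audit-log line."""
    return json.dumps(
        {"decision": decision, "claims": [asdict(c) for c in claims]},
        sort_keys=True,
    )


claims = [
    Claim(
        "Supplier lacks ISO 9001 certification.",
        (Citation("audit-5", 12, "No valid ISO 9001 certificate was provided."),),
    ),
    Claim("Supplier is likely to miss deadlines.", ()),
]
record = audit_record("reject", claims)
missing = uncited_claims(claims)
```

A check like `uncited_claims` can run as a deterministic gate: if any claim in a decision lacks a source, the decision is held back instead of being logged as final.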

But it's also the difference between an impressive demo and a system that can actually be deployed in production. If you can't audit and explain decisions, you can't deploy the system in any regulated industry or any situation where stakeholders need accountability.

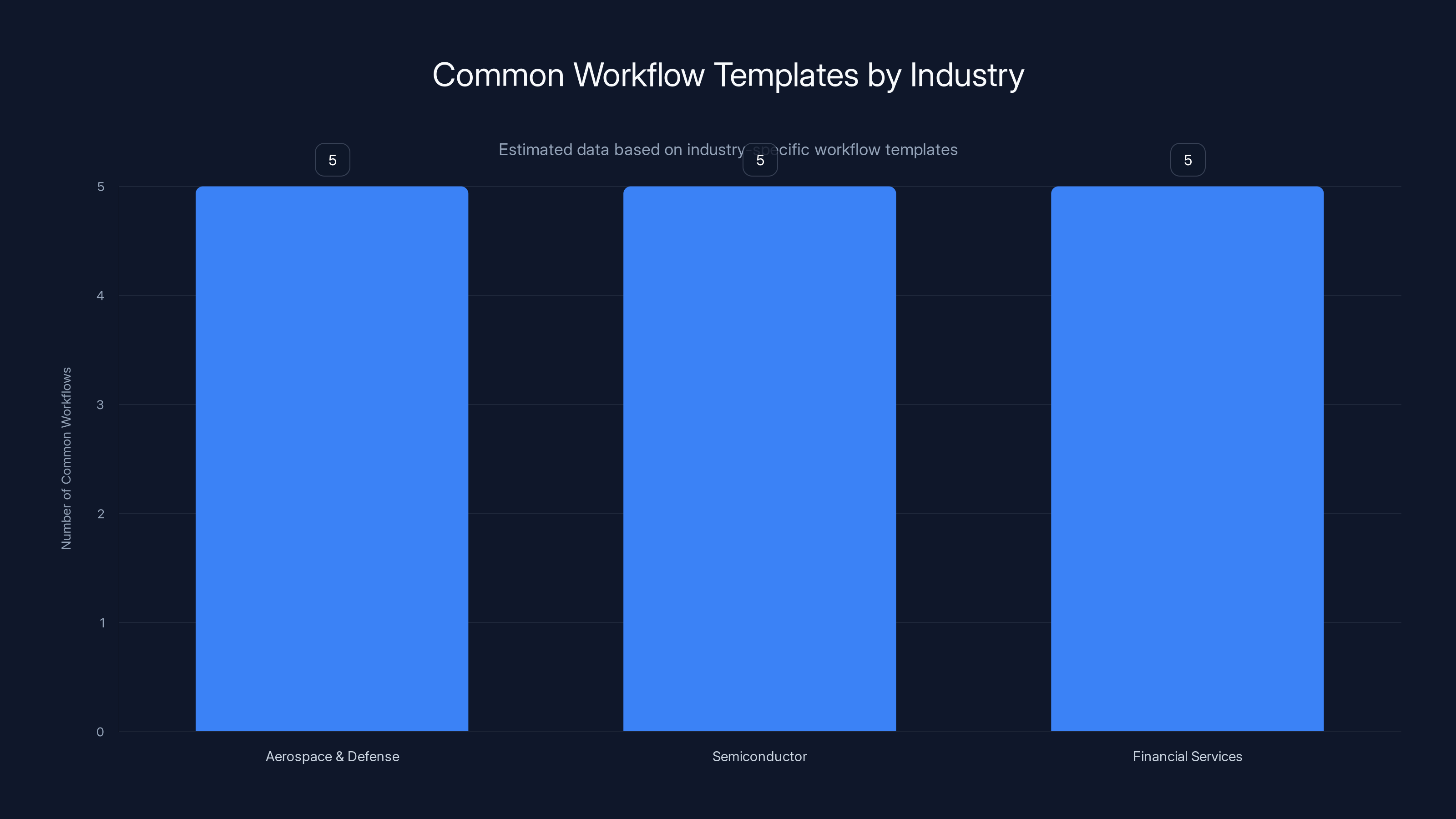

Each industry typically has around five common workflow templates, highlighting the importance of industry-specific platforms for efficient deployment. Estimated data based on typical workflows.

Industry Templates: Accelerating Deployment for Common Workflows

One of the most valuable patterns emerging in production AI systems is pre-built industry templates. These are workflow patterns specifically designed for particular industries, based on thousands of hours of experience with companies in that space.

In aerospace and defense, common workflows include: design change management, compliance verification against specifications, supply chain risk assessment, component failure root cause analysis, and regulatory documentation management.

In semiconductor manufacturing: process deviation investigation, equipment maintenance planning, yield analysis and optimization, supplier quality assessment, and design review process automation.

In financial services: regulatory compliance checking, fraud detection, anti-money laundering reviews, customer onboarding verification, and claims processing.

Each of these workflows has specific requirements. Design changes in aerospace require traceability to regulatory documents. They need to verify compliance with mil-spec standards. They need to check against historical issues with similar designs. They need to route for appropriate approvals.

A generic orchestration platform could build this workflow, but it would take weeks. An aerospace-specific platform has it pre-built, tested, and refined based on dozens of customer deployments. You configure it for your specific requirements, but the core logic is proven.

Beyond the workflow templates themselves, industry-specific platforms typically include:

Domain-Specific Training Data: The underlying models might be fine-tuned on documents and language from your industry. This dramatically improves accuracy for domain-specific concepts.

Regulatory Knowledge: Built-in understanding of relevant regulations, standards, and compliance requirements. The system knows what compliance verification means in your industry.

Integration Templates: Pre-built integrations with systems commonly used in your industry. ERP systems. CAD software. Manufacturing execution systems. You don't have to build these integrations from scratch.

Benchmark Performance Data: Data on how similar workflows perform in other companies using the same industry template. This helps you set realistic expectations and identify optimization opportunities.

The time and cost savings from using industry templates are substantial. Instead of building workflows from scratch (weeks to months), you configure a template (days to weeks). Instead of training models on your data (expensive and time-consuming), you use industry-trained models and fine-tune on your specific documents (much faster).

One-Click Optimization: Continuous Improvement Through Feedback

A sophisticated enterprise AI system doesn't stay static. It gets better over time. How? Through feedback loops.

Imagine you've deployed an AI agent to analyze equipment failures and recommend next steps. Week one, it makes decisions. Some are good. Some are mediocre. A human reviews the recommendations. Maybe 85% are actually useful.

In a static system, that's as good as it gets. The system doesn't learn. It won't improve.

In a learning system, you capture the feedback. The model learns which kinds of failures it handles well and which it doesn't. The retrieval system learns which documents tend to be actually relevant. The prompt engineering improves based on which phrasings consistently lead to better decisions.

The challenge is that AI model retraining is expensive and complex. You can't just ask an engineer to retrain the model. You need ML expertise. You need compute resources. You need careful validation.

The best platforms abstract this away with "one-click optimization." You flag a few decisions that were particularly good or particularly bad. The system automatically processes these, identifies patterns, and suggests improvements. In some cases, it can automatically retrain and deploy improvements.

This doesn't mean the system improves from a single feedback point. It means the system is continuously capturing data about what works and what doesn't. Over weeks, it identifies systematic improvements. Performance improves.

The measurable impact of continuous improvement can be significant. A system might start at 75% accuracy and reach 88% accuracy over three months through accumulated feedback. The person-hours required are minimal—mostly just reviewing the system's decisions (which you'd do anyway) plus a few configuration changes.
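The simplest version of such a feedback loop just aggregates reviewer verdicts and shows where the system is weakest. The sketch below assumes each reviewed recommendation is logged as a (failure category, was it useful) pair; the category names and the 0.8 floor are made up for the example.

```python
from collections import defaultdict


def accuracy_by_category(feedback: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of recommendations reviewers marked useful, per category."""
    totals: dict[str, int] = defaultdict(int)
    useful: dict[str, int] = defaultdict(int)
    for category, was_useful in feedback:
        totals[category] += 1
        useful[category] += int(was_useful)
    return {category: useful[category] / totals[category] for category in totals}


def weakest_categories(feedback: list[tuple[str, bool]], floor: float = 0.8) -> list[str]:
    """List categories whose accuracy falls below the floor, worst first."""
    scores = accuracy_by_category(feedback)
    return sorted((c for c in scores if scores[c] < floor), key=scores.get)


feedback = [
    ("bearing", True), ("bearing", True),
    ("electrical", False), ("electrical", True),
    ("coolant", False),
]
scores = accuracy_by_category(feedback)
needs_work = weakest_categories(feedback)
```

A report like this costs almost nothing to produce once reviews are logged, and it tells you which retrieval sources or prompts to revisit first.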

This is where orchestration platforms that include feedback loops actually have a huge advantage over custom-built systems. Building feedback mechanisms into custom systems is a significant undertaking. Using a platform that has it built in is vastly simpler.

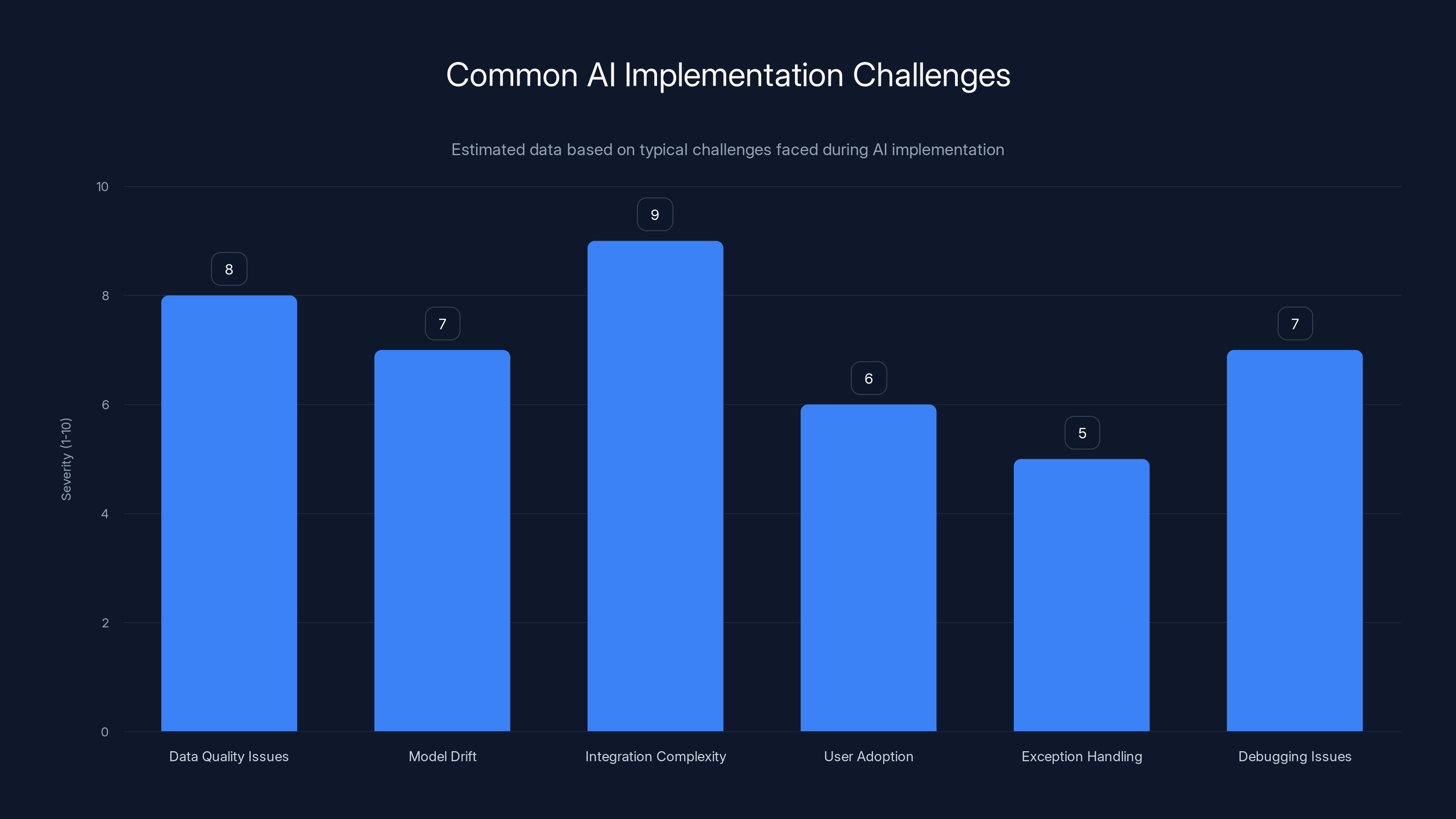

Integration complexity and data quality issues are the most severe challenges in AI implementation, often requiring significant resources to address. Estimated data.

Handling Uncertainty and Confidence Scoring

AI systems are probabilistic. They don't always output decisions with the same confidence. Sometimes the model is very confident. Sometimes it's uncertain. In production systems, this matters enormously.

A sophisticated orchestration system tracks confidence alongside decisions. When the AI recommends something with 95% confidence, that's meaningful. When it recommends the same thing with 62% confidence, that's very different.

Production systems should surface this uncertainty to users and downstream processes. For low-confidence decisions, the workflow can branch differently. Maybe it escalates to human review. Maybe it requires additional information retrieval. Maybe it routes to a second AI system to validate. Maybe it simply flags the decision as "proceed with caution."

Confidence scoring also enables cost-benefit optimization. Maybe you're willing to accept high-confidence decisions from the AI without review, but low-confidence decisions require human sign-off. This lets you automate 70% of decisions (the high-confidence ones) while maintaining oversight on the tricky cases.

Measuring and improving confidence scoring is an ongoing challenge. Some systems are miscalibrated—they're overconfident about uncertain things and underconfident about areas where they're actually reliable. Good platforms continuously calibrate confidence scoring against actual outcomes.
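Calibration can be checked by grouping past decisions by stated confidence and comparing that with how often they turned out correct. The sketch below computes a binned calibration table and the expected calibration error, a standard summary of the gap; the five-bin default and the input format of (confidence, was correct) pairs are assumptions.

```python
def calibration_table(predictions: list[tuple[float, bool]],
                      bins: int = 5) -> list[tuple[float, float, int]]:
    """Group predictions by confidence and compare stated vs. observed accuracy.

    Returns (mean confidence, observed accuracy, count) for each non-empty bin.
    """
    buckets: list[list[tuple[float, bool]]] = [[] for _ in range(bins)]
    for confidence, correct in predictions:
        index = min(int(confidence * bins), bins - 1)  # 1.0 goes in the top bin
        buckets[index].append((confidence, correct))
    table = []
    for bucket in buckets:
        if bucket:
            mean_conf = sum(c for c, _ in bucket) / len(bucket)
            accuracy = sum(ok for _, ok in bucket) / len(bucket)
            table.append((mean_conf, accuracy, len(bucket)))
    return table


def expected_calibration_error(predictions: list[tuple[float, bool]],
                               bins: int = 5) -> float:
    """Count-weighted average gap between confidence and accuracy across bins."""
    total = len(predictions)
    return sum(abs(conf - acc) * n / total
               for conf, acc, n in calibration_table(predictions, bins))


# An overconfident system: it claims 95% but is right only half the time.
history = [(0.95, True), (0.95, True), (0.95, False), (0.95, False)]
gap = expected_calibration_error(history)
```

A large gap in the high-confidence bins is the dangerous case, since those are the decisions most likely to be automated without review.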

Multi-Model Orchestration: Combining Different AI Capabilities

Here's something interesting: the best enterprise AI systems don't rely on a single AI model. They use multiple models, each optimized for different tasks.

You might use one model specialized for document understanding and extraction. Another for reasoning about complex scenarios. Another for generating explanations. Another for detecting when something is potentially wrong or needs human review.

Orchestration systems enable multi-model pipelines. Retrieve documents with Model A (the document understanding specialist). Extract key information with Model B. Pass the extracted information to Model C for reasoning. Have Model D generate an explanation. Have Model E review the explanation for potential issues. Different models, different strengths, combined in a pipeline.

This approach has multiple benefits. First, you can use models specifically fine-tuned for each task. A model trained specifically on document extraction will outperform a general model trying to do everything. Second, you get some error redundancy. If one model makes a mistake, the next model might catch it. Third, you can mix open-source models, proprietary models, custom fine-tuned models, and external APIs in the same workflow.

The trade-off is complexity. Multi-model orchestration requires more sophisticated management. You need to handle the case where one model fails or produces unexpected output. You need to maintain multiple models and their dependencies. You need to test the entire pipeline, not just individual models.
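A multi-model pipeline, including the failure handling mentioned above, can be sketched as a chain of named stages. Everything here is illustrative: the stage functions are plain-Python stubs standing in for separate models, and `StageError` is a name invented for this example.

```python
from typing import Callable

Stage = tuple[str, Callable[[str], str]]


class StageError(RuntimeError):
    """Raised when a stage fails or returns unusable output."""


def run_pipeline(stages: list[Stage], payload: str) -> str:
    """Pass the payload through each stage, stopping at the first failure."""
    for name, stage in stages:
        try:
            payload = stage(payload)
        except Exception as exc:
            raise StageError(f"stage '{name}' failed: {exc}") from exc
        if not payload.strip():
            raise StageError(f"stage '{name}' returned empty output")
    return payload


# Stubs standing in for separate models; real stages would call model APIs.
def extract(text: str) -> str:
    return text.split(":", 1)[1].strip()


def reason(facts: str) -> str:
    return f"Finding: {facts} exceeds the limit."


def explain(finding: str) -> str:
    return finding + " Recommend inspection."


stages = [("extract", extract), ("reason", reason), ("explain", explain)]
result = run_pipeline(stages, "LOG: pump pressure 9 bar")
```

Naming the failing stage in the error keeps debugging tractable as the pipeline grows, since the whole chain has to be tested together and not only model by model.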

But for critical workflows, the benefits often outweigh the complexity. Mission-critical systems in aerospace and defense are increasingly using multi-model approaches because the additional reliability is worth the engineering cost.

Real-World Performance: When AI Agents Dramatically Reduce Time

Let's talk about actual numbers because the theoretical benefits only matter if they actually happen in practice.

A semiconductor company was spending eight hours on average per incident doing root cause analysis. An engineer would collect sensor data, review maintenance logs, search through technical documentation, analyze failure modes, check against historical incidents, and document findings. Eight hours of skilled engineering time per incident.

They deployed an AI orchestration system with a root cause analysis template. The system automatically retrieved sensor data, analyzed equipment logs, pulled relevant technical specifications, searched for historical patterns, and generated a preliminary analysis. An engineer reviewed the AI's analysis, made adjustments as needed, and documented final findings.

Average time per incident dropped to about 25 minutes: 20 minutes of engineer review on top of maybe 5 minutes of automated processing. Against the original eight hours (480 minutes), that is a reduction of roughly 95%.

But here's the important part: this wasn't magic. The AI didn't become superintelligent. What happened was that the AI handled the tedious parts perfectly. It never forgot to check a log. It never missed a relevant specification. It never skipped a historical comparison. It did the boring work faster and more reliably than humans ever could.

The engineer's job changed from "spend eight hours analyzing" to "spend twenty minutes reviewing and adjusting the AI's analysis." This is the actual value proposition of enterprise AI in practice: taking over the tedious work that humans find hard and time-consuming.
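One way to picture the root cause analysis template described above is as an ordered checklist of retrieval steps that always ends in a draft awaiting engineer review. The sketch below is illustrative only: the step functions, their return values, and the `AnalysisDraft` class are hypothetical stand-ins for real data sources.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AnalysisDraft:
    incident_id: str
    evidence: dict[str, list[str]] = field(default_factory=dict)
    status: str = "pending_engineer_review"  # the draft is never auto-finalized


# Hypothetical stand-ins for a sensor historian, maintenance system,
# document store, and incident database.
def fetch_sensor_data(incident_id: str) -> list[str]:
    return ["chamber pressure drift before fault"]


def fetch_maintenance_logs(incident_id: str) -> list[str]:
    return ["seal replaced 40 days ago"]


def fetch_specifications(incident_id: str) -> list[str]:
    return ["spec section on seal service interval"]


def find_similar_incidents(incident_id: str) -> list[str]:
    return ["earlier incident with same fault code"]


# The template is an ordered list, so no step can be silently skipped.
RCA_TEMPLATE: list[tuple[str, Callable[[str], list[str]]]] = [
    ("sensor_data", fetch_sensor_data),
    ("maintenance_logs", fetch_maintenance_logs),
    ("specifications", fetch_specifications),
    ("similar_incidents", find_similar_incidents),
]


def run_template(template, incident_id: str) -> AnalysisDraft:
    draft = AnalysisDraft(incident_id)
    for name, step in template:
        draft.evidence[name] = step(incident_id)
    return draft


def engineer_sign_off(draft: AnalysisDraft, engineer: str) -> AnalysisDraft:
    draft.status = f"approved_by:{engineer}"
    return draft


draft = run_template(RCA_TEMPLATE, "INC-0001")
print(list(draft.evidence), draft.status)
```

The design point is that completeness comes from the template rather than from anyone's memory, while the final judgment stays with the engineer.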

Other real-world examples:

Compliance checking: An aerospace company with strict mil-spec compliance requirements. Analyzing designs for specification compliance used to take two engineers three days. Now: 25 minutes with the AI system and an engineer review. That is roughly a 99% reduction in engineer time, because the AI reliably handles searching through thousands of pages of specifications for relevant constraints.

Supplier assessment: A manufacturing company evaluating new suppliers against quality, capability, and compliance requirements. Used to require two days of document review and research. Now: 45 minutes with AI-assisted analysis. The tedious part (reading through all the documents, checking against requirements) became fast and reliable.

Design change impact analysis: Aerospace. When you propose a change to a component, you need to understand all the systems that might be affected. This requires searching through interconnected technical documents. Used to take a senior engineer a full day. Now: 2 hours with AI analysis. The AI is perfect at cross-referencing interconnected specifications. Humans are slow at this kind of tedious searching.

The pattern is clear: AI provides massive value when the work involves information retrieval, document analysis, and pattern matching across large bodies of information. It's less valuable for creative design work or novel problem-solving that requires truly new thinking.
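The reductions in these examples can be checked with quick arithmetic. The sketch below assumes an eight-hour working day, which the examples do not state, and treats "two engineers three days" as six engineer-days.

```python
HOURS_PER_DAY = 8  # assumption: length of a working day is not given in the text


def reduction_pct(before_minutes: float, after_minutes: float) -> float:
    """Percentage of time saved, rounded to one decimal place."""
    return round(100 * (1 - after_minutes / before_minutes), 1)


cases = {
    "root cause analysis (review only)": (8 * 60, 20),
    "root cause analysis (review + processing)": (8 * 60, 25),
    "compliance check (2 engineers x 3 days)": (2 * 3 * HOURS_PER_DAY * 60, 25),
    "supplier assessment (2 days)": (2 * HOURS_PER_DAY * 60, 45),
    "design change impact (1 day)": (HOURS_PER_DAY * 60, 120),
}

results = {name: reduction_pct(before, after) for name, (before, after) in cases.items()}
for name, pct in results.items():
    print(f"{name}: {pct}%")
```

Under these assumptions the root cause analysis and compliance figures land within about a percentage point of the numbers quoted above. The design change case works out to a 75% reduction, which is smaller but still substantial.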

Security and Data Governance in AI Orchestration

When you're routing company data through AI systems, security and governance become critical. You can't just hand all your engineering specifications to a cloud API.

Production orchestration platforms need to address several security concerns:

Data Retention and Privacy: Does the platform store your data? For how long? Is it used to train models? Some platforms use your data to improve their general models. This might violate your data governance requirements. Look for platforms that explicitly don't use your data for training or model improvement.

Encryption in Transit and at Rest: Your engineering specifications, manufacturing data, and other sensitive information need to be encrypted. This sounds basic, but verify it. Which encryption standards are used? Who holds the keys?

Access Control and Audit Logging: You need fine-grained control over who can access what. For example, engineer Alice can access mechanical engineering specs but not electrical specs. You also need audit logs showing who accessed what, and when. Regulatory compliance often requires this.

Model Transparency: You should know exactly which models are processing your data and how they work. Some platforms use only open-source models. Others use proprietary models. Some are a mix. Be clear about what you're comfortable with.

Data Residency: Some regulated industries require data to stay in specific geographic regions. Some require on-premises deployment. Verify that your orchestration platform can meet these requirements.

The good news is that mature orchestration platforms take these concerns seriously. They offer privacy-focused deployment options, encryption throughout the pipeline, fine-grained access control, and comprehensive audit logging. If a platform downplays these concerns, that's a red flag.
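To make the access control and audit logging requirements concrete, here is a minimal sketch. The permission table, collection names, and `fetch_document` function are hypothetical. A production system would rely on the platform's own identity and logging services rather than an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical per-user permissions on document collections.
PERMISSIONS = {
    "alice": {"mechanical_specs"},
    "bob": {"mechanical_specs", "electrical_specs"},
}

audit_log: list[dict] = []


def can_access(user: str, collection: str) -> bool:
    return collection in PERMISSIONS.get(user, set())


def fetch_document(user: str, collection: str, doc_id: str) -> str:
    allowed = can_access(user, collection)
    # Record every attempt, including denied ones: who, what, and when.
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "collection": collection,
        "doc_id": doc_id,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{user} may not read {collection}")
    return f"<contents of {collection}/{doc_id}>"


print(fetch_document("alice", "mechanical_specs", "M-100"))
try:
    fetch_document("alice", "electrical_specs", "E-200")
except PermissionError as exc:
    print("denied:", exc)
```

The permission check runs before retrieval, so a restricted document never reaches the model's context in the first place. Denied attempts are logged alongside successful ones.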

Cost-Benefit Analysis: When Orchestration Pays for Itself

Enterprise AI projects often struggle with ROI calculations. How do you measure the value of slightly better decision-making? How do you quantify the risk reduction from automating processes?

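The clearest ROI comes from time savings. If you can reduce time spent on a process, that's directly calculable. If a workflow that previously took 8 hours now takes 20 minutes, each incident frees up 7 hours and 40 minutes of engineer time, and the saving per incident is that time multiplied by the engineer's fully loaded hourly cost.

For a company with 500 incidents per year, that's roughly 3,800 engineer-hours recovered annually.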
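The time-savings arithmetic can be sketched as below. The workflow durations and incident count come from the example above. The hourly cost is a hypothetical placeholder, not a figure from this article, so substitute your own fully loaded rate.

```python
MINUTES_BEFORE = 8 * 60     # from the text: 8 hours per incident
MINUTES_AFTER = 20          # from the text: 20-minute engineer review
INCIDENTS_PER_YEAR = 500    # from the text
HOURLY_COST = 100.0         # hypothetical placeholder; use your own loaded rate


def hours_saved_per_year(before_min: float, after_min: float, incidents: int) -> float:
    return (before_min - after_min) * incidents / 60


def annual_savings(hours_saved: float, hourly_cost: float) -> float:
    return hours_saved * hourly_cost


hours = hours_saved_per_year(MINUTES_BEFORE, MINUTES_AFTER, INCIDENTS_PER_YEAR)
savings = annual_savings(hours, HOURLY_COST)
print(f"{hours:,.0f} engineer-hours and {savings:,.0f} in labor cost per year")
```

Keeping the hourly cost as an explicit input makes the base case easy to audit: anyone who disputes the rate can change one number and rerun the calculation.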

Other ROI sources are harder to quantify but still real:

Accuracy and Compliance Improvements: Better decisions reduce risk. Maybe your supplier assessment process previously missed 2% of quality issues. Reducing this to 0.2% prevents defects and warranty costs. Difficult to quantify precisely, but real.

Scalability: With automation, the same team can handle 3x the volume. Instead of hiring more people, you deploy AI. This is an indirect ROI—you avoid costs rather than generating savings.

Speed to Decision: Faster analysis means faster decisions. For time-sensitive issues (supply chain disruptions, quality problems), faster decision-making might prevent significant losses.

Employee Retention: Automating the tedious parts of jobs makes them more interesting. This can improve retention and reduce hiring/training costs.

When building your ROI case, focus on the time savings first. These are concrete and measurable. Add the harder-to-quantify benefits once you have a solid base case.

One important note: be realistic about what's actually being automated. The best results come from automating specific, well-defined workflows that were previously tedious but straightforward. Don't expect AI to automate truly novel problem-solving. That's not what this technology is for.

Implementation Challenges: What Goes Wrong and How to Fix It

Orchestrating AI agents in production sounds straightforward. In practice, companies encounter predictable challenges.

Data Quality Issues: Your AI system is only as good as the data it works with. If your technical specifications are outdated, incomplete, or inconsistent, the AI will make decisions based on bad data. Before deploying, clean your data. Audit document completeness. Establish version control and update processes.

Model Drift: Models trained on data from six months ago might perform poorly on data from today if the underlying processes changed. Production systems need monitoring and periodic retraining. Some platforms handle this automatically. Others require manual intervention.

Integration Complexity: Your AI system needs to integrate with existing systems. Your ERP. Your CAD system. Your manufacturing execution system. Each integration is a potential failure point. Plan for longer integration timelines than you think you'll need.

User Adoption: Even if the system works perfectly, adoption will be poor if your team doesn't trust it or doesn't know how to use it. Training and trust-building require deliberate effort. Start with skeptical users, show them the system working, and build confidence gradually.

Exception Handling: Every process has edge cases. Even if your orchestrated workflow handles 95% of cases cleanly, the remaining 5% will require manual intervention. You need clear exception handling paths.

Debugging Production Issues: When something goes wrong in production, debugging AI workflows is harder than debugging traditional systems. Was it a data quality issue? A retrieval failure? A model hallucination? Your system needs comprehensive logging to enable rapid debugging.

Companies that implement successfully typically follow a pattern: they start with a single pilot workflow that's important but not mission-critical. They get it working. They monitor it for two to three months. They improve based on real data. Only then do they expand to more critical workflows.
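The exception handling and debugging points above can be combined into one small pattern: every step emits a structured log record, and known failure types are routed to a manual review queue instead of crashing the workflow. This is a sketch under assumptions. The exception classes, the queue, and `run_step` are hypothetical names, and only Python's standard `logging` and `json` modules are used.

```python
import json
import logging
from collections import deque

logger = logging.getLogger("orchestrator")


class DataQualityError(Exception):
    """Input document was missing, outdated, or inconsistent (hypothetical)."""


class RetrievalError(Exception):
    """No usable context could be retrieved (hypothetical)."""


manual_review_queue: deque = deque()


def run_step(item_id: str, step_name: str, step, payload):
    record = {"item_id": item_id, "step": step_name}
    try:
        result = step(payload)
    except (DataQualityError, RetrievalError) as exc:
        # Known failure modes go to a human instead of stopping the workflow.
        record.update(outcome="manual_review",
                      failure_type=type(exc).__name__,
                      detail=str(exc))
        manual_review_queue.append(record)
        logger.warning(json.dumps(record))
        return None
    record.update(outcome="ok")
    logger.info(json.dumps(record))
    return result


def failing_retrieval(payload):
    raise RetrievalError("no documents matched part number")


outcome = run_step("INC-0001", "retrieve_specs", failing_retrieval, {"part": "B7"})
print(outcome, len(manual_review_queue))
```

Tagging each record with a failure type is what lets you answer the debugging question quickly: was it the data, the retrieval, or the model?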

The Future: Where Enterprise AI Orchestration Is Heading

The enterprise AI landscape is evolving quickly. Where is it heading?

Tighter Integration with Domain Systems: As platforms mature, you'll see deeper integration with industry-specific systems. Your CAD system won't just export designs. It will have AI orchestration built in. Your ERP system won't just store data. It will orchestrate AI analysis natively.

Automated Workflow Discovery: Instead of manually designing workflows, systems will observe your processes, identify candidates for automation, and suggest workflow designs. This is harder than it sounds, but platforms are starting to get there.

Multi-Agent Collaboration: More sophisticated systems will deploy multiple AI agents that work together, negotiating and validating each other's work. This is particularly valuable for complex workflows requiring diverse expertise.

Regulatory Compliance Built In: Future platforms will have deep knowledge of relevant regulations and will automatically verify that orchestrated workflows meet compliance requirements. This will be particularly valuable in heavily regulated industries.

Self-Improving Systems: Systems will get better at identifying which decisions were correct and which were wrong, adjusting automatically. Today this requires some manual feedback. Future systems will learn more autonomously.

Hybrid Human-AI Teams: Rather than trying to fully automate workflows, future systems will optimize for human-AI collaboration. The AI handles the analysis. The human makes the decision, informed by perfect information from the AI.

The common thread: enterprise AI is moving from generic systems to deeply customized, industry-specific, workflow-aware platforms. This is appropriate. Enterprise AI isn't one-size-fits-all. Domain expertise matters. Industry-specific knowledge matters. Thoughtful workflow design matters.

The companies winning with enterprise AI aren't the ones with the flashiest technology. They're the ones who thought carefully about their actual processes, identified where AI genuinely helps, and deployed focused solutions.

FAQ

What exactly is the difference between RAG and AI orchestration?

Retrieval-augmented generation (RAG) is about providing AI models with access to your company's documents so they can ground responses in your actual data rather than training data. AI orchestration goes further by coordinating multiple steps, multiple AI capabilities, and deterministic rules to complete complex multi-step workflows. RAG solves the context problem. Orchestration solves the workflow automation problem. You typically use both together.
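The distinction can be shown in a few lines. In this toy sketch, `retrieve` and `build_prompt` are the RAG part, and `orchestrate` is the workflow part that adds a deterministic rule around the model call. The documents, the keyword-overlap ranking (a stand-in for real vector search), and `stub_llm` are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical document store.
DOCS = {
    "spec-001": "Torque limit for bracket B7 is 45 Nm",
    "spec-002": "Bracket B7 must use grade 8 fasteners",
    "memo-014": "Cafeteria hours change in March",
}


def retrieve(query: str, k: int = 2) -> list[str]:
    """Toy retrieval: rank documents by words shared with the query."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(text.lower().split())), doc_id)
              for doc_id, text in DOCS.items()]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc_id for score, doc_id in ranked if score > 0][:k]


def build_prompt(query: str, sources: list[str]) -> str:
    """RAG: ground the model in retrieved text instead of its training data."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id in sources)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


def orchestrate(query: str, llm: Callable[[str], str]) -> dict:
    """Orchestration: retrieval, a deterministic rule, then the AI step."""
    sources = retrieve(query)
    if not sources:  # deterministic rule: no grounding means no AI answer
        return {"status": "escalate_to_human", "sources": []}
    answer = llm(build_prompt(query, sources))
    return {"status": "answered", "answer": answer, "sources": sources}


def stub_llm(prompt: str) -> str:
    return "stubbed answer citing [spec-001]"


grounded = orchestrate("torque limit for bracket B7", stub_llm)
ungrounded = orchestrate("quarterly revenue forecast", stub_llm)
print(grounded["status"], grounded["sources"])
print(ungrounded["status"])
```

Retrieval alone only decides what the model sees. The orchestration layer decides whether the model should be called at all and what happens when it should not.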

Why do most enterprise AI pilots fail to reach production?

The primary reasons are governance gaps, insufficient data quality, integration challenges, and lack of trust from end users. Organizations often underestimate how much work is required for production deployment versus a working prototype. The technical challenges (which are solvable) are usually smaller than the organizational challenges (governance, change management, training).

How do I measure whether an AI orchestration system is actually improving our workflows?

Start by measuring time savings for the specific workflows you're automating. If an 8-hour workflow becomes a 20-minute review, that's concrete and measurable. Secondary metrics include accuracy improvements (fewer errors), consistency (the same situation always produces the same decision type), and exception rates (how often the AI needs human intervention). Track these metrics before and after deployment.
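A before-and-after comparison of this kind needs very little tooling. The sketch below uses made-up sample records purely for illustration. The field names `minutes` and `needed_intervention` are assumptions, not part of any particular platform.

```python
from statistics import median


def summarize(runs: list[dict]) -> dict:
    """Median handling time and share of runs that needed a human fix."""
    return {
        "median_minutes": median(r["minutes"] for r in runs),
        "exception_rate": sum(r.get("needed_intervention", False) for r in runs) / len(runs),
    }


# Hypothetical sample data, not measurements from a real deployment.
before = [{"minutes": 480}, {"minutes": 510}, {"minutes": 450}]
after = [
    {"minutes": 25, "needed_intervention": False},
    {"minutes": 30, "needed_intervention": False},
    {"minutes": 90, "needed_intervention": True},
    {"minutes": 20, "needed_intervention": False},
]

baseline = summarize(before)
current = summarize(after)
print(baseline, current)
```

Using the median rather than the mean keeps one unusually slow incident from distorting the comparison. The exception rate shows how often the automated path still needs a person.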

What's the main advantage of using pre-built industry templates versus building custom workflows?

Pre-built templates dramatically accelerate time-to-value. Instead of weeks spent designing and testing a workflow, you configure a template in days. Additionally, templates are based on patterns learned from dozens of customer deployments. They typically handle edge cases and failure modes better than custom-built workflows, especially in your first deployment.

How do I ensure my sensitive company data stays secure when using an AI orchestration platform?

Review the platform's data handling practices thoroughly. Verify that your data isn't used to train the platform's general models. Confirm encryption standards for data in transit and at rest. Ensure fine-grained access controls and audit logging are in place. For highly sensitive data, some platforms support on-premises deployment. Have your security team review the platform's architecture before deployment.

What's the typical timeline from platform selection to production deployment?

For well-scoped, relatively straightforward workflows in your industry, expect 2-4 months from platform selection to production deployment. This includes setup, integration with existing systems, testing, user training, and monitoring. For more complex workflows, or when significant data cleanup is needed, the timeline extends to 4-6 months. The companies that deploy fastest are those that pick workflows with the clearest time-saving value proposition.

Can I use AI orchestration for workflows that have truly novel, creative requirements?

Probably not. AI orchestration excels at handling structured workflows with well-defined steps, deterministic rules for some steps, and AI reasoning for others. If your workflow requires truly novel problem-solving that varies significantly between instances, orchestration will add overhead without much benefit. These are better handled by simpler AI approaches or traditional human processes.

How frequently do I need to update my orchestrated workflows as my business changes?

Mature orchestration platforms make workflow updates relatively straightforward—usually configuration changes rather than code changes. However, if your business processes fundamentally change, the underlying workflow design might need revision. In practice, most workflows remain relatively stable with periodic tuning. Plan for quarterly reviews to identify improvement opportunities, but don't expect constant workflow redesign.

What's the relationship between AI orchestration and traditional robotic process automation (RPA)?

Traditional RPA automates repetitive, rule-based processes by scripting clicks and keystrokes. AI orchestration adds intelligent reasoning on top of this, enabling automation of processes that require some judgment or analysis. The best enterprise systems often combine both: RPA for the mechanical parts and AI orchestration for the decision-making parts.

How should I approach change management when deploying an orchestration system to my team?

Start with training that focuses on how the system changes their job, not just how to use the system. Help them understand why the tedious parts are being automated (freeing them for more interesting work) and how their role is evolving. Involve skeptical power users early—show the system working to people who are most likely to resist it. Celebrate early wins publicly. Expect 6-8 weeks for teams to become proficient and comfortable with new workflows.

Conclusion: Moving Enterprise AI From Pilot to Production

Four years into the AI revolution, enterprise organizations face a critical moment. The easy part—deploying chatbots and interesting demos—is done. The hard part—moving AI into actual production, handling complex workflows, maintaining reliability and auditability—is just beginning.

The bottleneck was never the intelligence of AI models. Modern language models are remarkably capable. The bottleneck has always been context: can your AI system access the right information? And increasingly, orchestration: can your system handle the multi-step workflows that actually drive business value?

Companies that are winning with enterprise AI right now are doing three things consistently. First, they're building intelligent context layers that ensure their AI systems work with proprietary data, institutional knowledge, and domain-specific documents. Second, they're thinking about orchestration from the start—designing workflows that mix deterministic rules where they're needed with AI reasoning where it adds value. Third, they're being ruthlessly specific about where AI actually helps. They're not trying to automate everything. They're automating the tedious information retrieval and document analysis that humans find time-consuming but AI handles perfectly.

This approach produces real business value. Not incremental improvements. Dramatic improvements. Workflows that previously took eight hours now take twenty minutes. Processes that previously required senior expertise now work with junior-level review. Quality improvements because the system never forgets a check. Consistency because the same process always follows the same logic.

The infrastructure to enable this is maturing rapidly. Orchestration platforms are moving from experimental to production-grade. Industry-specific templates are reducing implementation time dramatically. Integration with existing systems is becoming straightforward. Security and compliance capabilities are meeting enterprise requirements.

If you're running a company with knowledge-intensive workflows, particularly in aerospace, semiconductor manufacturing, financial services, or similar technically demanding fields, enterprise AI orchestration is no longer a future possibility. It's a present opportunity. Not some hypothetical future where robots replace workers. Right now, today, you can dramatically improve efficiency by strategically automating the tedious parts of your processes.

The key is starting with clear-eyed assessment of where AI actually helps versus where it adds complexity. Pick workflows with obvious time-saving value. Use industry-specific platforms rather than building from scratch. Focus on reliability and auditability from the start. Involve end users in design so they trust and adopt the system.

Do this well, and your organization will have a significant competitive advantage. Your best engineers will spend time on creative problem-solving instead of tedious information retrieval. Your processes will be faster and more consistent. Your decisions will be better informed. You'll have reduced time-to-decision on everything from design changes to supplier assessments to incident investigation.

The companies moving from AI pilots to production aren't waiting for better models. Better models will come, and they'll provide marginal improvements. The real value is in moving from "can we make AI work in theory" to "how do we orchestrate AI to solve real business problems in practice." That transition is happening now. The question is whether your organization will lead or lag.

If you're building complex workflows and need to automate them with AI, consider platforms that provide the intelligent context and orchestration capabilities discussed here. The best solutions combine industry-specific expertise with flexible orchestration that lets you design workflows specific to your business.

For teams looking to accelerate deployment and focus on their core business rather than building AI infrastructure, using proven industry templates and orchestration platforms typically delivers value 2-3x faster than custom-built approaches. The economics work out: investment in a good platform pays for itself within months through time savings on the first handful of automated workflows.

Key Takeaways

- Context intelligence, not model capability, is the primary bottleneck preventing enterprise AI adoption in production

- Intelligent context layers that retrieve and structure proprietary data reduce hallucinations by 90% compared to basic RAG approaches

- Hybrid workflows mixing deterministic rules with AI reasoning provide both reliability and capability for complex enterprise processes

- Industry-specific workflow templates reduce deployment time from months to weeks and are based on proven patterns from dozens of implementations

- Real-world time savings are substantial: complex 8-hour engineering workflows reduced to 20-minute AI-assisted reviews represent 96% efficiency gains

- Multi-step orchestration with confidence-based routing enables selective human oversight while automating 70%+ of routine decisions

- Citation tracking at the sentence level and comprehensive audit logging are non-negotiable for regulated industries and critical workflows

- Typical ROI payback period is 4-6 months for well-scoped workflows with clear time-saving value propositions

- Continuous feedback loops and automated optimization enable AI systems to improve performance over time without manual retraining

- Security, data governance, and access control requirements differ significantly between orchestration platforms and must be carefully evaluated
