Introduction: Healthcare Meets Artificial Intelligence at Scale

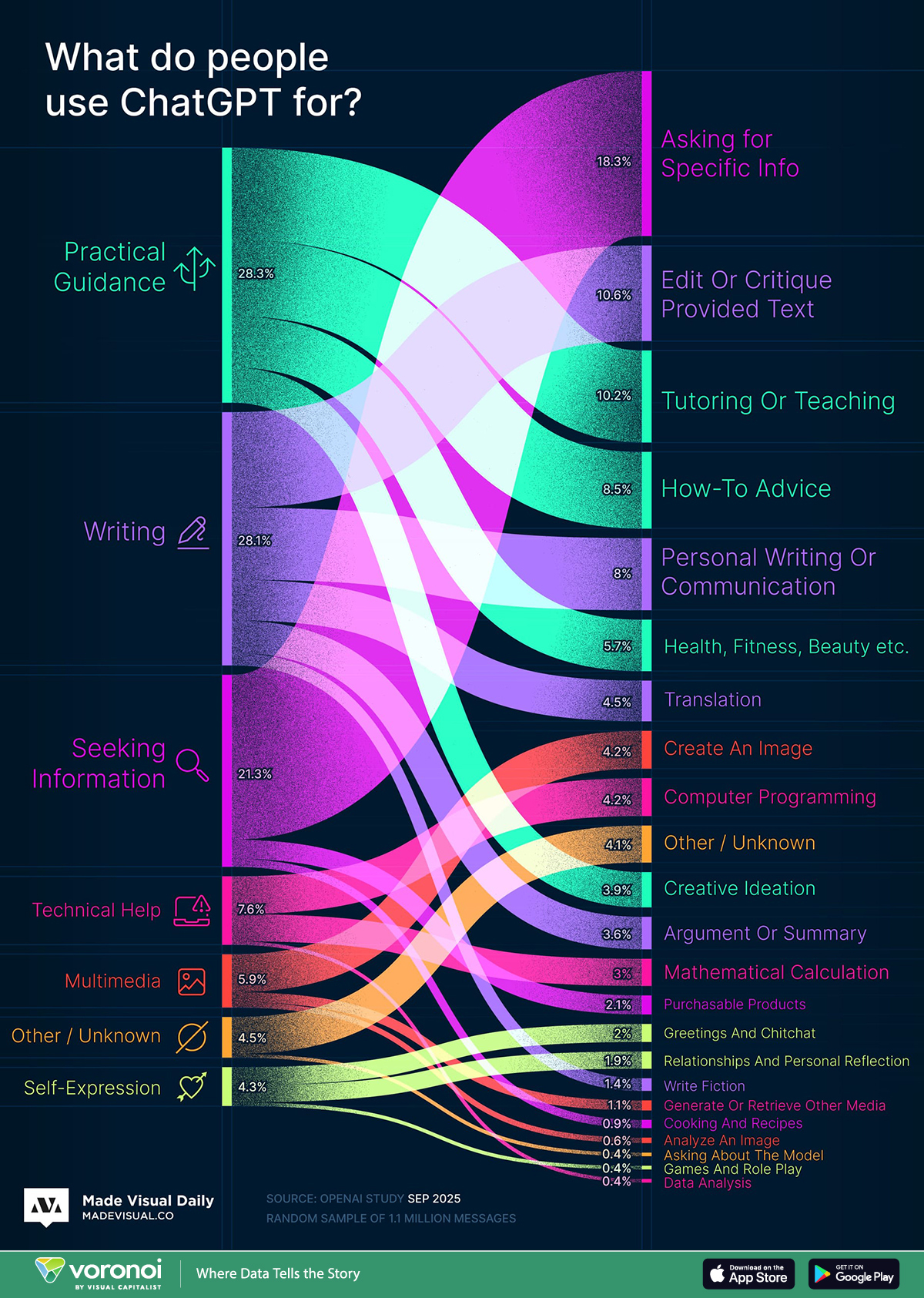

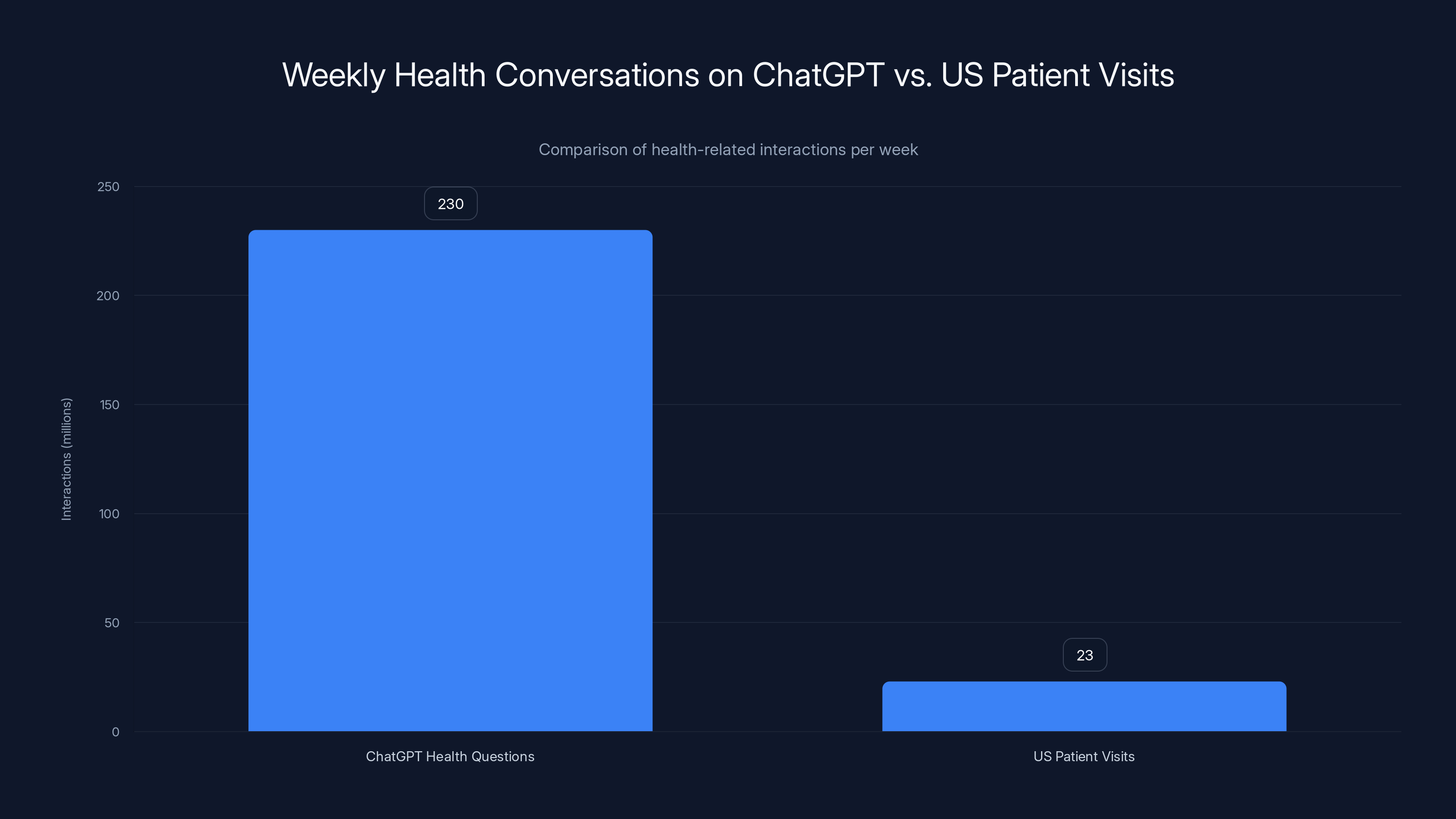

When was the last time you had a health question and immediately grabbed your phone to search for answers? You're not alone. In fact, over 230 million people turn to ChatGPT every single week to ask about health and wellness issues. That's an astounding number that tells us something profound: people are already using AI to navigate medical uncertainty, and they're doing it at a scale that dwarfs traditional healthcare channels.

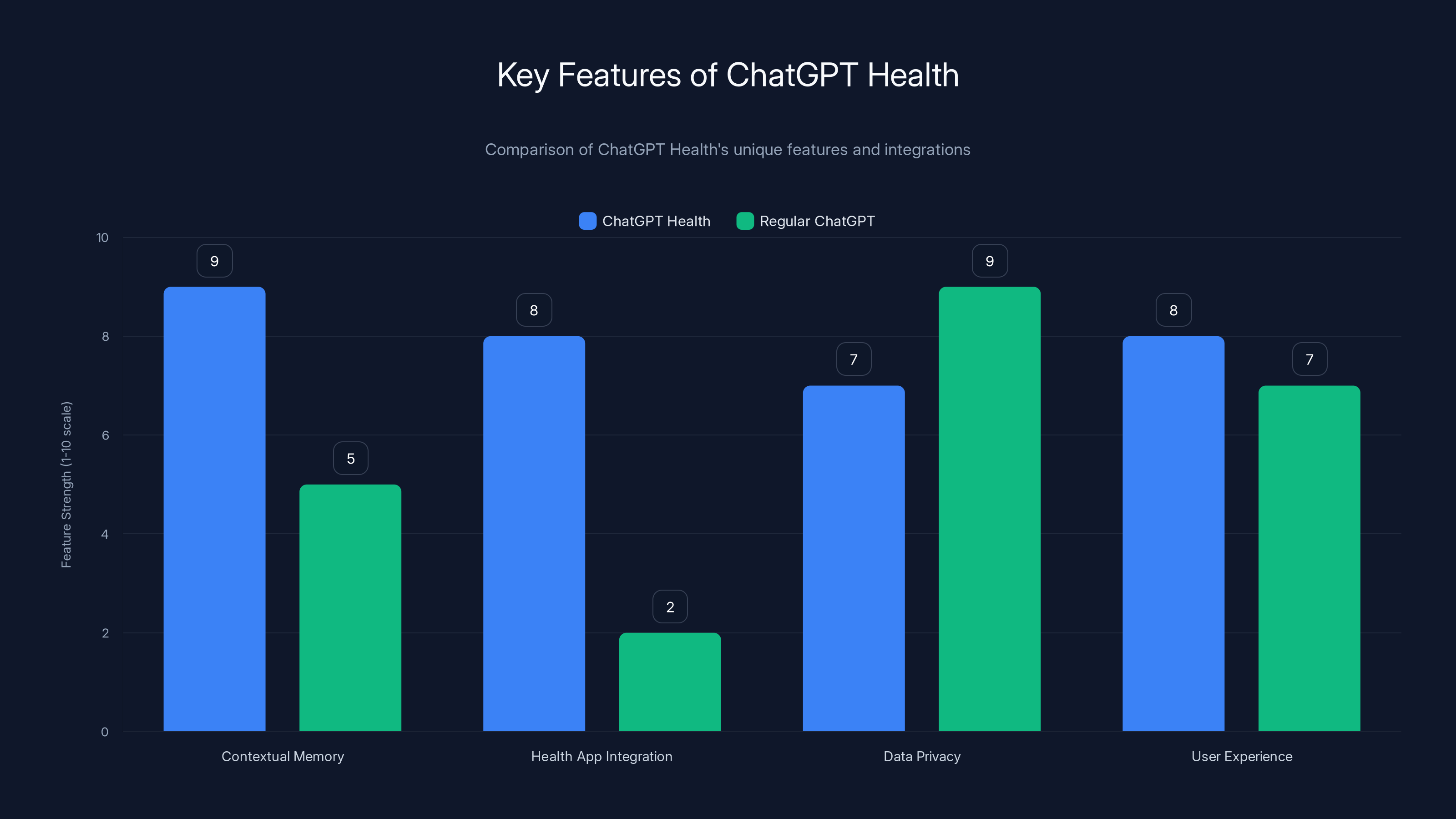

OpenAI just announced ChatGPT Health, a dedicated feature designed to create a separate, privacy-focused space for health conversations. Unlike your regular ChatGPT chats, where health questions might sit alongside work projects and casual queries, ChatGPT Health isolates your medical discussions. This isn't just a cosmetic change. It signals a fundamental shift in how tech companies view health information and how people are beginning to rely on AI as a first point of contact for medical concerns.

But here's what nobody's talking about openly: this is both revolutionary and deeply concerning. Revolutionary because accessibility to health information is a genuine problem in many countries. Deeply concerning because large language models don't understand medical truth the way doctors do. They understand statistical probability. They hallucinate confidently. They can't take responsibility for what they get wrong.

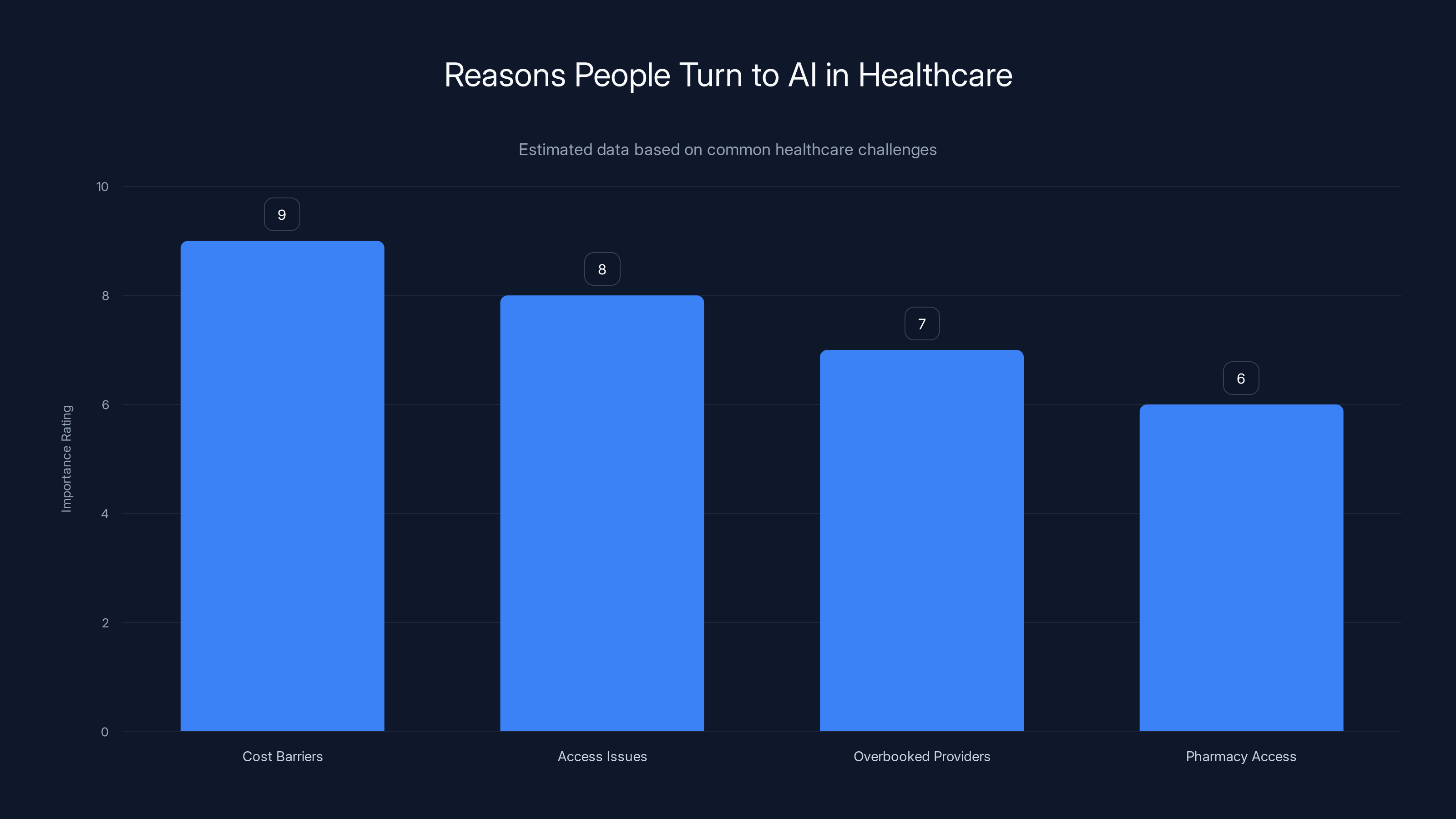

The healthcare system is broken in many ways. Doctors are overbooked. Medical debt crushes people. Access is unequal. Wait times are brutal. When Fidji Simo, OpenAI's CEO of Applications, described the motivation behind ChatGPT Health, she pointed to exactly these problems. Cost barriers. Continuity issues. Overworked providers. AI could theoretically help here. But it also introduces a new set of problems nobody has fully solved yet.

This article digs into what ChatGPT Health actually is, how it works technically, what the privacy implications are, where it fails, and what it might mean for healthcare's future. We're talking about real adoption patterns, actual limitations of the technology, and honest assessment of whether AI chatbots belong in healthcare at all.

TL;DR

- 230 million people use ChatGPT for health questions every week, showing a massive appetite for accessible health information

- ChatGPT Health isolates medical conversations in a dedicated space with cross-app integration (Apple Health, MyFitnessPal, Function)

- OpenAI won't train on Health conversations, addressing one privacy concern but not all of them

- LLMs predict probability, not medical truth, creating real risks of confident hallucinations in health contexts

- Integration with medical records could improve personalization but raises data security questions

- Regulatory landscape is unclear, with FDA oversight still evolving for AI health tools

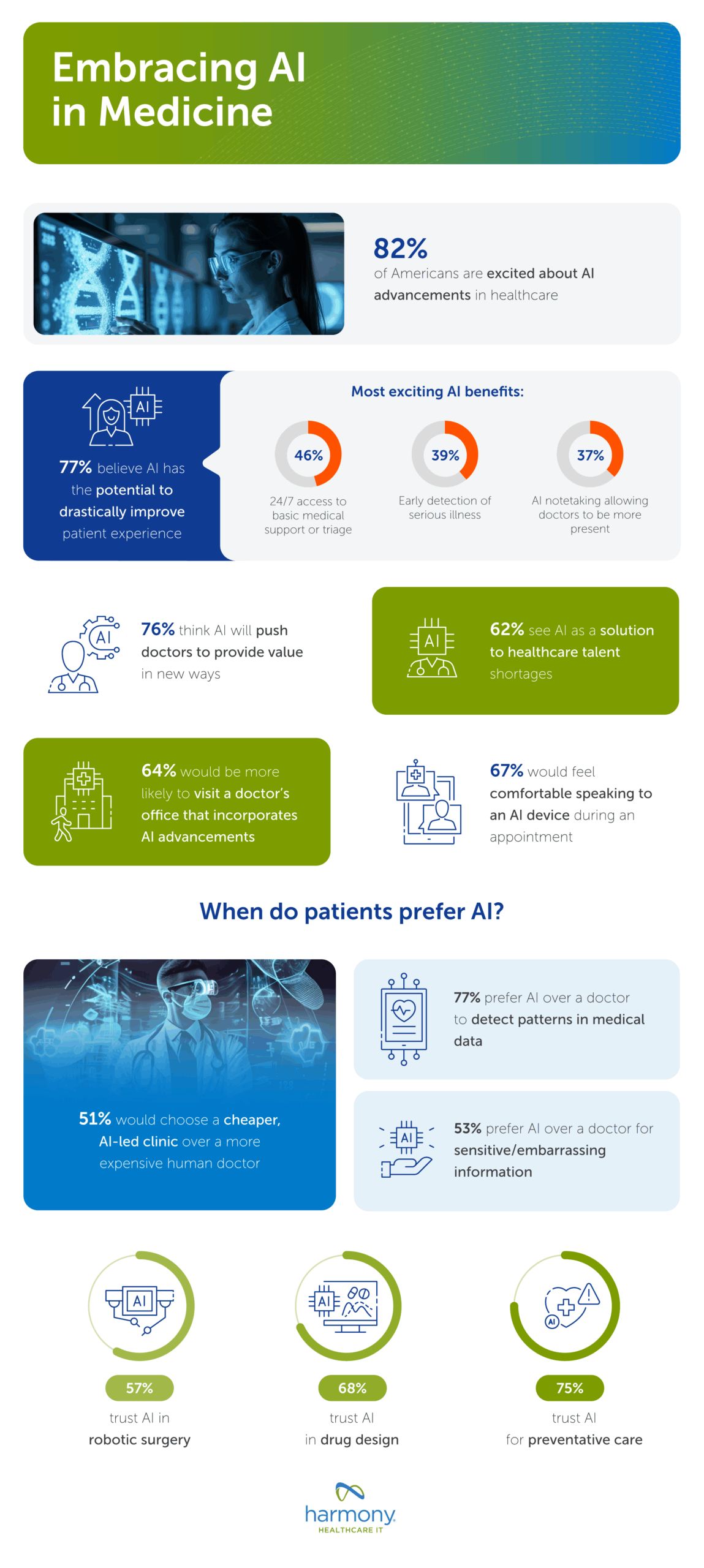

ChatGPT Health excels in conversational depth and accessibility compared to other health AI tools. Estimated data based on typical tool capabilities.

The Scale of Health Conversations on ChatGPT

Why 230 Million Users Matter

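Take a moment to absorb that number: 230 million people asking ChatGPT health questions every week. To contextualize: the entire healthcare system in the United States handles roughly 1.2 billion patient visits per year. That's about 23 million per week in the US alone. ChatGPT's weekly health audience is roughly 10 times that figure, globally. The comparison is loose (it counts people rather than visits, and spans the world rather than one country), but the order of magnitude is striking.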
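The arithmetic behind that comparison is easy to check. This sketch uses only the figures quoted in the paragraph; keep in mind that it divides people by visits, so the ratio indicates scale rather than like-for-like volume.

```python
# Back-of-the-envelope scale comparison using the figures quoted above.
US_VISITS_PER_YEAR = 1_200_000_000            # approximate annual US patient visits
WEEKS_PER_YEAR = 52
CHATGPT_HEALTH_USERS_PER_WEEK = 230_000_000   # people asking health questions

us_visits_per_week = US_VISITS_PER_YEAR / WEEKS_PER_YEAR
ratio = CHATGPT_HEALTH_USERS_PER_WEEK / us_visits_per_week

print(f"US patient visits per week: {us_visits_per_week / 1e6:.1f} million")  # 23.1 million
print(f"Weekly ChatGPT health users vs. US visits: {ratio:.1f}x")              # 10.0x
```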

This tells us something critical about human behavior and access. People don't always have easy access to doctors. When they have a health question at 2 AM, they can't call their physician. They can't get a same-day appointment. They can't afford the visit. So they ask their phone.

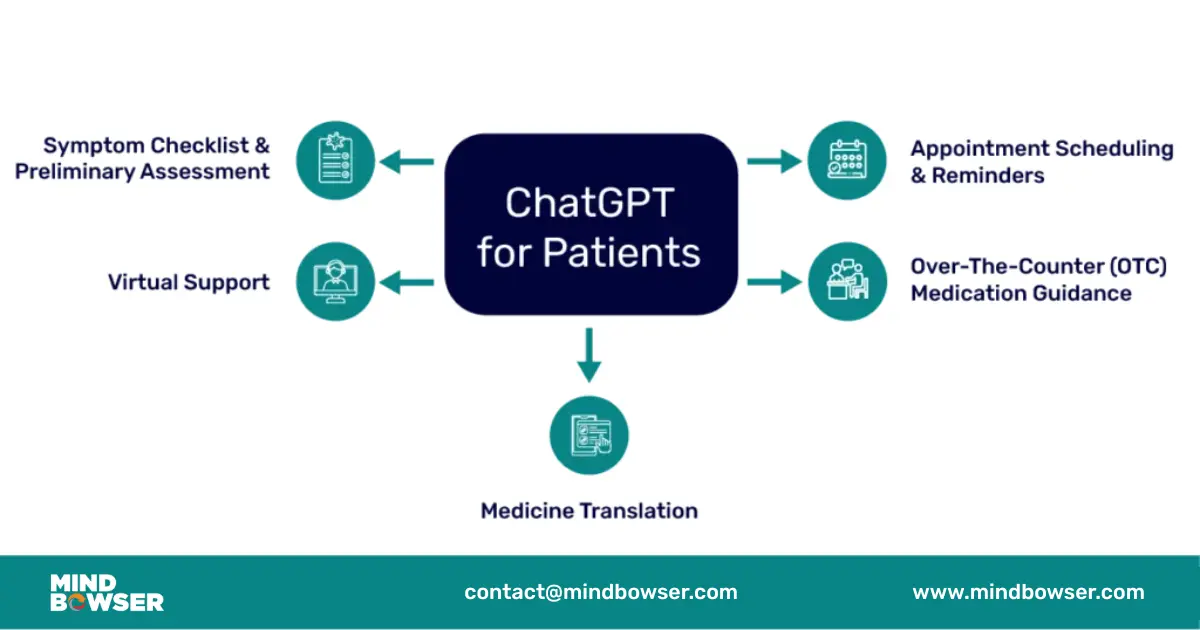

The types of questions vary wildly. Some people ask about symptoms they're experiencing. Others ask about medication side effects. Some are looking for general wellness advice. Others are asking about serious chronic conditions. OpenAI's data shows that people treat ChatGPT like a search engine crossed with a patient advocate. They're looking for information, reassurance, and personalized guidance.

What's remarkable is that OpenAI didn't build ChatGPT as a medical product. There was no medical board approval and no FDA clearance. People just started using it for health questions because the alternatives (searching WebMD, calling a nurse hotline, waiting for an appointment) felt worse.

The Problem This Solves

Currently, if you ask ChatGPT health questions, they sit in your general chat history. You've got your work project, a recipe you asked about, and your health question all in one place. This creates several problems.

First, context contamination. If ChatGPT later generates something for a work presentation, it might accidentally pull health-related information into your context window. That's not exactly how LLMs work technically (the model itself doesn't retain past conversations; only product features like memory bring earlier context back), but the conceptual issue matters: health information is different. It should be treated differently.

Second, psychological separation. When health conversations are isolated, you might feel more comfortable sharing sensitive details. The act of stepping into a "Health" section signals that this is a medical conversation, not casual chat. This changes how people interact with the system and what they're willing to share.

Third, feature targeting. In a dedicated health space, OpenAI can build health-specific features—like integration with medical apps, health history context, and medication tracking—without polluting the general ChatGPT experience.

OpenAI addresses this by allowing the AI to recognize when health questions appear in standard conversations and gently nudge users to the Health section. If you ask about fitness in regular ChatGPT, the AI might suggest taking that to Health instead. This sounds small, but it's actually smart design. It uses the AI's understanding of intent to route conversations appropriately.
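OpenAI hasn't published how this routing works, and it almost certainly relies on the model's own judgment rather than keyword lists. As a deliberately simplistic stand-in, the idea can be sketched as a check that flags health-related messages and returns a nudge. The keyword set and the `suggest_health_space` function are hypothetical.

```python
import re
from typing import Optional

# Hypothetical keyword list; a real system would rely on the model's own
# understanding of intent rather than string matching.
HEALTH_TERMS = {"symptom", "symptoms", "medication", "pain", "sleep", "fitness", "workout"}

def suggest_health_space(message: str) -> Optional[str]:
    """Return a nudge toward the Health space if the message looks health-related."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    matches = sorted(words & HEALTH_TERMS)
    if matches:
        return f"This looks health-related ({', '.join(matches)}). Continue in ChatGPT Health?"
    return None

print(suggest_health_space("What's a good fitness routine for beginners?"))
print(suggest_health_space("Summarize this quarterly report"))  # None
```

A keyword match is brittle ("painting" would not match, but neither would "my chest feels tight"), which is exactly why intent recognition by the model itself is the smarter design.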

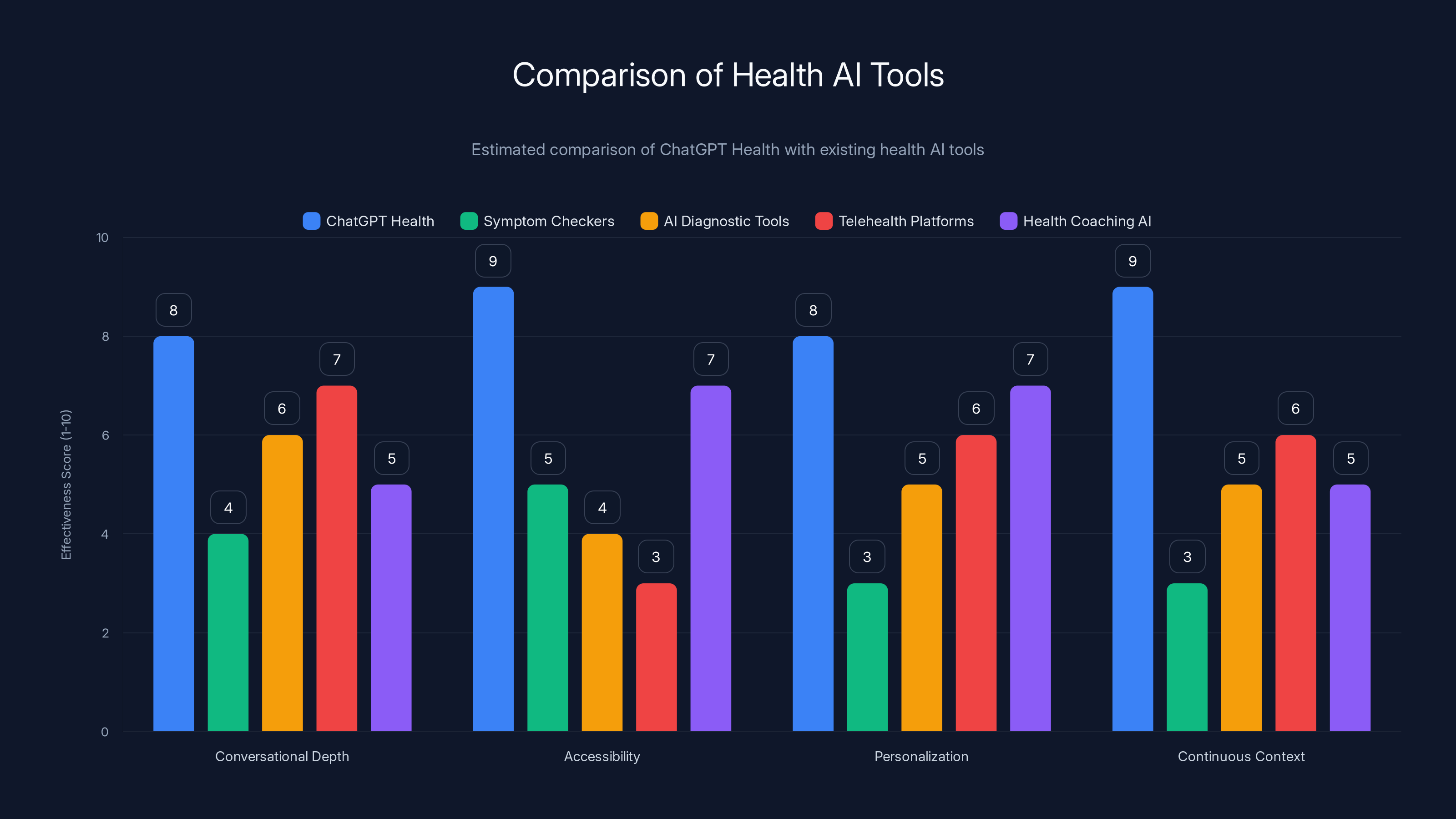

ChatGPT Health excels in contextual memory and health app integration compared to regular ChatGPT, while maintaining strong data privacy and user experience.

How ChatGPT Health Works: Architecture and Features

The Dedicated Conversation Space

Technically, ChatGPT Health is a new conversation type within the ChatGPT application. Think of it like a separate label in Gmail: same app, different space. It's not a separate application; it's a distinct conversation environment within ChatGPT.

When you open ChatGPT Health, you're entering a separate space. Your Health conversations don't sit in your regular chat history, and what you share there stays there. This is intentional isolation.

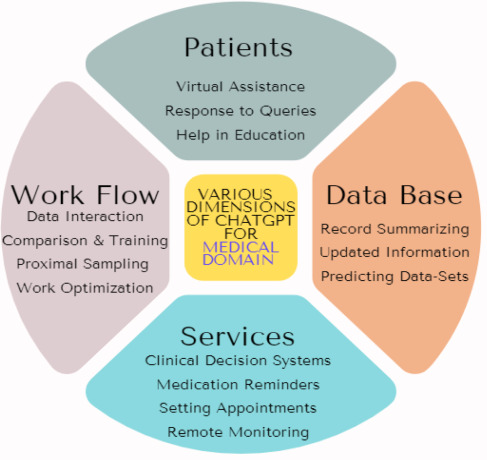

But here's where it gets interesting. ChatGPT Health can remember information within the Health context itself. If you tell ChatGPT in Health that you're training for a marathon, and later ask about knee pain, it will remember the marathon context and can factor in that the pain may be related to running.

The system carries context across your health conversations. If you mention that you take medication X and ask about symptom Y, ChatGPT can check whether Y is a known side effect of X. It can cross-reference information you've shared across multiple conversations within the Health space.

Rather than treating each conversation as a standalone session, Health maintains continuity of context across conversations within that sandbox.
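A minimal sketch of Health-scoped continuity: facts remembered in one Health conversation remain available in later ones, and a new symptom is checked against the side effects of a remembered medication. The `HealthMemory` class and the tiny side-effect table are hypothetical, illustrative values, not a real drug database or OpenAI's implementation.

```python
from dataclasses import dataclass, field

# Illustrative, incomplete side-effect table (hypothetical data for the sketch).
KNOWN_SIDE_EFFECTS = {
    "medication_x": {"nausea", "headache"},
    "medication_y": {"dizziness"},
}

@dataclass
class HealthMemory:
    """Facts persisted across conversations, but only within the Health space."""
    medications: set[str] = field(default_factory=set)
    notes: list[str] = field(default_factory=list)

    def remember_medication(self, name: str) -> None:
        self.medications.add(name)

    def possible_causes(self, symptom: str) -> list[str]:
        """Remembered medications that list this symptom as a known side effect."""
        return sorted(m for m in self.medications
                      if symptom in KNOWN_SIDE_EFFECTS.get(m, set()))

memory = HealthMemory()
memory.remember_medication("medication_x")    # mentioned in an earlier Health chat
memory.notes.append("training for a marathon")
print(memory.possible_causes("headache"))      # ['medication_x'], in a later chat
```

The lookup only knows what is in its table; a side effect missing from the data simply never comes back, which foreshadows the limitations discussed later.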

Integration with Health Apps and Medical Records

This is the feature that gets people excited and nervous simultaneously. ChatGPT Health can connect to external data sources:

- Apple Health: Fitness data, heart rate, step count, sleep data

- MyFitnessPal: Nutrition and calorie tracking

- Function: Another health and wellness app

- Presumably other integrations coming

The integration works like this: ChatGPT can read your health data from these apps and use it as context. If you ask about your sleep quality, ChatGPT can pull your actual sleep data from Apple Health and base its response on real information instead of just your description.

This is powerful because self-reported health data is unreliable. People remember their sleep as better or worse than it actually was. They underestimate calorie intake. They misremember medication timing. If ChatGPT has access to your actual data, its advice becomes more grounded in reality.
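To illustrate the gap between self-report and device data, here is a sketch that summarizes connected sleep records and places that summary in the model's context next to the user's own description. The `SleepRecord` format and the `build_context` helper are hypothetical; they are not Apple Health's export schema or OpenAI's integration API.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean

@dataclass
class SleepRecord:
    night: date
    hours_asleep: float  # as measured by a connected device

def build_context(records: list[SleepRecord], self_report: str) -> str:
    """Combine measured sleep data with the user's description for the prompt."""
    avg = mean(r.hours_asleep for r in records)
    short_nights = sum(1 for r in records if r.hours_asleep < 6)
    return (f"User says: {self_report}\n"
            f"Measured over {len(records)} nights: average {avg:.1f} h asleep, "
            f"{short_nights} nights under 6 h.")

records = [SleepRecord(date(2026, 1, d), h)
           for d, h in [(1, 7.5), (2, 5.2), (3, 5.8), (4, 6.9), (5, 5.0)]]
print(build_context(records, "I sleep about 8 hours a night."))
```

With both lines in context, the model can notice that "about 8 hours" and a measured average of 6.1 hours don't match.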

But the data security question is obvious: who has access to your health information? OpenAI is explicit that health conversations won't be used to train its models. But what about the health app data you connect? What's the encryption standard? What's the data retention policy? Can law enforcement compel this data?

The Context Carryover Problem and Solution

One issue OpenAI solved smartly: how much information should ChatGPT Health know about your regular life?

Option 1 would be zero. Complete isolation. But that's useless. If you run marathons and ask about knee pain in Health, it's helpful if ChatGPT knows you're a runner.

Option 2 would be total access to everything. But that's a privacy disaster. ChatGPT Health shouldn't know about your work projects or personal relationships.

OpenAI went with selective carryover. Health conversations can reference lifestyle patterns you've established in other conversations (like "you mentioned you're a runner"), but they can't access sensitive work or personal information. The specifics of how this filtering works aren't fully detailed, but the concept is right.

This is actually solving a real user need. When you talk to your doctor, they want to understand your lifestyle. "Do you exercise?" "Do you drink?" "What's your job?" These aren't random questions; they're context. ChatGPT Health needs similar context to be useful.
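OpenAI hasn't detailed how this filtering works, so the following is only one plausible way to express the idea: an allowlist of lifestyle categories that may flow into Health, with everything else dropped. The category labels, and the assumption that memories are already tagged by category, are hypothetical.

```python
# Hypothetical memory items from regular chats, each tagged with a category.
general_memories = [
    {"category": "lifestyle", "text": "User runs marathons"},
    {"category": "work", "text": "User is preparing a Q3 board deck"},
    {"category": "diet", "text": "User is vegetarian"},
    {"category": "relationships", "text": "User is planning a wedding"},
]

# Only these categories may carry over into the Health space (assumed policy).
HEALTH_ALLOWED_CATEGORIES = {"lifestyle", "diet"}

def carryover_for_health(memories: list[dict]) -> list[str]:
    """Return only the memory texts the Health space is permitted to see."""
    return [m["text"] for m in memories
            if m["category"] in HEALTH_ALLOWED_CATEGORIES]

print(carryover_for_health(general_memories))
# ['User runs marathons', 'User is vegetarian']
```

Note the asymmetry: limited lifestyle context flows into Health, while Health conversations themselves stay isolated from regular chats.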

The Privacy Architecture: What Gets Saved, What Doesn't

The "We Won't Train on Health Data" Promise

OpenAI made an explicit commitment: conversations in ChatGPT Health won't be used to improve ChatGPT's models.

This is significant and meaningfully different from how they handle regular ChatGPT conversations (where they can use your chats for model improvement if you haven't disabled that setting). But what does this promise actually mean technically?

Model training typically happens offline. OpenAI's engineers take datasets of conversations and feed them into training pipelines that update the model weights. If health conversations aren't in that pipeline, they're not contributing to model improvement.

But "training" is just one use case for data. There are others:

- Safety monitoring: Humans reviewing conversations to improve safety systems

- Service improvement: Analyzing patterns to understand what features people need

- Aggregate analytics: Understanding trends without accessing individual conversations

- Data backup and redundancy: Storing conversations on OpenAI's servers for service continuity

OpenAI is specifically excluding "model training," which is the most sensitive use case. But other uses likely continue. The company is balancing privacy (not training models on health data) with operational necessity (needing to store data somewhere).

For regulatory purposes, this matters. The FDA and FTC care about model training on medical data because that's how bias gets baked in. If your training data is predominantly from one demographic group, your model will work worse for others. By not training on health conversations, OpenAI sidesteps some regulatory concerns.

But users should understand what "not used for training" actually means. It doesn't mean "completely deleted." It doesn't mean "nobody at OpenAI can read it." It means specifically "not fed into model improvement pipelines."
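The distinction can be made concrete with a sketch in which Health conversations are filtered out before a training dataset is assembled, while all stored conversations, Health included, remain available for other uses such as safety review (an assumption, per the list above). The `space` and `training_opt_out` field names are hypothetical; OpenAI's actual pipeline isn't public.

```python
from dataclasses import dataclass

@dataclass
class Conversation:
    conv_id: str
    space: str              # "general" or "health"
    training_opt_out: bool  # the user's setting for regular chats

stored = [
    Conversation("c1", "general", False),
    Conversation("c2", "health", False),
    Conversation("c3", "general", True),
]

def training_eligible(convs: list[Conversation]) -> list[str]:
    """Health conversations never enter training; others respect the user setting."""
    return [c.conv_id for c in convs
            if c.space != "health" and not c.training_opt_out]

def safety_review_queue(convs: list[Conversation]) -> list[str]:
    """A separate use (assumed): stored Health data is still present here."""
    return [c.conv_id for c in convs]

print(training_eligible(stored))    # ['c1']
print(safety_review_queue(stored))  # ['c1', 'c2', 'c3']
```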

Data Retention and Deletion

When you delete a regular ChatGPT conversation, what happens to your data? OpenAI's terms say conversations are kept for 30 days after deletion, then removed. But "removed" is ambiguous. Does that mean deleted from all servers? From backups? From research datasets?
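A 30-day window like this is commonly implemented as a soft delete followed by a scheduled purge. The sketch below shows that pattern under that assumption; the `ConversationStore` class is hypothetical, and it says nothing about backups or research copies, which is exactly the ambiguity raised above.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION_AFTER_DELETE = timedelta(days=30)

class ConversationStore:
    """Soft delete now, hard delete after a retention window (hypothetical design)."""

    def __init__(self) -> None:
        self._deleted_at: dict[str, Optional[datetime]] = {}

    def add(self, conv_id: str) -> None:
        self._deleted_at[conv_id] = None

    def delete(self, conv_id: str, now: datetime) -> None:
        # Hidden from the user immediately, but still physically stored.
        self._deleted_at[conv_id] = now

    def purge(self, now: datetime) -> list[str]:
        """Permanently remove conversations deleted at least 30 days ago."""
        expired = [cid for cid, ts in self._deleted_at.items()
                   if ts is not None and now - ts >= RETENTION_AFTER_DELETE]
        for cid in expired:
            del self._deleted_at[cid]
        return expired

    def stored_ids(self) -> list[str]:
        return sorted(self._deleted_at)

t0 = datetime(2026, 1, 1, tzinfo=timezone.utc)
store = ConversationStore()
store.add("health-1")
store.delete("health-1", t0)
print(store.purge(t0 + timedelta(days=10)))  # [] (still retained)
print(store.purge(t0 + timedelta(days=31)))  # ['health-1']
```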

Healthcare data is supposed to be different. There are legal requirements in many jurisdictions. HIPAA in the US, GDPR in Europe, PIPEDA in Canada—all these frameworks have specific requirements for health information retention and deletion.

OpenAI hasn't detailed how ChatGPT Health handles deletion requests under these frameworks. Does HIPAA even apply (its six-year retention rule covers compliance documentation, not consumer chat logs)? How would OpenAI honor GDPR's right to erasure (the "right to be forgotten")? These are critical questions that aren't fully answered yet.

The practical reality is that health data is more sensitive than other data. If someone gains access to your health history, that's worse than them accessing your ChatGPT conversations about cooking. Governments, employers, and insurers all have reasons to want your health data. OpenAI's infrastructure needs to reflect this higher sensitivity.

Encryption and Access Controls

OpenAI states that health conversations are encrypted in transit (moving to OpenAI's servers) and at rest (sitting on OpenAI's servers). This is standard practice for any cloud service handling sensitive data.

But encryption is only as strong as the protection of its keys. Unless it's end-to-end (you hold the only key), whoever holds the keys can decrypt the data. OpenAI holds the keys, which means employees could theoretically access your data. Most cloud services work this way, but it's worth understanding: you're trusting OpenAI as a custodian of your health data, not keeping it completely private.

Access controls are stricter for ChatGPT Health than regular ChatGPT. Not all OpenAI employees can read your health conversations. Only specific teams (likely safety, trust and safety, and health product teams) have access, and that access is presumably logged and audited.

But again, this isn't end-to-end or zero-knowledge encryption. You're relying on OpenAI's internal controls to keep your data private.
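"Logged and audited" access usually means role-based access control with an append-only audit trail. A minimal sketch, assuming hypothetical team names (OpenAI hasn't published which teams have access):

```python
from datetime import datetime, timezone

# Hypothetical roles permitted to read Health data.
HEALTH_READ_ROLES = {"safety", "trust_and_safety", "health_product"}

audit_log: list[dict] = []

def read_health_conversation(employee: str, role: str, conv_id: str) -> bool:
    """Allow access only for permitted roles, and log every attempt either way."""
    allowed = role in HEALTH_READ_ROLES
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "employee": employee,
        "role": role,
        "conversation": conv_id,
        "allowed": allowed,
    })
    return allowed

print(read_health_conversation("alice", "health_product", "h-42"))  # True
print(read_health_conversation("bob", "marketing", "h-42"))         # False
print(len(audit_log))                                                # 2
```

Note what this does not do: anyone who can change the role list or holds the decryption keys can still read the data. The controls constrain and record access; they don't make it impossible.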

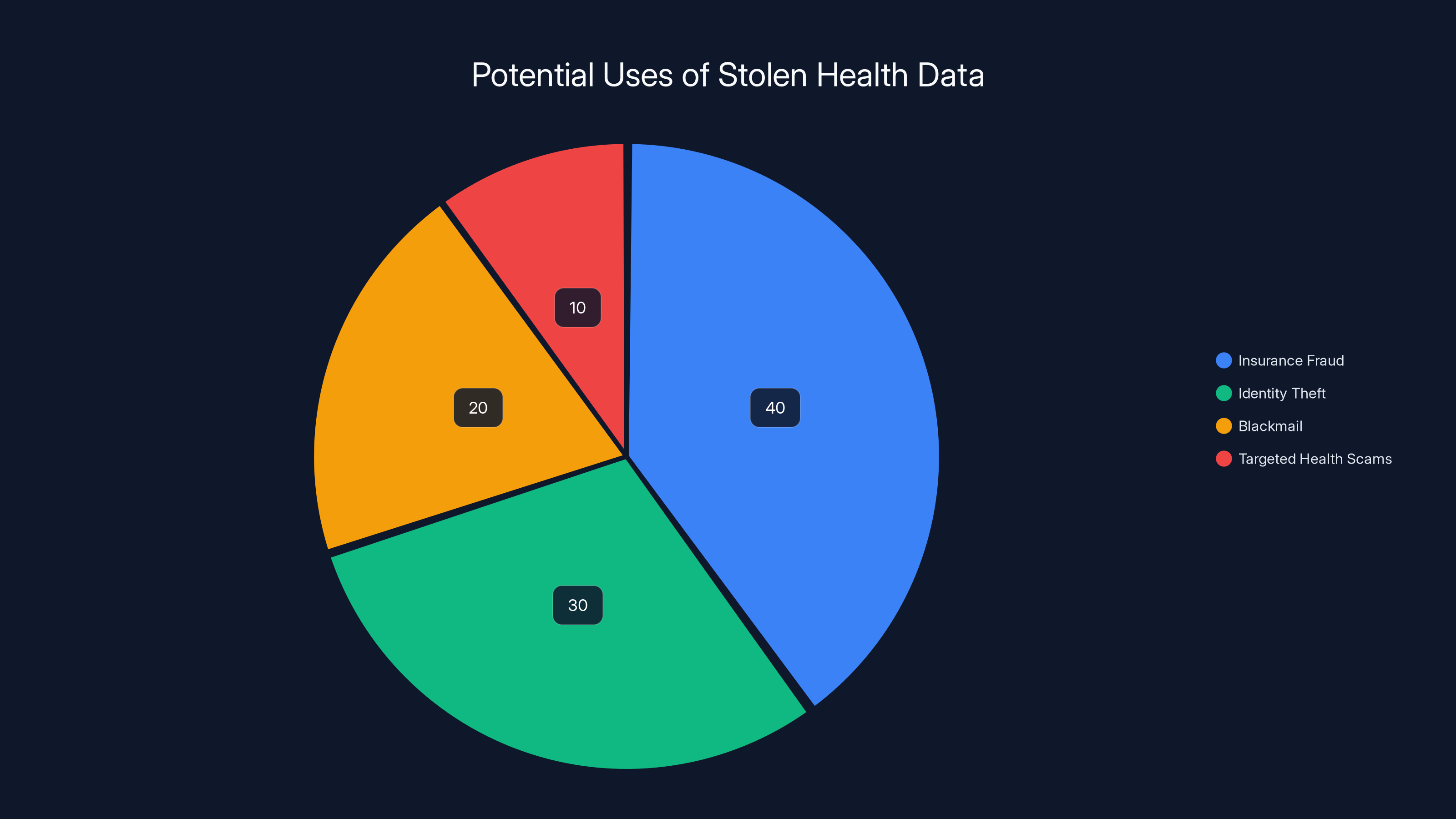

Estimated data shows insurance fraud as the most common use of stolen health data, followed by identity theft.

How LLMs Understand Health: Prediction vs. Truth

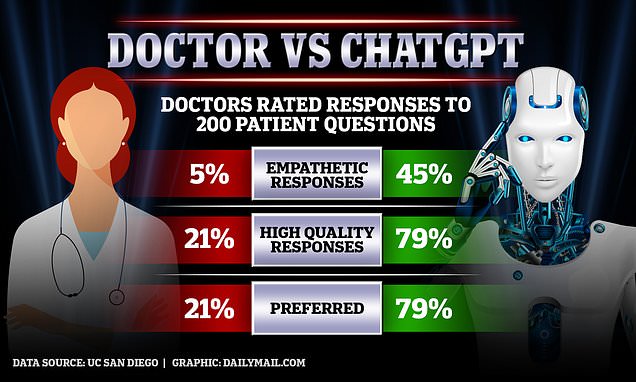

The Fundamental Limitation: Prediction Over Accuracy

This is the core issue nobody can solve with ChatGPT Health or any LLM. Large language models are next-token prediction engines. They work by calculating probability: given the previous tokens (words or word fragments), which next token is most statistically likely?

This is how they generate coherent text. It's also how they generate confident nonsense.
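A toy bigram model makes the point concrete. It counts which word follows which in a tiny made-up corpus and always emits the most frequent continuation. Real LLMs use neural networks over subword tokens and far more context, so this is an analogy, not how ChatGPT is built; but the objective is similar in kind: plausibility given the training text, not truth.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; the model only knows these co-occurrence counts.
corpus = ("chest pain is usually muscular . chest pain is usually muscular . "
          "chest pain is sometimes cardiac .").split()

# Count how often each token follows each other token.
next_counts: dict[str, Counter] = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    next_counts[prev][nxt] += 1

def generate(start: str, length: int) -> list[str]:
    """Greedy decoding: repeatedly append the most frequent next token."""
    out = [start]
    for _ in range(length):
        candidates = next_counts.get(out[-1])
        if not candidates:
            break
        out.append(candidates.most_common(1)[0][0])
    return out

print(" ".join(generate("chest", 4)))  # chest pain is usually muscular
```

The rarer but more dangerous continuation ("sometimes cardiac") is in the data, yet greedy prediction never surfaces it.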

Medical decision-making doesn't use probability the same way. When you have chest pain, the question isn't just "what's the statistically likely cause?" It's "could this be a heart attack?" Triage prioritizes sensitivity (catching real problems) over specificity (avoiding false alarms), because the cost of missing a real problem is much higher than the cost of a false alarm.
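The asymmetry can be written as a simple expected-cost rule. If a miss costs C_miss and a false alarm costs C_fa, seeking care is the better choice whenever p × C_miss > (1 − p) × C_fa, which means whenever the probability p of a serious cause exceeds C_fa / (C_fa + C_miss). The cost values below are arbitrary illustrative numbers, not clinical estimates.

```python
def referral_threshold(cost_false_alarm: float, cost_miss: float) -> float:
    """Probability above which 'seek care now' has lower expected cost than 'wait'."""
    return cost_false_alarm / (cost_false_alarm + cost_miss)

def should_refer(p_serious: float, cost_false_alarm: float, cost_miss: float) -> bool:
    # Refer when the expected cost of waiting exceeds the expected cost of referring.
    return p_serious * cost_miss > (1 - p_serious) * cost_false_alarm

# Illustrative assumption: a missed heart attack is 99x worse than an unneeded ER visit.
threshold = referral_threshold(cost_false_alarm=1, cost_miss=99)
print(threshold)                  # 0.01
print(should_refer(0.05, 1, 99))  # True: 5% is "unlikely" but far above the threshold
```

A system that simply reports the most likely explanation (a 95% chance it's nothing serious) would point toward the wrong action here.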

LLMs are trained to be helpful and harmless, and in practice that pushes them toward sounding confident and giving people the information they want. This creates a dangerous combination with health advice. People want reassurance, so LLMs provide it. People want clear answers, so LLMs provide them, even when the truth is uncertain.

A real doctor facing uncertainty says "I don't know, let's run tests." ChatGPT facing uncertainty says "this could be X, Y, or Z. If you're concerned, see a doctor." That last sentence is a hedge, but the preceding sentences have already established in the user's mind that the AI understands their situation. It doesn't, not really.

Hallucinations in Medical Context

Hallucination is when an LLM generates false information confidently. It happens because the model is trained to predict plausible tokens, not true tokens. Medical hallucinations are particularly dangerous.

Example: Someone asks about a drug interaction between their two medications. ChatGPT's training data probably includes information about both drugs and their interactions. But the model doesn't understand drugs the way a pharmacist does. It doesn't have a mental model of drug mechanisms. It has statistical associations.

If those associations are weak in the training data (maybe the interaction is rare or poorly documented online), ChatGPT might confabulate. It might generate an interaction that sounds real but doesn't exist. The user trusts it because it sounds authoritative.

OpenAI has worked on reducing hallucinations with techniques like retrieval-augmented generation (pulling in real information from reliable sources). ChatGPT Health presumably uses this. But even with RAG, the model can still misapply information or get causal relationships wrong.
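Retrieval-augmented generation, in its simplest form, looks up relevant passages and places them in the prompt so the model answers from them. A minimal sketch, assuming a hypothetical two-document knowledge base and plain word-overlap scoring (real systems typically use embedding search); it stops at prompt construction, because the final step is an ordinary model call.

```python
import re

# Hypothetical vetted snippets; a real system would index curated medical sources.
KNOWLEDGE_BASE = {
    "doc-ibuprofen": "Ibuprofen can irritate the stomach and is best taken with food.",
    "doc-sleep": "Adults generally need seven or more hours of sleep per night.",
}

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question: str, k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question and return the top k ids."""
    q = tokenize(question)
    ranked = sorted(KNOWLEDGE_BASE,
                    key=lambda doc_id: len(q & tokenize(KNOWLEDGE_BASE[doc_id])),
                    reverse=True)
    return ranked[:k]

def build_prompt(question: str) -> str:
    sources = "\n".join(f"[{d}] {KNOWLEDGE_BASE[d]}" for d in retrieve(question))
    return (f"Answer using only these sources and cite them:\n{sources}\n\n"
            f"Question: {question}")

print(build_prompt("Should I take ibuprofen with food?"))
```

Even with the right passage in context, the model still has to apply it correctly, which is where the risk of misapplied information comes back in.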

The difference between LLMs and search engines matters here. A search engine returns information; you evaluate it. ChatGPT synthesizes information and presents it as understanding. The medium changes how people interpret the output.

Bias in Medical Training Data

ChatGPT was trained on internet text, which includes medical information. But internet medical information reflects the biases in the medical system itself. Certain conditions are overrepresented (conditions affecting wealthy populations). Certain demographics are underrepresented (racial and gender minorities often get worse healthcare and less documentation of their experiences).

When ChatGPT provides medical advice, it's likely to be better calibrated for majority populations and worse for minorities. This mirrors exactly the kinds of health disparities the medical system struggles with.

OpenAI hasn't detailed how they're addressing bias in ChatGPT Health. Have they deliberately included diverse medical information? Have they tested the system on different populations? These questions matter.

Historically, AI systems trained on unfiltered internet data inherit societal biases. Healthcare is one of the most biased fields. Combining the two is risky.
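Testing a system on different populations usually means stratified evaluation: scoring its answers separately for each demographic group instead of reporting one overall number. The records below are made up purely to show the computation; the group labels are placeholders.

```python
from collections import defaultdict

# Made-up evaluation records purely to show the computation:
# (demographic group, whether the system's answer was judged correct).
results = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]

def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Accuracy per group; an overall average can hide a gap between groups."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += ok
    return {g: correct[g] / totals[g] for g in totals}

overall = sum(ok for _, ok in results) / len(results)
print(f"overall: {overall:.3f}")   # overall: 0.625
print(accuracy_by_group(results))  # {'group_a': 0.75, 'group_b': 0.5}
```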

The Healthcare System Context: Why People Turn to AI

The Broken Incentives Problem

Fidji Simo's framing of why ChatGPT Health matters is honest: the healthcare system has real failures.

Cost barriers are massive. In the US, a routine doctor's visit can cost $150-300 for patients paying out of pocket, and high-deductible insurance plans can leave insured patients covering much of that themselves. Lab tests are billed separately. Specialist care costs more still. People ration healthcare. They skip checkups. They self-diagnose because they can't afford a professional diagnosis.

Access is terrible. In rural areas, the nearest doctor might be an hour away. In cities, you wait weeks for appointments. Telehealth helped, but it's still gatekept by insurance. And if you don't have insurance, you're out of luck.

Overbooked providers are the norm. Doctors see dozens of patients a day, often with about 10 minutes per patient. Continuity of care is increasingly rare. You might see a different doctor every visit. They don't know your history. They run tests just to establish a baseline.

Pharmacy access varies wildly. In some areas, you can't get antibiotics without a doctor's visit. In others, pharmacists can prescribe certain medications. The rules change by location and insurance.

In this broken context, ChatGPT actually solves some real problems. It's always available. It doesn't cost anything for the user. It won't forget what you told it (within the Health context). It will take time explaining things. It's patient and non-judgmental.

From a public health perspective, if ChatGPT can reduce unnecessary ER visits by helping people understand what's genuinely urgent versus what they can address at home, that's valuable. If it helps people realize they need to see a doctor when they were planning to ignore symptoms, that's valuable.

The risk is obvious: ChatGPT might tell people they're fine when they're not. It might tell them they don't need a doctor when they do. It might suggest treatments that don't work or interact with their other conditions.

The Access Angle

OpenAI's framing emphasizes access. ChatGPT Health isn't meant to replace doctors. It's meant to supplement a broken system. For people who can't access doctors, even a flawed AI is better than nothing.

But this creates a moral hazard. If people rely on ChatGPT for health guidance because they can't afford doctors, that's not actually solving healthcare access. It's papering over the problem. It lets healthcare systems avoid fixing the underlying failures.

ChatGPT Health is a band-aid on a bullet wound.

That said, band-aids matter when you're bleeding. If ChatGPT Health helps someone in a country without universal healthcare avoid an unnecessary ER visit, that's real value. That person's time and money matter.

Estimated data suggests cost barriers and access issues are the most significant reasons people turn to AI in healthcare, with ratings of 9 and 8 out of 10, respectively.

Regulatory Landscape: Where Does ChatGPT Health Fit?

FDA Oversight of AI in Medicine

The FDA has been slowly developing guidance on AI in healthcare. They've issued statements emphasizing the need for evidence, validation, and ongoing monitoring. But they haven't mandated specific approval processes for AI tools that provide health information (as opposed to medical devices that diagnose or treat).

ChatGPT Health sits in a gray area. It's not a medical device in the FDA's sense—it's not diagnosing or treating. It's providing information. Search engines provide health information. WebMD provides health information. These aren't regulated as medical devices.

But the distinction blurs. If ChatGPT Health says "based on your symptoms, this is likely a UTI," is that diagnosing? The FDA would probably say yes. If it says "UTIs are usually caused by bacteria and treatment typically involves antibiotics," the FDA would probably say that's information, not diagnosis.

OpenAI is likely operating conservatively here. ChatGPT Health will probably include appropriate disclaimers ("This is not medical advice. See a healthcare provider for diagnosis."). But disclaimers don't eliminate legal risk. If someone relies on ChatGPT Health's guidance and has a bad outcome, there could be liability questions.

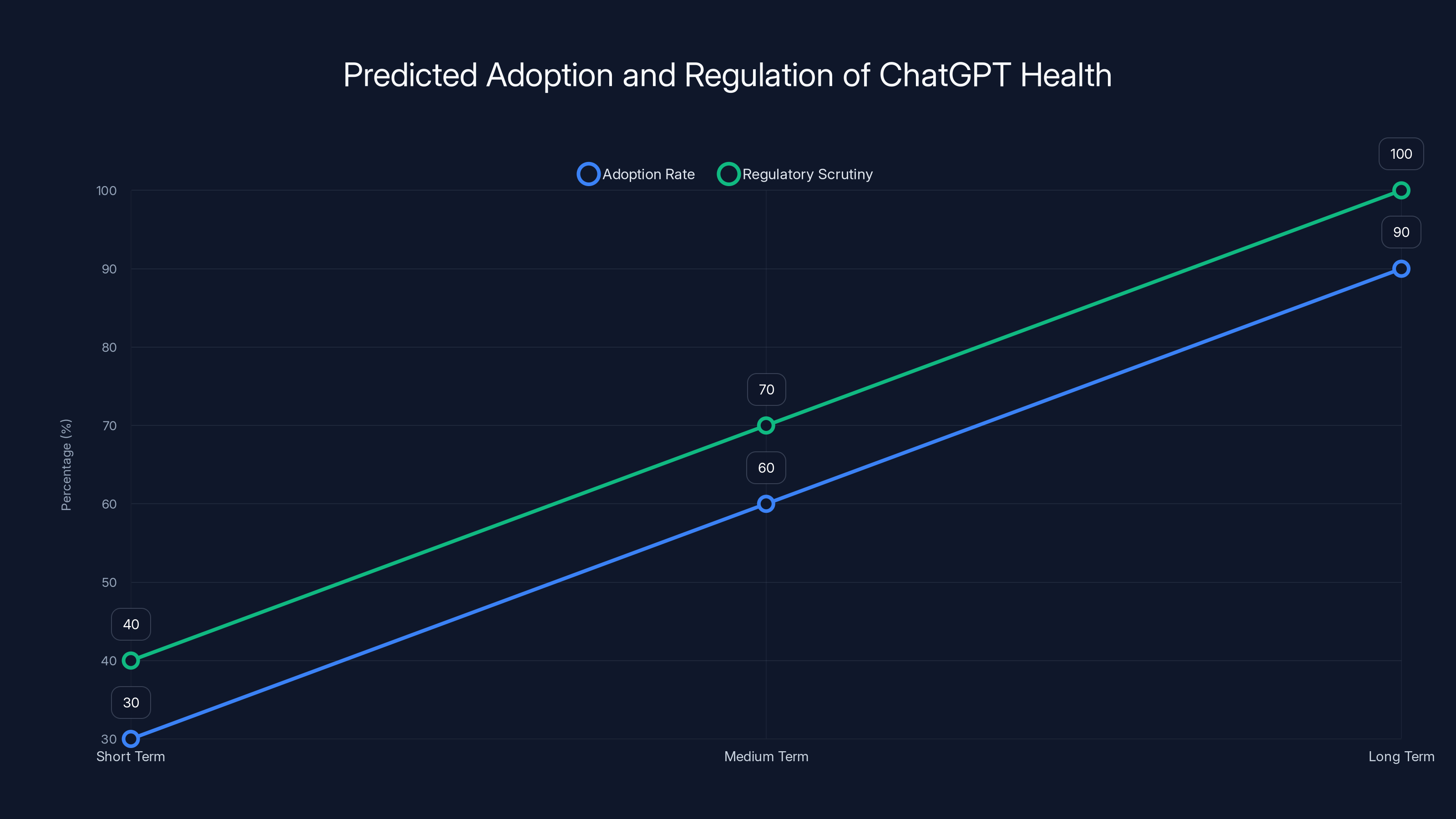

The regulatory environment will clarify as more AI health tools launch. The Biden administration called for AI regulation in healthcare. The FTC is scrutinizing AI-driven health claims. Eventually, there will likely be specific guidance on what AI health tools can and can't claim.

Right now, ChatGPT Health is moving faster than regulation can catch up.

FTC and Consumer Protection

The FTC cares about unfounded health claims and deceptive practices. If ChatGPT Health claims to diagnose medical conditions but can't reliably do so, that's deceptive. If it claims to replace doctors when it can't, that's deceptive.

OpenAI has built in safeguards (telling people to see doctors, not diagnosing). But the FTC will probably review whether these safeguards are sufficient. If consumers are actually relying on ChatGPT Health as a substitute for doctors, claiming it's just informational might not be legally defensible.

The FTC has also been aggressive about health data privacy. If ChatGPT Health collects health data and doesn't protect it adequately, the FTC could take action.

International Regulatory Fragmentation

Outside the US, regulations are more specific. The EU's Medical Device Regulation (MDR) covers software with a medical purpose, including AI-based clinical tools, and requires evidence of safety and performance. If regulators judged ChatGPT Health to have a medical purpose, it would likely need formal certification in Europe.

Canada's regulatory path is similar to the US. Health Canada regulates medical devices and AI tools that diagnose or treat.

China takes a completely different approach, with state control and specific registration requirements for health AI tools.

For OpenAI, this means ChatGPT Health might launch in the US in January 2026 but face longer delays or modifications in other markets.

Comparison: ChatGPT Health vs. Existing Health AI Tools

The Landscape of Health AI

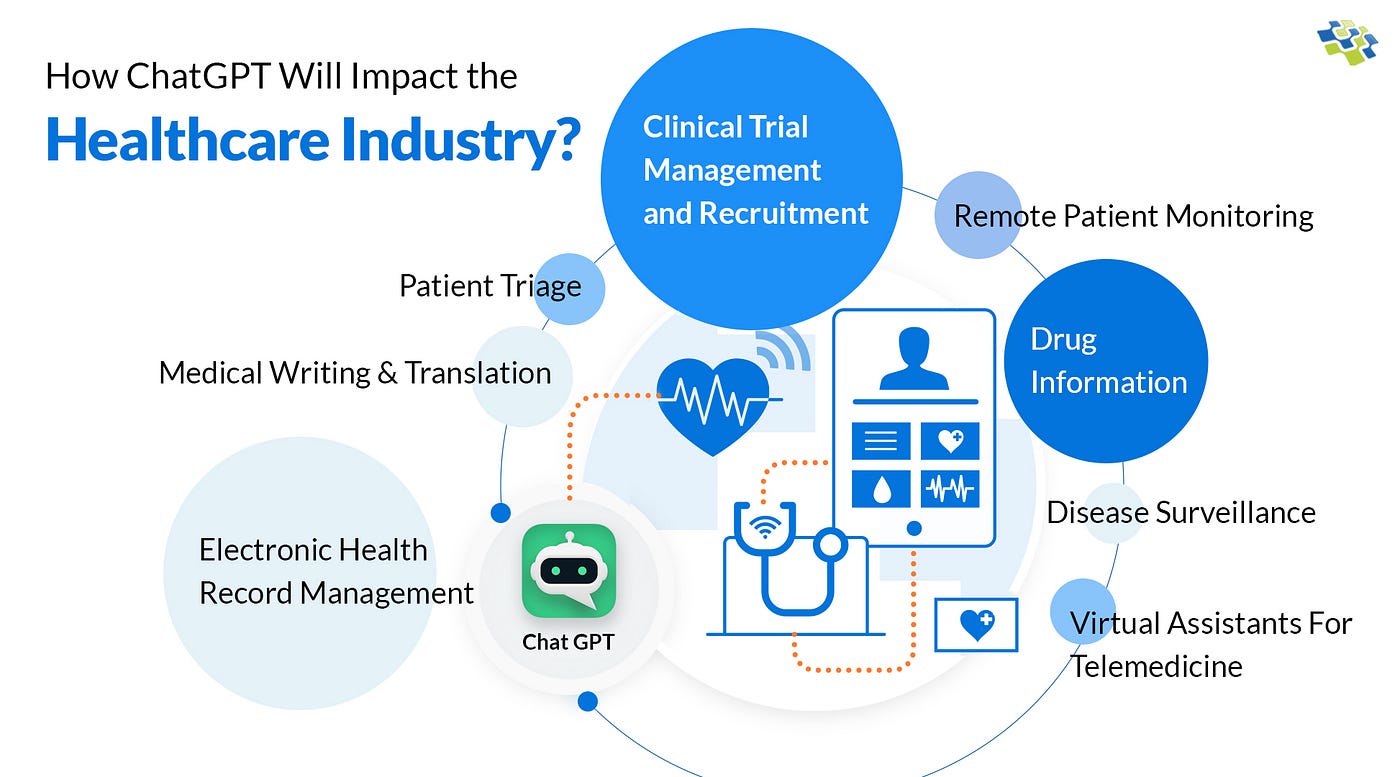

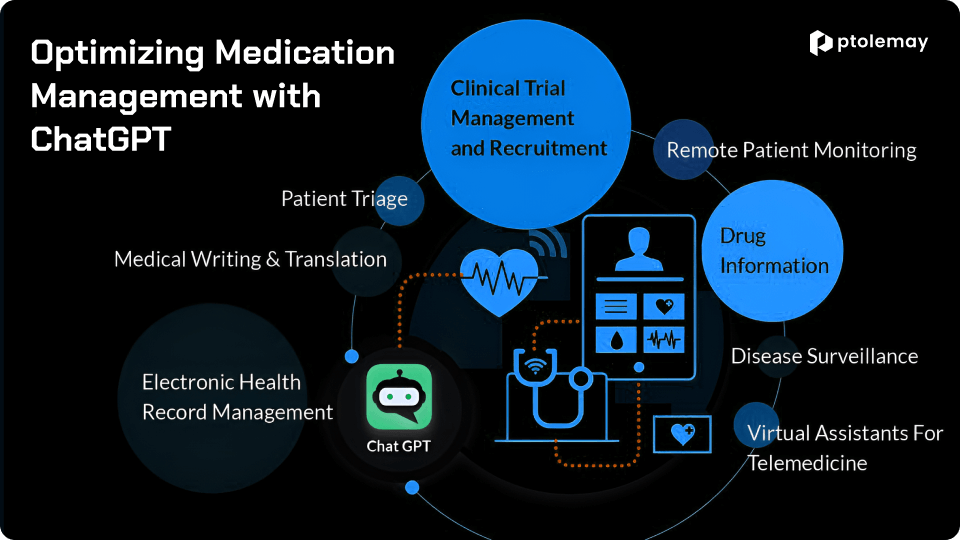

ChatGPT Health isn't the first AI tool attempting to provide health information. The field already includes several approaches:

Symptom checkers like Healthline Symptom Checker and WebMD Symptom Checker use decision trees and algorithms to suggest possible conditions based on symptoms. They're rule-based, not AI-based in the modern sense.

AI diagnostic tools like Tempus are trained specifically on medical data and designed to assist doctors; IBM Watson for Oncology was an earlier, since-discontinued example. They're used in clinical settings, not by consumers directly.

Telehealth platforms like Teladoc and Amwell connect you to actual doctors via video. They provide real medical advice from licensed providers.

Health coaching AI like Lark and Livongo use AI to provide personalized health guidance based on your data. They're typically used for chronic disease management.

General LLMs like ChatGPT, Claude, and Gemini can all answer health questions, but they're not optimized for health and don't have dedicated privacy controls.

ChatGPT Health combines elements of these. It's an LLM (like general ChatGPT) with health-specific features (privacy controls, app integration, health context). It's not a clinical tool like Watson, and it's not a doctor like Teladoc.
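For contrast with an LLM, a rule-based symptom checker is essentially a fixed decision tree: every path is written by hand, so it can't improvise, but it also can't answer anything its authors didn't anticipate. The tree below is a hypothetical, illustrative placeholder, not clinical guidance or the logic of any named product.

```python
# Each node is either a question with yes/no branches or a final recommendation.
# Hypothetical, illustrative tree; not clinical guidance.
TREE = {
    "question": "Is the chest pain severe or spreading to the arm or jaw?",
    "yes": {"result": "Call emergency services now."},
    "no": {
        "question": "Has it lasted more than a few days?",
        "yes": {"result": "Book an appointment with a doctor."},
        "no": {"result": "Monitor symptoms; seek care if they worsen."},
    },
}

def run_checker(node: dict, answers: list[bool]) -> str:
    """Walk the tree using a pre-supplied list of yes/no answers."""
    for answer in answers:
        if "result" in node:
            break
        node = node["yes"] if answer else node["no"]
    return node.get("result", "More information needed.")

print(run_checker(TREE, [True]))          # Call emergency services now.
print(run_checker(TREE, [False, False]))  # Monitor symptoms; seek care if they worsen.
print(run_checker(TREE, [False]))         # More information needed.
```

Every output is traceable to a rule someone wrote and reviewed, which is the property an LLM's free-form answers lack.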

Where ChatGPT Health Wins

Conversational depth: ChatGPT can have longer, more nuanced conversations than symptom checkers. You can ask follow-up questions and explain complex situations.

Accessibility: It's free and always available. Teladoc costs money and requires scheduling. Symptom checkers are often paywalled or ad-laden.

Personalization through data: By integrating with Apple Health and other apps, ChatGPT Health can understand your specific situation better than generic health information.

Continuous context: Within the Health space, ChatGPT remembers conversations. You don't have to re-explain your situation.

Where ChatGPT Health Loses

Accountability: If a doctor gives bad advice, you can sue. If ChatGPT gives bad advice, you probably can't.

Evidence standards: Doctors are trained using medical evidence and specific protocols. ChatGPT is trained on internet text.

Regulation and oversight: Doctors are licensed and regulated. ChatGPT is not.

Accuracy: Despite all efforts, LLMs hallucinate. Doctors (hopefully) don't.

Ability to examine you: A doctor can do a physical exam. ChatGPT can't.

Projected increase in both adoption and regulatory scrutiny of ChatGPT Health over the next few years. Estimated data based on potential market and regulatory developments.

Security Risks and Data Vulnerability

The Data Breach Scenario

ChatGPT Health's biggest vulnerability is being a centralized repository of health data. If OpenAI's systems are breached, attackers get access to everyone's health information simultaneously.

OpenAI has had security incidents before, including a March 2023 bug that briefly exposed some users' chat titles to other users. And the healthcare industry is a major target for hackers because health data is extremely valuable.

On the black market, health records command high prices because they can be used for:

- Insurance fraud: Filing false claims using someone's medical history

- Identity theft: Using medical identity to get prescriptions or procedures

- Blackmail: Threatening to publish embarrassing health information

- Targeted health scams: Targeting people with specific conditions

OpenAI has built-in protections (encryption, access controls, monitoring). But perfect security doesn't exist. A breach is possible, and the consequences would be severe.

API Integration Vulnerabilities

When ChatGPT Health connects to Apple Health, MyFitnessPal, and Function, you're creating additional potential breach points. Each integration is another company handling your health data.

What happens if MyFitnessPal is breached? ChatGPT Health doesn't cause that breach, but connecting systems has increased your exposure. The more places your data exists, the higher the risk.

OpenAI's documentation doesn't detail how these integrations are secured. Are they using OAuth (which gives time-limited access without sharing passwords)? Or are they sharing API keys that grant broader access? This matters.
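The difference between the two models can be sketched as data: a scoped, expiring OAuth-style access token versus a static key that works for everything until someone revokes it. The scope names and credential fields are hypothetical; this models only the authorization check, not a real OAuth flow or any of these companies' APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Union

@dataclass(frozen=True)
class AccessToken:
    """OAuth-style token: limited scopes and a short lifetime."""
    scopes: frozenset
    expires_at: datetime

@dataclass(frozen=True)
class StaticApiKey:
    """Long-lived key with no scopes: valid for everything until revoked."""
    key_id: str

def can_read(credential: Union[AccessToken, StaticApiKey], scope: str, now: datetime) -> bool:
    if isinstance(credential, AccessToken):
        return scope in credential.scopes and now < credential.expires_at
    return True  # a static key is not limited by scope or time

now = datetime(2026, 1, 15, tzinfo=timezone.utc)
token = AccessToken(frozenset({"sleep:read"}), now + timedelta(hours=1))

print(can_read(token, "sleep:read", now))                       # True
print(can_read(token, "nutrition:read", now))                   # False: out of scope
print(can_read(token, "sleep:read", now + timedelta(hours=2)))  # False: expired
print(can_read(StaticApiKey("k-1"), "nutrition:read", now))     # True
```

If a scoped token leaks, the damage is limited to one data type for a short window; a leaked static key exposes everything it can reach until it is revoked.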

Regulatory Compliance Challenges

HIPAA in the US requires that healthcare providers (covered entities) and their business associates implement specific security standards. OpenAI is probably not a covered entity (ChatGPT Health is an information service, not a medical service). But if people use ChatGPT Health as their primary source of health guidance, regulators might argue it should be held to HIPAA standards.

GDPR in Europe gives people the right to access their data and have it deleted. ChatGPT Health needs infrastructure to support these rights at scale.

State privacy laws (California's CPRA, Virginia's VCDPA, etc.) classify health information as sensitive data requiring extra protection; Virginia's law, for example, requires opt-in consent before processing it.

OpenAI's existing privacy policies might not meet these standards for health data. As ChatGPT Health launches, the company will likely need to update privacy policies and potentially hire compliance experts.

Real-World Use Cases and Limitations

Where ChatGPT Health Actually Helps

Medication side effects: "I started taking this medication and experienced these symptoms. Are they known side effects?" ChatGPT can check against documented side effect profiles. This is genuinely useful. It helps people distinguish normal side effects from concerning ones.

Symptom research: "I've had this strange symptom for a week. What conditions cause it?" ChatGPT can list possibilities. This helps people decide whether to call a doctor. It won't diagnose, but it narrows the field.

Health literacy: "What is a lipid panel?" "How does insulin resistance work?" "What's the difference between Type 1 and Type 2 diabetes?" ChatGPT can educate. Informed patients make better decisions.

Lifestyle optimization: "Based on my sleep data, what should I change?" ChatGPT can give personalized suggestions using your actual data. This is more useful than generic health advice.

Pre-appointment preparation: "I have a doctor's appointment tomorrow for knee pain. What questions should I ask?" ChatGPT can help people prepare and ask better questions of their doctors.

Where ChatGPT Health Fails or Is Dangerous

Emergency assessment: "Am I having a heart attack?" ChatGPT might not tell you to call 911. It might provide information about heart attack symptoms, but the responsibility is now on you to assess yourself. In emergencies, every second matters. You shouldn't be having a text conversation with an AI.

Rare conditions: ChatGPT's training data has plenty of information about common conditions but less about rare ones. If you have a rare condition, ChatGPT's advice might be useless or dangerous.

Drug interactions: While ChatGPT can check documented interactions, it might miss rare interactions or interactions specific to your condition. A pharmacist is still better.

Mental health: Chatbots aren't therapists. ChatGPT Health might help someone understand anxiety, but it can't replace therapy. And for suicidal ideation or severe mental health crises, an AI chatbot is actively dangerous if it's someone's only resource.

Genetic counseling: If you're concerned about genetic conditions, ChatGPT can provide information but can't replace a genetic counselor who understands your family history and can advise on testing.

Personalization limits: ChatGPT Health can integrate with health apps, but it doesn't understand you the way a doctor does. It doesn't know your values, your anxiety levels, your work stress. It can't take these into account the way a human provider can.

230 million people ask ChatGPT health questions weekly, roughly 10 times the number of weekly US patient visits, highlighting its role as a major health information resource. Estimated data.

The Broader Implications: AI as Healthcare Infrastructure

Democratization vs. Dilution

One way to view ChatGPT Health: it democratizes health information. Healthcare has historically been gatekept by credentials. You need a medical degree to practice medicine. ChatGPT Health puts basic health guidance in everyone's hands.

Another way to view it: it dilutes the value of healthcare expertise. If anyone can get health advice from an AI, what's the point of medical training? This is probably overblown (doctors do more than provide information), but it's worth considering.

The optimistic scenario: ChatGPT Health supplements healthcare. People use it for basic questions, filtering out worries that don't need medical attention. This reduces unnecessary doctor visits, freeing up medical resources. Doctors focus on people who genuinely need them.

The pessimistic scenario: ChatGPT Health substitutes for healthcare. People with low health literacy or limited access rely on it entirely. They miss real conditions. They take incorrect medications. They delay necessary treatment.

The likely reality: both things happen simultaneously. For some users, ChatGPT Health is genuinely helpful. For others, it's dangerous. The system as a whole becomes more fragmented.

The Labor Question

Will ChatGPT Health put doctors out of work? No. Healthcare demand already exceeds supply. The US has a shortage of about 37,000 physicians, and the gap is widening.

What ChatGPT Health might do is change what doctors do. If AI handles basic information provision and triage, doctors spend more time on complex cases and patient care rather than explanation.

Or it might reduce demand for certain types of medical work. Telemedicine doctors answering basic questions might see decreased demand as people use ChatGPT instead. Radiology might be affected if AI gets better at image interpretation (it already is in some domains).

Healthcare is unlikely to see massive job losses from ChatGPT Health specifically. But the integration of AI into healthcare will reshape the profession.

Global Healthcare Inequality

Here's a radical thought: ChatGPT Health might be better than the alternative for people in countries without good healthcare systems.

In parts of Africa, Asia, and Latin America, doctors are scarce. People die of treatable conditions because they can't access care. For someone in rural Zimbabwe, ChatGPT Health is better than nothing. It's free. It's available. It doesn't require visiting a clinic.

ChatGPT Health doesn't solve healthcare inequality. But for certain use cases and certain populations, it meaningfully improves access.

This creates a weird situation where the US and Europe might regulate ChatGPT Health heavily (limiting its claims, requiring disclaimers) while people in lower-income countries use it as their primary healthcare resource. The regulation might actually harm global access.

Privacy Deep Dive: What You Should Know

What Data Is Collected

When you use ChatGPT Health, OpenAI collects:

- Your health questions and conversations

- Health data you've connected from Apple Health, MyFitnessPal, etc.

- Any files you upload (medical records, test results)

- Metadata (when you asked, how long the conversation was, etc.)

This is substantially more health data than OpenAI collects from regular ChatGPT use.

Who Can Access Your Data

- OpenAI employees: Limited to specific teams (health, trust and safety, product). Access is logged.

- OpenAI contractors: If OpenAI uses external contractors for data labeling or analysis, they might have access.

- Third parties via integration: When you connect an app like Apple Health, the company behind that app is also part of the data flow, under its own privacy policy.

- Law enforcement: If served with a warrant or subpoena, OpenAI must comply with legal requests for your data.

OpenAI's privacy policy is more transparent than those of many tech companies, but it's not zero-knowledge encryption. Your data is protected by OpenAI's policies and infrastructure, not by cryptography that makes your data unknowable to them.

Opt-Out Options

You can presumably delete ChatGPT Health conversations, but this has the same deletion timeline as regular ChatGPT (30 days in a deleted state, then removal). For healthcare data, you might want stronger deletion assurances.
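To make that timeline concrete, here is a minimal Python sketch that computes when a deleted conversation would be permanently removed, assuming a flat 30-day window measured from the moment of deletion. The helpers `purge_date` and `is_purged` are hypothetical illustrations, not OpenAI code, and real systems may also keep backups or honor legal holds that this ignores.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a flat 30-day "deleted state" before permanent removal,
# as described above. Backups and legal holds are not modeled.
RETENTION_WINDOW = timedelta(days=30)


def purge_date(deleted_at: datetime) -> datetime:
    """Earliest time a conversation deleted at `deleted_at` would be removed."""
    return deleted_at + RETENTION_WINDOW


def is_purged(deleted_at: datetime, now: datetime) -> bool:
    """True once the 30-day deleted-state window has fully elapsed."""
    return now >= purge_date(deleted_at)


deleted = datetime(2026, 1, 10, tzinfo=timezone.utc)
print(purge_date(deleted).date())  # 2026-02-09
print(is_purged(deleted, datetime(2026, 2, 1, tzinfo=timezone.utc)))  # False
```

Under this assumption, deleted health data still exists on OpenAI's systems for about a month before it is removed.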

You can decline to connect external apps. This limits ChatGPT Health's functionality (it can't see your actual health data) but improves privacy.

You can ask OpenAI for a data export under privacy laws, though the process isn't well-publicized.

The Trade-off

This is the fundamental privacy trade-off: personalization requires data. ChatGPT Health is more useful with your health data (it can see your actual sleep patterns, exercise, nutrition). But your health data in OpenAI's systems is a vulnerability. You're trusting OpenAI with information that's extremely sensitive.

Some people will make this trade happily. Others will refuse and accept lower functionality. Both choices are reasonable.

Implementation Timeline and Rollout Strategy

Phase 1: Initial Launch (Early 2026)

ChatGPT Health is rolling out "in the coming weeks" as of January 2026. This likely means January through March.

Initial availability will probably be limited to ChatGPT Plus subscribers (paying users). This serves two purposes: testing with engaged users and monetizing the feature. OpenAI can gather feedback and iterate before opening it to the free tier.

Integration phasing: Initial integrations are probably Apple Health, MyFitnessPal, and Function. More integrations (Fitbit, Strava, healthcare provider patient portals) will likely follow.

Geographic rollout: US first, then international expansion. Regulatory requirements vary by country, so expansion will be staged.
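Staged rollouts like this are commonly implemented with feature gates. The Python sketch below is hypothetical: the `ROLLOUT` config, its tier and country values, and the integration identifiers are assumptions chosen to mirror this article's speculation, not anything OpenAI has published.

```python
from dataclasses import dataclass

# Hypothetical Phase 1 gate mirroring the article's speculation;
# none of these values come from OpenAI.
ROLLOUT = {
    "tiers": {"plus"},        # paying users first
    "countries": {"US"},      # US first, international later
    "integrations": {"apple_health", "myfitnesspal", "function"},
}


@dataclass(frozen=True)
class User:
    tier: str
    country: str


def health_enabled(user: User, rollout: dict = ROLLOUT) -> bool:
    """The feature is visible only if both the user's tier and country are enabled."""
    return user.tier in rollout["tiers"] and user.country in rollout["countries"]


def allowed_integrations(user: User, requested: set, rollout: dict = ROLLOUT) -> set:
    """Keep only the app connections that are live in the current phase."""
    if not health_enabled(user, rollout):
        return set()
    return requested & rollout["integrations"]


print(health_enabled(User("plus", "US")))  # True
print(health_enabled(User("free", "US")))  # False
print(sorted(allowed_integrations(User("plus", "US"), {"apple_health", "fitbit"})))  # ['apple_health']
```

In this design, moving to a later phase is a configuration change (for example, adding `"free"` to `tiers` or `"fitbit"` to `integrations`) rather than a code change, which is one reason staged launches are usually built this way.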

Phase 2: Expansion and Integration (Mid-2026 to Late-2026)

Once initial launch is stable, OpenAI will likely:

- Expand to free tier users

- Add more app integrations (partnering with major health platforms)

- Add provider integrations (letting doctors view recommendations)

- Expand to additional countries

- Adjust based on user feedback and incident reports

Phase 3: Mainstream Adoption (2027 and Beyond)

If ChatGPT Health gains traction, it could become standard within ChatGPT. Millions of people might use it daily for health questions.

This timeline assumes nothing catastrophic happens (a major breach, serious misguidance leading to harm, regulatory rejection). If one of those occurs, the timeline either ends abruptly (shutdown) or slows (suspension for improvements).

The Developer and Enterprise Angle

API Access for Healthcare Apps

OpenAI hasn't announced whether ChatGPT Health will have APIs for healthcare app developers. But this is likely coming.

Imagine that you're building a healthcare app for managing diabetes. You could integrate ChatGPT Health to provide personalized health guidance within your app. Users get coaching and symptom assessment integrated into their diabetes management tool.

This creates a whole ecosystem where ChatGPT Health becomes infrastructure for other health apps.

The risk: it centralizes AI health guidance through OpenAI. All these apps depend on ChatGPT working correctly. If ChatGPT hallucinates, it propagates through the entire ecosystem.

Integration Standards

Healthcare has existing integration standards: HL7 v2 for messaging between clinical systems, and FHIR (HL7's newer, API-based standard) for data exchange. ChatGPT Health will need to work with these standards to integrate with healthcare systems.
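To show what FHIR data looks like, here is a trimmed FHIR R4 Observation resource for a heart-rate reading, plus a small Python function that turns it into a line of plain text an assistant could reason over. The fields (`resourceType`, `code.coding`, `valueQuantity`, `effectiveDateTime`) follow the FHIR Observation structure, and LOINC 8867-4 is the standard heart-rate code. The patient reference, the values, and the `summarize_observation` helper are illustrative assumptions, and nothing here reflects how ChatGPT Health actually ingests records.

```python
import json

# A trimmed FHIR R4 Observation. LOINC 8867-4 is the heart-rate code;
# the patient reference and measurements are made-up sample data.
raw = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2026-01-05T08:30:00Z",
  "valueQuantity": {"value": 72, "unit": "beats/minute", "system": "http://unitsofmeasure.org", "code": "/min"}
}
"""


def summarize_observation(resource: dict) -> str:
    """Turn a single-valued FHIR Observation into a one-line, human-readable summary."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    coding = resource["code"]["coding"][0]
    label = coding.get("display", coding["code"])  # fall back to the raw code
    qty = resource["valueQuantity"]
    return f"{label}: {qty['value']} {qty['unit']} at {resource['effectiveDateTime']}"


print(summarize_observation(json.loads(raw)))
# Heart rate: 72 beats/minute at 2026-01-05T08:30:00Z
```

The sketch only handles `valueQuantity`. Real Observations can carry other value types or multiple `component` entries (blood pressure is a common example), so a production parser would need more cases.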

If your health provider uses Epic (the dominant medical records system), ChatGPT Health would need to integrate with Epic's APIs. This is technically feasible but requires certification and security review.
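Epic, like other major EHR vendors, exposes patient data through FHIR REST APIs, typically authorized with a SMART on FHIR OAuth 2.0 access token. The Python sketch below only builds a FHIR search request (a `GET` on `Observation` filtered by patient and LOINC code) and does not send it. The base URL, patient ID, and token are placeholders, and `heart_rate_search` is a hypothetical helper. A real integration would get the endpoint from the provider and go through the OAuth flow and the certification and security review mentioned above.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical FHIR R4 base URL; a real one is published by the provider,
# and the access token comes from a SMART on FHIR OAuth 2.0 flow.
FHIR_BASE = "https://fhir.example-hospital.org/api/FHIR/R4"


def heart_rate_search(patient_id: str, access_token: str) -> Request:
    """Build (but do not send) a FHIR search for a patient's heart-rate Observations."""
    params = urlencode({
        "patient": patient_id,
        "code": "http://loinc.org|8867-4",  # FHIR token search syntax: system|code
    })
    return Request(
        f"{FHIR_BASE}/Observation?{params}",
        headers={
            "Accept": "application/fhir+json",
            "Authorization": f"Bearer {access_token}",
        },
        method="GET",
    )


req = heart_rate_search("example-patient-id", "TOKEN_FROM_OAUTH_FLOW")
print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes it easy to inspect or log exactly what would be asked of the provider's server before any patient data moves.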

OpenAI's trajectory suggests they're planning for serious healthcare system integration. The initial consumer play (Apple Health, MyFitnessPal) is just the start.

Critical Assessment: Is This Good or Bad?

The Glass Is Half Full

Accessibility: ChatGPT Health can help people in underserved regions access health information and guidance. For a person who can't see a doctor, it's better than nothing.

Symptom filtering: Helps people understand whether symptoms require medical attention, reducing unnecessary ER visits and doctor appointments.

Health literacy: Encourages people to understand their own health conditions, medications, and risk factors.

Cost reduction: If it prevents even a small percentage of unnecessary medical visits, the healthcare savings are enormous.

24/7 availability: Health information is available at 2 AM on a Sunday, whenever you have a question.

The Glass Is Half Empty

Hallucination risk: LLMs confidently generate false information. In a healthcare context, this can be directly harmful.

Accountability gaps: If ChatGPT's advice leads to harm, it's unclear who's responsible. This creates perverse incentives: without clear liability for accuracy, OpenAI has less reason to prioritize it.

Regulatory uncertainty: The legal and regulatory framework is undefined, making it risky for users and OpenAI alike.

Inequality: While it helps some people, it might make inequality worse if it replaces actual doctor access for people who need it.

Privacy risk: Centralizing healthcare data in a corporate system creates vulnerability.

Bias reproduction: If healthcare has bias (and it does), ChatGPT inherits that bias and potentially amplifies it.

The Realistic View

ChatGPT Health is neither a revolutionary healthcare savior nor a dangerous AI menace. It's a useful tool with serious limitations and risks.

For some use cases (symptom research, medication side effect checking, health literacy), it's genuinely helpful. For others (emergency assessment, rare disease diagnosis, mental health crisis), it's inadequate or dangerous.

The real question isn't whether ChatGPT Health is good or bad. It's whether OpenAI, users, regulators, and healthcare providers can establish guardrails that maximize benefit while minimizing risk.

Early evidence suggests the answer is maybe. OpenAI is being thoughtful about privacy and safety. But the incentives favor expansion and adoption over caution.

What Happens Next: Predictions and Implications

Short Term (Next 6-12 Months)

ChatGPT Health launches successfully. Millions of people try it. Some use cases work well (symptom research). Others generate problems (people relying on it instead of seeing doctors).

Media attention focuses on the risks. Stories emerge of people harmed by bad advice (even though ChatGPT always recommends seeing doctors, some people don't listen). This generates regulatory scrutiny.

OpenAI iterates based on feedback. They add disclaimers. They refuse to answer certain questions. They improve accuracy through retrieval augmentation and fine-tuning on medical data.
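Retrieval augmentation, in its simplest form, means fetching relevant reference passages first and instructing the model to answer from them. The Python sketch below is a toy: the three-passage `CORPUS` is made up, and word overlap stands in for the embedding search a real system would likely use. It illustrates the general technique, not OpenAI's pipeline.

```python
import re

# Hypothetical mini corpus standing in for vetted medical reference text.
CORPUS = {
    "lipid-panel": "A lipid panel measures total cholesterol, LDL, HDL and triglycerides.",
    "diabetes-types": "Type 1 diabetes is autoimmune; type 2 diabetes involves insulin resistance.",
    "insulin-resistance": "Insulin resistance means cells respond poorly to insulin.",
}


def tokens(text: str) -> set:
    """Lowercased word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> list:
    """Return up to k passage IDs ranked by word overlap with the question."""
    q = tokens(question)
    scores = {doc_id: len(q & tokens(text)) for doc_id, text in CORPUS.items()}
    ranked = sorted((d for d in scores if scores[d] > 0), key=scores.get, reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Put retrieved passages ahead of the question and ask for cited answers."""
    context = "\n".join(f"[{d}] {CORPUS[d]}" for d in retrieve(question))
    return f"Answer using only these sources, citing them by ID:\n{context}\n\nQuestion: {question}"


print(build_prompt("What does a lipid panel measure?"))
```

Grounding answers in retrieved text makes them easier to check against sources, but it does not eliminate hallucination: the model can still misread or ignore the passages it is given.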

Competitors launch their own health features. Google, Microsoft (Copilot), Meta, and others all have LLMs and all have motivation to enter the health space. ChatGPT Health isn't alone for long.

Medium Term (1-2 Years)

Regulatory frameworks clarify. The FDA issues guidance on AI health tools. The FTC settles cases about deceptive health claims. Countries implement specific requirements for AI in healthcare.

OpenAI either certifies ChatGPT Health as meeting new standards or pulls back its health claims. The feature either becomes regulated like a medical device or becomes more limited in what it claims to do.

Integration expands. Major healthcare systems consider ChatGPT Health integration. Epic, Oracle Health (formerly Cerner), and athenahealth (major medical records vendors) face pressure to integrate or build competing solutions.

Liability becomes clear. Either someone successfully sues OpenAI for harm from ChatGPT Health, or courts rule that OpenAI isn't liable because users were warned. This determines whether the product is viable long-term.

Long Term (2+ Years)

If successful, ChatGPT Health becomes standard within healthcare IT infrastructure. Patients access it through their healthcare providers. Doctors use it as a diagnostic support tool.

If unsuccessful, it gets relegated to consumer wellness (like general health information) without claims of medical guidance.

The broader question: does AI medicine work? Can we build AI systems that doctors trust enough to use in clinical care? Are LLMs the right architecture, or do we need symbolic AI or hybrid approaches?

These questions will shape healthcare for decades.

FAQ

What is ChatGPT Health?

ChatGPT Health is a dedicated space within ChatGPT for having health-related conversations. Unlike regular ChatGPT chats, health conversations are isolated from your other discussions. The feature can integrate with health apps like Apple Health and MyFitnessPal to provide personalized guidance based on your actual health data.

How does ChatGPT Health work technically?

ChatGPT Health functions as a separate conversation context within ChatGPT. It uses the same underlying language model but applies specialized safety guidelines for health topics. It can retain context across multiple conversations within the Health space and access data from connected health apps. OpenAI won't use these conversations to train its general models, providing a privacy boundary that regular ChatGPT doesn't offer.
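One way to picture that separation is as a conversation store partitioned by space. New chats only see memory from their own space, and health conversations are filtered out of any training export. This Python sketch is a hypothetical model of the behavior described above. The `Conversation` and `ConversationStore` classes and the `"health"`/`"general"` labels are assumptions, not OpenAI's architecture.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    space: str                                    # hypothetical labels: "general" or "health"
    messages: list = field(default_factory=list)


@dataclass
class ConversationStore:
    conversations: list = field(default_factory=list)

    def add(self, conv: Conversation) -> None:
        self.conversations.append(conv)

    def context_for(self, space: str) -> list:
        """Memory a new chat can draw on: messages from the same space only."""
        return [m for c in self.conversations if c.space == space for m in c.messages]

    def training_export(self) -> list:
        """Conversations eligible for model training: health chats are always excluded."""
        return [c for c in self.conversations if c.space != "health"]


store = ConversationStore()
store.add(Conversation("general", ["Help me outline a work presentation"]))
store.add(Conversation("health", ["My resting heart rate has been 72 all week"]))
store.add(Conversation("health", ["What questions should I ask about knee pain?"]))

print(store.context_for("health"))   # only the two health messages
print(len(store.training_export()))  # 1
```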

Is ChatGPT Health a replacement for doctors?

No. ChatGPT Health is explicitly positioned as an information and guidance tool, not a substitute for medical care. It can help you understand symptoms, research conditions, and prepare for doctor visits. But it can't diagnose conditions, prescribe medications, or provide personalized medical care the way licensed doctors can. For any serious health concern, you should see a healthcare provider.

What are the privacy concerns with ChatGPT Health?

ChatGPT Health collects and stores your health conversations and, optionally, your health data from connected apps. While OpenAI encrypts this data and won't use it for model training, it's centralized in OpenAI's systems. If OpenAI were breached, attackers would gain access to everyone's health data simultaneously. Additionally, regulatory compliance with HIPAA, GDPR, and state privacy laws is still being determined.

Can ChatGPT Health access my medical records?

Initially, ChatGPT Health integrates with consumer health apps (Apple Health, MyFitnessPal, Function) that provide fitness, sleep, and nutrition data. Integration with medical records from your doctor's office would require connecting through those provider systems, which isn't available at launch. Future integrations might allow this, but it would require additional security and regulatory approvals.

What are the accuracy limitations of ChatGPT Health?

Large language models like ChatGPT predict statistically likely responses, not necessarily correct ones. They can hallucinate confident-sounding false information, especially about rare conditions or unusual drug interactions. ChatGPT Health is useful for common conditions and general health education but shouldn't be trusted for rare diagnoses, emergency assessment, or complex medication interactions without verification from a pharmacist or doctor.
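Here is a toy illustration of "statistically likely, not necessarily correct." A language model scores candidate next words, converts those scores to probabilities with a softmax, and, under greedy decoding, emits the top one. The scores below are invented, and real models work over vocabularies of many thousands of tokens. The key point still carries over: nothing in this computation checks whether the chosen answer is true, and a correct answer that was rare in the training data can end up with a low score.

```python
import math


def softmax(scores: dict) -> dict:
    """Turn raw model scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Invented scores for the next words after "These symptoms are most often caused by"
scores = {"a virus": 2.0, "bacteria": 1.5, "a rare genetic disorder": 0.5}
probs = softmax(scores)

# Greedy decoding: emit whichever continuation is most probable.
# No step here asks whether that continuation is true for this patient.
choice = max(probs, key=probs.get)
print({tok: round(p, 2) for tok, p in probs.items()})
print(choice)  # a virus
```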

How is ChatGPT Health different from other health AI tools?

ChatGPT Health combines conversational AI capabilities with health-specific privacy protections and health app integration. Compared to basic symptom checkers, it offers more nuanced conversation. Compared to clinical AI tools like IBM Watson, it's designed for consumers, not doctors. Compared to telehealth, it's free but doesn't provide the accountability and expertise of a licensed provider. It's a middle ground between raw information and professional care.

When will ChatGPT Health be available?

OpenAI announced ChatGPT Health in January 2026 with rollout "in the coming weeks." Initial availability is expected for ChatGPT Plus subscribers first, with expansion to free tier users later. International rollout will follow after US launch, pending regulatory approval in each region.

What health data does OpenAI collect through ChatGPT Health?

OpenAI collects your health questions, health app data you've connected (exercise, sleep, nutrition), any health documents you upload, and metadata about your conversations. OpenAI has committed to not using this data for model training, but it's stored on OpenAI's servers and is accessible to certain OpenAI teams for trust, safety, and product purposes. You can control this by not connecting external apps or by deleting conversations.

What should I do if ChatGPT Health gives me wrong information?

Trust your doctor over ChatGPT. If you received health guidance from ChatGPT Health and it contradicts what your doctor says, follow your doctor's guidance. ChatGPT is a tool for understanding health information and researching conditions, not for making medical decisions. Always verify important health information with qualified healthcare providers before acting on it.

Conclusion: Healthcare's AI Moment

ChatGPT Health represents a critical inflection point. For the first time, a general-purpose AI tool is being explicitly positioned as a healthcare resource. It's not a niche medical AI system designed by healthcare companies. It's an LLM from a tech company, applied to medicine.

This is significant because it signals that healthcare is now part of AI's mainstream application. For years, AI in healthcare was specialized and regulated. Now it's consumer-facing and casual.

The optimistic reading: ChatGPT Health helps millions of people understand their health better. It reduces unnecessary medical visits, freeing up doctors for serious cases. It enables healthcare access in underserved regions. Over time, integration with medical systems makes healthcare more efficient and patient-centered.

The pessimistic reading: ChatGPT Health creates a false sense of medical understanding. People rely on it instead of seeing doctors and miss serious conditions. The technology amplifies healthcare disparities by being better for educated, tech-savvy users. Eventually, a high-profile failure occurs, and ChatGPT Health is sued out of existence.

The realistic reading: Both things happen. ChatGPT Health helps some people and harms others. The net effect depends on implementation details, regulation, and how society chooses to integrate AI into healthcare.

The next two years will determine which narrative wins. If ChatGPT Health launches cleanly, gains user trust, and integrates responsibly with healthcare systems, it could transform medicine. If problems emerge early (major privacy breach, significant harm from misguidance, regulatory rejection), it could become a cautionary tale about moving too fast in healthcare.

What's certain: healthcare's relationship with AI is no longer theoretical. It's real, it's starting now, and it will reshape how we think about medical knowledge, access, and trust.

OpenAI has opened a door. The question is what comes through it.

Key Takeaways

- 230 million people ask health questions on ChatGPT every week, showing massive demand for accessible health information

- ChatGPT Health isolates medical conversations and integrates with health apps (Apple Health, MyFitnessPal, Function) while not using data for model training

- Large language models predict probability, not medical truth, creating risks of hallucinations in health contexts where accuracy is critical

- ChatGPT Health addresses real healthcare failures (cost barriers, provider overload, access limitations) but introduces new risks around accountability and regulation

- Initial rollout is expected to begin in early 2026, likely with ChatGPT Plus subscribers first; regulatory approval questions and liability frameworks remain undefined
