Introduction: When a Messaging App Becomes a Social Platform

For years, WhatsApp operated in a regulatory gray zone. It was the messaging app your grandmother used to send voice notes, the secure communication tool that activists relied on, the simple private chat platform that Meta acquired back in 2014 for a staggering $19 billion. But things shifted in 2023 when Meta introduced WhatsApp Channels, a feature that fundamentally changed what WhatsApp does.

WhatsApp Channels lets users broadcast messages to followers in a one-to-many model. It's not private messaging anymore. It's more like Twitter, or how Instagram Stories work. And that distinction matters enormously for regulators across Europe.

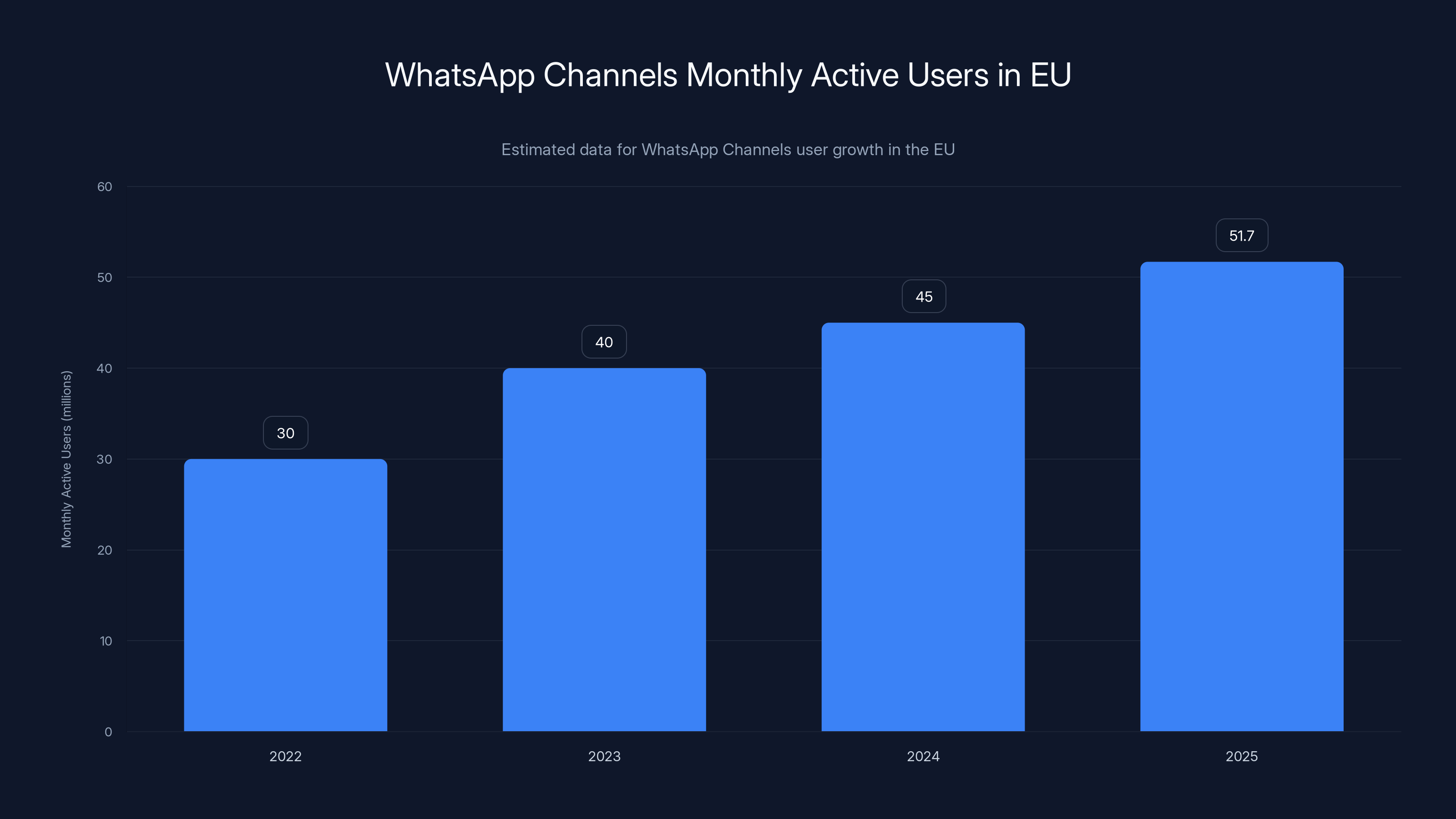

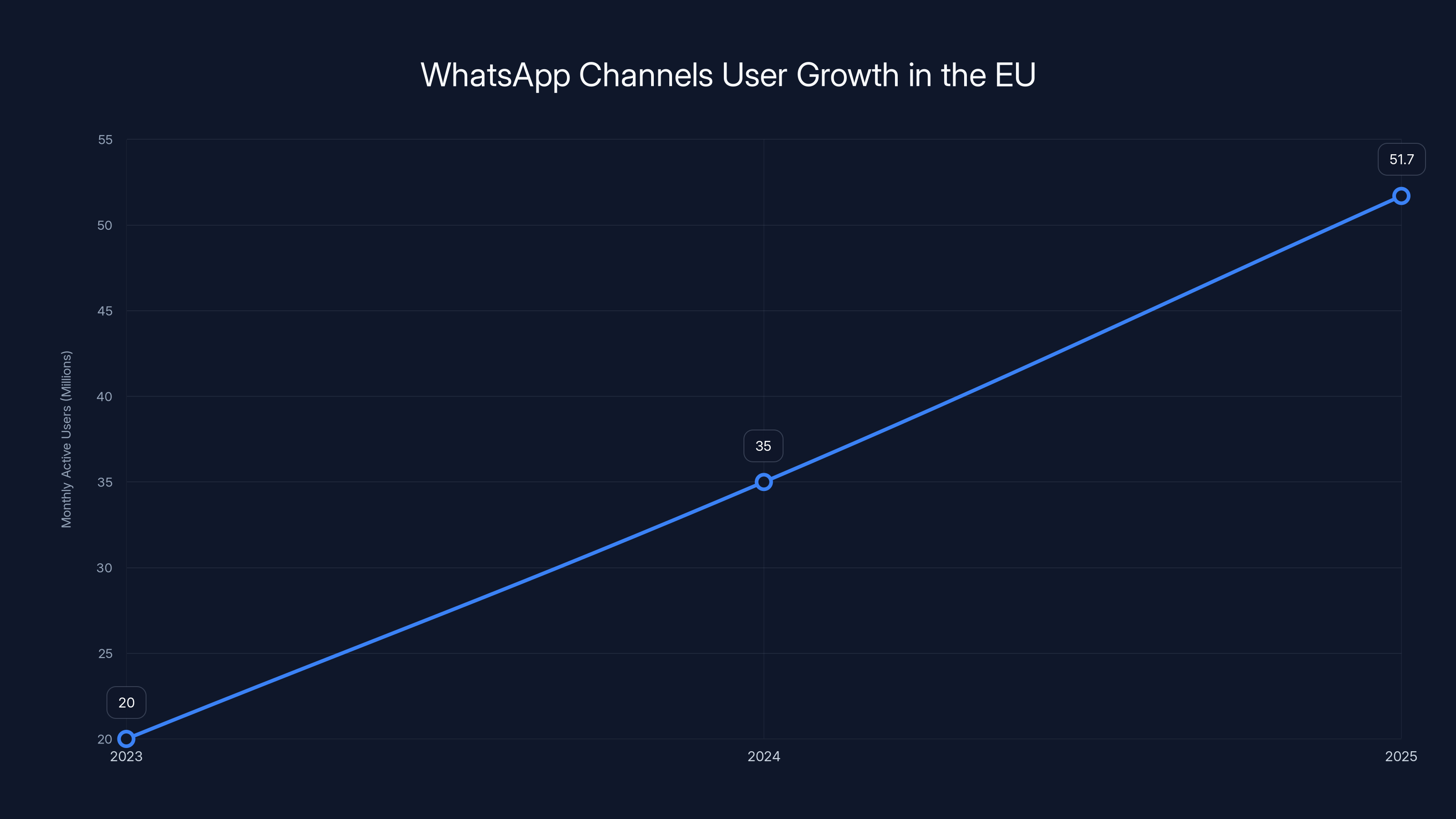

The European Commission revealed that WhatsApp Channels had accumulated approximately 51.7 million average monthly active users across the European Union during the first half of 2025. That number crossed a crucial threshold: 45 million users. Under the EU's Digital Services Act (DSA), any platform that reaches 45 million monthly active users can be classified as a "very large online platform" or VLOP. Once you're a VLOP, you're subject to intense regulatory scrutiny, mandatory compliance frameworks, and potential fines up to 6% of global annual revenue.

This development marks a pivotal moment for Meta, for European digital regulation, and for how we think about messaging apps in an age of platform consolidation. WhatsApp Channels didn't just cross a user threshold. It walked directly into one of the most complex regulatory regimes ever created for digital platforms.

Let's break down what happened, why it matters, and what comes next.

TL;DR

- WhatsApp Channels hit 51.7M EU users: The broadcasting feature exceeded the 45M threshold for "very large online platform" status under the DSA

- DSA penalties are severe: Non-compliance can result in fines up to 6% of global annual revenue, affecting Meta's bottom line significantly

- Meta already faces DSA violations: The company was previously fined for Facebook and Instagram compliance issues around illegal content reporting

- Channels transforms WhatsApp's nature: Moving from private messaging to broadcast communication triggered regulatory oversight

- Timeline matters: Designation could happen within months, not years, based on Commission statements

WhatsApp Channels reached 51.7 million monthly active users in the EU by 2025, surpassing the DSA's 'very large online platform' threshold of 45 million users. (Estimated data)

Understanding the Digital Services Act: Europe's Bold Regulatory Move

Before we talk about what happens to WhatsApp, you need to understand the Digital Services Act itself. Because here's the thing: the DSA isn't some minor compliance update. It's fundamentally reshaping how the world's largest tech platforms operate.

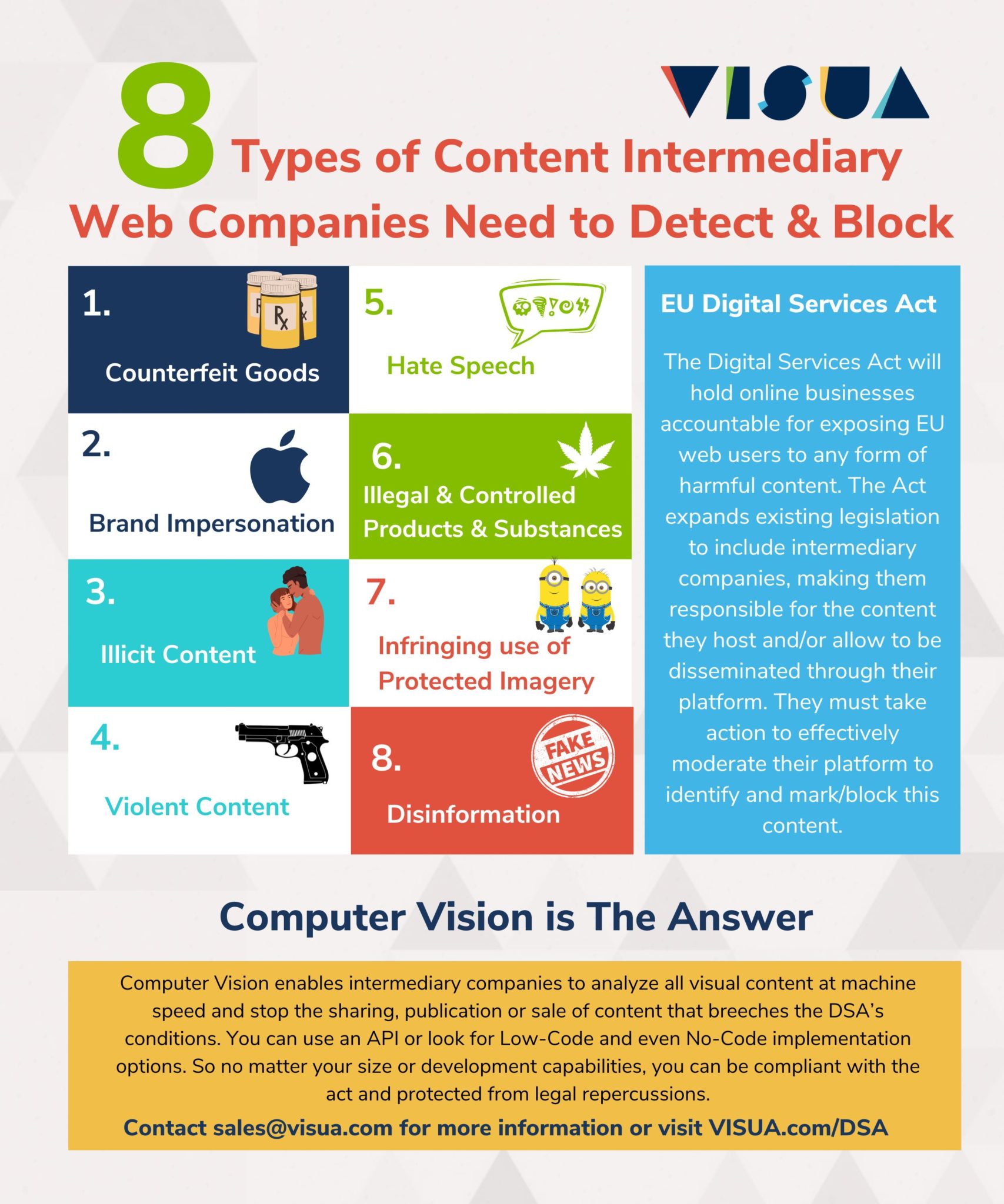

The DSA was officially adopted by the European Union in 2022 and began enforcement in 2024. It's the EU's direct response to decades of tech platforms operating with minimal oversight. Social media companies, search engines, marketplaces, and now messaging apps could basically do whatever they wanted as long as they followed their own terms of service. The DSA changed that calculus.

Under the DSA, platforms are categorized based on user numbers and social impact. Most apps exist in a kind of regulatory baseline tier. But once you hit 45 million monthly active users, you become a VLOP. And VLOP status comes with mandates that feel foreign to tech companies accustomed to self-regulation.

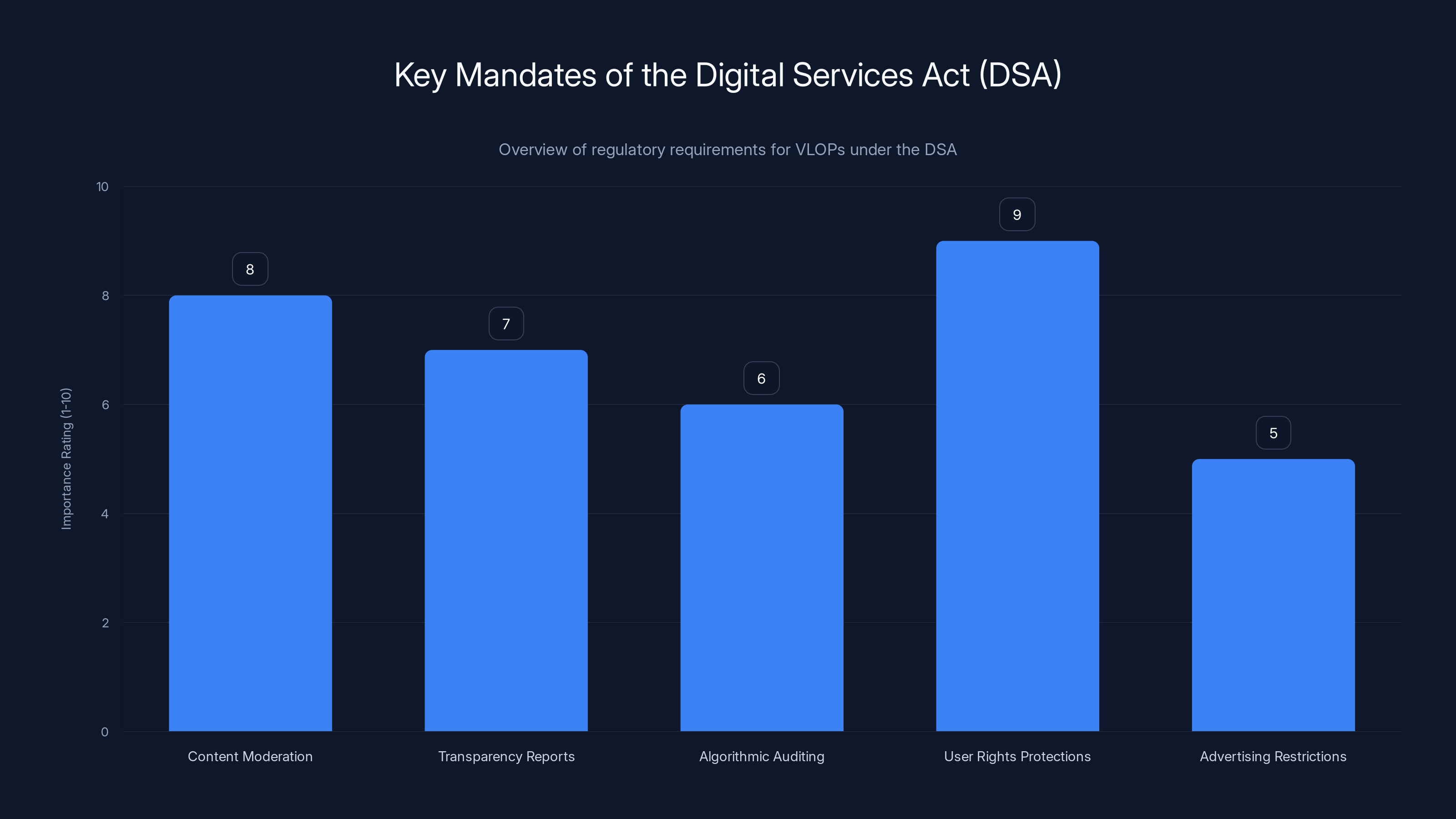

Those mandates include mandatory content moderation, transparency reports, algorithmic auditing, user rights protections, and advertising restrictions. Companies must document their systems, prove compliance, and open their platforms to independent auditors. It's the regulatory equivalent of moving from a small startup where anything goes to a publicly traded company where SEC oversight is constant.

Meta already has experience with this. Facebook and Instagram were designated as VLOPs almost immediately because they vastly exceeded the 45-million-user threshold. The company has been navigating DSA compliance since 2024, which is exactly why the WhatsApp designation matters so much. Meta now faces a three-front regulatory battle: Facebook, Instagram, and potentially WhatsApp.


WhatsApp Channels: How a Messaging App Became a Broadcast Platform

WhatsApp's original concept was pure. Create a simple, encrypted messaging service where individuals could communicate privately with other individuals. No advertisements. No algorithms. Just messages from person to person, protected by end-to-end encryption.

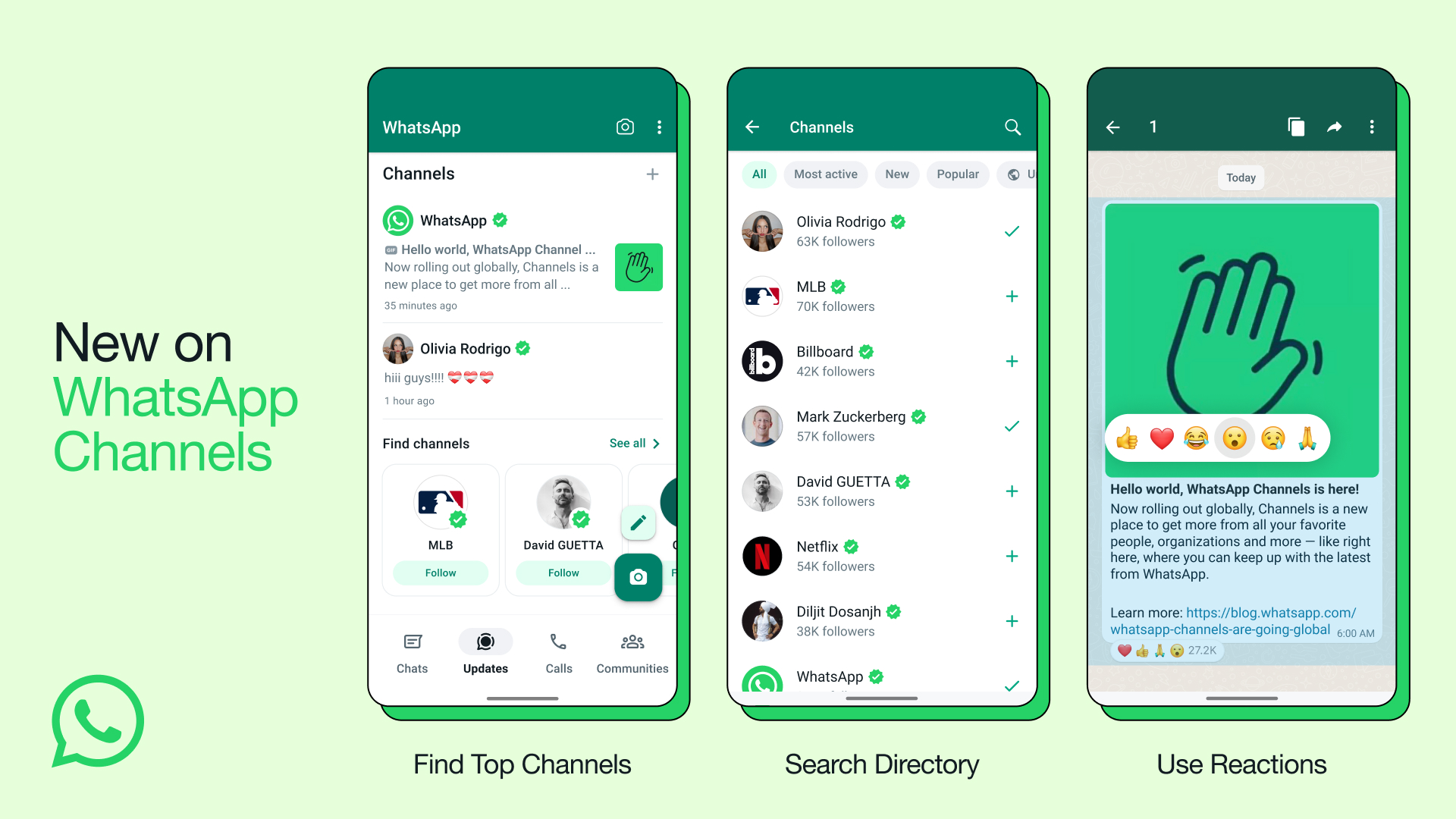

Then Meta started experimenting with broadcast functionality. In 2023, they rolled out WhatsApp Channels. The feature works like this: any user can create a channel. That channel can have followers. The channel creator broadcasts messages to those followers in a one-way stream. Followers see the messages, but they can't directly reply in the broadcast feed.

It's functionally similar to Telegram Channels, Twitter/X feeds, or Instagram broadcast stories. And that matters because once you're broadcasting to large audiences, the entire regulatory framework changes. You're no longer a private messaging tool. You're a publisher of content to potentially millions of people.

This is where WhatsApp crossed into DSA territory. The company didn't need to completely rebuild WhatsApp. They just needed a single feature to reach 45 million users for the classification to apply specifically to that feature. WhatsApp Channels did exactly that.

The feature grew rapidly because it solved real problems. Businesses could communicate with customers at scale. Public figures could reach supporters directly. News organizations could distribute breaking information. NGOs could coordinate with members. The functionality was genuinely useful, which is why adoption accelerated.

But that same growth created a regulatory problem. More users meant more content. More content meant more responsibility under EU law. And more responsibility meant WhatsApp had to suddenly operate like every other social platform.

The 45-Million-User Threshold: A Regulatory Tipping Point

There's something almost mathematical about how EU regulation works. The DSA defines specific thresholds, and platforms either meet them or they don't. There's no gray area. Either you're a VLOP or you're not. Either you hit 45 million monthly active users or you didn't.

WhatsApp Channels reaching 51.7 million users in the EU means the feature unambiguously crosses this threshold. The European Commission confirmed in a briefing that they're actively investigating whether to formally designate WhatsApp Channels as a VLOP. The word "designate" matters here. It's not automatic. The Commission must formally make the designation.
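The comparison itself is simple arithmetic. Here is a minimal sketch in Python that uses only the two figures quoted in this article. The function and constant names are hypothetical, and the check says nothing about the formal designation, which remains a Commission decision rather than an automatic calculation.

```python
VLOP_THRESHOLD_MAU = 45_000_000  # DSA threshold as cited in this article


def exceeds_vlop_threshold(avg_monthly_active_users: int) -> bool:
    """Return True if the reported EU average MAU meets or exceeds the threshold."""
    return avg_monthly_active_users >= VLOP_THRESHOLD_MAU


def margin_over_threshold(avg_monthly_active_users: int) -> tuple[int, float]:
    """Return (absolute margin, margin as a percentage of the threshold)."""
    margin = avg_monthly_active_users - VLOP_THRESHOLD_MAU
    return margin, 100 * margin / VLOP_THRESHOLD_MAU


channels_mau = 51_700_000  # figure reported for the first half of 2025
margin, pct = margin_over_threshold(channels_mau)
print(exceeds_vlop_threshold(channels_mau))
print(f"{margin:,} users over the threshold ({pct:.1f}%)")
```

With the reported figure, Channels sits 6.7 million users above the line, or roughly 15% over the threshold.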

But the numbers are undeniable. 51.7 million is slightly more than the population of Spain and well above that of Poland. It's larger than the combined population of all Scandinavian countries. For a feature that didn't exist three years ago, that growth is phenomenal.

The threshold of 45 million wasn't arbitrary. EU regulators designed it to capture the genuinely large platforms while excluding smaller competitors. The logic is sound: only the biggest platforms have the infrastructure and reach to cause systemic harm. If you reach 45 million users across the EU alone, you're operating at a scale where your decisions affect democracies.

Meta knew this threshold existed when they built Channels. The company operates other VLOPs like Facebook and Instagram. They understood the regulatory implications. What they maybe didn't anticipate was how quickly Channels would grow among EU users specifically. Growth in Europe has historically been slower than in other regions, but Channels bucked that trend.

WhatsApp Channels saw significant growth in the EU, reaching 51.7 million users in the first half of 2025, surpassing the VLOP threshold of 45 million users. Estimated data.

DSA Compliance Requirements: What WhatsApp Must Now Do

Assuming the Commission formally designates WhatsApp Channels as a VLOP, the compliance requirements are extensive. This isn't like getting a small fine from some regulator. This is a complete overhaul of how the platform operates.

First, there's content moderation. The DSA requires VLOPs to have documented systems for identifying and removing illegal content. "Illegal" in this context means content that violates EU law, not just the platform's terms of service. That includes hate speech, incitement to violence, child sexual abuse material, terrorism-related content, and copyright violations. Platforms must act on these within defined timeframes.

WhatsApp already uses automated systems for abuse detection, but those systems operate primarily in private messages. Channels are broadcast, which means content is public and requires different moderation approaches. The company would need to develop scalable, transparent moderation processes that can handle millions of pieces of content daily.

Second, there's algorithmic transparency. The DSA requires platforms to disclose how algorithms work, especially recommendation algorithms. WhatsApp Channels includes a recommendation system that suggests channels to users. The Commission wants to know exactly how this works, what data it uses, and how it might amplify harmful content.

This is tricky because algorithmic systems are often proprietary and protected. Meta doesn't want to reveal its recommendation architecture because competitors could copy it. But under the DSA, they don't have a choice. Transparency requirements are mandatory.

Third, there's user empowerment. Platforms must give users meaningful choices about algorithmic recommendation. Users should be able to see why content was recommended to them. Users should be able to opt out of personalized recommendations. These features exist on some platforms, but they're often hidden or poorly designed. The DSA requires them to be prominent and functional.
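To make the user empowerment idea concrete, here is a minimal Python sketch of a recommender that attaches a reason to every suggestion and falls back to a non-personalized ranking when the user opts out. Everything in it (the `Channel` and `Recommendation` types, the `recommend` function, the popularity fallback) is a hypothetical illustration, not a description of how WhatsApp's system works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    name: str
    topic: str
    followers: int


@dataclass(frozen=True)
class Recommendation:
    channel: Channel
    reason: str  # shown to the user to explain the suggestion


def recommend(channels, followed_topics, personalized=True, limit=3):
    """Rank channels; use plain popularity when the user has opted out."""
    if personalized:
        # Personalized path: only channels matching topics the user follows.
        pool = [c for c in channels if c.topic in followed_topics]
        reason = "matches a topic you follow: {topic}"
    else:
        # Opt-out path: no user data involved, just overall popularity.
        pool = list(channels)
        reason = "popular channel (personalization is off)"
    ranked = sorted(pool, key=lambda c: c.followers, reverse=True)[:limit]
    return [Recommendation(c, reason.format(topic=c.topic)) for c in ranked]


channels = [
    Channel("City News", "news", 900),
    Channel("Football Daily", "sports", 500),
    Channel("Recipes", "food", 700),
]
for rec in recommend(channels, {"sports"}):
    print(rec.channel.name, "->", rec.reason)
for rec in recommend(channels, set(), personalized=False, limit=2):
    print(rec.channel.name, "->", rec.reason)
```

The design point is that the explanation and the opt-out live in the same code path as the ranking, so they can't quietly drift out of sync with what the user actually sees.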

Fourth, there's advertising regulation. VLOPs face strict requirements around political advertising, including transparency about who paid for ads and how they're targeted. WhatsApp currently doesn't show ads, but it does allow businesses to send promotional messages through Channels. If those become classified as advertising, Meta would need to implement political ad libraries and targeting restrictions.

Fifth, there's reporting. Meta must publish semiannual reports on DSA compliance, detailing how many pieces of content they removed, how many accounts they suspended, and what complaints they received. These reports must be detailed enough for auditors and regulators to verify accuracy.
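The headline numbers in such a report are essentially counts over a log of moderation events. The Python sketch below shows one way that aggregation might look. The `ModerationEvent` type, the event kinds, and the categories are all assumptions made for illustration; they are not taken from any actual Meta or Commission reporting schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationEvent:
    kind: str      # hypothetical kinds: "content_removed", "account_suspended", "complaint_received"
    category: str  # illustrative category label, e.g. "hate_speech"


def summarize(events: list[ModerationEvent]) -> dict[str, int]:
    """Count events per kind, the headline figures a report would list."""
    return dict(Counter(event.kind for event in events))


events = [
    ModerationEvent("content_removed", "hate_speech"),
    ModerationEvent("content_removed", "copyright"),
    ModerationEvent("account_suspended", "terrorism"),
    ModerationEvent("complaint_received", "copyright"),
]
print(summarize(events))
```

Keeping the raw events rather than only the totals is what would let an auditor recompute the published figures and verify them.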

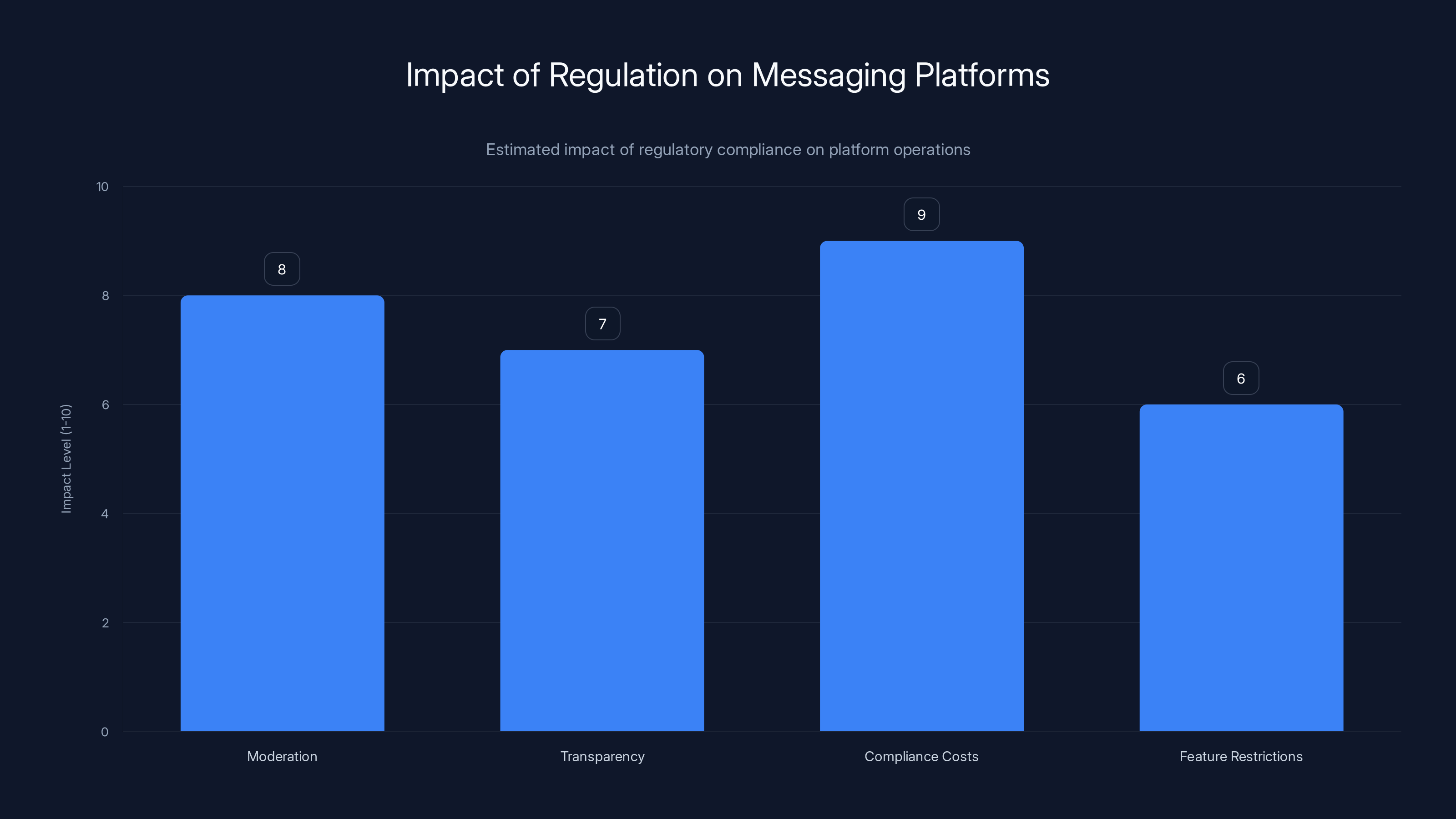

All of this costs money. It requires hiring compliance staff, building new systems, updating moderation infrastructure, and maintaining detailed documentation. For Meta, it's manageable. For smaller platforms, reaching VLOP status would be financially catastrophic.

The 6% Revenue Fine: What's Actually at Stake

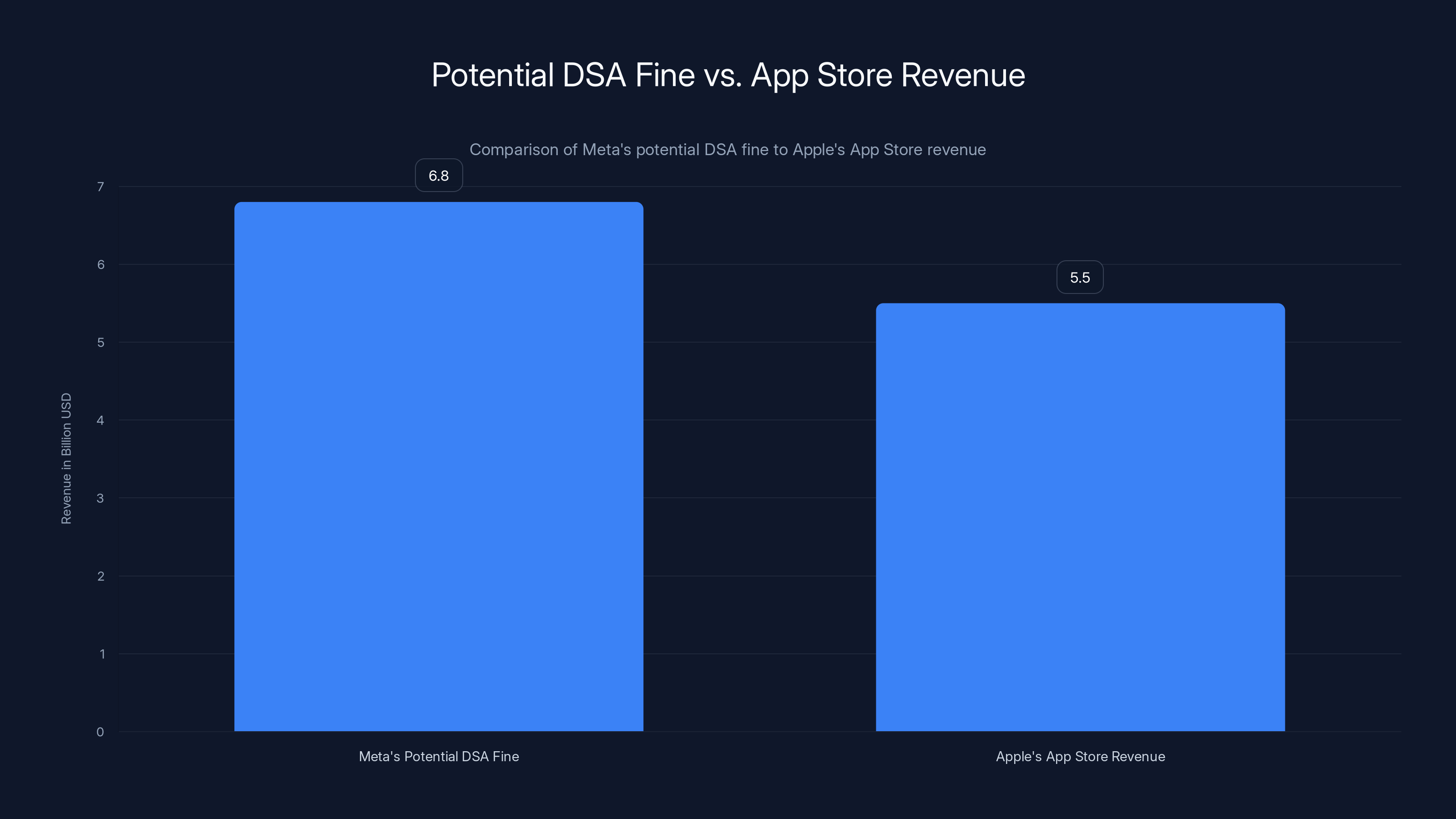

Let's talk about the financial incentive to comply. The DSA allows the European Commission to fine VLOPs up to 6% of global annual revenue for serious violations. Not regional revenue. Global revenue.
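The ceiling is easy to compute for any revenue figure. A minimal Python sketch follows; the function name is hypothetical, and the example revenue is a round placeholder chosen only to make the arithmetic easy to follow, not any company's actual figure.

```python
DSA_MAX_FINE_PERCENT = 6  # ceiling cited in this article: 6% of global annual revenue


def max_dsa_fine(global_annual_revenue: int) -> int:
    """Upper bound on a DSA fine, in the same currency units as the input."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    return global_annual_revenue * DSA_MAX_FINE_PERCENT // 100


# Hypothetical revenue, for illustration only.
example_revenue = 100_000_000_000
print(f"{max_dsa_fine(example_revenue):,}")
```

For every 100 billion in global revenue, the maximum exposure is 6 billion. This is a cap, not an expected penalty.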

Meta's annual revenue in 2024 was approximately

That's not a rounding error. That's a figure that would move markets and shareholder meetings. That's more than most Fortune 500 companies make annually. To put it in perspective, Apple's entire App Store division generates roughly $5-6 billion in annual revenue. Meta's potential DSA fine would exceed that.

Now, the Commission isn't going to immediately fine Meta 6%. These penalties are reserved for egregious violations. But they're the backstop. They're the maximum deterrent. And they create incentives to comply seriously.

Meta has already experienced DSA fines. In October 2025, the Commission fined the company for how it handled illegal content reporting on Facebook and Instagram. The company was charged with making it too difficult for users to report illegal content, which violates DSA requirements around user empowerment.

These weren't the maximum penalties. But they set a precedent. The Commission is actively enforcing the DSA. They're not issuing warnings and moving on. They're investigating, documenting violations, and imposing real financial consequences.

For Meta, that means WhatsApp Channels compliance can't be an afterthought. It has to be a priority. The company has to anticipate what regulators will scrutinize and build compliant systems proactively.

The Digital Services Act introduces several key mandates for VLOPs, with user rights protections and content moderation being among the most critical. Estimated data.

Meta's Existing DSA Violations: A Pattern Emerges

Meta's experience with DSA enforcement hasn't been smooth. The company has faced multiple investigations and violations since 2024. Understanding this history helps explain why WhatsApp Channels designation is significant. It's not Meta's first rodeo. It's just another front in an expanding regulatory battle.

The most notable issue involves illegal content reporting mechanisms. The DSA requires platforms to make it easy for users to report illegal content and to respond to those reports with transparency. Meta's approach on Facebook and Instagram apparently didn't meet that standard.

Users complained that the process for reporting illegal content was buried, unclear, or ineffective. The Commission found that Meta hadn't made sufficient effort to streamline reporting, which violates the principle of user empowerment. This is almost laughably straightforward: if your platform has illegal content, users need to be able to report it easily.

Meta fixed this in 2025 by improving its reporting interfaces and making illegal content reporting more prominent. But the fact that this required regulatory intervention says something about how the company initially prioritized user empowerment versus other concerns.

There's also the algorithmic recommendation issue. On Instagram, Meta's recommendation algorithm showed potentially harmful content to minors. The Commission investigated whether the algorithm violated requirements around protecting minors and preventing harm. These investigations are ongoing, and they could result in additional fines.

For WhatsApp, these prior violations create context. The Commission already views Meta as a company that needs monitoring. They already have active investigations. Adding WhatsApp Channels to the list means three separate VLOPs under investigation, all owned by the same parent company.

From a regulatory perspective, that pattern matters. It suggests systemic issues rather than isolated violations. It suggests Meta prioritizes growth and engagement over compliance. And it makes regulators more likely to impose serious penalties if new violations emerge.

The Timeline: When Will Formal Designation Happen?

One question everyone wants answered: when will the Commission formally designate WhatsApp Channels as a VLOP?

There's no official timeline. The Commission spokesperson said they're "actively looking into it" and wouldn't "exclude a future designation," which is bureaucratic language for "this is definitely happening but we're not saying when."

Based on how the Commission handled Facebook and Instagram designations, the process typically takes several months. The Commission needs to verify user numbers, evaluate the platform's nature, assess potential harms, and prepare internal documentation. They might also reach out to Meta for comment and request information about current compliance measures.

Given that WhatsApp Channels' designation is likely based on data from the first half of 2025, and assuming the Commission had that data by mid-2025, a formal announcement could come anytime in late 2025 or early 2026. But these timelines are fluid, and regulators don't rush critical decisions.

What's virtually certain is that the designation will happen. The numbers are too clear, the precedent too established, and the Commission's appetite for enforcement too obvious. Meta's legal teams are probably already preparing compliance plans based on the assumption that designation is coming.

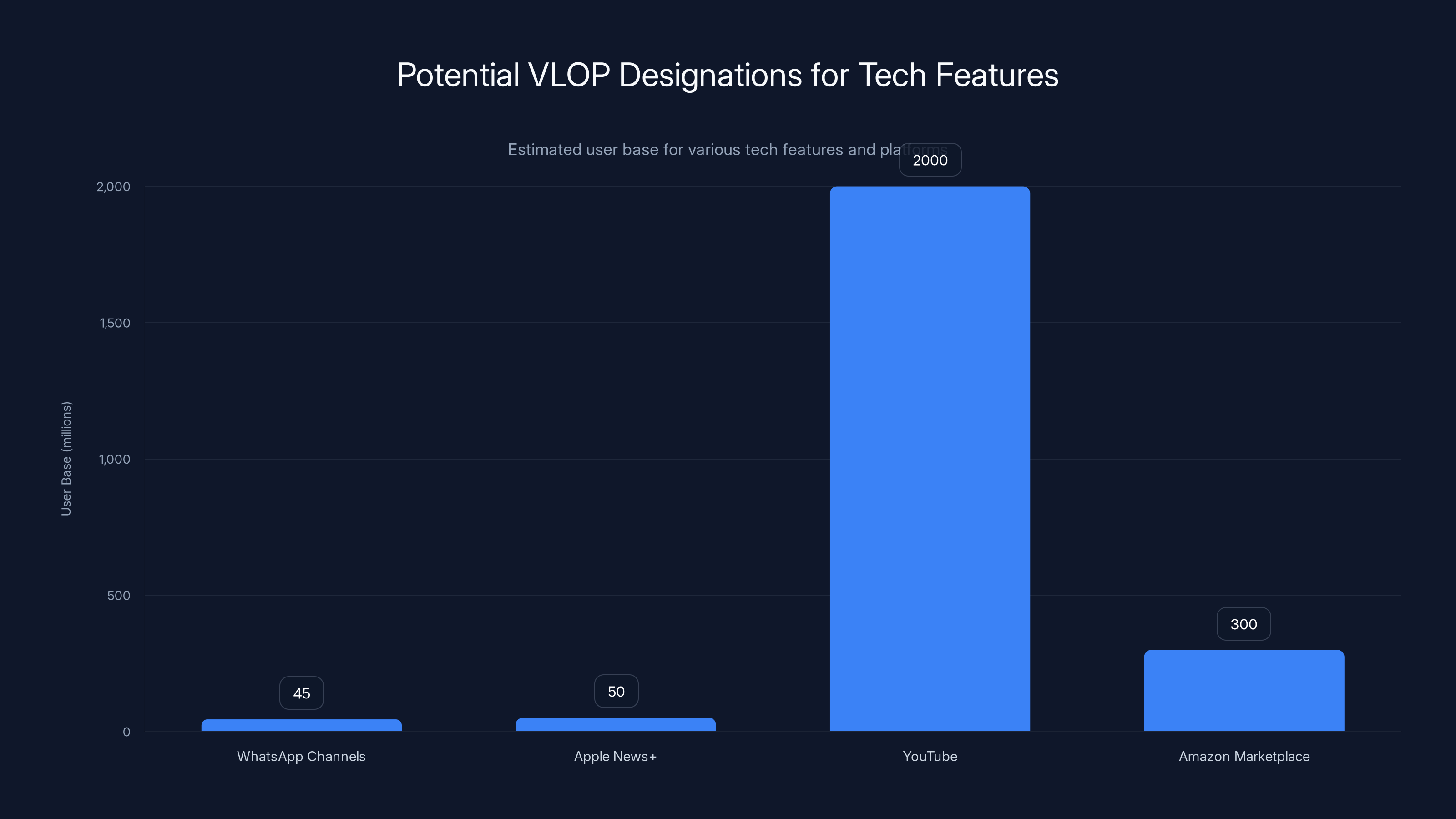

Estimated data shows potential VLOP designations for tech features based on user base, highlighting regulatory implications for large platforms.

WhatsApp's Unique Challenge: Encryption and Moderation

Here's where things get genuinely complicated. WhatsApp's core feature is end-to-end encryption. Messages sent through WhatsApp are encrypted on the sender's device and decrypted on the recipient's device. Neither Meta nor any government can read those messages.

This encryption is a feature. It's why activists, journalists, and people in authoritarian countries rely on WhatsApp. It's why the app is trusted.

But it's also a problem for DSA compliance. How do you identify and remove illegal content if you can't read it? How do you audit for hate speech if messages are encrypted? How do you comply with requirements to remove terrorism-related content if you literally can't see what's being communicated?

For private messages, this tension hasn't been resolved. WhatsApp maintains encryption and acknowledges that they can't moderate encrypted messages. Regulators have generally accepted this as a technical limitation.

But WhatsApp Channels is different. Channels are broadcast communication. They're not private. They're public. That means encryption isn't the core issue. The content is visible to anyone following the channel. Meta can absolutely see what's being posted in Channels and can moderate it just like they moderate Instagram or Facebook.

This actually makes WhatsApp Channels easier to regulate than private WhatsApp messaging. The company doesn't face the encryption paradox. They can see, moderate, and comply.

But this also highlights why the DSA designation matters. It forces Meta to apply the same moderation standards to WhatsApp Channels that they apply to Facebook and Instagram. And those standards are robust. The Commission expects comprehensive content moderation. They expect rapid response to illegal content. They expect transparency about moderation decisions.

Meta will have to implement these systems. They'll probably build on existing infrastructure from Facebook and Instagram moderation teams. But it's still a significant engineering and operational challenge.

Broader Implications: What This Means for Global Tech Regulation

WhatsApp Channels crossing the VLOP threshold has implications far beyond Meta or Europe. It sets a precedent for how regulators think about platform features and user classification.

Consider this: WhatsApp Channels is a feature within an existing app. It's not a standalone platform. Yet it's potentially subject to its own VLOP designation because it independently reached 45 million users. This creates a weird regulatory universe where features become platforms.

What does this mean for other companies? If you're Apple and you have Apple News+, does that count as a separate platform for DSA purposes? If you're Google and YouTube becomes so large it needs separate regulation, does YouTube get its own VLOP status distinct from Google Search? If you're Amazon and your Marketplace reaches 45 million users, is it separately regulated from your retail operations?

These aren't hypothetical questions. The DSA framework could create a future where companies manage multiple separate VLOP designations, each with its own compliance requirements and audit obligations. This dramatically increases regulatory complexity and compliance costs.

For smaller platforms, the message is clear: once you reach 45 million users, you enter a new world of regulatory scrutiny. Plan for it. Budget for it. Build compliance into your product from day one. Don't assume you can retrofit compliance later.

For larger platforms, it reinforces a trend we're already seeing. The biggest tech companies spend enormous resources on regulatory affairs and compliance. They hire former regulators. They maintain relationships with government officials. They build compliance infrastructure proactively. This creates a competitive moat. Smaller competitors simply can't afford the compliance overhead that VLOPs face.

Europe has effectively created a regulatory framework that favors incumbents and raises barriers to entry for challengers. That might be intentional. Regulators might believe that only the largest, most sophisticated platforms can be trusted with billions of users. Or it might be an unintended consequence. Either way, it's reshaping platform economics.

Estimated data shows that compliance costs and moderation are highly impacted by new regulations, indicating significant operational changes for platforms like WhatsApp Channels.

The European Commission's Active Enforcement: Why This Designation Matters

The European Commission isn't issuing warnings and hoping companies comply. They're actively investigating platforms and imposing financial penalties. This is enforcement at scale.

When the Commission spokesperson said they're "actively looking into" WhatsApp, that means investigators are reviewing data about Channels' user base, content, and moderation practices. They're probably requesting information from Meta about current compliance measures. They're examining whether the platform poses any special harms given its focus on privacy and encryption.

This active enforcement creates urgency for Meta. The company can't wait for a formal designation and then scramble to build compliance. They need to assume designation is coming and prepare now.

For WhatsApp specifically, this probably means building out a dedicated compliance team, implementing automated content moderation for Channels, creating transparency reporting infrastructure, and possibly restricting certain types of content or advertising on the feature.

Meta's legal and policy teams are already doing this. But it's resource-intensive and ongoing. Every investigation, every violation discovery, every fine makes the next stage more resource-intensive.

DSA Enforcement Across Europe: The Bigger Picture

WhatsApp Channels isn't the only platform facing DSA scrutiny. The Commission has been investigating multiple platforms for violations.

TikTok faced investigations into whether it adequately protects minor users and whether its recommendation algorithm amplifies harmful content. Instagram faced similar scrutiny. YouTube, Snapchat, and other major platforms all went through DSA reviews.

Some of these investigations resulted in negotiated compliance improvements rather than formal violations. Others are ongoing. A few have resulted in fines.

The pattern suggests the Commission isn't randomly targeting companies. They're systematically working through the largest platforms, checking compliance, and imposing consequences where violations exist. It's methodical and relentless.

For Meta, this means the company is squarely in the Commission's focus. Facebook, Instagram, and now WhatsApp. That's sustained attention from the most powerful digital regulator in the world.

Meta's Response: What the Company Might Do

Meta hasn't publicly announced specific plans for WhatsApp Channels compliance post-designation, but you can infer what the company will likely do based on their Facebook and Instagram playbook.

First, dedicated compliance team. Meta will likely establish a WhatsApp-specific team focused on DSA compliance. This team will handle reporting, auditing, policy development, and regulatory liaison.

Second, content moderation expansion. Meta will build out moderation systems specifically for Channels. This probably means machine learning models trained to identify illegal content in public channels, plus a team of human moderators who can review flagged content.

Third, transparency initiatives. Meta will likely publish reports on Channels moderation decisions, content removal, and user complaints. These reports will mirror the detailed transparency reports the company publishes for Instagram and Facebook.

Fourth, feature adjustments. Meta might restrict certain types of content on Channels, limit who can create channels, or require verification for high-profile channels. These changes would reduce moderation burden while improving compliance posture.

Fifth, algorithmic changes. Meta will probably modify how Channels recommendations work to reduce amplification of potentially harmful content. This might mean promoting channels from verified creators and reducing algorithmic spread of new channels by unverified users.

None of these changes will kill Channels. The feature is too valuable to Meta. But they'll change how Channels operates and how much infrastructure Meta needs to maintain it.

Users and Privacy: The Collateral Impact

While the regulatory focus is on corporate-level compliance, WhatsApp users will experience actual changes. DSA compliance isn't abstract. It manifests in how platforms operate.

WhatsApp Channels users might notice that flagged content gets removed or restricted more aggressively. They might see new policies around what kinds of channels can exist. They might encounter more verification requirements. Channels with content that violates DSA standards might be suspended entirely.

For most users, these changes are fine. They're aligned with reasonable expectations about illegal content. But there are edge cases. Content that's legal but controversial might face additional scrutiny. Channels operated by dissidents, activists, or marginalized groups might face scrutiny simply because the content is politically sensitive.

This is the trade-off of regulation. You get protection against illegal content, but you also get bureaucratic moderation by a large company responding to regulatory pressure. Sometimes that's appropriate. Sometimes it's over-moderation.

WhatsApp's core users—people relying on the app for encrypted, private communication—probably won't be significantly affected. Private messages remain encrypted. WhatsApp Channels is separate. But people creating public channels or following public channels will definitely notice the regulatory footprint.

What Happens in Other Regions: Global Implications

The EU isn't the only region developing digital regulation. The UK has the Online Safety Act. Singapore has the Protection from Online Falsehoods and Manipulation Act. Brazil has its own social media regulation. The US Congress periodically proposes bills that would regulate platforms.

WhatsApp Channels hitting 45 million EU users might trigger similar scrutiny in other regions. If Channels is popular enough to designate as a VLOP in Europe, it might be popular enough to attract regulatory attention in the UK, Brazil, or other jurisdictions.

The company could face similar designations and compliance requirements globally. This would be significantly more expensive and complex than dealing with EU regulation alone. Multiple regulatory frameworks might create conflicting requirements. Meta would have to navigate those conflicts and find ways to remain compliant across regions with different legal standards.

For users, this might mean Channels features or capabilities vary by region. A channel that operates normally in the US might face restrictions in Europe and different restrictions in Brazil. This creates a fragmented experience but allows Meta to tailor compliance to regional requirements.

Expert Perspectives: What Industry Watchers Say

Digital regulation experts and tech policy analysts have largely viewed the DSA as a necessary but blunt instrument. It's necessary because platforms were largely unregulated before. It's blunt because it applies the same standards to all VLOPs regardless of their specific characteristics.

WhatsApp presents an interesting case because it blurs the line between messaging and social media. Some experts argue that extending DSA oversight to Channels is appropriate because the feature operates like social media. Others argue that regulating encrypted communication, even as a broadcast feature, sets a dangerous precedent.

Most experts agree that the timeline matters. The DSA was designed for platforms that existed before 2024. Channels is a newer feature that evolved rapidly. Designating it as a VLOP relatively quickly suggests the regulatory framework is responsive and adaptive. That's positive. But it also creates uncertainty for platforms experimenting with new features. Every new feature that grows quickly could trigger VLOP designation.

Long-term, expert consensus seems to be that the DSA is probably necessary but will require refinement. The 45-million-user threshold might be too broad or too narrow. The compliance requirements might be overly burdensome for some platforms and insufficiently stringent for others. The Commission will probably adjust the framework based on early enforcement experience.

WhatsApp Channels is part of that learning process. It's a test case for how the DSA applies to features within larger platforms.

Timeline Predictions: When Will Things Actually Change?

If we're being speculative, here's a likely timeline:

Late 2025 or early 2026: The Commission formally designates WhatsApp Channels as a VLOP. This is announced in a regulatory decision that Meta can challenge in EU courts.

Mid-2026: Meta submits compliance plans to the Commission, demonstrating how the company will meet DSA requirements for Channels. These plans include moderation infrastructure, reporting systems, and algorithmic changes.

Late 2026 through 2027: Meta implements compliance measures. The company might temporarily restrict new channel creation or change how recommendations work while building moderation infrastructure. Users notice changes but aren't directly impacted unless their channels get moderated.

2027 onwards: Meta submits regular compliance reports. The Commission audits the platform. If violations are discovered, there's a cycle of investigation, notice, remediation, and potential fines. This becomes Meta's operational reality.

These predictions assume the Commission follows the procedural path used for Facebook and Instagram. It could move faster or slower depending on resource availability and investigation complexity.

Conclusion: A Turning Point for Messaging and Regulation

WhatsApp Channels crossing 45 million EU users is more than a data point. It's a regulatory inflection point. It's the moment when a private messaging company discovered that a new feature it built was large enough to trigger oversight typically reserved for Facebook and Instagram.

This matters for Meta because the company now faces multiple regulatory fronts and increasing compliance costs. It matters for other platforms because it establishes precedent: build a successful feature and you might trigger VLOP designation, complete with all the compliance burdens that entails.

It matters for users because regulation, while sometimes necessary, changes how platforms operate. Moderation becomes more aggressive. Transparency increases. Some things become restricted. These are consequences of living in a regulated digital ecosystem.

Most importantly, it matters for how we think about digital governance. The era of self-regulated platforms is over. At least in Europe. Regulators are actively enforcing rules, imposing consequences, and reshaping platform economics. WhatsApp Channels didn't cause this shift. But it's becoming one of the most visible examples of how broadly digital regulation now applies.

Meta will comply. The company has the resources and expertise to navigate DSA requirements. But that compliance will change how WhatsApp Channels operates and set a precedent for how other features and platforms will face similar scrutiny.

Welcome to the regulated internet. It's more complicated than it was. But it's also more accountable.

FAQ

What is the Digital Services Act?

The Digital Services Act is European Union legislation adopted in 2022 that regulates how digital platforms operate, particularly around content moderation, algorithmic transparency, and user protection. It has applied to designated very large platforms since August 2023 and to all covered services since February 2024, with its strictest obligations reserved for "very large online platforms" that have 45 million or more monthly active users in the EU. The DSA gives the European Commission authority to investigate platforms, impose fines up to 6% of global annual revenue, and mandate specific compliance measures.

How does the DSA define "very large online platform"?

A platform qualifies as a "very large online platform" or VLOP once it reaches 45 million average monthly active users across the European Union, and the status takes effect when the European Commission formally designates it. The threshold is assessed using European Commission methodology for calculating monthly active users during specified reporting periods. WhatsApp Channels met this threshold in the first half of 2025 with 51.7 million average monthly active users, triggering potential designation.

What are the main DSA compliance requirements?

DSA compliance includes mandatory content moderation for illegal content, algorithmic transparency and auditability, user empowerment features that let people control recommendations, detailed reporting on moderation decisions, protection for minors, and restrictions on certain types of targeted advertising. Platforms must document systems, undergo independent audits, and respond to Commission investigations. Non-compliance can result in fines, restriction orders, or forced changes to platform operations.

Why does WhatsApp Channels specifically trigger DSA designation when private WhatsApp messaging doesn't?

WhatsApp Channels is a broadcast feature where one-way messages go to public followers, making content visible and moderatable. This is fundamentally different from end-to-end encrypted private messages, which the DSA framework struggles to cover. Because Channels content is public and visible, it falls squarely under the DSA provisions for content moderation, algorithmic recommendation, and user protection that apply to social media platforms like Facebook and Instagram.

What happens if Meta doesn't comply with DSA requirements for WhatsApp Channels?

Non-compliance triggers investigation by the European Commission, which can result in fines up to 6% of Meta's global annual revenue (roughly $9.9 billion based on reported 2024 revenue of about $164.5 billion). The Commission can also impose interim measures requiring specific compliance steps, restrict platform operations in the EU, or require changes to algorithms and features. Meta could face multiple violation notices and escalating fines for continuing non-compliance.
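To make the arithmetic concrete, here is a minimal sketch of the two numeric rules discussed in this FAQ: the 45 million user threshold and the 6% fine ceiling. The function names and the round $100 billion revenue figure are hypothetical placeholders for illustration, not values from the Commission or from Meta's filings; swap in the reported revenue for the year in question.

```python
# Illustrative only: function names and the sample revenue are assumptions.
VLOP_THRESHOLD_USERS = 45_000_000  # DSA threshold cited in the article
DSA_FINE_CAP_PERCENT = 6           # maximum fine as a share of global annual revenue


def meets_vlop_threshold(avg_monthly_active_users: int) -> bool:
    """Return True when EU average monthly active users reach the threshold."""
    return avg_monthly_active_users >= VLOP_THRESHOLD_USERS


def max_dsa_fine(global_annual_revenue: int) -> int:
    """Return the fine ceiling (6% of revenue), in the same currency units."""
    return global_annual_revenue * DSA_FINE_CAP_PERCENT // 100


if __name__ == "__main__":
    channels_users = 51_700_000             # H1 2025 figure reported for Channels
    hypothetical_revenue = 100_000_000_000  # placeholder, NOT Meta's actual revenue

    print("Meets VLOP threshold:", meets_vlop_threshold(channels_users))
    print(f"Fine ceiling: ${max_dsa_fine(hypothetical_revenue):,}")
```

The ceiling scales linearly with revenue, so every additional $10 billion in global annual revenue raises the maximum possible fine by $600 million.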

When will WhatsApp Channels be formally designated as a VLOP?

The European Commission has indicated it is "actively looking into" formal designation but hasn't announced a specific timeline. Based on precedent from Facebook and Instagram designations, the process typically takes several months from when user data is verified. Given that Channels user data from the first half of 2025 demonstrated VLOP status, formal designation could occur anytime in late 2025 through early 2026, though exact timing remains uncertain.

How does WhatsApp Channels' designation affect private WhatsApp messaging?

Formal designation as a VLOP applies specifically to WhatsApp Channels, not to the private messaging service. Private messages would remain unaffected by these requirements because they are not broadcast to the public, and WhatsApp's end-to-end encryption prevents the company from reviewing their content. However, Meta's compliance infrastructure and legal obligations for Channels might affect resources devoted to other WhatsApp features and could influence how the company approaches future feature development.

What is Meta's experience with DSA enforcement so far?

Meta has faced DSA enforcement action since 2024. In October 2025, the Commission issued preliminary findings that Meta had made illegal content reporting insufficiently accessible on Facebook and Instagram, in breach of user empowerment requirements. A Dutch court also ordered Meta to change how it presents timeline options because users couldn't make "sufficiently free and autonomous choices" about algorithmic ranking. These actions suggest the DSA is being actively enforced and that the Commission is willing to pursue financial consequences for major platforms.

Key Takeaways

- WhatsApp Channels reached 51.7M EU users in H1 2025, exceeding the 45M threshold for VLOP designation under the Digital Services Act

- VLOP status would subject WhatsApp Channels to DSA compliance requirements including content moderation, algorithmic transparency, and mandatory reporting

- Meta faces potential fines up to 6% of global annual revenue for non-compliance, equivalent to roughly $9.9 billion based on reported 2024 revenue of about $164.5 billion

- Meta has already experienced DSA enforcement with violations on Facebook and Instagram, establishing a pattern of Commission oversight

- The designation sets precedent for how regulatory frameworks apply to features within larger platforms, reshaping platform economics and compliance burdens
