Google's Tool for Removing Non-Consensual Images from Search [2025]

The internet has a serious problem with privacy violations. Non-consensual explicit images circulate across the web, causing devastating harm to victims. For years, victims had few good options. If a photo was indexed by Google Search, it stayed visible: searchable, shareable, and very hard to escape.

Then Google introduced a removal tool.

It's not perfect. It won't prevent these images from being uploaded in the first place. It won't stop them from spreading on other platforms. But it does give people a way to request removal from Google Search results, and that matters.

This article breaks down exactly what Google's doing, how the tool works, what it actually achieves, and what privacy advocates think about it.

TL;DR

- Google added a removal tool for non-consensual explicit images, deepfakes, and personal information in Search

- The process is straightforward - open the image's three-dot menu, select "Remove result," specify what it shows, and submit

- You can track all requests through Google's "Results about you" hub with real-time updates

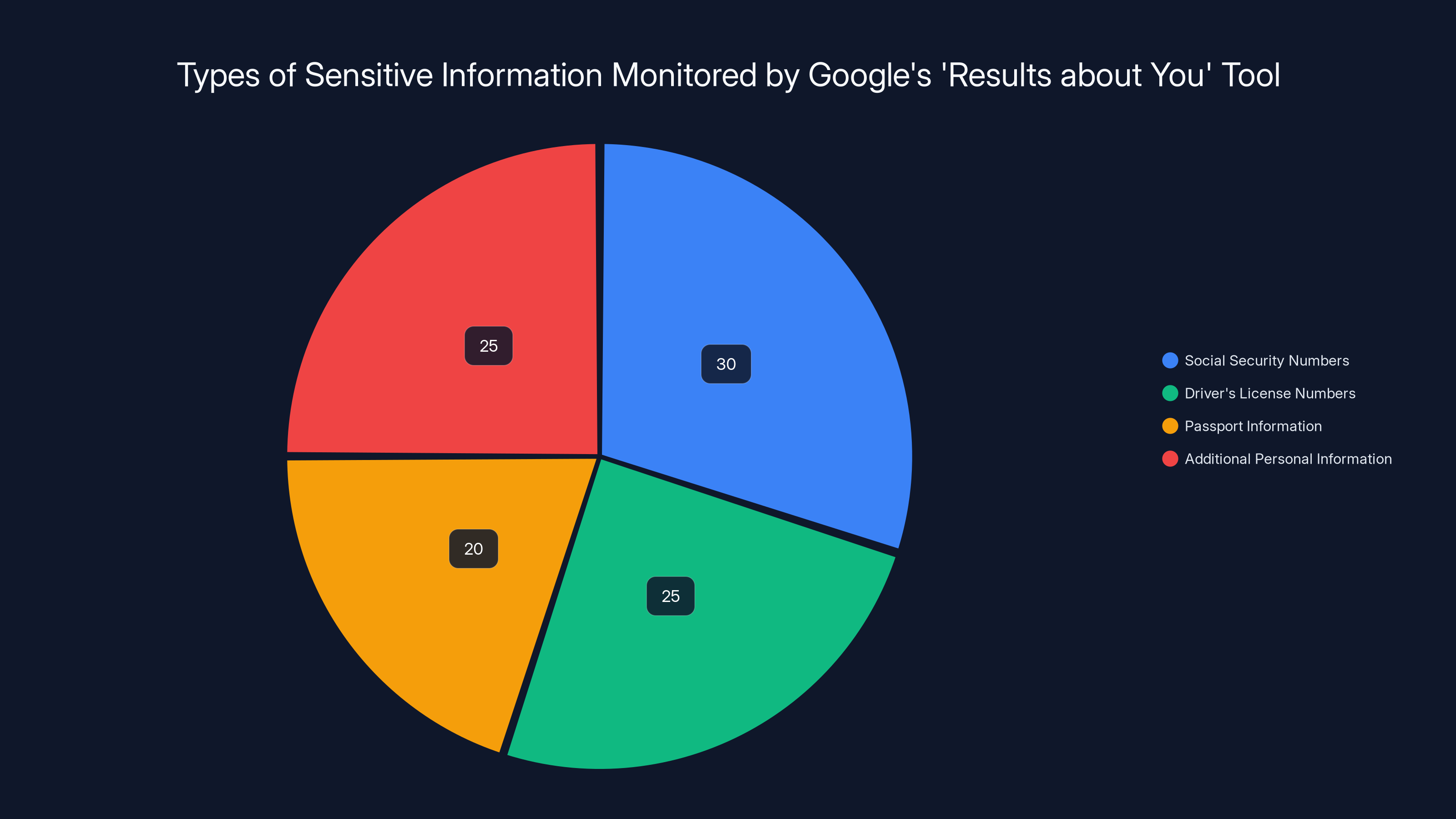

- Personal info protection expanded - Google now monitors for Social Security numbers, driver's license numbers, and passport data

- Deepfake reporting included - you can flag synthetic intimate images separately from real photos

Google's tool monitors various types of sensitive information, with Social Security Numbers being the most critical due to their direct link to identity theft. Estimated data.

The Problem: Non-Consensual Explicit Content Online

Non-consensual explicit images represent one of the most harmful forms of online abuse. These include real intimate photos shared without permission, often called "revenge porn," and deepfakes—AI-generated synthetic sexual content created without consent.

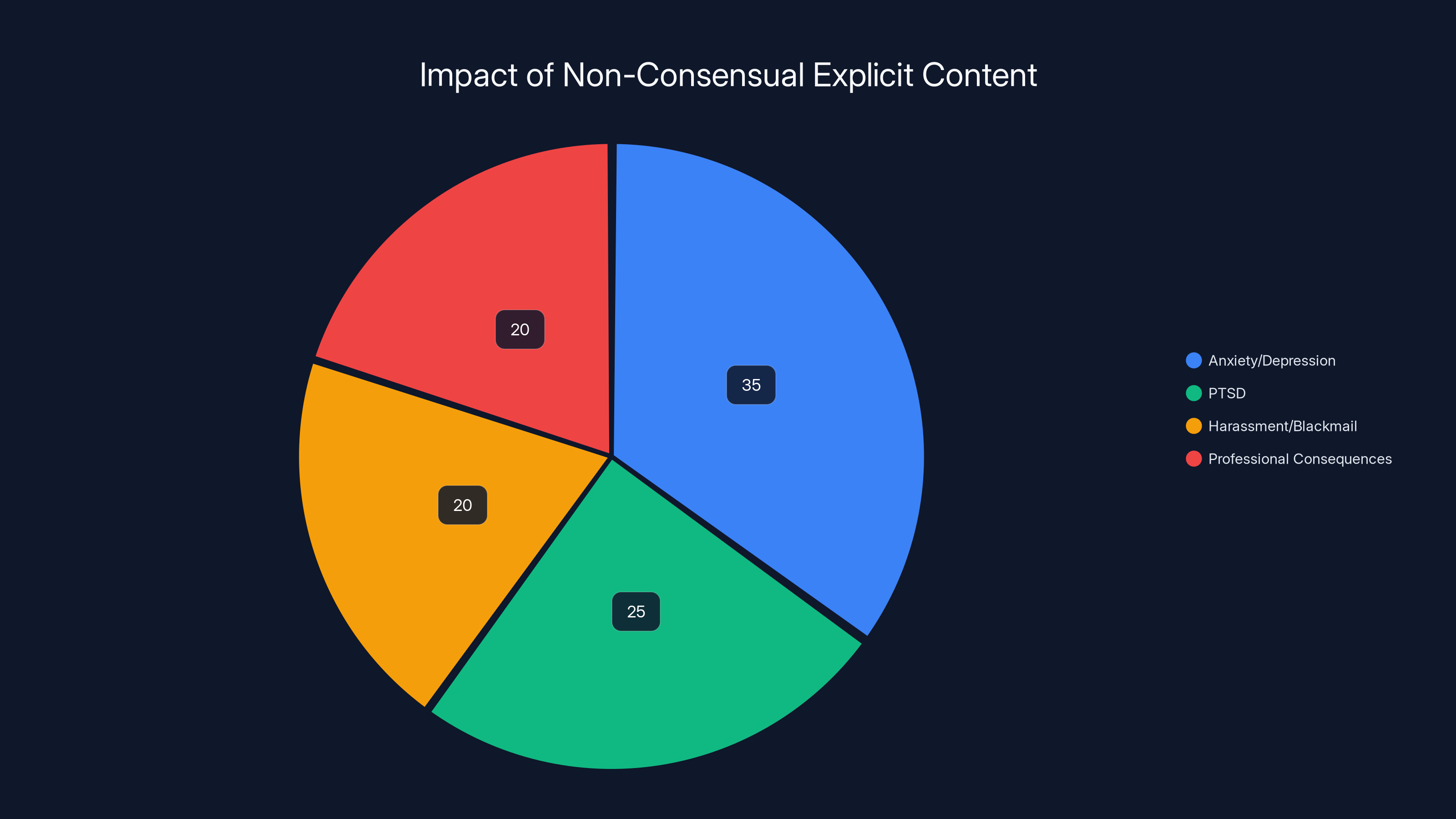

The statistics are grim. Research indicates that hundreds of thousands of women experience this violation annually. The emotional toll is severe. Victims report anxiety, depression, PTSD, and difficulty trusting others. Many face harassment, blackmail, and professional consequences when these images appear in search results.

When someone googled a victim's name, they'd see these explicit images alongside normal biographical information. The search visibility transformed private violations into public humiliations. Employers would find them. Family members would find them. Everyone the victim knew could potentially find them.

Before Google's removal tool, taking action required contacting the hosting platform directly, then requesting Google manually remove the link through their standard removal form. This process was slow, fragmented, and often ineffective. If five different sites hosted the image, you'd need to contact five different platforms. Then Google might not remove it anyway.

The fundamental issue: Google indexes the web as it exists. They see themselves as neutral. But that neutrality has real consequences for people experiencing some of the worst online violations imaginable.

Privacy advocates had been pushing for direct removal tools for years. Now Google has finally responded.

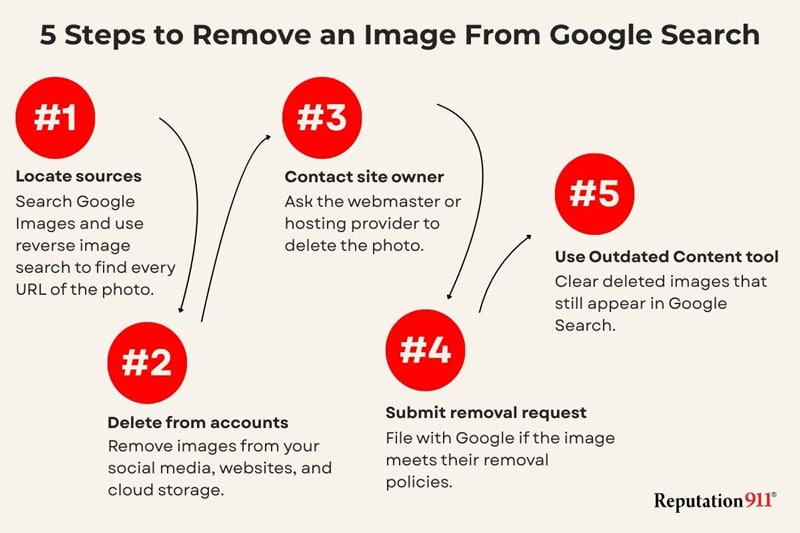

How Google's New Removal Tool Works

The actual mechanics are surprisingly simple. When you encounter a non-consensual explicit image in Google Search, you can request its removal without hiring a lawyer or navigating complex procedures.

Here's the step-by-step process:

Step 1: Find the image in Search results

Search for yourself or search Google Images directly. If harmful content appears, you'll see it alongside regular search results.

Step 2: Click the three-dot menu

Hover over or long-press the image. A three-dot menu appears in the corner (this works on desktop and mobile).

Step 3: Select "Remove result"

The menu offers several options. Click "Remove result" or "Report this image."

Step 4: Specify the content type

Google asks you to categorize what the image shows. You'll choose from:

- "It shows a sexual image of me"

- "It shows a person under 18"

- "It shows my personal information"

- "It violates my rights in another way"

Step 5: Indicate if it's a deepfake

If you selected the sexual image option, Google asks a follow-up question: "Is this a real image or a deepfake?" This distinction matters because deepfakes are synthetic content, while real images are actual photos shared without consent.

Step 6: Submit your request

Click submit. Google immediately directs you to resources—hotlines, counseling services, legal organizations, and support groups.

Step 7: Track your request in Results about You

Instead of wondering whether Google processed your request, you can check your "Results about you" hub. This dashboard shows all your removal requests, their status, and whether Google approved them.

The entire process takes maybe two minutes. That's the key innovation here. Accessibility matters. When the barrier to action is low, people actually take action.

Google also allows batch processing. If multiple explicit images exist, you can select several at once and submit them together instead of clicking through each one individually.

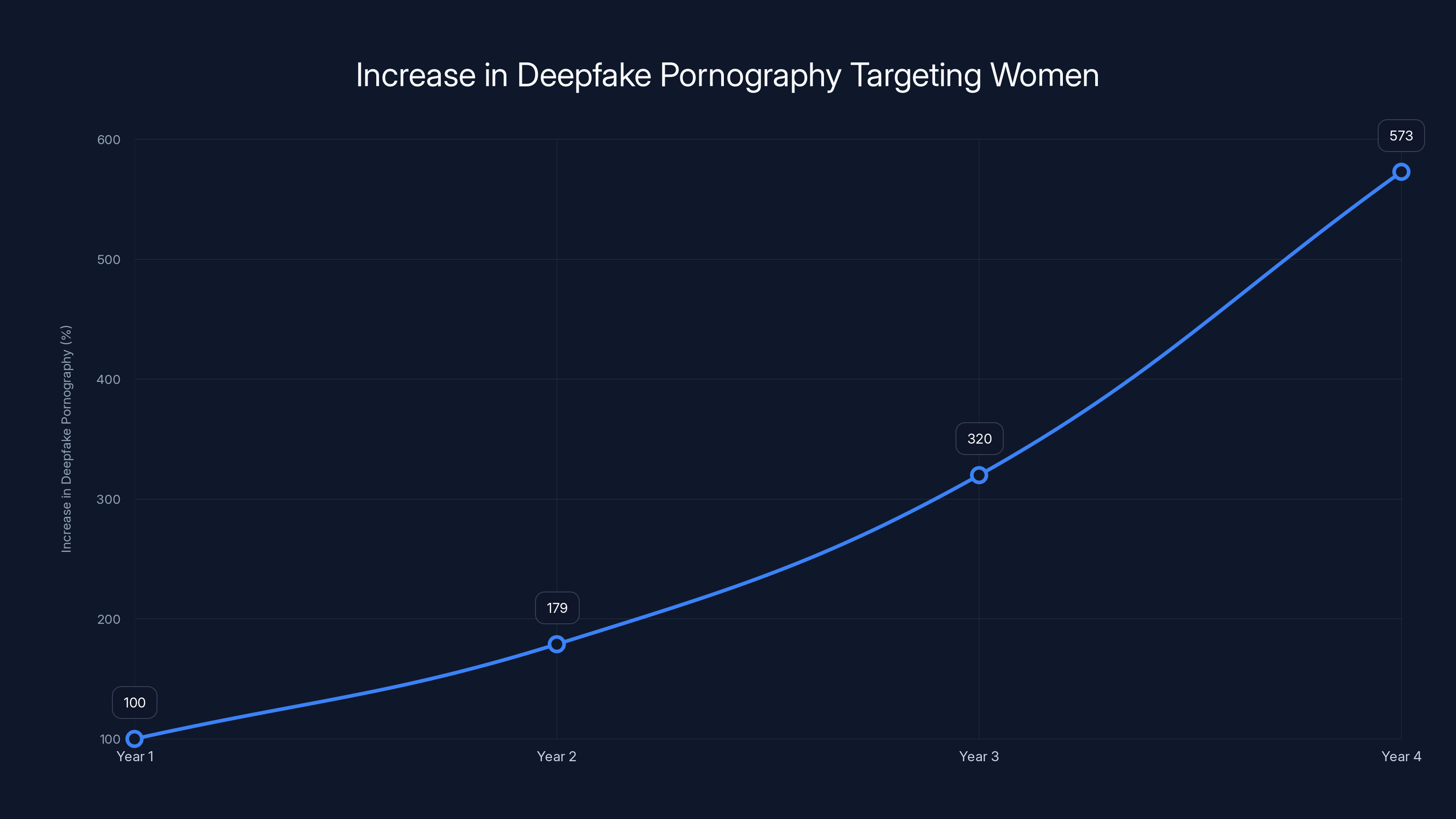

Deepfake pornography targeting women has seen a dramatic increase, with a 79% rise in just one year. Estimated data projects continued growth if current trends persist.

The "Results About You" Hub: Your Control Center

Google's "Results about you" hub is the command center for managing your search presence. Think of it as a personal SEO dashboard, except instead of optimizing visibility, you're controlling it.

The hub does several things:

Tracks your removal requests in real time. When you submit a removal request through the explicit image tool, it appears in your hub with the current status. You see immediately whether Google is processing it, reviewing it, or has completed the action.

Shows you what's indexed under your name. Google scans Search results for personal information appearing about you—addresses, phone numbers, email addresses. If anything appears, the hub alerts you.

Monitors for identity theft risks. This is the newer, expanded functionality. Google now checks whether your Social Security number, driver's license number, or passport information appears in Search results. If it does, the hub notifies you and provides removal tools.

Provides removal options for each result. You can request removal of individual results directly through the hub without having to find them in Search first.

Keeps historical records. The hub maintains a record of everything you've requested removal for. You can see timestamps, statuses, and outcomes.

Accessing the hub requires logging into your Google account. You'll verify your identity (sometimes with two-factor authentication) and can then add your contact information and government ID numbers. This verification prevents people from falsely removing others' content.

Google notes that setting up the hub takes about ten minutes. Once configured, it runs automatically in the background, continuously scanning for your personal information and alerting you if anything new appears.

Important limitation: The hub only affects what appears in Google Search. It doesn't remove content from the original websites. Those images remain hosted wherever they were uploaded. What the hub does is prevent Google from directing people to that content when they search for you.

Deepfakes: A New Category of Harm

The tool's separate deepfake option is significant because it acknowledges a growing problem: AI-generated explicit content created without consent.

Deepfakes use machine learning to swap someone's face onto explicit video or generate entirely synthetic intimate imagery. The technology has improved dramatically. Five years ago, most deepfakes were easy to spot. Now many are convincing.

The harm is real even though the content is synthetic. A deepfake claiming to show you in explicit situations damages your reputation, relationships, and career prospects. People don't care that it's AI-generated—they see your face and draw conclusions.

Research from Sensity found that deepfake pornography targeting women increased 79% in one year. Women are the overwhelming target: some research suggests at least 90% of deepfake porn depicts women, often without their knowledge or consent.

Google's decision to ask whether content is a real image or deepfake matters because the removal logic might differ. Real explicit images often need to be traced to the original host and removed at the source. Deepfakes, as AI-generated synthetic content, may have no original photo to trace, so they might be handled differently and possibly more quickly (though the synthetic version is still harmful).

The tool doesn't currently identify deepfakes automatically. You have to tell Google whether it's real or AI-generated. Google has been investing in deepfake detection technology, but automated identification remains imperfect. That's why they ask you directly.

Personal Information Protection: Beyond Explicit Content

Google expanded the "Results about you" tool beyond non-consensual explicit images. The updated system now protects sensitive personal information that could enable identity theft or harassment.

The hub now monitors for:

Social Security numbers. If your SSN appears anywhere in Google Search results, the hub alerts you. This is critical because SSN exposure directly enables identity theft. Credit agencies, banks, and government databases all use SSNs to verify identity. Someone with your number can open accounts in your name, file fraudulent tax returns, and damage your credit.

Driver's license numbers. State driver's licenses contain enough identifying information to impersonate you. Combined with an address or date of birth, a driver's license number is extremely valuable to criminals.

Passport information. Passport details enable international identity fraud. Someone with your passport number could potentially book travel, create accounts with international services, or cause complications if they're caught using a fraudulent document in your name.

Additional personal information. The system can flag other sensitive data like home addresses, unlisted phone numbers, and bank account information, depending on your settings.

Here's how it works in practice: You configure the hub with information about yourself. You add your SSN, driver's license number, and passport details (the hub encrypts this locally). Google then continuously scans Search results for this information. If it appears, you receive an alert within the hub and can request removal.
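The article doesn't say how Google matches your registered numbers against indexed pages. One common privacy-preserving pattern, sketched here purely as an assumption, is to keep only a keyed fingerprint (an HMAC) of each number. The system then fingerprints any SSN-shaped strings it finds in page text and compares the results. A keyed HMAC is used instead of a plain hash because there are only about a billion possible SSNs, so an unkeyed hash could be reversed by brute force. The key, the regex, and the names `register` and `scan_page` are hypothetical. This is not Google's implementation.

```python
import hashlib
import hmac
import re

# Hypothetical secret key. A real service would keep this in a key-management
# system; it is hard-coded here only so the sketch runs on its own.
SECRET_KEY = b"example-key-not-for-production"

# Matches 9-digit SSN-shaped strings such as 123-45-6789, 123 45 6789, or 123456789.
SSN_PATTERN = re.compile(r"\b(\d{3})[- ]?(\d{2})[- ]?(\d{4})\b")


def fingerprint(digits: str) -> str:
    """Keyed HMAC-SHA256 of the bare digits. Without the key, the small space
    of possible SSNs cannot simply be brute-forced from the stored value."""
    return hmac.new(SECRET_KEY, digits.encode("utf-8"), hashlib.sha256).hexdigest()


def register(ssn: str) -> str:
    """Normalize a user-supplied SSN and return only its fingerprint."""
    digits = re.sub(r"\D", "", ssn)
    if len(digits) != 9:
        raise ValueError("expected a 9-digit number")
    return fingerprint(digits)


def scan_page(text: str, watched: set) -> list:
    """Return SSN-shaped strings in text whose fingerprint is on the watch list."""
    hits = []
    for match in SSN_PATTERN.finditer(text):
        digits = "".join(match.groups())
        if fingerprint(digits) in watched:
            hits.append(match.group(0))
    return hits


if __name__ == "__main__":
    watched = {register("123-45-6789")}  # the user opts in with their number
    page = "Leaked record: 123 45 6789. Unrelated: 987-65-4321."
    print(scan_page(page, watched))  # ['123 45 6789']
```

The registered number is found even though the page uses different separators. The unrelated number is ignored because the regex on its own would flag any nine-digit string, and only the fingerprint comparison narrows the hits to numbers the user actually registered.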

Google claims these removal requests are processed quickly. In many cases, the company can contact the website hosting the information and request takedown without your involvement.

The protection isn't automatic. You have to opt in by adding your sensitive information to the hub. This creates a privacy paradox: to protect your personal data from appearing in Search, you have to give Google your personal data. But Google already has vast amounts of your information from your Google account, browsing history, and other services.

The real-world impact is significant. Identity theft costs victims an average of $14,000 and 200+ hours to resolve, according to IdentityTheft.gov. Keeping SSNs and driver's license numbers out of search results reduces that risk substantially.

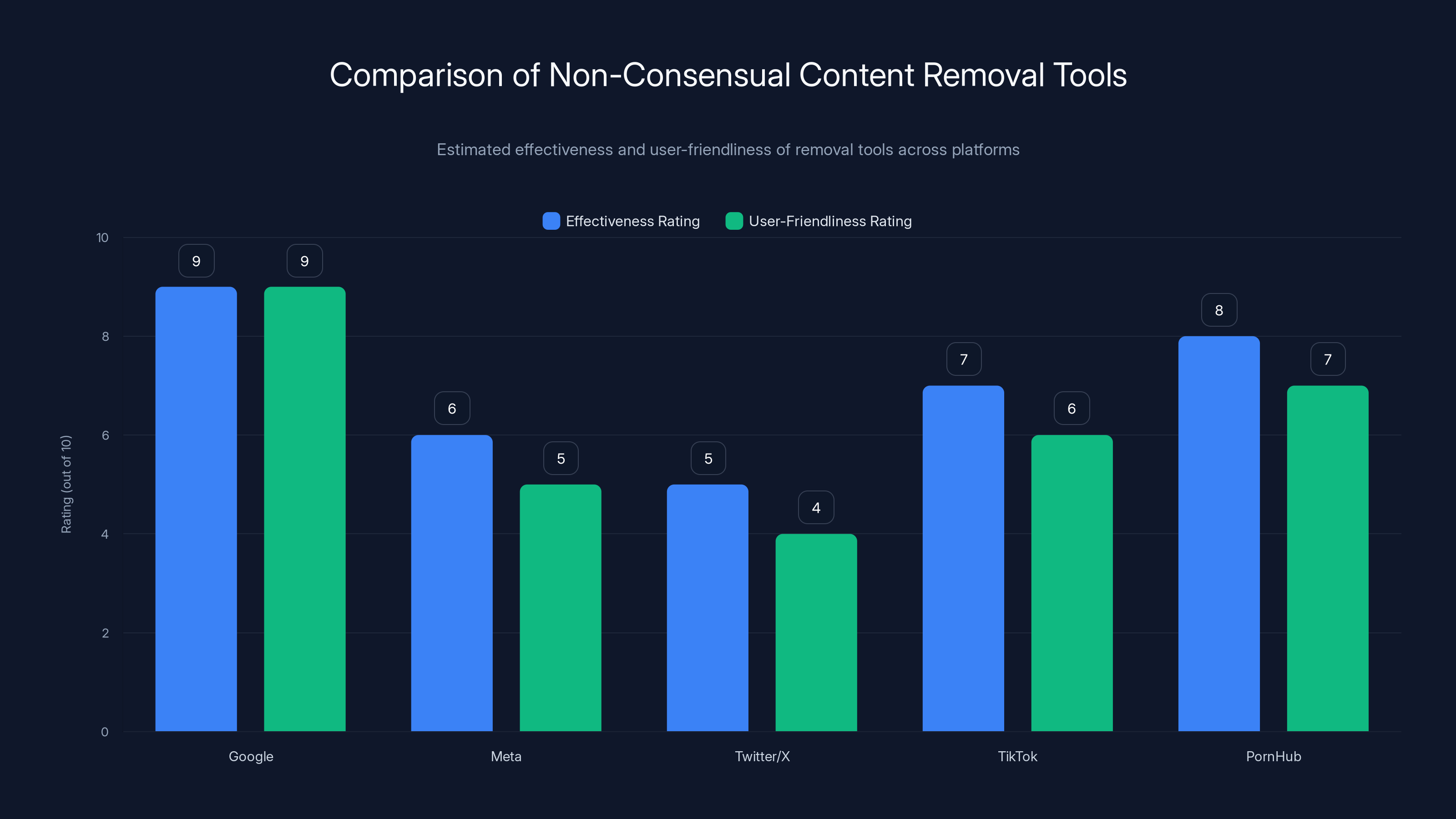

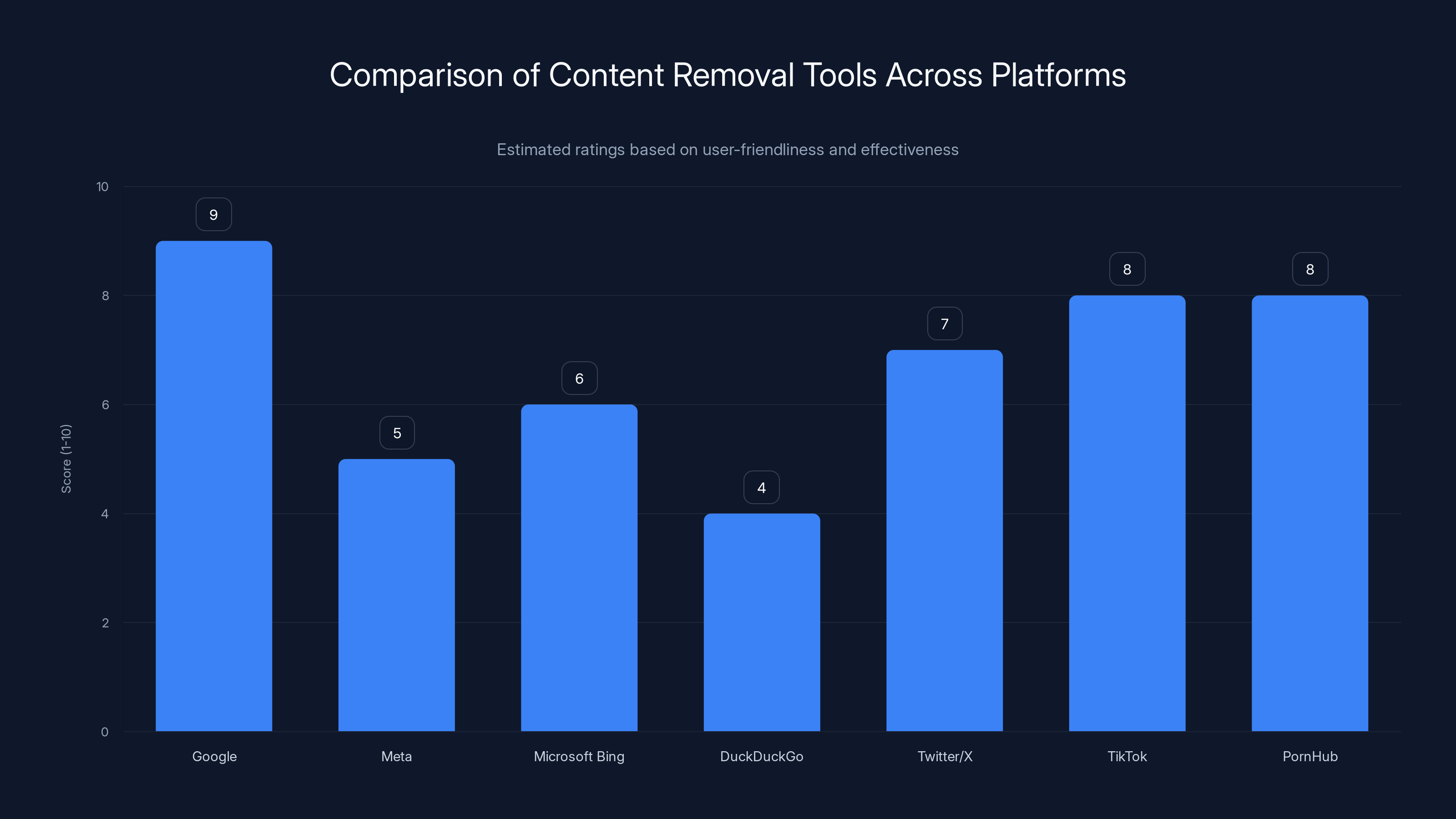

Google leads in both effectiveness and user-friendliness for non-consensual content removal tools, thanks to its comprehensive approach and centralized dashboard. Estimated data based on platform features.

The Immediate Support System

One of the most important features of Google's tool isn't technical—it's human support. When someone submits a removal request for non-consensual explicit content, they're often in emotional distress.

Google responds by immediately showing links to support organizations:

Emotional and mental health support. Organizations like Childhelp, RAINN (Rape, Abuse & Incest National Network), and local crisis counseling services appear in the tool.

Legal resources. The tool links to organizations that provide free or low-cost legal assistance for victims of image-based abuse. These organizations can help you understand your rights and potentially pursue legal action against the person who uploaded the content.

Hotlines and crisis support. Direct phone numbers and text lines for immediate support when you need to talk to someone.

Practical guides. Information about reporting to law enforcement, working with platforms, and documenting evidence.

This wraparound support acknowledges a reality that tech companies often miss: the technology is only part of the solution. Someone discovering their explicit images in Google Search needs more than a deletion button. They need psychological support, legal guidance, and practical next steps.

Google's approach here reflects feedback from advocacy organizations. These groups have long argued that removal tools are meaningless without supporting victims through the emotional and practical aftermath.

Limitations: What This Tool Doesn't Do

It's crucial to understand what Google's removal tool doesn't accomplish.

It doesn't prevent uploads. Google can't stop someone from uploading explicit images in the first place. The tool only removes them from Search results after the fact. If someone is determined to share intimate images of you, this tool doesn't block that behavior.

It only affects Google Search. Your images might appear on Bing, DuckDuckGo, social media platforms, specialized porn sites, or dedicated revenge porn websites. Google removal doesn't touch any of those. You'd need to contact each platform separately.

The images stay on the original hosting site. Removing an image from Google Search doesn't delete it from wherever it was uploaded. If someone posted it on a porn site, it remains there. Google just stops directing people to it through their search engine.

It requires your time and effort. Each image needs individual handling (though batch processing helps). If someone uploads 50 images of you, you may need to find and report each one. That's tedious and emotionally draining.

Removal isn't guaranteed. Google reviews each request. If they decide the content doesn't violate their policies or if they can't verify your request, they might deny removal. The approval rate is high, but it's not automatic.

Existing visibility has already caused harm. By the time you discover the images and request removal, damage is done. Employers might have seen them. Classmates might have seen them. Friends might have seen them. Deletion from Search doesn't undo that prior exposure.

Similar results might still surface. Google notes that you can opt into "safeguards" that filter out similar results. But this filtering isn't perfect. Related images might still appear, or the same content under a different URL.

These limitations don't make the tool worthless. They just mean it's a partial solution to a complex problem.

The Privacy Trade-off: Giving Google Your Information

To use the expanded "Results about you" hub effectively, you need to provide Google with sensitive information: your SSN, driver's license number, and passport details.

This creates a legitimate tension. You're protecting yourself from information exposure by... exposing information to Google.

Google's defense is reasonable: the company encrypts this information locally on your devices. They don't store your SSN or driver's license number on their servers. Instead, they use this information to scan Search results, and the data stays protected.

But Google already knows vast amounts about you. They have your search history, your Gmail messages, your location data, your Google Maps history, your YouTube watch history, and hundreds of other data points. Adding your SSN to that collection is adding to an already massive profile.

The counterargument: Google already has tools to access this type of information. If Google wanted your SSN, they could likely infer it or obtain it through other means. The explicit addition to the hub is actually more transparent—you know what information you're providing and why.

From a practical perspective, the benefit likely outweighs the concern. If your SSN appears in public Google Search results, identity thieves don't need access to Google's internal databases. They just need to Google your name. Protecting against that very real public threat is worth providing Google with information they already largely control.

Still, users should understand what they're trading: some privacy from Google in exchange for privacy from the broader public.

Estimated data shows that anxiety and depression are the most common impacts, affecting 35% of victims, followed by PTSD and harassment.

How This Compares to Other Platforms

Google isn't alone in offering removal tools for non-consensual content. But its approach is notably more comprehensive than its competitors'.

Meta (Facebook and Instagram) has removal features, but they're buried in reporting menus and less intuitive than Google's dedicated tool. Meta requires you to report content through their platform, which then decides whether it violates terms.

Twitter/X allows reporting non-consensual intimate images, but the platform's chaotic moderation means approval is inconsistent.

TikTok has similar reporting features with reasonable success rates but limited transparency about request status.

Pornhub actually has a relatively straightforward removal tool, possibly because operating a porn site that doesn't allow revenge porn is good PR. You can submit removal requests through their site, and they verify your identity before removing content.

Google's advantage is the integration with the "Results about you" hub, which provides a centralized dashboard, removes the need to contact hosting websites directly, and offers real-time tracking. You're not submitting requests and hoping for the best. You can see the current status of each request.

The immediate support resources are also notably more developed than what competitors offer. When you submit a TikTok removal request, you don't get connected to RAINN or crisis counseling. Google does.

Legal and Regulatory Context

Google's removal tool arrives as regulations around image-based abuse are tightening globally.

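In the United States, non-consensual pornography was long handled mainly by the states. Federal law caught up in May 2025, when the TAKE IT DOWN Act made it a federal crime to knowingly publish non-consensual intimate images, including AI-generated ones. The law also requires covered platforms to remove such images within 48 hours of a valid request. At the state level, more than 45 states have criminalized what's often called "revenge porn." Penalties vary from misdemeanors to felonies, with some states imposing up to 5 years in prison.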

The EU is more aggressive. The Digital Services Act requires platforms to remove non-consensual content quickly once they are notified. In the UK, the Online Safety Act (passed in 2023 as the Online Safety Bill) has similar requirements.

Canada specifically made non-consensual intimate images illegal in 2014. Japan, South Korea, and several other countries have passed similar legislation.

Google's tool partially responds to regulatory pressure. By providing removal functionality, the company demonstrates good-faith effort to combat the problem. That matters when regulators evaluate whether platforms are doing enough to protect users.

But there's ongoing debate about whether removal tools go far enough. Some advocates argue that platforms should be responsible for preventing uploads in the first place, not just removing content after victims discover it. That would require more aggressive automated detection and verification systems.

Google has invested in automated detection systems, but they're not perfect. False positives (flagging non-explicit content as explicit) and false negatives (missing actual explicit content) both create problems. Too aggressive, and you're incorrectly removing legitimate content. Too lenient, and harmful content slips through.

The Role of Artificial Intelligence in Detection

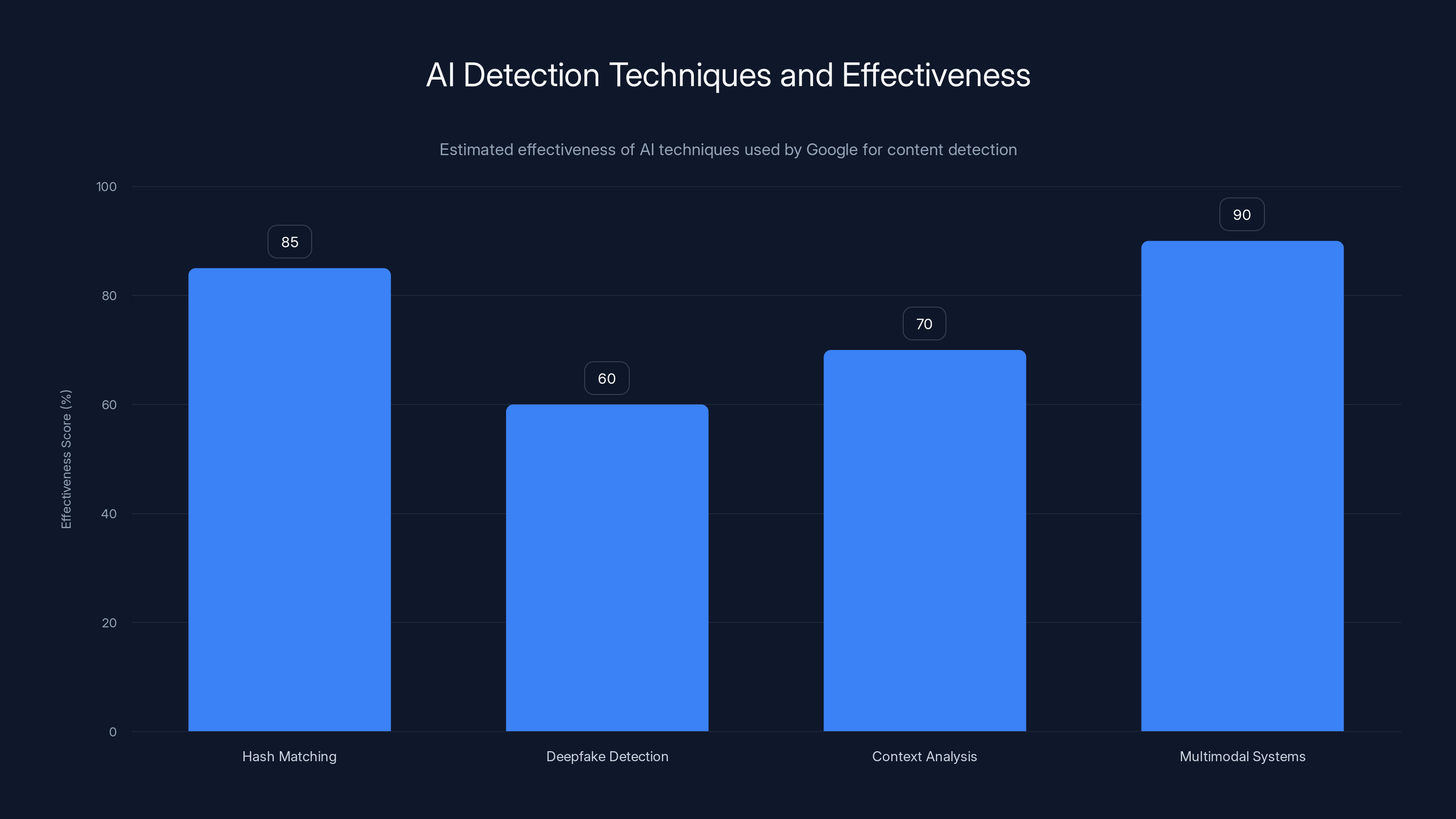

Google is using AI to improve both detection of non-consensual content and removal efficiency.

Hash matching is the primary technique. Google creates a digital fingerprint of known explicit images and compares newly found images against those fingerprints. If a match is found, the new copy is blocked from being indexed or removed from existing Search results.
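The article doesn't say which fingerprinting algorithm Google uses, so the sketch below stands in a simple perceptual "average hash". It shrinks the image to an 8x8 grid and sets one bit per cell, depending on whether that cell is brighter than the grid's mean. Two images count as a match when their 64-bit hashes differ in only a few bits (their Hamming distance). A perceptual hash is used instead of a cryptographic one because re-saving or brightening an image changes a cryptographic hash completely. Production systems use sturdier perceptual hashes, such as Microsoft's PhotoDNA or Meta's open-source PDQ. The grid size, the `max_distance` threshold of 5, and all function names here are assumptions for illustration.

```python
from typing import List

# A grayscale image as rows of pixel intensities from 0 (black) to 255 (white).
Image = List[List[int]]


def downsample(img: Image, size: int = 8) -> List[List[float]]:
    """Shrink img to size x size by averaging equal blocks of pixels.
    Simplification: assumes both dimensions are multiples of size."""
    block_h, block_w = len(img) // size, len(img[0]) // size
    grid = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [
                img[y][x]
                for y in range(by * block_h, (by + 1) * block_h)
                for x in range(bx * block_w, (bx + 1) * block_w)
            ]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def average_hash(img: Image, size: int = 8) -> int:
    """Pack one bit per grid cell: 1 if the cell is brighter than the grid's mean."""
    cells = [value for row in downsample(img, size) for value in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def matches_blocklist(img: Image, blocklist: List[int], max_distance: int = 5) -> bool:
    """True if img's hash is within max_distance bits of any known hash."""
    h = average_hash(img)
    return any(hamming_distance(h, known) <= max_distance for known in blocklist)


if __name__ == "__main__":
    original = [[x * 16 for x in range(16)] for _ in range(16)]  # left-to-right gradient
    brighter = [[p + 10 for p in row] for row in original]       # lightly edited copy
    inverted = [[255 - p for p in row] for row in original]      # a genuinely different image
    blocklist = [average_hash(original)]
    print(matches_blocklist(brighter, blocklist))  # True
    print(matches_blocklist(inverted, blocklist))  # False
```

The brightened copy hashes identically because adding a constant shifts every cell and the mean together. The inverted image flips all 64 bits. Simple hashes like this can still miss heavily cropped or edited copies, which fits the caveat that similar results may keep surfacing.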

Deepfake detection is more challenging. Google has been working with organizations like Sensity to develop AI models that identify synthetic explicit content. These models analyze facial structure, eye movements, lighting, and other features to detect AI generation. But the technology is still imperfect, and deepfake generation tools are improving faster than detection tools.

Context analysis involves AI examining surrounding text and metadata to understand whether content is being shared in a non-consensual context. The same image means something different in a medical textbook on anatomy than when it is shared without consent as revenge porn.

The limitations are real. AI systems generate both false positives (incorrectly flagging content) and false negatives (missing actual violations). This is why Google combines automated detection with human review for removal requests.

Looking forward, Google and competitors are investing heavily in multimodal AI systems that combine image analysis, text analysis, user behavior, and reported-content metadata to improve detection accuracy. But we're probably years away from a system that reliably catches all non-consensual content while avoiding false positives.

Estimated data: Hash matching is currently the most effective AI technique for detecting non-consensual content, while deepfake detection remains challenging.

The Broader Internet Problem

Google's tool is important, but it highlights a fundamental internet problem: the platforms that host and distribute content have limited incentive to police non-consensual material aggressively.

Porn sites make money from traffic and ad revenue. More content means more traffic. Non-consensual content actually drives engagement because of its notoriety. Some sites have been remarkably resistant to removing content even when contacted by victims.

Image hosting platforms often claim they don't know whether content is consensual. A photo of you that you uploaded willingly looks identical to a photo of you that was shared without permission. Without additional metadata, it's hard for platforms to distinguish.

Social media platforms have invested in detection systems, but they're designed to catch obvious violations. Revenge porn shared in private messages, in closed groups, or under fake accounts is harder to catch than public violations.

The core problem: responsibility for non-consensual content is fragmented across dozens of platforms, hosting services, and search engines. Victims have to contact each one separately. Most have inconsistent policies and removal speeds.

Google's centralized approach through the "Results about you" hub addresses part of this fragmentation, at least for Google Search. It doesn't solve the broader internet problem, but it's a practical step toward managing it.

Availability and Rollout Timeline

Google announced the removal tool and expanded "Results about you" hub in early 2025. As of now, the tool is rolling out gradually across countries.

In the United States, the tool began rolling out within days of the announcement and should be fully available by mid-2025. This is the first priority market.

In Europe, rollout is happening slightly slower due to GDPR compliance requirements. The regulation requires clear consent and specific data handling procedures. Google needs to structure the tool to comply with these standards.

In other countries, availability depends on local regulations and infrastructure. Countries with strong data protection laws or specific revenge porn legislation are getting the tool earlier.

Google's approach to phased rollout is actually smart. It allows them to gather feedback, identify bugs, and adjust before global availability. Early users are often more technically savvy and less likely to have issues.

When the tool becomes available in your region, you'll see prompts in Google Search and within Google One (Google's subscription service) if you're logged into your account. You can set it up proactively or wait until you need it.

Privacy Advocates' Perspective: The Incomplete Solution

Women's rights organizations and digital privacy advocates have mixed reactions to Google's tool.

The positive: This is a dedicated removal process built directly into Search results, specifically for non-consensual explicit images. Before this, victims had to find and complete a separate removal form outside of Search. Having a specific in-Search pathway with immediate support resources is a significant improvement.

Advocates also appreciate that Google is tackling deepfakes specifically. This is forward-thinking, acknowledging threats that didn't exist a few years ago.

The concerns: Privacy organizations note that removal from Search isn't removal from the internet. As long as the content exists on hosting sites, motivated people can still find it. In their view, the tool risks creating a false sense of resolution.

Some advocates worry that removal tools let platforms avoid responsibility for prevention. If Google makes it easy to remove images after they're indexed, does that reduce pressure on hosting platforms to prevent non-consensual uploads in the first place?

There's also concern about verification and false removals. What prevents someone from maliciously removing other people's content by falsely claiming it's non-consensual? Google says they verify requests, but specifics on how verification works are limited.

Advocates would ideally see:

- Mandatory age verification on porn sites to prevent minors from uploading

- Stronger prevention through AI detection of non-consensual uploads before they spread

- Cooperation between platforms to ban users who upload revenge porn from other services

- Criminal prosecution of people who create and distribute deepfakes

- Liability for hosting platforms if they knowingly host non-consensual content

Google's tool addresses none of these. It's a removal system, not a prevention system.

But removal is still better than no removal. Incomplete solutions that help real people are more valuable than perfect solutions that exist only in theory.

Google's removal tool scores highest due to its user-friendly interface and effective support, closely followed by TikTok and Pornhub. Estimated data based on described features.

What Happens to Your Data After Removal?

When you submit a removal request, Google processes your information and makes decisions about the content. What happens to your data?

The removal request itself: Your contact information, the images in question, your explanation of why you're requesting removal—all of this is stored temporarily while Google processes it. Google says they keep this data for compliance purposes and to prevent spam or false reports.

Timing: Once your request is processed (approved or denied), Google eventually deletes this associated data, though they don't specify exactly how long they retain it.

Your Results about you profile: If you configure the hub with your SSN and driver's license number, Google stores this encrypted locally on your device by default. But they may retain some encrypted metadata to enable scanning.

Appeals: If Google denies a removal request, you can appeal. Your appeal goes through the same process, and corresponding data is retained during review.

Google's privacy policy covers this, but the specifics are somewhat vague about exactly how long data is retained and what happens to it after your request is resolved.

For transparency, it would be helpful if Google were more explicit: "We retain your removal request data for 6 months, then delete it" or "We keep encrypted records of your sensitive information for the duration of your use of Results about you."

The lack of specificity is typical of tech companies—they want flexibility to adapt policies without formally announcing changes. But for something this sensitive, clearer timelines would build trust.

What You Should Do If You Find Explicit Images of Yourself

If you've discovered non-consensual explicit images of yourself online, here's a practical action plan.

Immediate (today):

- Take screenshots of the images and URLs where you found them. This documentation is important for reporting and potential legal action.

- Try to stay calm. This is traumatic, and you may feel like you're in crisis. Reach out to someone you trust: a friend, family member, or counselor.

- Don't contact the person who uploaded the content. That rarely leads to removal and sometimes escalates the situation.

- Consider calling a crisis hotline. RAINN (1-800-656-4673) offers confidential support.

Within 24-48 hours:

- Report to Google using the removal tool described in this article.

- Identify the hosting platform where the images appear (porn site, image hosting service, social media, etc.) and report to that platform.

- Save copies of your removal requests, confirmation numbers, and communications. You'll need these if you pursue legal action.

- Document the emotional impact. Keep notes about how the violation affects your daily life. This matters if you eventually sue the person who uploaded the content.

Within the first week:

- Report to law enforcement if you're comfortable doing so. Non-consensual explicit images are illegal in many jurisdictions. Police involvement can sometimes help identify the person responsible.

- Consider consulting an attorney about your legal options. Some attorneys specialize in image-based abuse cases, and some offer free consultations.

- Decide whether to tell your employer. This is your choice, but telling them can help in case someone brings the images to their attention.

- Consider a cease and desist letter if you know who uploaded the content. An attorney can send this formally.

Ongoing:

- Set up the "Results about you" hub to monitor for future uploads or spread.

- Periodically check Search for synthetic versions of your images, and report any you find using the deepfake option in Google's tool.

- Follow up on your removal requests. If Google hasn't processed them within a few weeks, escalate.

- Work with crisis organizations. Many offer ongoing support, not just initial crisis intervention.

The Future: Where This Goes Next

Google's removal tool is version 1.0. Expect significant evolution.

Better deepfake detection is inevitable. As AI detection improves, Google will likely add automatic flagging of synthetic explicit content without requiring users to identify it manually.

Cross-platform coordination is possible but faces obstacles. Imagine if Google, Meta, Twitter, TikTok, and porn sites shared hash databases of non-consensual content. An image removed from Google would be automatically removed from competing platforms. This would dramatically reduce spread. But getting competitors to cooperate on anything is difficult.

Prevention at upload is the holy grail. Some platforms are experimenting with verifying whether someone has consent to upload intimate images. This might involve the uploader and subject confirming consent before posting. It's invasive and raises its own privacy concerns, but it would prevent non-consensual uploads before they happen.

Legislation will likely drive much of this evolution. As countries pass more specific laws about non-consensual content, platforms will be forced to respond with better tools.

AI-powered support could expand beyond hotline links. Imagine an AI assistant helping victims understand their options, draft cease and desist letters, and navigate legal processes. It wouldn't replace human support, but it could bridge gaps when human resources are unavailable.

International standards might emerge. Right now, Google's tool works differently across countries based on local regulations. Eventually, there might be international agreements on how platforms should handle non-consensual content, similar to agreements on child safety.

Real-World Impact: What People Actually Experience

Despite limitations, the tool is making a difference for people who use it.

Victims report relief at having a straightforward process. Instead of navigating Google's generic removal form and hoping for the best, they can specifically describe what they're reporting and see real-time updates on whether their requests are being processed.

The immediate connection to support resources is genuinely valuable. Many victims don't know where to turn. Being directed to RAINN, local legal aid organizations, and crisis counseling within the same interface where you request removal is substantively more helpful than a generic form.

The "Results about you" hub gives people ongoing control. Instead of being passive victims waiting for Google to maybe respond, they have an active tool they can check regularly. That sense of control, even if limited, matters psychologically.

For some people, removal from Google Search is sufficient. They're less concerned about the image existing somewhere on the internet than about it appearing in search results when colleagues, family, or potential employers Google their name. Making the image unfindable through Google's ubiquitous search engine meaningfully improves their daily life.

Of course, for others, this is insufficient. A victim whose explicit images are hosted on a major porn site, shared across social media, and discussed in forums won't feel protected by Google removal alone. They want the content gone entirely, and Google removal is just one small piece of that.

But for many victims, it's the difference between having no tools and having some tools. And some tools are significantly better than no tools.

Comparing Platforms: Who Else Offers Removal Tools

How does Google's approach stack up against other major tech companies?

Meta (Facebook/Instagram): Has removal processes buried in report menus. You report to Meta, they decide whether to remove. No dedicated dashboard tracking requests. No immediate support resources. The process works, but it's less user-friendly than Google's.

Microsoft Bing: Offers removal requests through its webmaster tools, which are less prominent than Google's dedicated tool. Bing's smaller share of the search market means fewer people see its results, so removal there is less critical.

DuckDuckGo: Focuses on privacy but doesn't index as much of the web as Google. Offers a basic removal request capability, and its smaller index means fewer non-consensual images appear there.

Twitter/X: Allows reporting of non-consensual intimate images with relatively fast removal. But moderation is inconsistent. The tool works when your report reaches the right person.

TikTok: Has a dedicated removal process for non-consensual content. Reportedly responsive, likely because the platform skews young and regulators scrutinize its moderation practices heavily.

Pornhub: Surprisingly, it has perhaps the most straightforward process. You can submit removal requests directly on the site with identity verification, and the company follows through on removing content. This may be because hosting revenge porn is bad PR and a regulatory risk.

Overall, Google's tool is most comparable to a combination of TikTok's responsiveness and Pornhub's straightforwardness. The dedicated dashboard and immediate support resources are features Google uniquely emphasizes.

A Realistic Assessment

Google's removal tool for non-consensual explicit images is a meaningful but partial solution to a significant problem.

What it gets right: It's accessible, transparent, and combines removal with support resources. The "Results about you" hub provides ongoing monitoring and control. The tool acknowledges both deepfakes and real images. It doesn't require technical expertise. Processing appears to be fast, though Google hasn't published exact timeframes.

What it doesn't address: It doesn't prevent uploads, doesn't remove content from other platforms, and doesn't eliminate harm already done. It doesn't attack the underlying problem that people upload non-consensual content in the first place.

The bottom line: For someone who discovers their explicit images in Google Search, this tool provides more options than existed before. That matters. It's not perfect, but it's better than the alternative of having no dedicated path to removal.

The real solution to non-consensual explicit images requires action across multiple fronts:

- Stronger prevention systems on hosting platforms

- Faster removal processes across all platforms

- Successful prosecution of people who create and distribute such content

- Cultural change that treats this behavior with the seriousness it deserves

Google's tool is part of that multi-pronged approach. It's one tool, not the solution. But one tool is better than zero.

FAQ

What is Google's non-consensual image removal tool?

It's a dedicated feature in Google Search that lets you request removal of explicit images posted about you without your consent. You can identify images as real photos, deepfakes, or containing personal information, then submit removal requests with tracking through Google's "Results about you" hub. Google processes requests and notifies you of the outcome.

How do I access Google's removal tool for explicit images?

When you encounter an explicit image in Google Search results, click the three-dot menu next to it (on desktop or mobile). Select "Remove result" and choose what the image shows—sexual content, content involving minors, or personal information. Fill in additional details and submit. You can also batch-submit multiple images at once.

What is the "Results about you" hub?

It's a privacy dashboard where you can track all your removal requests, monitor what personal information appears in Google Search results, and set up alerts for sensitive data like Social Security numbers, driver's licenses, and passport information. You can access it by logging into your Google account and navigating to the hub.

How long does Google take to process removal requests?

Google states that processing is relatively quick but doesn't specify exact timeframes. You can check status in real time through the "Results about you" hub. Many requests appear to be processed within days, though complex cases may take longer. You'll be notified of the outcome in your hub.

What happens if Google denies my removal request?

If Google determines content doesn't violate their policies or doesn't meet criteria for removal, they'll deny the request and explain why in your hub. You can appeal denied requests, and Google will review them again. If you believe the content violates laws in your jurisdiction, you might also consider legal action or reporting to law enforcement.

Does removing an image from Google Search delete it from the entire internet?

No. Removal from Google Search only stops Google from directing people to that content. The image remains on whatever site originally hosted it, and you'd need to contact that platform separately to request deletion. Removing it from Google still matters because it sharply reduces how easily people can find it, but it doesn't eliminate the content.

How is deepfake content handled differently?

When you report sexual imagery, Google asks whether it's a real photo or a deepfake. This distinction matters because deepfakes are synthetic AI-generated content, while real images document actual violations. Google may handle the two differently, though it hasn't detailed how. Both are harmful, and both are eligible for removal.

Is my personal information safe if I add it to the Results about you hub?

Google stores sensitive information like your SSN encrypted locally on your device. They don't retain it on their servers in unencrypted form. However, you're providing Google with information they don't typically have, so consider whether the protection benefit outweighs the concentration of data with one company. The tool is optional—you only set it up if you want the monitoring service.

What support resources does Google provide when I submit a removal request?

Google immediately directs you to organizations including RAINN, local crisis services, legal aid organizations, and counseling resources. These include crisis hotlines you can call immediately and legal organizations offering free or low-cost assistance with image-based abuse cases.

Can I report images of other people?

The tool is designed for images of yourself. You can report images of minors (anyone under 18) in sexual content, which helps protect child safety. But you cannot unilaterally remove images of adults on behalf of someone else. The person depicted needs to submit the removal request themselves to verify legitimacy and prevent malicious removals.

When will this tool be available in my country?

Google is rolling out the tool gradually, starting in the United States and then expanding to Europe and other regions. The phased rollout allows for regulatory compliance (particularly GDPR in Europe) and for gathering feedback. Check your "Results about you" hub or Google Search settings to see whether it's available in your region. It should reach most countries by mid-2025.

Conclusion

Google's new removal tool for non-consensual explicit images represents a genuine, if incomplete, step forward in addressing one of the internet's most harmful phenomena.

For someone who discovers explicit images of themselves in Google Search results, having a dedicated, straightforward removal process is transformative. Before this tool, victims faced fragmentation and bureaucratic obstacles. Now they can submit requests in minutes and track status in real-time.

The integration with support resources—crisis hotlines, legal aid, mental health services—recognizes that removal is only part of the solution. The emotional and practical impacts of having such content exposed require wraparound support.

The expansion of the "Results about you" hub to monitor Social Security numbers, driver's licenses, and passport information addresses a different but related concern: identity theft. Preventing sensitive personal information from appearing in public search results protects people from fraud and harassment.

The acknowledgment of deepfakes is forward-thinking. Synthetic explicit content is a growing threat, and building specialized handling into removal tools anticipates future problems.

But the limitations are real and should be understood. Removing from Google doesn't remove from the internet. It doesn't prevent future uploads. It doesn't address the broader cultural problem that some people create and share non-consensual explicit images.

A complete solution requires:

- Hosting platforms being more aggressive about preventing non-consensual uploads

- Faster removal processes across all platforms

- Criminal prosecution of people who create and distribute such content

- Cultural change that treats this behavior with appropriate seriousness

Google's tool helps address these issues but is not a complete solution. It's one piece of a multi-pronged approach that includes platform policies, legal frameworks, victim support, and cultural standards.

For victims of image-based abuse, that's progress. Is it enough? No. But it's significantly better than what existed before.

If you're concerned about your own search presence or have discovered non-consensual content online, take action today. Set up your "Results about you" hub to monitor for future issues. If you find explicit imagery, use Google's removal tool. Contact the hosting platform directly. Reach out to support organizations. Document everything.

You don't have to navigate this alone. The tools and resources exist. They're imperfect, but they're real and they help.

Key Takeaways

- Google's removal tool makes it easy to request deletion of non-consensual explicit images from Search with a simple three-dot menu interface

- The Results about you hub provides centralized tracking of removal requests and monitoring for sensitive personal information like SSNs and driver's licenses

- The tool includes immediate connections to RAINN, crisis hotlines, and legal aid organizations—not just removal functionality

- A separate deepfake reporting option acknowledges that synthetic explicit content requires different handling than real photographs

- While the tool helps with Google Search visibility, it doesn't delete content from original hosting sites or prevent uploads—a partial but meaningful solution
