OpenAI's Super Bowl Hardware Hoax: What Really Happened and Why It Matters

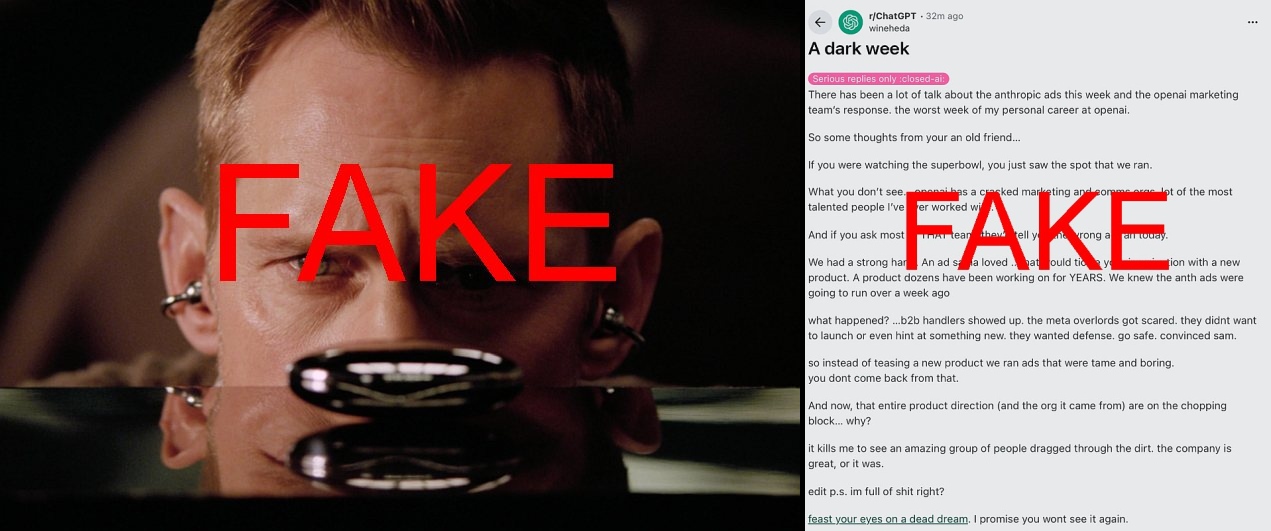

In February 2025, the tech world fell for one of the slickest hoaxes in recent memory. Videos and images began circulating online showing what looked like an official OpenAI Super Bowl commercial featuring actor Alexander Skarsgård alongside design legend Jony Ive, both playing with an elegant orb-shaped hardware device. The narrative was compelling: OpenAI had supposedly pulled the ad at the last minute due to competitive concerns. The story spread across Twitter, tech blogs, and industry publications within hours.

There's just one problem. None of it was real.

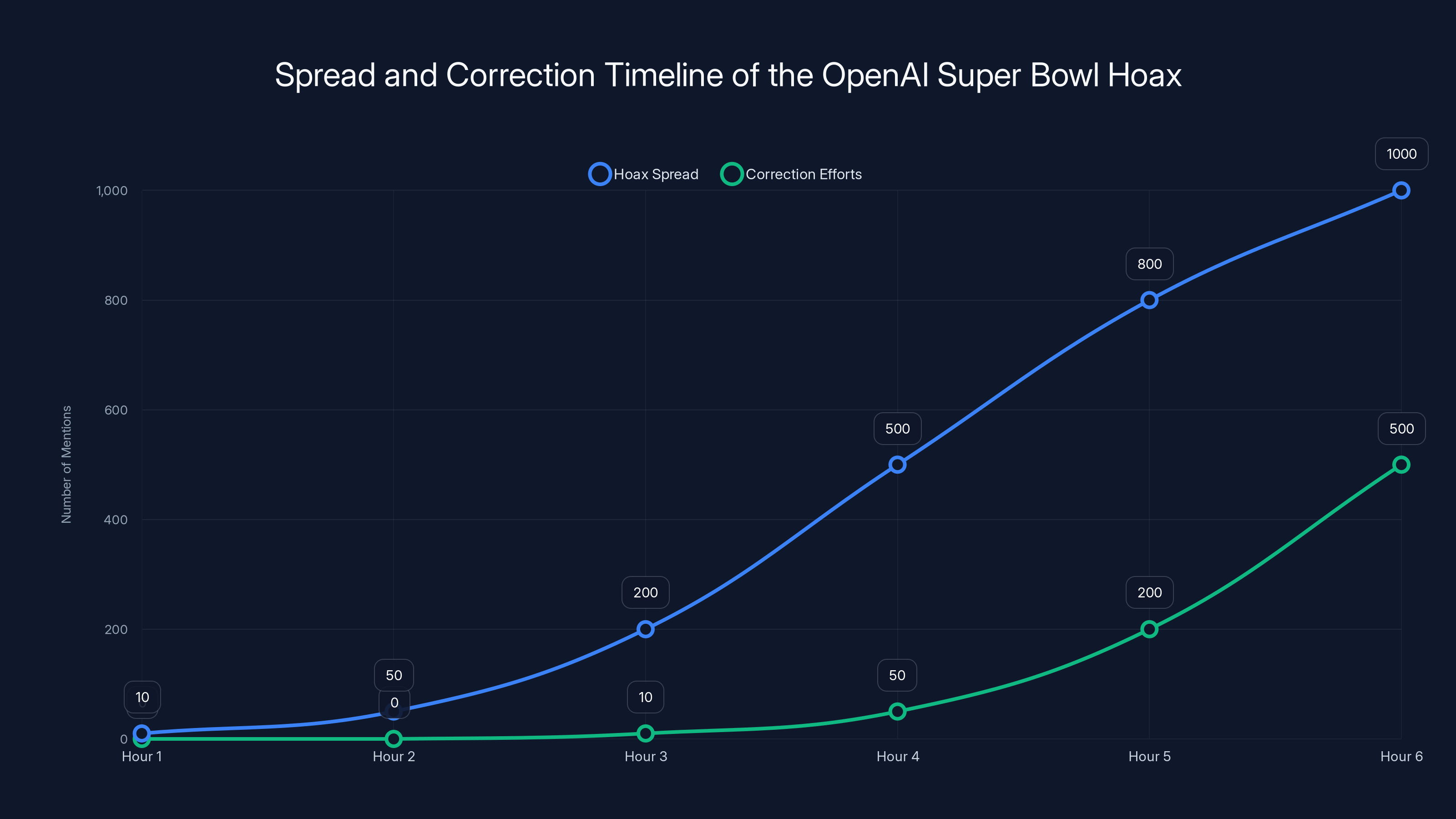

OpenAI president Greg Brockman quickly shot back on X, calling it "fake news," and company spokesperson Lindsay McCallum Rémy declared the entire story "totally fake." But the damage was already done. By that point, the hoax had seeded itself across the internet, demonstrating something troubling about how information travels in the AI era: it takes seconds to create convincing fiction, and minutes to spread it globally, but hours or days to correct it.

This wasn't a sloppy deepfake or an obvious forgery. The perpetrators went to extraordinary lengths, crafting fake news headlines, creating fraudulent websites, and even paying influencers real money to promote tweets about the non-existent product. Understanding how this hoax worked, who was behind it, and what it reveals about our information ecosystem is crucial for anyone paying attention to AI, tech, and media credibility in 2025.

TL;DR

- The Hoax: A fake Super Bowl ad supposedly showing OpenAI's first hardware device with Skarsgård and Ive went viral across social media and tech outlets

- The Scope: The perpetrators created fake websites, fraudulent news headlines, paid influencers, and sent promotional emails with real payment transfers

- The Speed: Misinformation spread globally within hours before OpenAI issued denials

- The Lesson: Even tech-savvy audiences can fall for convincing fabrications when they confirm existing expectations about company behavior

- The Implication: As AI generation tools become more sophisticated, distinguishing real from fake will become exponentially harder

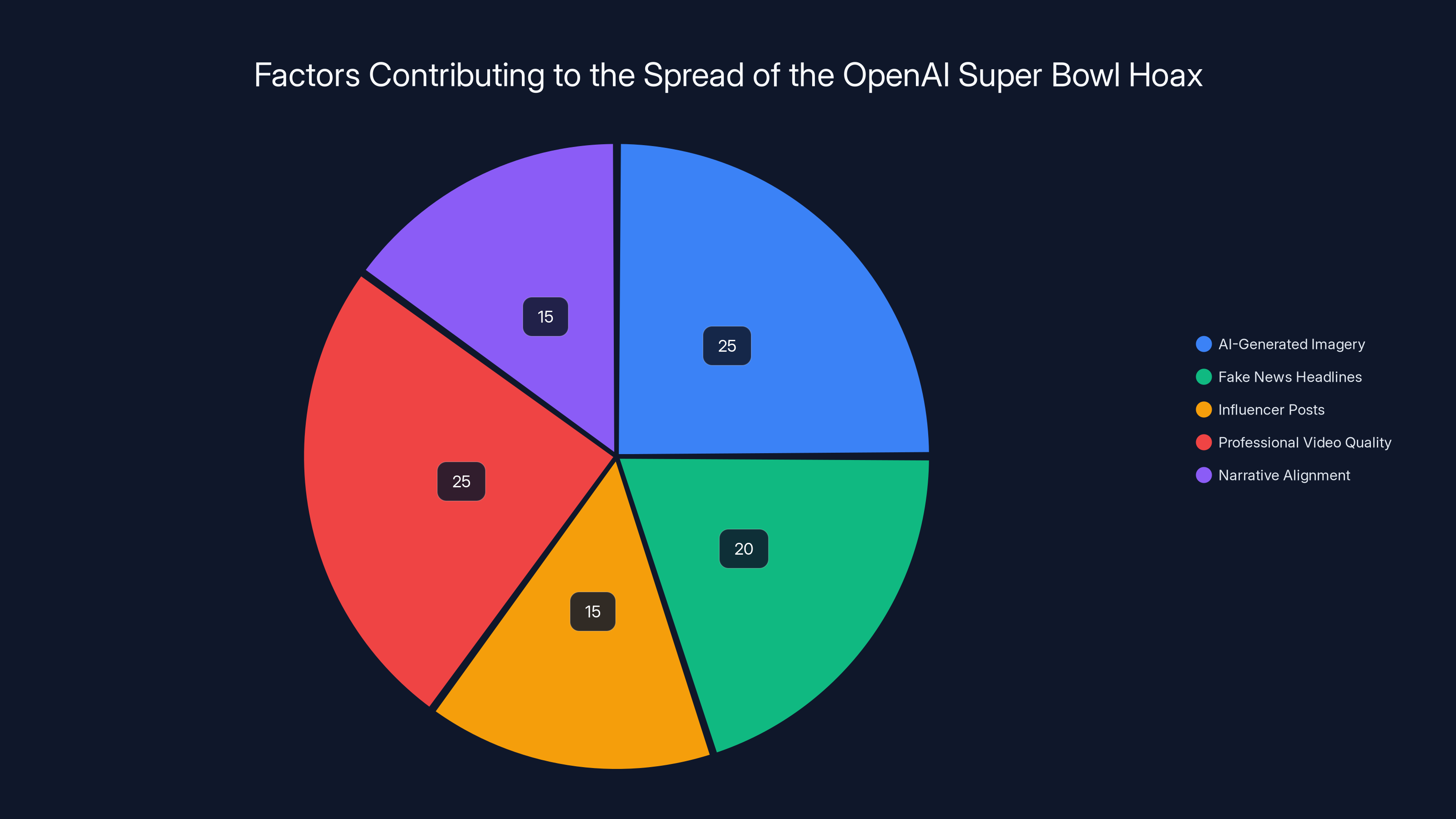

AI-generated imagery and professional video quality were the most influential factors, each contributing 25% to the hoax's believability. Estimated data based on narrative description.

How the Super Bowl Hoax Actually Worked

The anatomy of this hoax reveals a coordinated, sophisticated operation. It wasn't the work of a teenager with Photoshop skills. This required planning, resources, and multiple attack vectors designed to make the lie seem true from every angle.

The core of the hoax centered on a fabricated video or series of images showing the fictional hardware device. The design was sleek and minimalist, exactly the kind of aesthetic you'd expect from a collaboration between former Apple design chief Jony Ive and an AI company trying to look cutting-edge. The imagery included a polished orb with subtle lighting effects, earbuds that appeared Apple-inspired, and professional cinematography that looked like it came from a premium brand campaign.

What made this believable wasn't just the visual quality. It was the narrative context. OpenAI has been aggressively pursuing hardware. Sam Altman, the company's CEO, has made public statements about building AI devices. Jony Ive and Altman did announce a real AI hardware project in late 2024, so the idea of actual hardware wasn't fictional. The hoax simply took what people already suspected was coming and presented it as if it had already happened.

The perpetrators also understood influencer dynamics. Max Weinbach, a tech leaker with a significant following, tweeted screenshots of an email he'd received roughly a week before the hoax went public. The email proposed promoting a tweet about the OpenAI hardware teaser ad and apparently included a real payment of $1,146.12. This wasn't asking someone to spread rumors. It was literally paying them to post about a product that didn't exist, creating a false record of advance knowledge.

The fake news angle came through multiple channels. Ad Age reporter Gillian Follett called out a fake headline falsely attributed to her, portraying a story about OpenAI changing its Super Bowl ad at the last minute. OpenAI CMO Kate Rouch mentioned the existence of "an entire fake website" designed to back up the same narrative. These weren't accidents or misunderstandings. Someone built infrastructure specifically designed to create the appearance that this story had been reported by legitimate news outlets.

That's the sophistication that separates a hoax from random noise. Any idiot can post something fake online. Making it look like multiple independent sources are reporting the same story? That requires coordination, planning, and resources.

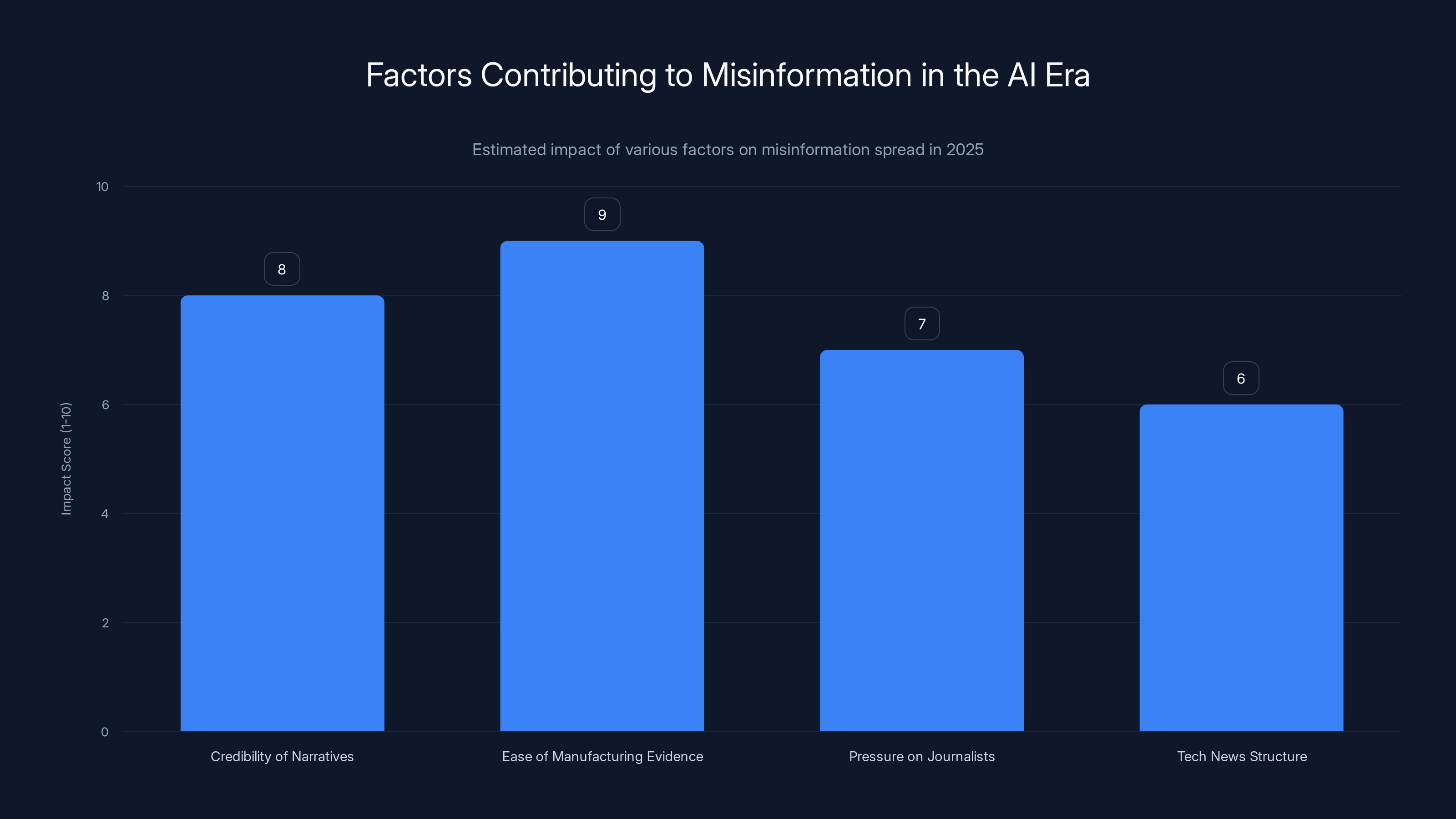

Estimated data suggests that the ease of manufacturing evidence and the credibility of narratives are major factors in the spread of misinformation in the AI era.

The Timing and Strategic Placement

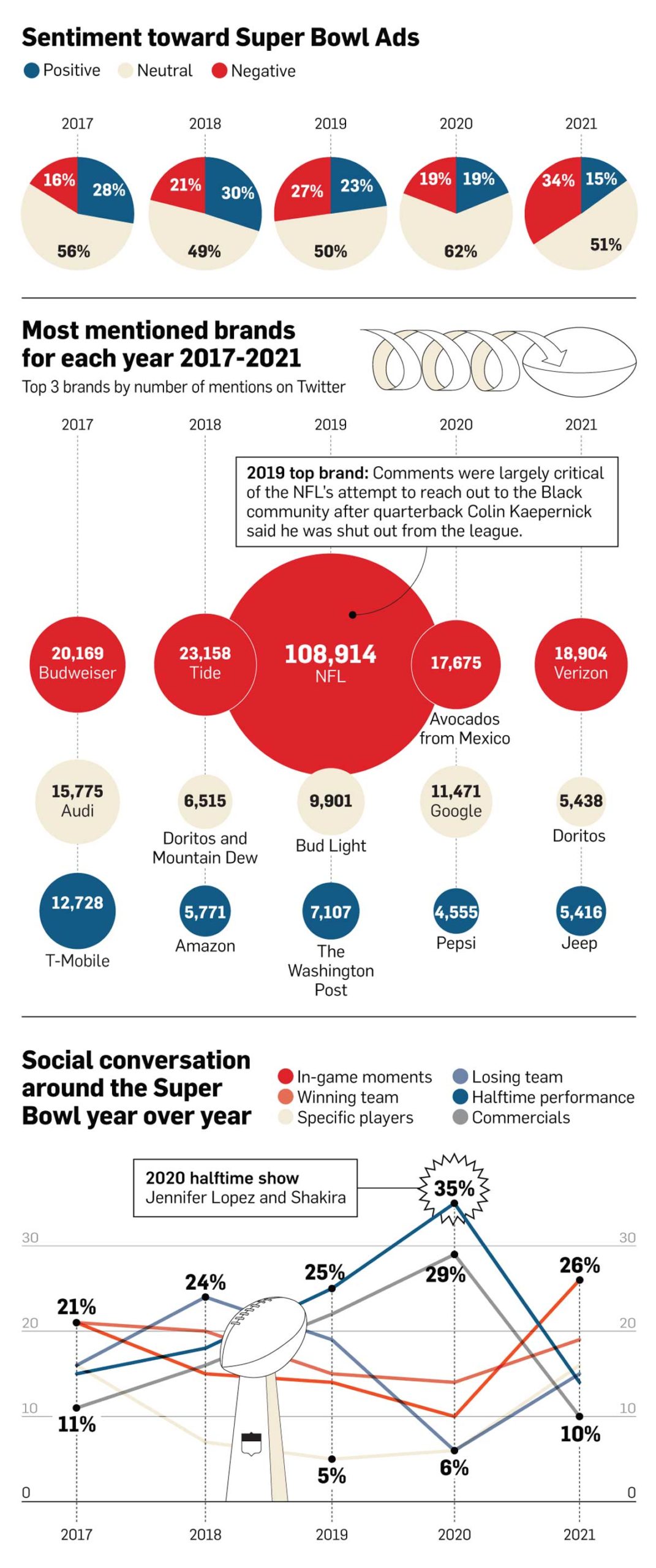

The hoax dropped at the most strategically valuable moment: Super Bowl season. In America, the Super Bowl isn't just a sports event; it's also the advertising Olympics. Major brands spend $7 million or more for 30 seconds of air time. Tech companies have historically used the Super Bowl to announce major products or positioning shifts.

Apple introduced the Macintosh in a Super Bowl ad in 1984. Microsoft, Google, Amazon, and others have all used the Super Bowl as a platform for major announcements. So when people saw a Super Bowl ad supposedly showing OpenAI's first hardware, it fit perfectly with existing expectations about how major tech announcements work.

The timing also mattered because OpenAI is in an increasingly competitive hardware race. Anthropic, Microsoft, and various hardware startups are all pushing toward AI devices. The narrative that OpenAI pulled an ad because of competitive concerns wasn't absurd. It was the kind of corporate paranoia that actually happens.

February 2025 also placed the hoax during a period of high AI interest. The previous months had seen significant announcements about o1 reasoning models, competitive tensions between OpenAI and other labs, and increasing scrutiny of AI companies' moves into hardware. The hoax rode a wave of genuine news momentum.

What the Hoax Reveals About Misinformation in the AI Era

This hoax is more than just embarrassing for people who fell for it. It's a case study in how misinformation operates in 2025, especially when AI-generated content becomes more convincing.

First, the hoax demonstrates that humans remain deeply credulous when presented with coherent narratives. The elements felt real because they connected to established facts. Yes, Jony Ive and Sam Altman announced an AI hardware project. Yes, OpenAI does plan to build devices. Yes, companies do sometimes pull ads before major broadcasts. Yes, Super Bowl ads are expensive and companies do compete for messaging advantage. By stringing together true premises and adding one false conclusion (the ad got pulled and was being leaked), the hoaxers created something that felt internally consistent.

Second, it shows how easy it is to manufacture evidence in the digital age. Creating convincing imagery and video is no longer expensive. The tools exist. Professional-quality renders can be made with AI image generators like Midjourney or DALL-E. Video editing is accessible. Deepfakes, while still somewhat detectable, are getting better. By 2026, we may not be able to reliably distinguish real from fake video without forensic analysis.

Third, the hoax reveals structural problems with how tech news travels. Tech journalists are simultaneously trained to be skeptical and incentivized to be first. If a story looks plausible and has some evidence behind it, the competitive pressure to publish before competitors often overrides caution. This doesn't excuse sloppy reporting, but it explains why the hoax was so effective. Some outlets probably ran versions of this story before confirming directly with OpenAI.

Fourth, it highlights the vulnerability of the influencer economy. Influencers are paid to promote things. That's how the ecosystem works. But when you can be paid to promote things that don't exist, you've created a marketplace for lies. The person who received that $1,146.12 payment was being directly compensated to assist in spreading misinformation. How many other influencers and accounts shared the content without knowing about the payment? The payment created a false record of credibility (look, someone with standing knew about this in advance).

Fifth, the hoax exploits our trust in institutional validation. When a fake Ad Age headline exists, it creates the appearance that professional news outlets are reporting on the story. When there's a fake website, it looks like companies are documenting this narrative. We trust institutions to catch lies, but when the institutions themselves can be faked, that protection disappears.

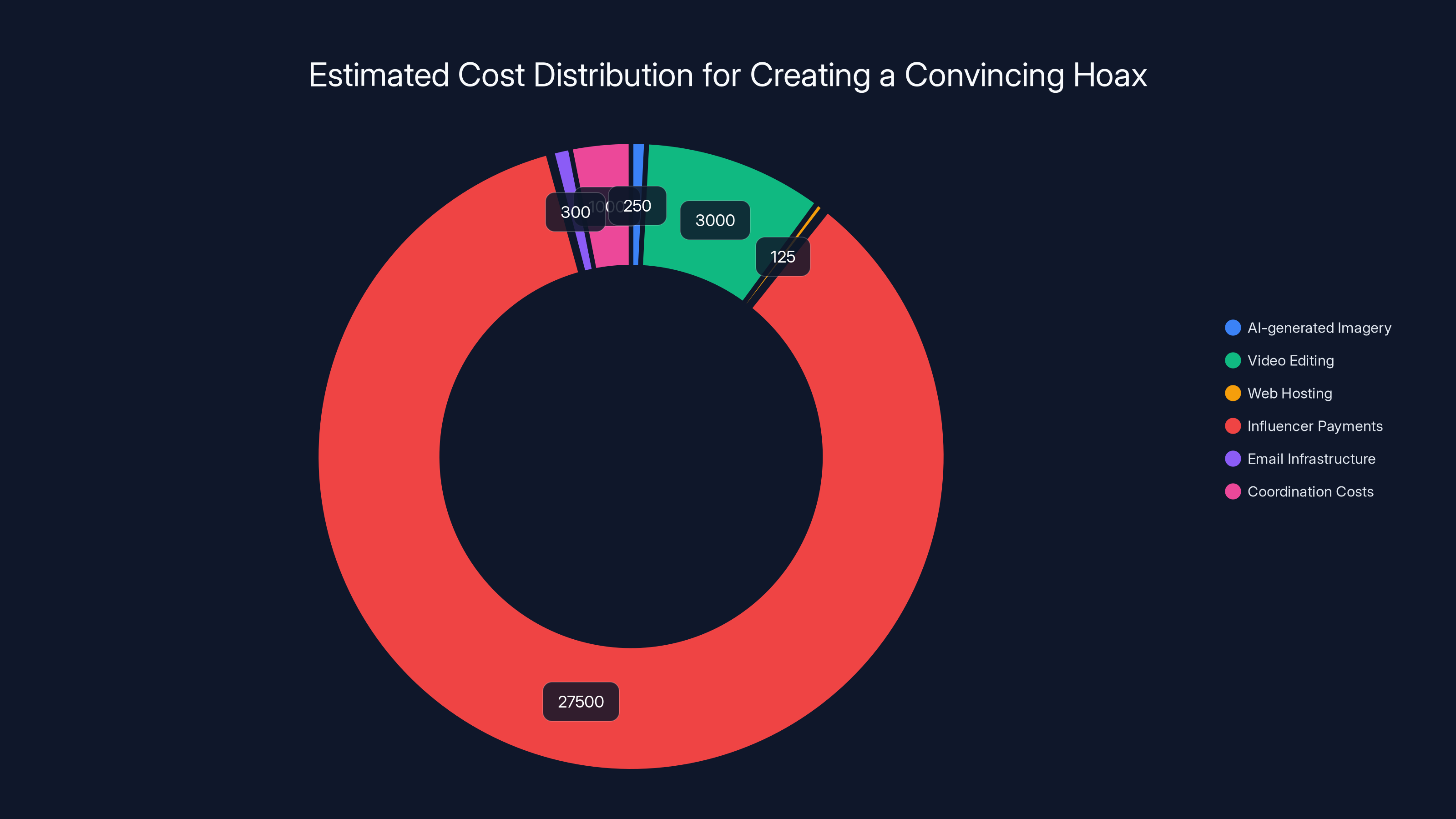

Influencer payments and video editing are the largest expenses in creating a convincing hoax, potentially accounting for over 80% of the total cost. Estimated data.

The Business of Creating Convincing Hoaxes

Someone invested real resources into this. We're not talking about posting fake news on Reddit. This required infrastructure, payment processing, social coordination, and possibly custom media creation.

The costs would include:

- High-quality AI-generated or rendered imagery (500, depending on tools used)

- Video editing and composition (5,000 if outsourced)

- Web hosting and domain registration (200)

- Influencer payments (50,000 depending on reach)

- Email infrastructure (500)

- Coordination across multiple accounts and platforms (time cost)

So we're probably talking about someone spending

Possible motivations included:

Competitive sabotage: A rival company or AI lab wanting to damage OpenAI's credibility or distract from announcements.

Influence manipulation: Someone wanting to move markets or test the propagation speed of misinformation.

Attention and clout: A hacker or social engineer wanting to prove they could fool the tech industry and achieve fame.

Research: Someone studying how misinformation spreads, funded by a university or research institution.

Marketing for another product: A company wanting to ride the attention wave to promote something else.

The investigation into who was actually behind it continued through the spring of 2025, but the perpetrators had covered their tracks well. Crypto wallets don't easily trace back to identities. Email addresses can be spoofed. Influencers who received payments might not even know who hired them, just that they got paid through intermediaries.

OpenAI's Response and Corporate Reputation Management

OpenAI's response was swift and direct, which probably limited the damage but didn't eliminate it. Greg Brockman and Lindsay McCallum Rémy both issued quick denials on their primary communication channels. By responding quickly and forcefully, OpenAI made it clear the story was fabricated.

However, there's a particular irony here. OpenAI is a company built partly on surprising announcements and carefully controlled information releases. Sam Altman is known for strategic communications. The company has a history of creating hype around announcements. So when a story combines something true (the company is working on hardware) and something likely true (a major announcement is coming) with fabricated leaks, it creates cognitive dissonance. People are inclined to believe OpenAI would pull off something this elaborate.

The company's CMO Kate Rouch had to publicly acknowledge the fake website, fake headlines, and coordination behind it. This transparency actually helped credibility. Rather than just saying "it's fake," they explained the specific vectors the hoaxers used. This made it clear that dismissing the story as mere misinformation wasn't enough. There was actual coordination and infrastructure behind it.

The incident also probably accelerated OpenAI's timeline for announcing actual hardware. If you're going to be associated with hardware rumors regardless, you might as well get ahead of it. Within weeks, OpenAI would release more concrete details about hardware partnerships and timelines, allowing them to control that narrative rather than letting hoaxes define the conversation.

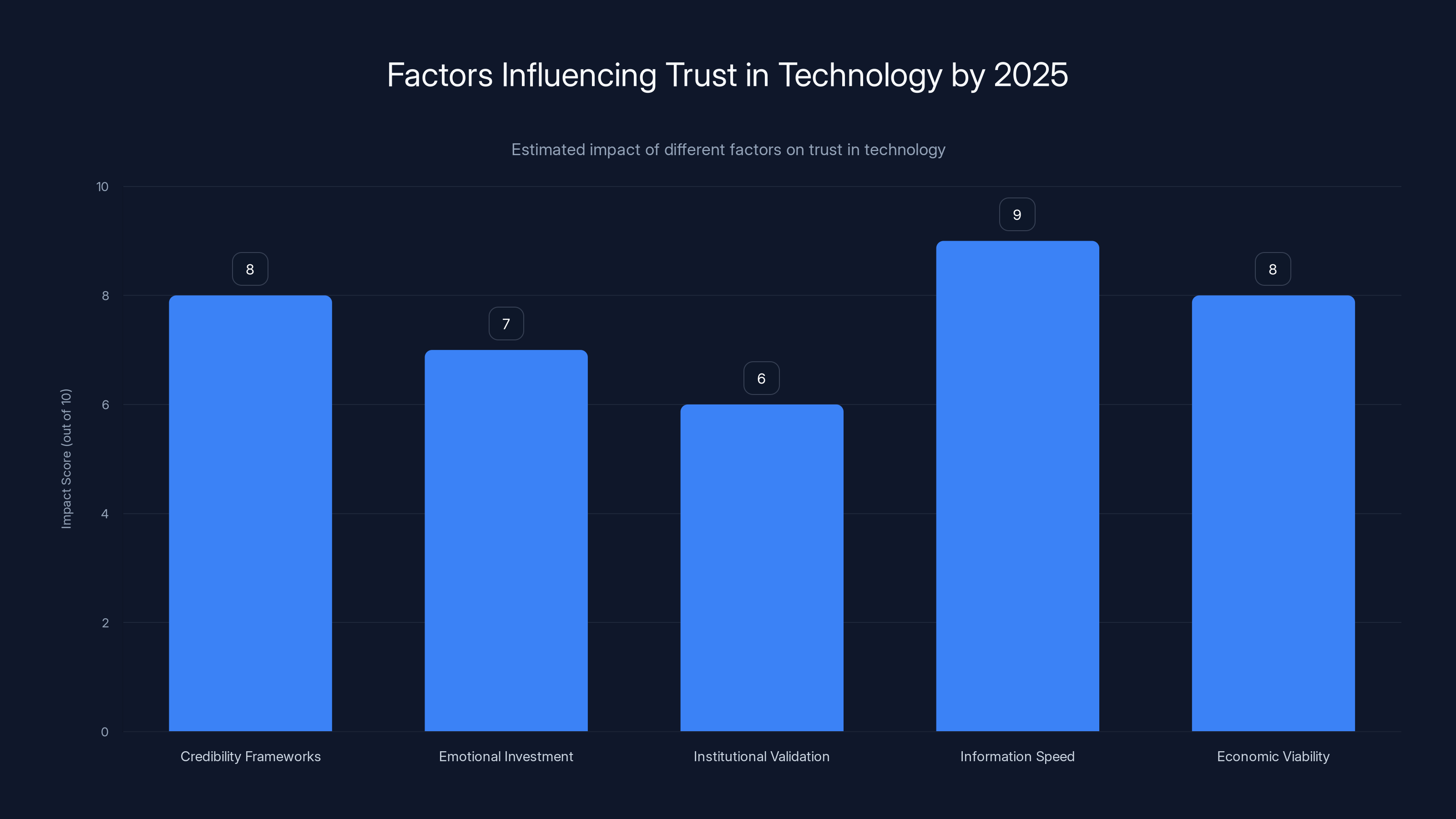

Estimated data suggests that the speed of information distribution and economic viability of misinformation are the most impactful factors affecting trust in technology by 2025.

How This Compares to Previous Tech Hoaxes and Leaks

This isn't the first time the tech industry has fallen for an elaborate hoax, but it might be the most coordinated and well-funded one targeting an AI company specifically.

Previous notable tech hoaxes included:

The AirPower wireless charger rumors (2019-2023): Apple famously cancelled this product after years of development. But for years before and after, fake images of AirPower units in the wild circulated online, along with hoaxes claiming the product was coming back. People wanted to believe AirPower was real, so hoaxes found an audience.

The iPhone 4 prototype bar incident (2010): An iPhone 4 prototype was found in a bar and later recovered by Apple. The episode led to an investigation and was a genuine leak, not a hoax, but it shows how physical leaks can trigger massive attention.

Various "leaked" product images: Throughout the 2010s, fake images of upcoming Samsung, Apple, and Google products circulated constantly. Some were genuine leaks, others were fakes. The lack of certainty created an environment where hoaxes could thrive.

But the OpenAI Super Bowl hoax had something those previous hoaxes lacked: sophisticated multimedia content, coordinated influencer participation, fake institutional validation, and significant funding. It was hoaxing at scale and with resources.

The Role of AI in Creating and Detecting Hoaxes

Here's the uncomfortable part of this story: the same AI technology that makes OpenAI valuable also makes hoaxes like this much easier to execute.

Tools like Midjourney can generate photorealistic images from text prompts. DALL-E 3 and Stability AI's models can create compelling product renders. Runway can generate video. These tools democratized high-quality media creation. Someone doesn't need a $10,000 camera and a professional videographer to create something that looks professional.

Conversely, there are emerging tools designed to detect AI-generated content. IBM, Microsoft, and various startups are working on forensic analysis to identify deepfakes and generated media. But these tools are in an arms race with generation tools. Every time detection improves, generation improves. We're in an escalation cycle.

The hoax probably benefited from the fact that most people can't forensically analyze media. If you see a professional-looking video of Skarsgård and Ive with an elegant device, you just... believe it's real? That's the current state of media literacy. We're outsourcing credibility assessment to whether something "feels" professional, not whether it's actually real.

The hoax spread rapidly within the first few hours, reaching a peak before significant correction efforts began. Estimated data.

Implications for Tech Journalism and News Credibility

This hoax puts tech journalists in a difficult position. They're expected to break news first, but they're also expected to verify before publishing. The pressure to be first often wins, especially when a story seems plausible.

Some tech outlets probably ran versions of this story before OpenAI issued denials. Others were more cautious. The outlets that published immediately had to issue corrections. The outlets that waited looked slow. This creates perverse incentives: publish without full verification and risk spreading misinformation, or wait for verification and risk being scooped.

The solution probably involves changing how tech journalism works. Rather than individual journalists competing to break stories first, we could see more emphasis on verification networks and collaborative investigation. Imagine tech journalists having a shared verification system: before any major leak is published, multiple journalists confirm it independently. This would slow down individual publications but increase overall credibility.

Alternatively, tech companies could be more proactive about directly communicating with journalists. If OpenAI had a system where journalists could quickly verify claims by reaching out to company representatives, fewer hoaxes would gain traction. Some companies do this. Others keep journalists at arm's length, creating information vacuums that hoaxers can fill.

The Broader Context: Why We're Vulnerable to These Hoaxes Now

The reason this hoax worked so well is that it arrived at a moment when people are genuinely uncertain about AI and its capabilities. There's real innovation happening. There are real hardware projects in development. There's genuine competition between labs. That uncertainty creates an environment where hoaxes flourish.

If you're not sure whether OpenAI is building hardware (they probably are), a sophisticated story claiming to show that hardware can feel credible. If you're not sure whether hardware announcements would happen at the Super Bowl (they might), that narrative fits. If you're unsure about the relationship between Jony Ive and OpenAI (it's real, announced in 2024), then seeing them together in an ad feels plausible.

The hoax exploited genuine uncertainty and filled it with a confident false narrative. This is the core vulnerability: people don't know what's true, so they accept what feels true.

This is going to get worse before it gets better. As AI gets more capable, the ability to generate convincing false content will only increase. By 2027, we probably won't be able to trust video or audio at all without cryptographic verification or blockchain proofs of authenticity. The legal and technical infrastructure for proving something is real hasn't caught up with the ability to fabricate it.

Lessons for Companies, Journalists, and Users

If you're an executive at a tech company, the lesson is clear: get ahead of your own news. Announce things on your own timeline before hoaxers can do it for you. Control the narrative. Jony Ive and Sam Altman had announced their hardware partnership, so hoaxers could credibly claim to show that product. If there were no prior announcements, the hoax would have seemed more obviously fake.

If you're a journalist, the lesson is: verify before you publish, even if it means being slower. Corrections damage credibility more than being second does. Build relationships with company PR teams so you can quickly verify claims. Don't rely on social media screenshots as primary evidence.

If you're a user consuming tech news, the lesson is: be skeptical of leaks that confirm what you already expected. The more a story aligns with your priors, the more likely you are to believe it without questioning. Real news often surprises you. Hoaxes usually confirm your expectations and provide a satisfying narrative.

More specifically:

- Check official channels first before accepting leaked information

- Cross-reference sources beyond social media and blogs

- Be suspicious of influencer posts promoting leaks (especially without context)

- Notice when multiple sources publish nearly identical stories (might be coordinated)

- Wait for company responses before treating rumors as fact

- Remember that professional-looking content doesn't equal real content
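One item on this checklist, spotting nearly identical stories across supposedly independent sources, lends itself to a rough automated check. The following is a minimal sketch using Python's standard `difflib` module. The source names, the sample snippets, and the 0.9 threshold are all assumptions made up for illustration, not data from the actual hoax, and text similarity alone cannot prove coordination.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio, ignoring case and extra whitespace."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def flag_near_duplicates(stories: dict[str, str], threshold: float = 0.9):
    """Return (source_a, source_b, score) for pairs at or above the threshold."""
    flagged = []
    for (src_a, text_a), (src_b, text_b) in combinations(stories.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            flagged.append((src_a, src_b, score))
    return flagged


# Hypothetical snippets, invented for illustration only.
stories = {
    "blog-a.example": "OpenAI pulled its Super Bowl ad at the last minute over competitive concerns.",
    "blog-b.example": "OpenAI pulled its Super Bowl ad at the last minute over  competitive concerns!",
    "blog-c.example": "A company spokesperson said the circulating video is fake.",
}

for src_a, src_b, score in flag_near_duplicates(stories):
    print(f"{src_a} and {src_b} look near-identical (similarity {score:.2f})")
```

A high score only says two texts share wording. Wire copy and syndicated press releases also produce near-duplicates, so a flag is a prompt to check where each story originated, not a verdict.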

What Happened Next: Fallout and Industry Impact

The hoax probably had second- and third-order effects that rippled through the industry for months.

First, it probably influenced how other companies approach product announcements. If hoaxers can credibly fake your Super Bowl ads, then you need to either announce faster or communicate more explicitly about what's real and what's not.

Second, it probably increased skepticism toward all hardware leaks in the AI space. For months after, legitimate leaks about AI hardware were probably treated with more caution. This makes it harder for actual news to spread.

Third, it probably accelerated regulation or discussion about synthetic media and misinformation. Policymakers saw that hoaxes could move markets and influence public perception. That creates pressure for new rules.

Fourth, it probably influenced how OpenAI and other labs think about hardware announcements. The fact that people wanted this to be real so badly suggested there's genuine appetite for AI hardware. But the hoax also showed the dangers of uncertainty.

Fifth, it probably made influencers more cautious about what they promote. Getting paid to post about something that doesn't exist is a bad look. More influencers probably started asking for verification before accepting promotional deals.

Future Prevention: What Tech Companies Can Do

Companies can't prevent hoaxes entirely, but they can reduce their effectiveness.

Implement verification systems: Use cryptographic signatures or blockchain proofs to verify authentic communications. If you see a supposed press release, there should be a way to verify it came from official channels.

Pre-announce in advance: Give journalists advance notice of major announcements so they're less vulnerable to hoaxes. If journalists know real news is coming, they're more skeptical of fake leaks.

Invest in monitoring: Hire teams to actively monitor for misinformation about your company. The faster you detect hoaxes, the faster you can respond.

Build journalist relationships: Work with trusted journalists to verify claims before hoaxes spiral. A quick call to verify whether something is real prevents amplification.

Be transparent about product timelines: The less uncertainty about what's coming, the harder hoaxes are to believe. If you publicly say hardware is coming in 2026, then fake ads from 2025 seem less credible.

Support media literacy: Fund organizations that teach people how to identify misinformation. This is cheap compared to the cost of hoaxes.

These aren't perfect solutions, but they reduce the surface area for hoaxes.
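To make the verification idea concrete, here is a minimal sketch of tagging and checking a statement with Python's standard `hmac` and `hashlib` modules. It is a simplification under stated assumptions: HMAC relies on a shared secret, so it only works between parties who both hold the key, whereas a public scheme of the kind described above would more likely use asymmetric signatures so that anyone can verify without being able to sign. The key and the statement text are hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret for illustration only. A real system would not
# hard-code a key, and a public scheme would use an asymmetric key pair.
SECRET_KEY = b"demo-key-for-illustration-only"


def sign_release(text: str, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the release text."""
    return hmac.new(key, text.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_release(text: str, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check the tag in constant time; any change to the text invalidates it."""
    expected = sign_release(text, key)
    return hmac.compare_digest(expected, tag)


# Invented statement text, not a real company communication.
official = "We have not produced a hardware ad for this broadcast."
tag = sign_release(official)

print(verify_release(official, tag))                 # genuine text
print(verify_release(official + " (updated)", tag))  # altered text
```

The design point is that the check depends on the exact bytes of the statement, so a doctored screenshot or a reworded "press release" fails verification even if it looks professional.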

The Deeper Question: Why Do We Want to Believe These Hoaxes?

Here's something worth thinking about. The hoax worked because people wanted it to be true. Imagine if the fake ad had shown OpenAI making a terrible product decision. It probably wouldn't have spread the same way. Instead, the ad showed thoughtful design and celebrity appeal. It showed a company doing something exciting. People wanted OpenAI to be building sleek hardware with Jony Ive and Alexander Skarsgård.

This suggests that misinformation succeeds not because we're stupid or lazy, but because it tells stories we want to be true. We're not really believing false information. We're hoping it's true and suspending skepticism accordingly.

That's actually the hardest problem to solve. You can improve media literacy and detection tools, but you can't change the fact that people hope for certain futures. And when someone presents a vision of that future, we're inclined to accept it.

The hoax exposed something about how we relate to tech companies and product announcements. We're eager to believe the next big thing is coming. We're desperate for excitement and innovation. So when someone packages that desire into a coherent narrative with professional-looking evidence, it feels true even when it's not.

That's the real vulnerability. Not that we lack information or literacy, but that we actively want to believe certain stories. Fixing that requires addressing why we're so hungry for these narratives in the first place.

FAQ

What exactly was the OpenAI Super Bowl hoax?

The hoax involved fabricated images and videos of a supposed OpenAI Super Bowl commercial featuring actor Alexander Skarsgård and designer Jony Ive with an elegant hardware device. The story claimed OpenAI had pulled the ad at the last minute due to competitive concerns, and the footage was being leaked online.

How did the perpetrators make it believable?

They used multiple credibility vectors: high-quality AI-generated imagery, fake news headlines attributed to real journalists and outlets, a fake website backing up the narrative, influencer posts with paid promotional content, and professional-quality video that mimicked brand advertising standards. The narrative also aligned with known facts (OpenAI is building hardware, Jony Ive works with OpenAI, companies do pull ads from major broadcasts).

Why did this hoax spread so quickly?

The hoax spread because it confirmed existing expectations about OpenAI's intentions while providing concrete "evidence" in the form of professional-looking media. People wanted to believe it was true. The story also arrived during high media interest in AI and hardware competitions, creating momentum for rapid sharing across social platforms.

How did OpenAI respond to the hoax?

OpenAI president Greg Brockman issued a quick public denial calling the story "fake news." Spokesperson Lindsay McCallum Rémy stated it was "totally fake." CMO Kate Rouch explained the specific coordination behind the hoax, including fake websites and fraudulent headlines. Their swift response limited the hoax's long-term credibility.

What does this reveal about misinformation in the AI age?

The hoax demonstrates that AI-powered content generation makes high-quality fake media accessible to anyone with basic resources. It shows that humans are credulous when presented with coherent narratives that align with existing expectations. It exposes weaknesses in tech journalism (pressure to break news before verification). And it highlights growing uncertainty about what's authentic in an age of synthetic media.

Could AI be used to prevent similar hoaxes in the future?

Yes, in multiple ways. IBM, Microsoft, and other companies are developing forensic analysis tools to detect AI-generated content and deepfakes. Blockchain-based verification systems could authenticate official communications. AI systems trained on company data could also help rapidly identify and flag misinformation about organizations. However, these tools are in an arms race with increasingly sophisticated generation tools.

How should people evaluate leaked tech news in the future?

Apply these verification steps: First, check official company statements before accepting leak claims. Second, verify whether multiple independent sources report the story (not just social media screenshots). Third, notice if the story confirms your existing expectations (hoaxes often do). Fourth, wait for company responses before treating rumors as fact. Fifth, remember that professional-looking content doesn't equal real content. Sixth, be suspicious of influencer posts promoting leaks without clear context or corporate confirmation.

What were the potential motivations behind this hoax?

Possible motivations included competitive sabotage (a rival company wanting to damage OpenAI's credibility), influence manipulation (testing how quickly misinformation spreads or attempting to move markets), attention-seeking (hackers proving they could fool the industry), research (universities studying misinformation propagation), or marketing (another company riding the attention wave). The investigation into the perpetrators continued through 2025 but hasn't reached public conclusions.

How did the hoax exploit the hardware announcement timeline?

The hoax took advantage of existing uncertainty about hardware announcements. OpenAI had announced a real hardware partnership with Jony Ive in 2024, but specific products and timelines remained unclear. The hoax filled this uncertainty with a confident false narrative. By appearing to show what that hardware would look like, it exploited the information gap between what was announced and what was actually known.

What impact did this have on tech journalism practices?

The hoax exposed tension between the pressure to break news first versus verifying claims before publication. Tech journalists faced a choice: publish immediately and risk spreading misinformation, or wait for verification and risk being scooped. This led to discussion about changing journalism workflows, including verification networks, collaborative investigation, and faster company communication channels. Some outlets probably reconsidered their sourcing standards for major announcements.

Why was this hoax harder to detect than typical misinformation?

Unlike obvious misinformation that contains errors or crude execution, this hoax was professionally executed across multiple channels. It included real payments to real people, fake institutional validation, high-quality media production, and a coherent narrative that aligned with known facts. The hoax exploited the fact that people are inclined to believe what they want to be true, and professional presentation gives false confidence in authenticity. These factors combined to make it significantly harder to dismiss as obviously fake.

Key Takeaways About Trust and Technology in 2025

The OpenAI Super Bowl hoax offers several important insights for anyone following technology and media in the AI era.

First, the line between real and fake is increasingly blurry. Professional-looking content doesn't mean authentic content. We need new frameworks for assessing credibility when anyone with accessible tools can generate convincing media.

Second, humans are fundamentally credulous when presented with coherent narratives that align with our expectations. We're not primarily failing due to intelligence gaps, but due to emotional investment in certain outcomes. That's a harder problem to solve.

Third, institutional validation can be faked, which undermines traditional credibility markers. When fake news headlines and fake websites can mimic real ones, our usual verification methods break down.

Fourth, speed of information distribution has outpaced our ability to verify it. Something can go global in hours, but corrections take days or weeks to gain traction. This structural asymmetry favors misinformation.

Fifth, the convergence of AI capability, social media reach, and financial incentives has made professional-grade misinformation economically viable. Someone can spend

Finally, the hoax revealed genuine appetite for the products and narratives it promoted. The fact that people wanted this to be true suggests real demand for AI hardware, celebrity-adjacent tech, and continued innovation from companies like OpenAI. That demand itself isn't misinformation; it is the underlying truth the hoax exploited.

Moving forward, individuals, companies, and institutions will need to evolve their relationship with information. That means developing stronger media literacy, implementing better verification systems, supporting journalism that has time to verify, and fundamentally rethinking how we assess authenticity in an age of synthetic media.

The hoax was ultimately harmless. Nobody lost money. OpenAI recovered quickly. But it was a warning. Next time, the stakes might be higher. Next time, someone might use similar tactics to move markets, influence elections, or damage a company beyond recovery. The question isn't whether we'll see more sophisticated hoaxes. The question is how we'll adapt our institutions and behaviors to handle them.
