India's Aggressive New Deepfake Regulations Transform Content Moderation

India just became one of the first countries to formally regulate deepfakes with teeth. And the regulatory bite is sharp.

Starting February 20, 2026, every major social media platform operating in India faces a radically compressed timeline for removing synthetic audio and video content. We're talking two to three hours from the moment a complaint lands or authorities issue orders. That's not a suggestion. It's a legal requirement with serious consequences for non-compliance.

What makes this particularly significant? India isn't some niche market. With over a billion internet users and a predominantly young population, it's the world's fastest-growing digital ecosystem. When India changes the rules, global tech firms listen. Meta, YouTube, Snapchat, X—they all have to adapt. And when they do, those adaptations often trickle into their global practices.

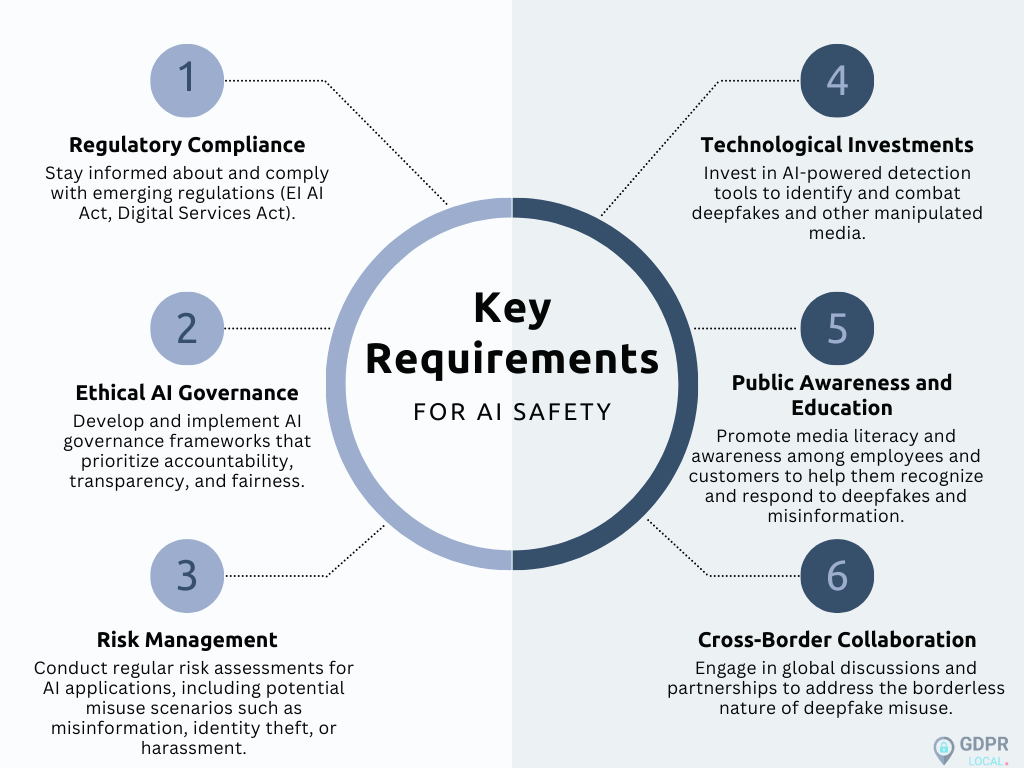

The implications are massive. We're looking at a shift from reactive moderation (spotting problems after they spread) to something closer to real-time content policing. Platforms will need to deploy sophisticated AI systems to identify deepfakes before humans even flag them. Labeling requirements. Traceability data embedded in synthetic content. Automated verification systems. This isn't just policy. It's a complete rearchitecture of how content moderation works.

But here's the thing—these timelines might actually be impossible to meet consistently. The Internet Freedom Foundation, India's leading digital rights group, is already flagging that two to three hours leaves almost zero room for human review. That means platforms will almost certainly automate content removal at scale, potentially catching innocent content in the dragnet.

This creates a fascinating tension. The rules exist to protect people from harmful deepfakes. But the execution might inadvertently suppress legitimate speech and create a chilling effect on what people feel comfortable sharing.

Let's dig into what's actually happening, what platforms are expected to do, and what this means for the global internet.

TL;DR

- New Indian rules take effect February 20, 2026, requiring social media platforms to remove deepfakes within 2-3 hours of complaints or official orders

- Synthetic content must be labeled and embedded with traceable provenance data, with certain categories (non-consensual intimate imagery, criminal content, deceptive impersonations) banned outright

- Non-compliance jeopardizes safe-harbor protections, exposing platforms to greater legal liability in India's massive market of 1 billion+ users

- Aggressive timelines create compliance challenges, requiring platforms to deploy advanced AI systems and automate moderation at scale

- Global impact is inevitable, as platforms adapt their policies to meet Indian requirements and those changes influence international practices

Estimated data shows that the 2-3 hour takedown deadline is significantly shorter than typical moderation cycles, highlighting the operational challenge for platforms.

What India's New Deepfake Rules Actually Require

The amended IT Rules aren't vague policy statements. They're specific technical and operational mandates that every platform must implement.

First, let's understand the scope. These rules target synthetic audio-visual content—deepfakes specifically. Not all AI-generated content. Not all misinformation. Just convincing synthetic media that impersonates real people or fabricates events.

Platforms must now require users to disclose whether content is synthetically generated. Not as a suggestion. As a mandatory field. Think of it like a label on processed food, except the consequence of mislabeling isn't a fine, it's legal liability.

But disclosures alone aren't enough. Platforms must deploy verification tools to check those claims. If someone uploads a video and claims it's real when it's actually a deepfake, the platform's verification system should catch it. This is where AI detection technology becomes critical. The problem? Current deepfake detection systems aren't perfect. Some miss obvious manipulations. Others flag legitimate videos as synthetic.

Any content identified as synthetic must be clearly labeled and embedded with provenance data—essentially a digital fingerprint showing when the content was created, by whom, and whether it's been modified. This traceability requirement is crucial because it creates an audit trail. You can't just slap a "synthetic" label on something and call it a day. There has to be proof of that claim.

Then there are the outright prohibitions. Certain categories of synthetic content are banned entirely: impersonations designed to deceive, non-consensual intimate imagery, content linked to serious crimes, and material that could incite violence or terrorism. These aren't edge cases. They're the categories where deepfakes do the most damage.

The really interesting part? The rules explicitly carve out exceptions for "routine, cosmetic or efficiency-related" uses of AI. So a slight color correction applied with AI during photo editing? Probably fine. A voice-cloning service that clearly labels outputs as synthetic? Probably fine. A deepfake video making someone look like they committed a crime? Absolutely not fine.

The Two-Hour Window That's Breaking Everything

Here's where the rules get operationally brutal: the takedown timelines.

When Indian authorities issue a formal takedown order, platforms have exactly three hours to remove the content. Three hours. Not three business days. Not three hours from when you see the email. Three hours from the moment they formally issue the directive.

For urgent user complaints—content that's causing immediate harm or involves children—the window shrinks to two hours. Two hours to identify the content, verify the complaint, review it for context, and remove it.
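
As a rough illustration of how those two clocks might be tracked, the sketch below computes a removal deadline from the moment a directive or complaint is received. The function and category names are hypothetical, and the three-hour and two-hour windows come from the description above, not from the legal text itself.

```python
from datetime import datetime, timedelta, timezone

# Windows as described in this article; the category names are illustrative.
TAKEDOWN_WINDOWS = {
    "official_order": timedelta(hours=3),
    "urgent_complaint": timedelta(hours=2),
}


def takedown_deadline(received_at: datetime, kind: str) -> datetime:
    """Return the latest time by which the content must be removed."""
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    return received_at + TAKEDOWN_WINDOWS[kind]


def time_remaining(received_at: datetime, kind: str, now: datetime) -> timedelta:
    """Time left before the deadline; negative once the window is missed."""
    return takedown_deadline(received_at, kind) - now


if __name__ == "__main__":
    ist = timezone(timedelta(hours=5, minutes=30))  # India Standard Time
    issued = datetime(2026, 2, 20, 23, 15, tzinfo=ist)
    print(takedown_deadline(issued, "official_order"))
    print(takedown_deadline(issued, "urgent_complaint"))
```

The clock starts at issuance, so a directive that arrives late at night carries a deadline in the early hours of the next morning. That is the practical reason round-the-clock coverage becomes unavoidable.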

To put this in perspective, most content moderation teams operate on 24-hour review cycles. Some run on 48 hours. A few enterprise platforms with round-the-clock moderation can hit 12 hours. Two to three hours means you're operating in real-time mode, 24/7, with zero buffer.

This is why automated systems become essential. You simply cannot have humans reviewing every flagged deepfake within two hours consistently. The math doesn't work. If you're getting 50 complaints an hour about synthetic content, and each requires 15 minutes of human review, that's 12.5 hours of review work arriving every hour. A single reviewer is hopelessly behind within the first hour, and you'd need at least 13 reviewers on shift around the clock just to keep pace.
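
A quick way to sanity-check staffing for any complaint volume is to compute the review workload arriving per hour. The sketch below uses this section's illustrative figures (50 complaints an hour, 15 minutes of review each). The function name is hypothetical, and the calculation ignores queueing delays, breaks, and uneven arrival, so it gives a floor, not a realistic headcount.

```python
import math


def reviewers_needed(complaints_per_hour: float, review_minutes: float) -> int:
    """Minimum number of reviewers working continuously to keep pace."""
    review_hours_per_hour = complaints_per_hour * review_minutes / 60
    return math.ceil(review_hours_per_hour)


if __name__ == "__main__":
    workload = 50 * 15 / 60  # reviewer-hours of work arriving each hour
    print(f"Workload: {workload} reviewer-hours per hour")
    print(f"Minimum reviewers on shift: {reviewers_needed(50, 15)}")
```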

Platforms will almost certainly respond by lowering their automation thresholds. Instead of waiting for high-confidence AI verdicts, they'll err on the side of removal. Why? Because missing a deadline has worse consequences than over-removing content.

According to policy experts analyzing the rules, this creates a perverse incentive structure. Platforms face significant legal liability if they miss takedown windows—they lose safe-harbor protections that shield them from liability for user-generated content. So they'll over-remove. And over-removal means legitimate content gets caught in the dragnet.

A photo edited with AI filters? Potentially removed. A parody video with clearly synthetic elements? Potentially removed. A documentary using deepfake technology for educational purposes? Potentially removed.

The Internet Freedom Foundation has already raised alarms about this, arguing that the compressed timelines essentially guarantee that platforms will choose automated removal over human review, undermining due process and free speech protections.

AI detection systems show high accuracy (85-95%) under controlled conditions but drop to around 70-80% in real-world scenarios. Estimated data.

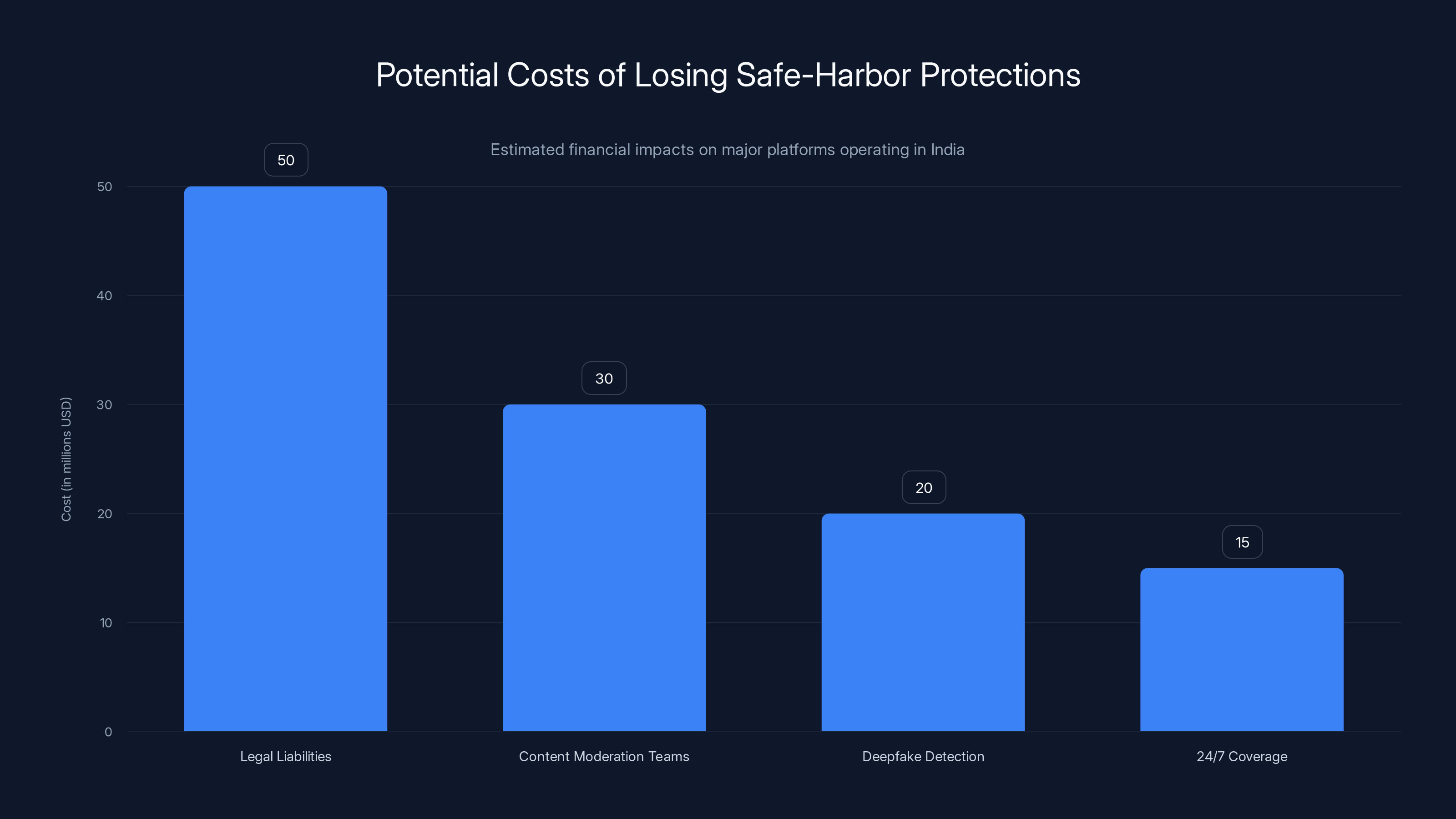

The Safe-Harbor Consequences That Actually Matter

So what happens if a platform misses a two-hour deadline? Or fails to properly label deepfakes? Or can't deploy adequate verification systems?

They lose their safe-harbor protections.

Safe-harbor provisions are fundamental to how the internet works. Without them, platforms would be legally liable for everything users post. Every video, every comment, every image. They couldn't operate at scale. The liability would be crushing.

Section 79 of India's Information Technology Act, 2000 provides safe-harbor protections for intermediaries (legal-speak for "platforms"). But those protections come with conditions. One key condition: comply with takedown orders promptly and follow the rules on content moderation.

If a platform violates these new deepfake rules, they're not just facing regulatory fines. They're losing safe-harbor status. That means they can be held directly liable for harmful deepfakes on their platform, even if a user created them.

For a platform operating in India, losing safe-harbor status is catastrophic. It transforms your legal liability from "we're not responsible for user content if we follow procedures" to "you're responsible for everything." Suddenly, every deepfake, every scam, every instance of non-consensual intimate imagery becomes the platform's direct legal problem.

This is why these rules have teeth. They're not just policy guidance. They're a threat to the fundamental economics of how platforms operate.

Companies are already working through the implications. Meta, Google, and others will likely establish dedicated teams for Indian content moderation. They'll need specialized deepfake detection capabilities. They'll need 24/7 coverage across multiple timezones. They'll need escalation procedures for edge cases. And they'll need to build all of this to operate at scale while hitting impossible timelines.

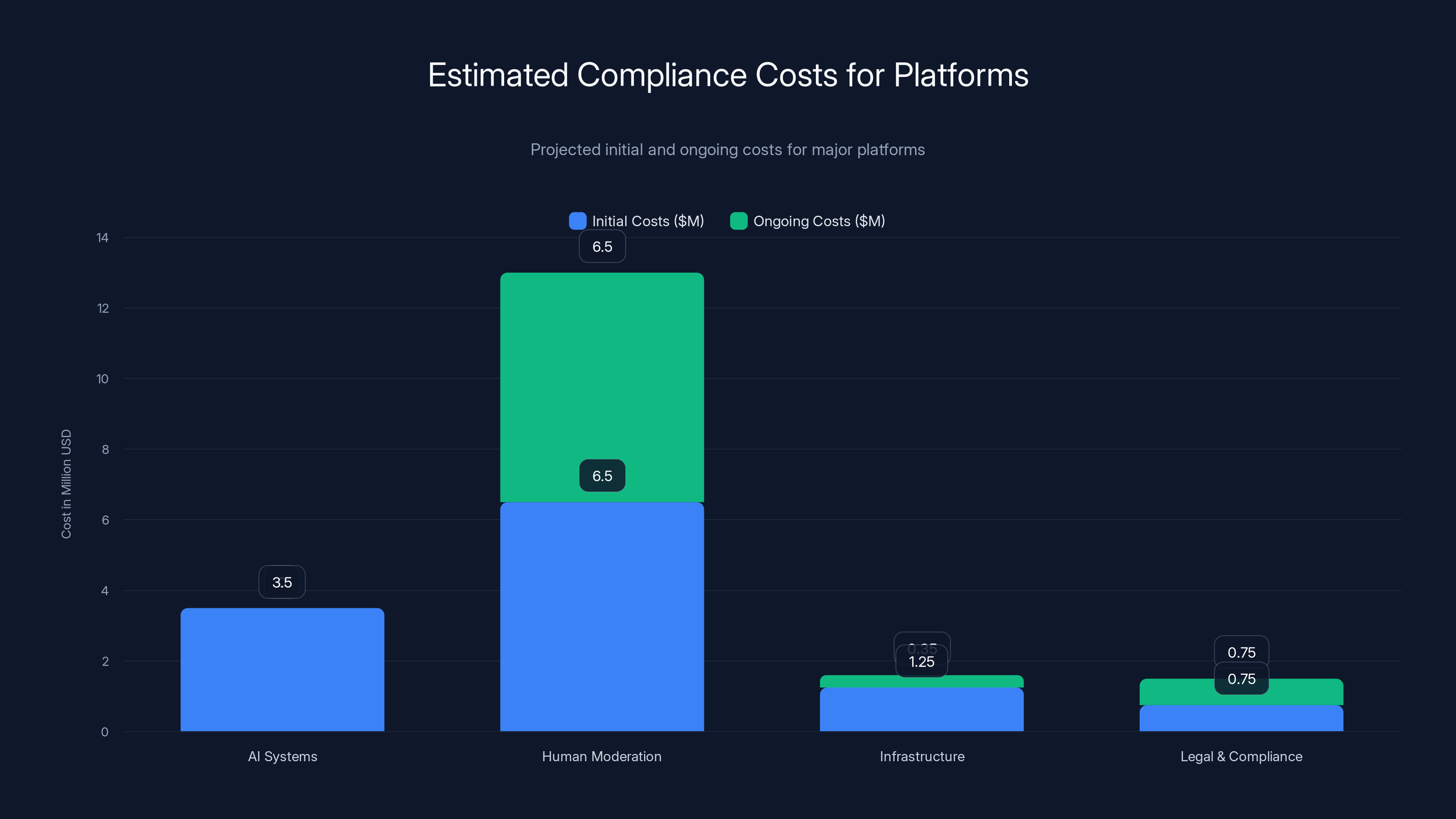

The financial cost is non-trivial. Industry sources estimate that major platforms will need to invest millions in infrastructure, AI systems, and human moderation capacity to meet these requirements in India alone.

AI Detection: The Technical Challenge Nobody's Solved

The rules assume that platforms can reliably detect deepfakes. That assumption is optimistic at best.

Deepfake detection is genuinely hard. The best AI-powered detection systems available today have accuracy rates somewhere in the 85-95% range under controlled conditions. Throw them at real-world data—compressed videos, low-resolution uploads, videos edited multiple times—and accuracy plummets.

There's also an arms race dynamic. As detection systems improve, so do deepfaking tools. It's a classic cat-and-mouse game. A detection system catches synthetic speech patterns, so deepfakers improve their models. The detector learns from new datasets, so deepfakers use different training approaches.

Platforms deploying detection systems face a choice: set high thresholds and miss real deepfakes, or set low thresholds and flag legitimate content. In an environment where missing deadlines costs safe-harbor status, platforms will almost certainly choose low thresholds.
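
To make the threshold trade-off concrete, here is a minimal sketch that counts missed deepfakes and wrongly removed legitimate items at different cut-offs. The scores and labels are invented purely for illustration and are not measurements from any real detector.

```python
# Hypothetical detector scores (0 to 1) paired with ground truth.
# These values are made up to illustrate the trade-off, not measured data.
SAMPLES = [
    (0.95, True), (0.88, True), (0.72, True), (0.55, True), (0.41, True),
    (0.81, False), (0.63, False), (0.47, False), (0.30, False), (0.12, False),
]


def confusion_at(threshold: float, samples=SAMPLES) -> dict:
    """Count outcomes when everything scoring >= threshold is removed."""
    missed = sum(1 for score, fake in samples if fake and score < threshold)
    wrongly_removed = sum(
        1 for score, fake in samples if not fake and score >= threshold
    )
    return {"missed_deepfakes": missed, "wrongly_removed": wrongly_removed}


if __name__ == "__main__":
    for threshold in (0.9, 0.6, 0.4):
        print(threshold, confusion_at(threshold))
```

On this toy data, dropping the threshold from 0.9 to 0.4 takes missed deepfakes from four to zero while wrongly removed items rise from zero to three. Real score distributions will differ, but the direction of the trade-off is the same.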

The technical approach most platforms are likely to adopt: a multi-layered system. First, automated detection using computer vision and audio analysis. Second, metadata analysis looking for signs of manipulation. Third, user-reported content flagged for human review. Fourth, escalation procedures for edge cases.
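
One way those four layers could fit together is sketched below. Everything here is an assumption made for illustration: the `Signals` fields, the threshold values, and the routing rules are hypothetical and do not describe any platform's actual system.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    detector_score: float      # layer 1: vision/audio model output, 0 to 1
    metadata_suspicious: bool  # layer 2: signs of manipulation in metadata
    user_reported: bool        # layer 3: flagged by a user complaint


def triage(s: Signals, remove_at: float = 0.9, review_at: float = 0.5) -> str:
    """Route an item to 'remove', 'human_review', 'escalate', or 'allow'."""
    if s.detector_score >= remove_at:
        return "remove"            # high-confidence automated verdict
    if s.user_reported:
        return "human_review"      # complaints always reach a person
    automated_flag = s.detector_score >= review_at
    if automated_flag != s.metadata_suspicious:
        return "escalate"          # layer 4: the automated layers disagree
    return "human_review" if automated_flag else "allow"


if __name__ == "__main__":
    print(triage(Signals(0.95, False, False)))
    print(triage(Signals(0.30, True, False)))
    print(triage(Signals(0.10, False, False)))
```

Under deadline pressure, the simplest lever in a design like this is `remove_at`: lowering it sends more items straight to removal and fewer to the slower human paths.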

But none of this happens in two hours consistently. It'll work maybe 70-80% of the time. The other 20-30%, either the content gets removed even though it shouldn't be, or it stays up longer than two hours.

Platforms may also adopt what's sometimes called "defensive removal"—taking down content that's potentially synthetic even if they're not certain. Better to over-remove than to miss deadlines and lose safe-harbor status.

This creates an interesting problem for the Indian government. The rules are designed to protect people from harmful deepfakes. But if over-zealous removal becomes the norm, they might inadvertently suppress legitimate speech, artistic expression, and research.

The Global Impact: When India's Rules Become Everybody's Rules

Here's why this matters beyond India: major platforms don't typically maintain separate moderation systems for different countries.

Yes, they have region-specific policies. YouTube's rules in India differ slightly from YouTube's rules in Germany. But the underlying infrastructure—the AI systems, the detection models, the removal procedures—those are global.

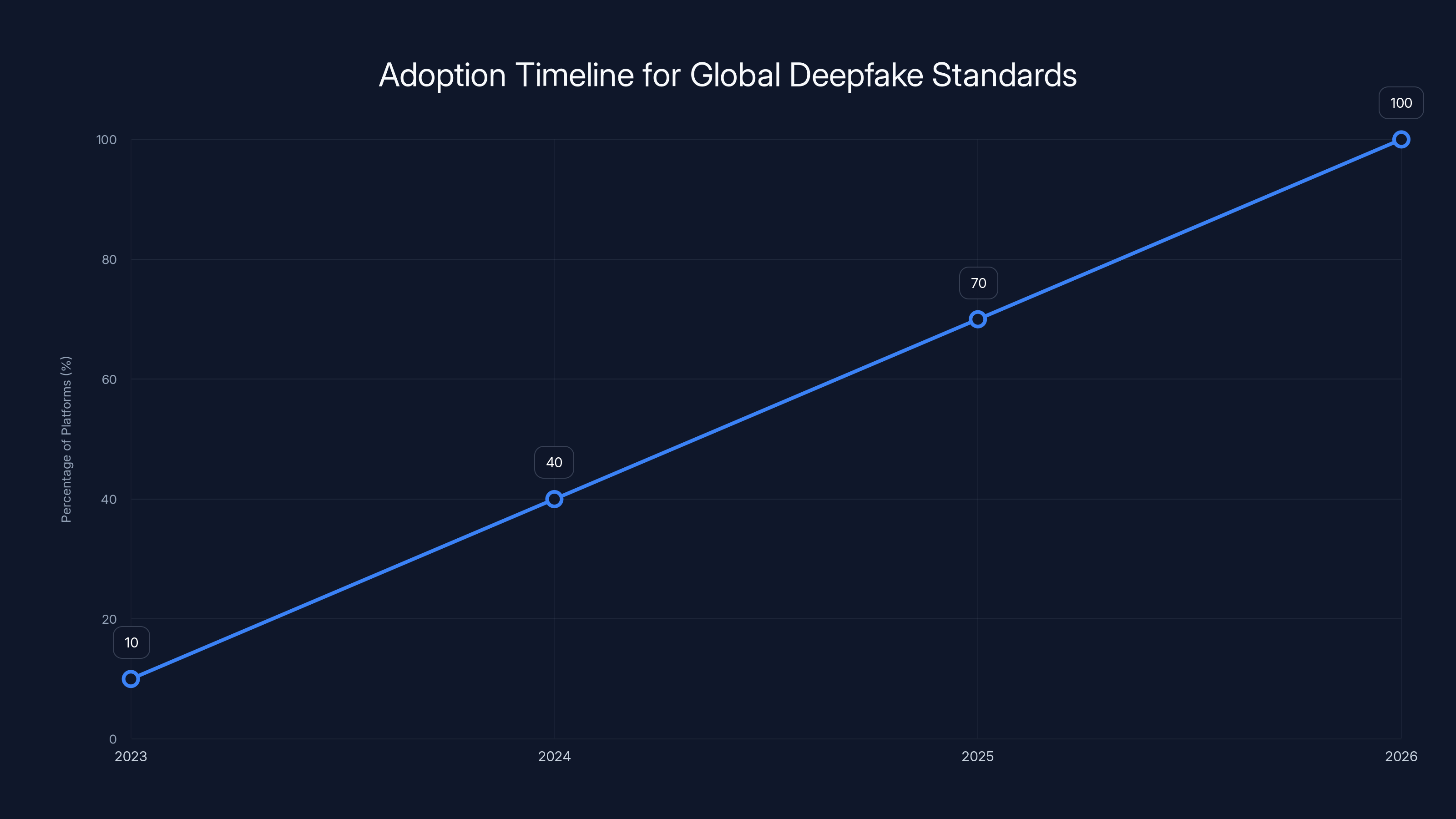

When India requires 2-3 hour takedowns for deepfakes, platforms face a decision: build separate systems for India, or adopt similar standards globally.

Separate systems are expensive and operationally messy. They require different training for moderation teams, different flagging procedures, different escalation paths. Most platforms will choose instead to implement similar standards globally.

What that means: India's aggressive deepfake rules become, de facto, global standards. A platform can't really say, "We use aggressive deepfake detection in India and lenient detection everywhere else." The cost and complexity don't make sense.

This is already happening with other regulations. When the European Union implemented GDPR, platforms didn't build separate GDPR-compliant systems for Europeans while exempting everyone else. They implemented privacy controls globally because that was simpler than managing two different systems.

Expect the same here. Within 12-18 months, the major platforms will likely have implemented deepfake detection and labeling systems globally that reflect India's requirements.

Why? Because compliance is cheaper than fragmentation. There's also a risk angle: once you've built systems that meet India's standards, you've shown those standards are achievable. Regulators in other countries will notice, and some will likely adopt similar rules.

We might be looking at the emergence of a global standard for deepfake regulation, with India as the template.

Estimated data suggests that by 2026, nearly all major platforms will have adopted deepfake standards similar to India's, reflecting a global shift towards unified regulation.

What Deepfakes Actually Are (And Why Regulation Is So Hard)

Before we go further, let's define the actual problem.

Deepfakes aren't just any AI-generated content. They're specifically synthetic media designed to convincingly impersonate someone real. Usually created using deep learning models trained on images or videos of actual people.

The basic technology is neural networks—specifically generative models that learn patterns from training data and can create new content that mimics those patterns. They're trained on hundreds or thousands of images of a real person, learning the shape of their face, how their skin reflects light, the patterns of their expressions. Then the model can generate entirely new images of that person in situations they never actually experienced.

Some deepfakes are obvious. The jaw doesn't quite match. The eyes don't focus consistently. There are artifacts around the edges. But state-of-the-art deepfakes? They're increasingly indistinguishable from reality to the human eye.

There are legitimate uses for the technology. Hollywood uses deepfake-adjacent technology for digital stunt doubles and aging/de-aging actors. Medical research uses synthetic imaging for training purposes. Education uses synthetic media for demonstrations.

But there are also deeply harmful uses. Non-consensual intimate imagery. Political misinformation. Fraud targeting someone's family members ("a loved one is in trouble, send money"). Harassment campaigns.

The challenge for regulation is distinguishing between the two. A deepfake of a politician making a controversial statement could be obviously fraudulent misinformation. Or it could be political satire protected as speech. How do you regulate one without suppressing the other?

India's rules attempt to split the difference by carving out exceptions for routine uses and focusing on clearly harmful categories. But enforcement happens in the gray area. And that's where these rules get tricky.

How Platforms Are Actually Responding

Major platforms haven't publicly detailed their India compliance strategies yet. But industry insiders have provided some insight.

Meta and Google are likely investing heavily in deepfake detection AI. They'll probably partner with specialized deepfake detection companies to supplement internal systems. Both companies already do this for other content categories (child sexual abuse material, terrorism). Deepfakes will follow the same model.

They're also probably building specialized teams in India focused purely on synthetic content moderation. Not just general content moderators, but people trained specifically on deepfake identification, artifact detection, and the edge cases that confuse automated systems.

They'll implement mandatory disclosure flows. Every upload in India will require users to attest whether content is synthetic. Some percentage of those uploads will be randomly audited for accuracy.

They'll add significant resources to their grievance handling systems. Two-hour removal windows require speed. That might mean automated removal for flagged content with appeals handled asynchronously. Or it might mean 24/7 moderation teams that can escalate to humans quickly.

YouTube in particular might shift its approach to user-uploaded content in India. Instead of allowing immediate publishing with after-the-fact moderation, they might implement pre-moderation for video categories flagged as high-risk for deepfakes.

Snapchat, with its focus on real-time video, might add filters or automated warnings for detected synthetic content rather than immediate removal.

X (formerly Twitter) has had a contentious relationship with Indian regulators, and these rules might trigger another escalation. X's moderation is lighter than its competitors', and two-hour deepfake removal windows might force a strategic decision about how serious the company is about the Indian market.

Smaller platforms operating in India will face brutal challenges. They don't have the resources to build sophisticated AI detection systems or maintain 24/7 moderation teams. Some might simply restrict uploads to pre-verified content, essentially shutting down user-generated content in India.

The Legal and Political Context

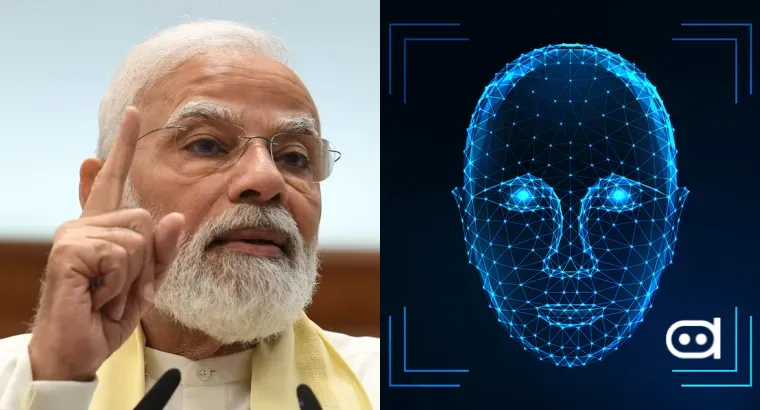

Understanding these rules requires understanding the broader Indian regulatory environment.

India's government has been increasingly assertive about content moderation over the past few years. In 2025, just months before these deepfake rules, the government reduced the number of officials authorized to issue content removal orders. That might sound like it's loosening control, but it's actually centralizing authority—fewer people making removal decisions, but with more power.

There's also a history of tension. Major platforms and Indian civil society groups have criticized government takedown orders as overbroad and lacking transparency. Meta and Google have published transparency reports showing thousands of removal orders from Indian authorities. Elon Musk's X actually sued the Indian government over removal directives, arguing they amounted to overreach.

But here's the political reality: India is a massive market. It's growing rapidly. Government regulators have leverage. Platforms need market access more than they need to resist government authority. So they comply.

There's also a legitimate concern about misinformation and harmful content. India has experienced deepfake-fueled violence. Synthetic videos of religious figures. Fabricated audio of political leaders. Manufactured intimate imagery used to harass women. These aren't abstract concerns. They've had real-world consequences.

The government sees regulation as necessary. Civil society groups worry about overreach and suppression of legitimate speech. Platforms are caught in the middle, trying to balance business interests with compliance obligations and principle.

What's interesting about these specific rules is that they're actually relatively narrow. They don't regulate all content. They don't regulate misinformation broadly. They focus specifically on synthetic media. That's actually more targeted than earlier draft versions, which would have covered a broader range of content.

Industry sources say that this narrowing happened because platforms pushed back on earlier drafts. The government took some feedback and refined the rules. But other recommendations didn't make the cut. And the final version was adopted with limited public consultation, which both industry and civil society groups have criticized.

From a policy perspective, there's a reasonable argument that these rules are necessary and well-designed for their specific purpose. From a compliance perspective, they're brutal. From a free speech perspective, they're concerning. All three perspectives are kind of right.

The Labeling Requirements and What They Mean

One element of these rules that hasn't gotten as much attention as the takedown timelines is the labeling requirement.

Every piece of synthetic audio-visual content has to be clearly labeled as such. Not hidden in metadata. Not buried in description text. Actually labeled where people will see it.

This is interesting because labeling does something different than removal. It doesn't suppress content. It provides context. It lets people make informed decisions about what they're watching.

The challenge is doing this at scale and in a way that's actually visible to users. Most platforms will probably add watermarks or badges to synthetic content. YouTube might add info panels. Instagram might add labels below posts. Snapchat might flag videos in the caption.

But there's also a usability question. Users don't read labels. If a synthetic video has a label saying it's synthetic, but 95% of people watching never see the label or don't process it, has the requirement actually accomplished anything?

Platforms might respond by making labels more prominent. They might pause synthetic videos before playback to show a warning. They might require explicit acceptance before viewing. Or they might just add subtle labels and hope for the best.

There's also the question of false positives. If the automated labeling system incorrectly marks legitimate content as synthetic, that damages credibility. A video labeled "synthetic" when it's actually real undermines trust in the labeling system itself.

Over time, these labeling requirements might actually become more effective than removal as a way of addressing deepfake harm. Instead of suppressing content, you provide context. People can still see things, make up their own minds, but with accurate information about authenticity.

That's actually the more sophisticated regulatory approach. It respects autonomy while addressing misinformation. The problem is that it requires users to read labels and process information. In practice, that doesn't always happen.

Privacy Concerns and Data Disclosure

Another provision that's raised concerns from civil society groups relates to user identification.

Under the new rules, platforms can disclose the identities of users who create deepfakes to complainants. Not just to law enforcement. To private individuals who report the content.

On the surface, this makes sense. If someone creates a deepfake that harms you, you should be able to identify them and potentially pursue civil remedies.

But there are obviously privacy implications. Users might be reluctant to upload legitimate synthetic content if they risk having their identity disclosed to complainants. There's potential for harassment. If you upload content that someone interprets as a deepfake even though it's not, your identity gets shared without judicial review.

The Internet Freedom Foundation has flagged this as a significant concern, arguing that disclosure without judicial oversight violates due process protections.

Platforms will need to implement procedures around this. They'll probably require some verification that the person requesting user information has a legitimate grievance. They might require judicial orders. But the rules as written don't explicitly mandate that.

This is another area where the rules create compliance challenges for platforms. They need to balance user privacy with compliance obligations, all while operating under tight timelines and legal uncertainty.

Traceability and Provenance Data: The Technical Deep Dive

The rules require that synthetic content be embedded with "traceable provenance data."

What does that actually mean technically?

Provenance essentially means origin and history. For synthetic content, it would ideally include: who created the content, when it was created, what tools were used to create it, whether it's been modified since creation, and the source training data used in creating it.
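
As a minimal sketch of what such a record could look like, the example below stores the creator, timestamp, tool, and a hash of the media bytes, plus a modification log. The class and field names are hypothetical and are not drawn from the rules or from any existing standard. Training-data lineage is omitted, and a plain hash like this only detects changes: it would need a cryptographic signature on top to resist deliberate tampering.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ProvenanceRecord:
    creator_id: str
    created_at: str            # ISO 8601 timestamp
    tool: str
    content_sha256: str        # hash of the media bytes at creation
    modifications: list = field(default_factory=list)

    def digest(self) -> str:
        """Stable hash of the record, so later edits to it are detectable."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def make_record(media: bytes, creator_id: str, created_at: str, tool: str) -> ProvenanceRecord:
    """Build a record for freshly created media."""
    return ProvenanceRecord(
        creator_id, created_at, tool, hashlib.sha256(media).hexdigest()
    )


def is_unmodified(media: bytes, record: ProvenanceRecord) -> bool:
    """True if the media bytes still match the hash stored at creation."""
    return hashlib.sha256(media).hexdigest() == record.content_sha256
```

Because SHA-256 changes completely when a single byte changes, this check fails as soon as a file is re-encoded or compressed, even if it looks identical to a viewer.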

Implementing this is genuinely difficult. You need to create digital signatures or watermarks that are resistant to tampering but also invisible or minimally noticeable to users. You need metadata that travels with the content as it's downloaded, shared, reuploaded, edited.

One approach that's been researched is media fingerprinting—essentially creating a hash or fingerprint of the content that's stable even when the content is compressed, edited, or modified slightly. This allows detection of the content even when it's been altered.
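
A toy version of this idea is the "average hash": each pixel becomes one bit depending on whether it is brighter than the image's mean, and two fingerprints are compared by counting differing bits. The sketch below works on a tiny grayscale grid in pure Python. Production systems typically downscale real frames first and use more robust features, so treat this as an illustration of the principle only.

```python
def average_hash(pixels):
    """Fingerprint a grayscale grid: one bit per pixel, set if above the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a, hash_b):
    """Number of bit positions where two fingerprints differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


if __name__ == "__main__":
    original = [
        [10, 20, 200, 210],
        [15, 25, 205, 215],
        [220, 230, 30, 40],
        [225, 235, 35, 45],
    ]
    # A uniform brightness shift stands in for a mild edit.
    brightened = [[value + 8 for value in row] for row in original]
    # Inverting the image stands in for entirely different content.
    inverted = [[255 - value for value in row] for row in original]

    base = average_hash(original)
    print(hamming_distance(base, average_hash(brightened)))
    print(hamming_distance(base, average_hash(inverted)))
```

A small distance suggests the same underlying content after minor edits, while a large distance suggests different content. Where to draw that line is a tuning decision with its own false-positive trade-off.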

Another approach is blockchain-based provenance tracking, where every modification is logged and verifiable. But this requires buy-in from all platforms handling the content, which is impractical.

Most platforms will probably implement internal provenance tracking at upload, with metadata attached to the content in their systems. But once content leaves a platform—gets downloaded, shared on messaging apps, reuploaded—that provenance data is likely to be lost.

The rules probably don't contemplate this limitation, but it's a real constraint of how content travels on the internet.

Platforms will need to be transparent about these limitations. They can track provenance for content hosted on their systems, but once it leaves, visibility is limited.

Platforms face significant pressure with Indian takedown orders requiring content removal in 3 hours, and urgent complaints in just 2 hours, compared to typical 24-hour review cycles. Estimated data.

Timeline Realities: What 2026 Actually Looks Like

The rules take effect February 20, 2026. That's when the clock starts.

For platforms, the practical implementation timeline is tight. They have roughly a month from the publication of the final rules to implement them. For major platforms with existing deepfake detection systems, this might be achievable. They'll need to integrate existing systems with Indian infrastructure, adapt them to meet specific requirements, and test at scale.

For smaller platforms, a month might not be enough. They might need to restrict uploads, implement manual review processes, or partner with external moderation vendors.

The first few months after implementation will probably be chaotic. Platforms will discover edge cases their systems don't handle well. They'll miss some deadlines while they scale up. They'll over-remove content while they calibrate their automation thresholds. There will be appeals, complaints, criticism.

Then, somewhere between months three and six, systems should stabilize. Platforms figure out what works. They hire additional staff, fine-tune AI systems, establish processes.

By six months in, compliance becomes routine. But it's an expensive routine. Platforms will be running dedicated moderation teams in India, maintaining specialized AI systems, handling appeals, dealing with regulatory queries.

That investment is a cost of doing business in India. Which brings us back to the global impact question: if platforms are building this infrastructure anyway, they might as well use it globally.

Consultation Process and What Got Left Out

Industry sources told analysts that the final rules were adopted after a limited consultation process.

The original draft was broader. It would have covered all synthetic content, not just audio-visual deepfakes. That would have included AI-generated images, text, etc.

Platforms pushed back. They argued the scope was too broad, that it would capture too much legitimate AI content, and that enforcement would be impossible.

The government listened to that feedback and narrowed the scope to audio-visual content. That's a concession to industry input.

But other recommendations didn't make it. Industry groups apparently suggested longer takedown timelines. They suggested carving out more exceptions. They suggested allowing for judicial review before disclosure of user identities.

None of those made it into the final rules. So while there was consultation, it seems selective. The government took feedback on scope but not on timelines, exceptions, or due process protections.

This creates frustration in the industry. Platforms feel like they were heard on some issues but not others. It feels arbitrary. Why adjust the scope but not the timelines?

Civil society groups are similarly frustrated, but for different reasons. They feel the consultation process excluded their voices, that civil society perspectives on free speech and due process weren't adequately considered.

This is actually pretty typical for Indian tech regulation. The government seems willing to engage with industry feedback on technical feasibility, but less willing to compromise on enforcement mechanisms or timelines. The assumption seems to be that if you're a major platform, you have resources to comply even with aggressive requirements.

That assumption might be defensible from a policy perspective—you want to incentivize compliance, so you set demanding requirements. But it creates real tensions with industry and civil society.

Deepfakes and Democracy: The Broader Concern

Underlying these rules is a genuine concern: deepfakes and misinformation in democratic politics.

India has experienced incidents where synthetic videos or audio contributed to real-world violence or to the spread of misinformation. These aren't theoretical harms. They've happened.

The government's perspective is that regulation is necessary to protect democratic discourse and public safety. That's not an unreasonable position.

But there's also legitimate concern about using deepfake regulation as a pretext for political censorship. If you can flag anything as a deepfake, you can suppress inconvenient information or political opponents.

These rules might be written with good intentions, but once they're in place, there's potential for abuse. That's why civil society groups emphasize the importance of judicial oversight, due process, and transparency in how the rules are enforced.

The regulatory approach India is taking—specific categories of prohibited content, labeling requirements, traceability—is actually fairly sophisticated. It's not a blunt instrument. It's trying to be targeted.

But the execution, with those two-hour timelines and limited due process, is where concerns arise.

Over time, we might see judicial review of these rules. The Indian courts have been willing to intervene on free speech issues. If civil society groups challenge the rules as unconstitutional, courts might adjust them.

But for now, the rules are in place. And platforms need to comply.

Losing safe-harbor protections could lead to significant costs for platforms, with legal liabilities and content moderation being major expenses. (Estimated data)

What This Means for Users

If you're a user in India, these rules will affect your experience.

First, synthetic content will be harder to access. Not impossible, but flagged, labeled, potentially requiring extra steps to view. That might be good if you're trying to avoid misinformation. It might be frustrating if you're interested in the technology or artistic uses of deepfakes.

Second, you might see more aggressive moderation from platforms. Some content that would have been fine a year ago might get removed. Platforms are going to err on the side of caution.

Third, if you create content using AI tools, you'll need to be thoughtful about disclosure. If you use AI to edit a video or generate imagery, you probably need to disclose that. Otherwise, you risk having your account penalized or your content removed.

Fourth, if you're concerned about synthetic content being created about you, these rules offer some protection. You can report deepfakes, and platforms are obligated to act quickly. You might also be able to identify the creator and pursue civil remedies.

Overall, if you're concerned about deepfakes and misinformation, these rules probably feel like they're protecting you. If you're interested in creative AI use or political satire, they probably feel restrictive.

Both perspectives are valid. The rules are trying to address a real problem, but in doing so, they add friction for legitimate uses and push platforms toward over-moderation.

International Comparisons: How India Stacks Up

India isn't the first country to regulate deepfakes, but its approach is notably aggressive.

The European Union's Digital Services Act addresses deepfakes as part of broader content moderation requirements, but without specific timelines as tight as India's. The EU focuses more on transparency and due process.

The United States is still working on federal deepfake regulation. Some states have passed laws, but they're inconsistent and mostly focus on criminal liability rather than platform responsibility.

China has deepfake regulations that are actually quite strict, but they exist within a broader surveillance and content control apparatus.

India's approach is distinctive because it combines aggressive timelines with specific technical requirements. It's stricter than most countries' approaches in terms of speed, but narrower in scope than some.

Over time, we might see a global pattern emerge where countries adopt similar timelines and requirements because the major platforms implement them as global standards. India might effectively be setting the global baseline for deepfake regulation.

That's potentially positive from a content safety perspective—consistent rules across countries. It's potentially negative from a free speech perspective—the most aggressive regulations becoming global defaults.

But that's how international regulation tends to work. The strictest requirement often becomes the global standard because platforms find it simpler to implement consistently.

The Economics of Compliance

How much is this going to cost platforms?

That's the question analysts are wrestling with. The answer varies based on platform, but we can estimate.

AI systems: Platforms will need to develop or license deepfake detection systems optimized for their content types. Building custom systems costs $2-5M. Licensing existing systems is cheaper but less tailored. Most platforms will probably do some combination.

Human moderation: India-specific moderation teams. If you're handling content at global-platform scale, you're probably looking at 100-500 dedicated moderators for India.

Infrastructure: Servers, systems, testing environments for Indian content.

Legal and compliance: Staying on top of regulatory changes, handling appeals, documenting compliance. Maybe $500K-1M annually.

Total estimated cost per major platform: roughly $5-15M annually.

For Meta or Google, that's a rounding error on their budgets. For smaller platforms, it's significant.

But there's also indirect cost. Implementation complexity, brand risk if you get compliance wrong, regulatory uncertainty.

Platforms are going to pass some of this cost to users through moderation delays or reduced functionality. They might also use it as leverage in discussions with the Indian government—"compliance is expensive, help us find ways to make it work."

From a public policy perspective, the question is whether the compliance costs are justified by the harm prevented. If these rules prevent significant deepfake-related harm, they're worth it. If they mostly cause unnecessary friction with minimal benefit, they're not.

That's ultimately an empirical question that will be answered in 2-3 years when we have data on actual impacts.

What Happens When Rules and Reality Collide

Here's what's probably going to happen in practice.

February 20 arrives. Rules go into effect. For a few days or weeks, nothing much changes because systems aren't fully ready.

Platforms reach out to regulators: "We're implementing but need clarification on X, Y, Z." Some of those clarifications are granted, others aren't.

Moderation scales up. Some content gets removed quickly. Other content sits in queues.

Platforms start missing the two-to-three-hour windows occasionally. Regulators notice. Complaints are filed.

Platforms over-correct, starting to remove content more aggressively to ensure they meet timelines.

Users and civil society notice the over-removal. Complaints about censorship increase.

Court challenges begin. Civil society groups file suits arguing the rules violate free speech.

Regulators and platforms negotiate adjustments. Maybe the definitions get clarified. Maybe timelines get extended slightly. Maybe exceptions get added.

After 6-12 months, a new equilibrium emerges. Platforms know what compliance looks like. Regulators know what platforms can actually achieve. The system stabilizes.

It's not perfect, but it works.

That's the typical pattern for new regulations. Initial chaos, then adaptation.

The difference here is that the cost of getting it wrong—losing safe-harbor protections—is quite high. So platforms are probably going to be conservative in their interpretations.

Looking Forward: What's Next

India's deepfake rules are just the beginning.

Expect to see similar regulations in other countries within 2-3 years. Australia is considering it. The UK is considering it. Brazil is considering it. Canada is considering it.

Each country will add its own modifications, but the basic framework—detection requirements, labeling, takedown timelines—will probably be similar.

Platforms are already bracing for this. They're not just building systems for India. They're building systems they can scale globally.

There's also potential for international harmonization. Countries might eventually agree on basic standards—what deepfakes are prohibited, what timelines are reasonable, what due process protections are required. That would be simpler than having 200 different standards.

Technologically, we'll probably see advances in both deepfake generation and detection. As regulations incentivize better detection, research on detection systems will accelerate. But simultaneously, people motivated by these regulations to hide their deepfakes will develop better evasion techniques.

We might also see a shift toward embedded provenance systems, which attach metadata and verification to content from the moment it is created and so make it harder to pass off a deepfake as authentic. Some work is already happening on this, such as the C2PA content provenance standard.

From a policy perspective, India's rules offer a model that other countries will study. It's not the only model, but it's an influential one.

For platforms, the next few years are going to be expensive. Building compliant systems, adapting to rules in multiple jurisdictions, handling appeals and complaints.

But it's also an opportunity. Platforms that build sophisticated deepfake detection and labeling systems can differentiate themselves on content safety. They can advertise that their platforms have strong protections against harmful synthetic content.

Over time, managing deepfakes well might become a competitive advantage.

FAQ

What are deepfakes and why do they need regulation?

Deepfakes are synthetic audio or video content created using AI to convincingly impersonate real people or fabricate events. They need regulation because they've been used to create non-consensual intimate imagery, spread political misinformation, commit fraud, and harass individuals. While the technology has legitimate uses—film production, education, research—the harmful applications are significant enough that many governments believe regulation is necessary to protect people from deception and harm.

How do the new India deepfake rules work?

The rules require social media platforms to label synthetic audio-visual content, embed it with traceability data, and remove prohibited deepfakes within 2-3 hours of complaints or official orders. Platforms must deploy verification tools to check disclosures of synthetic content, and certain categories—like non-consensual intimate imagery and deceptive impersonations—are banned outright. Non-compliance jeopardizes platforms' safe-harbor protections, exposing them to greater legal liability.

What are the 2-3 hour takedown timelines and why are they challenging?

When Indian authorities issue a formal takedown order or urgent user complaints flag synthetic content, platforms have 2-3 hours to remove it. This is operationally challenging because it allows minimal time for human review. Most moderation systems operate on 12-48 hour cycles. Meeting these timelines requires 24/7 moderation teams and highly automated removal systems, which platforms are likely to calibrate conservatively to avoid missing deadlines, potentially leading to over-removal of legitimate content.
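To make the time pressure concrete, here is a minimal Python sketch of deadline tracking for a takedown queue. The flat three-hour window, the `Complaint` class, and the `minutes_remaining` and `triage` helpers are all illustrative assumptions, not anything specified by the rules or known to be used by any platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

# Assumption: a flat 3-hour window. The real window depends on the complaint type.
TAKEDOWN_WINDOW = timedelta(hours=3)


@dataclass
class Complaint:
    """Hypothetical record for one takedown complaint or order."""
    content_id: str
    received_at: datetime

    @property
    def deadline(self) -> datetime:
        return self.received_at + TAKEDOWN_WINDOW


def minutes_remaining(complaint: Complaint, now: datetime) -> float:
    """Minutes left before the deadline; negative once it has passed."""
    return (complaint.deadline - now).total_seconds() / 60


def triage(queue: List[Complaint]) -> List[Complaint]:
    """Order the queue so the complaints closest to their deadline come first."""
    return sorted(queue, key=lambda c: c.deadline)


received = datetime(2026, 2, 20, 9, 0, tzinfo=timezone.utc)
complaint = Complaint("video-123", received)
# Two and a half hours after receipt, only 30 minutes remain for any review.
print(minutes_remaining(complaint, received + timedelta(hours=2, minutes=30)))  # 30.0
```

Even in this toy version, a complaint that waits in a queue for most of the window leaves a reviewer only minutes to decide, which is why automated removal becomes the default path.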

What happens if platforms fail to comply with these rules?

Non-compliance puts at risk the safe-harbor protections platforms enjoy under Section 79 of India's Information Technology Act. Without safe harbor, platforms become directly liable for harmful deepfakes on their services, even if users created them. The legal position shifts from "we're not responsible if we follow procedures" to "you're responsible for everything." This transforms the legal and financial risk significantly, making compliance urgent regardless of practical challenges.

Will these rules affect users outside of India?

Yes, likely. Because major platforms maintain global systems rather than region-specific alternatives, adapting to India's requirements often influences how they operate worldwide. When platforms build deepfake detection and labeling systems to comply with India's rules, those same systems typically become global infrastructure. This means India's aggressive regulatory approach may indirectly set standards for users in other countries as well.

How will platforms detect deepfakes if current technology isn't perfect?

Platforms will use multi-layered approaches: automated AI detection systems using computer vision and audio analysis, metadata analysis looking for signs of manipulation, user reporting systems, and human review for flagged content. However, current detection systems have accuracy rates of 85-95% under controlled conditions and perform worse on real-world compressed or edited content. This imperfection is why platforms are likely to set low confidence thresholds for automated removal and over-remove content to avoid missing 2-3 hour deadlines.
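A quick base-rate calculation shows why imperfect detection leads to over-removal. The sketch below is illustrative only: it treats the quoted accuracy as both sensitivity and specificity, and the 1% prevalence of deepfakes among scanned uploads is an assumed number, not a figure from the rules or from any platform.

```python
def removal_precision(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Share of flagged items that really are deepfakes (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = (1 - specificity) * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Assumed inputs: 95% sensitivity, 95% specificity, 1 in 100 uploads is a deepfake.
precision = removal_precision(sensitivity=0.95, specificity=0.95, prevalence=0.01)
print(f"{precision:.1%} of flagged items are actual deepfakes")
```

Under these assumptions only about 16% of flagged items are real deepfakes, so a system that removes everything it flags would mostly remove legitimate content. The exact share depends heavily on the assumed prevalence.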

What are the privacy concerns with these rules?

The rules allow platforms to disclose user identities to private complainants without explicit judicial oversight. This raises concerns about harassment, misidentification, and chilling effects on legitimate content creation. Civil society groups argue this violates due process protections. While the intent is to help victims identify creators of harmful deepfakes, the lack of judicial review means users could have their identities disclosed based on subjective interpretations of whether content is actually synthetic.

How much will compliance cost platforms?

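Compliance costs for major platforms are estimated at $5-15M annually, spread across detection systems, India-specific moderation teams, infrastructure, and legal and compliance work. For the largest platforms that is a small fraction of their budgets. For smaller platforms, it is significant.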

Will other countries adopt similar rules?

Likely. India's approach is already being studied by Australia, the UK, Brazil, and Canada. Because deepfake regulation is emerging globally and India is setting an aggressive but relatively targeted standard, other countries will probably adopt similar frameworks. And because platforms find it simpler to implement consistent systems globally than to maintain country-specific approaches, India's rules may effectively establish international baselines.

What's the difference between labeling and removal?

Labeling provides context without suppressing content—people can still see synthetic content, but they know it's synthetic. Removal eliminates content entirely. India's rules require both: clearly labeling synthetic content AND removing prohibited categories like non-consensual intimate imagery. Labeling is more aligned with free speech principles because it respects autonomy while providing information. Removal is more effective at preventing harm but more restrictive of expression.
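The label-versus-remove distinction can be sketched as a small routing function. This is a hypothetical illustration: the category names, the `Action` enum, and the `decide` function are assumptions made for the example, and the real rules define prohibited categories in legal language rather than as string tags.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    NONE = "none"      # not synthetic: no special handling
    LABEL = "label"    # synthetic but permitted: stays up with a visible label
    REMOVE = "remove"  # synthetic and prohibited: taken down


# Hypothetical tags for the prohibited categories named in the article.
PROHIBITED = {"non_consensual_intimate_imagery", "deceptive_impersonation"}


def decide(is_synthetic: bool, category: Optional[str] = None) -> Action:
    """Route a piece of content to no action, a label, or removal."""
    if not is_synthetic:
        return Action.NONE
    if category in PROHIBITED:
        return Action.REMOVE
    return Action.LABEL


print(decide(True, "parody"))                   # Action.LABEL
print(decide(True, "deceptive_impersonation"))  # Action.REMOVE
```

The hard part in practice is not this routing but the two inputs: deciding whether content is synthetic and which category it falls into, both of which must happen inside the takedown window.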

Final Thoughts

India's deepfake rules represent a significant regulatory moment. They're not just Indian policy. They're potentially the template for how democracies globally will regulate synthetic media.

The approach is thoughtful in some ways: it focuses on audio-visual content rather than all AI-generated content, and it carves out exceptions for legitimate uses. But the execution is brutal: two-to-three-hour takedown windows that essentially require automated moderation, and loss of safe-harbor status for non-compliance.

For platforms, the next few months will be challenging. Building systems to meet impossible timelines while navigating legal and political uncertainty. But ultimately, they'll adapt. They have resources and incentive. Compliance is expensive but manageable.

For users, the impact is mixed. Better protection from harmful deepfakes, but also more aggressive moderation that might catch legitimate content. Synthetic content becomes harder to access, which might be protective or restrictive depending on your perspective.

For democracies globally, India's approach offers a case study. It shows what aggressive regulation of synthetic media looks like, what challenges emerge, and what trade-offs are necessary. Countries building their own regulations will learn from India's experience.

The deepfake problem isn't going away. Technology for creating convincing synthetic content is improving. Detecting it remains hard. Regulating it creates tensions with free speech and due process.

India's rules won't solve the problem, but they're an attempt. Whether they work—whether they actually reduce harmful deepfakes without suppressing legitimate speech—we'll know in 6-12 months.

Until then, platforms and users in India are living in the future of content moderation. The two-to-three-hour window is real. The labels are real. The rules are real.

Everyone else is watching to see how it goes.

Key Takeaways

- India's new deepfake rules (effective February 20, 2026) require platform removal of synthetic content within 2-3 hours, an operationally aggressive timeline that will reshape global moderation practices

- Platforms must label synthetic content, embed traceability data, and deploy AI verification systems, with non-compliance jeopardizing safe-harbor protections and exposing companies to direct legal liability

- The 2-3 hour window essentially mandates automated moderation, likely causing over-removal of legitimate content as platforms prioritize compliance over human review

- Compliance costs for major platforms are estimated at $5-15M annually, and because platforms tend to adapt their systems uniformly across jurisdictions, India's rules may effectively set global standards

- Civil society groups raise concerns about compressed timelines eliminating meaningful human review, user privacy risks from identity disclosure without judicial oversight, and potential suppression of legitimate synthetic media uses
