The AI Video Revolution Just Got Better: Inside Google's Veo 3.1 Update

We're witnessing something remarkable happen in real time. The quality gap between AI-generated video and actual footage has shrunk so dramatically that you'd miss it if you weren't paying close attention. Just a year ago, AI videos looked obviously artificial. The colors would shift awkwardly. Motion felt uncanny. Text would corrupt itself mid-frame. Today? You might genuinely wonder if what you're watching came from a camera or a neural network.

Google's latest update to its Veo video model represents another significant leap forward, and it's worth understanding why this matters for creators, businesses, and anyone who cares about how media gets made in 2025.

The company announced Veo 3.1 earlier this month, and the improvements aren't just incremental tweaks. They're meaningful upgrades that address real constraints creators have complained about since Veo first launched. We're talking about vertical video support (finally), the ability to use reference images to guide the AI's output, and 4K output that's already being baked into YouTube's creator tools.

What makes this particularly significant is that these tools are already live. Not coming soon. Not in beta. Live right now in the Gemini app, YouTube Shorts, and the YouTube Create app. That means creators can start experimenting with these capabilities today, which is rare in the world of AI tools that typically spend months in limited access purgatory.

But here's what caught my attention: this update didn't get a major version number bump. Google went from Veo 3 to Veo 3.1, which in software versioning language means "minor update." Yet the changes being described sound anything but minor. That makes me wonder what's coming next if a vertical video mode and 4K upscaling merit only a decimal increase.

Let's break down what's actually new, what it means for creators, and why the tech industry should be paying close attention to how quickly video AI is improving.

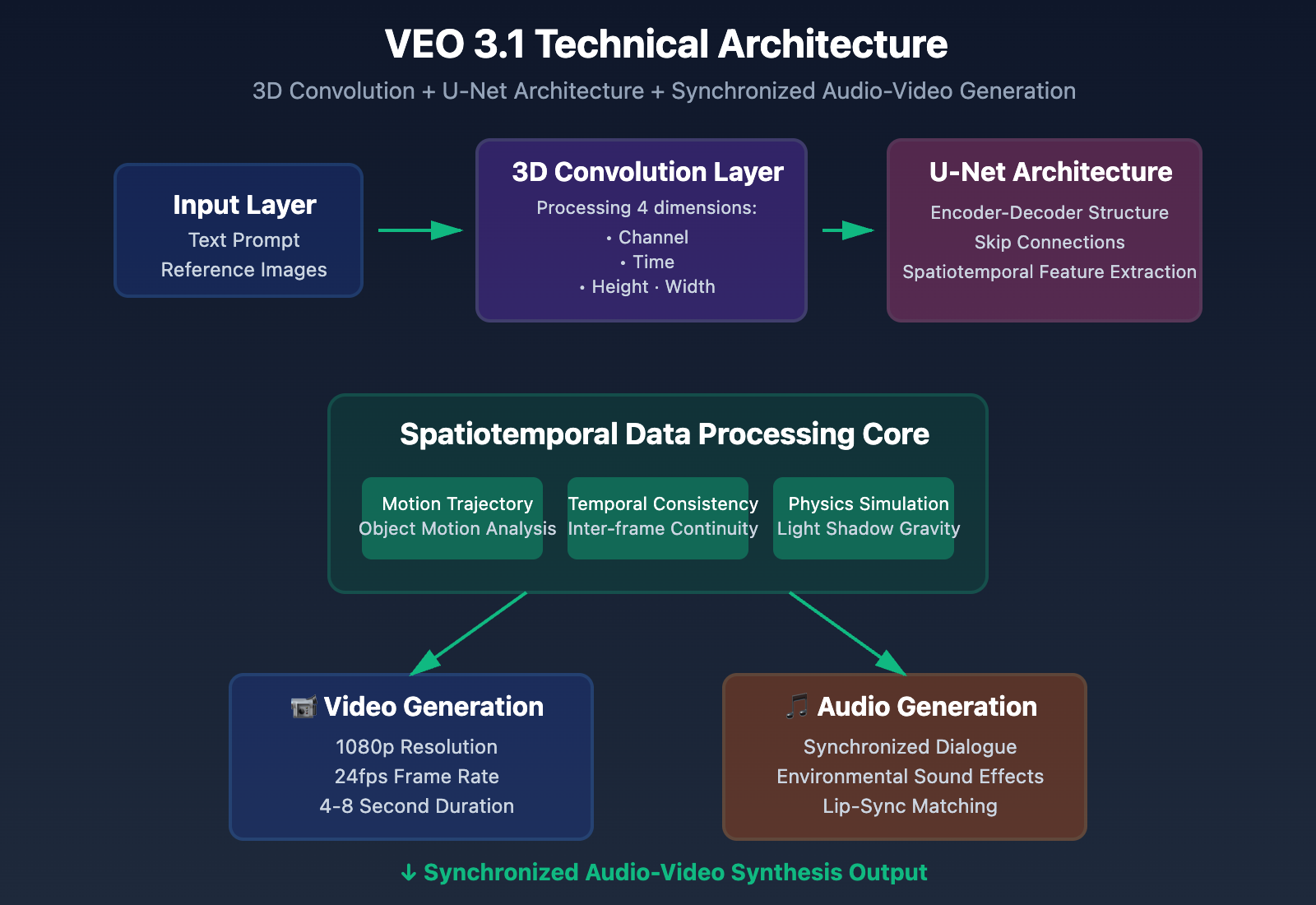

Understanding Veo's Architecture: How AI Video Generation Works at Scale

Before diving into Veo 3.1's specific features, it helps to understand what Veo actually is and how it differs from other video generation tools.

Veo is Google's proprietary diffusion-based video generation model, which is a fancy way of saying it works by starting with pure noise and gradually removing that noise over multiple iterations until an image (or in this case, a video) emerges. Think of it like sculpting from a cloud of fog. Each pass clarifies the image more, guided by text prompts and other input data.
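To make the "start with noise, remove it step by step" idea concrete, here is a toy sketch. It is not Veo's algorithm: a real diffusion model uses a trained neural network to predict the noise, conditioned on the prompt, whereas this example cheats by using a known target. The function names and the five-value "frame" are purely illustrative assumptions.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Start from pure noise and move toward `target` a little each step."""
    rng = random.Random(seed)
    # Step 0: a "frame" of pure Gaussian noise, same length as the target.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    history = [x]
    for step in range(1, steps + 1):
        # A real model would predict the noise with a neural network.
        # Here we simply blend toward the known target (toy assumption).
        blend = 1.0 / (steps - step + 1)
        x = [xi + blend * (ti - xi) for xi, ti in zip(x, target)]
        history.append(x)
    return history


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)


target = [0.0, 0.25, 0.5, 0.75, 1.0]  # stand-in for pixel values
history = toy_denoise(target)
errors = [mean_abs_error(x, target) for x in history]
print(f"error at start: {errors[0]:.3f}, halfway: {errors[25]:.3f}, end: {errors[-1]:.3f}")
```

The error shrinks with every pass, which is the "fog clearing" intuition. Everything that makes real video diffusion hard (learning the denoiser, keeping frames consistent over time) is left out here.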

The key difference between Veo and competitors like OpenAI's Sora (which also uses diffusion but hasn't been released publicly) is that Veo has been deliberately designed to be accessible to creators. It's been integrated directly into Google's existing platforms rather than kept behind a research lab door.

Veo outputs short clips—just eight seconds at a time. That's intentional. Eight seconds is enough time to create something meaningful but short enough that the model can maintain coherence and quality throughout the clip. Longer videos are harder to generate coherently because the AI has to track more variables, maintain consistency across more frames, and keep track of physics and motion over longer timeframes.

The eight-second constraint actually suits the short formats most people watch. A 60-second YouTube Short would take eight Veo clips stitched together (60 / 8 = 7.5, rounded up). Instagram Reels max out at 90 seconds. TikToks can be longer but typically perform better when they're under a minute. So rather than fighting against the constraint of short video clips, Veo leans into it as a feature.
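The clip arithmetic is simple enough to check by hand, but here is a small sketch for planning purposes. The eight-second clip length and the 60- and 90-second targets come from the text above. The helper name is just an illustration.

```python
import math

CLIP_SECONDS = 8  # clip length described in the article


def clips_needed(target_seconds, clip_seconds=CLIP_SECONDS):
    """Number of fixed-length clips needed to cover a target duration."""
    return math.ceil(target_seconds / clip_seconds)


for label, seconds in [("60-second Short", 60), ("90-second Reel", 90)]:
    print(label, clips_needed(seconds))
# prints:
# 60-second Short 8
# 90-second Reel 12
```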

The model itself was trained on vast amounts of video data from across the internet. Google hasn't disclosed the exact datasets, but Veo is clearly optimized for realistic video generation with good motion physics and consistent lighting. Earlier versions struggled with things like hands (a notorious AI problem) and complex camera movements, but each iteration has improved.

What's interesting about the architecture choice is that Google is running Veo inference on its cloud infrastructure rather than allowing local generation. That means you can't download Veo and run it on your own computer. You use it through Google's interfaces, which gives Google direct control over how the model is used and allows them to implement safeguards against misuse.

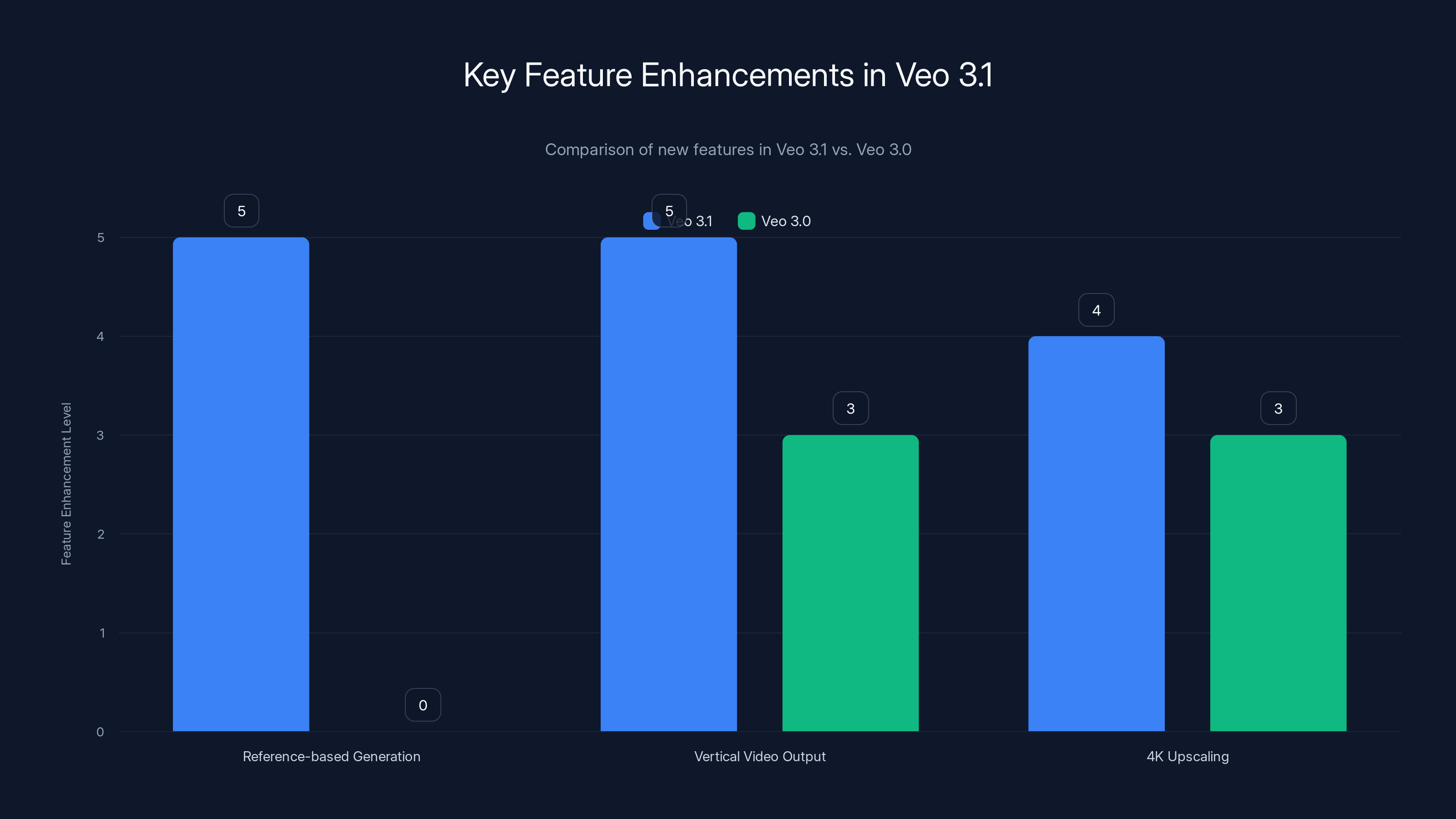

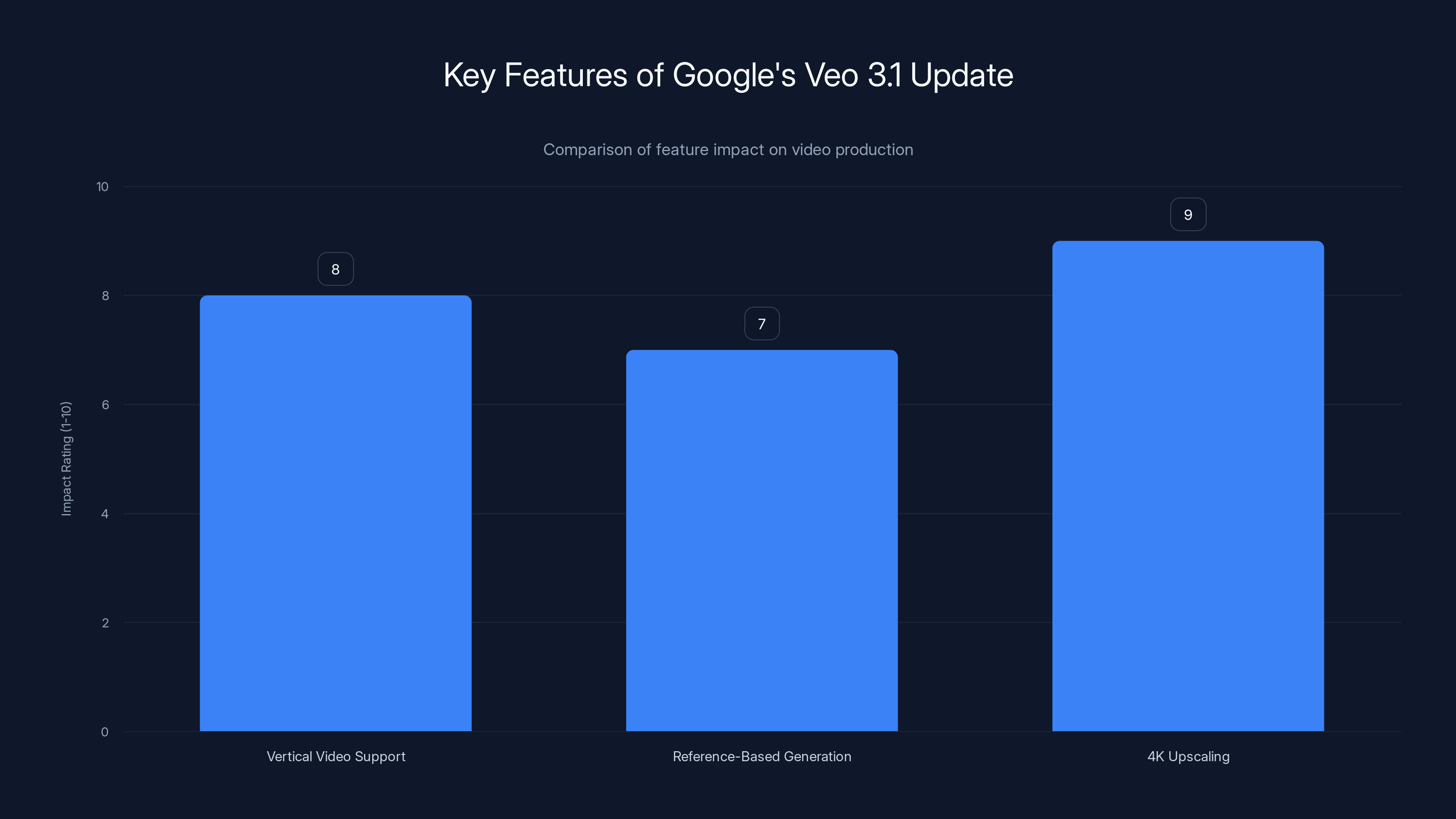

Veo 3.1 introduces significant improvements in reference-based generation and vertical video output, making it more suitable for modern content creation needs. Estimated data based on feature descriptions.

The Game-Changer: Ingredients to Video and Reference-Based Generation

Veo 3.1's most meaningful improvement is a feature called "Ingredients to Video." This is where the model stops being a simple text-to-video generator and becomes something more useful for serious production work.

Here's how it works: instead of just typing a prompt, you can upload up to three reference images. These images give the model specific visual material to work with. You might provide a character design, a background image, a texture you want incorporated, or a specific visual style you're trying to match.

The AI then generates video using those reference images as constraints. It's not just copying the images directly. It's extracting the visual characteristics and style from those images and applying them to the generated video.

This is genuinely useful because it solves a persistent problem with generative AI: consistency. When you ask an AI to generate multiple videos of the same character or setting, the model will typically create variations. The character might have slightly different facial features. The background lighting might shift. The whole aesthetic might drift.

With Ingredients to Video, you can lock down the core visual elements and let the AI focus on generating new motion and action while keeping the character design, environment, and style consistent across multiple clips.

Google claims the updated model "makes fewer random alterations" when you provide reference images, staying closer to what you've provided. In practice, this means if you upload an image of a specific person's face, the generated video will maintain recognizable facial features rather than morphing the character into someone else mid-clip.

There's another capability built into Ingredients to Video that's worth highlighting: you can generate multiple clips with different prompts while keeping certain elements consistent. Imagine you create a character design, upload the image, and then generate three different videos showing that character in different scenarios. You can ask for the character to stay the same while the setting, clothing, or action changes.

This is the kind of feature that makes AI video tools useful for actual production workflows rather than just experimental toys. Content creators don't want to generate random videos. They want to generate specific videos that fit their creative vision and maintain consistency across their content.

The technology behind this is actually pretty clever. Instead of just taking your reference images as static reference during generation, Veo appears to be encoding the visual characteristics of those images into the generation process itself. The model learns to extract features like "this person's face structure," "this background's color palette," and "this texture's pattern" and then applies those features to the new video content.

It's not perfect—no AI system is. But it's a meaningful improvement over previous versions that would generate completely different-looking scenes even when you asked for visual consistency.

Vertical Video Support: Finally Meeting Creators Where They Actually Create

Let's talk about something that seems obvious but took way too long to implement: vertical video.

YouTube Shorts are vertical. Instagram Reels are vertical. TikTok is vertical. Snapchat is vertical. Literally every major social platform where people actually watch short-form video is optimized for vertical orientation. Yet video generation tools kept defaulting to horizontal (16:9 or 21:9) output because that's what film and television traditionally used.

Google did add vertical video support to Veo last year via text prompts, but it was treated as an afterthought, a feature you had to specifically request. With Veo 3.1, vertical video (9:16 aspect ratio) is now a first-class option throughout the Ingredients to Video workflow. You can specify vertical output from the start, and the model is optimized to generate content that actually looks good in that format.

This matters more than it might seem. Vertical videos have different framing requirements than horizontal ones. The composition rules are different. Where you place characters and objects in frame changes. The pacing of cuts and transitions feels different. An AI model trained primarily on horizontal footage needs to understand these differences when generating vertical content.

Veo 3.1 appears to have been specifically tuned for vertical generation, not just adapted from horizontal output. The model likely has separate training paths, or at least significant fine-tuning, for the 9:16 format so that the generated videos work on the platforms creators are actually using.

The practical implications are significant. A creator working in YouTube Shorts no longer has to generate horizontal video and then crop or reframe it. They can generate native vertical video that's composed for vertical viewing from the start.
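A quick calculation shows why cropping is such a poor substitute for native vertical output. The sketch below assumes a standard 1920x1080 source frame and the largest possible centered 9:16 crop. The function name is illustrative.

```python
from fractions import Fraction


def retained_fraction(src_w, src_h, dst_ratio_w, dst_ratio_h):
    """Fraction of the source area kept by the largest crop at the target ratio."""
    src = Fraction(src_w, src_h)
    dst = Fraction(dst_ratio_w, dst_ratio_h)
    if dst <= src:
        # Target is narrower than the source: keep full height, trim width.
        return dst / src
    # Target is wider than the source: keep full width, trim height.
    return src / dst


kept = retained_fraction(1920, 1080, 9, 16)
print(kept, f"= {float(kept):.1%} of the frame")
# prints: 81/256 = 31.6% of the frame
```

In other words, a vertical crop of a horizontal frame throws away roughly two thirds of what was generated, and whatever was composed for the wide frame may no longer be in shot.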

There's also a psychological element here. For an AI tool company, supporting vertical video signals an understanding of where creators actually publish content. It shows the company isn't just building tools for demonstration purposes but tools that integrate into real creator workflows. Every tool that ignores vertical video is implicitly saying "we're more interested in research than actual creator use."

Google's YouTube dominance means they can move faster on this than competitors. They own YouTube Shorts, YouTube Create, and the entire creator ecosystem on that platform. When they add vertical video support, they can immediately integrate it across multiple applications. A startup building a competing video generation tool doesn't have that luxury.

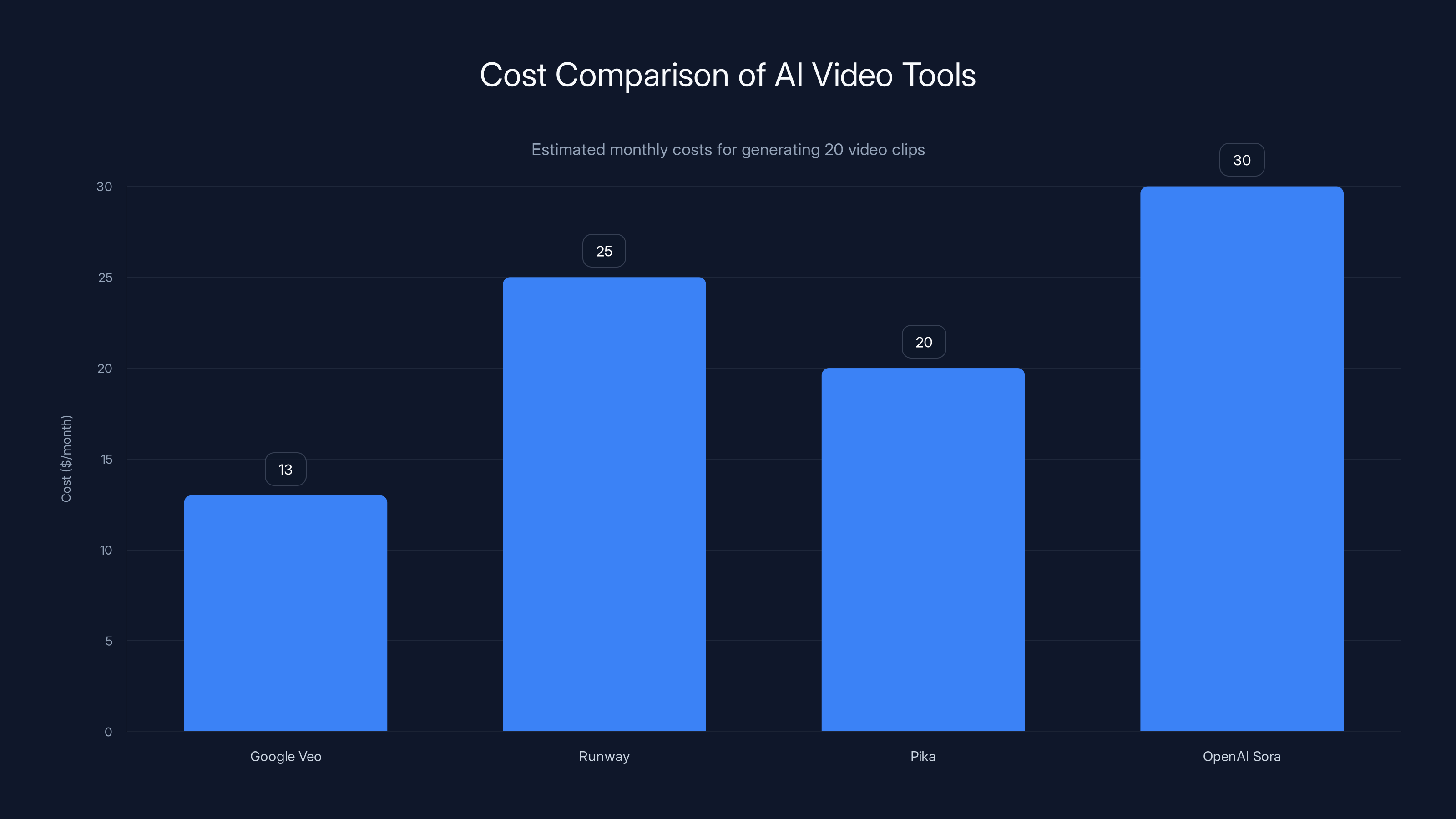

Google Veo is effectively free for Google One subscribers, offering a significant cost advantage over competitors like Runway, Pika, and OpenAI Sora. Estimated data based on typical usage.

4K Upscaling: More Pixels, More Possibilities

Veo 3.1 now supports both 1080p and 4K output, with Google noting that 4K is newly available in this update.

Here's what's interesting about this: Veo doesn't actually render videos natively at 4K resolution. The model generates everything at 720p, and then Google applies post-processing upscaling to create higher-resolution outputs. So when they say "4K support," they mean "the model can upscale its 720p output to 4K."

This raises an obvious question: if the content is generated at 720p and then upscaled, how much quality is actually added? Is 4K output meaningfully better than 1080p, or is it just more pixels?

Upscaling technology has actually come a long way. Modern AI-based upscalers use neural networks trained to reconstruct detail that wasn't present in the original image. They don't just stretch pixels (which would look terrible). They actually synthesize new detail based on patterns learned during training.

That said, there are limits to what upscaling can do. You can't create detail that wasn't implicitly present in the 720p source. Upscaling is better thought of as "educated interpolation" rather than "recovering hidden detail." The upscaler is making educated guesses about what pixels should exist between the original pixels, informed by what it learned during training.

For video content generated by Veo, there's probably some meaningful difference between 720p native and 4K upscaled, but it's likely not as dramatic as the difference between native 720p and native 4K photography. The upscaling process adds crispness and reduces some artifacts, but you're not getting nine times the actual detail information (which is what the jump in pixel count from 720p to true 4K would normally provide).
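For reference, the raw pixel counts can be compared directly. This sketch assumes the common consumer definitions of each label (1280x720, 1920x1080, and 3840x2160 for 4K UHD). Google hasn't said exactly which dimensions Veo outputs, so treat the table as an assumption.

```python
# Assumed frame sizes for each label (standard consumer definitions).
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixels(name):
    """Total pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(high, low):
    """How many times more pixels `high` has than `low`."""
    return pixels(high) / pixels(low)


print(pixel_ratio("4K", "720p"))     # 9.0
print(pixel_ratio("4K", "1080p"))    # 4.0
print(pixel_ratio("1080p", "720p"))  # 2.25
```

So an upscaler going from a 720p source to 4K has to synthesize eight out of every nine output pixels, which is why the result is best thought of as educated interpolation.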

Why would Google add this feature if it has limitations? A few reasons:

First, some creators care about having 4K content in their library, even if it's upscaled. It's a competitive advantage. If two tools generate similar quality at 1080p, the one that outputs 4K looks more professional even if the extra resolution is upscaled.

Second, for certain types of content—particularly things with clean lines, simple compositions, or limited detail complexity—upscaling can genuinely produce good results. A character on a plain background upscales better than a complex outdoor scene with lots of texture detail.

Third, as a baseline, 1080p is plenty for most online uses anyway. Upscaling to 4K gives a future-proofing option for creators who want their content to remain relevant as platform bitrate support improves.

Google's approach here is pragmatic. They're offering 4K as an option without claiming it's truly native generation. The upscaling is "for high-fidelity production workflows," according to their own description, which is honest about the use case.

Integration with YouTube Ecosystem: Distribution as a Feature

Veo 3.1's features being built directly into YouTube Shorts and the YouTube Create app is perhaps more important than the features themselves.

Historically, AI tools exist separate from where creators actually work. You generate something, download a file, and then import it into your editing software or social platform. That workflow is fine for advanced creators who use complex editing pipelines, but it's friction for everyone else.

Google's approach eliminates that friction. If you're creating YouTube Shorts, you don't need to install anything or manage downloads. You generate video directly in the Shorts creation interface, and it's immediately available to include in your Short. That's a massive usability advantage.

It also means Google is collecting telemetry on how creators use Veo. They can see which prompts work best, which reference images produce the most compelling videos, and where the model struggles. That data feeds back into improving the model.

The YouTube Create app integration is particularly significant because YouTube Create is explicitly designed for casual creators. It's not a professional editing suite. It's a lightweight tool for people who want to make videos without learning complex software. Veo's integration here brings generative video to creators who might not use more powerful tools like Adobe Premiere or Final Cut Pro.

Google also added these tools to the Gemini app, which is their consumer AI chatbot interface. This gives anyone with a Gemini account access to video generation. You can generate video right in the chat, which is even less friction than going to a dedicated app.

The distribution advantage Google has here is enormous. YouTube has over two billion logged-in users per month. Gemini has hundreds of millions of users. When you embed a generative video tool into platforms with that kind of reach, adoption becomes nearly automatic.

Smaller competitors in the video generation space don't have this distribution advantage. They have to convince creators to adopt a new tool, learn a new interface, and add a new step to their workflow. Google just gives creators a button in the tools they already use every day.

The Production Workflow Implications: Moving from Toy to Tool

There's a threshold all generative AI tools cross where they stop being experimental toys and start being genuinely useful production tools. Veo 3.1 seems to be pushing further across that threshold.

Consider a real-world workflow: a content creator wants to produce 20 YouTube Shorts per month featuring the same animated character in different scenarios. With previous versions of Veo, this would be tedious. You'd generate videos for each scenario, but the character would look different in each one. You'd spend time asking the model to regenerate videos until it got the character design consistent.

With Veo 3.1 and Ingredients to Video, the workflow becomes:

- Create or commission a character design image

- Upload the character design as a reference to Veo

- Generate multiple videos with the same character in different scenarios

- Compile the videos into Shorts using YouTube Create

- Publish
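The workflow above can be sketched as a simple planning script. To be clear, this is not a Veo API: `ClipRequest` and `plan_series` are hypothetical names made up for illustration, and nothing here talks to Google's services. The only facts taken from the article are the three-image reference limit and the 9:16 output option.

```python
from dataclasses import dataclass

MAX_REFERENCE_IMAGES = 3  # limit described in the article


@dataclass(frozen=True)
class ClipRequest:
    """Hypothetical record of one generation request (not a real Veo type)."""
    prompt: str
    reference_images: tuple
    aspect_ratio: str = "9:16"

    def __post_init__(self):
        if not 1 <= len(self.reference_images) <= MAX_REFERENCE_IMAGES:
            raise ValueError("provide between 1 and 3 reference images")


def plan_series(character_image, scenarios):
    """Build one request per scenario, all sharing the same character reference."""
    return [
        ClipRequest(prompt=f"The character {scenario}",
                    reference_images=(character_image,))
        for scenario in scenarios
    ]


plan = plan_series(
    "character_design.png",  # hypothetical file name
    ["running through a forest", "cooking breakfast", "riding a bicycle"],
)
for request in plan:
    print(request.aspect_ratio, "|", request.prompt)
```

The point of the structure is that the reference stays fixed while only the prompt varies, which mirrors how the consistency feature is meant to be used.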

This is a production workflow that actually works. It's not perfect—you might still need to regenerate videos if the output doesn't match your vision—but it's practical for someone trying to maintain consistent visual branding across multiple pieces of content.

That's the kind of shift that separates a novelty tool from something creators will actually use regularly.

The quality threshold matters too. If the output looks obviously AI-generated, creators won't use it except as a joke. If the output looks indistinguishable from real video, creators will integrate it into their workflows. Google's claims about improved fidelity in 2025 suggest Veo is approaching that threshold where the output quality is high enough that creators can use it for genuine content production rather than just experiments.

Veo 3.1 shows high success rates for simple prompts and reference image accuracy, but lower for complex prompts. Estimated data.

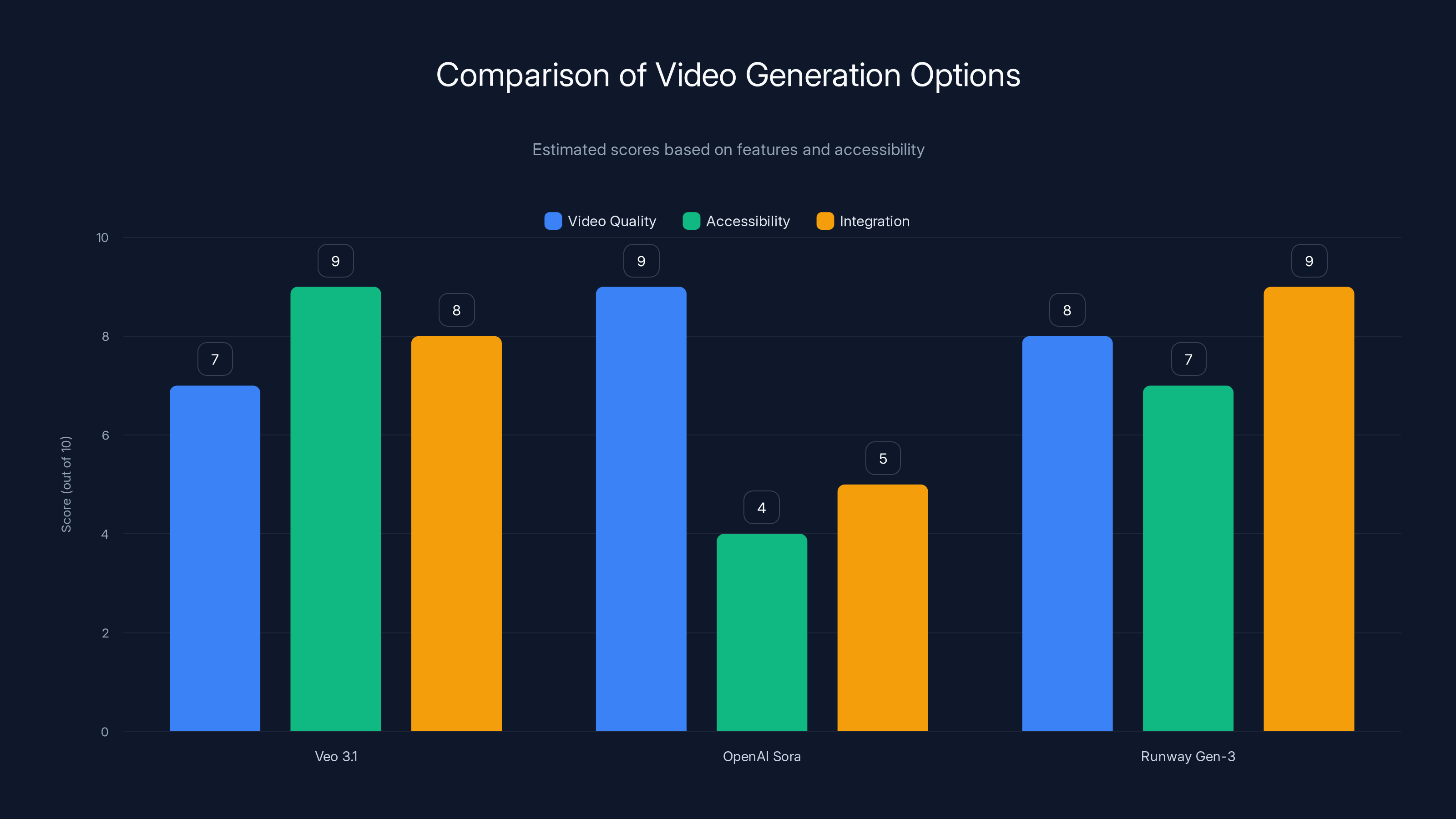

Comparing Veo 3.1 to Other Video Generation Options

OpenAI Sora: Still Waiting

OpenAI's Sora is probably the most hyped video generation model that most people still can't access. Sora was demonstrated to the public in early 2024 with absolutely stunning results. The quality of the generated videos was genuinely impressive—smooth motion, realistic physics, good composition.

But over a year later, Sora still hasn't been released publicly. It's available to "red teamers" and some professional creators, but there's no general access. This makes it hard to compare directly with Veo because you can't actually test Sora's real-world usability.

Based on the limited samples released, Sora seems to generate higher quality video than Veo, particularly in terms of physics simulation and motion continuity. But without being able to actually use it, it's impossible to evaluate things like consistency, reliability, or how well it handles edge cases.

Google's bet here is that shipping Veo in accessible, integrated tools matters more than pursuing perfect quality behind the scenes. They're getting real usage data and building creator familiarity while Sora remains mostly a research project.

Runway: The Creator-Focused Alternative

Runway ML has been focused on making AI video tools for creators since before Veo was public. Their Gen-3 model is competent and has many similar features to Veo 3.1, including directional control and some consistency features.

Runway's advantage is that they're not tied to any platform, so creators who use various tools can all access Runway through their own workflows. Runway has built integrations with Adobe Premiere and other professional software, which appeals to more advanced creators.

The disadvantage is that Runway doesn't have Google's distribution reach or YouTube's creator ecosystem. Runway requires creators to go to a separate platform, learn their interface, and manage another subscription.

For casual creators making YouTube Shorts, Veo's integration directly into YouTube Create probably trumps Runway's more flexible approach. For professional editors already using Premiere, Runway's integrations might be more appealing.

Pika: The Speed Option

Pika Labs has focused on speed as their primary differentiator. While Veo and Runway can take 30 seconds to a couple of minutes to generate an eight-second video, Pika claims faster generation times.

Speed matters in creative workflows because it affects iteration. If you can generate video in 15 seconds, you'll try more variations than if each generation takes two minutes. Faster generation means more attempts per session, and more attempts usually means a better final result.
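As a rough back-of-the-envelope check, here is how many generations fit into an hour at each speed. It uses the 15-second and two-minute figures from the paragraph above and ignores the time spent reviewing results or rewriting prompts, so real numbers would be lower.

```python
def attempts_per_hour(seconds_per_generation):
    """Upper bound on generations per hour, ignoring review and prompt-editing time."""
    return 3600 // seconds_per_generation


fast = attempts_per_hour(15)   # 240
slow = attempts_per_hour(120)  # 30
print(fast, slow, fast // slow)  # prints: 240 30 8
```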

But among the tools creators can actually use today, Veo 3.1 is still the quality leader by most accounts, and Google's distribution advantage through YouTube is hard to beat for the target audience of social media creators.

The Economics of AI Video: Cost Considerations

Google hasn't publicly disclosed Veo 3.1's pricing, but Veo access is included with Google One subscriptions (their premium tier that starts at around $13/month) and is integrated into YouTube's creator tools, where it's available to channel members.

That pricing structure is notably different from competitors. Runway charges separately for credits (usually

Google's bundling approach makes Veo effectively free for anyone already paying for Google One or anyone with a YouTube channel. That's a significant economic advantage. When the tool is bundled into services you're already paying for, the perceived cost is zero, and adoption becomes much easier.

For context, generating 20 video clips per month (roughly what an active YouTube Shorts creator might produce) would cost you somewhere between

That's the same total cost for multiple services versus paying for video generation separately. It's a pricing structure that makes Veo look like the default choice, which is exactly what you'd expect from a company trying to expand usage of a tool they've invested significant resources into developing.

Technical Limitations Worth Understanding

No technology is perfect, and Veo 3.1 certainly has constraints worth understanding before relying on it for serious production work.

Eight-Second Duration Limit: This is probably the biggest constraint. You can't generate long videos directly; you have to generate multiple clips and stitch them together. That works fine for YouTube Shorts or TikToks, but it's cumbersome if you're trying to create longer-form content.

Upscaling, Not Native 4K: As discussed, the 4K output is upscaled from 720p, which means it has inherent quality limits compared to truly native 4K generation.

Consistency Across Multiple Generations: While Ingredients to Video helps with consistency, generating multiple videos with absolutely perfect consistency across all elements is still difficult. You might need to regenerate videos several times to get the exact result you want.

Complex Physics and Interactions: AI video models still struggle with complex interactions between multiple objects, precise hand movements, and sophisticated physics simulations. If your video requires precise timing or complex object interactions, Veo might not be reliable.

Text Generation: Most generative video models struggle with rendering readable text. If your video needs on-screen text, you'll probably need to add it in post-production rather than having Veo generate it.

Specific Face Consistency: While Ingredients to Video helps with maintaining character consistency, getting Veo to generate videos of specific recognizable people (rather than character designs) is still unreliable without carefully prepared reference images.

These aren't criticisms—they're constraints any generative video tool currently has. They matter because they define the edges of what's actually production-ready right now.
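On the first constraint, stitching clips together doesn't need a full editor. One common approach outside Google's tools is FFmpeg's concat demuxer, which reads a text file listing the clips. The sketch below only builds the list file contents and the command arguments and does not run anything. The file names are hypothetical, paths containing single quotes would need escaping, and stream copy (`-c copy`) assumes every clip shares the same codec and encoding settings.

```python
def concat_list(clip_paths):
    """Contents of an FFmpeg concat-demuxer list file: one `file '...'` line per clip."""
    return "".join(f"file '{path}'\n" for path in clip_paths)


def concat_command(list_path, output_path):
    """Argument list that joins the listed clips without re-encoding."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", list_path, "-c", "copy", output_path]


clips = [f"clip_{i:02d}.mp4" for i in range(1, 9)]  # eight hypothetical clips
print(concat_list(clips), end="")
print(" ".join(concat_command("clips.txt", "short.mp4")))
```

Writing the list to `clips.txt` and running the printed command would produce a single file. Hard cuts between independently generated clips can still look jarring, so this solves the mechanics, not the continuity.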

Veo 3.1 offers high accessibility and integration, while OpenAI Sora excels in video quality but lacks accessibility. Runway Gen-3 provides balanced features with strong integration. (Estimated data)

The Bigger Picture: What This Says About AI Video's Trajectory

Veo 3.1 isn't revolutionary. It's iterative. Google went from 3.0 to 3.1, adding features and improving quality without claiming a massive breakthrough.

But that's actually what's most interesting about this update. We're not in the phase where every release brings shocking improvements anymore. We're in the phase where tools are becoming reliably usable, with incremental improvements that expand the range of production-ready use cases.

That's the mark of a technology that's moving from experimental to practical.

When Veo first launched in 2024, it was genuinely experimental. The quality was good for an AI tool, but you could tell it was AI-generated. There was an "uncanny valley" quality where things looked almost real but not quite right.

By late 2024, Veo 2 had improved noticeably. The motion looked more natural. The physics felt more correct. It was getting harder to identify AI video on sight.

Veo 3.1 continues that trajectory, adding features that make it more useful for actual production workflows rather than just experiments. Vertical video support, reference-based generation, and 4K upscaling are all features that unlock use cases that weren't practical before.

What worries some people about all of this is how quickly the quality gap between human-created and AI-created video is closing. The prospect of a world where AI video is indistinguishable from real footage is both exciting and unsettling, depending on your perspective.

From a creator's perspective, tools like Veo 3.1 are genuinely useful. They can save significant time on content production, particularly for creators who can't afford to hire videographers or video editors.

From a broader media literacy perspective, it raises questions. If AI video becomes indistinguishable from real footage, how do we maintain trust in video as evidence? How do we identify manipulated or deepfaked content?

Those are important questions, but they're questions for society and platforms to address through policy, not problems that Veo itself is responsible for solving. Veo is a tool. It can be used well or misused. The responsibility for preventing misuse falls on the platforms that distribute video and the people who create it.

Google's decision to integrate Veo into YouTube and use it alongside other safeguards (like disclosure requirements and content policies) suggests they're thinking about these issues. They're not just shipping a tool blindly; they're deploying it in a context where they can monitor usage and enforce policies.

What Creators Should Actually Do With This

If you're a creator reading this, the practical question is: should you start using Veo 3.1?

The answer depends entirely on what you're creating.

If you produce YouTube Shorts and you want to create content that would otherwise require filming, hiring a crew, or paying for stock footage, Veo 3.1 is probably worth experimenting with. The tool is accessible, integrated into YouTube's workflow, and the output quality is high enough for most uses.

If you're creating longer-form content, the eight-second clip limitation makes Veo less practical unless you're specifically looking for transition clips or b-roll.

If you're creating content where visual consistency and brand identity are critical (like character-driven content), Ingredients to Video is specifically designed for this use case. The ability to maintain consistent character design across multiple videos is valuable.

If you're creating content where quality is your primary differentiator (like high-end commercial work), you might want to wait another year or two for the quality to improve further. Veo is good, but humans are still better at cinematography, composition, and visual storytelling.

For everyone else, the only way to know if Veo fits into your workflow is to try it. It's available in YouTube Create, Gemini, and YouTube Shorts. The barrier to entry is zero if you already use these platforms.

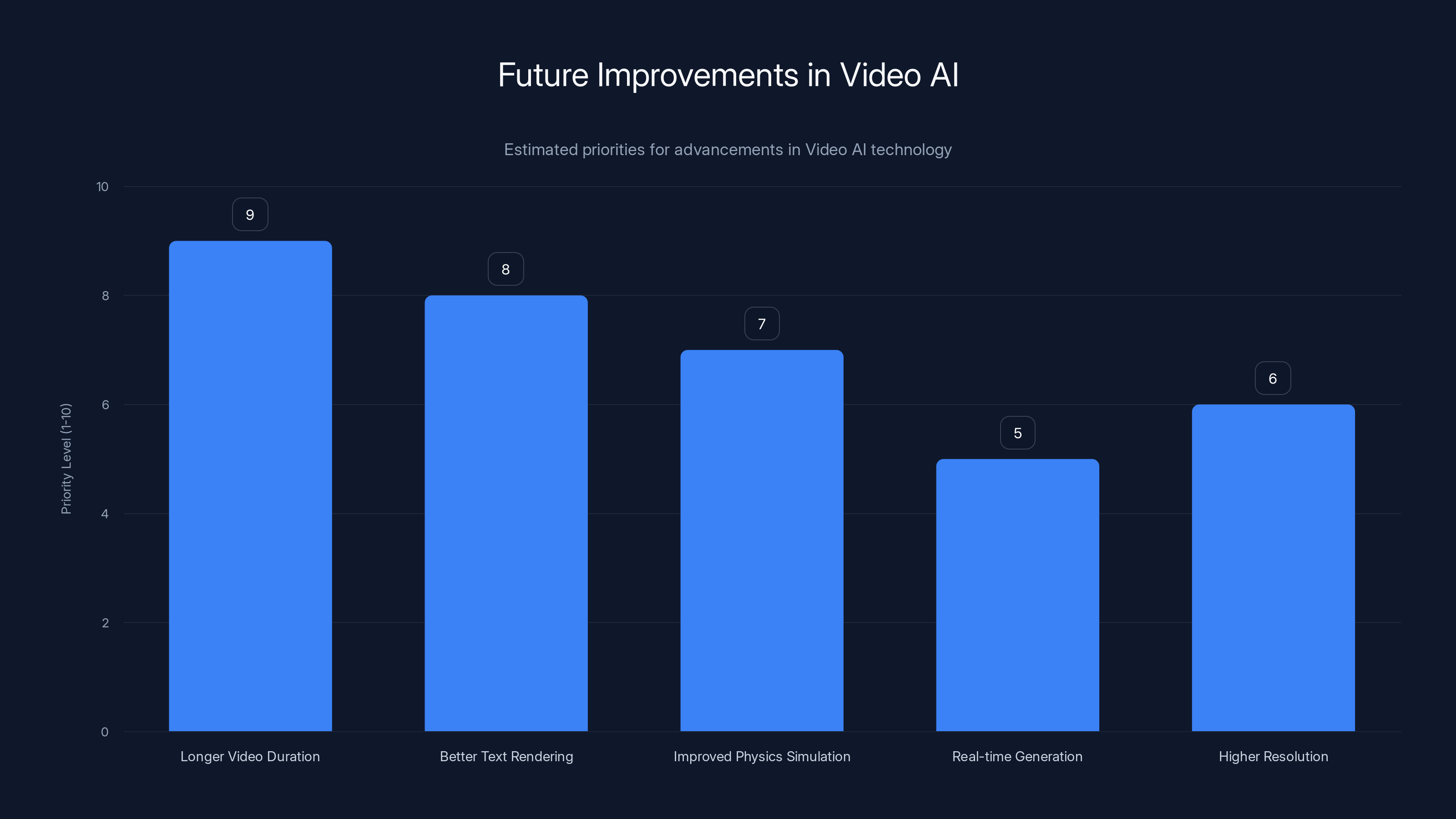

Looking Forward: What's Next for Video AI

Google's roadmap isn't publicly detailed, but we can make educated guesses about what's coming based on the pattern of improvements.

Longer video duration is almost certain. Eight seconds is useful for Shorts, but supporting 30-60 second generations would open up use cases for long-form YouTube content and other platforms. The technical challenges of maintaining consistency and coherence over longer durations are significant, but probably solvable.

Better text rendering is likely high priority. Most creators include text in their videos, and the inability to reliably generate readable text is a genuine limitation.

Improved physics simulation for complex interactions would be valuable, particularly for creating videos with multiple characters or complex object movements.

Real-time generation or generation speed improvement would be nice-to-have but probably not critical if the quality justifies the wait time.

Native higher-resolution generation (not upscaled) is probably further out but would be valuable as internet bandwidth and screen resolution continue improving.

The question that's harder to answer is how far Google can push quality with Veo's current architecture. At some point, you hit diminishing returns where small quality improvements require exponentially more compute or training data.

Veo might be approaching that threshold, which could mean future updates focus more on new capabilities (like longer videos or better consistency) rather than raw quality improvements.

That's actually fine. A tool that's 90% as good as real video but can be generated in seconds is more useful than a tool that's 95% as good but requires extensive post-production refinement.

Estimated data suggests that longer video duration and better text rendering are top priorities for future Video AI improvements, enhancing usability for creators.

The Competitive Landscape and What It Means

Veo 3.1 launches into a competitive market that includes Runway, Pika, and eventually Sora. But Google's advantages in this market are significant and probably hard to overcome.

Distribution is the first advantage. YouTube's reach means any creator can try Veo without seeking out a new tool or signing up for a new service.

Integration is the second. When the tool is built into your workflow rather than being separate, adoption is much higher.

Resources are the third. Google can invest in improving Veo for as long as it takes because they can afford to. Startups in this space have burn rates and need to reach profitability or get additional funding. Google can run Veo as a long-term investment in their creator ecosystem.

For smaller competitors to win, they'd need to either beat Google on specific use cases (like Runway's professional editing integration) or build in a category Google hasn't focused on yet.

The creative space for competition probably exists in specialized tools. Rather than trying to be a general-purpose video generator like Veo, you could build a tool specifically for animation, or for specific types of product videos, or for localization and subtitles. Specialized tools often win by being significantly better at a specific task than general-purpose tools.

But for general-purpose AI video generation, Google's advantages are probably insurmountable in the short term. Veo will likely remain the default choice for most creators simply because it's the easiest to access.

Quality Benchmarks: How Does Veo Actually Look?

Talking about quality is abstract. Let me try to describe what Veo video actually looks like in practical terms.

For well-composed shots with clear lighting and distinct subjects, Veo produces video that would be hard to identify as AI-generated if you saw it on YouTube Shorts. The motion looks natural, the color grading is consistent, and there are no obvious artifacts.

For more complex scenes with lots of detail, layered environments, or subtle interactions, you can sometimes tell it's AI-generated. There might be minor inconsistencies in how details are rendered, or subtle strangeness in how edges interact with backgrounds.

For characters and faces, the quality depends heavily on whether you're using Ingredients to Video (reference images) or generating from text alone. With good reference images, character consistency is strong. Without references, you get variations that are sometimes minor and sometimes quite noticeable.

For motion, Veo excels at smooth camera movements and physical motions that follow real-world physics. It's less reliable with fast-cutting action sequences or camera movements that would be difficult in real life.

The overall impression is that Veo is solidly in the category of "good enough for professional use" for many applications, while still occasionally showing limitations that require regeneration or post-production fixes.

That's actually a high bar for generative AI. Many AI tools make impressive demos but prove unreliable in real production work. Veo 3.1 appears to be useful for actual workflows.

Practical Implementation: How to Actually Use Ingredients to Video

If you want to start using Veo 3.1 specifically for reference-based generation, here's how to approach it.

First, create or source your reference images. These should be high-resolution, well-lit images that show the specific visual elements you want in your video. If you're using character designs, the character should be clearly visible with distinct features. If you're using backgrounds, they should be well-composed and clearly show the environment you want to feature.

Second, access Veo through Gemini, YouTube Shorts, or YouTube Create. Navigate to the Ingredients to Video feature and upload your reference images (up to three).

Third, write a prompt that describes the action or motion you want, and let the reference images supply the visual elements. For example, if your reference image shows a character in a blue jacket and your prompt is "character running through a forest," the model will generate video of that specific character running through a forest environment.

Fourth, generate the video. This typically takes 30 seconds to a couple of minutes depending on complexity.

Fifth, evaluate the output. If it matches your vision, you're done. If not, you can regenerate with modified prompts or different reference images.

The key is balancing specificity in your prompt with visual direction from the reference images. Don't try to specify too much in the prompt (the model tends to struggle with overly complex instructions), but do provide clear reference material that shows what you're aiming for.
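The checklist above can be turned into a small pre-flight check you run before opening the app. The sketch below is purely illustrative: `IngredientsRequest`, its fields, and the list of aspect ratios are hypothetical names invented for this example and are not part of any Google API. The only details taken from the steps above are the three-image limit and the advice to keep prompts short; the 40-word threshold is an arbitrary assumption.

```python
from dataclasses import dataclass, field

MAX_REFERENCE_IMAGES = 3          # limit described in the steps above
ASPECT_RATIOS = {"16:9", "9:16"}  # assumed options, for illustration only
MAX_PROMPT_WORDS = 40             # arbitrary threshold, not a documented limit


@dataclass
class IngredientsRequest:
    """Hypothetical container for one reference-based generation."""
    prompt: str
    reference_images: list = field(default_factory=list)
    aspect_ratio: str = "9:16"

    def problems(self):
        """Return a list of human-readable issues; empty means ready to try."""
        issues = []
        if not self.prompt.strip():
            issues.append("prompt is empty")
        elif len(self.prompt.split()) > MAX_PROMPT_WORDS:
            issues.append("prompt is long; describe the motion, not every detail")
        if not self.reference_images:
            issues.append("no reference images; character consistency may drift")
        elif len(self.reference_images) > MAX_REFERENCE_IMAGES:
            issues.append(f"too many reference images (max {MAX_REFERENCE_IMAGES})")
        if self.aspect_ratio not in ASPECT_RATIOS:
            issues.append(f"unsupported aspect ratio: {self.aspect_ratio}")
        return issues


request = IngredientsRequest(
    prompt="character running through a forest",
    reference_images=["blue_jacket_character.png"],
)
print(request.problems())  # prints [] because nothing is flagged
```

The point of the design is the split of responsibilities: the prompt carries motion, the images carry appearance, and anything that blurs that split gets flagged before you spend a generation on it.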

The Broader Implications for Content Creation as a Career

Veo 3.1 and tools like it raise an interesting question about the future of content creation as a profession.

If video generation tools become indistinguishable from real video and can be created in seconds, what happens to videographers, editors, and production crews? Do they become obsolete?

Historically, when technology has automated a task, the underlying skill hasn't become worthless; it has become less valuable. When cameras became accessible, demand for photorealistic painting dropped sharply, while photography emerged as a new skill that commanded significant value.

Something similar is probably happening with AI video. The skill of shooting video with cameras is becoming less critical. But the skills of creative direction, conceptualization, storytelling, and knowing when and how to use AI tools effectively are becoming more critical.

Creators who learn to use Veo and similar tools effectively—to direct them toward interesting creative output, to evaluate and refine the output, to integrate AI-generated content with human-created content—will likely thrive. Creators who depend solely on being able to shoot video with expensive equipment might find their value diminished.

That's a real transition with real implications for people's careers, and it's worth taking seriously even if the transition is still in progress.

For established production companies, the question is whether to integrate AI tools into their workflows or try to position themselves as "human-made" content producers, a niche that will probably always have some demand for premium productions.

For individual creators and small teams, AI video tools probably represent an opportunity. The ability to produce video content without expensive equipment or crew is genuinely valuable and opens up content creation to people who previously couldn't afford it.

Understanding the Limitations of the Upscaling Process

Since we discussed 4K upscaling earlier, it's worth diving deeper into what that actually means technically.

Upscaling uses an AI model trained to predict what pixels should exist between the original pixels of a lower-resolution image. The upscaler looks at the patterns in the 720p video and tries to intelligently fill in the gaps that would exist in 4K.
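To get a feel for how much "filling in" that involves, it helps to count pixels. The short calculation below assumes the standard 16:9 frame sizes (1280x720 for 720p, 1920x1080 for 1080p, 3840x2160 for 4K UHD); the function names are just for this example.

```python
def pixel_count(width, height):
    """Total pixels in one frame."""
    return width * height


def synthesized_fraction(source, target):
    """Share of target pixels that have no one-to-one source pixel."""
    return 1 - pixel_count(*source) / pixel_count(*target)


SOURCE_720P = (1280, 720)
TARGETS = {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}

for name, size in TARGETS.items():
    scale = pixel_count(*size) / pixel_count(*SOURCE_720P)
    share = synthesized_fraction(SOURCE_720P, size)
    print(f"{name}: {scale:.2f}x the pixels, {share:.0%} synthesized")
```

A 4K frame has nine times as many pixels as a 720p frame, so roughly eight out of every nine pixels in the 4K output are predicted by the upscaler rather than generated by the video model.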

The quality of upscaling depends on:

- How much detail was in the original image (you can't recover detail that's completely lost)

- How well-trained the upscaler is (better training = smarter interpolation)

- The type of content (simple content upscales better than complex content)

For video specifically, upscaling is more complex than image upscaling because you also need to ensure temporal consistency. If frame 1 is upscaled differently than frame 2, you'll see flickering in the video. Good video upscalers account for this by looking at multiple frames when deciding how to upscale each frame.
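A toy example makes the flicker problem concrete. The sketch below is not how Google's upscaler works (that isn't public); it simply treats each frame as a short list of pixel values, measures frame-to-frame change as a stand-in for flicker, and shows that averaging over neighbouring frames reduces it. The function names and the sample values are made up for illustration.

```python
def flicker(frames):
    """Mean absolute per-pixel change between consecutive frames."""
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for a, b in zip(prev, cur):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


def temporal_smooth(frames, window=3):
    """Average each pixel over a sliding window of neighbouring frames."""
    half = window // 2
    smoothed = []
    for i in range(len(frames)):
        group = frames[max(0, i - half): i + half + 1]
        smoothed.append([sum(px) / len(group) for px in zip(*group)])
    return smoothed


# A static scene whose pixels jitter because each frame was processed alone.
frames = [[10, 10], [12, 8], [10, 10], [12, 8]]

print(flicker(frames))
print(flicker(temporal_smooth(frames)))
```

The second number comes out lower than the first. The trade-off is that plain averaging also blurs genuine motion, which is why production upscalers generally rely on more sophisticated, motion-aware techniques than this.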

Google's upscaling is likely quite good given the company's resources and expertise, but it's still bound by what upscaling can do. It can make 720p video look cleaner and sharper, but it can't add genuine fine detail that the 720p source never captured.

For practical purposes, upscaled 4K from Veo probably looks comparable to native 1080p at typical viewing distances. You might see some quality difference if you're scrutinizing the output on a large monitor, but on phone screens or when embedded in web pages, the difference is probably imperceptible.

Security, Safety, and Responsible AI Considerations

As video generation becomes more sophisticated, concerns about deepfakes, misinformation, and misuse become more urgent.

Google has built some safeguards into Veo. The model is designed with safety considerations, and there are content policies that prohibit generating videos of real people (without authorization) or generating violent, sexual, or otherwise harmful content.

But enforcing these policies at scale is difficult. If millions of creators are using Veo daily, keeping up with potential misuse is challenging. Bad actors will always find ways to try to circumvent safeguards.

Google's approach has been to require disclosure when content is AI-generated, at least in some contexts. YouTube, for example, is implementing features to disclose AI-generated content to viewers. This is a first step toward media literacy in an age of sophisticated AI video.

But the responsibility for preventing serious misuse doesn't fall on Google alone. Platforms need to implement detection systems. Media literacy education needs to evolve to teach people how to evaluate video evidence. Policymakers might need to implement regulations around deepfakes and synthetic media.

These are important considerations, but they're addressed through systemic approaches rather than by limiting the technology. The technology itself is neutral—the outcomes depend on how it's used and what guardrails are put around it.

Real-World Performance and Reliability Metrics

When evaluating whether Veo 3.1 is production-ready, a few key metrics matter:

Generation Success Rate: How often does the model produce usable output on the first try? Based on user reports, this varies significantly depending on prompt complexity. Simple prompts with clear reference images probably succeed 70-80% of the time. Complex prompts might succeed 40-50% of the time.

Generation Time: How long does generation actually take? Google claims "seconds to minutes," which is accurate for simple clips but can stretch to several minutes for complex generations or at peak usage times.

Reference Image Accuracy: How closely does the model follow reference images? With Ingredients to Video, the model generally stays close to its references. As a rough, informal estimate rather than a measured figure, expect something like 80-90% visual consistency in most cases, with some room for variation.

Output Resolution: Do the 1080p and 4K outputs actually match the claimed resolution? Yes, the files are correctly sized, though as discussed, 4K is upscaled rather than native.

Consistency Across Generations: If you regenerate the same prompt multiple times, how different are the outputs? Veo will produce variations, which is useful for iteration but can make it hard to get exactly the same scene twice.

These metrics inform practical decision-making. If you plan to generate video content, budget time for regeneration, because you won't always get usable output on the first try. Plan on 2-3 generations per final video to get something truly usable.
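That budgeting advice follows from simple probability. The sketch below assumes each generation is an independent attempt with a fixed chance of being usable, which is a simplification, and plugs in the rough success rates quoted above (they are estimates, not measurements). The function names are illustrative.

```python
def expected_attempts(success_rate):
    """Average number of generations until the first usable one."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


def chance_within(success_rate, attempts):
    """Probability of at least one usable result within `attempts` tries."""
    return 1 - (1 - success_rate) ** attempts


for label, rate in [("simple prompt", 0.75), ("complex prompt", 0.45)]:
    print(
        f"{label}: about {expected_attempts(rate):.1f} attempts on average, "
        f"{chance_within(rate, 3):.0%} chance of success within 3"
    )
```

Under these assumptions a simple prompt needs a little over one attempt on average, while a complex prompt needs a bit more than two, which is where the 2-3 generation budget comes from.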

FAQ

What exactly is Veo 3.1 and how does it differ from previous versions?

Veo 3.1 is Google's updated video generation model. It improves on Veo 3 by adding reference-based generation (called Ingredients to Video), native vertical video output, and 4K upscaling. The core difference is practical: the new features enable consistency across multiple videos and match how creators actually publish content (vertical format for YouTube Shorts and social platforms).

How does Ingredients to Video actually improve consistency in generated videos?

Ingredients to Video allows you to upload up to three reference images that guide the AI's output. When you provide reference images of characters, backgrounds, or textures, the model encodes the visual characteristics from those images into the generation process, maintaining consistency with your references while generating new motion and action. This solves the persistent problem of character appearance changing across multiple generated videos, making Veo more practical for producing series content.

Is the 4K output truly native 4K or is it upscaled?

Veo generates all video at 720p resolution and then applies AI-based upscaling to create 1080p and 4K outputs. This means the 4K output is technically upscaled rather than native 4K. The upscaling uses neural networks trained to intelligently reconstruct detail, but there are inherent limits to what upscaling can achieve—it can't recover detail that's completely absent in the 720p source. For most online uses, the difference between native and upscaled 4K is negligible, but for large-screen or professional displays, the quality gap is noticeable.

Can Veo 3.1 generate vertical video directly, or does it require special prompting?

With Veo 3.1, vertical video (9:16 aspect ratio) is now a first-class feature that you select when generating video. You can specify vertical output directly when using Ingredients to Video, and the model is optimized to generate content that looks good in vertical format. This is a significant improvement over previous versions where vertical support required specific prompting. The model's composition and framing are specifically tuned for vertical viewing rather than being adapted from horizontal video.

What are the main limitations of Veo 3.1 that would prevent using it for professional production?

Key limitations include: eight-second maximum duration per generation (requiring multiple clips to be stitched together), difficulty with readable text rendering, challenges with complex interactions between multiple objects, unreliable generation of specific recognizable people without careful reference images, and occasional inconsistencies in detail rendering. For most YouTube Shorts or simple social media content, Veo 3.1 is sufficient. For complex commercial productions or content requiring pixel-perfect precision, you'd likely need human videography or significant post-production refinement.

How does Veo 3.1 compare to other video generation tools like Runway or Pika?

Veo 3.1 generally matches or exceeds competitors in output quality, but has different strengths. Veo's main advantage is integration into YouTube and Google's ecosystem, making it accessible without separate sign-up or subscription for YouTube creators. Runway offers better integration with professional editing software, which appeals to advanced creators. Pika focuses on faster generation times. OpenAI's Sora is publicly available as well and is a strong competitor on raw output quality. Your choice should depend on your specific workflow and distribution platform.

Can I use real people in Veo-generated videos, or is that prohibited?

Veo's content policies prohibit generating videos of real, recognizable people without explicit authorization. Using real people's likenesses to generate videos they didn't actually appear in raises serious legal and ethical concerns around consent and potential deepfaking. You can use reference images of character designs or artwork to maintain consistency, but using someone's actual photograph to generate them doing things they didn't do violates the tool's terms of service and raises significant ethical issues.

How long does it actually take to generate a video with Veo 3.1, and does this affect usability?

Generation typically takes 30 seconds to two minutes depending on complexity, with simple prompts being faster than complex ones. At peak usage times, generation might take even longer. This latency is acceptable for planning content in advance but isn't suitable for real-time content creation. The time investment means you should be strategic about generation parameters and expect to regenerate videos multiple times until you get output matching your vision. This workflow is similar to iterative design rather than instant creation.

Is Veo 3.1 available to all creators, or is there a waitlist or limited access period?

Veo 3.1 is currently available to creators with access to Gemini, YouTube Shorts creation tools, and YouTube Create. It's integrated into these existing platforms, so availability depends on your location and whether you have accounts with Google services. There isn't a traditional waitlist—if you can access YouTube's creator tools or Gemini, you can typically access Veo. Some features might be rolling out gradually by region, but the trend is toward broad availability rather than limited access.

What's the pricing for using Veo 3.1, and does it require a separate subscription?

Veo access is bundled into Google's services rather than sold separately. You get access through YouTube Create (free), YouTube Shorts (free), Gemini (free, with limited generations), or Google One subscription ($13/month for additional usage). There's no separate Veo subscription—you're paying for the broader service bundle. This bundling approach is a significant competitive advantage compared to tools like Runway that charge separately for video generation credits, making Veo effectively free for most creators who already use Google services.

How does reference image quality affect the output when using Ingredients to Video?

Higher-quality reference images produce more consistent and predictable results. You should use well-lit, high-resolution images with clear subject definition and distinct visual characteristics. Blurry or low-quality images, or images with mixed lighting, produce weaker results because the model struggles to extract clear visual guidance. The reference images don't retrain the model; they condition the generation by showing it what to pay attention to, so clear, professional reference material improves outcomes substantially. Lighting, composition, and visual clarity in your references directly affect the quality of the generated video.

Conclusion: The Video Future Is Already Here

Google's Veo 3.1 update represents a significant milestone in the journey toward AI-generated video becoming genuinely production-ready. It's not a revolutionary breakthrough—the features are incremental improvements to an already-capable system. But incremental improvements in the right areas unlock new use cases and push a tool from experimental to practical.

Vertical video support, reference-based generation, and 4K upscaling are all features that address real constraints creators have reported. They're not flashy features that look impressive in a demo. They're working features that solve actual production problems.

What makes this particularly significant is the integration into YouTube's ecosystem. Video generation tools are only useful if creators can actually use them. When you embed Veo into YouTube Shorts and YouTube Create, you're making it accessible to everyone without requiring them to learn new tools or change their workflows.

Google's scale and resources give them an enormous advantage here. They can iterate on Veo continuously, improving quality and adding features, while still bundling access into services creators are already using. Competitors would need to match Veo's quality while offering something meaningfully better to overcome that bundling advantage.

For creators, the practical takeaway is straightforward: if you make YouTube Shorts or short-form social video, Veo 3.1 is worth experimenting with. The tool is accessible, the features are practical, and the output quality is high enough for professional use in many cases. You might find it saves you significant time on content production or opens up creative possibilities that would've been impractical before.

For the broader media industry, Veo 3.1 is another data point in the rapid improvement trajectory of AI video. The gap between AI-generated and real video is closing faster than most people anticipated. Within a couple of years, the quality gap might be completely closed for most practical uses.

That's both exciting and sobering. Exciting because it enables more people to create video content without expensive equipment or crew. Sobering because it raises genuine questions about media literacy, authenticity, and how we distinguish real from generated.

But the technology itself is neutral. Veo is a tool. Like any powerful tool, it can be used well or misused. The responsibility for ensuring it's used responsibly falls on platforms, policymakers, educators, and creators themselves.

In the meantime, if you're making videos, Veo 3.1 is worth trying. The worst that happens is you spend 15 minutes experimenting with something that doesn't work for your use case. The best that happens is you discover a tool that genuinely improves your creative process and frees you to focus on the creative direction rather than the technical execution.

That's actually what tools are supposed to do.

Key Takeaways

- Veo 3.1 adds reference-based video generation (Ingredients to Video) that maintains visual consistency across multiple videos

- Native vertical video support at 9:16 aspect ratio directly addresses YouTube Shorts and social platform requirements

- 4K upscaling (from native 720p) enables higher-resolution outputs, though the result is upscaled rather than native 4K

- Integration into YouTube Create, YouTube Shorts, and Gemini makes Veo accessible without separate subscriptions

- Veo 3.1 is production-ready for YouTube Shorts and social video, with some limitations for complex scenes and longer formats
