Halide Mark III Looks Preview: Next-Gen iPhone Photography [2025]

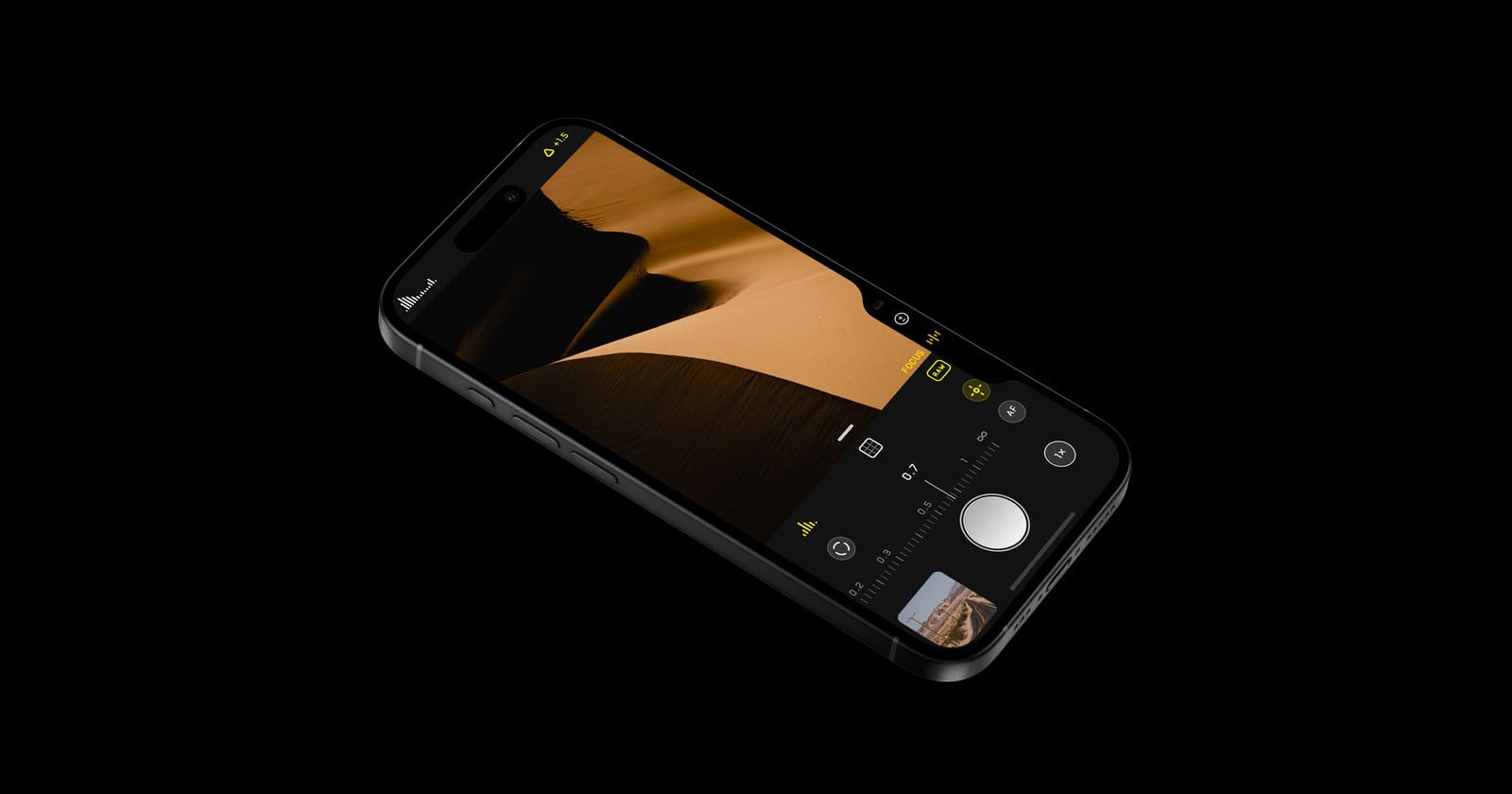

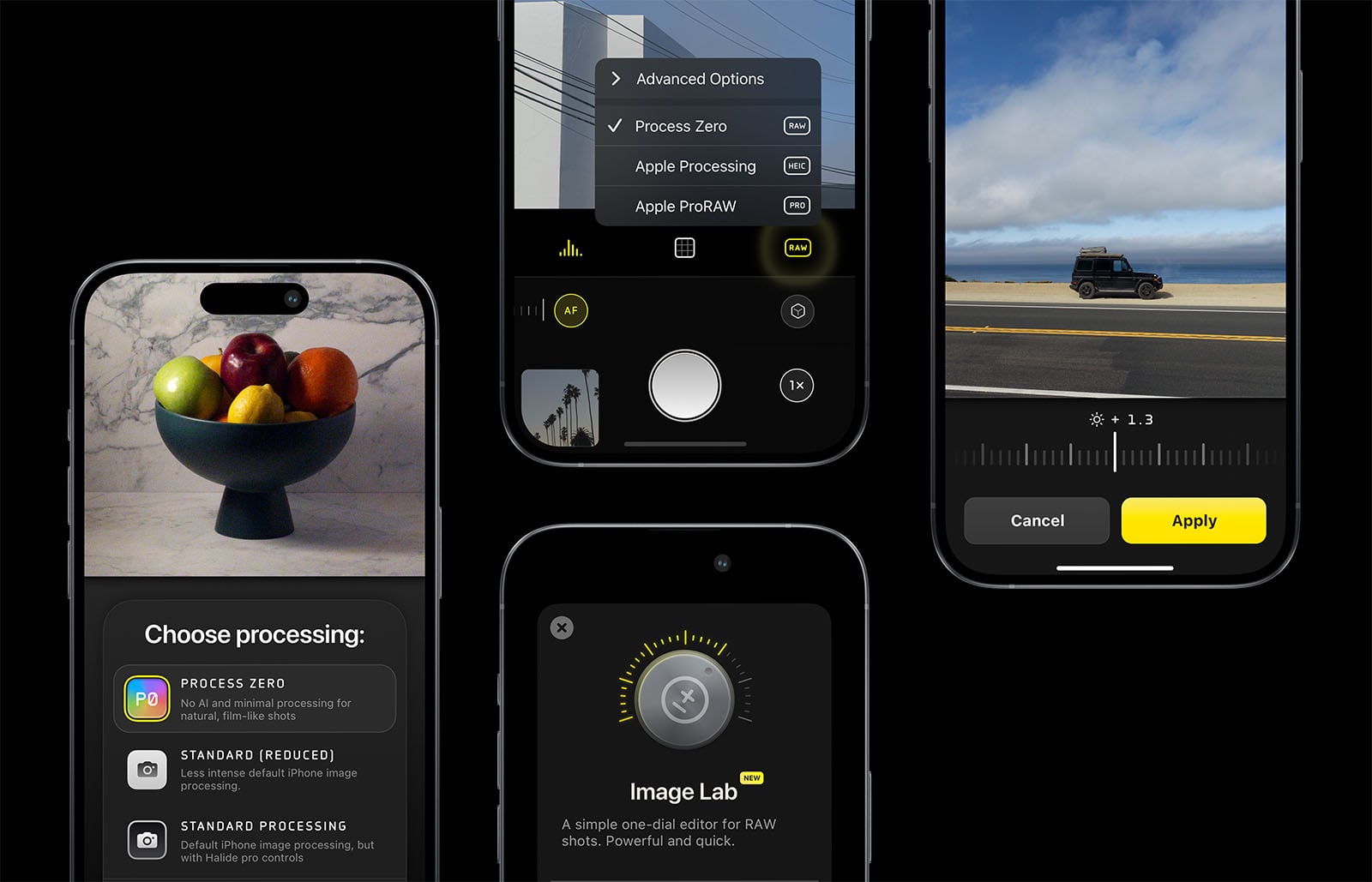

Today, a public preview of Halide Mark III launched, and it represents something genuinely different in mobile photography. After spending a year rebuilding the app's foundation, the team just opened the doors to what comes next. The first major feature they're showing off? Looks—a completely rethought approach to how iPhones capture and interpret photos.

Before we get into the weeds, let me be clear about why this matters. Most people use the default iPhone camera, which is genuinely good at one thing: taking the same photo everyone else takes. Apple's algorithms compress shadows, boost midtones, and apply a consistent aesthetic that prioritizes usable results over personality. That works great when you want convenience. But if you've ever felt like your iPhone photos all blend together, you've bumped into exactly what Halide is trying to solve.

The backstory here is worth understanding. The Halide team spent years watching mobile photography evolve, and simultaneously watching photographers abandon it. Some professionals started buying used Canon AE-1s and Fujifilm X100-series cameras just to escape the algorithmic sameness. Others downloaded app after app hunting for presets that actually looked different. None of it felt right because presets are just color grades. They can't change how the camera captures the light in the first place.

Halide's solution flips the script entirely. Instead of asking "how do we grade photos after capture?" they asked "what if we let photographers choose their camera's interpretation at the moment of capture?" That's where Looks come in.

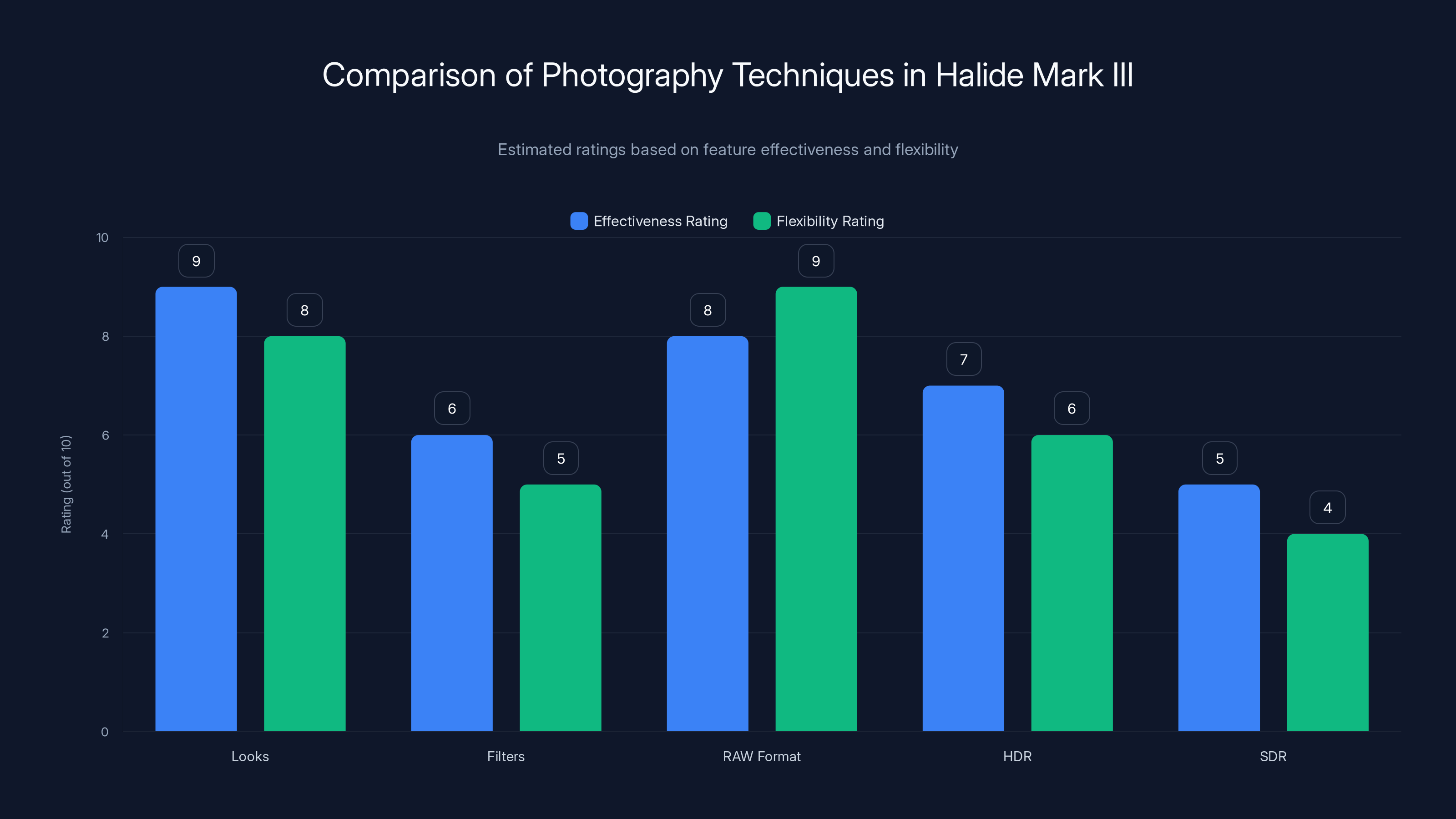

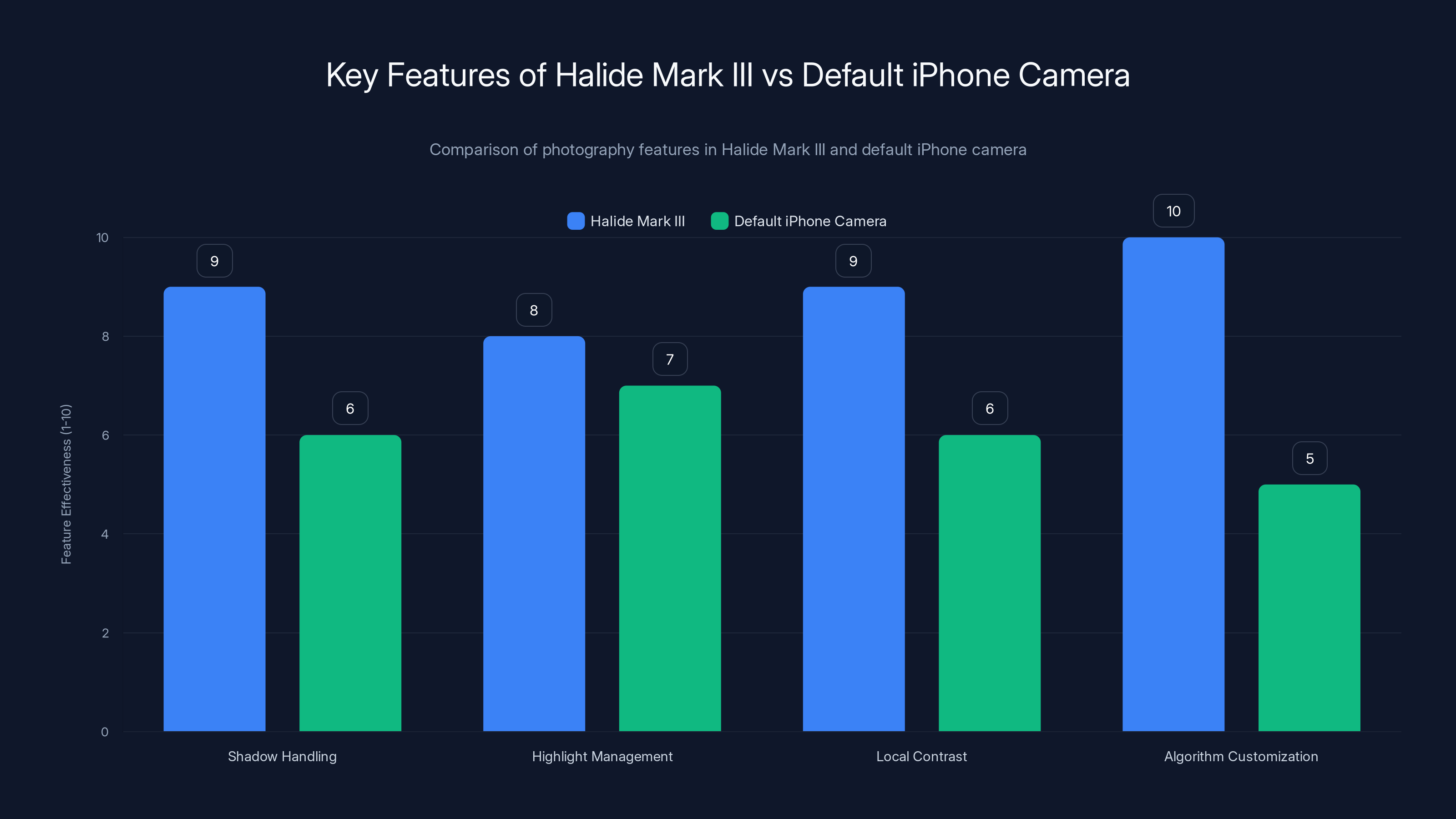

This isn't another preset app. This is architectural. When you select a Look in Halide, it changes how the iPhone's computational photography works—what algorithms fire, how highlights are handled, whether shadows get crushed or preserved, how much local contrast the system applies. The result is photos that look fundamentally different, not just shifted in tone or saturation.

I spent time working through the preview, and honestly, it's one of the more thoughtful camera app redesigns I've seen in years. Let me walk you through what's actually happening here.

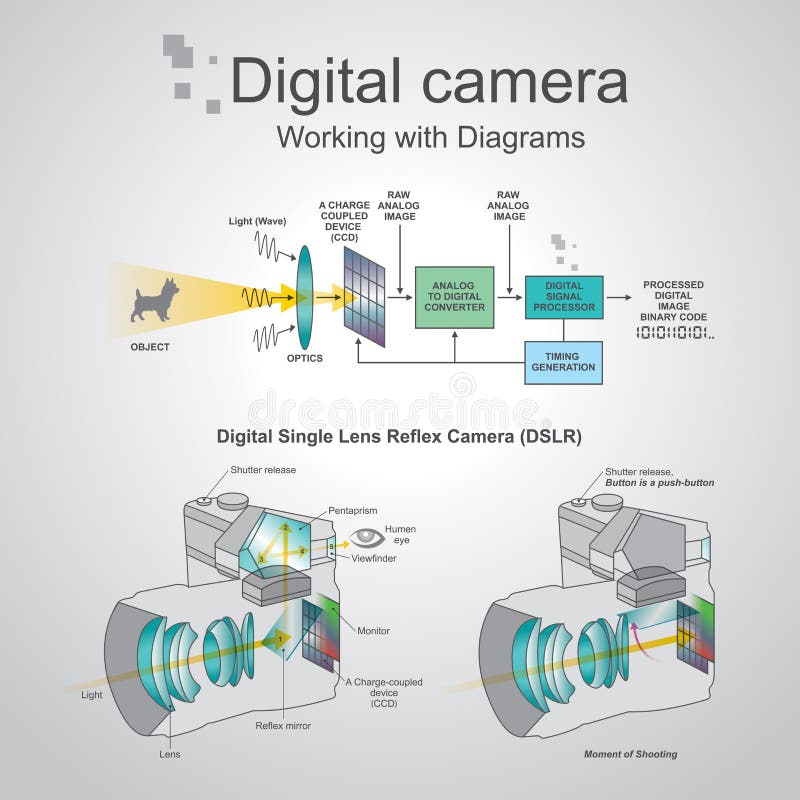

Understanding the Analog-to-Digital Bridge

There's a reason analog photography is experiencing a renaissance. It's not nostalgia—it's constraint.

When you load film into a camera, you've made your creative decision. Fujifilm Superia, Kodak Portra, Ilford HP5—each stock has a fixed color palette, contrast curve, and grain structure. You shoot the film, develop it, and the look is locked in. That simplicity is freeing. You don't spend an hour in Lightroom second-guessing your color grade. You pop the film in, frame the shot, and shoot. Some of the best photos you'll take are accidents, mistakes that worked.

Digital photography was supposed to be more flexible, but the opposite happened. The flexibility became paralysis. A billion menus, infinite presets, and yet everyone's phone photos look identical because they all started with the same iPhone algorithm.

The Halide team had the same realization. One of the developers took a deep dive into analog photography: cyanotype, platinum/palladium printing, home film development, and other alternative processes. The goal wasn't to become a photographer (though that happened). It was to understand why analog still felt emotionally different, even after a decade of building camera apps.

The answer: creative constraint plus authentic imperfection.

Film stock isn't trying to correct reality. It interprets it. A roll of Kodak Portra doesn't try to preserve every highlight. It crushes them slightly, leans warm, holds detail in shadows. That's not a bug. That's the look. Photographers chose that film specifically for that interpretation.

Halide's Looks are built on the same principle. You're not choosing a filter that gets applied in post. You're choosing a camera stock. The iPhone's sensors and processors interpret the light through that stock's lens.

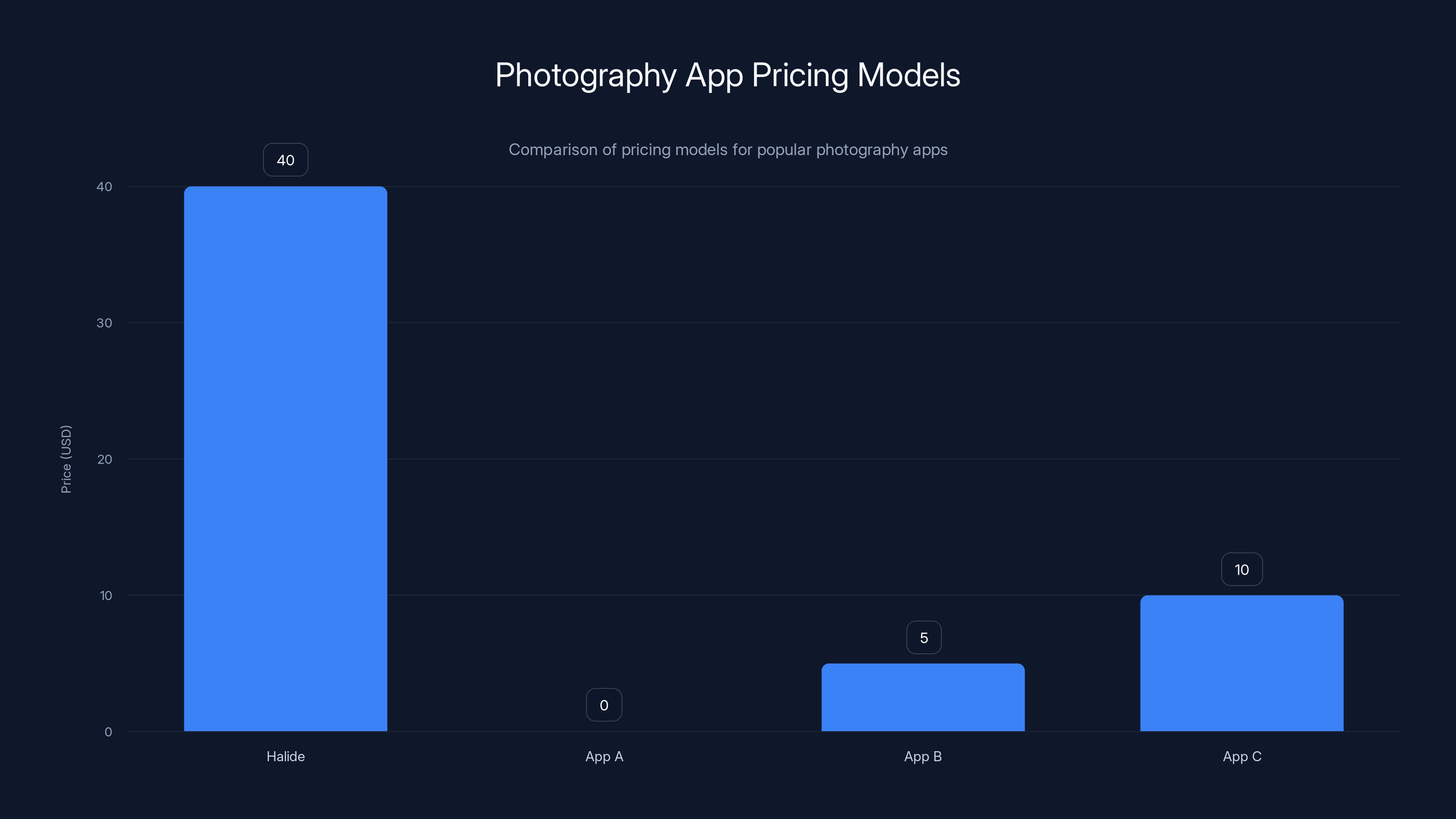

Chart: Halide's one-time purchase model at $40 stands out against free and subscription-based apps, appealing to serious photographers (estimated data for comparison).

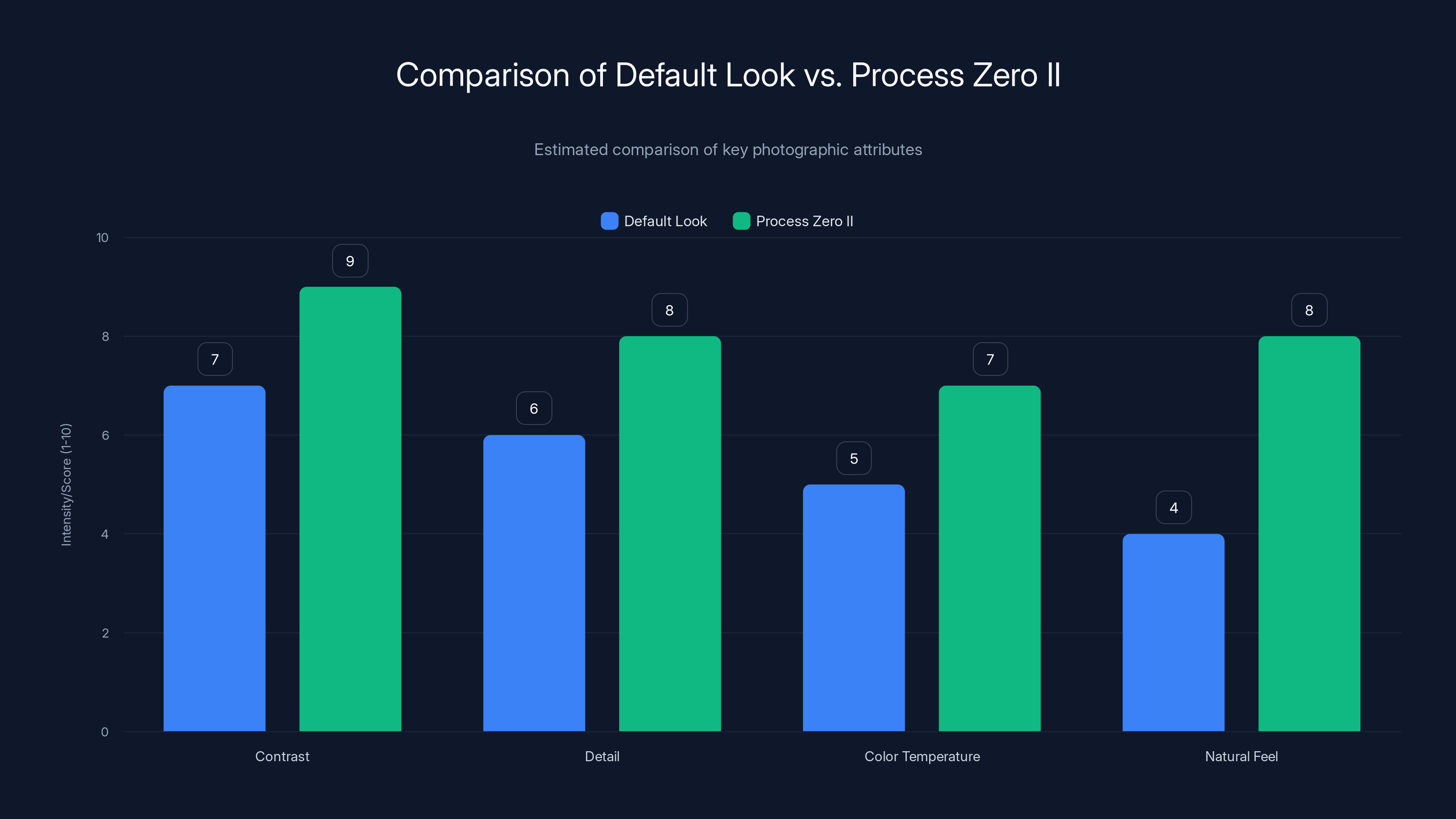

The Default Look vs. Process Zero II

When you launch Halide Mark III, you get options immediately. The Default look is straightforward: it matches what the iPhone's native camera app does. All the computational photography you're used to—face detection, local contrast boosting, shadow fill, highlight recovery, color science—it's all active. This is great if you want the Apple aesthetic with a bit more control. Manual focus, exposure metering, RAW capture on demand. Same results, less constraint.

Process Zero II is the opposite philosophy. It disables most computational photography at the moment of capture. The iPhone still has to process the raw sensor data (that's unavoidable), but it doesn't apply the aggressive algorithms that make everything feel polished and safe.

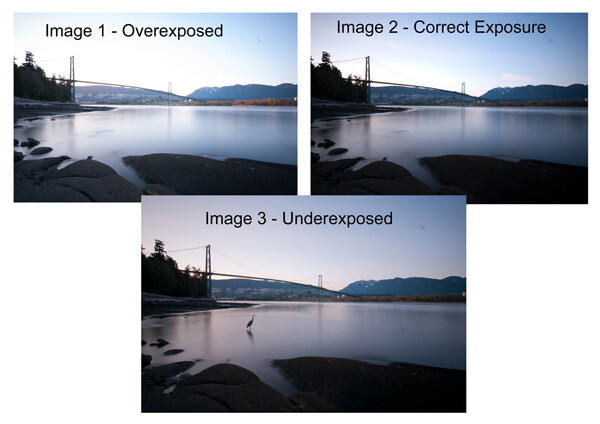

The difference is striking. Process Zero II photos have stronger contrast, subtler details, and an overall "more natural" feeling—though I'll be honest, "natural" is doing a lot of work in that sentence. What it really means is "less aggressively processed." You see more of what the sensor actually captured, less of what Apple's software thinks you want to see.

Side-by-side comparisons show it clearly. Shadows stay darker. Highlights hold more detail. Colors shift slightly cooler. The whole image has a bit more film-like character: less plasticky, less processed, just less. It's a quieter, more restrained image. Some people will hate it. Others will find it addictive.

Process Zero II is getting major upgrades in Mark III, starting with High Dynamic Range support. This is where things get interesting, because Halide's take on HDR is far more restrained than most.

The HDR Problem That Nobody Talks About

HDR has been a photography buzzword for years, but most implementations are garbage. You've seen it: halos around objects, blown-out skies, oversaturated colors, an overall artificial plastic look. The algorithm is technically working—it's recovering detail in shadows and highlights simultaneously—but it's doing it in a way that screams "I used HDR."

Halide took a different approach. Their HDR implementation is subtle. It recovers detail in extreme highlights (crucial for sunsets and bright scenes) while holding shadow information without the weird color shifts or halo artifacts. The result is that you can actually use HDR in real scenes without your photo looking like a hyperreal painting.

This is where the creative choice element matters. HDR isn't always better. In a foggy, low-contrast scene like an early morning in Osaka, shooting in SDR (Standard Dynamic Range) looks way more appropriate. The lack of extreme contrast is the whole mood. Add HDR, and you're fighting the light.

But throw in a dramatic sunset with bright sky and darker foreground? HDR suddenly becomes essential. It preserves color saturation in the sky while keeping the land from turning into a silhouette. Sunflowers in SDR look washed out as they approach peak brightness. In HDR, the yellows stay vibrant.
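
To make the washed-out-sunflower point concrete, here is a minimal sketch. It is not Halide's HDR pipeline, which targets HDR displays and isn't published. It assumes linear RGB values where 1.0 is SDR white and compares naive per-channel clipping with a luminance-based Reinhard curve, a standard tone-mapping operator swapped in purely for illustration. The petal values are hypothetical.

```python
def luminance(rgb):
    """Rec. 709 luminance of a linear RGB triple."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def clip_sdr(rgb):
    """Naive SDR encode: each channel above 1.0 is simply clipped."""
    return tuple(min(c, 1.0) for c in rgb)

def compress_highlights(rgb):
    """Luminance-based Reinhard: scale all channels by 1 / (1 + L).

    Scaling every channel by the same factor keeps their ratios, so hue and
    saturation survive while overall brightness is pulled into range.
    """
    scale = 1.0 / (1.0 + luminance(rgb))
    return tuple(c * scale for c in rgb)

def saturation(rgb):
    """Simple HSV-style saturation: (max - min) / max."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi

# Hypothetical linear values for a sunlit sunflower petal, brighter than SDR white.
petal = (2.4, 1.8, 0.3)

print(f"scene:      {saturation(petal):.3f}")
print(f"SDR clip:   {saturation(clip_sdr(petal)):.3f}")
print(f"compressed: {saturation(compress_highlights(petal)):.3f}")
```

Clipping forces the red and green channels to the same value, which is why bright yellows drift toward a paler, flatter yellow. Scaling all channels together avoids that, at the cost of darkening the highlight as a whole.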

The choice matters. And that's Halide's whole point: let the photographer decide, not the algorithm.

The clever part is that if you're not sure which is right, you shoot in RAW. Then in Image Lab (Halide's post-processing space), you can flip between HDR and SDR versions of the same shot and pick the one that works. You can even switch HDR off by default in settings if you're over it. This is how creative tools should work: smart defaults with an off switch.

Pro RAW: Fixing the RAW Format That Broke iPhones

Let's talk about something that should have been solved years ago but wasn't: iPhone RAW files look terrible out of the box.

Third-party apps have been able to capture RAW (DNG) files since iOS 10 in 2016, on the iPhone 6s and later, which should be amazing. RAW gives you all the sensor data, maximum flexibility in post-processing, and no lossy compression. In theory, perfect.

In practice, those RAW files emerge from the iPhone looking worse than the JPEG. Seriously. Shadows appear underexposed. Highlights are blown out. Colors are flat. A typical RAW file before editing looks like a disaster; you need to spend 10 minutes in Lightroom just to get it to look normal. Compare that to shooting JPEG with the iPhone camera app, which looks good immediately. From a user experience perspective, iPhone RAW was broken.

Apple knows this. In 2020, alongside the iPhone 12 Pro, it introduced its own format, Apple ProRAW, which combines the flexibility of RAW with some intelligent pre-processing. Pro RAW files bake in Apple's multi-frame processing and carry tone-mapping information with the image, so they look usable out of the box, while the high-bit-depth data underneath stays available for additional editing.

The problem is, not many apps actually use Pro RAW's full potential. They treat it like regular RAW. You import it, it still looks bad, and you're back to square one.

Halide is doing something different. They're engineering their Looks system to work with Pro RAW from the moment of capture. When you shoot Process Zero II in Pro RAW, the tone mapping is optimized for that look. The algorithm isn't trying to make the photo look like a polished iPhone JPEG. It's building the look directly into the file data.

This is genuinely technical. We're talking about different tone curves for different looks, optimized color science, exposure compensation that varies per-look. It's not a filter. It's computational photography intentionally designed around a specific aesthetic.
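
One way to picture "different tone curves, color science, and exposure compensation per look" is to treat a look as a small bundle of parameters that the renderer reads. This is a hypothetical sketch: the `Look` fields, the smoothstep S-curve, and both example looks are invented for illustration and are not Halide's actual values or code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Look:
    """Hypothetical per-look rendering parameters."""
    name: str
    exposure_ev: float  # exposure compensation in stops (+1.0 doubles the light)
    contrast: float     # 0.0 = linear tone curve, 1.0 = full S-curve
    warmth: float       # > 0 boosts red and cuts blue; < 0 does the opposite

def s_curve(x: float) -> float:
    """Smoothstep S-curve on [0, 1]: deepens shadows and lifts upper midtones."""
    return x * x * (3.0 - 2.0 * x)

def render(rgb: tuple, look: Look) -> tuple:
    """Render one linear RGB pixel through a look."""
    gain = 2.0 ** look.exposure_ev
    toned = []
    for c in rgb:
        v = min(max(c * gain, 0.0), 1.0)                            # exposure, then clamp
        v = (1.0 - look.contrast) * v + look.contrast * s_curve(v)  # blend toward the S-curve
        toned.append(v)
    r, g, b = toned
    r = min(max(r * (1.0 + look.warmth), 0.0), 1.0)  # warm/cool shift on red...
    b = min(max(b * (1.0 - look.warmth), 0.0), 1.0)  # ...and the opposite shift on blue
    return (r, g, b)

# Two invented looks, loosely echoing the descriptions in the text.
POLISHED = Look("polished", exposure_ev=0.3, contrast=0.2, warmth=0.05)
RESTRAINED = Look("restrained", exposure_ev=0.0, contrast=0.6, warmth=-0.03)

mid_gray = (0.18, 0.18, 0.18)
for look in (POLISHED, RESTRAINED):
    print(look.name, tuple(round(c, 3) for c in render(mid_gray, look)))
```

With more contrast and no exposure boost, the restrained look renders mid-gray darker and slightly cooler. Which is "right" is a matter of taste, which is exactly why it makes sense as a per-look parameter rather than a global setting.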

The result: Pro RAW files that look good immediately, with full flexibility to edit further. That addresses a problem iPhone RAW shooters have lived with since RAW capture first arrived.

Chart: Looks and the RAW format rate higher on effectiveness and flexibility than traditional filters and SDR mode, with HDR offering a balanced approach for dynamic scenes (estimated data).

How Looks Actually Work During Capture

Here's where most people get confused. When you select a Look in Halide, what's actually happening?

The short answer: The iPhone's computational photography pipeline is being reconfigured in real-time.

Let's get specific. The iPhone camera system involves several stages. First, the sensor captures raw photons and converts them to digital data. Then, the ISP (Image Signal Processor) applies noise reduction, demosaicing, color matrix transforms, and auto-exposure calculations. Then, higher-level algorithms apply face detection, local contrast boosting, shadow fill, and highlight recovery. Finally, the image gets encoded into JPEG or Pro RAW.

When you choose a Look, Halide is adjusting parameters throughout that entire pipeline. Different looks might disable certain algorithms entirely (like face detection or shadow fill), adjust the tone curve to preserve contrast instead of crushing blacks, use different color matrices for a cooler or warmer tone, or apply different amounts of local contrast.
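
The idea of a look switching pipeline stages on and off can be sketched as an ordered list of stages plus a per-look set of enabled stage names. Everything here is a hypothetical toy on a single row of luminance values: the stage functions, their constants, and the look names are invented and only stand in for the far more complex processing described above.

```python
from typing import Callable, Dict, List, Set

Row = List[float]  # toy "image": one row of linear luminance values

def box_blur3(row: Row) -> Row:
    """3-tap average with edge clamping."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0 for i in range(n)]

def noise_reduction(row: Row) -> Row:
    """Toy denoise: replace each value with its local average."""
    return box_blur3(row)

def shadow_fill(row: Row) -> Row:
    """Toy shadow lift: move values below 0.2 halfway toward 0.2."""
    return [v + 0.5 * (0.2 - v) if v < 0.2 else v for v in row]

def local_contrast(row: Row) -> Row:
    """Toy unsharp mask: exaggerate each value's difference from its neighbours."""
    return [v + 0.5 * (v - b) for v, b in zip(row, box_blur3(row))]

STAGES: Dict[str, Callable[[Row], Row]] = {
    "noise_reduction": noise_reduction,
    "shadow_fill": shadow_fill,
    "local_contrast": local_contrast,
}
ORDER = ["noise_reduction", "shadow_fill", "local_contrast"]

# Hypothetical looks: which stages each one leaves switched on.
LOOK_STAGES: Dict[str, Set[str]] = {
    "everything_on": set(ORDER),
    "minimal": {"noise_reduction"},
}

def run_pipeline(row: Row, look: str) -> Row:
    """Run the enabled stages in order, then clamp to the display range."""
    enabled = LOOK_STAGES[look]
    for name in ORDER:
        if name in enabled:
            row = STAGES[name](row)
    return [min(max(v, 0.0), 1.0) for v in row]

scene = [0.05, 0.1, 0.6, 0.9, 0.4]
print(run_pipeline(scene, "everything_on"))
print(run_pipeline(scene, "minimal"))
```

Keeping stage order fixed and letting each look choose only which stages run keeps looks easy to compare: any difference between two outputs traces back to a specific switched-off stage.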

The key insight: this only works if you choose the look before capture. The iPhone's ISP can't retroactively reprocess the sensor data. Once the ISP has done its work, you're stuck with the result.

That's why RAW becomes important. A RAW file keeps high-bit-depth image data that hasn't been locked into a final rendering (Pro RAW has already been through some of Apple's processing, but it retains far more latitude than a finished JPEG). You can then interpret that data differently in Image Lab, trying various looks until one clicks. This is where Halide's design gets clever: you're not stuck with your initial choice.
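
Why a capture-time choice is hard to undo without RAW comes down to clipping and quantization. The hypothetical sketch below bakes two bright linear values into 8-bit codes at too high an exposure, then tries to darken them afterward, and compares that with re-rendering from the retained linear values. It's a simplification: Pro RAW isn't untouched sensor data either, but it keeps far more headroom than an 8-bit file.

```python
def render_8bit(linear, gain):
    """Apply an exposure gain, clip to [0, 1], and quantize to 8-bit codes (0-255)."""
    return [round(min(max(v * gain, 0.0), 1.0) * 255) for v in linear]

def darken_codes(codes, factor):
    """Edit an already-rendered 8-bit image: only the stored codes are available."""
    return [round(c * factor) for c in codes]

# Hypothetical linear values: a bright cloud and a slightly brighter sunlit edge.
linear = [0.9, 1.0]

baked = render_8bit(linear, gain=1.5)       # too bright at capture: both clip to 255
edited = darken_codes(baked, factor=0.6)    # darkening later can't separate them again
rerendered = render_8bit(linear, gain=0.9)  # re-render from the linear data instead

print("baked:     ", baked)
print("edited:    ", edited)
print("rerendered:", rerendered)
```

Once both values hit 255, no later edit can tell them apart; going back to the linear data recovers the difference.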

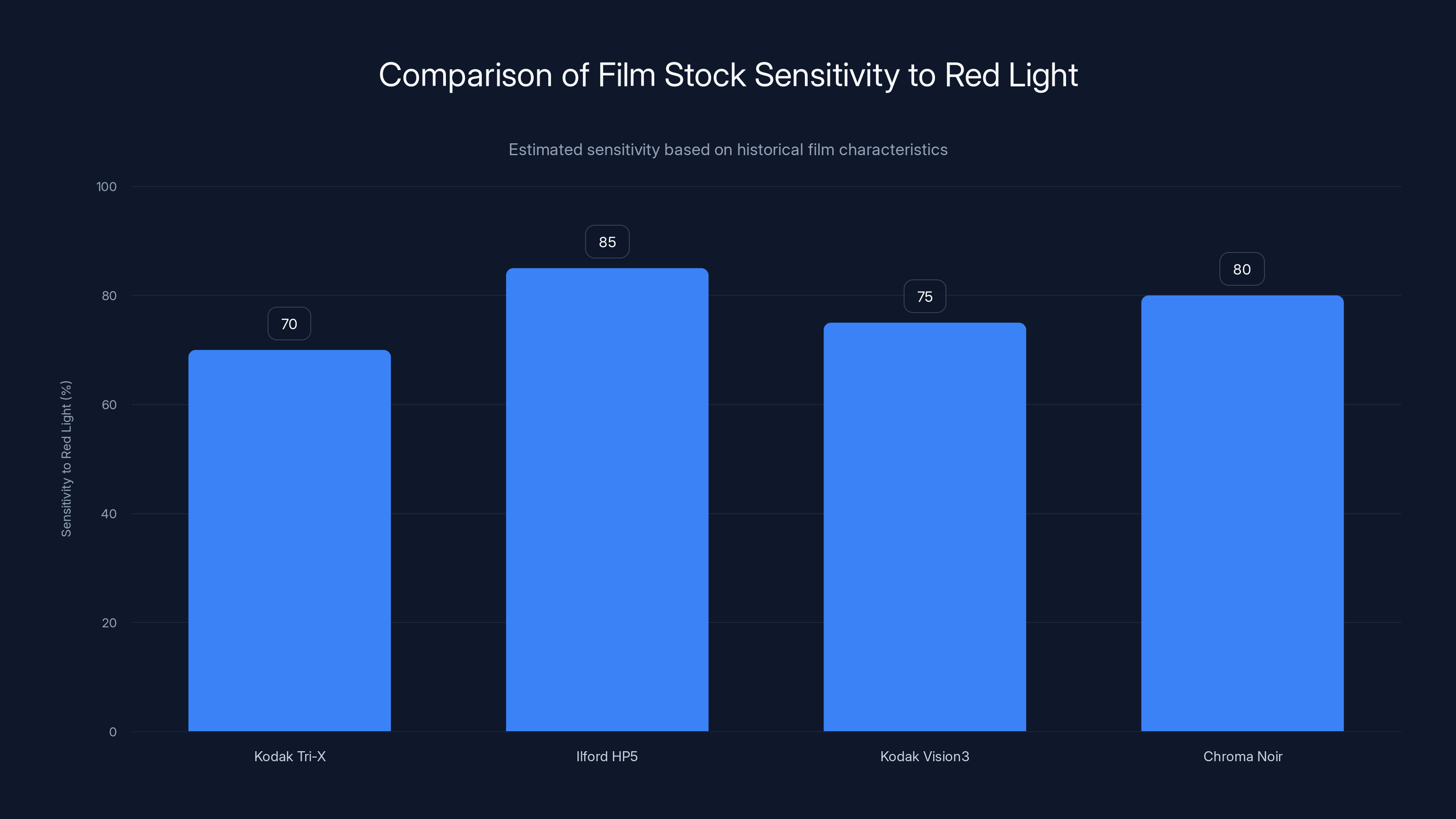

Chroma Noir and Cinematic Intent

As word spreads about Halide Mark III, the photography community is getting excited about Chroma Noir, which is a black-and-white look with specific color rendering characteristics.

Black and white seems simple—just desaturate and increase contrast. But there's actual photographic science here. Different film stocks had different spectral sensitivities. Kodak Tri-X responded to reds differently than Ilford HP5. That translated to different tonal renderings of colored objects.

When you shoot Kodak Tri-X, a red sweater might render darker than you'd expect because the film was less sensitive to red light. With Ilford HP5, that same sweater would look brighter. This isn't a bug. It's a feature. Photographers chose their film stock partly for these rendering characteristics.

Halide's Chroma Noir mimics this. It's not just desaturating the image. It's applying color-to-tone mappings that change how different wavelengths render in grayscale. A red neon sign looks different in Chroma Noir than it would in a standard desaturation. The rendering is intentional, not random.
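
The color-to-tone idea behind black-and-white film can be sketched as a weighted channel mix, the same technique a "channel mixer" B&W conversion uses. The weights below are hypothetical stand-ins for a red-sensitive and a red-insensitive emulsion. Halide hasn't published Chroma Noir's actual mapping, and real film spectral response is a continuous curve rather than three numbers.

```python
def to_mono(rgb, weights):
    """Map linear RGB to one gray value using per-channel sensitivities.

    Dividing by the weight total keeps pure white at 1.0 for any weighting.
    """
    return sum(c * w for c, w in zip(rgb, weights)) / sum(weights)

FLAT_DESATURATE = (1.0, 1.0, 1.0)  # plain channel average
RED_SENSITIVE = (0.5, 0.4, 0.1)    # hypothetical stock that "sees" red strongly
RED_INSENSITIVE = (0.1, 0.6, 0.3)  # hypothetical stock that barely sees red

red_sweater = (0.8, 0.1, 0.1)
for name, w in [("flat", FLAT_DESATURATE), ("red-sensitive", RED_SENSITIVE),
                ("red-insensitive", RED_INSENSITIVE)]:
    print(f"{name:>15}: {to_mono(red_sweater, w):.2f}")
```

The same red sweater lands at three different grays depending only on the weights, which is the whole point: a plain desaturation is just one arbitrary choice among many.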

There's also cinematic intent baked in here. Motion picture stocks like Kodak Vision3 had distinctive color rendering, and cinematographers chose them partly for how they handled shadows and midtones. The Halide team is essentially translating that cinematic know-how into a smartphone look.

This is what separates Looks from every other preset system. It's not "here's how to grade your photo." It's "here's how this film stock interprets light." The difference sounds subtle but it changes everything about how you approach photography.

Halation Effects and Film Authenticity

One detail that caught my attention: halation effects.

When film was the standard, halation was an optical phenomenon. Light would hit the film emulsion, pass through, reflect off the film base, and pass back through the emulsion. This created a subtle glow around bright light sources—a characteristic halo or blooming effect. Different film stocks handled this differently. Kodak Portra had noticeable halation. Fujifilm Superia less so.

Halation is technically a flaw. It reduces sharpness and detail. But it's also characteristic. Photographers grew to expect it and sometimes composed specifically to use it. A bright light at night becomes this ethereal glow instead of a harsh point source.

Halide's Looks can include halation effects—not because halation is better, but because it's authentic to the film stock. If you're trying to emulate Kodak Portra, you need some halation or it doesn't feel right. If you want clean, sharp results, you choose a stock that didn't have it.
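
A common way to imitate halation digitally is to isolate the brightest parts of the frame, blur them, and add them back as a warm glow. The glow is usually tinted red-orange because, in color film, light bouncing back from the base tends to re-expose the red-sensitive layer first. The sketch below applies that idea to a single row of pixels. The threshold, radius, strength, and tint are hypothetical defaults, not values from Halide.

```python
def box_blur(values, radius):
    """1-D box blur; out-of-range neighbours reuse the nearest edge value."""
    n = len(values)
    out = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def add_halation(row, threshold=0.9, radius=2, strength=0.3, tint=(1.0, 0.35, 0.1)):
    """Toy halation on a row of linear RGB pixels.

    1. Keep only the brightness above `threshold` (the light sources).
    2. Blur it so it spills into neighbouring pixels.
    3. Add it back, tinted red-orange and scaled by `strength`.
    """
    excess = [max(max(p) - threshold, 0.0) for p in row]
    glow = box_blur(excess, radius)
    return [tuple(c + strength * g * t for c, t in zip(p, tint)) for p, g in zip(row, glow)]

# A dark street with one bright lamp in the middle (hypothetical linear values).
dark = (0.05, 0.05, 0.05)
row = [dark] * 3 + [(2.0, 1.9, 1.7)] + [dark] * 3

for before, after in zip(row, add_halation(row)):
    print(before, "->", tuple(round(c, 3) for c in after))
```

Pixels next to the lamp pick up a reddish glow, while pixels beyond the blur radius are untouched, which mirrors the "ethereal glow instead of a harsh point source" effect described above.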

This level of detail matters because it's where Looks move from "presets that look nice" to "actually modeling how different photographic systems work." You're not chasing an aesthetic. You're choosing a photographic system and accepting its entire character.

The Image Lab: Post-Capture Creative Flexibility

Here's the thing about choosing a Look at capture time: sometimes you don't know what you want yet.

Maybe you shot in Default, then opened the image and realized it needs the character of Process Zero II. Or you shot Pro RAW because you weren't sure, and now you want to compare three different looks side-by-side.

Image Lab is Halide's answer to that. It's a post-processing space where you can reinterpret RAW files with different Looks, or fine-tune the edits on JPEGs.

Shoot in RAW with any look, and Image Lab lets you switch looks retroactively. You can see Looks A, B, and C rendered from the same RAW file. This is genuinely powerful because RAW retains all the sensor information—it's up to the software to interpret it.

For JPEG files, Image Lab offers more limited adjustments (you can't recover data that compression threw away), but you can still do basic editing: exposure, contrast, saturation, highlights, shadows, color temperature. The interface is designed to be approachable for beginners but powerful for people who know what they're doing.

The real magic is tone mapping controls. You can adjust how aggressively the algorithm handles shadows and highlights. If you shot with HDR but want less extreme HDR, you can dial it back. If you want more pop, turn it up. This is the kind of granular control that used to require professional software like Capture One.
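
A tone-mapping intensity slider can be modeled as a blend between a merely clipped value and a fully tone-mapped one. In this hypothetical sketch, the Reinhard curve stands in for whatever curve Halide actually uses, and `strength` plays the role of the slider.

```python
def tone_map(x: float) -> float:
    """Reinhard curve: maps [0, inf) into [0, 1), compressing highlights smoothly."""
    return x / (1.0 + x)

def apply_strength(x: float, strength: float) -> float:
    """Blend a clipped value with its tone-mapped version.

    strength = 0.0 only clips to [0, 1]; strength = 1.0 applies the full curve.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    x = max(x, 0.0)
    clipped = min(x, 1.0)
    return (1.0 - strength) * clipped + strength * tone_map(x)

# A highlight three times brighter than SDR white, at three slider positions.
for s in (0.0, 0.5, 1.0):
    print(s, apply_strength(3.0, s))
```

The full curve keeps a very bright highlight distinguishable from a merely bright one, but it also darkens midtones, which is one reason practical tone mappers usually pair the curve with an exposure adjustment.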

Chart: Process Zero II offers higher contrast and detail with a cooler color temperature, for a more natural feel than the Default look (estimated data based on feature descriptions).

Why This Matters: Photography's Algorithmic Sameness Problem

Step back for a moment. Why are we talking about a camera app when iPhones already have a camera app?

Because computational photography created a new problem: everyone's photos look the same.

This is unintentional. The iPhone's camera is engineered to make every photo look usable. That's a feature for people who take one photo a year. But when you're taking photos regularly, the consistency becomes stifling. You start seeing the same shadows crushed, the same highlights boosted, the same color palette in every image.

This is partly why film photography came back. A photographer could buy a different film stock, and suddenly their entire portfolio changed character. That was a creative choice, not a technical limitation. The choice was the point.

Digital photography promised unlimited creative choices. Instead, it delivered the opposite: fewer visual options because everyone used the same algorithms. Photographers either accepted it or went back to film.

Halide's Looks try to restore the creative choice element to digital photography without sacrificing digital's flexibility. You can have the simplicity of choosing a film stock and the flexibility to adjust and experiment in post. You can shoot in RAW and try looks retroactively. You can switch between looks in seconds, something film photographers could never do.

It's not a perfect solution—nothing is—but it addresses the fundamental problem: making computational photography a choice instead of an inevitability.

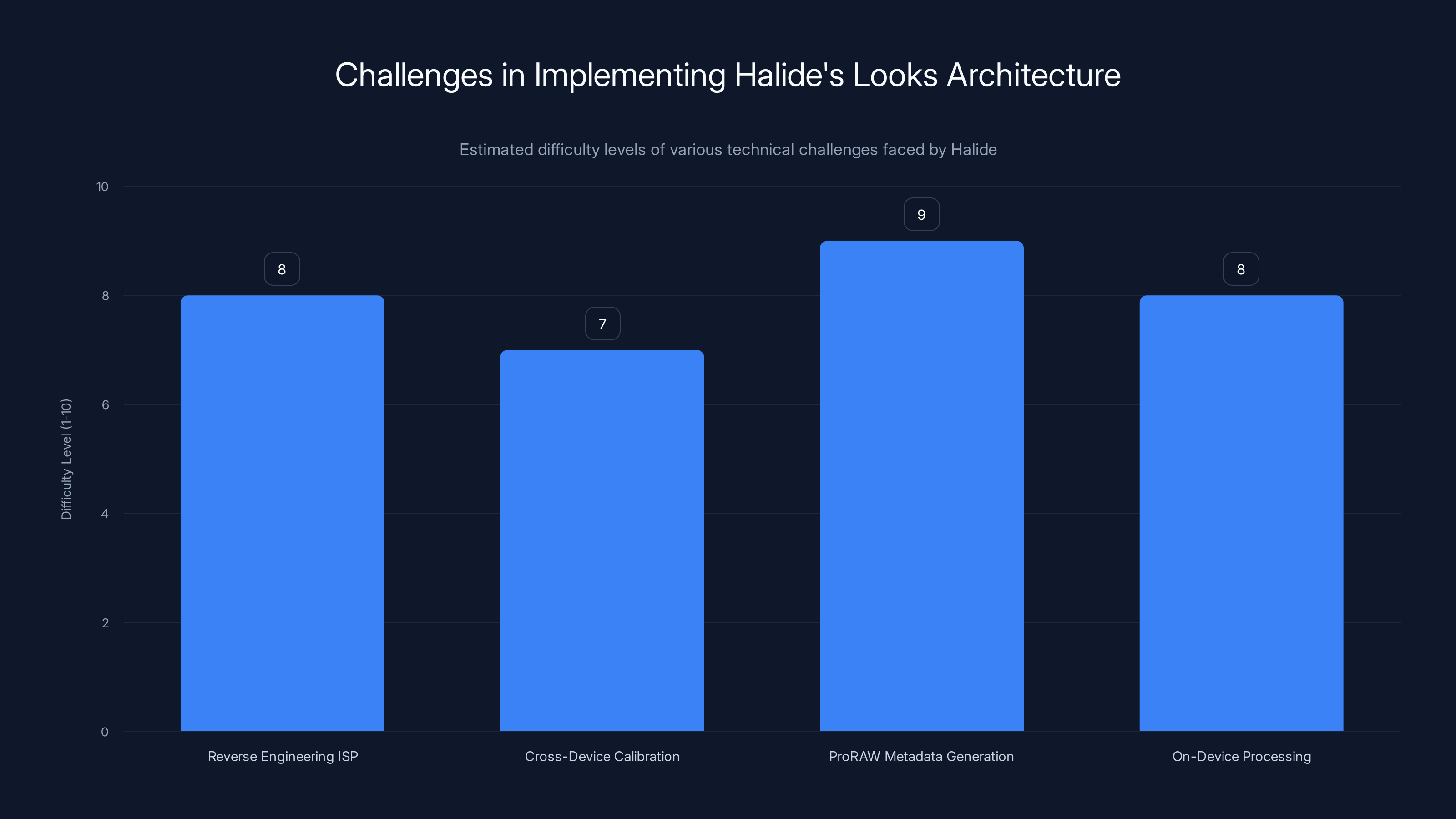

Technical Implementation: How Looks Architecture Works

For people who care about how this actually works, Halide had to solve some genuinely hard problems.

The iPhone's Image Signal Processor (ISP) is proprietary hardware. Apple doesn't publish detailed specs. So Halide's engineers had to reverse-engineer how different camera settings affect the output, then build Looks that work within those constraints.

Different iPhone models have different hardware. An iPhone 14 Pro has different computational capabilities than an iPhone 15. The Looks have to work across that range without looking completely different on each device. This requires careful calibration—what works on the 15 might need adjustment on the 14.
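
Cross-device consistency is commonly handled by converting each camera's native RGB into a shared reference space with a per-device 3x3 matrix before any look-specific curve runs. Whether Halide does exactly this isn't stated. The matrices and device names below are hypothetical placeholders (real ones come from measuring color targets on each device). They're chosen so each row sums to 1, which keeps neutral grays neutral.

```python
# Hypothetical per-device matrices: native sensor RGB -> shared reference RGB.
DEVICE_MATRICES = {
    "phone_a": ((1.10, -0.05, -0.05),
                (-0.02, 1.04, -0.02),
                (0.00, -0.08, 1.08)),
    "phone_b": ((0.95, 0.03, 0.02),
                (0.01, 0.98, 0.01),
                (-0.03, 0.02, 1.01)),
}

def to_reference(rgb, device):
    """Multiply a linear RGB triple by the device's calibration matrix."""
    m = DEVICE_MATRICES[device]
    return tuple(sum(m[row][col] * rgb[col] for col in range(3)) for row in range(3))

def display_gamma(c: float) -> float:
    """Stand-in 'look' curve: a plain 1/2.2 gamma, clamping negatives to 0 first."""
    return max(c, 0.0) ** (1 / 2.2)

def calibrated_render(rgb, device, look_curve=display_gamma):
    """Calibrate first, then apply the look, so one curve can serve every device."""
    return tuple(look_curve(c) for c in to_reference(rgb, device))

gray = (0.4, 0.4, 0.4)
for device in DEVICE_MATRICES:
    print(device, calibrated_render(gray, device))
```

Separating calibration (per device) from the look (shared) means a look only has to be designed once; the per-device work is confined to the matrices.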

Then there's the Pro RAW complication. Pro RAW files store tone mapping metadata. Halide had to figure out how to generate that metadata for different looks, ensuring Pro RAW files come out looking intentional, not like the software is guessing.

And all of this had to ship on-device. Halide can't send photos to a server for processing. Everything runs on the iPhone. That means the algorithms are optimized for the device's available compute power.

This is why Halide is a real company building real software, not an Instagram-style filter app. The engineering required to make Looks work properly is significant.

Comparison with Apple's Native Approaches

Let's be honest about what Apple's camera app does well and where it falls short.

Apple's strength is simplicity at scale. They engineer a single camera experience that works for a billion people. Face detection is genuinely good. Exposure is usually correct. Colors are pleasing. The camera app is fast and reliable.

Apple's weakness is creativity. For the most part, the algorithm decides how your photo looks. Photographic Styles (iPhone 13 and later) let you nudge tone and warmth, but they adjust Apple's rendering rather than replacing it, and most people never touch them.

Halide's approach is basically the opposite. More control. More options. More complexity. This appeals to photographers who have opinions about how their photos should look. It doesn't appeal to people who just want a quick snapshot.

There's also the question of intent. Apple's camera algorithms optimize for "usable photos." Halide's Looks optimize for "photos with character." These aren't always the same thing. A look that introduces halation and strong contrast makes a more interesting photo but a less technically perfect one. Which you prefer depends on your goals.

Neither approach is objectively better. They're optimizing for different things. It's valuable that both exist.

The Broader Camera App Landscape

Halide isn't the only third-party camera app, but it's in a different category than most.

Apps like Camera+ and Lightroom Mobile are post-processing focused. They import photos and let you edit them. Halide is trying to be a replacement for the iPhone's camera app—a new way to capture, not just a way to fix captures.

This puts it in a smaller category. Adobe's Lightroom has a camera interface, but it's secondary to the editing features. Halide's entire philosophy is built around the capture moment. That's unusual.

The advantage of being capture-focused is that you get creative decisions at the source. The disadvantage is that most iPhone users never use third-party camera apps. They use the built-in camera because it's there.

Halide's answer to that is to make the app good enough that photographers specifically download it and make it their go-to camera. Early Mark III feedback suggests they might be succeeding. The Looks system is compelling enough that photographers want to use it.

Chart: Chroma Noir mimics classic film stocks by adjusting sensitivity to red light, producing a distinctive grayscale rendering (estimated data).

Pro RAW Mastery: Advanced Photographers and Workflow

For advanced photographers, Pro RAW support is the actual killer feature of Halide Mark III.

Pro RAW stores 12 bits of data per channel (compared to 8 bits in JPEG). That's 4,096 levels per channel instead of 256. In practice, it means more editing flexibility, better color grading, and less posterization when you push the image hard.
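
Bit-depth figures are powers of two, so they're easy to sanity-check: each extra bit doubles the number of levels a channel can hold. The short sketch below tabulates a few common depths along with the size of one quantization step, which is what shows up as banding (posterization) when an edit pushes tones apart.

```python
def levels(bits: int) -> int:
    """Distinct code values one channel can store at the given bit depth."""
    return 2 ** bits

for label, bits in [("8-bit (JPEG)", 8), ("12-bit", 12), ("14-bit", 14), ("16-bit", 16)]:
    step = 1.0 / (levels(bits) - 1)  # gap between adjacent codes on a 0-1 scale
    print(f"{label:>13}: {levels(bits):>6,} levels, step {step:.6f}")
```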

But Pro RAW files are large (Apple cites roughly 75 MB for a 48 MP Pro RAW photo), and they take longer to process. Plus, not every app handles Pro RAW properly. Lightroom does. Snapseed doesn't. Capture One does.

Halide's angle is to make Pro RAW the right choice by ensuring the files come out looking good immediately. You're not fighting the Pro RAW look. You're embracing it because it's intentionally designed that way.

For photographers shooting multiple images per day, Pro RAW workflow changes your entire edit process. Instead of trying to fix bad captures, you're working with flexible data that was captured well. The edit becomes creative refinement, not rescue.

Color Science Across Different Looks

One thing I haven't touched on enough: color science is different for each look.

Default uses Apple's native color matrix, which is slightly warm and saturated. Great for generic photos, slightly plasticky if you're doing portrait work.

Process Zero II uses a more neutral color science—cooler tones, less saturation boost. This is where you see more subtle color nuance.

Chroma Noir uses grayscale rendering that's calibrated to different color sensitivities, like film stocks had.

Each look essentially has its own color science because they're trying to model different capture systems. Process Zero II isn't just "Default but with less processing." It's an entirely different color pipeline.

This is another level of complexity that separates Looks from filters. Filters are color grades applied on top of an existing color interpretation. Looks are different color interpretations entirely.

Halide's Evolution as a Camera App

Halide has been around since 2017, the better part of a decade of iPhone camera app development. The original Halide was focused on manual controls: focus, exposure, ISO, white balance. The idea was to give photographers what the native camera app didn't: hands-on creative control.

That worked for a specific audience: people who wanted to learn about exposure and light. But it missed a bigger opportunity. Most photographers don't want to manually adjust ISO. They want their photos to look good and be distinctive.

Mark II (the previous version) addressed this by adding Aspect Ratio and Color Grade control. You could shoot in a cinematic 2.4:1 ratio and apply color grades in real-time on the preview.

Mark III is the synthesis of all that learning. Instead of building more manual controls, they built Looks: opinionated, distinctive, but still customizable if you want to dig deeper.

It's a much more mature approach than "give photographers all the controls." It's "here are good starting points, and if you want to customize, you can."

The Philosophy Behind Constraint and Creative Freedom

There's a paradox in creative tools: more options doesn't always mean more creativity.

Studies in decision-making show that when you have too many choices, you either become paralyzed or make worse decisions. Photographers with 500 presets often end up using the same three. Photographers with a film stock choice use what they have and learn to work with it.

Halide's Looks philosophy recognizes this. By giving you distinct options (not sliding scales of tweaks), you're forced to pick and then commit. That constraint is where creativity comes from.

This mirrors how real photographers work. You buy film stock—a committed choice. You buy a camera—another committed choice. You bring those constraints to the shoot and work within them. That's how distinctive work emerges.

Digital's promise was freedom. But freedom without constraints is just options. Looks are trying to restore the creative value of constraint while keeping digital's flexibility.

Chart: Halide's engineering challenges (reverse-engineering Apple's ISP, cross-device calibration, generating ProRAW metadata, and keeping processing on-device), each rated high in difficulty (estimated data).

Future of Mobile Photography: Looks as a Standard

If Halide Mark III succeeds, Looks could become a category that other apps adopt.

Right now, preset systems are the standard—little graded packages you apply in post. But Looks are fundamentally different. They require building into the camera capture itself, not just the editing.

That's harder technically, but it's also way more powerful. Once photographers experience the difference, they'll expect it elsewhere.

Apple could add something like Looks to the native camera (though they probably won't—it goes against their design philosophy of simplicity). Other third-party apps could adopt the concept.

The broader trend is that mobile photography is professionalizing. More people are using iPhones as their primary camera. That means camera apps need to serve photographers, not just general users. Looks is a step toward that professionalization.

Practical Workflow: How Mark III Fits Into Modern Photo Workflow

Let's talk about actual usage. How does Halide Mark III fit into a real photography workflow?

Scenario 1: Casual photographer. You open Halide, switch to Process Zero II because it looks nice, shoot your stuff, and maybe make small adjustments in Image Lab. You export JPEG, share to Instagram. Total time from shoot to share: 10 minutes. Way faster than if you were using native camera + Lightroom.

Scenario 2: Professional photographer. You're shooting a portrait session. You might switch between Default (for technical accuracy), Process Zero II (for natural look), and Chroma Noir (for some B&W shots). You shoot everything in Pro RAW. Later, in Image Lab and Lightroom, you fine-tune. The Looks were a starting point, not the final destination. Total time in Halide: 2 hours capturing, 1 hour initial review in Image Lab.

Scenario 3: Travel photographer. You're in Kyoto without a laptop. You want to shoot in Halide, make final edits on the iPhone, and post directly to social. A Look like Process Zero II, plus light tone editing in Image Lab, gives you exactly what you want, no Lightroom needed. Total time: you edit and share as you go.

The app scales to different use cases. That's the real value.

The Mark III Preview: What's Still in Progress

Halide Mark III is a public preview, not a final release. The team is being honest about what's still being worked on.

Currently available: Looks system, Process Zero II with HDR, Image Lab, Pro RAW support, manual controls. Solid foundation.

Still coming: More looks (the team hinted at additional looks beyond what's available now), refined performance optimization (previews sometimes lag on older devices), and additional editing features in Image Lab.

This phased approach makes sense. Ship the core innovation (Looks), get feedback, build from there. It's how good software gets built.

Industry Context: Where Mobile Photography is Headed

Mobile photography is at an inflection point. iPhones are genuinely good enough for professional work now. Not just "good for a phone." Actually good. Professional photographers use iPhones for editorial work, commercial shoots, fine art projects.

That shift means camera app design matters more. Apple's approach (one solution for everyone) works at consumer scale. But it doesn't serve photographers who want creative control. That gap is where Halide lives.

We're likely to see more specialized camera apps. Some optimized for speed, some for creative control, some for specific genres (portrait, landscape, street). The one-size-fits-all camera app is increasingly a starting point, not the final destination.

Halide's Looks system is betting that photographers will choose creative control over simplicity. If that bet pays off, other apps will follow. If photographers mostly stay with the native camera, Halide remains a nice niche product. Either way, the conversation about what mobile photography can be is evolving.

Chart: Halide Mark III offers more customization and control than the default iPhone camera, particularly in algorithm customization and shadow handling (estimated data).

Common Mistakes in Using Looks-Based Systems

If you start using Halide Mark III, here are mistakes worth avoiding.

Mistake 1: Thinking Looks are presets. They're not. Choosing a Look isn't the final step. It's the starting point. You can (and should) fine-tune in Image Lab. Don't expect any Look to be perfect for every shot.

Mistake 2: Not shooting RAW when experimenting. If you're trying to figure out which Look you like best, shoot RAW. Then compare different looks applied to the same RAW file in Image Lab. You'll make better decisions than trying to choose a look blind before capture.

Mistake 3: Ignoring the HDR/SDR choice. HDR isn't always better. In high-contrast scenes (sunsets, bright backlight), HDR is essential. In low-contrast scenes (fog, overcast), SDR looks more natural. Think about the scene before shooting.

Mistake 4: Underestimating Pro RAW complexity. Pro RAW files are huge and slow. Only shoot Pro RAW if you plan to edit significantly or if you're uncertain about your creative direction. For quick snapshots, JPEG is fine.

Mistake 5: Not using manual focus. Halide has excellent manual focus. The native camera's autofocus is good, but for creative control (especially in Chroma Noir, where placing focus precisely decides which parts of the frame fall soft), manual focus is worth learning.

Building a Personal Visual Style with Looks

Here's something interesting: using a consistent Look builds recognizable visual style.

Film photographers achieved this naturally—if you shot Kodak Portra for a year, all your photos had that warm, saturated look. Viewers recognized your work.

Digital photography fragmented that. Every photo could use a different preset, different edit. No consistency.

If you pick one Look (or two) and stick with it, your iPhone photos start to develop coherence. A year of Process Zero II photos will have a consistent character that's distinctive.

This is actually how professional photographers build recognizable styles. They develop a point of view and apply it consistently. Looks make that easier on iPhone.

Comparison: Halide vs. Competing Apps

How does Mark III stack up against alternatives?

vs. the native Camera app: Native wins on speed and simplicity. Mark III wins on creative control and distinctive results. They serve different audiences.

vs. Lightroom Mobile: Lightroom is better for post-processing. Mark III is better for capture-time creativity. You could use both, shooting in Mark III and fine-tuning in Lightroom; they're complementary.

vs. Adobe's camera experience: Lightroom has a built-in camera, but it's secondary to editing. Mark III prioritizes capture. If you shoot only on your phone, Mark III might be the better choice. If you do complex edits, Lightroom is more powerful.

vs. Snapseed: Snapseed is free and good for basic edits. Mark III is focused and opinionated. They serve different purposes.

Mark III isn't trying to be better at everything. It's trying to be better at one thing: making distinctive photos through the lens of creative looks. That's a specific choice, and it works well if that's what you want.

Practical Setup: Making Halide Your Default Camera

If you want to actually use Halide Mark III as your primary camera app, here's how to set it up.

- Download Halide from the App Store

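- Make Halide easy to reach. iOS doesn't offer a dedicated default-camera setting the way it does for browsers and mail, so keep Halide on your Home Screen or open it with Siri; on recent iPhones you may also be able to assign it to a Lock Screen control or the Camera Control button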

- In Halide settings, choose your default Look (I'd suggest Process Zero II if you want character, Default if you want consistency with the native camera)

- Turn on ProRAW if you're planning to edit significantly

- Enable iCloud sync so your Image Lab edits sync across your devices

- Export to the Photos app, where your images are stored natively

The workflow is: shoot in Halide, initial review in Image Lab, export to Photos, further editing in Lightroom if needed, share from Photos.

One tip: create a Halide-specific album in Photos to keep your originals organized. You'll appreciate it when you want to revisit old shots.

The Broader Context: Why This Matters Beyond Photography

Halide Mark III and Looks seem like they're just about camera apps. But they're actually about something bigger.

Every software system involves tradeoffs between simplicity and expressiveness. Simple systems work for most people but can't serve specific needs. Expressive systems can do anything but require more learning.

Apple's camera app picks simplicity. Halide Mark III tries to find the middle ground: opinionated simplicity. You're not choosing from infinite options. You're choosing from a curated set of looks. That's different from both "here's one way" and "here's infinite ways."

This model could apply to other tools. Email apps, note-taking, text editors. Instead of blank canvases or rigid templates, you provide thoughtful starting points that users can customize.

It's a lesson in design: good constraints are more useful than infinite freedom.

What Comes Next: Mark III's Roadmap

Halide's team hasn't published a detailed roadmap, but they've hinted at what's coming.

In the near term: More Looks are planned. The team mentioned exploring additional creative directions beyond what's currently available. It hasn't said how many Looks to expect; five to seven in total would be a reasonable guess, and there's clearly room to expand.

Performance optimization: The preview can sometimes lag on older iPhones. The final release will presumably address this, likely through better memory management and preview optimization.

Video looks: This isn't confirmed, but since computational photography is already applied to video, it would make sense to bring Looks to video too. Imagine recording video with Process Zero II or Chroma Noir applied in real time.

Integration with editing tools: Better export to Capture One, Affinity Photo, or other professional tools. Right now, Pro RAW export is the bridge, but deeper integration is probably coming.

Community looks: This is speculation, but allowing users to create and share custom looks would be a natural evolution. Some camera apps do this (though it's technically complex).

Pricing and Accessibility

Halide has historically been a paid app (around $20-40, depending on region and features). Final pricing for Mark III hasn't been announced, but the team has suggested it will likely follow Halide Mark II's pricing.

That puts it in an interesting position. Most photography apps are either free (with limitations) or subscription-based. Halide is a one-time purchase.

For photographers, $40 one-time is appealing—you own the app permanently, no recurring costs. For casual users, that's a barrier. But Halide isn't targeting casual users. It's targeting photographers who care about their image quality enough to spend money on a better camera app.

Accessibility and Learning Curve

One concern with a feature-rich app like Halide: is it too complex?

The team has clearly thought about this. The interface is clean. Default Look is approachable for beginners. Manual controls are there if you want them but hidden by default.

For someone coming from the native camera app, switching to Halide adds maybe 10 minutes of learning. "Here are some looks, pick one, shoot." That's not steep.

If you want to understand the trade-offs between HDR and SDR, or between ProRAW and JPEG, that takes more learning. But it's optional.

The design pattern is: simple by default, deep if you want it. That's good pedagogy.

Technical Limitations Worth Understanding

Despite being genuinely well built, Halide Mark III can't do everything.

No computational bursting: The native camera can shoot multiple frames per second and computationally select the sharpest. Halide can't do this (limitation of the iOS APIs).

Limited macro support: Newer iPhones have macro capability, but Halide's macro support isn't as refined as the native camera's.

Video takes a back seat: Looks are currently built for still photos, so for video the native camera remains the more complete tool.

These limitations mostly aren't about Halide's engineering. They're about what Apple's APIs expose to third-party apps. The native camera gets deeper, hardware-level access, while apps like Halide have to work through the public iOS camera frameworks such as AVFoundation.

It's worth knowing these limitations if you're considering switching. For most photography, it doesn't matter. For specific advanced use cases, you might need to use the native camera sometimes.

The Evolution of Mobile Photography Devices

We're at a point where camera capabilities across flagship phones have largely converged. iPhone, Samsung, Google, OnePlus: they all take good photos now.

The differentiation increasingly happens in software, not hardware: how the device interprets and processes the sensor data. That's where Looks come in. They offer software-level creativity that hardware alone can't provide.

This trend will likely continue. We'll see more camera apps offering distinctive interpretations of iPhone sensors. We might see more brands (not just phone manufacturers) building their own camera experiences.

Halide is ahead of this trend. They're positioning the camera app not as a simple tool but as a creative instrument. That's increasingly where the value lies in mobile photography.

Final Thoughts: Why Halide Mark III Matters

There's a tendency to dismiss camera apps as "just another tool." But Halide Mark III represents something more thoughtful.

It's not trying to be everything. It's not offering infinite customization. It's not replacing Lightroom or Capture One.

What it's doing is restoring something that computational photography accidentally removed: the idea that how you capture matters as much as what you capture.

For a decade, iPhone photography meant one thing: the iPhone's interpretation. You could adjust exposure and focus, but the fundamental look was determined by Apple's algorithm.

Looks restore choice without adding complexity. That's genuinely useful.

Whether you switch to Halide or not, Mark III represents the direction mobile photography is heading. Apps that serve photographers specifically. Tools built on the understanding that creative choices matter, starting at the moment of capture.

The preview is available now. If you care about how your iPhone photos look, it's worth trying. The Looks system is intuitive enough that you'll get it in minutes, but deep enough that you could spend months exploring it.

FAQ

What exactly are Looks in Halide Mark III?

Looks are curated capture configurations that change how the iPhone's computational photography interprets light and color at the moment of capture. Unlike presets, which adjust color after the photo is taken, Looks alter the camera's entire processing pipeline (shadow handling, contrast, color science, and more) from the initial sensor data onward. You can think of them as digital film stocks, each with its own character and interpretation.

How do Looks differ from regular photo filters or presets?

Filters and presets modify photos after they're captured, working with whatever data the camera's algorithm already processed and locked in. Looks operate at the capture level, before the ISP (Image Signal Processor) completes its work. This means Looks can influence how shadows are handled, what detail is preserved, and how colors are interpreted from the raw sensor data itself. The result is fundamentally different—not just color-graded, but genuinely captured differently.
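To make the capture-versus-preset distinction concrete, here is a toy Python model. It is not how Halide or Apple's ISP actually work; the linear 0-to-1 scale, the brightening gain, and the 8-bit output are simplifying assumptions, and the function names are hypothetical. It shows one mechanism behind the claim above: once a pipeline has clipped and quantized the highlights, a preset applied afterwards has nothing left to recover, while the same adjustment made before that step keeps the tones distinct.

```python
# Toy model (not Halide's or Apple's actual pipeline): why a post-capture preset
# can't bring back highlight detail that the capture pipeline already discarded.
# Assumptions: linear scene values where 1.0 is the clip point, a simple
# brightening gain, and an 8-bit (0-255) finished image.

def capture_to_8bit(linear, gain=1.5):
    """Brighten linear values, clip anything above 1.0, and quantize to 8 bits."""
    return [round(min(v * gain, 1.0) * 255) for v in linear]

def darken_preset(pixels_8bit, factor=0.6):
    """A 'preset' that only sees the finished 8-bit pixels and scales them down."""
    return [round(p * factor) for p in pixels_8bit]

def darken_at_capture(linear, gain=1.5, factor=0.6):
    """The same darkening, applied before clipping and quantization."""
    return capture_to_8bit(linear, gain * factor)

# Three sky tones that really are different in the scene.
sky = [0.70, 0.80, 0.90]

print(darken_preset(capture_to_8bit(sky)))  # [153, 153, 153]: all three were clipped first
print(darken_at_capture(sky))               # [161, 184, 207]: the gradient survives
```

The preset version ends up as one flat tone because the three sky values were already identical by the time it ran. Real pipelines keep more precision and use gentler curves, so the loss is usually less abrupt, but the direction of the effect is the same.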

Can I change my Look after taking a photo?

Yes, if you shoot in RAW format. RAW files retain the full sensor data without final processing, allowing you to reinterpret them with different Looks later in Halide's Image Lab. With JPEG files, your Look choice is locked in at capture (you can adjust exposure and color after, but not change the fundamental look). This is why RAW is valuable when you're experimenting with different Looks.
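The RAW-versus-JPEG point can be sketched the same way. Below, a Look is modeled as a small set of rendering choices applied to saved linear data. The `Look` class, its two fields, and both example Looks are hypothetical, loosely inspired by this FAQ's descriptions of Default and Process Zero II rather than by Halide's real parameters. Keeping the linear data is what makes re-rendering possible; a JPEG keeps only one rendered result.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Look:
    """Hypothetical stand-in for a Look: a couple of rendering choices, nothing more."""
    name: str
    shadow_lift: float  # 0 leaves shadows alone; larger values brighten dark tones more
    contrast: float     # 1.0 is neutral; above 1.0 steepens contrast around mid-gray

def render(linear, look):
    """Render linear values (0.0-1.0) to 8-bit output using the look's choices."""
    out = []
    for v in linear:
        v = v ** (1.0 / (1.0 + look.shadow_lift))  # power curve: lifts shadows, keeps 0 and 1 fixed
        v = 0.5 + (v - 0.5) * look.contrast        # contrast pivoting on mid-gray
        out.append(round(min(max(v, 0.0), 1.0) * 255))  # clamp, then quantize to 8 bits
    return out

# Hypothetical Looks, loosely echoing "polished" versus "minimal processing".
POLISHED = Look("polished", shadow_lift=0.6, contrast=0.9)
MINIMAL = Look("minimal", shadow_lift=0.0, contrast=1.2)

raw_capture = [0.05, 0.20, 0.50, 0.80]  # linear data a RAW file keeps
jpeg = render(raw_capture, POLISHED)    # a JPEG stores only this one result

# With the RAW data still available, any Look can be applied later.
for look in (POLISHED, MINIMAL):
    print(look.name, render(raw_capture, look))
```

In this toy version the minimal Look crushes the darkest value to 0 while the polished one lifts it, echoing the "stronger contrast" versus "more polished" trade-off this FAQ describes for Process Zero II and Default.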

What's the difference between shooting in HDR and SDR modes?

HDR (High Dynamic Range) preserves detail in both bright highlights and dark shadows simultaneously, which is essential for high-contrast scenes like sunsets. SDR (Standard Dynamic Range) doesn't attempt extreme highlight recovery and can look more natural in low-contrast scenes like foggy mornings. Halide lets you choose which mode makes sense for your shot, or shoot RAW and compare both versions afterward in Image Lab.
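One way to picture the difference is as a question of where highlight values are allowed to go. The sketch below is a deliberately simple toy, not how iOS or Halide actually tone-map. It assumes linear scene values where 1.0 is the display's standard white, treats SDR as clipping everything brighter than that, and treats HDR as letting highlights rise into extra display headroom (an assumed 4.0, or two stops) along a smooth roll-off.

```python
# Toy comparison of SDR and HDR highlight handling (illustrative assumptions only).
# Scene values are linear; 1.0 is the display's standard (SDR) white.

def sdr(v):
    """SDR-style output: anything brighter than standard white is clipped to it."""
    return min(v, 1.0)

def hdr(v, headroom=4.0):
    """HDR-style output: values above 1.0 keep rising toward `headroom`
    (an assumed display limit) along a smooth curve that never exceeds it."""
    if v <= 1.0:
        return v
    excess = v - 1.0
    room = headroom - 1.0
    return 1.0 + room * excess / (excess + room)

sunset = [0.5, 1.0, 2.0, 6.0]  # high contrast: bright sky well above standard white
fog = [0.3, 0.4, 0.5]          # low contrast: nothing above standard white

print([sdr(v) for v in sunset])  # [0.5, 1.0, 1.0, 1.0]: the bright sky tones merge
print([hdr(v) for v in sunset])  # [0.5, 1.0, 1.75, 2.875]: they stay distinct
print([sdr(v) for v in fog] == [hdr(v) for v in fog])  # True
```

In the fog case the two functions return identical values: with no highlights above standard white, HDR headroom has nothing to add, which fits the advice to consider SDR for flat, low-contrast light.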

Should I always shoot in Pro RAW or is JPEG fine?

ProRAW is worth it if you plan significant editing, shoot in difficult lighting, or want maximum color grading flexibility later. JPEG is perfectly fine for quick snapshots, instant social media sharing, or when you're confident in your creative direction at capture time. ProRAW files are much larger (tens of megabytes each at 48 MP) and slower to process. Only use ProRAW when the flexibility justifies the extra file size and storage.
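Since file size is the main cost, a quick storage estimate can help with the decision. This sketch uses placeholder per-shot sizes (about 4 MB for a JPEG and about 75 MB for a 48 MP ProRAW file); these are rough assumptions rather than measurements, so substitute the sizes you actually see on your own iPhone.

```python
# Rough storage budget for JPEG versus ProRAW shooting.
# The per-shot sizes below are placeholder assumptions, not measurements.
ASSUMED_MB_PER_SHOT = {
    "JPEG": 4,
    "ProRAW (48 MP)": 75,
}

def shots_that_fit(free_gb, mb_per_shot):
    """How many whole shots fit in free_gb of storage, counting 1 GB as 1000 MB."""
    return (free_gb * 1000) // mb_per_shot

free_gb = 64
for fmt, size_mb in ASSUMED_MB_PER_SHOT.items():
    print(f"{fmt}: about {shots_that_fit(free_gb, size_mb)} shots in {free_gb} GB")
```

Under these assumptions, 64 GB holds about 16,000 JPEGs but only about 853 ProRAW files, so ProRAW fills storage nearly twenty times faster.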

How does Process Zero II differ from the Default look?

Default uses all of Apple's computational photography algorithms—shadow fill, highlight recovery, local contrast boosting, color optimization. Process Zero II disables most of these algorithms, resulting in stronger contrast, subtler details, and a more natural, less processed appearance. Default looks more polished; Process Zero II looks more film-like. Neither is objectively better—it depends on your creative preference.

Can I create my own custom Looks or upload community-created ones?

Not yet. Halide Mark III ships with Looks designed by the Halide team. Custom Look creation would require access to the ISP (Image Signal Processor) configuration, which is extremely complex and hasn't been exposed in iOS APIs. The team might explore community Looks in future versions, but it's technically non-trivial.

How does Halide Mark III handle different iPhone models with different hardware?

Looks are calibrated to work across different iPhone generations, though each model's hardware has slightly different ISP capabilities. The team spent significant time ensuring that Process Zero II looks consistent on iPhone 14, 15, and 16, even though their computational photography hardware differs. You'll see quality variation (newer phones are faster, sharper), but the creative intent of each Look remains consistent.

What if I want to use Halide but sometimes need the native camera's speed or features?

There's no reason to choose exclusively. You can use Halide as your primary camera app while keeping the native camera for situations where you need burst shooting, macro capability, or video. Make Halide your go-to camera; the native camera is still there when you need it. Many photographers use both, depending on the shooting situation.

Does Halide Mark III work well for video, or just still photos?

Currently, Looks are optimized for still photography. Applying Looks to video in real time could come in a future update, but this isn't officially confirmed. For now, if you want cinematic video, you'll still mostly use the native camera app. Halide Mark III's focus is still image capture.

Key Takeaways

- Halide Mark III's Looks system operates at capture time, altering computational photography algorithms rather than applying post-processing filters like traditional presets

- Process Zero II disables most algorithmic processing for stronger contrast and a more natural appearance, while Default matches the iPhone's native look with added manual controls

- HDR and SDR modes serve different creative purposes: HDR excels in high-contrast scenes while SDR looks more natural in low-contrast lighting conditions

- ProRAW files get intentional tone mapping optimization for each Look, eliminating the traditional problem of RAW files looking bad without extensive editing

- Shooting in RAW enables retroactive Look selection and comparison in Image Lab, providing flexibility when uncertain about creative direction at capture time
